TensorFlow in Deep Learning, Lecture 4: Convolutional Neural Network (CNN)

- Slides: 34

TensorFlow in Deep Learning, Lecture 4: Convolutional Neural Network (CNN)
JAHANDAR JAHANIPOUR, jjahanipour@uh.edu
www.easy-tensorflow.com, https://github.com/easy-tensorflow
01/05/2018

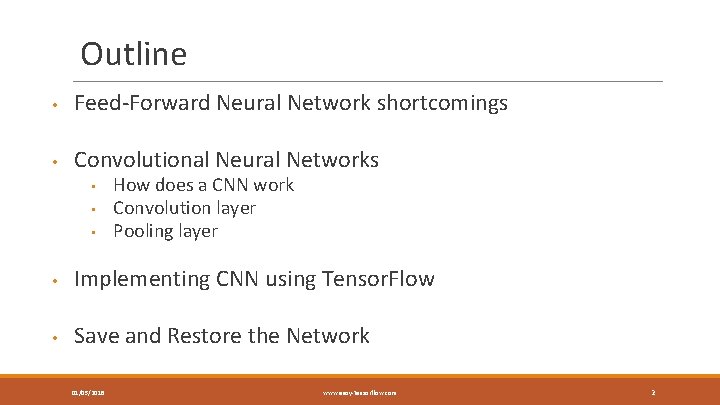

Outline

- Feed-Forward Neural Network shortcomings
- Convolutional Neural Networks
  - How does a CNN work
  - Convolution layer
  - Pooling layer
- Implementing CNN using TensorFlow
- Save and Restore the Network

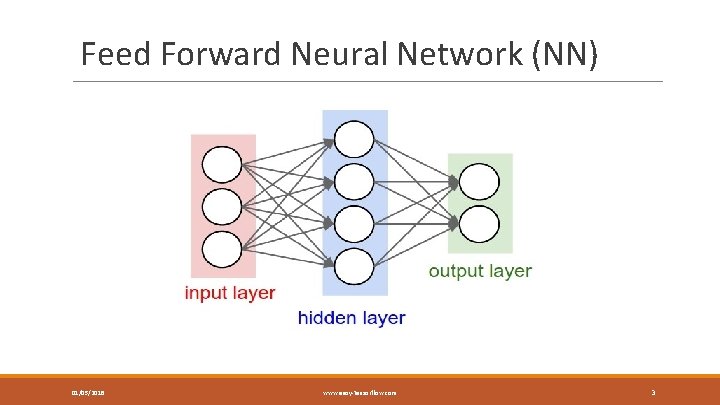

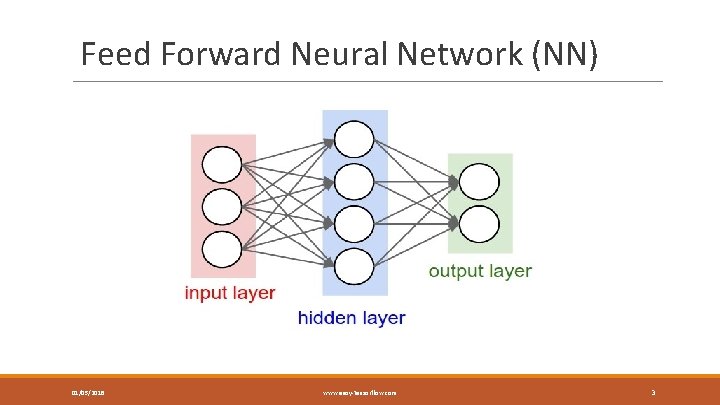

Feed Forward Neural Network (NN)

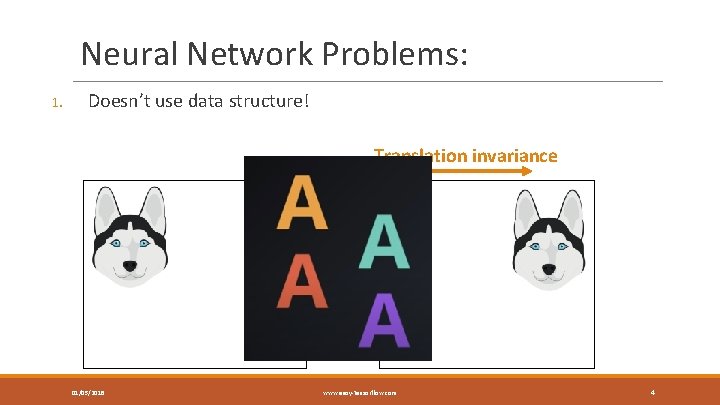

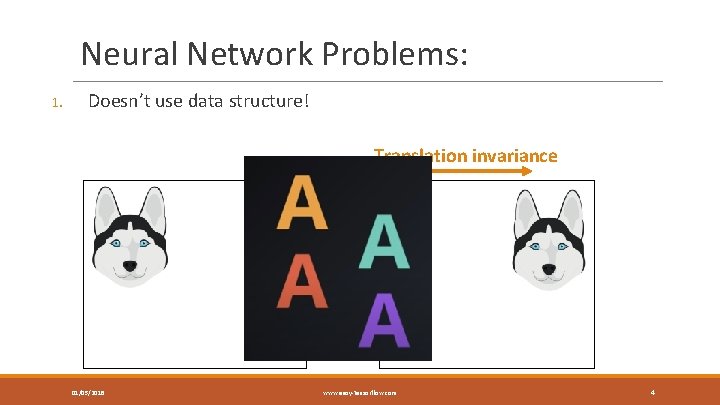

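Neural Network Problems: 1. Doesn't use the structure of the data!

A fully connected network has no built-in translation invariance: the same pattern at a different position in the image looks like a completely new input.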

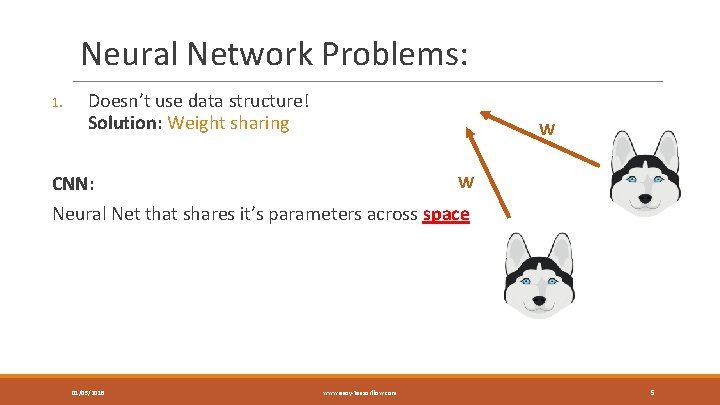

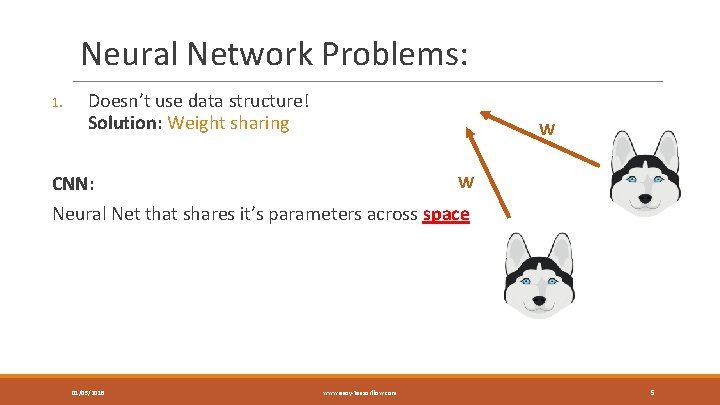

Neural Network Problems: 1. Doesn't use the structure of the data!

Solution: weight sharing. A CNN is a neural network that shares its parameters (W) across space.

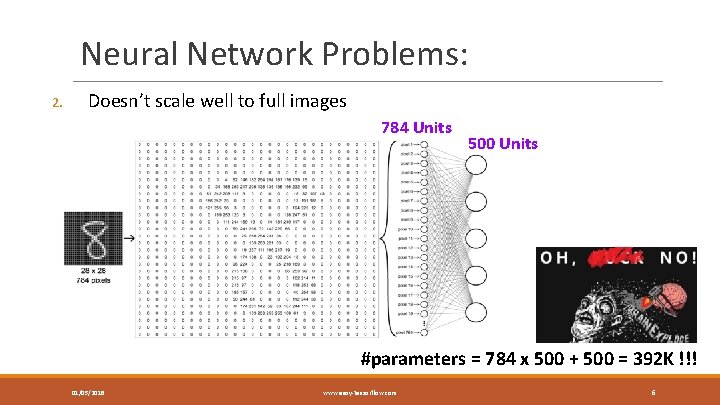

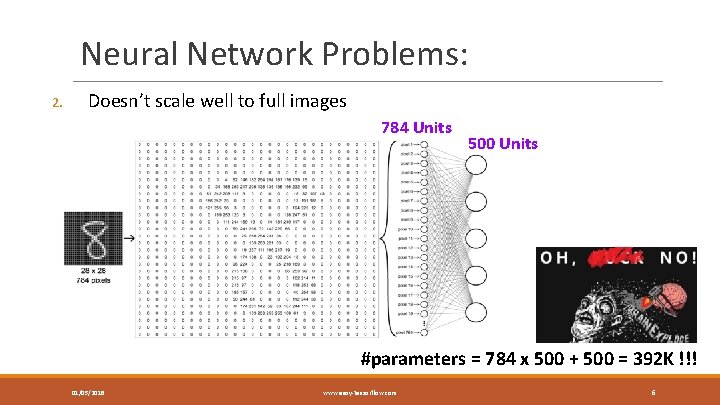

Neural Network Problems: 2. Doesn't scale well to full images

With 784 input units fully connected to 500 hidden units:

#parameters = 784 x 500 + 500 = 392,500 ≈ 392 K!

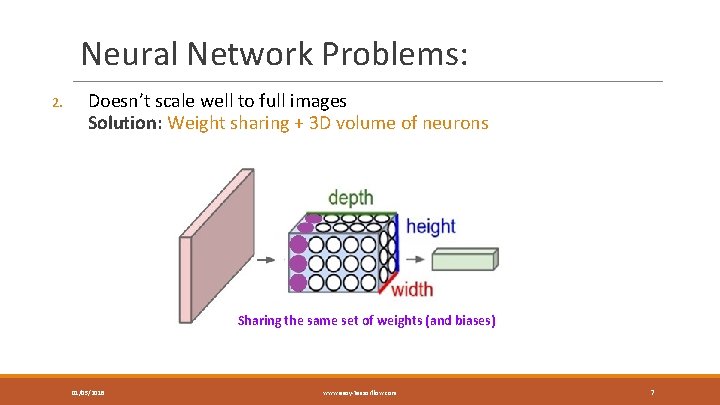

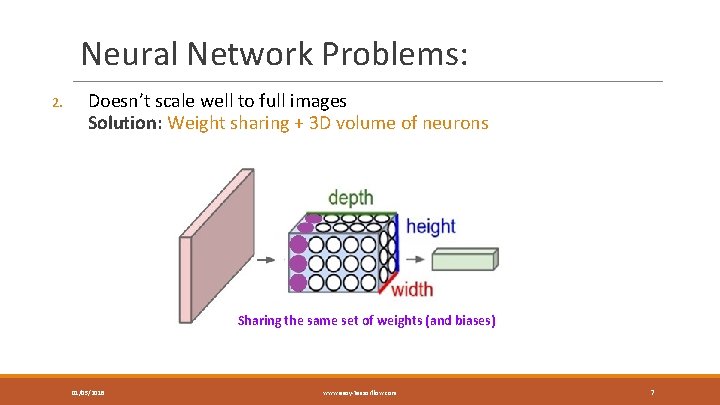

Neural Network Problems: 2. Doesn't scale well to full images

Solution: weight sharing + 3D volume of neurons. Neurons share the same set of weights (and biases).

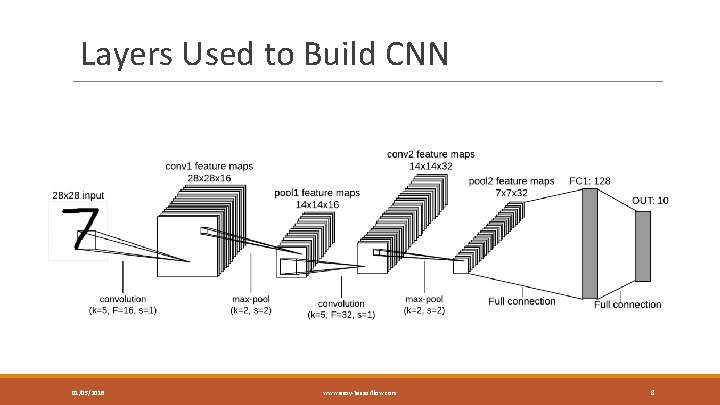

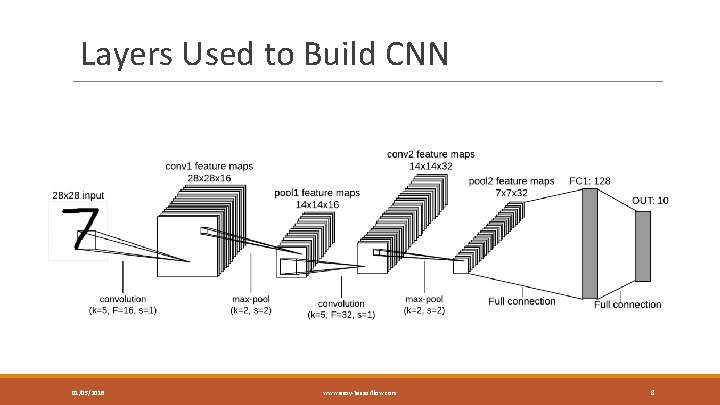
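To make the effect of weight sharing concrete, the sketch below compares the parameter count of the fully connected layer from the slides (784 inputs, 500 units) with that of a convolution layer. The convolution settings (5x5 filters, 1 input channel, 16 filters) and the function names are assumptions chosen only for illustration; they do not come from the slides.

```python
def fc_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv_params(filter_size, in_channels, num_filters):
    """Weights plus biases of a convolution layer.

    Each filter is shared across all spatial positions, so the count
    does not depend on the image size.
    """
    return filter_size * filter_size * in_channels * num_filters + num_filters


fc_count = fc_params(784, 500)      # layer from the slide
conv_count = conv_params(5, 1, 16)  # hypothetical 5x5 conv layer with 16 filters
print(fc_count)    # 392500
print(conv_count)  # 416
```

The convolution layer's count stays the same for a 28 x 28 or a 1000 x 1000 image, while the fully connected count grows with the number of pixels.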

Layers Used to Build CNN

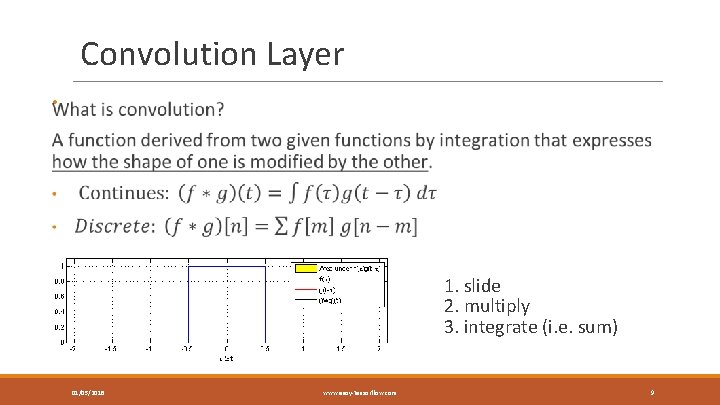

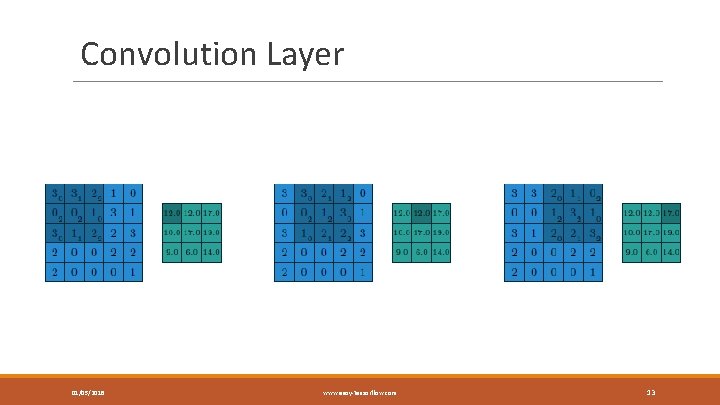

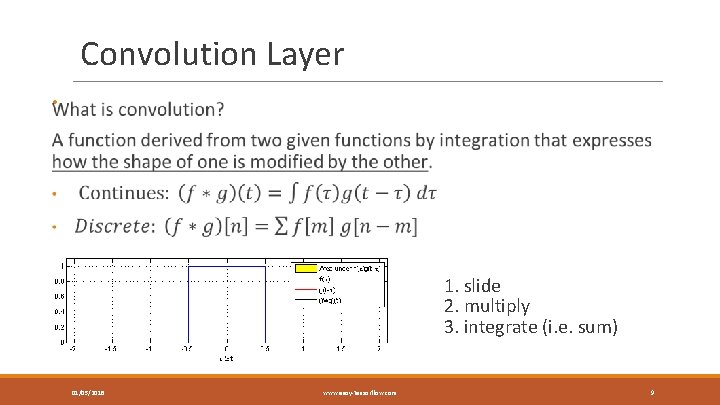

Convolution Layer

1. Slide
2. Multiply
3. Integrate (i.e. sum)

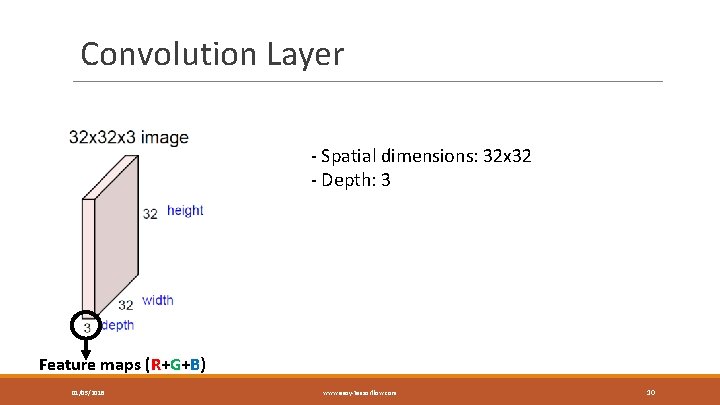

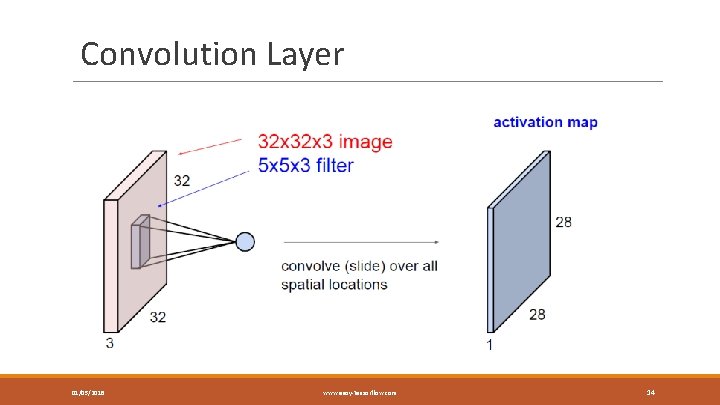

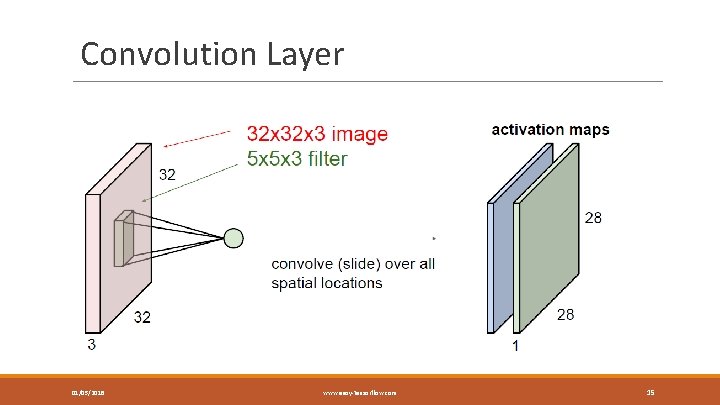

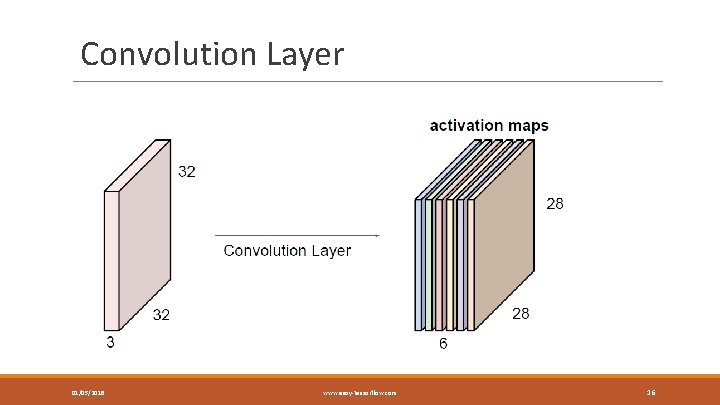

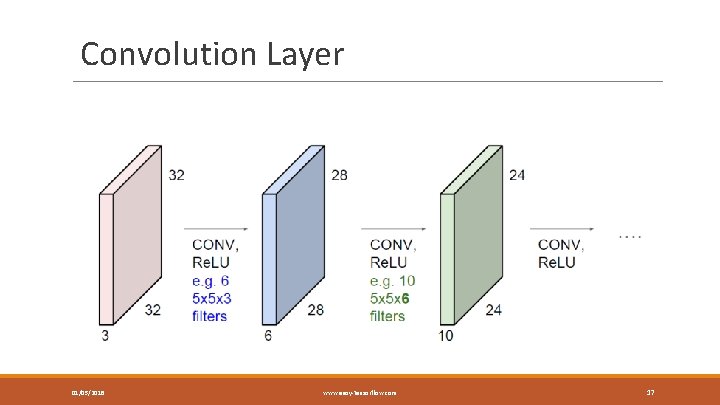

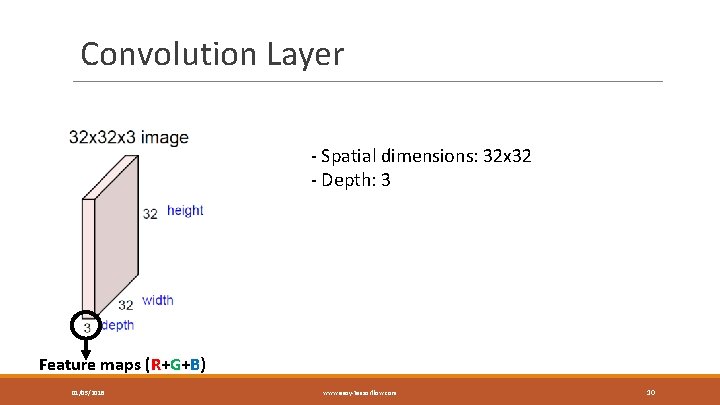

Convolution Layer

- Spatial dimensions: 32 x 32
- Depth: 3 feature maps (R + G + B)

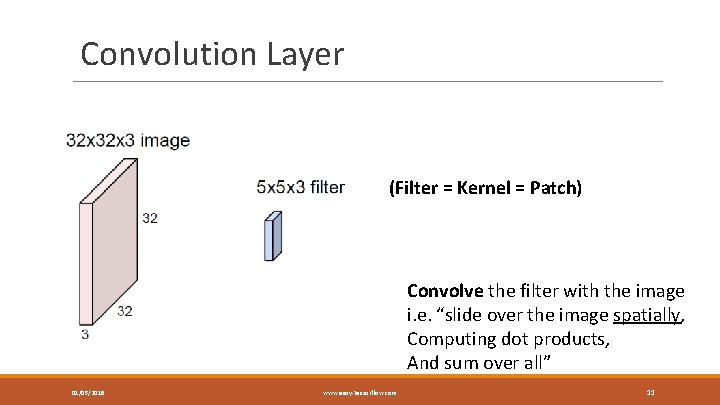

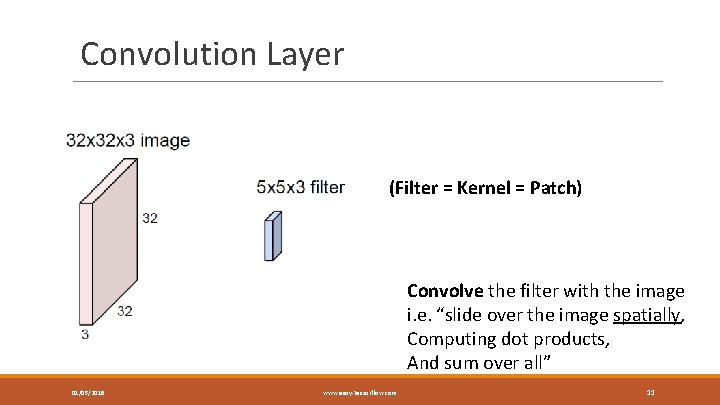

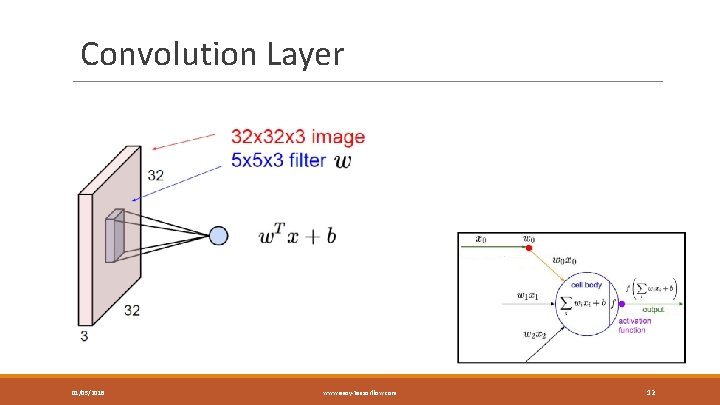

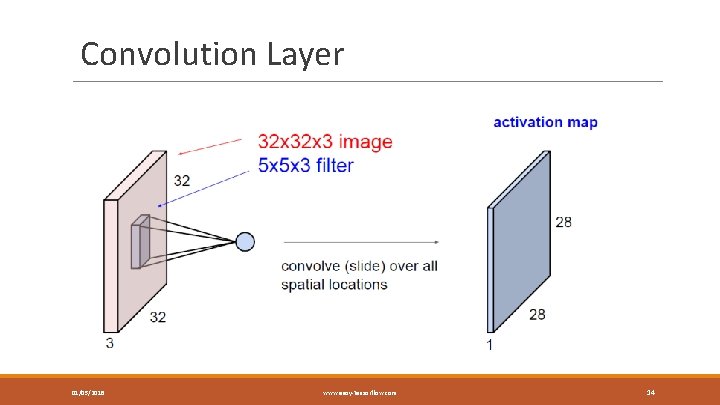

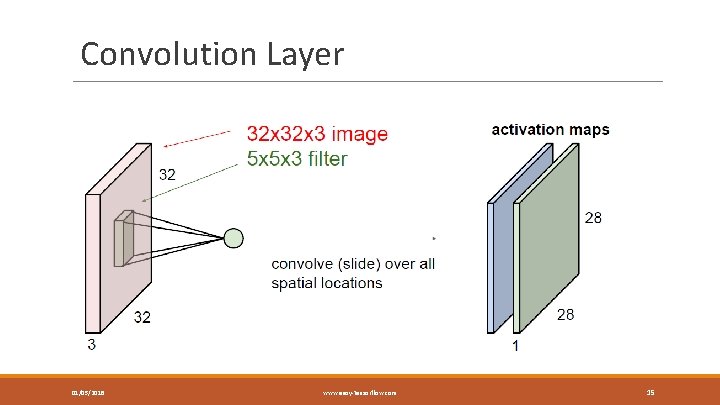

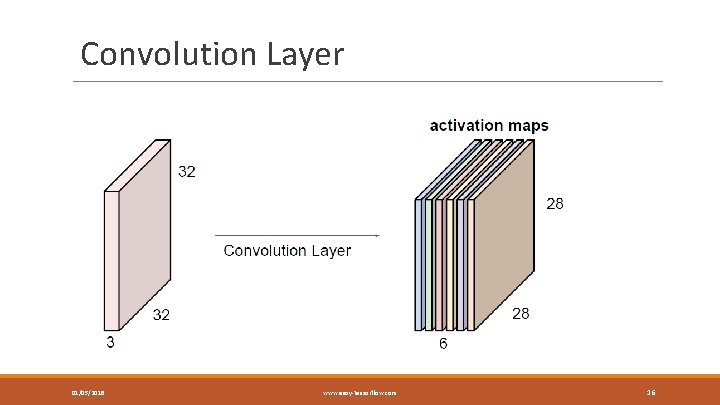

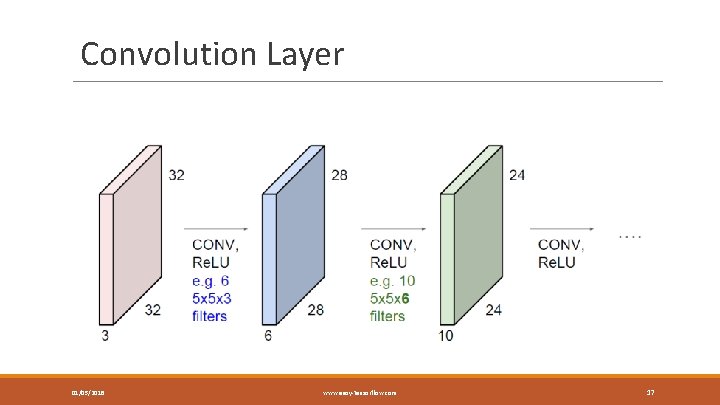

Convolution Layer (Filter = Kernel = Patch)

Convolve the filter with the image, i.e. "slide over the image spatially, computing dot products, and sum over all".

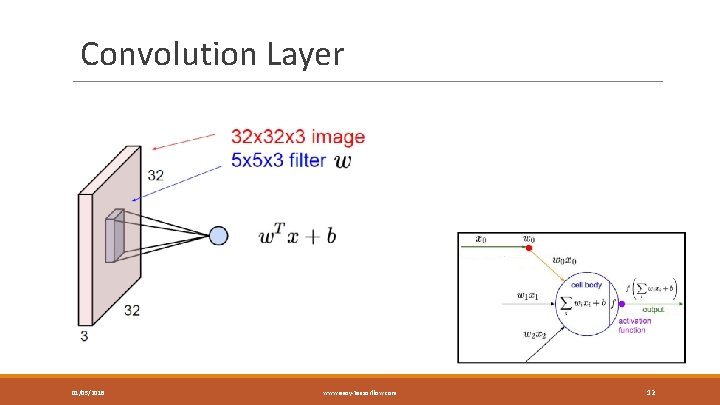
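The slide, multiply, and sum steps can be sketched in plain Python. This is a minimal illustration under simplifying assumptions (a single channel, no padding, no bias), not the TensorFlow implementation. The function name `conv2d_single` and the sample values are made up for the example. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this is a cross-correlation.

```python
def conv2d_single(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the output map.

    `image` and `kernel` are lists of lists (height x width), single channel.
    """
    i_h, i_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out = []
    # 1. slide: visit every position where the filter fits inside the image
    for r in range(0, i_h - k_h + 1, stride):
        row = []
        for c in range(0, i_w - k_w + 1, stride):
            total = 0
            for dr in range(k_h):
                for dc in range(k_w):
                    # 2. multiply element-wise, 3. sum the products
                    total += image[r + dr][c + dc] * kernel[dr][dc]
            row.append(total)
        out.append(row)
    return out


image = [
    [1, 2, 3, 0],
    [0, 1, 2, 3],
    [3, 0, 1, 2],
    [2, 3, 0, 1],
]
kernel = [
    [1, 0],
    [0, 1],
]
result = conv2d_single(image, kernel)
print(result)  # [[2, 4, 6], [0, 2, 4], [6, 0, 2]]
```

For a multi-channel input such as an RGB image, the same sum would also run over the depth dimension, producing one output value per filter position.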

Convolution Layer

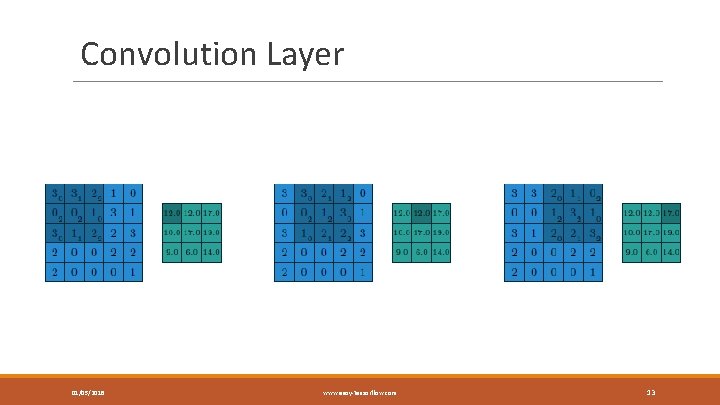

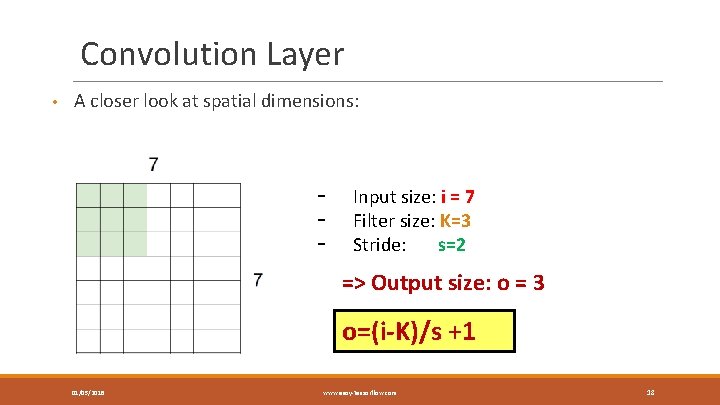

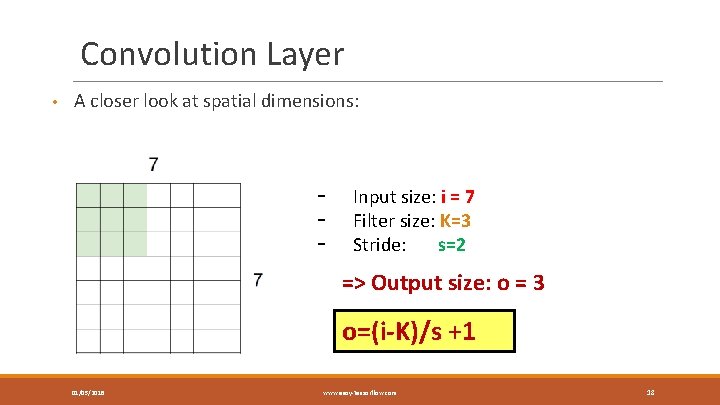

Convolution Layer

A closer look at spatial dimensions:

- Input size: $i = 7$
- Filter size: $K = 3$
- Stride: $s = 2$
- Output size: $o = (i - K)/s + 1 = 3$

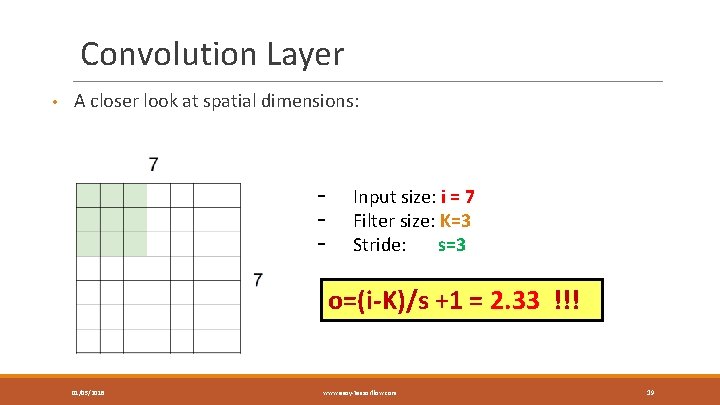

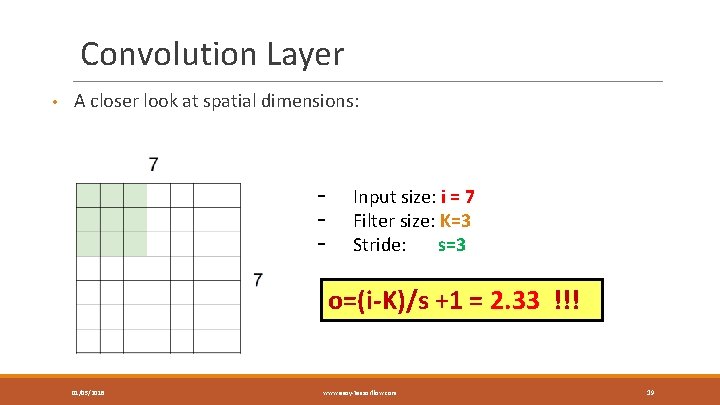

Convolution Layer

A closer look at spatial dimensions:

- Input size: $i = 7$
- Filter size: $K = 3$
- Stride: $s = 3$
- Output size: $o = (i - K)/s + 1 = 2.33$ (!), which is not an integer, so the filter does not fit the input evenly with this stride.

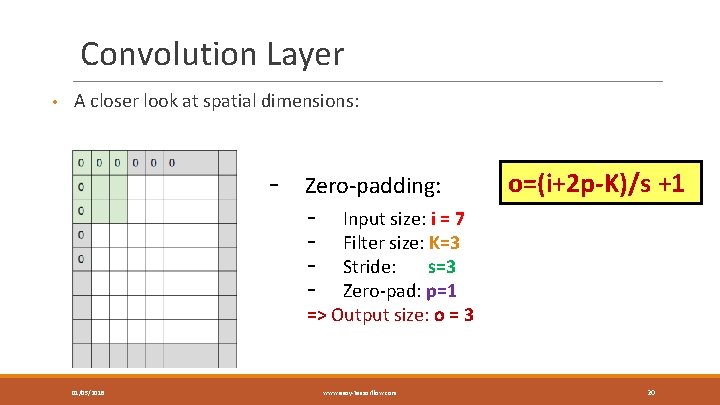

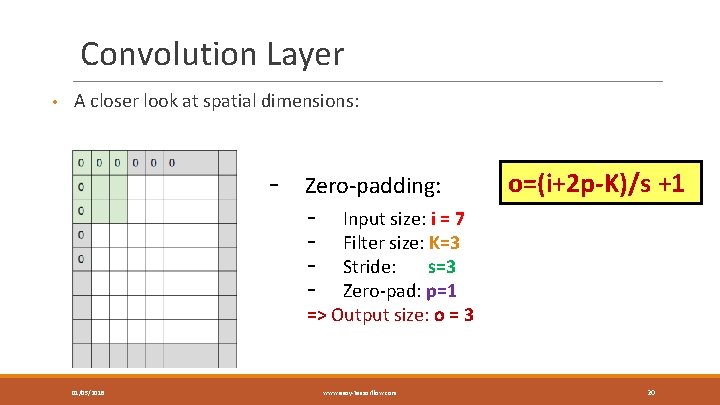

Convolution Layer

A closer look at spatial dimensions, with zero-padding: $o = (i + 2p - K)/s + 1$

- Input size: $i = 7$
- Filter size: $K = 3$
- Stride: $s = 3$
- Zero-pad: $p = 1$
- Output size: $o = 3$

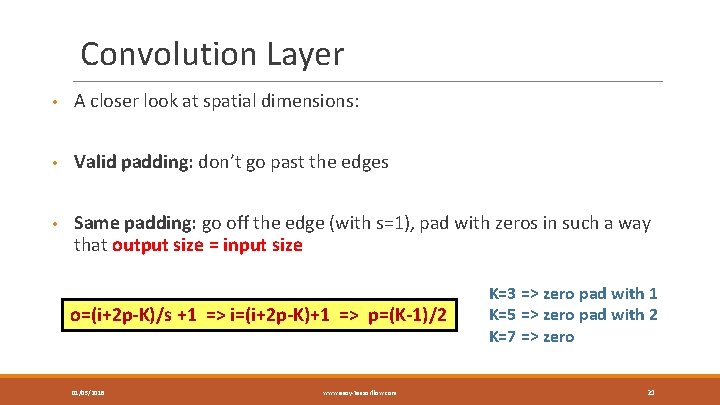

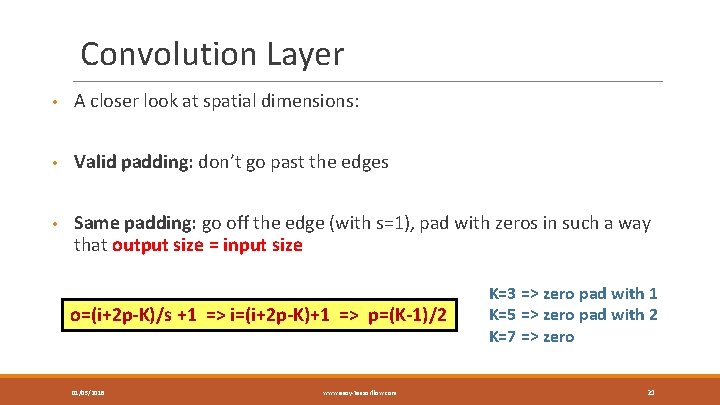
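The output-size examples from the slides (stride 2, stride 3 without padding, and stride 3 with zero-padding) can be checked with a small helper. This is a sketch; the function name `conv_output_size` is hypothetical, and raising an error when the filter does not fit is a design choice for the example, not something the slides prescribe.

```python
def conv_output_size(i, k, s, p=0):
    """Return o = (i + 2p - k) / s + 1, or raise if the result is not an integer."""
    span = i + 2 * p - k
    if span < 0 or span % s != 0:
        raise ValueError(
            "filter does not fit: i=%d, k=%d, s=%d, p=%d" % (i, k, s, p)
        )
    return span // s + 1


print(conv_output_size(7, 3, 2))       # 3
print(conv_output_size(7, 3, 3, p=1))  # 3
try:
    conv_output_size(7, 3, 3)          # (7 - 3) / 3 + 1 = 2.33, not an integer
except ValueError as err:
    print(err)
```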

Convolution Layer

A closer look at spatial dimensions:

- Valid padding: don't go past the edges.
- Same padding: go off the edge and pad with zeros in such a way that, with $s = 1$, output size = input size:

  $o = (i + 2p - K)/s + 1 \Rightarrow i = (i + 2p - K) + 1 \Rightarrow p = (K - 1)/2$

  - $K = 3$: zero-pad with 1
  - $K = 5$: zero-pad with 2
  - $K = 7$: zero-pad with 3

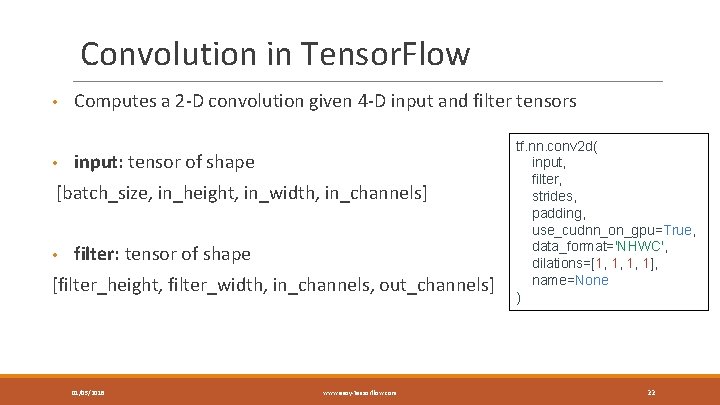

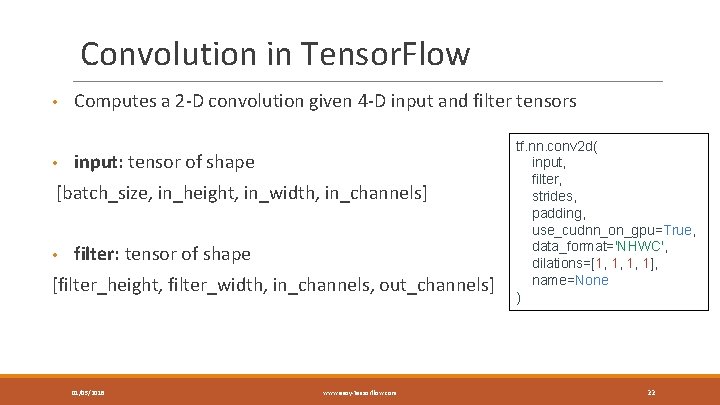
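The rule $p = (K - 1)/2$ for same padding at stride 1 can be verified numerically. The sketch below assumes odd filter sizes; the function names and the input size of 32 are chosen only for illustration.

```python
def same_padding(k):
    """Zero-padding per side that keeps output size = input size at stride 1.

    Follows p = (K - 1) / 2, so K is assumed to be odd.
    """
    if k % 2 == 0:
        raise ValueError("this formula assumes an odd filter size")
    return (k - 1) // 2


def output_size(i, k, s=1, p=0):
    """o = (i + 2p - K) / s + 1, using integer division."""
    return (i + 2 * p - k) // s + 1


for k in (3, 5, 7):
    p = same_padding(k)
    # With this padding the output size equals the input size (here i = 32).
    print(k, p, output_size(32, k, s=1, p=p))
```

Each printed line shows the filter size, the padding, and an output size of 32, matching the input.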

Convolution in TensorFlow

tf.nn.conv2d computes a 2-D convolution given 4-D input and filter tensors:

- input: tensor of shape [batch_size, in_height, in_width, in_channels]
- filter: tensor of shape [filter_height, filter_width, in_channels, out_channels]

Signature:

    tf.nn.conv2d(
        input,
        filter,
        strides,
        padding,
        use_cudnn_on_gpu=True,
        data_format='NHWC',
        dilations=[1, 1, 1, 1],
        name=None
    )

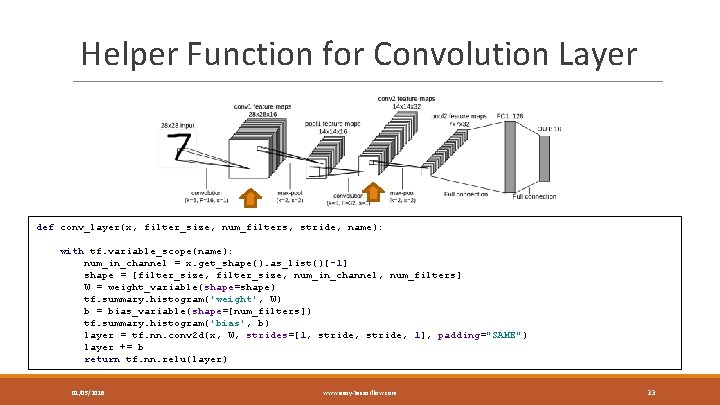

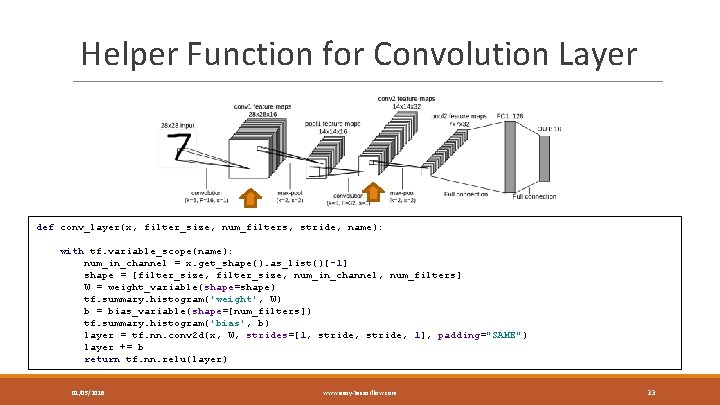
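To see how the input and filter shapes combine, the following sketch computes the shape of the output tensor for the NHWC layout without calling TensorFlow. It mirrors the output-size rules documented for "SAME" and "VALID" padding, without dilation. The function name `conv2d_output_shape` and the example shapes are assumptions for illustration.

```python
import math


def conv2d_output_shape(input_shape, filter_shape, strides, padding):
    """Shape of the tensor a 2-D convolution would return (NHWC layout).

    input_shape:  [batch_size, in_height, in_width, in_channels]
    filter_shape: [filter_height, filter_width, in_channels, out_channels]
    strides:      [1, stride_h, stride_w, 1]
    padding:      "SAME" or "VALID"
    """
    batch, in_h, in_w, in_c = input_shape
    f_h, f_w, f_in_c, out_c = filter_shape
    if in_c != f_in_c:
        raise ValueError("in_channels of input and filter must match")
    _, s_h, s_w, _ = strides
    if padding == "SAME":
        out_h = math.ceil(in_h / s_h)
        out_w = math.ceil(in_w / s_w)
    elif padding == "VALID":
        out_h = math.ceil((in_h - f_h + 1) / s_h)
        out_w = math.ceil((in_w - f_w + 1) / s_w)
    else:
        raise ValueError("padding must be 'SAME' or 'VALID'")
    return [batch, out_h, out_w, out_c]


# Hypothetical example: a batch of 100 images of 28 x 28 x 1, 16 filters of 5 x 5.
same_shape = conv2d_output_shape([100, 28, 28, 1], [5, 5, 1, 16], [1, 1, 1, 1], "SAME")
valid_shape = conv2d_output_shape([100, 28, 28, 1], [5, 5, 1, 16], [1, 1, 1, 1], "VALID")
print(same_shape)   # [100, 28, 28, 16]
print(valid_shape)  # [100, 24, 24, 16]
```

The number of output channels always equals the number of filters, which is why the last filter dimension is called out_channels.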

Helper Function for Convolution Layer

    import tensorflow as tf  # TensorFlow 1.x API

    # weight_variable and bias_variable are helper functions defined elsewhere.
    def conv_layer(x, filter_size, num_filters, stride, name):
        with tf.variable_scope(name):
            num_in_channel = x.get_shape().as_list()[-1]
            # Filter shape: [filter_height, filter_width, in_channels, out_channels]
            shape = [filter_size, filter_size, num_in_channel, num_filters]
            W = weight_variable(shape=shape)
            tf.summary.histogram('weight', W)
            b = bias_variable(shape=[num_filters])
            tf.summary.histogram('bias', b)
            # Strides: [batch, height, width, channels]
            layer = tf.nn.conv2d(x, W, strides=[1, stride, stride, 1], padding="SAME")
            layer += b
            return tf.nn.relu(layer)

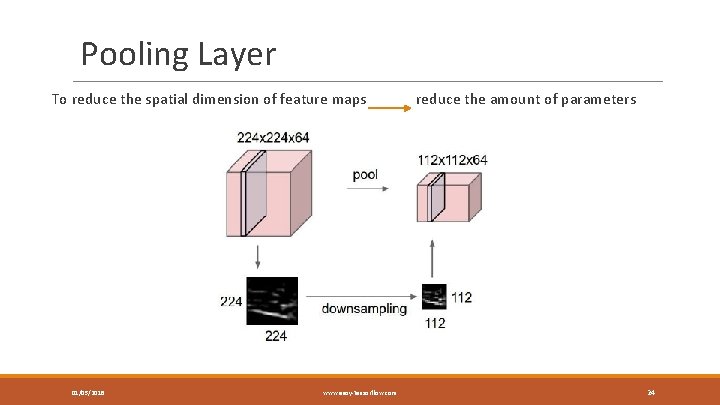

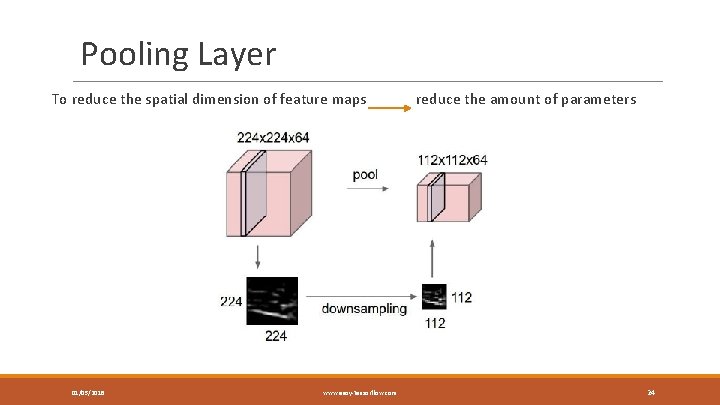

Pooling Layer

Reduces the spatial dimensions of the feature maps, which in turn reduces the number of parameters in the layers that follow.

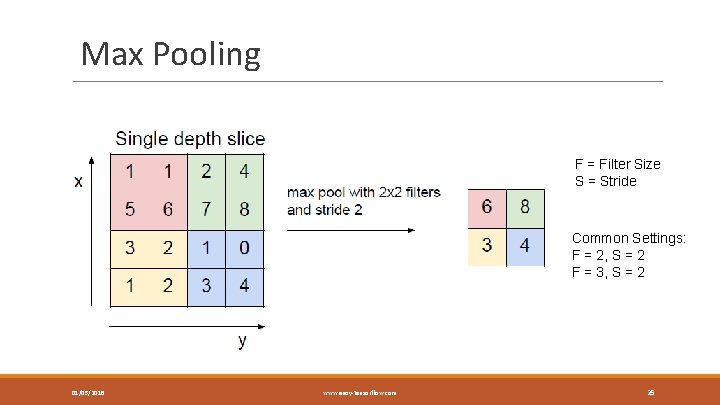

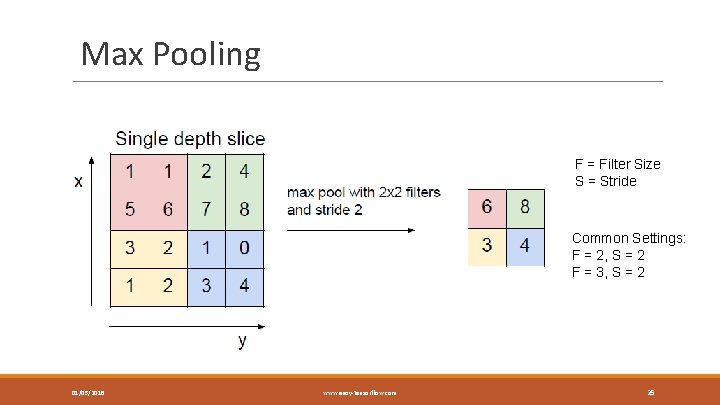

Max Pooling

F = filter size, S = stride

Common settings:

- F = 2, S = 2
- F = 3, S = 2

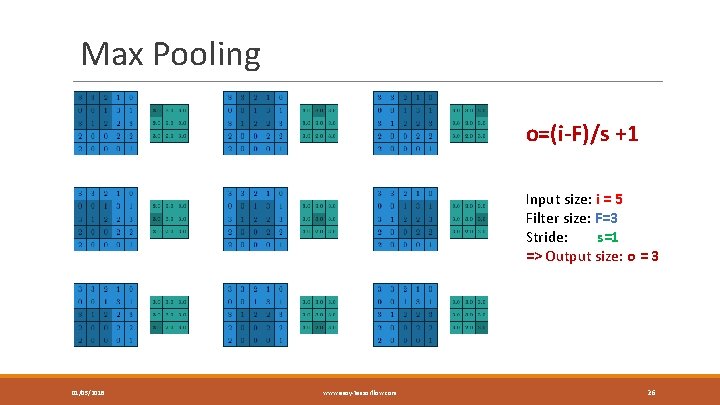

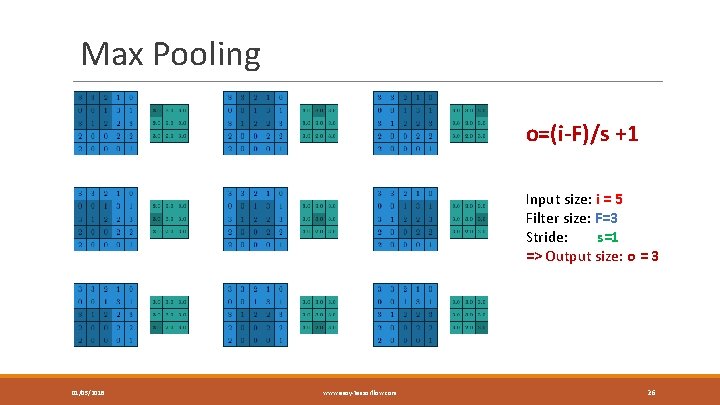

Max Pooling

$o = (i - F)/s + 1$

- Input size: $i = 5$
- Filter size: $F = 3$
- Stride: $s = 1$
- Output size: $o = 3$

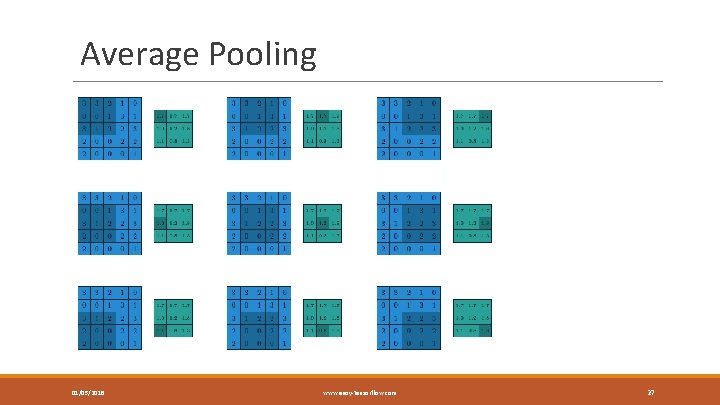

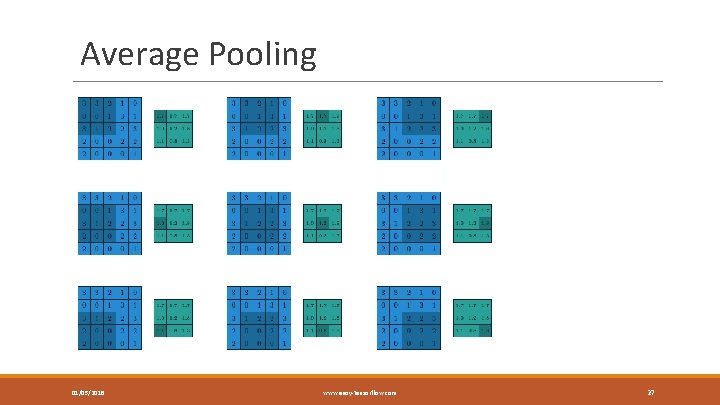
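Max pooling follows the same sliding-window idea as convolution, but keeps the largest value in each window instead of a weighted sum. The sketch below is a plain Python illustration for a single feature map without padding; the function name `max_pool2d` and the sample values are made up for the example.

```python
def max_pool2d(feature_map, f, s):
    """Max pooling over a single-channel feature map (list of lists), no padding."""
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for r in range(0, h - f + 1, s):
        row = []
        for c in range(0, w - f + 1, s):
            # Collect the f x f window and keep only its largest value.
            window = [feature_map[r + dr][c + dc]
                      for dr in range(f) for dc in range(f)]
            row.append(max(window))
        out.append(row)
    return out


feature_map = [
    [1, 3, 2, 4],
    [5, 6, 7, 8],
    [3, 2, 1, 0],
    [1, 2, 3, 4],
]
pooled = max_pool2d(feature_map, f=2, s=2)
print(pooled)  # [[6, 8], [3, 4]]
```

With F = 2 and S = 2, each spatial dimension is halved, and the layer itself has no trainable parameters.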

Average Pooling

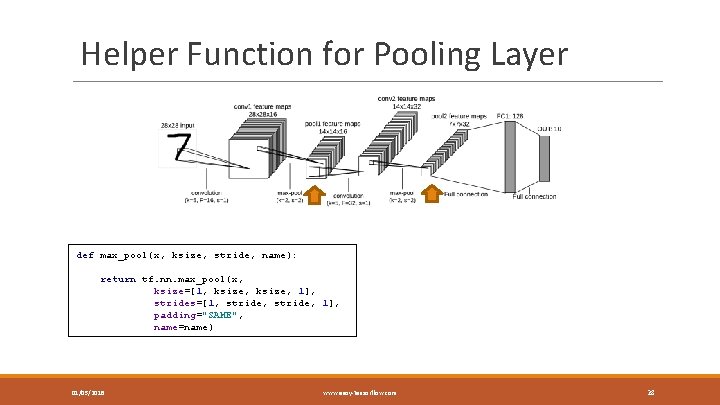

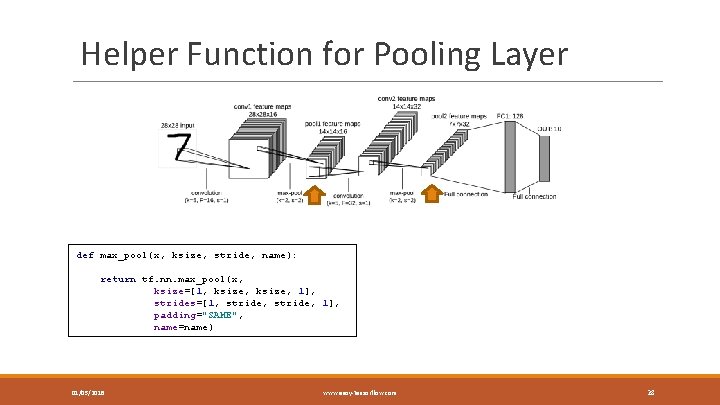

Helper Function for Pooling Layer

    def max_pool(x, ksize, stride, name):
        # ksize and strides follow the NHWC order: [batch, height, width, channels]
        return tf.nn.max_pool(x,
                              ksize=[1, ksize, ksize, 1],
                              strides=[1, stride, stride, 1],
                              padding="SAME",
                              name=name)

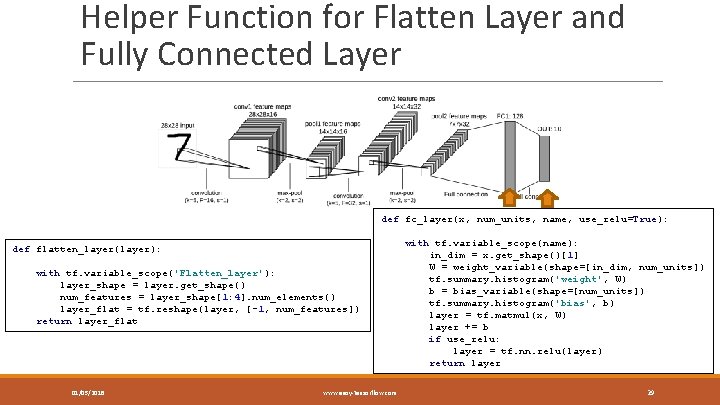

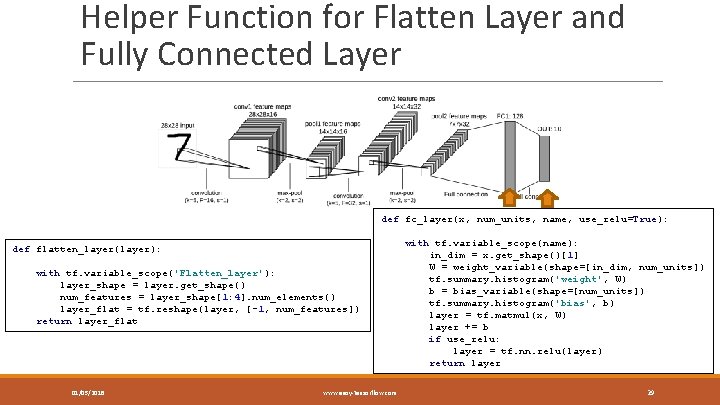

Helper Function for Flatten Layer and Fully Connected Layer

    def flatten_layer(layer):
        with tf.variable_scope('Flatten_layer'):
            layer_shape = layer.get_shape()
            # Number of features per example: height * width * channels
            num_features = layer_shape[1:4].num_elements()
            layer_flat = tf.reshape(layer, [-1, num_features])
            return layer_flat

    def fc_layer(x, num_units, name, use_relu=True):
        with tf.variable_scope(name):
            in_dim = x.get_shape()[1]
            W = weight_variable(shape=[in_dim, num_units])
            tf.summary.histogram('weight', W)
            b = bias_variable(shape=[num_units])
            tf.summary.histogram('bias', b)
            layer = tf.matmul(x, W)
            layer += b
            if use_relu:
                layer = tf.nn.relu(layer)
            return layer

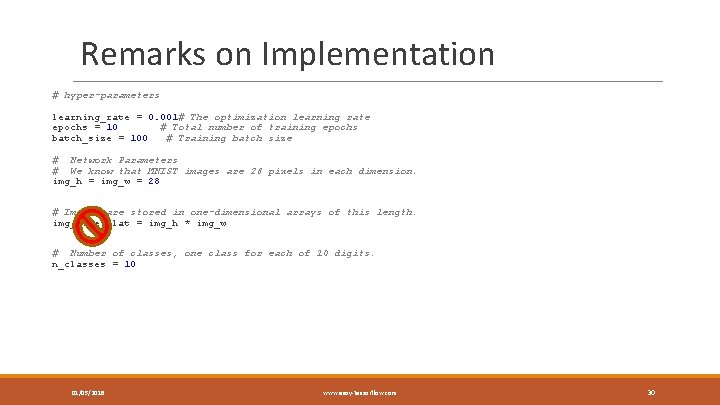

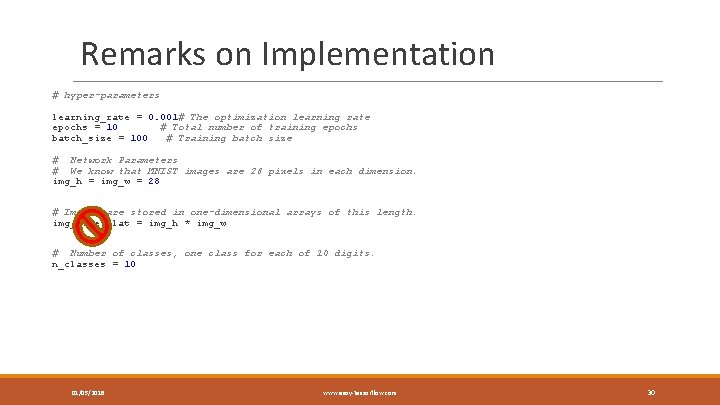

Remarks on Implementation

    # Hyper-parameters
    learning_rate = 0.001  # The optimization learning rate
    epochs = 10            # Total number of training epochs
    batch_size = 100       # Training batch size

    # Network parameters
    # We know that MNIST images are 28 pixels in each dimension.
    img_h = img_w = 28
    # Images are stored in one-dimensional arrays of this length.
    img_size_flat = img_h * img_w
    # Number of classes, one class for each of 10 digits.
    n_classes = 10

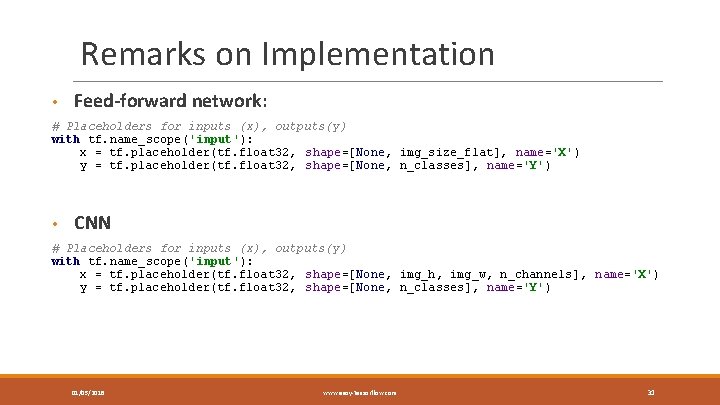

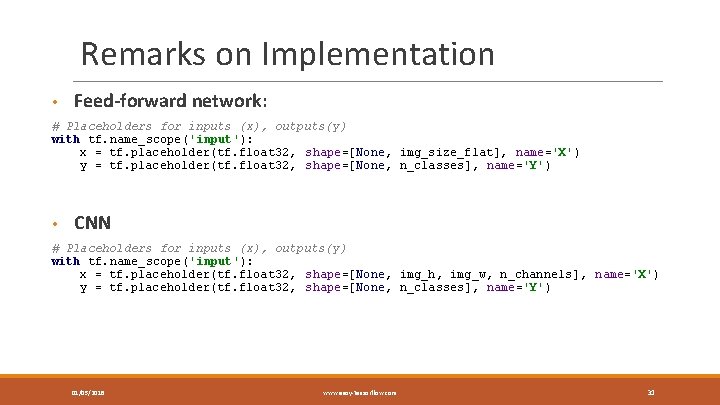

Remarks on Implementation

Feed-forward network:

    # Placeholders for inputs (x), outputs (y)
    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, shape=[None, img_size_flat], name='X')
        y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')

CNN:

    # Placeholders for inputs (x), outputs (y)
    with tf.name_scope('input'):
        x = tf.placeholder(tf.float32, shape=[None, img_h, img_w, n_channels], name='X')
        y = tf.placeholder(tf.float32, shape=[None, n_classes], name='Y')

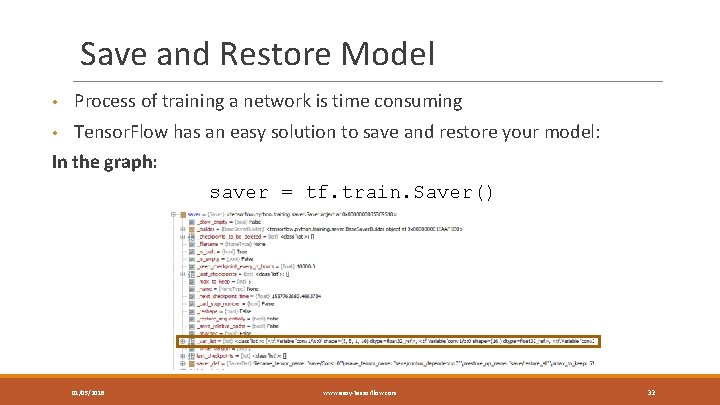

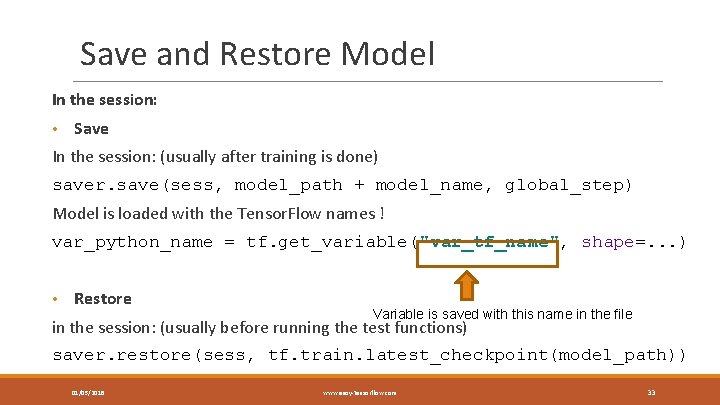

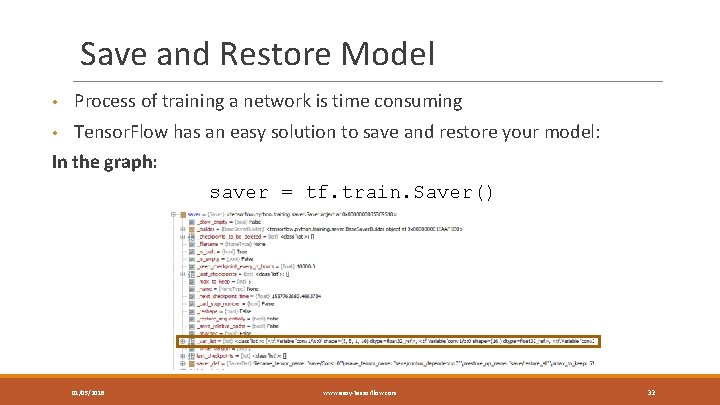
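The difference between the two placeholders is only the layout of each example: a flat vector of length img_size_flat for the feed-forward network versus an [img_h, img_w, n_channels] volume for the CNN. The plain Python sketch below shows that rearrangement for one image. Here `n_channels = 1` is an assumption (grayscale MNIST; the slides do not define it), and `to_image` is a hypothetical helper.

```python
img_h = img_w = 28
n_channels = 1  # assumption: grayscale MNIST; not defined on the slides


def to_image(flat, height, width, channels):
    """Reshape a flat list of pixels into nested lists [height][width][channels]."""
    if len(flat) != height * width * channels:
        raise ValueError("flat vector has the wrong length")
    it = iter(flat)
    # Row-major order: channels vary fastest, then columns, then rows.
    return [[[next(it) for _ in range(channels)]
             for _ in range(width)]
            for _ in range(height)]


flat = list(range(img_h * img_w * n_channels))  # stand-in for one flattened image
image = to_image(flat, img_h, img_w, n_channels)
print(len(image), len(image[0]), len(image[0][0]))  # 28 28 1
print(image[1][0][0])  # 28: first pixel of the second row
```

Keeping the 2-D layout is what lets the convolution filters see neighbouring pixels together.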

Save and Restore Model

- The process of training a network is time-consuming.
- TensorFlow has an easy solution to save and restore your model.

In the graph:

    saver = tf.train.Saver()

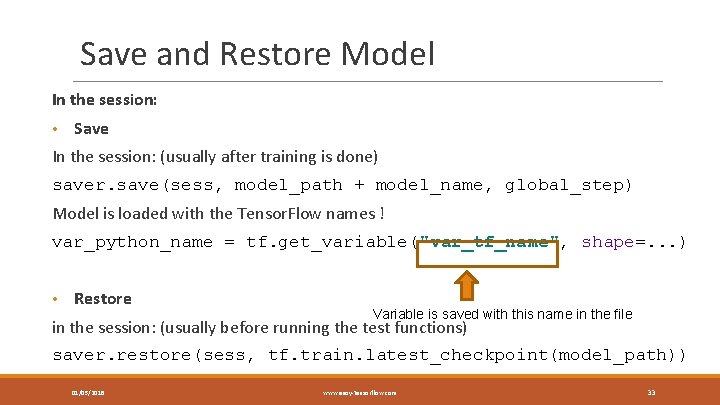

Save and Restore Model

Save, in the session (usually after training is done):

    saver.save(sess, model_path + model_name, global_step)

The model is loaded with the TensorFlow names! In the definition below, the variable is saved in the file under the name "var_tf_name", not under its Python name:

    var_python_name = tf.get_variable("var_tf_name", shape=...)

Restore, in the session (usually before running the test functions):

    saver.restore(sess, tf.train.latest_checkpoint(model_path))

- If you found this workshop interesting, please consider giving us a star on GitHub and following us for more tutorials: https://github.com/easy-tensorflow
- Feel free to leave comments on the tutorials if you have any questions or suggestions: http://easy-tensorflow.com/