Teamwork, Safety Culture, and Patient Satisfaction

- Slides: 57

Teamwork and Its Relation to Patient Safety Culture, Patient Experience & Outcomes

TeamSTEPPS National Conference, Nashville, TN, June 21, 2012

Steve Hines, Ph.D., HRET

Joann Sorra, Ph.D., Westat (joannsorra@westat.com)

Objectives
- Discuss teamwork, patient safety culture, patient experience, and patient safety and quality measures
- Describe measure options
- Identify data collection and analysis challenges and recommend strategies to overcome them
- Discuss how to use the data to improve patient safety and quality

90-Minute Agenda
- Tag team approach
- Open Q&A after each section
- Small group discussion and reporting back

Background/Introduction

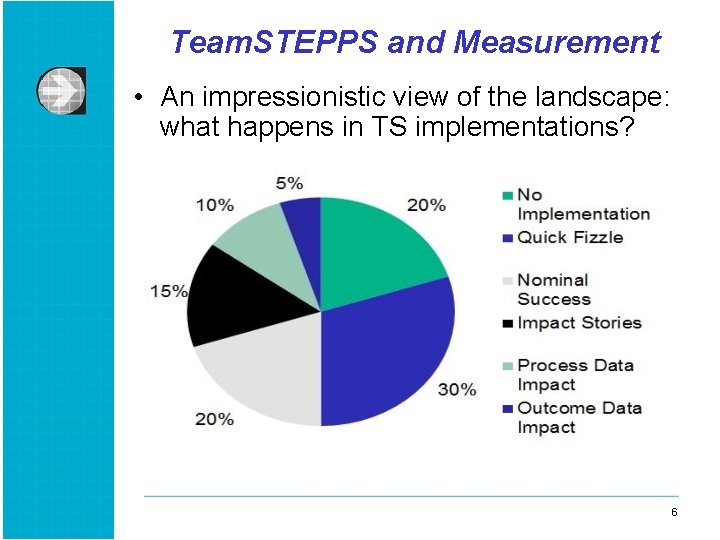

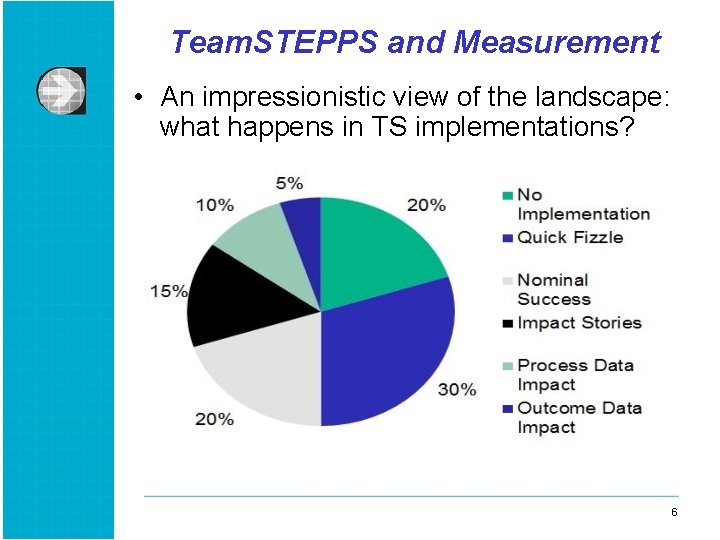

TeamSTEPPS (TS) and Measurement
- An impressionistic view of the landscape: what happens in TS implementations?

Why Measurement Is So Challenging
- Implementation doesn’t work
- Focus is quality improvement, not measurement
- Focus is often on stories, not data
- TS is part of broader effort to create a safety culture
- Some changes don’t warrant collecting data
- Compelling impact data is hard to produce

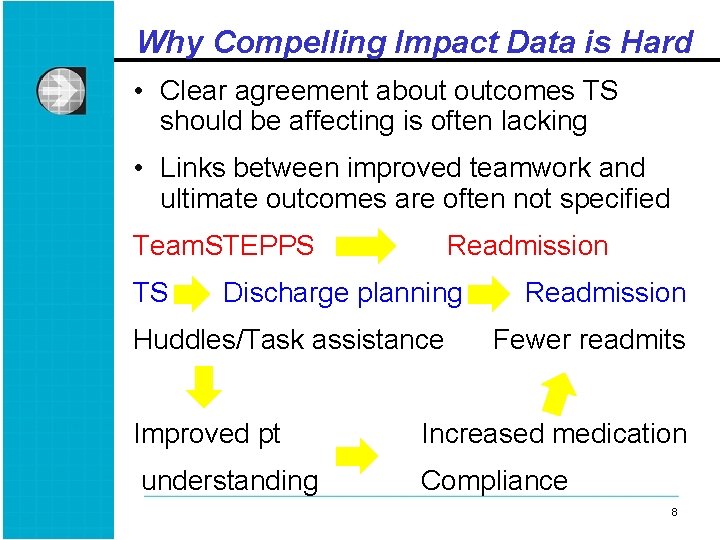

Why Compelling Impact Data Is Hard
- Clear agreement about outcomes TS should be affecting is often lacking
- Links between improved teamwork and ultimate outcomes are often not specified

[Diagram: example chain linking TeamSTEPPS to readmissions: discharge planning huddles/task assistance, improved patient medication understanding, increased compliance, fewer readmits]

Why Compelling Impact Data Is Hard
- Measurement of intermediate processes is time consuming and challenging
- Data is hard to collect for an extended period
- If measures aren’t believed, then the value of collecting them is small
- No surprise most TS implementations lack strong proof of impact, even when they really make one

Measurement Options
- Unit or Task-Specific Options
- Broader Measures of Culture & Satisfaction

Unit and Task Specific Measures
- What these are: assessments of micro-level processes or outcomes directly linked to the use of a part of TS on a specific task or in a particular unit
- Characteristics:
  - Sometimes created to assess TS implementation
  - Typically unique to a specific hospital or unit
  - Often linked to a problem the unit is trying to overcome
  - Relatively easy and quick to collect
  - Can be perceptual or observational

Unit and Task Specific Measures
- Why they’re useful:
  - See whether TS tool is actually being used
  - Forces leaders and staff to talk about underlying causes of undesired outcomes
  - See whether specific process causing problems is changing
  - Can be created by unit staff, which helps get their buy-in and interest
  - Provides evidence of progress useful for sustaining implementation efforts
  - Key element of PDSA cycles required in most TS implementations

Unit and Task Specific Measures
- Evaluating these measures
  - Are they linked to specific TS tools being introduced?
  - Is there agreement that the process or short-term outcomes matter and should change when TS is used?
  - Can the data be collected and shared quickly and efficiently (or existing data be used)?
  - Will staff and leadership be excited when the measure improves?
  - Is there a good match between the scope of the TS implementation and the universe of activities being measured?

SOPS, CAHPS & Outcome Measures
- AHRQ Surveys on Patient Safety Culture
  - www.ahrq.gov/qual/patientsafetyculture
- Consumer Assessment of Healthcare Providers and Systems (CAHPS)
  - www.cahps.ahrq.gov
- Outcome measures

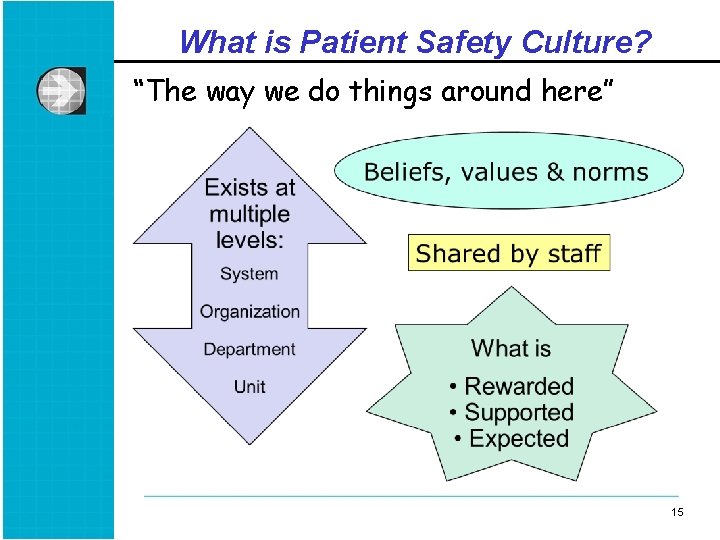

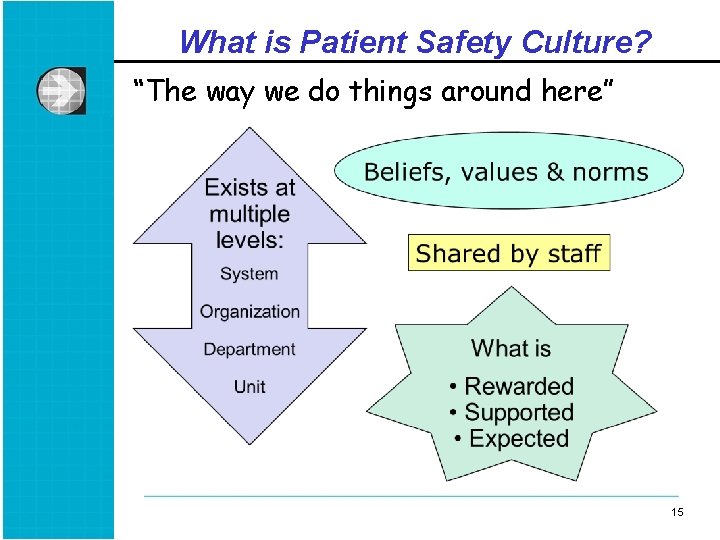

What is Patient Safety Culture?

“The way we do things around here”

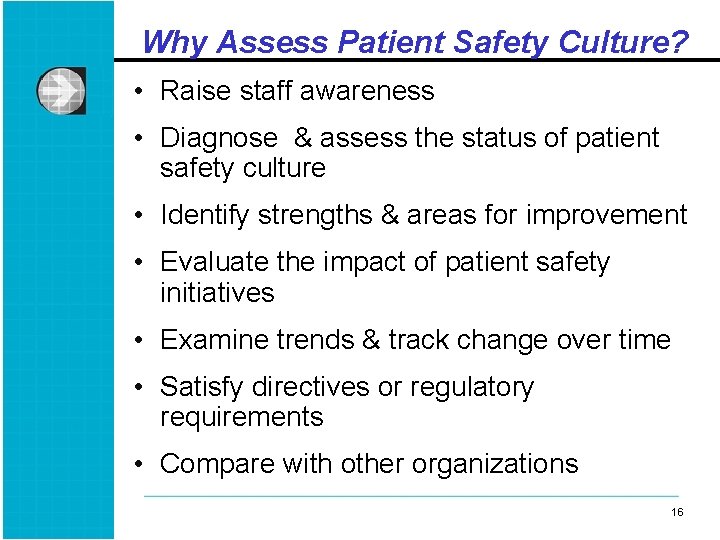

Why Assess Patient Safety Culture?
- Raise staff awareness
- Diagnose & assess the status of patient safety culture
- Identify strengths & areas for improvement
- Evaluate the impact of patient safety initiatives
- Examine trends & track change over time
- Satisfy directives or regulatory requirements
- Compare with other organizations

AHRQ SOPS Surveys
- Assess provider & staff opinions about patient safety culture in
  - Hospitals (2004)
  - Nursing homes (2008)
  - Medical offices (2009)
  - Retail pharmacies (Expected Summer 2012)

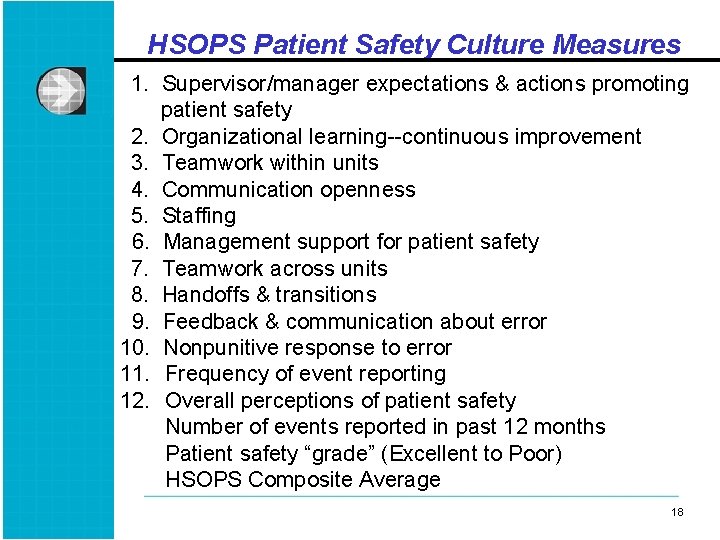

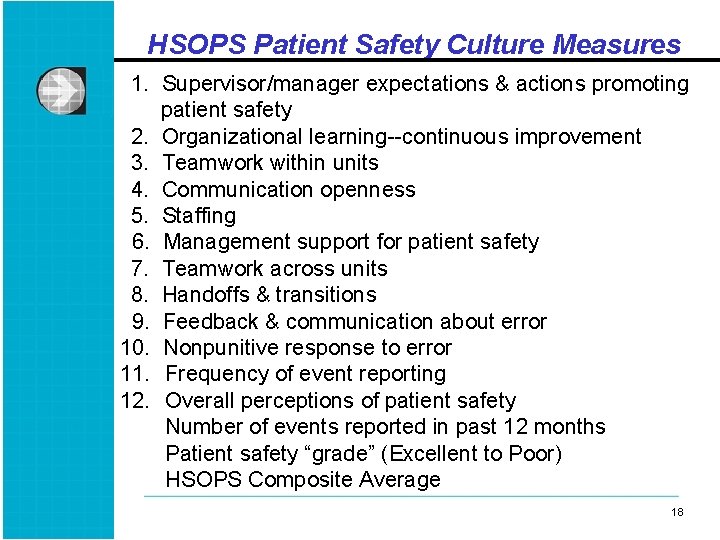

HSOPS Patient Safety Culture Measures
1. Supervisor/manager expectations & actions promoting patient safety
2. Organizational learning: continuous improvement
3. Teamwork within units
4. Communication openness
5. Staffing
6. Management support for patient safety
7. Teamwork across units
8. Handoffs & transitions
9. Feedback & communication about error
10. Nonpunitive response to error
11. Frequency of event reporting
12. Overall perceptions of patient safety

Additional measures:
- Number of events reported in past 12 months
- Patient safety “grade” (Excellent to Poor)
- HSOPS Composite Average

What is Patient Experience?

“Quality from the patient’s perspective”
- Aspects of care for which patients are the best or only source of information
  - Communication with providers
  - Access to care

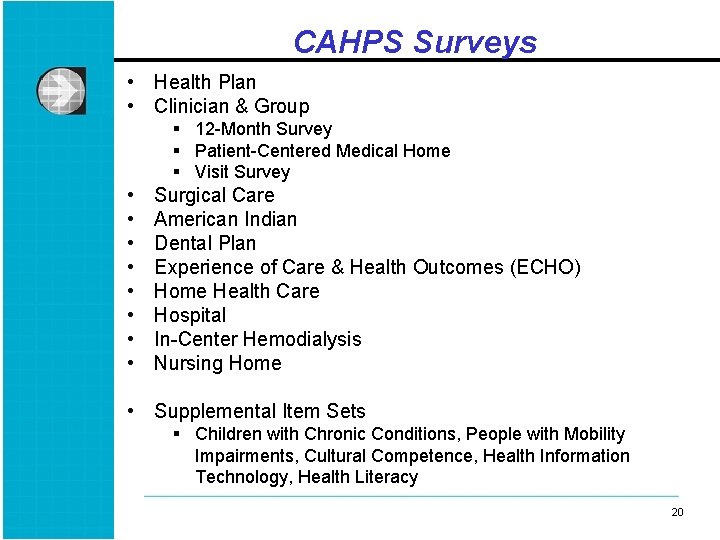

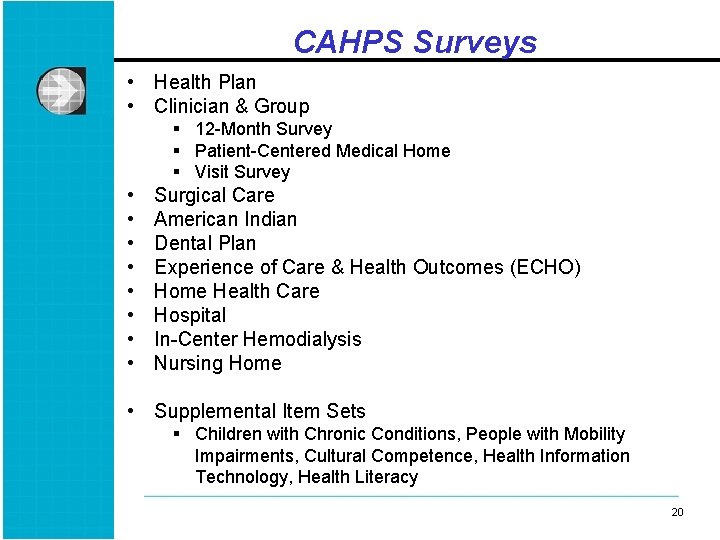

CAHPS Surveys
- Health Plan
- Clinician & Group
  - 12-Month Survey
  - Patient-Centered Medical Home
  - Visit Survey
- Surgical Care
- American Indian
- Dental Plan
- Experience of Care & Health Outcomes (ECHO)
- Home Health Care
- Hospital
- In-Center Hemodialysis
- Nursing Home
- Supplemental Item Sets
  - Children with Chronic Conditions, People with Mobility Impairments, Cultural Competence, Health Information Technology, Health Literacy

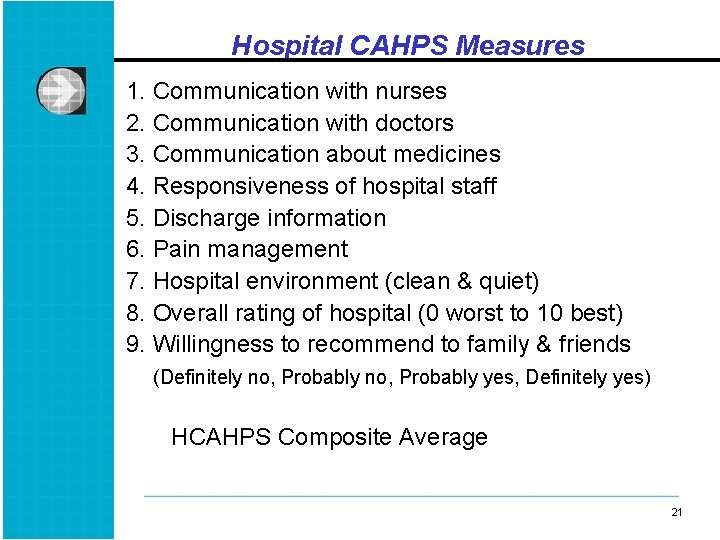

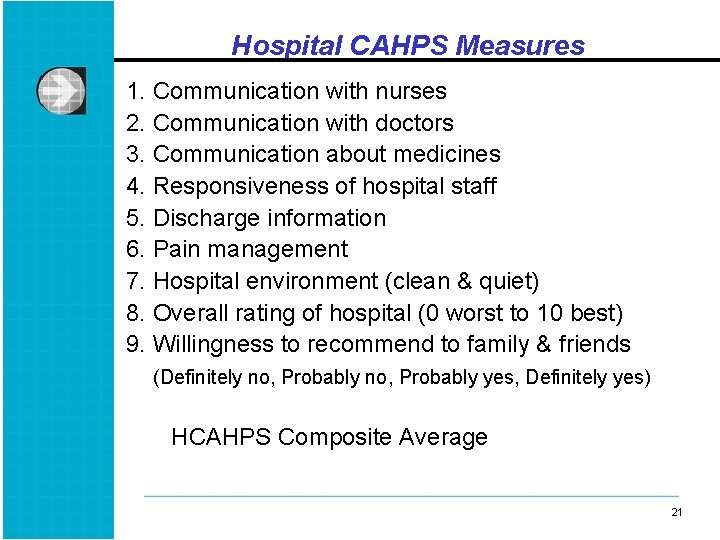

Hospital CAHPS Measures
1. Communication with nurses
2. Communication with doctors
3. Communication about medicines
4. Responsiveness of hospital staff
5. Discharge information
6. Pain management
7. Hospital environment (clean & quiet)
8. Overall rating of hospital (0 worst to 10 best)
9. Willingness to recommend to family & friends (Definitely no, Probably yes, Definitely yes)

Additional measure:
- HCAHPS Composite Average

Data Collection and Analysis Challenges & Recommended Strategies

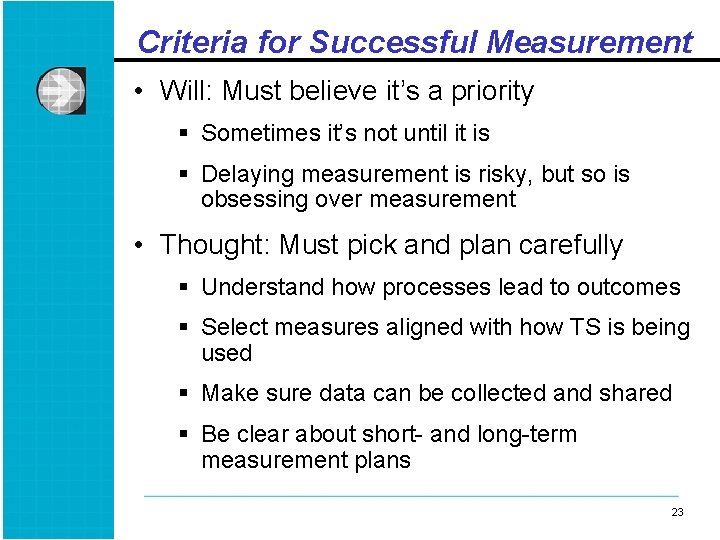

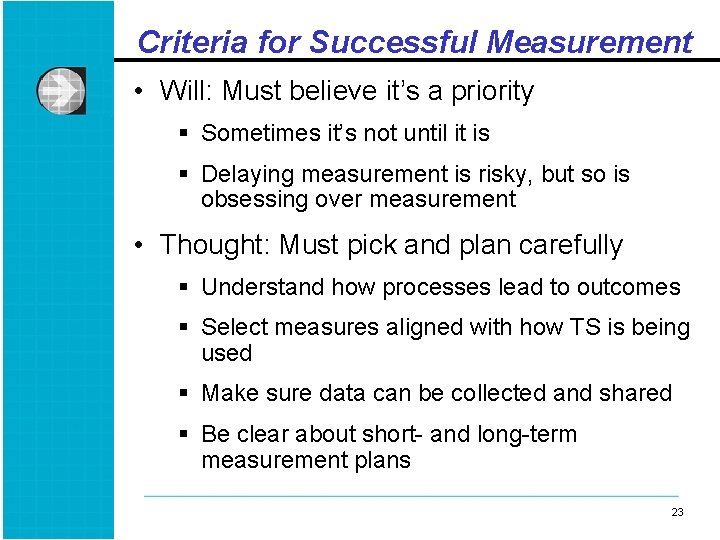

Criteria for Successful Measurement
- Will: Must believe it’s a priority
  - Sometimes it’s not until it is
  - Delaying measurement is risky, but so is obsessing over measurement
- Thought: Must pick and plan carefully
  - Understand how processes lead to outcomes
  - Select measures aligned with how TS is being used
  - Make sure data can be collected and shared
  - Be clear about short- and long-term measurement plans

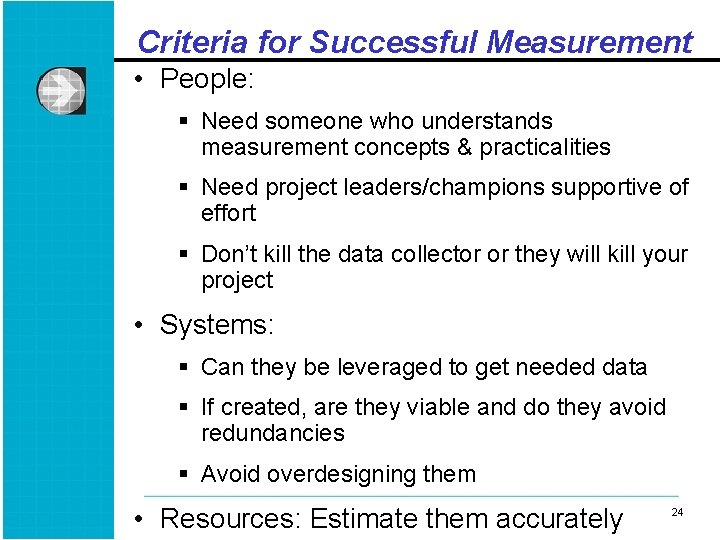

Criteria for Successful Measurement
- People:
  - Need someone who understands measurement concepts & practicalities
  - Need project leaders/champions supportive of the effort
  - Don’t kill the data collector or they will kill your project
- Systems:
  - Can they be leveraged to get needed data?
  - If created, are they viable and do they avoid redundancies?
  - Avoid overdesigning them
- Resources: Estimate them accurately

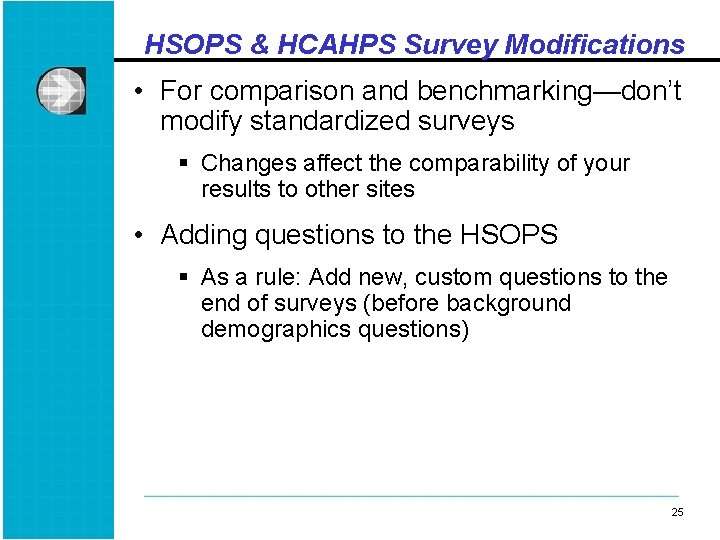

HSOPS & HCAHPS Survey Modifications
- For comparison and benchmarking, don’t modify standardized surveys
  - Changes affect the comparability of your results to other sites
- Adding questions to the HSOPS
  - As a rule: Add new, custom questions to the end of surveys (before background demographics questions)

HSOPS Survey Buy-in
- Obtain senior leadership buy-in from the start
- Engaging physicians in the survey is important but challenging
  - Identify which physicians to include and which “units” they should respond about

Deciding Whom to Survey
- Who should be included?
  - The goal is representativeness
- HSOPS
  - Many hospitals administer the survey in-house without a vendor
  - All staff, or a sample of all staff from all work areas/units (90% of hospitals)
- HCAHPS
  - Certified vendors administer the patient survey using strict guidelines
    - http://www.hcahpsonline.org/qaguidelines.aspx

Maximizing HSOPS Response
- Modes (paper gets the highest response):
  - Web (66% of hospitals); 51% response rate
  - Paper (21%); 61% response rate
  - Web & Paper mixed (13%); 49% response rate
- Advance publicity & communication is critical
- Visible leadership support needed through newsletters, emails from the CEO/President, and Department Managers

Maximizing HSOPS Response
- For web surveys, staff need access to computers with intranet/internet
- Monitor response statistics & report department/unit response rates
- Multiple contacts and reminders in all modes
- Consider incentives
  - Raffles for gift cards, printable cafeteria meal ticket upon web survey completion, ice cream socials or pizza parties for units with 75% response

Frequency of HSOPS Administration
- Depends on purposes of the data
  - To assess impact of an intervention?
  - To get regular diagnosis/assessment of patient safety culture?
- On average, hospitals administer the HSOPS every 20 months
- Culture changes slowly

Expected Levels of Change
- 650 trending hospitals in AHRQ comparative database
  - Average increase of 1 percentage point (range of 0 to 2) on the composites from previous to most recent administration
  - Lots of variability within and across hospitals
- A 5 percentage-point difference is not easy to achieve
  - But it is meaningful and oftentimes statistically significant at the hospital level
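
One way to check whether a 5 percentage-point change is statistically significant for a given hospital is a two-proportion z-test. The sketch below is only an illustration under stated assumptions, not the method behind the AHRQ comparative database: the function name and respondent counts are hypothetical, and it treats percent positive as a simple proportion of independent respondents.

```python
import math


def two_proportion_z(pos1, n1, pos2, n2):
    """Two-sided z-test for a change in percent positive.

    pos1/n1: positive responses and respondents at the previous administration.
    pos2/n2: the same counts at the most recent administration.
    Returns (z, p_value). Assumes independent respondents (a simplification).
    """
    p1 = pos1 / n1
    p2 = pos2 / n2
    pooled = (pos1 + pos2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 60% -> 65% positive with 1,000 respondents each time.
z_large, p_large = two_proportion_z(600, 1000, 650, 1000)
# The same 5-point change with only 100 respondents each time.
z_small, p_small = two_proportion_z(60, 100, 65, 100)
print(f"n=1000: z={z_large:.2f}, p={p_large:.3f}")
print(f"n=100:  z={z_small:.2f}, p={p_small:.3f}")
```

With these made-up counts the same 5-point change clears the conventional 0.05 threshold for the larger respondent pool but not for the smaller one, which is one reason unit-level changes are harder to confirm than hospital-level ones.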

Data Analysis
- Goals are to identify strengths and areas for improvement
- HCAHPS (% responding “Always” or giving highest rating; “top box” scores)
- HSOPS (% positive response: Strongly agree/Agree on positively worded items)
- Percentages are easier to understand; better than averages (e.g., 4.3 out of 5)
  - When conducting linkage analyses, can use either % scores or mean scores
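
The percent-positive and mean scores mentioned above can be sketched in a few lines. This is a minimal illustration, assuming a 5-point agreement scale coded 1 (Strongly disagree) to 5 (Strongly agree) with missing answers stored as `None`; the function names and the sample responses are hypothetical.

```python
def percent_positive(responses, positive=(4, 5)):
    """Percent of answered responses that fall in the `positive` set.

    For a positively worded item on a 1-5 agreement scale, Agree (4) and
    Strongly agree (5) count as positive. Missing answers (None) are
    excluded from the denominator. Returns None if nobody answered.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        return None
    hits = sum(1 for r in answered if r in positive)
    return 100.0 * hits / len(answered)


def mean_score(responses):
    """Average of the answered responses, or None if nobody answered."""
    answered = [r for r in responses if r is not None]
    if not answered:
        return None
    return sum(answered) / len(answered)


# Hypothetical responses to one positively worded item.
item = [5, 4, 4, 3, 2, None, 5, 1]
print(f"percent positive: {percent_positive(item):.1f}%")
print(f"mean score: {mean_score(item):.2f} out of 5")
```

The `positive` parameter is left open so the same function could score an HCAHPS-style "top box" item, for example by passing only the highest response code.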

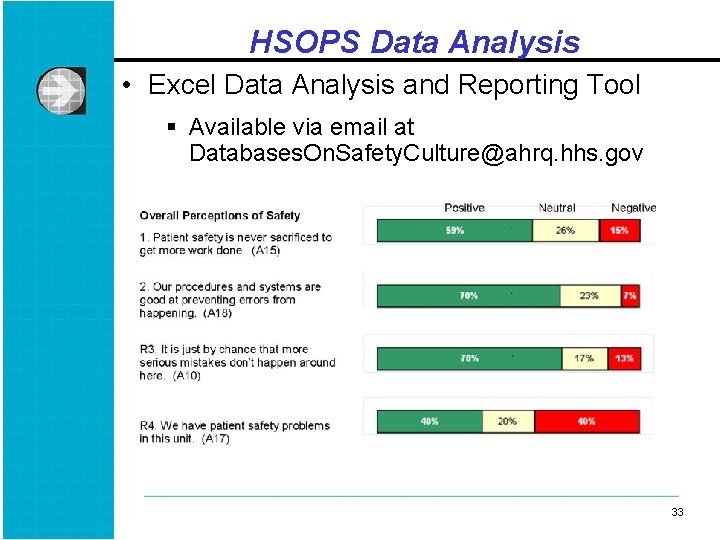

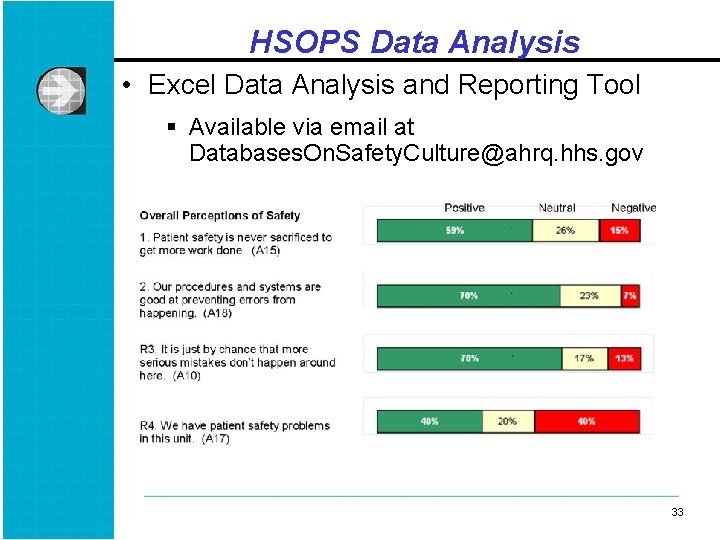

HSOPS Data Analysis
- Excel Data Analysis and Reporting Tool
  - Available via email at DatabasesOnSafetyCulture@ahrq.hhs.gov

Drilling Down Into the HSOPS Data
- By staff position
- By work area/unit
  - Unit-level results make the data more relevant for staff
  - Action planning and improvement initiatives are often done at unit level
- Rule of 5
  - Do not report responses for groups with fewer than 5 respondents
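
The Rule of 5 can be applied mechanically when producing unit-level results. The sketch below is a minimal illustration: the record layout (unit name plus a positive/not-positive flag), the function name, and the sample units are all hypothetical.

```python
from collections import defaultdict

MIN_RESPONDENTS = 5  # Rule of 5: suppress groups with fewer than 5 respondents


def unit_percent_positive(records, min_n=MIN_RESPONDENTS):
    """Percent positive per unit, suppressed (None) for small groups.

    records: iterable of (unit, is_positive) pairs, one per respondent.
    """
    counts = defaultdict(lambda: [0, 0])  # unit -> [respondents, positives]
    for unit, is_positive in records:
        counts[unit][0] += 1
        counts[unit][1] += int(is_positive)

    report = {}
    for unit, (n, positives) in counts.items():
        # Report nothing for groups below the minimum size.
        report[unit] = None if n < min_n else round(100.0 * positives / n, 1)
    return report


# Hypothetical respondents: six in the ICU, three in Pharmacy.
records = (
    [("ICU", True)] * 4 + [("ICU", False)] * 2
    + [("Pharmacy", True)] * 2 + [("Pharmacy", False)]
)
print(unit_percent_positive(records))
```

Small units that are suppressed here would still count toward hospital-wide totals; only their separate breakdown is withheld.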

Using the Data to Improve Patient Safety and Quality

Overview
- Data doesn’t change anything, but using data can
- Keys to using it well:
  - Making connections between data sources that matter
  - Linking measures you use to outcomes everyone understands and cares about
  - Embedding data into initial project planning and midcourse project adjustments
  - Communicating it with people that matter

Linking HSOPS & HCAHPS
- The IOM has emphasized the importance of both establishing a culture of safety & delivering patient-centered care
- Thousands of hospitals administer these surveys, so it is important to examine the relationship between these measures
- To examine relationships at the hospital level, you need data from a sufficient number of hospitals

Challenges to Linking Measures
- Different departments manage data collection
  - Staff and patient safety culture surveys vs. patient experience surveys vs. infection rate data vs. other measures
- In-house time, resources, capability
- Not enough data in a single hospital to achieve enough power to detect results

HSOPS-HCAHPS Analysis
- Data from 73 hospitals
- HSOPS data from 2005 to 2007
- HCAHPS data from 2005 to 2006

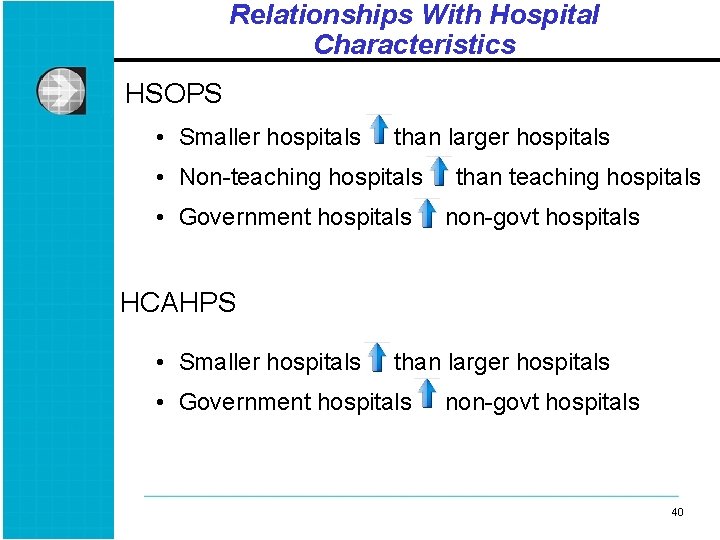

Relationships With Hospital Characteristics

HSOPS
- Smaller hospitals than larger hospitals
- Non-teaching hospitals than teaching hospitals
- Government hospitals than non-govt hospitals

HCAHPS
- Smaller hospitals than larger hospitals
- Government hospitals than non-govt hospitals

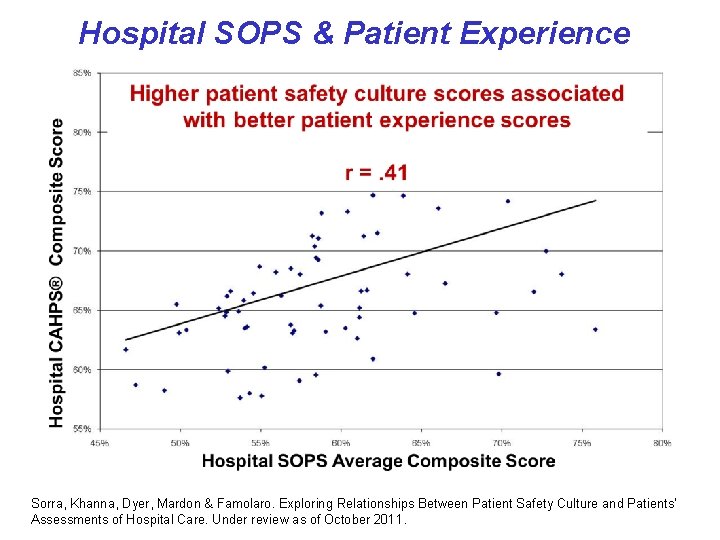

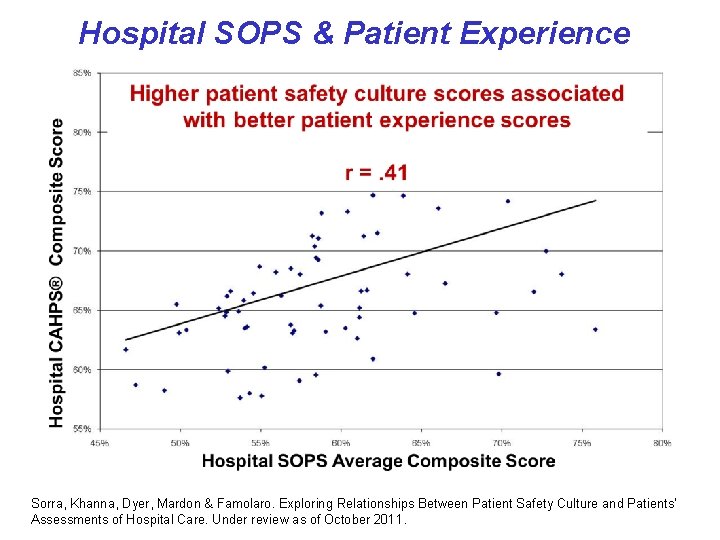

HSOPS-HCAHPS Results
- Hospitals with better patient safety cultures had patients who rated the hospital higher on quality of care
  - Correlation: r = .41
  - Regression controlling for hospital characteristics: β = .33
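
A hospital-level correlation like the one reported above pairs each hospital's HSOPS composite average with its HCAHPS composite average. The sketch below computes a plain Pearson r under stated assumptions: the function name and the five hospitals' scores are invented for illustration and are not the study data, and no control for hospital characteristics is attempted.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a list has no variation")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical hospital-level scores, one entry per hospital.
hsops_avg = [58.0, 62.5, 65.0, 70.0, 73.5]   # HSOPS composite average (% positive)
hcahps_avg = [64.0, 69.0, 66.0, 72.0, 75.0]  # HCAHPS composite average (% top box)
print(f"r = {pearson_r(hsops_avg, hcahps_avg):.2f}")
```

Because each hospital contributes a single pair of scores, the number of hospitals, not the number of survey respondents, determines how much power the analysis has.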

Hospital SOPS & Patient Experience

Sorra, Khanna, Dyer, Mardon & Famolaro. Exploring Relationships Between Patient Safety Culture and Patients’ Assessments of Hospital Care. Under review as of October 2011.

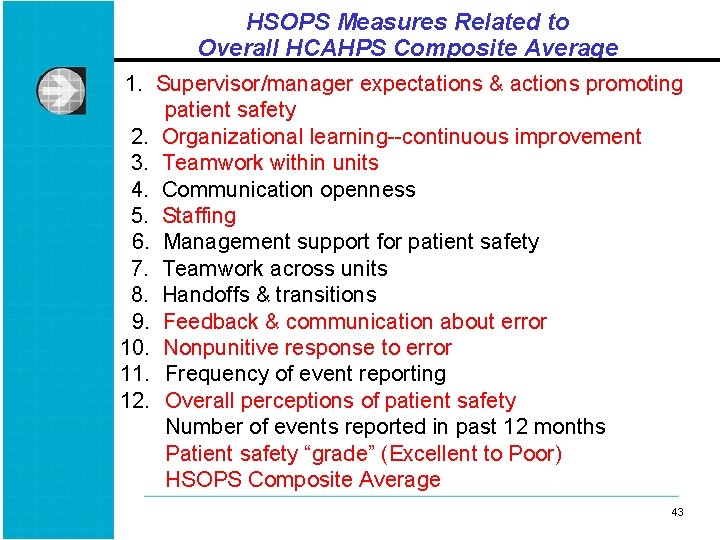

HSOPS Measures Related to Overall HCAHPS Composite Average
1. Supervisor/manager expectations & actions promoting patient safety
2. Organizational learning: continuous improvement
3. Teamwork within units
4. Communication openness
5. Staffing
6. Management support for patient safety
7. Teamwork across units
8. Handoffs & transitions
9. Feedback & communication about error
10. Nonpunitive response to error
11. Frequency of event reporting
12. Overall perceptions of patient safety

Additional measures:
- Number of events reported in past 12 months
- Patient safety “grade” (Excellent to Poor)
- HSOPS Composite Average

HCAHPS Measures Related to Overall HSOPS Composite Average
1. Communication with nurses
2. Communication with doctors
3. Communication about medicines
4. Responsiveness of hospital staff
5. Discharge information
6. Pain management
7. Hospital environment (clean & quiet)
8. Overall rating of hospital (0 worst to 10 best)
9. Willingness to recommend to family & friends (Definitely no, Probably yes, Definitely yes)

Additional measure:
- HCAHPS Composite Average

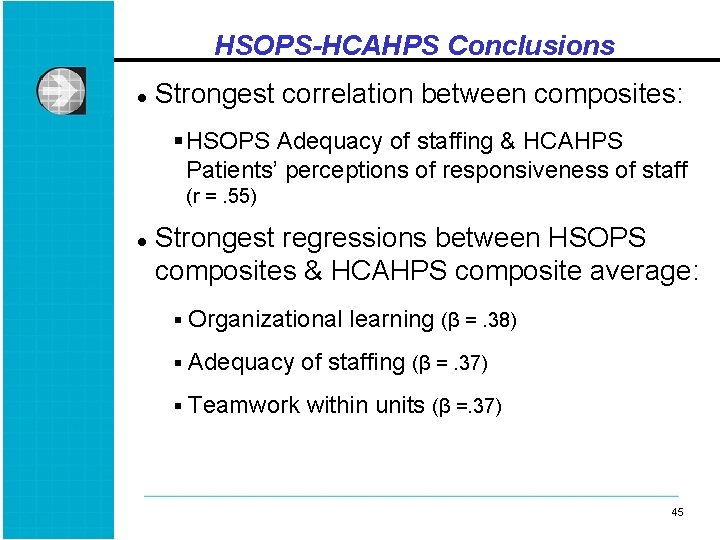

HSOPS-HCAHPS Conclusions
- Strongest correlation between composites:
  - HSOPS Adequacy of staffing & HCAHPS Patients’ perceptions of responsiveness of staff (r = .55)
- Strongest regressions between HSOPS composites & HCAHPS composite average:
  - Organizational learning (β = .38)
  - Adequacy of staffing (β = .37)
  - Teamwork within units (β = .37)

HSOPS & AHRQ Patient Safety Indicators (PSIs)
- Rates of adverse events per 1,000 patients
- HSOPS and PSI data from 179 hospitals
- 8 indicators and an overall PSI composite
  - Iatrogenic pneumothorax (PSI 6)
  - Selected infections due to medical care (PSI 7)
  - Accidental puncture and laceration (PSI 15)
  - Postoperative:
    - Hemorrhage or hematoma (PSI 9)
    - Physiologic & metabolic derangements (PSI 10)
    - Respiratory failure (PSI 11)
    - Sepsis (PSI 13)
    - Wound dehiscence in abdominopelvic surgical patients (PSI 14)

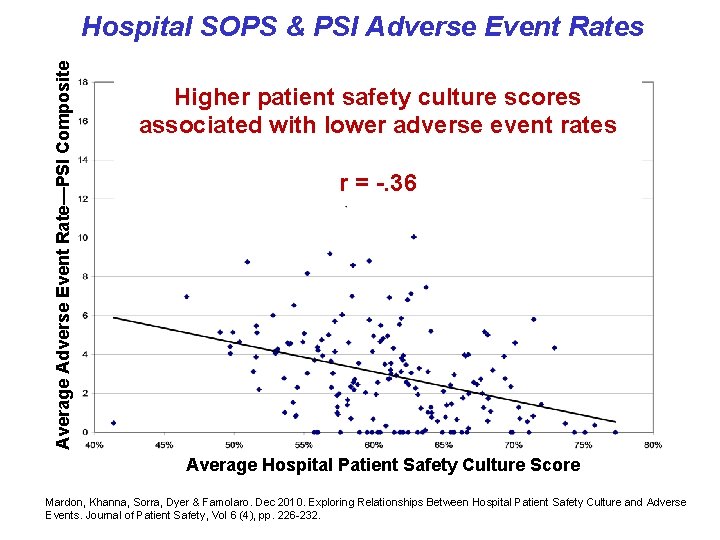

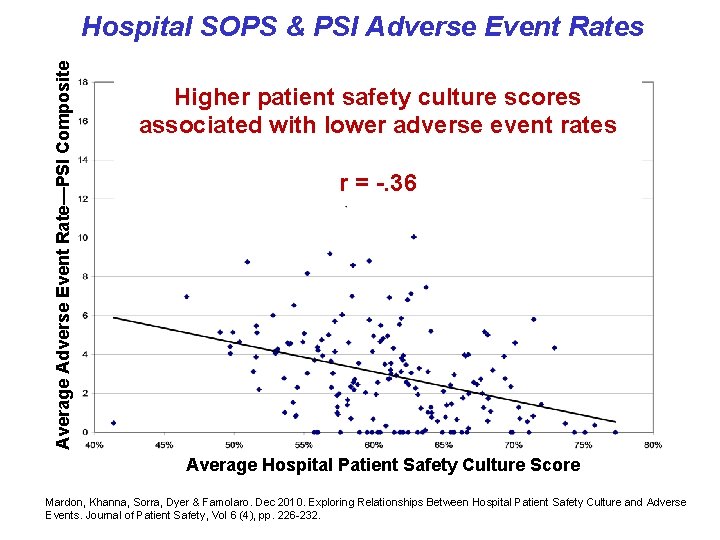

Hospital SOPS & PSI Adverse Event Rates
- Higher patient safety culture scores associated with lower adverse event rates (r = -.36)

[Scatter plot axes: Average Hospital Patient Safety Culture Score; Average Adverse Event Rate (PSI Composite)]

Mardon, Khanna, Sorra, Dyer & Famolaro. Dec 2010. Exploring Relationships Between Hospital Patient Safety Culture and Adverse Events. Journal of Patient Safety, Vol 6 (4), pp. 226-232.

Further Linkage Analysis
- Obtain larger numbers of sites
- Examine data collection periods that help determine the causal relationships between these measures
- Examine relationships
  - At the unit level
  - In other settings
    - Medical Office SOPS & Clinician-Group CAHPS
    - Medical Office SOPS & Outpatient quality and safety
  - Between other measures
    - HSOPS and employee engagement surveys

HCAHPS and Outcomes
- Higher hospital-level patient satisfaction (overall & for discharge planning) was related to
  - Lower 30-day readmission rates for AMI, heart failure and pneumonia
    - Boulding, Glickman, Manary et al. 2011. The Amer J of Managed Care; 17(1): 41-48.

HCAHPS and Outcomes
- Higher hospital-level patient satisfaction (overall rating & willingness to recommend) was related to:
  - HQA process measures
  - AHRQ PSIs for medical and surgical complication rates
    - Isaac, Zaslavsky, Cleary, & Landon, 2010. Health Services Research; 45(4): 1024-1040.

HCAHPS and TeamSTEPPS
- Why it’s a useful measure to consider
  - Affects hospital’s bottom line, so people care
  - Reinforces value of including patients and families on teams
  - Evidence that better teamwork can improve patient satisfaction
    - Auerbach et al. Effects of a multicentre teamwork and communication programme on patient outcomes: results from the Triad for Optimal Patient Safety (TOPS) project. BMJ Qual Saf. 2012 Feb; 21(2): 118-26.
    - Armour et al. Team training can improve operating room performance. Surgery. 2011 Oct; 150(4): 771-8.
  - Available data with some ability to link to specific parts of hospital

HCAHPS and TeamSTEPPS
- Things we’ve noticed working with HCAHPS
  - Communication dimensions almost perfectly predict willingness to recommend and overall satisfaction
  - Focus on satisfaction, neglect of dissatisfaction (which better predicts other outcomes of interest)
  - Links between specific dimensions and outcomes TS projects are targeting (e.g., readmissions/staff gave information about recovery; ADEs/staff explained meds well)
  - Lots of room for improvement
  - Between-hospital variation tricky to interpret

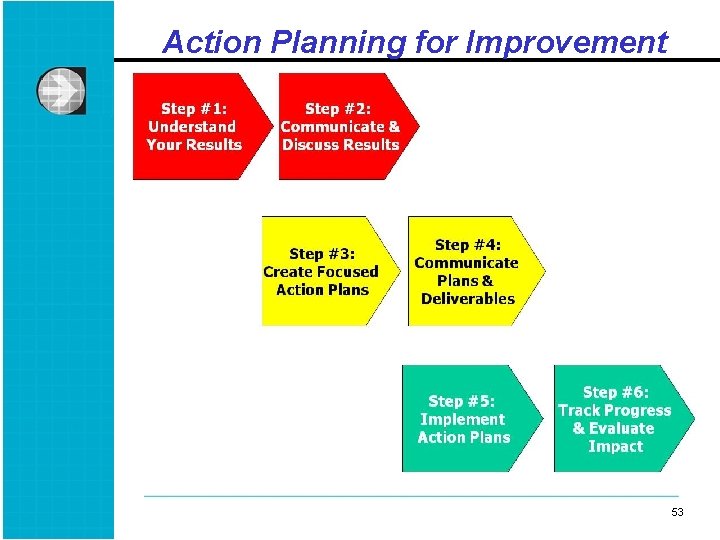

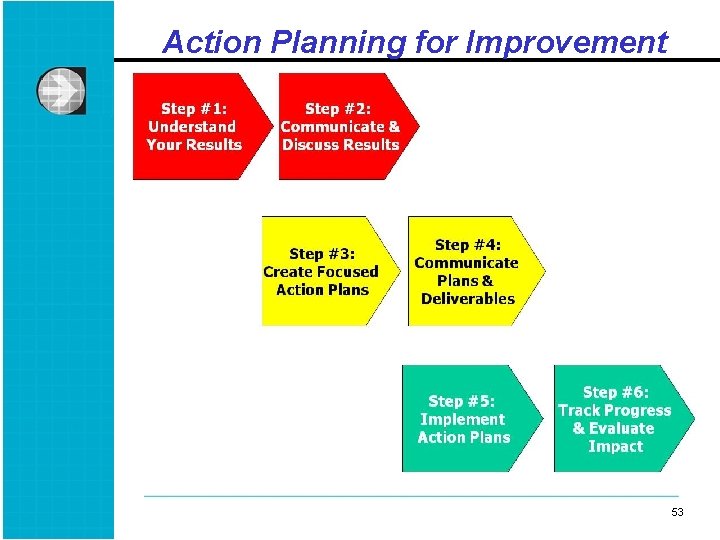

Action Planning for Improvement

Step 1: Understand Your Results
- Identify strengths & areas for improvement
  - Select 2-3 areas for improvement to avoid focusing on too many issues at once
- Discuss survey results to arrive at deeper understanding of underlying issues
  - Consider conducting focus groups

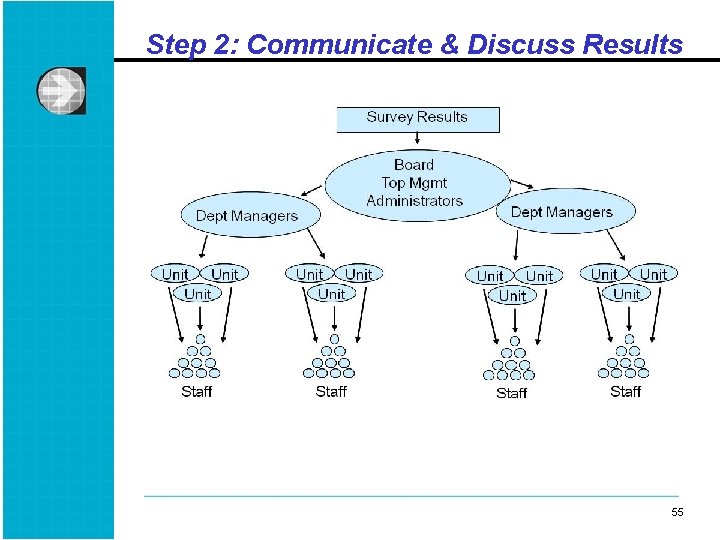

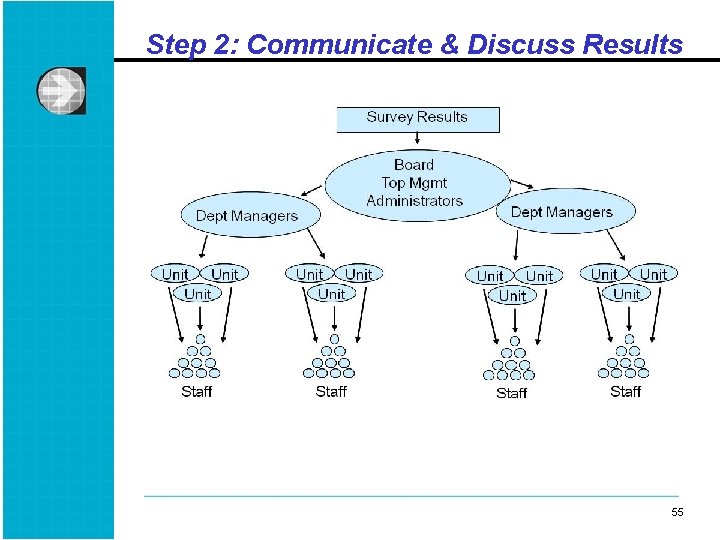

Step 2: Communicate & Discuss Results

Step 3: Implement Action Plans
- In 2010, top 5 actions taken by HSOPS hospitals
  - Improved fall prevention program (56%)
  - Conducted root cause analysis
  - Implemented SBAR (Situation-Background-Assessment-Recommendation)
  - Improved compliance with Joint Commission National Patient Safety Goals
  - Held education/patient safety fair for staff
- Implemented TeamSTEPPS (18%)

Questions & Group Discussion

Acrostic poem about teamwork

Acrostic poem about teamwork Bedside shift report and patient satisfaction

Bedside shift report and patient satisfaction Patient satisfaction 2017

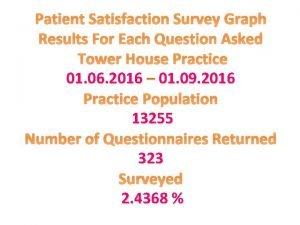

Patient satisfaction 2017 Patient satisfaction graph

Patient satisfaction graph Patient 2 patient

Patient 2 patient A nurse floats to a busy surgical unit

A nurse floats to a busy surgical unit Patient environment and safety

Patient environment and safety Patient safety and quality care movement

Patient safety and quality care movement Time space compression ap human geography

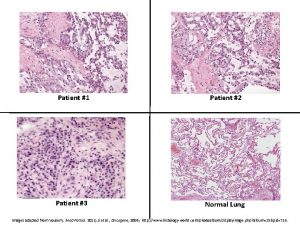

Time space compression ap human geography Continuous culture and batch culture

Continuous culture and batch culture Difference between american and indian culture

Difference between american and indian culture Uses of selenite f broth

Uses of selenite f broth Folk culture and popular culture venn diagram

Folk culture and popular culture venn diagram Chapter 4 folk and popular culture

Chapter 4 folk and popular culture Anaerobic media

Anaerobic media Folk culture and popular culture venn diagram

Folk culture and popular culture venn diagram Stroke culture method

Stroke culture method Describe lawn culture and surface plating

Describe lawn culture and surface plating Surface culture deep culture and esol

Surface culture deep culture and esol Scottish patient safety programme

Scottish patient safety programme Solutions for patient safety

Solutions for patient safety Malaysian patients safety goals

Malaysian patients safety goals National patient safety goals 2012

National patient safety goals 2012 Patient safety incident report form

Patient safety incident report form To err is human to cover up is unforgivable

To err is human to cover up is unforgivable National patient safety goal 6

National patient safety goal 6 Sentinel event

Sentinel event Nj patient safety act

Nj patient safety act Patient safety assistant

Patient safety assistant Dod patient safety program

Dod patient safety program National patient safety goal 6

National patient safety goal 6 Sue sheridan patient safety

Sue sheridan patient safety Patient safety goals - awareness course

Patient safety goals - awareness course National patient safety goals 2017

National patient safety goals 2017 Patient safety solutions

Patient safety solutions Ihi care bundles

Ihi care bundles Safety incident management system

Safety incident management system 2013 hospital national patient safety goals

2013 hospital national patient safety goals Patient safety goals

Patient safety goals National patient safety framework

National patient safety framework National safety goal 6

National safety goal 6 Scottish patient safety programme

Scottish patient safety programme Canadian patient safety officer course

Canadian patient safety officer course Patient safety evaluation system

Patient safety evaluation system Ahrq patient safety survey

Ahrq patient safety survey Promoting a positive health and safety culture

Promoting a positive health and safety culture Building customer satisfaction value and retention

Building customer satisfaction value and retention Individual culture traits combine to form culture patterns.

Individual culture traits combine to form culture patterns. Batch culture vs continuous culture

Batch culture vs continuous culture Individualistic culture definition

Individualistic culture definition Subculture group

Subculture group Inert organizational culture

Inert organizational culture Quality culture vs traditional culture

Quality culture vs traditional culture Total safety culture

Total safety culture Safety culture in aviation industry

Safety culture in aviation industry Dow safety training

Dow safety training Hudson ladder of safety culture

Hudson ladder of safety culture Nuclear safety culture

Nuclear safety culture