Status of CVMFS for ALICE: Deployment timeline


ALICE© | Offline Week | 18-21 June 2013 | Predrag Buncic

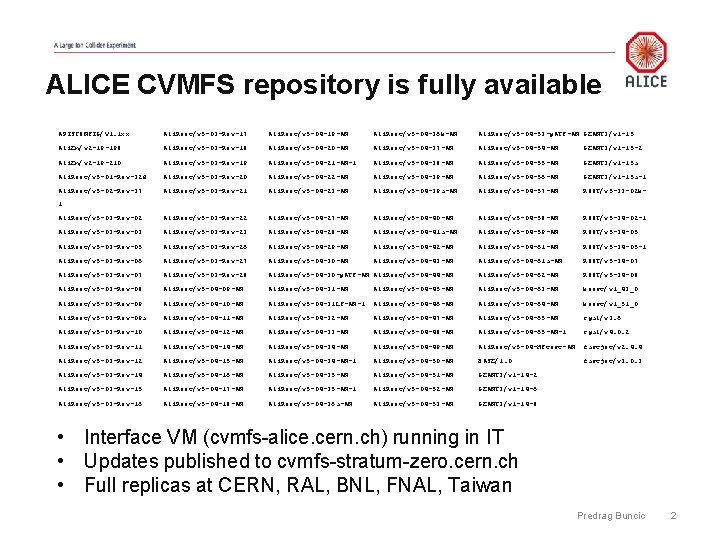

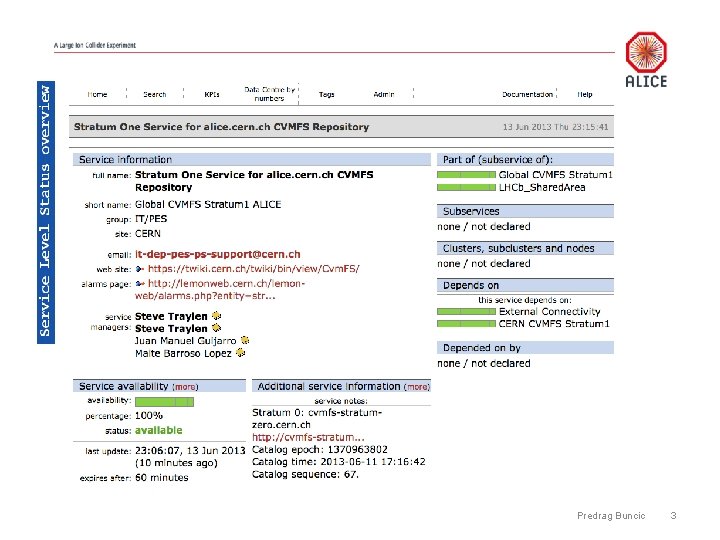

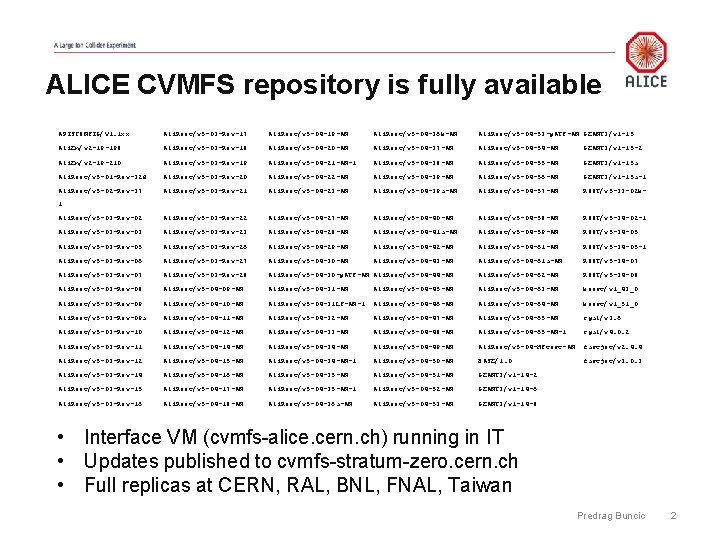

ALICE CVMFS repository is fully available:

- APISCONFIG: V1.1xx
- AliEn: v2-19-198, v2-19-210
- AliRoot (v5-01 to v5-03): v5-01-Rev-32d, v5-02-Rev-37, v5-03-Rev-02, v5-03-Rev-03, v5-03-Rev-06, v5-03-Rev-08, v5-03-Rev-09, v5-03-Rev-09a, v5-03-Rev-10, v5-03-Rev-11, v5-03-Rev-12, v5-03-Rev-14, v5-03-Rev-15, v5-03-Rev-16, v5-03-Rev-17, v5-03-Rev-18, v5-03-Rev-19, v5-03-Rev-20, v5-03-Rev-21, v5-03-Rev-22, v5-03-Rev-23, v5-03-Rev-26, v5-03-Rev-27, v5-03-Rev-28
- AliRoot (v5-04): v5-04-09-AN, v5-04-10-AN, v5-04-11-AN, v5-04-12-AN, v5-04-14-AN, v5-04-15-AN, v5-04-16-AN, v5-04-17-AN, v5-04-18-AN, v5-04-19-AN, v5-04-20-AN, v5-04-21-AN-1, v5-04-22-AN, v5-04-23-AN, v5-04-27-AN, v5-04-28-AN, v5-04-29-AN, v5-04-30-AN, v5-04-30-pATF-AN, v5-04-31-AN, v5-04-31LF-AN-1, v5-04-32-AN, v5-04-33-AN, v5-04-34-AN, v5-04-34-AN-1, v5-04-35-AN, v5-04-35-AN-1, v5-04-36a-AN, v5-04-36b-AN, v5-04-37-AN, v5-04-38-AN, v5-04-39-AN, v5-04-39a-AN, v5-04-40-AN, v5-04-41a-AN, v5-04-42-AN, v5-04-43-AN, v5-04-44-AN, v5-04-45-AN, v5-04-46-AN, v5-04-47-AN, v5-04-48-AN, v5-04-49-AN, v5-04-50-AN, v5-04-51-AN, v5-04-52-AN, v5-04-53-AN, v5-04-53-pATF-AN, v5-04-54-AN, v5-04-55-AN, v5-04-56-AN, v5-04-57-AN, v5-04-58-AN, v5-04-59-AN, v5-04-61-AN, v5-04-61a-AN, v5-04-62-AN, v5-04-63-AN, v5-04-64-AN, v5-04-65-AN, v5-04-65-AN-1, v5-04-HFtest-AN
- BASE: 1.0
- boost: v1_43_0, v1_51_0
- cgal: v3.6, v4.0.2
- fastjet: v2.4.4, v3.0.3
- GEANT3: v1-14-2, v1-14-6, v1-14-8, v1-15, v1-15-2, v1-15a, v1-15a-1
- ROOT: v5-33-02b-, v5-34-02-1, v5-34-05, v5-34-05-1, v5-34-07, v5-34-08

- Interface VM (cvmfs-alice.cern.ch) running in IT
- Updates published to cvmfs-stratum-zero.cern.ch
- Full replicas at CERN, RAL, BNL, FNAL, Taiwan
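Every entry in the repository listing is a `Package/version` directory pair, the same form that `alienv setenv` takes later in this deck (`AliRoot/v5-03-Rev-19`). A minimal Python sketch, assuming the first `/` separates package from version, that groups such names by package; `group_by_package` is a hypothetical helper, not part of AliEn or CVMFS:

```python
from collections import defaultdict


def group_by_package(entries):
    """Group 'Package/version' names into {package: sorted versions}."""
    grouped = defaultdict(list)
    for entry in entries:
        # Split on the first '/': package directory, then version directory.
        package, _, version = entry.partition("/")
        if not package or not version:
            raise ValueError(f"not a Package/version entry: {entry!r}")
        grouped[package].append(version)
    # Plain string sort; adequate for the zero-padded tags in the listing.
    return {pkg: sorted(versions) for pkg, versions in sorted(grouped.items())}


if __name__ == "__main__":
    listing = [
        "AliRoot/v5-04-10-AN",
        "GEANT3/v1-15a",
        "AliRoot/v5-03-Rev-19",
        "AliRoot/v5-04-09-AN",
    ]
    for package, versions in group_by_package(listing).items():
        print(package, ", ".join(versions))
```

Sorting is lexical, which orders v5-04-09 before v5-04-10 only because the tags are zero-padded; it is not a general version comparison.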

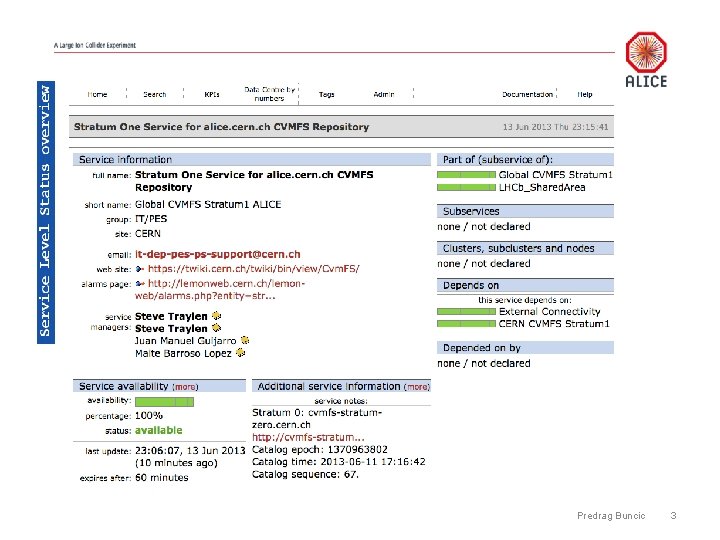


Use Cases

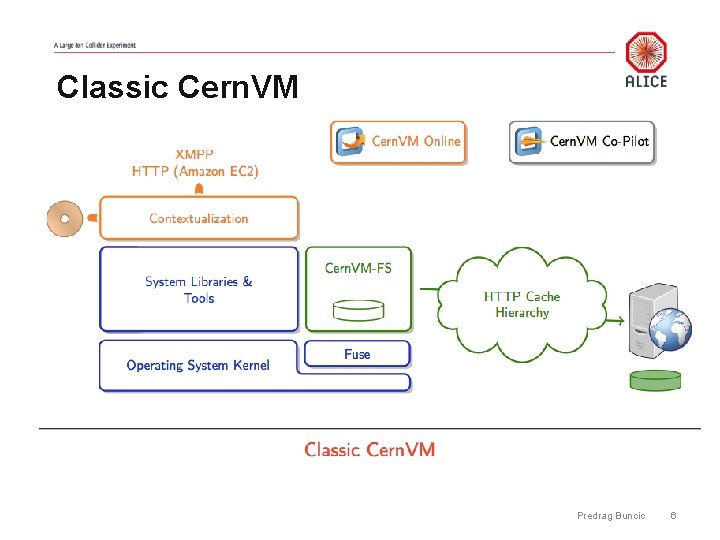

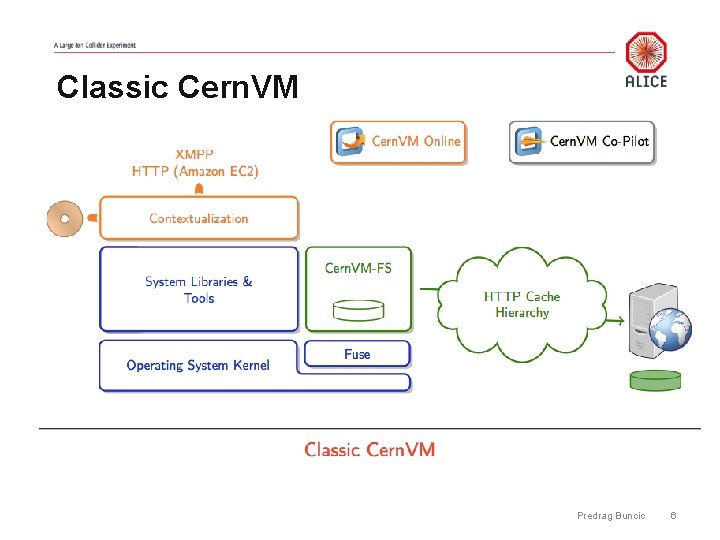

Classic CernVM

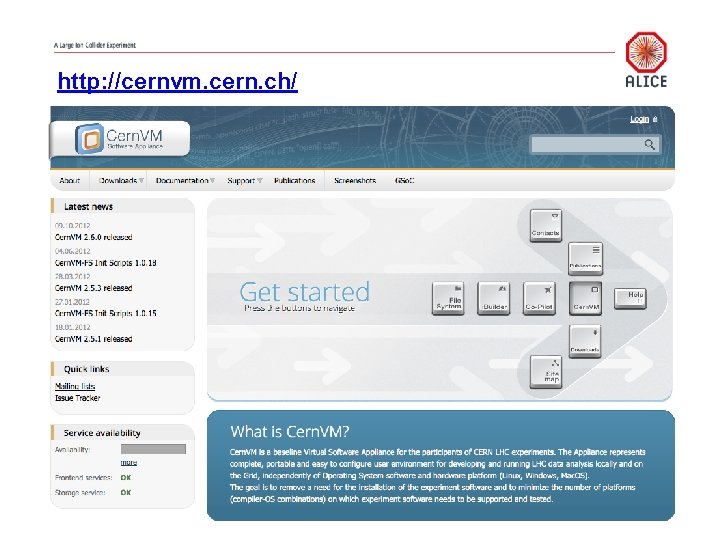

http://cernvm.cern.ch/

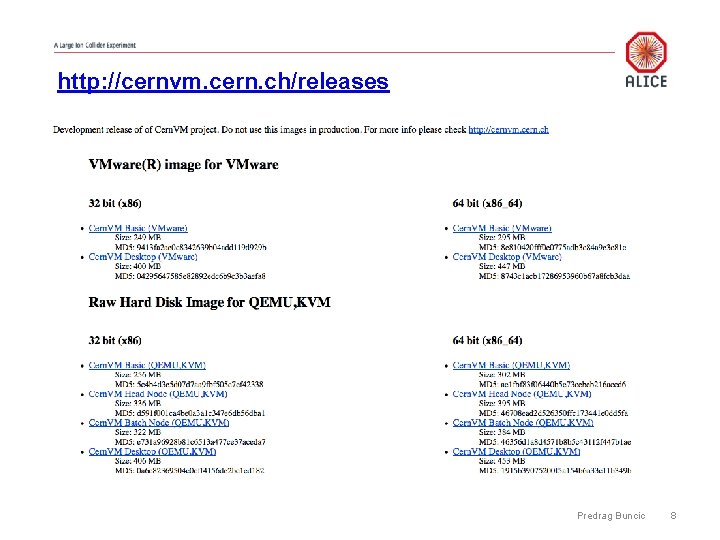

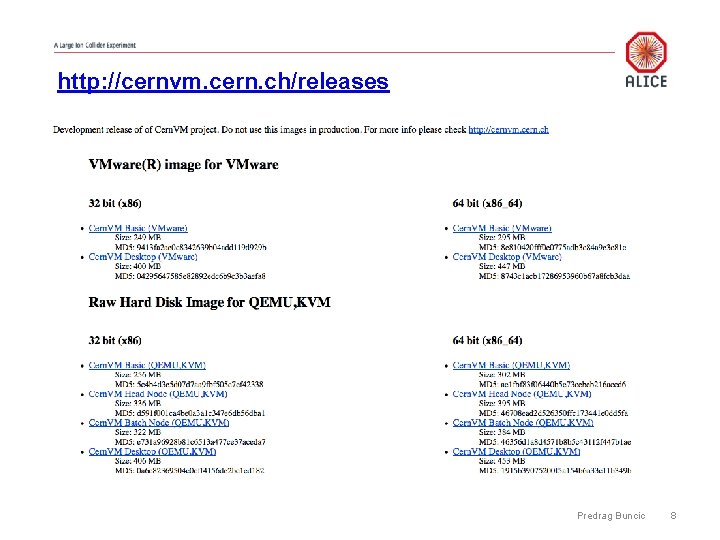

http://cernvm.cern.ch/releases

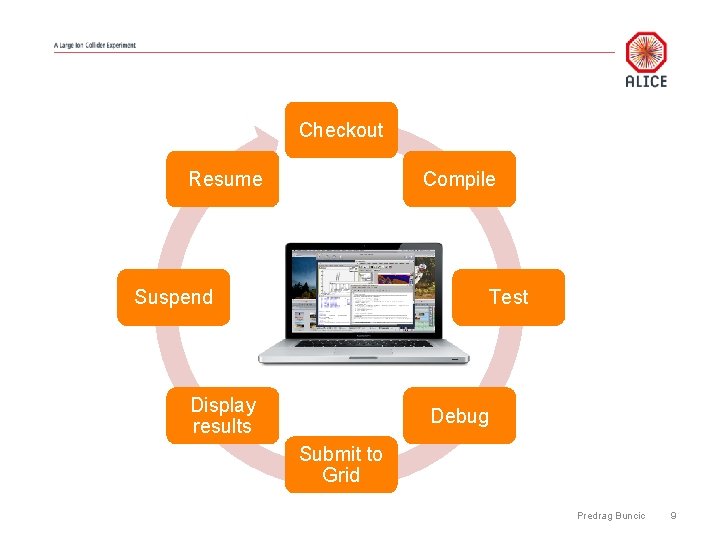

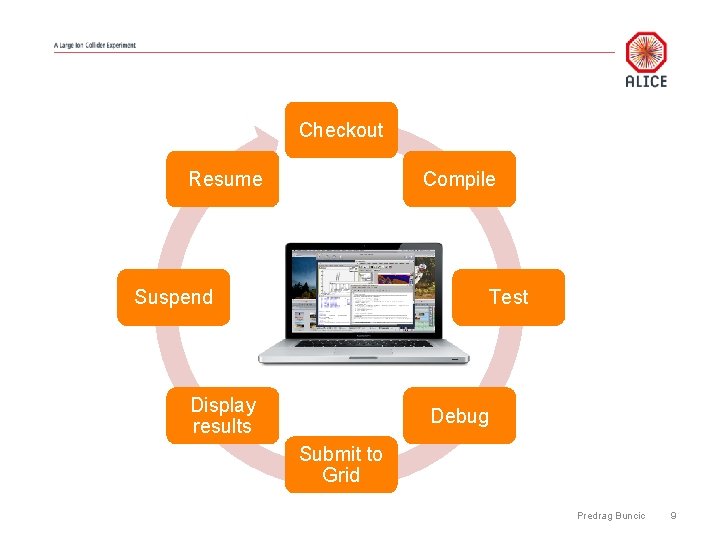

Checkout, Resume, Compile, Suspend, Test, Display results, Debug, Submit to Grid

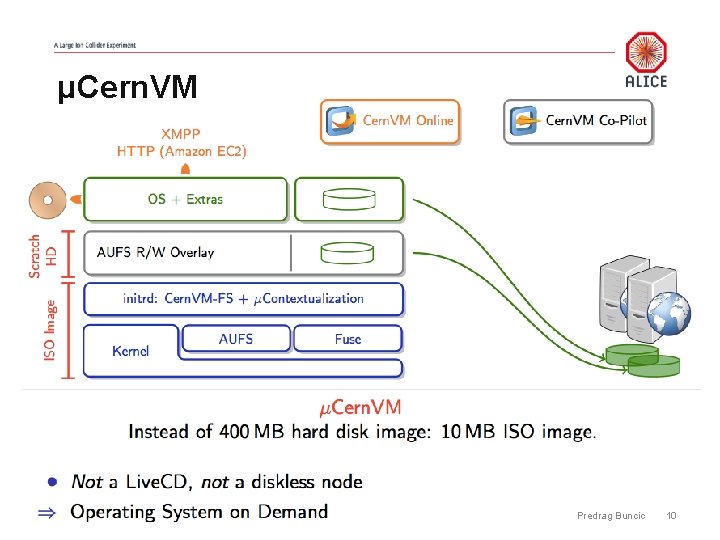

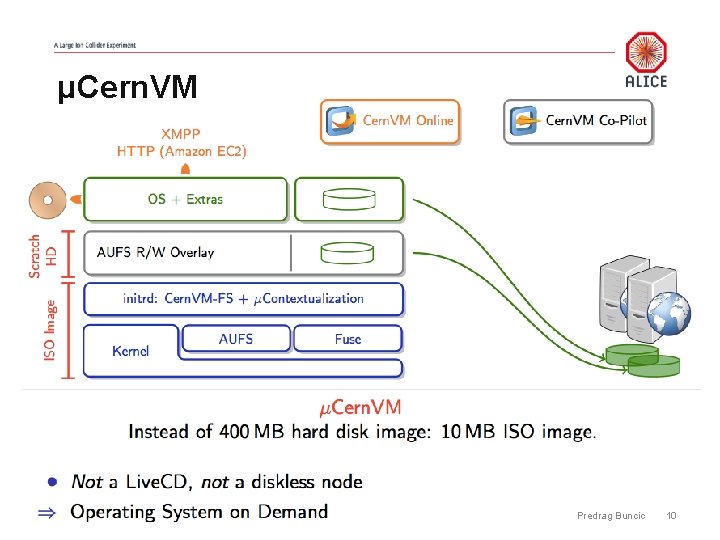

μCernVM

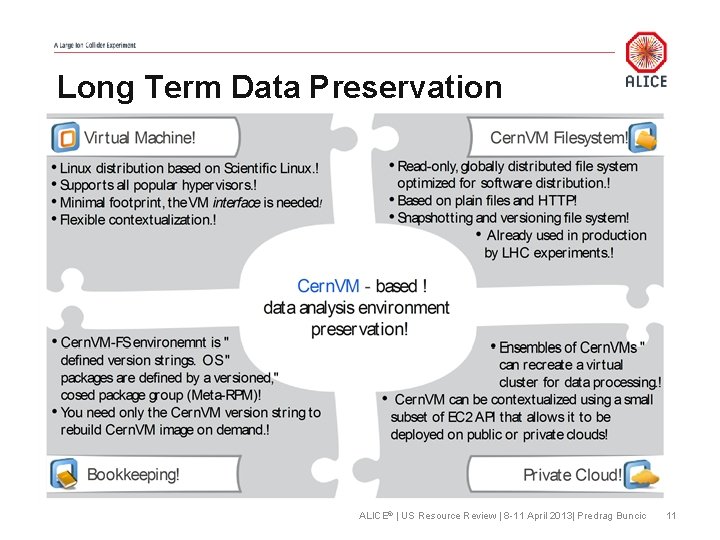

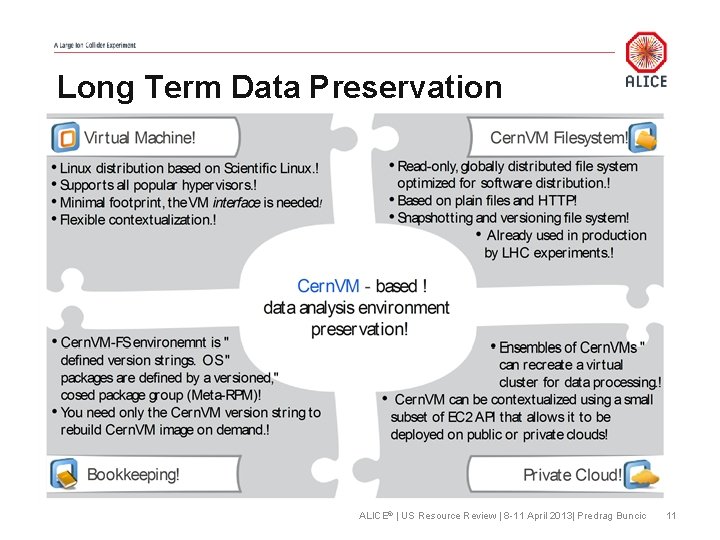

Long Term Data Preservation (ALICE© | US Resource Review | 8-11 April 2013 | Predrag Buncic)

![Use of opportunistic resources](https://slidetodoc.com/presentation_image_h2/81d0dbd03aec3bb57af0e8e5b734c4c9/image-12.jpg)

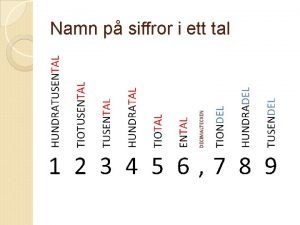

Use of opportunistic resources

    [pbuncic@localhost bin]$ ./parrot_run -p "http://cernvm.lbl.gov:3128;DIRECT" -r "alice.cern.ch:url=http://cernvm-devwebfs.cern.ch/cvmfs/alice.cern.ch" /bin/bash
    [pbuncic@localhost bin]$ . /cvmfs/alice.cern.ch/etc/login.sh
    [pbuncic@localhost bin]$ time alienv setenv AliRoot/v5-03-Rev-19 -c aliroot -b
      **********************
      *  W E L C O M E to R O O T
      *  Version 5.34/05 14 February 2013
      *  You are welcome to visit our Web site
      *  http://root.cern.ch
      **********************
    ROOT 5.34/05 (tags/v5-34-05@48582, Feb 15 2013, 17:08:24 on linuxx8664gcc)
    CINT/ROOT C/C++ Interpreter version 5.18.00, July 2, 2010
    Type ? for help. Commands must be C++ statements.
    Enclose multiple statements between { }.

- Connection: Wifi
- Time to start: 4 m 55 s
- Download: 86 MB
- Cache: 292 MB
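The parrot_run invocation packs two settings into string arguments: a semicolon-separated proxy list ending in DIRECT, and a `repository:url=...` specification. A small Python sketch that assembles the same argument list, for example inside a pilot script; `parrot_command` is a hypothetical helper, and the option layout is copied from the slide rather than from the Parrot documentation:

```python
import shlex


def parrot_command(proxies, repo, repo_url, program="/bin/bash"):
    """Assemble the parrot_run argv shown on the slide.

    proxies:  proxy URLs tried in order; the slide ends the list with DIRECT.
    repo:     repository name, e.g. alice.cern.ch.
    repo_url: URL the repository is served from.
    """
    return [
        "./parrot_run",
        "-p", ";".join(proxies),
        "-r", f"{repo}:url={repo_url}",
        program,
    ]


if __name__ == "__main__":
    cmd = parrot_command(
        ["http://cernvm.lbl.gov:3128", "DIRECT"],
        "alice.cern.ch",
        "http://cernvm-devwebfs.cern.ch/cvmfs/alice.cern.ch",
    )
    # Shell-quoted form, for logging or pasting into a terminal.
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

Handing the list to `subprocess.run` instead of a shell string sidesteps quoting entirely; on an unquoted shell command line the `;` in the proxy list would be taken as a command separator.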

From “common Grid” to “our grid on the cloud(s)”

`use AliEn::PackMan::CVMFS;` (more details in Miguel’s talk)


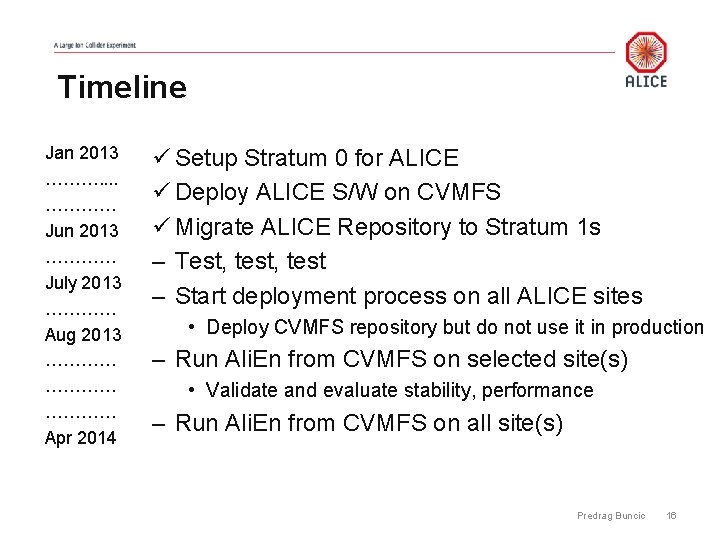

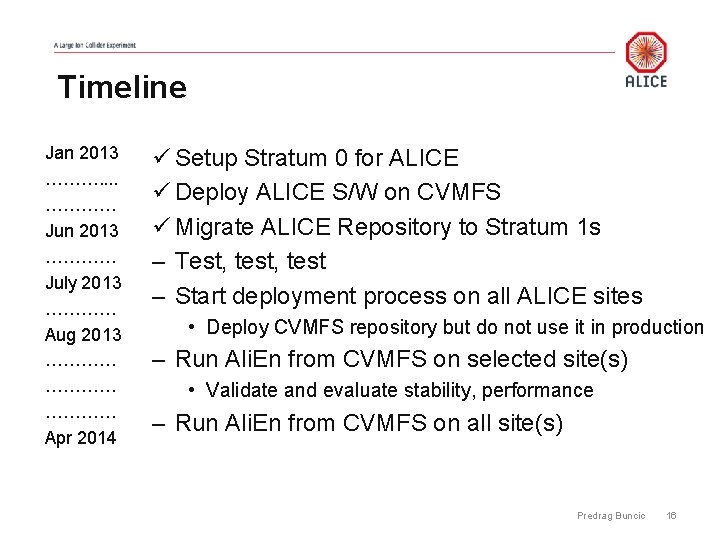

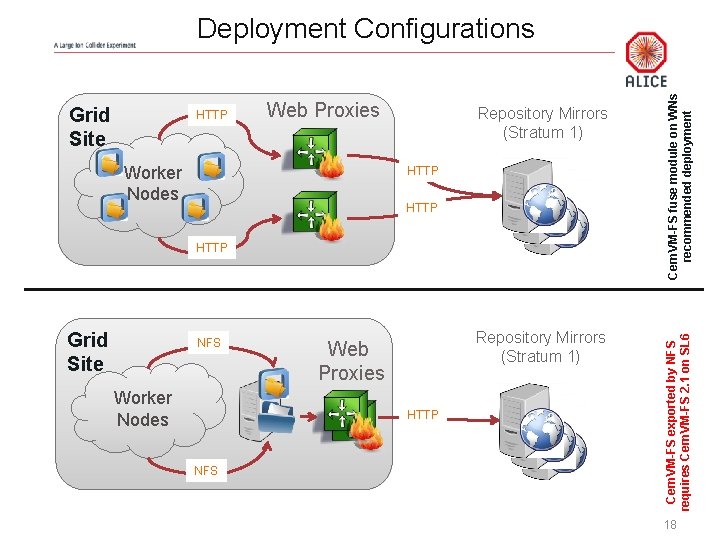

Timeline: Jan 2013, Jun 2013, July 2013, Aug 2013, Apr 2014

- ✓ Set up Stratum 0 for ALICE
- ✓ Deploy ALICE S/W on CVMFS
- ✓ Migrate ALICE repository to Stratum 1s
- Test, test
- Start deployment process on all ALICE sites
  - Deploy CVMFS repository but do not use it in production
- Run AliEn from CVMFS on selected site(s)
  - Validate and evaluate stability, performance
- Run AliEn from CVMFS on all site(s)

32/32/32

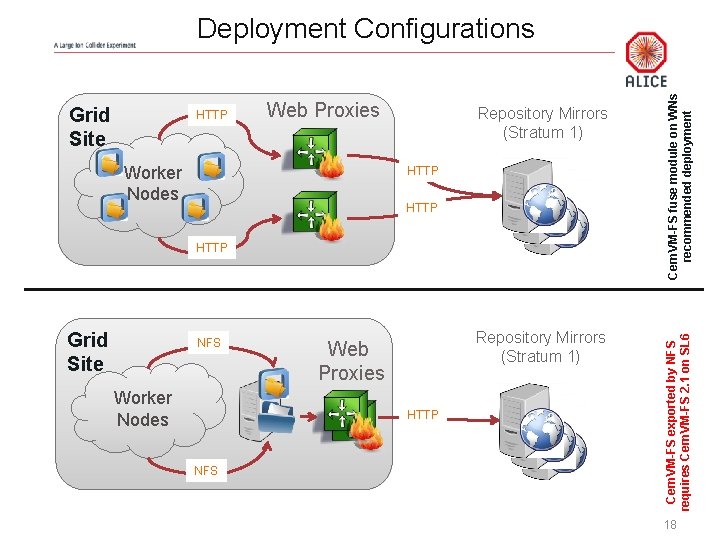

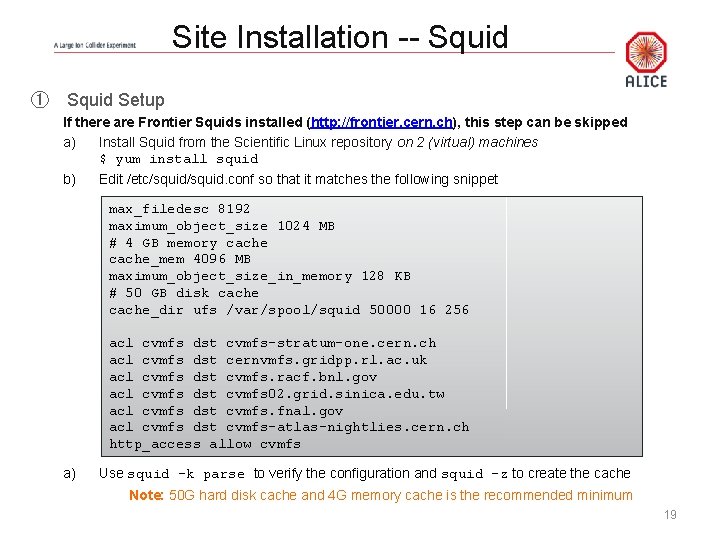

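Deployment Configurations

- Recommended deployment: CernVM-FS fuse module on the worker nodes. The worker nodes at the Grid site fetch over HTTP through the web proxies, which fetch over HTTP from the repository mirrors (Stratum 1).
- Alternative: CernVM-FS exported by NFS, which requires CernVM-FS 2.1 on SL6. The repository mirrors (Stratum 1) are again reached over HTTP through the web proxies, and the CernVM-FS volumes are exported to the worker nodes by NFS.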

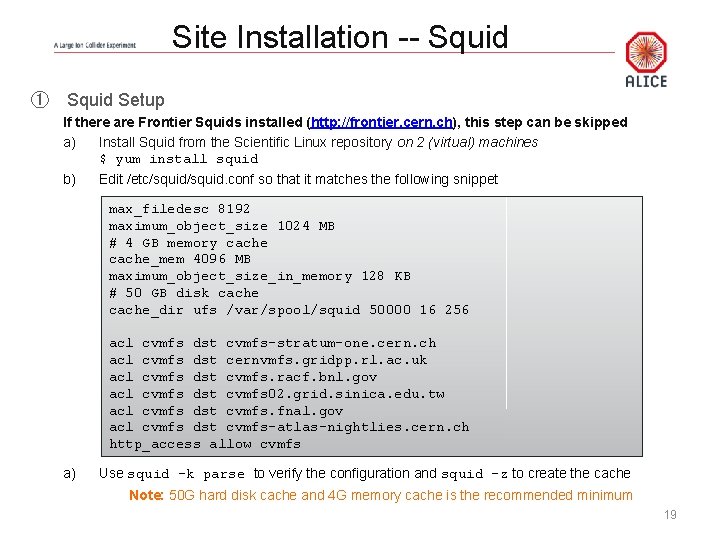

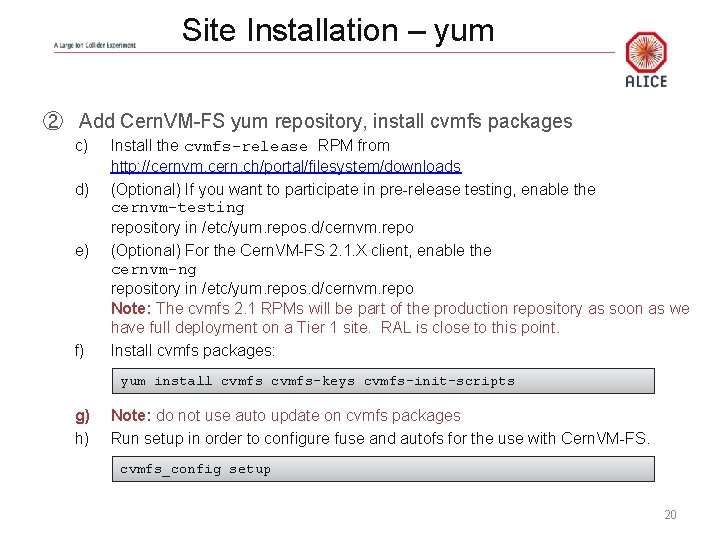

Site Installation – Squid

① Squid setup. If there are Frontier Squids installed (http://frontier.cern.ch), this step can be skipped.

a) Install Squid from the Scientific Linux repository on 2 (virtual) machines: `yum install squid`

b) Edit /etc/squid/squid.conf so that it matches the following snippet:

    max_filedesc 8192
    maximum_object_size 1024 MB
    # 4 GB memory
    cache_mem 4096 MB
    maximum_object_size_in_memory 128 KB
    # 50 GB disk
    cache_dir ufs /var/spool/squid 50000 16 256
    acl cvmfs dst cvmfs-stratum-one.cern.ch
    acl cvmfs dst cernvmfs.gridpp.rl.ac.uk
    acl cvmfs dst cvmfs.racf.bnl.gov
    acl cvmfs dst cvmfs02.grid.sinica.edu.tw
    acl cvmfs dst cvmfs.fnal.gov
    acl cvmfs dst cvmfs-atlas-nightlies.cern.ch
    http_access allow cvmfs

c) Use `squid -k parse` to verify the configuration and `squid -z` to create the cache.

Note: a 50 G hard disk cache and a 4 G memory cache are the recommended minimum.
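Sites that run several squids may prefer to generate this snippet rather than edit it by hand. A minimal Python sketch, assuming the Stratum 1 host list from the slide is still current; `squid_snippet` is a hypothetical helper, and refusing to go below the recommended 4 G / 50 G minimum is a design choice of this sketch, not a squid requirement:

```python
# Stratum 1 hosts as listed on the slide; check for updates before use.
STRATUM_ONE_HOSTS = [
    "cvmfs-stratum-one.cern.ch",
    "cernvmfs.gridpp.rl.ac.uk",
    "cvmfs.racf.bnl.gov",
    "cvmfs02.grid.sinica.edu.tw",
    "cvmfs.fnal.gov",
    "cvmfs-atlas-nightlies.cern.ch",
]

MIN_MEM_MB = 4096     # 4 G memory cache, the slide's recommended minimum
MIN_DISK_MB = 50000   # 50 G disk cache, the slide's recommended minimum


def squid_snippet(hosts, mem_cache_mb=MIN_MEM_MB, disk_cache_mb=MIN_DISK_MB):
    """Render the squid.conf fragment from the slide for the given hosts."""
    if mem_cache_mb < MIN_MEM_MB or disk_cache_mb < MIN_DISK_MB:
        raise ValueError("below the recommended 4 G memory / 50 G disk minimum")
    lines = [
        "max_filedesc 8192",
        "maximum_object_size 1024 MB",
        f"cache_mem {mem_cache_mb} MB",
        "maximum_object_size_in_memory 128 KB",
        # ufs store: directory, size in MB, 16 first-level / 256 second-level dirs
        f"cache_dir ufs /var/spool/squid {disk_cache_mb} 16 256",
    ]
    # One ACL line per Stratum 1; all share the ACL name 'cvmfs'.
    lines += [f"acl cvmfs dst {host}" for host in hosts]
    lines.append("http_access allow cvmfs")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(squid_snippet(STRATUM_ONE_HOSTS), end="")
```

The generated file should still be checked with `squid -k parse` before the cache is created with `squid -z`.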

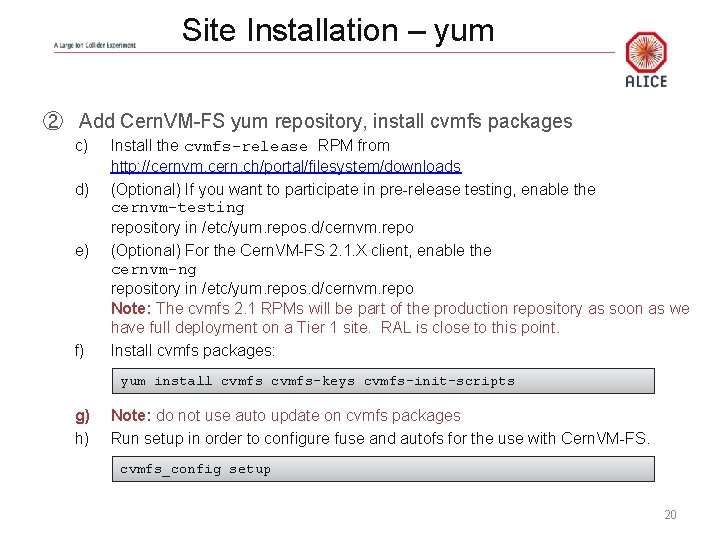

Site Installation – yum

② Add the CernVM-FS yum repository and install the cvmfs packages

c) Install the cvmfs-release RPM from http://cernvm.cern.ch/portal/filesystem/downloads.

d) (Optional) If you want to participate in pre-release testing, enable the cernvm-testing repository in /etc/yum.repos.d/cernvm.repo.

e) (Optional) For the CernVM-FS 2.1.X client, enable the cernvm-ng repository in /etc/yum.repos.d/cernvm.repo. Note: the cvmfs 2.1 RPMs will become part of the production repository as soon as there is full deployment on a Tier 1 site. RAL is close to this point.

f) Install the cvmfs packages: `yum install cvmfs cvmfs-keys cvmfs-init-scripts`

g) Note: do not use auto-update on the cvmfs packages.

h) Run setup to configure fuse and autofs for use with CernVM-FS: `cvmfs_config setup`
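The two optional repository steps come down to setting `enabled=1` in one section of /etc/yum.repos.d/cernvm.repo. Where yum-utils is installed, `yum-config-manager --enable cernvm-ng` does this directly; the Python sketch below shows the same edit with the standard-library configparser, assuming the section headers are literally [cernvm-testing] and [cernvm-ng]. `enable_repo` and the sample repo text are hypothetical, and configparser drops comments when it rewrites the file, so keep a backup:

```python
import configparser
import io


def enable_repo(repo_text, section):
    """Return the .repo text with enabled=1 in the given section.

    Assumption: the section header equals the repository name on the
    slide, e.g. [cernvm-ng] or [cernvm-testing].
    """
    # interpolation=None keeps yum variables such as $basearch untouched.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_text)
    if not parser.has_section(section):
        raise KeyError(f"no [{section}] section in the repo file")
    parser.set(section, "enabled", "1")
    out = io.StringIO()
    # Write key=value without spaces, the usual .repo style.
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


if __name__ == "__main__":
    # Hypothetical repo file content, for illustration only.
    sample = (
        "[cernvm]\nname=CernVM packages\nenabled=1\n\n"
        "[cernvm-ng]\nname=CernVM-FS 2.1 packages\nenabled=0\n"
    )
    print(enable_repo(sample, "cernvm-ng"))
```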

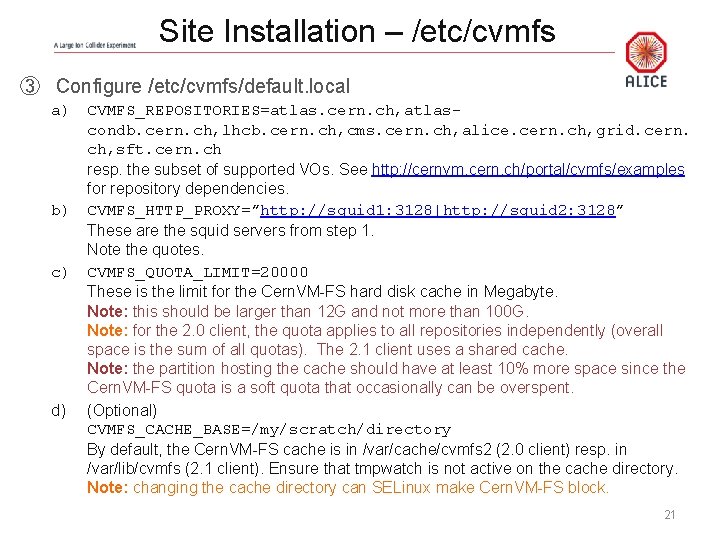

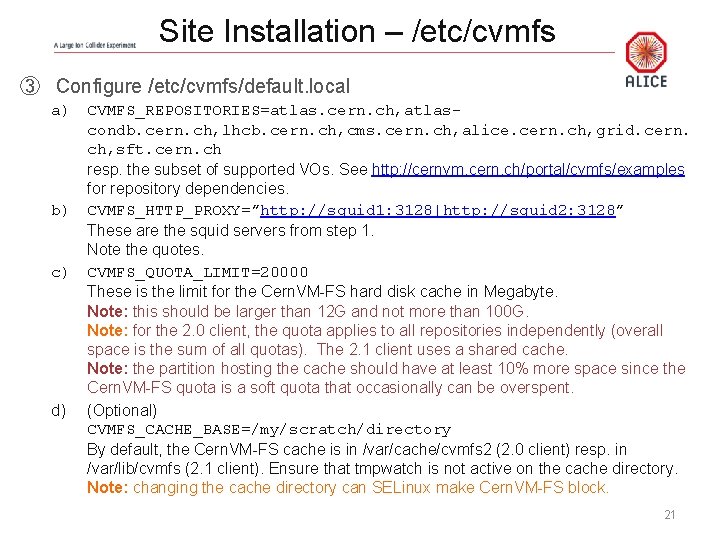

Site Installation – /etc/cvmfs

③ Configure /etc/cvmfs/default.local

a) `CVMFS_REPOSITORIES=atlas.cern.ch,atlascondb.cern.ch,lhcb.cern.ch,cms.cern.ch,alice.cern.ch,grid.cern.ch,sft.cern.ch`, or the subset of supported VOs. See http://cernvm.cern.ch/portal/cvmfs/examples for repository dependencies.

b) `CVMFS_HTTP_PROXY="http://squid1:3128|http://squid2:3128"`. These are the squid servers from step ①. Note the quotes.

c) `CVMFS_QUOTA_LIMIT=20000`. This is the limit for the CernVM-FS hard disk cache in megabytes. Note: this should be larger than 12 G and not more than 100 G. Note: for the 2.0 client, the quota applies to each repository independently (the overall space is the sum of all quotas); the 2.1 client uses a shared cache. Note: the partition hosting the cache should have at least 10% more space, since the CernVM-FS quota is a soft quota that can occasionally be overspent.

d) (Optional) `CVMFS_CACHE_BASE=/my/scratch/directory`. By default, the CernVM-FS cache is in /var/cache/cvmfs2 (2.0 client) or /var/lib/cvmfs (2.1 client). Ensure that tmpwatch is not active on the cache directory. Note: changing the cache directory can cause SELinux to block CernVM-FS.
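The default.local rules (comma-separated repository list, quoted `|`-separated proxy list, quota bounds, 10% partition headroom) are easy to check mechanically. A minimal Python sketch; `default_local` and `partition_has_headroom` are hypothetical helpers, and it assumes 1 G = 1024 MB when converting the 12 G / 100 G bounds, and that the headroom rule refers to the total size of the cache partition:

```python
import shutil

MB = 1024 * 1024           # bytes per megabyte (binary units assumed)
QUOTA_MIN_MB = 12 * 1024   # "larger than 12 G"
QUOTA_MAX_MB = 100 * 1024  # "not more than 100 G"


def default_local(repositories, proxies, quota_mb, cache_base=None):
    """Render /etc/cvmfs/default.local following the rules on the slide."""
    if not QUOTA_MIN_MB < quota_mb <= QUOTA_MAX_MB:
        raise ValueError("CVMFS_QUOTA_LIMIT should be > 12 G and <= 100 G")
    lines = [
        # Comma-separated, no spaces.
        "CVMFS_REPOSITORIES=" + ",".join(repositories),
        # Quoted, as the slide stresses; presumably because the file is
        # read with shell-like syntax, where a bare '|' is a pipe.
        'CVMFS_HTTP_PROXY="' + "|".join(proxies) + '"',
        f"CVMFS_QUOTA_LIMIT={quota_mb}",
    ]
    if cache_base is not None:
        lines.append(f"CVMFS_CACHE_BASE={cache_base}")
    return "\n".join(lines) + "\n"


def partition_has_headroom(path, quota_mb, headroom=0.10):
    """True if the partition holding `path` is at least quota + 10% in size.

    The quota is soft and can occasionally be overspent, hence the margin.
    """
    return shutil.disk_usage(path).total >= quota_mb * MB * (1 + headroom)


if __name__ == "__main__":
    print(default_local(
        ["alice.cern.ch", "grid.cern.ch"],
        ["http://squid1:3128", "http://squid2:3128"],
        20000,
    ), end="")
```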

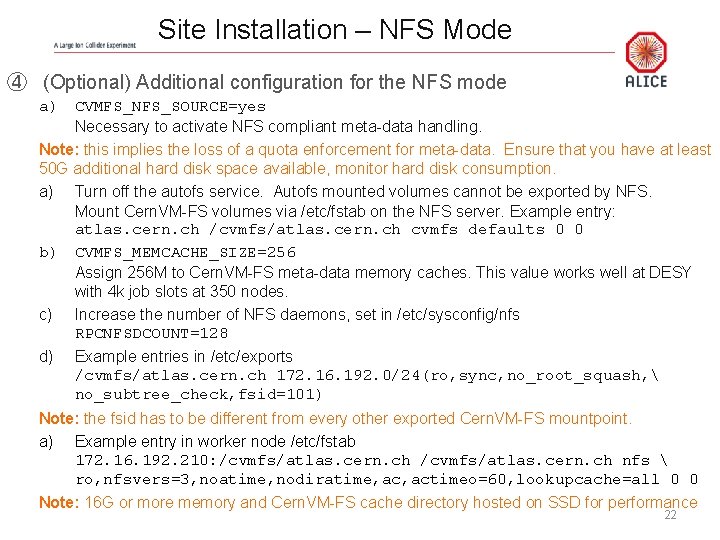

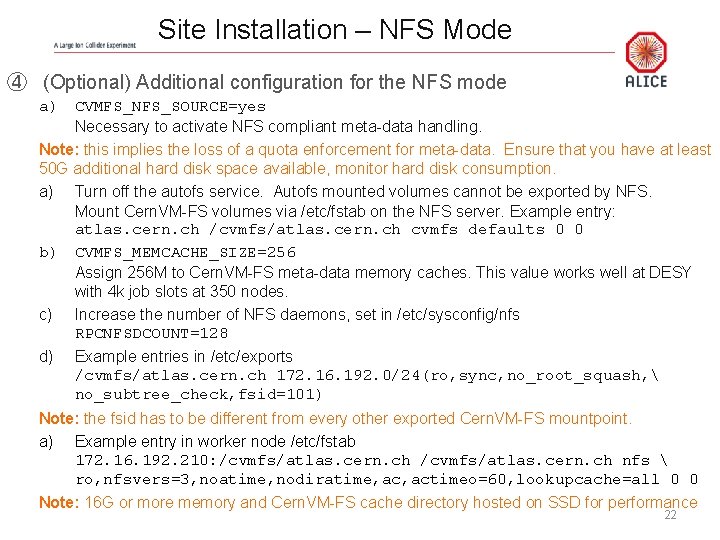

Site Installation – NFS Mode

④ (Optional) Additional configuration for the NFS mode

a) `CVMFS_NFS_SOURCE=yes`. Necessary to activate NFS-compliant metadata handling. Note: this implies the loss of quota enforcement for metadata. Ensure that you have at least 50 G of additional hard disk space available, and monitor hard disk consumption.

b) Turn off the autofs service: autofs-mounted volumes cannot be exported by NFS. Mount the CernVM-FS volumes via /etc/fstab on the NFS server. Example entry: `atlas.cern.ch /cvmfs/atlas.cern.ch cvmfs defaults 0 0`

c) `CVMFS_MEMCACHE_SIZE=256`. Assigns 256 M to the CernVM-FS metadata memory caches. This value works well at DESY with 4 k job slots on 350 nodes.

d) Increase the number of NFS daemons, set in /etc/sysconfig/nfs: `RPCNFSDCOUNT=128`

e) Example entries in /etc/exports: `/cvmfs/atlas.cern.ch 172.16.192.0/24(ro,sync,no_root_squash,no_subtree_check,fsid=101)`. Note: the fsid has to be different from that of every other exported CernVM-FS mountpoint.

f) Example entry in the worker node /etc/fstab: `172.16.192.210:/cvmfs/atlas.cern.ch /cvmfs/atlas.cern.ch nfs ro,nfsvers=3,noatime,nodiratime,actimeo=60,lookupcache=all 0 0`

Note: 16 G or more of memory and a CernVM-FS cache directory hosted on SSD are recommended for performance.
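With several repositories exported, the per-mountpoint fsid rule is the easiest one to get wrong. A Python sketch that derives the server fstab, exports and worker fstab lines from one repository list, numbering fsids consecutively from 101 as in the slide's example; the helpers are hypothetical, and mounting each export at the same /cvmfs/<repo> path on the worker nodes is an assumption of this sketch:

```python
def nfs_exports(repositories, client_net, first_fsid=101):
    """NFS-server /etc/exports lines; every mountpoint gets its own fsid."""
    lines = []
    for offset, repo in enumerate(repositories):
        fsid = first_fsid + offset  # consecutive, hence unique per mountpoint
        opts = f"ro,sync,no_root_squash,no_subtree_check,fsid={fsid}"
        lines.append(f"/cvmfs/{repo} {client_net}({opts})")
    return lines


def server_fstab(repositories):
    """NFS-server /etc/fstab lines (autofs is switched off in NFS mode)."""
    return [f"{repo} /cvmfs/{repo} cvmfs defaults 0 0" for repo in repositories]


def worker_fstab(server, repositories):
    """Worker-node /etc/fstab lines; same mount path assumed on the workers."""
    opts = "ro,nfsvers=3,noatime,nodiratime,actimeo=60,lookupcache=all"
    return [f"{server}:/cvmfs/{repo} /cvmfs/{repo} nfs {opts} 0 0"
            for repo in repositories]


if __name__ == "__main__":
    repos = ["atlas.cern.ch", "alice.cern.ch"]
    for line in server_fstab(repos) + nfs_exports(repos, "172.16.192.0/24"):
        print(line)
    for line in worker_fstab("172.16.192.210", repos):
        print(line)
```

Keeping the repository list in one place means adding a repository later cannot silently reuse an fsid.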

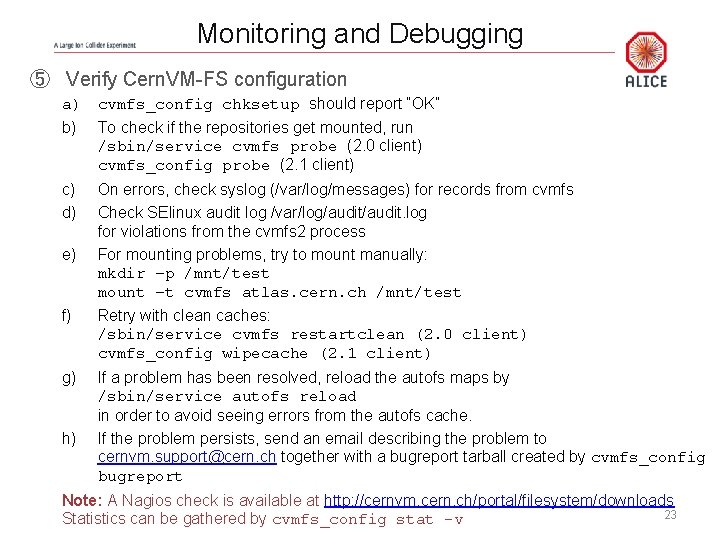

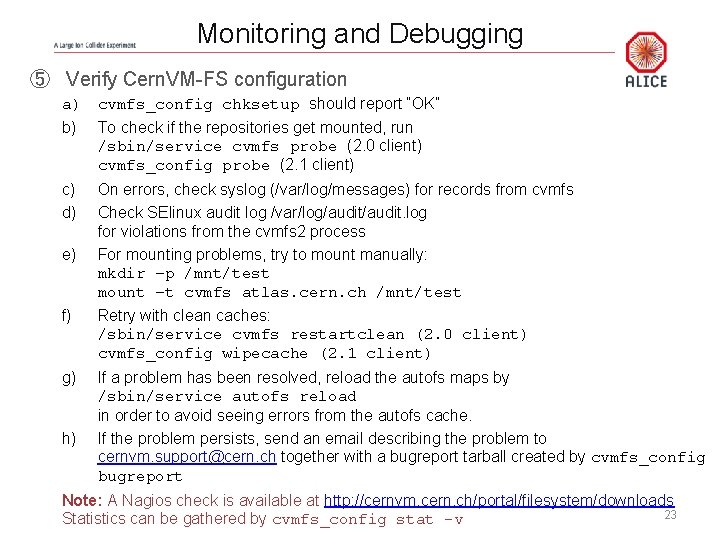

Monitoring and Debugging

⑤ Verify the CernVM-FS configuration

a) `cvmfs_config chksetup` should report "OK".

b) To check whether the repositories get mounted, run `/sbin/service cvmfs probe` (2.0 client) or `cvmfs_config probe` (2.1 client).

c) On errors, check syslog (/var/log/messages) for records from cvmfs.

d) Check the SELinux audit log (/var/log/audit/audit.log) for violations from the cvmfs2 process.

e) For mounting problems, try to mount manually: `mkdir -p /mnt/test` followed by `mount -t cvmfs atlas.cern.ch /mnt/test`

f) Retry with clean caches: `/sbin/service cvmfs restartclean` (2.0 client) or `cvmfs_config wipecache` (2.1 client).

g) Once a problem has been resolved, reload the autofs maps with `/sbin/service autofs reload` to avoid seeing errors from the autofs cache.

h) If the problem persists, send an email describing the problem to cernvm.support@cern.ch, together with a bug-report tarball created by `cvmfs_config bugreport`.

Note: a Nagios check is available at http://cernvm.cern.ch/portal/filesystem/downloads. Statistics can be gathered with `cvmfs_config stat -v`.
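Two of the debugging steps differ only by client series (2.0 versus 2.1). A minimal Python sketch of a lookup table a site support script might use; `debug_command` is hypothetical, only the two command pairs given on the slide are covered, the version string "2.1.0" is illustrative, and how the installed version is obtained is left open:

```python
# Commands from the slide, keyed by client series.
COMMANDS = {
    "2.0": {
        "probe": ["/sbin/service", "cvmfs", "probe"],
        "wipecache": ["/sbin/service", "cvmfs", "restartclean"],
    },
    "2.1": {
        "probe": ["cvmfs_config", "probe"],
        "wipecache": ["cvmfs_config", "wipecache"],
    },
}


def client_series(version):
    """Map a version string such as '2.1.0' to its series key, '2.1'."""
    major, minor = version.split(".")[:2]
    series = f"{major}.{minor}"
    if series not in COMMANDS:
        raise ValueError(f"unsupported CernVM-FS client version: {version}")
    return series


def debug_command(version, action):
    """Return the argv for 'probe' or 'wipecache' on the given client."""
    return COMMANDS[client_series(version)][action]


if __name__ == "__main__":
    # Hypothetical node running a 2.1-series client.
    print(" ".join(debug_command("2.1.0", "wipecache")))
```

Commands the slide gives without a version qualifier, such as `cvmfs_config chksetup` and `cvmfs_config bugreport`, are left out of the table.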

Useful Links

- Mailing lists: cvmfs-talk@cern.ch, cvmfs-testing@cern.ch, cvmfs-devel@cern.ch
- Technical report, known issues, configuration examples: http://cernvm.cern.ch/portal/filesystem/techinfo
- Bug tracker: https://savannah.cern.ch/projects/cernvm
- Source code: https://github.com/cvmfs
- RPMs: http://cernvm.cern.ch/portal/filesystem/downloads
- Yum repositories: http://cvmrepo.web.cern.ch/cvmrepo/yum
- Nightly builds: https://ecsft.cern.ch/dist/cvmfs
- CVMFS module for Puppet: https://github.com/cvmfs/puppet-cvmfs (straylen@cern.ch)
- CVMFS and Quattor: straylen@cern.ch, ian.collier@stfc.ac.uk

Nyckelkompetenser för livslångt lärande

Nyckelkompetenser för livslångt lärande Tack för att ni har lyssnat

Tack för att ni har lyssnat Programskede byggprocessen

Programskede byggprocessen Tidbok

Tidbok Vanlig celldelning

Vanlig celldelning Presentera för publik crossboss

Presentera för publik crossboss Rbk-mätning

Rbk-mätning Fspos vägledning för kontinuitetshantering

Fspos vägledning för kontinuitetshantering Myndigheten för delaktighet

Myndigheten för delaktighet Var 1721 för stormaktssverige

Var 1721 för stormaktssverige Tack för att ni har lyssnat

Tack för att ni har lyssnat Boverket ka

Boverket ka Hur ser ett referat ut

Hur ser ett referat ut Varför kallas perioden 1918-1939 för mellankrigstiden

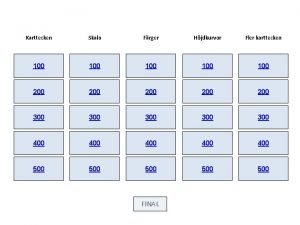

Varför kallas perioden 1918-1939 för mellankrigstiden Brunn karttecken

Brunn karttecken Mjälthilus

Mjälthilus Mindre än tecken

Mindre än tecken Tryck formel

Tryck formel Elektronik för barn

Elektronik för barn Blomman för dagen drog

Blomman för dagen drog Borra hål för knoppar

Borra hål för knoppar Bris för vuxna

Bris för vuxna Mat för unga idrottare

Mat för unga idrottare Anatomi organ reproduksi

Anatomi organ reproduksi Smärtskolan kunskap för livet

Smärtskolan kunskap för livet