SIMS 290-2: Applied Natural Language Processing, Marti Hearst

- Slides: 45

SIMS 290-2: Applied Natural Language Processing
Marti Hearst
October 25, 2004

Next Few Classes
- This week: lexicons and ontologies
  - Today: WordNet's structure, computing term similarity
  - Wed: Guest lecture: Prof. Charles Fillmore on FrameNet
- Next week: Enron labeling in class
  - The entire assignment will be due on Nov 15
- Following week: Question-Answering

Text Categorization Assignment
- Great job, you learned a lot!
  - Comparing to a baseline
  - Selecting features
  - Comparing relative usefulness of features
  - Training, testing, cross-validation
- I learned a lot too! (from your results)
- (I'll send your feedback today)

Text Categorization Assignment
- Features
  - Boosting weights of terms in the subject line is helpful.
  - Stemming does help in some circumstances (often works well with SVM, for example), but not always.
    - Counter-intuitively, stemming can increase the number of features in our implementation, because it increases how many terms pass the minimum-document-occurrence cutoff.
    - An example of the Porter stemmer preserving a useful distinction: it converts "gaseous" to "gase", so "gaseous" (the science group) is not conflated with "gas" (fuel, in the motorcycles group).

Text Categorization Assignment
- Features
  - Tokens beyond the default alphabetic-only terms are helpful, perhaps partly because they capture domain-name information, but also because they capture technical terms.
  - It's probably best to use the Weka feature selector to tell you what *kind* of features are performing well, but not to select those for use exclusively.
  - I'm surprised that no one tried bigrams or noun compounds as features.

Text Categorization Assignment
- Feature Weighting
  - tf.idf: Almost everyone who tried it found it was raw term frequency (there were exceptions).
  - Binary feature weights with document count minimum thresholds can be a good substitute.
  - An interesting variation on tf.idf is to do it in a class-based manner.
    - Weight terms higher that only occur in one class vs. the others.
    - A couple of students tried this and got good results on the diverse comparison, but less good on the homogeneous. This makes sense, since the measure would not help as much in distinguishing similar newsgroups that share many terms.
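To make the weighting options concrete, here is a minimal Python sketch of standard tf.idf next to one possible class-based variant. The slides do not say which class-based formula students used, so `class_idf` (log of the number of classes over the number of classes containing the term) is an assumption for illustration, as are the function names and the toy documents.

```python
import math
from collections import Counter

def tf_idf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({t: tf[t] * math.log(n_docs / doc_freq[t]) for t in tf})
    return weights

def class_idf(docs, labels):
    """Hypothetical class-based idf: log(#classes / #classes containing the term)."""
    classes_with_term = {}
    for doc, label in zip(docs, labels):
        for term in set(doc):
            classes_with_term.setdefault(term, set()).add(label)
    n_classes = len(set(labels))
    return {t: math.log(n_classes / len(c)) for t, c in classes_with_term.items()}

# Toy data (made up for this sketch).
docs = [["gas", "engine", "bike"], ["gas", "molecule"], ["engine", "bike", "bike"]]
labels = ["motorcycles", "science", "motorcycles"]
weights = tf_idf(docs)
cidf = class_idf(docs, labels)
```

In this toy data "gas" occurs in both classes, so its class-based weight is zero, while "bike" and "molecule" each occur in only one class and get the maximum weight. That also illustrates why the measure helps less on homogeneous newsgroups: most terms are shared across classes.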

Text Categorization Assignment
- Classifiers
  - Naive Bayes Multinomial (NBM) was a clear winner
  - SVM worked well most of the time, but not as well as NBM
  - Naive Bayes seemed to be more robust to unseen information; the kernel estimator seems to improve the default Naive Bayes settings.
  - VotedPerceptron worked very well, but only does binary classification, so people who found it did very well on diverse did not transfer it to homogeneous.

Today
- Lexicons, Semantic Nets and Ontologies
- The Structure of WordNet
- Computing Similarities
- Automatic Acquisition of New Terms

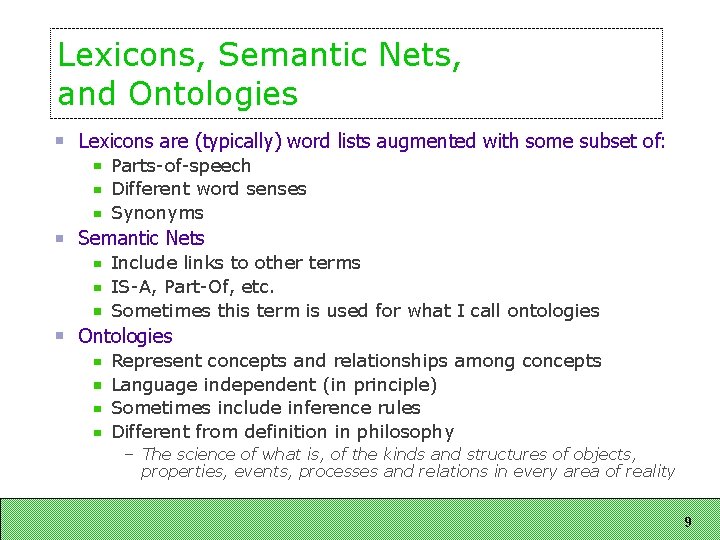

Lexicons, Semantic Nets, and Ontologies
- Lexicons are (typically) word lists augmented with some subset of:
  - Parts-of-speech
  - Different word senses
  - Synonyms
- Semantic Nets
  - Include links to other terms
    - IS-A, Part-Of, etc.
  - Sometimes this term is used for what I call ontologies
- Ontologies
  - Represent concepts and relationships among concepts
  - Language independent (in principle)
  - Sometimes include inference rules
  - Different from definition in philosophy
    - The science of what is, of the kinds and structures of objects, properties, events, processes and relations in every area of reality

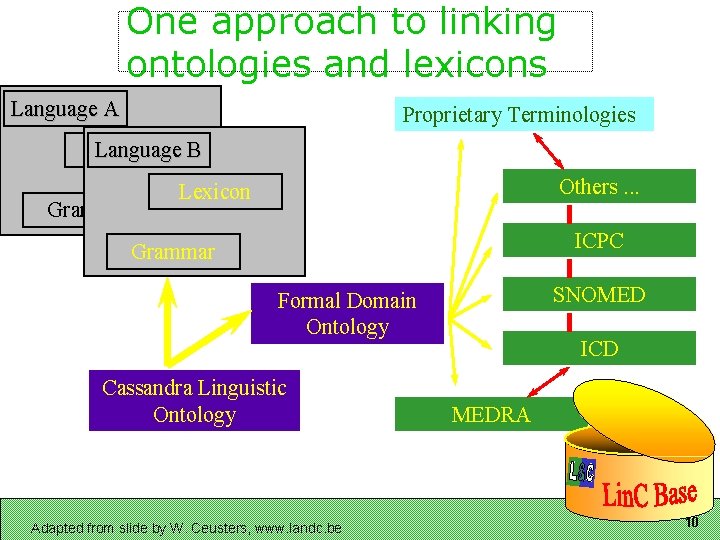

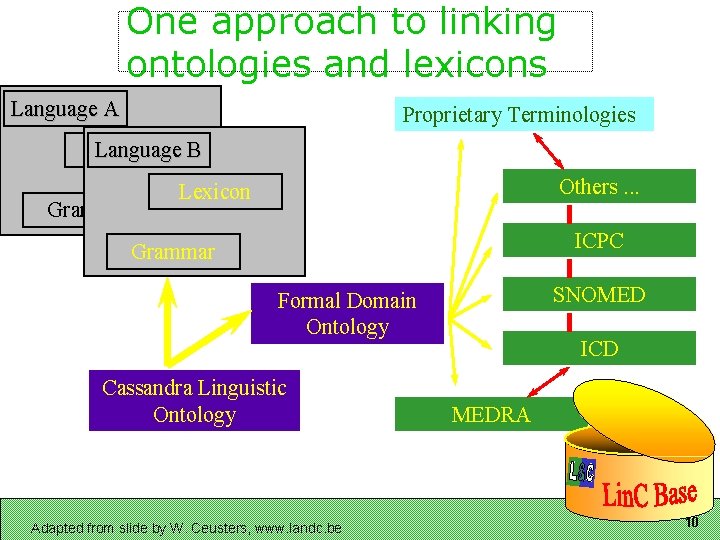

One approach to linking ontologies and lexicons
[Diagram labels: Language A (Lexicon, Grammar); Language B (Lexicon, Grammar); Proprietary Terminologies; ICPC; SNOMED; ICD; MEDRA; Others...; Formal Domain Ontology; Cassandra Linguistic Ontology]
Adapted from slide by W. Ceusters, www.landc.be

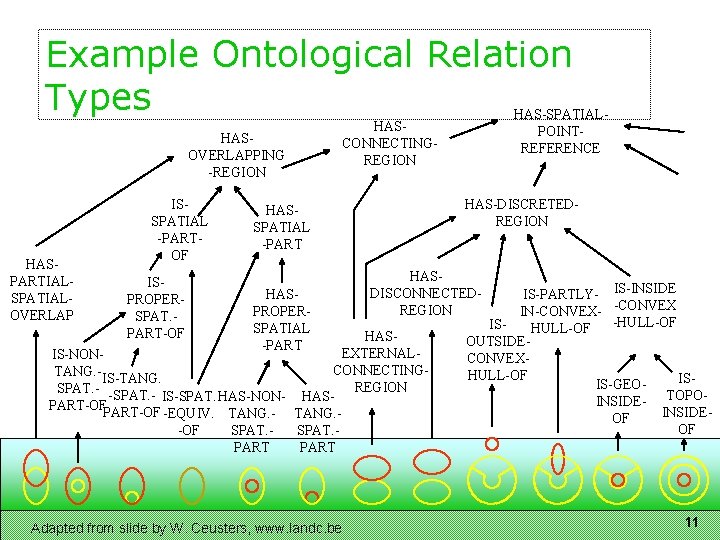

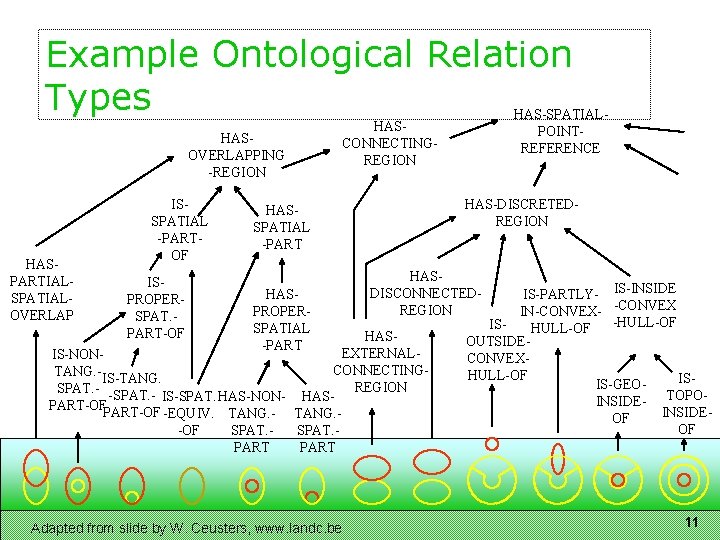

Example Ontological Relation Types
[Diagram: hierarchy of spatial relation types, including HAS-OVERLAPPING-REGION, HAS-PARTIAL-SPATIAL-OVERLAP, IS-SPATIAL-PART-OF, IS-PROPER-SPATIAL-PART-OF, HAS-SPATIAL-POINT-REFERENCE, HAS-CONNECTING-REGION, HAS-DISCRETED-REGION, HAS-SPATIAL-PART, HAS-PROPER-SPATIAL-PART, HAS-DISCONNECTED-REGION, IS-PARTLY-IN-CONVEX-HULL-OF, IS-INSIDE-CONVEX-HULL-OF, IS-OUTSIDE-CONVEX-HULL-OF, IS-GEO-INSIDE-OF, and IS-TOPO-INSIDE-OF, among others]
Adapted from slide by W. Ceusters, www.landc.be

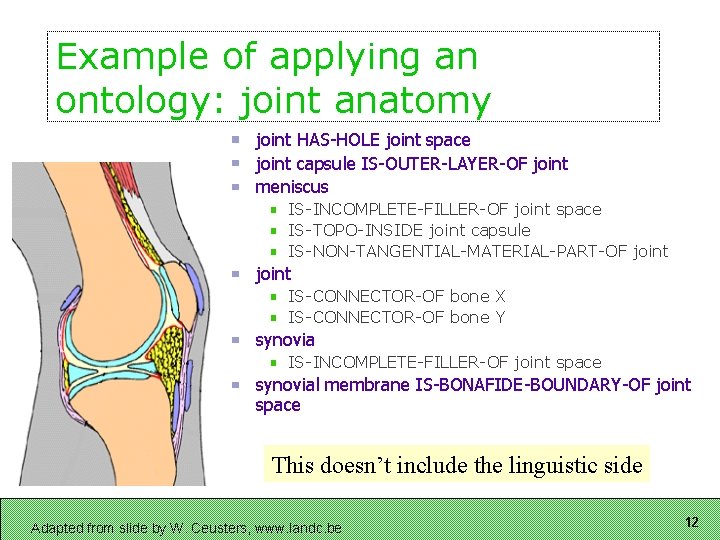

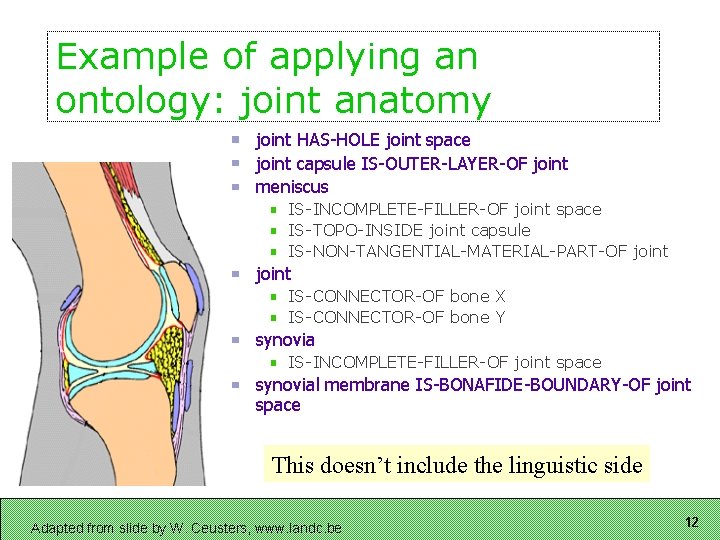

Example of applying an ontology: joint anatomy
- joint HAS-HOLE joint space
- joint capsule IS-OUTER-LAYER-OF joint
- meniscus
  - IS-INCOMPLETE-FILLER-OF joint space
  - IS-TOPO-INSIDE joint capsule
  - IS-NON-TANGENTIAL-MATERIAL-PART-OF joint
  - IS-CONNECTOR-OF bone X
  - IS-CONNECTOR-OF bone Y
- synovia IS-INCOMPLETE-FILLER-OF joint space
- synovial membrane IS-BONAFIDE-BOUNDARY-OF joint space
- This doesn't include the linguistic side
Adapted from slide by W. Ceusters, www.landc.be

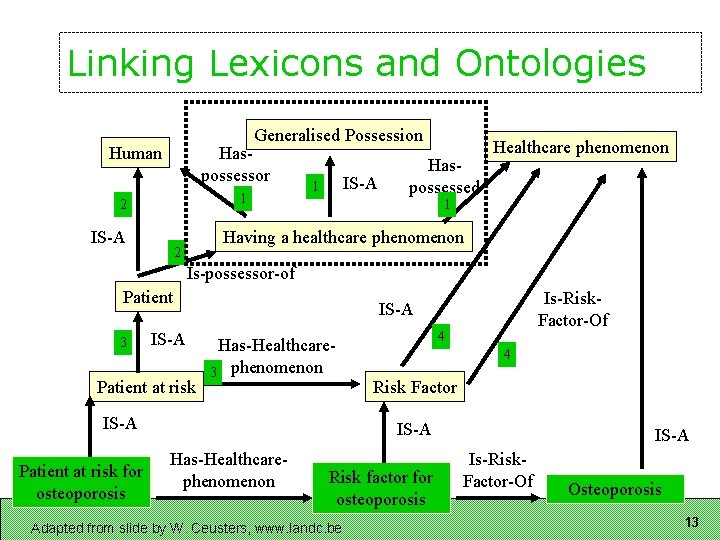

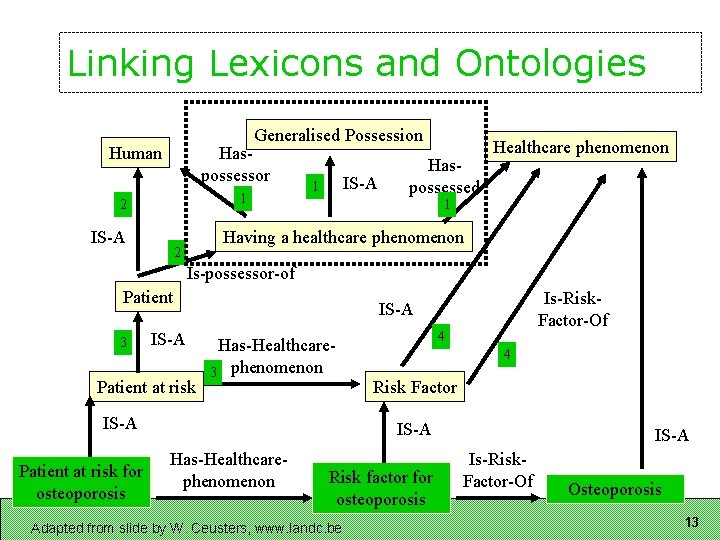

Linking Lexicons and Ontologies
[Diagram: concept graph connecting Generalised Possession, Human, Healthcare phenomenon, Having a healthcare phenomenon, Patient, Patient at risk, Patient at risk for osteoporosis, Risk Factor, Risk factor for osteoporosis, and Osteoporosis through the relations IS-A, Has-possessor, Has-possessed, Is-possessor-of, Has-Healthcare-phenomenon, and Is-RiskFactor-Of]
Adapted from slide by W. Ceusters, www.landc.be

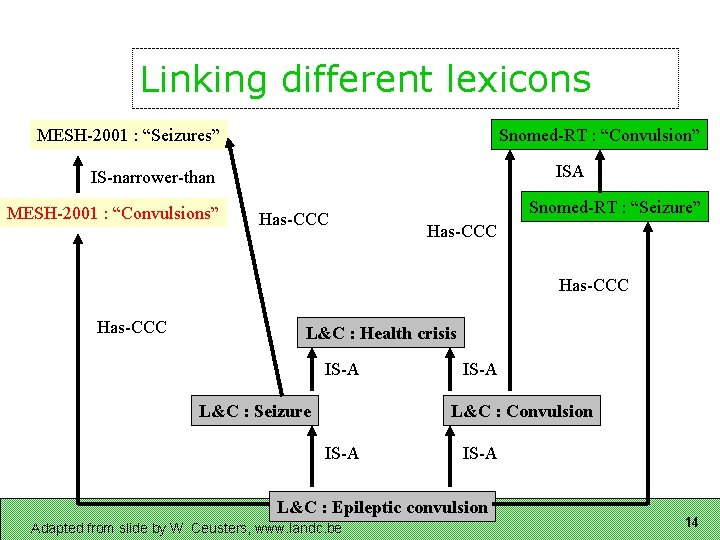

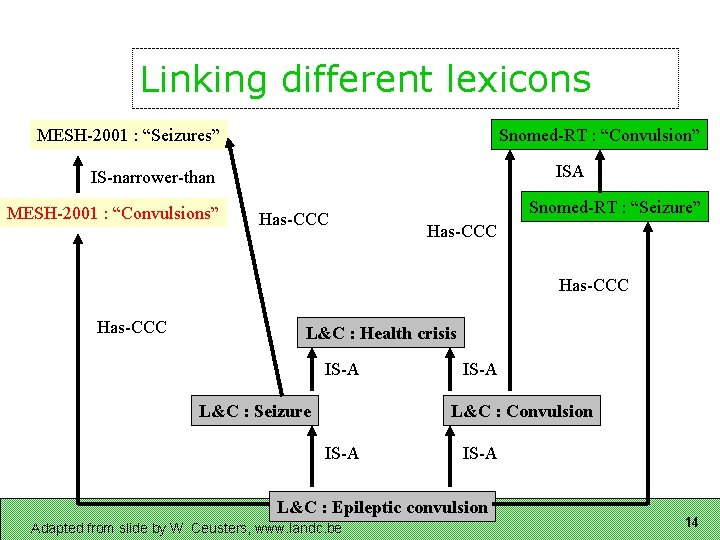

Linking different lexicons
[Diagram: MESH-2001 terms "Seizures" and "Convulsions" and Snomed-RT terms "Convulsion" and "Seizure", related by ISA and IS-narrower-than, are mapped via Has-CCC onto the L&C concepts Health crisis, Seizure, Convulsion, and Epileptic convulsion, which are connected by IS-A links]
Adapted from slide by W. Ceusters, www.landc.be

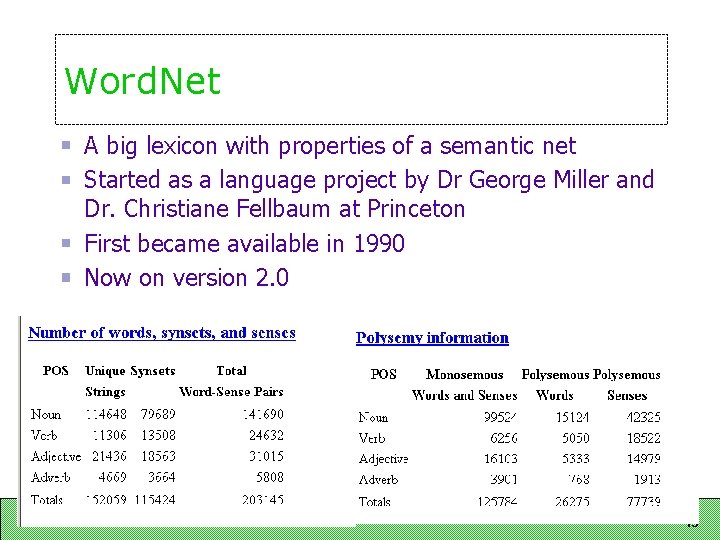

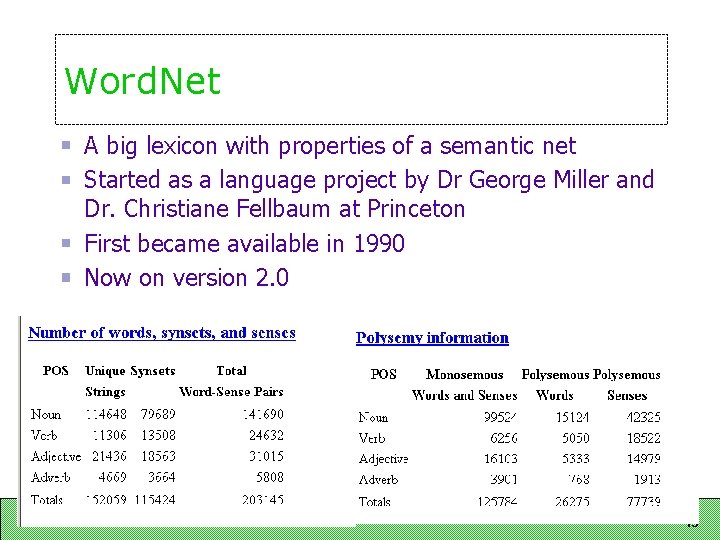

WordNet
- A big lexicon with properties of a semantic net
- Started as a language project by Dr. George Miller and Dr. Christiane Fellbaum at Princeton
- First became available in 1990
- Now on version 2.0

WordNet
- Huge amounts of research (and products) use it

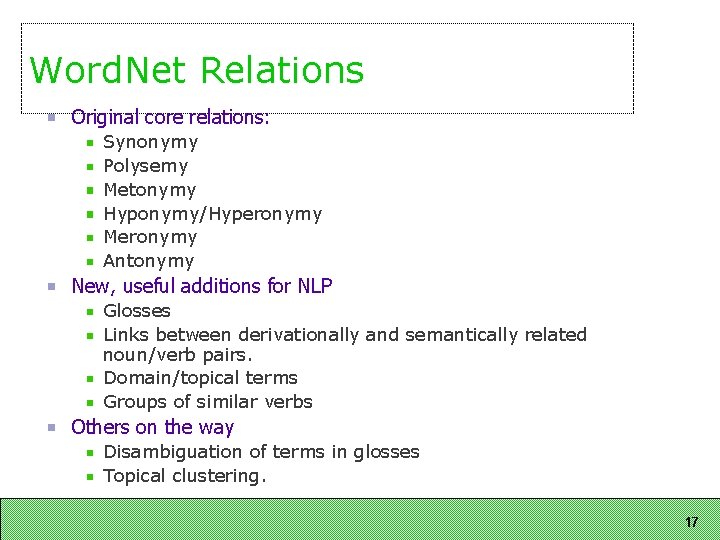

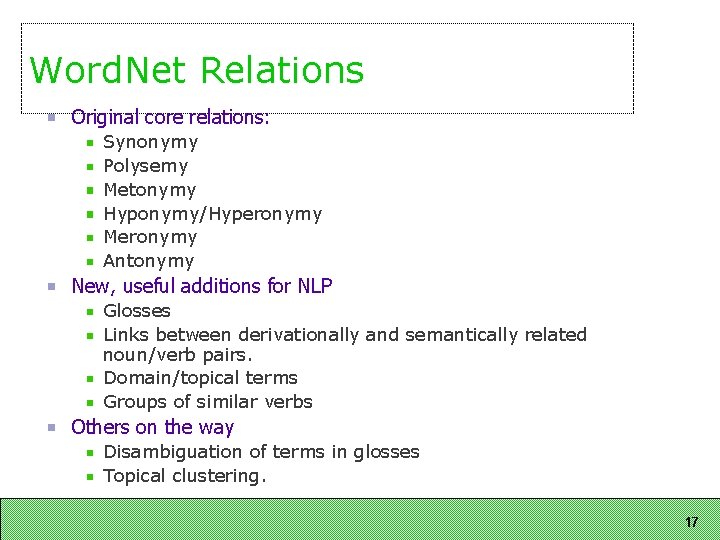

WordNet Relations
- Original core relations:
  - Synonymy
  - Polysemy
  - Metonymy
  - Hyponymy/Hyperonymy
  - Meronymy
  - Antonymy
- New, useful additions for NLP
  - Glosses
  - Links between derivationally and semantically related noun/verb pairs
  - Domain/topical terms
  - Groups of similar verbs
- Others on the way
  - Disambiguation of terms in glosses
  - Topical clustering

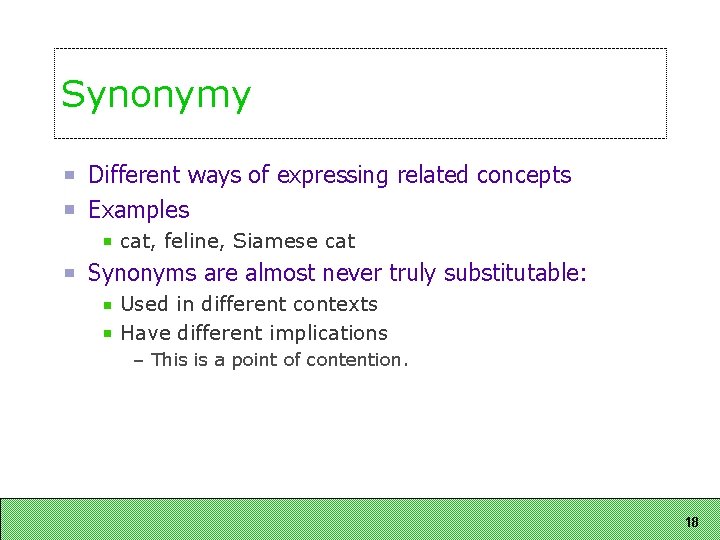

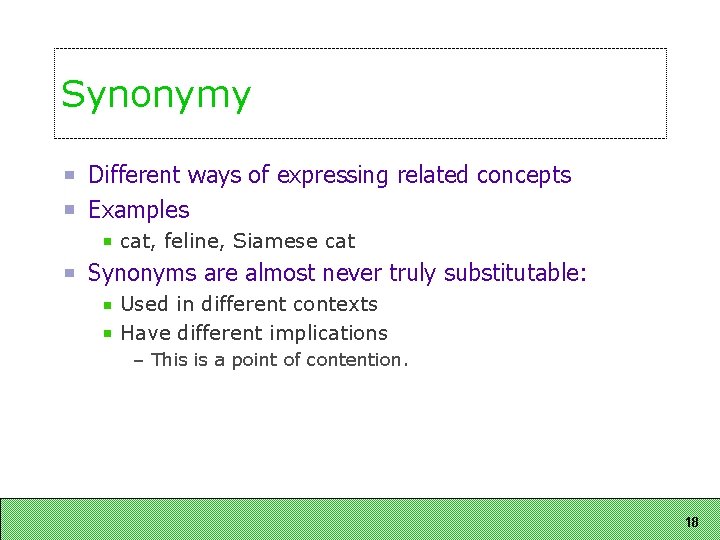

Synonymy
- Different ways of expressing related concepts
- Examples
  - cat, feline, Siamese cat
- Synonyms are almost never truly substitutable:
  - Used in different contexts
  - Have different implications
    - This is a point of contention.

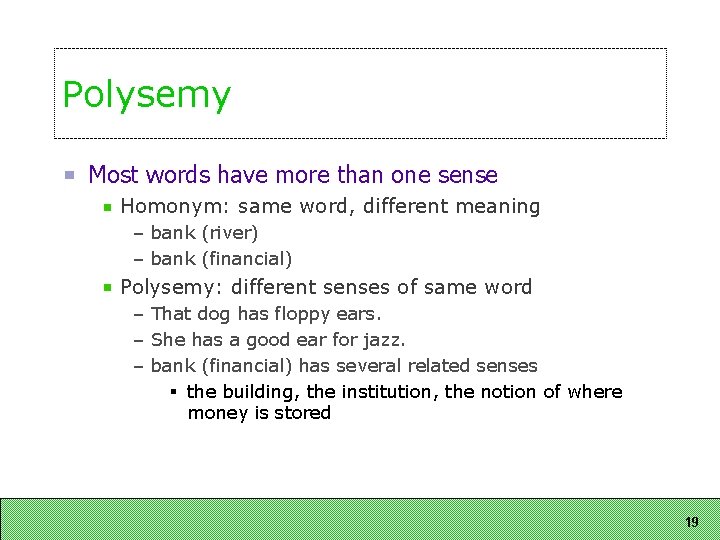

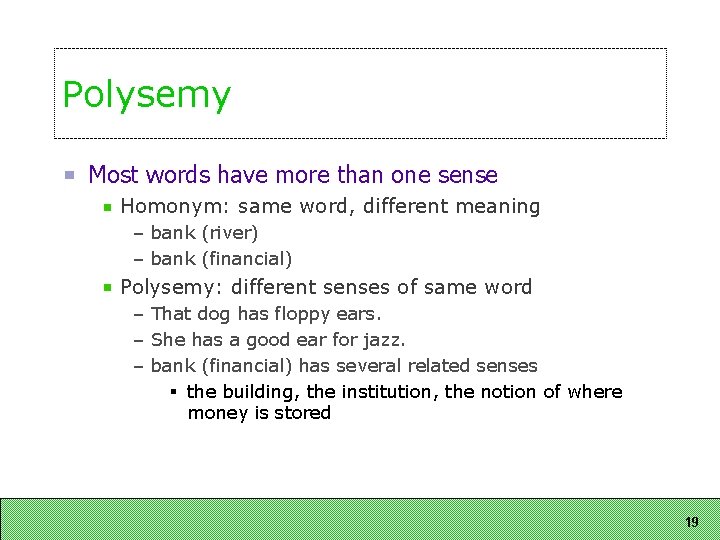

Polysemy
- Most words have more than one sense
  - Homonym: same word, different meaning
    - bank (river)
    - bank (financial)
  - Polysemy: different senses of same word
    - That dog has floppy ears.
    - She has a good ear for jazz.
    - bank (financial) has several related senses
      - the building, the institution, the notion of where money is stored

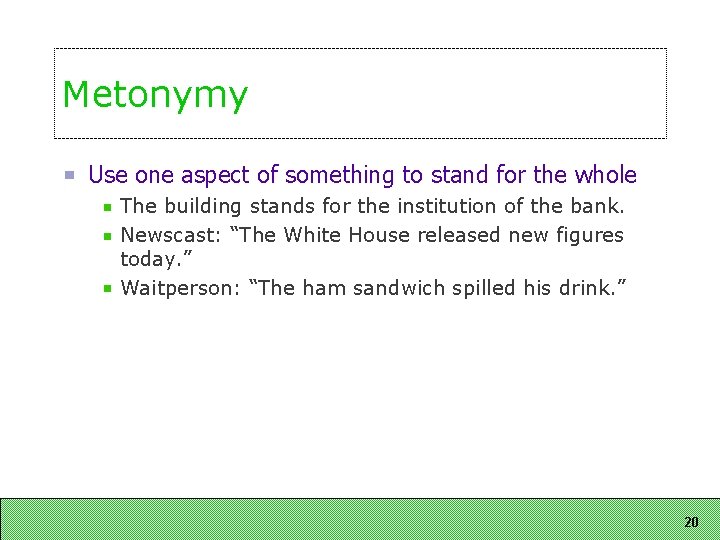

Metonymy
- Use one aspect of something to stand for the whole
  - The building stands for the institution of the bank.
  - Newscast: "The White House released new figures today."
  - Waitperson: "The ham sandwich spilled his drink."

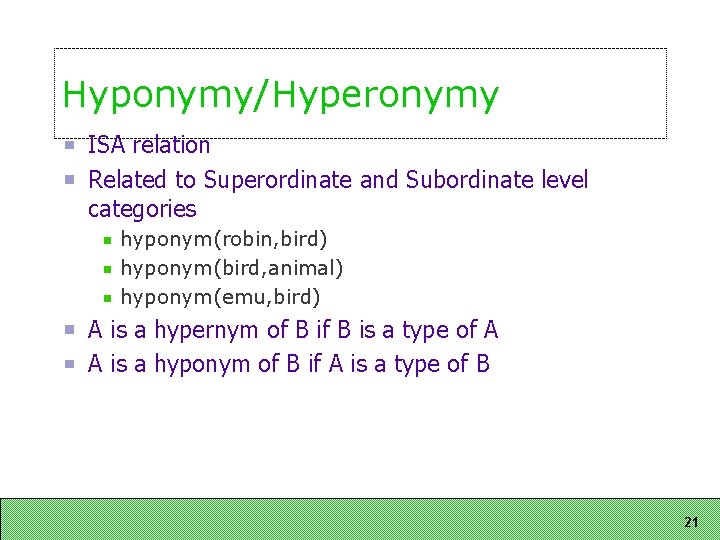

Hyponymy/Hyperonymy
- ISA relation
- Related to Superordinate and Subordinate level categories
  - hyponym(robin, bird)
  - hyponym(bird, animal)
  - hyponym(emu, bird)
- A is a hypernym of B if B is a type of A
- A is a hyponym of B if A is a type of B
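The ISA facts on this slide can be stored as a child-to-parent map and followed upward. The sketch below is a toy illustration in Python, not WordNet's data or API: it assumes each term has a single hypernym (WordNet allows several), and the names `HYPERNYM`, `hypernym_chain`, and `is_hyponym` are hypothetical.

```python
# Toy ISA links taken from the slide: child -> direct hypernym.
HYPERNYM = {"robin": "bird", "emu": "bird", "bird": "animal"}

def hypernym_chain(term):
    """Return the hypernyms of term, nearest first."""
    chain = []
    while term in HYPERNYM:
        term = HYPERNYM[term]
        chain.append(term)
    return chain

def is_hyponym(a, b):
    """True if a is a (direct or indirect) type of b."""
    return b in hypernym_chain(a)
```

Because the chain is followed transitively, `is_hyponym("robin", "animal")` holds even though only robin-to-bird and bird-to-animal are stored.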

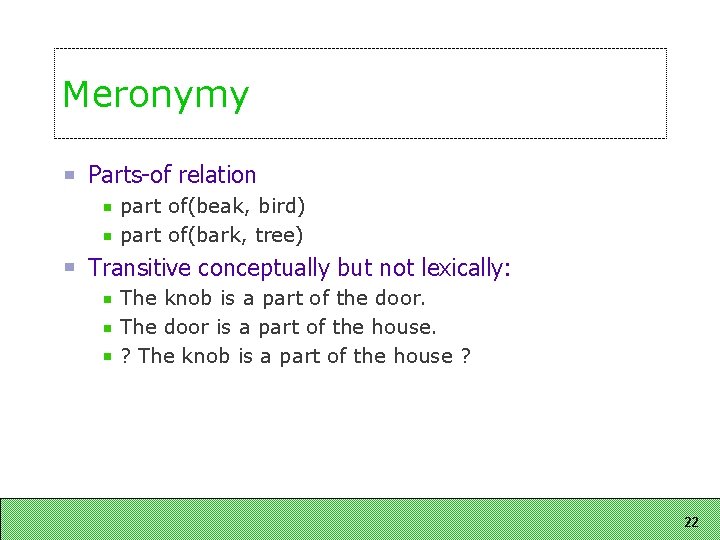

Meronymy
- Parts-of relation
  - part of(beak, bird)
  - part of(bark, tree)
- Transitive conceptually but not lexically:
  - The knob is a part of the door.
  - The door is a part of the house.
  - ? The knob is a part of the house ?

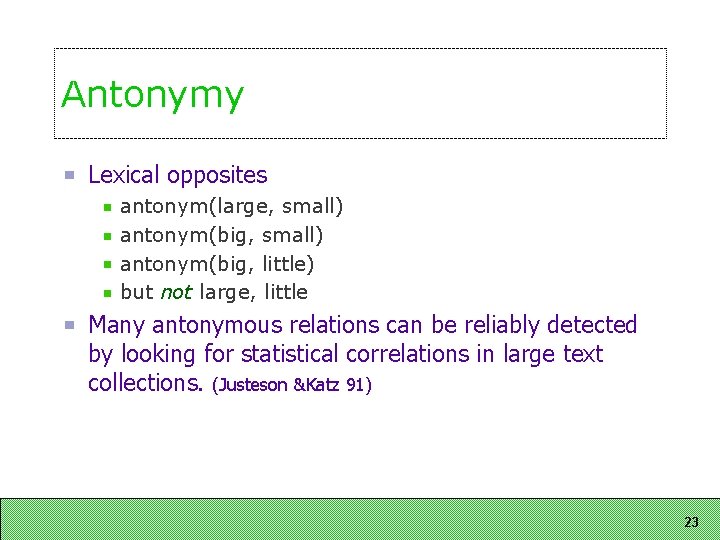

Antonymy
- Lexical opposites
  - antonym(large, small)
  - antonym(big, little)
  - but not large, little
- Many antonymous relations can be reliably detected by looking for statistical correlations in large text collections. (Justeson & Katz 91)

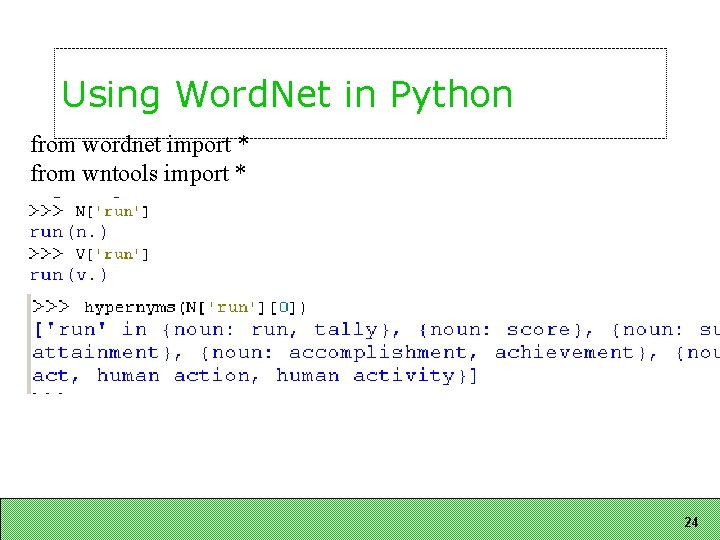

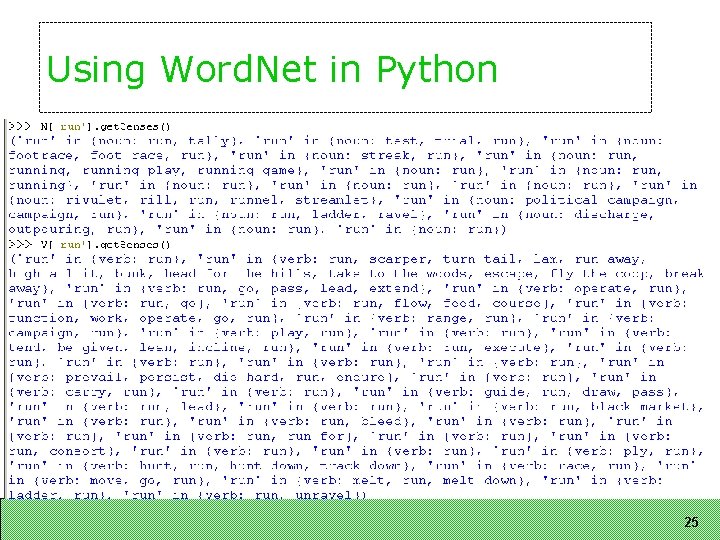

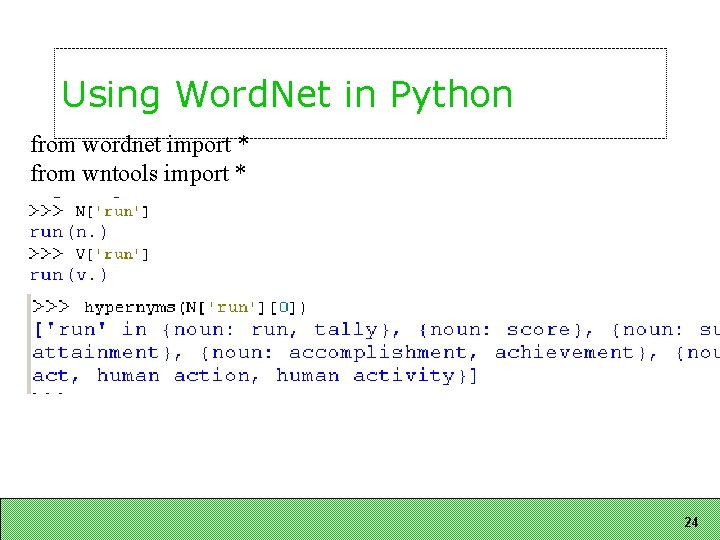

Using WordNet in Python
- from wordnet import *
- from wntools import *

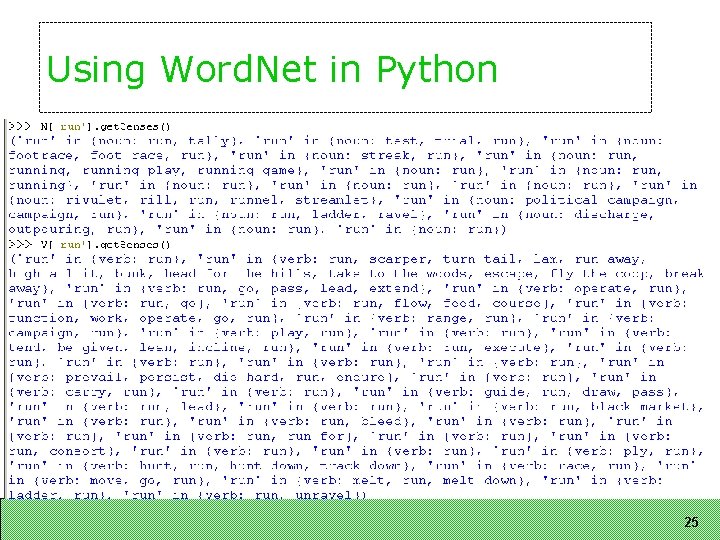

Using WordNet in Python
- from wordnet import *
- from wntools import *

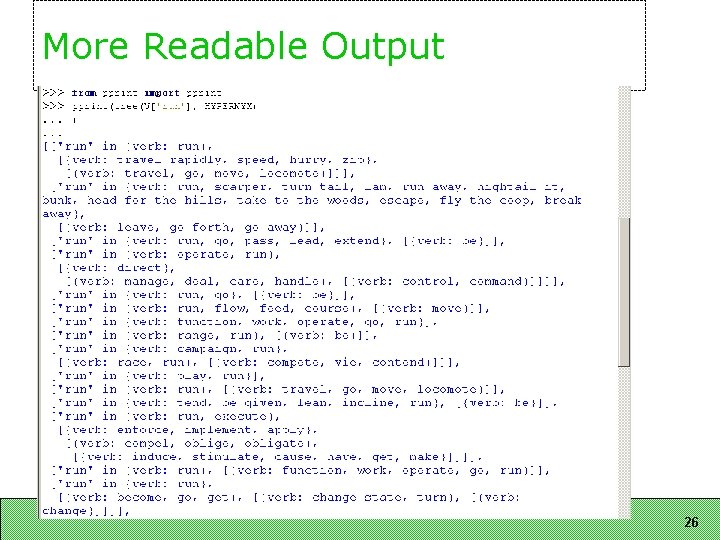

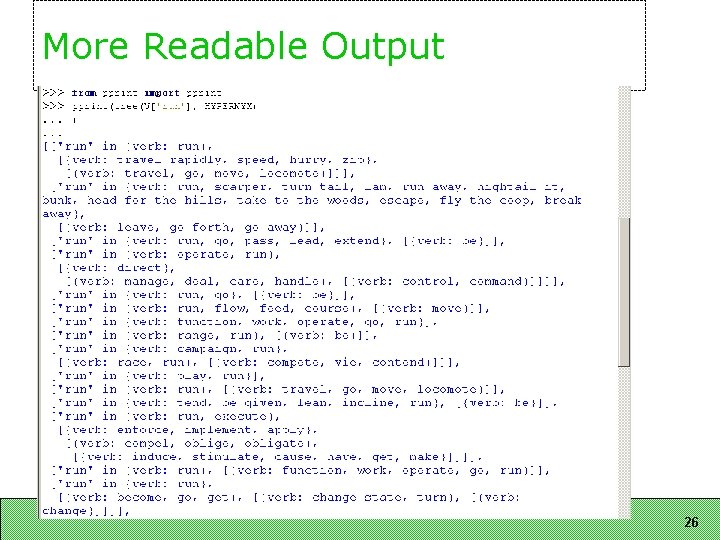

More Readable Output

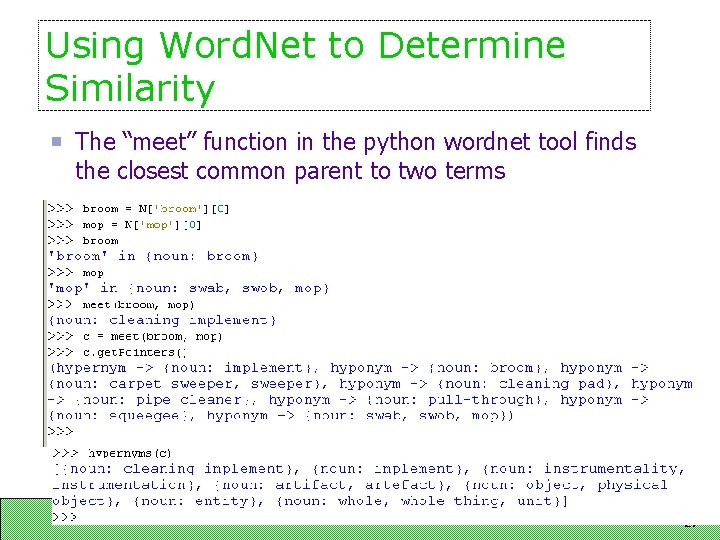

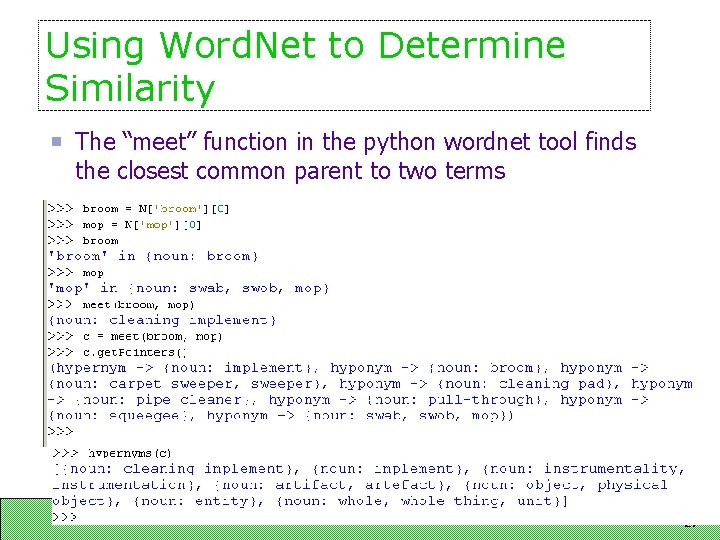

Using WordNet to Determine Similarity
- The "meet" function in the Python wordnet tool finds the closest common parent of two terms
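The idea of a closest common parent can be sketched on a small hand-built hierarchy. This is not the wordnet tool's own "meet" implementation: the taxonomy, the single-parent assumption, and the function names `ancestors` and `closest_common_parent` are all made up for illustration.

```python
# Toy is-a hierarchy (child -> parent), made up for this sketch.
PARENT = {
    "dog": "canine", "canine": "carnivore",
    "cat": "feline", "feline": "carnivore",
    "carnivore": "mammal", "mammal": "animal",
    "robin": "bird", "bird": "animal",
}

def ancestors(term):
    """The term itself followed by its parents, nearest first."""
    path = [term]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def closest_common_parent(a, b):
    """First node on a's path to the root that also lies on b's path."""
    b_line = set(ancestors(b))
    for node in ancestors(a):
        if node in b_line:
            return node
    return None  # the two terms share no hierarchy
```

Here "dog" and "cat" meet at "carnivore", while "dog" and "robin" only meet at the root "animal", which is the intuition behind treating a deeper meeting point as a sign of greater similarity.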

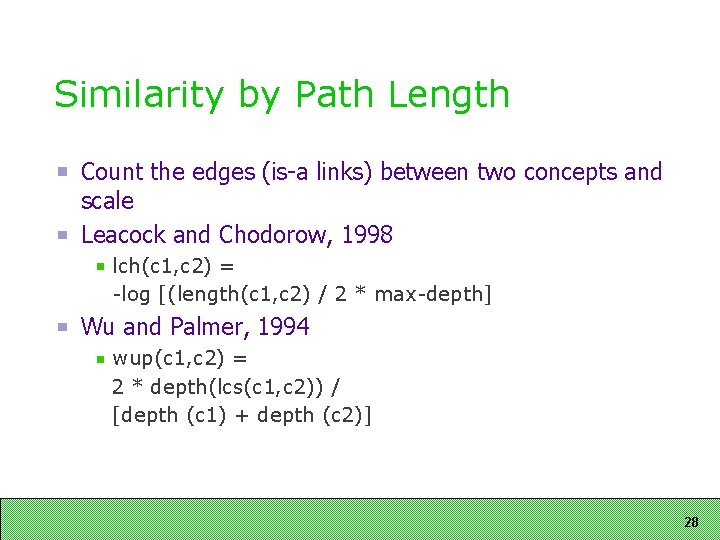

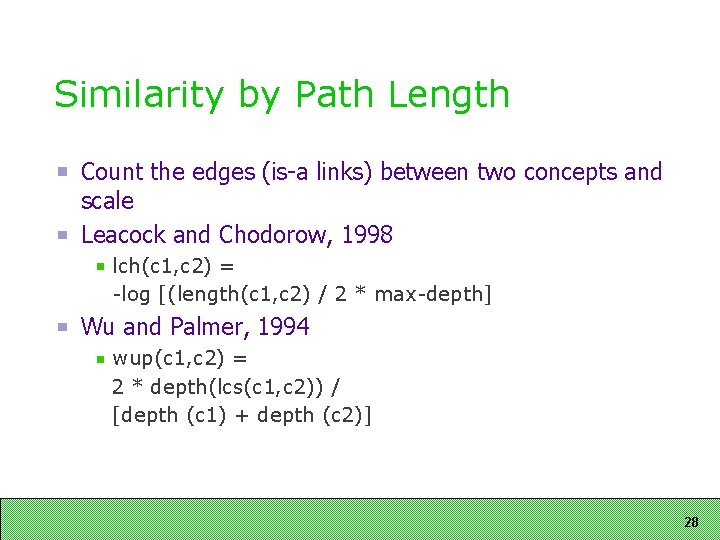

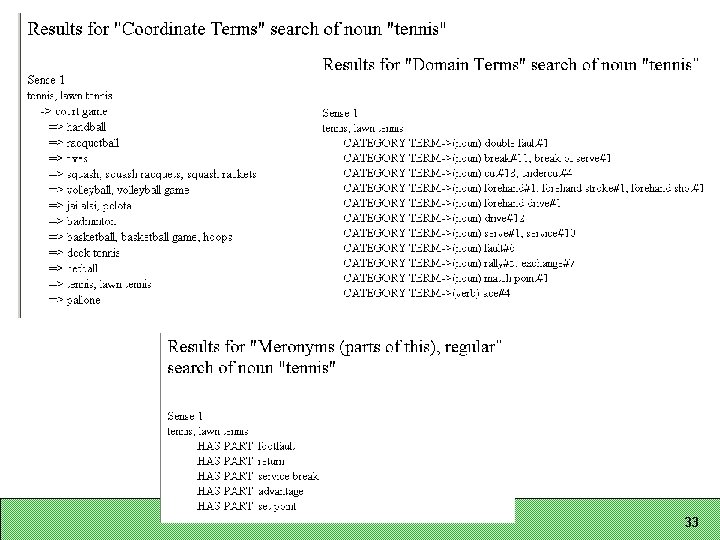

Similarity by Path Length
- Count the edges (is-a links) between two concepts and scale
- Leacock and Chodorow, 1998: $\mathrm{lch}(c_1, c_2) = -\log\left[\mathrm{length}(c_1, c_2) / (2 \cdot \text{max-depth})\right]$
- Wu and Palmer, 1994: $\mathrm{wup}(c_1, c_2) = 2 \cdot \mathrm{depth}(\mathrm{lcs}(c_1, c_2)) / \left[\mathrm{depth}(c_1) + \mathrm{depth}(c_2)\right]$
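Both measures can be tried on a toy hierarchy. The Python sketch below rests on several assumptions that the slide does not fix: the taxonomy is made up, each concept has one parent, depth is counted in nodes (the root has depth 1), and path length is counted in nodes (edges + 1) so that identical concepts do not produce log(0).

```python
import math

# Toy is-a hierarchy (child -> parent), made up for this sketch.
PARENT = {
    "dog": "canine", "canine": "carnivore",
    "cat": "feline", "feline": "carnivore",
    "carnivore": "mammal", "mammal": "animal",
    "robin": "bird", "bird": "animal",
}

def ancestors(term):
    """The term itself followed by its parents, nearest first."""
    path = [term]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def depth(term):
    """Depth counted in nodes, so the root has depth 1 (an assumption)."""
    return len(ancestors(term))

def lcs(a, b):
    """Lowest common subsumer: the closest shared ancestor."""
    b_line = set(ancestors(b))
    return next(n for n in ancestors(a) if n in b_line)

def path_length(a, b):
    """Number of nodes on the is-a path from a to b (edges + 1)."""
    common = lcs(a, b)
    return ancestors(a).index(common) + ancestors(b).index(common) + 1

MAX_DEPTH = max(depth(t) for t in PARENT)

def lch(a, b):
    """Leacock-Chodorow: path length scaled by twice the taxonomy depth."""
    return -math.log(path_length(a, b) / (2 * MAX_DEPTH))

def wup(a, b):
    """Wu-Palmer: depth of the common subsumer relative to both depths."""
    return 2 * depth(lcs(a, b)) / (depth(a) + depth(b))
```

With these conventions, dog and cat score higher than dog and robin under both measures, because their common subsumer ("carnivore") sits deeper and the path between them is shorter.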

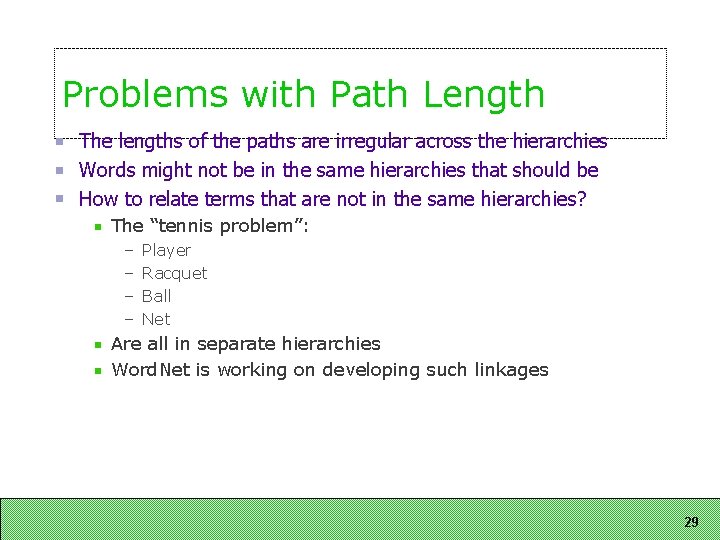

Problems with Path Length
- The lengths of the paths are irregular across the hierarchies
- Words that should be in the same hierarchies might not be
- How to relate terms that are not in the same hierarchies?
- The "tennis problem":
  - Player, Racquet, Ball, Net
  - Are all in separate hierarchies
- WordNet is working on developing such linkages

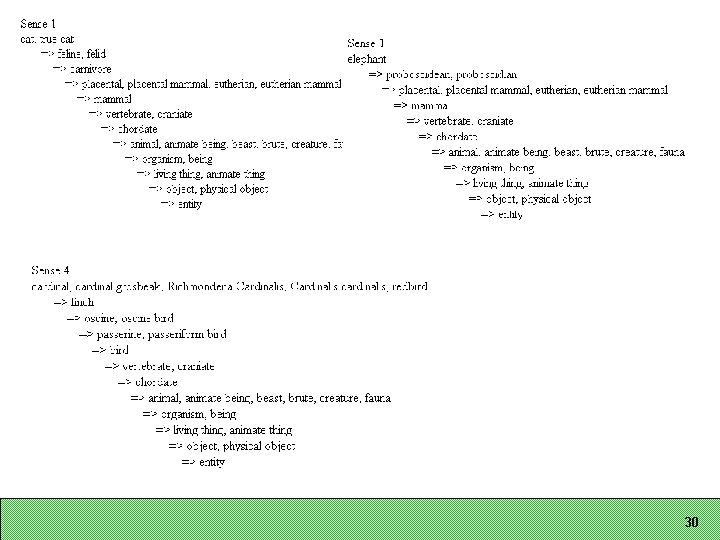


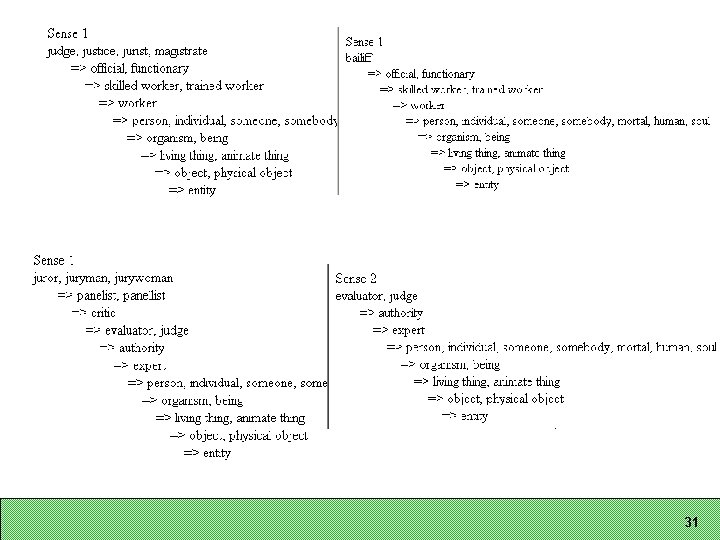


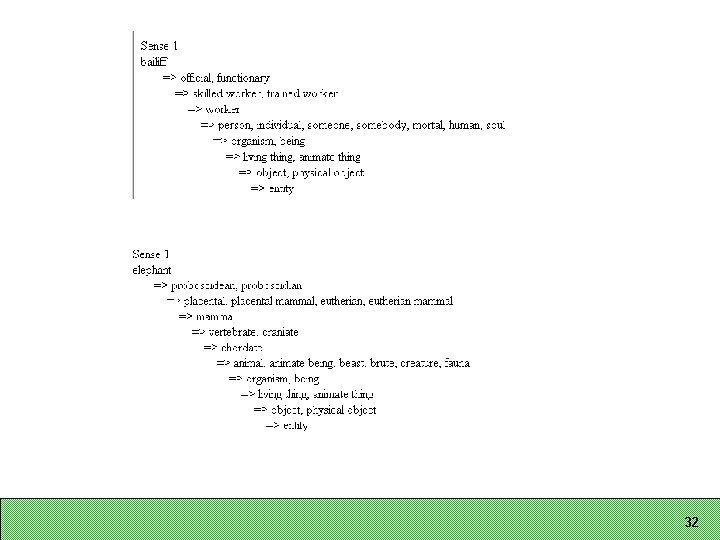

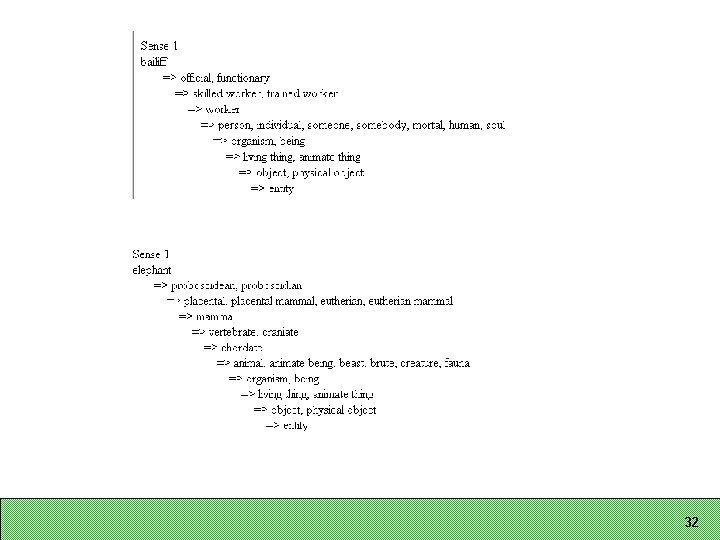


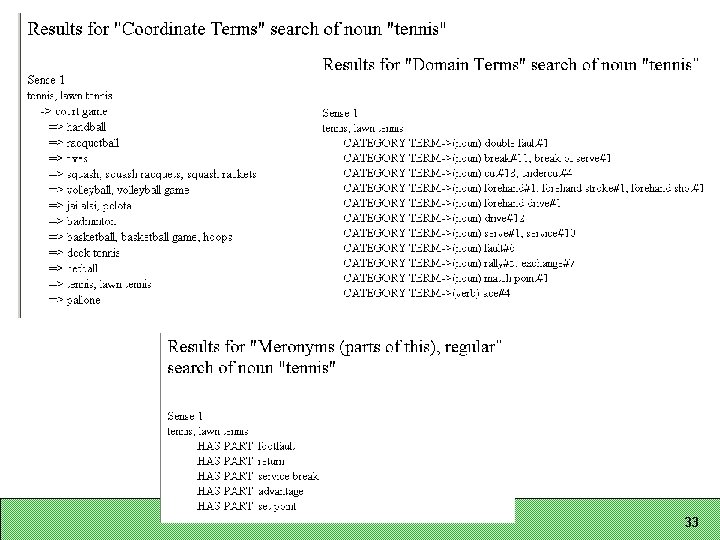


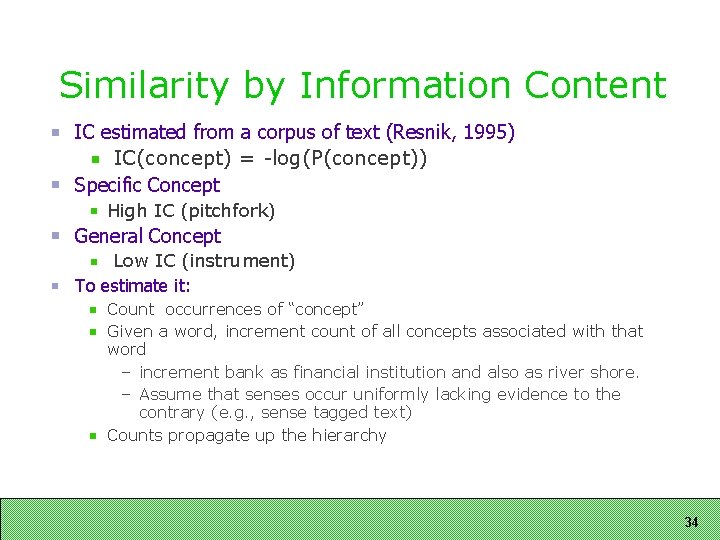

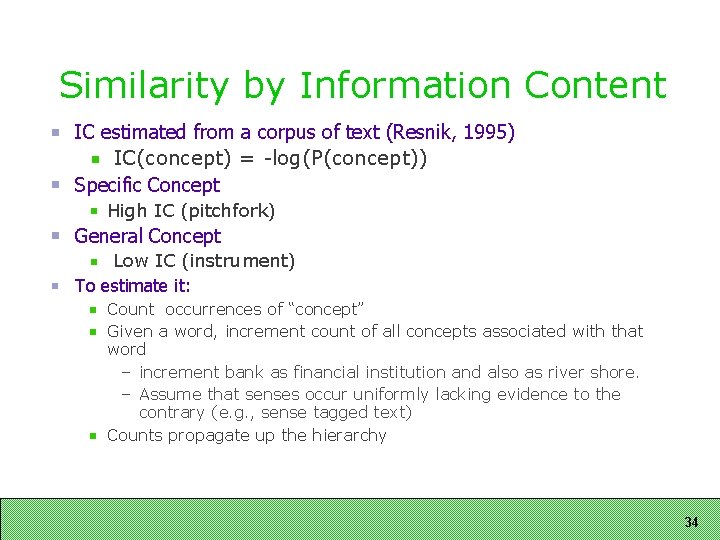

Similarity by Information Content
- IC estimated from a corpus of text (Resnik, 1995)
  - $\mathrm{IC}(\text{concept}) = -\log P(\text{concept})$
- Specific concept: high IC (pitchfork)
- General concept: low IC (instrument)
- To estimate it:
  - Count occurrences of "concept"
  - Given a word, increment count of all concepts associated with that word
    - Increment bank as financial institution and also as river shore.
    - Assume that senses occur uniformly, lacking evidence to the contrary (e.g., sense-tagged text)
  - Counts propagate up the hierarchy
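The estimation steps can be sketched in a few lines of Python. Everything concrete here is an assumption for illustration: the tiny hierarchy, the sense inventory, the choice to split each token's count evenly across its senses (one reading of "senses occur uniformly"), and the function names.

```python
import math
from collections import Counter

# Toy hierarchy (child -> parent) and sense inventory, made up for this sketch.
PARENT = {
    "bank_institution": "organization", "organization": "entity",
    "bank_shore": "landform", "hill": "landform", "landform": "entity",
}
SENSES = {"bank": ["bank_institution", "bank_shore"], "hill": ["hill"]}

def concept_counts(tokens):
    """Credit every sense of each word, and propagate counts up to the root."""
    counts = Counter()
    for word in tokens:
        senses = SENSES.get(word, [])
        for concept in senses:
            share = 1 / len(senses)  # uniform split across senses (assumption)
            node = concept
            while node is not None:
                counts[node] += share
                node = PARENT.get(node)
    return counts

def information_content(counts, root="entity"):
    """IC(c) = -log P(c), with P(c) = count(c) / count(root)."""
    return {c: -math.log(n / counts[root]) for c, n in counts.items()}

counts = concept_counts(["bank", "bank", "hill", "bank"])
ic = information_content(counts)
```

Because counts propagate upward, the root accumulates every observation and gets an IC of zero, while a leaf such as "hill" ends up with a higher IC than its parent "landform".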

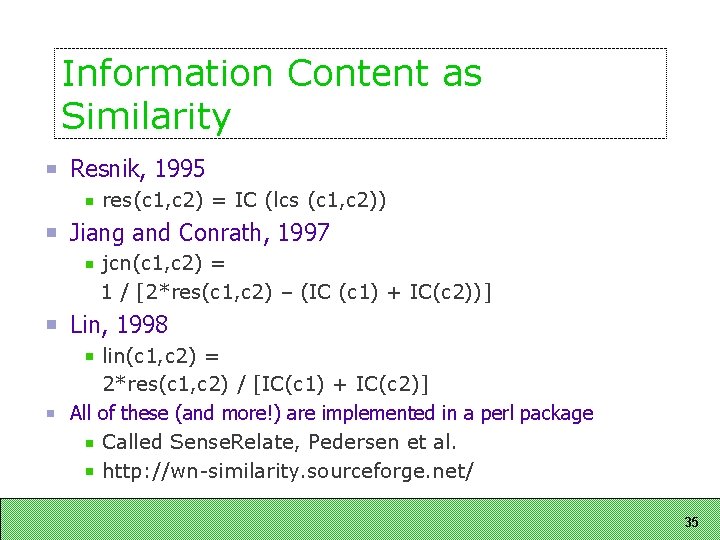

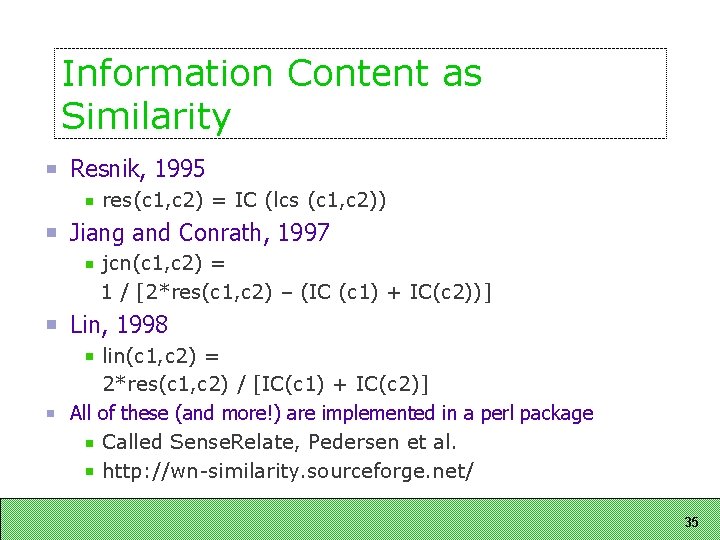

Information Content as Similarity
- Resnik, 1995: $\mathrm{res}(c_1, c_2) = \mathrm{IC}(\mathrm{lcs}(c_1, c_2))$
- Jiang and Conrath, 1997: $\mathrm{jcn}(c_1, c_2) = 1 / \left[\mathrm{IC}(c_1) + \mathrm{IC}(c_2) - 2 \cdot \mathrm{res}(c_1, c_2)\right]$
- Lin, 1998: $\mathrm{lin}(c_1, c_2) = 2 \cdot \mathrm{res}(c_1, c_2) / \left[\mathrm{IC}(c_1) + \mathrm{IC}(c_2)\right]$
- All of these (and more!) are implemented in a Perl package
  - Called SenseRelate, Pedersen et al.
  - http://wn-similarity.sourceforge.net/
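The three measures differ only in how they combine the same IC values, which a short Python sketch can show. The hierarchy and the concept probabilities below are invented for illustration (they are not corpus estimates), and returning infinity from `jcn` for identical concepts is a choice made here to avoid dividing by zero.

```python
import math

# Toy hierarchy (child -> parent) and hypothetical concept probabilities,
# assumed to be already propagated up the hierarchy.
PARENT = {"pitchfork": "tool", "hammer": "tool",
          "tool": "instrument", "violin": "instrument"}
PROB = {"pitchfork": 0.1, "hammer": 0.3, "tool": 0.5,
        "violin": 0.2, "instrument": 1.0}
IC = {c: -math.log(p) for c, p in PROB.items()}

def ancestors(term):
    """The term itself followed by its parents, nearest first."""
    path = [term]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def lcs(a, b):
    """Lowest common subsumer: the closest shared ancestor."""
    b_line = set(ancestors(b))
    return next(n for n in ancestors(a) if n in b_line)

def res(a, b):
    """Resnik: information content of the lowest common subsumer."""
    return IC[lcs(a, b)]

def jcn(a, b):
    """Jiang-Conrath: inverse of the IC distance between the concepts."""
    distance = IC[a] + IC[b] - 2 * res(a, b)
    return math.inf if distance == 0 else 1 / distance

def lin(a, b):
    """Lin: shared information relative to the total information."""
    return 2 * res(a, b) / (IC[a] + IC[b])
```

In this toy setup pitchfork and hammer share the fairly specific subsumer "tool", so all three measures rank them above pitchfork and violin, whose only common subsumer is the root with an IC of zero.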

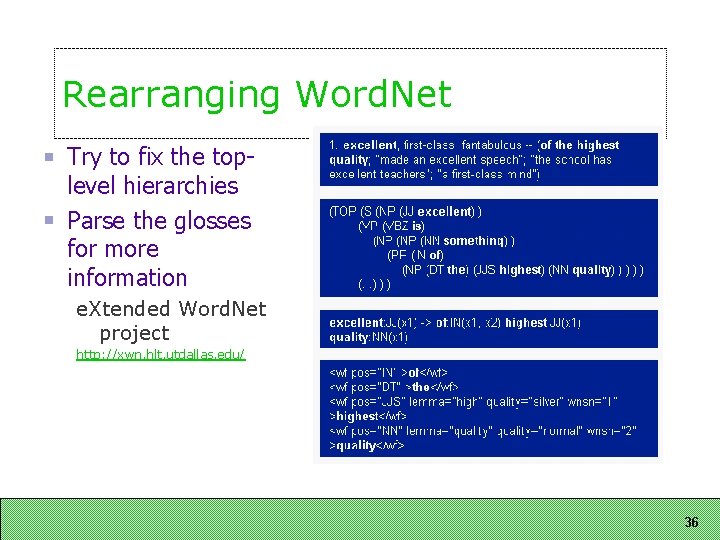

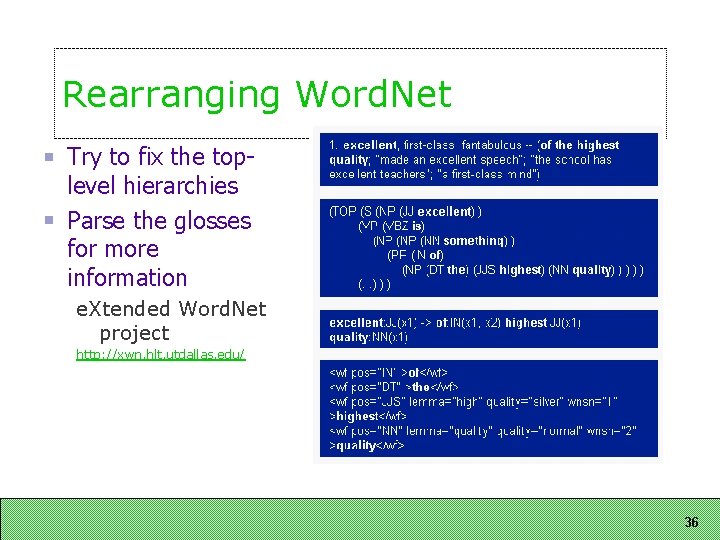

Rearranging WordNet
- Try to fix the top-level hierarchies
- Parse the glosses for more information
  - eXtended WordNet project
  - http://xwn.hlt.utdallas.edu/

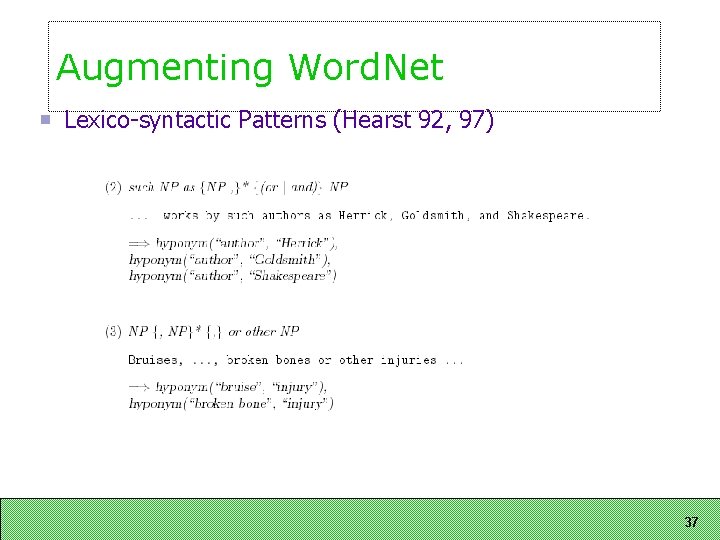

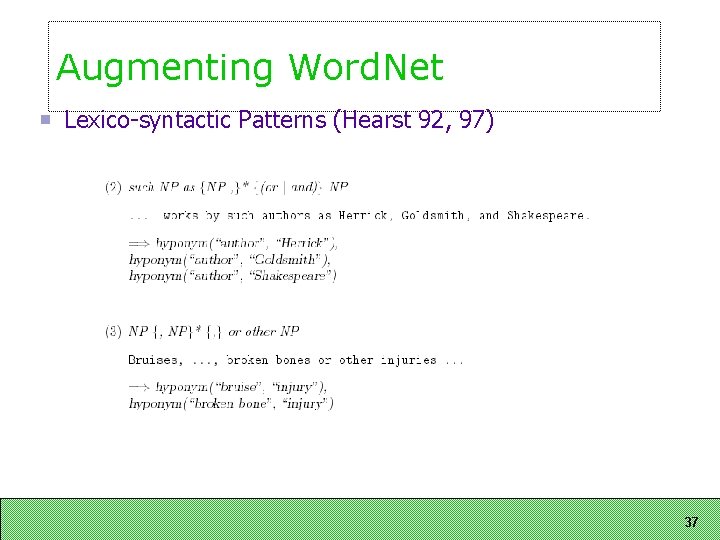

Augmenting WordNet
- Lexico-syntactic Patterns (Hearst 92, 97)

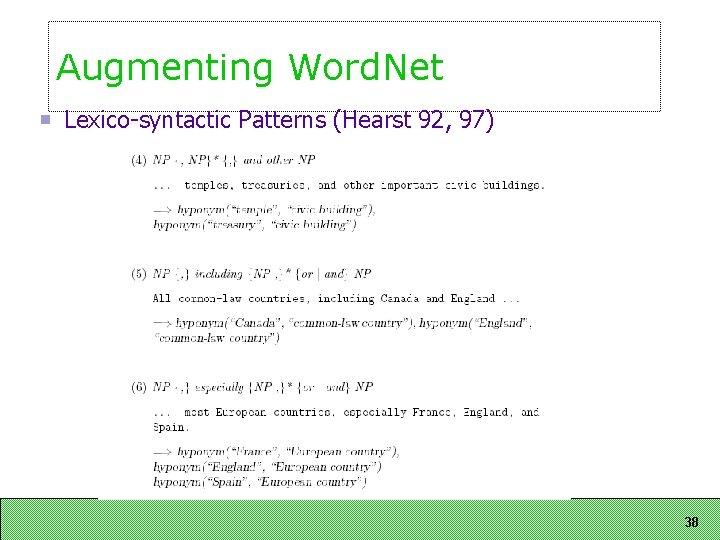

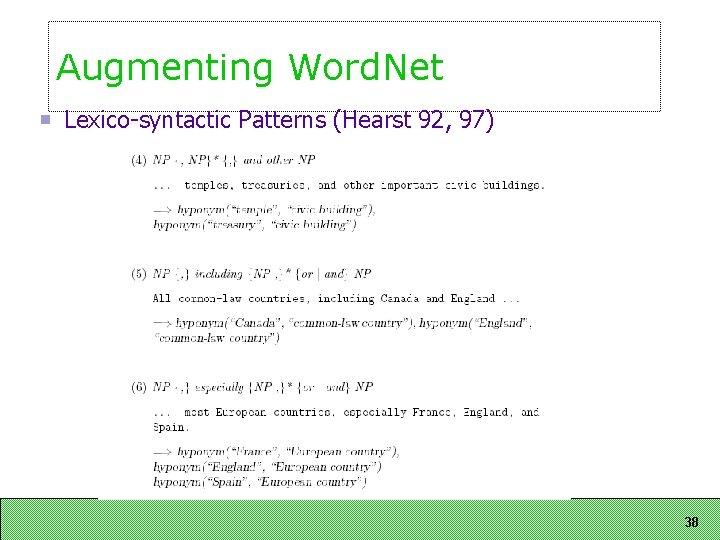

Augmenting WordNet
- Lexico-syntactic Patterns (Hearst 92, 97)
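One well-known lexico-syntactic pattern of this kind is "X such as A, B, and C", which suggests that A, B, and C are hyponyms of X. The Python sketch below is a heavily simplified illustration: it treats every noun phrase as a single word and uses a regular expression instead of a parser or chunker, and the names `SUCH_AS`, `SEPARATOR`, and `extract_hyponyms` are hypothetical.

```python
import re

# Separators inside the list of examples; longer alternatives come first.
SEPARATOR = re.compile(r", and |, or |, | and | or ")

# "<hypernym> such as <w1>, <w2> and <w3>" with single-word noun phrases
# (a simplification; real noun phrases need a chunker or parser).
SUCH_AS = re.compile(
    r"(\w+),? such as ((?:\w+(?:, and |, or |, | and | or ))*\w+)"
)

def extract_hyponyms(text):
    """Return (hyponym, hypernym) candidate pairs found by the pattern."""
    pairs = []
    for match in SUCH_AS.finditer(text):
        hypernym = match.group(1)
        for hyponym in SEPARATOR.split(match.group(2)):
            pairs.append((hyponym, hypernym))
    return pairs

pairs = extract_hyponyms("He treated injuries such as bruises, wounds, and fractures.")
```

On the example sentence this yields bruises, wounds, and fractures as candidate hyponyms of injuries. The output should be treated as candidates to be filtered, since a surface pattern like this will also match spurious text.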

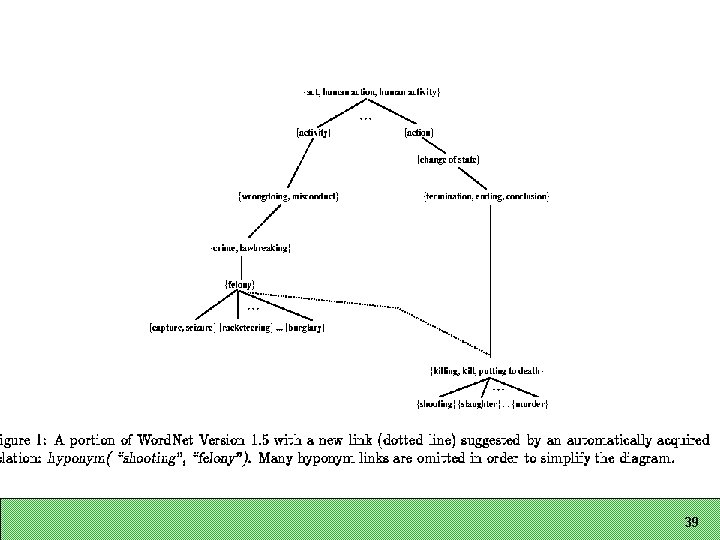

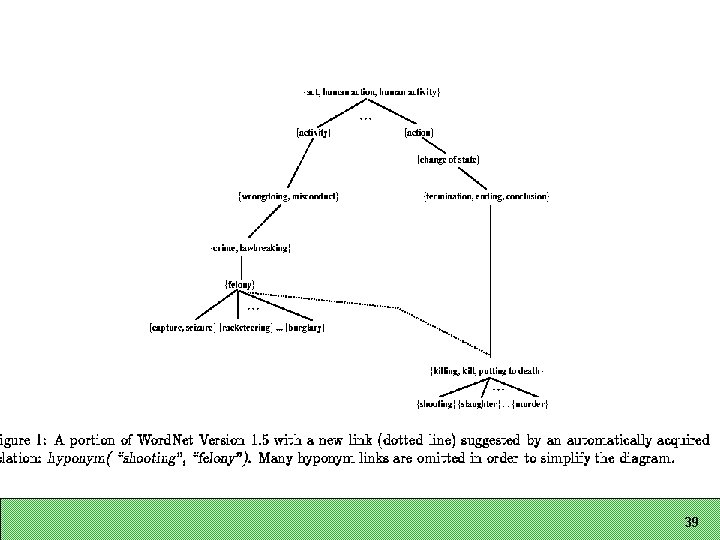


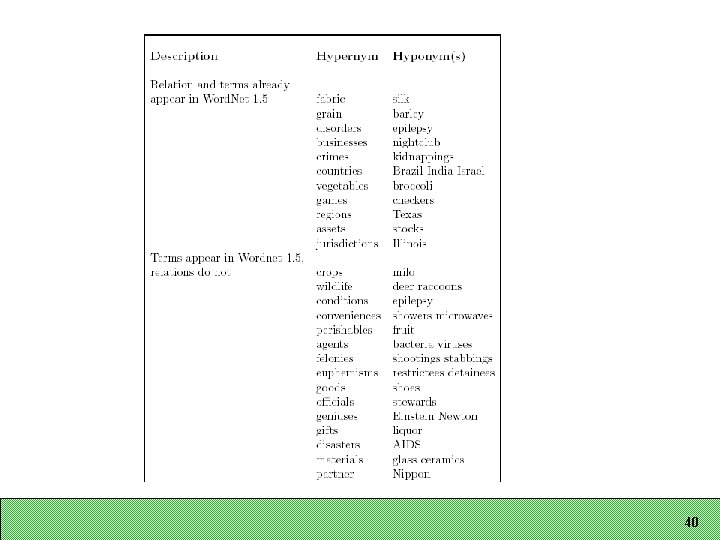

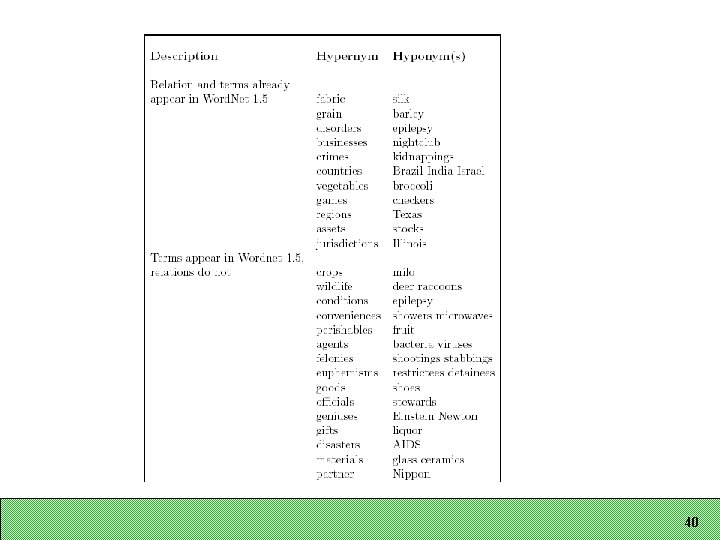

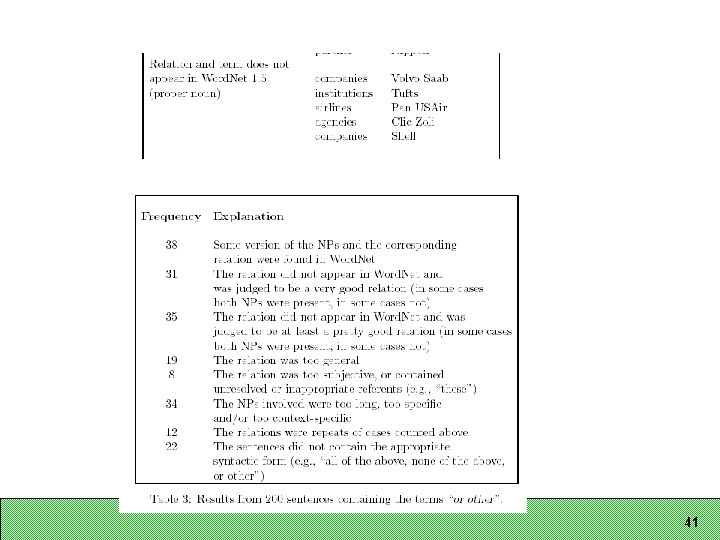


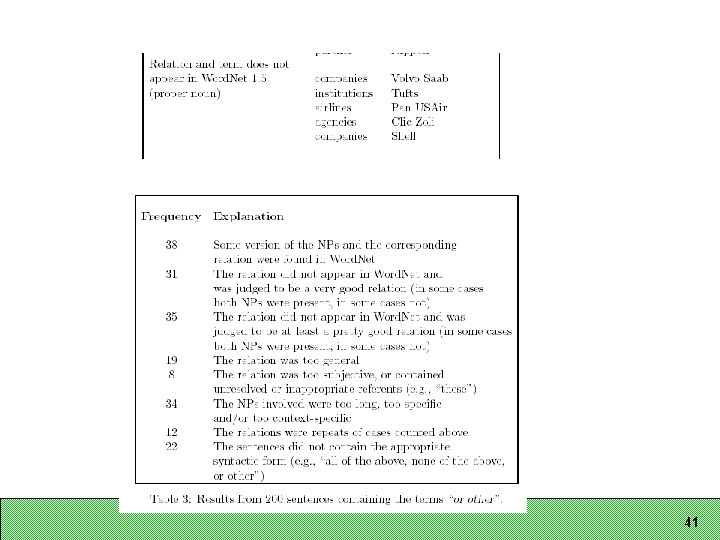


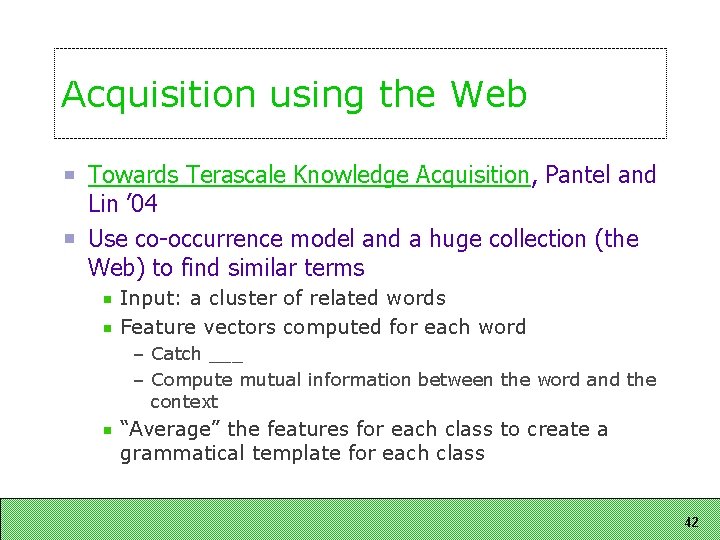

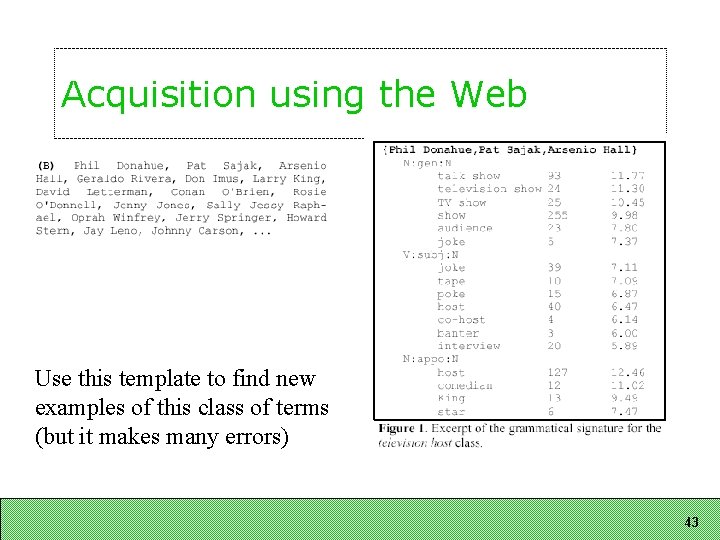

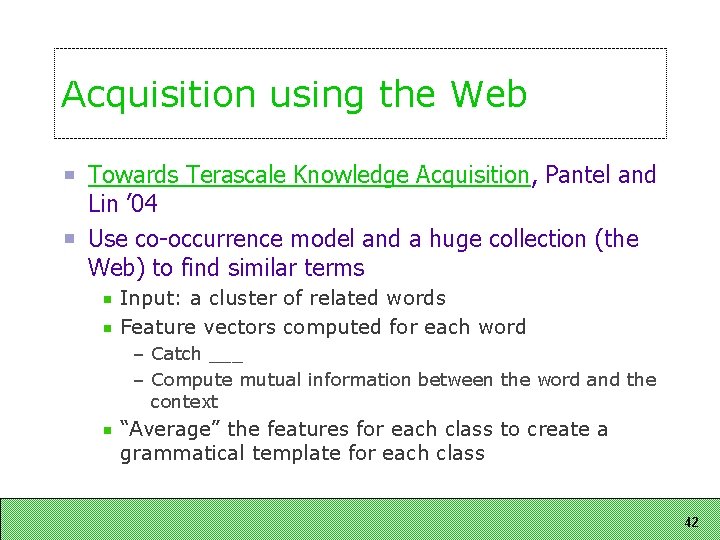

Acquisition using the Web
- Towards Terascale Knowledge Acquisition, Pantel and Lin '04
- Use co-occurrence model and a huge collection (the Web) to find similar terms
  - Input: a cluster of related words
  - Feature vectors computed for each word
    - Catch ___
    - Compute mutual information between the word and the context
  - "Average" the features for each class to create a grammatical template for each class
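The feature-vector step can be illustrated with pointwise mutual information over (word, context) observations. This Python sketch is not the paper's implementation: the PMI formula is the standard one, but the toy observations, the plain averaging, and the names `pmi_vectors` and `average_vector` are assumptions made here.

```python
import math
from collections import Counter

def pmi_vectors(pairs):
    """pairs: (word, context) observations, e.g. ("fish", "catch ___").

    Returns {word: {context: PMI(word, context)}}.
    """
    pairs = list(pairs)
    pair_count = Counter(pairs)
    word_count = Counter(word for word, _ in pairs)
    ctx_count = Counter(ctx for _, ctx in pairs)
    total = len(pairs)
    vectors = {}
    for (word, ctx), n in pair_count.items():
        # PMI = log( P(word, ctx) / (P(word) * P(ctx)) )
        pmi = math.log((n * total) / (word_count[word] * ctx_count[ctx]))
        vectors.setdefault(word, {})[ctx] = pmi
    return vectors

def average_vector(vectors, words):
    """Average the feature weights over a class of words (missing = 0)."""
    summed = {}
    for word in words:
        for ctx, value in vectors.get(word, {}).items():
            summed[ctx] = summed.get(ctx, 0.0) + value
    return {ctx: value / len(words) for ctx, value in summed.items()}

# Toy observations (made up for this sketch).
observations = [("fish", "catch ___"), ("fish", "eat ___"),
                ("trout", "catch ___"), ("bread", "eat ___")]
vectors = pmi_vectors(observations)
template = average_vector(vectors, ["fish", "trout"])
```

The averaged vector plays the role of the class template: contexts that score highly for most members of the cluster dominate it, and new words that share those contexts become candidate members.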

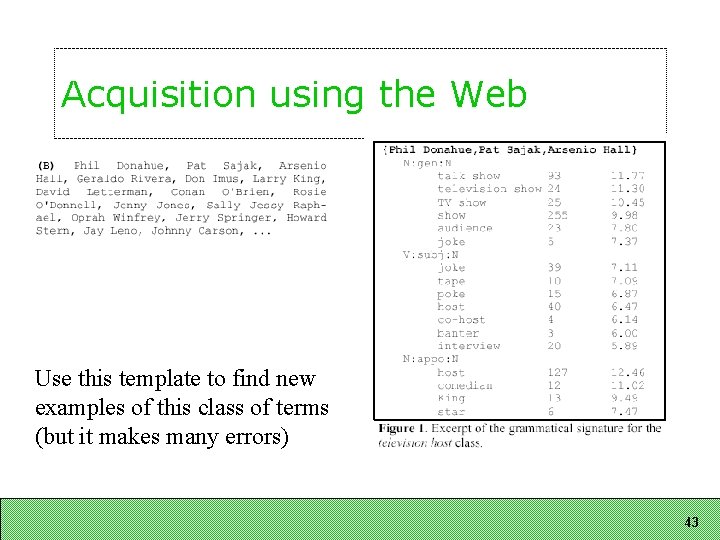

Acquisition using the Web
- Use this template to find new examples of this class of terms (but it makes many errors)

Next Time
- FrameNet
  - A background paper is on the class website
  - (Not required to read it beforehand)

Acquisition using the Web