
SIMS 290-2: Applied Natural Language Processing
Marti Hearst
October 18, 2004

How might we analyze email?
- Identify different parts
  - Reply blocks, signature blocks
- Integrate email with workflow tasks
- Build a social network
  - Who do you know, and what is their contact info?
- Reputation analysis
  - Useful for anti-spam too

Today
- Email analysis
- Spam filtering

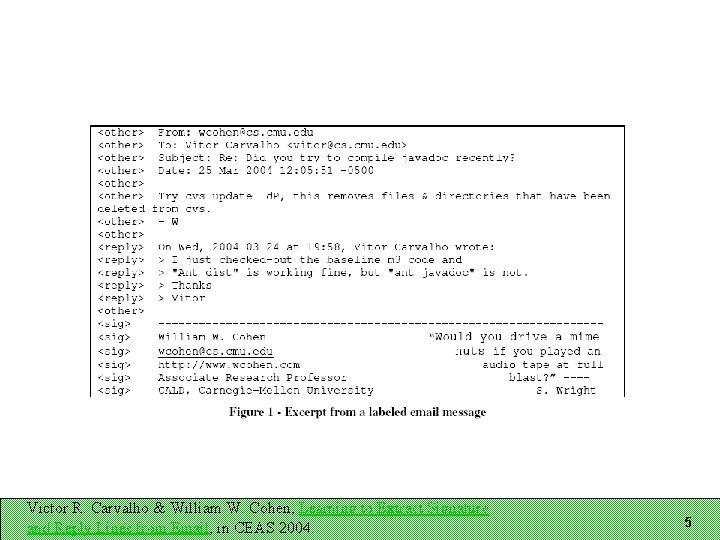

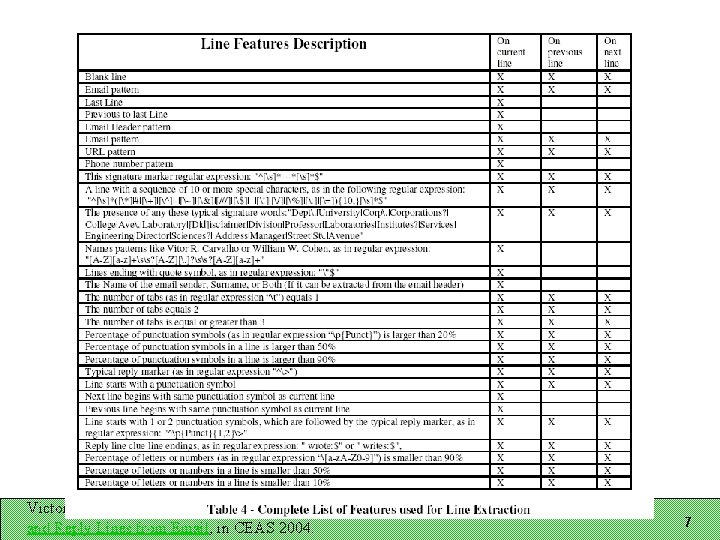

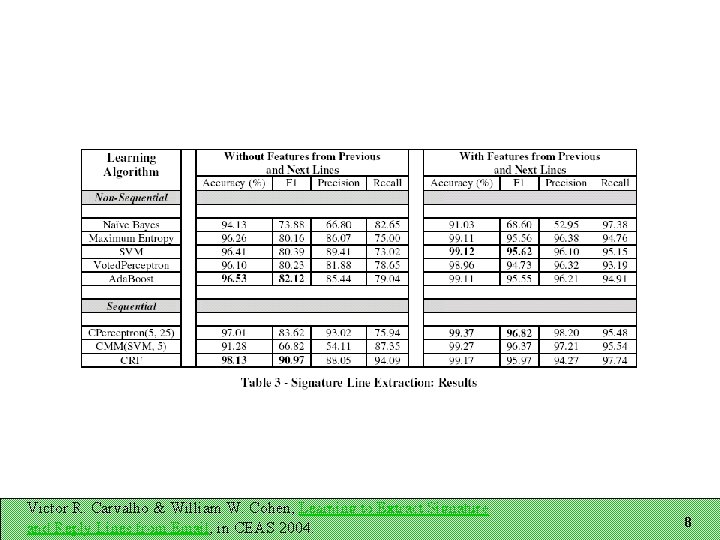

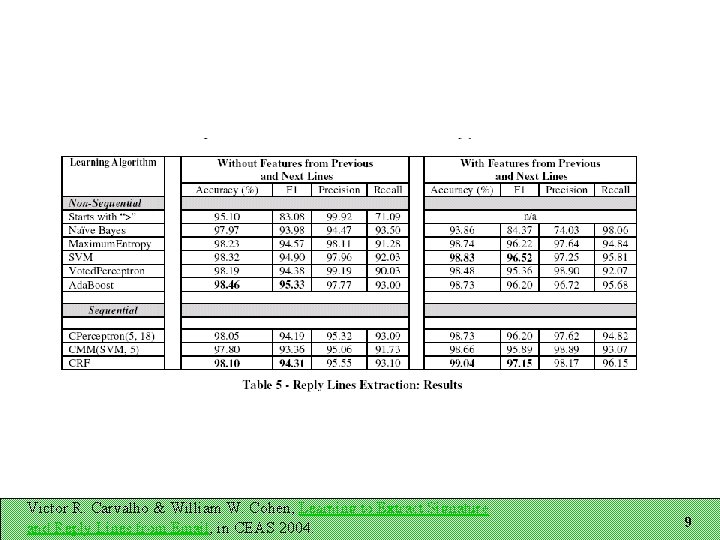

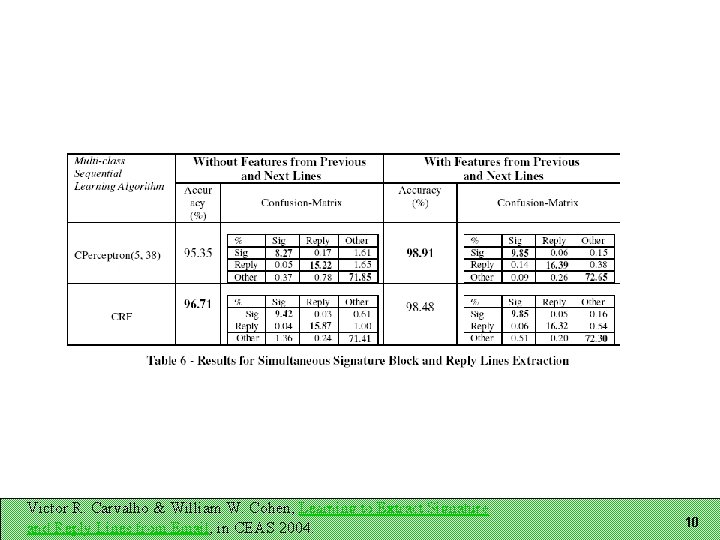

Recognizing Email Structure
- Three tasks:
  - Does this message contain a signature block?
  - If so, which lines are in it?
  - Which lines are reply lines?
- Three-way classification for each line
- Representation
  - A sequence of lines
  - Each line has features associated with it
  - Windows of lines are important for line classification

Victor R. Carvalho & William W. Cohen, Learning to Extract Signature and Reply Lines from Email, in CEAS 2004.
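The representation above (a sequence of lines, per-line features, windows of neighboring lines) can be sketched as follows. The individual features here are hypothetical cues chosen for illustration, not the feature set from Carvalho & Cohen; the point is only that each line's feature vector also carries its neighbors' features, keyed by offset, so that a downstream classifier (not shown) can label the line as signature, reply, or other.

```python
import re

def line_features(line: str) -> dict[str, float]:
    """Hypothetical per-line cues (illustrative only, not the paper's features)."""
    stripped = line.strip()
    return {
        "starts_with_quote": float(stripped.startswith(">")),
        "has_email": float(bool(re.search(r"\S+@\S+\.\w+", stripped))),
        "has_phone": float(bool(re.search(r"\d{3}[-.\s]\d{3}[-.\s]\d{4}", stripped))),
        "is_separator": float(bool(re.fullmatch(r"[-_=*]{2,}", stripped))),
        "is_blank": float(stripped == ""),
    }

def windowed_features(lines: list[str], window: int = 1) -> list[dict[str, float]]:
    """Combine each line's features with those of lines within +/- window."""
    base = [line_features(l) for l in lines]
    out = []
    for i in range(len(lines)):
        feats = {}
        for offset in range(-window, window + 1):
            j = i + offset
            if 0 <= j < len(lines):  # neighbors past either end are simply absent
                for name, value in base[j].items():
                    feats[f"{name}@{offset:+d}"] = value
        out.append(feats)
    return out

email = ["Thanks for the update.", "", "--", "Jane Doe",
         "jane@example.com", "> Original message text"]
for line, feats in zip(email, windowed_features(email)):
    print(repr(line), sorted(k for k, v in feats.items() if v))
```

A window of one line on each side is an arbitrary choice here; a wider window lets a line "see" a separator or an address block several lines away.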

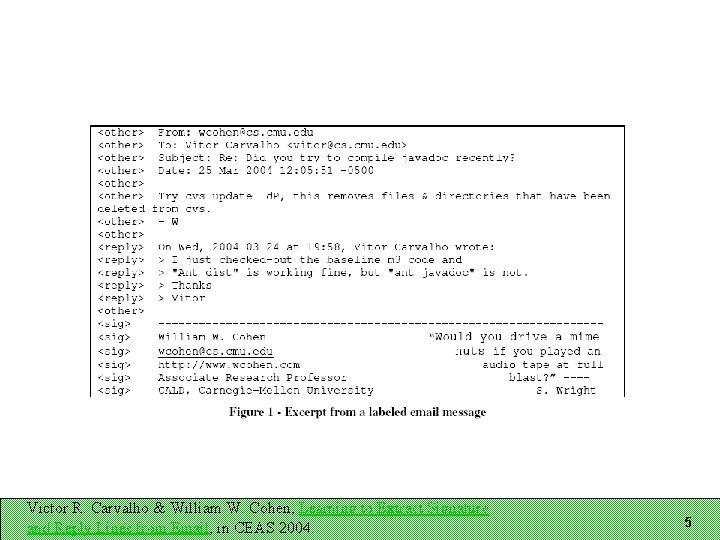


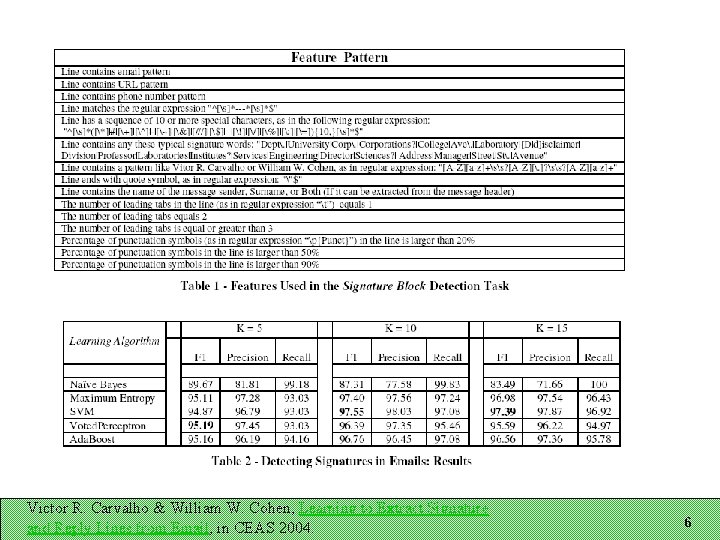

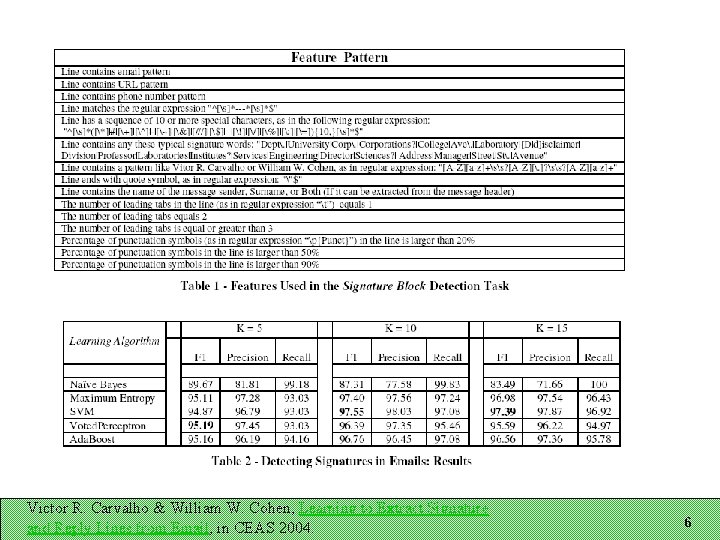


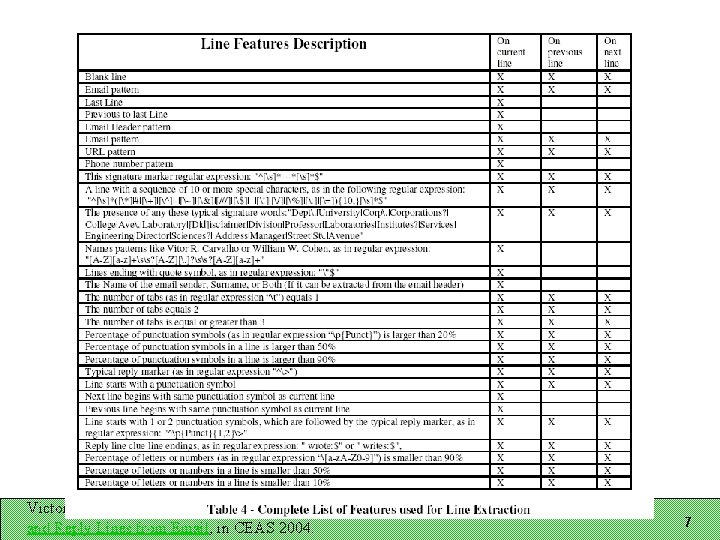


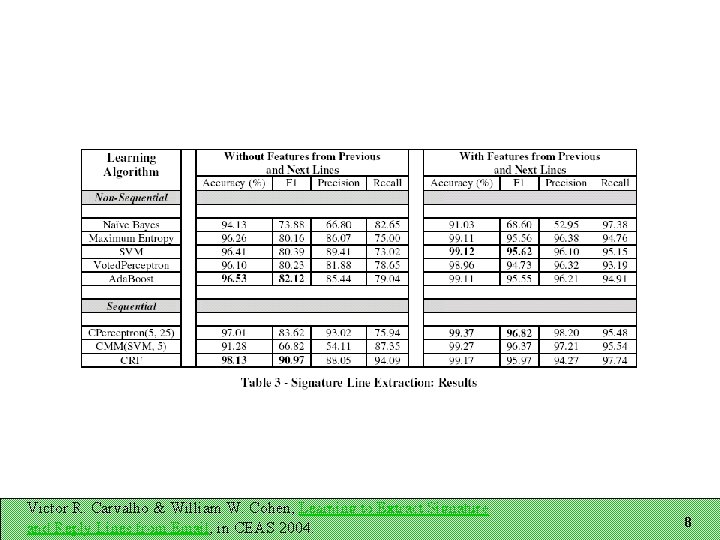


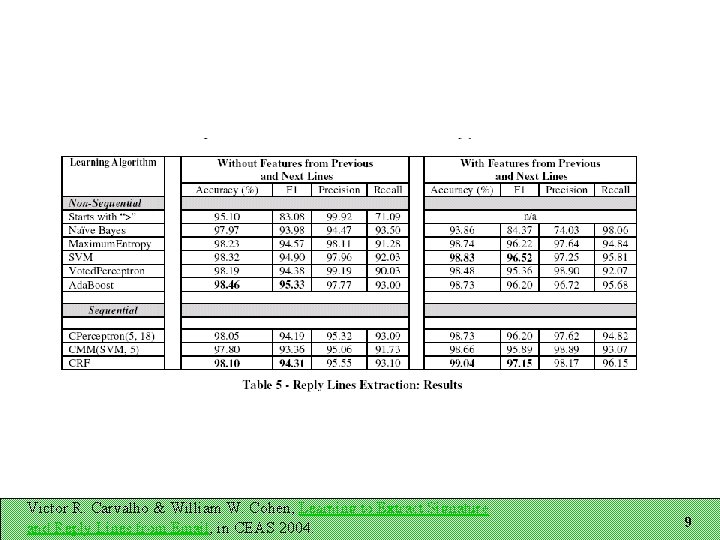


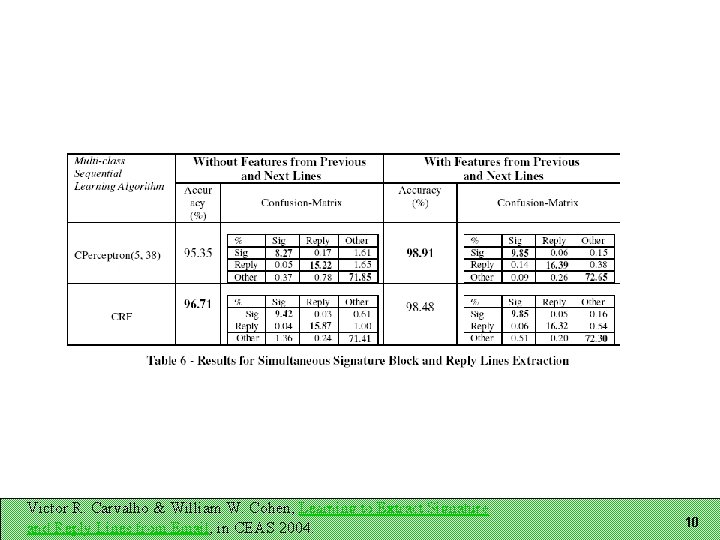


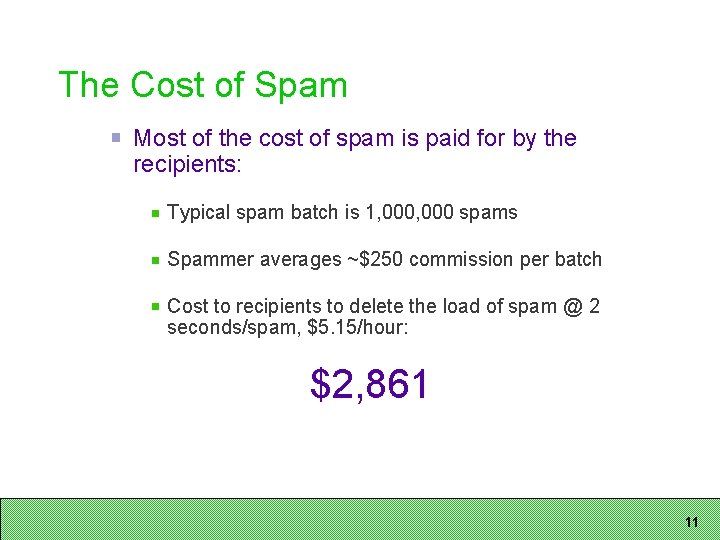

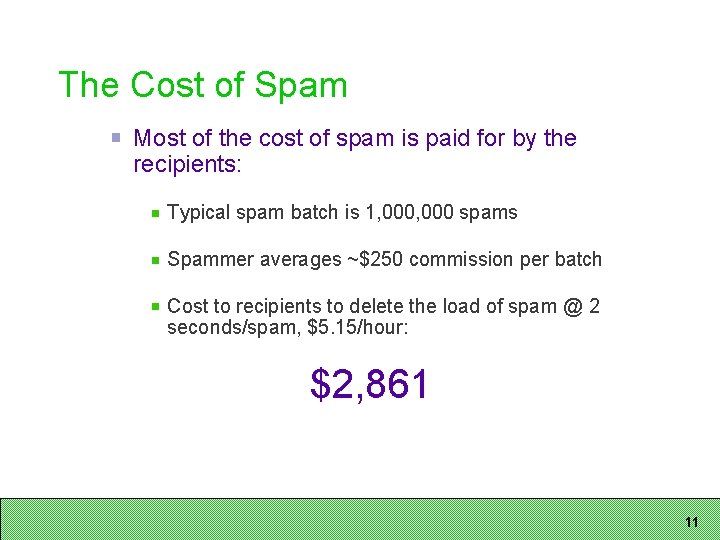

The Cost of Spam
- Most of the cost of spam is paid for by the recipients:
  - Typical spam batch is 1,000,000 spams
  - Spammer averages ~$250 commission per batch
  - Cost to recipients to delete the load of spam @ 2 seconds/spam, $5.15/hour: $2,861

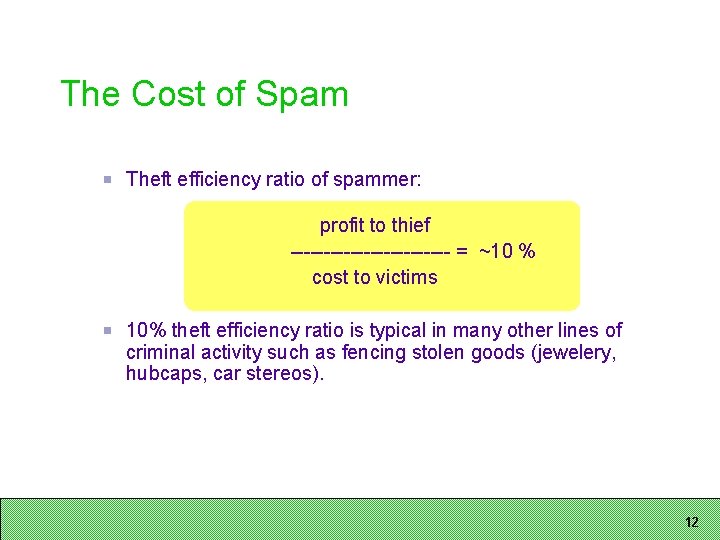

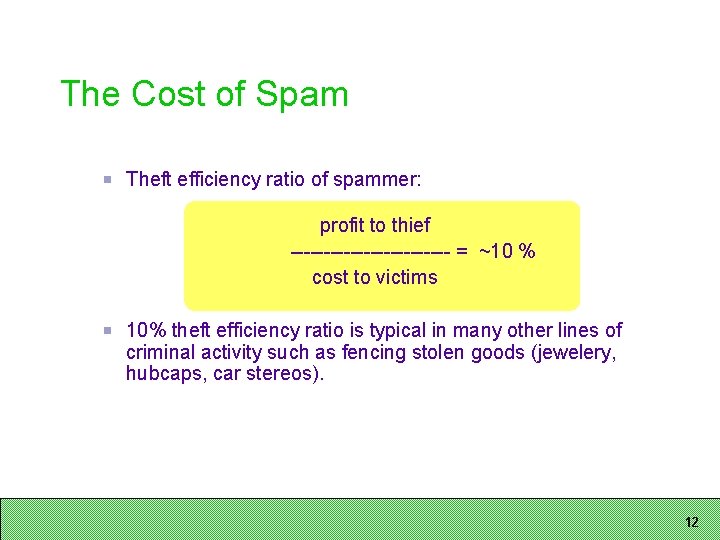

The Cost of Spam
- Theft efficiency ratio of spammer:

$$\text{theft efficiency ratio} = \frac{\text{profit to thief}}{\text{cost to victims}} \approx 10\%$$

- A 10% theft efficiency ratio is typical in many other lines of criminal activity, such as fencing stolen goods (jewelry, hubcaps, car stereos).
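The cost and ratio figures can be checked with a few lines of arithmetic. This sketch assumes a batch of 1,000,000 spams, which is the batch size that makes the $2,861 recipient cost come out at 2 seconds per spam and $5.15/hour; the commission is the ~$250 quoted above.

```python
# Figures from the cost-of-spam slides; the batch size of 1,000,000 is the
# value consistent with the quoted $2,861 total.
SPAMS_PER_BATCH = 1_000_000
SECONDS_PER_SPAM = 2
HOURLY_WAGE = 5.15            # dollars per hour
COMMISSION_PER_BATCH = 250.0  # dollars earned by the spammer

def recipient_cost(spams: int, seconds_each: float, wage_per_hour: float) -> float:
    """Dollar value of the time recipients spend deleting the batch."""
    hours = spams * seconds_each / 3600
    return hours * wage_per_hour

cost = recipient_cost(SPAMS_PER_BATCH, SECONDS_PER_SPAM, HOURLY_WAGE)
ratio = COMMISSION_PER_BATCH / cost
print(f"cost to recipients: ${cost:,.0f}")      # prints "cost to recipients: $2,861"
print(f"theft efficiency ratio: {ratio:.1%}")  # prints "theft efficiency ratio: 8.7%"
```

The exact ratio is about 8.7%, which the slide rounds to ~10%.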

How to Recognize Spam?
- What features and algorithms should we use?

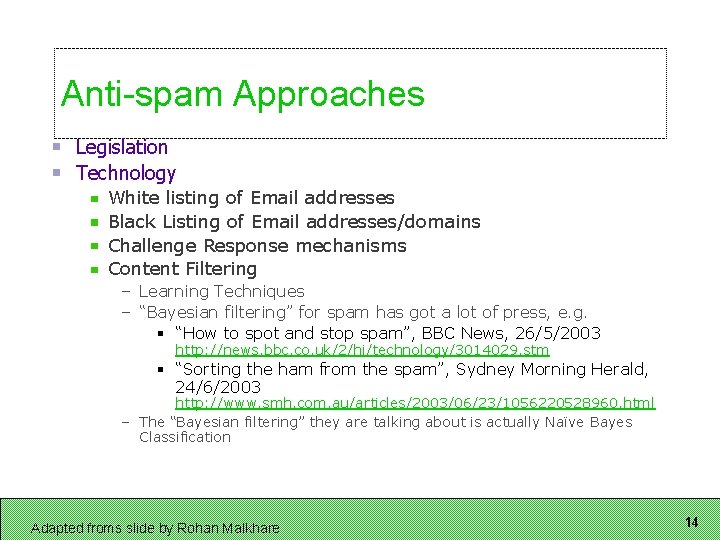

Anti-spam Approaches
- Legislation
- Technology
  - Whitelisting of email addresses
  - Blacklisting of email addresses/domains
  - Challenge-response mechanisms
  - Content filtering
    - Learning techniques
    - “Bayesian filtering” for spam has received a lot of press, e.g.:
      - “How to spot and stop spam”, BBC News, 26/5/2003, http://news.bbc.co.uk/2/hi/technology/3014029.stm
      - “Sorting the ham from the spam”, Sydney Morning Herald, 24/6/2003, http://www.smh.com.au/articles/2003/06/23/1056220528960.html
    - The “Bayesian filtering” they are talking about is actually Naïve Bayes classification

Adapted from slide by Rohan Malkhare

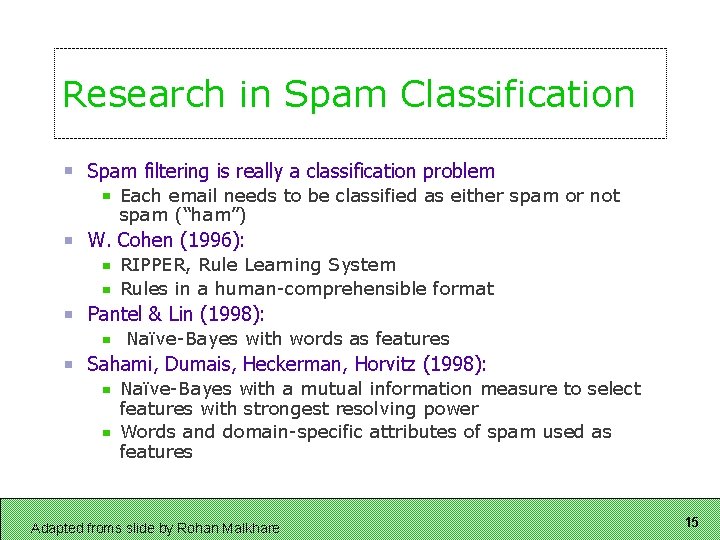

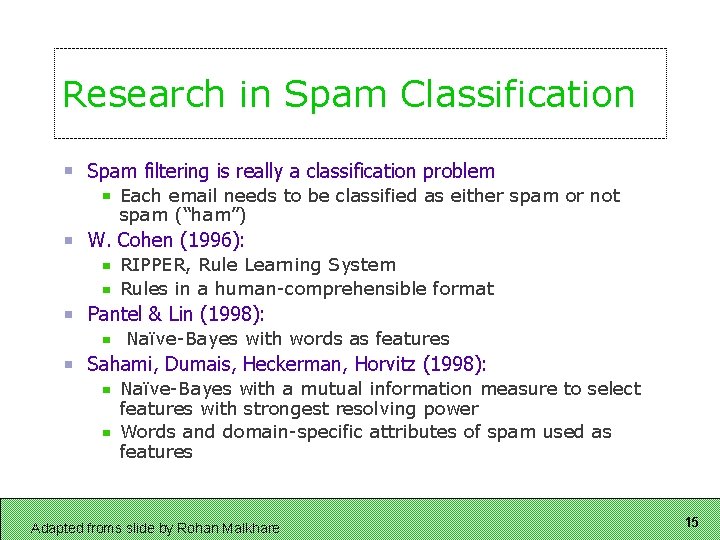

Research in Spam Classification
- Spam filtering is really a classification problem
  - Each email needs to be classified as either spam or not spam (“ham”)
- W. Cohen (1996): RIPPER, a rule learning system
  - Rules in a human-comprehensible format
- Pantel & Lin (1998): Naïve Bayes with words as features
- Sahami, Dumais, Heckerman, Horvitz (1998): Naïve Bayes with a mutual information measure to select the features with the strongest resolving power
  - Words and domain-specific attributes of spam used as features
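Mutual-information feature selection, as used by Sahami et al., ranks candidate features by how much knowing the feature tells you about the class. The sketch below is a generic version under assumptions of its own: features are binary word presence, classes are the labels "spam" and "ham", probabilities are document-frequency estimates, and logs are natural (nats). It is not a reproduction of that paper's exact setup.

```python
import math
from collections import Counter

def mutual_information(docs: list[list[str]], labels: list[str]) -> dict[str, float]:
    """MI between 'word is present' and the class label, for every word seen."""
    n = len(docs)
    class_counts = Counter(labels)
    # Number of documents in each class that contain each word.
    df = {c: Counter() for c in class_counts}
    for doc, label in zip(docs, labels):
        for w in set(doc):
            df[label][w] += 1
    vocab = set().union(*df.values())
    scores = {}
    for w in vocab:
        n_w = sum(df[c][w] for c in class_counts)  # docs containing w
        mi = 0.0
        for present in (True, False):
            n_x = n_w if present else n - n_w
            for c, n_c in class_counts.items():
                n_xc = df[c][w] if present else n_c - df[c][w]
                if n_xc == 0:
                    continue  # 0 * log 0 is taken as 0
                # P(x,c) * log( P(x,c) / (P(x) P(c)) ), written with raw counts
                mi += (n_xc / n) * math.log((n_xc * n) / (n_x * n_c))
        scores[w] = mi
    return scores

docs = [["buy", "now"], ["buy", "cheap"], ["meeting", "today"], ["lunch", "today"]]
labels = ["spam", "spam", "ham", "ham"]
scores = mutual_information(docs, labels)
print(sorted(scores, key=scores.get, reverse=True)[:2])
```

Words that occur in every document of one class and none of the other ("buy", "today" here) get the maximum score of log 2; a filter would keep only the top-scoring words as features.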

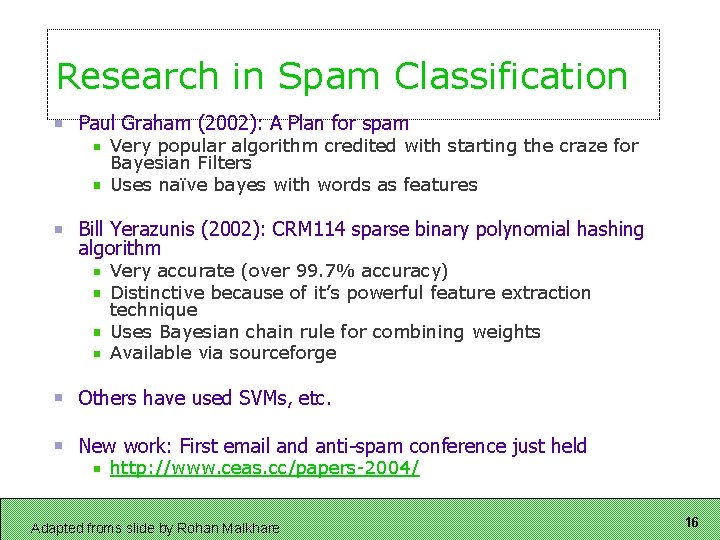

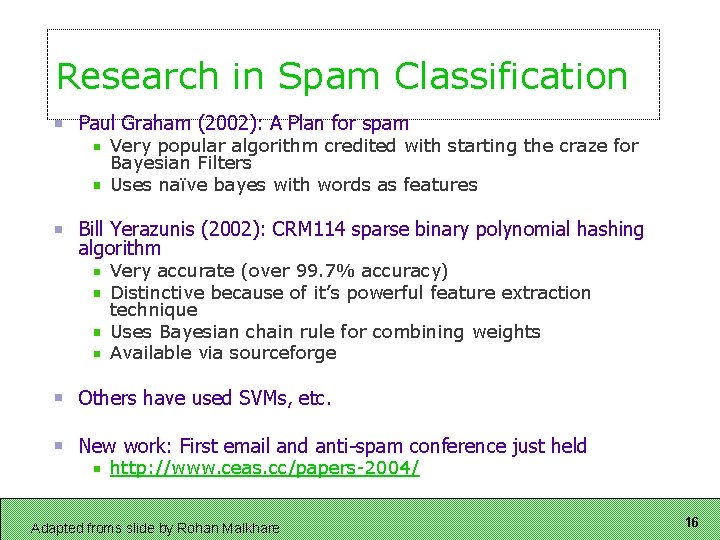

Research in Spam Classification
- Paul Graham (2002): A Plan for Spam
  - Very popular algorithm, credited with starting the craze for Bayesian filters
  - Uses naïve Bayes with words as features
- Bill Yerazunis (2002): CRM114, a sparse binary polynomial hashing algorithm
  - Very accurate (over 99.7% accuracy)
  - Distinctive because of its powerful feature extraction technique
  - Uses the Bayesian chain rule for combining weights
  - Available via SourceForge
- Others have used SVMs, etc.
- New work: the first email and anti-spam conference was just held
  - http://www.ceas.cc/papers-2004/
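Naïve Bayes with words as features can be sketched as a textbook multinomial classifier with add-one (Laplace) smoothing, scored in log space to avoid underflow. This is a generic illustration; it is not Graham's specific token-scoring scheme, and the class names "spam" and "ham" are assumptions of the sketch.

```python
import math
from collections import Counter

class NaiveBayes:
    """Multinomial naive Bayes over words, two classes: 'spam' and 'ham'."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def train(self, words: list[str], label: str) -> None:
        self.word_counts[label].update(words)
        self.doc_counts[label] += 1

    def log_score(self, words: list[str], label: str) -> float:
        """log P(label) + sum of log P(word | label); needs >= 1 doc per class."""
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total = sum(self.word_counts[label].values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for w in words:
            # Add-one smoothing keeps unseen words from zeroing out the product.
            score += math.log((self.word_counts[label][w] + 1) / (total + len(vocab)))
        return score

    def classify(self, words: list[str]) -> str:
        return max(("spam", "ham"), key=lambda c: self.log_score(words, c))

nb = NaiveBayes()
nb.train("buy cheap viagra now".split(), "spam")
nb.train("cheap meds buy now".split(), "spam")
nb.train("meeting agenda for today".split(), "ham")
nb.train("lunch today with the team".split(), "ham")
print(nb.classify("buy cheap now".split()))  # prints "spam"
```

Summing logs instead of multiplying probabilities matters in practice: a long message would otherwise drive the product below the smallest representable float.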

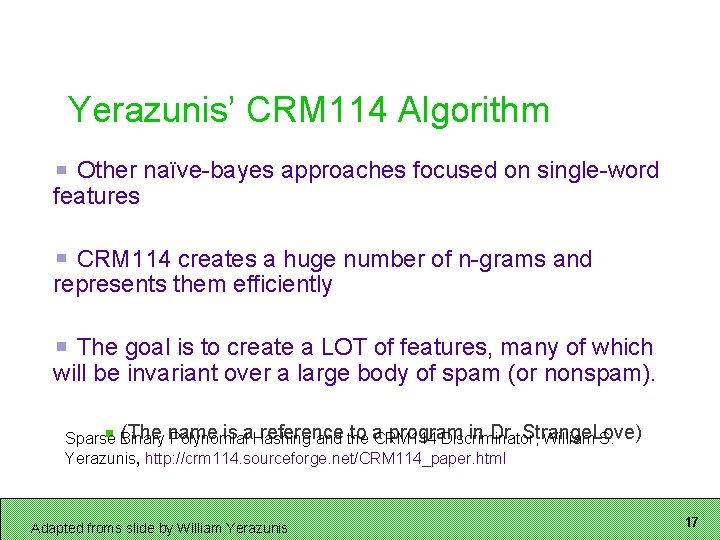

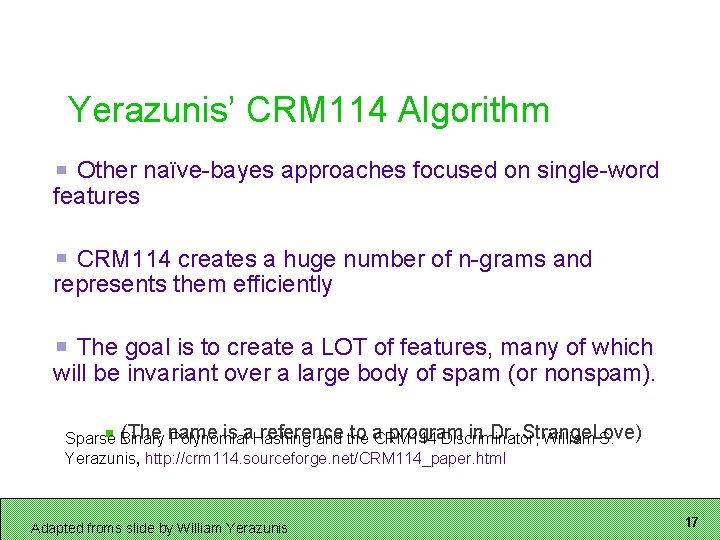

Yerazunis’ CRM114 Algorithm
- Other naïve Bayes approaches focused on single-word features
- CRM114 creates a huge number of n-grams and represents them efficiently
- The goal is to create a LOT of features, many of which will be invariant over a large body of spam (or nonspam).
- (The name is a reference to the CRM 114 discriminator in Dr. Strangelove.)

Sparse Binary Polynomial Hashing and the CRM114 Discriminator, William S. Yerazunis, http://crm114.sourceforge.net/CRM114_paper.html

Adapted from slide by William Yerazunis

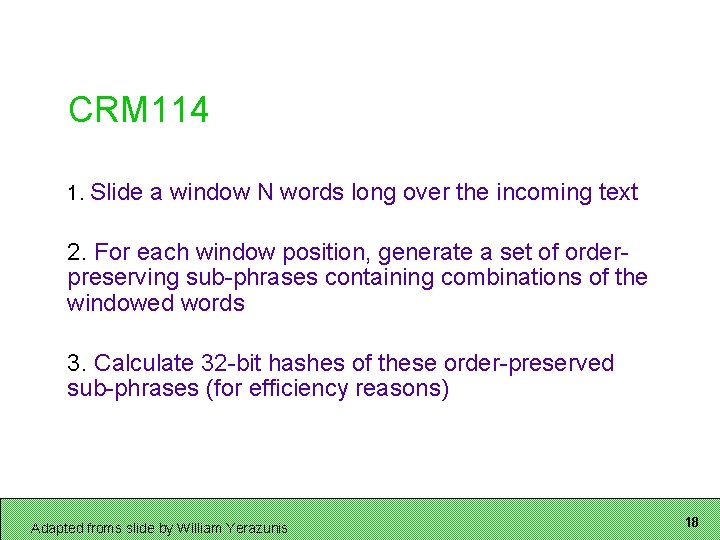

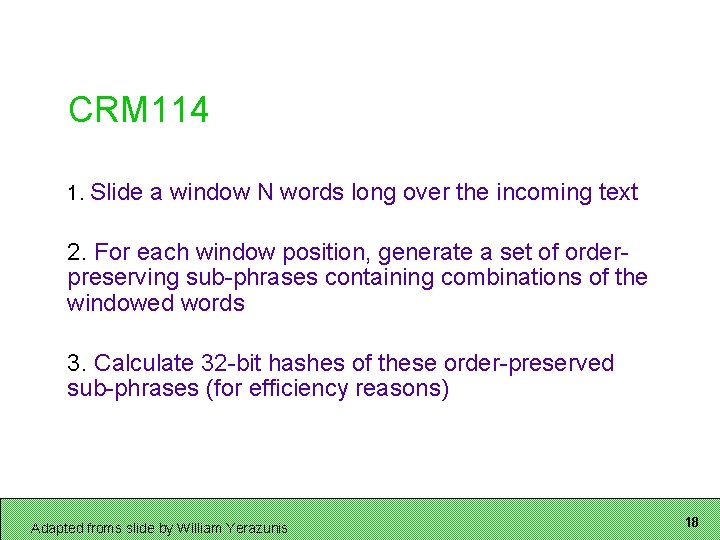

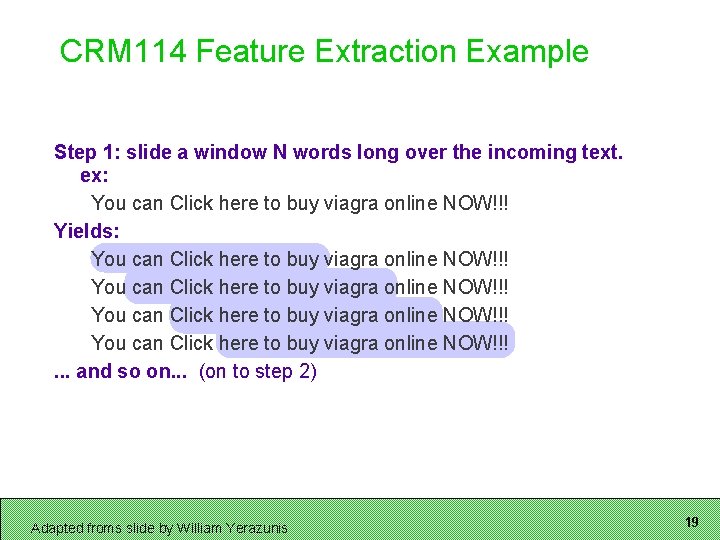

CRM114
1. Slide a window N words long over the incoming text
2. For each window position, generate a set of order-preserving sub-phrases containing combinations of the windowed words
3. Calculate 32-bit hashes of these order-preserved sub-phrases (for efficiency reasons)

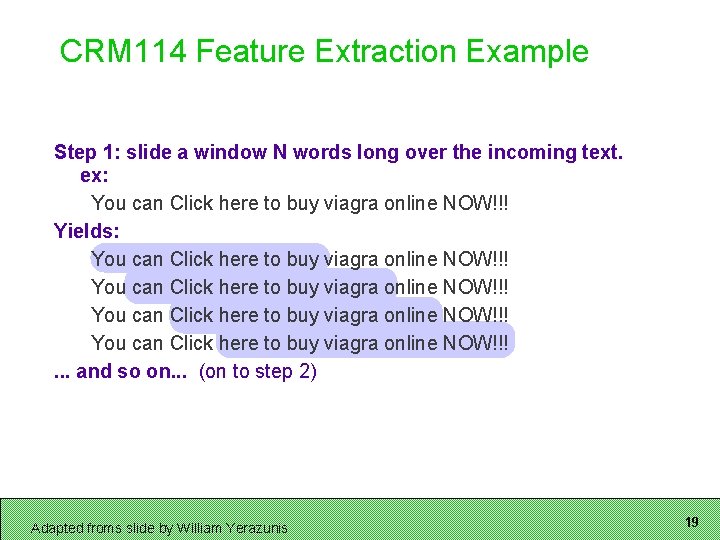

CRM114 Feature Extraction Example
Step 1: slide a window N words long over the incoming text.
ex: You can Click here to buy viagra online NOW!!!
Yields: a sequence of overlapping N-word windows over “You can Click here to buy viagra online NOW!!!” ... and so on ... (on to step 2)
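Step 1 can be sketched as a simple sliding window. Two assumptions here are not from the slide: words are split on whitespace (CRM114's own tokenizer is configurable), and N = 5, chosen because the step 2 example window “Click here to buy viagra” is five words long.

```python
def sliding_windows(text: str, n: int = 5) -> list[list[str]]:
    """Every run of n consecutive whitespace-separated words.

    A text shorter than n words yields a single, shorter window.
    """
    words = text.split()
    if len(words) <= n:
        return [words] if words else []
    return [words[i:i + n] for i in range(len(words) - n + 1)]

for window in sliding_windows("You can Click here to buy viagra online NOW!!!"):
    print(" ".join(window))
# prints 5 windows, from "You can Click here to" to "to buy viagra online NOW!!!";
# the third is "Click here to buy viagra"
```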

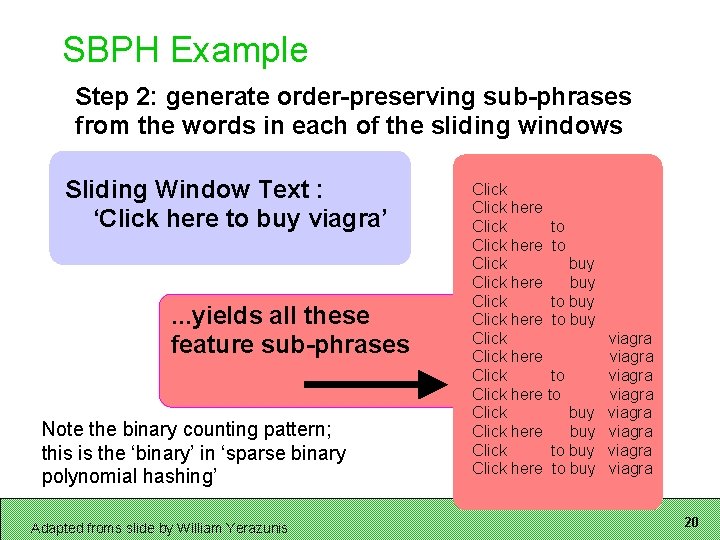

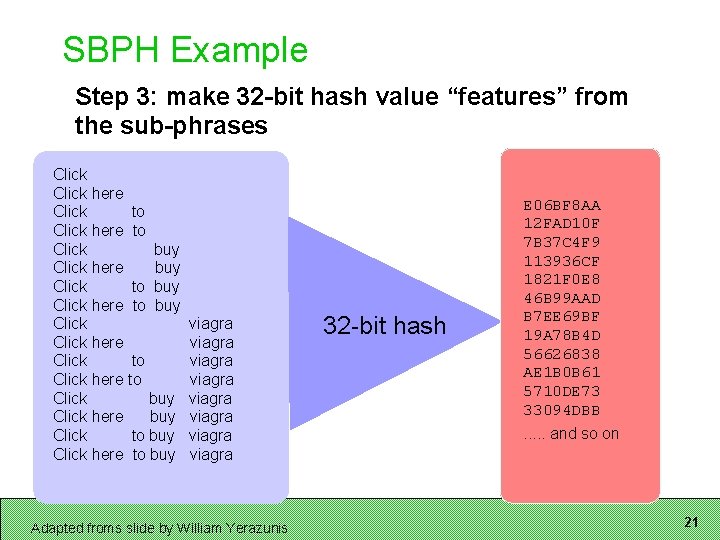

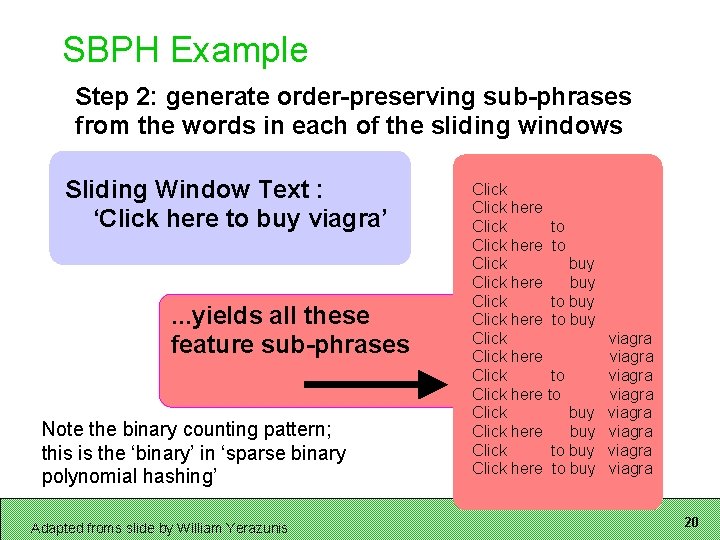

SBPH Example
Step 2: generate order-preserving sub-phrases from the words in each of the sliding windows
Sliding window text: ‘Click here to buy viagra’
... yields all these feature sub-phrases:
Click here
Click to
Click here to
Click buy
Click here buy
Click to buy
Click here to buy
... and so on, continuing the pattern with the sub-phrases that end in viagra
Note the binary counting pattern; this is the ‘binary’ in ‘sparse binary polynomial hashing’
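The binary counting pattern in step 2 can be sketched directly: keep the window's first word, and let bit k of a counter decide whether the (k+1)-th following word is included. Following the slide's list, which starts at “Click here”, this sketch skips the lone first word, giving 2^(N-1) - 1 = 15 sub-phrases for a five-word window. Whether CRM114 itself also counts the single word, and how it marks skipped positions, are not stated on these slides; treat both as assumptions.

```python
def sbph_subphrases(window: list[str]) -> list[str]:
    """Order-preserving sub-phrases of one window, in binary counting order."""
    if not window:
        return []
    anchor, rest = window[0], window[1:]
    phrases = []
    # mask = 1, 2, 3, ...: bit k set means rest[k] is included.
    # Starting at 1 skips the lone anchor word, as in the slide's list.
    for mask in range(1, 2 ** len(rest)):
        chosen = [anchor] + [w for k, w in enumerate(rest) if mask & (1 << k)]
        phrases.append(" ".join(chosen))
    return phrases

for phrase in sbph_subphrases("Click here to buy viagra".split()):
    print(phrase)
# first lines: "Click here", "Click to", "Click here to", "Click buy", ...
# last line: "Click here to buy viagra"
```

One simplification to be aware of: joining with plain spaces means “Click buy” produced by skipping two words looks identical to an adjacent “Click buy” from another window. Inserting a placeholder for each skipped position would keep those features distinct.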

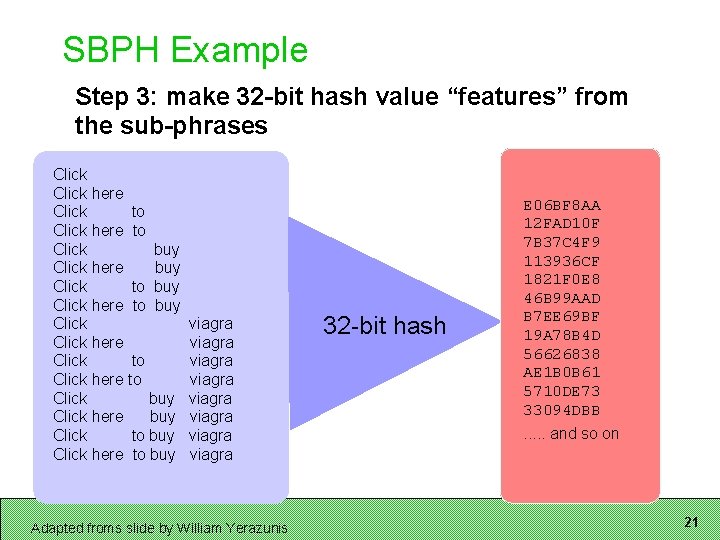

SBPH Example
Step 3: make 32-bit hash value “features” from the sub-phrases
Sub-phrases: Click here, Click to, Click here to, Click buy, Click here buy, Click to buy, Click here to buy, ... viagra
32-bit hashes: E06BF8AA, 12FAD10F, 7B37C4F9, 113936CF, 1821F0E8, 46B99AAD, B7EE69BF, 19A78B4D, 56626838, AE1B0B61, 5710DE73, 33094DBB, ... and so on
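Step 3 turns each sub-phrase string into a fixed-size integer feature. This sketch uses Python's `zlib.crc32` purely as a stand-in 32-bit hash; CRM114 uses its own hash function, so the values will not match the ones listed on the slide.

```python
import zlib

def hash_features(phrases: list[str]) -> list[int]:
    """Map each sub-phrase to an unsigned 32-bit integer feature."""
    # zlib.crc32 is only a stand-in hash, not the one CRM114 uses.
    return [zlib.crc32(p.encode("utf-8")) & 0xFFFFFFFF for p in phrases]

phrases = ["Click here", "Click to", "Click here to"]
for phrase, h in zip(phrases, hash_features(phrases)):
    print(f"{phrase:<14} {h:08X}")
```

Hashing trades a small chance of two phrases colliding on the same value for fixed-size features that are cheap to store and compare, which is the efficiency point made in step 3.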

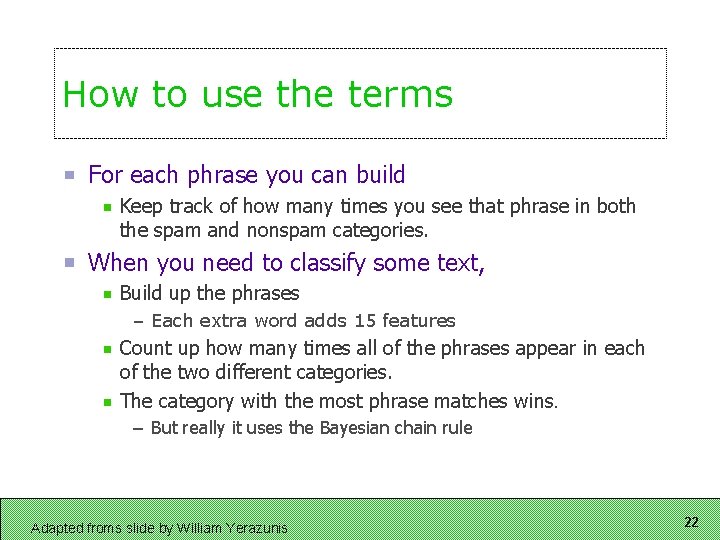

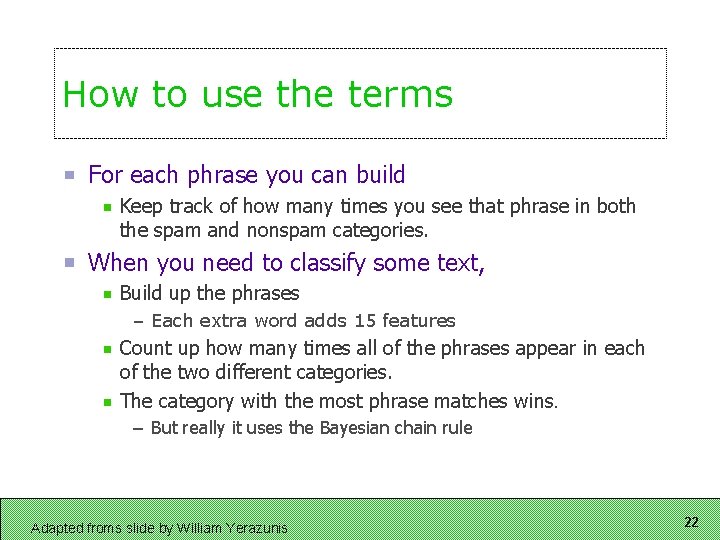

How to use the terms
- For each phrase you can build, keep track of how many times you see that phrase in both the spam and nonspam categories.
- When you need to classify some text:
  - Build up the phrases (each extra word adds 15 features)
  - Count up how many times all of the phrases appear in each of the two categories
  - The category with the most phrase matches wins
    - But really it uses the Bayesian chain rule
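The simplified “most phrase matches wins” rule can be sketched with one counter per category. The class and method names are hypothetical, and as the slide notes, CRM114 does not actually classify by raw match counts; this only shows the bookkeeping.

```python
from collections import Counter

class PhraseCounter:
    """Per-category phrase counts with a naive 'most matches wins' vote."""

    def __init__(self) -> None:
        self.counts = {"spam": Counter(), "nonspam": Counter()}

    def learn(self, features: list, category: str) -> None:
        # Features can be phrase strings or their 32-bit hashes.
        self.counts[category].update(features)

    def naive_vote(self, features: list) -> tuple[str, dict[str, int]]:
        # Counter returns 0 for unseen features without storing them.
        scores = {c: sum(table[f] for f in features) for c, table in self.counts.items()}
        return max(scores, key=scores.get), scores

pc = PhraseCounter()
pc.learn(["click here", "buy viagra"], "spam")
pc.learn(["meeting agenda", "see you"], "nonspam")
print(pc.naive_vote(["click here", "buy viagra", "see you"]))
# prints ('spam', {'spam': 2, 'nonspam': 1})
```

Raw counts favor whichever category has more training text, which is one reason to move to probability estimates and the Bayesian chain rule.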

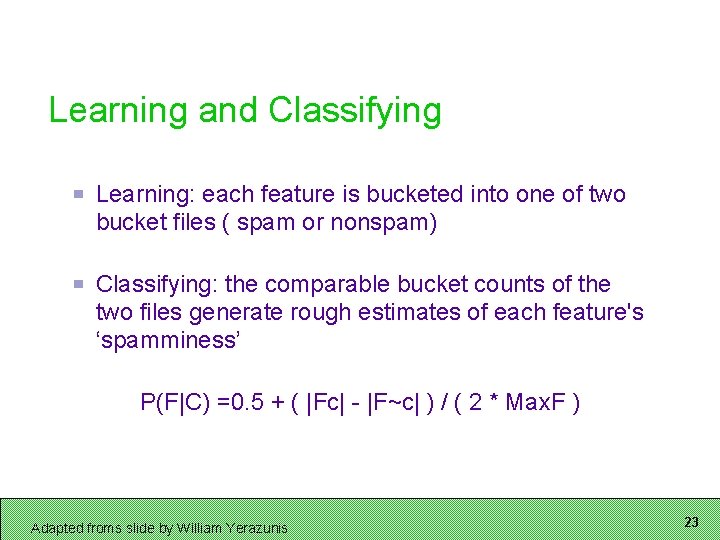

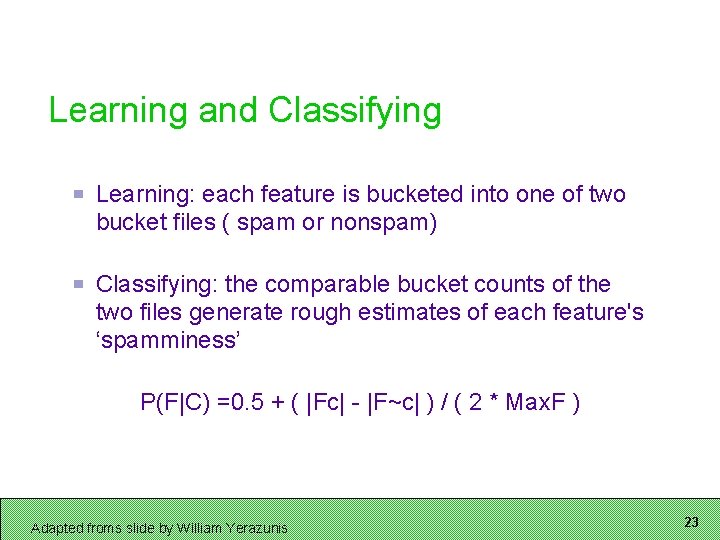

Learning and Classifying
- Learning: each feature is bucketed into one of two bucket files (spam or nonspam)
- Classifying: the comparable bucket counts of the two files generate rough estimates of each feature's ‘spamminess’

$$P(F \mid C) = 0.5 + \frac{|F_C| - |F_{\neg C}|}{2 \cdot \mathrm{Max}_F}$$

where $|F_C|$ and $|F_{\neg C}|$ are the feature's bucket counts in class $C$ and in the other class.
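The spamminess estimate translates directly into code. The slide does not define Max_F, so this sketch takes it as a caller-supplied normalizer (`max_f`, a hypothetical parameter name) and only requires it to be at least as large as both counts, which keeps the result in [0, 1].

```python
def feature_spamminess(count_in_class: int, count_in_other: int, max_f: int) -> float:
    """P(F|C) = 0.5 + (|F_C| - |F_notC|) / (2 * Max_F), per the slide's formula."""
    if max_f <= 0 or max_f < max(count_in_class, count_in_other):
        raise ValueError("max_f must be positive and >= both counts")
    return 0.5 + (count_in_class - count_in_other) / (2 * max_f)

print(feature_spamminess(0, 0, 10))  # 0.5: a feature never seen is uninformative
print(feature_spamminess(3, 1, 10))  # about 0.6: leans slightly toward class C
```

Swapping the two counts gives 1 - P(F|C), so the same function yields the estimate for the opposite class.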

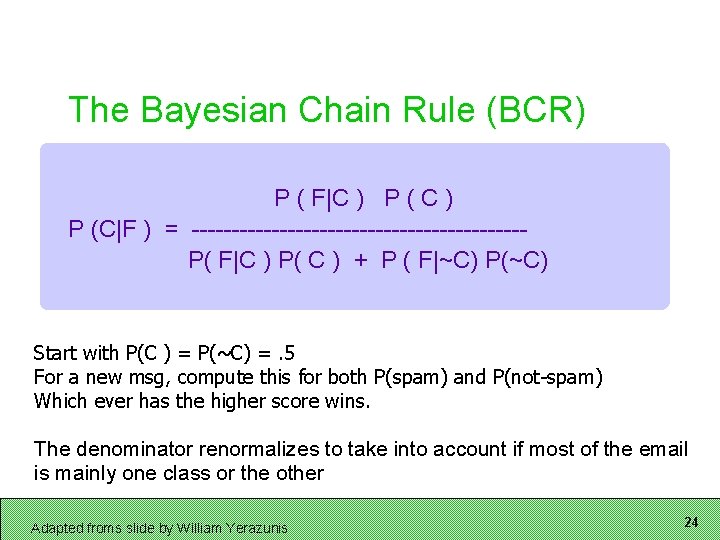

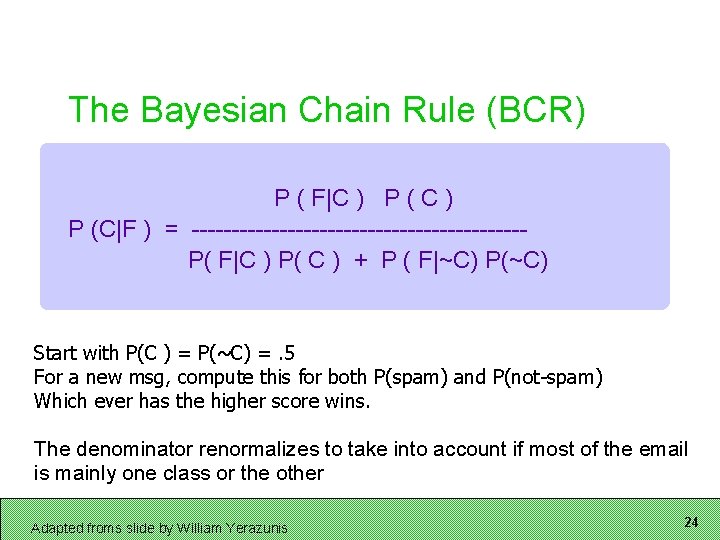

The Bayesian Chain Rule (BCR)

$$P(C \mid F) = \frac{P(F \mid C)\,P(C)}{P(F \mid C)\,P(C) + P(F \mid \neg C)\,P(\neg C)}$$

- Start with $P(C) = P(\neg C) = 0.5$
- For a new message, compute this for both P(spam) and P(not-spam)
- Whichever has the higher score wins
- The denominator renormalizes to take into account whether most of the email is mainly one class or the other
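The chain rule can be sketched as a loop in which each feature's posterior becomes the prior for the next feature, starting from 0.5. The input format, a list of (P(F|C), P(F|¬C)) pairs, is an assumption of this sketch; with per-feature estimates like the spamminess formula, the second value would be 1 - P(F|C).

```python
def bayesian_chain_rule(feature_probs: list[tuple[float, float]], prior: float = 0.5) -> float:
    """Fold (P(F|C), P(F|not C)) pairs into P(C | all features)."""
    p_c = prior
    for p_f_c, p_f_not_c in feature_probs:
        numerator = p_f_c * p_c
        denominator = numerator + p_f_not_c * (1.0 - p_c)
        if denominator == 0:
            continue  # this feature gives no usable evidence
        p_c = numerator / denominator  # posterior becomes the next prior
    return p_c

evidence = [(0.8, 0.2), (0.8, 0.2)]
p_spam = bayesian_chain_rule(evidence)
p_nonspam = bayesian_chain_rule([(b, a) for a, b in evidence])
print(round(p_spam, 4), round(p_nonspam, 4))  # prints 0.9412 0.0588
```

Because the value is renormalized after every feature, it stays in [0, 1] no matter how many thousands of features are combined, avoiding the underflow a raw product of probabilities would hit.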

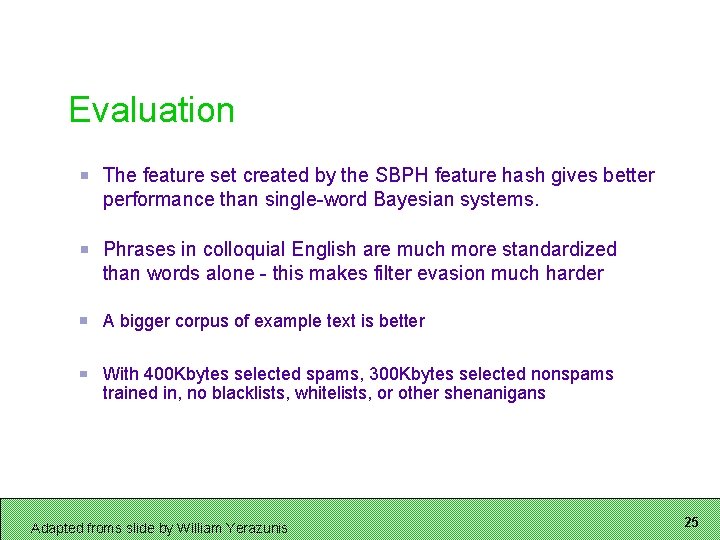

Evaluation
- The feature set created by the SBPH feature hash gives better performance than single-word Bayesian systems.
  - Phrases in colloquial English are much more standardized than words alone; this makes filter evasion much harder
- A bigger corpus of example text is better
  - With 400 Kbytes of selected spams and 300 Kbytes of selected nonspams trained in; no blacklists, whitelists, or other shenanigans

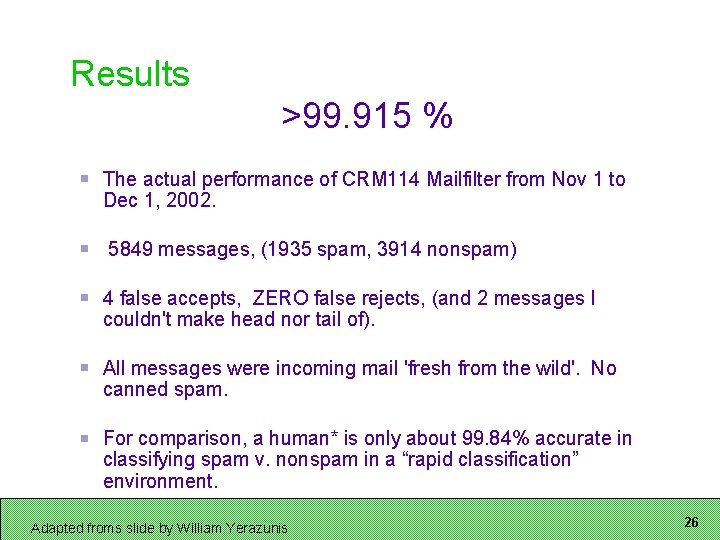

Results
- >99.915%: the actual performance of CRM114 Mailfilter from Nov 1 to Dec 1, 2002
  - 5849 messages (1935 spam, 3914 nonspam)
  - 4 false accepts, ZERO false rejects (and 2 messages I couldn't make head nor tail of)
  - All messages were incoming mail 'fresh from the wild'. No canned spam.
- For comparison, a human* is only about 99.84% accurate in classifying spam vs. nonspam in a “rapid classification” environment.

Results Stats
- Filtering speed: classification about 20 Kbytes per second; learning about 10 Kbytes per second (on a Transmeta 666 MHz laptop)
- Memory required: about 5 megabytes
  - 404K spam features, 322K nonspam features

Downsides?
- The bad news: SPAM MUTATES
- Even a perfectly trained Bayesian filter will slowly deteriorate.
- New spams appear, with new topics, as well as old topics with creative twists to evade antispam filters.

Revenge of the Spammers
- How do the spammers game these algorithms?
  - Break the tokenizer
    - Split up words, use HTML tags, etc.
  - Throw in randomly ordered words
    - Throw off the n-gram-based statistics
  - Use few words
    - Harder for the classifier to work

On Attacking Statistical Spam Filters. Gregory L. Wittel and S. Felix Wu, CEAS ’04.
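The “break the tokenizer” attack is easy to demonstrate. In this sketch the obfuscated message is a made-up example, the naive tokenizer keeps runs of letters, and the countermeasure simply strips HTML comments and tags before tokenizing. It addresses only this one trick; it does nothing against random-word padding or very short messages.

```python
import re

def naive_tokens(text: str) -> list[str]:
    """Tokenize by keeping runs of ASCII letters."""
    return re.findall(r"[A-Za-z]+", text)

def tag_stripping_tokens(text: str) -> list[str]:
    """Drop HTML comments and tags first, then tokenize (a simple countermeasure)."""
    without_comments = re.sub(r"<!--.*?-->", "", text, flags=re.DOTALL)
    without_tags = re.sub(r"<[^>]+>", "", without_comments)
    return naive_tokens(without_tags)

obfuscated = "Buy via<!-- x -->gra now"  # hypothetical spam text
print(naive_tokens(obfuscated))          # ['Buy', 'via', 'x', 'gra', 'now']
print(tag_stripping_tokens(obfuscated))  # ['Buy', 'viagra', 'now']
```

An HTML mail client renders both versions as “Buy viagra now”, but the naive tokenizer never sees the word the filter learned.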

Next Time
- In-class work: creating categories for the Enron email corpus