Shared Memory and Message Passing WA 1 2

- Slides: 32

Shared Memory and Message Passing

Readings: W+A 1.2.1, 1.2.2, 1.2.3, 2.1.2, 2.1.3, 8.3, 9.2.1, 9.2.2; Akl 2.5.2

CSE 160/Berman

Models for Communication

- A parallel program is a program composed of tasks (processes) that communicate to accomplish an overall computational goal.
- Two prevalent models for communication:
  - Message passing (MP)
  - Shared memory (SM)
- This lecture focuses on MP and SM computing.

Message Passing Communication

- Processes in a message-passing program communicate by passing messages (e.g., from process A to process B).
- Basic message-passing primitives:
  - Send(parameter list)
  - Receive(parameter list)
  - The parameters depend on the software and can be complex.
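As a minimal sketch, assuming a POSIX system, the two primitives can be mimicked with a pipe between two operating-system processes: `write` plays the role of Send and a blocking `read` plays the role of Receive. The function name `pipe_send_receive` is hypothetical; real message-passing libraries such as MPI take richer parameter lists (buffer, count, datatype, destination, tag, communicator).

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Process A (the forked child) sends `value` to process B (the parent)
   through a pipe. Returns the value B receives, or -1 on error. */
int pipe_send_receive(int value) {
    int fd[2];
    if (pipe(fd) != 0) return -1;
    pid_t pid = fork();
    if (pid < 0) return -1;
    if (pid == 0) {                       /* process A: Send */
        close(fd[0]);
        ssize_t w = write(fd[1], &value, sizeof value);
        close(fd[1]);
        _exit(w == (ssize_t)sizeof value ? 0 : 1);
    }
    close(fd[1]);                         /* process B: Receive */
    int received = -1;
    if (read(fd[0], &received, sizeof received) != (ssize_t)sizeof received)
        received = -1;                    /* read blocks until data arrives */
    close(fd[0]);
    waitpid(pid, NULL, 0);
    return received;
}
```

For a small message like this, the pipe's kernel buffer lets A's `write` return before B has read anything, which previews the buffered behavior discussed under nonblocking message passing.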

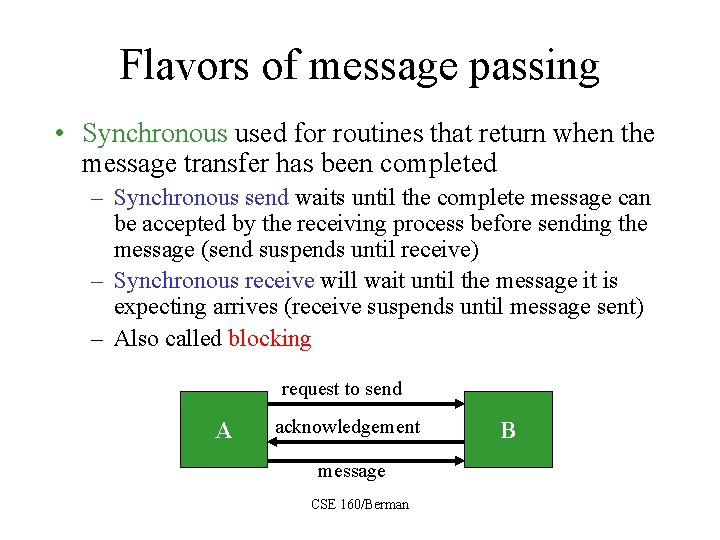

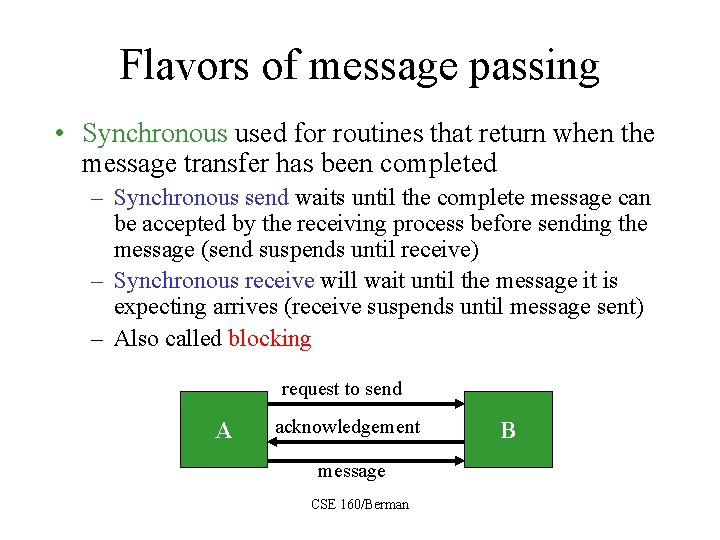

Flavors of Message Passing

- Synchronous: describes routines that return only when the message transfer has been completed.
  - A synchronous send waits until the complete message can be accepted by the receiving process before sending it (the send suspends until the receive).
  - A synchronous receive waits until the message it expects arrives (the receive suspends until the message is sent).
  - Also called blocking.
- (Figure: A sends a request to send to B, B replies with an acknowledgement, and A then sends the message.)
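A minimal sketch of synchronous send/receive semantics, assuming POSIX threads stand in for processes A and B and a one-slot "channel" stands in for the network. The type `sync_chan` and the functions `chan_send`, `chan_recv`, and `sync_demo` are hypothetical names for illustration; the point is that `chan_send` does not return until a receiver has actually taken the value.

```c
#include <pthread.h>

/* One-slot rendezvous channel: chan_send returns only after a receiver has
   taken the value, mimicking a synchronous (blocking) send. */
typedef struct {
    pthread_mutex_t mu;
    pthread_cond_t cv;
    int value;
    int full;                /* 1 while a sent value waits to be received */
    unsigned long received;  /* number of completed receives */
} sync_chan;

void chan_init(sync_chan *c) {
    pthread_mutex_init(&c->mu, NULL);
    pthread_cond_init(&c->cv, NULL);
    c->full = 0;
    c->received = 0;
}

void chan_send(sync_chan *c, int v) {
    pthread_mutex_lock(&c->mu);
    while (c->full)                       /* slot busy: wait for it to drain */
        pthread_cond_wait(&c->cv, &c->mu);
    c->value = v;
    c->full = 1;
    unsigned long before = c->received;   /* our message is the next one taken */
    pthread_cond_broadcast(&c->cv);
    while (c->received == before)         /* send suspends until receive */
        pthread_cond_wait(&c->cv, &c->mu);
    pthread_mutex_unlock(&c->mu);
}

int chan_recv(sync_chan *c) {
    pthread_mutex_lock(&c->mu);
    while (!c->full)                      /* receive suspends until a send */
        pthread_cond_wait(&c->cv, &c->mu);
    int v = c->value;
    c->full = 0;
    c->received++;
    pthread_cond_broadcast(&c->cv);
    pthread_mutex_unlock(&c->mu);
    return v;
}

/* Demo: process B is a thread that receives one value; process A sends it. */
static sync_chan demo_chan;
static int demo_got;

static void *receiver(void *arg) {
    (void)arg;
    demo_got = chan_recv(&demo_chan);
    return NULL;
}

int sync_demo(int v) {
    pthread_t b;
    chan_init(&demo_chan);
    if (pthread_create(&b, NULL, receiver, NULL) != 0) return -1;
    chan_send(&demo_chan, v);             /* returns only after B has taken v */
    pthread_join(b, NULL);
    pthread_mutex_destroy(&demo_chan.mu);
    pthread_cond_destroy(&demo_chan.cv);
    return demo_got;
}
```

The receive counter, rather than the `full` flag alone, is what tells a sender that its own message (and not a later one) has been accepted.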

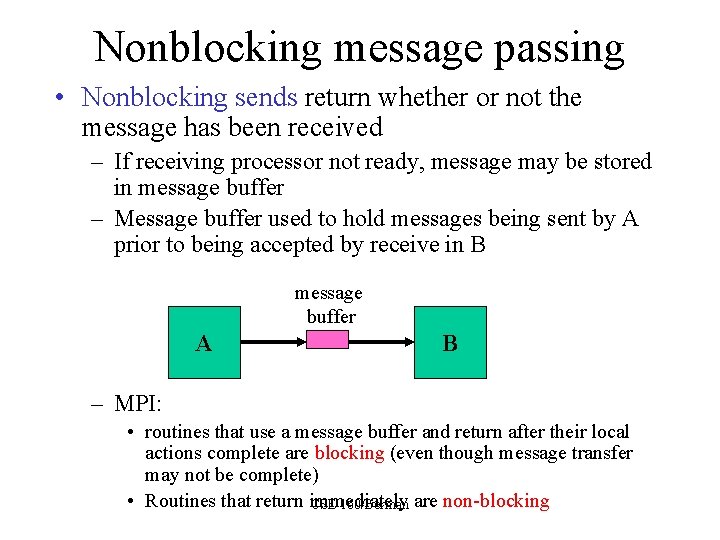

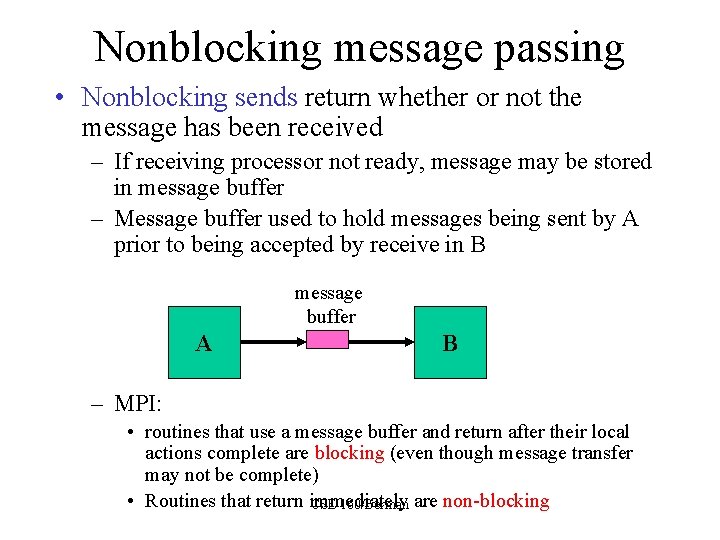

Nonblocking Message Passing

- Nonblocking sends return whether or not the message has been received.
  - If the receiving processor is not ready, the message may be stored in a message buffer.
  - The message buffer holds messages sent by A until they are accepted by a receive in B. (Figure: A sends into a message buffer, from which B receives.)
- MPI terminology:
  - Routines that use a message buffer and return after their local actions complete are called blocking, even though the message transfer may not be complete.
  - Routines that return immediately are called nonblocking.
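A minimal sketch of the message buffer between A and B, assuming a fixed-capacity ring buffer in a single address space. The names `msg_buffer`, `buffered_send`, `try_receive`, and the capacity of 4 are hypothetical. The send copies the message and returns at once; reporting `false` when the buffer is full is one design choice among several (a real system might block or raise an error instead).

```c
#include <stdbool.h>

#define BUF_CAP 4   /* hypothetical buffer capacity */

typedef struct {
    int data[BUF_CAP];
    int head;    /* index of the oldest message */
    int count;   /* number of buffered messages */
} msg_buffer;

/* Buffered send: copies the message into the buffer and returns immediately,
   whether or not B has received anything. Returns false if the buffer is full. */
bool buffered_send(msg_buffer *b, int msg) {
    if (b->count == BUF_CAP) return false;
    b->data[(b->head + b->count) % BUF_CAP] = msg;
    b->count++;
    return true;
}

/* Receive: takes the oldest buffered message (FIFO order).
   Returns false if no message has arrived yet. */
bool try_receive(msg_buffer *b, int *msg) {
    if (b->count == 0) return false;
    *msg = b->data[b->head];
    b->head = (b->head + 1) % BUF_CAP;
    b->count--;
    return true;
}
```

In MPI's terms, a send that returns after this local copy would still be called blocking; MPI's nonblocking routines (such as `MPI_Isend`) return before the send buffer may safely be reused.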

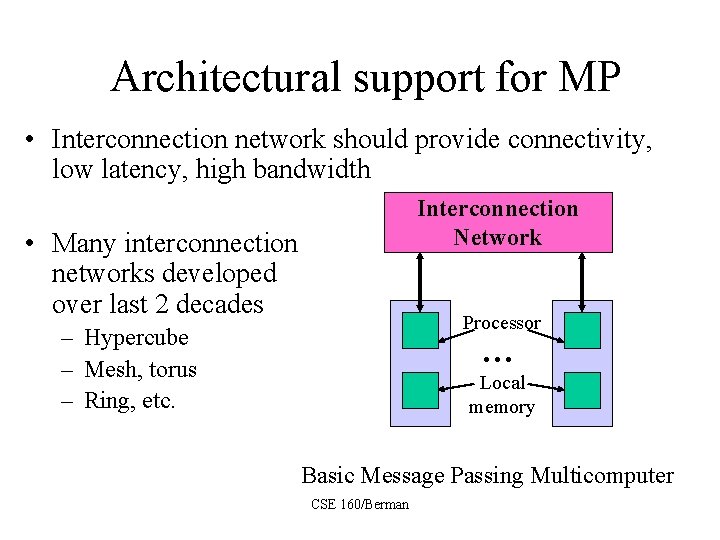

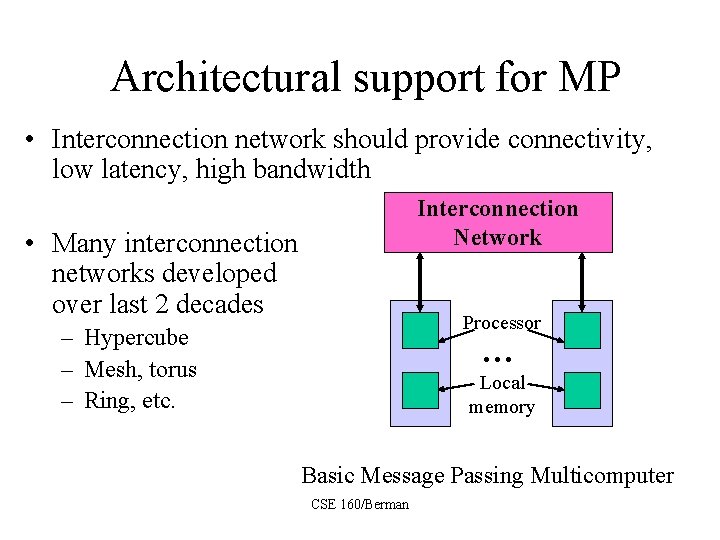

Architectural Support for MP

- The interconnection network should provide connectivity, low latency, and high bandwidth.
- Many interconnection networks have been developed over the last two decades:
  - Hypercube
  - Mesh, torus
  - Ring, etc.
- (Figure: Basic message-passing multicomputer: processors, each with its own local memory, connected by an interconnection network.)
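One rough way to compare the listed topologies is hop distance, a proxy for latency. A minimal sketch, assuming ring nodes are numbered 0 to p-1, mesh nodes are addressed by (row, column) without wraparound, and hypercube nodes by binary address; the function names are hypothetical. A torus would apply the ring formula in each dimension.

```c
/* Hop distance between nodes a and b on a ring of p nodes (shorter direction). */
unsigned ring_hops(unsigned a, unsigned b, unsigned p) {
    unsigned d = a > b ? a - b : b - a;
    return d < p - d ? d : p - d;
}

/* Hop distance on a 2D mesh without wraparound: Manhattan distance. */
unsigned mesh_hops(unsigned r1, unsigned c1, unsigned r2, unsigned c2) {
    unsigned dr = r1 > r2 ? r1 - r2 : r2 - r1;
    unsigned dc = c1 > c2 ? c1 - c2 : c2 - c1;
    return dr + dc;
}

/* Hop distance on a hypercube: neighbors differ in exactly one address bit,
   so the distance is the number of differing bits (Hamming distance). */
unsigned hypercube_hops(unsigned a, unsigned b) {
    unsigned x = a ^ b, n = 0;
    while (x) {
        n += x & 1u;
        x >>= 1;
    }
    return n;
}
```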

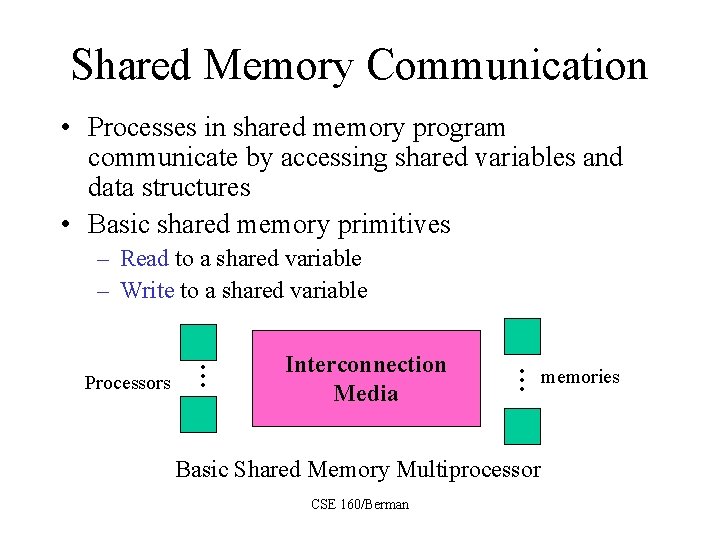

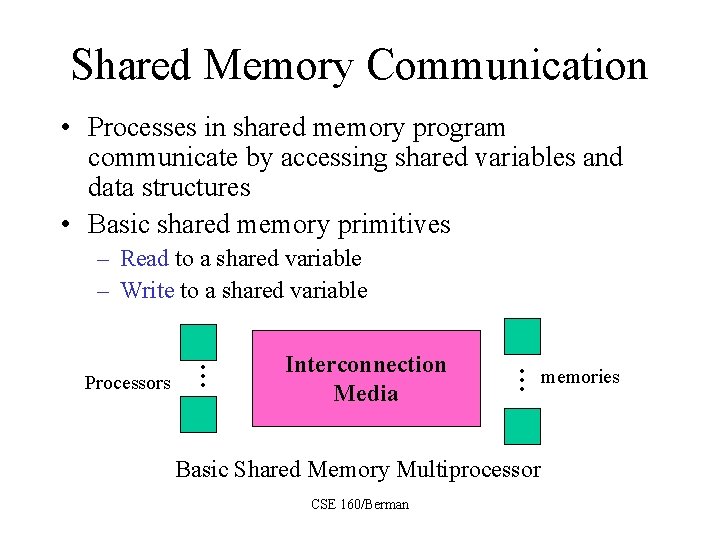

Shared Memory Communication

- Processes in a shared-memory program communicate by accessing shared variables and data structures.
- Basic shared-memory primitives:
  - Read from a shared variable
  - Write to a shared variable
- (Figure: Basic shared-memory multiprocessor: processors connected to memories through interconnection media.)

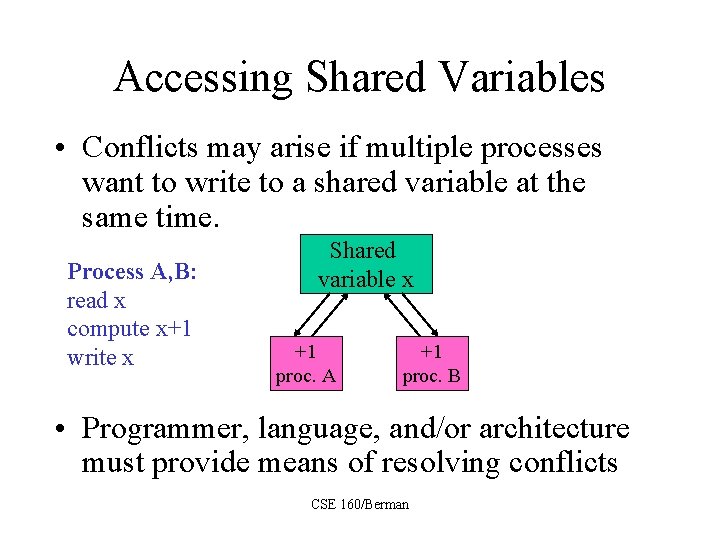

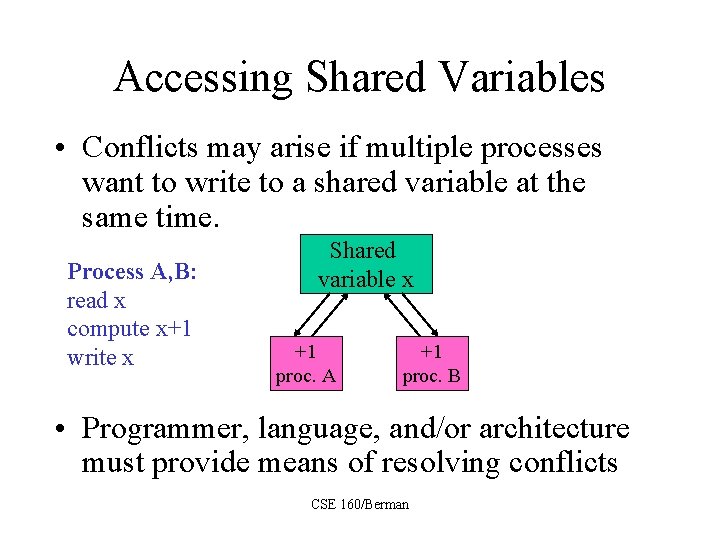

Accessing Shared Variables

- Conflicts may arise if multiple processes want to write to a shared variable at the same time.
  - Example: processes A and B each execute read x; compute x+1; write x. If both read x before either writes it back, both write the same value and one of the two increments is lost.
- The programmer, language, and/or architecture must provide a means of resolving such conflicts.
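The lost update can be reproduced deterministically by writing out each step of A and B in a fixed order, without real threads. A minimal sketch; the function names are hypothetical, and the local variables `a` and `b` stand for each process's private copy of x.

```c
/* Processes A and B each run: read x; compute x+1; write x.
   Each step is written out so the order of the six steps is explicit. */

/* Both processes read x before either writes: one update is lost. */
int race_interleaved(int x) {
    int a = x;   /* A: read x */
    int b = x;   /* B: read x */
    a = a + 1;   /* A: compute x+1 */
    b = b + 1;   /* B: compute x+1 */
    x = a;       /* A: write x */
    x = b;       /* B: write x, overwriting A's result */
    return x;
}

/* A finishes its read-compute-write before B starts: both updates survive. */
int race_serialized(int x) {
    int a = x; a = a + 1; x = a;   /* A */
    int b = x; b = b + 1; x = b;   /* B */
    return x;
}
```

Starting from x = 0, the interleaved order yields 1 and the serialized order yields 2. Resolving the conflict means making read-compute-write indivisible, for example with a lock or an atomic fetch-and-add such as C11's `atomic_fetch_add`.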

Architectural Support for SM

- Four basic types of interconnection media:
  - Bus
  - Crossbar switch
  - Multistage network
  - Interconnection network with distributed shared memory

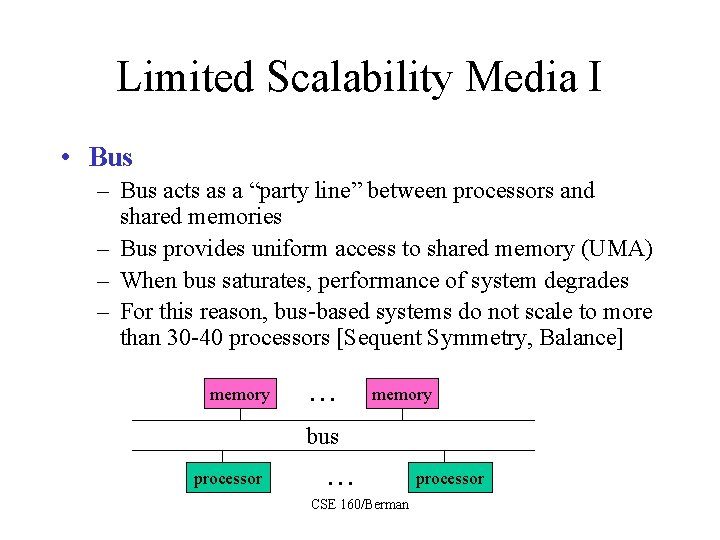

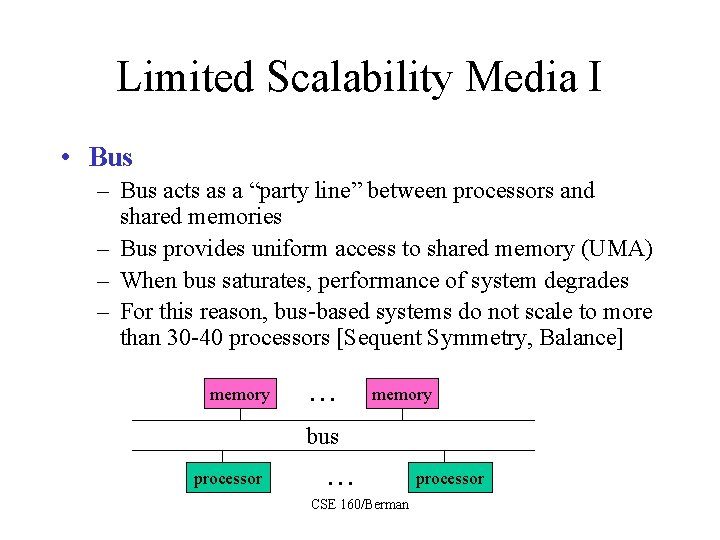

Limited Scalability Media I

- Bus
  - The bus acts as a “party line” between processors and shared memories.
  - The bus provides uniform access to shared memory (UMA).
  - When the bus saturates, system performance degrades.
  - For this reason, bus-based systems do not scale to more than 30 to 40 processors [Sequent Symmetry, Balance].
- (Figure: processors and memories attached to a single shared bus.)

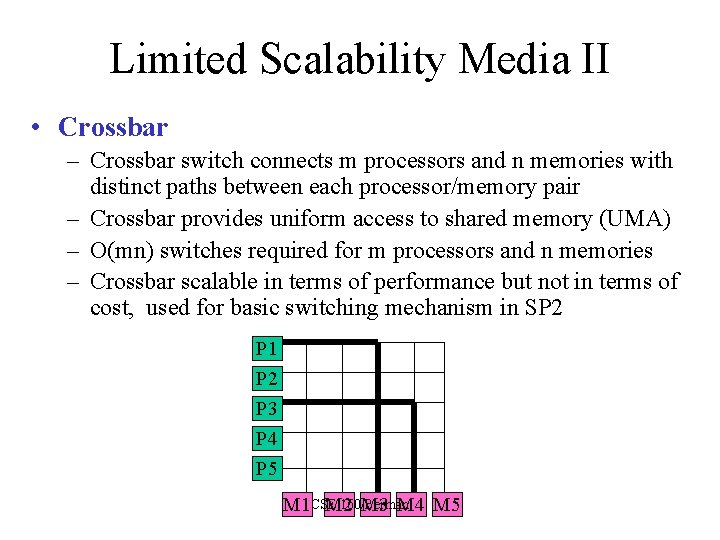

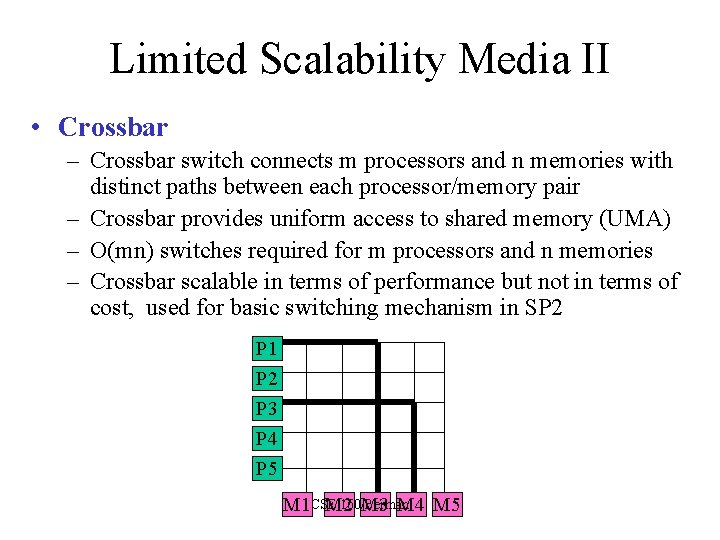

Limited Scalability Media II

- Crossbar
  - A crossbar switch connects m processors and n memories with a distinct path between each processor/memory pair.
  - A crossbar provides uniform access to shared memory (UMA).
  - O(mn) switches are required for m processors and n memories.
  - A crossbar is scalable in terms of performance but not in terms of cost; it is used as the basic switching mechanism in the SP2.
- (Figure: 5 × 5 crossbar connecting processors P1 to P5 with memories M1 to M5.)

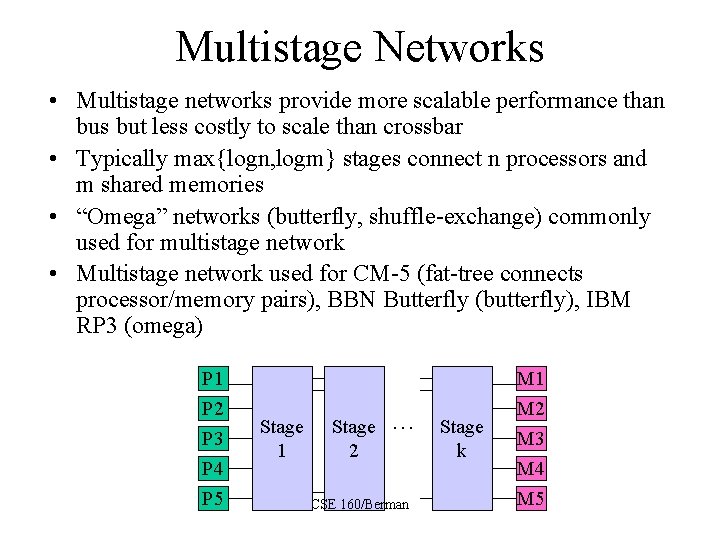

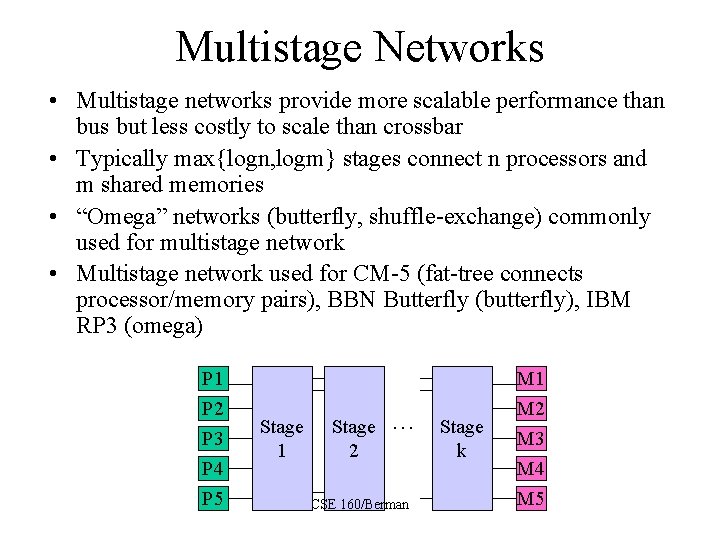

Multistage Networks

- Multistage networks provide more scalable performance than a bus and are less costly to scale than a crossbar.
- Typically max{log n, log m} stages connect n processors and m shared memories.
- “Omega” networks (butterfly, shuffle-exchange) are commonly used as multistage networks.
- Multistage networks are used in the CM-5 (a fat tree connects processor/memory pairs), the BBN Butterfly (butterfly), and the IBM RP3 (omega).
- (Figure: processors P1 to P5 connected to memories M1 to M5 through stages 1, 2, …, k.)
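The cost argument can be made concrete by counting switches. A minimal sketch, assuming n processors and n memories with n a power of two, and an omega network built from 2 × 2 switching elements (n/2 switches in each of log2 n stages, the standard construction). The function names are hypothetical.

```c
/* Crosspoint switches in an m x n crossbar: one per processor/memory pair. */
unsigned long crossbar_switches(unsigned long m, unsigned long n) {
    return m * n;
}

/* Stages in an omega network with n ports: ceil(log2 n). */
unsigned omega_stages(unsigned long n) {
    unsigned k = 0;
    while ((1UL << k) < n) k++;
    return k;
}

/* 2x2 switches in an omega network, assuming n is a power of two:
   n/2 switches in each of the log2 n stages. */
unsigned long omega_switches(unsigned long n) {
    return (n / 2) * omega_stages(n);
}
```

For 64 processors and 64 memories this gives 4096 crossbar switches versus 192 omega switches in 6 stages: O(n²) versus O(n log n) hardware, paid for with a path of log n stages on every access.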

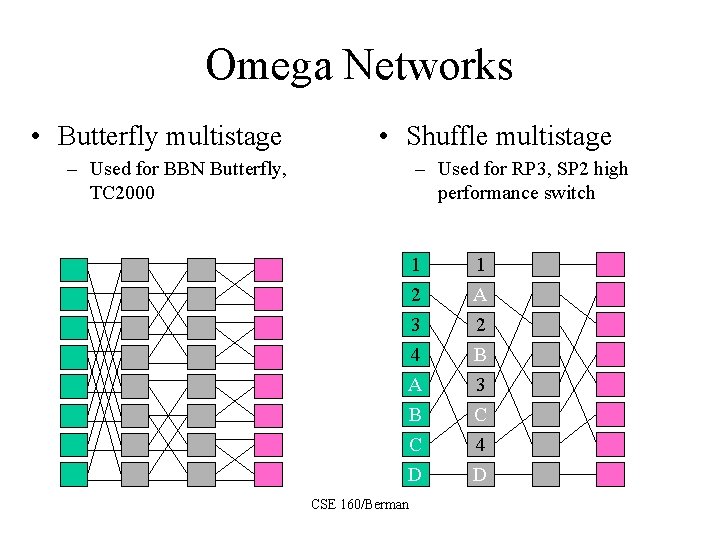

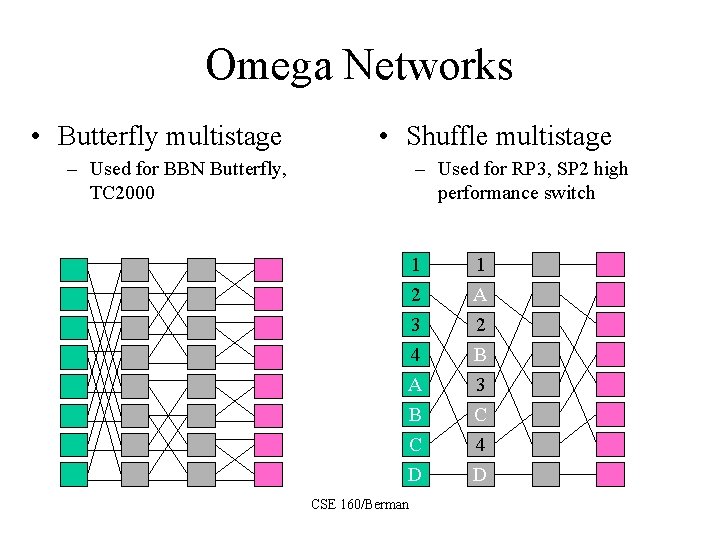

Omega Networks

- Butterfly multistage: used in the BBN Butterfly and TC2000.
- Shuffle multistage: used in the RP3 and the SP2 high-performance switch.
- (Figures: four-port butterfly and shuffle multistage networks.)
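The slides do not say how a message finds its way through a shuffle multistage network. One standard scheme, swapped in here as an illustration, is destination-tag routing: with n = 2^k ports numbered in binary, each stage applies a perfect shuffle (rotate the k-bit line number left by one) and then a 2 × 2 switch sets the low bit to the next destination bit, most significant first. A minimal sketch; `shuffle` and `omega_route` are hypothetical names, and k ≥ 1 is assumed.

```c
/* Perfect shuffle: rotate the low k bits of x left by one position. */
static unsigned shuffle(unsigned x, unsigned k) {
    unsigned mask = (1u << k) - 1u;
    return ((x << 1) | (x >> (k - 1))) & mask;
}

/* Destination-tag routing from input src to output dst in an omega network
   with 2^k ports. Stores the line number after each stage in path[0..k-1]
   and returns the output reached (always dst). */
unsigned omega_route(unsigned src, unsigned dst, unsigned k, unsigned path[]) {
    unsigned pos = src;
    for (unsigned stage = 0; stage < k; stage++) {
        pos = shuffle(pos, k);                          /* wiring between stages */
        unsigned bit = (dst >> (k - 1 - stage)) & 1u;   /* destination bit, MSB first */
        pos = (pos & ~1u) | bit;                        /* switch: upper (0) or lower (1) */
        path[stage] = pos;
    }
    return pos;
}
```

After k stages each destination bit has been rotated into its proper position, so the message arrives at `dst` regardless of `src`; for example, routing 5 to 2 with k = 3 visits lines 2, 5, 2.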

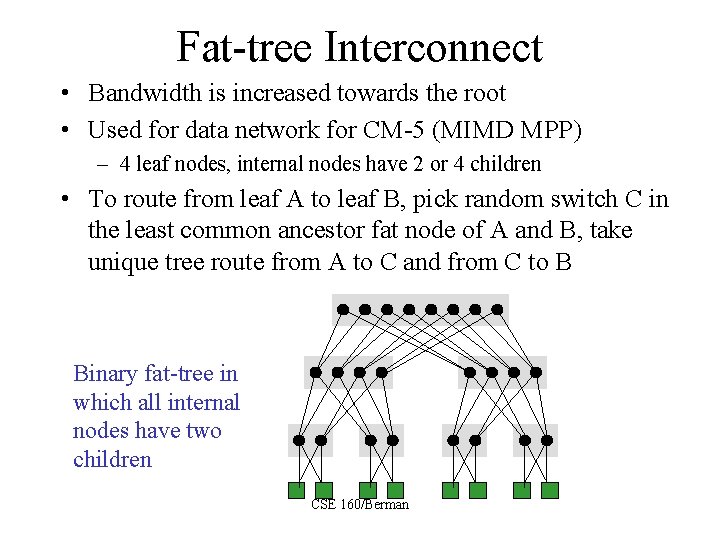

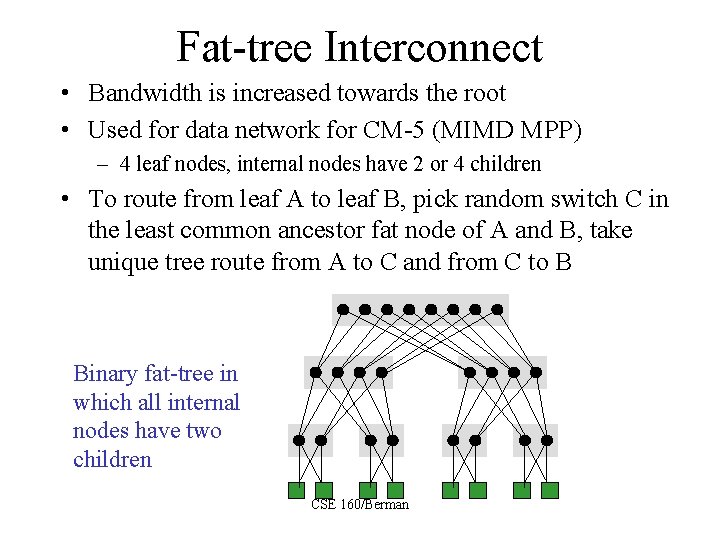

Fat-tree Interconnect

- Bandwidth is increased towards the root.
- Used for the data network of the CM-5 (MIMD MPP).
  - 4 leaf nodes; internal nodes have 2 or 4 children.
- To route from leaf A to leaf B, pick a random switch C in the least-common-ancestor fat node of A and B, then take the unique tree route from A to C and from C to B.
- (Figure: binary fat tree in which all internal nodes have two children.)
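The routing rule can be sketched for a binary fat tree, assuming leaves are numbered 0 to 2^h - 1 so that the least common ancestor's level is given by the highest bit in which the two leaf numbers differ. The parameter `width` (how many parallel switches a fat node contains) is hypothetical; real CM-5 fat nodes are organized differently. The random pick spreads traffic across the parallel switches near the root.

```c
#include <stdlib.h>

/* Level of the least common ancestor of leaves a and b (leaves at level 0):
   index of the highest differing bit of a and b, plus one. */
unsigned lca_level(unsigned a, unsigned b) {
    unsigned x = a ^ b, level = 0;
    while (x) {
        x >>= 1;
        level++;
    }
    return level;
}

/* Hops on the unique tree path from a up to the LCA fat node and down to b. */
unsigned route_hops(unsigned a, unsigned b) {
    return 2 * lca_level(a, b);
}

/* Pick one of the `width` switches inside the LCA fat node at random. */
unsigned pick_switch(unsigned width) {
    return (unsigned)(rand() % (int)width);
}
```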

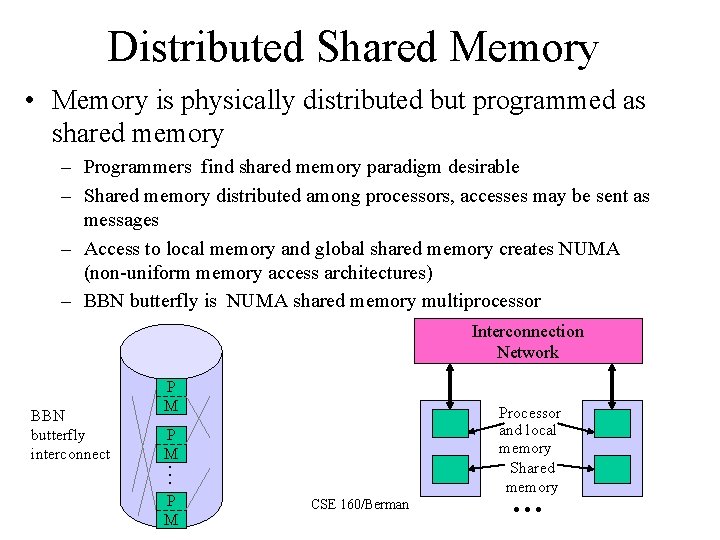

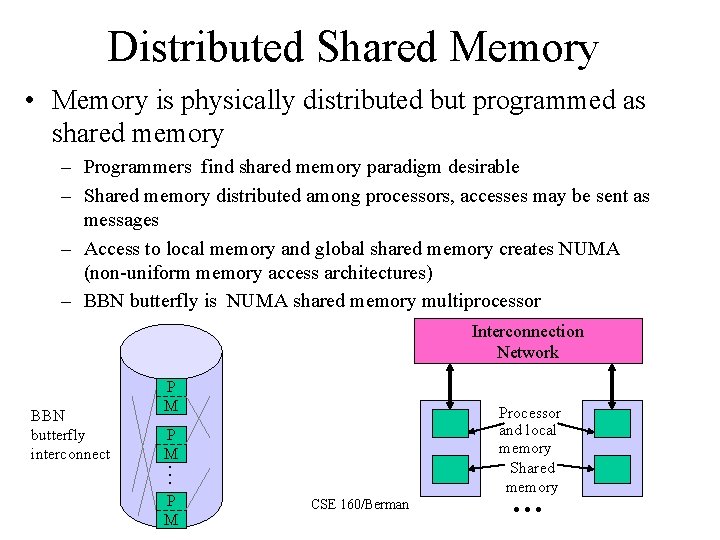

Distributed Shared Memory

- Memory is physically distributed but programmed as shared memory.
  - Programmers find the shared-memory paradigm desirable.
  - Shared memory is distributed among the processors; accesses may be sent as messages.
  - Access to local memory and to global shared memory creates NUMA (non-uniform memory access) architectures.
  - The BBN Butterfly is a NUMA shared-memory multiprocessor.
- (Figure: BBN Butterfly: processor/local-memory pairs (P, M), together forming the shared memory, connected by the butterfly interconnection network.)
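To make "non-uniform" concrete, here is a minimal sketch of a NUMA cost model, assuming a hypothetical layout in which the shared address space is split into contiguous blocks, one per node. The functions `home_node` and `access_cost` and the latencies `t_local` and `t_remote` are placeholders, not parameters of any real machine.

```c
/* Hypothetical layout: the shared address space is split into contiguous
   blocks of `block` words, and block k lives in the local memory of node k. */
unsigned home_node(unsigned long addr, unsigned long block) {
    return (unsigned)(addr / block);
}

/* NUMA cost model: an access is cheap if the address is homed on the
   requesting node and expensive otherwise (the remote access would travel
   as a message over the interconnect). Latencies are placeholders. */
unsigned access_cost(unsigned node, unsigned long addr, unsigned long block,
                     unsigned t_local, unsigned t_remote) {
    return home_node(addr, block) == node ? t_local : t_remote;
}
```

The same program-level read therefore costs different amounts depending on which processor issues it, which is why data placement matters on DSM machines.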

Alphabet Soup

- Terms for shared-memory multiprocessors:
  - NUMA = non-uniform memory access: BBN Butterfly, Cray T3E, Origin 2000
  - UMA = uniform memory access: Sequent, Sun HPC 1000
  - COMA = cache-only memory architecture: KSR
  - (NORMA = no remote memory access: message-passing MPPs)

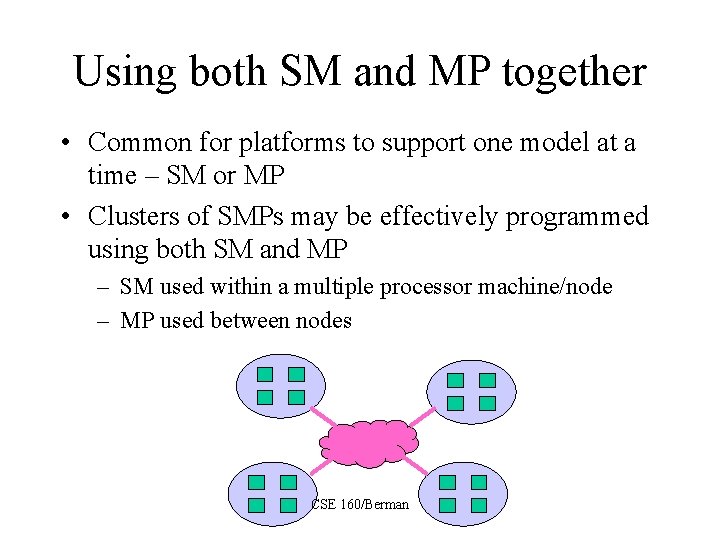

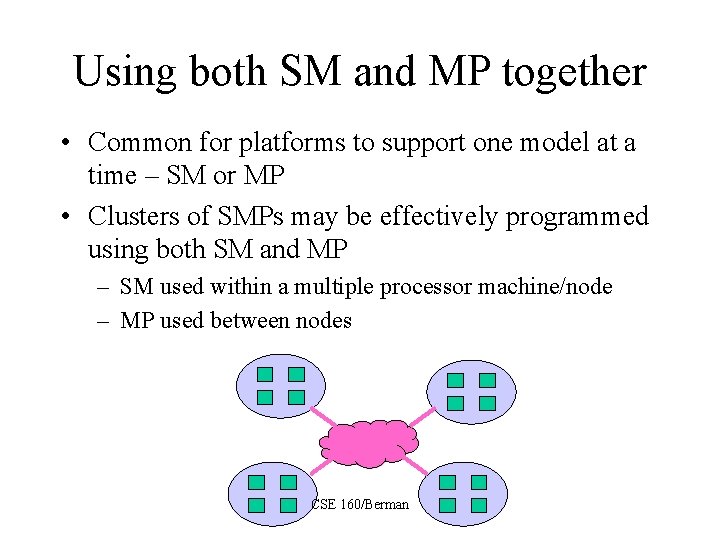

Using Both SM and MP Together

- It is common for platforms to support one model at a time: SM or MP.
- Clusters of SMPs may be effectively programmed using both SM and MP:
  - SM is used within a multiprocessor machine/node.
  - MP is used between nodes.

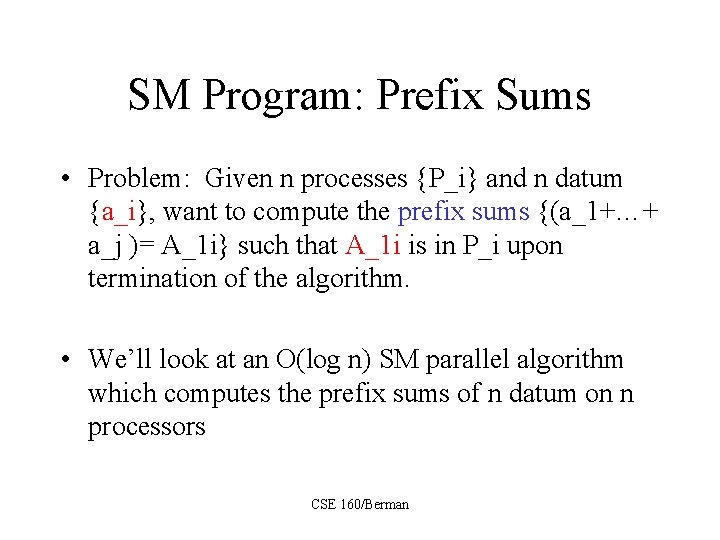

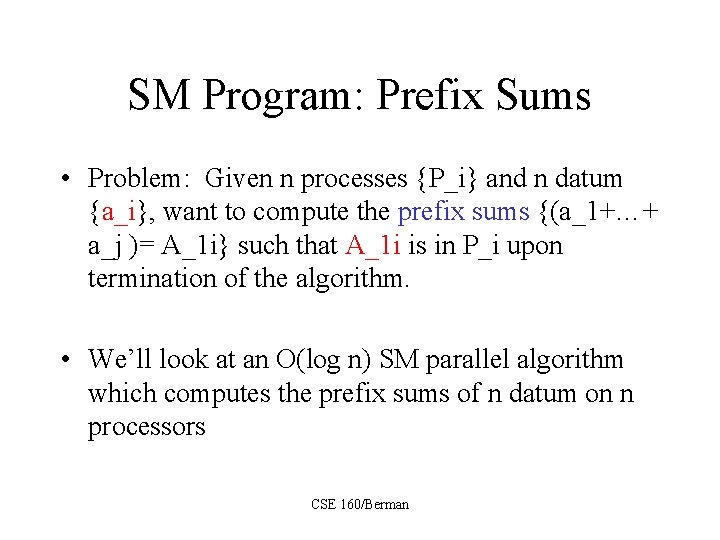

SM Program: Prefix Sums

- Problem: given n processes {P_i} and n data items {a_i}, compute the prefix sums A_{1i} = a_1 + … + a_i, such that A_{1i} is in P_i when the algorithm terminates.
- We'll look at an O(log n) SM parallel algorithm that computes the prefix sums of n data items on n processors.

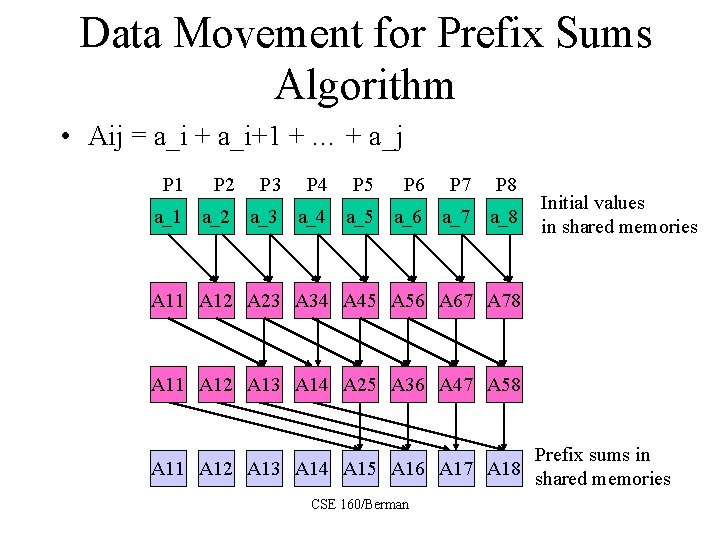

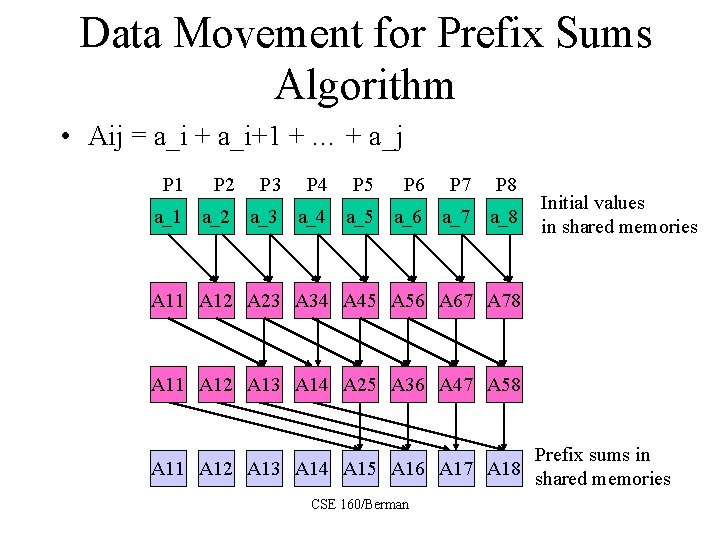

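Data Movement for Prefix Sums Algorithm

- A_{ij} = a_i + a_{i+1} + … + a_j

| Contents of shared memories | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 |
|---|---|---|---|---|---|---|---|---|
| Initial values | a_1 | a_2 | a_3 | a_4 | a_5 | a_6 | a_7 | a_8 |
| After j = 0 | A_{11} | A_{12} | A_{23} | A_{34} | A_{45} | A_{56} | A_{67} | A_{78} |
| After j = 1 | A_{11} | A_{12} | A_{13} | A_{14} | A_{25} | A_{36} | A_{47} | A_{58} |
| After j = 2 (prefix sums) | A_{11} | A_{12} | A_{13} | A_{14} | A_{15} | A_{16} | A_{17} | A_{18} |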

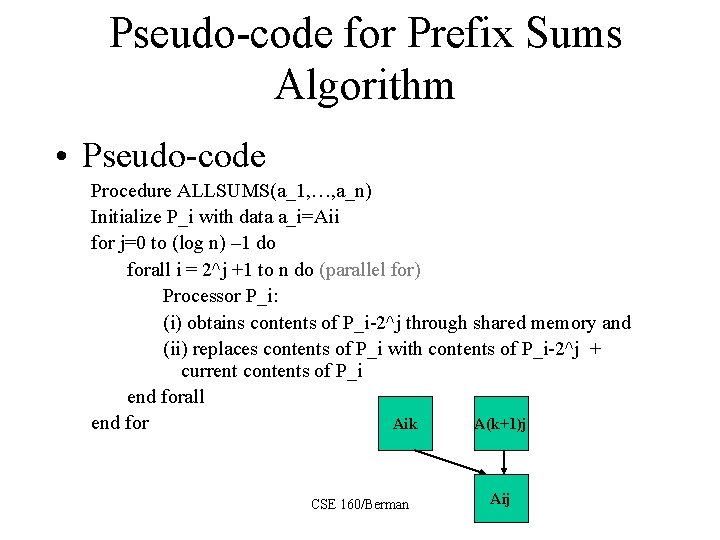

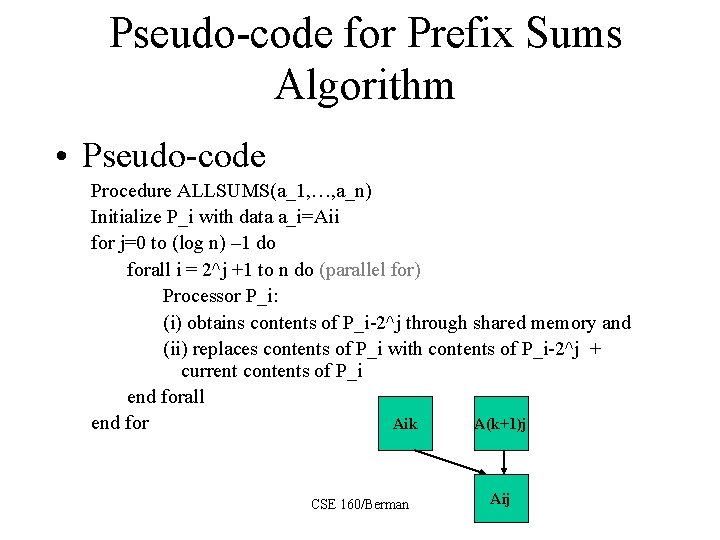

Pseudo-code for Prefix Sums Algorithm

    Procedure ALLSUMS(a_1, …, a_n)
      Initialize P_i with data a_i = A_ii
      for j = 0 to (log n) - 1 do
        forall i = 2^j + 1 to n do          (parallel for)
          Processor P_i:
            (i)  obtains the contents of P_{i-2^j} through shared memory, and
            (ii) replaces the contents of P_i with
                 (contents of P_{i-2^j}) + (current contents of P_i)
        end forall
      end for

- Combining step: A_{ik} + A_{(k+1)j} = A_{ij}
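ALLSUMS can be checked with a sequential simulation, assuming `A[i]` stands for the shared-memory location of P_{i+1} (0-based indexing). The snapshot copy `prev` stands in for the "all P_i act at once" assumption: every addition at a level reads values from the end of the previous level. The function name `allsums` follows the slide's procedure name, but this C version is only an illustrative sketch.

```c
#include <stdlib.h>
#include <string.h>

/* Simulates ALLSUMS on n "processors". On return, A[i] = A[0] + ... + A[i].
   Returns 0 on success, -1 if the snapshot buffer cannot be allocated. */
int allsums(int A[], int n) {
    if (n <= 1) return 0;
    int *prev = malloc((size_t)n * sizeof *prev);
    if (prev == NULL) return -1;
    for (int offset = 1; offset < n; offset *= 2) {     /* offset = 2^j */
        /* Snapshot the end of the previous level so every addition at this
           level reads "old" values, as if all P_i acted simultaneously. */
        memcpy(prev, A, (size_t)n * sizeof *A);
        for (int i = offset; i < n; i++)                 /* forall i = 2^j + 1 .. n */
            A[i] = prev[i - offset] + prev[i];
    }
    free(prev);
    return 0;
}
```

The outer loop runs ceil(log2 n) times, which is log n levels when n is a power of two: 3 levels for the 8-processor example.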

Programming Issues

- The algorithm assumes that all additions with the same offset (i.e., at each level) are performed at the same time.
  - We need some way of tagging or synchronizing computations.
  - It may be cost-effective to do a barrier synchronization between levels (all processors must reach a “barrier” before proceeding to the next level).
- For this algorithm, there are no write conflicts within a level: each P_i writes only its own location, and one of the two values is already there, so the other value need only be added to the existing value.
  - If two processes had to update the same shared variable, we would need to establish a well-defined protocol for handling conflicting writes.
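Why the levels must stay separated can be seen by dropping the snapshot and updating in place. In the sketch below, an ascending sweep lets P_i read a neighbor that has already advanced to the current level, loosely modeling processors that run ahead without a barrier, and the sums come out wrong. A descending sweep happens to read only not-yet-updated neighbors and gives the correct result. Both function names are hypothetical.

```c
/* In-place, ascending i: P_i may read P_{i-2^j} after it has already been
   updated at this level, mixing two levels (models missing synchronization). */
void allsums_unsynchronized(int A[], int n) {
    for (int offset = 1; offset < n; offset *= 2)
        for (int i = offset; i < n; i++)
            A[i] = A[i - offset] + A[i];
}

/* In-place, descending i: A[i - offset] has not been touched yet at this
   level, so every read sees the previous level's value. */
void allsums_descending(int A[], int n) {
    for (int offset = 1; offset < n; offset *= 2)
        for (int i = n - 1; i >= offset; i--)
            A[i] = A[i - offset] + A[i];
}
```

With four 1s as input, the unsynchronized version produces 1, 2, 4, 6 instead of 1, 2, 3, 4.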

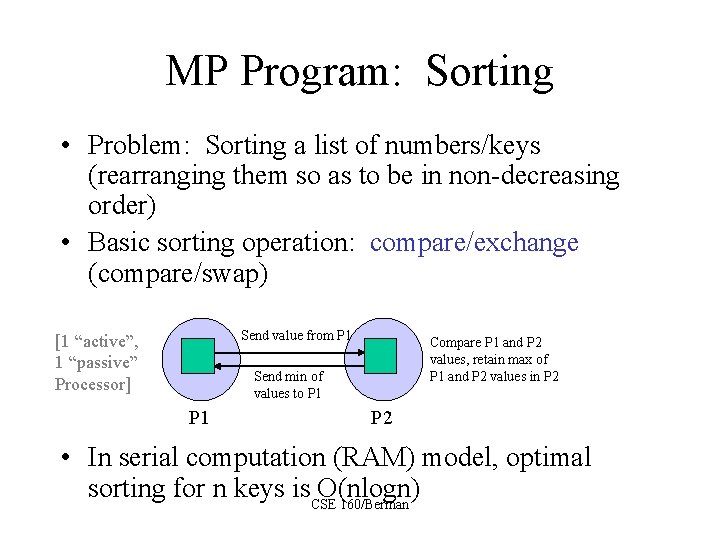

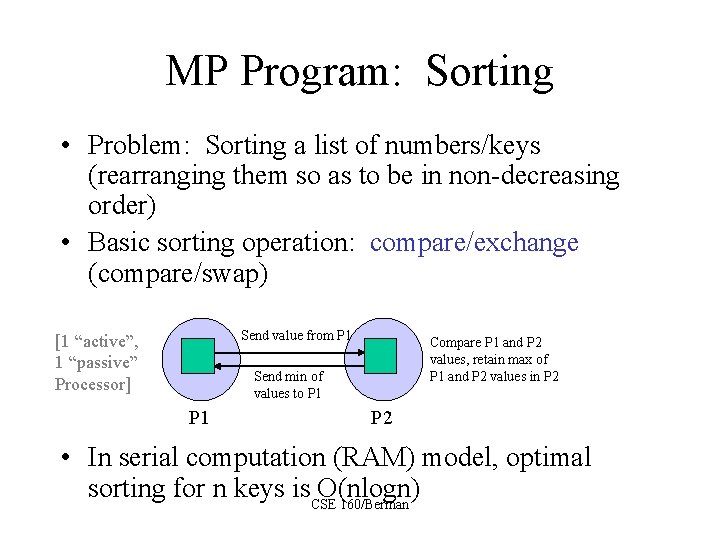

MP Program: Sorting

- Problem: sort a list of numbers/keys (rearrange them into non-decreasing order).
- Basic sorting operation: compare-exchange (compare/swap), with one “active” and one “passive” processor:
  - P1 sends its value to P2.
  - P2 compares the P1 and P2 values and retains the max.
  - P2 sends the min back to P1.
- In the serial computation (RAM) model, optimal comparison-based sorting of n keys takes Θ(n log n) comparisons.
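The three message steps of a compare-exchange can be traced in a small sketch, assuming the two processes' values are passed by pointer and each "message" is modeled as a plain copy. The function name `compare_exchange` is hypothetical.

```c
/* Compare-exchange between P1 (holds *p1) and P2 (holds *p2), following the
   active/passive message pattern; "messages" are modeled as plain copies. */
void compare_exchange(int *p1, int *p2) {
    int msg = *p1;                      /* P1 sends its value to P2 */
    int lo = msg < *p2 ? msg : *p2;     /* P2 compares the two values */
    int hi = msg < *p2 ? *p2 : msg;
    *p2 = hi;                           /* P2 retains the max */
    *p1 = lo;                           /* P2 sends the min back; P1 stores it */
}
```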

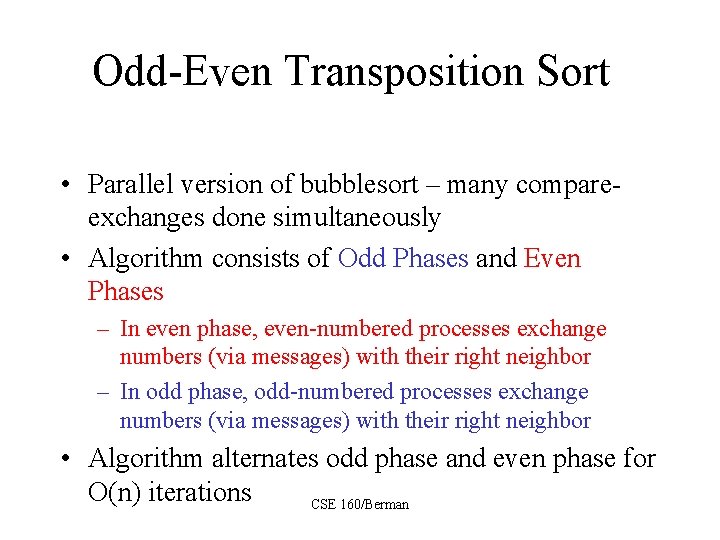

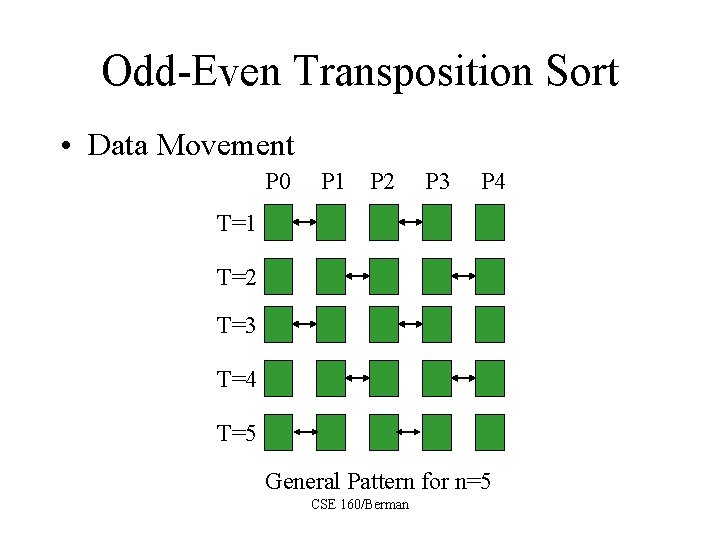

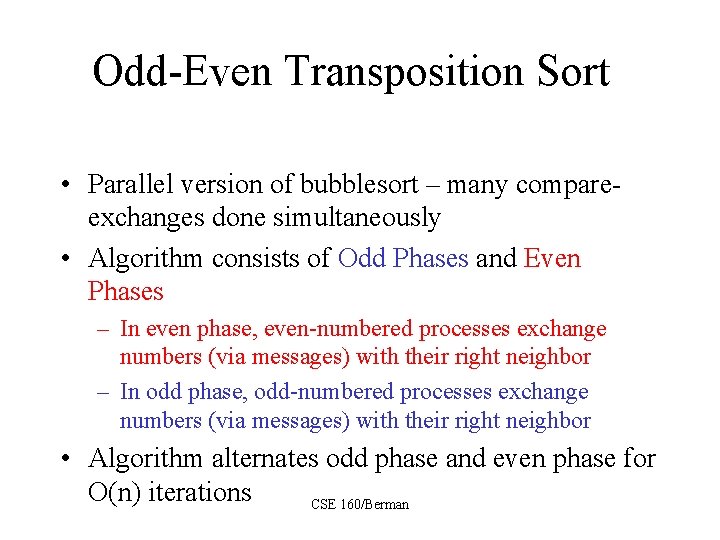

Odd-Even Transposition Sort

- A parallel version of bubblesort: many compare-exchanges are done simultaneously.
- The algorithm consists of odd phases and even phases:
  - In an even phase, even-numbered processes exchange numbers (via messages) with their right neighbor.
  - In an odd phase, odd-numbered processes exchange numbers (via messages) with their right neighbor.
- The algorithm alternates odd and even phases for O(n) iterations; n phases suffice to sort n keys.

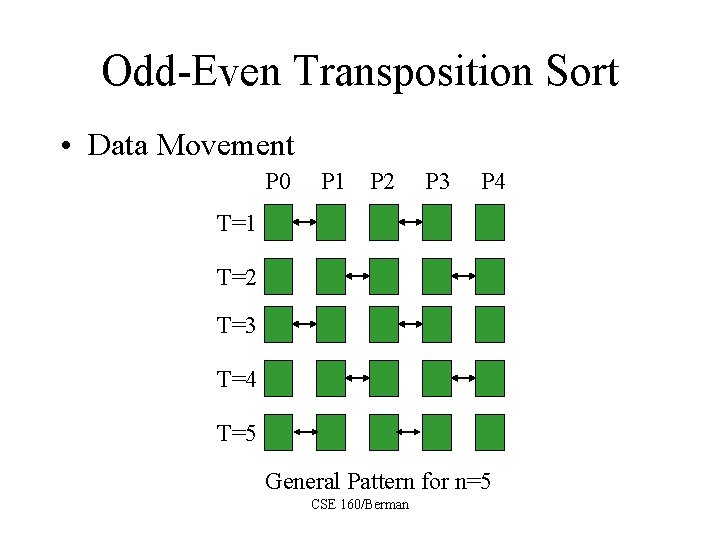

Odd-Even Transposition Sort: Data Movement

(Figure: general pattern of compare-exchanges among P0 to P4 over time steps T=1 to T=5, for n = 5.)

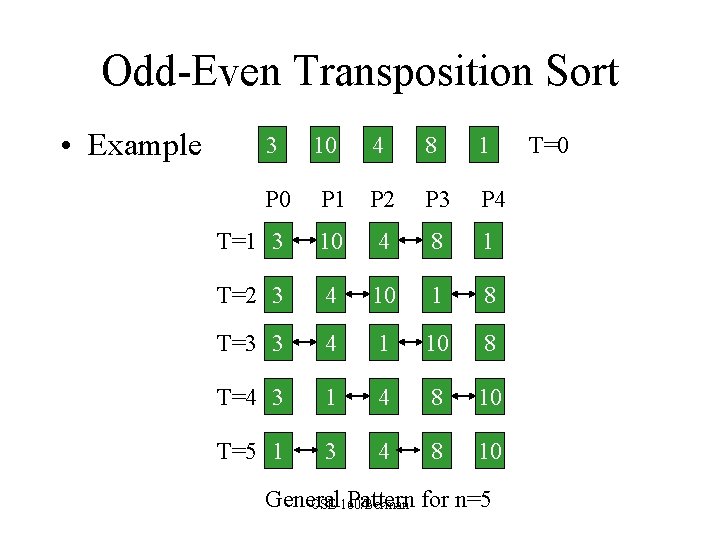

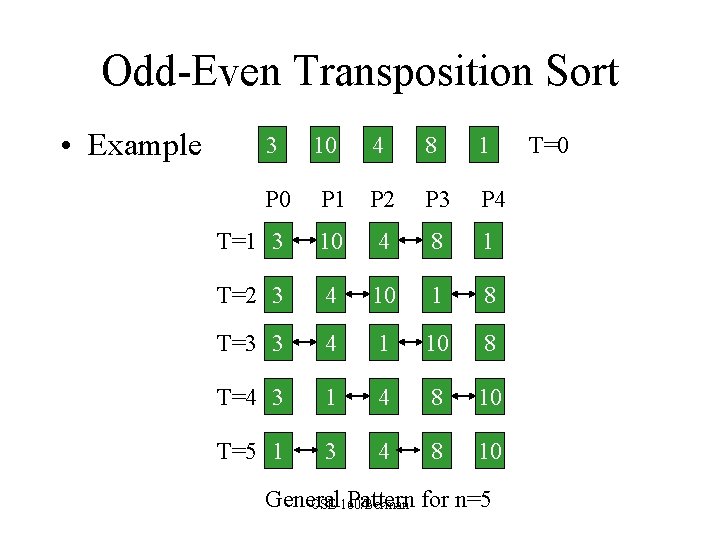

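Odd-Even Transposition Sort: Example (n = 5)

| T | P0 | P1 | P2 | P3 | P4 |
|---|---|---|---|---|---|
| 0 | 3 | 10 | 4 | 8 | 1 |
| 1 | 3 | 10 | 4 | 8 | 1 |
| 2 | 3 | 4 | 10 | 1 | 8 |
| 3 | 3 | 4 | 1 | 10 | 8 |
| 4 | 3 | 1 | 4 | 8 | 10 |
| 5 | 1 | 3 | 4 | 8 | 10 |

T = 1, 3, 5 are even phases (pairs P0/P1 and P2/P3); T = 2, 4 are odd phases (pairs P1/P2 and P3/P4).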
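The example can be replayed with a sequential simulation in which each array slot stands for one process. A minimal sketch; `odd_even_sort` and its `phases` parameter are hypothetical, and phase t starts its pairs at index t % 2, so the first phase is an even phase, as at T = 1 in the table.

```c
/* Sequential simulation of odd-even transposition sort on n "processes".
   Phase t (t = 0, 1, ...) compare-exchanges pairs starting at index t % 2:
   even phases pair P0/P1, P2/P3, ...; odd phases pair P1/P2, P3/P4, ...
   Running `phases` = n phases sorts the array. */
void odd_even_sort(int a[], int n, int phases) {
    for (int t = 0; t < phases; t++)
        for (int i = t % 2; i + 1 < n; i += 2)
            if (a[i] > a[i + 1]) {      /* left keeps min, right keeps max */
                int tmp = a[i];
                a[i] = a[i + 1];
                a[i + 1] = tmp;
            }
}
```

Stopping after 2 phases reproduces the T = 2 row (3 4 10 1 8), and 5 phases give the sorted row.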

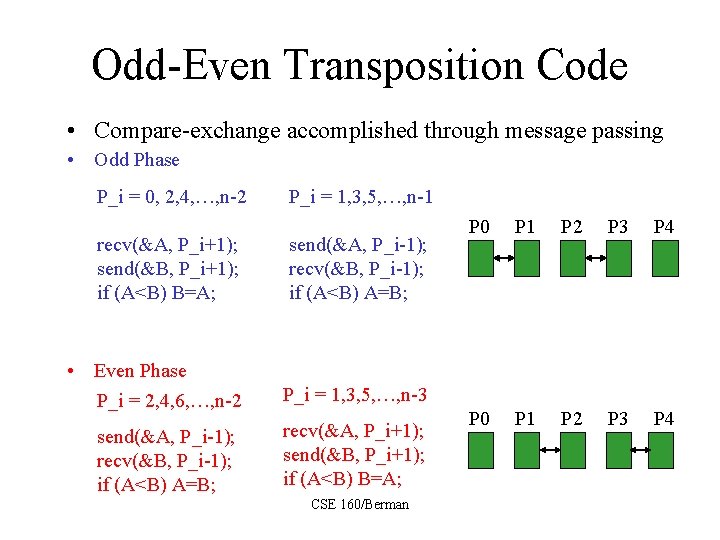

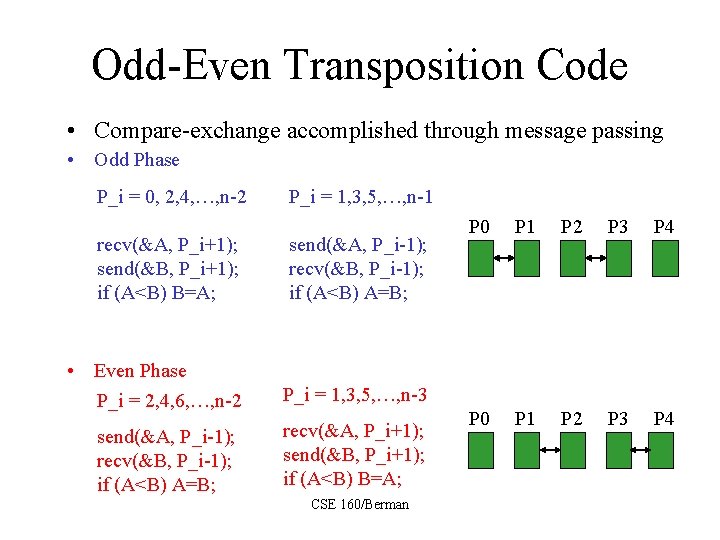

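Odd-Even Transposition Code

- Compare-exchange is accomplished through message passing. In each pair, each process sends its own value and receives its partner's; the left process keeps the smaller value and the right process keeps the larger. The index ranges below assume n is even.
- Even phase (pairs P0/P1, P2/P3, …):

      P_i, i = 0, 2, 4, …, n-2        P_i, i = 1, 3, 5, …, n-1
      recv(&A, P_i+1);                send(&A, P_i-1);
      send(&B, P_i+1);                recv(&B, P_i-1);
      if (A < B) B = A;               if (A < B) A = B;

- Odd phase (pairs P1/P2, P3/P4, …):

      P_i, i = 2, 4, 6, …, n-2        P_i, i = 1, 3, 5, …, n-3
      send(&A, P_i-1);                recv(&A, P_i+1);
      recv(&B, P_i-1);                send(&B, P_i+1);
      if (A < B) A = B;               if (A < B) B = A;

- In every pair one process sends first while its partner receives first, so the exchange cannot deadlock even with synchronous sends.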

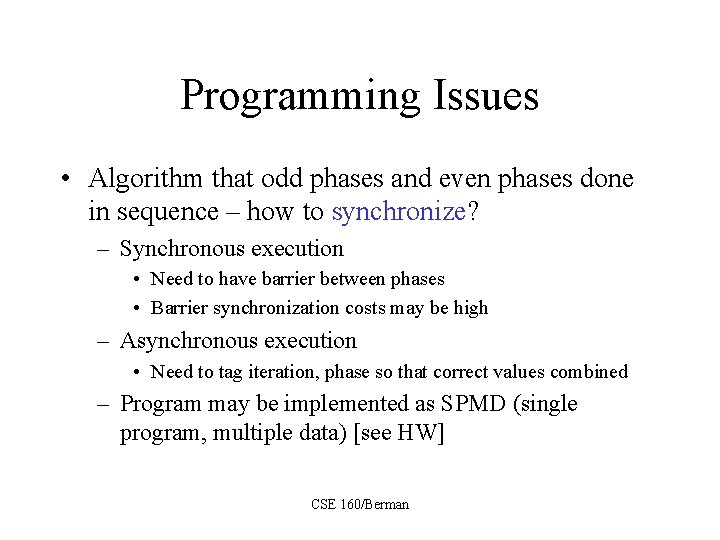

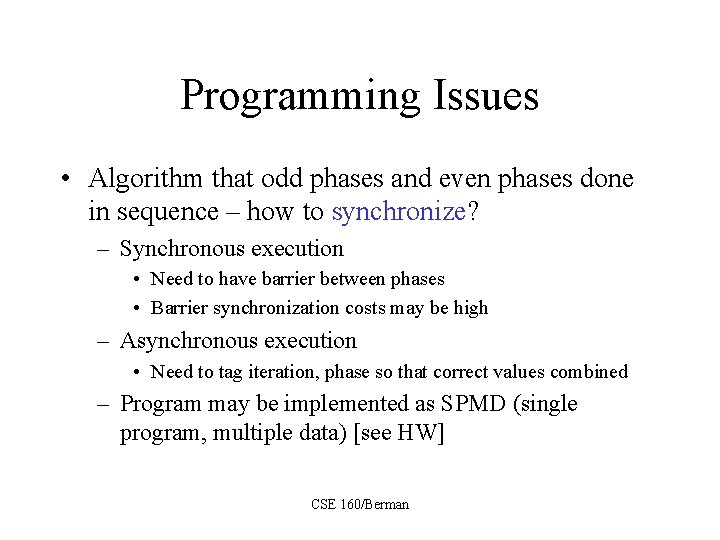

Programming Issues

- The algorithm requires that odd and even phases be done in sequence; how should they be synchronized?
  - Synchronous execution
    - Needs a barrier between phases.
    - Barrier synchronization costs may be high.
  - Asynchronous execution
    - Needs to tag the iteration and phase so that the correct values are combined.
- The program may be implemented as SPMD (single program, multiple data) [see HW].
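For asynchronous execution, tagging can be sketched as a receive that accepts only the message whose tag matches the phase it is waiting for, leaving early arrivals from later phases queued. This mirrors the tag argument of MPI's `MPI_Recv`, but the `message` type, the queue, and `recv_tagged` are hypothetical, and using the phase number as the tag is an assumption.

```c
/* A received message carrying its phase number as a tag. */
typedef struct {
    int tag;
    int value;
} message;

/* Remove the first queued message whose tag equals `want`, store its value in
   *value, and return 1. Messages for other phases stay queued in arrival
   order. Returns 0 if no matching message has arrived yet. */
int recv_tagged(message q[], int *len, int want, int *value) {
    for (int k = 0; k < *len; k++) {
        if (q[k].tag == want) {
            *value = q[k].value;
            for (int j = k + 1; j < *len; j++)   /* close the gap */
                q[j - 1] = q[j];
            (*len)--;
            return 1;
        }
    }
    return 0;
}
```

A process waiting for phase 1 thus ignores a phase-2 message that a faster neighbor has already sent, instead of combining the wrong values.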

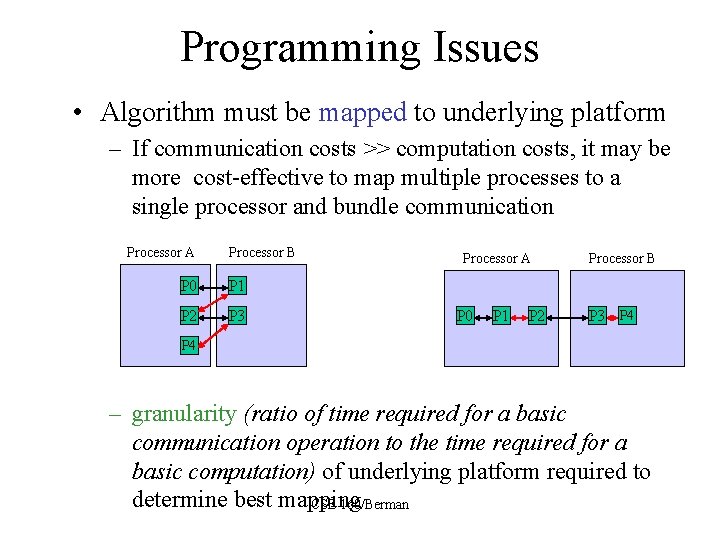

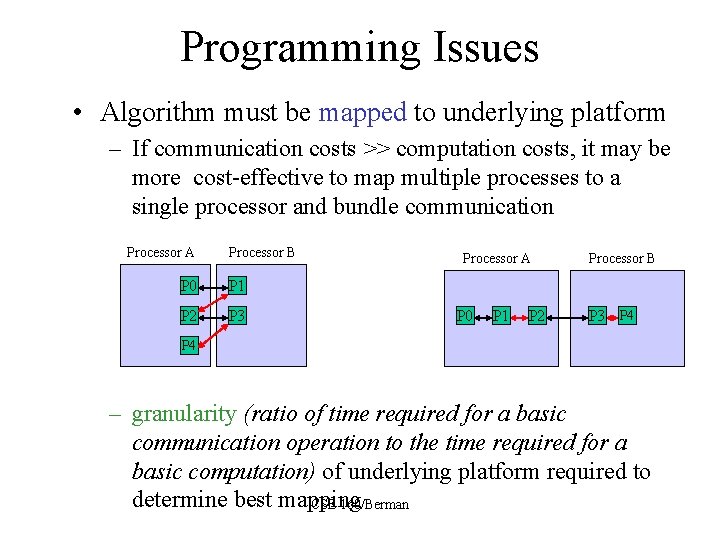

Programming Issues

- The algorithm must be mapped to the underlying platform.
  - If communication costs >> computation costs, it may be more cost-effective to map multiple processes to a single processor and bundle communication.
  - (Figure: processes mapped onto Processor A and Processor B; in one mapping, P0, P1, P2 run on Processor A and P3, P4 on Processor B.)
  - The granularity of the underlying platform (the ratio of the time required for a basic communication operation to the time required for a basic computation) is needed to determine the best mapping.
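The effect of bundling can be estimated by counting how many compare-exchange pairs still cross a processor boundary. A minimal sketch, assuming a block mapping of n processes onto p processors; `owner` and `cross_pairs` are hypothetical names. Only crossing pairs need inter-processor messages; the others become local operations.

```c
/* Block mapping: process i (0 <= i < n) goes to processor i / ceil(n/p). */
unsigned owner(unsigned i, unsigned n, unsigned p) {
    unsigned per = (n + p - 1) / p;   /* processes per processor, rounded up */
    return i / per;
}

/* Number of compare-exchange pairs in one phase whose two processes sit on
   different processors. parity 0: pairs (0,1),(2,3),...; parity 1: (1,2),(3,4),... */
unsigned cross_pairs(unsigned n, unsigned p, unsigned parity) {
    unsigned count = 0;
    for (unsigned i = parity; i + 1 < n; i += 2)
        if (owner(i, n, p) != owner(i + 1, n, p))
            count++;
    return count;
}
```

With the slide's five processes on two processors (P0 to P2 on A, P3 and P4 on B), an even phase needs 1 inter-processor exchange and an odd phase needs none, versus 2 per phase when each process has its own processor.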

Is Odd-Even Transposition Sort Optimal?

- What is optimal?
  - An algorithm is optimal if there is a lower bound for the problem it addresses, with respect to the basic operation being counted, that equals the upper bound given by the algorithm's complexity function, i.e., lower bound = upper bound.

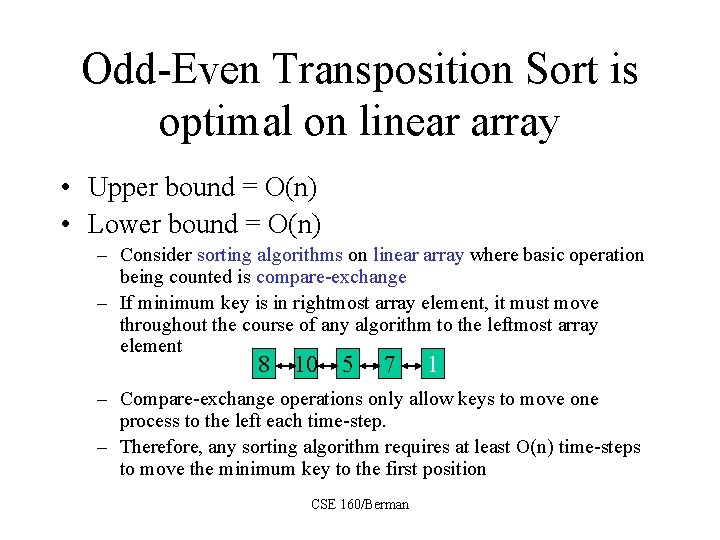

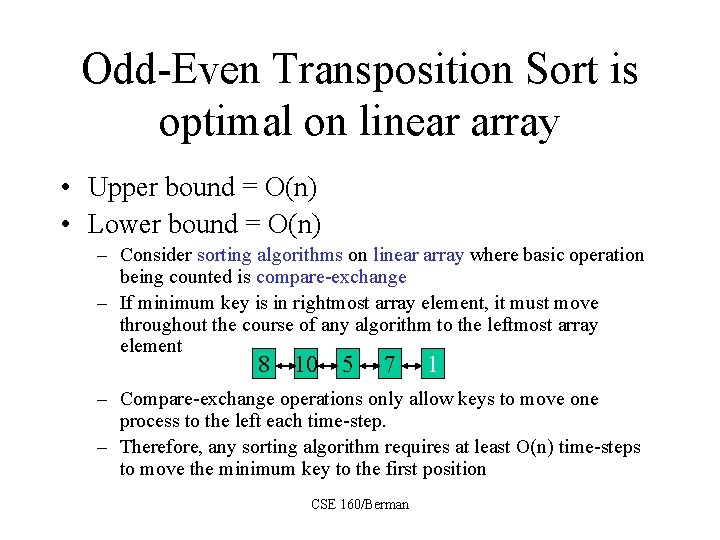

Odd-Even Transposition Sort Is Optimal on a Linear Array

- Upper bound = O(n)
- Lower bound = Ω(n)
  - Consider sorting algorithms on a linear array where the basic operation being counted is compare-exchange.
  - If the minimum key is in the rightmost array element (e.g., 8 10 5 7 1), then over the course of any algorithm it must move to the leftmost array element.
  - Compare-exchange operations allow a key to move at most one process to the left in each time step.
  - Therefore, any sorting algorithm requires at least n-1 time steps, i.e., Ω(n), to move the minimum key to the first position.
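The distance argument can be checked on the slide's array by counting phases until the minimum reaches P0. A minimal sketch using the same phase convention as the earlier simulation (even phase first); `phases_until_min_at_front` is a hypothetical name. The loop always terminates because odd-even transposition sort sorts within n phases.

```c
/* Runs odd-even transposition phases (even phase first) on a[0..n-1] and
   returns the number of phases completed when the minimum key reaches a[0]. */
int phases_until_min_at_front(int a[], int n) {
    int min = a[0];
    for (int i = 1; i < n; i++)
        if (a[i] < min) min = a[i];
    int t = 0;
    while (a[0] != min) {
        for (int i = t % 2; i + 1 < n; i += 2)
            if (a[i] > a[i + 1]) {
                int tmp = a[i];
                a[i] = a[i + 1];
                a[i + 1] = tmp;
            }
        t++;
    }
    return t;
}
```

For 8 10 5 7 1 it returns 5: the key 1 starts n-1 = 4 positions from the front and moves at most one position per phase, so no compare-exchange schedule could need fewer than 4.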

Optimality

- Ω(n log n) lower bound for serial sorting algorithms on a RAM with respect to comparisons
- Ω(n) lower bound for parallel sorting algorithms on a linear array with respect to compare-exchanges
- No conflict, since the platforms/computing environments are different (apples vs. oranges).
- Note that in the parallel world there are different lower bounds for sorting in different environments:
  - Ω(log n) lower bound on a PRAM (Parallel RAM)
  - Ω(n^{1/2}) lower bound on a 2D array, etc.

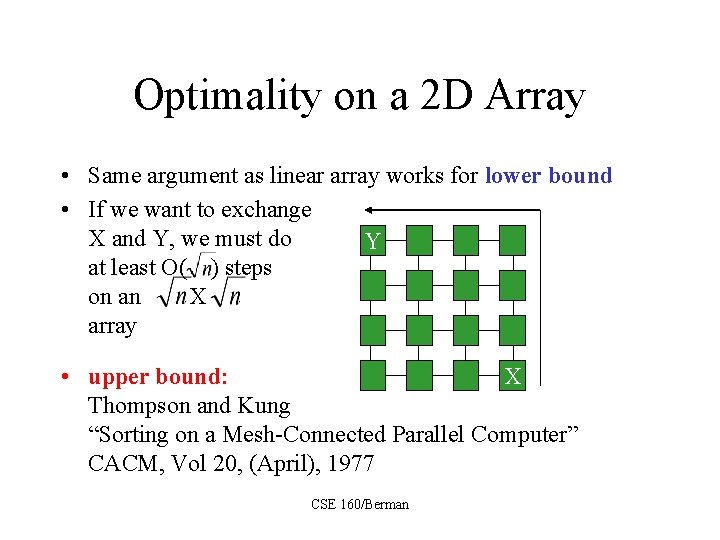

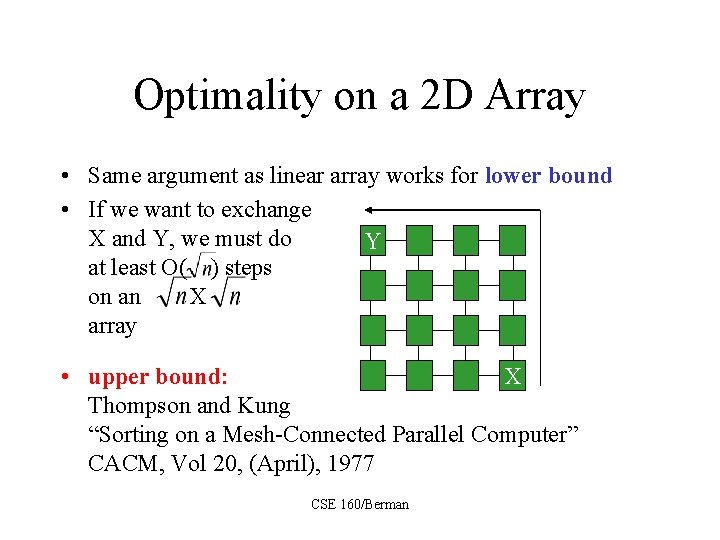

Optimality on a 2D Array

- The same argument as for the linear array works for the lower bound.
  - If keys X and Y start in opposite corners of a √n × √n array and must be exchanged, at least 2(√n - 1) = Ω(√n) steps are needed, since a key moves at most one position per step.
- Upper bound: Thompson and Kung, “Sorting on a Mesh-Connected Parallel Computer,” CACM, Vol. 20 (April 1977).