Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank

- Slides: 21

Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank

Richard Socher, Alex Perelygin, Jean Y. Wu, Jason Chuang, Christopher D. Manning, Andrew Y. Ng and Christopher Potts

Presented by Ben King for the NLP Reading Group, November 13, 2013

(some material borrowed from the NAACL 2013 Deep Learning tutorial)

Sentiment Treebank

Need for a Sentiment Treebank

- Almost all work on sentiment analysis has used mostly word-order-independent methods
- But many papers acknowledge that sentiment interacts with syntax in complex ways
- Little work has been done on these interactions because they're very difficult to learn
- Single-sentence sentiment classification accuracy has languished at ~80% for a long time

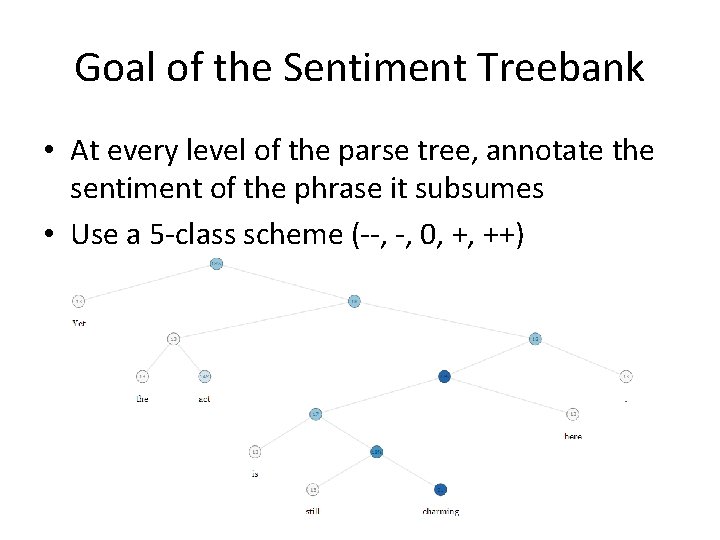

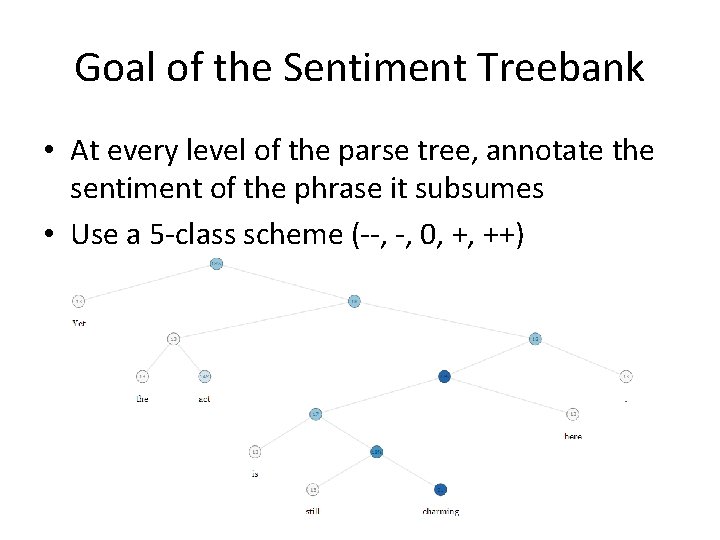

Goal of the Sentiment Treebank

- At every level of the parse tree, annotate the sentiment of the phrase it subsumes
- Use a 5-class scheme (--, -, 0, +, ++)
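One way to picture "a label at every level of the parse tree" is a binary tree whose every node, leaf or internal, carries one of the five classes. The sketch below is illustrative only: the `Node` class, the helper `labeled_phrases`, and the example labels are assumptions made up for this example, not the treebank's actual file format or annotations.

```python
from dataclasses import dataclass
from typing import Iterator, Optional, Tuple

LABELS = ("--", "-", "0", "+", "++")  # the 5-class scheme from the slide


@dataclass
class Node:
    """Hypothetical tree node: every node stores the sentiment of its phrase."""
    label: str
    word: Optional[str] = None       # set only on leaves
    left: Optional["Node"] = None    # set only on internal nodes
    right: Optional["Node"] = None

    def phrase(self) -> str:
        """Return the text this node spans."""
        if self.word is not None:
            return self.word
        return f"{self.left.phrase()} {self.right.phrase()}"


def labeled_phrases(node: Node) -> Iterator[Tuple[str, str]]:
    """Yield (phrase, label) for every node in the tree, root first."""
    yield node.phrase(), node.label
    if node.word is None:
        yield from labeled_phrases(node.left)
        yield from labeled_phrases(node.right)


# Labels below are invented for illustration, not taken from the treebank.
tree = Node(
    "-",
    left=Node("0", word="not"),
    right=Node("+", left=Node("0", word="very"), right=Node("+", word="good")),
)
pairs = list(labeled_phrases(tree))
```

A three-word sentence yields five labeled phrases here (three words plus two internal nodes), which is why the number of annotated phrases grows much faster than the number of sentences.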

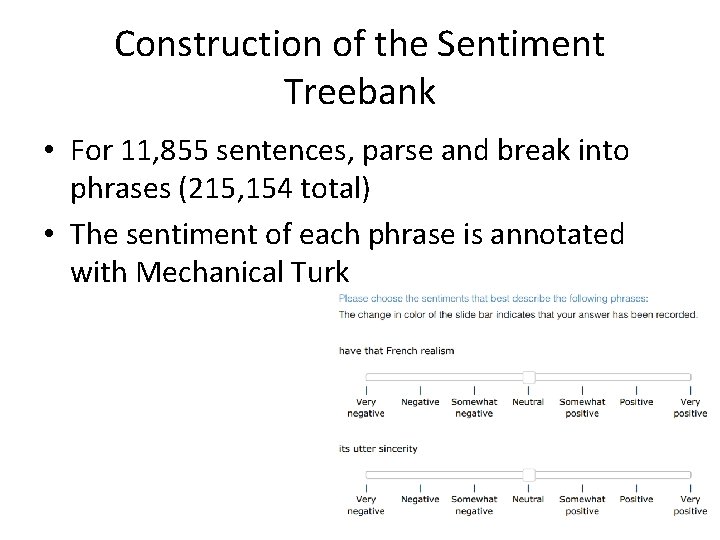

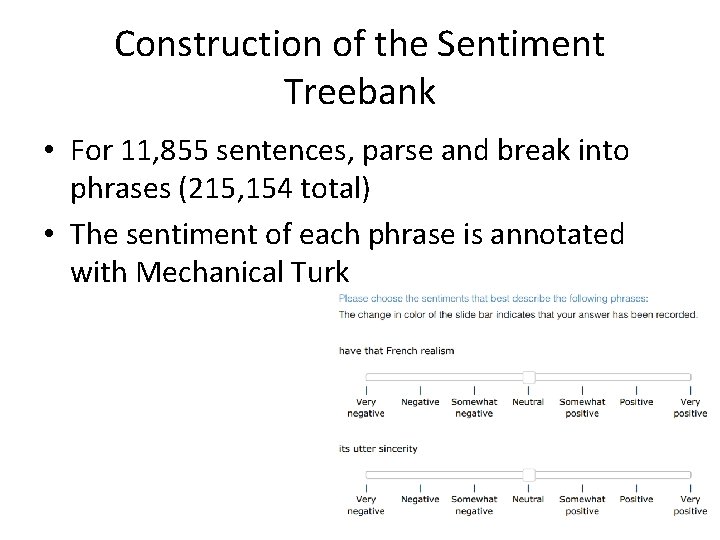

Construction of the Sentiment Treebank

- For 11,855 sentences, parse and break into phrases (215,154 total)
- The sentiment of each phrase is annotated with Mechanical Turk

Construction of the Sentiment Treebank

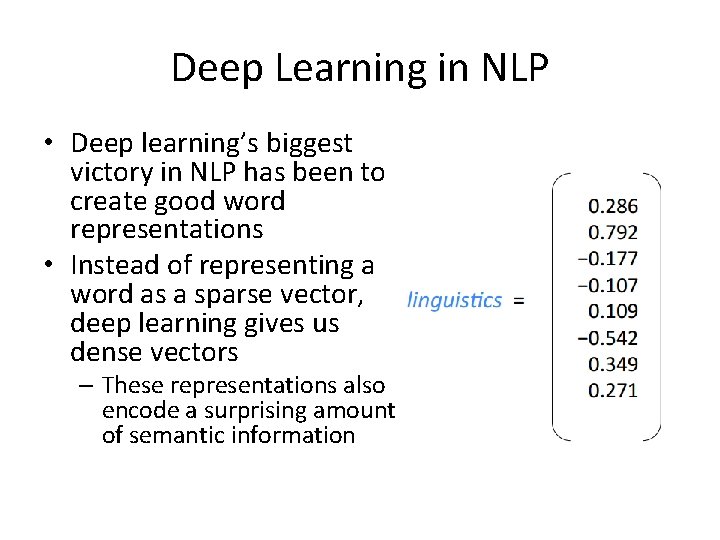

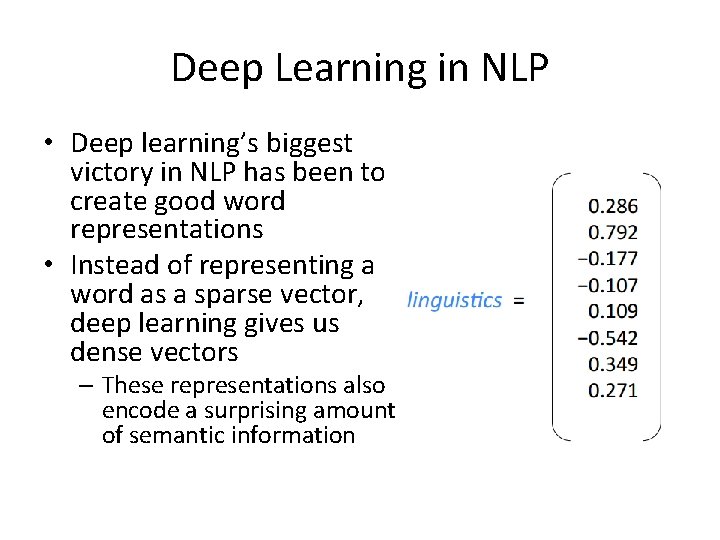

Deep Learning in NLP

- Deep learning's biggest victory in NLP has been to create good word representations
- Instead of representing a word as a sparse vector, deep learning gives us dense vectors
  - These representations also encode a surprising amount of semantic information
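The contrast between sparse and dense representations can be made concrete with a toy comparison. In the sketch below, the three-word vocabulary and the 2-dimensional dense vectors are made-up values chosen for illustration; real embeddings are learned and have many more dimensions.

```python
import math

vocab = ["good", "great", "terrible"]


def one_hot(word):
    """Sparse representation: a 1 in the word's own slot, 0 elsewhere."""
    return [1.0 if w == word else 0.0 for w in vocab]


# Toy dense vectors, invented for illustration (not learned from data).
dense = {
    "good": [0.8, 0.1],
    "great": [0.9, 0.2],
    "terrible": [-0.7, 0.3],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# One-hot vectors of two different words never overlap, so similarity is 0.
sparse_sim = cosine(one_hot("good"), one_hot("great"))

# Dense vectors can place related words close together.
dense_sim = cosine(dense["good"], dense["great"])
opposite_sim = cosine(dense["good"], dense["terrible"])
```

With one-hot vectors every pair of distinct words looks equally unrelated, whereas dense vectors leave room for "good" and "great" to be close and "good" and "terrible" to point in different directions.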

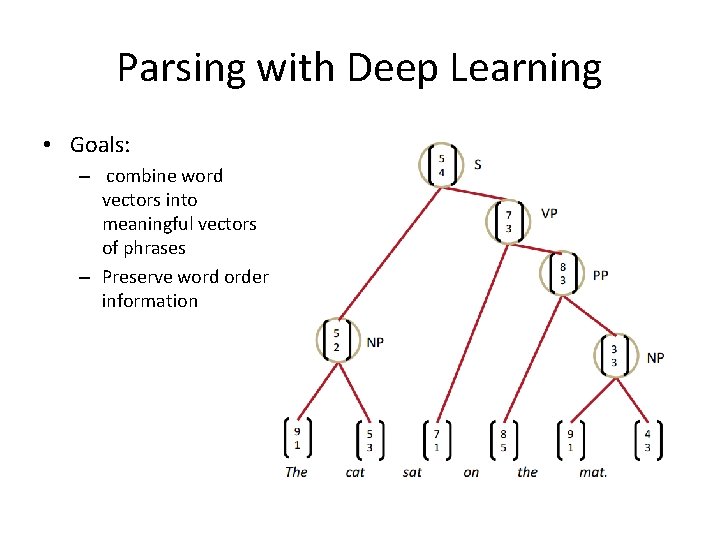

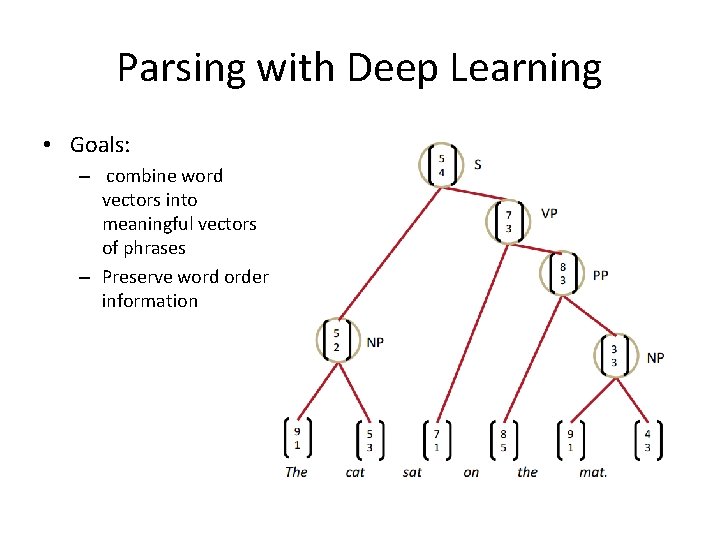

Parsing with Deep Learning

- Goals:
  - Combine word vectors into meaningful vectors of phrases
  - Preserve word order information

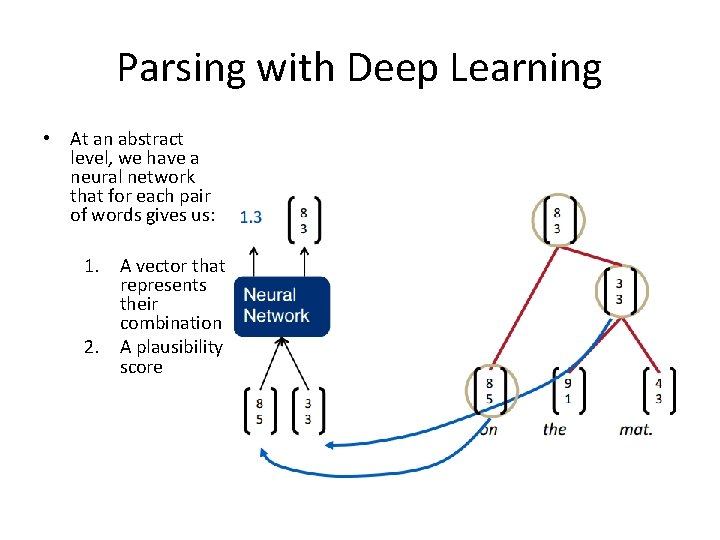

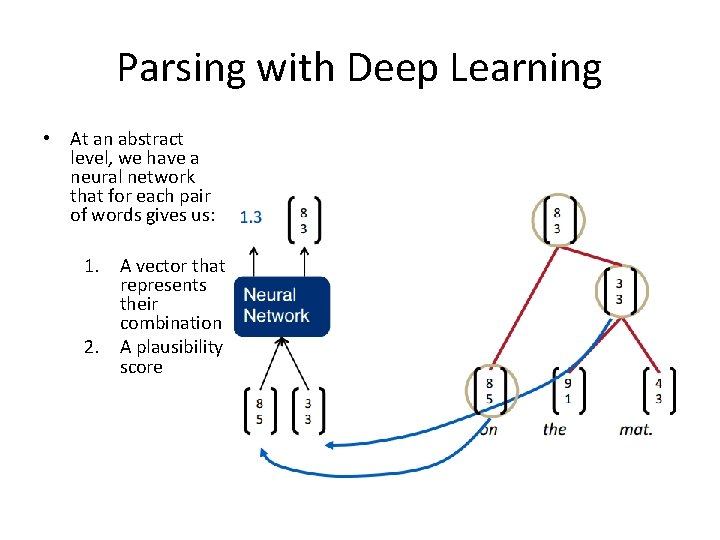

Parsing with Deep Learning

- At an abstract level, we have a neural network that for each pair of words gives us:
  1. A vector that represents their combination
  2. A plausibility score

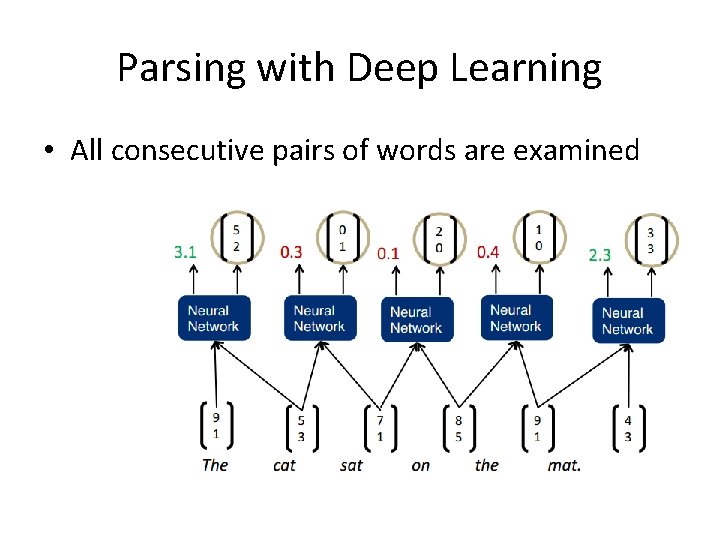

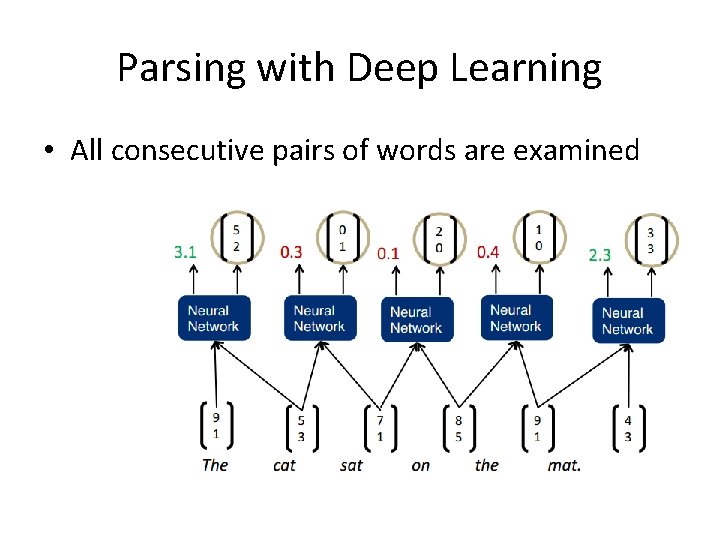

Parsing with Deep Learning

- All consecutive pairs of words are examined

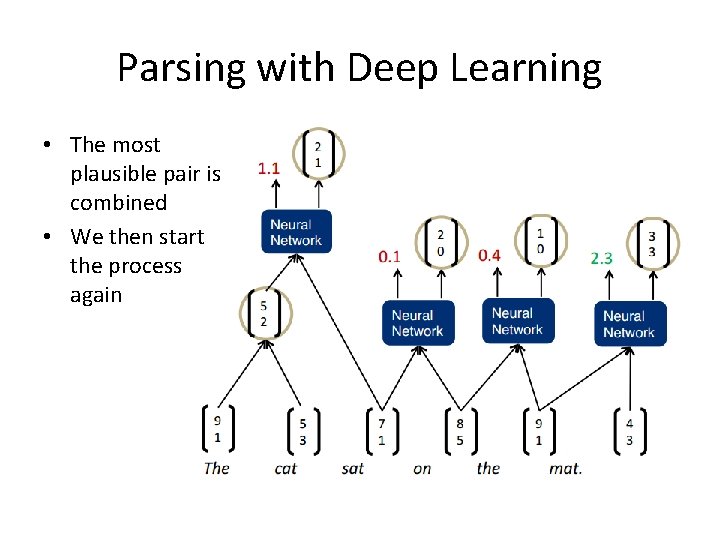

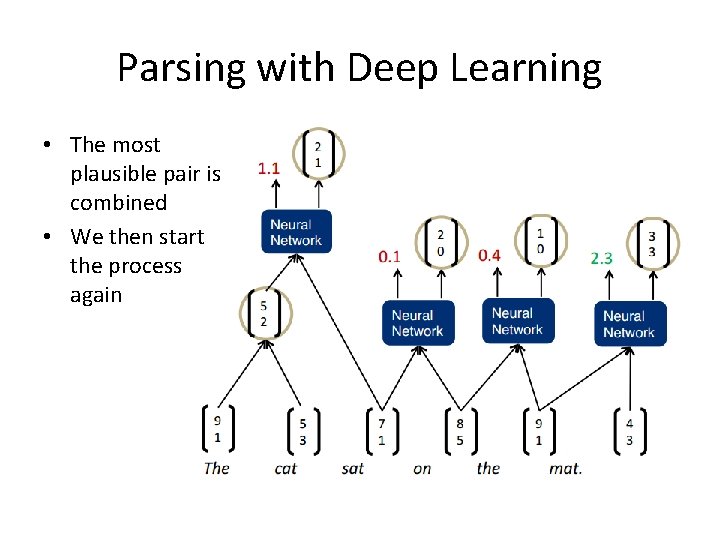

Parsing with Deep Learning

- The most plausible pair is combined
- We then start the process again
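The procedure described on the last few slides (score every adjacent pair, merge the most plausible one, repeat) can be sketched as a greedy loop. This is a minimal sketch under stated assumptions: the composition is taken to be `tanh(W [left; right] + b)` and the plausibility score a dot product with a vector `u`, which is a common formulation but is not spelled out on the slides. The weights are random rather than trained, and the names `compose`, `greedy_parse`, `W`, `b`, `u`, and `D` are illustrative.

```python
import math
import random

random.seed(0)
D = 4  # toy vector dimension (assumption)

# Untrained, random parameters: W maps a concatenated pair (2*D) to a parent (D).
W = [[random.uniform(-0.5, 0.5) for _ in range(2 * D)] for _ in range(D)]
b = [0.0] * D
u = [random.uniform(-0.5, 0.5) for _ in range(D)]  # scoring vector


def compose(left, right):
    """Return (parent_vector, plausibility_score) for two adjacent children."""
    x = left + right  # list concatenation: [left; right]
    parent = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + bias)
        for row, bias in zip(W, b)
    ]
    score = sum(ui * pi for ui, pi in zip(u, parent))
    return parent, score


def greedy_parse(words, vectors):
    """Repeatedly merge the highest-scoring adjacent pair until one node remains."""
    nodes = list(zip(words, vectors))  # each entry is (subtree, vector)
    while len(nodes) > 1:
        # Examine all consecutive pairs.
        candidates = [
            compose(nodes[i][1], nodes[i + 1][1]) for i in range(len(nodes) - 1)
        ]
        # Pick the most plausible pair and replace it with its parent.
        best = max(range(len(candidates)), key=lambda i: candidates[i][1])
        parent_vec, _ = candidates[best]
        merged = ((nodes[best][0], nodes[best + 1][0]), parent_vec)
        nodes[best:best + 2] = [merged]
    return nodes[0]


words = ["the", "movie", "was", "great"]
vectors = [[random.uniform(-1, 1) for _ in range(D)] for _ in words]
tree, root_vec = greedy_parse(words, vectors)
```

Because the parent vector has the same dimension as its children, it can be fed back into `compose` at the next step, which is what lets the same network build the whole tree. Only adjacent nodes are ever merged, so the left-to-right word order is preserved in the resulting tree.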

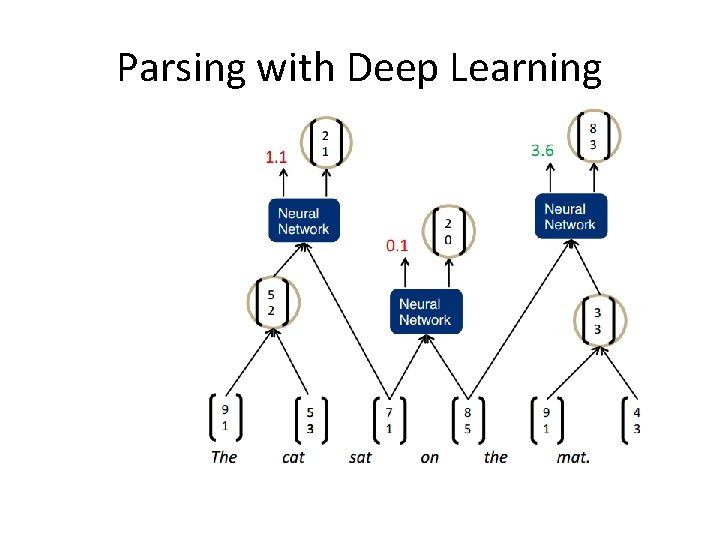

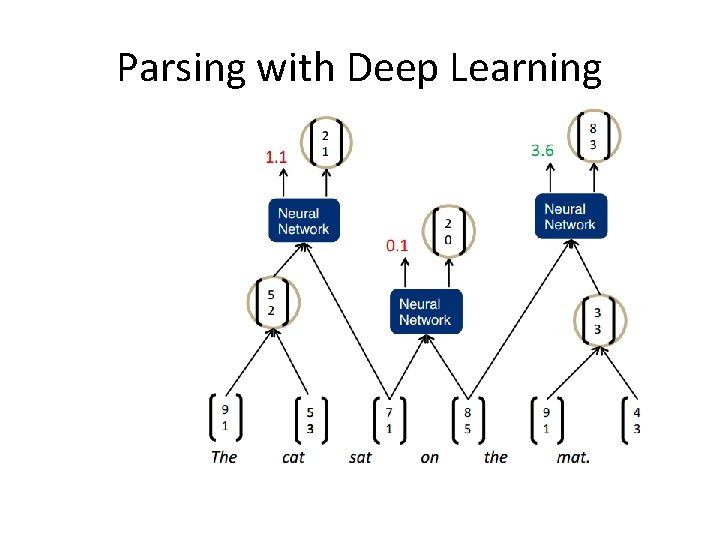

Parsing with Deep Learning

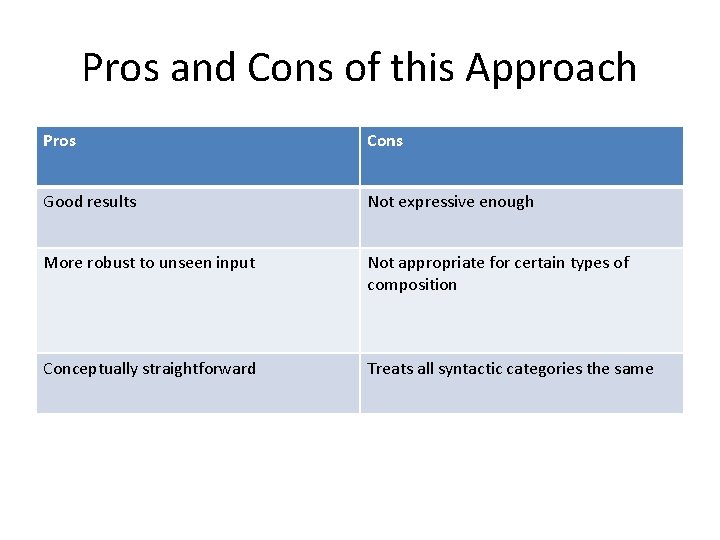

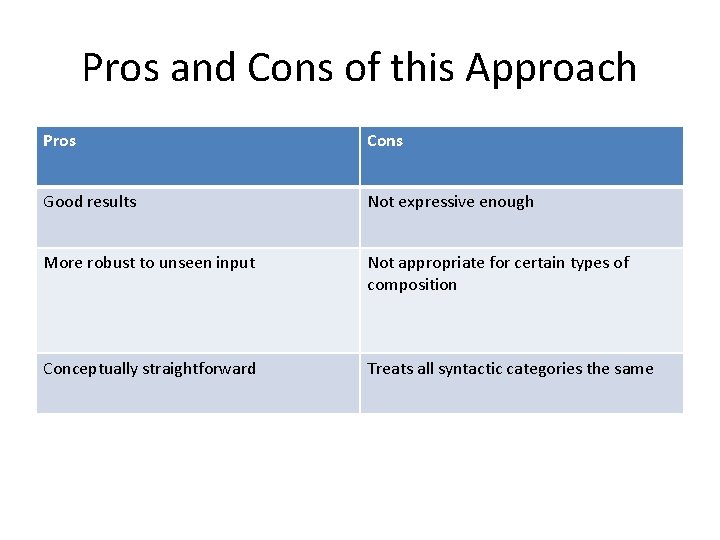

Pros and Cons of this Approach

| Pros | Cons |
| --- | --- |
| Good results | Not expressive enough |
| More robust to unseen input | Not appropriate for certain types of composition |
| Conceptually straightforward | Treats all syntactic categories the same |

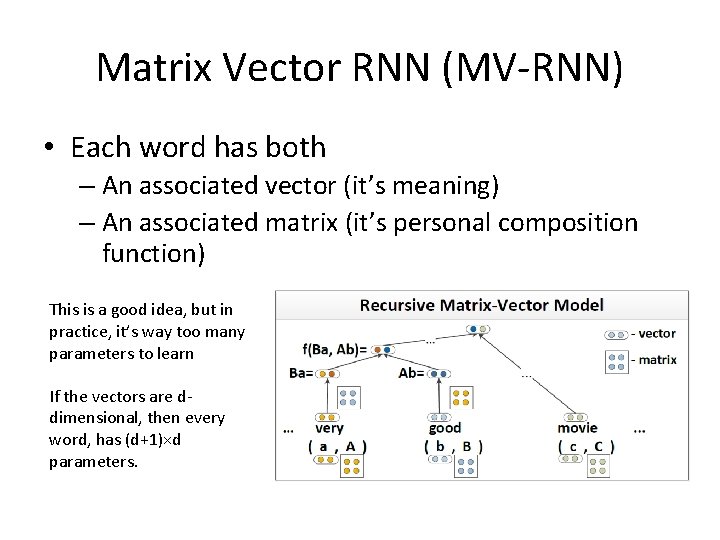

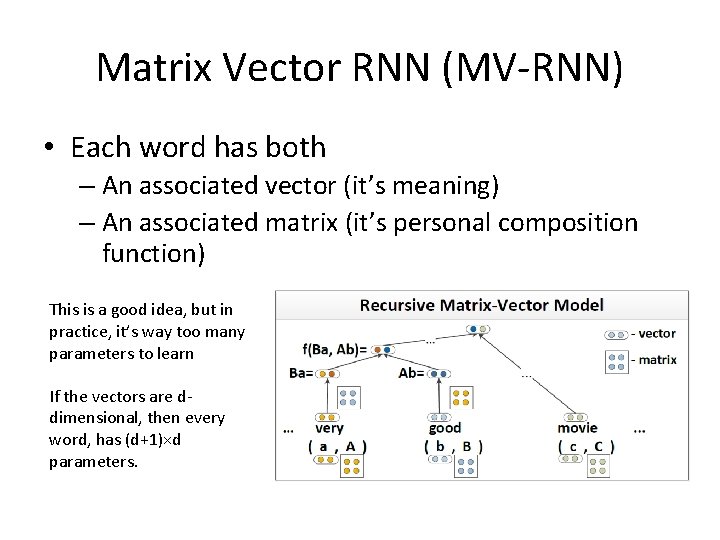

Matrix Vector RNN (MV-RNN)

- Each word has both
  - An associated vector (its meaning)
  - An associated matrix (its personal composition function)

This is a good idea, but in practice it is far too many parameters to learn. If the vectors are d-dimensional, then every word has (d+1)×d parameters: d for the vector plus d×d for the matrix.
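To get a feel for how quickly the per-word parameter count grows, the arithmetic can be written out directly. The dimension and vocabulary size below are hypothetical values picked for illustration; the function name `mv_rnn_word_params` is likewise made up.

```python
def mv_rnn_word_params(d, vocab_size):
    """Count MV-RNN word-level parameters for d-dimensional vectors."""
    per_word = d + d * d          # vector (d) + matrix (d x d) = (d + 1) * d
    return per_word, per_word * vocab_size


# Hypothetical setting: 25-dimensional vectors, 10,000-word vocabulary.
per_word, total = mv_rnn_word_params(25, 10_000)
print(per_word, total)  # 650 6500000
```

The cost is dominated by the d×d matrix, so the total grows quadratically in d and linearly in vocabulary size.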

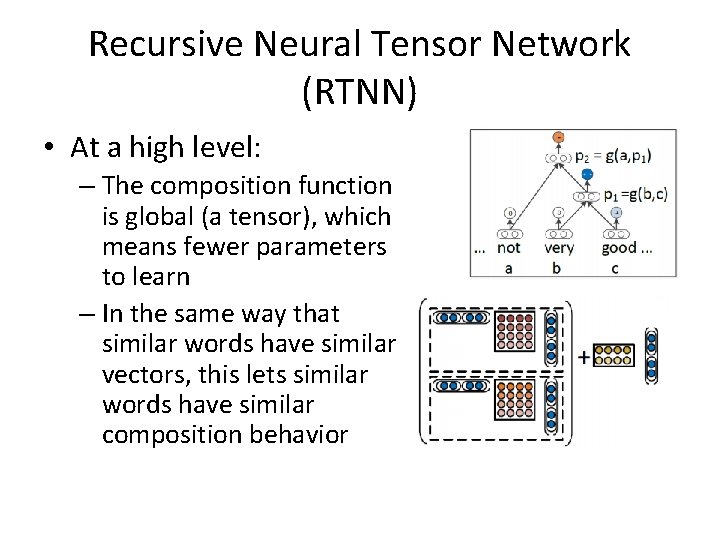

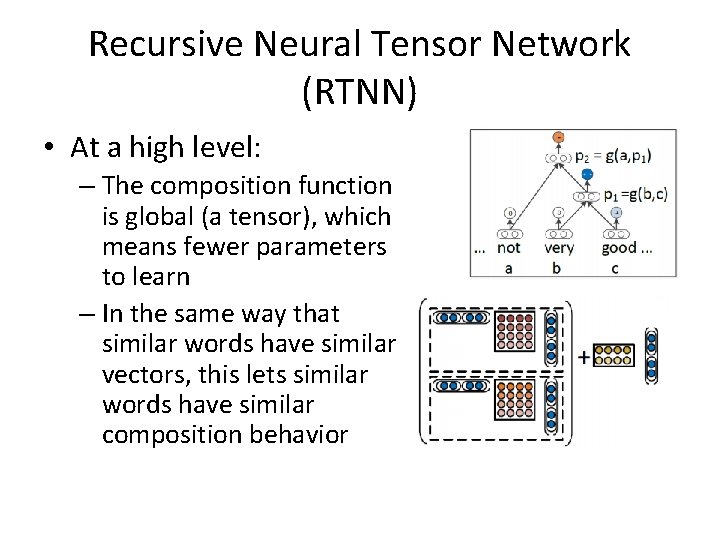

Recursive Neural Tensor Network (RNTN)

- At a high level:
  - The composition function is global (a tensor), which means fewer parameters to learn
  - In the same way that similar words have similar vectors, this lets similar words have similar composition behavior
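A single global tensor-based composition can be sketched as follows. This assumes the composition takes the form `tanh(x^T V[k] x + W x)` for each output dimension `k`, where `x` is the concatenation of the two child vectors; the slide only states the idea at a high level, so treat the exact form, the omitted bias term, and the names `V`, `W`, `D`, and `rntn_compose` as assumptions. The weights are random, not trained.

```python
import math
import random

random.seed(0)
D = 3  # toy vector dimension (assumption)

# One global tensor V with D slices of shape (2D x 2D), shared by all words,
# plus a standard weight matrix W of shape (D x 2D). Both are untrained.
V = [
    [[random.uniform(-0.1, 0.1) for _ in range(2 * D)] for _ in range(2 * D)]
    for _ in range(D)
]
W = [[random.uniform(-0.1, 0.1) for _ in range(2 * D)] for _ in range(D)]


def rntn_compose(a, b):
    """Combine child vectors a and b into a parent vector of the same size."""
    x = a + b  # list concatenation: [a; b]
    parent = []
    for k in range(D):
        # Bilinear term: each tensor slice lets the two children interact
        # multiplicatively.
        bilinear = sum(
            x[i] * V[k][i][j] * x[j]
            for i in range(2 * D)
            for j in range(2 * D)
        )
        # Linear term: the same form as a plain recursive network layer.
        linear = sum(W[k][j] * x[j] for j in range(2 * D))
        parent.append(math.tanh(bilinear + linear))
    return parent


parent = rntn_compose([0.1, -0.2, 0.3], [0.4, 0.0, -0.1])
```

In this sketch the parameters in `V` and `W` are shared across every node in every tree, so their count depends only on `D` and not on vocabulary size, in contrast to a per-word matrix.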

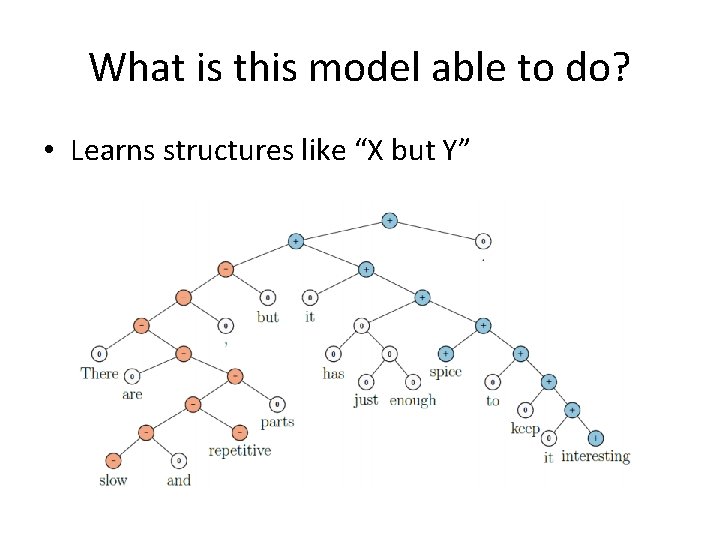

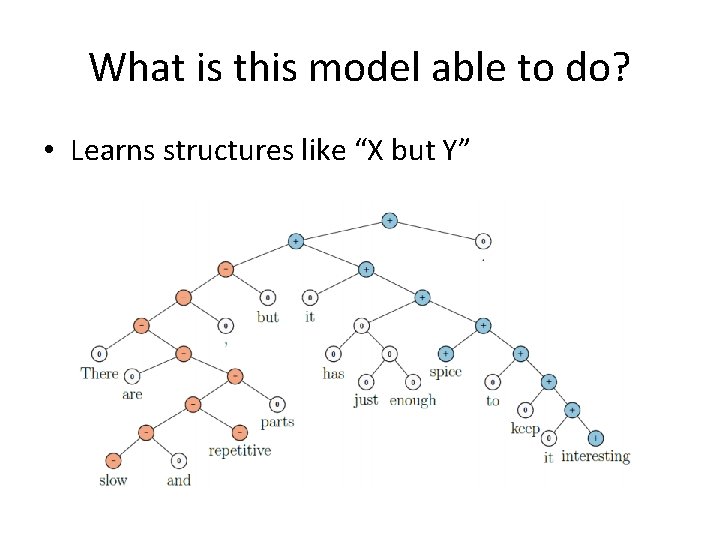

What is this model able to do?

- Learns structures like “X but Y”

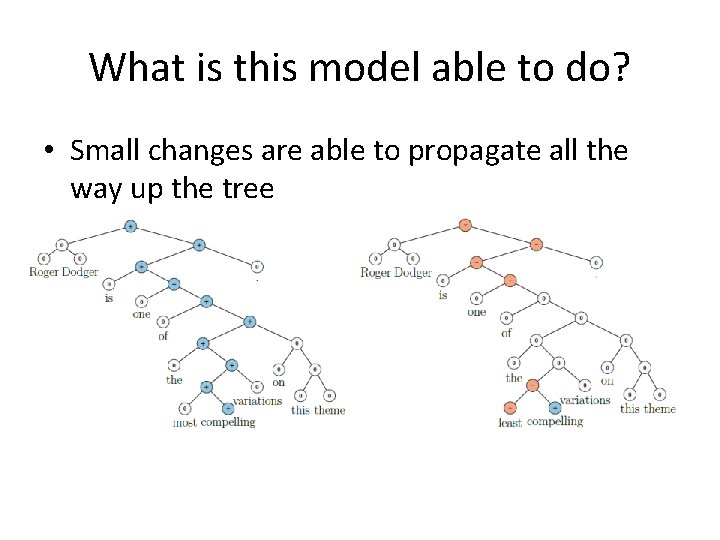

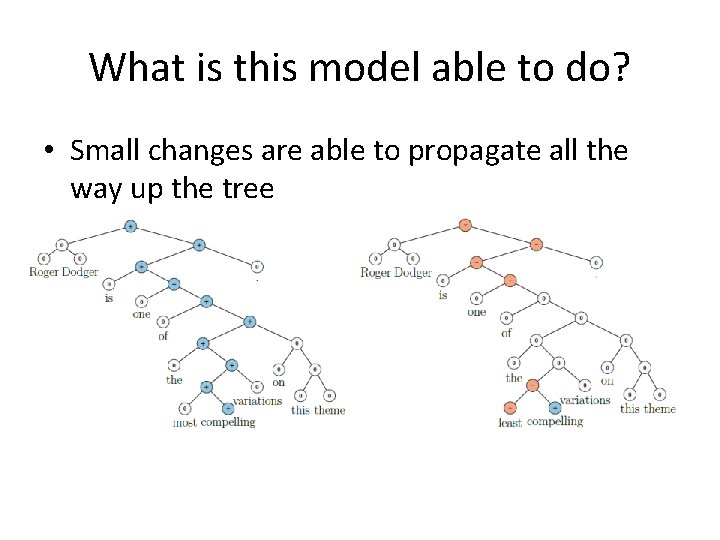

What is this model able to do?

- Small changes are able to propagate all the way up the tree

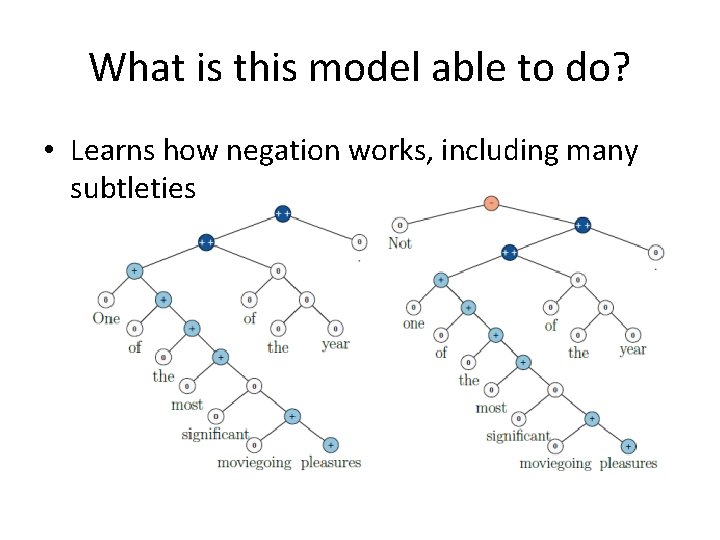

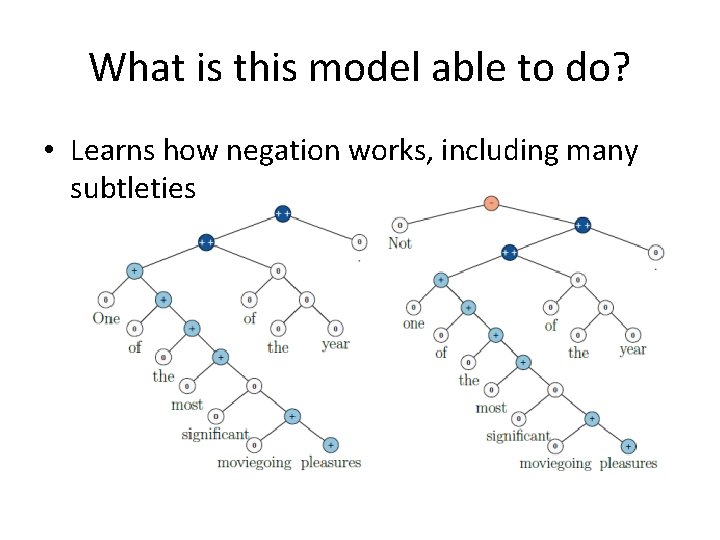

What is this model able to do?

- Learns how negation works, including many subtleties

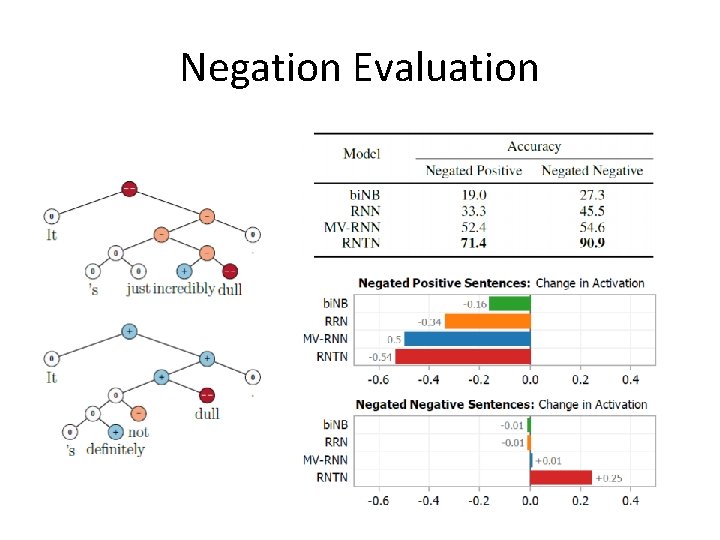

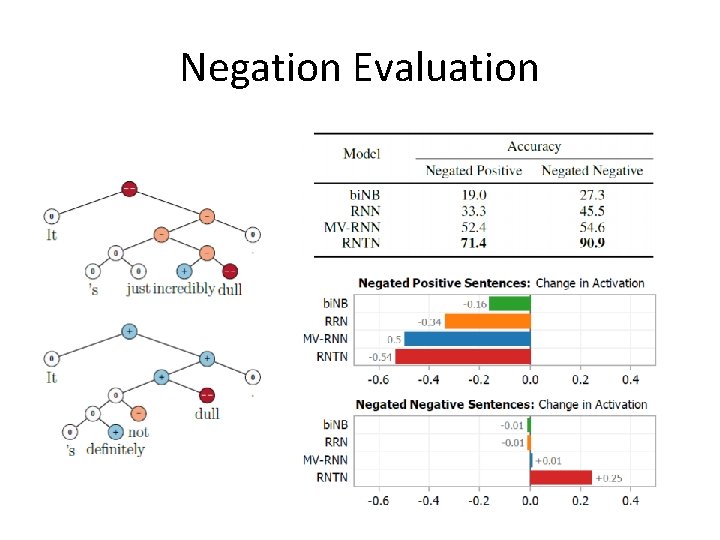

Negation Evaluation

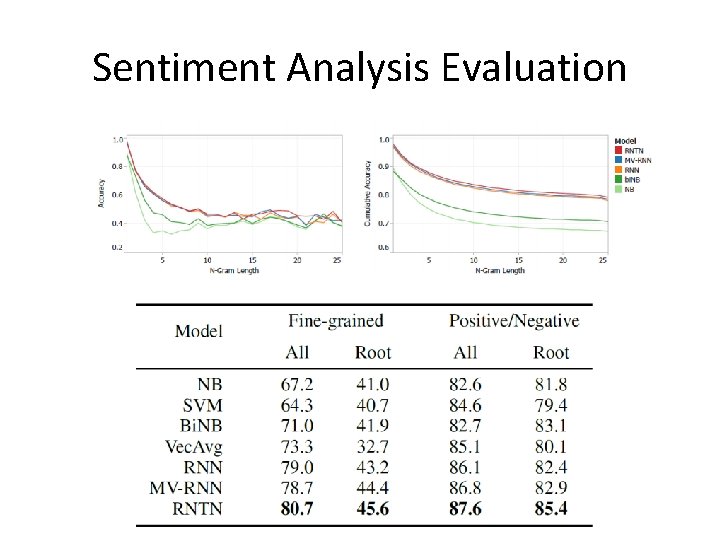

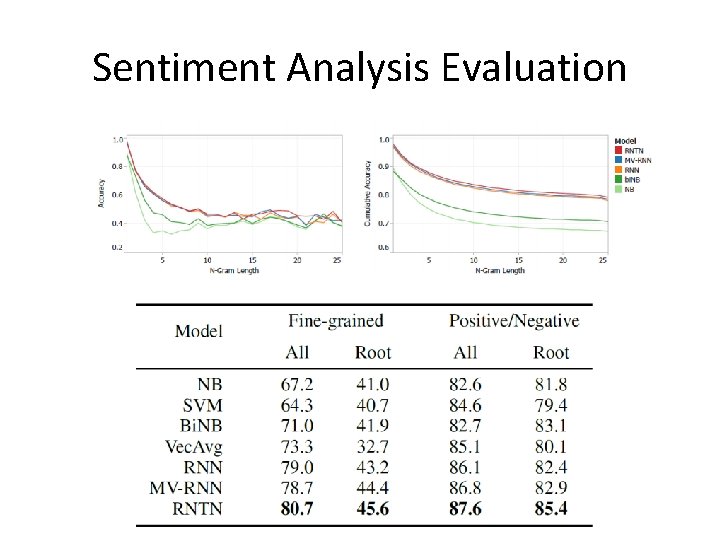

Sentiment Analysis Evaluation

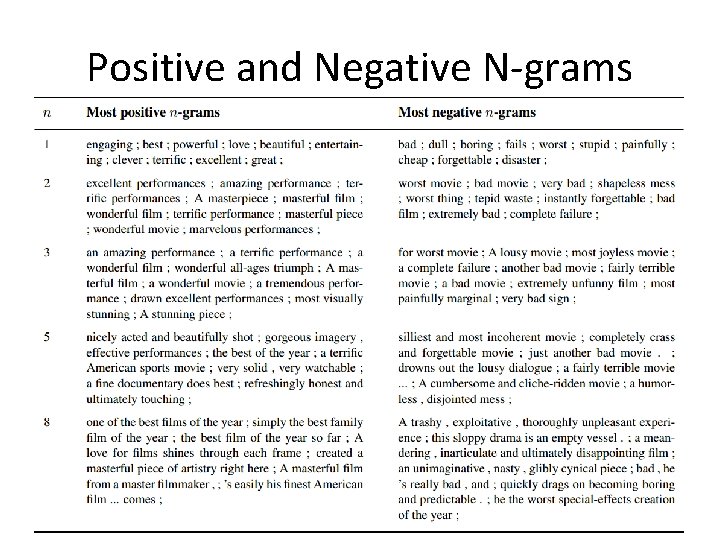

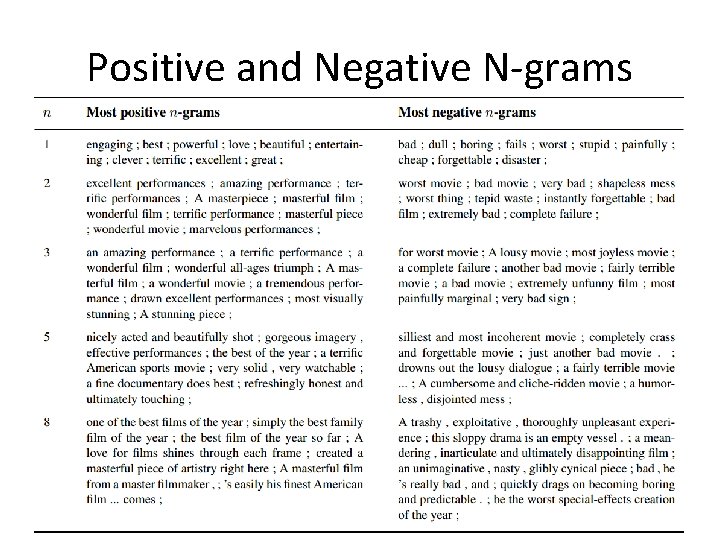

Positive and Negative N-grams