Part 6 HMM in Practice CSE 717 SPRING



Part 6 HMM in Practice CSE 717, SPRING 2008 CUBS, Univ at Buffalo

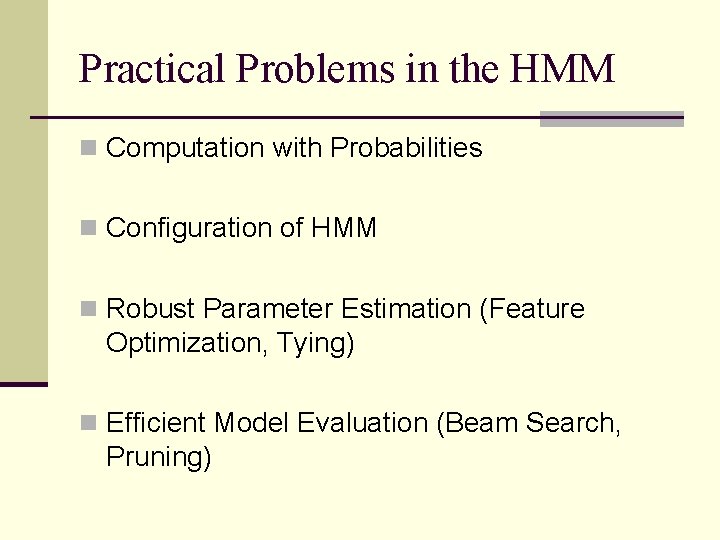

Practical Problems in the HMM

- Computation with Probabilities
- Configuration of HMM
- Robust Parameter Estimation (Feature Optimization, Tying)
- Efficient Model Evaluation (Beam Search, Pruning)

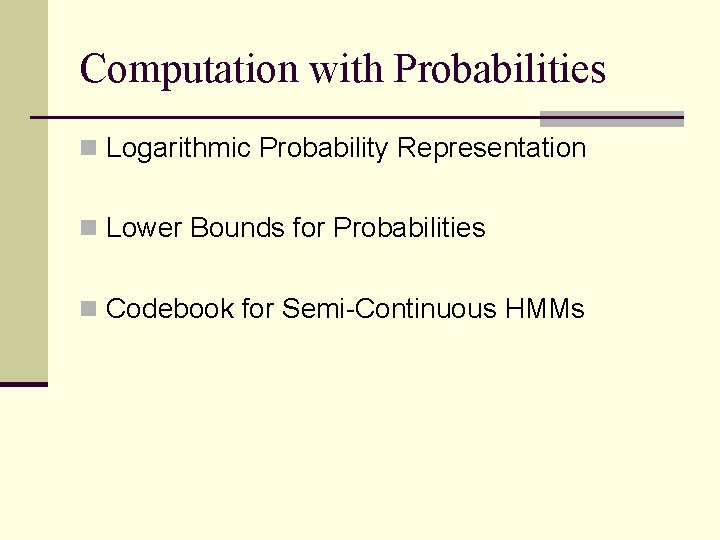

Computation with Probabilities

- Logarithmic Probability Representation
- Lower Bounds for Probabilities
- Codebook for Semi-Continuous HMMs

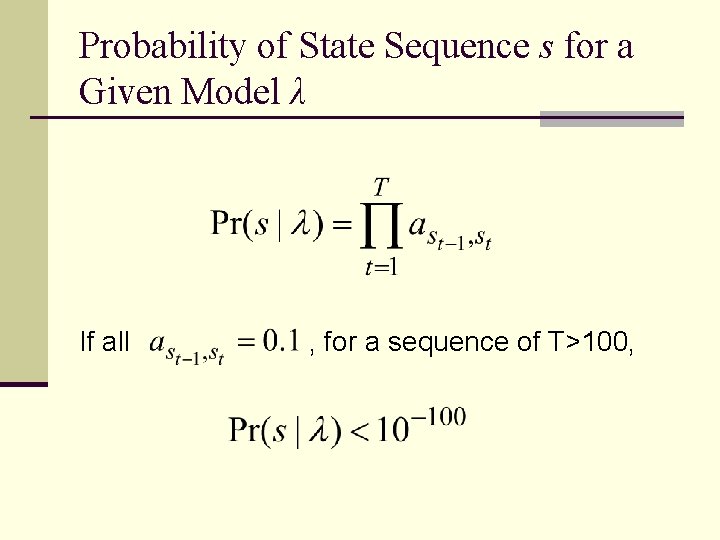

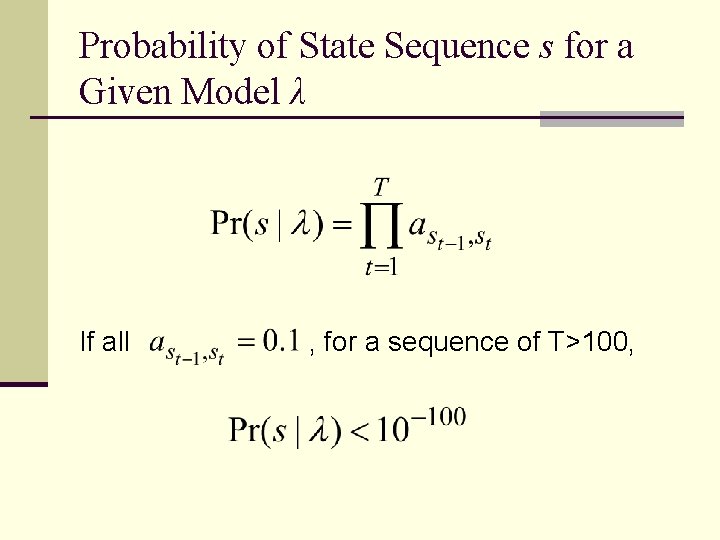

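Probability of State Sequence s for a Given Model λ: this probability is the product of a start probability and T-1 transition probabilities. If all of these transition probabilities are small, then for a sequence of T > 100 the product becomes vanishingly small and can leave the range of floating-point numbers.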

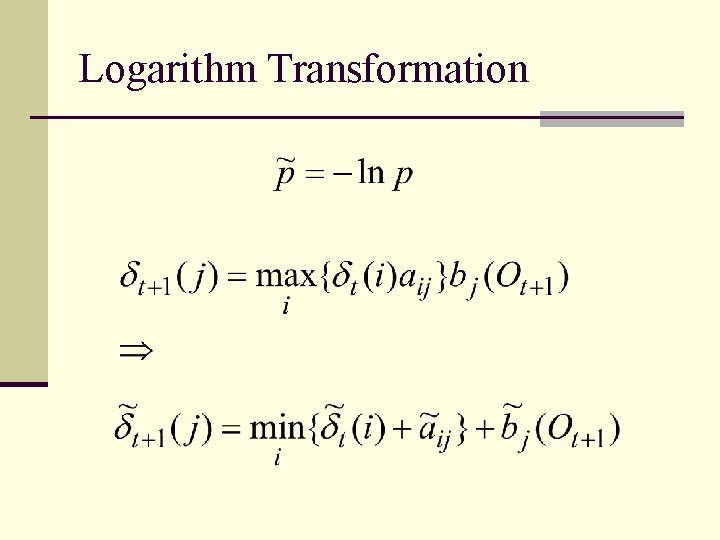

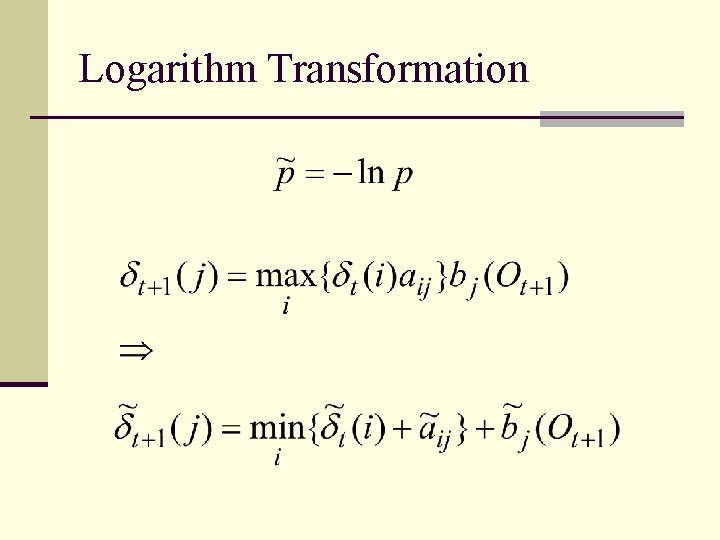
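As a worked sketch, assuming the standard parameterization with start probabilities $\pi_i$ and transition probabilities $a_{ij}$ (notation not spelled out in the text above), the probability of a state sequence $s = s_1, \ldots, s_T$ is a product of $T$ factors, and a uniform upper bound $q < 1$ on the transition probabilities makes it decay geometrically in $T$:

```latex
P(s \mid \lambda) = \pi_{s_1} \prod_{t=2}^{T} a_{s_{t-1} s_t}
\;\le\; \pi_{s_1}\, q^{\,T-1} \;\le\; q^{\,T-1},
\qquad \text{if } a_{ij} \le q \text{ for all } i, j .
```

For example, $q = 0.1$ and $T = 101$ already give $P(s \mid \lambda) \le 10^{-100}$.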

Logarithm Transformation

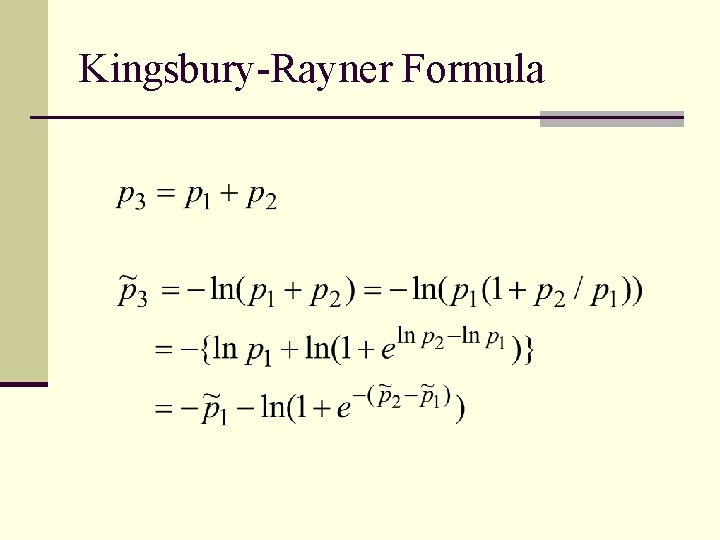

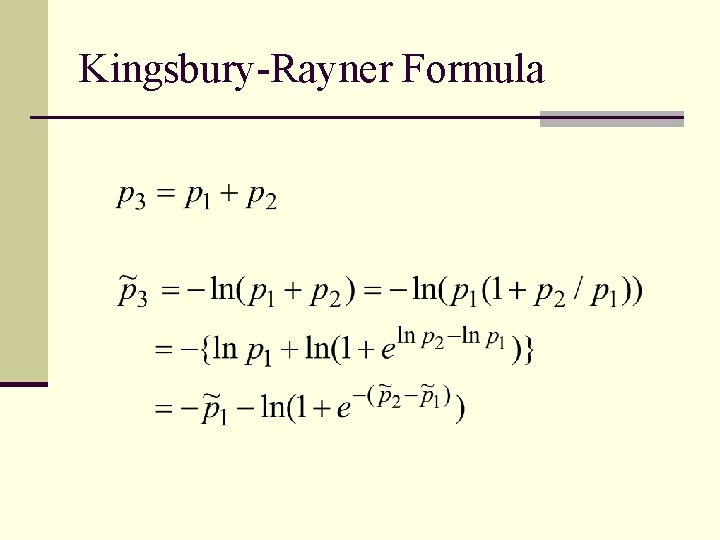
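A minimal Python sketch of why the logarithmic representation helps, assuming a hypothetical sequence of 400 factors that are all 0.1: the direct product underflows to zero in double precision, while the sum of logarithms remains an ordinary number. The sketch uses natural logarithms; implementations often store negative logarithms instead, which only flips the sign.

```python
import math

def product_direct(probs):
    """Multiply probabilities directly (prone to floating-point underflow)."""
    result = 1.0
    for p in probs:
        result *= p
    return result

def product_log(probs):
    """Return ln(prod(probs)) as a sum of logarithms: products become sums."""
    return sum(math.log(p) for p in probs)

# Hypothetical example: 400 factors of 0.1 each (true value 1e-400).
probs = [0.1] * 400
direct = product_direct(probs)   # underflows to 0.0
logp = product_log(probs)        # about -921.03, easily representable
print(direct, logp)
```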

Kingsbury-Rayner Formula

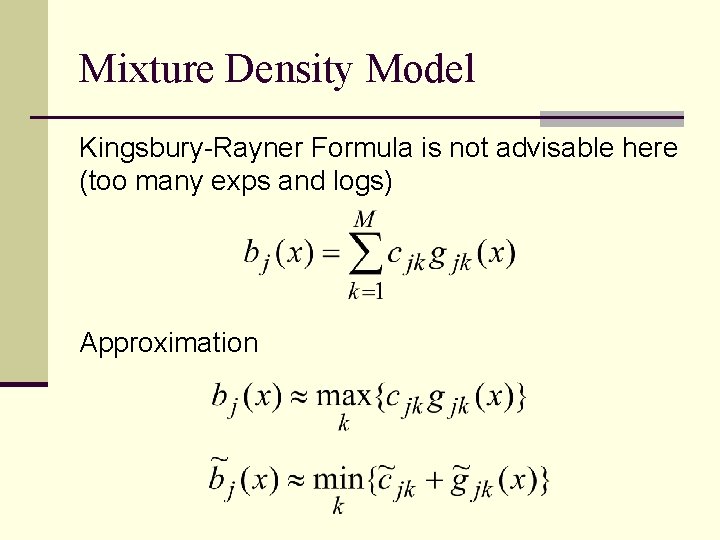

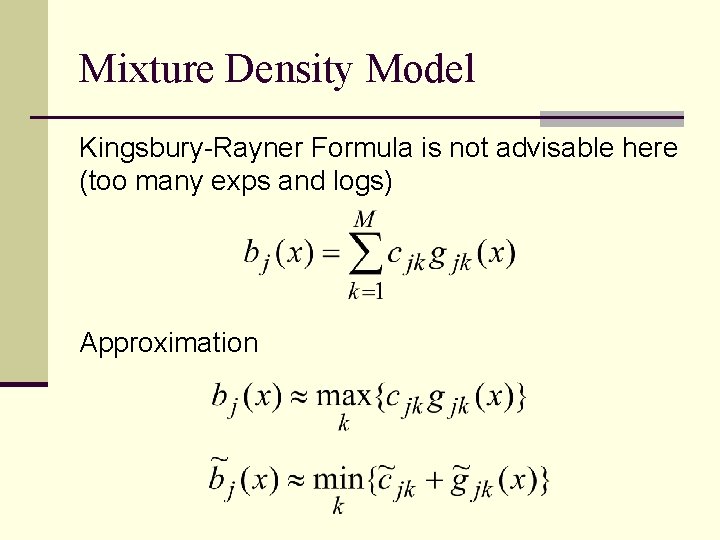
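The Kingsbury-Rayner formula adds two probabilities that are only available as logarithms: $\ln(p_1 + p_2) = \ln p_1 + \ln\bigl(1 + e^{\ln p_2 - \ln p_1}\bigr)$. Below is a minimal sketch in natural-log form (the slides may use negative logarithms, which only changes signs). Taking the larger operand as $p_1$ keeps the exponent non-positive, so `math.exp` cannot overflow, and `math.log1p` preserves precision when the second term is tiny. The name `log_add` is illustrative.

```python
import math

def log_add(log_p1, log_p2):
    """Return ln(p1 + p2) given ln(p1) and ln(p2), via Kingsbury-Rayner."""
    # Put the larger log-probability first so the exponent below is <= 0.
    if log_p2 > log_p1:
        log_p1, log_p2 = log_p2, log_p1
    if log_p2 == -math.inf:          # p2 == 0 contributes nothing
        return log_p1
    return log_p1 + math.log1p(math.exp(log_p2 - log_p1))

# Usage: add 0.3 and 0.2 without leaving the log domain.
print(math.exp(log_add(math.log(0.3), math.log(0.2))))  # close to 0.5
```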

Mixture Density Model: The Kingsbury-Rayner formula is not advisable here, because evaluating a mixture with it would require too many exponentials and logarithms. An approximation is used instead.

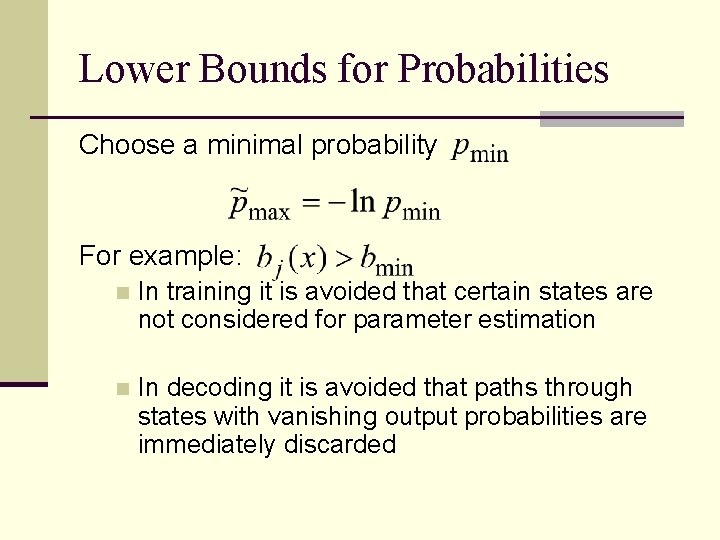

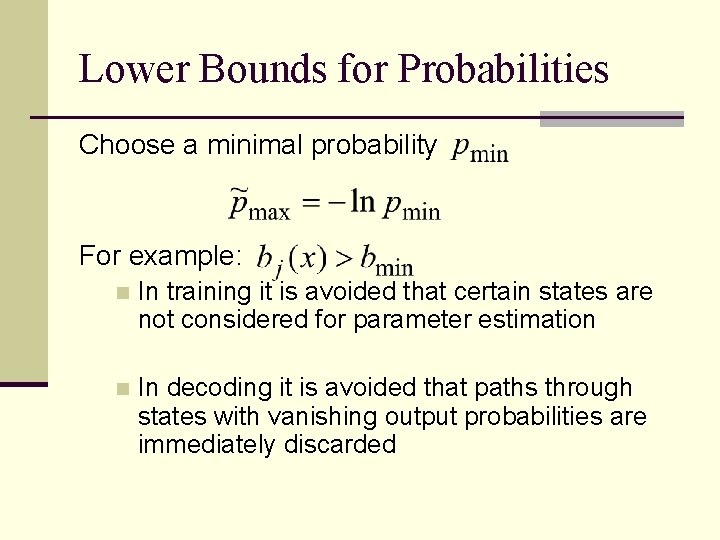
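A common choice, assumed here, is the maximum approximation, which replaces the sum over mixture components by its largest term: $\ln \sum_k c_k\, g_k(\boldsymbol{x}) \approx \max_k \bigl(\ln c_k + \ln g_k(\boldsymbol{x})\bigr)$. The sketch compares it with the exact value on hypothetical log weights and log component densities. Because the largest term is at most the sum, and the sum is at most $K$ times the largest term, the approximation error lies between $0$ and $\ln K$.

```python
import math

def log_mixture_exact(log_weights, log_densities):
    """ln(sum_k c_k g_k(x)) computed stably by factoring out the largest term."""
    terms = [w + d for w, d in zip(log_weights, log_densities)]
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))

def log_mixture_max(log_weights, log_densities):
    """Maximum approximation: keep only the dominant component (no exp/log)."""
    return max(w + d for w, d in zip(log_weights, log_densities))

# Hypothetical 3-component mixture evaluated at one feature vector x.
log_c = [math.log(0.5), math.log(0.3), math.log(0.2)]
log_g = [-12.0, -15.5, -30.0]
exact = log_mixture_exact(log_c, log_g)
approx = log_mixture_max(log_c, log_g)
print(exact, approx)
```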

Lower Bounds for Probabilities: Choose a minimal probability and use it to bound probabilities, such as output probabilities, from below. This has two effects:

- In training, it prevents certain states from being left out of parameter estimation.
- In decoding, it prevents paths through states with vanishing output probabilities from being discarded immediately.

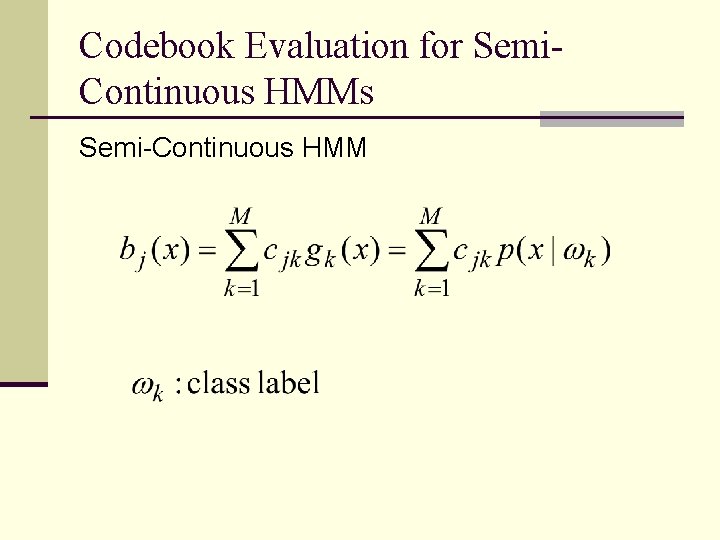

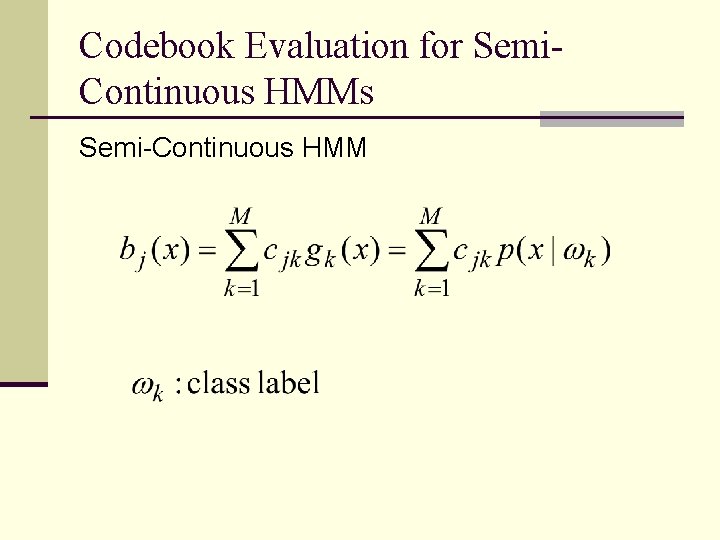
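A minimal sketch of flooring output probabilities with a lower bound. The value of `P_MIN` and the helper name `floor_probs` are hypothetical; in practice the bound is a tuning parameter. The usage lines show how a state whose output probability would be zero keeps a finite log score, so paths through it are not discarded immediately.

```python
import math

P_MIN = 1e-10  # hypothetical minimal probability; tune per application

def floor_probs(probs, p_min=P_MIN):
    """Replace every probability below p_min by p_min."""
    return [max(p, p_min) for p in probs]

# Output probabilities of three states for one observation; state 2 vanishes.
b = [0.02, 3e-4, 0.0]
b_floored = floor_probs(b)
log_scores = [math.log(p) for p in b_floored]  # math.log(0.0) would raise
print(b_floored, log_scores)
```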

Codebook Evaluation for Semi-Continuous HMMs: Semi-Continuous HMM

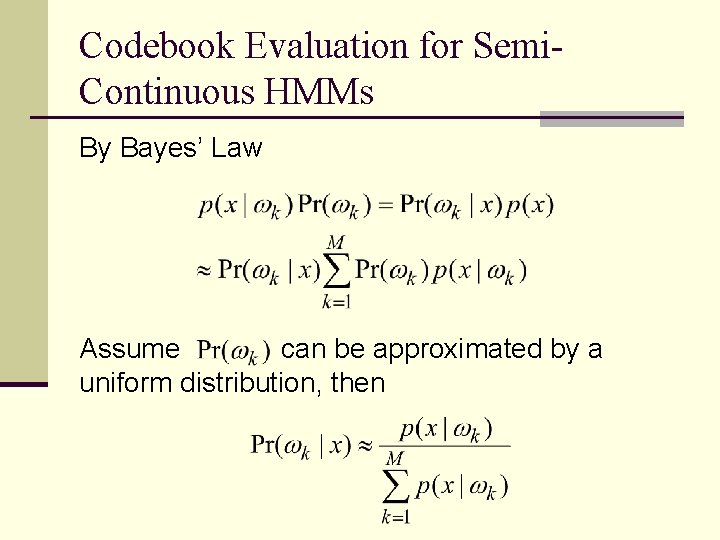

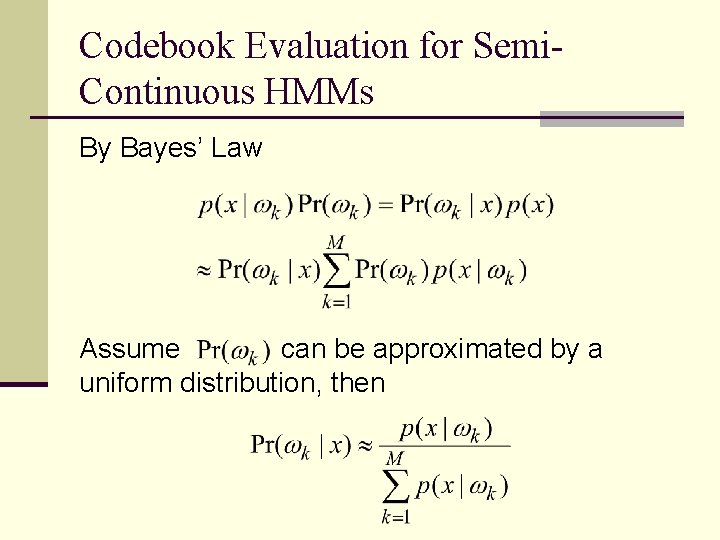
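In a semi-continuous HMM all states share one codebook of densities $g_k$ and differ only in their mixture weights $c_{jk}$, so the output probability is $b_j(\boldsymbol{x}) = \sum_k c_{jk}\, g_k(\boldsymbol{x})$. The minimal sketch below assumes one-dimensional Gaussian codebook densities and hypothetical parameters. It shows the practical consequence: the codebook is evaluated once per feature vector, and every state reuses the result.

```python
import math

def gaussian(x, mean, var):
    """One-dimensional normal density N(x; mean, var)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def semi_continuous_outputs(x, codebook, weights):
    """b_j(x) = sum_k c_jk g_k(x) for all states j with a shared codebook."""
    g = [gaussian(x, m, v) for m, v in codebook]   # evaluated once per x
    return [sum(c * gk for c, gk in zip(c_j, g)) for c_j in weights]

# Hypothetical codebook of two densities (mean, variance), shared by all states.
codebook = [(0.0, 1.0), (3.0, 1.0)]
# Hypothetical state-specific mixture weights c_jk (each row sums to 1).
weights = [[1.0, 0.0],
           [0.5, 0.5],
           [0.1, 0.9]]
print(semi_continuous_outputs(0.0, codebook, weights))
```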

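Codebook Evaluation for Semi-Continuous HMMs: By Bayes' law, each codebook density can be expressed through the posterior probability of its codebook class. Assume that the prior probabilities of the codebook classes can be approximated by a uniform distribution; then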

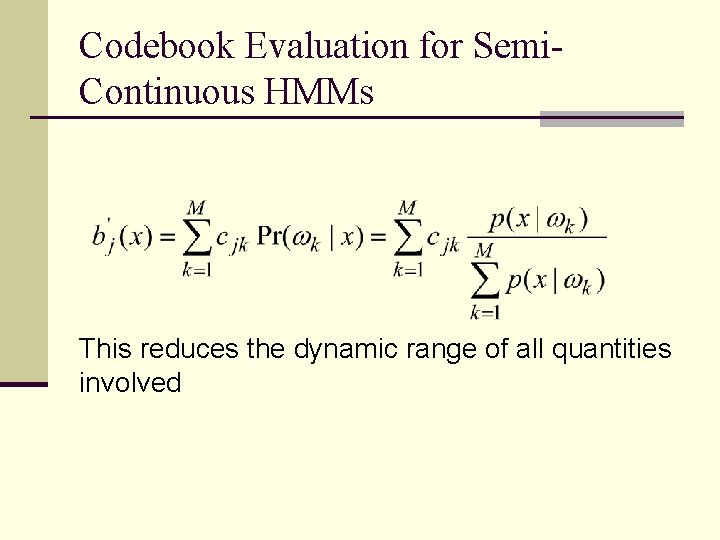

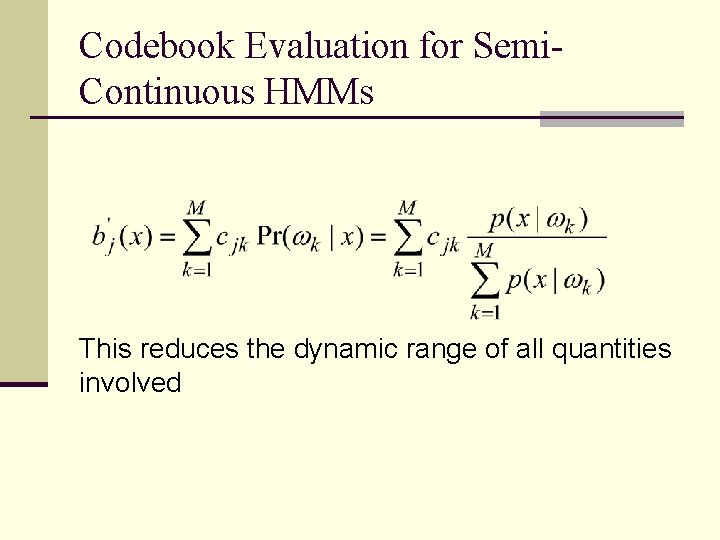

Codebook Evaluation for Semi-Continuous HMMs: This reduces the dynamic range of all quantities involved.
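A minimal sketch of this normalization, assuming uniform prior probabilities of the codebook classes, so that each posterior equals the codebook density divided by the sum over all codebook densities. It operates on hypothetical log-densities, which for high-dimensional features can lie far below the range of `float`. After normalization the values are probabilities in $[0, 1]$ that sum to one.

```python
import math

def codebook_posteriors(log_densities):
    """Normalize codebook scores to posteriors P(omega_k | x).

    Assumes uniform class priors, so P(omega_k | x) = g_k(x) / sum_m g_m(x).
    Works in the log domain, factoring out the largest score to avoid underflow.
    """
    m = max(log_densities)
    shifted = [d - m for d in log_densities]           # largest becomes 0
    log_norm = math.log(sum(math.exp(s) for s in shifted))
    return [math.exp(s - log_norm) for s in shifted]

# Hypothetical log-densities: exp(-1000.0) alone would underflow to 0.0.
post = codebook_posteriors([-1000.0, -1000.0, -1003.0])
print(post)
```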

Configuration of HMM

- Model Topology
- Modularization
- Compound Models
- Modeling Emissions

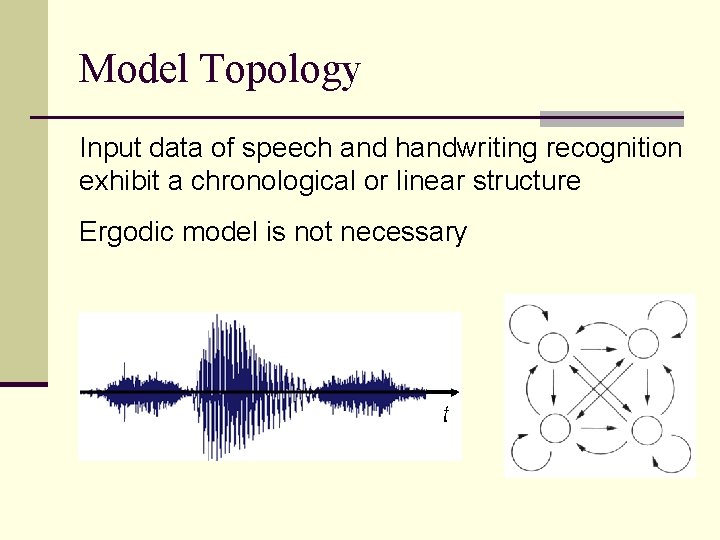

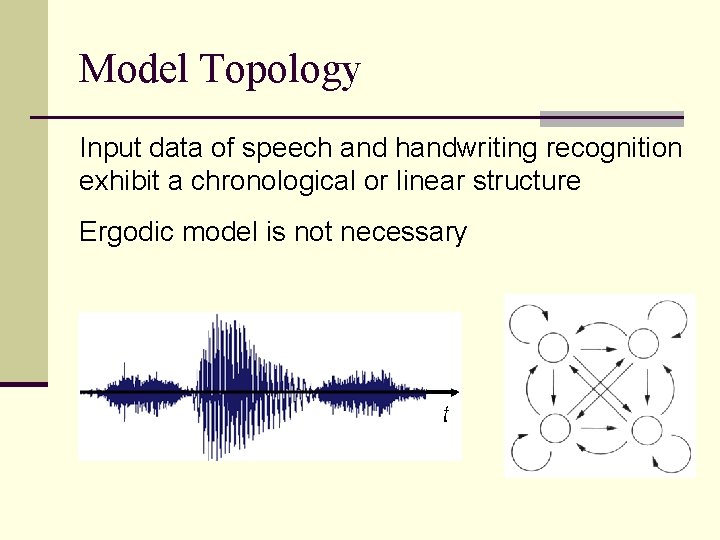

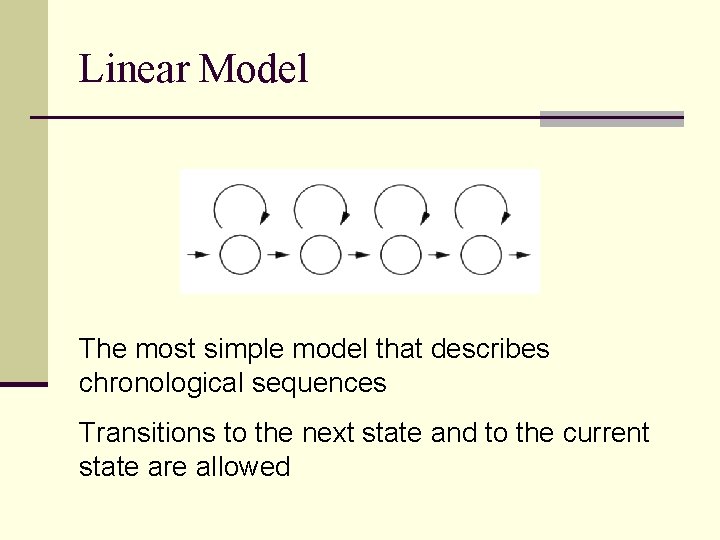

Model Topology: Input data in speech and handwriting recognition exhibit a chronological, or linear, structure. An ergodic model, in which every state can be reached from every other state, is therefore not necessary.

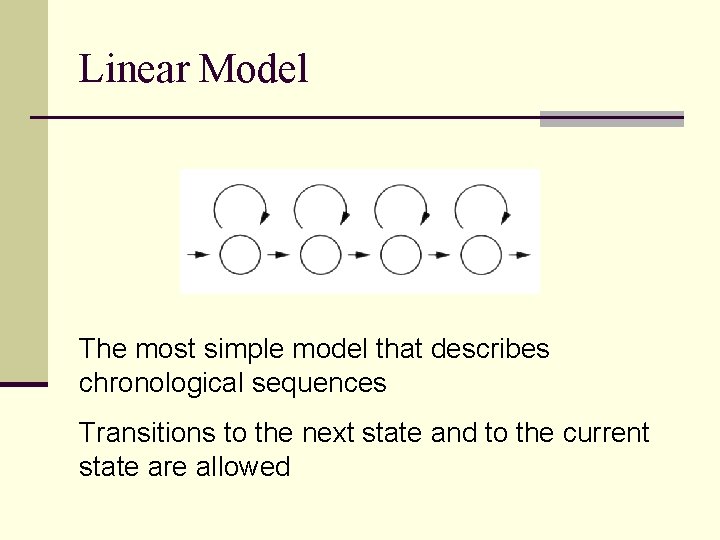

Linear Model: The simplest model that describes chronological sequences. Only transitions to the next state and to the current state are allowed.

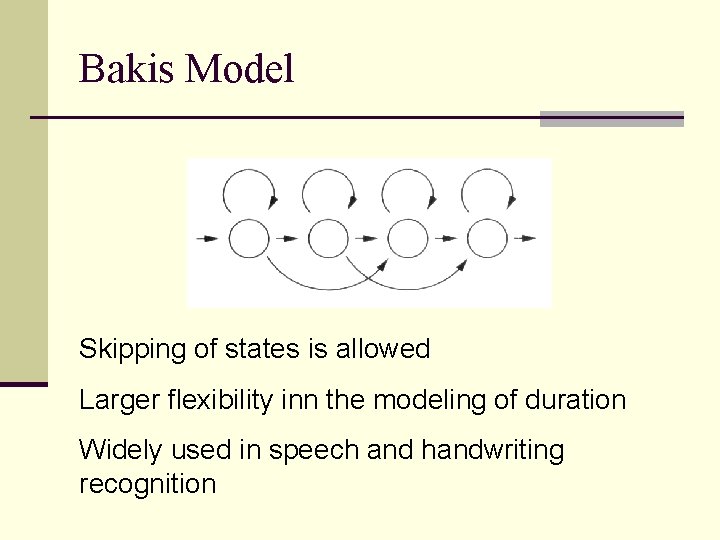

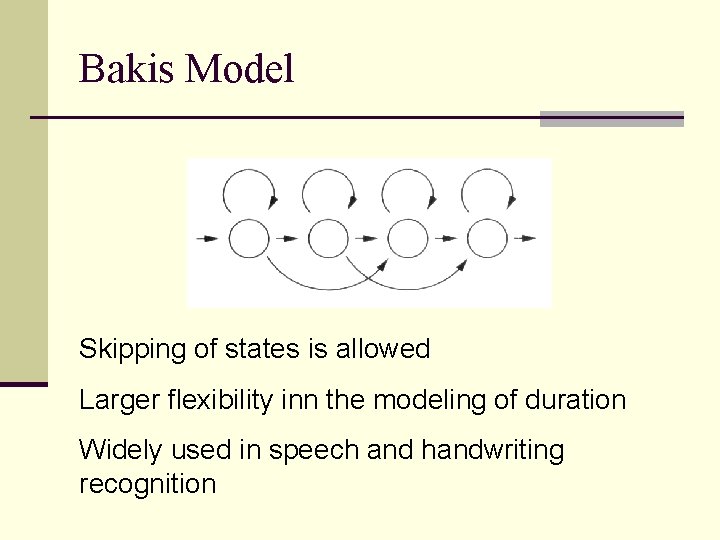

Bakis Model: In addition, skipping a single state is allowed. This gives greater flexibility in modeling duration. The Bakis model is widely used in speech and handwriting recognition.

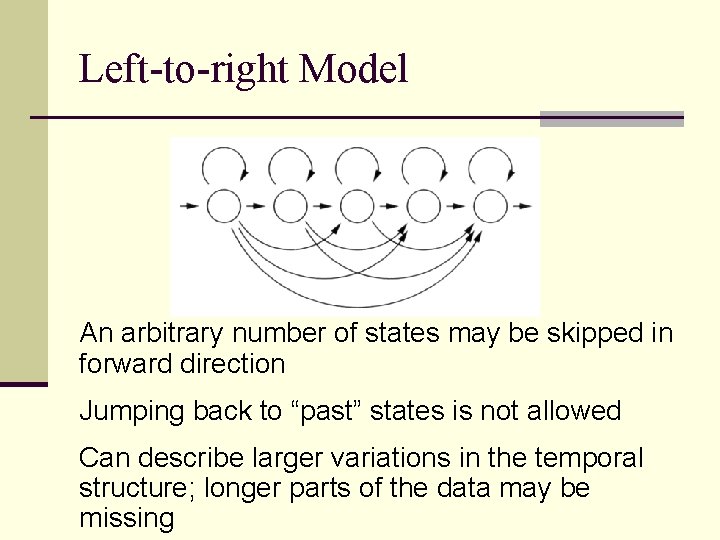

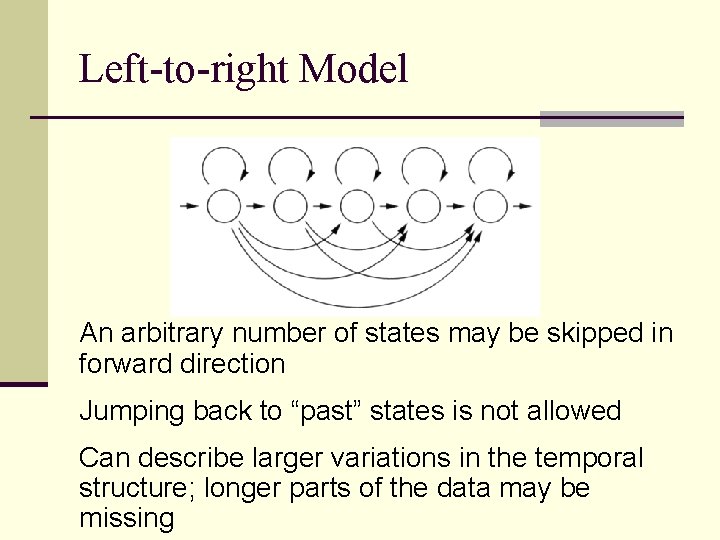

Left-to-right Model: An arbitrary number of states may be skipped in the forward direction, but jumping back to “past” states is not allowed. This model can describe larger variations in the temporal structure; longer parts of the data may be missing.
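The topologies differ only in which transitions are allowed. The minimal sketch below builds the allowed-transition pattern for $N$ states and initializes the allowed transitions uniformly. The function names and the `kind` strings are hypothetical, and the Bakis variant here permits skipping exactly one state.

```python
def allowed_transitions(n, kind):
    """Boolean matrix: entry [i][j] is True if the transition i -> j is allowed."""
    def allowed(i, j):
        if kind == "ergodic":          # every state reachable from every state
            return True
        if kind == "linear":           # stay or move to the next state
            return j in (i, i + 1)
        if kind == "bakis":            # additionally skip one state
            return j in (i, i + 1, i + 2)
        if kind == "left-to-right":    # any forward jump, never backward
            return j >= i
        raise ValueError(f"unknown topology: {kind}")
    return [[allowed(i, j) for j in range(n)] for i in range(n)]

def uniform_init(mask):
    """Spread each row's probability mass evenly over its allowed transitions."""
    return [[1.0 / sum(row) if ok else 0.0 for ok in row] for row in mask]

for kind in ("linear", "bakis", "left-to-right", "ergodic"):
    mask = allowed_transitions(4, kind)
    print(kind, sum(map(sum, mask)))   # number of allowed transitions
```

For four states this yields 7, 9, 10, and 16 allowed transitions, respectively; each step toward greater flexibility adds parameters to estimate.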

Modularization

- English word recognition
  - Thousands of words would need thousands of word models, which requires a large amount of training data
  - 26 letters: only a limited number of character models
- Modularization divides a complex model into smaller models of segmentation units
  - Word -> subword -> character

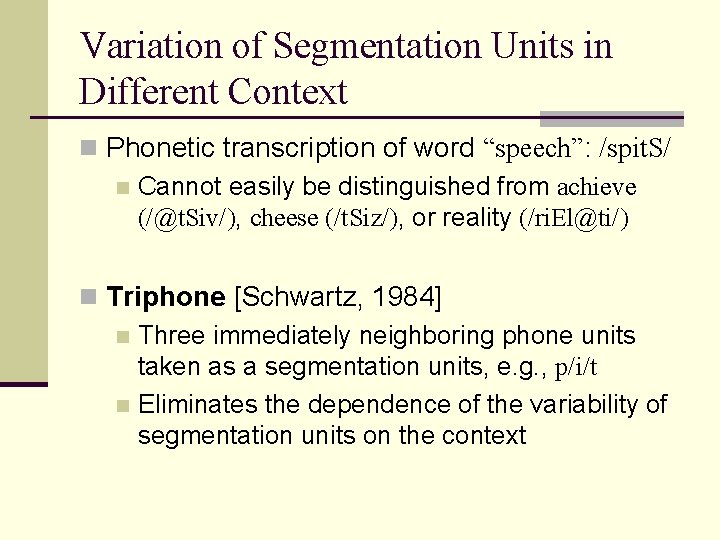
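The minimal sketch below illustrates modularization, assuming every character is modeled by a hypothetical three-state model. A word model is built by concatenating the character models in spelling order, so 26 character models cover any English word without a separate model per word. State names like `c0` are illustrative labels only.

```python
import string

STATES_PER_CHAR = 3  # hypothetical number of states per character model

# One small model per letter: here just its list of state labels.
char_models = {ch: [f"{ch}{k}" for k in range(STATES_PER_CHAR)]
               for ch in string.ascii_lowercase}

def word_model(word):
    """Concatenate the character models' states, in spelling order."""
    states = []
    for ch in word.lower():
        states.extend(char_models[ch])
    return states

print(len(char_models))      # 26 character models in total
print(word_model("cab"))
```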

Variation of Segmentation Units in Different Contexts

- Phonetic transcription of the word “speech”: /spitS/
- Its /i/ cannot easily be distinguished from the /i/ in achieve (/@tSiv/), cheese (/tSiz/), or reality (/riEl@ti/)
- Triphone [Schwartz, 1984]
  - Three immediately neighboring phone units are taken as one segmentation unit, e.g., p/i/t
  - This eliminates the dependence of the variability of segmentation units on the context
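The minimal sketch below turns a phone sequence into triphone units in the left/center/right notation used above. The boundary symbol `#` for missing neighbors at word edges is a hypothetical convention. "Speech" is written as the phone list s, p, i, tS, with the affricate tS treated as one phone, so the unit around /i/ comes out as p/i/tS.

```python
def triphones(phones, boundary="#"):
    """Map each phone to a left/center/right unit using its two neighbors."""
    padded = [boundary] + list(phones) + [boundary]
    return [f"{padded[i - 1]}/{padded[i]}/{padded[i + 1]}"
            for i in range(1, len(padded) - 1)]

# "speech" as a phone list (tS is one affricate phone).
print(triphones(["s", "p", "i", "tS"]))
```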

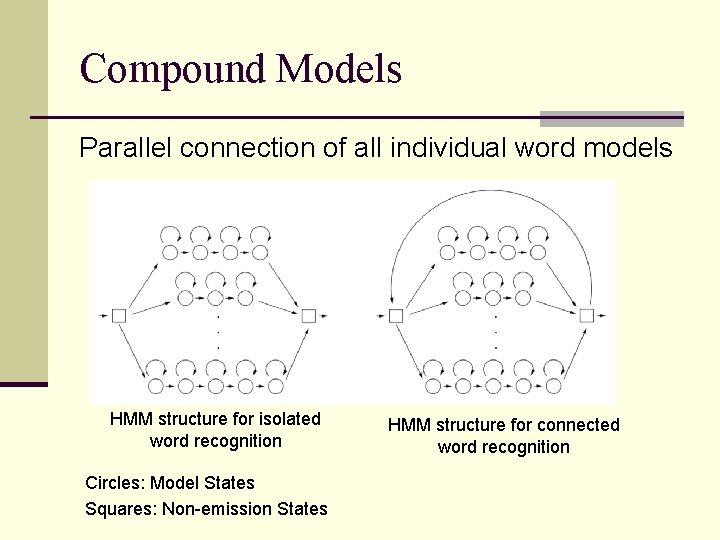

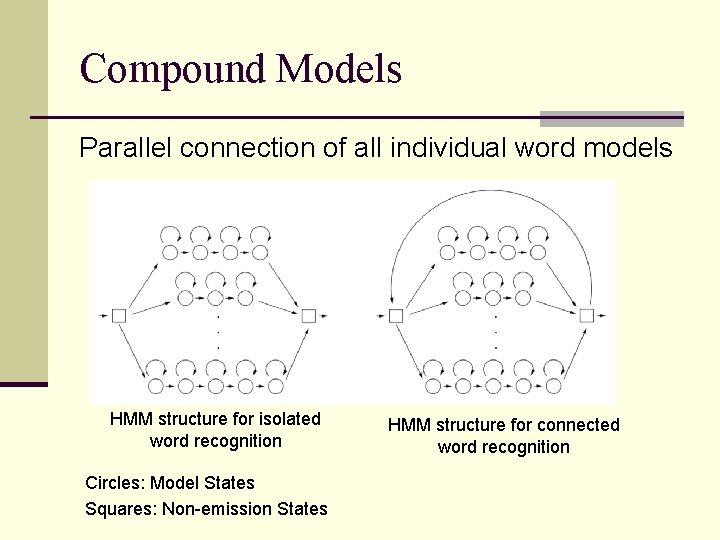

Compound Models: For isolated word recognition, all individual word models are connected in parallel.

Figures: HMM structure for isolated word recognition; HMM structure for connected word recognition (circles: model states; squares: non-emitting states).

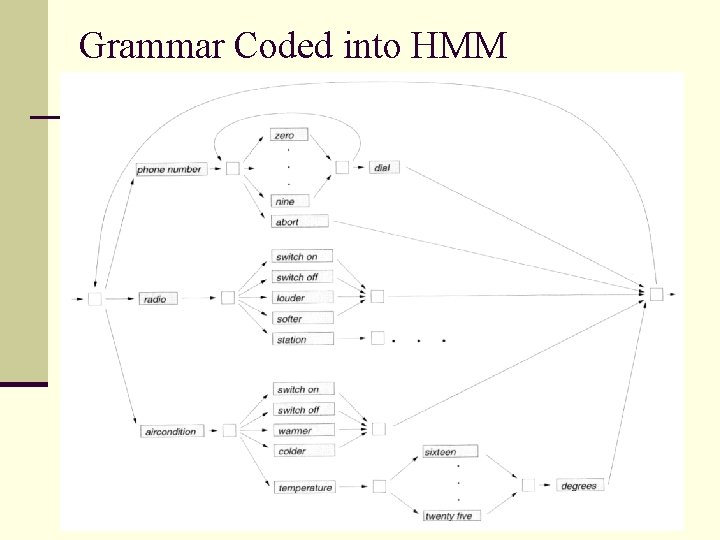

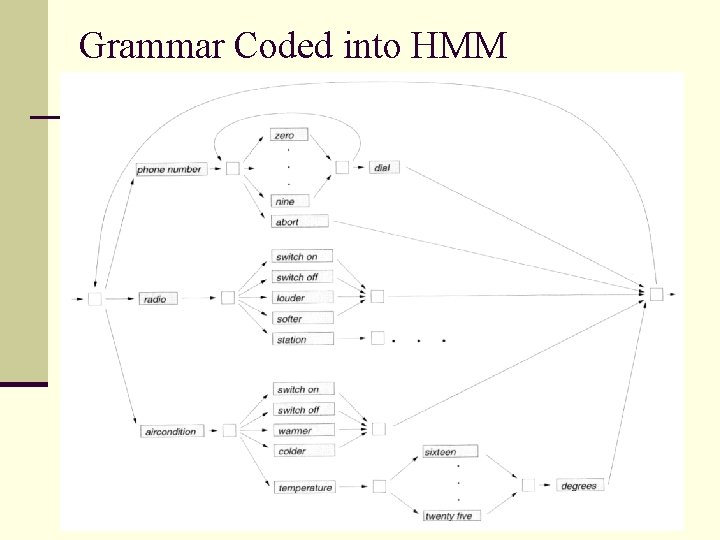
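The minimal sketch below represents a compound model as a graph, assuming each word model is a simple chain of emitting states with self-loops. For isolated words, a non-emitting start node fans out to the first state of every word model, and the last state of every word model leads to a non-emitting end node. Adding an edge from the end node back to the start node lets word models follow one another, as in connected word recognition. Node names and the word list are hypothetical.

```python
def compound_model(word_states, connected=False):
    """Return the edges of a parallel network of word models.

    word_states maps each word to its list of emitting state names.
    'START' and 'END' stand for non-emitting states (no observation consumed).
    """
    edges = set()
    for states in word_states.values():
        edges.add(("START", states[0]))                 # enter the word model
        for a, b in zip(states, states[1:]):
            edges.add((a, b))                           # forward transition
        for s in states:
            edges.add((s, s))                           # self-loop
        edges.add((states[-1], "END"))                  # leave the word model
    if connected:
        edges.add(("END", "START"))                     # allow another word
    return edges

words = {"yes": ["yes0", "yes1", "yes2"], "no": ["no0", "no1"]}
isolated = compound_model(words)
connected = compound_model(words, connected=True)
print(len(isolated), len(connected))
```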

Grammar Coded into HMM

Modeling Emissions

- Continuous feature vectors in speech and handwriting recognition are described by mixture models
- The size of the codebook and the number of component densities per mixture density need to be decided
  - There is no general way to choose them; the choice is a compromise between the precision of the model, its generalization capabilities, and the computation time
- Semi-continuous model: a codebook of a few hundred up to a few thousand densities
- Mixture model: 8 to 64 component densities
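To make the trade-off concrete, the small sketch below counts how many Gaussian densities and mixture weights each approach needs. The state count, the feature dimension, and the diagonal-covariance assumption (one mean and one variance per dimension) are hypothetical. The codebook size of 1000 and the 16 components per mixture are picked from within the ranges above.

```python
def continuous_params(n_states, n_components, dim):
    """Continuous HMM: every state has its own mixture of diagonal Gaussians."""
    densities = n_states * n_components
    weights = n_states * n_components
    return densities, weights, densities * 2 * dim + weights

def semi_continuous_params(n_states, codebook_size, dim):
    """Semi-continuous HMM: one shared codebook, state-specific weights."""
    densities = codebook_size
    weights = n_states * codebook_size
    return densities, weights, densities * 2 * dim + weights

# Hypothetical system: 300 states, 39-dimensional feature vectors.
print(continuous_params(300, 16, 39))        # 16 components per mixture
print(semi_continuous_params(300, 1000, 39)) # codebook of 1000 densities
```

With these particular numbers the total parameter counts are similar (379200 vs. 378000). However, the semi-continuous system evaluates only its 1000 shared densities per feature vector, while the continuous system may need to evaluate up to 4800.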


References

[1] Schwartz R, Chow Y, Roucos S, Krasner M, Makhoul J, Improved hidden Markov modelling of phonemes for continuous speech recognition, in International Conference on Acoustics, Speech and Signal Processing, pp. 35.6.1-35.6.4, 1984.

Robust Parameter Estimation

- Feature Optimization
- Tying

Feature Optimization Techniques