Variants of HMM Sequence Alignment via HMM Lecture

- Slides: 20

Variants of HMM; Sequence Alignment via HMM. Lecture #10.

Background readings: Chapters 3.4, 3.5, and 4 in Durbin et al., 2001; Chapter 3.4 in Setubal et al., 1997.

This class has been edited from Nir Friedman's lecture, which is available at www.cs.huji.ac.il/~nir. Changes made by Dan Geiger, then Shlomo Moran.

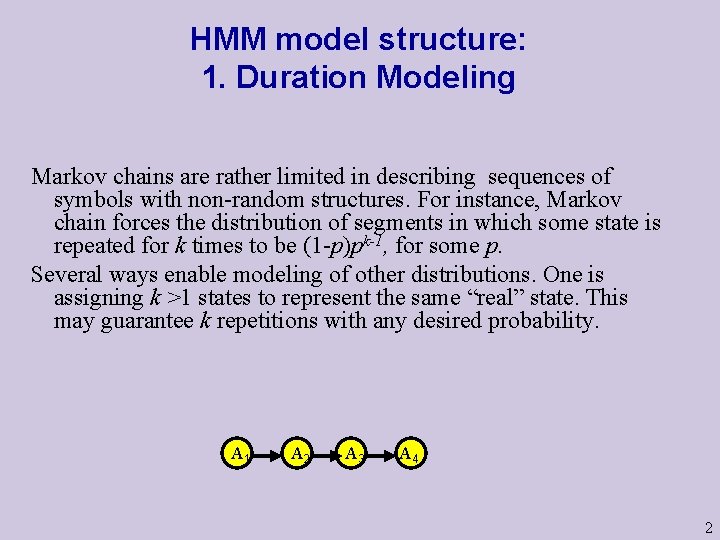

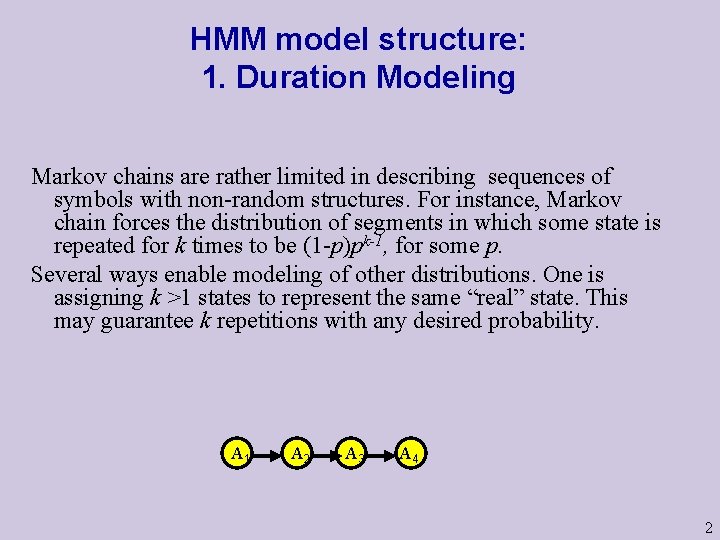

HMM model structure: 1. Duration Modeling

Markov chains are rather limited in describing sequences of symbols with non-random structures. For instance, a Markov chain forces the distribution of segments in which some state is repeated $k$ times to be $(1-p)p^{k-1}$, for some $p$. Several ways enable modeling of other distributions. One is assigning $k > 1$ states to represent the same "real" state. This may guarantee $k$ repetitions with any desired probability.

(Figure: states A1, A2, A3, A4 representing the same "real" state.)

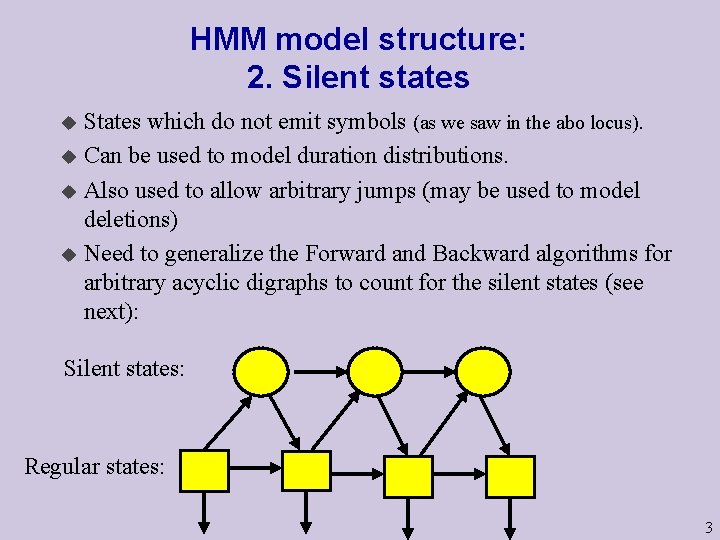

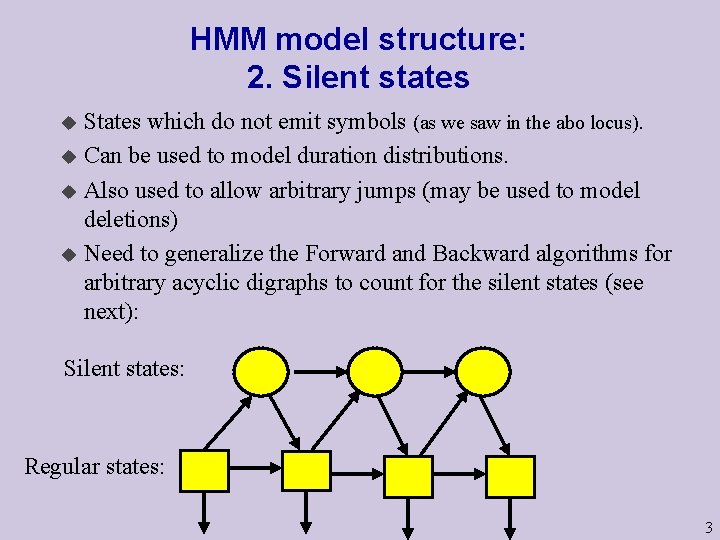
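To make the duration argument concrete, here is a minimal sketch that compares the run-length distribution of a single state with that of a chain of copies of the same state. It assumes (this is not stated on the slide) that every copy has the same self-loop probability `p` and moves to the next copy with probability `1 - p`; under that assumption the total length follows a negative binomial law. The function name `duration_pmf` is hypothetical.

```python
from math import comb

def duration_pmf(length, n_copies, p):
    """P(total run length == length) for a chain of n_copies states.

    Assumption: each copy loops on itself with probability p and moves on
    to the next copy (or leaves the chain) with probability 1 - p.
    """
    if length < n_copies:
        return 0.0  # every copy must be visited at least once
    return (comb(length - 1, n_copies - 1)
            * p ** (length - n_copies)
            * (1 - p) ** n_copies)

# One copy reproduces the geometric law (1 - p) * p**(k - 1) from the text.
print([round(duration_pmf(k, 1, 0.5), 4) for k in range(1, 6)])
# Four copies: runs shorter than 4 are impossible, longer runs are reshaped.
print([round(duration_pmf(k, 4, 0.5), 4) for k in range(1, 10)])
```

With one copy the most likely run length is always 1; with several copies the most likely length moves away from 1, which is the extra modeling freedom the slide refers to.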

HMM model structure: 2. Silent states

Silent states are states which do not emit symbols (as we saw in the ABO locus).

- They can be used to model duration distributions.
- They are also used to allow arbitrary jumps (which may be used to model deletions).
- The Forward and Backward algorithms need to be generalized to arbitrary acyclic digraphs to account for the silent states (see next).

(Figure legend: silent states, regular states.)

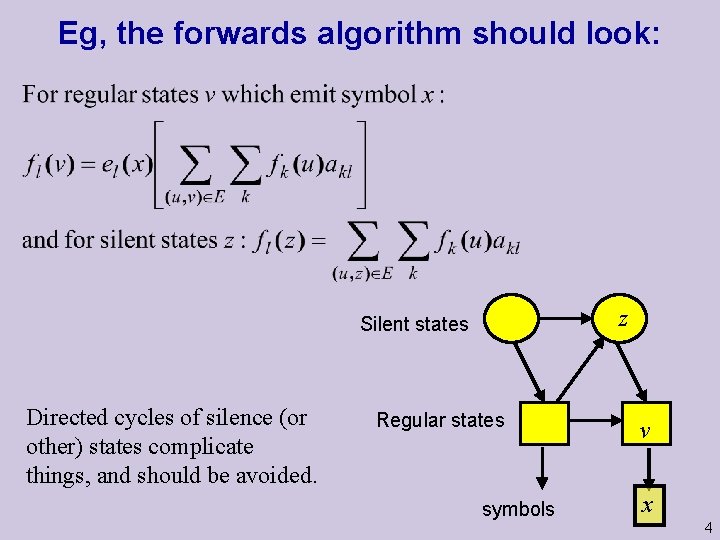

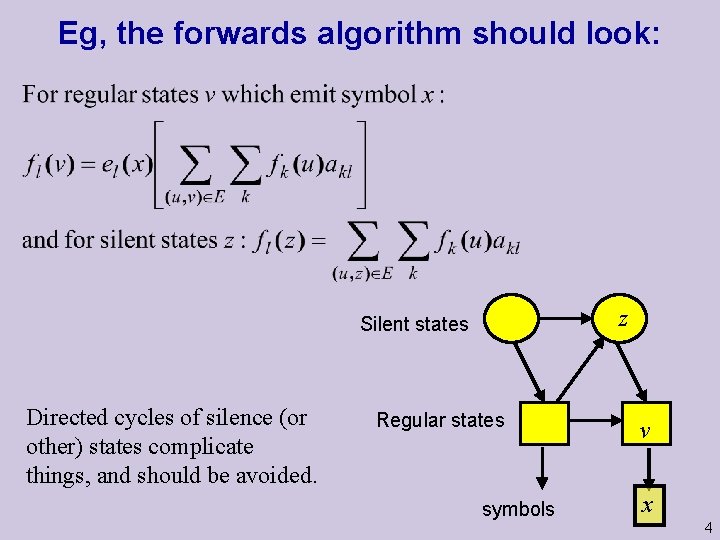

E.g., the forward algorithm should look as in the figure below. Directed cycles of silent (or other) states complicate things and should be avoided.

(Figure labels: silent states, regular states, symbols; z, v, x.)

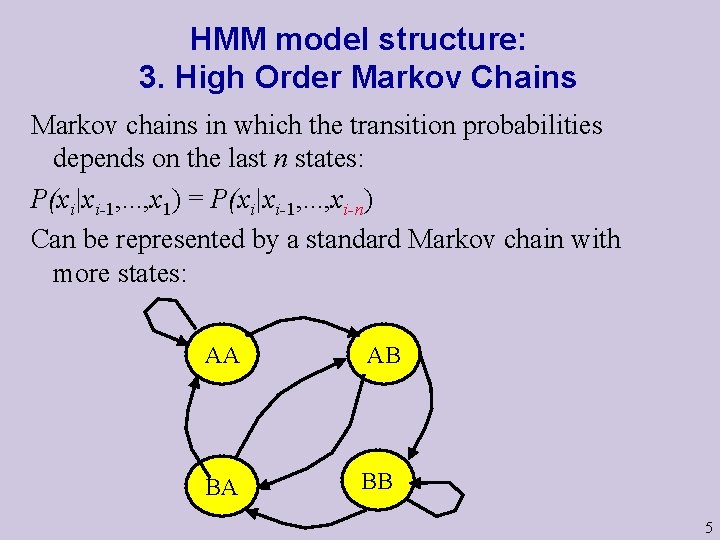

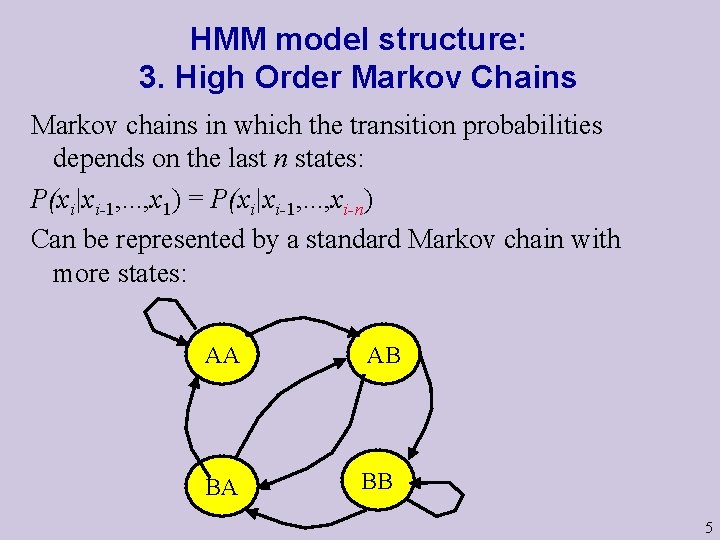
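A minimal sketch of a forward pass that handles silent states, under assumptions that are not on the slide: silent states are supplied in topological order (so there are no silent cycles), the first silent state is a begin state and the last is an end state, and the returned value is the forward mass at the end state after the whole sequence is emitted. The function name and the data layout (`trans` as a dictionary keyed by state pairs) are hypothetical.

```python
def forward_with_silent(x, emitting, silent_order, trans, emit):
    """Forward probability of sequence x in an HMM with silent states.

    emitting:     list of emitting state names
    silent_order: list of silent states in topological order;
                  silent_order[0] is the begin state, silent_order[-1] the end
    trans:        dict mapping (source, target) to probability (missing = 0)
    emit:         dict mapping emitting state to {symbol: probability}
    """
    def a(k, l):
        return trans.get((k, l), 0.0)

    # Position 0: all mass sits in the begin state, then flows through
    # silent states only, in topological order.
    prev = {s: 0.0 for s in emitting + silent_order}
    prev[silent_order[0]] = 1.0
    for idx, l in enumerate(silent_order[1:], start=1):
        prev[l] = sum(prev[k] * a(k, l) for k in silent_order[:idx])

    for sym in x:
        cur = {}
        # Emitting states consume one symbol, coming from any state at i - 1.
        for l in emitting:
            cur[l] = emit[l].get(sym, 0.0) * sum(prev[k] * a(k, l) for k in prev)
        # Silent states consume nothing: they are fed at the same position by
        # emitting states and by silent states earlier in the order.
        for idx, l in enumerate(silent_order):
            preds = emitting + silent_order[:idx]
            cur[l] = sum(cur[k] * a(k, l) for k in preds)
        prev = cur
    return prev[silent_order[-1]]

# Toy model: begin goes to E1 directly, or to E2 through the silent state S.
trans = {("begin", "E1"): 0.5, ("begin", "S"): 0.5, ("S", "E2"): 1.0,
         ("E1", "end"): 1.0, ("E2", "end"): 1.0}
emit = {"E1": {"a": 1.0}, "E2": {"b": 1.0}}
print(forward_with_silent("a", ["E1", "E2"], ["begin", "S", "end"], trans, emit))
```

Processing silent states in topological order is what makes each of them computable from values that are already known at the same position; a silent cycle would break that ordering.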

HMM model structure: 3. High Order Markov Chains

Markov chains in which the transition probabilities depend on the last $n$ states:

$$P(x_i \mid x_{i-1}, \ldots, x_1) = P(x_i \mid x_{i-1}, \ldots, x_{i-n})$$

Such a chain can be represented by a standard Markov chain with more states.

(Figure: a second-order chain over {A, B} represented with the states AA, AB, BA, BB.)

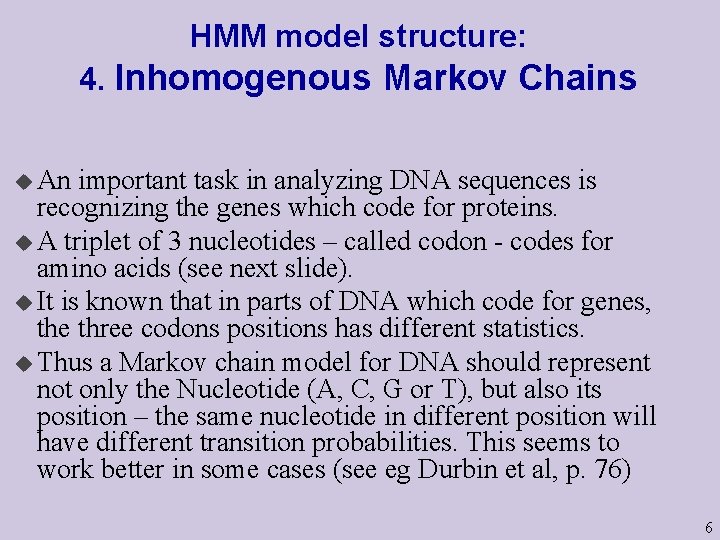

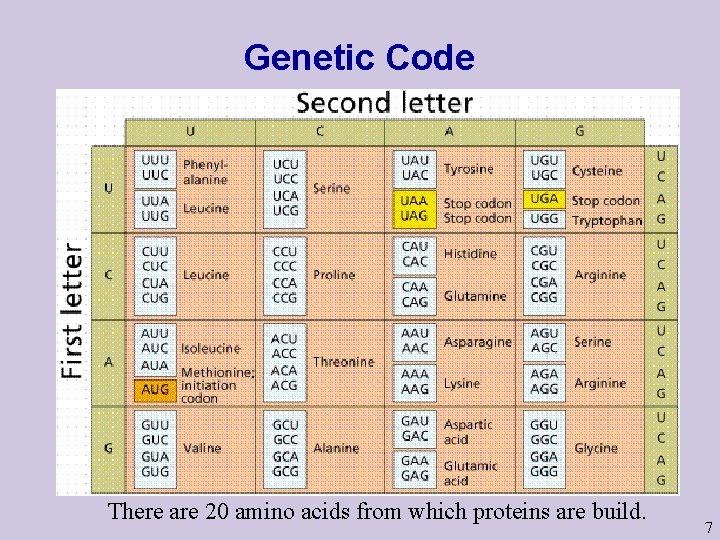
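The reduction to a standard chain can be sketched for the second-order case shown in the figure: each pair of consecutive symbols becomes one state, and the pair (a, b) may only move to a pair of the form (b, c). The function name `lift_second_order` and the numbers in `p2` are made up for illustration.

```python
from itertools import product

def lift_second_order(alphabet, p2):
    """Turn a second-order chain into a first-order chain over pairs.

    p2[(a, b)][c] = P(x_i = c | x_{i-2} = a, x_{i-1} = b).
    Returns t with t[(a, b)][(b, c)] = p2[(a, b)][c]; transitions to pairs
    that do not start with b are simply absent (probability 0).
    """
    t = {}
    for a, b in product(alphabet, repeat=2):
        t[(a, b)] = {(b, c): p2[(a, b)][c] for c in alphabet}
    return t

# Illustrative second-order probabilities over the alphabet {A, B}.
p2 = {
    ("A", "A"): {"A": 0.9, "B": 0.1},
    ("A", "B"): {"A": 0.5, "B": 0.5},
    ("B", "A"): {"A": 0.2, "B": 0.8},
    ("B", "B"): {"A": 0.3, "B": 0.7},
}
for state, row in lift_second_order("AB", p2).items():
    print(state, row)
```

The price of the reduction is the state count: an order-n chain over an alphabet of size s needs s**n pair-like states.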

HMM model structure: 4. Inhomogeneous Markov Chains

- An important task in analyzing DNA sequences is recognizing the genes which code for proteins.
- A triplet of 3 nucleotides, called a codon, codes for an amino acid (see next slide).
- It is known that in parts of DNA which code for genes, the three codon positions have different statistics.
- Thus a Markov chain model for DNA should represent not only the nucleotide (A, C, G or T), but also its position: the same nucleotide in different positions will have different transition probabilities.

This seems to work better in some cases (see e.g. Durbin et al., p. 76).

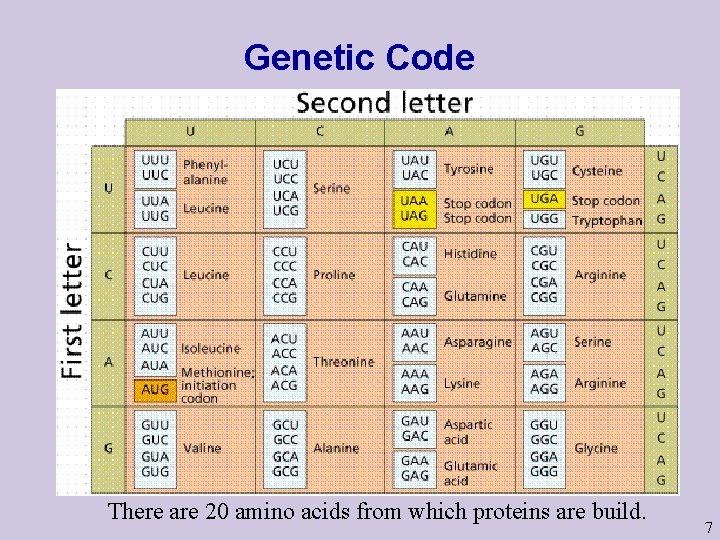
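As a rough illustration of a position-dependent chain, the sketch below scores a DNA string with three transition tables, one per codon position. It assumes the sequence starts in frame (its first nucleotide is at codon position 0); the function name, the table layout, and the numbers are hypothetical.

```python
from math import log

def codon_chain_log_prob(seq, init, trans):
    """Log-probability of seq under a chain with codon-position-specific tables.

    init[a]:        probability of the first nucleotide a
    trans[k][a][b]: P(next = b | current = a) when the current nucleotide
                    sits at codon position k (0, 1 or 2)
    Assumption: seq[0] is at codon position 0.
    """
    lp = log(init[seq[0]])
    for i in range(1, len(seq)):
        k = (i - 1) % 3  # codon position of the nucleotide we are leaving
        lp += log(trans[k][seq[i - 1]][seq[i]])
    return lp

NUC = "ACGT"
uniform = {a: {b: 0.25 for b in NUC} for a in NUC}
init = {a: 0.25 for a in NUC}
# With identical tables the model collapses to an ordinary Markov chain.
print(codon_chain_log_prob("ATGGCC", init, [uniform, uniform, uniform]))
```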

Genetic Code

There are 20 amino acids from which proteins are built.

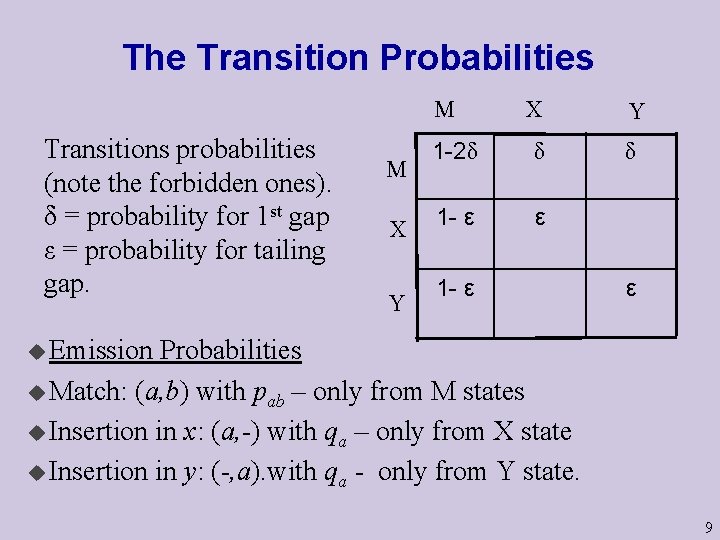

Sequence Comparison using HMM

HMM for sequence alignment, which incorporates affine gap scores.

"Hidden" states:

- Match
- Insertion in x
- Insertion in y

Symbols emitted:

- Match: $\{(a, b) \mid a, b \in \Sigma\}$
- Insertion in x: $\{(a, -) \mid a \in \Sigma\}$
- Insertion in y: $\{(-, a) \mid a \in \Sigma\}$

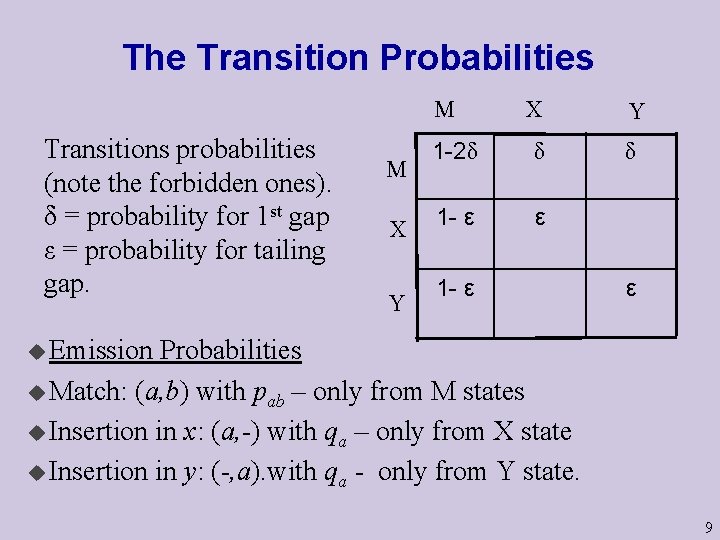

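The Transition Probabilities

Transition probabilities (note the forbidden ones), where $\delta$ is the probability for the 1st gap and $\varepsilon$ is the probability for a tailing gap:

| From \ To | M | X | Y |
|---|---|---|---|
| M | $1-2\delta$ | $\delta$ | $\delta$ |
| X | $1-\varepsilon$ | $\varepsilon$ | forbidden |
| Y | $1-\varepsilon$ | forbidden | $\varepsilon$ |

Emission probabilities:

- Match: $(a, b)$ with $p_{ab}$, only from the M state.
- Insertion in x: $(a, -)$ with $q_a$, only from the X state.
- Insertion in y: $(-, a)$ with $q_a$, only from the Y state.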

The Transition Probabilities

Each aligned pair is generated by the above HMM with a certain probability. Note that the hidden states can be reconstructed from the alignment. However, for each pair of sequences x (of length m) and y (of length n), there are many alignments of x and y, each corresponding to a different state path (the path lengths are between max{m, n} and m+n). Our task is to score alignments by using this model. The score should reflect the probability of the alignment.

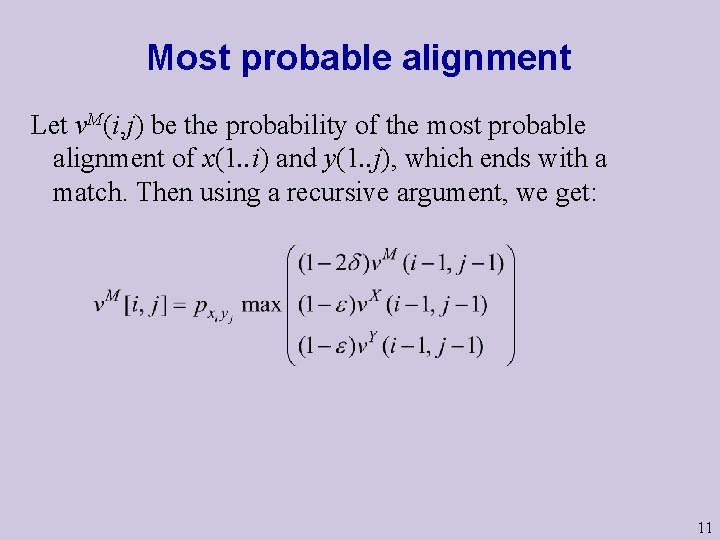

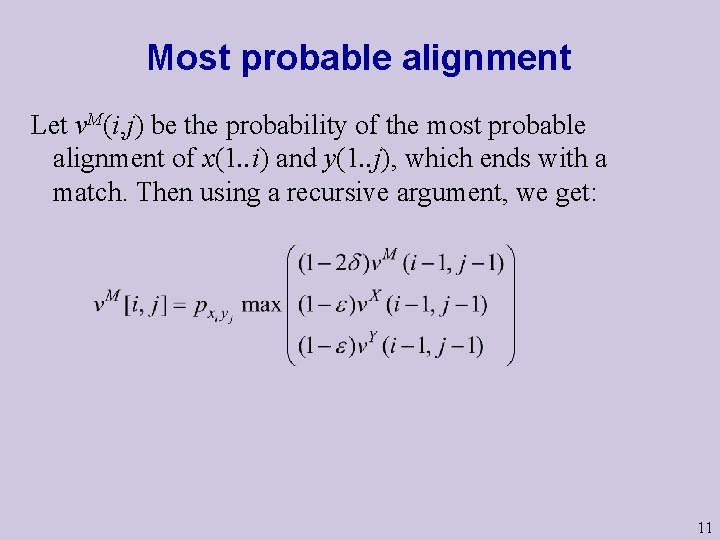
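To illustrate how the state path is read off an alignment and turned into a probability, here is a minimal sketch. It assumes the path starts in M (as the forward initialization later in the lecture does) and uses the model without an END state; the function name and the numeric parameters are made up for illustration.

```python
def alignment_probability(ax, ay, p, q, delta, eps):
    """Probability of one gapped alignment under the pair HMM.

    ax, ay: the two alignment rows, equal length, '-' marks a gap
    p[(a, b)]: match emission probability; q[a]: gap emission probability
    Assumption: the state path starts in M.
    """
    if len(ax) != len(ay):
        raise ValueError("alignment rows must have equal length")
    prob, state = 1.0, "M"
    for a, b in zip(ax, ay):
        if a == "-" and b == "-":
            raise ValueError("a column cannot contain two gaps")
        # Reconstruct the hidden state from the column.
        if a != "-" and b != "-":
            nxt, emission = "M", p[(a, b)]
        elif b == "-":
            nxt, emission = "X", q[a]
        else:
            nxt, emission = "Y", q[b]
        # Transition probability from the table above.
        if state == "M":
            step = 1 - 2 * delta if nxt == "M" else delta
        elif nxt == "M":
            step = 1 - eps
        elif nxt == state:
            step = eps
        else:
            step = 0.0  # X -> Y and Y -> X are forbidden
        prob *= step * emission
        state = nxt
    return prob

p = {("A", "A"): 0.5}
q = {"A": 0.25}
print(alignment_probability("AA", "A-", p, q, delta=0.1, eps=0.3))
```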

Most probable alignment

Let $v^M(i, j)$ be the probability of the most probable alignment of $x(1..i)$ and $y(1..j)$ which ends with a match. Then, using a recursive argument, we get:

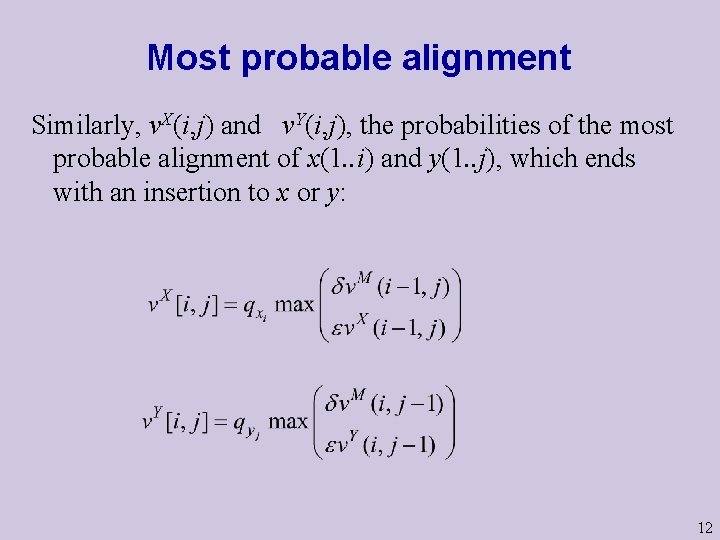

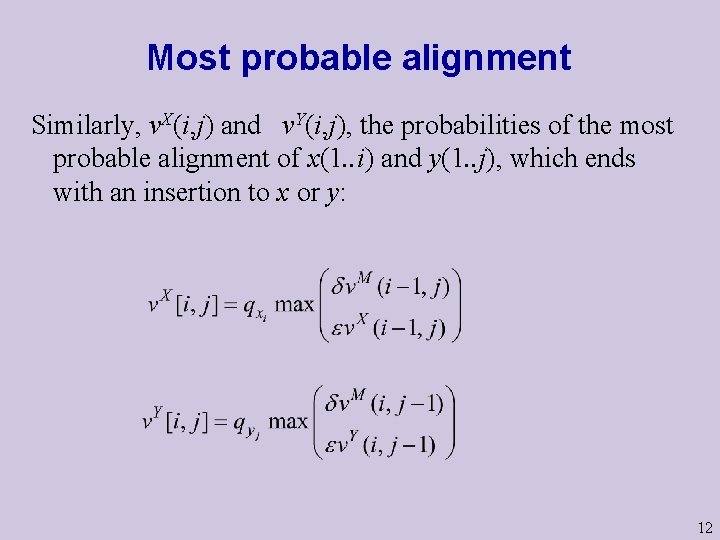

Most probable alignment

Similarly, $v^X(i, j)$ and $v^Y(i, j)$ are the probabilities of the most probable alignments of $x(1..i)$ and $y(1..j)$ which end with an insertion in x or in y, respectively.
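A minimal sketch of the three Viterbi tables for the model without an END state. The recursions used are the standard pair-HMM ones from Durbin et al.: each table takes the best predecessor, multiplied by the transition and emission probabilities introduced earlier. Treat their exact agreement with the slide's formulas as an assumption. The function name and the numbers are illustrative.

```python
def pair_hmm_viterbi(x, y, p, q, delta, eps):
    """Probability of the most probable alignment of x and y.

    vM / vX / vY hold the best alignment of x[:i], y[:j] ending in a match,
    an insertion in x, or an insertion in y. Assumption: start in M.
    """
    m, n = len(x), len(y)
    vM = [[0.0] * (n + 1) for _ in range(m + 1)]
    vX = [[0.0] * (n + 1) for _ in range(m + 1)]
    vY = [[0.0] * (n + 1) for _ in range(m + 1)]
    vM[0][0] = 1.0
    for i in range(m + 1):
        for j in range(n + 1):
            if i == 0 and j == 0:
                continue
            if i > 0 and j > 0:  # match consumes x[i-1] and y[j-1]
                vM[i][j] = p[(x[i - 1], y[j - 1])] * max(
                    (1 - 2 * delta) * vM[i - 1][j - 1],
                    (1 - eps) * vX[i - 1][j - 1],
                    (1 - eps) * vY[i - 1][j - 1])
            if i > 0:  # insertion in x consumes x[i-1] only
                vX[i][j] = q[x[i - 1]] * max(delta * vM[i - 1][j],
                                             eps * vX[i - 1][j])
            if j > 0:  # insertion in y consumes y[j-1] only
                vY[i][j] = q[y[j - 1]] * max(delta * vM[i][j - 1],
                                             eps * vY[i][j - 1])
    return max(vM[m][n], vX[m][n], vY[m][n])

p = {("A", "A"): 0.5}
q = {"A": 0.25}
print(pair_hmm_viterbi("AA", "A", p, q, delta=0.1, eps=0.3))
```

In practice the products underflow for long sequences, so an implementation would usually work with log probabilities and keep back-pointers to recover the alignment itself; both are omitted here to keep the sketch short.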

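Adding termination probabilities

We may want a model which defines a probability distribution over all possible sequences. For this, an END state is added, with transition probability $\tau$ from any other state to END. This assumes an expected sequence length of $1/\tau$.

| From \ To | M | X | Y | END |
|---|---|---|---|---|
| M | $1-2\delta-\tau$ | $\delta$ | $\delta$ | $\tau$ |
| X | $1-\varepsilon-\tau$ | $\varepsilon$ | | $\tau$ |
| Y | $1-\varepsilon-\tau$ | | $\varepsilon$ | $\tau$ |
| END | | | | 1 |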

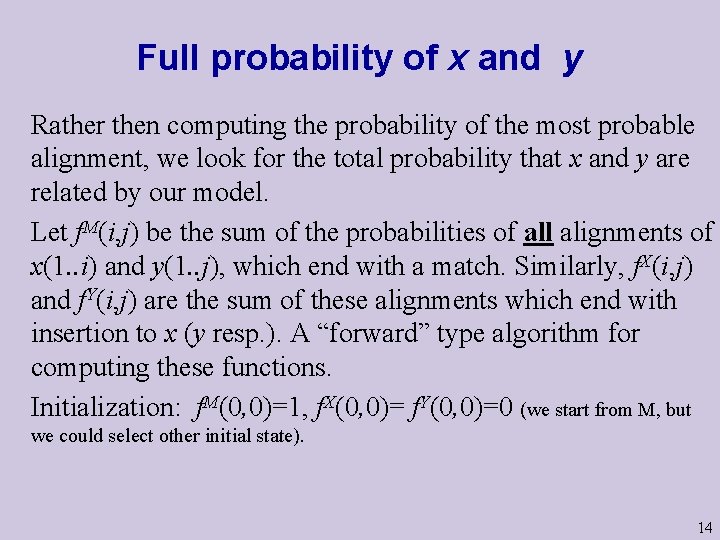

Full probability of x and y

Rather than computing the probability of the most probable alignment, we look for the total probability that x and y are related by our model. Let $f^M(i, j)$ be the sum of the probabilities of all alignments of $x(1..i)$ and $y(1..j)$ which end with a match. Similarly, $f^X(i, j)$ and $f^Y(i, j)$ are the sums over the alignments which end with an insertion in x (y, resp.). These functions are computed by a "forward"-type algorithm.

Initialization: $f^M(0, 0) = 1$, $f^X(0, 0) = f^Y(0, 0) = 0$ (we start from M, but we could select another initial state).

Full probability of x and y (cont.)

The total probability of all alignments is:

$$P(x, y \mid \text{model}) = f^M(m, n) + f^X(m, n) + f^Y(m, n)$$

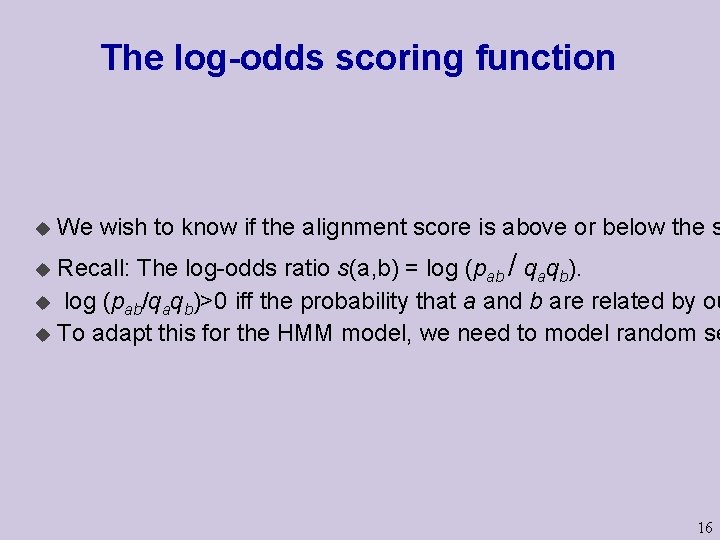

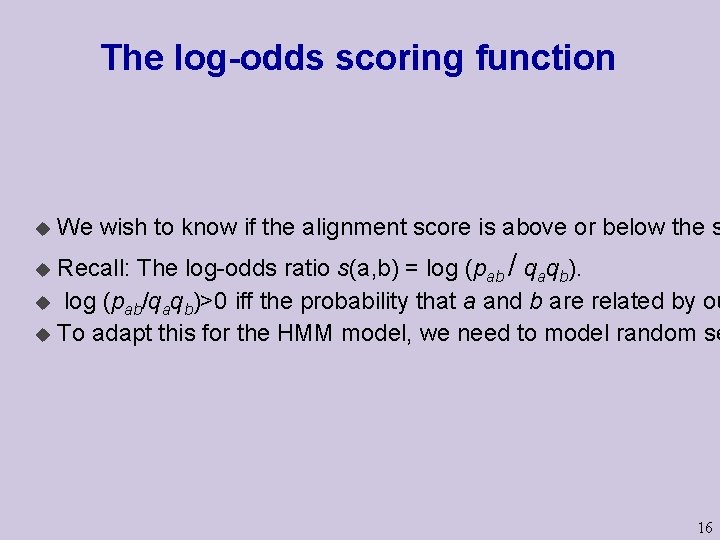
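A minimal sketch of the forward computation, using the initialization and the final sum stated above. The recursions are assumed to be the usual pair-HMM ones (sums over the allowed predecessors) for the model without an END state; the function name and the numbers are illustrative.

```python
def pair_hmm_forward(x, y, p, q, delta, eps):
    """Total probability of all alignments of x and y.

    fM / fX / fY sum over all alignments of x[:i], y[:j] ending in a match,
    an insertion in x, or an insertion in y.
    """
    m, n = len(x), len(y)
    fM = [[0.0] * (n + 1) for _ in range(m + 1)]
    fX = [[0.0] * (n + 1) for _ in range(m + 1)]
    fY = [[0.0] * (n + 1) for _ in range(m + 1)]
    fM[0][0] = 1.0  # initialization from the text: start in M
    for i in range(m + 1):
        for j in range(n + 1):
            if i == 0 and j == 0:
                continue
            if i > 0 and j > 0:
                fM[i][j] = p[(x[i - 1], y[j - 1])] * (
                    (1 - 2 * delta) * fM[i - 1][j - 1]
                    + (1 - eps) * (fX[i - 1][j - 1] + fY[i - 1][j - 1]))
            if i > 0:
                fX[i][j] = q[x[i - 1]] * (delta * fM[i - 1][j]
                                          + eps * fX[i - 1][j])
            if j > 0:
                fY[i][j] = q[y[j - 1]] * (delta * fM[i][j - 1]
                                          + eps * fY[i][j - 1])
    # Total probability as stated in the text: sum of the three end cells.
    return fM[m][n] + fX[m][n] + fY[m][n]

p = {("A", "A"): 0.5}
q = {"A": 0.25}
print(pair_hmm_forward("AA", "A", p, q, delta=0.1, eps=0.3))
```

The only change from a most-probable-alignment computation is that each maximum over predecessors becomes a sum, so the result is never smaller than the probability of any single alignment.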

The log-odds scoring function

- We wish to know if the alignment score is above or below the s…
- Recall: the log-odds ratio is $s(a, b) = \log \frac{p_{ab}}{q_a q_b}$.
- $\log \frac{p_{ab}}{q_a q_b} > 0$ iff the probability that $a$ and $b$ are related by ou…
- To adapt this for the HMM model, we need to model random se…

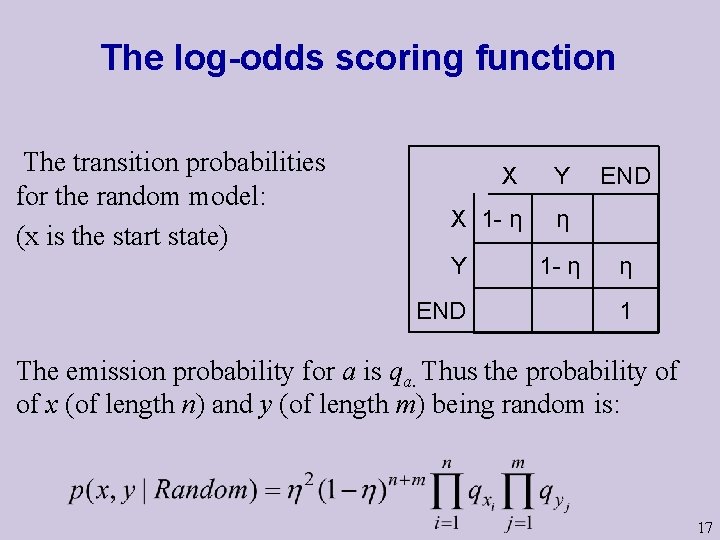

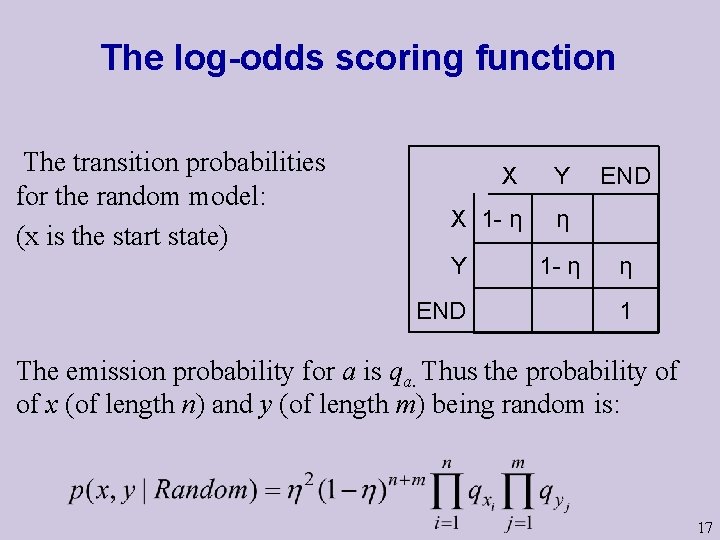

The log-odds scoring function

The transition probabilities for the random model (X is the start state):

| From \ To | X | Y | END |
|---|---|---|---|
| X | $1-\eta$ | $\eta$ | |
| Y | | $1-\eta$ | $\eta$ |
| END | | | 1 |

The emission probability for $a$ is $q_a$. Thus the probability of x (of length n) and y (of length m) being random is:

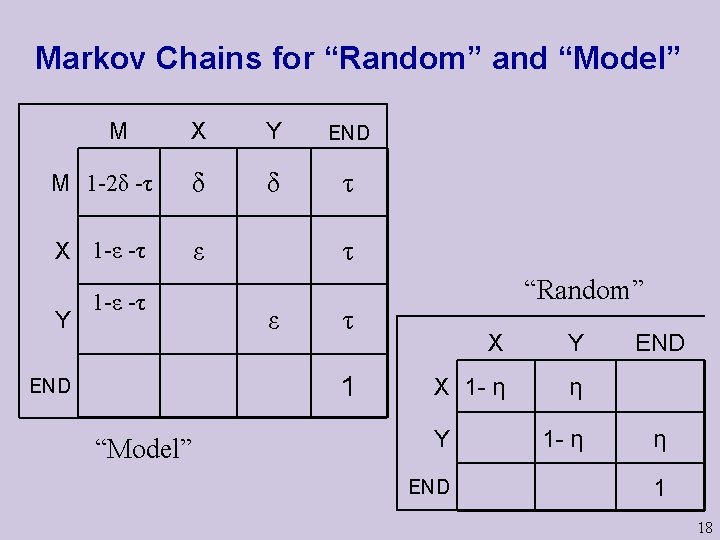

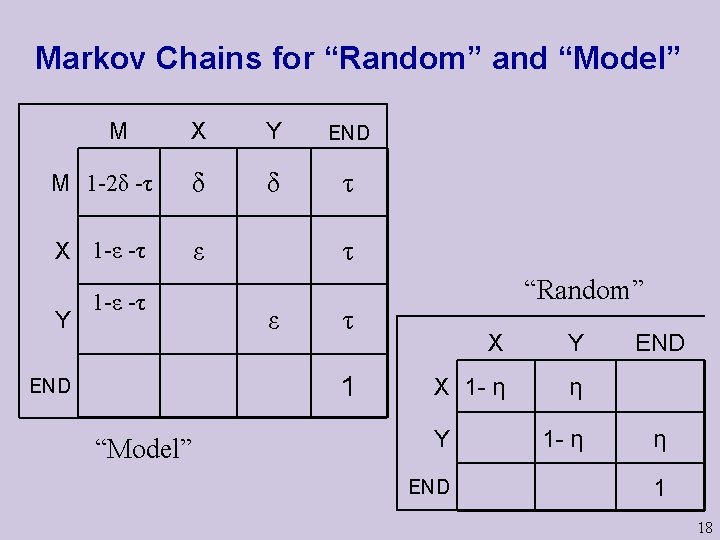
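For a quick numerical feel, the sketch below evaluates the random-model probability in the form given by Durbin et al.: $\eta^2 (1-\eta)^{n+m} \prod_i q_{x_i} \prod_j q_{y_j}$. That this is exactly the formula shown on the slide is an assumption; the function name and the numbers are hypothetical.

```python
from math import prod

def random_model_probability(x, y, q, eta):
    """P(x, y | random model): both sequences emitted independently.

    Assumed form (Durbin et al.): eta^2 * (1 - eta)^(len(x) + len(y))
    times the product of the background frequencies q of all symbols.
    """
    emissions = prod(q[a] for a in x) * prod(q[b] for b in y)
    return eta ** 2 * (1 - eta) ** (len(x) + len(y)) * emissions

q = {"A": 0.25, "C": 0.25, "G": 0.25, "T": 0.25}
print(random_model_probability("ACG", "AG", q, eta=0.1))
```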

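Markov Chains for "Random" and "Model"

"Model":

| From \ To | M | X | Y | END |
|---|---|---|---|---|
| M | $1-2\delta-\tau$ | $\delta$ | $\delta$ | $\tau$ |
| X | $1-\varepsilon-\tau$ | $\varepsilon$ | | $\tau$ |
| Y | $1-\varepsilon-\tau$ | | $\varepsilon$ | $\tau$ |
| END | | | | 1 |

"Random":

| From \ To | X | Y | END |
|---|---|---|---|
| X | $1-\eta$ | $\eta$ | |
| Y | | $1-\eta$ | $\eta$ |
| END | | | 1 |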

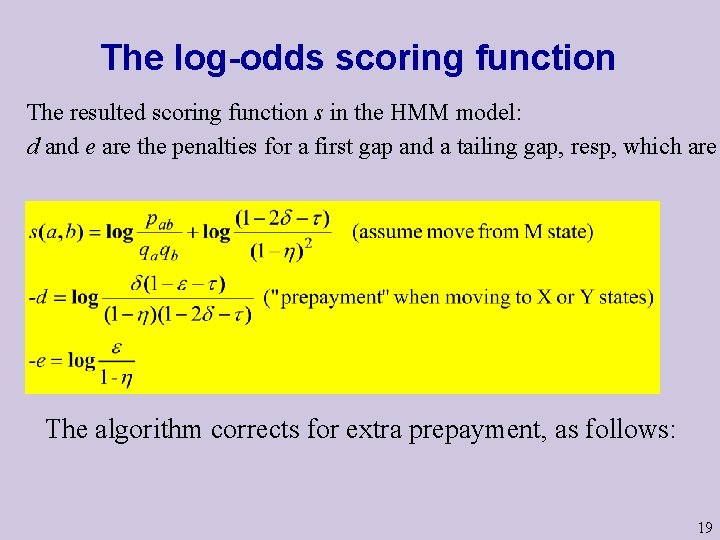

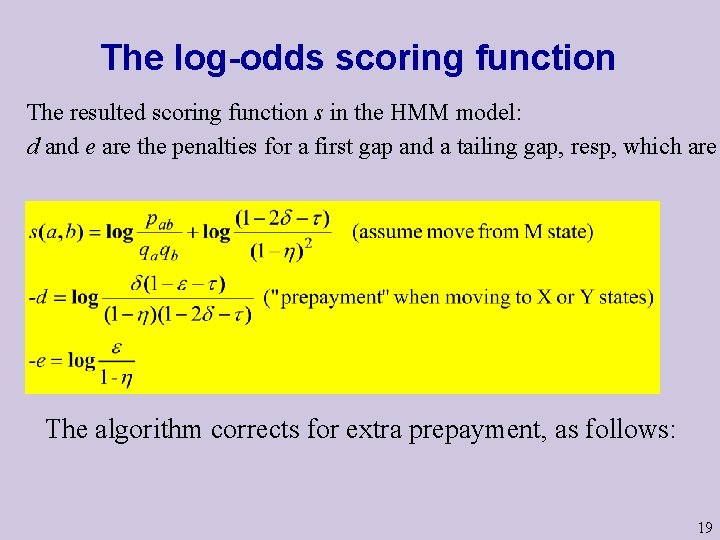

The log-odds scoring function

The resulting scoring function $s$ in the HMM model, and the penalties $d$ and $e$ for a first gap and a tailing gap, respectively, are given by the formulas in the figures. The algorithm corrects for extra prepayment, as follows:

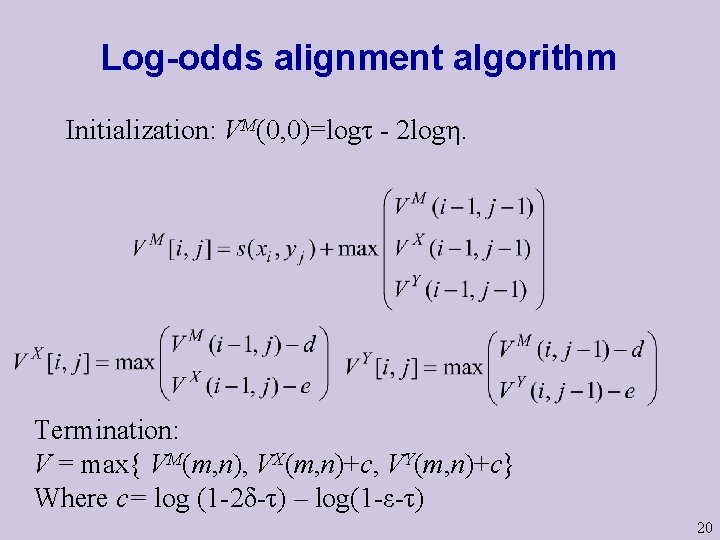

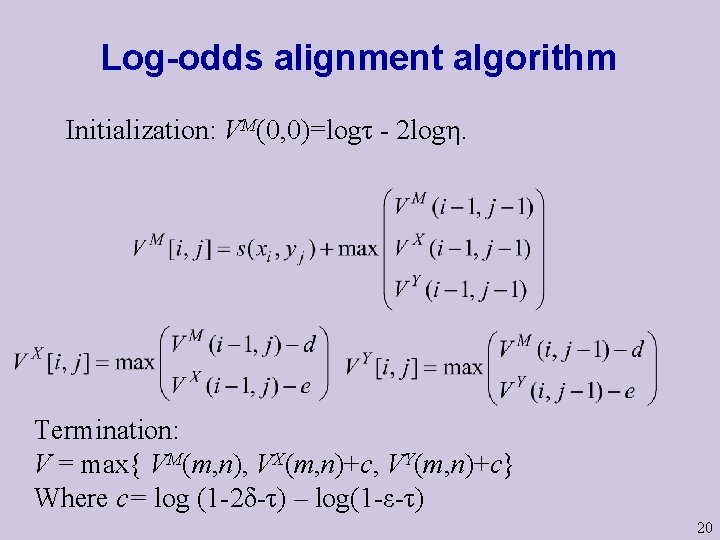

Log-odds alignment algorithm

Initialization: $V^M(0, 0) = \log\tau - 2\log\eta$.

Termination: $V = \max\{V^M(m, n),\ V^X(m, n) + c,\ V^Y(m, n) + c\}$, where $c = \log(1-2\delta-\tau) - \log(1-\varepsilon-\tau)$.

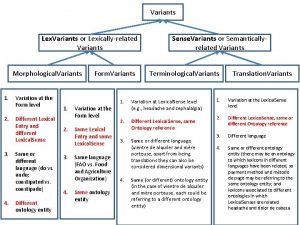
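A minimal sketch of the log-odds dynamic program, using the initialization and termination stated above. The recursion step is assumed to be the usual affine-gap one (add $s$ for a match, subtract $d$ to open a gap, subtract $e$ to extend it). Because the formulas for $s$, $d$ and $e$ appear only in the figures, the sketch takes them as inputs rather than deriving them; the function name and all numbers are hypothetical.

```python
from math import log

NEG_INF = float("-inf")

def log_odds_viterbi(x, y, s, d, e, init, c):
    """Best log-odds alignment score of x and y.

    s[(a, b)]: substitution score; d, e: first-gap and tailing-gap penalties
    init: value of V^M(0, 0); c: termination correction for X and Y
    """
    m, n = len(x), len(y)
    VM = [[NEG_INF] * (n + 1) for _ in range(m + 1)]
    VX = [[NEG_INF] * (n + 1) for _ in range(m + 1)]
    VY = [[NEG_INF] * (n + 1) for _ in range(m + 1)]
    VM[0][0] = init
    for i in range(m + 1):
        for j in range(n + 1):
            if i == 0 and j == 0:
                continue
            if i > 0 and j > 0:
                VM[i][j] = s[(x[i - 1], y[j - 1])] + max(
                    VM[i - 1][j - 1], VX[i - 1][j - 1], VY[i - 1][j - 1])
            if i > 0:
                VX[i][j] = max(VM[i - 1][j] - d, VX[i - 1][j] - e)
            if j > 0:
                VY[i][j] = max(VM[i][j - 1] - d, VY[i][j - 1] - e)
    return max(VM[m][n], VX[m][n] + c, VY[m][n] + c)

# Illustrative parameters; init and c follow the formulas in the text.
delta, eps, tau, eta = 0.1, 0.3, 0.05, 0.05
init = log(tau) - 2 * log(eta)
c = log(1 - 2 * delta - tau) - log(1 - eps - tau)
s = {("A", "A"): 2.0}  # made-up substitution score
print(log_odds_viterbi("AA", "A", s, d=3.0, e=1.0, init=init, c=c))
```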
