Natural Language Processing Dr Andy Evans Text processing



Text processing has been at the core of computing since the 1950s, and it forms the core of third-generation languages. To run a program we need to do a lexical analysis, parsing (splitting) the program into tokens (keywords etc.), understand the order (syntax analysis) and semantics (meaning), and then translate it into binary. For more on this, see: Michael L. Scott, Programming Language Pragmatics.

However, for other texts, there are similar processes we can go through to investigate human understanding. In increasing complication, they include:

- Tokenizing
- Search/regex
- Statistics
- Parts of Speech (PoS) tagging
- Machine Translation
- Logic
- Semantics and Sentiment analysis

NLTK

The Natural Language Toolkit (NLTK) dominates. It opened up NLP to programmers and was a major reason Python became so popular. https://www.nltk.org/ It is provided with Anaconda.

NLTK Corpora, Lexicons, etc.

NLTK uses a variety of text files containing example data. These can be downloaded with:

    nltk.download()       # Interactive downloader listing all resources
    nltk.download(name)   # A single named resource

Generally, if something fails NLTK will tell you what you are missing and where it should go, and if you can't install it that way, you can download it from the resources page: http://www.nltk.org/nltk_data/

NLTK Book

The NLTK project has its own book, "Natural Language Processing with Python", which you can buy or read online (make sure you get the Python 3 version). This lecture makes extensive use of, and reference to, the book, though we've adjusted some examples so they work without the corpora, so check both versions.

Tokenizing; Search/regex; Statistics; Parts of Speech (PoS) tagging and Semantics; Machine Translation; Logic; Sentiment analysis

Encoding

The first part of reading text is recognising text as text. We've seen that all computer information is binary, but text is binary that can be recognised by some software as text and displayed using characters. There is a difference between the number 2 and the character "2"; the former cannot be read by humans when displayed as text:

2 stored as a 2-byte big-endian number: 00000000 00000010
"2" stored in the same fashion using ASCII encoding: 00000000 00110010
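The difference between a number and a character can be checked directly in Python. This is a minimal sketch; the two-byte width is an assumption chosen to match the bit patterns shown above, and `bits` is a helper name introduced only for illustration.

```python
# The integer 2 and the character "2" are stored as different bit patterns.
number_bytes = (2).to_bytes(2, byteorder="big")   # two-byte big-endian integer
char_bytes = "2".encode("ascii")                   # a single ASCII byte (value 50)


def bits(data):
    """Return the bytes as space-separated groups of eight bits."""
    return " ".join(f"{byte:08b}" for byte in data)


print(bits(number_bytes))  # 00000000 00000010
print(bits(char_bytes))    # 00110010
```

ASCII itself only needs one byte per character, so the leading zero byte in the character example above is padding.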

Encoding

Binary encoding includes:

- The amount of binary used to represent a character or value.
- The order of the binary.
- The way negative numbers are represented.
- Bits for error checking.
- File format elements.
- Which binary represents which character (character encoding).

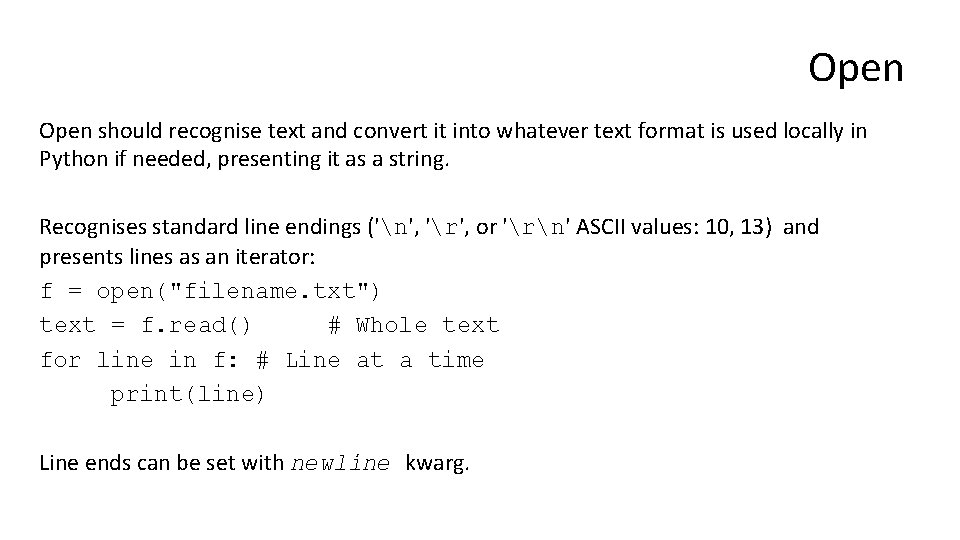

Python

The character encoding used is local to the OS, but can be set in open with the encoding kwarg. By default this tends to be UTF-8, an encoding for one to four byte Unicode (backwards compatible with ASCII). This has risen to dominate computational text over the last 10 years. For dealing with other encodings, see the codecs module: https://docs.python.org/3/library/codecs.html

A detailed introduction to dealing with other encodings and some of the issues can be found in: Luciano Ramalho, Fluent Python. Also see Section 3.3 in http://www.nltk.org/book/ch03.html
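A short sketch of why the encoding kwarg matters: the same bytes read back with two different encodings. The sample string and the temporary file are assumptions made purely for the demonstration.

```python
import os
import tempfile

text = "naïve café"

# Write the text with an explicit encoding rather than relying on the OS default.
with tempfile.NamedTemporaryFile("w", encoding="utf-8", suffix=".txt",
                                 delete=False) as f:
    f.write(text)
    path = f.name

# Reading with the same encoding recovers the original string.
with open(path, encoding="utf-8") as f:
    as_utf8 = f.read()

# Reading with a different encoding raises no error here,
# but the non-ASCII characters come back wrong.
with open(path, encoding="latin-1") as f:
    as_latin1 = f.read()

os.remove(path)
print(as_utf8 == text)    # True
print(as_latin1 == text)  # False
```

Each of the two accented characters takes two bytes in UTF-8, so the mis-decoded string is two characters longer than the original.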

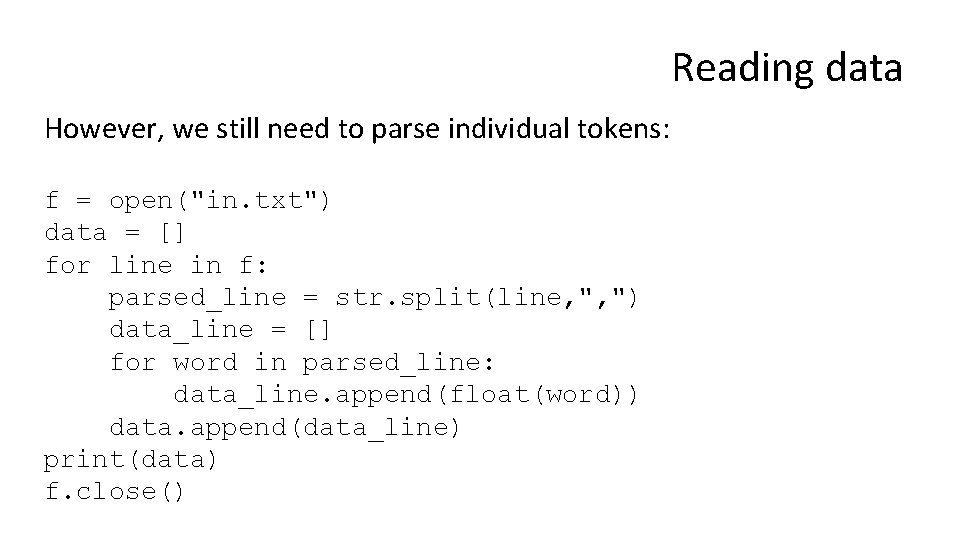

open should recognise text and convert it into whatever text format is used locally in Python if needed, presenting it as a string. It recognises standard line endings ('\n', '\r', or '\r\n'; ASCII values 10 and 13) and presents lines as an iterator:

    f = open("filename.txt")
    text = f.read()    # Whole text, or...
    for line in f:     # ...a line at a time
        print(line)

Use one approach or the other: after f.read() the file is at its end, so the loop would find no lines. Line ends can be set with the newline kwarg.

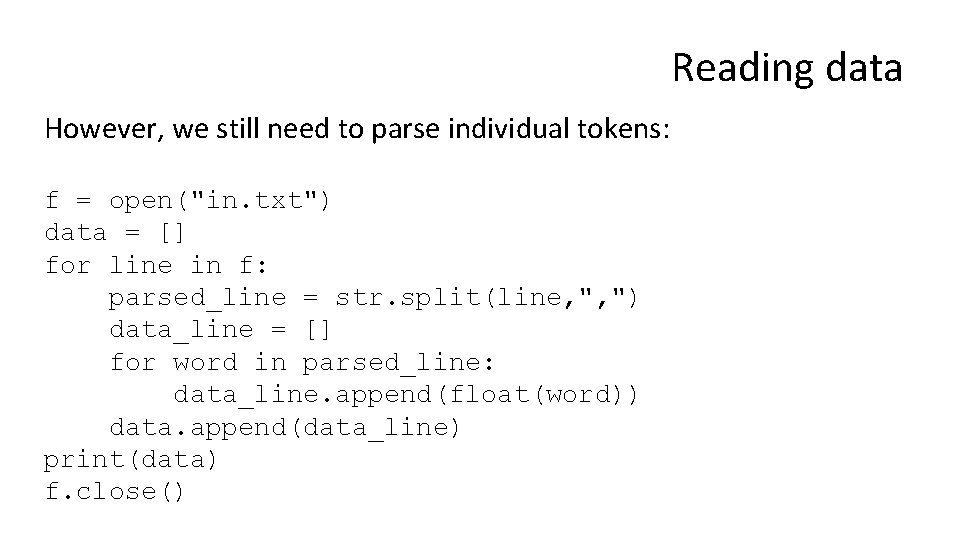

Reading data

However, we still need to parse individual tokens:

    f = open("in.txt")
    data = []
    for line in f:
        parsed_line = line.split(",")        # Split the line on commas
        data_line = []
        for word in parsed_line:
            data_line.append(float(word))    # Convert each token to a float
        data.append(data_line)
    print(data)
    f.close()

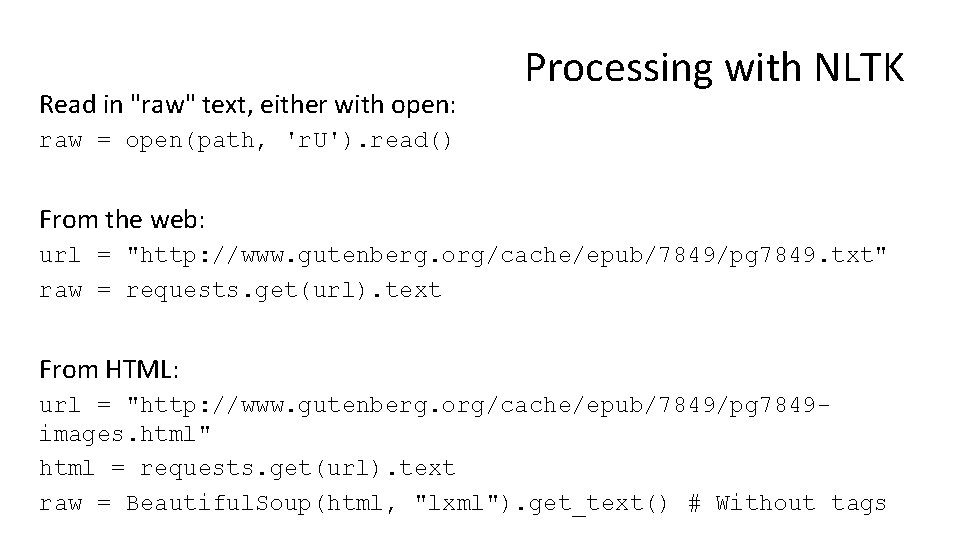

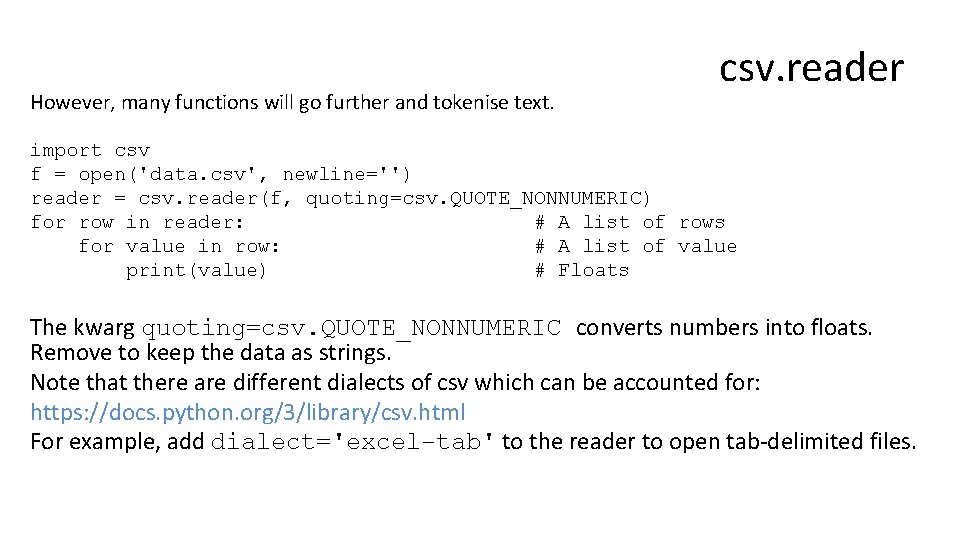

However, many functions will go further and tokenise text.

csv.reader

    import csv
    f = open('data.csv', newline='')
    reader = csv.reader(f, quoting=csv.QUOTE_NONNUMERIC)
    for row in reader:        # Each row is a list of values
        for value in row:
            print(value)      # Floats
    f.close()

The kwarg quoting=csv.QUOTE_NONNUMERIC converts unquoted values into floats. Remove it to keep the data as strings. Note that there are different dialects of csv which can be accounted for: https://docs.python.org/3/library/csv.html For example, add dialect='excel-tab' to the reader to open tab-delimited files.
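To see what QUOTE_NONNUMERIC does without needing a file on disk, the sketch below feeds the reader from an in-memory string. The sample data is made up for illustration, and io.StringIO simply stands in for a file opened with newline=''.

```python
import csv
import io

# Two rows: unquoted fields are numbers, quoted fields are text.
sample = io.StringIO('1.5,2,"label"\n3,4.25,"other"\n')

reader = csv.reader(sample, quoting=csv.QUOTE_NONNUMERIC)
rows = [row for row in reader]
print(rows)  # [[1.5, 2.0, 'label'], [3.0, 4.25, 'other']]
```

Note that an unquoted field that is not a number raises a ValueError under this setting, so it suits files where all text is quoted.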

Processing with NLTK

Read in "raw" text, either with open:

    raw = open(path, 'r').read()

From the web:

    import requests
    url = "http://www.gutenberg.org/cache/epub/7849/pg7849.txt"
    raw = requests.get(url).text

From HTML:

    from bs4 import BeautifulSoup
    url = "http://www.gutenberg.org/cache/epub/7849/pg7849-images.html"
    html = requests.get(url).text
    raw = BeautifulSoup(html, "lxml").get_text()   # Without tags

Other formats

- Search results
- RSS
- PDF
- MSWord
- Keyboard

See: http://www.nltk.org/book/ch03.html

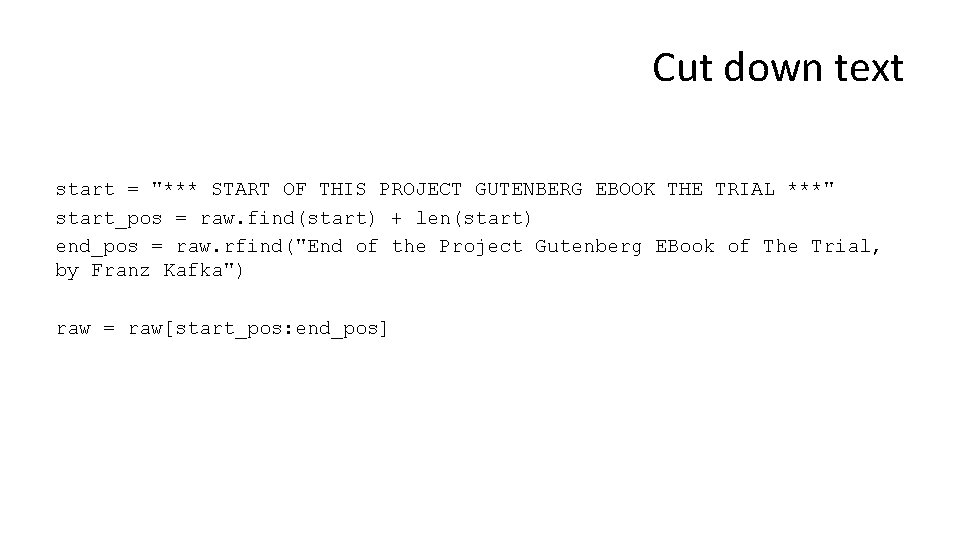

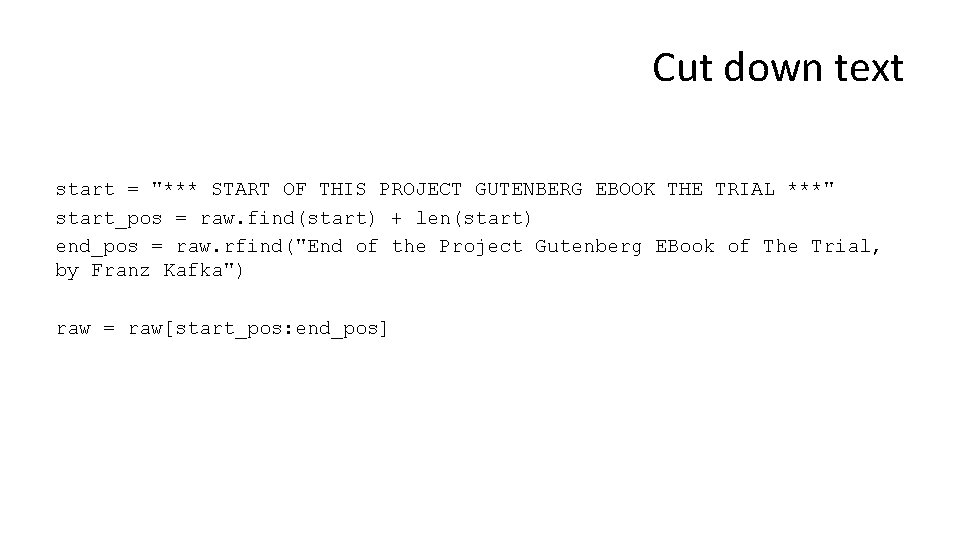

Cut down text

    start = "*** START OF THIS PROJECT GUTENBERG EBOOK THE TRIAL ***"
    start_pos = raw.find(start) + len(start)
    end_pos = raw.rfind("End of the Project Gutenberg EBook of The Trial, by Franz Kafka")
    raw = raw[start_pos:end_pos]
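One thing to watch with this approach is that find and rfind return -1 when a marker is missing, which silently gives the wrong slice. A hedged sketch of a more defensive version follows; the function name `trim` and the short sample markers are assumptions for illustration, not part of the lecture code.

```python
def trim(raw, start_marker, end_marker):
    """Return the text between the two markers, or raise if either is missing."""
    start = raw.find(start_marker)
    end = raw.rfind(end_marker)
    if start == -1 or end == -1:
        raise ValueError("marker not found")
    return raw[start + len(start_marker):end].strip()


sample = ("licence text *** START *** Someone must have been telling lies. "
          "*** END *** more licence")
body = trim(sample, "*** START ***", "*** END ***")
print(body)  # Someone must have been telling lies.
```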

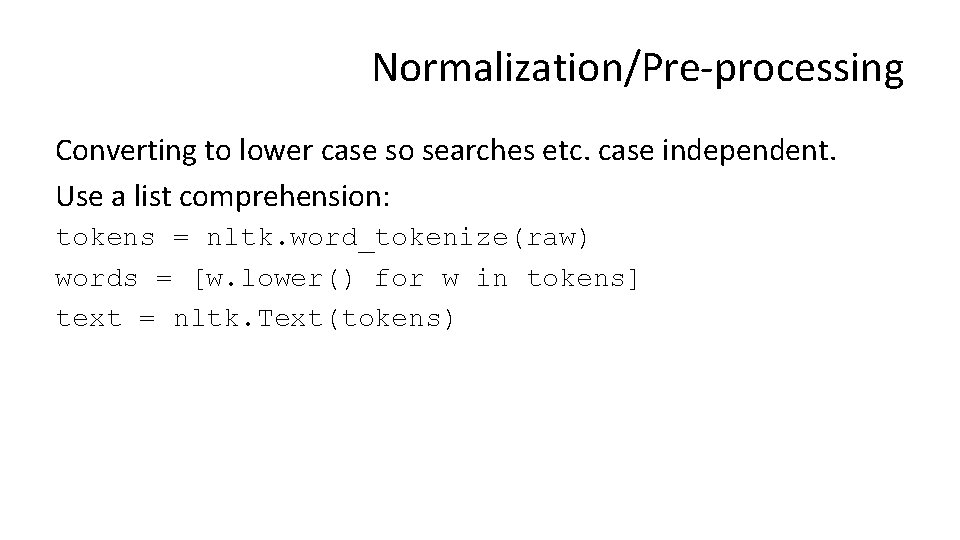

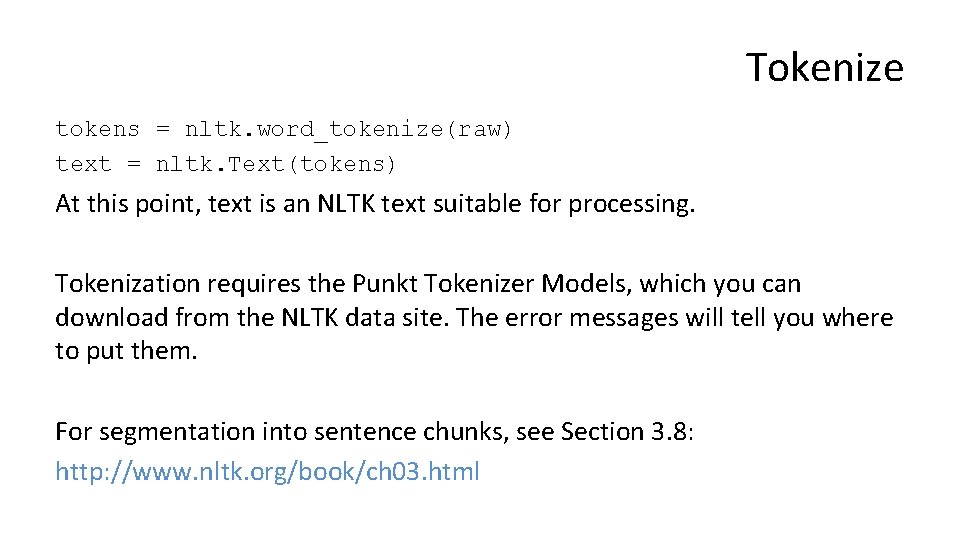

Tokenize

    tokens = nltk.word_tokenize(raw)
    text = nltk.Text(tokens)

At this point, text is an NLTK text suitable for processing. Tokenization requires the Punkt Tokenizer Models, which you can download from the NLTK data site. The error messages will tell you where to put them. For segmentation into sentence chunks, see Section 3.8: http://www.nltk.org/book/ch03.html
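To get a feel for what tokenizing involves, here is a rough standard-library sketch using a regular expression. It is not how nltk.word_tokenize works: NLTK treats contractions and abbreviations more carefully. The function name `simple_tokenize` is an assumption for illustration.

```python
import re


def simple_tokenize(raw):
    """Split text into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", raw)


tokens = simple_tokenize("K. was arrested, wasn't he?")
print(tokens)
# ['K', '.', 'was', 'arrested', ',', 'wasn', "'", 't', 'he', '?']
```

The way "wasn't" falls apart into three tokens shows why a purpose-built tokenizer is usually the better choice.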

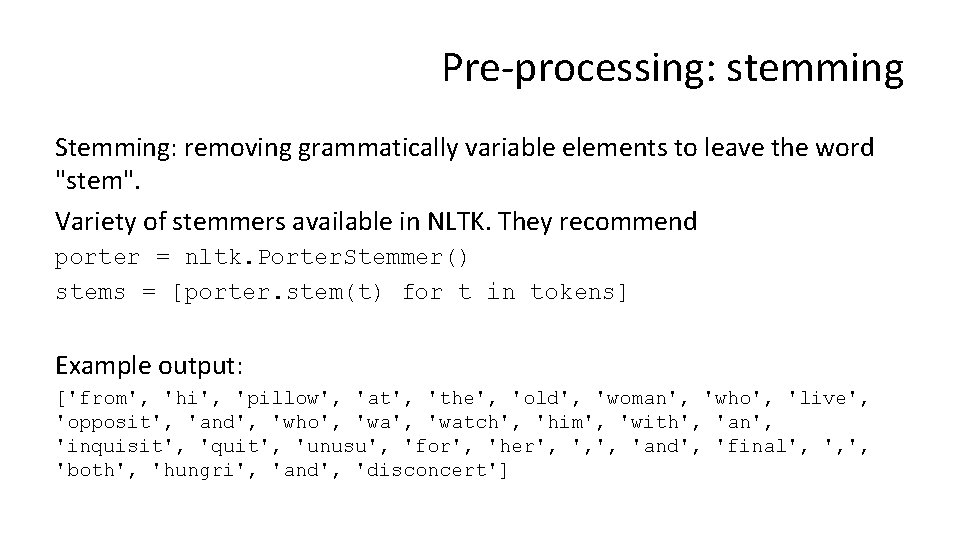

Normalization/Pre-processing

Converting to lower case makes searches etc. case independent. Use a list comprehension:

    tokens = nltk.word_tokenize(raw)
    words = [w.lower() for w in tokens]
    text = nltk.Text(words)   # Build the text from the lower-cased words

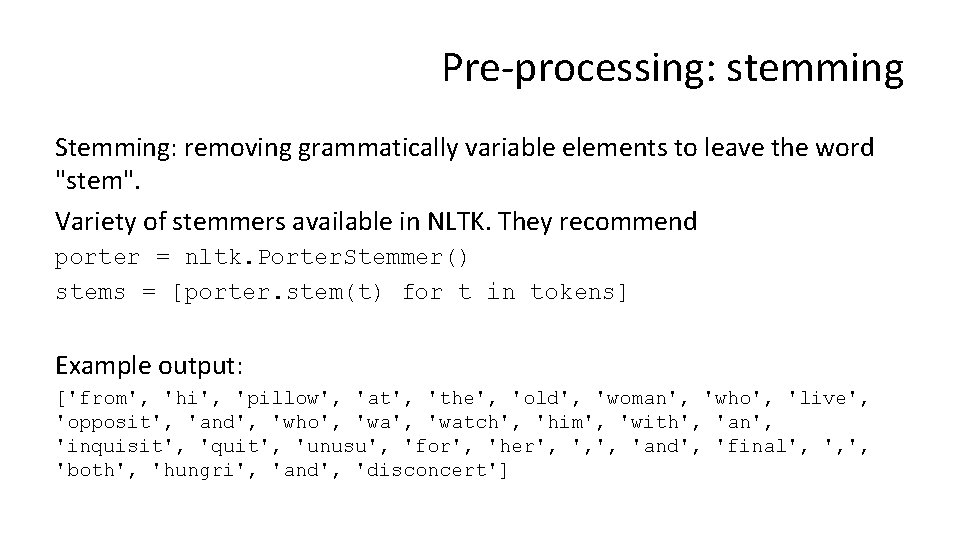

Pre-processing: stemming

Stemming: removing grammatically variable elements to leave the word "stem". A variety of stemmers is available in NLTK. They recommend the Porter stemmer:

    porter = nltk.PorterStemmer()
    stems = [porter.stem(t) for t in tokens]

Example output:

    ['from', 'hi', 'pillow', 'at', 'the', 'old', 'woman', 'who', 'live', 'opposit', 'and', 'who', 'watch', 'him', 'with', 'an', 'inquisit', 'quit', 'unusu', 'for', 'her', ',', 'and', 'final', ',', 'both', 'hungri', 'and', 'disconcert']

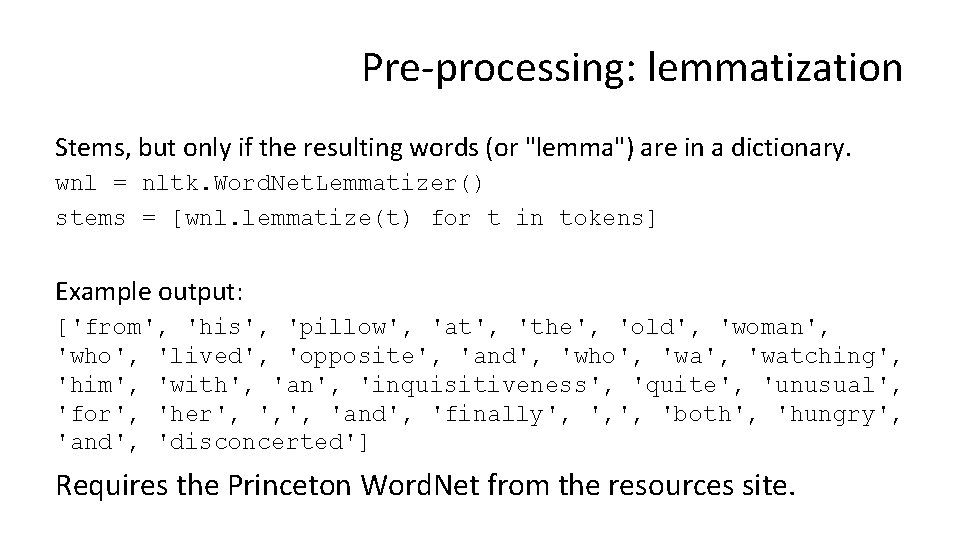

Pre-processing: lemmatization

Lemmatization stems, but only if the resulting words (or "lemmas") are in a dictionary.

    wnl = nltk.WordNetLemmatizer()
    lemmas = [wnl.lemmatize(t) for t in tokens]

Example output:

    ['from', 'his', 'pillow', 'at', 'the', 'old', 'woman', 'who', 'lived', 'opposite', 'and', 'who', 'watching', 'him', 'with', 'an', 'inquisitiveness', 'quite', 'unusual', 'for', 'her', ',', 'and', 'finally', ',', 'both', 'hungry', 'and', 'disconcerted']

Requires the Princeton WordNet from the resources site.

Tokenizing; Search/regex; Statistics; Parts of Speech (PoS) tagging and Semantics; Machine Translation; Logic; Sentiment analysis

Search

We dealt with text searching in the Core course, and it is worth revisiting string functions in part 3. We can also use regex, a (somewhat complicated but powerful) search language: https://docs.python.org/3/library/re.html

The NLTK book includes extensive notes on searching with regex, in Section 3.4 onwards: http://www.nltk.org/book/ch03.html We'll see an example shortly.
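As a small taste of regex searching with the standard re module, the sketch below finds words by their ending and locates a whole word. The sample sentence is invented for the example.

```python
import re

raw = "He was walking and talking, then stopped walking."

# All words ending in "ing"; \b marks a word boundary.
ing_words = re.findall(r"\b\w+ing\b", raw)

# Character position of the first occurrence of a whole word.
first = re.search(r"\bwalking\b", raw)

print(ing_words)      # ['walking', 'talking', 'walking']
print(first.start())  # 7
```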

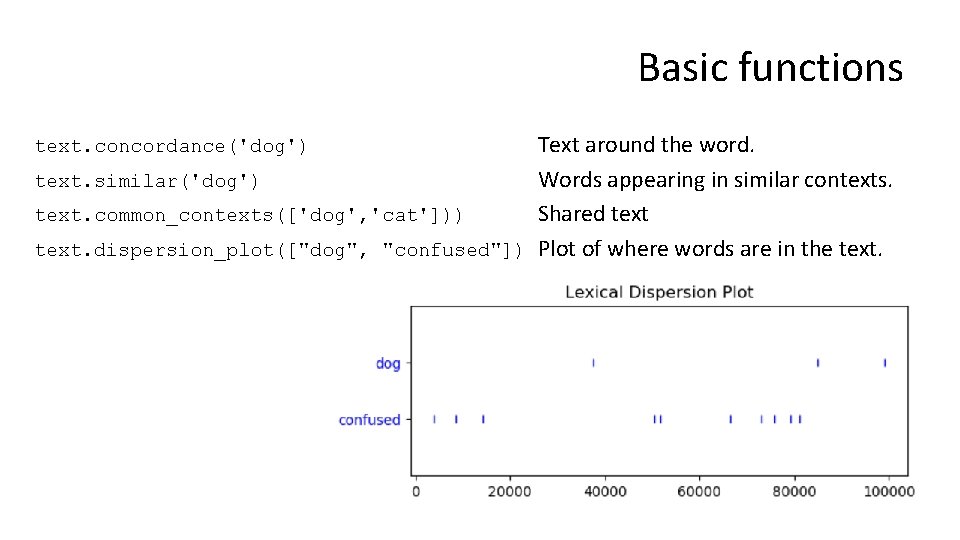

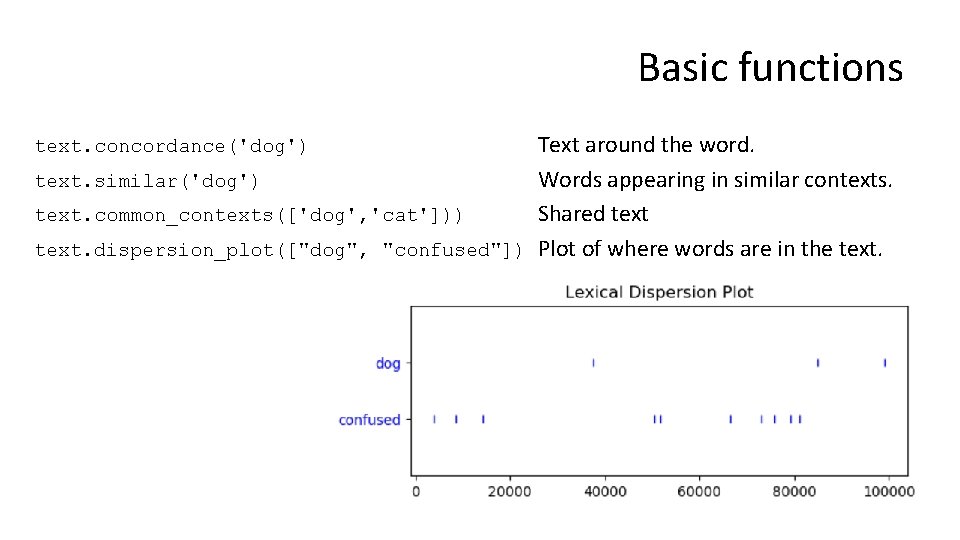

Basic functions

    text.concordance('dog')                      # Text around the word
    text.similar('dog')                          # Words appearing in similar contexts
    text.common_contexts(['dog', 'cat'])         # Contexts shared by the words
    text.dispersion_plot(["dog", "confused"])    # Plot of where words are in the text
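The idea behind a concordance (each occurrence of a word shown with its neighbours) can be sketched in a few lines of plain Python. This is only an illustration of the concept, not NLTK's implementation; the function below and its `width` parameter are assumptions.

```python
def concordance(tokens, word, width=2):
    """Return each occurrence of word with `width` tokens of context either side."""
    lines = []
    for i, token in enumerate(tokens):
        if token == word:
            left = tokens[max(0, i - width):i]
            right = tokens[i + 1:i + 1 + width]
            lines.append(" ".join(left + [token] + right))
    return lines


tokens = "the old dog barked at the other dog".split()
lines = concordance(tokens, "dog")
print(lines)  # ['the old dog barked at', 'the other dog']
```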

Statistics A common use of language processing is to get a statistical overview of a writer and/or their work. See the ongoing battle over who wrote Shakespeare's plays. Statistics can tell us a lot about the overall meaning, evolution, and audience of a text.

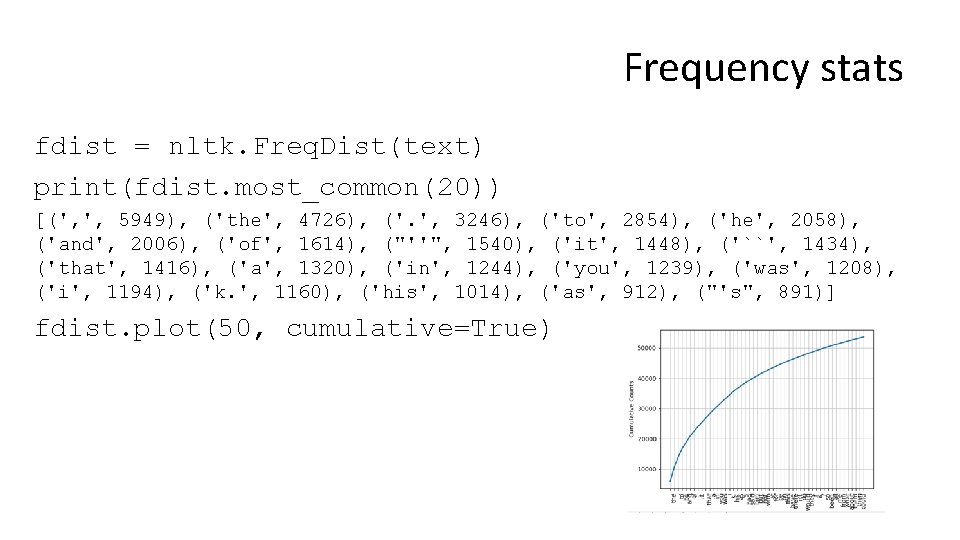

Basic statistics

    len(text)                          # Number of words
    sorted_words = sorted(set(text))   # Duplicates collapsed by set
    text.count('dog')                  # Number of occurrences of a word
    text.vocab().hapaxes()             # Words only appearing once (hapaxes is a FreqDist method)
    text.index('dog')                  # First location
    long_words = [w for w in words if len(w) > 15]
    text.collocations()

collocations() gives pairs of words that appear more often than expected from their individual frequencies (requires stopwords from the resources site).
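The same basic statistics can be reproduced on a plain list of tokens with the standard library, which helps clarify what each call measures. The sample sentence is invented, and this is a sketch of the ideas rather than of NLTK's internals.

```python
from collections import Counter

words = "the dog saw the cat and the cat saw a bird".split()
counts = Counter(words)

n_words = len(words)                  # number of tokens
vocabulary = sorted(set(words))       # duplicates collapsed by set
dog_count = counts["dog"]             # occurrences of one word
hapaxes = sorted(w for w, c in counts.items() if c == 1)  # words appearing once
first_cat = words.index("cat")        # first location

print(n_words, dog_count, first_cat)  # 11 1 4
print(hapaxes)                        # ['a', 'and', 'bird', 'dog']
```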

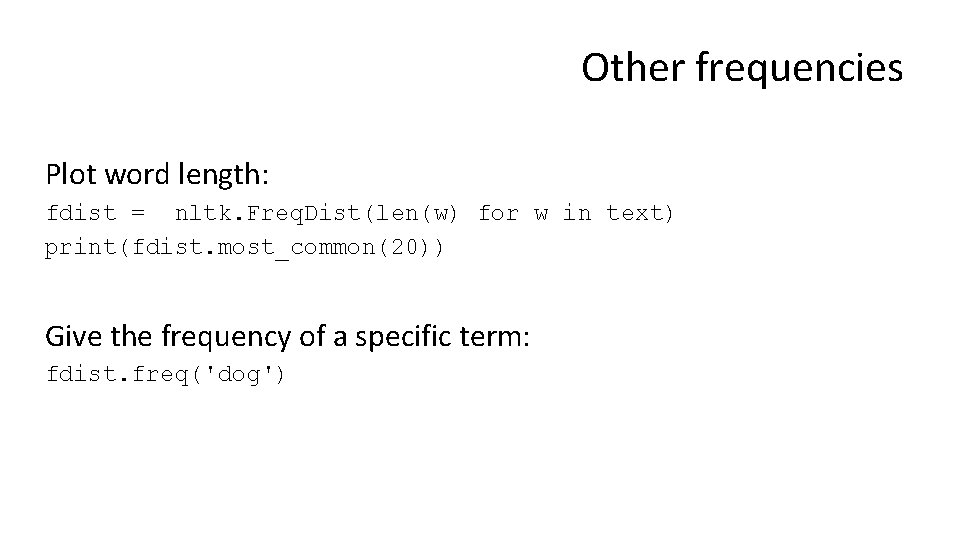

Frequency stats

    fdist = nltk.FreqDist(text)
    print(fdist.most_common(20))

Example output:

    [(',', 5949), ('the', 4726), ('.', 3246), ('to', 2854), ('he', 2058), ('and', 2006), ('of', 1614), ("''", 1540), ('it', 1448), ('``', 1434), ('that', 1416), ('a', 1320), ('in', 1244), ('you', 1239), ('was', 1208), ('i', 1194), ('k.', 1160), ('his', 1014), ('as', 912), ("'s", 891)]

To plot the cumulative frequency of the 50 commonest tokens:

    fdist.plot(50, cumulative=True)

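Other frequencies

Frequency of word lengths:

    fdist = nltk.FreqDist(len(w) for w in text)
    print(fdist.most_common(20))

Give the relative frequency of a specific term (using a FreqDist built from the words themselves, not their lengths):

    fdist = nltk.FreqDist(text)
    fdist.freq('dog')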
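A frequency distribution is essentially a counter, so the calculations above can be illustrated with collections.Counter. The sample sentence is invented, and the relative frequency is computed by hand as count divided by total tokens, which is what freq reports.

```python
from collections import Counter

words = "the dog saw the cat and the cat saw a bird".split()

word_freq = Counter(words)
commonest = word_freq.most_common(1)          # the single most frequent word
relative = word_freq["cat"] / len(words)      # share of all tokens that are "cat"

length_freq = Counter(len(w) for w in words)  # how many words of each length

print(commonest)           # [('the', 3)]
print(length_freq[3])      # 9
print(round(relative, 3))  # 0.182
```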

Tokenizing; Search/regex; Statistics; Parts of Speech (PoS) tagging and Semantics; Machine Translation; Logic; Sentiment analysis

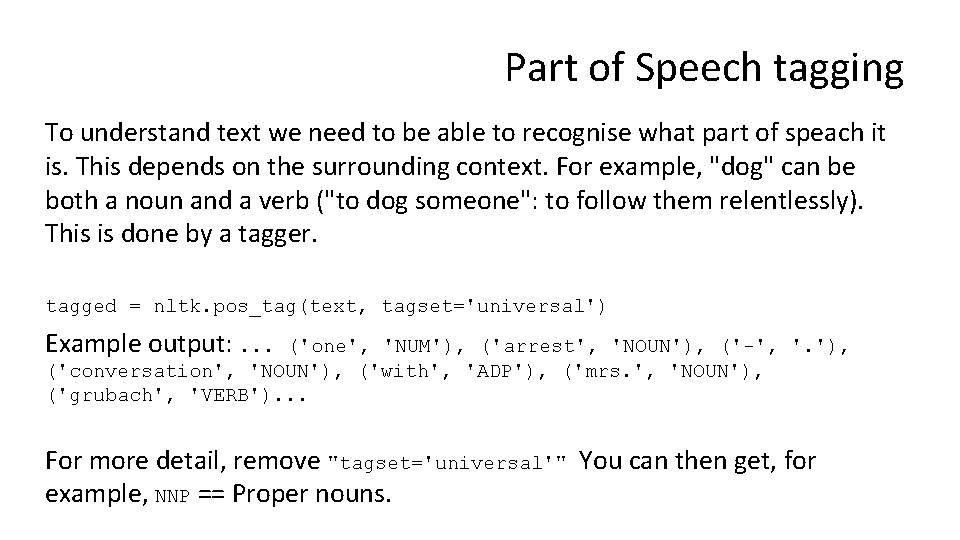

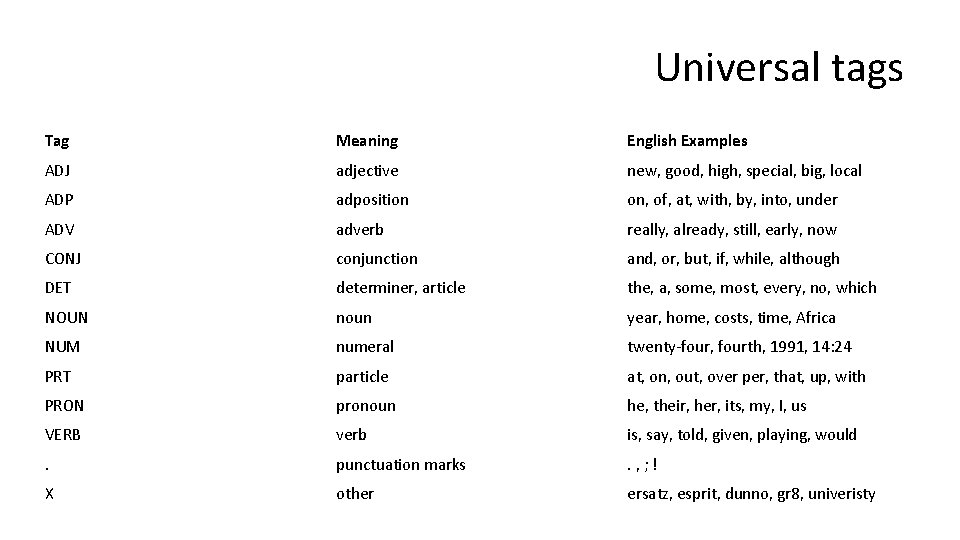

Part of Speech tagging

To understand text we need to be able to recognise what part of speech each word is. This depends on the surrounding context. For example, "dog" can be both a noun and a verb ("to dog someone": to follow them relentlessly). This is done by a tagger.

    tagged = nltk.pos_tag(text, tagset='universal')

Example output:

    ... ('one', 'NUM'), ('arrest', 'NOUN'), ('-', '.'), ('conversation', 'NOUN'), ('with', 'ADP'), ('mrs.', 'NOUN'), ('grubach', 'VERB') ...

For more detail, remove tagset='universal'. You can then get, for example, NNP == proper nouns.

Taggers utilise a combination of lookup tables, derived rules, and training on tagged corpora (samples of text). They often take in n-grams, that is, the word, plus n-1 words around it. More at: http://www.nltk.org/book/ch05.html

Taggers can be trained and then serialised to files using pickle, which turns Python objects into files. They can then be loaded when needed. pos_tag is the Averaged Perceptron Tagger, which is pre-trained.
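The lookup-table and pickling ideas can be sketched with a toy tagger built from the standard library. Everything here is an assumption made for illustration: the hand-tagged training pairs, the function names, and the use of an in-memory pickle instead of a file. It is not how NLTK's perceptron tagger works.

```python
import pickle
from collections import Counter, defaultdict

# Hypothetical hand-tagged training data: (word, tag) pairs.
training = [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB"),
            ("they", "PRON"), ("dog", "VERB"), ("him", "PRON"),
            ("a", "DET"), ("dog", "NOUN")]


def train_lookup(pairs):
    """Map each word to the tag it carries most often in the training pairs."""
    tag_counts = defaultdict(Counter)
    for word, tag in pairs:
        tag_counts[word][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in tag_counts.items()}


def tag(tokens, table, default="X"):
    """Tag each token from the lookup table, using `default` for unknown words."""
    return [(t, table.get(t, default)) for t in tokens]


model = train_lookup(training)

# Serialise the trained table and load it back (in memory here;
# pickle.dump and pickle.load do the same with a file opened in binary mode).
restored = pickle.loads(pickle.dumps(model))

result = tag(["the", "dog", "sleeps"], restored)
print(result)  # [('the', 'DET'), ('dog', 'NOUN'), ('sleeps', 'X')]
```

A pure lookup tagger always calls "dog" a noun, which is exactly the limitation that context-aware taggers address.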

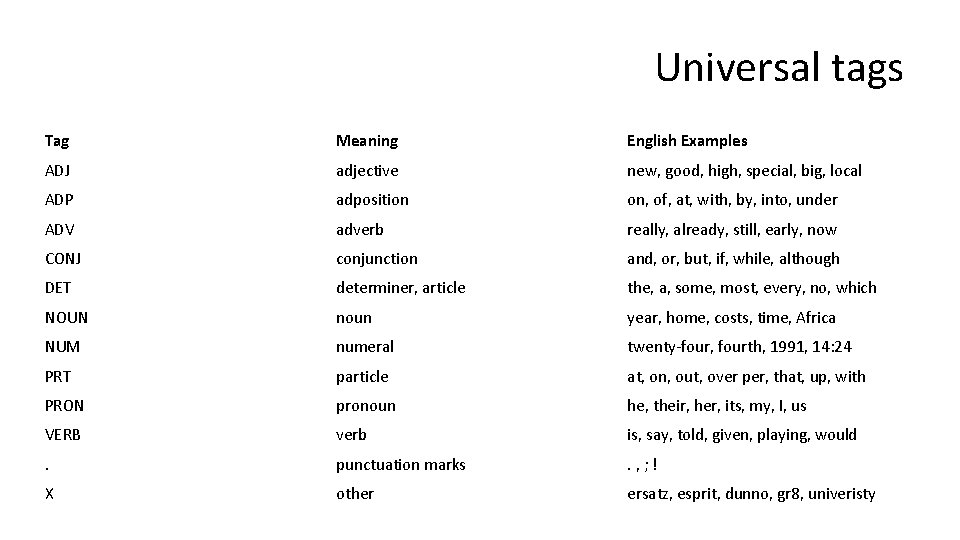

Universal tags

| Tag | Meaning | English Examples |
| --- | --- | --- |
| ADJ | adjective | new, good, high, special, big, local |
| ADP | adposition | on, of, at, with, by, into, under |
| ADV | adverb | really, already, still, early, now |
| CONJ | conjunction | and, or, but, if, while, although |
| DET | determiner, article | the, a, some, most, every, no, which |
| NOUN | noun | year, home, costs, time, Africa |
| NUM | numeral | twenty-four, fourth, 1991, 14:24 |
| PRT | particle | at, on, out, over, per, that, up, with |
| PRON | pronoun | he, their, her, its, my, I, us |
| VERB | verb | is, say, told, given, playing, would |
| . | punctuation marks | . , ; ! |
| X | other | ersatz, esprit, dunno, gr8, univeristy |

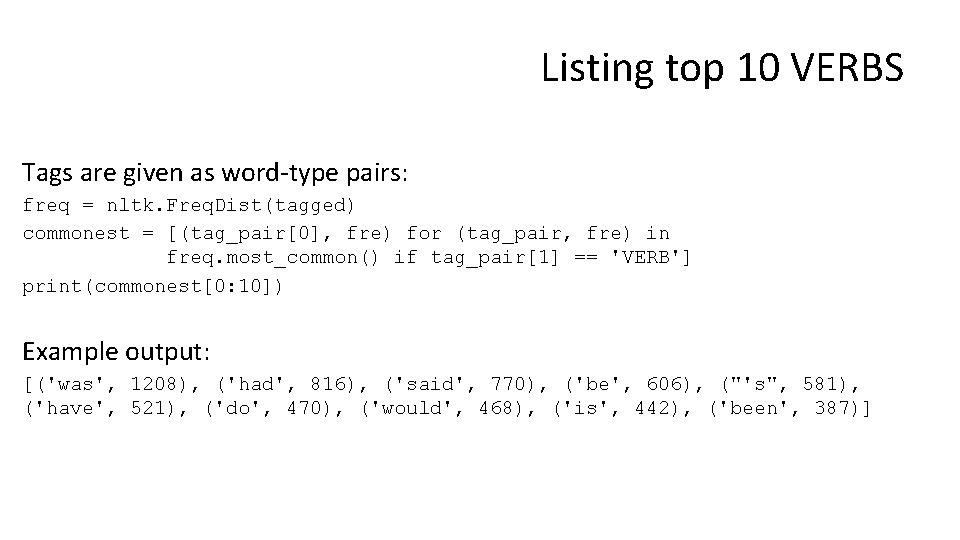

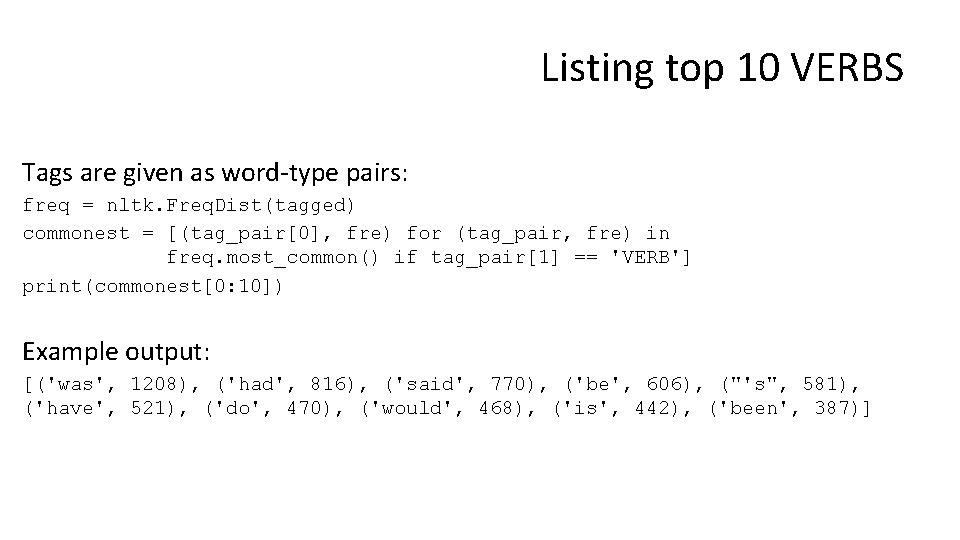

Listing top 10 VERBS

Tags are given as word-type pairs:

    freq = nltk.FreqDist(tagged)
    commonest = [(tag_pair[0], fre) for (tag_pair, fre) in freq.most_common() if tag_pair[1] == 'VERB']
    print(commonest[0:10])

Example output:

    [('was', 1208), ('had', 816), ('said', 770), ('be', 606), ("'s", 581), ('have', 521), ('do', 470), ('would', 468), ('is', 442), ('been', 387)]
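The filtering step is easier to follow on a tiny hand-made list. The sketch below uses collections.Counter in place of FreqDist, and the tagged pairs are invented for illustration.

```python
from collections import Counter

# Hypothetical tagger output: (word, tag) pairs.
tagged = [("he", "PRON"), ("was", "VERB"), ("tired", "ADJ"),
          ("she", "PRON"), ("was", "VERB"), ("said", "VERB")]

freq = Counter(tagged)  # counts each distinct (word, tag) pair

# Keep only the pairs tagged VERB, commonest first.
verbs = [(word, n) for (word, tag), n in freq.most_common() if tag == "VERB"]
print(verbs)  # [('was', 2), ('said', 1)]
```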

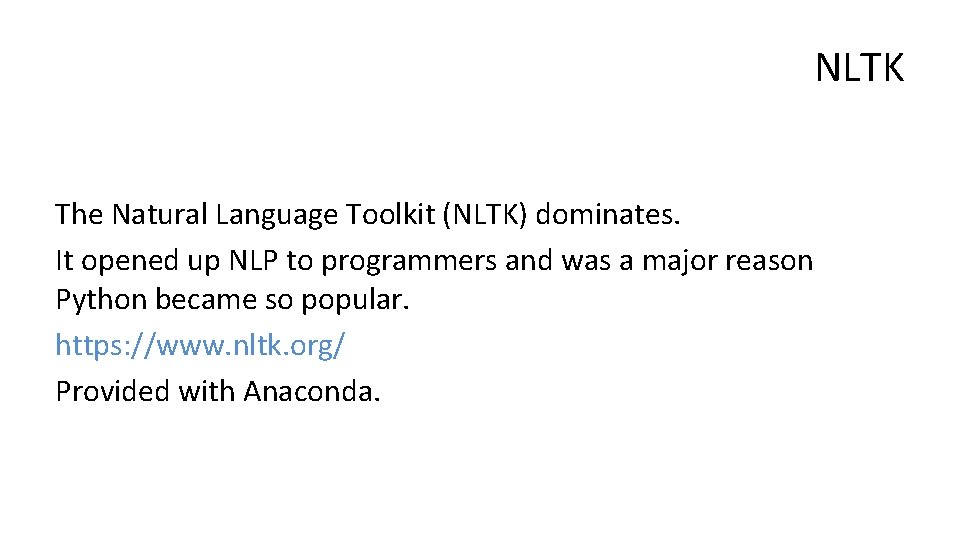

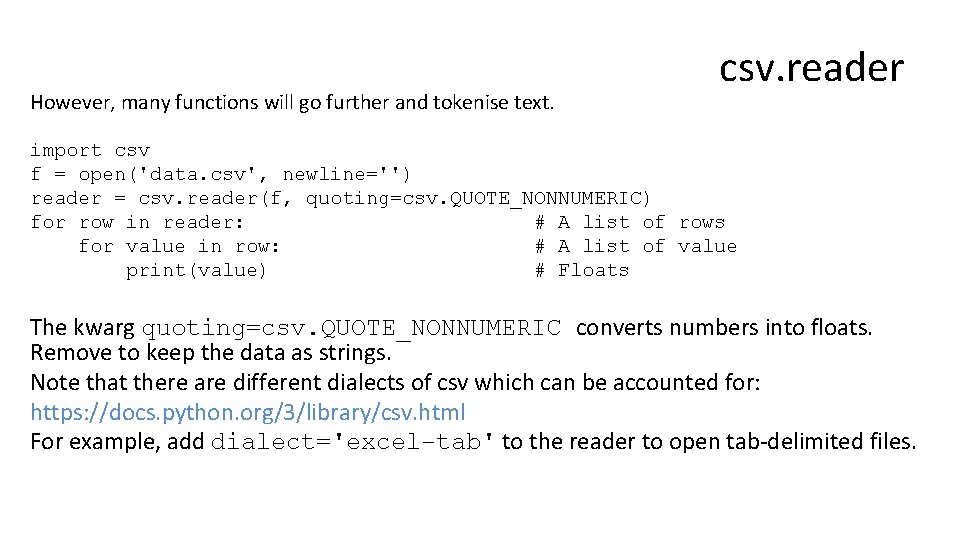

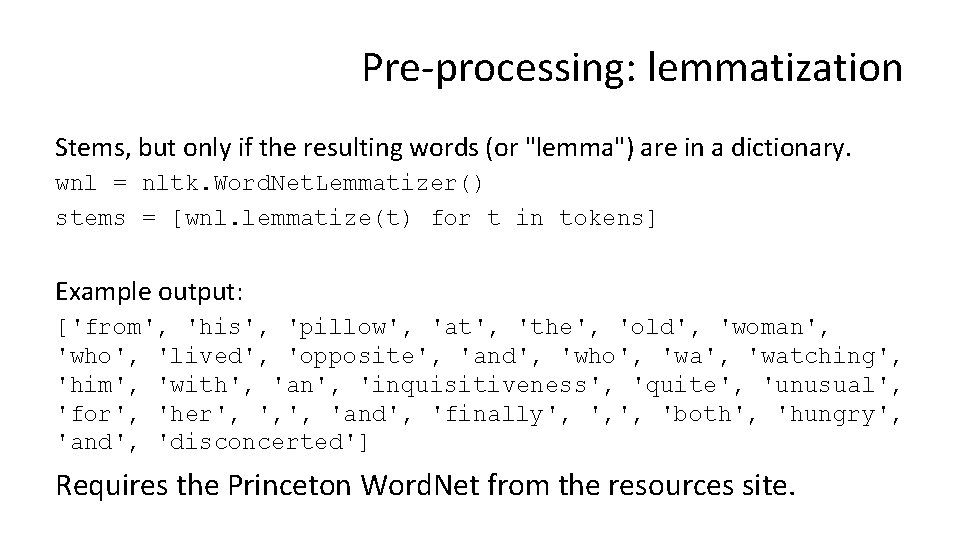

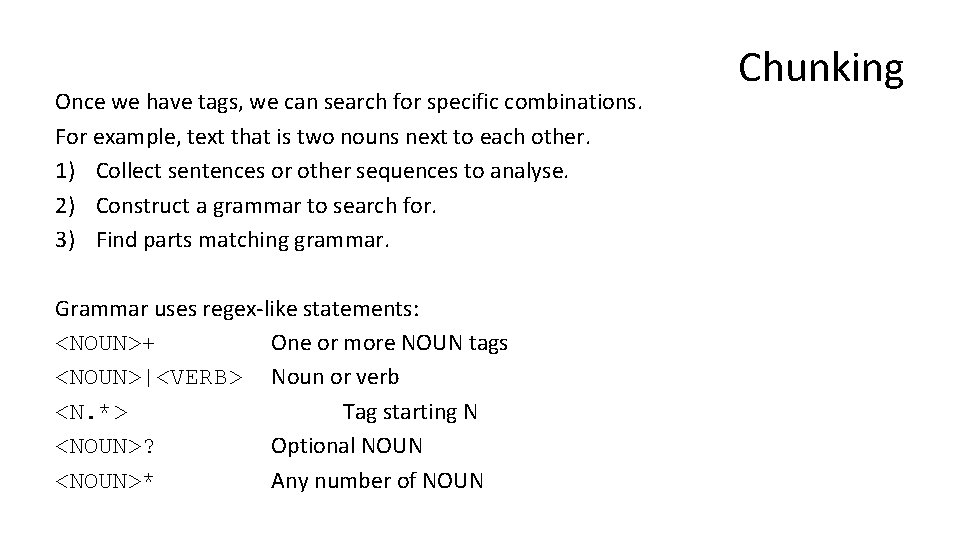

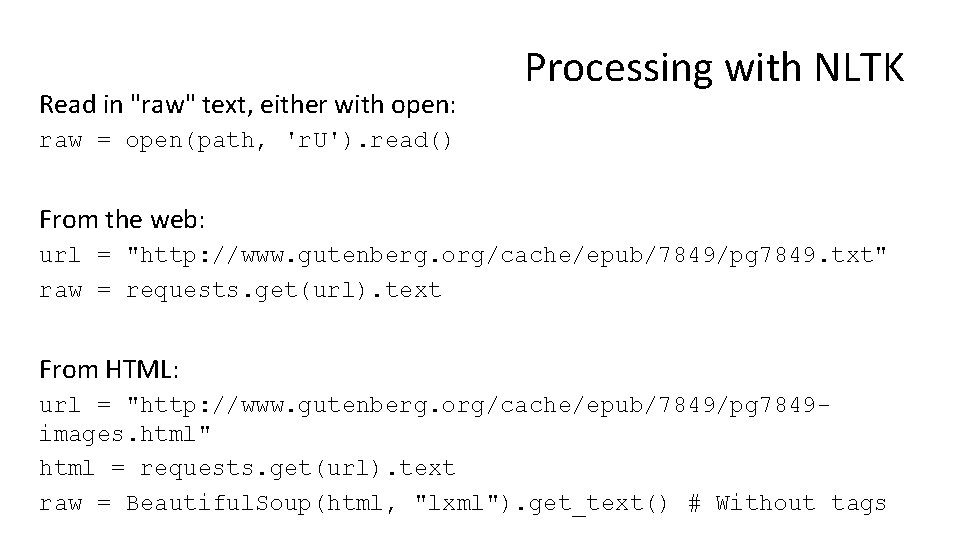

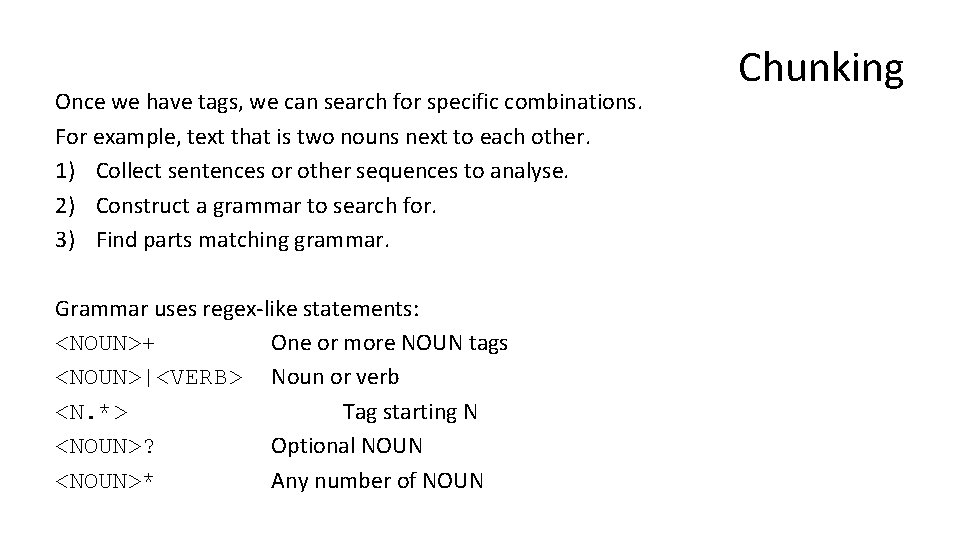

Chunking

Once we have tags, we can search for specific combinations. For example, text that is two nouns next to each other.

1) Collect sentences or other sequences to analyse.
2) Construct a grammar to search for.
3) Find parts matching the grammar.

Grammar uses regex-like statements:

    <NOUN>+          One or more NOUN tags
    <NOUN>|<VERB>    Noun or verb
    <N.*>            Tag starting N
    <NOUN>?          Optional NOUN
    <NOUN>*          Any number of NOUN


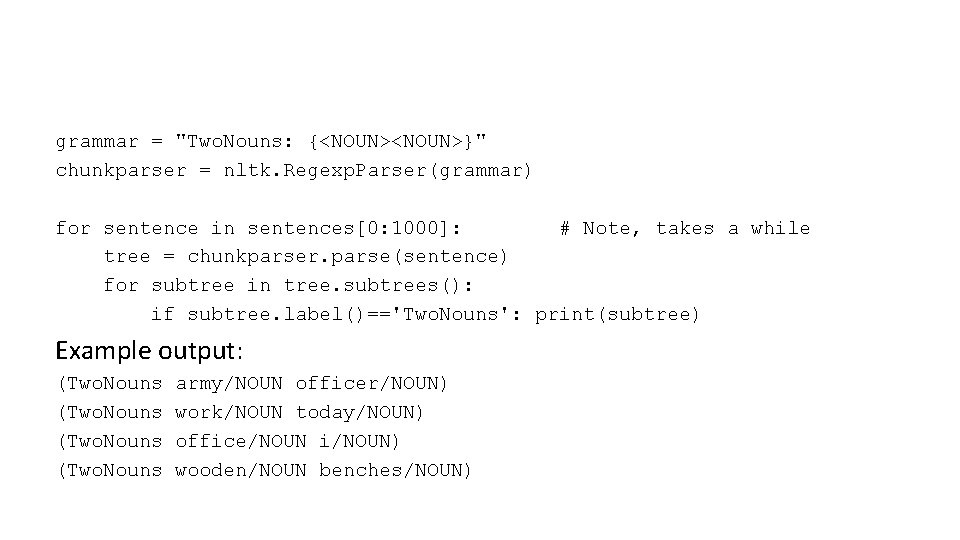

Collect the tagged tokens into sentences, ending each at a full stop:

    sentences = []
    sentence = []
    for tag in tagged:
        sentence.append(tag)
        if tag[0] == ".":               # End of sentence
            sentences.append(sentence)
            sentence = []

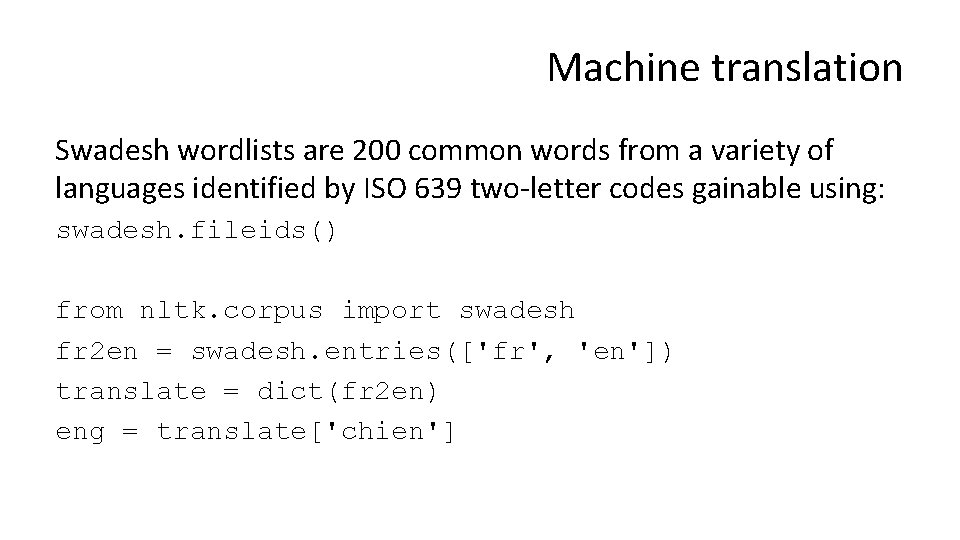

Construct the grammar and find the matching parts:

    grammar = "TwoNouns: {<NOUN><NOUN>}"
    chunkparser = nltk.RegexpParser(grammar)
    for sentence in sentences[0:1000]:   # Note, takes a while
        tree = chunkparser.parse(sentence)
        for subtree in tree.subtrees():
            if subtree.label() == 'TwoNouns':
                print(subtree)

Example output:

    (TwoNouns army/NOUN officer/NOUN)
    (TwoNouns work/NOUN today/NOUN)
    (TwoNouns office/NOUN i/NOUN)
    (TwoNouns wooden/NOUN benches/NOUN)
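The two steps (splitting into sentences, then searching for adjacent nouns) can be mimicked in plain Python to show what the chunker is looking for. This is a simplified sketch, not NLTK's RegexpParser: the function names and sample data are assumptions, and unlike a chunker it reports overlapping pairs in a run of three or more nouns.

```python
def split_sentences(tagged):
    """Group (word, tag) pairs into sentences, ending each at a '.' token."""
    sentences, sentence = [], []
    for pair in tagged:
        sentence.append(pair)
        if pair[0] == ".":
            sentences.append(sentence)
            sentence = []
    if sentence:  # keep any trailing words that lack a full stop
        sentences.append(sentence)
    return sentences


def noun_pairs(sentence):
    """Return every pair of adjacent tokens that are both tagged NOUN."""
    return [(a[0], b[0]) for a, b in zip(sentence, sentence[1:])
            if a[1] == "NOUN" and b[1] == "NOUN"]


# Hypothetical tagger output.
tagged = [("the", "DET"), ("army", "NOUN"), ("officer", "NOUN"),
          ("left", "VERB"), (".", "."),
          ("wooden", "NOUN"), ("benches", "NOUN"), ("creaked", "VERB")]

sentences = split_sentences(tagged)
pairs = [noun_pairs(s) for s in sentences]
print(pairs)  # [[('army', 'officer')], [('wooden', 'benches')]]
```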

Machine translation

Swadesh wordlists are 200 common words from a variety of languages, identified by ISO 639 two-letter codes, which can be listed using swadesh.fileids():

    from nltk.corpus import swadesh
    swadesh.fileids()                        # Available language codes
    fr2en = swadesh.entries(['fr', 'en'])    # (French, English) word pairs
    translate = dict(fr2en)
    eng = translate['chien']

Wordnets are structured networks of words that are related. They can be set up in NLTK, but it also comes with some standard ones. These are usable to find synonyms, antonyms, generalisations and more specific words, along with entailed verbs (that is, verbs that generally go with specific words like swim and swimming pool). They can also be used to find semantically similar words (useful for search): http://www.nltk.org/book/ch02.html#ex-car1

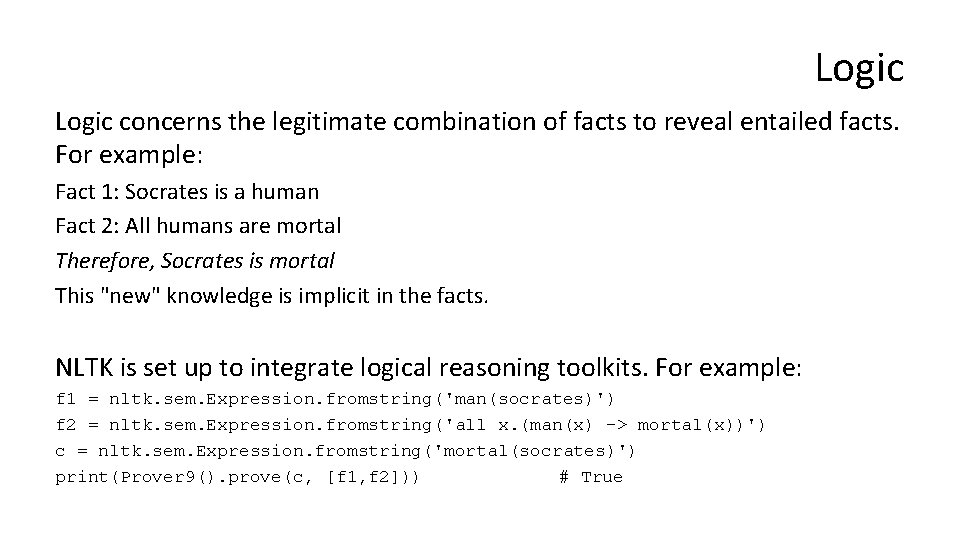

Logic concerns the legitimate combination of facts to reveal entailed facts. For example:

Fact 1: Socrates is a human
Fact 2: All humans are mortal
Therefore, Socrates is mortal

This "new" knowledge is implicit in the facts. NLTK is set up to integrate logical reasoning toolkits. For example, using the Prover9 theorem prover (which needs to be installed separately):

    f1 = nltk.sem.Expression.fromstring('man(socrates)')
    f2 = nltk.sem.Expression.fromstring('all x. (man(x) -> mortal(x))')
    c = nltk.sem.Expression.fromstring('mortal(socrates)')
    print(nltk.Prover9().prove(c, [f1, f2]))   # True
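The Socrates example can be reproduced with a very small forward-chaining loop, which shows the kind of inference a prover automates. This is a toy sketch under strong assumptions (only rules of the form "all x. P(x) -> Q(x)" and facts about named individuals) and has nothing to do with how Prover9 works internally.

```python
# Facts are (predicate, individual) pairs; each rule (P, Q) means
# "all x. (P(x) -> Q(x))".
facts = {("man", "socrates")}
rules = [("man", "mortal")]


def entails(facts, rules, goal):
    """Apply the rules until nothing new is derived, then check for the goal."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for predicate, individual in list(known):
                new_fact = (conclusion, individual)
                if predicate == premise and new_fact not in known:
                    known.add(new_fact)
                    changed = True
    return goal in known


print(entails(facts, rules, ("mortal", "socrates")))  # True
print(entails(facts, rules, ("mortal", "plato")))     # False
```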

Book sections on Logic

- Proving logic: Chapter 10
- Applying logic to discourses: Chapter 10
- Recognizing Textual Entailment (not logic, but supposition): Section 6.2.3

Tokenizing; Search/regex; Statistics; Parts of Speech (PoS) tagging and Semantics; Machine Translation; Logic; Sentiment analysis (easier in other libraries, so let's look at a couple)

Other processes in the NLTK (with book sections)

- Identifying male/female names: Section 6.1.1
- Classifying documents: Section 6.1.3
- Identifying dialogue act types, such as "Statement", "Emotion", "Question": Section 6.2.2
- Named Entity Recognition (e.g. people and organisations): Section 7.5
- Relationship discovery (e.g. between organisations): Section 7.6
- Parsing sentences as trees: Chapters 8 and 9

Other libraries: TextBlob

Finally, it is worth pointing out a couple of other libraries. TextBlob doesn't come with Anaconda, but is a good addition. It is a wrapper for NLTK that makes a number of functions simple: it offers little scope for adaptation, but is extremely easy to use. http://textblob.readthedocs.io/en/dev/

TextBlob

For example, sentiment analysis. This is the analysis of text for emotional and subjective levels. We might, for example, track how Tweets mentioning a politician change over time. In NLTK you need to set up and train your own text classifier. In TextBlob, it is just:

    kafka = textblob.TextBlob(raw)
    print(kafka.sentiment)
    # Sentiment(polarity=0.055229266442657333, subjectivity=0.49242972733871276)

Polarity is between -1.0 (very negative) and 1.0 (very positive). Subjectivity is between 0.0 (very objective) and 1.0 (very subjective).

Other functions

Spelling correction and checking:

    b = textblob.TextBlob("I havv goood speling!")
    print(b.correct())   # I have good spelling!

Also:

- Translation
- POS and noun phrase extraction
- A wide variety of the areas covered by NLTK.

Other libraries: spaCy

https://spacy.io/ "Industrial Strength NLP": optimised for big data and deep learning.