Low Latency Analytics and Edge Connected Vehicles with

- Slides: 43

Low Latency Analytics and Edge Connected Vehicles with RapidIO Interconnect. Devashish Paul, Director Strategic Marketing, devashish.paul@idt.com. June 2016, © Integrated Device Technology

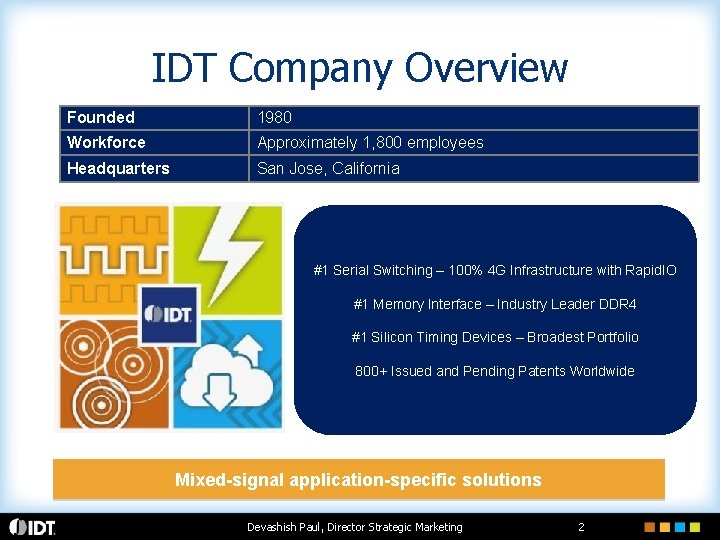

IDT Company Overview

- Founded: 1980
- Workforce: approximately 1,800 employees
- Headquarters: San Jose, California
- #1 in serial switching: 100% of 4G infrastructure with RapidIO
- #1 in memory interface: industry leader in DDR4
- #1 in silicon timing devices: broadest portfolio
- 800+ issued and pending patents worldwide
- Mixed-signal application-specific solutions

Agenda

- Computing trends / distributed computing
- RapidIO 20-50 Gbps technology
- 100 ns low latency analytics architectures
- CERN OpenLab collaboration
- Analytics examples with low latency RapidIO
- Connected vehicles use case

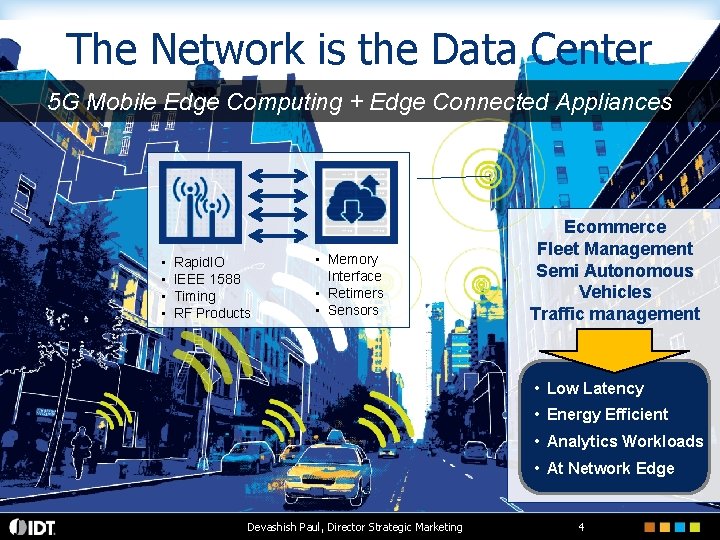

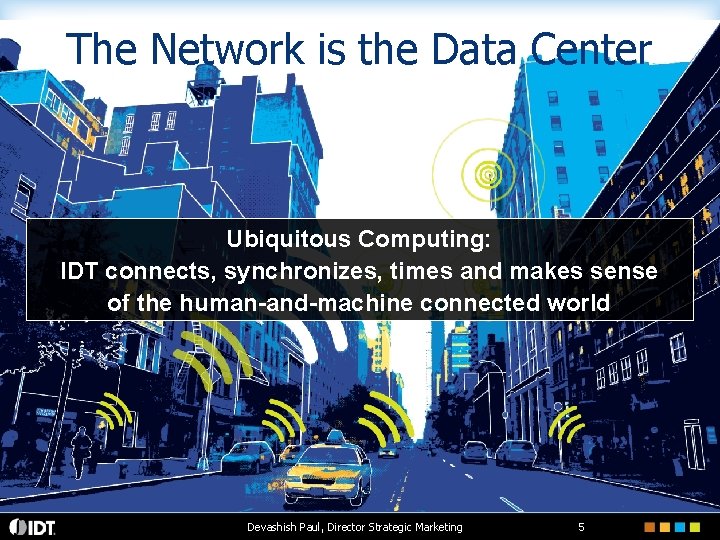

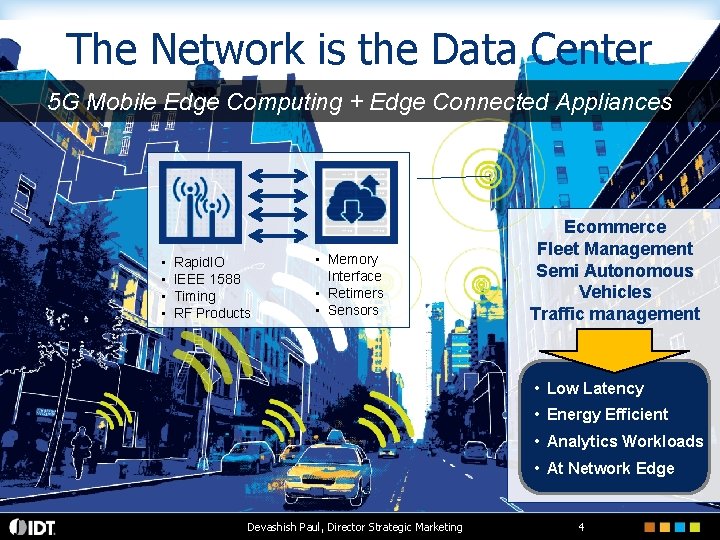

The Network is the Data Center: 5G Mobile Edge Computing + Edge Connected Appliances

- RapidIO, IEEE 1588 timing, RF products, memory interface, retimers, sensors
- E-commerce, fleet management, semi-autonomous vehicles, traffic management
- Low latency, energy efficient analytics workloads at the network edge

The Network is the Data Center. Ubiquitous computing: IDT connects, synchronizes, times and makes sense of the human-and-machine connected world.

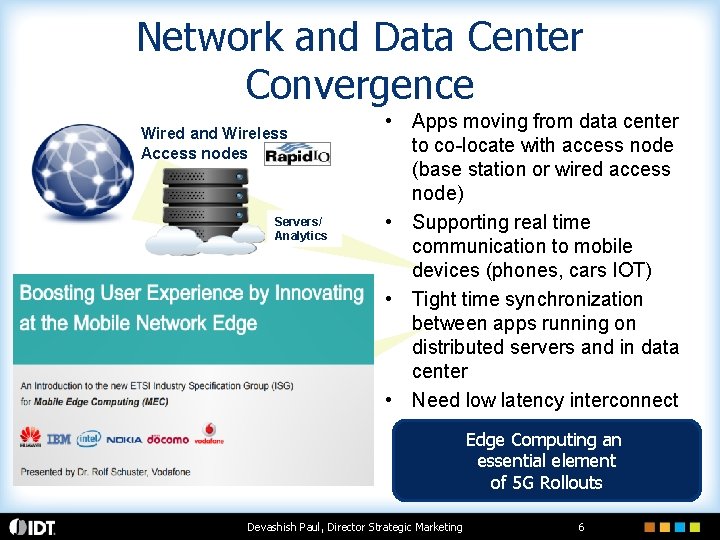

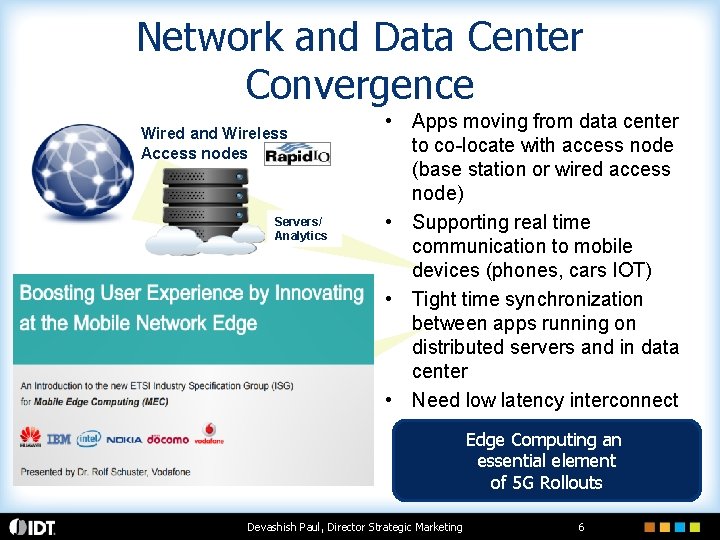

Network and Data Center Convergence

Diagram labels: wired and wireless access nodes; servers / analytics.

- Apps are moving from the data center to co-locate with the access node (base station or wired access node)
- Supporting real-time communication to mobile devices (phones, cars, IoT)
- Tight time synchronization between apps running on distributed servers and in the data center
- Need for a low latency interconnect

Edge computing is an essential element of 5G rollouts.

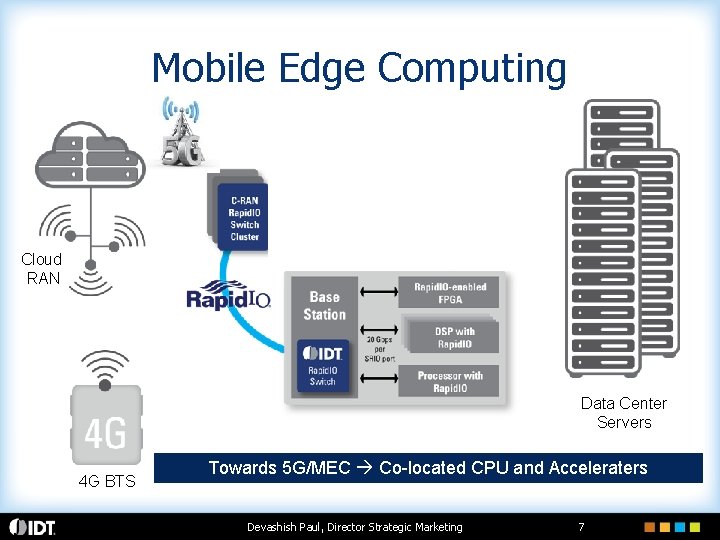

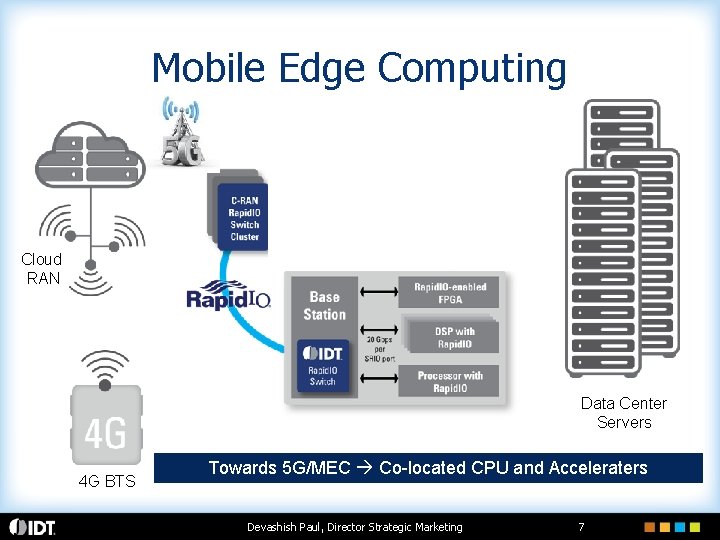

Mobile Edge Computing: from 4G BTS towards 5G/MEC with co-located CPU and accelerators. Diagram labels: PPC, GPU, Cloud RAN, data center servers.

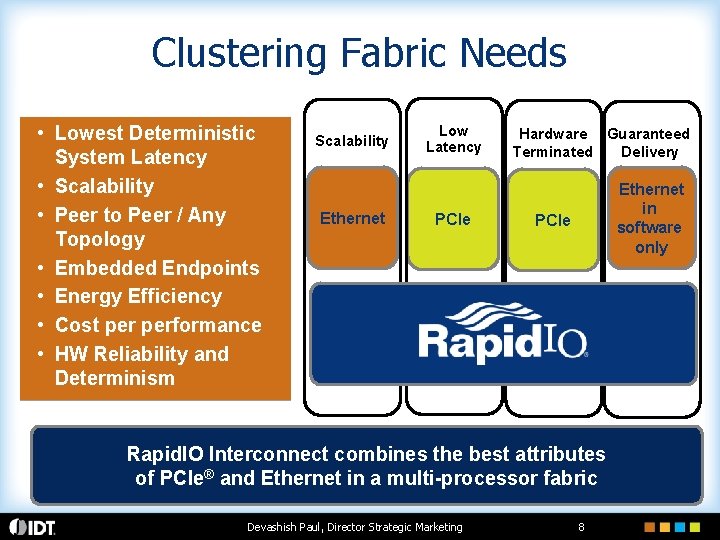

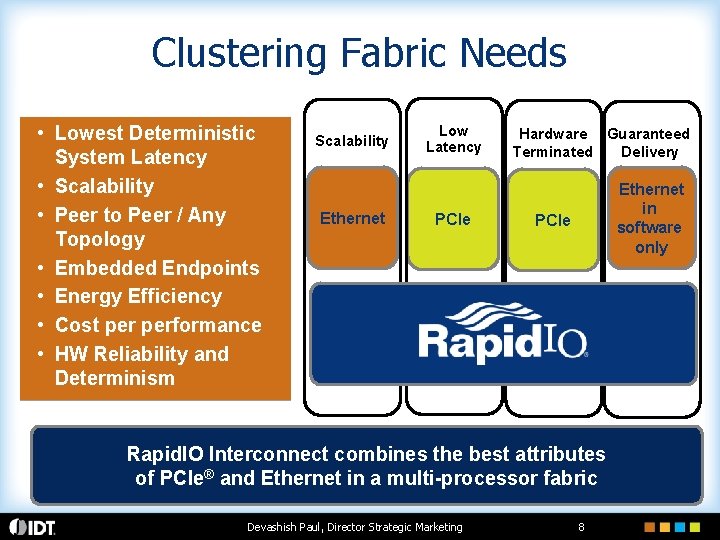

Clustering Fabric Needs

- Lowest deterministic system latency
- Scalability
- Peer to peer / any topology
- Embedded endpoints
- Energy efficiency
- Cost performance
- HW reliability and determinism

Diagram labels: Scalability, Low Latency, Hardware Terminated, Guaranteed Delivery; Ethernet (in software only); PCIe.

RapidIO Interconnect combines the best attributes of PCIe® and Ethernet in a multi-processor fabric.

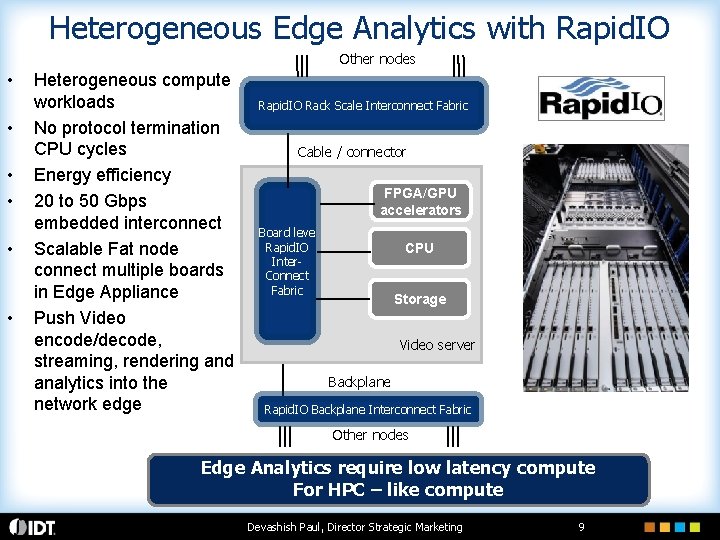

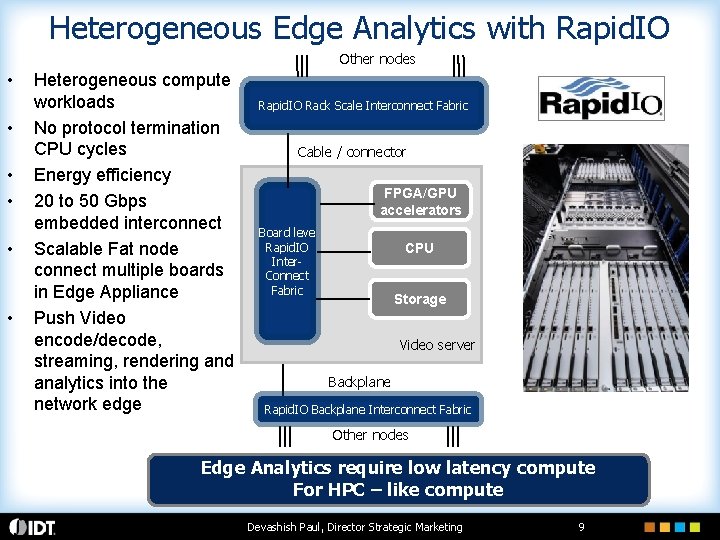

Heterogeneous Edge Analytics with RapidIO

- Heterogeneous compute workloads
- No CPU cycles spent on protocol termination
- Energy efficiency
- 20 to 50 Gbps embedded interconnect
- Scalable fat node: connect multiple boards in an edge appliance
- Push video encode/decode, streaming, rendering and analytics into the network edge

Diagram labels: RapidIO rack scale interconnect fabric (cable / connector); board level RapidIO interconnect fabric; RapidIO backplane interconnect fabric (backplane); FPGA/GPU accelerators; CPU; storage; video server; other nodes.

Edge analytics require low latency compute for HPC-like compute.

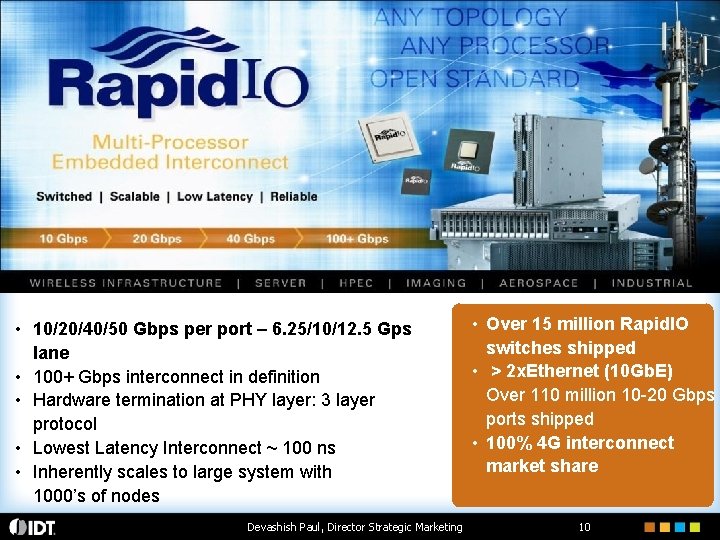

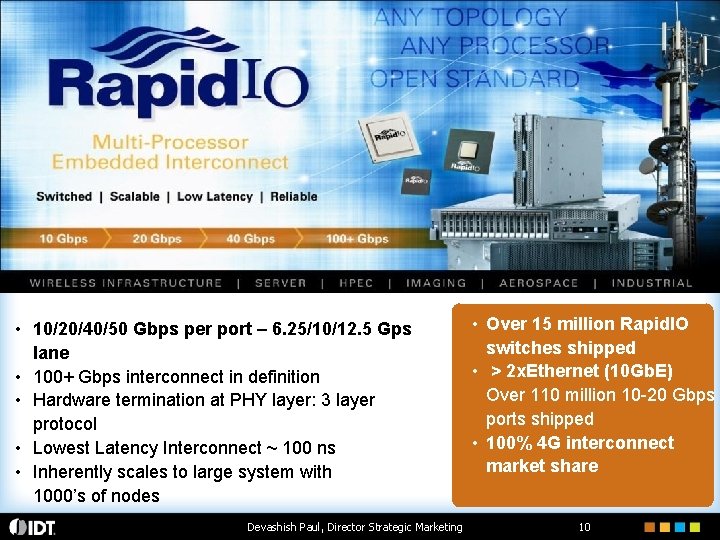

- 10/20/40/50 Gbps per port, with 6.25/10/12.5 Gbps lanes
- 100+ Gbps interconnect in definition
- Hardware termination at PHY layer: 3-layer protocol
- Lowest latency interconnect: ~100 ns
- Inherently scales to large systems with 1000s of nodes
- Over 15 million RapidIO switches shipped
- Over 110 million 10-20 Gbps ports shipped, more than 2x Ethernet (10 GbE)
- 100% 4G interconnect market share

Supported by Large Ecosystem. © 2015 RapidIO.org, 02/09/16

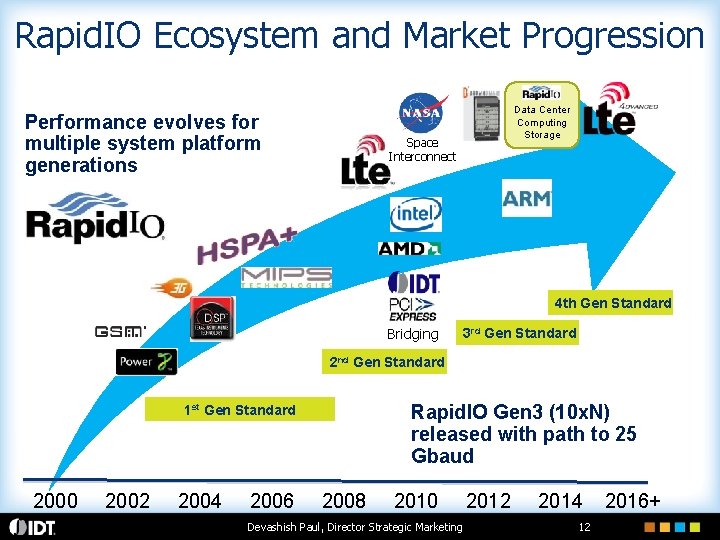

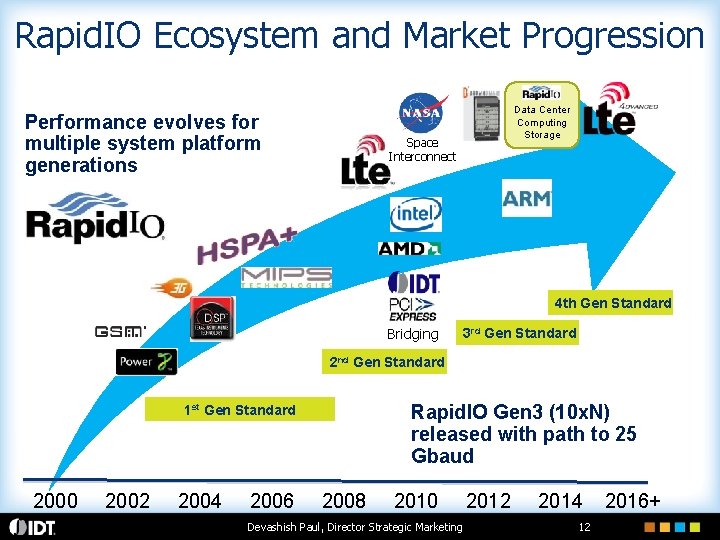

RapidIO Ecosystem and Market Progression: performance evolves for multiple system platform generations.

Figure labels: Data Center Computing, Storage, Space Interconnect, Bridging; 1st Gen Standard through 4th Gen Standard; timeline axis from 2000 to 2016+. RapidIO Gen 3 (10xN) released with path to 25 Gbaud.

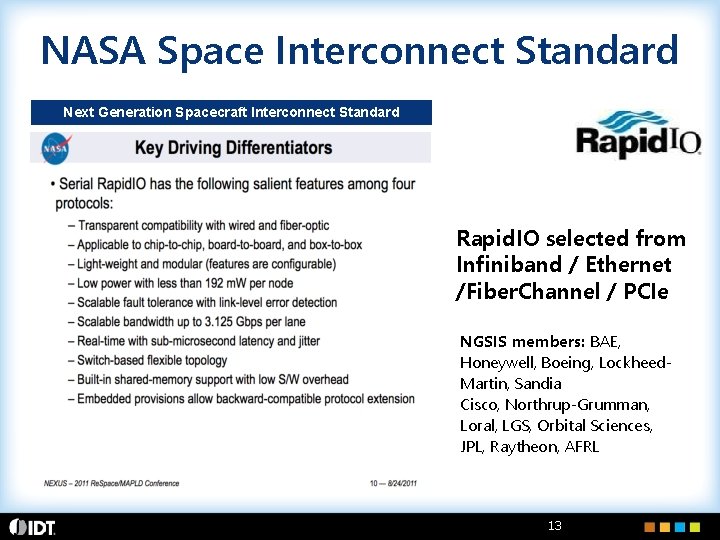

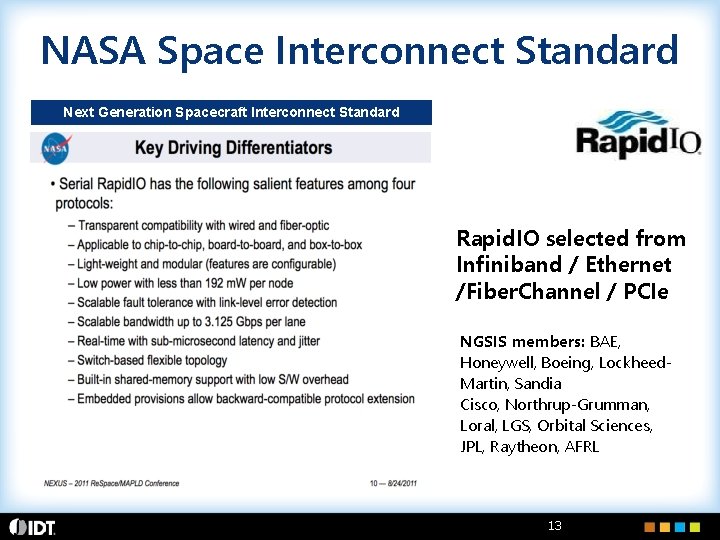

NASA Space Interconnect Standard

Next Generation Spacecraft Interconnect Standard (NGSIS): RapidIO selected from InfiniBand / Ethernet / Fibre Channel / PCIe.

NGSIS members: BAE, Honeywell, Boeing, Lockheed Martin, Sandia, Cisco, Northrop Grumman, Loral, LGS, Orbital Sciences, JPL, Raytheon, AFRL

Open Compute Project High Performance Computing: IDT co-chairs the Opencompute.org HPC initiative.

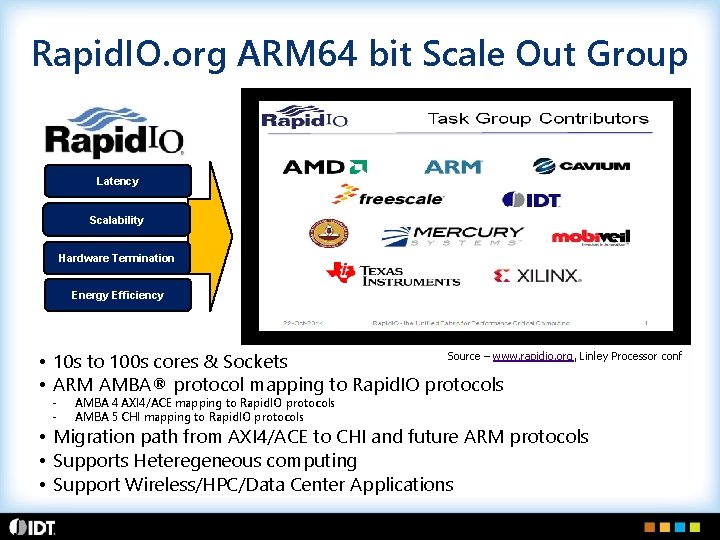

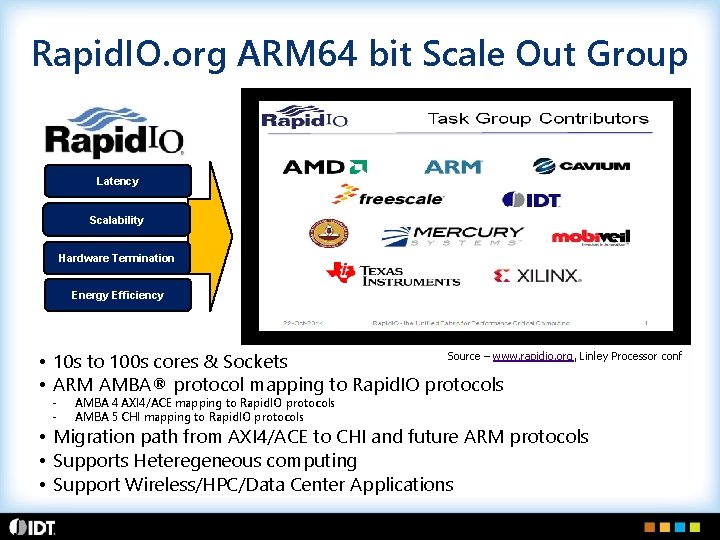

RapidIO.org ARM 64-bit Scale Out Group

Figure labels: Latency, Scalability, Hardware Termination, Energy Efficiency. Source: www.rapidio.org, Linley Processor Conference.

- 10s to 100s of cores and sockets
- ARM AMBA® protocol mapping to RapidIO protocols
  - AMBA 4 AXI4/ACE mapping to RapidIO protocols
  - AMBA 5 CHI mapping to RapidIO protocols
- Migration path from AXI4/ACE to CHI and future ARM protocols
- Supports heterogeneous computing
- Supports wireless / HPC / data center applications

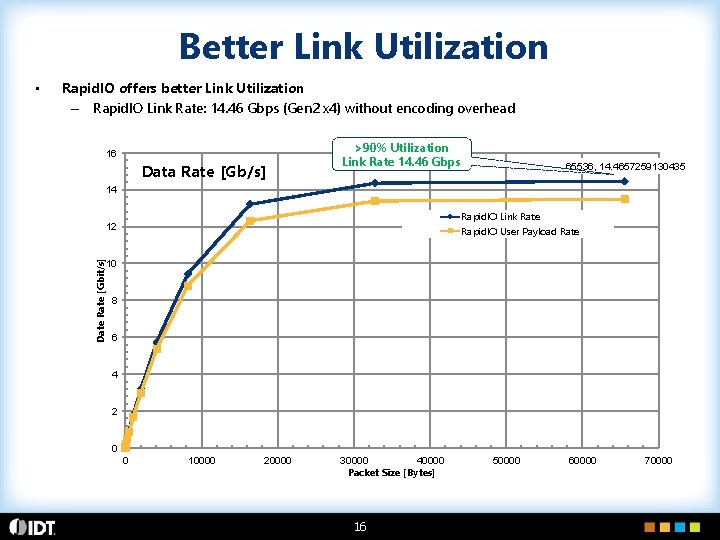

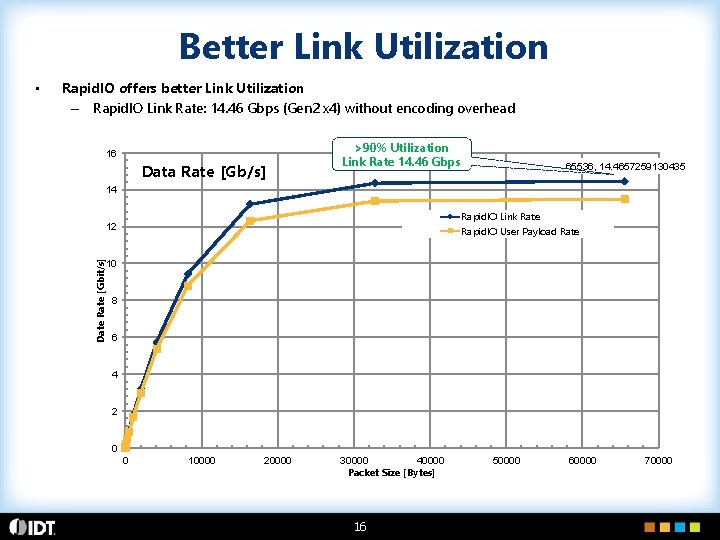

Better Link Utilization

- RapidIO offers better link utilization: >90% utilization
- RapidIO link rate: 14.46 Gbps (Gen 2 x4) without encoding overhead

Chart: data rate [Gbit/s] versus packet size [bytes] (0 to 70000), plotting the RapidIO link rate and the RapidIO user payload rate; the data label at 65536 bytes reads about 14.47 Gbit/s.

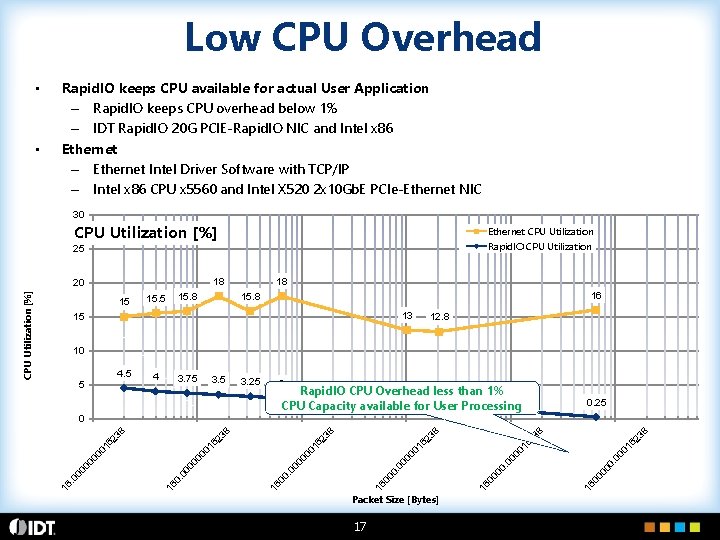

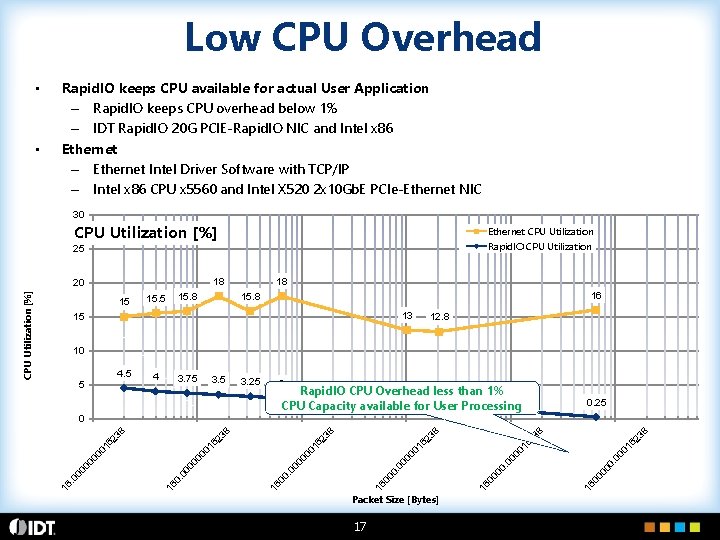

Low CPU Overhead

- RapidIO keeps the CPU available for the actual user application
  - RapidIO keeps CPU overhead below 1%
  - IDT RapidIO 20G PCIe-RapidIO NIC and Intel x86 Ethernet
  - Ethernet Intel driver software with TCP/IP
  - Intel x86 CPU X5560 and Intel X520 2x10 GbE PCIe-Ethernet NIC

Chart: CPU utilization [%] versus packet size [bytes], comparing Ethernet CPU utilization with RapidIO CPU utilization. Annotation: RapidIO CPU overhead is less than 1%, leaving CPU capacity available for user processing.

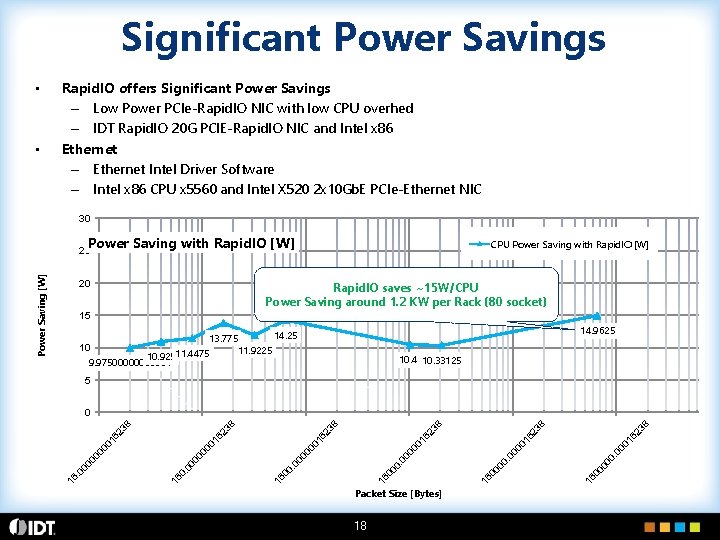

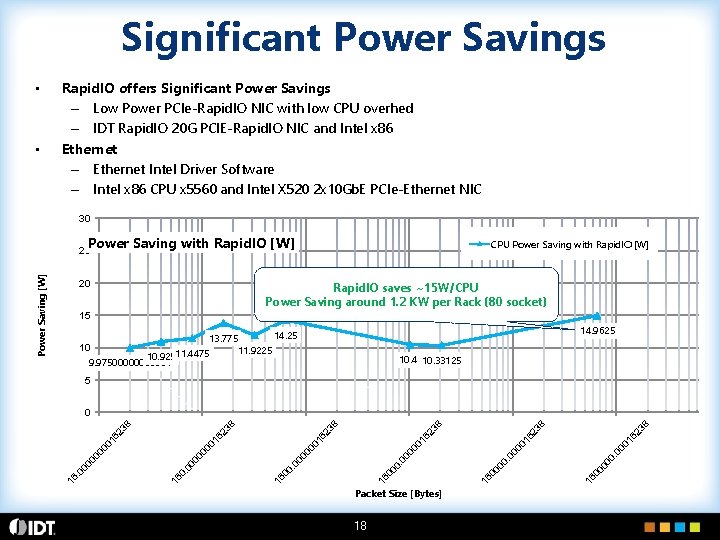

Significant Power Savings

- RapidIO offers significant power savings
  - Low power PCIe-RapidIO NIC with low CPU overhead
  - IDT RapidIO 20G PCIe-RapidIO NIC and Intel x86 Ethernet
  - Ethernet Intel driver software
  - Intel x86 CPU X5560 and Intel X520 2x10 GbE PCIe-Ethernet NIC

Chart: CPU power saving with RapidIO [W] versus packet size [bytes]. Annotation: RapidIO saves ~15 W per CPU, a power saving of around 1.2 kW per rack (80 sockets).
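The rack-level figure on this slide follows from the per-CPU saving. A minimal sketch of that arithmetic, assuming the saving scales linearly with socket count; the function name `rack_power_saving_w` is illustrative and not taken from the deck:

```python
def rack_power_saving_w(saving_per_cpu_w: float, sockets_per_rack: int) -> float:
    """Estimate rack-level saving, assuming it scales linearly per socket."""
    if sockets_per_rack < 0:
        raise ValueError("sockets_per_rack must be non-negative")
    return saving_per_cpu_w * sockets_per_rack


# Figures quoted on the slide: ~15 W saved per CPU, 80 sockets per rack.
saving_w = rack_power_saving_w(15.0, 80)
print(f"{saving_w / 1000:.1f} kW per rack")
```

With the slide's inputs this reproduces the quoted 1.2 kW per rack; the measured per-CPU savings in the chart vary with packet size, so the real figure would vary too.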

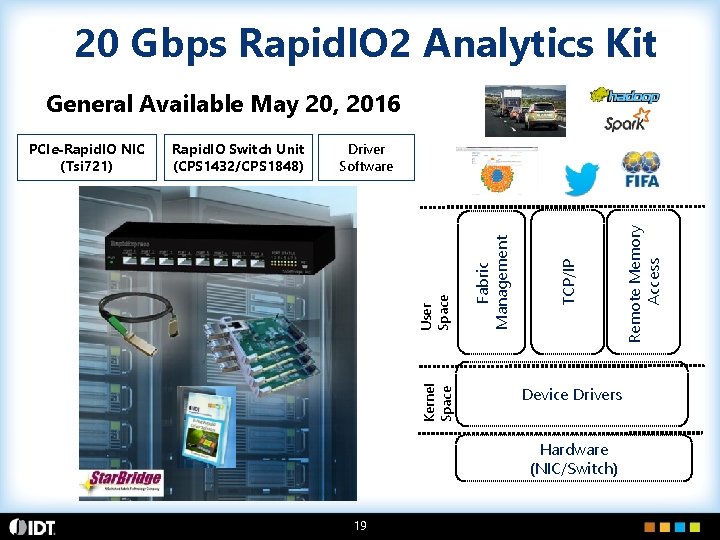

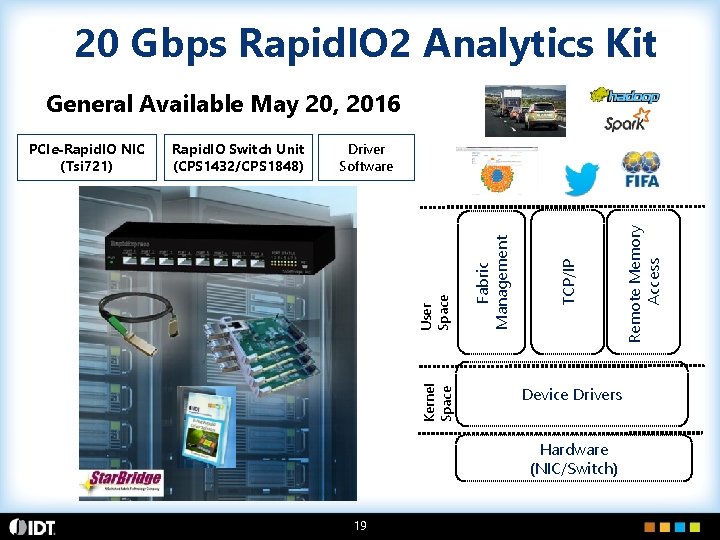

20 Gbps RapidIO 2 Analytics Kit: generally available May 20, 2016

Diagram labels: User Space; Kernel Space; Driver Software; Device Drivers; Remote Memory Access; TCP/IP; Fabric Management; Hardware (NIC/Switch); RapidIO Switch Unit (CPS-1432/CPS-1848); PCIe-RapidIO NIC (Tsi721).

RapidIO Interconnect at CERN OpenLab

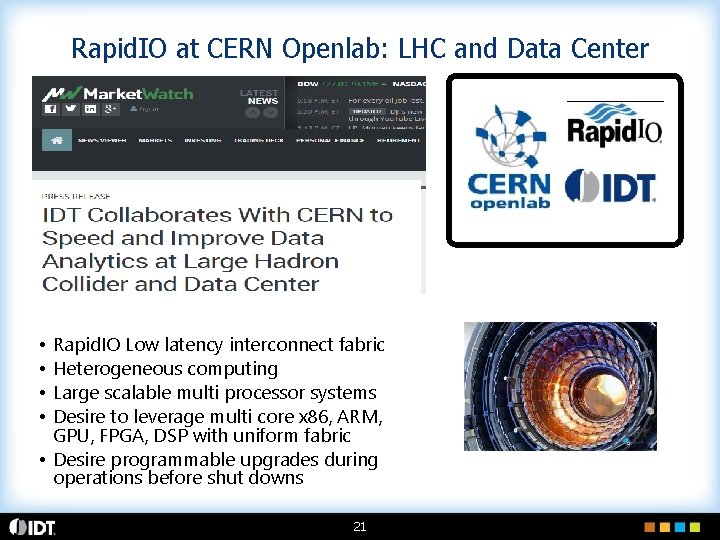

RapidIO at CERN OpenLab: LHC and Data Center

- RapidIO low latency interconnect fabric
- Heterogeneous computing
- Large scalable multi-processor systems
- Desire to leverage multi-core x86, ARM, GPU, FPGA, DSP with a uniform fabric
- Desire programmable upgrades during operations before shutdowns

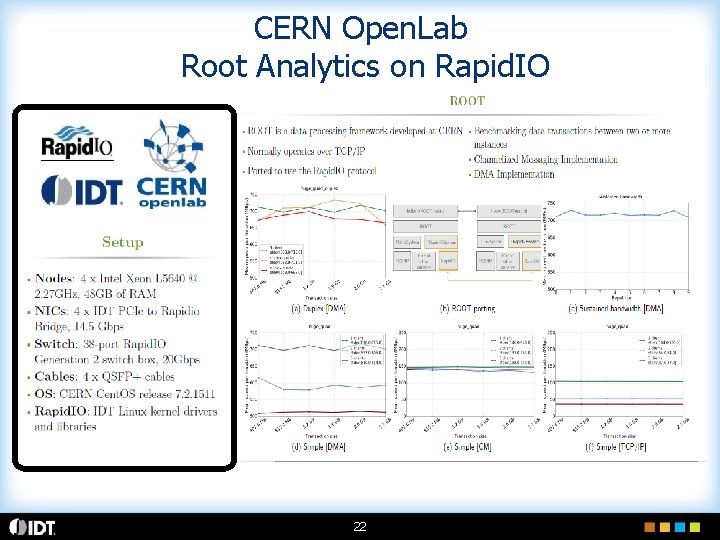

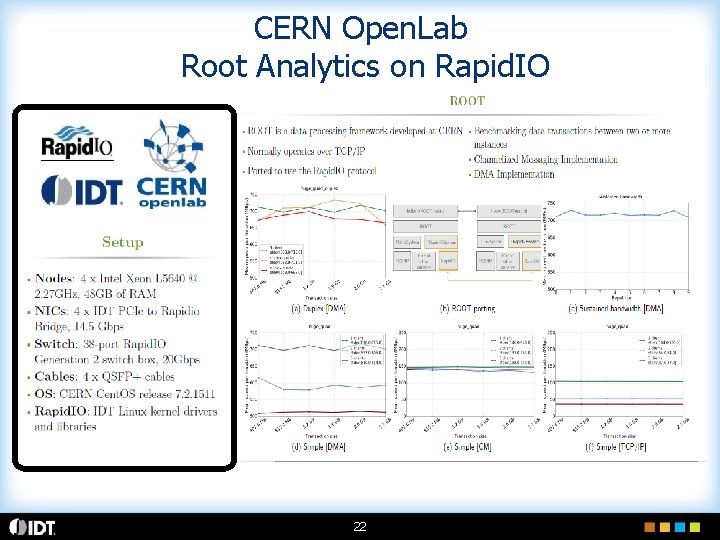

CERN OpenLab ROOT Analytics on RapidIO

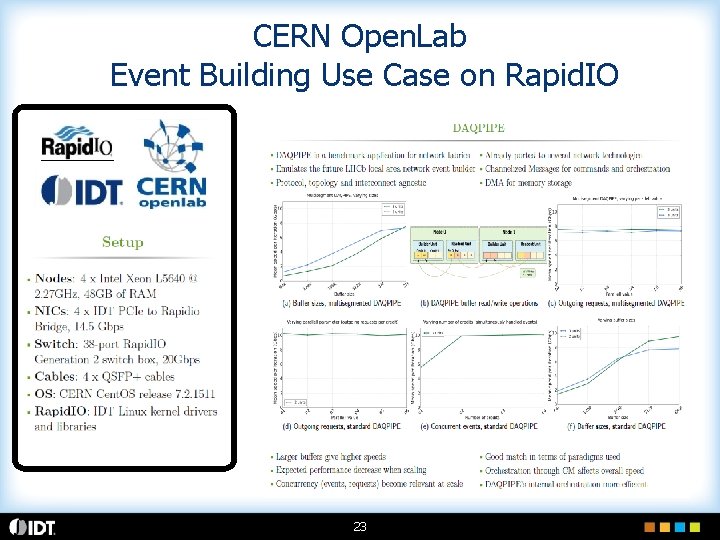

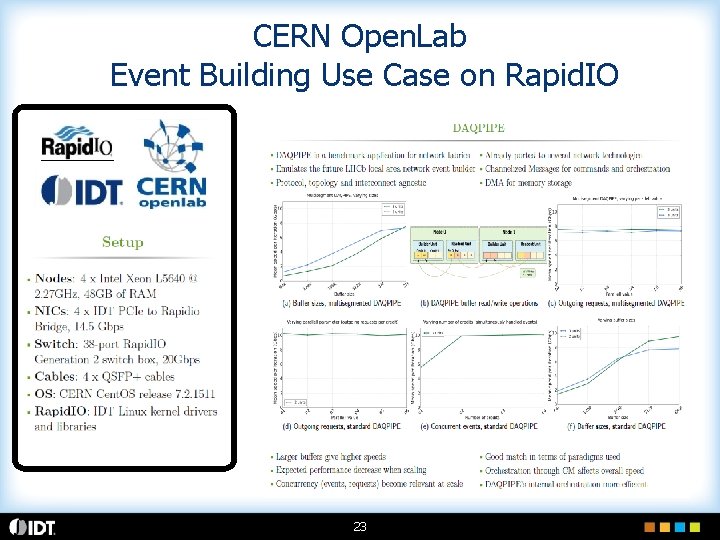

CERN OpenLab Event Building Use Case on RapidIO

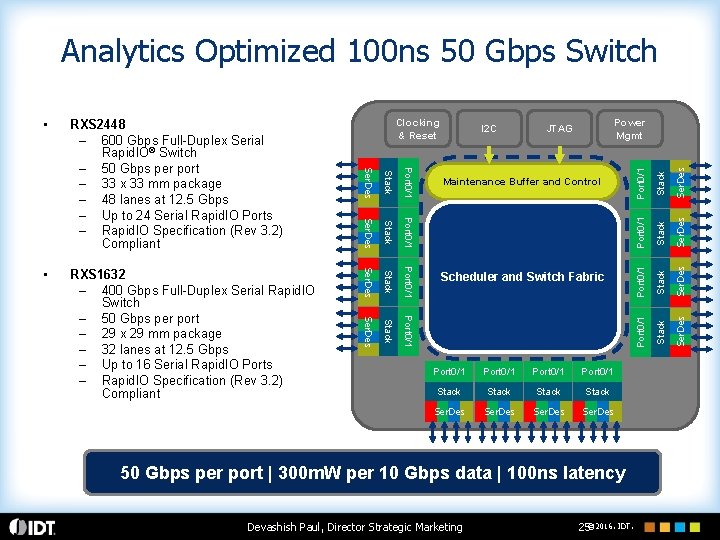

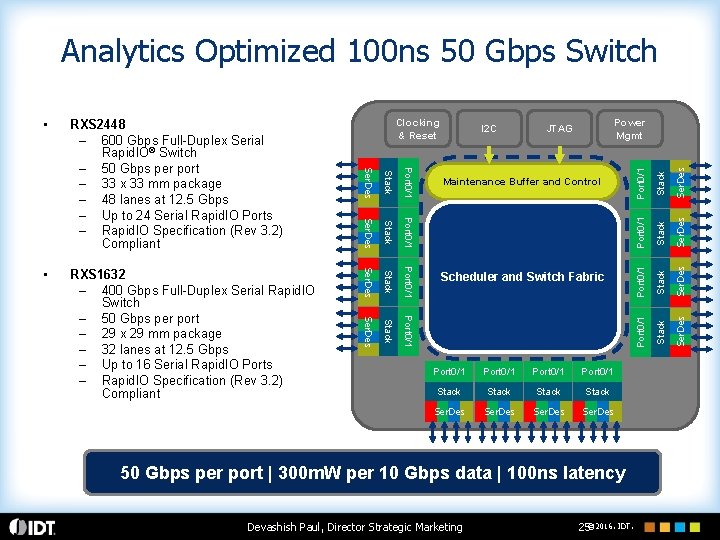

Analytics Optimized 100 ns 50 Gbps Switch

50 Gbps per port | 300 mW per 10 Gbps data | 100 ns latency

- RXS1632: 400 Gbps full-duplex Serial RapidIO switch
  - 50 Gbps per port
  - 29 x 29 mm package
  - 32 lanes at 12.5 Gbps
  - Up to 16 Serial RapidIO ports
  - RapidIO Specification (Rev 3.2) compliant
- RXS2448: 600 Gbps full-duplex Serial RapidIO® switch
  - 50 Gbps per port
  - 33 x 33 mm package
  - 48 lanes at 12.5 Gbps
  - Up to 24 Serial RapidIO ports
  - RapidIO Specification (Rev 3.2) compliant

Block diagram labels: SerDes; Port 0/1 stacks; Scheduler and Switch Fabric; Maintenance; Buffer and Control; Power Mgmt; JTAG; I2C; Clocking & Reset. © 2016 IDT.
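The headline bandwidth of each switch matches its lane count multiplied by the per-lane rate. The sketch below checks that arithmetic and adds a rough power estimate. The `SwitchSpec` class and its method names are hypothetical, and applying the quoted 300 mW per 10 Gbps linearly to the full aggregate bandwidth is an assumption, not a datasheet figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SwitchSpec:
    """Headline figures quoted on the slide for one RXS switch."""
    name: str
    lanes: int
    lane_rate_gbps: float

    def aggregate_gbps(self) -> float:
        """Aggregate bandwidth as lane count times per-lane rate."""
        return self.lanes * self.lane_rate_gbps

    def estimated_power_w(self, mw_per_10_gbps: float = 300.0) -> float:
        """Rough estimate: assumes the mW-per-10-Gbps figure scales linearly."""
        return self.aggregate_gbps() / 10.0 * mw_per_10_gbps / 1000.0


RXS1632 = SwitchSpec("RXS1632", lanes=32, lane_rate_gbps=12.5)
RXS2448 = SwitchSpec("RXS2448", lanes=48, lane_rate_gbps=12.5)

for spec in (RXS1632, RXS2448):
    print(f"{spec.name}: {spec.aggregate_gbps():.0f} Gbps aggregate, "
          f"~{spec.estimated_power_w():.0f} W estimated at full load")
```

The aggregate values come out at 400 and 600 Gbps, matching the slide. The power numbers are only an extrapolation and should be checked against the device datasheets.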

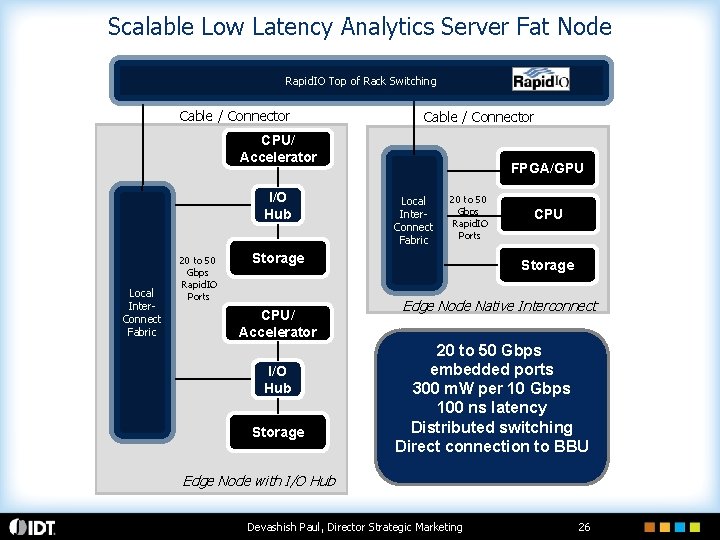

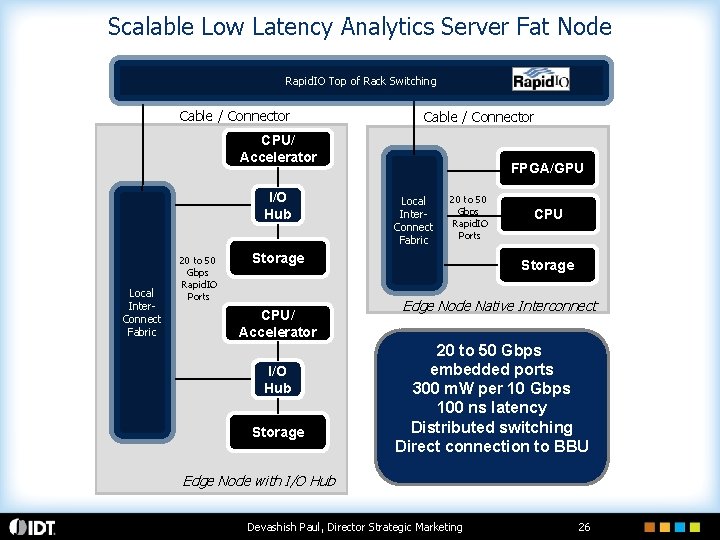

Scalable Low Latency Analytics Server

- 20 to 50 Gbps embedded ports
- 300 mW per 10 Gbps
- 100 ns latency
- Distributed switching
- Direct connection to BBU

Diagram labels: fat node; RapidIO top of rack switching; cable / connector; CPU / accelerator; I/O hub; local interconnect fabric (20 to 50 Gbps RapidIO ports); FPGA/GPU; storage; edge node with native interconnect; edge node with I/O hub.

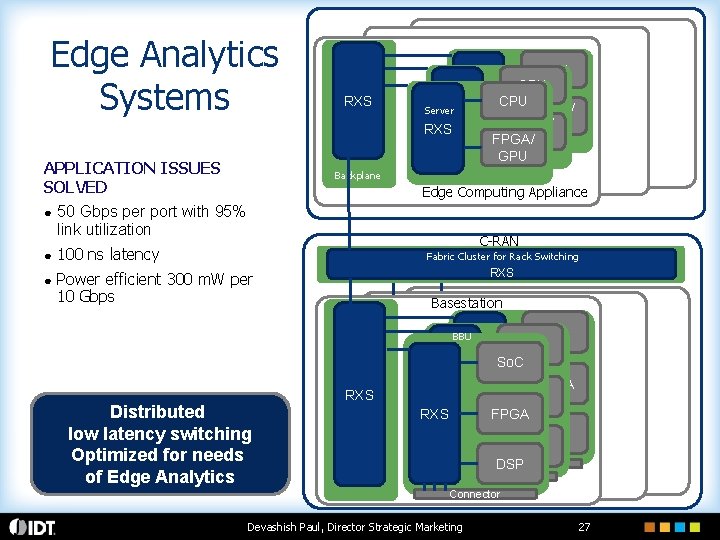

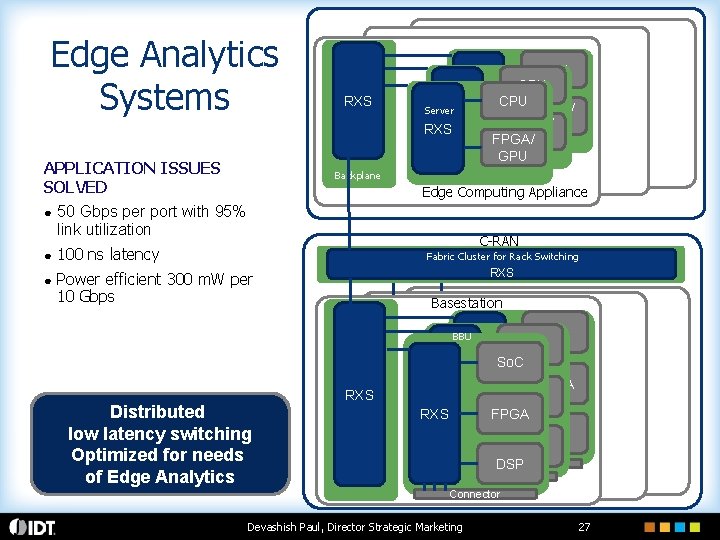

Edge Analytics Systems

Application issues solved:

- 50 Gbps per port with 95% link utilization
- 100 ns latency
- Power efficient: 300 mW per 10 Gbps

Distributed low latency switching, optimized for the needs of edge analytics.

Diagram labels: Edge Computing Appliance; C-RAN Fabric Cluster for Rack Switching; Basestation BBU; backplane; connector; RXS; server; CPU; GPU; FPGA; DSP; SoC.

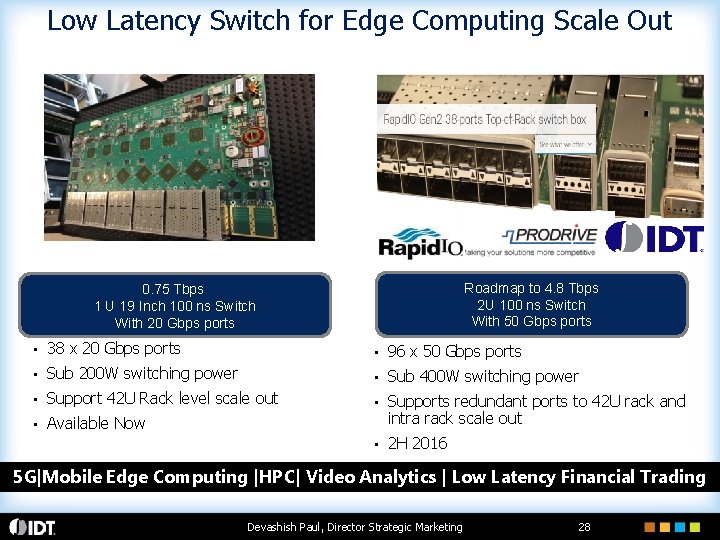

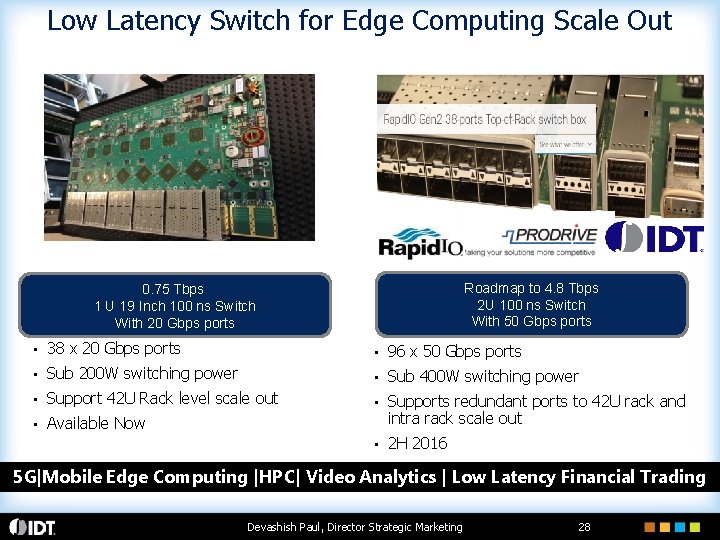

Low Latency Switch for Edge Computing Scale Out

0.75 Tbps 1U 19-inch 100 ns switch with 20 Gbps ports (available now):

- 38 x 20 Gbps ports
- Sub 200 W switching power
- Supports 42U rack level scale out

Roadmap to 4.8 Tbps 2U 100 ns switch with 50 Gbps ports (2H 2016):

- 96 x 50 Gbps ports
- Sub 400 W switching power
- Supports redundant ports to 42U rack and intra-rack scale out

5G | Mobile Edge Computing | HPC | Video Analytics | Low Latency Financial Trading
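The capacity figures for the two top-of-rack switches can be checked by multiplying port count by port rate. A minimal sketch, using a hypothetical helper `switch_capacity_tbps` that is not part of the deck:

```python
def switch_capacity_tbps(ports: int, port_rate_gbps: float) -> float:
    """Total switching capacity in Tbps for identical ports."""
    return ports * port_rate_gbps / 1000.0


# Port counts and rates as quoted on the slide.
current_1u = switch_capacity_tbps(38, 20)   # 1U switch, 20 Gbps ports
roadmap_2u = switch_capacity_tbps(96, 50)   # 2U roadmap switch, 50 Gbps ports

print(f"1U: {current_1u:.2f} Tbps, 2U roadmap: {roadmap_2u:.1f} Tbps")
```

The 1U product works out to 0.76 Tbps, which the slide quotes as 0.75 Tbps. The roadmap device matches the quoted 4.8 Tbps.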

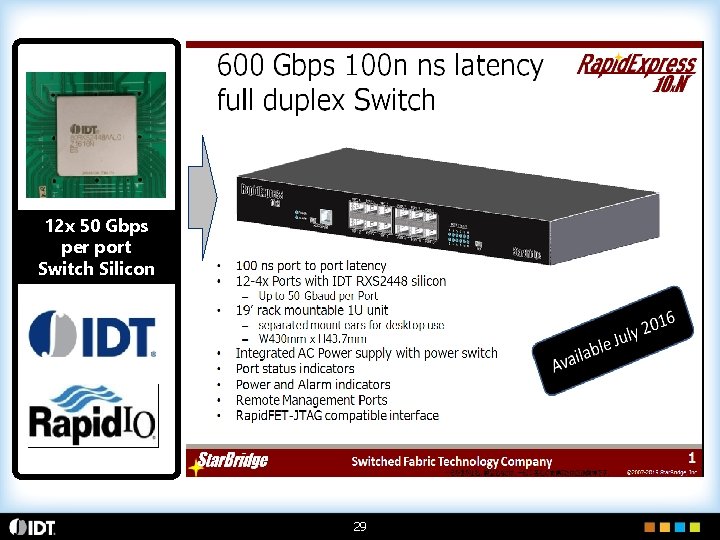

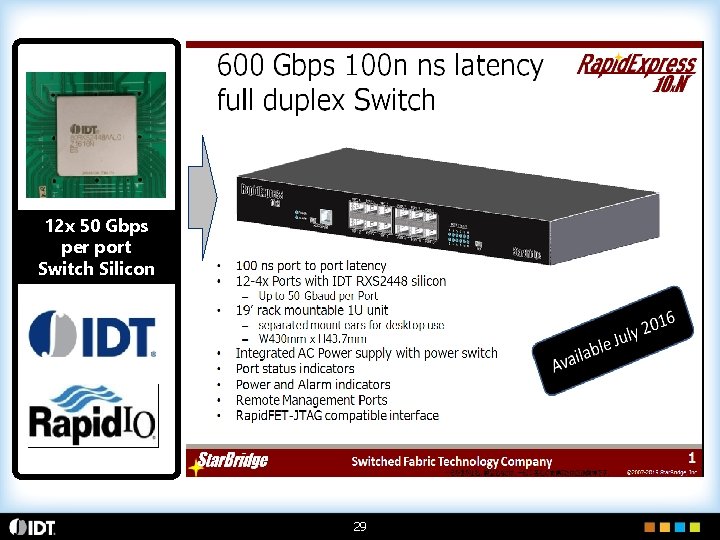

12 x 50 Gbps per port Switch Silicon

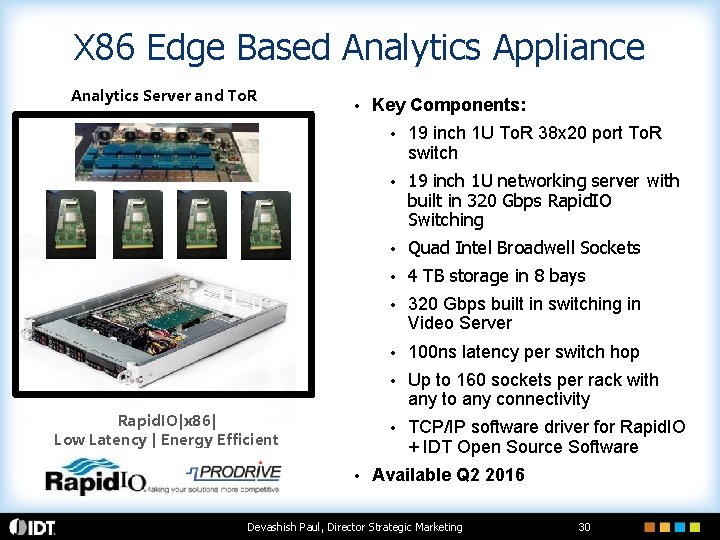

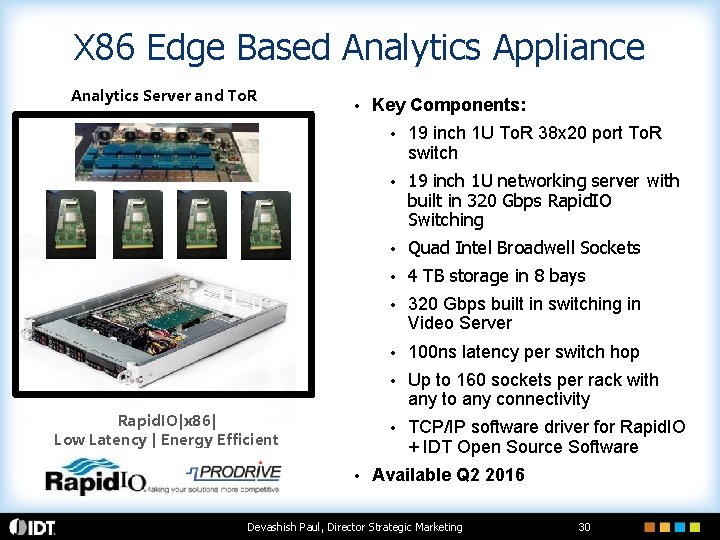

x86 Edge Based Analytics Appliance: Analytics Server and ToR

- RapidIO | x86 | Low Latency | Energy Efficient
- Key components:
  - 19-inch 1U ToR switch with 38 x 20 Gbps ports
  - 19-inch 1U networking server with built-in 320 Gbps RapidIO switching
  - Quad Intel Broadwell sockets
  - 4 TB storage in 8 bays
  - 320 Gbps built-in switching in video server
  - 100 ns latency per switch hop
  - Up to 160 sockets per rack with any-to-any connectivity
  - TCP/IP software driver for RapidIO + IDT open source software

Available Q2 2016
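The per-hop figure on this slide gives a simple way to estimate switching latency along a path. The sketch below assumes latency adds linearly per switch hop and counts switch traversal only (no NIC, cable or software time). The three-hop path (server switch, ToR switch, server switch) is a hypothetical topology used for illustration, not one described in the deck.

```python
SWITCH_HOP_LATENCY_NS = 100  # per-hop switch latency quoted on the slide


def switching_latency_ns(hops: int, per_hop_ns: int = SWITCH_HOP_LATENCY_NS) -> int:
    """Total switch traversal latency, assuming each hop adds the same delay."""
    if hops < 0:
        raise ValueError("hops must be non-negative")
    return hops * per_hop_ns


# Hypothetical path: built-in server switch -> ToR switch -> built-in server switch.
path_hops = 3
print(f"{path_hops} hops: {switching_latency_ns(path_hops)} ns of switching latency")
```

Under these assumptions a three-hop path spends 300 ns in switches; end-to-end application latency would be higher once endpoints and software are included.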

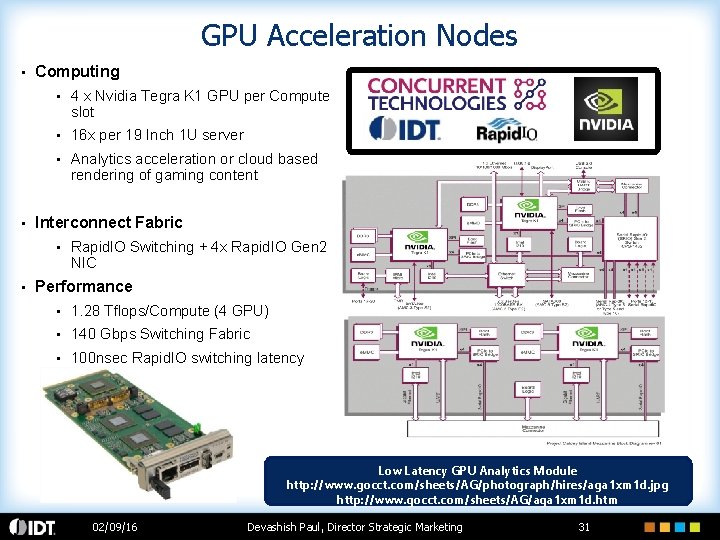

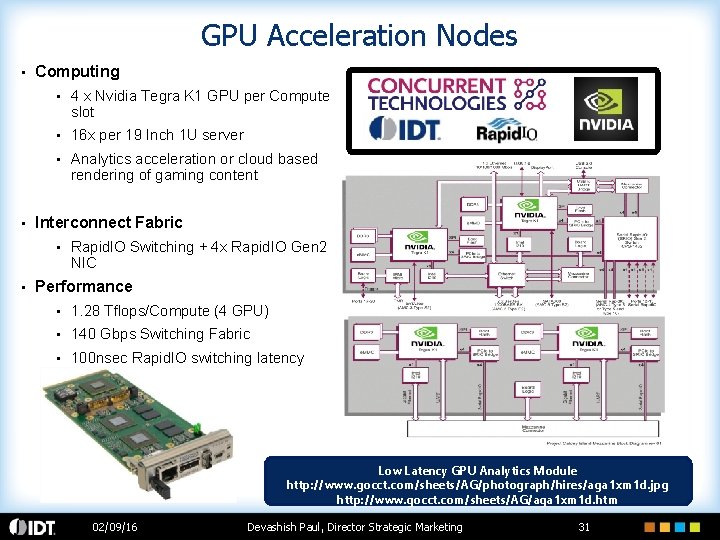

GPU Acceleration Nodes: Low Latency GPU Analytics Module

- Computing
  - 4 x Nvidia Tegra K1 GPU per compute slot
  - 16 x per 19-inch 1U server
  - Analytics acceleration or cloud-based rendering of gaming content
- Interconnect fabric
  - RapidIO switching + 4 x RapidIO Gen 2 NIC
- Performance
  - 1.28 Tflops/compute (4 GPU)
  - 140 Gbps switching fabric
  - 100 ns RapidIO switching latency

Image sources: http://www.gocct.com/sheets/AG/photograph/hires/aga1xm1d.jpg and http://www.gocct.com/sheets/AG/aga1xm1d.htm

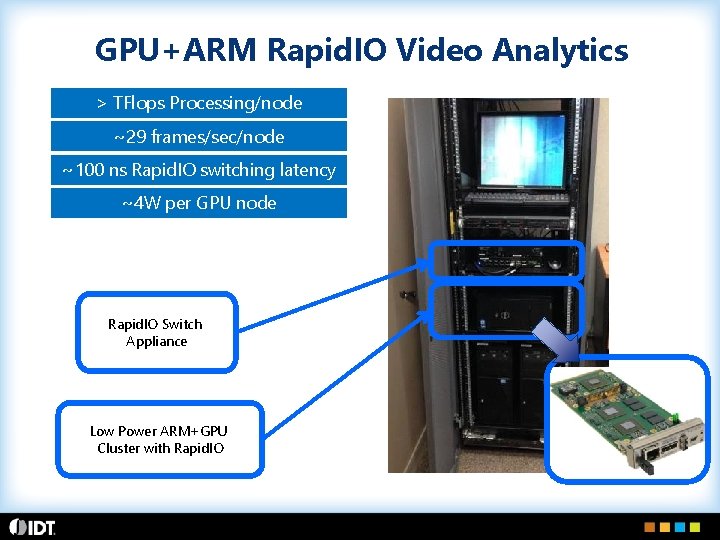

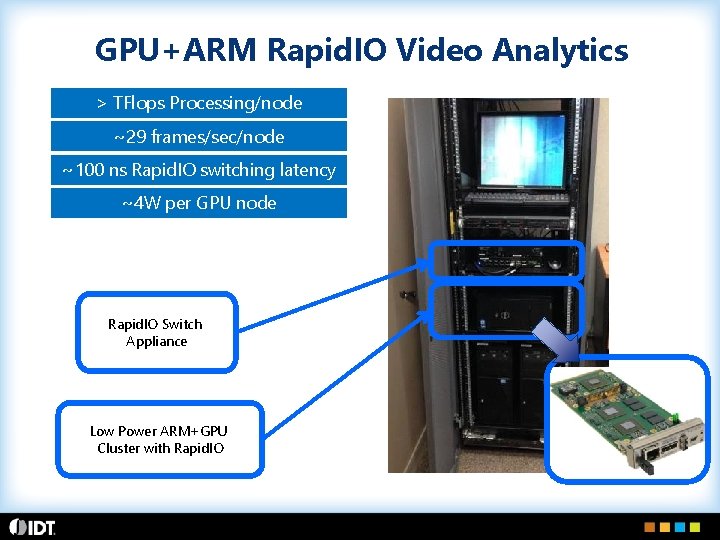

GPU+ARM RapidIO Video Analytics: Low Power ARM+GPU Cluster with RapidIO

- > TFlops processing per node
- ~29 frames/sec per node
- ~100 ns RapidIO switching latency
- ~4 W per GPU node

Diagram label: RapidIO switch appliance.

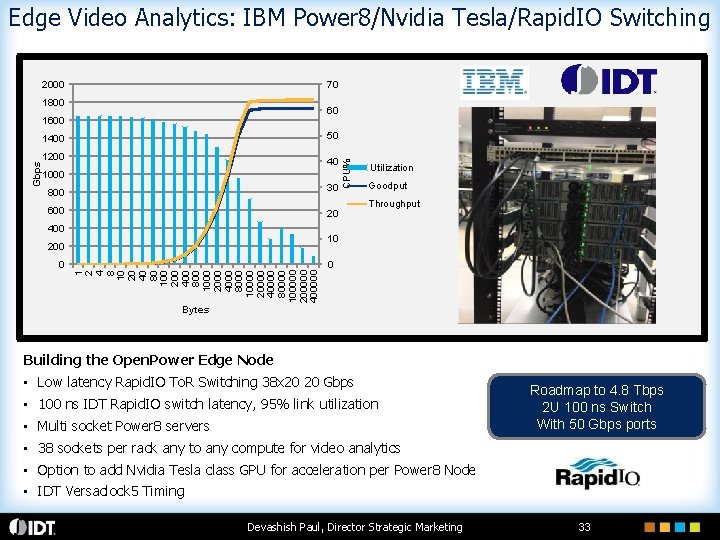

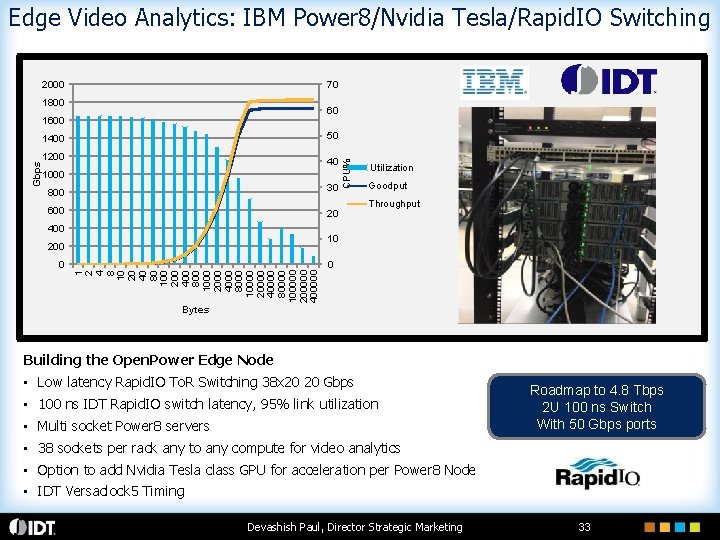

Edge Video Analytics: IBM Power8 / Nvidia Tesla / RapidIO Switching

Chart: utilization, goodput and throughput (axes labeled Gbps and CPU%) versus transfer size in bytes (1 to 400000).

Building the OpenPower Edge Node:

- Low latency RapidIO ToR switching, 38 x 20 Gbps
- 100 ns IDT RapidIO switch latency, 95% link utilization
- Multi-socket Power8 servers
- 38 sockets per rack, any-to-any compute for video analytics
- Option to add Nvidia Tesla class GPU for acceleration per Power8 node
- IDT VersaClock 5 timing
- Roadmap to 4.8 Tbps 2U 100 ns switch with 50 Gbps ports

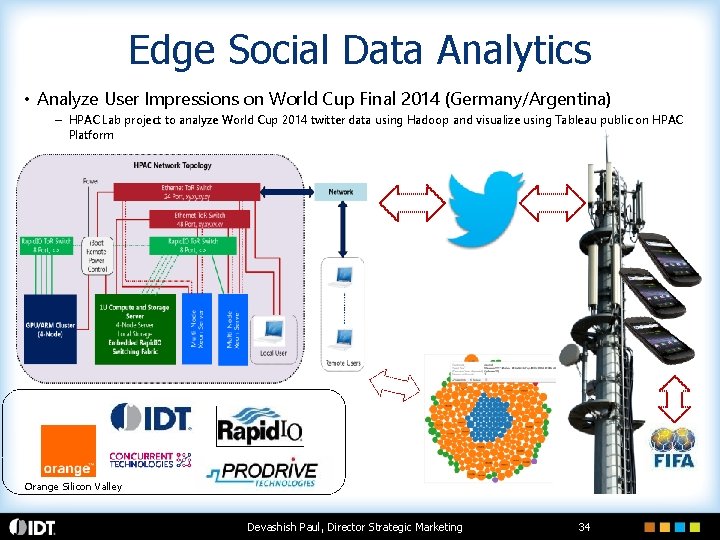

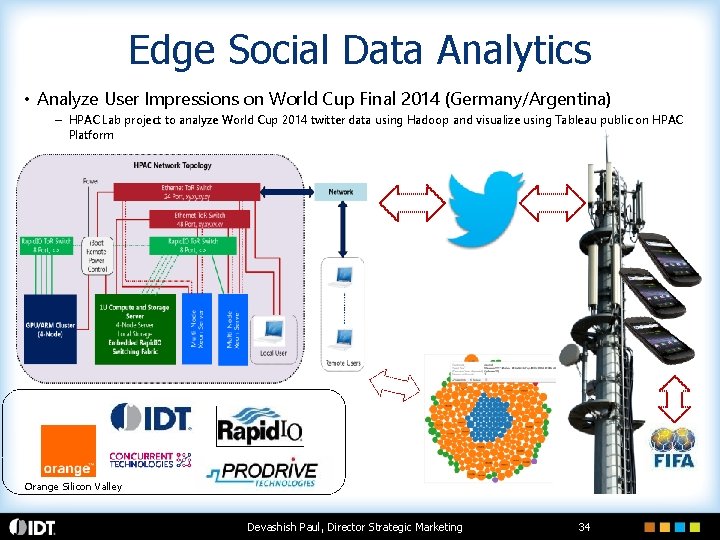

Edge Social Data Analytics

- Analyze user impressions on the World Cup Final 2014 (Germany/Argentina)
  - HPAC Lab project to analyze World Cup 2014 Twitter data using Hadoop and visualize it using Tableau Public on the HPAC platform

Orange Silicon Valley

5G Lab Germany: Edge Analytics for Autonomous Vehicles. Low latency edge computing is key to the Tactile Internet.

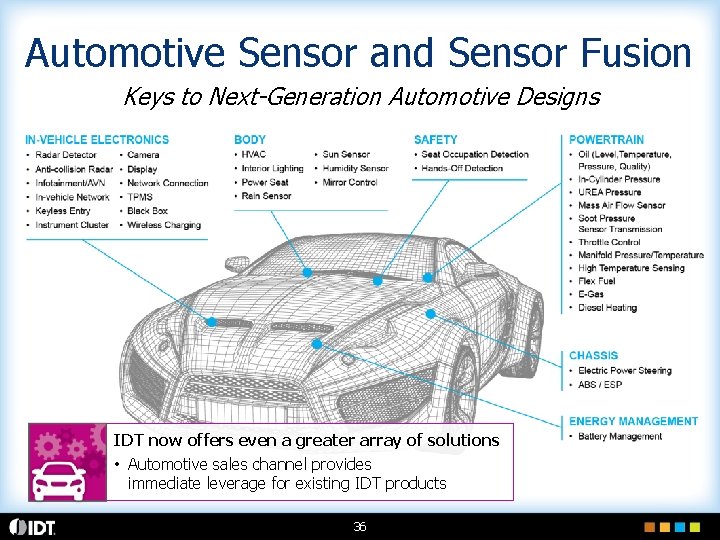

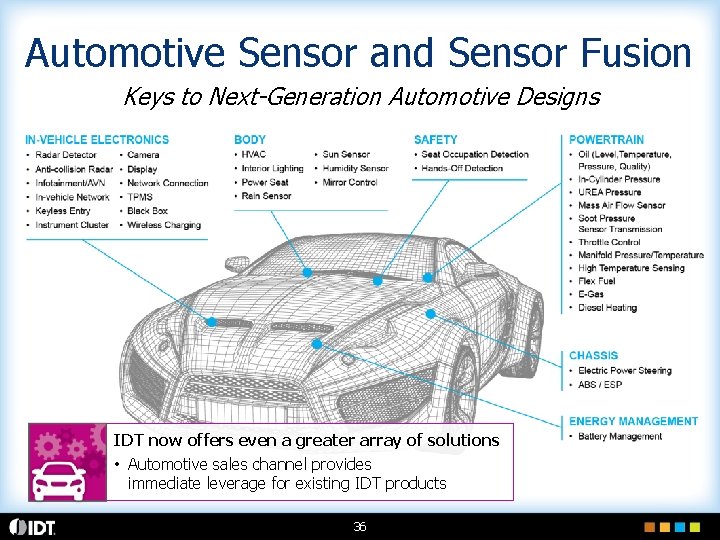

Automotive Sensor and Sensor Fusion: Keys to Next-Generation Automotive Designs

- IDT now offers an even greater array of solutions
- Automotive sales channel provides immediate leverage for existing IDT products

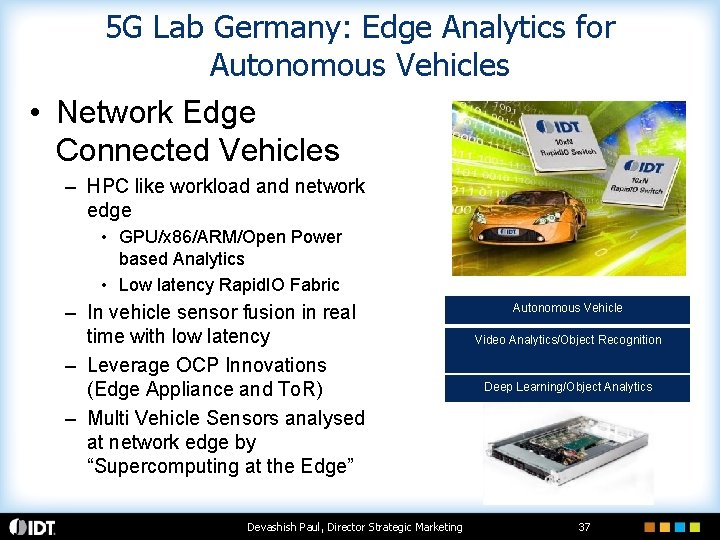

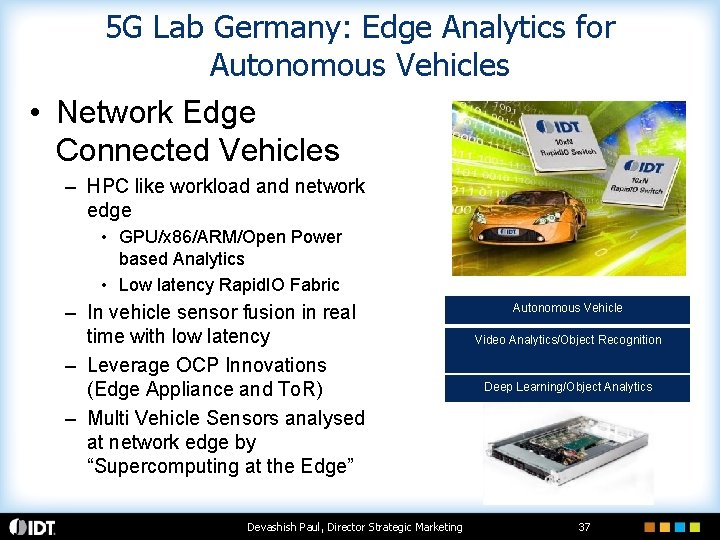

5G Lab Germany: Edge Analytics for Autonomous Vehicles

- Network edge connected vehicles
  - HPC-like workload at the network edge
- GPU / x86 / ARM / OpenPower based analytics
- Low latency RapidIO fabric
  - In-vehicle sensor fusion in real time with low latency
  - Leverage OCP innovations (edge appliance and ToR)
  - Multi-vehicle sensors analysed at the network edge by “Supercomputing at the Edge”

Figure labels: autonomous vehicle; video analytics / object recognition; deep learning / object analytics.

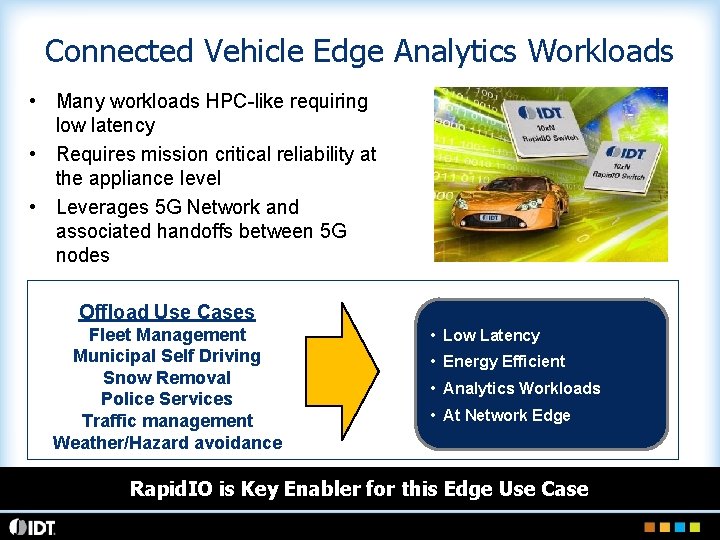

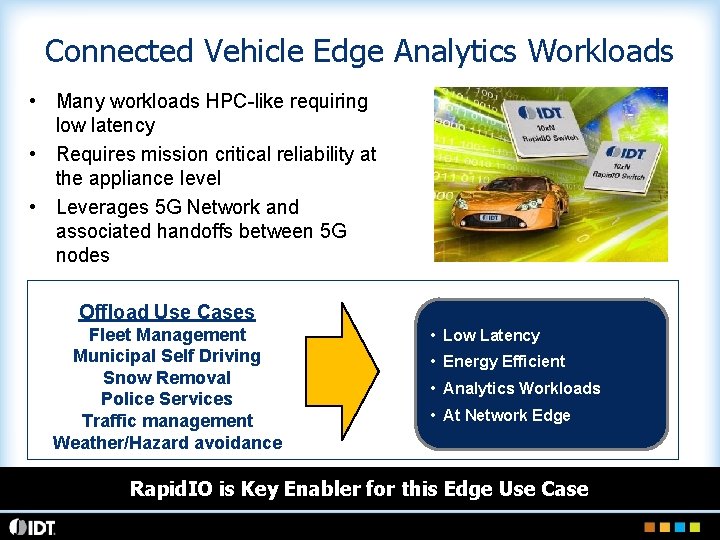

Connected Vehicle Edge Analytics Workloads

- Many workloads are HPC-like, requiring low latency
- Requires mission-critical reliability at the appliance level
- Leverages the 5G network and associated handoffs between 5G nodes

Offload use cases:

- Fleet management
- Municipal self-driving snow removal
- Police services
- Traffic management
- Weather/hazard avoidance

Low latency, energy efficient analytics workloads at the network edge. RapidIO is the key enabler for this edge use case.

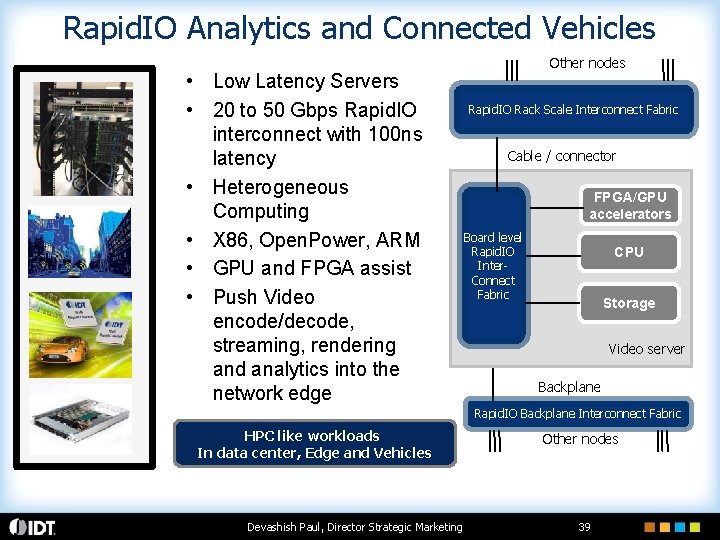

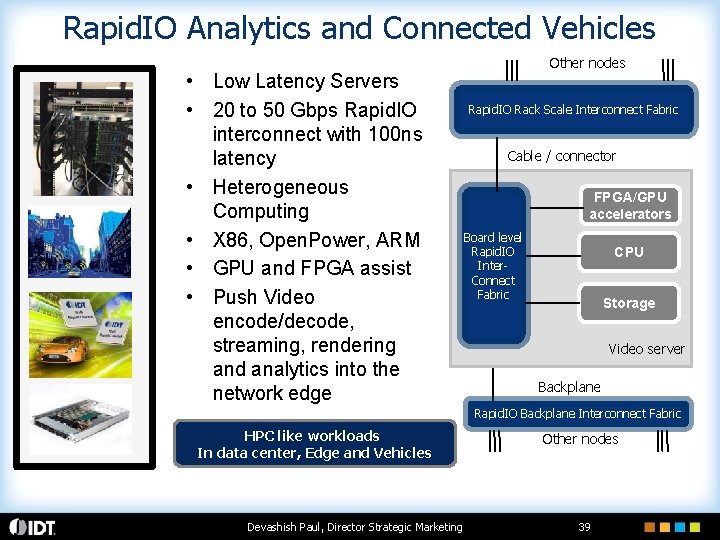

RapidIO Analytics and Connected Vehicles

- Low latency servers
  - 20 to 50 Gbps RapidIO interconnect with 100 ns latency
- Heterogeneous computing
  - x86, OpenPower, ARM
  - GPU and FPGA assist
- Push video encode/decode, streaming, rendering and analytics into the network edge

Diagram labels: RapidIO rack scale interconnect fabric (cable / connector); board level RapidIO interconnect fabric; RapidIO backplane interconnect fabric (backplane); FPGA/GPU accelerators; CPU; storage; video server; other nodes.

HPC-like workloads in the data center, edge and vehicles.

Backup

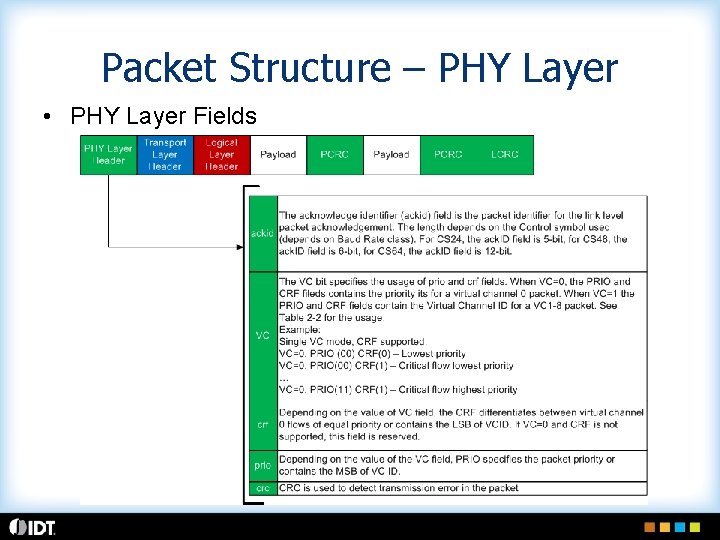

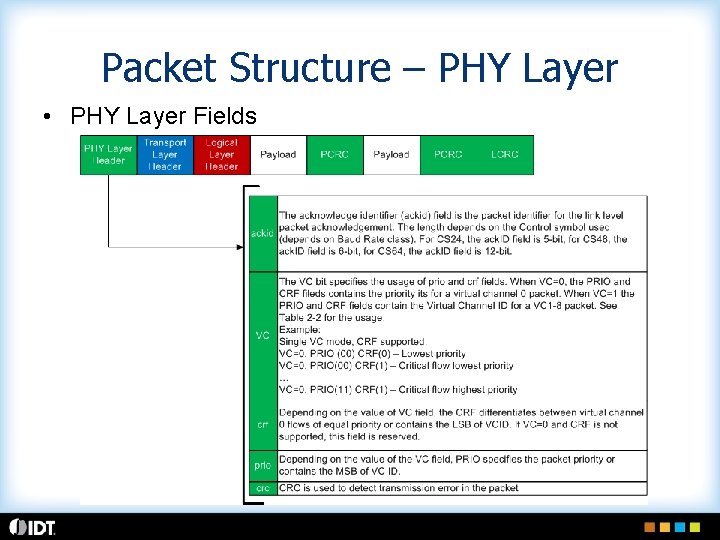

Packet Structure – PHY Layer • PHY Layer Fields

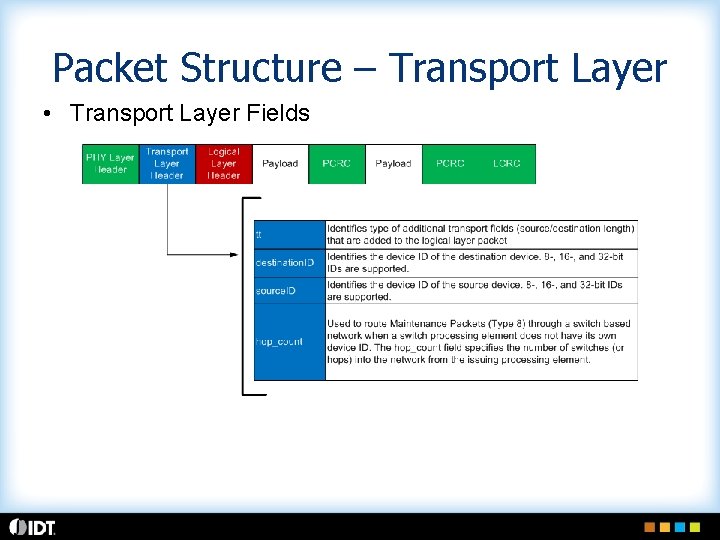

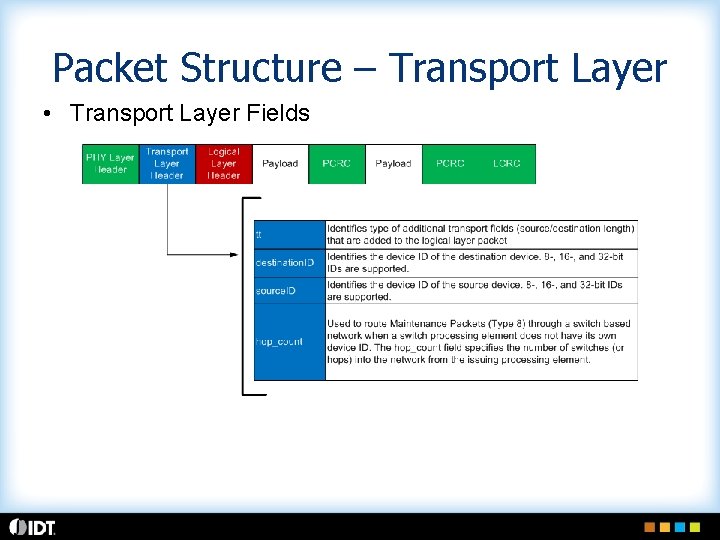

Packet Structure – Transport Layer • Transport Layer Fields

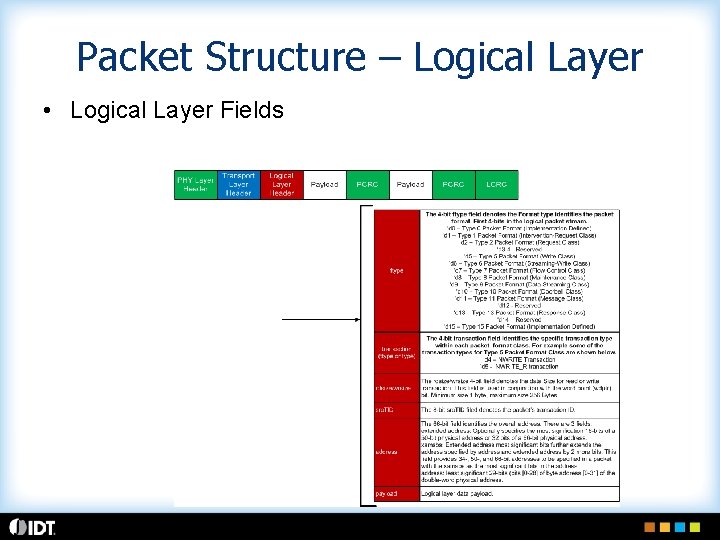

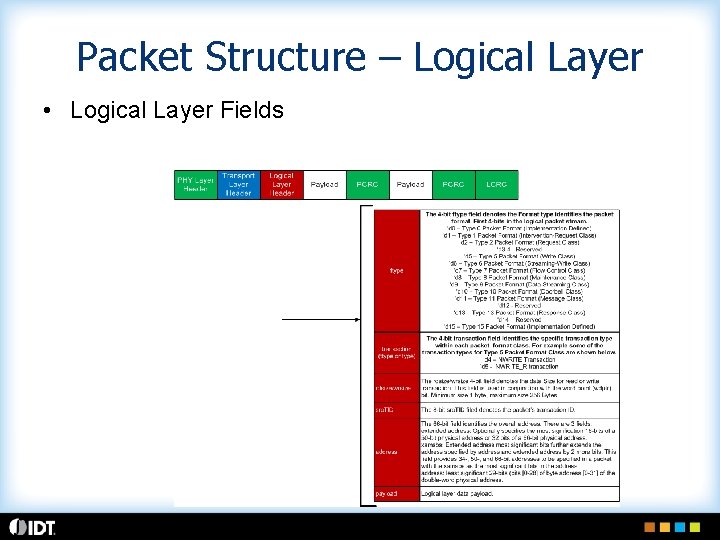

Packet Structure – Logical Layer • Logical Layer Fields