Linear Models II, Rong Jin

- Slides: 29


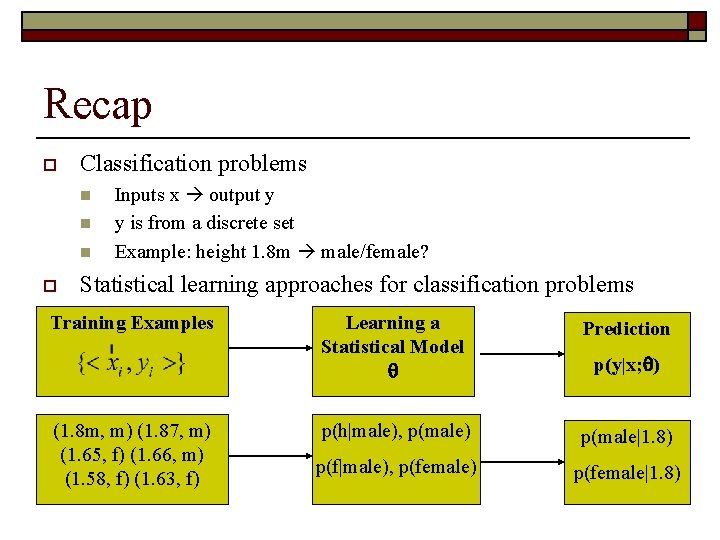

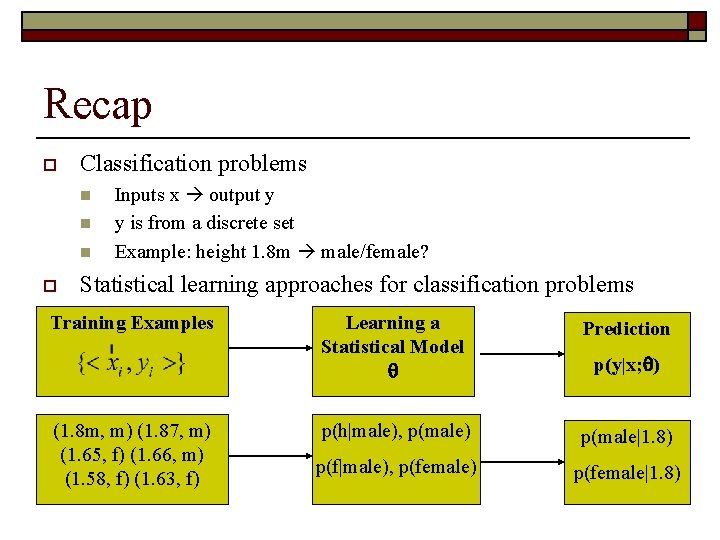

Recap
- Classification problems
  - Input x, output y
  - y is from a discrete set
  - Example: height 1.8 m → male or female?
- Statistical learning approaches for classification problems
  - Training examples: (1.8 m, m), (1.87, m), (1.65, f), (1.66, m), (1.58, f), (1.63, f)
  - Learning a statistical model $p(y|x;\theta)$, e.g. $p(h|male)$, $p(male)$, $p(h|female)$, $p(female)$
  - Prediction: $p(male|1.8)$, $p(female|1.8)$

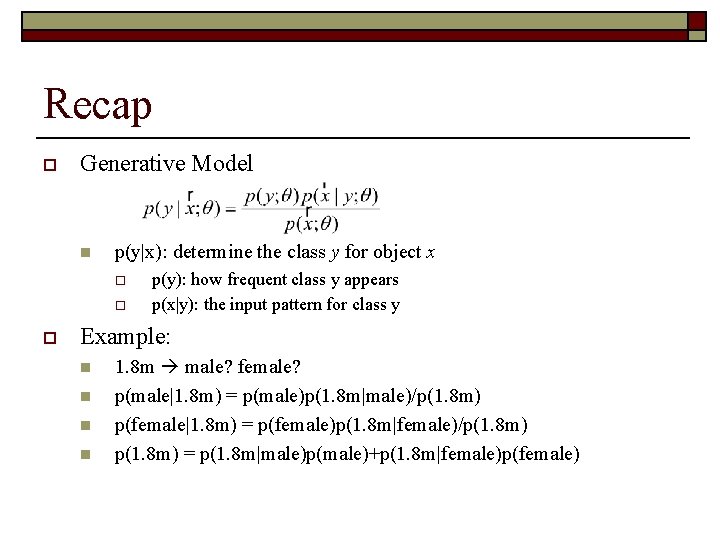

Recap
- Generative model
  - $p(y|x)$: determine the class y for object x
  - $p(y)$: how frequently class y appears
  - $p(x|y)$: the input pattern for class y
- Example: 1.8 m, male or female?

$$p(male|1.8\,m) = \frac{p(male)\,p(1.8\,m|male)}{p(1.8\,m)}, \qquad p(female|1.8\,m) = \frac{p(female)\,p(1.8\,m|female)}{p(1.8\,m)}$$

$$p(1.8\,m) = p(1.8\,m|male)\,p(male) + p(1.8\,m|female)\,p(female)$$
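The Bayes-rule computation above can be sketched in a few lines of Python. The function name and the prior and likelihood values are hypothetical placeholders chosen for illustration, not numbers from the slides.

```python
def posterior(priors, likelihoods):
    """Return p(y|x) for every class y via Bayes' rule.

    priors[y]:      p(y)
    likelihoods[y]: p(x|y) evaluated at the observed x
    """
    # Evidence p(x) = sum over y of p(x|y) p(y)
    evidence = sum(likelihoods[y] * priors[y] for y in priors)
    return {y: likelihoods[y] * priors[y] / evidence for y in priors}

# Hypothetical numbers: equal priors, and a density p(1.8 m | y) four times larger for males.
priors = {"male": 0.5, "female": 0.5}
likelihoods = {"male": 2.0, "female": 0.5}
post = posterior(priors, likelihoods)
print(post)
```

With these placeholder values the evidence is 1.25, so the posterior is 0.8 for male and 0.2 for female.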

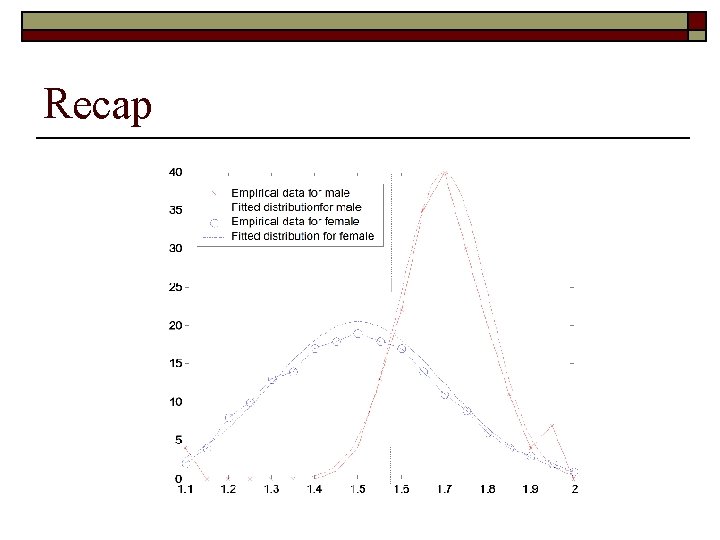

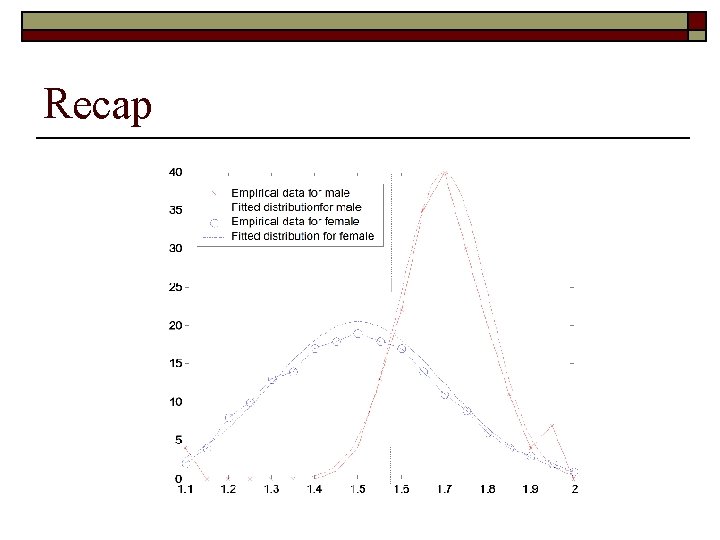

Recap
- Learning p(x|y) and p(y)
  - p(y) = #examples(y) / #examples
  - Maximum likelihood estimation for p(x|y)
- Example
  - Training examples: (1.8 m, m), (1.87, m), (1.65, f), (1.66, m), (1.58, f), (1.63, f)
  - $p(male) = N_{male}/N$, $p(female) = N_{female}/N$
  - Assume that the height distributions for male and female are Gaussian, with parameters $(\mu_{male}, \sigma_{male})$ and $(\mu_{female}, \sigma_{female})$
  - MLE estimation
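A minimal sketch of this recipe applied to the six training examples above. It estimates p(y) by counting, fits one Gaussian per class by MLE (sample mean, and variance divided by $N_y$ rather than $N_y - 1$), then applies Bayes' rule at 1.8 m. The function names are illustrative and not taken from the slides.

```python
import math
from collections import defaultdict

# The six training examples from the slide: (height in metres, class label).
examples = [(1.80, "m"), (1.87, "m"), (1.65, "f"),
            (1.66, "m"), (1.58, "f"), (1.63, "f")]

def fit_gaussian_classes(data):
    """MLE for p(y) and a 1-D Gaussian p(x|y) for each class y."""
    by_class = defaultdict(list)
    for x, y in data:
        by_class[y].append(x)
    n = len(data)
    params = {}
    for y, xs in by_class.items():
        prior = len(xs) / n                              # p(y) = N_y / N
        mu = sum(xs) / len(xs)                           # MLE mean
        var = sum((x - mu) ** 2 for x in xs) / len(xs)   # MLE variance (divides by N_y)
        params[y] = (prior, mu, var)
    return params

def gaussian_pdf(x, mu, var):
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def predict(params, x):
    """Posterior p(y|x) via Bayes' rule."""
    joint = {y: prior * gaussian_pdf(x, mu, var) for y, (prior, mu, var) in params.items()}
    evidence = sum(joint.values())
    return {y: j / evidence for y, j in joint.items()}

params = fit_gaussian_classes(examples)
print(params)
print(predict(params, 1.80))
```

On this tiny sample the female heights are tightly clustered around 1.62 m, so the posterior at 1.8 m comes out almost entirely male.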

Recap

Recap

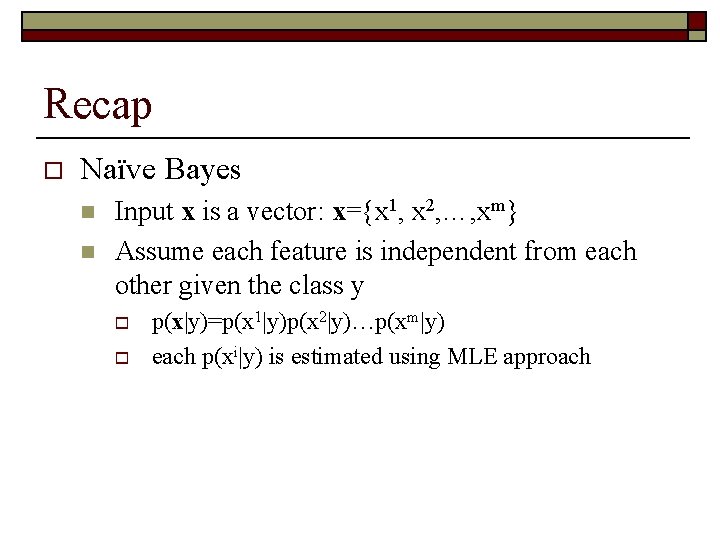

Recap
- Naïve Bayes
  - Input x is a vector: $x = \{x_1, x_2, \ldots, x_m\}$
  - Assume the features are conditionally independent of each other given the class y
    - $p(x|y) = p(x_1|y)\,p(x_2|y)\cdots p(x_m|y)$
    - Each $p(x_i|y)$ is estimated using the MLE approach
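A minimal sketch of the naïve Bayes factorization, assuming binary features (the slide does not fix the feature type). Each $p(x_i = 1|y)$ is the MLE frequency within class y, and $\log p(x|y)$ is computed as a sum of per-feature log-probabilities, which avoids numerical underflow when m is large. The data and function names are hypothetical.

```python
import math

def mle_feature_probs(X, labels, y):
    """MLE of p(x_i = 1 | y): fraction of class-y examples whose i-th feature is 1."""
    rows = [x for x, lab in zip(X, labels) if lab == y]
    return [sum(r[i] for r in rows) / len(rows) for i in range(len(rows[0]))]

def naive_bayes_log_likelihood(x, feature_probs):
    """log p(x|y) = sum_i log p(x_i|y) for binary features x_i.

    feature_probs[i] is p(x_i = 1 | y). An MLE value of exactly 0 or 1 makes
    math.log fail for a mismatching feature (the zero-probability problem).
    """
    total = 0.0
    for xi, p in zip(x, feature_probs):
        total += math.log(p if xi == 1 else 1.0 - p)
    return total

# Hypothetical training data: three binary features, labels +1 / -1.
X = [[1, 0, 1], [1, 1, 0], [0, 0, 1], [1, 0, 1], [0, 1, 0]]
labels = [1, 1, 1, 1, -1]
probs_pos = mle_feature_probs(X, labels, 1)
ll = naive_bayes_log_likelihood([1, 0, 1], probs_pos)
print(probs_pos)
print(math.exp(ll))
```

Here the positive-class estimates are 0.75, 0.25 and 0.75, so $p([1, 0, 1]\,|\,+) = 0.75^3 = 0.421875$.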

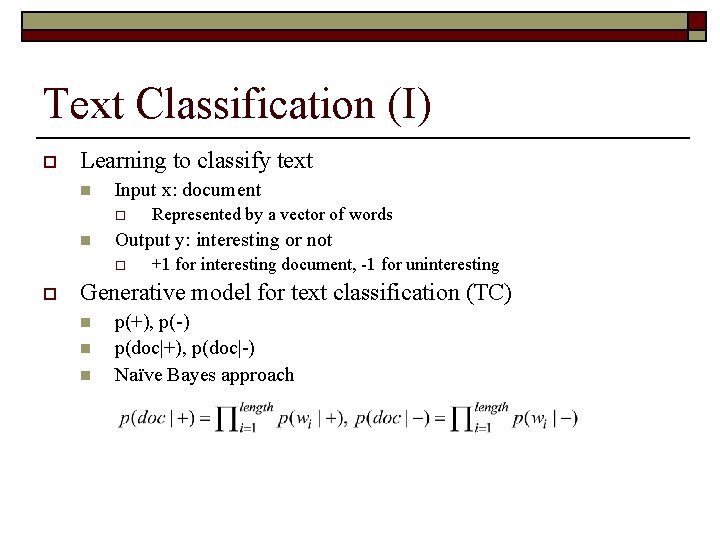

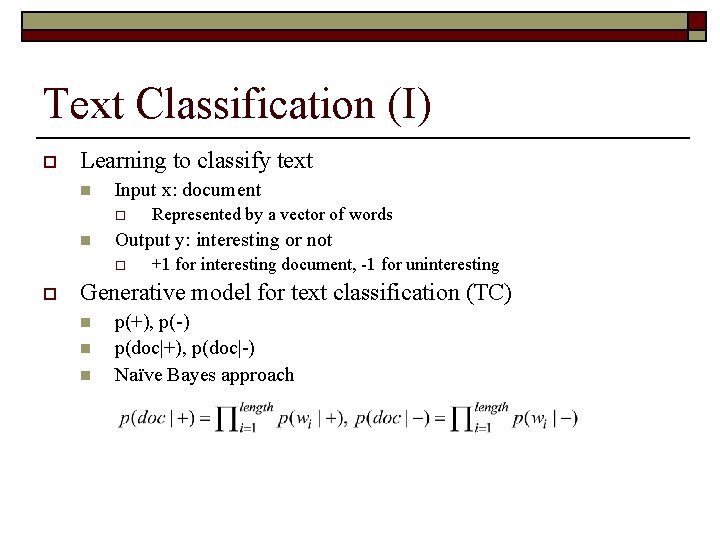

Text Classification (I)
- Learning to classify text
  - Input x: a document, represented by a vector of words
  - Output y: interesting or not
    - +1 for an interesting document, -1 for an uninteresting one
- Generative model for text classification (TC)
  - p(+), p(-)
  - p(doc|+), p(doc|-)
  - Naïve Bayes approach

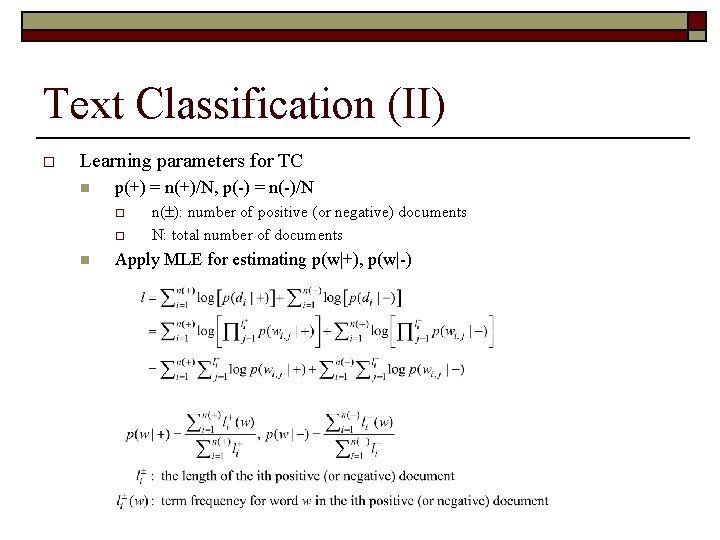

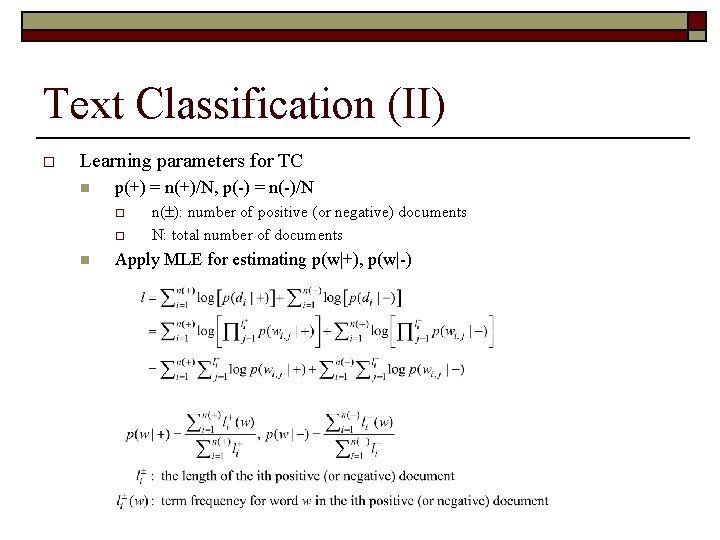

Text Classification (II)
- Learning parameters for TC
  - p(+) = n(+)/N, p(-) = n(-)/N
    - n(+), n(-): number of positive (or negative) documents
    - N: total number of documents
  - Apply MLE to estimate p(w|+), p(w|-)
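One way to realize these MLE estimates, assuming a word-count (multinomial) document model, which the slide does not spell out. The corpus below is a hypothetical toy example, and `train_text_nb` is an illustrative name.

```python
from collections import Counter

def train_text_nb(docs, labels):
    """MLE estimates of p(+), p(-) and p(w|+), p(w|-) under a word-count model.

    docs:   list of documents, each a list of word tokens
    labels: list of "+" / "-" labels
    """
    N = len(docs)
    priors, word_probs = {}, {}
    for c in ("+", "-"):
        class_docs = [d for d, lab in zip(docs, labels) if lab == c]
        priors[c] = len(class_docs) / N                    # p(c) = n(c) / N
        counts = Counter(w for d in class_docs for w in d)
        total = sum(counts.values())
        # p(w|c) = occurrences of w in class-c documents / all word occurrences in class c
        word_probs[c] = {w: k / total for w, k in counts.items()}
    return priors, word_probs

# Hypothetical toy corpus.
docs = [["cheap", "flights", "cheap"], ["flights", "schedule"], ["meeting", "schedule"]]
labels = ["+", "+", "-"]
priors, word_probs = train_text_nb(docs, labels)
print(priors)
print(word_probs["+"])                    # {'cheap': 0.4, 'flights': 0.4, 'schedule': 0.2}
print(word_probs["-"].get("cheap", 0.0))  # 0.0: "cheap" never appears in a "-" document
```

Under plain MLE, a word never seen in a class gets p(w|c) = 0, which zeroes out the whole product p(doc|c). This is the unseen-word problem listed on the following slides.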

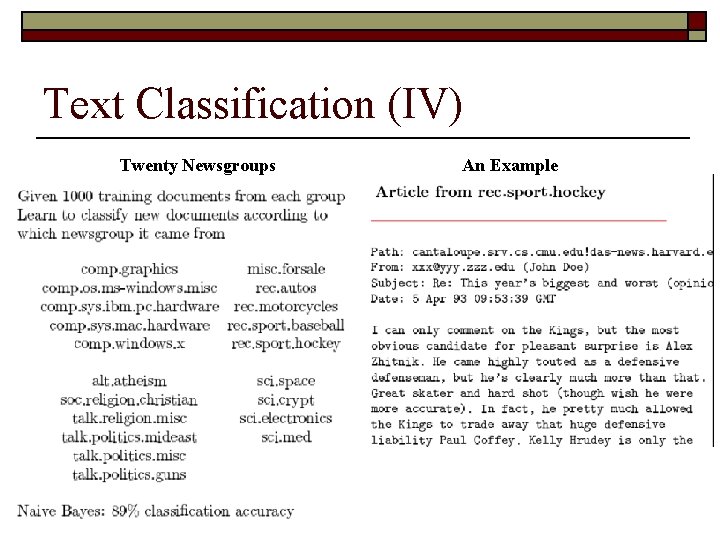

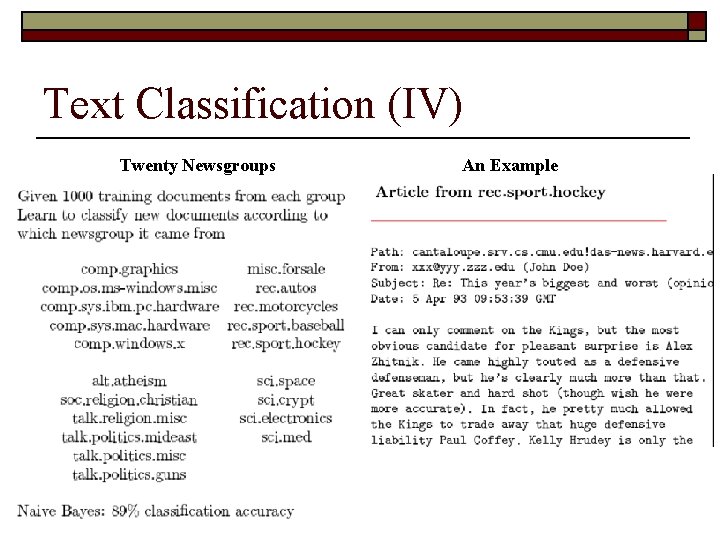

Text Classification (IV): Twenty Newsgroups, an Example

Text Classification (IV) o Any problems with the naïve Bayes text classifier?

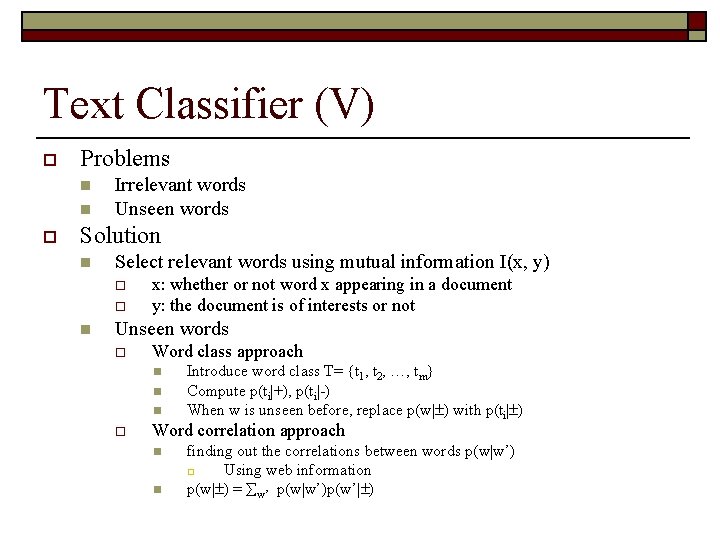

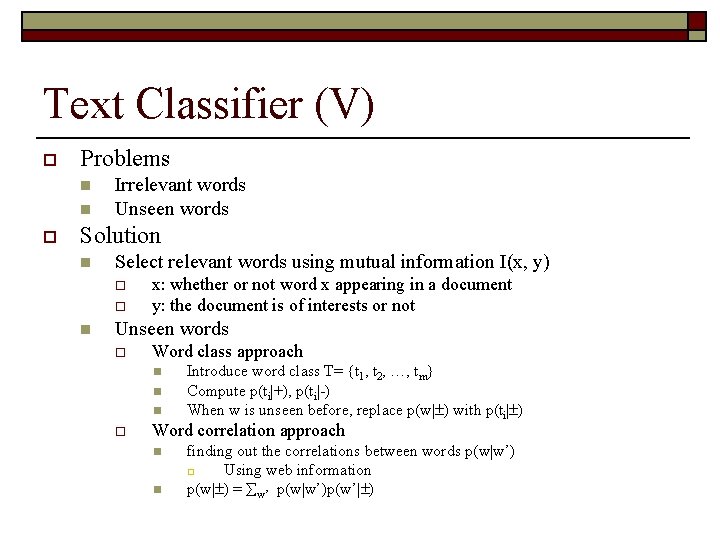

Text Classification (V)
- Problems
  - Irrelevant words
  - Unseen words
- Solution
  - Irrelevant words: select relevant words using the mutual information I(x, y)
    - x: whether or not word x appears in a document
    - y: whether or not the document is of interest
  - Unseen words
    - Word class approach
      - Introduce word classes $T = \{t_1, t_2, \ldots, t_m\}$
      - Compute $p(t_i|+)$, $p(t_i|-)$
      - When w has not been seen before, replace $p(w|\cdot)$ with $p(t_i|\cdot)$, where $t_i$ is the class of w
    - Word correlation approach
      - Find the correlations between words, $p(w|w')$, using web information
      - $p(w|\cdot) = \sum_{w'} p(w|w')\,p(w'|\cdot)$
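A minimal sketch of both fixes. `mutual_information` computes I(x, y) in nats from plain empirical frequencies for a binary "word appears" variable and a binary label. `word_correlation_prob` implements the sum $p(w|\cdot) = \sum_{w'} p(w|w')\,p(w'|\cdot)$ over a lookup table. Both names, the toy labels and the $p(w|w')$ table are hypothetical; the slides obtain $p(w|w')$ from web information, which is not modelled here.

```python
import math
from collections import Counter

def mutual_information(word_present, labels):
    """I(x, y) in nats for binary x (word appears?) and y (document interesting?).

    Uses empirical frequencies; pairs that never occur contribute 0 and are skipped.
    """
    n = len(labels)
    joint = Counter(zip(word_present, labels))
    px, py = Counter(word_present), Counter(labels)
    return sum((c / n) * math.log((c / n) / ((px[x] / n) * (py[y] / n)))
               for (x, y), c in joint.items())

def word_correlation_prob(w, p_w_given_w2, p_w2_given_class):
    """p(w|class) = sum over w' of p(w|w') * p(w'|class)."""
    return sum(p_w_given_w2.get((w, w2), 0.0) * p for w2, p in p_w2_given_class.items())

labels = [1, 1, -1, -1]
print(mutual_information([1, 1, 0, 0], labels))  # word tracks the label: log 2
print(mutual_information([1, 0, 1, 0], labels))  # word independent of the label: 0

# Hypothetical correlation table and class-conditional probabilities of seen words.
p_w_given_w2 = {("irrigation", "garden"): 0.5, ("irrigation", "water"): 0.25}
p_seen = {"garden": 0.6, "water": 0.4}
print(word_correlation_prob("irrigation", p_w_given_w2, p_seen))
```

Words whose mutual information with the label is close to zero are the natural candidates to drop as irrelevant.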

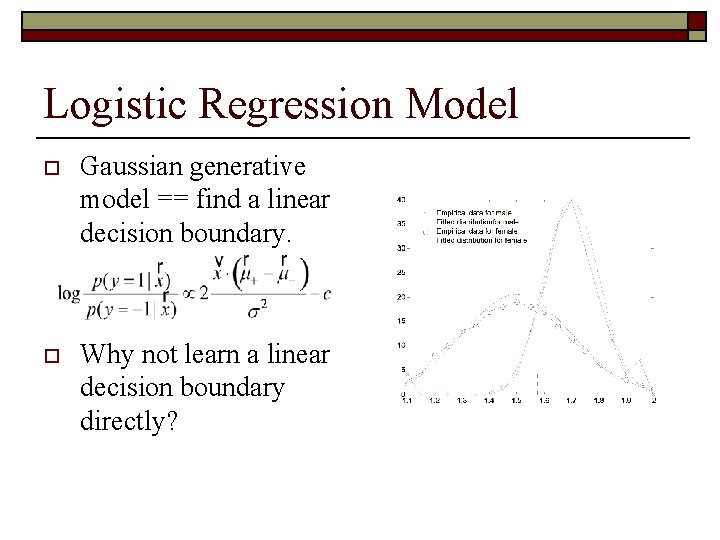

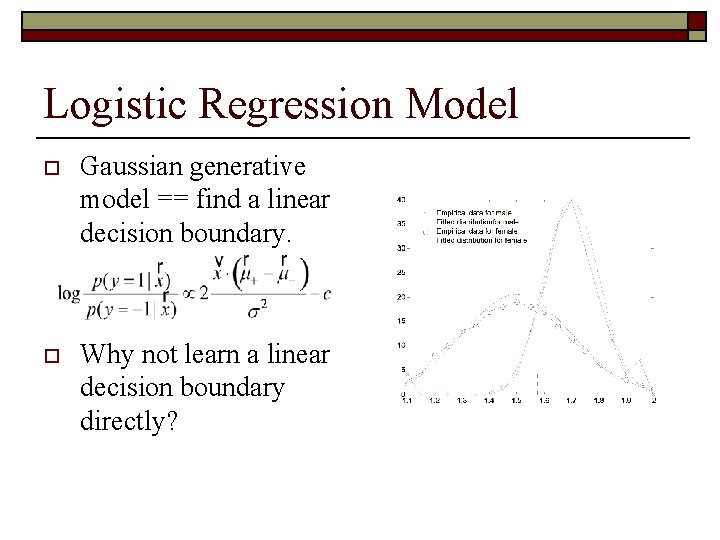

Logistic Regression Model
- A Gaussian generative model (with a variance shared by both classes) amounts to finding a linear decision boundary.
- Why not learn a linear decision boundary directly?

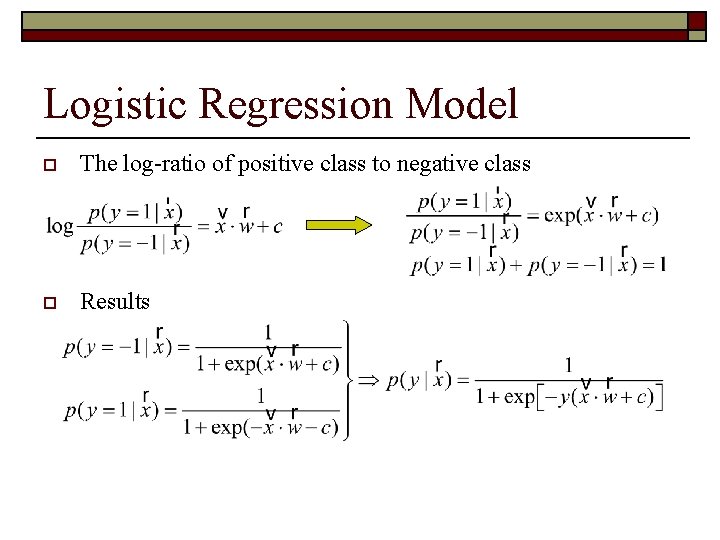

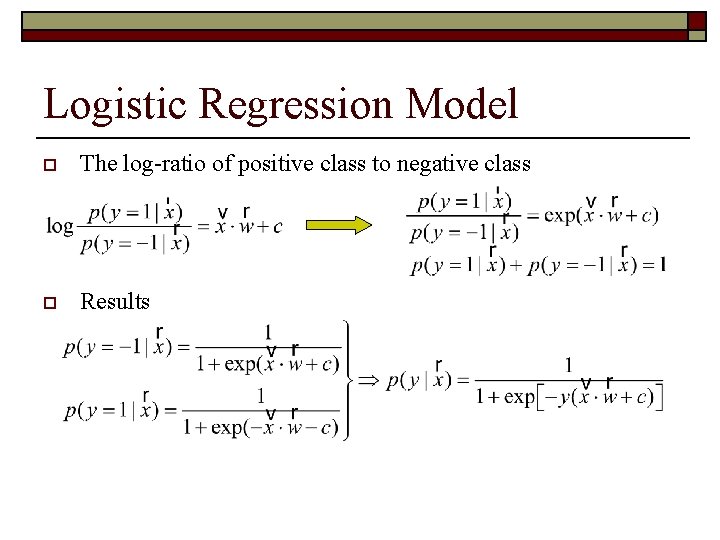

Logistic Regression Model
- The log-ratio of the positive class to the negative class
- Results
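The results of this slide survive only as images. As a sketch of why the log-ratio is linear, assume (an assumption, not recovered from the slide) one-dimensional Gaussian class-conditionals $p(x|\pm) = \mathcal{N}(\mu_\pm, \sigma^2)$ with the same variance $\sigma^2$ for both classes. Then $p(x)$ and the Gaussian normalizing constants cancel:

$$\log\frac{p(+|x)}{p(-|x)} = \log\frac{p(+)}{p(-)} + \log\frac{p(x|+)}{p(x|-)} = \log\frac{p(+)}{p(-)} - \frac{(x-\mu_+)^2 - (x-\mu_-)^2}{2\sigma^2}$$

$$= \frac{\mu_+ - \mu_-}{\sigma^2}\,x + \log\frac{p(+)}{p(-)} - \frac{\mu_+^2 - \mu_-^2}{2\sigma^2} = w\,x + c$$

The $x^2$ terms cancel only because the variances are equal; with class-specific variances the log-ratio, and hence the decision boundary, is quadratic in x.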

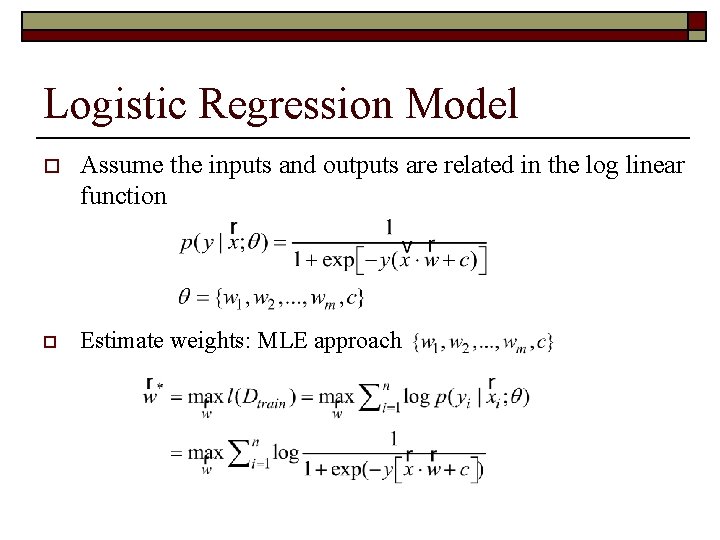

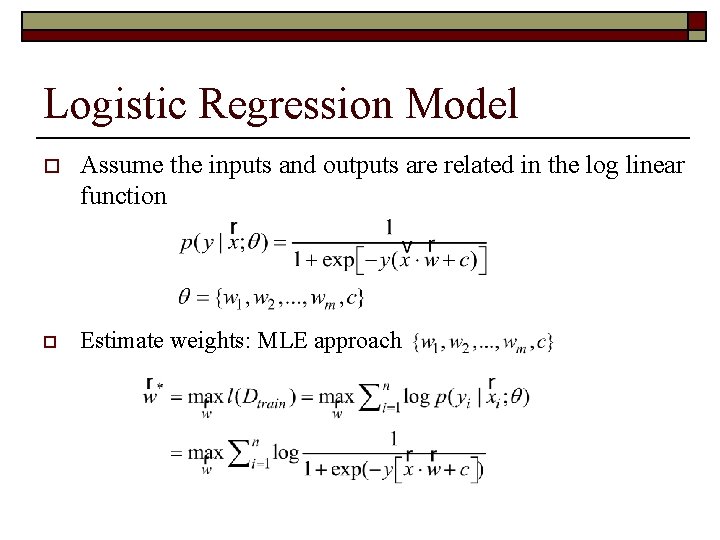

Logistic Regression Model
- Assume the inputs and outputs are related by a log-linear function
- Estimate the weights: MLE approach
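A minimal sketch of MLE for logistic regression, assuming the common form $p(y|x) = 1/(1 + \exp(-y(w \cdot x + c)))$ with labels $y \in \{+1, -1\}$ (the slide's own formula is only in the images). There is no closed-form solution, so this uses plain batch gradient ascent on the average log-likelihood. Other numerical optimizers would work equally well. The data and function names are hypothetical.

```python
import math

def p_label(y, x, w, c):
    """p(y|x) = 1 / (1 + exp(-y (w.x + c))) for a label y in {+1, -1}."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + c
    return 1.0 / (1.0 + math.exp(-y * z))

def log_likelihood(X, Y, w, c):
    return sum(math.log(p_label(y, x, w, c)) for x, y in zip(X, Y))

def fit_logistic_regression(X, Y, lr=0.1, iters=5000):
    """Approximate MLE of (w, c) by batch gradient ascent on the average log-likelihood."""
    n, m = len(X), len(X[0])
    w, c = [0.0] * m, 0.0
    for _ in range(iters):
        grad_w, grad_c = [0.0] * m, 0.0
        for x, y in zip(X, Y):
            # d log p(y|x) / dz = y * (1 - p(y|x)), where z = w.x + c
            g = y * (1.0 - p_label(y, x, w, c))
            for i in range(m):
                grad_w[i] += g * x[i] / n
            grad_c += g / n
        w = [wi + lr * gi for wi, gi in zip(w, grad_w)]
        c += lr * grad_c
    return w, c

# Hypothetical 1-D data that is not linearly separable, so a finite MLE exists.
X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
Y = [-1, -1, 1, -1, 1, 1]
w, c = fit_logistic_regression(X, Y)
print(w, c)
```

If the classes were perfectly separable, the likelihood would keep increasing as |w| grows and the MLE would not exist. That is why the toy data mixes labels at x = 3 and x = 4.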

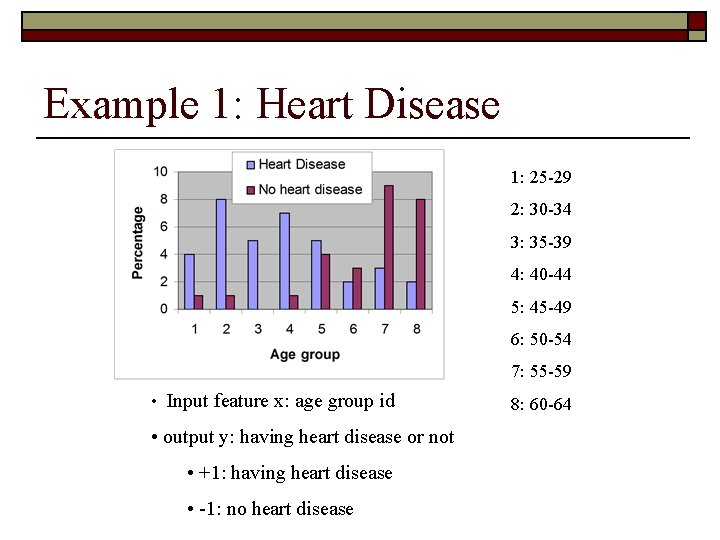

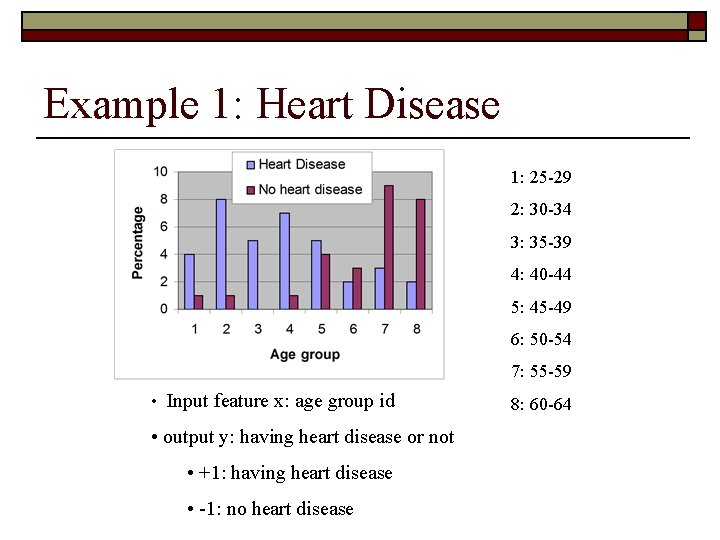

Example 1: Heart Disease
- Input feature x: age group id
- Output y: having heart disease or not
  - +1: having heart disease
  - -1: no heart disease

| Age group id | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Age | 25-29 | 30-34 | 35-39 | 40-44 | 45-49 | 50-54 | 55-59 | 60-64 |

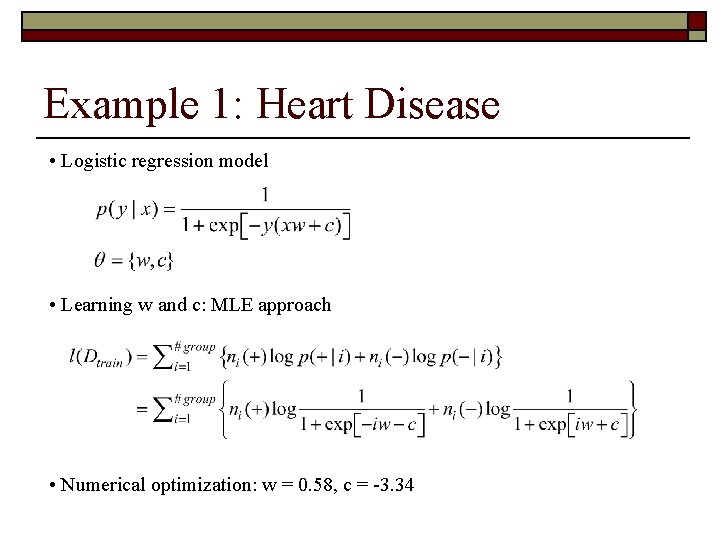

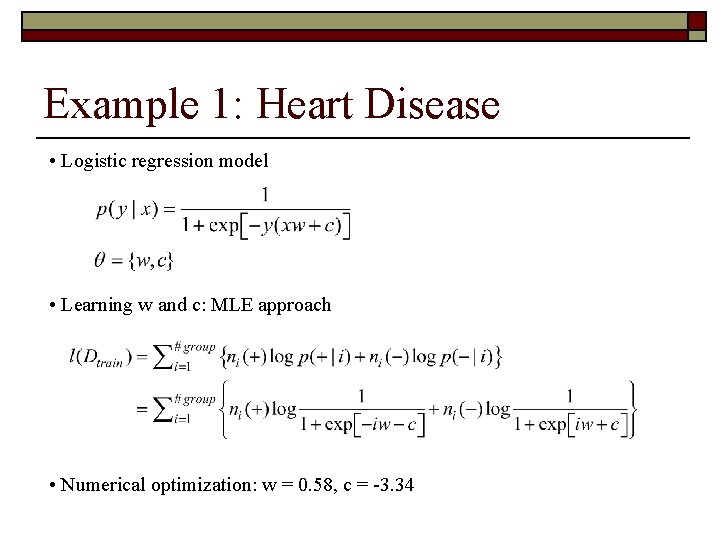

Example 1: Heart Disease
- Logistic regression model
- Learning w and c: MLE approach
- Numerical optimization: w = 0.58, c = -3.34
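Assuming the model takes the usual form $p(+|i) = 1/(1 + \exp(-(i\,w + c)))$ for age group id i, the reported estimates translate into per-group probabilities as shown below. This form is consistent with the sign rules on the next slide, but the formula itself is only in the images. `p_heart_disease` is an illustrative name.

```python
import math

W, C = 0.58, -3.34   # estimates reported on the slide

def p_heart_disease(i, w=W, c=C):
    """p(+|i) = 1 / (1 + exp(-(i*w + c))) for age group id i."""
    return 1.0 / (1.0 + math.exp(-(i * w + c)))

for i in range(1, 9):
    print(i, round(p_heart_disease(i), 3))
```

Because w > 0 the probability rises monotonically with the age group, and it crosses 0.5 between groups 5 and 6.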

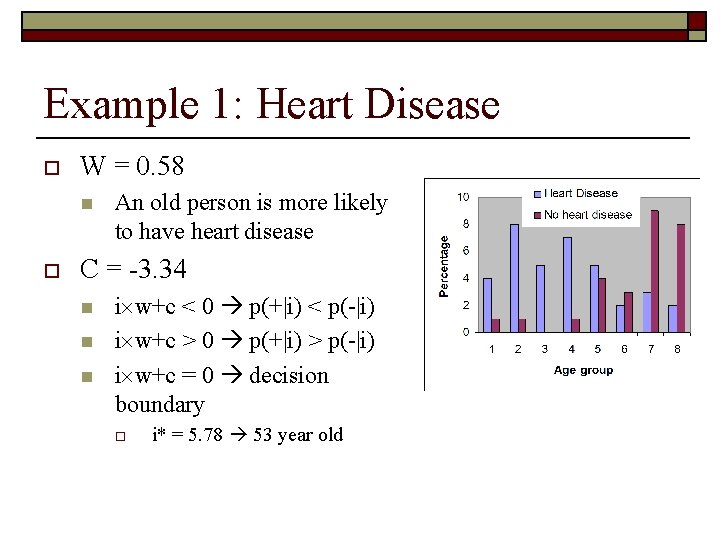

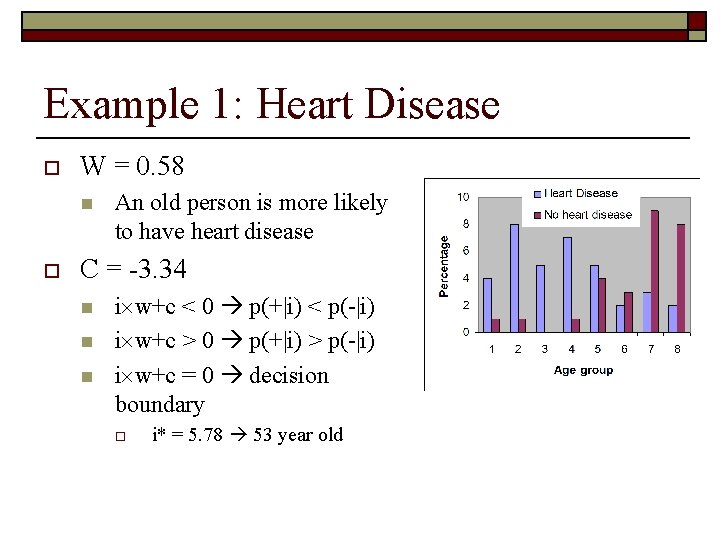

Example 1: Heart Disease
- w = 0.58
  - An older person is more likely to have heart disease
- c = -3.34
  - $i\,w + c < 0 \Rightarrow p(+|i) < p(-|i)$
  - $i\,w + c > 0 \Rightarrow p(+|i) > p(-|i)$
  - $i\,w + c = 0$: decision boundary
- $i^* = 5.78$, i.e. about 53 years old
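Setting $i\,w + c = 0$ gives the boundary $i^* = -c/w$. A quick check with the rounded values shown on the slides follows; `decision_boundary` is an illustrative name.

```python
def decision_boundary(w, c):
    """Group id i* where i*w + c = 0, i.e. where p(+|i*) = p(-|i*) = 0.5."""
    return -c / w

print(round(decision_boundary(0.58, -3.34), 2))  # 5.76
print(round(decision_boundary(0.63, -3.56), 2))  # 5.65
```

The rounded w and c give about 5.76 rather than the 5.78 quoted here, presumably because the slide used unrounded estimates. The same formula reproduces the 5.65 of the later "Solution" slide.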

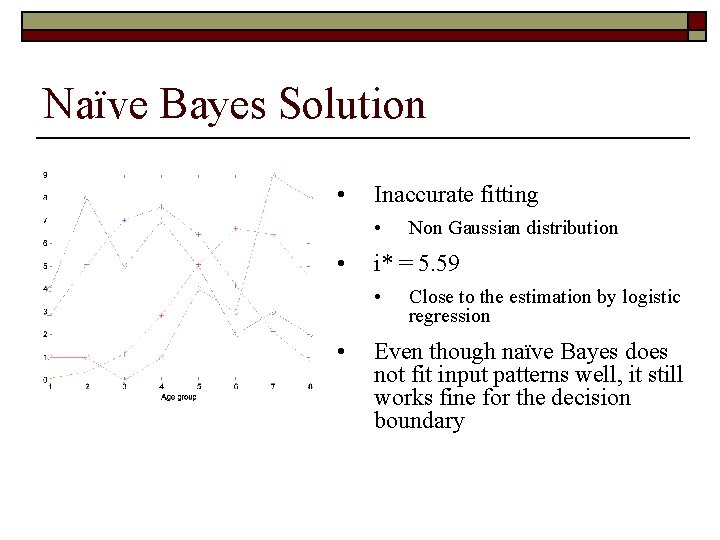

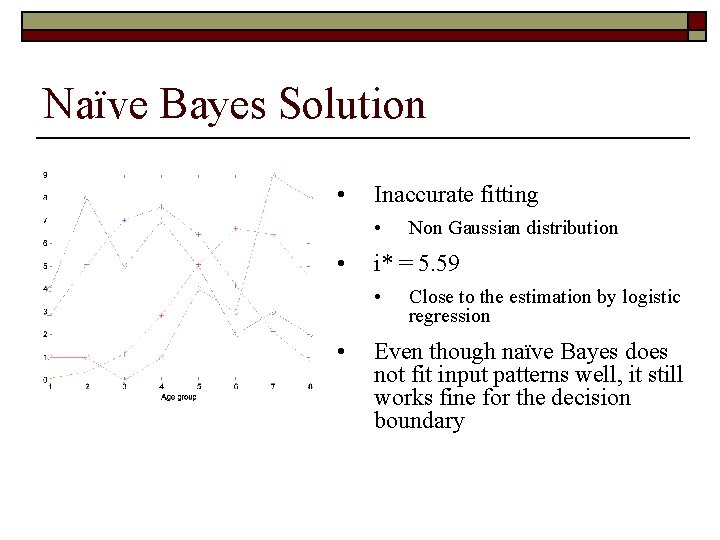

Naïve Bayes Solution
- Inaccurate fitting
  - Non-Gaussian distribution
- $i^* = 5.59$
  - Close to the estimate from logistic regression
- Even though naïve Bayes does not fit the input patterns well, it still yields a good decision boundary

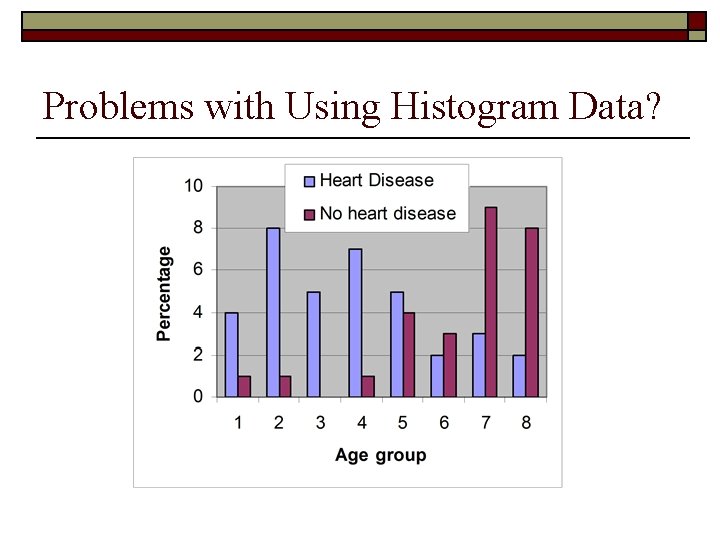

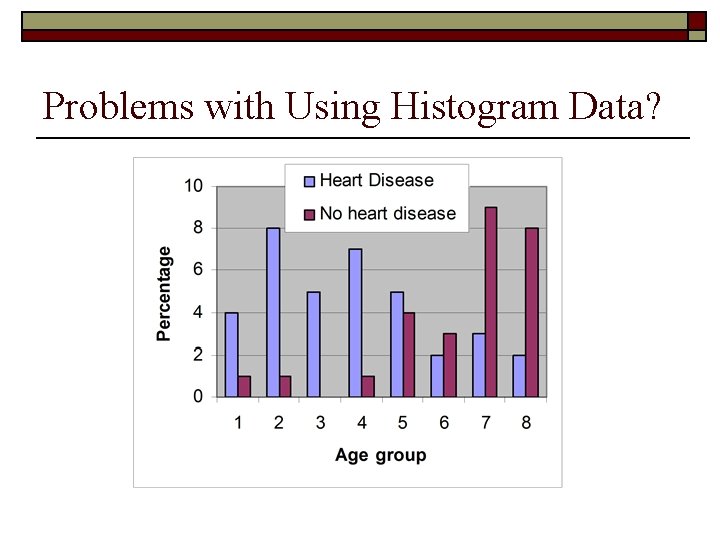

Problems with Using Histogram Data?

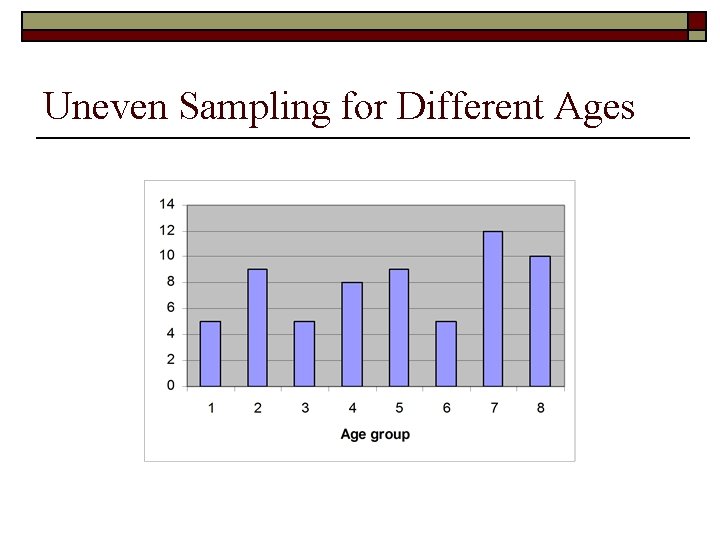

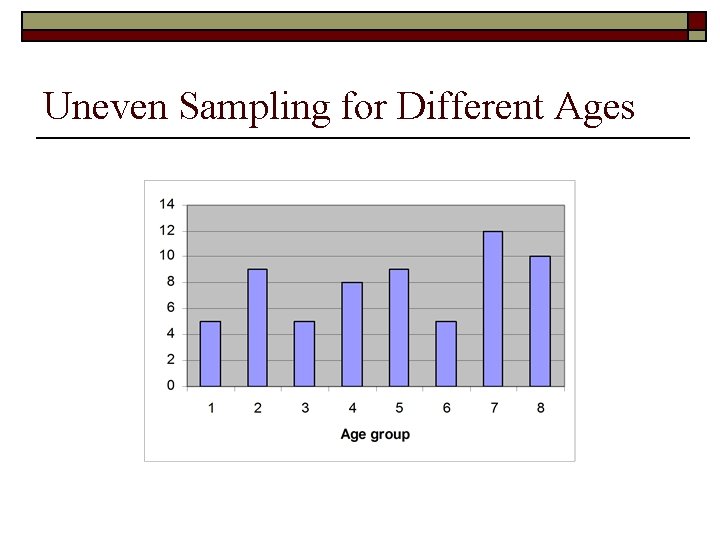

Uneven Sampling for Different Ages

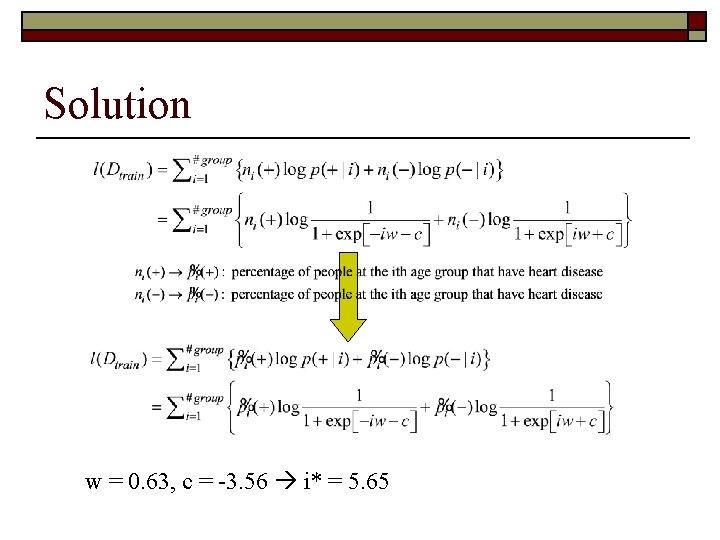

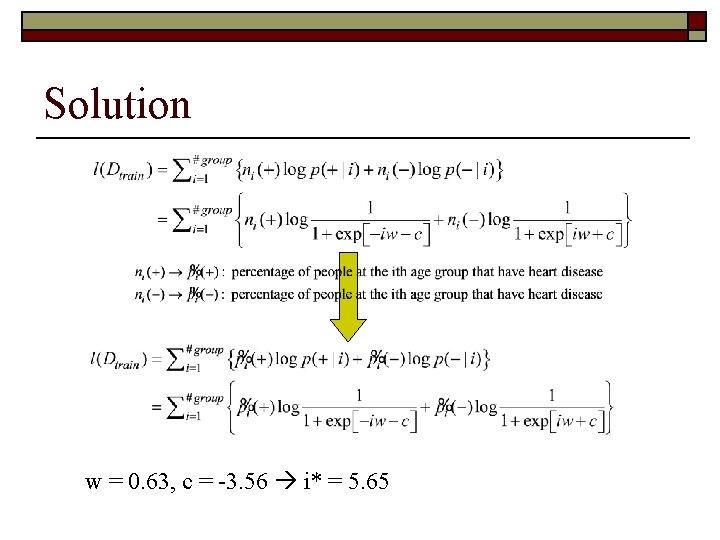

Solution: w = 0.63, c = -3.56, $i^* = 5.65$

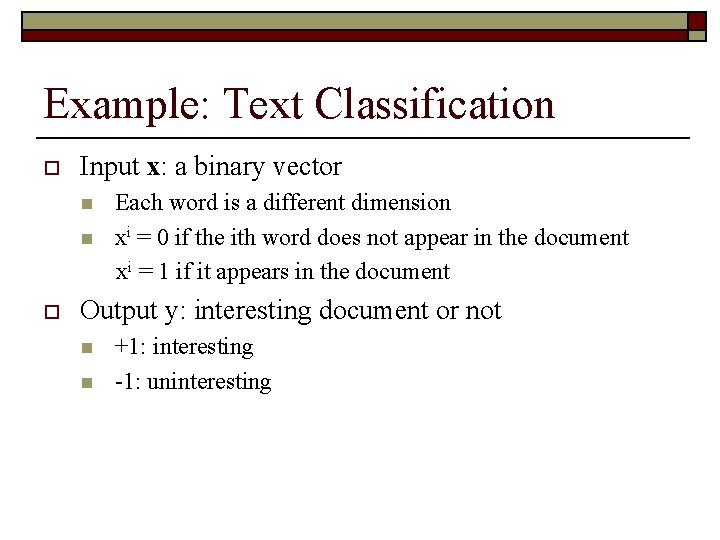

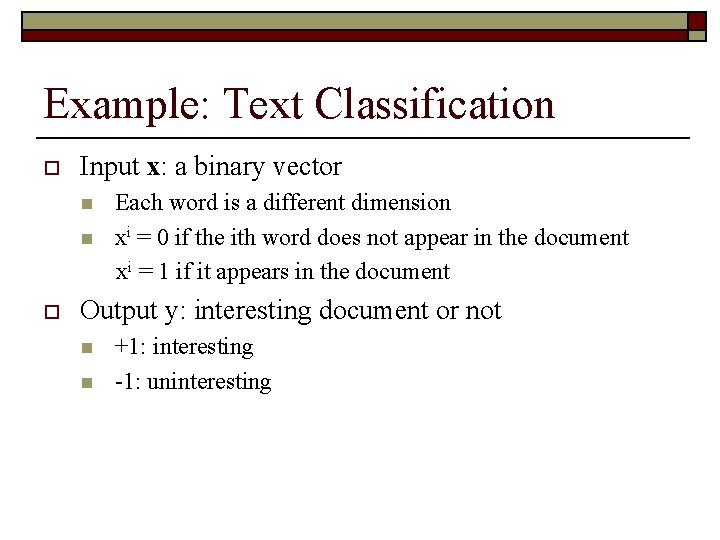

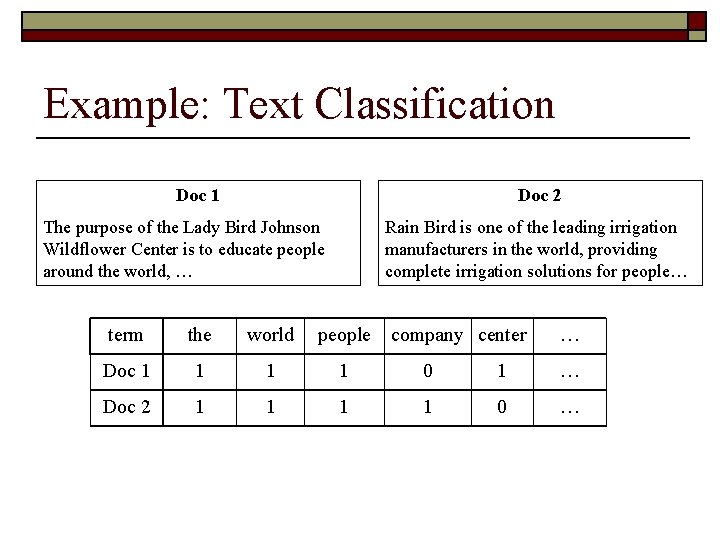

Example: Text Classification
- Input x: a binary vector
  - Each word is a different dimension
  - $x_i = 0$ if the i-th word does not appear in the document
  - $x_i = 1$ if it appears in the document
- Output y: interesting document or not
  - +1: interesting
  - -1: uninteresting

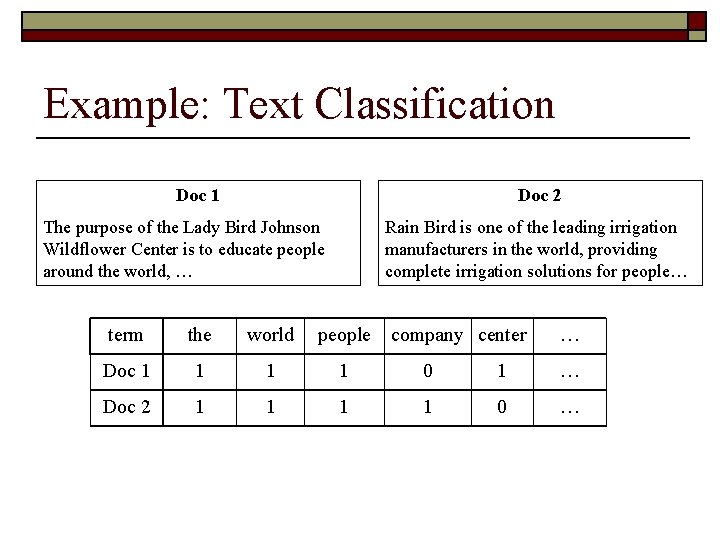

Example: Text Classification
- Doc 1: "The purpose of the Lady Bird Johnson Wildflower Center is to educate people around the world, …"
- Doc 2: "Rain Bird is one of the leading irrigation manufacturers in the world, providing complete irrigation solutions for people…"

Terms: the, world, people, company, center, …
Doc 1: 1 0 1 …
Doc 2: 1 1 0 …
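A minimal sketch of how the binary vectors $x_i$ can be built for the listed terms. It uses a simple lowercase, letters-only tokenization (an assumption). The vectors are computed only from the visible, truncated excerpts, so they need not match the slide's partly garbled table. `binary_vector` is an illustrative name.

```python
import re

# Terms listed in the slide's table (the trailing "…" columns are not recoverable).
TERMS = ["the", "world", "people", "company", "center"]

def binary_vector(text, terms=TERMS):
    """x_i = 1 if the i-th term appears in the document, else 0."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return [1 if t in words else 0 for t in terms]

doc1 = ("The purpose of the Lady Bird Johnson Wildflower Center is to educate "
        "people around the world,")
doc2 = ("Rain Bird is one of the leading irrigation manufacturers in the world, "
        "providing complete irrigation solutions for people")
print(binary_vector(doc1))  # [1, 1, 1, 0, 1]
print(binary_vector(doc2))  # [1, 1, 1, 0, 0]
```

Only presence matters in this representation. "the" appears three times in Doc 1 but still contributes a single 1.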

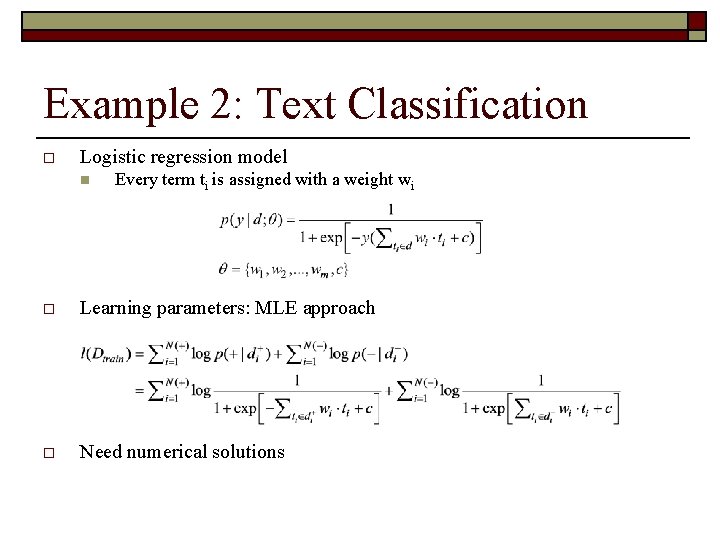

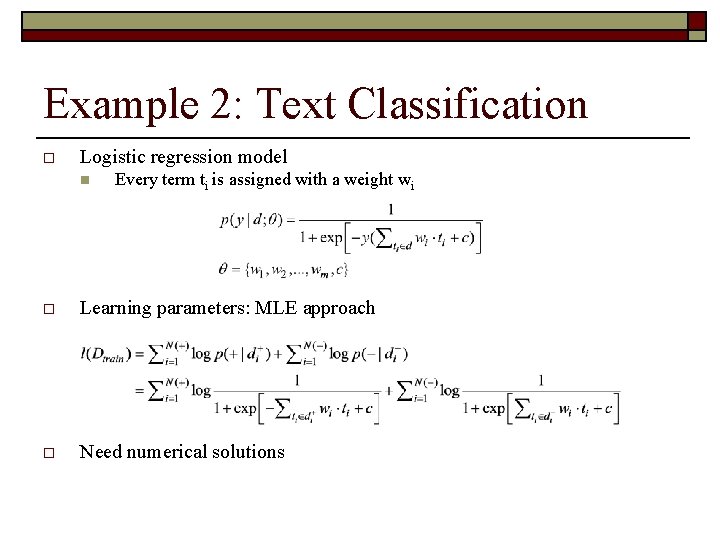

Example 2: Text Classification
- Logistic regression model
  - Every term $t_i$ is assigned a weight $w_i$
- Learning parameters: MLE approach
- Numerical solutions are needed

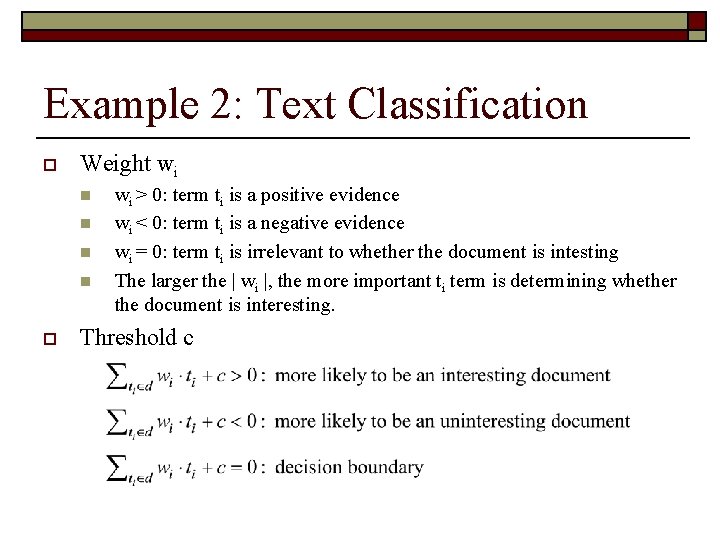

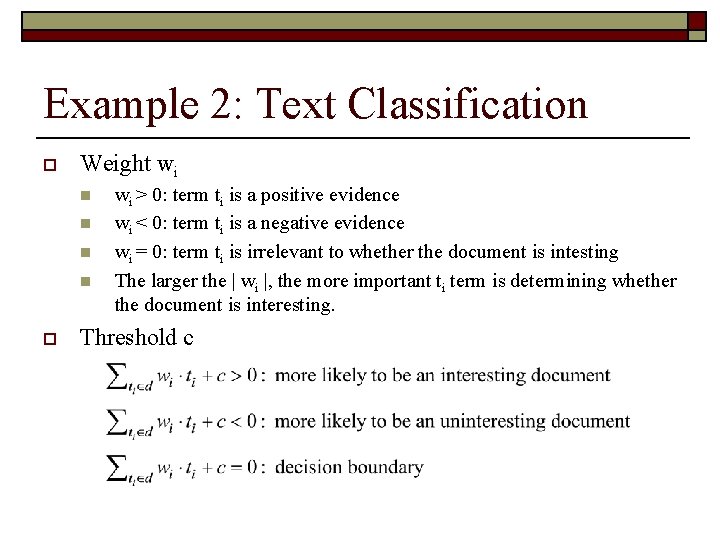

Example 2: Text Classification
- Weight $w_i$
  - $w_i > 0$: term $t_i$ is positive evidence
  - $w_i < 0$: term $t_i$ is negative evidence
  - $w_i = 0$: term $t_i$ is irrelevant to whether the document is interesting
  - The larger $|w_i|$, the more important term $t_i$ is in determining whether the document is interesting
- Threshold c
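A small sketch of how such weights can be read and applied, with a binary document vector represented as the set of terms whose $x_i = 1$. The terms, weights and threshold are hypothetical. The decision rule $\sum_i w_i x_i + c > 0$ mirrors the heart-disease example rather than anything stated on this slide.

```python
def explain_weights(weights):
    """Sort terms by |w_i| and label each as positive, negative or irrelevant evidence."""
    def kind(w):
        if w > 0:
            return "positive evidence"
        if w < 0:
            return "negative evidence"
        return "irrelevant"
    return [(t, w, kind(w)) for t, w in sorted(weights.items(), key=lambda kv: -abs(kv[1]))]

def is_interesting(present_terms, weights, c):
    """Return +1 if sum_i w_i x_i + c > 0, else -1 (x_i = 1 for terms in present_terms)."""
    score = sum(weights[t] for t in present_terms if t in weights) + c
    return 1 if score > 0 else -1

# Hypothetical weights and threshold, not learned from real data.
weights = {"irrigation": -1.2, "wildflower": 2.0, "the": 0.0, "people": 0.3}
c = -0.5
for row in explain_weights(weights):
    print(row)
print(is_interesting({"the", "wildflower", "people"}, weights, c))  # 2.0 + 0.3 - 0.5 > 0
```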

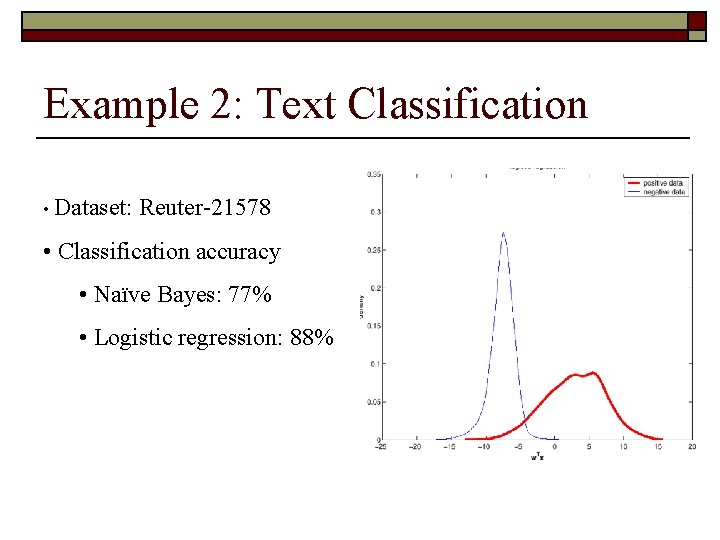

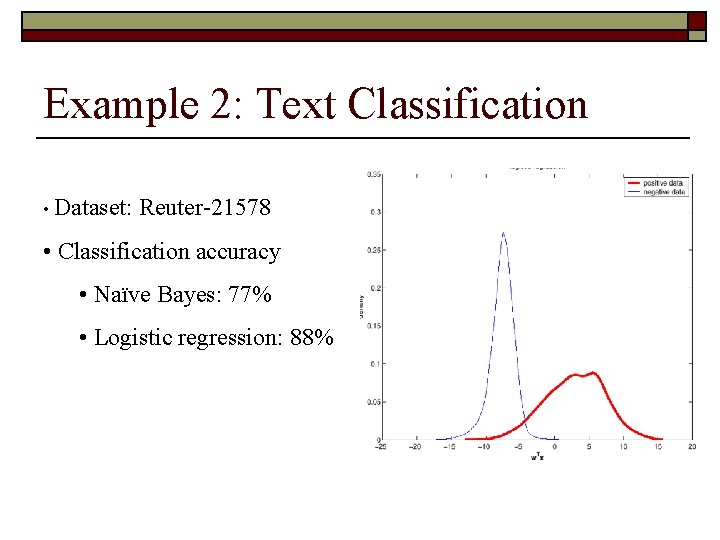

Example 2: Text Classification
- Dataset: Reuters-21578
- Classification accuracy
  - Naïve Bayes: 77%
  - Logistic regression: 88%

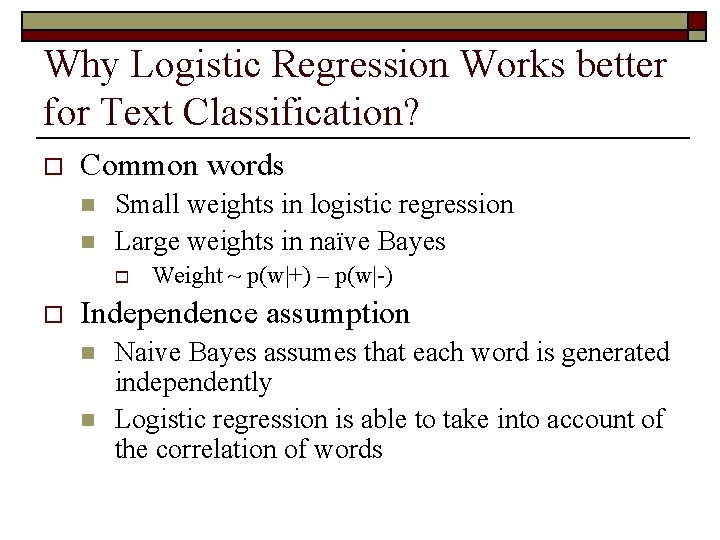

Why Does Logistic Regression Work Better for Text Classification?
- Common words
  - Small weights in logistic regression
  - Large weights in naïve Bayes
    - Weight ~ p(w|+) - p(w|-)
- Independence assumption
  - Naïve Bayes assumes that each word is generated independently
  - Logistic regression is able to take into account the correlation between words

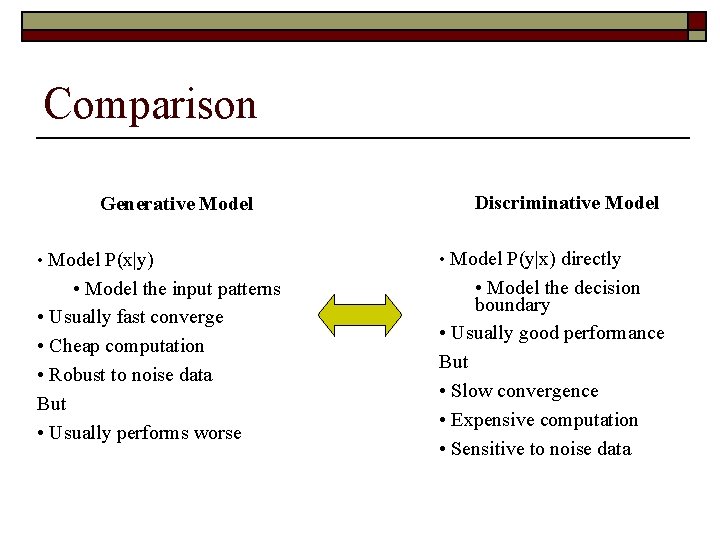

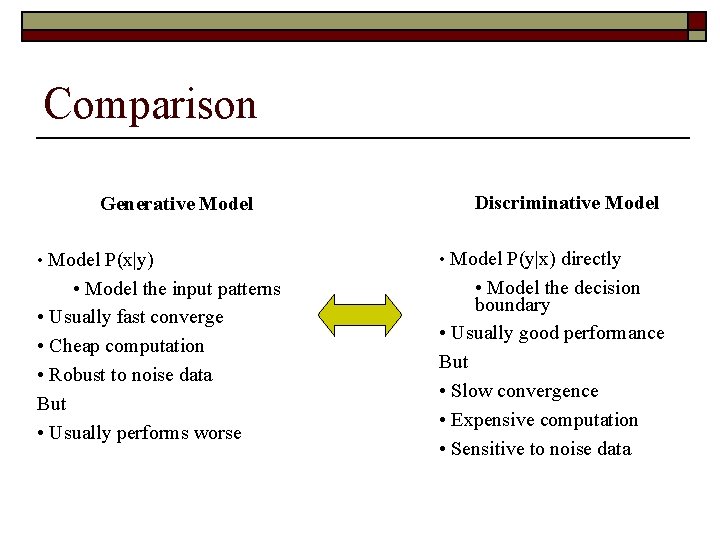

Comparison

Generative model
- Model P(x|y)
- Model the input patterns
- Usually fast convergence
- Cheap computation
- Robust to noisy data
- But: usually performs worse

Discriminative model
- Model P(y|x) directly
- Model the decision boundary
- Usually good performance
- But: slow convergence, expensive computation, sensitive to noisy data