Cross Lingual Information Retrieval (CLIR)

Rong Jin

The Problem

- Increasing pressure to access information in foreign languages:
  - find information written in foreign languages
  - read and interpret that information
  - merge it with information in other languages
- Need for multilingual information access

Why Is Cross Lingual IR Important?

- The Internet is no longer monolingual, and non-English content is growing rapidly
- Non-English speakers represent the fastest-growing group of new Internet users
  - In 1997: 8.1 million Spanish-speaking users
  - In 2000: 37 million …

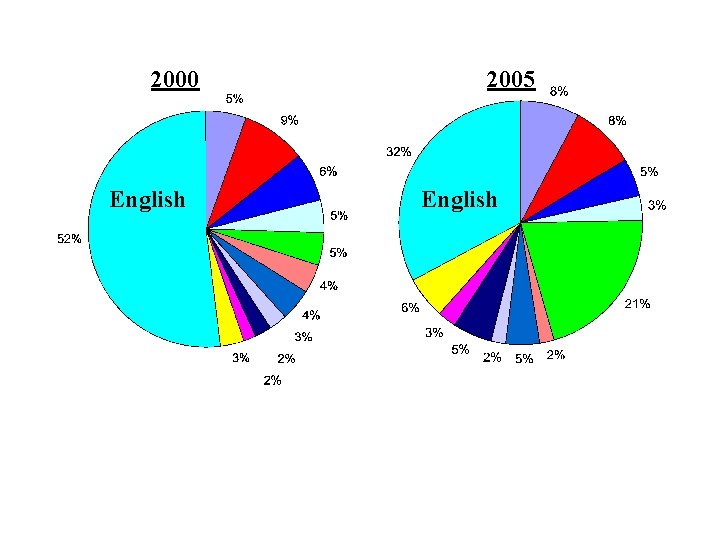

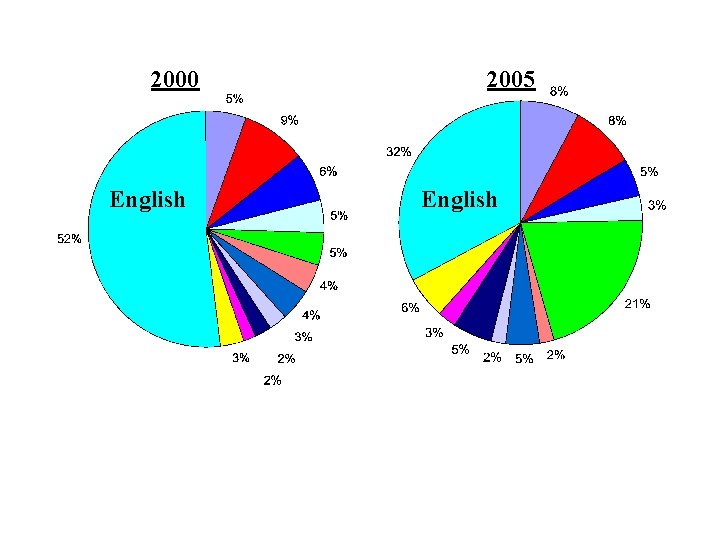

[Chart comparing 2000 and 2005 (series include English). Source: Confidential, unpublished information, ©Manning & Napier Information Services 2000.]

2. Multilingual Text Processing

- Character encoding
- Language recognition
- Tokenization
- Stop word removal
- Feature normalization (stemming)
- Part-of-speech tagging
- Phrase identification

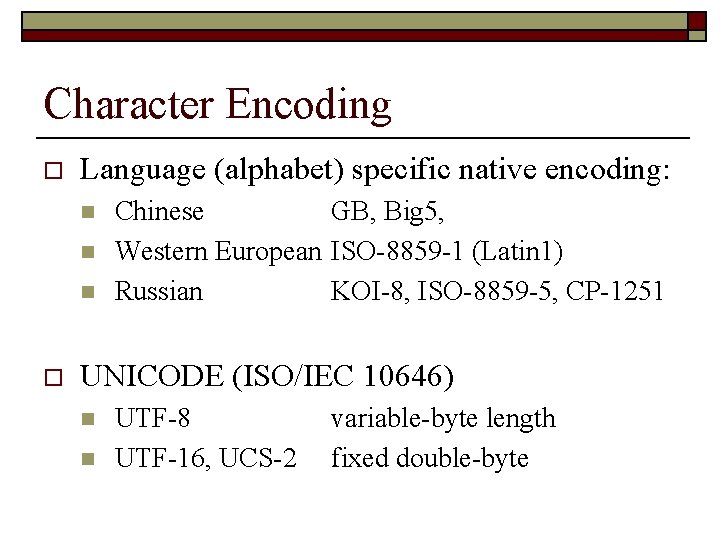

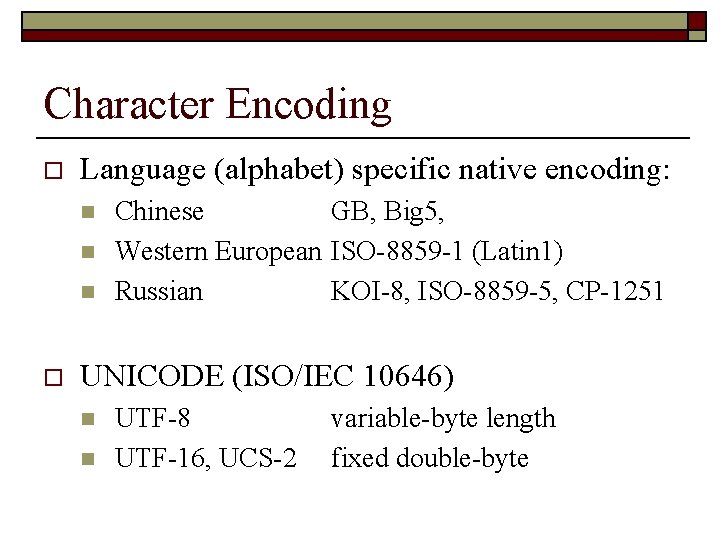

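Character Encoding

- Language (alphabet) specific native encodings:
  - Chinese: GB, Big5
  - Western European: ISO-8859-1 (Latin 1)
  - Russian: KOI-8, ISO-8859-5, CP-1251
- UNICODE (ISO/IEC 10646):
  - UTF-8: variable byte length (1 to 4 bytes per character)
  - UCS-2: fixed double-byte
  - UTF-16: double-byte for most characters, 4 bytes (a surrogate pair) outside the Basic Multilingual Plane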
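To make the byte-length differences concrete, here is a small Python sketch using Python's built-in codec names (`gb2312`, `big5`, `koi8-r`, `iso8859-5`, `cp1251`, `latin-1`, `utf-8`, `utf-16-le`; `koi8-r` is the Russian variant of KOI-8). The sample strings are arbitrary choices for illustration:

```python
# Byte length of the same text under native (language-specific) and Unicode encodings.
SAMPLES = {
    "中文": ["gb2312", "big5", "utf-8", "utf-16-le"],
    "мир": ["koi8-r", "iso8859-5", "cp1251", "utf-8"],
    "café": ["latin-1", "utf-8"],
}


def encoded_lengths(text, codecs):
    """Return {codec: number of bytes} for `text` encoded with each codec."""
    return {codec: len(text.encode(codec)) for codec in codecs}


for text, codecs in SAMPLES.items():
    print(text, encoded_lengths(text, codecs))

# UTF-16 needs a surrogate pair (4 bytes) for characters outside the BMP.
print(len("中".encode("utf-16-le")), len("\U00020000".encode("utf-16-le")))  # 2 4
```

The native encodings use 2 bytes per Chinese character and 1 byte per Cyrillic letter, while UTF-8 uses 3 and 2 bytes respectively; the trade-off is that UTF-8 covers every language with one encoding.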

Tokenization

- Punctuation is separated from words, including word-separation characters:
  - "The train stopped." → "The", "train", "stopped", "."
- The string is split into lexical units, including segmentation (Chinese) and compound splitting (German)
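A minimal sketch of punctuation-aware tokenization with Python's standard `re` module; the regular expression is one simple choice, not a reference tokenizer:

```python
import re

# One token = a run of word characters, or a single non-space punctuation mark.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text):
    """Split text into words and separate punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


print(tokenize("The train stopped."))  # ['The', 'train', 'stopped', '.']
```

Because `\w` also matches CJK characters, the same pattern leaves a Chinese sentence as one long token: `tokenize("列车停了。")` gives `['列车停了', '。']`, which is why Chinese needs a separate segmentation step.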

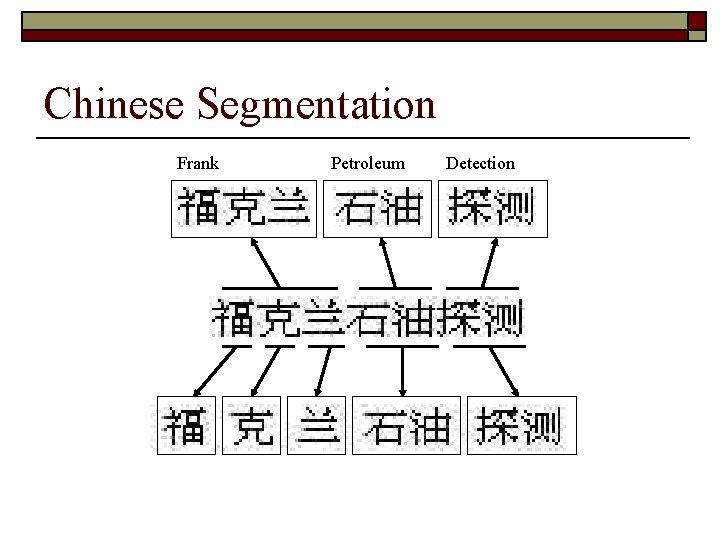

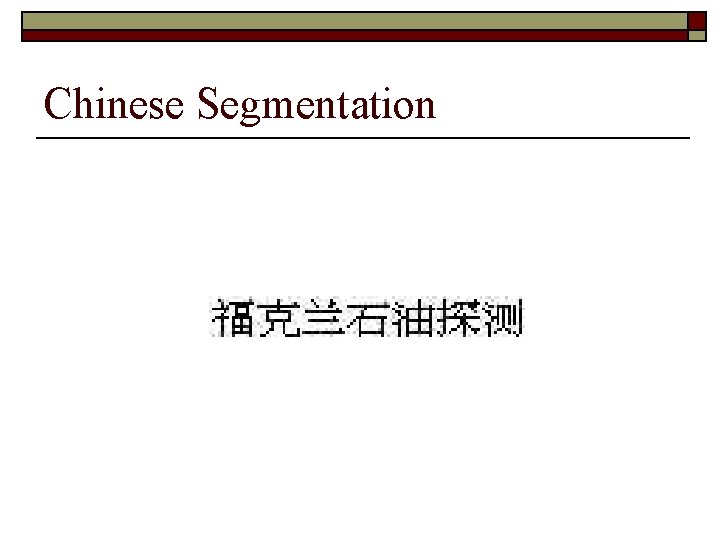

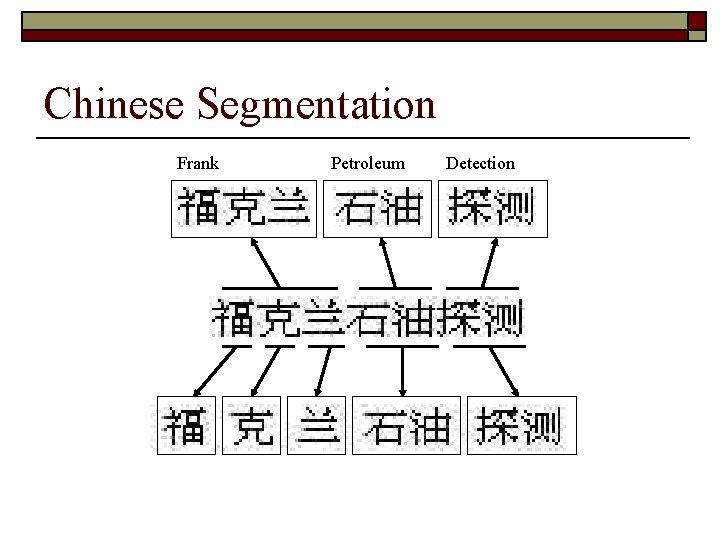

Chinese Segmentation

"Frank Petroleum Detection"
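One common dictionary-based baseline for Chinese segmentation is forward maximum matching: scan left to right and take the longest dictionary word at each position. The sketch below is illustrative only; the lexicon is a hypothetical toy set built from the example query used in this deck, and real systems use large dictionaries and often statistical models:

```python
def forward_max_match(text, lexicon, max_len=4):
    """Greedy left-to-right segmentation: take the longest lexicon word at each position.

    Falls back to a single character when no lexicon word matches.
    """
    tokens = []
    i = 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            if size == 1 or candidate in lexicon:
                tokens.append(candidate)
                i += size
                break
    return tokens


# Toy lexicon (hypothetical), using words from the example query in this deck.
LEXICON = {"1998", "贏得", "環法", "自行車", "大賽"}
print(forward_max_match("誰在1998年贏得環法自行車大賽", LEXICON))
# ['誰', '在', '1998', '年', '贏得', '環法', '自行車', '大賽']
```

Greedy matching needs no training, but it cannot recognize words missing from the lexicon and may take a long match whose characters actually belong to two different words, which is one way segmentation errors arise.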

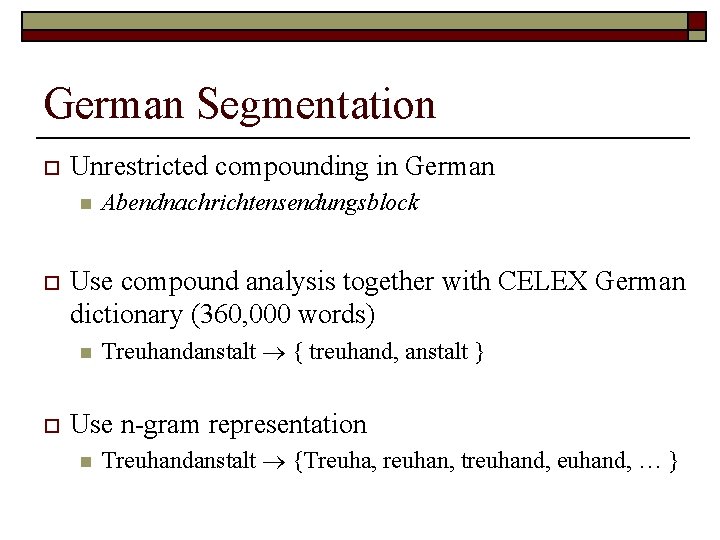

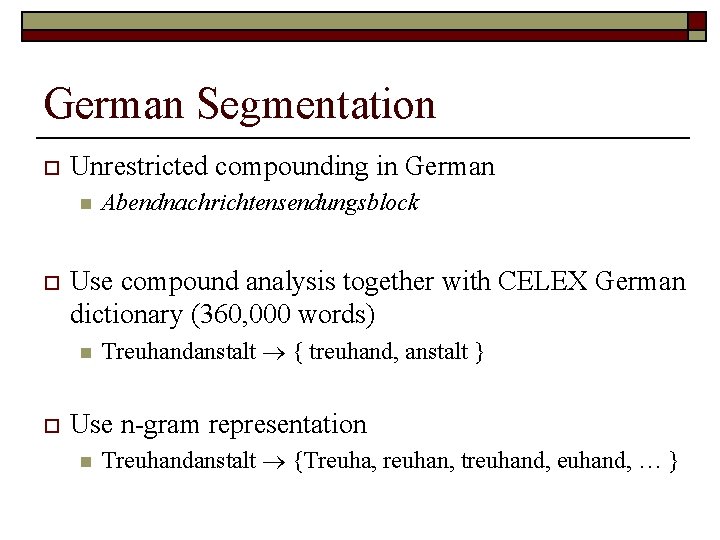

German Segmentation

- Unrestricted compounding in German
  - e.g. Abendnachrichtensendungsblock
- Use compound analysis together with the CELEX German dictionary (360,000 words)
  - Treuhandanstalt → {treuhand, anstalt}
- Use an n-gram representation
  - Treuhandanstalt → {treuha, reuhan, euhand, …}
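A sketch of both German strategies in Python. The lexicon is a hypothetical toy set standing in for a real dictionary such as CELEX, and the splitter is a simple recursive longest-prefix search that also tries dropping a linking "s" (as in Sendung-s-block); it illustrates the idea and is not the compound analysis actually used with CELEX:

```python
def split_compound(word, lexicon, min_len=3):
    """Recursively split a German compound into lexicon words (longest head first).

    Also tries dropping a linking "s" (Fugen-s) at the end of a head.
    Returns None if no complete split exists.
    """
    word = word.lower()
    if word in lexicon:
        return [word]
    for i in range(len(word) - min_len, min_len - 1, -1):
        head, tail = word[:i], word[i:]
        stems = [head]
        if head.endswith("s"):
            stems.append(head[:-1])
        for stem in stems:
            if stem in lexicon:
                rest = split_compound(tail, lexicon, min_len)
                if rest is not None:
                    return [stem] + rest
    return None


def char_ngrams(word, n=6):
    """Overlapping character n-grams of a lowercased word."""
    word = word.lower()
    return [word[i:i + n] for i in range(len(word) - n + 1)]


# Toy lexicon (hypothetical); a real system would use a full dictionary such as CELEX.
LEXICON = {"treuhand", "anstalt", "abend", "nachrichten", "sendung", "block"}
print(split_compound("Treuhandanstalt", LEXICON))               # ['treuhand', 'anstalt']
print(split_compound("Abendnachrichtensendungsblock", LEXICON))  # ['abend', 'nachrichten', 'sendung', 'block']
print(char_ngrams("Treuhandanstalt")[:3])                        # ['treuha', 'reuhan', 'euhand']
```

The n-gram representation needs no dictionary at all: a query and a document that share a compound part will share some of its n-grams even if neither was split.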

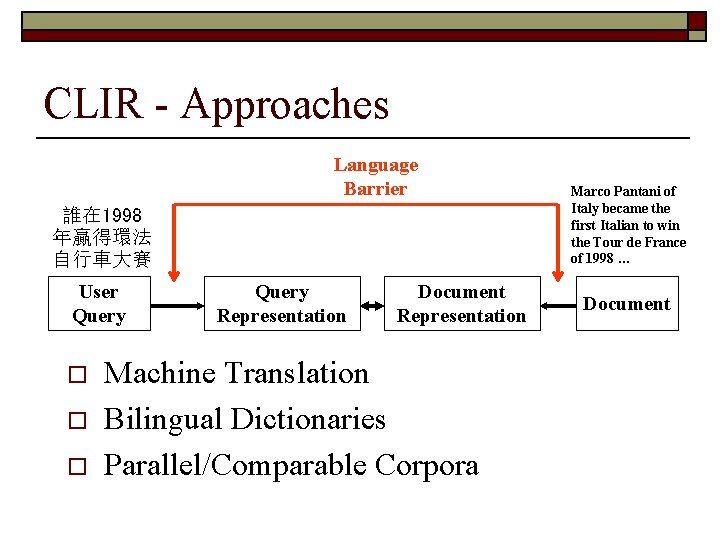

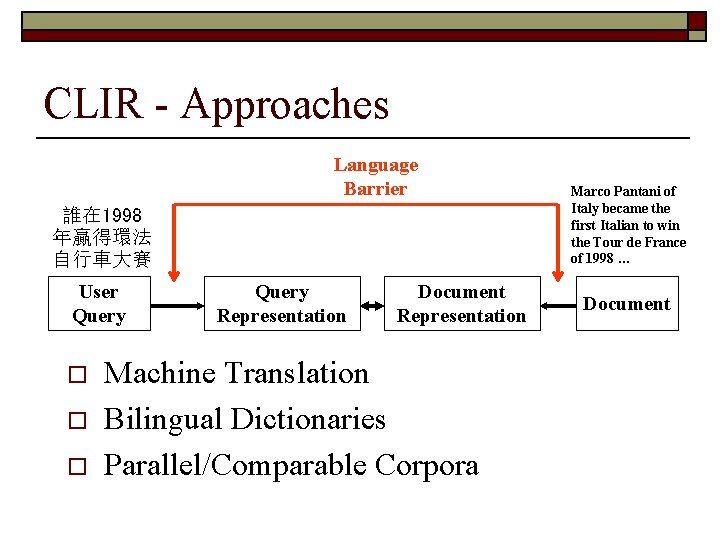

CLIR - Approaches

- User query: 誰在 1998 年贏得環法自行車大賽 ("Who won the Tour de France cycling race in 1998?")
- Document: "Marco Pantani of Italy became the first Italian to win the Tour de France of 1998 …"
- A language barrier separates the query representation from the document representation. Ways to bridge it:
  - Machine translation
  - Bilingual dictionaries
  - Parallel/comparable corpora

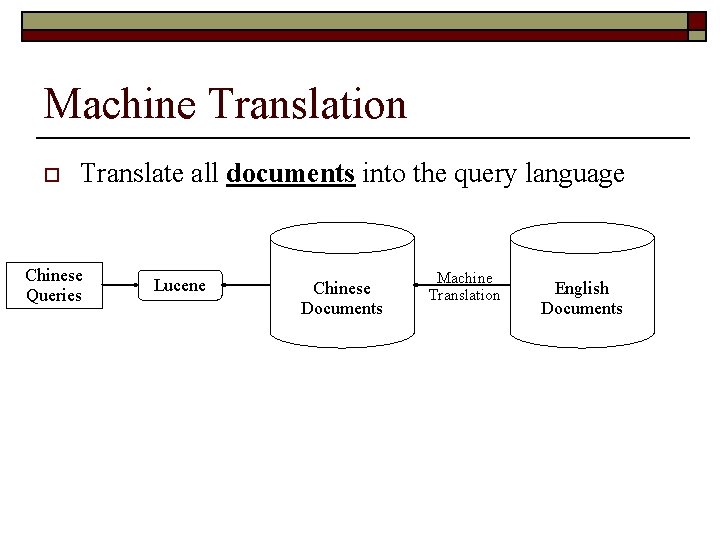

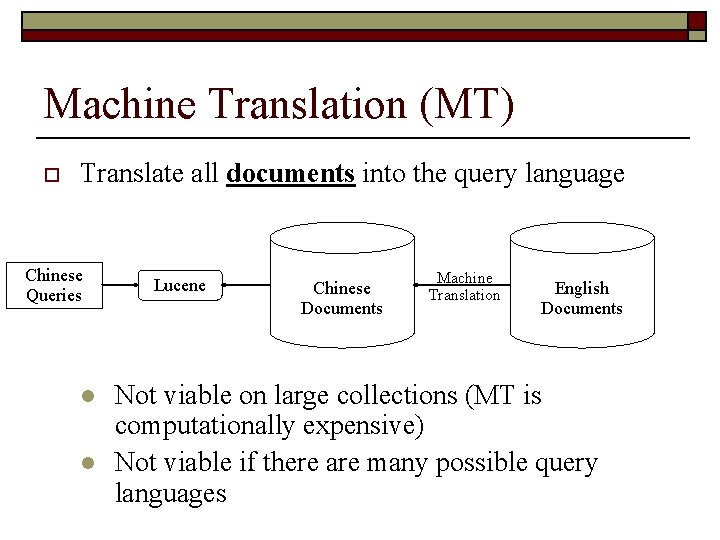

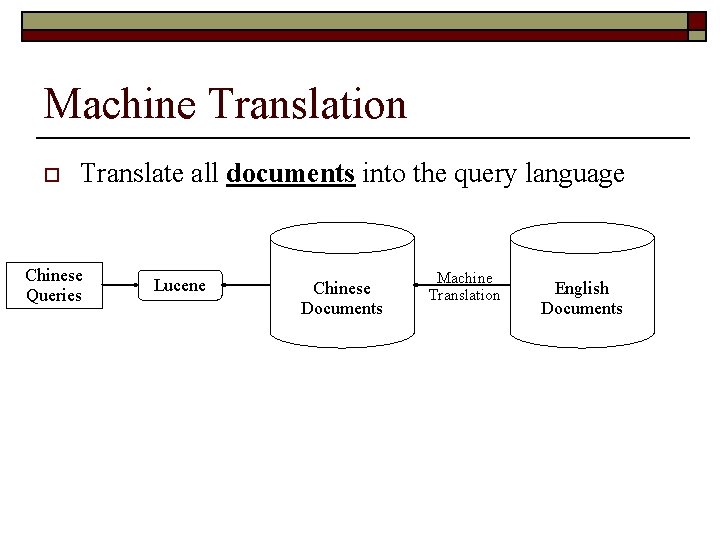

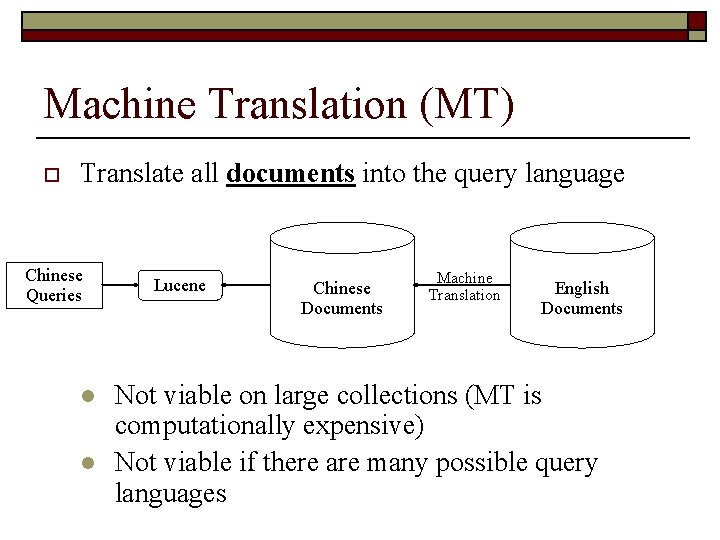

Machine Translation (MT)

- Translate all documents into the query language:
  - English documents → machine translation → Chinese documents → Lucene ← Chinese queries
- Not viable on large collections (MT is computationally expensive)
- Not viable if there are many possible query languages

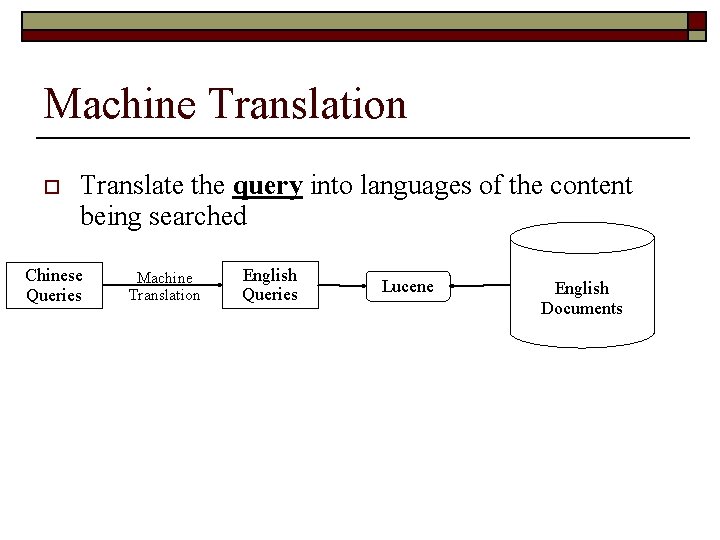

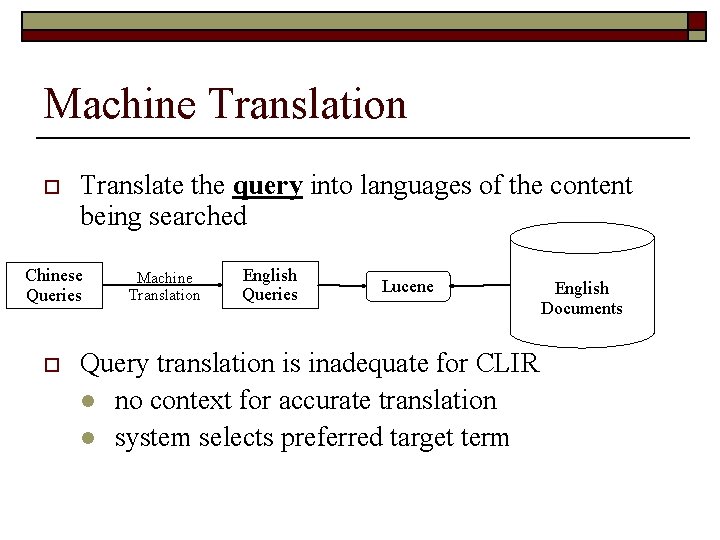

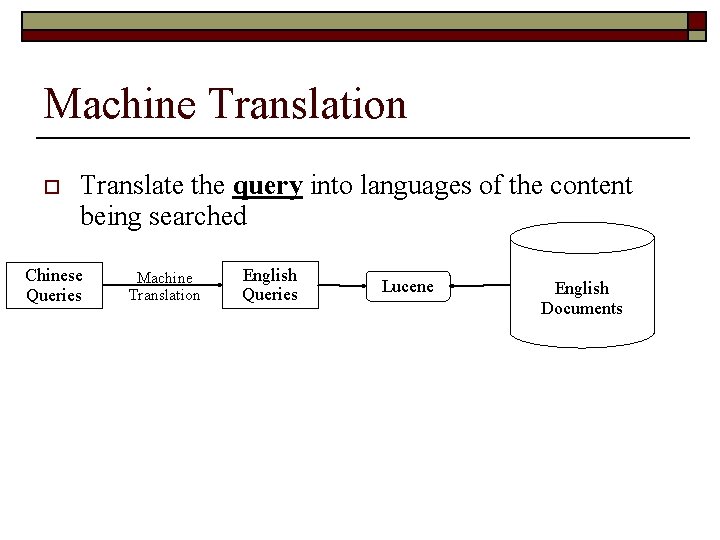

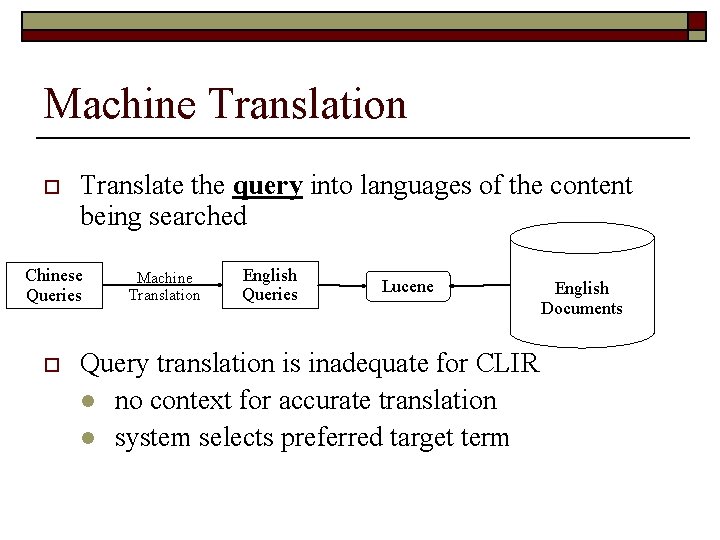

Machine Translation

- Translate the query into the languages of the content being searched:
  - Chinese queries → machine translation → English queries → Lucene ← English documents
- Query translation is inadequate for CLIR:
  - no context for accurate translation
  - the system selects a preferred target term

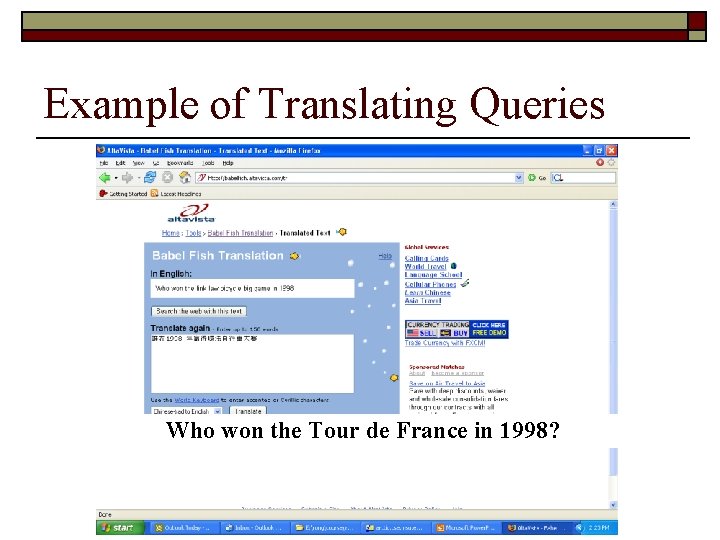

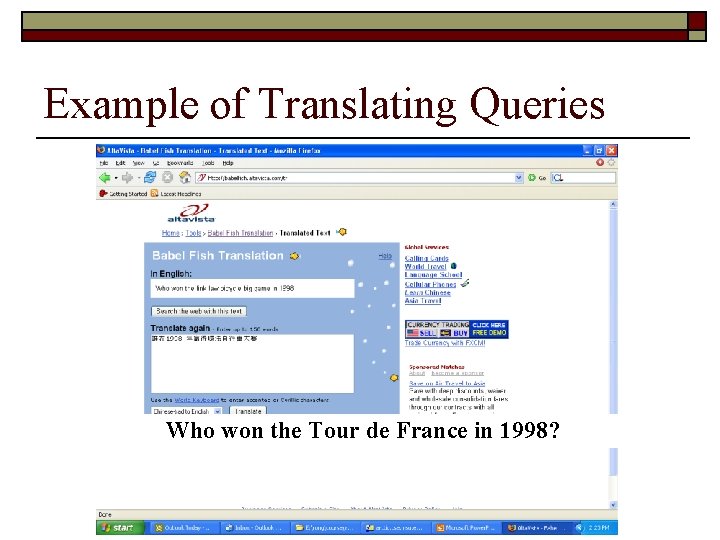

Example of Translating Queries

Who won the Tour de France in 1998?

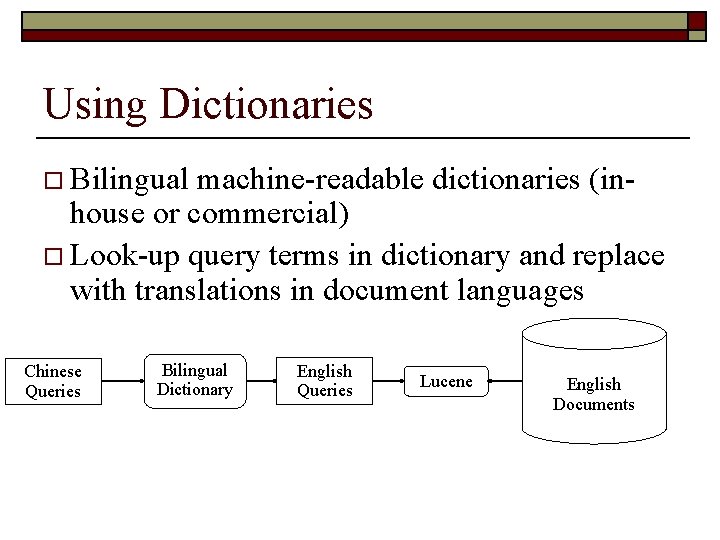

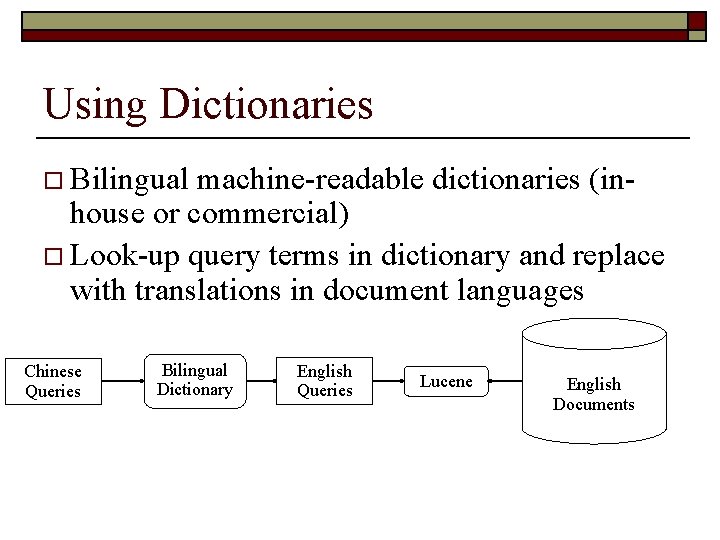

Using Dictionaries

- Bilingual machine-readable dictionaries (in-house or commercial)
- Look up query terms in the dictionary and replace them with their translations in the document languages:
  - Chinese queries → bilingual dictionary → English queries → Lucene ← English documents

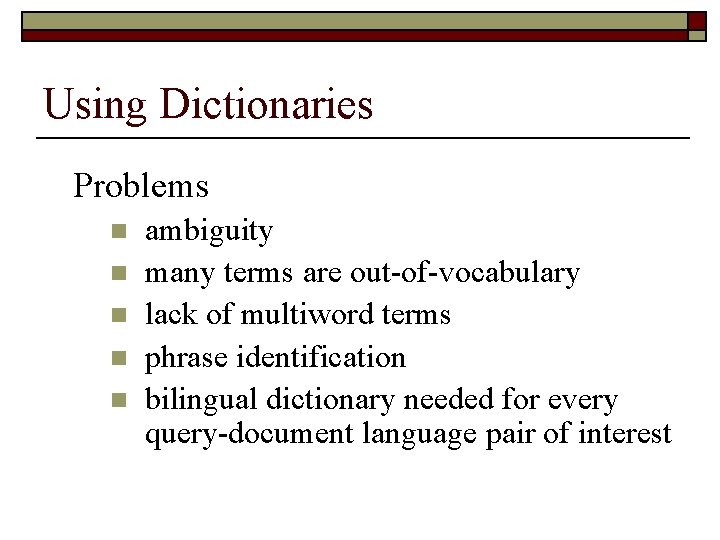

Using Dictionaries: Problems

- ambiguity
- many terms are out-of-vocabulary
- lack of multiword terms (phrase identification)
- a bilingual dictionary is needed for every query-document language pair of interest
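A minimal sketch of dictionary-based query translation that keeps every candidate translation and passes out-of-vocabulary terms through unchanged. The dictionary entries and the pre-segmented query are hypothetical, chosen to show two of the problems listed above: ambiguity (贏得 → win/gain) and out-of-vocabulary terms (環法, "Tour de France", stays untranslated):

```python
def translate_query(query_terms, bilingual_dict):
    """Replace each query term with all of its dictionary translations.

    Ambiguous terms expand to several candidates; out-of-vocabulary terms
    are passed through untranslated.
    """
    translated = []
    for term in query_terms:
        translated.extend(bilingual_dict.get(term, [term]))
    return translated


# Toy Chinese-English dictionary (hypothetical entries).
ZH_EN = {
    "誰": ["who"],
    "贏得": ["win", "gain"],
    "自行車": ["bicycle", "bike"],
    "大賽": ["race", "competition"],
}
query = ["誰", "1998", "贏得", "環法", "自行車", "大賽"]
print(translate_query(query, ZH_EN))
# ['who', '1998', 'win', 'gain', '環法', 'bicycle', 'bike', 'race', 'competition']
```

Keeping all candidates avoids picking the wrong sense but adds noise terms such as "gain"; choosing a single translation instead requires some form of word sense disambiguation.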

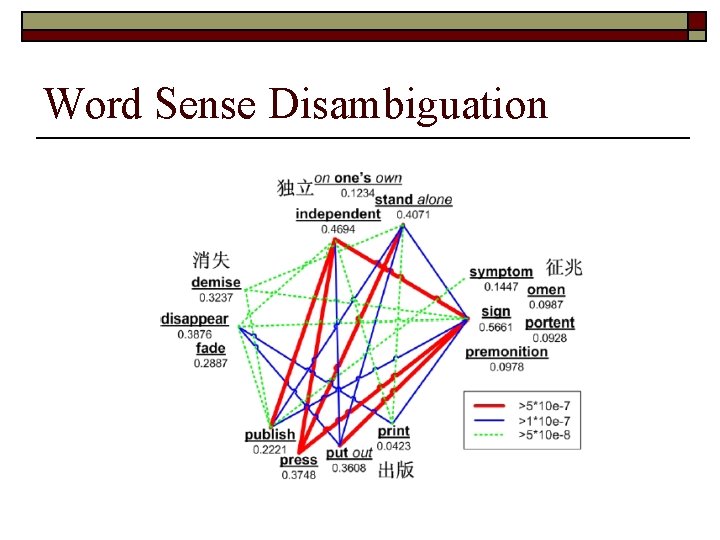

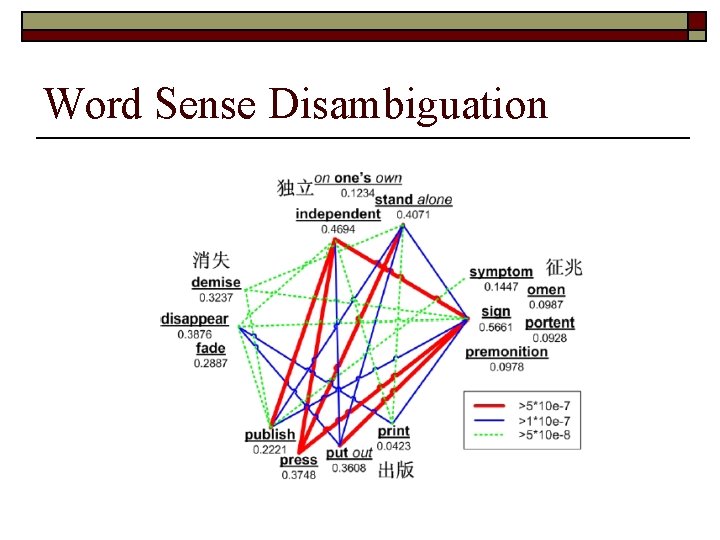

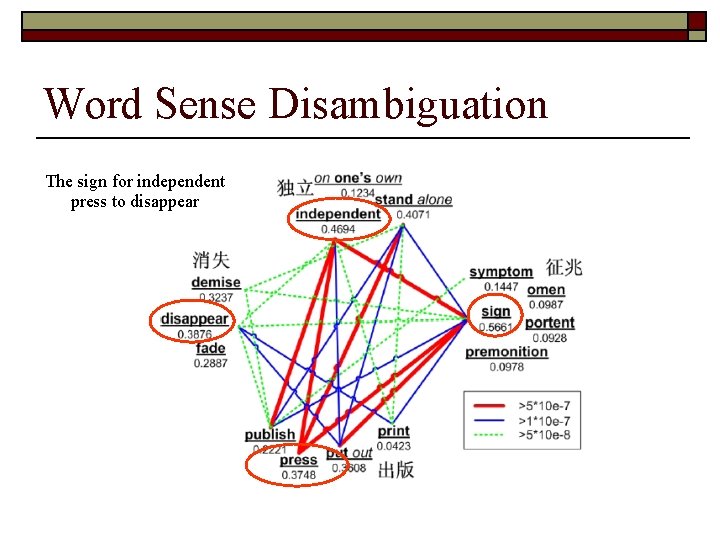

Word Sense Disambiguation

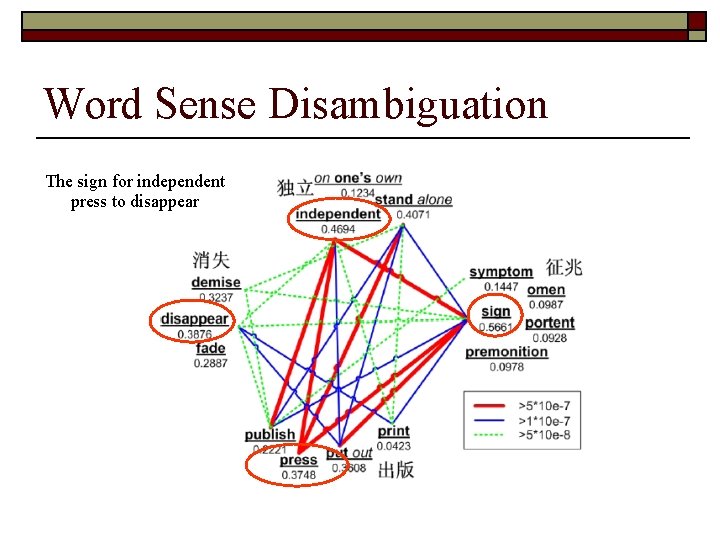

"The sign for independent press to disappear"

Using Corpora

- Parallel corpora
  - translation equivalents
  - e.g. the UN corpus in French, Spanish & English
- Comparable corpora
  - similar in topic, style, time, etc.
  - e.g. Hong Kong TV broadcast news in both Chinese and English

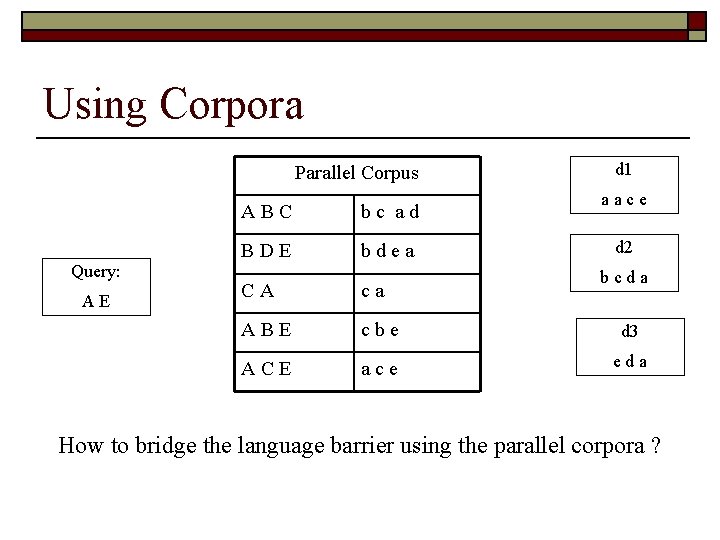

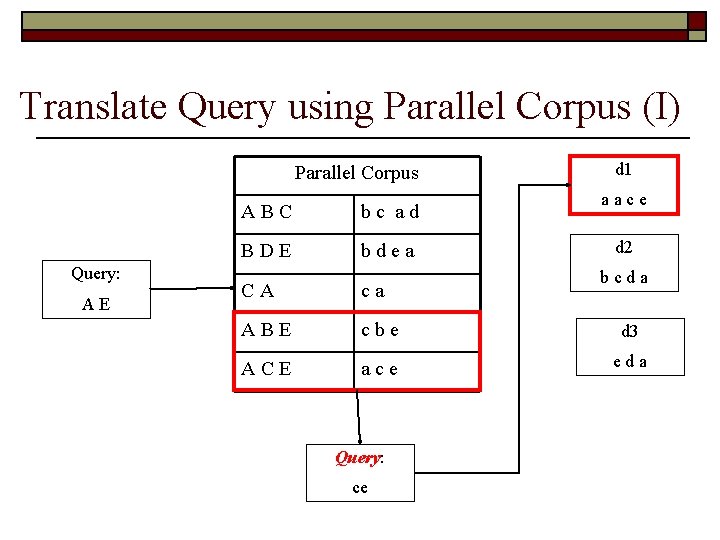

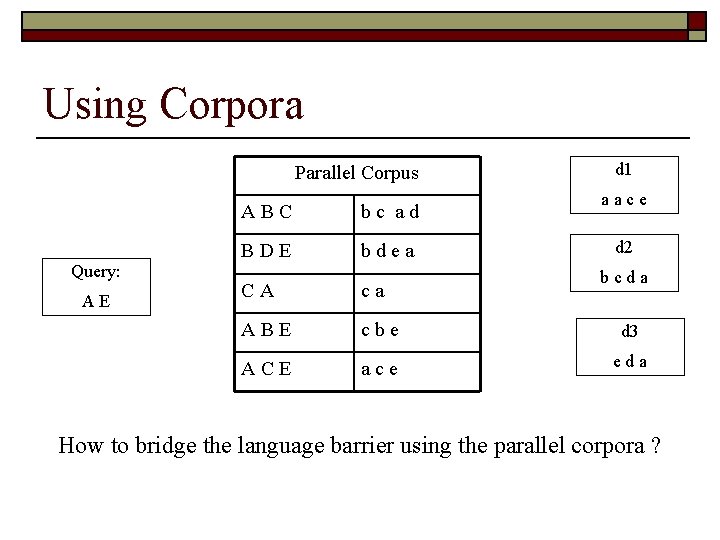

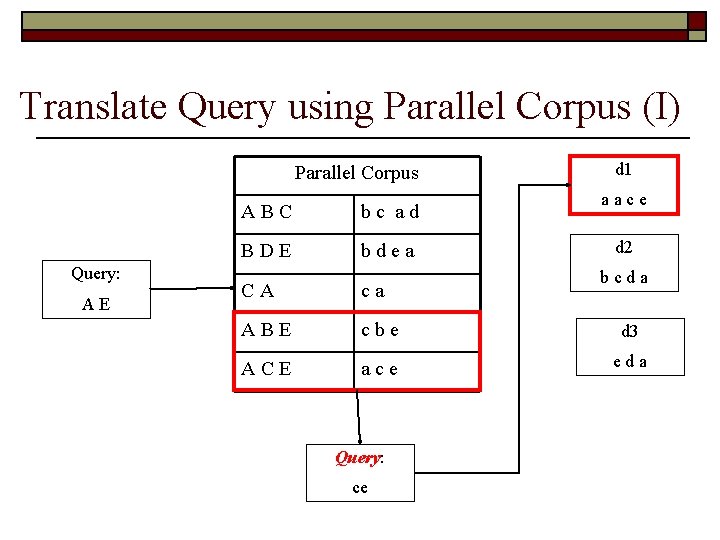

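Using Corpora

- Parallel corpus (uppercase letters are words of one language, lowercase letters words of the other): ABC ↔ bcad, BDE ↔ bdea, CA ↔ ca, ABE ↔ cbe, ACE ↔ ace
- Query: AE
- Documents: d1 = aace, d2 = bcda, d3 = eda
- How can the parallel corpus be used to bridge the language barrier?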

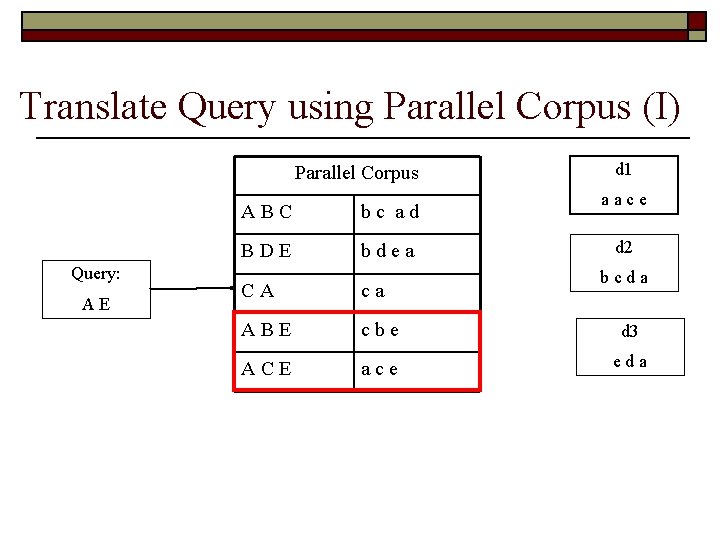

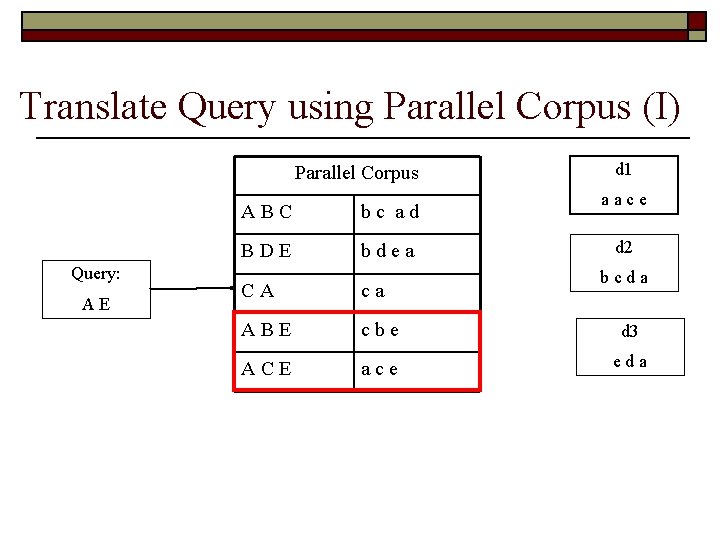

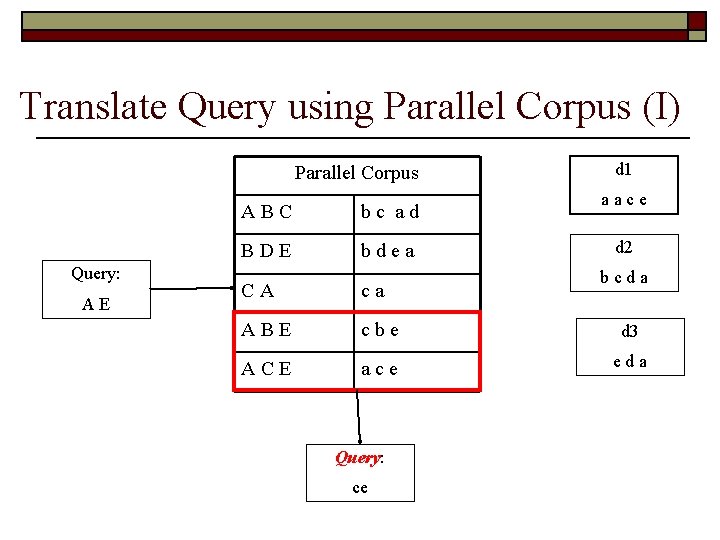


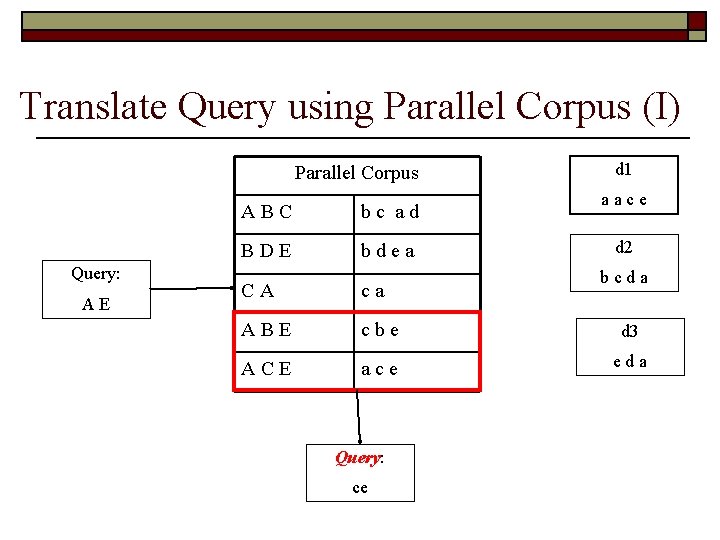

Translate Query using Parallel Corpus (I)

- Parallel corpus: ABC ↔ bcad, BDE ↔ bdea, CA ↔ ca, ABE ↔ cbe, ACE ↔ ace
- Query: AE
- Translated query: ce
- Documents: d1 = aace, d2 = bcda, d3 = eda


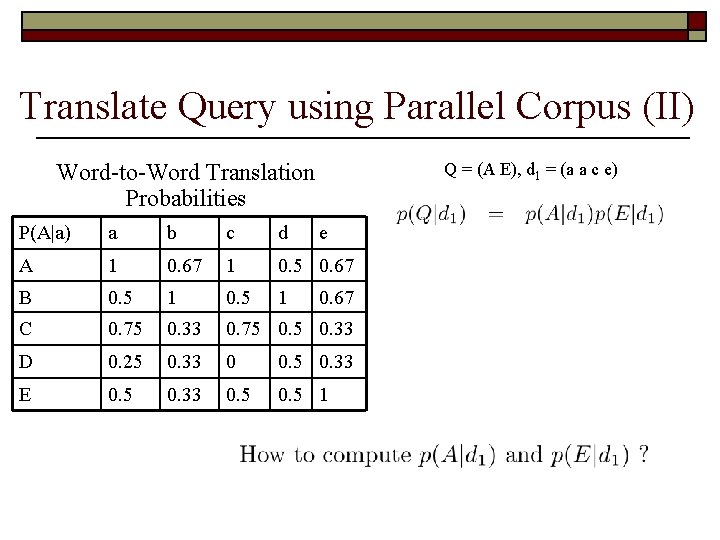

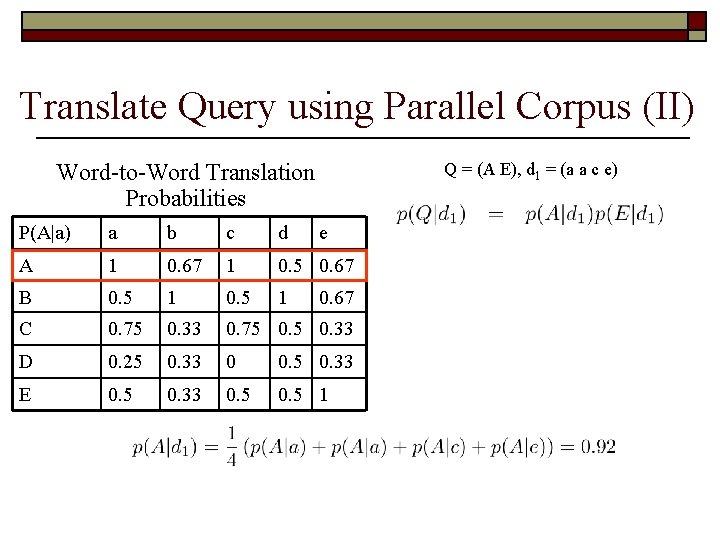

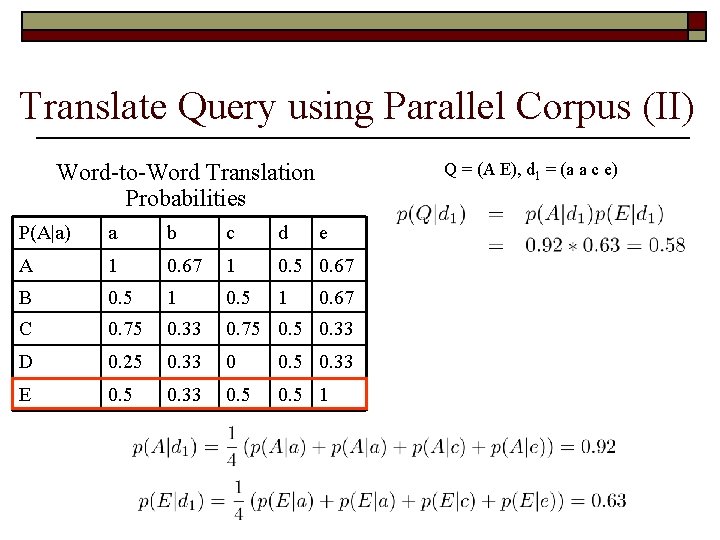

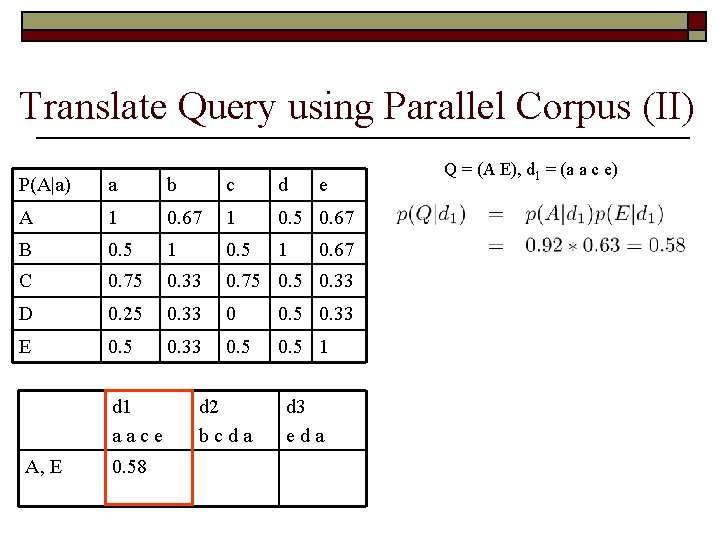

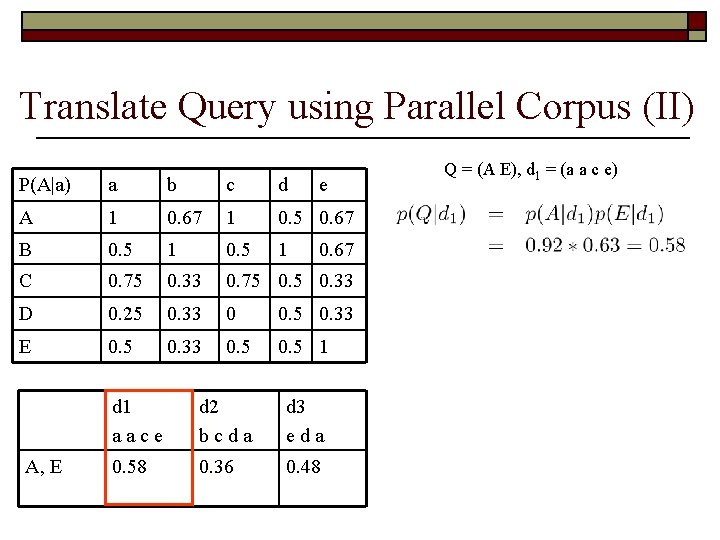

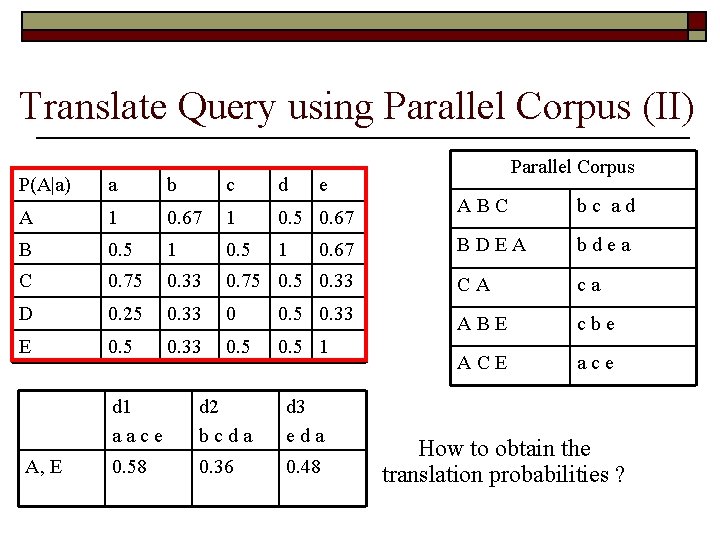

Translate Query using Parallel Corpus (II)

- Learn word-to-word translation probabilities from parallel corpora
- Compute the relevance of a document d to a given query q by estimating the probability of translating document d into query q
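As a sketch of how "the probability of translating d into q" can be scored, assuming a simplified IBM Model 1 likelihood (no NULL word, no length term) in which each query word q_j is generated by a uniformly chosen word d_i of the document:

```latex
P(q \mid d) = \prod_{j=1}^{|q|} \frac{1}{|d|} \sum_{i=1}^{|d|} P(q_j \mid d_i)
```

Documents are then ranked by P(q | d); a document scores high when, for every query word, many of its own words are likely translations of it.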

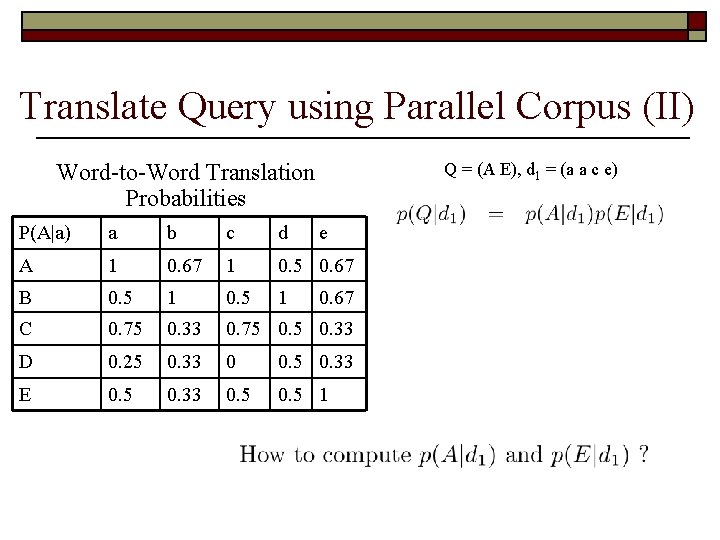

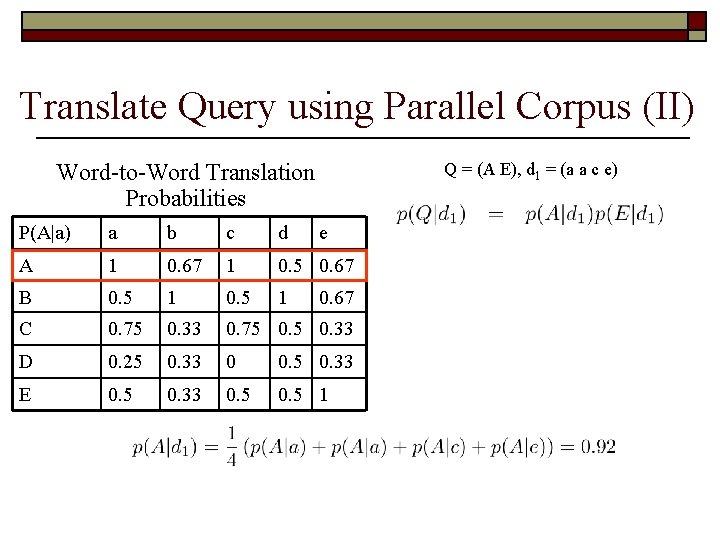

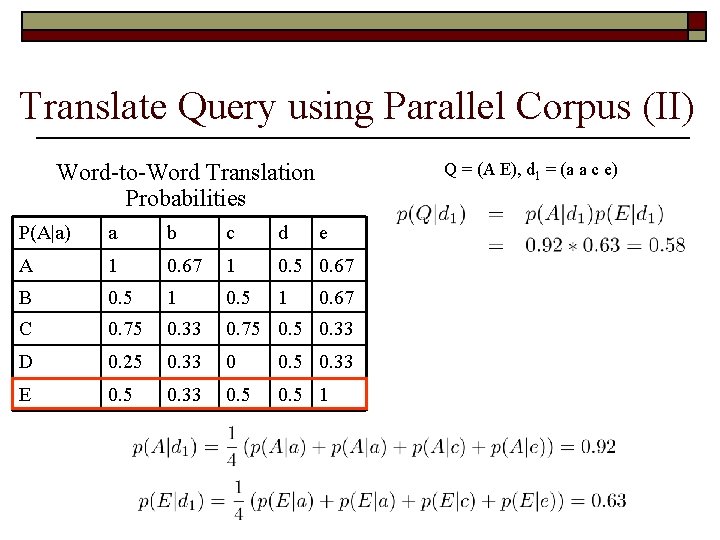

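Translate Query using Parallel Corpus (II)

Word-to-word translation probabilities for Q = (A E), d1 = (a a c e); each cell is P(X | y), e.g. P(A | a) = 1:

| P(X \| y) | a | b | c | d | e |
|---|---|---|---|---|---|
| A | 1 | 0.67 | 1 | 0.5 | 0.67 |
| B | 0.5 | 1 | 0.5 | 1 | 0.67 |
| C | 0.75 | 0.33 | 0.75 | 0.5 | 0.33 |
| D | 0.25 | 0.33 | 0 | 0.5 | 0.33 |
| E | 0.5 | 0.33 | 0.5 | 0.5 | 1 |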


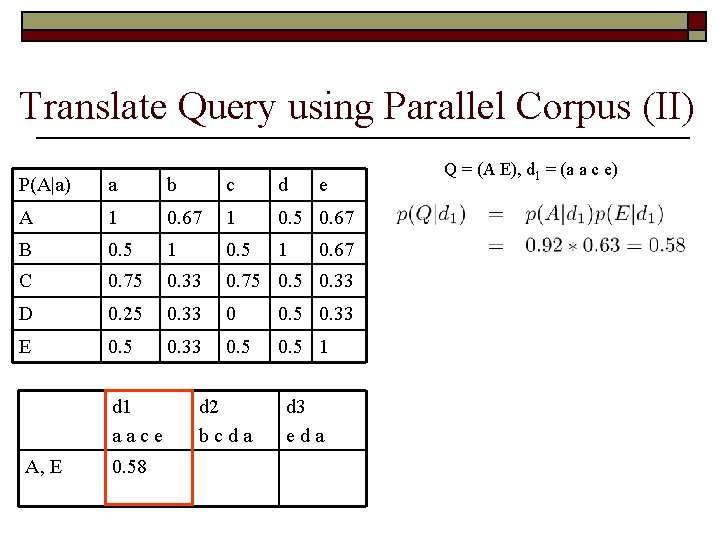


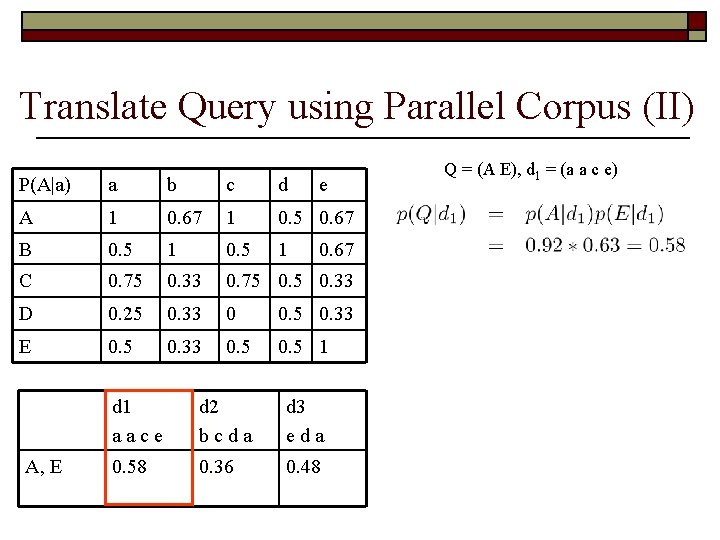


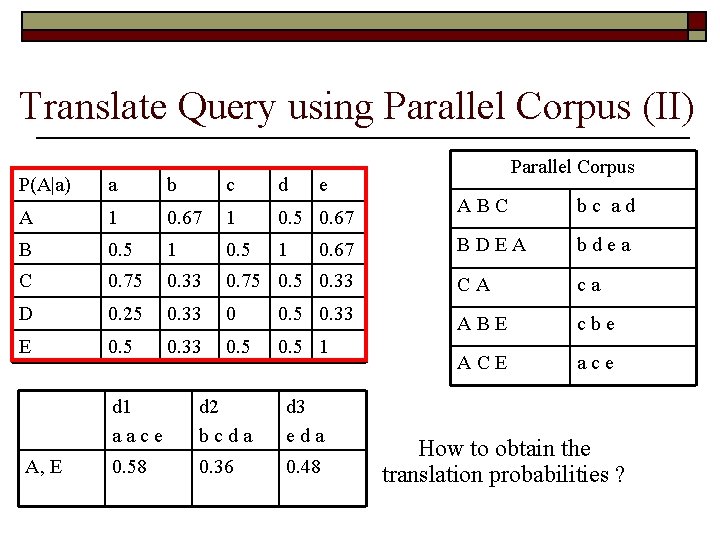

Translate Query using Parallel Corpus (II)

- Query Q = (A E); documents ranked by the probability of translating them into Q:
  - d1 = aace: 0.58
  - d2 = bcda: 0.36
  - d3 = eda: 0.48
- Parallel corpus: ABC ↔ bcad, BDEA ↔ bdea, CA ↔ ca, ABE ↔ cbe, ACE ↔ ace
- How do we obtain the translation probabilities?
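A Python sketch of this ranking, assuming the simplified translation likelihood P(q | d) = ∏_j (1/|d|) Σ_i P(q_j | d_i) (an assumption about the exact form). Only rows A and E of the translation-probability table are needed for Q = (A E):

```python
from math import prod

# Word-to-word translation probabilities P(X | y) for the query words A and E
# (rows A and E of the translation table; columns are the lowercase words).
P = {
    "A": {"a": 1.0, "b": 0.67, "c": 1.0, "d": 0.5, "e": 0.67},
    "E": {"a": 0.5, "b": 0.33, "c": 0.5, "d": 0.5, "e": 1.0},
}


def translation_score(query, doc, p):
    """P(query | doc): for each query word, average P(q | w) over the document words."""
    return prod(sum(p[q].get(w, 0.0) for w in doc) / len(doc) for q in query)


docs = {"d1": "aace", "d2": "bcda", "d3": "eda"}
for name, doc in docs.items():
    print(name, round(translation_score("AE", doc, P), 3))
```

This prints 0.573, 0.363 and 0.482. The d2 and d3 scores round to the slide's 0.36 and 0.48; the slide's 0.58 for d1 is what you get if P(A | d1) = 0.9175 is first rounded to 0.92 (0.92 × 0.625 = 0.575, which rounds up to 0.58).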

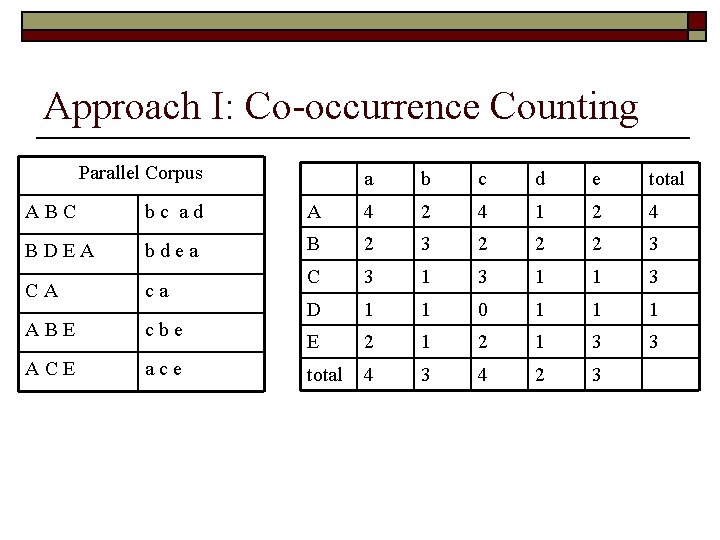

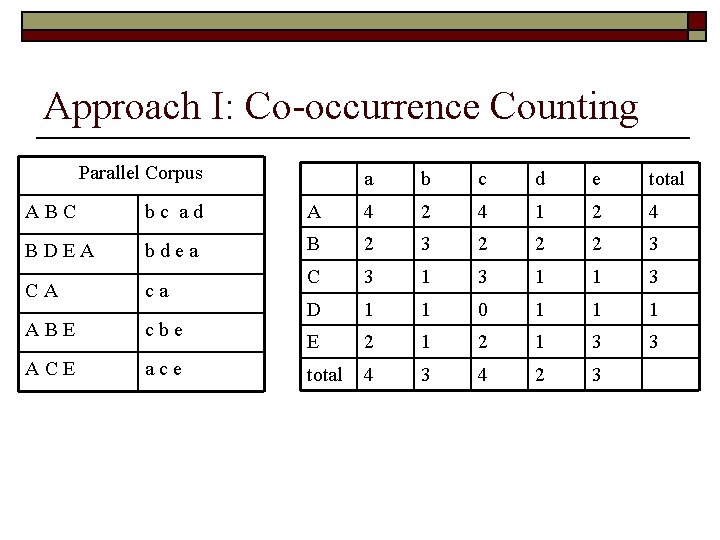


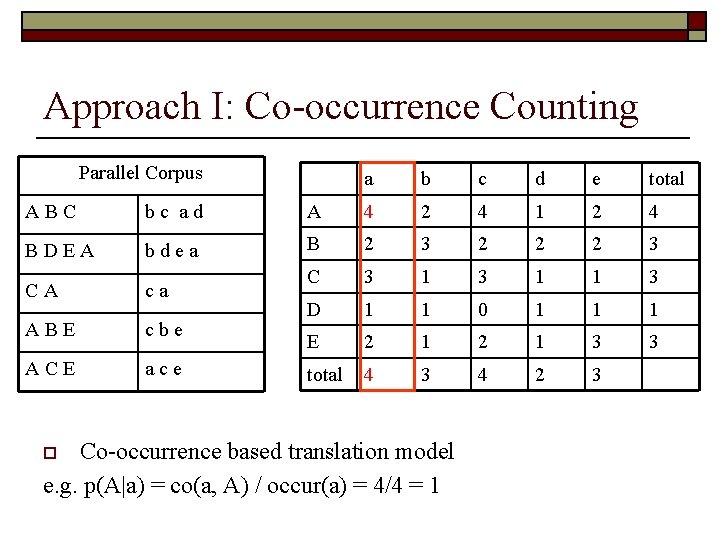

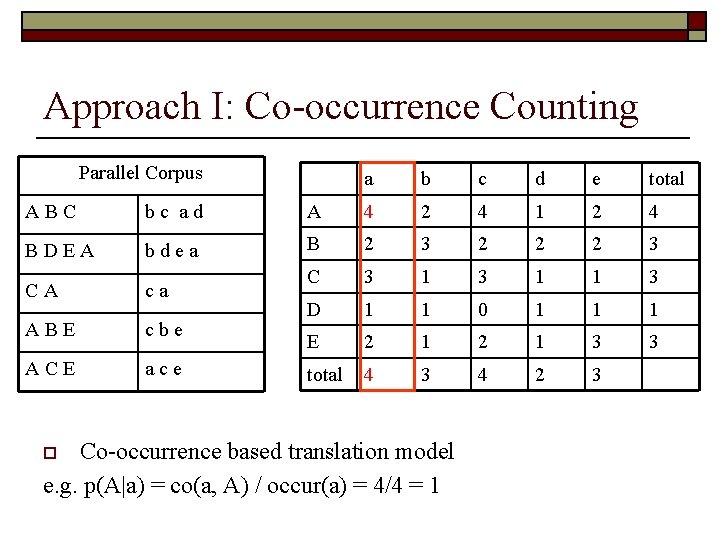

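Approach I: Co-occurrence Counting

Parallel corpus: ABC ↔ bcad, BDEA ↔ bdea, CA ↔ ca, ABE ↔ cbe, ACE ↔ ace

| co(X, y) | a | b | c | d | e | total |
|---|---|---|---|---|---|---|
| A | 4 | 2 | 4 | 1 | 2 | 4 |
| B | 2 | 3 | 2 | 2 | 2 | 3 |
| C | 3 | 1 | 3 | 1 | 1 | 3 |
| D | 1 | 1 | 0 | 1 | 1 | 1 |
| E | 2 | 1 | 2 | 1 | 3 | 3 |
| total | 4 | 3 | 4 | 2 | 3 | |

- Co-occurrence based translation model, e.g. p(A|a) = co(A, a) / occ(a) = 4/4 = 1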
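A minimal sketch of the counting model in Python, assuming the toy corpus as listed on this slide (with BDEA ↔ bdea as the second pair) and counting a pair (X, y) once per sentence pair in which both occur. It reproduces the worked examples P(A|a) = 4/4 and P(B|c) = 2/4:

```python
from collections import Counter
from itertools import product

# Toy parallel corpus from the slides: (uppercase sentence, lowercase sentence).
# Each letter is one word. Assumption: the second pair is BDEA <-> bdea.
CORPUS = [("ABC", "bcad"), ("BDEA", "bdea"), ("CA", "ca"), ("ABE", "cbe"), ("ACE", "ace")]


def cooccurrence_model(corpus):
    """P(X | y) = co(X, y) / occ(y), counting sentence pairs in which X and y both appear."""
    co = Counter()
    occ = Counter()
    for upper, lower in corpus:
        occ.update(set(lower))
        co.update(product(set(upper), set(lower)))
    return {(x, y): co[(x, y)] / occ[y] for (x, y) in co}


p = cooccurrence_model(CORPUS)
print(p[("A", "a")], p[("B", "c")])  # 1.0 0.5
```

With this corpus co(A, b) comes out as 3 (from ABC, BDEA and ABE), the value used on the overcounting slides, so P(A|b) = 3/3 = 1: yet another large translation probability.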

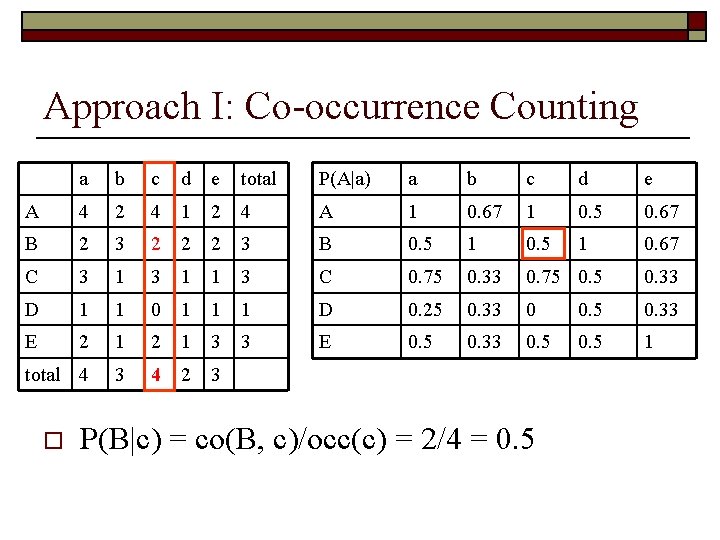

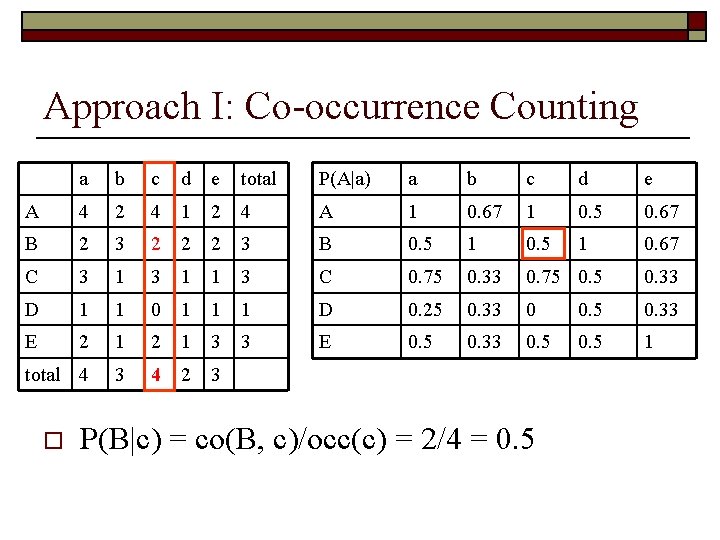

Approach I: Co-occurrence Counting

Dividing each count co(X, y) by occ(y), the number of sentences containing y, gives P(X | y):

| P(X \| y) | a | b | c | d | e |
|---|---|---|---|---|---|
| A | 1 | 0.67 | 1 | 0.5 | 0.67 |
| B | 0.5 | 1 | 0.5 | 1 | 0.67 |
| C | 0.75 | 0.33 | 0.75 | 0.5 | 0.33 |
| D | 0.25 | 0.33 | 0 | 0.5 | 0.33 |
| E | 0.5 | 0.33 | 0.5 | 0.5 | 1 |

- P(B|c) = co(B, c)/occ(c) = 2/4 = 0.5

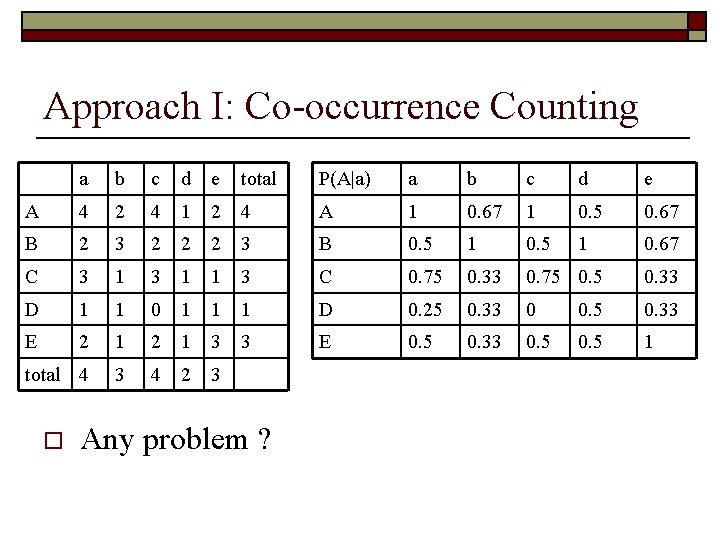

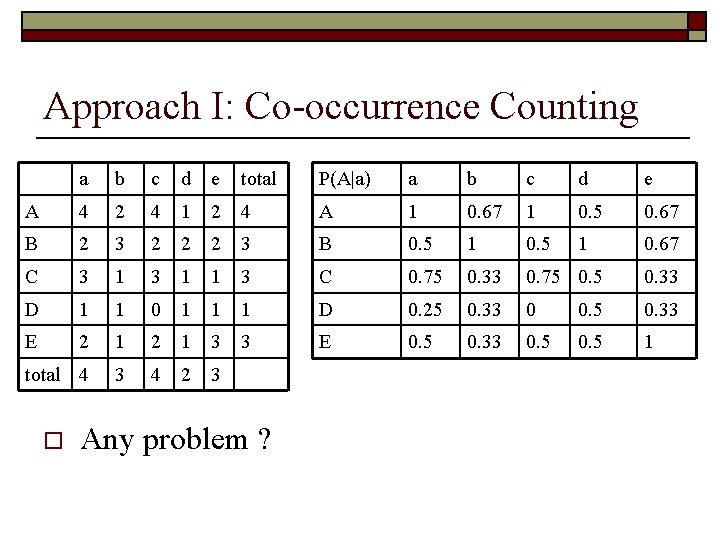

Approach I: Co-occurrence Counting

- Any problem?

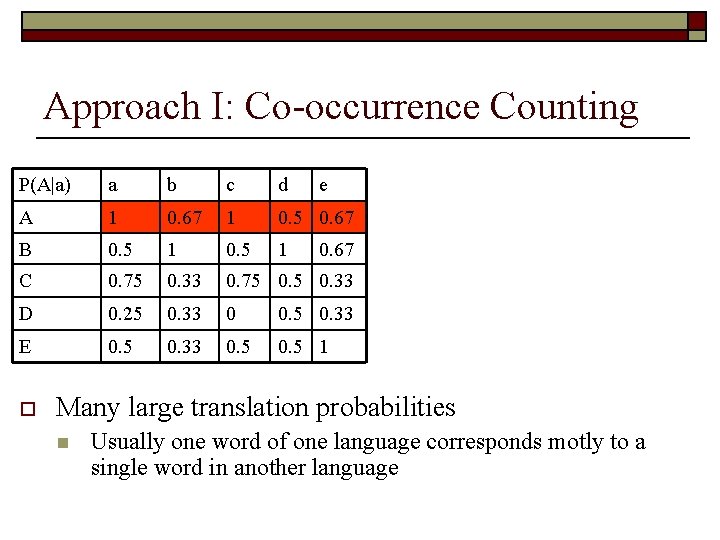

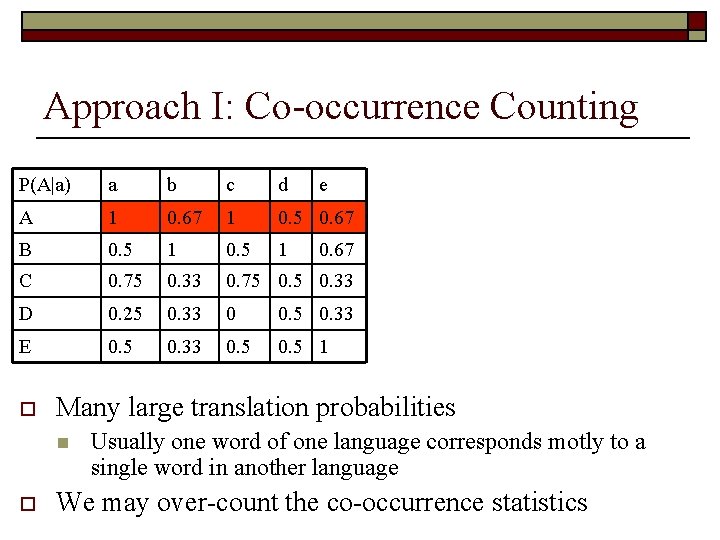

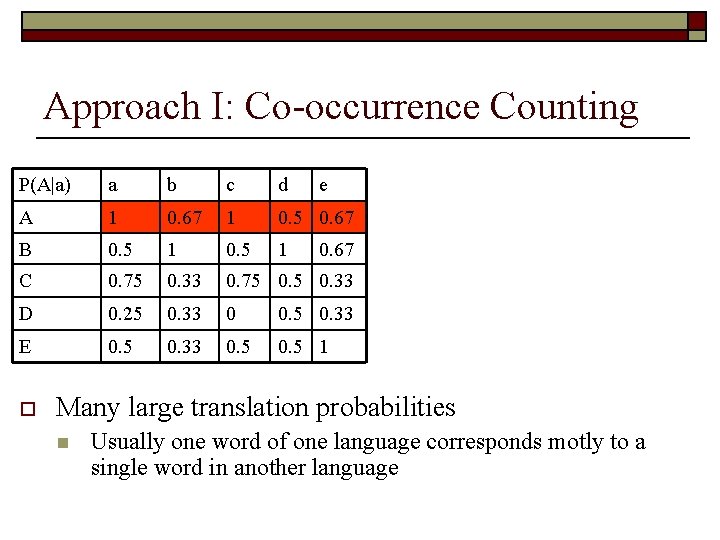

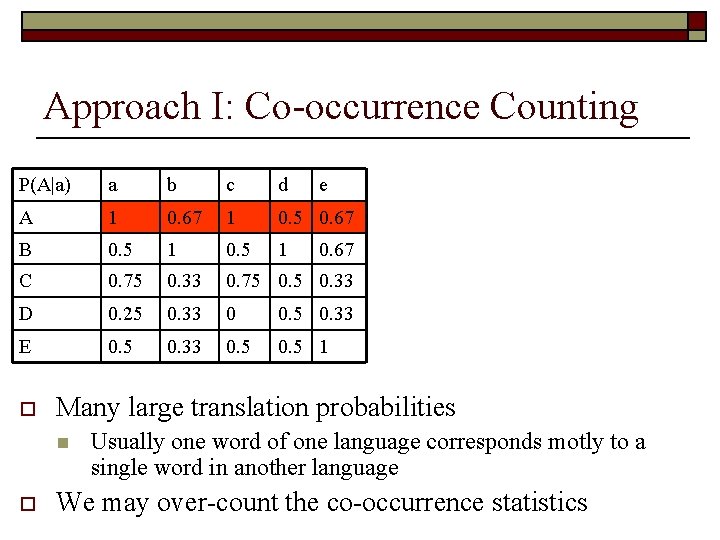


Approach I: Co-occurrence Counting

- Many large translation probabilities (e.g. P(A|a) = P(A|c) = 1)
  - usually a word in one language corresponds mostly to a single word in another language
- We may over-count the co-occurrence statistics

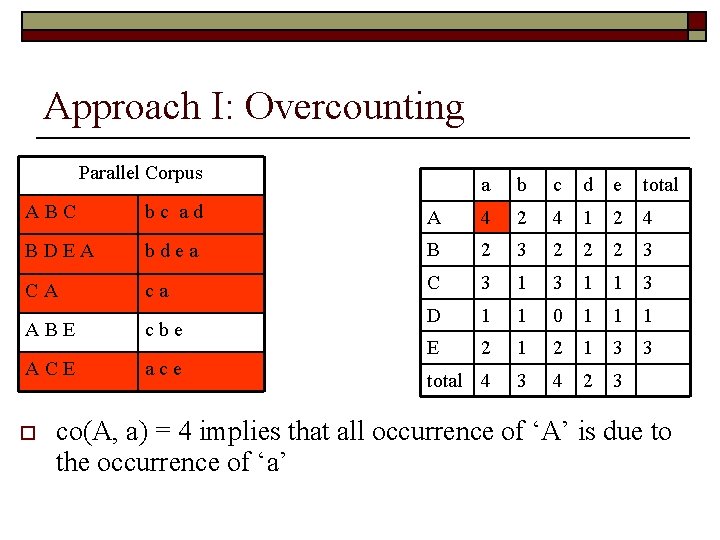

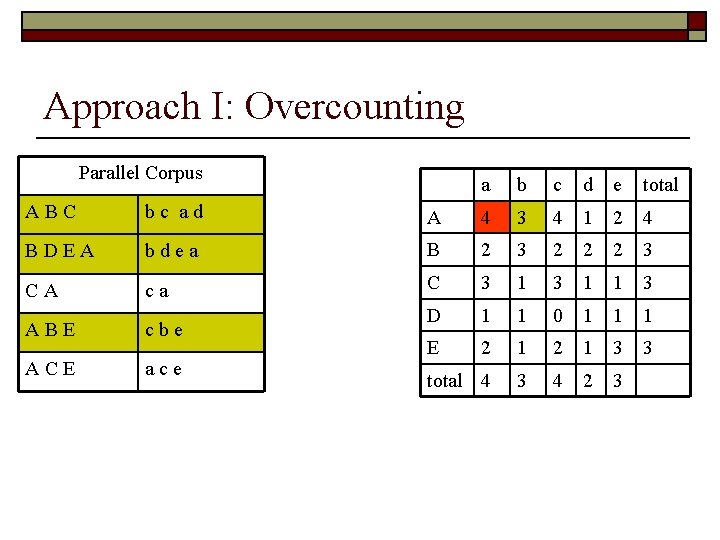

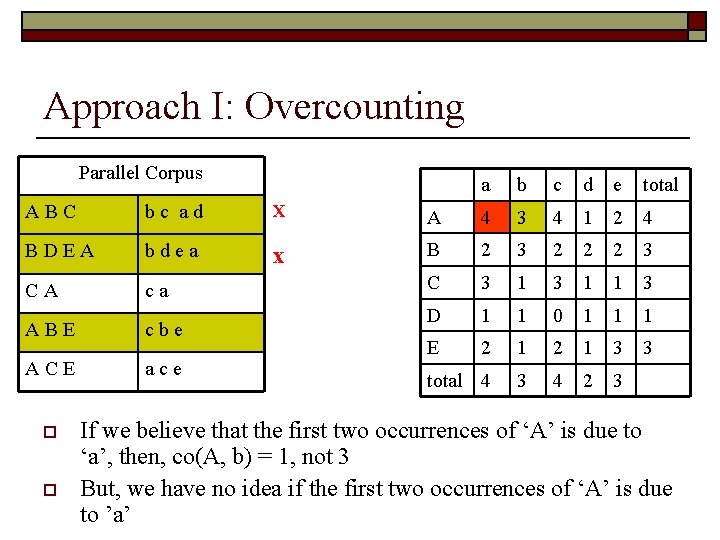

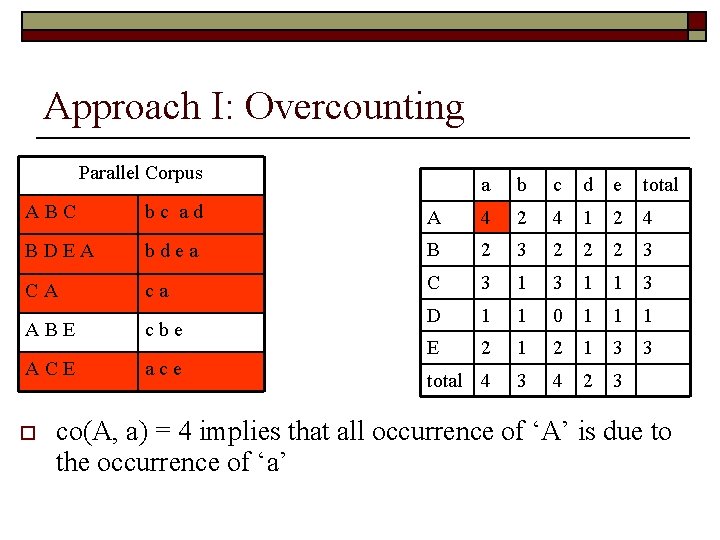

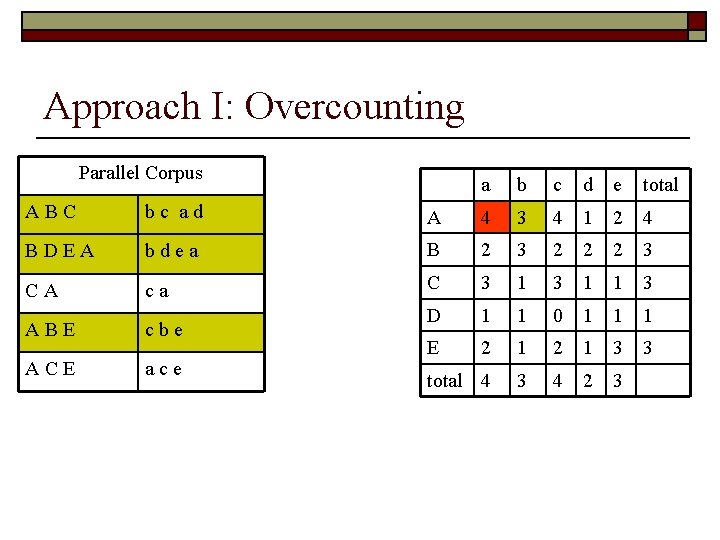

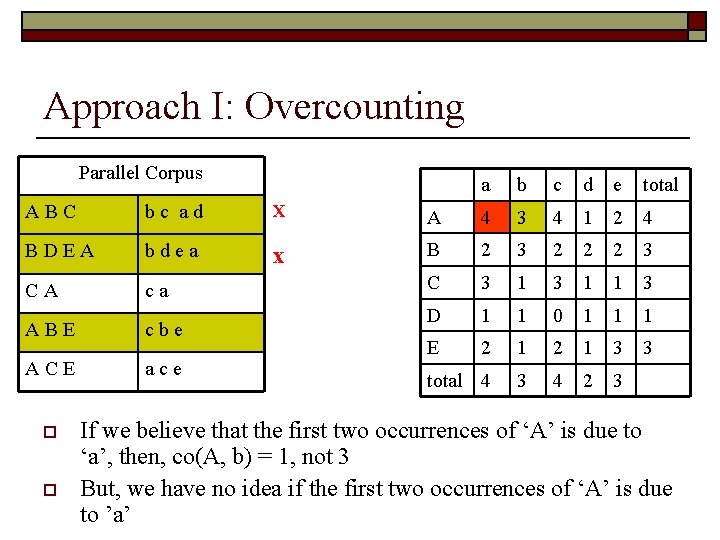

Approach I: Overcounting

- co(A, a) = 4 implies that every occurrence of ‘A’ is due to the occurrence of ‘a’


Approach I: Overcounting

- Parallel corpus: ABC ↔ bcad (X), BDEA ↔ bdea (X), CA ↔ ca, ABE ↔ cbe, ACE ↔ ace
- Counting every co-occurrence gives co(A, b) = 3 (from ABC, BDEA and ABE)
- If we believe that the first two occurrences of ‘A’ (marked X) are due to ‘a’, then co(A, b) = 1, not 3
- But we have no idea whether the first two occurrences of ‘A’ are due to ‘a’

How to Compute Co-occurrence?

- IBM statistical translation models
  - a family of translation models published by IBM Research
  - we will only discuss IBM Translation Model 1
- It uses an iterative procedure to eliminate the over-counting problem

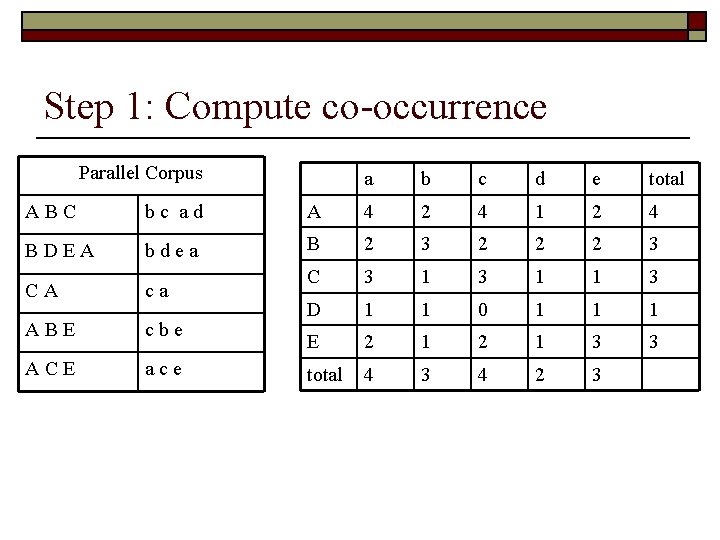

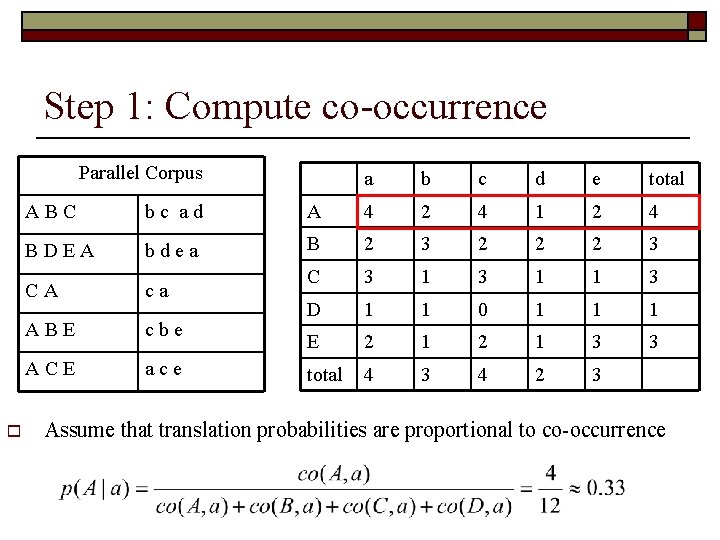

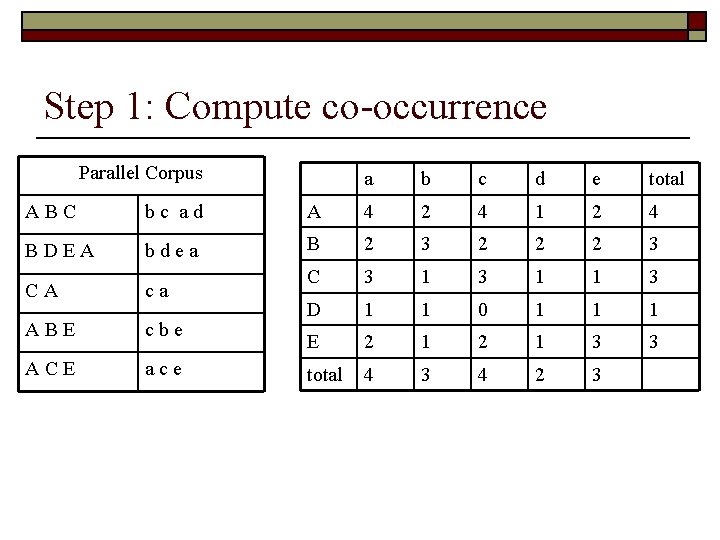

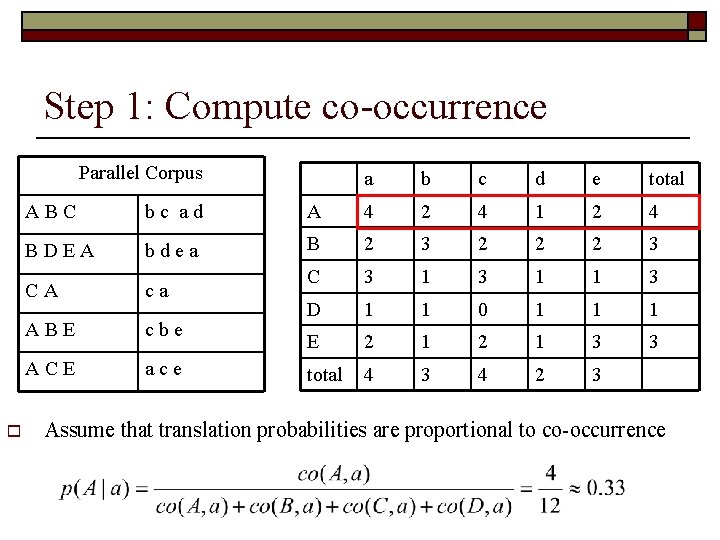

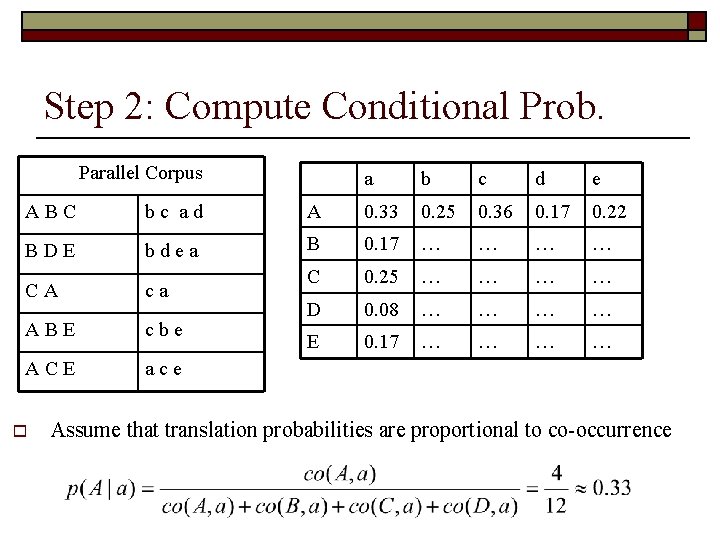


Step 1: Compute co-occurrence

- Count co-occurrences co(X, y) in the parallel corpus (the same counts as in Approach I)
- Assume that translation probabilities are proportional to co-occurrence

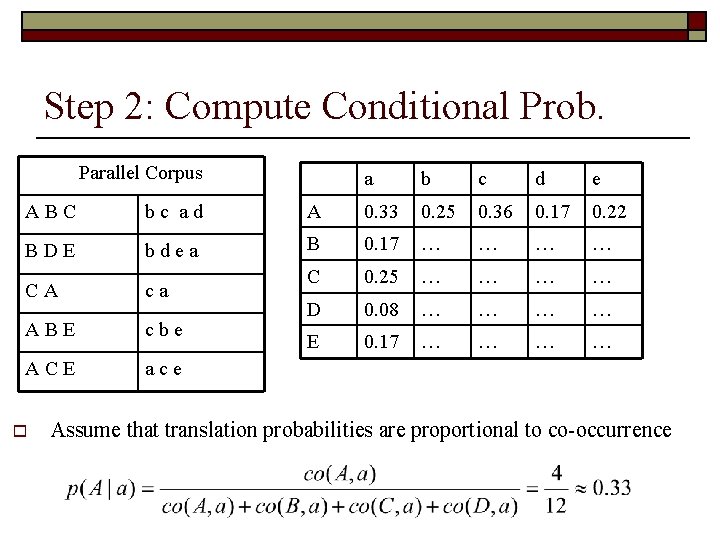

Step 2: Compute Conditional Prob.

| P(X \| y) | a | b | c | d | e |
|---|---|---|---|---|---|
| A | 0.33 | 0.25 | 0.36 | 0.17 | 0.22 |
| B | 0.17 | … | … | … | … |
| C | 0.25 | … | … | … | … |
| D | 0.08 | … | … | … | … |
| E | 0.17 | … | … | … | … |

- Assume that translation probabilities are proportional to co-occurrence
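A worked example of this normalization, assuming "proportional to co-occurrence" means dividing each count by the column total for the lowercase word y, so that each column sums to 1. With the Approach I counts for column a (co = 4, 2, 3, 1, 2 for A to E, total 12), this reproduces the first column of the table:

```latex
P(X \mid y) = \frac{\mathrm{co}(X, y)}{\sum_{X'} \mathrm{co}(X', y)}
\qquad
P(A \mid a) = \tfrac{4}{12} \approx 0.33,\;
P(B \mid a) = \tfrac{2}{12} \approx 0.17,\;
P(C \mid a) = \tfrac{3}{12} = 0.25,\;
P(D \mid a) = \tfrac{1}{12} \approx 0.08,\;
P(E \mid a) = \tfrac{2}{12} \approx 0.17
```

Unlike Approach I, which divides by occ(y), this normalization makes the candidate words X share a fixed probability mass for each y.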

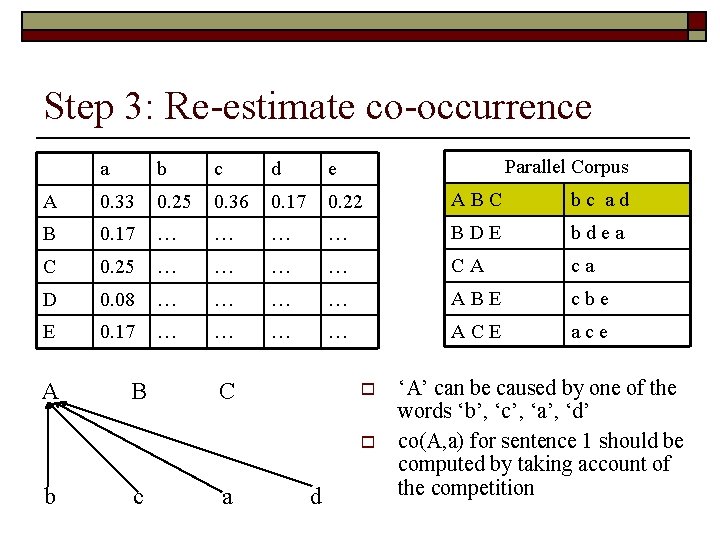

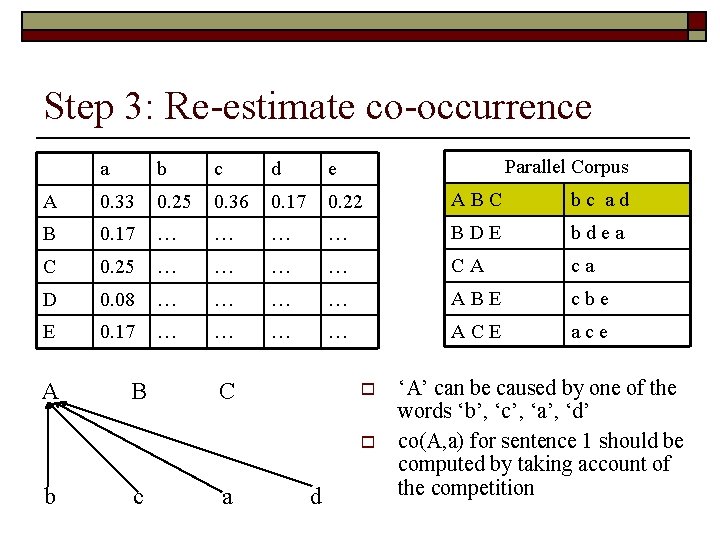

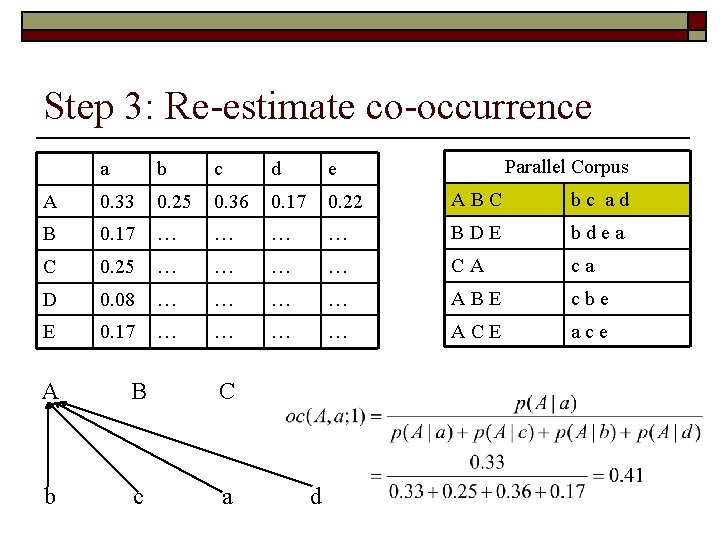

Step 3: Re-estimate co-occurrence

- In the first sentence pair, ABC ↔ b c a d, ‘A’ can be caused by any one of the words ‘b’, ‘c’, ‘a’, ‘d’
- co(A, a) for sentence 1 should therefore be computed by taking account of the competition among these words

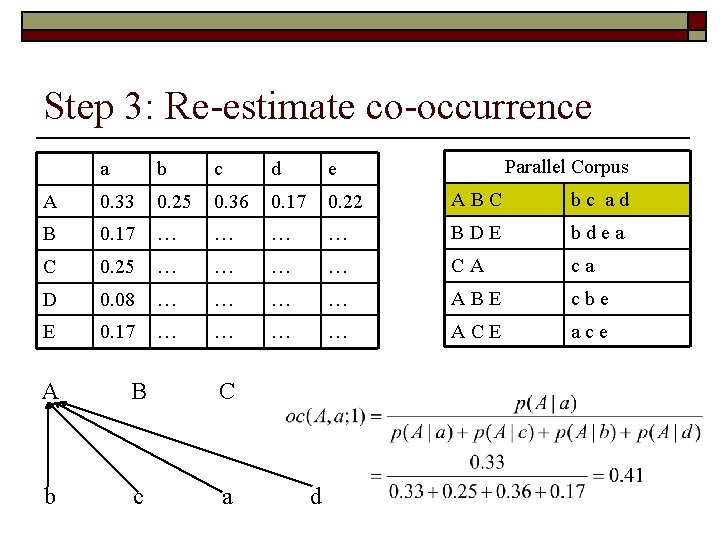


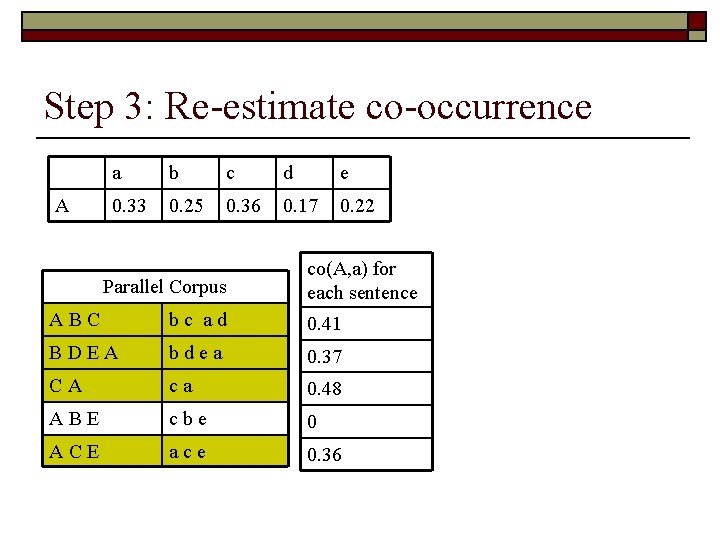

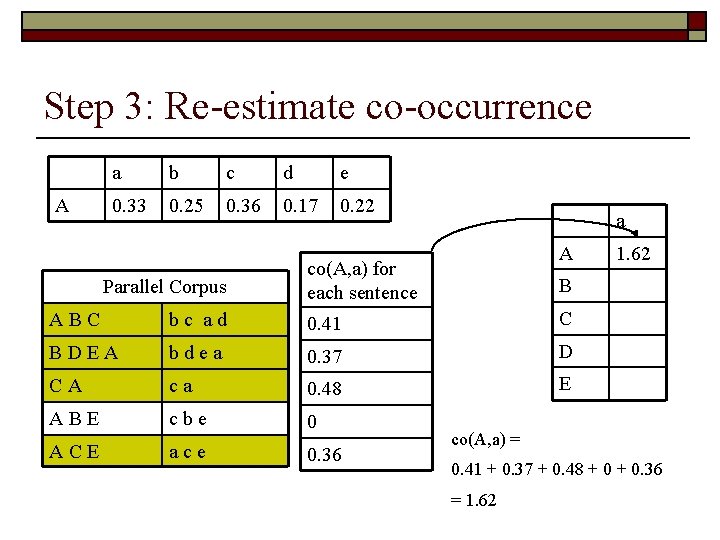

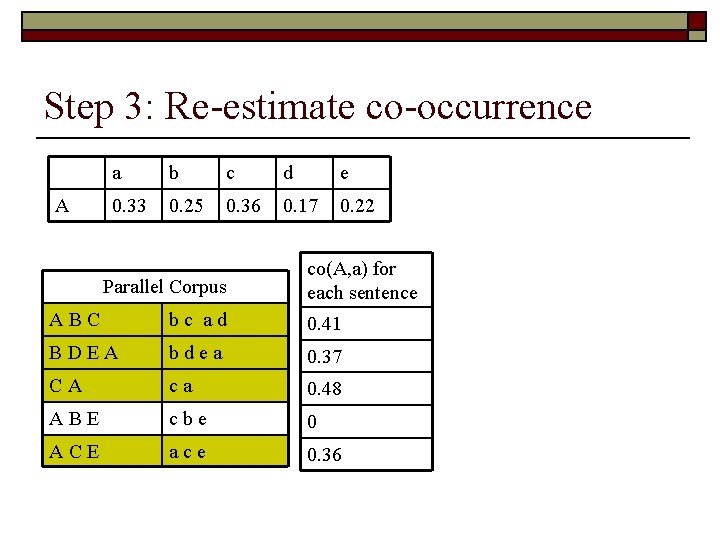

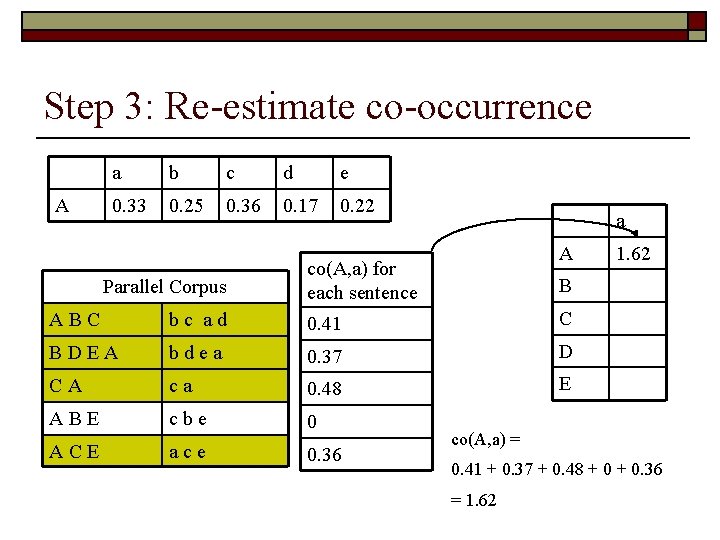


Step 3: Re-estimate co-occurrence

Using P(A | y) from Step 2 (a: 0.33, b: 0.25, c: 0.36, d: 0.17, e: 0.22):

| Sentence pair | co(A, a) |
|---|---|
| ABC ↔ bcad | 0.41 |
| BDEA ↔ bdea | 0.37 |
| CA ↔ ca | 0.48 |
| ABE ↔ cbe | 0 |
| ACE ↔ ace | 0.36 |

co(A, a) = 0.41 + 0.37 + 0.48 + 0.36 = 1.62
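The competition can be written as a formula. Assuming the standard IBM Model 1 E-step form (without a NULL word), each occurrence of ‘A’ in a sentence pair s is shared among the lowercase words y of s in proportion to P(A | y). With the Step 2 values this reproduces the CA and ACE entries of the table:

```latex
\mathrm{co}_s(A, a) = \frac{P(A \mid a)}{\sum_{y \in s} P(A \mid y)}
\qquad
\mathrm{co}_{\mathrm{CA}}(A, a) = \frac{0.33}{0.36 + 0.33} \approx 0.48,
\qquad
\mathrm{co}_{\mathrm{ACE}}(A, a) = \frac{0.33}{0.33 + 0.36 + 0.22} \approx 0.36
```

For ABE ↔ cbe the count is 0 because ‘a’ does not occur in cbe.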

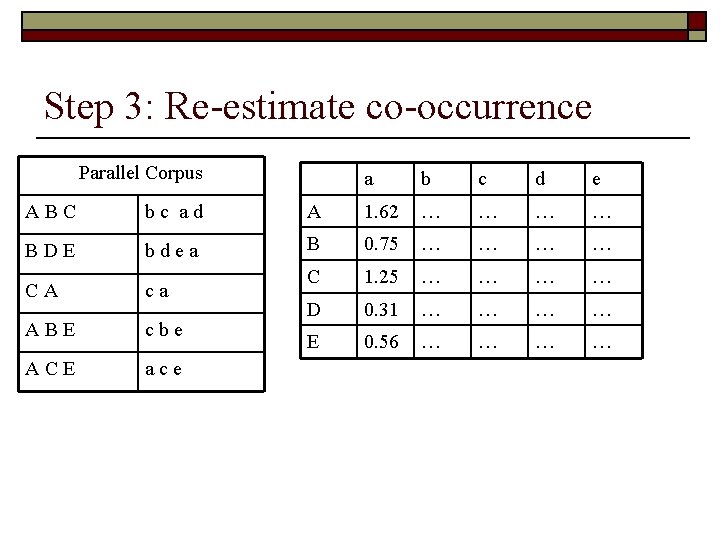

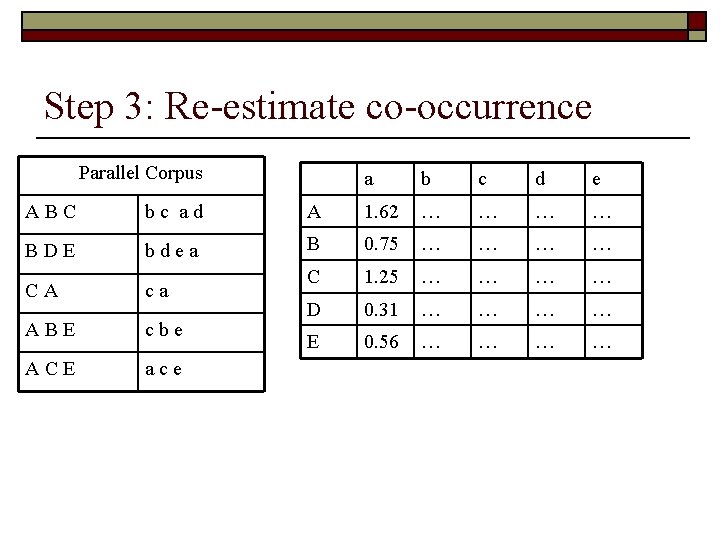

Step 3: Re-estimate co-occurrence

| co(X, y) | a | b | c | d | e |
|---|---|---|---|---|---|
| A | 1.62 | … | … | … | … |
| B | 0.75 | … | … | … | … |
| C | 1.25 | … | … | … | … |
| D | 0.31 | … | … | … | … |
| E | 0.56 | … | … | … | … |

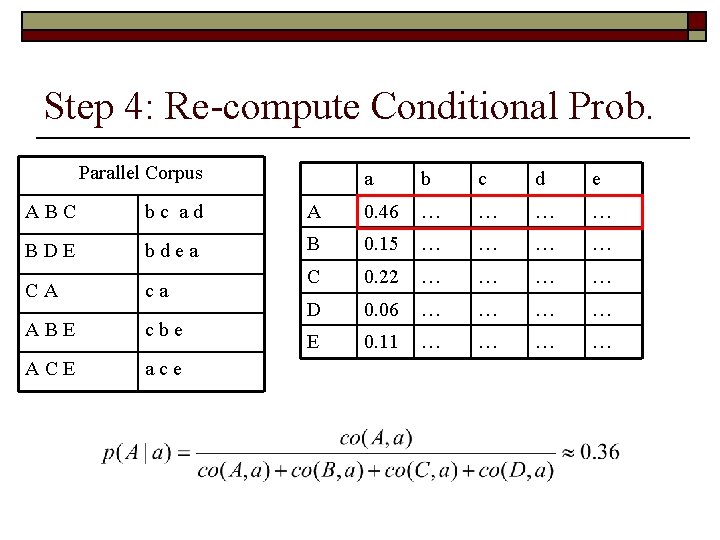

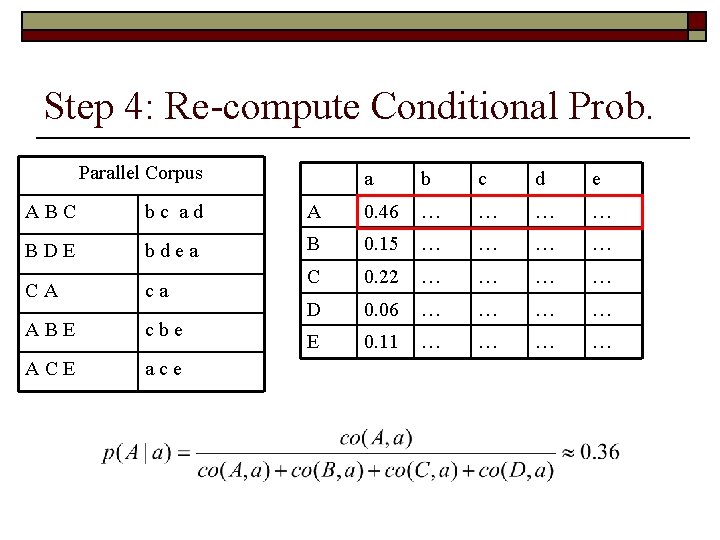

Step 4: Re-compute Conditional Prob.

| P(X \| y) | a | b | c | d | e |
|---|---|---|---|---|---|
| A | 0.46 | … | … | … | … |
| B | 0.15 | … | … | … | … |
| C | 0.22 | … | … | … | … |
| D | 0.06 | … | … | … | … |
| E | 0.11 | … | … | … | … |

IBM Statistical Translation Model

- Apply the counting and estimation steps iteratively
- Convergence can be proved
- This is related to the so-called Expectation-Maximization (EM) algorithm:
  - E step: counting
  - M step: estimating the translation probabilities
- It had the best performance in past TREC evaluations for CLIR
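The iterative procedure can be sketched in Python. Assumptions: the toy corpus from the slides with BDEA ↔ bdea as the second pair, initialization from normalized co-occurrence counts as in Steps 1 and 2 (IBM Model 1 is often initialized uniformly instead), no NULL word, and a fixed number of iterations rather than a convergence test. The function names are illustrative:

```python
from collections import defaultdict

# Toy parallel corpus from the slides (assumption: second pair is BDEA <-> bdea).
CORPUS = [("ABC", "bcad"), ("BDEA", "bdea"), ("CA", "ca"), ("ABE", "cbe"), ("ACE", "ace")]


def normalize(co):
    """M step: P(X | y) = co(X, y) / sum_X' co(X', y)."""
    totals = defaultdict(float)
    for (x, y), c in co.items():
        totals[y] += c
    return {(x, y): c / totals[y] for (x, y), c in co.items()}


def initial_probs(corpus):
    """Steps 1-2: raw co-occurrence counts, normalized per lowercase word y."""
    co = defaultdict(float)
    for upper, lower in corpus:
        for x in upper:
            for y in lower:
                co[(x, y)] += 1.0
    return normalize(co)


def expected_counts(corpus, t):
    """E step: each occurrence of X is shared among the words y of its paired
    sentence in proportion to P(X | y) (the competition between candidate causes)."""
    co = defaultdict(float)
    for upper, lower in corpus:
        for x in upper:
            z = sum(t[(x, y)] for y in lower)
            for y in lower:
                co[(x, y)] += t[(x, y)] / z
    return co


def train_ibm_model1(corpus, iterations=10):
    """Alternate E and M steps starting from the co-occurrence initialization."""
    t = initial_probs(corpus)
    for _ in range(iterations):
        t = normalize(expected_counts(corpus, t))
    return t


t = train_ibm_model1(CORPUS)
for x in "ABCDE":
    print(x, round(t.get((x, "a"), 0.0), 2))
```

As a check against Step 3, `expected_counts([("CA", "ca")], initial_probs(CORPUS))[("A", "a")]` is about 0.48, the CA entry in the table.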

Double palatal strap

Double palatal strap Lingual bar vs lingual plate

Lingual bar vs lingual plate A new approach to cross-modal multimedia retrieval

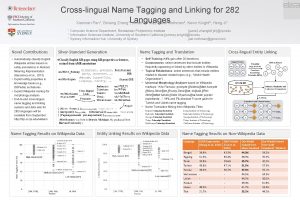

A new approach to cross-modal multimedia retrieval Xiaoman pan

Xiaoman pan Xin cho lòng chúng con

Xin cho lòng chúng con Tich phan suy rong 1

Tich phan suy rong 1 Qiu zhu

Qiu zhu Ngoại diên của hình vuông

Ngoại diên của hình vuông Euclid mở rộng

Euclid mở rộng Receptive grammar activities

Receptive grammar activities Advertisement rong

Advertisement rong Carnivourous

Carnivourous How to escape saddle points efficiently

How to escape saddle points efficiently Rong qu

Rong qu Muốn xóa một hoặc nhiều cột em thực hiện

Muốn xóa một hoặc nhiều cột em thực hiện Cái cối tân

Cái cối tân Sequential searching in information retrieval

Sequential searching in information retrieval Search engine architecture in information retrieval

Search engine architecture in information retrieval What is precision and recall in information retrieval

What is precision and recall in information retrieval Text operations in information retrieval

Text operations in information retrieval Query operations in information retrieval

Query operations in information retrieval For skip pointer more skip leads to

For skip pointer more skip leads to Index construction in information retrieval

Index construction in information retrieval Bsbi vs spimi

Bsbi vs spimi Which internet service is used for information retrieval

Which internet service is used for information retrieval Information retrieval tutorial

Information retrieval tutorial Wild card queries in information retrieval

Wild card queries in information retrieval Information retrieval system capabilities

Information retrieval system capabilities Link analysis in information retrieval

Link analysis in information retrieval Information retrieval lmu

Information retrieval lmu Defense acquisition management information retrieval

Defense acquisition management information retrieval Advantages of information retrieval system

Advantages of information retrieval system Information retrieval nlp

Information retrieval nlp Information retrieval data structures and algorithms

Information retrieval data structures and algorithms Information retrieval slides

Information retrieval slides Relevance information retrieval

Relevance information retrieval Stanford information retrieval

Stanford information retrieval Link analysis in information retrieval

Link analysis in information retrieval Which is a good idea for using skip pointers?

Which is a good idea for using skip pointers? Log frequency weighting

Log frequency weighting Levenshtein distance for oslo-snow

Levenshtein distance for oslo-snow Information retrieval

Information retrieval Information retrieval

Information retrieval Relevance information retrieval

Relevance information retrieval Information retrieval

Information retrieval Information retrieval

Information retrieval Url image

Url image Information retrieval

Information retrieval Cs-276

Cs-276 Introduction

Introduction Information retrieval

Information retrieval Information retrieval

Information retrieval Information retrieval

Information retrieval