Queries and Interfaces
Rong Jin

- Slides: 64


Queries and Information Needs
- An information need is the underlying cause of the query that a person submits to a search engine
  - an information need is generally related to a task
- A query can be a poor representation of the information need
  - User may find it difficult to express the information need
  - User is encouraged to enter short queries both by the search engine interface, and by the fact that long queries don’t work

Interaction
- Key aspect of effective retrieval
  - users can’t change ranking algorithm but can change results through interaction
  - helps refine description of information need
- Interaction with the system occurs
  - during query formulation and reformulation
  - while browsing the results

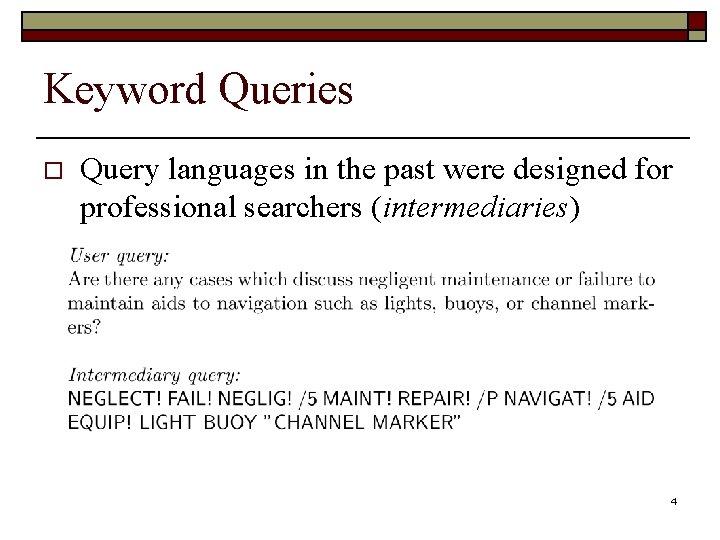

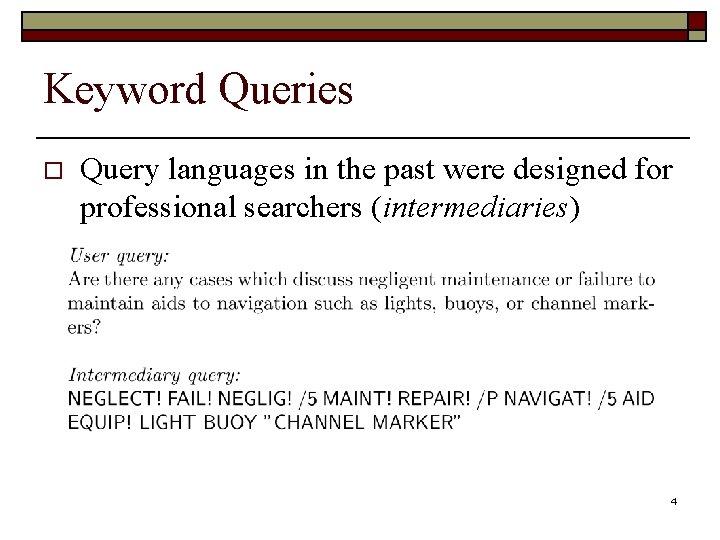

Keyword Queries
- Query languages in the past were designed for professional searchers (intermediaries)

Keyword Queries
- Simple, natural language queries were designed to enable everyone to search
- Current search engines do not perform well (in general) with natural language queries
- People trained (in effect) to use keywords
  - compare average of about 2.3 words/web query to average of 30 words/CQA query
- Keyword selection is not always easy
  - query refinement techniques can help

Query-Based Stemming
- Make decision about stemming at query time rather than during indexing
  - improved flexibility, effectiveness
- Query is expanded using word variants
  - documents are not stemmed
  - e.g., “rock climbing” expanded with “climb”, not stemmed to “climb”
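A minimal sketch of query-time expansion, assuming the stem classes have already been built offline. The names `STEM_CLASSES`, `VARIANT_TO_CLASS`, and `expand_query` are hypothetical and the class contents are toy values, not taken from the slides.

```python
# Hypothetical stem classes, as if produced offline by running a stemmer
# over a large corpus (toy values for illustration only).
STEM_CLASSES = [
    {"climb", "climbs", "climbed", "climbing"},
    {"rock", "rocks"},
]

# Map every variant to its class so the lookup at query time is cheap.
VARIANT_TO_CLASS = {word: cls for cls in STEM_CLASSES for word in cls}


def expand_query(query):
    """Return one group of acceptable variants per query word.

    The documents stay unstemmed; each group would be handed to the
    retrieval engine as a synonym (OR) group.
    """
    groups = []
    for word in query.lower().split():
        variants = VARIANT_TO_CLASS.get(word, {word})
        # Keep the user's own word first, then the other variants in order.
        groups.append([word] + sorted(variants - {word}))
    return groups
```

Because the index is left untouched, the choice of variants can be changed per query without re-indexing, which is the flexibility the slide refers to.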

Stem Classes
- A stem class is the group of words that will be transformed into the same stem by the stemming algorithm
  - generated by running stemmer on large corpus
  - e.g., Porter stemmer on TREC News

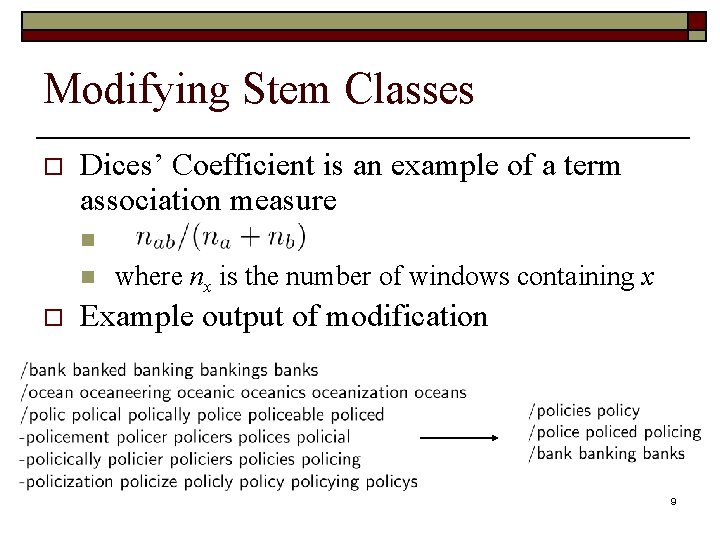

Stem Classes
- Stem classes are often too big and inaccurate
- Modify using analysis of word co-occurrence
- Assumption:
  - Word variants that could substitute for each other should co-occur often in documents

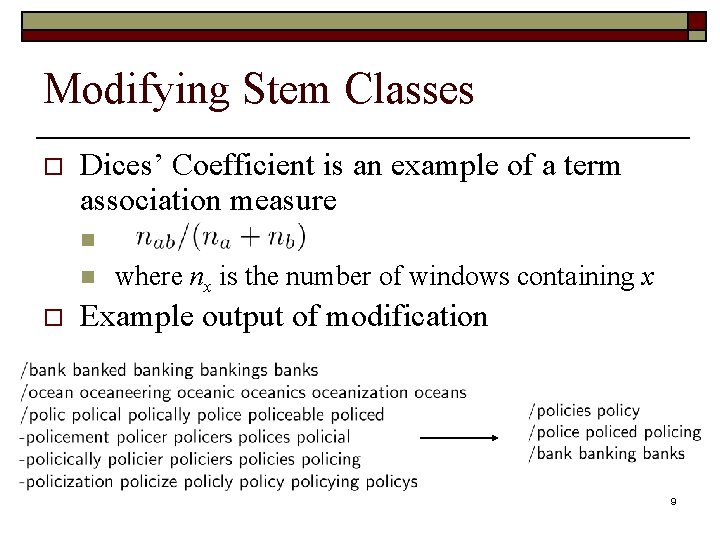

Modifying Stem Classes
- Dice’s Coefficient is an example of a term association measure
  - $2 \cdot n_{ab} / (n_a + n_b)$, where $n_x$ is the number of windows containing $x$
- Example output of modification
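One way the modification could be carried out is sketched below: score every pair of words in a stem class with Dice's coefficient, link the pairs that score above a cut-off, and treat the connected components as the new classes. The connected-components step, the `threshold` parameter, and the function names are assumptions made for this sketch; the slides only state that co-occurrence analysis is used.

```python
from collections import defaultdict
from itertools import combinations


def dice(n_ab, n_a, n_b):
    """Dice's coefficient: 2 * n_ab / (n_a + n_b)."""
    return 2 * n_ab / (n_a + n_b) if (n_a + n_b) else 0.0


def split_stem_class(stem_class, windows, threshold):
    """Split one stem class into groups of strongly associated words.

    windows: iterable of sets of words (for example fixed-size text windows).
    threshold: hypothetical cut-off; pairs scoring above it are linked.
    """
    words = sorted(stem_class)
    count = defaultdict(int)       # n_x: number of windows containing x
    pair_count = defaultdict(int)  # n_xy: windows containing both x and y
    for window in windows:
        present = [w for w in words if w in window]
        for w in present:
            count[w] += 1
        for a, b in combinations(present, 2):
            pair_count[(a, b)] += 1

    # Link the words whose association exceeds the threshold.
    neighbours = {w: set() for w in words}
    for a, b in combinations(words, 2):
        if dice(pair_count[(a, b)], count[a], count[b]) > threshold:
            neighbours[a].add(b)
            neighbours[b].add(a)

    # Each connected component of the graph becomes a new stem class.
    seen, classes = set(), []
    for start in words:
        if start in seen:
            continue
        component, stack = set(), [start]
        while stack:
            w = stack.pop()
            if w not in component:
                component.add(w)
                stack.extend(neighbours[w] - component)
        seen |= component
        classes.append(component)
    return classes
```

With toy windows in which "policy" and "policies" co-occur but "police" never appears with either, the class {police, policy, policies} splits into {police} and {policies, policy}.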

Spell Checking
- Important part of query processing
  - 10-15% of all web queries have spelling errors
- Errors include typical word processing errors but also many other types, e.g.:

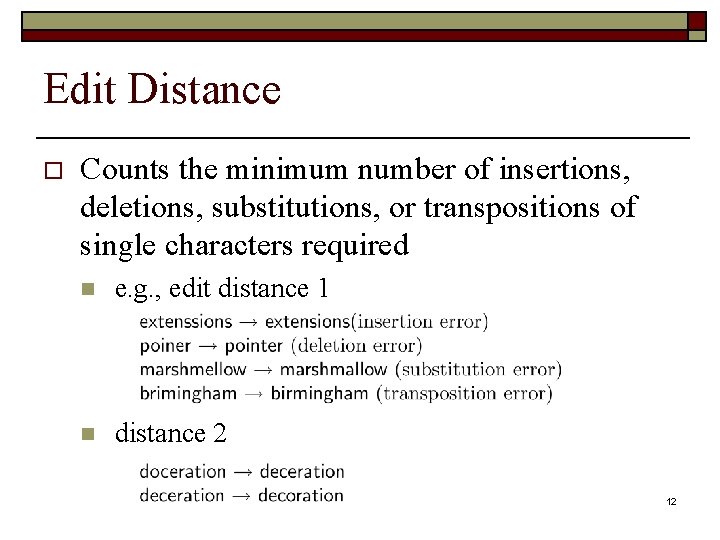

Spell Checking
- Basic approach: suggest corrections for words not found in spelling dictionary
- Suggestions found by comparing word to words in dictionary using similarity measure
- Most common similarity measure is edit distance
  - number of operations required to transform one word into the other

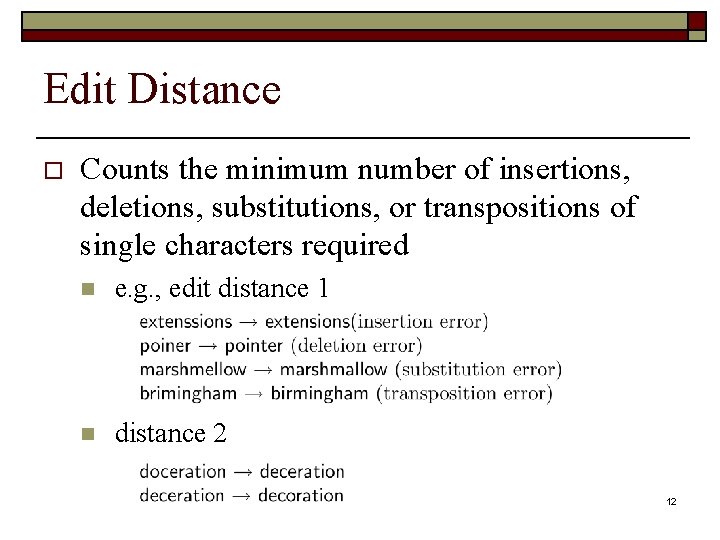

Edit Distance
- Counts the minimum number of insertions, deletions, substitutions, or transpositions of single characters required
  - e.g., edit distance 1
  - distance 2

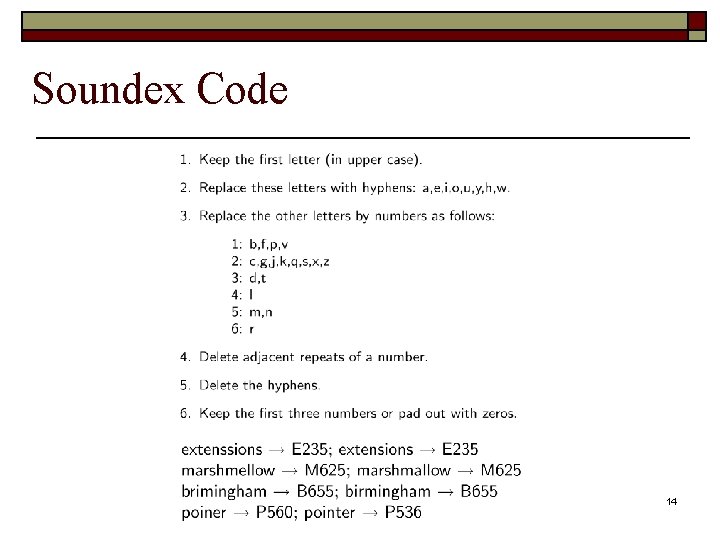

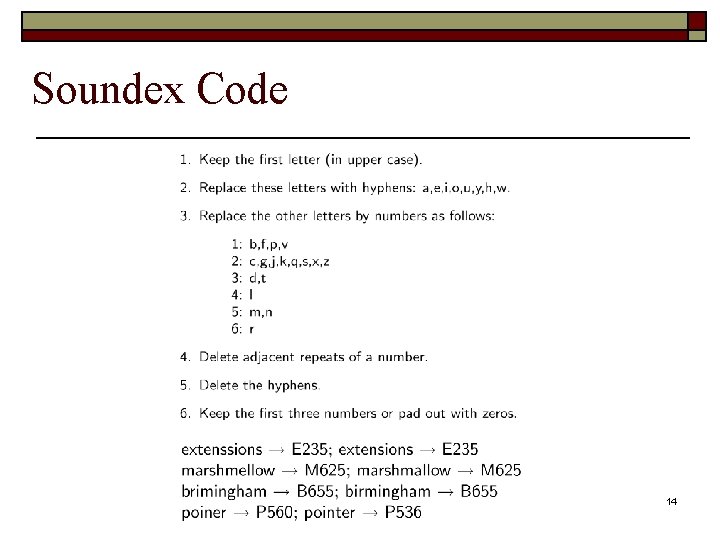
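The definition above (insertions, deletions, substitutions, and transpositions of single characters) can be sketched with dynamic programming. This is the restricted "optimal string alignment" form, in which only adjacent characters may be transposed; the function name and the sample word pairs in the usage note are illustrative choices for this sketch.

```python
def edit_distance(a, b):
    """Minimum number of single-character insertions, deletions,
    substitutions, or adjacent transpositions turning a into b."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i  # delete all i characters of a
    for j in range(cols):
        d[0][j] = j  # insert all j characters of b
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # match or substitution
            )
            # Transposition of two adjacent characters.
            if (i > 1 and j > 1
                    and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]):
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]
```

For instance, `edit_distance("poiner", "pointer")` needs one insertion and `edit_distance("brimingham", "birmingham")` one transposition, while `edit_distance("doceration", "decoration")` needs two substitutions.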

Edit Distance
- Speed up calculation of edit distances
  - restrict to words starting with same character
  - restrict to words of same or similar length
  - restrict to words that sound the same
- Last option uses a phonetic code to group words
  - e.g., restrict to words with the same Soundex code

Soundex Code

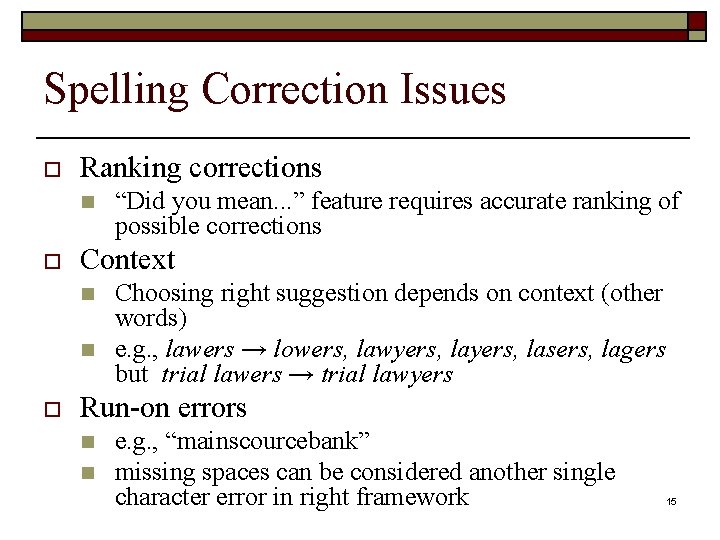

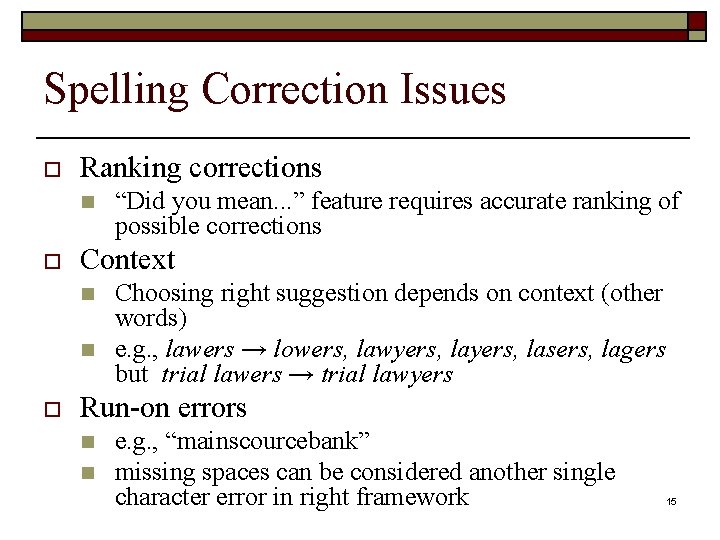
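A minimal sketch of a Soundex-style code, assuming the classic letter-to-digit groups and a simplified rule set: keep the first letter, treat vowels and h, w, y as separators, drop directly repeated digits, and pad or truncate to three digits. Published Soundex variants differ in how they handle h and w and the code of the first letter, so this should be read as one plausible variant, not necessarily the exact rules pictured on the slide.

```python
# Letter groups of the classic Soundex mapping.
_CODES = {}
for _digit, _letters in {"1": "bfpv", "2": "cgjkqsxz", "3": "dt",
                         "4": "l", "5": "mn", "6": "r"}.items():
    for _letter in _letters:
        _CODES[_letter] = _digit


def soundex(word):
    """Return a 4-character code: first letter plus three digits."""
    letters = [c for c in word.lower() if c.isalpha()]
    if not letters:
        return ""
    # Encode the letters after the first; vowels and h, w, y become '-'.
    symbols = [_CODES.get(c, "-") for c in letters[1:]]
    digits = []
    previous = None
    for s in symbols:
        # Keep a digit unless it directly repeats the previous symbol.
        if s != "-" and s != previous:
            digits.append(s)
        previous = s
    # Pad with zeros, then keep the letter and the first three digits.
    return (letters[0].upper() + "".join(digits) + "000")[:4]
```

Under these rules "extenssions" and "extensions" share a code, so the misspelling would be compared against the correct word, whereas "poiner" and "pointer" receive different codes and the correction would be missed by this filter.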

Spelling Correction Issues
- Ranking corrections
  - “Did you mean...” feature requires accurate ranking of possible corrections
- Context
  - Choosing right suggestion depends on context (other words)
  - e.g., lawers → lowers, lawyers, lasers, lagers but trial lawers → trial lawyers
- Run-on errors
  - e.g., “mainscourcebank”
  - missing spaces can be considered another single character error in right framework
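The effect of context on ranking can be illustrated with a small sketch that mixes how frequent a candidate is on its own with how often it follows the previous query word. The mixing weight `lam`, the function name, and all counts below are invented for illustration; they are not data from the slides.

```python
# Toy counts, invented purely for illustration.
UNIGRAMS = {"lowers": 50, "lawyers": 30, "lasers": 15, "lagers": 5, "trial": 20}
BIGRAMS = {("trial", "lawyers"): 10}


def rank_corrections(candidates, previous_word, unigrams, bigrams, lam=0.5):
    """Order candidate corrections, best first.

    score = lam * P(word) + (1 - lam) * P(word | previous_word),
    with both probabilities estimated from raw counts.
    """
    total = sum(unigrams.values()) or 1
    prev_count = unigrams.get(previous_word, 0)

    def score(word):
        p_word = unigrams.get(word, 0) / total
        if prev_count:
            p_context = bigrams.get((previous_word, word), 0) / prev_count
        else:
            p_context = 0.0  # no usable context: fall back to word frequency
        return lam * p_word + (1 - lam) * p_context

    return sorted(candidates, key=score, reverse=True)
```

With these toy counts, the candidates for "lawers" are ordered by frequency alone when there is no context, and "lawyers" moves to the top once the previous word is "trial".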

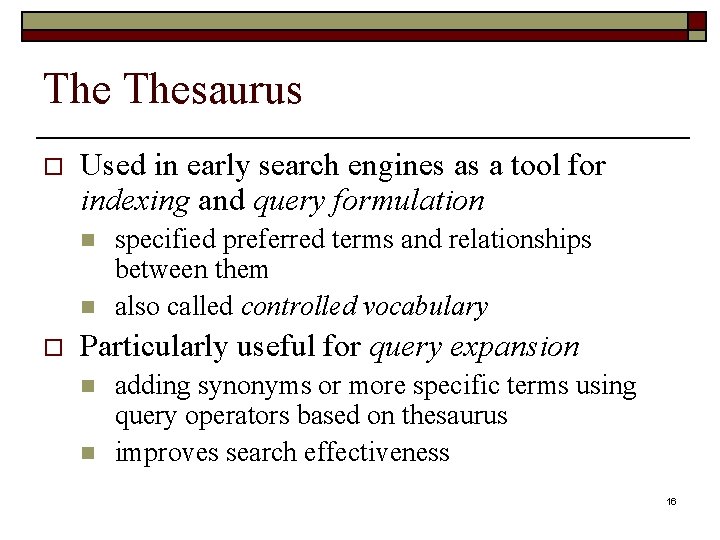

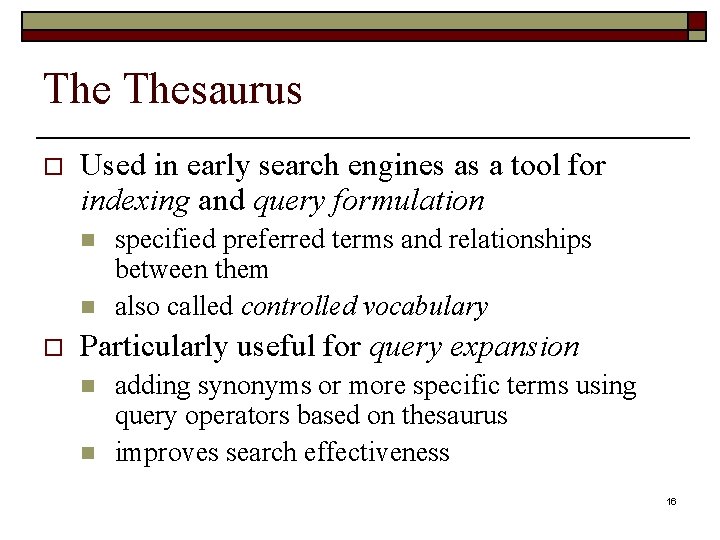

The Thesaurus
- Used in early search engines as a tool for indexing and query formulation
  - specified preferred terms and relationships between them
  - also called controlled vocabulary
- Particularly useful for query expansion
  - adding synonyms or more specific terms using query operators based on thesaurus
  - improves search effectiveness

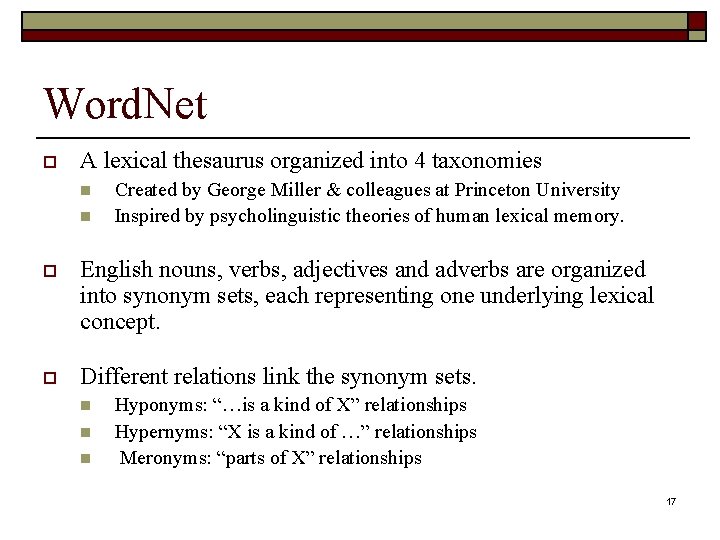

WordNet
- A lexical thesaurus organized into 4 taxonomies
  - Created by George Miller & colleagues at Princeton University
  - Inspired by psycholinguistic theories of human lexical memory.
- English nouns, verbs, adjectives and adverbs are organized into synonym sets, each representing one underlying lexical concept.
- Different relations link the synonym sets.
  - Hyponyms: “…is a kind of X” relationships
  - Hypernyms: “X is a kind of …” relationships
  - Meronyms: “parts of X” relationships

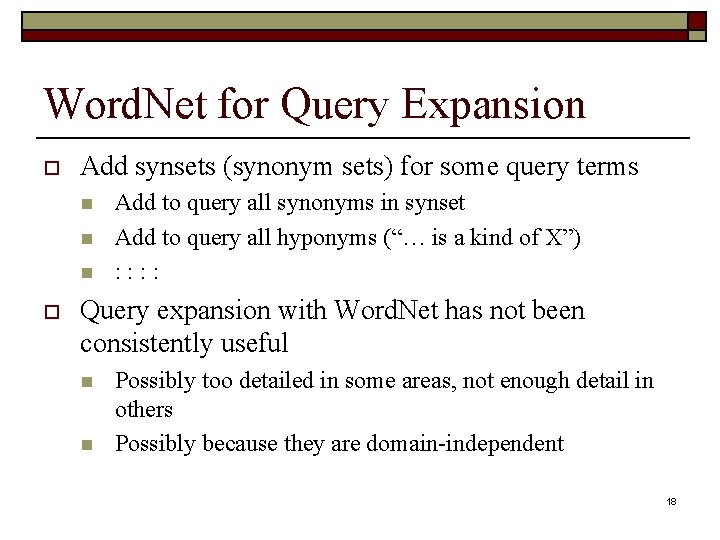

WordNet for Query Expansion
- Add synsets (synonym sets) for some query terms
  - Add to query all synonyms in synset
  - Add to query all hyponyms (“… is a kind of X”)
  - …
- Query expansion with WordNet has not been consistently useful
  - Possibly too detailed in some areas, not enough detail in others
  - Possibly because it is domain-independent

Query Expansion
- A variety of automatic or semi-automatic query expansion techniques have been developed
  - to improve effectiveness by matching related terms
  - semi-automatic techniques require user interaction to select best expansion terms
- Query suggestion is a related technique
  - alternative queries, not necessarily more terms

Query Expansion
- Approaches usually based on an analysis of term co-occurrence
  - either in the entire document collection, a large collection of queries, or the top-ranked documents in a result list
  - query-based stemming is also an expansion technique
- Automatic expansion based on general thesaurus not effective
  - does not take context into account

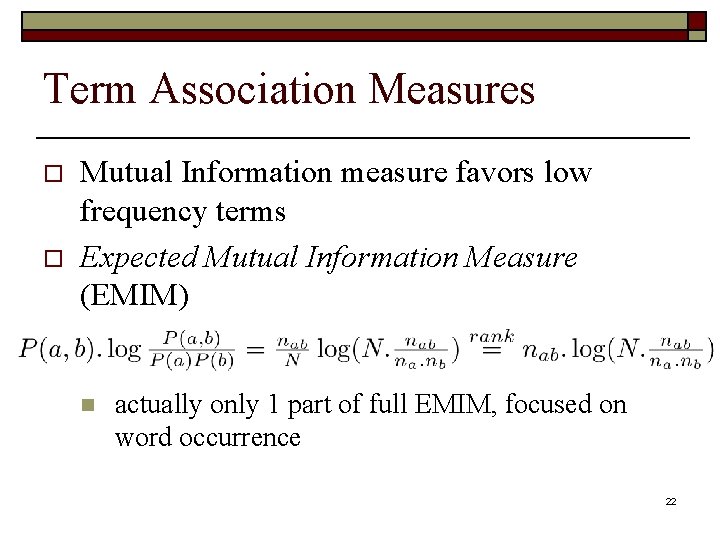

Term Association Measures
- Dice’s Coefficient
- Mutual Information
- Favor words with high co-occurrence but low document frequency $n_a$ and $n_b$

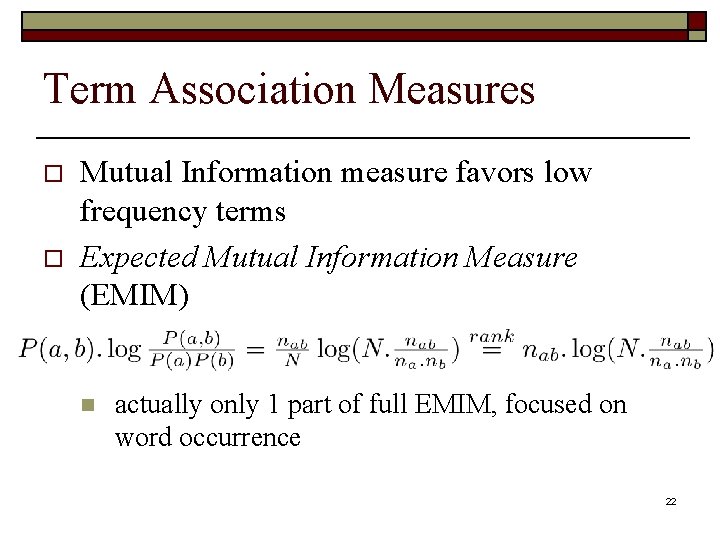

Term Association Measures
- Mutual Information measure favors low frequency terms
- Expected Mutual Information Measure (EMIM)
  - actually only 1 part of full EMIM, focused on word occurrence
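The measures named above can be sketched from co-occurrence counts. The sketch assumes probabilities are estimated as counts divided by `n_total` (the number of documents or windows), and that the EMIM term is the single "both words occur" component mentioned on the slide; the function and parameter names are chosen for this example.

```python
import math


def dice(n_ab, n_a, n_b):
    """Dice's coefficient: 2 * n_ab / (n_a + n_b)."""
    return 2 * n_ab / (n_a + n_b)


def mutual_information(n_ab, n_a, n_b, n_total):
    """log( P(a,b) / (P(a) * P(b)) ), with P estimated as count / n_total."""
    if n_ab == 0:
        return float("-inf")  # the words never co-occur
    return math.log(n_total * n_ab / (n_a * n_b))


def expected_mutual_information(n_ab, n_a, n_b, n_total):
    """P(a,b) * log( P(a,b) / (P(a) * P(b)) ): the co-occurrence term only."""
    if n_ab == 0:
        return 0.0
    return (n_ab / n_total) * mutual_information(n_ab, n_a, n_b, n_total)
```

Comparing a rare pair (each word in 2 of 10,000 units, always together) with a common pair (each in 1,000 units, together in 500) shows the bias: mutual information scores the rare pair higher, while the EMIM term, weighted by P(a,b), scores the common pair higher.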

Association Measure Example
Most strongly associated words for “tropical” in a collection of TREC news stories. Co-occurrence counts are measured at the document level.

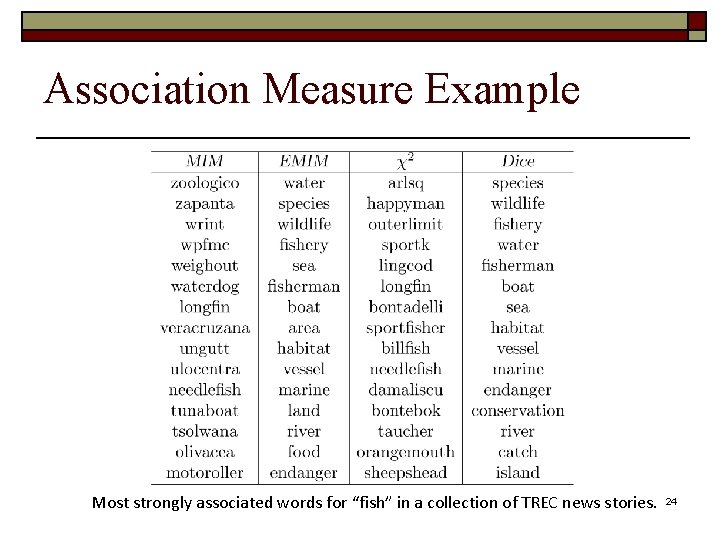

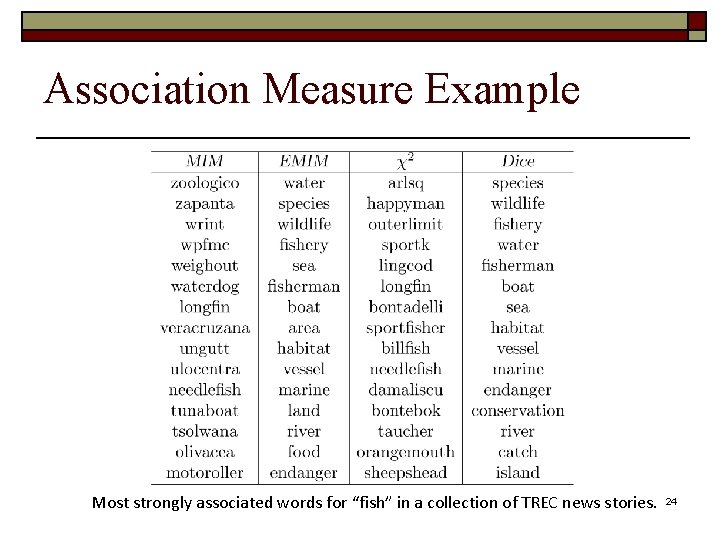

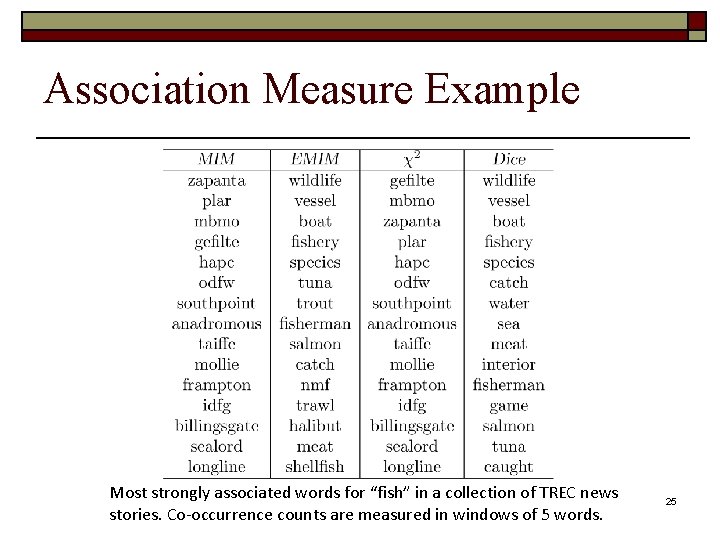

Association Measure Example
Most strongly associated words for “fish” in a collection of TREC news stories.

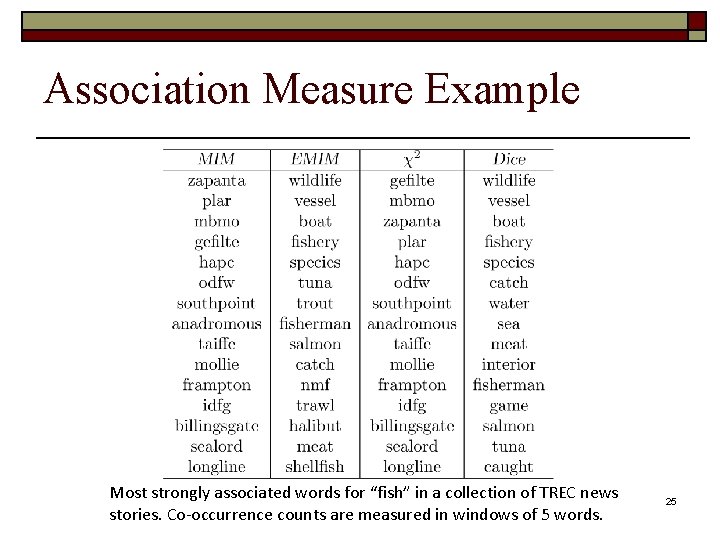

Association Measure Example
Most strongly associated words for “fish” in a collection of TREC news stories. Co-occurrence counts are measured in windows of 5 words.

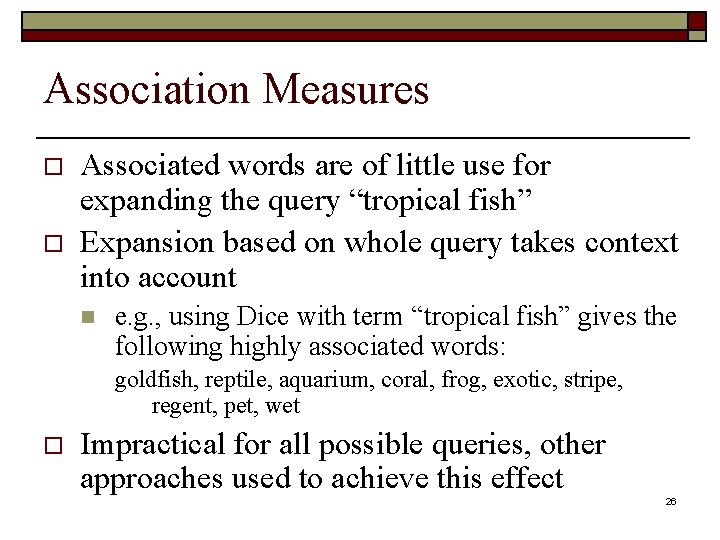

Association Measures
- Associated words are of little use for expanding the query “tropical fish”
- Expansion based on whole query takes context into account
  - e.g., using Dice with term “tropical fish” gives the following highly associated words: goldfish, reptile, aquarium, coral, frog, exotic, stripe, regent, pet, wet
- Impractical for all possible queries; other approaches are used to achieve this effect

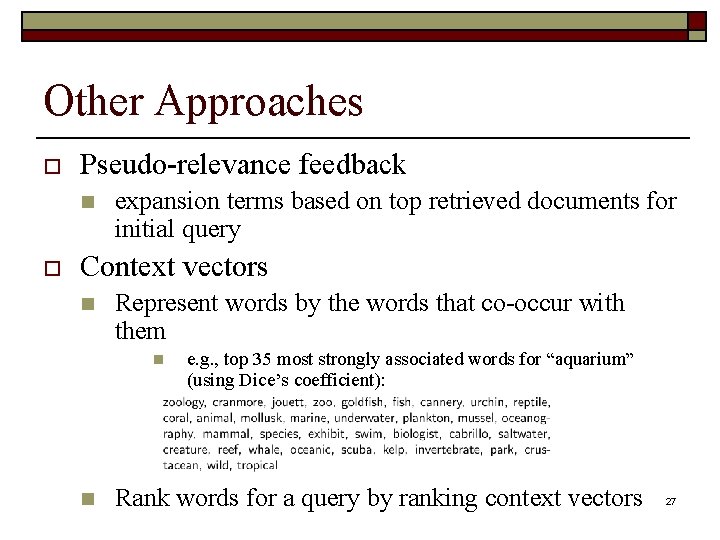

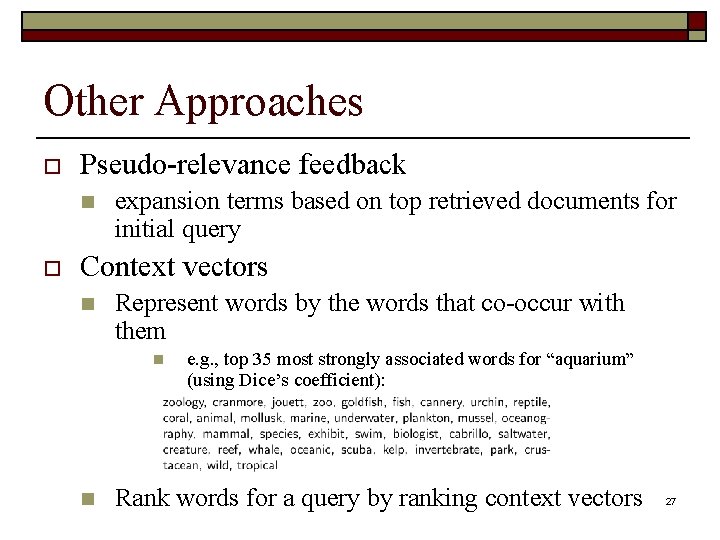

Other Approaches
- Pseudo-relevance feedback
  - expansion terms based on top retrieved documents for initial query
- Context vectors
  - Represent words by the words that co-occur with them
  - e.g., top 35 most strongly associated words for “aquarium” (using Dice’s coefficient):
  - Rank words for a query by ranking context vectors

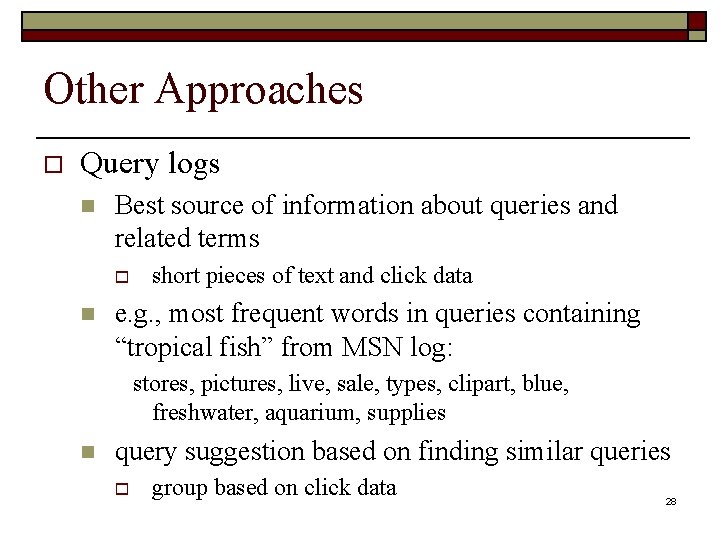

Other Approaches
- Query logs
  - Best source of information about queries and related terms
    - short pieces of text and click data
  - e.g., most frequent words in queries containing “tropical fish” from MSN log: stores, pictures, live, sale, types, clipart, blue, freshwater, aquarium, supplies
  - query suggestion based on finding similar queries
    - group based on click data

Relevance Feedback
- User identifies relevant (and maybe non-relevant) documents in the initial result list
- System modifies query using terms from those documents and re-ranks documents

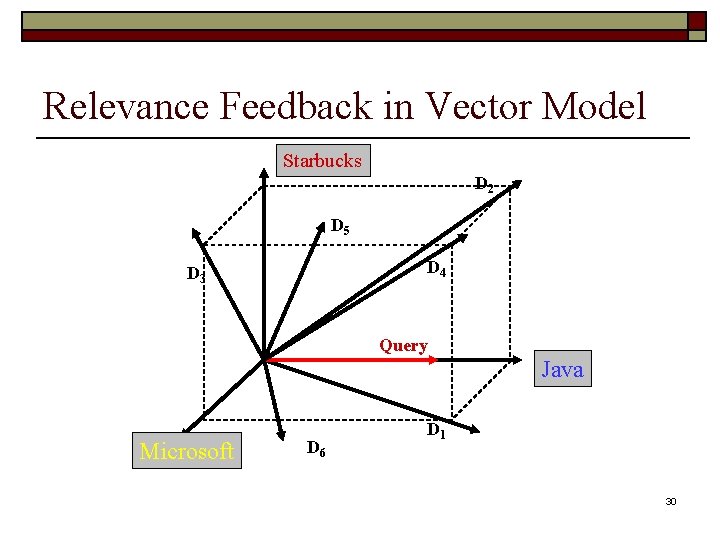

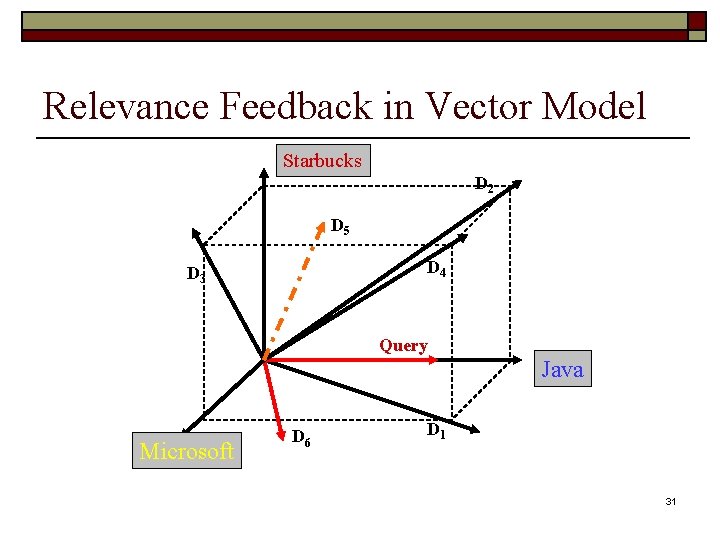

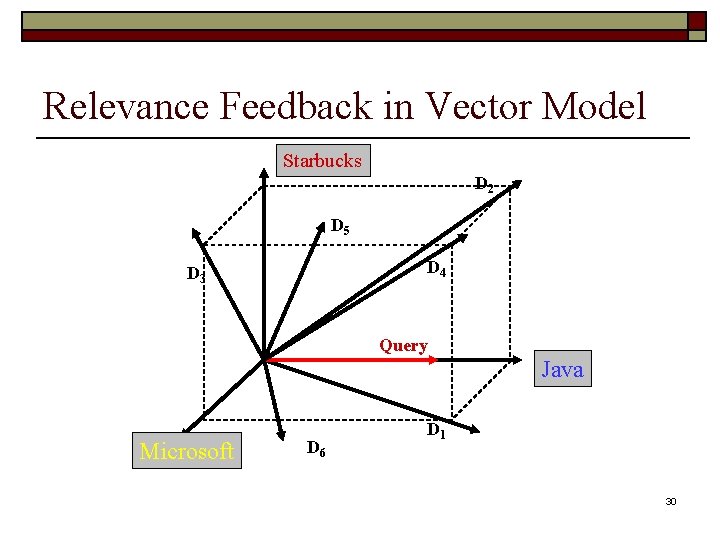

Relevance Feedback in Vector Model
[Figure: documents D1 to D6 and the query plotted in a vector space, with the labels Starbucks, Java, and Microsoft]

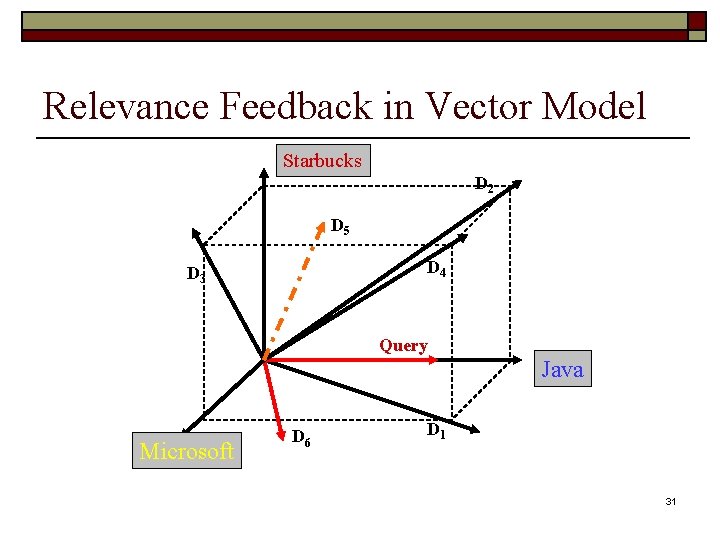


Relevance Feedback in Vector Model
[Figure: the plot of D1 to D6 with the labels Starbucks, Java, and Microsoft, with the query shown at a different position]

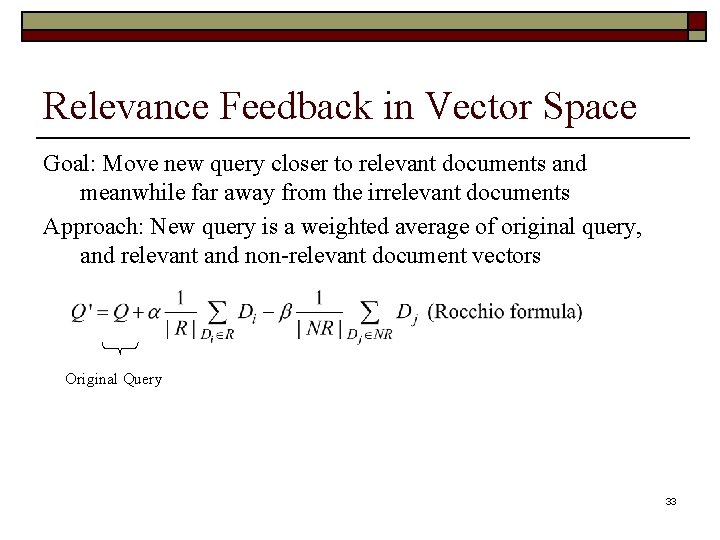

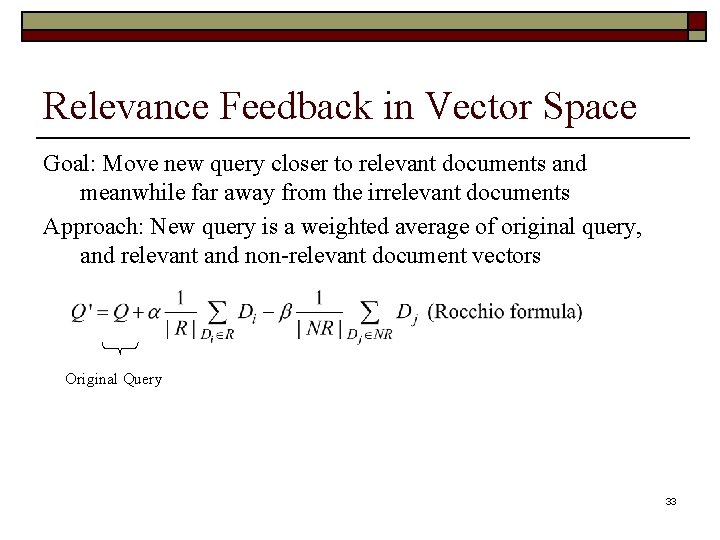

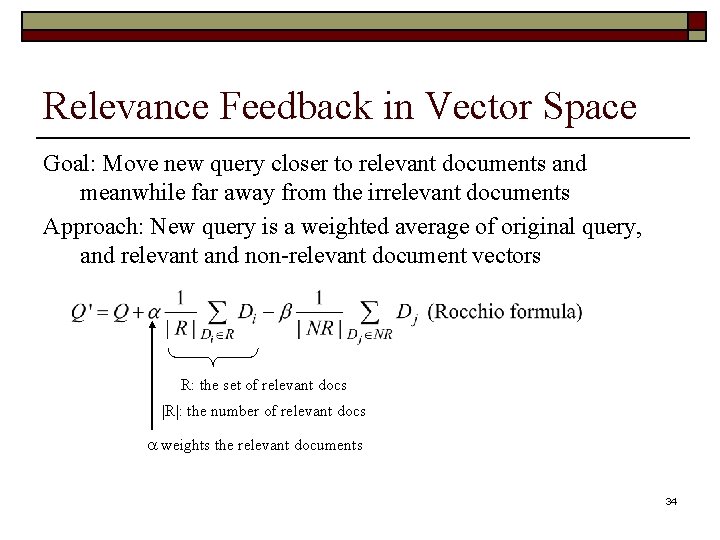

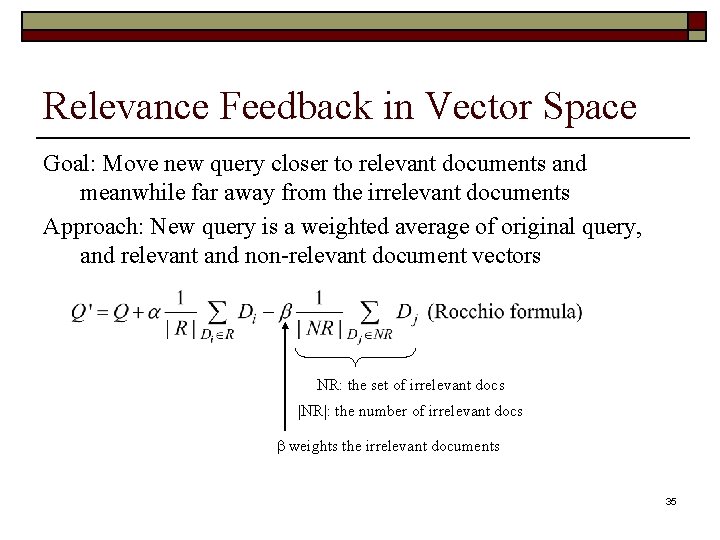

Relevance Feedback in Vector Space
- Goal: Move new query closer to relevant documents and meanwhile far away from the irrelevant documents
- Approach: New query is a weighted average of original query, and relevant and non-relevant document vectors

[Formula annotation: original query]

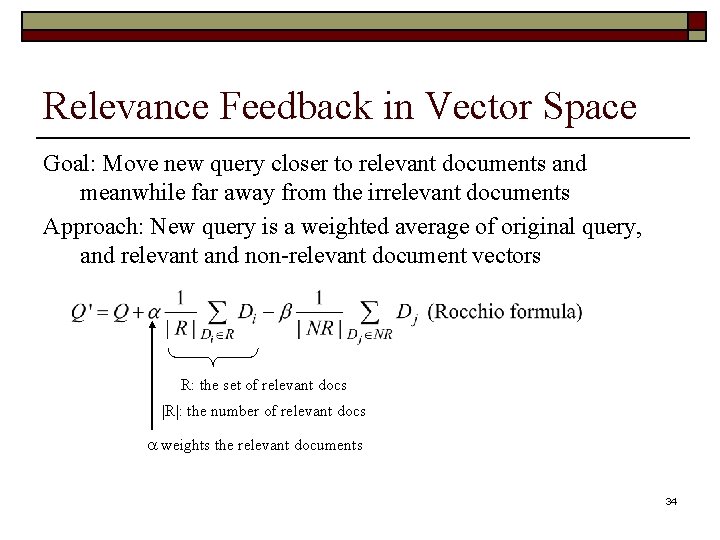

Relevance Feedback in Vector Space (continued)
- R: the set of relevant docs
- |R|: the number of relevant docs
- a weight is applied to the relevant documents

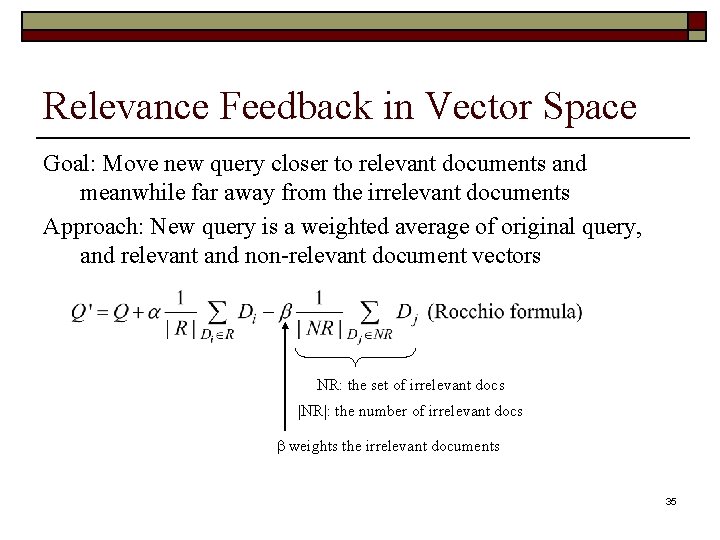

Relevance Feedback in Vector Space (continued)
- NR: the set of irrelevant docs
- |NR|: the number of irrelevant docs
- a weight is applied to the irrelevant documents
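The weighted average described on these slides is commonly written in the Rocchio form $q' = \alpha\, q + \frac{\beta}{|R|}\sum_{d \in R} d - \frac{\gamma}{|NR|}\sum_{d \in NR} d$. The sketch below assumes that form; the symbol names `alpha`, `beta`, `gamma`, their default values, and the clipping of negative weights are assumptions for this example, since the slide's own formula is only available as an image.

```python
from collections import defaultdict


def rocchio(query, relevant, non_relevant, alpha=1.0, beta=0.75, gamma=0.15):
    """Return a modified query vector.

    query: dict mapping term -> weight.
    relevant, non_relevant: lists of document vectors (dicts term -> weight).
    alpha, beta, gamma: assumed weights for the original query, the
    relevant centroid, and the non-relevant centroid.
    """
    new_query = defaultdict(float)
    for term, weight in query.items():
        new_query[term] += alpha * weight
    for docs, coeff in ((relevant, beta), (non_relevant, -gamma)):
        for doc in docs:
            for term, weight in doc.items():
                # Dividing by len(docs) averages the vectors in each set.
                new_query[term] += coeff * weight / len(docs)
    # Terms pushed to zero or below are dropped (a common simplification).
    return {term: w for term, w in new_query.items() if w > 0}
```

Terms that occur mainly in the relevant documents gain weight and enter the query, while terms that occur mainly in the non-relevant documents are reduced or removed.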

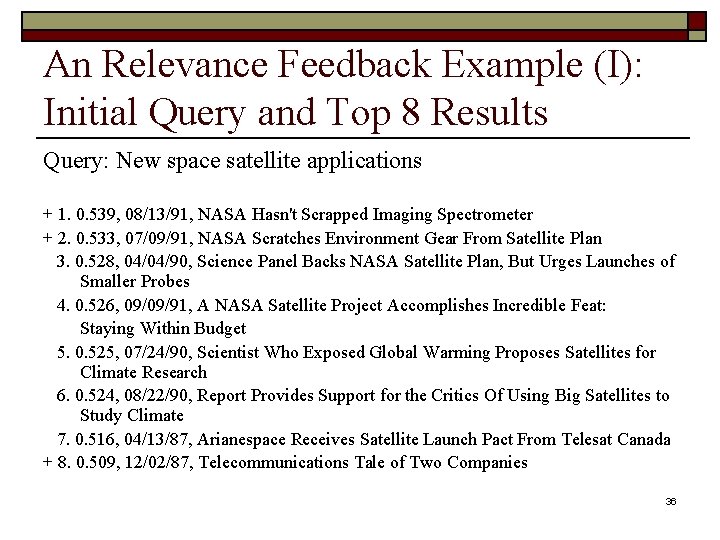

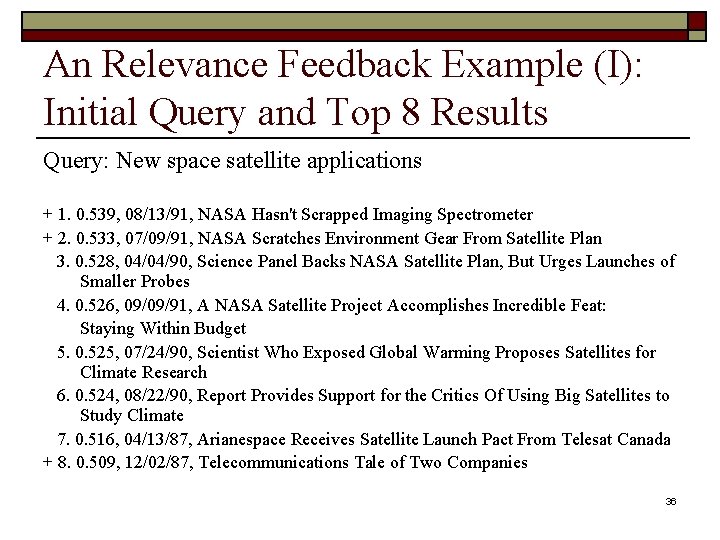

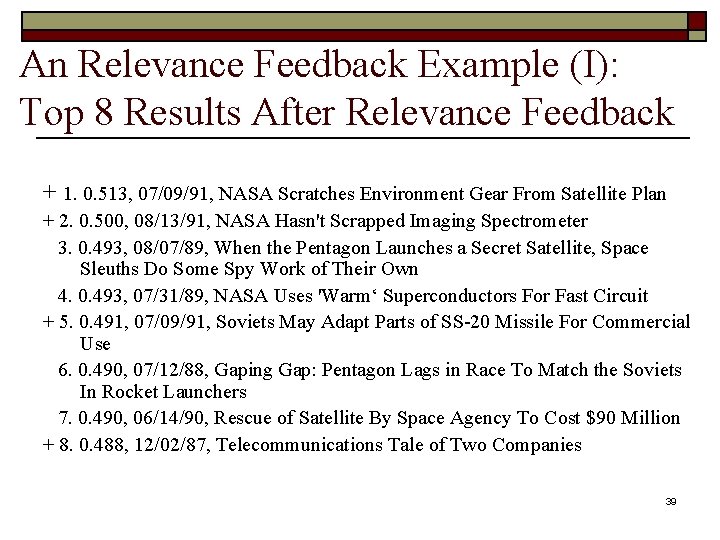

A Relevance Feedback Example (I): Initial Query and Top 8 Results
Query: New space satellite applications

| Marked | Rank | Score | Date | Title |
|---|---|---|---|---|
| + | 1 | 0.539 | 08/13/91 | NASA Hasn't Scrapped Imaging Spectrometer |
| + | 2 | 0.533 | 07/09/91 | NASA Scratches Environment Gear From Satellite Plan |
|  | 3 | 0.528 | 04/04/90 | Science Panel Backs NASA Satellite Plan, But Urges Launches of Smaller Probes |
|  | 4 | 0.526 | 09/09/91 | A NASA Satellite Project Accomplishes Incredible Feat: Staying Within Budget |
|  | 5 | 0.525 | 07/24/90 | Scientist Who Exposed Global Warming Proposes Satellites for Climate Research |
|  | 6 | 0.524 | 08/22/90 | Report Provides Support for the Critics Of Using Big Satellites to Study Climate |
|  | 7 | 0.516 | 04/13/87 | Arianespace Receives Satellite Launch Pact From Telesat Canada |
| + | 8 | 0.509 | 12/02/87 | Telecommunications Tale of Two Companies |

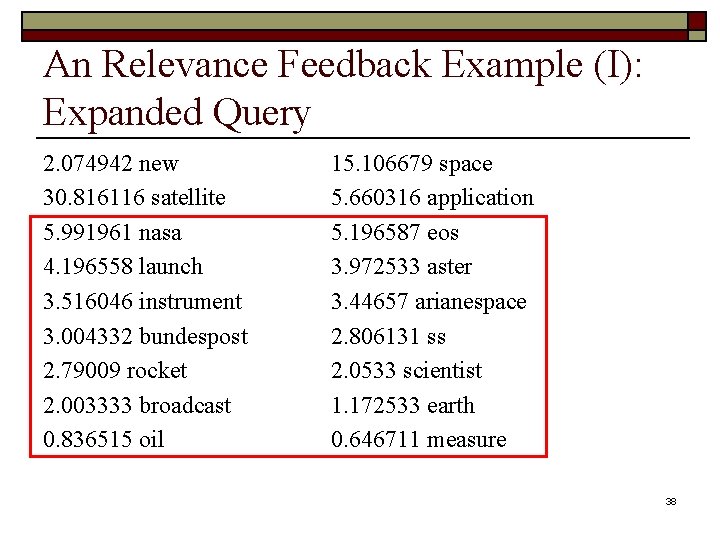

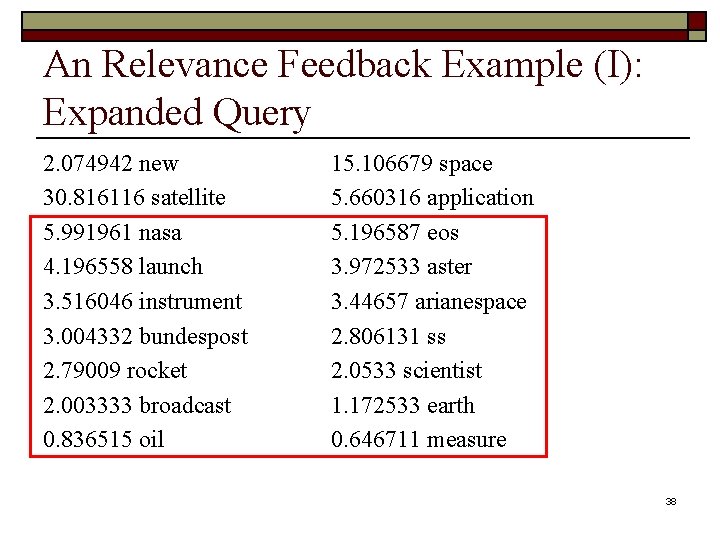

A Relevance Feedback Example (I): Expanded Query

| Weight | Term | Weight | Term |
|---|---|---|---|
| 2.074942 | new | 15.106679 | space |
| 30.816116 | satellite | 5.660316 | application |
| 5.991961 | nasa | 5.196587 | eos |
| 4.196558 | launch | 3.972533 | aster |
| 3.516046 | instrument | 3.44657 | arianespace |
| 3.004332 | bundespost | 2.806131 | ss |
| 2.79009 | rocket | 2.0533 | scientist |
| 2.003333 | broadcast | 1.172533 | earth |
| 0.836515 | oil | 0.646711 | measure |


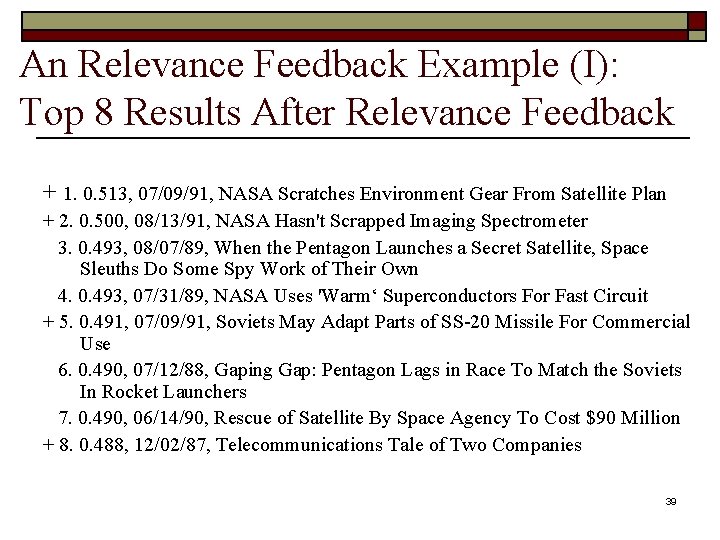

A Relevance Feedback Example (I): Top 8 Results After Relevance Feedback

| Marked | Rank | Score | Date | Title |
|---|---|---|---|---|
| + | 1 | 0.513 | 07/09/91 | NASA Scratches Environment Gear From Satellite Plan |
| + | 2 | 0.500 | 08/13/91 | NASA Hasn't Scrapped Imaging Spectrometer |
|  | 3 | 0.493 | 08/07/89 | When the Pentagon Launches a Secret Satellite, Space Sleuths Do Some Spy Work of Their Own |
|  | 4 | 0.493 | 07/31/89 | NASA Uses 'Warm' Superconductors For Fast Circuit |
| + | 5 | 0.491 | 07/09/91 | Soviets May Adapt Parts of SS-20 Missile For Commercial Use |
|  | 6 | 0.490 | 07/12/88 | Gaping Gap: Pentagon Lags in Race To Match the Soviets In Rocket Launchers |
|  | 7 | 0.490 | 06/14/90 | Rescue of Satellite By Space Agency To Cost $90 Million |
| + | 8 | 0.488 | 12/02/87 | Telecommunications Tale of Two Companies |

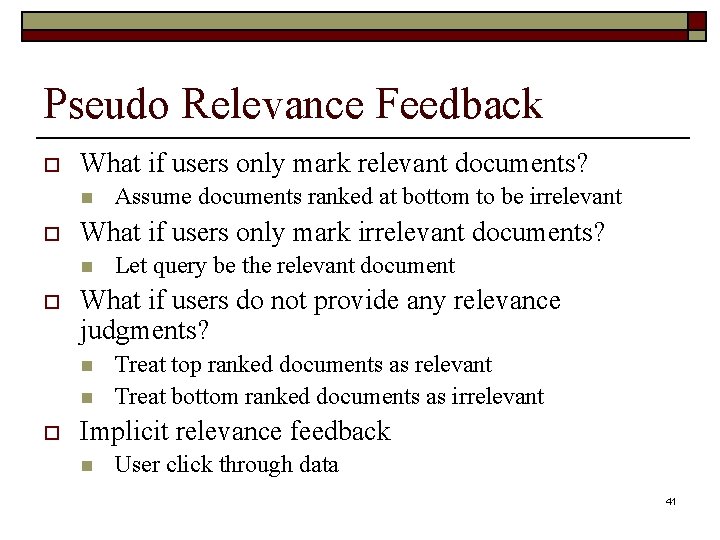

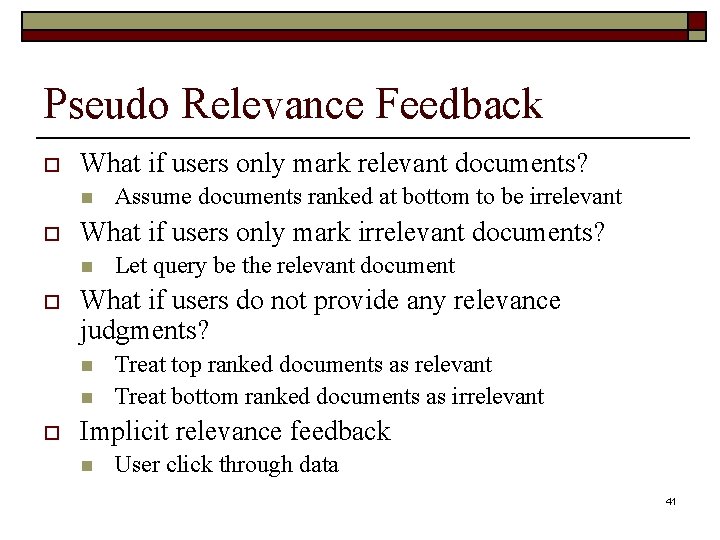

Pseudo Relevance Feedback
- What if users only mark relevant documents?
  - Assume documents ranked at bottom to be irrelevant
- What if users only mark irrelevant documents?
  - Let query be the relevant document
- What if users do not provide any relevance judgments?
  - Treat top ranked documents as relevant
  - Treat bottom ranked documents as irrelevant
- Implicit relevance feedback
  - User click-through data

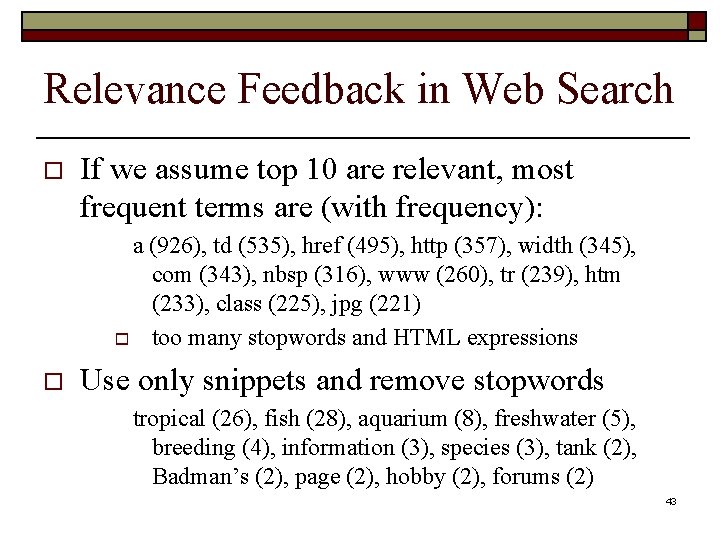

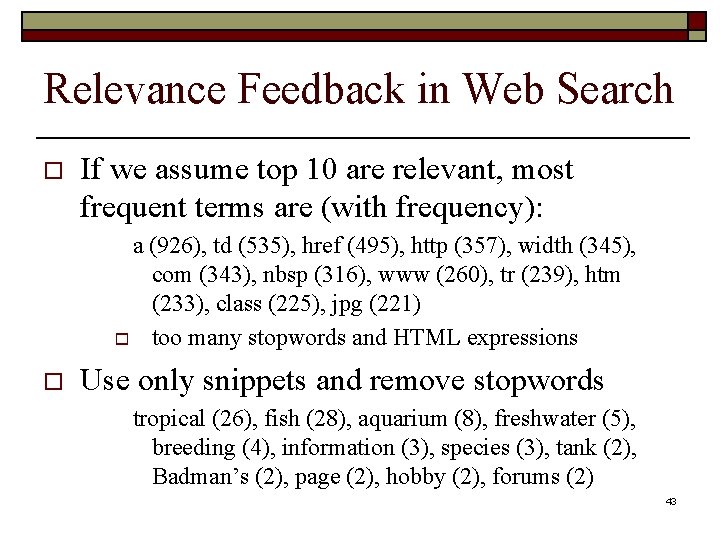

Relevance Feedback in Web Search
Top 10 documents for “tropical fish”

Relevance Feedback in Web Search
- If we assume top 10 are relevant, most frequent terms are (with frequency): a (926), td (535), href (495), http (357), width (345), com (343), nbsp (316), www (260), tr (239), htm (233), class (225), jpg (221)
  - too many stopwords and HTML expressions
- Use only snippets and remove stopwords: tropical (26), fish (28), aquarium (8), freshwater (5), breeding (4), information (3), species (3), tank (2), Badman’s (2), page (2), hobby (2), forums (2)
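The snippet-based counting described above can be sketched as follows. The stopword list is a tiny illustrative stand-in, the tag-stripping regular expression is a simplification (HTML entities such as `&nbsp;` would need separate handling), and the function name is hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "of", "for", "to", "in", "on",
             "is", "are", "with"}


def expansion_candidates(snippets, k=10):
    """Return the k most frequent non-stopword terms in the snippets."""
    counts = Counter()
    for snippet in snippets:
        text = re.sub(r"<[^>]+>", " ", snippet)  # drop HTML tags
        for token in re.findall(r"[a-z]+", text.lower()):
            if token not in STOPWORDS:
                counts[token] += 1
    return counts.most_common(k)
```

Counting over the snippets of the top-ranked results, rather than over full page source, keeps markup tokens such as `td` and `href` out of the candidate list.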

Relevance Feedback
- Relevance feedback is not used in many applications
  - Reliability issues, especially with queries that don’t retrieve many relevant documents
- Some applications use relevance feedback
  - filtering, “more like this”
- Query suggestion more popular
  - may be less accurate, but can work if initial query fails

Context and Personalization
- If a query has the same words as another query, results will be the same regardless of
  - who submitted the query
  - why the query was submitted
  - where the query was submitted
  - what other queries were submitted in the same session
- These other factors (the context) could have a significant impact on relevance
  - difficult to incorporate into ranking

User Models
- Generate user profiles based on documents that the person looks at
  - such as web pages visited, email messages, or word processing documents on the desktop
- Modify queries using words from profile
- Generally not effective
  - imprecise profiles, information needs can change significantly

Query Logs
- Query logs provide important contextual information that can be used effectively
- Context in this case is
  - previous queries that are the same
  - previous queries that are similar
  - query sessions including the same query
- Query history for individuals could be used for caching

Local Search
- Location is context
- Local search uses geographic information to modify the ranking of search results
  - location derived from the query text
  - location of the device where the query originated
- e.g.,
  - “underworld 3 cape cod”
  - “underworld 3” from mobile device in Hyannis

Local Search
- Identify the geographic region associated with web pages
  - location metadata, or
  - automatically identifying the locations such as place names, city names, or country names in text
- Identify the geographic region associated with the query
  - 10-15% of queries contain some location reference
- Rank web pages using location information in addition to text and link-based features

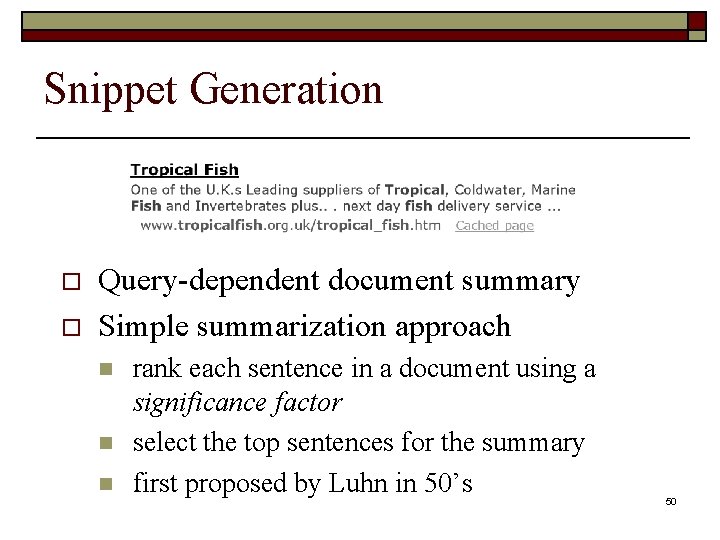

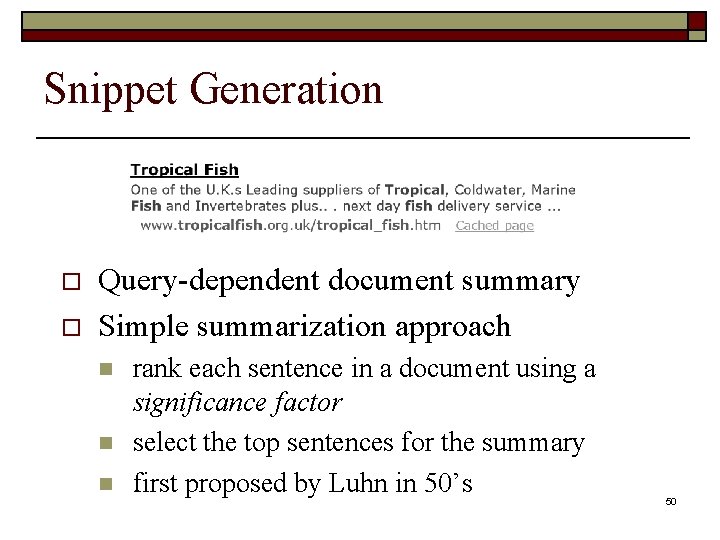

Snippet Generation
- Query-dependent document summary
- Simple summarization approach
  - rank each sentence in a document using a significance factor
  - select the top sentences for the summary
  - first proposed by Luhn in the 1950s
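A sketch of a Luhn-style significance factor, under these assumptions: significant words are bracketed into spans, a span is broken when more than `max_gap` non-significant words separate two significant words, and a span scores (number of significant words)² divided by its length. The `max_gap` default and the function name are assumptions for this sketch; for query-dependent snippets the significant words would typically be the query terms.

```python
def significance_factor(sentence_words, significant, max_gap=4):
    """Best span score for one sentence (0.0 if no significant word)."""
    positions = [i for i, w in enumerate(sentence_words) if w in significant]
    if not positions:
        return 0.0
    best = 0.0
    start = prev = positions[0]
    count = 1
    for pos in positions[1:]:
        if pos - prev - 1 > max_gap:
            # Gap too long: close the current span and start a new one.
            best = max(best, count ** 2 / (prev - start + 1))
            start, count = pos, 0
        count += 1
        prev = pos
    return max(best, count ** 2 / (prev - start + 1))
```

For a sentence with the pattern `w w s w s s w w s w w`, where `s` marks a significant word, the bracketed span has 7 words and 4 significant ones, giving 16/7, roughly 2.3.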

Snippet Generation
- Involves more features than just significance factor
- e.g., for a news story, could use
  - whether the sentence is a heading
  - whether it is the first or second line of the document
  - the total number of query terms occurring in the sentence
  - the number of unique query terms in the sentence
  - the longest contiguous run of query words in the sentence
  - a density measure of query words (significance factor)
- Weighted combination of features used to rank sentences
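Three of the listed features and the weighted combination can be sketched as below. The feature names, the `weights` dictionary, and its values in the usage note are hypothetical; in practice the weights would be tuned or learned.

```python
def sentence_features(sentence_words, query_terms):
    """Compute a few query-dependent features for one sentence."""
    words = [w.lower() for w in sentence_words]
    terms = {t.lower() for t in query_terms}
    hits = [w in terms for w in words]
    longest = run = 0
    for hit in hits:
        run = run + 1 if hit else 0  # length of the current run of query words
        longest = max(longest, run)
    return {
        "total_query_terms": sum(hits),
        "unique_query_terms": len({w for w in words if w in terms}),
        "longest_run": longest,
    }


def sentence_score(features, weights):
    """Weighted combination of feature values (unknown features weigh 0)."""
    return sum(weights.get(name, 0.0) * value
               for name, value in features.items())
```

For example, with weights such as `{"total_query_terms": 1.0, "unique_query_terms": 2.0, "longest_run": 1.5}`, sentences would be sorted by `sentence_score` and the top ones selected for the snippet.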

Snippet Guidelines
- All query terms should appear in the summary, showing their relationship to the retrieved page
- When query terms are present in the title, they need not be repeated
  - allows snippets that do not contain query terms
- Highlight query terms in URLs
- Snippets should be readable text, not lists of keywords

Advertising
- Sponsored search: advertising presented with search results
- Contextual advertising: advertising presented when browsing web pages
- Both involve finding the most relevant advertisements in a database
  - An advertisement usually consists of a short text description and a link to a web page describing the product or service in more detail

Searching Advertisements
- Factors involved in ranking advertisements
  - similarity of text content to query
  - bids for keywords in query
  - popularity of advertisement
- Small amount of text in advertisement
  - dealing with vocabulary mismatch is important
  - expansion techniques are effective

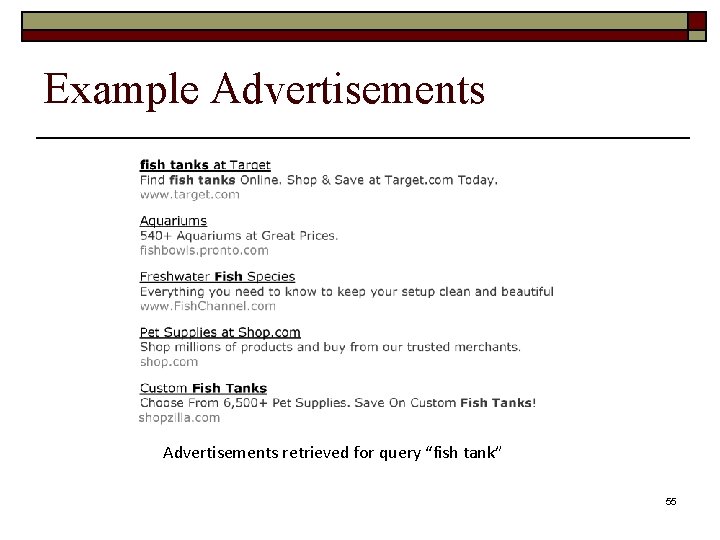

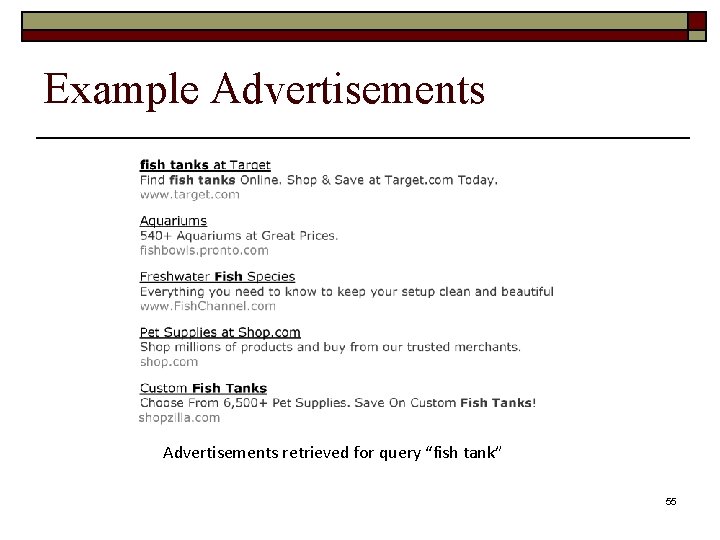

Example
Advertisements retrieved for query “fish tank”

Searching Advertisements
- Pseudo-relevance feedback
  - expand query and/or document using the Web
  - use ad text or query for pseudo-relevance feedback
  - rank exact matches first, followed by stem matches, followed by expansion matches
- Query reformulation based on search sessions
  - learn associations between words and phrases based on co-occurrence in search sessions

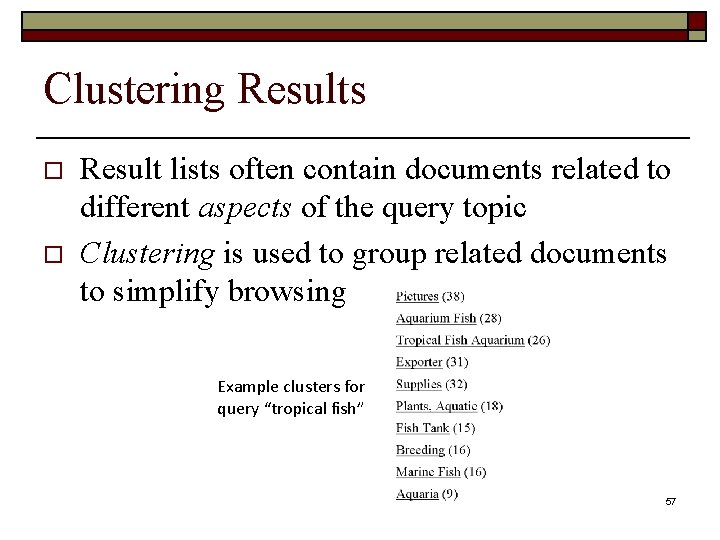

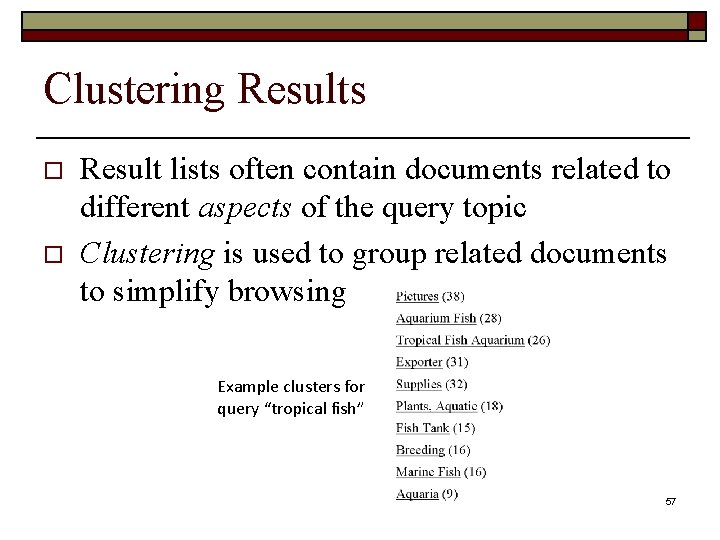

Clustering Results
- Result lists often contain documents related to different aspects of the query topic
- Clustering is used to group related documents to simplify browsing

Example clusters for query “tropical fish”

Result List Example
Top 10 documents for “tropical fish”

Clustering Results
- Requirements
- Efficiency
  - must be specific to each query and are based on the top-ranked documents for that query
  - typically based on snippets
- Easy to understand
  - Can be difficult to assign good labels to groups

Faceted Classification
- A set of categories, usually organized into a hierarchy, together with a set of facets that describe the important properties associated with the category
- Manually defined
  - potentially less adaptable than dynamic classification
- Easy to understand
  - commonly used in e-commerce

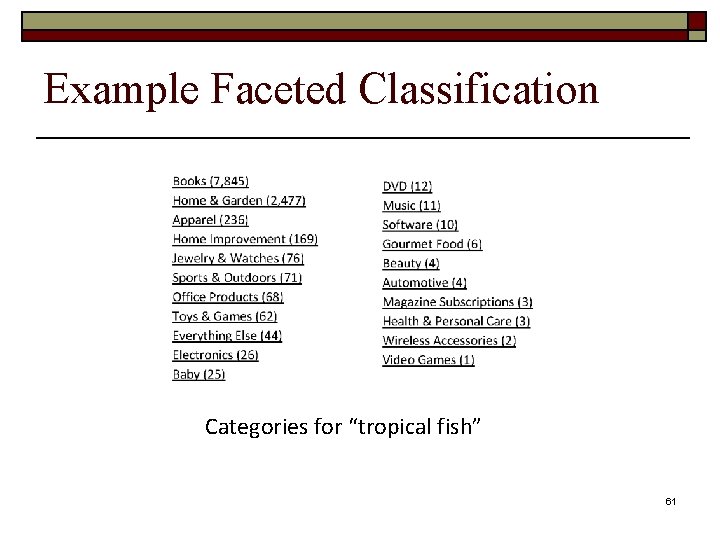

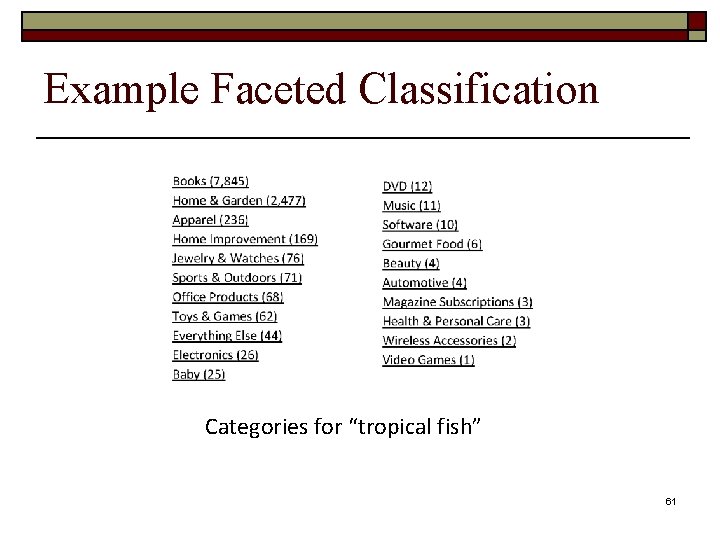

Example Faceted Classification
Categories for “tropical fish”

Example Faceted Classification
Subcategories and facets for “Home & Garden”

Cross-Language Search
- Query in one language, retrieve documents in multiple other languages
- Involves query translation, and probably document translation
- Query translation can be done using bilingual dictionaries
- Document translation requires more sophisticated statistical translation models
  - similar to some retrieval models

Cross-Language Search