Lecture Class 4: Introduction to Multithreaded Programming in C++11


Lecture Class 4 Introduction to Multithreaded Programming in C++11 "Concurrency is the next major revolution in how we write software. "—Herb Sutter, ISO C++ Standards Committee Chairman, "The Free Lunch is Over", Dr. Dobb's Journal, 2005

Processes Revisited
• A process is an active runtime environment that cradles a running program, providing an execution state for all threads in the process, including:
  – a process id, parent process id, process group id, etc.
  – file descriptor table
  – signal dispositions
  – data segments
  – heap
  – a process state (running, ready, waiting, etc.)
• Informally, a process is an executing program

Multiprocessing Revisited
• A multiprocessing or multitasking operating system (like Unix, as opposed to DOS) can have more than one process executing at any given time
• This simultaneous execution may either be
  – concurrent, meaning that multiple processes in a run state can be swapped in and out by the OS
  – parallel, meaning that multiple processes are actually running at the same time on multiple processors

What is a Thread?
• A thread is an encapsulation of a discrete flow of control in a program that can be independently scheduled
• Each process is given a single thread by default
• A thread is sometimes called a lightweight process, because it is similar to a process in that it has its own thread id, stack pointer, signal mask, program counter, register values, etc.
• All threads within a given process share resource handles, file descriptors, memory segments (heap and data segments), and code. THEREFORE HEAR THIS:
  – All threads share the same data segments and code segments, along with a single file descriptor table

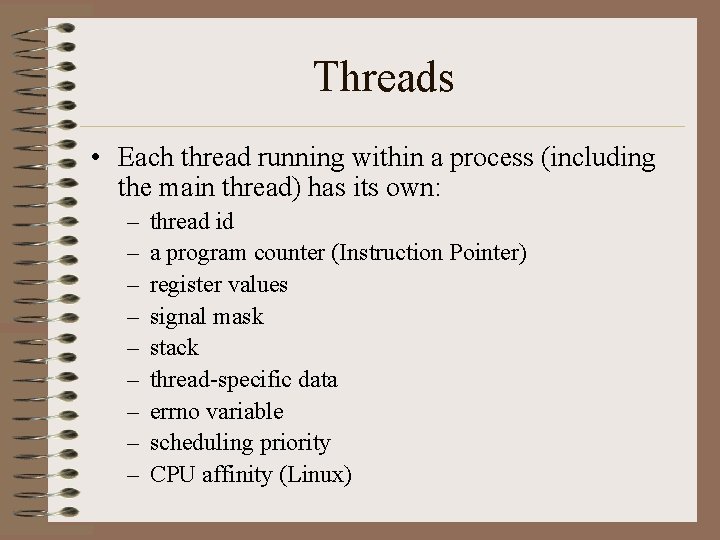

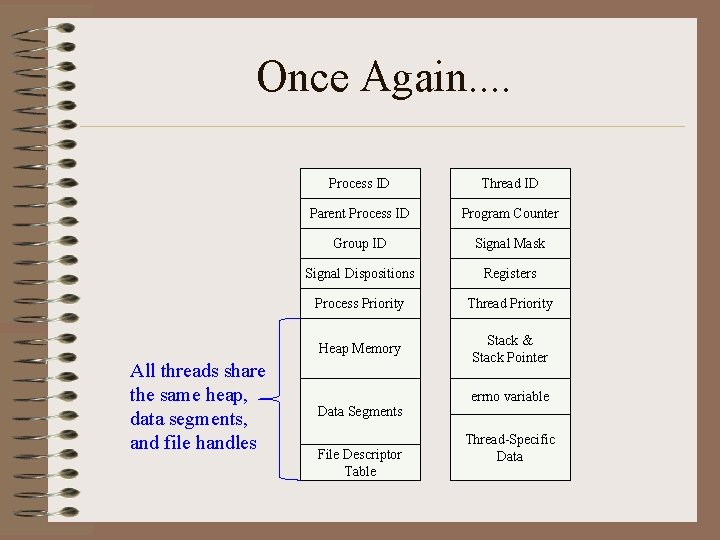

Threads
• Each thread running within a process (including the main thread) has its own:
  – thread id
  – program counter (Instruction Pointer)
  – register values
  – signal mask
  – stack
  – thread-specific data
  – errno variable
  – scheduling priority
  – CPU affinity (Linux)

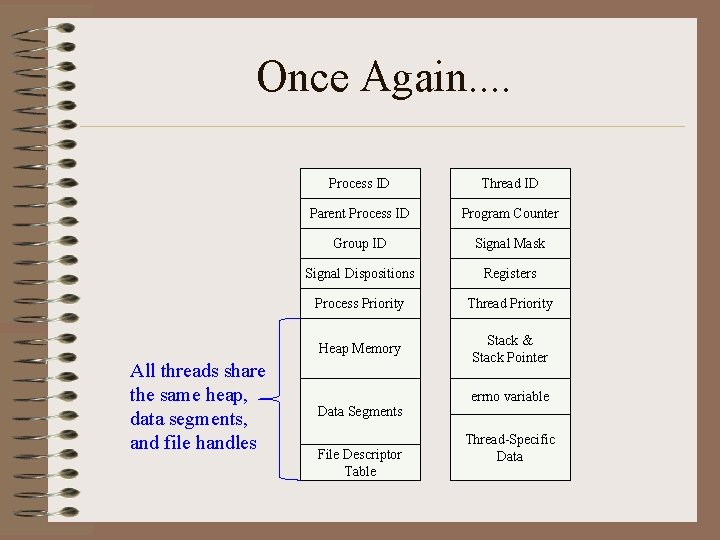

Once Again... All threads share the same heap, data segments, and file handles

| Shared by all threads in the process | Private to each thread |
|---|---|
| Process ID | Thread ID |
| Parent Process ID | Program Counter |
| Group ID | Signal Mask |
| Signal Dispositions | Registers |
| Process Priority | Thread Priority |
| Heap Memory | Stack & Stack Pointer |
| Data Segments | errno variable |
| File Descriptor Table | Thread-Specific Data |

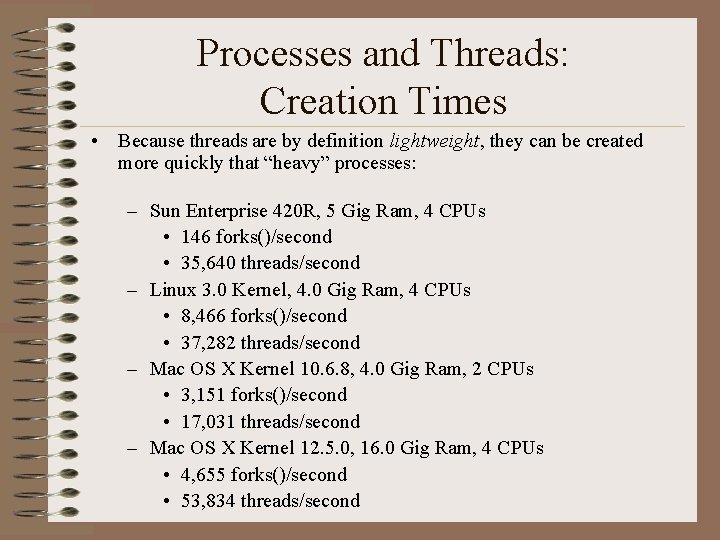

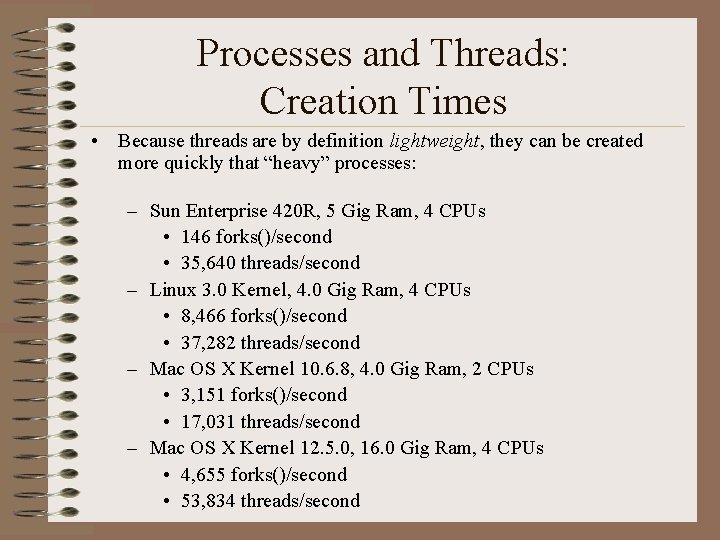

Processes and Threads: Creation Times
• Because threads are by definition lightweight, they can be created more quickly than "heavy" processes:
  – Sun Enterprise 420R, 5 GB RAM, 4 CPUs
    • 146 forks()/second
    • 35,640 threads/second
  – Linux 3.0 Kernel, 4.0 GB RAM, 4 CPUs
    • 8,466 forks()/second
    • 37,282 threads/second
  – Mac OS X Kernel 10.6.8, 4.0 GB RAM, 2 CPUs
    • 3,151 forks()/second
    • 17,031 threads/second
  – Mac OS X Kernel 12.5.0, 16.0 GB RAM, 4 CPUs
    • 4,655 forks()/second
    • 53,834 threads/second

Say What?
• Threads can be created and managed more quickly than processes because:
  – Threads have less overhead than processes; for example, threads share the process heap and all data and code segments
  – Threads can live entirely in user space, so that no kernel mode switch needs to be made to create a new thread
  – Processes don't need to be swapped to create a thread

Analogies
• Just as a multitasking operating system can have multiple processes executing concurrently or in parallel, so a single process can have multiple threads that are executing concurrently or in parallel
• These multiple threads can be task-swapped by a scheduler onto a single processor (via an LWP), or can run in parallel on separate processors

Benefits of Multithreading
• Performance gains
  – Amdahl's Law: speedup = 1 / ((1 − p) + (p / n))
  – Here p is the fraction of execution time that can be parallelized, n is the number of processors, and (1 − p) is the fraction that must still run serially
  – The more code that can run in parallel, the faster the overall program will run
  – If you can apply multiple processors for 75% of your program's execution time, and you're running on a dual-processor box:
    • 1 / ((1 − 0.75) + (0.75 / 2)) = 1.6, i.e., a 60% improvement
  – Why is it not strictly linear? How do you calculate p?

Benefits of Multithreading (continued)
• Increased throughput
• Increased application responsiveness (no more hourglasses)
• Replacing interprocess communications (you're in one process)
• A single binary executable runs on both multiprocessor and single-processor machines (processor transparency)
• Gains can be seen even on single-processor machines, because a blocking call in one thread no longer stops the whole program

On the Scheduling of Threads
• Threads may be scheduled by the system scheduler (OS) or by a scheduler in the thread library (depending on the threading model)
• The scheduler in the thread library:
  – will preempt currently running threads on the basis of priority
  – does NOT time-slice (i.e., is not fair). A running thread will continue to run (almost) forever unless:
    • a thread call is made into the thread library
    • a blocking call is made
    • the running thread calls sched_yield()

Models
• Many Threads to One LWP
  – DCE threads on HPUX 10.20
• One Thread to One LWP
  – Windows NT
  – Linux
• Many Threads to Many LWPs
  – Solaris, Digital UNIX, IRIX, HPUX 11.0

One Thread to One LWP (Windows NT, Linux) (there may be no real distinction between a thread and an LWP)

1x1 Model Variances
• Parallel execution is supported, as each user thread is directly associated with a single kernel thread which is scheduled by the OS scheduler
• Slower context switches, as the kernel is involved
• Number of threads is limited because each user thread is directly associated with a single kernel thread (in some instances threads take up an entry in the process table)
• Scheduling of threads is handled by the OS's scheduler, so threads are seldom starved
• Because threads are essentially kernel entities, swapping involves the kernel and is less efficient than a pure userspace scheduler

A Blog Entry "As a programmer, thanks to plummeting memory prices, and CPU speeds doubling every year, you had a choice. You could spend six months rewriting your inner loops in Assembler, or take six months off to play drums in a rock and roll band, and in either case, your program would run faster. Assembler programmers don't have groupies. "—Joel Spolsky

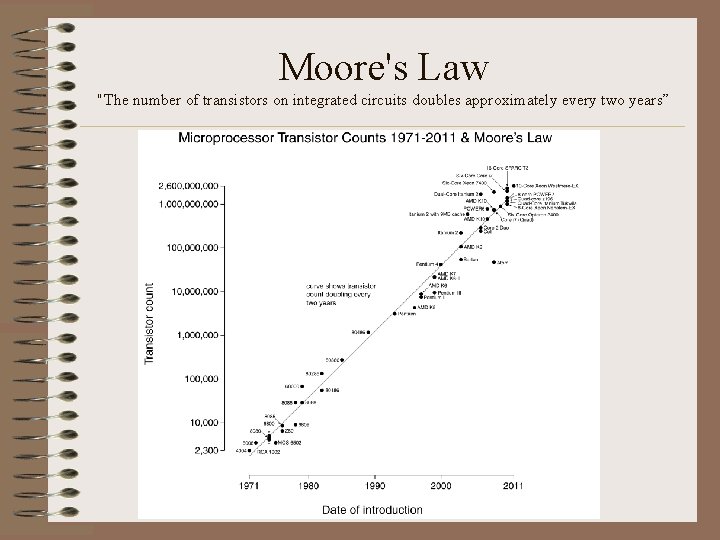

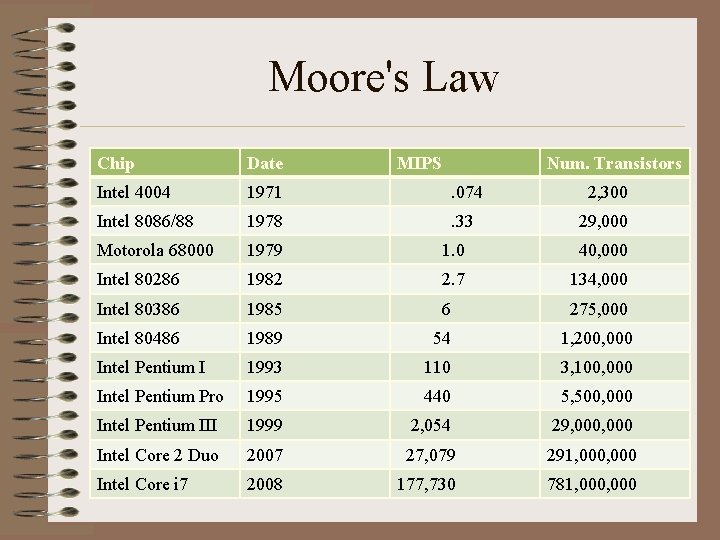

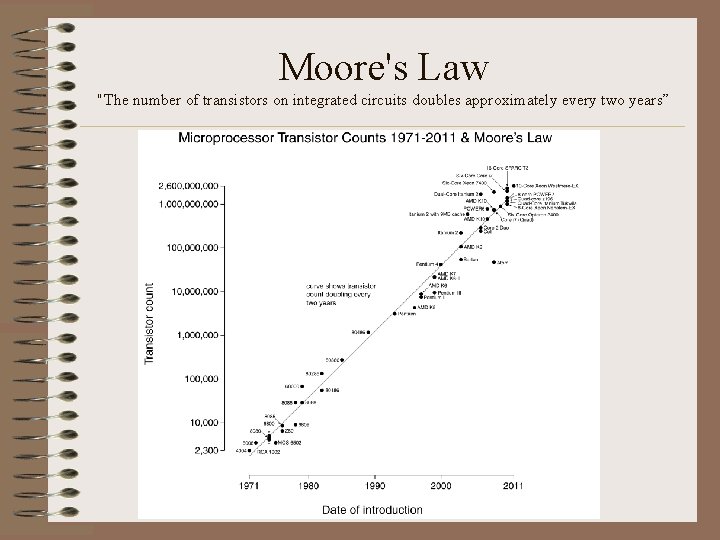

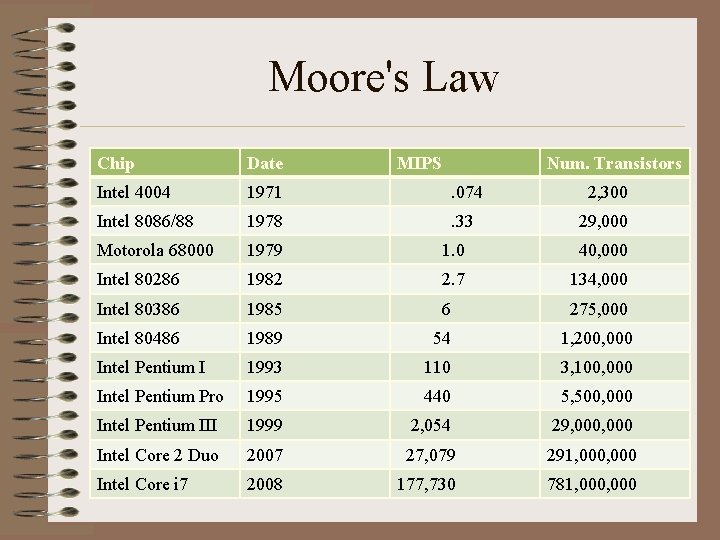

Moore's Law: "The number of transistors on integrated circuits doubles approximately every two years"

Moore's Law

| Chip | Date | MIPS | Num. Transistors |
|---|---|---|---|
| Intel 4004 | 1971 | 0.074 | 2,300 |
| Intel 8086/88 | 1978 | 0.33 | 29,000 |
| Motorola 68000 | 1979 | 1.0 | 40,000 |
| Intel 80286 | 1982 | 2.7 | 134,000 |
| Intel 80386 | 1985 | 6 | 275,000 |
| Intel 80486 | 1989 | 54 | 1,200,000 |
| Intel Pentium I | 1993 | 110 | 3,100,000 |
| Intel Pentium Pro | 1995 | 440 | 5,500,000 |
| Intel Pentium III | 1999 | 2,054 | 29,000,000 |
| Intel Core 2 Duo | 2007 | 27,079 | 291,000,000 |
| Intel Core i7 | 2008 | 177,730 | 781,000,000 |

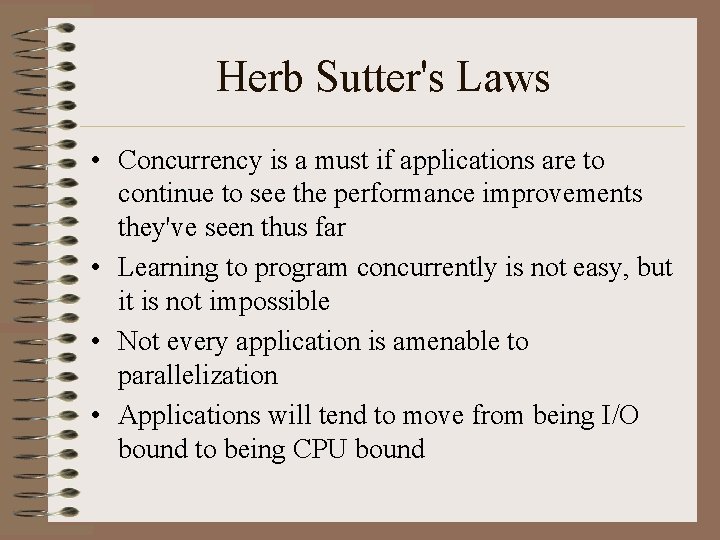

Herb Sutter's Laws
• Concurrency is a must if applications are to continue to see the performance improvements they've seen thus far
• Learning to program concurrently is not easy, but it is not impossible
• Not every application is amenable to parallelization
• Applications will tend to move from being I/O bound to being CPU bound

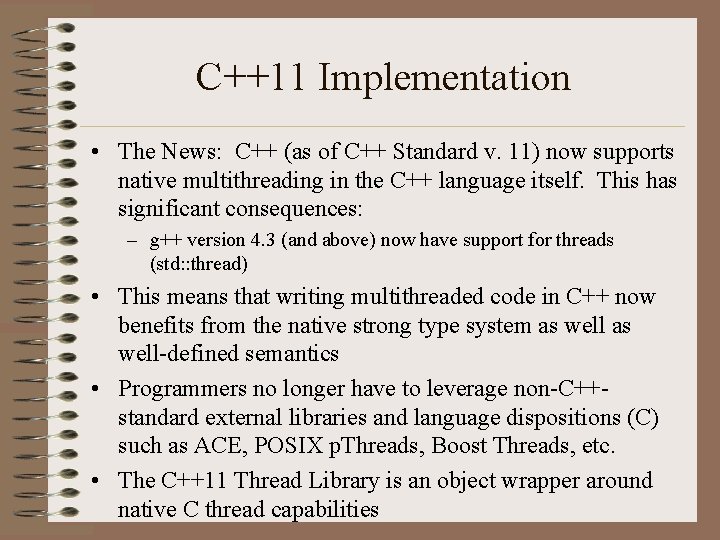

C++11 Implementation
• The News: C++ (as of the C++11 Standard) now supports native multithreading in the C++ language itself. This has significant consequences:
  – g++ version 4.3 (and above) now has support for threads (std::thread)
• This means that writing multithreaded code in C++ now benefits from the native strong type system as well as well-defined semantics
• Programmers no longer have to rely on external libraries and C-language facilities outside the C++ standard, such as ACE, POSIX pthreads, Boost Threads, etc.
• The C++11 Thread Library is an object wrapper around native C thread capabilities

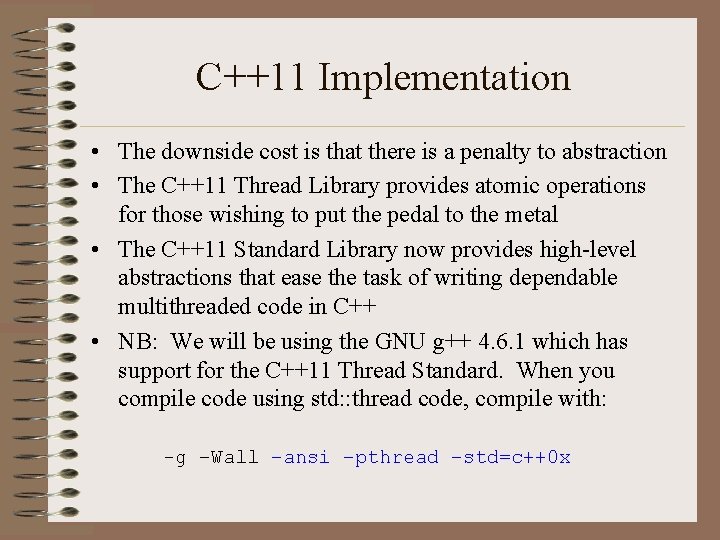

C++11 Implementation
• The downside is that abstraction carries a performance penalty
• The C++11 Thread Library provides atomic operations for those wishing to put the pedal to the metal
• The C++11 Standard Library now provides high-level abstractions that ease the task of writing dependable multithreaded code in C++
• NB: We will be using GNU g++ 4.6.1, which has support for the C++11 Thread Standard. When you compile code that uses std::thread, compile with: -g -Wall -ansi -pthread -std=c++0x

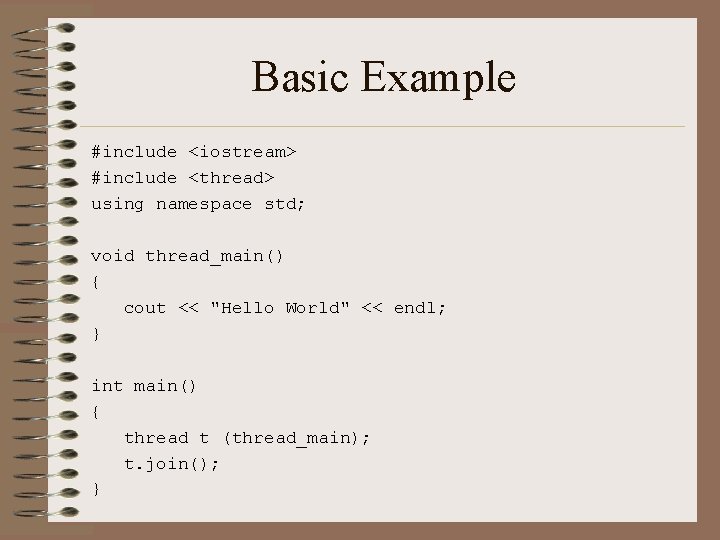

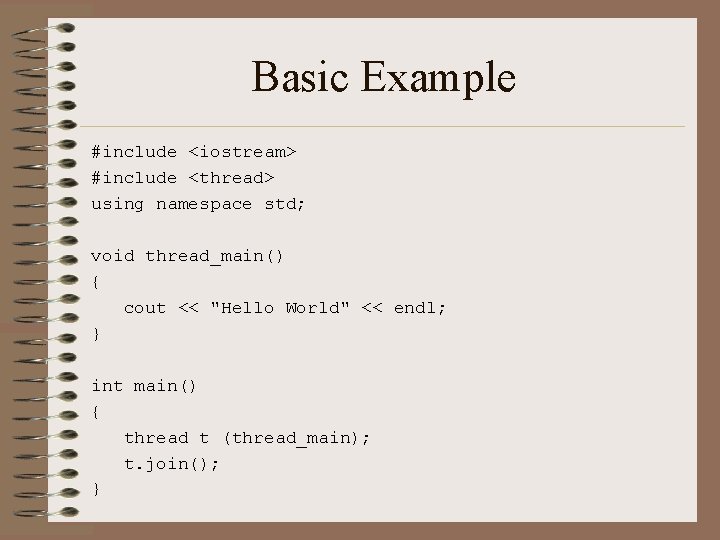

Basic Example

    #include <iostream>
    #include <thread>
    using namespace std;

    void thread_main() { cout << "Hello World" << endl; }

    int main()
    {
        thread t(thread_main);   // start a new thread running thread_main
        t.join();                // wait for it to finish
    }

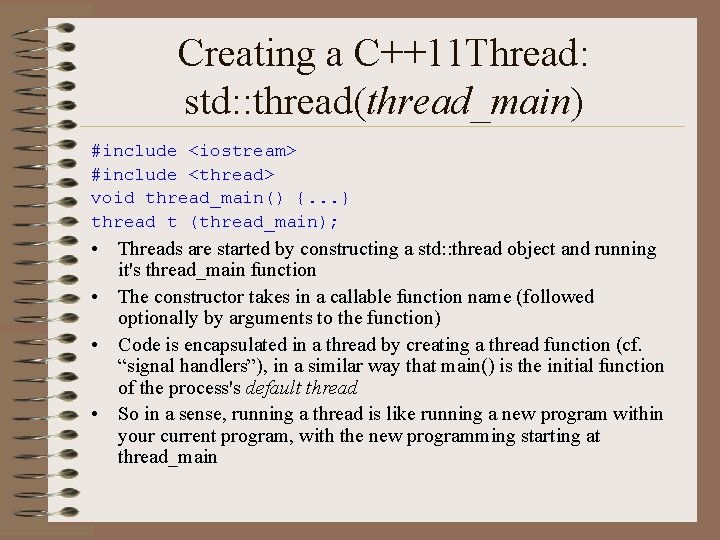

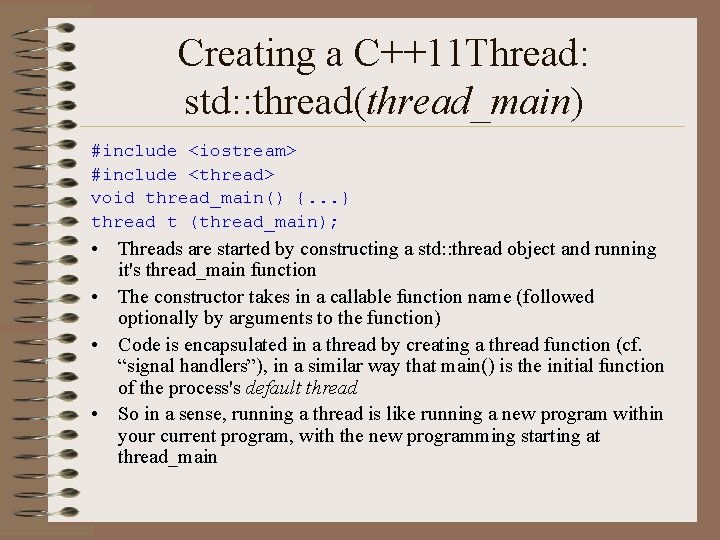

Creating a C++11 Thread: std::thread(thread_main)

    #include <iostream>
    #include <thread>
    using namespace std;

    void thread_main() { ... }

    thread t(thread_main);

• Threads are started by constructing a std::thread object, which runs its thread_main function
• The constructor takes a callable (such as a function name), optionally followed by arguments to pass to it
• Code is encapsulated in a thread by creating a thread function (cf. "signal handlers"), in the same way that main() is the initial function of the process's default thread
• So in a sense, running a thread is like running a new program within your current program, with the new program starting at thread_main

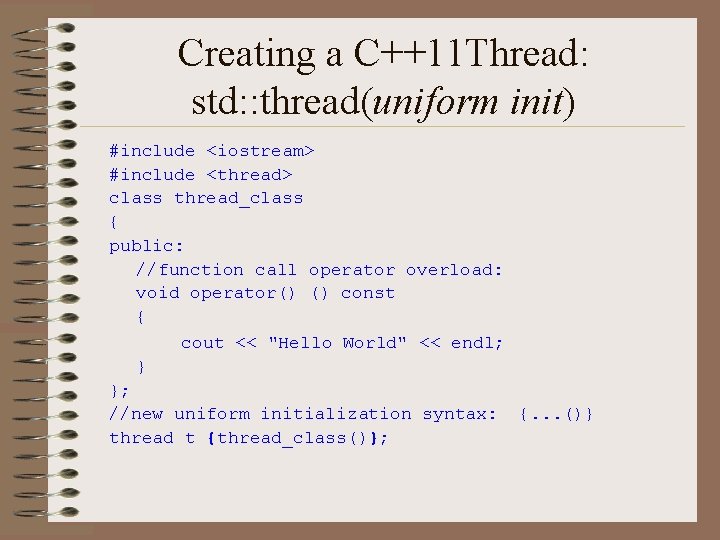

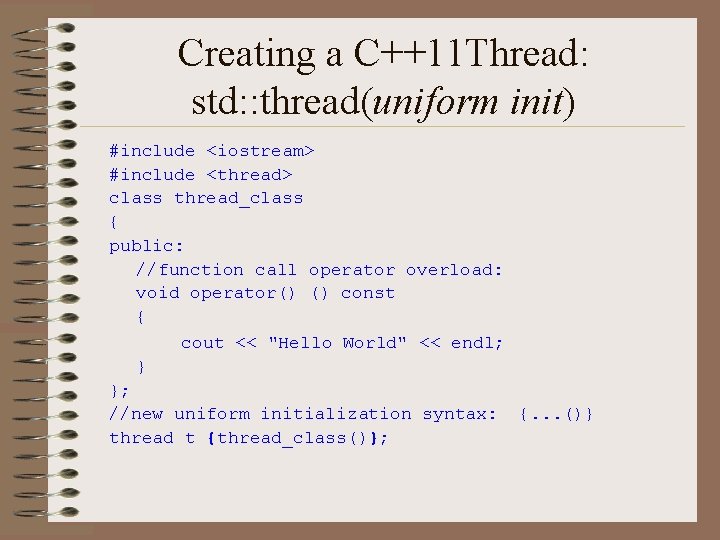

Creating a C++11 Thread: std::thread(uniform init)

    #include <iostream>
    #include <thread>
    using namespace std;

    class thread_class
    {
    public:
        // function call operator overload:
        void operator()() const { cout << "Hello World" << endl; }
    };

    // new uniform initialization syntax: {...()}
    thread t{thread_class()};

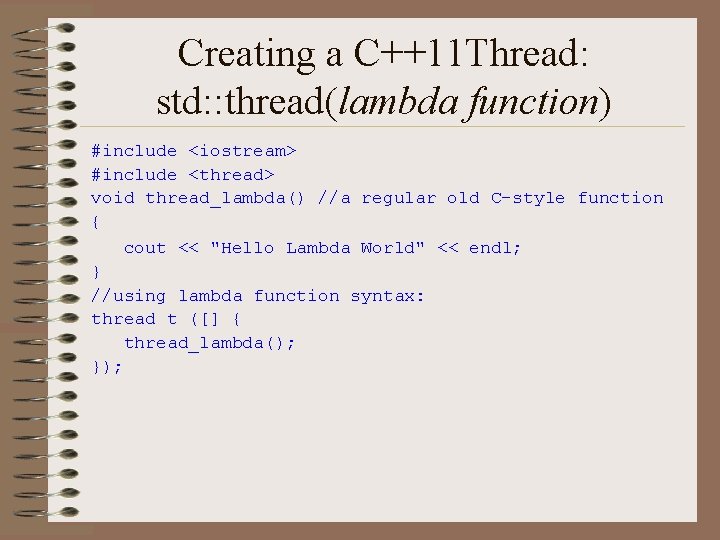

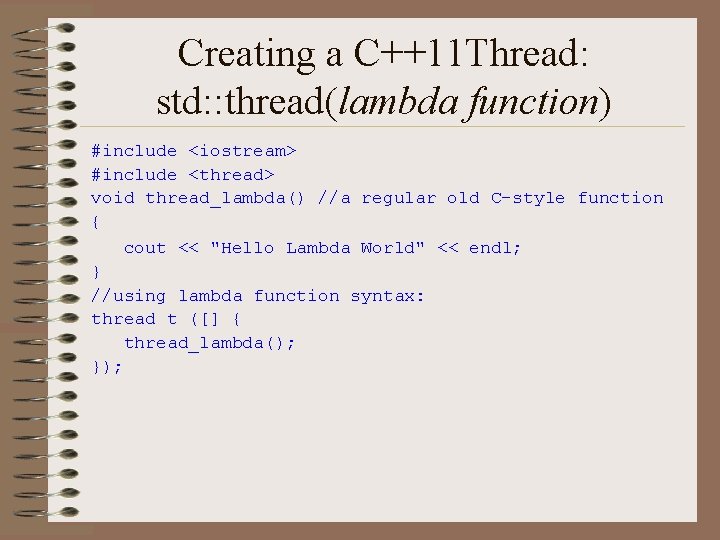

Creating a C++11 Thread: std::thread(lambda function)

    #include <iostream>
    #include <thread>
    using namespace std;

    void thread_lambda()   // a regular old C-style function
    {
        cout << "Hello Lambda World" << endl;
    }

    // using lambda function syntax:
    thread t([] { thread_lambda(); });

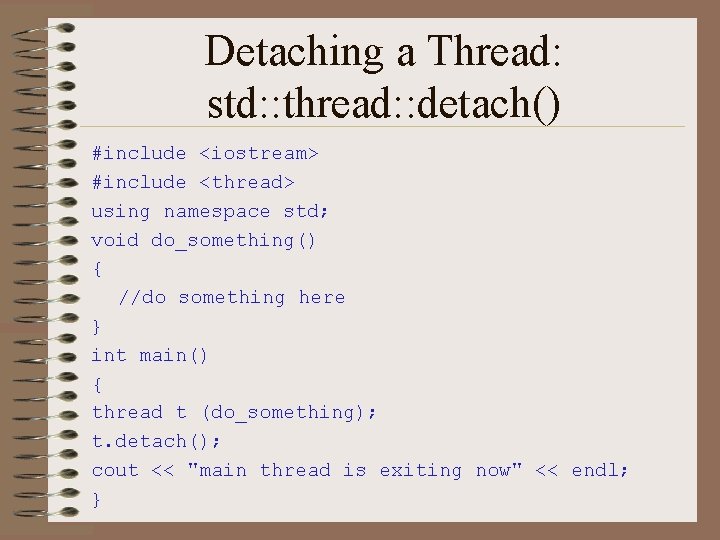

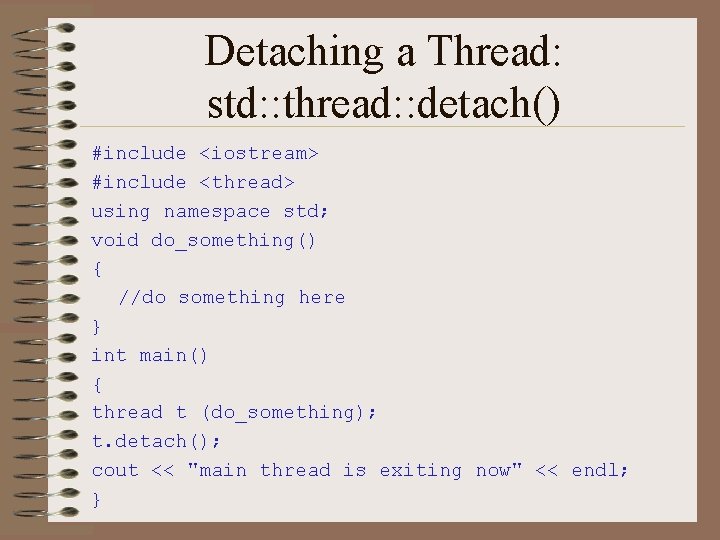

Detaching a Thread: std::thread::detach()

    #include <iostream>
    #include <thread>
    using namespace std;

    void do_something()
    {
        // do something here
    }

    int main()
    {
        thread t(do_something);
        t.detach();
        cout << "main thread is exiting now" << endl;
    }

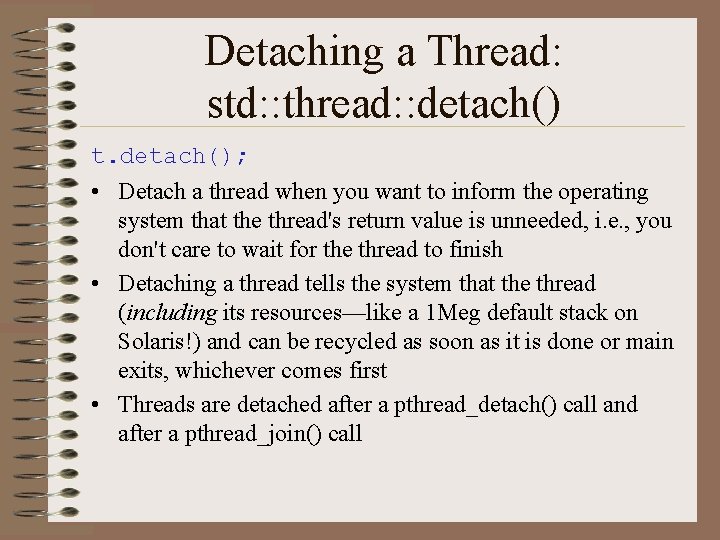

Detaching a Thread: std::thread::detach()

    t.detach();

• Detach a thread when you want to inform the operating system that the thread's return value is unneeded, i.e., you don't care to wait for the thread to finish
• Detaching a thread tells the system that the thread (including its resources, like a 1 MB default stack on Solaris!) can be recycled as soon as it is done or main exits, whichever comes first
• At the pthreads level, a thread's resources are reclaimed either once it has been detached with pthread_detach() and finishes, or once it has been joined with pthread_join()

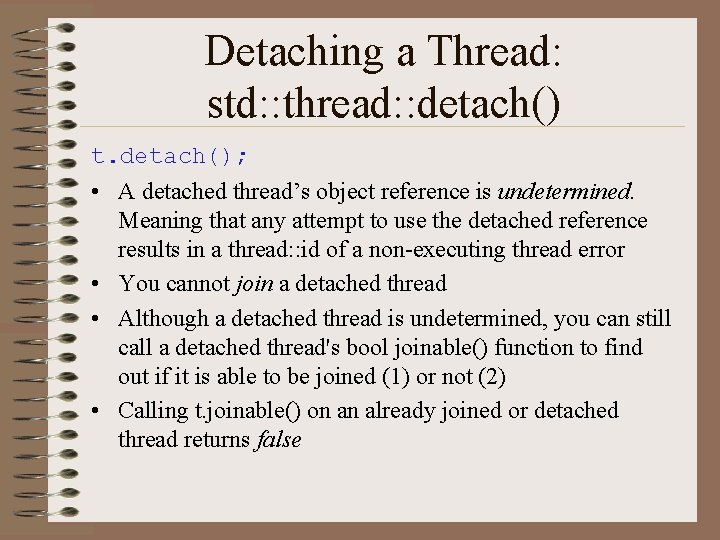

Detaching a Thread: std::thread::detach()

    t.detach();

• After detach(), the std::thread object no longer refers to any thread of execution: calling get_id() on it returns a default-constructed thread::id, which represents a non-executing thread
• You cannot join a detached thread
• You can still call the object's bool joinable() function to find out whether it can be joined (true) or not (false)
• Calling t.joinable() on an already joined or detached thread returns false

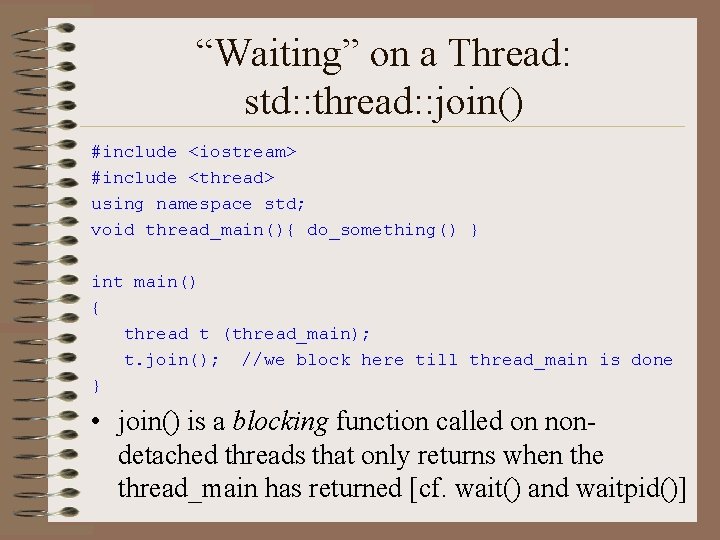

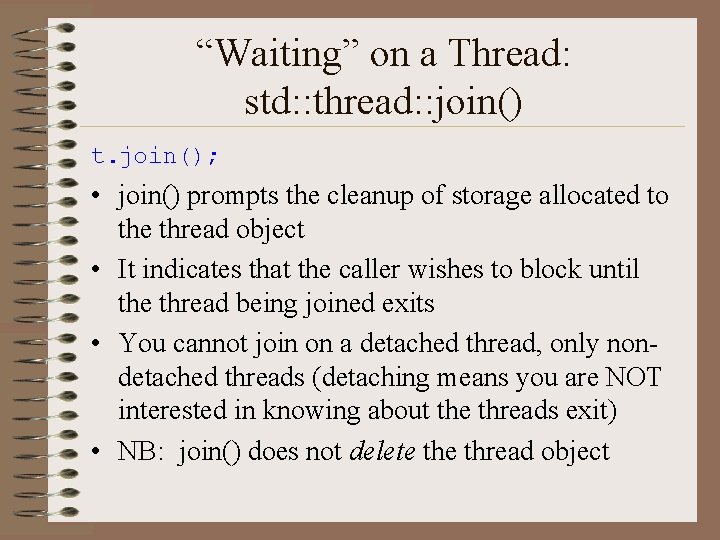

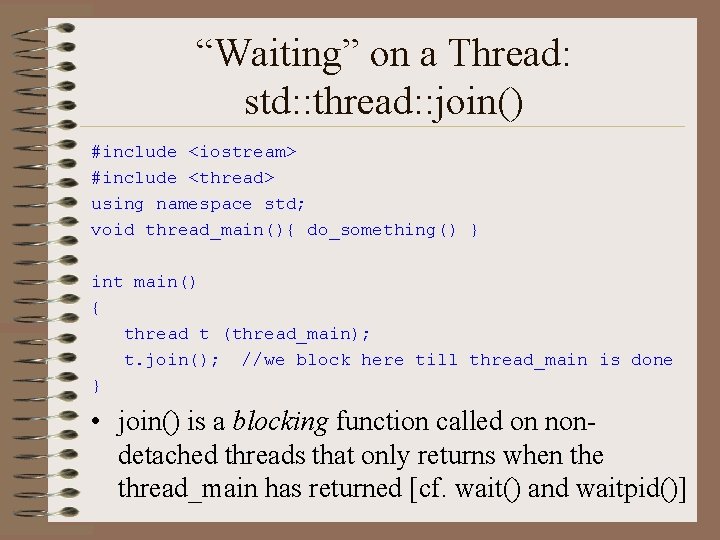

"Waiting" on a Thread: std::thread::join()

    #include <iostream>
    #include <thread>
    using namespace std;

    void thread_main() { do_something(); }

    int main()
    {
        thread t(thread_main);
        t.join();   // we block here till thread_main is done
    }

• join() is a blocking function called on non-detached threads that only returns when thread_main has returned [cf. wait() and waitpid()]

"Waiting" on a Thread: std::thread::join()

    t.join();

• join() prompts the cleanup of storage allocated to the thread
• It indicates that the caller wishes to block until the thread being joined exits
• You cannot join a detached thread, only non-detached threads (detaching means you are NOT interested in knowing about the thread's exit)
• NB: join() does not delete the thread object

![Identifying Threads](https://slidetodoc.com/presentation_image/08b15c5682291afe3d809155324bed5c/image-31.jpg)

Identifying Threads

    // ...
    int somefunc()
    {
        char buf[BUFSIZ];
        sprintf(buf, "%i", some_num);
        thread t(thread_main, 5, ref(buf));
        cout << "thread id of t is" << t.get_id() << endl;
        cout << "my thread id is" << this_thread::get_id() << endl;
    }

• std::thread::get_id() will return the thread id of the particular thread
• use std::this_thread::get_id() to print out the current thread's id

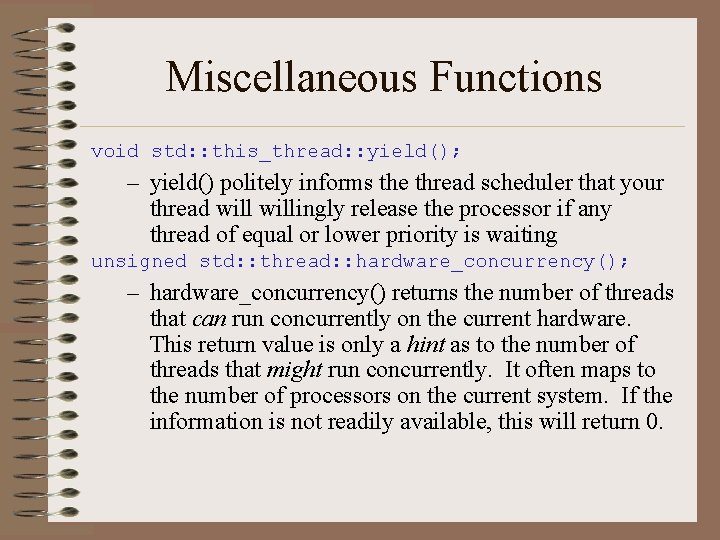

Miscellaneous Functions

    void std::this_thread::yield();

  – yield() politely informs the thread scheduler that your thread is willing to release the processor if any thread of equal or lower priority is waiting

    unsigned std::thread::hardware_concurrency();

  – hardware_concurrency() returns the number of threads that can run concurrently on the current hardware. This return value is only a hint as to the number of threads that might run concurrently. It often maps to the number of processors on the current system. If the information is not readily available, this will return 0.

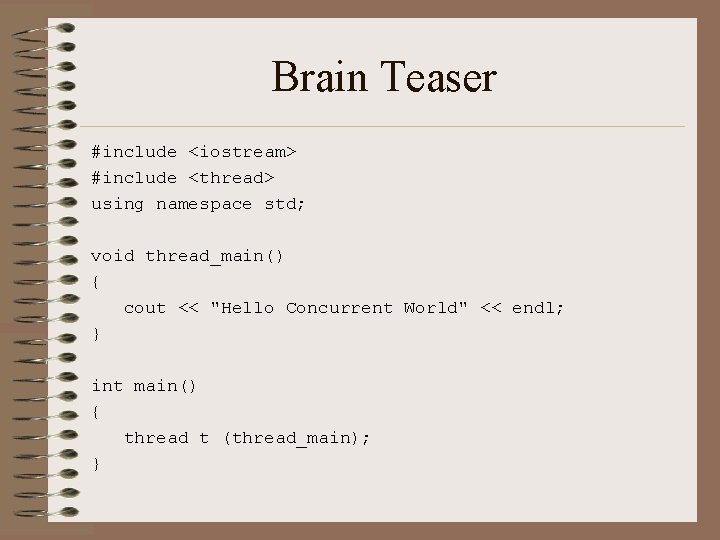

Brain Teaser

    #include <iostream>
    #include <thread>
    using namespace std;

    void thread_main() { cout << "Hello Concurrent World" << endl; }

    int main()
    {
        thread t(thread_main);
    }

STOP

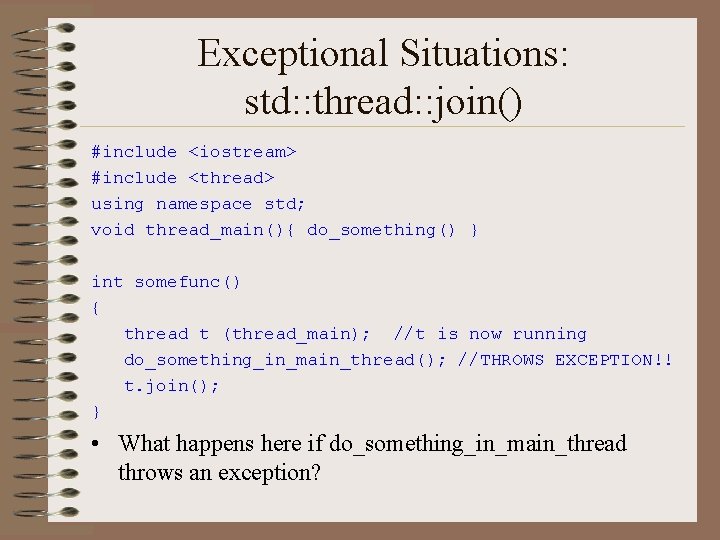

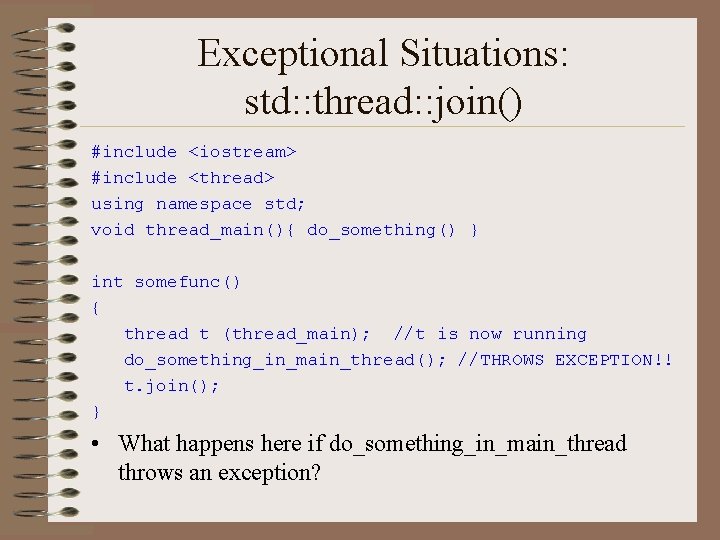

Exceptional Situations: std::thread::join()

    #include <iostream>
    #include <thread>
    using namespace std;

    void thread_main() { do_something(); }

    int somefunc()
    {
        thread t(thread_main);           // t is now running
        do_something_in_main_thread();   // THROWS EXCEPTION!!
        t.join();
    }

• What happens here if do_something_in_main_thread throws an exception?

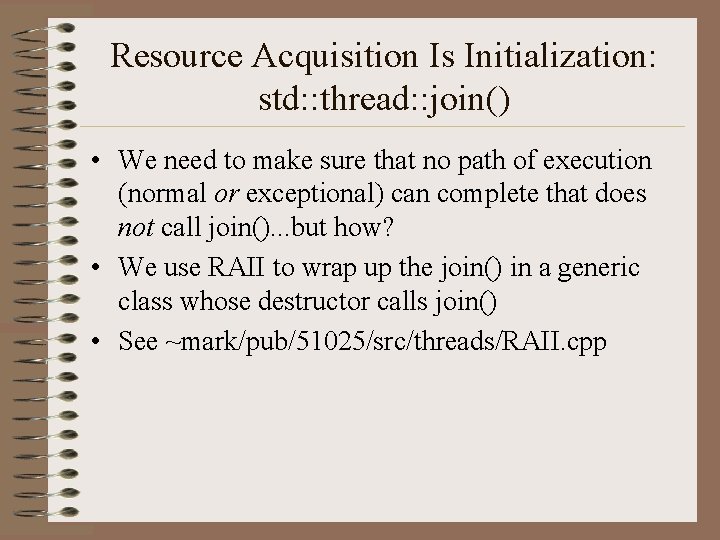

Resource Acquisition Is Initialization: std::thread::join()
• We need to make sure that no path of execution (normal or exceptional) can complete that does not call join()... but how?
• We use RAII to wrap up the join() in a generic class whose destructor calls join()
• See ~mark/pub/51025/src/threads/RAII.cpp

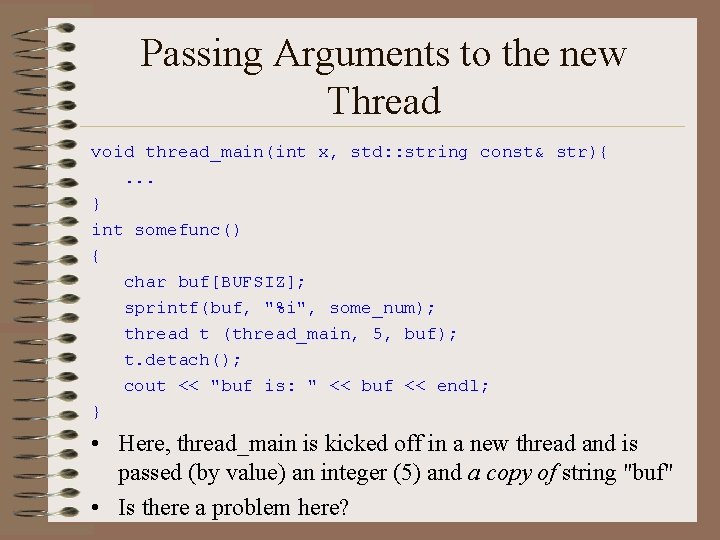

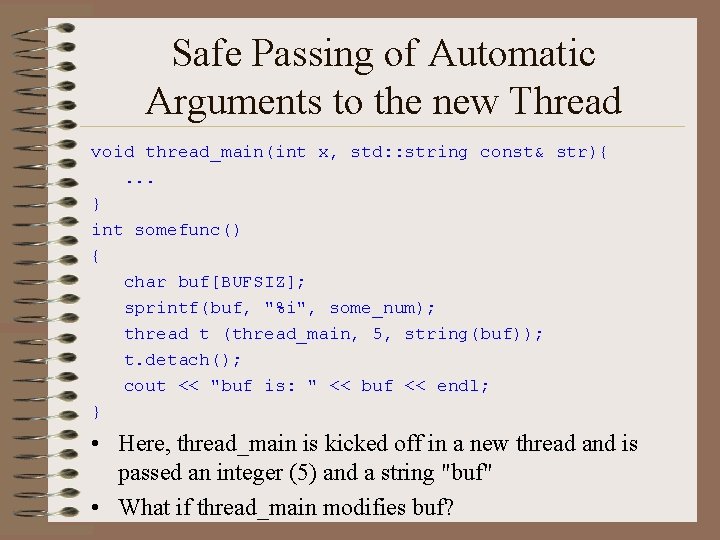

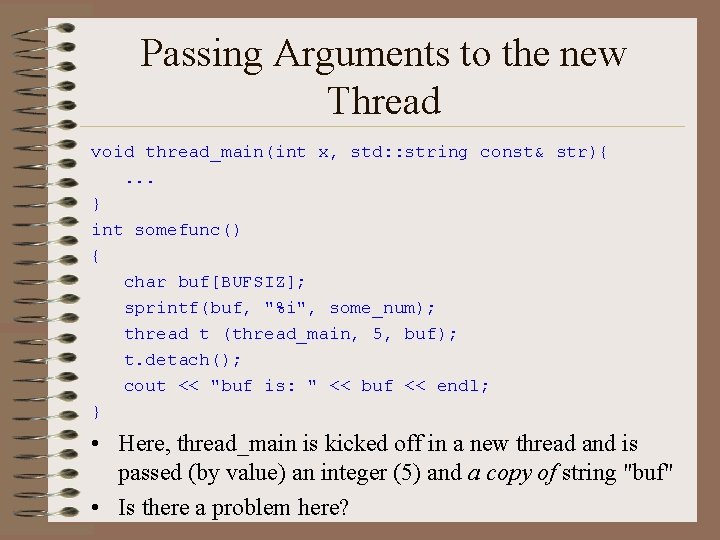

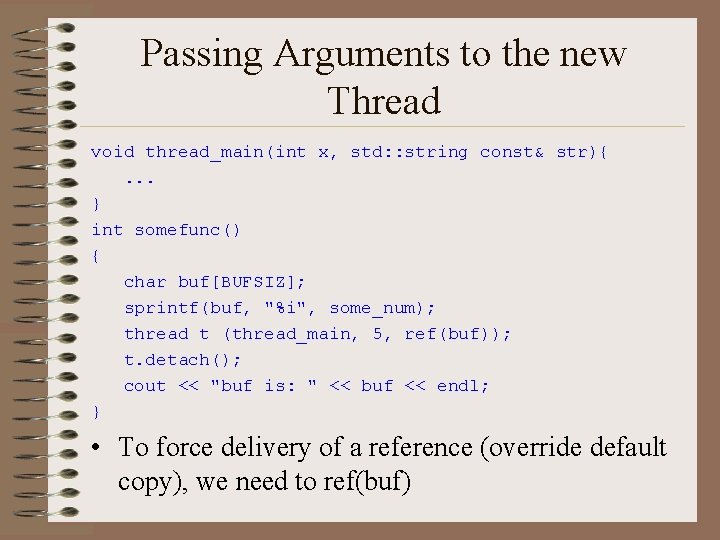

Passing Arguments to the new Thread

    void thread_main(int x, std::string const& str) { ... }

    int somefunc()
    {
        char buf[BUFSIZ];
        sprintf(buf, "%i", some_num);
        thread t(thread_main, 5, buf);
        t.detach();
        cout << "buf is: " << buf << endl;
    }

• Here, thread_main is kicked off in a new thread and is passed (by value) an integer (5) and a copy of string "buf"
• Is there a problem here?

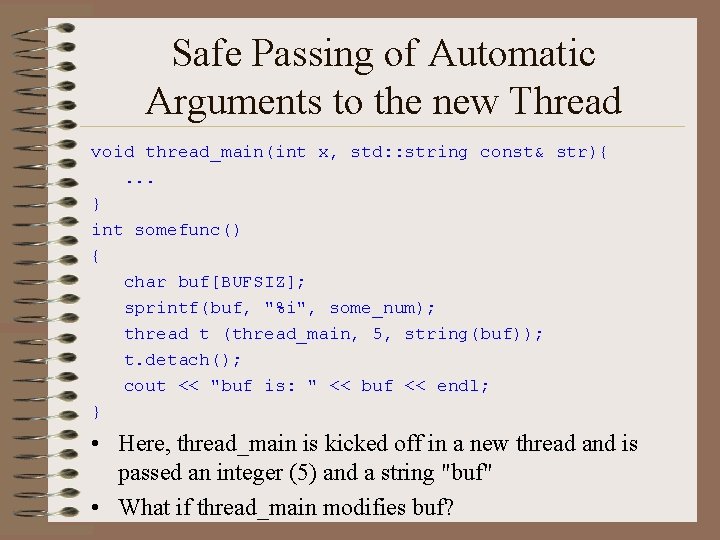

Safe Passing of Automatic Arguments to the new Thread

    void thread_main(int x, std::string const& str) { ... }

    int somefunc()
    {
        char buf[BUFSIZ];
        sprintf(buf, "%i", some_num);
        thread t(thread_main, 5, string(buf));
        t.detach();
        cout << "buf is: " << buf << endl;
    }

• Here, thread_main is kicked off in a new thread and is passed an integer (5) and a string "buf"
• What if thread_main modifies buf?

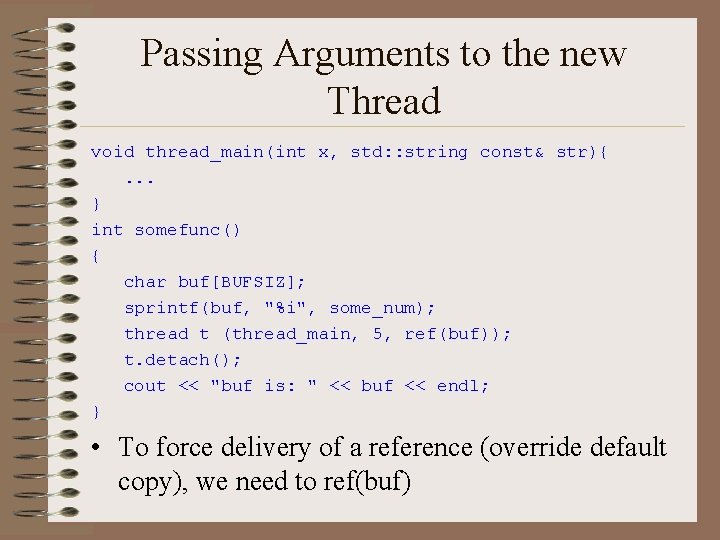

Passing Arguments to the new Thread

    void thread_main(int x, std::string const& str) { ... }

    int somefunc()
    {
        char buf[BUFSIZ];
        sprintf(buf, "%i", some_num);
        thread t(thread_main, 5, ref(buf));
        t.detach();
        cout << "buf is: " << buf << endl;
    }

• To force delivery of a reference (overriding the default copy), we need to wrap the argument with ref(), as in ref(buf)

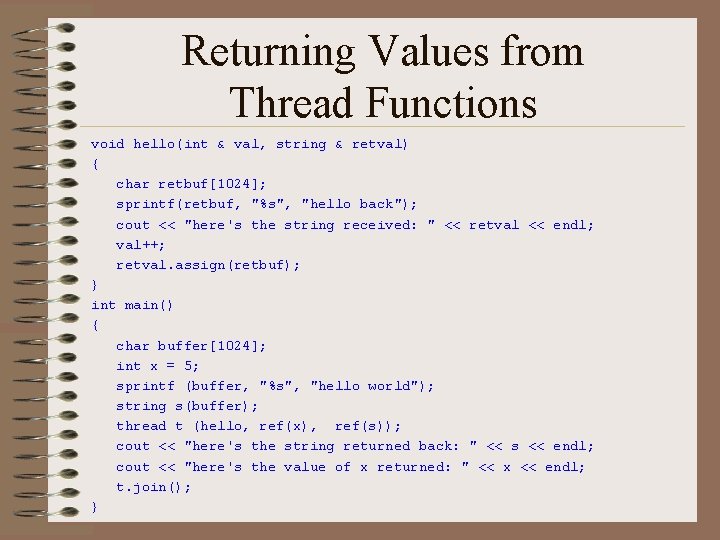

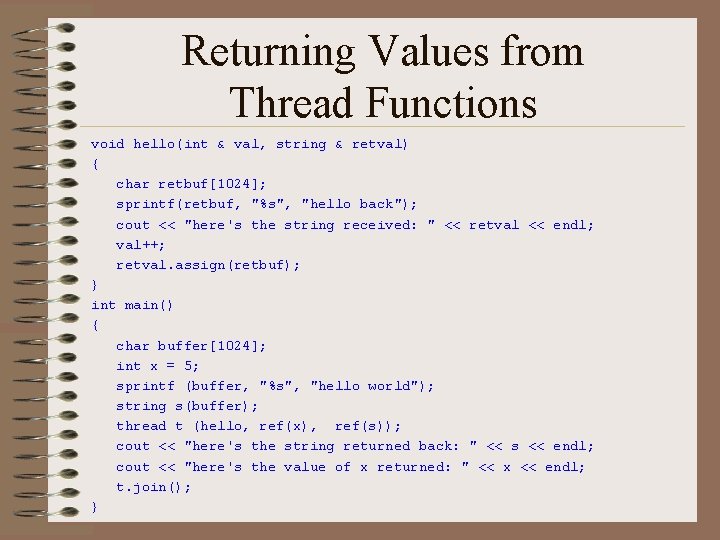

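Returning Values from Thread Functions

    #include <cstdio>
    #include <functional>
    #include <iostream>
    #include <string>
    #include <thread>
    using namespace std;

    void hello(int& val, string& retval)
    {
        char retbuf[1024];
        sprintf(retbuf, "%s", "hello back");
        cout << "here's the string received: " << retval << endl;
        val++;                   // "return" a value through the int reference
        retval.assign(retbuf);   // "return" a value through the string reference
    }

    int main()
    {
        char buffer[1024];
        int x = 5;
        sprintf(buffer, "%s", "hello world");
        string s(buffer);
        thread t(hello, ref(x), ref(s));
        t.join();   // wait for hello() before reading s and x (avoids a data race)
        cout << "here's the string returned back: " << s << endl;
        cout << "here's the value of x returned: " << x << endl;
    }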