Language Models for Hierarchical Summarization Dawn J Lawrie

- Slides: 28

Language Models for Hierarchical Summarization Dawn J. Lawrie Loyola College in Maryland

The Problem

Hierarchical Summaries
- Word-based summary
- Focus on topics of the documents
- Allows users to navigate through the results

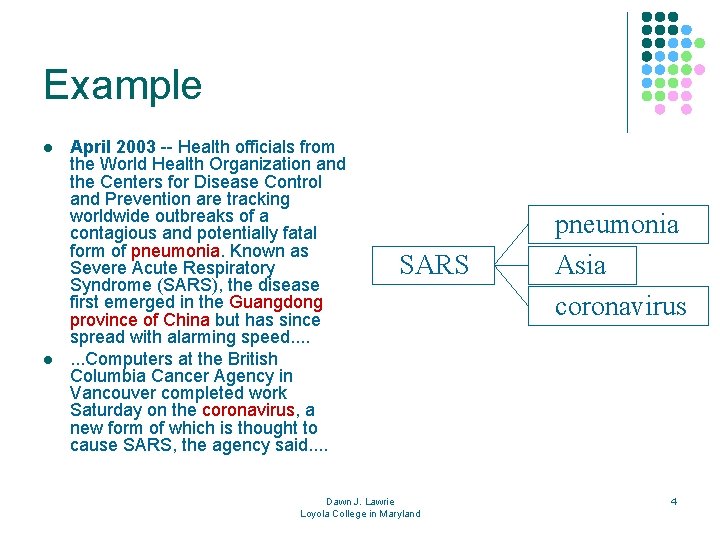

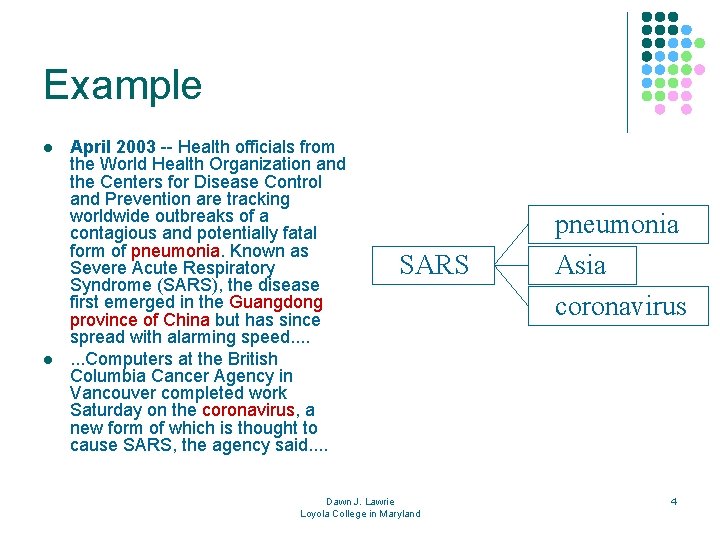

Example
- April 2003 -- Health officials from the World Health Organization and the Centers for Disease Control and Prevention are tracking worldwide outbreaks of a contagious and potentially fatal form of pneumonia. Known as Severe Acute Respiratory Syndrome (SARS), the disease first emerged in the Guangdong province of China but has since spread with alarming speed. ...
- Computers at the British Columbia Cancer Agency in Vancouver completed work Saturday on the coronavirus, a new form of which is thought to cause SARS, the agency said.

Topic words from the example: SARS, pneumonia, Asia, coronavirus

Approach
- Use language models to describe the text summarized by the hierarchy
  - Not using language models to predict text
  - Unigram and bigram models
- Use approximation of relative entropy to find topic-subtopic relationships – “Predictiveness”
- Use relative entropy to identify likely content-bearing words – “Topicality”

Applications of Hierarchical Summaries
- Full text hierarchies
  - Use all text in the document set as the basis for the hierarchy
- Snippet hierarchies
  - Use summaries of documents as the text basis for the hierarchy
  - Snippets contain about 30 words chosen from segments that contain query words

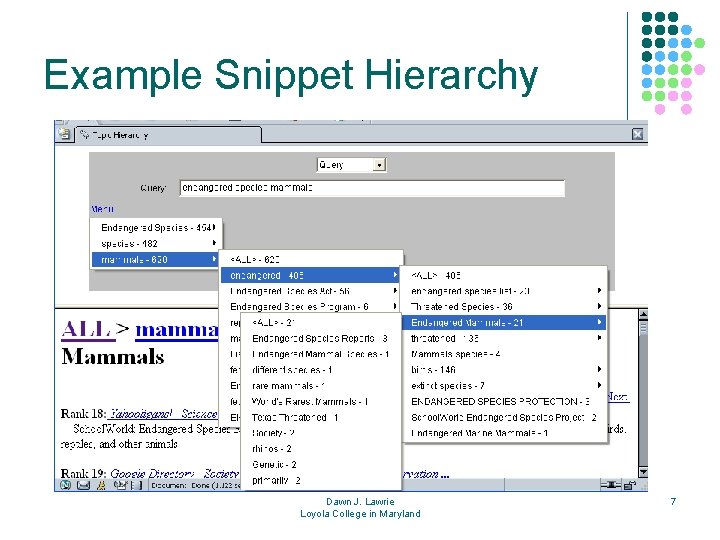

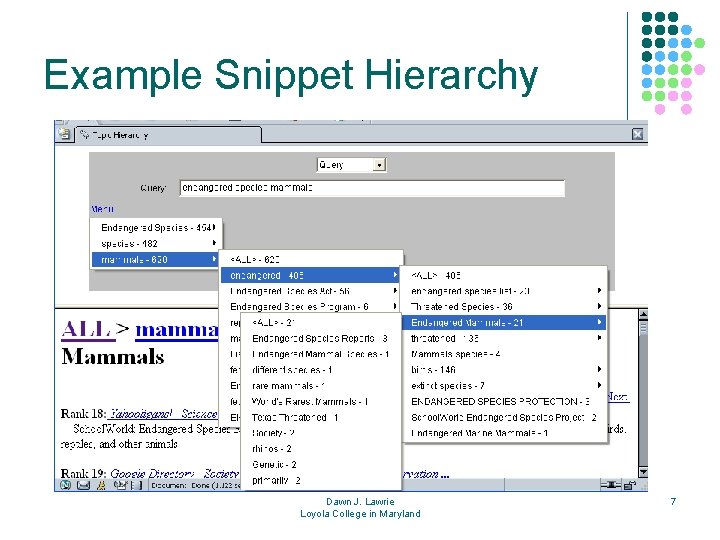

Example Snippet Hierarchy

Outline
- Introduction
- Related Work
- Probabilistic Framework for Summarization
- Evaluation
- Conclusion

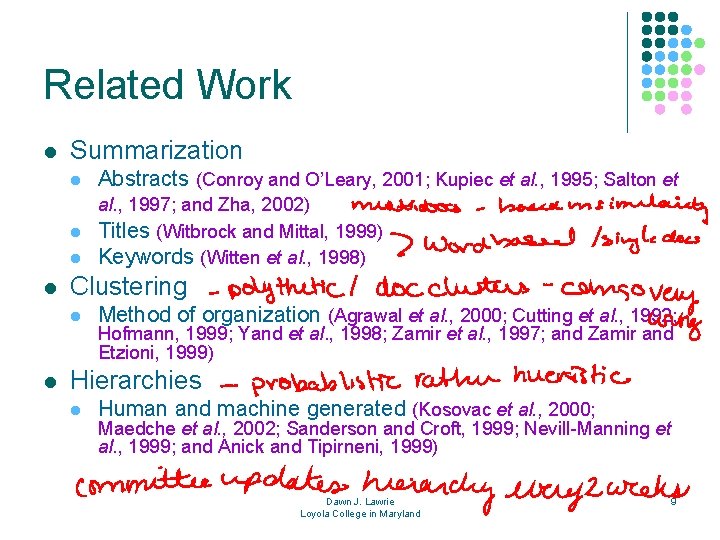

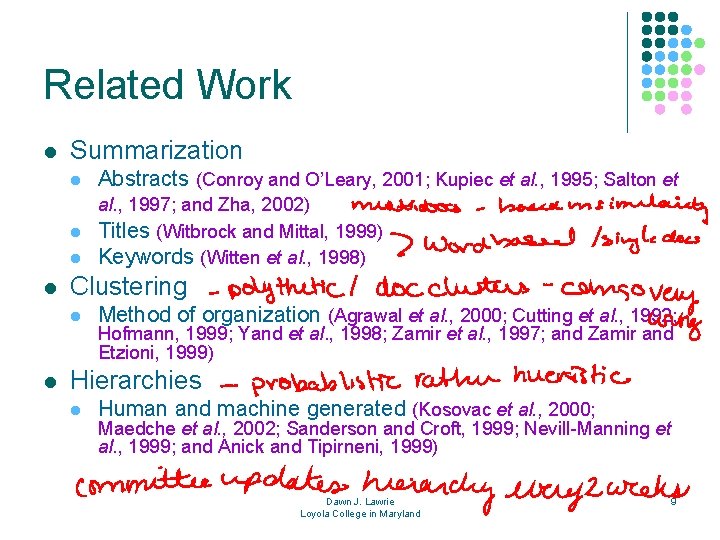

Related Work
- Summarization
  - Abstracts (Conroy and O’Leary, 2001; Kupiec et al., 1995; Salton et al., 1997; Zha, 2002)
  - Titles (Witbrock and Mittal, 1999)
  - Keywords (Witten et al., 1998)
- Clustering
  - Method of organization (Agrawal et al., 2000; Cutting et al., 1992; Hofmann, 1999; Yand et al., 1998; Zamir et al., 1997; Zamir and Etzioni, 1999)
- Hierarchies
  - Human and machine generated (Kosovac et al., 2000; Maedche et al., 2002; Sanderson and Croft, 1999; Nevill-Manning et al., 1999; Anick and Tipirneni, 1999)

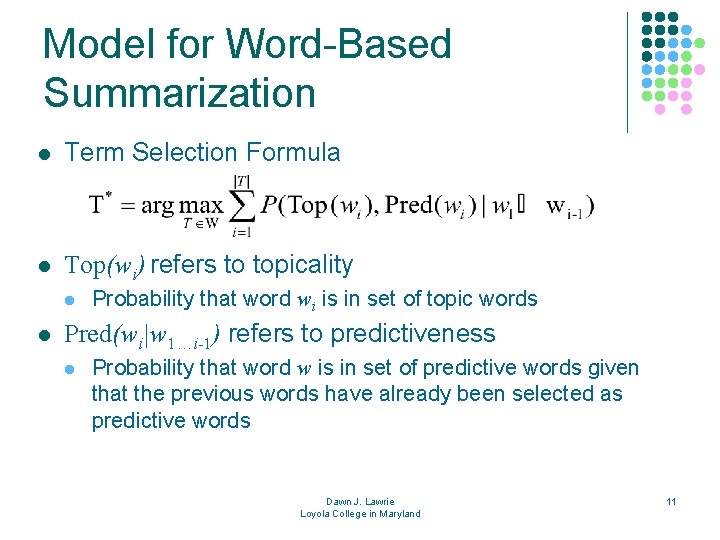

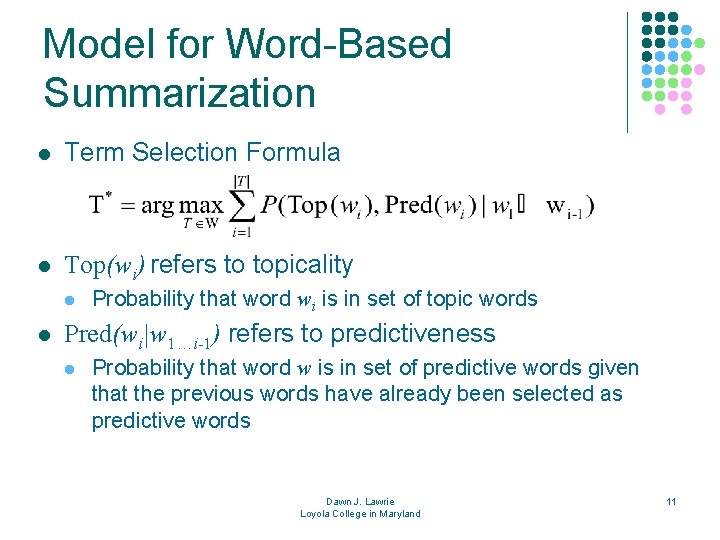

Model for Word-Based Summarization
- Term Selection Formula
- Top(w_i) refers to topicality
  - Probability that word w_i is in the set of topic words
- Pred(w_i | w_1 … w_{i-1}) refers to predictiveness
  - Probability that word w_i is in the set of predictive words given that the previous words have already been selected as predictive words

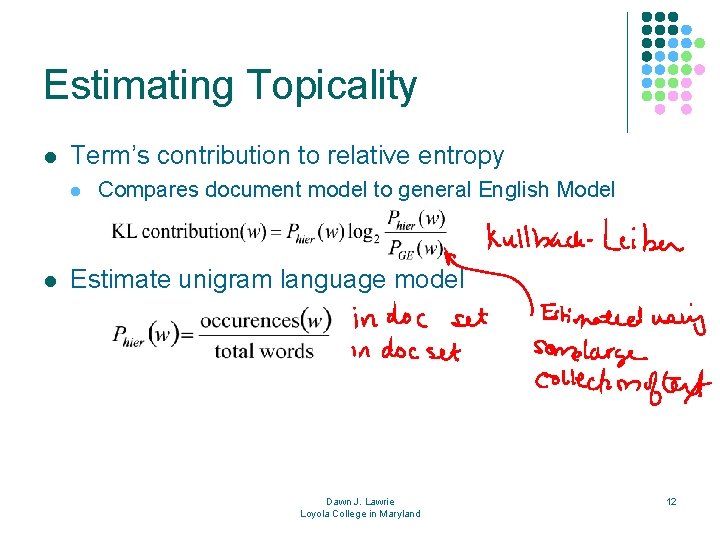

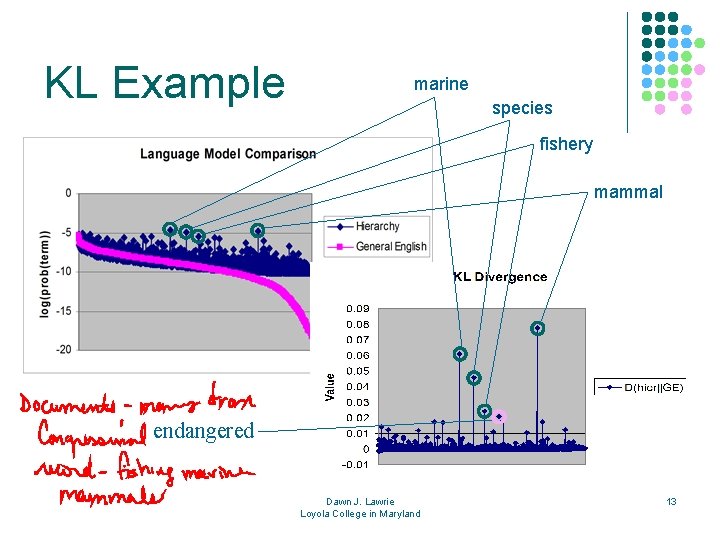

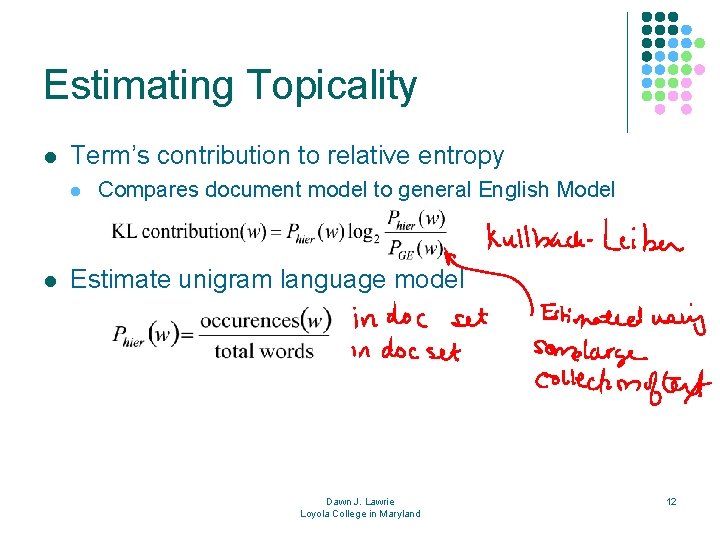

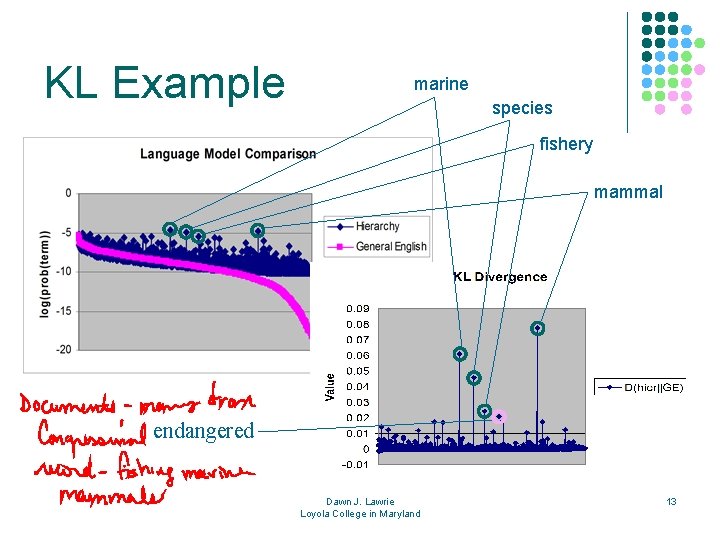

Estimating Topicality
- Term’s contribution to relative entropy
  - Compares document model to general English model
- Estimate unigram language model
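The topicality estimate above can be illustrated with a small sketch. It assumes maximum-likelihood unigram models and scores each word by its term in the relative entropy (KL divergence) sum, P_doc(w) · log(P_doc(w) / P_general(w)). The function names and the `floor` value used for words missing from the general-English sample are illustrative assumptions, not details taken from the slides.

```python
import math
from collections import Counter


def unigram_model(tokens):
    """Maximum-likelihood unigram model: P(w) = count(w) / number of tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def kl_contributions(doc_tokens, general_tokens, floor=1e-6):
    """Per-word contribution to KL(document model || general model).

    `floor` is an assumed stand-in probability for words that never occur
    in the general-English sample; a real system would smooth the model.
    """
    p_doc = unigram_model(doc_tokens)
    p_gen = unigram_model(general_tokens)
    return {
        w: p * math.log(p / max(p_gen.get(w, 0.0), floor))
        for w, p in p_doc.items()
    }


def top_topical_words(doc_tokens, general_tokens, n=5):
    """Words ranked by decreasing contribution to the relative entropy."""
    scores = kl_contributions(doc_tokens, general_tokens)
    return sorted(scores, key=scores.get, reverse=True)[:n]
```

Words that are much more frequent in the document set than in general English receive large positive scores, while common function words tend to score near or below zero.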

KL Example
- marine
- species
- fishery
- mammal
- endangered

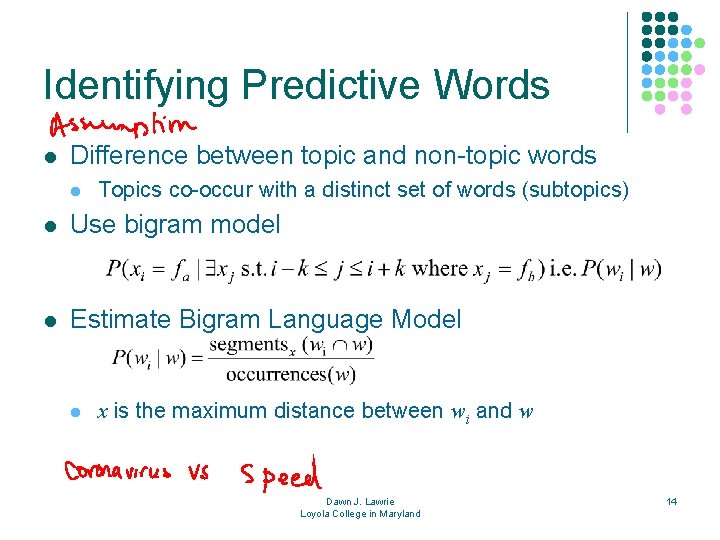

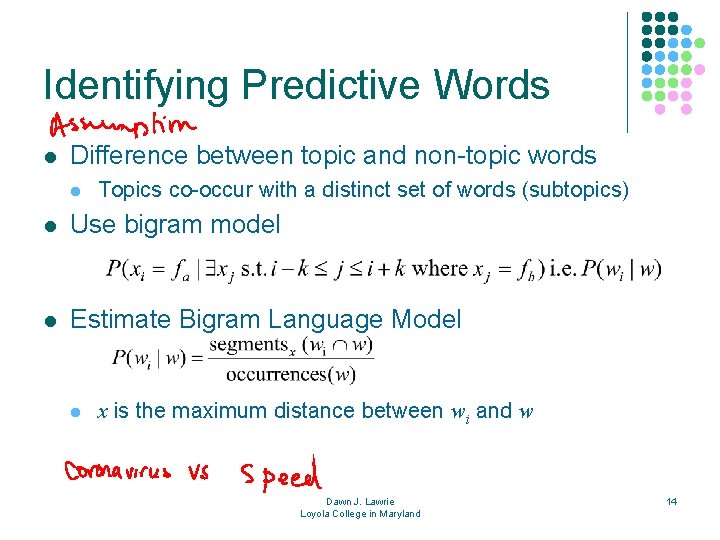

Identifying Predictive Words
- Difference between topic and non-topic words
  - Topics co-occur with a distinct set of words (subtopics)
  - Use bigram model
- Estimate bigram language model
  - x is the maximum distance between w_i and w
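A minimal sketch of a windowed bigram estimate follows. It assumes the model is a plain relative frequency: for each word b, count the words that appear within x positions of any occurrence of b, then normalize. The normalization and the function name are assumptions for illustration; the slide's own formula is not reproduced in this text.

```python
from collections import Counter, defaultdict


def windowed_bigram_model(tokens, x):
    """Estimate P_x(a | b) from co-occurrence within x positions.

    Returns a dict mapping b -> {a: probability}, where the probabilities
    for each b sum to 1. This is an assumed relative-frequency estimate.
    """
    pair_counts = defaultdict(Counter)
    for i, b in enumerate(tokens):
        lo = max(0, i - x)
        hi = min(len(tokens), i + x + 1)
        for j in range(lo, hi):
            if j != i:  # skip the occurrence of b itself
                pair_counts[b][tokens[j]] += 1

    model = {}
    for b, neighbours in pair_counts.items():
        total = sum(neighbours.values())
        model[b] = {a: c / total for a, c in neighbours.items()}
    return model
```

A larger x lets a candidate topic word co-occur with more of the vocabulary, at the cost of weaker evidence for each individual pair.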

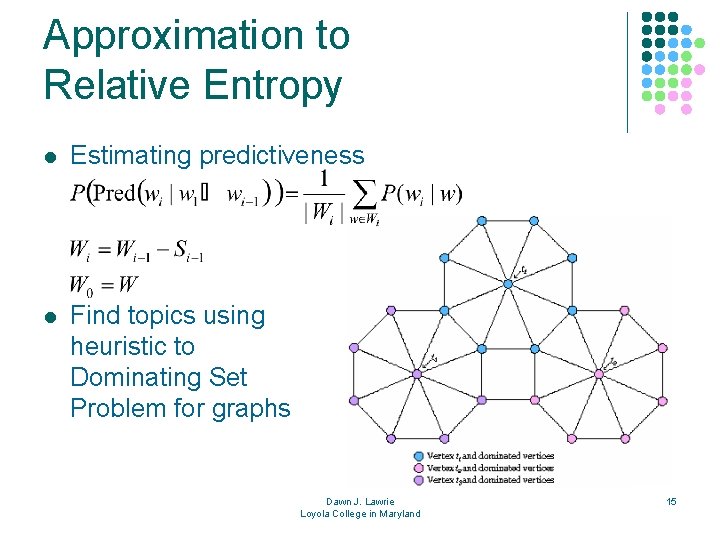

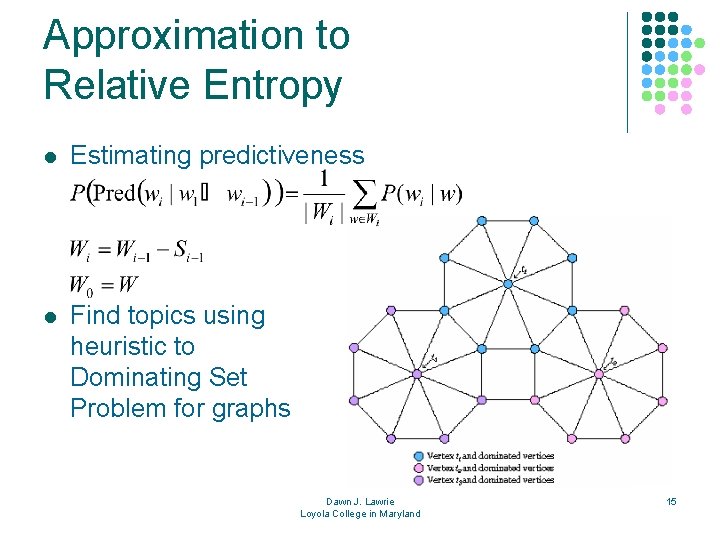

Approximation to Relative Entropy
- Estimating predictiveness
- Find topics using a heuristic for the Dominating Set Problem for graphs
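One way to picture the dominating set step is the standard greedy heuristic sketched below. The graph representation (a word maps to the set of words it predicts) and the optional limit `k` are assumptions for illustration, and this is the textbook greedy heuristic rather than necessarily the one used in the talk.

```python
def greedy_dominating_set(graph, k=None):
    """Greedily pick vertices until every vertex is dominated.

    graph: dict mapping a word to the set of words it dominates (predicts).
    k: optional cap on the number of topic words to select.
    A vertex always dominates itself.
    """
    uncovered = set(graph)
    for targets in graph.values():
        uncovered |= targets

    chosen = []
    while uncovered and (k is None or len(chosen) < k):
        # Pick the word that dominates the most still-uncovered words.
        best = max(graph, key=lambda v: len((graph[v] | {v}) & uncovered))
        gain = (graph[best] | {best}) & uncovered
        if not gain:
            break
        chosen.append(best)
        uncovered -= gain
    return chosen
```

Each selected word plays the role of a topic, and the words it dominates are candidates for its subtopic vocabulary.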

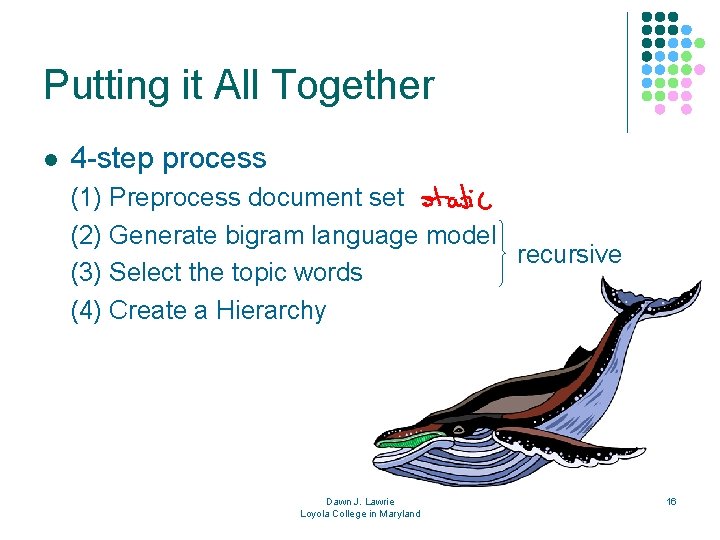

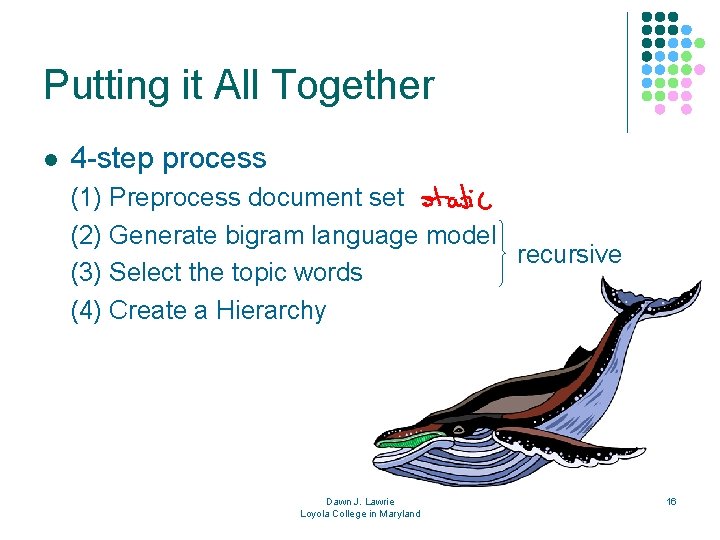

Putting it All Together
- 4-step process
  - (1) Preprocess document set
  - (2) Generate bigram language model (recursive)
  - (3) Select the topic words
  - (4) Create a hierarchy

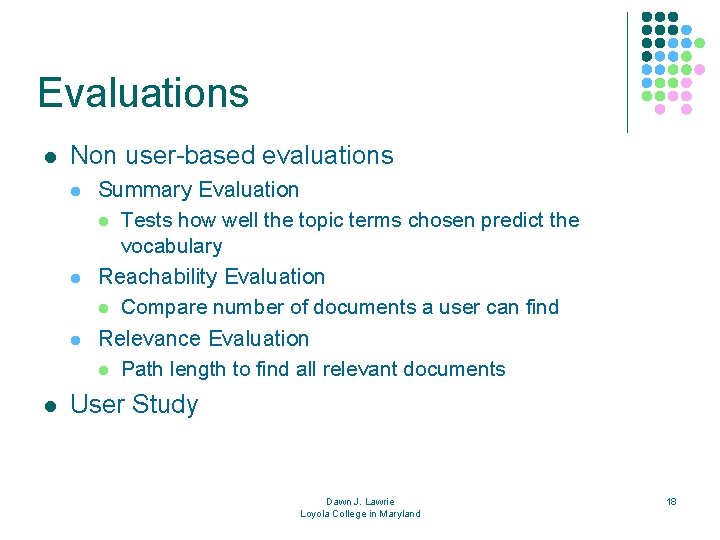

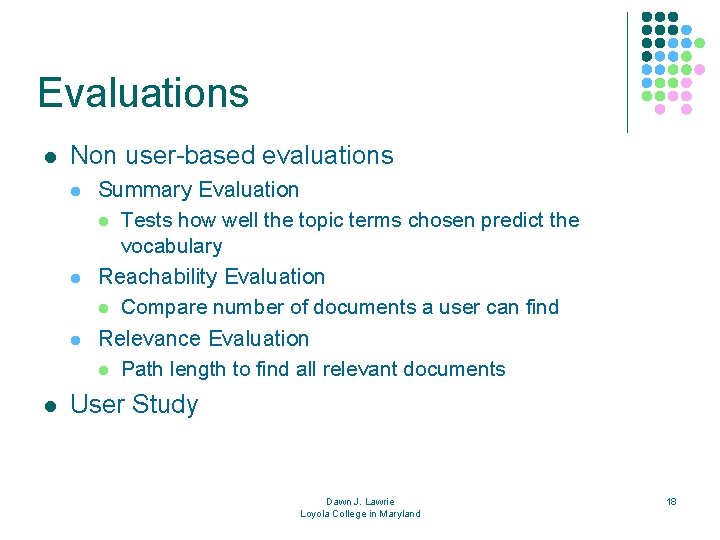

Evaluations
- Non user-based evaluations
  - Summary Evaluation
    - Tests how well the topic terms chosen predict the vocabulary
  - Reachability Evaluation
    - Compare number of documents a user can find
  - Relevance Evaluation
    - Path length to find all relevant documents
- User Study

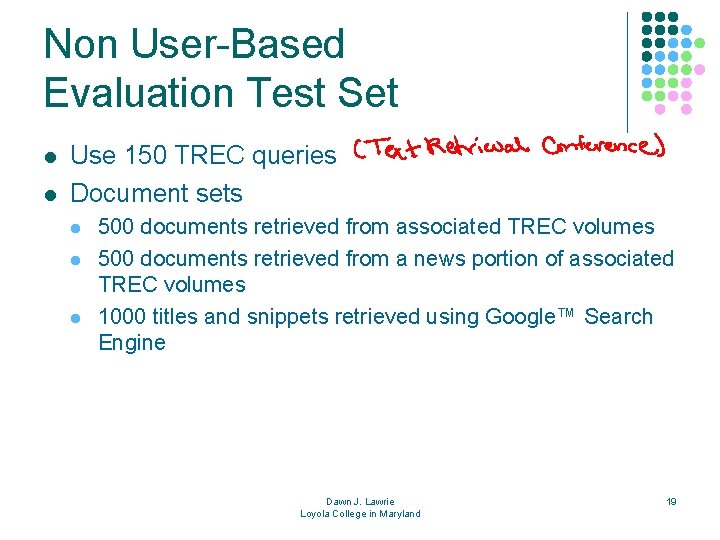

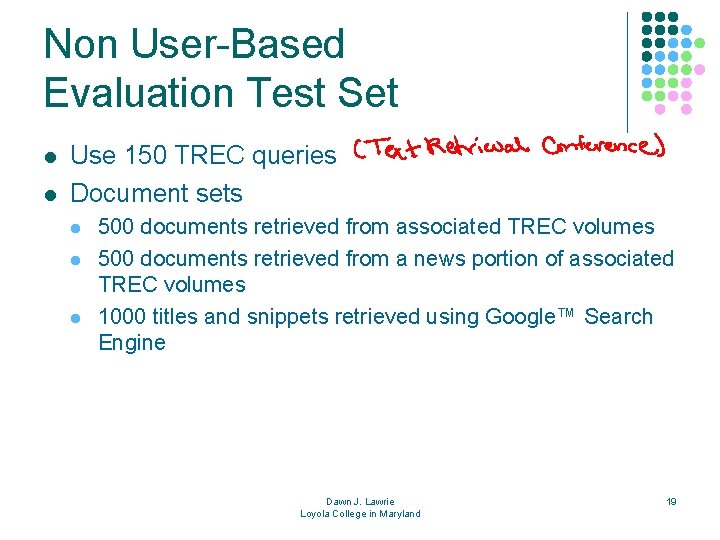

Non User-Based Evaluation Test Set
- Use 150 TREC queries
- Document sets
  - 500 documents retrieved from associated TREC volumes
  - 500 documents retrieved from a news portion of associated TREC volumes
  - 1000 titles and snippets retrieved using Google™ Search Engine

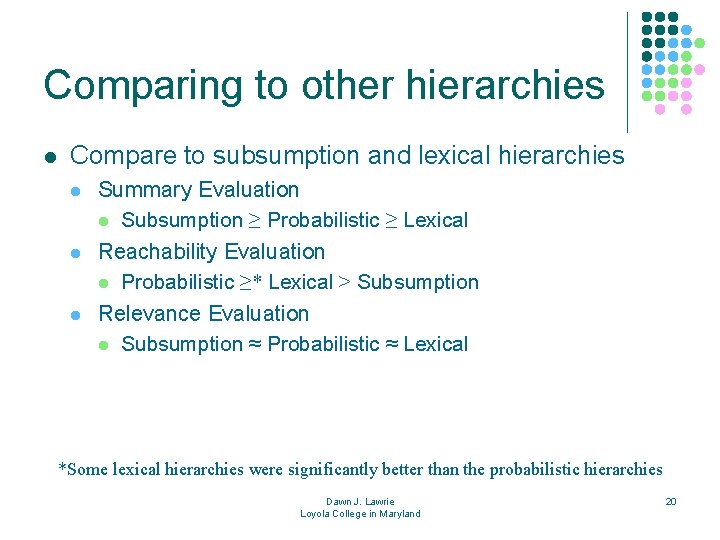

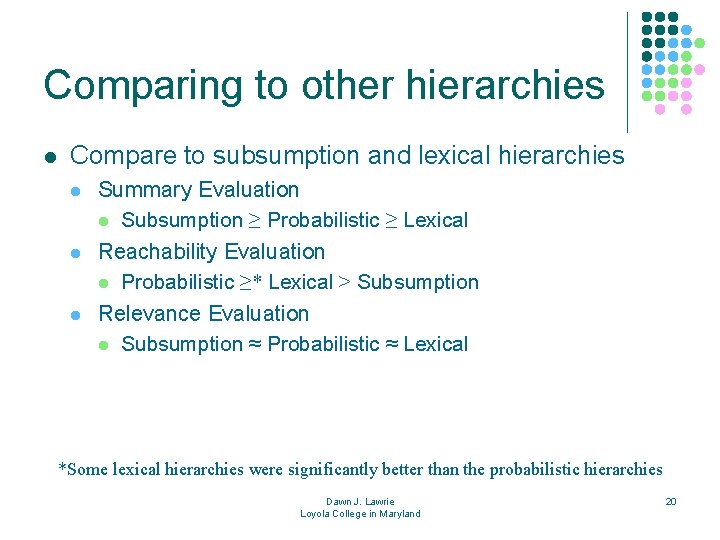

Comparing to other hierarchies
- Compare to subsumption and lexical hierarchies
  - Summary Evaluation
    - Subsumption ≥ Probabilistic ≥ Lexical
  - Reachability Evaluation
    - Probabilistic ≥* Lexical > Subsumption
  - Relevance Evaluation
    - Subsumption ≈ Probabilistic ≈ Lexical

*Some lexical hierarchies were significantly better than the probabilistic hierarchies

Comparison to Other Techniques
- Compare words chosen for hierarchy to top TF.IDF words
  - Hierarchy words significantly better according to Summary evaluation
- Compare access provided by hierarchy to access provided by ranked list
  - Full text hierarchy with 10 topics (groups no larger than 30): access to ~350 documents
  - Snippet hierarchy with 10 topics: access to ~250 documents

User Study
- TREC style study retrieving “aspects” of a topic
  - Users asked to find all documents relevant to the query
  - 12 users, 10 queries
- Compare ranked list and hierarchy to ranked list alone

Results
- Ranked list significantly better for aspectual recall
- Hierarchy slightly better for precision – not significant
- Recall performance seemed to improve during the course of using the hierarchy
- All but one user preferred the hierarchy

Discussion
- Choice of study
  - Believed a summary of results would help users find all aspects
  - Found users unable to understand topics in the hierarchy because they were unfamiliar with the query topic
- Better suited task
  - User familiar with topic and interested in details
  - Able to understand what words and phrases describe

Future Work
- Improve the hierarchy
  - Refine estimations of topicality and predictiveness
  - Learn more about the effect of different segment sizes and use of natural language features
- Redesign the interface for the hierarchy so it is more user friendly
- Develop a new topic model for snippets that better handles the tiny documents

Future Work
- Explore the use of topic hierarchies in other organizational tasks
  - Personal collections of documents
  - E-mails
- Create cross-lingual hierarchies using excerpts of documents
- Compare auto-generated hierarchies to manually created ones

Conclusions
- Developed a formal framework for topic hierarchies
- Used language models as an abstraction of documents
- Developed non user-based evaluations to combat problems associated with user studies