Introduction to Computational Linguistics Eleni Miltsakaki AUTH Fall

- Slides: 42

Introduction to Computational Linguistics, Eleni Miltsakaki, AUTH, Fall 2005, Lecture 9

What’s the plan for today?
- Discourse models cont’d
  - DLTAG: Lexicalized Tree Adjoining Grammar for Discourse
- A DLTAG-based system for parsing discourse
- The Penn Discourse Treebank
  - http://www.cis.upenn.edu/~pdtb

Basic references
- Anchoring a Lexicalized Tree-Adjoining Grammar for Discourse (1998)
  - B. Webber and A. Joshi
- What are Little Texts Made of? A Structural Presuppositional Account Using Lexicalized TAG
  - B. Webber, A. Joshi, A. Knott, M. Stone
- DLTAG System: Discourse Parsing with a Lexicalized Tree-Adjoining Grammar (2001)
  - K. Forbes, E. Miltsakaki, R. Prasad, A. Sarkar, A. Joshi and B. Webber
- The Penn Discourse Treebank (2004)
  - E. Miltsakaki, R. Prasad, A. Joshi and B. Webber

Motivation and basics of the DLTAG approach
- Discourse meaning: more than the sum of its parts
- Compositional vs non-compositional aspects of discourse meaning
- These two aspects are often conflated in related work
- Smooth transition from sentence-level structure to discourse-level structure

The DLTAG view of discourse connectives
- Discourse connectives are treated as higher-level predicates taking clausal arguments
- Basic types of discourse connectives:
  - Structural
    - Subordinate conjunctions (when, although, because, etc.)
    - Coordinate conjunctions (and, but, or)
  - “Anaphoric”
    - Adverbials (however, therefore, as a result, etc.)
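
The classification above can be sketched as a small lookup table. This is only an illustration: the lexicon contains just the connectives named on the slide, and the names `CONNECTIVE_TYPES` and `classify_connective` are hypothetical, not part of any DLTAG tool.

```python
from typing import Optional, Tuple

# Toy lexicon: only the connectives listed on the slide.
# Values are (type, syntactic class).
CONNECTIVE_TYPES = {
    "when": ("structural", "subordinate conjunction"),
    "although": ("structural", "subordinate conjunction"),
    "because": ("structural", "subordinate conjunction"),
    "and": ("structural", "coordinate conjunction"),
    "but": ("structural", "coordinate conjunction"),
    "or": ("structural", "coordinate conjunction"),
    "however": ("anaphoric", "adverbial"),
    "therefore": ("anaphoric", "adverbial"),
    "as a result": ("anaphoric", "adverbial"),
}


def classify_connective(connective: str) -> Optional[Tuple[str, str]]:
    """Return (type, class) for a known connective, or None if it is not in the toy lexicon."""
    key = " ".join(connective.lower().split())  # normalize case and whitespace
    return CONNECTIVE_TYPES.get(key)


if __name__ == "__main__":
    for word in ["Because", "but", "As a result", "dog"]:
        print(word, "->", classify_connective(word))
```

A real system would also have to decide whether a given token is used as a discourse connective at all, which a string lookup cannot do.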

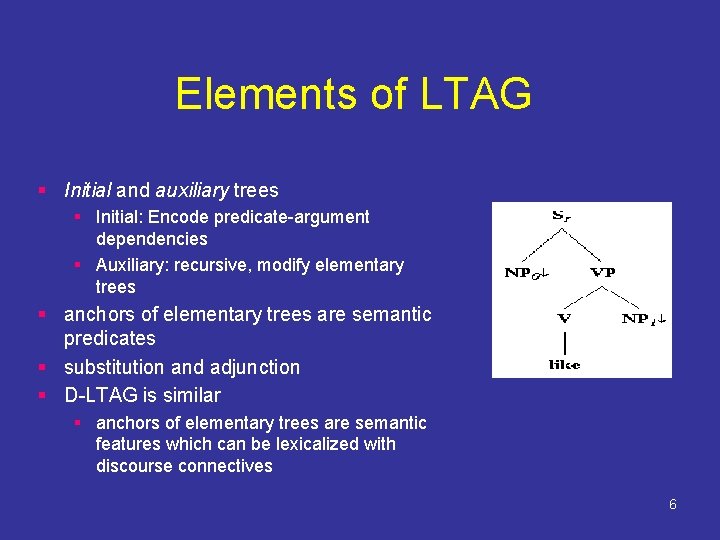

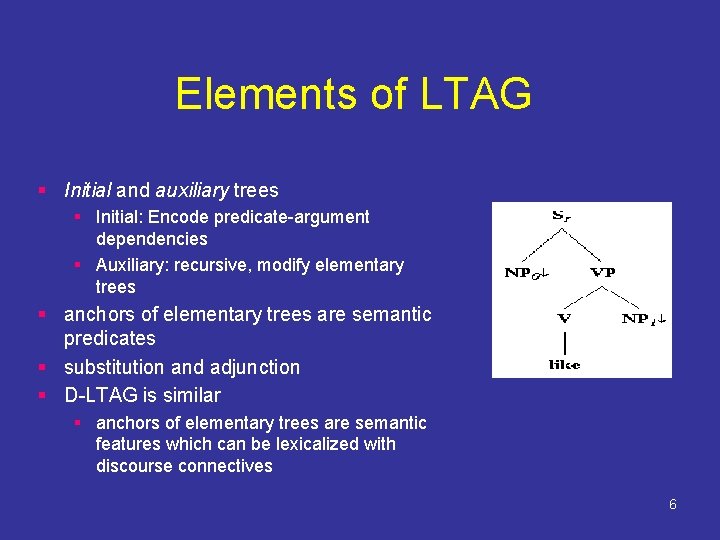

Elements of LTAG
- Initial and auxiliary trees
  - Initial: encode predicate-argument dependencies
  - Auxiliary: recursive, modify elementary trees
- Anchors of elementary trees are semantic predicates
- Substitution and adjunction
- D-LTAG is similar
  - Anchors of elementary trees are semantic features which can be lexicalized with discourse connectives
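
The two composition operations can be illustrated with a minimal sketch. It is a toy model under stated assumptions, not the XTAG or DLTAG implementation: the `Node` class, the `Dc` label for discourse clauses, and the helper names are all chosen for illustration. Following the lecture's own examples, "because" anchors an initial tree with two substitution sites, and "but" anchors an auxiliary tree with a foot node.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    label: str
    children: List["Node"] = field(default_factory=list)
    subst: bool = False  # open substitution site on the frontier
    foot: bool = False   # foot node of an auxiliary tree


def leaves(node: Node) -> List[str]:
    """Read the frontier of a tree from left to right."""
    if not node.children:
        return [node.label]
    out: List[str] = []
    for child in node.children:
        out.extend(leaves(child))
    return out


def substitute(tree: Node, initial: Node) -> bool:
    """Plug `initial` into the leftmost open substitution site with a matching label."""
    for i, child in enumerate(tree.children):
        if child.subst and child.label == initial.label:
            tree.children[i] = initial
            return True
        if substitute(child, initial):
            return True
    return False


def find_foot(node: Node) -> Optional[Node]:
    if node.foot:
        return node
    for child in node.children:
        found = find_foot(child)
        if found is not None:
            return found
    return None


def adjoin(target: Node, aux: Node) -> None:
    """Adjoin auxiliary tree `aux` at `target`: the material below `target`
    moves under the foot node, and `target` takes over the structure of `aux`."""
    foot = find_foot(aux)
    if foot is None or not (foot.label == aux.label == target.label):
        raise ValueError("root, foot and adjunction site must share a label")
    foot.children, foot.foot = target.children, False
    target.children = aux.children


def clause(text: str) -> Node:
    """Stand-in for a parsed clause: a Dc node over an unanalysed string."""
    return Node("Dc", [Node(text)])


if __name__ == "__main__":
    # Initial tree anchored by "because": Dc -> Dc(subst) because Dc(subst)
    because = Node("Dc", [Node("Dc", subst=True), Node("because"), Node("Dc", subst=True)])
    substitute(because, clause("John failed his exam"))
    substitute(because, clause("he was lazy"))
    print(" ".join(leaves(because)))

    # Auxiliary tree anchored by "but": Dc -> Dc(foot) but Dc(subst)
    but = Node("Dc", [Node("Dc", foot=True), Node("but"), Node("Dc", subst=True)])
    discourse = clause("Mary saw John")
    adjoin(discourse, but)
    substitute(discourse, clause("she decided to ignore him"))
    print(" ".join(leaves(discourse)))
```

Adjunction is what lets a discourse grow recursively: the existing discourse is wrapped by the auxiliary tree, and the result has the same root label, so another auxiliary tree can adjoin to it in turn.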

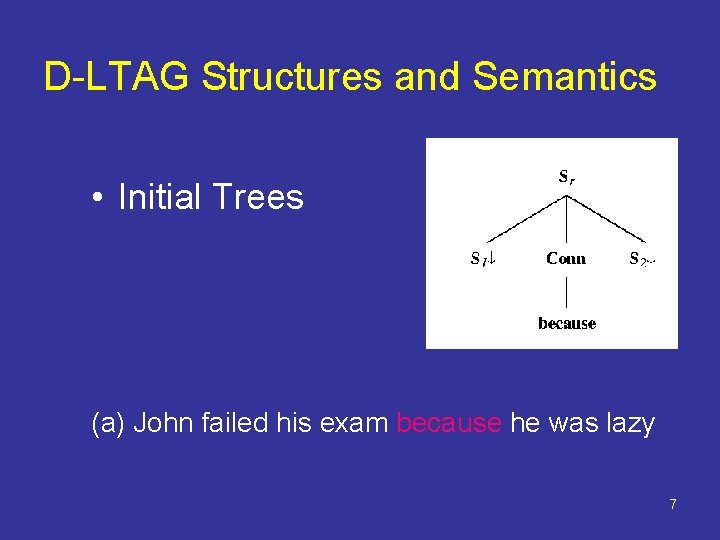

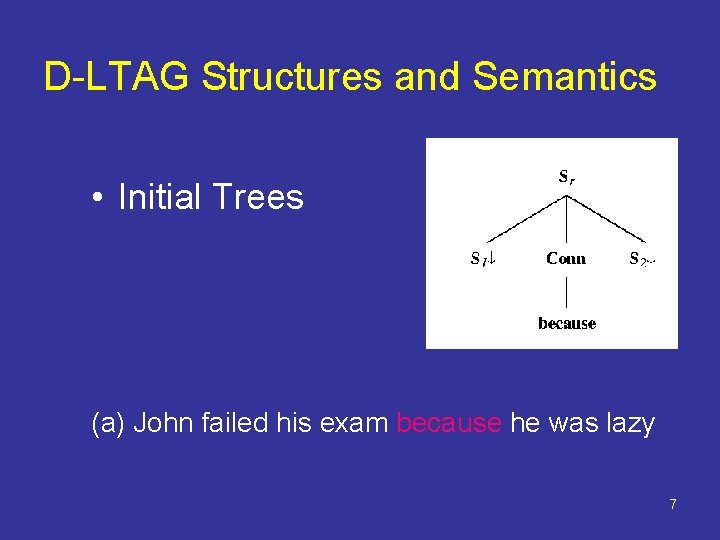

D-LTAG Structures and Semantics
- Initial trees
  - (a) John failed his exam because he was lazy.

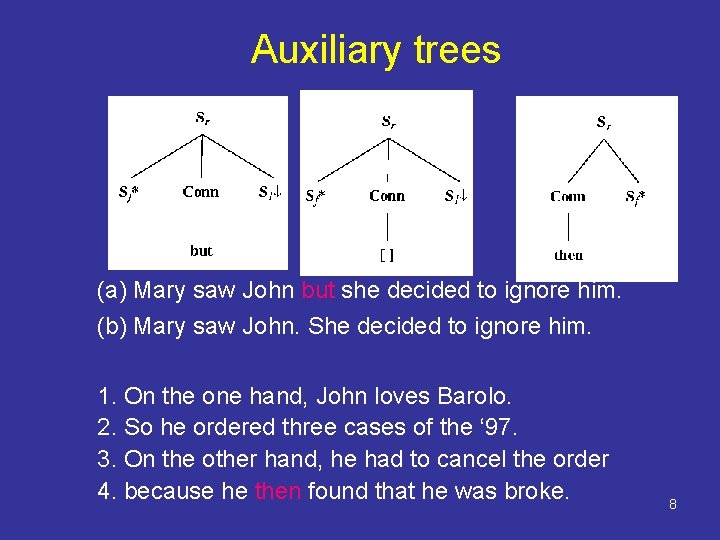

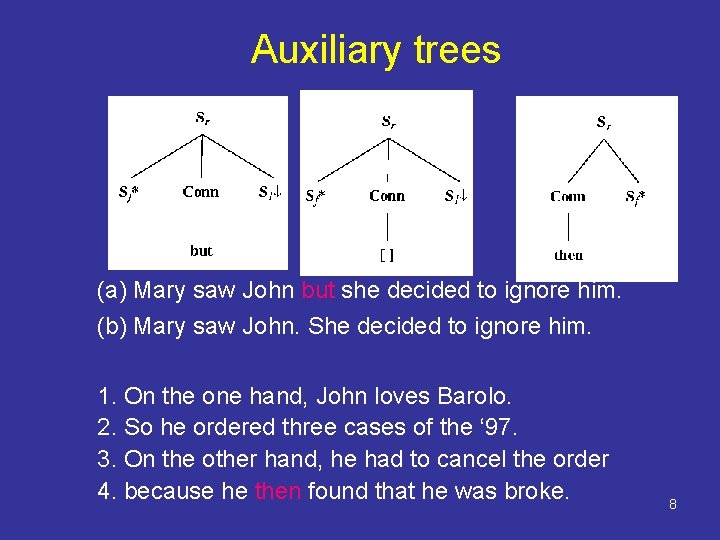

Auxiliary trees
- (a) Mary saw John but she decided to ignore him.
- (b) Mary saw John. She decided to ignore him.

1. On the one hand, John loves Barolo.
2. So he ordered three cases of the ’97.
3. On the other hand, he had to cancel the order
4. because he then found that he was broke.

Phenomena that DLTAG captures
- Arguments of a coherence relation can be stretched “long distance”
- Multiple discourse connectives can appear in a single sentence or even a single clause
- Coherence relations can vary in how and when they are realized lexically

Stretching arguments
- On the one hand, John loves Barolo.
- So he ordered three cases of the ’97.
- On the other hand, he had to cancel the order
- because he then found that he was broke.

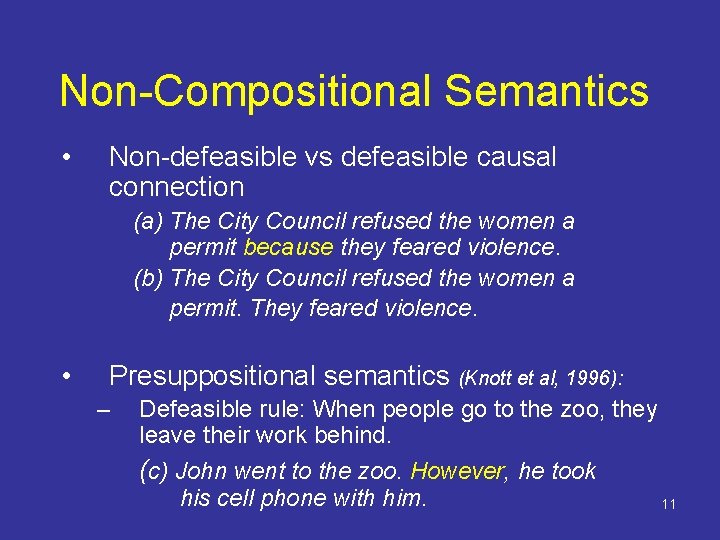

Non-Compositional Semantics
- Non-defeasible vs defeasible causal connection
  - (a) The City Council refused the women a permit because they feared violence.
  - (b) The City Council refused the women a permit. They feared violence.
- Presuppositional semantics (Knott et al, 1996):
  - Defeasible rule: When people go to the zoo, they leave their work behind.
  - (c) John went to the zoo. However, he took his cell phone with him.

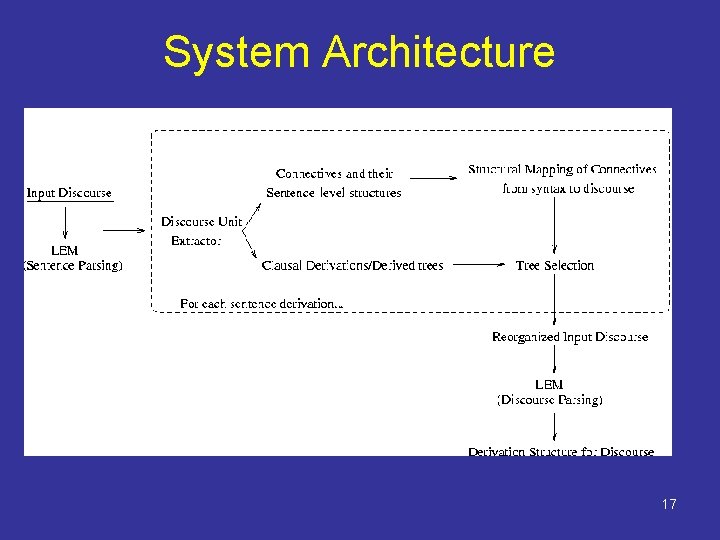

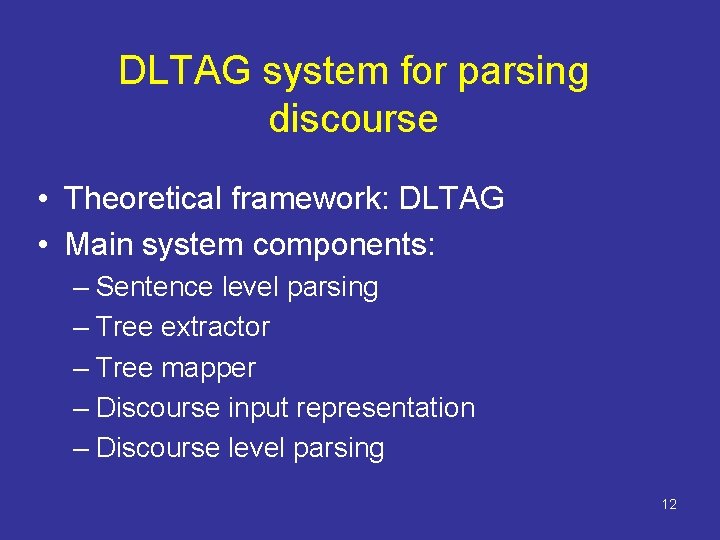

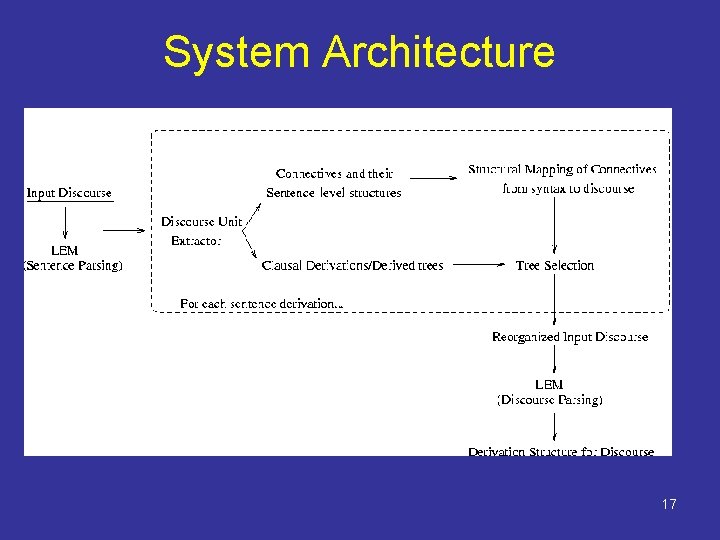

DLTAG system for parsing discourse
- Theoretical framework: DLTAG
- Main system components:
  - Sentence level parsing
  - Tree extractor
  - Tree mapper
  - Discourse input representation
  - Discourse level parsing

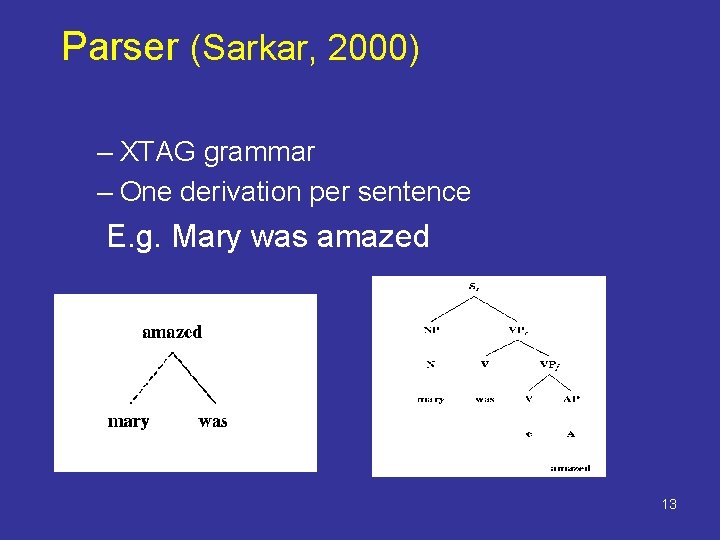

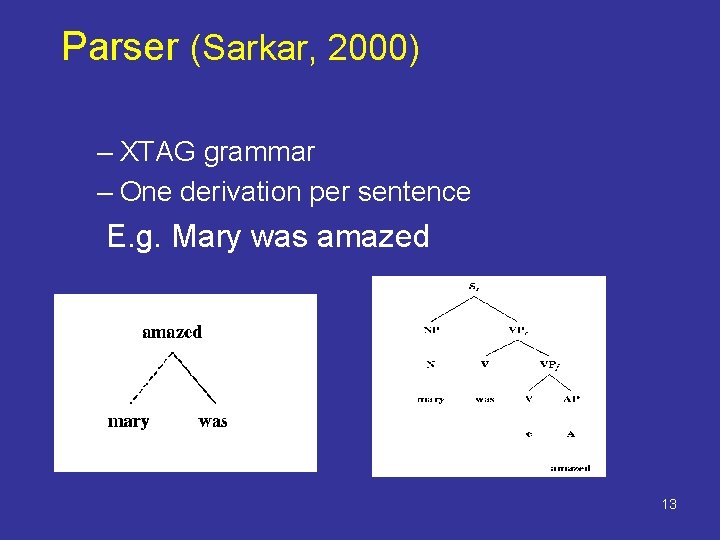

Parser (Sarkar, 2000)
- XTAG grammar
- One derivation per sentence

E.g. Mary was amazed

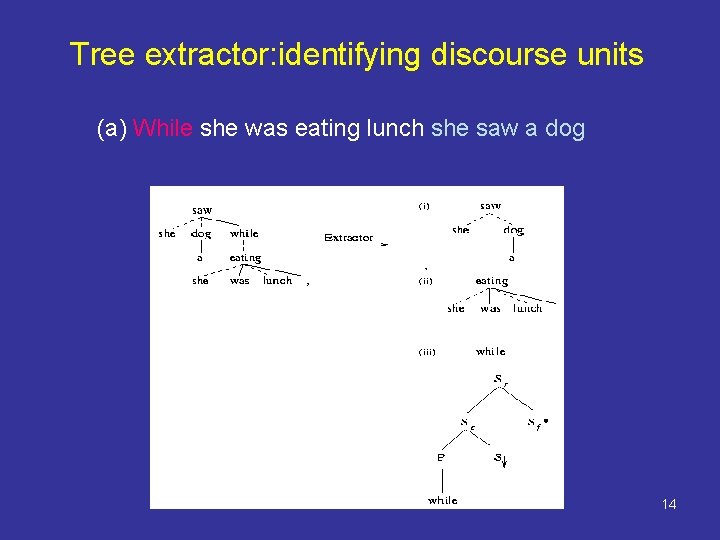

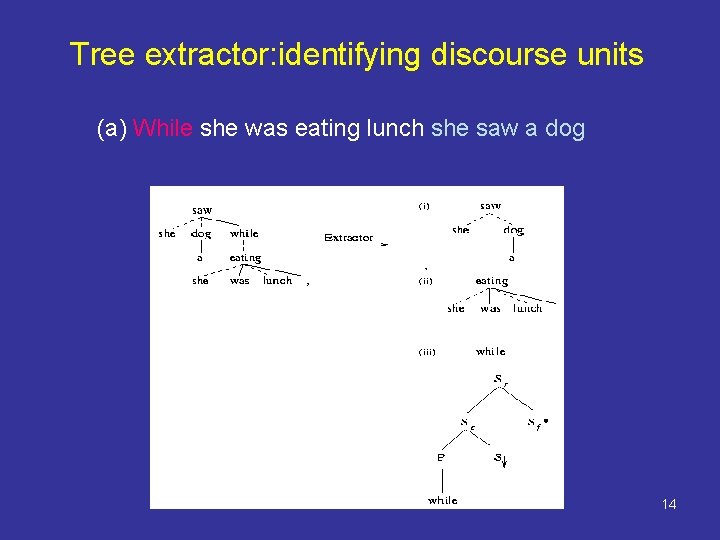

Tree extractor: identifying discourse units
- (a) While she was eating lunch she saw a dog

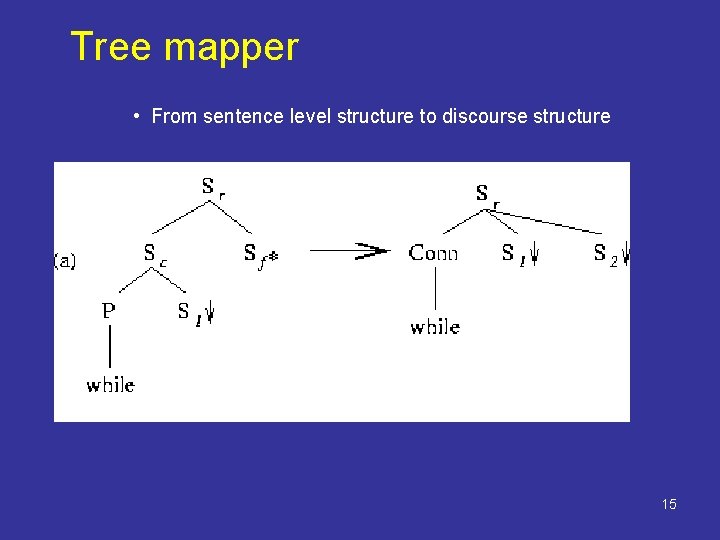

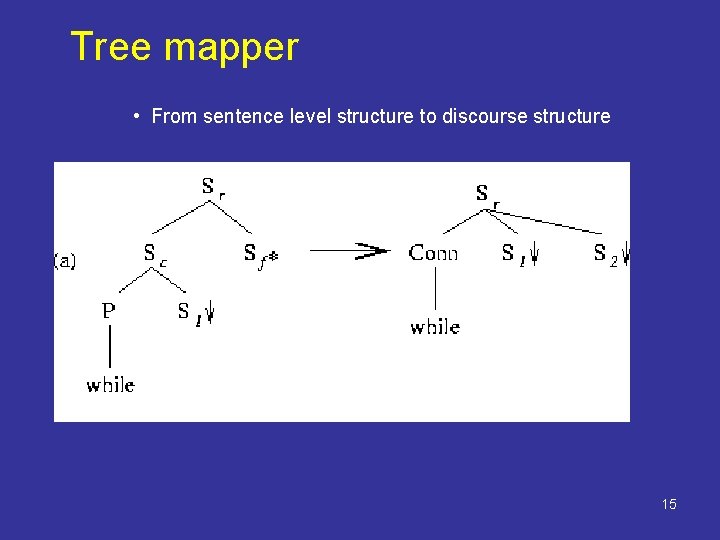

Tree mapper
- From sentence level structure to discourse structure

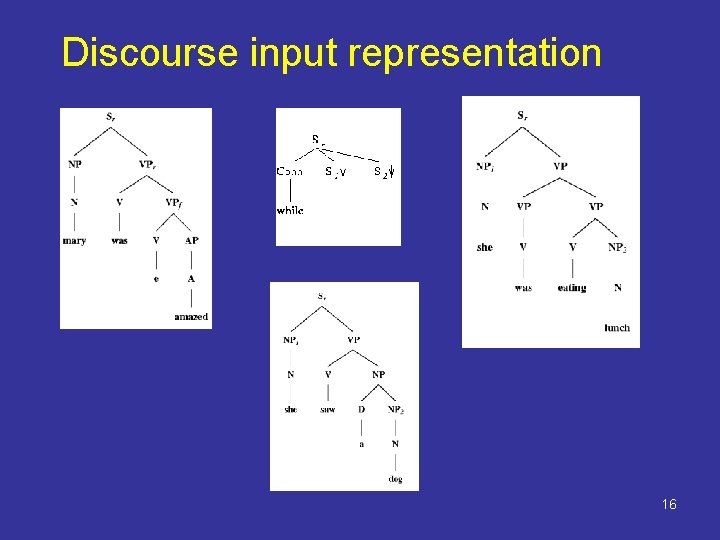

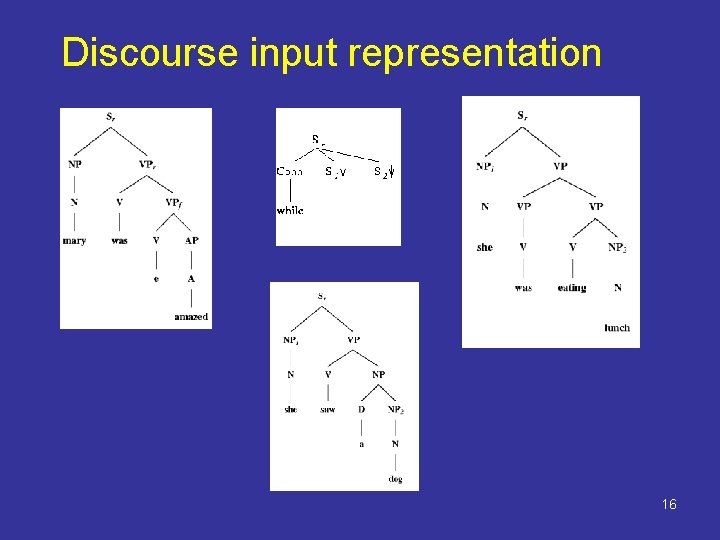

Discourse input representation

System Architecture

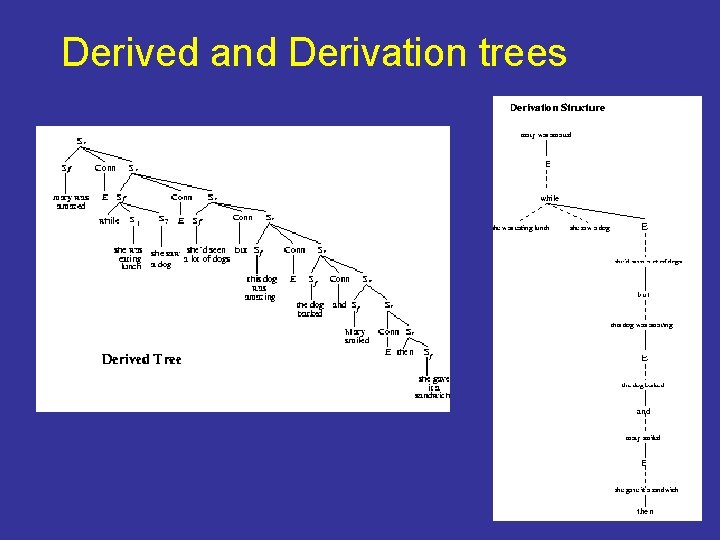

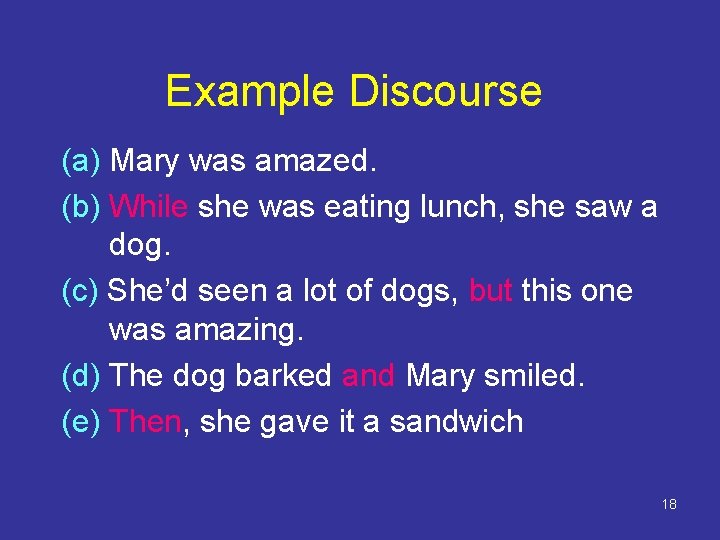

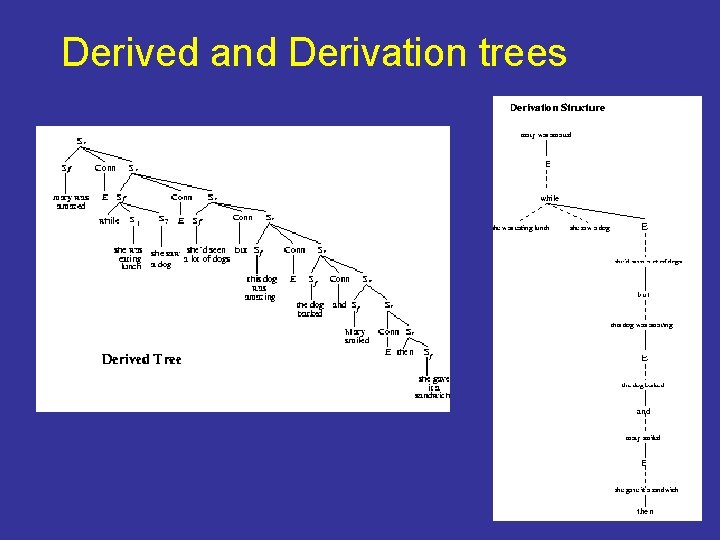

Example Discourse
- (a) Mary was amazed.
- (b) While she was eating lunch, she saw a dog.
- (c) She’d seen a lot of dogs, but this one was amazing.
- (d) The dog barked and Mary smiled.
- (e) Then, she gave it a sandwich.

Derived and Derivation trees

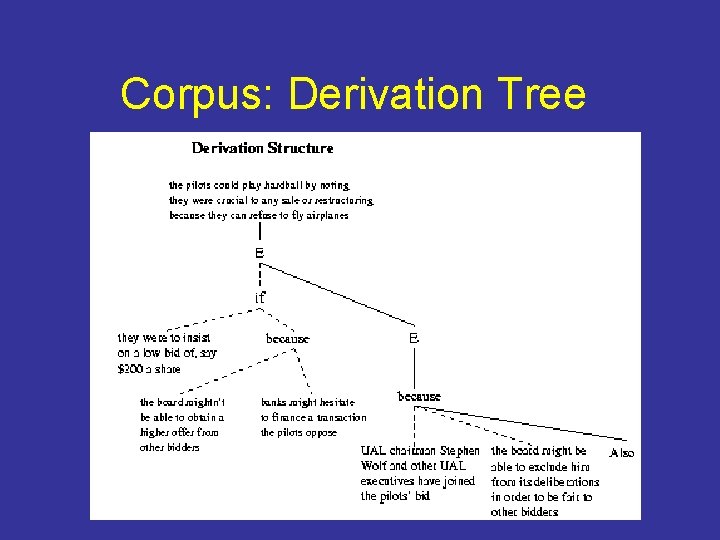

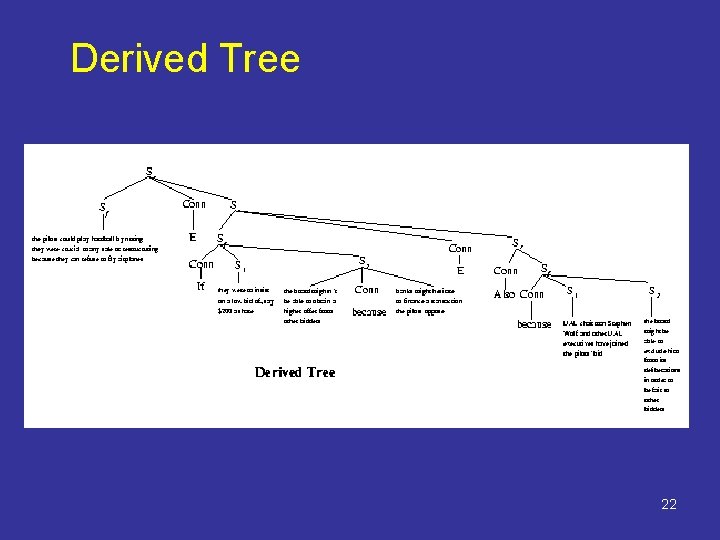

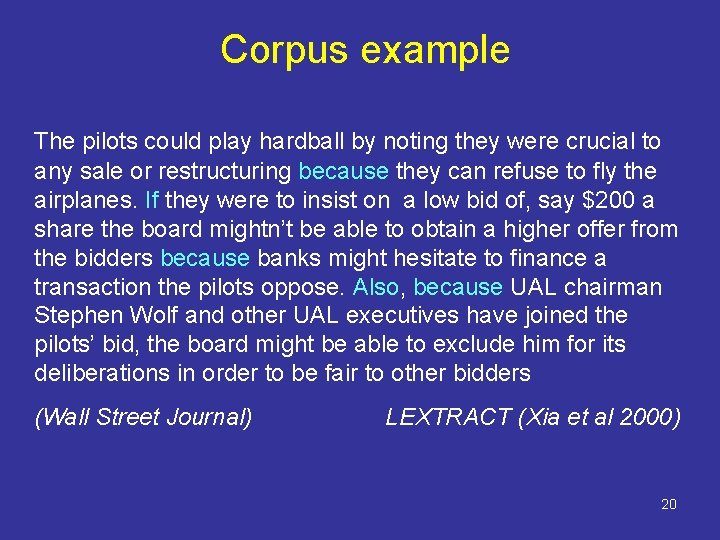

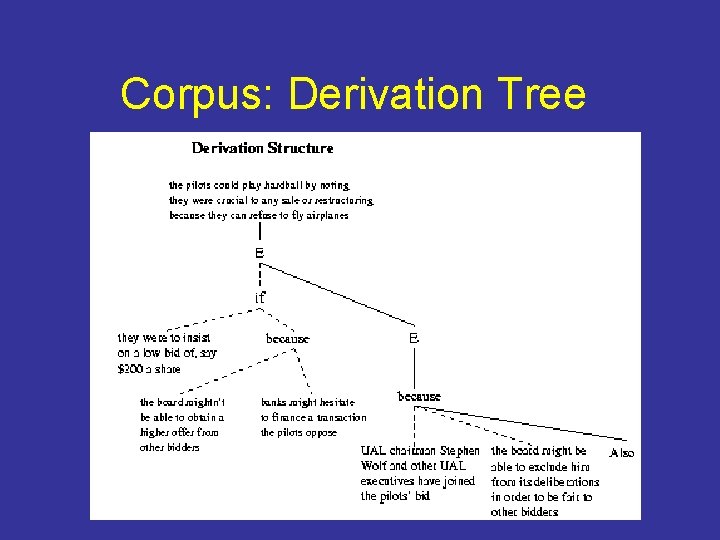

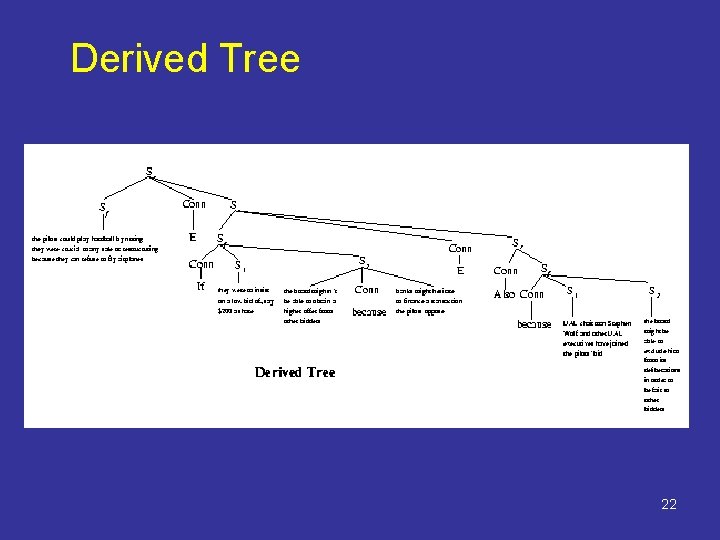

Corpus example

The pilots could play hardball by noting they were crucial to any sale or restructuring because they can refuse to fly the airplanes. If they were to insist on a low bid of, say $200 a share, the board mightn’t be able to obtain a higher offer from the bidders because banks might hesitate to finance a transaction the pilots oppose. Also, because UAL chairman Stephen Wolf and other UAL executives have joined the pilots’ bid, the board might be able to exclude him from its deliberations in order to be fair to other bidders. (Wall Street Journal)

LEXTRACT (Xia et al 2000)

Corpus: Derivation Tree

Derived Tree

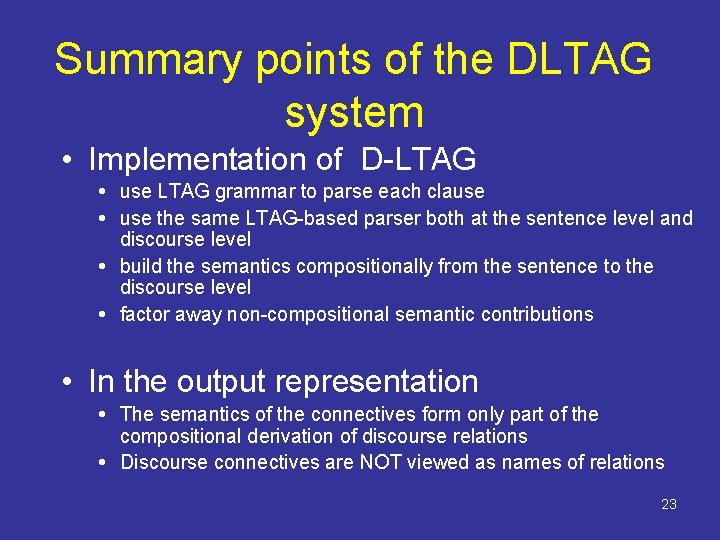

Summary points of the DLTAG system
- Implementation of D-LTAG
  - Use LTAG grammar to parse each clause
  - The same LTAG-based parser both at the sentence level and discourse level
  - Build the semantics compositionally from the sentence to the discourse level
  - Factor away non-compositional semantic contributions
- In the output representation
  - The semantics of the connectives form only part of the compositional derivation of discourse relations
  - Discourse connectives are NOT viewed as names of relations

The Penn Discourse Treebank
- Annotation of discourse connectives and their arguments
- Large scale: annotation of the entire Penn Treebank (1 million words)

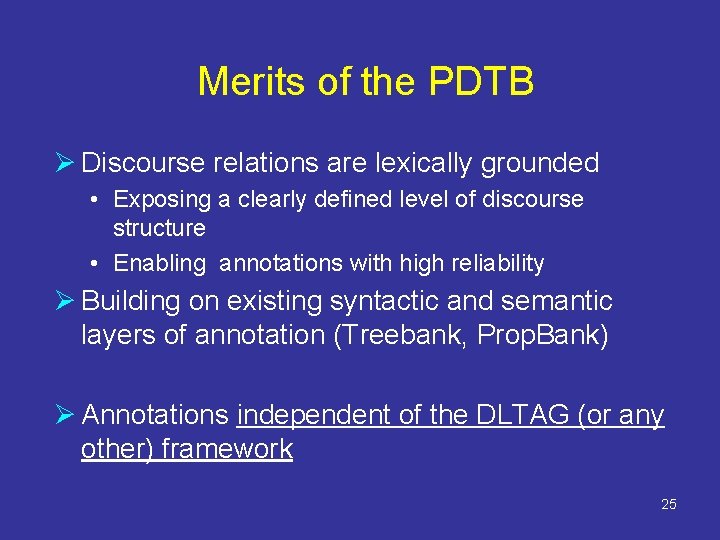

Merits of the PDTB
- Discourse relations are lexically grounded
  - Exposing a clearly defined level of discourse structure
  - Enabling annotations with high reliability
- Building on existing syntactic and semantic layers of annotation (Treebank, PropBank)
- Annotations independent of the DLTAG (or any other) framework

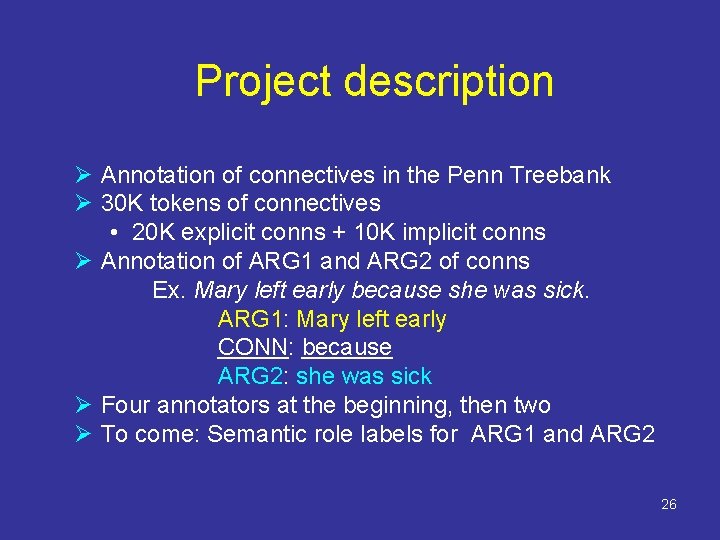

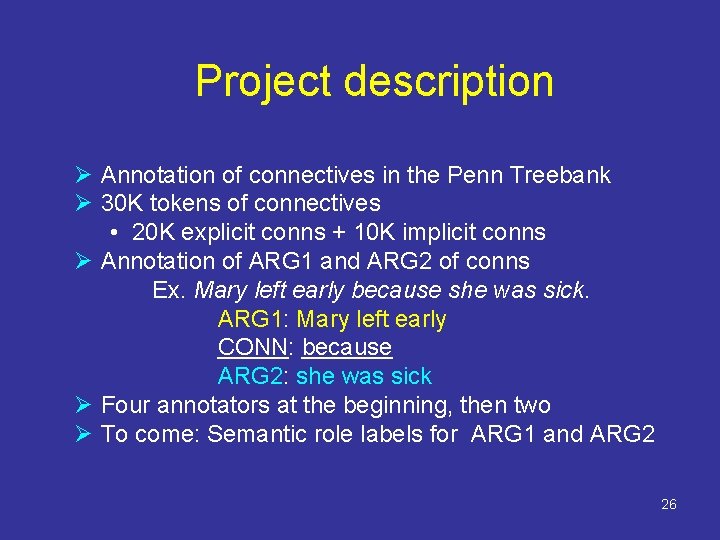

Project description
- Annotation of connectives in the Penn Treebank
- 30K tokens of connectives
  - 20K explicit conns + 10K implicit conns
- Annotation of ARG1 and ARG2 of conns
  - Ex. Mary left early because she was sick.
  - ARG1: Mary left early
  - CONN: because
  - ARG2: she was sick
- Four annotators at the beginning, then two
- To come: Semantic role labels for ARG1 and ARG2
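
The ARG1 / CONN / ARG2 triple in the example can be represented as a small record. The sketch below is hypothetical: `ConnectiveAnnotation` and `annotate_medial` are illustrative names, not the PDTB file format or annotation tool, and the helper handles only the simplest case, where an explicit connective sits between its two arguments in one sentence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectiveAnnotation:
    arg1: str
    conn: str
    arg2: str
    explicit: bool = True  # False would mark a connective supplied by the annotator


def annotate_medial(sentence: str, conn: str) -> ConnectiveAnnotation:
    """Split 'ARG1 conn ARG2.' on the first sentence-medial occurrence of `conn`.

    Assumption: the connective appears between its arguments, surrounded by spaces.
    Sentence-initial connectives (e.g. 'Because X, Y') are not handled.
    """
    body = sentence.strip().rstrip(".")
    left, sep, right = body.partition(f" {conn} ")
    if not sep:
        raise ValueError(f"connective {conn!r} not found in medial position")
    return ConnectiveAnnotation(arg1=left, conn=conn, arg2=right)


if __name__ == "__main__":
    ann = annotate_medial("Mary left early because she was sick.", "because")
    print("ARG1:", ann.arg1)
    print("CONN:", ann.conn)
    print("ARG2:", ann.arg2)
```

In the actual annotation task the argument spans are chosen by human annotators, and deciding how far each argument extends is the hard part; string splitting is only a way to show the shape of the record.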

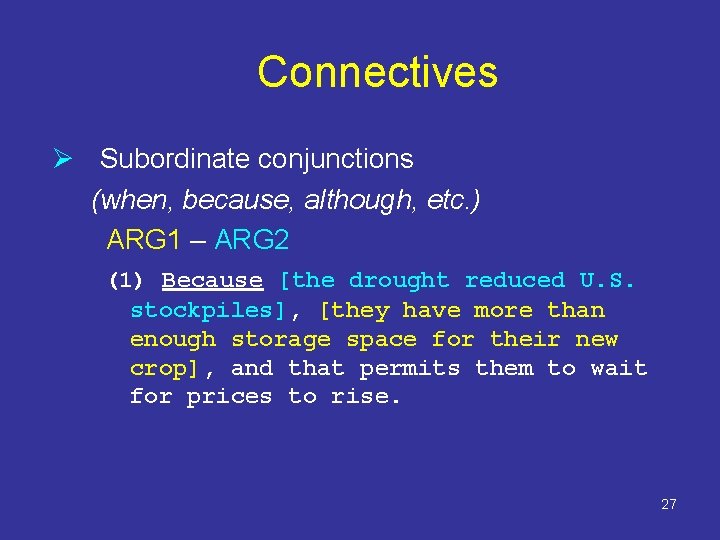

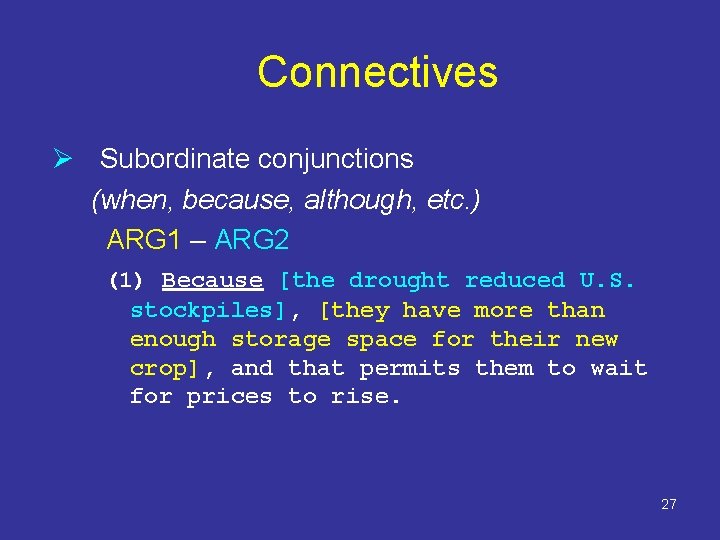

Connectives
- Subordinate conjunctions (when, because, although, etc.)

ARG1 – ARG2

(1) Because [the drought reduced U.S. stockpiles], [they have more than enough storage space for their new crop], and that permits them to wait for prices to rise.

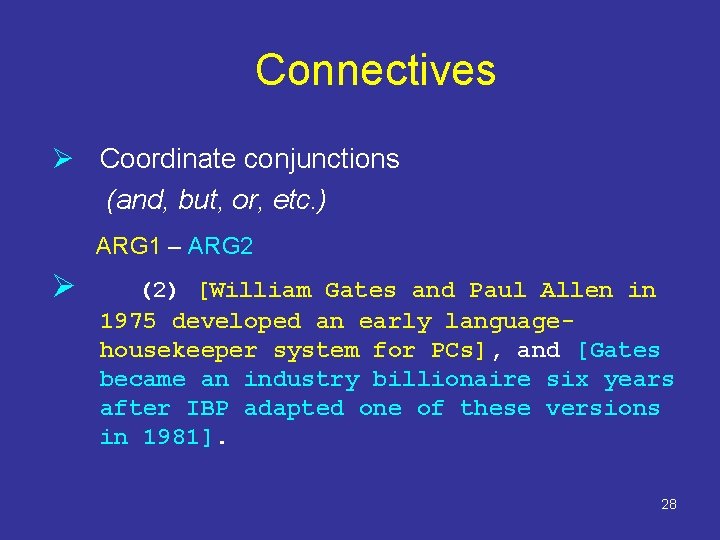

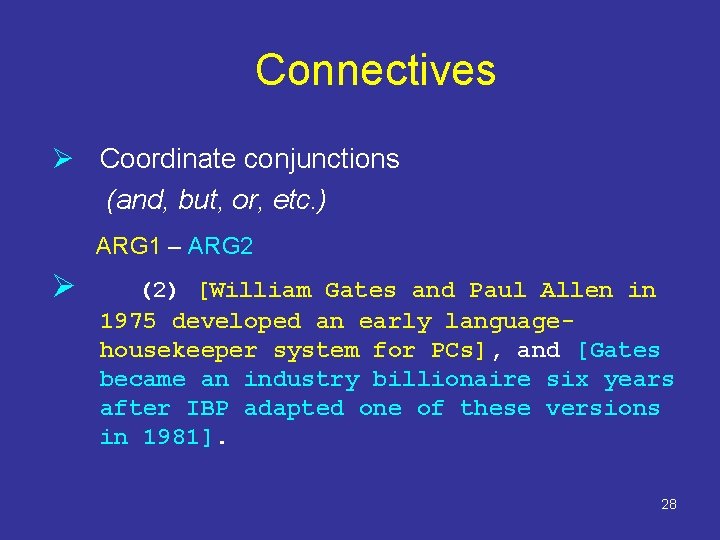

Connectives
- Coordinate conjunctions (and, but, or, etc.)

ARG1 – ARG2

(2) [William Gates and Paul Allen in 1975 developed an early language-housekeeper system for PCs], and [Gates became an industry billionaire six years after IBM adapted one of these versions in 1981].

Connectives
- Adverbials (therefore, then, as a result, etc.)

ARG1 – ARG2

(3) For years, costume jewelry makers fought a losing battle. Jewelry displays in department stores were often cluttered and uninspired. And the merchandise was, well, fake. As a result, marketers of faux gems steadily lost space in department stores to more fashionable rivals -- cosmetics makers.

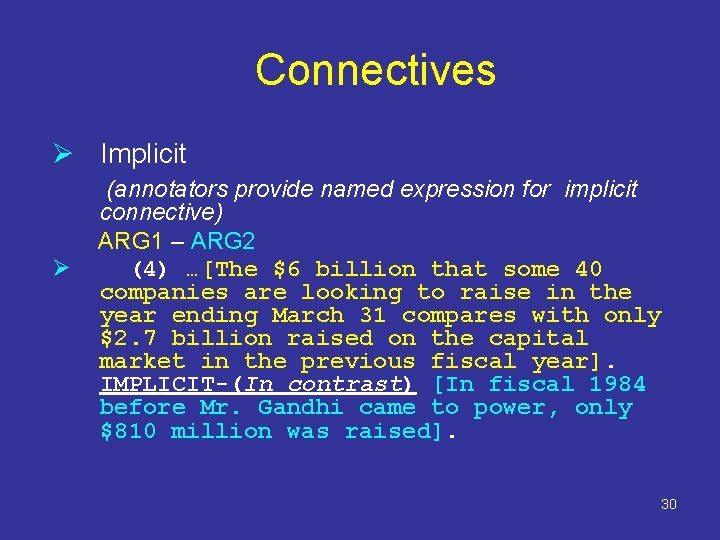

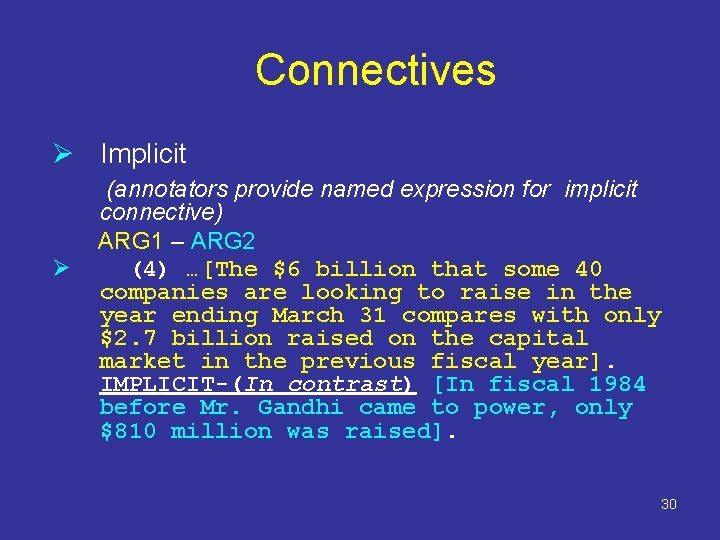

Connectives
- Implicit
- (annotators provide named expression for implicit connective)

ARG1 – ARG2

(4) …[The $6 billion that some 40 companies are looking to raise in the year ending March 31 compares with only $2.7 billion raised on the capital market in the previous fiscal year]. IMPLICIT-(In contrast) [In fiscal 1984 before Mr. Gandhi came to power, only $810 million was raised].

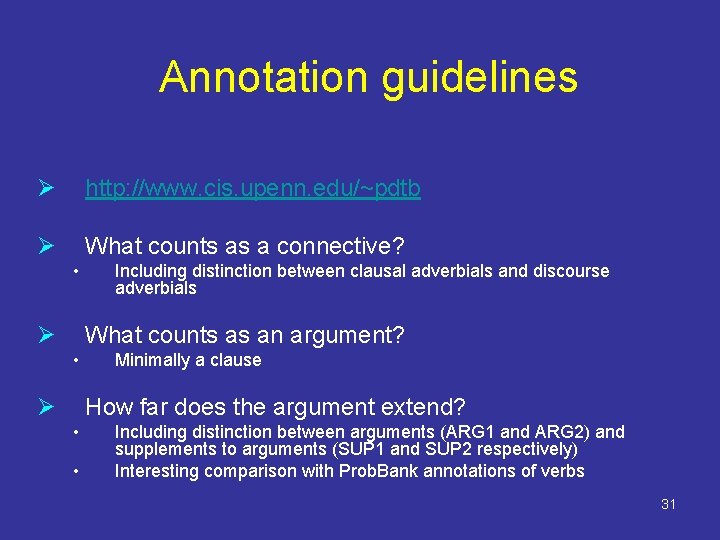

Annotation guidelines
- http://www.cis.upenn.edu/~pdtb
- What counts as a connective?
  - Including distinction between clausal adverbials and discourse adverbials
- What counts as an argument?
  - Minimally a clause
- How far does the argument extend?
  - Including distinction between arguments (ARG1 and ARG2) and supplements to arguments (SUP1 and SUP2 respectively)
  - Interesting comparison with PropBank annotations of verbs

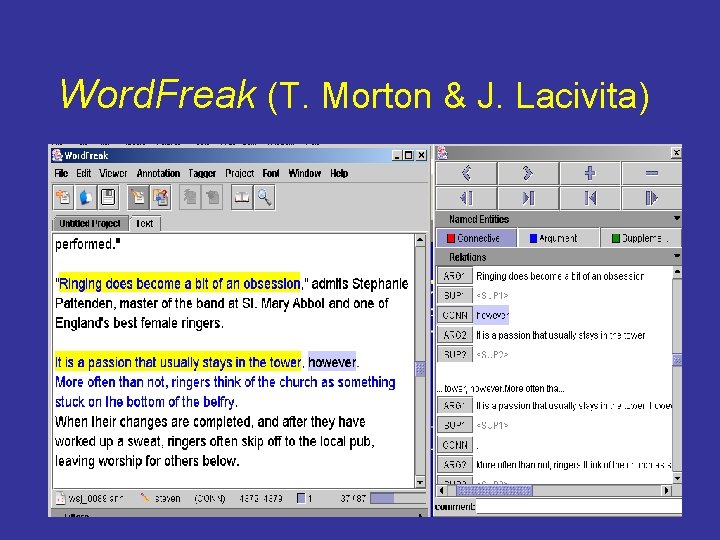

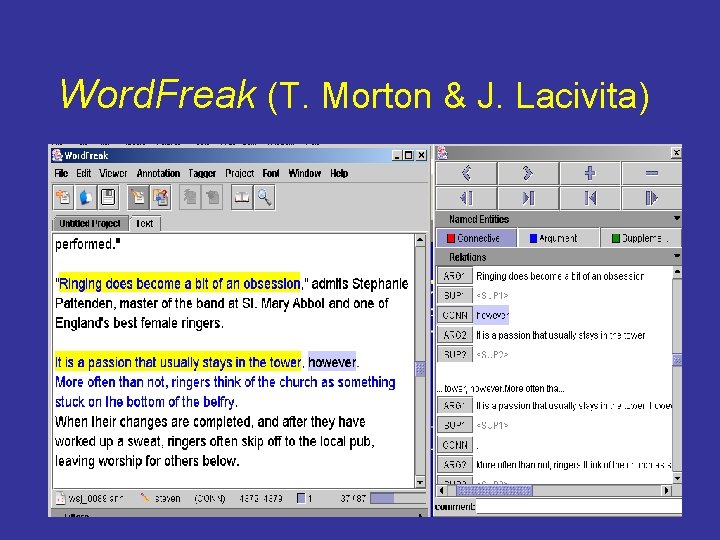

WordFreak (T. Morton & J. Lacivita)

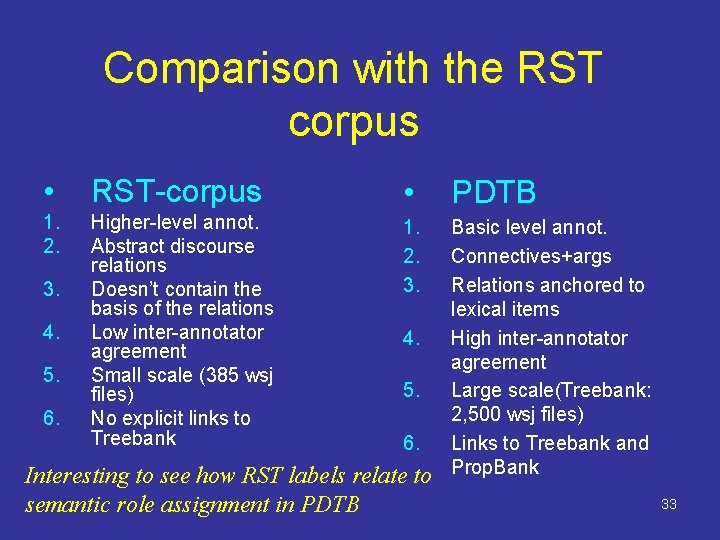

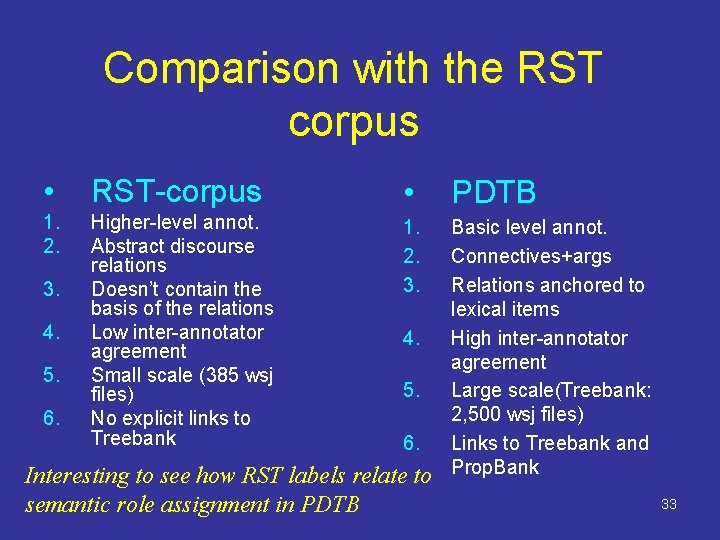

Comparison with the RST corpus

RST corpus
- Higher-level annot.
- Abstract discourse relations
- Doesn’t contain the basis of the relations
- Low inter-annotator agreement
- Small scale (385 wsj files)
- No explicit links to Treebank

PDTB
- Basic level annot.
- Connectives+args
- Relations anchored to lexical items
- High inter-annotator agreement
- Large scale (Treebank: 2,500 wsj files)
- Links to Treebank and PropBank

Interesting to see how RST labels relate to semantic role assignment in PDTB

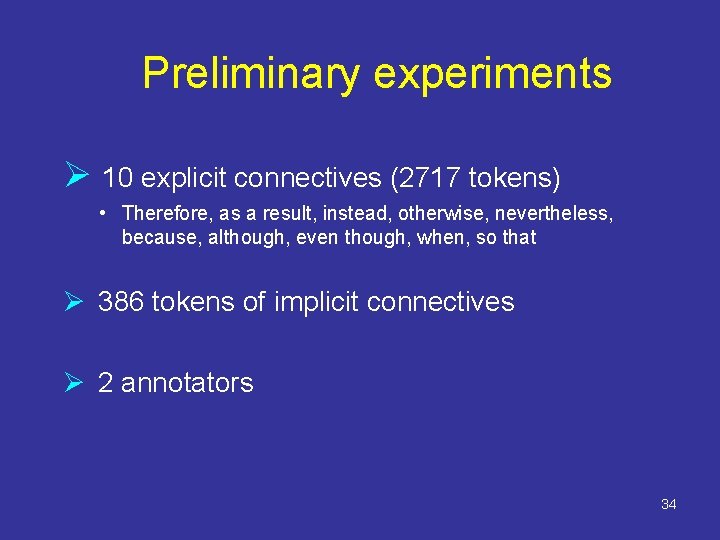

Preliminary experiments
- 10 explicit connectives (2717 tokens)
  - Therefore, as a result, instead, otherwise, nevertheless, because, although, even though, when, so that
- 386 tokens of implicit connectives
- 2 annotators

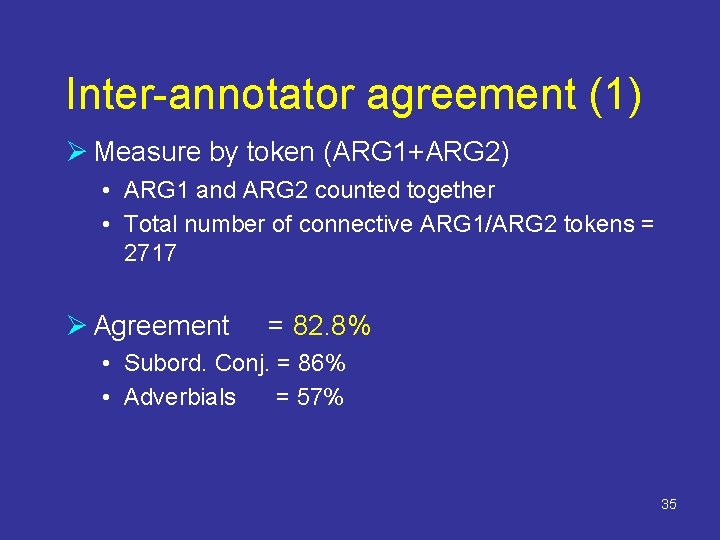

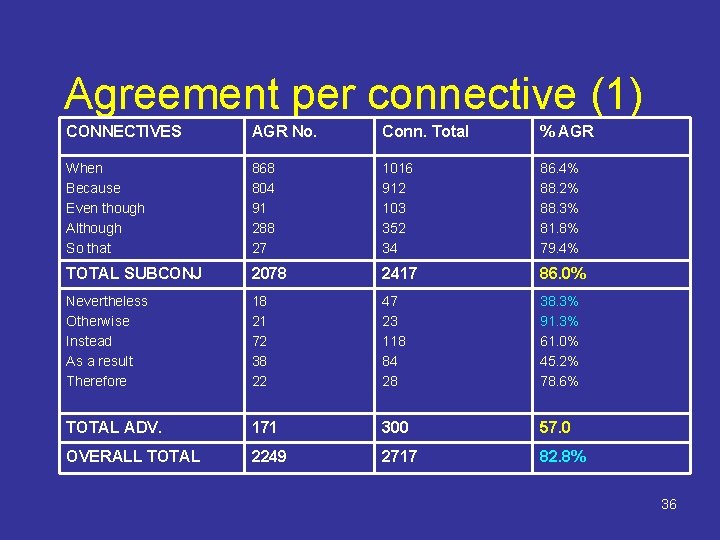

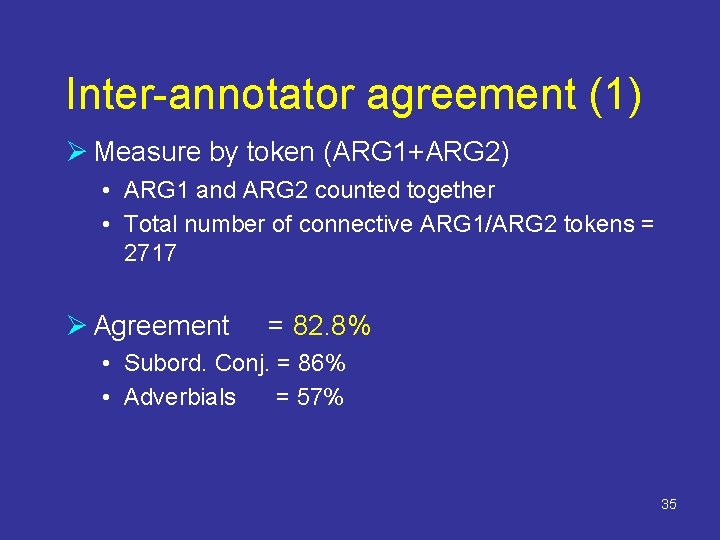

Inter-annotator agreement (1)
- Measure by token (ARG1+ARG2)
  - ARG1 and ARG2 counted together
  - Total number of connective ARG1/ARG2 tokens = 2717
- Agreement = 82.8%
  - Subord. Conj. = 86%
  - Adverbials = 57%

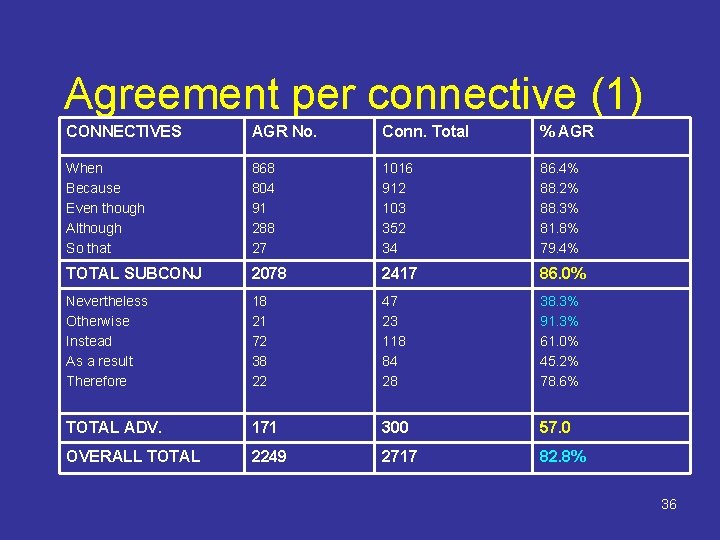

Agreement per connective (1)

| CONNECTIVES | AGR No. | Conn. Total | % AGR |
|---|---|---|---|
| When | 868 | 1016 | 86.4% |
| Because | 804 | 912 | 88.2% |
| Even though | 91 | 103 | 88.3% |
| Although | 288 | 352 | 81.8% |
| So that | 27 | 34 | 79.4% |
| TOTAL SUBCONJ | 2078 | 2417 | 86.0% |
| Nevertheless | 18 | 47 | 38.3% |
| Otherwise | 21 | 23 | 91.3% |
| Instead | 72 | 118 | 61.0% |
| As a result | 38 | 84 | 45.2% |
| Therefore | 22 | 28 | 78.6% |
| TOTAL ADV. | 171 | 300 | 57.0% |
| OVERALL TOTAL | 2249 | 2717 | 82.8% |
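
The percentages in this table are plain ratios of agreed tokens to total tokens, and the class and overall figures follow from summing the counts. The sketch below recomputes them from the counts on the slide; the variable and function names are illustrative. One caveat: the counts for "when" (868 of 1016) give 85.4%, which differs from the 86.4% printed on the slide, while the subtotals and totals are consistent with the counts.

```python
# (agreed, total) token counts per connective, copied from the slide.
COUNTS = {
    "subordinate conjunctions": {
        "when": (868, 1016),
        "because": (804, 912),
        "even though": (91, 103),
        "although": (288, 352),
        "so that": (27, 34),
    },
    "adverbials": {
        "nevertheless": (18, 47),
        "otherwise": (21, 23),
        "instead": (72, 118),
        "as a result": (38, 84),
        "therefore": (22, 28),
    },
}


def percent_agreement(agreed: int, total: int) -> float:
    """Exact-match agreement as a percentage, rounded to one decimal."""
    return round(100 * agreed / total, 1)


def class_totals(rows: dict) -> tuple:
    """Sum agreed and total counts over all connectives in one class."""
    agreed = sum(a for a, _ in rows.values())
    total = sum(t for _, t in rows.values())
    return agreed, total


if __name__ == "__main__":
    overall_agreed = overall_total = 0
    for cls, rows in COUNTS.items():
        for conn, (agreed, total) in rows.items():
            print(f"{conn:12s} {agreed:5d} {total:5d} {percent_agreement(agreed, total):5.1f}%")
        agreed, total = class_totals(rows)
        overall_agreed += agreed
        overall_total += total
        print(f"TOTAL {cls}: {agreed}/{total} = {percent_agreement(agreed, total)}%")
    print(f"OVERALL: {overall_agreed}/{overall_total} = "
          f"{percent_agreement(overall_agreed, overall_total)}%")
```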

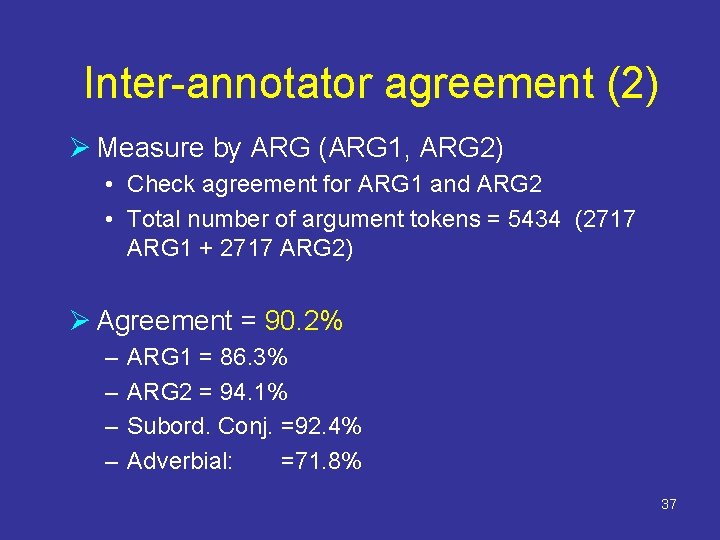

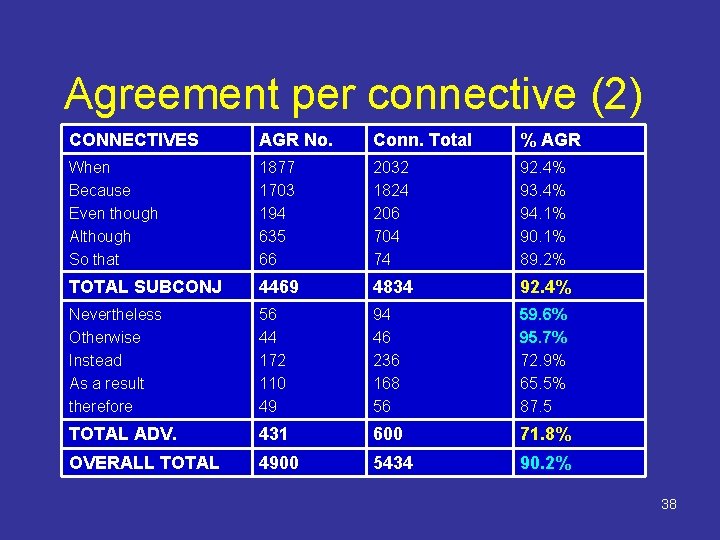

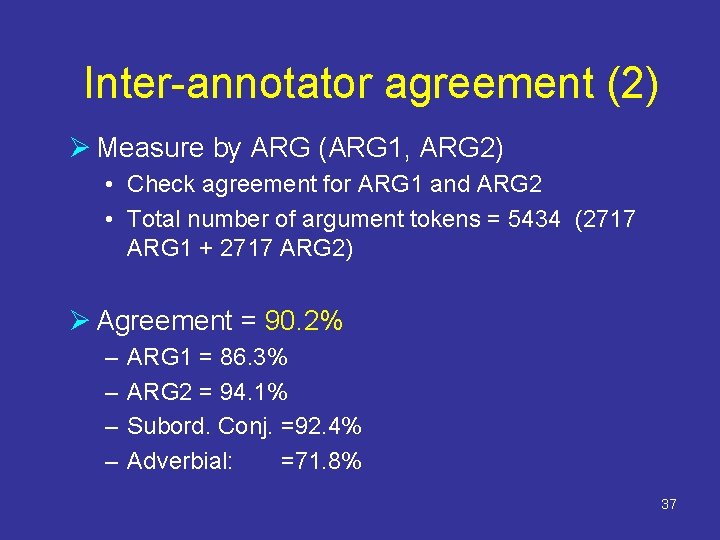

Inter-annotator agreement (2)
- Measure by ARG (ARG1, ARG2)
  - Check agreement for ARG1 and ARG2
  - Total number of argument tokens = 5434 (2717 ARG1 + 2717 ARG2)
- Agreement = 90.2%
  - ARG1 = 86.3%
  - ARG2 = 94.1%
  - Subord. Conj. = 92.4%
  - Adverbial = 71.8%
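
Because ARG1 and ARG2 each contribute 2717 tokens, the overall per-argument figure is the count-weighted mean of the two argument-level rates. A short check, using only the numbers on the slide (the function name is illustrative):

```python
# Token counts and agreement rates per argument, as given on the slide.
N_TOKENS = {"ARG1": 2717, "ARG2": 2717}
PCT_AGREEMENT = {"ARG1": 86.3, "ARG2": 94.1}


def pooled_agreement(pct: dict, n: dict) -> float:
    """Count-weighted mean of per-group agreement percentages."""
    total = sum(n.values())
    return sum(pct[k] * n[k] for k in n) / total


if __name__ == "__main__":
    overall = pooled_agreement(PCT_AGREEMENT, N_TOKENS)
    print(f"{sum(N_TOKENS.values())} argument tokens, overall agreement {overall:.1f}%")
```

The same weighting explains why the overall figure sits much closer to the subordinate-conjunction rate than to the adverbial rate: subordinate conjunctions account for most of the tokens.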

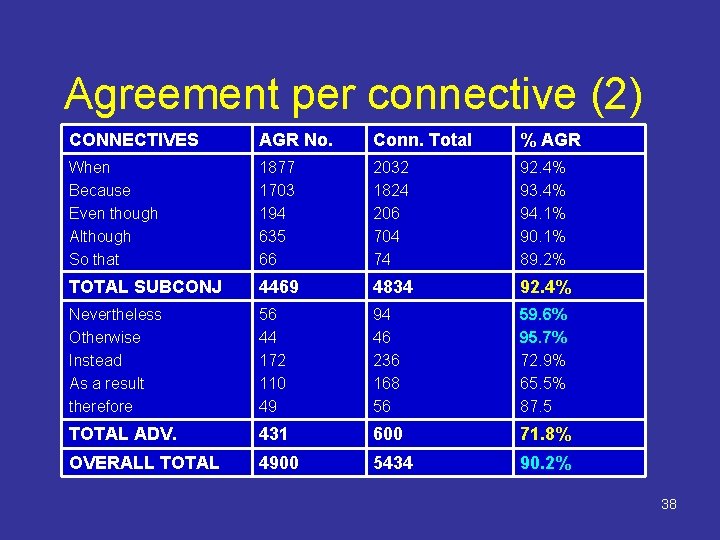

Agreement per connective (2)

| CONNECTIVES | AGR No. | Conn. Total | % AGR |
|---|---|---|---|
| When | 1877 | 2032 | 92.4% |
| Because | 1703 | 1824 | 93.4% |
| Even though | 194 | 206 | 94.1% |
| Although | 635 | 704 | 90.1% |
| So that | 66 | 74 | 89.2% |
| TOTAL SUBCONJ | 4469 | 4834 | 92.4% |
| Nevertheless | 56 | 94 | 59.6% |
| Otherwise | 44 | 46 | 95.7% |
| Instead | 172 | 236 | 72.9% |
| As a result | 110 | 168 | 65.5% |
| Therefore | 49 | 56 | 87.5% |
| TOTAL ADV. | 431 | 600 | 71.8% |
| OVERALL TOTAL | 4900 | 5434 | 90.2% |

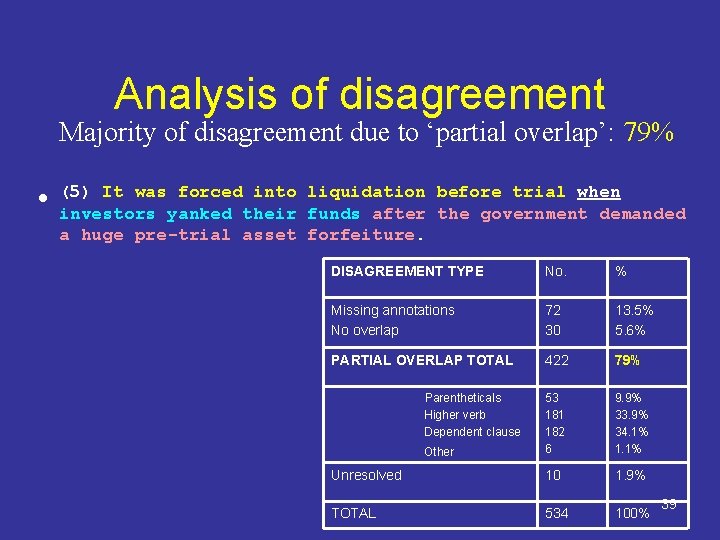

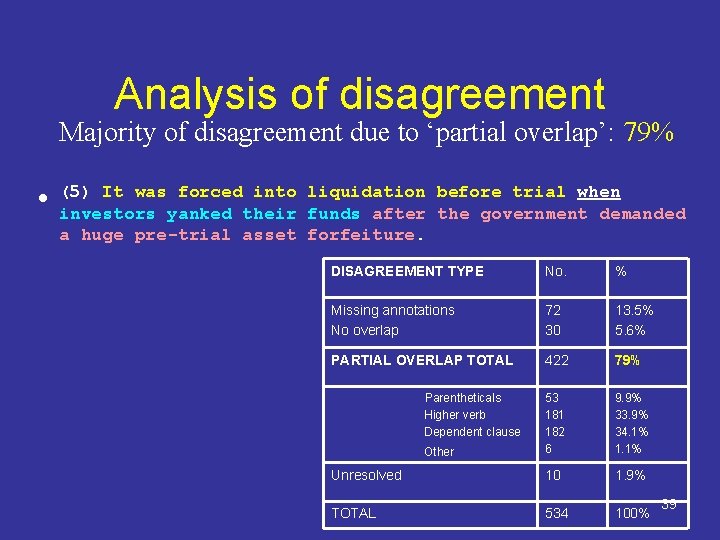

Analysis of disagreement

Majority of disagreement due to ‘partial overlap’: 79%
- (5) It was forced into liquidation before trial when investors yanked their funds after the government demanded a huge pre-trial asset forfeiture.

| DISAGREEMENT TYPE | No. | % |
|---|---|---|
| Missing annotations | 72 | 13.5% |
| No overlap | 30 | 5.6% |
| PARTIAL OVERLAP TOTAL | 422 | 79% |
| Parentheticals | 53 | 9.9% |
| Higher verb | 181 | 33.9% |
| Dependent clause | 182 | 34.1% |
| Other | 6 | 1.1% |
| Unresolved | 10 | 1.9% |
| TOTAL | 534 | 100% |
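
The breakdown can be recomputed from the raw counts: the four partial-overlap subtypes sum to 422, and the 534 disagreements equal the 5434 argument tokens minus the 4900 agreed ones. The sketch below uses only the counts on the slides; the names are illustrative.

```python
# Disagreement counts from the slide; partial overlap is split into its subtypes.
PARTIAL_OVERLAP = {
    "parentheticals": 53,
    "higher verb": 181,
    "dependent clause": 182,
    "other": 6,
}
OTHER_TYPES = {
    "missing annotations": 72,
    "no overlap": 30,
    "unresolved": 10,
}


def share(count: int, total: int) -> float:
    """Percentage of all disagreements, rounded to one decimal."""
    return round(100 * count / total, 1)


if __name__ == "__main__":
    partial_total = sum(PARTIAL_OVERLAP.values())
    total = partial_total + sum(OTHER_TYPES.values())
    print(f"total disagreements: {total}")
    print(f"partial overlap: {partial_total} ({share(partial_total, total)}%)")
    for name, count in {**PARTIAL_OVERLAP, **OTHER_TYPES}.items():
        print(f"  {name:20s} {count:4d} {share(count, total):5.1f}%")
```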

Reanalysis of agreement
- Inter-annotator agreement counting in partial overlap
  - 94.5%
- Dealing with extent of the argument
  - Revise guidelines
  - BUT: Some disagreement will persist

Comparing predicates
- PropBank: sentence level predicates (verbs)
  - Arity of arguments: Hard
  - Extent of the argument: Easy
- Penn Discourse Treebank: discourse predicates
  - Arity of arguments: Easy
  - Extent of the argument: Hard

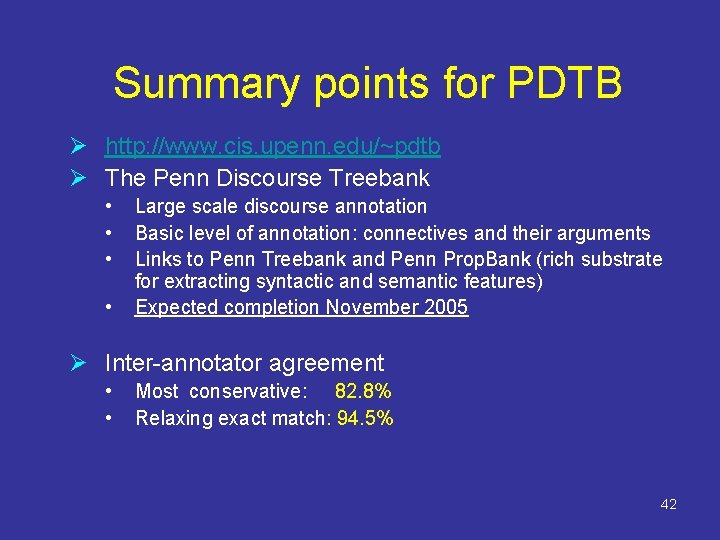

Summary points for PDTB
- http://www.cis.upenn.edu/~pdtb
- The Penn Discourse Treebank
  - Large scale discourse annotation
  - Basic level of annotation: connectives and their arguments
  - Links to Penn Treebank and Penn PropBank (rich substrate for extracting syntactic and semantic features)
  - Expected completion November 2005
- Inter-annotator agreement
  - Most conservative: 82.8%
  - Relaxing exact match: 94.5%
