Interlingua Design for Spoken Language Translation March 28

- Slides: 28

Interlingua Design for Spoken Language Translation, March 28, 2003. Presented by Lori Levin. Interlingua Team: Lori Levin, Donna Gates, Dorcas Wallace, Kay Peterson, Alon Lavie, Chad Langley.

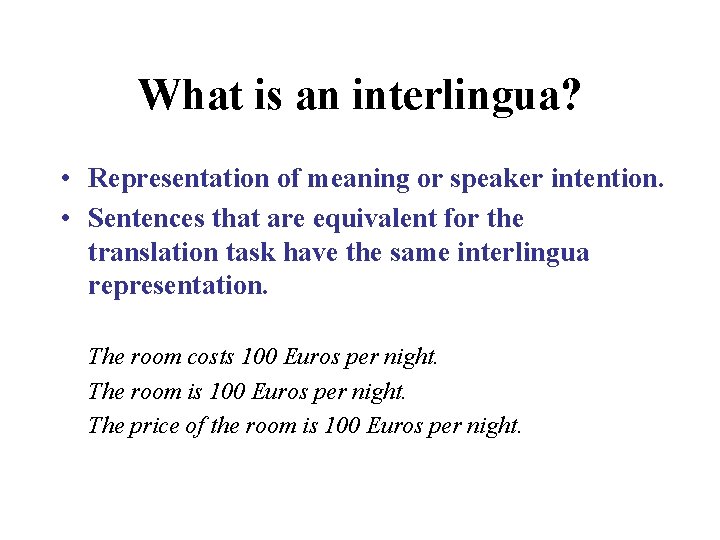

What is an interlingua?

- A representation of meaning or speaker intention.
- Sentences that are equivalent for the translation task have the same interlingua representation. For example:
  - The room costs 100 Euros per night.
  - The room is 100 Euros per night.
  - The price of the room is 100 Euros per night.
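One way to read this definition is that the interlingua acts as an equivalence-class key: every sentence tagged with the same representation lands in the same class. The minimal sketch below assumes sentences have already been tagged by hand; the `PRICE_IF` string is a hypothetical placeholder, not an IF taken from the specification, and `group_by_interlingua` is an illustrative helper.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def group_by_interlingua(tagged: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (sentence, interlingua) pairs into classes that share one representation."""
    classes: Dict[str, List[str]] = defaultdict(list)
    for sentence, interlingua in tagged:
        classes[interlingua].append(sentence)
    return dict(classes)


# Hypothetical placeholder for the shared representation (not real IF syntax).
PRICE_IF = "<IF for: room price of 100 Euros per night>"

tagged = [
    ("The room costs 100 Euros per night.", PRICE_IF),
    ("The room is 100 Euros per night.", PRICE_IF),
    ("The price of the room is 100 Euros per night.", PRICE_IF),
]

classes = group_by_interlingua(tagged)
print(len(classes))  # 1: all three paraphrases share one representation
```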

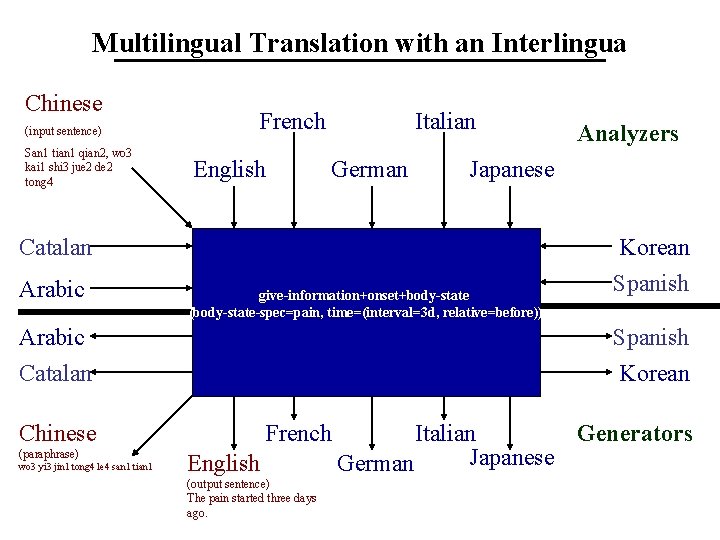

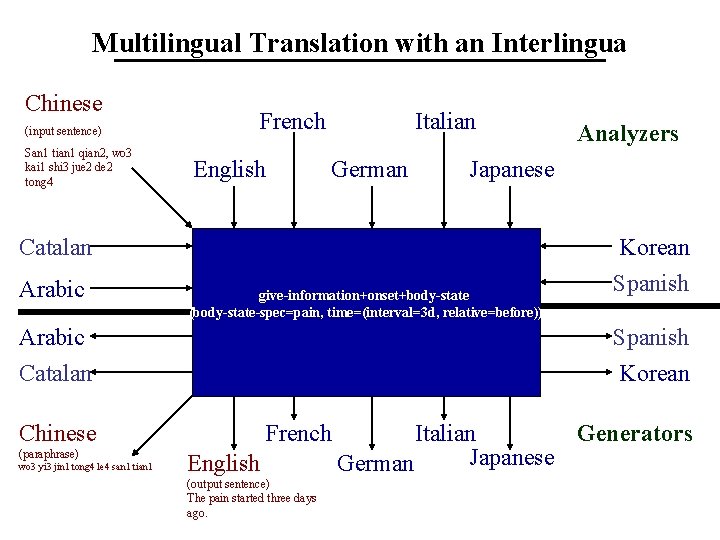

Multilingual Translation with an Interlingua (diagram: analyzers on the input side and generators on the output side, connected through a single interlingua; languages shown: Chinese, English, French, Italian, German, Japanese, Korean, Spanish, Catalan, Arabic)

- Chinese input sentence: san1 tian1 qian2, wo3 kai1 shi3 jue2 de2 tong4 ("Three days ago, I started to feel pain.")
- Interlingua: give-information+onset+body-state (body-state-spec=pain, time=(interval=3d, relative=before))
- English output sentence: The pain started three days ago.
- Chinese paraphrase: wo3 yi3 jin1 tong4 le4 san1 tian1 ("I have been in pain for three days already.")
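The hub-and-spoke flow in the diagram can be sketched as: analyze the input once into the interlingua, then run every generator on that single representation, including a generator for the source language to produce the confirmation paraphrase. The analyzer and generators below are hypothetical lookup-table stand-ins for real analysis and generation grammars; they cover only the one sentence from the slide.

```python
from typing import Callable, Dict

Analyzer = Callable[[str], str]   # source sentence -> IF string
Generator = Callable[[str], str]  # IF string -> target sentence

PAIN_IF = ("give-information+onset+body-state "
           "(body-state-spec=pain, time=(interval=3d, relative=before))")


def toy_chinese_analyzer(sentence: str) -> str:
    # Hypothetical stand-in for an analysis grammar: recognizes one sentence only.
    if sentence == "san1 tian1 qian2, wo3 kai1 shi3 jue2 de2 tong4":
        return PAIN_IF
    raise ValueError("sentence not covered by the toy analyzer")


def toy_english_generator(interlingua: str) -> str:
    return {PAIN_IF: "The pain started three days ago."}[interlingua]


def toy_chinese_generator(interlingua: str) -> str:
    # Generating back into the source language yields the paraphrase for confirmation.
    return {PAIN_IF: "wo3 yi3 jin1 tong4 le4 san1 tian1"}[interlingua]


def translate(sentence: str, analyzer: Analyzer,
              generators: Dict[str, Generator]) -> Dict[str, str]:
    """Analyze once into the interlingua, then generate into every target language."""
    interlingua = analyzer(sentence)
    return {lang: generate(interlingua) for lang, generate in generators.items()}


outputs = translate(
    "san1 tian1 qian2, wo3 kai1 shi3 jue2 de2 tong4",
    toy_chinese_analyzer,
    {"en": toy_english_generator, "zh": toy_chinese_generator},
)
print(outputs["en"])  # The pain started three days ago.
```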

Advantages of Interlingua

- Add a new language easily: adding one grammar for analysis and one grammar for generation yields all-ways translation to and from all previous languages.
- Monolingual development teams.
- Paraphrase: generate a new source-language sentence from the interlingua so that the user can confirm the meaning.
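The "add a new language easily" point is a counting argument. With an interlingua, N languages need one analyzer and one generator each (2N grammars). A direct-transfer design, used here only as a point of comparison, needs one system per ordered (source, target) pair, N(N−1) in total. The helper names below are illustrative.

```python
def interlingua_components(n_languages: int) -> int:
    # One analysis grammar and one generation grammar per language.
    return 2 * n_languages


def transfer_components(n_languages: int) -> int:
    # One directed transfer system per ordered (source, target) pair.
    return n_languages * (n_languages - 1)


for n in (2, 5, 10):
    print(n, interlingua_components(n), transfer_components(n))
# 2 4 2
# 5 10 20
# 10 20 90
```

Going from 10 to 11 languages adds 2 grammars in the interlingua design but 20 transfer systems in the pairwise design, which is why the interlingua approach scales well to the many languages in the diagram.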

Challenges for Interlingua

- "Meaning" is arbitrarily deep.
  - At what level of detail do you stop?
  - If it is too simple, meaning will be lost in translation.
  - If it is too complex, analysis and generation will be too difficult.
- It should be applicable to all languages.
- Human development time.

Design Principles of the Interchange Format

- Based on the speaker's intent, not the literal meaning.
  - "Can you pass the salt?" is represented only as a request for the hearer to perform an action, not as a question about the hearer's ability.
- Abstract away from the peculiarities of any particular language.
  - English: "Why not go to the meeting?"
  - Japanese: "Kaigi ni itte mittara doo?" (gloss: meeting to going try-if how)

Formulaic Utterances

- Good night.
- tisbaH cala xEr (gloss: waking up on good)

Romanization of Arabic from CallHome Egypt.

Same intention, different syntax

- rigly bitiwgacny: my leg hurts
- candy wagac fE rigly: I have pain in my leg
- rigly bitiClimny: my leg hurts
- fE wagac fE rigly: there is pain in my leg
- rigly bitinqaH calya: my leg bothers on me

Romanization of Arabic from CallHome Egypt.

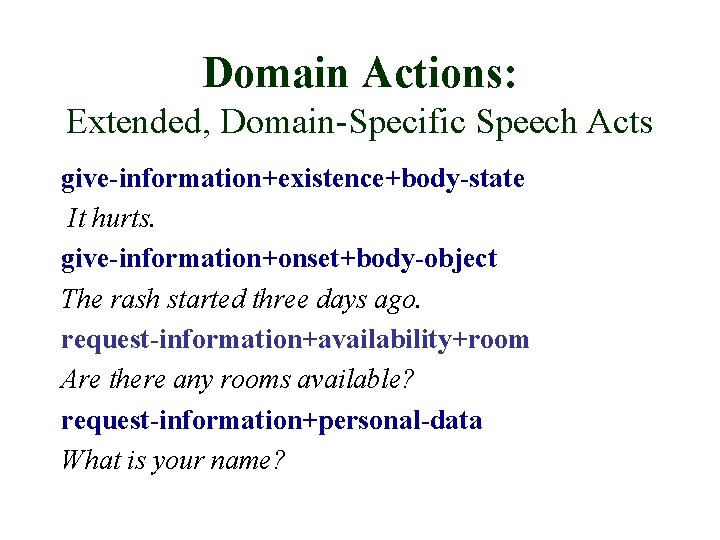

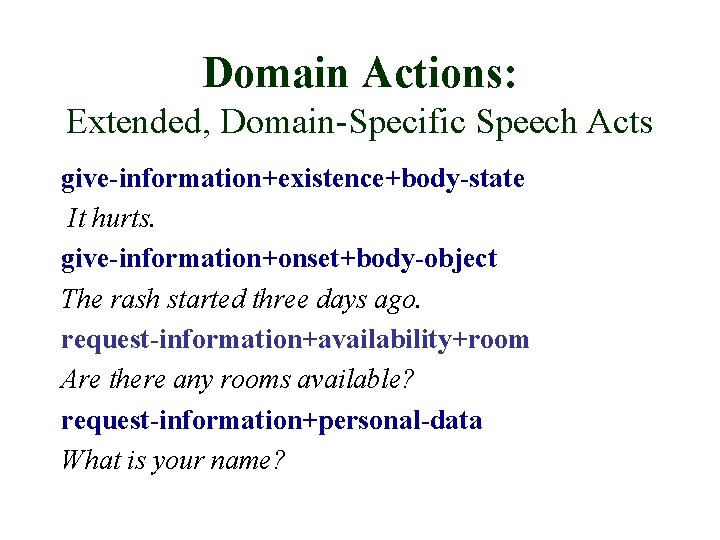

Domain Actions: Extended, Domain-Specific Speech Acts

- give-information+existence+body-state: "It hurts."
- give-information+onset+body-object: "The rash started three days ago."
- request-information+availability+room: "Are there any rooms available?"
- request-information+personal-data: "What is your name?"
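In the examples above a domain action is a speech act followed by zero or more concepts, joined by "+". A minimal sketch of splitting one apart, assuming "+" never appears inside a speech act or concept name (true of every example on this slide); `split_domain_action` is an illustrative name.

```python
from typing import List, Tuple


def split_domain_action(domain_action: str) -> Tuple[str, List[str]]:
    """Split a domain action into its speech act and its ordered list of concepts."""
    speech_act, *concepts = domain_action.split("+")
    return speech_act, concepts


print(split_domain_action("request-information+availability+room"))
# ('request-information', ['availability', 'room'])
```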

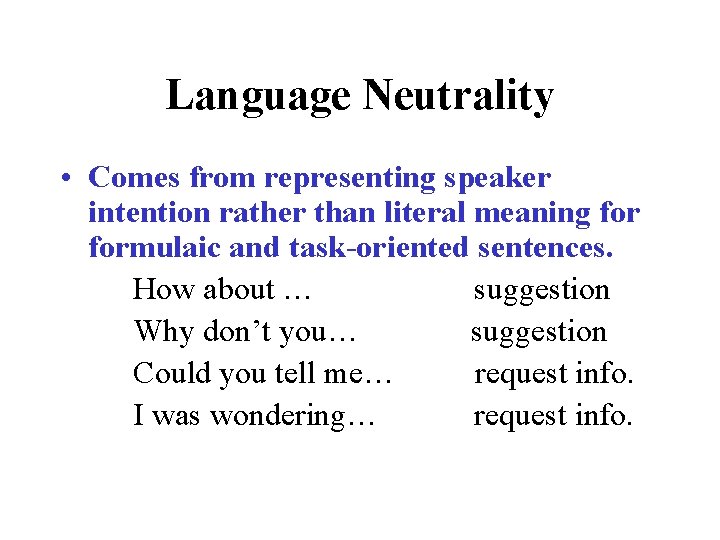

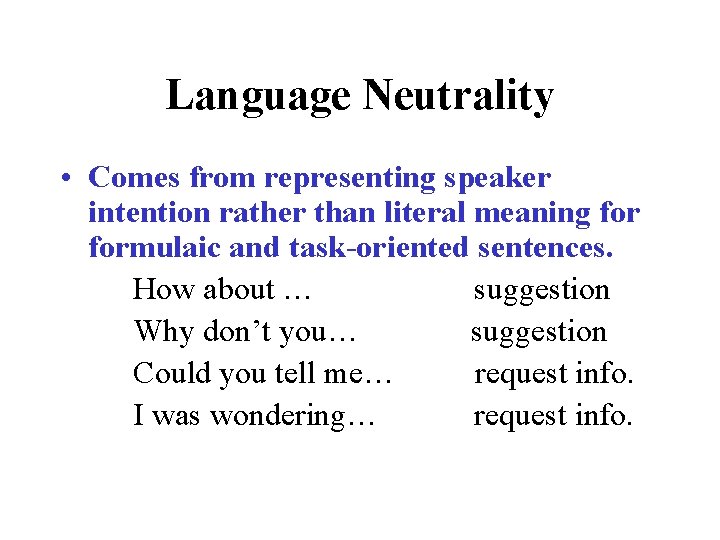

Language Neutrality

- Comes from representing speaker intention rather than literal meaning for formulaic and task-oriented sentences.
  - "How about …" → suggestion
  - "Why don't you …" → suggestion
  - "Could you tell me …" → request information
  - "I was wondering …" → request information
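The table above maps surface formulas to intentions, not to their literal form ("Could you tell me …" is not treated as a yes/no question about ability). A toy sketch of that idea as a prefix lookup; the prefix table and labels are taken from the slide, while `speech_act_for` is hypothetical and far simpler than a real analysis grammar.

```python
from typing import Optional

# Prefix table built from the examples on this slide (labels as written there).
FORMULAIC_PREFIXES = {
    "how about": "suggestion",
    "why don't you": "suggestion",
    "could you tell me": "request information",
    "i was wondering": "request information",
}


def speech_act_for(utterance: str) -> Optional[str]:
    """Return the intention signalled by a formulaic prefix, or None if none matches."""
    # Normalize case and typographic apostrophes before matching.
    normalized = utterance.lower().replace("\u2019", "'")
    for prefix, act in FORMULAIC_PREFIXES.items():
        if normalized.startswith(prefix):
            return act
    return None


print(speech_act_for("Could you tell me the price?"))  # request information
```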

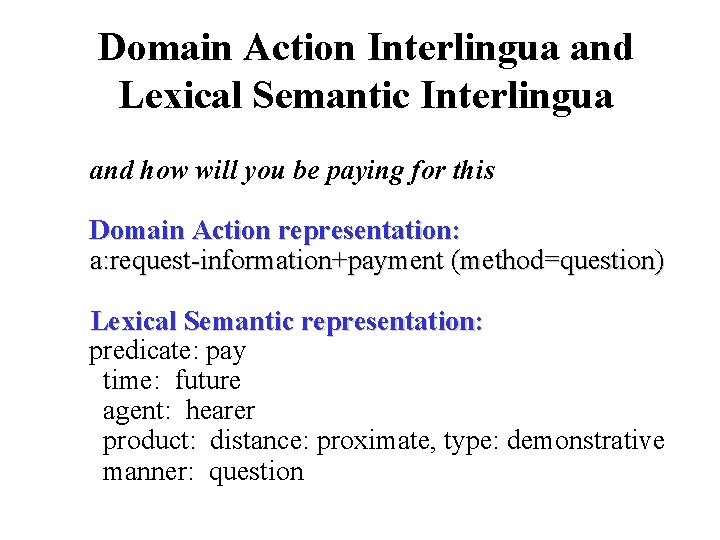

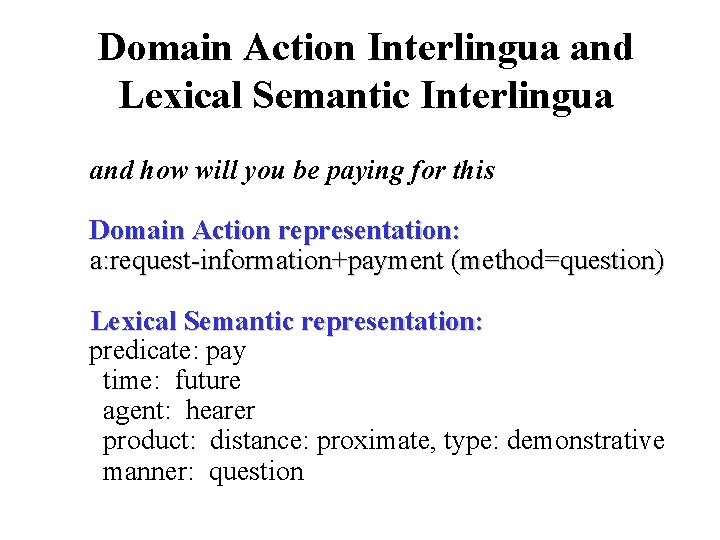

Domain Action Interlingua and Lexical Semantic Interlingua

Example: "and how will you be paying for this"

- Domain Action representation: a: request-information+payment (method=question)
- Lexical Semantic representation:
  - predicate: pay
  - time: future
  - agent: hearer
  - product:
    - distance: proximate
    - type: demonstrative
  - manner: question

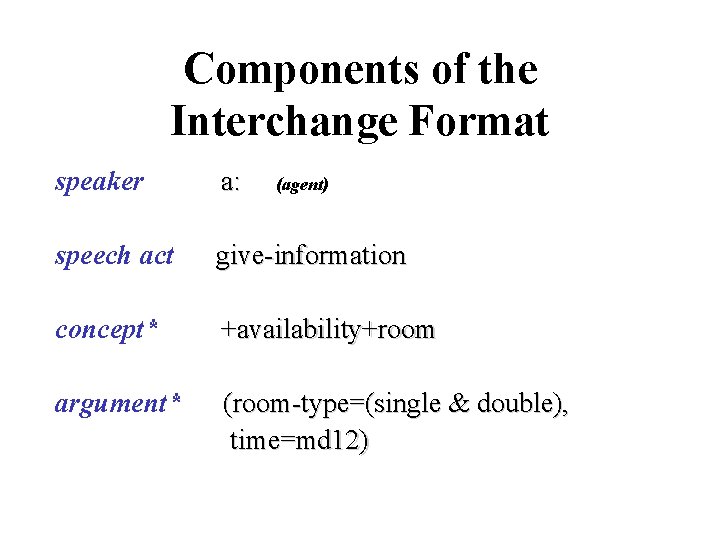

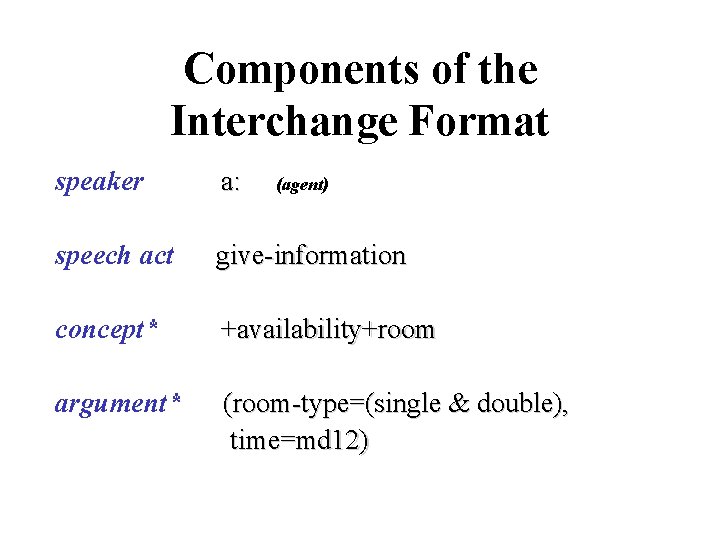

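Components of the Interchange Format

Example: a: give-information+availability+room (room-type=(single & double), time=md12)

- Speaker: a: (agent)
- Speech act: give-information
- Concepts (zero or more): +availability+room
- Arguments (zero or more): (room-type=(single & double), time=md12)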
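The four components can be pulled apart mechanically. The sketch below is a hypothetical parser, not the project's IF Checker: it assumes the speaker prefix ends at the first ":", the domain action ends at the first "(", and top-level arguments are separated by commas that are not nested inside parentheses. Nested argument values are kept as raw strings rather than parsed further.

```python
from typing import Dict, List, NamedTuple


class InterchangeFormat(NamedTuple):
    speaker: str
    speech_act: str
    concepts: List[str]
    arguments: Dict[str, str]  # argument name -> raw value (nested values unparsed)


def split_top_level(text: str) -> List[str]:
    """Split on commas that are not nested inside parentheses."""
    parts, current, depth = [], [], 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    if current:
        parts.append("".join(current).strip())
    return [part for part in parts if part]


def parse_if(line: str) -> InterchangeFormat:
    speaker, rest = line.split(":", 1)
    # The domain action runs up to the first "("; the arguments sit inside the outer parentheses.
    head, sep, tail = rest.strip().partition("(")
    speech_act, *concepts = head.replace(" ", "").split("+")
    arguments: Dict[str, str] = {}
    if sep:
        tail = tail.rstrip()
        if not tail.endswith(")"):
            raise ValueError("unbalanced argument list")
        for item in split_top_level(tail[:-1]):
            name, _, value = item.partition("=")
            arguments[name.strip()] = value.strip()
    return InterchangeFormat(speaker.strip(), speech_act, concepts, arguments)


parsed = parse_if("a: give-information+availability+room (room-type=(single & double), time=md12)")
print(parsed.arguments)  # {'room-type': '(single & double)', 'time': 'md12'}
```

Keeping nested values as strings keeps the sketch short; a fuller parser would recurse into values such as (single & double).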

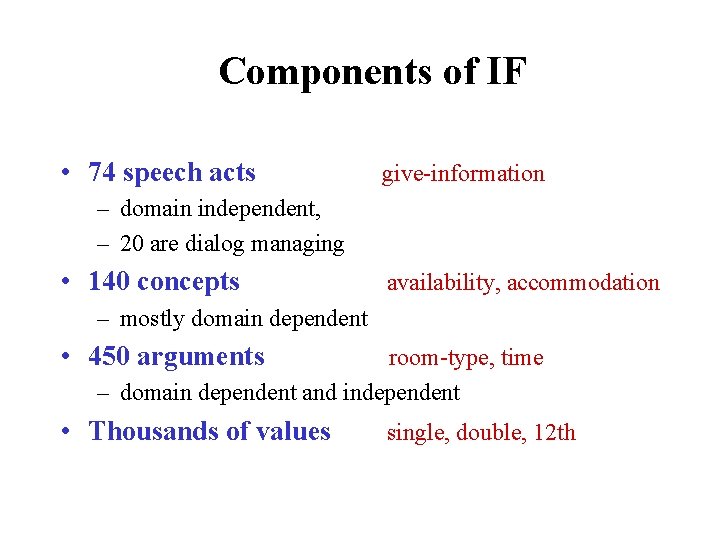

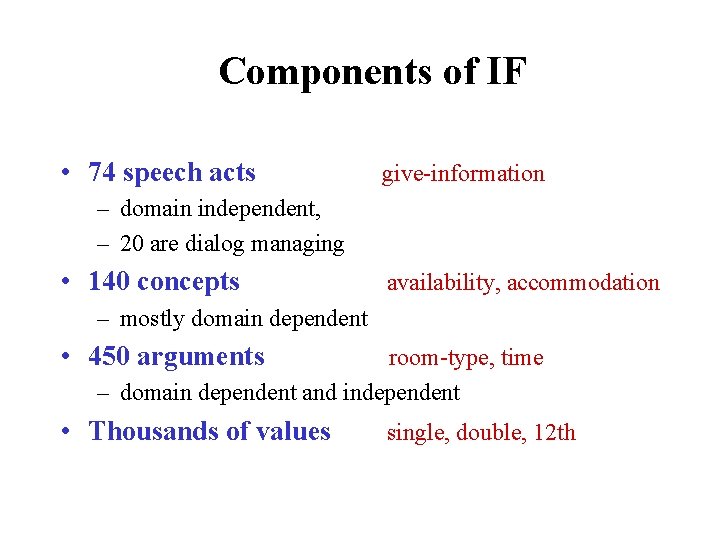

Components of IF

- 74 speech acts (e.g., give-information)
  - domain independent
  - 20 are dialog-managing
- 140 concepts (e.g., availability, accommodation)
  - mostly domain dependent
- 450 arguments (e.g., room-type, time)
  - domain dependent and independent
- Thousands of values (e.g., single, double, 12th)

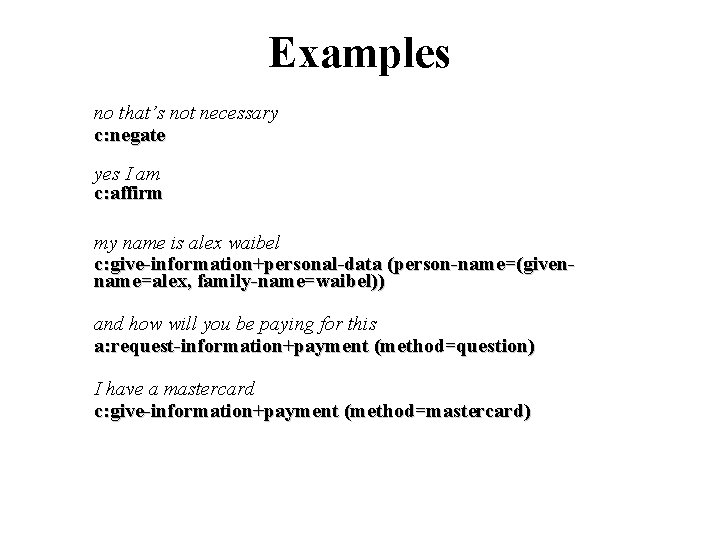

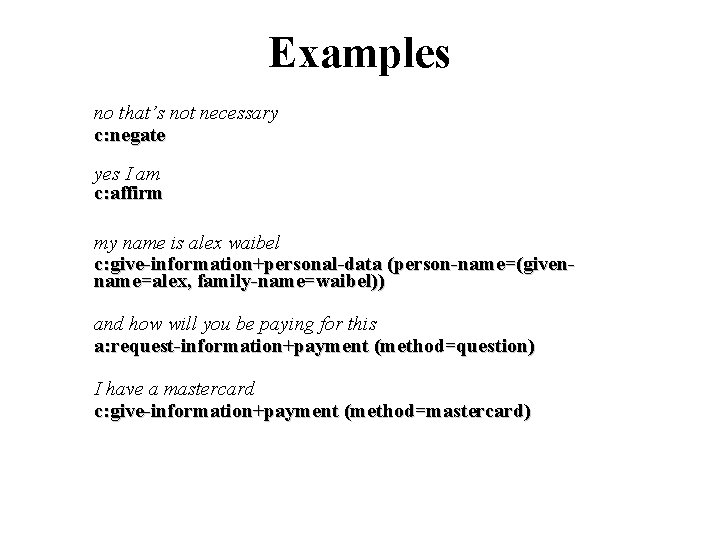

Examples

- "no that's not necessary" → c: negate
- "yes I am" → c: affirm
- "my name is alex waibel" → c: give-information+personal-data (person-name=(given-name=alex, family-name=waibel))
- "and how will you be paying for this" → a: request-information+payment (method=question)
- "I have a mastercard" → c: give-information+payment (method=mastercard)
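Going the other way, a tagged example can be rendered back into the one-line IF notation used above. This is a minimal sketch with hypothetical helper names; it assumes arguments are name/value pairs, with nested groups given as dicts. It does not cover mixed lists that contain bare values, such as for-whom=(associate, quantity=4).

```python
from typing import Dict, Optional


def format_arguments(arguments: Dict[str, object]) -> str:
    """Render name=value pairs, recursing into nested argument groups."""
    parts = []
    for name, value in arguments.items():
        if isinstance(value, dict):
            value = format_arguments(value)
        parts.append(f"{name}={value}")
    return "(" + ", ".join(parts) + ")"


def format_if(speaker: str, domain_action: str,
              arguments: Optional[Dict[str, object]] = None) -> str:
    """Render a speaker prefix, a domain action, and optional arguments on one line."""
    text = f"{speaker}: {domain_action}"
    if arguments:
        text += " " + format_arguments(arguments)
    return text


print(format_if("c", "give-information+personal-data",
                {"person-name": {"given-name": "alex", "family-name": "waibel"}}))
# c: give-information+personal-data (person-name=(given-name=alex, family-name=waibel))
print(format_if("c", "affirm"))  # c: affirm
```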

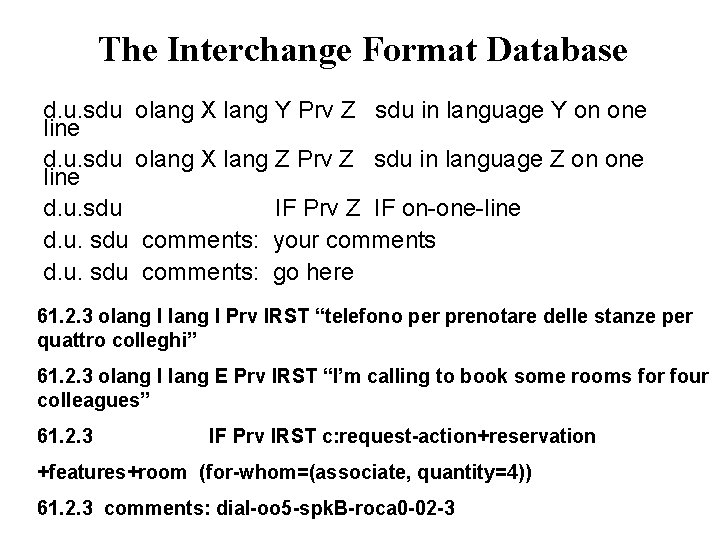

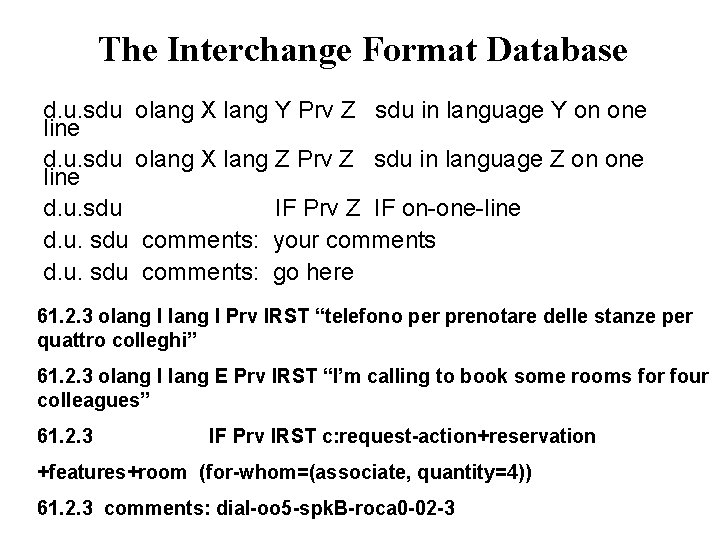

The Interchange Format Database

Record template (one field per line):

- d.u.sdu olang X lang Y Prv Z, followed by the SDU in language Y on one line
- d.u.sdu olang X lang Z Prv Z, followed by the SDU in language Z on one line
- d.u.sdu IF Prv Z, followed by the IF on one line
- d.u.sdu comments: your comments
- d.u.sdu comments: go here

Example record:

- 61.2.3 olang I Prv IRST "telefono per prenotare delle stanze per quattro colleghi"
- 61.2.3 olang I lang E Prv IRST "I'm calling to book some rooms for four colleagues"
- 61.2.3 IF Prv IRST c: request-action+reservation+features+room (for-whom=(associate, quantity=4))
- 61.2.3 comments: dial-oo5-spkB-roca0-02-3
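Because every field sits on its own line and starts with the d.u.sdu identifier, records can be regrouped with a few regular expressions. This is a sketch under assumptions: fields are whitespace-separated exactly as in the template, text values may be wrapped in straight or curly double quotes, and a text line without "lang" is treated as being in its original language. The regexes and `parse_records` are illustrative, not part of the NESPOLE! tools.

```python
import re
from typing import Dict, Iterable

ID = r"(?P<id>\d+\.\d+\.\d+)"
TEXT_LINE = re.compile(ID + r"\s+olang\s+(?P<olang>\S+)(?:\s+lang\s+(?P<lang>\S+))?"
                       r"\s+Prv\s+(?P<prv>\S+)\s+(?P<text>.+)$")
IF_LINE = re.compile(ID + r"\s+IF\s+Prv\s+(?P<prv>\S+)\s+(?P<text>.+)$")
COMMENT_LINE = re.compile(ID + r"\s+comments:\s*(?P<text>.*)$")
QUOTES = '"\u201c\u201d'


def parse_records(lines: Iterable[str]) -> Dict[str, dict]:
    """Group database lines by their d.u.sdu identifier."""
    records: Dict[str, dict] = {}
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        for pattern in (TEXT_LINE, IF_LINE, COMMENT_LINE):
            match = pattern.match(line)
            if match:
                break
        else:
            raise ValueError(f"unrecognized line: {line!r}")
        record = records.setdefault(match["id"], {"texts": {}, "if": None, "comments": []})
        if pattern is TEXT_LINE:
            # Assumption: a line without "lang" is in the original language (olang).
            language = match["lang"] or match["olang"]
            record["texts"][language] = match["text"].strip(QUOTES)
        elif pattern is IF_LINE:
            record["if"] = match["text"]
        else:
            record["comments"].append(match["text"])
    return records


SAMPLE = [
    '61.2.3 olang I Prv IRST "telefono per prenotare delle stanze per quattro colleghi"',
    '61.2.3 olang I lang E Prv IRST "I\'m calling to book some rooms for four colleagues"',
    "61.2.3 IF Prv IRST c: request-action+reservation+features+room (for-whom=(associate, quantity=4))",
    "61.2.3 comments: dial-oo5-spkB-roca0-02-3",
]
records = parse_records(SAMPLE)
print(sorted(records["61.2.3"]["texts"]))  # ['E', 'I']
```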

NESPOLE! Database

- Over 12,000 tagged sentences in English, Italian, and German.

Tools and Resources

- IF specifications (available on the web): http://www.is.cmu.edu/nespole/db/index.html
- IF discussion board: http://peace.is.cmu.edu/ISL/get/if.html
- C-STAR and NESPOLE! databases: http://www.is.cmu.edu/nespole/db/index.html
- IF Checker (web interface): http://tcc.it/projects/xig-on-line.html
- IF test suite: http://tcc.it/projects/xig-ts.html
- IF emacs mode

Measuring Coverage

- No-tag rate: can a human expert assign an interlingua representation to each sentence?
  - C-STAR II no-tag rate: 7.3%
  - NESPOLE no-tag rate: 2.4%
- 300 more sentences were covered in the C-STAR English database.
- End-to-end translation performance: measures recognizer, analyzer, and generator performance in combination with interlingua coverage.
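The no-tag rate is the share of sentences for which the expert could not assign any interlingua representation. A minimal sketch, assuming untaggable sentences are recorded as None (a hypothetical convention for illustration, not the databases' actual marker).

```python
from typing import Optional, Sequence


def no_tag_rate(tags: Sequence[Optional[str]]) -> float:
    """Percentage of sentences with no interlingua representation (tag is None)."""
    if not tags:
        raise ValueError("empty database")
    untagged = sum(1 for tag in tags if tag is None)
    return 100.0 * untagged / len(tags)


print(no_tag_rate(["c: affirm", None, "c: negate", "c: affirm"]))  # 25.0
```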

Example of failure of reliability

- Input: "3:00, right?"
- Interlingua: verify (time=3:00)
- Poor choice of speech act name: does it mean that the speaker is confirming the time or requesting verification from the user?
- Output: "3:00 is right."

Measuring Reliability: Cross-site evaluations

- Compare the performance of analyzer → interlingua → generator:
  - where the analyzer and generator are built at the same site (or by the same person);
  - where the analyzer and generator are built at different sites (or by different people who may not know each other).
- Comparable end-to-end performance within sites and across sites.

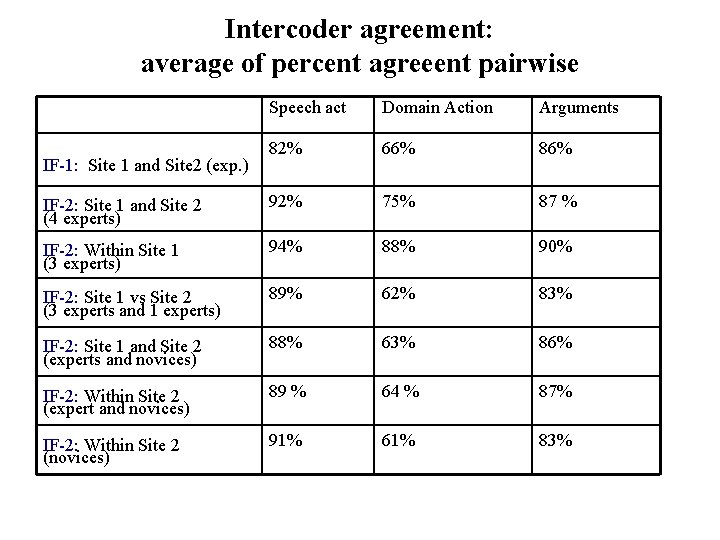

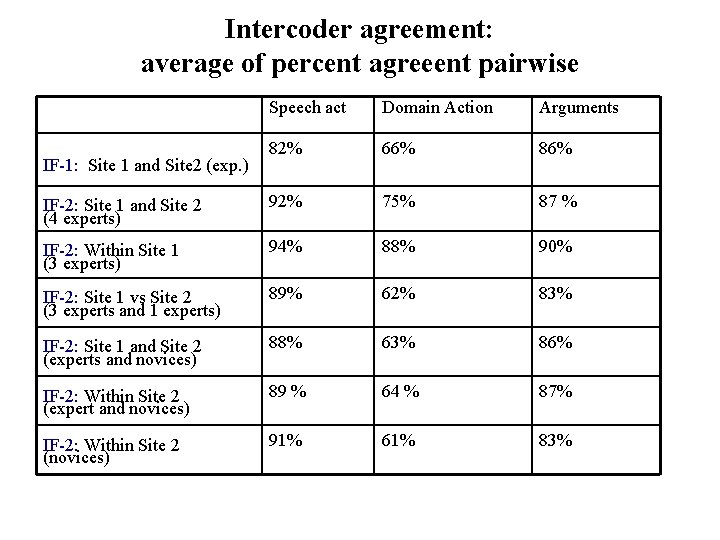

Intercoder agreement: average of pairwise percent agreement

| Interlingua and coders | Speech act | Domain action | Arguments |
|---|---|---|---|
| IF-2: Site 1 and Site 2 (4 experts) | 92% | 75% | 87% |
| IF-2: Within Site 1 (3 experts) | 94% | 88% | 90% |
| IF-2: Site 1 vs. Site 2 (3 experts and 1 expert) | 89% | 62% | 83% |
| IF-2: Site 1 and Site 2 (experts and novices) | 88% | 63% | 86% |
| IF-2: Within Site 2 (expert and novices) | 89% | 64% | 87% |
| IF-2: Within Site 2 (novices) | 91% | 61% | 83% |
| IF-1: Site 1 and Site 2 (experts) | 82% | 66% | 86% |
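The table's metric, average pairwise percent agreement, can be computed by scoring every pair of coders on the same items and averaging over pairs. A minimal sketch with made-up codings for three coders (the labels are toy data, not from the evaluation); the helper names are illustrative.

```python
from itertools import combinations
from typing import Dict, List


def percent_agreement(a: List[str], b: List[str]) -> float:
    """Percentage of items on which two coders assigned the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("coders must label the same non-empty list of items")
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return 100.0 * matches / len(a)


def average_pairwise_agreement(codings: Dict[str, List[str]]) -> float:
    """Average percent agreement over all unordered pairs of coders."""
    pairs = list(combinations(codings, 2))
    if not pairs:
        raise ValueError("need at least two coders")
    return sum(percent_agreement(codings[x], codings[y]) for x, y in pairs) / len(pairs)


toy = {
    "coder1": ["affirm", "negate", "give-information", "request-information"],
    "coder2": ["affirm", "negate", "give-information", "give-information"],
    "coder3": ["affirm", "affirm", "give-information", "request-information"],
}
print(round(average_pairwise_agreement(toy), 1))  # 66.7
```

To score agreement at the speech-act level rather than the full domain action, each label can first be reduced to the part before the first "+".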

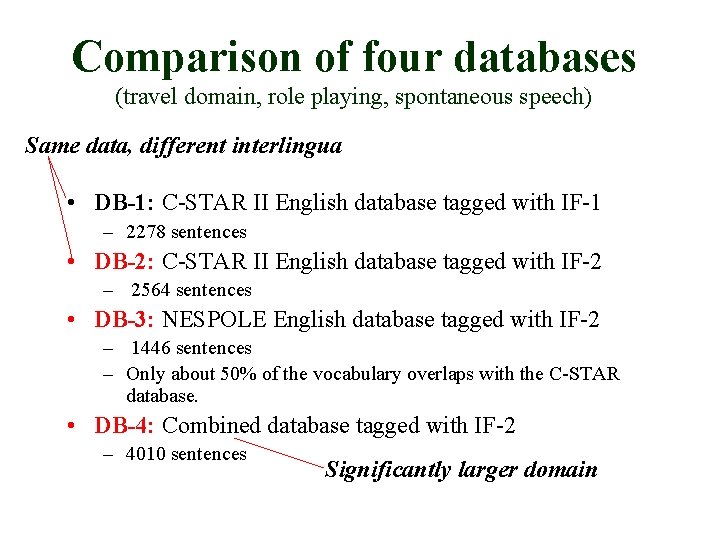

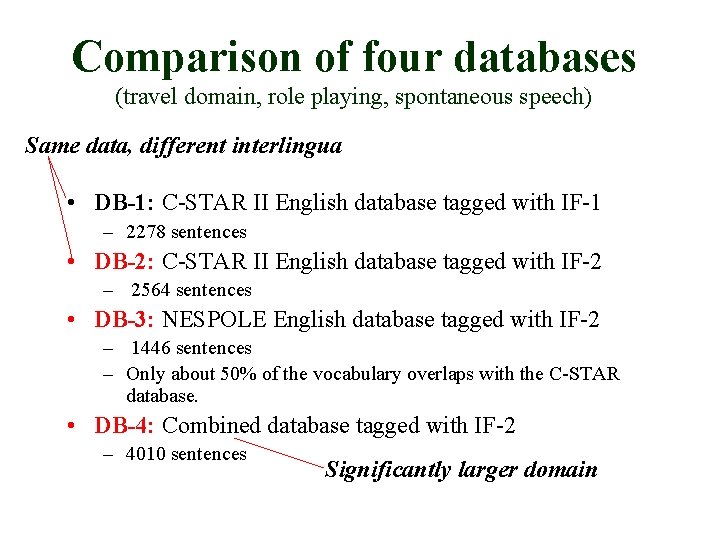

Comparison of four databases (travel domain, role playing, spontaneous speech)

Same data, different interlingua:

- DB-1: C-STAR II English database tagged with IF-1: 2,278 sentences
- DB-2: C-STAR II English database tagged with IF-2: 2,564 sentences
- DB-3: NESPOLE English database tagged with IF-2: 1,446 sentences
  - Only about 50% of the vocabulary overlaps with the C-STAR database.
- DB-4: Combined database tagged with IF-2: 4,010 sentences (significantly larger domain)

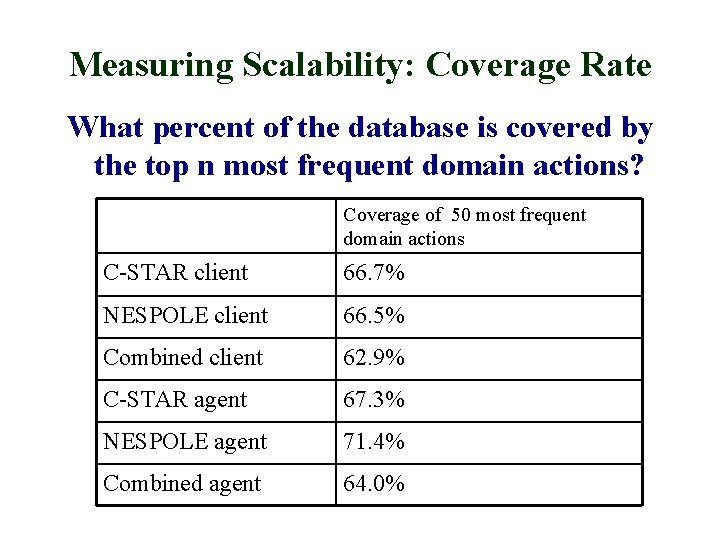

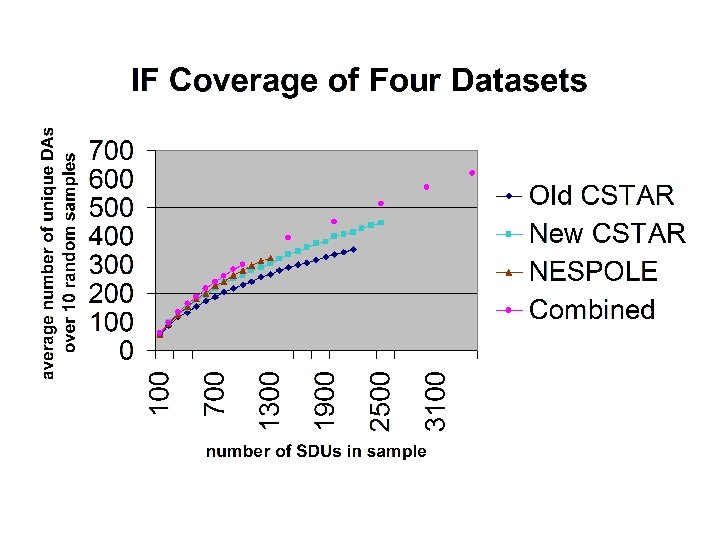

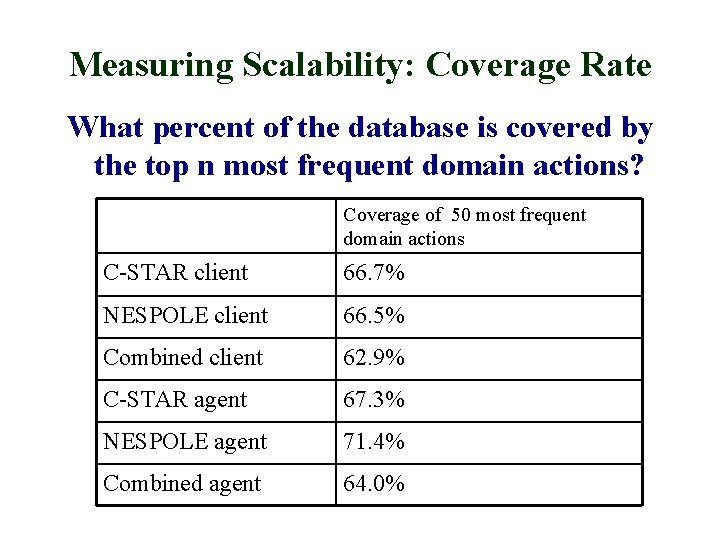

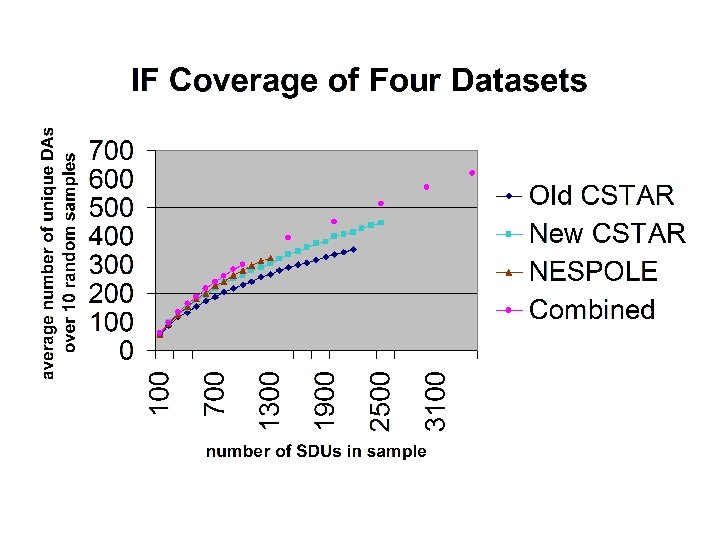

Measuring Scalability: Coverage Rate

What percentage of the database is covered by the n most frequent domain actions? Coverage of the 50 most frequent domain actions:

| Database | Client | Agent |
|---|---|---|
| C-STAR | 66.7% | 67.3% |
| NESPOLE | 66.5% | 71.4% |
| Combined | 62.9% | 64.0% |
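The coverage rate counts how often each domain action occurs, keeps the n most frequent ones, and divides their total count by the size of the database. A minimal sketch using `collections.Counter.most_common`; the toy list stands in for one speaker role's domain actions and is not real data.

```python
from collections import Counter
from typing import Iterable


def top_n_coverage(domain_actions: Iterable[str], n: int = 50) -> float:
    """Percentage of tagged units covered by the n most frequent domain actions."""
    counts = Counter(domain_actions)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty database")
    covered = sum(count for _, count in counts.most_common(n))
    return 100.0 * covered / total


toy = ["affirm"] * 5 + ["negate"] * 3 + ["give-information+payment"] * 2
print(top_n_coverage(toy, n=1), top_n_coverage(toy, n=2))  # 50.0 80.0
```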

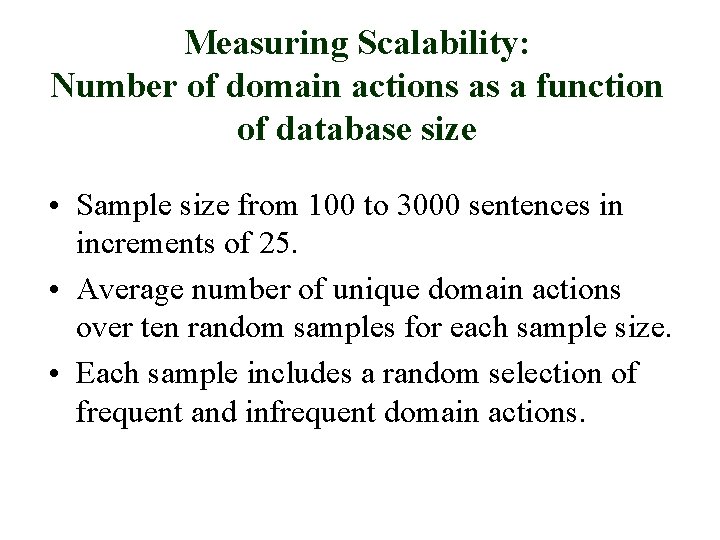

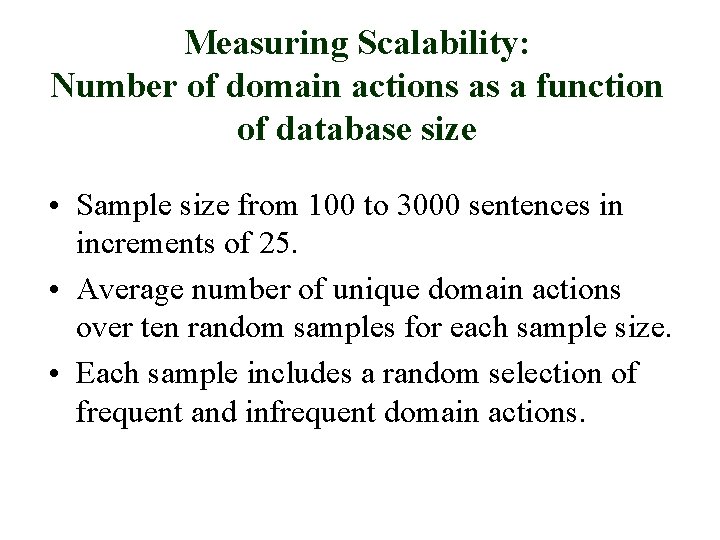

Measuring Scalability: Number of domain actions as a function of database size

- Sample sizes from 100 to 3,000 sentences, in increments of 25.
- Average number of unique domain actions over ten random samples for each sample size.
- Each sample includes a random selection of frequent and infrequent domain actions.
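The sampling procedure above can be sketched as follows, assuming each sentence contributes one domain action and samples are drawn without replacement. The defaults mirror the slide (100 to 3,000 in steps of 25, ten samples per size); the function name, the seed, and the cap at the database size are illustrative choices.

```python
import random
from statistics import mean
from typing import Dict, Sequence


def unique_da_curve(domain_actions: Sequence[str], start: int = 100, stop: int = 3000,
                    step: int = 25, trials: int = 10, seed: int = 0) -> Dict[int, float]:
    """Average number of distinct domain actions in random samples of each size."""
    rng = random.Random(seed)
    curve: Dict[int, float] = {}
    # Sample sizes cannot exceed the number of tagged sentences available.
    for size in range(start, min(stop, len(domain_actions)) + 1, step):
        counts = [len(set(rng.sample(domain_actions, size))) for _ in range(trials)]
        curve[size] = mean(counts)
    return curve


toy = [f"da{i % 40}" for i in range(400)]  # toy data: 40 domain actions, 10 sentences each
curve = unique_da_curve(toy)
print(curve[400])  # 40
```

A curve that flattens as the sample size grows indicates that new data mostly reuses existing domain actions rather than requiring new ones.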


Conclusions

- An interlingua based on domain actions is suitable for task-oriented dialogue:
  - reliable;
  - good coverage;
  - scalable without an explosion of domain actions.
- It is possible to evaluate an interlingua for:
  - reliability;
  - expressivity;
  - scalability.

How to have success with an interlingua in a multi-site project

- Keep it simple.
- Periodically check for intercoder agreement.
- Good documentation.
- Discussion board for developers.
- Know your language typology.