Conceptual Language Model Design for Spoken Language Understanding

- Slides: 13

Catherine Kobus†, Géraldine Damnati†, Lionel Delphin-Poulat†, Renato de Mori‡

†France Télécom Division R&D, 2 Av. Pierre Marzin, 22307 Lannion, France

‡LIA/CNRS, University of Avignon, BP 1228, 84911 Avignon cedex 09, France

INTERSPEECH 2005

Presenter: 郝柏翰, 2013/10/15

Outline

- Introduction
- Conceptual LMs for Semantic Composition
- Experiments
- Conclusion

Introduction

In spoken dialogues for telephone applications, interpretation consists of finding instances of conceptual structures that represent knowledge in the domain semantics.

SLU can be performed on the output of an Automatic Speech Recognition (ASR) system, or it can be part of an integrated process that generates word and concept hypotheses at the same time.

Of central importance in SLU is the relation between SK components and LMs. In principle, each entity, relation, or property can be associated with an LM that should capture long-distance dependencies between words. We call these LMs conceptual LMs.

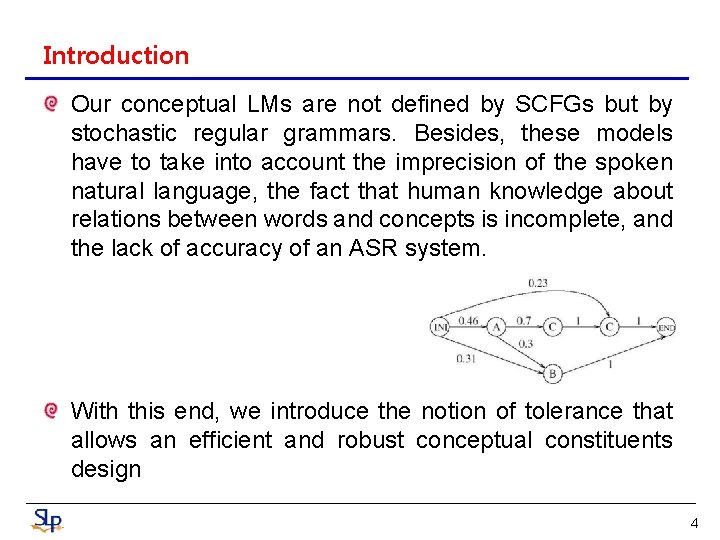

Introduction

Our conceptual LMs are defined not by SCFGs but by stochastic regular grammars.

In addition, these models have to account for the imprecision of spoken natural language, the incompleteness of human knowledge about relations between words and concepts, and the limited accuracy of an ASR system.

To this end, we introduce the notion of tolerance, which allows an efficient and robust design of conceptual constituents.

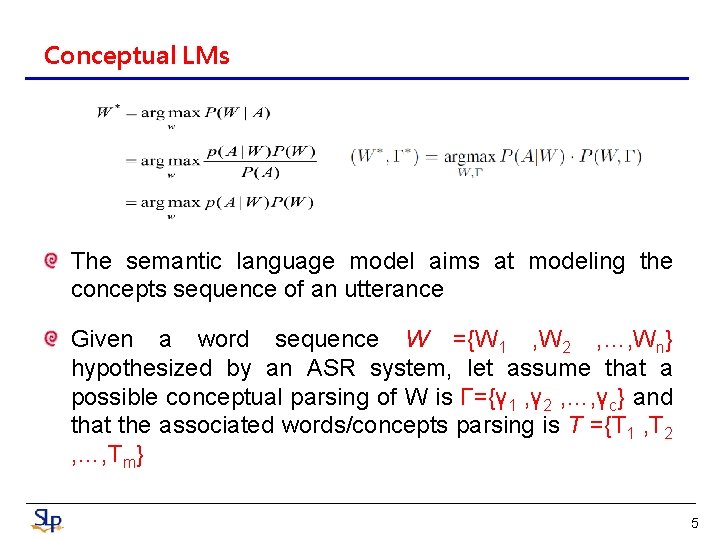

Conceptual LMs

The semantic language model aims at modeling the concept sequence of an utterance.

Given a word sequence $W = \{W_1, W_2, \ldots, W_n\}$ hypothesized by an ASR system, let us assume that a possible conceptual parsing of $W$ is $\Gamma = \{\gamma_1, \gamma_2, \ldots, \gamma_c\}$ and that the associated word/concept parsing is $T = \{T_1, T_2, \ldots, T_m\}$.

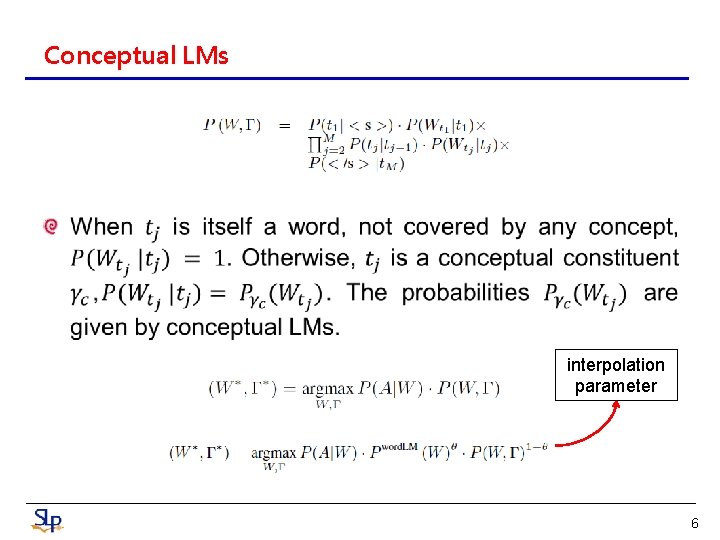

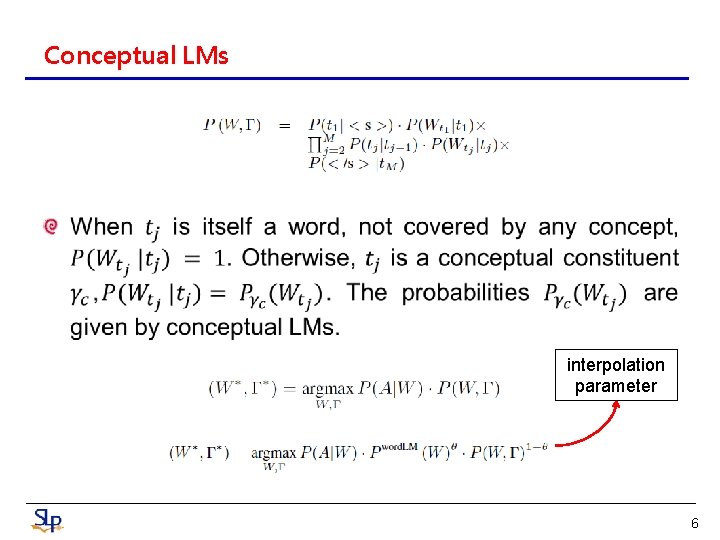
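The relation between the three sequences can be illustrated with a small sketch. It assumes that each element of $T$ is either a single link word or a concept covering one or more words, so that $W$ and $\Gamma$ can both be read off $T$. The `Unit` class, the helper names, and the concept labels (`BUY`, `QUANTITY`, `COMPANY`) are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Unit:
    """One element T_i: a concept span, or a single link word (concept=None)."""
    words: List[str]
    concept: Optional[str] = None  # hypothetical concept label


def word_sequence(parse: List[Unit]) -> List[str]:
    """Recover W by concatenating the words covered by every unit of T."""
    return [w for unit in parse for w in unit.words]


def concept_sequence(parse: List[Unit]) -> List[str]:
    """Recover Gamma by keeping only the units that carry a concept label."""
    return [unit.concept for unit in parse if unit.concept is not None]


# Illustrative parse T of a shortened banking request (labels are assumptions).
T = [
    Unit(["i"]), Unit(["would"]), Unit(["like"]), Unit(["to"]),
    Unit(["buy"], concept="BUY"),
    Unit(["fifteen", "shares"], concept="QUANTITY"),
    Unit(["of"]),
    Unit(["company", "cmp"], concept="COMPANY"),
]

W = word_sequence(T)          # n = 10 words
Gamma = concept_sequence(T)   # c = 3 concepts, while m = len(T) = 8 units
```

In this reading, $m$ lies between $c$ and $n$: grouping more words into concepts shortens $T$ without changing $W$.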

Conceptual LMs: interpolation parameter

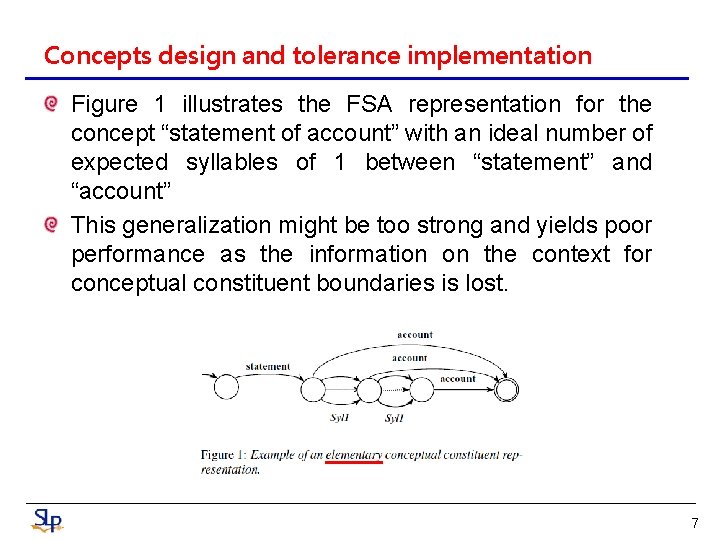

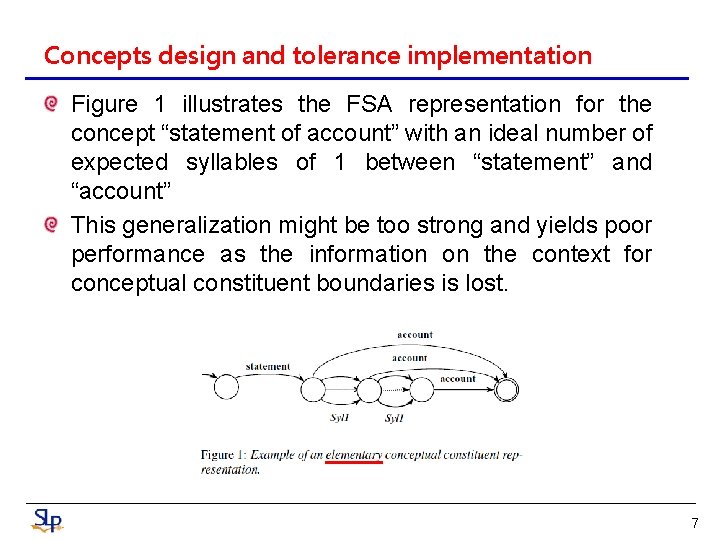

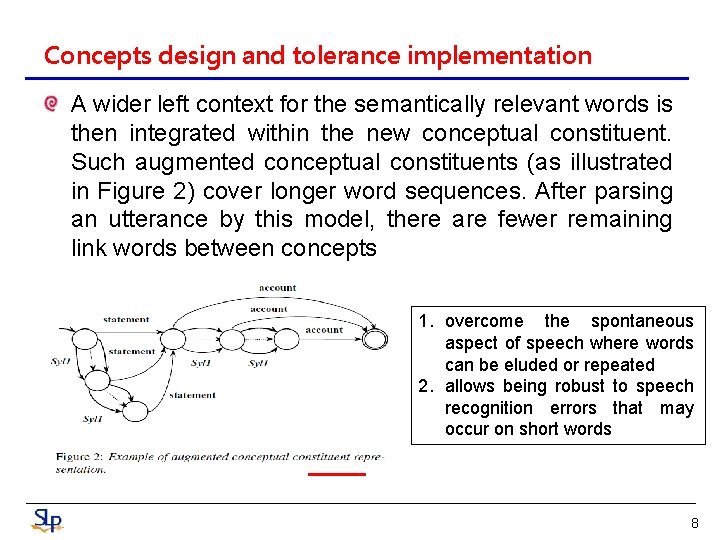
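The slide's equations are only available as images, so the exact role of the interpolation parameter cannot be read from the text. As a hedged illustration only, the sketch below assumes a standard linear interpolation between two probability estimates, with the weight chosen on held-out data. The function names and the example numbers are assumptions, not the authors' formulation.

```python
import math
from typing import Iterable, Sequence, Tuple


def interpolate(p_a: float, p_b: float, lam: float) -> float:
    """Linear interpolation: lam * p_a + (1 - lam) * p_b, with lam in [0, 1]."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("interpolation parameter must lie in [0, 1]")
    return lam * p_a + (1.0 - lam) * p_b


def best_lambda(pairs: Sequence[Tuple[float, float]], grid: Iterable[float]) -> float:
    """Pick the grid value that maximizes held-out log-likelihood.

    `pairs` holds, for each held-out event, the probability given by
    model A and by model B (illustrative inputs, all strictly positive).
    """
    def log_likelihood(lam: float) -> float:
        return sum(math.log(interpolate(a, b, lam)) for a, b in pairs)

    return max(grid, key=log_likelihood)


# Toy held-out data where model A is always the better predictor.
held_out = [(0.9, 0.1), (0.8, 0.2)]
chosen = best_lambda(held_out, [0.0, 0.5, 1.0])
```

A grid search is used here only for brevity; an EM-style estimate is a common alternative for tuning such weights.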

Concepts design and tolerance implementation

Figure 1 illustrates the FSA representation for the concept “statement of account”, with an ideal number of expected syllables of 1 between “statement” and “account”.

This generalization might be too strong and may yield poor performance, because the information on the context for conceptual constituent boundaries is lost.

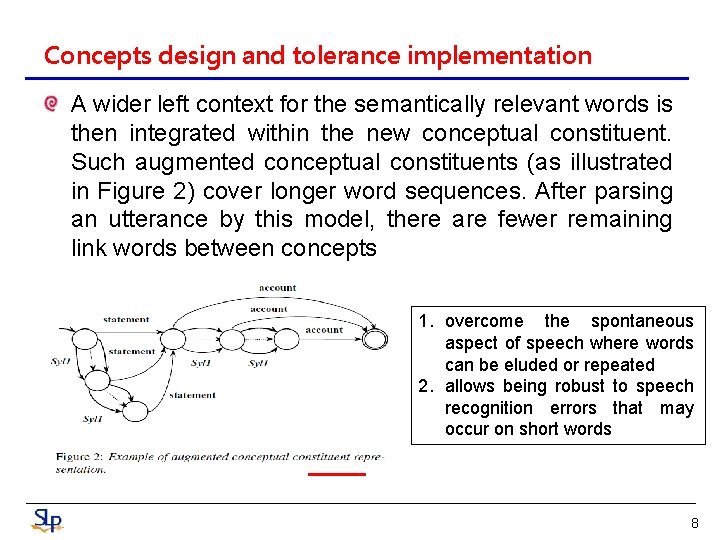
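The idea of tolerance in Figure 1 can be sketched as a simple matcher. This is a minimal sketch under stated assumptions: the tolerance is counted in intervening words rather than syllables, the search is greedy rather than a compiled FSA, and `find_concept` is a hypothetical helper, not the authors' implementation.

```python
from typing import List, Optional, Tuple


def find_concept(words: List[str], anchors: List[str],
                 tolerance: int) -> Optional[Tuple[int, int]]:
    """Find the first span where the anchor words occur in order.

    At most `tolerance` arbitrary words may separate two consecutive
    anchors (assumption: tolerance is measured in words). Returns
    (start, end) with `end` exclusive, or None if no span matches.
    """
    for start, word in enumerate(words):
        if word != anchors[0]:
            continue
        pos = start
        matched = True
        for anchor in anchors[1:]:
            # Look at the next word plus up to `tolerance` extra words.
            window = words[pos + 1: pos + 2 + tolerance]
            if anchor in window:
                pos = pos + 1 + window.index(anchor)
            else:
                matched = False
                break
        if matched:
            return (start, pos + 1)
    return None


utterance = "i want a statement of account".split()
span = find_concept(utterance, ["statement", "account"], tolerance=1)
```

With a tolerance of 1, both "statement of account" and "statement account" match, while "statement of my account" does not. Because any word is accepted inside the gap, the matcher shows why such a generalization can be too strong.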

Concepts design and tolerance implementation

A wider left context for the semantically relevant words is then integrated within the new conceptual constituent. Such augmented conceptual constituents (as illustrated in Figure 2) cover longer word sequences. After an utterance is parsed with this model, fewer link words remain between concepts.

Two benefits follow:

1. It overcomes the spontaneous aspect of speech, where words can be elided or repeated.
2. It is robust to speech recognition errors that may occur on short words.

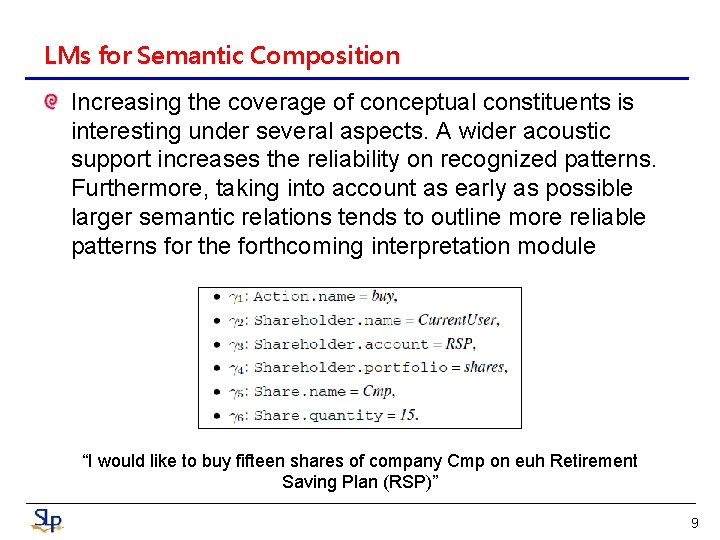

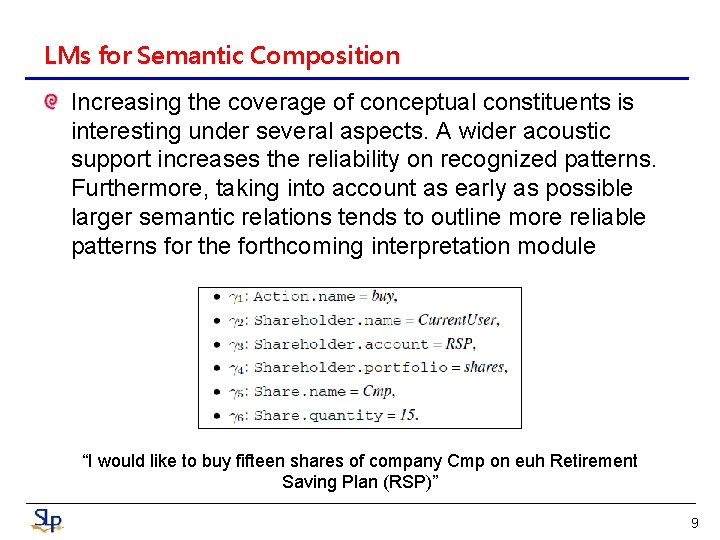

LMs for Semantic Composition

Increasing the coverage of conceptual constituents is interesting in several respects. A wider acoustic support increases the reliability of recognized patterns. Furthermore, taking larger semantic relations into account as early as possible tends to outline more reliable patterns for the forthcoming interpretation module.

Example: “I would like to buy fifteen shares of company Cmp on euh Retirement Saving Plan (RSP)”

Experiments setup

Experiments have been carried out with a corpus collected at France Telecom R&D for a banking application, which allows users to perform transactions and ask questions about the stock market, portfolios, and accounts.

The basic vocabulary of the application is about 2,732 words (including share names). The training set contains 134,722 words for 24,604 utterances collected over the French telephone network. The average utterance length is about 6 words. The development set contains 3,788 word graphs. A test set of 2,356 utterances is used.

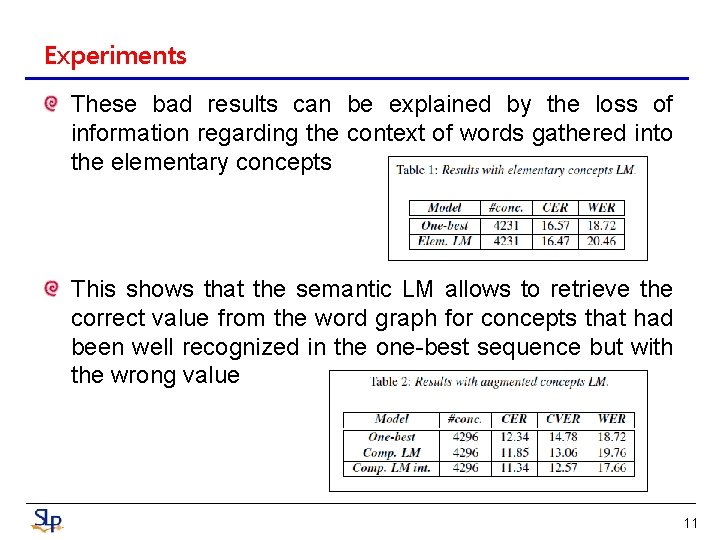

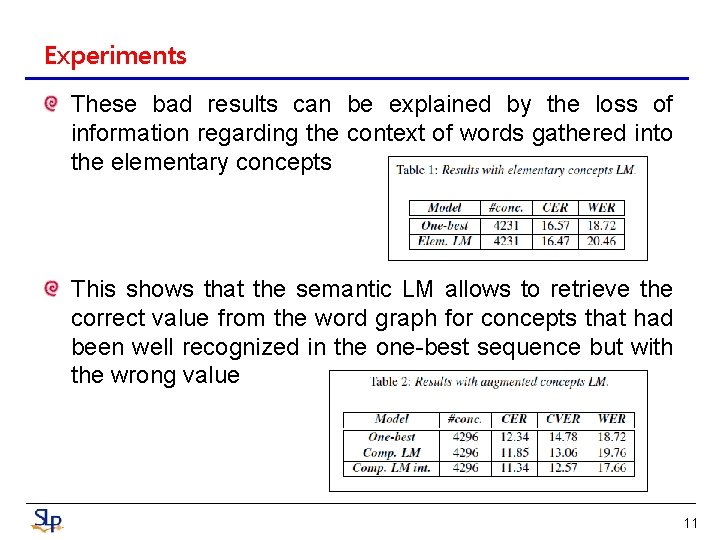

Experiments

These poor results can be explained by the loss of information about the context of the words gathered into the elementary concepts.

This shows that the semantic LM makes it possible to retrieve the correct value from the word graph for concepts that had been correctly recognized in the one-best sequence but with the wrong value.

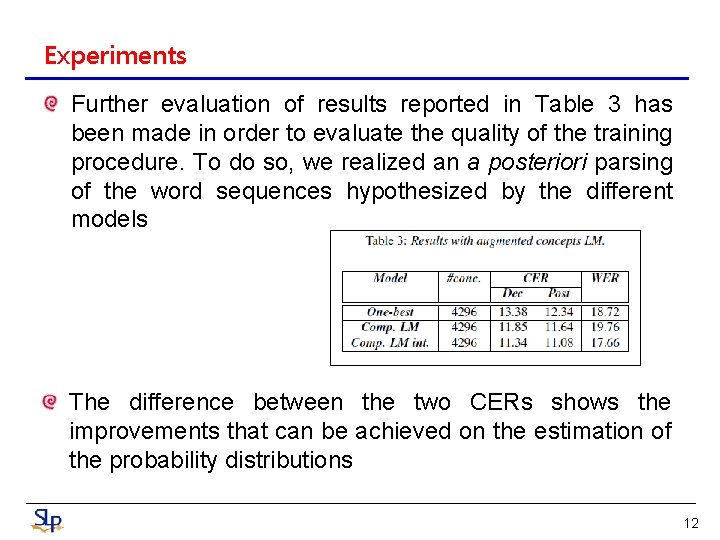

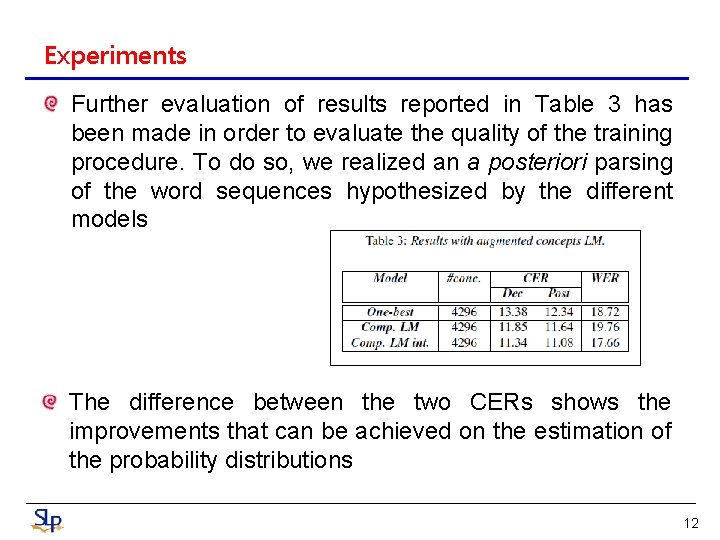

Experiments

The results reported in Table 3 have been analyzed further in order to evaluate the quality of the training procedure. To do so, we performed an a posteriori parsing of the word sequences hypothesized by the different models.

The difference between the two CERs shows the improvement that can be achieved in the estimation of the probability distributions.
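For readers who want to see how two CERs could be compared, here is a minimal sketch. It assumes that CER denotes a concept error rate computed like a word error rate, that is, the edit distance between reference and hypothesized concept sequences divided by the number of reference concepts. The slides do not spell out the definition, and the function names and concept labels are hypothetical.

```python
from typing import List, Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Levenshtein distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (r != h)))  # match or substitution
        prev = cur
    return prev[-1]


def concept_error_rate(refs: List[List[str]], hyps: List[List[str]]) -> float:
    """Total edit errors over total reference concepts (assumed definition)."""
    total = sum(len(r) for r in refs)
    if total == 0:
        raise ValueError("reference contains no concepts")
    errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return errors / total


# Toy comparison of two hypothetical systems against the same references.
refs = [["BUY", "QUANTITY", "COMPANY"], ["BALANCE"]]
direct = [["BUY", "COMPANY"], ["TRANSFER"]]
a_posteriori = [["BUY", "QUANTITY", "COMPANY"], ["TRANSFER"]]
gap = concept_error_rate(refs, direct) - concept_error_rate(refs, a_posteriori)
```

The toy sequences are invented for illustration and do not reproduce any figure from the experiments.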

Conclusion

Specific LMs for conceptual constituents and semantic compositions are introduced in this paper.

Furthermore, the specific LMs contain redundant word patterns for representing concepts, implemented by a tolerance scheme. Redundancies are represented by sequences of words longer than those that are essential for representing a concept.

Future work will focus on the automatic construction of redundant and composition LMs.