Grid Computing: A high-level look at Grid Computing

- Slides: 70

Grid Computing
A high-level look at Grid Computing in the world of Particle Physics and at LHC in particular. I am indebted to the EGEE, LCG and GridPP projects and to colleagues therein for much of the material presented here.
Robin Middleton, RAL-PPD/EGEE/GridPP

Overview
• e-Science and The Grid
• Grids in Particle Physics
– EGEE, LCG, GridPP
– Virtual Organisations
• Computing Model (very high level!)
• Components of the EGEE/LCG/GridPP Grid
– security
– information service
– resource brokering
– data management
• Monitoring & User Support
• Other Projects / Sciences
• Sustainability & EGI
• Further information – Links

What is e-Science? What is the Grid?

e-Science
• …also: e-Infrastructure, cyberinfrastructure, e-Research, …
• Includes
– grid computing (e.g. WLCG, EGEE, EGI, OSG, TeraGrid, NGS…)
  • computationally and/or data intensive; highly distributed over wide area
– digital curation
– digital libraries
– collaborative tools (e.g. Access Grid)
– …many other areas
• Most UK Research Councils active in e-Science
– BBSRC
– NERC (e.g. climate studies, NERC DataGrid)
– ESRC (e.g. NCeSS)
– AHRC (e.g. studies in collaborative performing arts)
– EPSRC (e.g. eMinerals, MyGrid, …)
– STFC (formerly PPARC and CCLRC) (e.g. GridPP, AstroGrid)

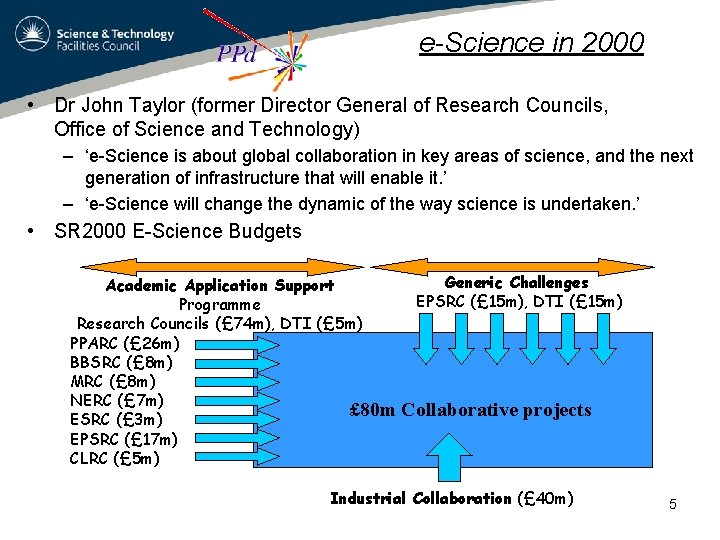

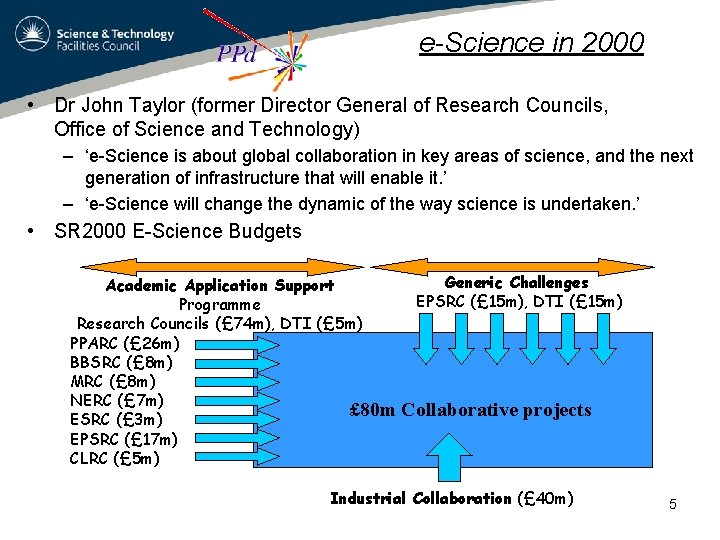

e-Science in 2000
• Dr John Taylor (former Director General of Research Councils, Office of Science and Technology)
– ‘e-Science is about global collaboration in key areas of science, and the next generation of infrastructure that will enable it.’
– ‘e-Science will change the dynamic of the way science is undertaken.’
• SR2000 e-Science Budgets
– Academic Application Support Programme (£80m): Research Councils (£74m), DTI (£5m)
  • PPARC (£26m), BBSRC (£8m), MRC (£8m), NERC (£7m), ESRC (£3m), EPSRC (£17m), CLRC (£5m)
– Generic Challenges: EPSRC (£15m), DTI (£15m)
– Collaborative projects: Industrial Collaboration (£40m)

And 9 Years on…
• An independent panel of international experts has judged the UK's e-Science Programme as "world-leading", citing that "investments are already empowering significant contributions to wellbeing in the UK and the world beyond".
• “The panel found the e-Science Programme to have had a positive economic impact, especially in the important areas of life sciences and medicine, materials, and energy and sustainability. Attractive to industry from its inception, the programme has drawn in around £30 million from industrial collaborations, both in cash and in-kind. Additionally it has already contributed to 138 stakeholder collaborations, 30 licenses or patents, 14 spin-off companies and 103 key results taken up by industry and early indications show there are still more to come.”
http://www.rcuk.ac.uk/news/100210.htm

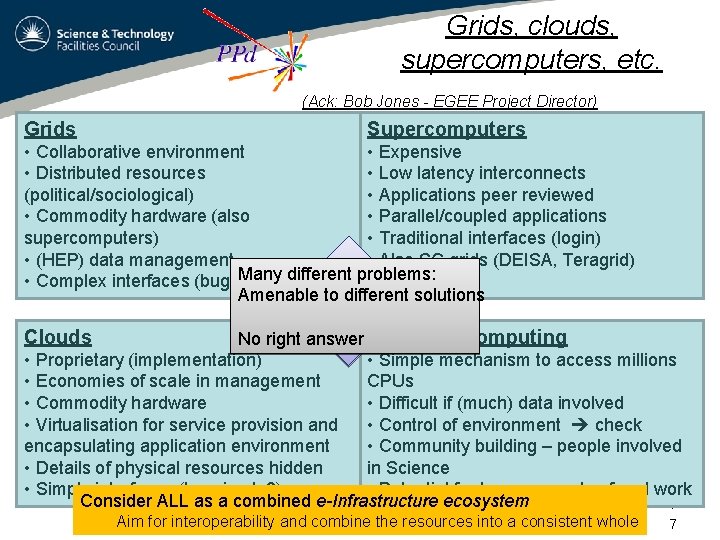

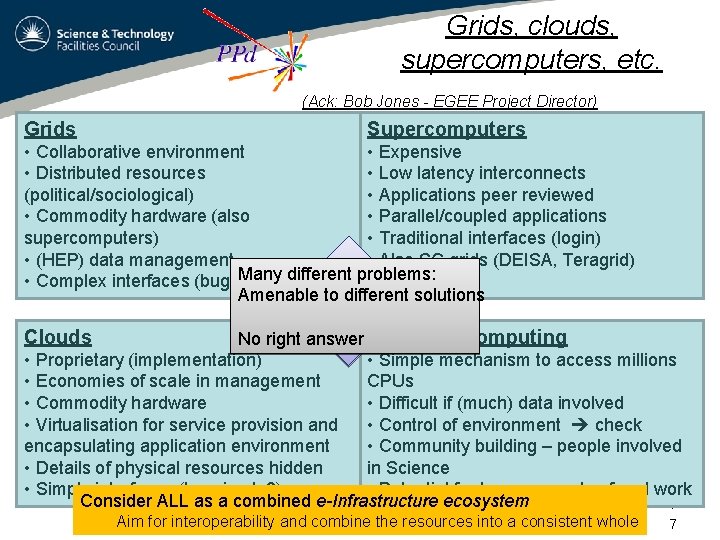

Grids, clouds, supercomputers, etc. (Ack: Bob Jones - EGEE Project Director)
Grids
• Collaborative environment
• Distributed resources (political/sociological)
• Commodity hardware (also supercomputers)
• (HEP) data management
• Complex interfaces (bug not feature)
Supercomputers
• Expensive
• Low latency interconnects
• Applications peer reviewed
• Parallel/coupled applications
• Traditional interfaces (login)
• Also SC grids (DEISA, Teragrid)
Clouds
• Proprietary (implementation)
• Economies of scale in management
• Commodity hardware
• Virtualisation for service provision and encapsulating application environment
• Details of physical resources hidden
• Simple interfaces (too simple?)
Volunteer computing
• Simple mechanism to access millions of CPUs
• Difficult if (much) data involved
• Control of environment check
• Community building – people involved in Science
• Potential for huge amounts of real work
Many different problems: amenable to different solutions. No right answer.
Consider ALL as a combined e-Infrastructure ecosystem. Aim for interoperability and combine the resources into a consistent whole.
Bob Jones - October 2009

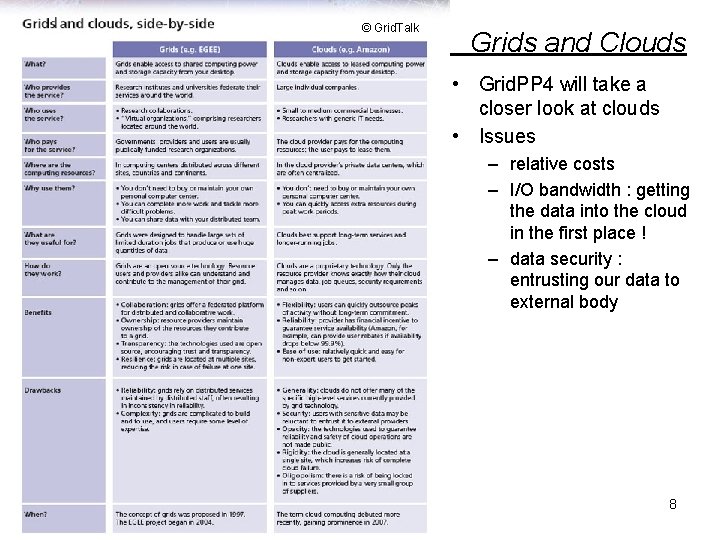

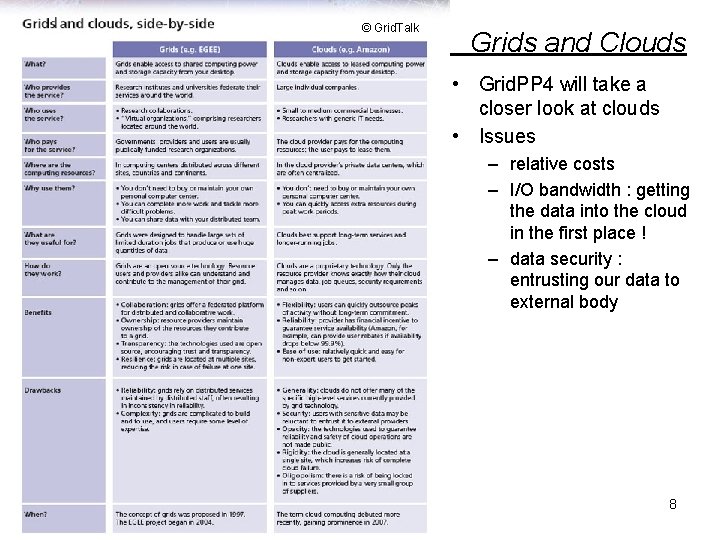

Grids and Clouds
• GridPP4 will take a closer look at clouds
• Issues
– relative costs
– I/O bandwidth: getting the data into the cloud in the first place!
– data security: entrusting our data to an external body
© GridTalk

What is the Grid?
• Much more than the web…
• “Grid computing [is] distinguished from conventional distributed computing by its focus on large-scale resource sharing, innovative applications, and, in some cases, high-performance orientation... we review the "Grid problem", which we define as flexible, secure, coordinated resource sharing among dynamic collections of individuals, institutions, and resources - what we refer to as virtual organizations.”
– From "The Anatomy of the Grid: Enabling Scalable Virtual Organizations" by Foster, Kesselman and Tuecke
• “The Web on Steroids”!

What is the Grid?
• The Grid: Blueprint for a New Computing Infrastructure (Ian Foster & Carl Kesselman)
– “A computational grid is a hardware and software infrastructure that provides dependable, consistent, pervasive, and inexpensive access to high-end computational capabilities.”
– http://www.mkp.com/mk/default.asp?isbn=1558604758
• What is the Grid? A Three Point Checklist (Ian Foster)
i) Co-ordinates resources that are not subject to centralised control
– see EGEE/LCG/GridPP Grid
ii) …using standard, open, general-purpose protocols and interfaces
– see Open Grid Forum, X.509 (also Globus, Condor, gLite)
iii) …to deliver nontrivial qualities of service
– see LCG MoU, service availability, resource promises (CPU, storage, network)
– http://www-fp.mcs.anl.gov/~foster/Articles/WhatIsTheGrid.pdf

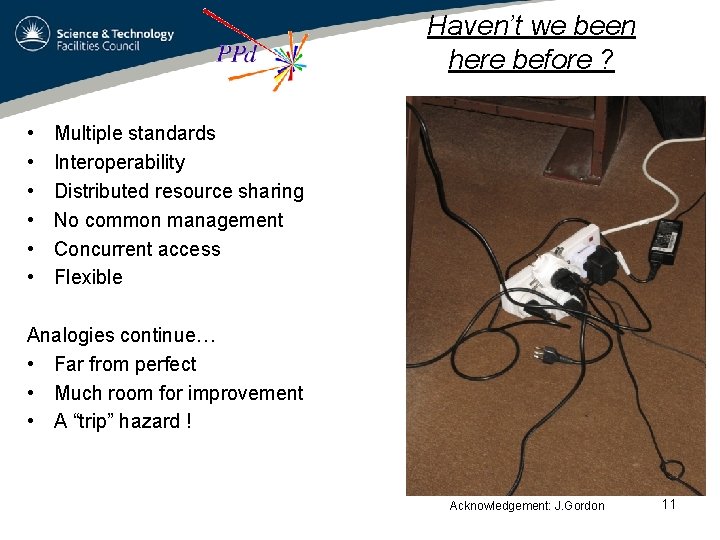

Haven’t we been here before?
• Multiple standards
• Interoperability
• Distributed resource sharing
• No common management
• Concurrent access
• Flexible
Analogies continue…
• Far from perfect
• Much room for improvement
• A “trip” hazard!
Acknowledgement: J. Gordon

Grids in Particle Physics (with the LHC as an example)

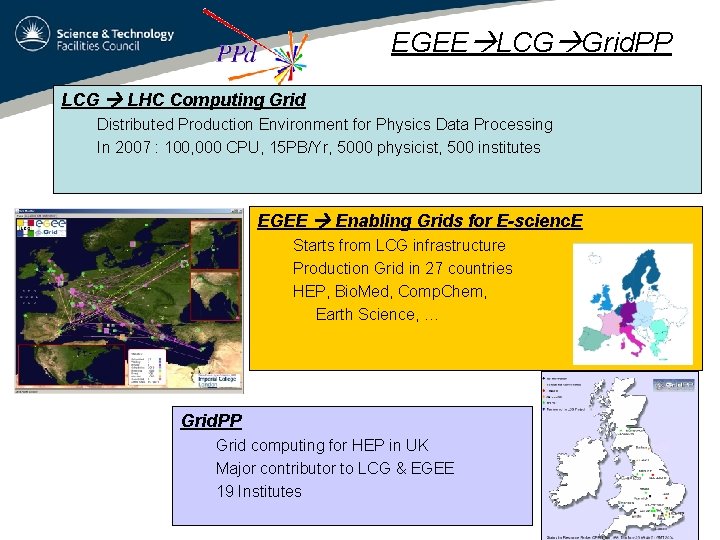

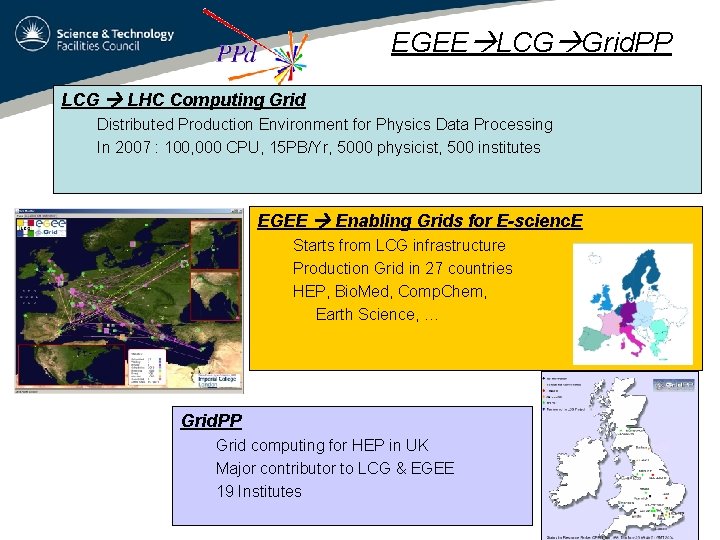

EGEE, LCG, GridPP
• LCG: LHC Computing Grid
– Distributed Production Environment for Physics Data Processing
– In 2007: 100,000 CPU, 15 PB/yr, 5000 physicists, 500 institutes
• EGEE: Enabling Grids for E-sciencE
– Starts from LCG infrastructure
– Production Grid in 27 countries
– HEP, BioMed, CompChem, Earth Science, …
• GridPP: Grid computing for HEP in UK
– Major contributor to LCG & EGEE
– 19 Institutes

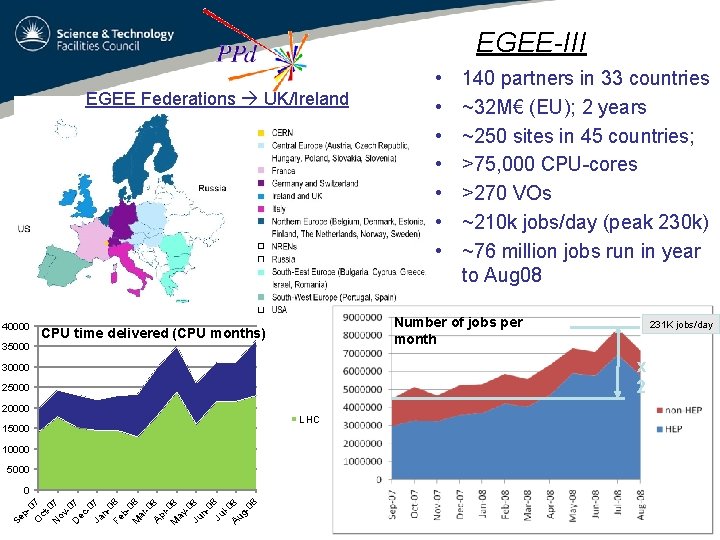

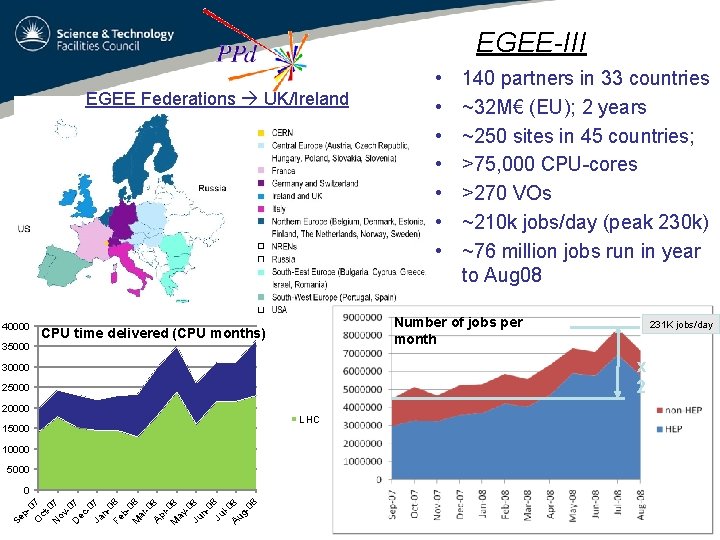

EGEE-III
• 140 partners in 33 countries
• ~32 M€ (EU); 2 years
• ~250 sites in 45 countries; >75,000 CPU-cores
• >270 VOs
• ~210k jobs/day (peak 230k)
• ~76 million jobs run in year to Aug 08
[Figure: number of jobs per month and CPU time delivered (CPU months), Sep 07 to Aug 08, with the LHC share marked; annotations "231k jobs/day" and "x2". Inset: EGEE Federations, UK/Ireland.]

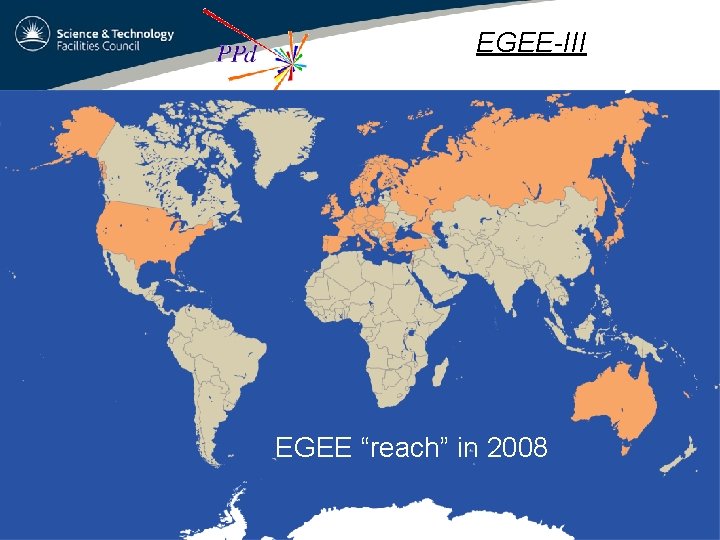

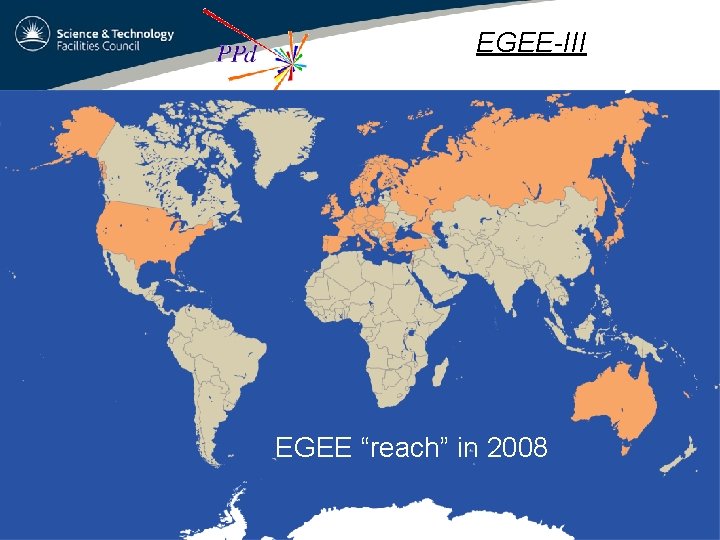

EGEE-III
• 140 partners in 33 countries
• ~32 M€ (EU); 2 years
• ~250 sites in 45 countries; >75,000 CPU-cores
• >270 VOs
• ~210k jobs/day (peak 230k)
• ~76 million jobs run in year to Aug 08
• “Other VOs”: 30k jobs/day
[Figure: EGEE “reach” in 2008]

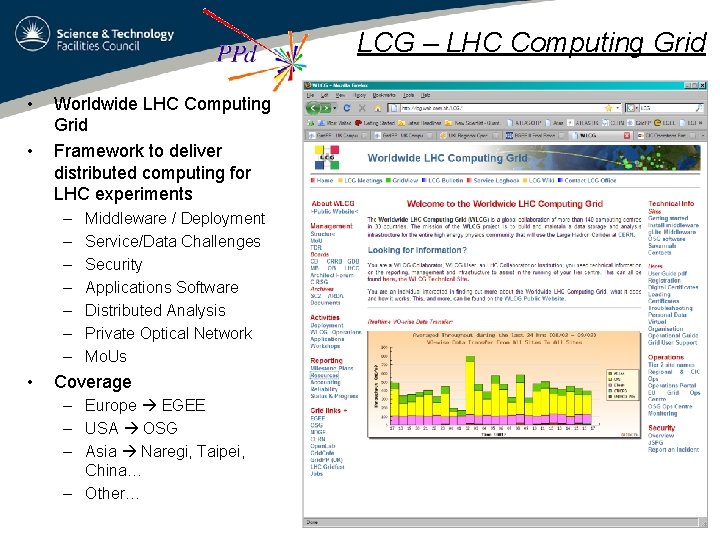

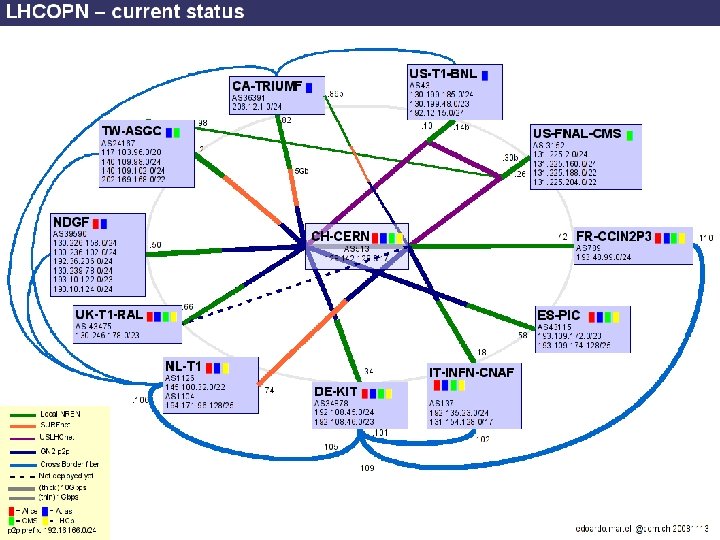

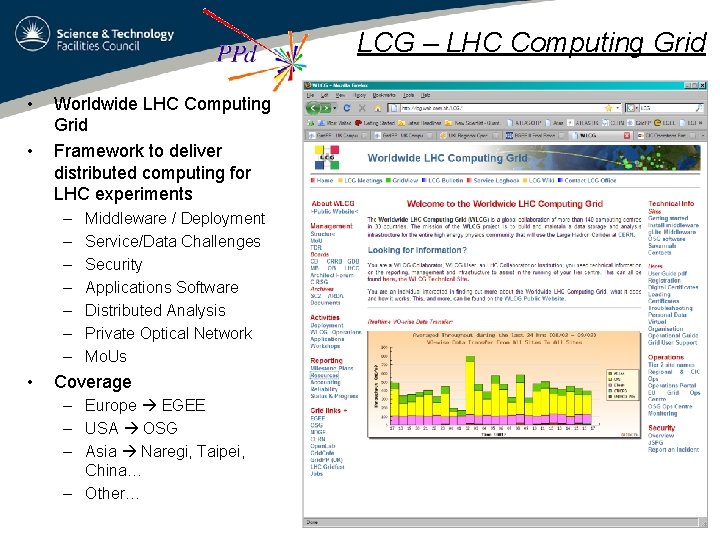

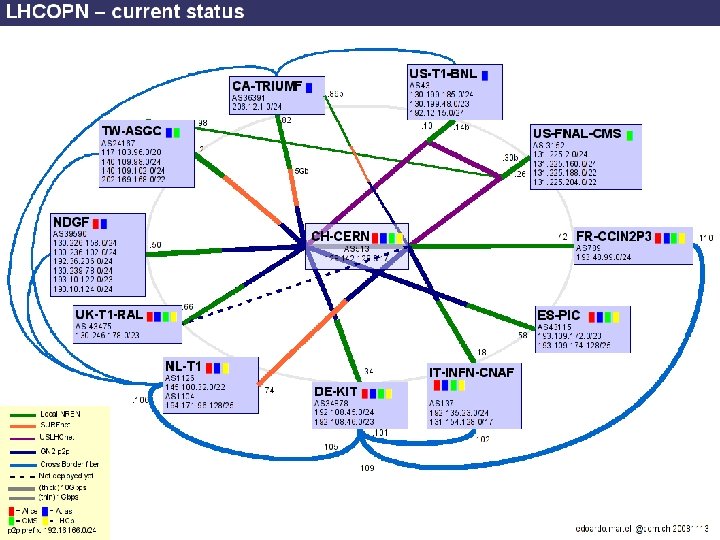

LCG – LHC Computing Grid
• Worldwide LHC Computing Grid
• Framework to deliver distributed computing for LHC experiments
– Middleware / Deployment
– Service/Data Challenges
– Security
– Applications Software
– Distributed Analysis
– Private Optical Network
– MoUs
• Coverage
– Europe: EGEE
– USA: OSG
– Asia: Naregi, Taipei, China…
– Other…

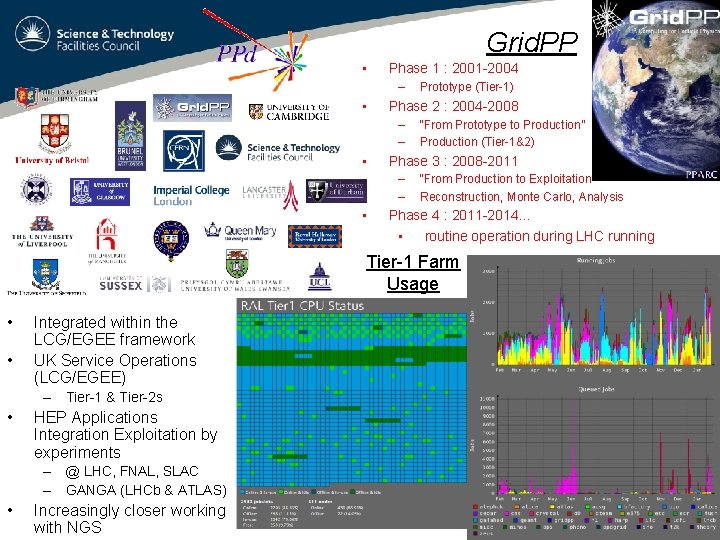

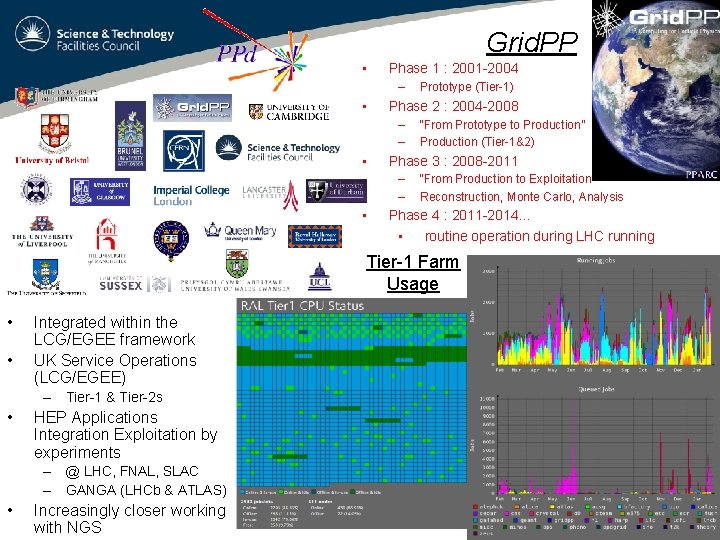

GridPP
• Phase 1: 2001-2004
– Prototype (Tier-1)
• Phase 2: 2004-2008
– “From Prototype to Production”
– Production (Tier-1&2)
• Phase 3: 2008-2011
– “From Production to Exploitation”
– Reconstruction, Monte Carlo, Analysis
• Phase 4: 2011-2014…
– routine operation during LHC running
• Integrated within the LCG/EGEE framework
• UK Service Operations (LCG/EGEE)
– Tier-1 & Tier-2s
• HEP Applications Integration
• Exploitation by experiments
– @ LHC, FNAL, SLAC
– GANGA (LHCb & ATLAS)
• Increasingly closer working with NGS
[Figure: Tier-1 Farm Usage]

Virtual Organisations

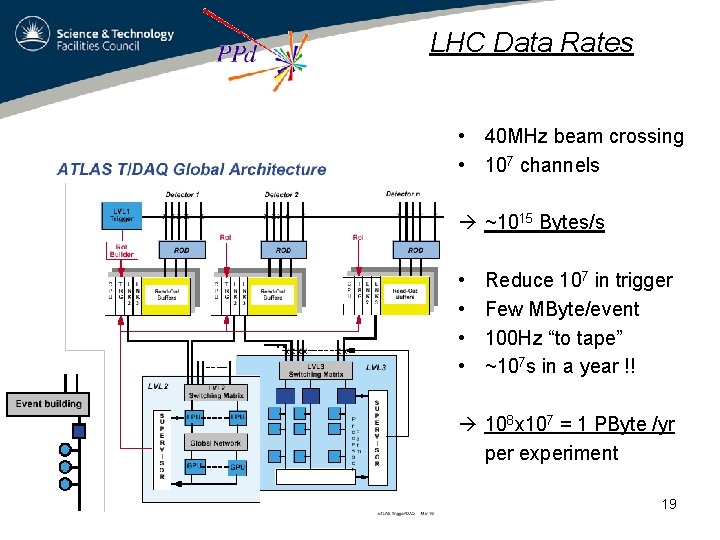

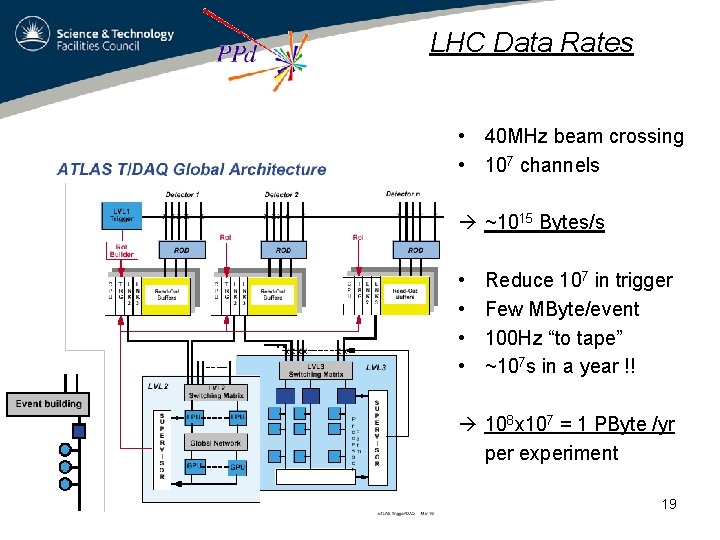

LHC Data Rates
• 40 MHz beam crossing
• 10^7 channels: ~10^15 Bytes/s
• Reduce by 10^7 in trigger
• Few MByte/event
• 100 Hz “to tape”
• ~10^7 s in a year!!
• 10^8 x 10^7 = 10^15 Bytes = 1 PByte/yr per experiment
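The order-of-magnitude arithmetic on this slide can be checked with a short Python sketch. The rates, the trigger reduction factor and the seconds per year are the slide's round numbers; the 1 MByte event size is an assumption (the low end of "few MByte/event") chosen so that the event-based rate agrees with the post-trigger rate.

```python
# Round numbers taken from the slide (orders of magnitude only).
RAW_RATE_BYTES_PER_S = 1e15   # ~10^15 Bytes/s off the detector
TRIGGER_REDUCTION = 1e7       # trigger reduces the rate by ~10^7
EVENT_RATE_HZ = 100           # events written "to tape" per second
SECONDS_PER_YEAR = 1e7        # ~10^7 s of data taking in a year

# Assumption (not on the slide): 1 MByte per event.
EVENT_SIZE_BYTES = 1e6


def rate_after_trigger(raw_rate, reduction):
    """Data rate (bytes/s) that survives the trigger."""
    return raw_rate / reduction


def yearly_volume(rate_bytes_per_s, seconds):
    """Total bytes recorded in one year of running."""
    return rate_bytes_per_s * seconds


to_tape = rate_after_trigger(RAW_RATE_BYTES_PER_S, TRIGGER_REDUCTION)
event_based = EVENT_RATE_HZ * EVENT_SIZE_BYTES
per_year = yearly_volume(to_tape, SECONDS_PER_YEAR)

print(f"rate to tape (trigger): {to_tape:.0e} bytes/s")
print(f"rate to tape (events) : {event_based:.0e} bytes/s")
print(f"volume per year       : {per_year / 1e15:.1f} PByte")
```

With a larger assumed event size the yearly volume scales linearly, which is why the slide's figure is best read as "about a petabyte per experiment per year".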

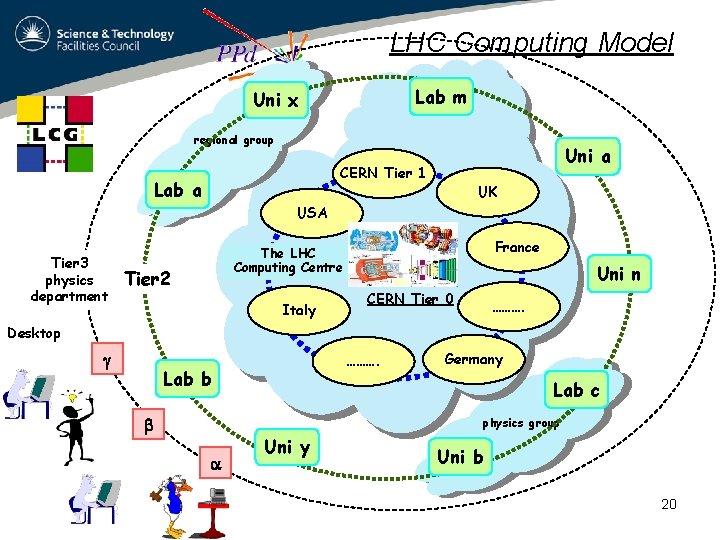

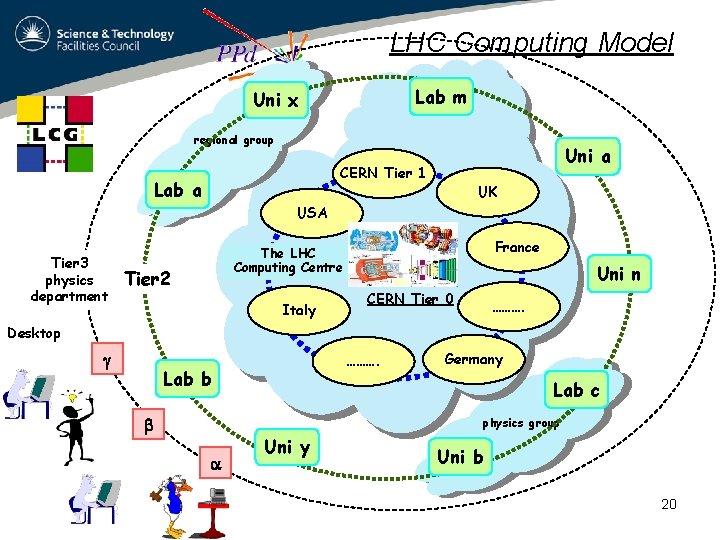

LHC Computing Model
[Diagram: tiered hierarchy. CERN Tier 0 and CERN Tier 1 (the LHC Computing Centre) at the centre; national Tier 1 centres (UK, USA, France, Italy, Germany, …); Tier 2 regional groups of labs and universities (Lab a, Lab b, Lab c, Lab m, Uni a, Uni b, Uni n, Uni x, Uni y); Tier 3 physics departments; desktops of physics groups.]


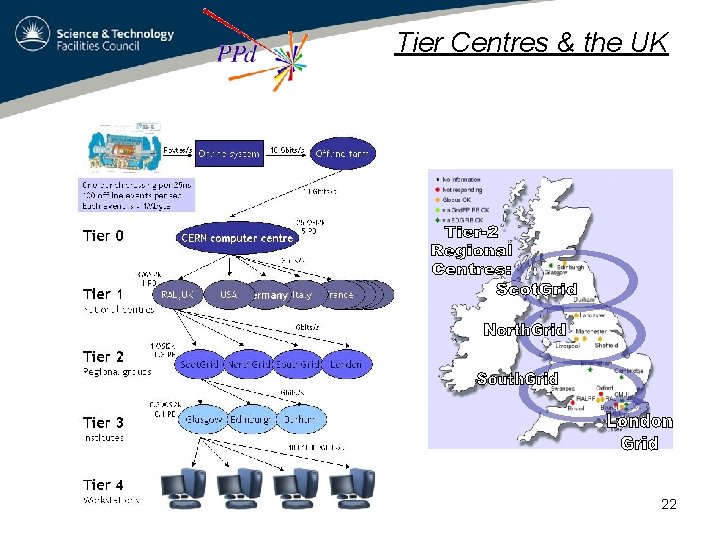

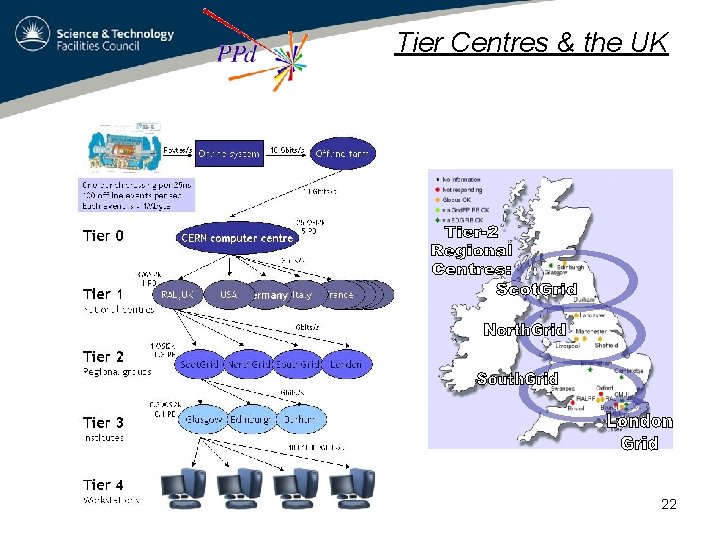

Tier Centres & the UK

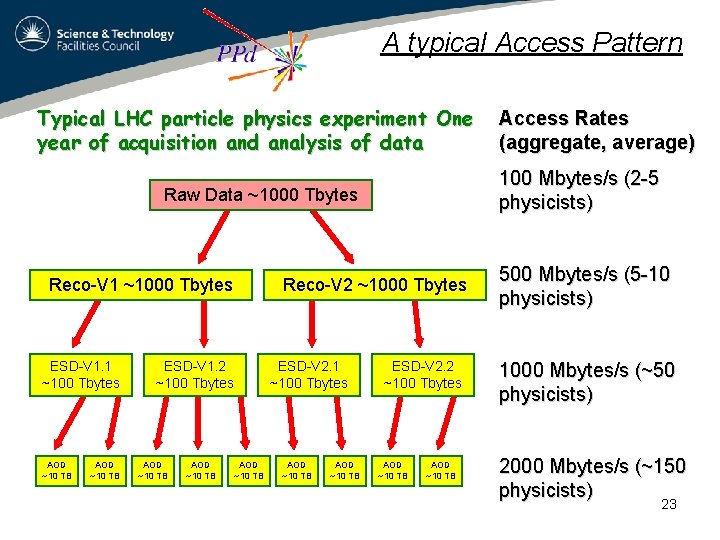

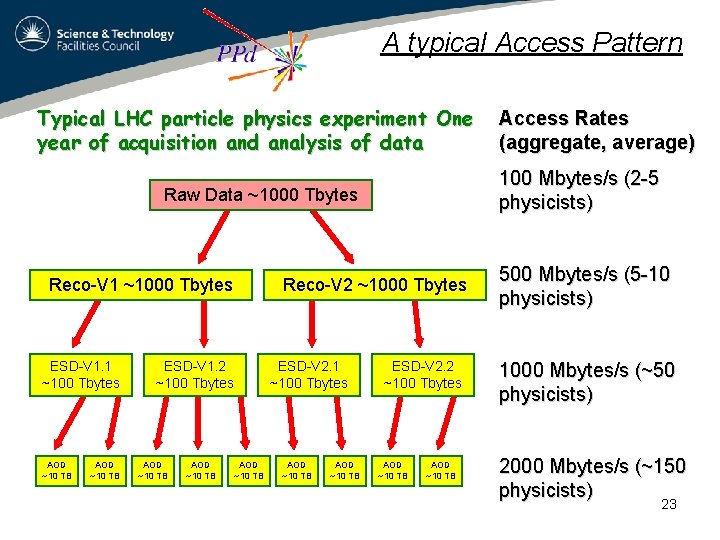

A typical Access Pattern
Typical LHC particle physics experiment: one year of acquisition and analysis of data
• Raw Data: ~1000 Tbytes
• Reco-V1, Reco-V2: ~1000 Tbytes each
• ESD-V1.1, ESD-V1.2, ESD-V2.1, ESD-V2.2: ~100 Tbytes each
• AOD: ~10 TB for each ESD version
Access Rates (aggregate, average)
• 100 Mbytes/s (2-5 physicists)
• 500 Mbytes/s (5-10 physicists)
• 1000 Mbytes/s (~50 physicists)
• 2000 Mbytes/s (~150 physicists)

Principal Components

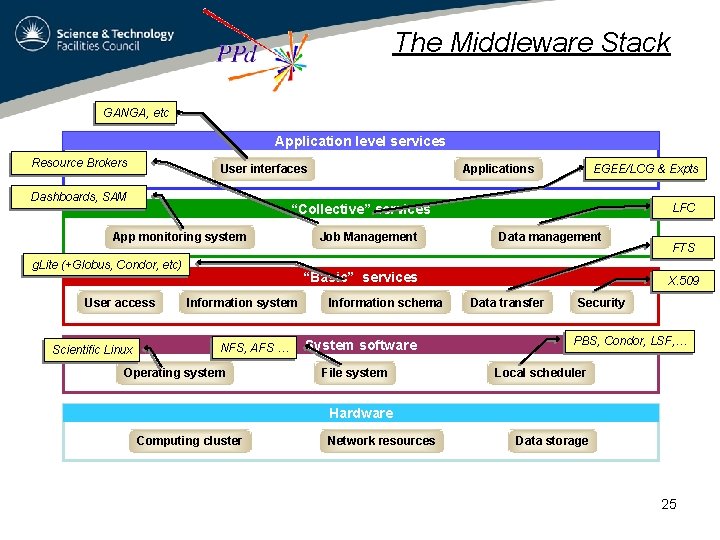

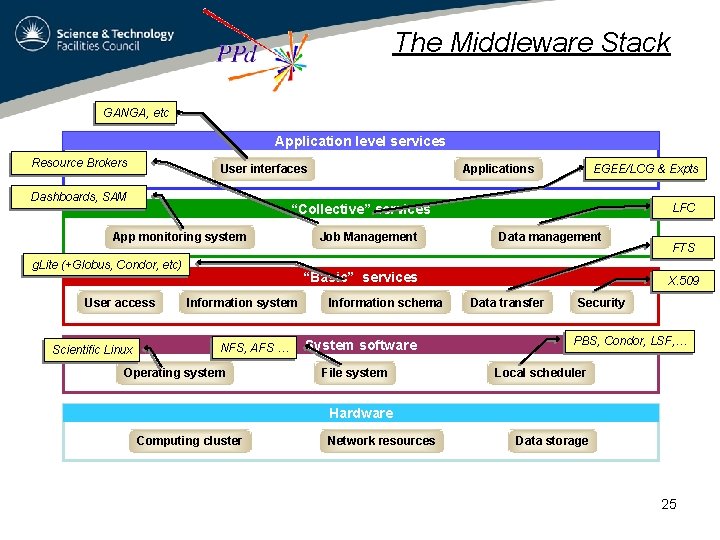

The Middleware Stack
• Applications: EGEE/LCG & Expts
• Application level services: user interfaces and applications (GANGA, etc.)
• “Collective” services: user access; job management (Resource Brokers); app monitoring system (Dashboards, SAM)
• “Basic” services, gLite (+Globus, Condor, etc.): data management (LFC); information system (information schema); data transfer (FTS); security (X.509)
• System software: operating system (Scientific Linux); file system (NFS, AFS, …); local scheduler (PBS, Condor, LSF, …)
• Hardware: computing cluster, network resources, data storage

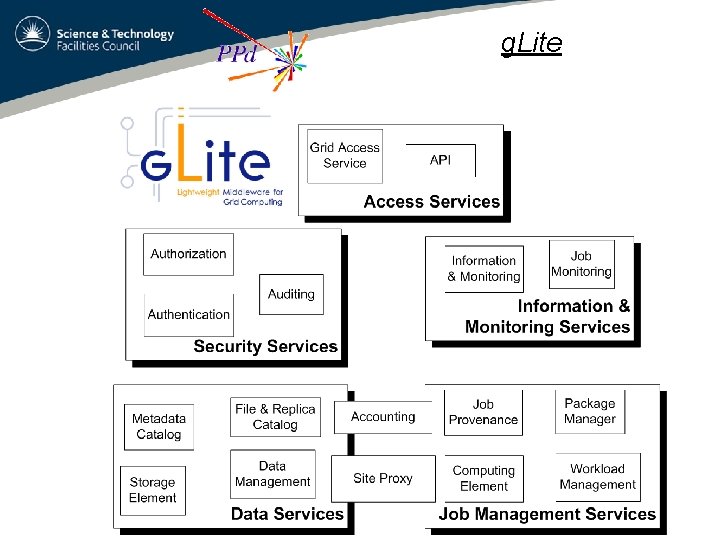

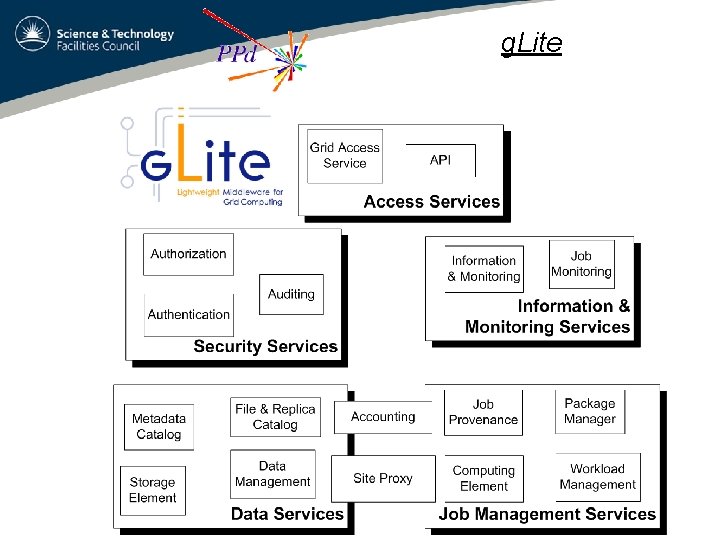

gLite

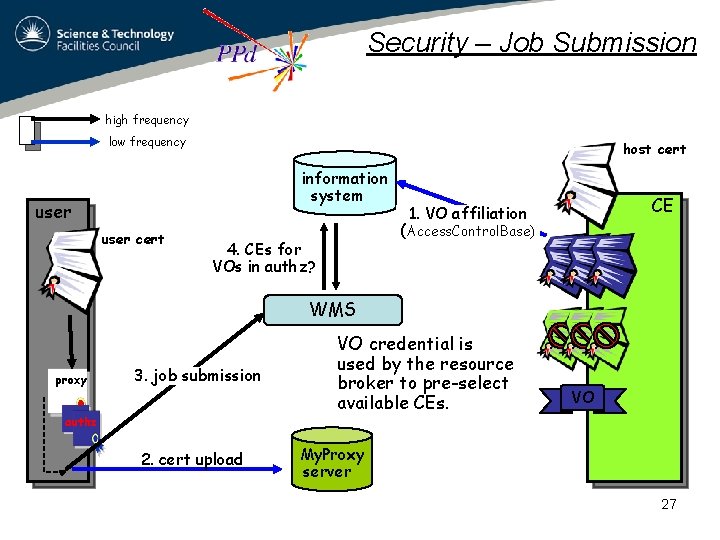

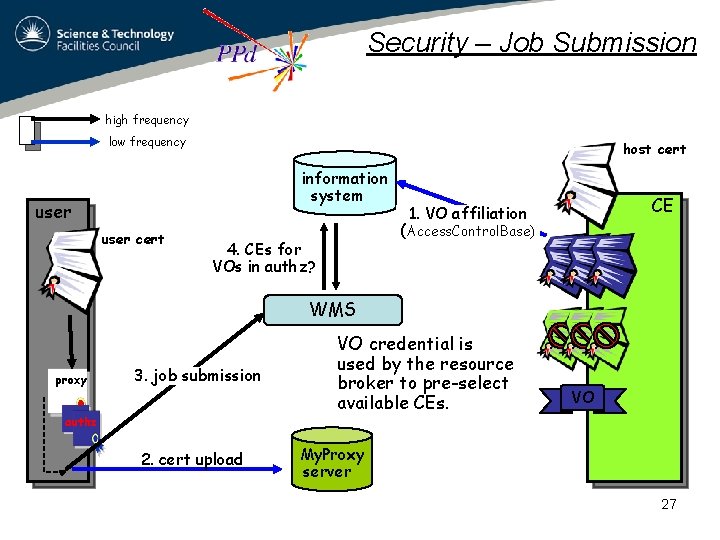

Security – Job Submission
1. VO affiliation (AccessControlBase)
2. cert upload
3. job submission
4. CEs for VOs in authz?
• VO credential is used by the resource broker to pre-select available CEs.
[Diagram labels: user cert, proxy, host cert, authz, WMS, CE, information system, VO, MyProxy server; interactions marked as high frequency or low frequency]

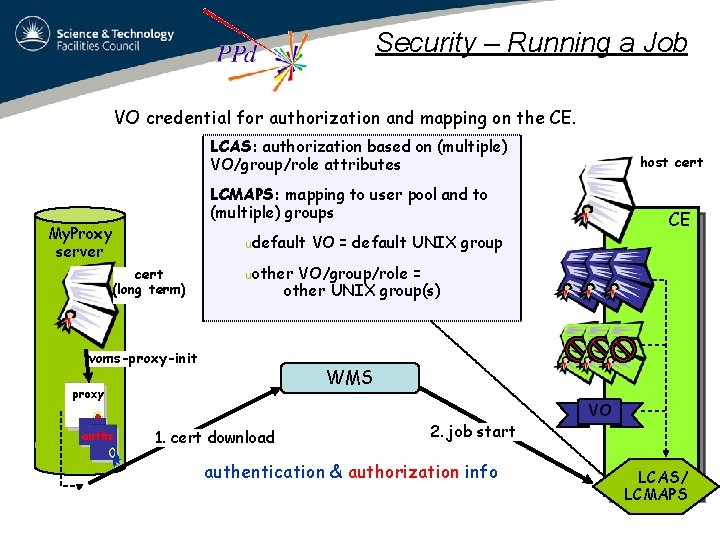

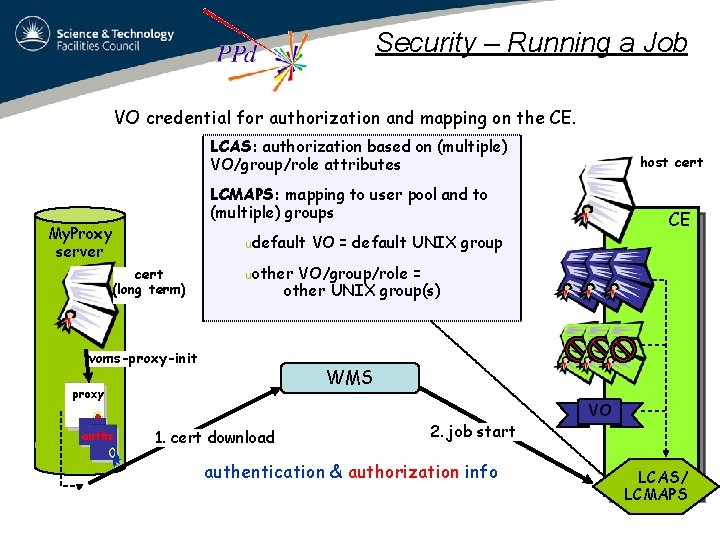

Security – Running a Job
• VO credential for authorization and mapping on the CE.
• LCAS: authorization based on (multiple) VO/group/role attributes
• LCMAPS: mapping to user pool and to (multiple) groups
– default VO = default UNIX group
– other VO/group/role = other UNIX group(s)
1. cert download
2. job start
[Diagram labels: MyProxy server, cert (long term), voms-proxy-init, proxy, host cert, authz, WMS, CE, VO, LCAS/LCMAPS, authentication & authorization info]
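The authorize-then-map idea can be illustrated with a toy Python model. This is only a sketch of the concept, not the LCAS/LCMAPS implementation or its configuration format: the `Site` class, the attribute strings, the pool account naming and the example DN are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """Toy stand-in for a CE's authorization and mapping policy (hypothetical)."""
    allowed_vos: set          # VOs accepted by this CE (LCAS-like decision)
    group_map: dict           # VO/group/role attribute -> UNIX group (LCMAPS-like)
    pool_size: int = 3        # pool accounts per VO, e.g. atlas001..atlas003
    leases: dict = field(default_factory=dict)  # user DN -> leased pool account

    def authorize(self, vo):
        """Accept or reject a job based on its VO."""
        return vo in self.allowed_vos

    def map_user(self, dn, vo, attributes):
        """Return (pool account, UNIX groups) for an authorized user."""
        if not self.authorize(vo):
            raise PermissionError(f"VO {vo!r} is not authorized on this CE")
        # Reuse an existing lease so that one DN keeps the same account.
        if dn not in self.leases:
            used = set(self.leases.values())
            free = [f"{vo}{i:03d}" for i in range(1, self.pool_size + 1)
                    if f"{vo}{i:03d}" not in used]
            if not free:
                raise RuntimeError("pool accounts exhausted")
            self.leases[dn] = free[0]
        # The first attribute gives the default group, the rest other groups.
        groups = [self.group_map[a] for a in attributes if a in self.group_map]
        return self.leases[dn], groups


site = Site(
    allowed_vos={"atlas"},
    group_map={"/atlas": "atlas", "/atlas/Role=production": "atlasprd"},
)
account, groups = site.map_user(
    "/C=UK/O=eScience/CN=a n other",          # hypothetical user DN
    "atlas",
    ["/atlas", "/atlas/Role=production"],
)
print(account, groups)
```

Keeping the lease table keyed by DN is the design choice that lets repeated jobs from the same user land on the same local account, while different users in one VO stay isolated from each other.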

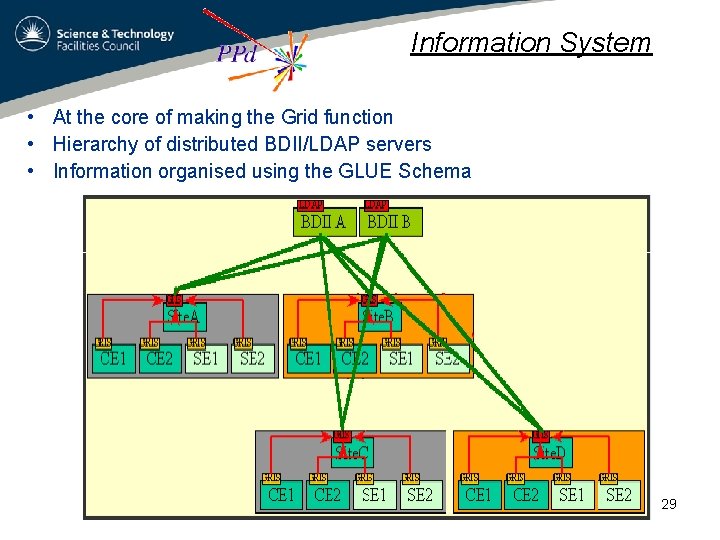

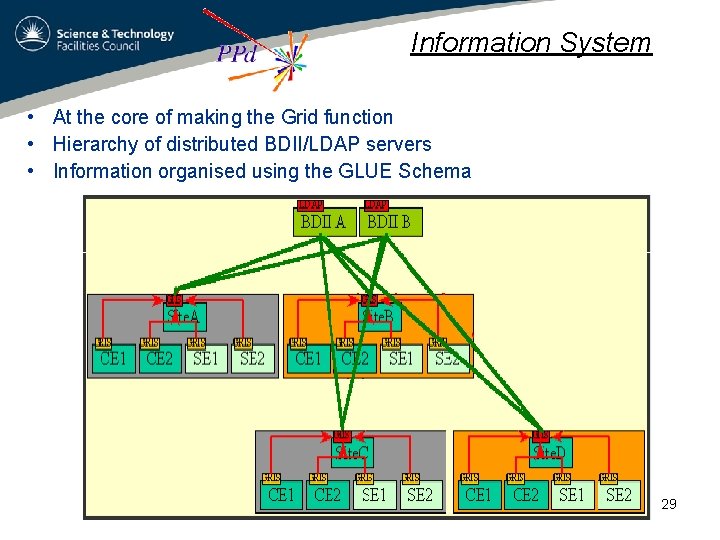

Information System
• At the core of making the Grid function
• Hierarchy of distributed BDII/LDAP servers
• Information organised using the GLUE Schema

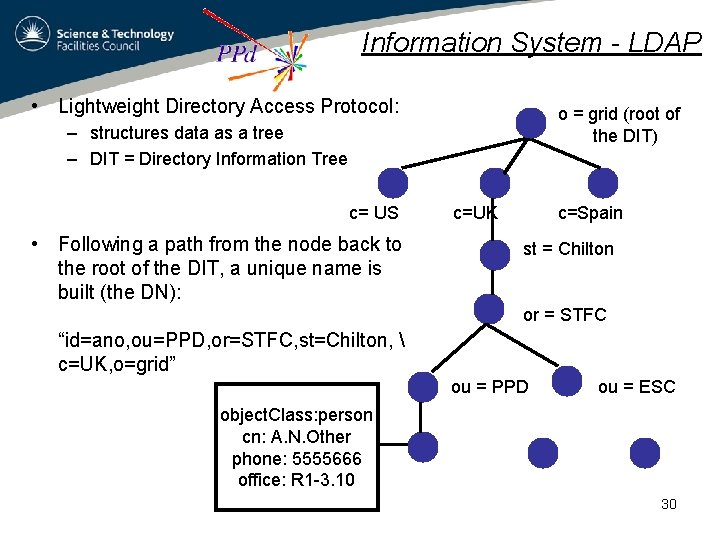

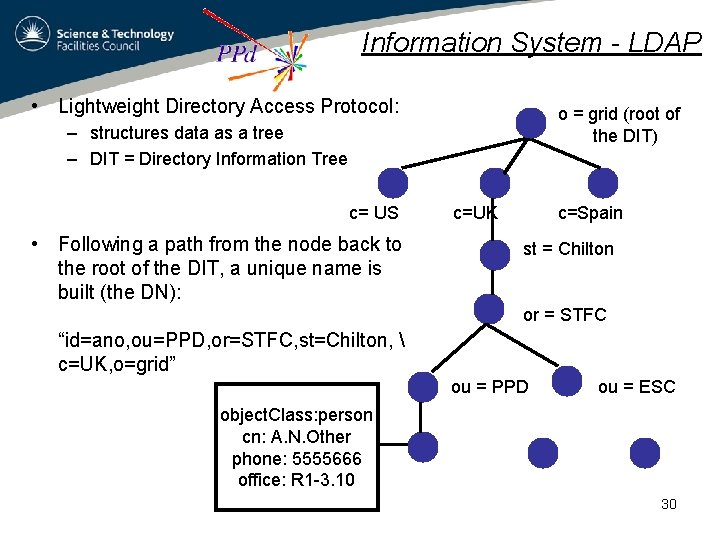

Information System - LDAP
• Lightweight Directory Access Protocol:
– structures data as a tree
– DIT = Directory Information Tree
• Following a path from the node back to the root of the DIT, a unique name is built (the DN):
– “id=ano, ou=PPD, or=STFC, st=Chilton, c=UK, o=grid”
[Diagram: example DIT. o=grid (root of the DIT); c=US, c=UK, c=Spain; below c=UK: st=Chilton, or=STFC, ou=PPD and ou=ESC; entry with objectClass: person, cn: A. N. Other, phone: 5555666, office: R1-3.10]
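How a DN is assembled from the path through the DIT can be shown in a few lines of Python. This is a minimal sketch using the slide's example entry; the helper names `build_dn` and `parse_dn` are made up for illustration, and no real LDAP library is used.

```python
def build_dn(path_from_root):
    """Build a DN by walking from the entry back to the root of the DIT.

    path_from_root is a list of (attribute, value) pairs, root first.
    """
    return ", ".join(f"{attr}={value}" for attr, value in reversed(path_from_root))


def parse_dn(dn):
    """Inverse of build_dn: return the (attribute, value) pairs, root first."""
    pairs = [tuple(part.strip().split("=", 1)) for part in dn.split(",")]
    return list(reversed(pairs))


# Path from the root (o=grid) down to the entry, as in the slide's example.
path = [
    ("o", "grid"),
    ("c", "UK"),
    ("st", "Chilton"),
    ("or", "STFC"),
    ("ou", "PPD"),
    ("id", "ano"),
]

dn = build_dn(path)
print(dn)
```

The sketch ignores escaping of special characters in values, which a real directory client has to handle.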

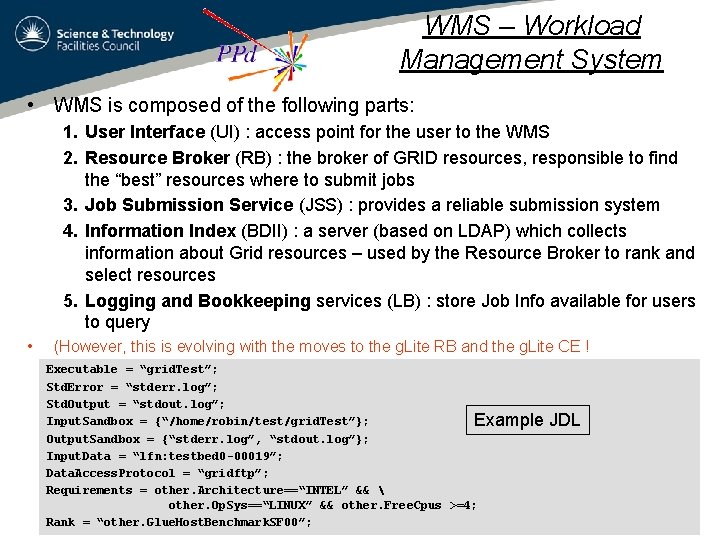

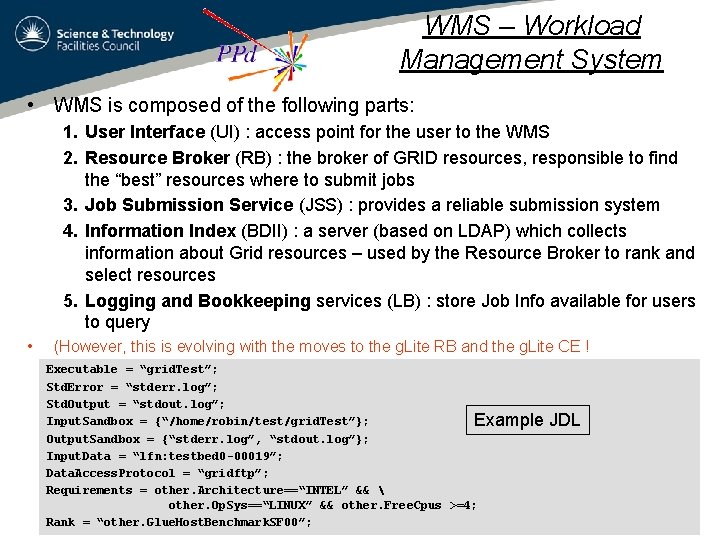

WMS – Workload Management System
• WMS is composed of the following parts:
1. User Interface (UI): access point for the user to the WMS
2. Resource Broker (RB): the broker of Grid resources, responsible for finding the “best” resources to which to submit jobs
3. Job Submission Service (JSS): provides a reliable submission system
4. Information Index (BDII): a server (based on LDAP) which collects information about Grid resources; used by the Resource Broker to rank and select resources
5. Logging and Bookkeeping services (LB): store job information for users to query
• (However, this is evolving with the moves to the gLite RB and the gLite CE!)

Example JDL (simple job):
Executable = "/bin/echo";
Arguments = "Good Morning";
StdError = "stderr.log";
StdOutput = "stdout.log";
OutputSandbox = {"stderr.log", "stdout.log"};

Example JDL (job with input data and requirements):
Executable = "gridTest";
StdError = "stderr.log";
StdOutput = "stdout.log";
InputSandbox = {"/home/robin/test/gridTest"};
OutputSandbox = {"stderr.log", "stdout.log"};
InputData = "lfn:testbed0-00019";
DataAccessProtocol = "gridftp";
Requirements = other.Architecture=="INTEL" && other.OpSys=="LINUX" && other.FreeCpus >=4;
Rank = "other.GlueHostBenchmarkSF00";
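The way a broker uses the Requirements and Rank expressions of a JDL can be sketched in Python: filter the known resources with the requirement, then order the survivors by rank. The resource records, their field names and the CE names below are hypothetical; a real Resource Broker takes this information from the BDII and evaluates the JDL expressions itself.

```python
# Hypothetical resource descriptions, loosely named after the attributes
# used in the example JDL.
resources = [
    {"name": "ce-a", "Architecture": "INTEL", "OpSys": "LINUX", "FreeCpus": 8, "Benchmark": 400},
    {"name": "ce-b", "Architecture": "INTEL", "OpSys": "LINUX", "FreeCpus": 2, "Benchmark": 900},
    {"name": "ce-c", "Architecture": "INTEL", "OpSys": "LINUX", "FreeCpus": 16, "Benchmark": 650},
]


def requirements(other):
    """Python rendering of the JDL Requirements expression."""
    return (other["Architecture"] == "INTEL"
            and other["OpSys"] == "LINUX"
            and other["FreeCpus"] >= 4)


def rank(other):
    """Python rendering of the JDL Rank expression (higher is better)."""
    return other["Benchmark"]


def match(candidates, requirements, rank):
    """Keep resources that satisfy the requirements, best rank first."""
    suitable = [r for r in candidates if requirements(r)]
    return sorted(suitable, key=rank, reverse=True)


ranked = match(resources, requirements, rank)
print([r["name"] for r in ranked])
```

Here `ce-b` has the highest benchmark but is filtered out before ranking because it has too few free CPUs, which is the point of separating the two expressions.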

Data Management
• DPM – Disk Pool Manager
– also (dCache), CASTOR
• LFC – LHC File Catalogue
• FTS – File Transfer Service

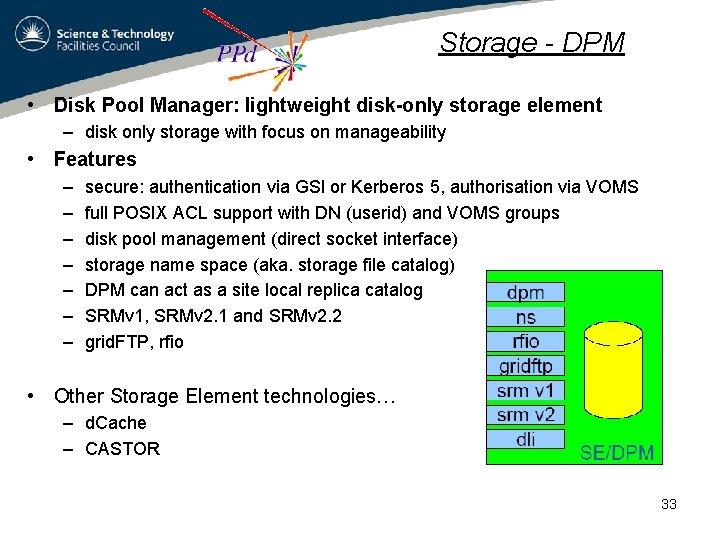

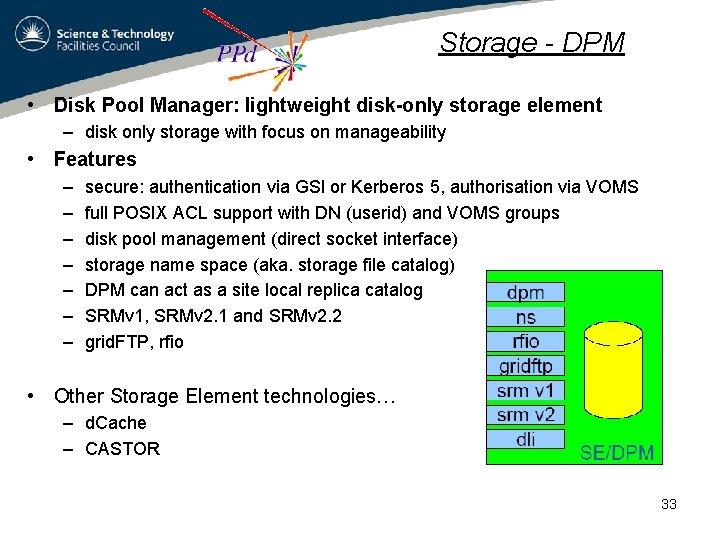

Storage - DPM
• Disk Pool Manager: lightweight disk-only storage element
– disk only storage with focus on manageability
• Features
– secure: authentication via GSI or Kerberos 5, authorisation via VOMS
– full POSIX ACL support with DN (userid) and VOMS groups
– disk pool management (direct socket interface)
– storage name space (aka. storage file catalog)
– DPM can act as a site local replica catalog
– SRMv1, SRMv2.1 and SRMv2.2
– gridFTP, rfio
• Other Storage Element technologies…
– dCache
– CASTOR

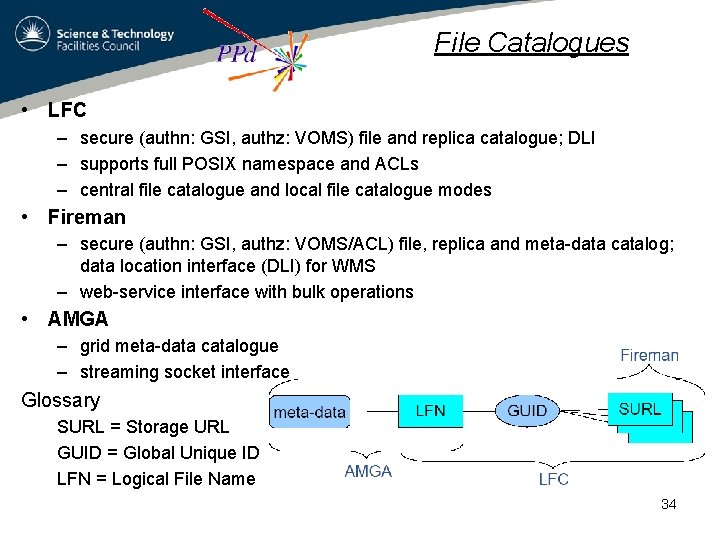

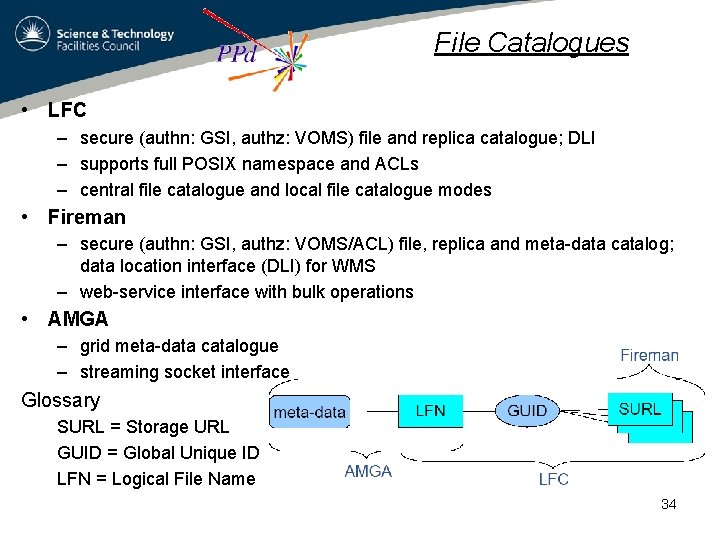

File Catalogues
• LFC
– secure (authn: GSI, authz: VOMS) file and replica catalogue; DLI
– supports full POSIX namespace and ACLs
– central file catalogue and local file catalogue modes
• Fireman
– secure (authn: GSI, authz: VOMS/ACL) file, replica and meta-data catalogue; data location interface (DLI) for WMS
– web-service interface with bulk operations
• AMGA
– grid meta-data catalogue
– streaming socket interface
Glossary
• SURL = Storage URL
• GUID = Global Unique ID
• LFN = Logical File Name
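The relationship between the three glossary terms can be sketched as two lookup tables: a logical file name (LFN) resolves to a GUID, and the GUID resolves to one or more replicas, each identified by a SURL. The `ToyCatalogue` class and the example names below are hypothetical and only illustrate the mapping; they are not the LFC API.

```python
import uuid


class ToyCatalogue:
    """Minimal in-memory file and replica catalogue (illustration only)."""

    def __init__(self):
        self.lfn_to_guid = {}     # logical file name -> GUID
        self.guid_to_surls = {}   # GUID -> list of replica SURLs

    def register(self, lfn, surl):
        """Register a replica; the first registration of an LFN mints its GUID."""
        if lfn not in self.lfn_to_guid:
            self.lfn_to_guid[lfn] = str(uuid.uuid4())
        guid = self.lfn_to_guid[lfn]
        self.guid_to_surls.setdefault(guid, []).append(surl)
        return guid

    def replicas(self, lfn):
        """Resolve an LFN to the SURLs of all its replicas."""
        return list(self.guid_to_surls[self.lfn_to_guid[lfn]])


catalogue = ToyCatalogue()
lfn = "lfn:/grid/myvo/data/file1"   # hypothetical logical name
guid_1 = catalogue.register(lfn, "srm://se1.example.org/myvo/file1")
guid_2 = catalogue.register(lfn, "srm://se2.example.org/myvo/file1")
print(guid_1 == guid_2, catalogue.replicas(lfn))
```

The indirection through the GUID is what allows a file to be replicated to further Storage Elements while users keep referring to the same logical name.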

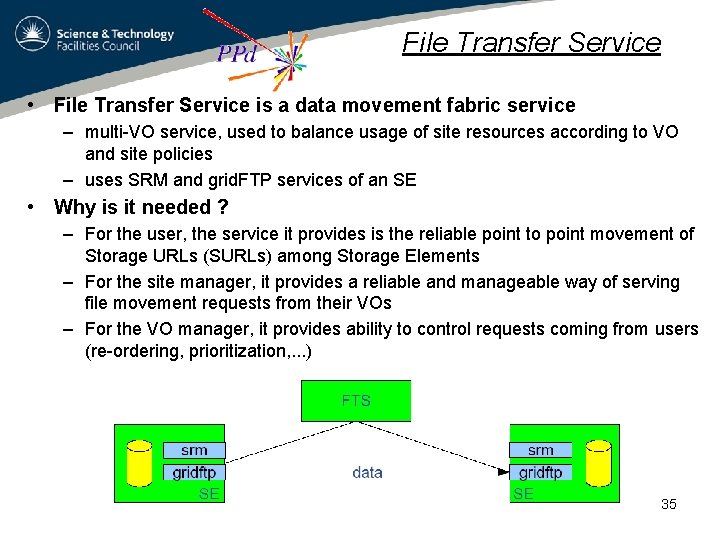

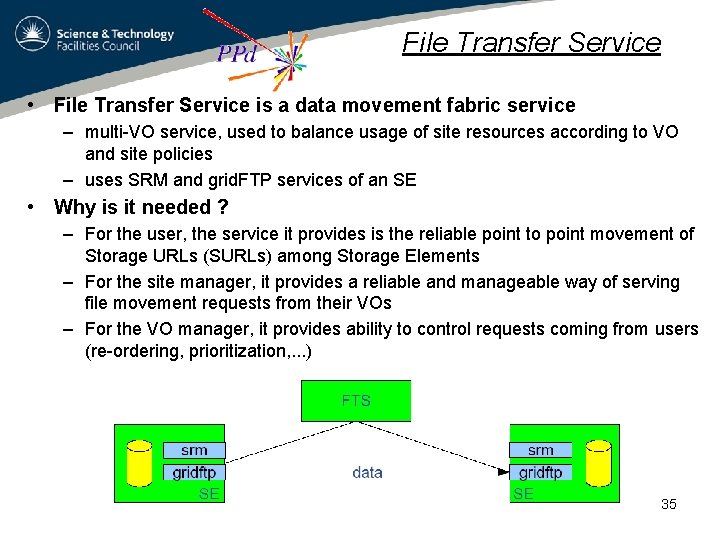

File Transfer Service
• File Transfer Service is a data movement fabric service
– multi-VO service, used to balance usage of site resources according to VO and site policies
– uses SRM and gridFTP services of an SE
• Why is it needed?
– For the user, it provides reliable point-to-point movement of files, identified by Storage URLs (SURLs), between Storage Elements
– For the site manager, it provides a reliable and manageable way of serving file movement requests from their VOs
– For the VO manager, it provides the ability to control requests coming from users (re-ordering, prioritization, ...)

Grid Portals

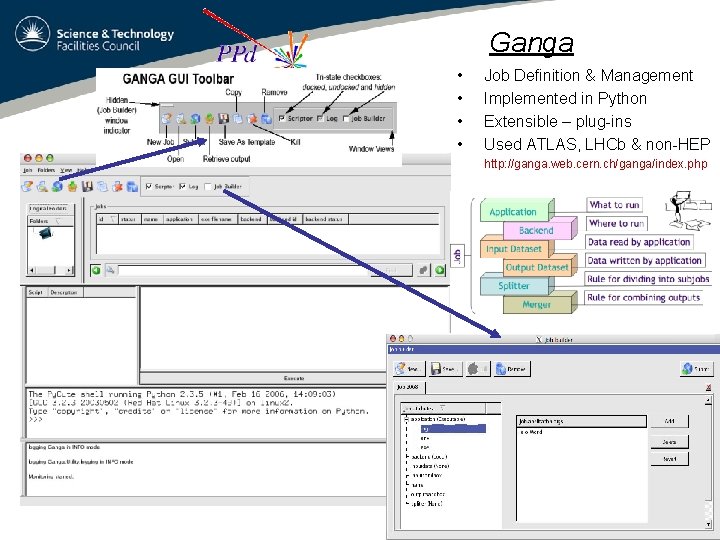

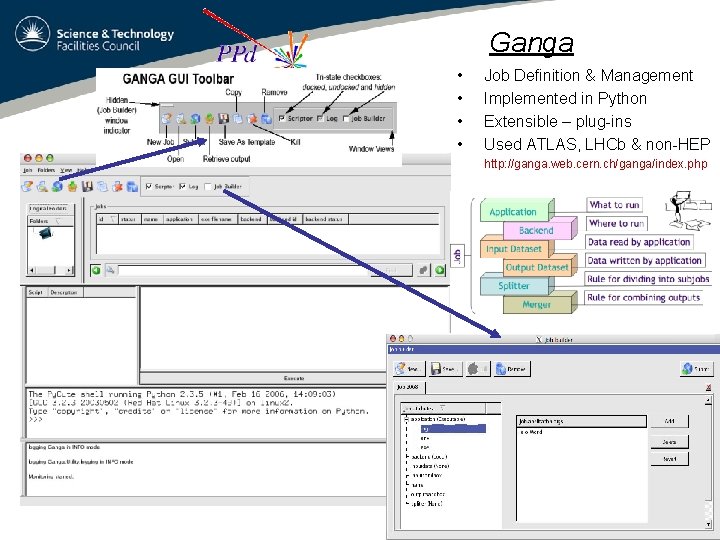

Ganga
• Job Definition & Management
• Implemented in Python
• Extensible – plug-ins
• Used by ATLAS, LHCb & non-HEP
http://ganga.web.cern.ch/ganga/index.php

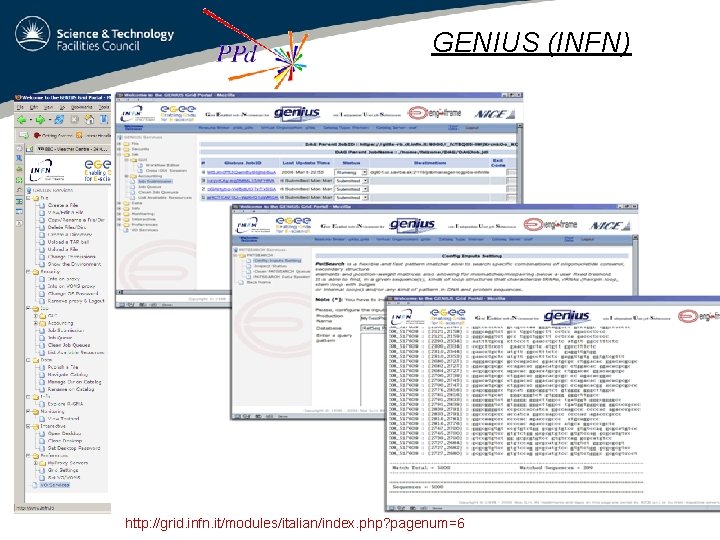

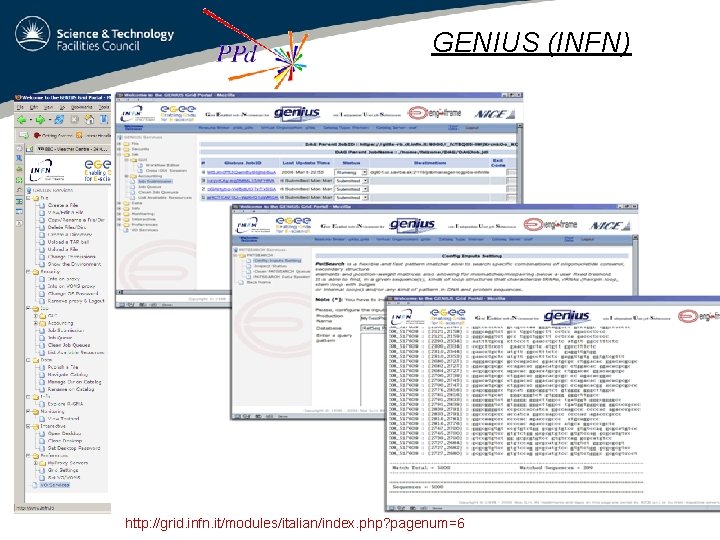

GENIUS (INFN)
http://grid.infn.it/modules/italian/index.php?pagenum=6

Monitoring the Grid, User Support

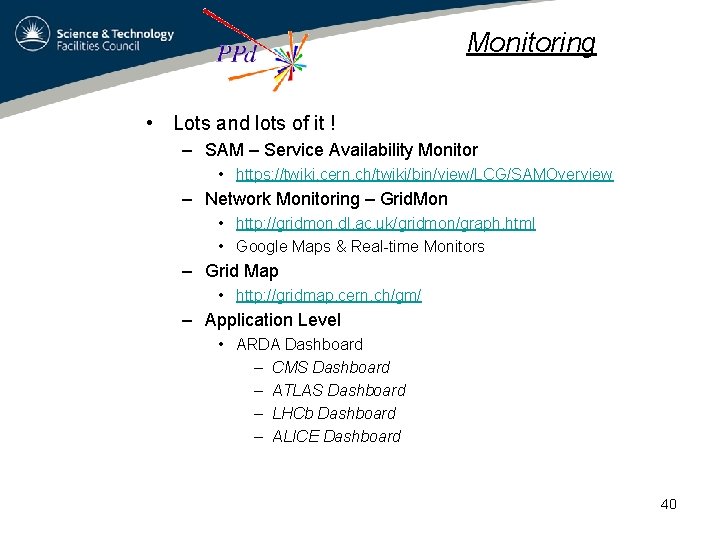

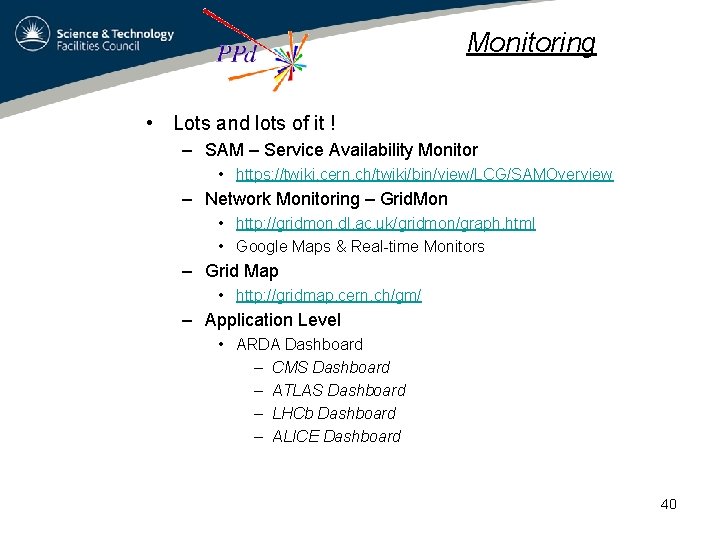

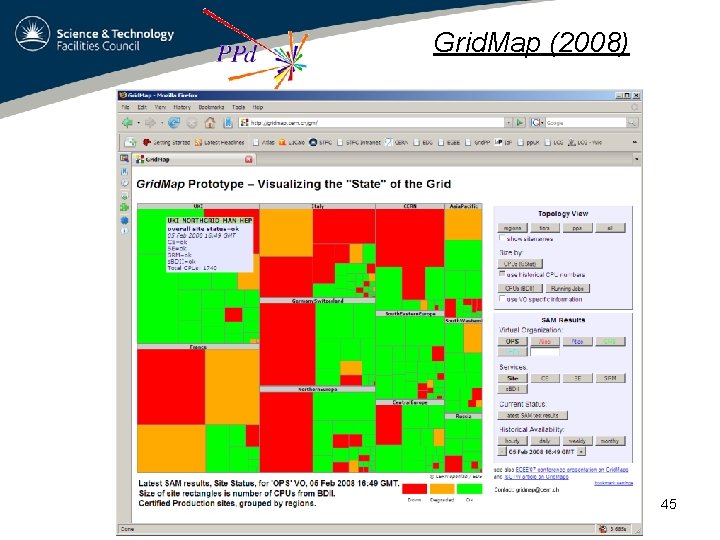

Monitoring
• Lots and lots of it!
– SAM – Service Availability Monitor
  • https://twiki.cern.ch/twiki/bin/view/LCG/SAMOverview
– Network Monitoring – GridMon
  • http://gridmon.dl.ac.uk/gridmon/graph.html
• Google Maps & Real-time Monitors
– Grid Map
  • http://gridmap.cern.ch/gm/
– Application Level
  • ARDA Dashboard: CMS Dashboard, ATLAS Dashboard, LHCb Dashboard, ALICE Dashboard

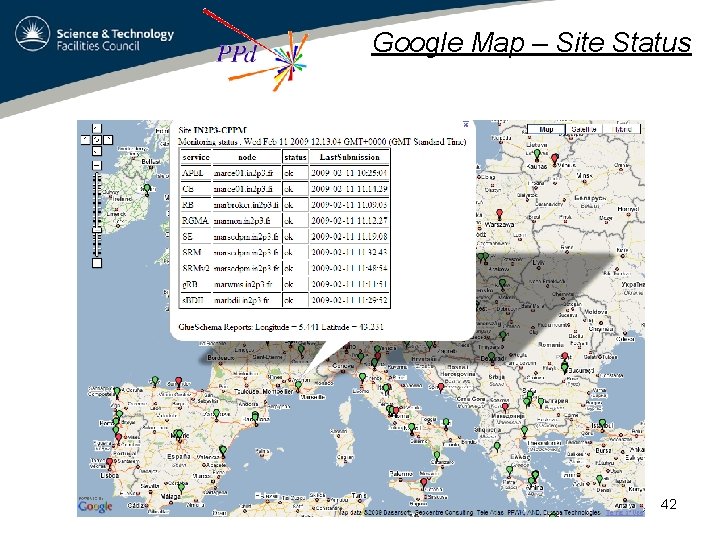

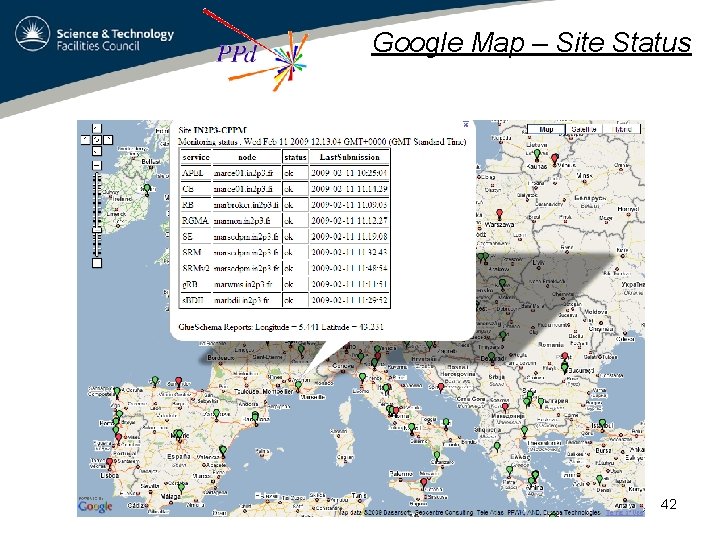

Google Map – Site Status
• http://goc02.grid-support.ac.uk/googlemaps/sam.html

Google Map – Site Status

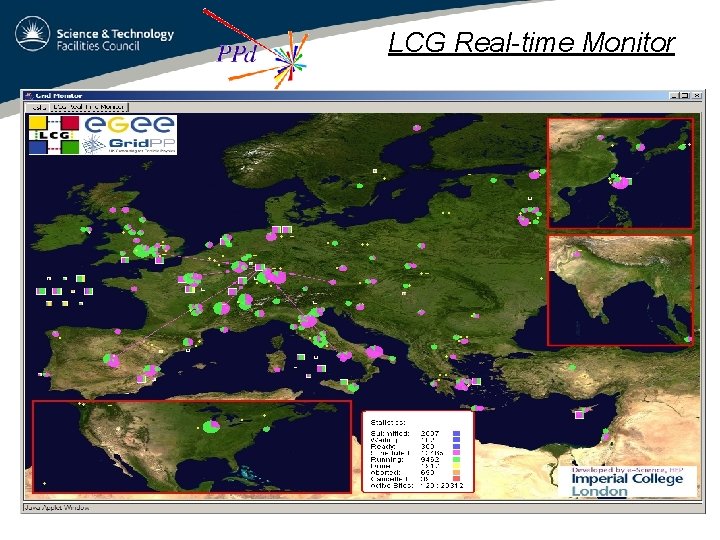

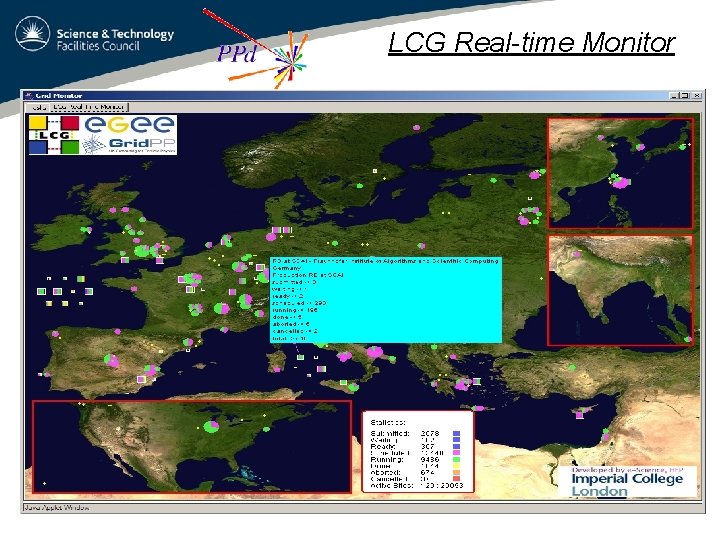

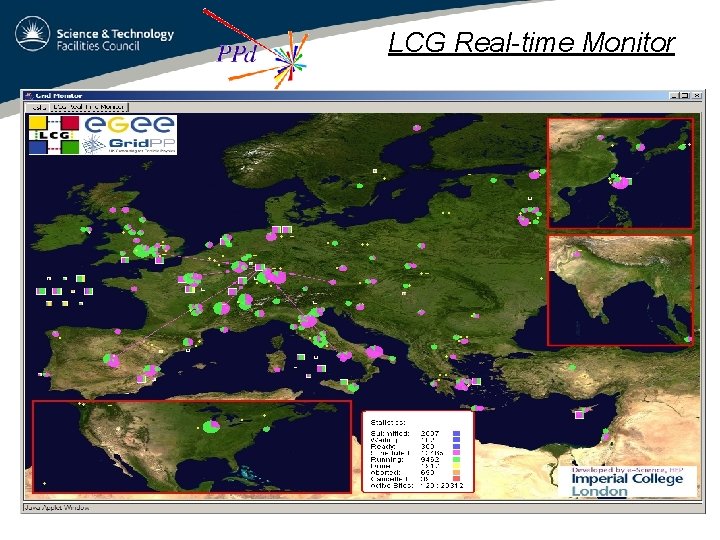

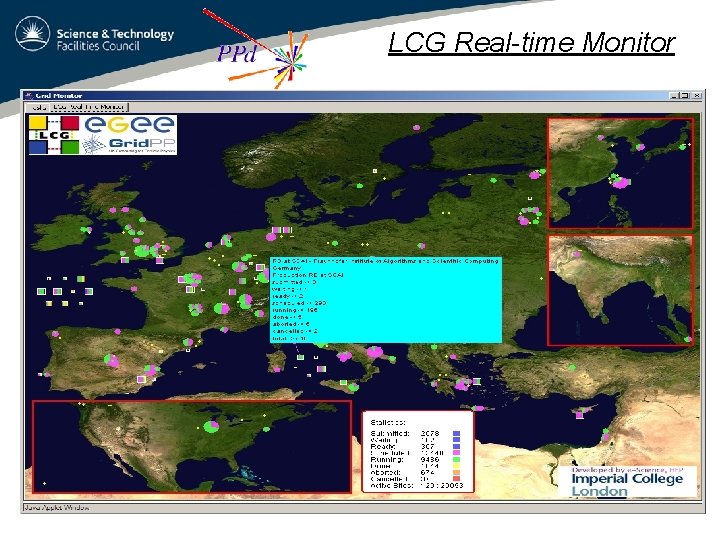

LCG Real-time Monitor


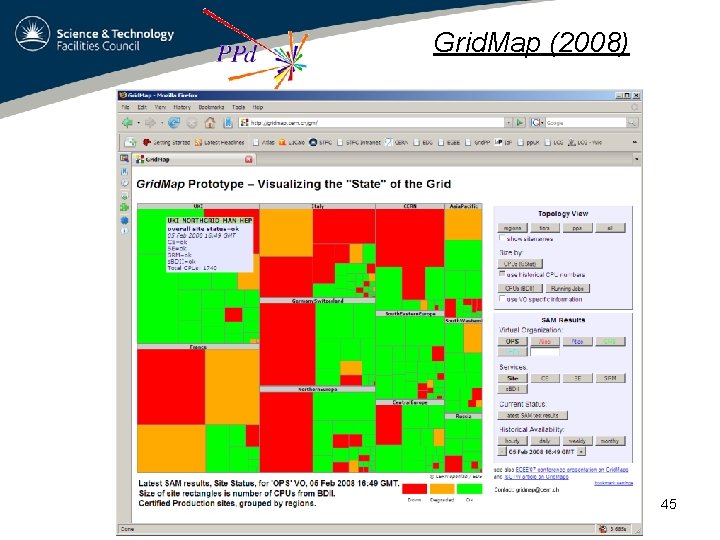

GridMap (2008)

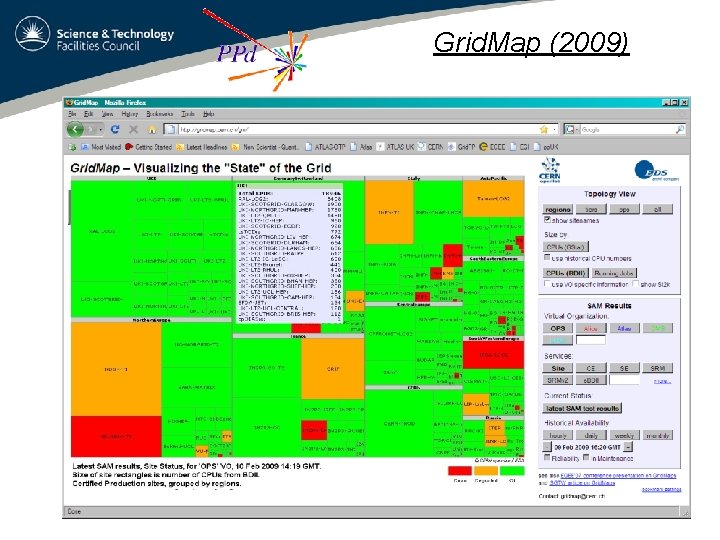

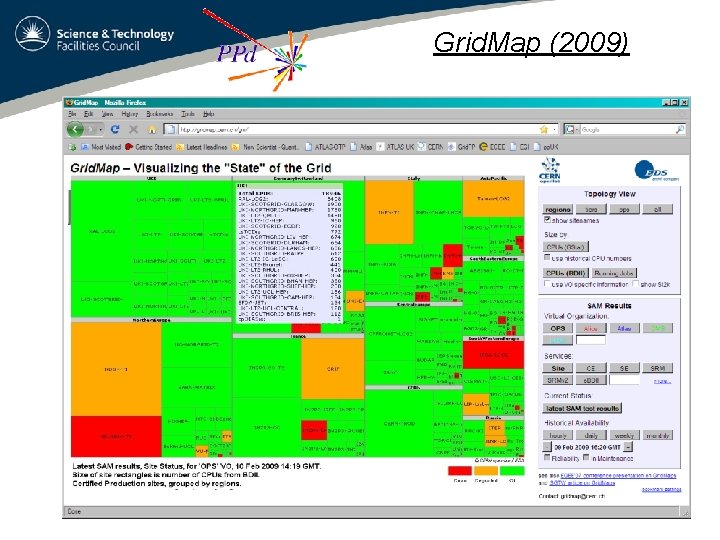

GridMap (2009)

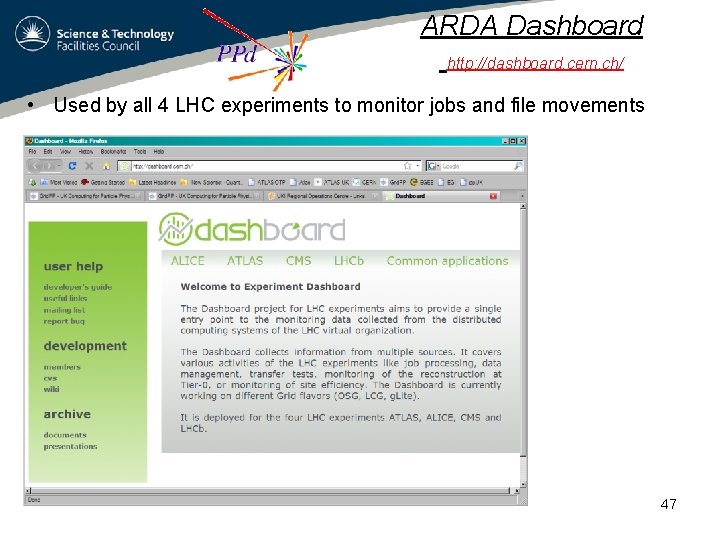

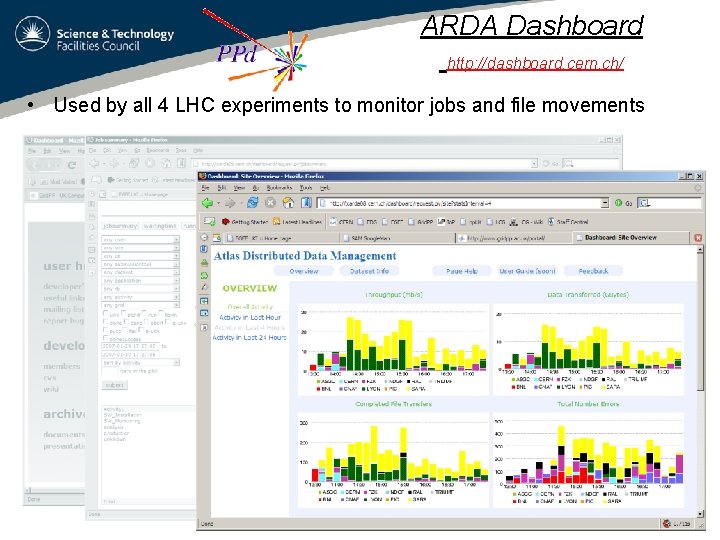

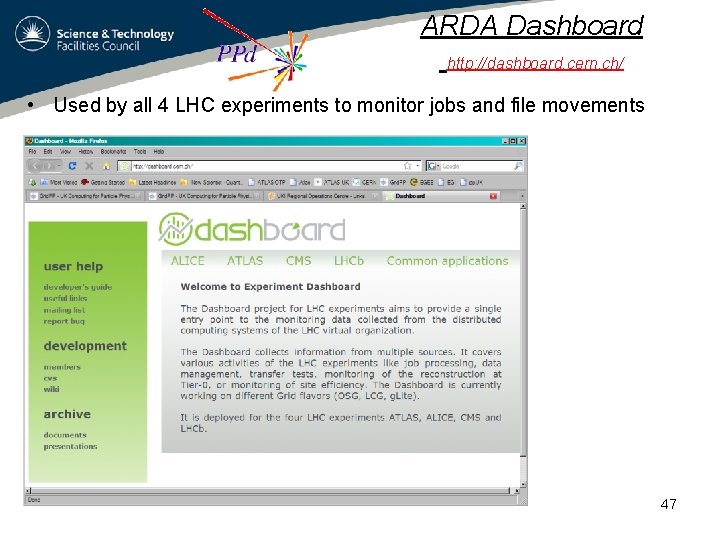

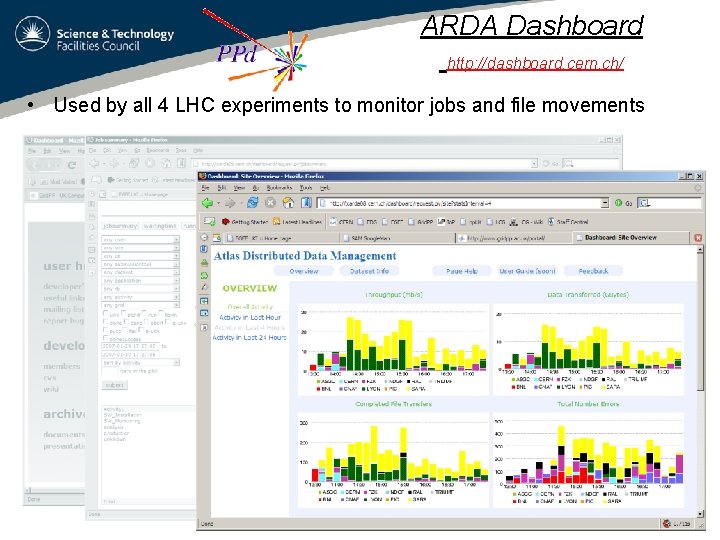

ARDA Dashboard
http://dashboard.cern.ch/
• Used by all 4 LHC experiments to monitor jobs and file movements

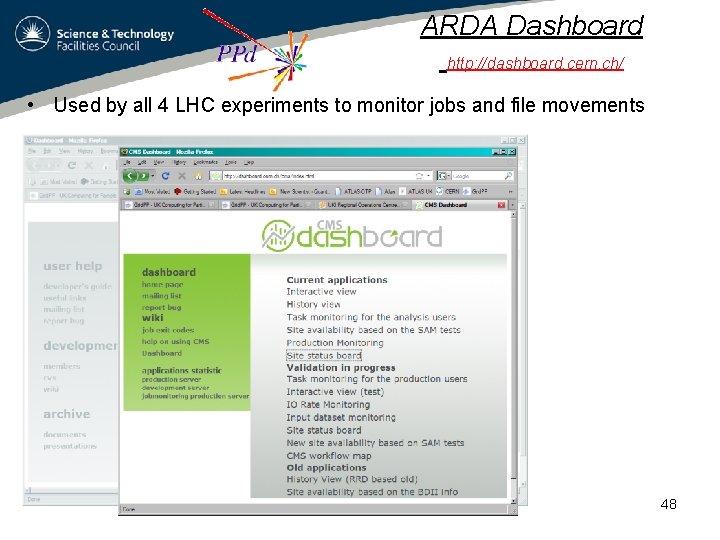

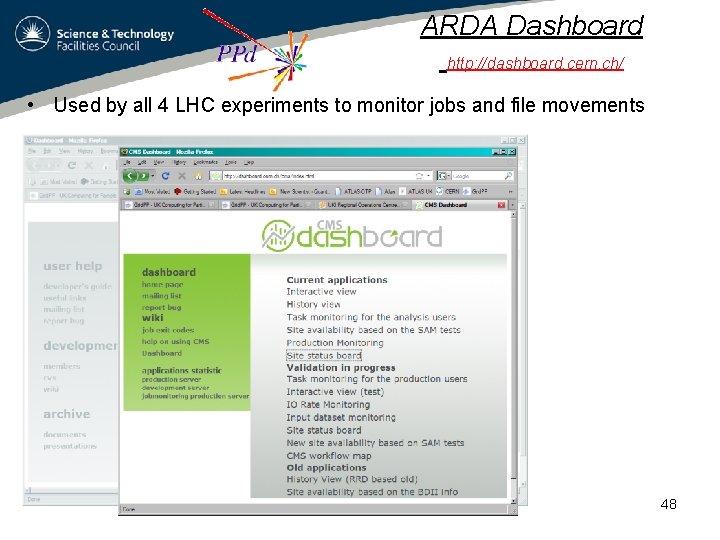

ARDA Dashboard

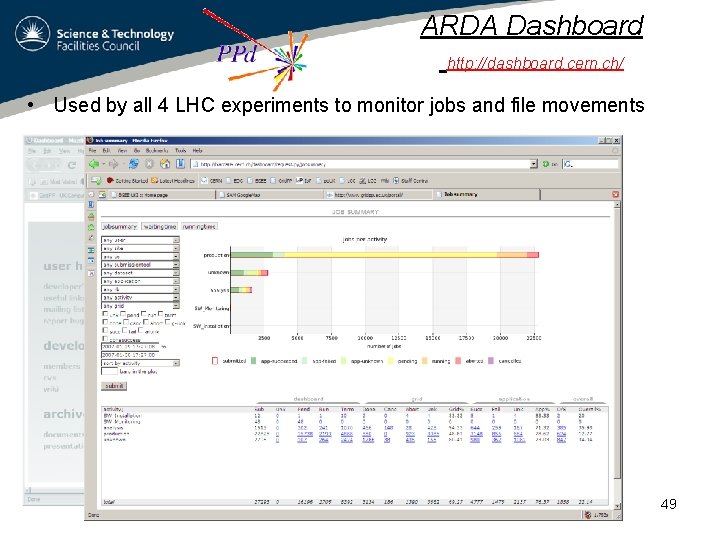

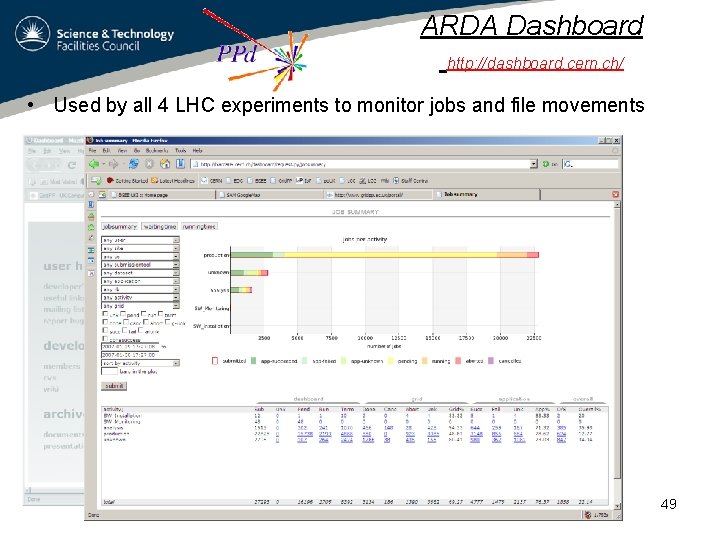

ARDA Dashboard


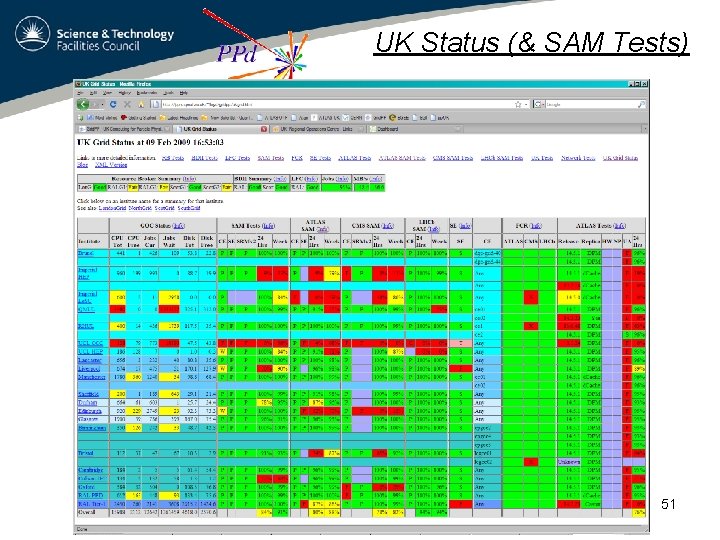

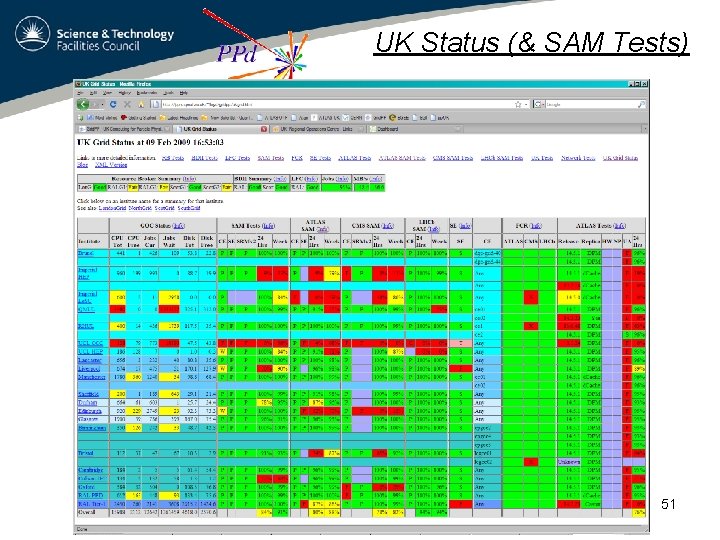

UK Status (& SAM Tests)

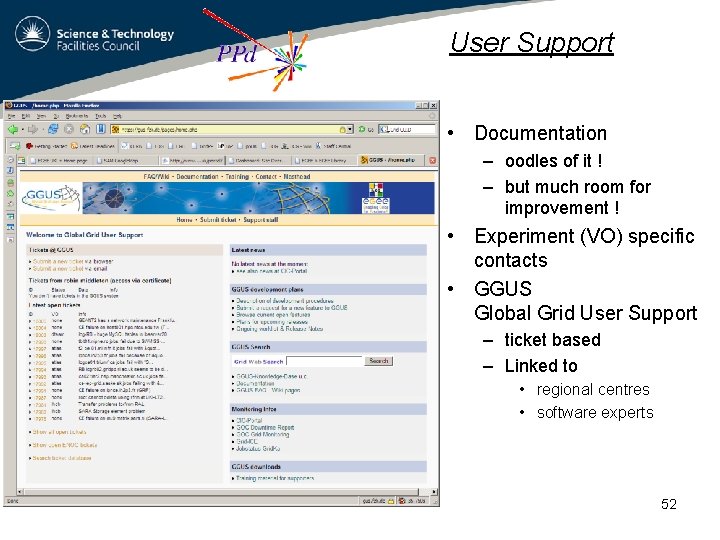

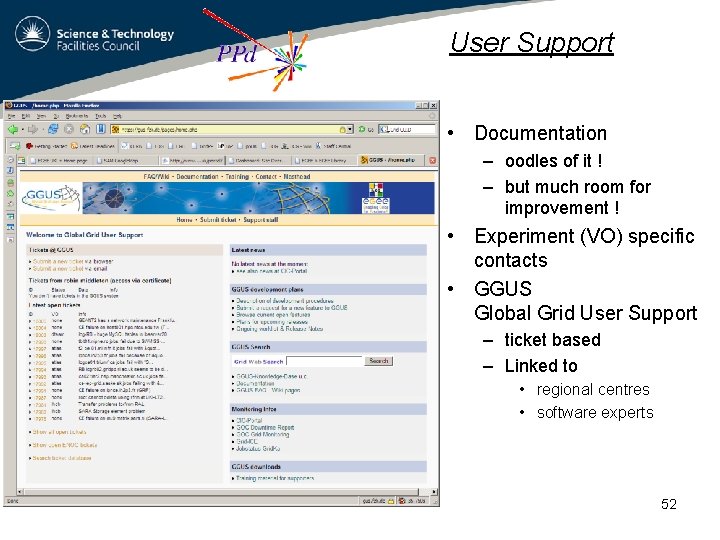

User Support
• Documentation
– oodles of it!
– but much room for improvement!
• Experiment (VO) specific contacts
• GGUS: Global Grid User Support
– ticket based
– linked to
  • regional centres
  • software experts

Other Projects, Other Sciences

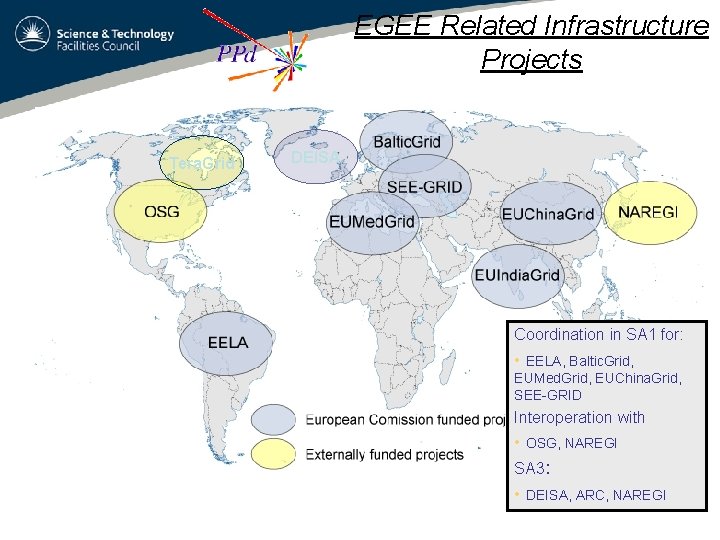

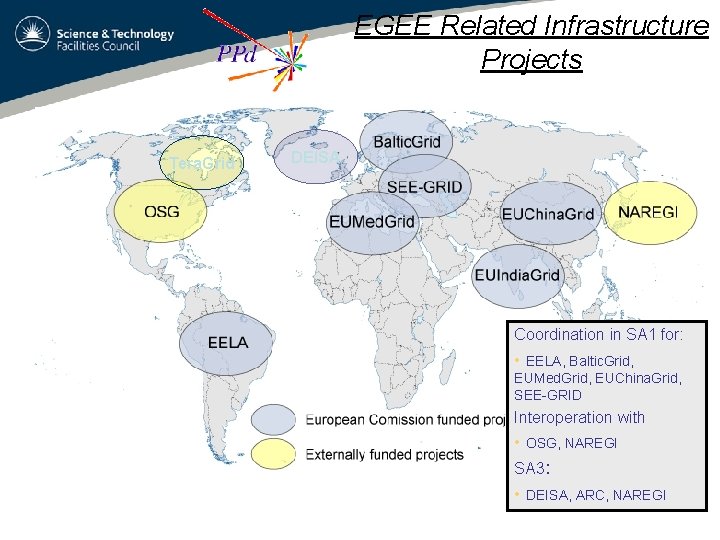

EGEE Related Infrastructure Projects
• Coordination in SA1 for: EELA, BalticGrid, EUMedGrid, EUChinaGrid, SEE-GRID
• Interoperation with: OSG, NAREGI
• SA3: DEISA, ARC, NAREGI
[Map labels: TeraGrid, DEISA]

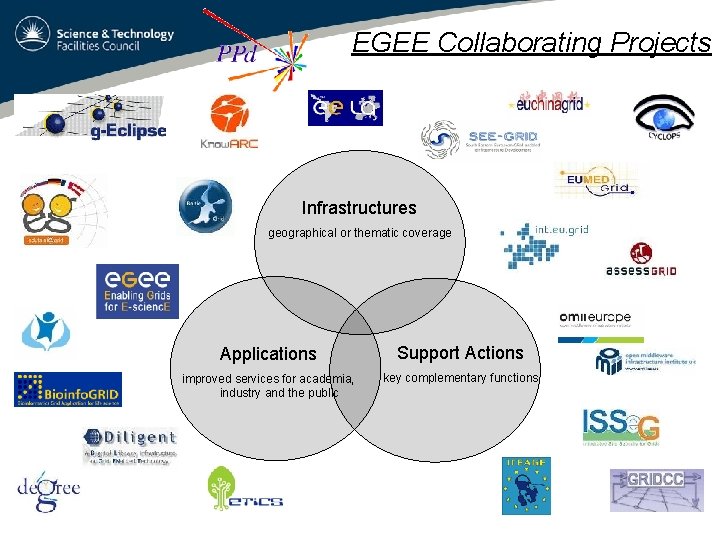

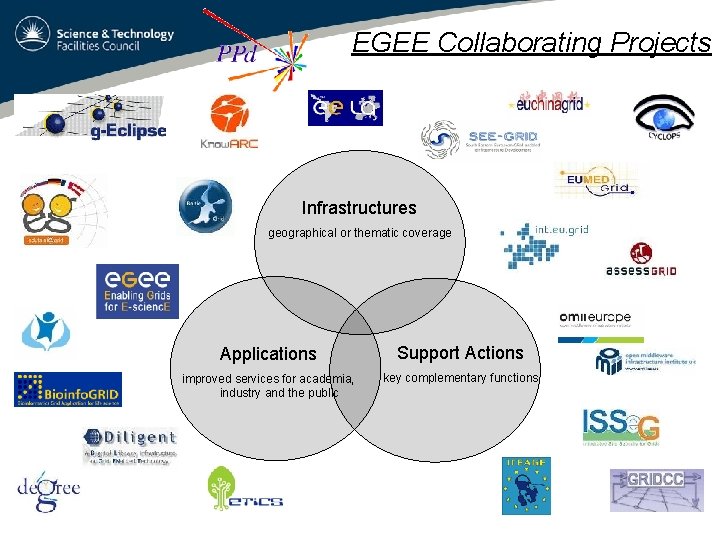

EGEE Collaborating Projects
• Infrastructures: geographical or thematic coverage
• Applications: improved services for academia, industry and the public
• Support Actions: key complementary functions

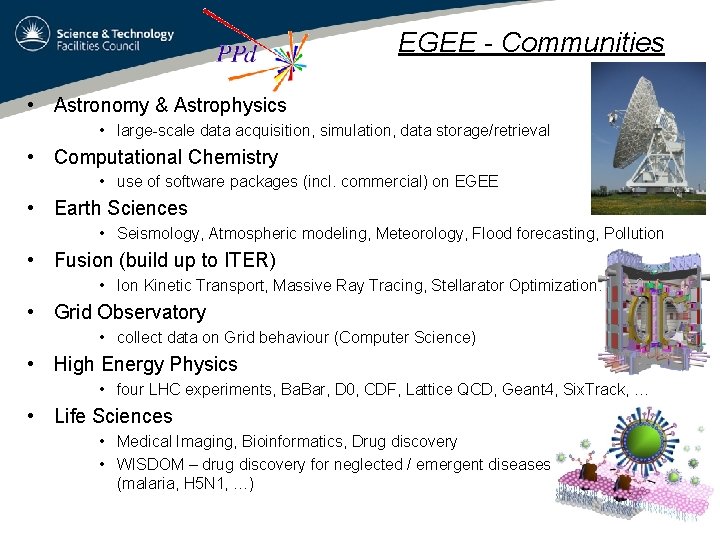

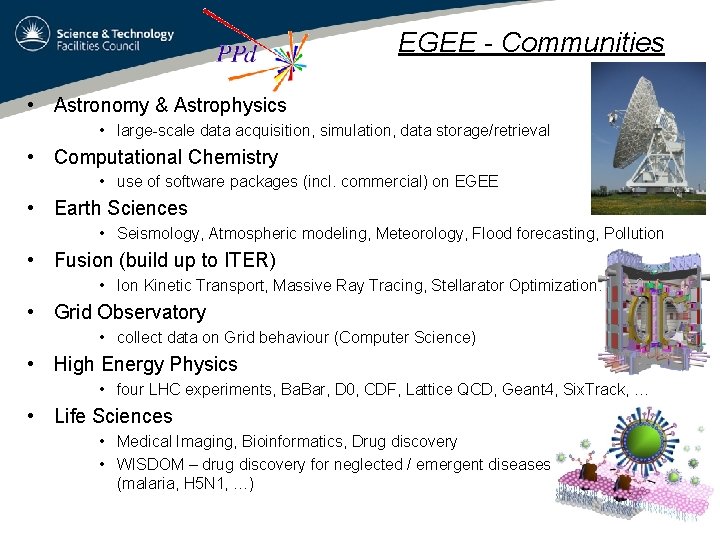

EGEE - Communities
• Astronomy & Astrophysics
– large-scale data acquisition, simulation, data storage/retrieval
• Computational Chemistry
– use of software packages (incl. commercial) on EGEE
• Earth Sciences
– Seismology, Atmospheric modeling, Meteorology, Flood forecasting, Pollution
• Fusion (build up to ITER)
– Ion Kinetic Transport, Massive Ray Tracing, Stellarator Optimization
• Grid Observatory
– collect data on Grid behaviour (Computer Science)
• High Energy Physics
– four LHC experiments, BaBar, D0, CDF, Lattice QCD, Geant4, SixTrack, …
• Life Sciences
– Medical Imaging, Bioinformatics, Drug discovery
– WISDOM: drug discovery for neglected / emergent diseases (malaria, H5N1, …)

ESFRI Projects
• Many are starting to look at their e-Science needs
– some at a similar scale to the LHC (petascale)
– project design study stage
– http://cordis.europa.eu/esfri/
[Image: Cherenkov Telescope Array]

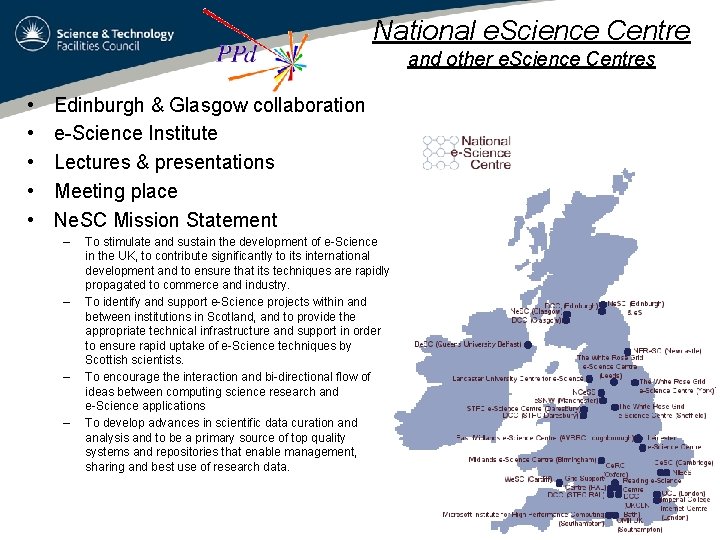

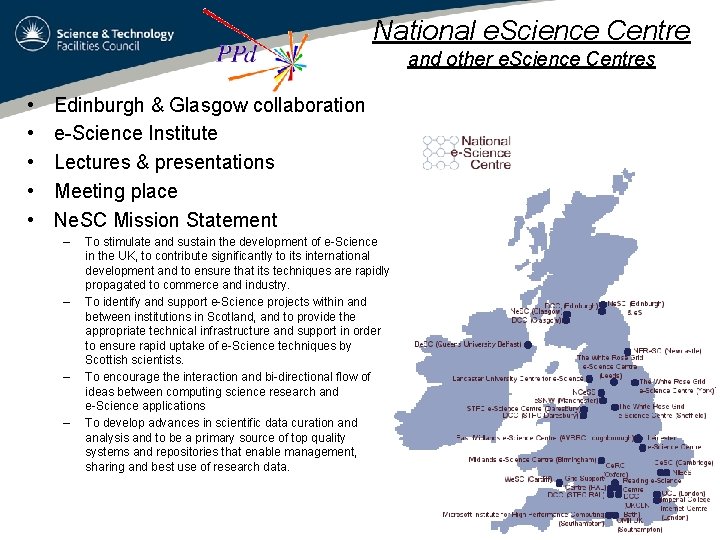

National eScience Centre and other eScience Centres
• Edinburgh & Glasgow collaboration
• e-Science Institute
– Lectures & presentations
– Meeting place
• NeSC Mission Statement
– To stimulate and sustain the development of e-Science in the UK, to contribute significantly to its international development and to ensure that its techniques are rapidly propagated to commerce and industry.
– To identify and support e-Science projects within and between institutions in Scotland, and to provide the appropriate technical infrastructure and support in order to ensure rapid uptake of e-Science techniques by Scottish scientists.
– To encourage the interaction and bi-directional flow of ideas between computing science research and e-Science applications.
– To develop advances in scientific data curation and analysis and to be a primary source of top quality systems and repositories that enable management, sharing and best use of research data.

Digital Curation
• Digital Curation Centre
– Edinburgh, NeSC, HATII, UKOLN, STFC
– Objectives
  • Provide strategic leadership in digital curation and preservation for the UK research community, with particular emphasis on science data
  • Influence and inform national and international policy
  • Provide advocacy and expert advice and guidance to practitioners and funding bodies
  • Create, manage and develop an outstanding suite of resources and tools
  • Raise the level of awareness and expertise amongst data creators and curators, and other individuals with a curation role
  • Strengthen community curation networks and collaborative partnerships
  • Continue our strong association with our research programme
• Particle Physics
– Study group / workshops (DESY & SLAC) in 2009 -> intermediate report to ICFA

Sustainability, EGI (European Grid Infrastructure)

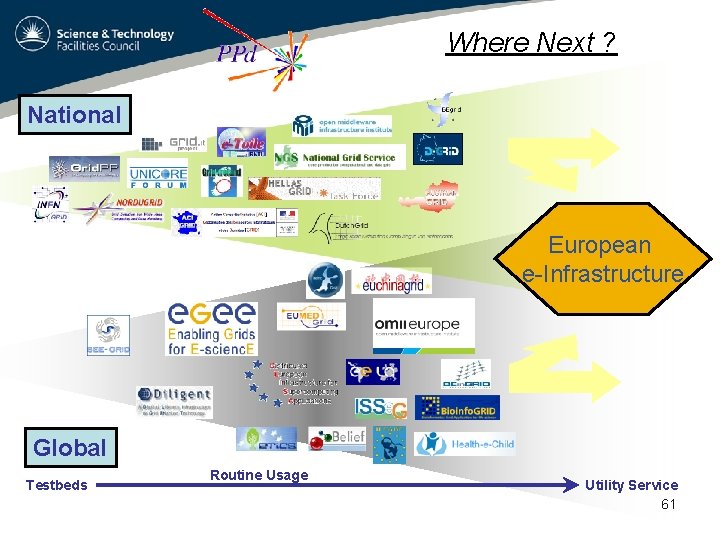

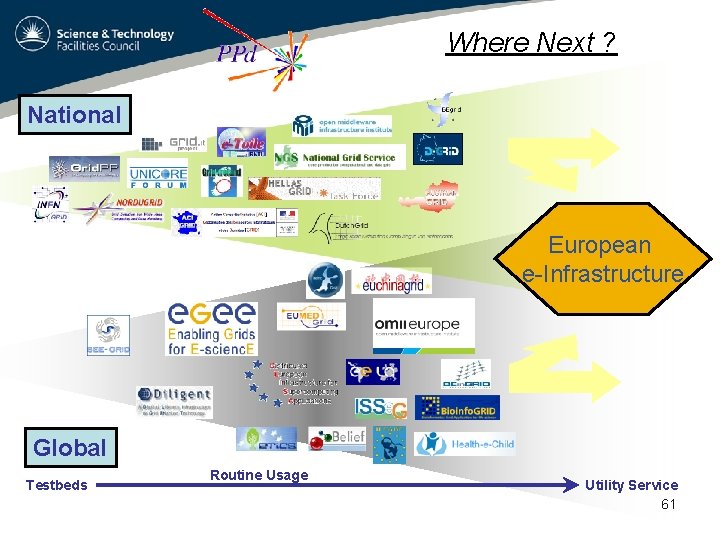

Where Next?
[Diagram: evolution from Testbeds through Routine Usage to Utility Service, across National, European and Global e-Infrastructure]

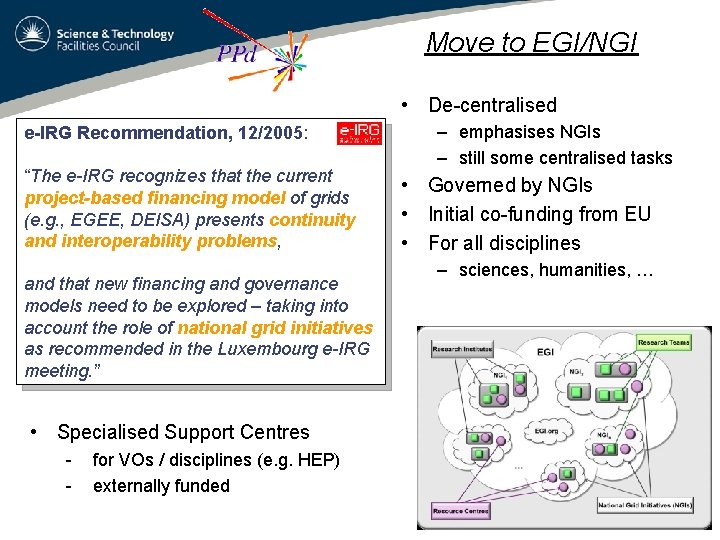

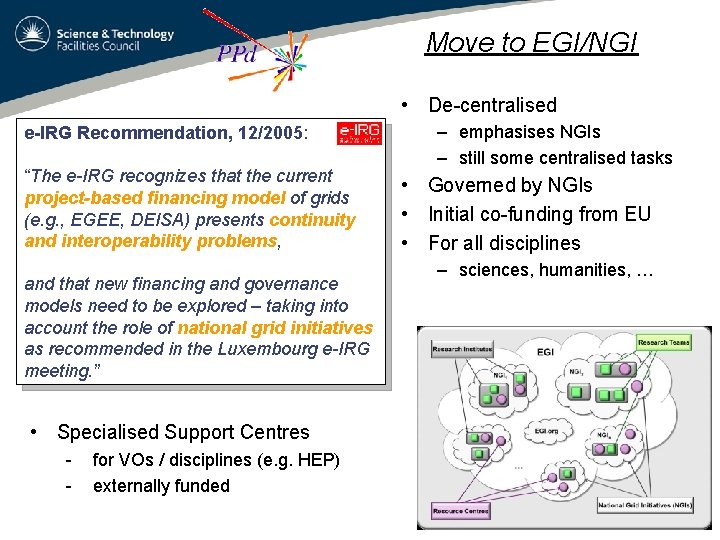

Move to EGI/NGI
e-IRG Recommendation, 12/2005: “The e-IRG recognizes that the current project-based financing model of grids (e.g., EGEE, DEISA) presents continuity and interoperability problems, and that new financing and governance models need to be explored – taking into account the role of national grid initiatives as recommended in the Luxembourg e-IRG meeting.”
• De-centralised
– emphasises NGIs
– still some centralised tasks
• Governed by NGIs
• Initial co-funding from EU
• For all disciplines
– sciences, humanities, …
• Specialised Support Centres
– for VOs / disciplines (e.g. HEP)
– externally funded

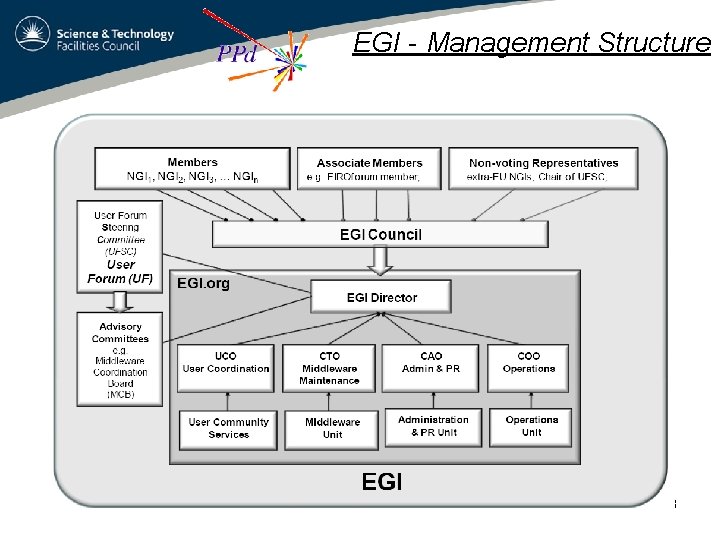

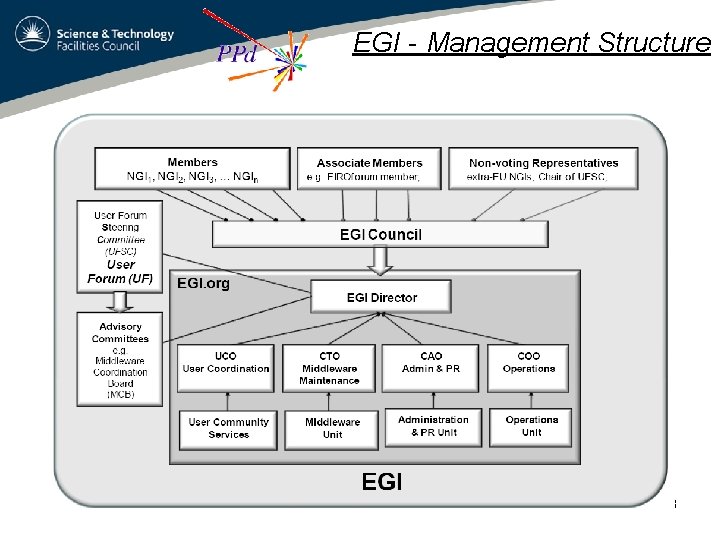

EGI - Management Structure

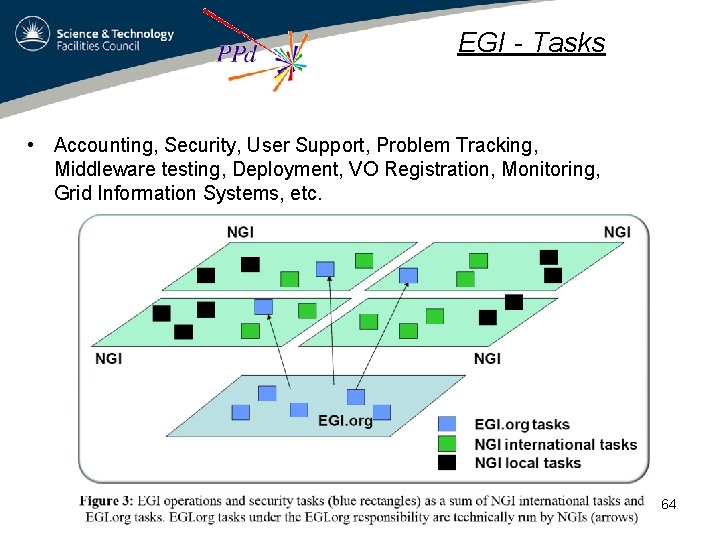

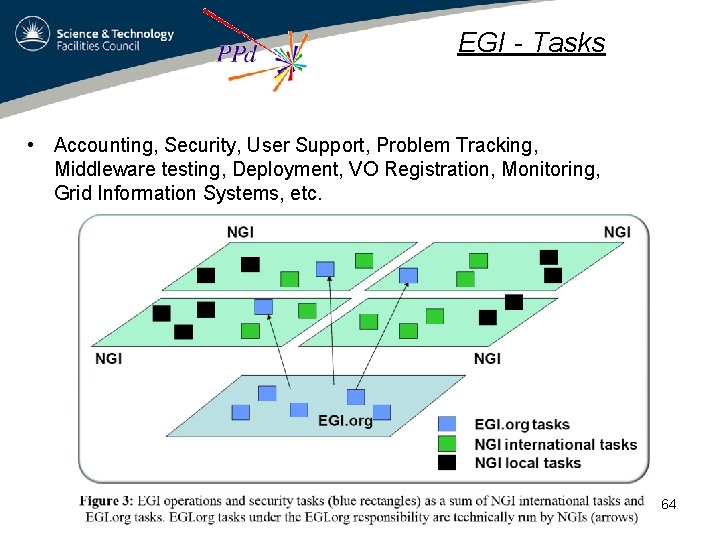

EGI - Tasks
• Accounting, Security, User Support, Problem Tracking, Middleware testing, Deployment, VO Registration, Monitoring, Grid Information Systems, etc.

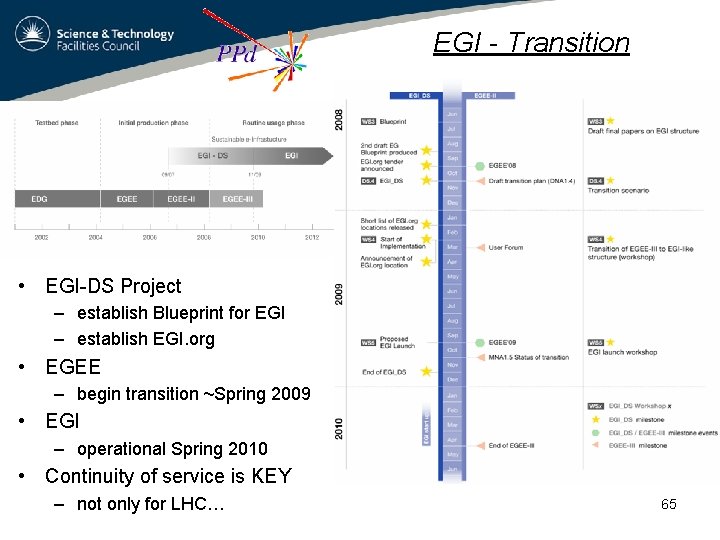

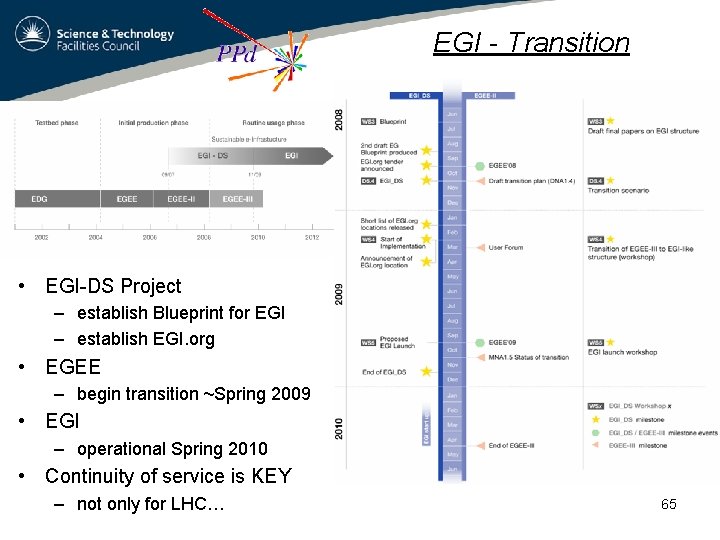

EGI - Transition
• EGI-DS Project
– establish Blueprint for EGI
– establish EGI.org
• EGEE
– begin transition ~Spring 2009
• EGI
– operational Spring 2010
• Continuity of service is KEY
– not only for LHC…

EGI - Status
• EU bids
– Proposals submitted: Nov ’09
– Significant (for HEP) SSCs not invited to hearing!
– EGI-Inspire & EMI hearing yesterday -> anticipate infrastructure & middleware development will be funded
• New legal entity, EGI.eu, created last week in Amsterdam
– …and soon recruiting
• Proto UK NGI based on NGS & GridPP is in place

Further Information

iSGTW: International Science Grid This Week
http://www.isgtw.org/

Links
• GridPP: http://www.gridpp.ac.uk/
• LCG: http://lcg.web.cern.ch/LCG/
– LCGwiki: https://twiki.cern.ch/twiki/bin/view/LCG/WebHome
– monitoring & status
• EGEE: http://www.eu-egee.org/
– gLite: http://glite.web.cern.ch/glite/
• EGI (European Grid Initiative)
– Design Study: http://web.eu-egi.org/
• Computing in…
– ATLAS: http://atlas-computing.web.cern.ch/atlas-computing/computing.php
– CMS: http://cms.cern.ch/iCMS/jsp/page.jsp?mode=cms&action=url&urlkey=CMS_COMPUTING
– LHCb: http://lhcb-comp.web.cern.ch/lhcb-comp/
• Portals
– Ganga: http://ganga.web.cern.ch/ganga/
– GILDA: https://gilda.ct.infn.it/
• Open Grid Forum: http://www.ogf.org/
• Globus: http://www.globus.org/
• Condor: http://www.cs.wisc.edu/condor/

The End
Robin Middleton, RAL-PPD/EGEE/GridPP