
WLCG - The Worldwide LHC Computing Grid

OSG All Hands Meeting, San Diego, 06 March 2007

Les Robertson, LCG Project Leader, les.robertson@cern.ch

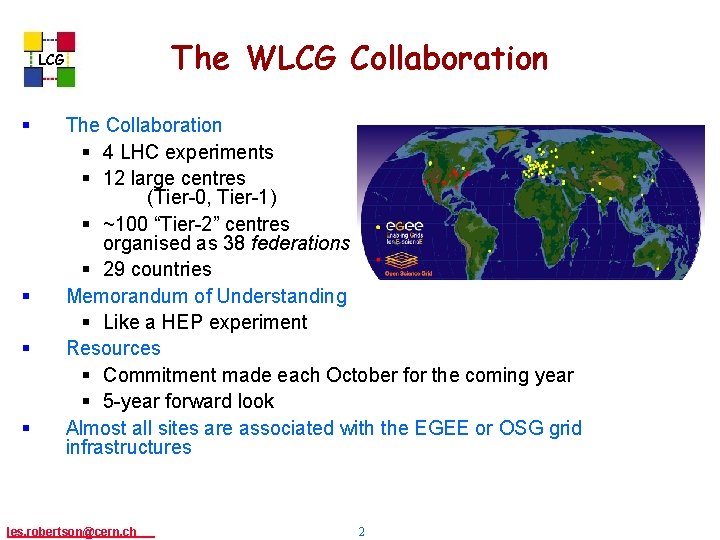

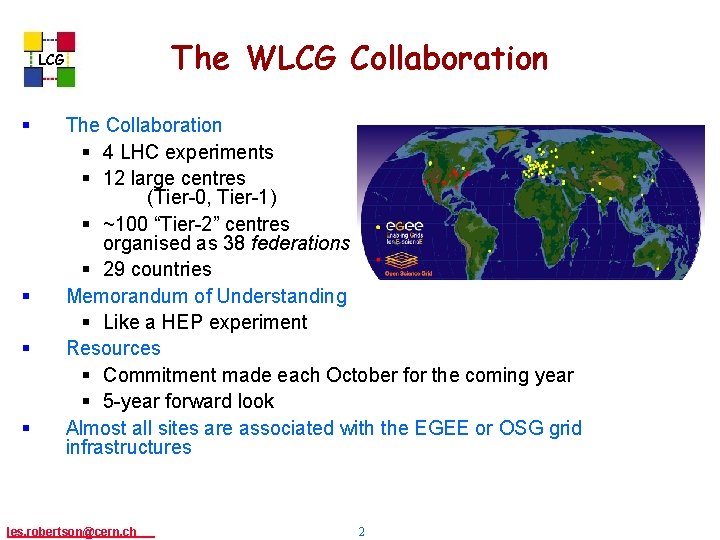

The WLCG Collaboration

- The Collaboration
  - 4 LHC experiments
  - 12 large centres (Tier-0, Tier-1)
  - ~100 "Tier-2" centres, organised as 38 federations
  - 29 countries
- Memorandum of Understanding
  - like a HEP experiment
- Resources
  - commitment made each October for the coming year
  - 5-year forward look
- Almost all sites are associated with the EGEE or OSG grid infrastructures

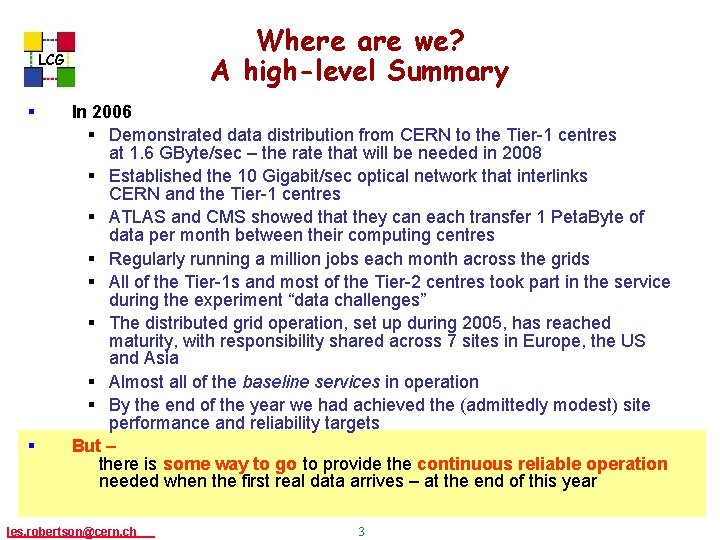

Where are we? A High-Level Summary

- In 2006 we:
  - demonstrated data distribution from CERN to the Tier-1 centres at 1.6 GByte/s, the rate that will be needed in 2008
  - established the 10 Gbit/s optical network that interlinks CERN and the Tier-1 centres
  - saw ATLAS and CMS each show that they can transfer 1 petabyte of data per month between their computing centres
  - were regularly running a million jobs each month across the grids
  - had all of the Tier-1s and most of the Tier-2 centres take part in the service during the experiment "data challenges"
  - brought the distributed grid operation, set up during 2005, to maturity, with responsibility shared across 7 sites in Europe, the US and Asia
  - had almost all of the baseline services in operation
  - by the end of the year, had achieved the (admittedly modest) site performance and reliability targets
- But there is still some way to go before we can provide the continuous, reliable operation needed when the first real data arrive at the end of this year
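As a rough cross-check of the 2006 figures, the sketch below converts them to common units. It assumes decimal units (1 GB = 10^9 bytes, 1 PB = 10^15 bytes) and a 30-day month; both are illustrative choices, not project definitions.

```python
# Back-of-envelope unit conversions for the 2006 transfer figures.
# Assumptions (illustrative only): decimal units and a 30-day month.

SECONDS_PER_DAY = 86_400
DAYS_PER_MONTH = 30


def pb_per_month_to_mb_per_s(pb: float) -> float:
    """Average rate (MB/s) needed to move `pb` petabytes in one month."""
    return pb * 1e15 / (DAYS_PER_MONTH * SECONDS_PER_DAY) / 1e6


def gb_per_s_to_tb_per_day(gb_per_s: float) -> float:
    """Volume (TB) moved in one day at a sustained `gb_per_s` GB/s."""
    return gb_per_s * 1e9 * SECONDS_PER_DAY / 1e12


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a link speed in Gbit/s to GByte/s (8 bits per byte)."""
    return gbit_per_s / 8


if __name__ == "__main__":
    print(f"1 PB/month is about {pb_per_month_to_mb_per_s(1):.0f} MB/s sustained")
    print(f"1.6 GB/s is about {gb_per_s_to_tb_per_day(1.6):.0f} TB/day")
    print(f"a 10 Gbit/s link carries at most {gbit_to_gbyte(10):.2f} GB/s")
```

On these assumptions, 1 PB per month corresponds to a sustained rate of roughly 386 MB/s, and 1.6 GB/s amounts to roughly 138 TB per day. A single 10 Gbit/s link tops out at 1.25 GB/s before protocol overhead, so the aggregate 1.6 GB/s out of CERN has to be spread over several Tier-1 links.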

LCG Workshop, 22-26 January 2007: 270 people representing 27 countries and 86 sites

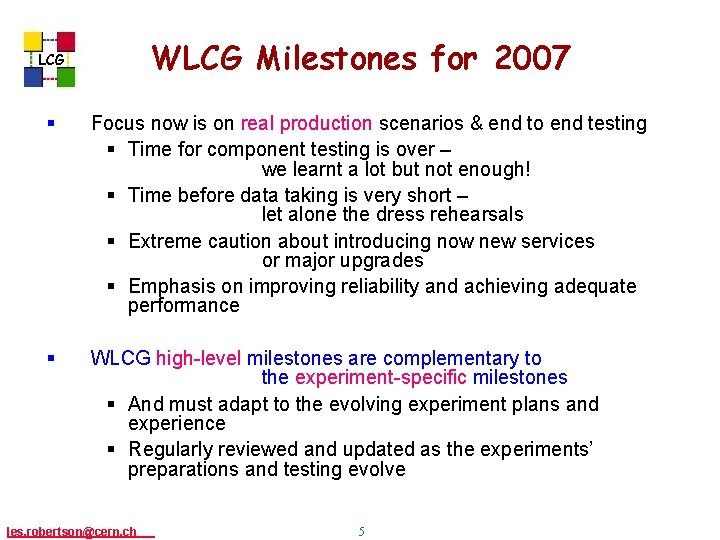

WLCG Milestones for 2007

- The focus is now on real production scenarios and end-to-end testing
  - The time for component testing is over: we learnt a lot, but not enough!
  - The time left before data taking is very short, and shorter still before the dress rehearsals
- Extreme caution about introducing new services or major upgrades now
- Emphasis on improving reliability and achieving adequate performance
- WLCG high-level milestones are complementary to the experiment-specific milestones
  - and must adapt to the evolving experiment plans and experience
  - they are regularly reviewed and updated as the experiments' preparations and testing evolve

LCG: A Data Grid for LHC

The MegaTable

Starting from the experiment computing models and the resources that sites plan to make available, the MegaTable specifies, for each experiment and each pair of sites:

- inter-site data rates
- sizing and utilisation of storage classes
- …
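The MegaTable is, in effect, a lookup keyed by experiment and site pair. The sketch below models that idea in memory; the `MegaTable` class, its method names and the units (MB/s for rates, TB for storage) are hypothetical choices for illustration, not the format of the actual planning table.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory model of MegaTable-style planning data.
# Units (MB/s, TB) and field names are assumptions for illustration.


@dataclass
class MegaTable:
    # (experiment, source site, destination site) -> planned rate in MB/s
    rates: dict[tuple[str, str, str], float] = field(default_factory=dict)
    # (experiment, site, storage class) -> planned size in TB
    storage: dict[tuple[str, str, str], float] = field(default_factory=dict)

    def set_rate(self, experiment: str, src: str, dst: str, mb_per_s: float) -> None:
        self.rates[(experiment, src, dst)] = mb_per_s

    def set_storage(self, experiment: str, site: str, storage_class: str, tb: float) -> None:
        self.storage[(experiment, site, storage_class)] = tb

    def rate(self, experiment: str, src: str, dst: str) -> float:
        """Planned rate for one experiment on one site pair (0.0 if none planned)."""
        return self.rates.get((experiment, src, dst), 0.0)

    def total_outgoing(self, site: str) -> float:
        """Planned outgoing rate from `site`, summed over experiments and destinations."""
        return sum(r for (_, src, _), r in self.rates.items() if src == site)

    def total_incoming(self, site: str) -> float:
        """Planned incoming rate to `site`, summed over experiments and sources."""
        return sum(r for (_, _, dst), r in self.rates.items() if dst == site)

    def storage_at(self, site: str) -> float:
        """Planned storage at `site`, summed over experiments and storage classes."""
        return sum(tb for (_, s, _), tb in self.storage.items() if s == site)
```

Keeping the per-experiment breakdown and summing on demand is one way for a site to see both what each experiment expects of it and its total planned network and storage load.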

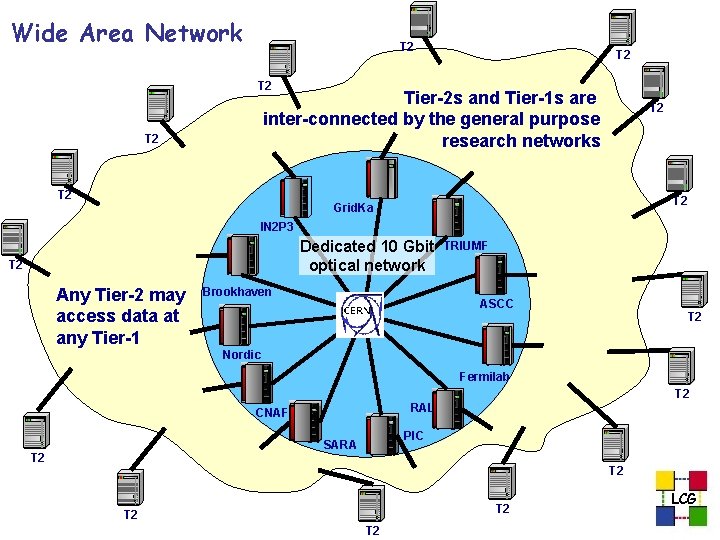

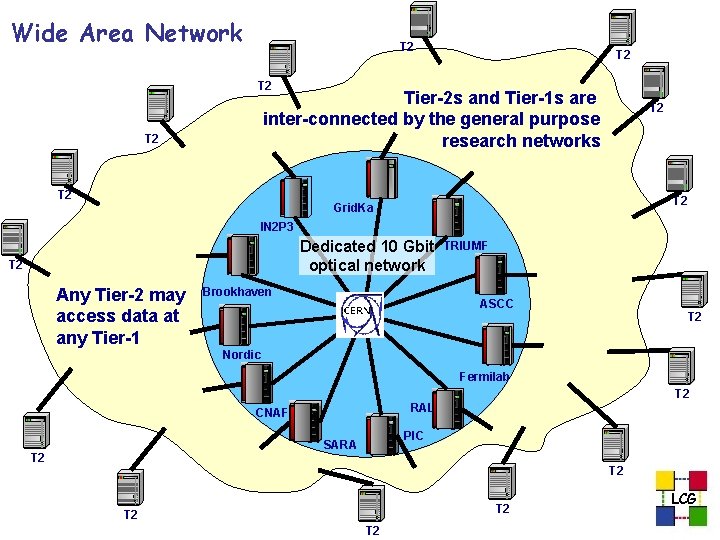

Wide Area Network

- A dedicated 10 Gbit optical network links the Tier-1 centres: GridKa, IN2P3, Brookhaven, TRIUMF, ASCC, Nordic, Fermilab, RAL, CNAF, PIC, SARA
- Tier-2s and Tier-1s are interconnected by the general-purpose research networks
- Any Tier-2 may access data at any Tier-1

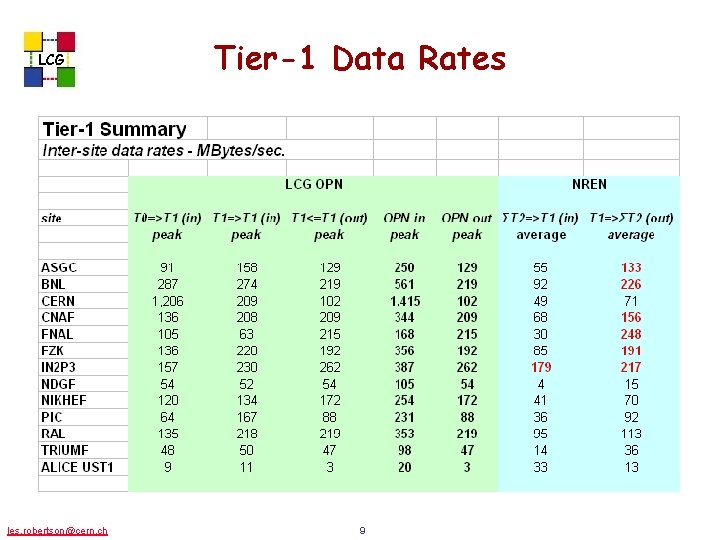

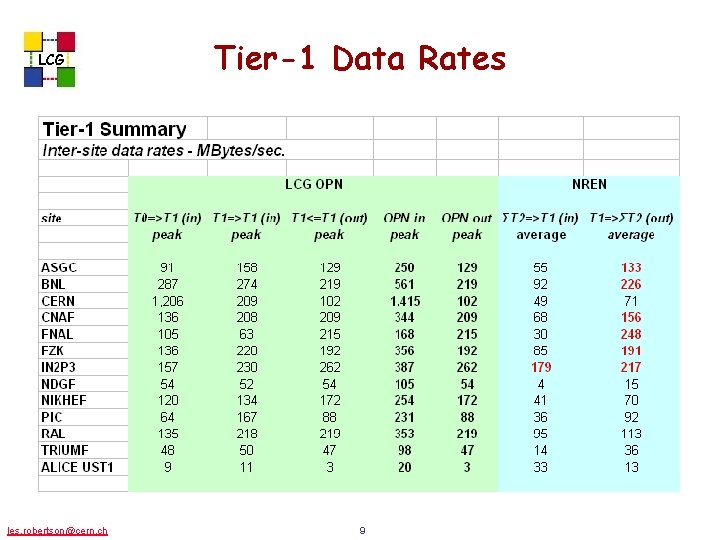

Tier-1 Data Rates

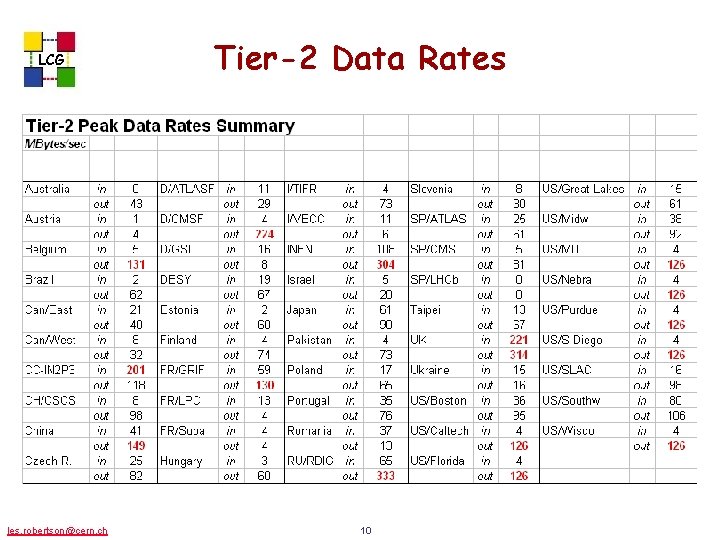

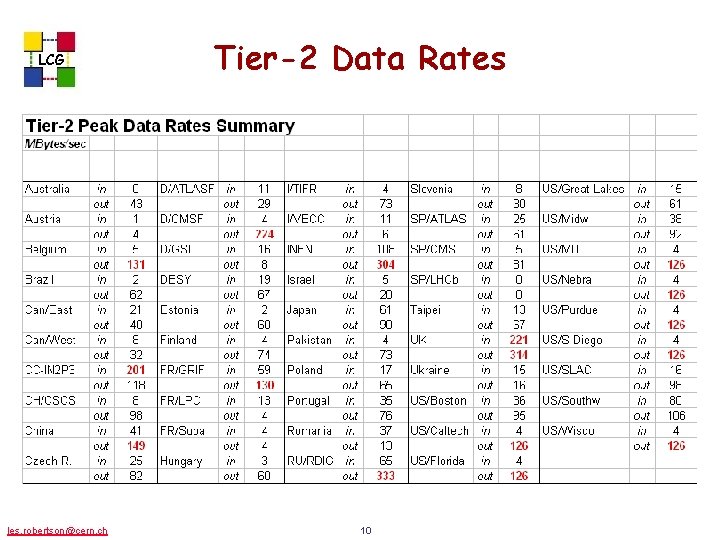

Tier-2 Data Rates

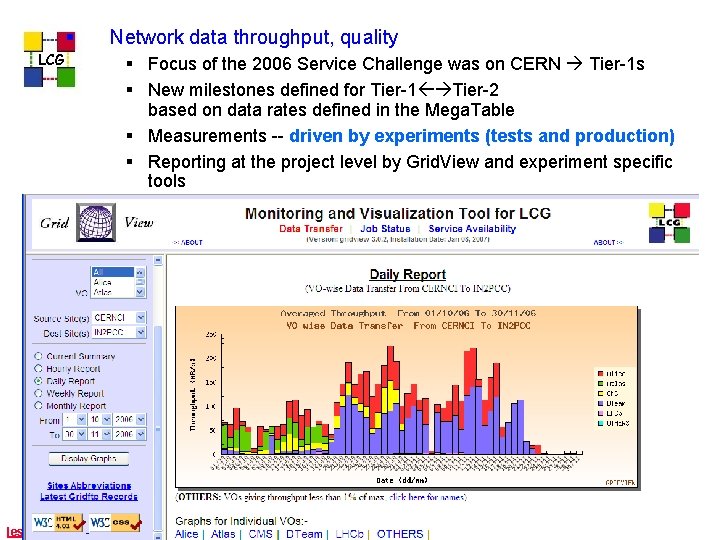

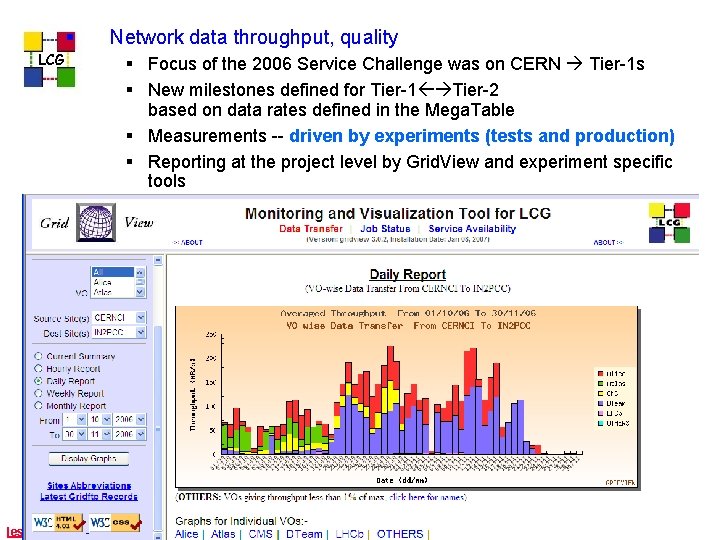

Network Data Throughput and Quality

- The focus of the 2006 Service Challenge was on CERN to Tier-1 transfers
- New milestones have been defined for Tier-1/Tier-2 transfers, based on the data rates defined in the MegaTable
- Measurements are driven by the experiments (tests and production)
- Reporting at the project level is done by GridView and experiment-specific tools

Service Reliability

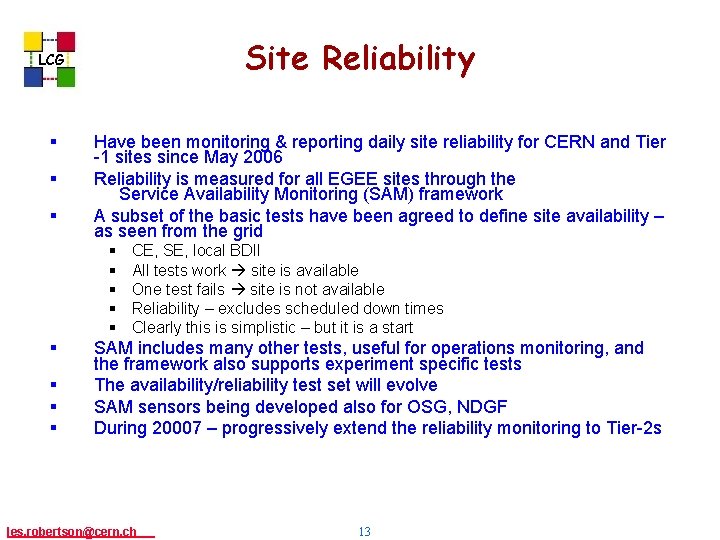

Site Reliability

- Daily site reliability for CERN and the Tier-1 sites has been monitored and reported since May 2006
- Reliability is measured for all EGEE sites through the Service Availability Monitoring (SAM) framework
- A subset of the basic tests has been agreed to define site availability, as seen from the grid
  - services tested: CE, SE, local BDII
  - if all tests pass, the site is available
  - if any one test fails, the site is not available
  - reliability excludes scheduled downtime
  - clearly this is simplistic, but it is a start
- SAM includes many other tests useful for operations monitoring, and the framework also supports experiment-specific tests
- The availability/reliability test set will evolve
- SAM sensors are also being developed for OSG and NDGF
- During 2007, reliability monitoring will be progressively extended to the Tier-2s
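The availability rule above can be written down directly. This is a minimal sketch, not SAM code: it assumes each site is sampled at fixed intervals (the interval length is not stated here), that a sample taken during scheduled downtime counts as unavailable for availability, and that reliability is the available fraction of the samples outside scheduled downtime. The `Sample` type and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# The three services in the agreed availability test subset.
CRITICAL_SERVICES = ("CE", "SE", "local BDII")


@dataclass
class Sample:
    """One monitoring interval for one site (interval length is an assumption)."""
    results: dict[str, bool]          # service name -> did its test pass?
    scheduled_downtime: bool = False


def is_available(sample: Sample) -> bool:
    # A site in scheduled downtime is not available.
    if sample.scheduled_downtime:
        return False
    # All tests pass -> available; any failing (or missing) test -> not available.
    return all(sample.results.get(svc, False) for svc in CRITICAL_SERVICES)


def availability(samples: list[Sample]) -> Optional[float]:
    """Fraction of all samples in which the site was available."""
    if not samples:
        return None
    return sum(is_available(s) for s in samples) / len(samples)


def reliability(samples: list[Sample]) -> Optional[float]:
    """Like availability, but samples in scheduled downtime are excluded."""
    counted = [s for s in samples if not s.scheduled_downtime]
    if not counted:
        return None
    return sum(is_available(s) for s in counted) / len(counted)
```

The all-or-nothing rule is what makes the metric "simplistic": a site with a healthy CE and a broken SE scores the same as a site that is completely down.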

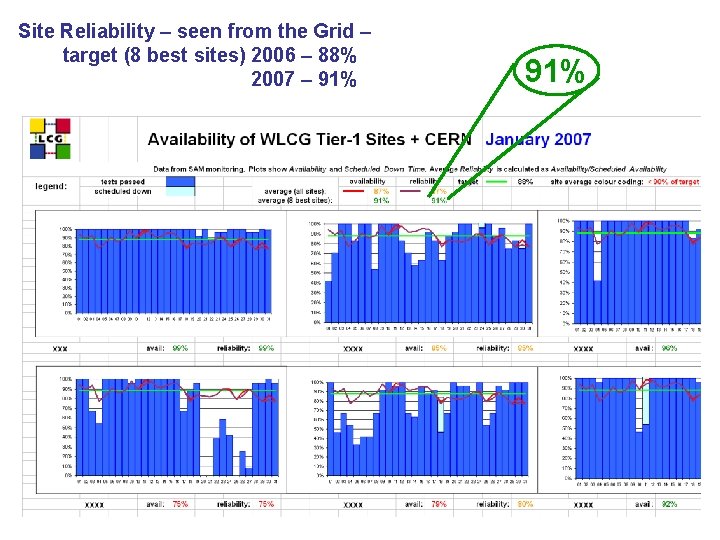

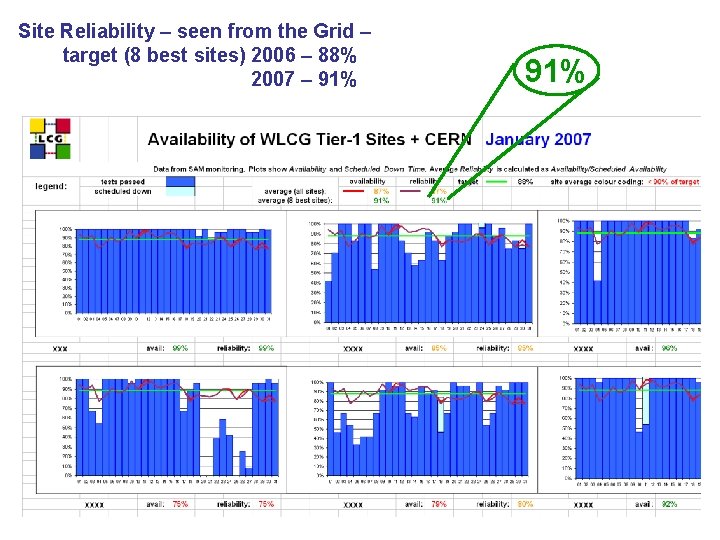

Site Reliability as Seen from the Grid

Target (8 best sites): 88% in 2006, 91% in 2007

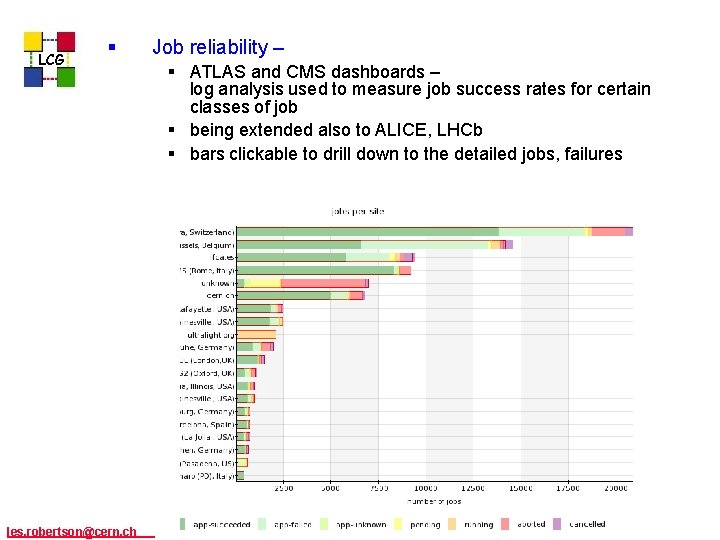

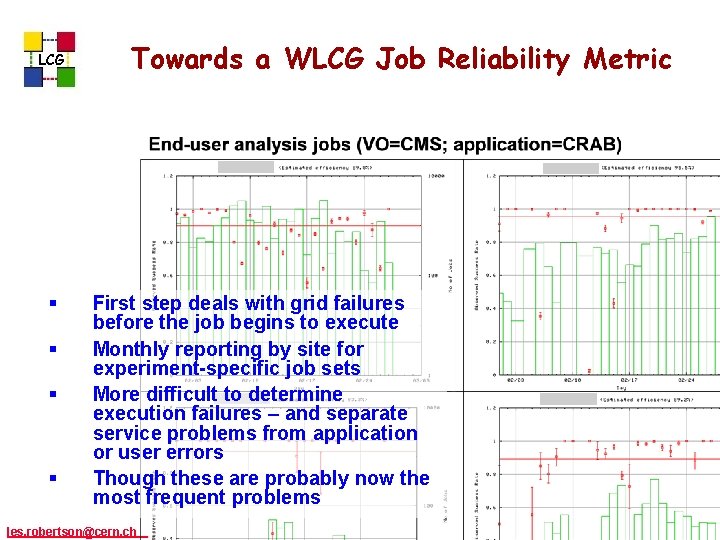

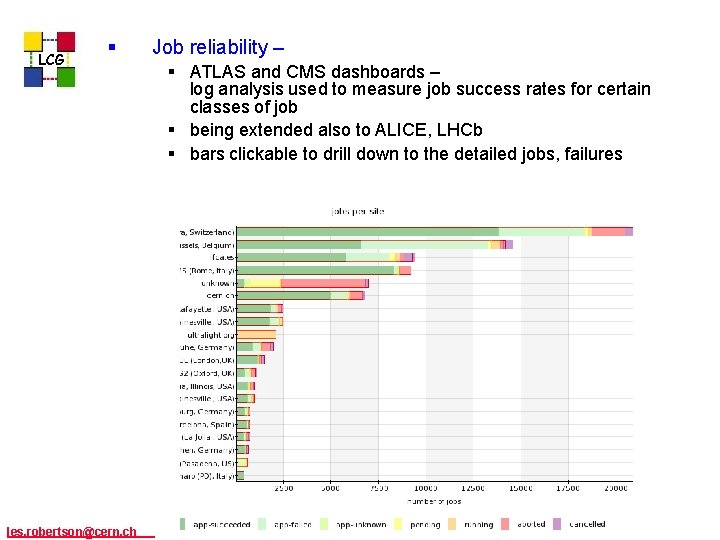

Job Reliability

- ATLAS and CMS dashboards: log analysis is used to measure job success rates for certain classes of job
  - being extended to ALICE and LHCb as well
  - bars are clickable to drill down to the detailed jobs and failures


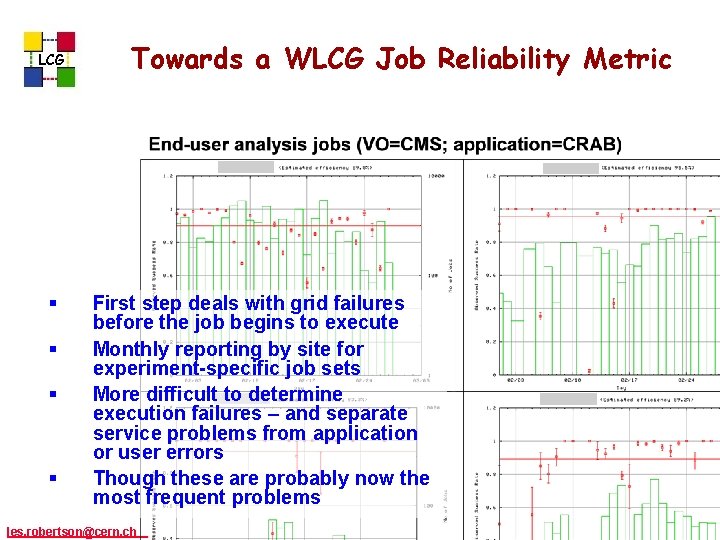

Towards a WLCG Job Reliability Metric

- The first step deals with grid failures that occur before the job begins to execute
- Monthly reporting by site for experiment-specific job sets
- Execution failures are more difficult to determine, as is separating service problems from application or user errors
  - though these are probably now the most frequent problems
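A minimal sketch of that first step, assuming each job record carries its site, experiment, month and a flag saying whether the grid got it as far as execution. `JobRecord` and `grid_success_by_site` are hypothetical names; real dashboard records are far richer, and this sketch deliberately ignores failures during execution, which is the harder part noted above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class JobRecord:
    site: str
    experiment: str
    month: str                # e.g. "2007-02"
    reached_execution: bool   # False if a grid failure stopped the job before it started


def grid_success_by_site(jobs: list[JobRecord], experiment: str, month: str) -> dict[str, float]:
    """Per-site fraction of one experiment's jobs, in one month, that reached execution."""
    submitted: Counter[str] = Counter()
    started: Counter[str] = Counter()
    for job in jobs:
        # Restrict to the experiment-specific job set for the reporting month.
        if job.experiment != experiment or job.month != month:
            continue
        submitted[job.site] += 1
        if job.reached_execution:
            started[job.site] += 1
    return {site: started[site] / n for site, n in submitted.items()}
```

Counting only pre-execution failures keeps the metric unambiguous: a job that never started cannot have failed because of the application or the user.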

Betting on Multi-Science Grid Infrastructures

- As multi-science infrastructures (EGEE, OSG) have emerged, WLCG has begun to depend on them for:
  - middleware selection, certification and packaging
  - grid operation
- Advantages:
  - science infrastructure funding provides an additional incentive for international and regional collaboration
  - imposes a certain level of standardisation
- Disadvantages:
  - it is hard to agree on a single set of basic services for an LHC experiment: because many decisions are taken by the grid infrastructure, the application must adapt to both OSG and EGEE (but imagine the situation if every national grid had its own set of standards!)
  - middleware polemics
  - additional layers of technical and social complexity and overhead
- Will these projects evolve into long-term science infrastructures? Or will HEP be left, in a few years, to undo over-complex services?
  - The answer depends on whether this type of grid is essential for other sciences

Middleware

- The base is still Condor and some Globus components
- Plus many enhancements (MyProxy, BDII, VOMS, improved CEs, FTS, LFC, GFAL, lcg_utils, accounting tools, various grid schedulers, …)
- Essential for WLCG are the certification and packaging activities of VDT and EGEE
  - the OSG support for VDT is very welcome and important
- Not usually classified as middleware, but at least as important for WLCG, are the HEP mass storage systems
  - dCache, CASTOR, DPM
  - with a standard (SRM) interface

Operation

- OSG and EGEE each have their own operation service to monitor and manage the grid infrastructure
- But for WLCG, site operation is just as important:
  - implications of experiment plans on sites
    - remember that most non-US LCG sites serve multiple accelerators, and some are multi-science
  - data transfer issues
    - Tier-1/Tier-2 relationships vary from experiment to experiment, and CMS requires full T1/T2 connectivity
  - storage management issues
- A weekly operations meeting resolves and escalates problems

WLCG Commissioning Schedule (2007 to 2008)

- Experiments, 2007:
  - continued testing of computing models and basic services
  - testing the full data flow: DAQ → Tier-0 → Tier-1 → Tier-2
  - building up end-user analysis support
  - dress rehearsals: exercising the computing systems, ramping up job rates, data management performance, …
- LCG services:
  - introduce residual services:
    - File Transfer Services for T1-T2 traffic
    - distributed database synchronisation
    - VOMS roles in site scheduling
    - Storage Resource Manager v2.2
    - migration to SL4 and 64-bit
  - commissioning the service for the 2007 run: increase performance, reliability and capacity to target levels; monitoring tools; 24x7 operation; …
- 01 Jul 07: service commissioned, with full 2007 capacity and performance
- First LHC collisions

www.cern.ch/lcg