Games on Graphs Uri Zwick Tel Aviv University


Lecture 2 – Markov Decision Processes (last modified 14/11/2019)

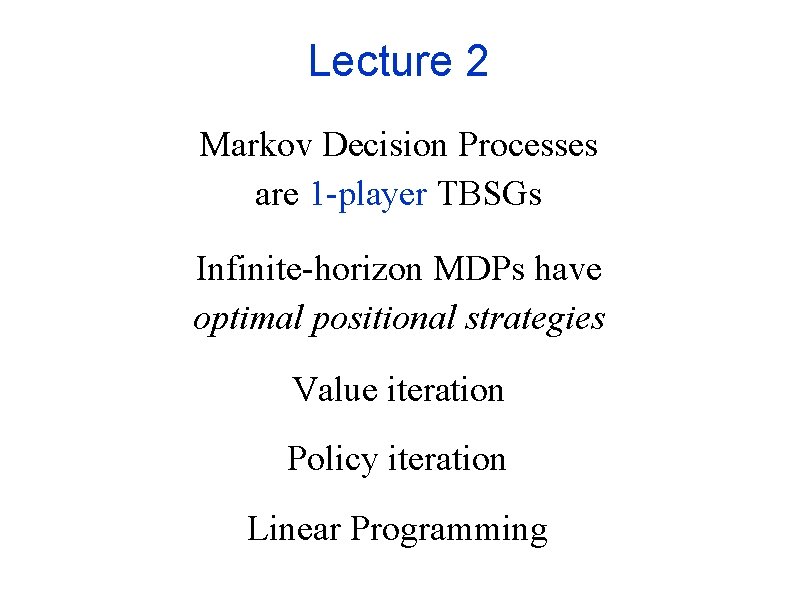

Lecture 2
- Markov Decision Processes are 1-player TBSGs
- Infinite-horizon MDPs have optimal positional strategies
- Value iteration
- Policy iteration
- Linear Programming

![Markov Decision Processes [Bellman ’57] [Howard ’60]: 1-player games](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-3.jpg)

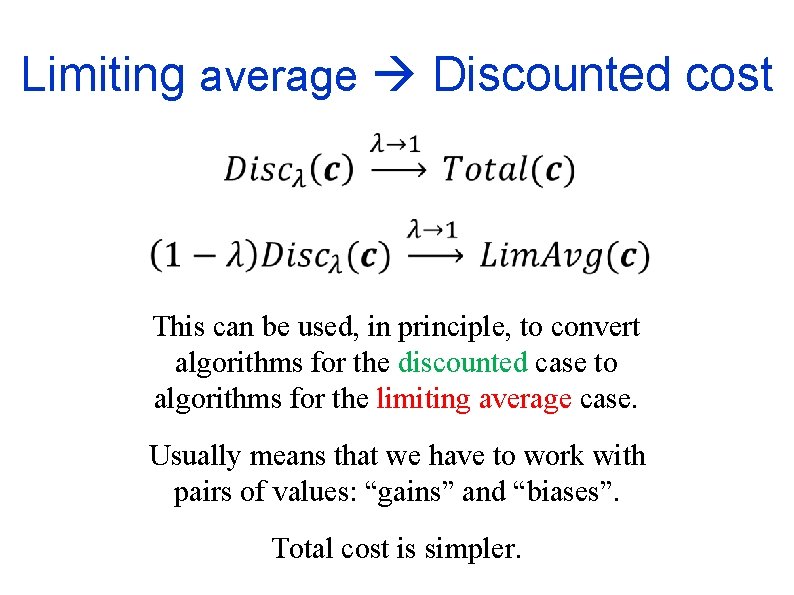

Markov Decision Processes [Bellman ’57] [Howard ’60] …: 1-player games with total cost, discounted cost, or limiting average cost. Optimal positional strategies can be found using LP. Is there a strongly polynomial time algorithm?

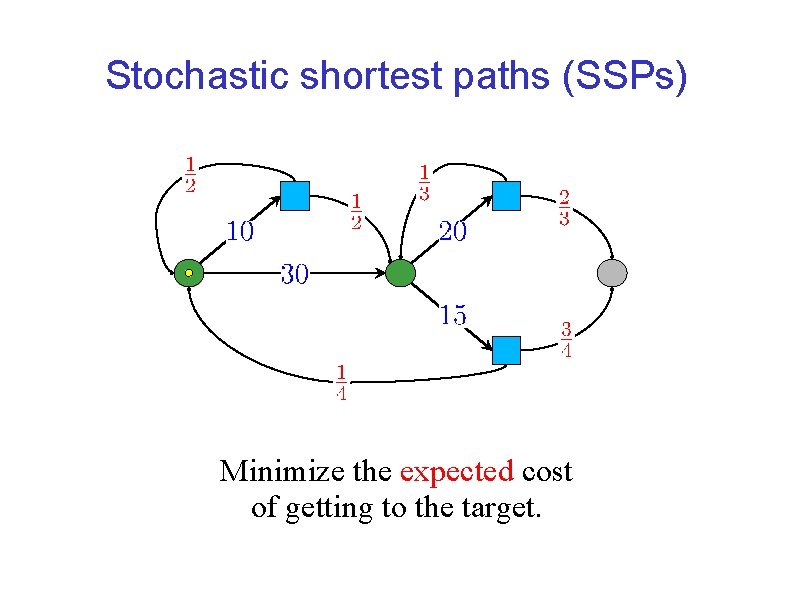

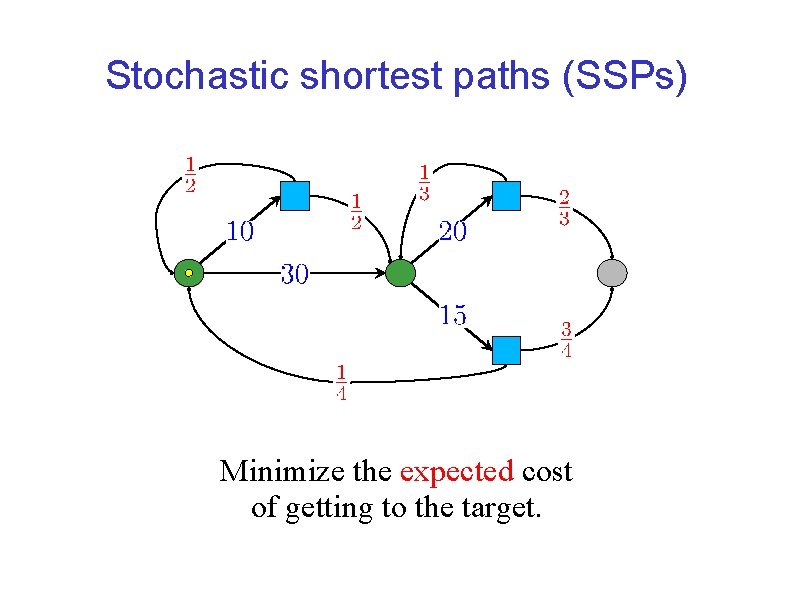

Stochastic shortest paths (SSPs): minimize the expected cost of getting to the target.

One-player Games (MDPs)

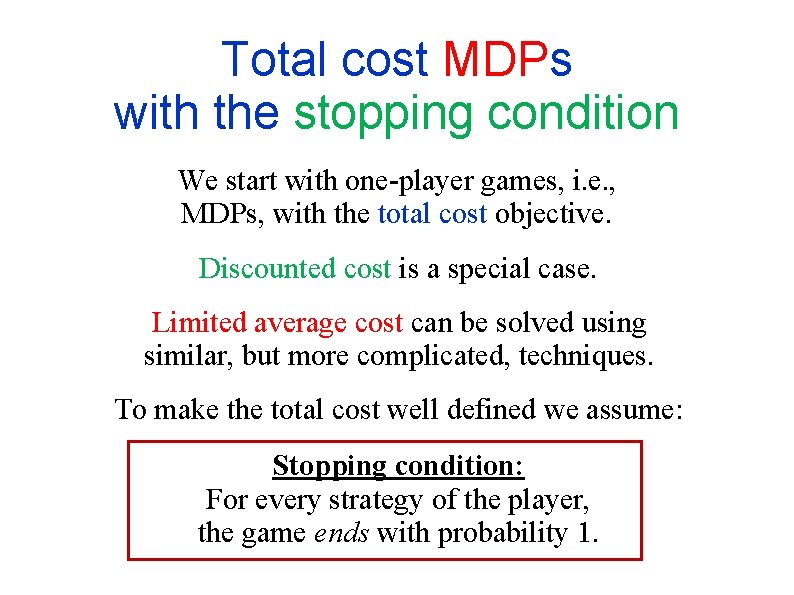

Total cost MDPs with the stopping condition. We start with one-player games, i.e., MDPs, with the total cost objective. Discounted cost is a special case. Limiting average cost can be handled using similar, but more complicated, techniques. To make the total cost well defined, we assume the stopping condition: for every strategy of the player, the game ends with probability 1.

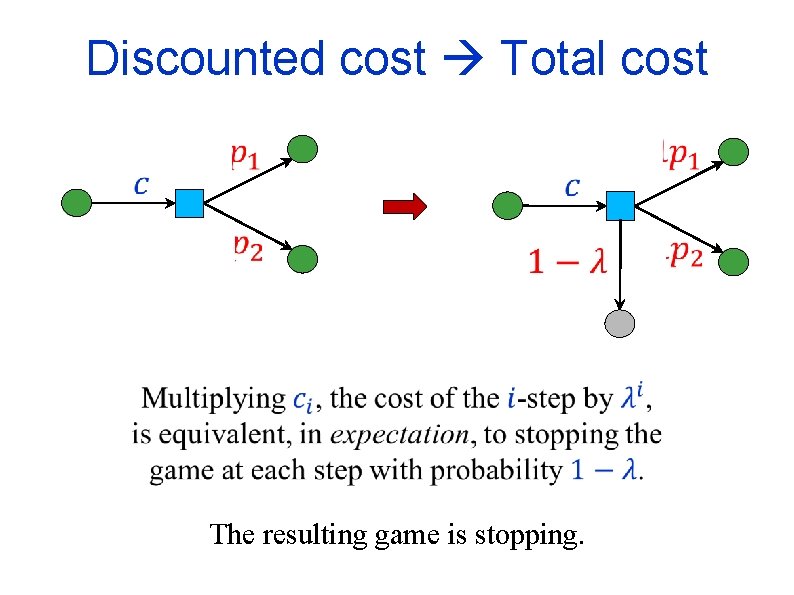

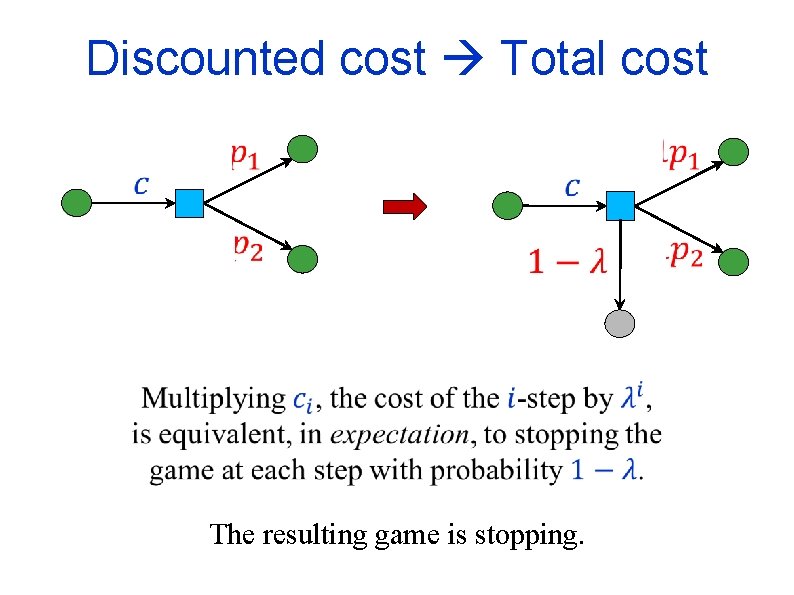

From discounted cost to total cost. The resulting game is stopping.
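One standard way to view discounted cost as a special case of total cost: with discount factor λ < 1, keep every action's cost, multiply each of its transition probabilities by λ, and send the remaining probability 1 − λ to the sink. Every step then stops with probability at least 1 − λ, so the new game is stopping, and its expected total cost equals the discounted cost of the original game. The sketch below assumes a hypothetical format in which `actions[i]` lists `(cost, probs)` pairs and `probs` maps successor states to probabilities; any mass missing from `probs` goes to the sink.

```python
def discounted_to_total(actions, lam):
    """Turn a discounted MDP into a stopping total cost MDP (hypothetical format).

    actions[i] is a list of (cost, probs) pairs; probs maps successor states to
    probabilities summing to 1. In the result, probabilities are scaled by lam
    and the missing mass 1 - lam implicitly goes to the sink.
    """
    if not 0.0 <= lam < 1.0:
        raise ValueError("discount factor must satisfy 0 <= lam < 1")
    return [
        [(cost, {j: lam * p for j, p in probs.items()}) for cost, probs in acts]
        for acts in actions
    ]


# One state, cost 1 per step, always stays put (hypothetical example).
discounted = [[(1.0, {0: 1.0})]]
total = discounted_to_total(discounted, 0.9)
cost, probs = total[0][0]

# Total cost recursion v = cost + probs[0] * v, iterated to convergence.
v = 0.0
for _ in range(2000):
    v = cost + probs[0] * v
print(round(v, 6))  # 10.0, i.e. 1 / (1 - 0.9), the discounted cost of the original
```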

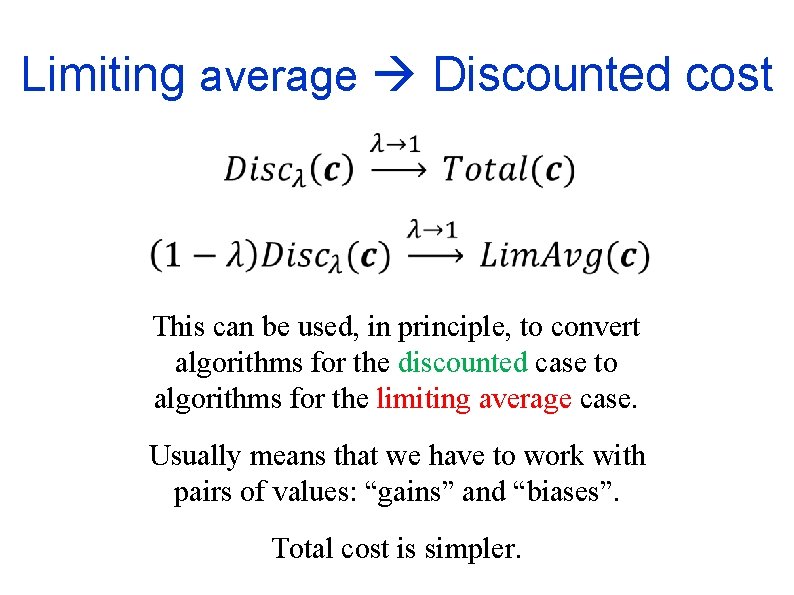

Limiting average cost and discounted cost. This can be used, in principle, to convert algorithms for the discounted case to algorithms for the limiting average case. This usually means that we have to work with pairs of values: “gains” and “biases”. Total cost is simpler.

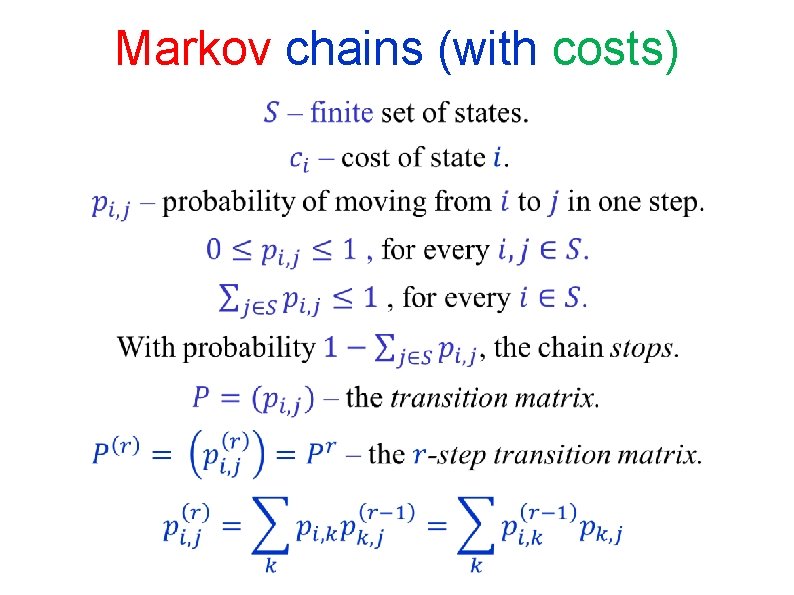

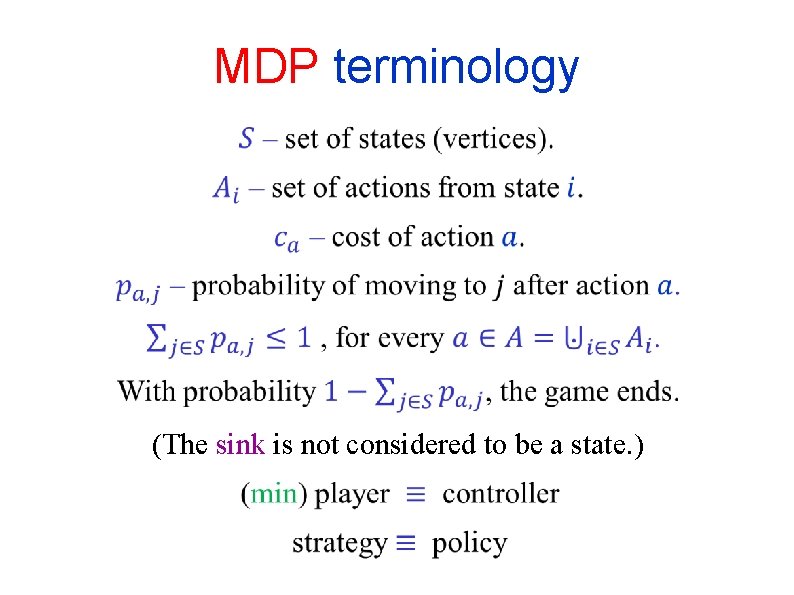

MDP terminology (the sink is not considered to be a state)

Markov chains: A Markov chain is an MDP in which there is only one action from each state.

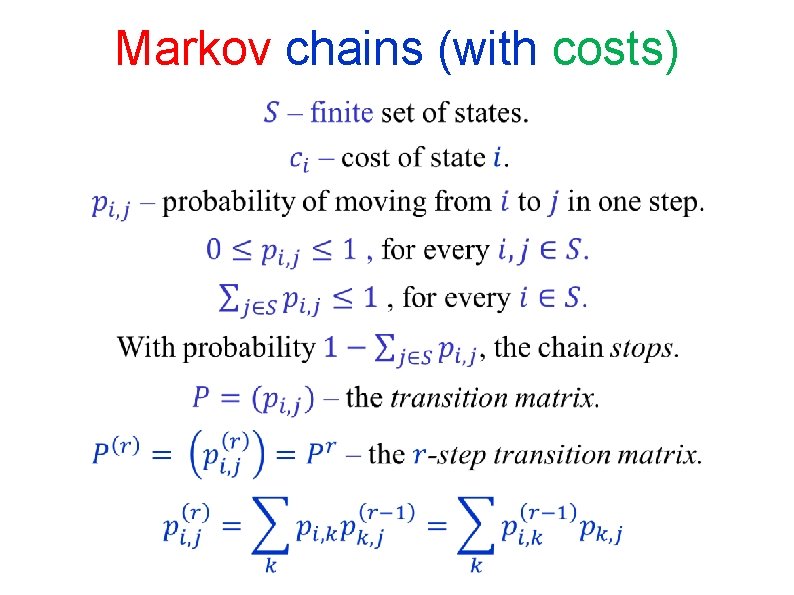

Markov chains (with costs)

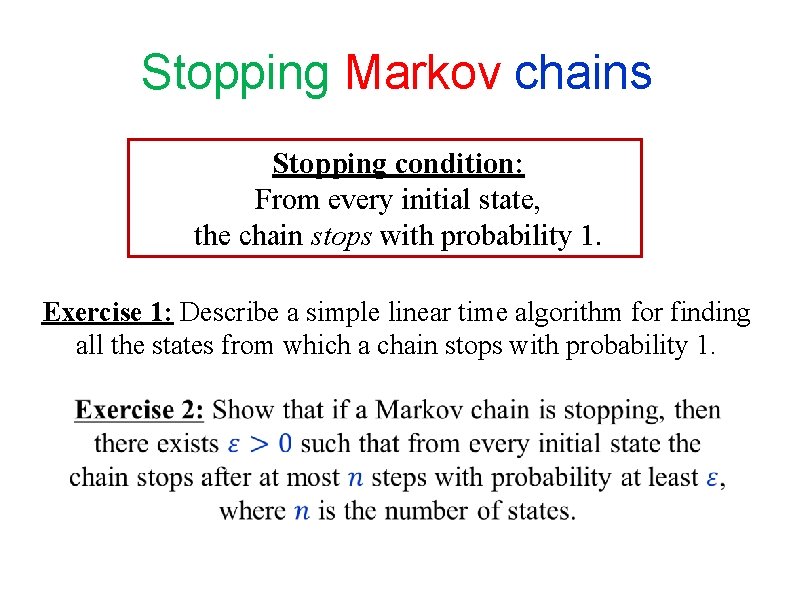

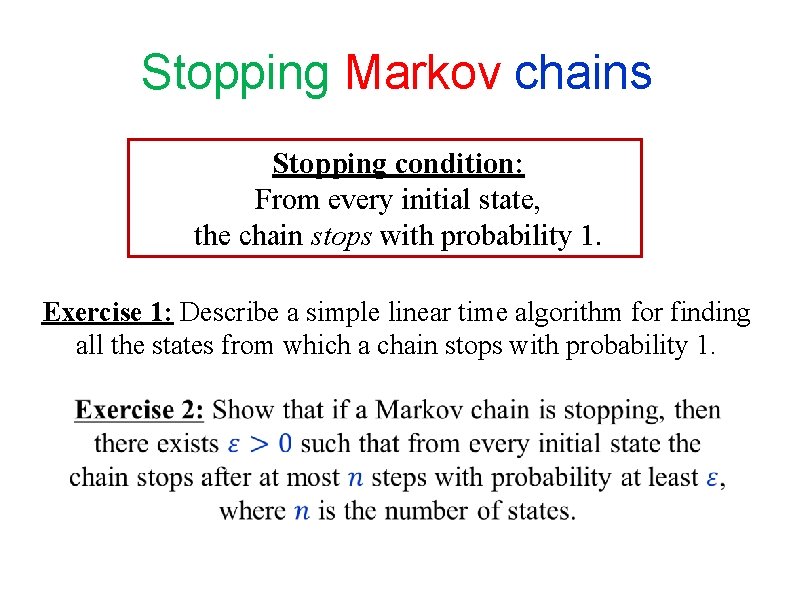

Stopping Markov chains. Stopping condition: from every initial state, the chain stops with probability 1. Exercise 1: Describe a simple linear-time algorithm for finding all the states from which a chain stops with probability 1.
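One possible linear-time approach to Exercise 1 (a sketch, not necessarily the intended solution): in a finite chain, a state stops with probability 1 exactly when every state reachable from it can still reach the sink. So a first backward search, from the states that move to the sink with positive probability, finds all states that can reach the sink; a second backward search, from the remaining trapped states, finds all states that can reach a trap. The input format (`succ[i]` lists the states j with p_ij > 0, `to_sink[i]` says whether state i moves to the sink with positive probability) is our assumption.

```python
from collections import deque


def stopping_states(succ, to_sink):
    """States from which the chain reaches the sink with probability 1."""
    n = len(succ)
    pred = [[] for _ in range(n)]
    for i in range(n):
        for j in succ[i]:
            pred[j].append(i)

    def backward_closure(start):
        # All states that can reach some state in `start` (BFS on reversed edges).
        seen = [False] * n
        for s in start:
            seen[s] = True
        queue = deque(start)
        while queue:
            j = queue.popleft()
            for i in pred[j]:
                if not seen[i]:
                    seen[i] = True
                    queue.append(i)
        return seen

    reaches_sink = backward_closure([i for i in range(n) if to_sink[i]])
    trapped = [i for i in range(n) if not reaches_sink[i]]
    reaches_trap = backward_closure(trapped)
    return {i for i in range(n) if not reaches_trap[i]}


# Hypothetical chain: 0 -> 1 -> sink, 2 loops forever, 3 may go to 0 or to 2.
succ = [[1], [], [2], [0, 2]]
to_sink = [False, True, False, False]
print(stopping_states(succ, to_sink))  # {0, 1}
```

State 3 can reach the sink, but it also reaches the trap at state 2 with positive probability, so it is excluded.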

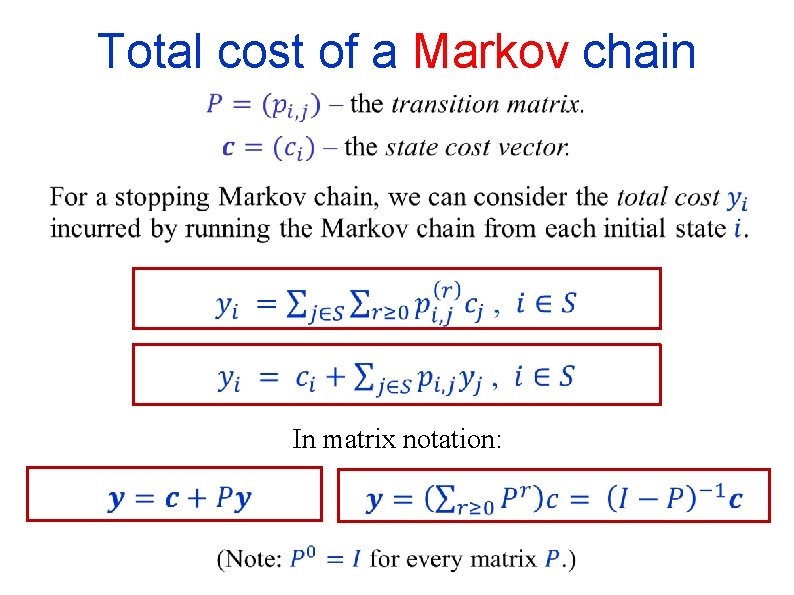

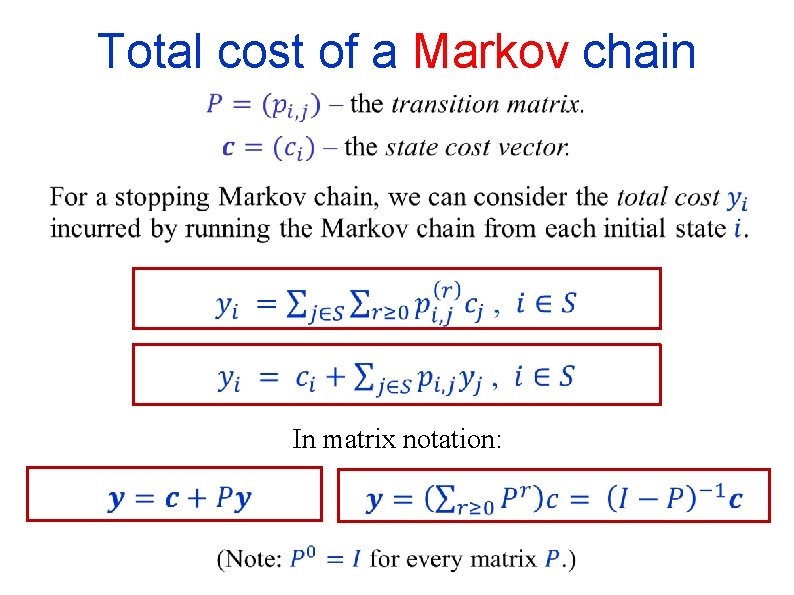

Total cost of a Markov chain. In matrix notation:
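For a stopping chain with transition matrix P among the non-sink states and cost vector c, the total cost vector v satisfies v = c + Pv, so v = (I − P)^{-1} c = Σ_{k≥0} P^k c. These symbol names are our assumption, since the slide's formula is an image. The sketch below computes v both ways using plain Python lists; the truncated series is only an approximation.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def total_cost(P, c):
    """Total cost vector v of a stopping chain: solve (I - P) v = c."""
    n = len(c)
    I_minus_P = [[(1.0 if i == j else 0.0) - P[i][j] for j in range(n)] for i in range(n)]
    return solve(I_minus_P, c)


def total_cost_series(P, c, terms=200):
    """Approximate v = sum_{k>=0} P^k c by its first `terms` terms."""
    n = len(c)
    v = [0.0] * n
    term = [float(x) for x in c]  # P^k c, starting with k = 0
    for _ in range(terms):
        v = [v[i] + term[i] for i in range(n)]
        term = [sum(P[i][j] * term[j] for j in range(n)) for i in range(n)]
    return v


# Hypothetical chain: each state moves to the other w.p. 1/2, to the sink w.p. 1/2.
P = [[0.0, 0.5], [0.5, 0.0]]
c = [1.0, 0.5]
print(total_cost(P, c))         # approximately [1.6667, 1.3333], i.e. [5/3, 4/3]
print(total_cost_series(P, c))  # agrees up to rounding
```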

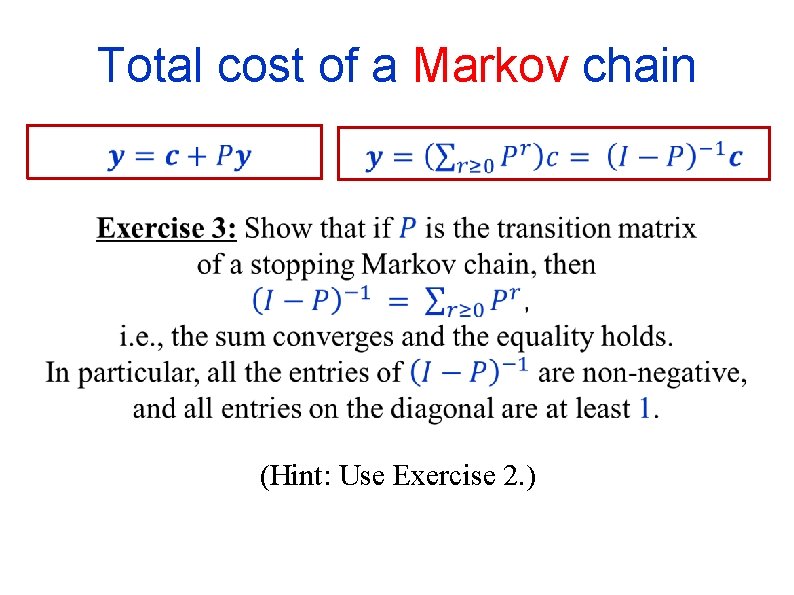

Total cost of a Markov chain (Hint: Use Exercise 2.)

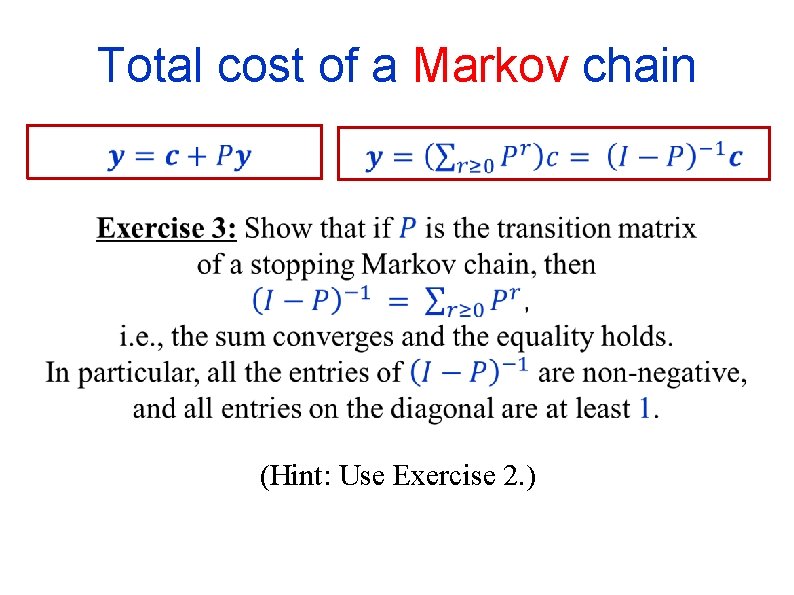

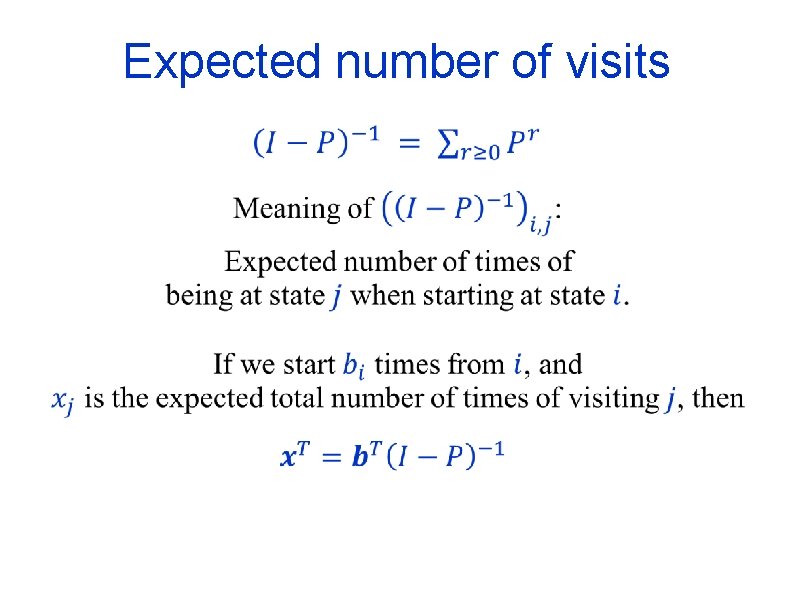

Expected number of visits
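In the usual notation (assumed here), entry (i, j) of N = (I − P)^{-1} = Σ_{k≥0} P^k is the expected number of visits to state j, counting time 0, when a stopping chain starts at state i; consequently the total cost vector is Nc. The sketch below computes N column by column for a hypothetical two-state chain and compares row 0 with a seeded Monte Carlo estimate, which is only a sanity check.

```python
import random


def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def visits_matrix(P):
    """N = (I - P)^{-1}, computed one column at a time."""
    n = len(P)
    I_minus_P = [[(1.0 if i == j else 0.0) - P[i][j] for j in range(n)] for i in range(n)]
    cols = [solve(I_minus_P, [1.0 if i == j else 0.0 for i in range(n)]) for j in range(n)]
    return [[cols[j][i] for j in range(n)] for i in range(n)]


def simulate_visits(P, start, runs=20000, seed=1):
    """Monte Carlo estimate of the expected number of visits to each state from `start`."""
    rng = random.Random(seed)
    n = len(P)
    counts = [0] * n
    for _ in range(runs):
        s = start
        while s is not None:
            counts[s] += 1
            u, acc, nxt = rng.random(), 0.0, None
            for j in range(n):
                acc += P[s][j]
                if u < acc:
                    nxt = j
                    break
            s = nxt  # None: the remaining probability mass leads to the sink
    return [k / runs for k in counts]


# Hypothetical chain: each state moves to the other w.p. 1/2, to the sink w.p. 1/2.
P = [[0.0, 0.5], [0.5, 0.0]]
N = visits_matrix(P)
print(N[0])                   # approximately [1.3333, 0.6667]
print(simulate_visits(P, 0))  # should be close to N[0]
```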

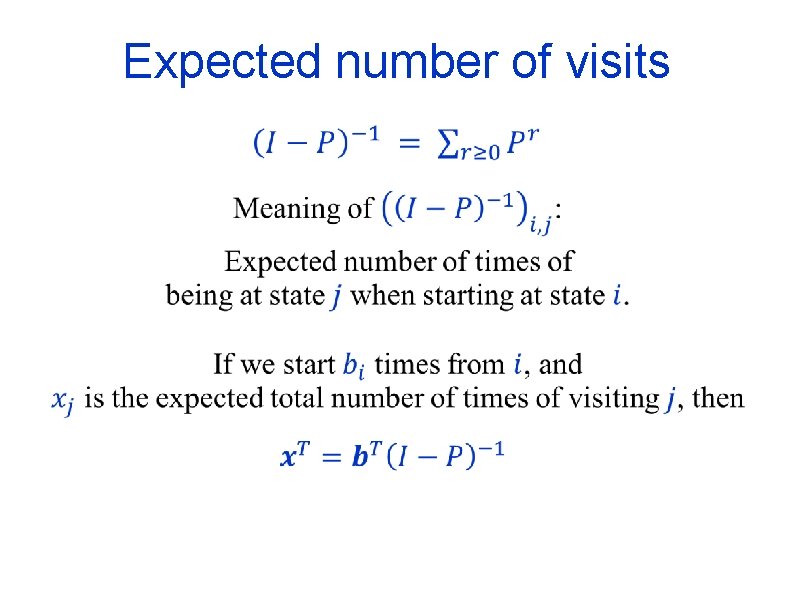

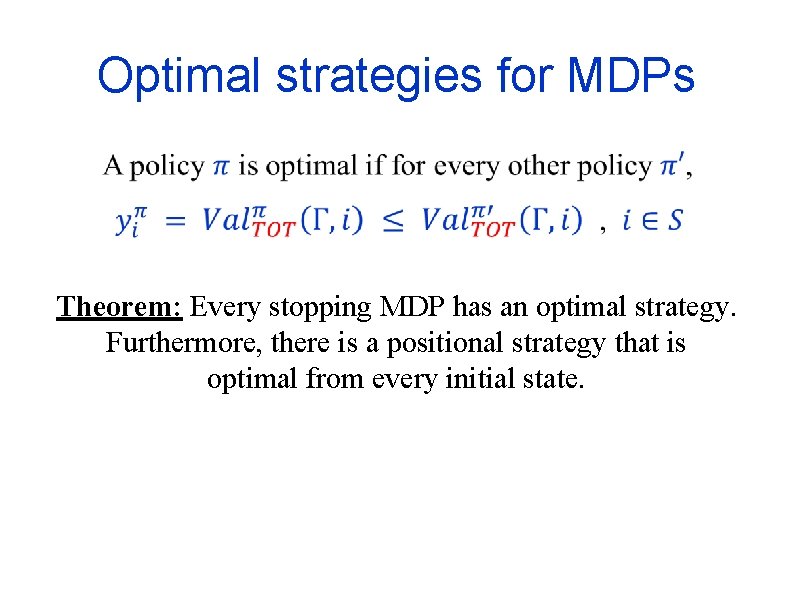

Optimal strategies for MDPs. Theorem: Every stopping MDP has an optimal strategy. Furthermore, there is a positional strategy that is optimal from every initial state.
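For a tiny MDP, the positional part of this theorem can be checked by brute force: evaluate every positional policy and confirm that a single policy attains the minimum value from every initial state simultaneously. This compares positional policies only (the theorem covers all strategies). The format (`actions[i]` is a list of `(cost, probs)` pairs, with the missing probability mass going to the sink) and the example MDP are hypothetical.

```python
from itertools import product


def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def evaluate(actions, policy):
    """Values y of a positional policy: solve (I - P_pi) y = c_pi."""
    n = len(actions)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    b = [0.0] * n
    for i, a in enumerate(policy):
        cost, probs = actions[i][a]
        b[i] = cost
        for j, p in probs.items():
            A[i][j] -= p
    return solve(A, b)


def best_positional_policies(actions):
    """Pointwise best values over all positional policies, and the policies attaining them everywhere."""
    n = len(actions)
    values = {pol: evaluate(actions, pol) for pol in product(*(range(len(acts)) for acts in actions))}
    best = [min(v[i] for v in values.values()) for i in range(n)]
    winners = [pol for pol, v in values.items() if all(abs(v[i] - best[i]) < 1e-9 for i in range(n))]
    return best, winners


actions = [
    [(4.0, {}), (1.0, {1: 0.5})],  # state 0: stop at cost 4, or pay 1 and move to 1 w.p. 1/2
    [(2.0, {}), (0.5, {0: 0.5})],  # state 1: stop at cost 2, or pay 0.5 and move to 0 w.p. 1/2
]
best, winners = best_positional_policies(actions)
print(best, winners)  # approximately [1.6667, 1.3333] and [(1, 1)]
```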

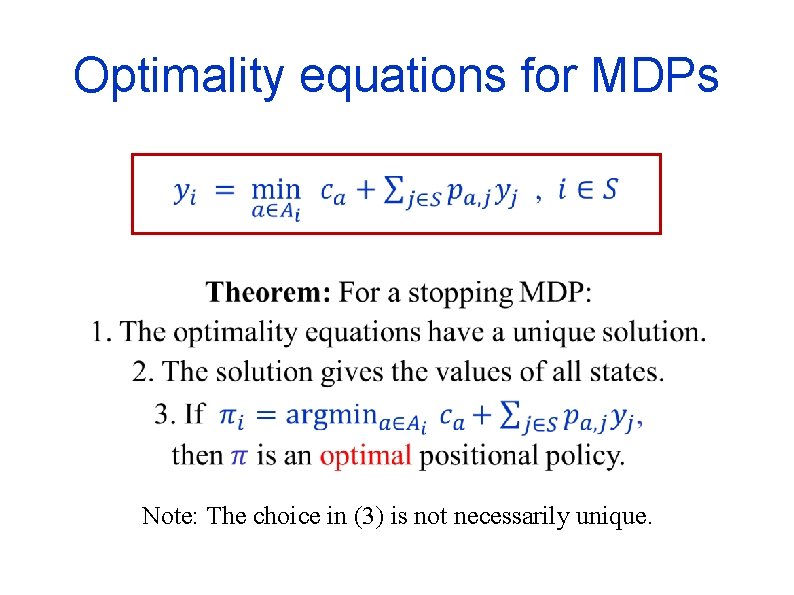

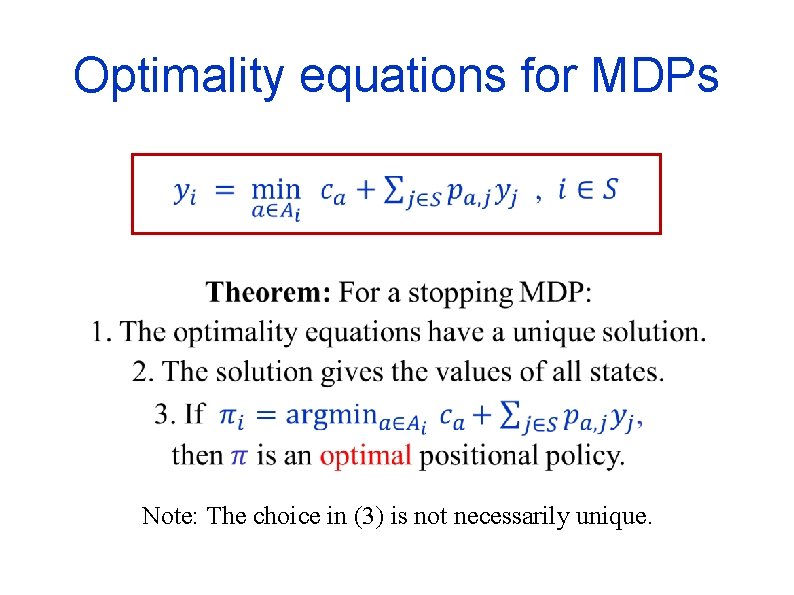

Optimality equations for MDPs. Note: The choice in (3) is not necessarily unique.
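For reference, the optimality equations are commonly written as follows, in notation we assume here (the slide's own formulas are images): A_i is the set of actions from state i, c_a is the cost of action a, and P_{a,j} is the probability that action a leads to state j (the sink contributes value 0). An optimal positional strategy π picks, in every state, an action attaining the minimum; when several actions attain it, any of them may be chosen.

```latex
\[
  y_i \;=\; \min_{a \in A_i} \Big( c_a + \sum_{j=1}^{n} P_{a,j}\, y_j \Big),
  \qquad i = 1, \dots, n,
\]
\[
  \pi(i) \;\in\; \arg\min_{a \in A_i} \Big( c_a + \sum_{j=1}^{n} P_{a,j}\, y_j \Big).
\]
```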

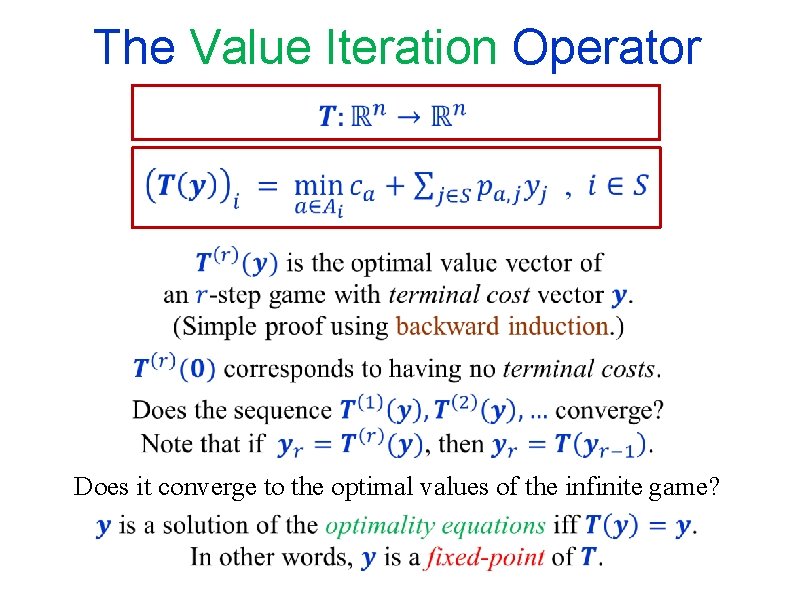

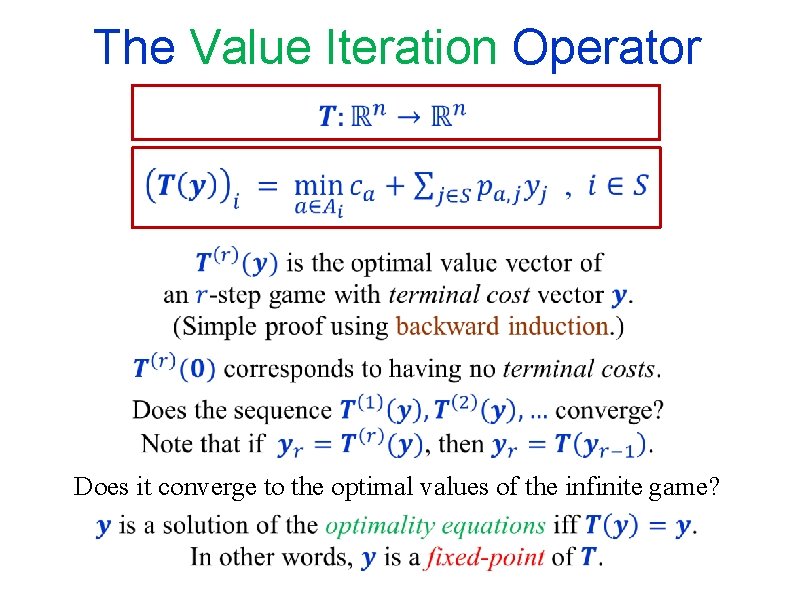

The Value Iteration Operator. Does value iteration converge to the optimal values of the infinite game?
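A minimal sketch of value iteration, assuming a hypothetical MDP format in which `actions[i]` is a list of `(cost, probs)` pairs and any probability mass missing from `probs` goes to the sink. The operator maps y to the vector whose i-th entry is the minimum, over actions a from state i, of c_a + Σ_j P_{a,j} y_j. The loop stops when successive iterates are very close, which is a heuristic stopping rule rather than a certified error bound.

```python
def vi_operator(actions, y):
    """One application of the value iteration operator."""
    return [
        min(cost + sum(p * y[j] for j, p in probs.items()) for cost, probs in acts)
        for acts in actions
    ]


def value_iteration(actions, tol=1e-12, max_iter=100000):
    """Iterate the operator from the zero vector until successive iterates are within tol."""
    y = [0.0] * len(actions)
    for _ in range(max_iter):
        y_next = vi_operator(actions, y)
        if max(abs(a - b) for a, b in zip(y_next, y)) < tol:
            return y_next
        y = y_next
    return y


# Hypothetical stopping MDP: every action moves to the sink w.p. at least 1/2.
actions = [
    [(4.0, {}), (1.0, {1: 0.5})],
    [(2.0, {}), (0.5, {0: 0.5})],
]
y = value_iteration(actions)
print(y)  # approximately [1.6667, 1.3333]
```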

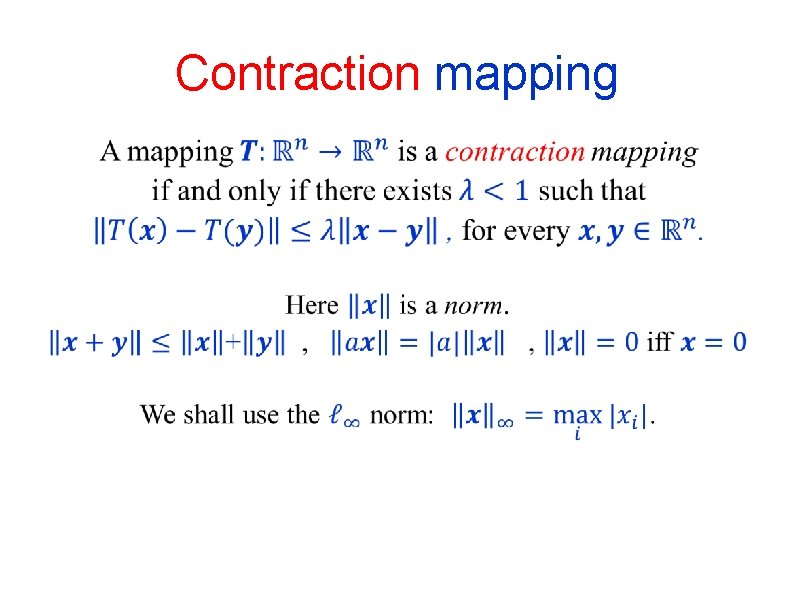

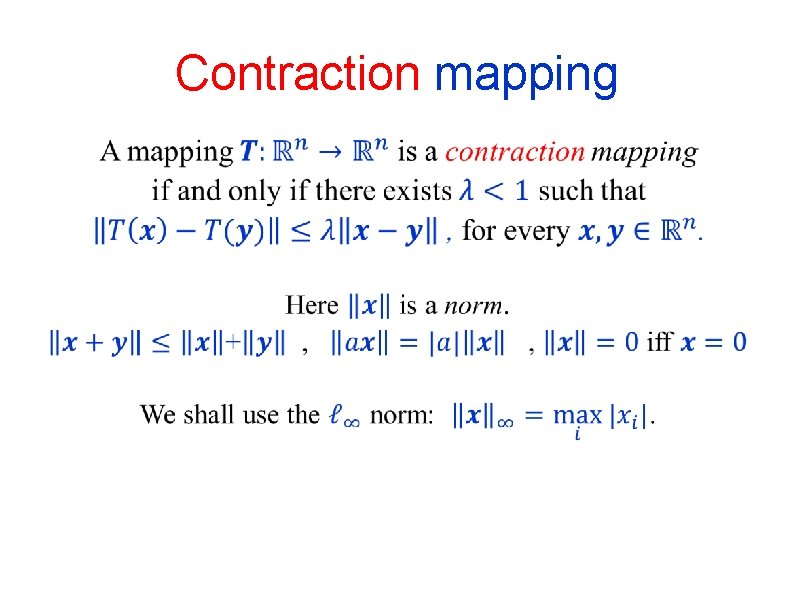

Contraction mapping
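For reference, the usual definition, specialized to value vectors with the ∞-norm (our choice of norm; the slide's own statement is an image): a map T from R^n to itself is a contraction with factor λ if

```latex
\[
  \| T(x) - T(y) \|_\infty \;\le\; \lambda \, \| x - y \|_\infty
  \quad \text{for all } x, y \in \mathbb{R}^n ,
  \qquad \text{for some fixed } 0 \le \lambda < 1 .
\]
```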

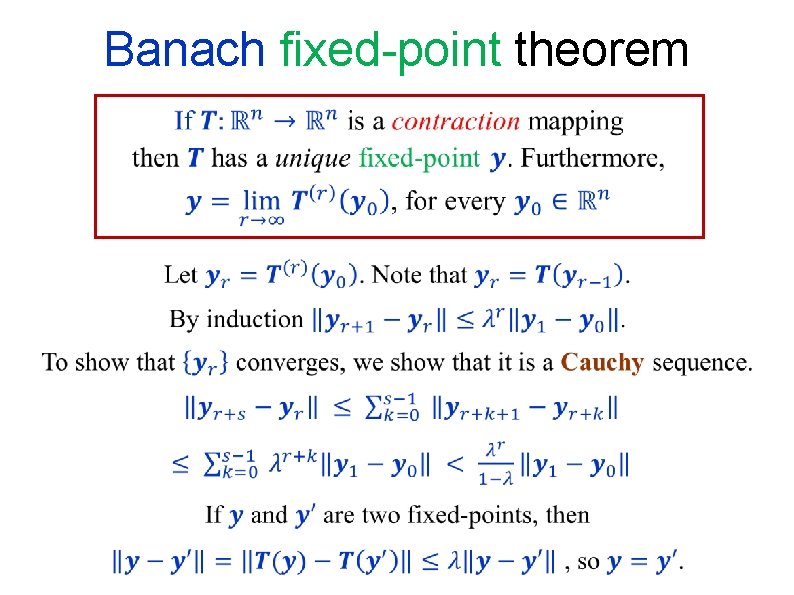

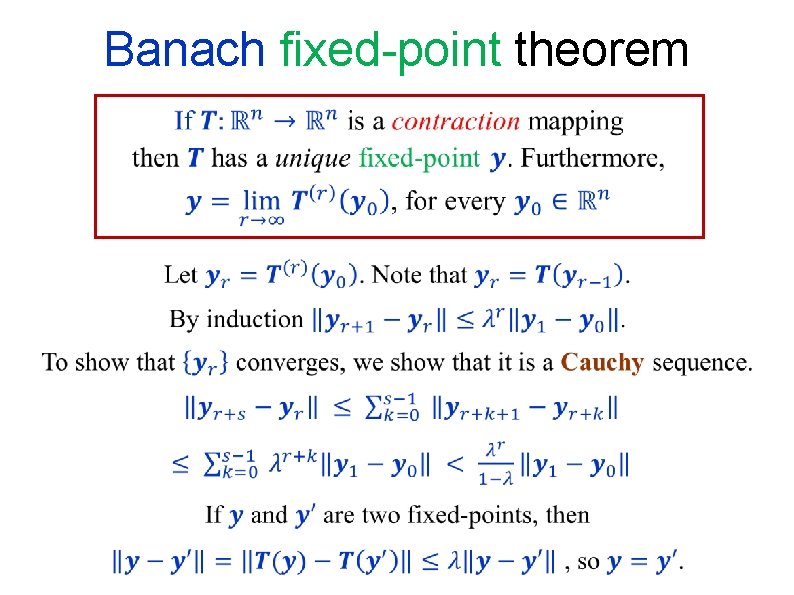

Banach fixed-point theorem
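The standard statement of the theorem (R^n with the ∞-norm is a complete metric space, so it applies to value vectors):

```latex
\[
  \text{If } (X, d) \text{ is a complete metric space and } T : X \to X \text{ satisfies }
  d\big(T(x), T(y)\big) \le \lambda \, d(x, y) \text{ with } 0 \le \lambda < 1,
\]
\[
  \text{then } T \text{ has a unique fixed point } x^*, \text{ and the iterates }
  x_{k+1} = T(x_k) \text{ satisfy } d(x_k, x^*) \le \lambda^k \, d(x_0, x^*)
  \text{ for every } x_0 \in X .
\]
```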

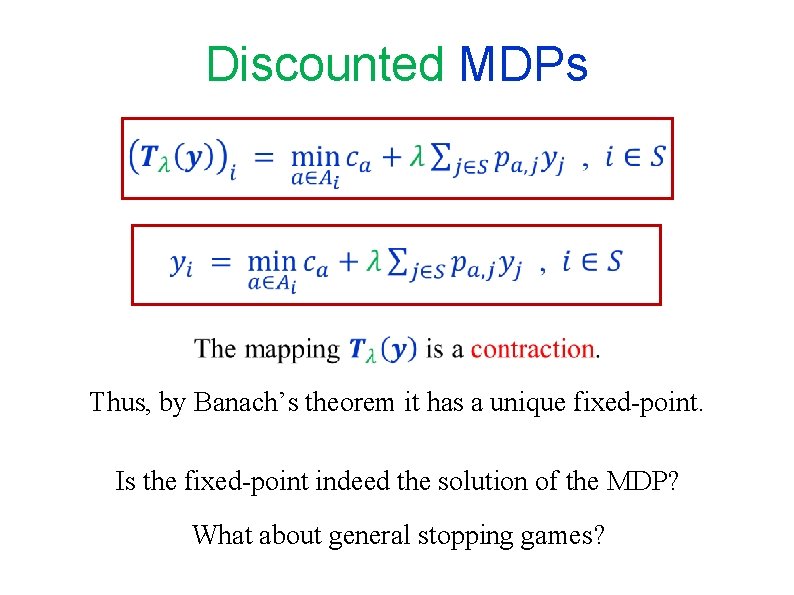

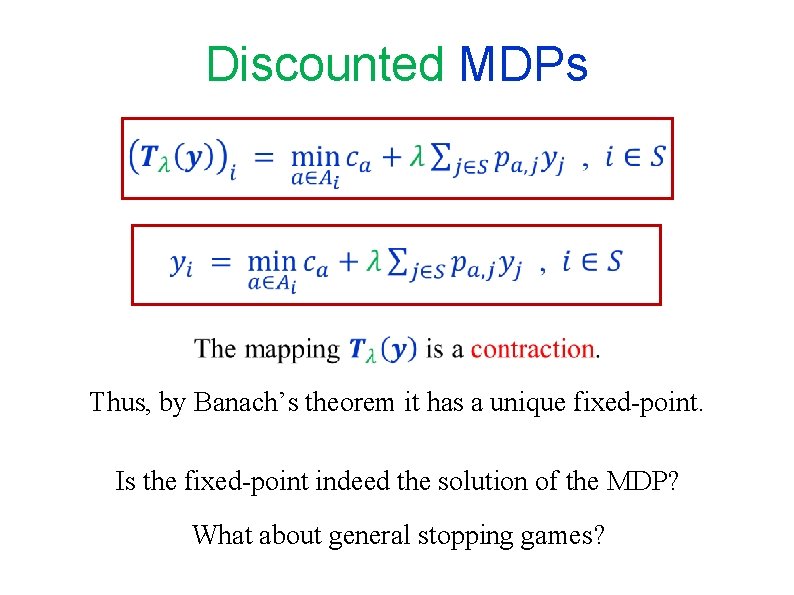

Discounted MDPs. Thus, by Banach’s theorem, the value iteration operator has a unique fixed point. Is this fixed point indeed the solution of the MDP? What about general stopping games?
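A numerical sanity check, not a proof, under assumed names: for a discounted MDP with discount factor λ (`lam` below), the operator T(y)_i = min_a (c_a + λ Σ_j P_{a,j} y_j) should satisfy ‖T(x) − T(y)‖∞ ≤ λ‖x − y‖∞, and iterating it from two very different starting vectors should reach the same fixed point. The two-state example is hypothetical; its transition probabilities sum to 1.

```python
import random


def discounted_operator(actions, lam, y):
    """T(y)_i = min over actions (cost, probs) of cost + lam * sum_j probs[j] * y[j]."""
    return [
        min(cost + lam * sum(p * y[j] for j, p in probs.items()) for cost, probs in acts)
        for acts in actions
    ]


def sup_dist(x, y):
    return max(abs(a - b) for a, b in zip(x, y))


# Hypothetical discounted MDP: each state can stay where it is or move to the other state.
actions = [
    [(1.0, {0: 1.0}), (3.0, {1: 1.0})],
    [(2.0, {1: 1.0}), (0.0, {0: 1.0})],
]
lam = 0.9

# Largest observed contraction ratio over random pairs of vectors.
rng = random.Random(0)
worst = 0.0
for _ in range(1000):
    x = [rng.uniform(-100, 100) for _ in range(2)]
    y = [rng.uniform(-100, 100) for _ in range(2)]
    d = sup_dist(x, y)
    if d > 0:
        tx, ty = discounted_operator(actions, lam, x), discounted_operator(actions, lam, y)
        worst = max(worst, sup_dist(tx, ty) / d)
print(worst <= lam + 1e-12)  # True

# Iterating from two different starting points reaches the same fixed point.
a, b = [0.0, 0.0], [1000.0, -1000.0]
for _ in range(400):
    a, b = discounted_operator(actions, lam, a), discounted_operator(actions, lam, b)
print(sup_dist(a, b) < 1e-9)  # True
```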

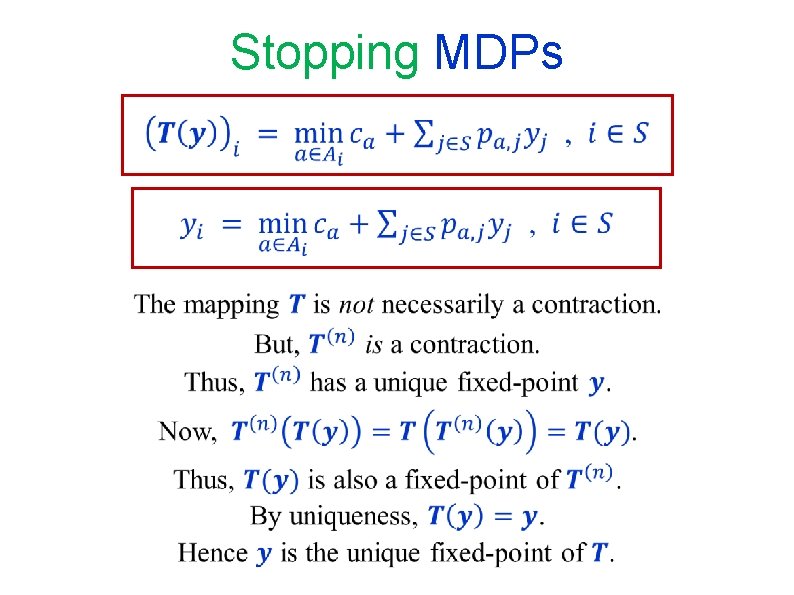

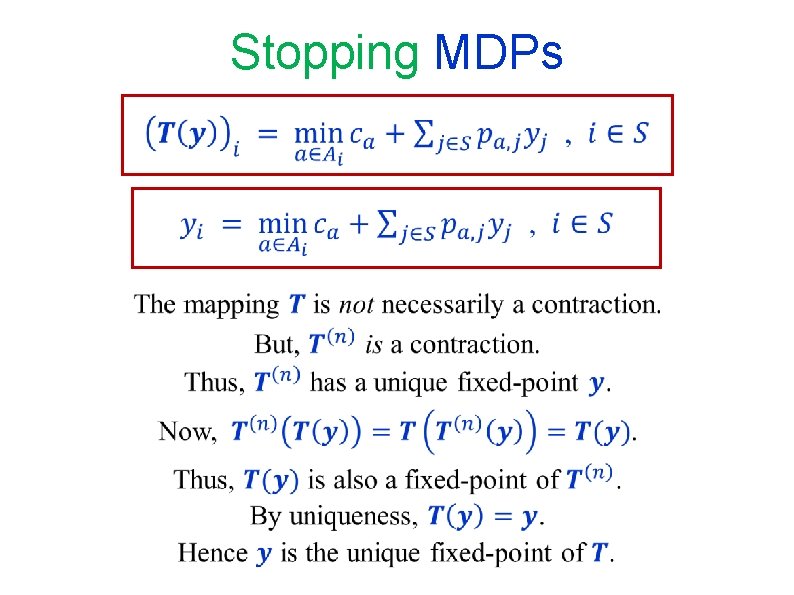

Stopping MDPs

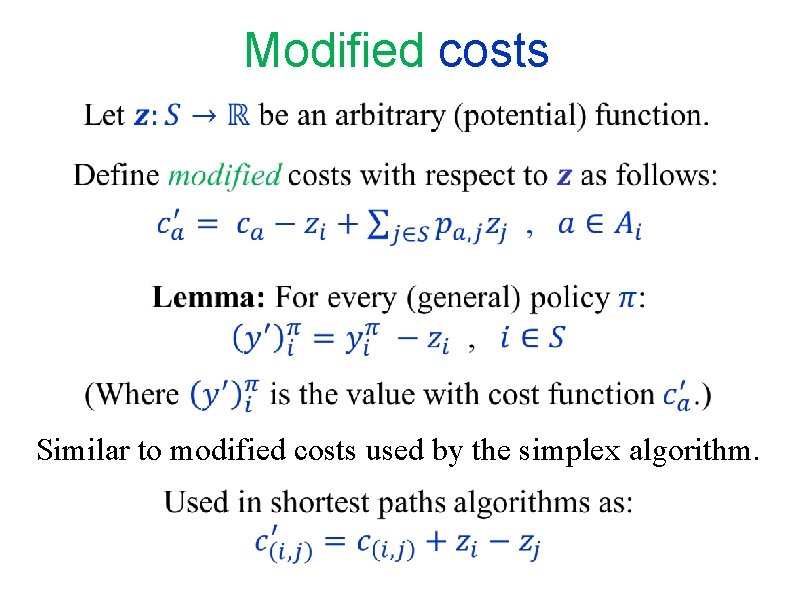

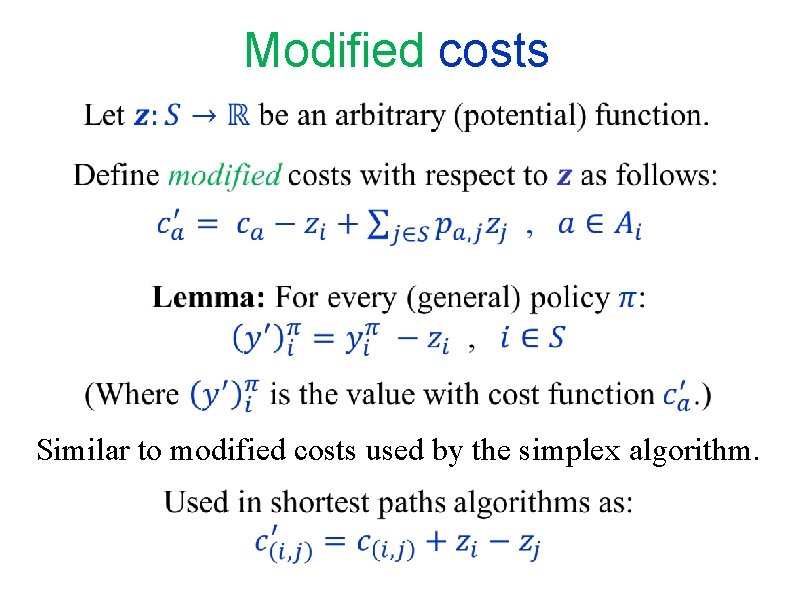

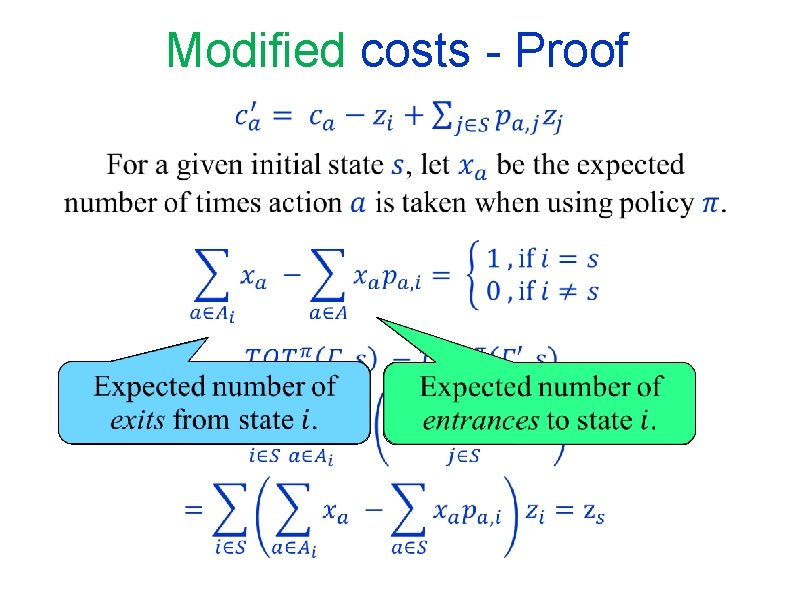

Modified costs: similar to the reduced costs used by the simplex algorithm.
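A small numerical illustration, in assumed notation: for a vector y of state potentials (the sink has potential 0), define the modified cost of an action a from state i as c_a + Σ_j P_{a,j} y_j − y_i. For a stopping MDP and any positional policy π, the values under the modified costs are y^π − y, because (I − P_π)^{-1}(c_π + P_π y − y) = y^π − y. The hypothetical two-state MDP below has optimal policy (1, 1); with y equal to its values, every modified cost is nonnegative and the chosen actions have modified cost 0.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def evaluate(actions, policy, costs=None):
    """Values of a positional policy; if given, costs[i][a] replaces the cost of action a at i."""
    n = len(actions)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    b = [0.0] * n
    for i, a in enumerate(policy):
        cost, probs = actions[i][a]
        b[i] = cost if costs is None else costs[i][a]
        for j, p in probs.items():
            A[i][j] -= p
    return solve(A, b)


def modified_costs(actions, y):
    """c_a + sum_j P[a][j] * y[j] - y[i] for every action a from every state i."""
    return [
        [cost + sum(p * y[j] for j, p in probs.items()) - y[i] for cost, probs in acts]
        for i, acts in enumerate(actions)
    ]


actions = [
    [(4.0, {}), (1.0, {1: 0.5})],  # state 0
    [(2.0, {}), (0.5, {0: 0.5})],  # state 1
]
policy = (1, 1)
y = [3.0, -1.0]  # arbitrary potentials
v = evaluate(actions, policy)
v_mod = evaluate(actions, policy, modified_costs(actions, y))
print([round(a - b, 9) for a, b in zip(v, y)], [round(t, 9) for t in v_mod])  # the two lists agree

mc_opt = modified_costs(actions, v)
print(mc_opt)  # approximately [[2.3333, 0.0], [0.6667, 0.0]]
```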

Modified costs - Proof

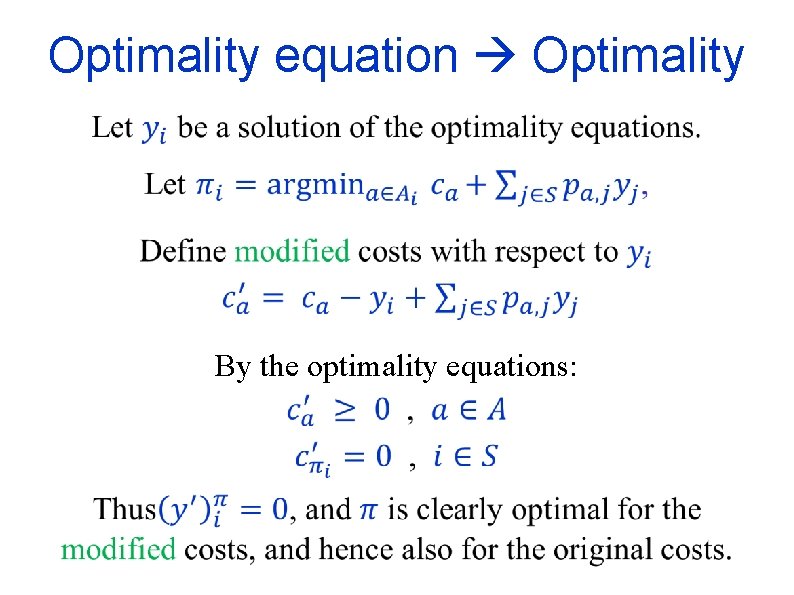

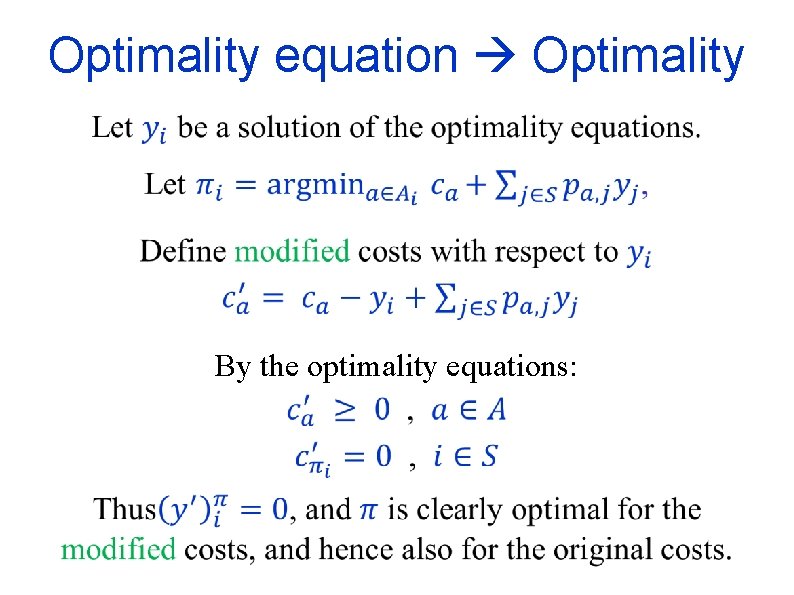

Optimality equations imply optimality. By the optimality equations:

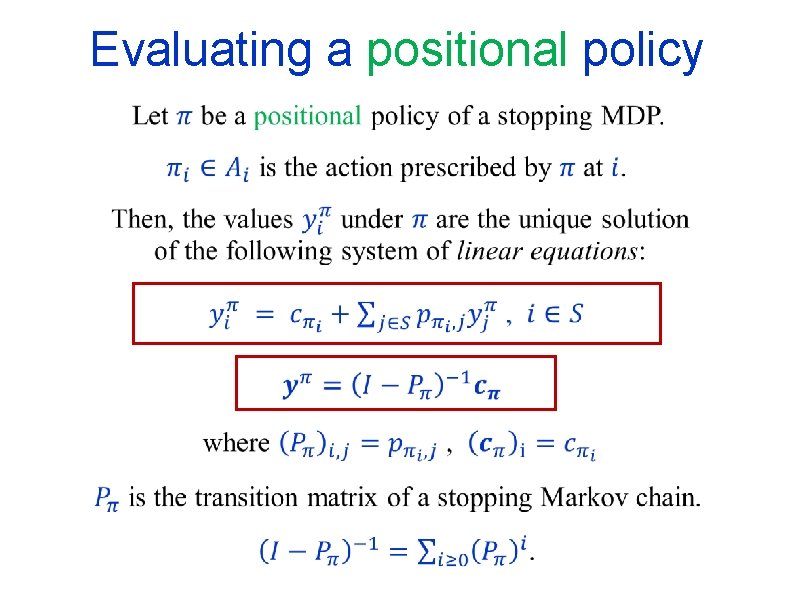

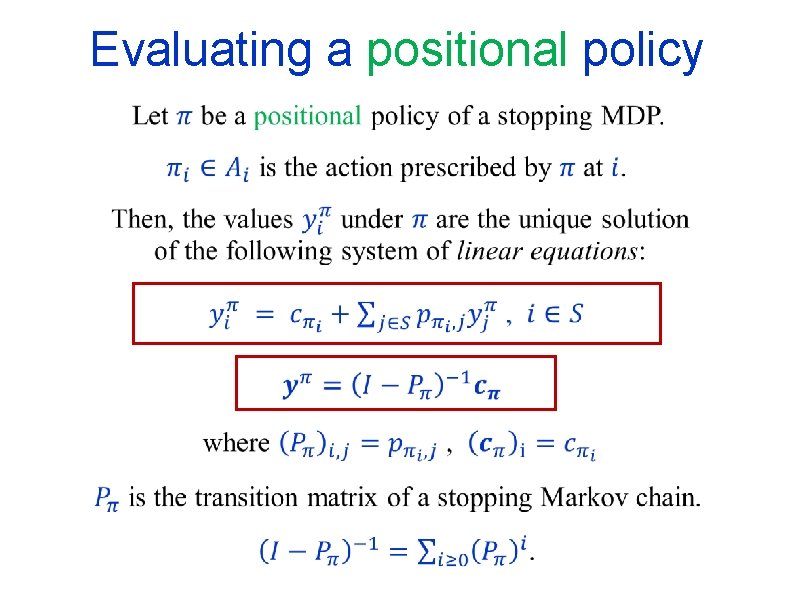

Evaluating a positional policy
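A minimal sketch of evaluating a positional policy π: its values are the total costs of the Markov chain it induces, i.e. the solution of (I − P_π) y = c_π, where row i of P_π and entry i of c_π come from the action π(i). The MDP format (`actions[i]` is a list of `(cost, probs)` pairs, missing mass goes to the sink) and the example are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def induced_chain(actions, policy):
    """Transition matrix P_pi and cost vector c_pi of the chain induced by a positional policy."""
    n = len(actions)
    P = [[0.0] * n for _ in range(n)]
    c = [0.0] * n
    for i, a in enumerate(policy):
        cost, probs = actions[i][a]
        c[i] = cost
        for j, p in probs.items():
            P[i][j] = p
    return P, c


def evaluate_policy(actions, policy):
    P, c = induced_chain(actions, policy)
    n = len(c)
    return solve([[(1.0 if i == j else 0.0) - P[i][j] for j in range(n)] for i in range(n)], c)


actions = [
    [(4.0, {}), (1.0, {1: 0.5})],
    [(2.0, {}), (0.5, {0: 0.5})],
]
for policy in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(policy, [round(v, 4) for v in evaluate_policy(actions, policy)])
# (0, 0) [4.0, 2.0]
# (0, 1) [4.0, 2.5]
# (1, 0) [2.0, 2.0]
# (1, 1) [1.6667, 1.3333]
```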

![Dual LP formulation for total cost MDPs [d’Epenoux (1964)]: theorem for a stopping MDP](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-27.jpg)

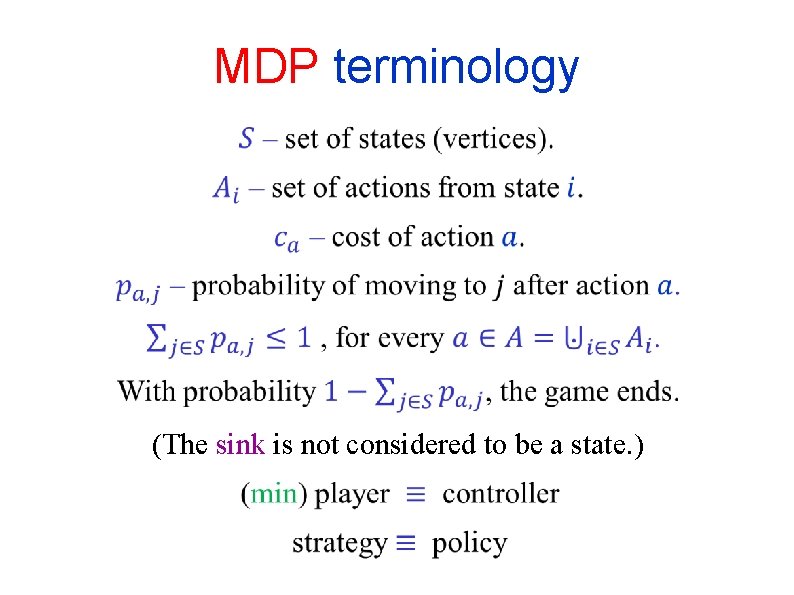

Dual LP formulation for total cost MDPs [d’Epenoux (1964)]. Theorem: For a stopping MDP, the LP has a unique optimal solution, which satisfies the optimality equations and hence gives the optimal values of all the states of the MDP.
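For reference, an LP of this kind is usually written with one variable y_i per state. The following is our reconstruction in assumed notation, since the slide's formula is an image: A_i is the set of actions from state i, c_a the cost and P_{a,j} the transition probabilities of action a. In matrix form, with J the actions × states incidence matrix (J_{a,i} = 1 if a ∈ A_i), the constraints read (J − P) y ≤ c.

```latex
\[
  \max \; \sum_{i=1}^{n} y_i
  \quad \text{s.t.} \quad
  y_i \;\le\; c_a + \sum_{j=1}^{n} P_{a,j} \, y_j
  \qquad \text{for all states } i \text{ and all } a \in A_i .
\]
```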

![Dual LP formulation for total cost MDPs [d’Epenoux (1964)]: optimality (alternative proof)](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-28.jpg)

Dual LP formulation for total cost MDPs [d’Epenoux (1964)]. Optimality (alternative proof):

![Dual LP formulation for total cost MDPs [d’Epenoux (1964)]: theorem on optimal positional strategies](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-29.jpg)

Dual LP formulation for total cost MDPs [d’Epenoux (1964)]. Theorem: An optimal positional strategy of a stopping MDP, and the values of all states, can be found in polynomial time by solving an LP. The dual LP formulation can be used to prove the existence of an optimal positional strategy without using Banach’s fixed-point theorem.

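Linear Programming Duality (a primal LP and its dual). Duality Theorem: (a) If both LPs are feasible, then both have optimal solutions, and their optimal values are equal. (b) If an LP is unbounded, then its dual is infeasible; conversely, if an LP is feasible and its dual is infeasible, then the LP is unbounded.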

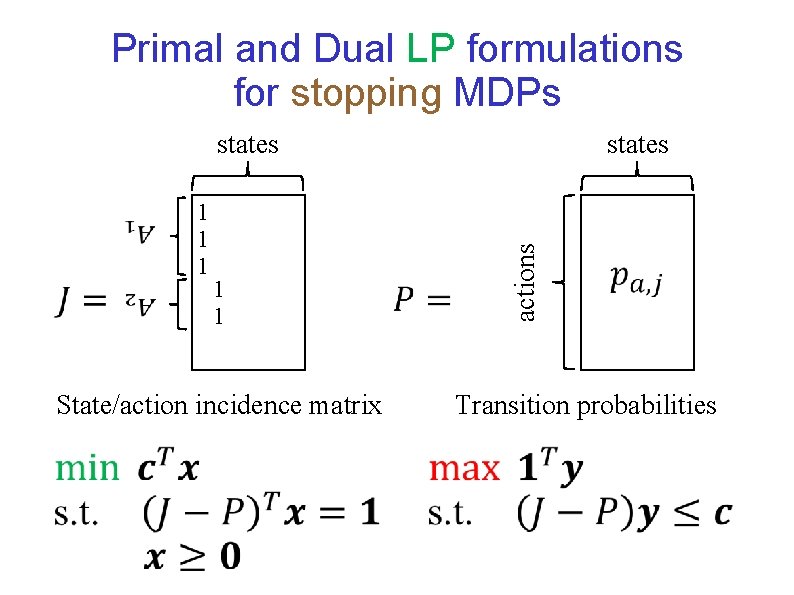

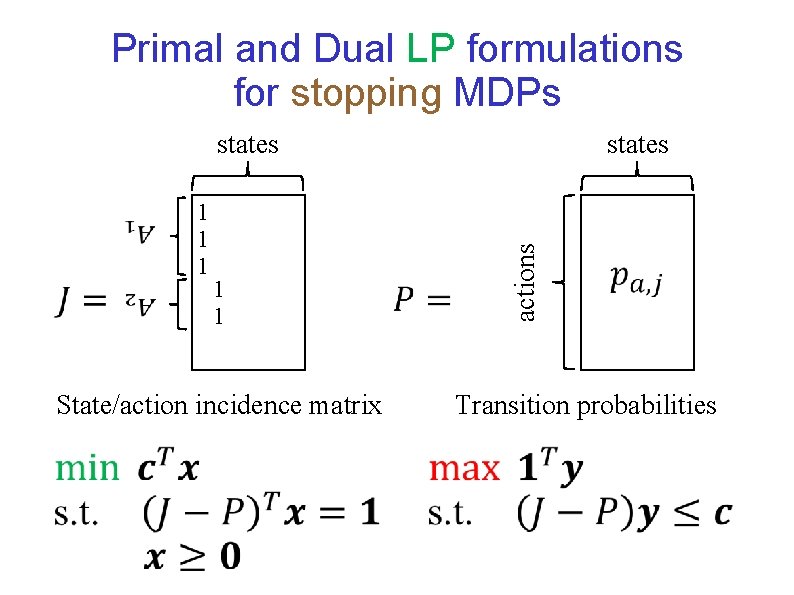

Primal and Dual LP formulations for stopping MDPs. (Figure: the state/action incidence matrix, with entries 1, indexed by actions and states, and the matrix of transition probabilities, indexed by actions and states.)

![Primal LP formulation for total cost MDPs [d’Epenoux (1964)]](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-32.jpg)

Primal LP formulation for total cost MDPs [d’Epenoux (1964)]

![Primal LP formulation for total cost MDPs [d’Epenoux (1964)]: the linear system](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-33.jpg)

Primal LP formulation for total cost MDPs [d’Epenoux (1964)]. (The linear system has a unique solution.)

![Primal LP formulation for total cost MDPs [d’Epenoux (1964)]: the calculations](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-34.jpg)

Primal LP formulation for total cost MDPs [d’Epenoux (1964)]. The calculations: these are exactly the constraints of the primal LP.
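A numerical check of this correspondence, under assumptions: we take the primal LP to be min Σ_a c_a x_a subject to Σ_{a∈A_j} x_a − Σ_a P_{a,j} x_a = 1 for every state j and x ≥ 0, i.e. (J − P)^T x = 1 in matrix form, with J the actions × states incidence matrix. For a positional policy π, setting x_{π(i)} to the expected number of visits to state i when the game is started once from every state (and x_a = 0 for all other actions) should satisfy these equalities, with objective Σ_i y^π_i. The MDP format and the example are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def policy_flux(actions, policy):
    """x[(i, a)] for the actions a = policy[i]; other actions implicitly have x = 0."""
    n = len(actions)
    # Transpose of (I - P_pi): entry (j, i) is (I - P_pi)[i][j].
    At = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, a in enumerate(policy):
        for j, p in actions[i][a][1].items():
            At[j][i] -= p
    z = solve(At, [1.0] * n)  # z = 1^T (I - P_pi)^{-1}: expected visits, one start per state
    return {(i, policy[i]): z[i] for i in range(n)}


def primal_residuals(actions, x):
    """(J - P)^T x - 1, one entry per state."""
    n = len(actions)
    lhs = [0.0] * n
    for (i, a), xa in x.items():
        lhs[i] += xa  # action a is an action from state i
        for j, p in actions[i][a][1].items():
            lhs[j] -= p * xa  # and leads to state j with probability p
    return [v - 1.0 for v in lhs]


actions = [
    [(4.0, {}), (1.0, {1: 0.5})],
    [(2.0, {}), (0.5, {0: 0.5})],
]
x = policy_flux(actions, (1, 1))
objective = sum(actions[i][a][0] * xa for (i, a), xa in x.items())
print(x)                             # approximately {(0, 1): 2.0, (1, 1): 2.0}
print(primal_residuals(actions, x))  # approximately [0.0, 0.0]
print(objective)                     # approximately 3.0 = 5/3 + 4/3, the sum of the values
```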

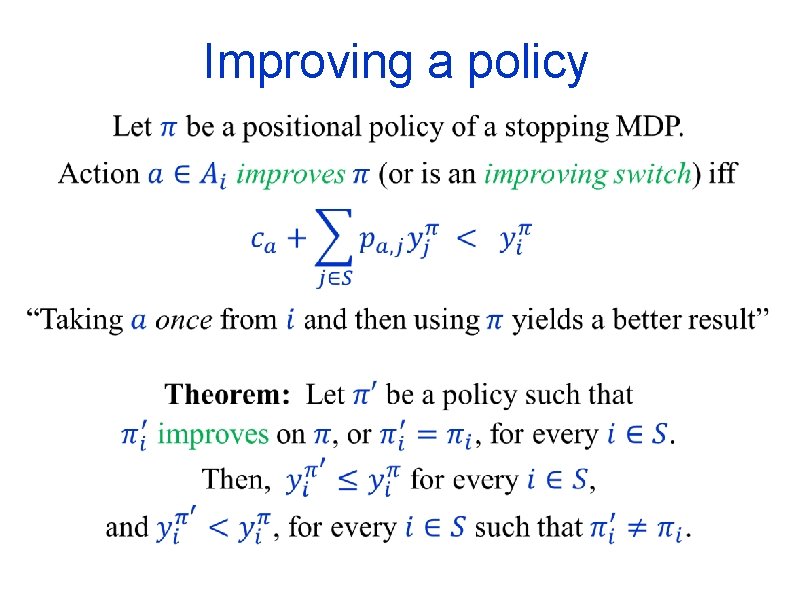

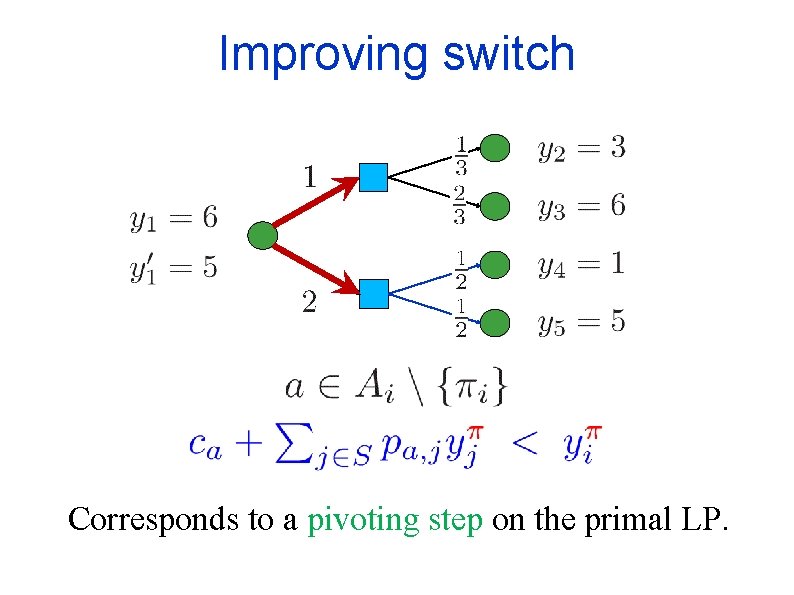

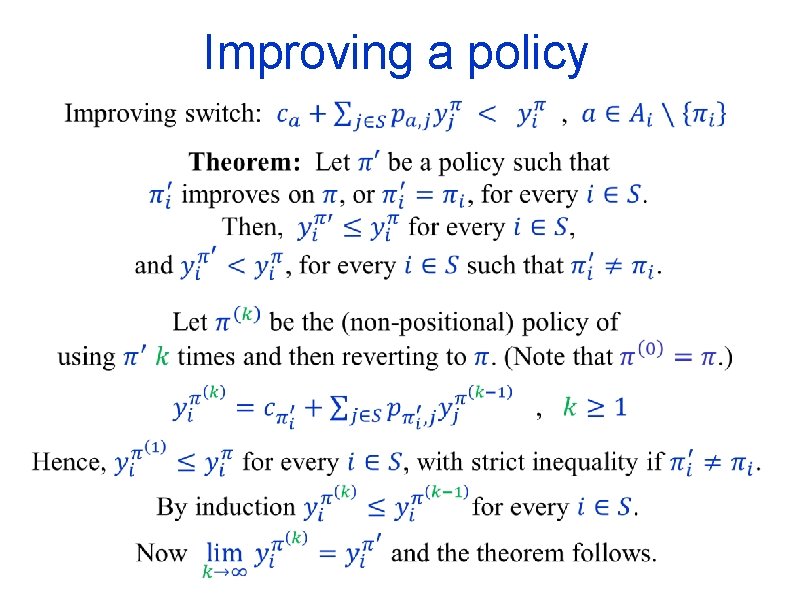

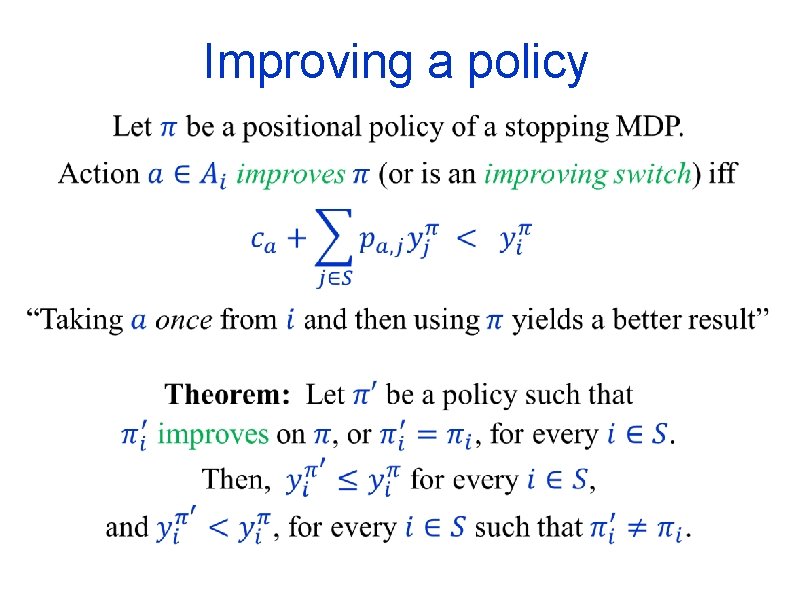

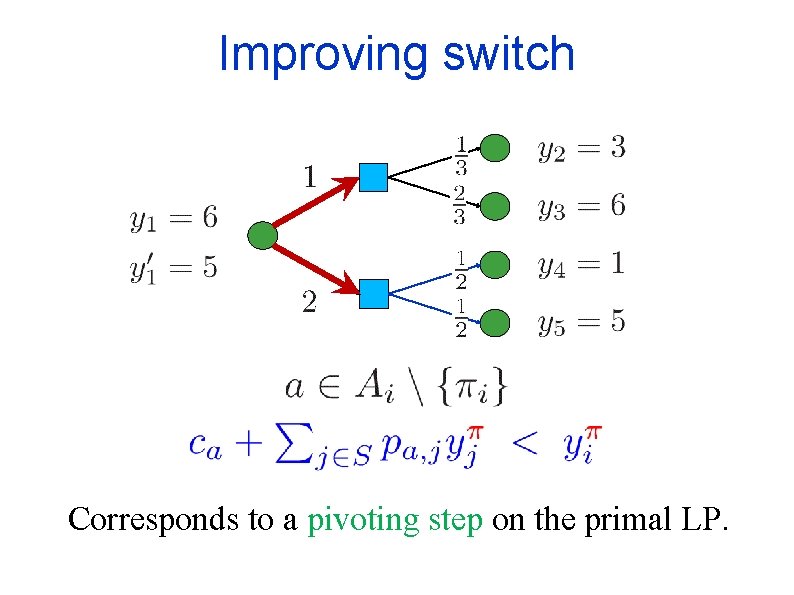

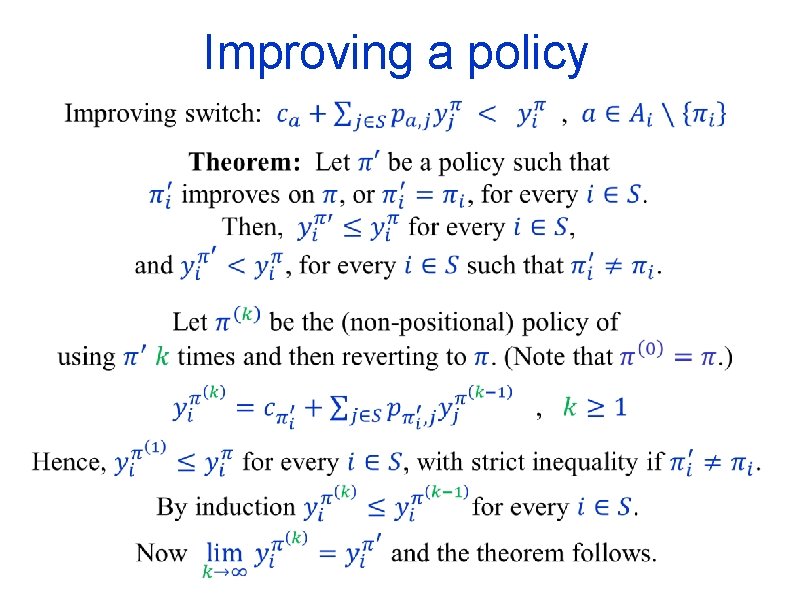

Improving a policy

Improving switch: corresponds to a pivoting step on the primal LP.
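A minimal sketch of detecting improving switches, assuming the usual criterion: switching state i of policy π to action a is improving when its modified cost c_a + Σ_j P_{a,j} y^π_j − y^π_i is negative. Performing such a switch should not increase any value and should strictly decrease the value of state i. Function names and the example MDP are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def evaluate(actions, policy):
    """Values y of a positional policy: solve (I - P_pi) y = c_pi."""
    n = len(actions)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    b = [0.0] * n
    for i, a in enumerate(policy):
        cost, probs = actions[i][a]
        b[i] = cost
        for j, p in probs.items():
            A[i][j] -= p
    return solve(A, b)


def improving_switches(actions, policy):
    """List of (modified cost, state, action) for all switches with negative modified cost."""
    y = evaluate(actions, policy)
    switches = []
    for i, acts in enumerate(actions):
        for a, (cost, probs) in enumerate(acts):
            modified = cost + sum(p * y[j] for j, p in probs.items()) - y[i]
            if a != policy[i] and modified < -1e-12:
                switches.append((modified, i, a))
    return switches


actions = [
    [(4.0, {}), (1.0, {1: 0.5})],
    [(2.0, {}), (0.5, {0: 0.5})],
]
old = (0, 0)
sw = improving_switches(actions, old)
print(sw)  # [(-2.0, 0, 1)]
_, i, a = sw[0]
new = tuple(a if k == i else old[k] for k in range(len(old)))
print(evaluate(actions, old), evaluate(actions, new))  # [4.0, 2.0] [2.0, 2.0]
```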

Improving a policy

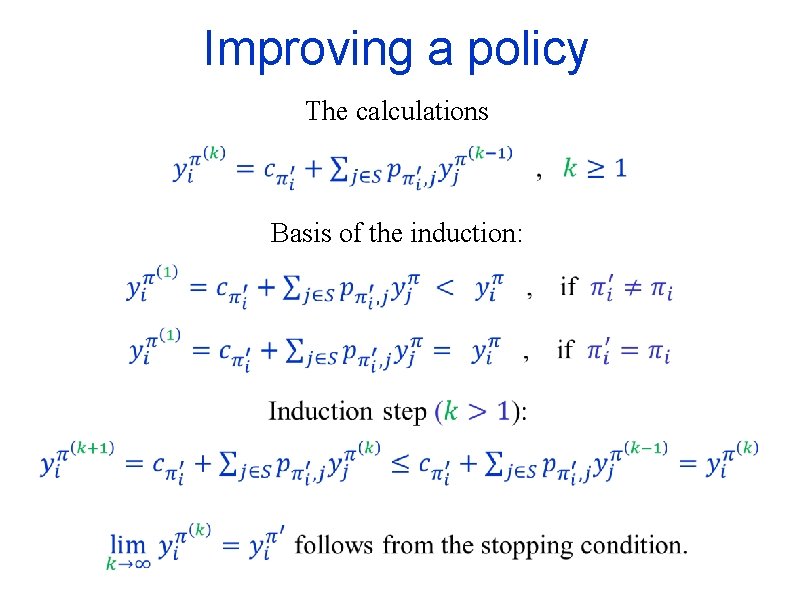

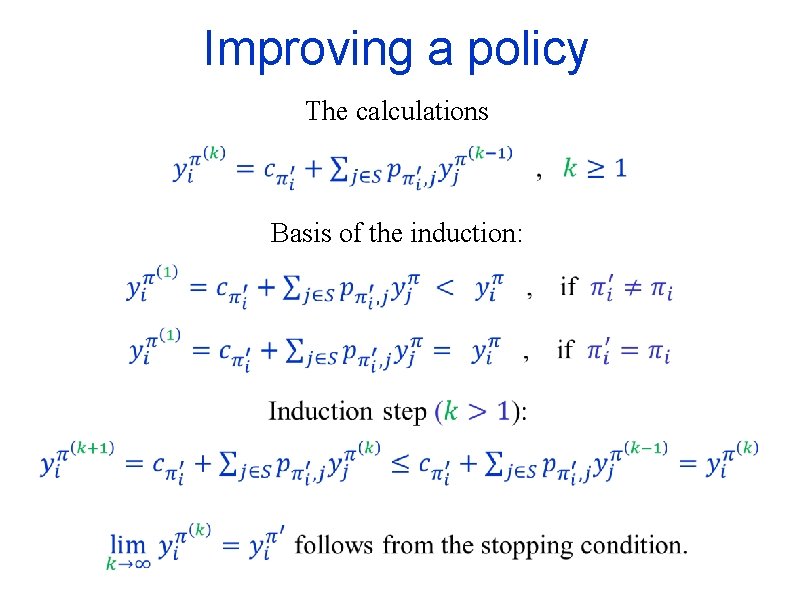

Improving a policy: the calculations. Basis of the induction:

Improving a policy (alternative proof):

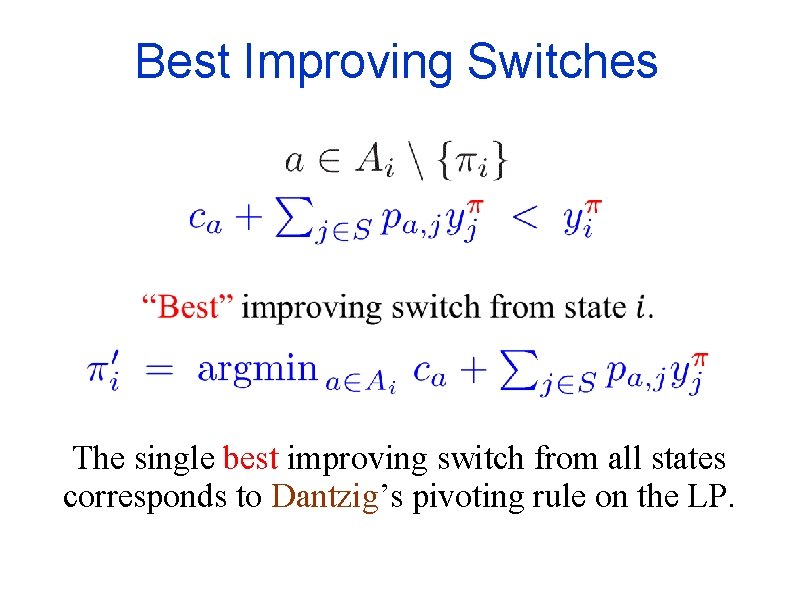

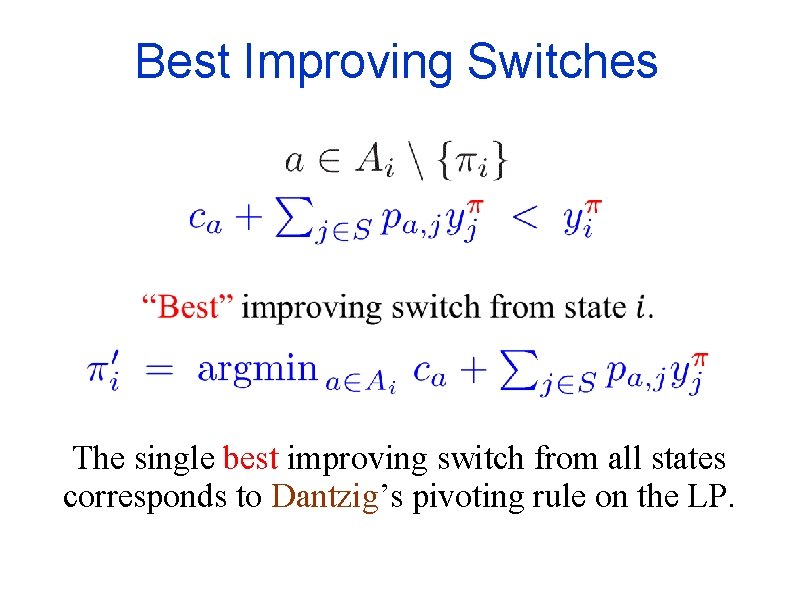

Best improving switches. Performing the single best improving switch over all states, i.e., the switch with the most negative modified cost, corresponds to Dantzig’s pivoting rule on the LP.
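A sketch of policy iteration that performs only the single best improving switch per iteration, i.e. the switch with the most negative modified cost over all states (the rule that corresponds to Dantzig's pivoting rule). Ties are broken by the lowest state index, an arbitrary choice of ours; the three-state MDP and all names are hypothetical.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def evaluate(actions, policy):
    """Values y of a positional policy: solve (I - P_pi) y = c_pi."""
    n = len(actions)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    b = [0.0] * n
    for i, a in enumerate(policy):
        cost, probs = actions[i][a]
        b[i] = cost
        for j, p in probs.items():
            A[i][j] -= p
    return solve(A, b)


def single_switch_policy_iteration(actions, policy):
    """Repeatedly apply the improving switch with the most negative modified cost."""
    policy = list(policy)
    switches = 0
    while True:
        y = evaluate(actions, policy)
        best = None  # (modified cost, state, action)
        for i, acts in enumerate(actions):
            for a, (cost, probs) in enumerate(acts):
                modified = cost + sum(p * y[j] for j, p in probs.items()) - y[i]
                if modified < -1e-12 and (best is None or modified < best[0]):
                    best = (modified, i, a)
        if best is None:  # no improving switch: the policy is optimal
            return tuple(policy), y, switches
        _, i, a = best
        policy[i] = a
        switches += 1


# Hypothetical cyclic MDP: each state either stops at cost 5, or pays 1 and
# moves to the next state (mod 3) with probability 1/2.
actions = [[(5.0, {}), (1.0, {(i + 1) % 3: 0.5})] for i in range(3)]
result = single_switch_policy_iteration(actions, (0, 0, 0))
print(result)  # policy (1, 1, 1), values approximately [2.0, 2.0, 2.0], after 3 switches
```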

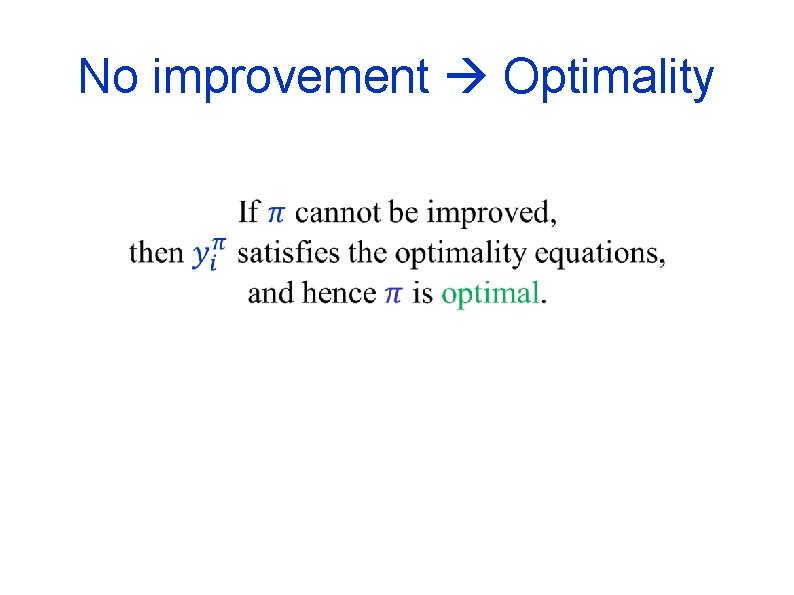

No improving switch implies optimality.

![Policy iteration for MDPs [Howard ’60]](https://slidetodoc.com/presentation_image_h/d983c196afbf760a8b7f6437a06b4641/image-42.jpg)

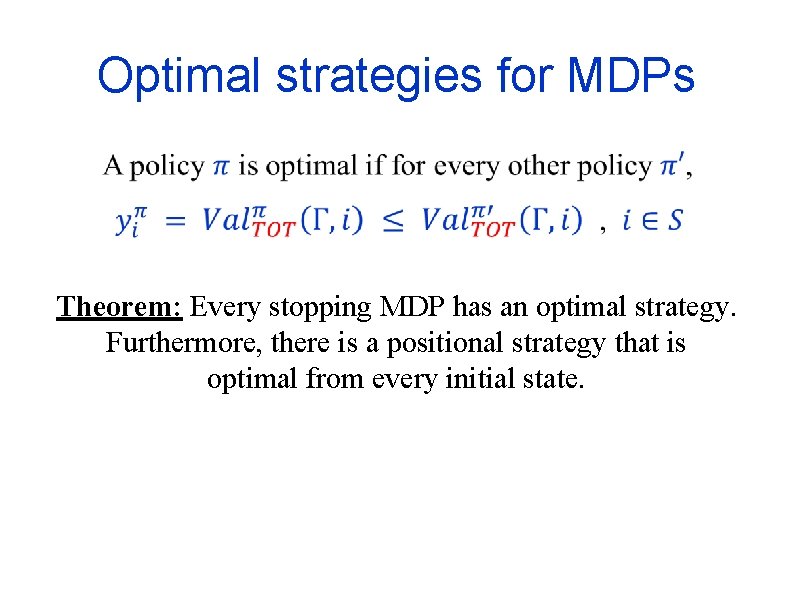

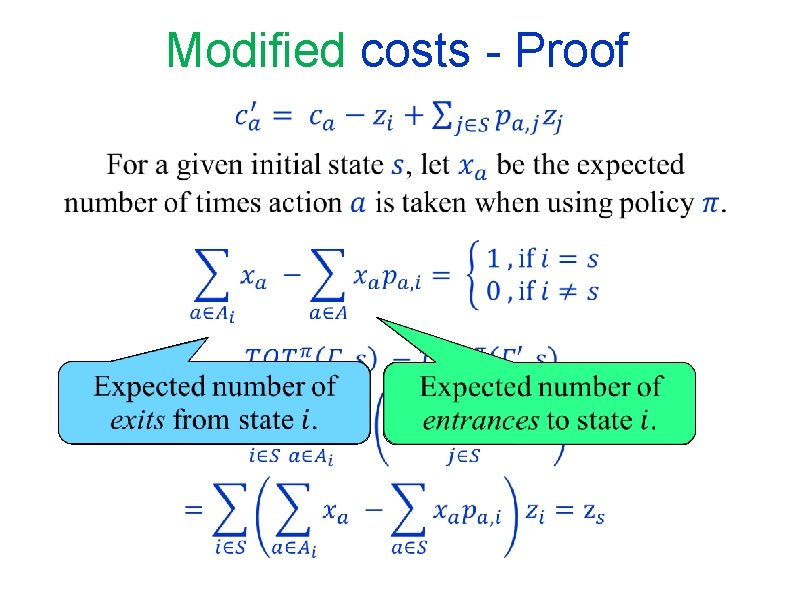

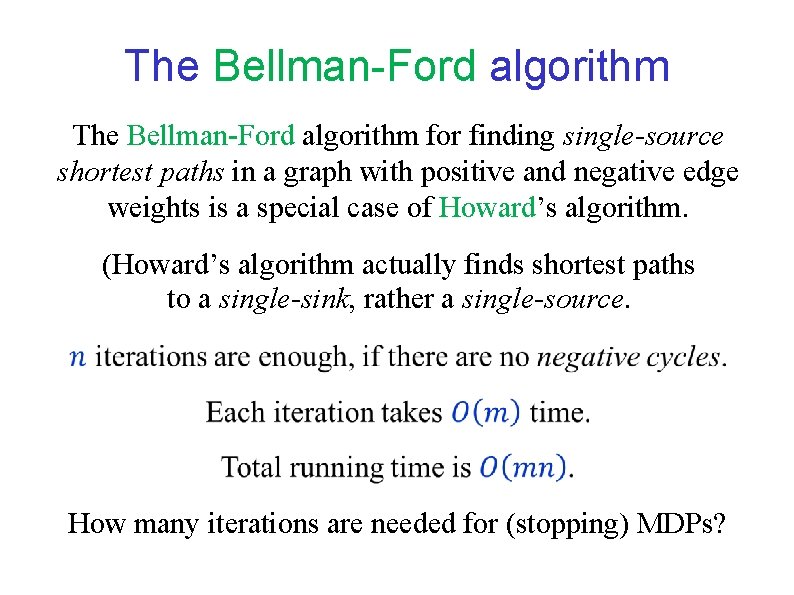

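Policy iteration for MDPs [Howard ’60]. Each iteration strictly improves the current policy, so no policy is visited twice. As there is only a finite number of positional policies, the algorithm must terminate, and when it does, no improving switch exists, so the final policy is optimal.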
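A minimal sketch of Howard's policy iteration under a hypothetical format (`actions[i]` is a list of `(cost, probs)` pairs, missing mass goes to the sink): evaluate the current policy, then in every state that has an improving switch, switch to an action minimizing c_a + Σ_j P_{a,j} y_j; stop when no state has one. Keeping the current action unless some action is strictly better (by a small tolerance, our choice) avoids cycling between tied actions. On the assumed three-state example, all three states switch at once, so a single improvement step suffices.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0.0:
                f = M[r][col] / M[col][col]
                for k in range(col, n + 1):
                    M[r][k] -= f * M[col][k]
    return [M[i][n] / M[i][i] for i in range(n)]


def evaluate(actions, policy):
    """Values y of a positional policy: solve (I - P_pi) y = c_pi."""
    n = len(actions)
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    b = [0.0] * n
    for i, a in enumerate(policy):
        cost, probs = actions[i][a]
        b[i] = cost
        for j, p in probs.items():
            A[i][j] -= p
    return solve(A, b)


def howard_policy_iteration(actions, policy):
    """Switch every state with an improving switch to a best action, until none remains."""
    policy = list(policy)
    iterations = 0
    while True:
        y = evaluate(actions, policy)
        new_policy = list(policy)
        for i, acts in enumerate(actions):
            q = [cost + sum(p * y[j] for j, p in probs.items()) for cost, probs in acts]
            best = min(range(len(acts)), key=lambda a: q[a])
            if q[best] < y[i] - 1e-12:  # strictly improving switch at state i
                new_policy[i] = best
        if new_policy == policy:
            return tuple(policy), y, iterations
        policy = new_policy
        iterations += 1


# Hypothetical cyclic MDP: each state either stops at cost 5, or pays 1 and
# moves to the next state (mod 3) with probability 1/2.
actions = [[(5.0, {}), (1.0, {(i + 1) % 3: 0.5})] for i in range(3)]
result = howard_policy_iteration(actions, (0, 0, 0))
print(result)  # policy (1, 1, 1), values approximately [2.0, 2.0, 2.0], after 1 iteration
```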

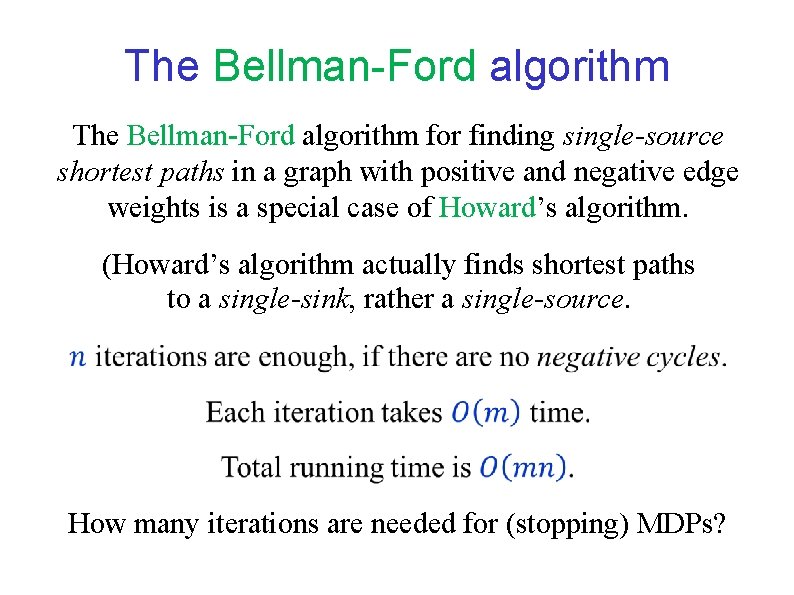

The Bellman-Ford algorithm for finding single-source shortest paths in a graph with positive and negative edge weights is a special case of Howard’s algorithm. (Howard’s algorithm actually finds shortest paths to a single sink, rather than from a single source.) How many iterations are needed for (stopping) MDPs?

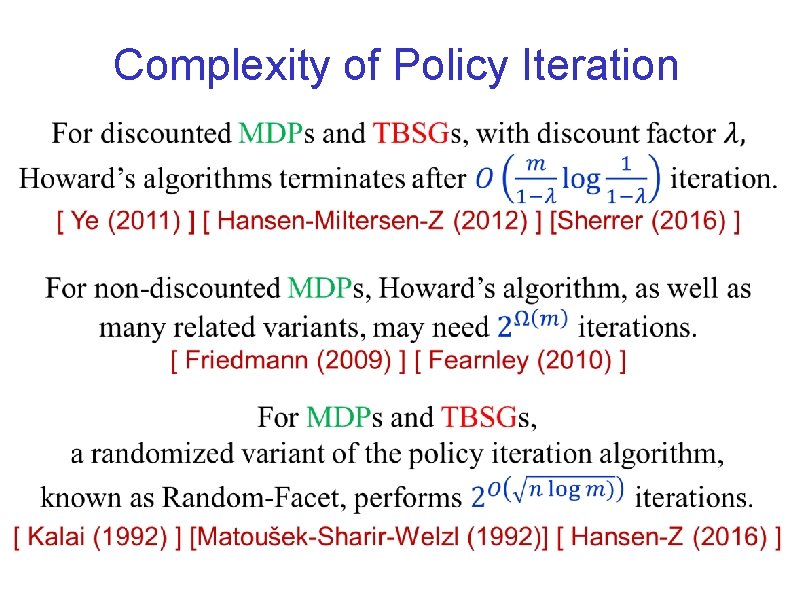

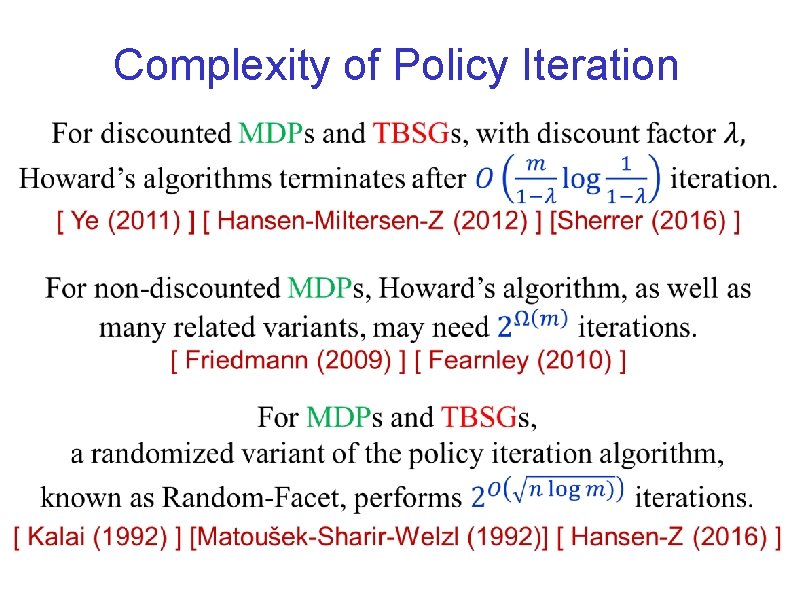

Complexity of Policy Iteration

END of LECTURE 2
