Exploiting Inter-Warp Heterogeneity to Improve GPGPU Performance


- Slides: 45

Exploiting Inter-Warp Heterogeneity to Improve GPGPU Performance

Rachata Ausavarungnirun, Saugata Ghose, Onur Kayiran, Gabriel H. Loh, Chita Das, Mahmut Kandemir, Onur Mutlu

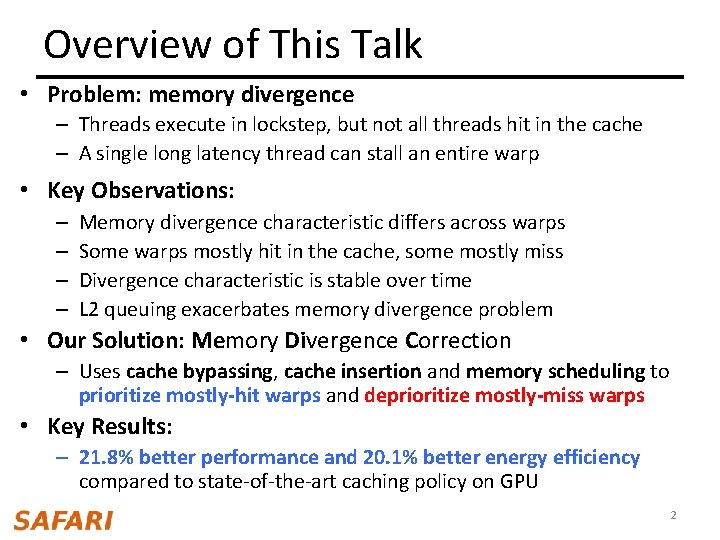

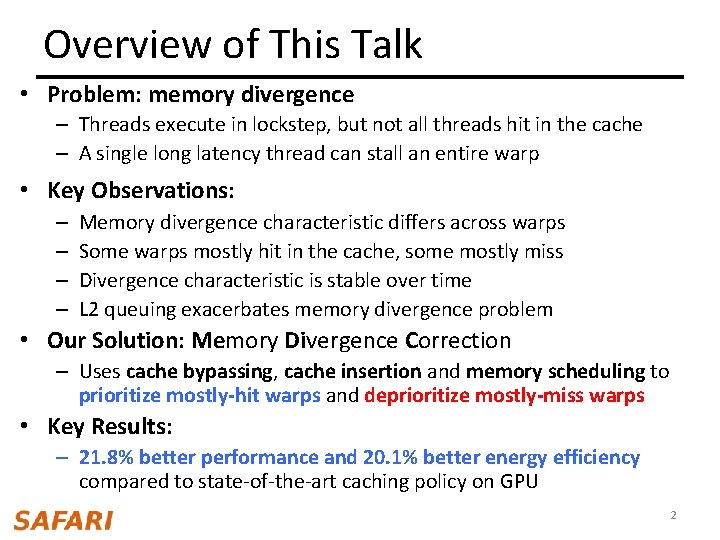

Overview of This Talk

- Problem: memory divergence
  - Threads execute in lockstep, but not all threads hit in the cache
  - A single long-latency thread can stall an entire warp
- Key Observations:
  - Memory divergence characteristic differs across warps
  - Some warps mostly hit in the cache, some mostly miss
  - Divergence characteristic is stable over time
  - L2 queuing exacerbates the memory divergence problem
- Our Solution: Memory Divergence Correction
  - Uses cache bypassing, cache insertion and memory scheduling to prioritize mostly-hit warps and deprioritize mostly-miss warps
- Key Results:
  - 21.8% better performance and 20.1% better energy efficiency compared to the state-of-the-art caching policy on GPUs

Outline

- Background
- Key Observations
- Memory Divergence Correction (MeDiC)
- Results

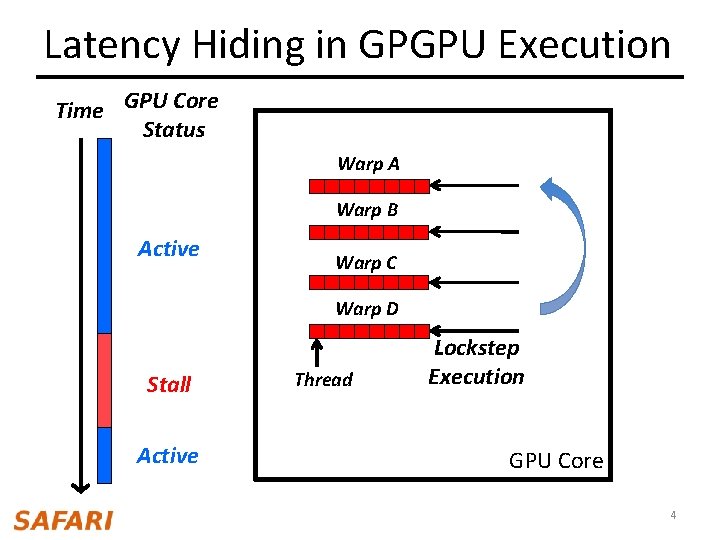

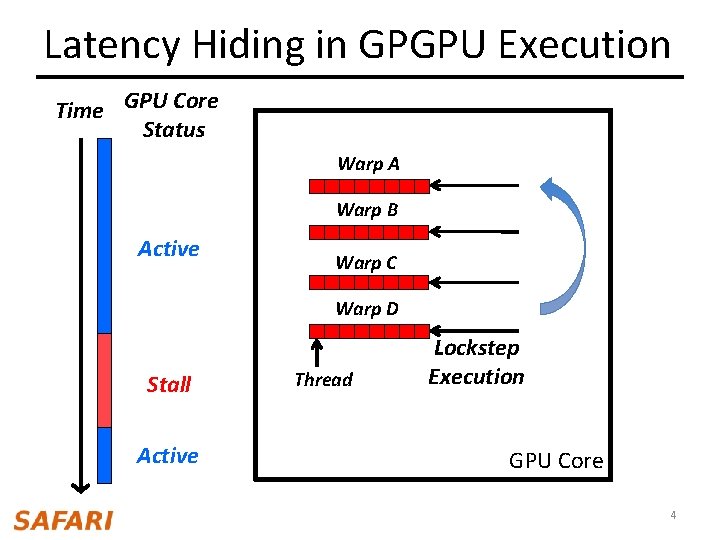

Latency Hiding in GPGPU Execution

[Figure: GPU core status over execution time for Warps A to D (active or stalled), with the threads of a warp executing in lockstep on the GPU core.]

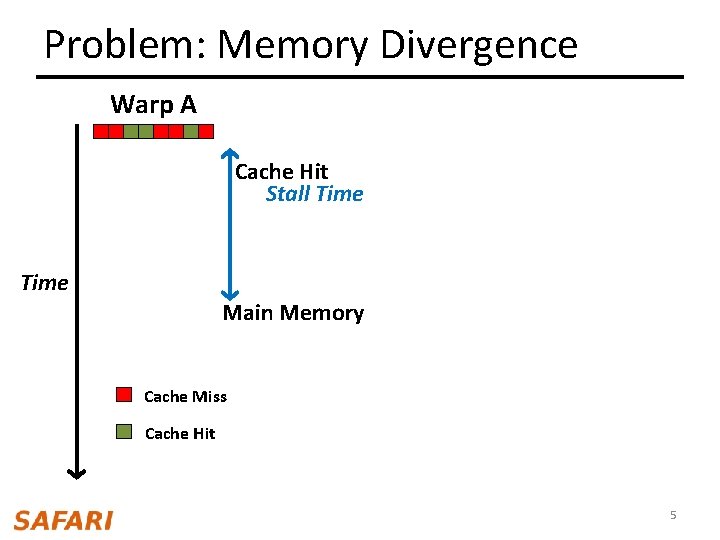

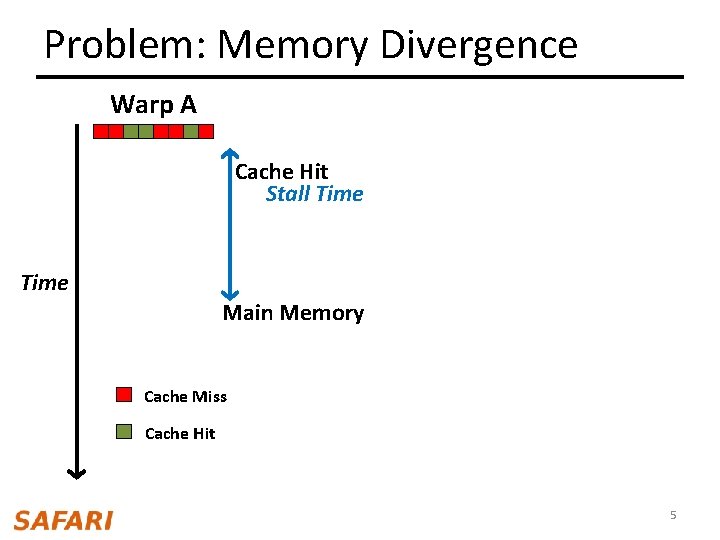

Problem: Memory Divergence

[Figure: stall time of Warp A when some of its threads hit in the cache and others miss and go to main memory.]

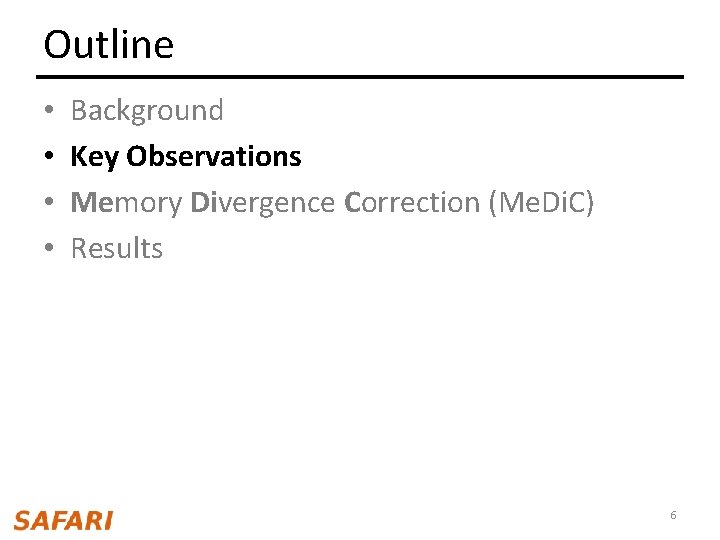


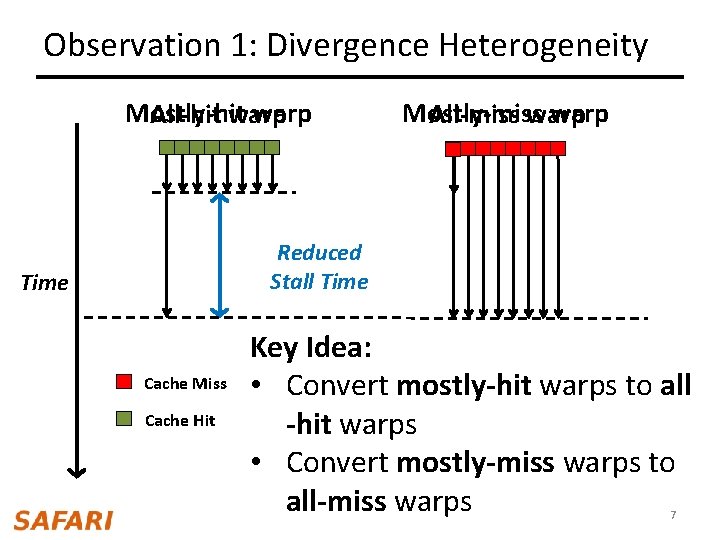

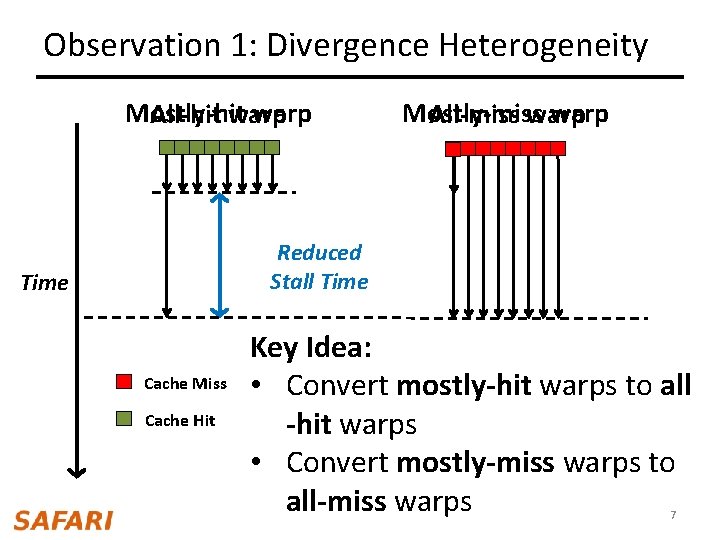

Observation 1: Divergence Heterogeneity

[Figure: cache hits and misses for a mostly-hit, an all-hit, a mostly-miss and an all-miss warp, with the reduced stall time marked.]

Key Idea:

- Convert mostly-hit warps to all-hit warps
- Convert mostly-miss warps to all-miss warps
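The key idea follows from lockstep execution: a warp resumes only when its slowest memory request returns. The sketch below illustrates this with a toy model. The two latency constants and the 32-thread warp size are illustrative assumptions, not numbers from the slides.

```python
# Toy model of warp stall time under lockstep execution.
# HIT_LATENCY and MISS_LATENCY are hypothetical cycle counts chosen for illustration.
HIT_LATENCY = 100
MISS_LATENCY = 400


def warp_stall_time(hits):
    """Return the stall time of a warp given one hit/miss flag per thread.

    The warp waits for its slowest thread, so the stall time is the
    maximum latency over all threads.
    """
    return max(HIT_LATENCY if hit else MISS_LATENCY for hit in hits)


# Four example warps of 32 threads each (warp size is an assumption).
mostly_hit = [True] * 31 + [False]
all_hit = [True] * 32
mostly_miss = [False] * 31 + [True]
all_miss = [False] * 32

for name, warp in [("mostly-hit", mostly_hit), ("all-hit", all_hit),
                   ("mostly-miss", mostly_miss), ("all-miss", all_miss)]:
    print(name, warp_stall_time(warp))
```

In this model a single miss makes a mostly-hit warp stall as long as an all-miss warp, so removing that miss shortens the stall. The single hit in a mostly-miss warp does not shorten the stall at all, so giving it up costs that warp nothing.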

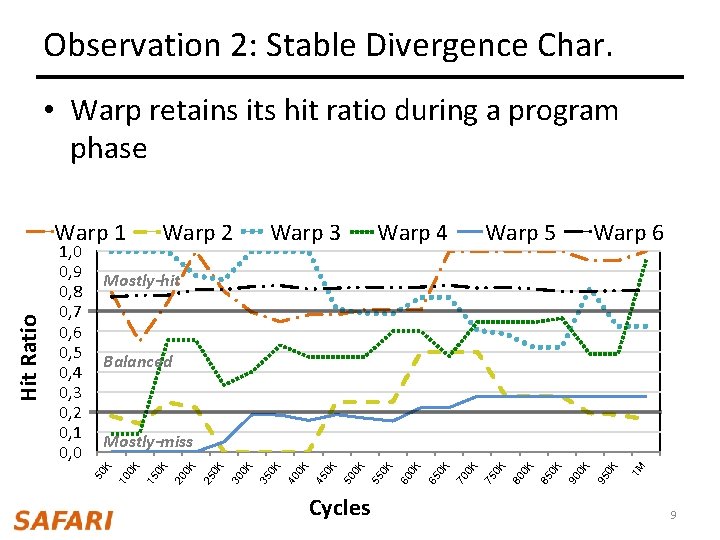

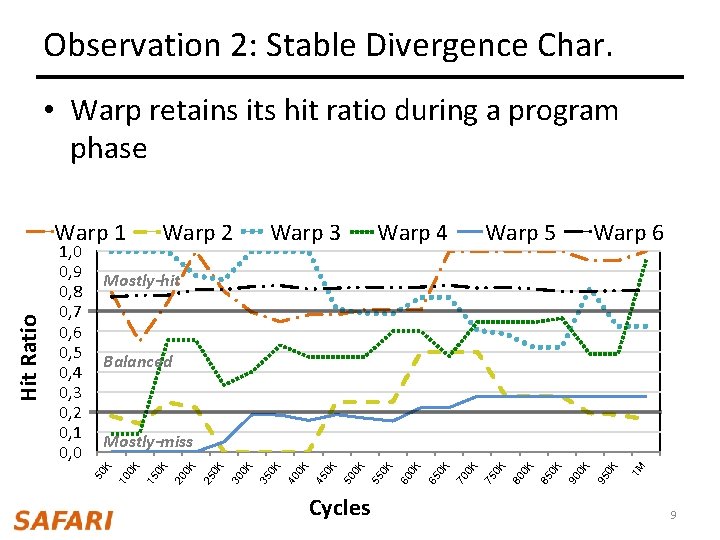

Observation 2: Stable Divergence Characteristics

- Warp retains its hit ratio during a program phase
  - Hit ratio = number of hits / number of accesses

[Figure: hit ratio (0.0 to 1.0) of Warps 1 to 6 over cycles (up to 1M), with mostly-hit, balanced and mostly-miss regions marked.]

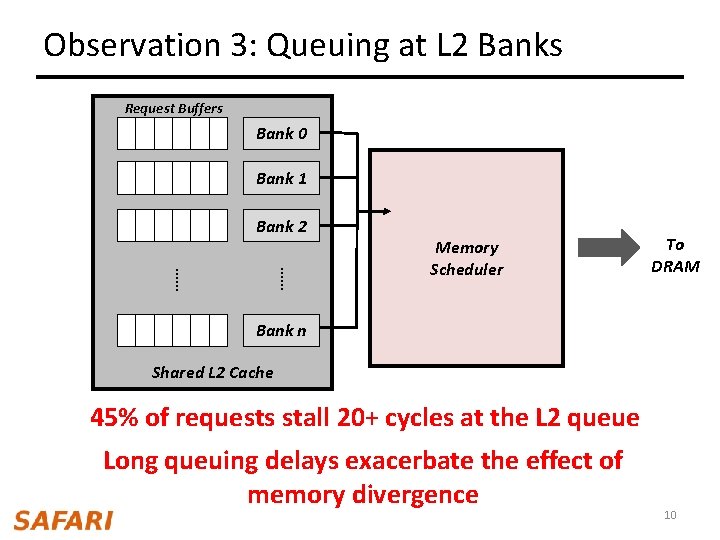

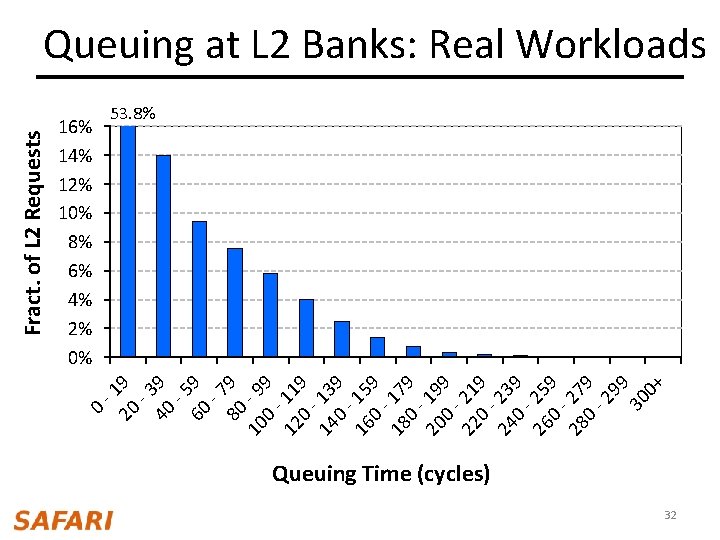

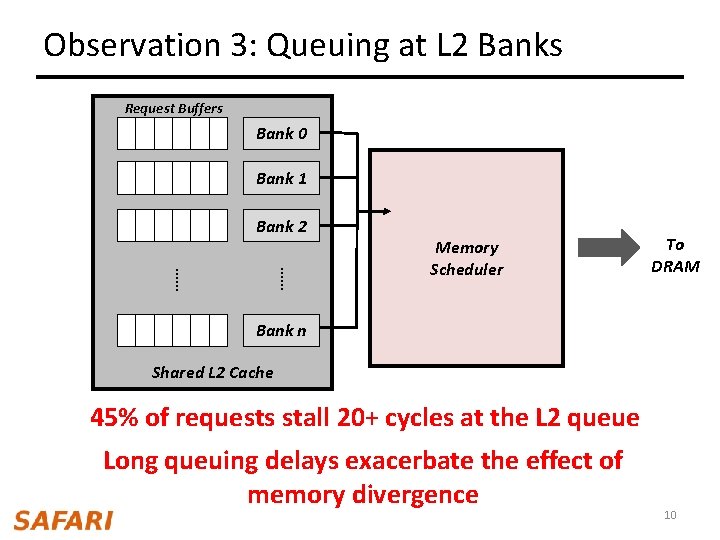

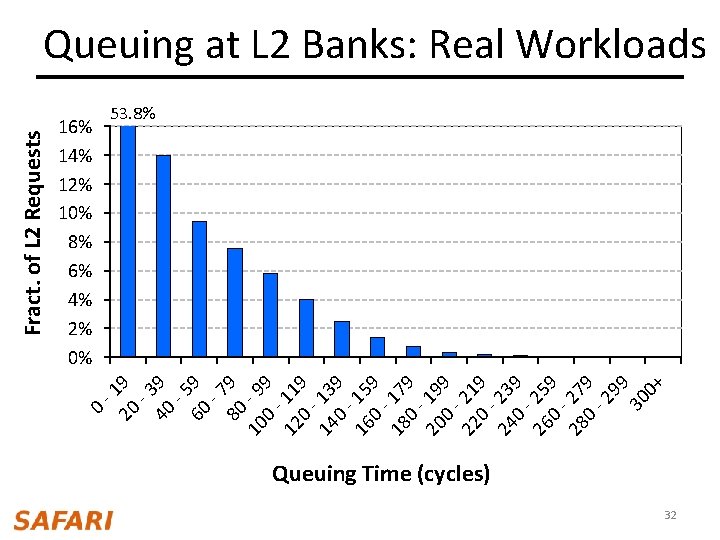

Observation 3: Queuing at L2 Banks

[Figure: request buffers in front of Banks 0 to n of the shared L2 cache, feeding the memory scheduler and DRAM.]

- 45% of requests stall 20+ cycles at the L2 queue
- Long queuing delays exacerbate the effect of memory divergence


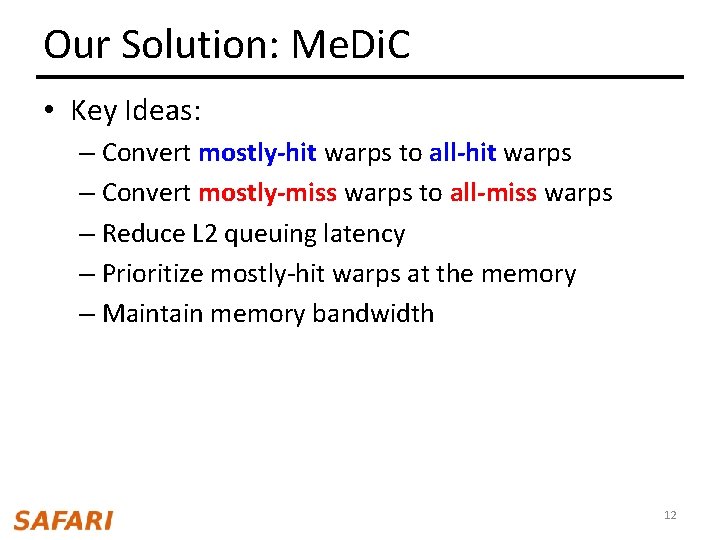

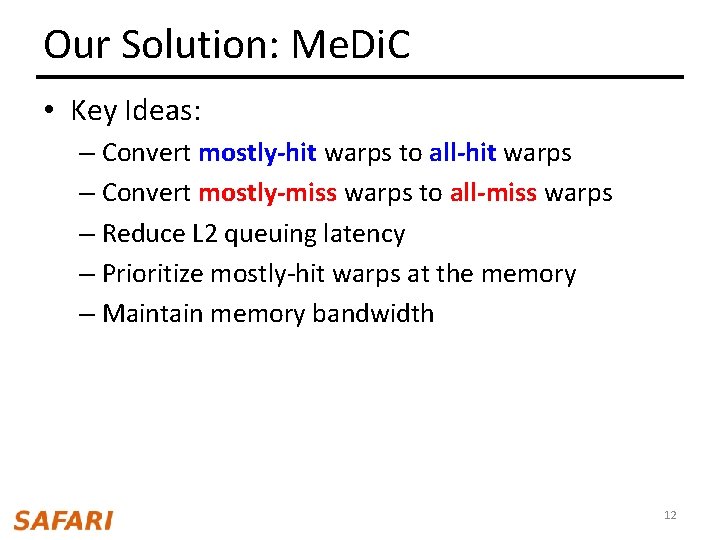

Our Solution: MeDiC

- Key Ideas:
  - Convert mostly-hit warps to all-hit warps
  - Convert mostly-miss warps to all-miss warps
  - Reduce L2 queuing latency
  - Prioritize mostly-hit warps at the memory
  - Maintain memory bandwidth

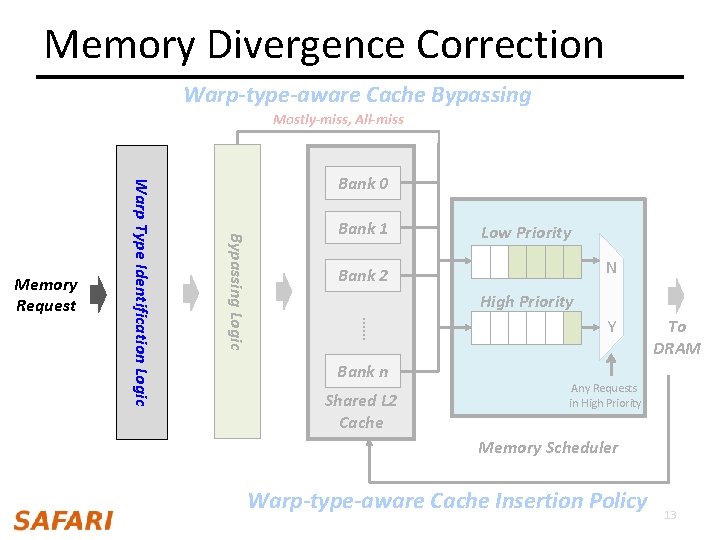

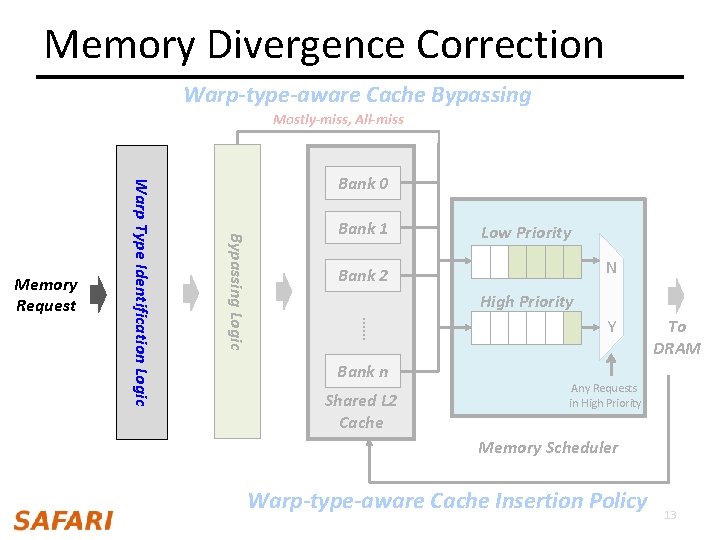

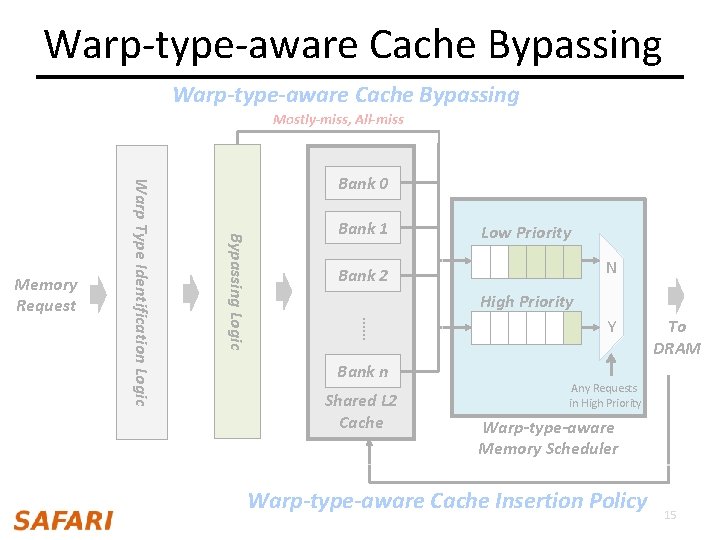

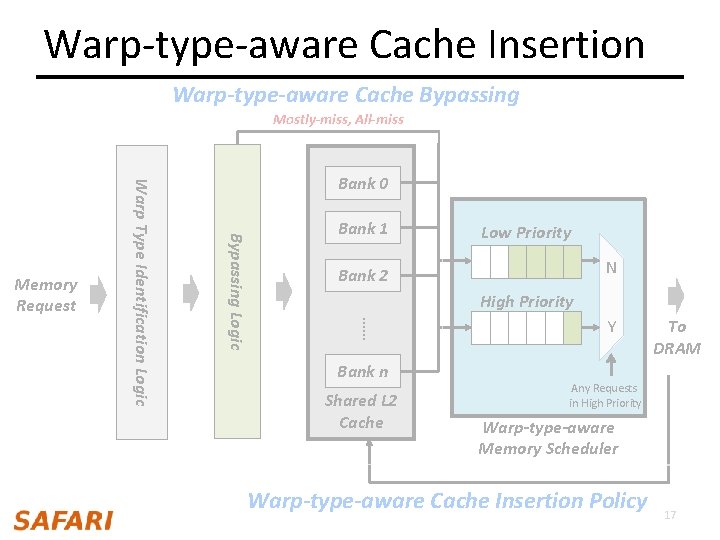

Memory Divergence Correction

[Figure: MeDiC block diagram. Warp type identification logic tags each memory request. Bypassing logic sends requests from mostly-miss and all-miss warps past the shared L2 cache (Banks 0 to n) to DRAM. The memory scheduler checks whether any requests are in the high-priority queue and otherwise serves the low-priority queue. Components shown: warp-type-aware cache bypassing, warp-type-aware cache insertion policy and warp-type-aware memory scheduler.]

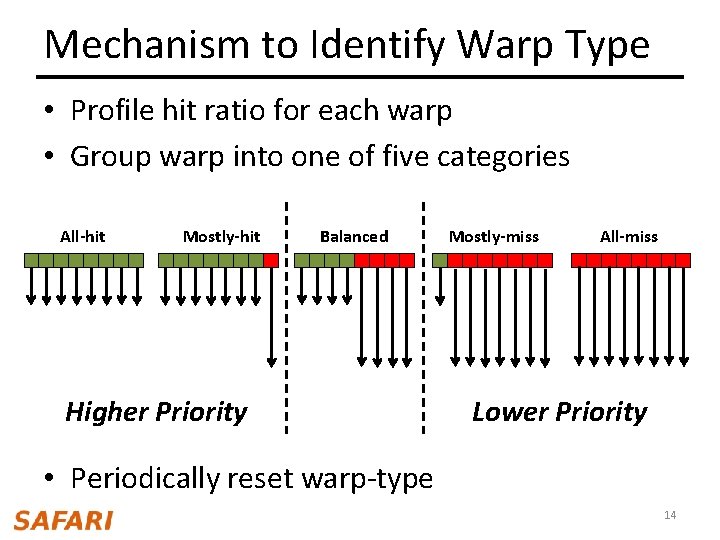

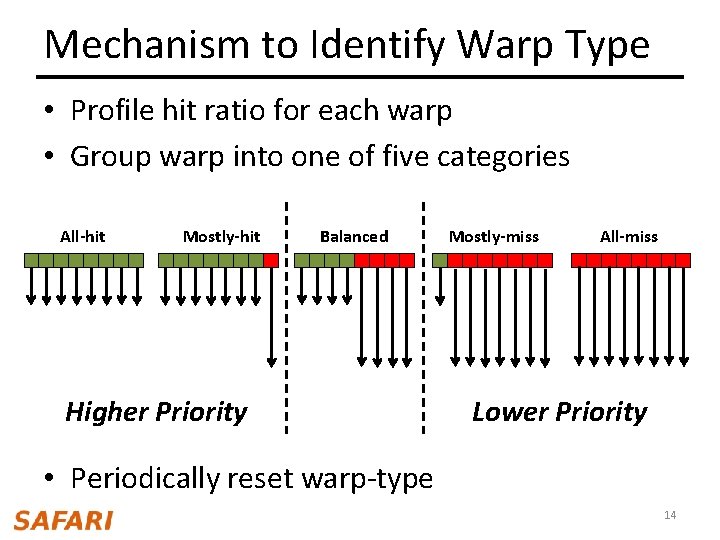

Mechanism to Identify Warp Type

- Profile hit ratio for each warp
- Group warp into one of five categories, from higher to lower priority: all-hit, mostly-hit, balanced, mostly-miss, all-miss
- Periodically reset warp-type
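A minimal sketch of the profiling step is shown below. The slides do not give the cut-off values between categories or the reset period, so `MOSTLY_HIT_MIN`, `MOSTLY_MISS_MAX` and `RESET_INTERVAL` are hypothetical values, and resetting after a fixed number of accesses is an assumption made for simplicity.

```python
from dataclasses import dataclass

# Hypothetical thresholds and reset period; the slides do not state them.
MOSTLY_HIT_MIN = 0.7
MOSTLY_MISS_MAX = 0.2
RESET_INTERVAL = 1000  # accesses between resets (assumption)


@dataclass
class WarpProfile:
    """Per-warp hit/access counters used to derive a warp type."""
    hits: int = 0
    accesses: int = 0

    def record(self, hit: bool) -> None:
        # Periodic reset so the type can follow a change of program phase.
        if self.accesses >= RESET_INTERVAL:
            self.hits = 0
            self.accesses = 0
        self.accesses += 1
        self.hits += int(hit)

    def warp_type(self) -> str:
        if self.accesses == 0:
            return "balanced"  # no data yet: fall back to the neutral category
        if self.hits == self.accesses:
            return "all-hit"
        if self.hits == 0:
            return "all-miss"
        ratio = self.hits / self.accesses
        if ratio >= MOSTLY_HIT_MIN:
            return "mostly-hit"
        if ratio <= MOSTLY_MISS_MAX:
            return "mostly-miss"
        return "balanced"


profile = WarpProfile()
for i in range(10):
    profile.record(hit=(i != 0))  # 9 hits out of 10 accesses
print(profile.warp_type())
```

Comparing integer counters for the all-hit and all-miss cases avoids any floating-point equality test on the ratio.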

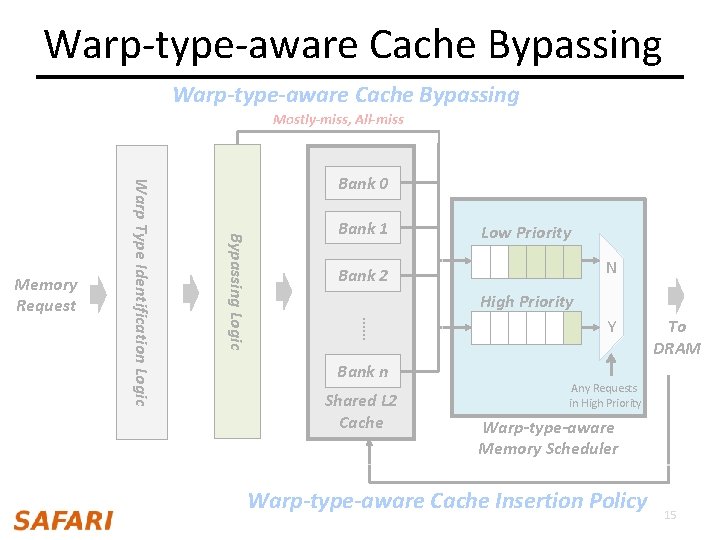


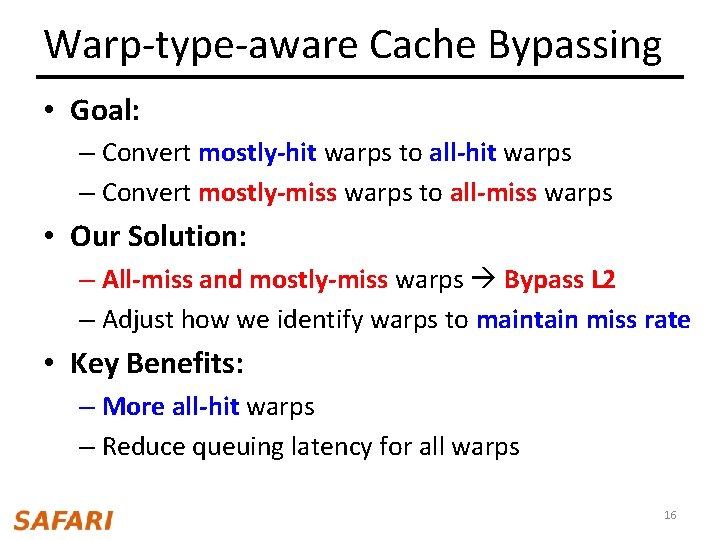

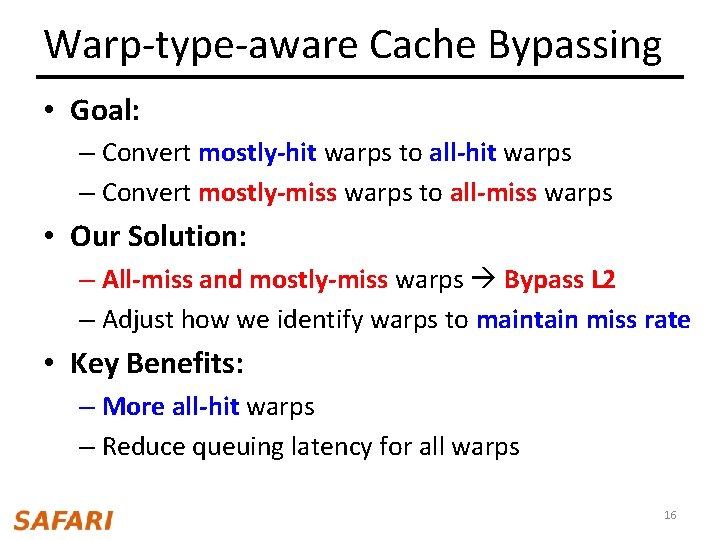

Warp-type-aware Cache Bypassing

- Goal:
  - Convert mostly-hit warps to all-hit warps
  - Convert mostly-miss warps to all-miss warps
- Our Solution:
  - All-miss and mostly-miss warps bypass the L2
  - Adjust how we identify warps to maintain miss rate
- Key Benefits:
  - More all-hit warps
  - Reduce queuing latency for all warps
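The bypass decision itself can be sketched as a lookup on the warp type. The function names and the string labels below are hypothetical, and the sketch leaves out the threshold adjustment that the slide mentions for maintaining the miss rate.

```python
# Warp types whose requests skip the L2, as listed on the slide.
BYPASS_TYPES = {"mostly-miss", "all-miss"}


def route_request(warp_type: str) -> str:
    """Return the first destination of a request: 'dram' if it bypasses the L2, else 'l2'."""
    return "dram" if warp_type in BYPASS_TYPES else "l2"


def l2_queue_load(warp_types) -> int:
    """Count how many requests would still enter the L2 request queue."""
    return sum(1 for warp_type in warp_types if route_request(warp_type) == "l2")


requests = ["all-hit", "mostly-miss", "balanced", "all-miss", "mostly-hit"]
print([route_request(warp_type) for warp_type in requests])
print(l2_queue_load(requests), "of", len(requests), "requests enter the L2 queue")
```

Every bypassed request is one fewer entry in the L2 queue, which is the source of the lower queuing latency for the warps that still use the cache.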

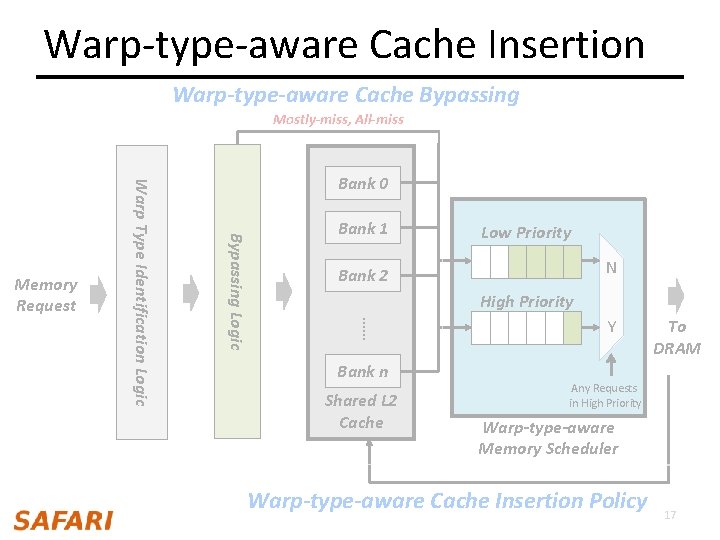


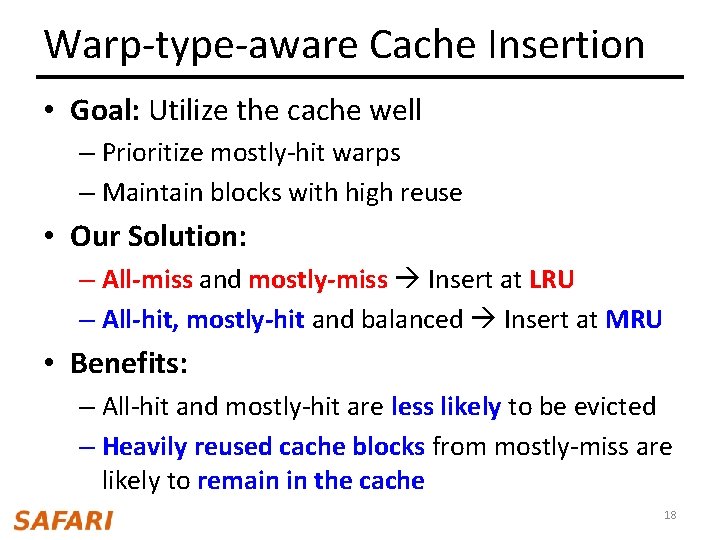

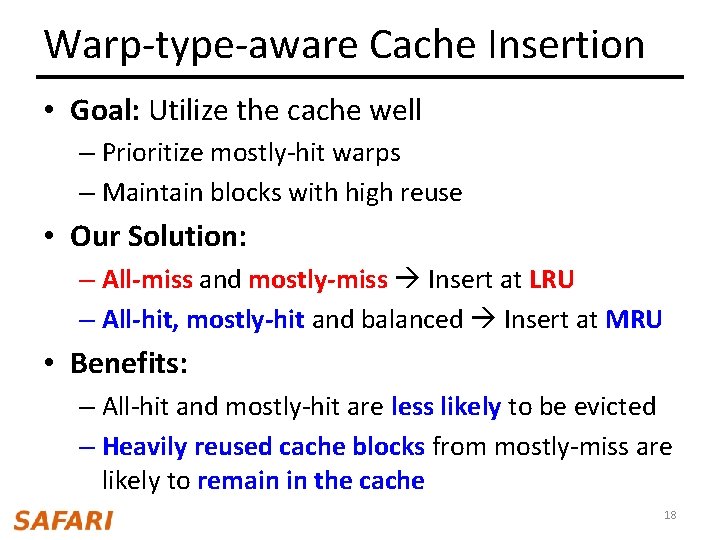

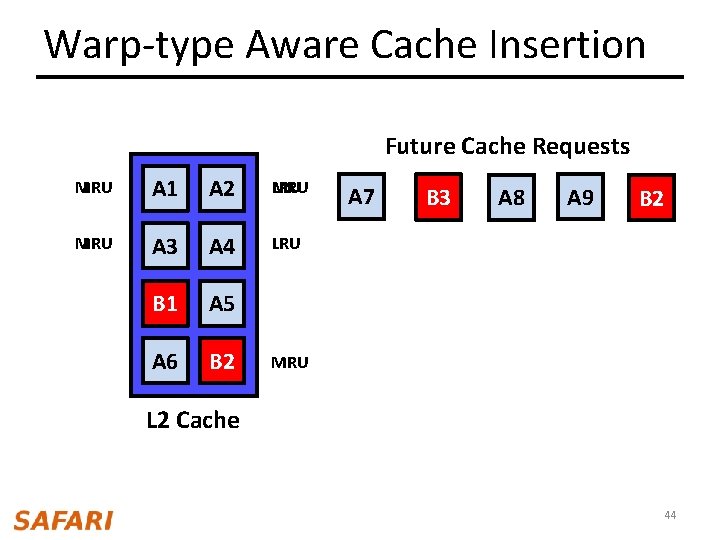

Warp-type-aware Cache Insertion

- Goal: Utilize the cache well
  - Prioritize mostly-hit warps
  - Maintain blocks with high reuse
- Our Solution:
  - All-miss and mostly-miss: insert at LRU
  - All-hit, mostly-hit and balanced: insert at MRU
- Benefits:
  - Blocks from all-hit and mostly-hit warps are less likely to be evicted
  - Heavily reused cache blocks from mostly-miss warps are likely to remain in the cache
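The insertion rule can be illustrated on a single cache set kept as a recency-ordered list. This is a simplified sketch: the `CacheSet` class is hypothetical, and promoting a block to MRU on every hit regardless of warp type is an assumption about the underlying replacement policy.

```python
# Warp types whose blocks are inserted at the MRU position, as listed on the slide.
MRU_TYPES = {"all-hit", "mostly-hit", "balanced"}


class CacheSet:
    """One cache set; blocks[0] is the LRU position, blocks[-1] is MRU."""

    def __init__(self, ways: int) -> None:
        self.ways = ways
        self.blocks = []

    def access(self, addr: str, warp_type: str) -> bool:
        """Access a block and return True on a hit."""
        if addr in self.blocks:
            # Hit: promote to MRU (assumed standard LRU behaviour).
            self.blocks.remove(addr)
            self.blocks.append(addr)
            return True
        if len(self.blocks) == self.ways:
            self.blocks.pop(0)  # evict the block in the LRU position
        if warp_type in MRU_TYPES:
            self.blocks.append(addr)      # insert at MRU
        else:
            self.blocks.insert(0, addr)   # insert at LRU
        return False


cache_set = CacheSet(ways=2)
cache_set.access("A", "mostly-hit")   # miss, A inserted at MRU
cache_set.access("B", "mostly-miss")  # miss, B inserted at LRU
cache_set.access("C", "all-miss")     # miss, evicts B rather than A
print(cache_set.blocks)
```

A block brought in by a mostly-miss warp starts in the LRU position, so it is the first eviction candidate unless it is reused. A single reuse moves it to MRU, which is how heavily reused blocks stay resident.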

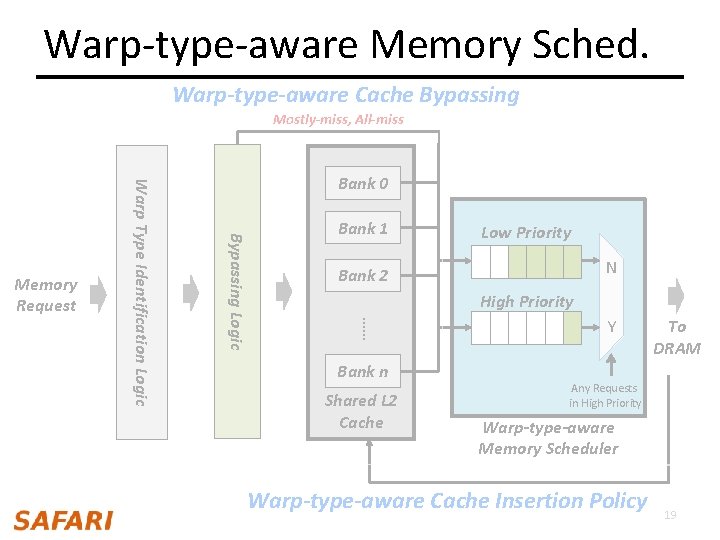


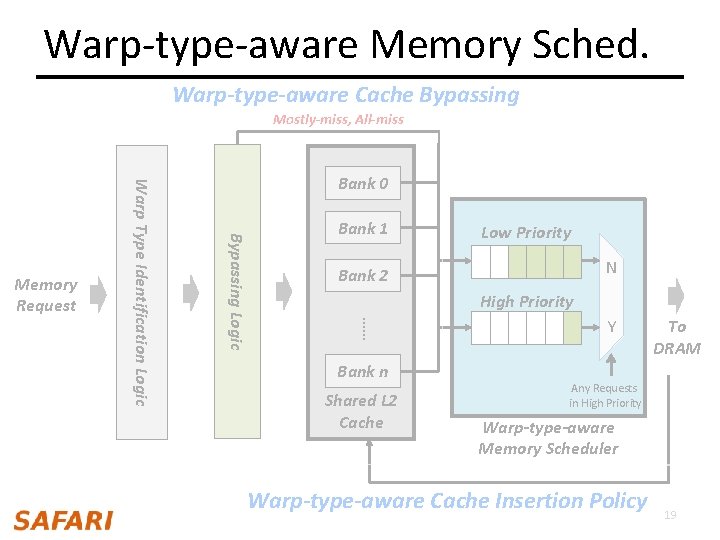

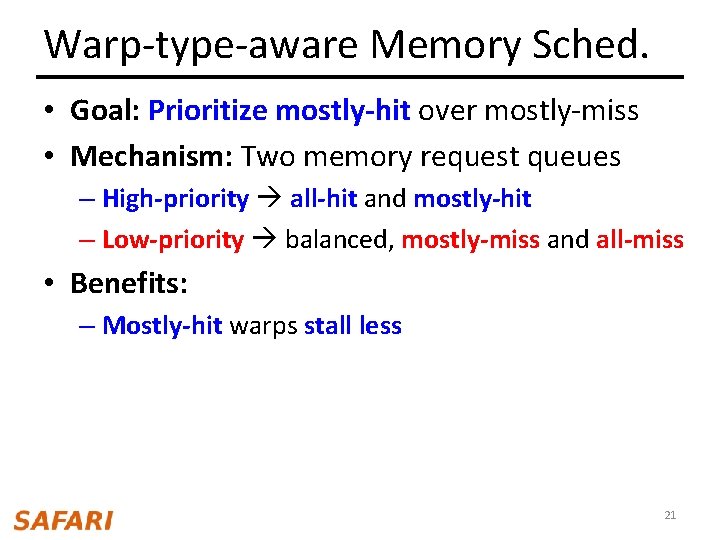

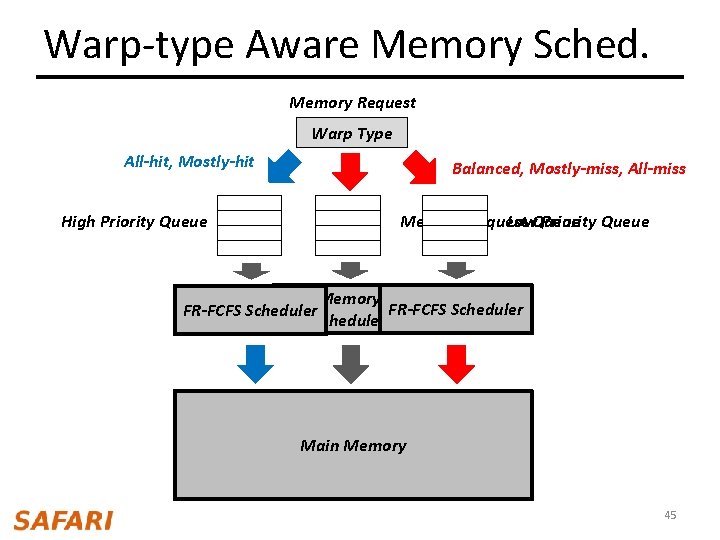

Not All Blocks Can Be Cached

- Despite best efforts, accesses from mostly-hit warps can still miss in the cache
  - Compulsory misses
  - Cache thrashing
- Solution: Warp-type-aware memory scheduler

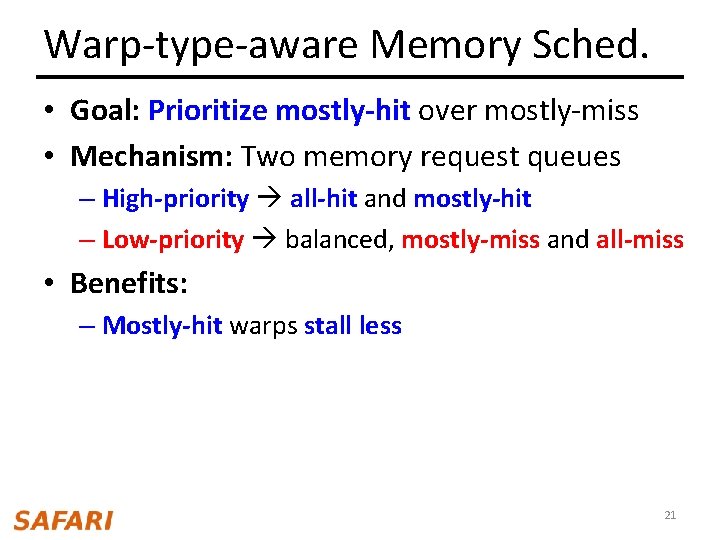

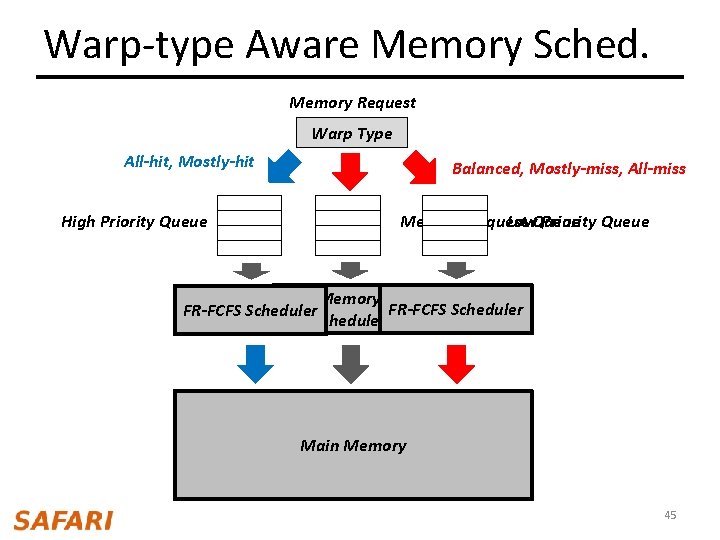

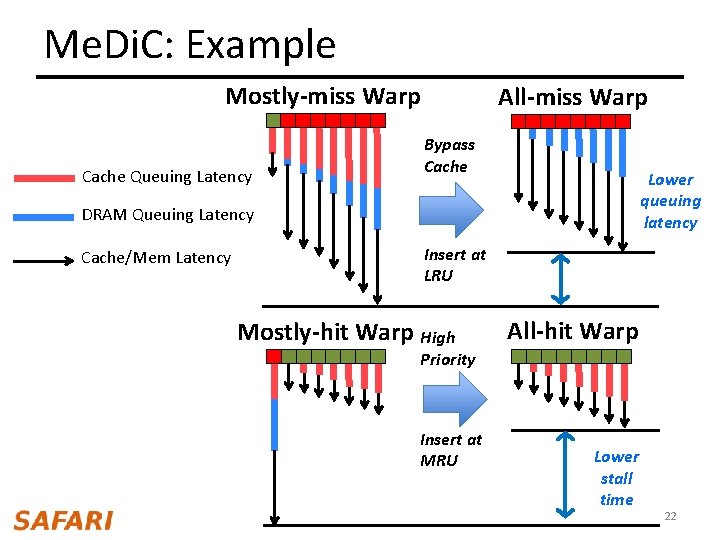

Warp-type-aware Memory Sched.

- Goal: Prioritize mostly-hit over mostly-miss
- Mechanism: Two memory request queues
  - High-priority: all-hit and mostly-hit
  - Low-priority: balanced, mostly-miss and all-miss
- Benefits:
  - Mostly-hit warps stall less
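The two-queue mechanism can be sketched as below. The `TwoQueueScheduler` class and its method names are hypothetical, and each queue is served in plain first-come-first-served order as a simplification; the sketch does not model DRAM row-buffer state or any scheduling policy applied within a queue.

```python
from collections import deque

# Warp types routed to the high-priority queue, as listed on the slide.
HIGH_PRIORITY_TYPES = {"all-hit", "mostly-hit"}


class TwoQueueScheduler:
    """Serve the high-priority queue whenever it holds a request."""

    def __init__(self) -> None:
        self.high = deque()
        self.low = deque()

    def enqueue(self, request_id: str, warp_type: str) -> None:
        queue = self.high if warp_type in HIGH_PRIORITY_TYPES else self.low
        queue.append(request_id)

    def next_request(self):
        """Return the next request to issue, or None if both queues are empty."""
        if self.high:
            return self.high.popleft()
        if self.low:
            return self.low.popleft()
        return None


scheduler = TwoQueueScheduler()
scheduler.enqueue("r0", "mostly-miss")
scheduler.enqueue("r1", "all-hit")
scheduler.enqueue("r2", "balanced")
scheduler.enqueue("r3", "mostly-hit")
order = [scheduler.next_request() for _ in range(4)]
print(order)
```

Requests from mostly-hit and all-hit warps overtake earlier low-priority requests, so the few misses those warps still suffer are served sooner.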

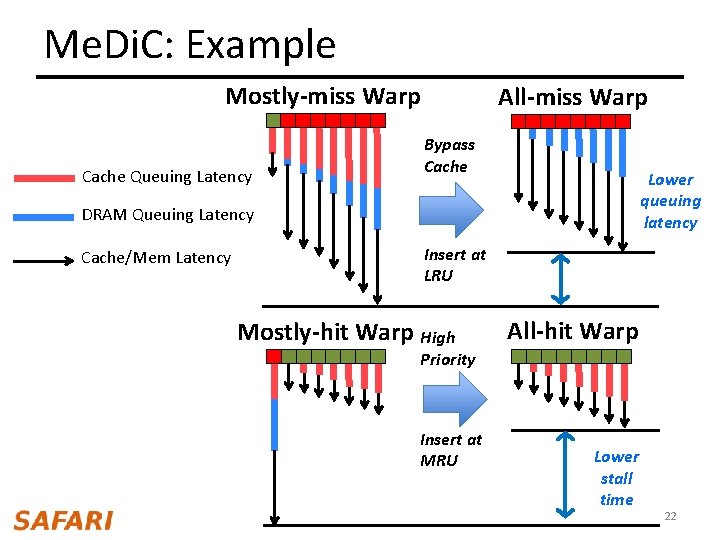

MeDiC: Example

[Figure: example timelines split into cache queuing latency, DRAM queuing latency and cache/memory latency. Mostly-miss and all-miss warps bypass the cache and insert at LRU, giving lower queuing latency. Mostly-hit and all-hit warps get high priority and insert at MRU, giving lower stall time.]


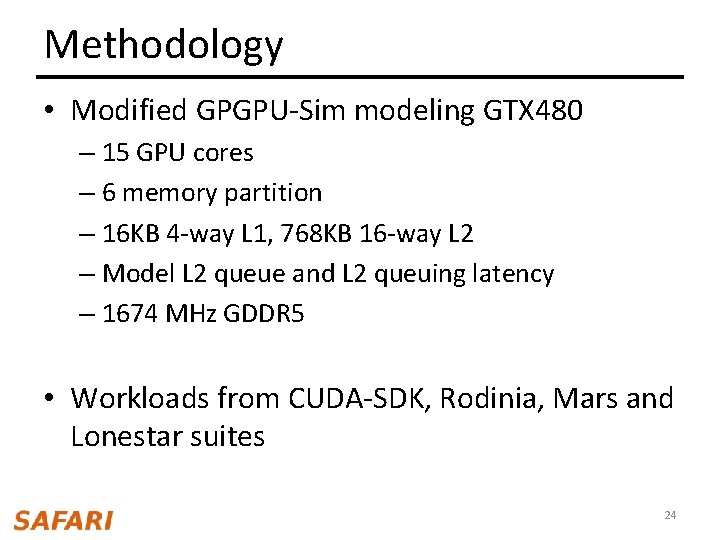

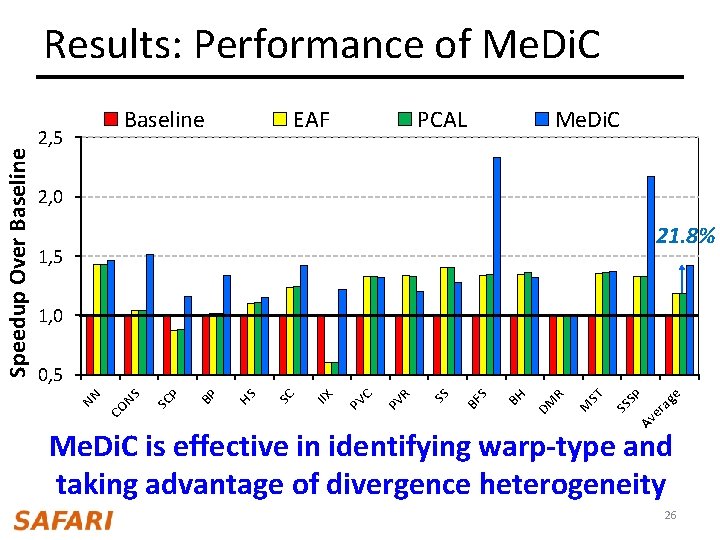

Methodology

- Modified GPGPU-Sim modeling GTX 480
  - 15 GPU cores
  - 6 memory partitions
  - 16 KB 4-way L1, 768 KB 16-way L2
  - Model L2 queue and L2 queuing latency
  - 1674 MHz GDDR5
- Workloads from CUDA-SDK, Rodinia, Mars and Lonestar suites

![Comparison Points](https://slidetodoc.com/presentation_image_h2/916821022955a40af737ab9cf81b2d4a/image-25.jpg)

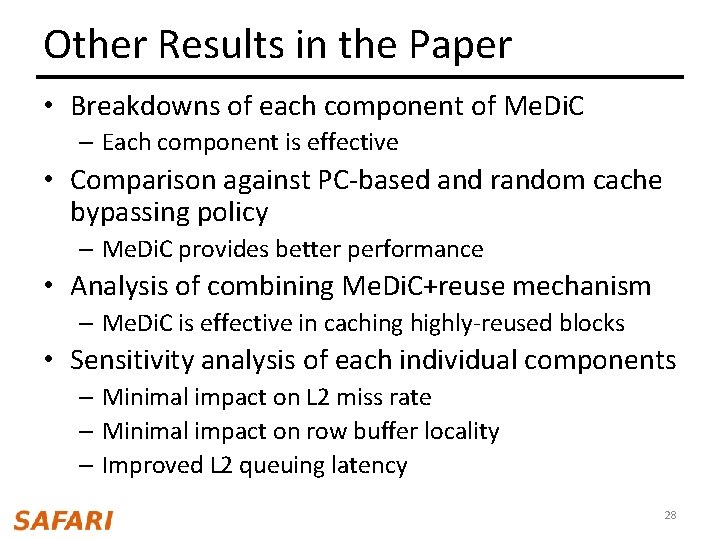

Comparison Points

- FR-FCFS baseline [Rixner+, ISCA’00]
- Cache Insertion:
  - EAF [Seshadri+, PACT’12]
    - Tracks blocks that are recently evicted to detect high reuse and inserts them at the MRU position
    - Does not take divergence heterogeneity into account
    - Does not lower queuing latency
- Cache Bypassing:
  - PCAL [Li+, HPCA’15]
    - Uses tokens to limit the number of warps that get to access the L2 cache, which lowers cache thrashing
    - Warps with highly reused accesses get more priority
    - Does not take divergence heterogeneity into account
  - PC-based and random bypassing policies

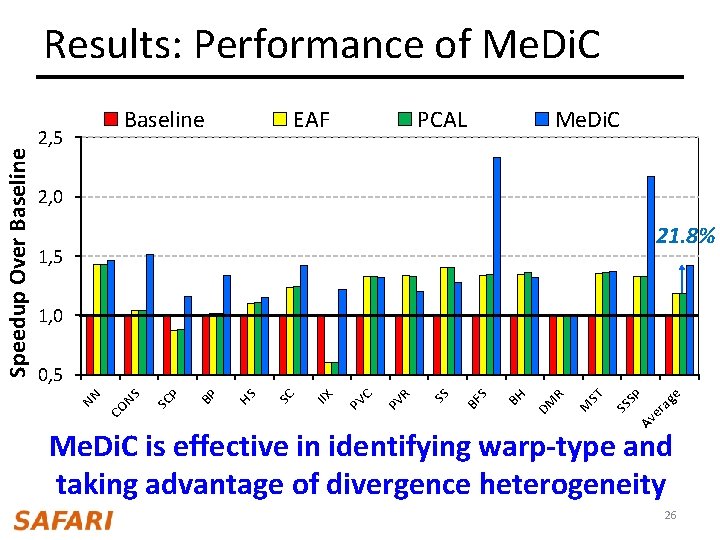

Results: Performance of MeDiC

[Figure: speedup over baseline for Baseline, EAF, PCAL and MeDiC on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average; annotated value: 21.8%.]

MeDiC is effective in identifying warp-type and taking advantage of divergence heterogeneity

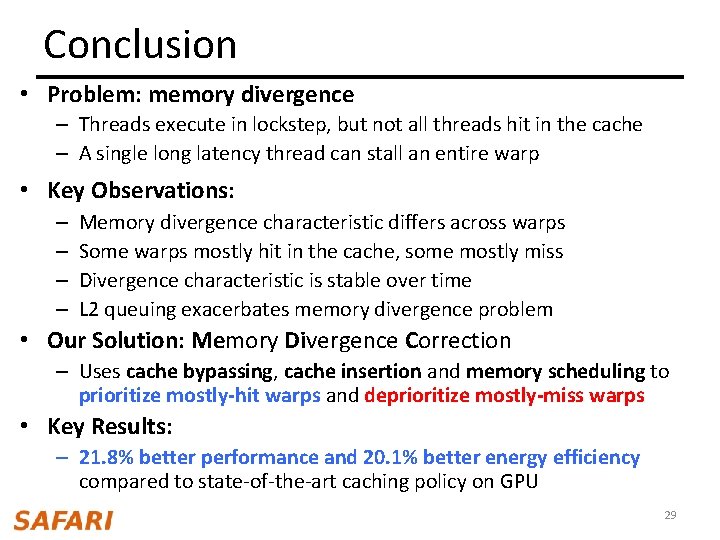

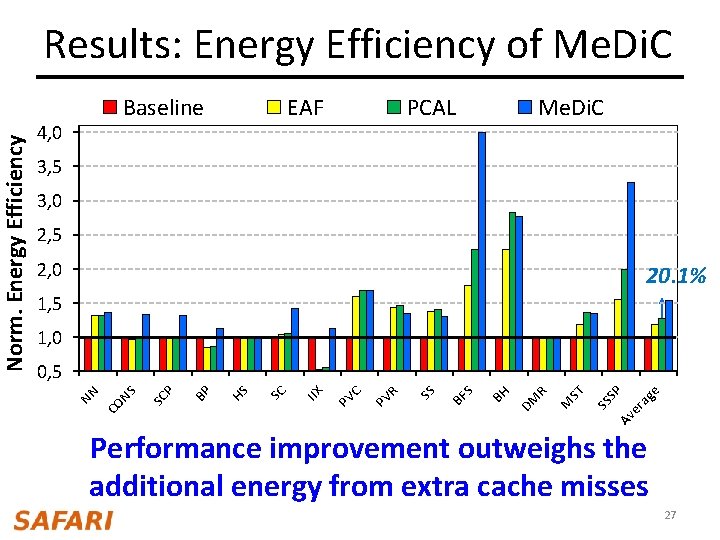

Results: Energy Efficiency of MeDiC

[Figure: normalized energy efficiency for Baseline, EAF, PCAL and MeDiC on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average; annotated value: 20.1%.]

Performance improvement outweighs the additional energy from extra cache misses

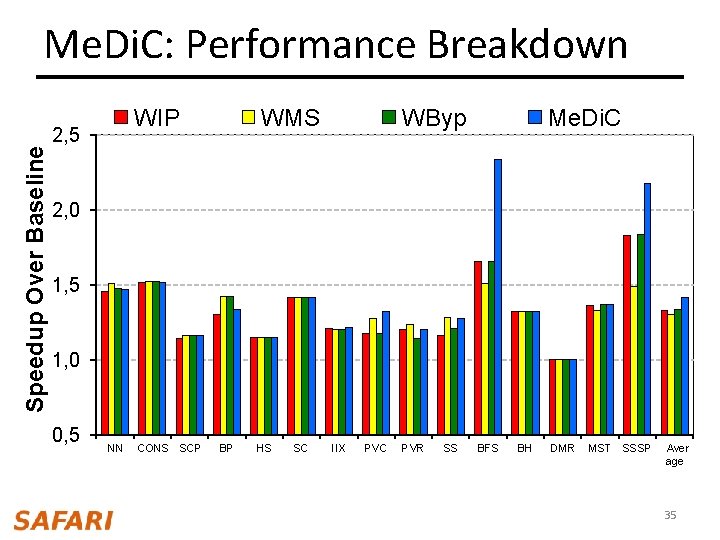

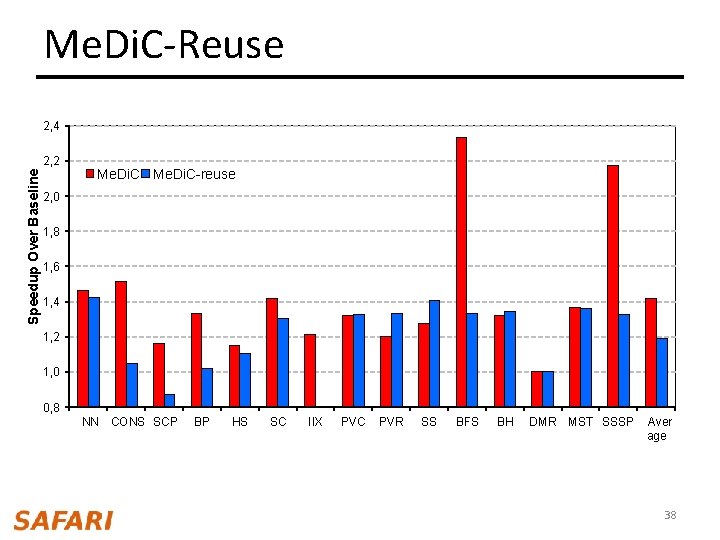

Other Results in the Paper

- Breakdowns of each component of MeDiC
  - Each component is effective
- Comparison against PC-based and random cache bypassing policies
  - MeDiC provides better performance
- Analysis of combining MeDiC + reuse mechanism
  - MeDiC is effective in caching highly-reused blocks
- Sensitivity analysis of each individual component
  - Minimal impact on L2 miss rate
  - Minimal impact on row buffer locality
  - Improved L2 queuing latency

Conclusion

- Problem: memory divergence
  - Threads execute in lockstep, but not all threads hit in the cache
  - A single long-latency thread can stall an entire warp
- Key Observations:
  - Memory divergence characteristic differs across warps
  - Some warps mostly hit in the cache, some mostly miss
  - Divergence characteristic is stable over time
  - L2 queuing exacerbates the memory divergence problem
- Our Solution: Memory Divergence Correction
  - Uses cache bypassing, cache insertion and memory scheduling to prioritize mostly-hit warps and deprioritize mostly-miss warps
- Key Results:
  - 21.8% better performance and 20.1% better energy efficiency compared to the state-of-the-art caching policy on GPUs


Backup Slides

Queuing at L2 Banks: Real Workloads

[Figure: histogram of the fraction of L2 requests (0% to 16%) by queuing time in cycles, in 20-cycle bins up to 300+; annotated value: 53.8%.]

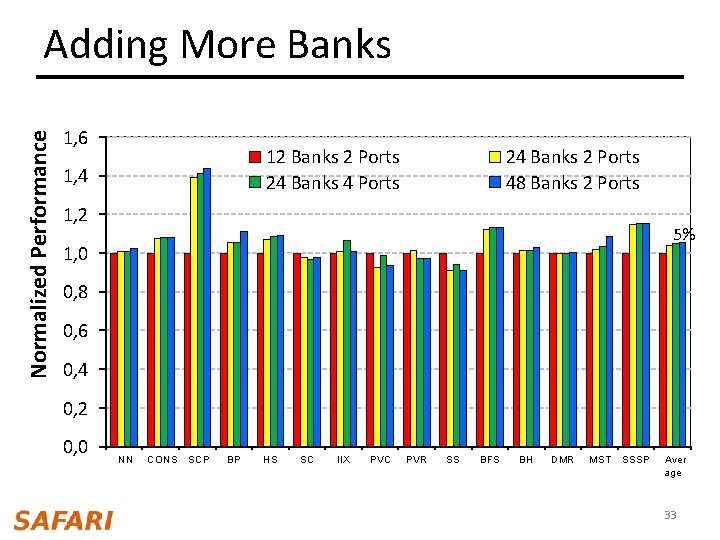

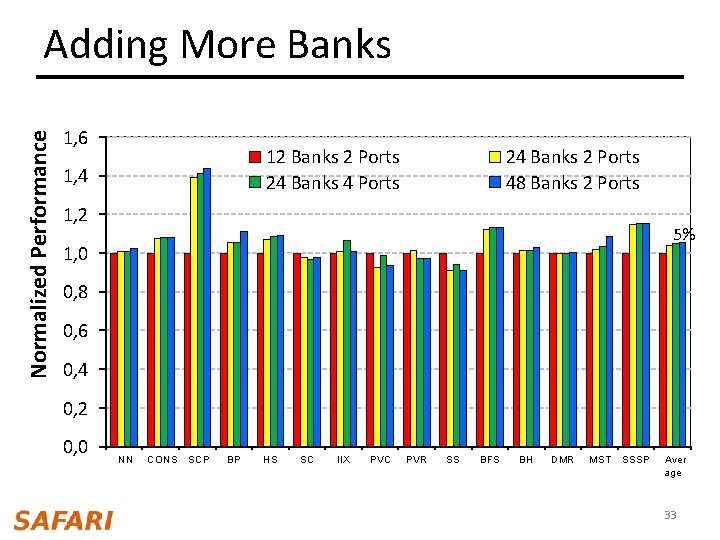

Adding More Banks

[Figure: normalized performance on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average for four L2 configurations: 12 banks with 2 ports, 24 banks with 2 ports, 24 banks with 4 ports and 48 banks with 2 ports; annotated value: 5%.]

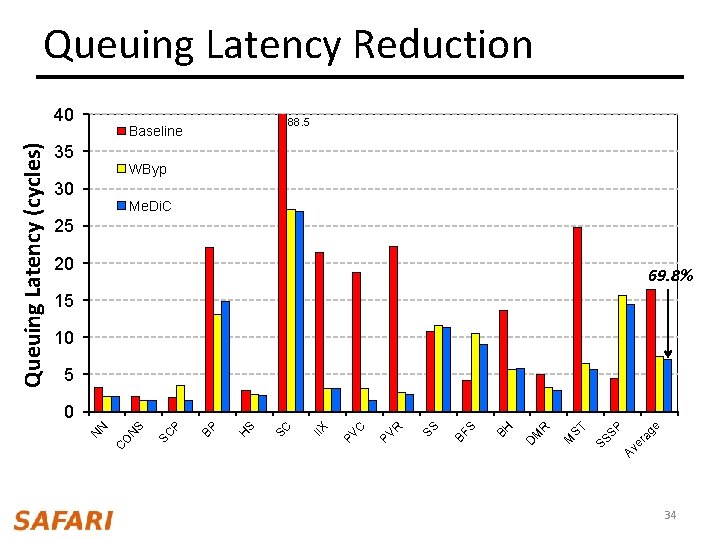

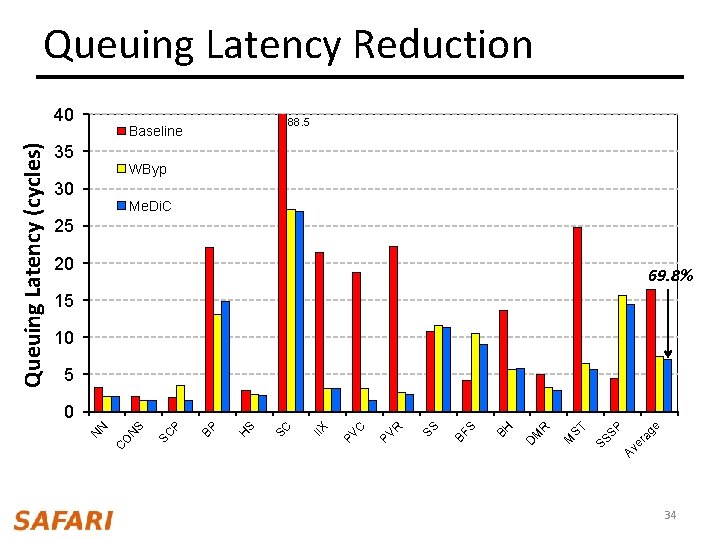

Queuing Latency Reduction

[Figure: queuing latency (cycles) for Baseline, WByp and MeDiC on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average; annotated values: 88.5 and 69.8%.]

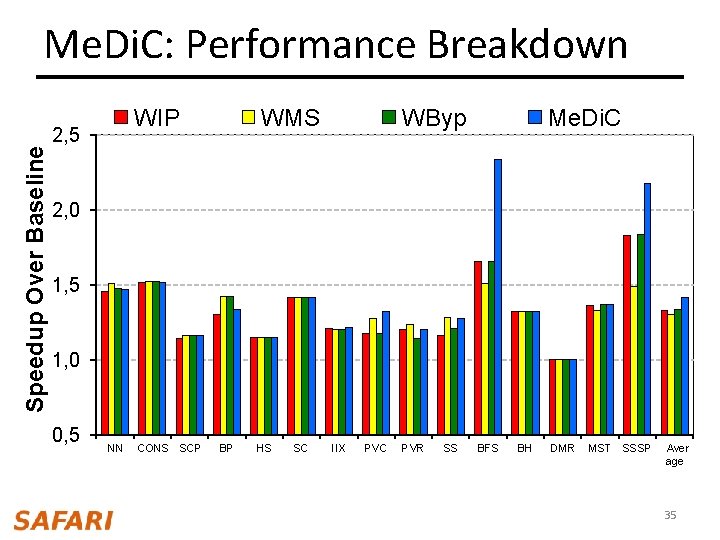

MeDiC: Performance Breakdown

[Figure: speedup over baseline for WIP, WMS, WByp and MeDiC on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average.]

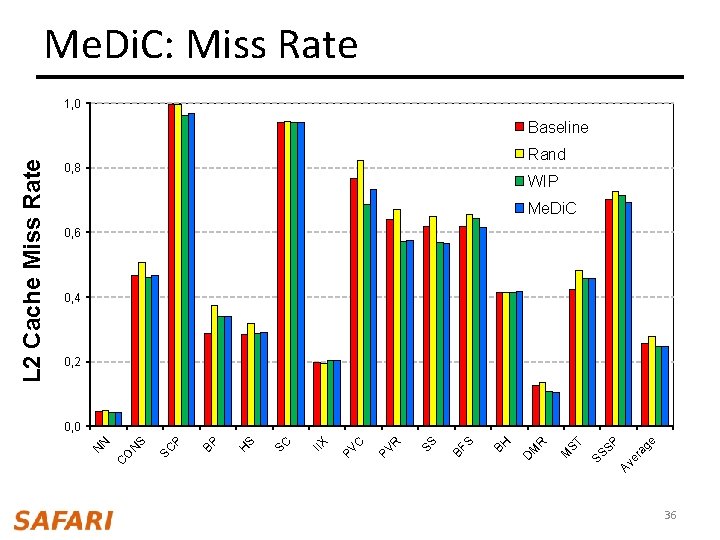

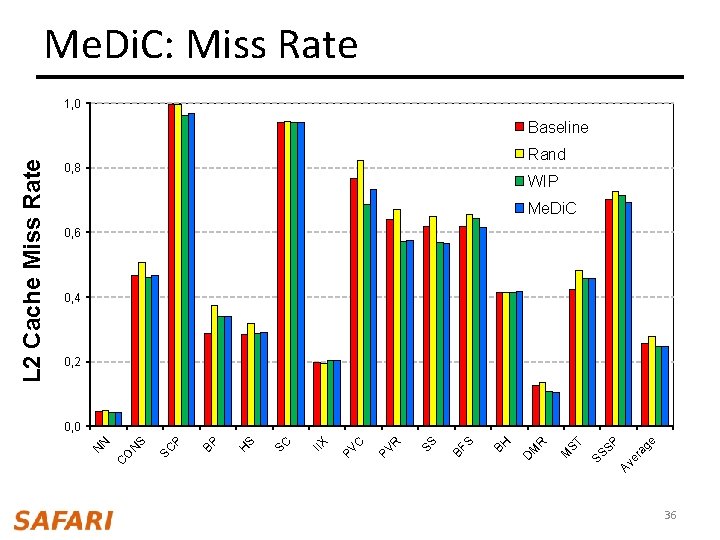

MeDiC: Miss Rate

[Figure: L2 cache miss rate for Baseline, Rand, WIP and MeDiC on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average.]

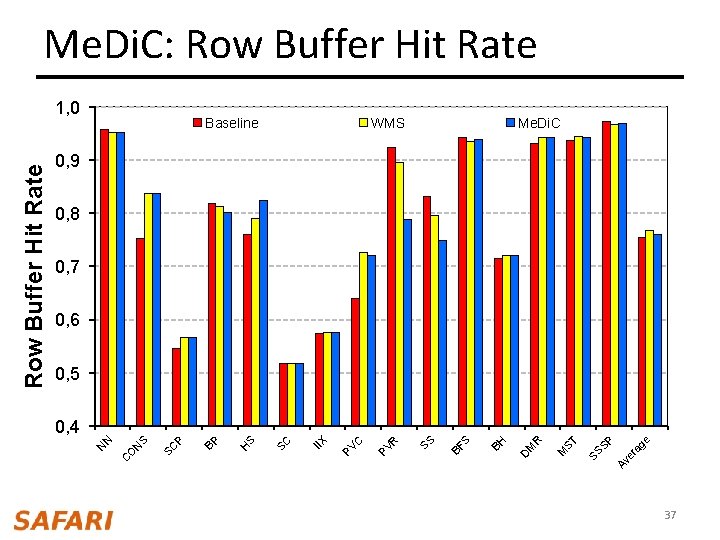

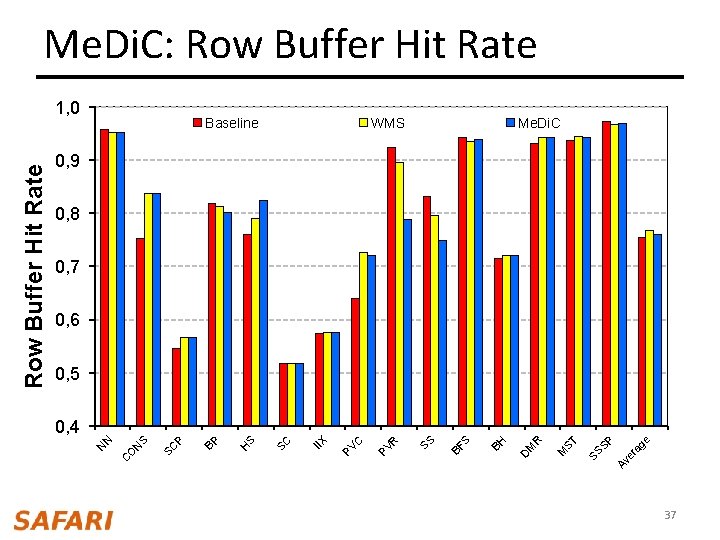

MeDiC: Row Buffer Hit Rate

[Figure: row buffer hit rate for Baseline, WMS and MeDiC on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average.]

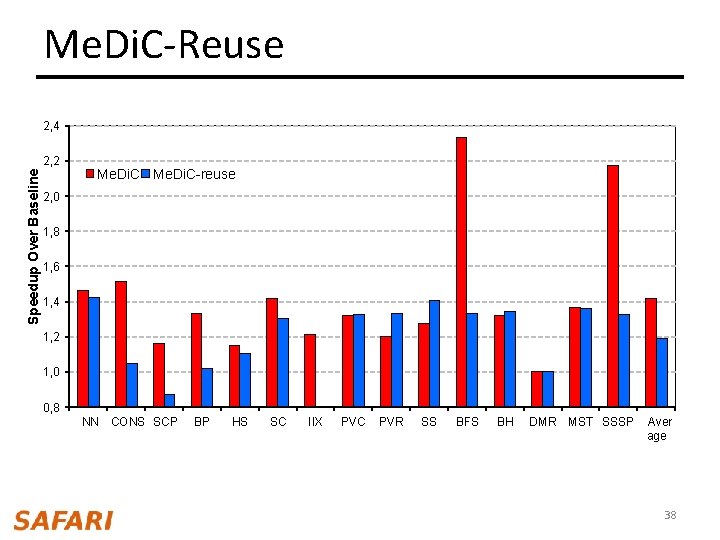

MeDiC-Reuse

[Figure: speedup over baseline on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST, SSSP and the average; legend includes MeDiC-reuse.]

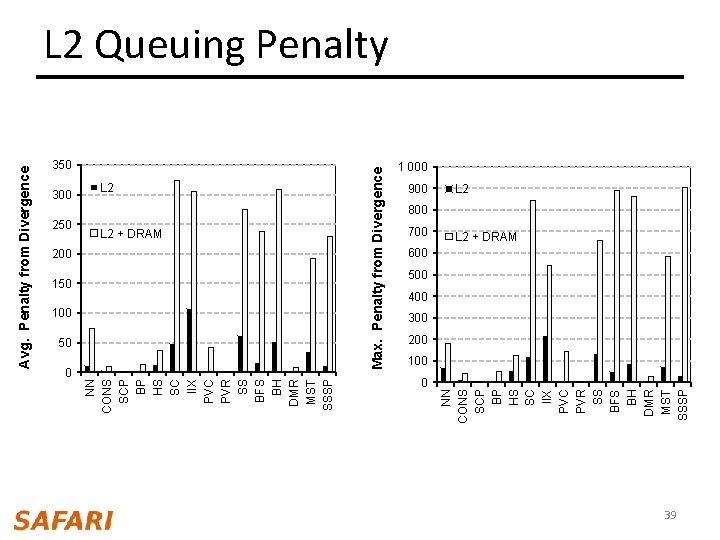

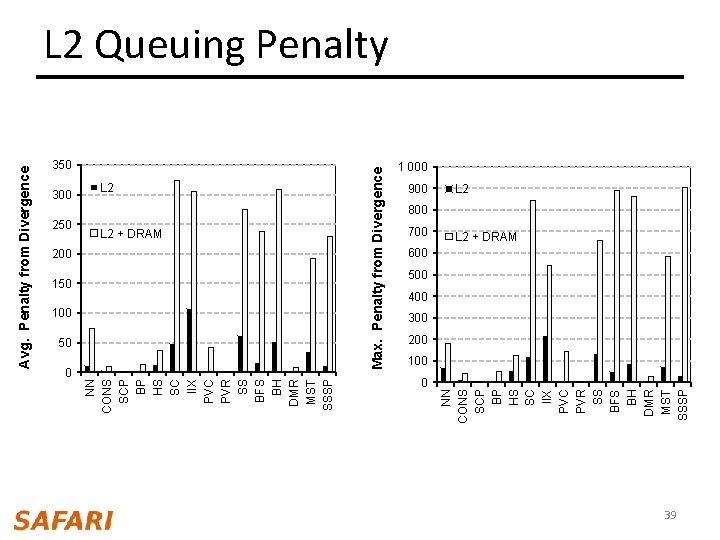

L2 Queuing Penalty

[Figure: two panels, maximum and average penalty from divergence, on NN, CONS, SCP, BP, HS, SC, IIX, PVC, PVR, SS, BFS, BH, DMR, MST and SSSP; series: L2 and L2 + DRAM.]

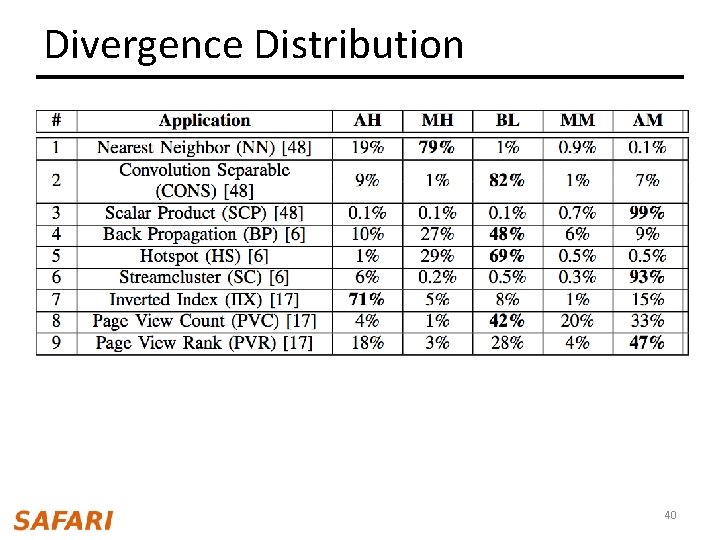

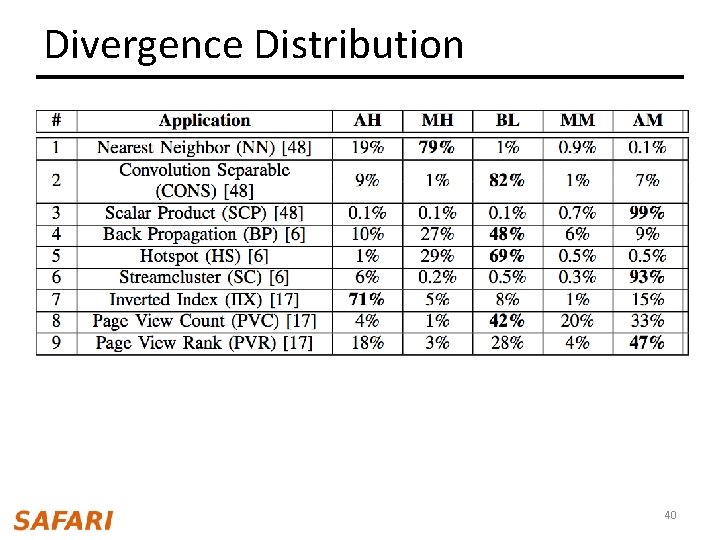

Divergence Distribution

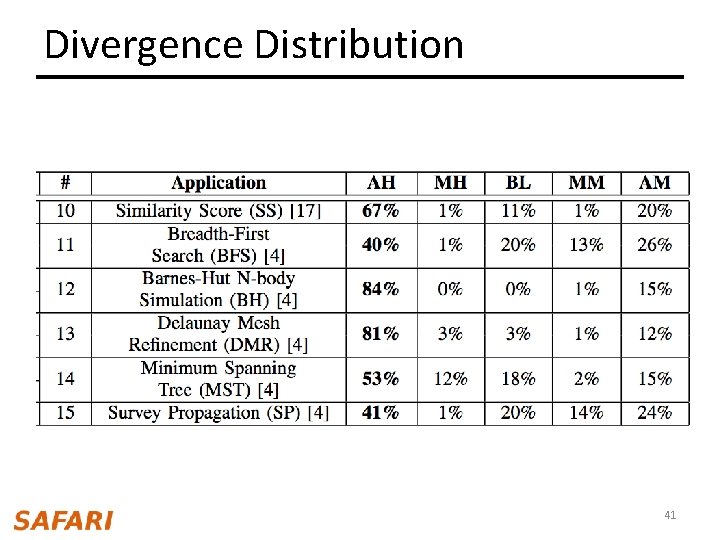

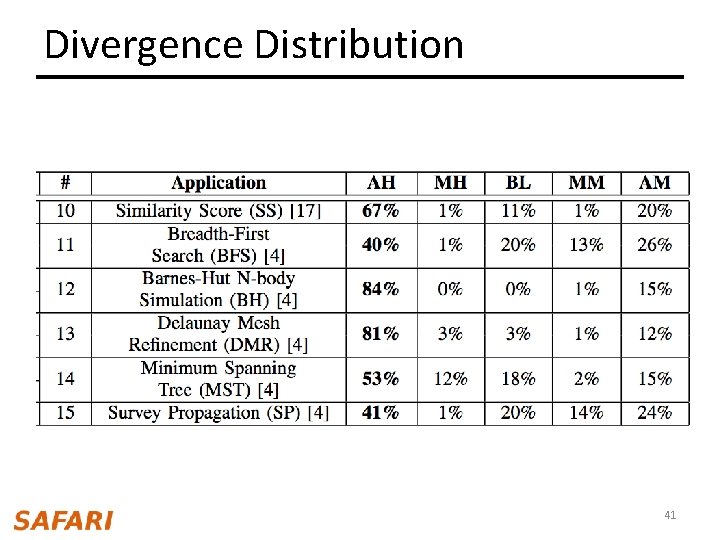

Divergence Distribution

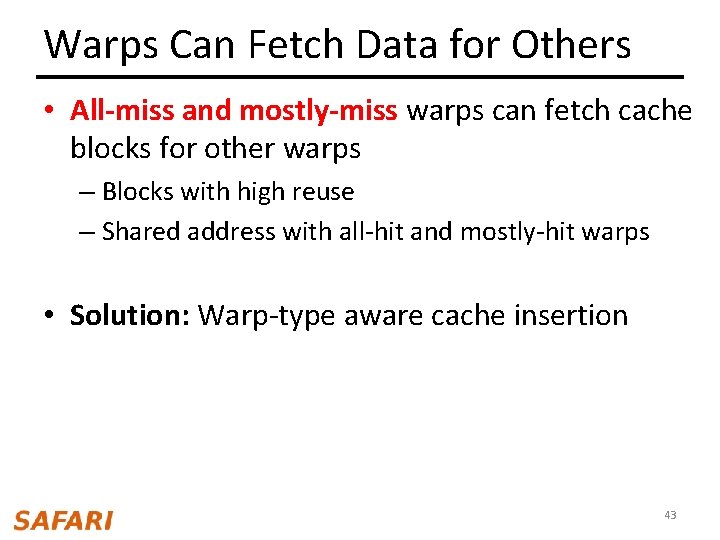

Stable Divergence Characteristics

- Warp retains its hit ratio during a program phase
- Heterogeneity
  - Control Divergence
  - Memory Divergence
  - Edge cases on the data the program is operating on
  - Coalescing
  - Affinity to different memory partition
- Stability
  - Temporal + spatial locality

Warps Can Fetch Data for Others

- All-miss and mostly-miss warps can fetch cache blocks for other warps
  - Blocks with high reuse
  - Shared address with all-hit and mostly-hit warps
- Solution: Warp-type aware cache insertion

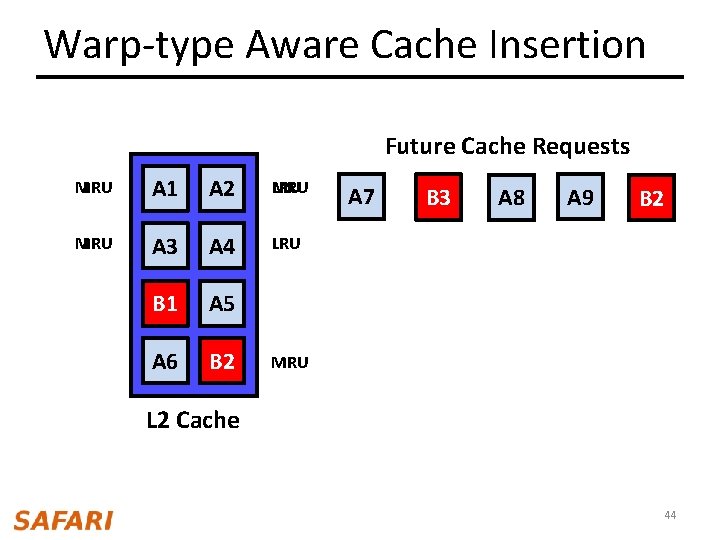

Warp-type Aware Cache Insertion

[Figure: L2 cache contents ordered between the LRU and MRU positions as a stream of future cache requests (blocks A1 to A9 and B1 to B3) arrives.]

Warp-type Aware Memory Sched.

[Figure: memory requests are split by warp type. Requests from all-hit and mostly-hit warps enter the high-priority queue; requests from balanced, mostly-miss and all-miss warps enter the low-priority queue. Both queues feed the FR-FCFS memory scheduler in front of main memory.]