Evolutionary Computation vol 2 Advanced Algorithms and Operators

- Slides: 19

Evolutionary Computation vol. 2: Advanced Algorithms and Operators CH. 22 - 26 30 March. 2011 Summarized by Kim Soo-Jin

Contents l CH 22. Meta-evolutionary approaches l CH 23. Coevolutionary algorithms l CH 24. Efficient implementation of algorithms l CH 25. Computation time of evolutionary operators l CH 26. Hardware realizations of evolutionary algorithms (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 2

CH 22. Meta-evolutionary approaches (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 3

Working mechanism l After having defined the individuals of a population for a given problem, the designer of an evolutionary algorithm (EA) is faced with the problem of deciding what types of operator and control parameter settings are likely to produce the best results. ¨ systematically checking a range of operators and/or parameter values and assessing the performance of the EA (De Jong 1975, Schaffer et a 1 1989) ¨ the experiences reported in the literature describing similar application scenarios (Goldberg I 989 a, Jog et a 1 1989, Oliver et a 1 1987, Starkweather et a 1 1991) ¨ the results of theoretical analyses for determining the optimal parameter settings (Goldberg 1989 b, Hesser and Manner 1990, Nakano et a 1 1994). (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 4

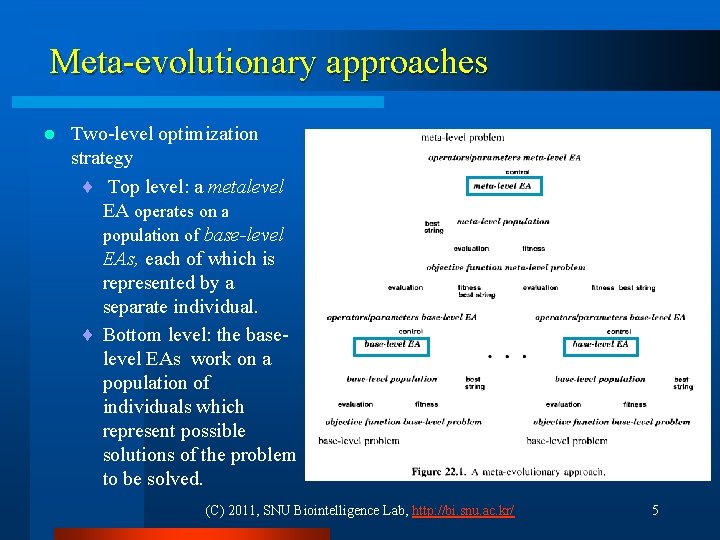

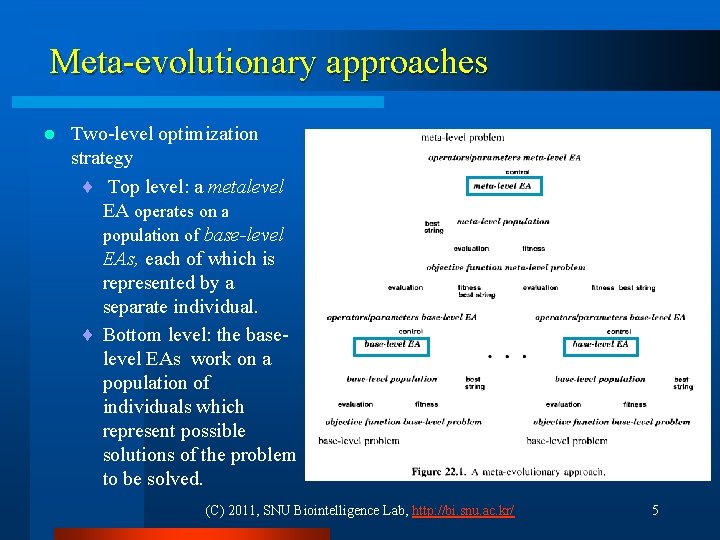

Meta-evolutionary approaches l Two-level optimization strategy ¨ Top level: a metalevel EA operates on a population of base-level EAs, each of which is represented by a separate individual. ¨ Bottom level: the baselevel EAs work on a population of individuals which represent possible solutions of the problem to be solved. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 5

Formal description l Let B be a base-level problem, IB its solution space, we assume that FB should be maximized l Thus, the search for a good solution for the base-level problem B reduces to a metalevel problem M of maximizing the objective function FM (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 6

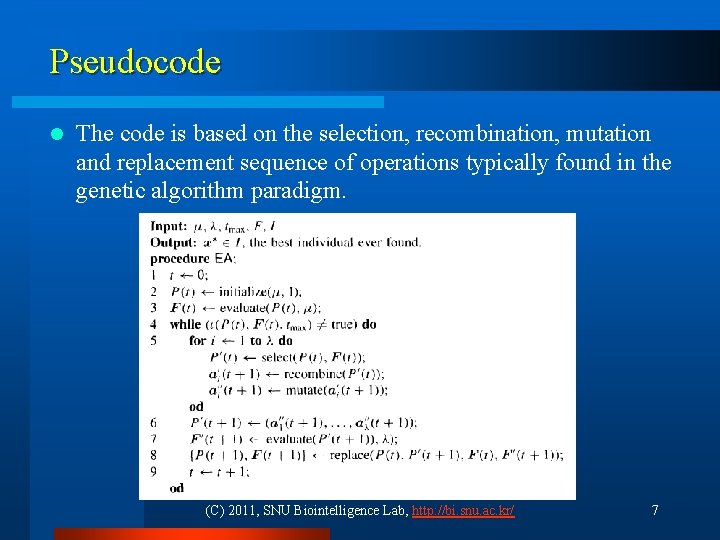

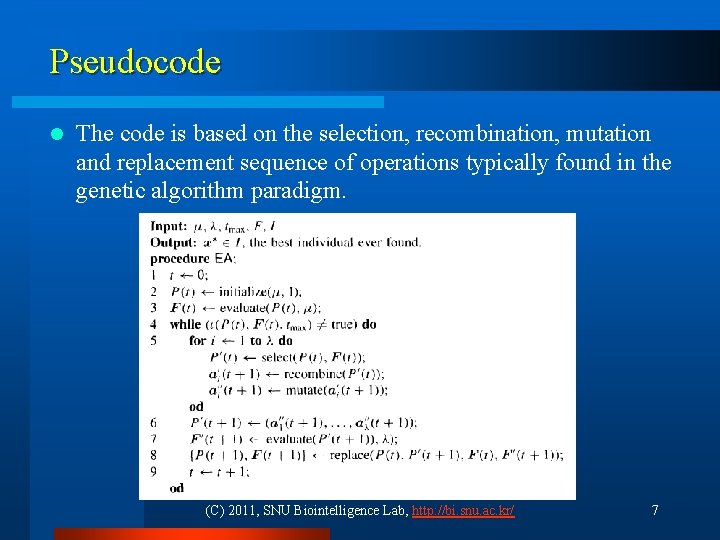

Pseudocode l The code is based on the selection, recombination, mutation and replacement sequence of operations typically found in the genetic algorithm paradigm. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 7

Related works (1/2) l l l (i) Mercer and Sampson (1978) gave presumably one of the first descriptions of a meta-evolutionary approach. (ii) Grefenstette’ s meta-CA (Grefenstette 1986) operated on individuals representing the population size, crossover probability, mutation rate, generation gap, scaling window, and selection strategy. (iii) Shahookar and Mazumder ( 1990) used a meta-GA to optimize the crossover rate, the mutation rate, and the inversion rate of a GA to solve the standard cell placement problem for industrial circuits consisting of between 100 and 800 cells. (iv) Freisleben and Hartfelder ( I 993 a, b) proposed a meta-GA approach which was based on a much larger space of up to 20 components, divided into decisions and parameters. (vi) Back’s meta-algorithm (1994) combines principles of ESs and GAS in order to optimize a population of GAs. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 8

Related works (2/2) (vii) Lee and Takagi (1994) have presented a meta-CA-based approach for studying the effects of a dynamically adaptive population size, crossover, and mutation rate on De Jong’s set of test functions. l (viii) Pham ( I 994) repeated Grefenstette’s approach for different baselevel objective functions as a preliminary step towards a proposal called competitive evolution. l (ix) Tuson and Ross (1 996 a, b) have investigated the dynamic adaptation of GA operator settings by means of two different approaches. l (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 9

CH 23. Coevolutionary algorithms (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 10

Coevolution: Complementary evolution of closely associated species. The interlocking adaptations of many flowering plants and their pollinating insects provide some striking examples of coevolution. In a broader sense, predator-prey relationships also involve coevolution, with an evolutionary advance in the predator, for instance, triggering an evolutionary response in the prey. (The Oxford Dictionary of Natural History, 1985) l According to the description above, coevolution involves closely interacting species. l (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 11

Competitive fitness The fitness function of most EAs is a user-defined optimization criterion which evaluates the individuals in isolation from each other. l Competitive fitness functions (Angeline and Pollack 1993) are a type of relative fitness function. They calculate the fitness of an individual through competition ‘duels’ with other individuals. ¨ Static fitness functions work well for simple problems, but for more complex or nondeterministic environments it can be difficult to create a function that accurately captures the fitness of every individual in the population. ¨ Competitive fitness functions help to deal with this problem, by judging the fitness of individuals relative to the rest of the population instead of compared to a single objective standard. A genetic programming system which uses a competitive fitness function is called a coevolutionary system, because the behavior of an individual evolves depending on the behavior of the other members of the population. l (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 12

Coevolving sorting network l Hillis (1992) put the computational use of predator-prey coevolution in the spotlight. ¨ The goal is to find a network which correctly sorts all possible lists of 16 numbers with as few comparisons as possible. ¨ Hillis used two population. < The first population: sorting network < The second population: sets of test lists which contain numbers to be sorted. ¨ Both populations were geographically distributed over a grid with each location containing one set of test lists and one sorting network. < At each generation, a sorting network was tested on the set of test lists at the same location. (selection is made locally) < The fitness of a sorting network was defined as the percentage of correctly sorted test lists. The fitness of the set of test lists was equal to the percentage of test lists incorrectly sorted by the network. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 13

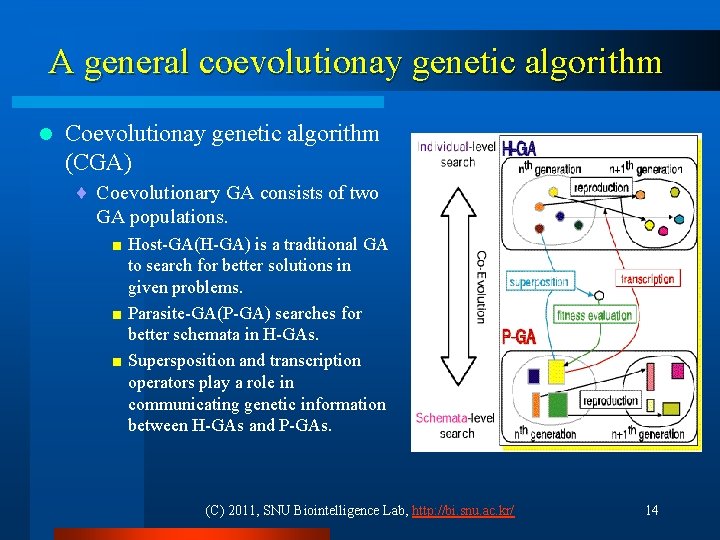

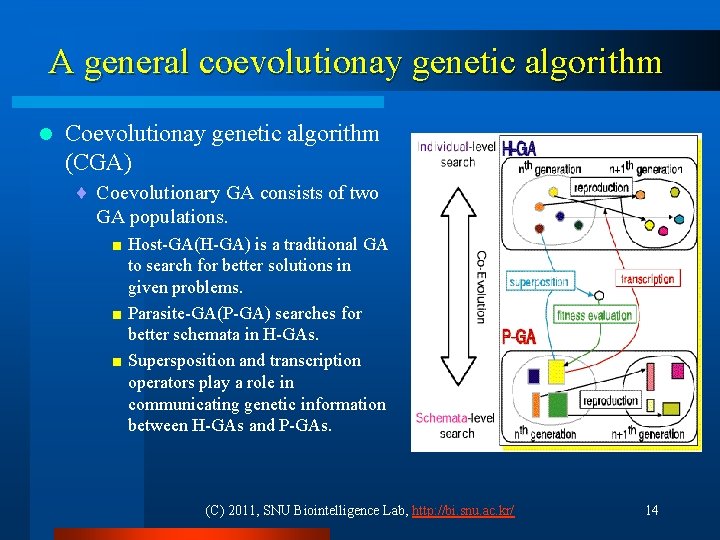

A general coevolutionay genetic algorithm l Coevolutionay genetic algorithm (CGA) ¨ Coevolutionary GA consists of two GA populations. < Host-GA(H-GA) is a traditional GA to search for better solutions in given problems. < Parasite-GA(P-GA) searches for better schemata in H-GAs. < Supersposition and transcription operators play a role in communicating genetic information between H-GAs and P-GAs. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 14

Solving test-solution problems with a CGA l Test-solution problems consist of two types of elements ¨ Potential solution (concept description ¨ Test (examples or constraints) l CGAs operate on two interacting populations. ¨ Fitness interaction between two types of individuals. ¨ Once the fitness of the initial populations is calculated, the individuals are sorted on fitness; that is, the fitter solutions and tests are located at the top of their respective populations. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 15

CGA applications l l l Classification Path planning Constraint satisfaction Density classification Symbiosis Recently, researchers have been using CGAs in realworld applications such as object motion estimation from video images (Dixon et al 1997) and timetabling for an emergency service (Kragelund 1997) (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 16

CH 24. Efficient implementation of algorithms l Given the variety of evolutionary techniques, it is not possible to present a complete discussion of implementation details. ¨ Random number generators < generating random populations as usual, and then performing repeated crossover operations with uniform random pairing ¨ Selection operators < compute selection probabilities for the current population based on fitness. ¨ Mutation operators < sampling from a random variable for each gene position ¨ Evaluation phase < Deterministic evaluation < Monte Carlo evaluation < Parallel evaluation (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 17

CH 25. Computation time of evolutionary operators l Computation time of selection operators ¨ ¨ ¨ Proportional selection vici roulette wheel Stochastic universal sampling q-ary tournament selection (µ, A) selection (µ+A) selection q-fold binary tournament selection (EP selection) Computation time of mutation operators l Computation time of recombination operators l Remarks l ¨ Without any doubt, it is always useful to employ the most efficient data structures and algorithms to realize variation and selection operators, but in almost all practical applications most time is spent during the calculation of the objective function value. Therefore, the realization of this operation ought to be always checked with regard to potential savings of computing time. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 18

CH 26. Hardware realization of evolutionary algorithms In order to use evolutionary algorithms (EAs) including genetic algorithms(GAs) in real time or for hard real-world applications. l As EA computation has inherent parallelisms, EA computation using parallel machines is a versatile and effective way for speeding up. l ¨ parallel implementations of GAs on different parallel machines ¨ dedicated hardware systems for EAs < a TSP GA machine, a wafer-scale GA machine, and vector processing of GA operators ¨ evolvable hardware (EHW) < It is built on FPGAs (field programmable gate arrays) and whose architecture can be reconfigured by using evolutionary computing techniques to adapt to the new environment. < If hardware errors occur or if new hardware functions are required, EHW can alter its own hardware structure in order to accommodate such changes. (C) 2011, SNU Biointelligence Lab, http: //bi. snu. ac. kr/ 19