RAS Reliability Availability Serviceability Product Support Engineering VI

RAS - Reliability, Availability, Serviceability
Product Support Engineering
VI4 - Mod 2-8 - Slide 1
VMware Confidential

Module 2 Lessons
- Lesson 1 – vCenter Server High Availability
- Lesson 2 – vCenter Server Distributed Resource Scheduler
- Lesson 3 – Fault Tolerance Virtual Machines
- Lesson 4 – Enhanced vMotion Compatibility
- Lesson 5 – DPM - IPMI
- Lesson 6 – vApps
- Lesson 7 – Host Profiles
- Lesson 8 – Reliability, Availability, Serviceability (RAS)
- Lesson 9 – Web Access
- Lesson 10 – vCenter Server Update Manager
- Lesson 11 – Guided Consolidation
- Lesson 12 – Health Status

Module 2-8 Lessons
- Lesson 1 – Overview of RAS
- Lesson 2 – RAS objectives
- Lesson 3 – Networking vProbs
- Lesson 4 – Storage vProbs
- Lesson 5 – VMFS vProbs
- Lesson 6 – Migration vProb

Introduction
The long-term goal of the ESX RAS project is to make ESX more reliable, available, and serviceable. To do so, the VMkernel needs to detect, report, recover from, diagnose, and repair or react to hardware and software problems that occur in the system. ESX RAS 1.0 will focus on detecting asynchronous hardware observations and synchronous software observations, and on reporting them.

RAS Objectives
The ESX RAS team's objective is to increase the reliability, availability, and serviceability of the VMkernel. This includes:
- Hardening VMkernel drivers against hardware errors: CPU, memory, PCI (X/Express), SCSI, networking.
- Hardening VMkernel facilities against software errors: SCSI, networking, VMotion, DMotion, etc.
- Developing a standardized method of reporting observations from software and hardware error handlers.
- Developing a method to diagnose a given stream of observations down to one or more problems that may have caused them.
- Developing a method for predicting the failure of a given (sub-)system and feeding that analysis to consumers (DRS, DPM, FT, HA).
- Gathering and writing service actions that correspond to the problem or set of problems possibly present.
- Developing automated policies for problems that can be handled without user action.
- Maintaining and improving the logging, coredump, and PSOD infrastructure in the VMkernel.

RAS Terms
- RAS: Reliability, Availability, Serviceability.
- Reliability: The ability of a system to perform and maintain its functions in the face of hostile or unexpected circumstances.
- Availability: The proportion of time a system is in a functioning condition.
- Serviceability: The ability to debug or perform root cause analysis in pursuit of solving a problem with a product.
- Hardening: Enhancing a (sub-)system so that it can detect, report, and handle the errors it may encounter, whether hardware or software related. Handling may involve panicking and/or attempting recovery from a given error or stream of errors.
- VProb: An automatically generated problem report.
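The availability definition above is a simple ratio. As a minimal Python sketch, assuming uptime and downtime are measured in the same unit over one observation window (the function name and the sample figures are illustrative, not taken from the course material):

```python
def availability(uptime_hours: float, downtime_hours: float) -> float:
    """Return the proportion of observed time the system was functioning."""
    total = uptime_hours + downtime_hours
    if total <= 0:
        raise ValueError("total observed time must be positive")
    return uptime_hours / total


# Hypothetical figures: 8,750 hours up and 10 hours down in one year.
print(f"{availability(8750, 10):.4%}")
```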

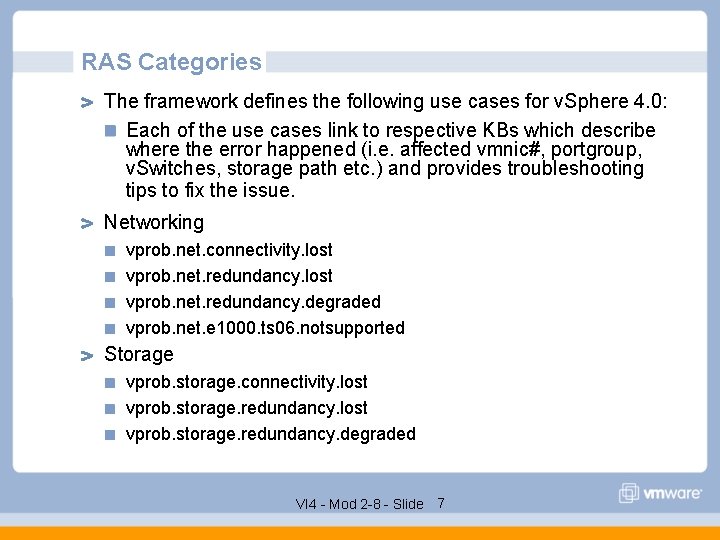

RAS Categories
The framework defines the following use cases for vSphere 4.0. Each use case links to a KB article that describes where the error happened (i.e. the affected vmnic#, port group, vSwitch, storage path, etc.) and provides troubleshooting tips to fix the issue.
Networking:
- vprob.net.connectivity.lost
- vprob.net.redundancy.degraded
- vprob.net.e1000.tso6.notsupported
Storage:
- vprob.storage.connectivity.lost
- vprob.storage.redundancy.degraded

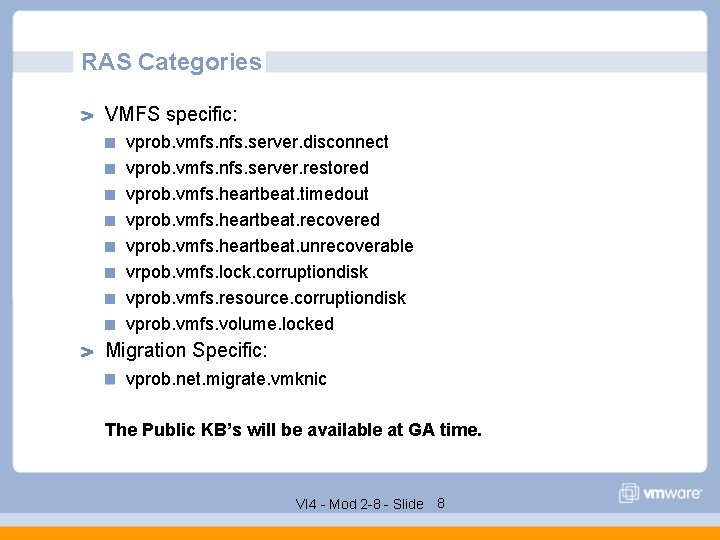

RAS Categories
VMFS specific:
- vprob.vmfs.nfs.server.disconnect
- vprob.vmfs.nfs.server.restored
- vprob.vmfs.heartbeat.timedout
- vprob.vmfs.heartbeat.recovered
- vprob.vmfs.heartbeat.unrecoverable
- vprob.vmfs.lock.corruptiondisk
- vprob.vmfs.resource.corruptiondisk
- vprob.vmfs.volume.locked
Migration specific:
- vprob.net.migrate.vmknic
The public KBs will be available at GA time.
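The vProb identifiers are dotted names whose prefix indicates the category. A minimal Python sketch of grouping an identifier by prefix, assuming the four categories listed on these slides; `vprob_category` and the prefix table are hypothetical helpers, not part of any VMware tool:

```python
# Prefix-to-category table derived from the category lists on the slides.
# The more specific migration prefix comes first so it wins over "vprob.net.".
CATEGORY_PREFIXES = [
    ("vprob.net.migrate.", "Migration"),
    ("vprob.net.", "Networking"),
    ("vprob.storage.", "Storage"),
    ("vprob.vmfs.", "VMFS"),
]


def vprob_category(vprob_id: str) -> str:
    """Return the slide category for a vProb identifier, or "Unknown"."""
    for prefix, category in CATEGORY_PREFIXES:
        if vprob_id.startswith(prefix):
            return category
    return "Unknown"


for name in ("vprob.net.connectivity.lost", "vprob.vmfs.volume.locked",
             "vprob.net.migrate.vmknic"):
    print(name, "->", vprob_category(name))
```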

Networking VProb
vprob.net.connectivity.lost
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-6122&communityID=2701
Connectivity to a physical network has been lost. The message lists all affected port groups, for example:
Lost network connectivity on virtual switch "system". Physical NIC vmnic1 is down. Affected port groups: "cos", "VM Network".

Networking VProb
vprob.net.redundancy.lost
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-6097&communityID=2701
Only one physical NIC is currently connected; one more failure will result in a loss of connectivity. For example:
Lost uplink redundancy on virtual switch "system". Physical NIC vmnic0 is down. Affected port groups: "cos", "VM Network".

Networking VProb
vprob.net.redundancy.degraded
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-6098&communityID=2701
One of the physical NICs in your NIC team has gone down; you still have n-1 NICs available. For example:
Uplink redundancy degraded on virtual switch "vSwitch0". Physical NIC vmnic1 is down. 2 uplinks still up. Affected portgroups: "VM Network".
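The three uplink-related networking vProbs differ mainly in how many physical NICs remain connected. A minimal Python sketch of that distinction, assuming the only inputs are the size of the NIC team and the number of uplinks currently up; `uplink_vprob` is a hypothetical helper and does not claim to reproduce the VMkernel's actual decision logic:

```python
from typing import Optional


def uplink_vprob(total_uplinks: int, uplinks_up: int) -> Optional[str]:
    """Map an uplink count to the vProb described on the slides, if any."""
    if not 0 <= uplinks_up <= total_uplinks:
        raise ValueError("uplinks_up must be between 0 and total_uplinks")
    if uplinks_up == total_uplinks:
        return None  # nothing is down, so no vProb applies
    if uplinks_up == 0:
        return "vprob.net.connectivity.lost"    # no path to the physical network
    if uplinks_up == 1:
        return "vprob.net.redundancy.lost"      # one more failure loses connectivity
    return "vprob.net.redundancy.degraded"      # a NIC is down, several remain


# Matches the degraded example: a three-NIC team with 2 uplinks still up.
print(uplink_vprob(total_uplinks=3, uplinks_up=2))
```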

Networking VProb
vprob.net.e1000.tso6.notsupported (KB article)
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-7393
The guest e1000 driver is misbehaving and sending TSO IPv6 packets, which will be dropped. The vProb specifies the affected VM, and the KB article discusses ways to fix this. For example:
"Guest-initiated IPv6 TCP Segmentation Offload (TSO) packets ignored. Manually disable TSO inside the guest operating system in virtual machine "XYZ", or use a different virtual adapter."

Storage VProb
vprob.storage.connectivity.lost
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-6099&communityID=2701
Connectivity to a specific device has been lost. For example:
"Lost connectivity to storage device naa.60a9800043346534645a433967325334. Path vmhba35:C1:T0:L7 is down."

Storage VProb
vprob.storage.redundancy.lost
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-6120&communityID=2701
Only one path to a device remains, and you no longer have any redundancy. For example:
"Lost path redundancy to storage device naa.60a9800043346534645a433967325334. Path vmhba35:C1:T0:L7 is down."

Storage VProb
vprob.storage.redundancy.degraded
http://communities.vmware.com/viewwebdoc.jspa?documentID=DOC-6099&communityID=2701
One of your paths to a device has been lost, but you still have n-1 paths remaining. For example:
"Path redundancy to storage device naa.60a9800043346534645a433967325334 degraded. Path vmhba35:C1:T0:L7 is down. 3 remaining active paths."
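The storage messages identify the failed path by a name of the form vmhbaN:C#:T#:L# (adapter, channel, target, LUN), as in vmhba35:C1:T0:L7. When triaging such a message it can help to split the name into its parts. A minimal Python sketch; `RuntimePath` and `parse_runtime_path` are hypothetical names introduced here for illustration:

```python
import re
from typing import NamedTuple


class RuntimePath(NamedTuple):
    adapter: str
    channel: int
    target: int
    lun: int


# Matches names such as "vmhba35:C1:T0:L7".
_PATH_RE = re.compile(r"^(vmhba\d+):C(\d+):T(\d+):L(\d+)$")


def parse_runtime_path(name: str) -> RuntimePath:
    """Split a vmhbaN:C#:T#:L# path name into adapter, channel, target, LUN."""
    match = _PATH_RE.match(name.strip())
    if match is None:
        raise ValueError(f"not a vmhbaN:C#:T#:L# path name: {name!r}")
    adapter, channel, target, lun = match.groups()
    return RuntimePath(adapter, int(channel), int(target), int(lun))


print(parse_runtime_path("vmhba35:C1:T0:L7"))
```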

VMFS vProb
vprob.vmfs.nfs.server.disconnect
vprob.vmfs.nfs.server.restored
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.vmfs.volume.locked.htm
Example message:
Lost connection to server nfs-server mount point /share, mounted as 1264e433-5854ee53-00000000 ("nfs-share")

VMFS vProb
vprob.vmfs.heartbeat.timedout
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.vmfs.heartbeat.combined.htm
Example message:
VMFS Volume Connectivity Degraded 496befed-1c79c817-6beb001ec9b60619 san-lun-100

VMFS vProb
vprob.vmfs.heartbeat.recovered
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.vmfs.heartbeat.combined.htm
Example message:
VMFS Volume Connectivity Restored 496befed-1c79c817-6beb001ec9b60619 san-lun-100

VMFS vProb
vprob.vmfs.heartbeat.unrecoverable
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.vmfs.heartbeat.combined.htm
Example message:
VMFS Volume Connectivity lost 496befed-1c79c817-6beb001ec9b60619 san-lun-100
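The three heartbeat vProbs report a volume's connectivity as degraded, restored, or lost. When reading a log with many such reports, the most recent one per volume is what matters. A minimal Python sketch of tracking that, assuming the reports have already been extracted as (vProb ID, volume) pairs in time order; `volume_states` and the input format are hypothetical:

```python
from typing import Dict, Iterable, Tuple

# Connectivity wording taken from the example messages on the slides.
HEARTBEAT_STATE = {
    "vprob.vmfs.heartbeat.timedout": "degraded",
    "vprob.vmfs.heartbeat.recovered": "restored",
    "vprob.vmfs.heartbeat.unrecoverable": "lost",
}


def volume_states(events: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Return the latest heartbeat state per volume from ordered events."""
    states: Dict[str, str] = {}
    for vprob_id, volume in events:
        state = HEARTBEAT_STATE.get(vprob_id)
        if state is not None:  # ignore vProbs that are not heartbeat reports
            states[volume] = state
    return states


events = [
    ("vprob.vmfs.heartbeat.timedout", "san-lun-100"),
    ("vprob.vmfs.heartbeat.recovered", "san-lun-100"),
]
print(volume_states(events))
```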

VMFS vProb
vprob.vmfs.lock.corruptiondisk
vprob.vmfs.resource.corruptiondisk
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.vmfs.corruptioncombined.htm
Example messages:
Volume 4976b16c-bd394790-6fd8-00215aaf0626 (san-lun-100) may be damaged on disk. Corrupt lock detected at offset O
Volume 4976b16c-bd394790-6fd8-00215aaf0626 (san-lun-100) may be damaged on disk. Resource cluster metadata corruption detected

VMFS vProb
vprob.vmfs.volume.locked
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.vmfs.volume.locked.htm
Example message:
Volume on device naa.60060160b3c018009bd1e02f725fdd11:1 locked, possibly because remote host 10.17.211.73 encountered an error during a volume operation and couldn't recover.

Migration Specific
vprob.net.migrate.vmknic
http://pseweb.vmware.com/twiki/bin/viewfile/Main/VmkernelRas?rev=1;filename=vprob.net.migrate.vmkernel.htm
Example message:
The ESX advanced config option /Migrate/Vmknic is set to an invalid vmknic: vmk0. /Migrate/Vmknic specifies a vmknic that VMotion binds to for improved performance. Please update the config option with a valid vmknic or, if you don't want VMotion to bind to a specific vmknic, remove the invalid vmknic and leave the option blank.
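The message implies a simple rule: the /Migrate/Vmknic option is acceptable when it is blank or names a vmknic that exists on the host. A minimal Python sketch of that rule, assuming the configured value and the list of existing vmknics have been collected by some other means; `migrate_vmknic_is_invalid` is a hypothetical helper and does not describe how ESX itself performs the check:

```python
from typing import Iterable


def migrate_vmknic_is_invalid(configured: str, existing_vmknics: Iterable[str]) -> bool:
    """Return True if the /Migrate/Vmknic value names a vmknic that does not exist."""
    configured = configured.strip()
    if not configured:
        return False  # blank means VMotion is not bound to a specific vmknic
    return configured not in set(existing_vmknics)


# Hypothetical host where only vmk1 exists but the option still names vmk0.
print(migrate_vmknic_is_invalid("vmk0", ["vmk1"]))
```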

Lesson 2-8 Summary
- Understand what vProbs are
- Learn how to troubleshoot vProbs

Lesson 2-8 – Optional Lab 1
Optional Lab 1 involves generating vProb scenarios.

- Slides: 24