EE 290 A Generalized Principal Component Analysis Lecture

- Slides: 22

EE 290 A: Generalized Principal Component Analysis

Lecture 2 (by Allen Y. Yang): Extensions of PCA

Sastry & Yang © Spring, 2011 EE 290 A, University of California, Berkeley

Last time

- Challenges in modern data clustering problems.
- PCA reduces the dimensionality of the data while retaining as much data variation as possible.
  - Statistical view: The first d PCs are given by the d leading eigenvectors of the covariance.
  - Geometric view: Fitting a d-dim subspace model via SVD.
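The two views above can be checked numerically. The following is a minimal sketch in Python with NumPy, assuming samples are stored as the columns of a D × N matrix. The function names `pca_svd` and `pca_cov` and the synthetic data are illustrative and do not come from the lecture.

```python
import numpy as np

def pca_svd(X, d):
    """Geometric view: fit a d-dim subspace to the centered data via SVD.
    X is (D, N) with samples as columns."""
    mu = X.mean(axis=1, keepdims=True)
    Xc = X - mu
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    U_d = U[:, :d]                 # basis of the fitted subspace
    Y = U_d.T @ Xc                 # (d, N) principal components
    return mu, U_d, Y, s

def pca_cov(X, d):
    """Statistical view: d leading eigenvectors of the sample covariance."""
    Xc = X - X.mean(axis=1, keepdims=True)
    C = Xc @ Xc.T / X.shape[1]
    evals, evecs = np.linalg.eigh(C)          # eigenvalues in ascending order
    order = np.argsort(evals)[::-1][:d]
    return evecs[:, order], evals[order]

# Hypothetical data: 200 samples close to a 2-dim subspace of R^5.
rng = np.random.default_rng(0)
basis = np.linalg.qr(rng.standard_normal((5, 2)))[0]
coords = rng.standard_normal((2, 200)) * np.array([[3.0], [1.5]])
X = basis @ coords + 0.01 * rng.standard_normal((5, 200))

mu, U_d, Y, s = pca_svd(X, 2)
V_d, evals = pca_cov(X, 2)

# Both views span the same subspace (the bases may differ by sign).
same_subspace = np.allclose(U_d @ U_d.T, V_d @ V_d.T, atol=1e-8)
```

The comparison uses the projection matrices rather than the bases themselves, because eigenvectors and singular vectors are only defined up to sign.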

This lecture

- Determine an optimal number of PCs: d
- Probabilistic PCA
- Kernel PCA

Robust PCA shall be discussed later.

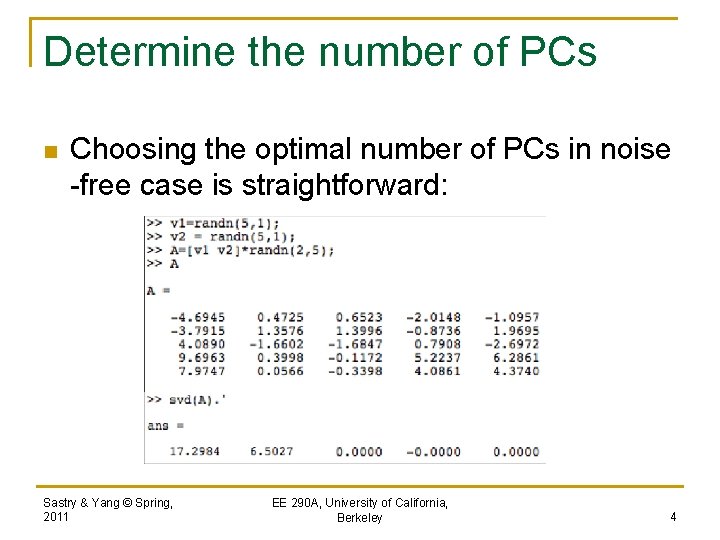

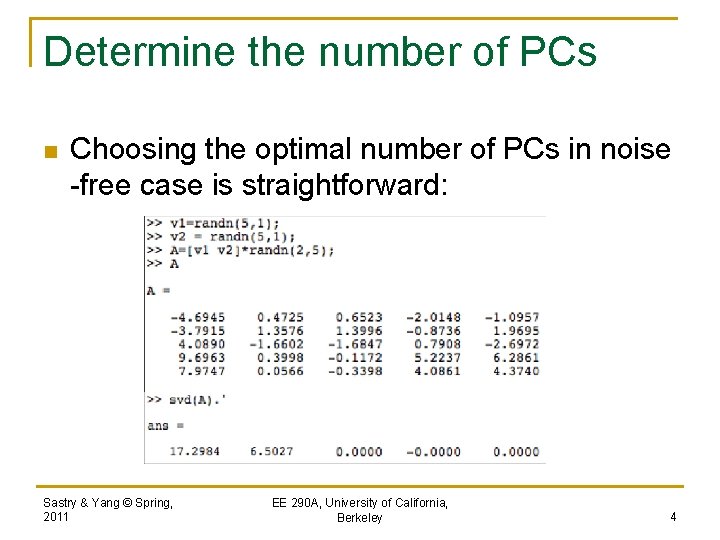

Determine the number of PCs

- Choosing the optimal number of PCs in the noise-free case is straightforward:

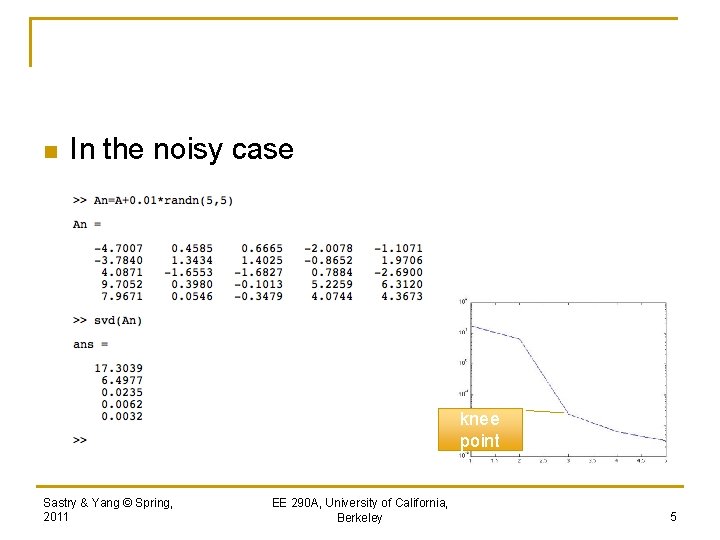

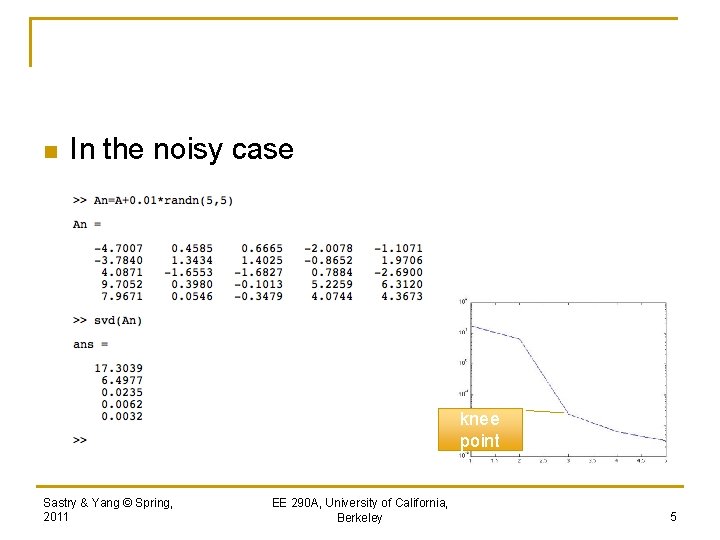
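As a small illustration of the noise-free case, the sketch below assumes the straightforward choice is d = rank of the centered data matrix, read off as the number of nonzero singular values. The synthetic data and the relative tolerance `1e-10` are assumptions made for this example.

```python
import numpy as np

rng = np.random.default_rng(1)
U = rng.standard_normal((6, 3))        # hypothetical basis of a 3-dim subspace of R^6
Y = rng.standard_normal((3, 50))       # 50 noise-free coefficient vectors
X = U @ Y                              # samples lie exactly in the subspace

Xc = X - X.mean(axis=1, keepdims=True)
s = np.linalg.svd(Xc, compute_uv=False)

# Count singular values that are nonzero up to floating-point round-off.
d = int(np.sum(s > 1e-10 * s[0]))
```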

- In the noisy case, look for the knee point.

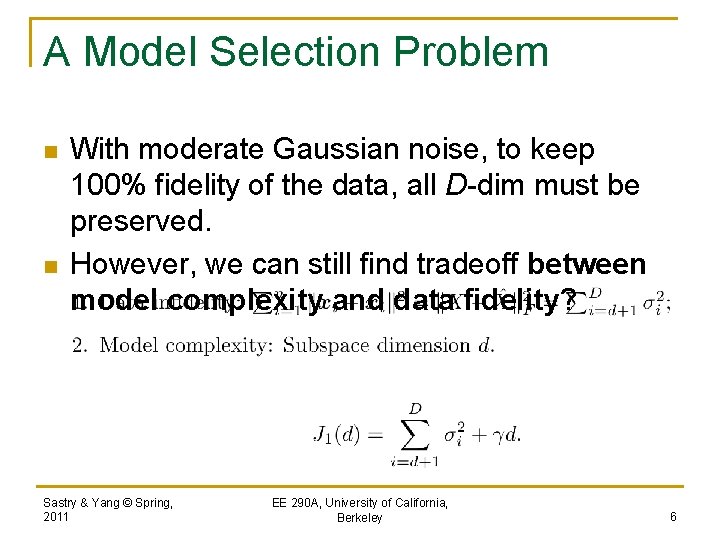

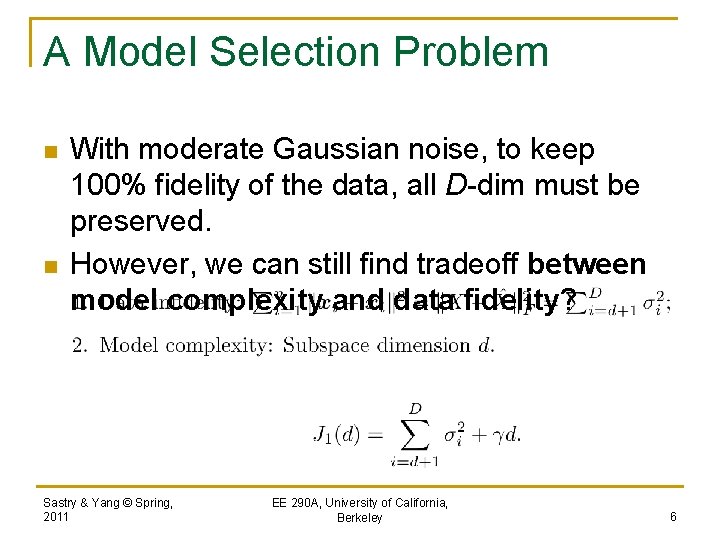

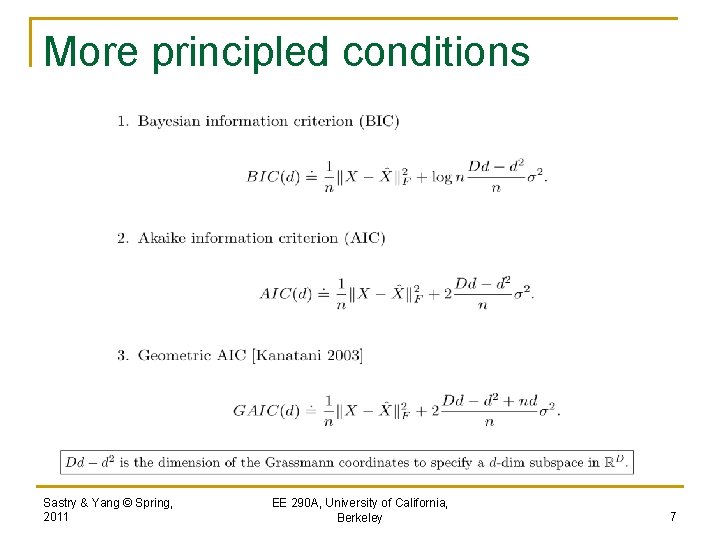

A Model Selection Problem

- With moderate Gaussian noise, all D dimensions must be preserved to keep 100% fidelity of the data.
- However, can we still find a tradeoff between model complexity and data fidelity?

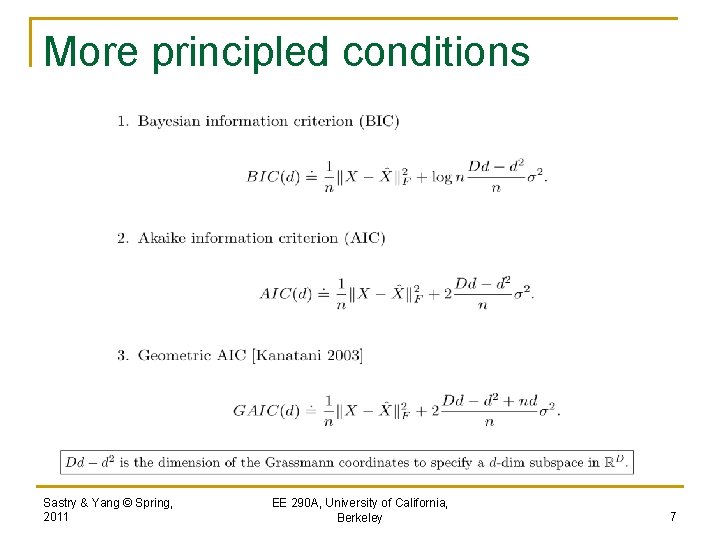
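One common way to trade model complexity against data fidelity is to keep the smallest d whose discarded energy falls below a threshold. The sketch below illustrates that heuristic only; it is not necessarily the criterion shown on the slide, and the function name `choose_d`, the threshold `tau`, and the example singular values are hypothetical.

```python
import numpy as np

def choose_d(singular_values, tau=0.05):
    """Smallest d such that the fraction of energy (sum of squared singular
    values) left outside the first d components is below tau (tau > 0)."""
    energy = np.asarray(singular_values, dtype=float) ** 2
    residual = 1.0 - np.cumsum(energy) / energy.sum()
    return int(np.argmax(residual < tau)) + 1

# Hypothetical spectrum: two dominant directions followed by a noise floor.
s = np.array([10.0, 8.0, 0.5, 0.4, 0.3])
d_strict = choose_d(s, tau=0.05)   # keeps both dominant directions
d_loose = choose_d(s, tau=0.5)     # tolerates losing up to half of the energy
```

A smaller `tau` favors fidelity and a larger `tau` favors a simpler model.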

More principled conditions

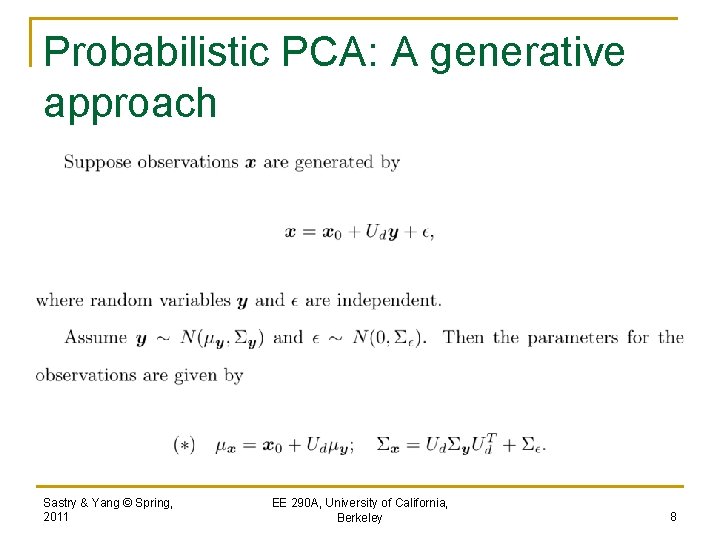

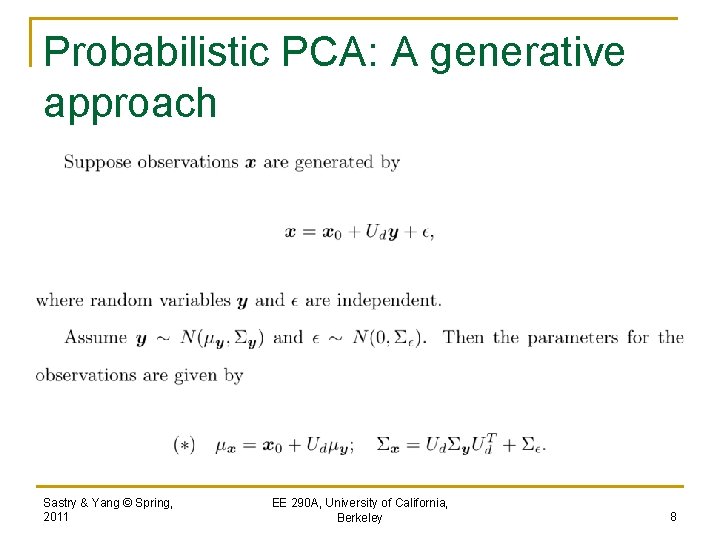

Probabilistic PCA: A generative approach

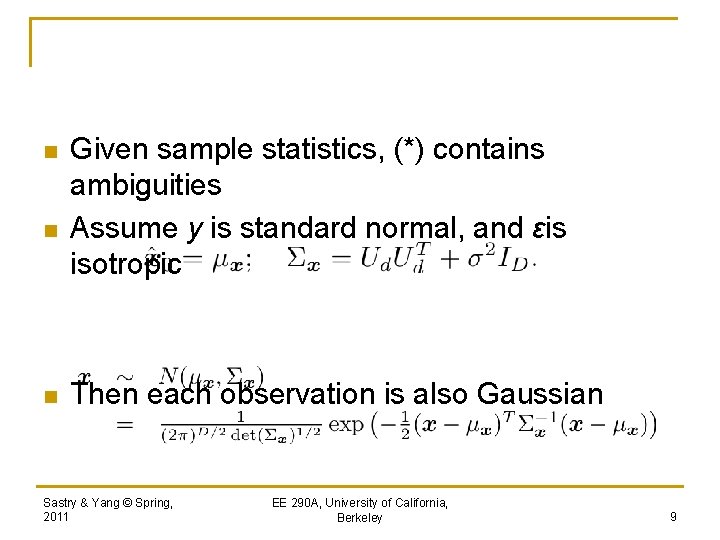

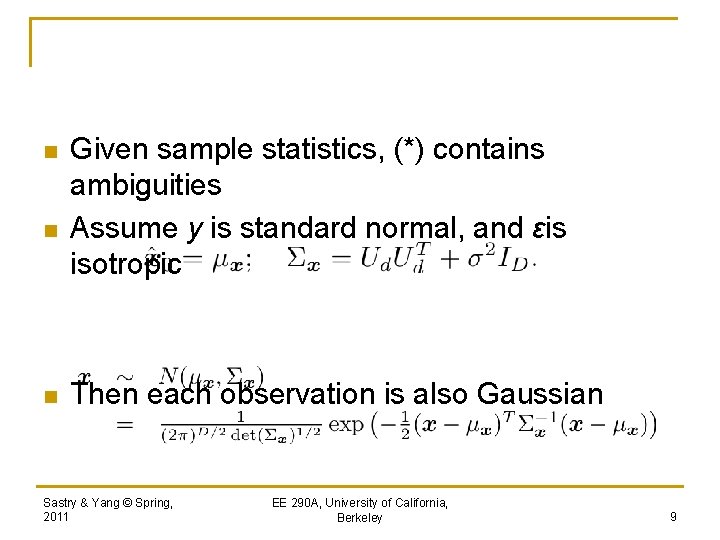

- Given sample statistics, (*) contains ambiguities.
- Assume y is standard normal, and ε is isotropic.
- Then each observation is also Gaussian.

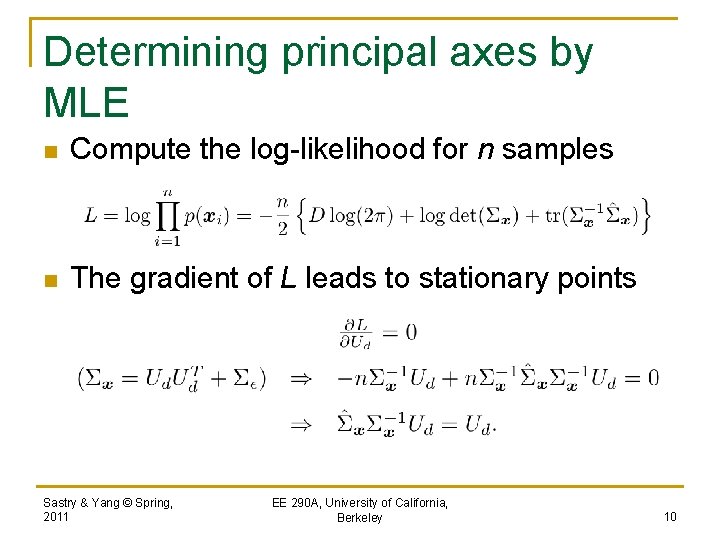

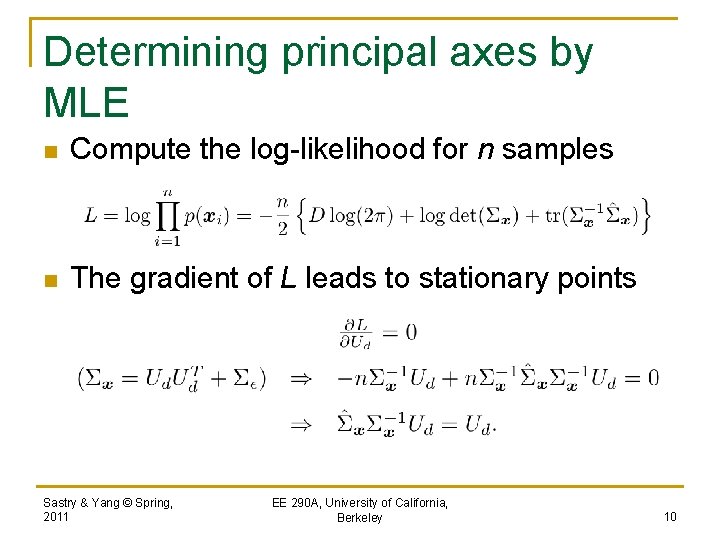

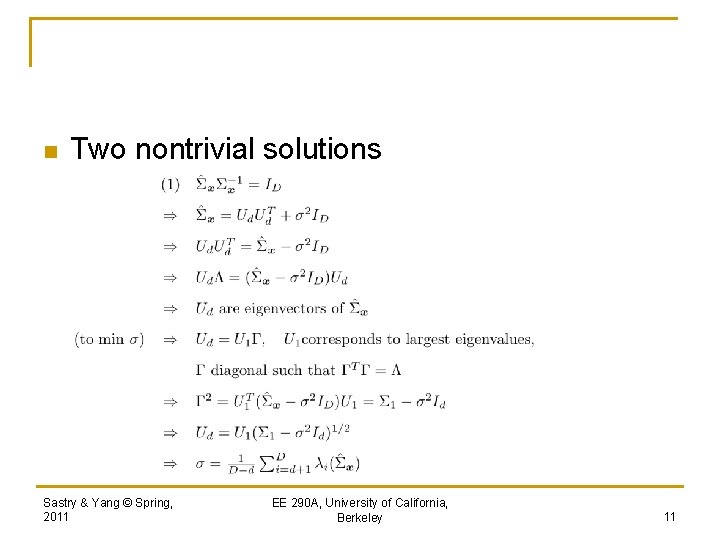
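The Gaussian claim can be illustrated by simulation. The sketch below assumes the model marked (*) is the standard probabilistic PCA model x = μ + W y + ε with y ~ N(0, I) and ε ~ N(0, σ²I), so that x ~ N(μ, W Wᵀ + σ²I). The symbols `W`, `mu`, `sigma` and all numeric values are assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
D, d, N = 4, 2, 200_000

W = rng.standard_normal((D, d))        # hypothetical factor loading matrix
mu = rng.standard_normal((D, 1))       # hypothetical mean
sigma = 0.5                            # isotropic noise standard deviation

y = rng.standard_normal((d, N))        # latent variables, standard normal
eps = sigma * rng.standard_normal((D, N))
x = mu + W @ y + eps                   # observations

model_cov = W @ W.T + sigma**2 * np.eye(D)
sample_cov = np.cov(x)                 # rows are variables, columns are samples
max_err = np.abs(sample_cov - model_cov).max()
```

With this many samples the empirical covariance should agree with W Wᵀ + σ²I up to sampling error.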

Determining principal axes by MLE

- Compute the log-likelihood for n samples
- The gradient of L leads to stationary points

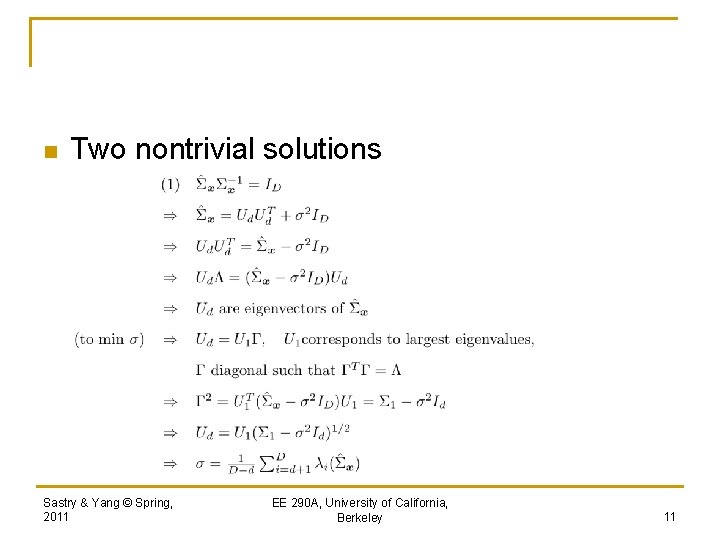

- Two nontrivial solutions

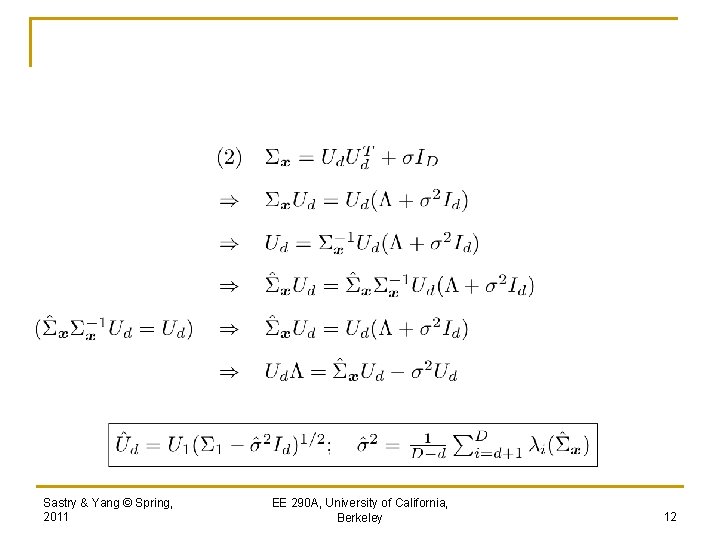

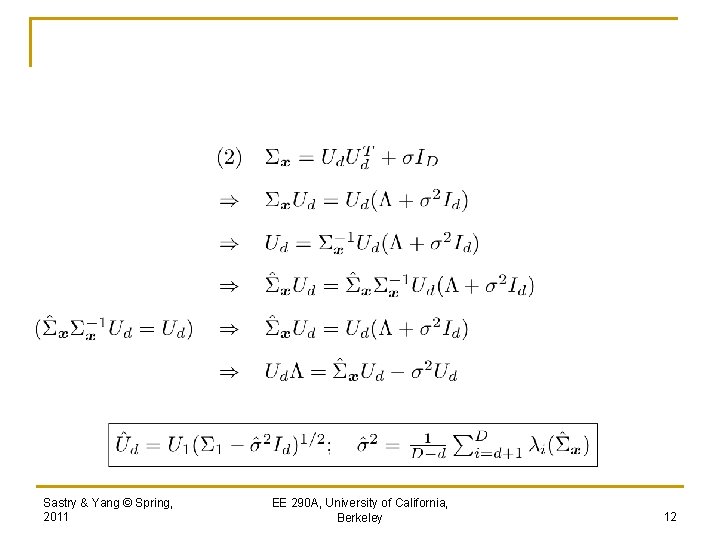

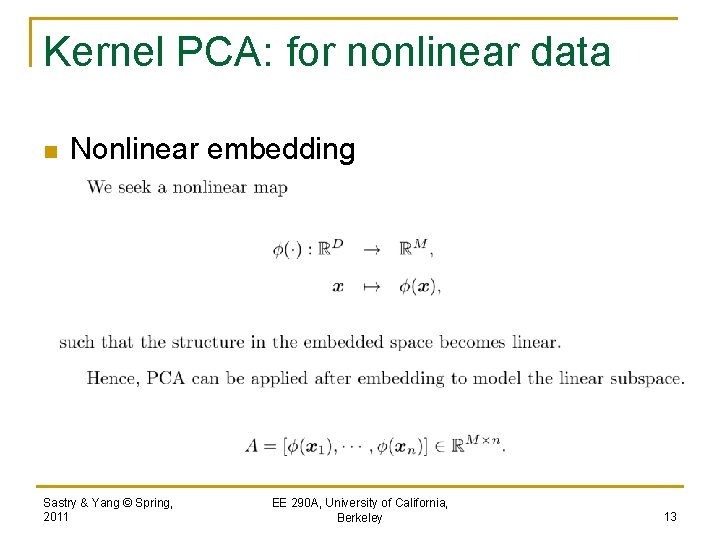

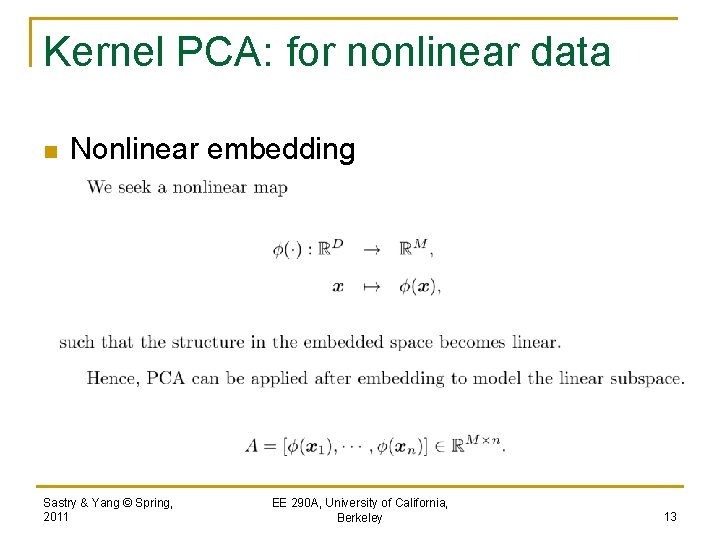
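For reference, here is a minimal sketch of the well-known closed-form maximum-likelihood estimate for probabilistic PCA (Tipping and Bishop), assuming the slides follow that result: σ² is the average of the D − d smallest covariance eigenvalues and W = U_d (Λ_d − σ²I)^{1/2}, with the arbitrary rotation taken as the identity. The function name `ppca_mle` and the test covariance are illustrative.

```python
import numpy as np

def ppca_mle(S, d):
    """Closed-form ML estimate of (W, sigma^2) from a (D, D) covariance S."""
    evals, evecs = np.linalg.eigh(S)                 # ascending order
    idx = np.argsort(evals)[::-1]
    evals, evecs = evals[idx], evecs[:, idx]
    sigma2 = evals[d:].mean()                        # average discarded variance
    scale = np.sqrt(np.maximum(evals[:d] - sigma2, 0.0))
    W = evecs[:, :d] * scale                         # scale each leading eigenvector
    return W, sigma2

# Hypothetical ground truth with an exact model covariance.
rng = np.random.default_rng(0)
W0 = rng.standard_normal((5, 2))
S = W0 @ W0.T + 0.25 * np.eye(5)

W, sigma2 = ppca_mle(S, 2)
```

`W` matches `W0` only up to a rotation of the latent space, so the meaningful comparison is between `W @ W.T` and `W0 @ W0.T`.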

Kernel PCA: for nonlinear data

- Nonlinear embedding

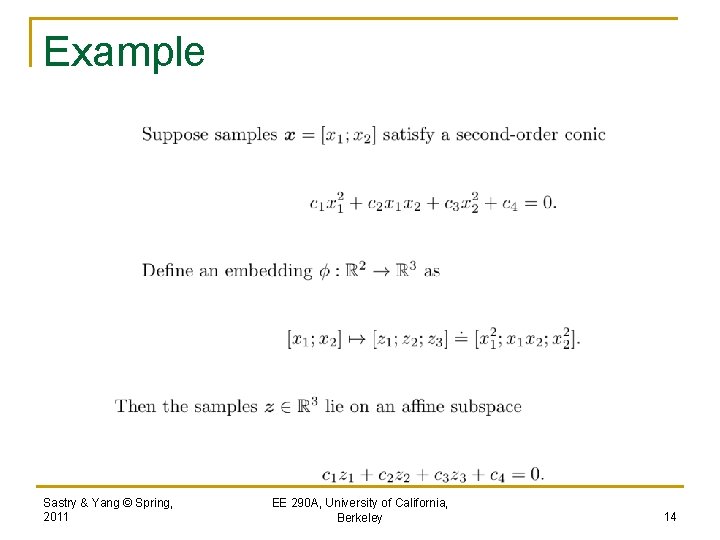

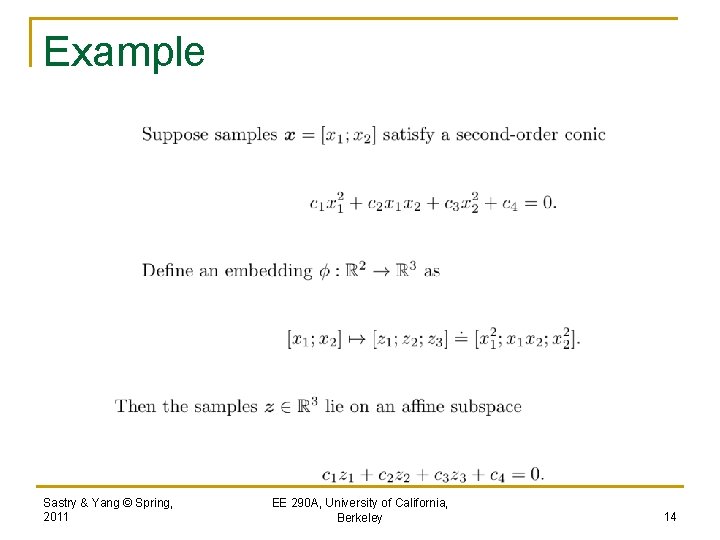

Example

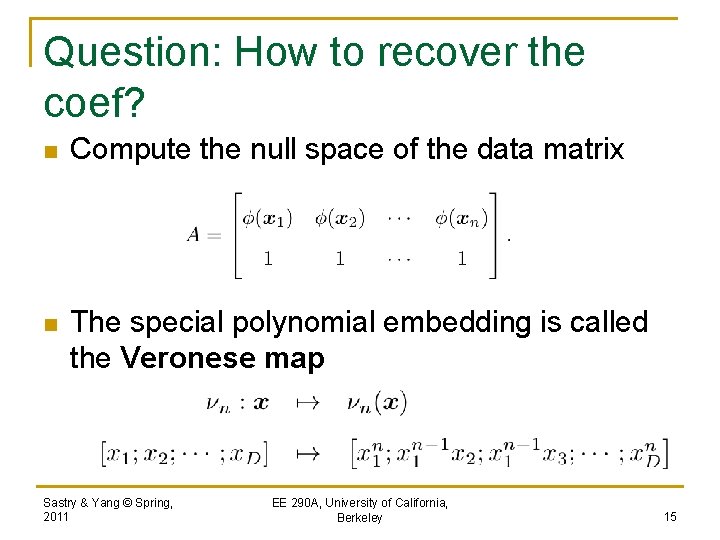

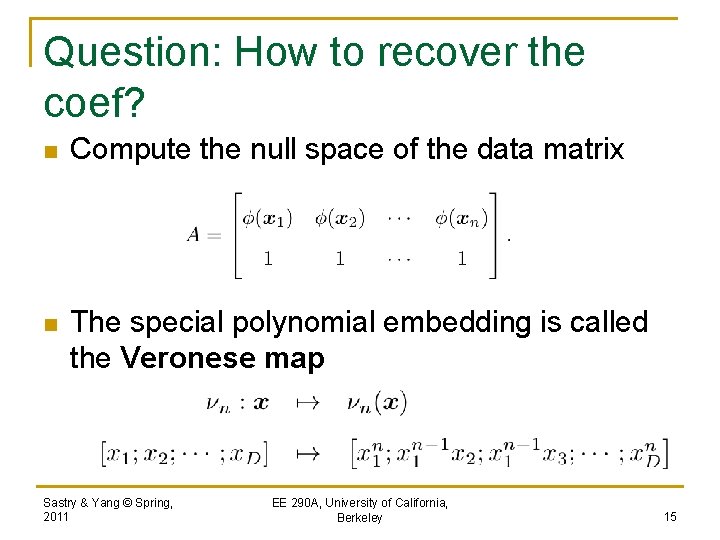

Question: How to recover the coefficients?

- Compute the null space of the data matrix
- The special polynomial embedding is called the Veronese map

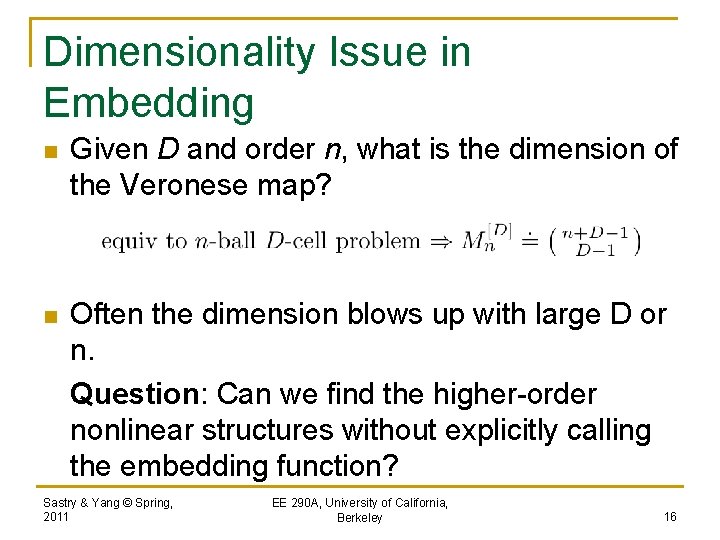

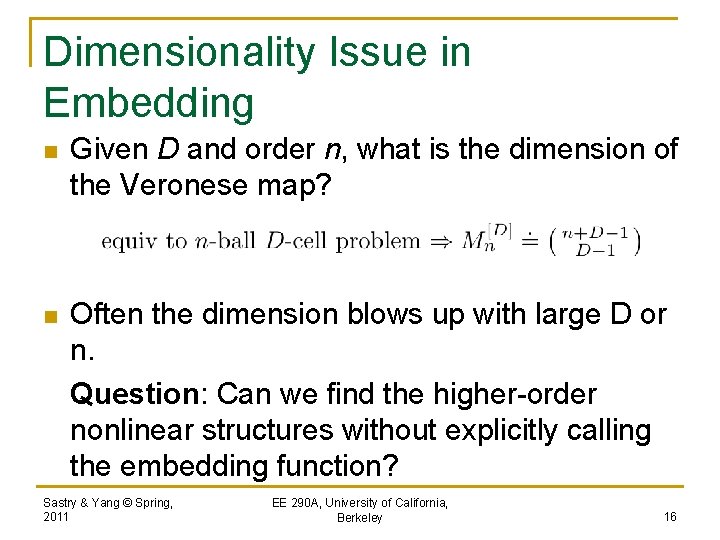
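The null-space idea can be sketched on a hypothetical example (not necessarily the one on the slides): points on two lines through the origin in R², x₂ = x₁ and x₂ = −2x₁, satisfy the single polynomial (x₁ − x₂)(2x₁ + x₂) = 2x₁² − x₁x₂ − x₂² = 0. Embedding each sample with the degree-2 Veronese map and computing the null space of the embedded data matrix recovers the coefficients up to scale. The helper name `veronese` is illustrative.

```python
import numpy as np
from itertools import combinations_with_replacement

def veronese(X, n):
    """Degree-n Veronese map. X is (D, N); returns an (M, N) matrix whose rows
    are all degree-n monomials of the coordinates."""
    D = X.shape[0]
    rows = [np.prod(X[list(idx), :], axis=0)
            for idx in combinations_with_replacement(range(D), n)]
    return np.vstack(rows)

t = np.linspace(-1.0, 1.0, 20)
line1 = np.vstack([t, t])              # points on x2 = x1
line2 = np.vstack([t, -2.0 * t])       # points on x2 = -2 x1
X = np.hstack([line1, line2])          # (2, 40) samples

V = veronese(X, 2)                     # rows: x1^2, x1*x2, x2^2
# Embedded samples as rows; the null space is the last right singular vector.
_, sv, Vt = np.linalg.svd(V.T)
c = Vt[-1]
c_scaled = c * (2.0 / c[0])            # fix the scale so the x1^2 coefficient is 2
```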

Dimensionality Issue in Embedding

- Given D and order n, what is the dimension of the Veronese map?
- Often the dimension blows up with large D or n.

Question: Can we find the higher-order nonlinear structures without explicitly calling the embedding function?

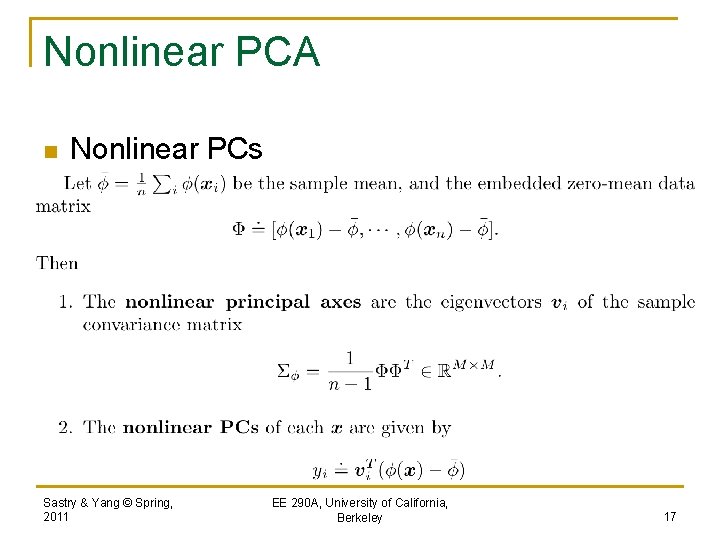

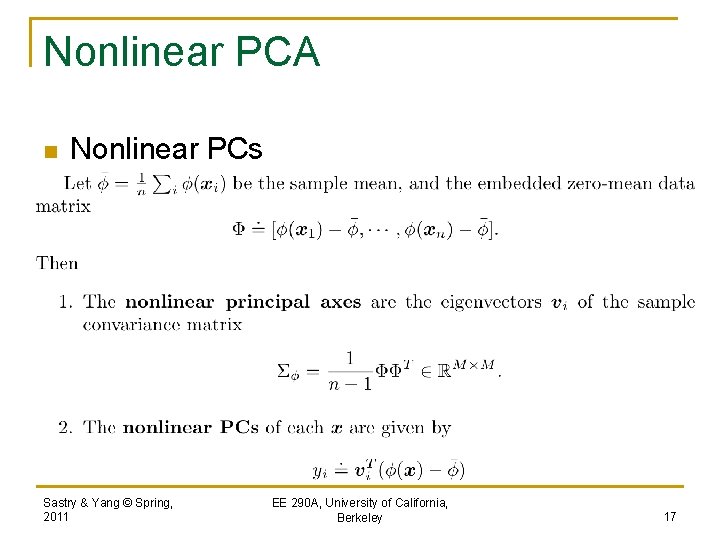
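To make the blow-up concrete, the sketch below assumes the homogeneous Veronese map, whose output dimension is the number of degree-n monomials in D variables, C(n + D − 1, n). The function name `veronese_dim` and the sample values of D and n are chosen only for illustration.

```python
from math import comb

def veronese_dim(D, n):
    """Number of degree-n monomials in D variables: C(n + D - 1, n)."""
    return comb(n + D - 1, n)

# A few (D, n) pairs, from tiny to large.
dims = {(D, n): veronese_dim(D, n) for D, n in [(2, 2), (3, 2), (5, 4), (100, 4)]}
for (D, n), M in dims.items():
    print(f"D={D}, n={n}: M={M}")
```

Already at D = 100 and n = 4 the embedded dimension is in the millions, which motivates avoiding the explicit embedding.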

Nonlinear PCA

- Nonlinear PCs

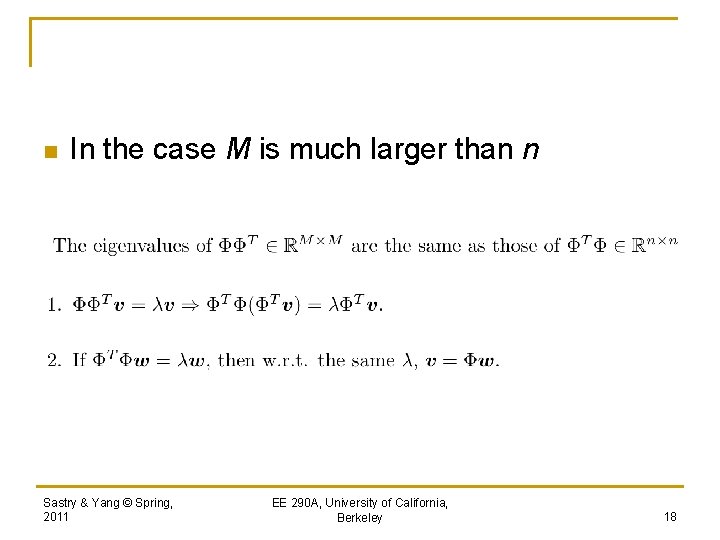

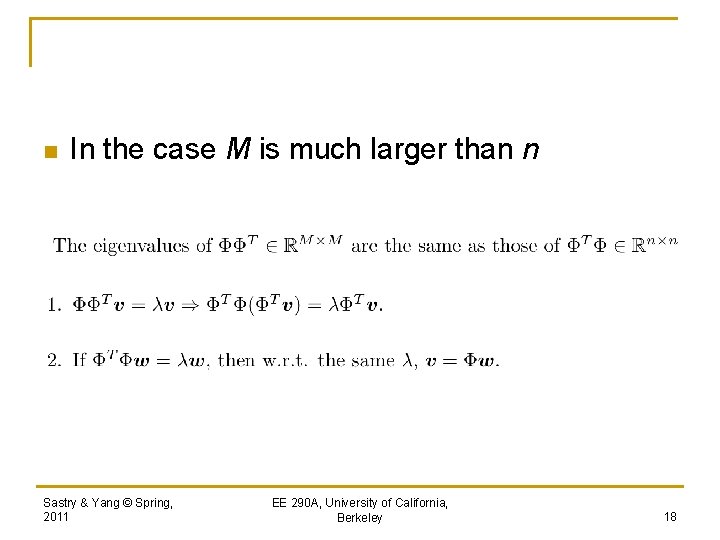

- In the case where M is much larger than n

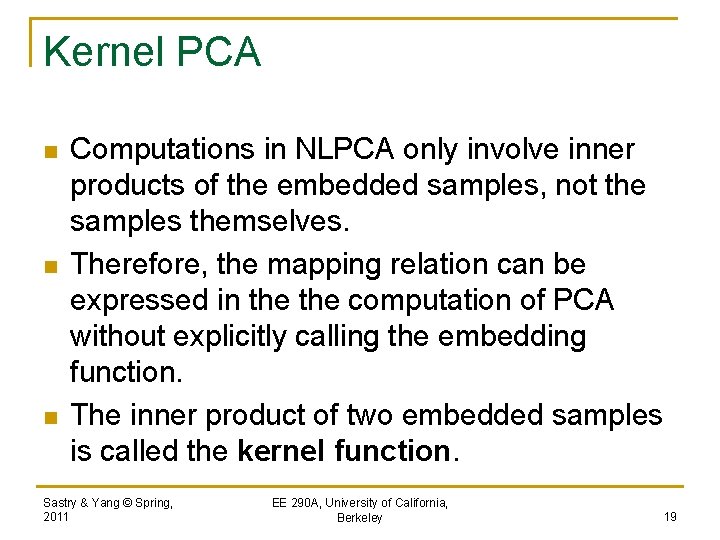

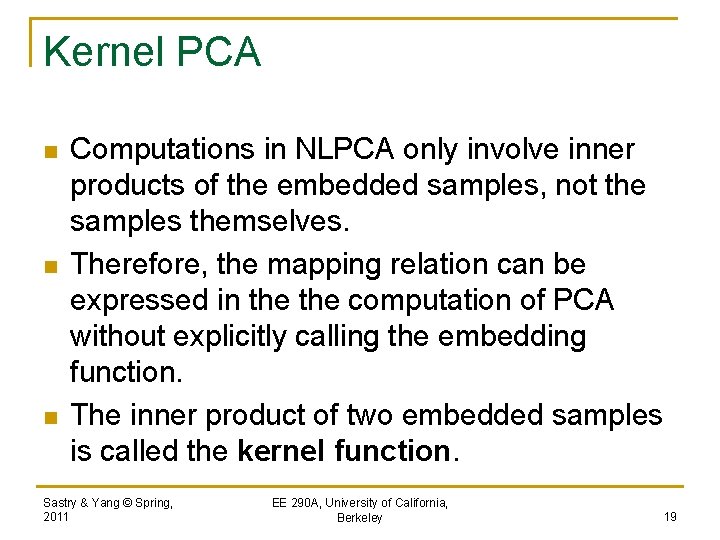

Kernel PCA

- Computations in NLPCA only involve inner products of the embedded samples, not the samples themselves.
- Therefore, the mapping relation can be expressed in the computation of PCA without explicitly calling the embedding function.
- The inner product of two embedded samples is called the kernel function.

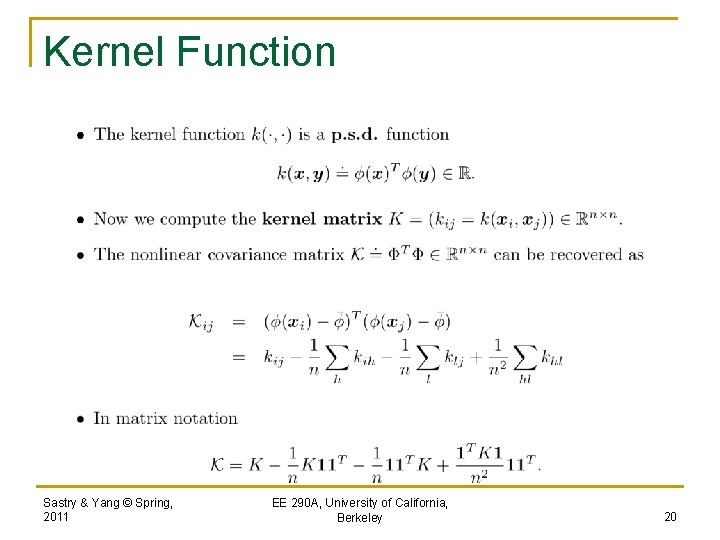

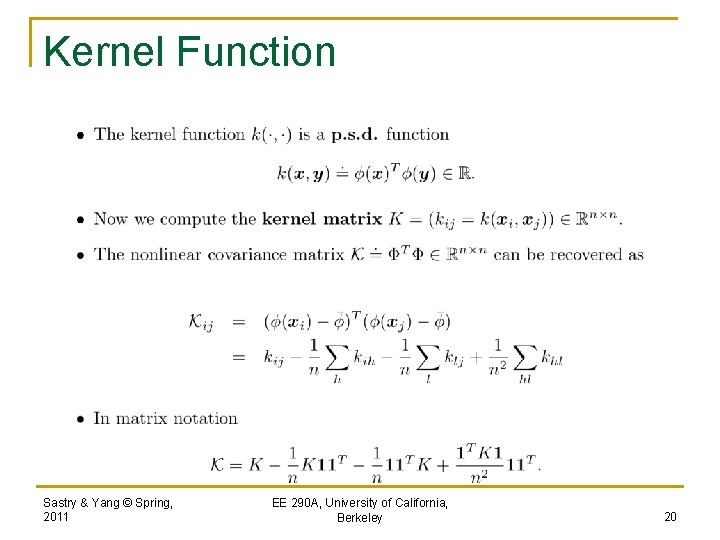

Kernel Function

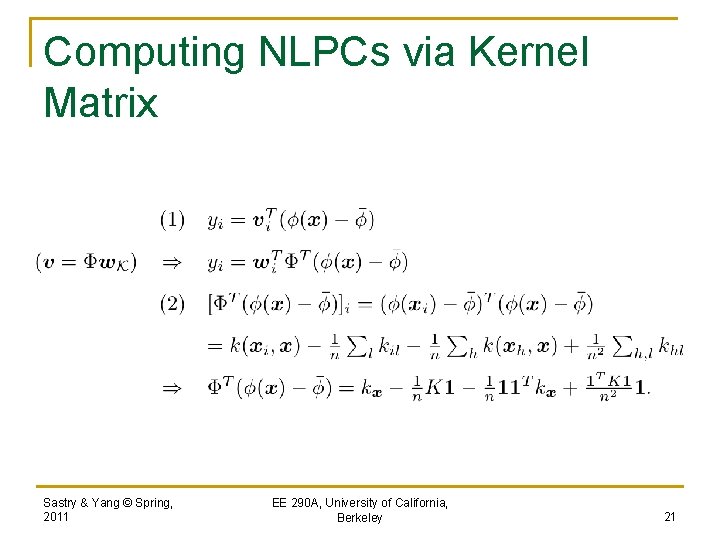

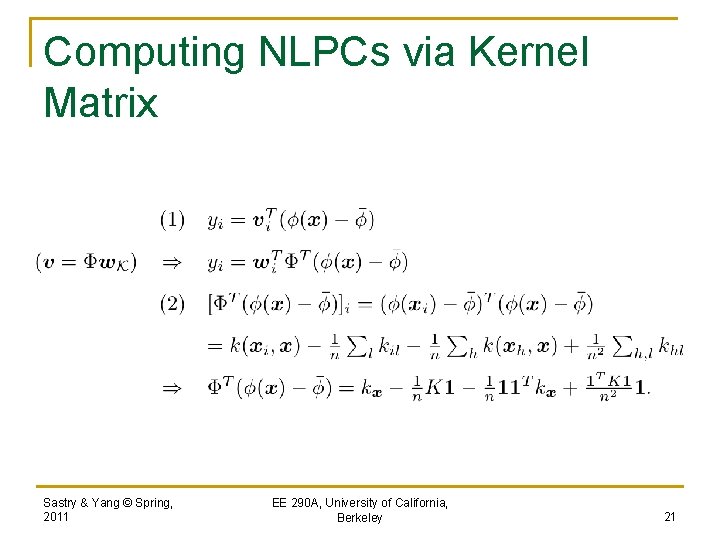

Computing NLPCs via Kernel Matrix

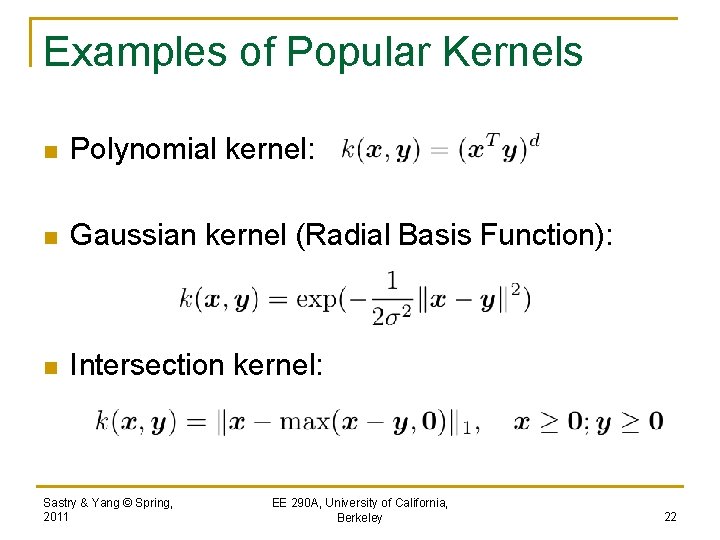

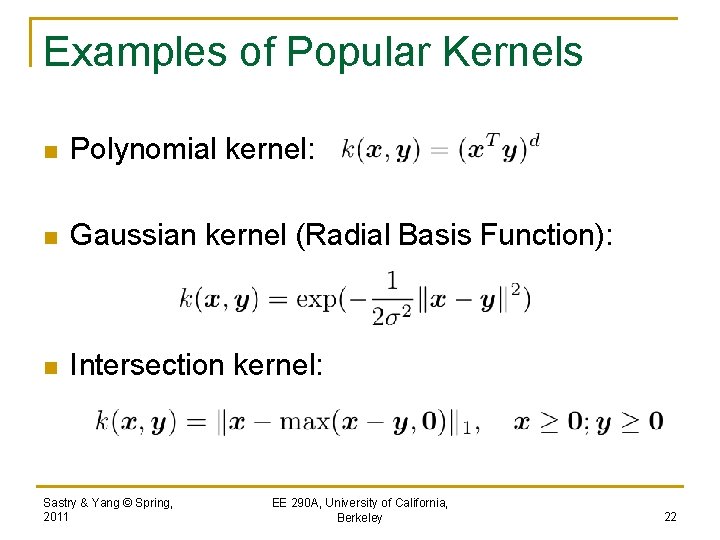
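A minimal sketch of computing nonlinear PCs from a kernel matrix follows, assuming the usual recipe: center the kernel matrix, take its leading eigenvectors, and rescale them so the implied feature-space directions have unit norm. The function name `kernel_pca` is illustrative, and the code writes N for the number of samples. As a sanity check it uses the linear kernel, for which the result should coincide with ordinary PCA up to sign.

```python
import numpy as np

def kernel_pca(K, d):
    """K is the (N, N) kernel matrix of N samples.
    Returns the (d, N) nonlinear PCs and the d leading eigenvalues."""
    N = K.shape[0]
    J = np.eye(N) - np.ones((N, N)) / N    # centering matrix
    Kc = J @ K @ J                          # kernel of mean-centered embedded samples
    evals, evecs = np.linalg.eigh(Kc)       # ascending order
    idx = np.argsort(evals)[::-1][:d]
    lam, A = evals[idx], evecs[:, idx]
    A = A / np.sqrt(lam)                    # unit-norm directions in feature space
    return A.T @ Kc, lam

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 40)) * np.array([[3.0], [2.0], [1.0]])

# Linear kernel k(x, y) = x^T y, so the embedding is the identity map.
Y_kpca, lam = kernel_pca(X.T @ X, d=2)

# Ordinary PCA on the same data for comparison.
Xc = X - X.mean(axis=1, keepdims=True)
U, s, _ = np.linalg.svd(Xc, full_matrices=False)
Y_pca = U[:, :2].T @ Xc
```

Only the kernel matrix is passed to `kernel_pca`, so swapping in another kernel changes the embedding without changing the routine.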

Examples of Popular Kernels

- Polynomial kernel:
- Gaussian kernel (Radial Basis Function):
- Intersection kernel:
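The formulas for these kernels did not survive in the text, so the sketch below uses their commonly cited forms as an assumption: (xᵀy + c)ⁿ for the polynomial kernel, exp(−‖x − y‖² / (2σ²)) for the Gaussian kernel, and Σᵢ min(xᵢ, yᵢ) for the intersection kernel. Parameter names and default values are illustrative. The last lines check that the degree-2 polynomial kernel equals an inner product of explicit degree-2 monomial embeddings (with a √2 weight on the cross term).

```python
import numpy as np

def polynomial_kernel(x, y, degree=2, c=0.0):
    return (np.dot(x, y) + c) ** degree

def gaussian_kernel(x, y, sigma=1.0):
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return np.exp(-np.dot(diff, diff) / (2.0 * sigma**2))

def intersection_kernel(x, y):
    # Typically applied to nonnegative vectors such as histograms.
    return np.sum(np.minimum(x, y))

x = np.array([1.0, 2.0])
y = np.array([3.0, 4.0])

k_poly = polynomial_kernel(x, y, degree=2)          # (1*3 + 2*4)^2
k_rbf_same = gaussian_kernel(x, x)                  # identical inputs
k_int = intersection_kernel(np.array([0.2, 0.5, 0.3]),
                            np.array([0.1, 0.6, 0.3]))

def embed2(v):
    """Explicit weighted degree-2 embedding of a 2-dim vector."""
    return np.array([v[0] ** 2, np.sqrt(2.0) * v[0] * v[1], v[1] ** 2])

k_explicit = np.dot(embed2(x), embed2(y))           # same value as k_poly
```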