Delving into Internet Streaming Media Delivery: A Quality and Resource Utilization Perspective

- Slides: 49

Delving into Internet Streaming Media Delivery: A Quality and Resource Utilization Perspective

Written by: Lei Guo, Enhua Tan, Songqing Chen, Zhen Xiao, Oliver Spatscheck, Xiaodong Zhang

Presented by: Harris C. C. Sun, Dicky Kwok

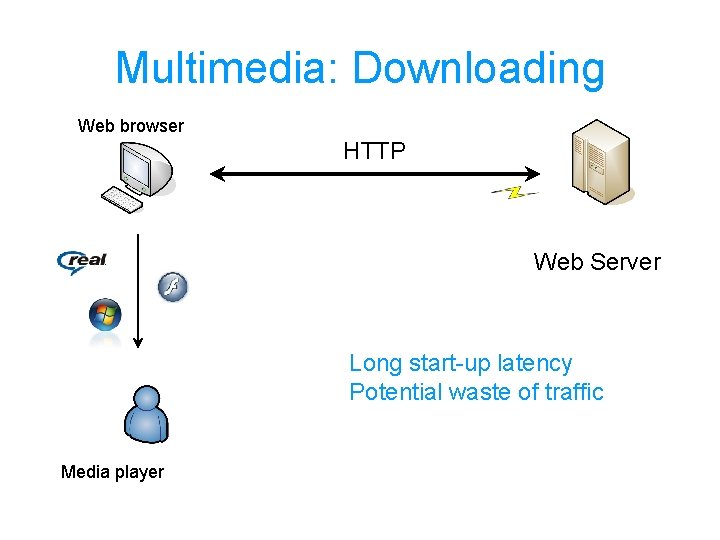

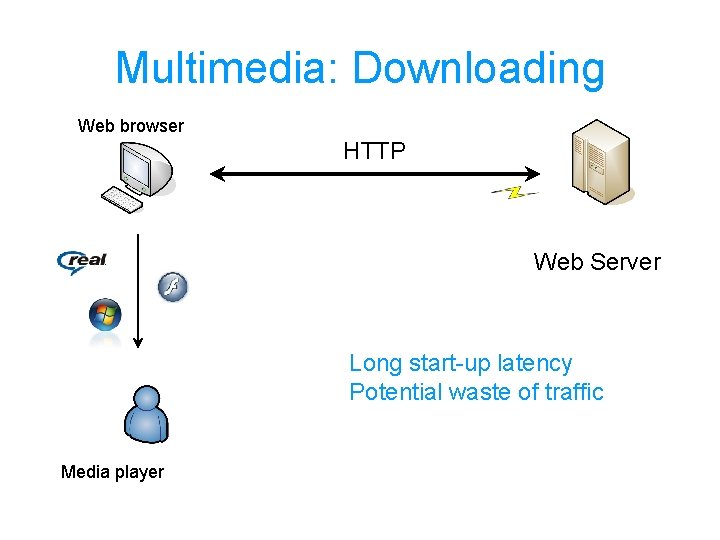

Multimedia: Downloading • Diagram: web browser, web server (HTTP), media player • Long start-up latency • Potential waste of traffic

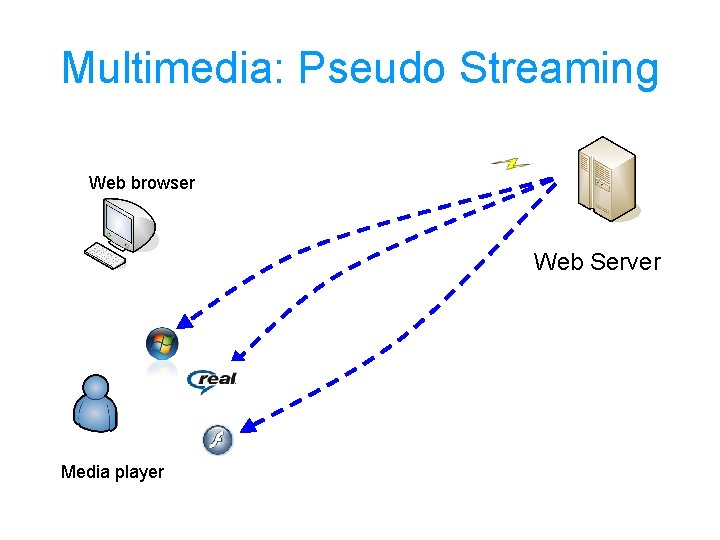

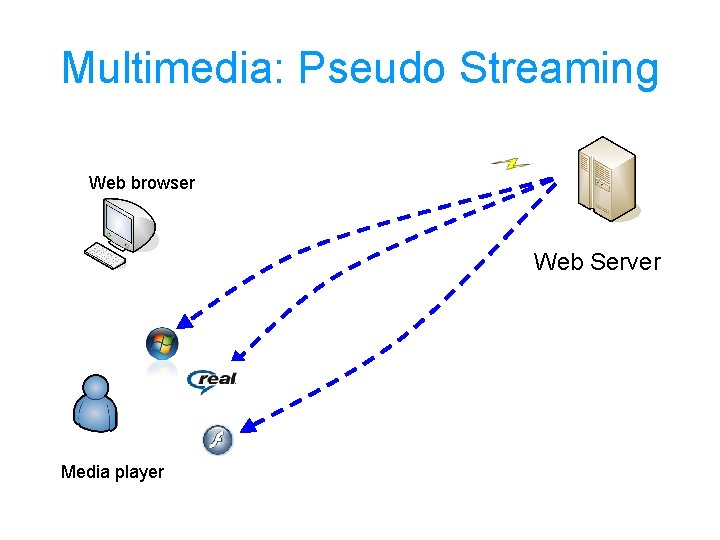

Multimedia: Pseudo Streaming • Diagram: web browser, web server, media player

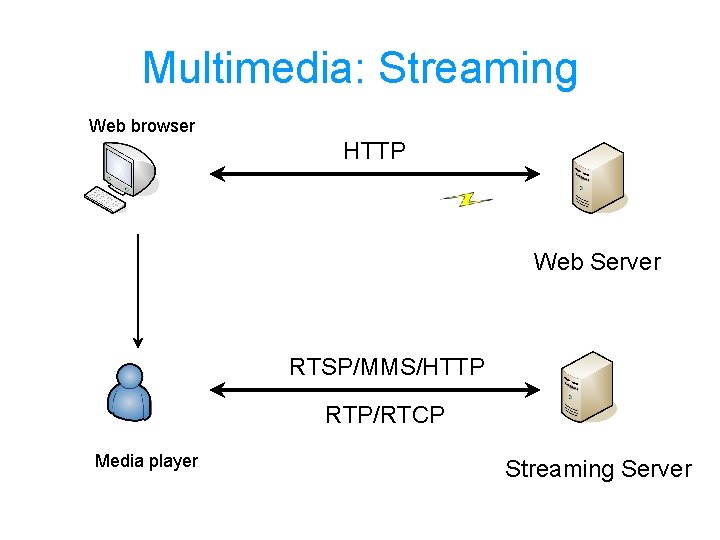

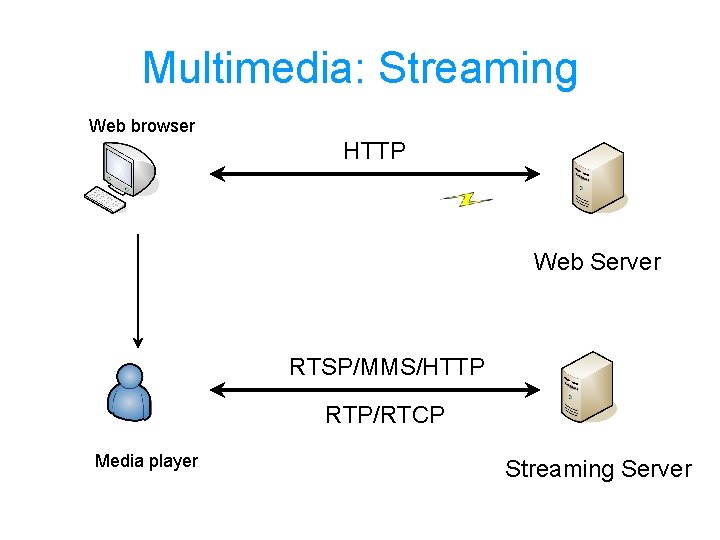

Multimedia: Streaming • Diagram: web browser and web server (HTTP); media player and streaming server (RTSP/MMS/HTTP, RTP/RTCP)

Streaming Media • Merits – Thousands of concurrent streams – Flexible response to network congestion – Efficient bandwidth utilization – High quality to end users • Challenges – Lack of QoS on the Internet – Diverse network connections of users – Prolonged startup latency • Research and techniques – Effective utilization of server and Internet resources – Mechanisms of streaming media delivery: protocol rollover, Fast Streaming, MBR encoding and rate adaptation

Motivation • Existing streaming studies have concentrated on – media access patterns and – user behavior as ways to improve streaming performance • Few have focused on – improving the techniques for quality streaming delivery or – the effective utilization of media resources

Objective and Approach • Understand modern streaming techniques – Their delivery quality and resource utilization • Collect a large streaming media workload – From thousands of home and business users hosted by a large ISP, collected with Gigascope – Using RTSP, RTP/RTCP, MMS and RDT packet headers rather than server logs • Analyze commonly used streaming techniques – Fast Streaming – Protocol rollover – MBR encoding and rate adaptation • Propose a coordinated streaming mechanism – Effectively combines the merits of caching and rate adaptation

• Trace Collection and Processing Methodology • Traffic Overview • Fast Streaming • Rate Adaptation • Protocol Rollover • Coordinated Streaming • Summary

Trace Collection and Processing Methodology • Collect streaming packets • Capture all TCP packets on ports 554-555, 7070-7071, 9070 and 1755 • Use keyword matching to identify RTSP and MMS packets • Group TCP packets into connections by IP addresses, ports, and TCP SYN/FIN/RST flags • Extract the streaming commands from each request • Identify media data and control packets
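
A minimal Python sketch of the port filtering, keyword matching and connection grouping described above. It assumes packets have already been decoded into simple records (the `Packet` class is hypothetical, not Gigascope's output format), and the payload keywords are assumed signatures, since the slide only says keyword matching was used. Closing a connection on the first FIN or RST is a simplification.

```python
from dataclasses import dataclass

# Ports listed on the slide.
STREAMING_PORTS = {554, 555, 7070, 7071, 9070, 1755}
# Assumed payload signatures; the exact keywords used in the study are not given.
KEYWORDS = (b"RTSP/1.0", b"MMS ")


@dataclass
class Packet:  # hypothetical decoded-packet record
    src: str
    sport: int
    dst: str
    dport: int
    flags: frozenset = frozenset()  # e.g. {"SYN"}, {"FIN"}, {"RST"}
    payload: bytes = b""


def on_streaming_port(p):
    return p.sport in STREAMING_PORTS or p.dport in STREAMING_PORTS


def is_control(p):
    """Keyword match: does the payload look like an RTSP/MMS command?"""
    return any(k in p.payload for k in KEYWORDS)


def conn_key(p):
    # Order the two endpoints so both directions map to the same key.
    a, b = (p.src, p.sport), (p.dst, p.dport)
    return (a, b) if a <= b else (b, a)


def group_connections(packets):
    """Split captured TCP packets into connections using SYN/FIN/RST flags."""
    open_conns, finished = {}, []
    for p in packets:
        if not on_streaming_port(p):
            continue
        key = conn_key(p)
        if "SYN" in p.flags and key not in open_conns:
            open_conns[key] = []
        if key not in open_conns:
            continue  # packet of a connection whose SYN was not captured
        open_conns[key].append(p)
        if p.flags & {"FIN", "RST"}:
            finished.append(open_conns.pop(key))  # simplification: close on first FIN/RST
    return finished + list(open_conns.values())
```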

Traffic Overview • User communities – Home users in a cable network – Business users hosted by a big ISP – The two communities have different access patterns • Media hosting services – Self-hosting – Third-party hosting

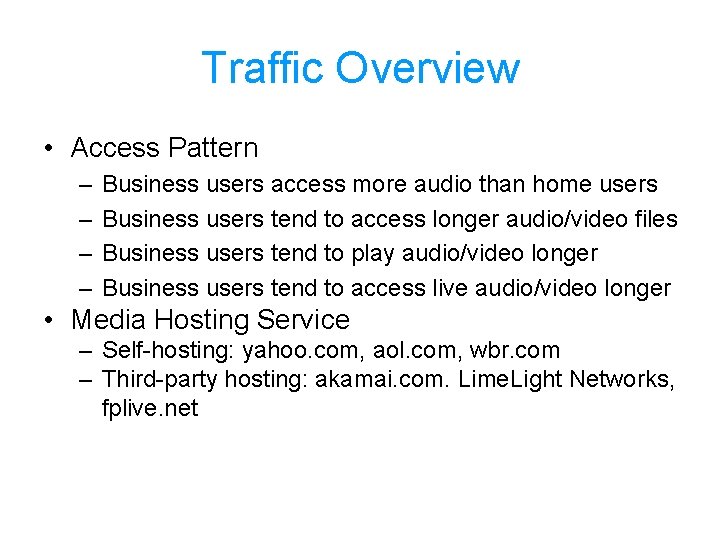

Traffic Overview • Access pattern – Business users access more audio than home users – Business users tend to access longer audio/video files – Business users tend to play audio/video longer – Business users tend to access live audio/video longer • Media hosting service – Self-hosting: yahoo.com, aol.com, wbr.com – Third-party hosting: akamai.com, LimeLight Networks, fplive.net

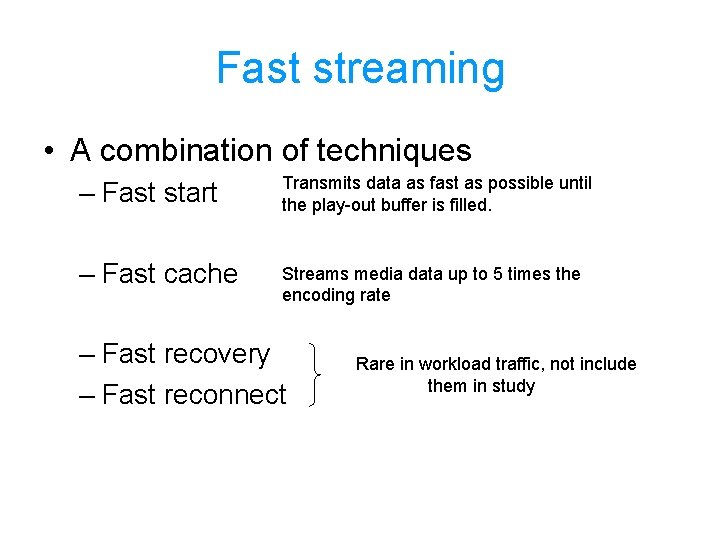

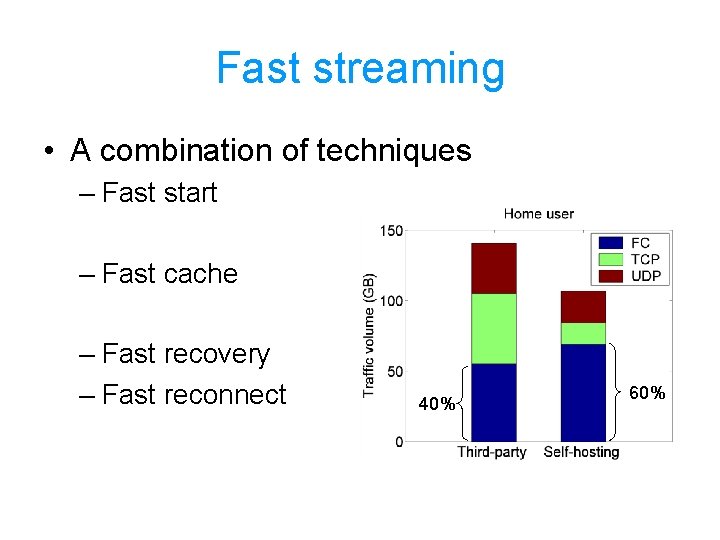

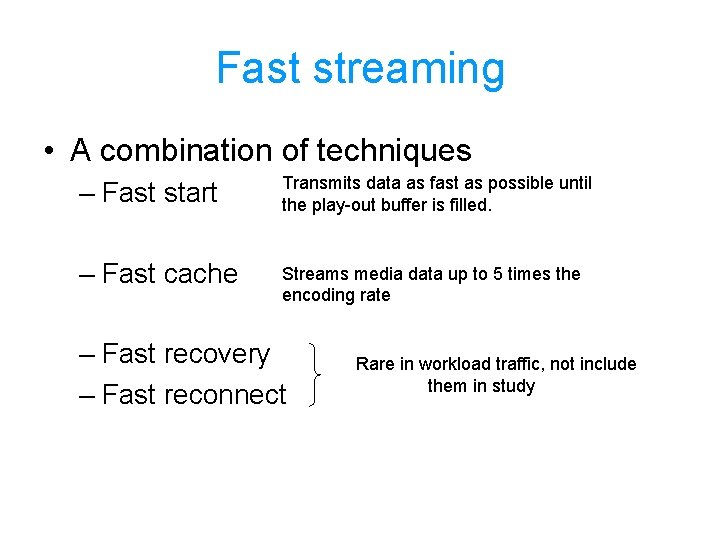

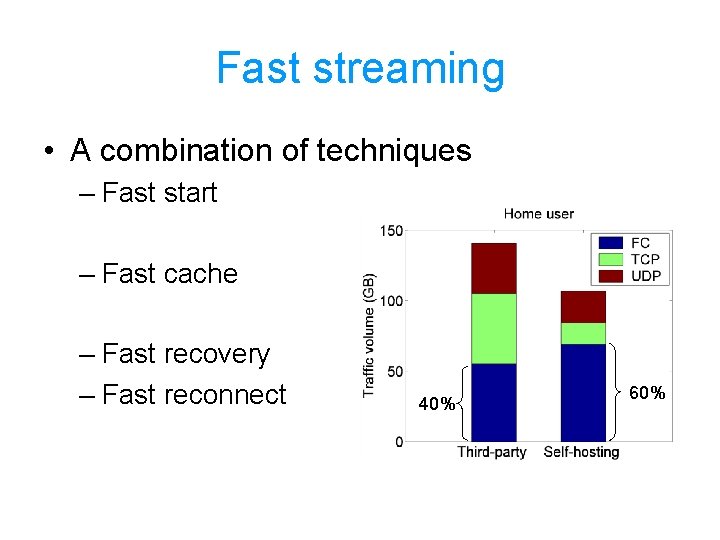

Fast Streaming • A combination of techniques – Fast Start: transmits data as fast as possible until the play-out buffer is filled – Fast Cache: streams media data at up to 5 times the encoding rate – Fast Recovery – Fast Reconnect • Fast Recovery and Fast Reconnect are rare in the workload traffic, so the study does not include them
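
To make the difference between Fast Start, Fast Cache and plain streaming concrete, here is a toy per-second sending schedule. It assumes 1-second slots, a buffer target measured in seconds of media, a positive bandwidth, and no TCP dynamics; the function name and parameters are illustrative, and only the 5x cap comes from the slide.

```python
def send_schedule(duration_s, enc_kbps, bw_kbps, buffer_s,
                  fast_cache=True, max_ratio=5):
    """Kbits sent in each 1-second slot until the whole clip is delivered."""
    total = duration_s * enc_kbps          # clip size in kbits
    buffer_target = buffer_s * enc_kbps    # play-out buffer size in kbits
    sent, slots = 0, []
    while sent < total:
        if sent < buffer_target:
            rate = bw_kbps                             # Fast Start: as fast as the link allows
        elif fast_cache:
            rate = min(bw_kbps, max_ratio * enc_kbps)  # Fast Cache: up to 5x the encoding rate
        else:
            rate = min(bw_kbps, enc_kbps)              # Fast Start only, then the encoding rate
        chunk = min(rate, total - sent)
        slots.append(chunk)
        sent += chunk
    return slots


fc = send_schedule(60, 300, 2000, 5)
plain = send_schedule(60, 300, 2000, 5, fast_cache=False)
print(len(fc), len(plain))  # 12 55
```

On a 2,000 Kbps link, the 60-second, 300 Kbps clip is fully delivered after 12 seconds with Fast Cache, versus 55 seconds with Fast Start alone; if the user quits early, the data pushed ahead of playback is wasted.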

Fast Streaming • A combination of techniques – Fast Start – Fast Cache – Fast Recovery – Fast Reconnect • (chart values: 40%, 60%)

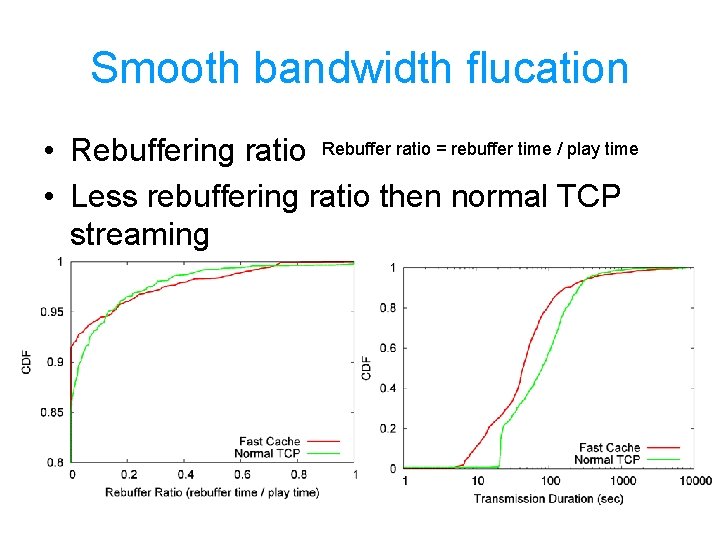

Smoothing Bandwidth Fluctuation • Rebuffering ratio = rebuffering time / play time • Fast Streaming has a lower rebuffering ratio than normal TCP streaming
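
The rebuffering ratio is simple to compute once a session has been split into playing and stalled intervals. The sketch below assumes such a per-session log is available (the tuple format is hypothetical) and takes "play time" to mean time spent actually playing; the paper's exact accounting may differ.

```python
def rebuffering_ratio(intervals):
    """intervals: (state, seconds) pairs, where state is "play" or "rebuffer"."""
    play = sum(sec for state, sec in intervals if state == "play")
    rebuffer = sum(sec for state, sec in intervals if state == "rebuffer")
    if play == 0:
        raise ValueError("no playback time recorded")
    return rebuffer / play


session = [("play", 50.0), ("rebuffer", 2.0), ("play", 50.0)]
print(rebuffering_ratio(session))  # 0.02
```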

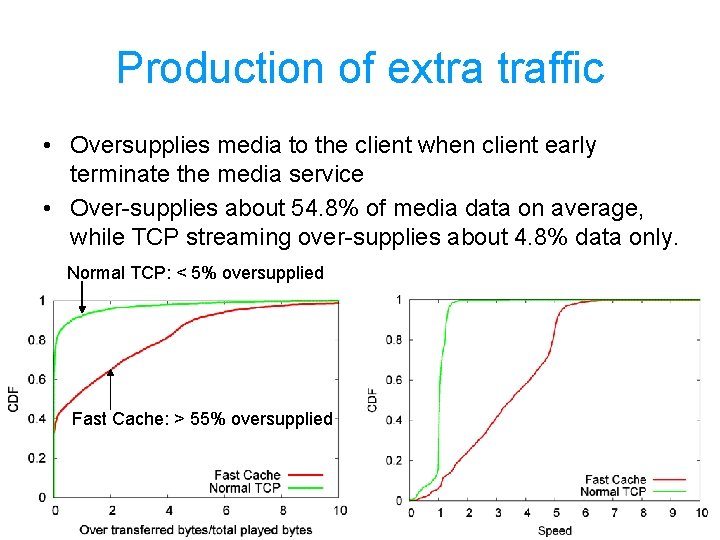

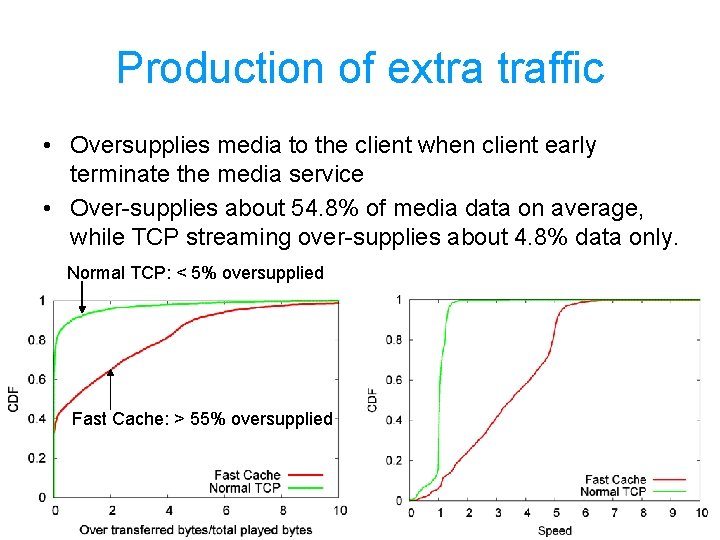

Production of Extra Traffic • Fast Cache over-supplies media data to the client when the client terminates the session early • On average, Fast Cache over-supplies about 54.8% of media data, while normal TCP streaming over-supplies only about 4.8% • Chart: normal TCP < 5% over-supplied; Fast Cache > 55% over-supplied
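
Why early termination produces so much waste can be seen with back-of-the-envelope arithmetic. This sketch assumes the server sends at a constant rate until the user quits, that the over-supplied share is measured against the data delivered, and that `send_kbps` is at least the encoding rate; the function and numbers are illustrative, not the paper's measurement method.

```python
def oversupply_ratio(watch_s, duration_s, enc_kbps, send_kbps, buffer_s=0.0):
    """Fraction of delivered data the user never plays (assumes send_kbps >= enc_kbps)."""
    if send_kbps < enc_kbps:
        raise ValueError("model assumes the sender keeps up with playback")
    total = duration_s * enc_kbps                     # whole clip, kbits
    played = min(watch_s, duration_s) * enc_kbps      # consumed before quitting
    # Delivered = initial buffer plus watch_s seconds at the sending rate, capped at the clip size.
    delivered = min(total, buffer_s * enc_kbps + watch_s * send_kbps)
    if delivered == 0:
        return 0.0
    return (delivered - played) / delivered


fast = oversupply_ratio(60, 600, 300, 1500)              # Fast Cache at 5x
normal = oversupply_ratio(60, 600, 300, 300, buffer_s=5)  # encoding rate plus a 5 s buffer
print(round(fast, 3), round(normal, 3))  # 0.8 0.077
```

A user who quits after one minute of a ten-minute, 300 Kbps clip delivered at 5x has received five minutes of media, so 80% of it is wasted, while streaming at the encoding rate with a 5-second buffer wastes about 7.7% in this model.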

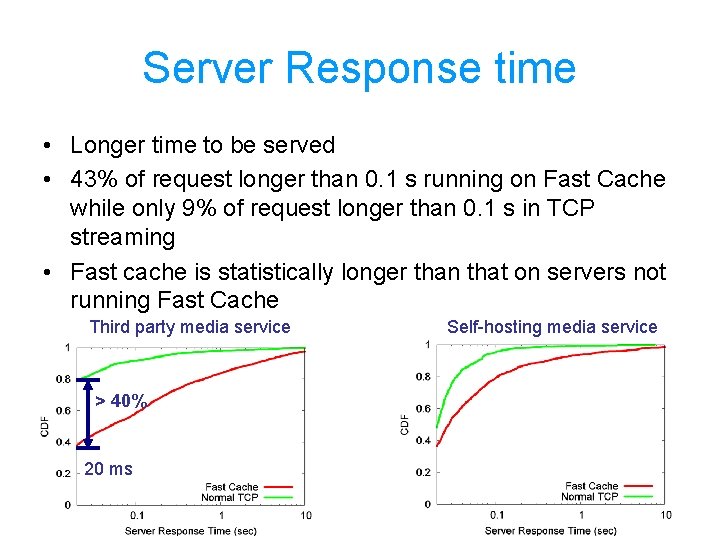

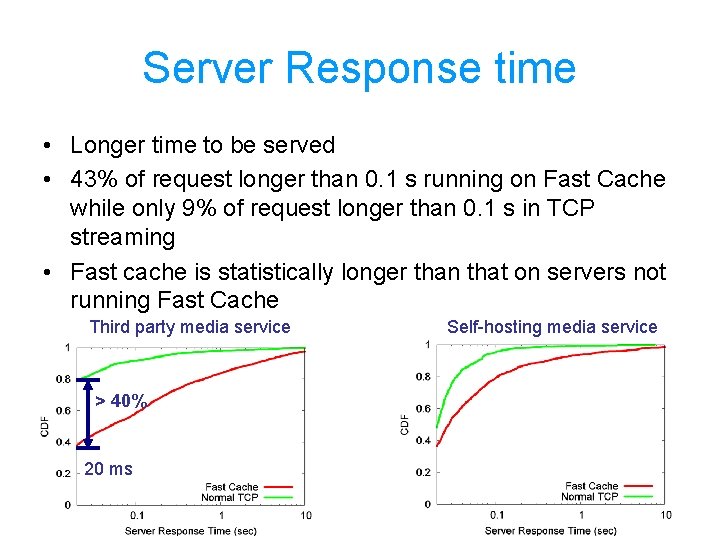

Server Response Time • Requests take longer to be served • On servers running Fast Cache, 43% of requests take longer than 0.1 s, while under normal TCP streaming only 9% of requests take longer than 0.1 s • Response time on servers running Fast Cache is statistically longer than on servers not running Fast Cache • Chart labels: third-party media service, self-hosting media service, > 40%, 20 ms

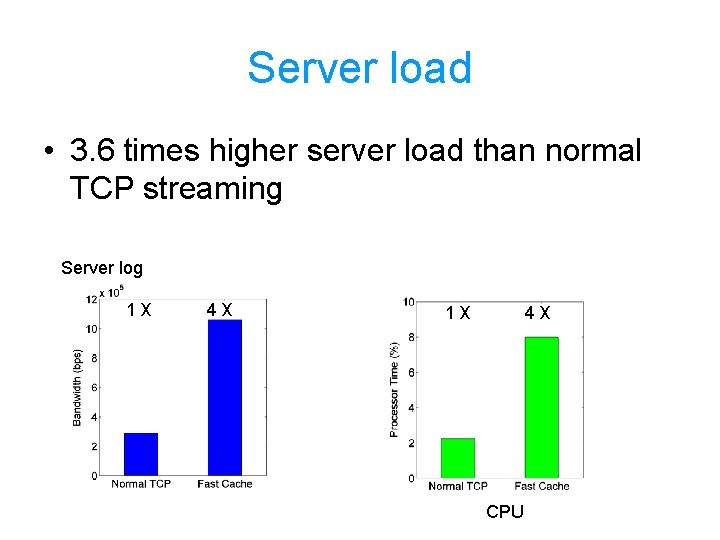

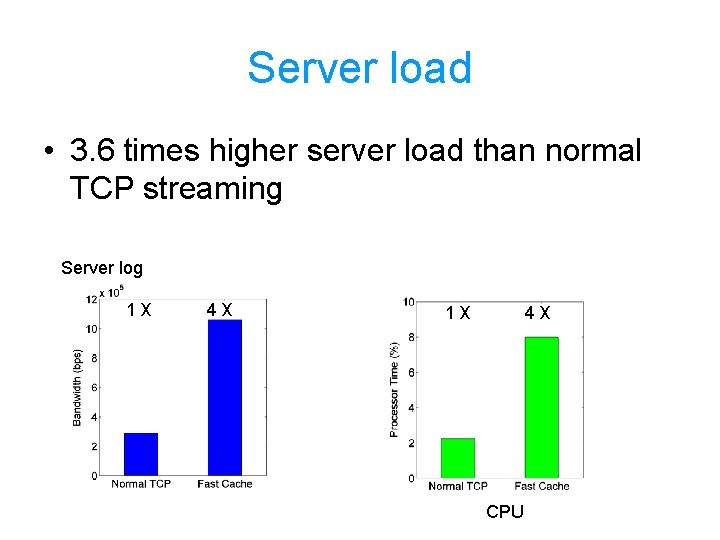

Server Load • Fast Cache causes about 3.6 times higher server load than normal TCP streaming • Chart: server CPU load, 1X vs. 4X

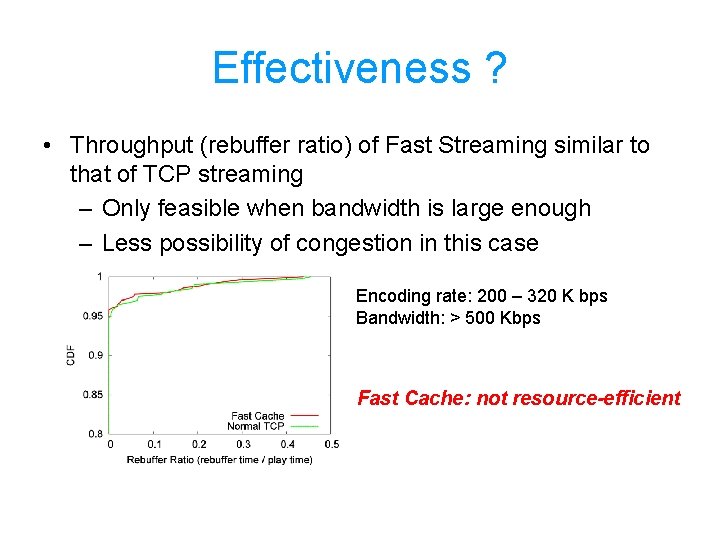

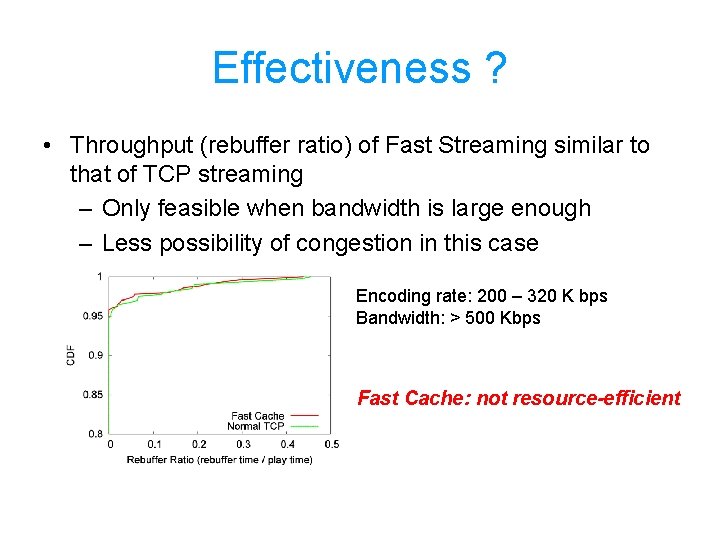

Effectiveness? • The rebuffering ratio of Fast Streaming is similar to that of normal TCP streaming – Fast Streaming is only feasible when bandwidth is large enough – In that case, congestion is less likely anyway • Encoding rate: 200–320 Kbps; bandwidth: > 500 Kbps • Fast Cache is not resource-efficient
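
The numbers on this slide bound how much room Fast Cache has to work with. A quick check, assuming the Fast Cache cap of 5x the encoding rate and a link at exactly 500 Kbps (the function name is illustrative):

```python
def fast_cache_speedup(bw_kbps, enc_kbps, max_ratio=5.0):
    """Highest delivery-to-encoding ratio the link permits, capped at max_ratio."""
    return min(max_ratio, bw_kbps / enc_kbps)


for enc in (200, 320):
    print(enc, fast_cache_speedup(500, enc))
# 200 2.5
# 320 1.5625
```

At 500 Kbps the link allows only about 1.6x to 2.5x the encoding rate; Fast Cache reaches its full 5x only when bandwidth is several times the encoding rate, which is also when congestion is least likely.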

Challenges of Streaming • Bandwidth fluctuation – The quality of media streaming may degrade significantly • Connection speeds vary – From dial-up to cable • Prolonged startup latency

Challenges of Streaming • To cope with bandwidth fluctuation, media players widely use rate adaptation • The basic concept is simple: – Lower the stream bit rate to match the available bandwidth – Restore the rate when bandwidth recovers – Never exceed the original bit rate
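
The basic rule can be written as a selection over the available encoded rates. This is a sketch under assumptions: the client knows its available bandwidth, the object is MBR-encoded with the listed rates (hypothetical values), and the client falls back to the lowest rate when nothing fits.

```python
def choose_rate(available_kbps, rates_kbps, original_kbps):
    """Highest encoded rate that fits the bandwidth, never above the original rate."""
    ceiling = min(available_kbps, original_kbps)
    fitting = [r for r in rates_kbps if r <= ceiling]
    return max(fitting) if fitting else min(rates_kbps)


rates = [56, 150, 300, 700]  # hypothetical MBR encodings
print([choose_rate(bw, rates, original_kbps=300) for bw in (1000, 200, 40, 400)])
# [300, 150, 56, 300]
```

The 700 Kbps stream is never chosen here because it exceeds the rate the session started with, matching the "never higher than the original bit rate" rule.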

Rate Adaptation • To adapt to bandwidth fluctuation, major media services support three techniques for rate adaptation: – Stream switch (known as Intelligent Streaming in Windows Media and SureStream in RealNetworks) – Stream thinning – Video cancellation

Rate Adaptation • Stream switch – Requires multiple bit rate (MBR) encoding – According to the paper's statistics, MBR encoding is widely used in media authoring

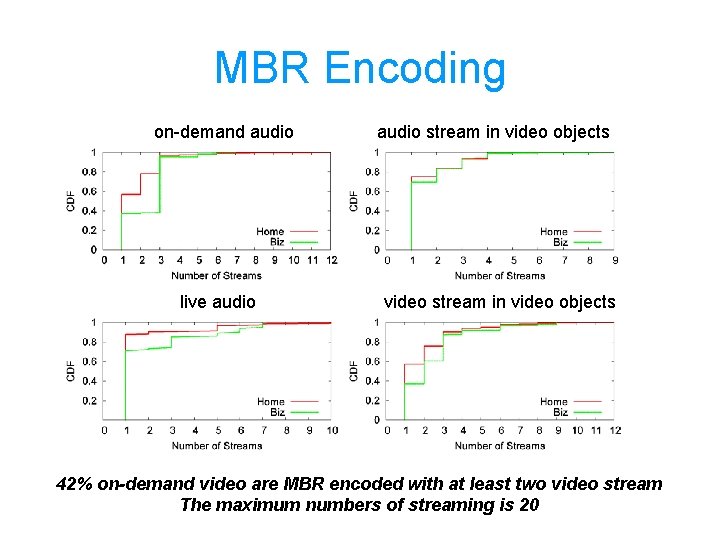

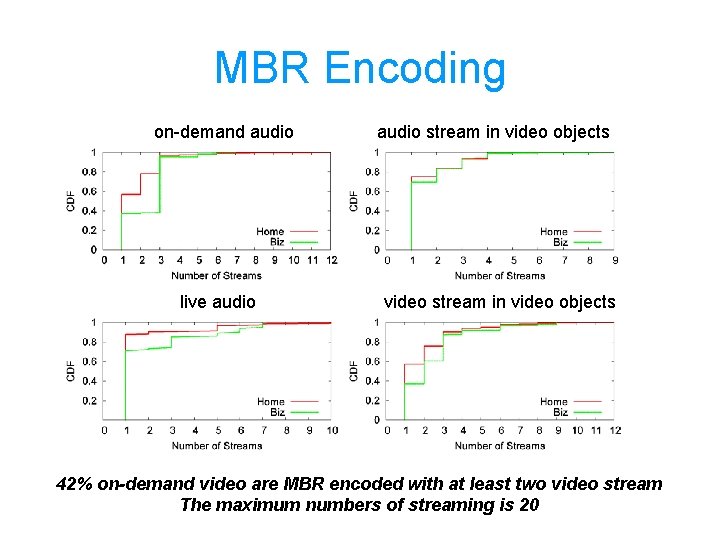

MBR Encoding • Chart categories: on-demand audio, live audio, stream in video objects, video stream in video objects • 42% of on-demand videos are MBR-encoded with at least two video streams • The maximum number of streams observed is 20

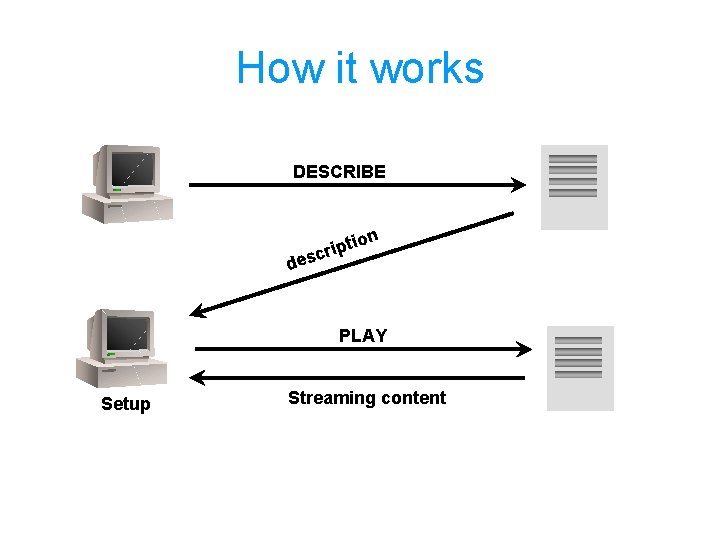

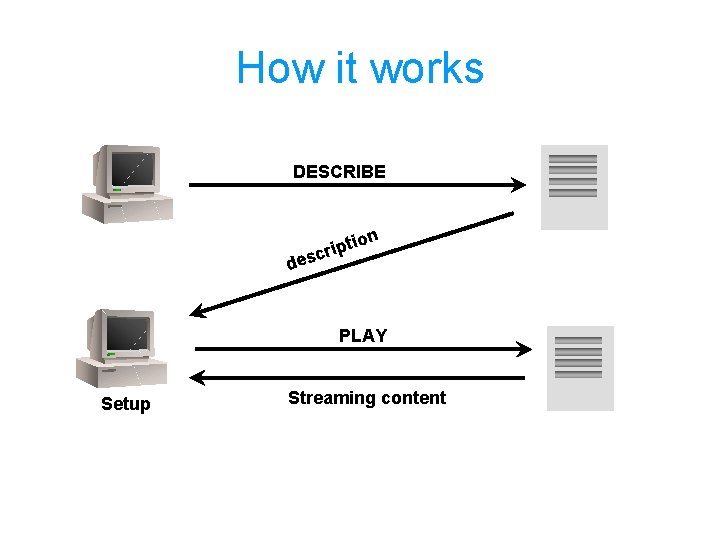

How it works • Diagram: DESCRIBE → description → SETUP → PLAY → streaming content
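
For reference, the standard RTSP (RFC 2326) request sequence behind this diagram can be sketched as plain request strings. The URL, track name, client ports, and session ID are hypothetical; in a real session the session ID comes from the SETUP response, and Windows Media and RealNetworks add their own extensions on top of this.

```python
def rtsp_request(method, url, cseq, headers=None):
    """Format one RTSP/1.0 request: request line, CSeq, extra headers, blank line."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


def session_requests(url, session_id, track="trackID=1"):
    """DESCRIBE fetches the description, SETUP picks a transport, PLAY starts the stream."""
    return [
        rtsp_request("DESCRIBE", url, 1, {"Accept": "application/sdp"}),
        rtsp_request("SETUP", f"{url}/{track}", 2,
                     {"Transport": "RTP/AVP;unicast;client_port=5000-5001"}),
        rtsp_request("PLAY", url, 3, {"Session": session_id}),
    ]


for req in session_requests("rtsp://example.com/clip", "12345678"):
    print(req.splitlines()[0])
```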

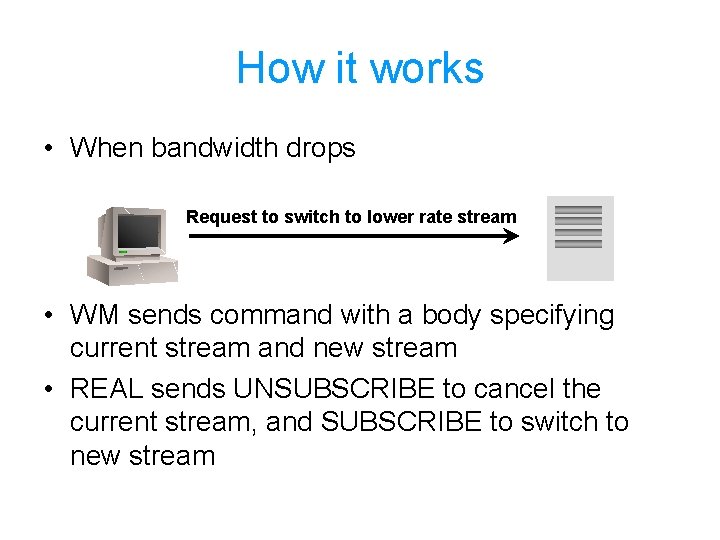

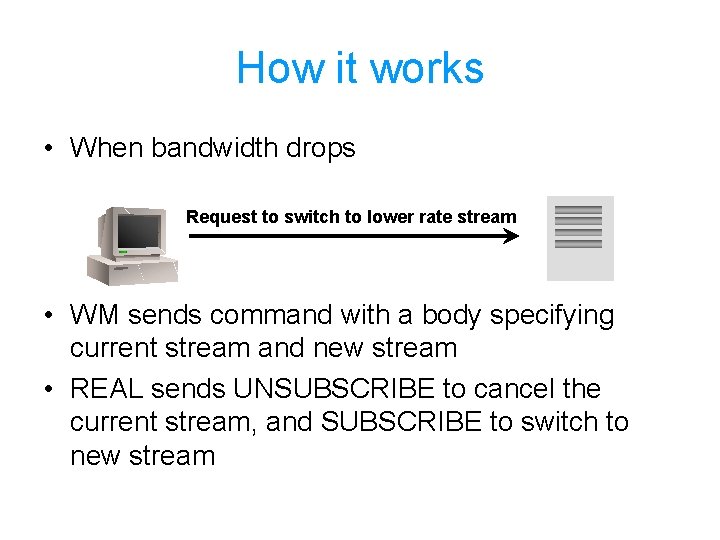

How it works • When bandwidth drops, the client requests a switch to a lower-rate stream • Windows Media (WM) sends a command whose body specifies the current stream and the new stream • RealNetworks (Real) sends UNSUBSCRIBE to cancel the current stream and SUBSCRIBE to switch to the new stream

How it works • During transmission, if the bandwidth decreases, the server automatically detects the change and switches to a stream with a lower bit rate. If bandwidth improves, the server switches to a stream with a higher bit rate, but never higher than the original bit rate. (from a Microsoft web page)

Problems • Analyzing the information extracted from RTSP/MMS commands reveals a switch latency • Switch latency is the freezing duration between the old stream and the new stream, during which the user has to wait for rebuffering • After the switch, a period of lower quality follows (the low-quality duration)
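
Switch latency, as defined above, can be measured from packet timestamps. A minimal sketch, assuming the arrival times of the old and new streams' data packets are known (the function names and sample times are illustrative):

```python
def switch_latency(old_times, new_times):
    """Freeze time: last packet of the old stream to first packet of the new one."""
    return max(0.0, min(new_times) - max(old_times))


def share_above(latencies, threshold_s):
    """Fraction of switches whose latency exceeds threshold_s."""
    return sum(1 for x in latencies if x > threshold_s) / len(latencies)


print(switch_latency([0.0, 1.0, 2.0], [5.5, 6.0]))  # 3.5
print(share_above([1.0, 3.5, 6.0, 2.0], 3.0))       # 0.5
```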

Problems • 30%–40% of stream switches have a latency greater than 3 seconds • 10%–20% of stream switches have a latency greater than 5 seconds • In 60% of sessions the low-quality duration is less than 30 seconds, and in 85% it is shorter than 40 seconds • These delays are non-trivial for end users

Stream Thinning • Similar to stream switch • If the bandwidth can no longer support the video stream, image quality is degraded to avoid rebuffering • The thinning interval is the interval between two consecutive stream thinning events
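
Thinning intervals follow directly from the event timestamps. This sketch assumes intervals are measured from the start of one thinning event to the start of the next; the timestamps are illustrative.

```python
def thinning_intervals(event_times):
    """Gaps (seconds) between consecutive thinning events, measured start to start."""
    t = sorted(event_times)
    return [b - a for a, b in zip(t, t[1:])]


gaps = thinning_intervals([10.0, 50.0, 65.0])
print(gaps)                                   # [40.0, 15.0]
print(sum(g > 30 for g in gaps) / len(gaps))  # 0.5
```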

Stream Thinning • 70% of thinning durations are shorter than 30 seconds • The thinning interval is longer than 30 seconds in 70% of cases for home users and in 82% of cases for business users

Video Cancellation • When the bandwidth is too low to transmit the key frames of the video stream, the client may send a TEARDOWN command to cancel the video stream • After that, the server continues to deliver only the audio stream • If the bandwidth increases, the client may set up and request the video stream again
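
The cancel-and-restore behavior amounts to a two-state client policy. In this sketch the key-frame bandwidth threshold and the class name are hypothetical, and the returned strings are just the RTSP method names the slide mentions; a real client would likely add hysteresis to avoid flapping.

```python
class VideoCanceller:
    """Toy client policy: drop video below a key-frame rate, restore it above."""

    def __init__(self, keyframe_kbps):
        self.keyframe_kbps = keyframe_kbps  # hypothetical minimum rate for key frames
        self.video_on = True

    def on_bandwidth(self, kbps):
        """Return the RTSP methods the client would send for this bandwidth sample."""
        if self.video_on and kbps < self.keyframe_kbps:
            self.video_on = False
            return ["TEARDOWN"]        # cancel the video stream; audio keeps playing
        if not self.video_on and kbps >= self.keyframe_kbps:
            self.video_on = True
            return ["SETUP", "PLAY"]   # set up and request the video stream again
        return []


c = VideoCanceller(keyframe_kbps=100)
print([c.on_bandwidth(b) for b in (300, 80, 60, 150, 200)])
# [[], ['TEARDOWN'], [], ['SETUP', 'PLAY'], []]
```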

Protocol Rollover • Streaming transport order: UDP, then TCP, then HTTP – If delivery over UDP fails, the client tries TCP – If delivery over TCP fails, it falls back to HTTP • Due to the wide deployment of NAT routers and firewalls among both home and business users, protocol rollover can greatly increase startup latency

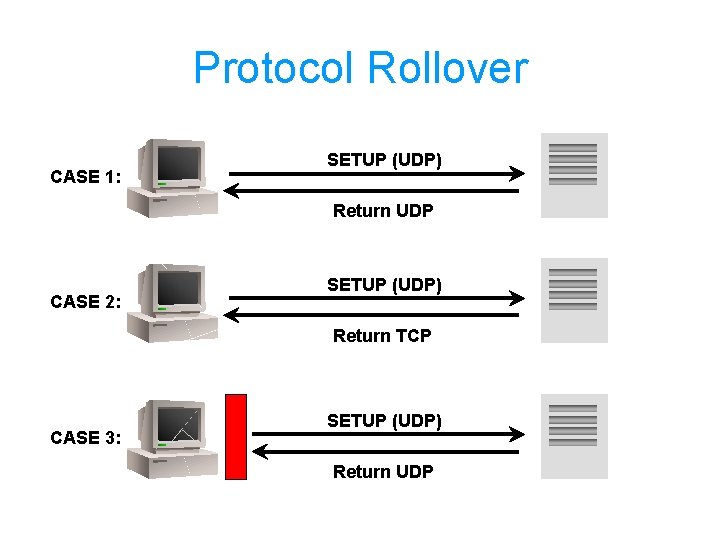

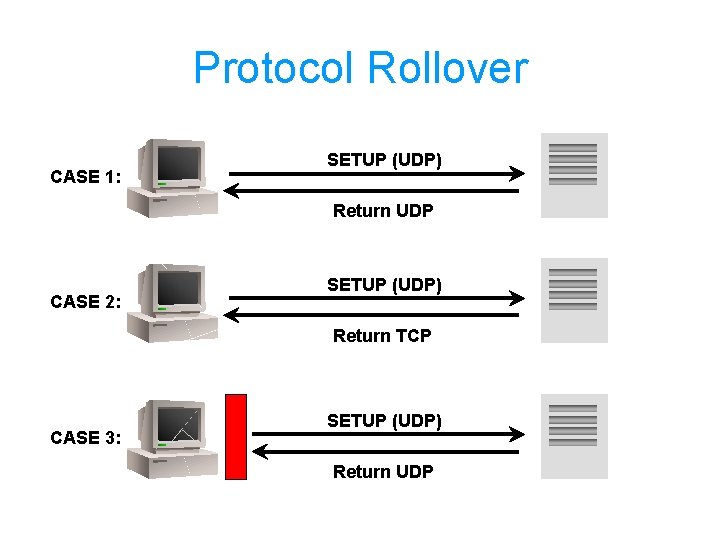

Protocol Rollover (diagram) • Case 1: SETUP (UDP), server returns UDP • Case 2: SETUP (UDP), server returns TCP • Case 3: SETUP (UDP), server returns UDP

Protocol Rollover • In the collected data, when protocol rollover occurs, the client tries to establish UDP 1 to 3 times before switching to TCP • Protocol rollover takes non-trivial time and increases the startup latency • The default protocol in the client is usually UDP • However, the data reveal some interesting results
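
The startup cost of rollover can be sketched as a fixed per-attempt delay. This assumes UDP is tried up to 3 times (the slide reports 1 to 3 attempts), then TCP, then HTTP, and that every attempt costs the same hypothetical `attempt_s`; real timeouts vary by player, and a blocked transport is assumed to fail on every attempt.

```python
def negotiate(blocked, udp_attempts=3, attempt_s=1.0):
    """Return (transport that works, seconds spent) given transports a NAT/firewall blocks."""
    elapsed = 0.0
    for transport in ["UDP"] * udp_attempts + ["TCP", "HTTP"]:
        elapsed += attempt_s
        if transport not in blocked:
            return transport, elapsed
    return None, elapsed


print(negotiate(set()))            # ('UDP', 1.0)
print(negotiate({"UDP"}))          # ('TCP', 4.0)
print(negotiate({"UDP", "TCP"}))   # ('HTTP', 5.0)
```

Under these assumptions, a client behind a UDP-blocking NAT spends four attempt periods before its first byte of media, versus one when TCP is requested directly.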

Protocol Rollover • In the home user workload, only 7.37% of streaming sessions try UDP first and then switch to TCP • In the business user workload, the figure is only 7.95% • This implies that most streaming sessions use TCP directly, without protocol rollover • What happened?

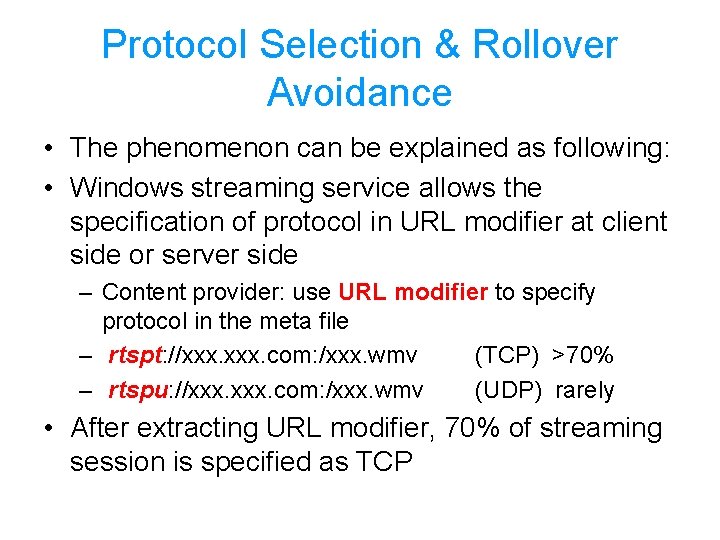

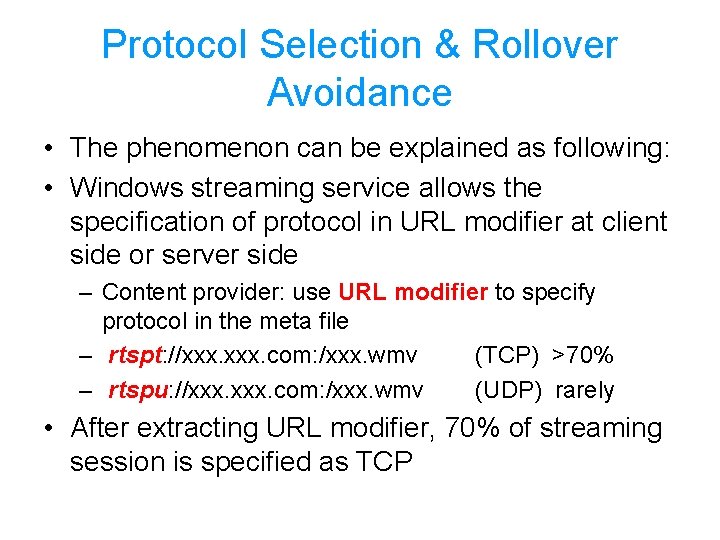

Protocol Selection & Rollover Avoidance • The phenomenon can be explained as follows: • Windows streaming services allow the protocol to be specified with a URL modifier at the client side or the server side – Content providers use the URL modifier to specify the protocol in the meta file – rtspt://xxx.com/xxx.wmv (TCP): > 70% – rtspu://xxx.com/xxx.wmv (UDP): rarely used • After extracting the URL modifiers, 70% of streaming sessions are found to specify TCP
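
Classifying sessions by URL modifier only requires looking at the URL scheme. A sketch using the standard library's `urlsplit`; treating plain `rtsp://` as "left to the client default" is an assumption, and the helper names are illustrative.

```python
from urllib.parse import urlsplit

# Transport implied by the URL scheme; plain rtsp:// is assumed to leave the
# choice to the client, which typically tries UDP first and may roll over.
MODIFIER_TRANSPORT = {"rtspt": "TCP", "rtspu": "UDP", "rtsp": "client default"}


def transport_for(url):
    return MODIFIER_TRANSPORT.get(urlsplit(url).scheme, "unknown")


def tcp_share(urls):
    """Fraction of session URLs that force TCP via the rtspt:// modifier."""
    return sum(transport_for(u) == "TCP" for u in urls) / len(urls)


urls = ["rtspt://xxx.com/xxx.wmv", "rtspu://xxx.com/xxx.wmv", "rtsp://xxx.com/xxx.wmv"]
print([transport_for(u) for u in urls])  # ['TCP', 'UDP', 'client default']
```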

Protocol Selection & Rollover Avoidance • The conjecture is: – Content providers are aware of NAT routers and firewalls – They understand that UDP is mostly blocked on the client side – They actively use TCP to avoid blocking and protocol rollover – Even if UDP is supported, the stream is delivered over TCP directly

Evidence • Further investigate NAT usage with MMS • Unlike RTSP, MMS clients report their local IP address to the server • Most users report private IP addresses • This indicates that most clients access the Internet through NAT
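
Checking whether a client-reported address is private is a one-liner with Python's `ipaddress` module. This sketch assumes the reported address has already been extracted from the MMS messages (not shown) and compares it with the source address seen on the wire; the function name is illustrative.

```python
import ipaddress


def likely_behind_nat(reported_ip, observed_ip):
    """True if the client-reported address is private and differs from the packet source."""
    reported = ipaddress.ip_address(reported_ip)
    return reported.is_private and reported != ipaddress.ip_address(observed_ip)


print(likely_behind_nat("192.168.1.10", "68.1.2.3"))  # True
print(likely_behind_nat("68.1.2.3", "68.1.2.3"))      # False
```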

Coordinating Caching and Rate Adaptation • Fast Cache: aggressively buffers data in advance – Over-utilizes CPU and bandwidth resources – Neither performance-effective nor cost-efficient • Rate adaptation: conservatively switches to a lower bit rate stream – Incurs switch handoff latency • Coordinated Streaming (diagram: high-rate stream, low-rate stream) – A buffer lower bound prevents switch latency – A buffer upper bound prevents aggressive buffering
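
A minimal sketch of how the two buffer bounds might drive decisions. The slide does not give the actual algorithm; the 5 s and 20 s thresholds, the two-stream setup, and the switch-up rule are assumptions for illustration only.

```python
def coordinated_step(buffer_s, bw_kbps, stream_kbps, high_kbps, low_kbps,
                     lower_s=5.0, upper_s=20.0):
    """Return (stream rate to use, sending rate in kbps) for the next interval."""
    # Lower bound: switch down while lower_s seconds are still buffered,
    # so playback continues during the switch instead of freezing.
    if stream_kbps == high_kbps and bw_kbps < high_kbps and buffer_s <= lower_s:
        stream_kbps = low_kbps
    # Switch back up only when bandwidth and a full buffer allow it.
    elif stream_kbps == low_kbps and bw_kbps >= high_kbps and buffer_s >= upper_s:
        stream_kbps = high_kbps
    # Upper bound: once reached, only keep pace with playback (no aggressive buffering).
    if buffer_s >= upper_s:
        send_kbps = min(bw_kbps, stream_kbps)
    else:
        send_kbps = bw_kbps
    return stream_kbps, send_kbps


print(coordinated_step(10, 800, 500, 500, 200))  # (500, 800)
print(coordinated_step(25, 800, 500, 500, 200))  # (500, 500)
print(coordinated_step(4, 300, 500, 500, 200))   # (200, 300)
```

The lower bound plays the role the slide assigns it: the switch happens while data is still buffered, so there is no freeze; the upper bound stops the Fast Cache-style over-delivery once enough is buffered.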

Results • The rebuffering ratio is close to zero • Reduces the over-supplied traffic produced by Fast Cache by 77%, although it still over-supplies more than normal TCP streaming • Switch handoff latency is nearly zero

What We Learned and What We Think • The paper mainly aims to investigate the streaming techniques currently used by media players • Most people enjoy video streaming but seldom know the theory behind it • Modern streaming services over-utilize CPU and bandwidth resources • Coordinated Streaming is proposed • Sound in theory, but it is unclear whether it works in practice

What We Learned and What We Think • The same group of authors has written further research papers on this topic • More study is needed

Thank You!