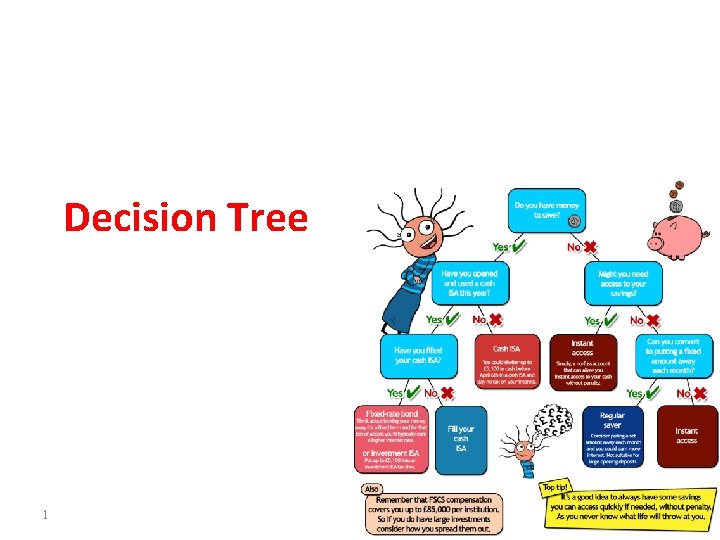

- Slides: 62

Decision Tree

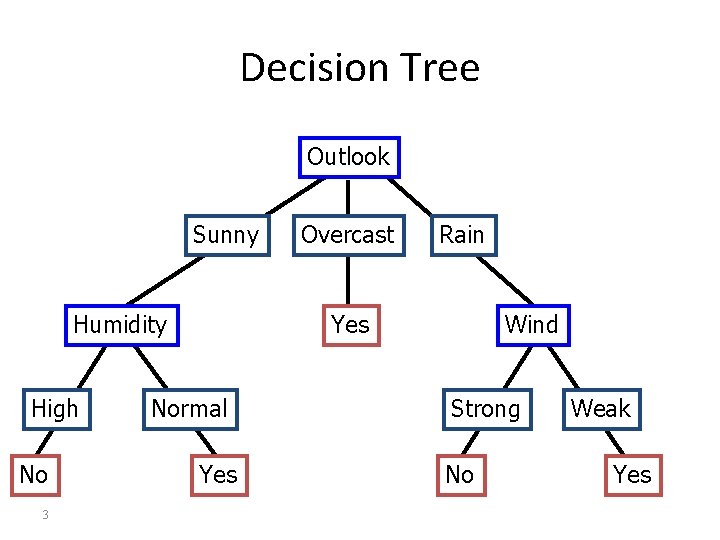

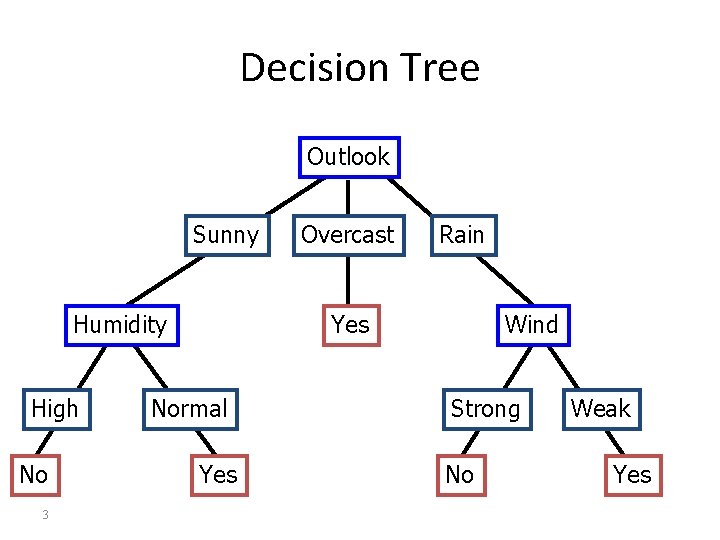

Decision Tree

- Outlook = Sunny: test Humidity
  - Humidity = High: No
  - Humidity = Normal: Yes
- Outlook = Overcast: Yes
- Outlook = Rain: test Wind
  - Wind = Strong: No
  - Wind = Weak: Yes
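As a minimal sketch, the tree on this slide can be stored as nested dictionaries and used to classify one example by walking from the root to a leaf. The dictionary layout and the names `play_tennis_tree` and `classify` are illustrative assumptions, not something the slides define.

```python
# Illustrative encoding (an assumption): an internal node is a dict with an
# "attribute" name and a "branches" mapping; a leaf is a plain label string.
play_tennis_tree = {
    "attribute": "Outlook",
    "branches": {
        "Sunny": {"attribute": "Humidity",
                  "branches": {"High": "No", "Normal": "Yes"}},
        "Overcast": "Yes",
        "Rain": {"attribute": "Wind",
                 "branches": {"Strong": "No", "Weak": "Yes"}},
    },
}


def classify(tree, example):
    """Follow the branch matching the example's value until a leaf is reached."""
    node = tree
    while isinstance(node, dict):
        value = example[node["attribute"]]
        node = node["branches"][value]
    return node


example = {"Outlook": "Sunny", "Humidity": "Normal", "Wind": "Strong"}
print(classify(play_tennis_tree, example))  # prints "Yes"
```

Only the attributes on the path from root to leaf are consulted, so Wind is ignored for this Sunny example.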

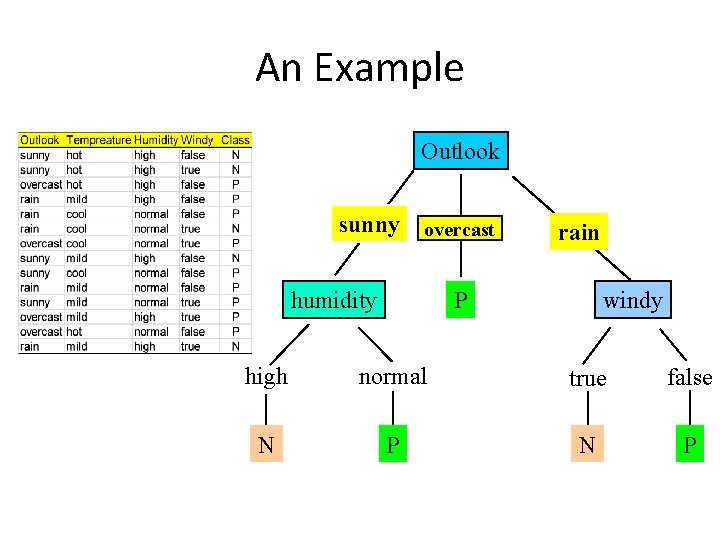

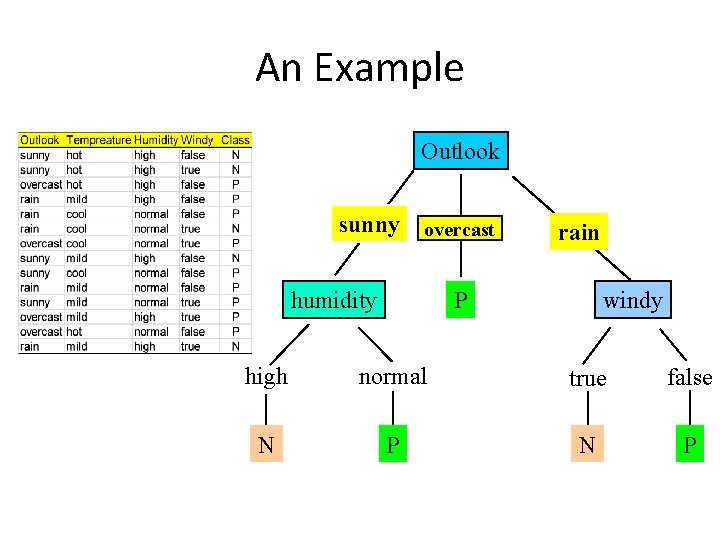

An Example

- Outlook = sunny: test humidity
  - humidity = high: N
  - humidity = normal: P
- Outlook = overcast: P
- Outlook = rain: test windy
  - windy = true: N
  - windy = false: P

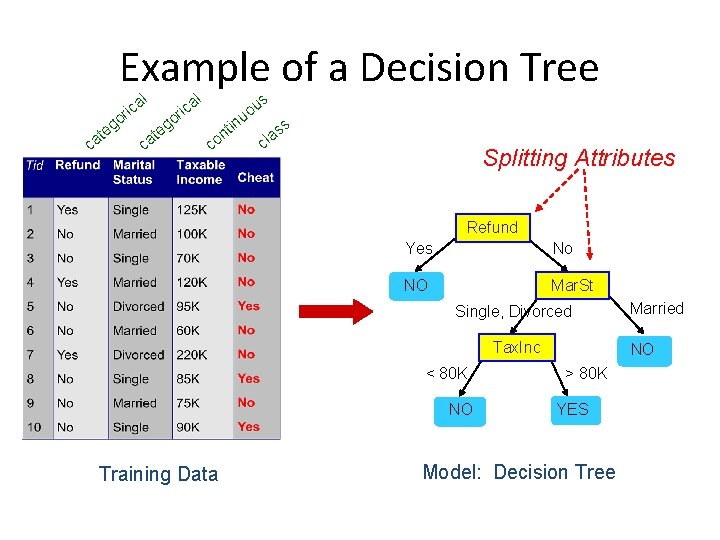

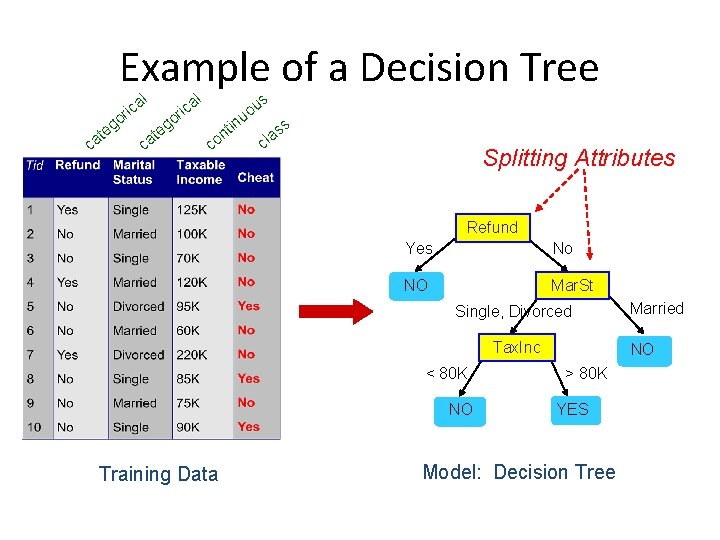

Example of a Decision Tree

Training Data (attribute types: categorical, categorical, continuous, class) → Model: Decision Tree

Splitting attributes:

- Refund = Yes: NO
- Refund = No: test MarSt
  - MarSt = Married: NO
  - MarSt = Single, Divorced: test TaxInc
    - TaxInc < 80K: NO
    - TaxInc > 80K: YES

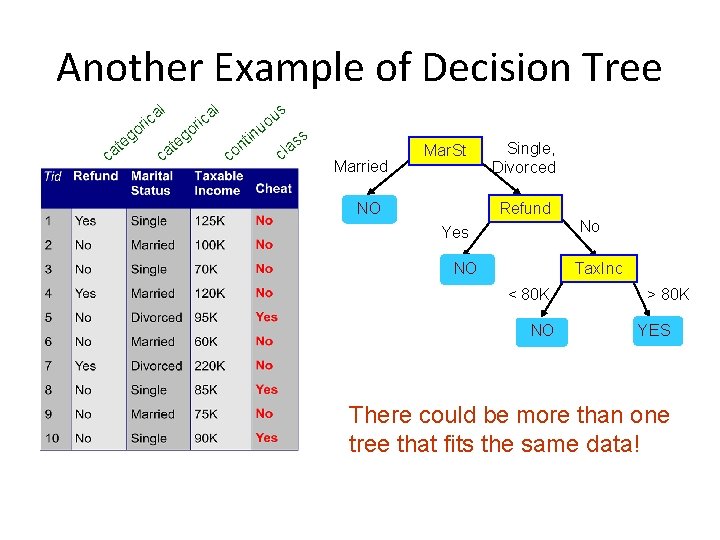

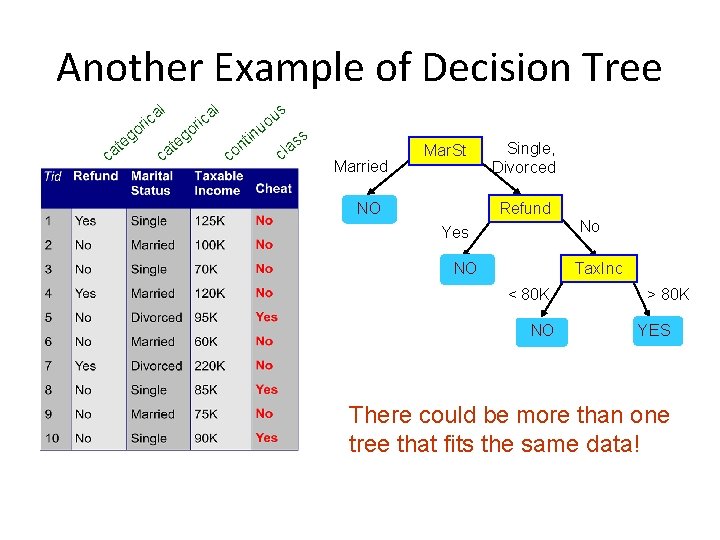

Another Example of Decision Tree

Attribute types: categorical, categorical, continuous, class

- MarSt = Married: NO
- MarSt = Single, Divorced: test Refund
  - Refund = Yes: NO
  - Refund = No: test TaxInc
    - TaxInc < 80K: NO
    - TaxInc > 80K: YES

There could be more than one tree that fits the same data!

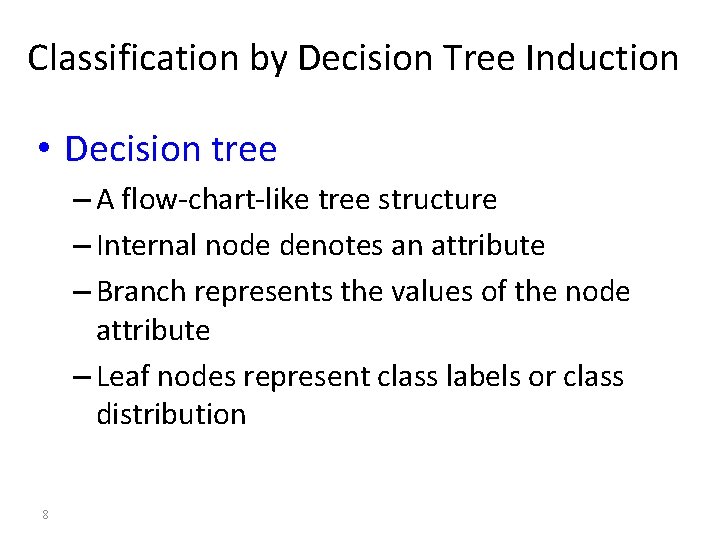

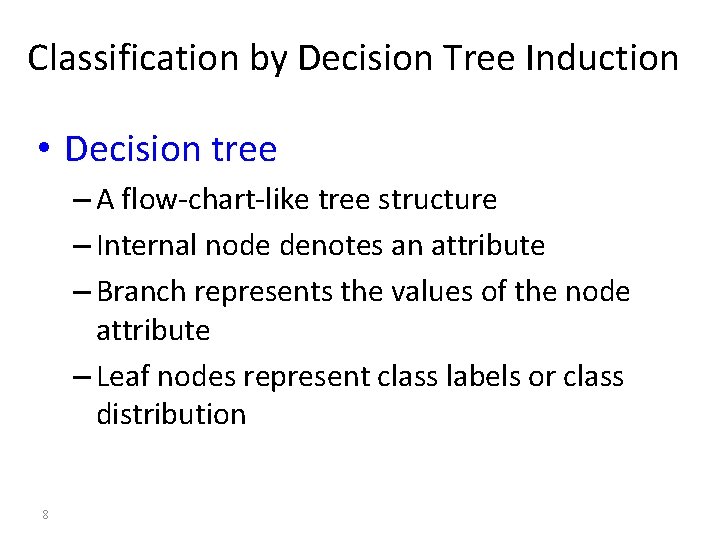

Classification by Decision Tree Induction

- Decision tree
  - A flow-chart-like tree structure
  - Internal node denotes an attribute
  - Branch represents the values of the node attribute
  - Leaf nodes represent class labels or class distribution

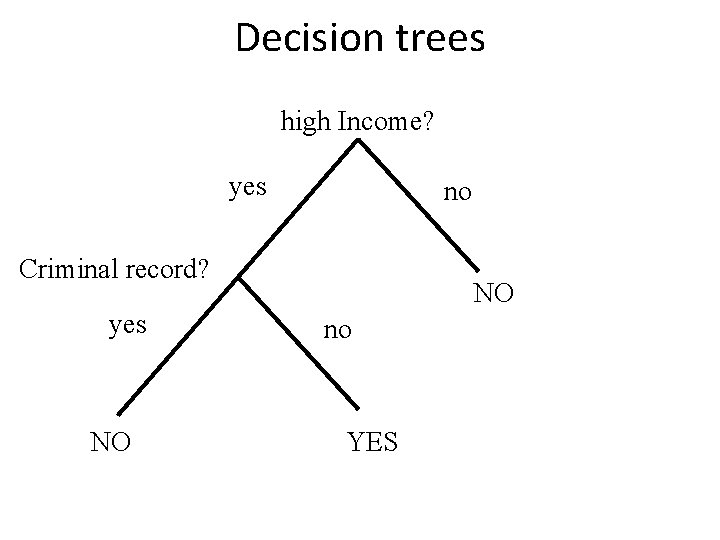

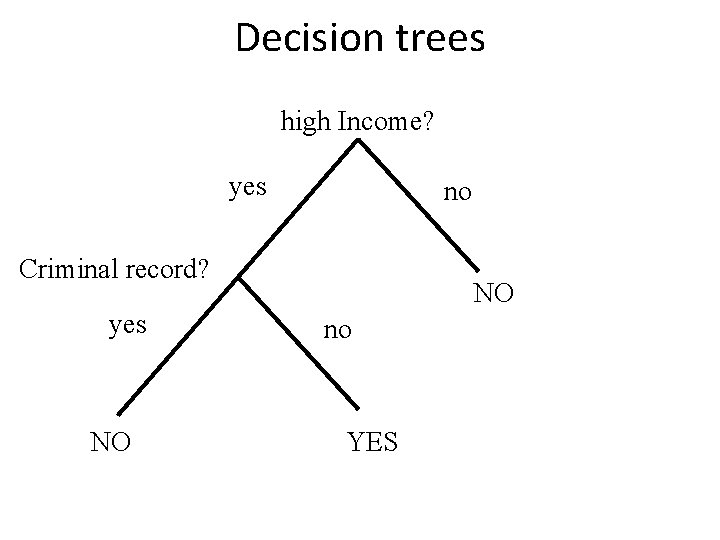

Decision trees high Income? yes no Criminal record? yes NO NO no YES

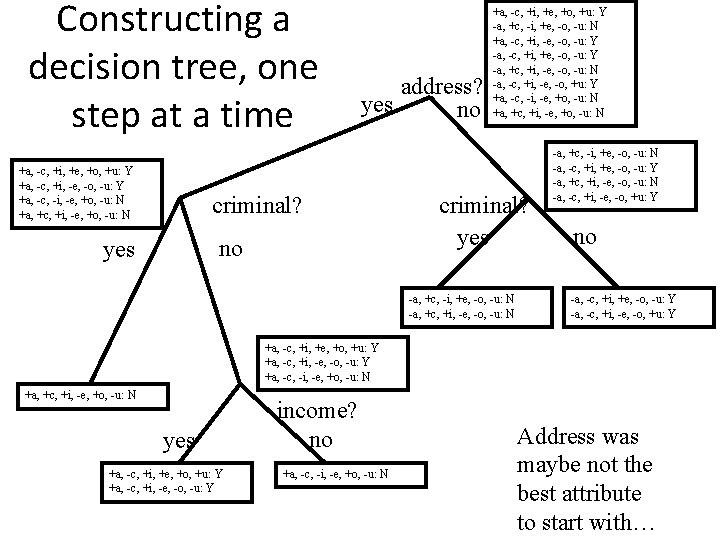

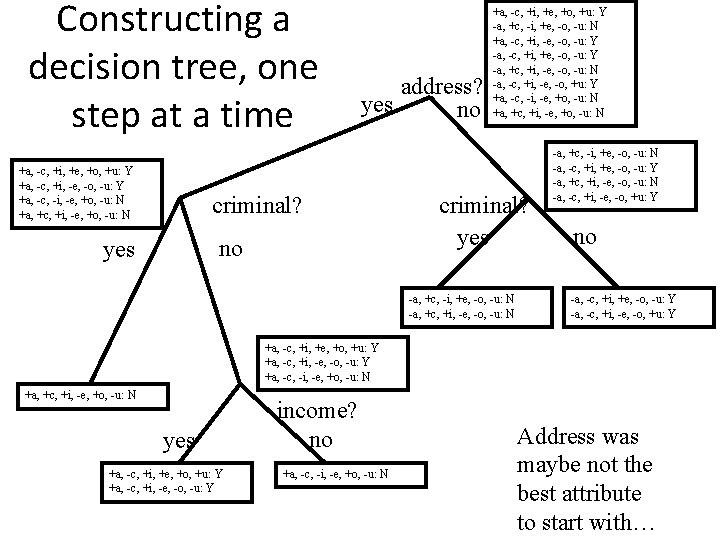

Constructing a decision tree, one step at a time +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N address? yes no criminal? no yes +a, -c, +i, +e, +o, +u: Y -a, +c, -i, +e, -o, -u: N +a, -c, +i, -e, -o, -u: Y -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N criminal? yes -a, +c, -i, +e, -o, -u: N -a, +c, +i, -e, -o, -u: N -a, +c, -i, +e, -o, -u: N -a, -c, +i, +e, -o, -u: Y -a, +c, +i, -e, -o, -u: N -a, -c, +i, -e, -o, +u: Y no -a, -c, +i, +e, -o, -u: Y -a, -c, +i, -e, -o, +u: Y +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y +a, -c, -i, -e, +o, -u: N +a, +c, +i, -e, +o, -u: N yes +a, -c, +i, +e, +o, +u: Y +a, -c, +i, -e, -o, -u: Y income? no +a, -c, -i, -e, +o, -u: N Address was maybe not the best attribute to start with…

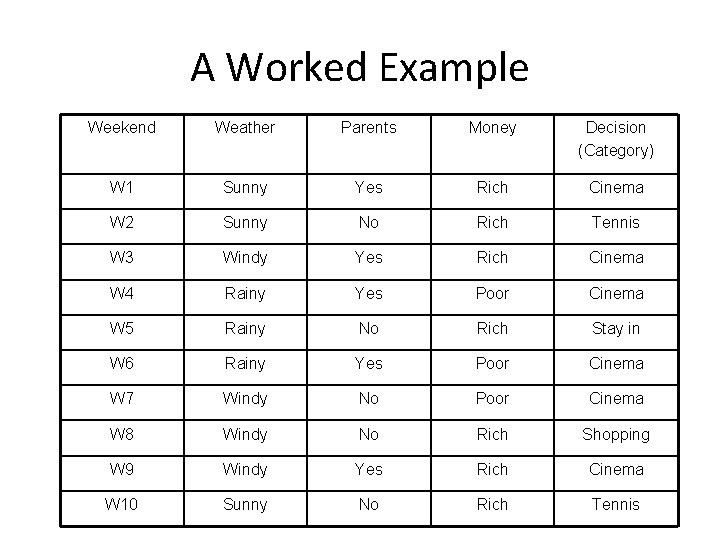

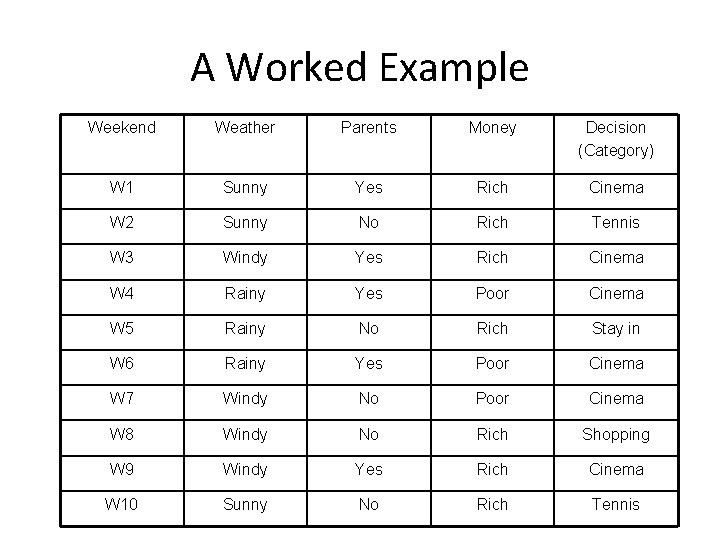

A Worked Example

| Weekend | Weather | Parents | Money | Decision (Category) |
| --- | --- | --- | --- | --- |
| W1 | Sunny | Yes | Rich | Cinema |
| W2 | Sunny | No | Rich | Tennis |
| W3 | Windy | Yes | Rich | Cinema |
| W4 | Rainy | Yes | Poor | Cinema |
| W5 | Rainy | No | Rich | Stay in |
| W6 | Rainy | Yes | Poor | Cinema |
| W7 | Windy | No | Poor | Cinema |
| W8 | Windy | No | Rich | Shopping |
| W9 | Windy | Yes | Rich | Cinema |
| W10 | Sunny | No | Rich | Tennis |

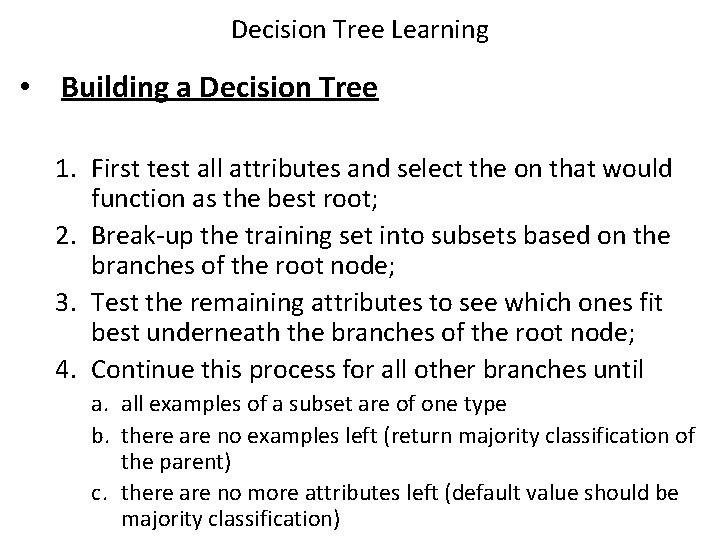

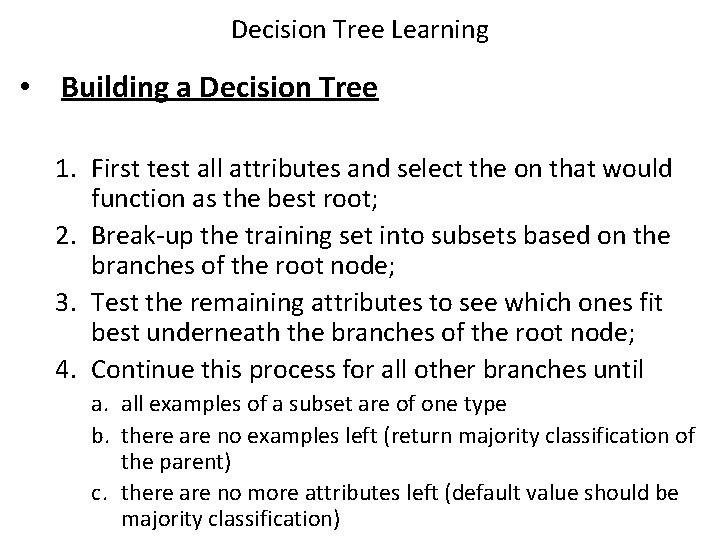

Decision Tree Learning

Building a decision tree:

1. First test all attributes and select the one that would function as the best root.
2. Break up the training set into subsets based on the branches of the root node.
3. Test the remaining attributes to see which ones fit best underneath the branches of the root node.
4. Continue this process for all other branches until
   - (a) all examples of a subset are of one type,
   - (b) there are no examples left (return the majority classification of the parent), or
   - (c) there are no more attributes left (the default value should be the majority classification).
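The four steps above can be sketched as a short recursive function. This is a minimal illustration that assumes "best" means highest information gain (entropy-based) and reuses the ten weekend examples from the worked-example slide; the names `build_tree`, `gain`, `majority`, and `domains` are hypothetical helpers, not part of the slides.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Entropy of a list of class labels, in bits."""
    total = len(labels)
    return -sum(n / total * log2(n / total) for n in Counter(labels).values())


def gain(rows, attr, target):
    """Information gain obtained by splitting rows on attr."""
    remainder = 0.0
    for value in {r[attr] for r in rows}:
        subset = [r[target] for r in rows if r[attr] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r[target] for r in rows]) - remainder


def majority(rows, target):
    """Most frequent class label among rows."""
    return Counter(r[target] for r in rows).most_common(1)[0][0]


def build_tree(rows, attrs, target, domains, default=None):
    if not rows:                      # stop (b): no examples left
        return default                # majority class of the parent
    labels = {r[target] for r in rows}
    if len(labels) == 1:              # stop (a): all examples of one type
        return labels.pop()
    if not attrs:                     # stop (c): no attributes left
        return majority(rows, target)
    best = max(attrs, key=lambda a: gain(rows, a, target))   # steps 1 and 3
    rest = [a for a in attrs if a != best]
    node = {"attribute": best, "branches": {}}
    for value in domains[best]:       # step 2: one subset per branch
        subset = [r for r in rows if r[best] == value]
        node["branches"][value] = build_tree(
            subset, rest, target, domains, majority(rows, target))
    return node


COLUMNS = ("Weather", "Parents", "Money", "Decision")
DATA = [
    ("Sunny", "Yes", "Rich", "Cinema"), ("Sunny", "No", "Rich", "Tennis"),
    ("Windy", "Yes", "Rich", "Cinema"), ("Rainy", "Yes", "Poor", "Cinema"),
    ("Rainy", "No", "Rich", "Stay in"), ("Rainy", "Yes", "Poor", "Cinema"),
    ("Windy", "No", "Poor", "Cinema"), ("Windy", "No", "Rich", "Shopping"),
    ("Windy", "Yes", "Rich", "Cinema"), ("Sunny", "No", "Rich", "Tennis"),
]
rows = [dict(zip(COLUMNS, r)) for r in DATA]
attrs = list(COLUMNS[:3])
domains = {a: sorted({r[a] for r in rows}) for a in attrs}

tree = build_tree(rows, attrs, "Decision", domains)
print(tree["attribute"])  # prints "Weather", the attribute with the largest gain
```

When two attributes tie on gain, this sketch simply keeps the one listed first; a real implementation would need an explicit tie-breaking policy.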

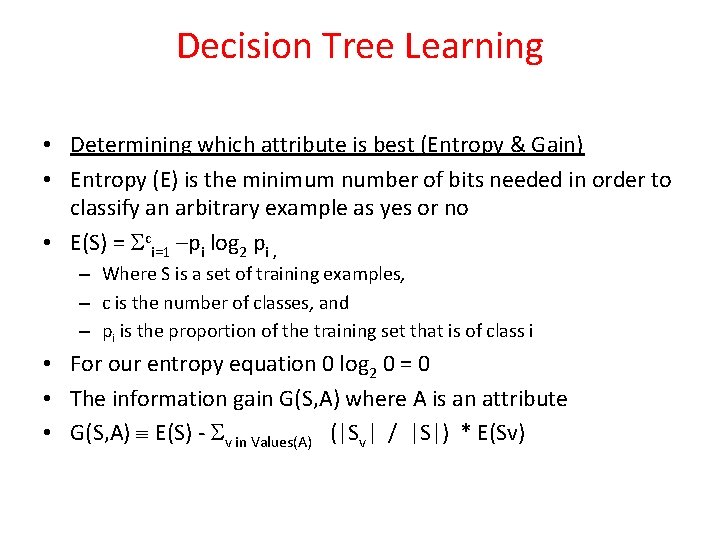

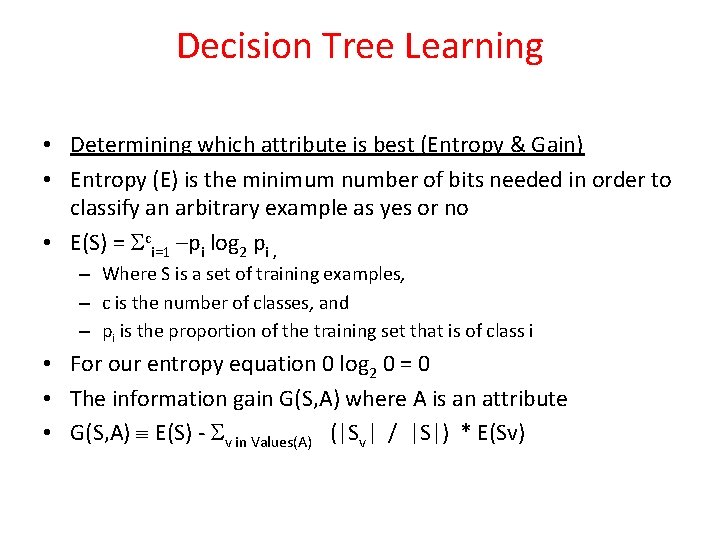

Decision Tree Learning

- Determining which attribute is best (Entropy & Gain)
- Entropy (E) is the minimum number of bits needed in order to classify an arbitrary example as yes or no:

  $$E(S) = \sum_{i=1}^{c} -p_i \log_2 p_i$$

  - where $S$ is a set of training examples,
  - $c$ is the number of classes, and
  - $p_i$ is the proportion of the training set that is of class $i$.
- For our entropy equation, $0 \log_2 0 = 0$.
- The information gain $G(S, A)$, where $A$ is an attribute, is

  $$G(S, A) = E(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|} \, E(S_v)$$

Information Gain

- $\mathrm{Gain}(S, A)$: expected reduction in entropy due to sorting $S$ on attribute $A$

  $$\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_{v \in \mathrm{Values}(A)} \frac{|S_v|}{|S|} \, \mathrm{Entropy}(S_v)$$

- $\mathrm{Entropy}([29+, 35-]) = -\frac{29}{64} \log_2 \frac{29}{64} - \frac{35}{64} \log_2 \frac{35}{64} = 0.99$
- Two candidate splits of $[29+, 35-]$:
  - $A_1$: True → $[21+, 5-]$, False → $[8+, 30-]$
  - $A_2$: True → $[18+, 33-]$, False → $[11+, 2-]$
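The entropy and gain formulas can be checked numerically on the $[29+, 35-]$ example. The sketch below works directly on class counts; the function names `entropy` and `gain` and the list-of-counts representation are assumptions chosen for illustration.

```python
from math import log2


def entropy(counts):
    """Entropy in bits of a node given its class counts, using 0*log2(0) = 0."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c > 0)


def gain(parent_counts, partitions):
    """Parent entropy minus the size-weighted entropy of the partitions."""
    total = sum(parent_counts)
    remainder = sum(sum(p) / total * entropy(p) for p in partitions)
    return entropy(parent_counts) - remainder


parent = [29, 35]                              # [29+, 35-]
gain_a1 = gain(parent, [[21, 5], [8, 30]])     # split on A1
gain_a2 = gain(parent, [[18, 33], [11, 2]])    # split on A2

print(round(entropy(parent), 2), round(gain_a1, 2), round(gain_a2, 2))
# prints: 0.99 0.27 0.12
```

Under this measure A1 would be preferred, since it reduces entropy by roughly twice as much as A2.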

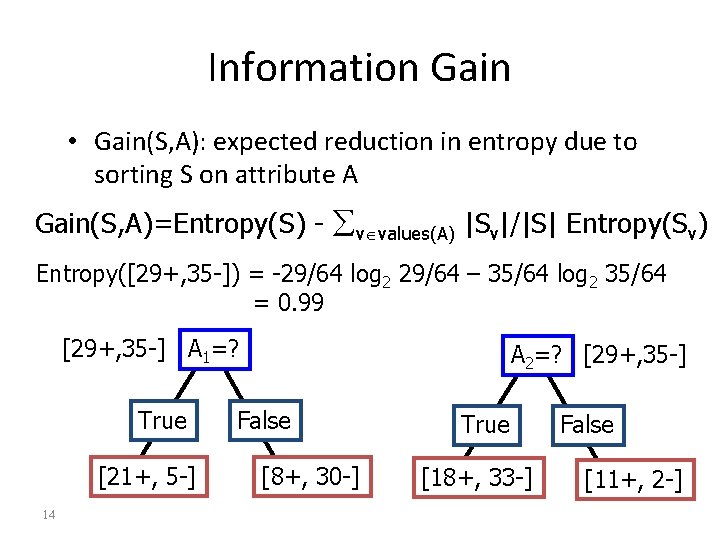

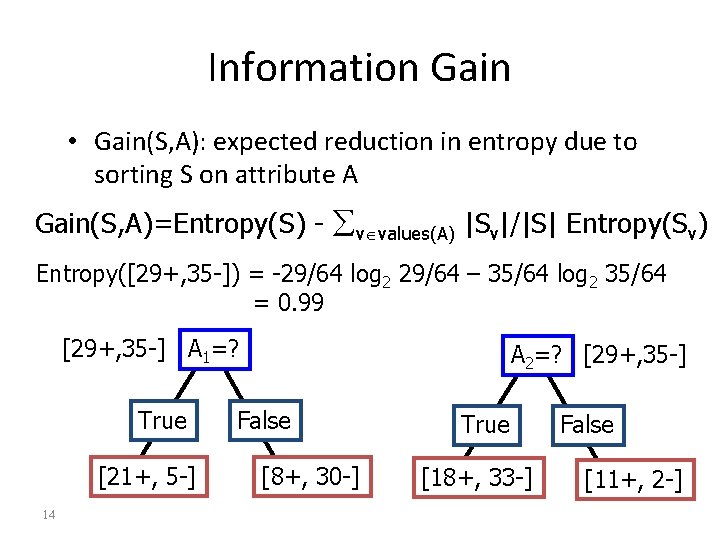

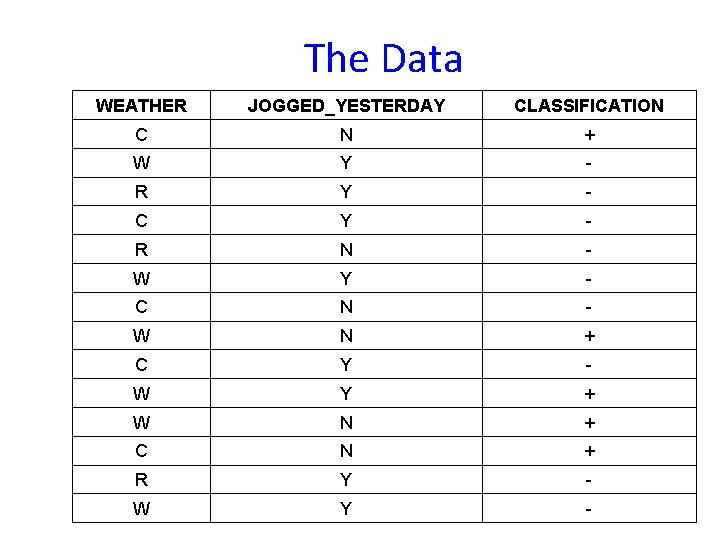

Training Examples Day Outlook Temp. Humidity Wind Play Tennis D 1 Sunny Hot High Weak No D 2 D 3 D 4 D 5 D 6 D 7 D 8 Sunny Overcast Rain Overcast Sunny Hot Mild Cool Mild High Normal High Strong Weak Strong Weak No Yes Yes No D 9 D 10 D 11 D 12 D 13 D 14 Sunny Rain Sunny Overcast Rain Cold Mild Hot Mild Normal High Weak Strong Weak Strong Yes Yes Yes No 15

![ID 3 Algorithm D 1 D 2 D 14 9 5 Outlook ID 3 Algorithm [D 1, D 2, …, D 14] [9+, 5 -] Outlook](https://slidetodoc.com/presentation_image_h2/6cf78c8f84f7d3ed0d4c0000df8099f4/image-16.jpg)

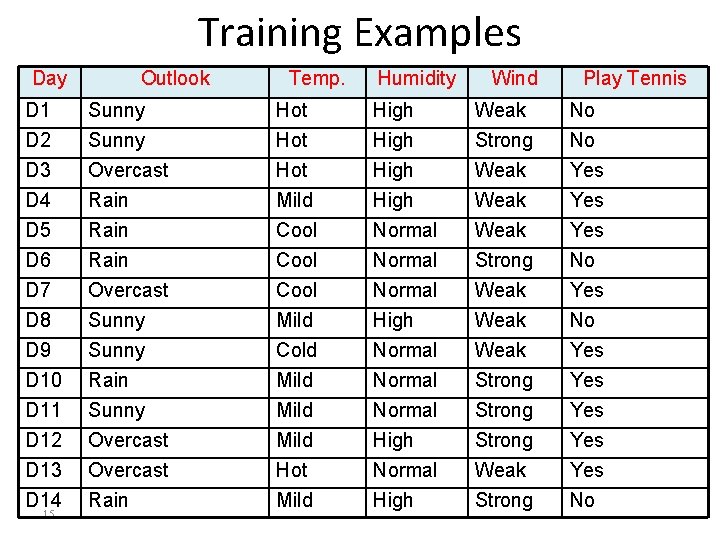

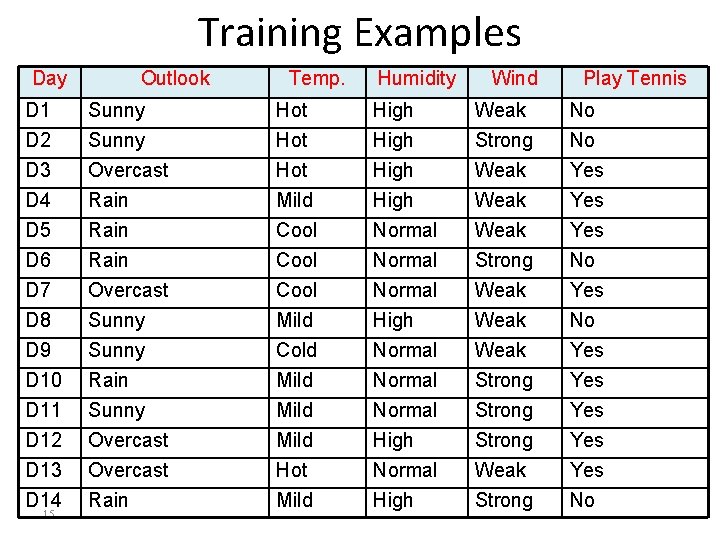

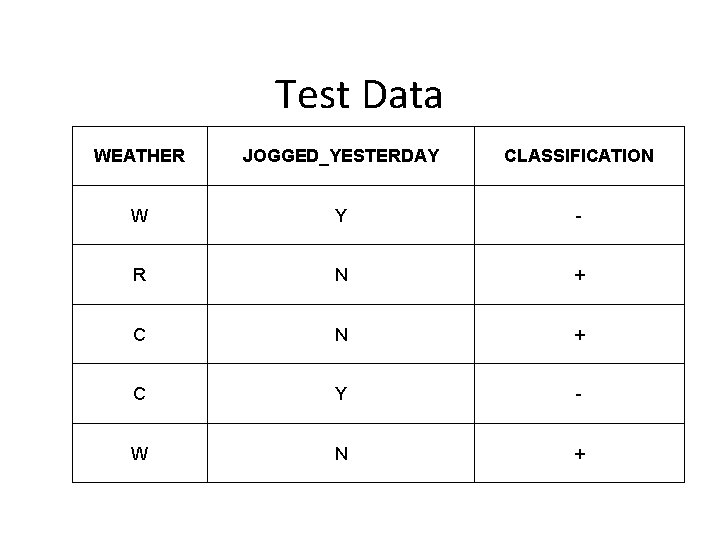

ID3 Algorithm

Root: Outlook, with $S$ = [D1, D2, …, D14], [9+, 5−]

- Sunny: $S_{sunny}$ = [D1, D2, D8, D9, D11], [2+, 3−] → ?
- Overcast: [D3, D7, D12, D13], [4+, 0−] → Yes
- Rain: [D4, D5, D6, D10, D14], [3+, 2−] → ?

Gains within $S_{sunny}$:

- $\mathrm{Gain}(S_{sunny}, \mathrm{Humidity}) = 0.970 - \frac{3}{5}(0.0) - \frac{2}{5}(0.0) = 0.970$
- $\mathrm{Gain}(S_{sunny}, \mathrm{Temp.}) = 0.970 - \frac{2}{5}(0.0) - \frac{2}{5}(1.0) - \frac{1}{5}(0.0) = 0.570$
- $\mathrm{Gain}(S_{sunny}, \mathrm{Wind}) = 0.970 - \frac{2}{5}(1.0) - \frac{3}{5}(0.918) = 0.019$

![ID 3 Algorithm Outlook Sunny Humidity High No D 1 D 2 17 Overcast ID 3 Algorithm Outlook Sunny Humidity High No [D 1, D 2] 17 Overcast](https://slidetodoc.com/presentation_image_h2/6cf78c8f84f7d3ed0d4c0000df8099f4/image-17.jpg)

ID3 Algorithm

- Outlook = Sunny: test Humidity
  - High: No [D1, D2, D8]
  - Normal: Yes [D9, D11]
- Outlook = Overcast: Yes [D3, D7, D12, D13]
- Outlook = Rain: test Wind
  - Strong: No [D6, D14]
  - Weak: Yes [D4, D5, D10]

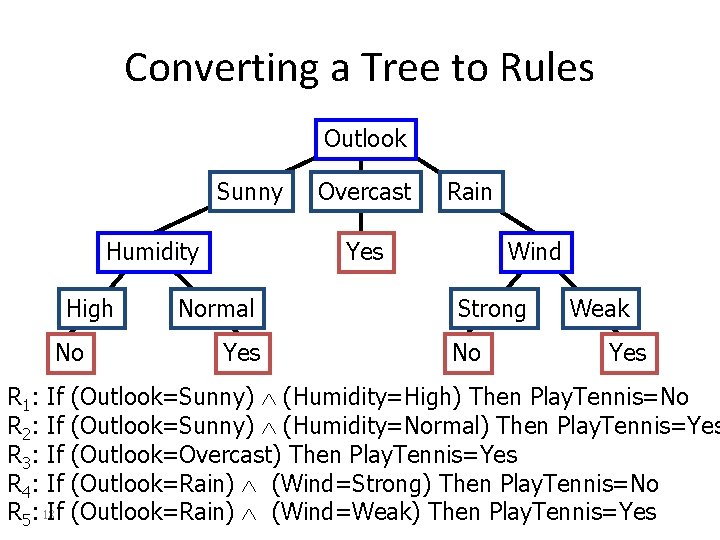

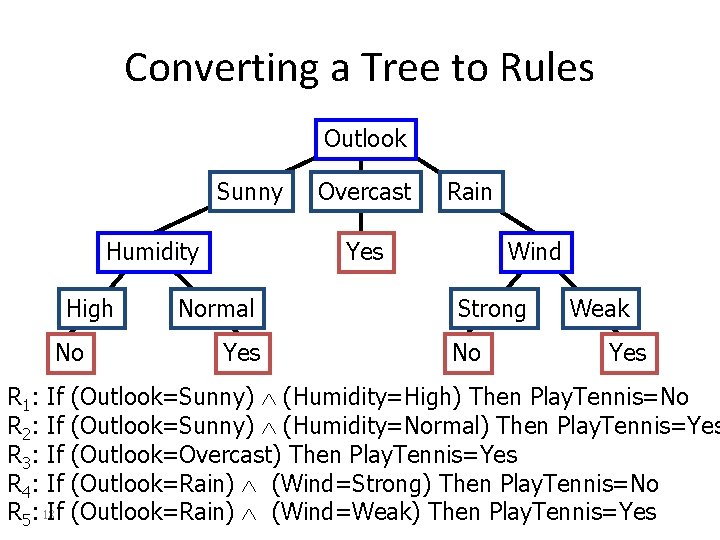

Converting a Tree to Rules

Tree: Outlook (Sunny → Humidity: High → No, Normal → Yes; Overcast → Yes; Rain → Wind: Strong → No, Weak → Yes)

- R1: If (Outlook=Sunny) ∧ (Humidity=High) Then PlayTennis=No
- R2: If (Outlook=Sunny) ∧ (Humidity=Normal) Then PlayTennis=Yes
- R3: If (Outlook=Overcast) Then PlayTennis=Yes
- R4: If (Outlook=Rain) ∧ (Wind=Strong) Then PlayTennis=No
- R5: If (Outlook=Rain) ∧ (Wind=Weak) Then PlayTennis=Yes
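Each rule corresponds to one root-to-leaf path, so the conversion can be sketched as a recursive traversal. The nested-dictionary encoding of the tree and the function name `tree_to_rules` are illustrative assumptions; the rule wording follows the slide, with AND standing for the conjunction.

```python
# Illustrative encoding (an assumption): internal node = dict with "attribute"
# and "branches"; leaf = class label string.
play_tennis_tree = {
    "attribute": "Outlook",
    "branches": {
        "Sunny": {"attribute": "Humidity",
                  "branches": {"High": "No", "Normal": "Yes"}},
        "Overcast": "Yes",
        "Rain": {"attribute": "Wind",
                 "branches": {"Strong": "No", "Weak": "Yes"}},
    },
}


def tree_to_rules(tree, target="PlayTennis", conditions=()):
    """Return one rule string per root-to-leaf path, in branch order."""
    if not isinstance(tree, dict):  # leaf: emit the accumulated conditions
        antecedent = " AND ".join(f"({a}={v})" for a, v in conditions)
        return [f"If {antecedent} Then {target}={tree}"]
    rules = []
    for value, subtree in tree["branches"].items():
        step = conditions + ((tree["attribute"], value),)
        rules += tree_to_rules(subtree, target, step)
    return rules


rules = tree_to_rules(play_tennis_tree)
for number, rule in enumerate(rules, start=1):
    print(f"R{number}: {rule}")
```

The output lists five rules in the same order as R1 to R5 on the slide, because Python dictionaries preserve insertion order.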

Decision tree classifiers

- Do not require any prior knowledge of the data distribution and work well on noisy data.
- Have been applied to:
  - classifying medical patients based on the disease,
  - classifying equipment malfunctions by cause,
  - classifying loan applicants by likelihood of payment.

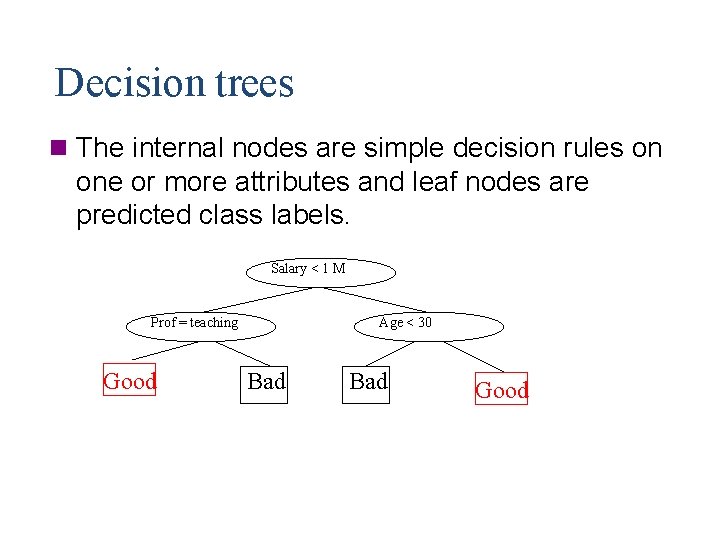

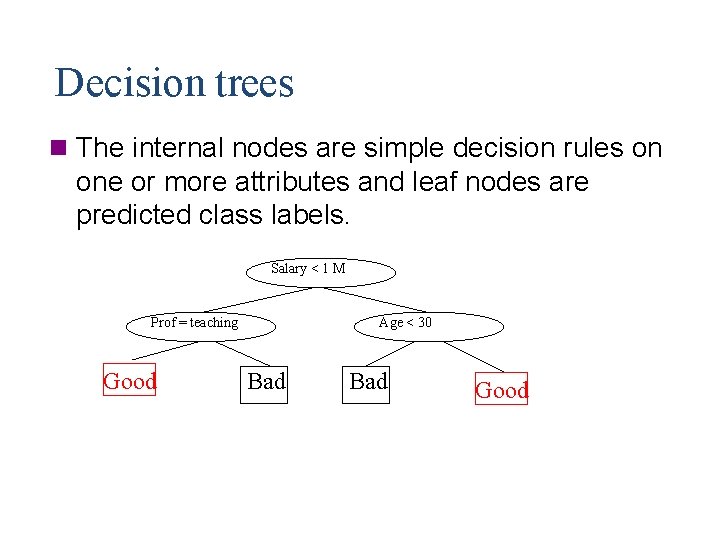

Decision trees

- The internal nodes are simple decision rules on one or more attributes and leaf nodes are predicted class labels.

Example nodes: Salary < 1 M, Prof = teaching, Age < 30; leaves: Good, Bad, Good

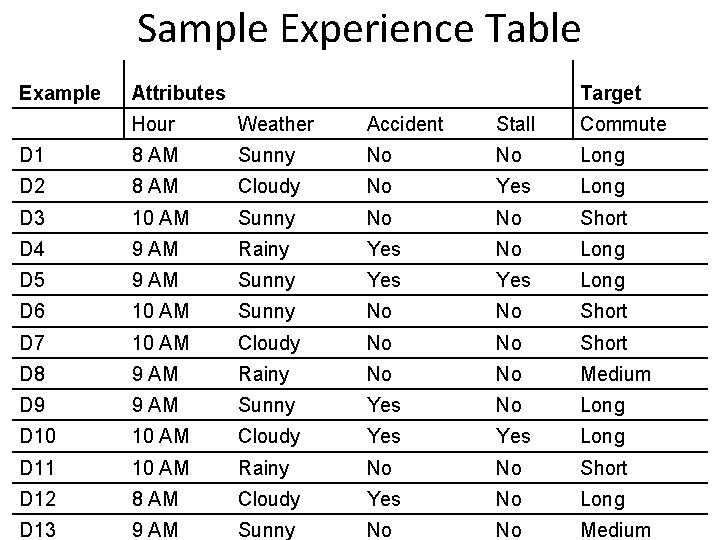

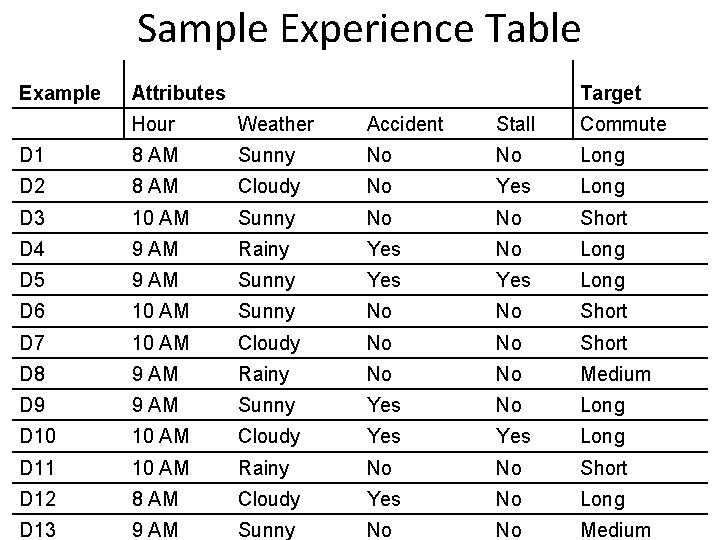

Sample Experience Table Example Attributes Target Hour Weather Accident Stall Commute D 1 8 AM Sunny No No Long D 2 8 AM Cloudy No Yes Long D 3 10 AM Sunny No No Short D 4 9 AM Rainy Yes No Long D 5 9 AM Sunny Yes Long D 6 10 AM Sunny No No Short D 7 10 AM Cloudy No No Short D 8 9 AM Rainy No No Medium D 9 9 AM Sunny Yes No Long D 10 10 AM Cloudy Yes Long D 11 10 AM Rainy No No Short D 12 8 AM Cloudy Yes No Long D 13 9 AM Sunny No No Medium

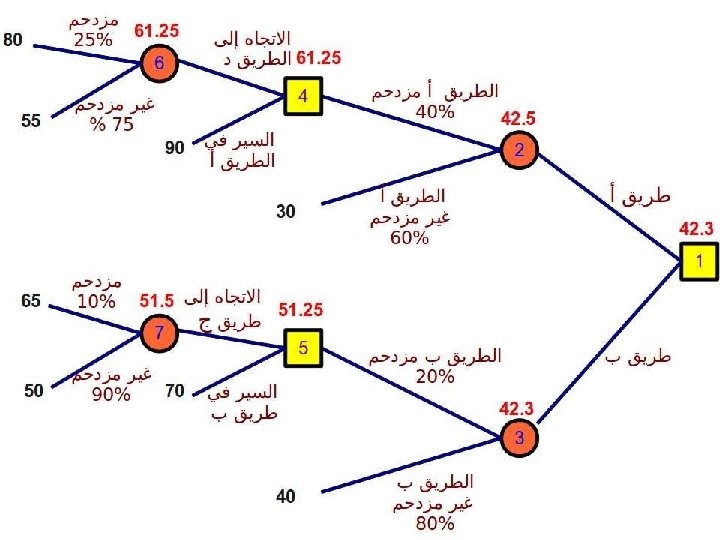

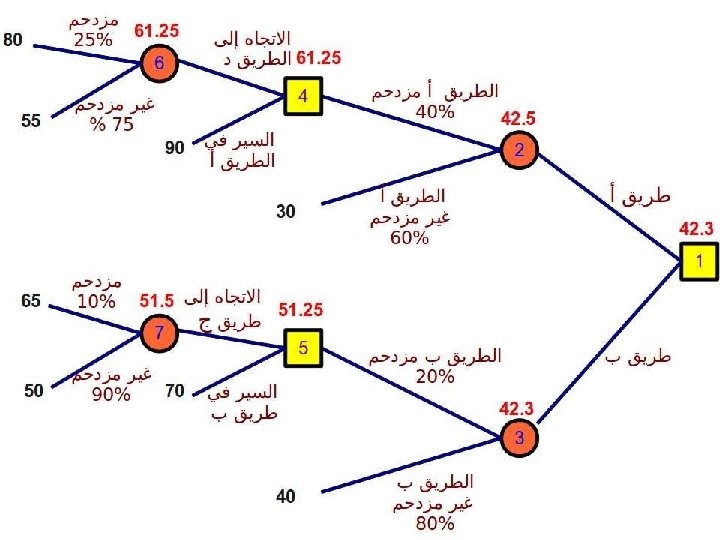

Decision Tree Analysis • For choosing the best course of action when future outcomes are uncertain.

Classification

- Data: It has $k$ attributes $A_1, \dots, A_k$. Each tuple (case or example) is described by values of the attributes and a class label.
- Goal: To learn rules or to build a model that can be used to predict the classes of new (or future or test) cases.
- The data used for building the model is called the training data.

Data and its format • Data – attribute-value pairs – with/without class • Data type – continuous/discrete – nominal • Data format – Flat – If not flat, what should we do?

Induction Algorithm

- We calculate the gain for each attribute and choose the attribute with the maximum gain as the node in the tree.
- After building the node, we calculate the gain for the remaining attributes and again choose the one with the maximum gain.

Decision Tree Induction

- Many algorithms:
  - Hunt's Algorithm (one of the earliest)
  - CART
  - ID3, C4.5
  - SLIQ, SPRINT

Decision Tree Induction Algorithm

- Create a root node for the tree
- If all cases are positive, return a single-node tree with label +
- If all cases are negative, return a single-node tree with label −
- Otherwise begin
  - For each possible value of the node
    - Add a new tree branch
    - Let cases be the subset of all data that have this value
  - Add a new node with a new subtree until a leaf node is reached

Tests for Choosing Best function • Purity (Diversity) Measures: – Gini (population diversity) – Entropy (information gain) – Information Gain Ratio – Chi-square Test We will only explore Gini in class

Measures of Node Impurity • Gini Index • Entropy • Misclassification error

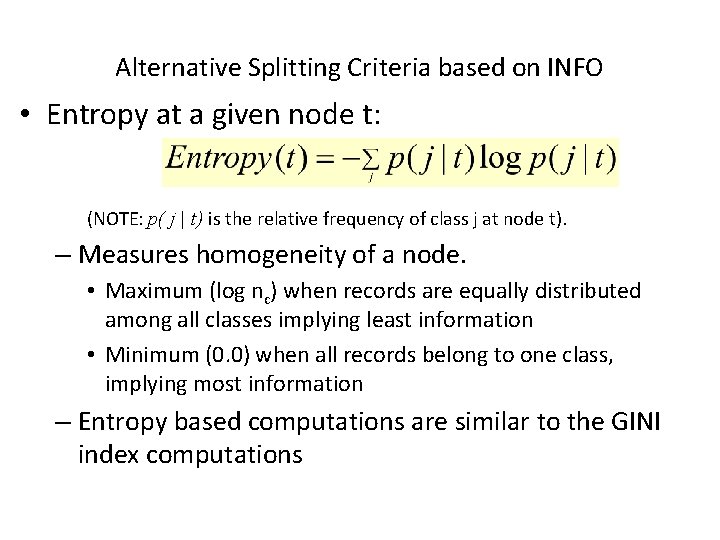

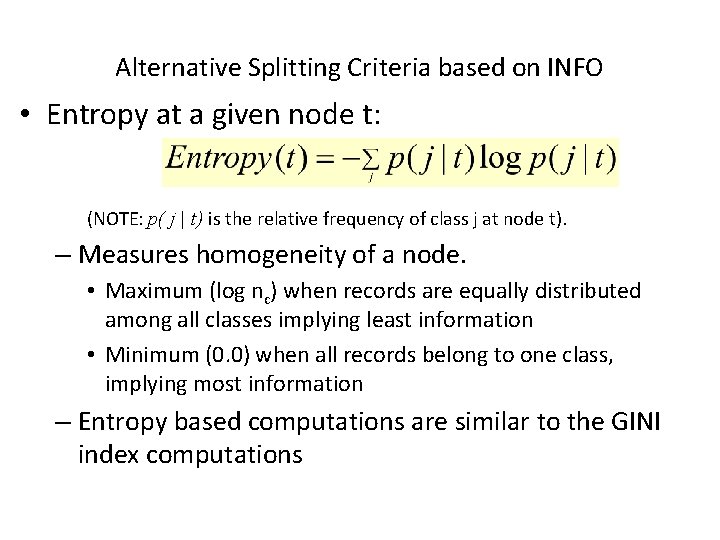

Alternative Splitting Criteria based on INFO

- Entropy at a given node $t$:

  $$\mathrm{Entropy}(t) = -\sum_{j} p(j \mid t) \log_2 p(j \mid t)$$

  (NOTE: $p(j \mid t)$ is the relative frequency of class $j$ at node $t$.)
  - Measures homogeneity of a node.
    - Maximum ($\log n_c$) when records are equally distributed among all classes, implying least information
    - Minimum (0.0) when all records belong to one class, implying most information
  - Entropy-based computations are similar to the GINI index computations

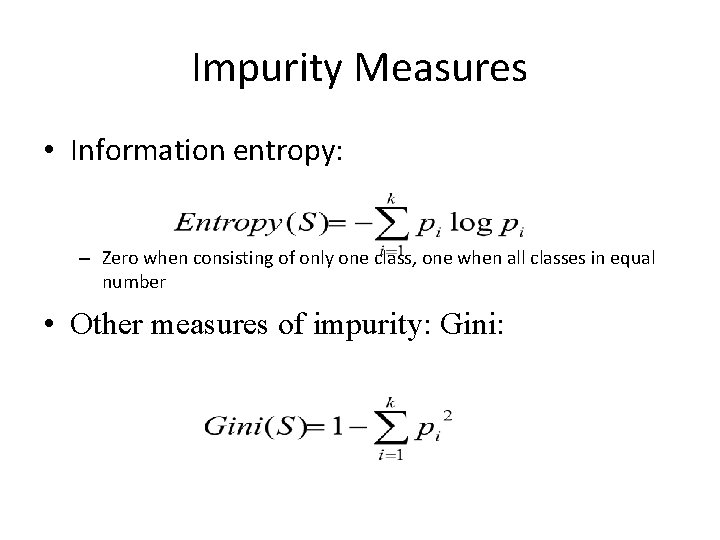

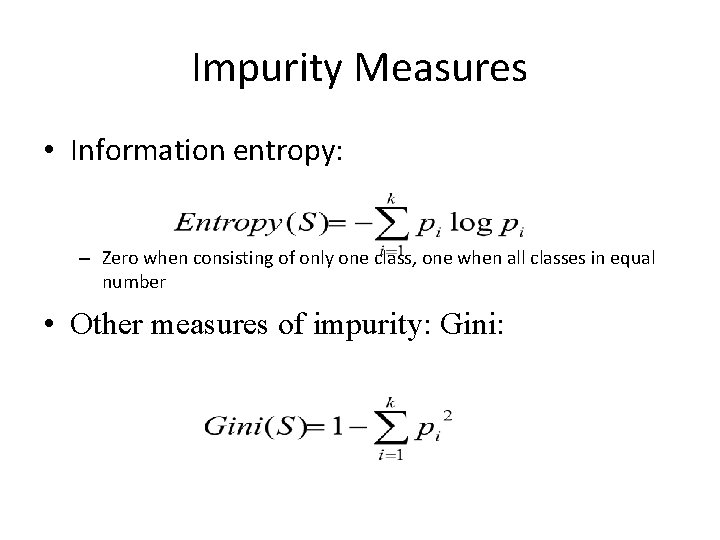

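Impurity Measures

- Information entropy:
  - Zero when the node consists of only one class; one when two classes occur in equal number (in general $\log_2 c$ for $c$ equally frequent classes)
- Other measures of impurity: Gini, $\mathrm{Gini}(t) = 1 - \sum_{j} p(j \mid t)^2$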
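To compare the impurity measures named in these slides, the sketch below evaluates Gini, entropy, and misclassification error on the class counts of a single node. The formulas are the standard textbook definitions, $1 - \sum_j p_j^2$, $-\sum_j p_j \log_2 p_j$, and $1 - \max_j p_j$; the function names are assumptions made for illustration.

```python
from math import log2


def gini(counts):
    """Gini index: 1 minus the sum of squared class proportions."""
    total = sum(counts)
    return 1 - sum((c / total) ** 2 for c in counts)


def entropy(counts):
    """Entropy in bits, treating 0*log2(0) as 0."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c > 0)


def misclassification_error(counts):
    """Error made by always predicting the majority class of the node."""
    return 1 - max(counts) / sum(counts)


for counts in ([10, 0], [5, 5], [4, 4, 4, 4]):
    print(counts,
          round(gini(counts), 3),
          round(entropy(counts), 3),
          round(misclassification_error(counts), 3))
```

All three measures are zero for a pure node and largest for a uniform class distribution; with four equally frequent classes the entropy reaches 2 bits, which is why the maximum is stated as $\log n_c$ rather than 1.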

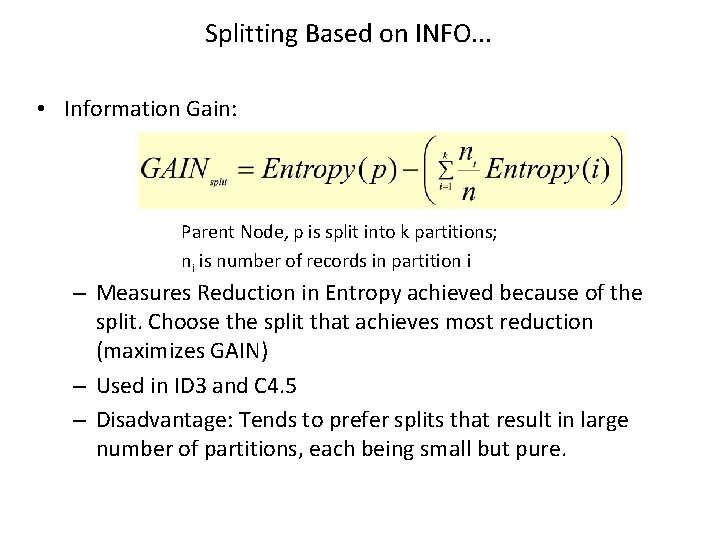

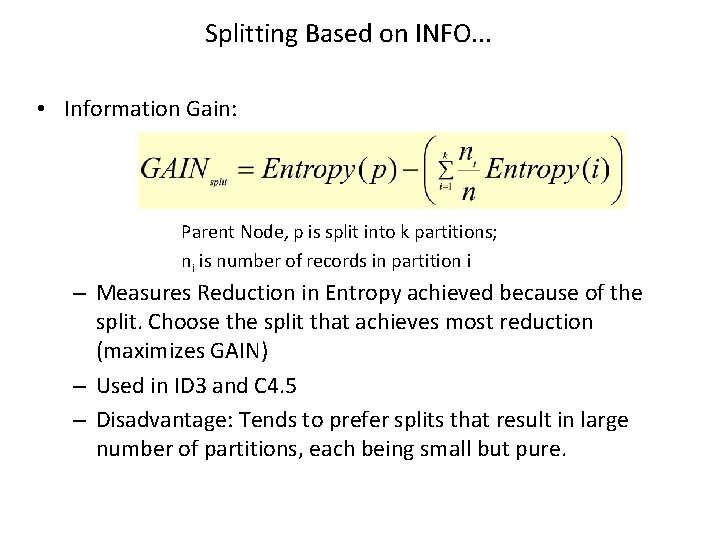

Splitting Based on INFO...

- Information Gain:

  $$\mathrm{GAIN}_{split} = \mathrm{Entropy}(p) - \sum_{i=1}^{k} \frac{n_i}{n} \, \mathrm{Entropy}(i)$$

  Parent node $p$ is split into $k$ partitions; $n_i$ is the number of records in partition $i$, and $n$ is the number of records at $p$.
  - Measures the reduction in entropy achieved because of the split. Choose the split that achieves the most reduction (maximizes GAIN).
  - Used in ID3 and C4.5
  - Disadvantage: Tends to prefer splits that result in a large number of partitions, each being small but pure.

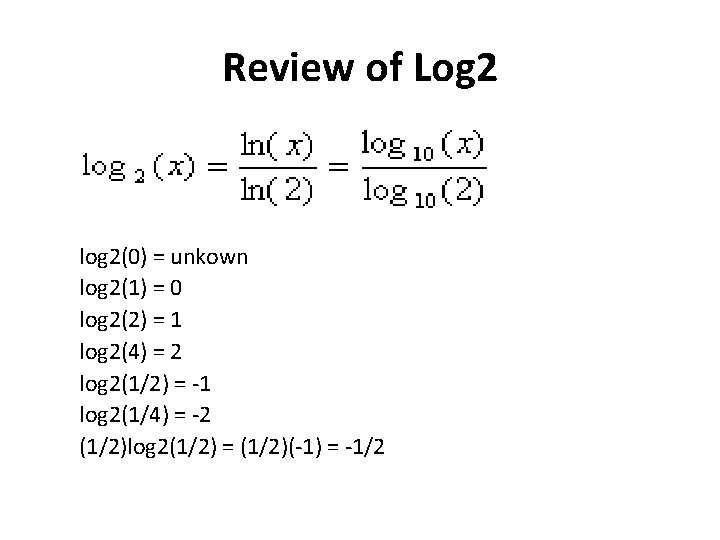

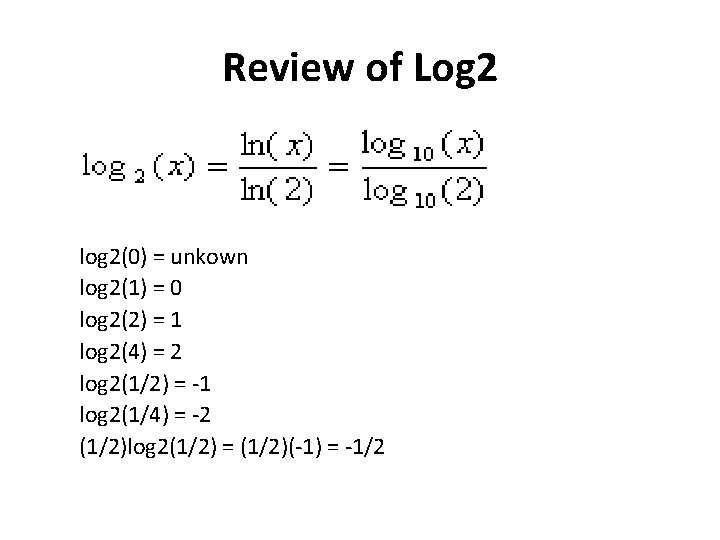

Review of $\log_2$

- $\log_2(0)$ is undefined
- $\log_2(1) = 0$
- $\log_2(2) = 1$
- $\log_2(4) = 2$
- $\log_2(1/2) = -1$
- $\log_2(1/4) = -2$
- $(1/2)\log_2(1/2) = (1/2)(-1) = -1/2$

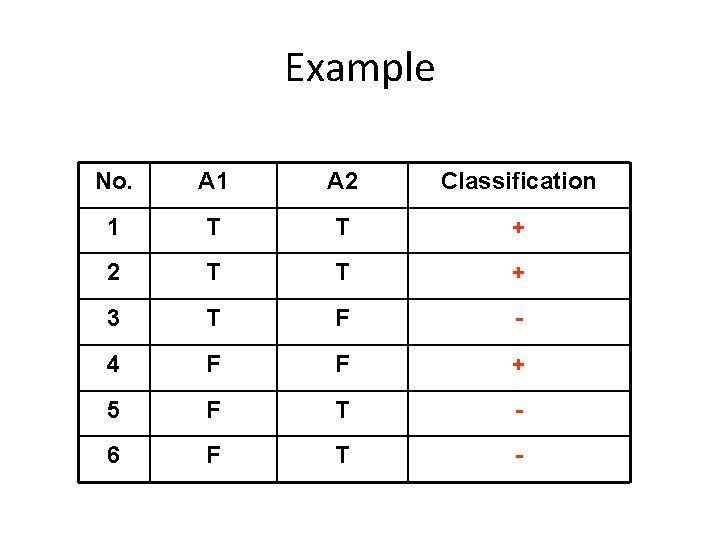

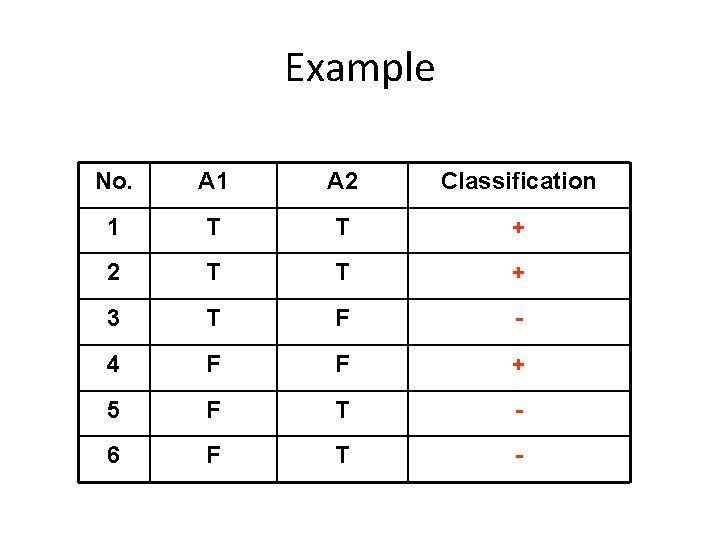

Example

| No. | A1 | A2 | Classification |
| --- | --- | --- | --- |
| 1 | T | T | + |
| 2 | T | T | + |
| 3 | T | F | - |
| 4 | F | F | + |
| 5 | F | T | - |
| 6 | F | T | - |

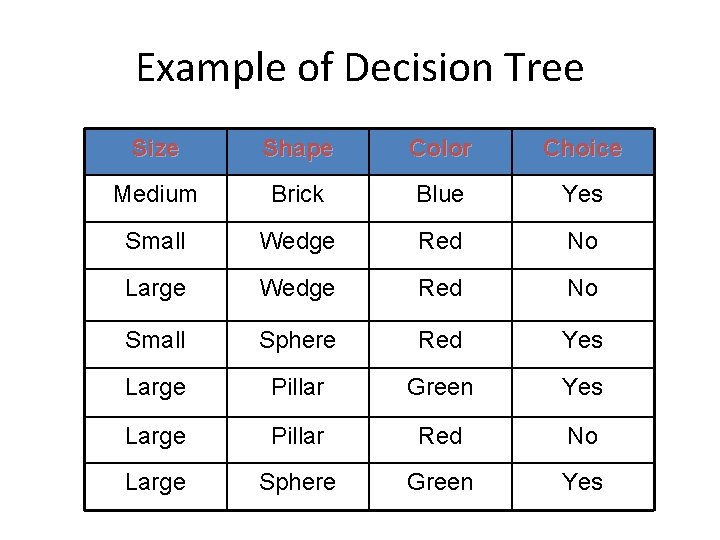

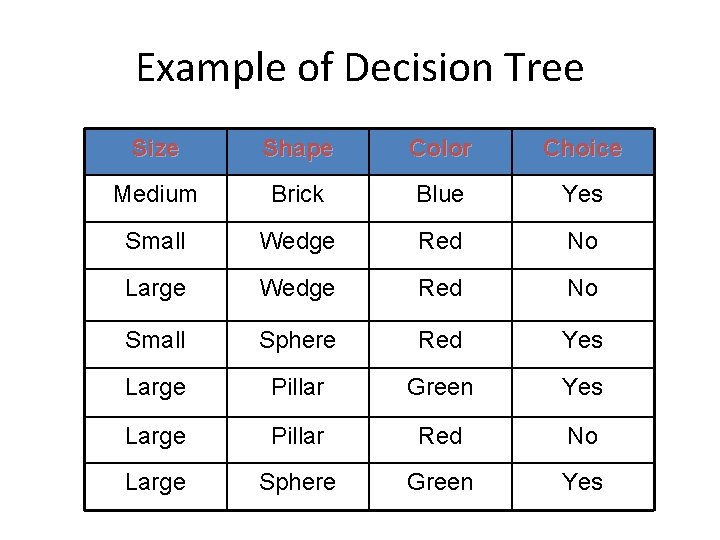

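Example of Decision Tree

| Size | Shape | Color | Choice |
| --- | --- | --- | --- |
| Medium | Brick | Blue | Yes |
| Small | Wedge | Red | No |
| Large | Wedge | Red | No |
| Small | Sphere | Red | Yes |
| Large | Pillar | Green | Yes |
| Large | Pillar | Red | No |
| Large | Sphere | Green | Yes |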

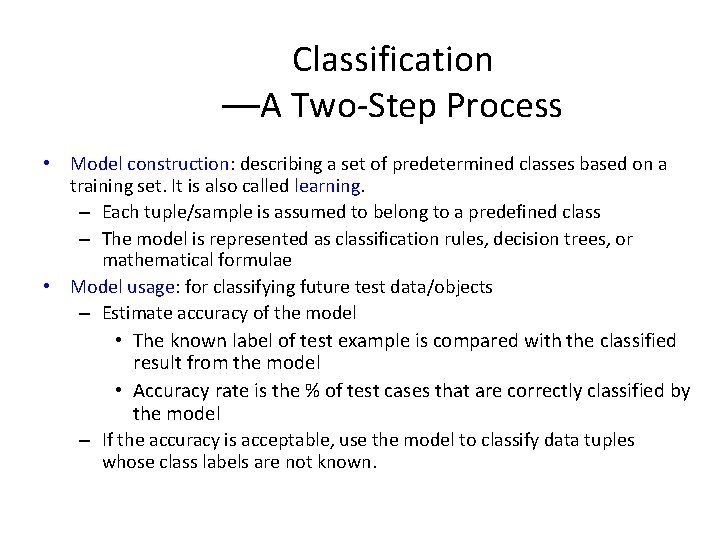

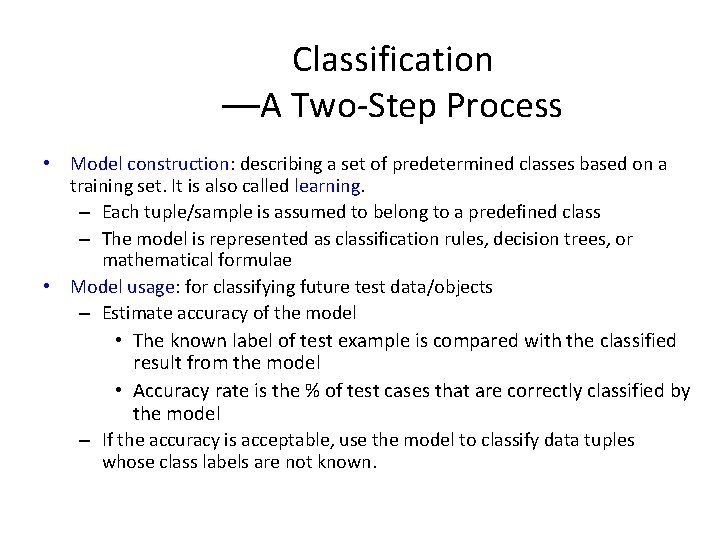

Classification: A Two-Step Process

- Model construction: describing a set of predetermined classes based on a training set. It is also called learning.
  - Each tuple/sample is assumed to belong to a predefined class
  - The model is represented as classification rules, decision trees, or mathematical formulae
- Model usage: for classifying future test data/objects
  - Estimate accuracy of the model
    - The known label of each test example is compared with the classified result from the model
    - Accuracy rate is the percentage of test cases that are correctly classified by the model
  - If the accuracy is acceptable, use the model to classify data tuples whose class labels are not known.

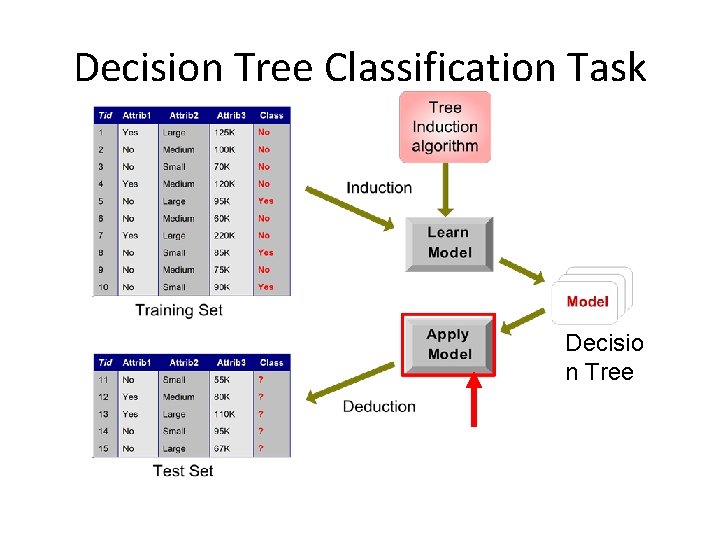

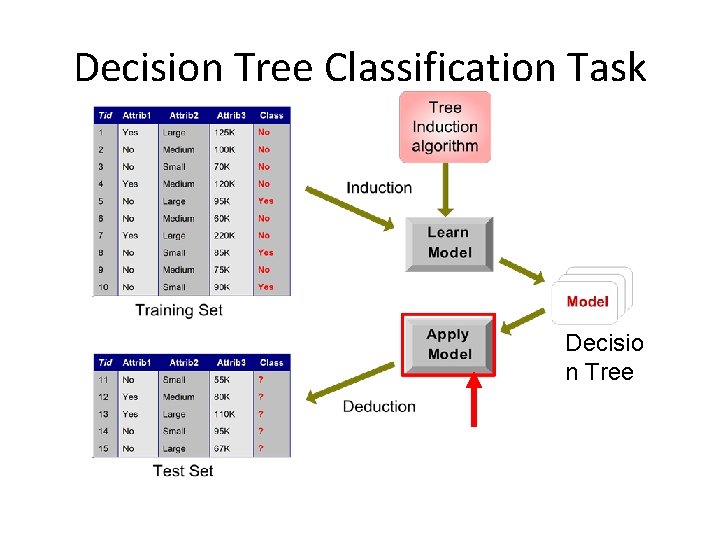

Decision Tree Classification Task

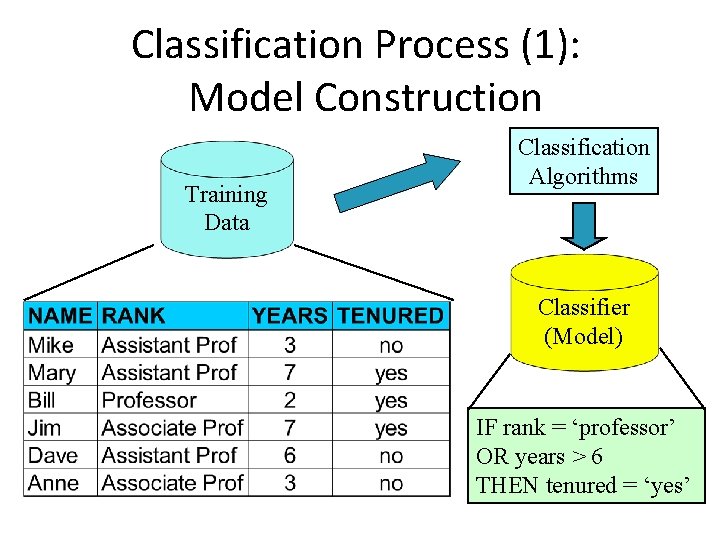

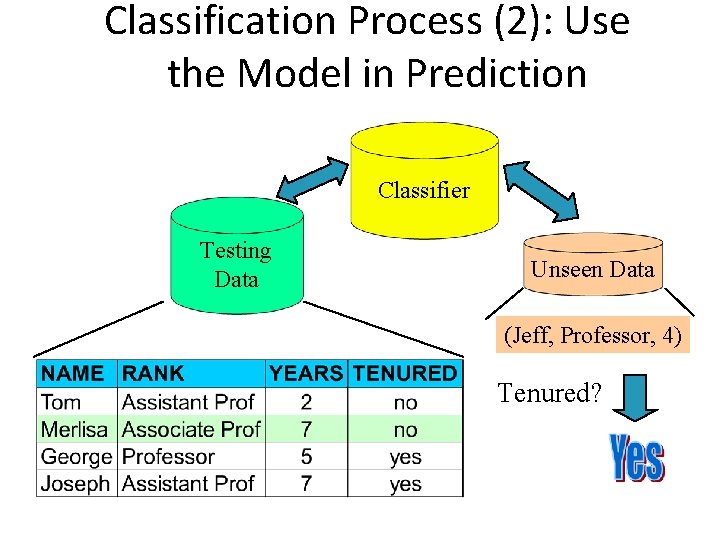

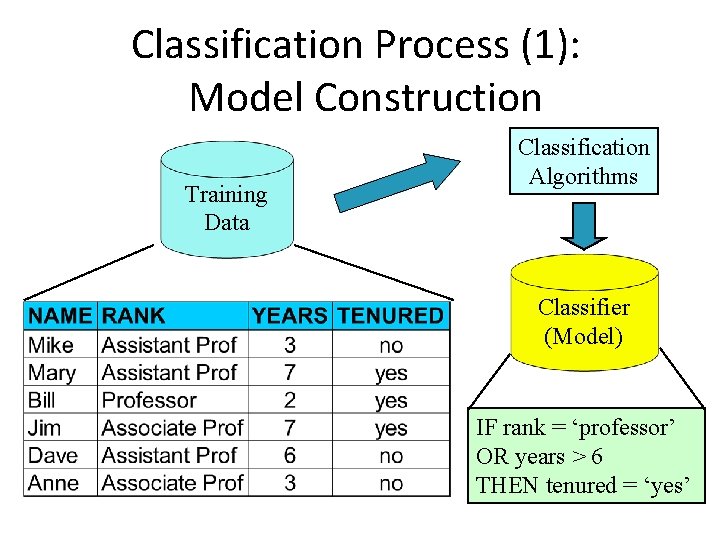

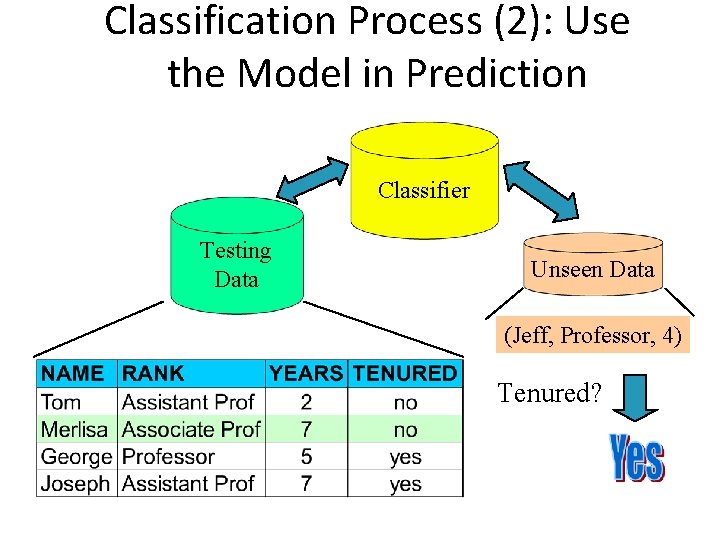

Classification Process (1): Model Construction

Training Data → Classification Algorithms → Classifier (Model): IF rank = 'professor' OR years > 6 THEN tenured = 'yes'

Classification Process (2): Use the Model in Prediction

The classifier is first applied to the Testing Data and then to Unseen Data: (Jeff, Professor, 4) → Tenured?
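As a small illustration of the prediction step, the learned rule from the model-construction slide (rank is professor, or years is greater than 6) can be written as a function and applied to the unseen tuple (Jeff, Professor, 4). The function name `tenured` and the fallback answer "no" when the rule does not fire are assumptions; the slides only state the "yes" case.

```python
def tenured(rank, years):
    """Apply the rule: IF rank = 'professor' OR years > 6 THEN tenured = 'yes'."""
    if rank.lower() == "professor" or years > 6:
        return "yes"
    return "no"  # assumed default when the rule does not fire


name, rank, years = ("Jeff", "Professor", 4)
print(name, tenured(rank, years))  # prints "Jeff yes"
```

Jeff is classified as tenured because the rank condition holds, even though the years condition does not.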

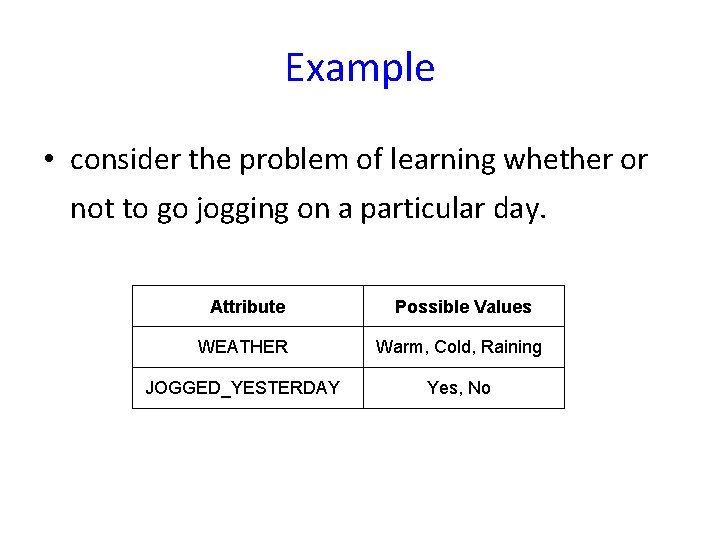

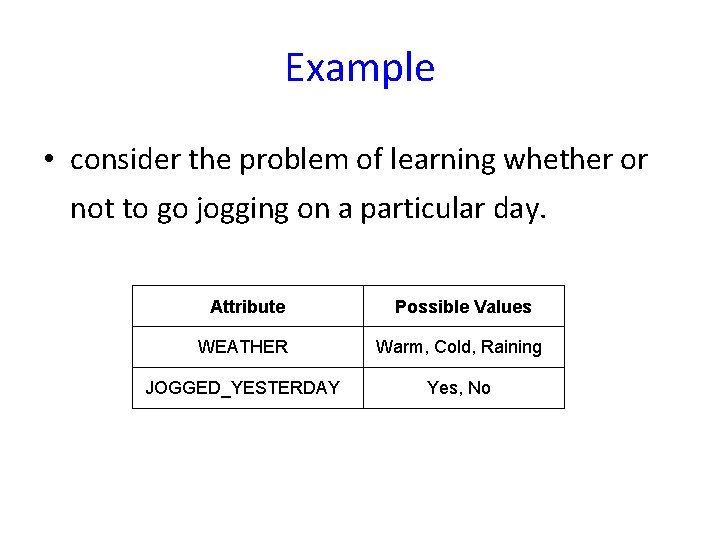

Example

Consider the problem of learning whether or not to go jogging on a particular day.

| Attribute | Possible Values |
| --- | --- |
| WEATHER | Warm, Cold, Raining |
| JOGGED_YESTERDAY | Yes, No |

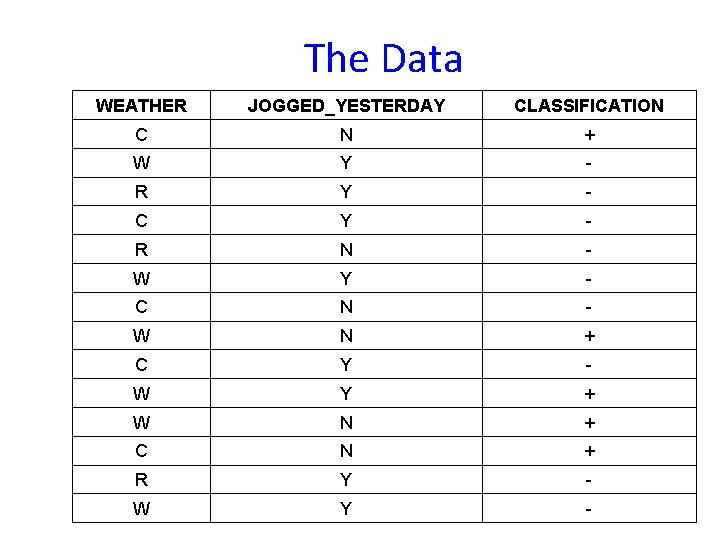

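The Data

| WEATHER | JOGGED_YESTERDAY | CLASSIFICATION |
| --- | --- | --- |
| C | N | + |
| W | Y | - |
| R | Y | - |
| C | Y | - |
| R | N | - |
| W | Y | - |
| C | N | - |
| W | N | + |
| C | Y | - |
| W | Y | + |
| W | N | + |
| C | N | + |
| R | Y | - |
| W | Y | - |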

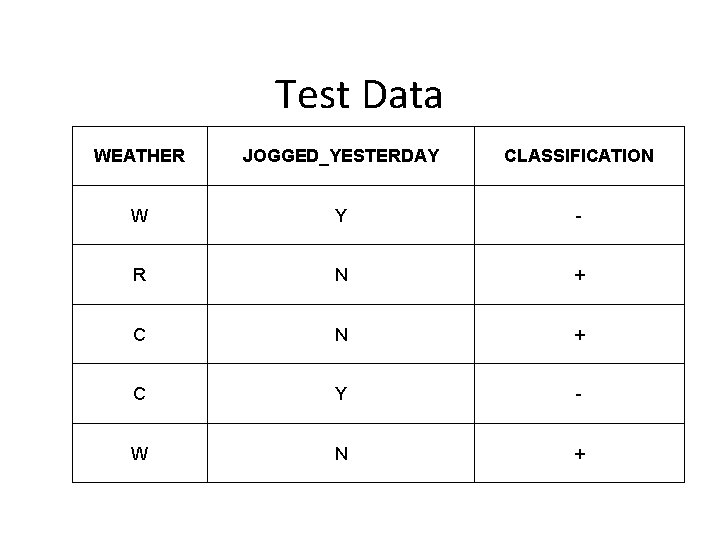

Test Data

| WEATHER | JOGGED_YESTERDAY | CLASSIFICATION |
| --- | --- | --- |
| W | Y | - |
| R | N | + |
| C | Y | - |
| W | N | + |

ID3: Some Issues

- Sometimes we arrive at a node with no examples. This means that such an example has not been observed. We simply assign the majority vote of its parent as the value.
- Sometimes we arrive at a node with both positive and negative examples and no attributes left. This means that there is noise in the data. We simply assign the majority vote of the examples as the value.

Problems with Decision Tree

- ID3 is not optimal
  - Uses expected entropy reduction, not actual reduction
- Must use discrete (or discretized) attributes
  - What if we left for work at 9:30 AM?
  - We could break down the attributes into smaller values…

Problems with Decision Trees • While decision trees classify quickly, the time for building a tree may be higher than another type of classifier • Decision trees suffer from a problem of errors propagating throughout a tree – A very serious problem as the number of classes increases

Decision Tree characteristics • The training data may contain missing attribute values. – Decision tree methods can be used even when some training examples have unknown values.

Unknown Attribute Values

What if some examples are missing values of A? Use the training example anyway and sort it through the tree:

- If node n tests A, assign the most common value of A among the other examples sorted to node n.
- Assign the most common value of A among the other examples with the same target value.
- Assign probability $p_i$ to each possible value $v_i$ of A, and assign fraction $p_i$ of the example to each descendant in the tree.
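The three strategies can be sketched on a handful of examples that reach some node n. The data, the attribute name `Wind`, and the helper names `most_common_value` and `value_fractions` are hypothetical and only serve to show the bookkeeping.

```python
from collections import Counter

# Hypothetical examples sorted to node n; None marks a missing value of A = "Wind".
examples = [
    {"Wind": "Weak", "Play": "Yes"},
    {"Wind": "Weak", "Play": "Yes"},
    {"Wind": "Weak", "Play": "No"},
    {"Wind": "Strong", "Play": "No"},
    {"Wind": "Strong", "Play": "No"},
    {"Wind": None, "Play": "No"},
]


def most_common_value(examples, attr, target=None, target_value=None):
    """Most common known value of attr, optionally among one target class only."""
    pool = [e[attr] for e in examples
            if e[attr] is not None
            and (target is None or e[target] == target_value)]
    return Counter(pool).most_common(1)[0][0]


def value_fractions(examples, attr):
    """Estimated probability p_i of each known value v_i of attr."""
    known = [e[attr] for e in examples if e[attr] is not None]
    return {value: n / len(known) for value, n in Counter(known).items()}


print(most_common_value(examples, "Wind"))                # strategy 1
print(most_common_value(examples, "Wind", "Play", "No"))  # strategy 2
print(value_fractions(examples, "Wind"))                  # strategy 3
```

With these examples the first strategy fills in Weak, the second fills in Strong because the incomplete example has target No, and the third sends 0.6 of the example down the Weak branch and 0.4 down the Strong branch.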

Rule Generation Once a decision tree has been constructed, it is a simple matter to convert it into set of rules. • Converting to rules allows distinguishing among the different contexts in which a decision node is used.

Rule Generation • Converting to rules improves readability. – Rules are often easier for people to understand. • To generate rules, trace each path in the decision tree, from root node to leaf node

Rule Simplification

Once a rule set has been devised:

- Simplify the individual rules, then eliminate redundant and unnecessary rules.
- Attempt to replace those rules that share the most common consequent by a default rule that is triggered when no other rule is triggered.

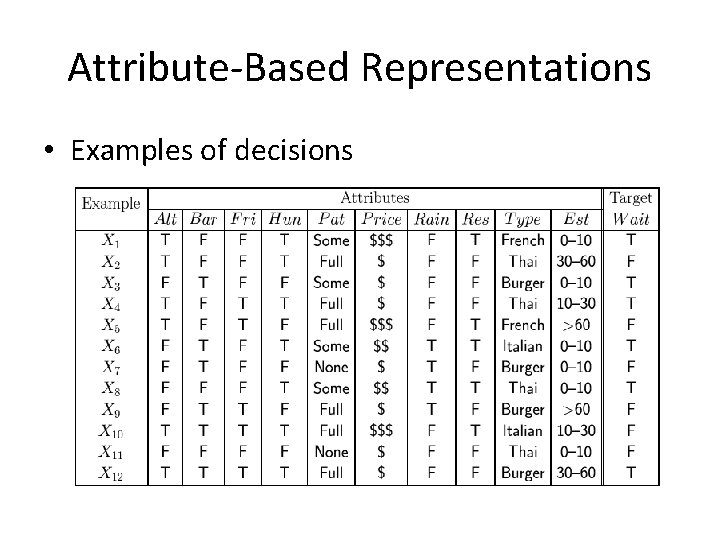

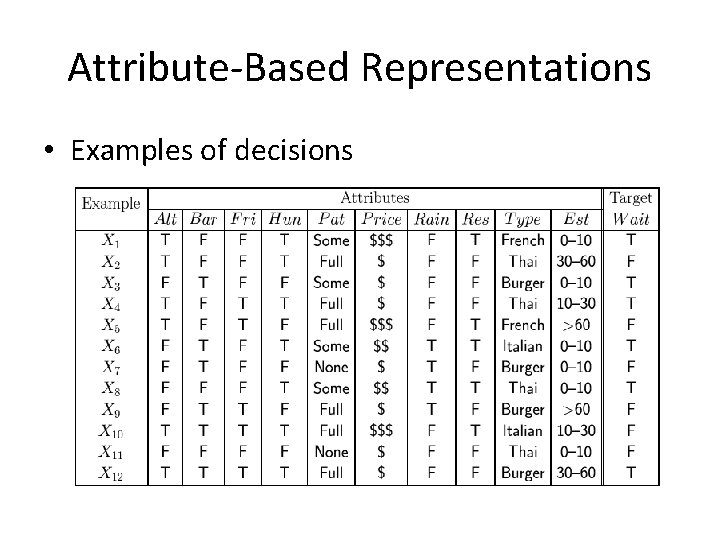

Attribute-Based Representations • Examples of decisions

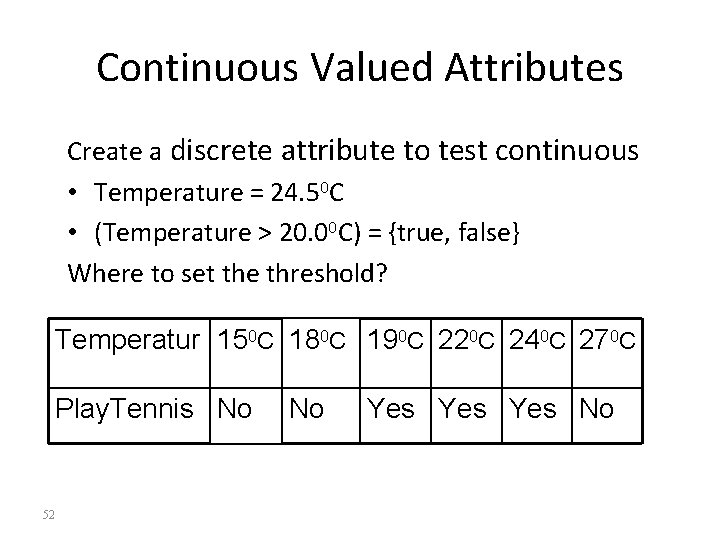

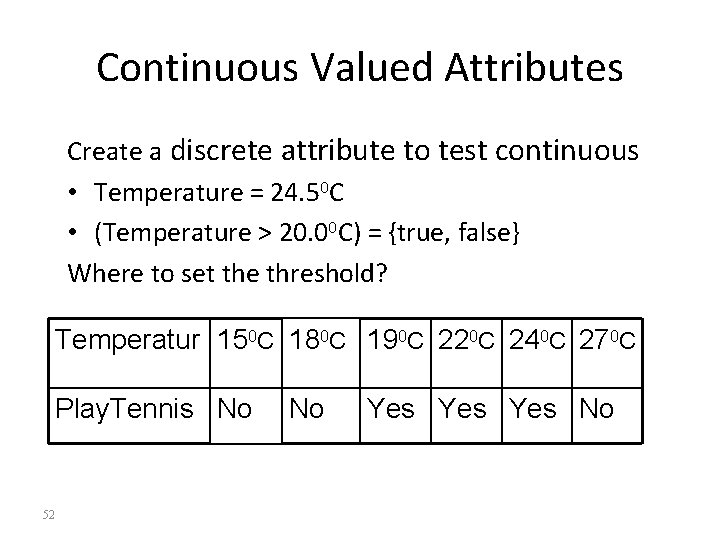

Continuous Valued Attributes

Create a discrete attribute to test a continuous one:

- Temperature = 24.5 °C
- (Temperature > 20.0 °C) = {true, false}

Where to set the threshold?

- Temperature: 15 °C, 18 °C, 19 °C, 22 °C, 24 °C, 27 °C
- PlayTennis: No, No, Yes, Yes, No
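One common way to answer the threshold question is to try the midpoints between adjacent sorted values where the class label changes and to keep the midpoint with the highest information gain. The sketch below assumes that approach; the six labels are illustrative assumptions rather than data taken from the slide, and `best_threshold` is a hypothetical helper name.

```python
from math import log2


def entropy(labels):
    """Entropy in bits of a list of class labels."""
    total = len(labels)
    return -sum(labels.count(c) / total * log2(labels.count(c) / total)
                for c in set(labels))


def best_threshold(values, labels):
    """Return (threshold, gain) for the best binary split value <= threshold."""
    pairs = sorted(zip(values, labels))
    all_labels = [label for _, label in pairs]
    best_t, best_gain = None, -1.0
    for (v1, l1), (v2, l2) in zip(pairs, pairs[1:]):
        if l1 == l2 or v1 == v2:
            continue  # only boundaries where the class changes are candidates
        t = (v1 + v2) / 2
        left = [label for v, label in pairs if v <= t]
        right = [label for v, label in pairs if v > t]
        g = (entropy(all_labels)
             - len(left) / len(pairs) * entropy(left)
             - len(right) / len(pairs) * entropy(right))
        if g > best_gain:
            best_t, best_gain = t, g
    return best_t, best_gain


temperatures = [15, 18, 19, 22, 24, 27]
play_tennis = ["No", "No", "Yes", "Yes", "Yes", "No"]  # assumed labels
threshold, g = best_threshold(temperatures, play_tennis)
print(threshold, round(g, 3))  # prints: 18.5 0.459
```

With these assumed labels there are two candidate thresholds, 18.5 and 25.5, and the split at 18.5 gives the larger gain.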

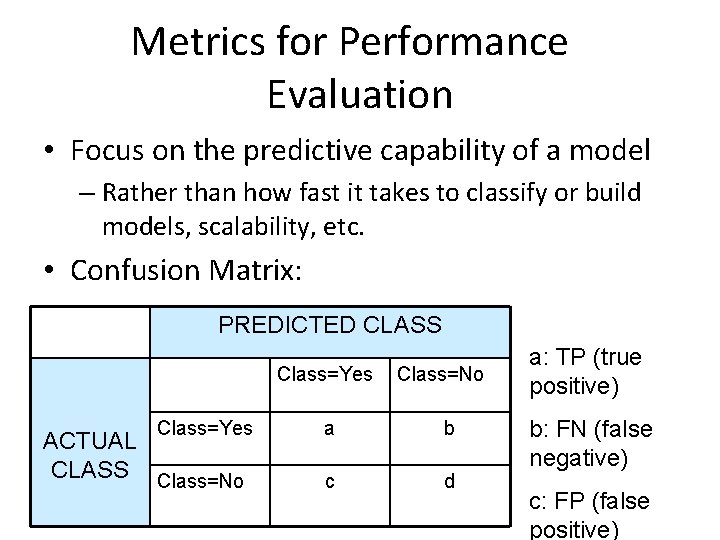

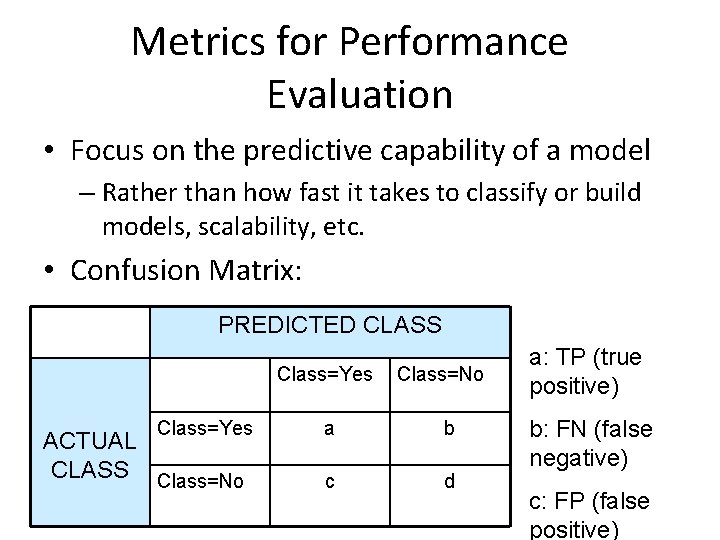

Metrics for Performance Evaluation

- Focus on the predictive capability of a model
  - Rather than how long it takes to classify or build models, scalability, etc.
- Confusion Matrix:

| | PREDICTED Class=Yes | PREDICTED Class=No |
| --- | --- | --- |
| ACTUAL Class=Yes | a | b |
| ACTUAL Class=No | c | d |

a: TP (true positive), b: FN (false negative), c: FP (false positive), d: TN (true negative)

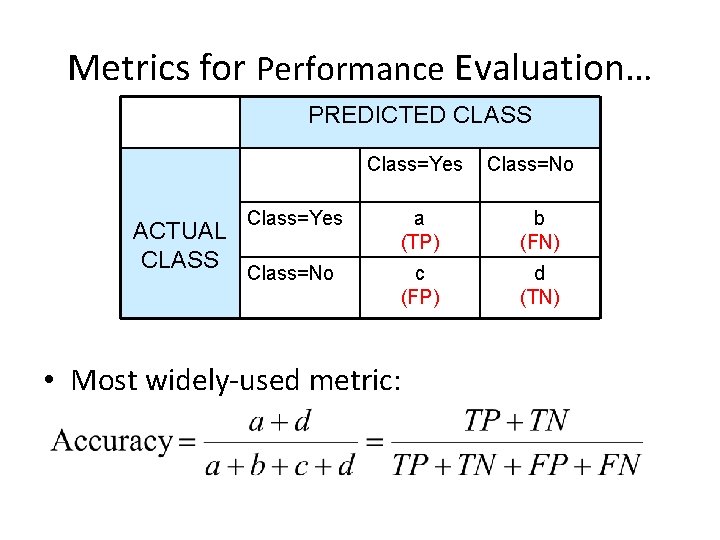

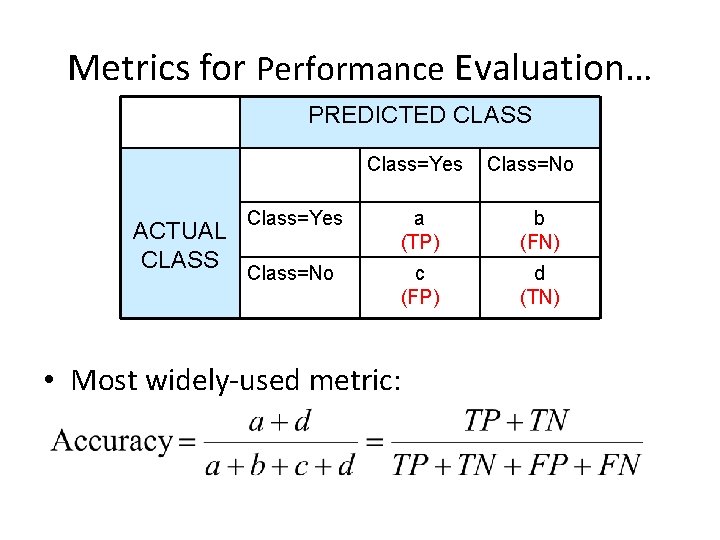

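Metrics for Performance Evaluation…

| | PREDICTED Class=Yes | PREDICTED Class=No |
| --- | --- | --- |
| ACTUAL Class=Yes | a (TP) | b (FN) |
| ACTUAL Class=No | c (FP) | d (TN) |

- Most widely-used metric: accuracy

  $$\mathrm{Accuracy} = \frac{a + d}{a + b + c + d} = \frac{TP + TN}{TP + TN + FP + FN}$$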
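A short sketch of how the four cells of the confusion matrix and the accuracy could be computed from actual and predicted labels. The label lists are made-up illustrations, and `confusion_counts` and `accuracy` are hypothetical helper names.

```python
def confusion_counts(actual, predicted, positive="Yes"):
    """Return (a, b, c, d) = (TP, FN, FP, TN) for a two-class problem."""
    tp = fn = fp = tn = 0
    for truth, guess in zip(actual, predicted):
        if truth == positive and guess == positive:
            tp += 1
        elif truth == positive:
            fn += 1
        elif guess == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def accuracy(tp, fn, fp, tn):
    """Fraction of cases on the diagonal of the confusion matrix."""
    return (tp + tn) / (tp + fn + fp + tn)


actual = ["Yes", "Yes", "No", "No", "Yes"]      # made-up labels
predicted = ["Yes", "No", "No", "Yes", "Yes"]   # made-up predictions
a, b, c, d = confusion_counts(actual, predicted)
print(a, b, c, d, accuracy(a, b, c, d))  # prints: 2 1 1 1 0.6
```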

Pruning Trees • There is another technique for reducing the number of attributes used in a tree - pruning • Two types of pruning: – Pre-pruning (forward pruning) – Post-pruning (backward pruning)

Prepruning • In prepruning, we decide during the building process when to stop adding attributes (possibly based on their information gain) • However, this may be problematic – Why? – Sometimes attributes individually do not contribute much to a decision, but combined, they may have a significant impact

Postpruning

- Postpruning waits until the full decision tree has been built and then prunes the attributes
- Two techniques:
  - Subtree Replacement
  - Subtree Raising

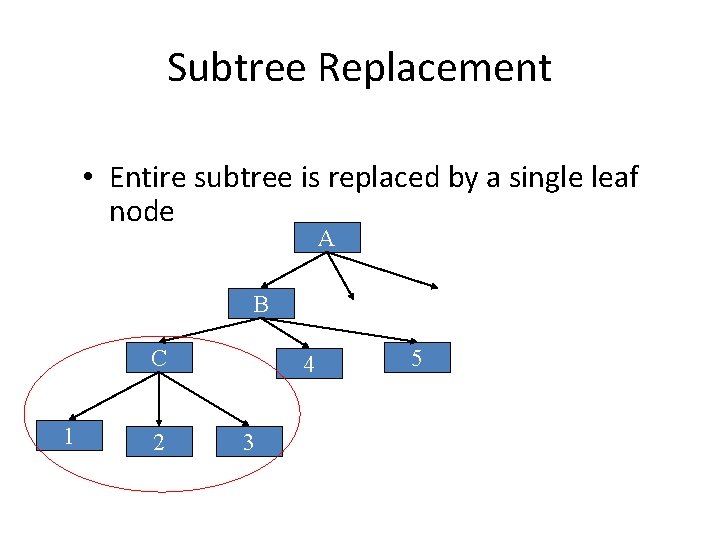

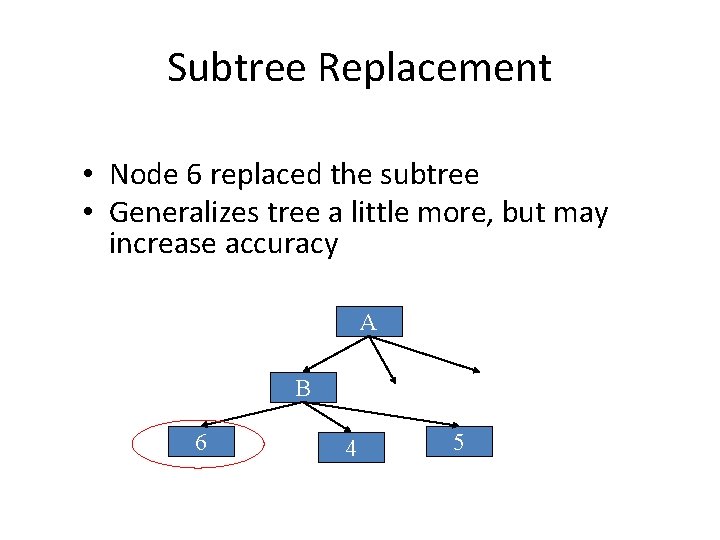

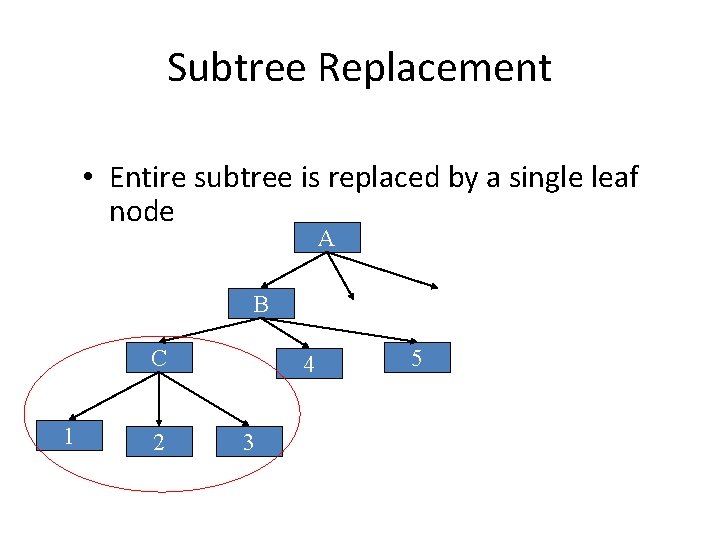

Subtree Replacement • Entire subtree is replaced by a single leaf node A B C 1 2 4 3 5

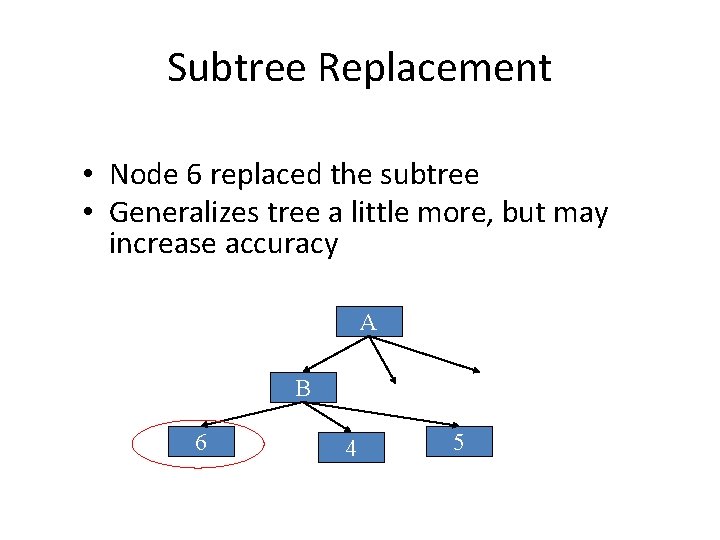

Subtree Replacement • Node 6 replaced the subtree • Generalizes tree a little more, but may increase accuracy A B 6 4 5

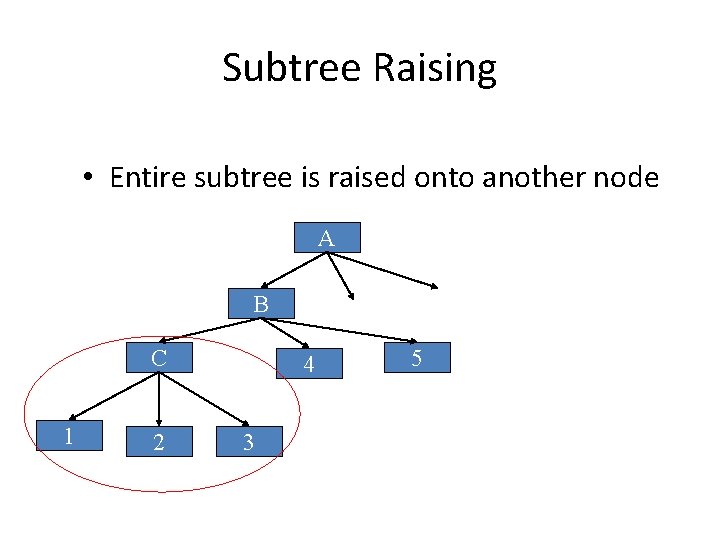

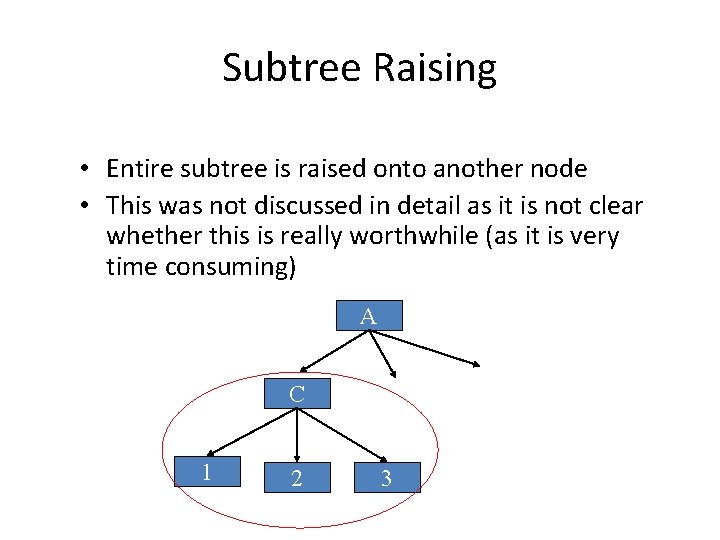

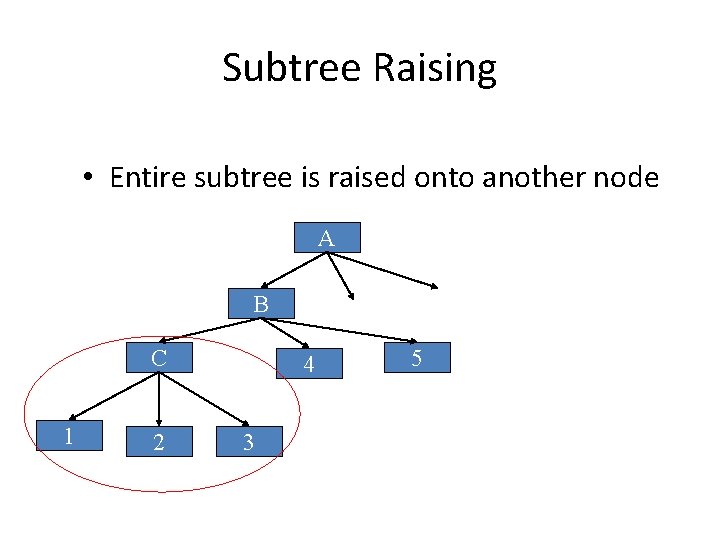

Subtree Raising • Entire subtree is raised onto another node A B C 1 2 4 3 5

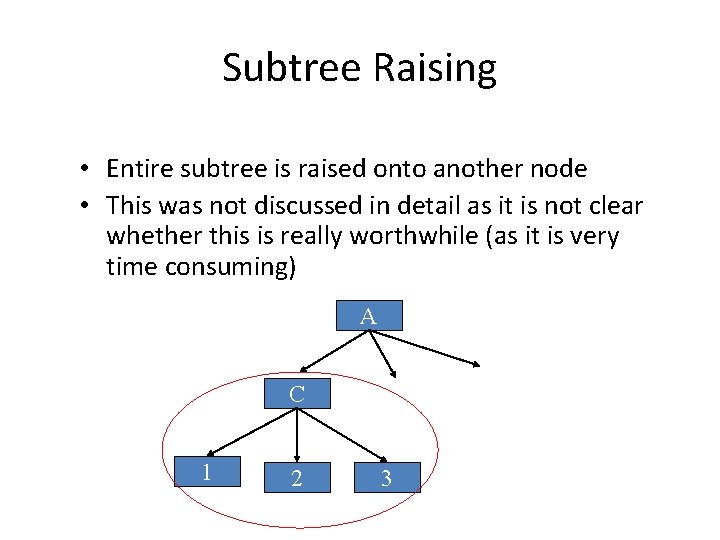

Subtree Raising • Entire subtree is raised onto another node • This was not discussed in detail as it is not clear whether this is really worthwhile (as it is very time consuming) A C 1 2 3
