Data Repositories and Science Gateways for Open Science

- Slides: 30

Data Repositories and Science Gateways for Open Science

Roberto Barbera, University of Catania and INFN

EGI Community Forum, Bari, 11 November 2015

Outline

- Introductory concepts, definitions and driving considerations
- A viable approach to Open Science
- Summary and conclusions

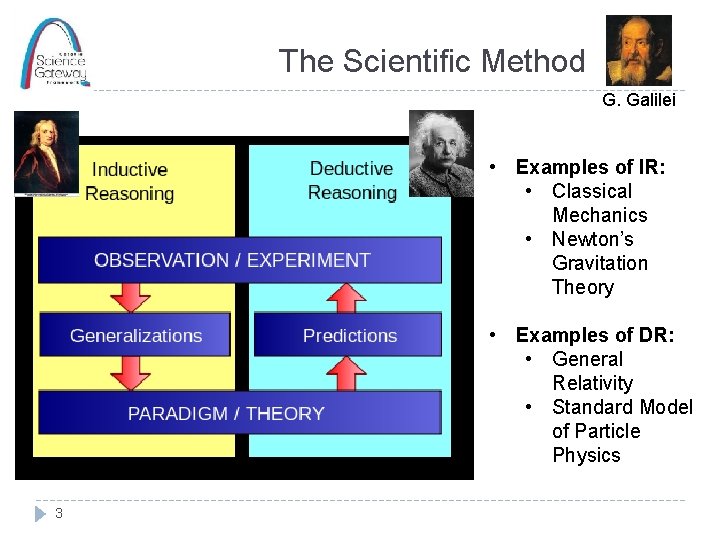

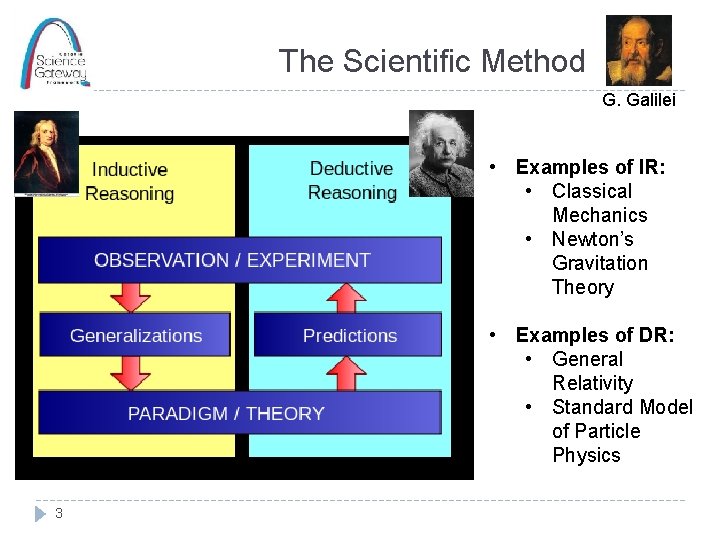

The Scientific Method

G. Galilei

- Examples of IR:
  - Classical Mechanics
  - Newton’s Gravitation Theory
- Examples of DR:
  - General Relativity
  - Standard Model of Particle Physics

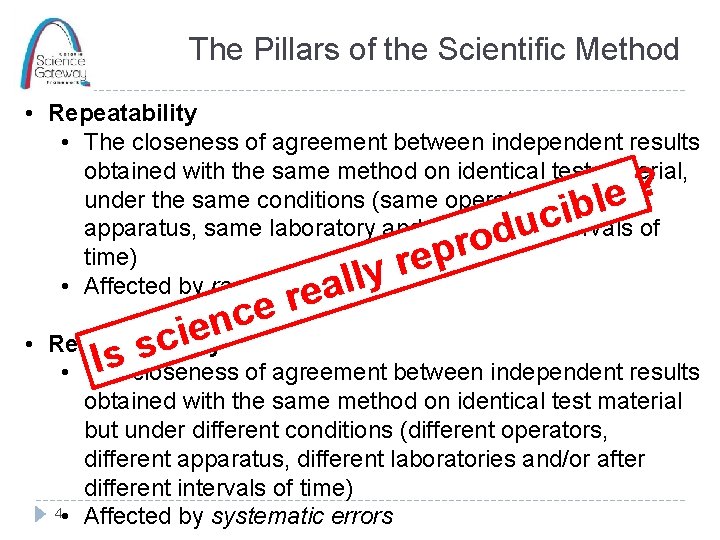

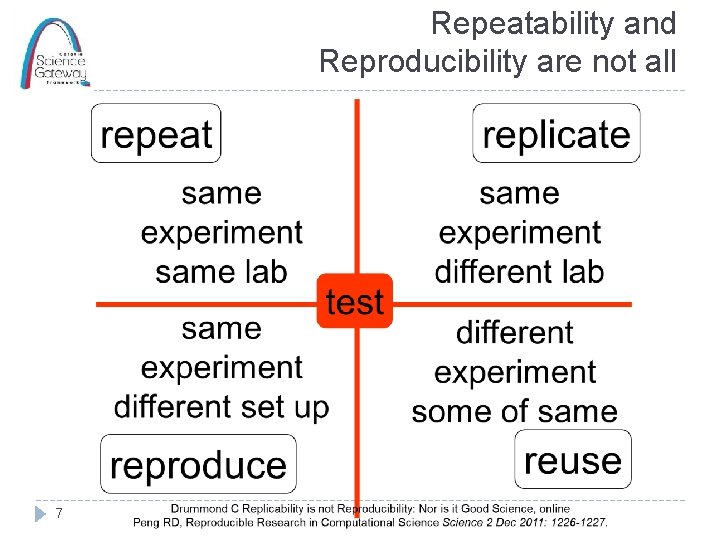

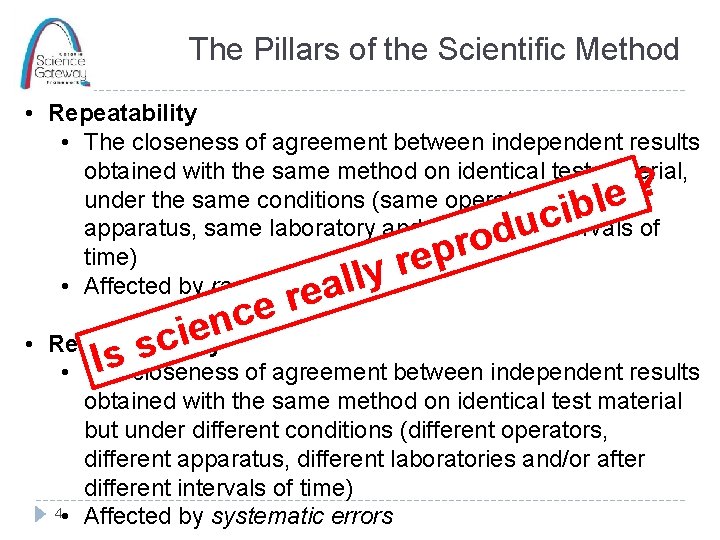

The Pillars of the Scientific Method

- Repeatability
  - The closeness of agreement between independent results obtained with the same method on identical test material, under the same conditions (same operator, same apparatus, same laboratory and after short intervals of time)
  - Affected by random errors
- Reproducibility
  - The closeness of agreement between independent results obtained with the same method on identical test material but under different conditions (different operators, different apparatus, different laboratories and/or after different intervals of time)
  - Affected by systematic errors

Is science really reproducible?
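The distinction between the two pillars can be made concrete with a small numerical sketch. The measurements below are invented for illustration, and the function names are hypothetical. The spread of repeated runs inside one laboratory stands in for repeatability (random errors), while the spread between laboratory means is only a rough indicator of reproducibility (lab-specific systematic offsets).

```python
from statistics import mean, stdev

# Hypothetical measurements of the same quantity (arbitrary units).
# Each list holds repeated runs in one laboratory under identical conditions.
labs = {
    "lab_A": [10.1, 9.9, 10.0, 10.2, 9.8],
    "lab_B": [10.6, 10.4, 10.5, 10.7, 10.3],
    "lab_C": [9.6, 9.4, 9.5, 9.7, 9.3],
}


def repeatability_sd(runs_by_lab):
    """Pooled within-lab standard deviation (equal group sizes assumed)."""
    variances = [stdev(runs) ** 2 for runs in runs_by_lab.values()]
    return mean(variances) ** 0.5


def between_lab_sd(runs_by_lab):
    """Standard deviation of the per-lab means."""
    lab_means = [mean(runs) for runs in runs_by_lab.values()]
    return stdev(lab_means)


within = repeatability_sd(labs)
between = between_lab_sd(labs)
print(f"within-lab spread:  {within:.3f}")
print(f"between-lab spread: {between:.3f}")
```

In this made-up data set every laboratory is internally consistent, yet the laboratories disagree with each other by more than their own scatter, which is the signature of a systematic effect.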

Challenges in irreproducible research (http://www.nature.com/nature/focus/reproducibility/index.html)

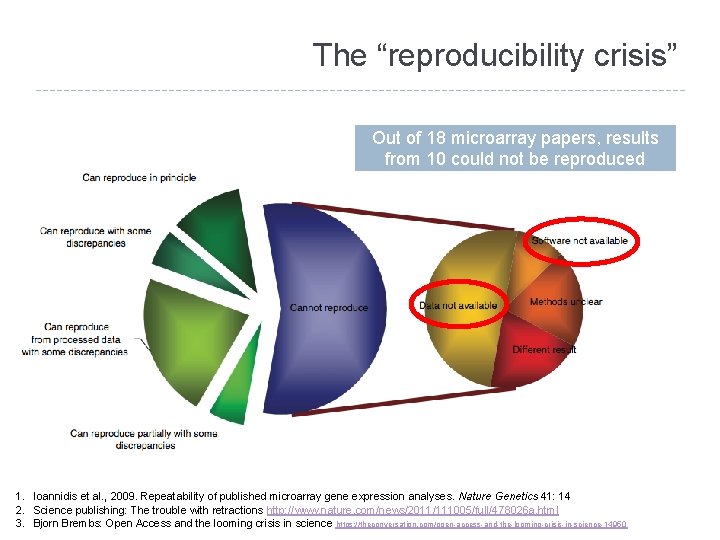

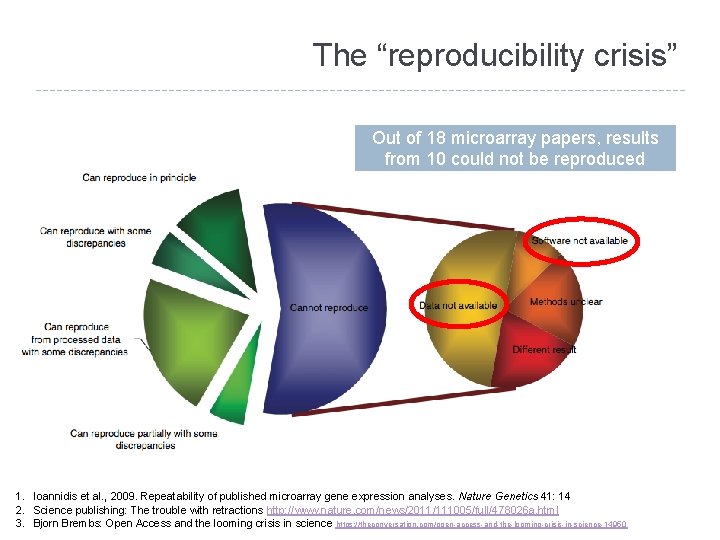

The “reproducibility crisis”

Out of 18 microarray papers, results from 10 could not be reproduced.

1. Ioannidis et al., 2009. Repeatability of published microarray gene expression analyses. Nature Genetics 41: 14
2. Science publishing: The trouble with retractions. http://www.nature.com/news/2011/111005/full/478026a.html
3. Bjorn Brembs: Open Access and the looming crisis in science. https://theconversation.com/open-access-and-the-looming-crisis-in-science-14950

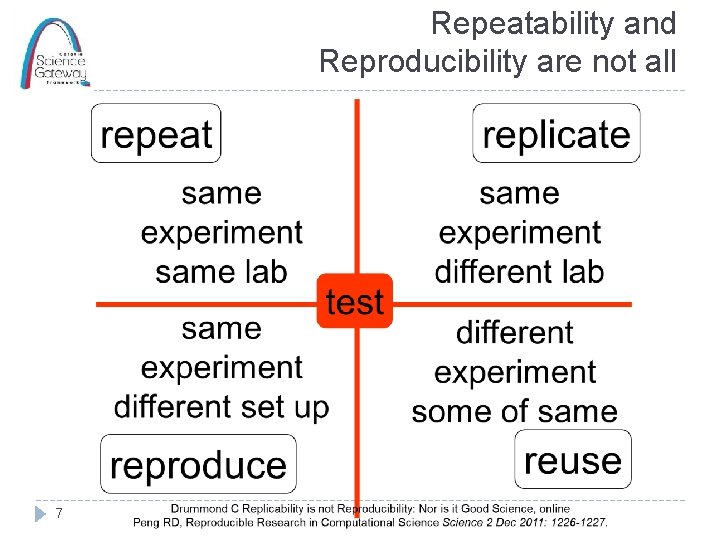

Repeatability and Reproducibility are not all

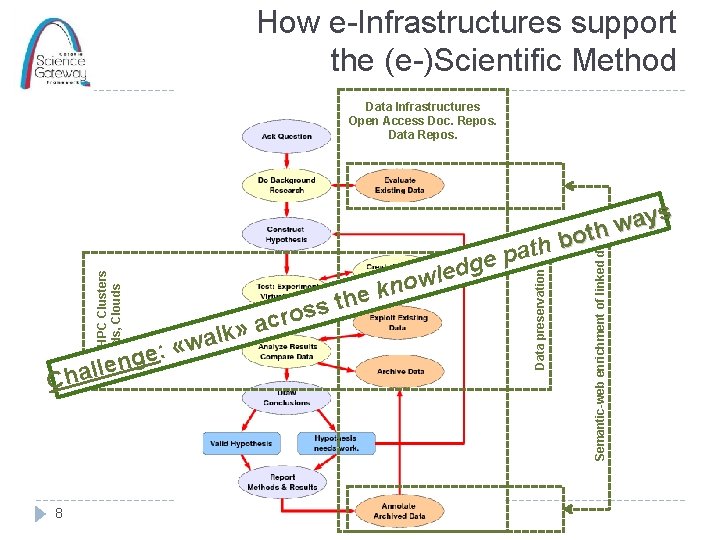

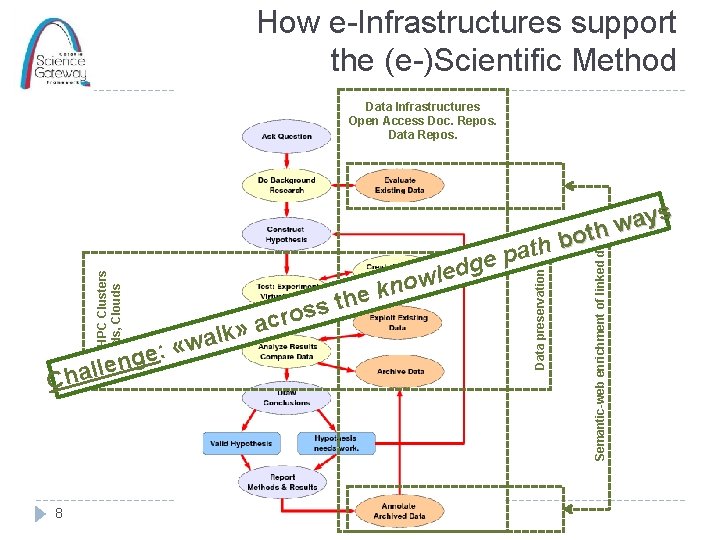

How e-Infrastructures support the (e-)Scientific Method

Diagram labels:

- Data Infrastructures: Open Access Doc. Repos., Data Repos.
- Semantic-web enrichment of linked data
- Data preservation
- HTC/HPC Clusters, Grids, Clouds

Challenge: «walk across the knowledge path both ways»

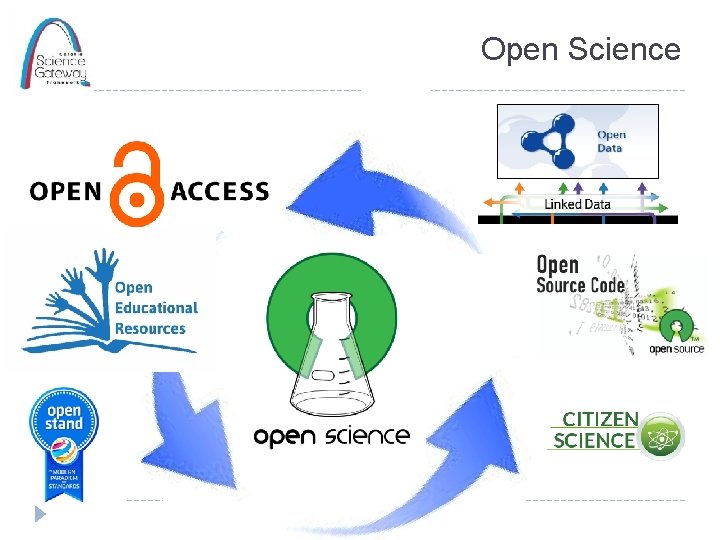

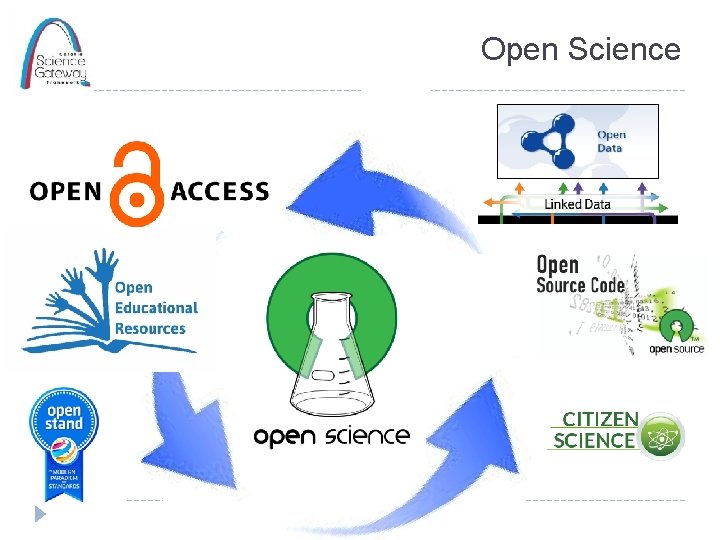

Open Science

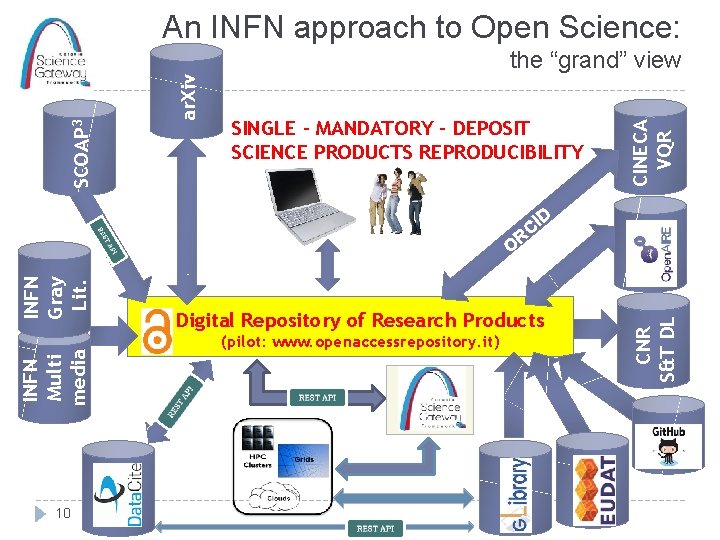

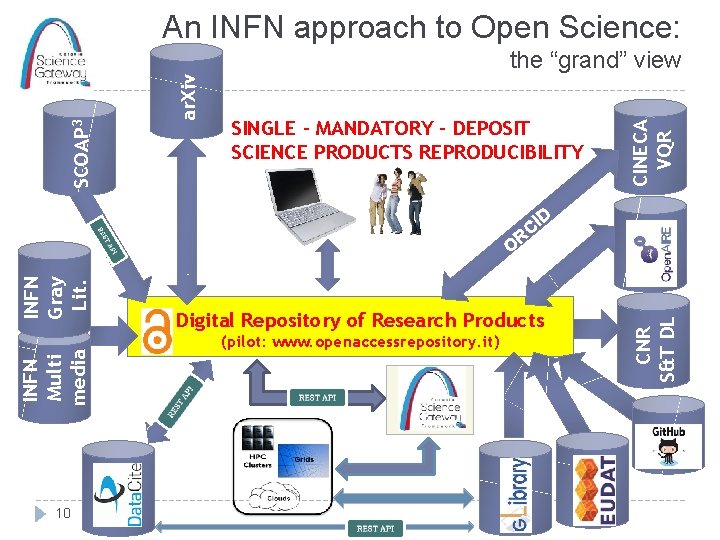

An INFN approach to Open Science: the “grand” view

Diagram labels:

- SINGLE - MANDATORY - DEPOSIT
- SCIENCE PRODUCTS REPRODUCIBILITY
- CINECA, VQR, arXiv, SCOAP3, ORCID, CNR S&T DL
- INFN Gray Lit., INFN Multimedia
- Digital Repository of Research Products (pilot: www.openaccessrepository.it)

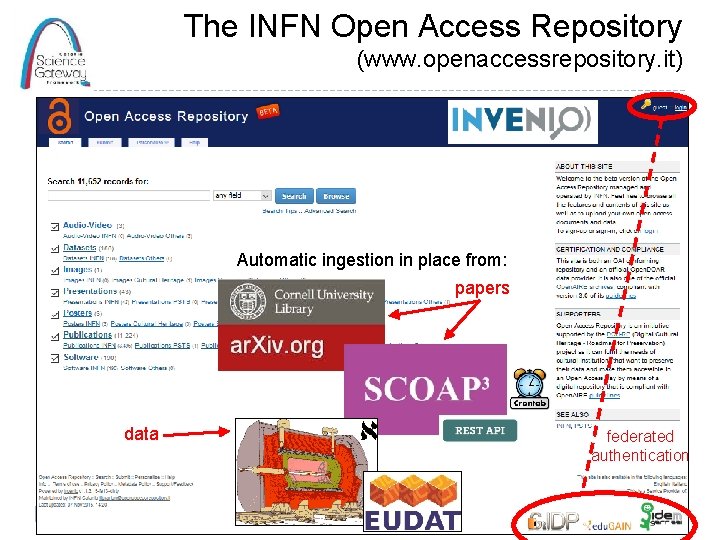

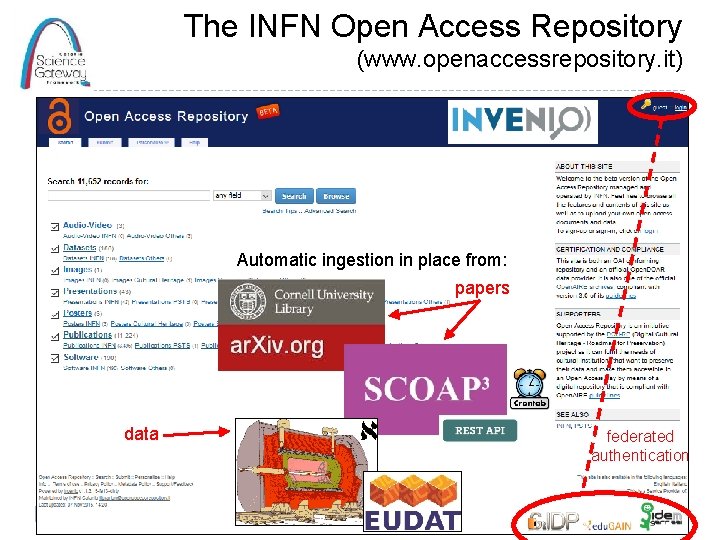

The INFN Open Access Repository (www.openaccessrepository.it)

Automatic ingestion in place from:

Screenshot labels: papers, data, federated authentication

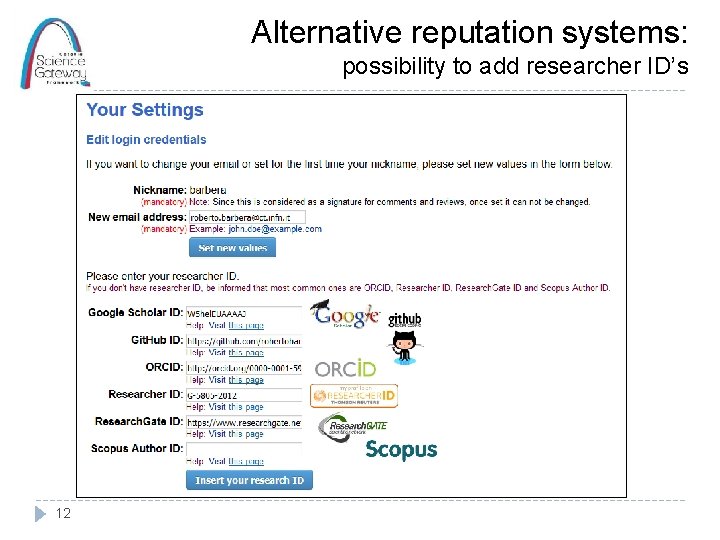

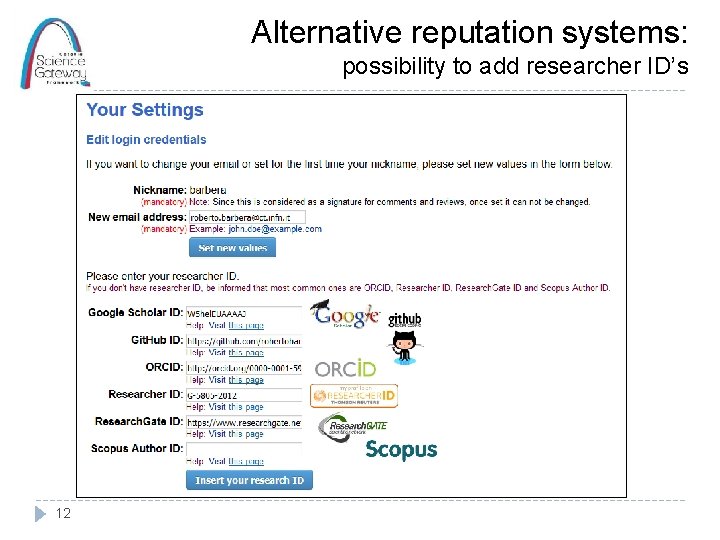

Alternative reputation systems: possibility to add researcher IDs

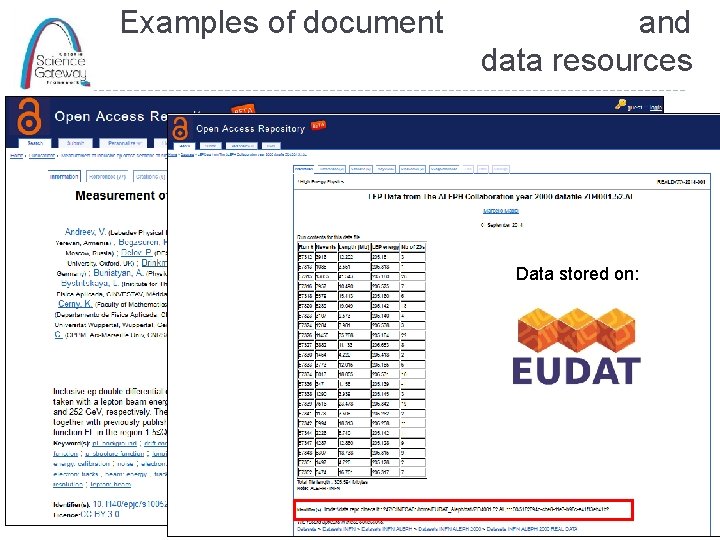

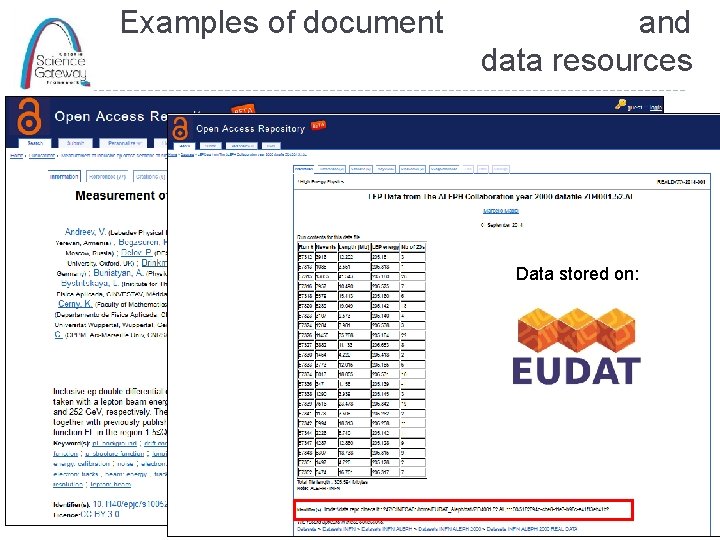

Examples of document and data resources

Data stored on:

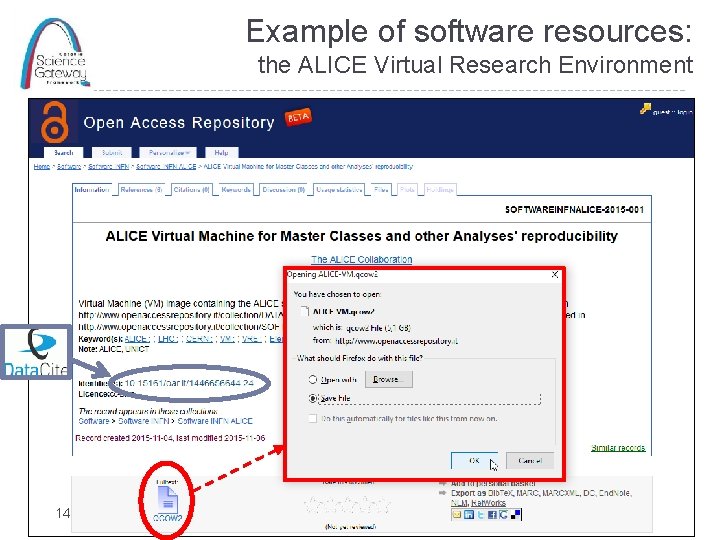

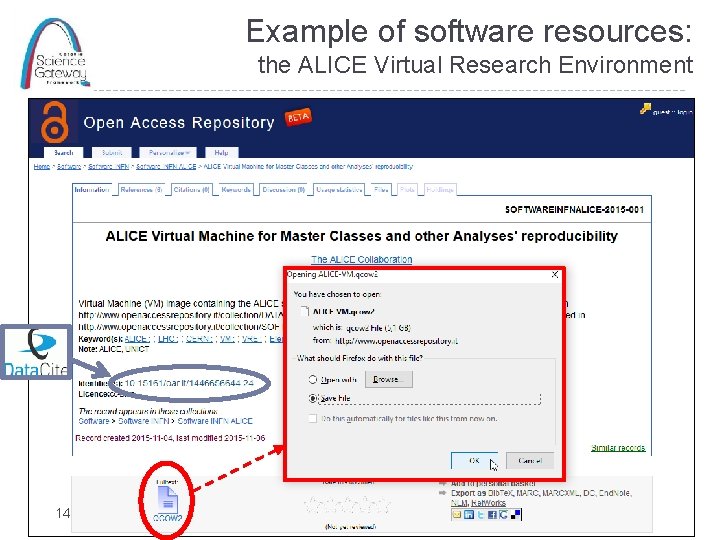

Example of software resources: the ALICE Virtual Research Environment

Example of research “package”

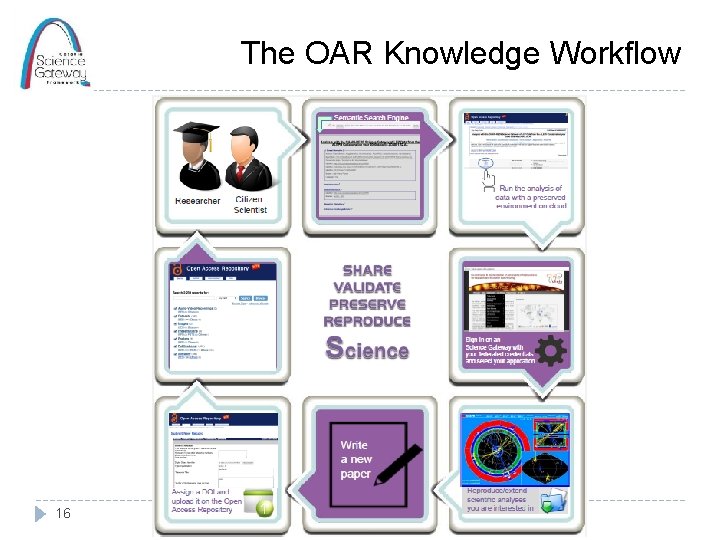

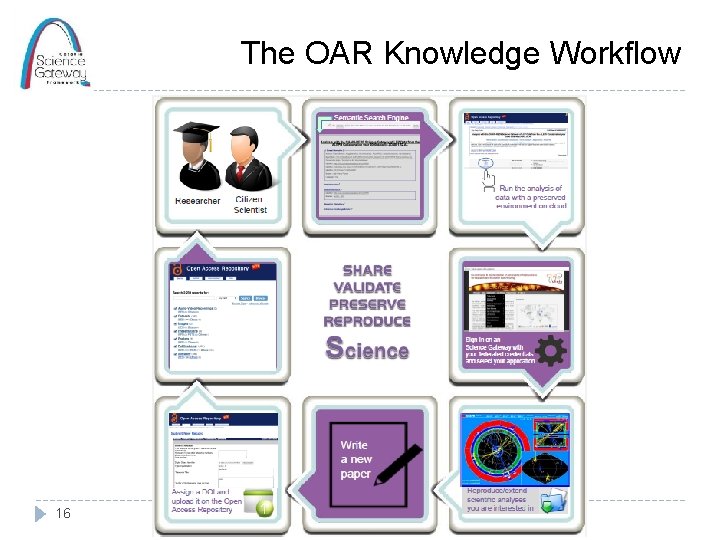

The OAR Knowledge Workflow

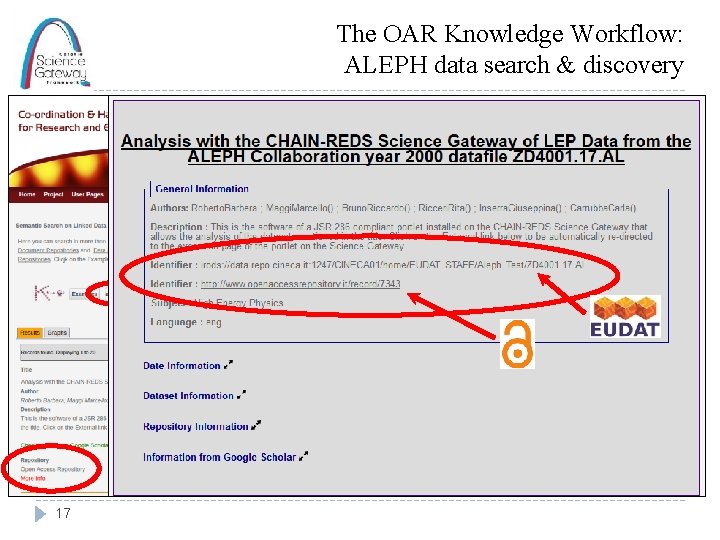

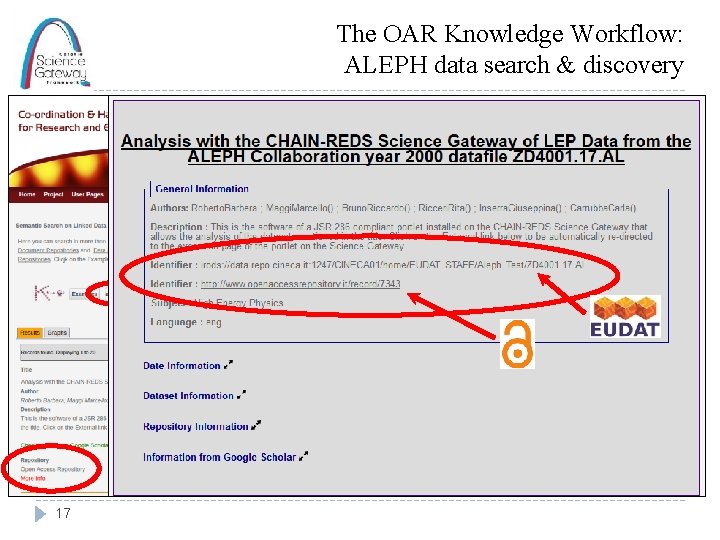

The OAR Knowledge Workflow: ALEPH data search & discovery

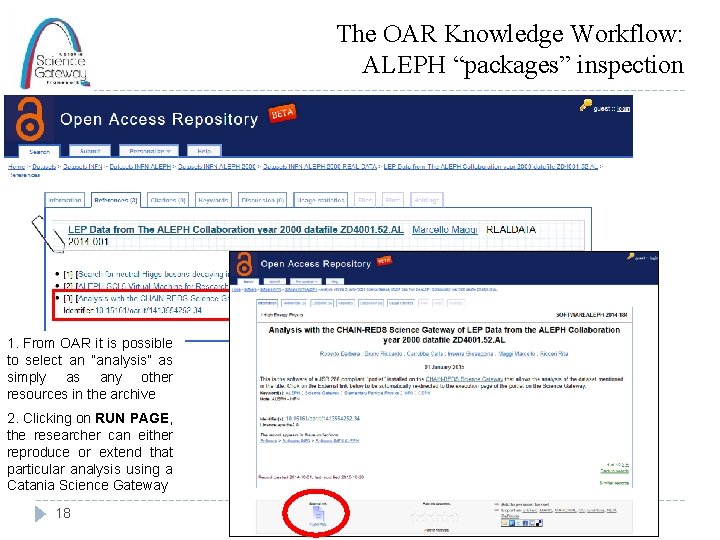

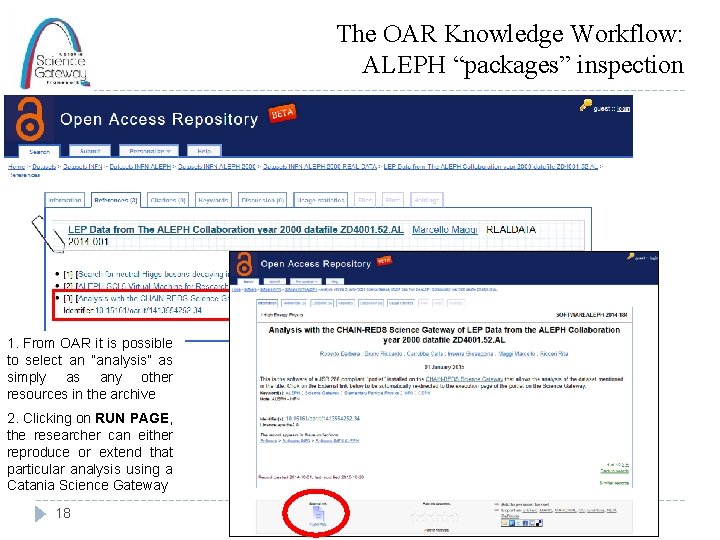

The OAR Knowledge Workflow: ALEPH “packages” inspection

1. From the OAR it is possible to select an “analysis” as simply as any other resource in the archive
2. Clicking on RUN PAGE, the researcher can either reproduce or extend that particular analysis using a Catania Science Gateway

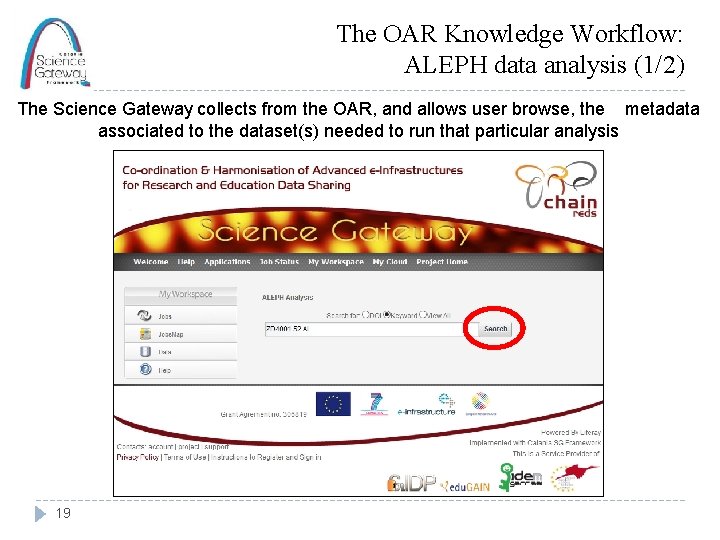

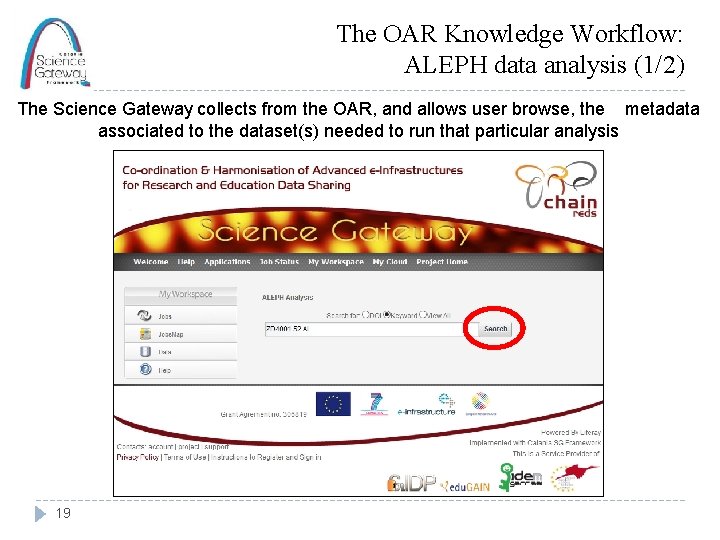

The OAR Knowledge Workflow: ALEPH data analysis (1/2)

The Science Gateway collects from the OAR, and lets the user browse, the metadata associated with the dataset(s) needed to run that particular analysis

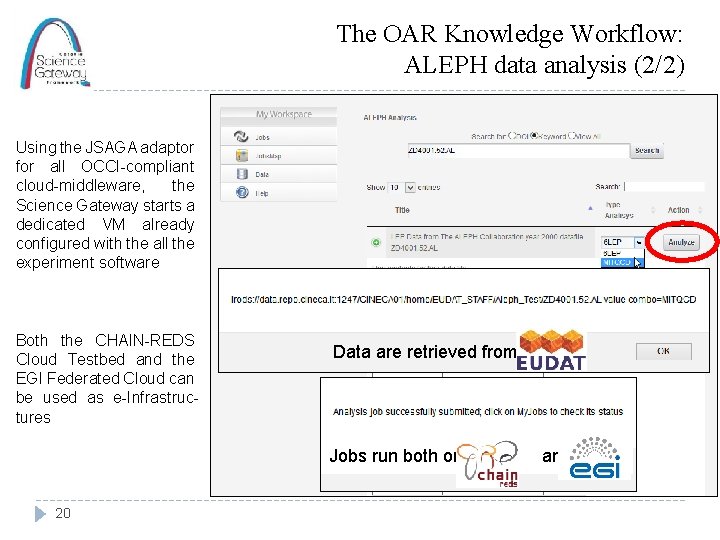

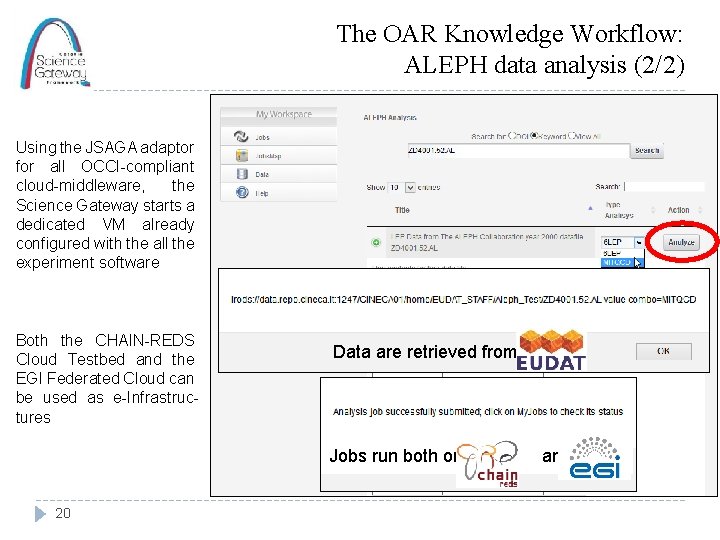

The OAR Knowledge Workflow: ALEPH data analysis (2/2)

- Using the JSAGA adaptor for all OCCI-compliant cloud middleware, the Science Gateway starts a dedicated VM already configured with all the experiment software
- Both the CHAIN-REDS Cloud Testbed and the EGI Federated Cloud can be used as e-Infrastructures
- Data are retrieved from …
- Jobs run both on … and …
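To give a feel for what starting a VM on an OCCI-compliant cloud involves, the sketch below builds (but does not send) the kind of HTTP request an OCCI client issues to create a compute resource. It is not the JSAGA adaptor, which is a Java component; it only assumes the OCCI 1.1 `text/occi` header rendering. The endpoint URL, the OS template name and its scheme, and the function name are all hypothetical placeholders.

```python
# Scheme of the standard OCCI infrastructure kinds (compute, storage, network).
OCCI_INFRA = "http://schemas.ogf.org/occi/infrastructure#"


def build_occi_compute_request(endpoint, os_tpl, os_tpl_scheme, cores, memory_gb, title):
    """Describe a 'create compute resource' request as plain data.

    Nothing is sent over the network; the caller decides how to issue it.
    """
    headers = [
        ("Content-Type", "text/occi"),
        # The kind of resource being created.
        ("Category", f'compute; scheme="{OCCI_INFRA}"; class="kind"'),
        # Provider-specific mixin selecting the pre-configured VM image.
        ("Category", f'{os_tpl}; scheme="{os_tpl_scheme}"; class="mixin"'),
        ("X-OCCI-Attribute", f'occi.core.title="{title}"'),
        ("X-OCCI-Attribute", f"occi.compute.cores={cores}"),
        ("X-OCCI-Attribute", f"occi.compute.memory={memory_gb}"),
    ]
    return {
        "method": "POST",
        "url": endpoint.rstrip("/") + "/compute/",
        "headers": headers,
    }


# Hypothetical values: a real site publishes its own endpoint and templates.
request = build_occi_compute_request(
    endpoint="https://cloud.example.org:11443",
    os_tpl="experiment_analysis_vm",
    os_tpl_scheme="http://example.org/occi/os_tpl#",
    cores=2,
    memory_gb=4.0,
    title="aleph-analysis",
)
for name, value in request["headers"]:
    print(f"{name}: {value}")
```

Because the request is provider-neutral apart from the template mixin, the same description could in principle target either of the two e-Infrastructures named above, which is the benefit of going through a standard interface.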

Remember: repeatability and reproducibility are not all

«Reusability and extensibility matter!»

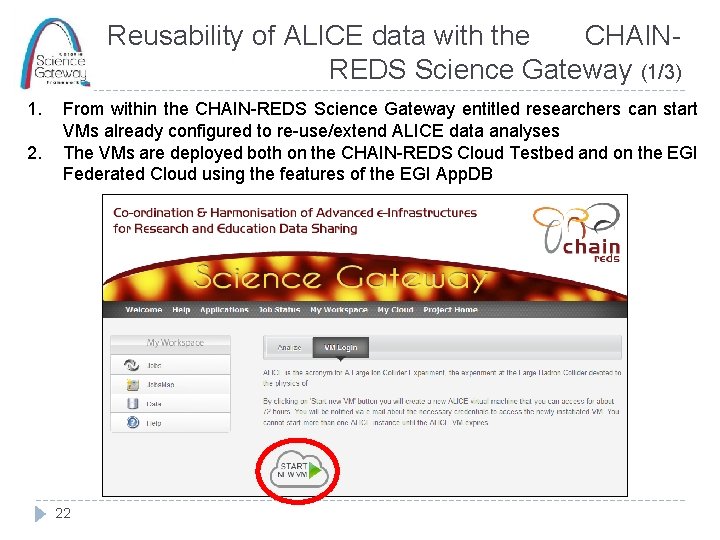

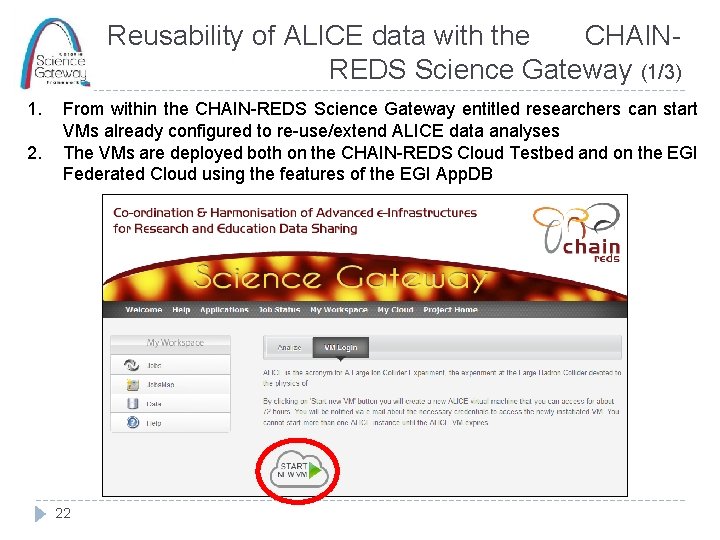

Reusability of ALICE data with the CHAIN-REDS Science Gateway (1/3)

1. From within the CHAIN-REDS Science Gateway, entitled researchers can start VMs already configured to re-use/extend ALICE data analyses
2. The VMs are deployed both on the CHAIN-REDS Cloud Testbed and on the EGI Federated Cloud using the features of the EGI AppDB

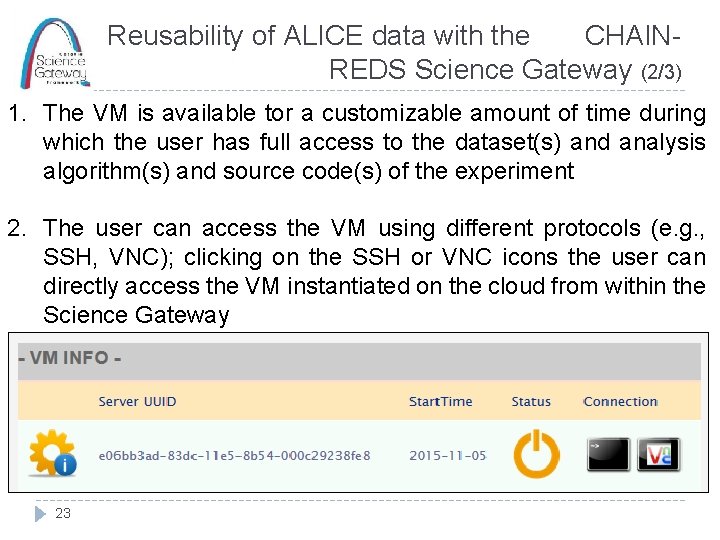

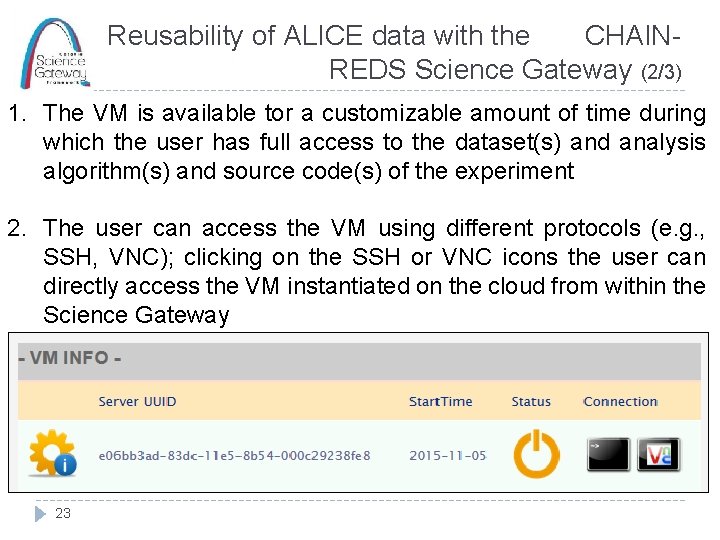

Reusability of ALICE data with the CHAIN-REDS Science Gateway (2/3)

1. The VM is available for a customizable amount of time, during which the user has full access to the dataset(s), analysis algorithm(s) and source code of the experiment
2. The user can access the VM using different protocols (e.g., SSH, VNC); clicking on the SSH or VNC icons, the user can directly access the VM instantiated on the cloud from within the Science Gateway

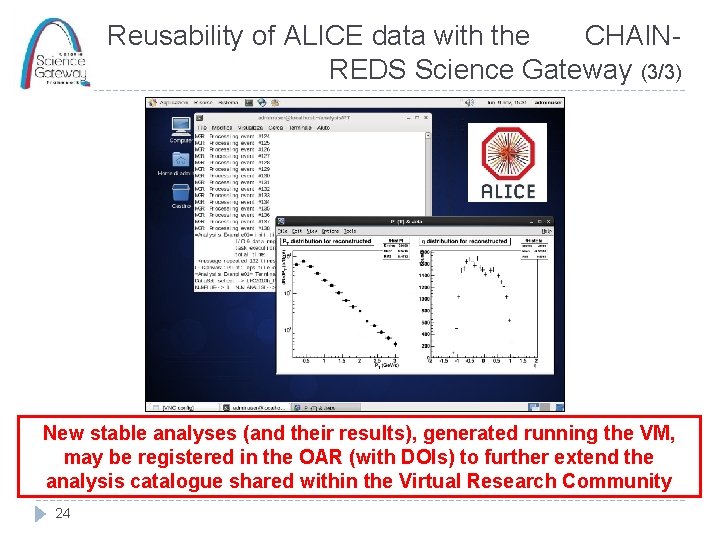

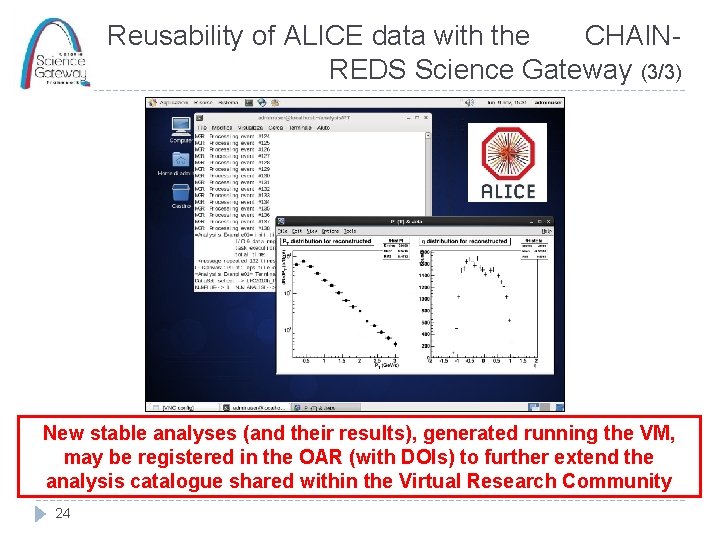

Reusability of ALICE data with the CHAIN-REDS Science Gateway (3/3)

New stable analyses (and their results), generated by running the VM, may be registered in the OAR (with DOIs) to further extend the analysis catalogue shared within the Virtual Research Community

“Whose science is this?”

How to provide authorship to research products?

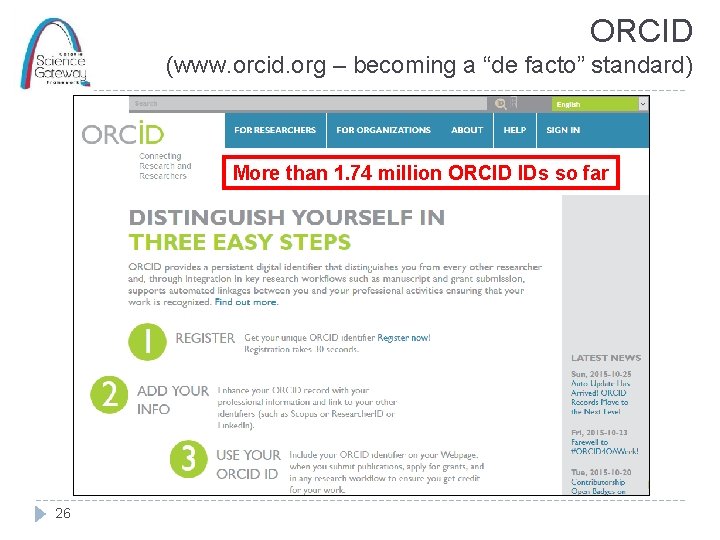

ORCID (www.orcid.org), becoming a “de facto” standard

More than 1.74 million ORCID IDs so far
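A repository that lets researchers attach an ORCID iD to their products can check the identifier before storing it. An ORCID iD is 16 characters long and its last character is a check character computed with the ISO 7064 MOD 11-2 algorithm. The sketch below is one way to validate that check character; the function names are illustrative and are not taken from the OAR.

```python
def orcid_check_character(base_digits):
    """Compute the ISO 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid):
    """Return True if the string is a well-formed ORCID iD with a correct check character."""
    compact = orcid.replace("-", "").upper()
    if len(compact) != 16:
        return False
    base, check = compact[:15], compact[15]
    if not (base.isascii() and base.isdigit()):
        return False
    return orcid_check_character(base) == check


# 0000-0002-1825-0097 is the well-known sample iD used in ORCID documentation.
print(is_valid_orcid("0000-0002-1825-0097"))
print(is_valid_orcid("0000-0002-1825-0098"))
```

The check only catches typing errors; it does not confirm that the iD is registered or that it belongs to the person depositing the product.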

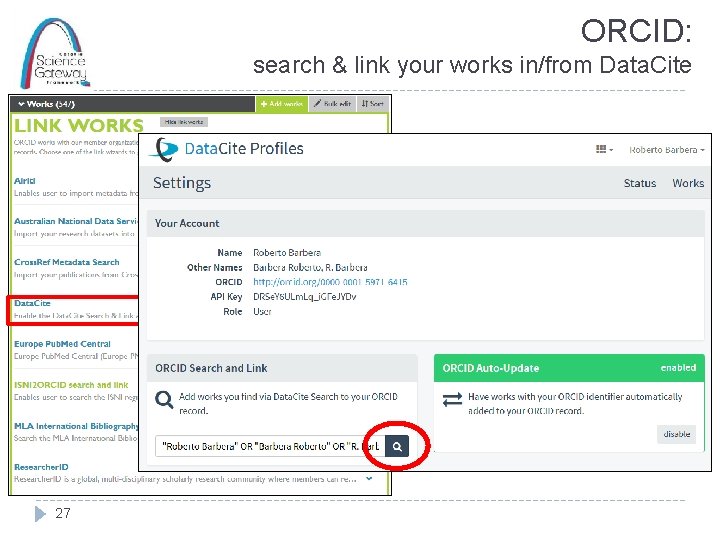

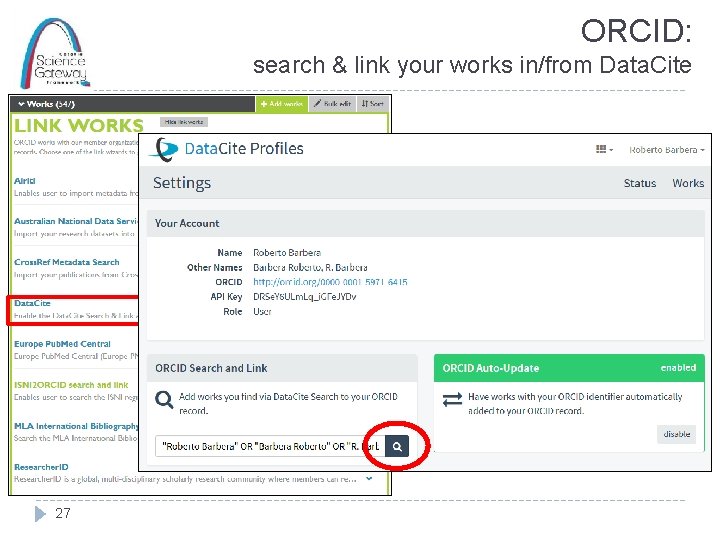

ORCID: search & link your works in/from DataCite

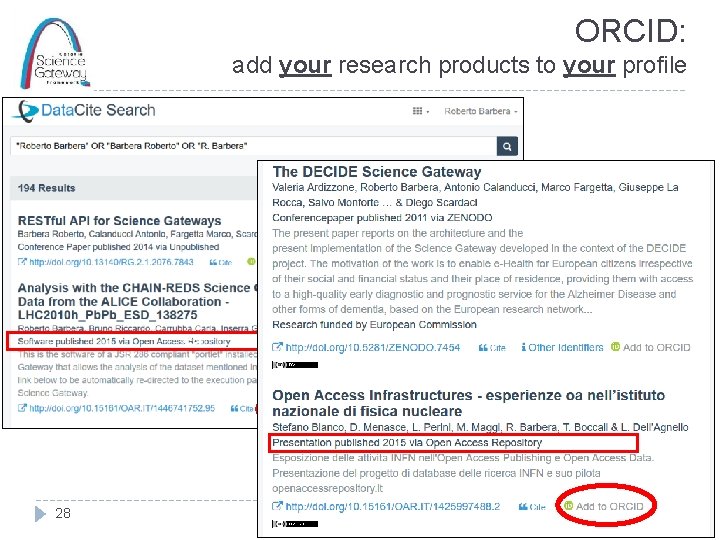

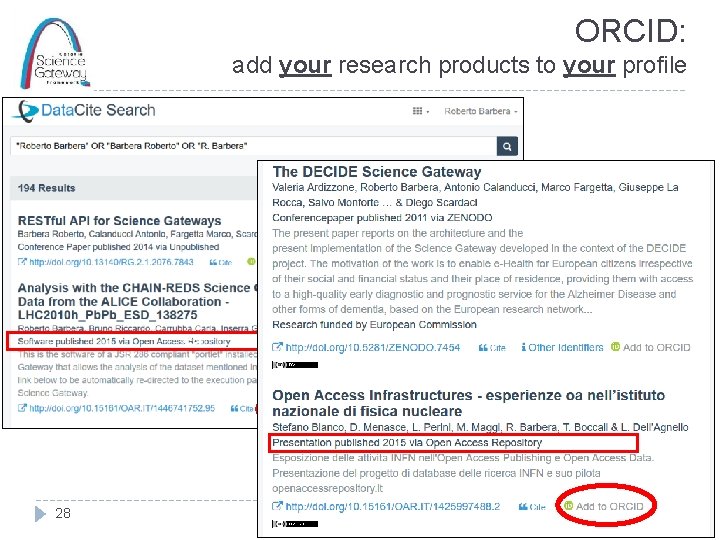

ORCID: add your research products to your profile

Summary and conclusions

- The Open Science vision can be implemented only if the “openness” paradigm becomes pervasive in research
- Science outputs’ reproducibility, but also re-usability and extensibility, are key to walking the “knowledge path” in both directions
- The INFN Open Access Repository is a pilot knowledge preservation repository meant to serve both researchers and citizen scientists
- What makes the INFN OAR different from other repositories is:
  - Its capability to connect to Science Gateways and exploit cloud resources worldwide to easily reproduce/extend scientific analyses
  - Its capability to provide full authorship (and hence credit, reputation and visibility) for all products of a scientist; this is key for a correct evaluation of research (…and of researchers)

Thank you!