
DAQ Status and Prospects
Frans Meijers, Wed 4 November 2009, Readiness for Data-Taking review

Outline: Intro • Global Running • DAQ • Readiness for Data Taking

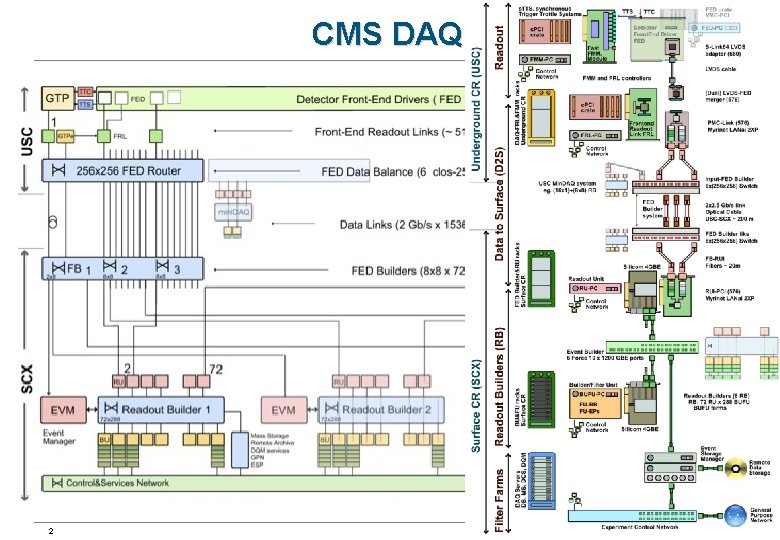

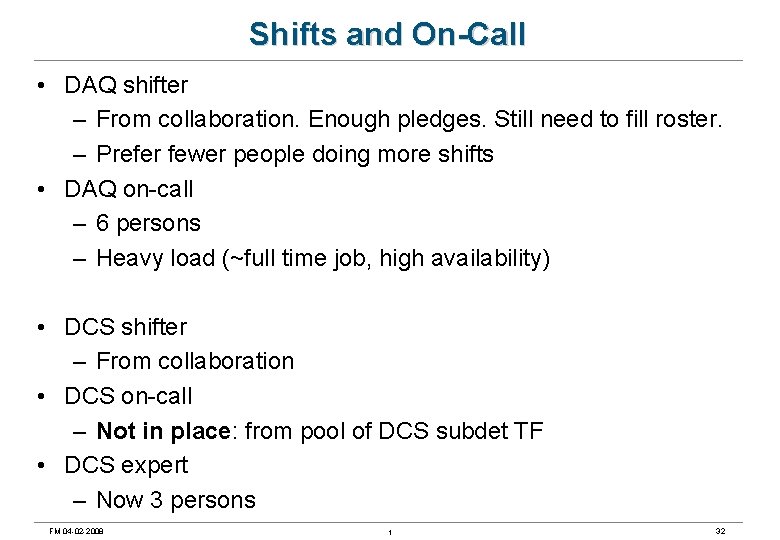

CMS DAQ

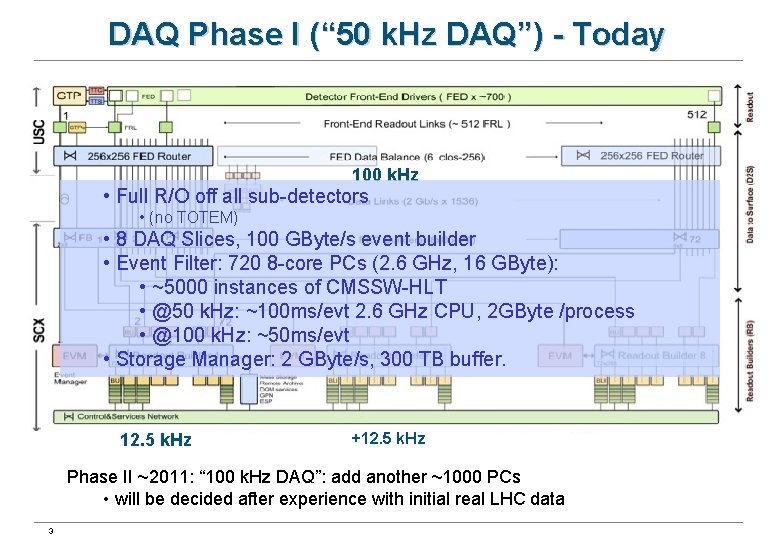

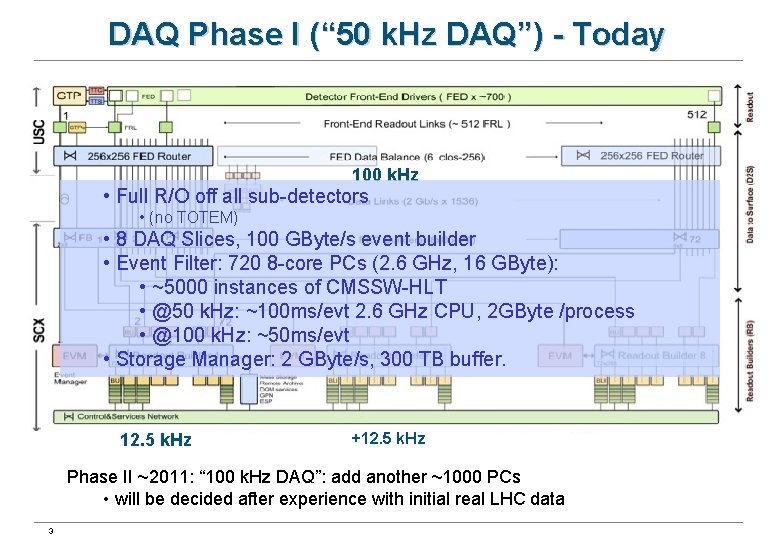

DAQ Phase I ("50 kHz DAQ"), today 100 kHz
• Full readout of all sub-detectors (no TOTEM)
• 8 DAQ slices, 100 GByte/s event builder
• Event Filter: 720 8-core PCs (2.6 GHz, 16 GByte):
  – ~5000 instances of CMSSW-HLT
  – @50 kHz: ~100 ms/evt on a 2.6 GHz CPU, 2 GByte/process
  – @100 kHz: ~50 ms/evt
• Storage Manager: 2 GByte/s, 300 TB buffer
12.5 kHz + 12.5 kHz
Phase II (~2011): "100 kHz DAQ": add another ~1000 PCs
• Will be decided after experience with initial real LHC data
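The Event Filter numbers follow from a simple throughput budget: with N HLT instances working in parallel and a Level-1 accept rate R, each instance can spend on average N/R seconds per event. The sketch below reproduces the slide's figures, assuming ~5000 instances, one HLT process per core, and perfect load balancing (no idle or I/O overhead); the function names are illustrative only.

```python
# Per-event HLT time budget, assuming all HLT instances run in parallel
# and events are perfectly load-balanced (no idle or I/O overhead).


def hlt_budget_ms(n_instances: int, l1_rate_hz: float) -> float:
    """Average processing time available per event, in milliseconds."""
    return n_instances / l1_rate_hz * 1000.0


def memory_per_process_gb(node_memory_gb: float, cores_per_node: int) -> float:
    """Memory available per HLT process if one process runs on each core."""
    return node_memory_gb / cores_per_node


if __name__ == "__main__":
    for rate_hz in (50_000, 100_000):
        print(f"{rate_hz / 1000:.0f} kHz -> {hlt_budget_ms(5000, rate_hz):.0f} ms/evt")
    print(f"{memory_per_process_gb(16, 8):.0f} GByte/process")
```

Doubling the Level-1 rate from 50 kHz to 100 kHz halves the budget from ~100 ms to ~50 ms per event, which is why Phase II foresees adding PCs rather than relying on faster HLT code alone.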

GLOBAL RUNNING

FM 04-02-2008

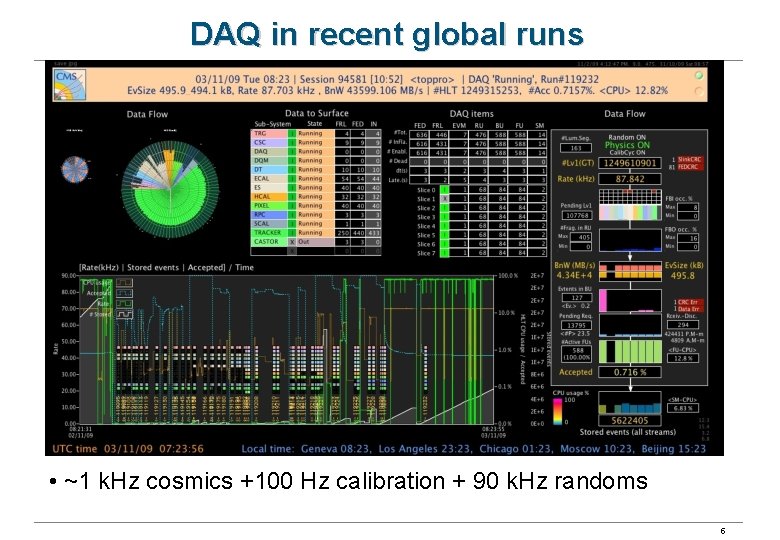

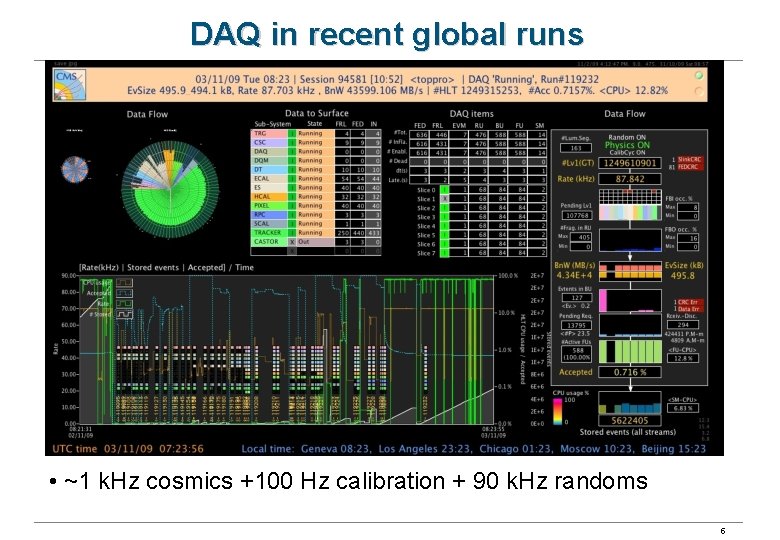

DAQ in recent global runs
• ~1 kHz cosmics + 100 Hz calibration + 90 kHz randoms

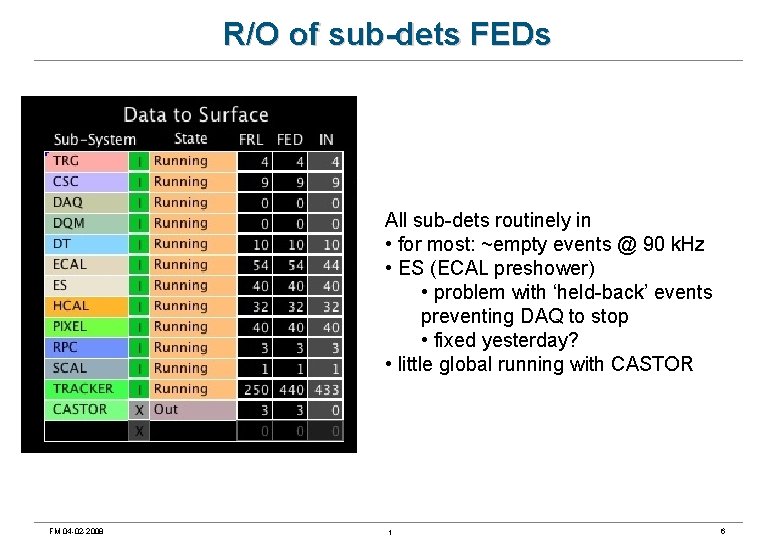

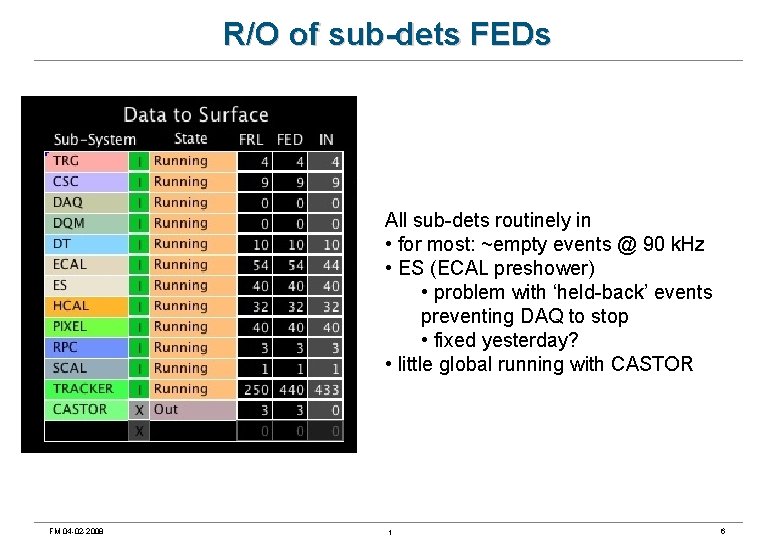

Readout of sub-detector FEDs
All sub-detectors routinely in
• For most: ~empty events @ 90 kHz
• ES (ECAL preshower):
  – problem with 'held-back' events preventing the DAQ from stopping
  – fixed yesterday?
• Little global running with CASTOR

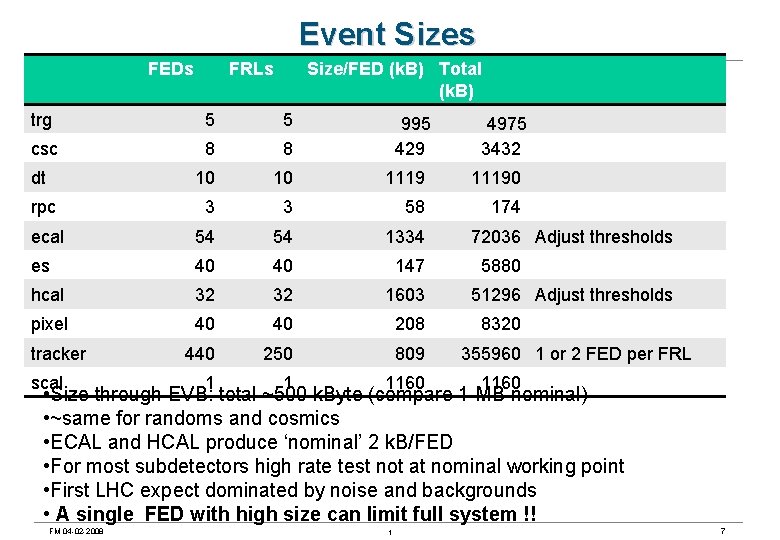

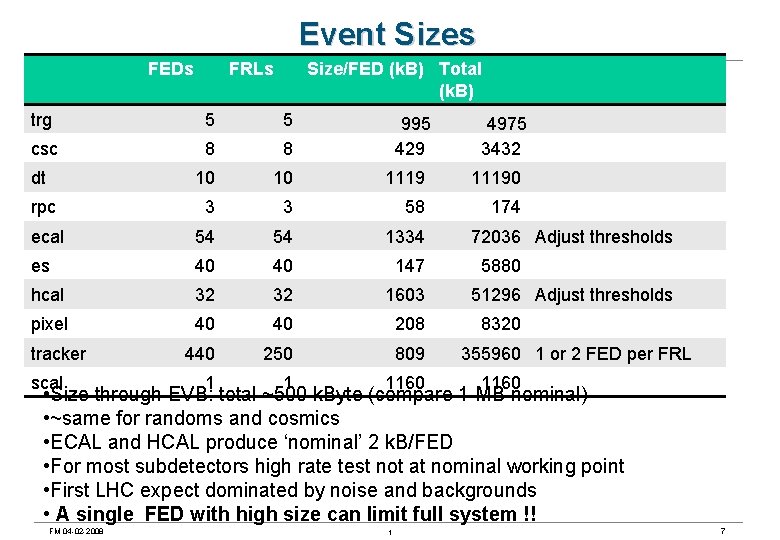

Event Sizes

| Sub-det | FEDs | FRLs | Size/FED (bytes) | Total (bytes) | Note |
|---|---|---|---|---|---|
| trg | 5 | 5 | 995 | 4975 | |
| csc | 8 | 8 | 429 | 3432 | |
| dt | 10 | 10 | | 11190 | |
| rpc | 3 | 3 | 58 | 174 | |
| ecal | 54 | 54 | 1334 | 72036 | Adjust thresholds |
| es | 40 | 40 | 147 | 5880 | |
| hcal | 32 | 32 | 1603 | 51296 | Adjust thresholds |
| pixel | 40 | 40 | 208 | 8320 | |
| tracker | 440 | 250 | 809 | 355960 | 1 or 2 FEDs per FRL |
| scal | 1 | 1 | 1160 | 1160 | |

• Size through EVB: total ~500 kByte (compare 1 MB nominal)
• ~Same for randoms and cosmics
• ECAL and HCAL produce 'nominal' 2 kB/FED
• For most subdetectors the high-rate test was not at the nominal working point
• First LHC data expected to be dominated by noise and backgrounds
• A single FED with a large event size can limit the full system!
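The event-size numbers on this slide, and the warning that one large FED can limit the whole system, can be checked with a short calculation. This sketch sums FEDs × bytes per FED, converts the total into event-builder throughput at a given Level-1 rate, and finds the sub-detector whose fragments would saturate a link first. Two inputs are assumptions rather than slide data: the per-FED link bandwidth of 200 MByte/s (nominal 2 kB/FED at 100 kHz), and the DT size per FED, derived as 11190/10.

```python
# Event sizes from the slide: sub-detector -> (number of FEDs, bytes per FED).
# DT bytes per FED is derived as total / FEDs = 11190 / 10 (not listed directly).
EVENT_SIZES = {
    "trg": (5, 995), "csc": (8, 429), "dt": (10, 1119), "rpc": (3, 58),
    "ecal": (54, 1334), "es": (40, 147), "hcal": (32, 1603),
    "pixel": (40, 208), "tracker": (440, 809), "scal": (1, 1160),
}

# ASSUMPTION: each FED link sustains ~200 MByte/s (nominal 2 kB/FED x 100 kHz).
LINK_BYTES_PER_S = 200e6


def total_event_bytes(sizes: dict) -> int:
    """Sum of n_feds * bytes_per_fed over all sub-detectors."""
    return sum(n * size for n, size in sizes.values())


def evb_throughput_gbyte_s(sizes: dict, l1_rate_hz: float) -> float:
    """Event-builder throughput in GByte/s at the given L1 accept rate."""
    return total_event_bytes(sizes) * l1_rate_hz / 1e9


def max_rate_hz(fed_bytes: float, link_bytes_per_s: float = LINK_BYTES_PER_S) -> float:
    """Highest L1 rate a single FED link can sustain for a given fragment size."""
    return link_bytes_per_s / fed_bytes


def limiting_subdetector(sizes: dict) -> tuple:
    """Sub-detector with the largest per-FED fragment, i.e. the lowest max rate."""
    name = max(sizes, key=lambda k: sizes[k][1])
    return name, max_rate_hz(sizes[name][1])


if __name__ == "__main__":
    print(total_event_bytes(EVENT_SIZES))                # 514423 bytes, i.e. ~500 kByte
    print(evb_throughput_gbyte_s(EVENT_SIZES, 100_000))  # ~51.4 GByte/s at 100 kHz
    print(limiting_subdetector(EVENT_SIZES))             # hcal, ~125 kHz
    print(max_rate_hz(4000))                             # a single 4 kB FED caps at 50 kHz
```

Because every event needs a fragment from every FED, the system rate is bounded by the slowest link: under the assumed bandwidth, a single noisy FED sending 4 kB fragments would cap the full DAQ at 50 kHz even though the total event size is well below nominal.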

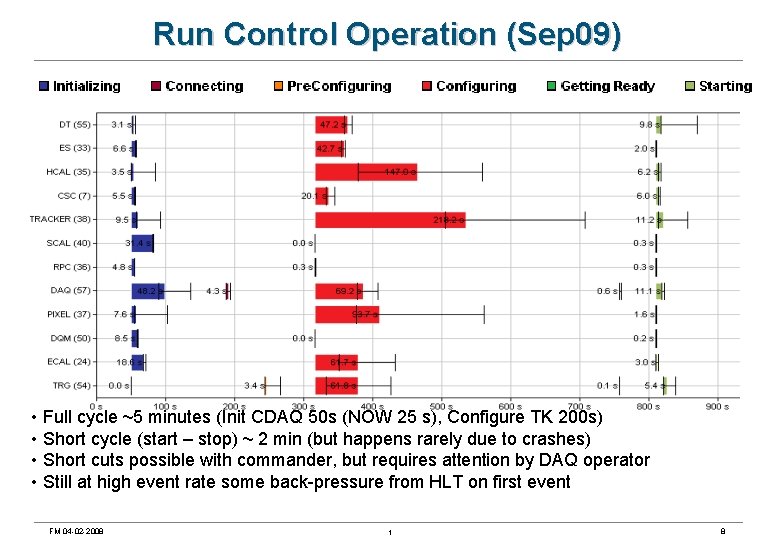

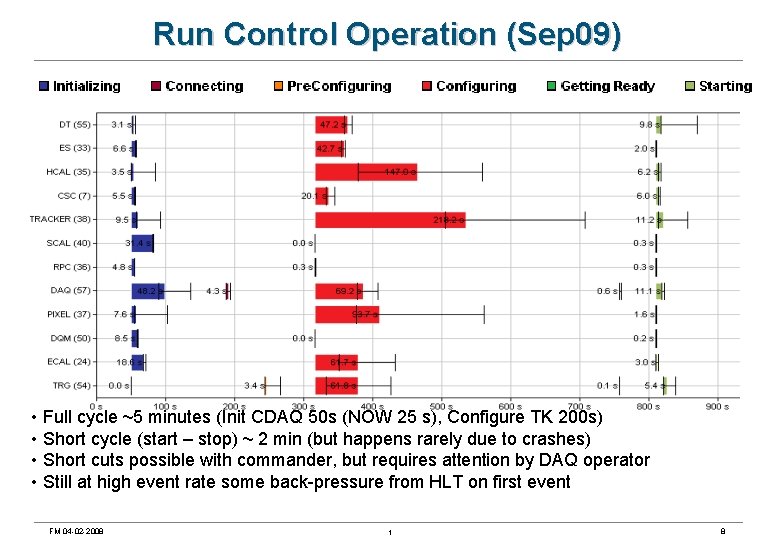

Run Control Operation (Sep 09)
• Full cycle ~5 minutes (Init CDAQ 50 s, now 25 s; Configure TK 200 s)
• Short cycle (start–stop) ~2 min (but rarely possible, due to crashes)
• Shortcuts possible with the commander, but they require attention from the DAQ operator
• At high event rate there is still some back-pressure from the HLT on the first event

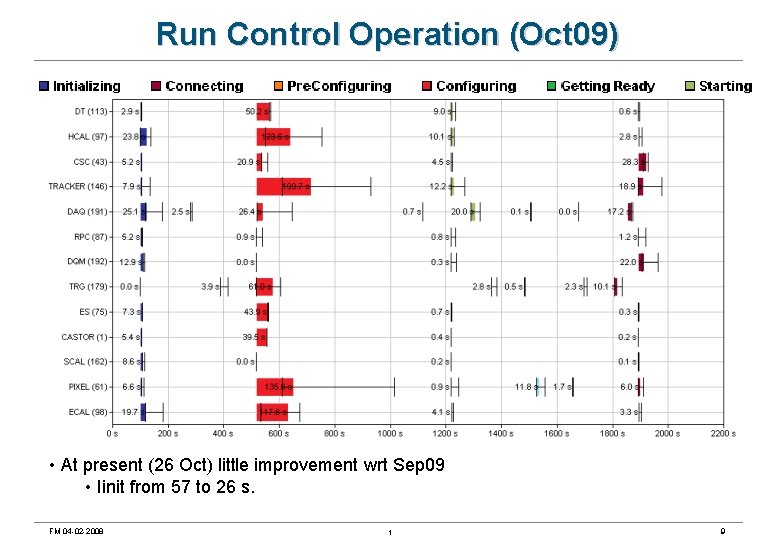

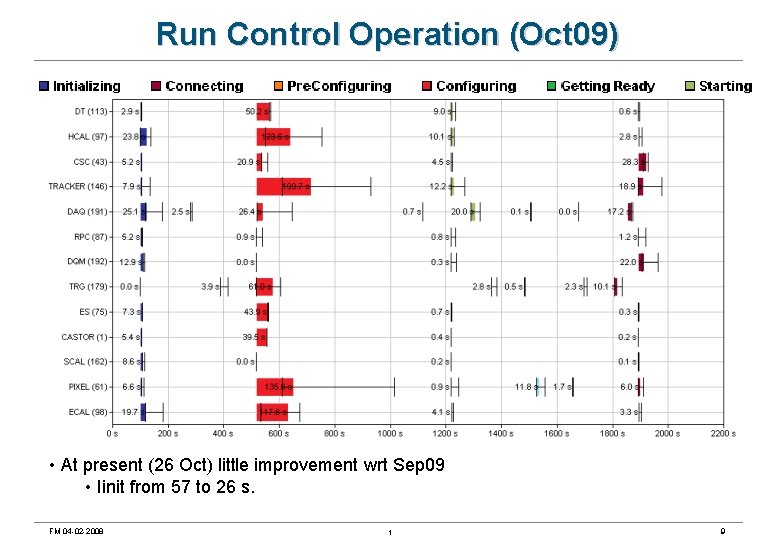

Run Control Operation (Oct 09)
• At present (26 Oct) little improvement w.r.t. Sep 09
• Init from 57 s to 26 s

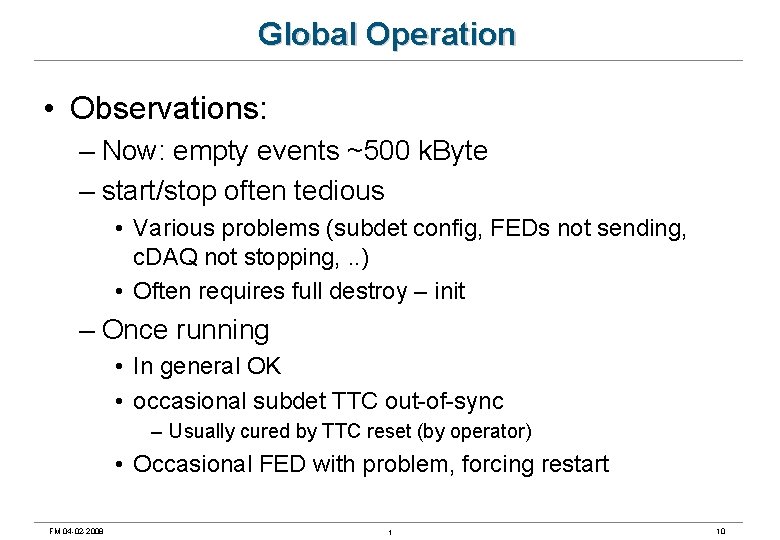

Global Operation
• Observations:
  – Now: empty events ~500 kByte
  – Start/stop often tedious
    • Various problems (subdet config, FEDs not sending, cDAQ not stopping, ...)
    • Often requires full destroy – init
  – Once running
    • In general OK
    • Occasional subdet TTC out-of-sync, usually cured by TTC reset (by operator)
    • Occasional FED with problem, forcing restart
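The start/stop behaviour described here, a short stop-start cycle when things go well and a full destroy-init when they do not, can be pictured as a small finite-state machine. The sketch below is hypothetical: the state names, command names and transition table are illustrative and are not the actual CMS Run Control interface.

```python
from enum import Enum, auto


class State(Enum):
    INITIAL = auto()     # applications not yet created
    HALTED = auto()      # applications initialised
    CONFIGURED = auto()  # hardware/software configured, ready to start
    RUNNING = auto()


# HYPOTHETICAL command table: command -> (states it is allowed from, resulting state).
TRANSITIONS = {
    "init": ({State.INITIAL}, State.HALTED),
    "configure": ({State.HALTED}, State.CONFIGURED),
    "start": ({State.CONFIGURED}, State.RUNNING),
    "stop": ({State.RUNNING}, State.CONFIGURED),
    "destroy": (set(State), State.INITIAL),  # allowed from any state
}


class RunControl:
    def __init__(self) -> None:
        self.state = State.INITIAL
        self.log: list[str] = []

    def send(self, command: str) -> State:
        """Apply one command, rejecting it if the current state does not allow it."""
        allowed, target = TRANSITIONS[command]
        if self.state not in allowed:
            raise ValueError(f"{command!r} not allowed in state {self.state.name}")
        self.state = target
        self.log.append(command)
        return self.state

    def restart(self, full: bool) -> None:
        """Short cycle (stop, start) or full cycle (destroy, init, configure, start)."""
        steps = ["destroy", "init", "configure", "start"] if full else ["stop", "start"]
        for step in steps:
            self.send(step)


if __name__ == "__main__":
    rc = RunControl()
    for cmd in ("init", "configure", "start"):
        rc.send(cmd)
    rc.restart(full=False)  # cheap path, ~2 min on the Sep 09 slide
    rc.restart(full=True)   # fallback after a crash, ~5 min on the Sep 09 slide
    print(rc.state.name, rc.log)
```

The design choice to make `destroy` legal from any state mirrors the observation that a stuck component is usually cleared by tearing everything down; the cost is that the expensive init and configure steps must then be repeated.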

CENTRAL DAQ

Network
• GPN and private network (dot-cms)
• Status: OK
• Support by IT
• Note:
  – during the Xmas break no configuration changes are possible
  – from the Green Barrack, connection to the CMS private network is possible

Cluster + Network
• Operates independently of the CERN campus network
• Network structure: private network + headnodes that also have access to the campus network
• PCs: ~2000 servers, ~100 desktops
• Linux SLC 4
• Cluster services:
  – NTP, DNS, DHCP, Kerberos, LDAP
  – NAS filer for home directories and projects
• Cluster monitoring with Nagios
• Software packaged in RPM
• Quattor for system installation and configuration
  – can install a PC from scratch in ~2 min, the whole cluster in 1 hour
• Windows cluster of ~100 nodes for DCS

Cluster – Progress and Prospects
• Purchased a few 'spare' PE 1950 and PE 2950
  – Dell models with PCI-X (vmepc, Myrinet) can no longer be bought
On-going:
• Re-arrangement of server nodes and headnodes
• Extending the SCX 5 control room (~continuously)
• Consolidation of sub-detector computing: storage, PC models
  – Use of the central filer instead of local disks, e.g. CSC local DAQ farm
• Security
• SLC 5

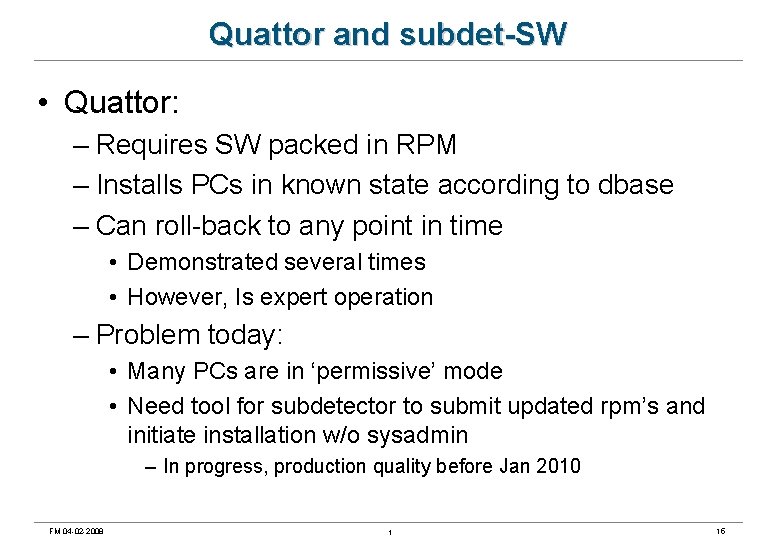

Quattor and subdet-SW
• Quattor:
  – Requires SW packaged in RPM
  – Installs PCs in a known state according to the database
  – Can roll back to any point in time; demonstrated several times
  – However, this is an expert operation
• Problem today:
  – Many PCs are in 'permissive' mode
  – Need a tool for subdetectors to submit updated RPMs and initiate installation without a sysadmin
  – In progress; production quality before Jan 2010

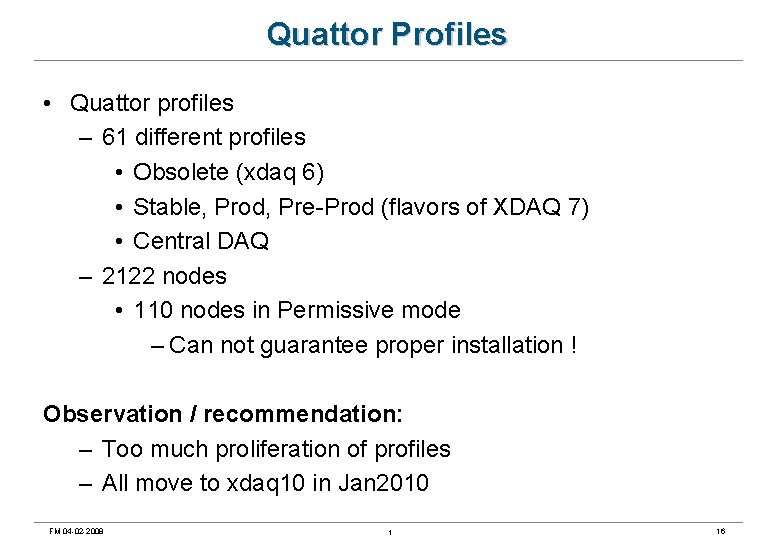

Quattor Profiles
• Quattor profiles: 61 different profiles
  – Obsolete (xdaq 6)
  – Stable, Prod, Pre-Prod (flavors of XDAQ 7)
• Central DAQ: 2122 nodes
  – 110 nodes in permissive mode: cannot guarantee proper installation!
Observation / recommendation:
  – Too much proliferation of profiles
  – All move to xdaq 10 in Jan 2010

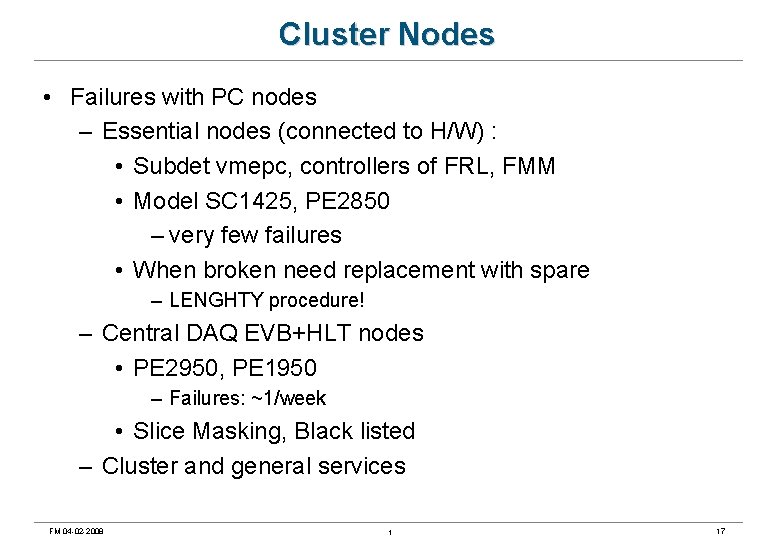

Cluster Nodes
• Failures with PC nodes
  – Essential nodes (connected to H/W): subdet vmepc, controllers of FRL, FMM
    • Models SC 1425, PE 2850: very few failures
    • When broken, need replacement with a spare: LENGTHY procedure!
  – Central DAQ EVB+HLT nodes
    • PE 2950, PE 1950: failures ~1/week
    • Slice masking, blacklisting
  – Cluster and general services

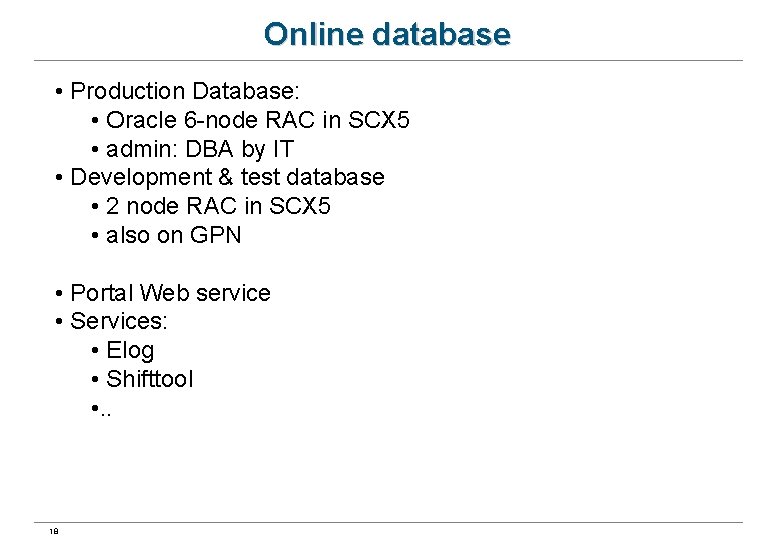

Online database
• Production database:
  – Oracle 6-node RAC in SCX 5
  – admin: DBA by IT
• Development & test database:
  – 2-node RAC in SCX 5
  – also on GPN
• Portal web service
• Services:
  – Elog
  – Shifttool
  – ...

Run Control
• Progress:
  – Ability to mask DAQ slices
    • DAQ shifter can disable a DAQ slice for problems from the RU onwards; requires destroy and re-init
    • Does NOT cover a faulty FRL, etc.
  – Improvements in handling FED masking
• Prospects:
  – Some more fault tolerance
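Masking a DAQ slice trades capacity for availability. A minimal sketch, assuming 8 identical slices that each take an equal share of the 100 kHz design rate (12.5 kHz per slice) and that consecutive events are assigned round-robin to the unmasked slices; the function names and the round-robin policy are illustrative assumptions, not a description of the actual event manager.

```python
# ASSUMPTION: 8 identical DAQ slices, each taking an equal share of the
# 100 kHz design rate (12.5 kHz per slice), events assigned round-robin.
N_SLICES = 8
RATE_PER_SLICE_HZ = 100_000 / N_SLICES


def active_slices(masked: set[int]) -> list[int]:
    """Indices of slices that are not masked."""
    return [s for s in range(N_SLICES) if s not in masked]


def capacity_hz(masked: set[int]) -> float:
    """Event-building capacity left after masking the given slices."""
    return len(active_slices(masked)) * RATE_PER_SLICE_HZ


def assign_slices(n_events: int, masked: set[int]) -> list[int]:
    """Round-robin assignment of consecutive events to the unmasked slices."""
    active = active_slices(masked)
    if not active:
        raise ValueError("all DAQ slices are masked")
    return [active[i % len(active)] for i in range(n_events)]


if __name__ == "__main__":
    print(capacity_hz(set()))   # all 8 slices
    print(capacity_hz({3}))     # one slice masked after an RU-side problem
    print(assign_slices(4, {0, 1, 2, 3, 4, 5}))
```

Under these assumptions, masking one slice leaves 87.5 kHz of capacity, so the DAQ keeps running at reduced rate instead of stopping; the slide notes that the mask itself still requires a destroy and re-init.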

Event Filter
Progress:
• Adoption of CMSSW_3
• Streamlining of patching procedure and deployment
  – CMSSW HLT patches via tag-collector
• Rework of EventProcessor
  – More efficient in memory, control, ...
  – Can restart a crashed HLT instance on the fly
In progress:
• HLT counters to RunInfo dBase
  – To be finalized; signal on end-of-LumiSection from EVM

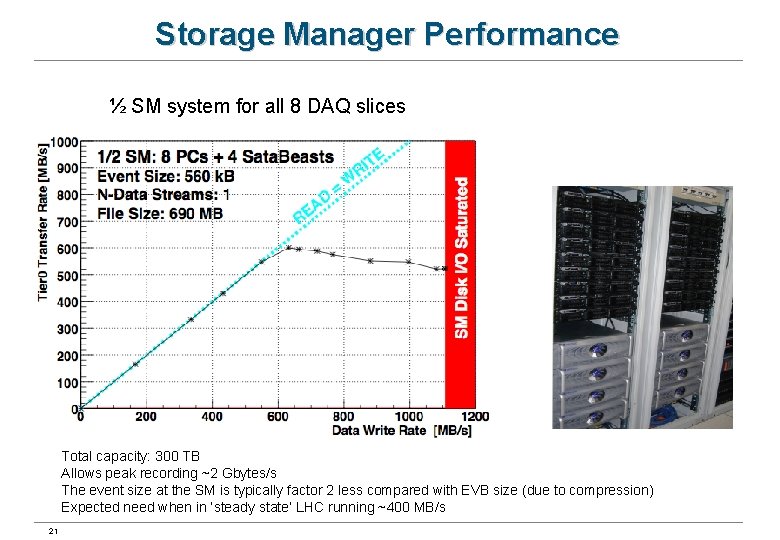

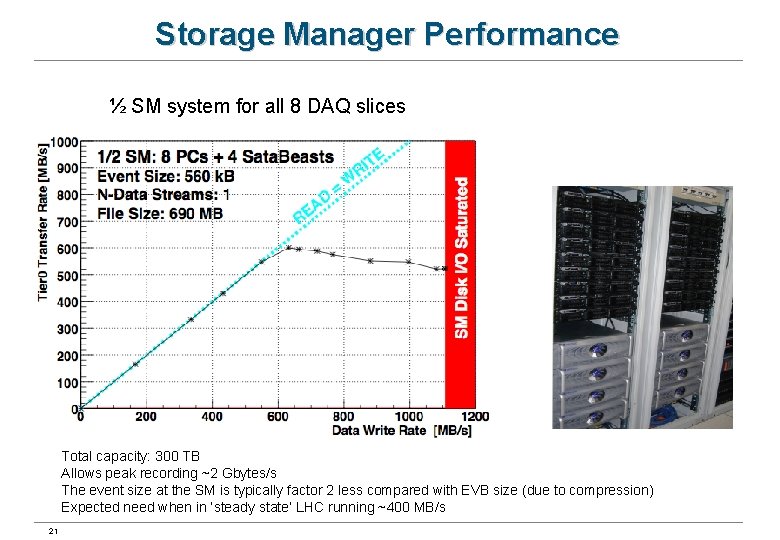

Storage Manager Performance
• ½ SM system for all 8 DAQ slices
• Total capacity: 300 TB
• Allows peak recording of ~2 GByte/s
• The event size at the SM is typically a factor 2 smaller than at the EVB (due to compression)
• Expected need in 'steady state' LHC running: ~400 MB/s
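A quick way to read the Storage Manager numbers is as buffer depth: how long the 300 TB can absorb data if nothing is transferred out. The sketch below assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and a worst case with no outgoing transfer; both are assumptions, not statements from the slide. The compression factor of 2 is taken from the slide.

```python
# ASSUMPTIONS: 1 TB = 1e12 bytes, 1 MB = 1e6 bytes, and no data leaves the
# buffer during the interval (worst case, e.g. outgoing transfer unavailable).


def buffer_hours(capacity_tb: float, write_rate_mbyte_s: float) -> float:
    """Hours of recording the Storage Manager buffer can absorb."""
    seconds = capacity_tb * 1e12 / (write_rate_mbyte_s * 1e6)
    return seconds / 3600.0


def sm_event_size_kbyte(evb_size_kbyte: float, compression_factor: float = 2.0) -> float:
    """Event size written by the SM, given the size through the EVB."""
    return evb_size_kbyte / compression_factor


if __name__ == "__main__":
    print(f"steady state (400 MB/s): {buffer_hours(300, 400):.0f} h")
    print(f"peak (2 GByte/s):        {buffer_hours(300, 2000):.0f} h")
    print(f"SM size of a 500 kB EVB event: {sm_event_size_kbyte(500):.0f} kB")
```

With these assumptions the buffer holds about 208 hours (~8.7 days) at the expected 400 MB/s, but only about 42 hours at the 2 GByte/s peak.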

Issues – Short term (2009)
• Synchronisation
  – L1 configuration and HLT
  – Updates to online dataBase
• Monitoring of L1 and HLT scalers
  – L1 scalers (with deadtime)
    • Collected by EVB event managers
  – Monitoring at LumiSection boundaries

Issues – Medium term (2010)
• Overall control:
  – Automation and communication between DCS, Run Control and LHC status
  – In place:
    • Basic communication mechanism
    • DCS is partitioned according to the RC TTC partitions
  – Missing:
    • Higher-level control
    • Different implementation options
    • "The devil is in the details"

SLC 5/gcc 4.3
• Motivation:
  – Follow 'current' versions: functionality, HW support, security fixes
  – TSG prefers HLT on the same version of SLC 5/gcc as offline
    • SLC 5 has gcc 4.1, LCG uses gcc 4.3.x, CMSSW uses gcc 4.3.4+mod
• Migration to SLC 5/gcc 4.3.x
  – SLC 5 (64-bit OS, 32-bit applications)
  – Private gcc 4.3.x (distributed with CMSSW)
  – Only migrate Event Filter (and SM) nodes
  – Involves:
    • System
    • XDAQ port (coretools and powerpack) and EVB, EvF
    • CMSSW

SLC 5/gcc 4.3 status
• XDAQ release pre-10
  – Subset (components required by EvF, SM)
  – Built with CMSSW gcc 4.3.4
  – NOT tested
• CMSSW online release
  – Build environment operational
  – Build in progress
• My (FM) personal feeling
  – Window of opportunity to migrate in Jan 2010
  – Needs extensive testing (hybrid environment of EVB, monitoring)

Xdaq
• Release and deployment
  – Online SW migrated from CVS+SourceForge to SVN+Trac, from build-8 onwards
  – Global (umbrella) release number, e.g. 9.4
• Release schedule
  – Build-9 (21-Sep-09): improvements for central DAQ, in particular the monitoring infrastructure (collecting many large FLs)
  – Build-10: both SLC 4 and SLC 5/gcc 4.3.4 (partial)
• Sub-detectors
  – Now on build 6 or 7
  – "Suggest" migrating to bld-10 (SLC 4) in Jan 2010

READINESS FOR DATA-TAKING

Splashes
• Low L1 trigger rate ~1 Hz
• HLT accepts all, flagging
• No TK, PX
  – Maybe use a small configuration to minimise risks
• Note:
  – 2008 splashes: CSC FED problem, repaired
• Conclusion: DAQ is ready

Early Collisions
• 900 GeV and possibly 2.2 TeV
• Go up to 8 x 8
  – L1 can accept all events (88 kHz)
  – HLT menu to bring the rate down (budget 50 ms/evt)
  – Put in all 16 Storage Managers
    • 2 GByte/s write (~2 kHz) instantaneous
• Conclusion:
  – DAQ ready (also efficient?)
  – Hope to have robust L1, HLT scalers

Plan for Jan 2010
• Implement and test
  – Tolerate missing FEDs
  – Migrate to xdaq 10, also for all subdetectors (SLC 4)
  – HLT+SM on SLC 5/gcc 4.3.4 if ready and tested in time
• Design and progressively advance
  – Overall DCS/DAQ control

Shifts and On-Call (I)
• Sysadmin on-call
  – Currently 3, increase to 6
• Network
  – IT/CS support
• Database
  – DBA from IT
  – Need CMS-specific first line / filter

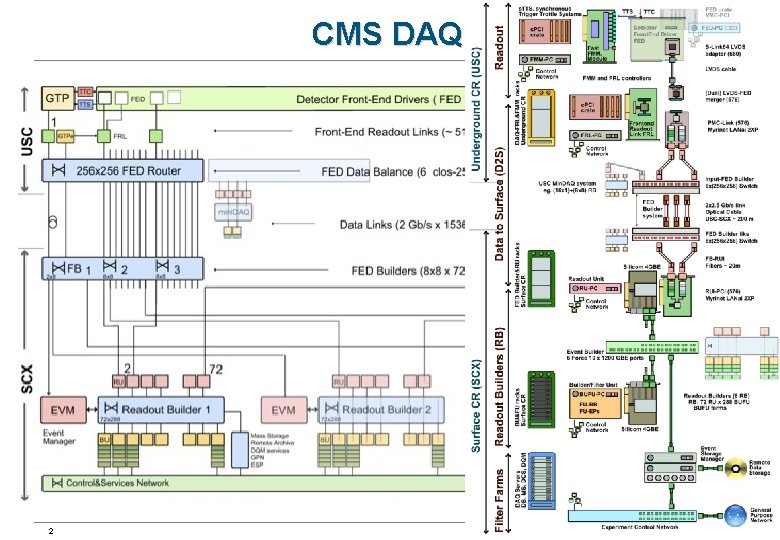

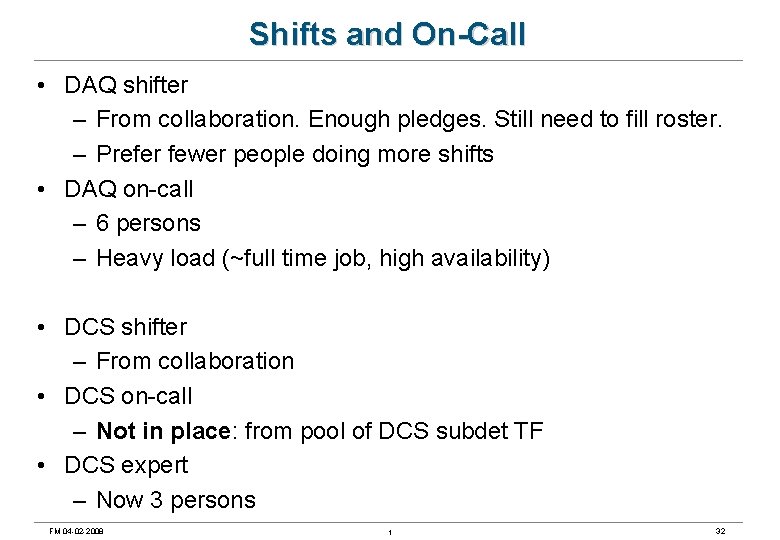

Shifts and On-Call
• DAQ shifter
  – From the collaboration. Enough pledges, but the roster still needs to be filled.
  – Prefer fewer people doing more shifts
• DAQ on-call
  – 6 persons
  – Heavy load (~full-time job, high availability)
• DCS shifter
  – From the collaboration
• DCS on-call
  – Not in place: from pool of DCS subdet TF
• DCS expert
  – Now 3 persons

Frans meijers

Frans meijers Meijers near me

Meijers near me Enno meijers

Enno meijers Quantum computing: progress and prospects

Quantum computing: progress and prospects In paragraph 1 impatient of is best interpreted as meaning

In paragraph 1 impatient of is best interpreted as meaning Prospects of agriculture in bangladesh

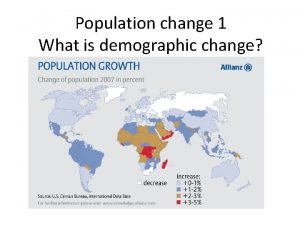

Prospects of agriculture in bangladesh World population prospects

World population prospects Prospects preposition

Prospects preposition Digital data acquisition system block diagram

Digital data acquisition system block diagram Mini daq

Mini daq Bls game

Bls game Simultaneous sampling daq

Simultaneous sampling daq Clark county daq

Clark county daq Daq assistant express vi download

Daq assistant express vi download Daq online

Daq online Frans van waarden

Frans van waarden Descartes frans hals

Descartes frans hals Frans nijhof

Frans nijhof Onbepaald lidwoord frans

Onbepaald lidwoord frans Lees je bijbel bid elke dag frans

Lees je bijbel bid elke dag frans Grote verkeerstoets leerling

Grote verkeerstoets leerling Frans rikhof

Frans rikhof Frans van ittersum

Frans van ittersum Frans kaashoek

Frans kaashoek Eiffeltoren in het frans

Eiffeltoren in het frans Kaartspel frans

Kaartspel frans Frans schuurman

Frans schuurman Frans johansson medici effect

Frans johansson medici effect Duurzaamheid frans

Duurzaamheid frans Slimstampen frans

Slimstampen frans Vgc sport

Vgc sport Uit het oog uit het hart frans

Uit het oog uit het hart frans Frans suijkerbuijk

Frans suijkerbuijk