CUDA Odds and Ends Joseph Kider University of

![Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0; Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0;](https://slidetodoc.com/presentation_image_h/11939cfeb87c28e892eb7e546b69da29/image-41.jpg)

![Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0; Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0;](https://slidetodoc.com/presentation_image_h/11939cfeb87c28e892eb7e546b69da29/image-42.jpg)

![Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0; Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0;](https://slidetodoc.com/presentation_image_h/11939cfeb87c28e892eb7e546b69da29/image-43.jpg)

- Slides: 64

CUDA Odds and Ends Joseph Kider University of Pennsylvania CIS 565 - Fall 2011

Sources Patrick Cozzi Spring 2011 n NVIDIA CUDA Programming Guide n CUDA by Example n Programming Massively Parallel Processors n

Agenda Atomic Functions n Paged-Locked Host Memory n Streams n Graphics Interoperability n

Atomic Functions n What is the value of count if 8 threads execute ++count? __device__ unsigned int count = 0; //. . . ++count;

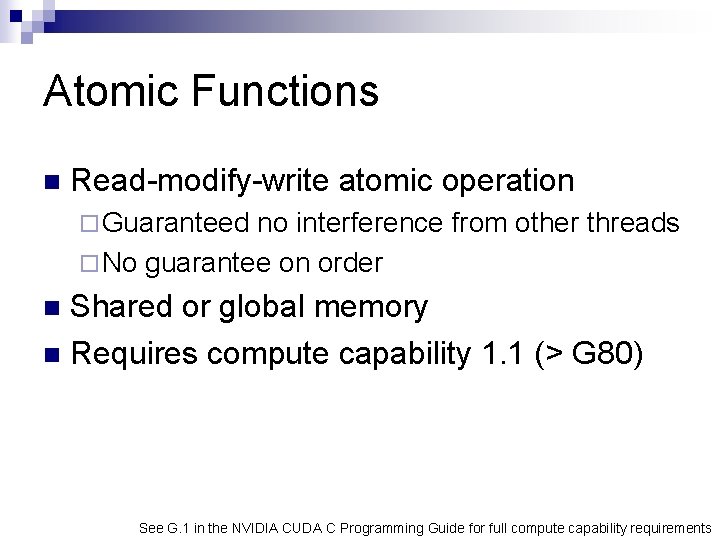

Atomic Functions n Read-modify-write atomic operation ¨ Guaranteed no interference from other threads ¨ No guarantee on order Shared or global memory n Requires compute capability 1. 1 (> G 80) n See G. 1 in the NVIDIA CUDA C Programming Guide for full compute capability requirements

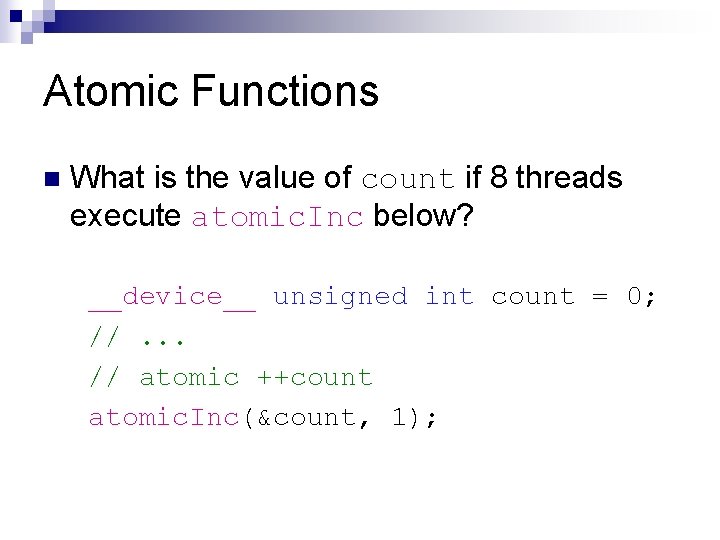

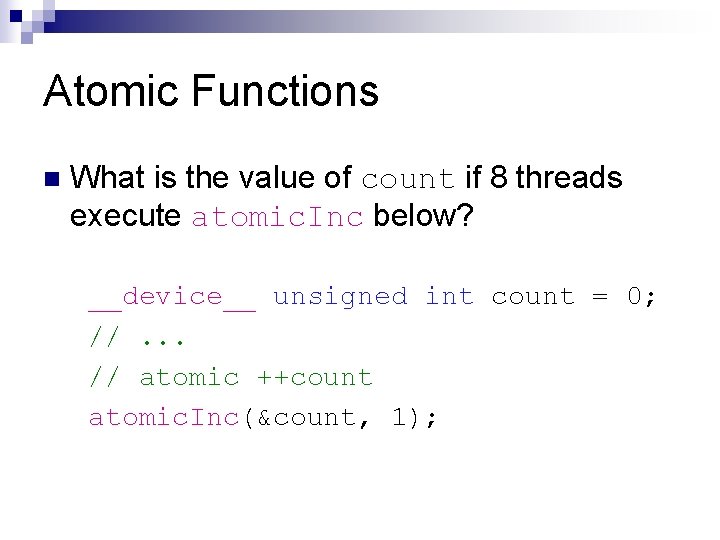

Atomic Functions n What is the value of count if 8 threads execute atomic. Inc below? __device__ unsigned int count = 0; //. . . // atomic ++count atomic. Inc(&count, 1);

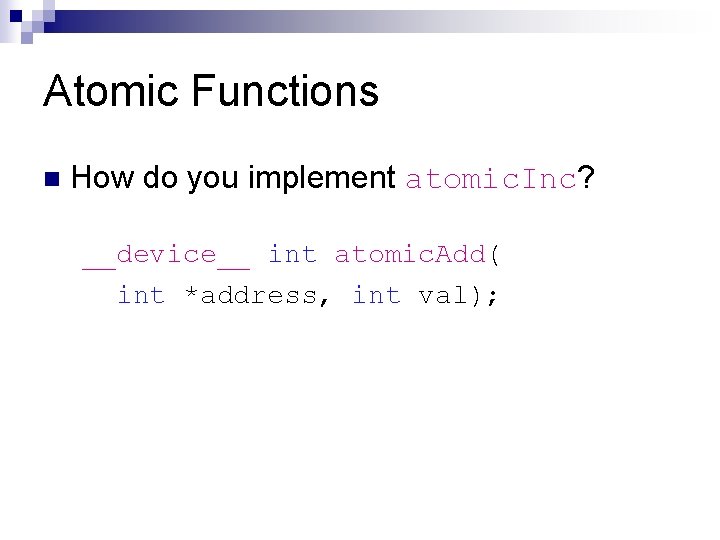

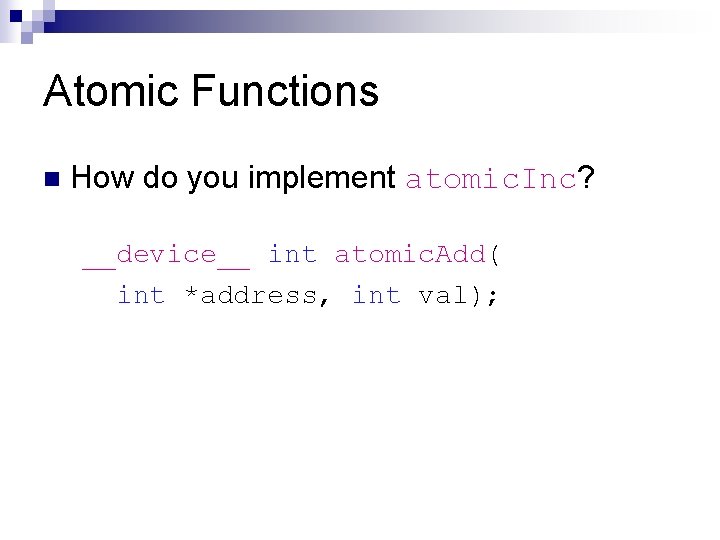

Atomic Functions n How do you implement atomic. Inc? __device__ int atomic. Add( int *address, int val);

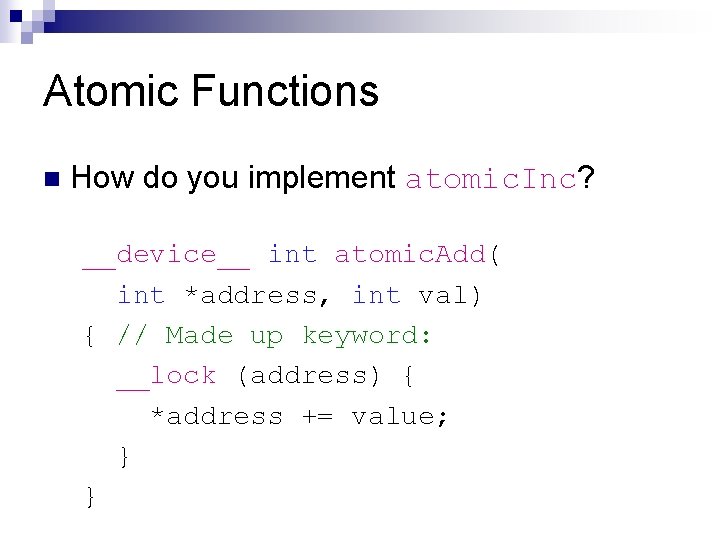

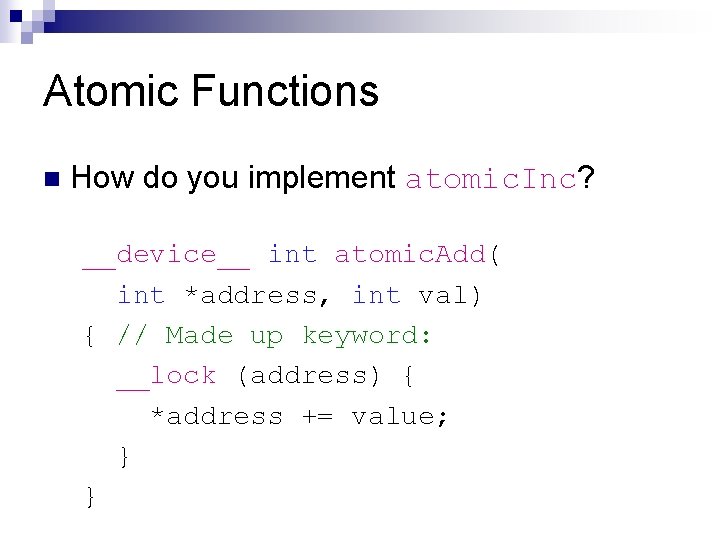

Atomic Functions n How do you implement atomic. Inc? __device__ int atomic. Add( int *address, int val) { // Made up keyword: __lock (address) { *address += value; } }

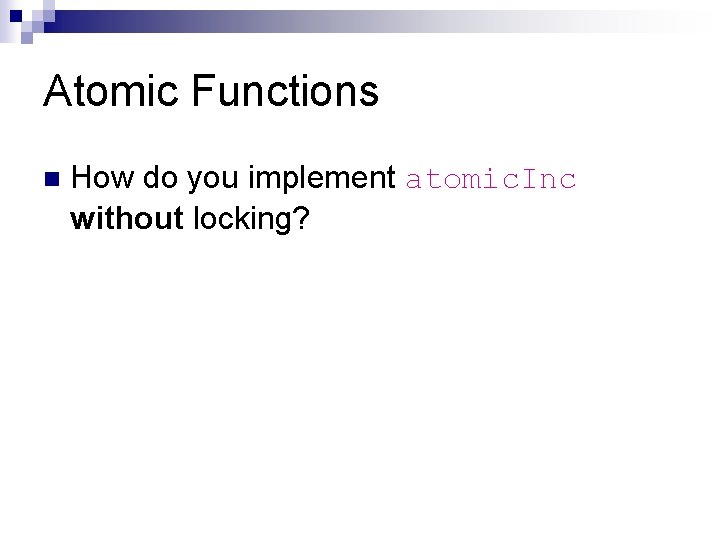

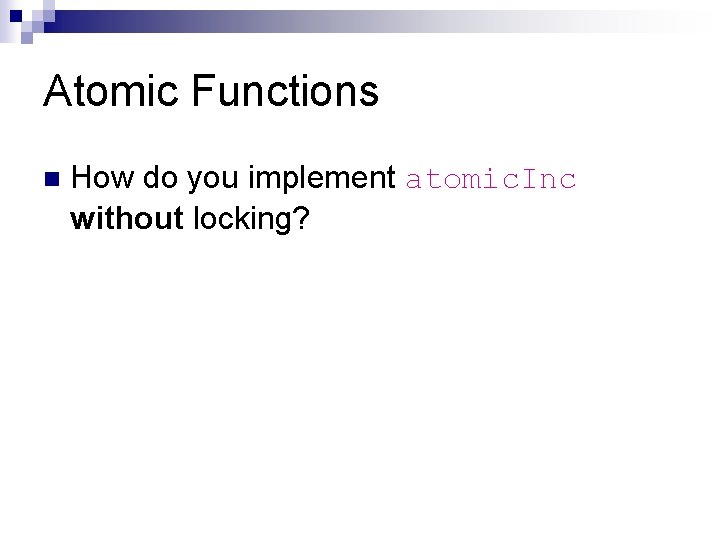

Atomic Functions n How do you implement atomic. Inc without locking?

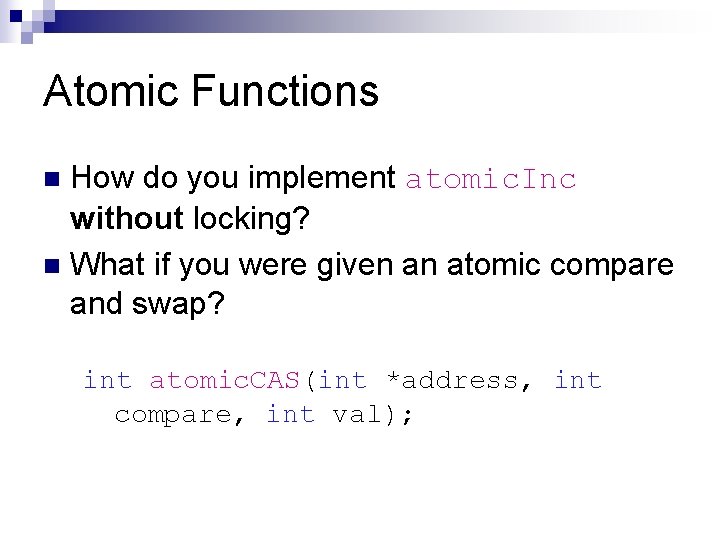

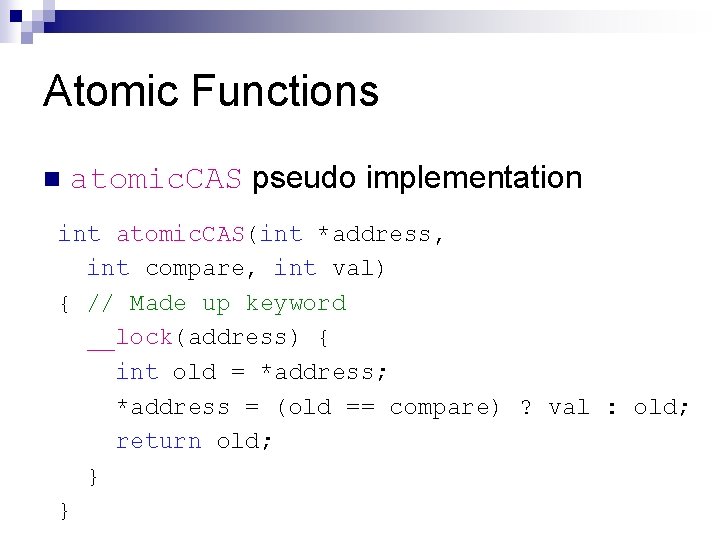

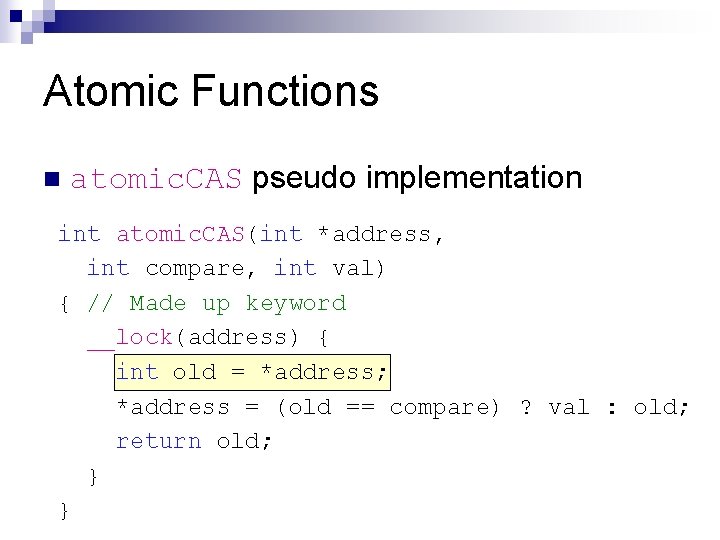

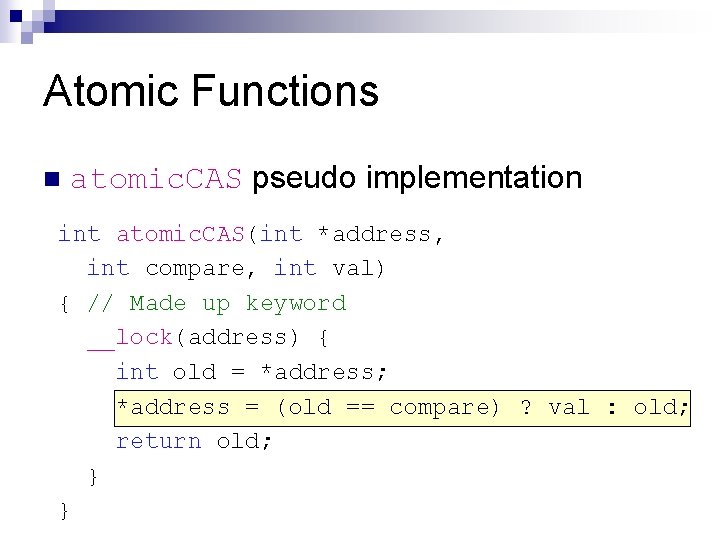

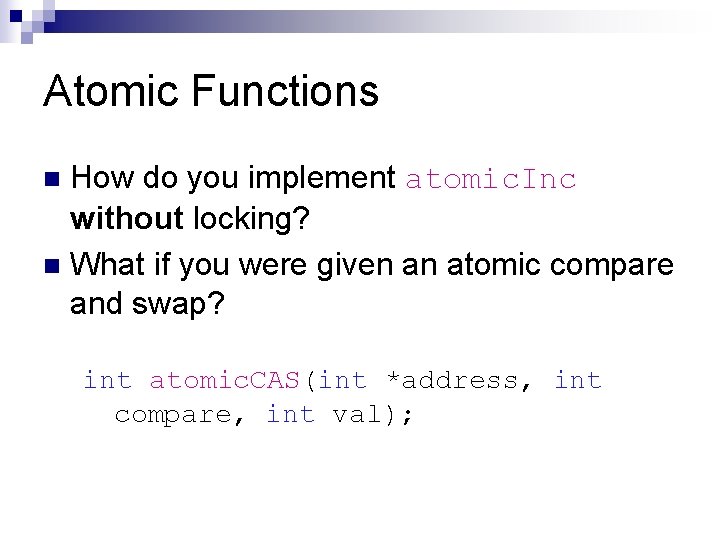

Atomic Functions How do you implement atomic. Inc without locking? n What if you were given an atomic compare and swap? n int atomic. CAS(int *address, int compare, int val);

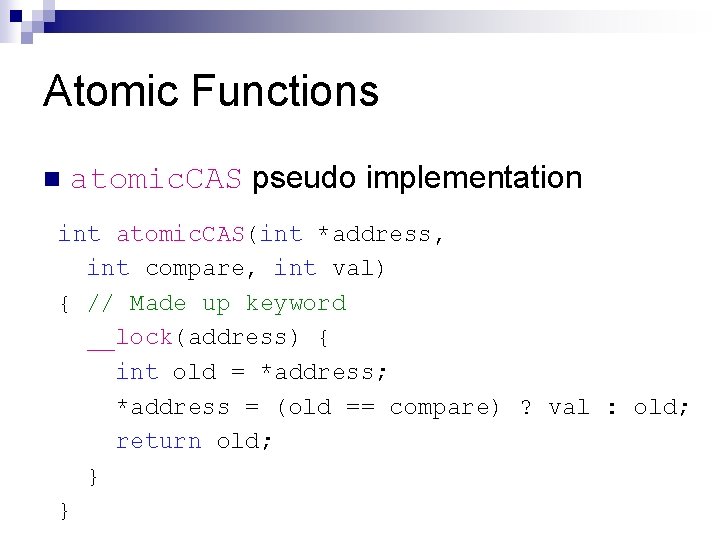

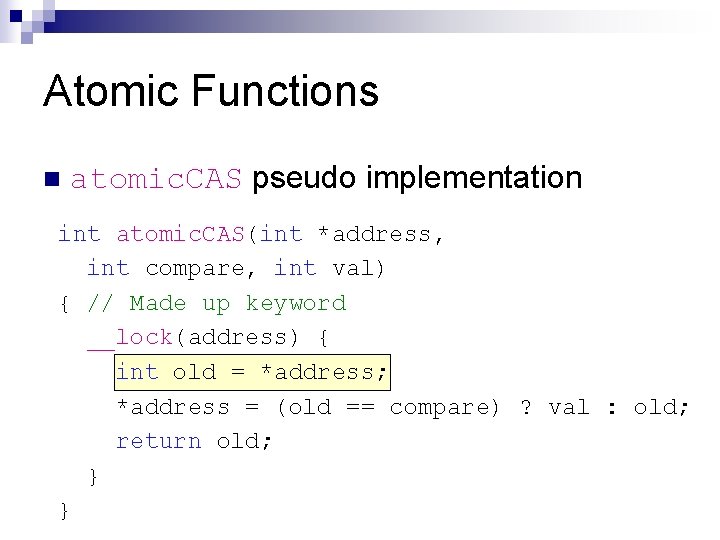

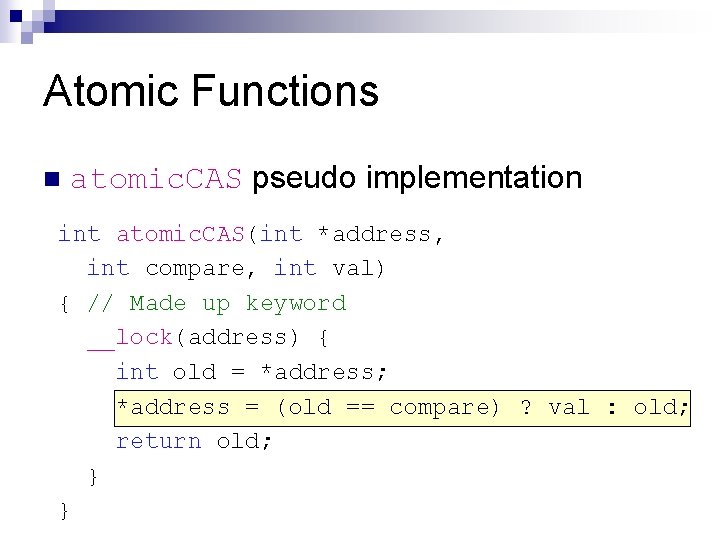

Atomic Functions n atomic. CAS pseudo implementation int atomic. CAS(int *address, int compare, int val) { // Made up keyword __lock(address) { int old = *address; *address = (old == compare) ? val : old; return old; } }

Atomic Functions n atomic. CAS pseudo implementation int atomic. CAS(int *address, int compare, int val) { // Made up keyword __lock(address) { int old = *address; *address = (old == compare) ? val : old; return old; } }

Atomic Functions n atomic. CAS pseudo implementation int atomic. CAS(int *address, int compare, int val) { // Made up keyword __lock(address) { int old = *address; *address = (old == compare) ? val : old; return old; } }

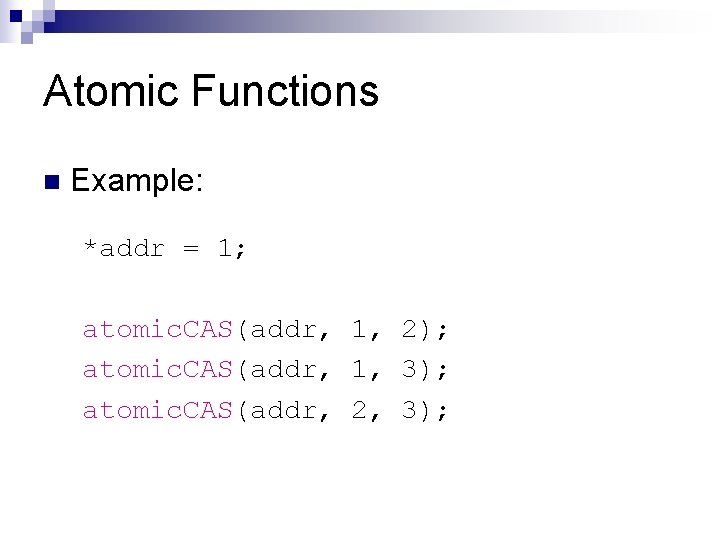

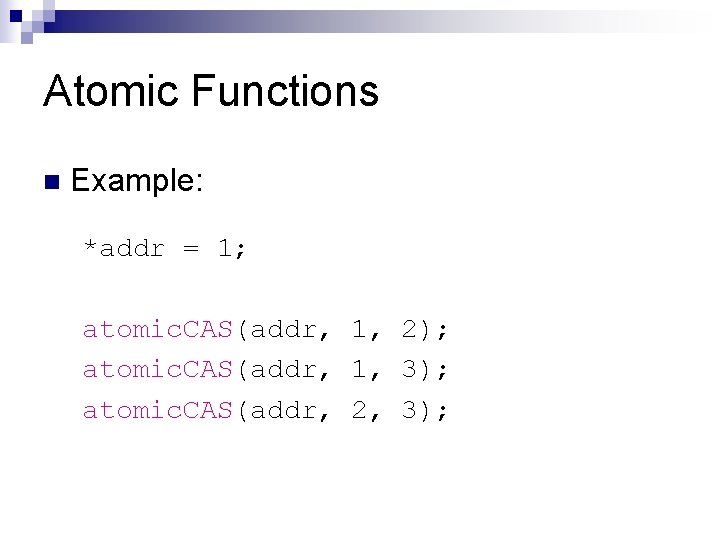

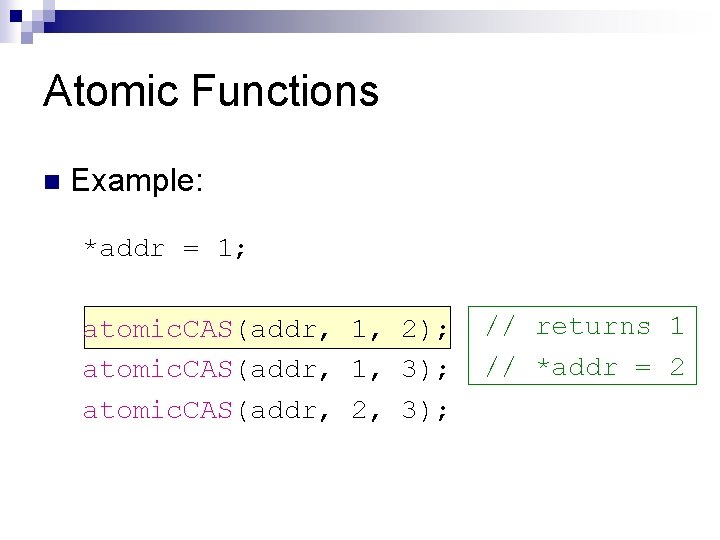

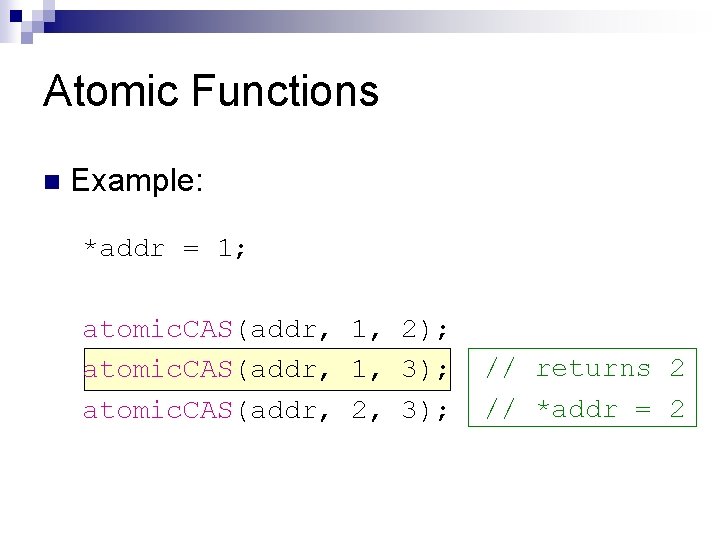

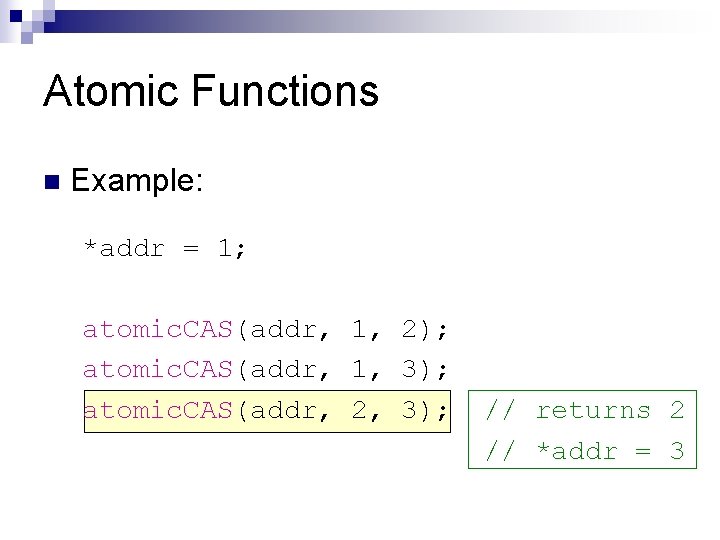

Atomic Functions n Example: *addr = 1; atomic. CAS(addr, 1, 2); atomic. CAS(addr, 1, 3); atomic. CAS(addr, 2, 3);

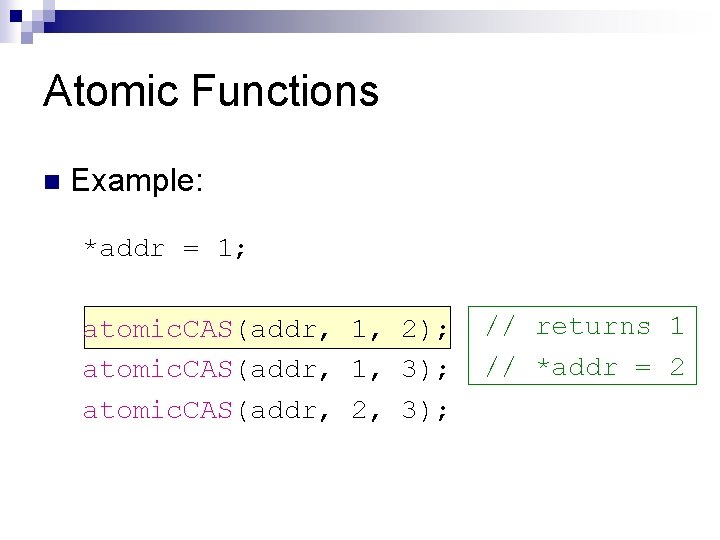

Atomic Functions n Example: *addr = 1; atomic. CAS(addr, 1, 2); atomic. CAS(addr, 1, 3); atomic. CAS(addr, 2, 3); // returns 1 // *addr = 2

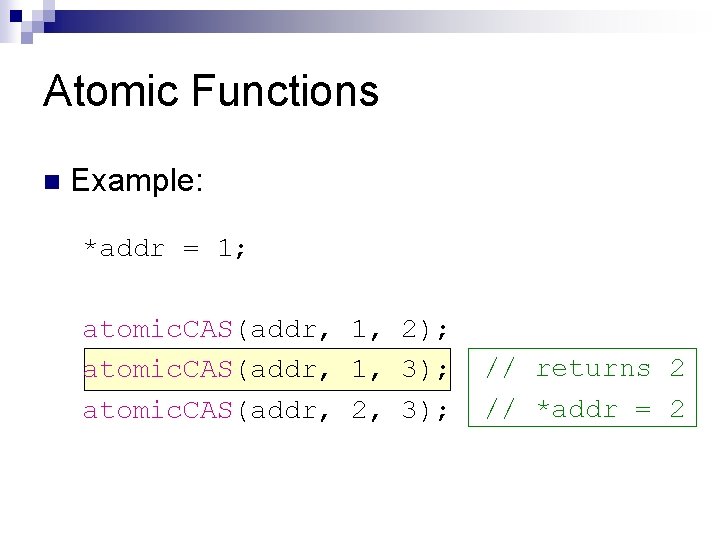

Atomic Functions n Example: *addr = 1; atomic. CAS(addr, 1, 2); atomic. CAS(addr, 1, 3); atomic. CAS(addr, 2, 3); // returns 2 // *addr = 2

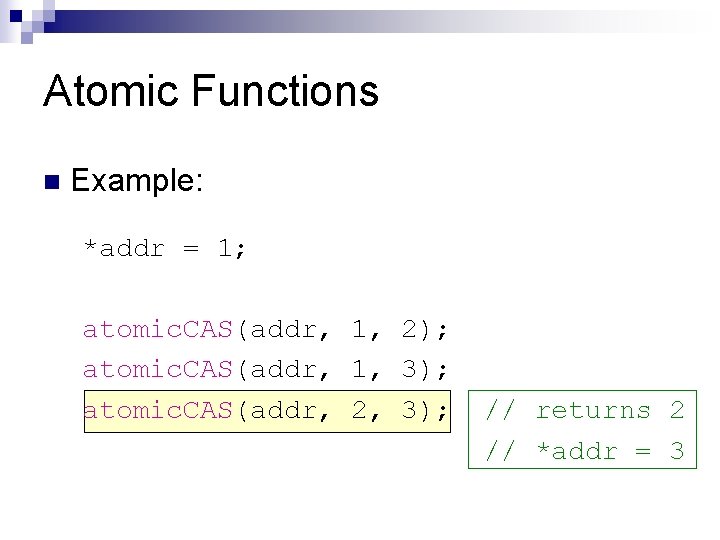

Atomic Functions n Example: *addr = 1; atomic. CAS(addr, 1, 2); atomic. CAS(addr, 1, 3); atomic. CAS(addr, 2, 3); // returns 2 // *addr = 3

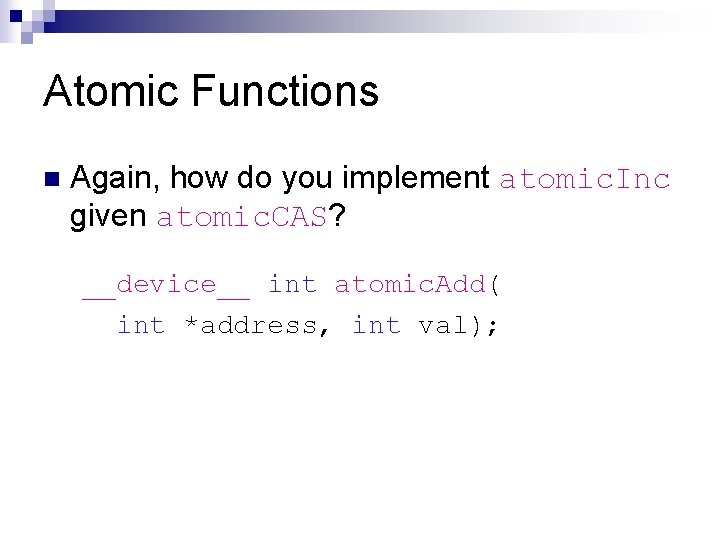

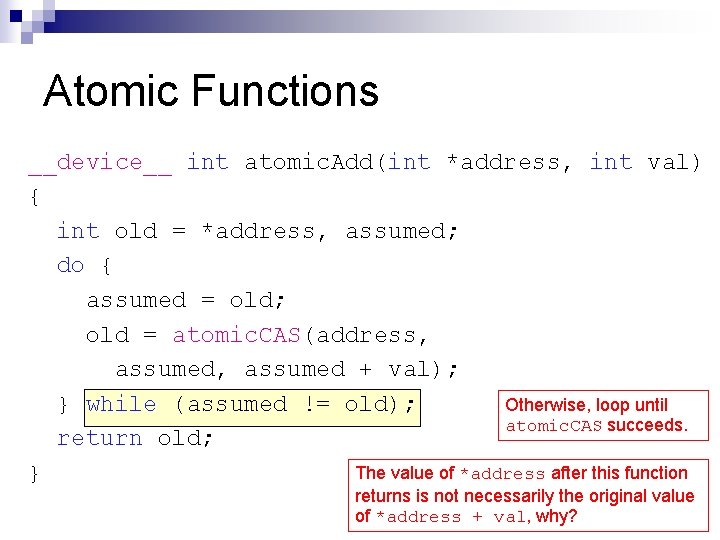

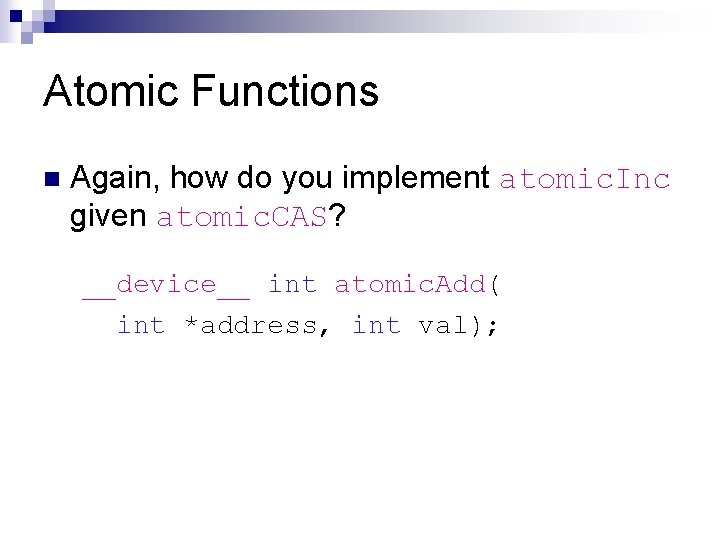

Atomic Functions n Again, how do you implement atomic. Inc given atomic. CAS? __device__ int atomic. Add( int *address, int val);

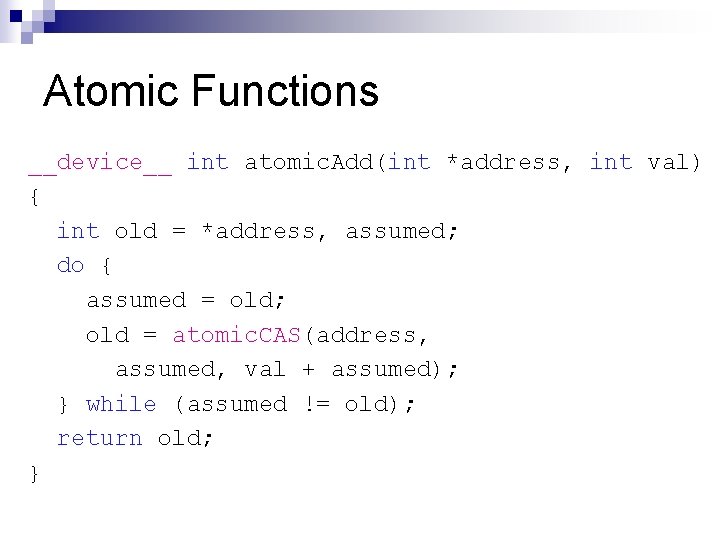

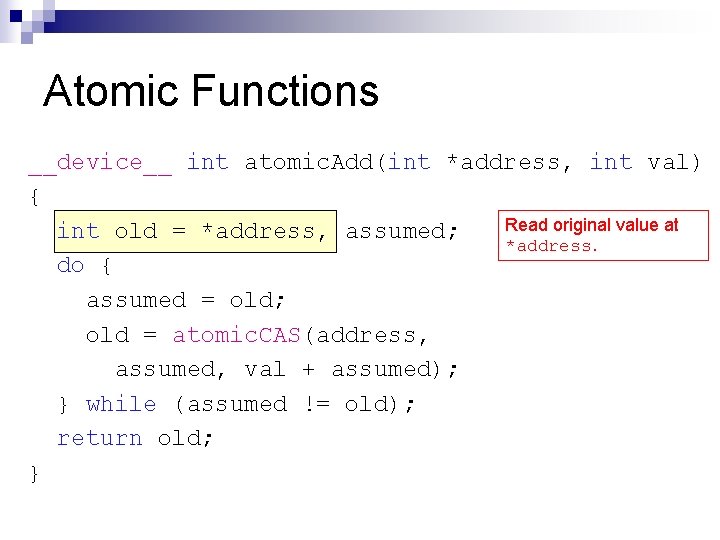

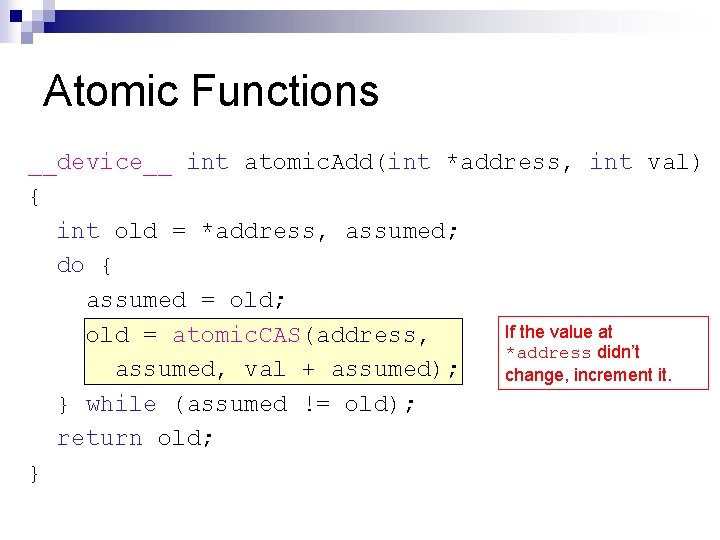

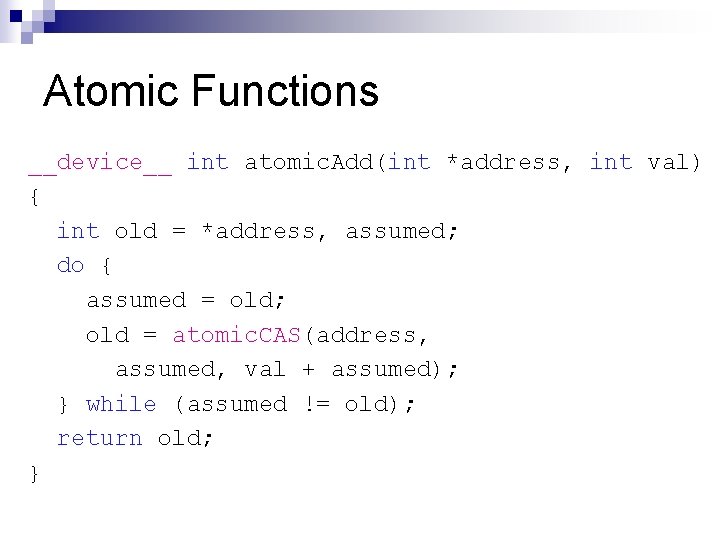

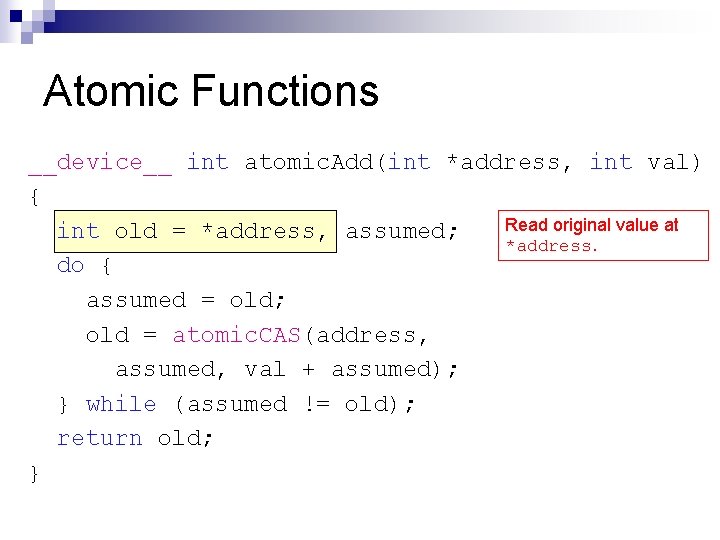

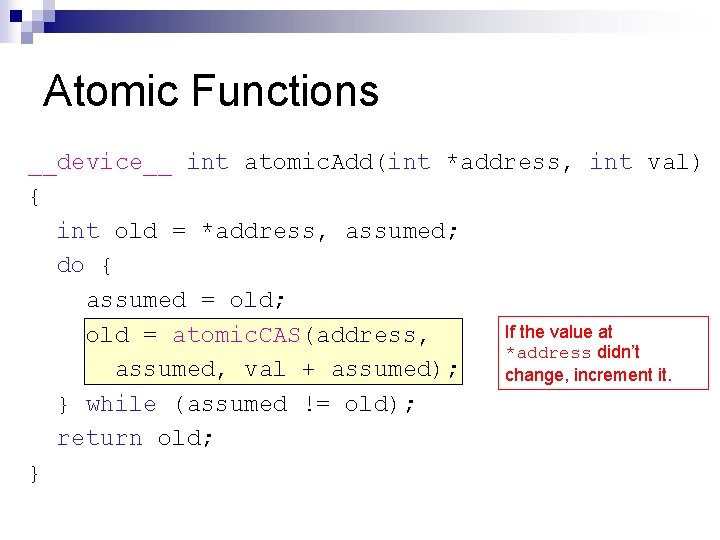

Atomic Functions __device__ int atomic. Add(int *address, int val) { int old = *address, assumed; do { assumed = old; old = atomic. CAS(address, assumed, val + assumed); } while (assumed != old); return old; }

Atomic Functions __device__ int atomic. Add(int *address, int val) { Read original value at int old = *address, assumed; *address. do { assumed = old; old = atomic. CAS(address, assumed, val + assumed); } while (assumed != old); return old; }

Atomic Functions __device__ int atomic. Add(int *address, int val) { int old = *address, assumed; do { assumed = old; If the value at old = atomic. CAS(address, *address didn’t assumed, val + assumed); change, increment it. } while (assumed != old); return old; }

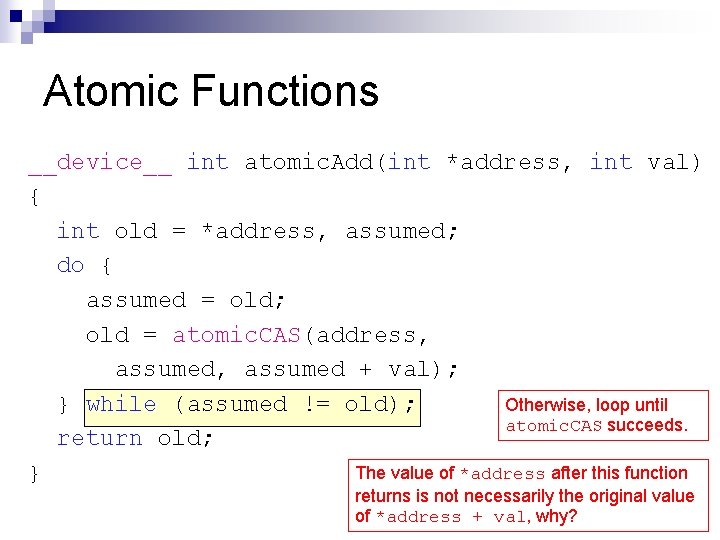

Atomic Functions __device__ int atomic. Add(int *address, int val) { int old = *address, assumed; do { assumed = old; old = atomic. CAS(address, assumed + val); } while (assumed != old); Otherwise, loop until atomic. CAS succeeds. return old; The value of *address after this function } returns is not necessarily the original value of *address + val, why?

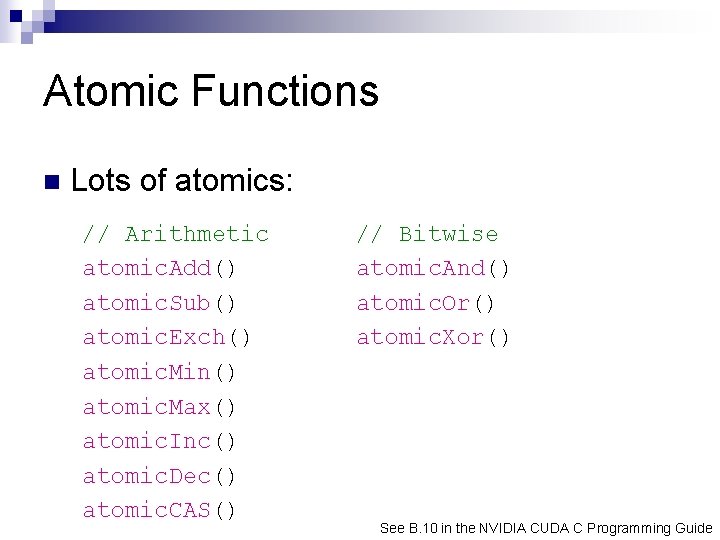

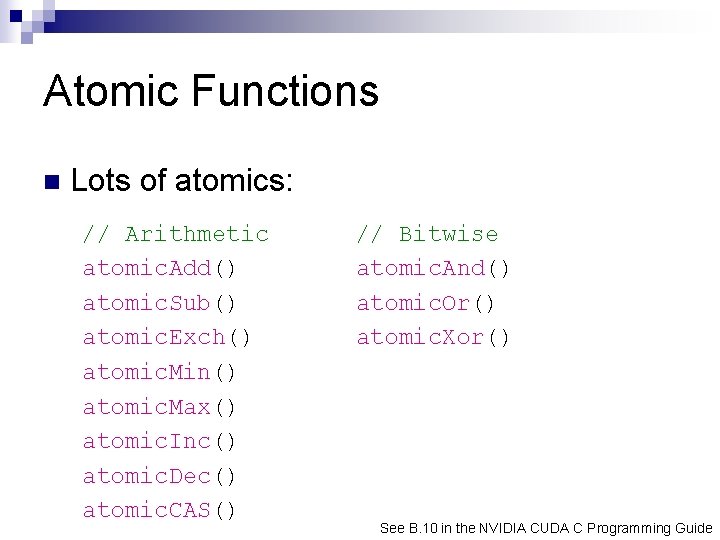

Atomic Functions n Lots of atomics: // Arithmetic atomic. Add() atomic. Sub() atomic. Exch() atomic. Min() atomic. Max() atomic. Inc() atomic. Dec() atomic. CAS() // Bitwise atomic. And() atomic. Or() atomic. Xor() See B. 10 in the NVIDIA CUDA C Programming Guide

Atomic Functions How can threads from different blocks work together? n Use atomics sparingly. Why? n

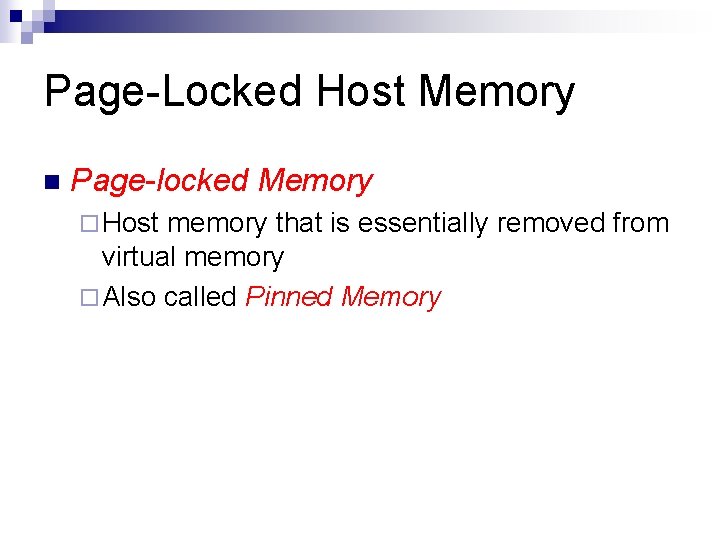

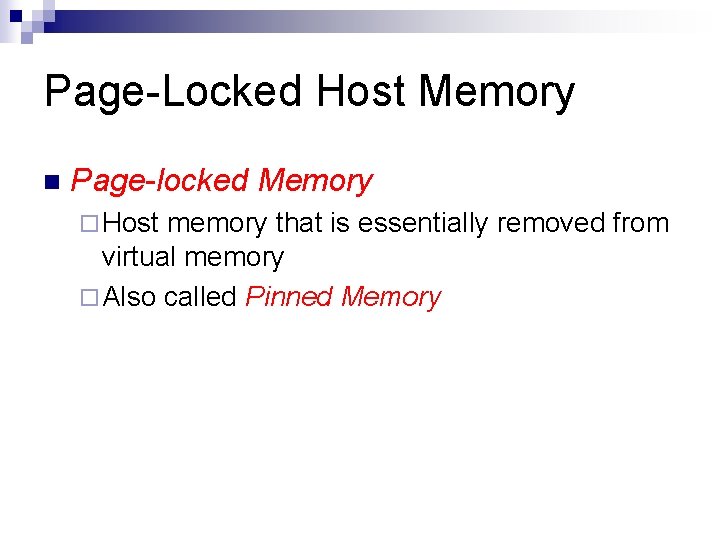

Page-Locked Host Memory n Page-locked Memory ¨ Host memory that is essentially removed from virtual memory ¨ Also called Pinned Memory

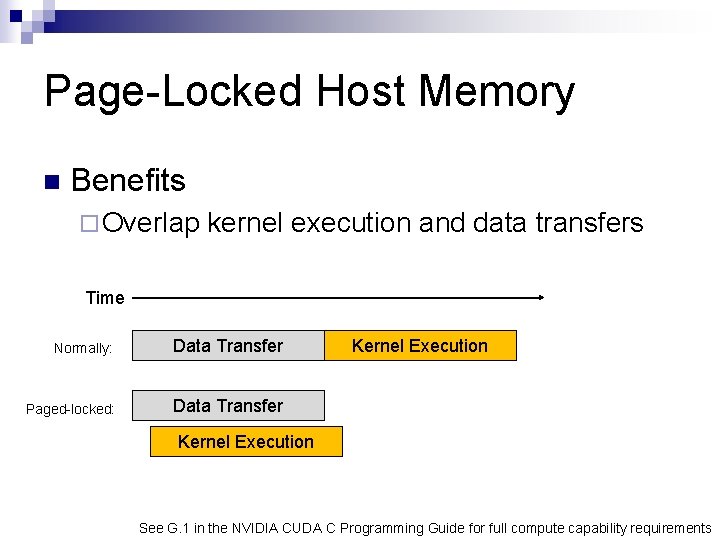

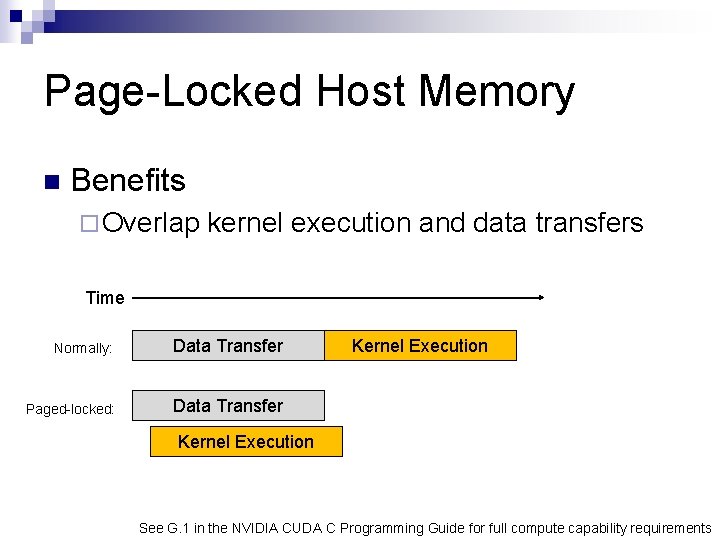

Page-Locked Host Memory n Benefits ¨ Overlap kernel execution and data transfers Time Normally: Data Transfer Paged-locked: Data Transfer Kernel Execution See G. 1 in the NVIDIA CUDA C Programming Guide for full compute capability requirements

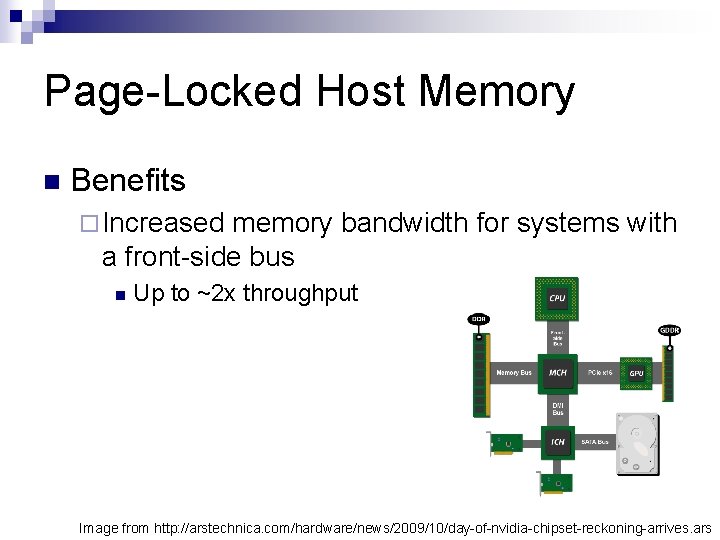

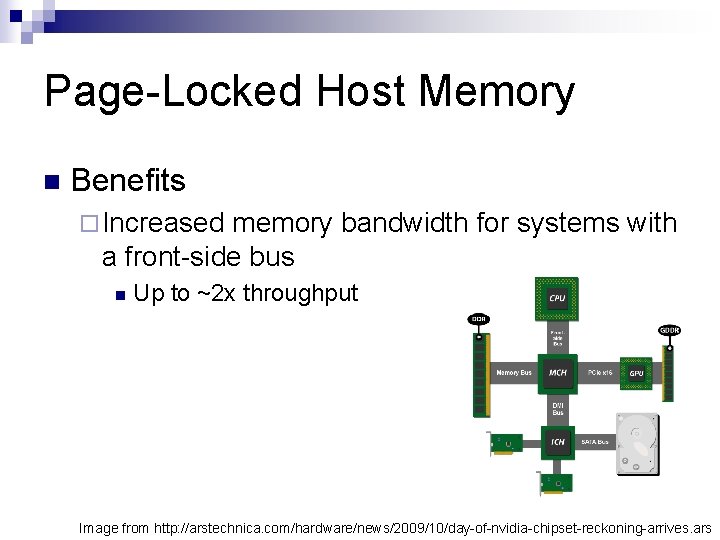

Page-Locked Host Memory n Benefits ¨ Increased memory bandwidth for systems with a front-side bus n Up to ~2 x throughput Image from http: //arstechnica. com/hardware/news/2009/10/day-of-nvidia-chipset-reckoning-arrives. ars

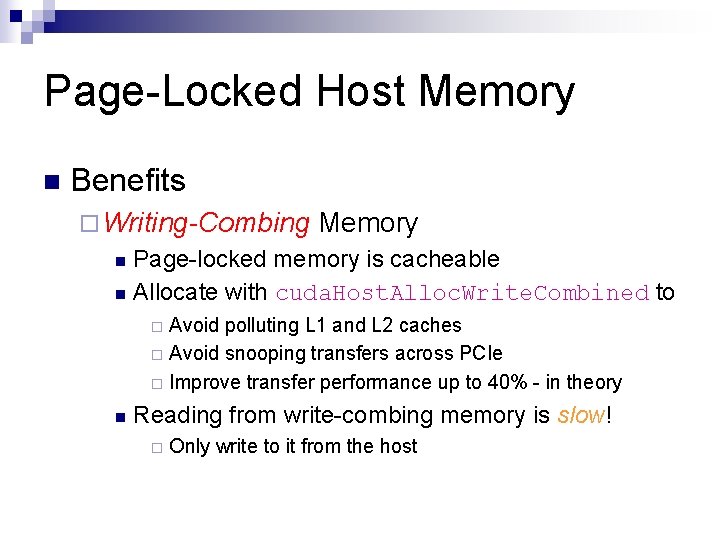

Page-Locked Host Memory n Benefits ¨ Writing-Combing Memory Page-locked memory is cacheable n Allocate with cuda. Host. Alloc. Write. Combined to n Avoid polluting L 1 and L 2 caches ¨ Avoid snooping transfers across PCIe ¨ Improve transfer performance up to 40% - in theory ¨ n Reading from write-combing memory is slow! ¨ Only write to it from the host

Page-Locked Host Memory n Benefits ¨ Paged-locked host memory can be mapped into the address space of the device on some systems What systems allow this? n What does this eliminate? n What applications does this enable? n ¨ Call cuda. Get. Device. Properties() and check can. Map. Host. Memory

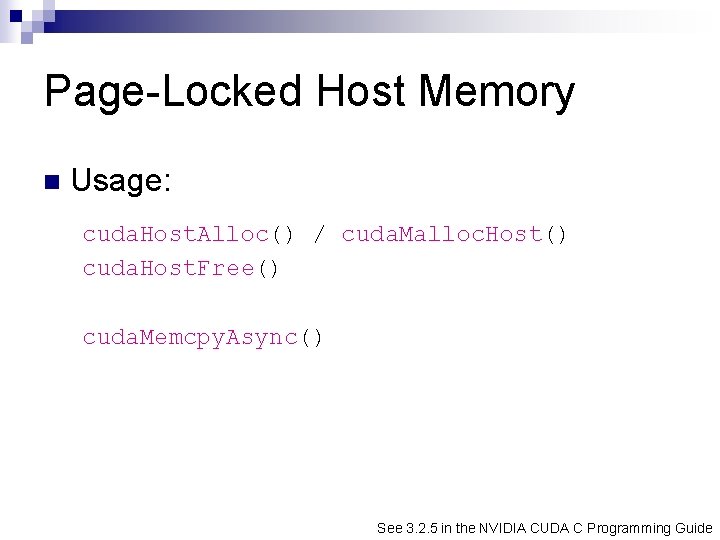

Page-Locked Host Memory n Usage: cuda. Host. Alloc() / cuda. Malloc. Host() cuda. Host. Free() cuda. Memcpy. Async() See 3. 2. 5 in the NVIDIA CUDA C Programming Guide

Page-Locked Host Memory DEMO CUDA SDK Example: bandwidth. Test

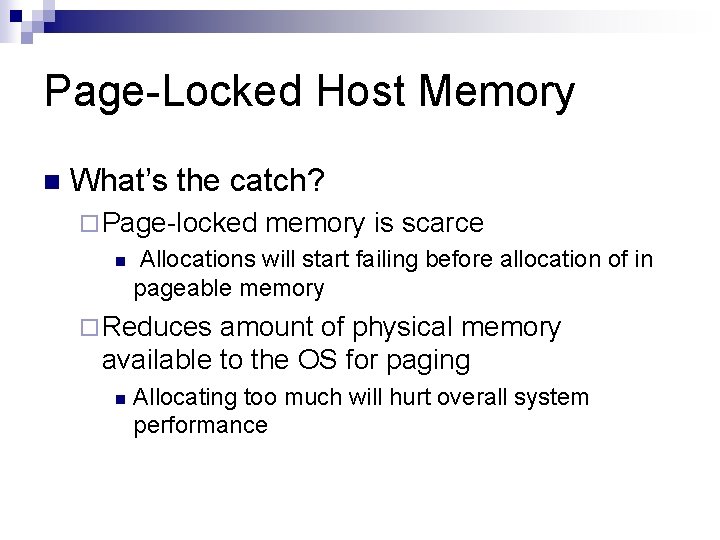

Page-Locked Host Memory n What’s the catch? ¨ Page-locked n memory is scarce Allocations will start failing before allocation of in pageable memory ¨ Reduces amount of physical memory available to the OS for paging n Allocating too much will hurt overall system performance

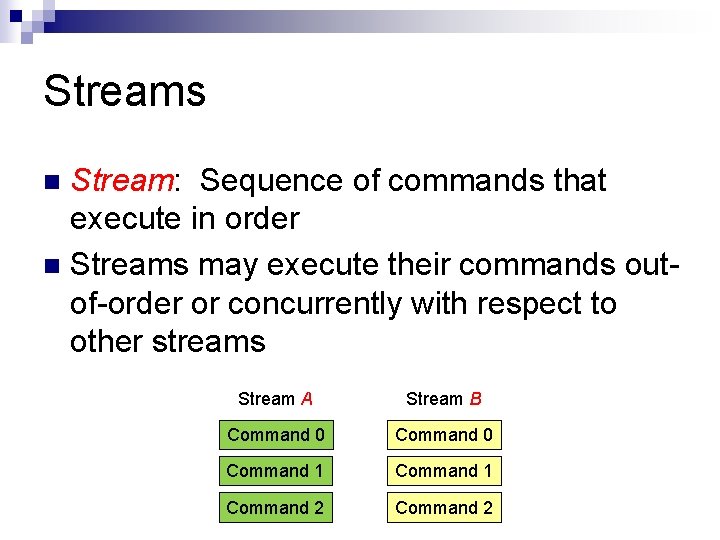

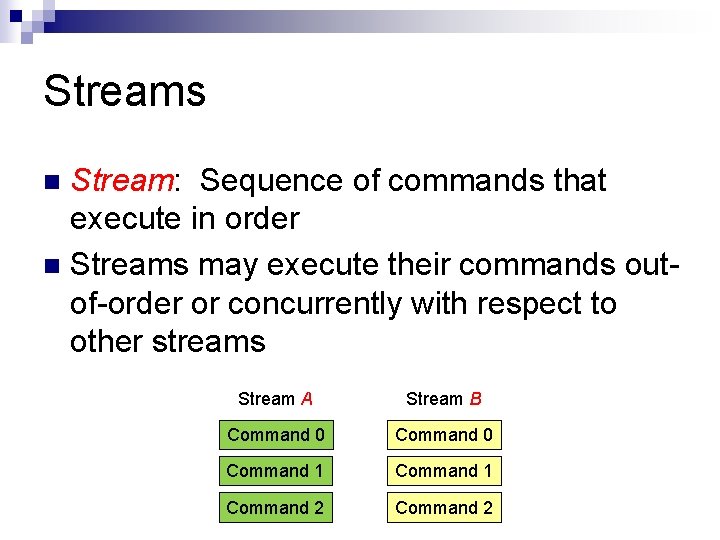

Streams Stream: Sequence of commands that execute in order n Streams may execute their commands outof-order or concurrently with respect to other streams n Stream A Stream B Command 0 Command 1 Command 2

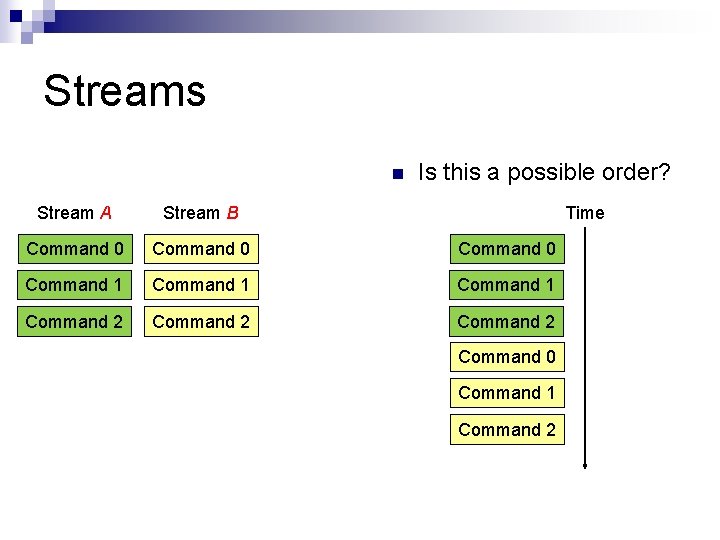

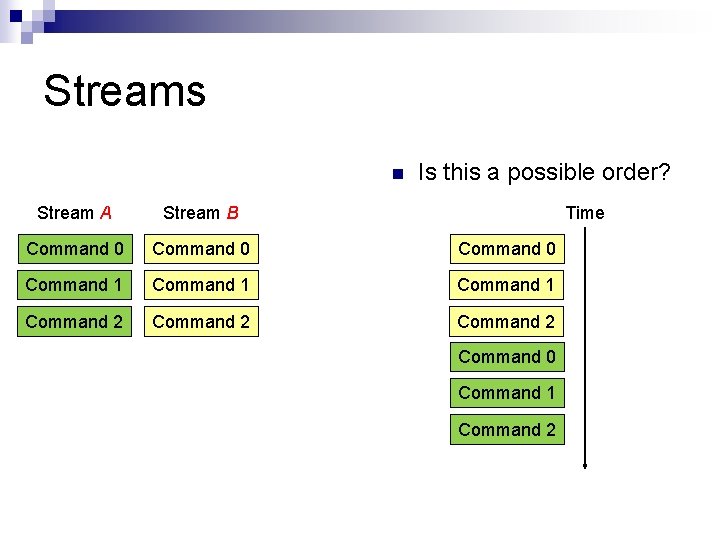

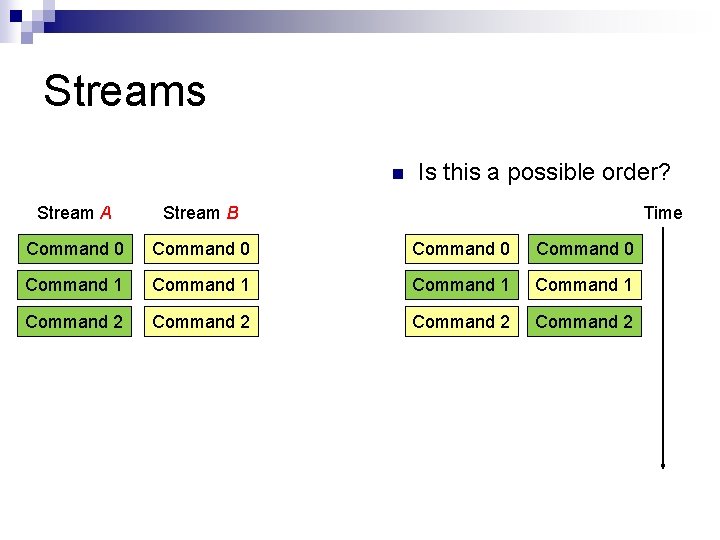

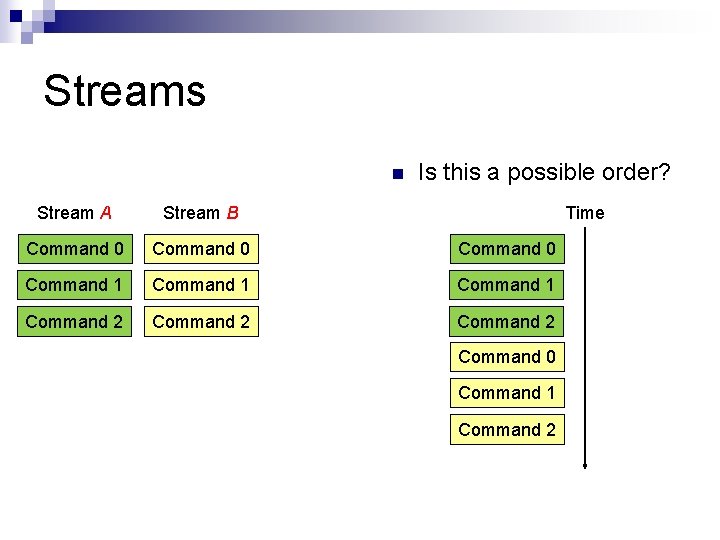

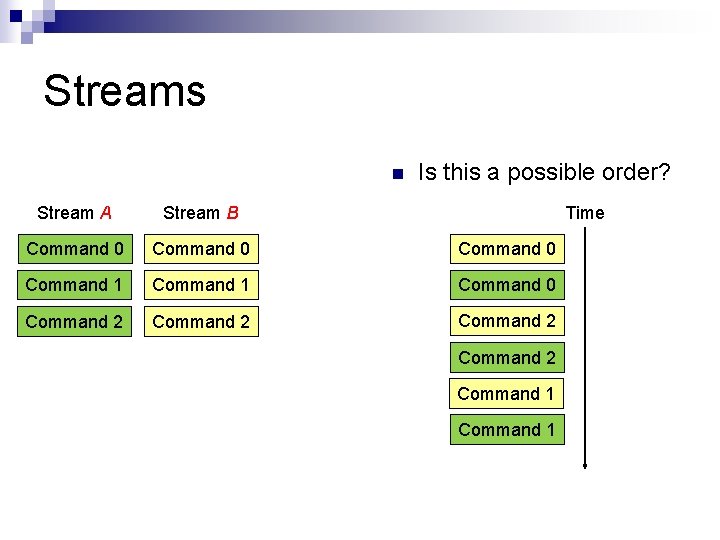

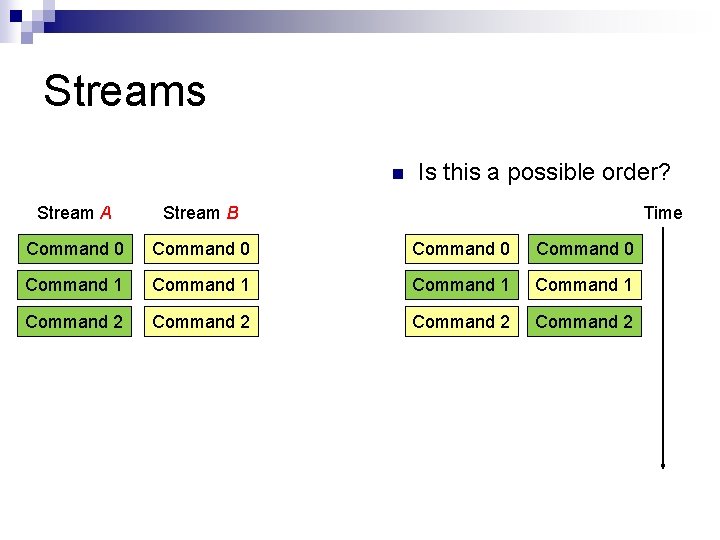

Streams n Is this a possible order? Stream A Stream B Time Command 0 Command 1 Command 2 Command 0 Command 1 Command 2

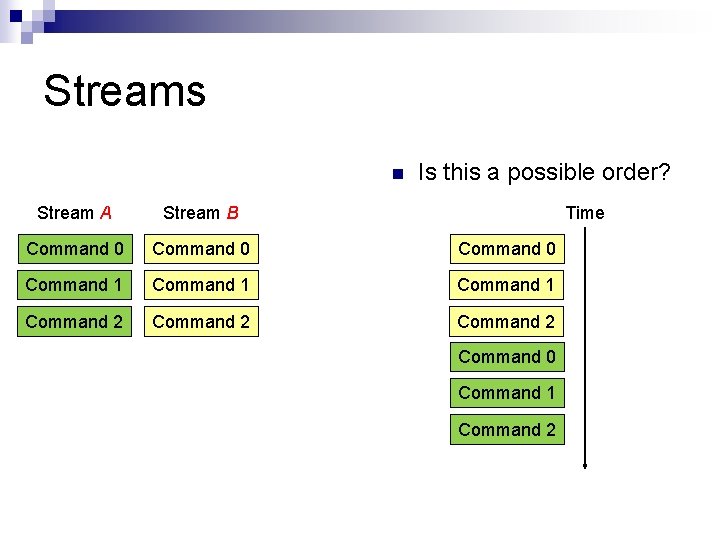

Streams n Is this a possible order? Stream A Stream B Time Command 0 Command 1 Command 2 Command 0 Command 1 Command 2

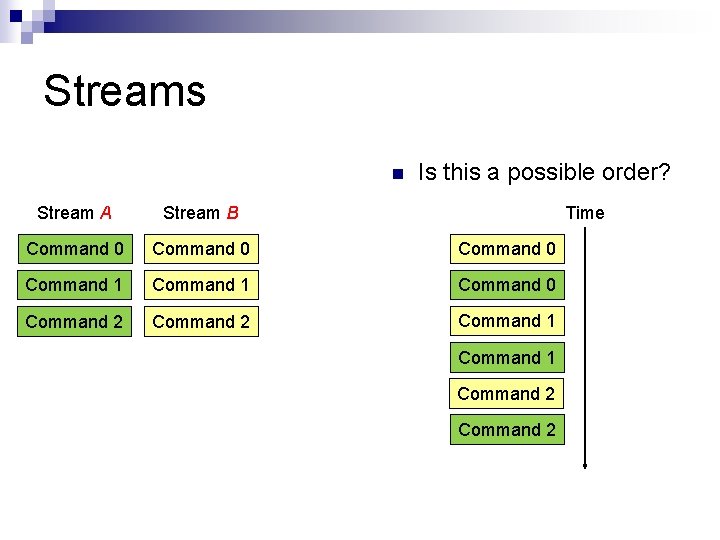

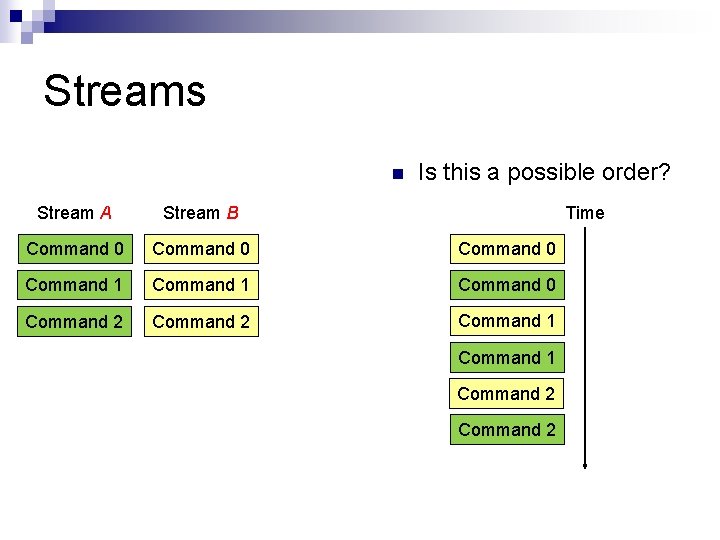

Streams n Is this a possible order? Stream A Stream B Time Command 0 Command 1 Command 0 Command 2 Command 1 Command 2

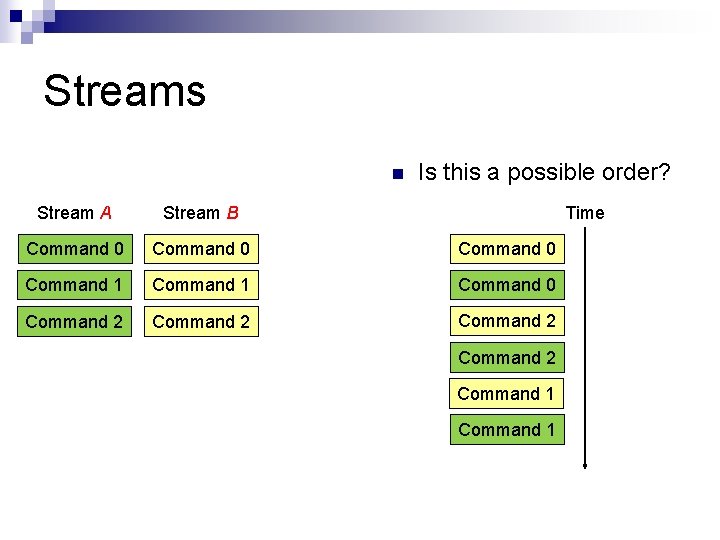

Streams n Is this a possible order? Stream A Stream B Time Command 0 Command 1 Command 0 Command 2 Command 1

Streams n Is this a possible order? Stream A Stream B Time Command 0 Command 1 Command 2

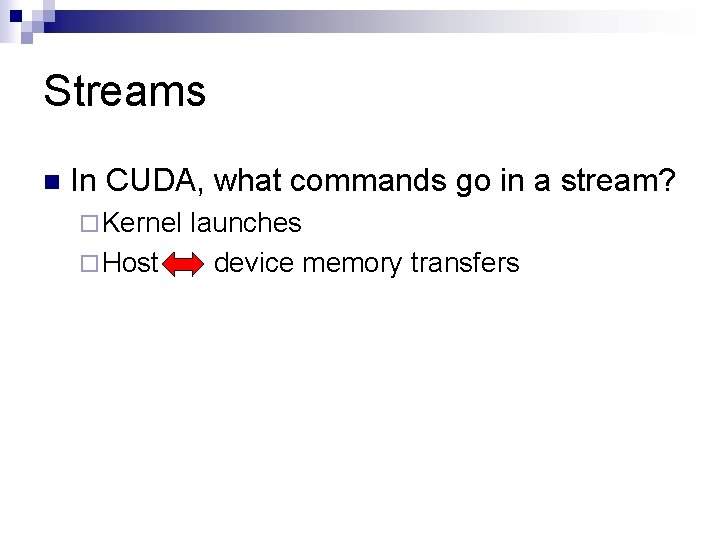

Streams n In CUDA, what commands go in a stream? ¨ Kernel ¨ Host launches device memory transfers

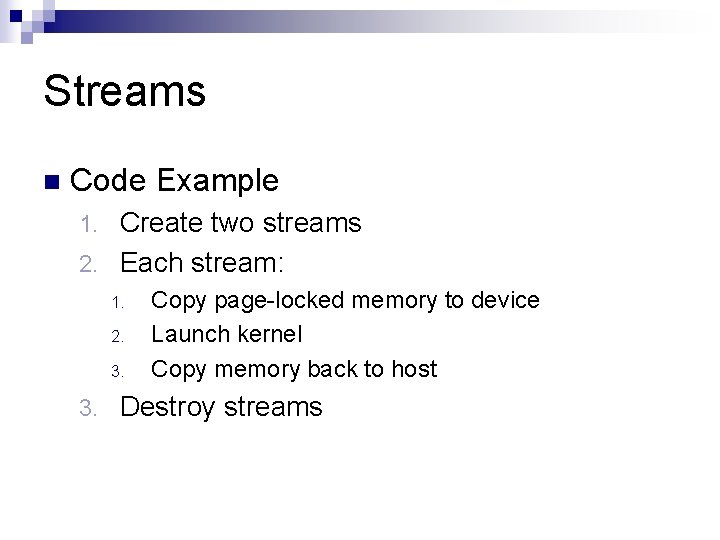

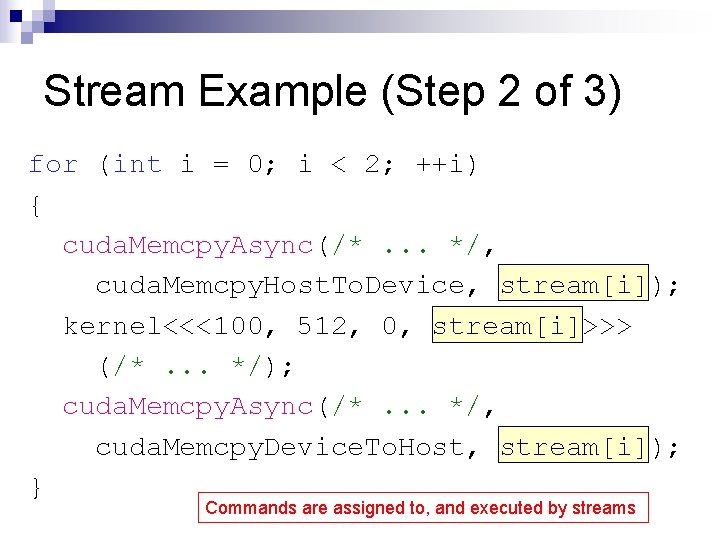

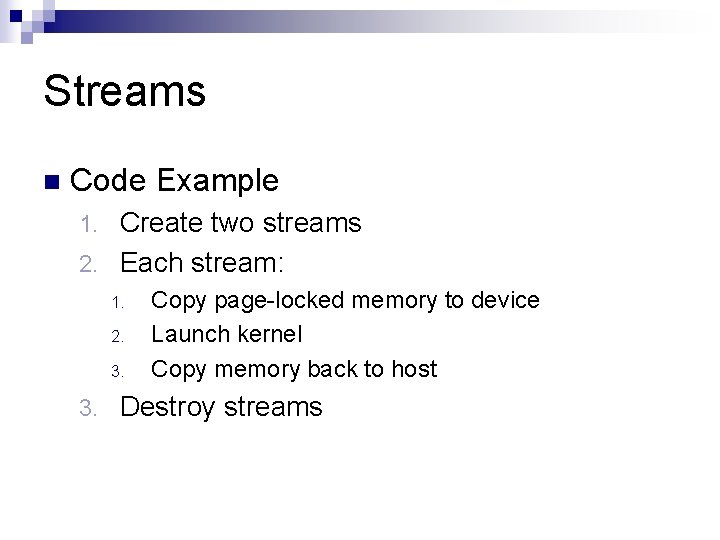

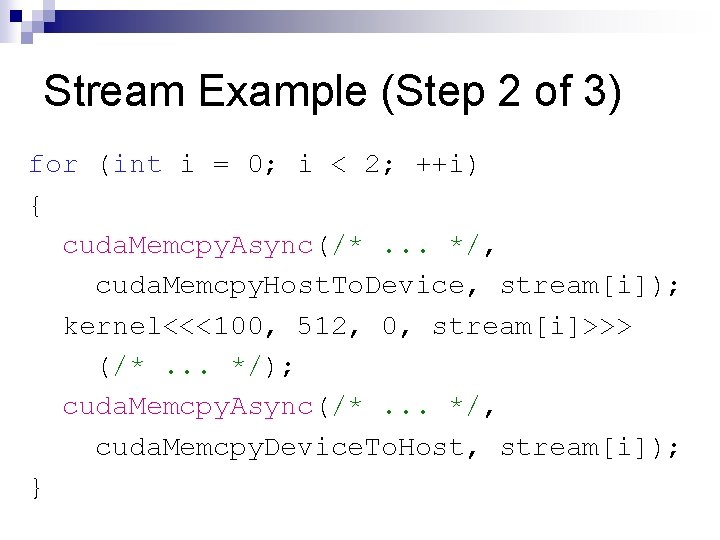

Streams n Code Example Create two streams 2. Each stream: 1. 2. 3. Copy page-locked memory to device Launch kernel Copy memory back to host Destroy streams

![Stream Example Step 1 of 3 cuda Streamt stream2 for int i 0 Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0;](https://slidetodoc.com/presentation_image_h/11939cfeb87c28e892eb7e546b69da29/image-41.jpg)

Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0; i < 2; ++i) { cuda. Stream. Create(&stream[i]); } float *host. Ptr; cuda. Malloc. Host(&host. Ptr, 2 * size);

![Stream Example Step 1 of 3 cuda Streamt stream2 for int i 0 Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0;](https://slidetodoc.com/presentation_image_h/11939cfeb87c28e892eb7e546b69da29/image-42.jpg)

Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0; i < 2; ++i) { cuda. Stream. Create(&stream[i]); } Create two streams float *host. Ptr; cuda. Malloc. Host(&host. Ptr, 2 * size);

![Stream Example Step 1 of 3 cuda Streamt stream2 for int i 0 Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0;](https://slidetodoc.com/presentation_image_h/11939cfeb87c28e892eb7e546b69da29/image-43.jpg)

Stream Example (Step 1 of 3) cuda. Stream_t stream[2]; for (int i = 0; i < 2; ++i) { cuda. Stream. Create(&stream[i]); } float *host. Ptr; cuda. Malloc. Host(&host. Ptr, 2 * size); Allocate two buffers in page-locked memory

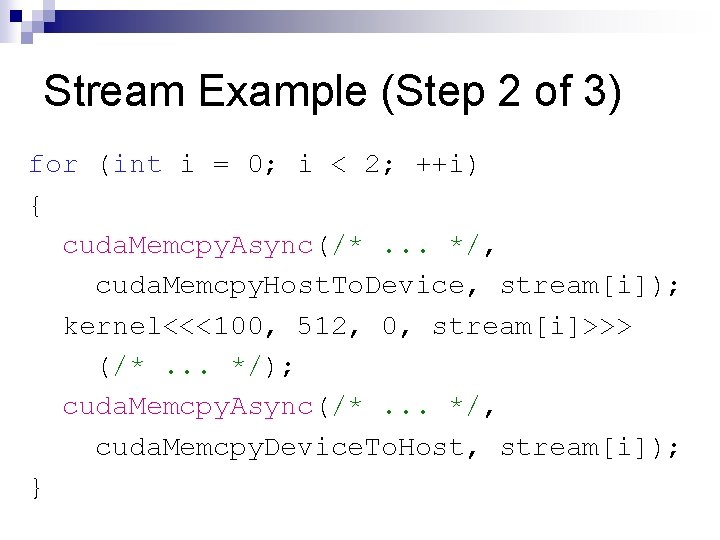

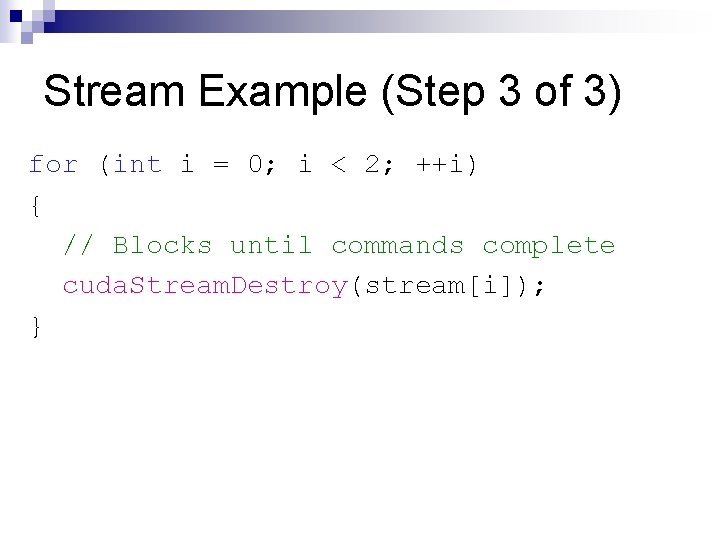

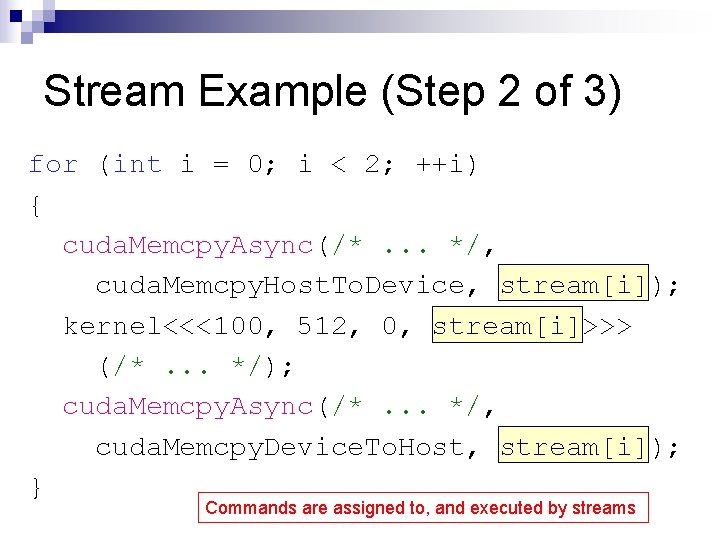

Stream Example (Step 2 of 3) for (int i = 0; i < 2; ++i) { cuda. Memcpy. Async(/*. . . */, cuda. Memcpy. Host. To. Device, stream[i]); kernel<<<100, 512, 0, stream[i]>>> (/*. . . */); cuda. Memcpy. Async(/*. . . */, cuda. Memcpy. Device. To. Host, stream[i]); }

Stream Example (Step 2 of 3) for (int i = 0; i < 2; ++i) { cuda. Memcpy. Async(/*. . . */, cuda. Memcpy. Host. To. Device, stream[i]); kernel<<<100, 512, 0, stream[i]>>> (/*. . . */); cuda. Memcpy. Async(/*. . . */, cuda. Memcpy. Device. To. Host, stream[i]); } Commands are assigned to, and executed by streams

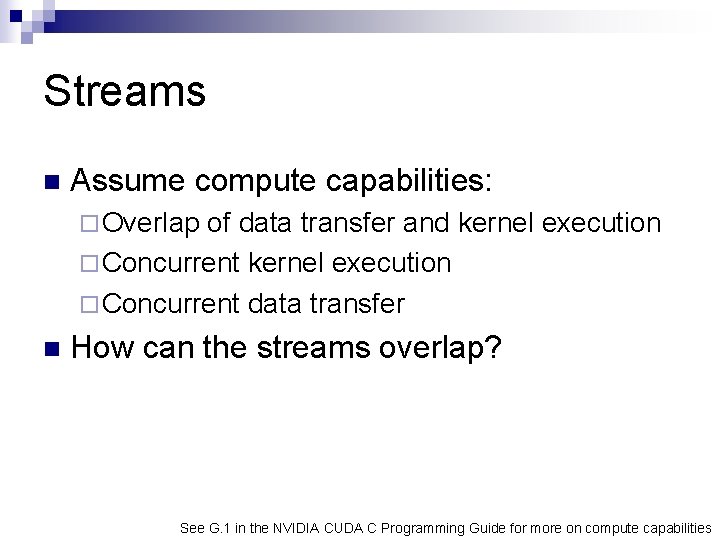

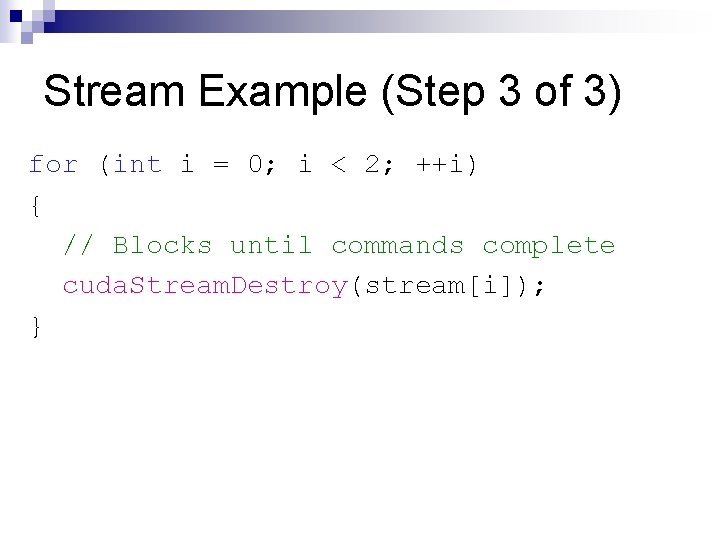

Stream Example (Step 3 of 3) for (int i = 0; i < 2; ++i) { // Blocks until commands complete cuda. Stream. Destroy(stream[i]); }

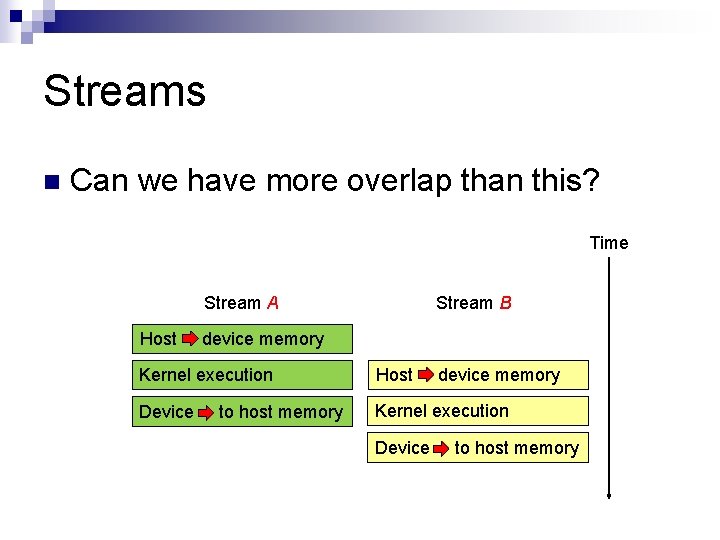

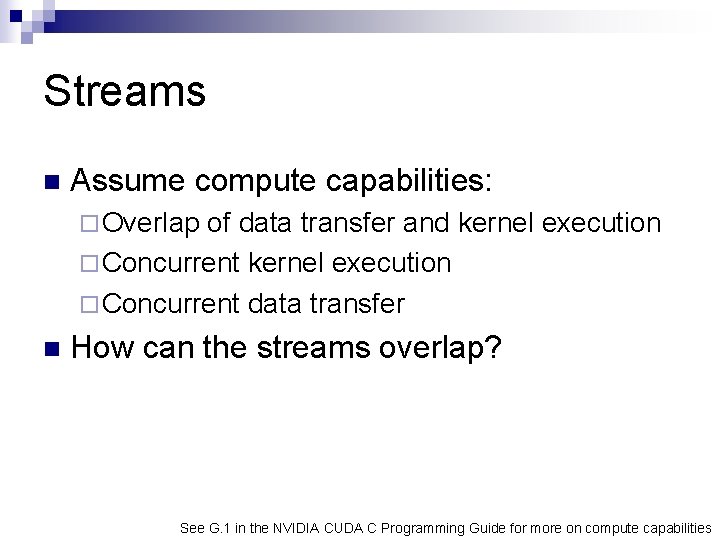

Streams n Assume compute capabilities: ¨ Overlap of data transfer and kernel execution ¨ Concurrent data transfer n How can the streams overlap? See G. 1 in the NVIDIA CUDA C Programming Guide for more on compute capabilities

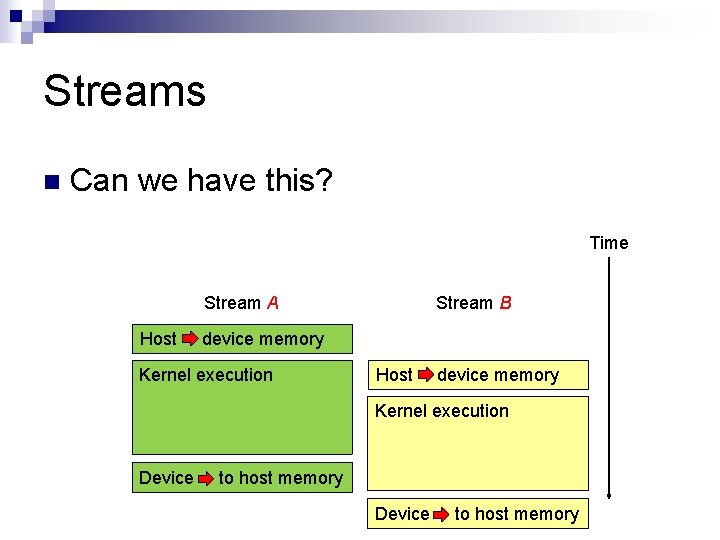

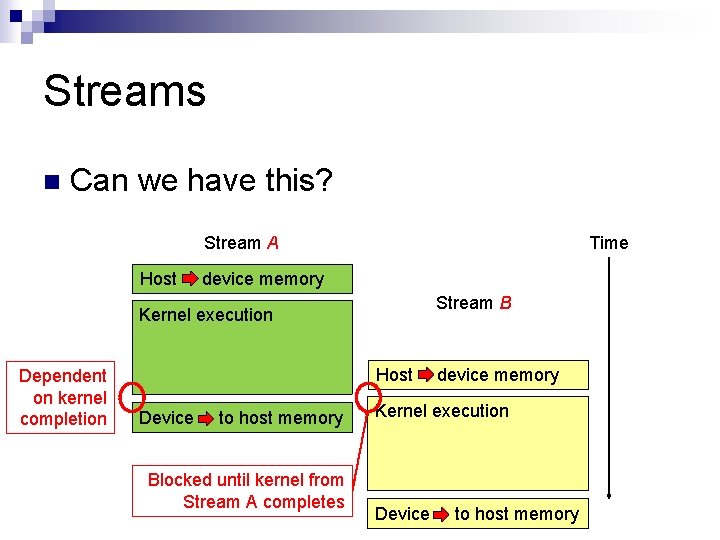

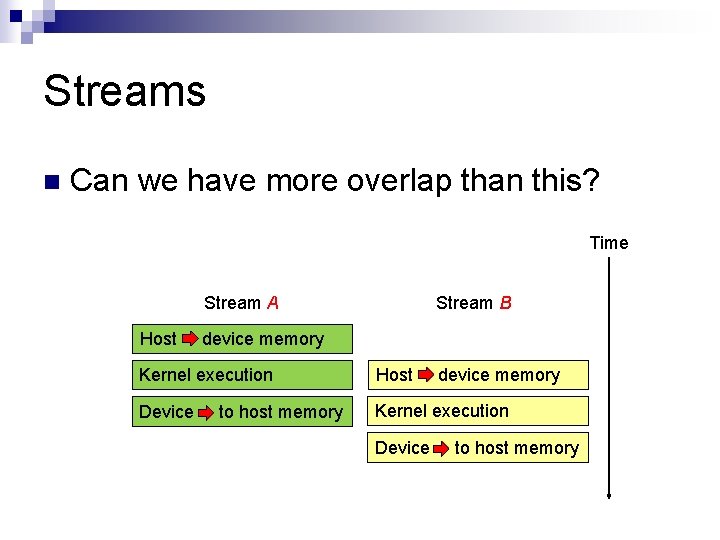

Streams n Can we have more overlap than this? Time Stream A Host Stream B device memory Kernel execution Host Device Kernel execution to host memory Device device memory to host memory

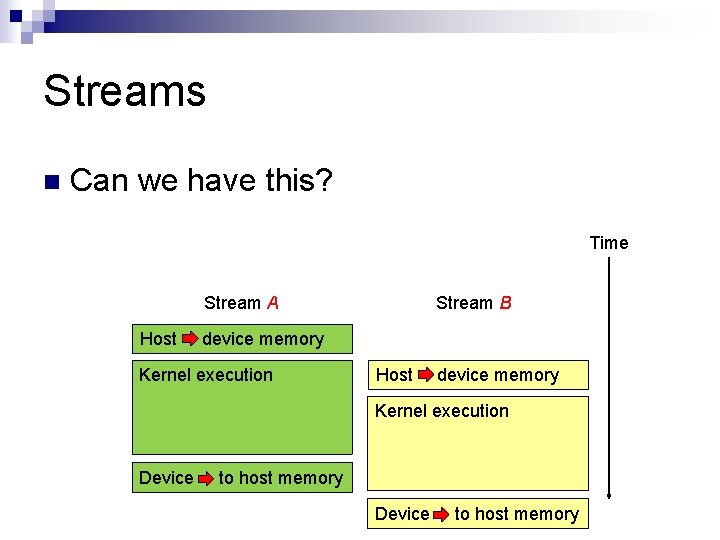

Streams n Can we have this? Time Stream A Host Stream B device memory Kernel execution Host device memory Kernel execution Device to host memory

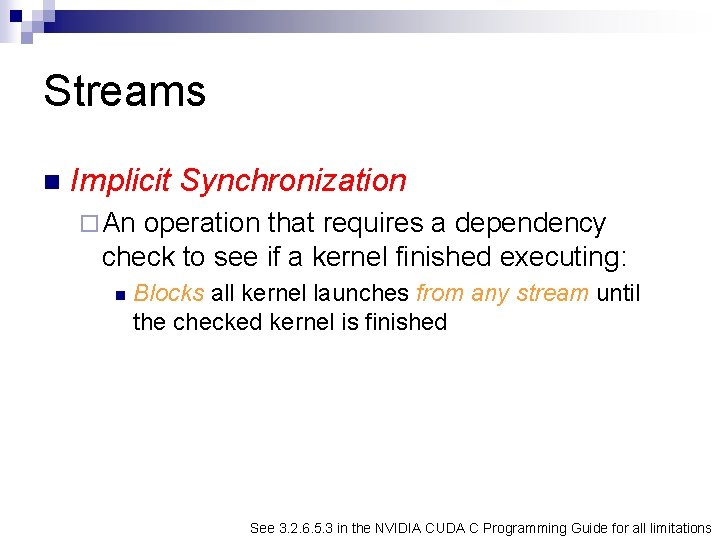

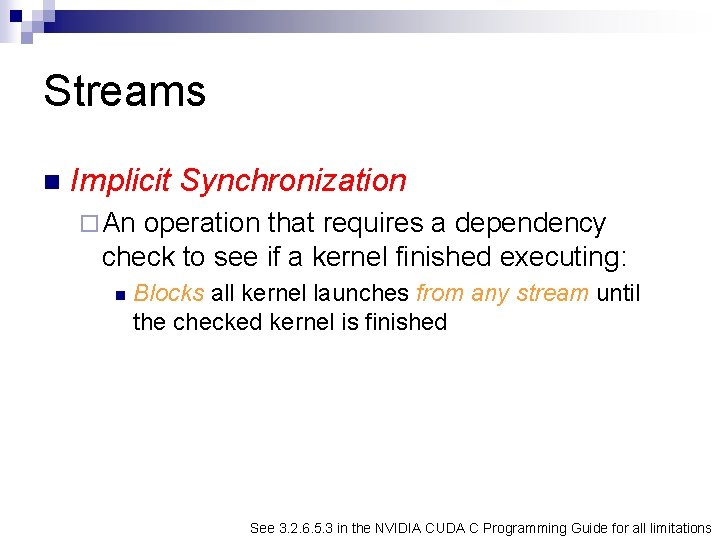

Streams n Implicit Synchronization ¨ An operation that requires a dependency check to see if a kernel finished executing: n Blocks all kernel launches from any stream until the checked kernel is finished See 3. 2. 6. 5. 3 in the NVIDIA CUDA C Programming Guide for all limitations

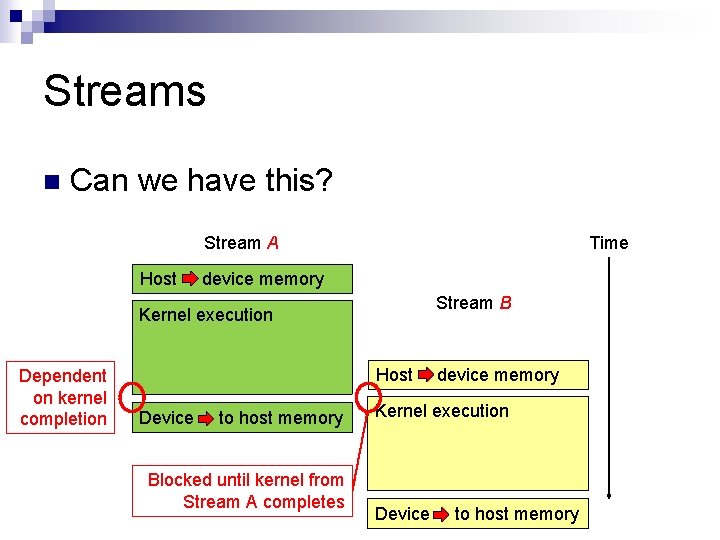

Streams n Can we have this? Stream A Host Time device memory Stream B Kernel execution Dependent on kernel completion Host Device to host memory Blocked until kernel from Stream A completes device memory Kernel execution Device to host memory

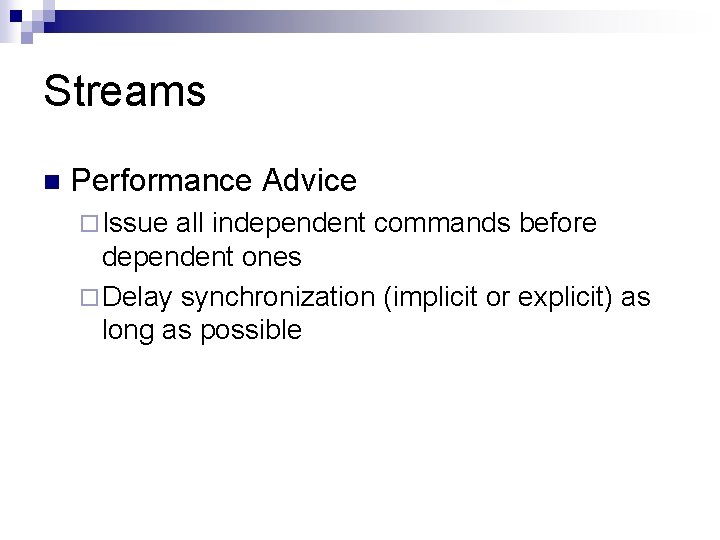

Streams n Performance Advice ¨ Issue all independent commands before dependent ones ¨ Delay synchronization (implicit or explicit) as long as possible

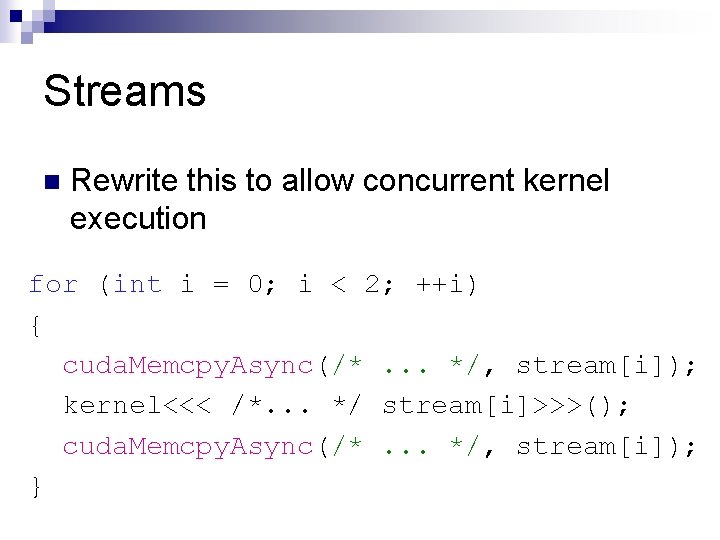

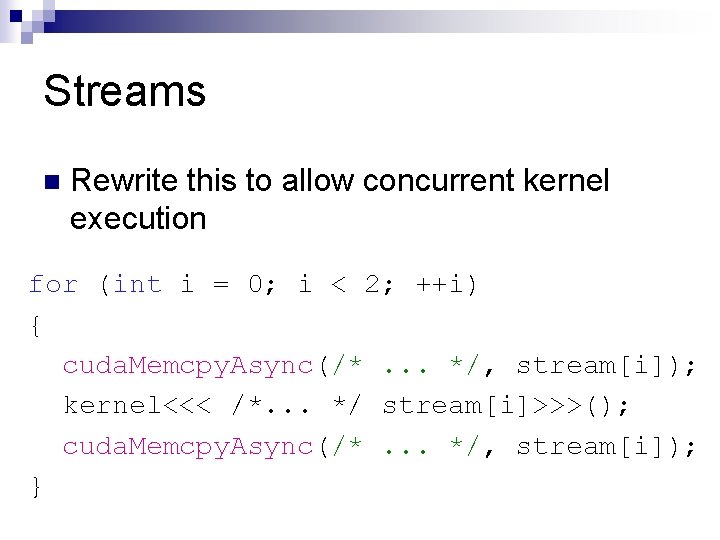

Streams n Rewrite this to allow concurrent kernel execution for (int i = 0; i < 2; ++i) { cuda. Memcpy. Async(/*. . . */, stream[i]); kernel<<< /*. . . */ stream[i]>>>(); cuda. Memcpy. Async(/*. . . */, stream[i]); }

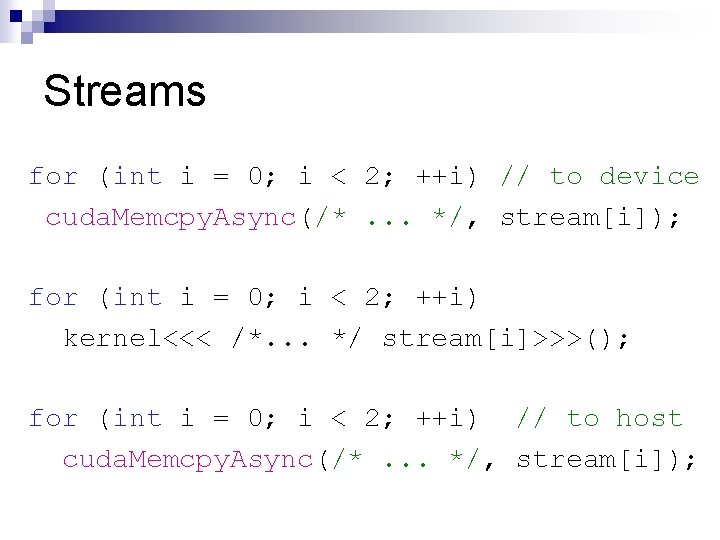

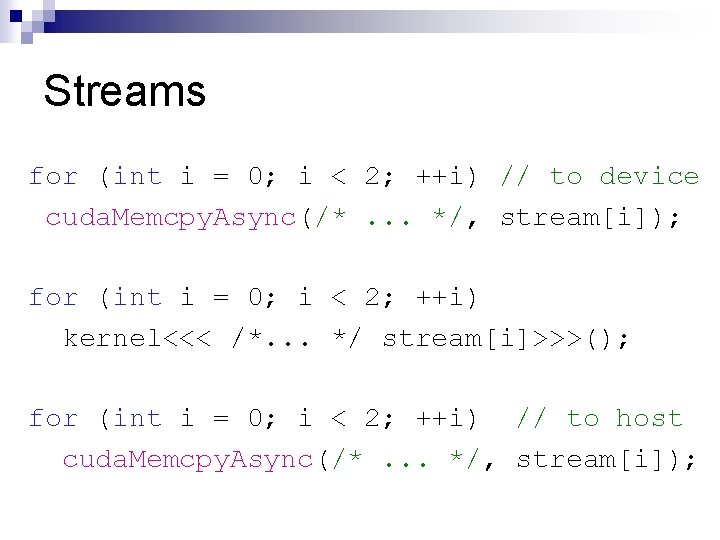

Streams for (int i = 0; i < 2; ++i) // to device cuda. Memcpy. Async(/*. . . */, stream[i]); for (int i = 0; i < 2; ++i) kernel<<< /*. . . */ stream[i]>>>(); for (int i = 0; i < 2; ++i) // to host cuda. Memcpy. Async(/*. . . */, stream[i]);

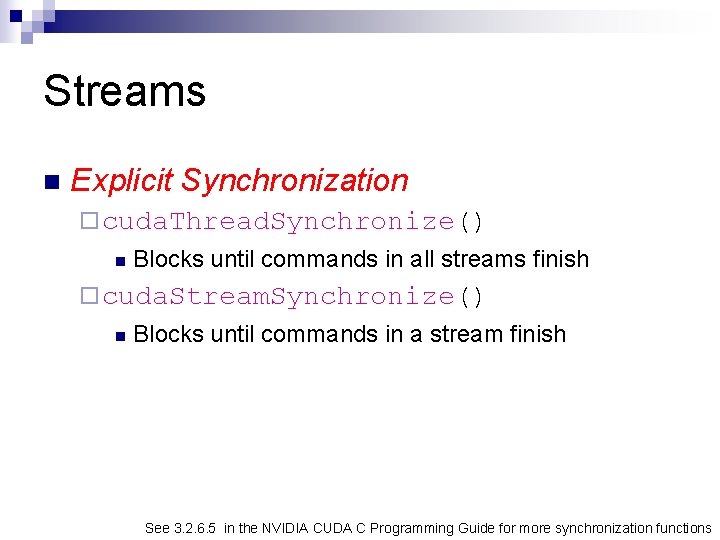

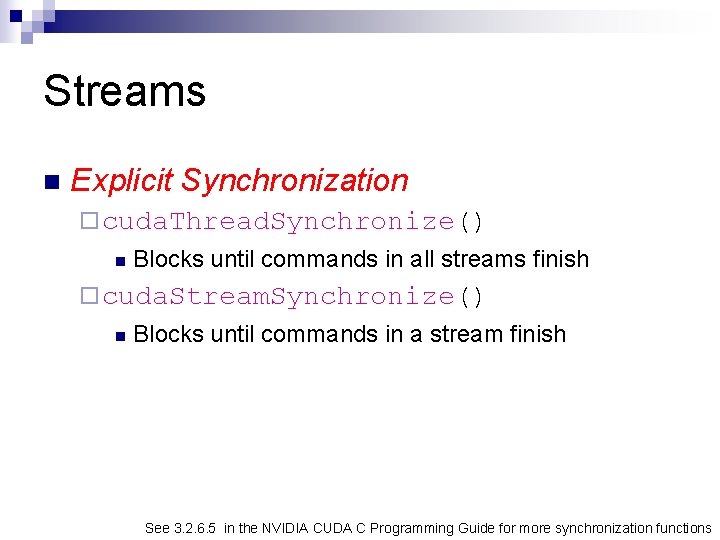

Streams n Explicit Synchronization ¨ cuda. Thread. Synchronize() n Blocks until commands in all streams finish ¨ cuda. Stream. Synchronize() n Blocks until commands in a stream finish See 3. 2. 6. 5 in the NVIDIA CUDA C Programming Guide for more synchronization functions

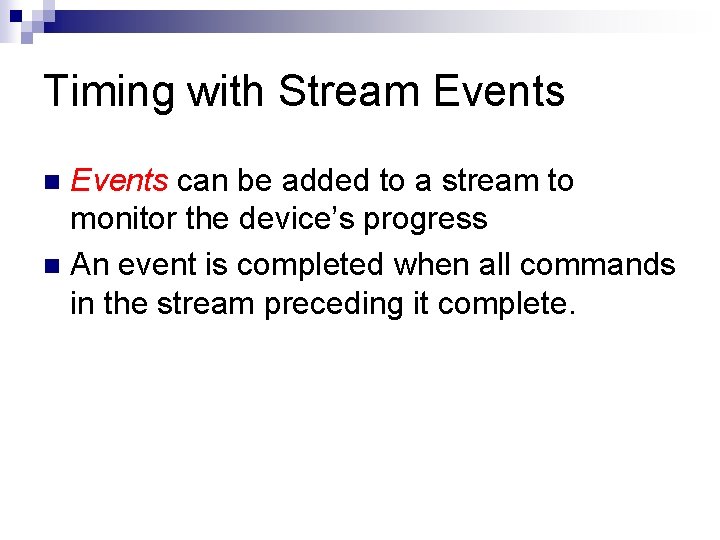

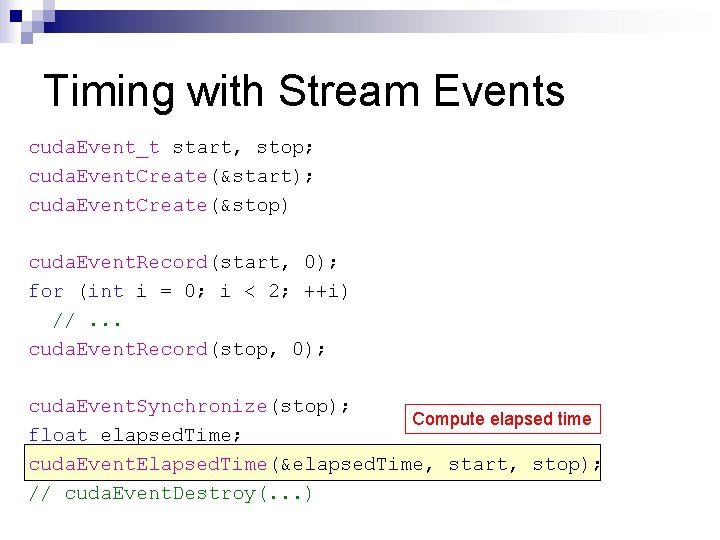

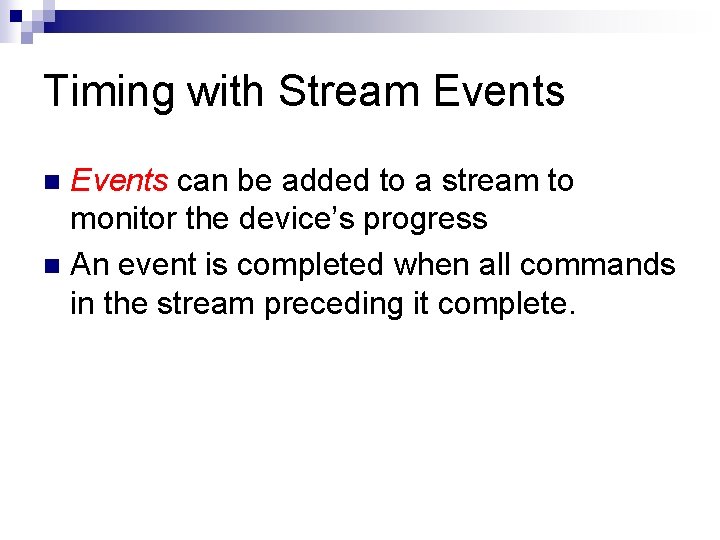

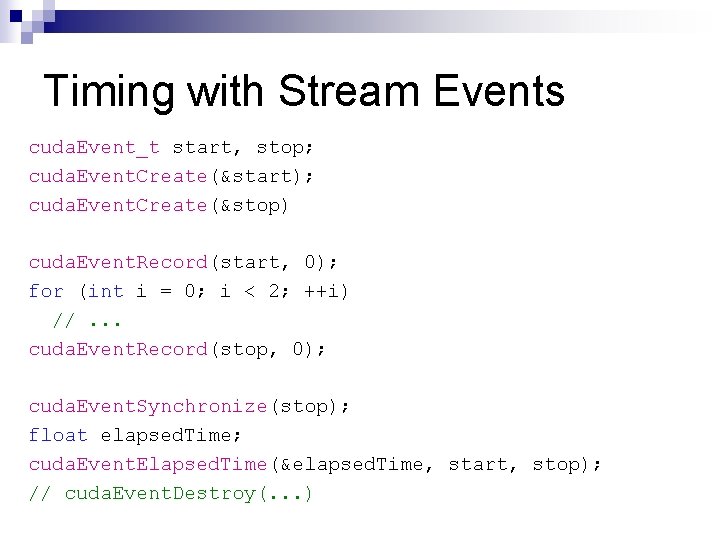

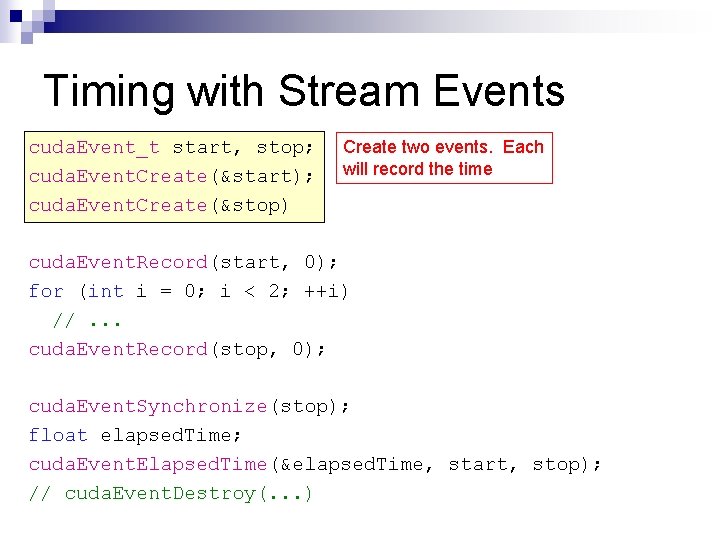

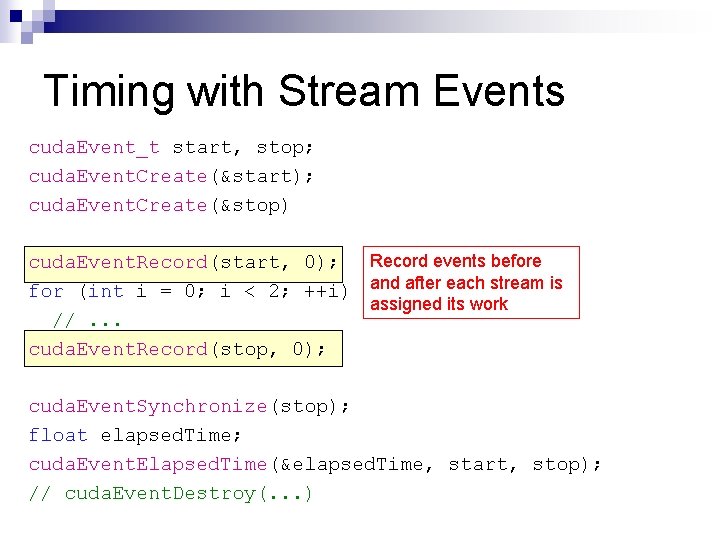

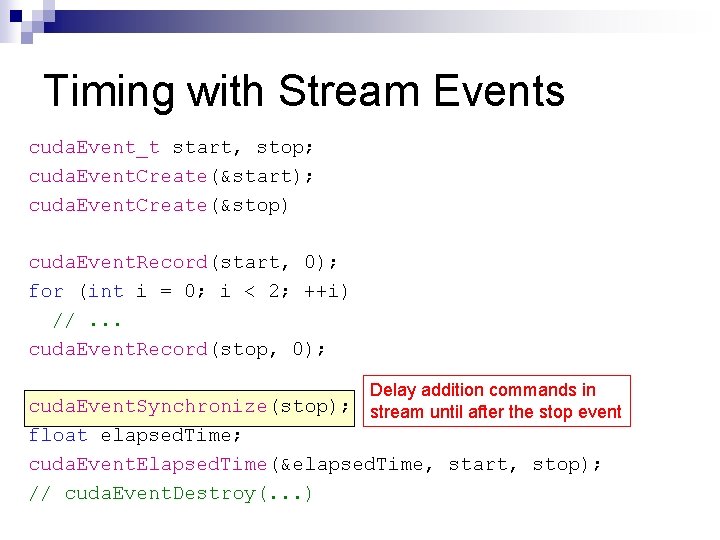

Timing with Stream Events can be added to a stream to monitor the device’s progress n An event is completed when all commands in the stream preceding it complete. n

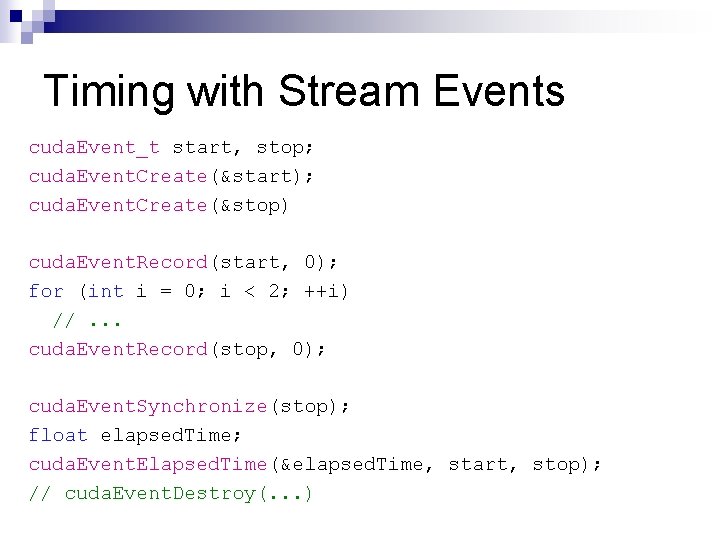

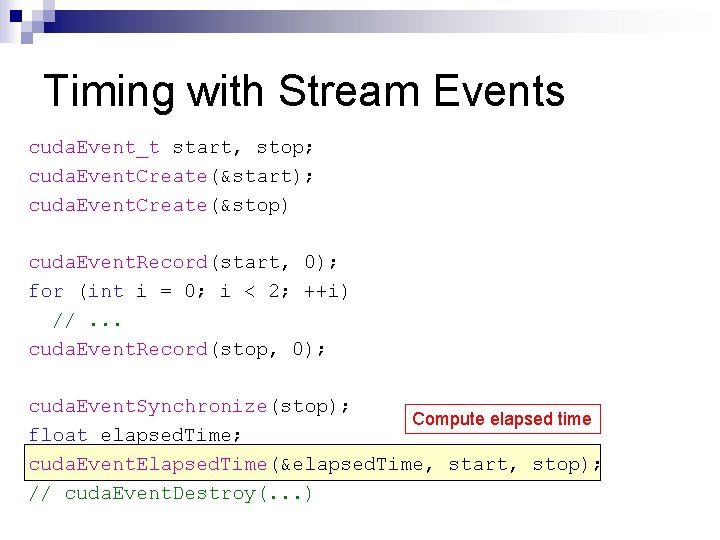

Timing with Stream Events cuda. Event_t start, stop; cuda. Event. Create(&start); cuda. Event. Create(&stop) cuda. Event. Record(start, 0); for (int i = 0; i < 2; ++i) //. . . cuda. Event. Record(stop, 0); cuda. Event. Synchronize(stop); float elapsed. Time; cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); // cuda. Event. Destroy(. . . )

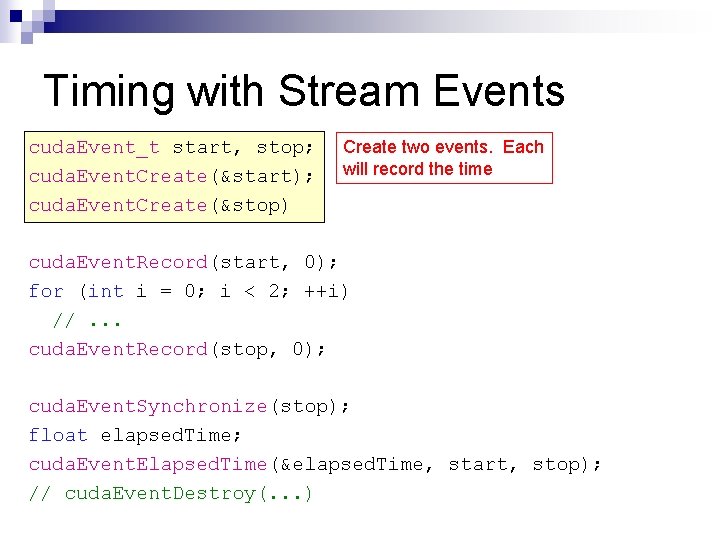

Timing with Stream Events cuda. Event_t start, stop; cuda. Event. Create(&start); cuda. Event. Create(&stop) Create two events. Each will record the time cuda. Event. Record(start, 0); for (int i = 0; i < 2; ++i) //. . . cuda. Event. Record(stop, 0); cuda. Event. Synchronize(stop); float elapsed. Time; cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); // cuda. Event. Destroy(. . . )

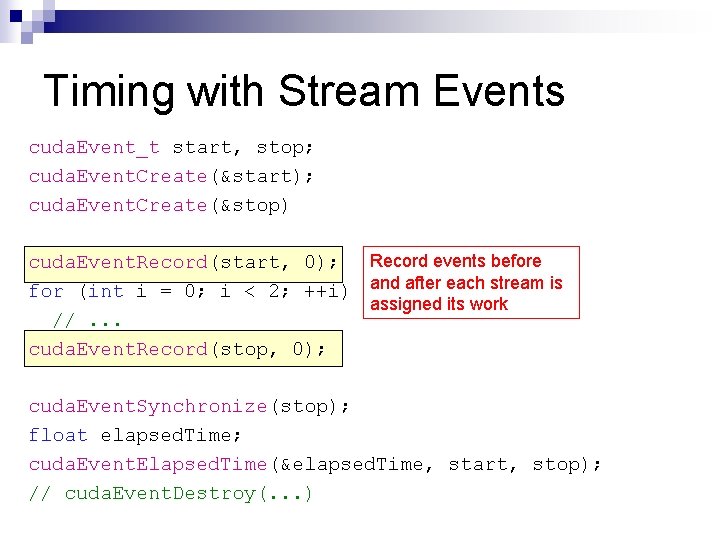

Timing with Stream Events cuda. Event_t start, stop; cuda. Event. Create(&start); cuda. Event. Create(&stop) cuda. Event. Record(start, 0); Record events before for (int i = 0; i < 2; ++i) and after each stream is assigned its work //. . . cuda. Event. Record(stop, 0); cuda. Event. Synchronize(stop); float elapsed. Time; cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); // cuda. Event. Destroy(. . . )

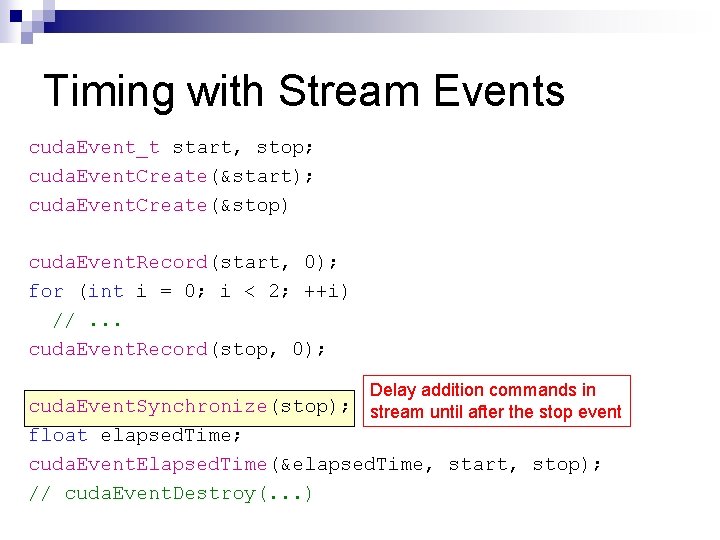

Timing with Stream Events cuda. Event_t start, stop; cuda. Event. Create(&start); cuda. Event. Create(&stop) cuda. Event. Record(start, 0); for (int i = 0; i < 2; ++i) //. . . cuda. Event. Record(stop, 0); Delay addition commands in cuda. Event. Synchronize(stop); stream until after the stop event float elapsed. Time; cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); // cuda. Event. Destroy(. . . )

Timing with Stream Events cuda. Event_t start, stop; cuda. Event. Create(&start); cuda. Event. Create(&stop) cuda. Event. Record(start, 0); for (int i = 0; i < 2; ++i) //. . . cuda. Event. Record(stop, 0); cuda. Event. Synchronize(stop); Compute elapsed time float elapsed. Time; cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); // cuda. Event. Destroy(. . . )

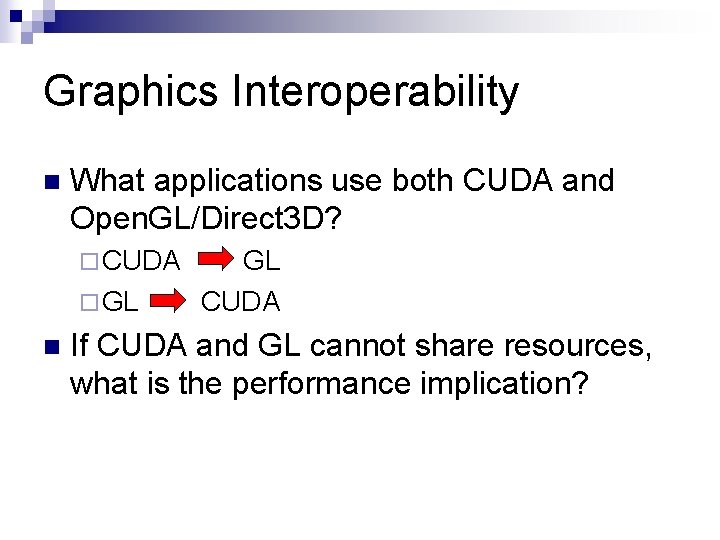

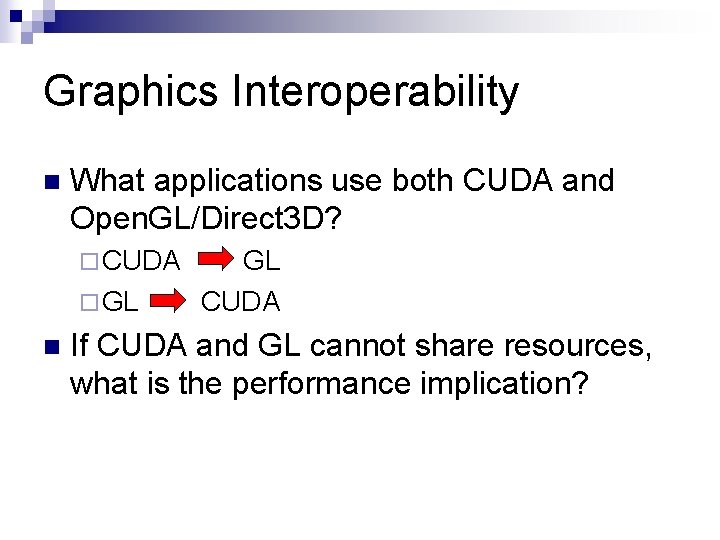

Graphics Interoperability n What applications use both CUDA and Open. GL/Direct 3 D? ¨ CUDA ¨ GL n GL CUDA If CUDA and GL cannot share resources, what is the performance implication?

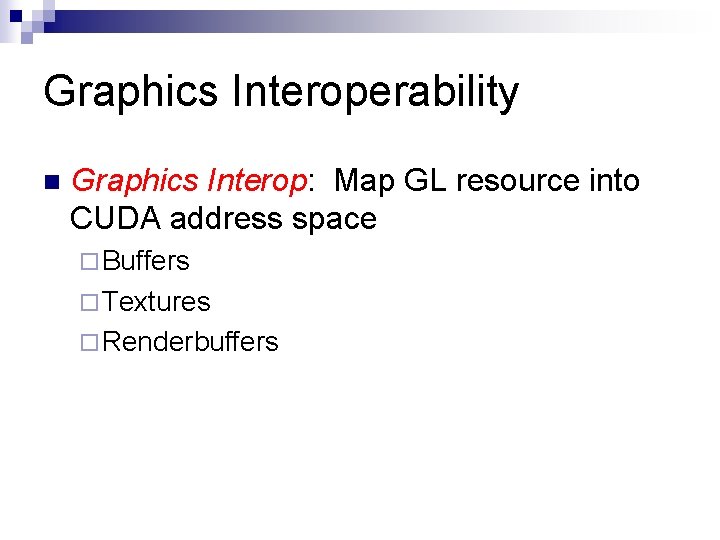

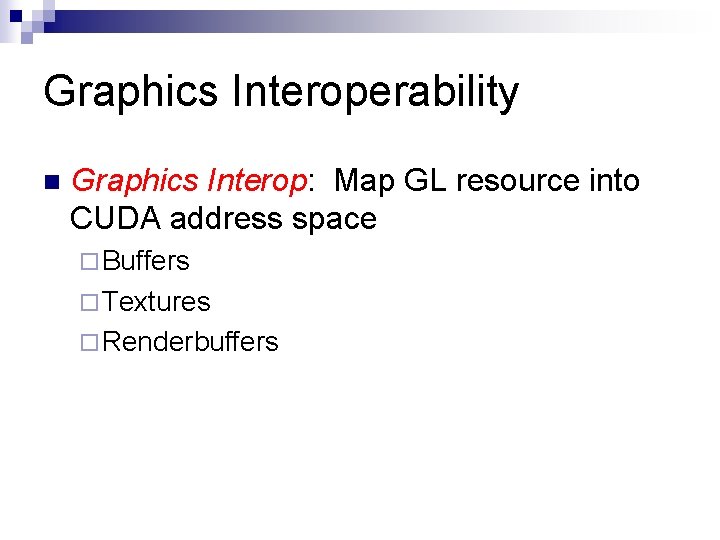

Graphics Interoperability n Graphics Interop: Map GL resource into CUDA address space ¨ Buffers ¨ Textures ¨ Renderbuffers

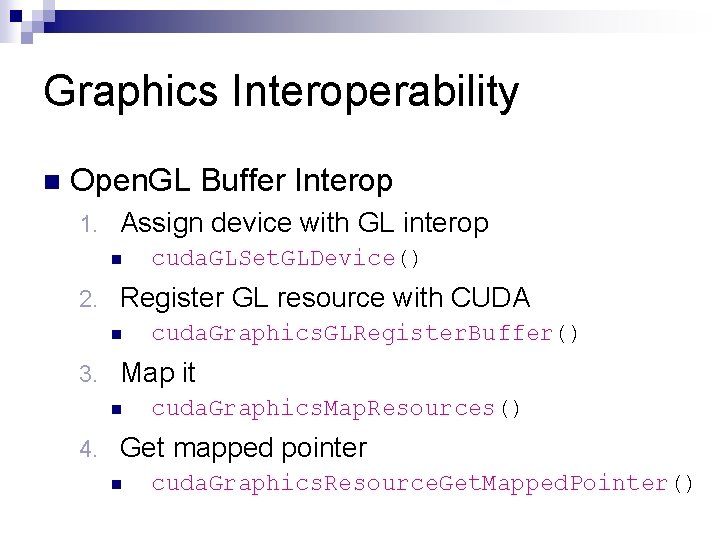

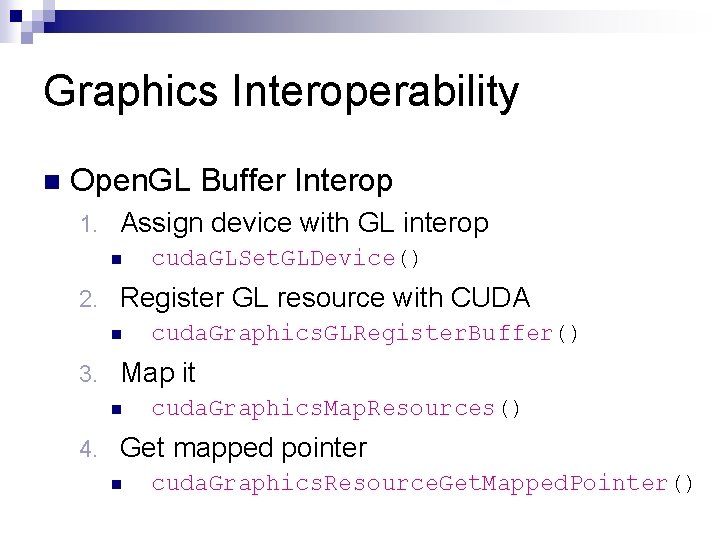

Graphics Interoperability n Open. GL Buffer Interop 1. Assign device with GL interop n 2. Register GL resource with CUDA n 3. cuda. Graphics. GLRegister. Buffer() Map it n 4. cuda. GLSet. GLDevice() cuda. Graphics. Map. Resources() Get mapped pointer n cuda. Graphics. Resource. Get. Mapped. Pointer()

Trichomoniasis

Trichomoniasis Netball odds and evens

Netball odds and evens Attributable risk rumus

Attributable risk rumus Ukuran asosiasi epidemiologi

Ukuran asosiasi epidemiologi Gripsholmsstenen

Gripsholmsstenen Hazard ratio vs odds ratio

Hazard ratio vs odds ratio Probability definition

Probability definition Diagnostic odds ratio

Diagnostic odds ratio Contoh penelitian reaktif

Contoh penelitian reaktif Odds ratio interprétation

Odds ratio interprétation Odds ratio interprétation

Odds ratio interprétation Odds ratio interprétation

Odds ratio interprétation Odd haskell

Odd haskell Apakah probabilitas sama dengan peluang

Apakah probabilitas sama dengan peluang Odds ratio

Odds ratio Tipos de estudo epidemiológico

Tipos de estudo epidemiológico Calcular odds ratio

Calcular odds ratio Rule of thirda

Rule of thirda Haskell odds

Haskell odds Odds of getting pregnant

Odds of getting pregnant Nna odds

Nna odds Slimbu

Slimbu Penggolongan jenis penelitian

Penggolongan jenis penelitian Tft dice odds

Tft dice odds Unit 9 lesson 1

Unit 9 lesson 1 Odds ratio

Odds ratio Skema case control

Skema case control Solid

Solid Battle ends and down goes

Battle ends and down goes Jerusalem judea samaria and the ends of the earth

Jerusalem judea samaria and the ends of the earth Who governs and to what ends

Who governs and to what ends These violent delights will have violent ends

These violent delights will have violent ends Science greatly advanced when galileo favored

Science greatly advanced when galileo favored A solid object with two identical ends and flat sides

A solid object with two identical ends and flat sides Maternal and paternal chromosomes

Maternal and paternal chromosomes Lesson 5 world war 2 ends

Lesson 5 world war 2 ends Chapter 27 lesson 4 world war 1 ends

Chapter 27 lesson 4 world war 1 ends Oxymorons in romeo and juliet

Oxymorons in romeo and juliet Serongagandi description

Serongagandi description Ends-based thinking

Ends-based thinking Ends based thinking

Ends based thinking Ends in a climax community

Ends in a climax community End of the digestive system

End of the digestive system Superlative of peaceful

Superlative of peaceful Comparative and superlative sweet

Comparative and superlative sweet Ends in a climax community

Ends in a climax community Ends in a climax community

Ends in a climax community Adjective ends with y

Adjective ends with y Manner adverb examples

Manner adverb examples Irregular adverbs of manner

Irregular adverbs of manner Many superlative and comparative

Many superlative and comparative Mutcd no outlet sign

Mutcd no outlet sign The name of a binary compound ends with _____.

The name of a binary compound ends with _____. Teori means-ends scheme

Teori means-ends scheme Sticky ends

Sticky ends Restriction ends

Restriction ends A play that ends happily

A play that ends happily Illustrate the steps in restriction digestion and pcr

Illustrate the steps in restriction digestion and pcr Charles by shirley jackson setting

Charles by shirley jackson setting Sticky ends

Sticky ends Sticky ends

Sticky ends Who said this line

Who said this line Shel silverstein where the sidewalk ends

Shel silverstein where the sidewalk ends Gb3fc

Gb3fc What is drama/play

What is drama/play