CSE Senior Design I Critical Topic Review Instructor

- Slides: 53

CSE Senior Design I Critical Topic Review Instructor: Mike O’Dell

CSE Senior Design I: Classic Mistakes. Instructor: Mike O’Dell. This presentation was derived from the textbook used for this class: McConnell, Steve, Rapid Development, Chapter 3.

Why Projects Fail - Overview

- Five main reasons:
  - Failing to communicate
  - Failing to create a realistic plan
  - Lack of buy-in
  - Allowing scope/feature creep
  - Throwing resources at a problem

Categories of Classic Mistakes

- People-related
- Process-related
- Product-related
- Technology-related

Classic Mistakes Enumerated

1. Undermined motivation:
   - The Big One: probably the largest single factor in poor productivity
   - Motivation must come from within
2. Weak personnel:
   - The right people in the right roles
3. Uncontrolled problem employees:
   - Problem people (or just one person) can kill a team and doom a project
   - The team must take action… early
   - Consider the Welch Grid

Classic Mistakes Enumerated

4. Heroics:
   - Heroics seldom work to your advantage
   - Honesty is better than empty “can-do”
5. Adding people to a late project:
   - Productivity killer
   - Throwing people at a problem seldom helps
6. Noisy, crowded offices:
   - Work environment is important to productivity
   - Noisy, crowded conditions lengthen schedules

Classic Mistakes Enumerated

7. Friction between developers and customers:
   - Cooperation is the key
   - Encourage participation in the process
8. Unrealistic expectations:
   - Avoid seat-of-the-pants commitments
   - Setting realistic expectations is a TOP 5 issue
9. Lack of effective project sponsorship:
   - Management must buy in and provide support
   - Potential morale killer

Classic Mistakes Enumerated

10. Lack of stakeholder buy-in:
    - Team members, end-users, customers, management, etc.
    - Buy-in engenders cooperation at all levels
11. Lack of user input:
    - You can’t build what you don’t understand
    - Early input is critical to avoid feature creep
12. Politics placed over substance:
    - Being well regarded by management will not make your project successful

Classic Mistakes Enumerated

13. Wishful thinking:
    - Not the same as optimism
    - Don’t plan on good luck!
    - May be the root cause of many other mistakes
14. Overly optimistic schedules:
    - Wishful thinking?
15. Insufficient risk management:
    - Identify unique risks and develop a plan to eliminate them
    - Consider a “spiral” approach for larger risks

Classic Mistakes Enumerated

16. Contractor failure:
    - Relationship/cooperation/clear SOW
17. Insufficient planning:
    - If you can’t plan it… you can’t do it!
18. Abandonment of planning under pressure:
    - Path to failure
    - Code-and-fix mentality takes over… and will fail

Classic Mistakes Enumerated

19. Wasted time during fuzzy front end:
    - That would be now!
    - Almost always cheaper and faster to spend time up front working/refining the plan
20. Shortchanged upstream activities:
    - See above… do the work up front!
    - Avoid the “jump to coding” mentality
21. Inadequate design:
    - See above… do the required work up front!

Classic Mistakes Enumerated

22. Shortchanged quality assurance:
    - Test planning is a critical part of every plan
    - Cutting 1 day of QA early on will likely cost you 3-10 days later
    - QA me now, or pay me later!
23. Insufficient management controls:
    - Buy-in implies participation & cooperation
24. Premature or overly frequent convergence:
    - It’s not done until it’s done!

Classic Mistakes Enumerated

25. Omitting necessary tasks from estimates:
    - Can add 20-30% to your schedule
    - Don’t sweat the small stuff!
26. Planning to catch up later:
    - Schedule adjustments WILL be necessary
    - A month lost early on probably cannot be made up later
27. Code-like-hell programming:
    - The fast, loose, “entrepreneurial” approach
    - This is simply… Code-and-Fix. Don’t!

Classic Mistakes Enumerated

28. Requirements gold-plating:
    - Avoid complex, difficult-to-implement features
    - Often, they add disproportionately to schedule
29. Feature creep:
    - The average project experiences 25% change
    - Another killer mistake!
30. Developer gold-plating:
    - Use proven stuff to do your job
    - Avoid dependence on the hottest new tools
    - Avoid implementing all the cool new features

Classic Mistakes Enumerated

31. Push-me, pull-me negotiation:
    - Schedule slip = feature addition
32. Research-oriented development:
    - Software research schedules are theoretical at best
    - Try not to push the envelope unless you allow for frequent schedule revisions
    - If you push the state of the art… it will push back!
33. Silver-bullet syndrome:
    - There is no magic in product development
    - Don’t plan on some new whiz-bang thing to save your bacon (i.e., your schedule)

Classic Mistakes Enumerated

34. Overestimated savings from new tools or methods:
    - Silver bullets probably won’t improve your schedule… don’t overestimate their value
35. Switching tools in the middle of the project:
    - Version 3.1… version 3.2… version 4.0!
    - Learning curve, rework inevitable
36. Lack of automated source control:
    - Stuff happens… enough said!

Recommendation: Develop a Disaster Avoidance Plan

- Get together as a team sometime soon and make a list of “worst practices” that you should avoid in your project.
- Include specific mistakes that you think could/will be made by your team.
- Post this list on the wall in your lab space, or wherever it will be visible and prominent on a daily basis.
- Refer to it frequently and talk about how you will avoid these mistakes.

CSE Senior Design I: Your Plan: Estimation. Instructor: Mike O’Dell. This presentation was derived from the textbook used for this class, McConnell, Steve, Rapid Development, Chapter 8, further expanded on by Mr. Tom Rethard for this course.

The Software-Estimation Story

- Software/system development, and thus estimation, is a process of gradual refinement.
- Can you build a 3-bedroom house for $100,000? (Answer: It depends!)
- Some organizations want cost estimates to within ±10% before they’ll fund work on requirements definition. (Is this possible?)
- Present your estimate as a range instead of a “single point in time” estimate.
- The tendency of most developers is to underestimate and over-commit!

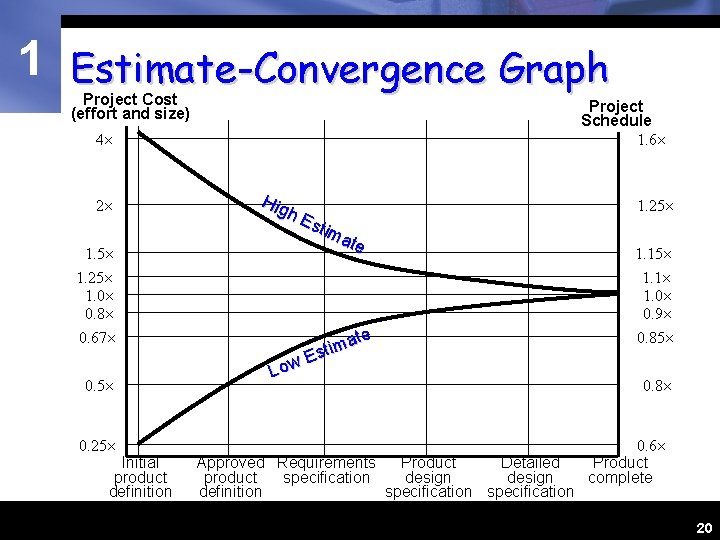

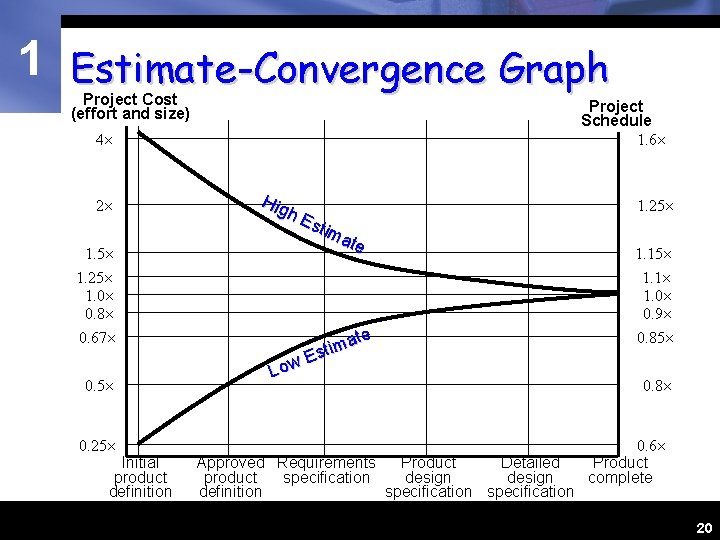

Estimate-Convergence Graph

Figure: high-estimate and low-estimate curves converging toward 1.0 as the project moves through its milestones (initial product definition, approved product definition, requirements specification, product design specification, detailed design specification, product complete).

- Project cost (effort and size) axis: 4, 2, 1.5, 1.25, 1.0, 0.8, 0.67, 0.5, 0.25
- Project schedule axis: 1.6, 1.25, 1.1, 1.0, 0.9, 0.85, 0.8, 0.6
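
As a rough illustration of how the graph can be used, the sketch below turns a single-point cost estimate into a range. The pairing of milestones with (low, high) multipliers is a reading of the cost axis above; the function name `estimate_range` and the 20 staff-month figure are made up for the example.

```python
# Cost (effort and size) multipliers read off the estimate-convergence graph.
# Keys are milestone names from the graph; values are (low, high) multipliers.
# The pairing of values to milestones is an interpretation of the figure.
COST_MULTIPLIERS = {
    "initial product definition": (0.25, 4.0),
    "approved product definition": (0.5, 2.0),
    "requirements specification": (0.67, 1.5),
    "product design specification": (0.8, 1.25),
}


def estimate_range(point_estimate, milestone):
    """Return (low, high) bounds for a single-point estimate at a milestone."""
    low, high = COST_MULTIPLIERS[milestone]
    return point_estimate * low, point_estimate * high


if __name__ == "__main__":
    # Hypothetical point estimate of 20 staff-months.
    for name in COST_MULTIPLIERS:
        low, high = estimate_range(20, name)
        print(f"{name}: {low:.1f} to {high:.1f} staff-months")
```

The range narrows at each milestone, which is the point of the graph: the same point estimate deserves a much wider range early in the project than after the design is specified.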

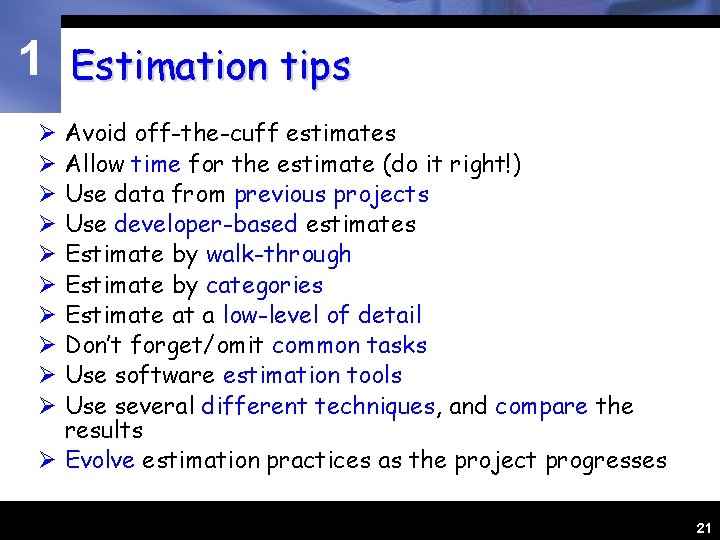

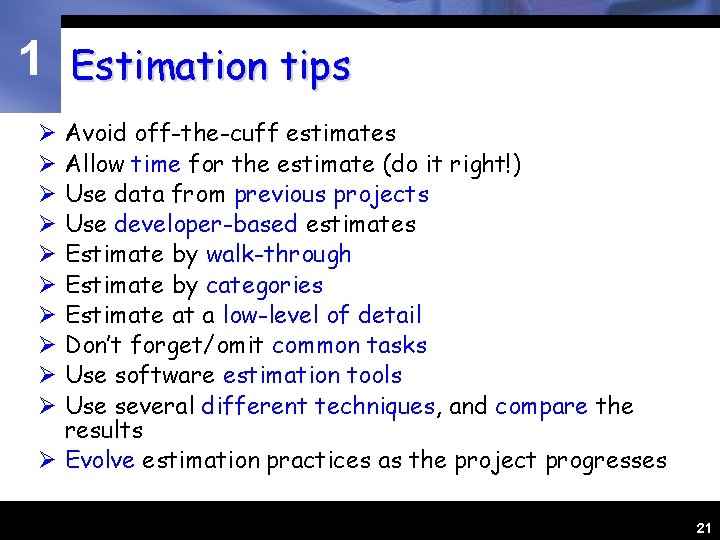

Estimation tips

- Avoid off-the-cuff estimates
- Allow time for the estimate (do it right!)
- Use data from previous projects
- Use developer-based estimates
- Estimate by walk-through
- Estimate by categories
- Estimate at a low level of detail
- Don’t forget/omit common tasks
- Use software estimation tools
- Use several different techniques, and compare the results
- Evolve estimation practices as the project progresses

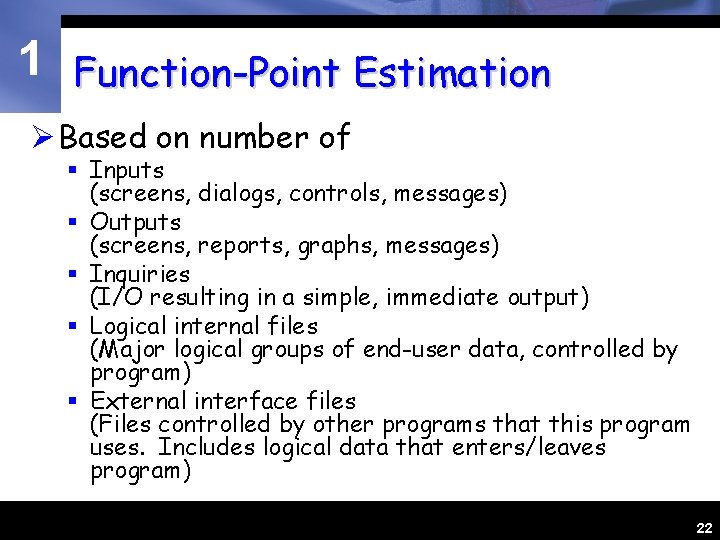

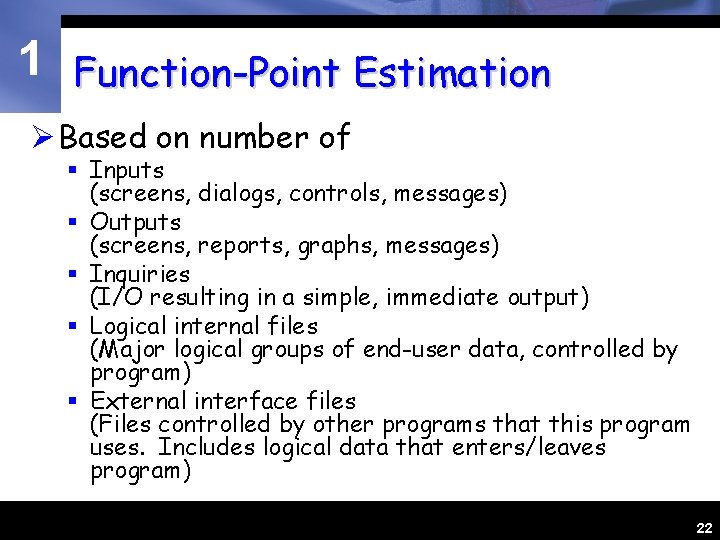

Function-Point Estimation

- Based on number of:
  - Inputs (screens, dialogs, controls, messages)
  - Outputs (screens, reports, graphs, messages)
  - Inquiries (I/O resulting in a simple, immediate output)
  - Logical internal files (major logical groups of end-user data, controlled by the program)
  - External interface files (files controlled by other programs that this program uses; includes logical data that enters/leaves the program)

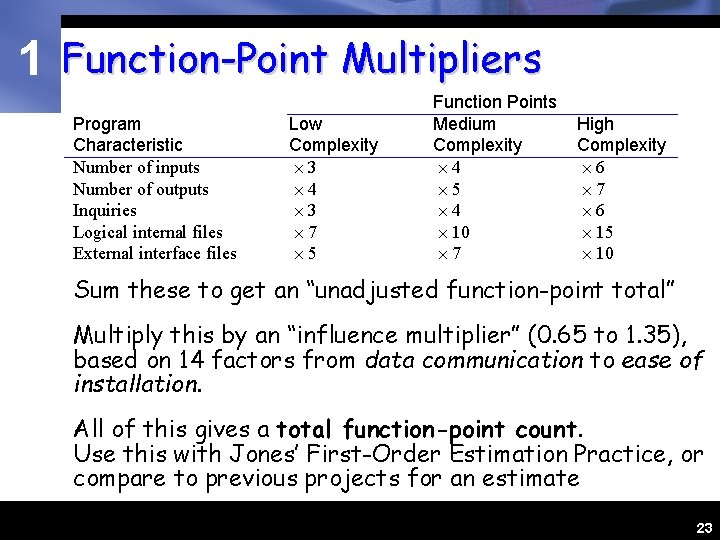

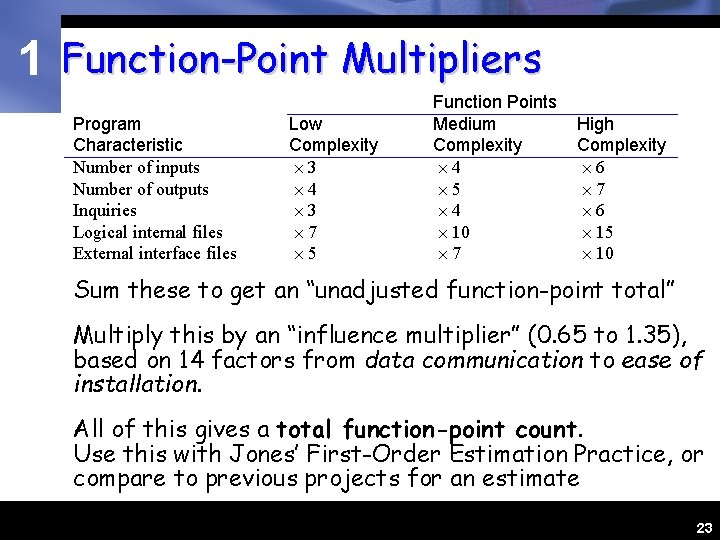

Function-Point Multipliers

Function points per item, by complexity:

| Program characteristic | Low complexity | Medium complexity | High complexity |
|---|---|---|---|
| Number of inputs | 3 | 4 | 6 |
| Number of outputs | 4 | 5 | 7 |
| Inquiries | 3 | 4 | 6 |
| Logical internal files | 7 | 10 | 15 |
| External interface files | 5 | 7 | 10 |

- Multiply each count by its weight and sum the results to get an “unadjusted function-point total”.
- Multiply this by an “influence multiplier” (0.65 to 1.35), based on 14 factors from data communication to ease of installation.
- All of this gives a total function-point count.
- Use this with Jones’ First-Order Estimation Practice, or compare to previous projects for an estimate.
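
The arithmetic above can be sketched in a few lines. The weights come from the multiplier table; the function names and the sample counts are hypothetical, and the influence multiplier is passed in directly because the 14 underlying factors are not listed on the slide.

```python
# Function-point weights from the multiplier table on this slide.
WEIGHTS = {
    "inputs": {"low": 3, "medium": 4, "high": 6},
    "outputs": {"low": 4, "medium": 5, "high": 7},
    "inquiries": {"low": 3, "medium": 4, "high": 6},
    "logical_internal_files": {"low": 7, "medium": 10, "high": 15},
    "external_interface_files": {"low": 5, "medium": 7, "high": 10},
}


def unadjusted_function_points(counts):
    """Sum count * weight over every characteristic and complexity level."""
    total = 0
    for characteristic, by_complexity in counts.items():
        for complexity, count in by_complexity.items():
            total += count * WEIGHTS[characteristic][complexity]
    return total


def adjusted_function_points(counts, influence_multiplier):
    """Apply the influence multiplier (0.65 to 1.35) to the unadjusted total."""
    if not 0.65 <= influence_multiplier <= 1.35:
        raise ValueError("influence multiplier must be between 0.65 and 1.35")
    return unadjusted_function_points(counts) * influence_multiplier


if __name__ == "__main__":
    # Hypothetical counts for a small project.
    sample = {
        "inputs": {"low": 6, "medium": 2},
        "outputs": {"medium": 4},
        "inquiries": {"low": 3},
        "logical_internal_files": {"medium": 2},
        "external_interface_files": {"low": 1},
    }
    print(unadjusted_function_points(sample))      # 18 + 8 + 20 + 9 + 20 + 5 = 80
    print(adjusted_function_points(sample, 1.0))
```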

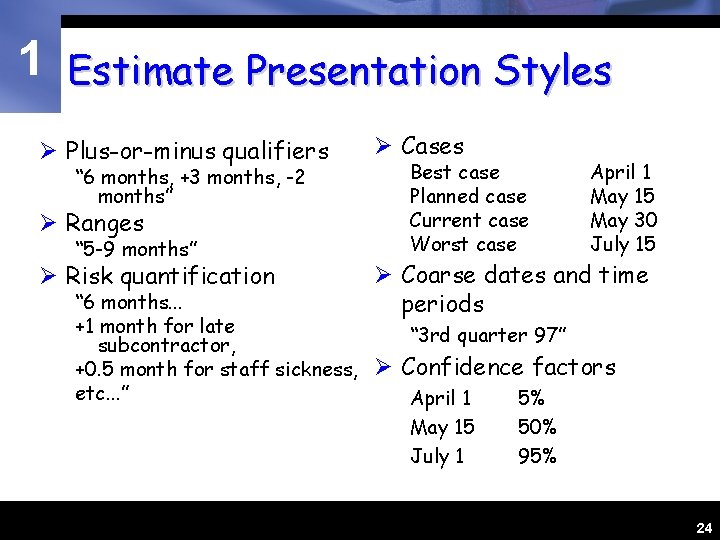

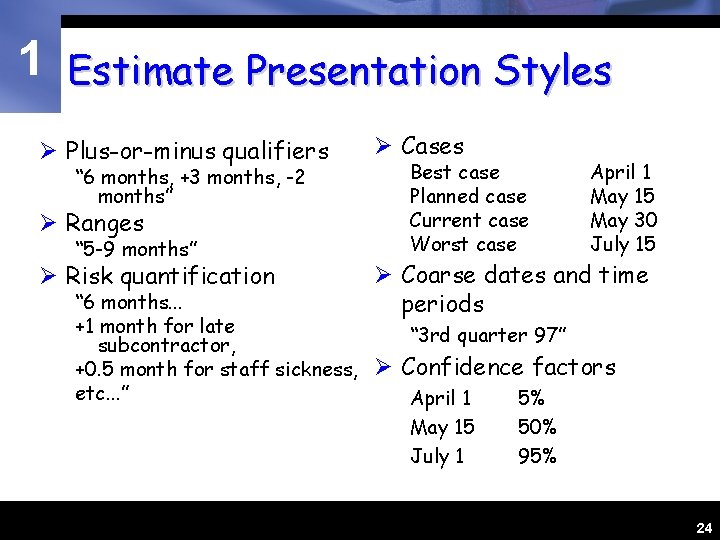

Estimate Presentation Styles

- Plus-or-minus qualifiers: “6 months, +3 months, -2 months”
- Ranges: “5-9 months”
- Risk quantification: “6 months... +1 month for late subcontractor, +0.5 month for staff sickness, etc...”
- Cases:
  - Best case: April 1
  - Planned case: May 15
  - Current case: May 30
  - Worst case: July 15
- Coarse dates and time periods: “3rd quarter 97”
- Confidence factors:
  - April 1: 5%
  - May 15: 50%
  - July 1: 95%

Schedule Estimation

- Rule-of-thumb equation:
  - $\text{schedule in months} = 3.0 \times \text{man-months}^{1/3}$
  - This equation implies an optimal team size.
- Use estimation software to compute the schedule from your size and effort estimates
- Use historical data from your organization
- Use McConnell’s Tables 8-8 through 8-10 to look up a schedule estimate based on the size estimate
- Use the schedule estimation step from one of the algorithmic approaches (e.g., COCOMO) to get a more finely tuned estimate than the rule-of-thumb equation.
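
A minimal sketch of the rule-of-thumb equation follows. The team-size calculation (effort divided by schedule) is one way to read the remark that the equation implies an optimal team size; it is an interpretation, and the function names and the 27 man-month figure are hypothetical.

```python
def schedule_months(man_months):
    """Rule of thumb: schedule in months = 3.0 * man-months ** (1/3)."""
    if man_months <= 0:
        raise ValueError("effort must be positive")
    return 3.0 * man_months ** (1.0 / 3.0)


def average_team_size(man_months):
    """Average staffing implied by spreading the effort over the schedule."""
    return man_months / schedule_months(man_months)


if __name__ == "__main__":
    effort = 27  # hypothetical effort estimate in man-months
    print(f"schedule: {schedule_months(effort):.1f} months")
    print(f"implied average team size: {average_team_size(effort):.1f} people")
```

Because the schedule grows only with the cube root of effort, doubling the effort lengthens the schedule by about 26%, not 100%.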

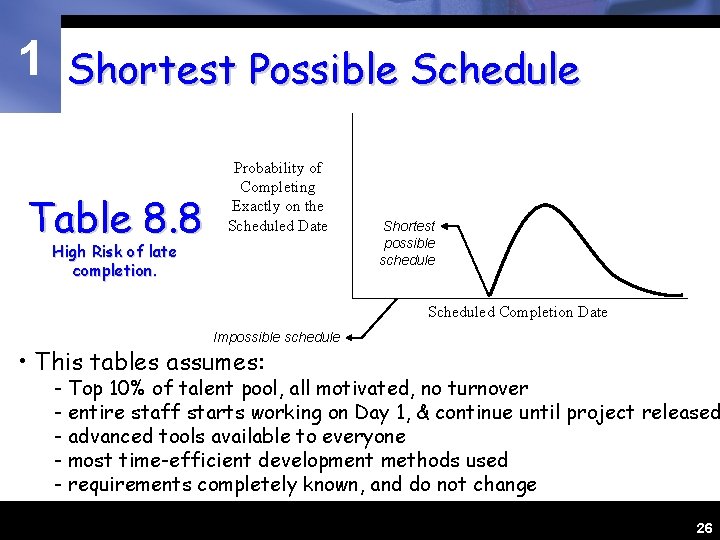

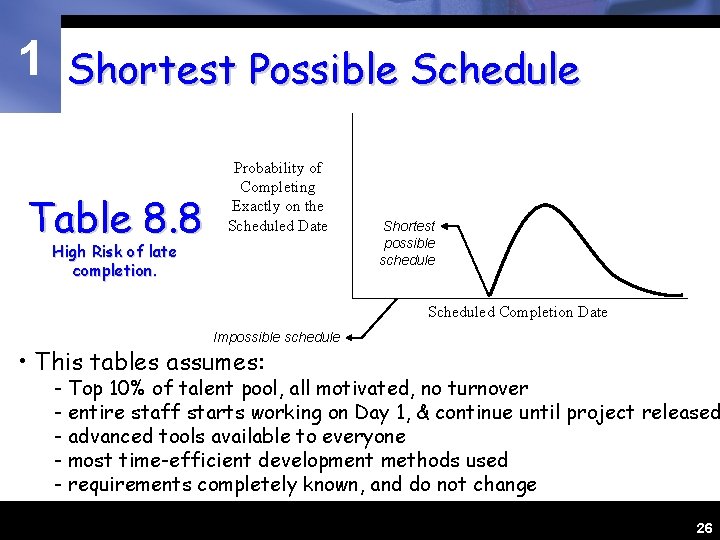

Shortest Possible Schedule (Table 8-8)

Figure: probability of completing exactly on the scheduled date, plotted against scheduled completion date. Labeled regions: impossible schedule, shortest possible schedule, high risk of late completion.

- This table assumes:
  - Top 10% of talent pool, all motivated, no turnover
  - Entire staff starts working on Day 1 and continues until the project is released
  - Advanced tools available to everyone
  - Most time-efficient development methods used
  - Requirements completely known, and do not change

Efficient Schedules (Table 8-9)

- This table assumes:
  - Top 25% of talent pool
  - Turnover < 6% per year
  - No significant personnel conflicts
  - Using efficient development practices from Chapters 1-5
- Note that less effort is required in the efficient-schedule tables
- For most projects, the efficient schedules represent “best-case”

Nominal Schedules (Table 8-10)

- This table assumes:
  - Top 50% of talent pool
  - Turnover 10-12% per year
  - Risk management less than ideal
  - Office environment only adequate
  - Sporadic use of efficient development practices
- Achieving nominal schedule may be a 50/50 bet.

Estimate Refinement

- An estimate can be refined only with a more refined definition of the software product
- Developers often let themselves get trapped by a “single-point” estimate, and are held to it (Case study 1-1)
  - When the estimate later increases, it creates the impression of a slip or a budget overrun
- When estimate ranges decrease as the project progresses, customer confidence is built up.

Conclusions

- Estimate accuracy is directly proportional to product definition.
  - Before requirements specification, the product is very vaguely defined
- Use ranges for estimates and gradually refine (tighten) them as the project progresses.
- Measure progress and compare to your historical data
- Refine…

CSE Senior Design I: Feature-Set Control. Instructor: Mike O’Dell. The slides in this presentation are derived from materials in the textbook used for CSE 4316/4317, Rapid Development: Taming Wild Software Schedules, by Steve McConnell.

The Problem

- Products are initially stuffed with more features (requirements) than can be reasonably accommodated
- Features continue to be added as the project progresses (“Feature-Creep”)
- Features must be removed/reduced or significantly changed late in a project

Sources of Change

- End-users: driven by the “need” for additional or different functionality
- Marketers: driven by the fact that markets and customer perspectives on requirements change (“latest and greatest” syndrome)
- Developers: driven by emotional/intellectual desire to build the “best” widget

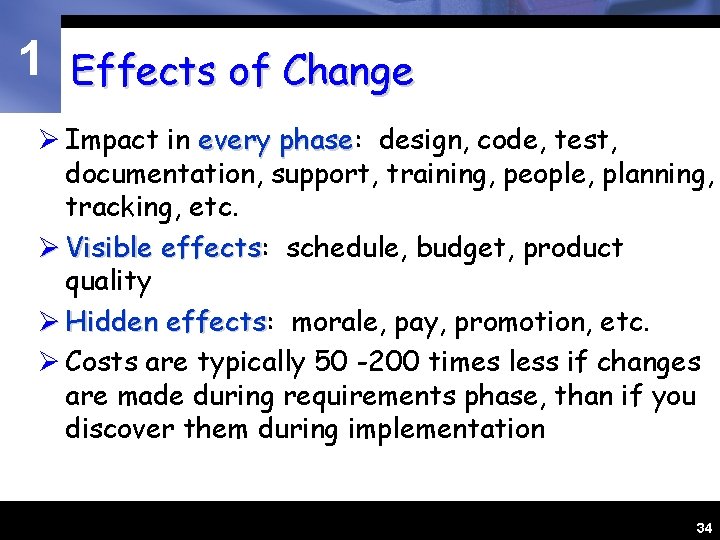

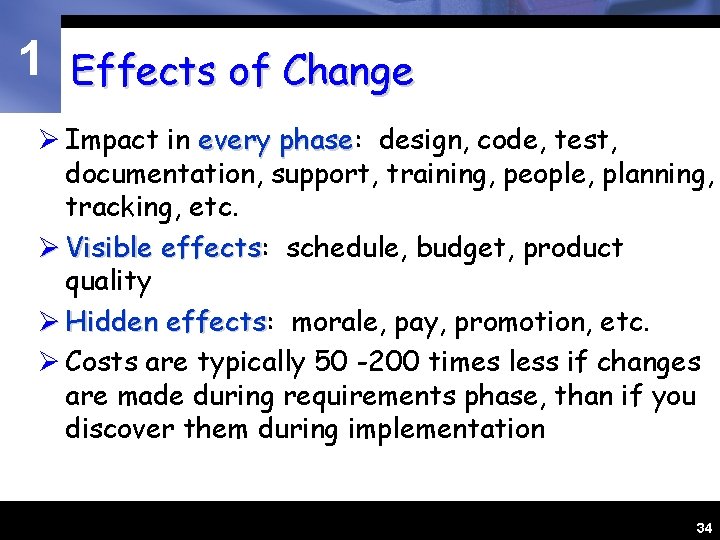

Effects of Change

- Impact in every phase: design, code, test, documentation, support, training, people, planning, tracking, etc.
- Visible effects: schedule, budget, product quality
- Hidden effects: morale, pay, promotion, etc.
- Costs are typically 50-200 times less if changes are made during the requirements phase than if you discover them during implementation

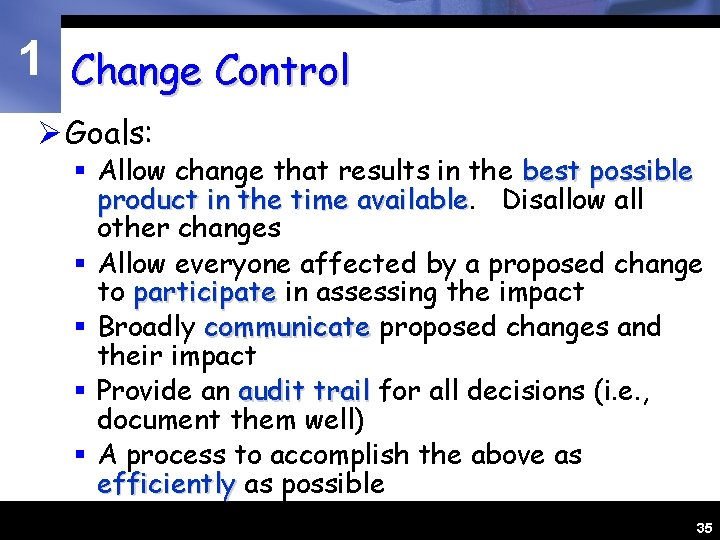

Change Control

- Goals:
  - Allow change that results in the best possible product in the time available; disallow all other changes
  - Allow everyone affected by a proposed change to participate in assessing the impact
  - Broadly communicate proposed changes and their impact
  - Provide an audit trail for all decisions (i.e., document them well)
  - Define a process to accomplish the above as efficiently as possible

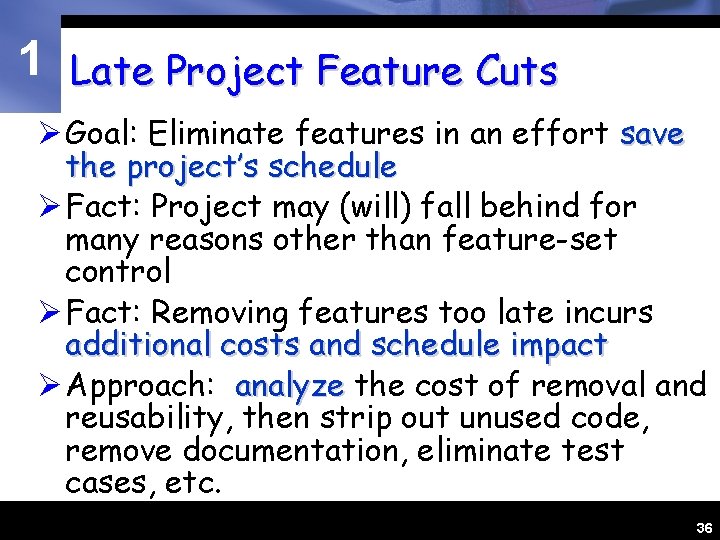

Late Project Feature Cuts

- Goal: Eliminate features in an effort to save the project’s schedule
- Fact: Project may (will) fall behind for many reasons other than feature-set control
- Fact: Removing features too late incurs additional costs and schedule impact
- Approach: analyze the cost of removal and reusability, then strip out unused code, remove documentation, eliminate test cases, etc.

CSE Senior Design I: Risk Management. Instructor: Mike O’Dell. This presentation was derived from the textbook used for this class: McConnell, Steve, Rapid Development, Chapter 5.

Why Do Projects Fail?

- Generally, from poor risk management
  - Failure to identify risks
  - Failure to actively/aggressively plan for, attack and eliminate “project killing” risks
- Risk comes in different shapes and sizes
  - Schedule risks (short to long)
  - Cost risks (small to large)
  - Technology risks (probable to impossible)

Elements of Risk Management

- Managing risk consists of: identifying, addressing and eliminating risks
- When does this occur?
  - WORST: Crisis management/fire fighting: addressing risks only after they present a big problem
  - BAD: Fix on failure: finding and addressing risks as they occur
  - OKAY: Risk mitigation: plan ahead and allocate resources to address risks that occur, but don’t try to eliminate them before they occur
  - GOOD: Prevention: part of the plan to identify and prevent risks before they become problems
  - BEST: Eliminate root causes: part of the plan to identify and eliminate the factors that make specific risks possible

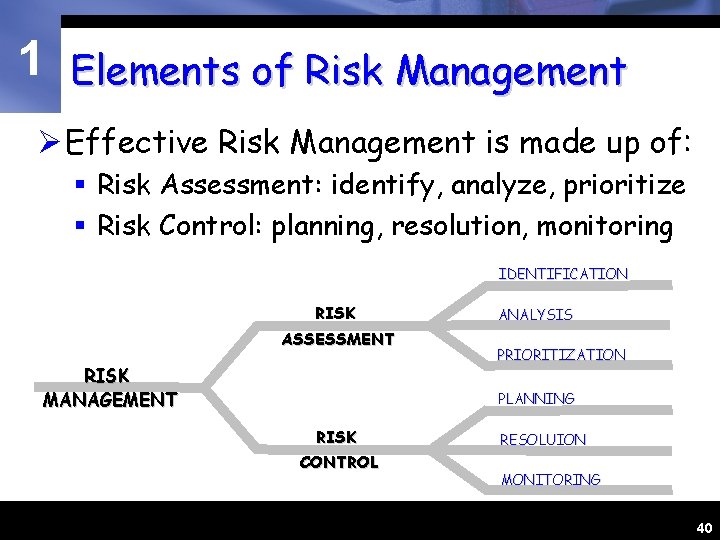

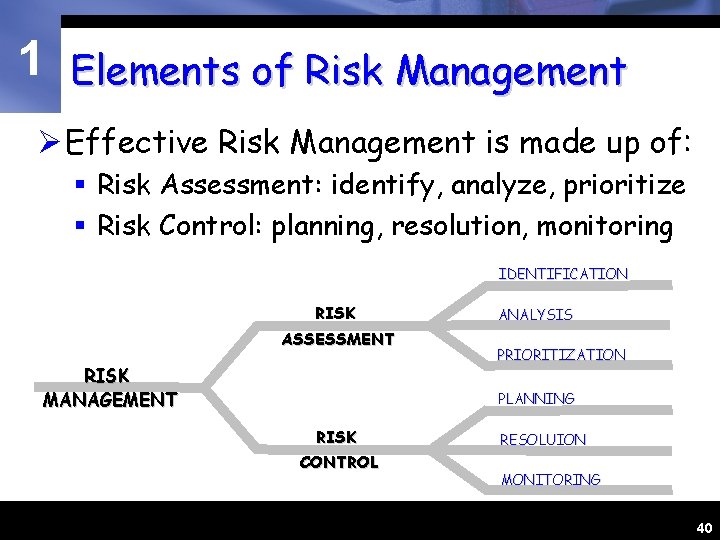

Elements of Risk Management

- Effective risk management is made up of:
  - Risk assessment: identify, analyze, prioritize
  - Risk control: planning, resolution, monitoring

Figure: tree diagram in which risk management splits into risk assessment (identification, analysis, prioritization) and risk control (planning, resolution, monitoring).

Risk Monitoring

- Risks and potential impact will change throughout the course of a project
- Keep an evolving “TOP 10 RISKS” list
  - See Table 5-7 for an example
  - Review the list frequently
  - Refine…
- Put someone in charge of monitoring risks
- Make it a part of your process & project plan
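
One possible way to keep such a list in code is sketched below. The slides do not say how to rank risks; ordering by probability times impact is an assumption made here for illustration, and the `Risk` fields, the `top_risks` helper, and the sample entries are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float   # team's estimate that the risk occurs, 0.0 to 1.0
    impact_weeks: float  # team's estimate of schedule loss if it occurs

    @property
    def exposure(self) -> float:
        """Ranking key assumed for this sketch: probability times impact."""
        return self.probability * self.impact_weeks


def top_risks(risks, limit=10):
    """Return the highest-exposure risks, largest first."""
    return sorted(risks, key=lambda risk: risk.exposure, reverse=True)[:limit]


if __name__ == "__main__":
    # Hypothetical entries; re-estimate and re-rank at every review.
    current = [
        Risk("feature creep", 0.5, 4),
        Risk("key hardware arrives late", 0.25, 20),
        Risk("unfamiliar toolchain", 0.1, 10),
    ]
    for rank, risk in enumerate(top_risks(current), start=1):
        print(f"{rank}. {risk.name} (exposure {risk.exposure:.1f} weeks)")
```

Re-running the ranking after each review keeps the list evolving, as the slide recommends, rather than freezing the order chosen at project start.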

CSE Senior Design I: Overview: Software System Architecture, Software System Test. Mike O’Dell. Based on an earlier presentation by Bill Farrior, UTA, modified by Mike O’Dell.

What is System Design?

- A progressive definition of how a system will be constructed:
  - Guiding principles/rules for design (Meta-architecture)
  - Top-level structure, design abstraction (Architecture Design)
  - Details of all lowest-level design elements (Detailed Design)

CSE 4317

What is Software Architecture?

- A critical bridge between what a system will do/look like, and how it will be constructed
- A blueprint for a software system and how it will be built
- An abstraction: a conceptual model of what must be done to construct the software system
  - It is NOT a specification of the details of the construction

What is Software Architecture?

- The top-level breakdown of how a system will be constructed:
  - design principles/rules
  - high-level structural components
  - high-level data elements (external/internal)
  - high-level data flows (external/internal)
- Discussion: Architectural elements of the new ERB

System Architecture Design Process

- Define guiding principles/rules for design
- Define top-level components of the system structure (“architectural layers”)
- Define top-level data elements/flows (external and between layers)
- Deconstruct layers into major functional units (“subsystems”)
- Translate top-level data elements/flows to subsystems

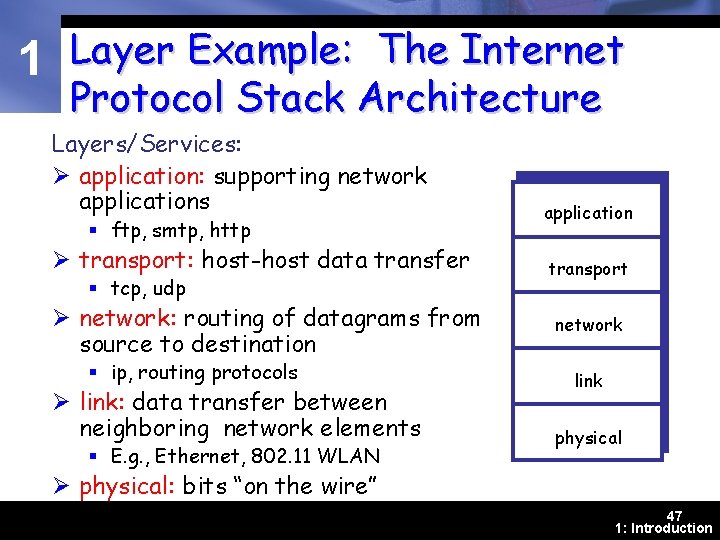

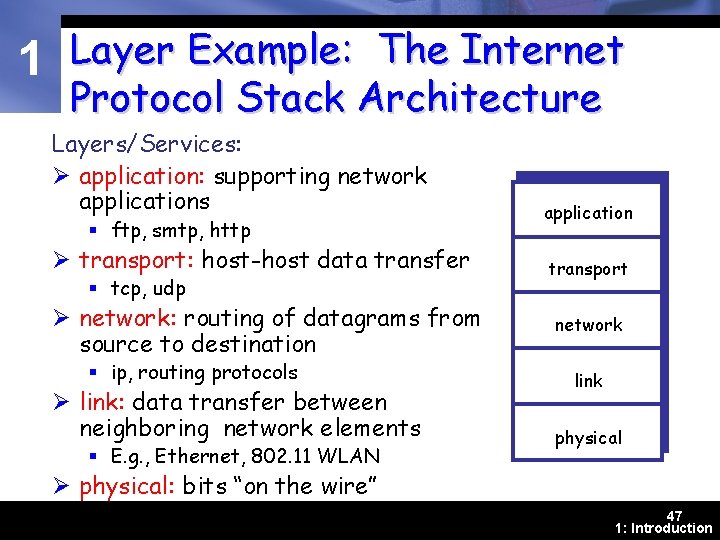

Layer Example: The Internet Protocol Stack

Architecture layers/services:

- application: supporting network applications
  - ftp, smtp, http
- transport: host-host data transfer
  - tcp, udp
- network: routing of datagrams from source to destination
  - ip, routing protocols
- link: data transfer between neighboring network elements
  - e.g., Ethernet, 802.11 WLAN
- physical: bits “on the wire”

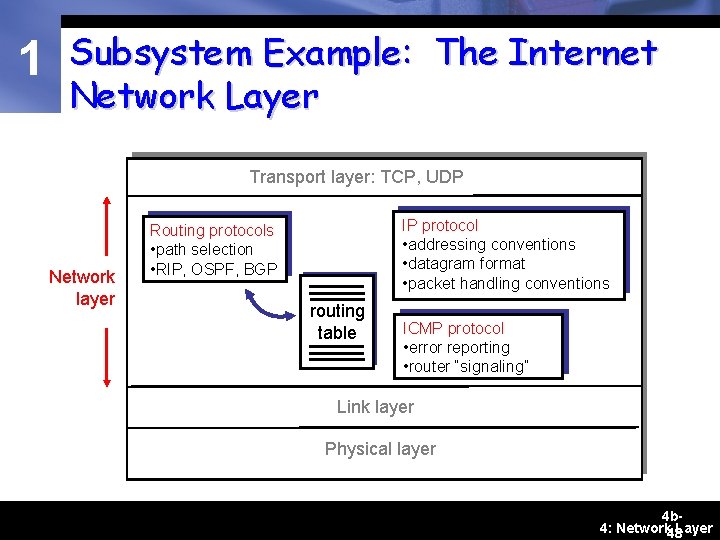

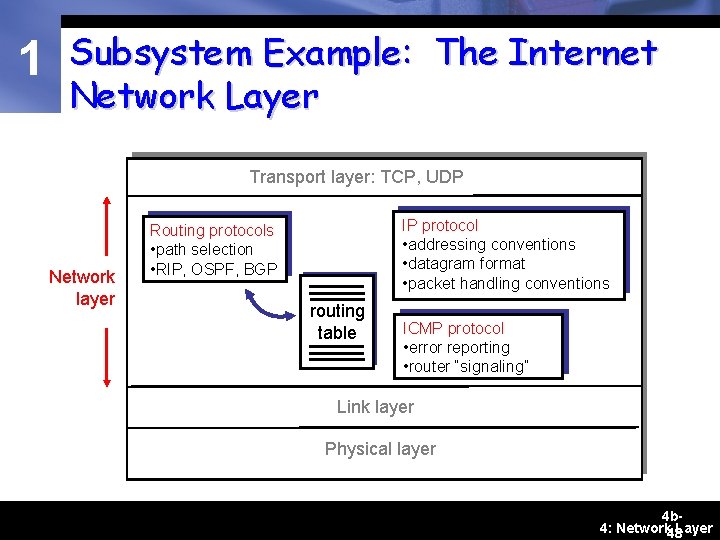

Subsystem Example: The Internet Network Layer

- Transport layer: TCP, UDP
- Network layer:
  - IP protocol: addressing conventions, datagram format, packet handling conventions
  - Routing protocols: path selection (RIP, OSPF, BGP), feeding the routing table
  - ICMP protocol: error reporting, router “signaling”
- Link layer
- Physical layer

Criteria for a Good Architecture (The Four I’s)

- Independence: the layers are independent of each other, and each layer’s functions are internally specific and have little reliance on other layers. Changes in the implementation of one layer should not impact other layers.
- Interfaces/Interactions: the interfaces and interactions between layers are complete and well-defined, with explicit data flows.
- Integrity: the whole thing “hangs together”. It’s complete, consistent, accurate… it works.
- Implementable: the approach is feasible, and the specified system can actually be designed and built using this architecture.
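
The independence and interface criteria can be illustrated with a small sketch. Everything here is hypothetical: a made-up `Transport` interface stands in for the boundary between two layers, and two loopback implementations stand in for alternative lower layers. The point is only that the upper layer does not change when the lower layer’s implementation does.

```python
from typing import Protocol


class Transport(Protocol):
    """Interface the application layer relies on; the only coupling point."""

    def send(self, payload: bytes) -> bytes:
        """Deliver the payload and return the reply."""
        ...


class LoopbackTransport:
    """Simplest lower layer: hands the payload straight back."""

    def send(self, payload: bytes) -> bytes:
        return payload


class FramedLoopbackTransport:
    """Alternative lower layer: adds a 2-byte length header, then strips it."""

    def send(self, payload: bytes) -> bytes:
        frame = len(payload).to_bytes(2, "big") + payload
        length = int.from_bytes(frame[:2], "big")
        return frame[2:2 + length]


class EchoApplication:
    """Upper layer: knows the Transport interface, not its implementation."""

    def __init__(self, transport: Transport) -> None:
        self._transport = transport

    def echo(self, text: str) -> str:
        reply = self._transport.send(text.encode("utf-8"))
        return reply.decode("utf-8")


if __name__ == "__main__":
    for transport in (LoopbackTransport(), FramedLoopbackTransport()):
        print(EchoApplication(transport).echo("hello"))
```

Swapping one transport for the other requires no edit to `EchoApplication`, which is the kind of change isolation the Independence criterion asks for.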

How do you Document a Software Architecture?

- Describe the “rules”: meta-architecture
  - guiding principles, vision, concepts
  - key decision criteria
- Describe the layers
  - what they do, how they interact with other layers
  - what they are composed of (subsystems)

How do you Document a Software Architecture?

- Describe the data flows between layers
  - what the critical data elements are
  - provider subsystems (sources) and consumer subsystems (sinks)
- Describe the subsystems within each layer
  - what each subsystem does
  - what its critical interfaces are, within and external to its layer
  - what its critical interfaces outside the system are

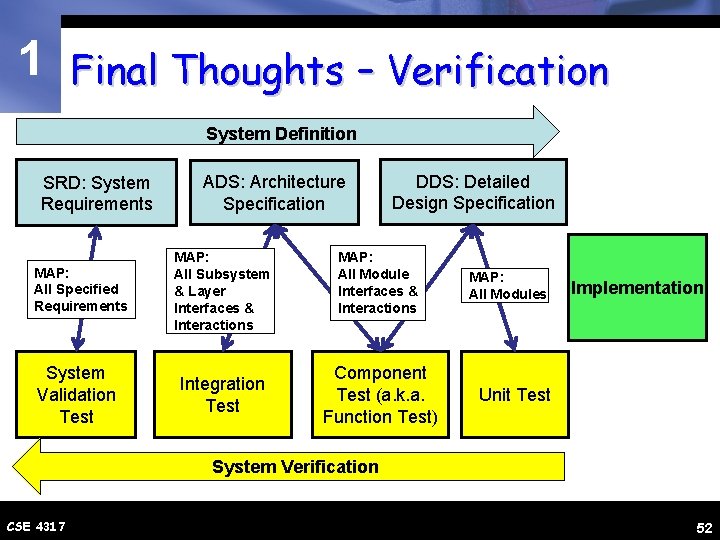

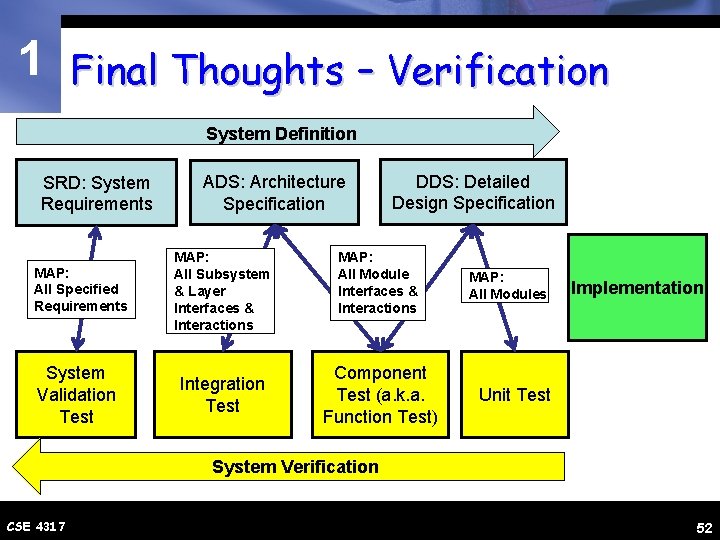

Final Thoughts: Verification

| System definition | What is mapped | System verification |
|---|---|---|
| SRD: System Requirements | All specified requirements | System Validation Test |
| ADS: Architecture Specification | All subsystem & layer interfaces & interactions | Integration Test |
| DDS: Detailed Design Specification | All module interfaces & interactions | Component Test (a.k.a. Function Test) |
| Implementation | All modules | Unit Test |

Final Thoughts: Verification

- Unit Test: verifies that EVERY module (HW/SW) specified in the DDS operates as specified.
- Component/Function Test: verifies integrity of ALL inter-module interfaces and interactions.
- Integration Test: verifies integrity of ALL inter-subsystem interfaces and interactions.
- System Verification Test: verifies ALL requirements are met.
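
Since each test level is meant to cover EVERY or ALL items at its level, a simple coverage check can flag gaps. The sketch below is one hypothetical way to do that; the `uncovered_items` helper, the requirement IDs, and the test names are invented for the example.

```python
def uncovered_items(specified, test_cases):
    """Return specified items that no test case claims to verify.

    specified:  iterable of item IDs (requirements, interfaces, or modules)
    test_cases: dict mapping a test name to the item IDs it verifies
    """
    covered = set()
    for items in test_cases.values():
        covered.update(items)
    return sorted(set(specified) - covered)


if __name__ == "__main__":
    # Hypothetical system-level example: requirement IDs from an SRD.
    requirements = ["R1", "R2", "R3"]
    system_tests = {
        "login flow": ["R1"],
        "report export": ["R1", "R3"],
    }
    print(uncovered_items(requirements, system_tests))
```

The same check applies at each level by changing what is listed as specified: modules for unit test, inter-module interfaces for component test, inter-subsystem interfaces for integration test.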