Concurrency


- Slides: 33

Concurrency • Concurrency - simultaneous execution of program code • Forms of concurrency – instruction level – 2 or more machine instructions execute simultaneously – statement level – 2 or more high-level statements – unit level – 2 or more subprograms – program level – 2 or more programs • instruction and program level concurrency involve no language issues and typically require parallel processing • statement and unit level often are handled by interleaving the machine instructions’ execution via multitasking/multithreading • these two forms can be supported via programming language constructs, so we concentrate on these

Definitions • Physical concurrency – program code executed in parallel on multiple processors • Logical concurrency – multitasking on a single processor where the OS or program code is responsible for the switching between processes • Physical and logical concurrency look the same to the programmer – but the language implementer must map logical concurrency onto the underlying hardware • Thread of control – the path through the code taken, that is, the sequence of program points reached

Subprogram-Level Concurrency • Unit-level logical concurrency is implemented through the co-routine, which we can also describe as a task – the co-routine differs from a subroutine because a co-routine may be started implicitly rather than explicitly • for example, when it is resumed by the OS or by another co-routine – co-routines have multiple “entry” points • a co-routine is usually restarted from the point it last reached rather than from the beginning – co-routines execute in some interleaved fashion rather than LIFO – co-routines can interrupt themselves to start other co-routines or be interrupted when an event occurs – a unit that invokes a co-routine does not have to wait for the co-routine to complete before it resumes (it can continue executing) – control may or may not return to the calling unit

How are Co-routines Controlled? • Subroutines are strictly controlled by a pattern of subroutine invocations and returns • The co-routine, by contrast, is controlled, at least partially, by the OS – some entity will start the process of co-routines – co-routines are suspended because of • multitasking or multithreading within the OS, based on the timer or I/O – in this case, a timer interrupts the CPU, forcing the OS to switch tasks based on some scheduling algorithm – programmers and users may be able to specify priorities of tasks to alter scheduling behavior • having to wait for an event that is being handled by another co-routine

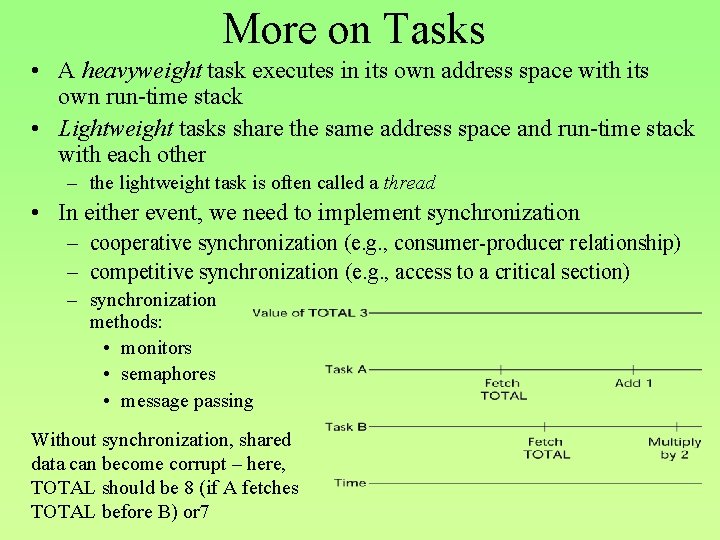

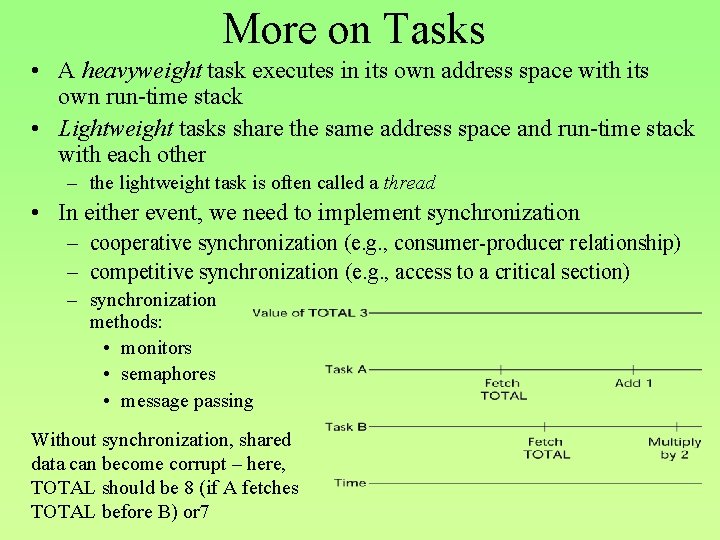

More on Tasks • A heavyweight task executes in its own address space with its own run-time stack • Lightweight tasks share the same address space, although each still has its own run-time stack – the lightweight task is often called a thread • In either case, we need to implement synchronization – cooperative synchronization (e.g., consumer-producer relationship) – competitive synchronization (e.g., access to a critical section) – synchronization methods: • monitors • semaphores • message passing • Without synchronization, shared data can become corrupt – in the figure's example, TOTAL should be 8 (if A updates TOTAL before B) or 7 (if B updates it first); interleaving the two updates without synchronization can leave some other, incorrect value
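A minimal Java sketch of the TOTAL example, assuming TOTAL starts at 3, task A adds 1, and task B multiplies by 2. These values are chosen here to reproduce the 8-or-7 outcomes, since the figure itself is not reproduced; the class and method names are hypothetical. Marking the update methods `synchronized` provides competitive synchronization, so only the two legal results should occur:

```java
// Sketch: two tasks update a shared TOTAL; each update is indivisible.
class SharedTotal {
    private int total = 3; // assumed starting value (not stated in the slide text)

    // synchronized makes each fetch-update-store sequence atomic
    synchronized void addOne()   { total = total + 1; }
    synchronized void timesTwo() { total = total * 2; }
    synchronized int get()       { return total; }

    // Runs task A (add 1) and task B (multiply by 2) concurrently
    // and returns the final value of TOTAL.
    static int runOnce() {
        SharedTotal t = new SharedTotal();
        Thread a = new Thread(t::addOne);
        Thread b = new Thread(t::timesTwo);
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return t.get();
    }

    public static void main(String[] args) {
        // 8 when A's update runs first, 7 when B's runs first
        System.out.println(runOnce());
    }
}
```

Removing `synchronized` would allow the fetch and store steps of the two tasks to interleave, which is the corruption the slide warns about.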

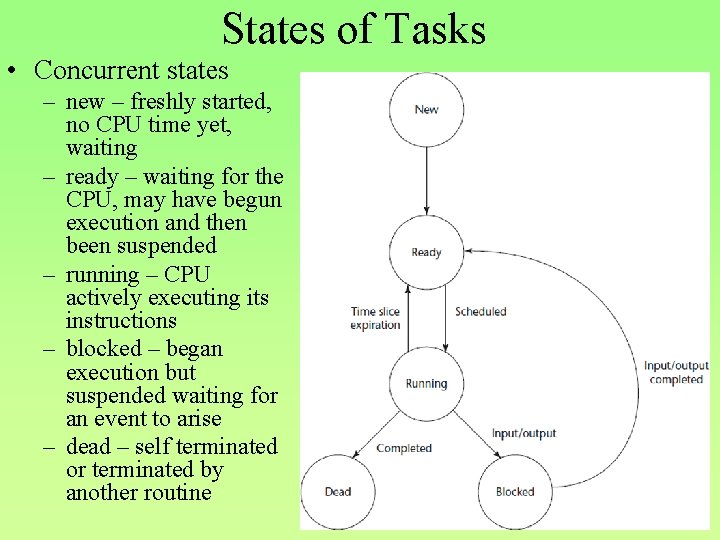

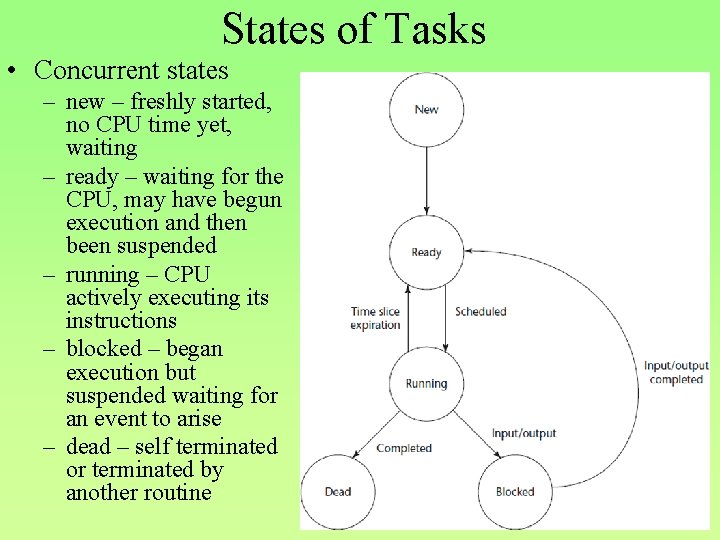

States of Tasks • Concurrent states – new: freshly started, no CPU time yet, waiting – ready: waiting for the CPU; may have begun execution and then been suspended – running: the CPU is actively executing its instructions – blocked: began execution but is suspended, waiting for an event to arise – dead: self-terminated or terminated by another routine
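As a rough illustration, Java exposes a similar (though not identical) set of states through `Thread.getState()`. The sketch below, with a hypothetical class name, observes only the two states that can be checked deterministically: `NEW` before the thread is started and `TERMINATED` (the slide's "dead") after it finishes.

```java
// Sketch: observing a thread's first and last life-cycle states.
class TaskStates {
    // Returns the state seen before start() and the state seen after join().
    static Thread.State[] observe() {
        Thread t = new Thread(() -> { /* no work needed for this demo */ });
        Thread.State before = t.getState(); // created but not yet started
        t.start();
        try {
            t.join(); // wait until the thread has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        Thread.State after = t.getState(); // finished executing
        return new Thread.State[] { before, after };
    }

    public static void main(String[] args) {
        Thread.State[] s = observe();
        System.out.println(s[0] + " -> " + s[1]);
    }
}
```

The intermediate states (ready, running, blocked) depend on the scheduler, so they are not asserted here.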

Concurrency and Synchronization • With concurrency, we see a pattern of tasks starting and stopping seemingly at random – we don’t have issues here if the tasks are independent – if they share resources/communicate with each other, then we have to ensure that we do not get data corruption – we need a form of synchronized access to the shared data – disjoint tasks are tasks which do not affect each other or have any common memory – most tasks are not disjoint but instead share information • Languages have attempted different forms of communication – shared memory (non-local variables) – parameter passing – message passing

Liveness: How Tasks Make Progress • We want to ensure that our tasks are making progress toward completion – a task may be continually blocked because of • deadlock – one task is holding resources that this task needs while this task is holding resources the other task needs – neither can make any progress • starvation – every time the task is selected by the CPU, it can make no progress because it is blocked • Liveness is when a task is in a state that will eventually allow it to complete execution and terminate (no deadlock, no starvation) – these issues are studied in operating systems and so we won’t discuss them in much more detail in this chapter • Note that without concurrency or multitasking, deadlock cannot arise and starvation should not arise – unless resources are unavailable (off-line, not functioning)

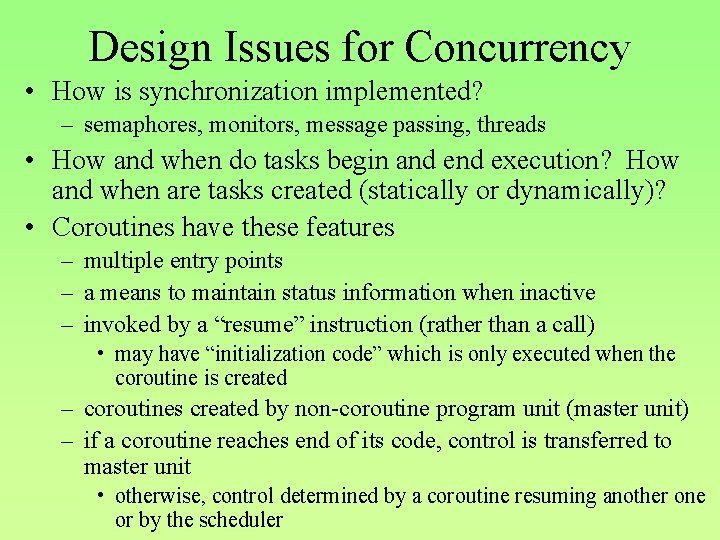

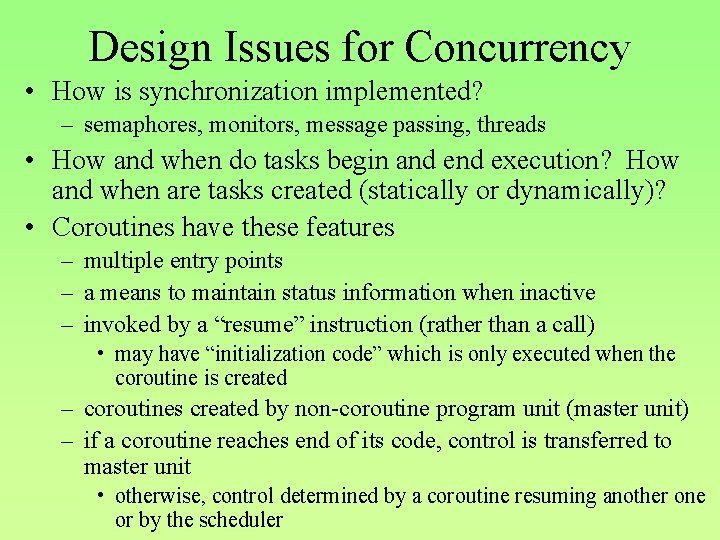

Design Issues for Concurrency • How is synchronization implemented? – semaphores, monitors, message passing, threads • How and when do tasks begin and end execution? How and when are tasks created (statically or dynamically)? • Coroutines have these features – multiple entry points – a means to maintain status information when inactive – invoked by a “resume” instruction (rather than a call) • may have “initialization code” which is only executed when the coroutine is created – coroutines are created by a non-coroutine program unit (master unit) – if a coroutine reaches the end of its code, control is transferred to the master unit • otherwise, control is determined by a coroutine resuming another one or by the scheduler

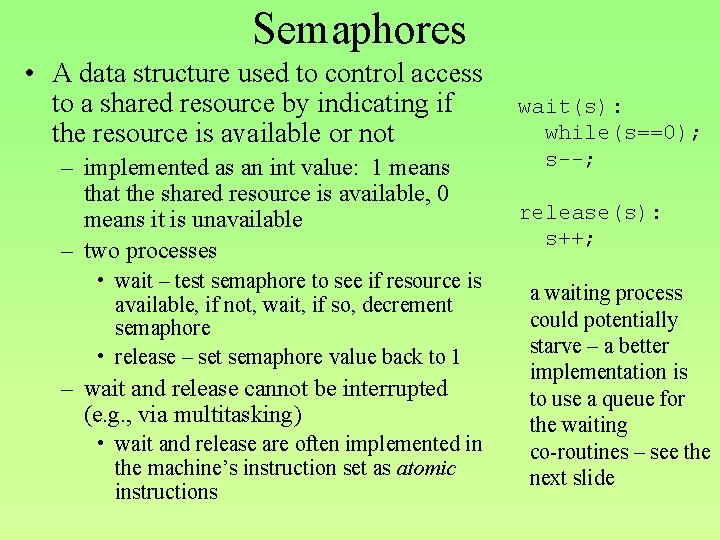

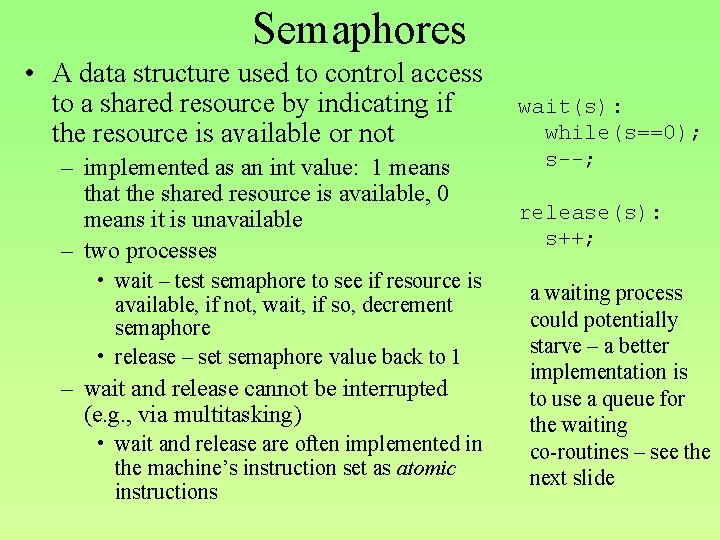

Semaphores • A data structure used to control access to a shared resource by indicating whether the resource is available – implemented as an int value: 1 means that the shared resource is available, 0 means it is unavailable – two operations • wait – test the semaphore to see if the resource is available; if not, wait; if so, decrement the semaphore • release – set the semaphore value back to 1 – wait and release cannot be interrupted (e.g., via multitasking) • wait and release are often implemented in the machine’s instruction set as atomic instructions • With the busy-waiting version below, a waiting process could potentially starve – a better implementation is to use a queue for the waiting co-routines – see the next slide

    wait(s):    while (s == 0); s--;
    release(s): s++;

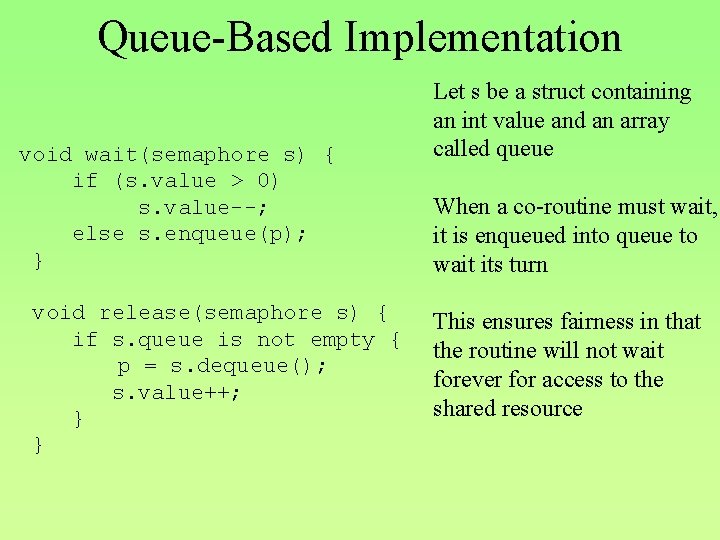

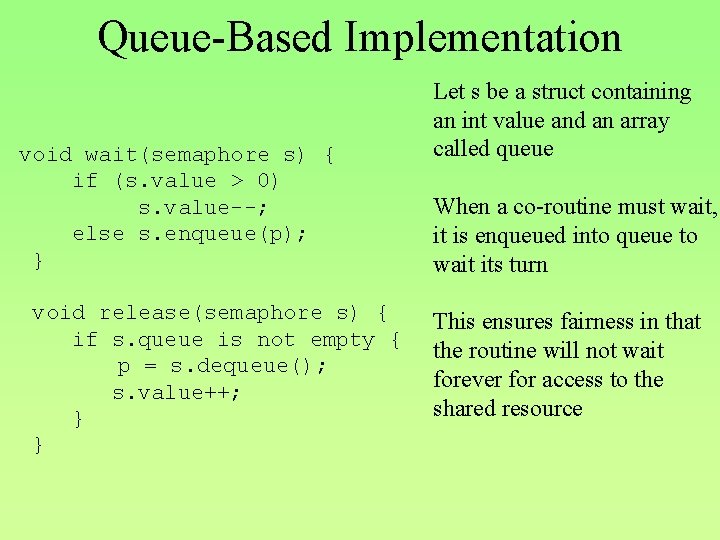

Queue-Based Implementation

    void wait(semaphore s) {
        if (s.value > 0) s.value--;
        else s.enqueue(p);            // p, the calling task, is suspended
    }

    void release(semaphore s) {
        if s.queue is empty
            s.value++;
        else
            p = s.dequeue();          // p is resumed and now holds the resource
    }

Let s be a struct containing an int value and an array called queue. When a co-routine must wait, it is enqueued into queue to wait its turn. This ensures fairness in that the routine will not wait forever for access to the shared resource.
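For comparison, Java's library class `java.util.concurrent.Semaphore` can be constructed with a fairness flag that makes waiting threads acquire permits in FIFO order, which is close in spirit to the queue-based scheme above. The following is a hedged sketch; the class name `FairSemaphoreDemo` and the counter workload are illustrative assumptions, not part of the slides.

```java
import java.util.concurrent.Semaphore;

// Sketch: a binary semaphore with FIFO (fair) queueing guarding a shared counter.
class FairSemaphoreDemo {
    // Starts `threads` workers that each add 1 to a shared counter
    // `incrementsEach` times, and returns the final count.
    static int run(int threads, int incrementsEach) {
        int[] shared = {0};
        Semaphore s = new Semaphore(1, true); // 1 permit; true requests FIFO ordering
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int k = 0; k < incrementsEach; k++) {
                    s.acquireUninterruptibly(); // plays the role of wait(s)
                    try {
                        shared[0]++;            // critical section
                    } finally {
                        s.release();            // plays the role of release(s)
                    }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return shared[0];
    }

    public static void main(String[] args) {
        System.out.println(run(4, 1000));
    }
}
```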

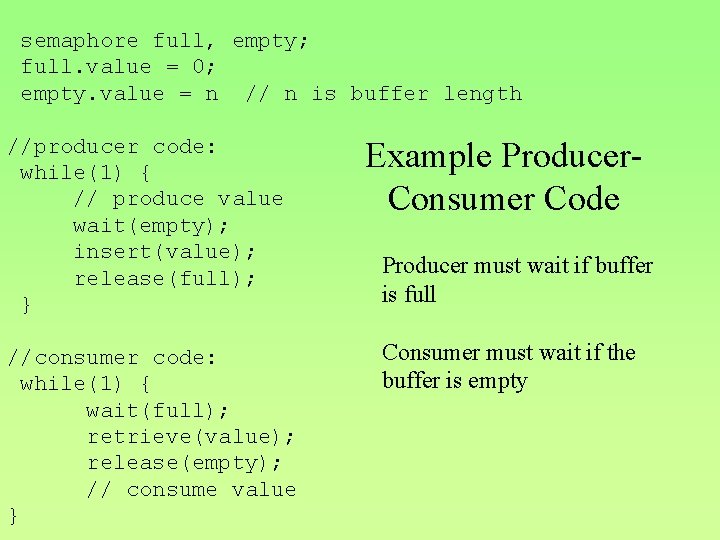

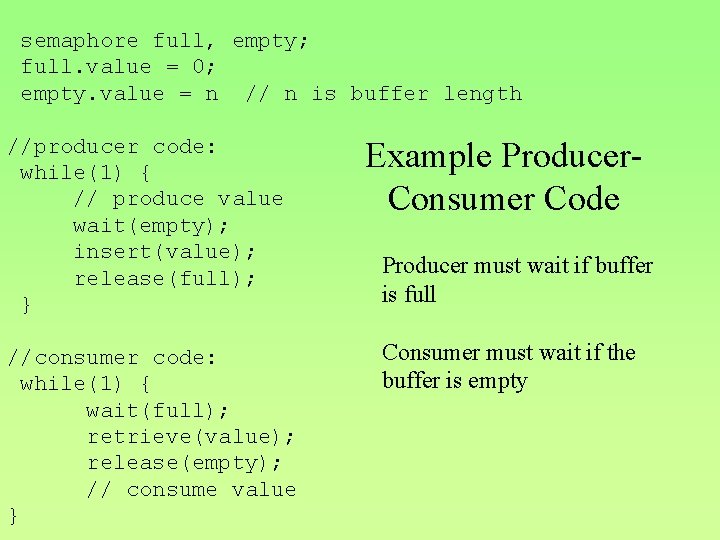

Example Producer-Consumer Code

    semaphore full, empty;
    full.value = 0;
    empty.value = n;      // n is buffer length

    // producer code:
    while (1) {
        // produce value
        wait(empty);
        insert(value);
        release(full);
    }

    // consumer code:
    while (1) {
        wait(full);
        retrieve(value);
        release(empty);
        // consume value
    }

The consumer must wait if the buffer is empty. The producer must wait if the buffer is full.

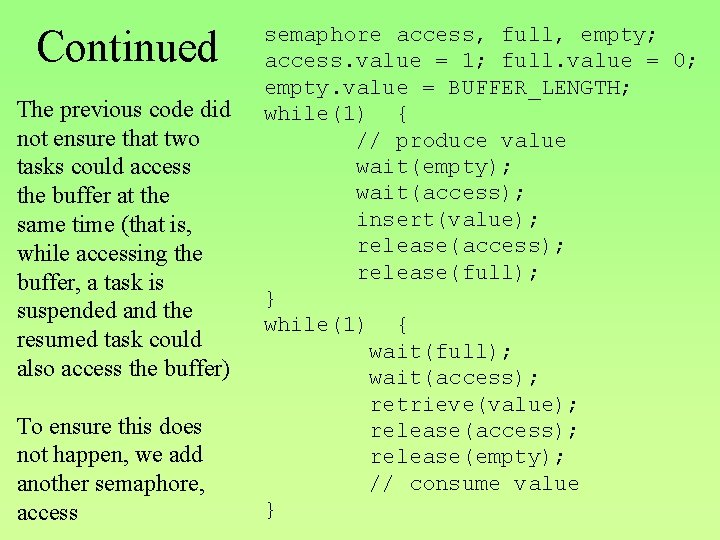

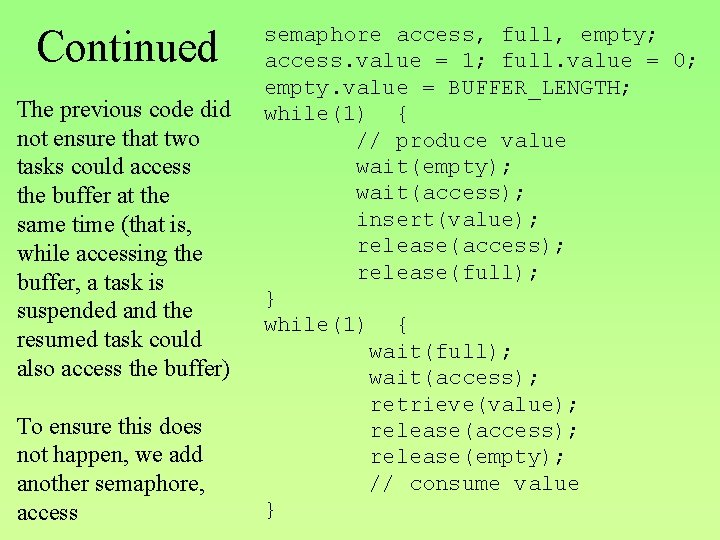

Continued

The previous code did not ensure that two tasks could not access the buffer at the same time (that is, while accessing the buffer, a task is suspended and the resumed task could also access the buffer). To ensure this does not happen, we add another semaphore, access.

    semaphore access, full, empty;
    access.value = 1;
    full.value = 0;
    empty.value = BUFFER_LENGTH;

    // producer code:
    while (1) {
        // produce value
        wait(empty);
        wait(access);
        insert(value);
        release(access);
        release(full);
    }

    // consumer code:
    while (1) {
        wait(full);
        wait(access);
        retrieve(value);
        release(access);
        release(empty);
        // consume value
    }
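The three-semaphore scheme can be sketched in runnable Java using `java.util.concurrent.Semaphore`. The semaphore names `access`, `full`, and `empty` follow the slide; the class name `BoundedBuffer`, the circular-array layout, and the bounded `demo` loop (used so the program terminates) are assumptions made for illustration.

```java
import java.util.concurrent.Semaphore;

// Sketch: bounded buffer guarded by the access/full/empty semaphores.
class BoundedBuffer {
    private final int[] buf;
    private int in = 0, out = 0;
    private final Semaphore access = new Semaphore(1); // mutual exclusion
    private final Semaphore full = new Semaphore(0);   // counts filled slots
    private final Semaphore empty;                     // counts free slots

    BoundedBuffer(int n) {
        buf = new int[n];
        empty = new Semaphore(n);
    }

    void insert(int value) {
        empty.acquireUninterruptibly();   // wait(empty)
        access.acquireUninterruptibly();  // wait(access)
        buf[in] = value;
        in = (in + 1) % buf.length;
        access.release();                 // release(access)
        full.release();                   // release(full)
    }

    int retrieve() {
        full.acquireUninterruptibly();    // wait(full)
        access.acquireUninterruptibly();  // wait(access)
        int value = buf[out];
        out = (out + 1) % buf.length;
        access.release();                 // release(access)
        empty.release();                  // release(empty)
        return value;
    }

    // Producer inserts 1..items; consumer sums what it retrieves.
    static long demo(int items) {
        BoundedBuffer b = new BoundedBuffer(4);
        long[] sum = {0};
        Thread producer = new Thread(() -> {
            for (int i = 1; i <= items; i++) b.insert(i);
        });
        Thread consumer = new Thread(() -> {
            for (int i = 0; i < items; i++) sum[0] += b.retrieve();
        });
        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return sum[0];
    }

    public static void main(String[] args) {
        System.out.println(demo(100)); // sum of 1..100
    }
}
```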

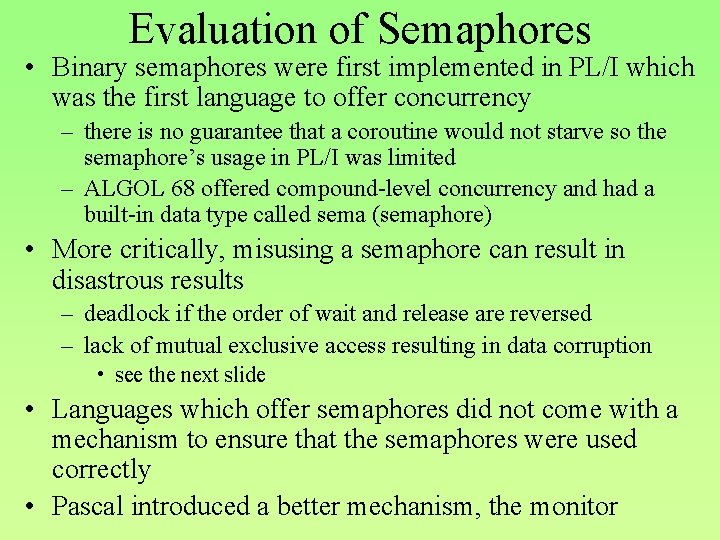

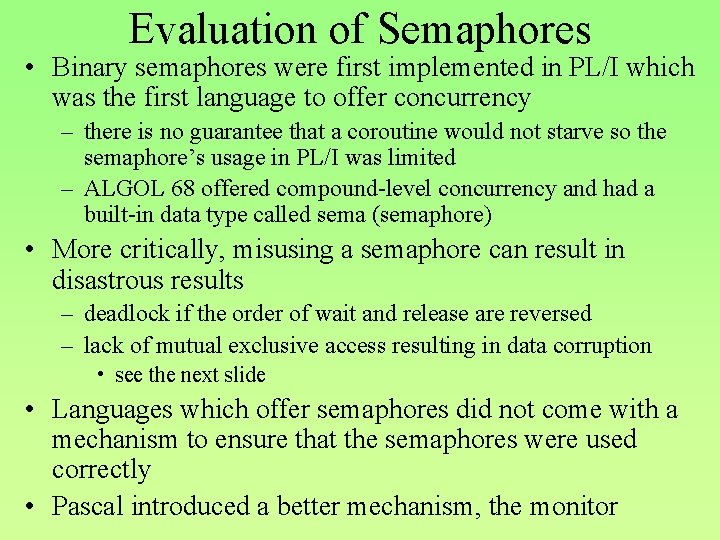

Evaluation of Semaphores • Binary semaphores were first implemented in PL/I, which was the first language to offer concurrency – there is no guarantee that a coroutine would not starve, so the semaphore’s usage in PL/I was limited – ALGOL 68 offered compound-level concurrency and had a built-in data type called sema (semaphore) • More critically, misusing a semaphore can be disastrous – deadlock if the order of wait and release is reversed – lack of mutually exclusive access resulting in data corruption • see the next slide • Languages which offer semaphores did not come with a mechanism to ensure that the semaphores were used correctly • Concurrent Pascal introduced a better mechanism, the monitor

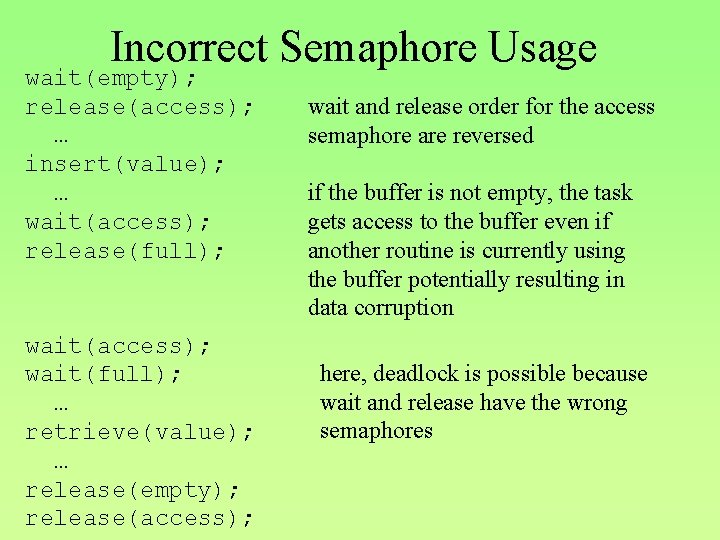

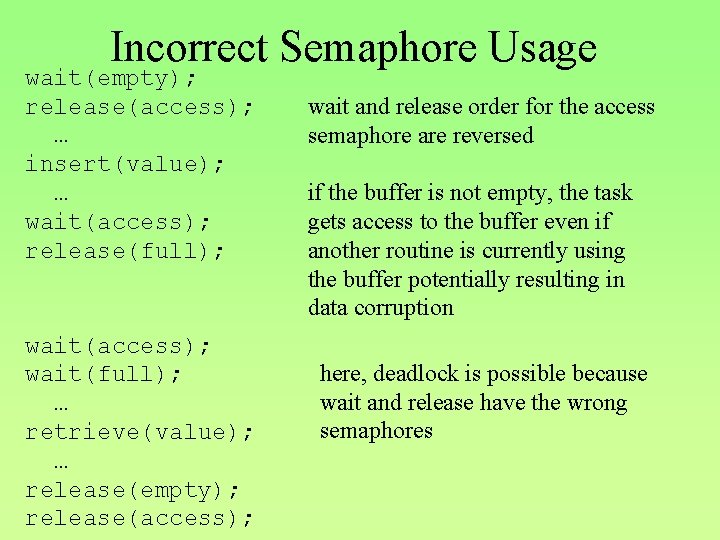

Incorrect Semaphore Usage

    // producer with wait and release on access reversed:
    wait(empty);
    release(access);
    … insert(value); …
    wait(access);
    release(full);

The wait and release order for the access semaphore is reversed: if the buffer is not full, the task gets access to the buffer even if another routine is currently using the buffer, potentially resulting in data corruption.

    // consumer with the semaphores in the wrong order:
    wait(access);
    wait(full);
    … retrieve(value); …
    release(empty);
    release(access);

Here, deadlock is possible because wait and release are applied to the semaphores in the wrong order: the task holds access while it waits on full, so no other task can enter the buffer to fill it.

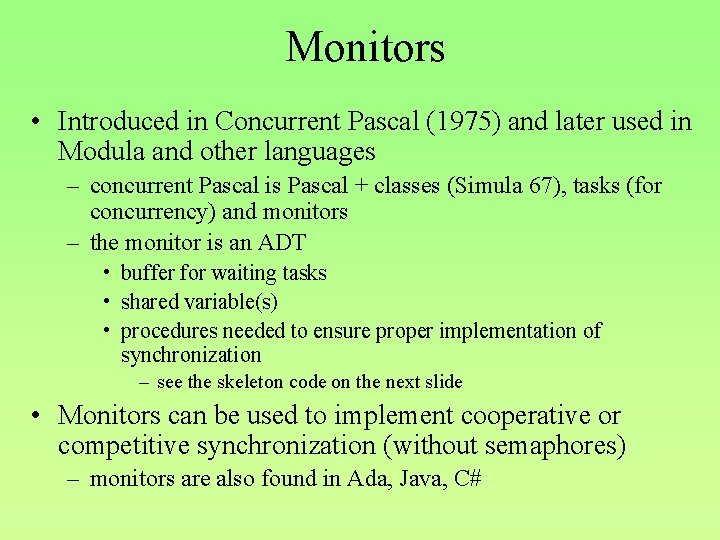

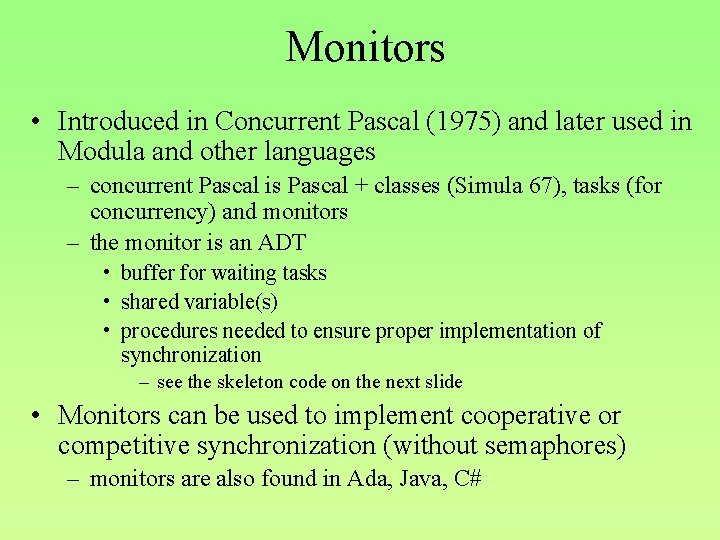

Monitors • Introduced in Concurrent Pascal (1975) and later used in Modula and other languages – Concurrent Pascal is Pascal + classes (Simula 67), tasks (for concurrency) and monitors – the monitor is an ADT containing • a buffer for waiting tasks • shared variable(s) • procedures needed to ensure proper implementation of synchronization – see the skeleton code on the next slide • Monitors can be used to implement cooperative or competitive synchronization (without semaphores) – monitors are also found in Ada, Java, C#

Skeleton of a Monitor (Pascal/Ada)

    type monitor_name = monitor(params)
        -- declaration of shared vars --
        -- definitions of local procedures --
        -- definitions of exported procedures --
        -- initialization code --
    end

Exported procedures are those that can be referenced externally, i.e., the public portion of the monitor. Because the monitor is implemented in the language itself as a subprogram type, there is no way to misuse it like you could semaphores.

Competitive and Cooperative Synchronization • Access to the shared data of a monitor is automatically synchronized through the monitor – competitive synchronization needs no further mechanisms – cooperative synchronization requires further communication implemented by the programmer to ensure that an empty/full buffer is not misused

Evaluation of Monitors • Safer than semaphores, easier to use – different tasks communicate through the shared buffer, inserting data into and removing data from the buffer • One drawback is that there needs to be an extra mechanism for one process to alert another that a datum is available – this is implemented via an instruction like continue(process) • thus, the burden is on the programmer to implement the communication when implementing cooperative synchronization • additionally, this approach won’t work with distributed systems, where the continue instruction will not be sent to other processors – message passing is a better approach…

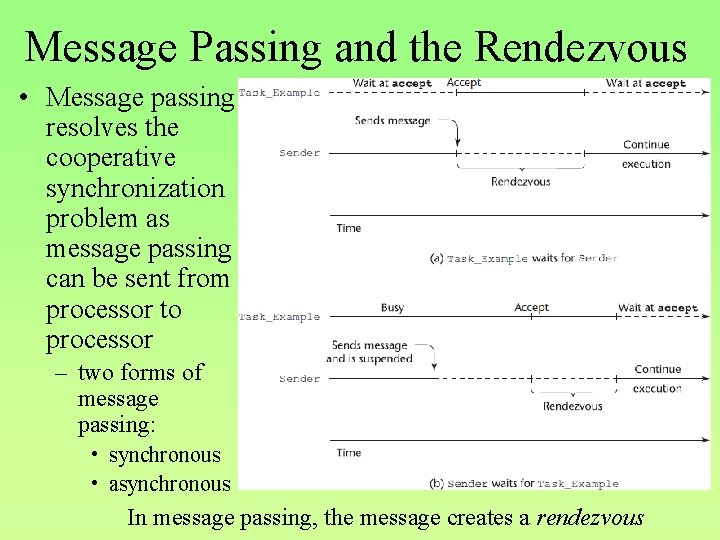

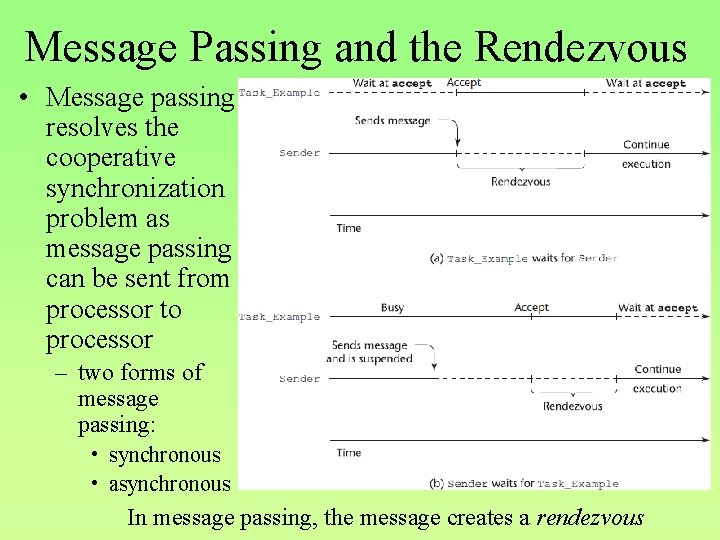

Message Passing and the Rendezvous • Message passing resolves the cooperative synchronization problem, as messages can be sent from processor to processor – two forms of message passing: • synchronous • asynchronous • In message passing, the message creates a rendezvous

Rendezvous • From the figure, there are two running tasks, Task_Example and Sender – in the top portion of the figure • Task_Example waits for Sender to “catch up” to it for a rendezvous • while waiting, it surrenders access to the CPU and enters a waiting state • only once the rendezvous starts can it resume • once the rendezvous ends, both tasks can operate in any order – in the bottom of the figure • Task_Example is running doing something but not yet ready for a rendezvous • Sender is ready and sends its message, but now it must suspend and wait for Task_Example to “catch up” • when Task_Example is ready for the rendezvous, both can proceed through the rendezvous and once the rendezvous is over, they can proceed in either order
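Java has no rendezvous construct, but `java.util.concurrent.SynchronousQueue` gives a loosely analogous behavior: `put` blocks until another thread calls `take`, and vice versa, so whichever task arrives first waits for the other. The sketch below is only an analogy under that assumption, not how Ada implements a rendezvous; the class and method names are hypothetical.

```java
import java.util.concurrent.SynchronousQueue;

// Sketch: sender and receiver must both arrive before either proceeds.
class RendezvousSketch {
    // Hands `message` from a sender thread to the calling (receiver) thread.
    static String exchange(String message) {
        SynchronousQueue<String> channel = new SynchronousQueue<>();
        Thread sender = new Thread(() -> {
            try {
                channel.put(message); // blocks until the receiver is ready
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        sender.start();
        try {
            String received = channel.take(); // blocks until the sender is ready
            sender.join();                    // afterwards both proceed independently
            return received;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(exchange("hello"));
    }
}
```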

Implementing Rendezvous • Ada 83: implemented using processes similar to the monitor (but via message passing) • Ada 95: implemented using threads – threads are implemented using objects – but recall that Ada 95 did not implement objects as we would consider them from OOPLs – we won’t look at the details; you can find them in the textbook if interested • Instead, we will move on to languages that directly support threads.

Threads • The concurrent unit in Java and C# is the thread – lightweight tasks (as opposed to Ada’s heavyweight tasks) – threads share one address space, but each thread has its own run-time stack for its private data • Note that while Thread has a run method, you do not invoke it directly; you override run and call the inherited start method, which arranges for run to execute in the new thread • See additional example in the notes section of this slide

    public class MyThreadedClass extends Thread {
        public void run( ) {…}
    }

    // Some other class:
    MyThreadedClass mt1 = new MyThreadedClass();
    MyThreadedClass mt2 = new MyThreadedClass();
    mt1.start();
    mt2.start();
    if(…) mt1.suspend(…);    // note: suspend is deprecated in current Java
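A small runnable sketch of the same pattern follows. It records which thread actually executes `run`, to show that calling `start` runs the body on the new thread rather than on the caller. The `ranOn` field and the `demo` method are hypothetical additions for illustration.

```java
// Sketch: override run(), call start(), and check where run() executed.
class MyThreadedClass extends Thread {
    private volatile Thread ranOn; // the thread that executed run()

    @Override
    public void run() {
        ranOn = Thread.currentThread();
    }

    Thread ranOn() {
        return ranOn;
    }

    // Starts two threads and reports whether each run() executed on its own thread.
    static boolean demo() {
        MyThreadedClass mt1 = new MyThreadedClass();
        MyThreadedClass mt2 = new MyThreadedClass();
        mt1.start(); // schedules run() on the new thread mt1
        mt2.start();
        try {
            mt1.join();
            mt2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return mt1.ranOn() == mt1 && mt2.ranOn() == mt2;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Calling `mt1.run()` instead of `mt1.start()` would execute the body on the calling thread, with no concurrency.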

More on Java Threads • Your thread class must implement run • Other Thread methods you can call if you decide to are – yield – a suggestion to the scheduler to place this thread back into the ready queue • note that the thread could restart immediately depending on other waiting tasks and the scheduling algorithm – sleep – suspend this thread for the specified amount of time (in milliseconds); when the suspension ends, the thread is placed into the ready queue – join – forces the calling thread to suspend until the thread that this message is sent to completes (a rendezvous) – interrupt, isInterrupted – allow a thread to interrupt another or determine if it has been interrupted
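The interplay of `sleep`, `interrupt`, and `join` can be sketched as follows. The class name and the 60-second sleep are arbitrary choices for the demo; the sleeping thread is woken early by the interrupt, which surfaces as an `InterruptedException`.

```java
// Sketch: one thread sleeps, the caller interrupts it, then joins it.
class InterruptDemo {
    // Returns true if the sleeping thread observed the interrupt.
    static boolean run() {
        boolean[] wasInterrupted = {false};
        Thread sleeper = new Thread(() -> {
            try {
                Thread.sleep(60_000); // would sleep a minute if left alone
            } catch (InterruptedException e) {
                wasInterrupted[0] = true; // woken early by interrupt()
            }
        });
        sleeper.start();
        sleeper.interrupt(); // request that the sleeper stop waiting
        try {
            sleeper.join();  // caller suspends until the sleeper completes
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return wasInterrupted[0];
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```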

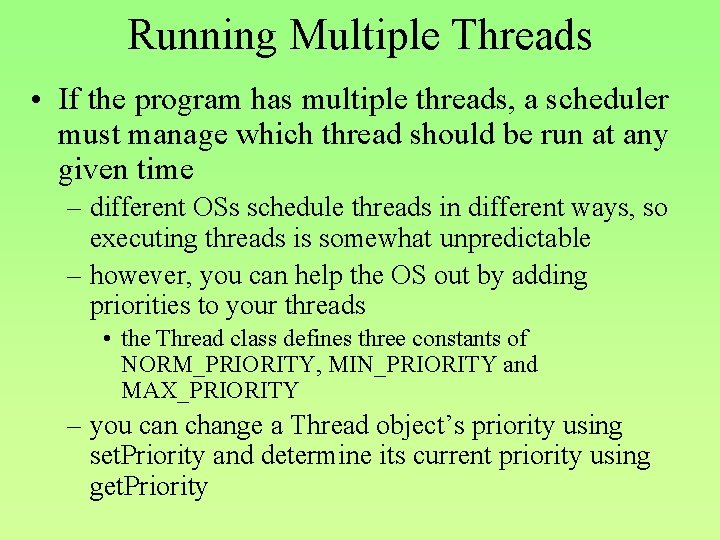

Running Multiple Threads • If the program has multiple threads, a scheduler must manage which thread should be run at any given time – different OSs schedule threads in different ways, so executing threads is somewhat unpredictable – however, you can help the OS out by adding priorities to your threads • the Thread class defines three constants: NORM_PRIORITY, MIN_PRIORITY and MAX_PRIORITY – you can change a Thread object’s priority using setPriority and determine its current priority using getPriority
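A short sketch of the priority API named above; the class name `PriorityDemo` is hypothetical. Priorities are only hints, so how much they influence scheduling depends on the JVM and the OS.

```java
// Sketch: reading the priority constants and changing a thread's priority.
class PriorityDemo {
    // Returns {MIN, NORM, MAX, priority of a thread after setPriority(MAX)}.
    static int[] run() {
        Thread t = new Thread(() -> { /* no work needed */ });
        t.setPriority(Thread.MAX_PRIORITY); // raise the priority before starting
        return new int[] {
            Thread.MIN_PRIORITY,
            Thread.NORM_PRIORITY,
            Thread.MAX_PRIORITY,
            t.getPriority()
        };
    }

    public static void main(String[] args) {
        for (int p : run()) {
            System.out.println(p);
        }
    }
}
```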

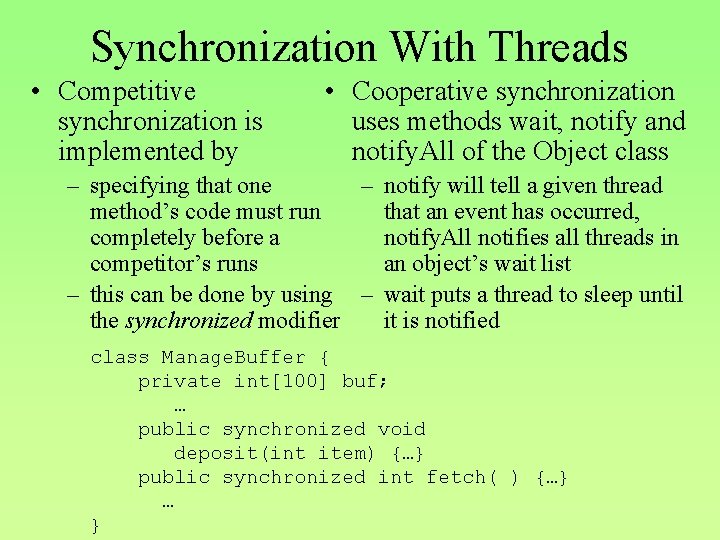

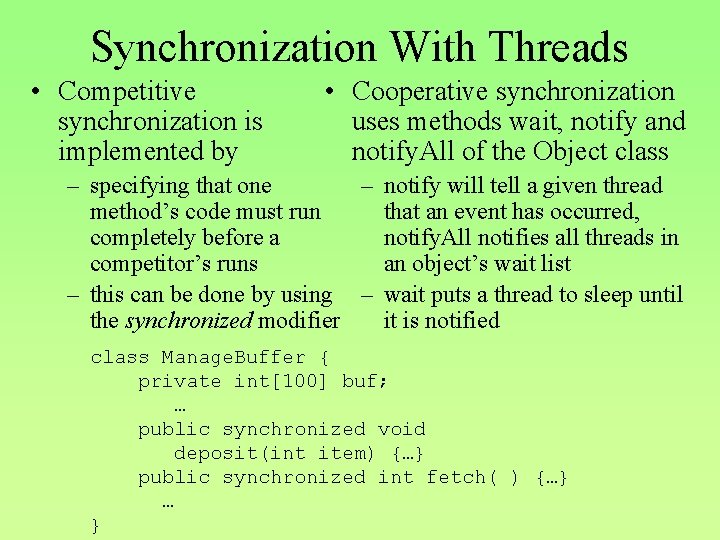

Synchronization With Threads • Competitive synchronization is implemented by – specifying that one method’s code must run completely before a competitor’s runs – this can be done by using the synchronized modifier • Cooperative synchronization uses the methods wait, notify and notifyAll of the Object class – notify tells one waiting thread that an event has occurred; notifyAll notifies all threads in an object’s wait list – wait puts a thread to sleep until it is notified

    class ManageBuffer {
        private int[] buf = new int[100];
        …
        public synchronized void deposit(int item) {…}
        public synchronized int fetch( ) {…}
        …
    }


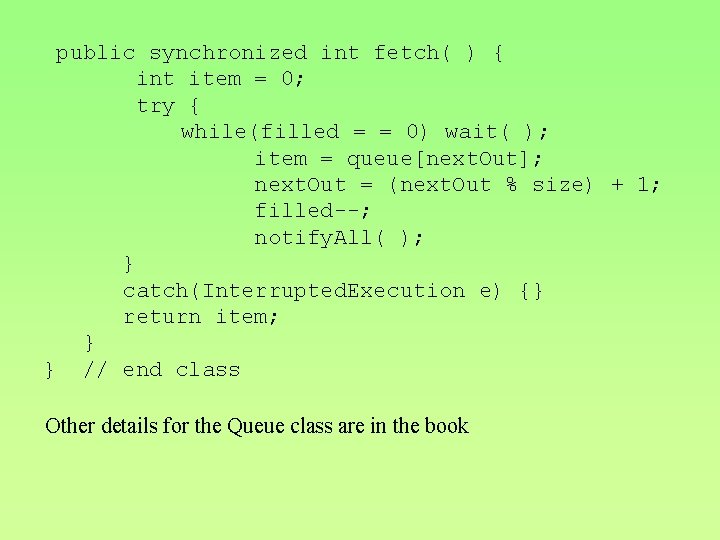

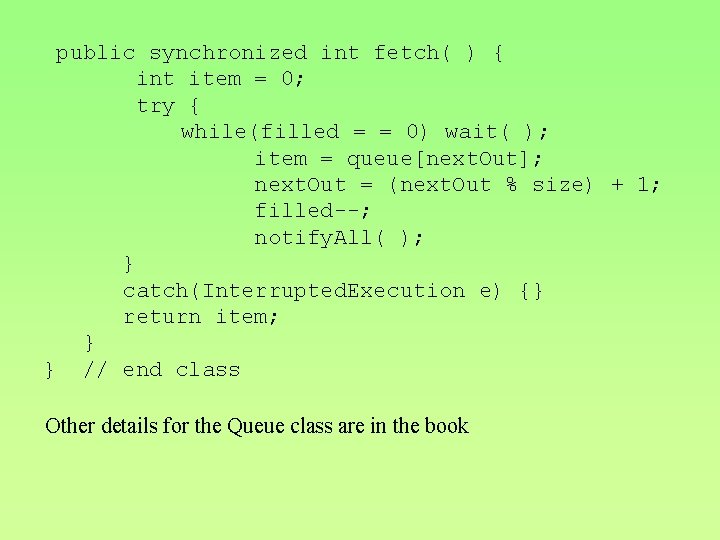

Partial Example

    class Queue {
        private int[ ] queue;
        private int nextIn, nextOut, filled, size;
        // constructor omitted

        public synchronized void deposit(int item) {
            try {
                while (filled == size) wait( );      // buffer is full
                queue[nextIn] = item;
                nextIn = (nextIn + 1) % size;         // wrap within 0..size-1
                filled++;
                notifyAll( );
            } catch (InterruptedException e) {}
        }

        public synchronized int fetch( ) {
            int item = 0;
            try {
                while (filled == 0) wait( );         // buffer is empty
                item = queue[nextOut];
                nextOut = (nextOut + 1) % size;       // wrap within 0..size-1
                filled--;
                notifyAll( );
            } catch (InterruptedException e) {}
            return item;
        }
    } // end class

Other details for the Queue class are in the book.

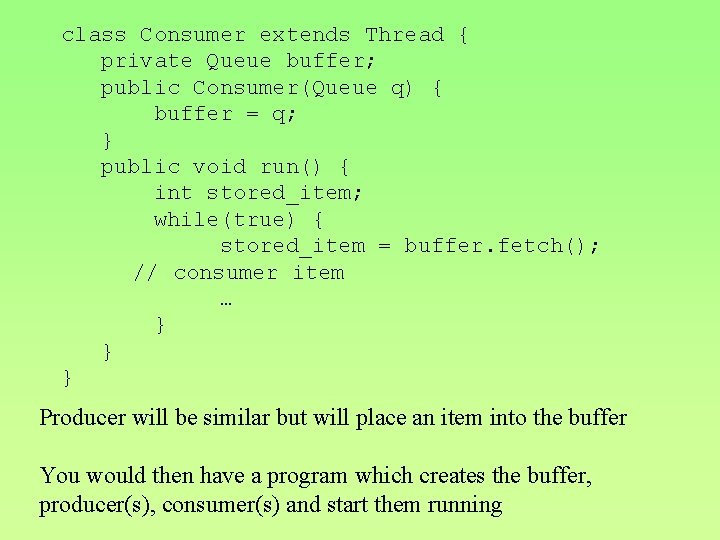

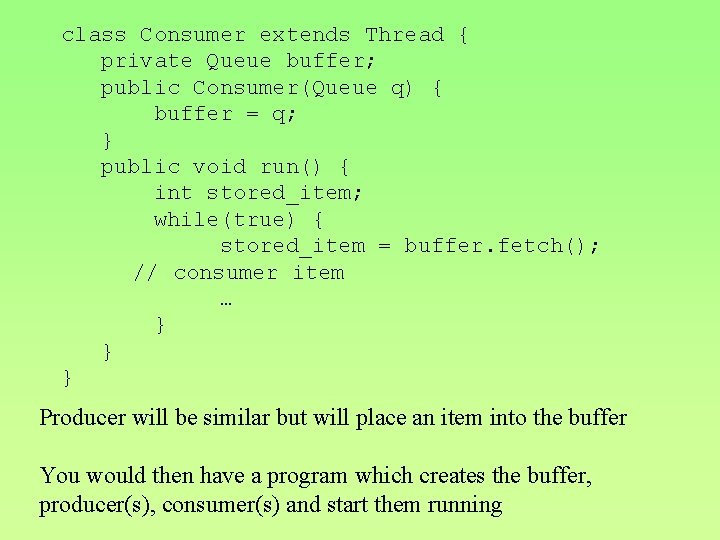

    class Consumer extends Thread {
        private Queue buffer;

        public Consumer(Queue q) { buffer = q; }

        public void run() {
            int stored_item;
            while (true) {
                stored_item = buffer.fetch();
                // consume item
                …
            }
        }
    }

Producer will be similar but will place an item into the buffer. You would then have a program which creates the buffer, producer(s) and consumer(s), and starts them running.
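Putting the pieces together, a self-contained sketch of such a program might look like the following. The buffer class is renamed `MonitorQueue` here to avoid confusion with `java.util.Queue`. The constructor, the fixed item count (so the program terminates, unlike the endless loops on the slide), and the `ProducerConsumerDemo` driver are assumptions added for illustration.

```java
// Sketch: monitor-style bounded queue with one producer and one consumer.
class MonitorQueue {
    private final int[] queue;
    private int nextIn = 0, nextOut = 0, filled = 0;

    MonitorQueue(int size) { queue = new int[size]; }

    synchronized void deposit(int item) throws InterruptedException {
        while (filled == queue.length) wait(); // buffer full: cooperate by waiting
        queue[nextIn] = item;
        nextIn = (nextIn + 1) % queue.length;
        filled++;
        notifyAll();                           // wake any waiting consumer
    }

    synchronized int fetch() throws InterruptedException {
        while (filled == 0) wait();            // buffer empty: wait for a deposit
        int item = queue[nextOut];
        nextOut = (nextOut + 1) % queue.length;
        filled--;
        notifyAll();                           // wake any waiting producer
        return item;
    }
}

class Producer extends Thread {
    private final MonitorQueue buffer;
    private final int count;

    Producer(MonitorQueue q, int count) { buffer = q; this.count = count; }

    @Override
    public void run() {
        try {
            for (int i = 1; i <= count; i++) buffer.deposit(i); // produce 1..count
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

class Consumer extends Thread {
    private final MonitorQueue buffer;
    private final int count;
    private long sum = 0;

    Consumer(MonitorQueue q, int count) { buffer = q; this.count = count; }

    @Override
    public void run() {
        try {
            for (int i = 0; i < count; i++) sum += buffer.fetch(); // consume items
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    long getSum() { return sum; }
}

class ProducerConsumerDemo {
    // Creates the buffer, one producer and one consumer, and runs them to completion.
    static long run(int count) {
        MonitorQueue q = new MonitorQueue(5);
        Producer p = new Producer(q, count);
        Consumer c = new Consumer(q, count);
        p.start();
        c.start();
        try {
            p.join();
            c.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return c.getSum();
    }

    public static void main(String[] args) {
        System.out.println(run(100)); // sum of 1..100
    }
}
```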

Other Comments/Evaluation • Java includes classes to control synchronized access to shared int, long and boolean variables in a non-blocking way – this uses built-in atomic machine instructions • There is a Lock interface with methods lock, unlock, tryLock – this interface provides explicit locks as an alternative to the implicit locks provided by synchronized methods – the reason for this is to avoid a possible indefinite wait time • Java’s thread support is effective and fairly simple to use – but communication between threads is limited to shared data, which prevents, for instance, threads from being distributed across processors that do not share memory
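Both facilities can be sketched briefly. `AtomicInteger` (from `java.util.concurrent.atomic`) updates a shared int without blocking, and `tryLock` on a `ReentrantLock` returns immediately instead of waiting when the lock is held. The class and method names below are hypothetical, and the workloads are chosen only to make the behavior observable.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Sketch: non-blocking atomic updates and a lock attempt that does not wait.
class NonBlockingDemo {
    // Several threads increment one AtomicInteger; no synchronized block is needed.
    static int atomicCount(int threads, int each) {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int k = 0; k < each; k++) counter.incrementAndGet();
            });
            workers[i].start();
        }
        for (Thread w : workers) joinQuietly(w);
        return counter.get();
    }

    // The caller holds the lock while another thread calls tryLock();
    // returns whether that other thread managed to acquire it.
    static boolean tryLockWhileHeld() {
        Lock lock = new ReentrantLock();
        boolean[] acquired = {false};
        lock.lock();
        try {
            Thread other = new Thread(() -> {
                acquired[0] = lock.tryLock(); // returns at once, no indefinite wait
                if (acquired[0]) lock.unlock();
            });
            other.start();
            joinQuietly(other);
        } finally {
            lock.unlock();
        }
        return acquired[0];
    }

    private static void joinQuietly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(atomicCount(4, 1000));
        System.out.println(tryLockWhileHeld());
    }
}
```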

C# Threads • A modest improvement over Java threads – any method can run in its own thread by creating a Thread object – Thread objects are instantiated with a predefined ThreadStart delegate, which is passed the method that implements the action of the new Thread object • C# has the methods Start, Join and Sleep, and includes an Abort method that makes termination of threads superior to that in Java • In C#, threads can be synchronized by – being placed inside a Monitor class (for creating an ADT with a critical section as per Pascal) – using the Interlocked class (this is used only when synchronizing access to a datum that is to be incremented/decremented) – using the lock statement (to mark access to a critical section inside a thread) • C# threads are not as flexible as Ada threads, but are simpler to use/implement

Concurrency in LISP • Multilisp is an extension of Scheme to support concurrency • To (potentially) launch a computation concurrently, use the pcall construct – it receives the function to be launched and its arguments, as in (pcall foo a b c), and the arguments may be evaluated concurrently – there is no built-in security for using pcall • for instance, side effects that corrupt data, and deadlock, can arise unless the function foo has proper synchronization mechanisms • Another construct is called future, which is similar to pcall except that the function executed is launched as a thread, with the parent thread running concurrently with the newly launched function – the book also briefly mentions Concurrent ML and the language F#, which has its own form of thread-based concurrency similar to C#
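The idea behind future has a rough counterpart in Java's `CompletableFuture`. This is offered only as an analogy, not as how Multilisp works internally. In the sketch, the function `foo` and its arguments are hypothetical stand-ins for the `(pcall foo a b c)` example: the parent keeps working while `foo` runs on another thread, and blocks only when it needs the result.

```java
import java.util.concurrent.CompletableFuture;

// Sketch: launch foo concurrently, keep working, then collect its value.
class FutureSketch {
    // Hypothetical function standing in for foo in the slide's example.
    static int foo(int a, int b, int c) {
        return a + b + c;
    }

    static int run() {
        // Start foo(1, 2, 3) on another thread.
        CompletableFuture<Integer> future =
            CompletableFuture.supplyAsync(() -> foo(1, 2, 3));
        int parentWork = 10 * 2;          // the parent continues concurrently
        return parentWork + future.join(); // wait only when the value is needed
    }

    public static void main(String[] args) {
        System.out.println(run()); // 20 + 6 = 26
    }
}
```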

Statement-Level Concurrency • Need language support so that programmers can identify how a program can be mapped onto a multiprocessor – there are many ways to parallelize code, the book only refers to methods for a SIMD architecture • High-Performance FORTRAN (an extension to FORTRAN 90) has the following statements – PROCESSORS – describes number of processors available for the program, used with other specifications to tell the compiler how data is to be distributed – DISTRIBUTE – specifies what data is to be distributed (e. g. an array) – ALIGN – relates distribution of arrays with each other – FORALL – lists statements that can be executed concurrently • Other languages with statement-level concurrency are Cn (variation of C), Parallaxis-III (variation of Modula 2) and Vector Pascal
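Java has no FORALL statement, but a parallel stream over array indices conveys a similar idea: the loop body is declared safe to run for all indices concurrently, and the runtime decides how to distribute the work. This is an analogy under that assumption, not HPF semantics; the class and method names are hypothetical.

```java
import java.util.stream.IntStream;

// Sketch: apply the same statement to every array element, possibly in parallel.
class ForallSketch {
    // Returns a new array whose elements are a[i] * factor.
    static int[] scale(int[] a, int factor) {
        int[] out = new int[a.length];
        // Each index is independent, so iterations may run concurrently.
        IntStream.range(0, a.length)
                 .parallel()
                 .forEach(i -> out[i] = a[i] * factor);
        return out;
    }

    public static void main(String[] args) {
        int[] r = scale(new int[] {1, 2, 3, 4}, 3);
        for (int v : r) {
            System.out.println(v);
        }
    }
}
```

The approach is only safe because no iteration reads or writes an element that another iteration writes.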