Simultaneous Multithreading REHNUMA AFRIN




Outline

- Motivation
- Simultaneous Multithreading (SMT)
  - Resource Utilization
  - SMT Visually
  - SMT Pipeline
  - Performance
- Limitations
- References

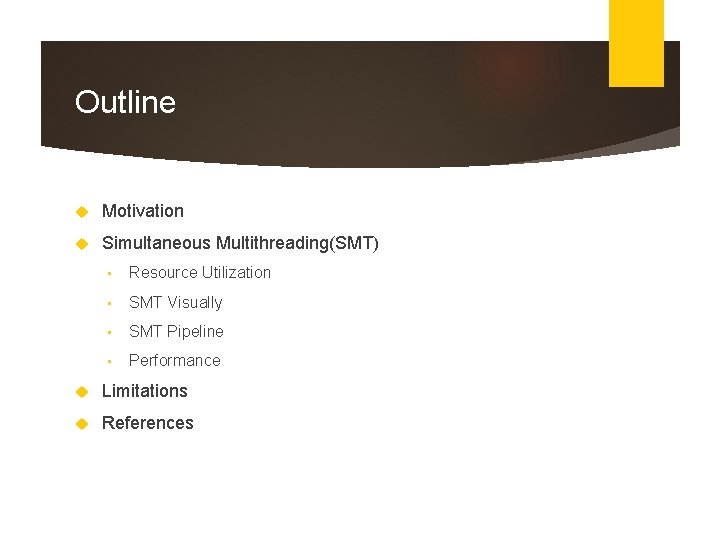

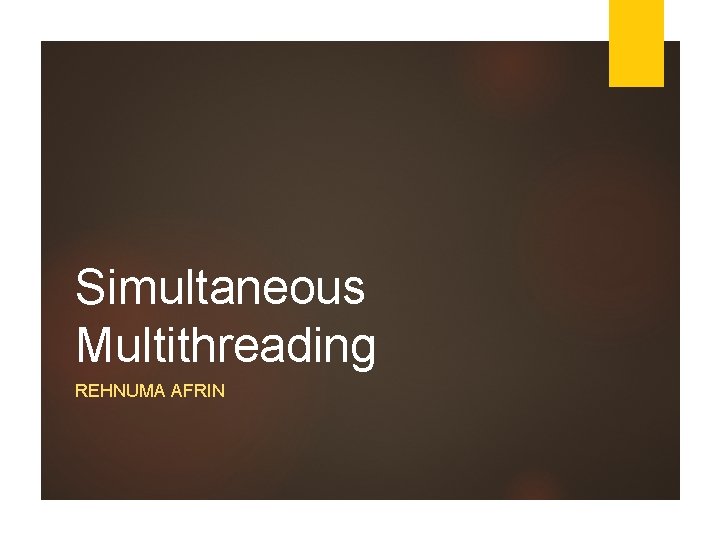

Motivation

Superscalar execution and traditional multithreading have limited ability to increase instruction throughput in future processors:

- Instruction dependencies
- Unutilized CPU resources

Empty issue slots can be classified as either vertical waste or horizontal waste. Vertical waste occurs when the processor issues no instructions in a cycle; horizontal waste occurs when not all issue slots can be filled in a cycle. Superscalar execution (as opposed to single-issue execution) both introduces horizontal waste and increases the amount of vertical waste.
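The two kinds of waste defined above can be counted directly from a per-cycle issue trace. The following is a minimal sketch, not taken from the slides: the function name `issue_waste`, the sample trace, and the 4-wide issue width are all illustrative assumptions.

```python
from typing import List, Tuple


def issue_waste(issued_per_cycle: List[int], issue_width: int) -> Tuple[int, int]:
    """Return (vertical_waste, horizontal_waste), both measured in empty issue slots.

    issued_per_cycle[c] is the number of instructions issued in cycle c
    (a hypothetical trace; real numbers would come from a simulator).
    """
    vertical = 0
    horizontal = 0
    for issued in issued_per_cycle:
        if not 0 <= issued <= issue_width:
            raise ValueError("issued count must be between 0 and issue_width")
        if issued == 0:
            # Nothing issued this cycle: every slot is vertical waste.
            vertical += issue_width
        else:
            # Some slots filled: the remaining ones are horizontal waste.
            horizontal += issue_width - issued
    return vertical, horizontal


# Hypothetical 6-cycle trace on an assumed 4-wide machine.
trace = [2, 0, 1, 4, 0, 3]
vertical, horizontal = issue_waste(trace, issue_width=4)
print(f"vertical waste: {vertical} slots, horizontal waste: {horizontal} slots")
```

In this trace the two completely idle cycles account for all of the vertical waste, while the partially filled cycles contribute the horizontal waste.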

Simultaneous Multithreading

A technique that permits several independent threads to issue instructions to a superscalar processor's multiple functional units in a single cycle.

- Uniprocessor: 4-6 wide, but lucky to achieve 1-2 IPC, so utilization is poor
- SMP: 2-4 CPUs, but needs independent tasks, otherwise utilization is poor as well
- SMT: the idea is to use a single large uniprocessor as a multiprocessor

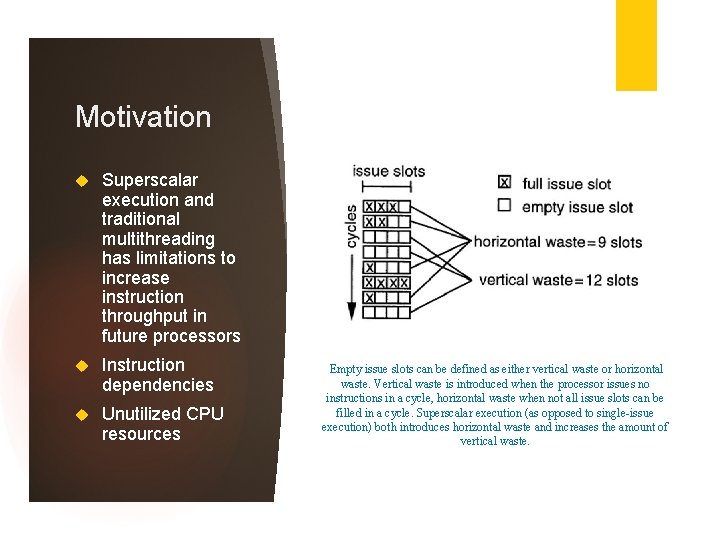

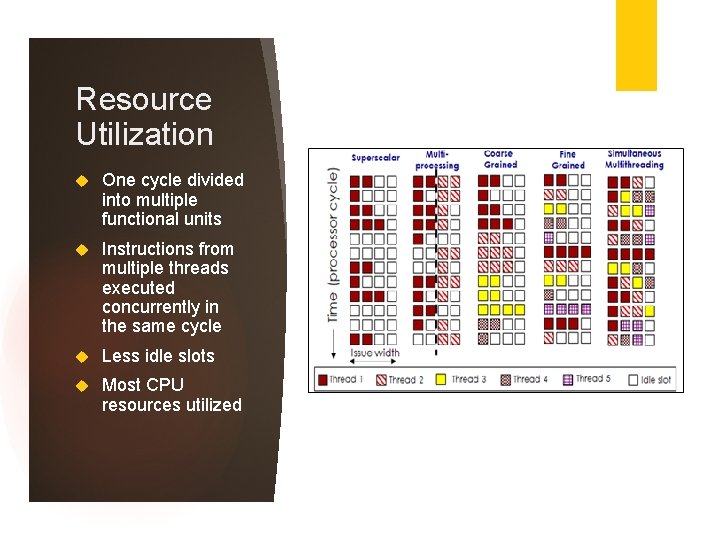

Resource Utilization

- Each cycle offers issue slots on multiple functional units
- Instructions from multiple threads execute concurrently in the same cycle
- Fewer idle slots
- Most CPU resources are utilized
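A toy model can illustrate how issuing from several threads in the same cycle reduces idle slots. This is a sketch under simplifying assumptions that are not in the slides: the per-thread "ready" counts are made up, threads are served in a fixed priority order, and instructions that do not issue in a cycle are simply dropped rather than carried over.

```python
from typing import List


def issued_slots(ready_per_thread: List[List[int]], issue_width: int) -> List[int]:
    """Return the number of issue slots filled in each cycle.

    ready_per_thread[t][c] is how many instructions thread t could issue in
    cycle c (hypothetical data). Slots are filled greedily in thread order.
    """
    cycles = len(ready_per_thread[0])
    filled = []
    for c in range(cycles):
        free = issue_width
        for thread in ready_per_thread:
            take = min(thread[c], free)  # a thread can only use slots still free
            free -= take
        filled.append(issue_width - free)
    return filled


def utilization(filled: List[int], issue_width: int) -> float:
    """Fraction of all issue slots that were filled."""
    return sum(filled) / (issue_width * len(filled))


# Two hypothetical threads on an assumed 4-wide machine.
thread0 = [2, 0, 1, 4, 0, 3]
thread1 = [1, 3, 0, 2, 2, 0]

single = issued_slots([thread0], issue_width=4)
smt = issued_slots([thread0, thread1], issue_width=4)
print("single thread:", single, f"{utilization(single, 4):.2f}")
print("two threads:  ", smt, f"{utilization(smt, 4):.2f}")
```

With the second thread present, the cycles in which the first thread issues nothing are no longer fully idle, which is the effect the bullets above describe.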

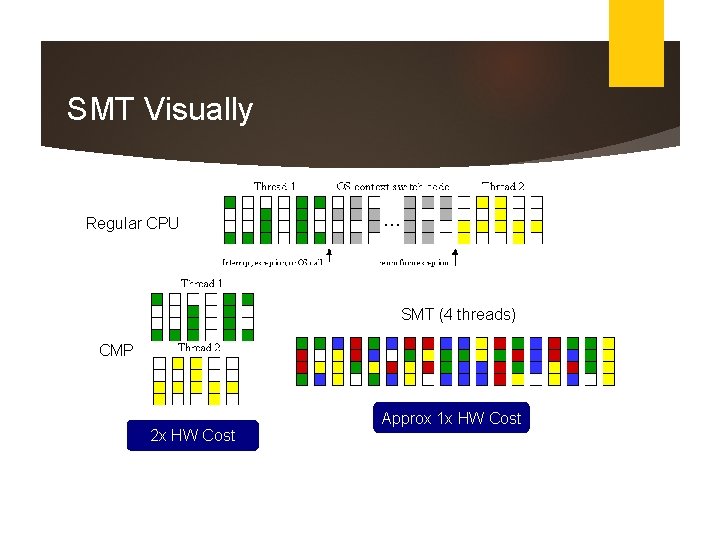

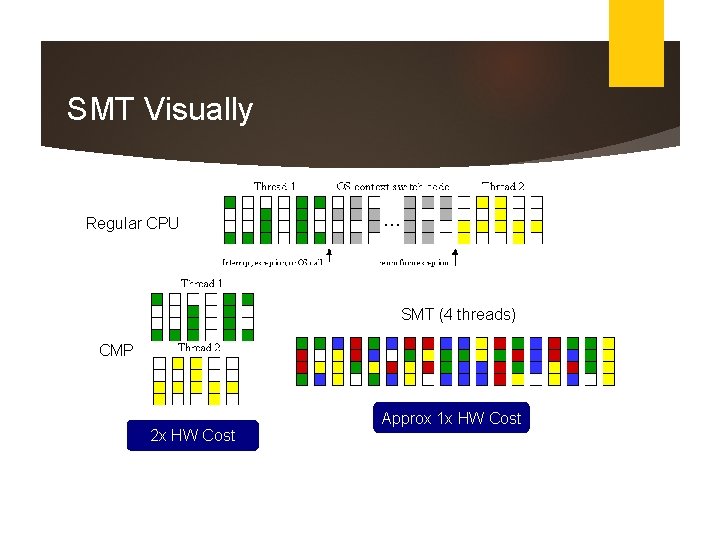

SMT Visually

[Figure: issue-slot diagrams for a regular CPU, SMT (4 threads), and CMP. Labels: approx. 1x HW cost, 2x HW cost.]

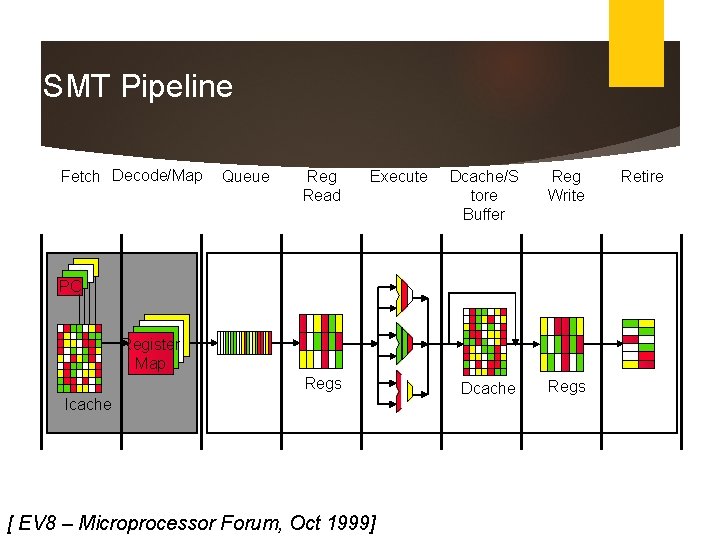

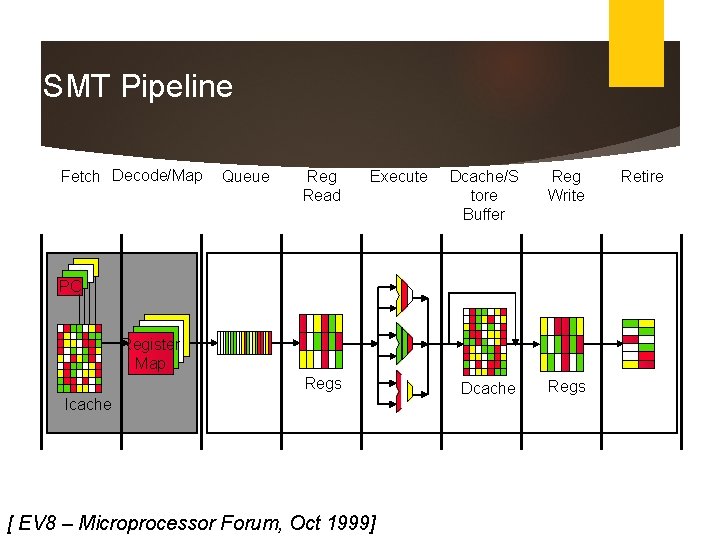

SMT Pipeline

[Figure: SMT pipeline. Stages: Fetch, Decode/Map, Queue, Reg Read, Execute, Dcache/Store Buffer, Reg Write, Retire. Structures shown: PC, Icache, Register Map, Regs, Dcache, Regs. Source: EV8 – Microprocessor Forum, Oct 1999.]

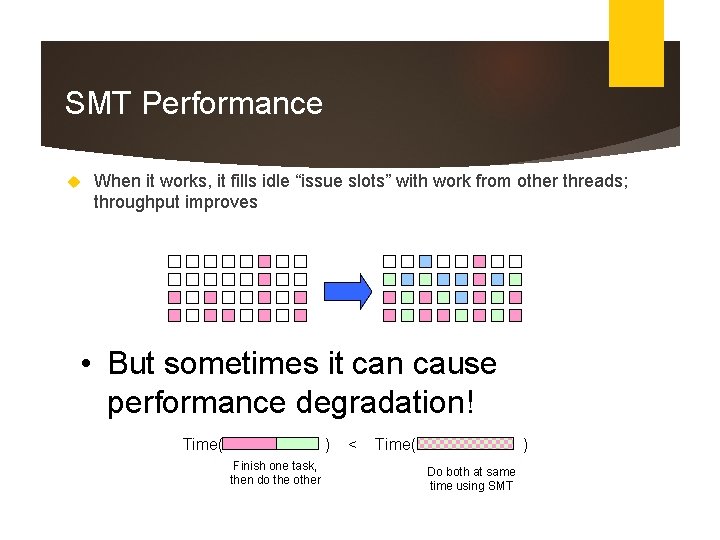

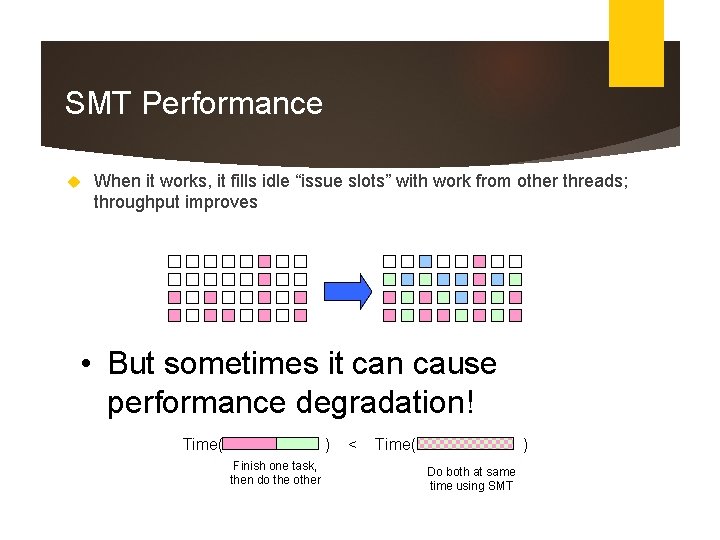

SMT Performance

- When it works, it fills idle "issue slots" with work from other threads, and throughput improves
- But sometimes it can cause performance degradation:

Time(finish one task, then do the other) < Time(do both at the same time using SMT)

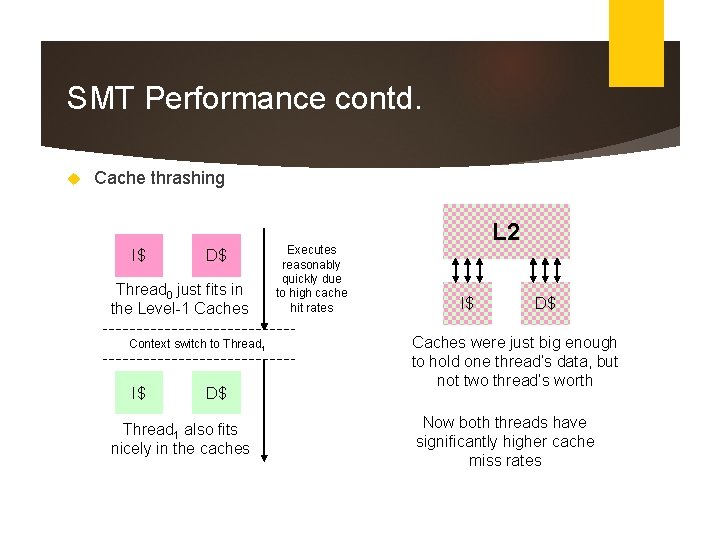

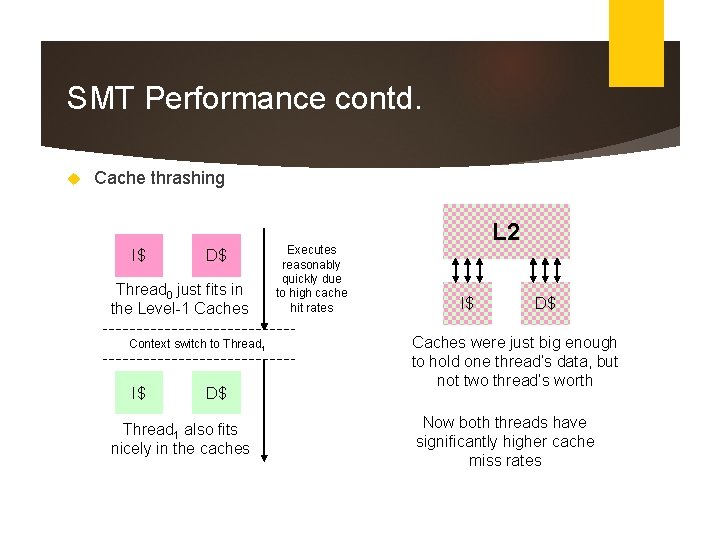

SMT Performance contd.

Cache thrashing

One thread at a time (figure labels: I$, D$, L2):

- Thread 0 just fits in the Level-1 caches
- Context switch to Thread 1
- Thread 1 also fits nicely in the caches
- Each thread executes reasonably quickly due to high cache hit rates

Both threads at the same time:

- The caches were just big enough to hold one thread's data, but not two threads' worth
- Now both threads have significantly higher cache miss rates
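The thrashing effect can be reproduced with a very small cache model. The sketch below is illustrative only: it assumes a fully associative LRU cache of 4 lines, two made-up threads that each loop over a 4-line working set, and strict access-by-access interleaving as a stand-in for simultaneous execution. None of these parameters come from the slides.

```python
from collections import OrderedDict
from itertools import chain
from typing import Iterable, List


def count_misses(accesses: Iterable[int], capacity: int) -> int:
    """Count misses for a fully associative LRU cache holding `capacity` lines."""
    cache: "OrderedDict[int, bool]" = OrderedDict()
    misses = 0
    for line in accesses:
        if line in cache:
            cache.move_to_end(line)  # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = True
    return misses


def working_set_loop(base: int, lines: int, passes: int) -> List[int]:
    """Addresses for a thread that sweeps `lines` cache lines, `passes` times."""
    return [base + i for _ in range(passes) for i in range(lines)]


CAPACITY = 4  # assumed cache size, in lines
thread0 = working_set_loop(base=0, lines=4, passes=10)
thread1 = working_set_loop(base=100, lines=4, passes=10)

# Finish one task, then do the other.
one_after_other = thread0 + thread1
# Both at the same time: accesses from the two threads alternate.
interleaved = list(chain.from_iterable(zip(thread0, thread1)))

sequential_misses = count_misses(one_after_other, CAPACITY)
smt_misses = count_misses(interleaved, CAPACITY)
print(f"sequential: {sequential_misses} misses out of {len(one_after_other)}")
print(f"interleaved: {smt_misses} misses out of {len(interleaved)}")
```

Run one after the other, each thread only pays for its initial cold misses. Interleaved, the combined working set is twice the cache capacity, so under LRU every access evicts a line the other thread is about to reuse.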

Limitations

- Increased complexity
- Increased stress on shared structures such as caches


References

[1] Dean M. Tullsen, Susan J. Eggers, and Henry M. Levy. Simultaneous Multithreading: Maximizing On-Chip Parallelism. ISCA '95, Santa Margherita Ligure, Italy. © 1995 ACM. doi:10.1145/223982.224449
[2] J. Laudon, A. Gupta, and M. Horowitz. Interleaving: A multithreading technique targeting multiprocessors and workstations. ACM SIGPLAN Notices, 1994.
[3] Mike Johnson. Superscalar Microprocessor Design. Prentice-Hall, 1991. ISBN 0-13-875634-1
[4] J. P. Shen and M. H. Lipasti. Modern Processor Design: Fundamentals of Superscalar Processors. 2013.
[5] M. Loikkanen and N. Bagherzadeh. A fine-grain multithreading superscalar architecture. Proceedings of the 1996 Conference on Parallel Architectures and Compilation Techniques. doi:10.1109/PACT.1996.552663
[6] Nikos Chrisochoides. Multithreaded model for the dynamic load-balancing of parallel adaptive PDE computation. https://doi.org/10.1016/0168-9274(95)00104-2
[7] A. Agarwal. Performance tradeoffs in multithreaded processors. IEEE Transactions on Parallel and Distributed Systems, 3(5):525–539, September 1992.
[8] A. Agarwal, B. H. Lim, D. Kranz, and J. Kubiatowicz. APRIL: a processor architecture for multiprocessing. In 17th Annual International Symposium on Computer Architecture, pages 104–114, May 1990.


References Contd.

[9] J. Laudon, A. Gupta, and M. Horowitz. Interleaving: A multithreading technique targeting multiprocessors and workstations. In Sixth International Conference on Architectural Support for Programming Languages and Operating Systems, pages 308–318, October 1994.
[10] A. Gupta, J. Hennessy, K. Gharachorloo, T. Mowry, and W. D. Weber. Comparative evaluation of latency reducing and tolerating techniques. In 18th Annual International Symposium on Computer Architecture, pages 254–263, May 1991.
[11] W. D. Weber and A. Gupta. Exploring the benefits of multiple hardware contexts in a multiprocessor architecture: preliminary results. In 16th Annual International Symposium on Computer Architecture, pages 273–280, June 1989.
[12] R. Thekkath and S. J. Eggers. The effectiveness of multiple hardware contexts. In Sixth International Conference on Architectural Support for Programming Languages and Operating Systems, pages 328–337, October 1994.
[13] R. Alverson, D. Callahan, D. Cummings, B. Koblenz, A. Porterfield, and B. Smith. The Tera computer system. In International Conference on Supercomputing, pages 1–6, June 1990.

THANK YOU