PROCESS CONCEPT Process a program in execution process

![DEKKER’S ALGORITHM- SECOND APPROACH Flag[0] Critical Section Flag[1] 1 P 0 P 1 Turn DEKKER’S ALGORITHM- SECOND APPROACH Flag[0] Critical Section Flag[1] 1 P 0 P 1 Turn](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-118.jpg)

![SECOND ATTEMPT P 0 P 1 while (flag[1]) /**/; while (flag[0]) /**/; flag[0] = SECOND ATTEMPT P 0 P 1 while (flag[1]) /**/; while (flag[0]) /**/; flag[0] =](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-119.jpg)

![Consider the following trace: 1. P 0 finds (state[1] == outside) 2. The Consider the following trace: 1. P 0 finds (state[1] == outside) 2. The](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-121.jpg)

![THIRD ATTEMPT Busy Flag Modified P 0 flag[0] = true; while (flag[1]==true) /**/; critical THIRD ATTEMPT Busy Flag Modified P 0 flag[0] = true; while (flag[1]==true) /**/; critical](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-124.jpg)

![THIRD ATTEMPT Once P 0 has set flag[0] to “true”, P 1 can not THIRD ATTEMPT Once P 0 has set flag[0] to “true”, P 1 can not](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-127.jpg)

![4 TH ATTEMPT - P 0 sets flag[0] to true. - P 1 sets 4 TH ATTEMPT - P 0 sets flag[0] to true. - P 1 sets](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-131.jpg)

![DEKKER’S ALGORITHM-CORRECT SOLUTION Turn Critical Section 1 P 0 P 1 Flag[0] Flag[1] Turn DEKKER’S ALGORITHM-CORRECT SOLUTION Turn Critical Section 1 P 0 P 1 Flag[0] Flag[1] Turn](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-132.jpg)

![CORRECT SOLUTION Procedure P 0 Begin Repeat flag[0]= True While flag[1] do if turn=1 CORRECT SOLUTION Procedure P 0 Begin Repeat flag[0]= True While flag[1] do if turn=1](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-133.jpg)

![while (true) { state[i] = interested; /* declare interest */ while (state[j]) /* If while (true) { state[i] = interested; /* declare interest */ while (state[j]) /* If](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-134.jpg)

![PETERSON’S ALGORITHM Procedure P 0 Begin Procedure P 1 Begin Repeat Flag[0]=true Turn=1 Repeat PETERSON’S ALGORITHM Procedure P 0 Begin Procedure P 1 Begin Repeat Flag[0]=true Turn=1 Repeat](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-136.jpg)

![1) Mutual exclusion - state[0] and state[1] can both be true, but turn can 1) Mutual exclusion - state[0] and state[1] can both be true, but turn can](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-138.jpg)

![EXAMPLE 2 Process 0 i=0, j=1 state [0] = TRUE turn = 1 EXAMPLE 2 Process 0 i=0, j=1 state [0] = TRUE turn = 1](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-140.jpg)

![EXAMPLE 3 Process 0 i=0, j=1 state[0] = TRUE - Lose processor here EXAMPLE 3 Process 0 i=0, j=1 state[0] = TRUE - Lose processor here](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-141.jpg)

![THE DINING-PHILOSOPHER PROBLEM The structure of Philosopher i: do { wait (chopstick[i]); wait (chop. THE DINING-PHILOSOPHER PROBLEM The structure of Philosopher i: do { wait (chopstick[i]); wait (chop.](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-162.jpg)

- Slides: 195

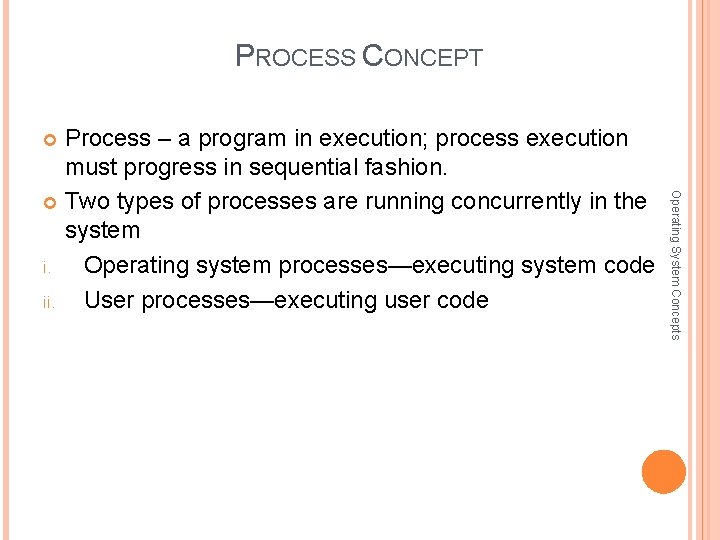

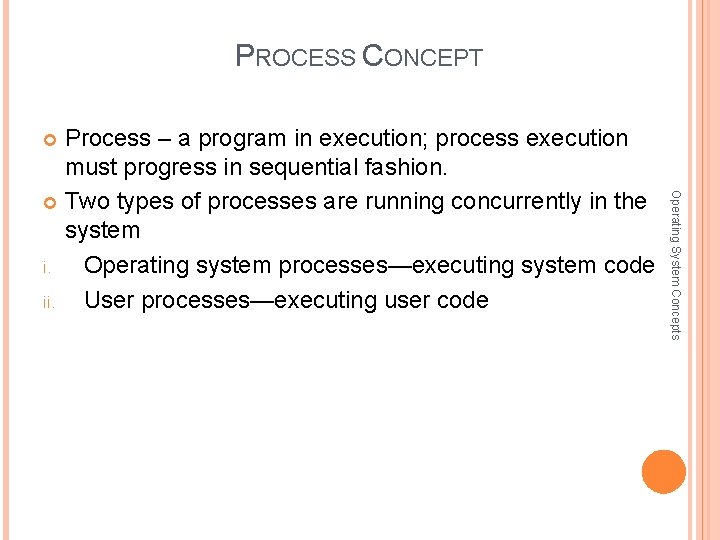

PROCESS CONCEPT Process – a program in execution; process execution must progress in sequential fashion. Two types of processes are running concurrently in the system i. Operating system processes—executing system code ii. User processes—executing user code Operating System Concepts

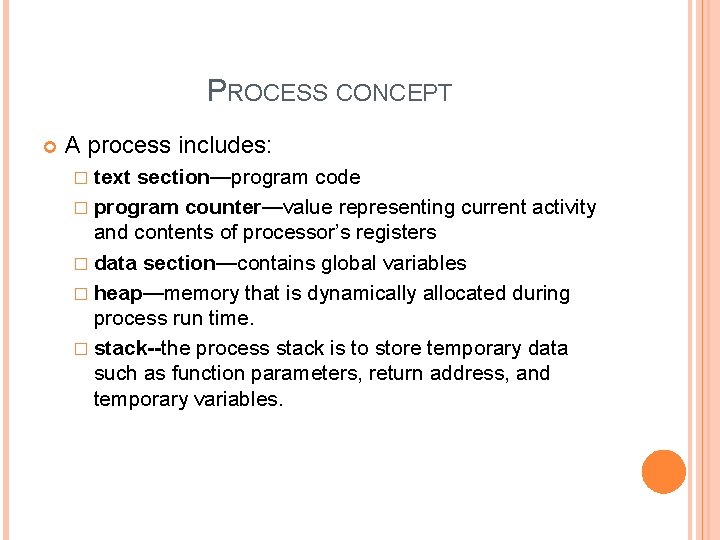

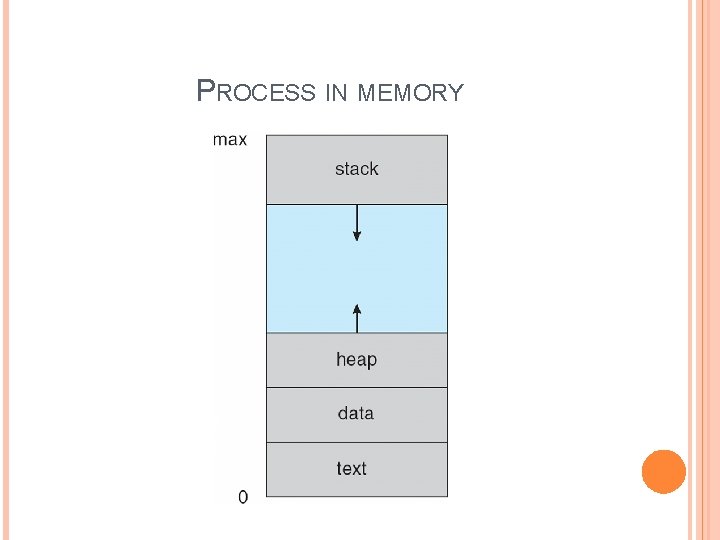

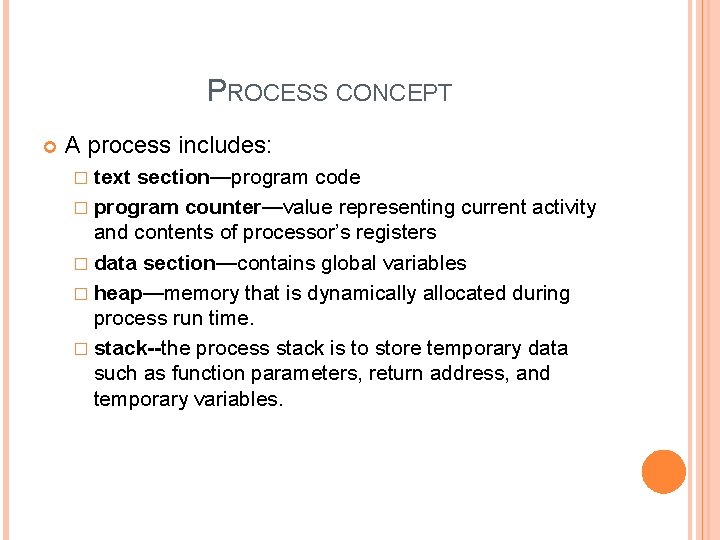

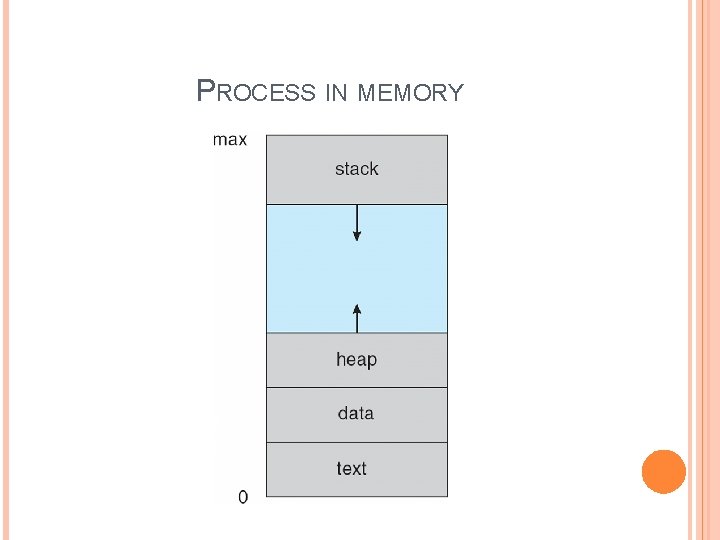

PROCESS CONCEPT A process includes: � text section—program code � program counter—value representing current activity and contents of processor’s registers � data section—contains global variables � heap—memory that is dynamically allocated during process run time. � stack--the process stack is to store temporary data such as function parameters, return address, and temporary variables.

PROCESS IN MEMORY

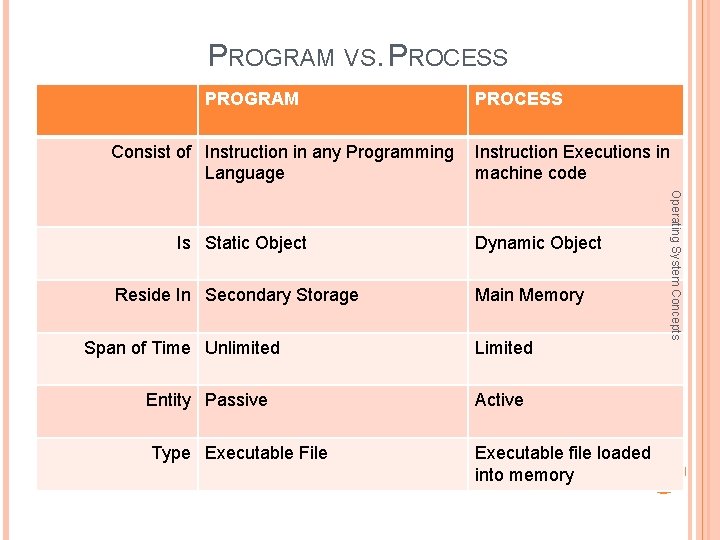

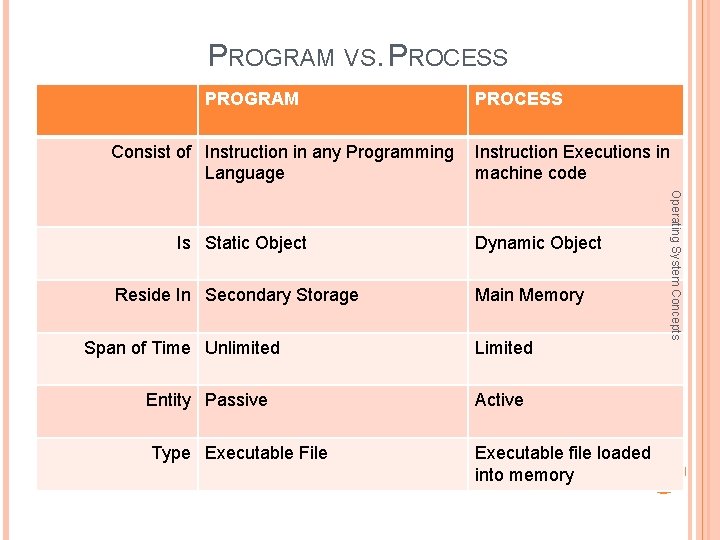

PROGRAM VS. PROCESS PROGRAM PROCESS Consist of Instruction in any Programming Instruction Executions in Language machine code Reside In Secondary Storage Span of Time Unlimited Dynamic Object Main Memory Limited Entity Passive Active Type Executable File Executable file loaded into memory Operating System Concepts Is Static Object

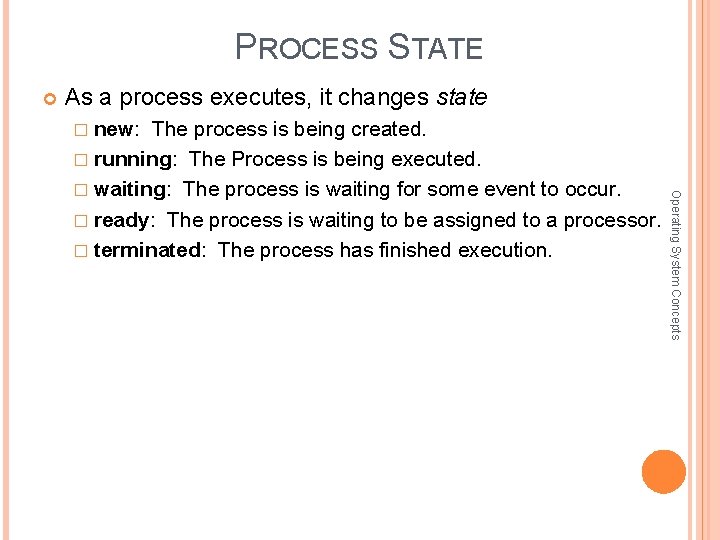

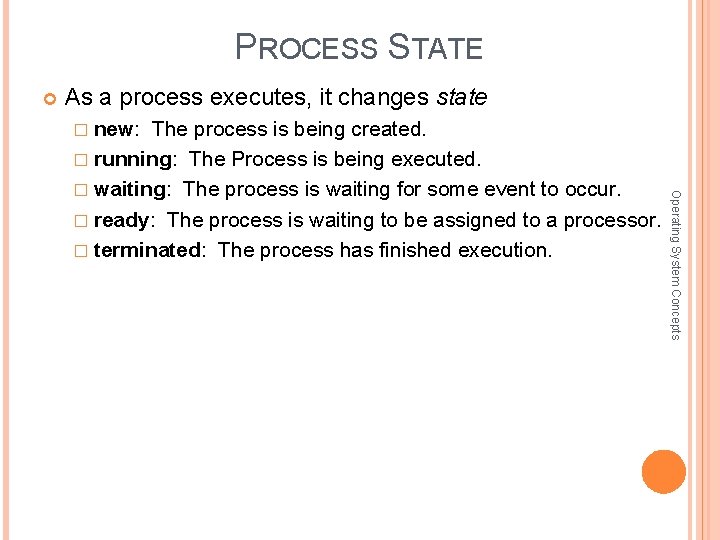

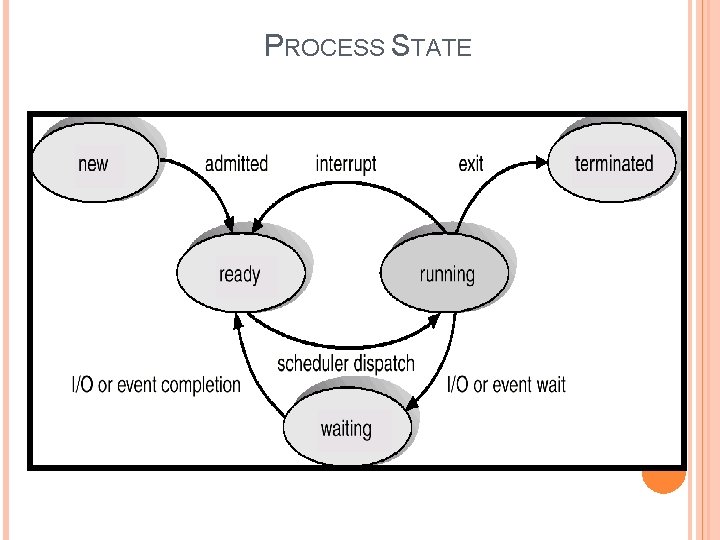

PROCESS STATE As a process executes, it changes state � new: The process is being created. � running: The Process is being executed. � ready: The process is waiting to be assigned to a processor. � terminated: The process has finished execution. Operating System Concepts � waiting: The process is waiting for some event to occur.

PROCESS STATE Operating System Concepts

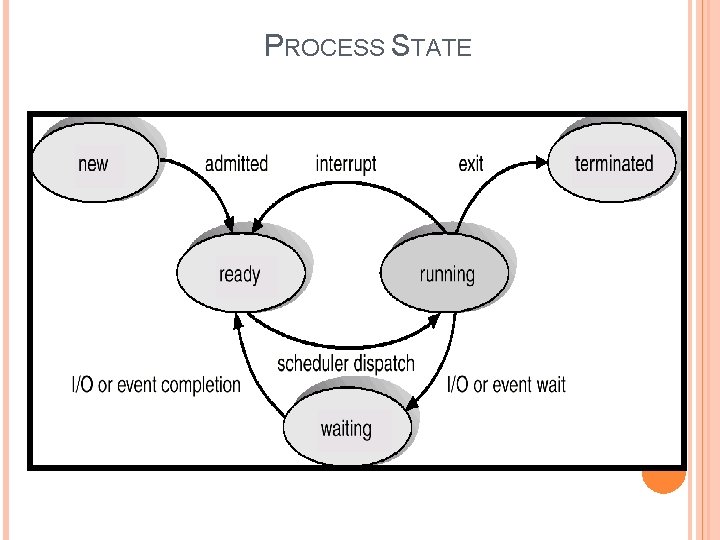

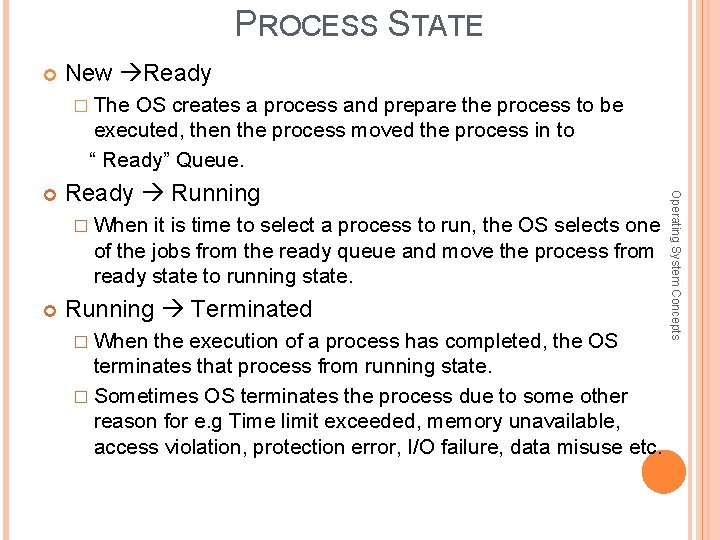

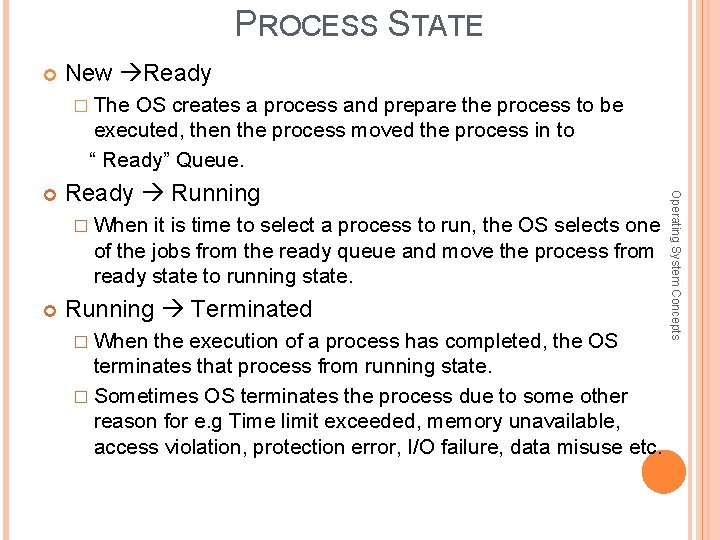

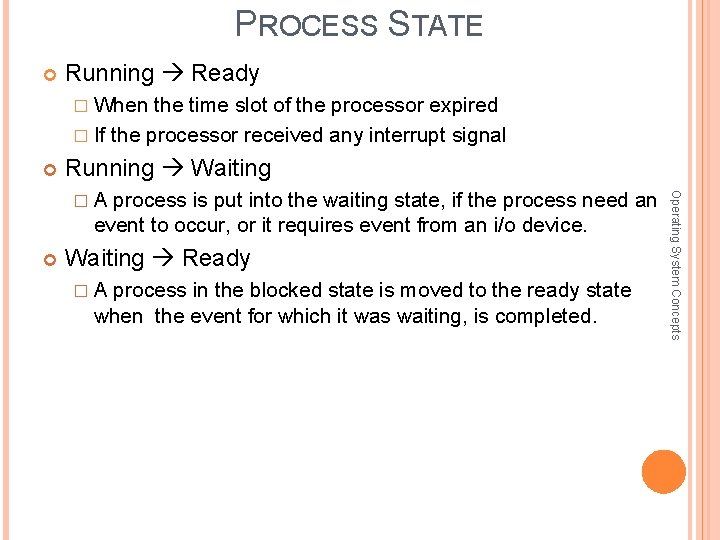

PROCESS STATE New Ready � The OS creates a process and prepare the process to be executed, then the process moved the process in to “ Ready” Queue. Ready Running � When it is time to select a process to run, the OS selects one of the jobs from the ready queue and move the process from ready state to running state. Running Terminated � When the execution of a process has completed, the OS terminates that process from running state. � Sometimes OS terminates the process due to some other reason for e. g Time limit exceeded, memory unavailable, access violation, protection error, I/O failure, data misuse etc. Operating System Concepts

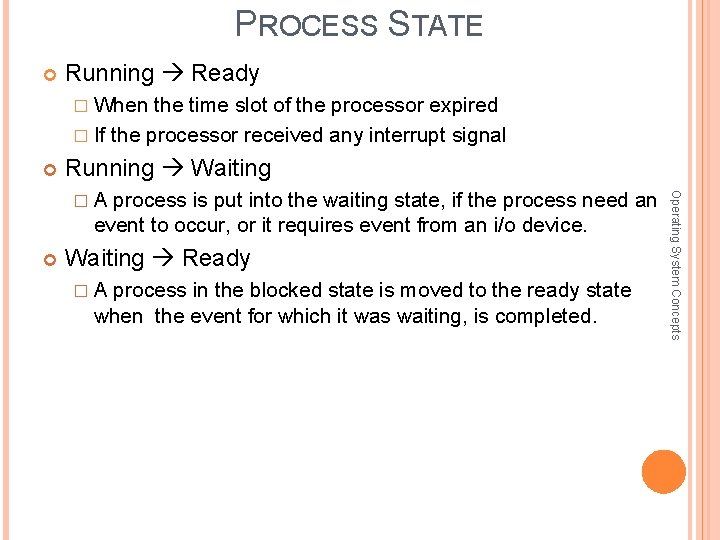

PROCESS STATE Running Ready � When the time slot of the processor expired � If the processor received any interrupt signal Running Waiting event to occur, or it requires event from an i/o device. Waiting Ready � A process in the blocked state is moved to the ready state when the event for which it was waiting, is completed. Operating System Concepts � A process is put into the waiting state, if the process need an

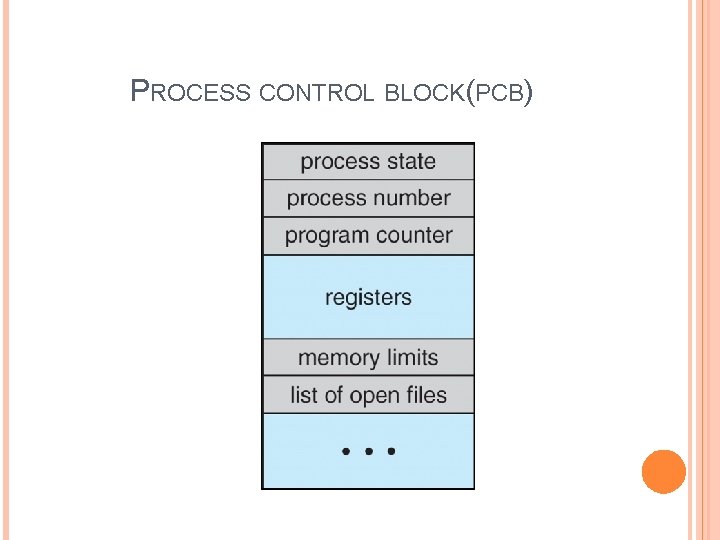

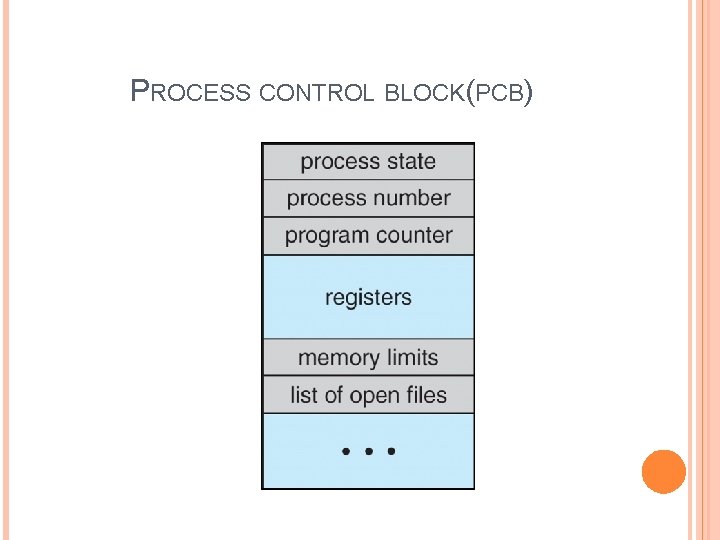

PROCESS CONTROL BLOCK(PCB)

PROCESS CONTROL BLOCK(PCB) Each process is represented in the operating system by a process control block: i. Process State—represents the state of process ii. Program Counter—indicates the address of next instruction to be executed iii. CPU registers—includes contents of all processcentric registers iv. CPU scheduling algorithms—includes process priority and pointers to scheduling queues v. Memory management information—memory allocated to the process vi. Accounting information—CPU used, clock time elapsed since start, time limits vii. I/O status information—I/O devices allocated to process, list of open files

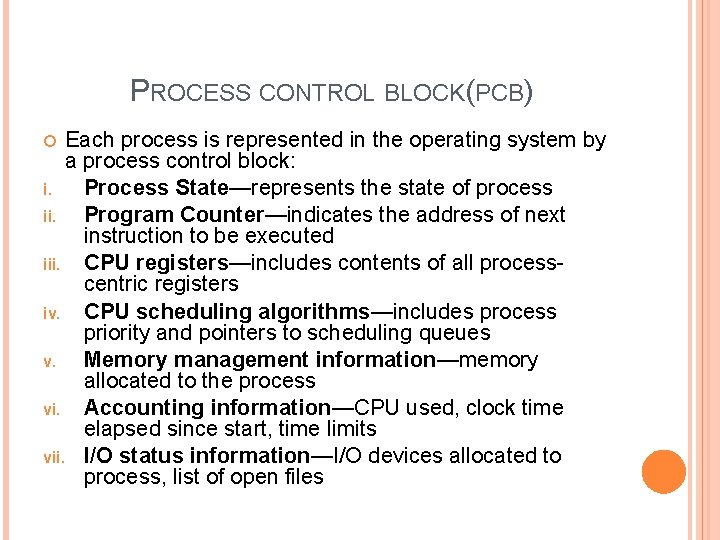

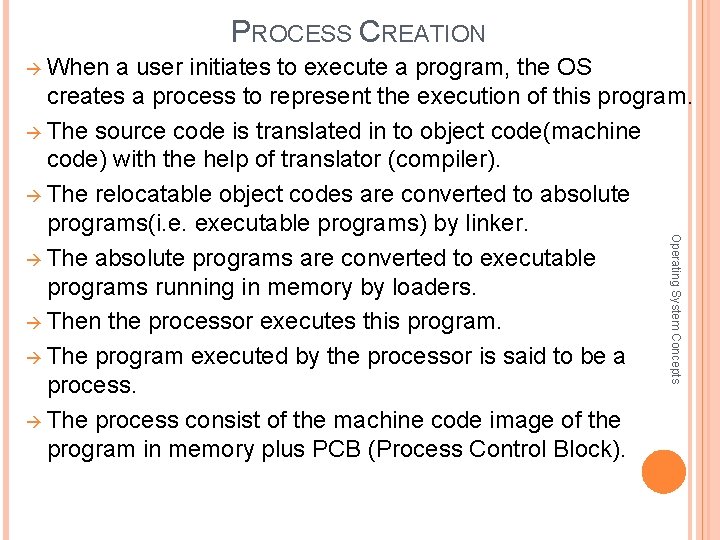

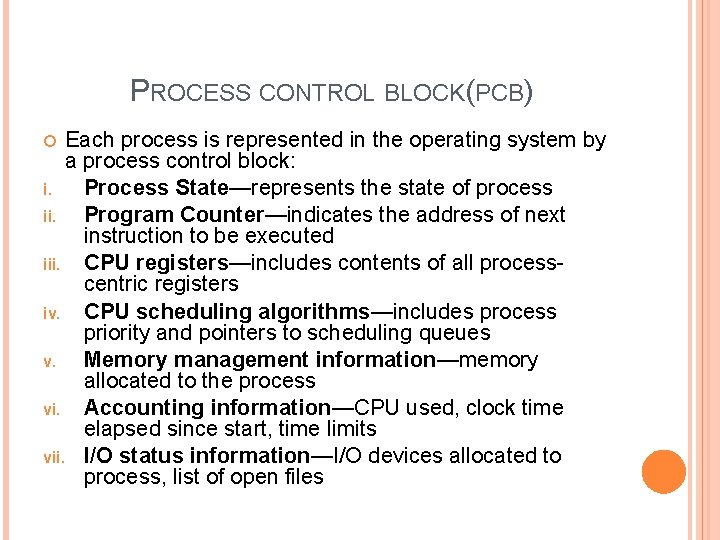

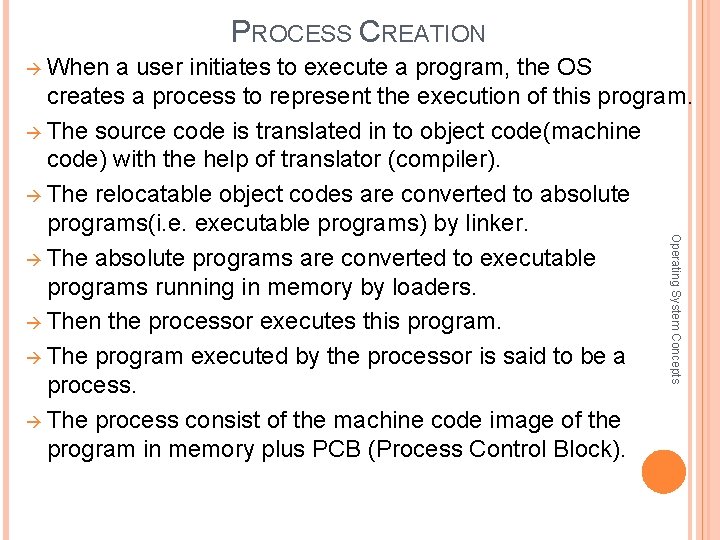

PROCESS CREATION When a user initiates to execute a program, the OS Operating System Concepts creates a process to represent the execution of this program. The source code is translated in to object code(machine code) with the help of translator (compiler). The relocatable object codes are converted to absolute programs(i. e. executable programs) by linker. The absolute programs are converted to executable programs running in memory by loaders. Then the processor executes this program. The program executed by the processor is said to be a process. The process consist of the machine code image of the program in memory plus PCB (Process Control Block).

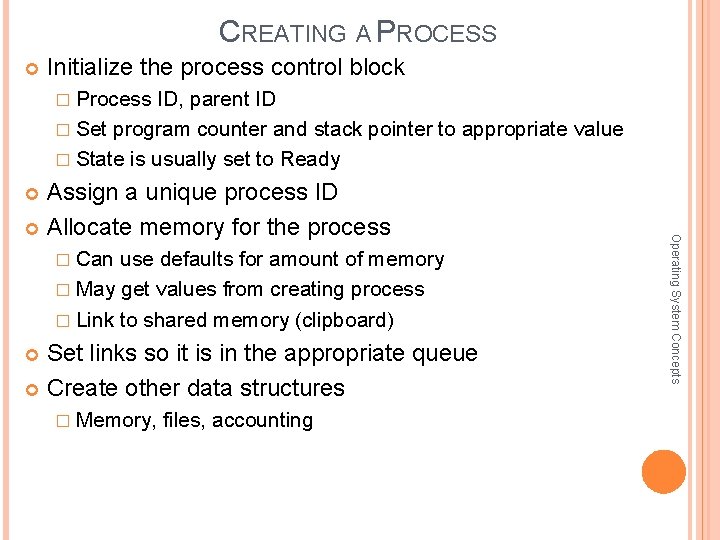

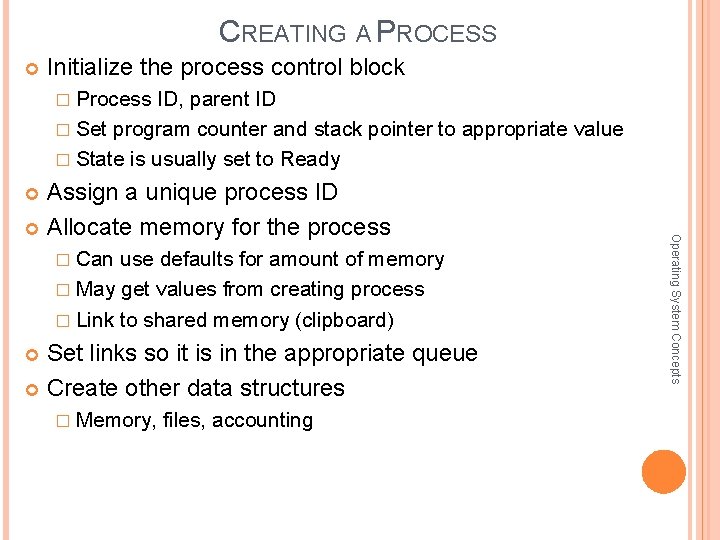

CREATING A PROCESS Initialize the process control block � Process ID, parent ID � Set program counter and stack pointer to appropriate value � State is usually set to Ready Assign a unique process ID Allocate memory for the process � May get values from creating process � Link to shared memory (clipboard) Set links so it is in the appropriate queue Create other data structures � Memory, files, accounting Operating System Concepts � Can use defaults for amount of memory

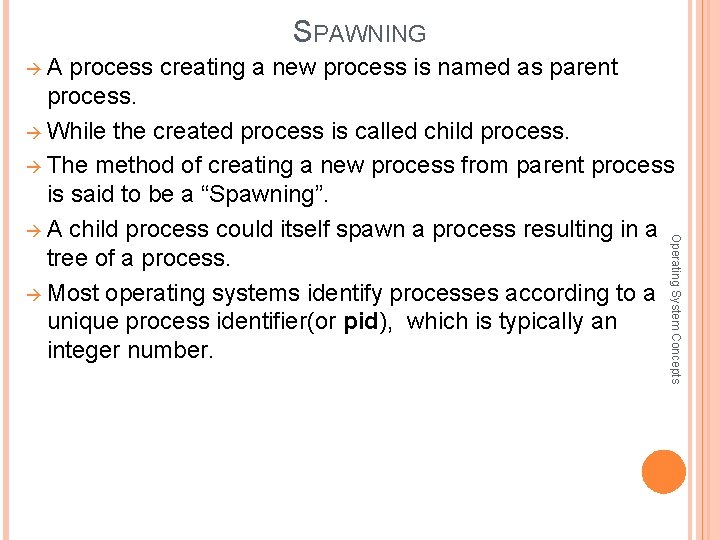

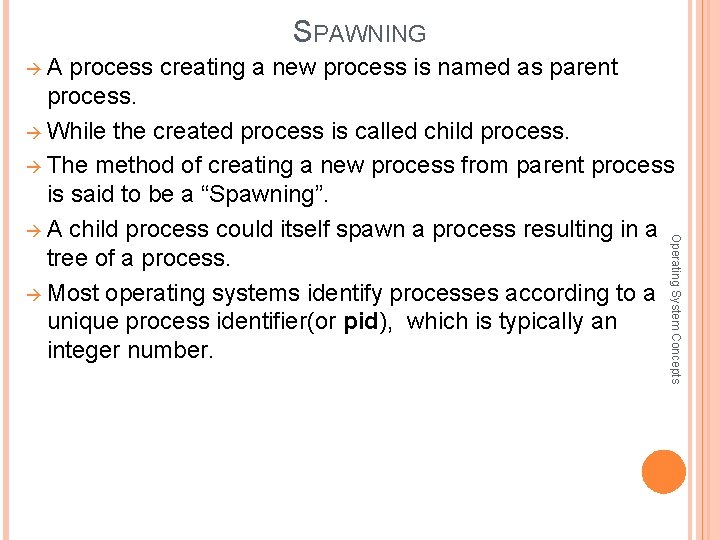

SPAWNING A process creating a new process is named as parent Operating System Concepts process. While the created process is called child process. The method of creating a new process from parent process is said to be a “Spawning”. A child process could itself spawn a process resulting in a tree of a process. Most operating systems identify processes according to a unique process identifier(or pid), which is typically an integer number.

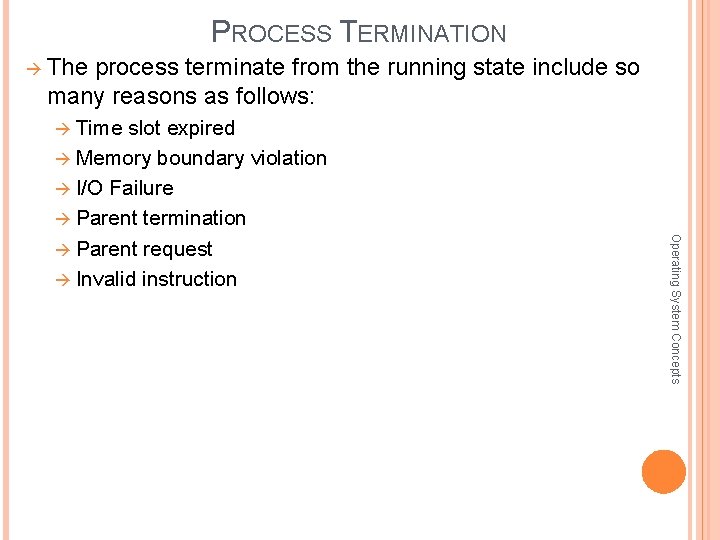

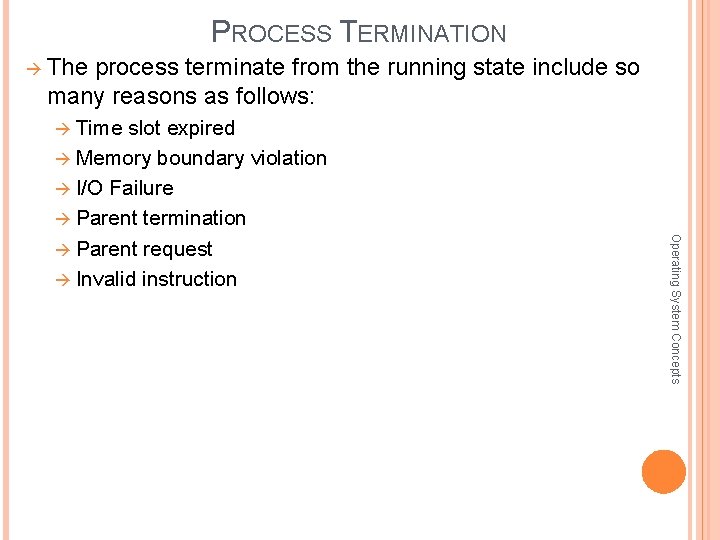

PROCESS TERMINATION The process terminate from the running state include so many reasons as follows: Time slot expired Memory boundary violation I/O Failure Parent termination Invalid instruction Operating System Concepts Parent request

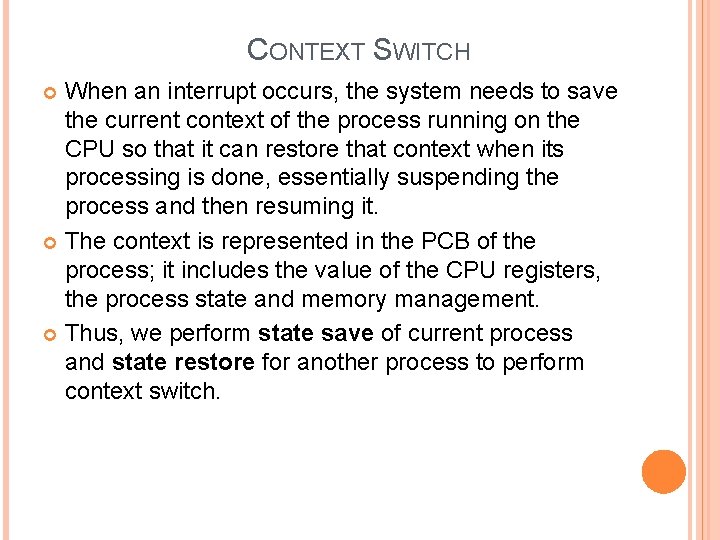

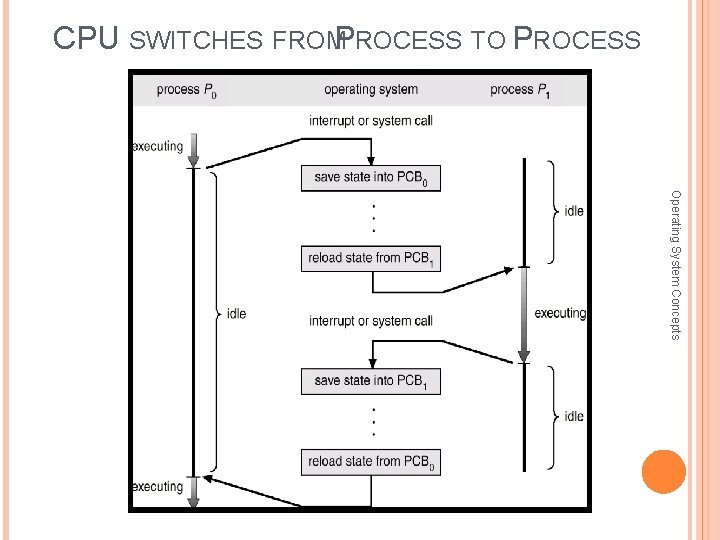

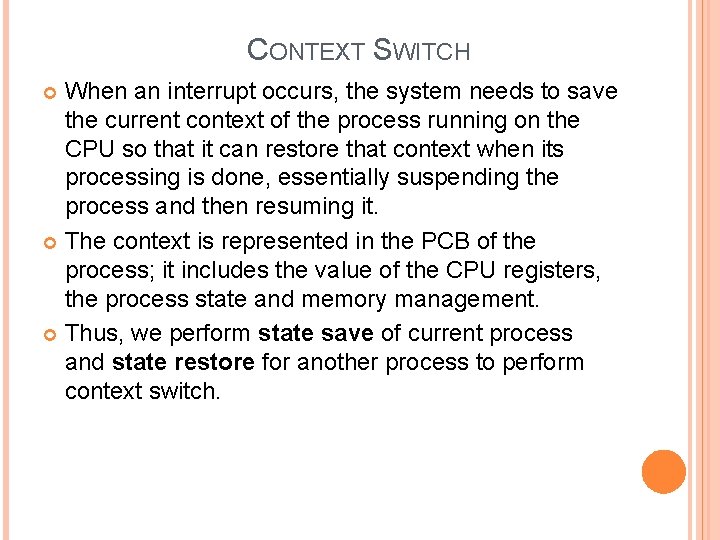

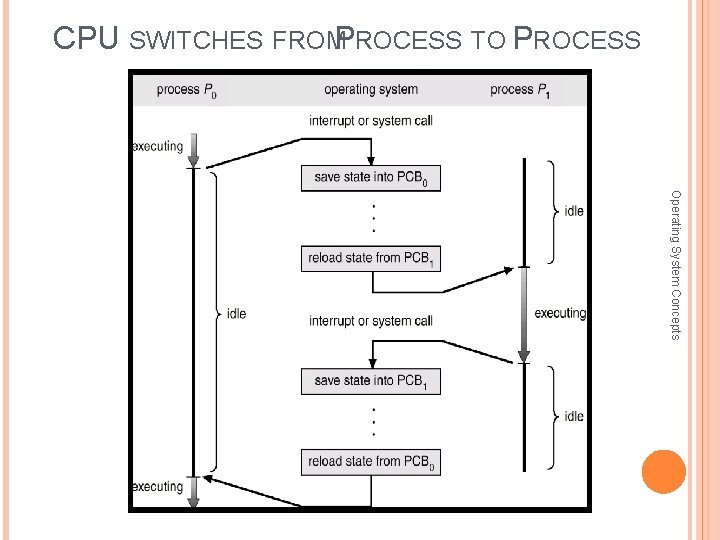

CONTEXT SWITCH When an interrupt occurs, the system needs to save the current context of the process running on the CPU so that it can restore that context when its processing is done, essentially suspending the process and then resuming it. The context is represented in the PCB of the process; it includes the value of the CPU registers, the process state and memory management. Thus, we perform state save of current process and state restore for another process to perform context switch.

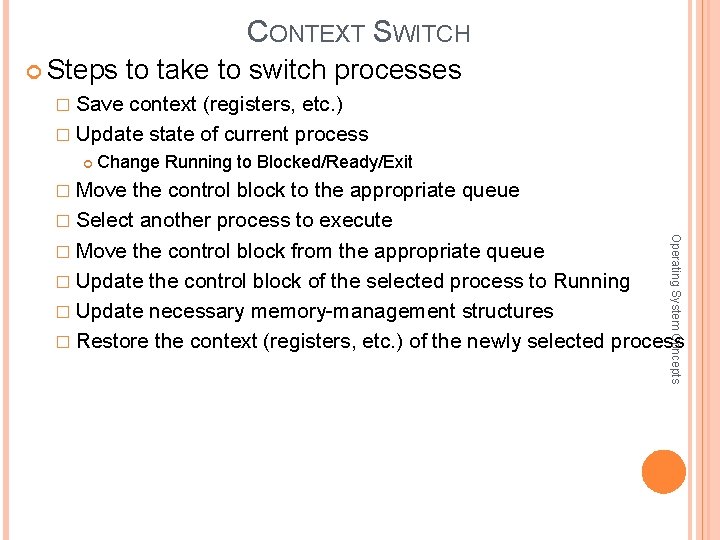

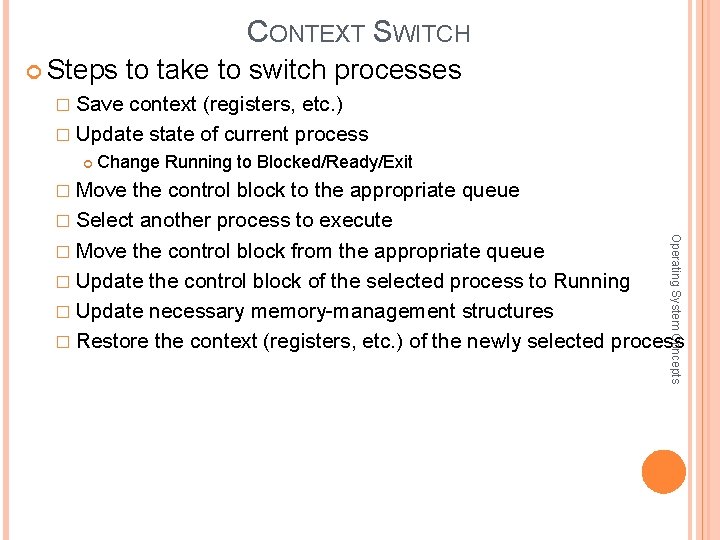

CONTEXT SWITCH Steps to take to switch processes � Save context (registers, etc. ) � Update state of current process Change Running to Blocked/Ready/Exit � Move the control block to the appropriate queue � Select another process to execute � Update the control block of the selected process to Running � Update necessary memory-management structures Operating System Concepts � Move the control block from the appropriate queue � Restore the context (registers, etc. ) of the newly selected process

CPU SWITCHES FROMP ROCESS TO PROCESS Operating System Concepts

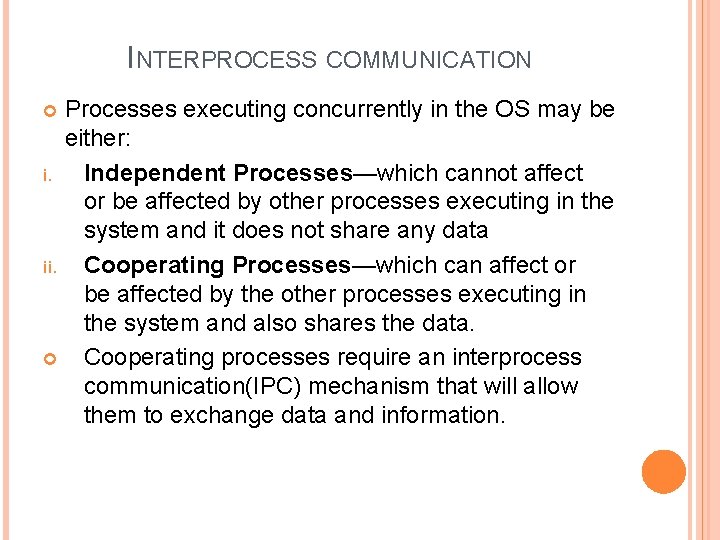

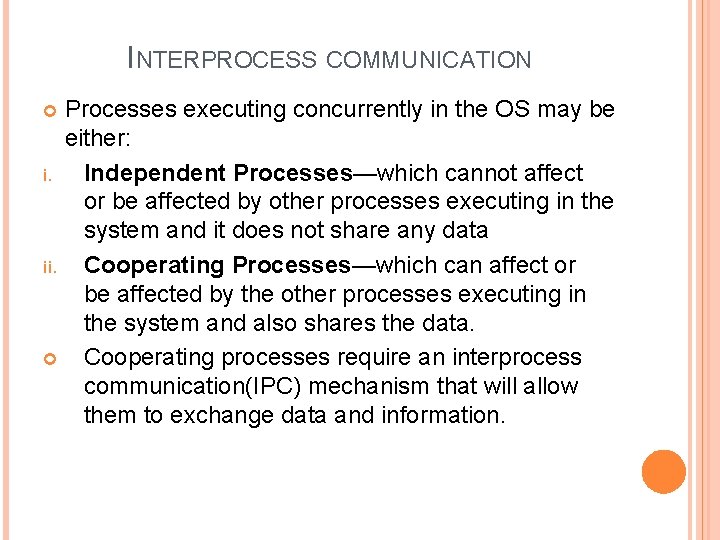

INTERPROCESS COMMUNICATION Processes executing concurrently in the OS may be either: i. Independent Processes—which cannot affect or be affected by other processes executing in the system and it does not share any data ii. Cooperating Processes—which can affect or be affected by the other processes executing in the system and also shares the data. Cooperating processes require an interprocess communication(IPC) mechanism that will allow them to exchange data and information.

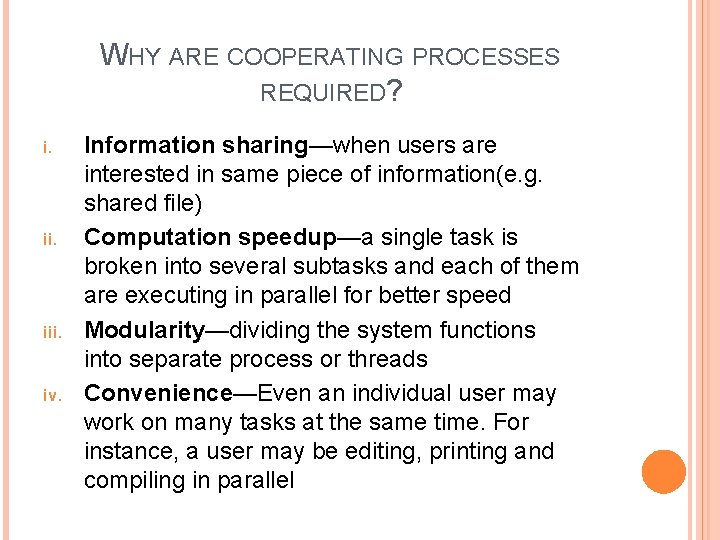

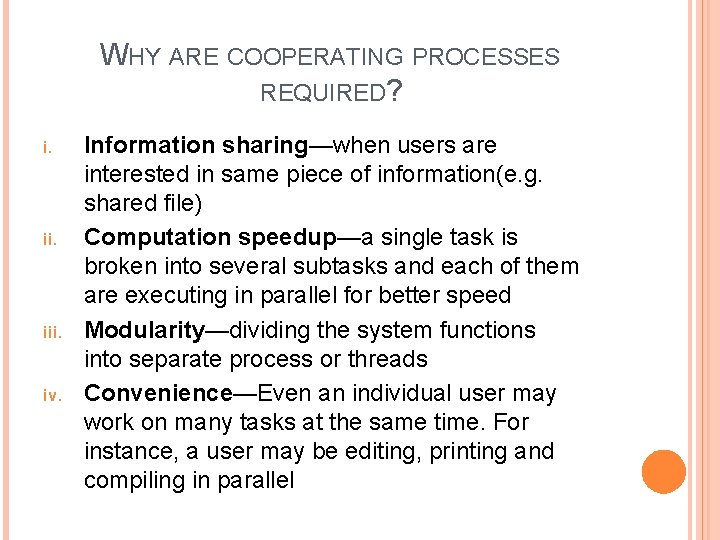

WHY ARE COOPERATING PROCESSES REQUIRED? i. iii. iv. Information sharing—when users are interested in same piece of information(e. g. shared file) Computation speedup—a single task is broken into several subtasks and each of them are executing in parallel for better speed Modularity—dividing the system functions into separate process or threads Convenience—Even an individual user may work on many tasks at the same time. For instance, a user may be editing, printing and compiling in parallel

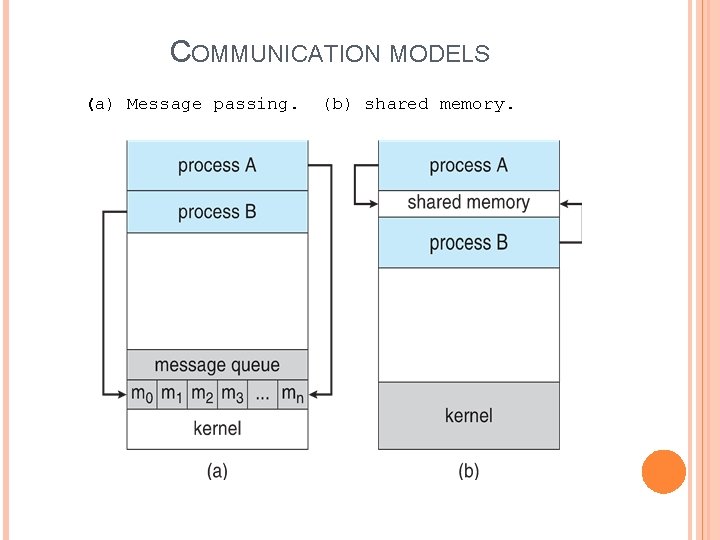

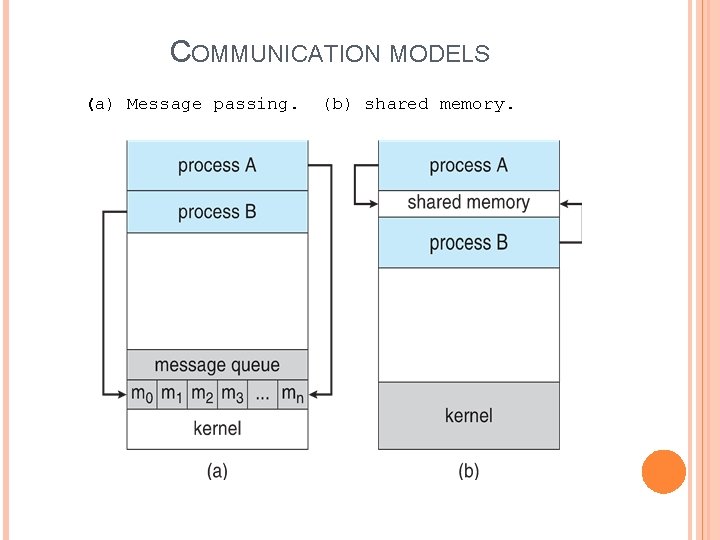

COMMUNICATION MODELS (a) Message passing. (b) shared memory.

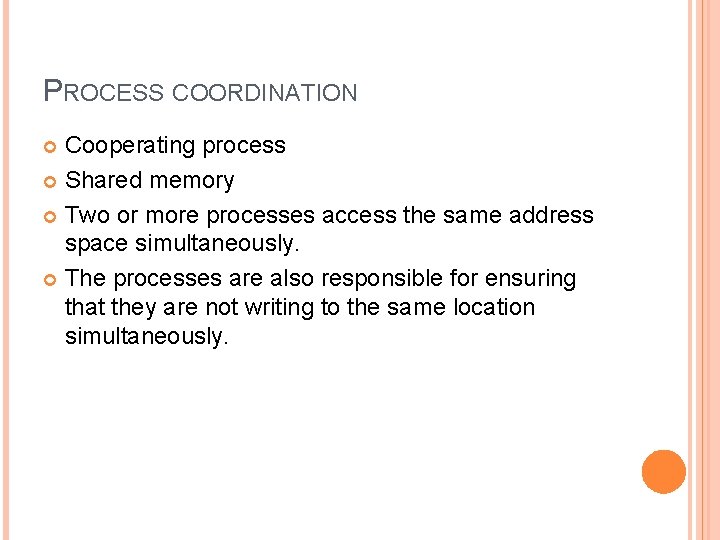

SHARED MEMORY It requires the communicating processes to establish a region of shared memory. Different processes share same address space The processes are also responsible for ensuring that they are not writing to the same location simultaneously.

MESSAGE PASSING In this mechanism the processes communicate and synchronize without sharing the same address space They communicate via passing messages to each other. This mechanism is used in distributed environment where the communicating processes may reside on different computers connected by a network. Example: chat program used on World Wide Web

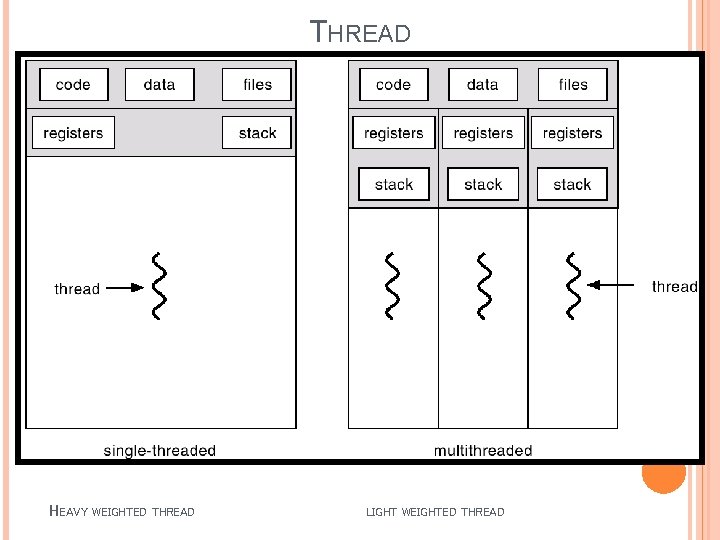

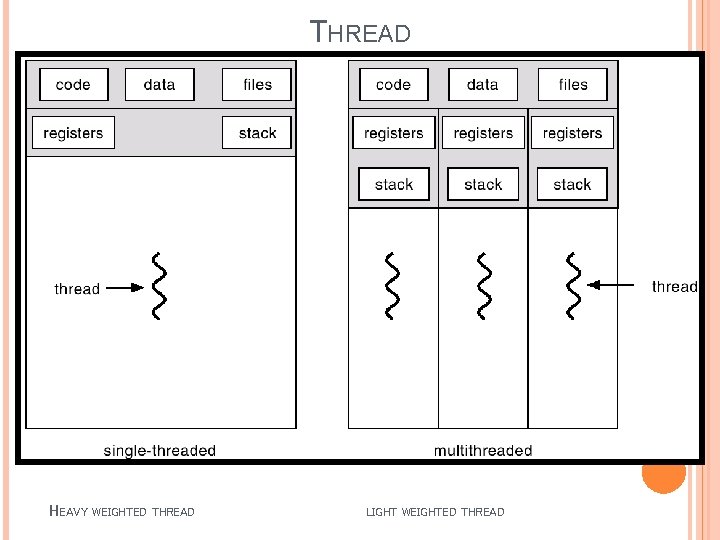

THREAD A process is divided into number of light weight process, each light weight process is said to be a thread. The thread has a program counter that keeps the tracks of which instructions to execute next. It has registers which hold its current working variables. It has stack which contains the execution history. If a process has multiple threads of control, it can perform more than one task at a time.

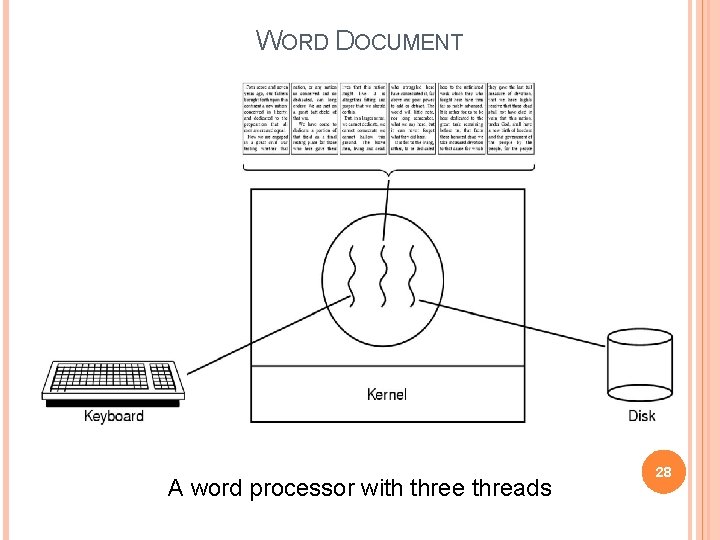

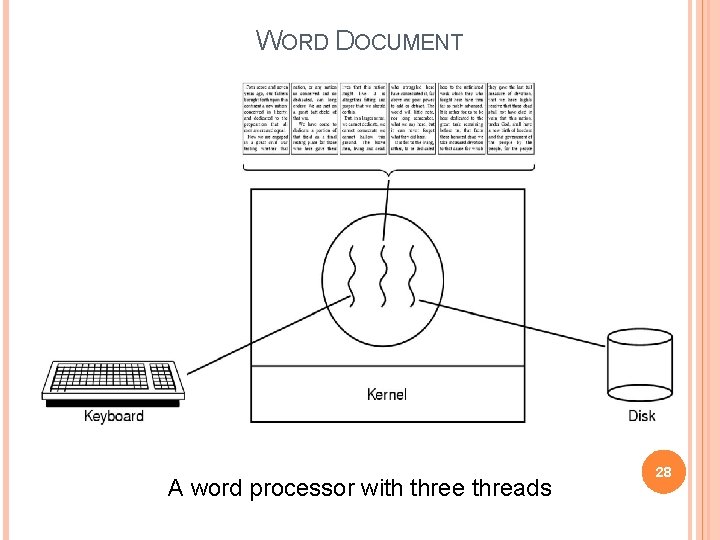

THREAD EXAMPLE A web browser might have one thread which display Operating System Concepts image or text while another thread retrieves data from the network. In word processor, programmer may open a file, responding to keystrokes(one thread), text is automatically formatting (another thread), the text is automatically saved in the disk (another thread).

THREAD HEAVY WEIGHTED THREAD LIGHT WEIGHTED THREAD

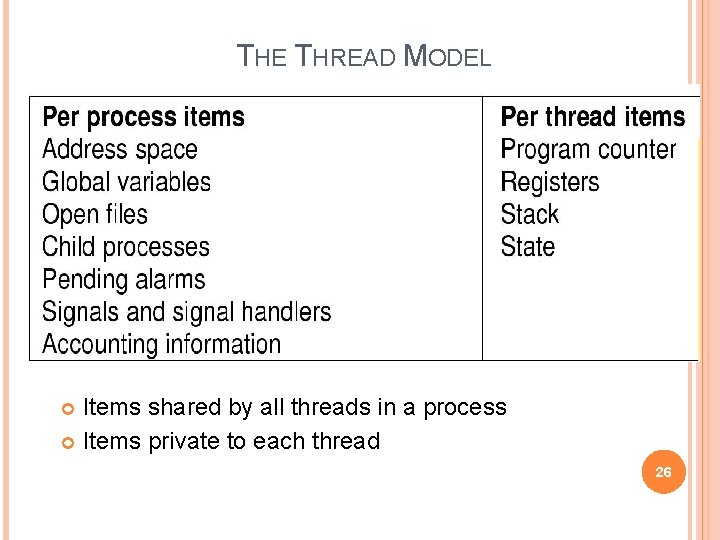

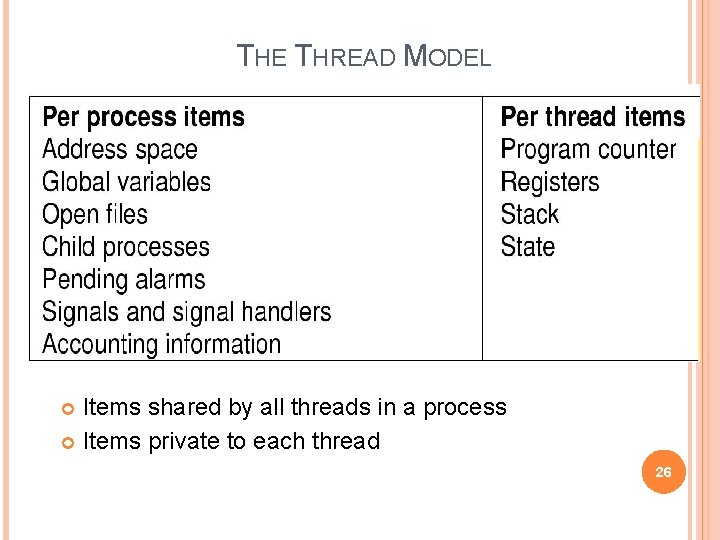

THE THREAD MODEL Items shared by all threads in a process Items private to each thread 26

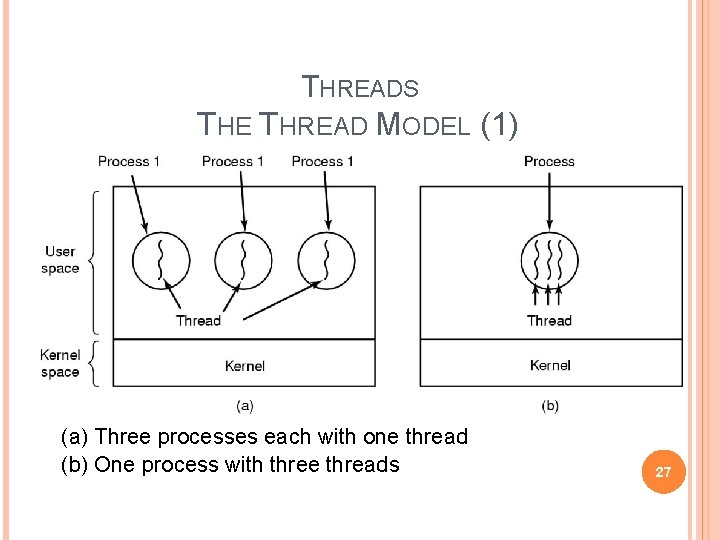

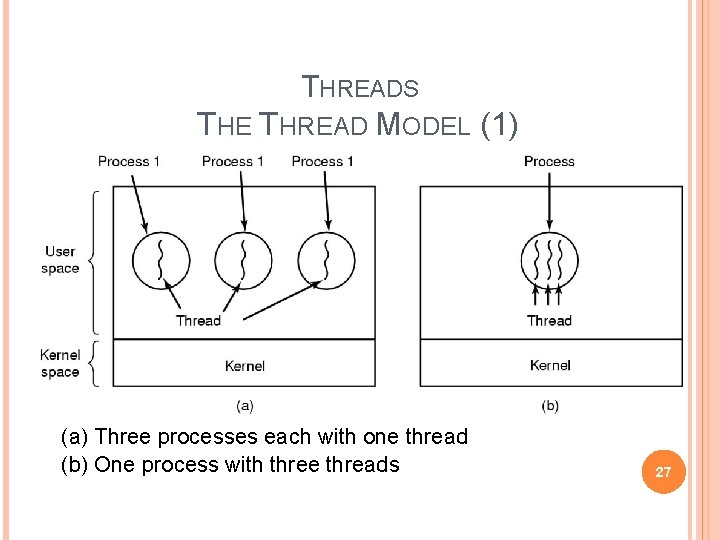

THREADS THE THREAD MODEL (1) (a) Three processes each with one thread (b) One process with three threads 27

WORD DOCUMENT A word processor with three threads 28

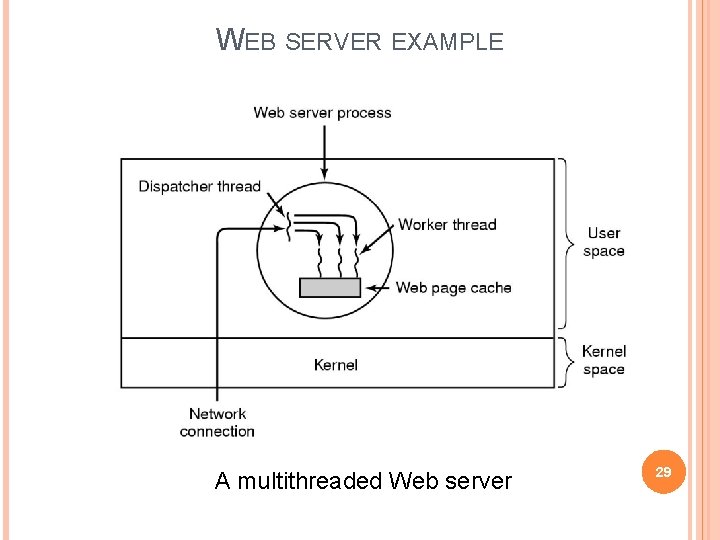

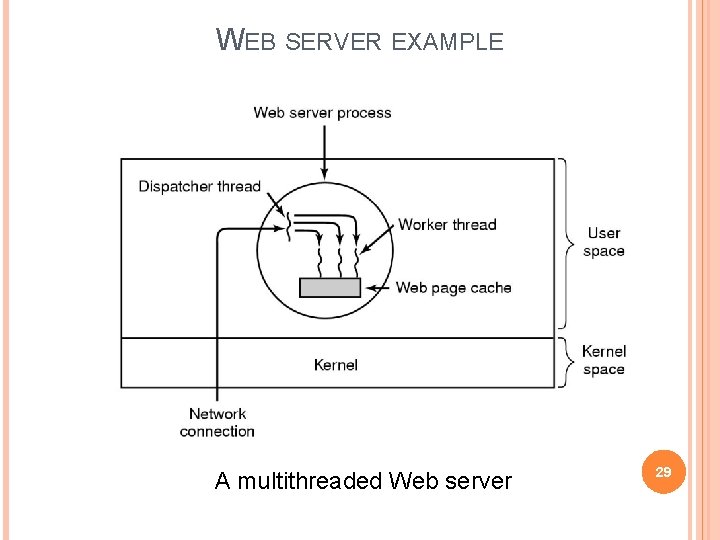

WEB SERVER EXAMPLE A multithreaded Web server 29

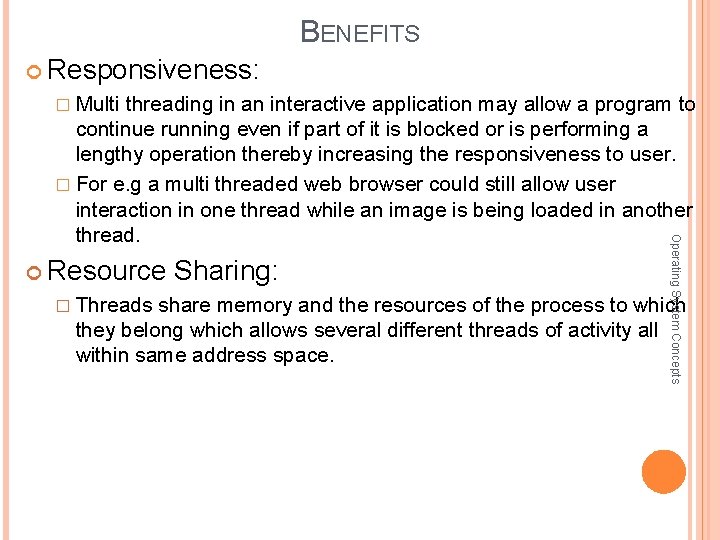

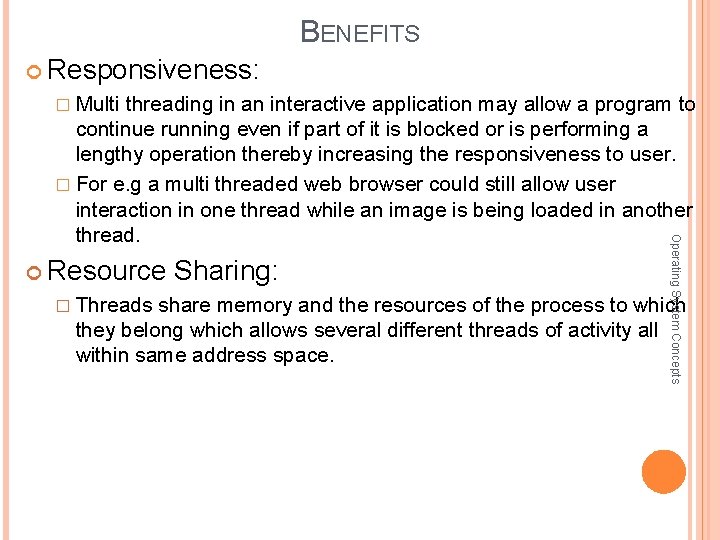

BENEFITS Responsiveness: � Multi threading in an interactive application may allow a program to Resource Sharing: Operating System Concepts continue running even if part of it is blocked or is performing a lengthy operation thereby increasing the responsiveness to user. � For e. g a multi threaded web browser could still allow user interaction in one thread while an image is being loaded in another thread. � Threads share memory and the resources of the process to which they belong which allows several different threads of activity all within same address space.

BENEFITS Economy: � Allocating a memory and resources for process creation may be costly. Threads share same resources of the process to which they belong, it is more economical to create and context switch threads. Utilization of multiprocessor architectures: Operating System Concepts � Implementations of thread can be greatly increased in a multiprocessor architecture, where each thread may be running in parallel on a different processor.

THREAD Two level threads: �User level thread �Kernel level thread Operating System Concepts

USER LEVEL THREAD User threads are supported above the kernel and are Operating System Concepts implemented by thread library at the user level. The library support for thread creation, scheduling, management is without support of kernel. Kernel is unaware of user level threads, all threads creation and scheduling are done in user space without the need for kernel intervention. User Level threads are fast to create and manage. It has drawbacks for e. g. if the kernel is single threaded, then any user level thread that blocks system call will cause the entire process to block even if other threads are available to run within the application.

KERNEL LEVEL THREAD Kernel level threads are supported directly by the Operating System Concepts operating system. The kernel performs thread creation, scheduling, and management in kernel space. Kernel threads are generally slower to create and manage as compared to user threads. Since the kernel is managing the threads, if a thread performs a blocking a system call, the kernel can schedule another thread in the application for execution. In multiprocessor environment, the kernel can schedule threads on different processors. .

MULTITHREADING MODELS Many-to-One One-to-One Many-to-Many Operating System Concepts

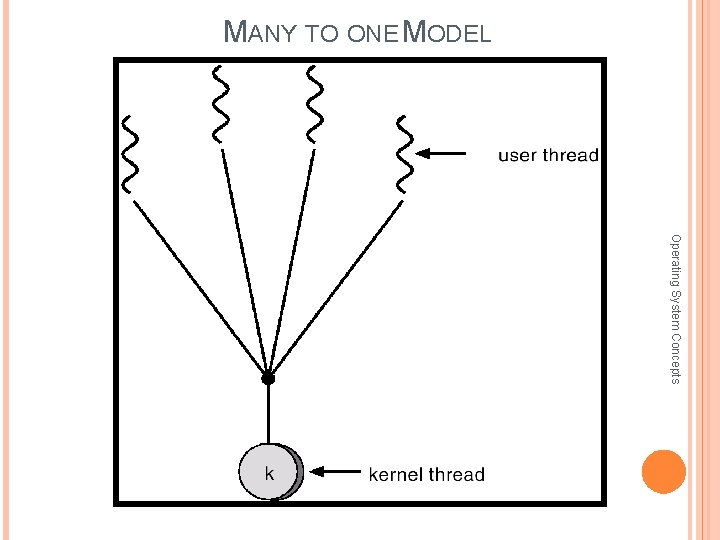

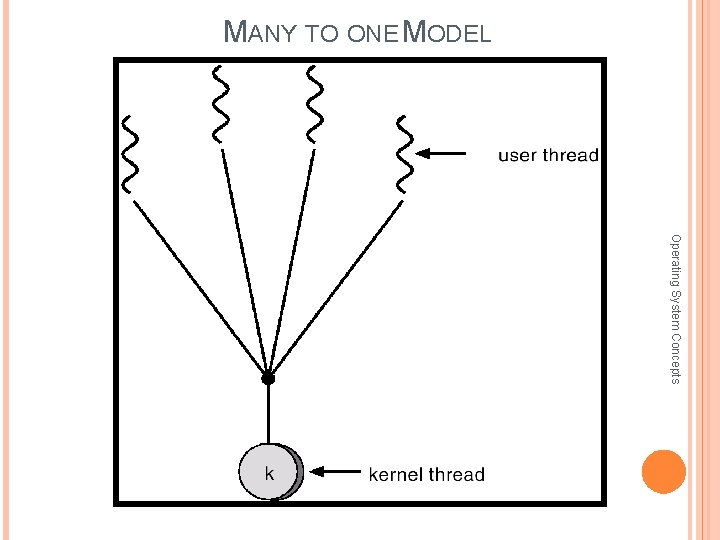

MANY TO ONE MODEL The many-to-one model maps many user level threads to one kernel level thread. Thread management is done in user space, it is efficient. But if the thread makes blocking a system call, the entire process will be blocked. Only one thread can access kernel at a time, multiple threads are unable to run in parallel on multiprocessors.

MANY TO ONE MODEL Operating System Concepts

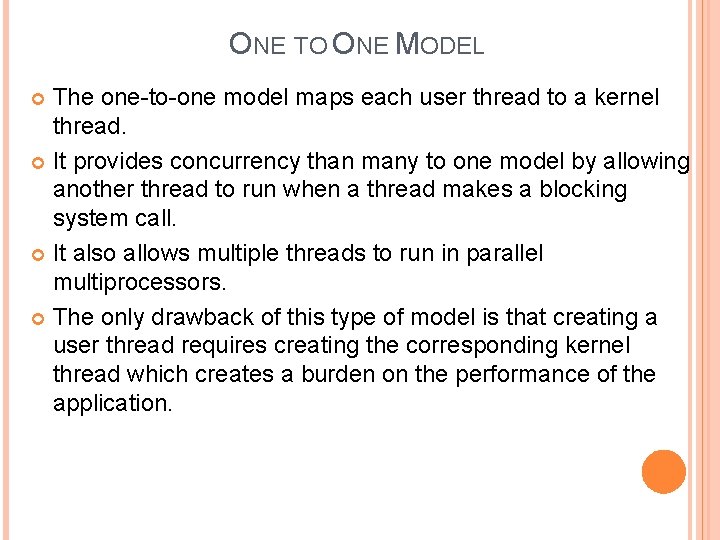

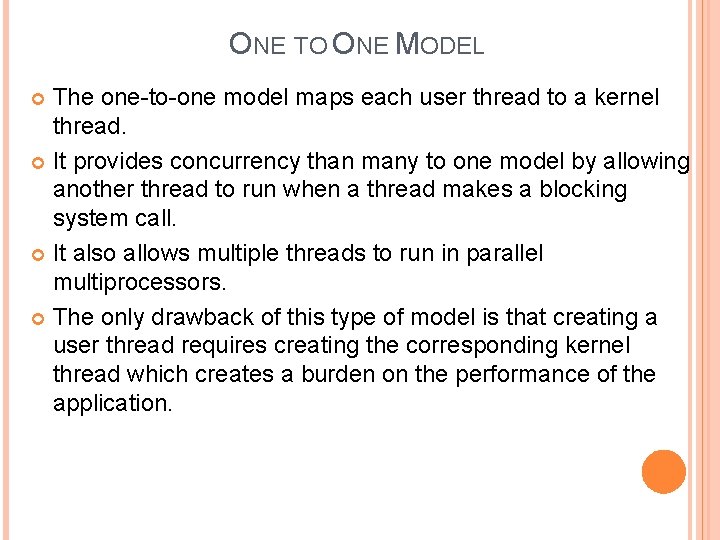

ONE TO ONE MODEL The one-to-one model maps each user thread to a kernel thread. It provides concurrency than many to one model by allowing another thread to run when a thread makes a blocking system call. It also allows multiple threads to run in parallel multiprocessors. The only drawback of this type of model is that creating a user thread requires creating the corresponding kernel thread which creates a burden on the performance of the application.

ONE TO ONE MODELS Operating System Concepts

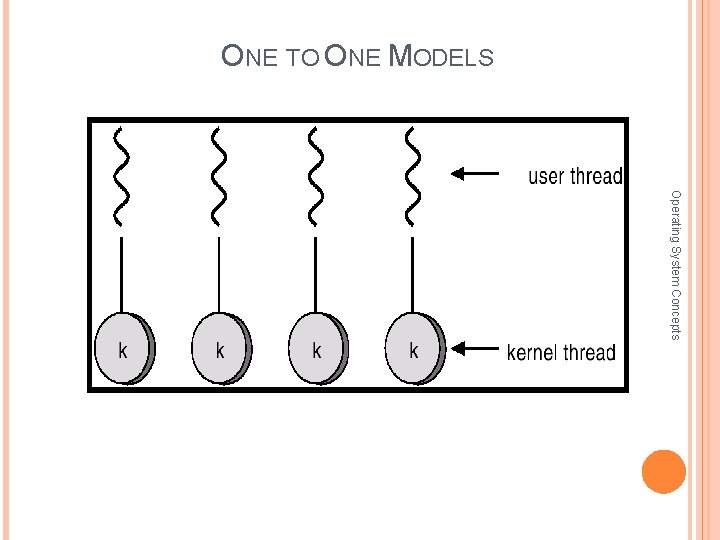

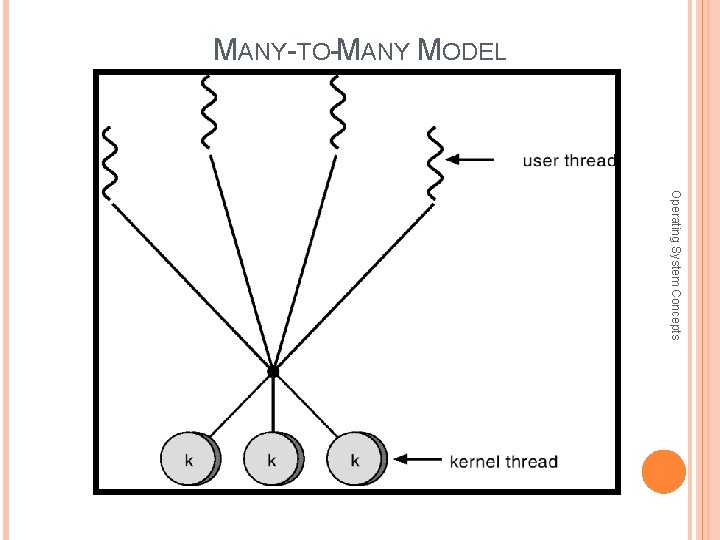

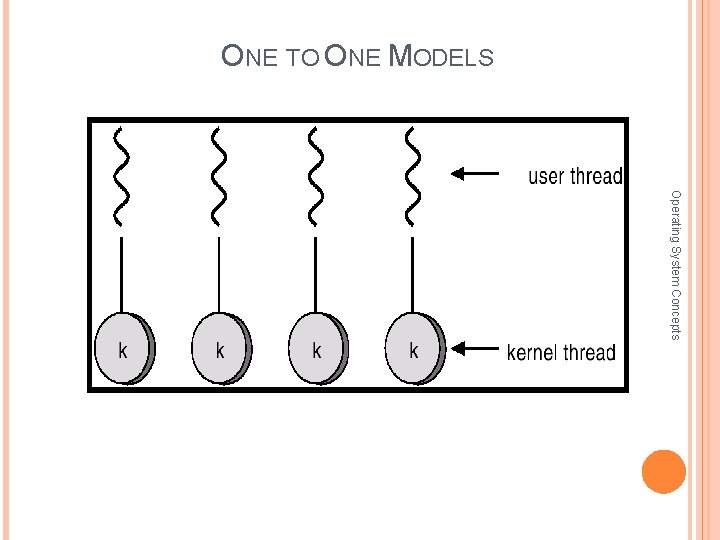

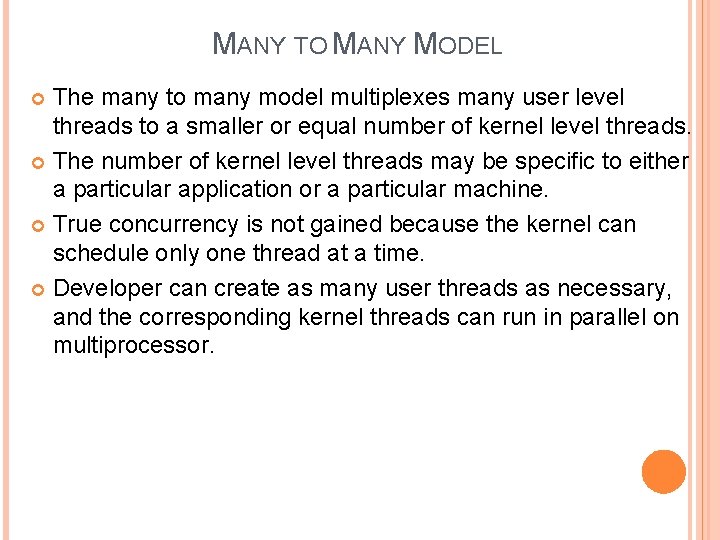

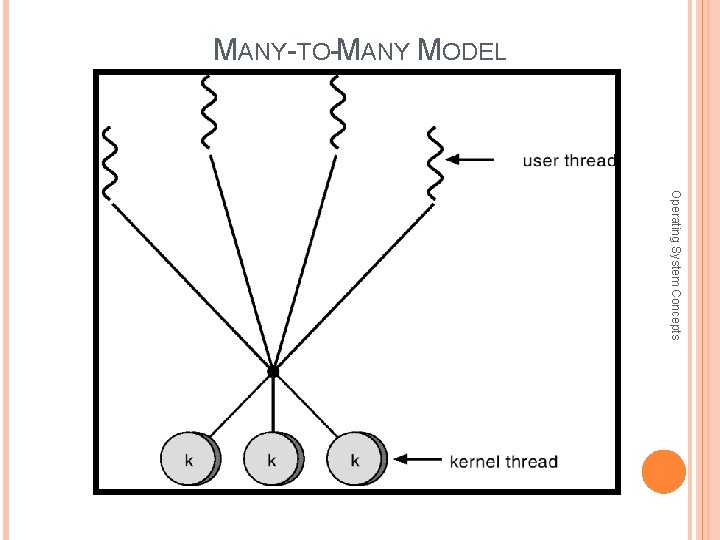

MANY TO MANY MODEL The many to many model multiplexes many user level threads to a smaller or equal number of kernel level threads. The number of kernel level threads may be specific to either a particular application or a particular machine. True concurrency is not gained because the kernel can schedule only one thread at a time. Developer can create as many user threads as necessary, and the corresponding kernel threads can run in parallel on multiprocessor.

MANY-TO-MANY MODEL Operating System Concepts

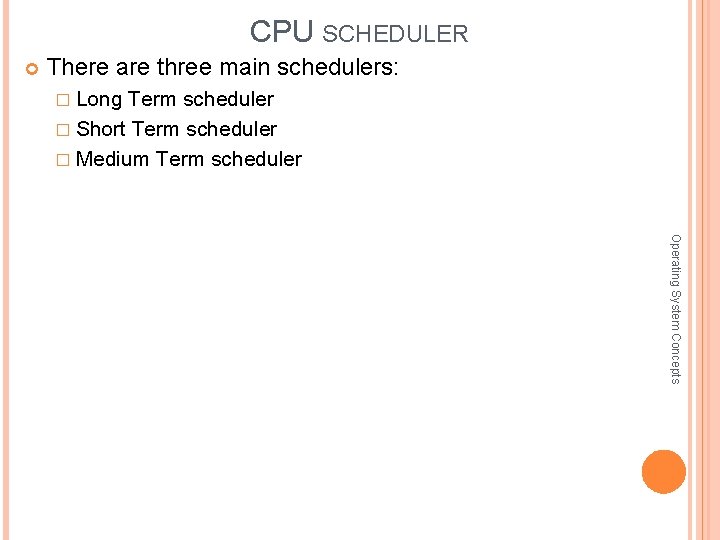

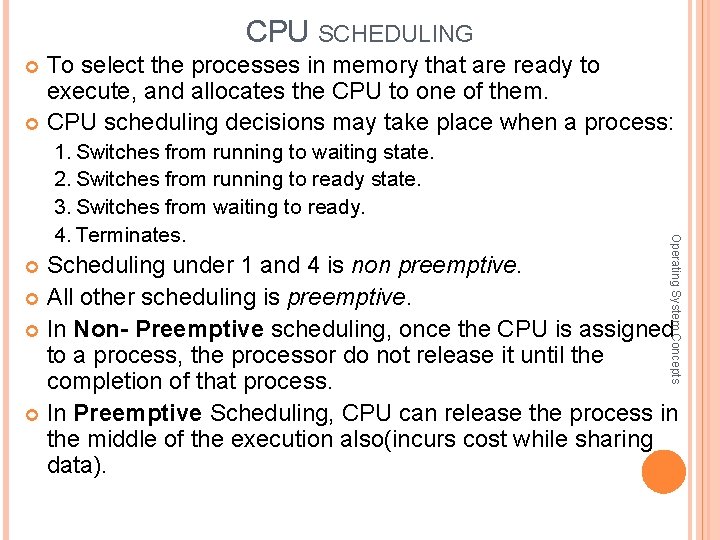

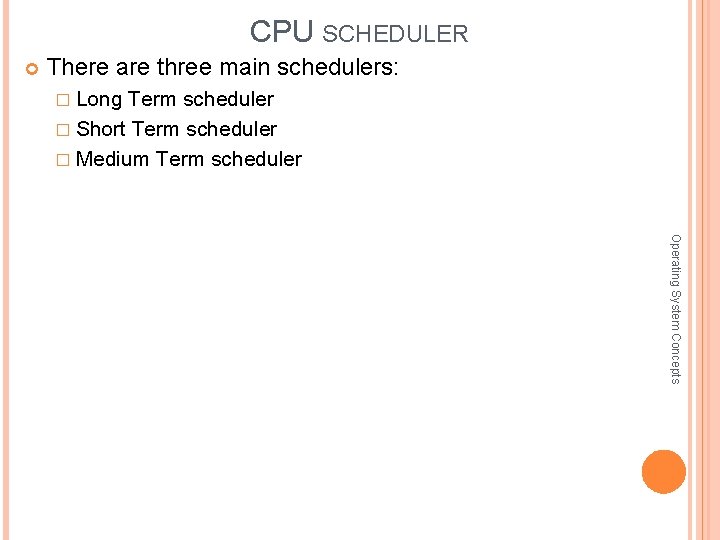

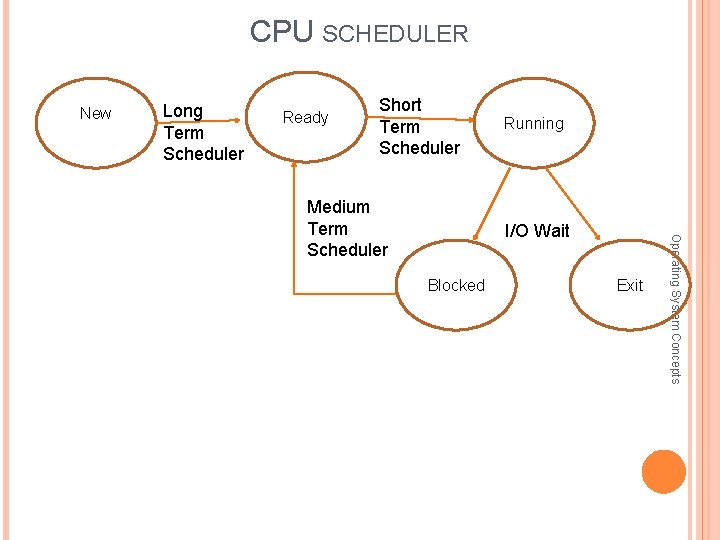

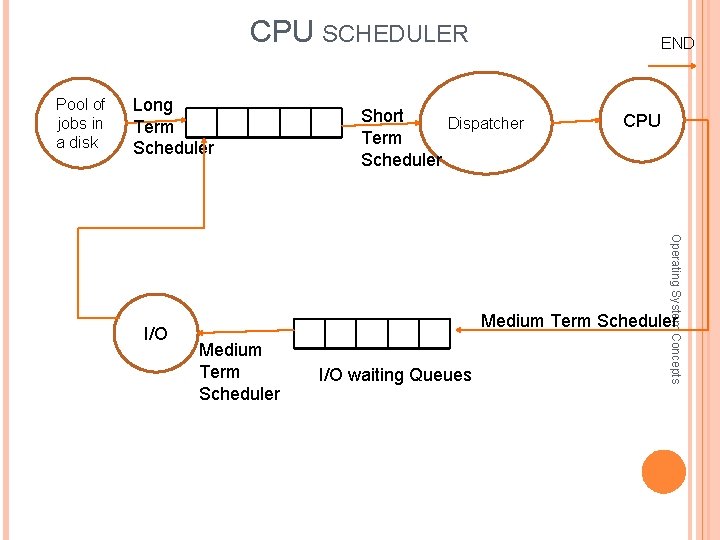

CPU SCHEDULER There are three main schedulers: � Long Term scheduler � Short Term scheduler � Medium Term scheduler Operating System Concepts

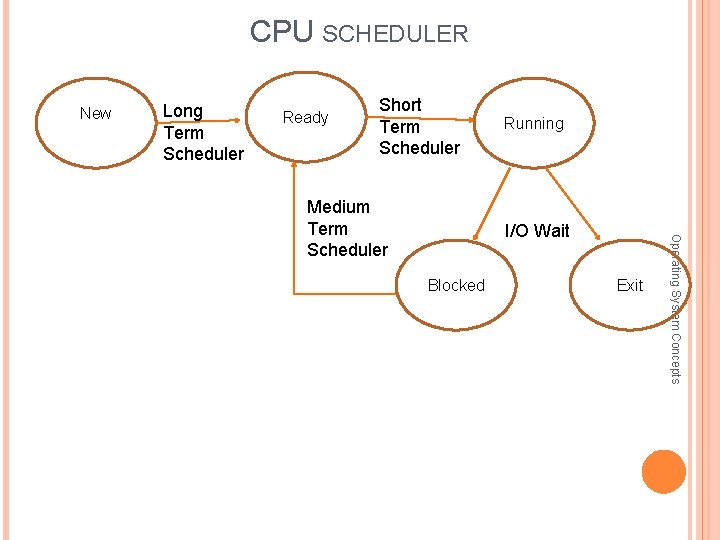

CPU SCHEDULER New Long Term Scheduler Ready Short Term Scheduler I/O Wait Blocked Exit Operating System Concepts Medium Term Scheduler Running

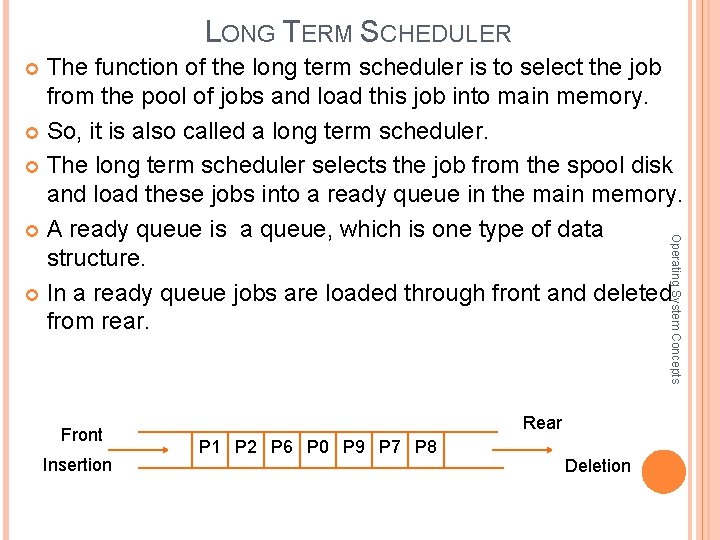

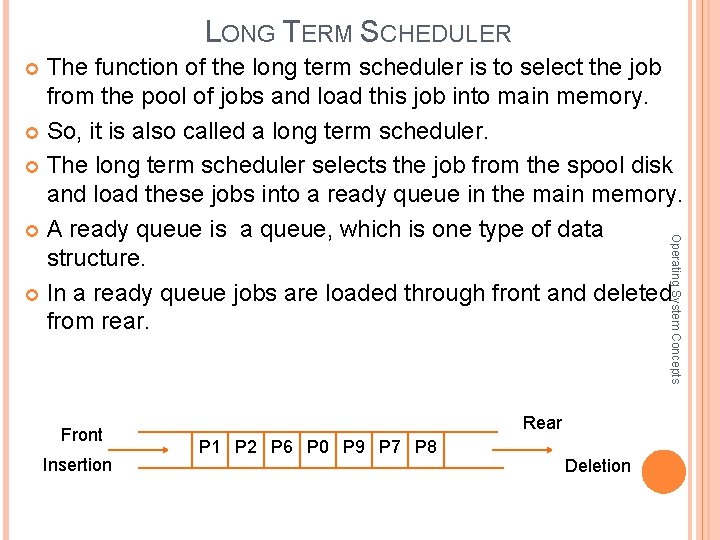

LONG TERM SCHEDULER The function of the long term scheduler is to select the job from the pool of jobs and load this job into main memory. So, it is also called a long term scheduler. The long term scheduler selects the job from the spool disk and load these jobs into a ready queue in the main memory. A ready queue is a queue, which is one type of data structure. In a ready queue jobs are loaded through front and deleted from rear. Operating System Concepts Front Insertion Rear P 1 P 2 P 6 P 0 P 9 P 7 P 8 Deletion

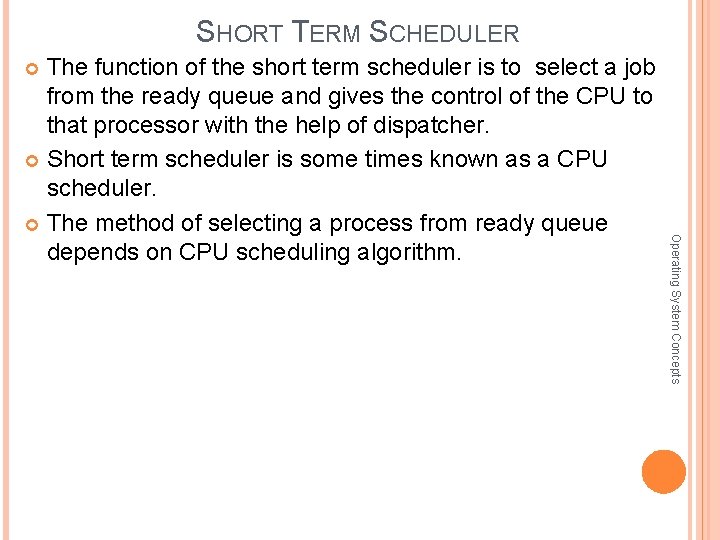

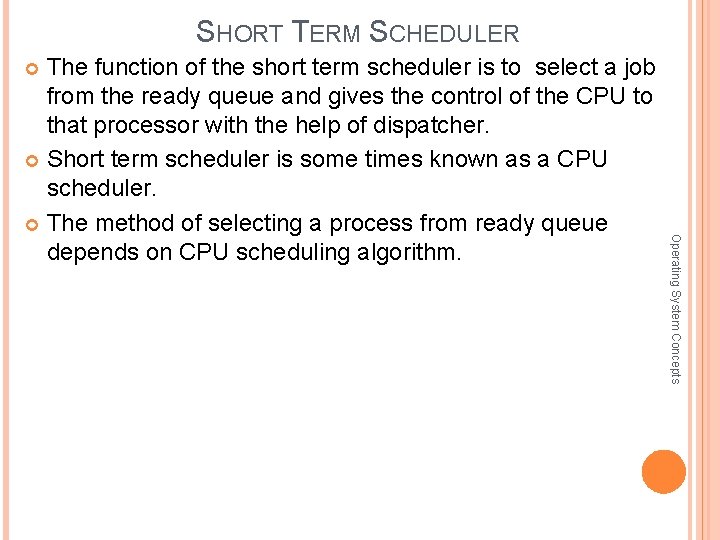

SHORT TERM SCHEDULER The function of the short term scheduler is to select a job from the ready queue and gives the control of the CPU to that processor with the help of dispatcher. Short term scheduler is some times known as a CPU scheduler. The method of selecting a process from ready queue depends on CPU scheduling algorithm. Operating System Concepts

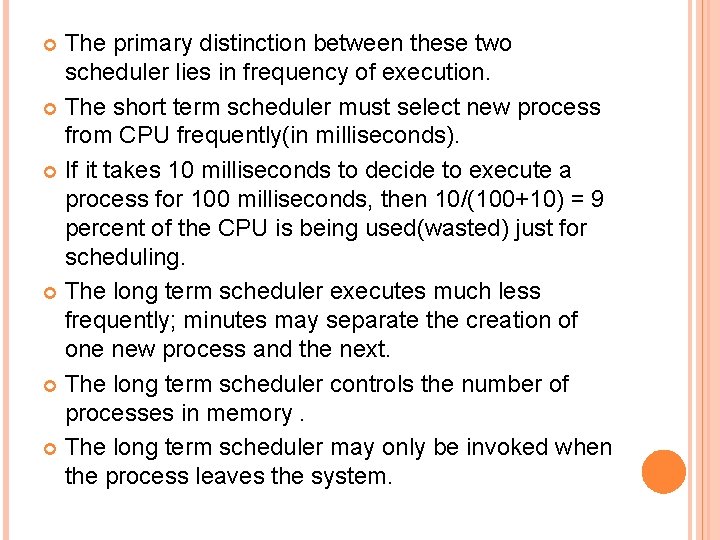

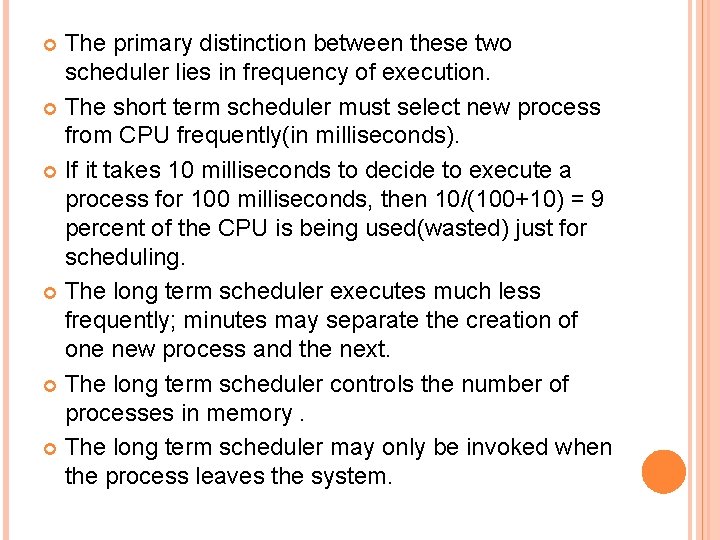

The primary distinction between these two scheduler lies in frequency of execution. The short term scheduler must select new process from CPU frequently(in milliseconds). If it takes 10 milliseconds to decide to execute a process for 100 milliseconds, then 10/(100+10) = 9 percent of the CPU is being used(wasted) just for scheduling. The long term scheduler executes much less frequently; minutes may separate the creation of one new process and the next. The long term scheduler controls the number of processes in memory. The long term scheduler may only be invoked when the process leaves the system.

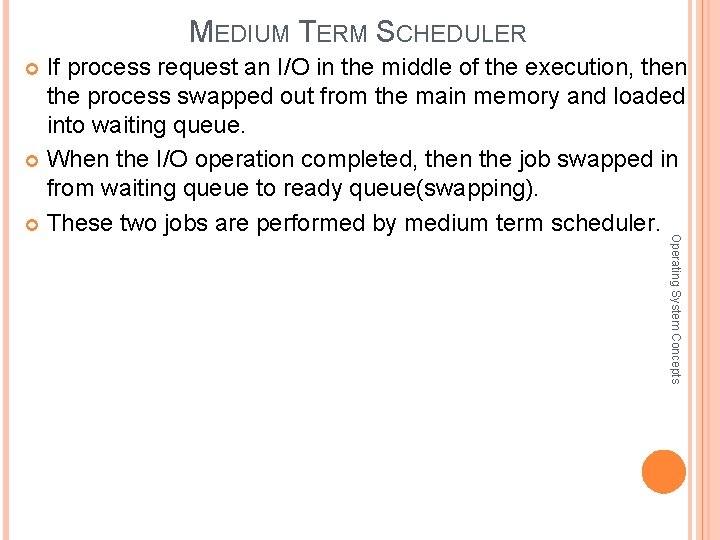

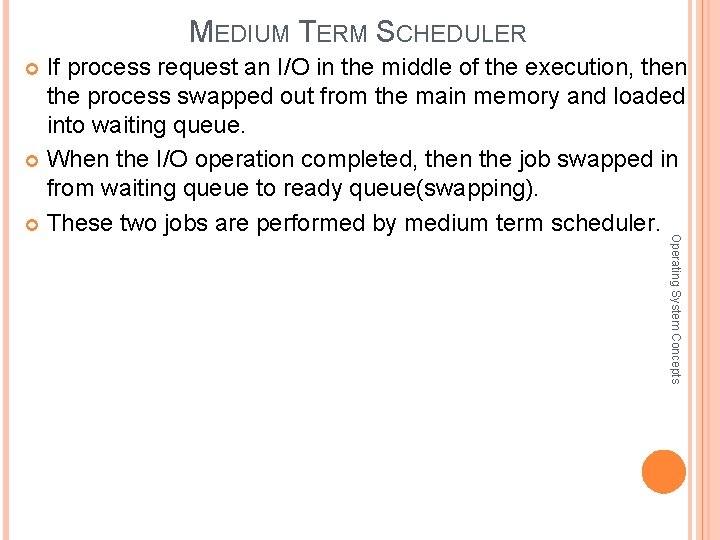

MEDIUM TERM SCHEDULER If process request an I/O in the middle of the execution, then the process swapped out from the main memory and loaded into waiting queue. When the I/O operation completed, then the job swapped in from waiting queue to ready queue(swapping). These two jobs are performed by medium term scheduler. Operating System Concepts

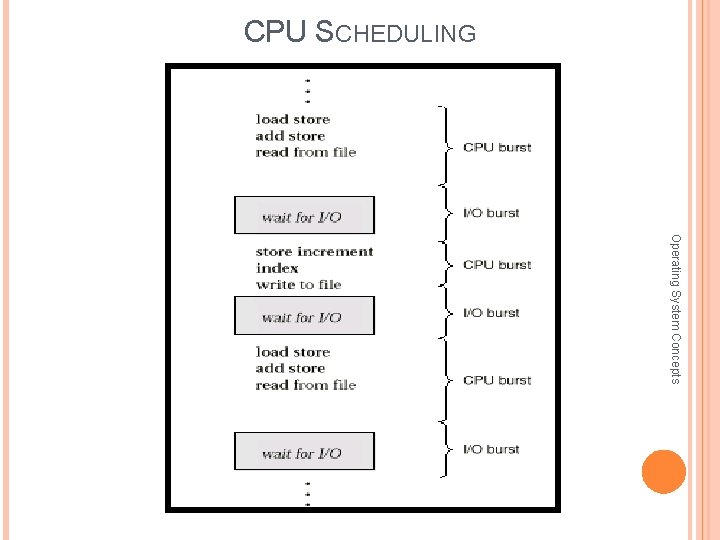

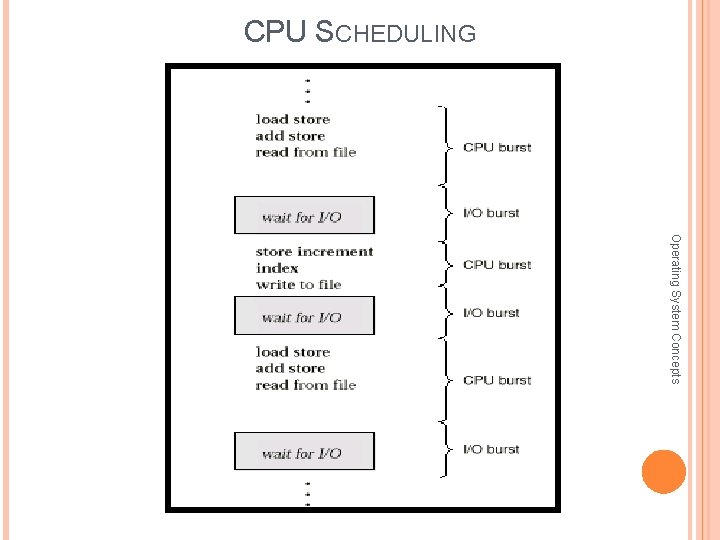

NEED OF CPU SCHEDULING Maximum CPU utilization obtained with multiprogramming CPU–I/O Burst Cycle – Process execution consists of a cycle of CPU execution and I/O wait. CPU burst distribution Operating System Concepts

CPU SCHEDULING Operating System Concepts

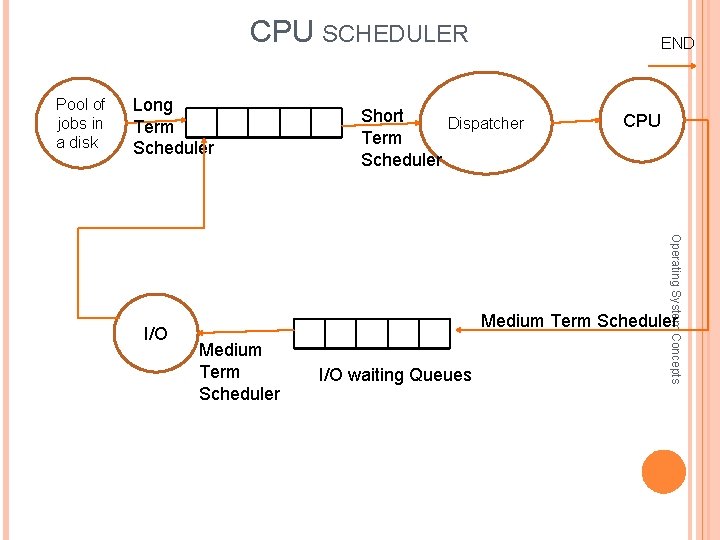

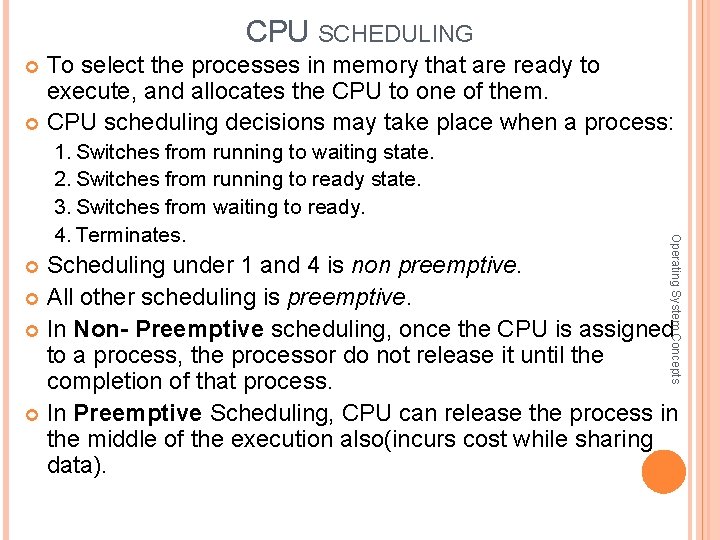

CPU SCHEDULING To select the processes in memory that are ready to execute, and allocates the CPU to one of them. CPU scheduling decisions may take place when a process: Operating System Concepts 1. Switches from running to waiting state. 2. Switches from running to ready state. 3. Switches from waiting to ready. 4. Terminates. Scheduling under 1 and 4 is non preemptive. All other scheduling is preemptive. In Non- Preemptive scheduling, once the CPU is assigned to a process, the processor do not release it until the completion of that process. In Preemptive Scheduling, CPU can release the process in the middle of the execution also(incurs cost while sharing data).

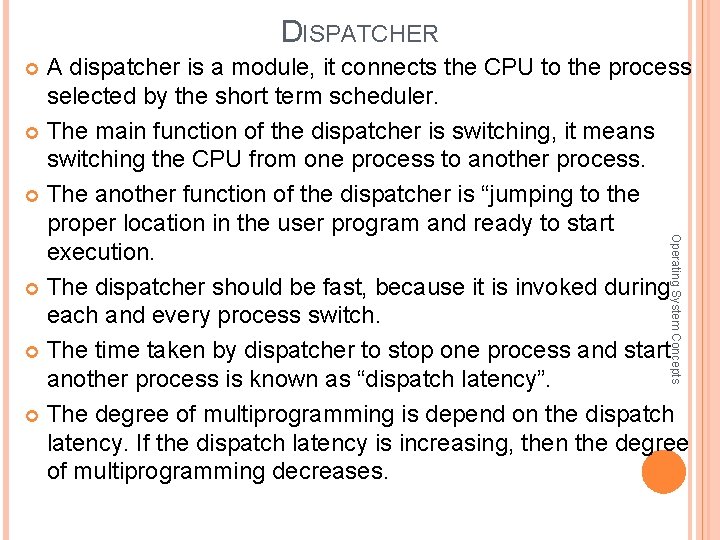

CPU SCHEDULER Pool of jobs in a diskx Long Term Scheduler Short Dispatcher Term Scheduler CPU Operating System Concepts I/O END Medium Term Scheduler I/O waiting Queues

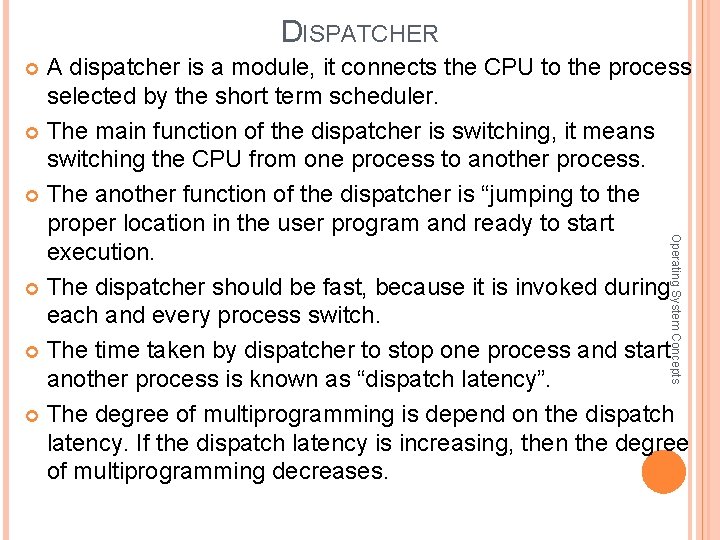

DISPATCHER A dispatcher is a module, it connects the CPU to the process selected by the short term scheduler. The main function of the dispatcher is switching, it means switching the CPU from one process to another process. The another function of the dispatcher is “jumping to the proper location in the user program and ready to start execution. The dispatcher should be fast, because it is invoked during each and every process switch. The time taken by dispatcher to stop one process and start another process is known as “dispatch latency”. The degree of multiprogramming is depend on the dispatch latency. If the dispatch latency is increasing, then the degree of multiprogramming decreases. Operating System Concepts

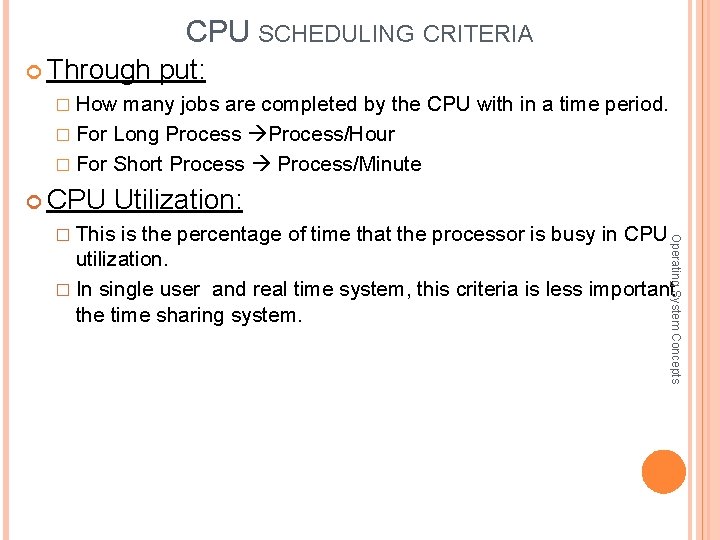

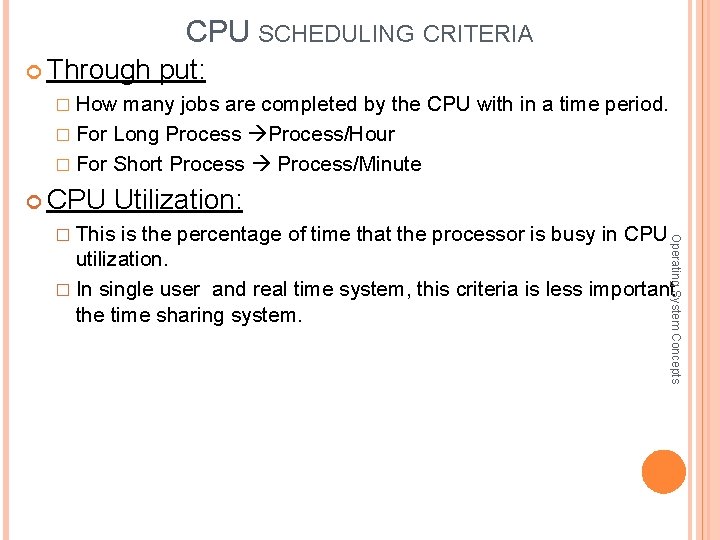

CPU SCHEDULING CRITERIA Through put: � How many jobs are completed by the CPU with in a time period. � For Long Process/Hour � For Short Process/Minute CPU Utilization: Operating System Concepts � This is the percentage of time that the processor is busy in CPU utilization. � In single user and real time system, this criteria is less important the time sharing system.

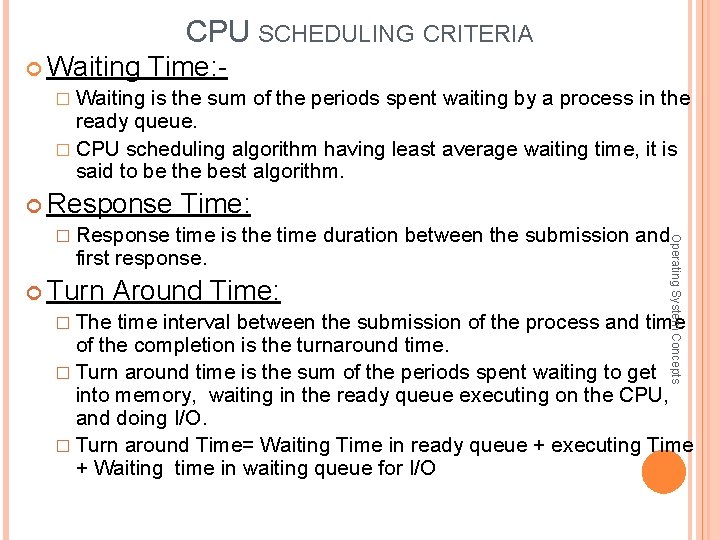

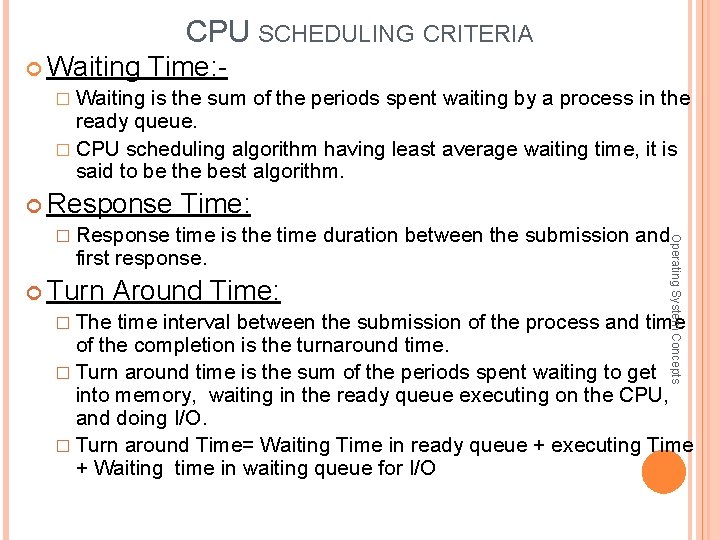

CPU SCHEDULING CRITERIA Waiting Time: � Waiting is the sum of the periods spent waiting by a process in the ready queue. � CPU scheduling algorithm having least average waiting time, it is said to be the best algorithm. Operating System Concepts Response Time: � Response time is the time duration between the submission and first response. Turn Around Time: � The time interval between the submission of the process and time of the completion is the turnaround time. � Turn around time is the sum of the periods spent waiting to get into memory, waiting in the ready queue executing on the CPU, and doing I/O. � Turn around Time= Waiting Time in ready queue + executing Time + Waiting time in waiting queue for I/O

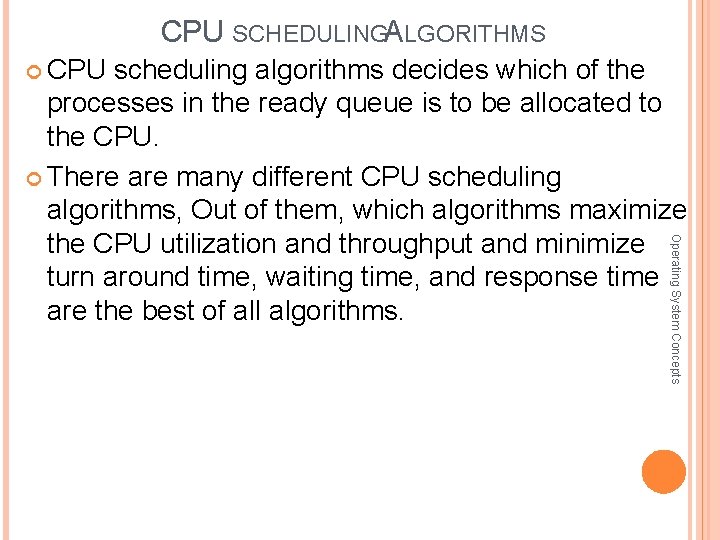

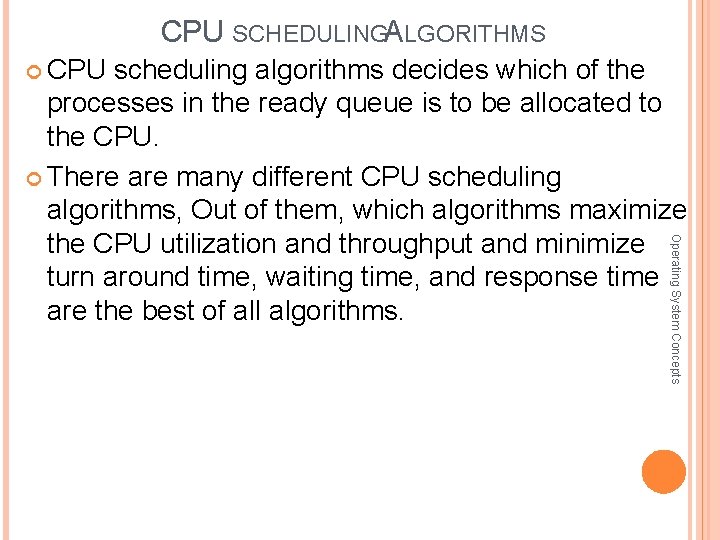

CPU SCHEDULINGA LGORITHMS CPU scheduling algorithms decides which of the Operating System Concepts processes in the ready queue is to be allocated to the CPU. There are many different CPU scheduling algorithms, Out of them, which algorithms maximize the CPU utilization and throughput and minimize turn around time, waiting time, and response time are the best of all algorithms.

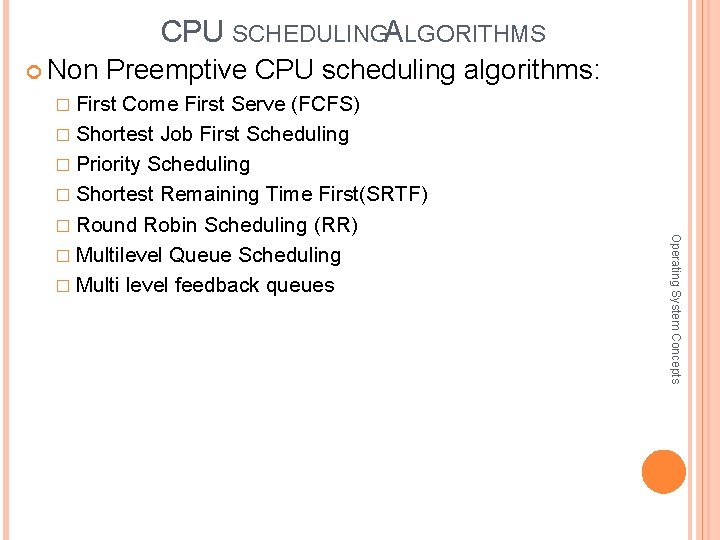

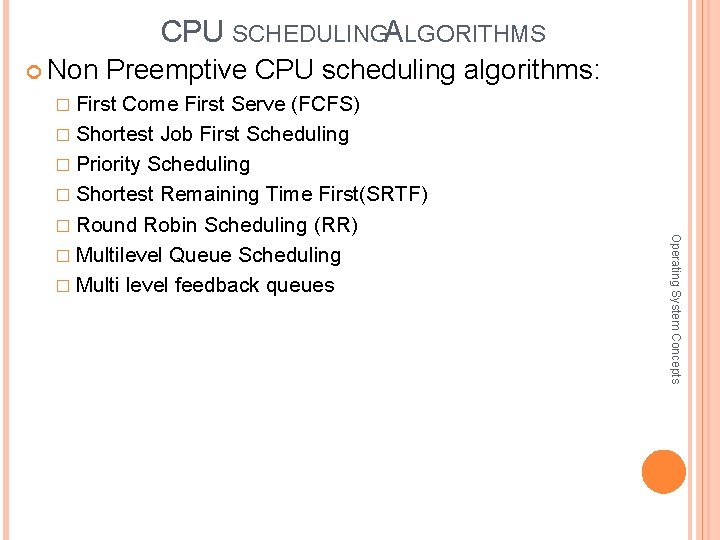

CPU SCHEDULINGA LGORITHMS Non Preemptive CPU scheduling algorithms: � First Come First Serve (FCFS) � Shortest Job First Scheduling � Priority Scheduling � Shortest Remaining Time First(SRTF) � Multilevel Queue Scheduling � Multi level feedback queues Operating System Concepts � Round Robin Scheduling (RR)

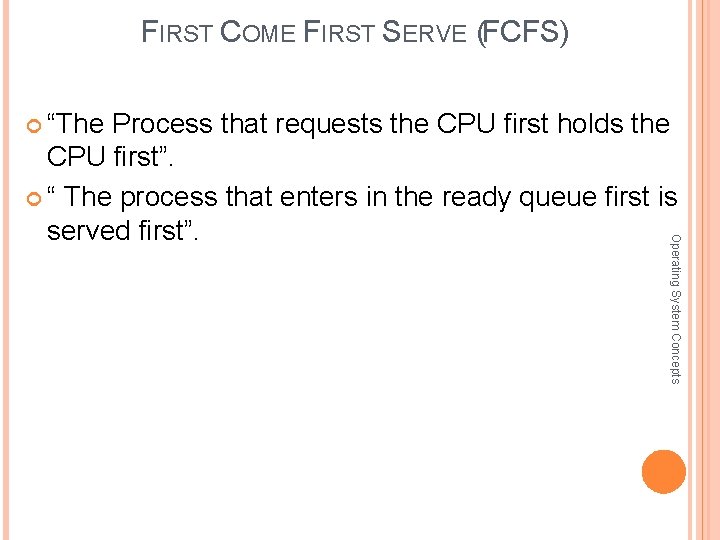

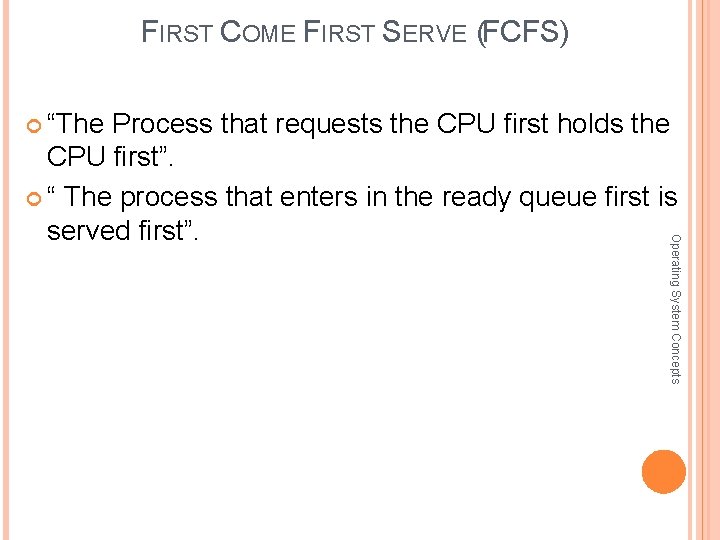

FIRST COME FIRST SERVE (FCFS) “The Process that requests the CPU first holds the Operating System Concepts CPU first”. “ The process that enters in the ready queue first is served first”.

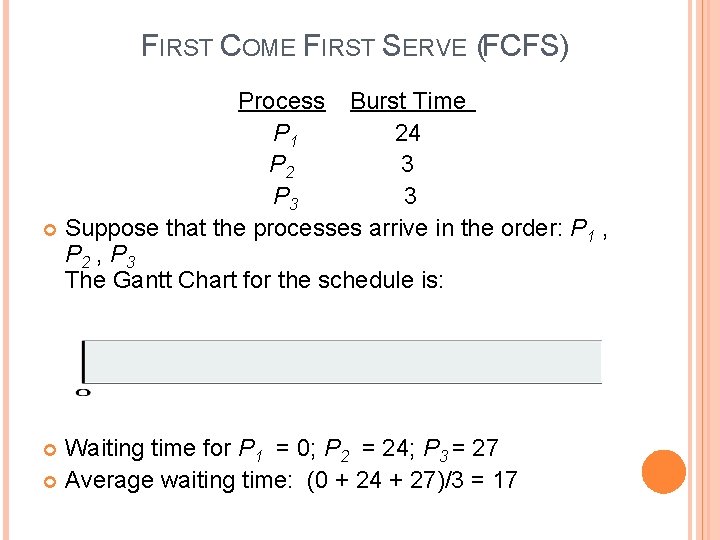

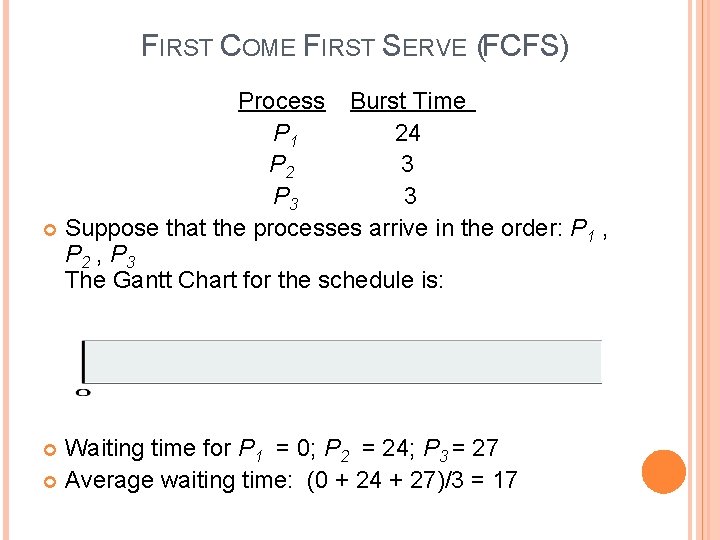

FIRST COME FIRST SERVE (FCFS) Process Burst Time P 1 24 P 2 3 P 3 3 Suppose that the processes arrive in the order: P 1 , P 2 , P 3 The Gantt Chart for the schedule is: Waiting time for P 1 = 0; P 2 = 24; P 3 = 27 Average waiting time: (0 + 24 + 27)/3 = 17

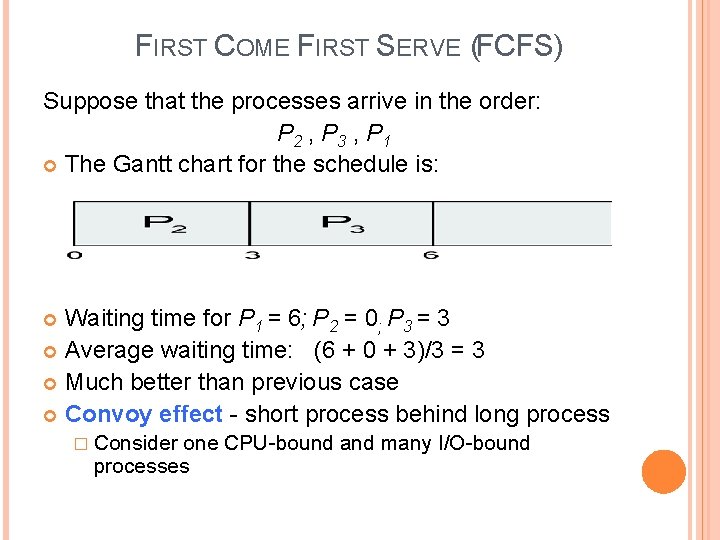

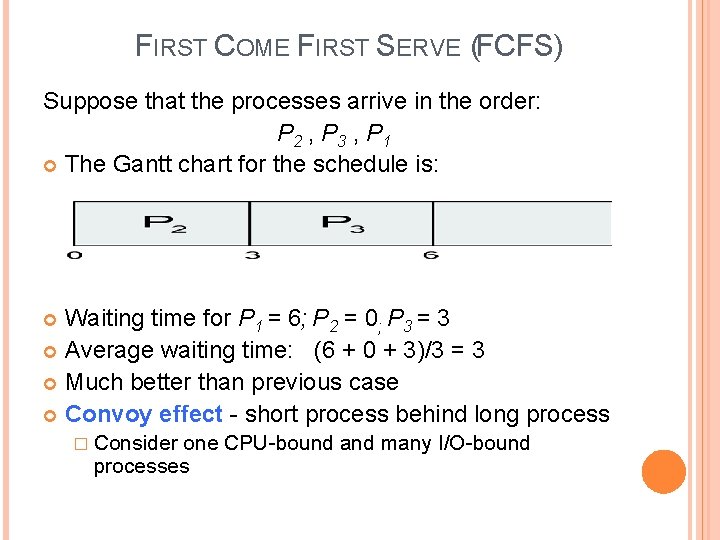

FIRST COME FIRST SERVE (FCFS) Suppose that the processes arrive in the order: P 2 , P 3 , P 1 The Gantt chart for the schedule is: Waiting time for P 1 = 6; P 2 = 0; P 3 = 3 Average waiting time: (6 + 0 + 3)/3 = 3 Much better than previous case Convoy effect - short process behind long process � Consider one CPU-bound and many I/O-bound processes

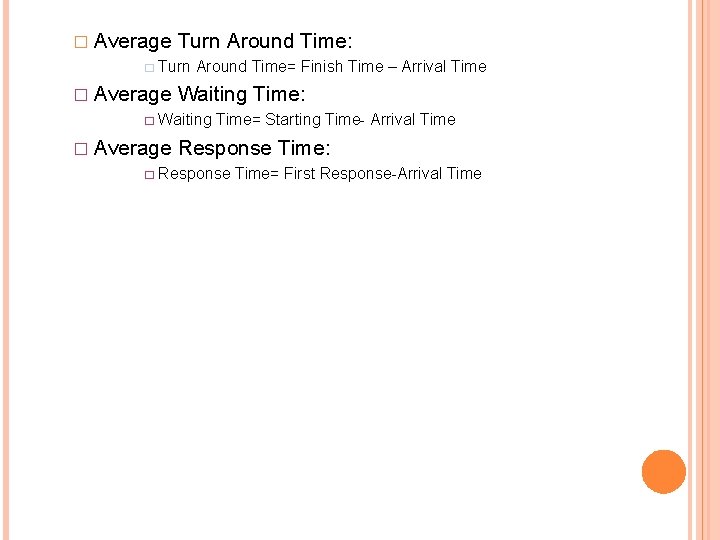

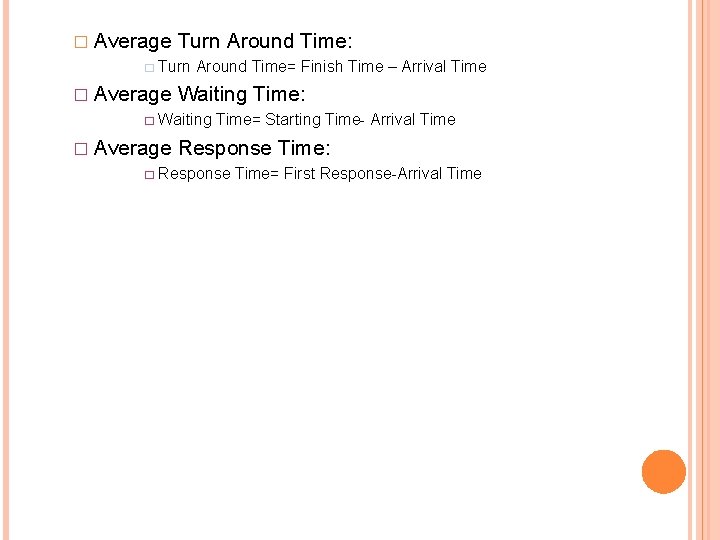

� Average Turn Around Time: � Turn Around Time= Finish Time – Arrival Time � Average Waiting Time: � Waiting Time= Starting Time- Arrival Time � Average Response Time: � Response Time= First Response-Arrival Time

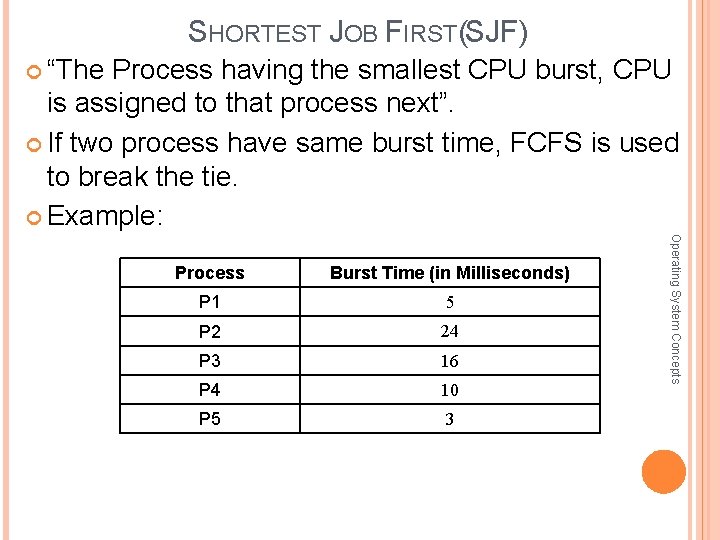

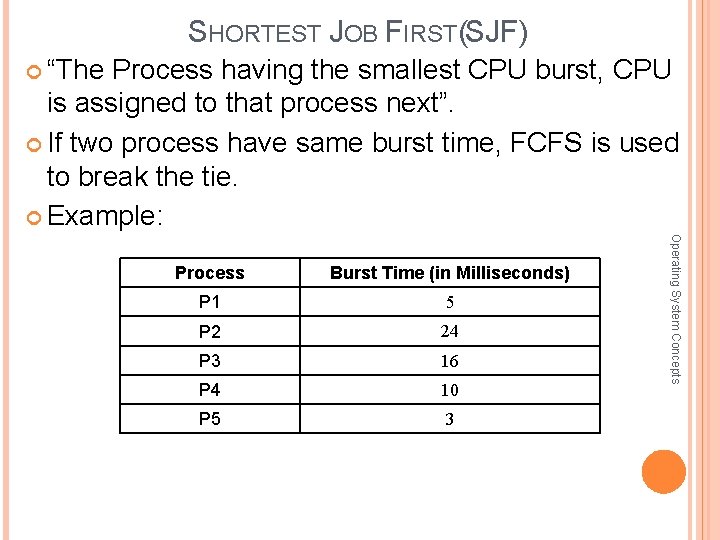

SHORTEST JOB FIRST(SJF) “The Process having the smallest CPU burst, CPU is assigned to that process next”. If two process have same burst time, FCFS is used to break the tie. Example: Burst Time (in Milliseconds) P 1 5 P 2 24 P 3 16 P 4 10 P 5 3 Operating System Concepts Process

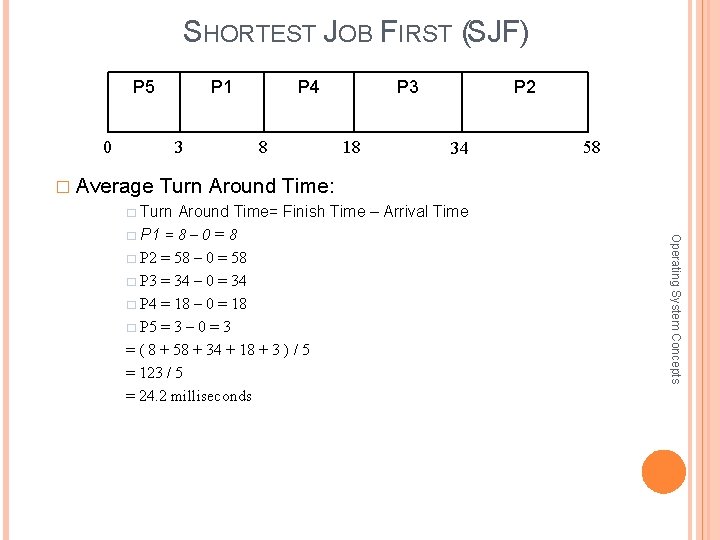

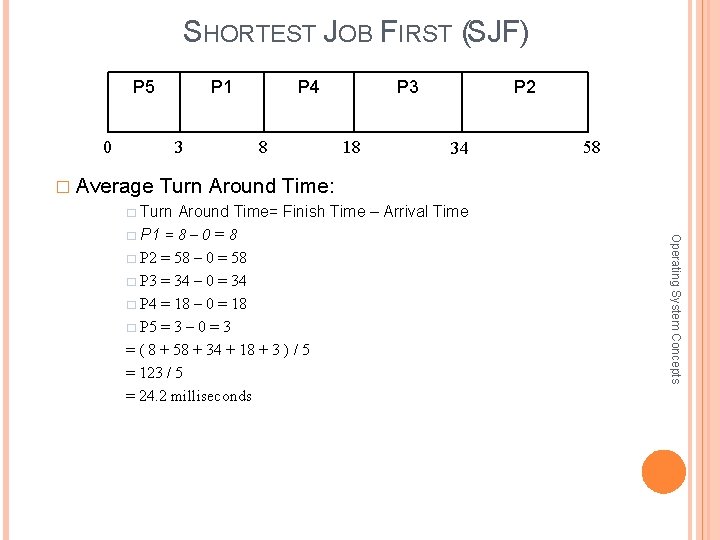

SHORTEST JOB FIRST (SJF) P 5 0 P 1 3 P 4 8 P 3 18 P 2 34 58 � Average Turn Around Time: � Turn Around Time= Finish Time – Arrival Time =8– 0=8 � P 2 = 58 – 0 = 58 � P 3 = 34 – 0 = 34 � P 4 = 18 – 0 = 18 � P 5 = 3 – 0 = 3 = ( 8 + 58 + 34 + 18 + 3 ) / 5 = 123 / 5 = 24. 2 milliseconds Operating System Concepts � P 1

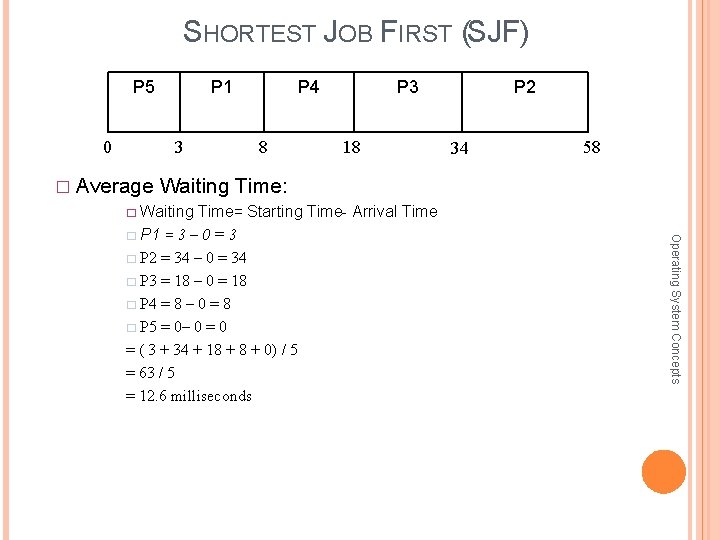

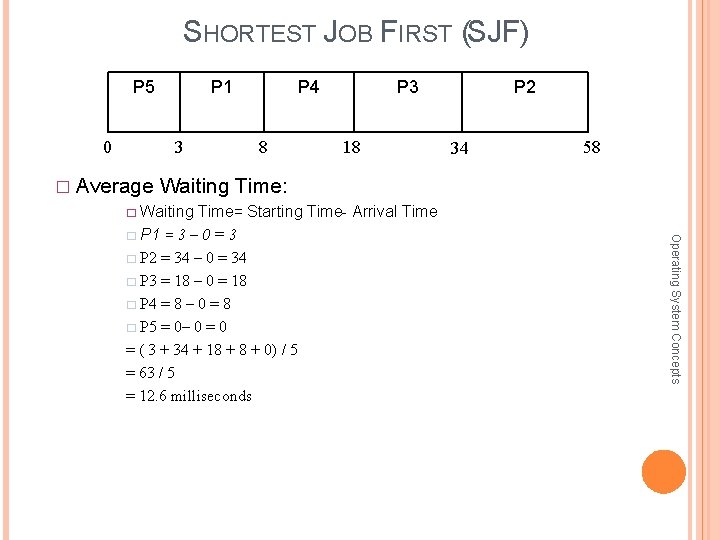

SHORTEST JOB FIRST (SJF) P 5 0 P 1 3 P 4 8 P 3 18 P 2 34 58 � Average Waiting Time: � Waiting Time= Starting Time- Arrival Time =3– 0=3 � P 2 = 34 – 0 = 34 � P 3 = 18 – 0 = 18 � P 4 = 8 – 0 = 8 � P 5 = 0– 0 = ( 3 + 34 + 18 + 0) / 5 = 63 / 5 = 12. 6 milliseconds Operating System Concepts � P 1

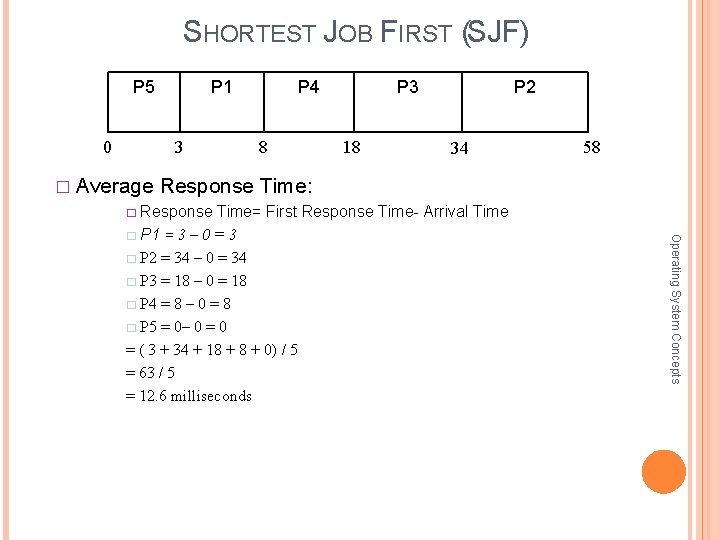

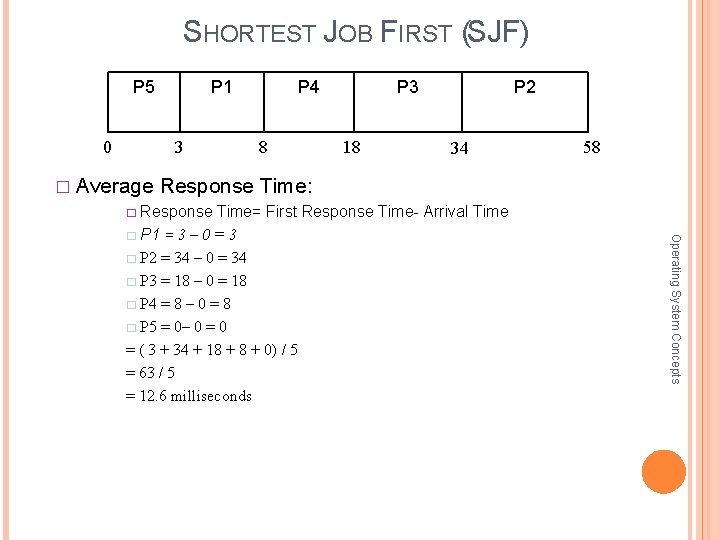

SHORTEST JOB FIRST (SJF) P 5 0 P 1 3 P 4 8 P 3 18 P 2 34 58 � Average Response Time: � Response Time= First Response Time- Arrival Time =3– 0=3 � P 2 = 34 – 0 = 34 � P 3 = 18 – 0 = 18 � P 4 = 8 – 0 = 8 � P 5 = 0– 0 = ( 3 + 34 + 18 + 0) / 5 = 63 / 5 = 12. 6 milliseconds Operating System Concepts � P 1

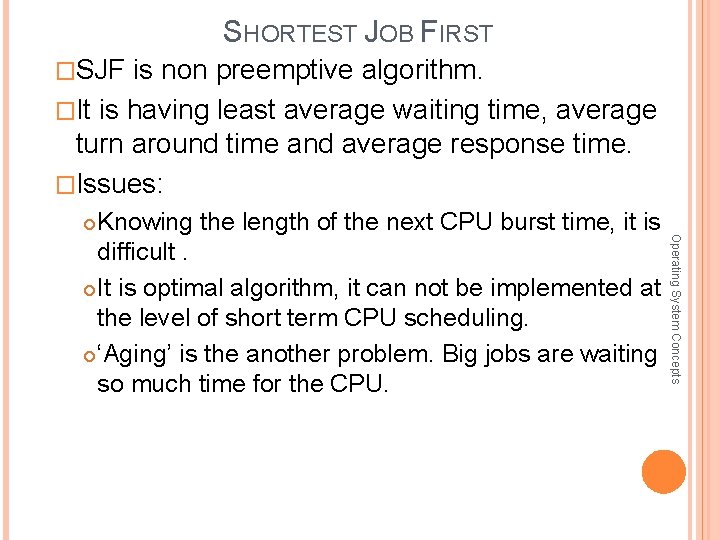

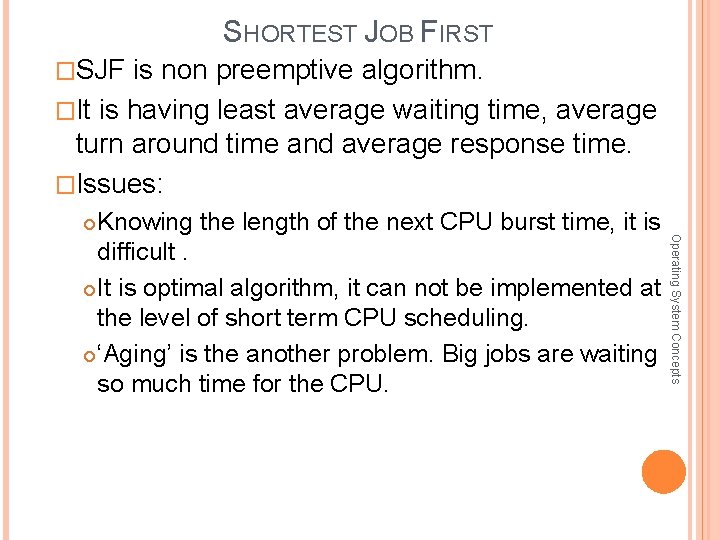

SHORTEST JOB FIRST �SJF is non preemptive algorithm. �It is having least average waiting time, average turn around time and average response time. �Issues: Knowing the length of the next CPU burst time, it is difficult. It is optimal algorithm, it can not be implemented at the level of short term CPU scheduling. ‘Aging’ is the another problem. Big jobs are waiting so much time for the CPU. Operating System Concepts

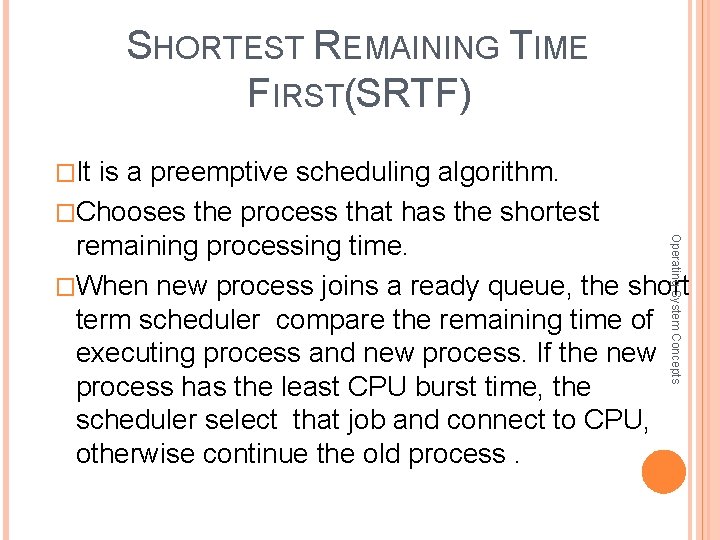

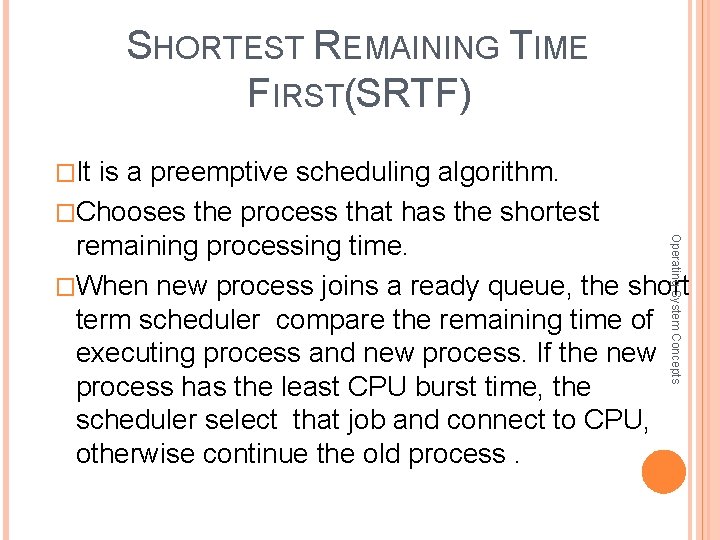

SHORTEST REMAINING TIME FIRST(SRTF) �It is a preemptive scheduling algorithm. �Chooses the process that has the shortest Operating System Concepts remaining processing time. �When new process joins a ready queue, the short term scheduler compare the remaining time of executing process and new process. If the new process has the least CPU burst time, the scheduler select that job and connect to CPU, otherwise continue the old process.

Now we add the concepts of varying arrival times and preemption to the analysis Process. AArrival Time. TBurst Time P 1 0 8 P 2 1 4 P 3 2 9 P 4 3 5 Preemptive SJF Gantt Chart Average waiting time = [(10 -1 -0)+(1 -1)+(17 -2)+53)]/4 = 26/4 = 6. 5 msec

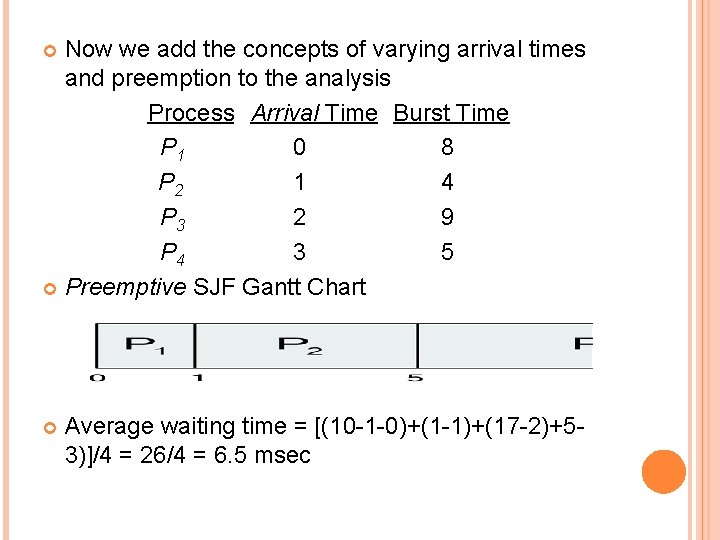

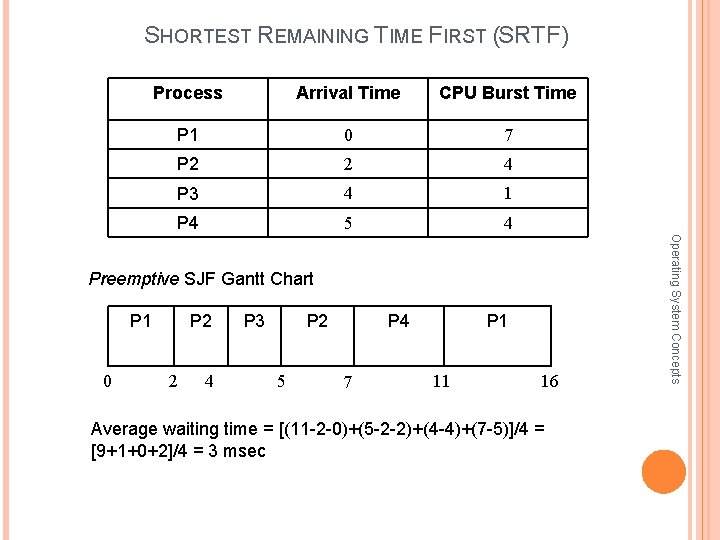

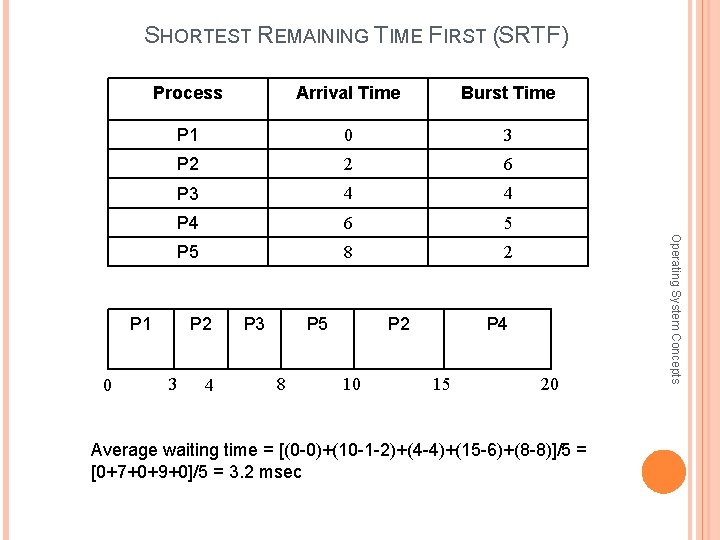

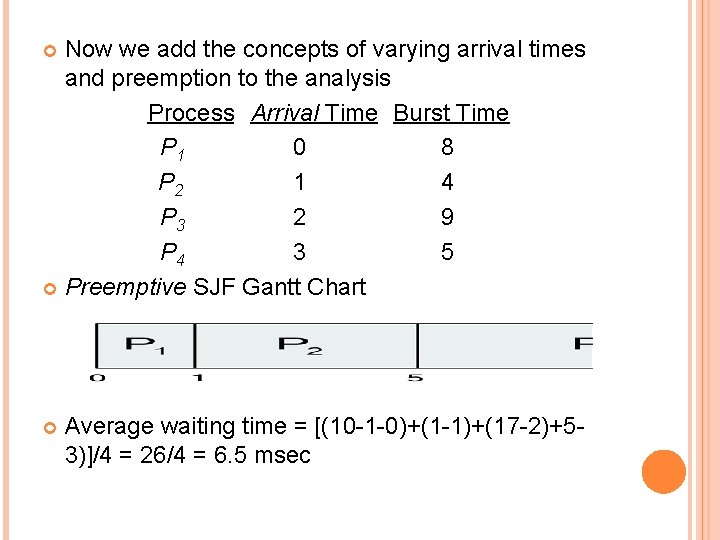

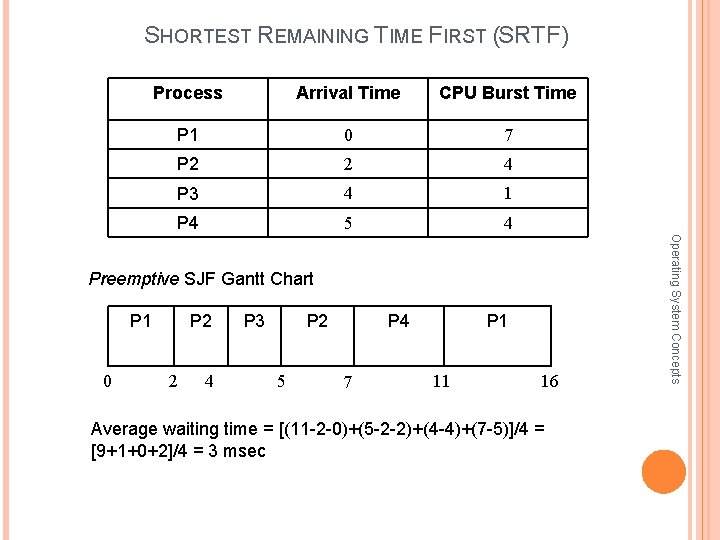

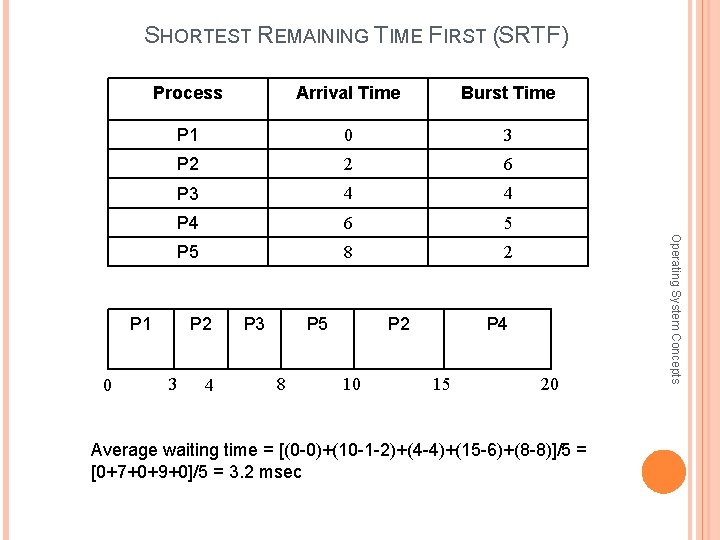

SHORTEST REMAINING TIME FIRST (SRTF) Process Arrival Time CPU Burst Time P 1 0 7 P 2 2 4 P 3 4 1 P 4 5 4 P 1 0 P 2 2 4 P 3 P 2 5 P 4 7 P 1 11 16 Average waiting time = [(11 -2 -0)+(5 -2 -2)+(4 -4)+(7 -5)]/4 = [9+1+0+2]/4 = 3 msec Operating System Concepts Preemptive SJF Gantt Chart

SHORTEST REMAINING TIME FIRST (SRTF) Arrival Time Burst Time P 1 0 3 P 2 2 6 P 3 4 4 P 4 6 5 P 5 8 2 P 1 0 P 2 3 4 P 3 P 5 8 P 2 10 P 4 15 20 Average waiting time = [(0 -0)+(10 -1 -2)+(4 -4)+(15 -6)+(8 -8)]/5 = [0+7+0+9+0]/5 = 3. 2 msec Operating System Concepts Process

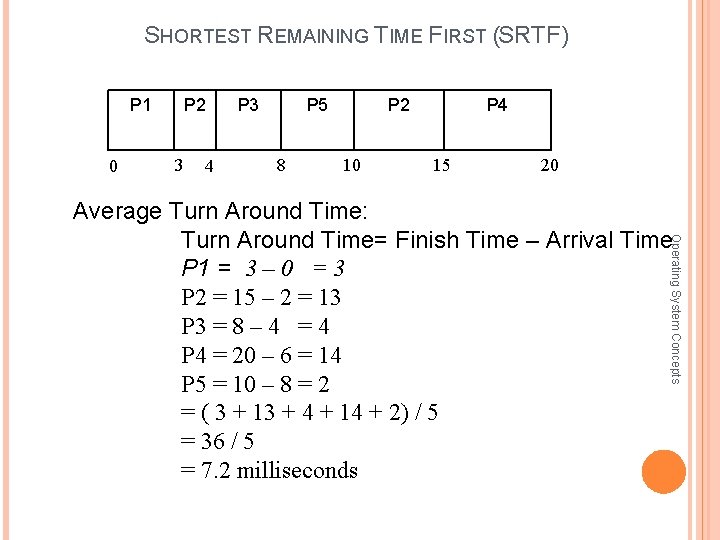

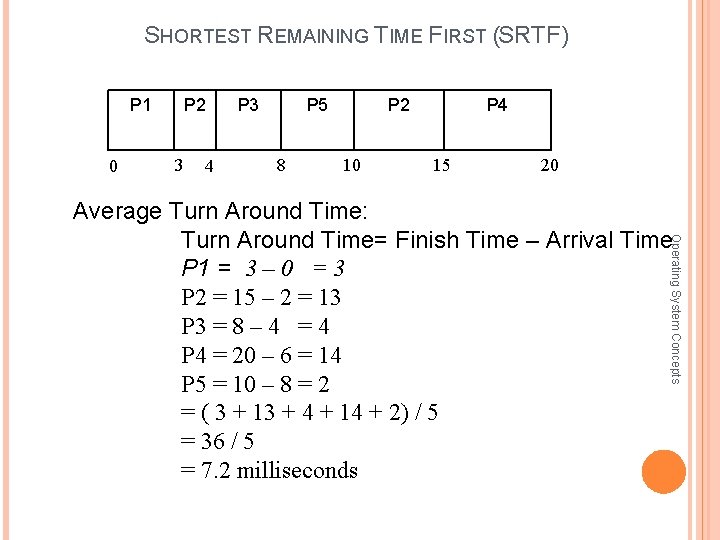

SHORTEST REMAINING TIME FIRST (SRTF) P 1 0 P 2 3 4 P 3 P 5 8 P 2 10 P 4 15 20 Operating System Concepts Average Turn Around Time: Turn Around Time= Finish Time – Arrival Time P 1 = 3 – 0 = 3 P 2 = 15 – 2 = 13 P 3 = 8 – 4 = 4 P 4 = 20 – 6 = 14 P 5 = 10 – 8 = 2 = ( 3 + 13 + 4 + 14 + 2) / 5 = 36 / 5 = 7. 2 milliseconds

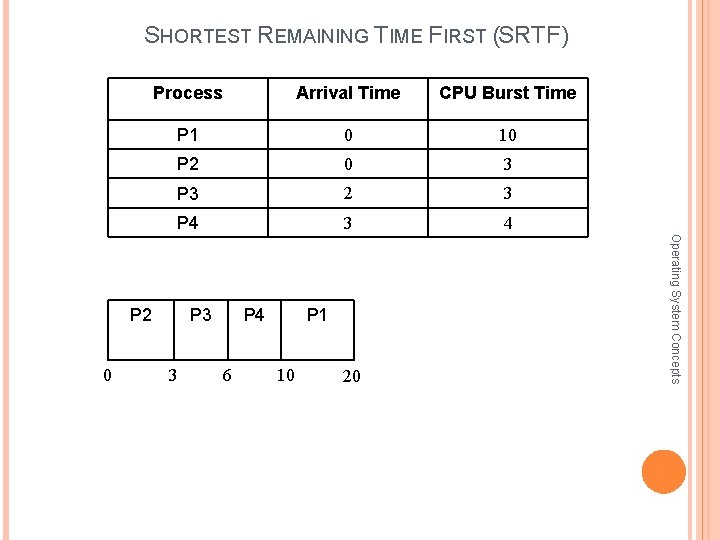

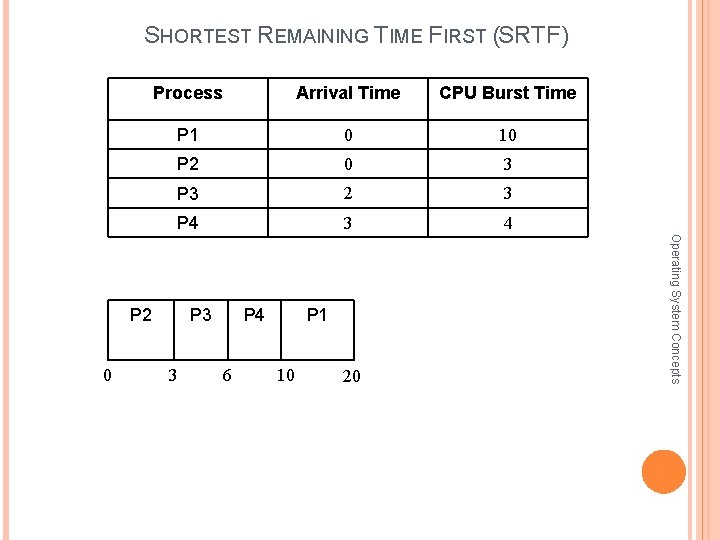

SHORTEST REMAINING TIME FIRST (SRTF) Process Arrival Time CPU Burst Time P 1 0 10 P 2 0 3 P 3 2 3 P 4 3 4 0 P 3 3 P 4 6 P 1 10 20 Operating System Concepts P 2

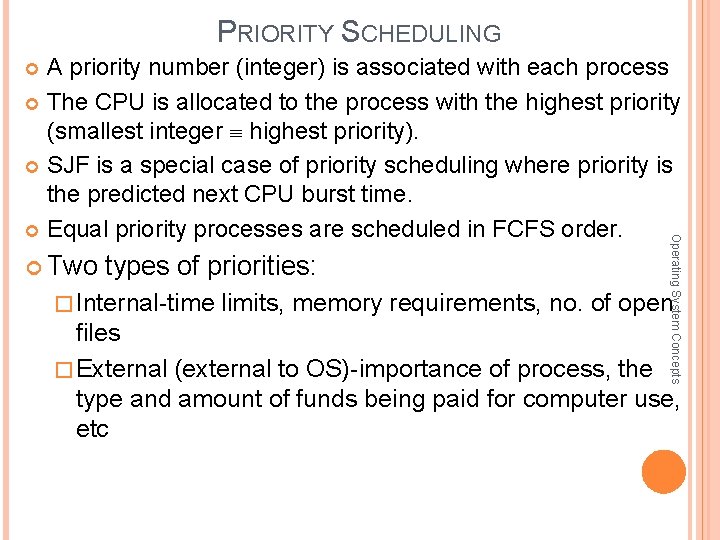

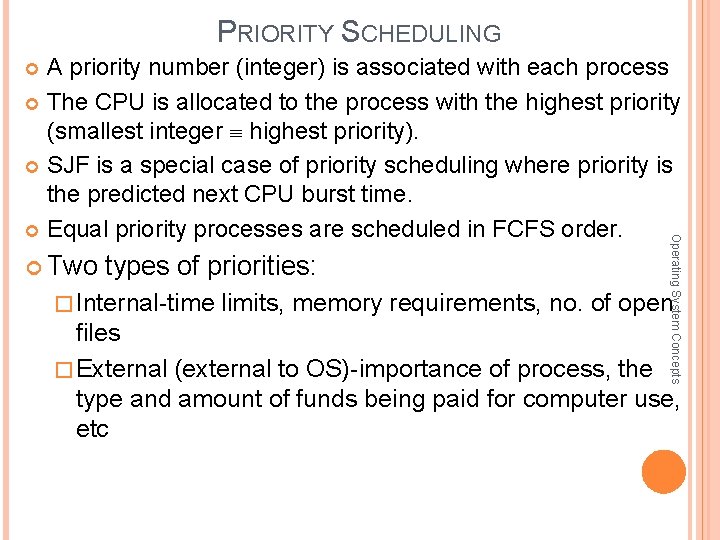

PRIORITY SCHEDULING A priority number (integer) is associated with each process The CPU is allocated to the process with the highest priority (smallest integer highest priority). SJF is a special case of priority scheduling where priority is the predicted next CPU burst time. Equal priority processes are scheduled in FCFS order. Operating System Concepts Two types of priorities: � Internal-time limits, memory requirements, no. of open files � External (external to OS)-importance of process, the type and amount of funds being paid for computer use, etc

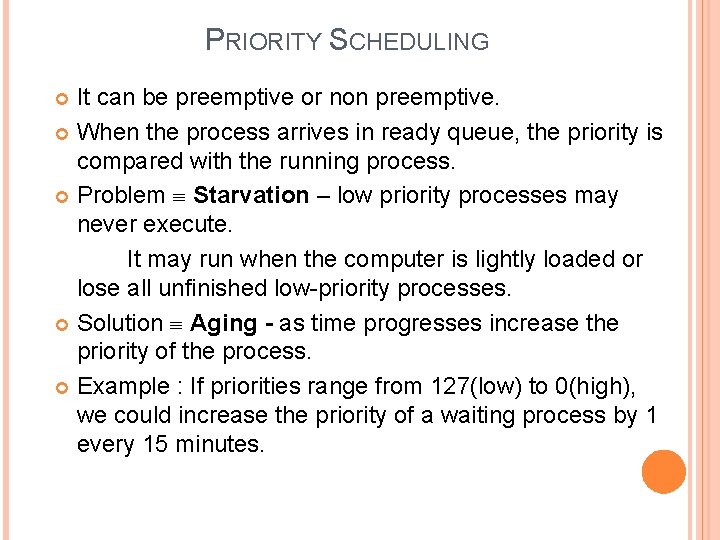

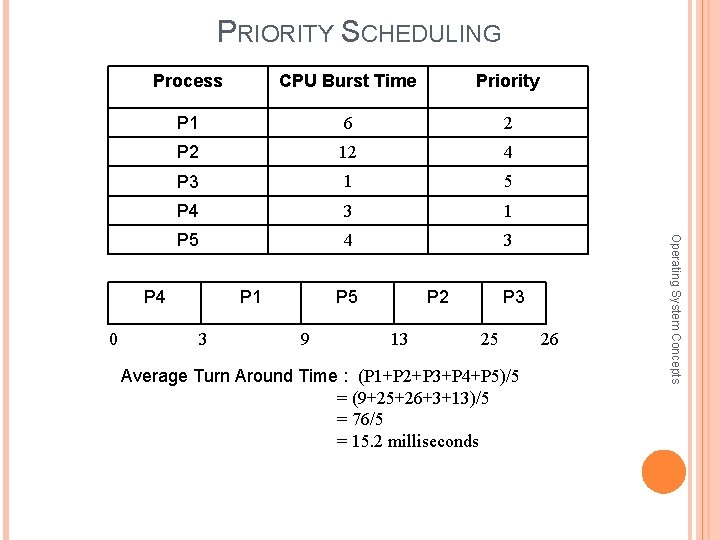

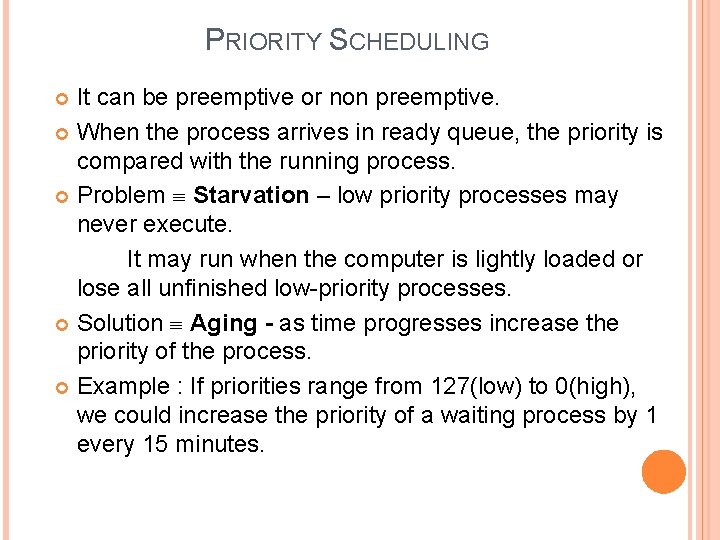

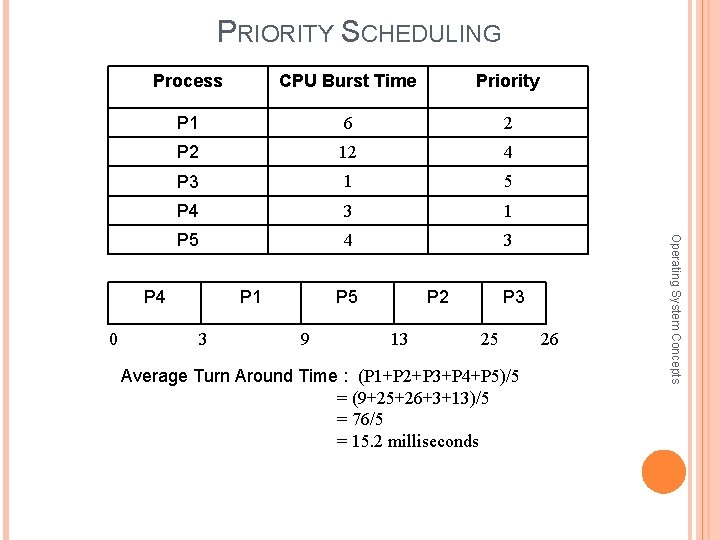

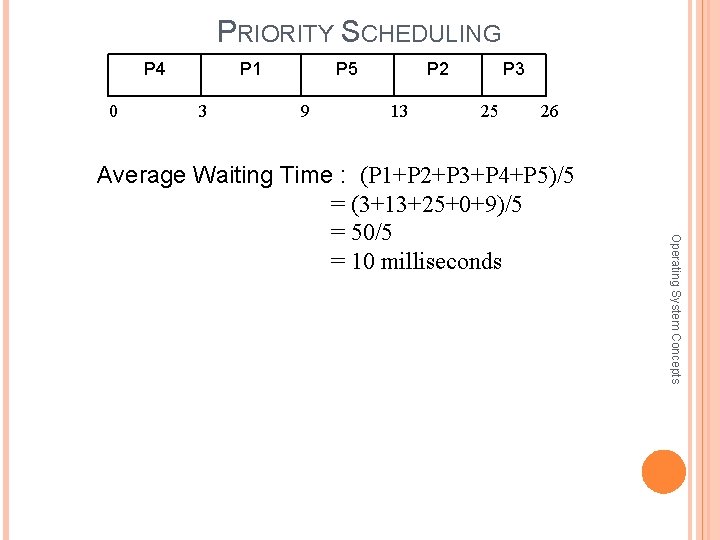

PRIORITY SCHEDULING It can be preemptive or non preemptive. When the process arrives in ready queue, the priority is compared with the running process. Problem Starvation – low priority processes may never execute. It may run when the computer is lightly loaded or lose all unfinished low-priority processes. Solution Aging - as time progresses increase the priority of the process. Example : If priorities range from 127(low) to 0(high), we could increase the priority of a waiting process by 1 every 15 minutes.

PRIORITY SCHEDULING CPU Burst Time Priority P 1 6 2 P 2 12 4 P 3 1 5 P 4 3 1 P 5 4 3 P 4 0 P 1 3 P 5 9 P 2 13 P 3 25 Average Turn Around Time : (P 1+P 2+P 3+P 4+P 5)/5 = (9+25+26+3+13)/5 = 76/5 = 15. 2 milliseconds 26 Operating System Concepts Process

PRIORITY SCHEDULING P 4 0 P 1 3 P 5 9 P 2 13 P 3 25 26 Operating System Concepts Average Waiting Time : (P 1+P 2+P 3+P 4+P 5)/5 = (3+13+25+0+9)/5 = 50/5 = 10 milliseconds

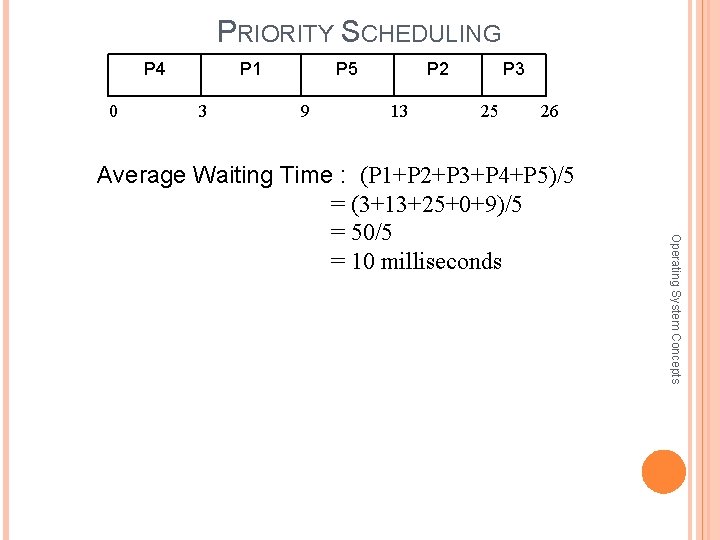

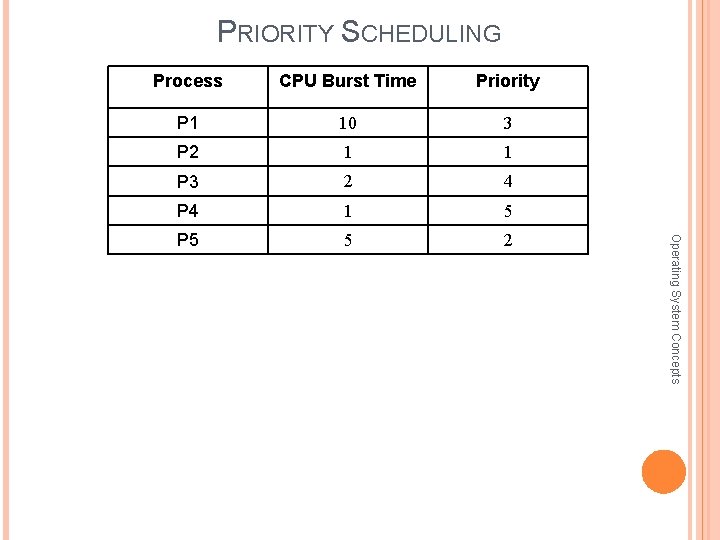

PRIORITY SCHEDULING CPU Burst Time Priority P 1 10 3 P 2 1 1 P 3 2 4 P 4 1 5 P 5 5 2 Operating System Concepts Process

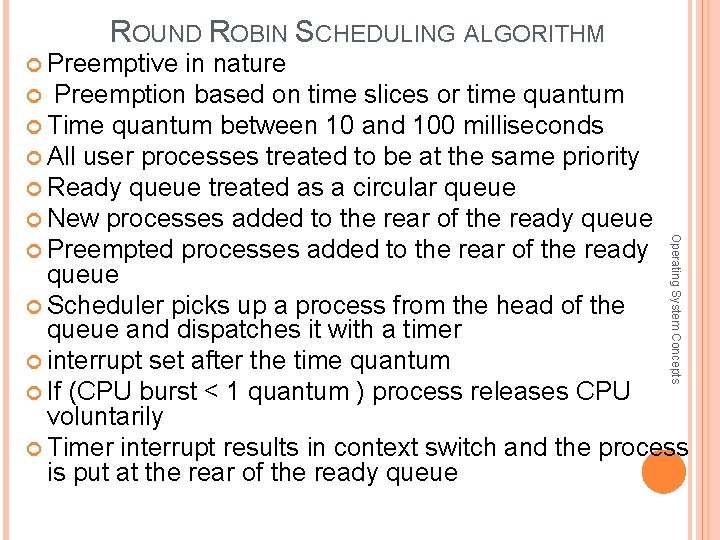

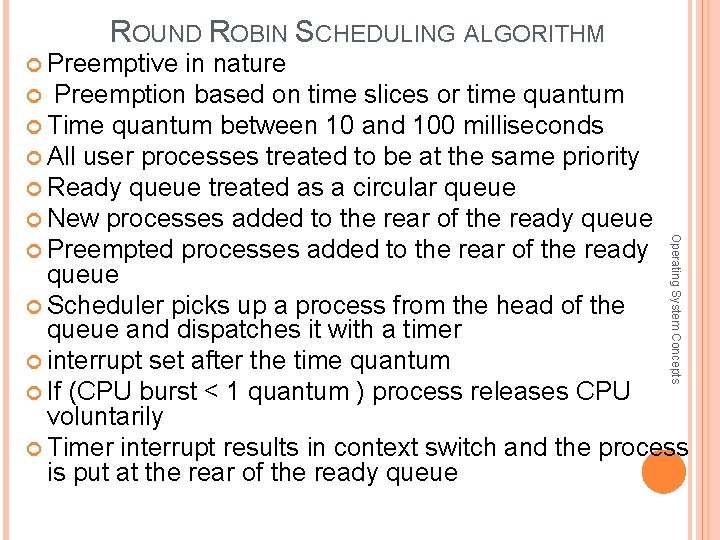

ROUND ROBIN SCHEDULING ALGORITHM Preemptive in nature Preemption based on time slices or time quantum Time quantum between 10 and 100 milliseconds All user processes treated to be at the same priority Ready queue treated as a circular queue New processes added to the rear of the ready queue Operating System Concepts Preempted processes added to the rear of the ready queue Scheduler picks up a process from the head of the queue and dispatches it with a timer interrupt set after the time quantum If (CPU burst < 1 quantum ) process releases CPU voluntarily Timer interrupt results in context switch and the process is put at the rear of the ready queue

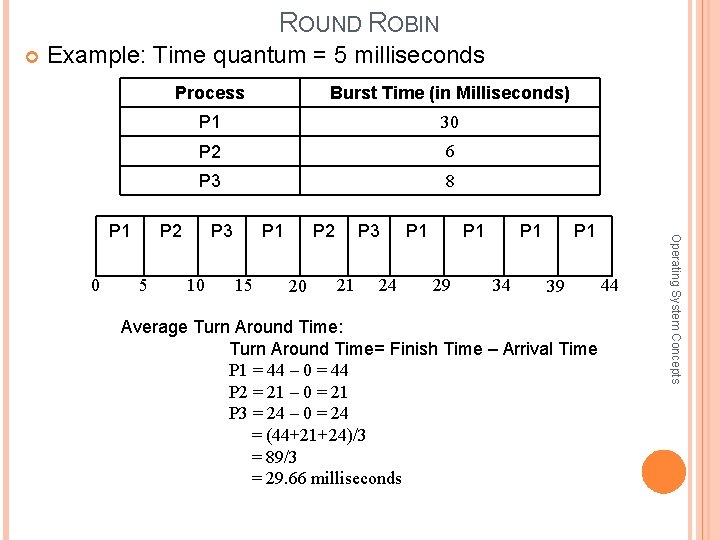

ROUND ROBIN Example: Time quantum = 5 milliseconds 0 Burst Time (in Milliseconds) P 1 30 P 2 6 P 3 8 P 2 5 P 3 10 P 1 15 P 2 20 P 3 21 24 P 1 29 P 1 34 P 1 39 44 Average Turn Around Time: Turn Around Time= Finish Time – Arrival Time P 1 = 44 – 0 = 44 P 2 = 21 – 0 = 21 P 3 = 24 – 0 = 24 = (44+21+24)/3 = 89/3 = 29. 66 milliseconds Operating System Concepts P 1 Process

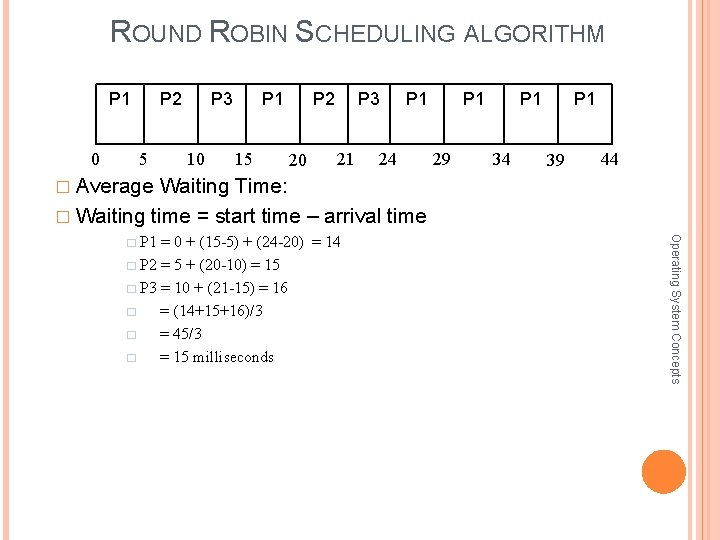

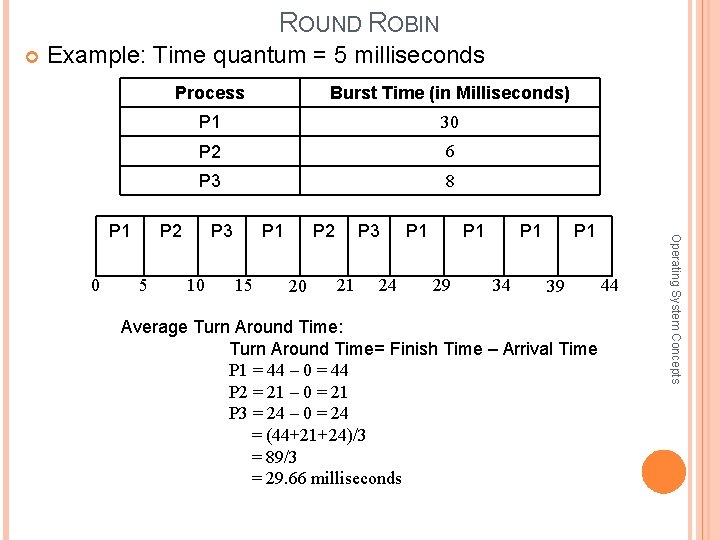

ROUND ROBIN SCHEDULING ALGORITHM P 1 0 P 2 5 P 3 10 P 1 15 P 2 20 P 3 21 P 1 24 P 1 29 P 1 34 P 1 39 44 � Average Waiting Time: � Waiting time = start time – arrival time = 0 + (15 -5) + (24 -20) = 14 � P 2 = 5 + (20 -10) = 15 � P 3 = 10 + (21 -15) = 16 � = (14+15+16)/3 � = 45/3 � = 15 milliseconds Operating System Concepts � P 1

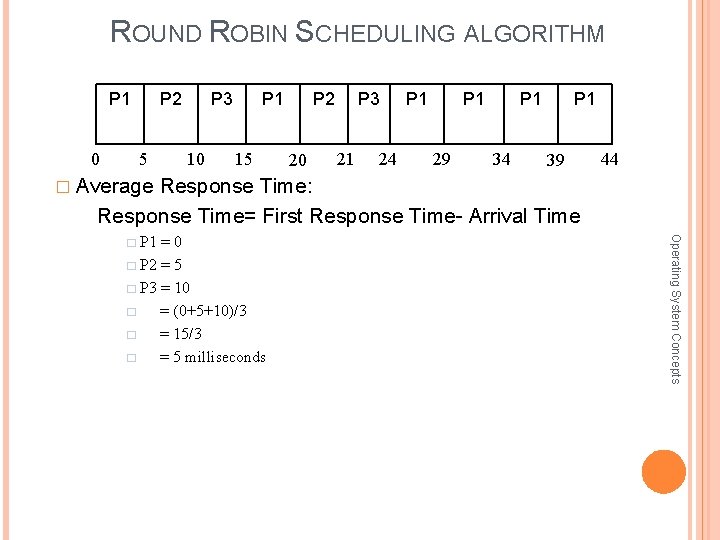

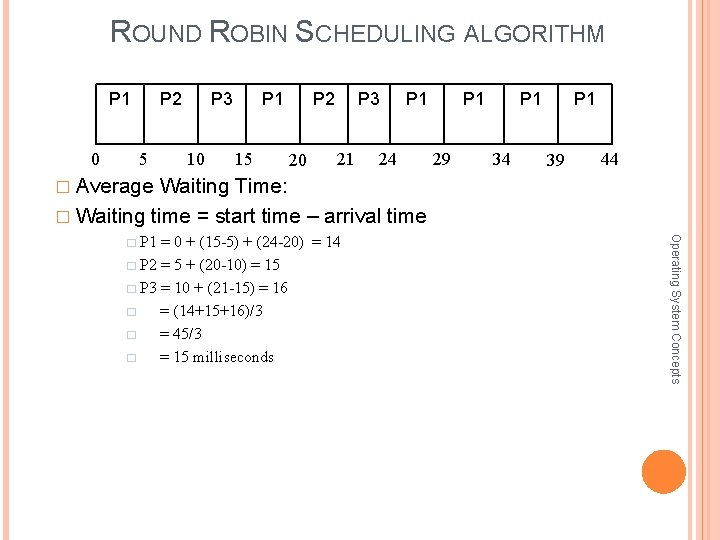

ROUND ROBIN SCHEDULING ALGORITHM P 1 0 P 2 5 P 3 10 P 1 15 P 2 20 P 3 21 24 P 1 29 P 1 34 P 1 39 44 � Average Response Time: Response Time= First Response Time- Arrival Time =0 � P 2 = 5 � P 3 = 10 � = (0+5+10)/3 � = 15/3 � = 5 milliseconds Operating System Concepts � P 1

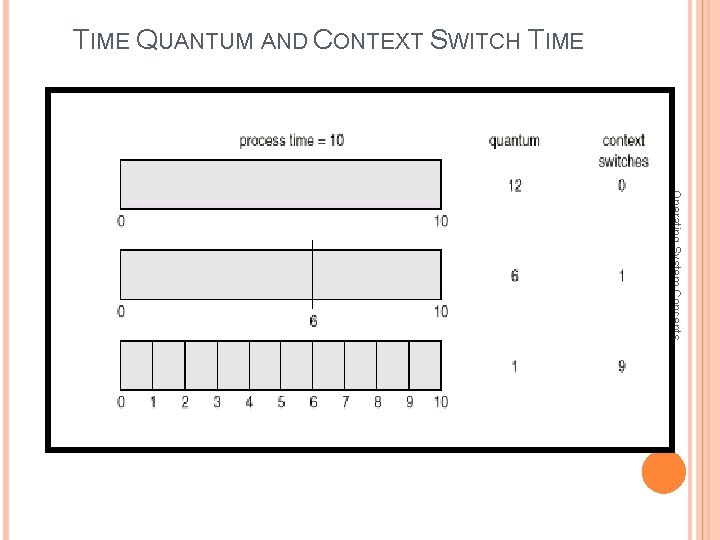

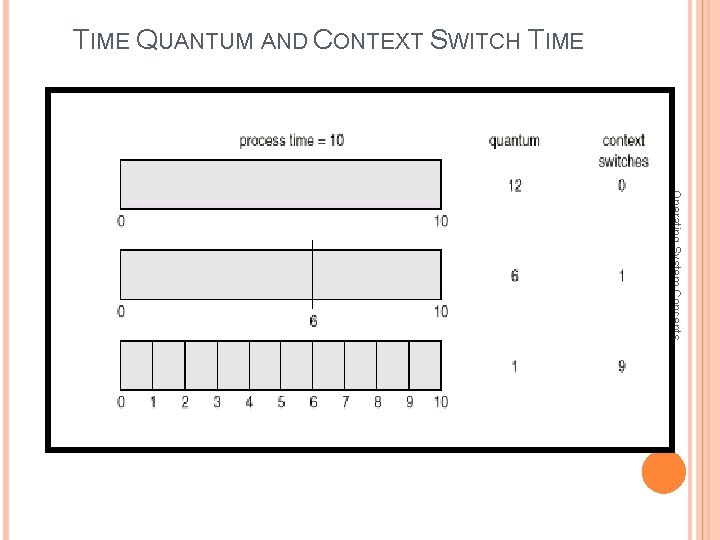

TIME QUANTUM AND CONTEXT SWITCH TIME Operating System Concepts

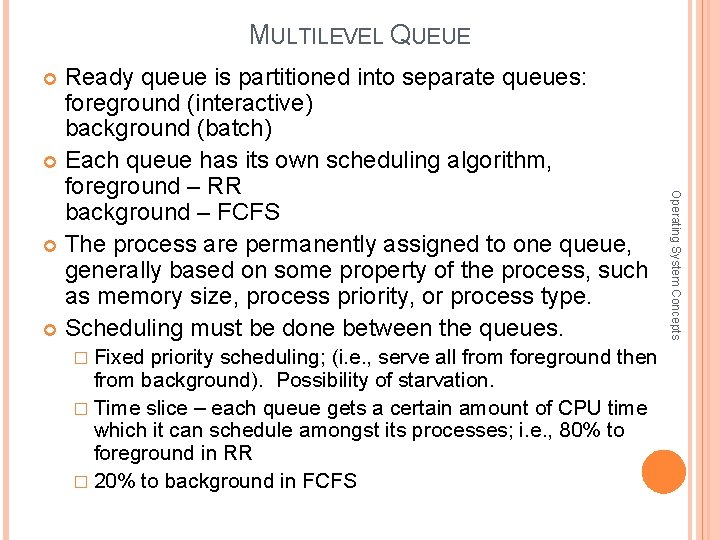

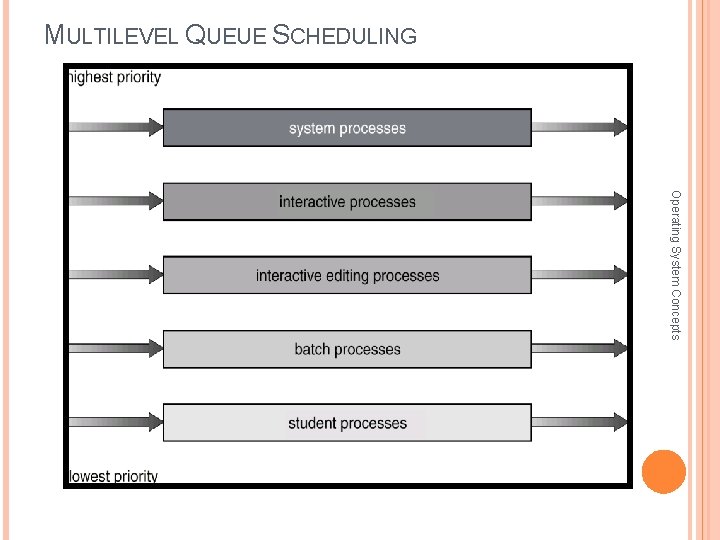

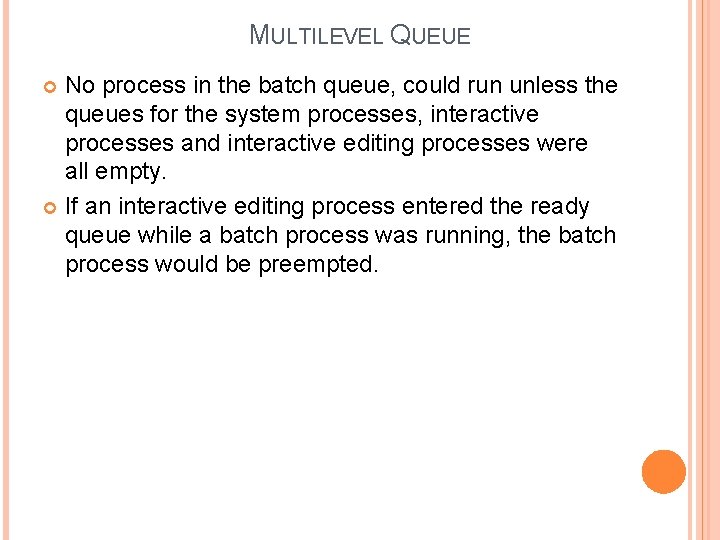

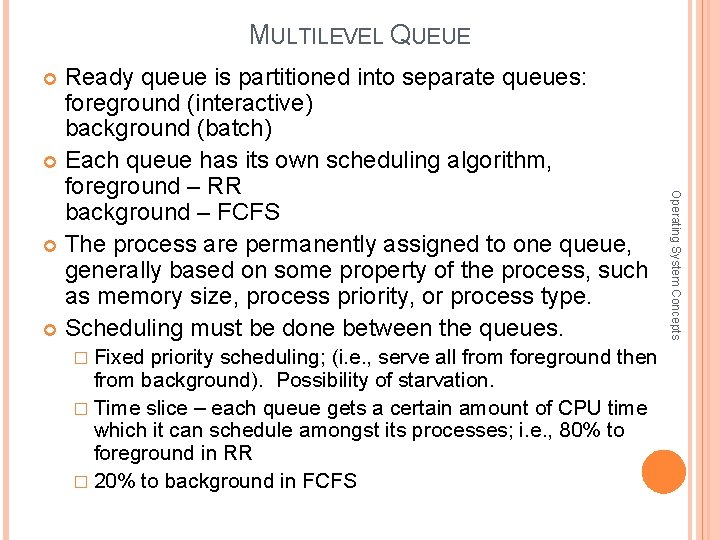

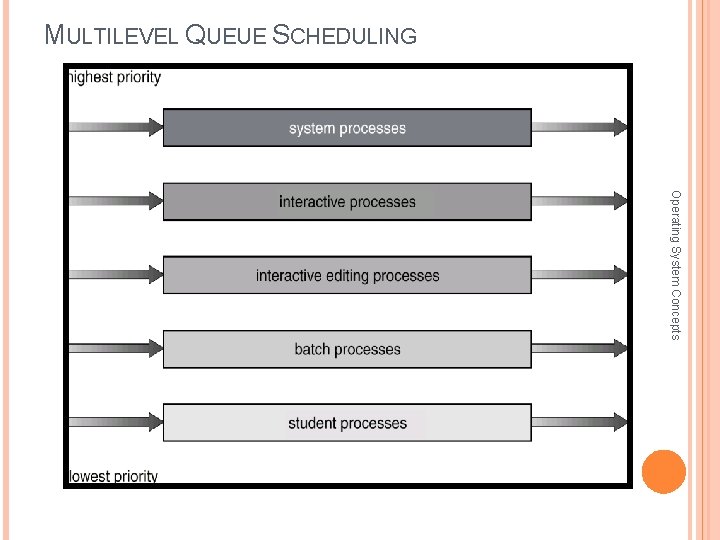

MULTILEVEL QUEUE Ready queue is partitioned into separate queues: foreground (interactive) background (batch) Each queue has its own scheduling algorithm, foreground – RR background – FCFS The process are permanently assigned to one queue, generally based on some property of the process, such as memory size, process priority, or process type. Scheduling must be done between the queues. from background). Possibility of starvation. � Time slice – each queue gets a certain amount of CPU time which it can schedule amongst its processes; i. e. , 80% to foreground in RR � 20% to background in FCFS Operating System Concepts � Fixed priority scheduling; (i. e. , serve all from foreground then

MULTILEVEL QUEUE SCHEDULING Operating System Concepts

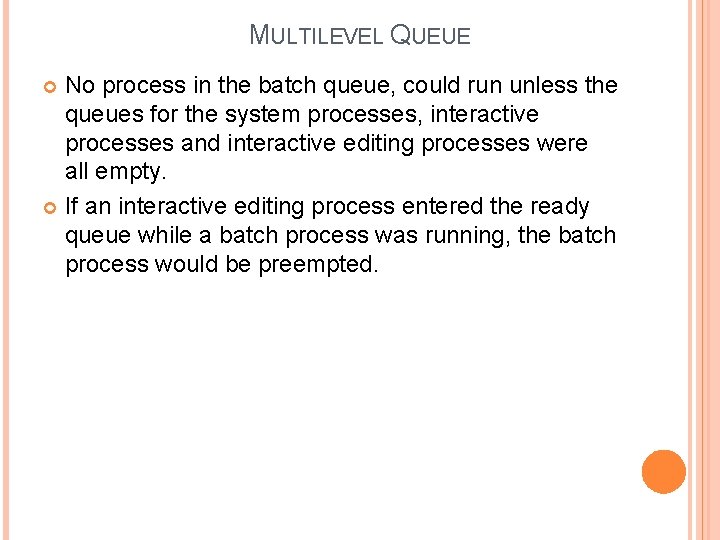

MULTILEVEL QUEUE No process in the batch queue, could run unless the queues for the system processes, interactive processes and interactive editing processes were all empty. If an interactive editing process entered the ready queue while a batch process was running, the batch process would be preempted.

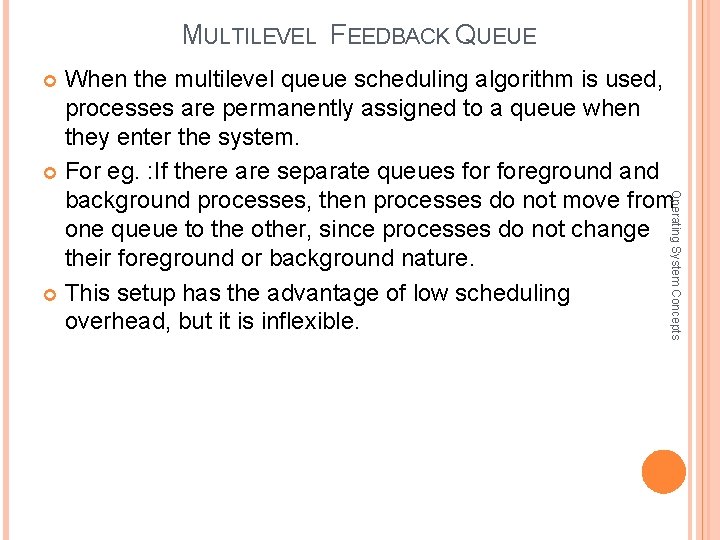

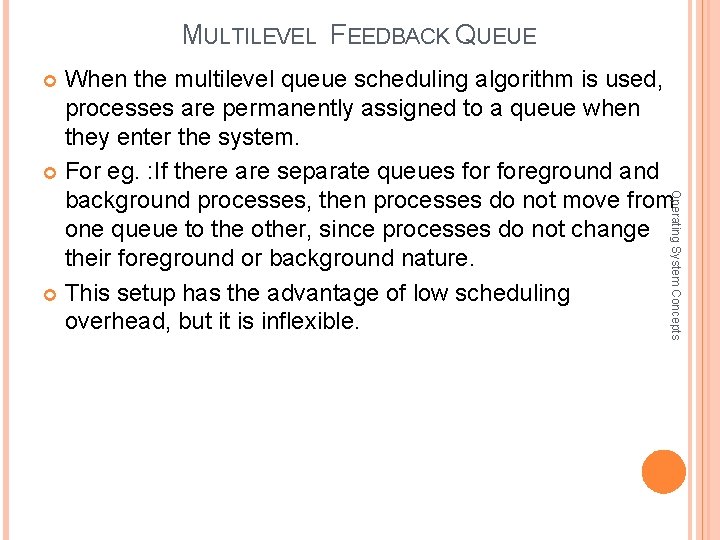

MULTILEVEL FEEDBACK QUEUE When the multilevel queue scheduling algorithm is used, processes are permanently assigned to a queue when they enter the system. For eg. : If there are separate queues foreground and background processes, then processes do not move from one queue to the other, since processes do not change their foreground or background nature. This setup has the advantage of low scheduling overhead, but it is inflexible. Operating System Concepts

MULTILEVEL FEEDBACK QUEUE The multilevel feedback queue algorithm allows a process to move between the queues. Used to separate process with different CPU – Burst characteristics. If a process uses too much CPU time, it will be moved to next low priority queue. A process that waits too long in a lower queue may be moved to a higher priority queue. Used to prevent starvation. Operating System Concepts

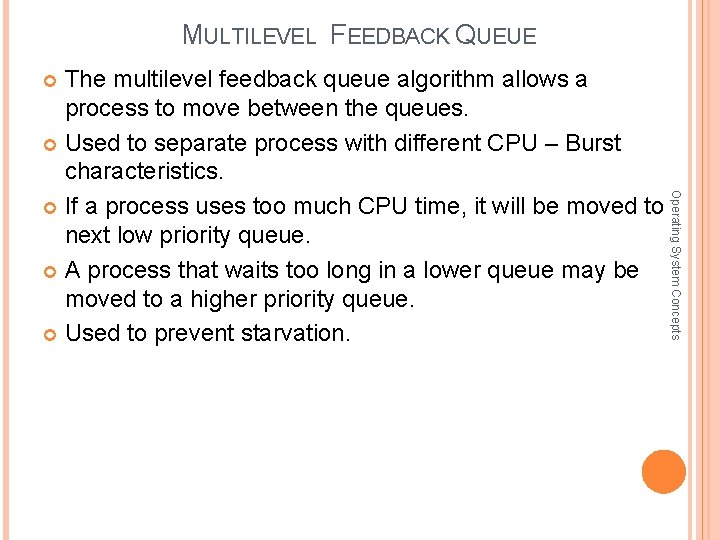

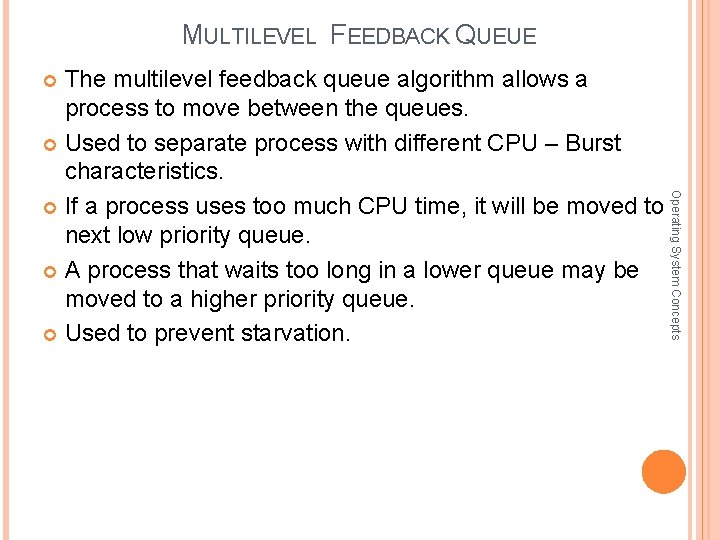

MULTILEVEL FEEDBACK QUEUE RQ 0 RQ 1 RQ 2

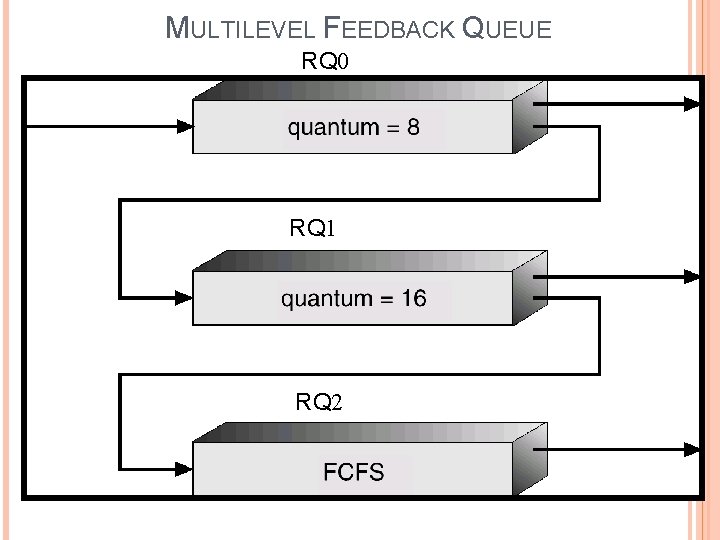

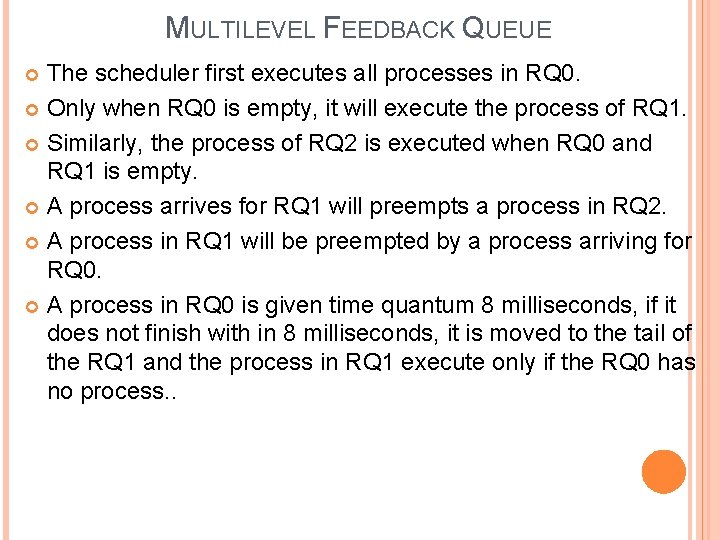

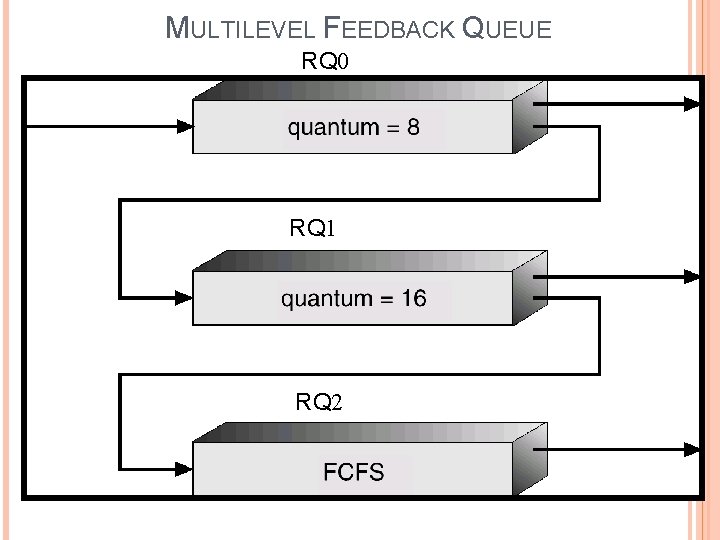

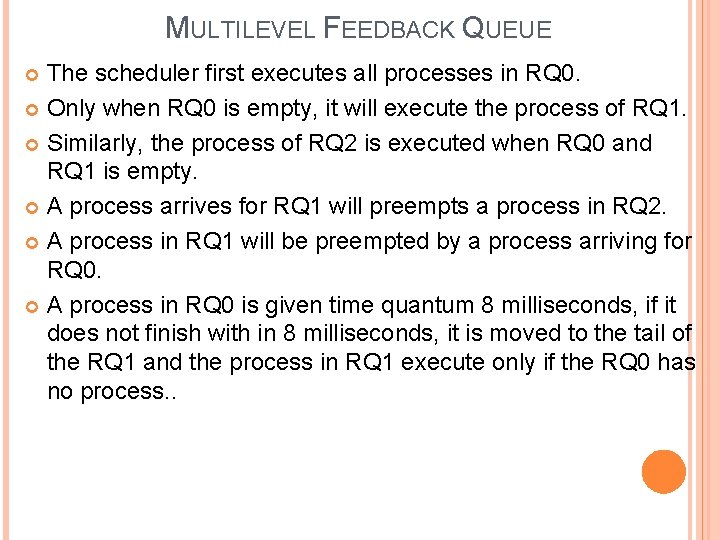

MULTILEVEL FEEDBACK QUEUE The scheduler first executes all processes in RQ 0. Only when RQ 0 is empty, it will execute the process of RQ 1. Similarly, the process of RQ 2 is executed when RQ 0 and RQ 1 is empty. A process arrives for RQ 1 will preempts a process in RQ 2. A process in RQ 1 will be preempted by a process arriving for RQ 0. A process in RQ 0 is given time quantum 8 milliseconds, if it does not finish with in 8 milliseconds, it is moved to the tail of the RQ 1 and the process in RQ 1 execute only if the RQ 0 has no process. .

MULTILEVEL FEEDBACK QUEUE A process at the head of a the RQ 1 is given a time quantum of 16 milliseconds. If it does not complete with in the time quantum, it is moved to the tail of the RQ 2. The process in RQ 2 are run on FCFS basis. The process in the RQ 2 will execute only if the RQ 0 and RQ 1 are empty. The main advantage of this algorithm is , shorter process will complete quickly and a longer process need not to wait much time in the ready queue and will gradually draft downward.

MULTILEVEL FEEDBACK QUEUE In general, a multilevel feedback queue scheduler is defined by the following parameters: 1. The number of queues. 2. The scheduling algorithm for each queue. 3. The method used to determine when to upgrade a process to a higher-priority queue 4. The method used to determine when to demote a process to a lower-priority queue 5. The method used to determine which queue a process will enter when that process needs service.

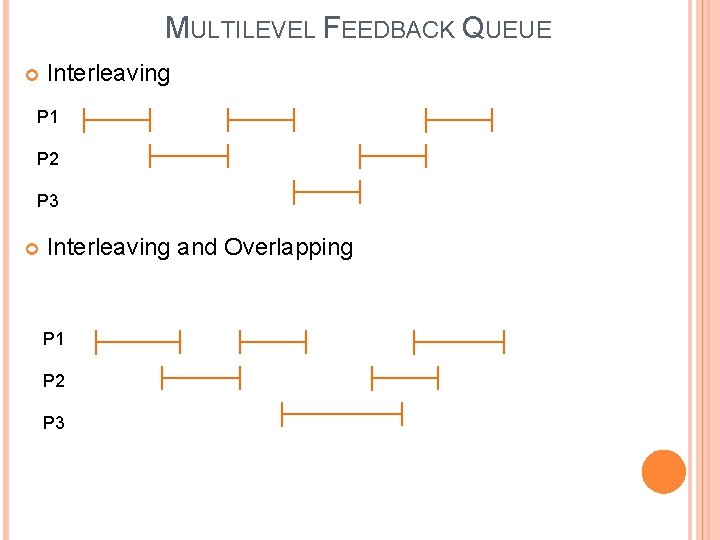

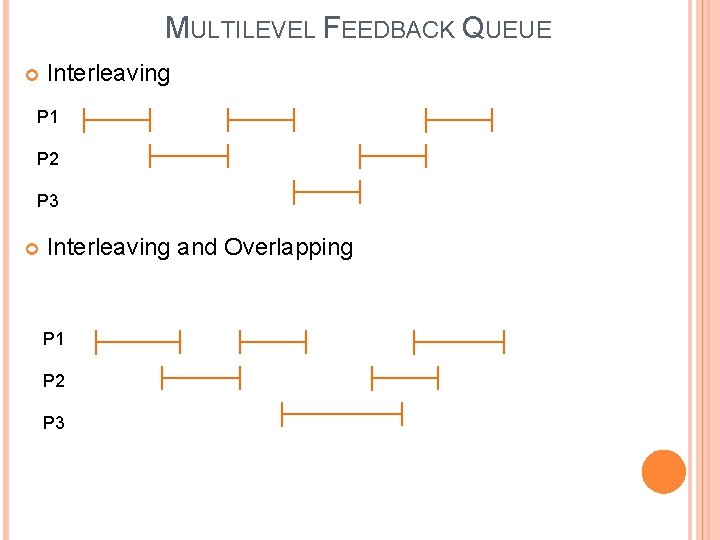

CONCURRENCY In a single processor multi programming system, processes are inter leaved in time to yield the appearance of simultaneous execution. Actual parallel processing is not achieved. Though Certain amount of overhead involved in switching back and forth between processes, interleaved execution provides major benefits in processing efficiency and in program structuring. In a multiprocessor system, it is possible not only to interleave processes but to overlap them.

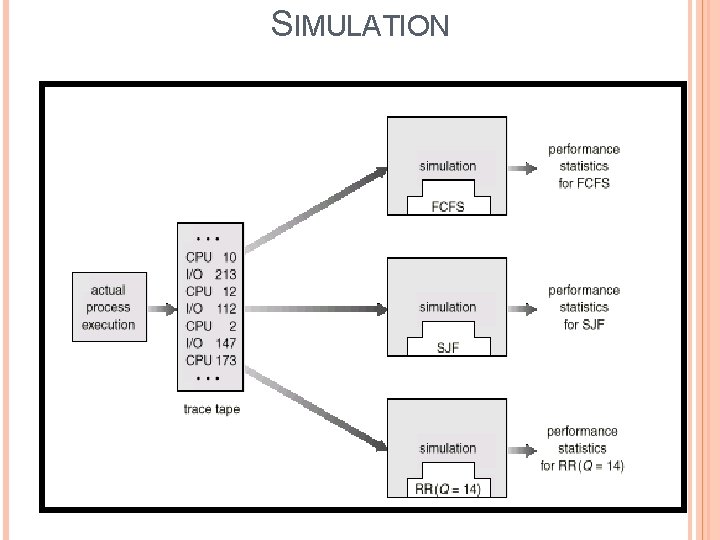

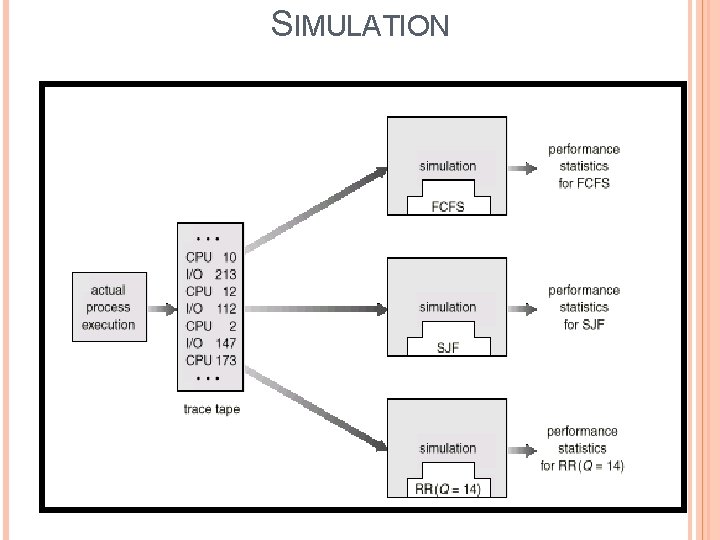

EVALUATION OF ALGORITHM Deterministic modeling – takes a particular predetermined workload and defines the performance of each algorithm for that workload. Queuing models Simulators

SIMULATION

MULTILEVEL FEEDBACK QUEUE Interleaving P 1 P 2 P 3 Interleaving and Overlapping P 1 P 2 P 3

PROCESS SYNCHRONIZATION

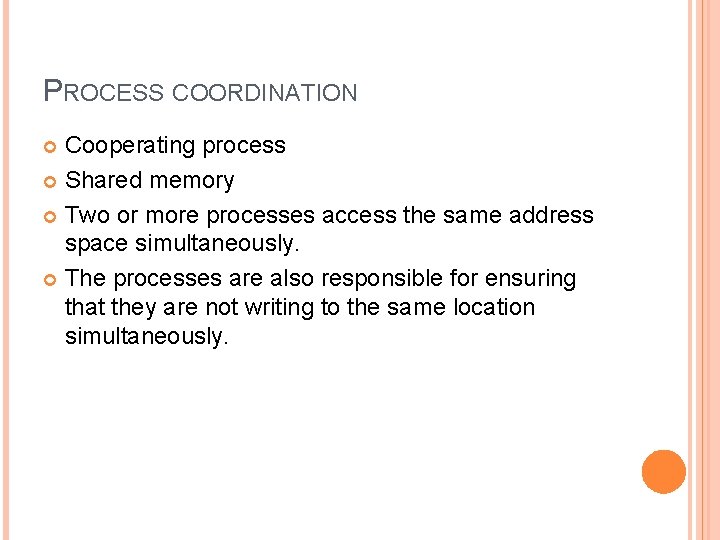

PROCESS COORDINATION Cooperating process Shared memory Two or more processes access the same address space simultaneously. The processes are also responsible for ensuring that they are not writing to the same location simultaneously.

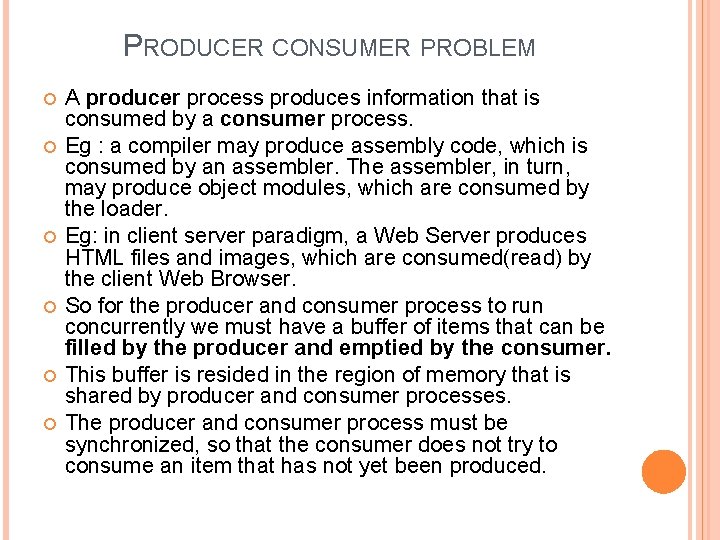

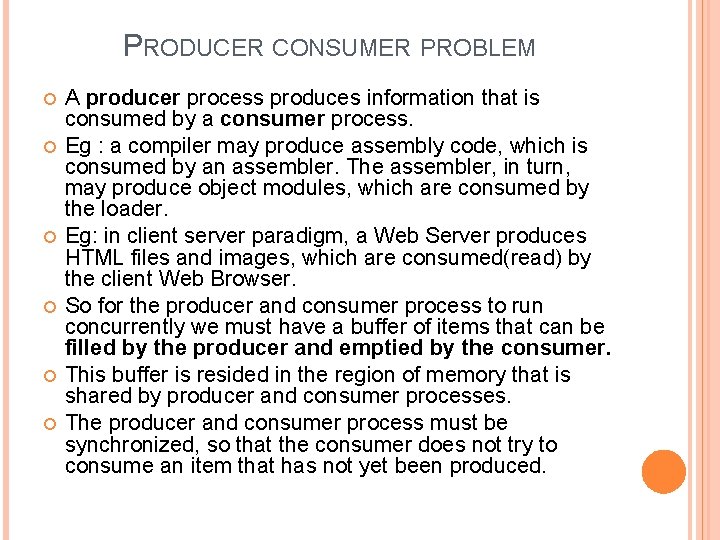

PRODUCER CONSUMER PROBLEM A producer process produces information that is consumed by a consumer process. Eg : a compiler may produce assembly code, which is consumed by an assembler. The assembler, in turn, may produce object modules, which are consumed by the loader. Eg: in client server paradigm, a Web Server produces HTML files and images, which are consumed(read) by the client Web Browser. So for the producer and consumer process to run concurrently we must have a buffer of items that can be filled by the producer and emptied by the consumer. This buffer is resided in the region of memory that is shared by producer and consumer processes. The producer and consumer process must be synchronized, so that the consumer does not try to consume an item that has not yet been produced.

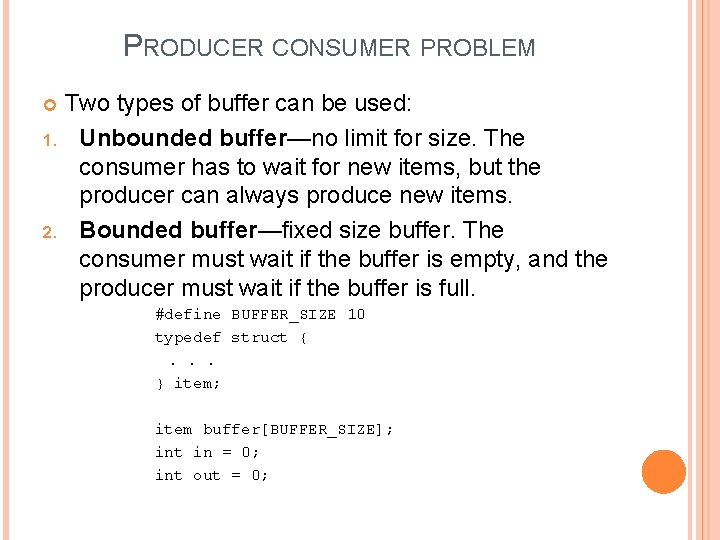

PRODUCER CONSUMER PROBLEM Two types of buffer can be used: 1. Unbounded buffer—no limit for size. The consumer has to wait for new items, but the producer can always produce new items. 2. Bounded buffer—fixed size buffer. The consumer must wait if the buffer is empty, and the producer must wait if the buffer is full. #define BUFFER_SIZE 10 typedef struct {. . . } item; item buffer[BUFFER_SIZE]; int in = 0; int out = 0;

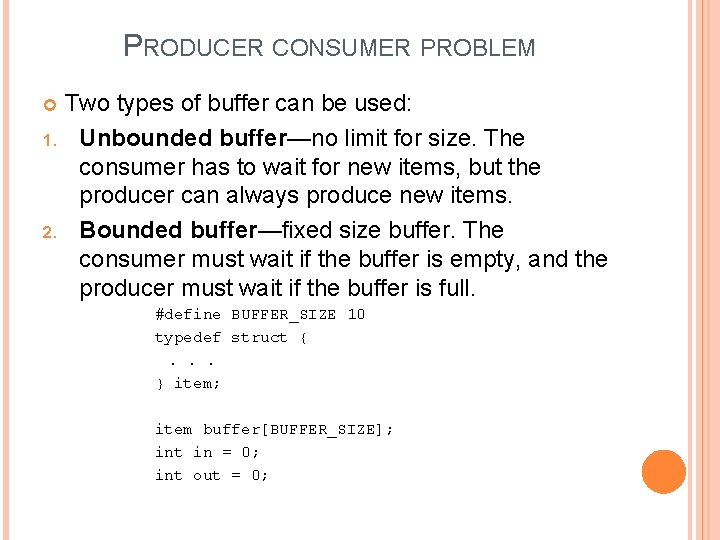

BOUNDED BUFFER – PRODUCER item next_produced; while (true) { /* produce an item in next produced */ while (((in + 1) % BUFFER_SIZE) == out) ; /* do nothing */ buffer[in] = next_produced; in = (in + 1) % BUFFER_SIZE; }

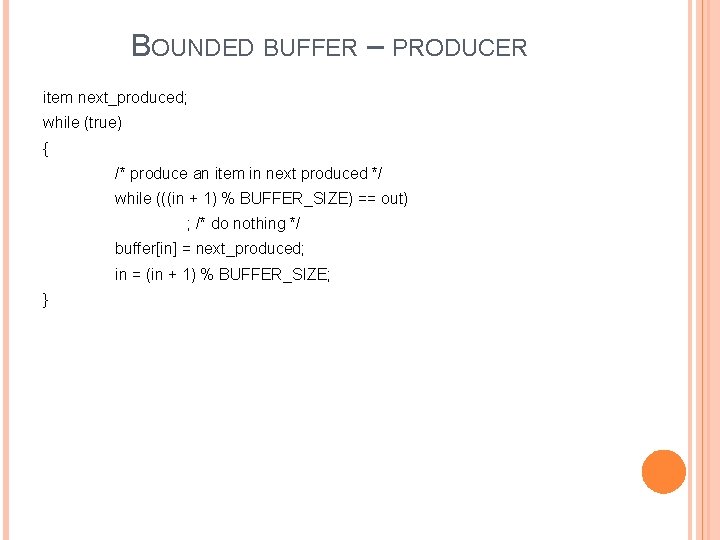

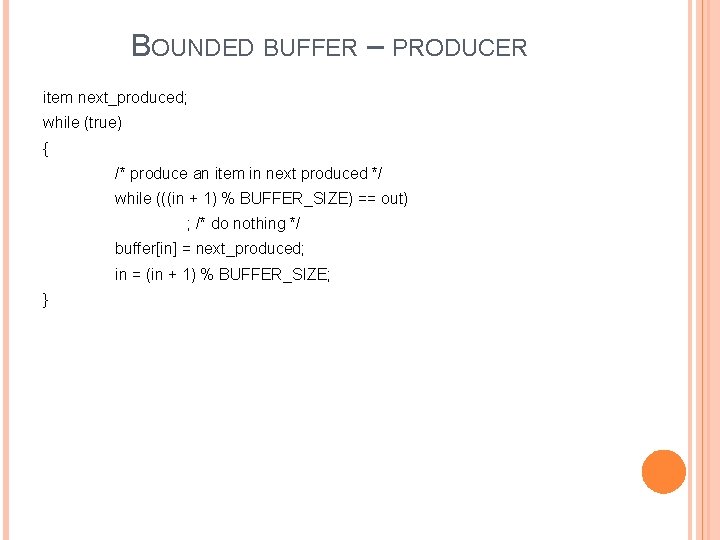

BOUNDED BUFFER - CONSUMER item next_consumed; while (true) { while (in == out) ; /* do nothing */ next_consumed = buffer[out]; out = (out + 1) % BUFFER_SIZE; /* consume the item in next consumed */ }

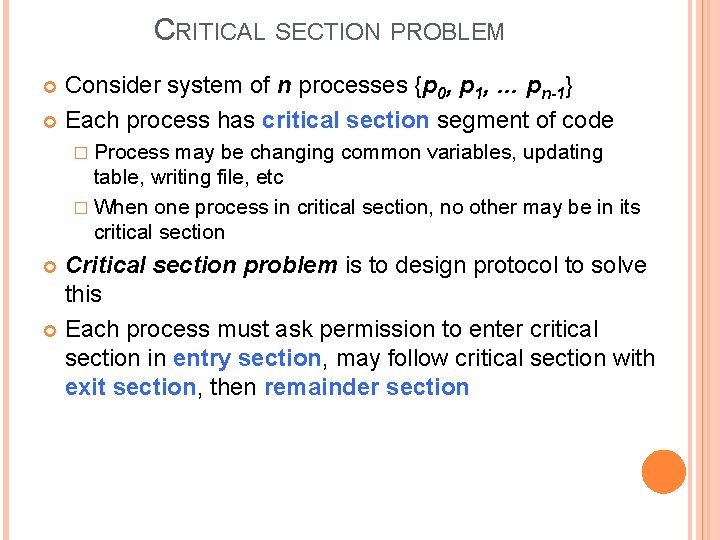

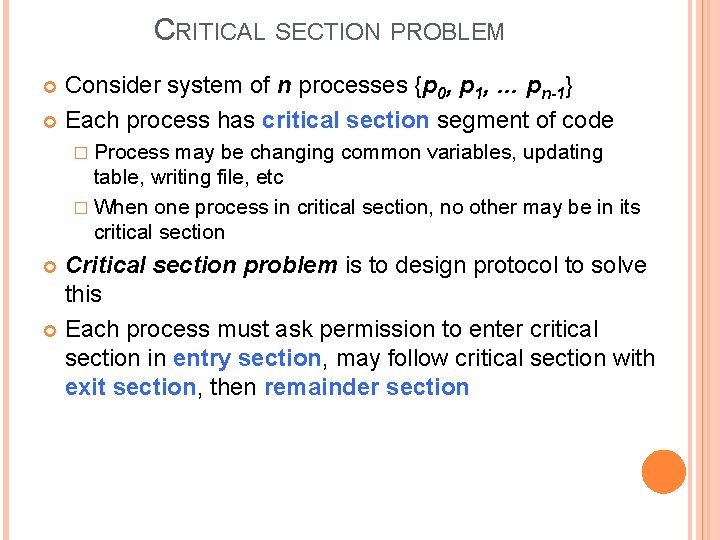

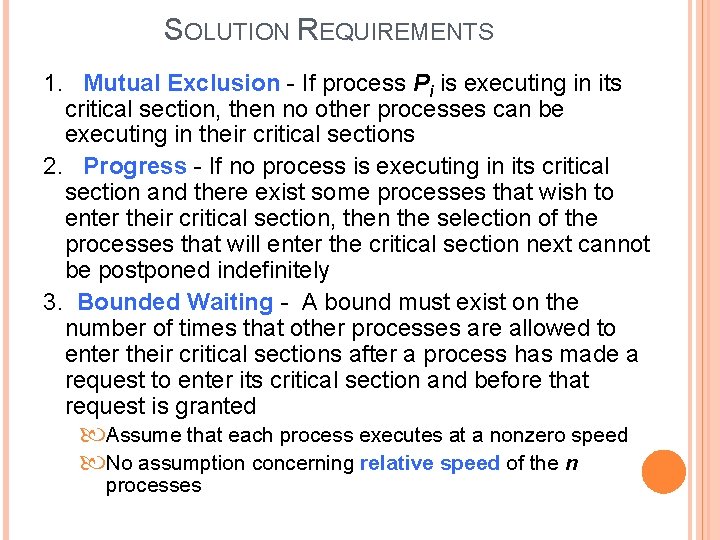

CRITICAL SECTION PROBLEM Consider system of n processes {p 0, p 1, … pn-1} Each process has critical section segment of code � Process may be changing common variables, updating table, writing file, etc � When one process in critical section, no other may be in its critical section Critical section problem is to design protocol to solve this Each process must ask permission to enter critical section in entry section, may follow critical section with exit section, then remainder section

CRITICAL SECTION PROBLEM General structure of process Pi

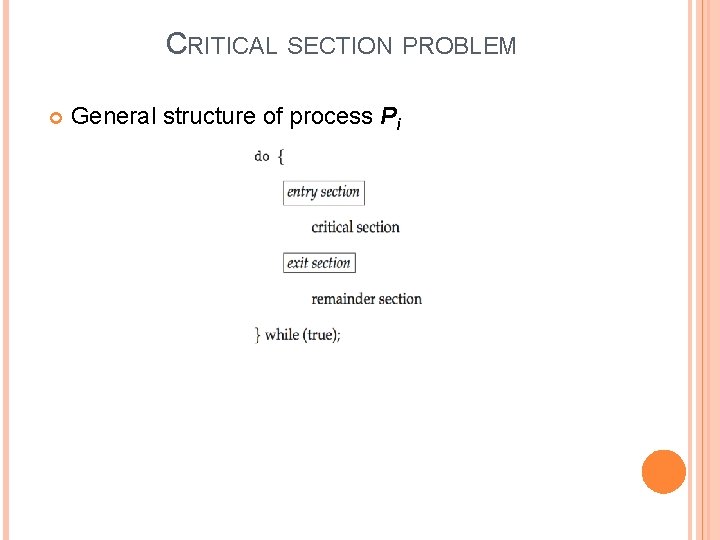

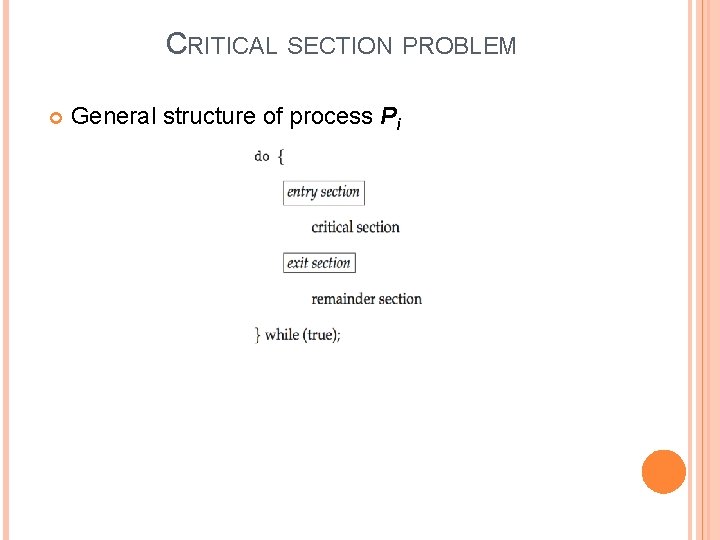

SOLUTION REQUIREMENTS 1. Mutual Exclusion - If process Pi is executing in its critical section, then no other processes can be executing in their critical sections 2. Progress - If no process is executing in its critical section and there exist some processes that wish to enter their critical section, then the selection of the processes that will enter the critical section next cannot be postponed indefinitely 3. Bounded Waiting - A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted Assume that each process executes at a nonzero speed No assumption concerning relative speed of the n processes

RACE CONDITION At a given time, many kernel-mode processes may be active in the operating system. Eg. : consider a kernel data structure which maintains the list of all open files in the system. This list must be modified when a new file is opened or closed. If two processes were to open files simultaneously, the separate updates to this list could result in race condition. Other kernel data structures can be for maintaining memory allocation, for maintaining process lists and for interrupt handelling.

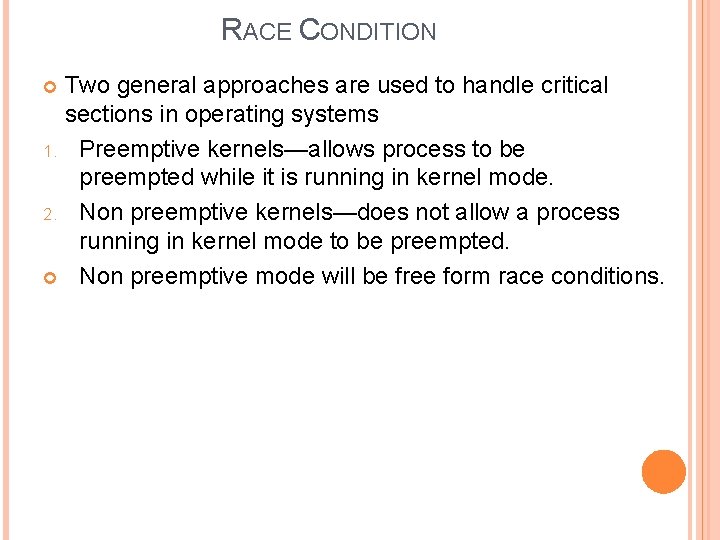

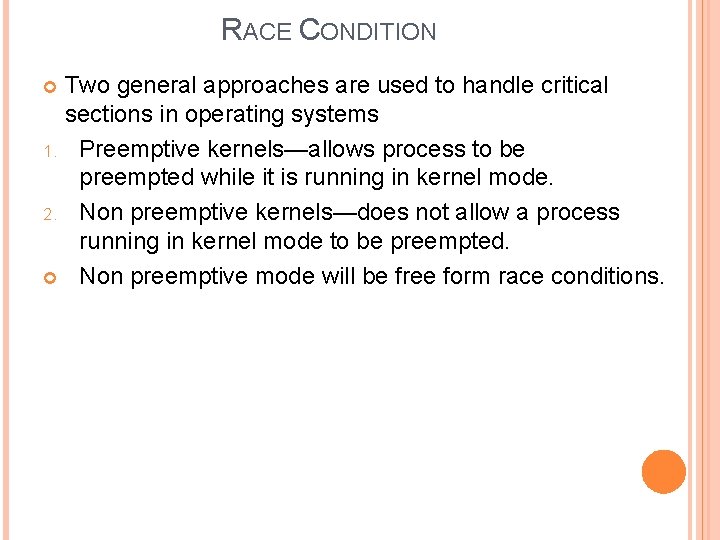

RACE CONDITION Two general approaches are used to handle critical sections in operating systems 1. Preemptive kernels—allows process to be preempted while it is running in kernel mode. 2. Non preemptive kernels—does not allow a process running in kernel mode to be preempted. Non preemptive mode will be free form race conditions.

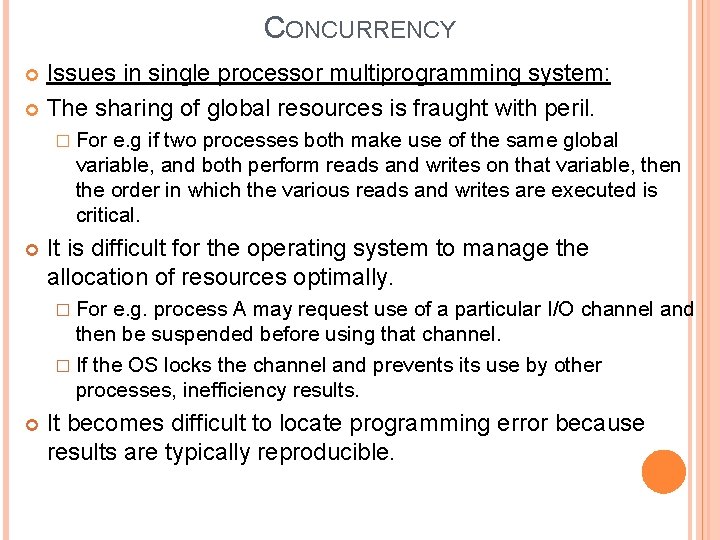

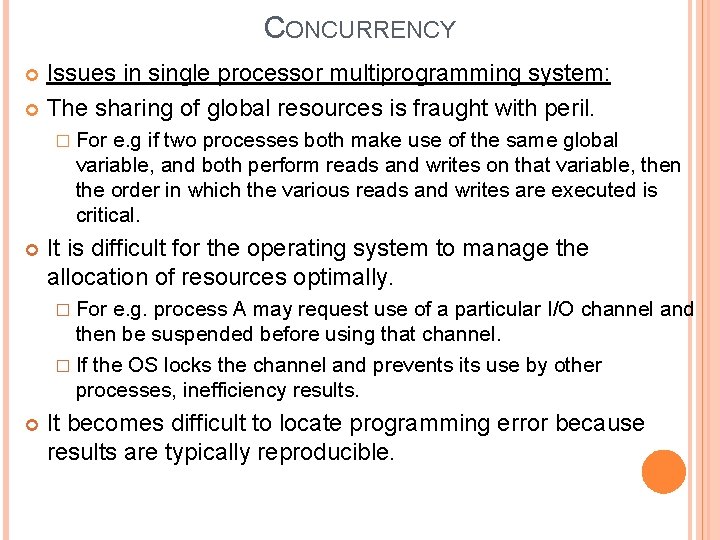

CONCURRENCY Issues in single processor multiprogramming system: The sharing of global resources is fraught with peril. � For e. g if two processes both make use of the same global variable, and both perform reads and writes on that variable, then the order in which the various reads and writes are executed is critical. It is difficult for the operating system to manage the allocation of resources optimally. � For e. g. process A may request use of a particular I/O channel and then be suspended before using that channel. � If the OS locks the channel and prevents its use by other processes, inefficiency results. It becomes difficult to locate programming error because results are typically reproducible.

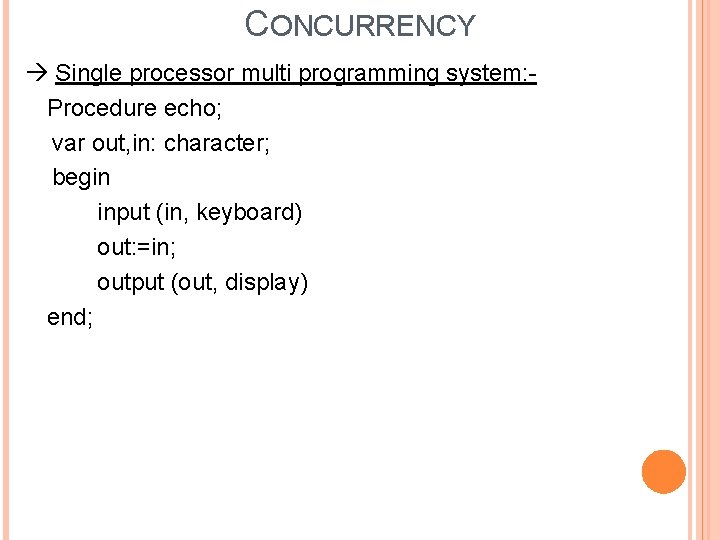

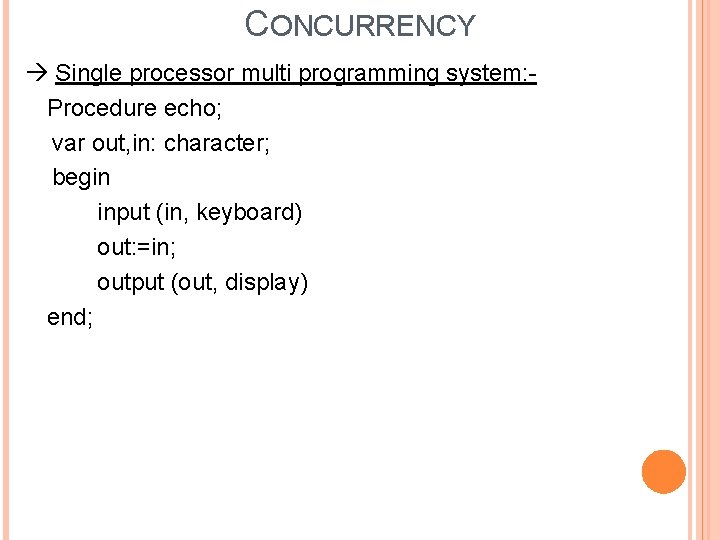

CONCURRENCY Single processor multi programming system: Procedure echo; var out, in: character; begin input (in, keyboard) out: =in; output (out, display) end;

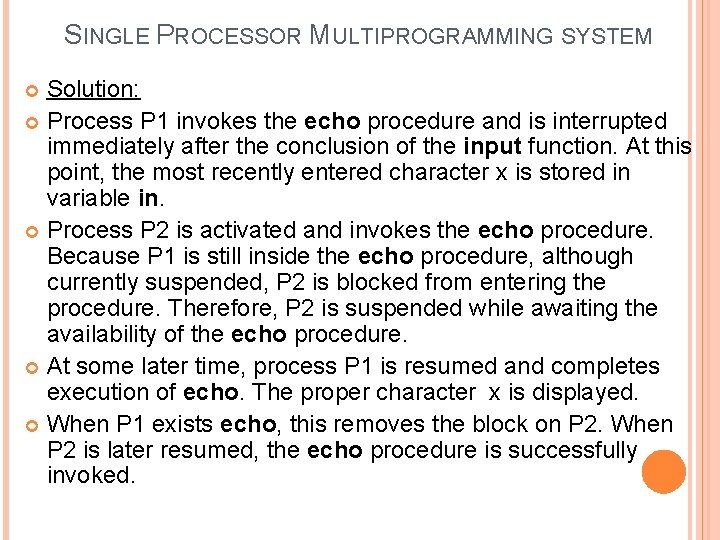

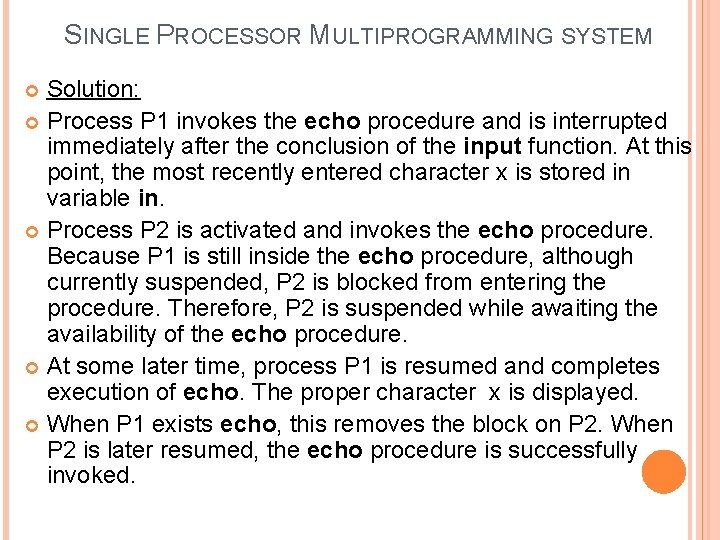

SINGLE PROCESSOR MULTIPROGRAMMING SYSTEM Process P 1 invokes the echo procedure and is interrupted immediately after the conclusion of the input function. At this point, the most recently entered character x, is stored in variable in. Process P 2 is activated and invokes the echo procedure, which runs to conclusion, inputting, and then displaying a single character y on the screen. Process P 1 is resumed. By this time, the value x has overwritten in in and therefore lost, Instead, in contains y, which is transferred to out and displayed. Reason: � Shared global variable in

SINGLE PROCESSOR MULTIPROGRAMMING SYSTEM Solution: Process P 1 invokes the echo procedure and is interrupted immediately after the conclusion of the input function. At this point, the most recently entered character x is stored in variable in. Process P 2 is activated and invokes the echo procedure. Because P 1 is still inside the echo procedure, although currently suspended, P 2 is blocked from entering the procedure. Therefore, P 2 is suspended while awaiting the availability of the echo procedure. At some later time, process P 1 is resumed and completes execution of echo. The proper character x is displayed. When P 1 exists echo, this removes the block on P 2. When P 2 is later resumed, the echo procedure is successfully invoked.

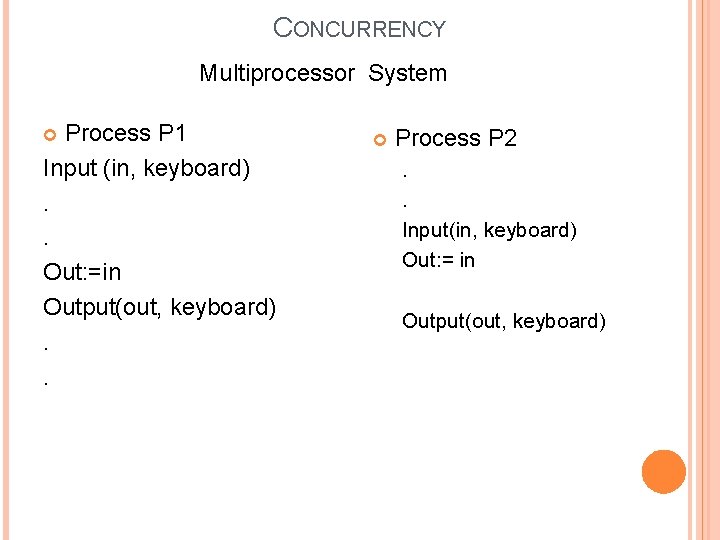

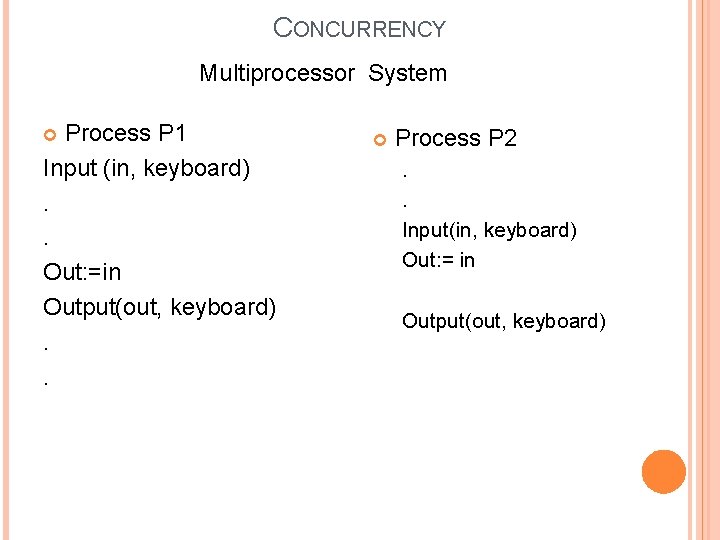

CONCURRENCY Multiprocessor System Process P 1 Input (in, keyboard). . Out: =in Output(out, keyboard). . Process P 2. . Input(in, keyboard) Out: = in Output(out, keyboard)

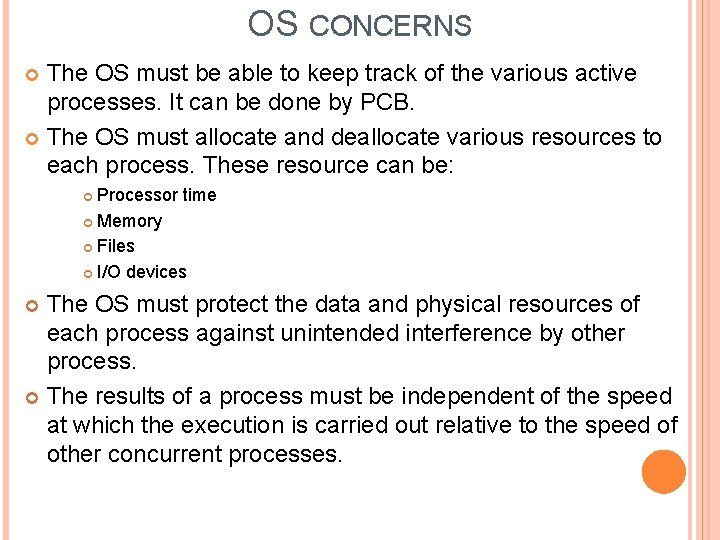

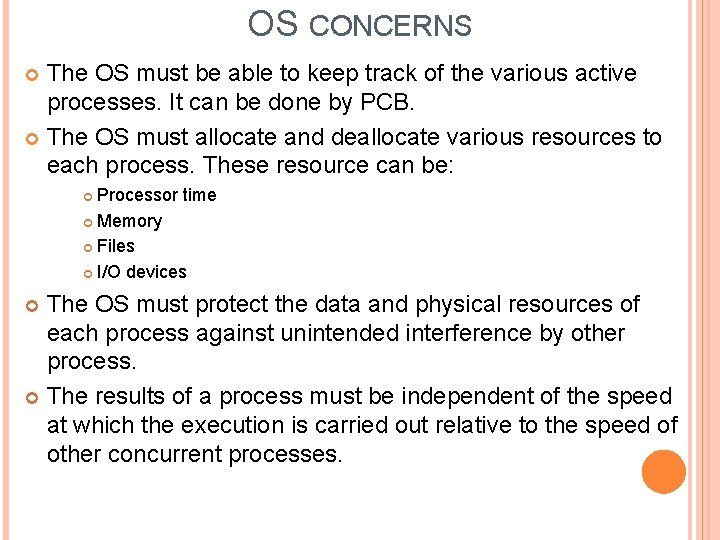

OS CONCERNS The OS must be able to keep track of the various active processes. It can be done by PCB. The OS must allocate and deallocate various resources to each process. These resource can be: Processor time Memory Files I/O devices The OS must protect the data and physical resources of each process against unintended interference by other process. The results of a process must be independent of the speed at which the execution is carried out relative to the speed of other concurrent processes.

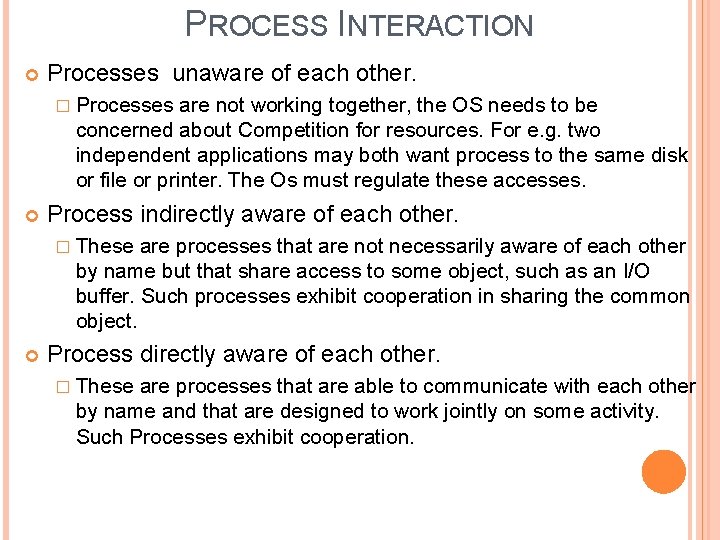

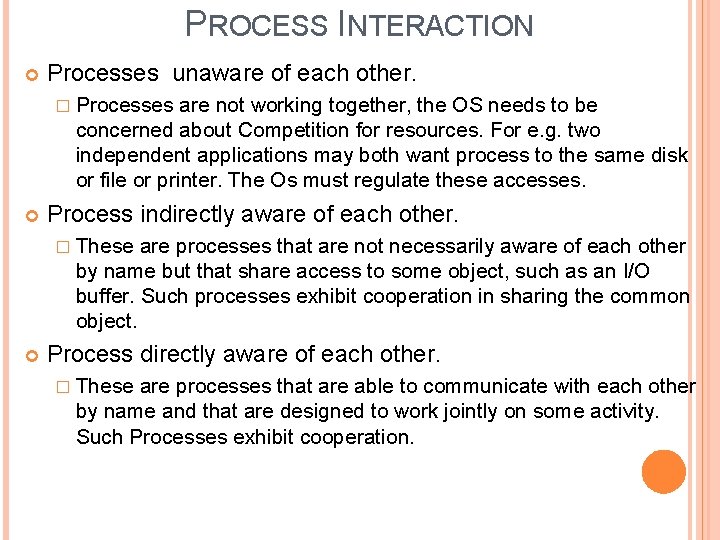

PROCESS INTERACTION Processes unaware of each other. � Processes are not working together, the OS needs to be concerned about Competition for resources. For e. g. two independent applications may both want process to the same disk or file or printer. The Os must regulate these accesses. Process indirectly aware of each other. � These are processes that are not necessarily aware of each other by name but that share access to some object, such as an I/O buffer. Such processes exhibit cooperation in sharing the common object. Process directly aware of each other. � These are processes that are able to communicate with each other by name and that are designed to work jointly on some activity. Such Processes exhibit cooperation.

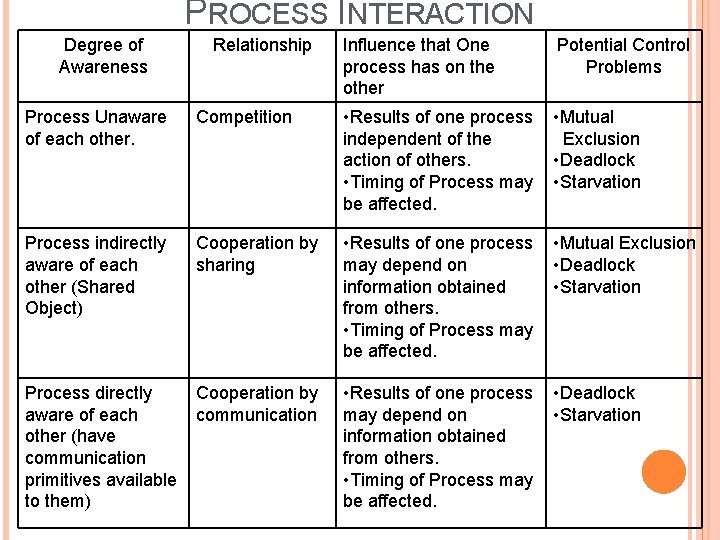

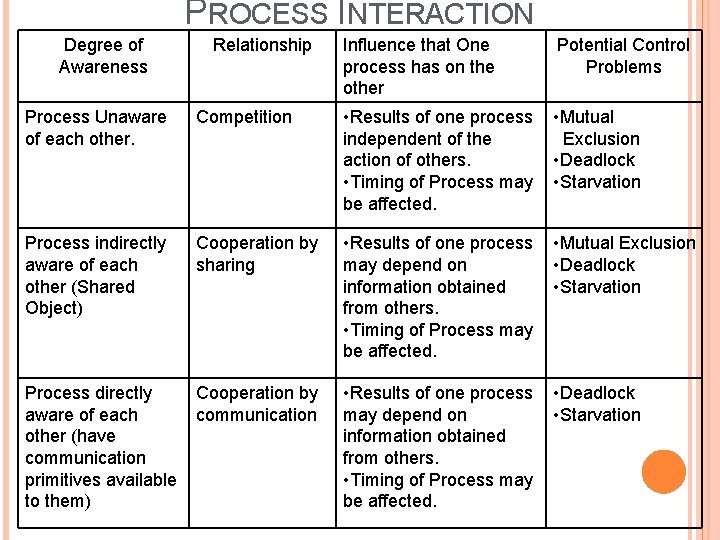

PROCESS INTERACTION Degree of Awareness Relationship Influence that One process has on the other Potential Control Problems • Mutual Exclusion • Deadlock • Starvation Process Unaware of each other. Competition • Results of one process independent of the action of others. • Timing of Process may be affected. Process indirectly aware of each other (Shared Object) Cooperation by sharing • Results of one process • Mutual Exclusion may depend on • Deadlock information obtained • Starvation from others. • Timing of Process may be affected. Process directly Cooperation by aware of each communication other (have communication primitives available to them) • Results of one process • Deadlock may depend on • Starvation information obtained from others. • Timing of Process may be affected.

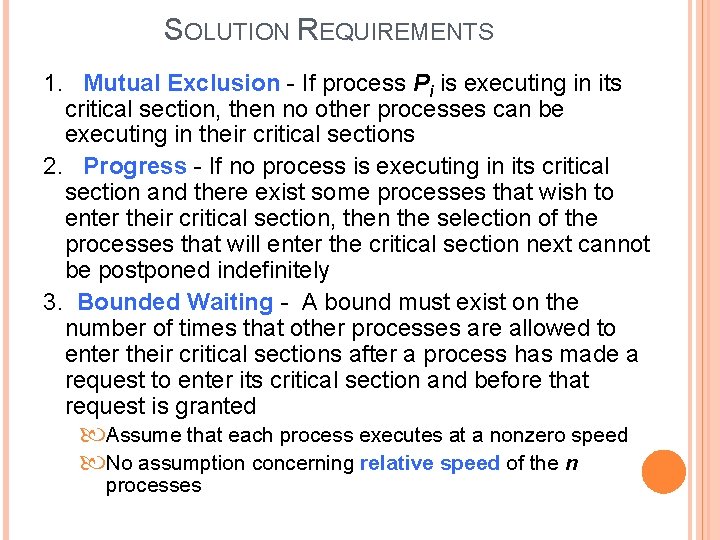

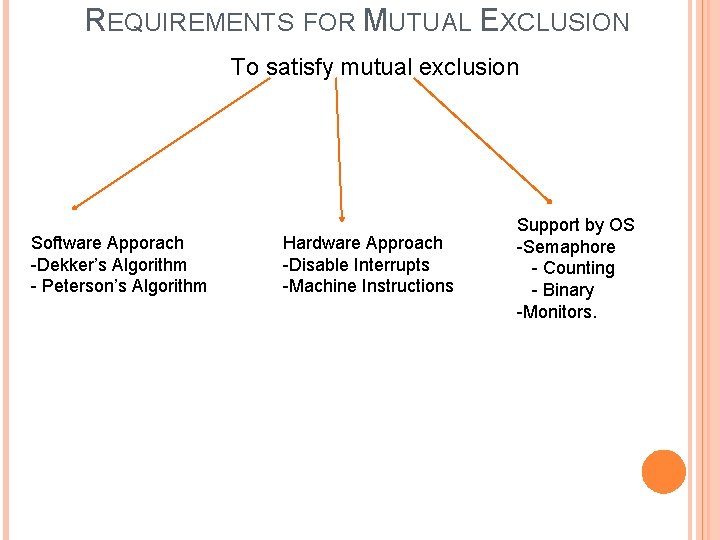

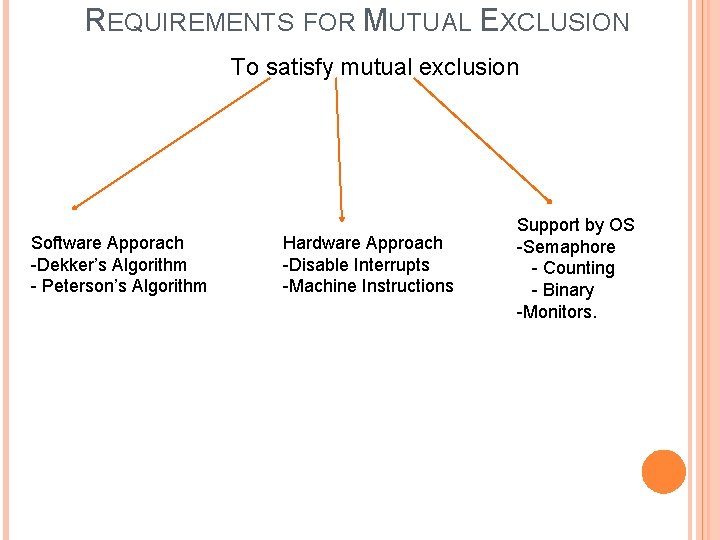

REQUIREMENTS FOR MUTUAL EXCLUSION To satisfy mutual exclusion Software Apporach -Dekker’s Algorithm - Peterson’s Algorithm Hardware Approach -Disable Interrupts -Machine Instructions Support by OS -Semaphore - Counting - Binary -Monitors.

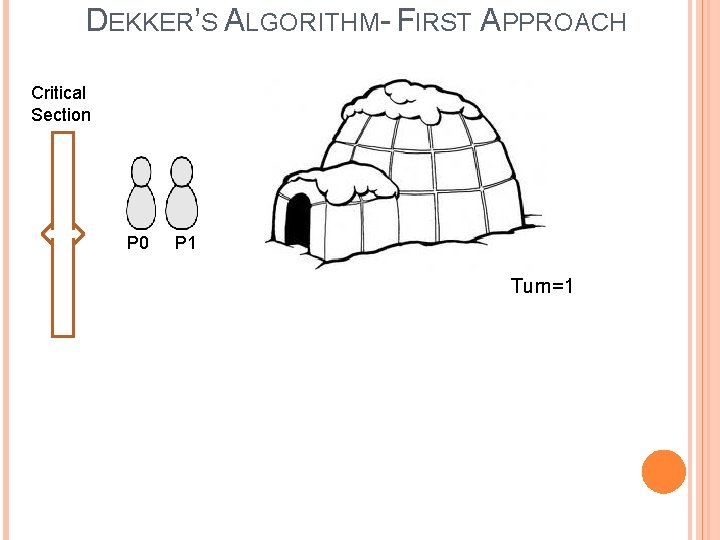

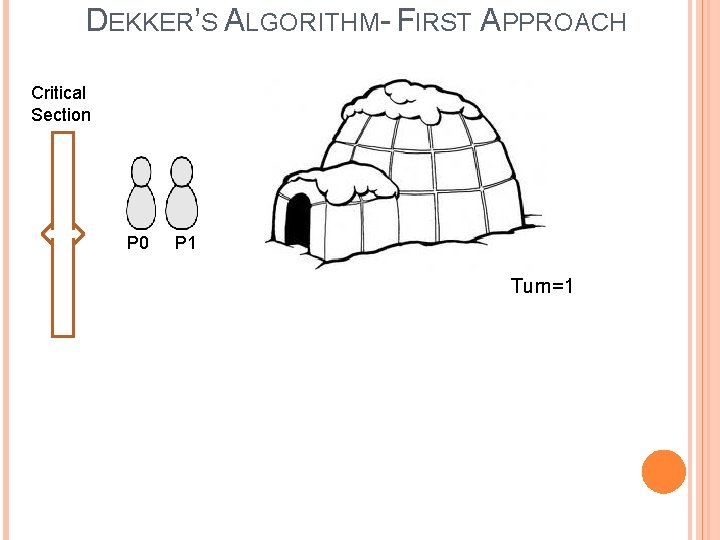

DEKKER’S ALGORITHM- FIRST APPROACH Critical Section P 0 P 1 Turn=1

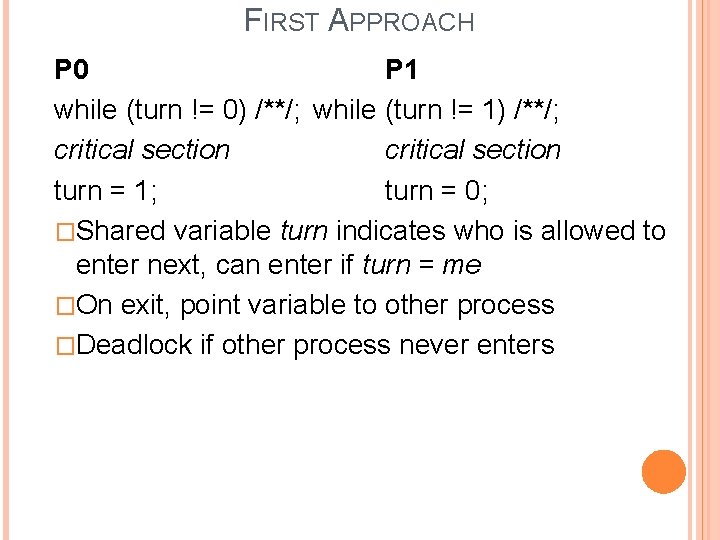

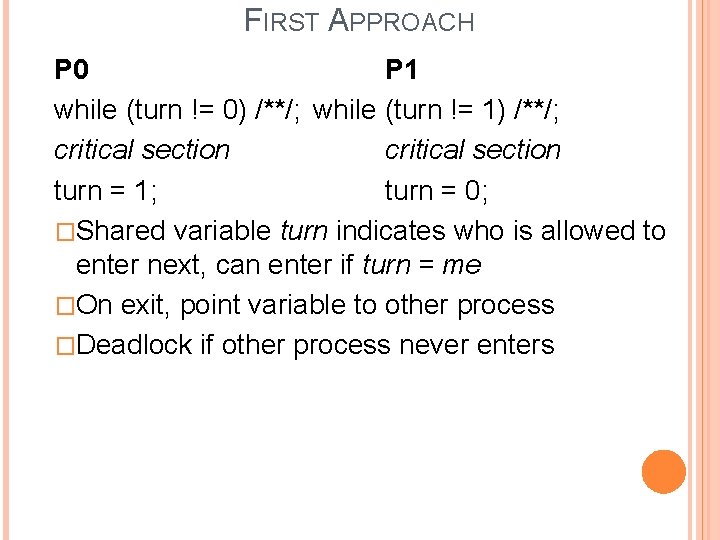

FIRST APPROACH P 0 P 1 while (turn != 0) /**/; while (turn != 1) /**/; critical section turn = 1; turn = 0; �Shared variable turn indicates who is allowed to enter next, can enter if turn = me �On exit, point variable to other process �Deadlock if other process never enters

FIRST APPROACH Issues: Processes must strictly alternate in their use of their critical section; the speed of execution is decided by the slower of two processes. If P 0 uses its critical section only once per hour, but p 1 would like to use its critical section at a rate of 1000 times per hour, p 1 is forced to adopt the speed of P 0. If one process is failed inside its critical section, the other process is permanently blocked.

![DEKKERS ALGORITHM SECOND APPROACH Flag0 Critical Section Flag1 1 P 0 P 1 Turn DEKKER’S ALGORITHM- SECOND APPROACH Flag[0] Critical Section Flag[1] 1 P 0 P 1 Turn](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-118.jpg)

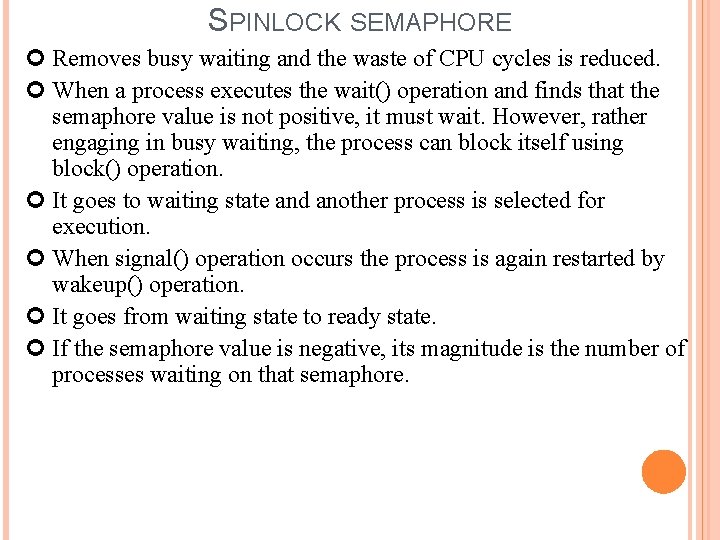

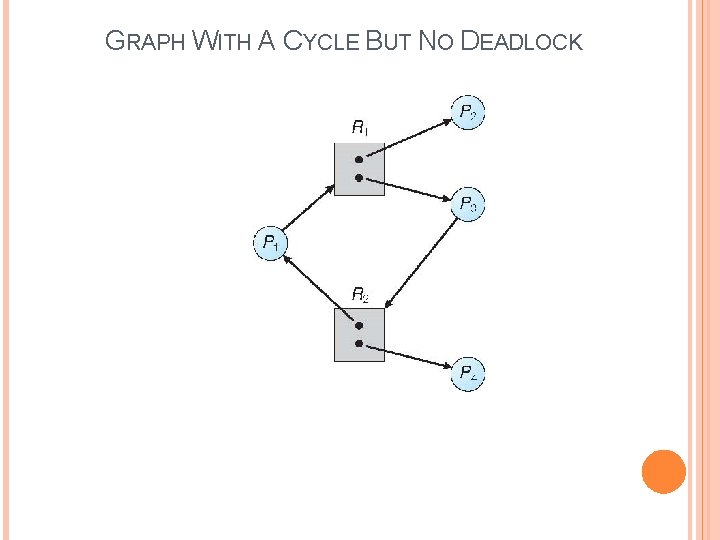

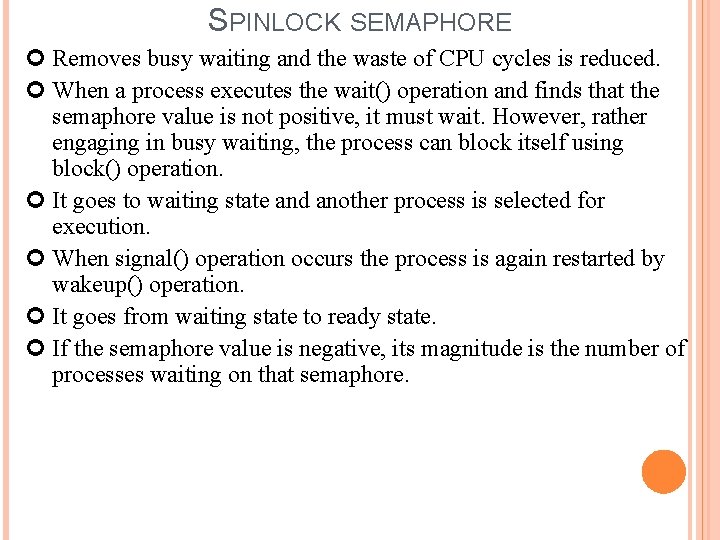

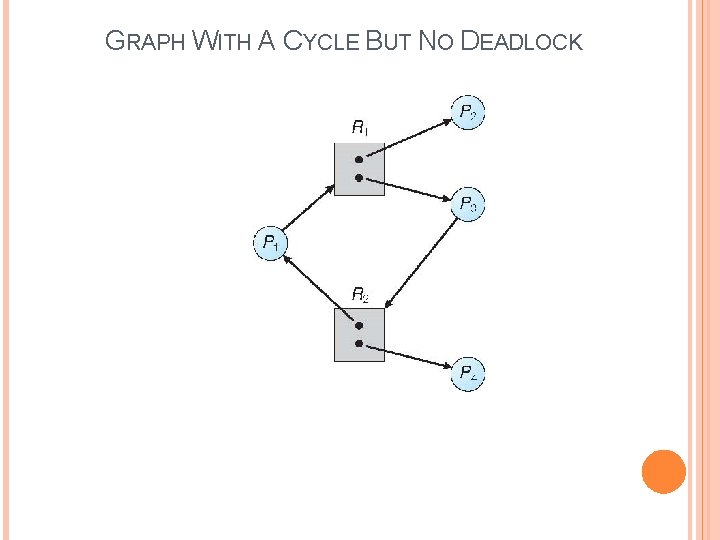

DEKKER’S ALGORITHM- SECOND APPROACH Flag[0] Critical Section Flag[1] 1 P 0 P 1 Turn

![SECOND ATTEMPT P 0 P 1 while flag1 while flag0 flag0 SECOND ATTEMPT P 0 P 1 while (flag[1]) /**/; while (flag[0]) /**/; flag[0] =](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-119.jpg)

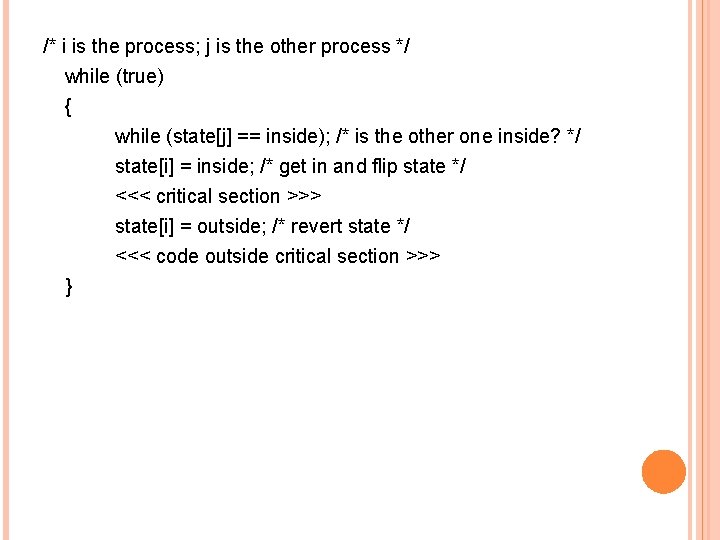

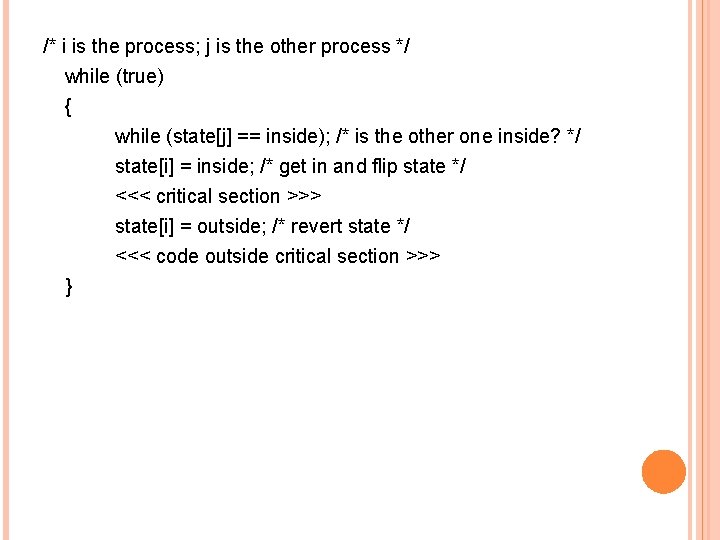

SECOND ATTEMPT P 0 P 1 while (flag[1]) /**/; while (flag[0]) /**/; flag[0] = true; flag[1] = true; <critical section> flag[0] = false; flag[1] = false; �Each process has a flag to indicate it is in the critical section �Fails mutual exclusion if processes are in lockstep

/* i is the process; j is the other process */ while (true) { while (state[j] == inside); /* is the other one inside? */ state[i] = inside; /* get in and flip state */ <<< critical section >>> state[i] = outside; /* revert state */ <<< code outside critical section >>> }

![Consider the following trace 1 P 0 finds state1 outside 2 The Consider the following trace: 1. P 0 finds (state[1] == outside) 2. The](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-121.jpg)

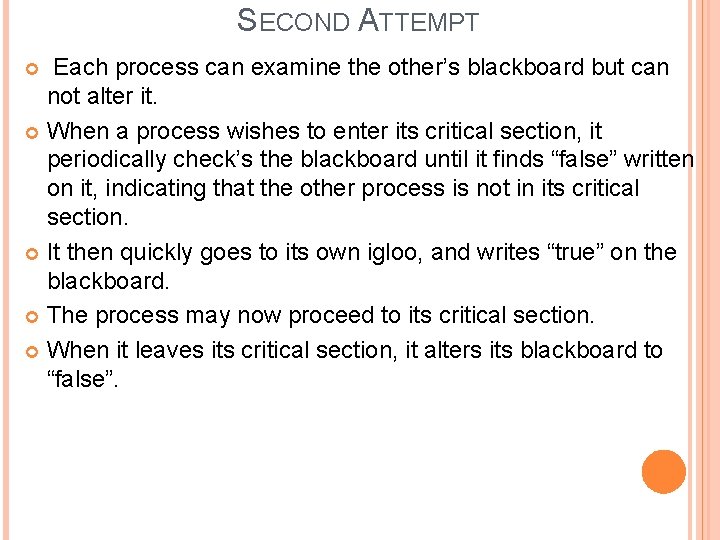

Consider the following trace: 1. P 0 finds (state[1] == outside) 2. The scheduler forces a context-switch 3. P 1 finds (state[0]==outside) 4. P 1 sets (state[1] = inside) 5. P 1 enters the critical section 6. The scheduler forces a context-switch 7. P 0 sets (state[0] = inside) 8. P 0 enters the critical section 9. Both P 0 and P 1 are now in the critical section With both processes in the critical section, the mutual exclusion criteria has been violated. P 0 P 1 while (state[1]==outside); while (state[0]==outside); state[0] = inside; state[1] = inside; <critical section> state[0] = outside; state[1] = outside;

SECOND ATTEMPT Each process can examine the other’s blackboard but can not alter it. When a process wishes to enter its critical section, it periodically check’s the blackboard until it finds “false” written on it, indicating that the other process is not in its critical section. It then quickly goes to its own igloo, and writes “true” on the blackboard. The process may now proceed to its critical section. When it leaves its critical section, it alters its blackboard to “false”.

2 ND ATTEMPT Issues: � If a process is fails inside its critical section, or after setting its flag to true just before entering its critical section, then the other process is permanently blocked. � Proposed solution is not independent of relative speeds of process execution.

![THIRD ATTEMPT Busy Flag Modified P 0 flag0 true while flag1true critical THIRD ATTEMPT Busy Flag Modified P 0 flag[0] = true; while (flag[1]==true) /**/; critical](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-124.jpg)

THIRD ATTEMPT Busy Flag Modified P 0 flag[0] = true; while (flag[1]==true) /**/; critical section flag[0] = false; P 1 flag[1] = true; while (flag[0]==true) /**/; critical section flag[1] = false;

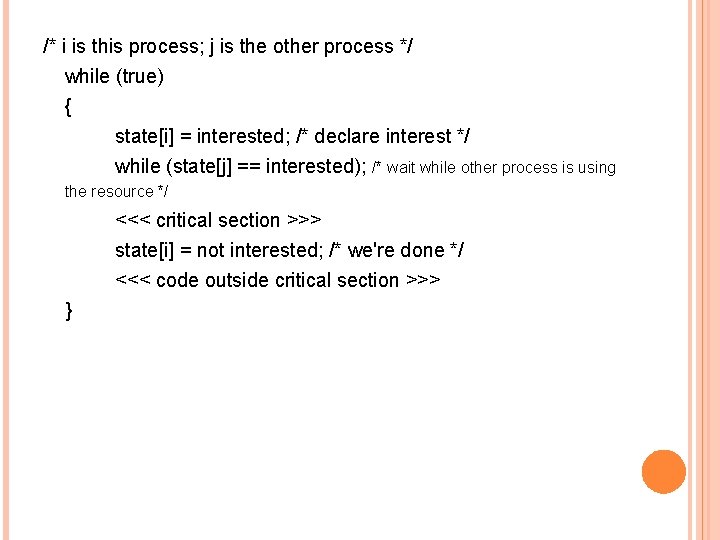

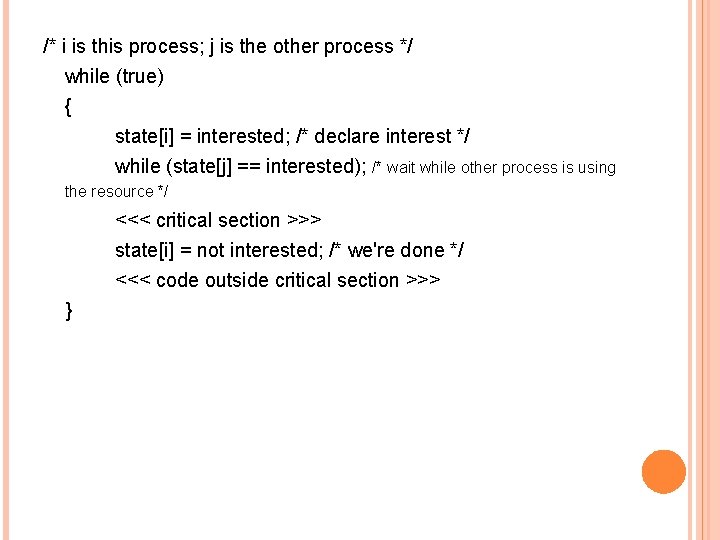

/* i is this process; j is the other process */ while (true) { state[i] = interested; /* declare interest */ while (state[j] == interested); /* wait while other process is using the resource */ <<< critical section >>> state[i] = not interested; /* we're done */ <<< code outside critical section >>> }

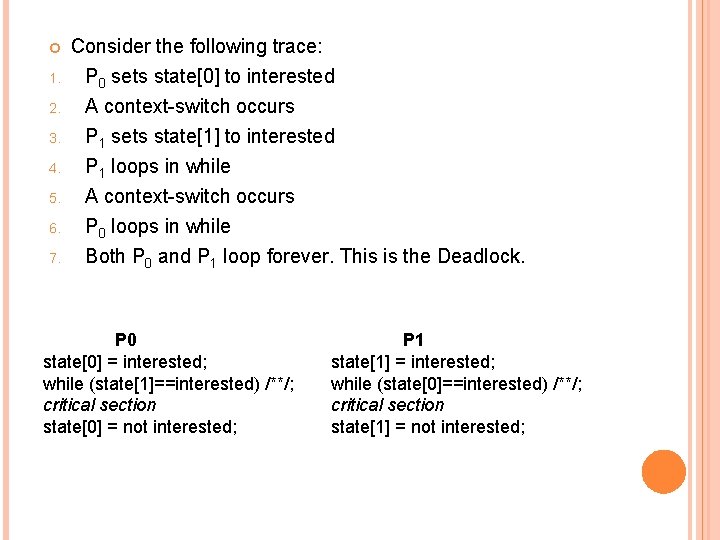

1. 2. 3. 4. 5. 6. 7. Consider the following trace: P 0 sets state[0] to interested A context-switch occurs P 1 sets state[1] to interested P 1 loops in while A context-switch occurs P 0 loops in while Both P 0 and P 1 loop forever. This is the Deadlock. P 0 state[0] = interested; while (state[1]==interested) /**/; critical section state[0] = not interested; P 1 state[1] = interested; while (state[0]==interested) /**/; critical section state[1] = not interested;

![THIRD ATTEMPT Once P 0 has set flag0 to true P 1 can not THIRD ATTEMPT Once P 0 has set flag[0] to “true”, P 1 can not](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-127.jpg)

THIRD ATTEMPT Once P 0 has set flag[0] to “true”, P 1 can not enter its critical section until after P 0 has entered and left its critical section. It could be that P 1 is already in its critical section when P 0 sets its flag. In that case, P 0 will be blocked by the while statement until P 1 has left its critical section. The same reasoning applies from the point of view of P 1. It guarantees mutual exclusion. Issue: � If both processes set their flags to “true” before either has executed the while statement, then each will think that other has entered its critical section. � The result is deadlock.

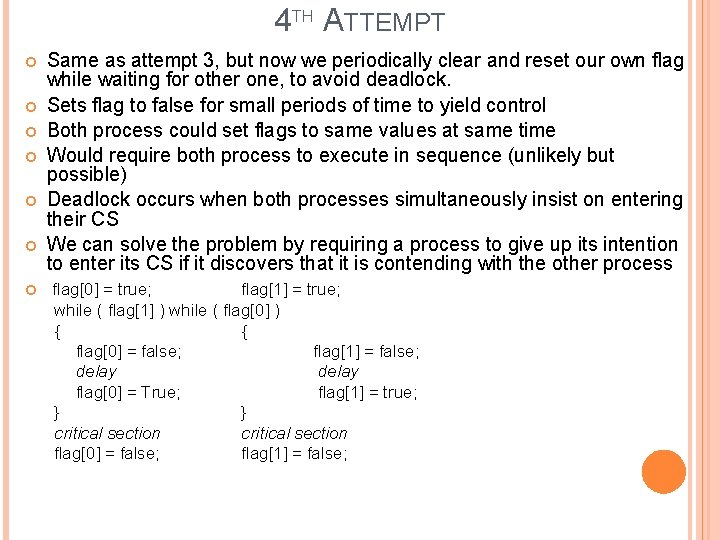

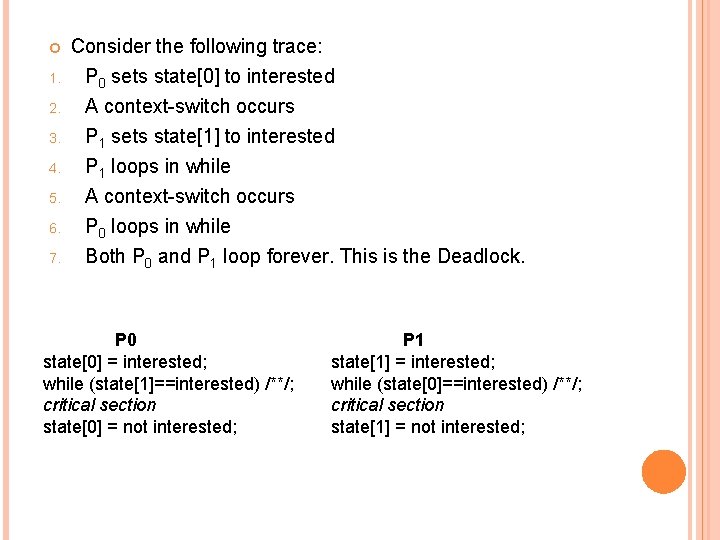

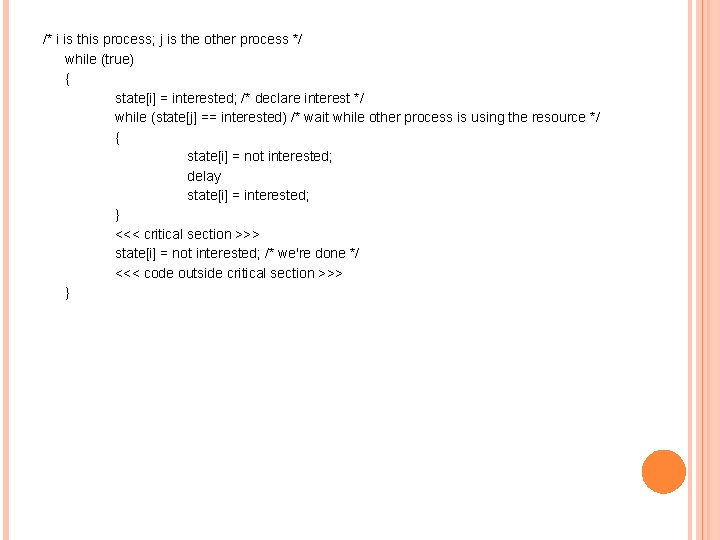

4 TH ATTEMPT Same as attempt 3, but now we periodically clear and reset our own flag while waiting for other one, to avoid deadlock. Sets flag to false for small periods of time to yield control Both process could set flags to same values at same time Would require both process to execute in sequence (unlikely but possible) Deadlock occurs when both processes simultaneously insist on entering their CS We can solve the problem by requiring a process to give up its intention to enter its CS if it discovers that it is contending with the other process flag[0] = true; flag[1] = true; while ( flag[1] ) while ( flag[0] ) { { flag[0] = false; flag[1] = false; delay flag[0] = True; flag[1] = true; } } critical section flag[0] = false; flag[1] = false;

/* i is this process; j is the other process */ while (true) { state[i] = interested; /* declare interest */ while (state[j] == interested) /* wait while other process is using the resource */ { state[i] = not interested; delay state[i] = interested; } <<< critical section >>> state[i] = not interested; /* we're done */ <<< code outside critical section >>> }

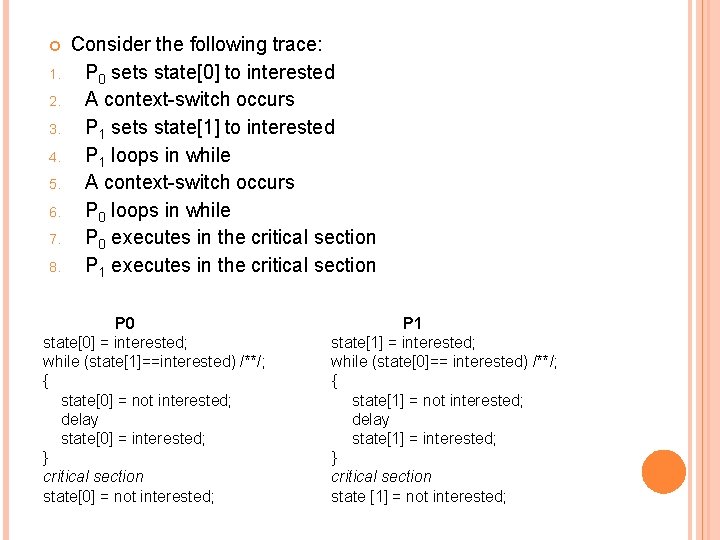

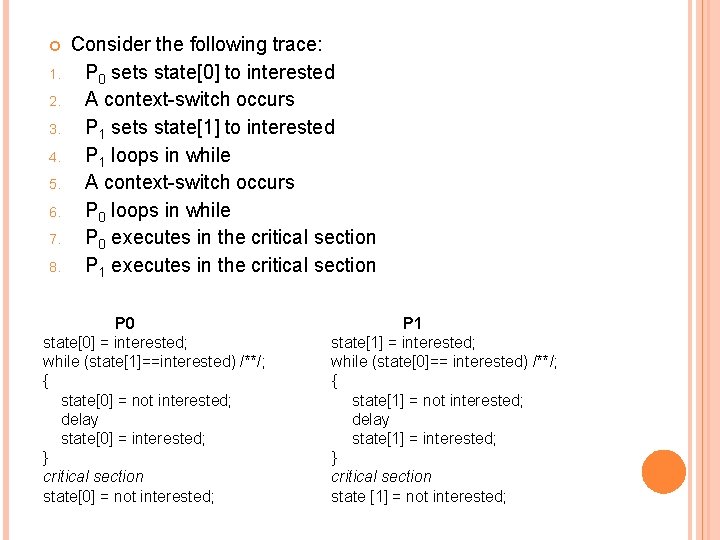

1. 2. 3. 4. 5. 6. 7. 8. Consider the following trace: P 0 sets state[0] to interested A context-switch occurs P 1 sets state[1] to interested P 1 loops in while A context-switch occurs P 0 loops in while P 0 executes in the critical section P 1 executes in the critical section P 0 P 1 state[0] = interested; state[1] = interested; while (state[1]==interested) /**/; while (state[0]== interested) /**/; { { state[0] = not interested; state[1] = not interested; delay delay state[0] = interested; state[1] = interested; } } critical section state[0] = not interested; state [1] = not interested;

![4 TH ATTEMPT P 0 sets flag0 to true P 1 sets 4 TH ATTEMPT - P 0 sets flag[0] to true. - P 1 sets](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-131.jpg)

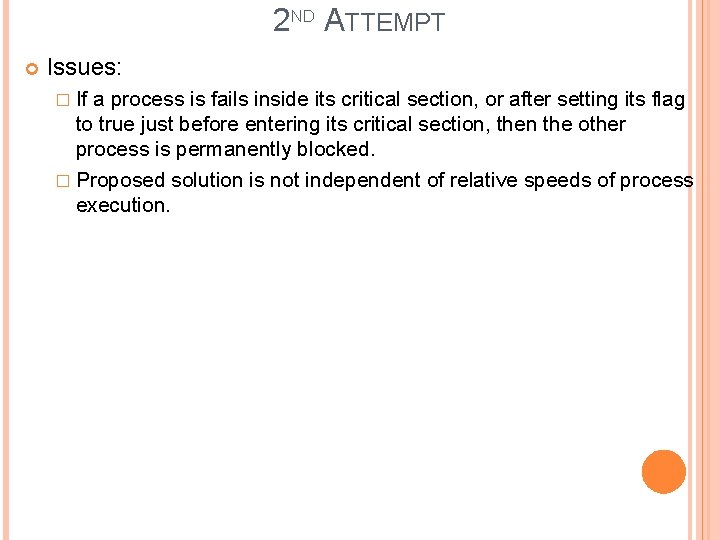

4 TH ATTEMPT - P 0 sets flag[0] to true. - P 1 sets flag[1] to true. - P 0 checks flag[1]. - P 1 checks flag[0] - P 0 sets flag[0] to false - P 1 sets flag[1] to false - P 0 sets flag[0] to true - P 1 sets flag[1] to true This sequence could be extended indefinitely, and neither process could enter its critical section. It is not deadlock because any alteration in the relative speed of the two processes will break this cycle and allow one to enter into critical section. The deadlock is solved, but there is still the possibility of starvation, in the case of perfect interleaving in executing while

![DEKKERS ALGORITHMCORRECT SOLUTION Turn Critical Section 1 P 0 P 1 Flag0 Flag1 Turn DEKKER’S ALGORITHM-CORRECT SOLUTION Turn Critical Section 1 P 0 P 1 Flag[0] Flag[1] Turn](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-132.jpg)

DEKKER’S ALGORITHM-CORRECT SOLUTION Turn Critical Section 1 P 0 P 1 Flag[0] Flag[1] Turn

![CORRECT SOLUTION Procedure P 0 Begin Repeat flag0 True While flag1 do if turn1 CORRECT SOLUTION Procedure P 0 Begin Repeat flag[0]= True While flag[1] do if turn=1](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-133.jpg)

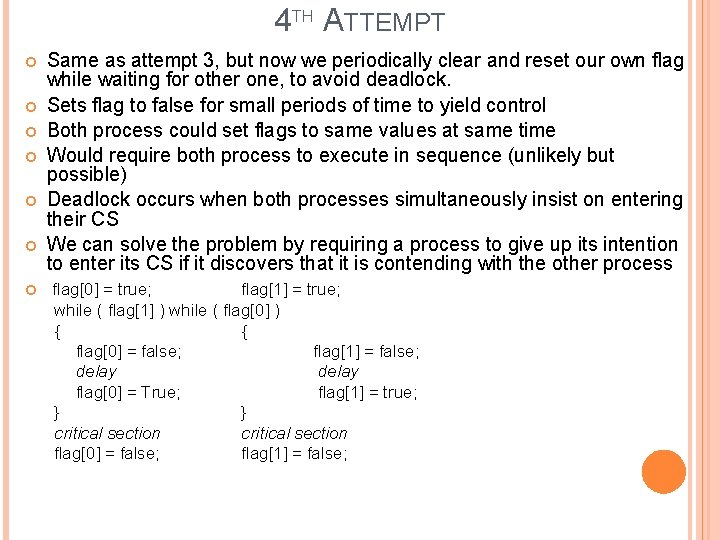

CORRECT SOLUTION Procedure P 0 Begin Repeat flag[0]= True While flag[1] do if turn=1 then begin flag[0]=false while turn=1 {do nothing} flag[0]=true end <critical section> turn=1 flag[0]=False <remainder> Forever End Procedure P 1 Begin Repeat flag[1]=true while flag[0] do if turn=0 then begin flag[1]=false while turn=0 {do nothing} flag[1]=true end <critical section> turn=0 flag[1]=false <remainder> Forever End

![while true statei interested declare interest while statej If while (true) { state[i] = interested; /* declare interest */ while (state[j]) /* If](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-134.jpg)

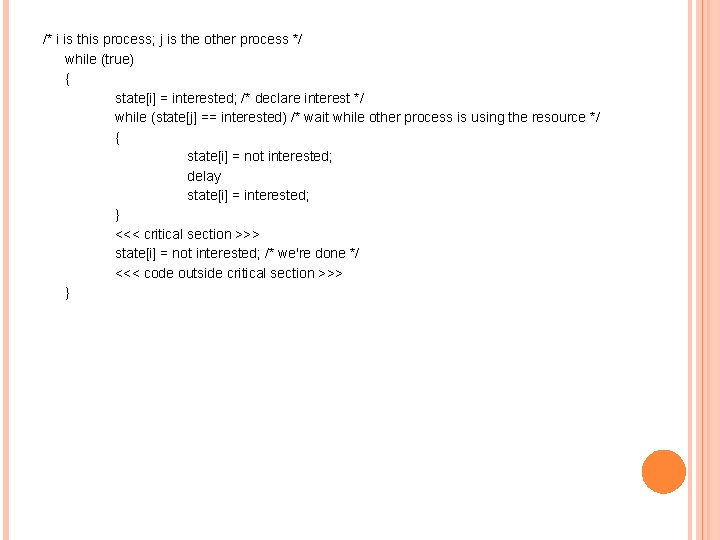

while (true) { state[i] = interested; /* declare interest */ while (state[j]) /* If the other one's interested */ { if (turn == j) /* and it is its turn */ { state[i] = not interested; /* we stay uninterested */ while (turn == j); /*. . . until our turn */ state[i] = interested; } } <<< critical section >>> turn = j; /* forces alternation if both are interested */ state[i] = not interested; /* Need to ask again if I want again */ <<< code outside critical section >>> }

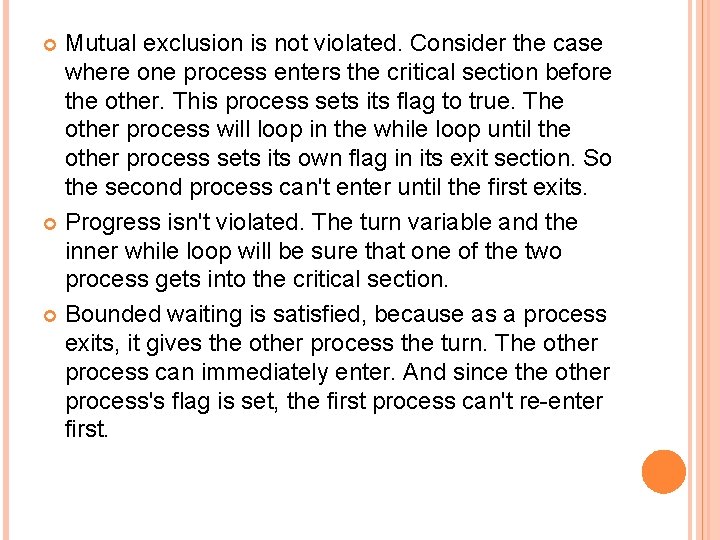

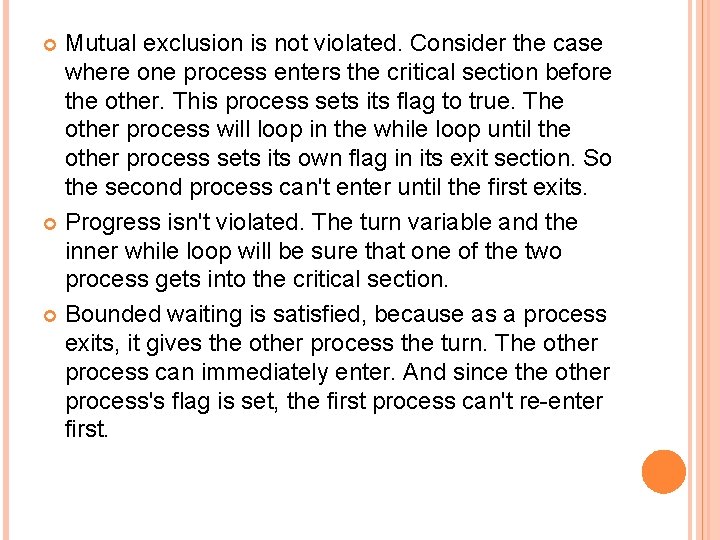

Mutual exclusion is not violated. Consider the case where one process enters the critical section before the other. This process sets its flag to true. The other process will loop in the while loop until the other process sets its own flag in its exit section. So the second process can't enter until the first exits. Progress isn't violated. The turn variable and the inner while loop will be sure that one of the two process gets into the critical section. Bounded waiting is satisfied, because as a process exits, it gives the other process the turn. The other process can immediately enter. And since the other process's flag is set, the first process can't re-enter first.

![PETERSONS ALGORITHM Procedure P 0 Begin Procedure P 1 Begin Repeat Flag0true Turn1 Repeat PETERSON’S ALGORITHM Procedure P 0 Begin Procedure P 1 Begin Repeat Flag[0]=true Turn=1 Repeat](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-136.jpg)

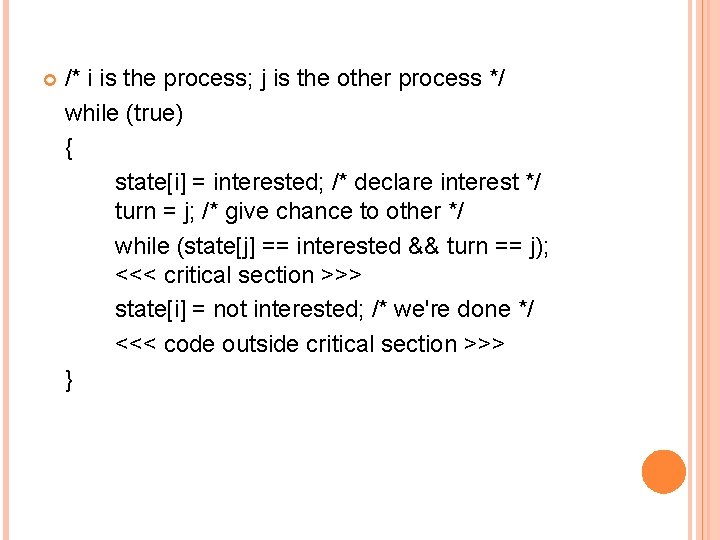

PETERSON’S ALGORITHM Procedure P 0 Begin Procedure P 1 Begin Repeat Flag[0]=true Turn=1 Repeat Flag[1]=true Turn=0 while (flag[1] and turn=1) while (flag[0] and turn=0) {do nothing} { do nothing} <critical section> flag[0]= False <remainder> Forever End flag[1]=false <remainder> Forever End

/* i is the process; j is the other process */ while (true) { state[i] = interested; /* declare interest */ turn = j; /* give chance to other */ while (state[j] == interested && turn == j); <<< critical section >>> state[i] = not interested; /* we're done */ <<< code outside critical section >>> }

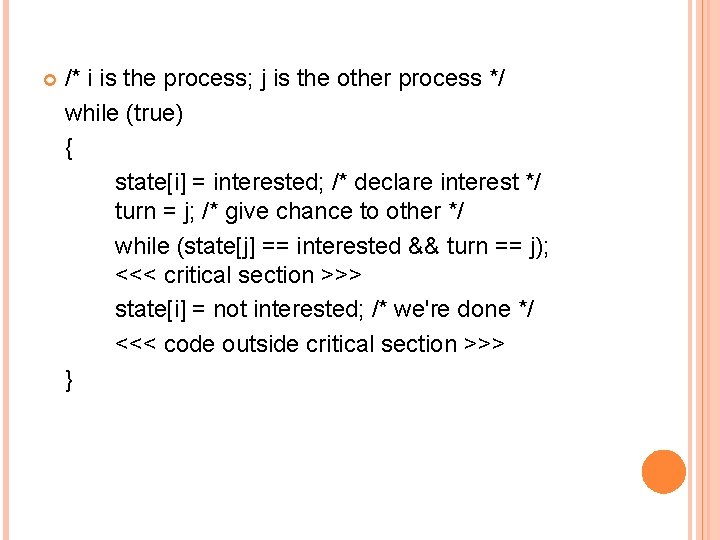

![1 Mutual exclusion state0 and state1 can both be true but turn can 1) Mutual exclusion - state[0] and state[1] can both be true, but turn can](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-138.jpg)

1) Mutual exclusion - state[0] and state[1] can both be true, but turn can only be 0 or 1. Therefore only one of the two critical sections can be executed. The other will spin wait. 2) Progress - If process 0 is in the critical section, then turn = 0 and state[0] is true. Once it has completed it's critical section (even if something catastrophic happens), it must set state[0] to false. If process 1 was spin waiting, it will now free as state[0] != true. 3) Bound-waiting - If Process 1 is waiting, Process 0 can only enter it's critical section once, before process 1 is given the green light, as explained in #2.

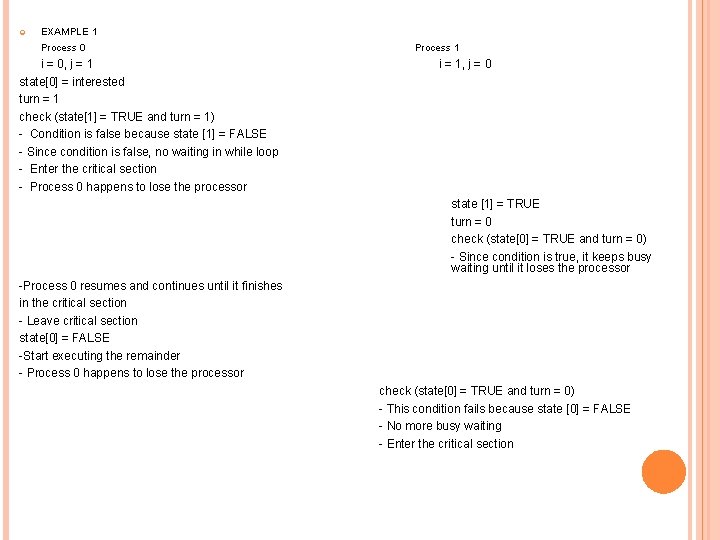

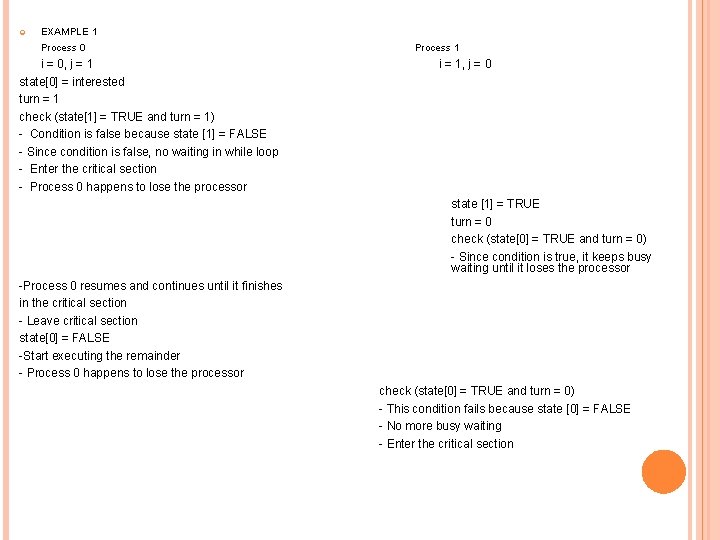

EXAMPLE 1 Process 0 Process 1 i = 0, j = 1 i = 1, j = 0 state[0] = interested turn = 1 check (state[1] = TRUE and turn = 1) - Condition is false because state [1] = FALSE - Since condition is false, no waiting in while loop - Enter the critical section - Process 0 happens to lose the processor state [1] = TRUE turn = 0 check (state[0] = TRUE and turn = 0) - Since condition is true, it keeps busy waiting until it loses the processor -Process 0 resumes and continues until it finishes in the critical section - Leave critical section state[0] = FALSE -Start executing the remainder - Process 0 happens to lose the processor check (state[0] = TRUE and turn = 0) - This condition fails because state [0] = FALSE - No more busy waiting - Enter the critical section

![EXAMPLE 2 Process 0 i0 j1 state 0 TRUE turn 1 EXAMPLE 2 Process 0 i=0, j=1 state [0] = TRUE turn = 1](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-140.jpg)

EXAMPLE 2 Process 0 i=0, j=1 state [0] = TRUE turn = 1 - Lose processor here Process 1 i=1, j=0 state [1] = TRUE turn = 0 check (state[0] = TRUE and turn = 0) - Condition is true so Process 1 busy waits until it loses the processor check (state[1] = TRUE and turn = 1) - This condition is false because turn = 0 - No waiting in loop - Enters critical section

![EXAMPLE 3 Process 0 i0 j1 state0 TRUE Lose processor here EXAMPLE 3 Process 0 i=0, j=1 state[0] = TRUE - Lose processor here](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-141.jpg)

EXAMPLE 3 Process 0 i=0, j=1 state[0] = TRUE - Lose processor here Process 1 i=1, j=0 state[1] = TRUE turn = 0 check (state[0] = TRUE and turn = 0) - Condition is true so, Process 1 busy waits until it loses the processor turn = 1 check (state[1] = TRUE and turn = 1) - Condition is true so Process 0 busy waits until it loses the processor turn = 0) check (state[0] = TRUE and - The condition is false so, Process 1 enters the critical section

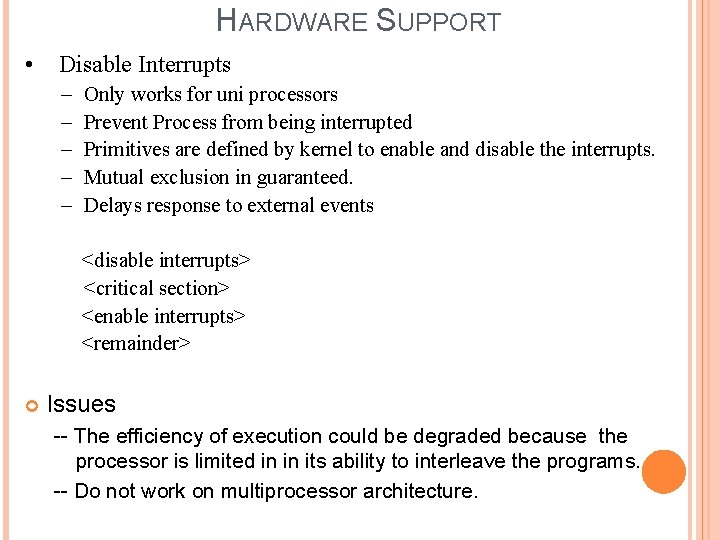

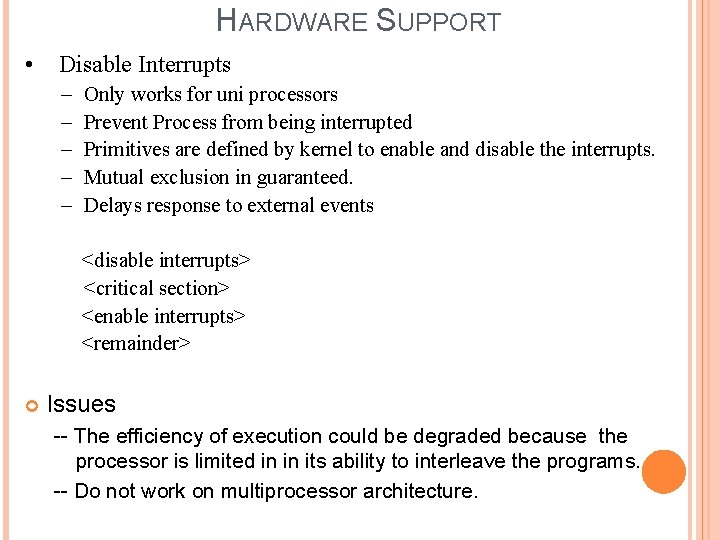

HARDWARE SUPPORT • Disable Interrupts – – – Only works for uni processors Prevent Process from being interrupted Primitives are defined by kernel to enable and disable the interrupts. Mutual exclusion in guaranteed. Delays response to external events <disable interrupts> <critical section> <enable interrupts> <remainder> Issues -- The efficiency of execution could be degraded because the processor is limited in in its ability to interleave the programs. -- Do not work on multiprocessor architecture.

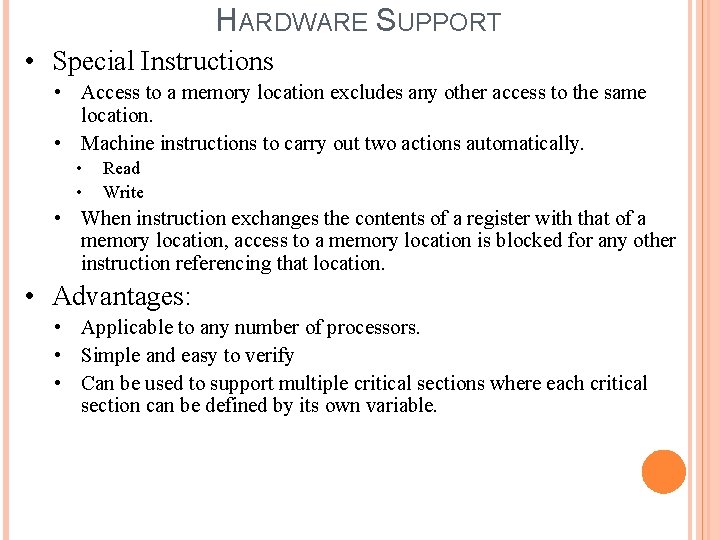

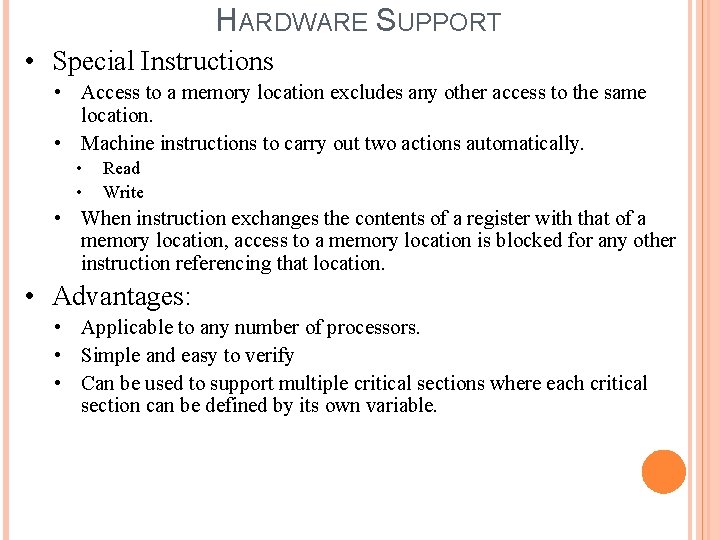

HARDWARE SUPPORT • Special Instructions • Access to a memory location excludes any other access to the same location. • Machine instructions to carry out two actions automatically. • • Read Write • When instruction exchanges the contents of a register with that of a memory location, access to a memory location is blocked for any other instruction referencing that location. • Advantages: • Applicable to any number of processors. • Simple and easy to verify • Can be used to support multiple critical sections where each critical section can be defined by its own variable.

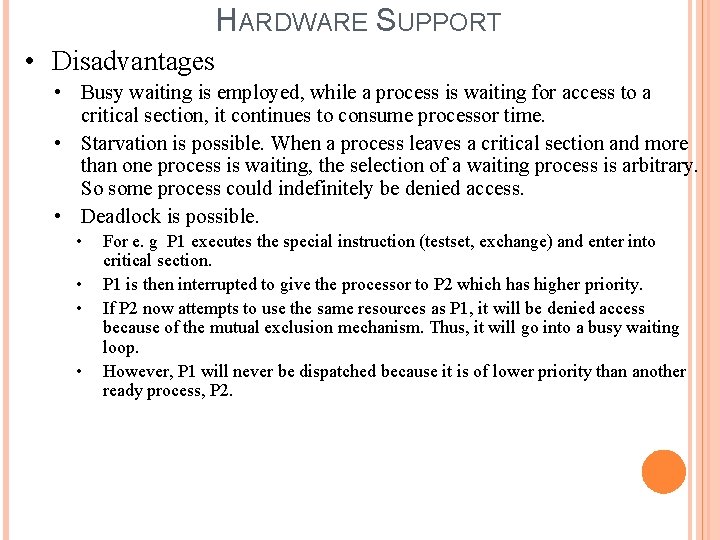

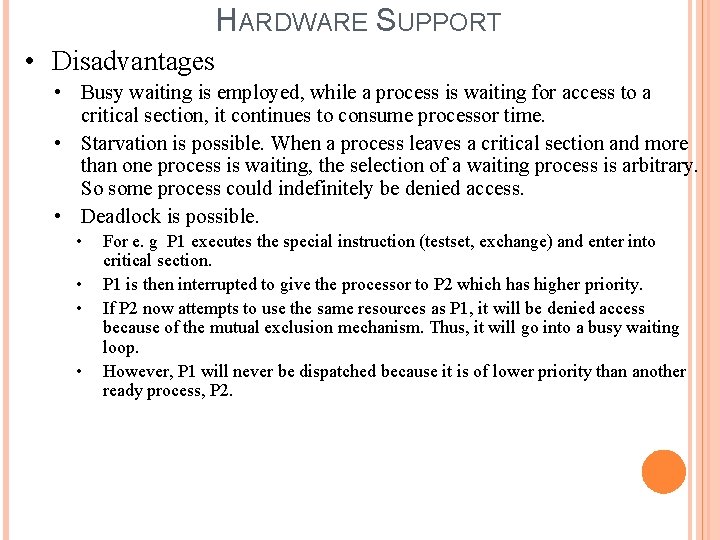

HARDWARE SUPPORT • Disadvantages • Busy waiting is employed, while a process is waiting for access to a critical section, it continues to consume processor time. • Starvation is possible. When a process leaves a critical section and more than one process is waiting, the selection of a waiting process is arbitrary. So some process could indefinitely be denied access. • Deadlock is possible. • • For e. g P 1 executes the special instruction (testset, exchange) and enter into critical section. P 1 is then interrupted to give the processor to P 2 which has higher priority. If P 2 now attempts to use the same resources as P 1, it will be denied access because of the mutual exclusion mechanism. Thus, it will go into a busy waiting loop. However, P 1 will never be dispatched because it is of lower priority than another ready process, P 2.

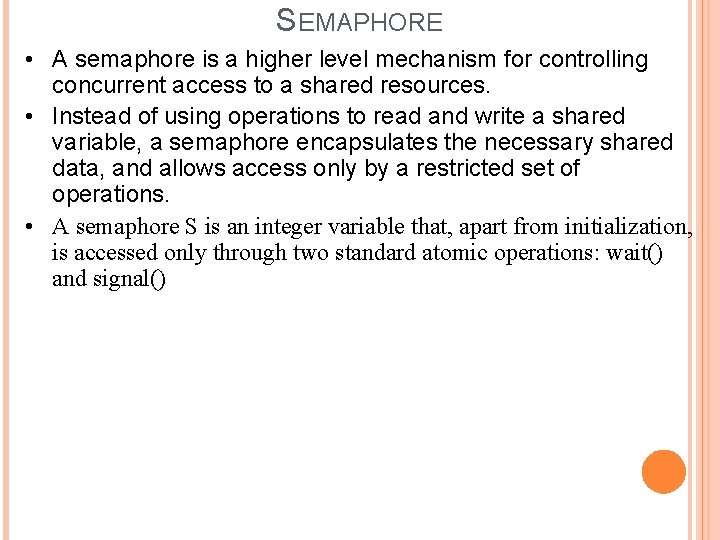

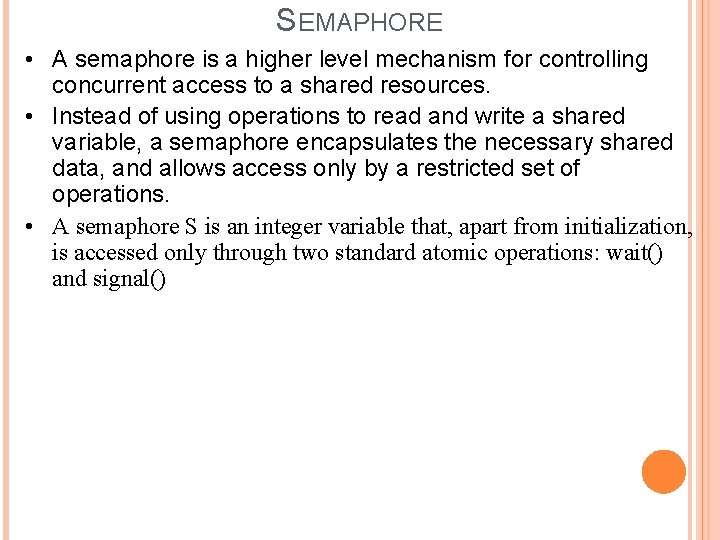

SEMAPHORE • A semaphore is a higher level mechanism for controlling concurrent access to a shared resources. • Instead of using operations to read and write a shared variable, a semaphore encapsulates the necessary shared data, and allows access only by a restricted set of operations. • A semaphore S is an integer variable that, apart from initialization, is accessed only through two standard atomic operations: wait() and signal()

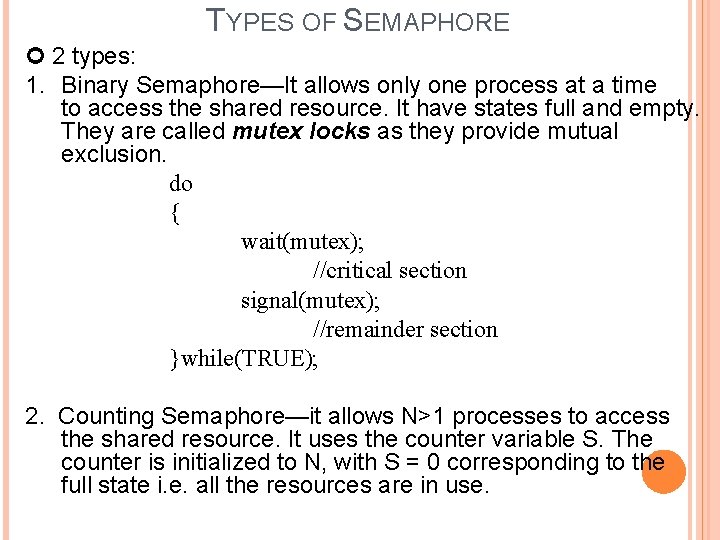

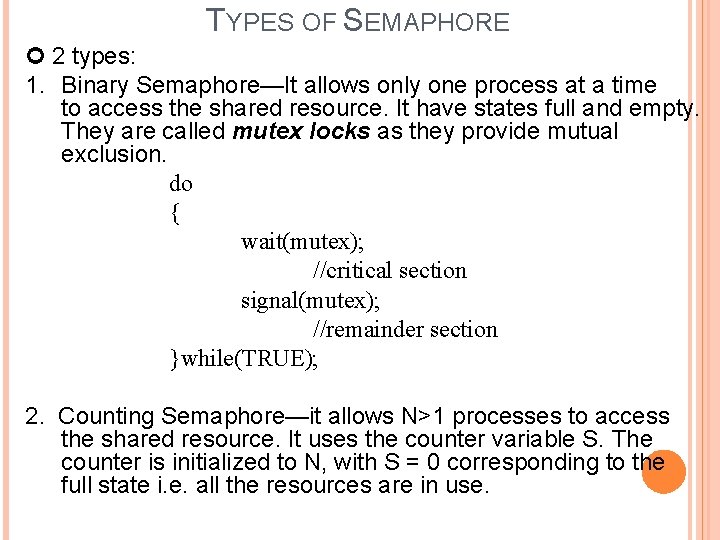

TYPES OF SEMAPHORE 2 types: 1. Binary Semaphore—It allows only one process at a time to access the shared resource. It have states full and empty. They are called mutex locks as they provide mutual exclusion. do { wait(mutex); //critical section signal(mutex); //remainder section }while(TRUE); 2. Counting Semaphore—it allows N>1 processes to access the shared resource. It uses the counter variable S. The counter is initialized to N, with S = 0 corresponding to the full state i. e. all the resources are in use.

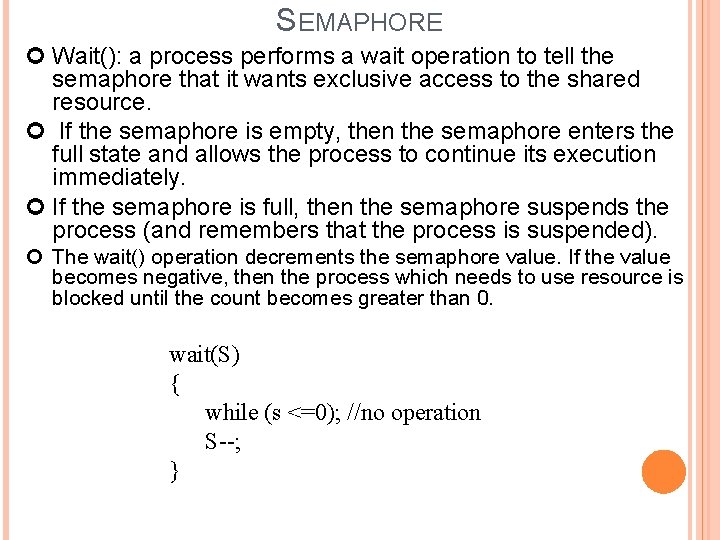

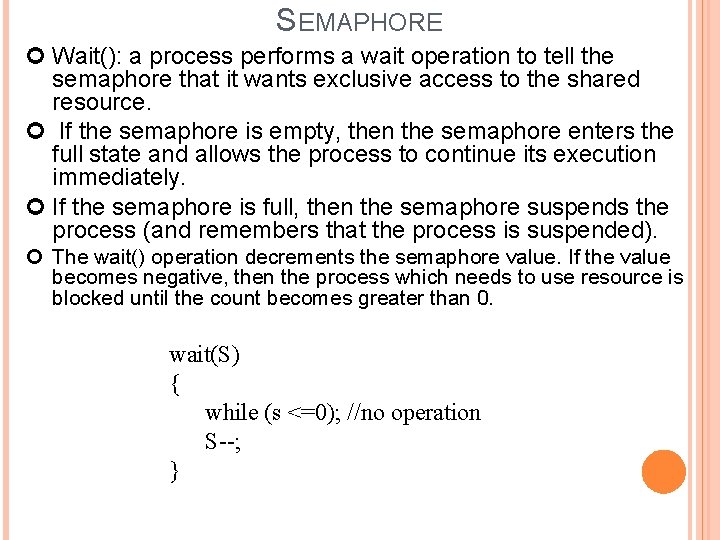

SEMAPHORE Wait(): a process performs a wait operation to tell the semaphore that it wants exclusive access to the shared resource. If the semaphore is empty, then the semaphore enters the full state and allows the process to continue its execution immediately. If the semaphore is full, then the semaphore suspends the process (and remembers that the process is suspended). The wait() operation decrements the semaphore value. If the value becomes negative, then the process which needs to use resource is blocked until the count becomes greater than 0. wait(S) { while (s <=0); //no operation S--; }

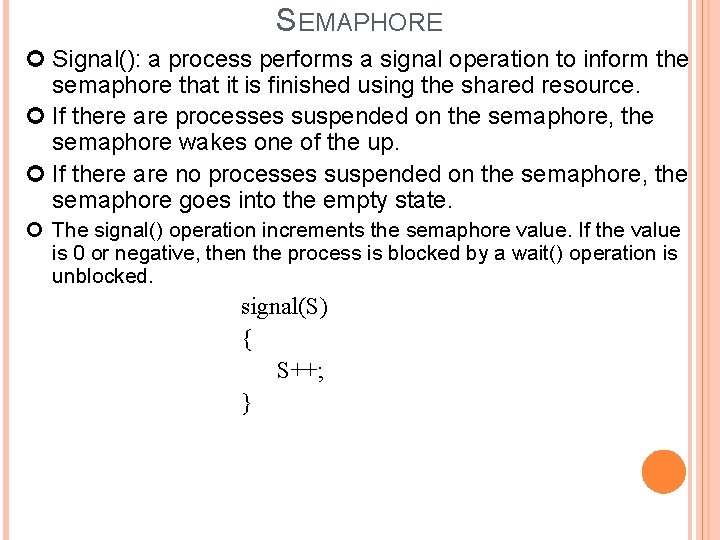

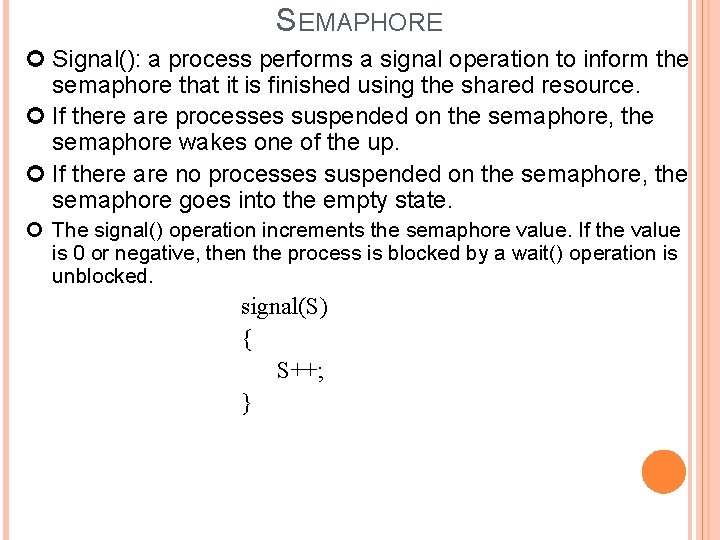

SEMAPHORE Signal(): a process performs a signal operation to inform the semaphore that it is finished using the shared resource. If there are processes suspended on the semaphore, the semaphore wakes one of the up. If there are no processes suspended on the semaphore, the semaphore goes into the empty state. The signal() operation increments the semaphore value. If the value is 0 or negative, then the process is blocked by a wait() operation is unblocked. signal(S) { S++; }

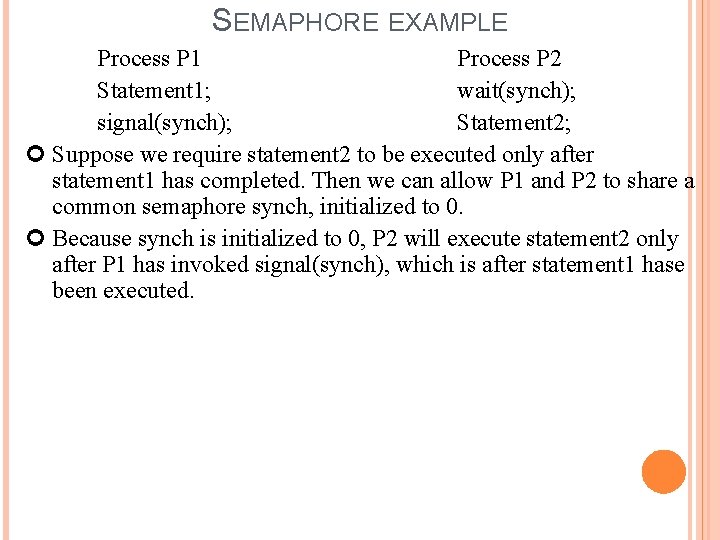

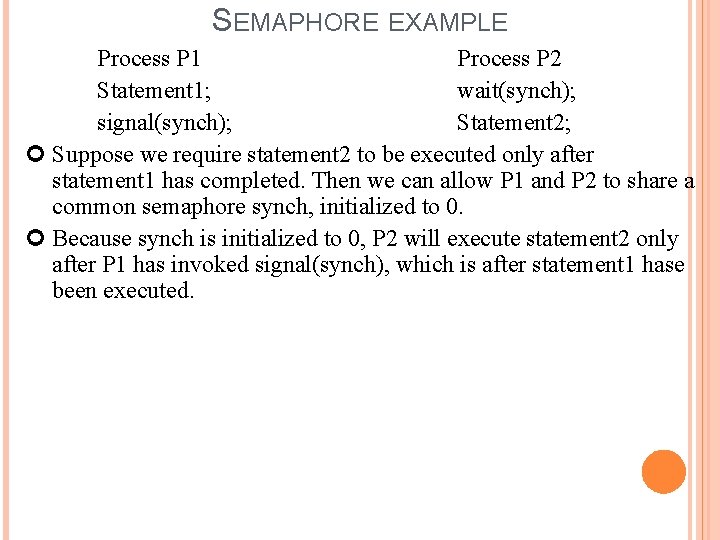

SEMAPHORE EXAMPLE Process P 1 Process P 2 Statement 1; wait(synch); signal(synch); Statement 2; Suppose we require statement 2 to be executed only after statement 1 has completed. Then we can allow P 1 and P 2 to share a common semaphore synch, initialized to 0. Because synch is initialized to 0, P 2 will execute statement 2 only after P 1 has invoked signal(synch), which is after statement 1 hase been executed.

SPINLOCK SEMAPHORE Removes busy waiting and the waste of CPU cycles is reduced. When a process executes the wait() operation and finds that the semaphore value is not positive, it must wait. However, rather engaging in busy waiting, the process can block itself using block() operation. It goes to waiting state and another process is selected for execution. When signal() operation occurs the process is again restarted by wakeup() operation. It goes from waiting state to ready state. If the semaphore value is negative, its magnitude is the number of processes waiting on that semaphore.

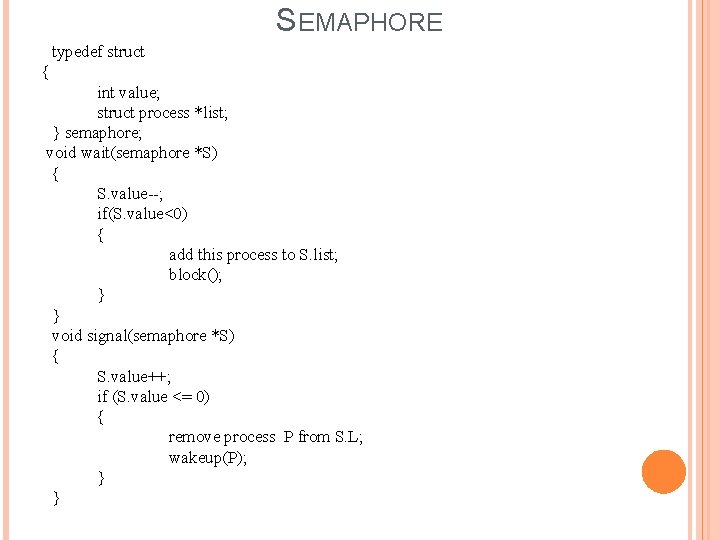

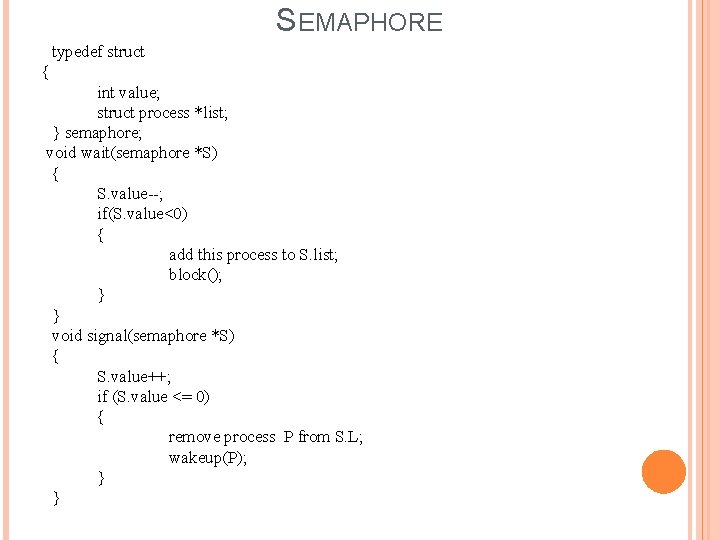

SEMAPHORE typedef struct { int value; struct process *list; } semaphore; void wait(semaphore *S) { S. value--; if(S. value<0) { add this process to S. list; block(); } } void signal(semaphore *S) { S. value++; if (S. value <= 0) { remove process P from S. L; wakeup(P); } }

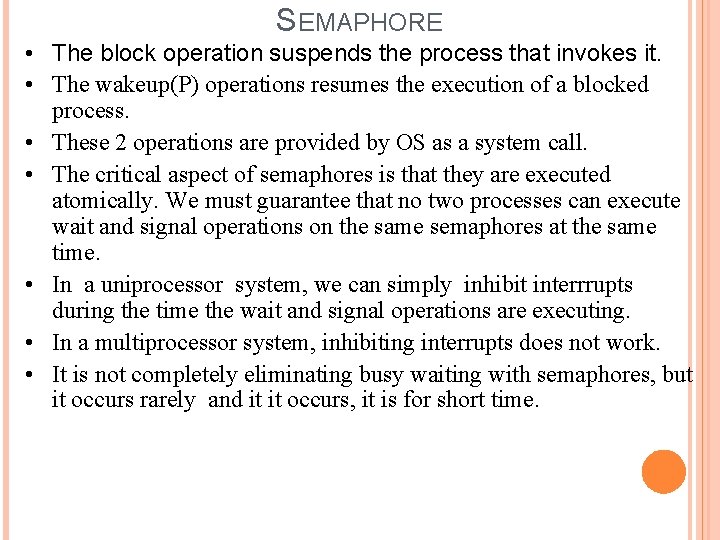

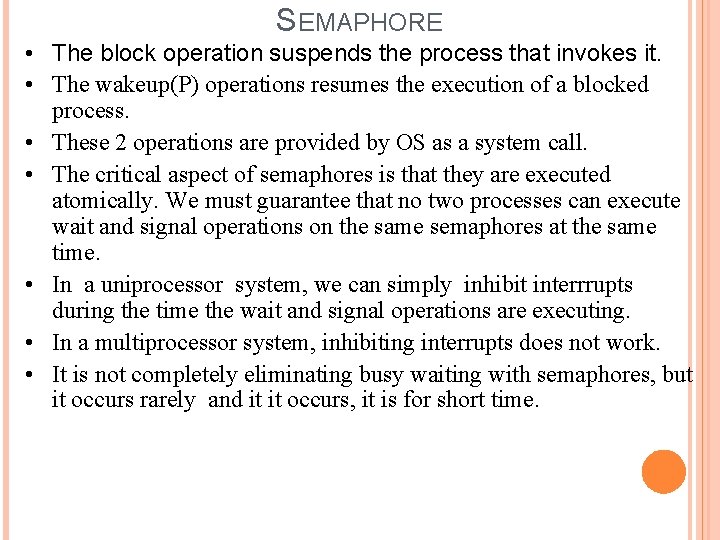

SEMAPHORE • The block operation suspends the process that invokes it. • The wakeup(P) operations resumes the execution of a blocked process. • These 2 operations are provided by OS as a system call. • The critical aspect of semaphores is that they are executed atomically. We must guarantee that no two processes can execute wait and signal operations on the same semaphores at the same time. • In a uniprocessor system, we can simply inhibit interrrupts during the time the wait and signal operations are executing. • In a multiprocessor system, inhibiting interrupts does not work. • It is not completely eliminating busy waiting with semaphores, but it occurs rarely and it it occurs, it is for short time.

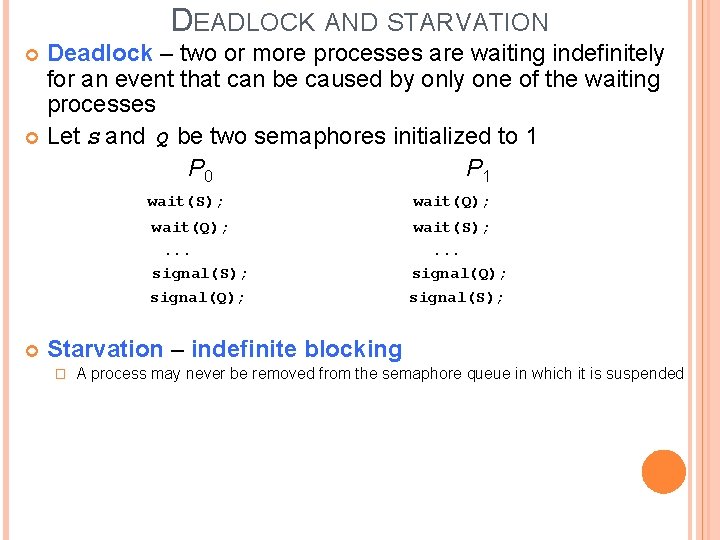

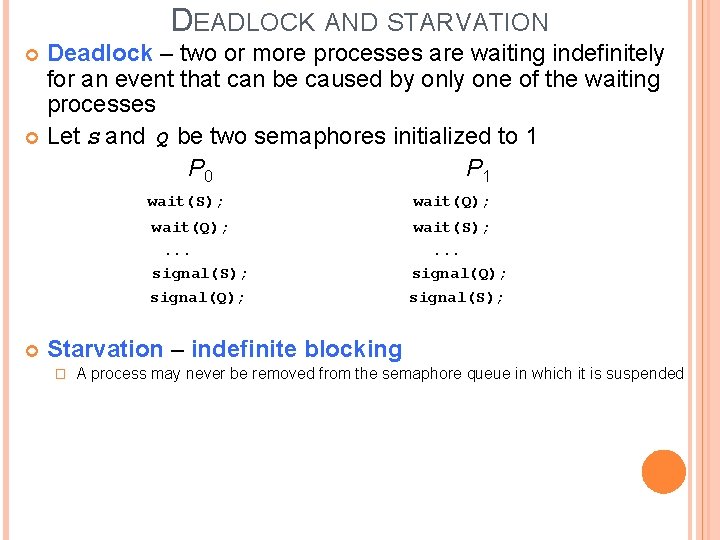

DEADLOCK AND STARVATION Deadlock – two or more processes are waiting indefinitely for an event that can be caused by only one of the waiting processes Let S and Q be two semaphores initialized to 1 P 0 P 1 wait(S); wait(Q); . . . signal(S); signal(Q); wait(S); . . . signal(Q); signal(S); Starvation – indefinite blocking � A process may never be removed from the semaphore queue in which it is suspended

CLASSICAL SYNCHRONIZATION PROBLEMS • The Readers and Writers Problem • Producer/Consumer Problem or Bounded Buffer Problem • The dining philosopher problem

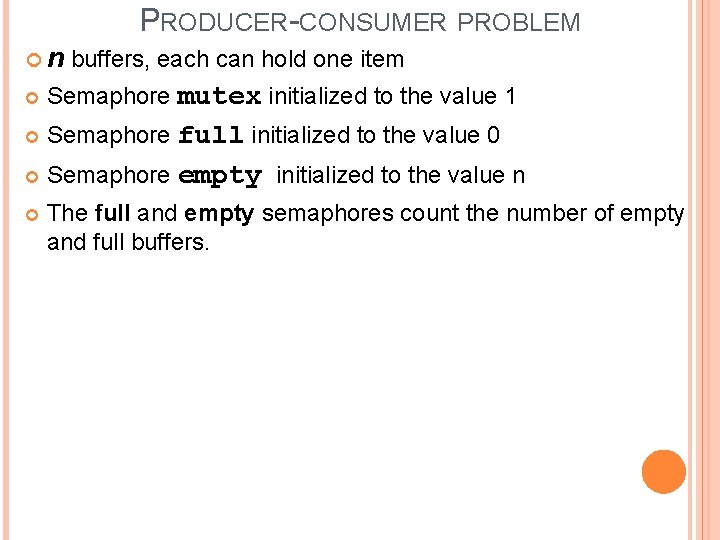

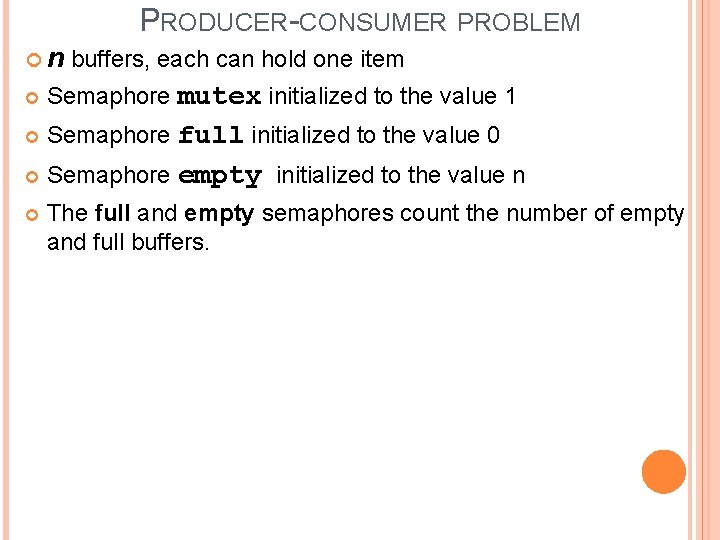

PRODUCER-CONSUMER PROBLEM n buffers, each can hold one item Semaphore mutex initialized to the value 1 Semaphore full initialized to the value 0 Semaphore empty initialized to the value n The full and empty semaphores count the number of empty and full buffers.

PRODUCER-CONSUMER PROBLEM The structure of the producer process do { . . . /* produce an item in next_produced */. . . /* wait for an empty space */ wait(empty); /* store the item */ wait(mutex); /*lock the item*/. . . /* add next produced to the buffer */. . . /* report the new full slot */ signal(mutex); signal(full); } while (true);

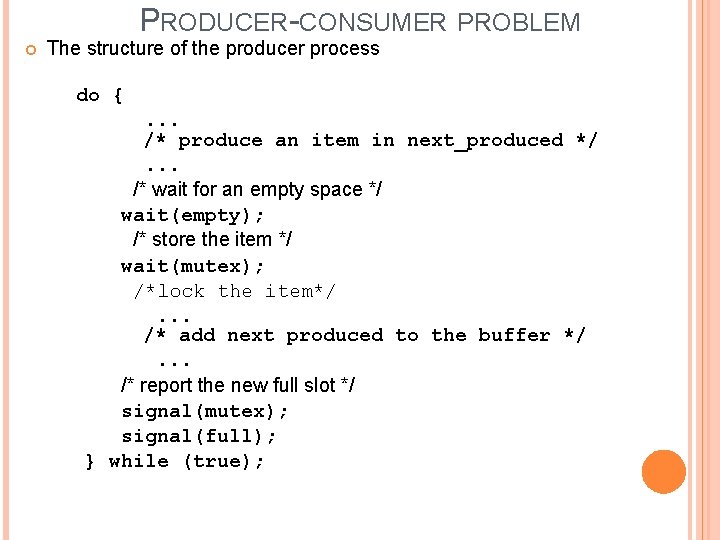

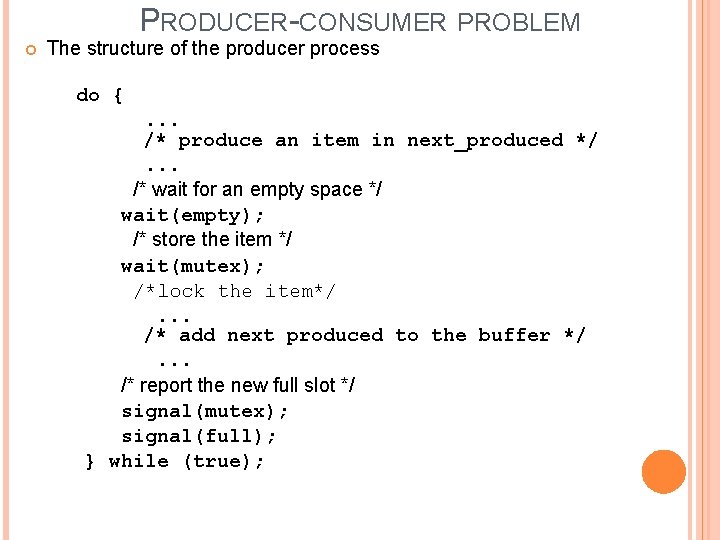

PRODUCER-CONSUMER PROBLEM n The structure of the consumer process Do { */ /* wait for a stored item */ wait(full); /* remove the item */ wait(mutex); . . . /* remove an item from buffer to next_consumed. . . /* report the new empty slot */ signal(mutex); /* consume it */ signal(empty); . . . /* consume the item in next consumed */. . . } while (true);

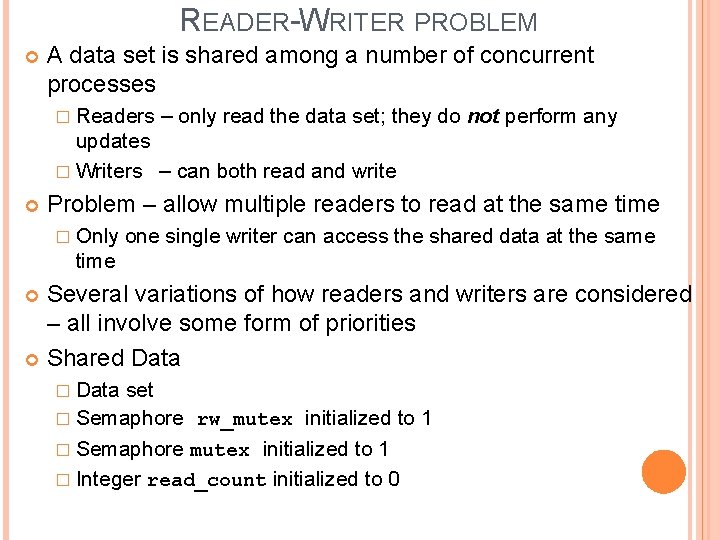

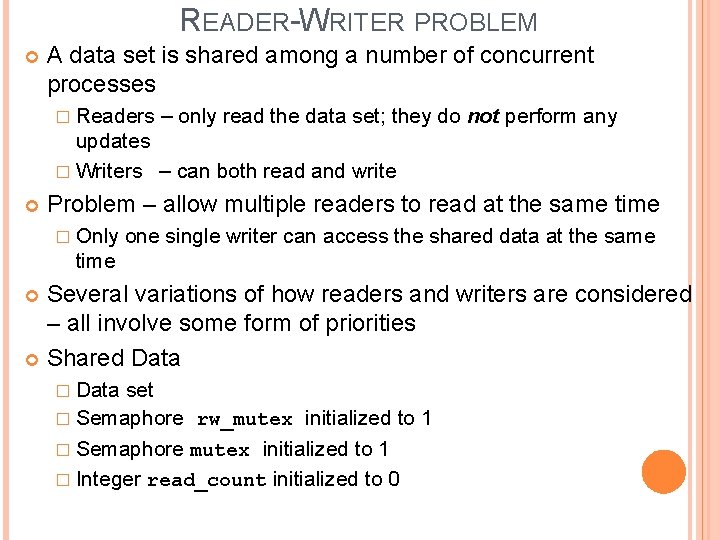

READER-WRITER PROBLEM A data set is shared among a number of concurrent processes � Readers – only read the data set; they do not perform any updates � Writers – can both read and write Problem – allow multiple readers to read at the same time � Only one single writer can access the shared data at the same time Several variations of how readers and writers are considered – all involve some form of priorities Shared Data � Data set � Semaphore rw_mutex initialized to 1 � Semaphore mutex initialized to 1 � Integer read_count initialized to 0

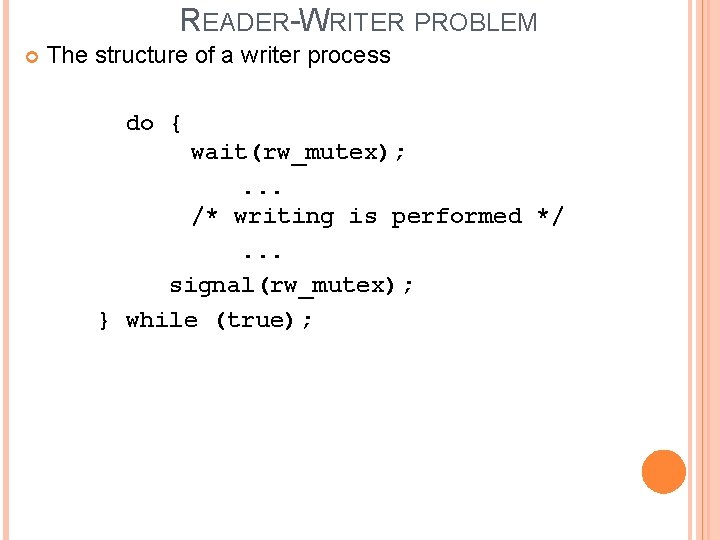

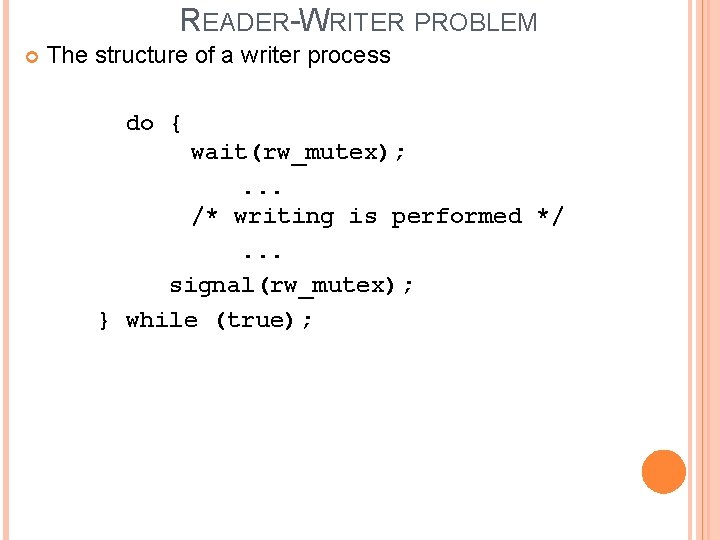

READER-WRITER PROBLEM The structure of a writer process do { wait(rw_mutex); . . . /* writing is performed */. . . signal(rw_mutex); } while (true);

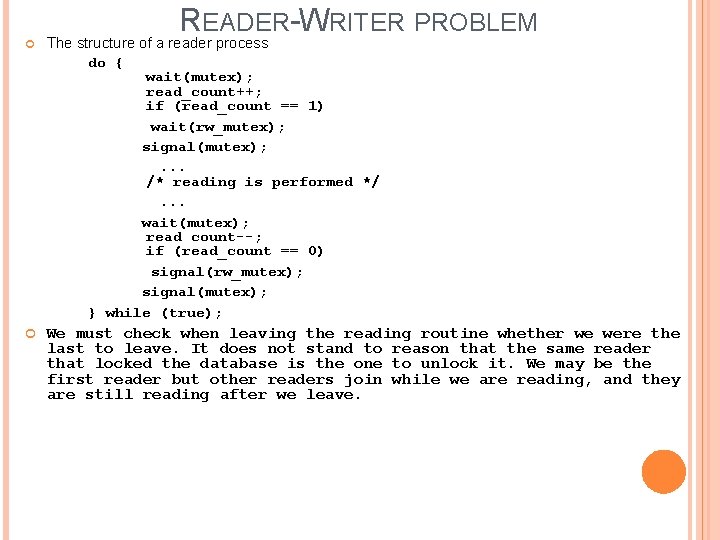

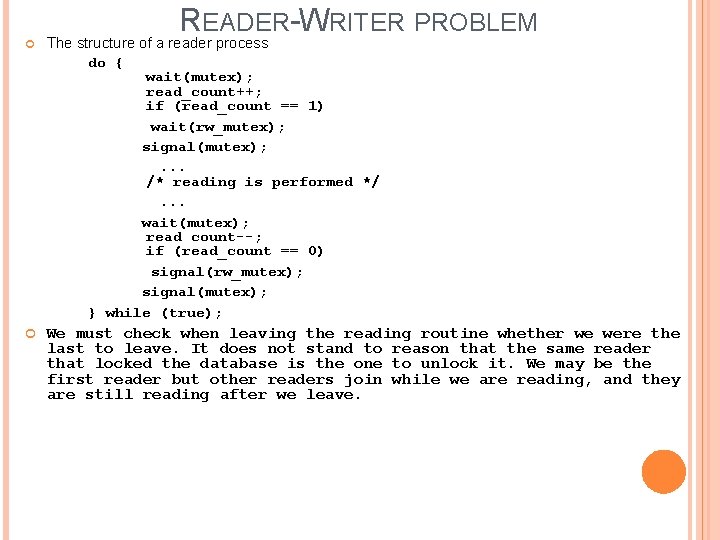

READER-WRITER PROBLEM The structure of a reader process do { wait(mutex); read_count++; if (read_count == 1) wait(rw_mutex); signal(mutex); . . . /* reading is performed */. . . wait(mutex); read count--; if (read_count == 0) signal(rw_mutex); signal(mutex); } while (true); We must check when leaving the reading routine whether we were the last to leave. It does not stand to reason that the same reader that locked the database is the one to unlock it. We may be the first reader but other readers join while we are reading, and they are still reading after we leave.

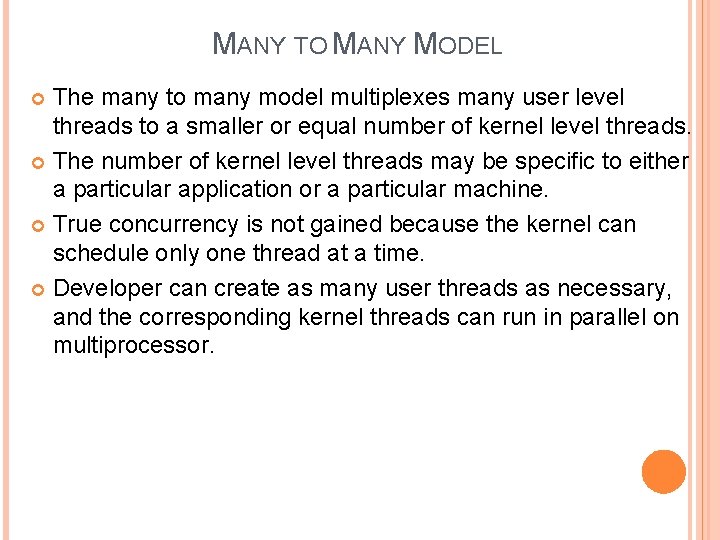

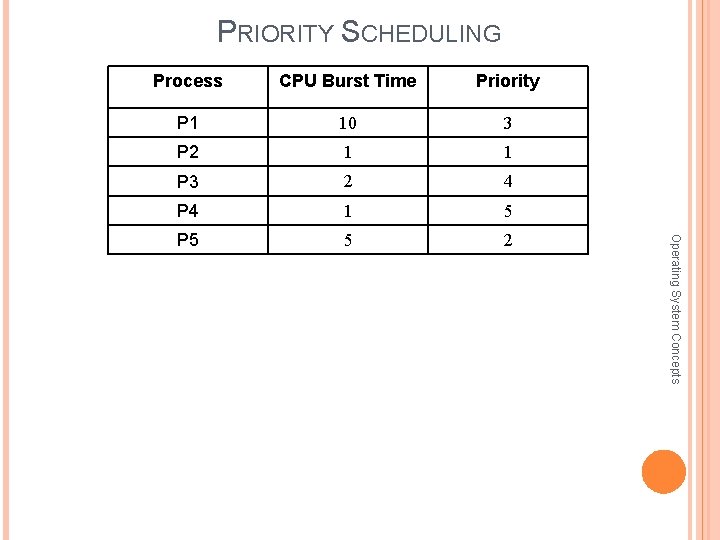

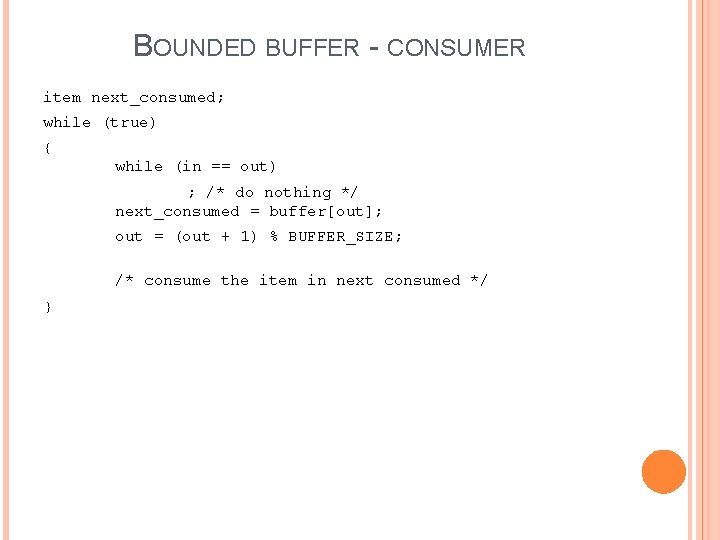

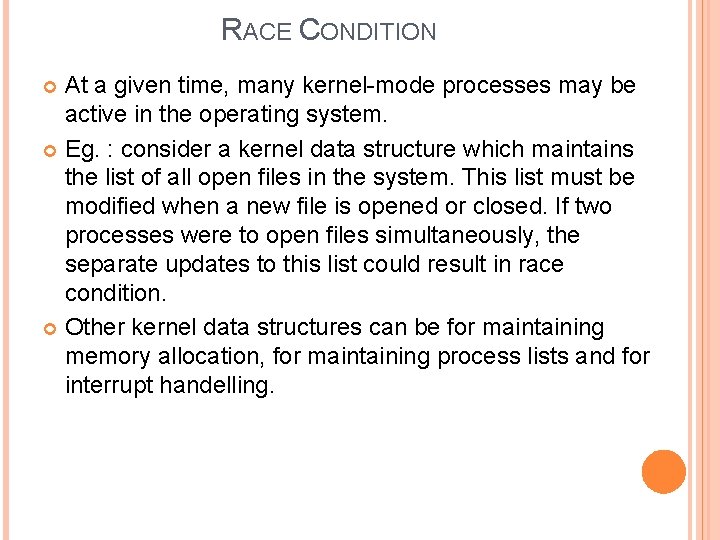

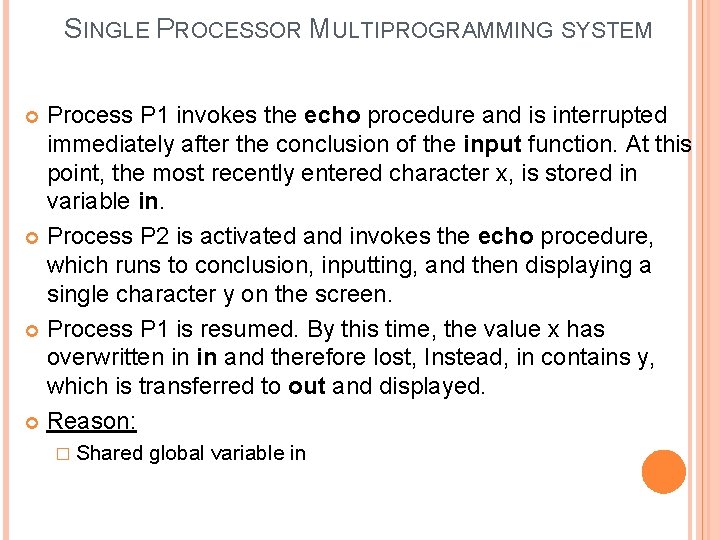

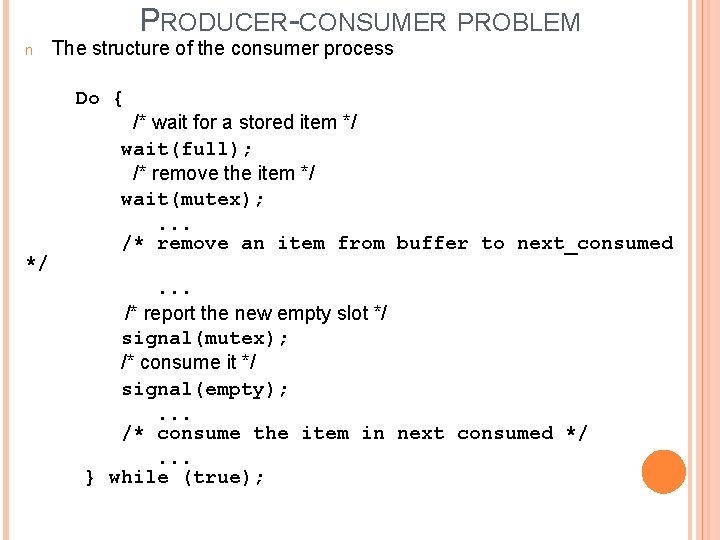

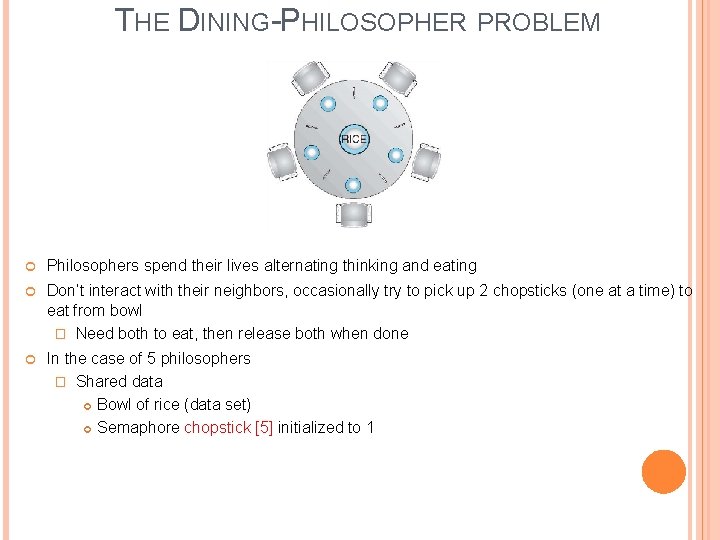

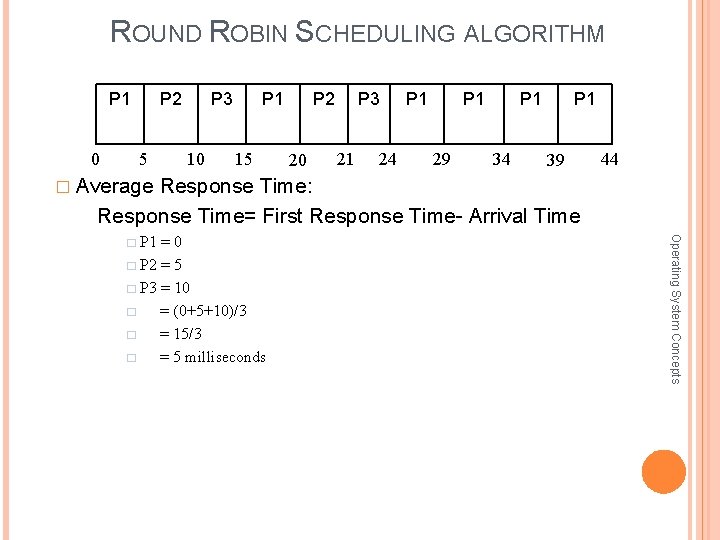

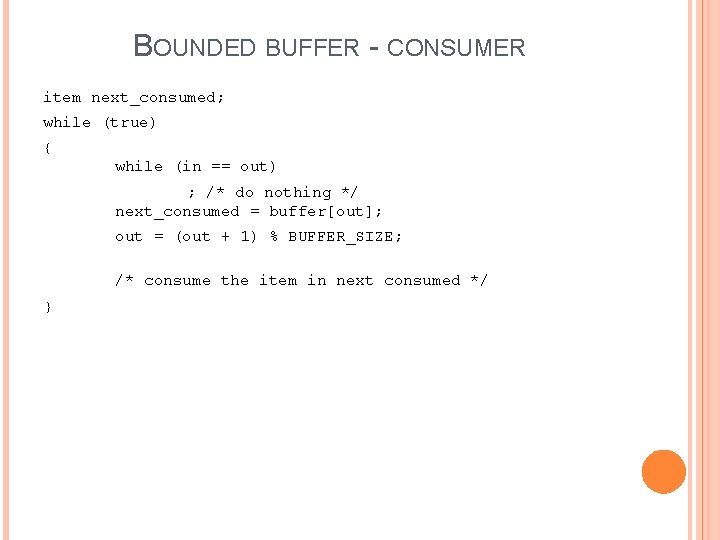

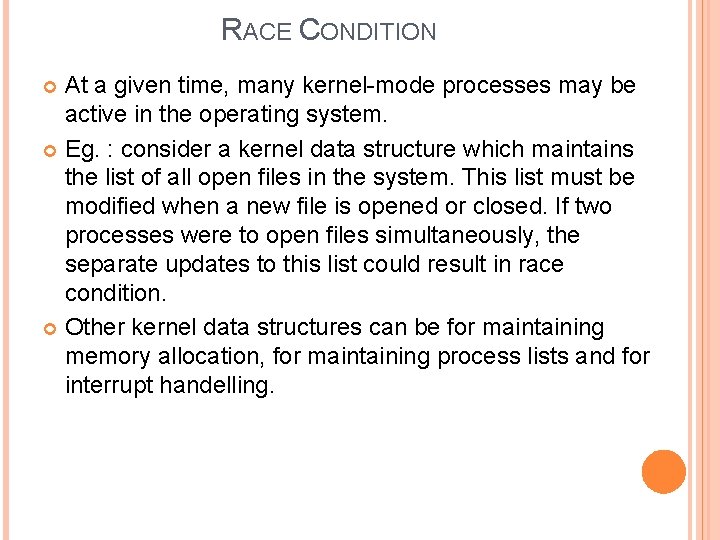

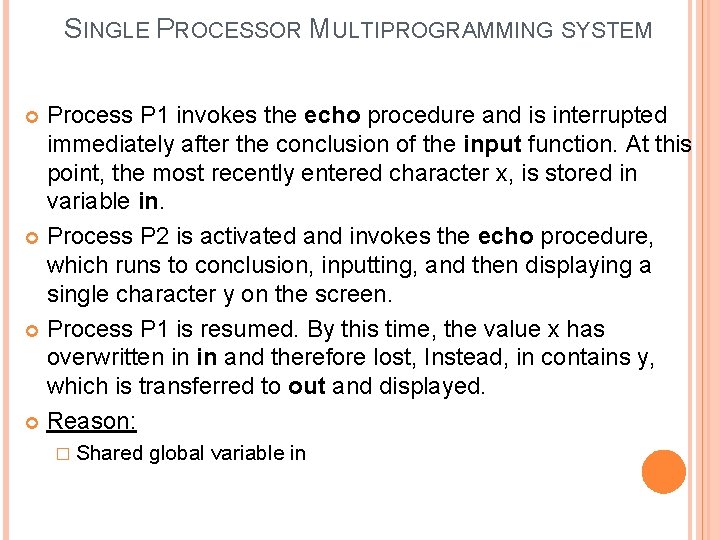

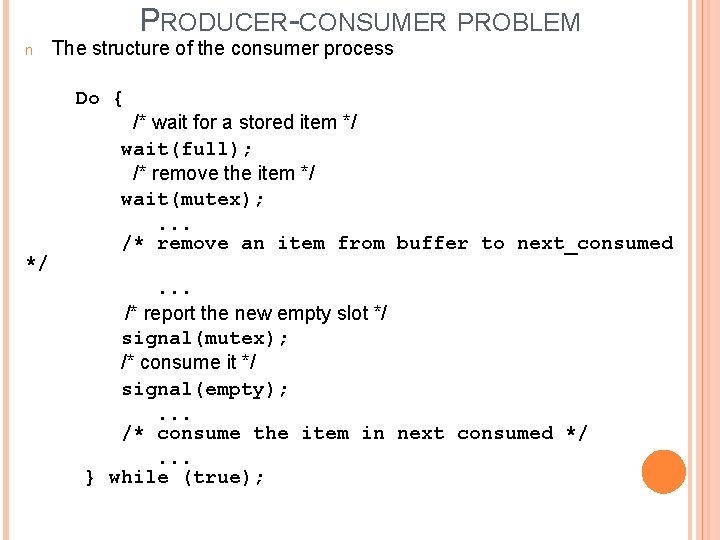

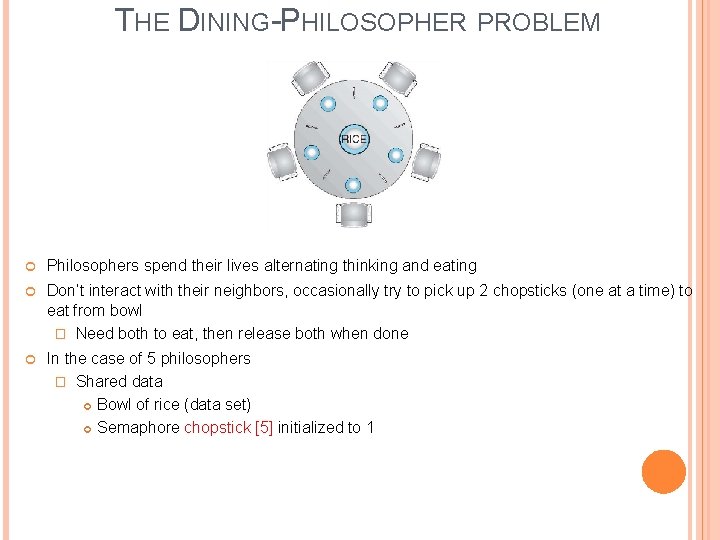

THE DINING-PHILOSOPHER PROBLEM Philosophers spend their lives alternating thinking and eating Don’t interact with their neighbors, occasionally try to pick up 2 chopsticks (one at a time) to eat from bowl � Need both to eat, then release both when done In the case of 5 philosophers � Shared data Bowl of rice (data set) Semaphore chopstick [5] initialized to 1

![THE DININGPHILOSOPHER PROBLEM The structure of Philosopher i do wait chopsticki wait chop THE DINING-PHILOSOPHER PROBLEM The structure of Philosopher i: do { wait (chopstick[i]); wait (chop.](https://slidetodoc.com/presentation_image_h/0dca50e566507135263625c0b55dab11/image-162.jpg)

THE DINING-PHILOSOPHER PROBLEM The structure of Philosopher i: do { wait (chopstick[i]); wait (chop. Stick[(i + 1) % 5]); // eat signal (chopstick[i]); signal (chopstick[(i + 1) % 5]); // } while (TRUE); think

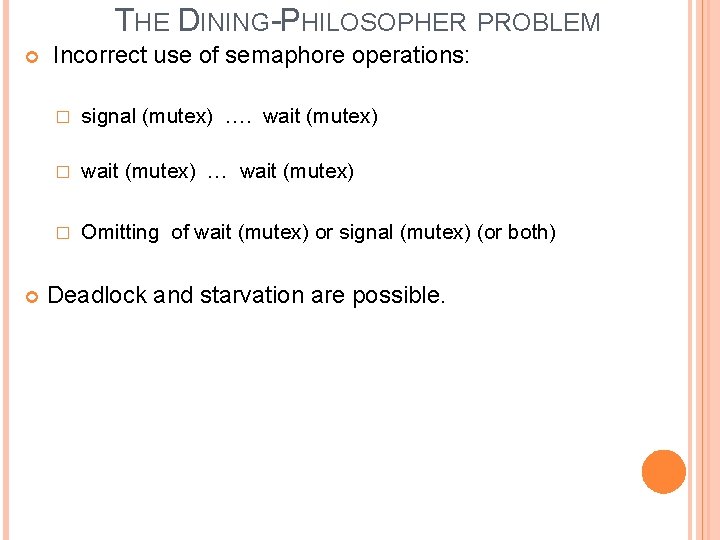

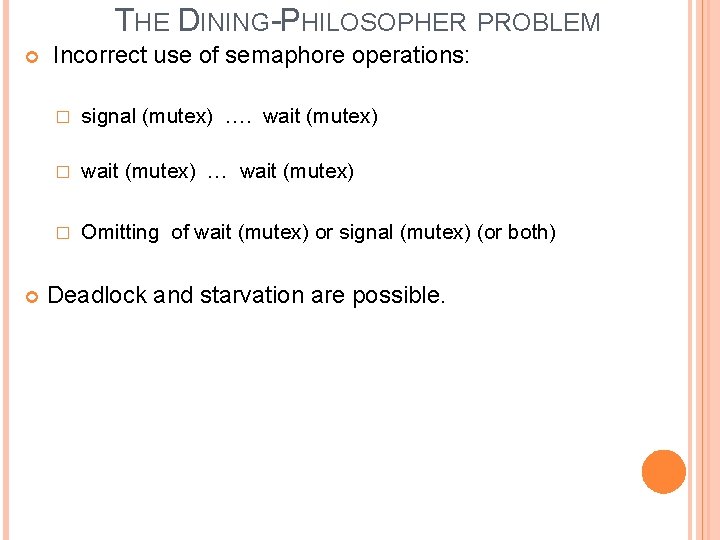

THE DINING-PHILOSOPHER PROBLEM Incorrect use of semaphore operations: � signal (mutex) …. wait (mutex) � wait (mutex) … wait (mutex) � Omitting of wait (mutex) or signal (mutex) (or both) Deadlock and starvation are possible.

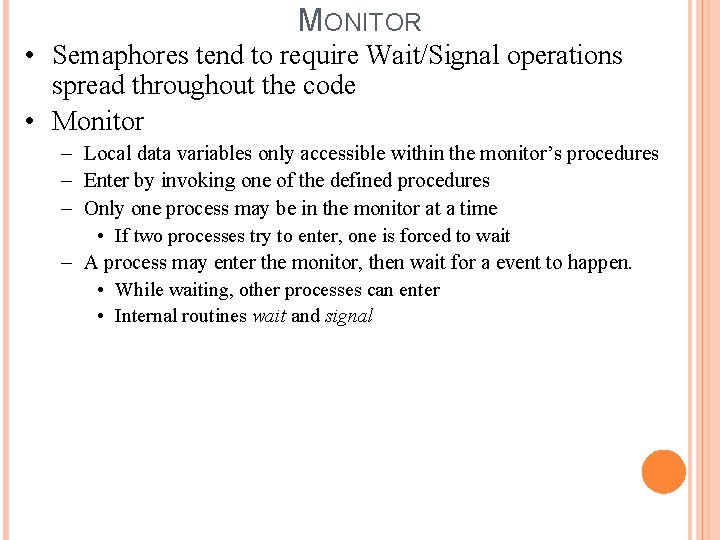

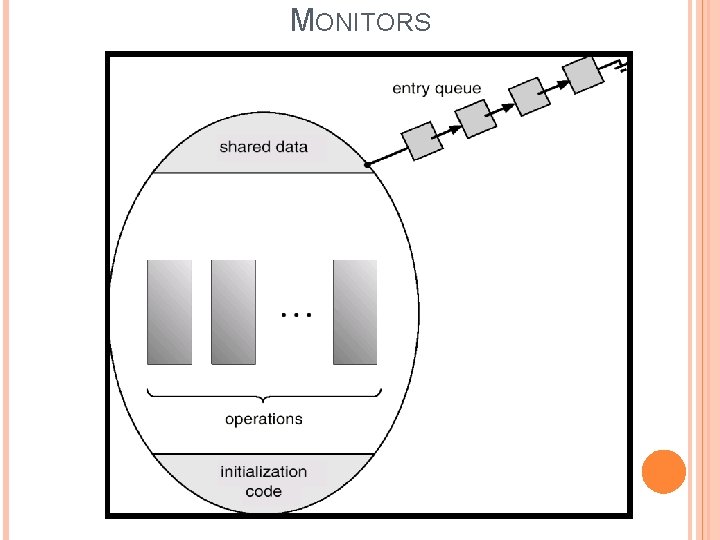

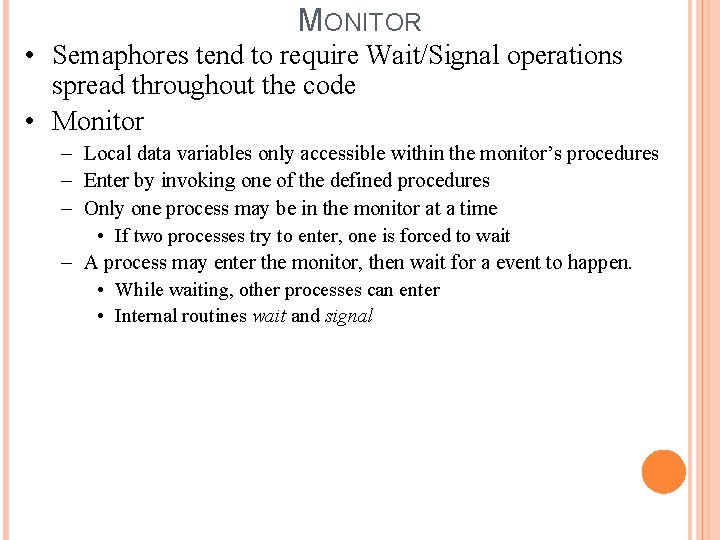

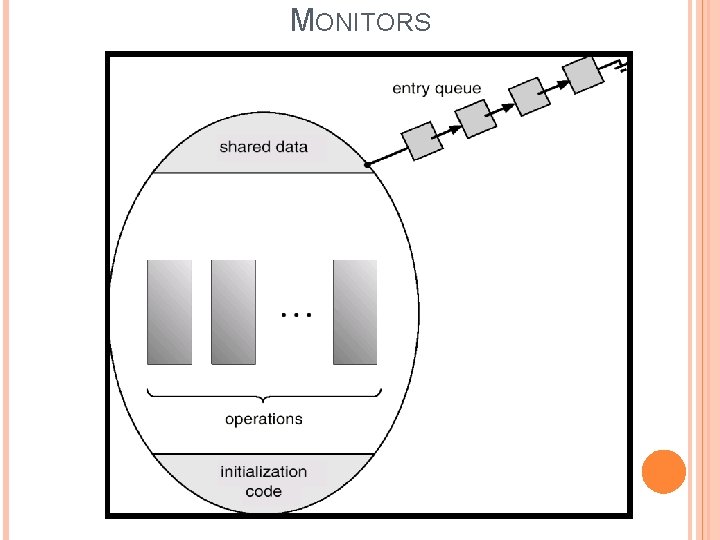

MONITOR • Semaphores tend to require Wait/Signal operations spread throughout the code • Monitor – Local data variables only accessible within the monitor’s procedures – Enter by invoking one of the defined procedures – Only one process may be in the monitor at a time • If two processes try to enter, one is forced to wait – A process may enter the monitor, then wait for a event to happen. • While waiting, other processes can enter • Internal routines wait and signal

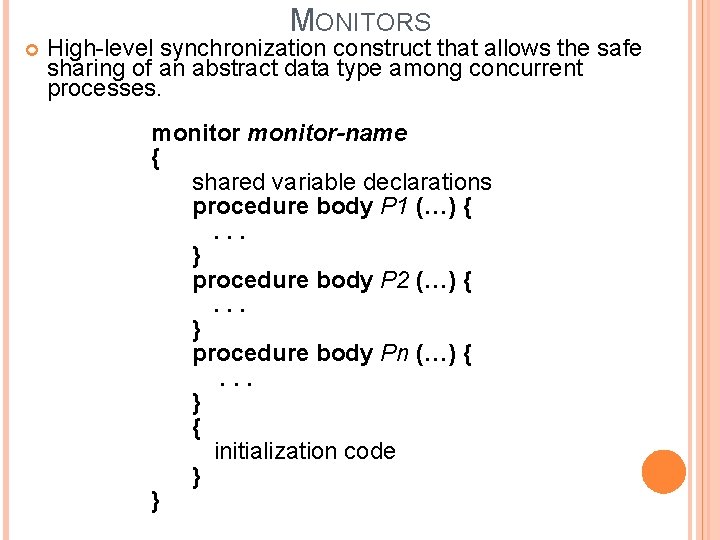

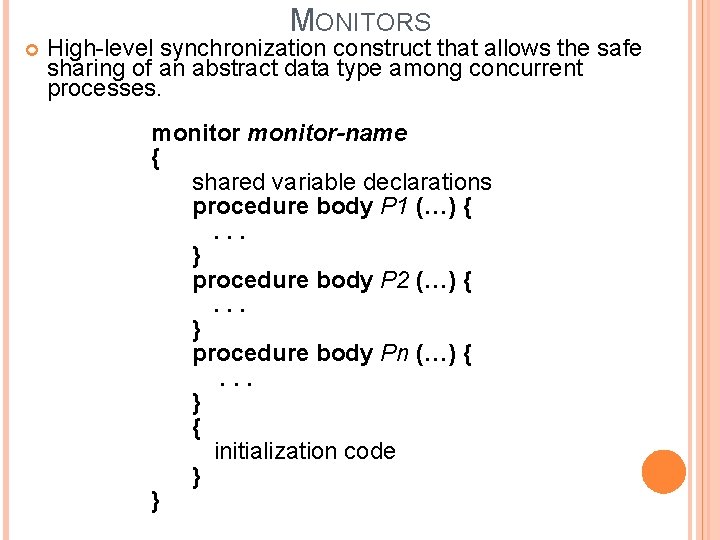

MONITORS High-level synchronization construct that allows the safe sharing of an abstract data type among concurrent processes. monitor-name { shared variable declarations procedure body P 1 (…) {. . . } procedure body P 2 (…) {. . . } procedure body Pn (…) { . . . } { initialization code } }

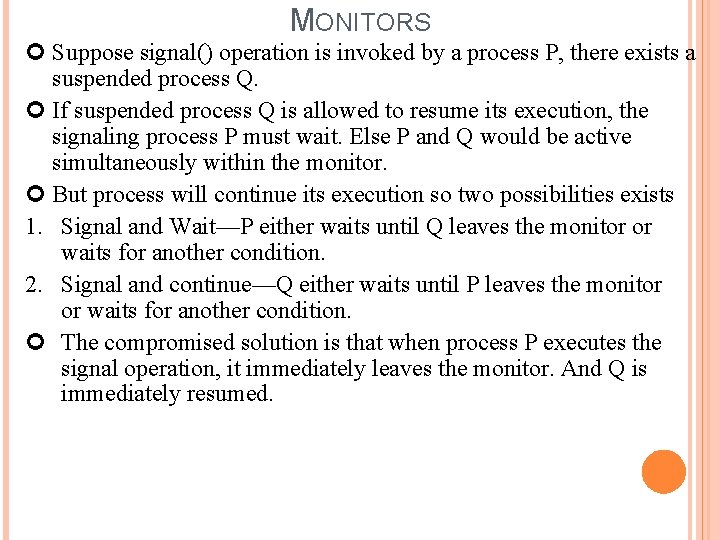

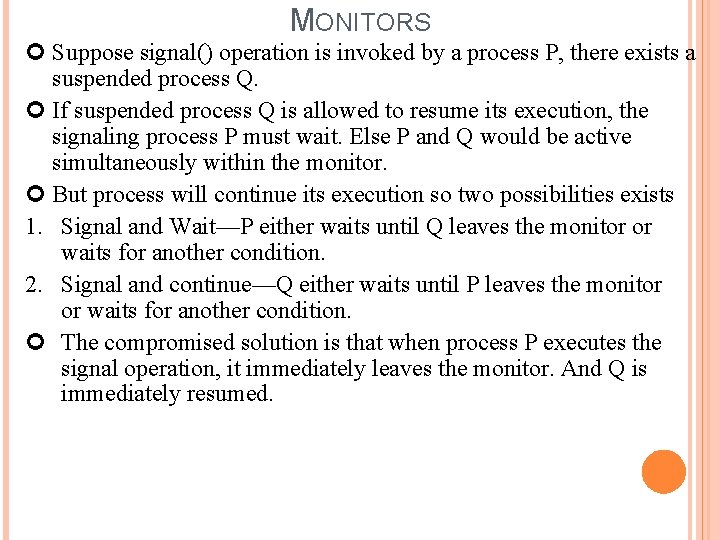

MONITORS Suppose signal() operation is invoked by a process P, there exists a suspended process Q. If suspended process Q is allowed to resume its execution, the signaling process P must wait. Else P and Q would be active simultaneously within the monitor. But process will continue its execution so two possibilities exists 1. Signal and Wait—P either waits until Q leaves the monitor or waits for another condition. 2. Signal and continue—Q either waits until P leaves the monitor or waits for another condition. The compromised solution is that when process P executes the signal operation, it immediately leaves the monitor. And Q is immediately resumed.

MONITORS

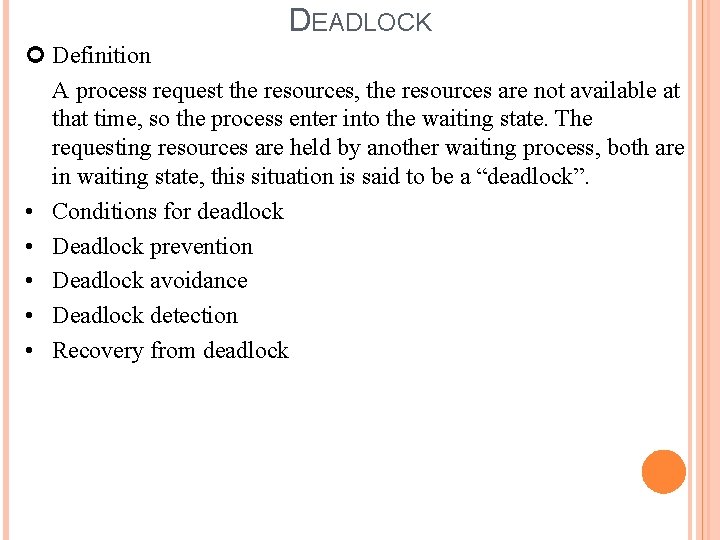

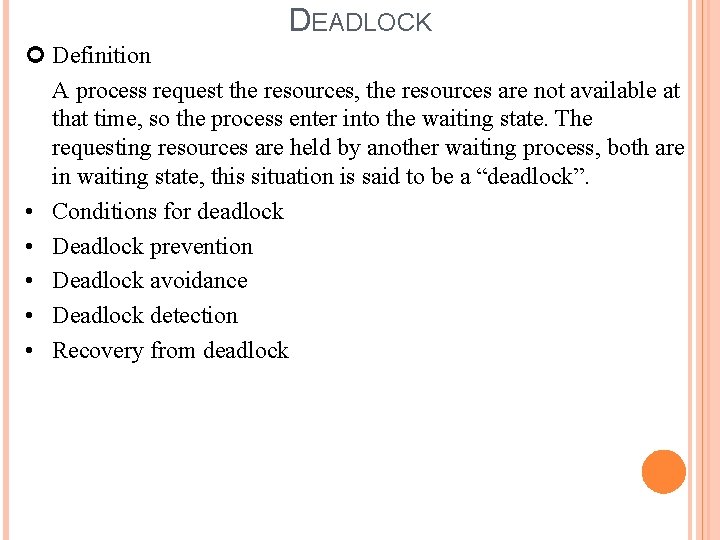

DEADLOCK

DEADLOCK Definition A process request the resources, the resources are not available at that time, so the process enter into the waiting state. The requesting resources are held by another waiting process, both are in waiting state, this situation is said to be a “deadlock”. • Conditions for deadlock • Deadlock prevention • Deadlock avoidance • Deadlock detection • Recovery from deadlock

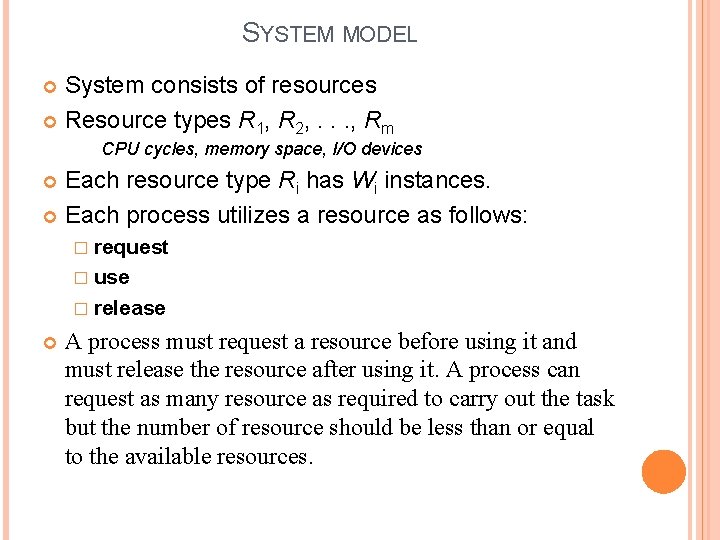

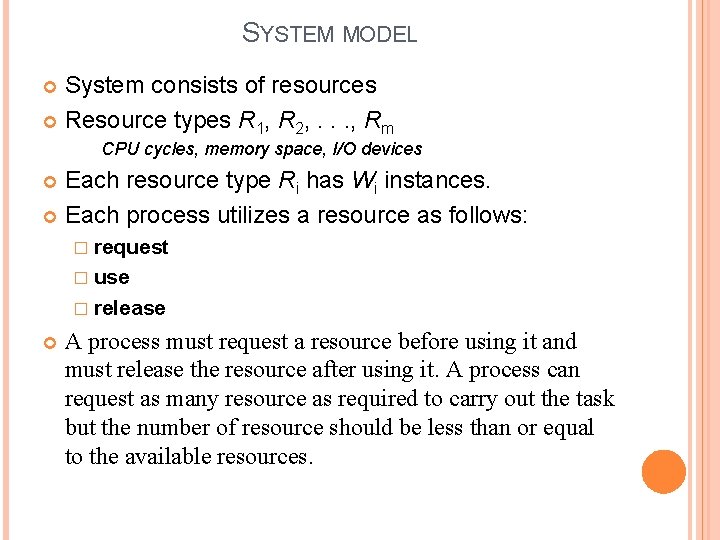

SYSTEM MODEL System consists of resources Resource types R 1, R 2, . . . , Rm CPU cycles, memory space, I/O devices Each resource type Ri has Wi instances. Each process utilizes a resource as follows: � request � use � release A process must request a resource before using it and must release the resource after using it. A process can request as many resource as required to carry out the task but the number of resource should be less than or equal to the available resources.

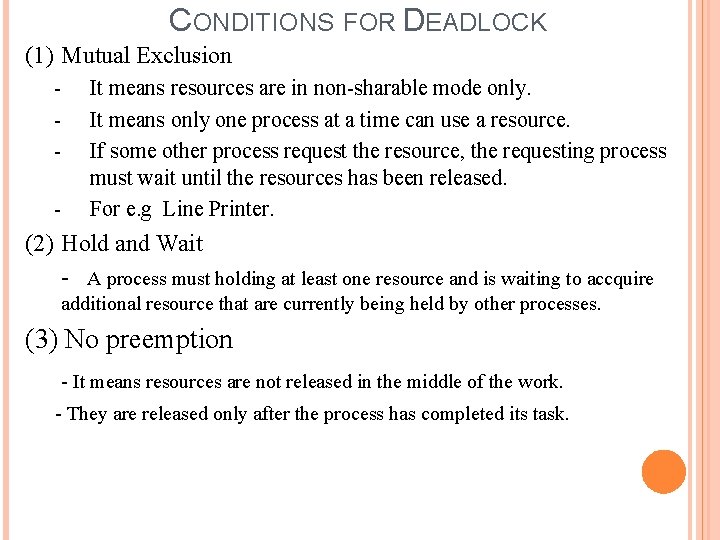

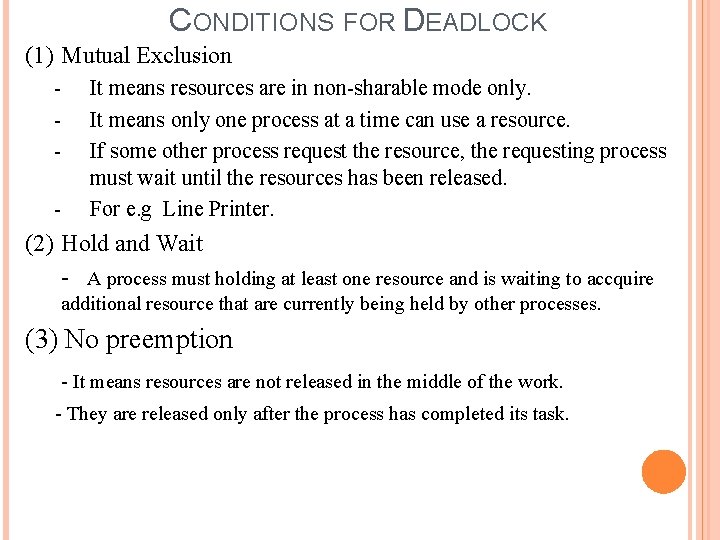

CONDITIONS FOR DEADLOCK (1) Mutual Exclusion - It means resources are in non-sharable mode only. It means only one process at a time can use a resource. If some other process request the resource, the requesting process must wait until the resources has been released. For e. g Line Printer. (2) Hold and Wait - A process must holding at least one resource and is waiting to accquire additional resource that are currently being held by other processes. (3) No preemption - It means resources are not released in the middle of the work. - They are released only after the process has completed its task.

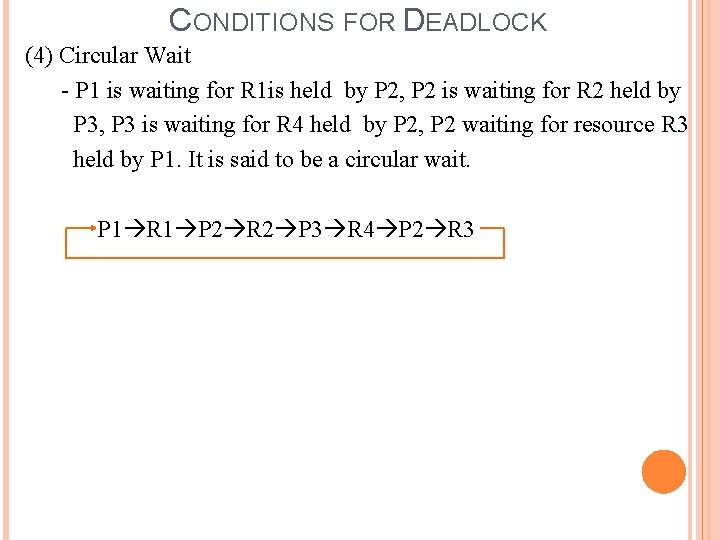

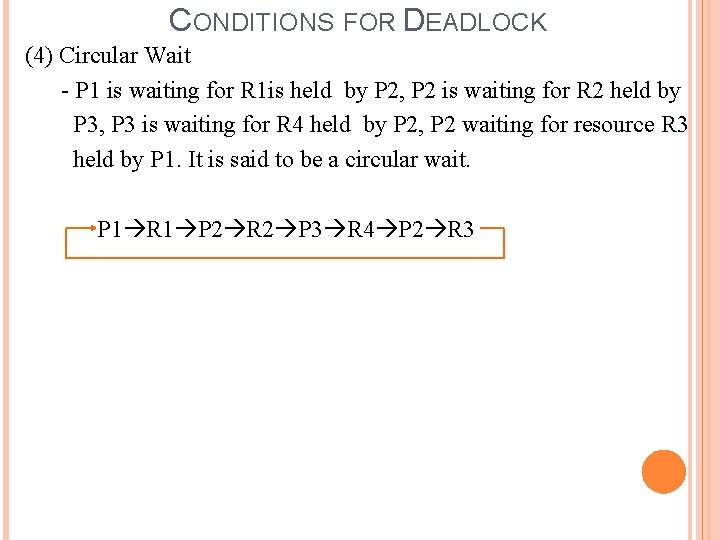

CONDITIONS FOR DEADLOCK (4) Circular Wait - P 1 is waiting for R 1 is held by P 2, P 2 is waiting for R 2 held by P 3, P 3 is waiting for R 4 held by P 2, P 2 waiting for resource R 3 held by P 1. It is said to be a circular wait. P 1 R 1 P 2 R 2 P 3 R 4 P 2 R 3

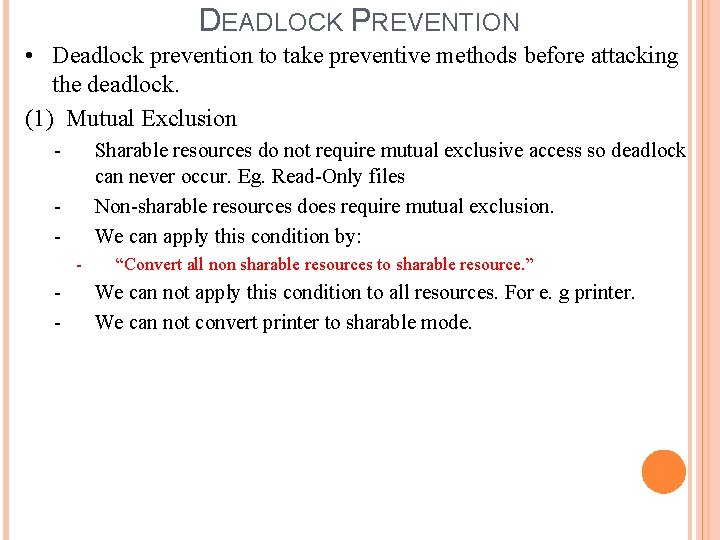

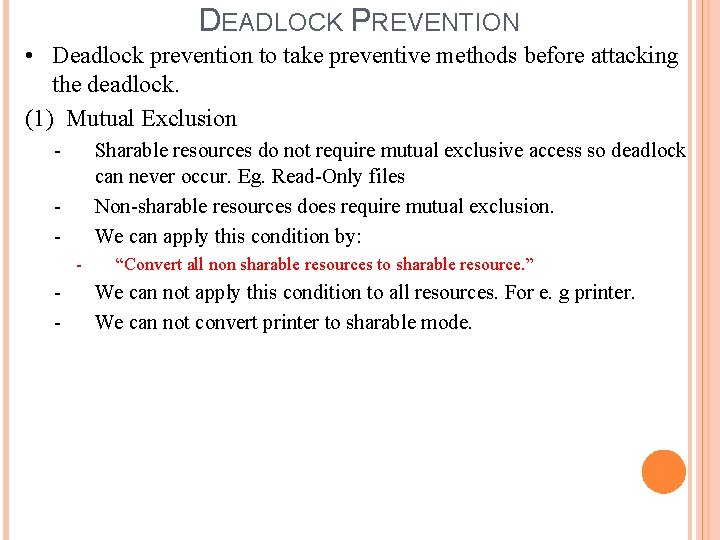

DEADLOCK PREVENTION • Deadlock prevention to take preventive methods before attacking the deadlock. (1) Mutual Exclusion - Sharable resources do not require mutual exclusive access so deadlock can never occur. Eg. Read-Only files Non-sharable resources does require mutual exclusion. We can apply this condition by: - - “Convert all non sharable resources to sharable resource. ” We can not apply this condition to all resources. For e. g printer. We can not convert printer to sharable mode.

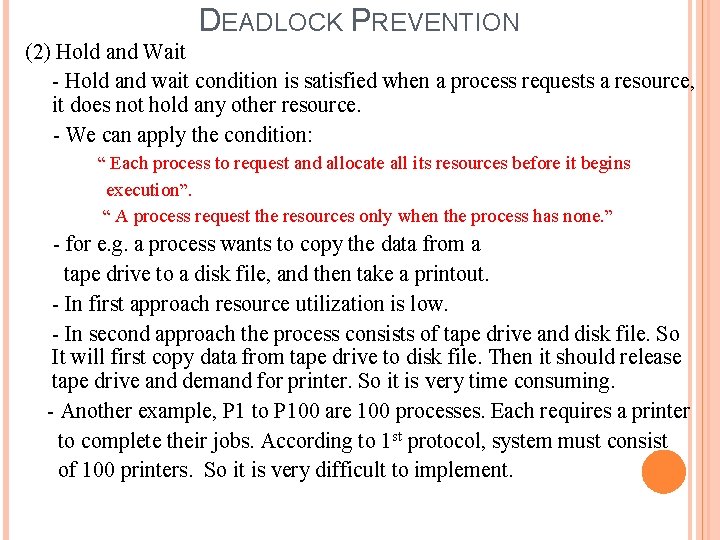

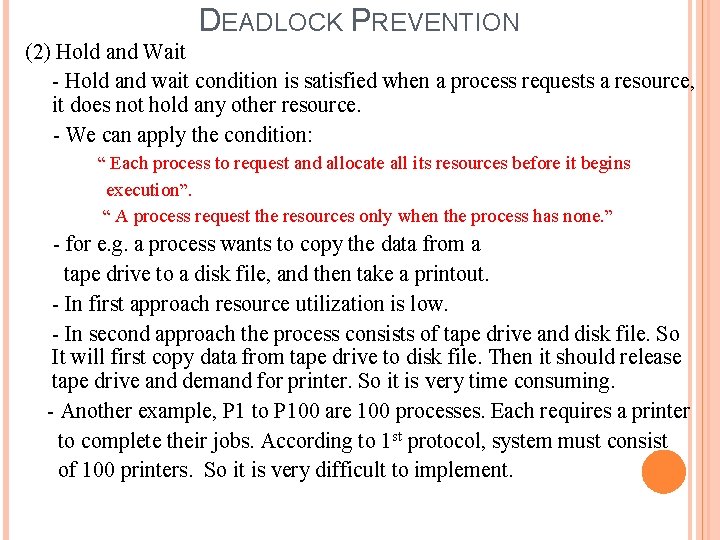

DEADLOCK PREVENTION (2) Hold and Wait - Hold and wait condition is satisfied when a process requests a resource, it does not hold any other resource. - We can apply the condition: “ Each process to request and allocate all its resources before it begins execution”. “ A process request the resources only when the process has none. ” - for e. g. a process wants to copy the data from a tape drive to a disk file, and then take a printout. - In first approach resource utilization is low. - In second approach the process consists of tape drive and disk file. So It will first copy data from tape drive to disk file. Then it should release tape drive and demand for printer. So it is very time consuming. - Another example, P 1 to P 100 are 100 processes. Each requires a printer to complete their jobs. According to 1 st protocol, system must consist of 100 printers. So it is very difficult to implement.

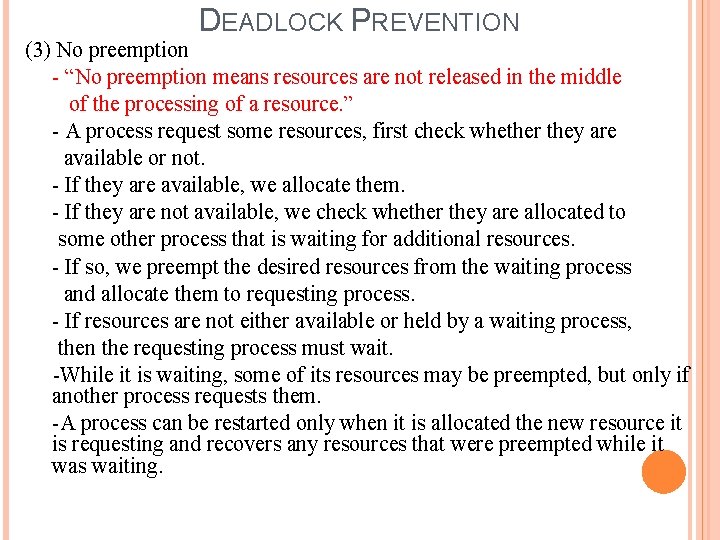

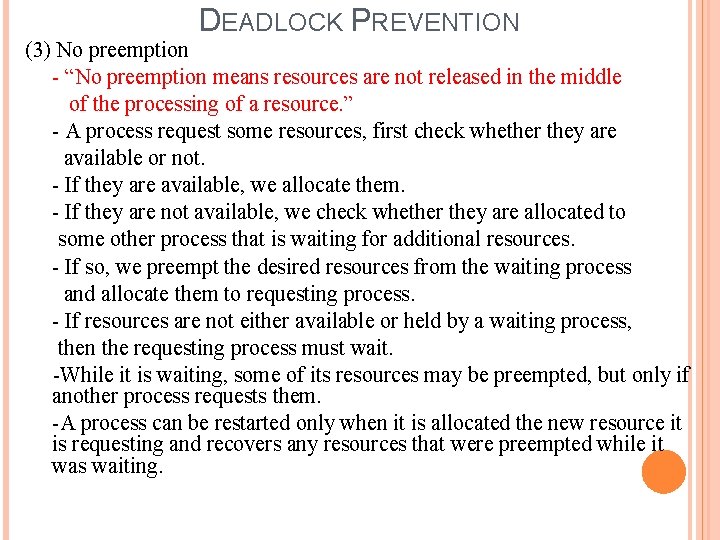

DEADLOCK PREVENTION (3) No preemption - “No preemption means resources are not released in the middle of the processing of a resource. ” - A process request some resources, first check whether they are available or not. - If they are available, we allocate them. - If they are not available, we check whether they are allocated to some other process that is waiting for additional resources. - If so, we preempt the desired resources from the waiting process and allocate them to requesting process. - If resources are not either available or held by a waiting process, then the requesting process must wait. -While it is waiting, some of its resources may be preempted, but only if another process requests them. -A process can be restarted only when it is allocated the new resource it is requesting and recovers any resources that were preempted while it was waiting.

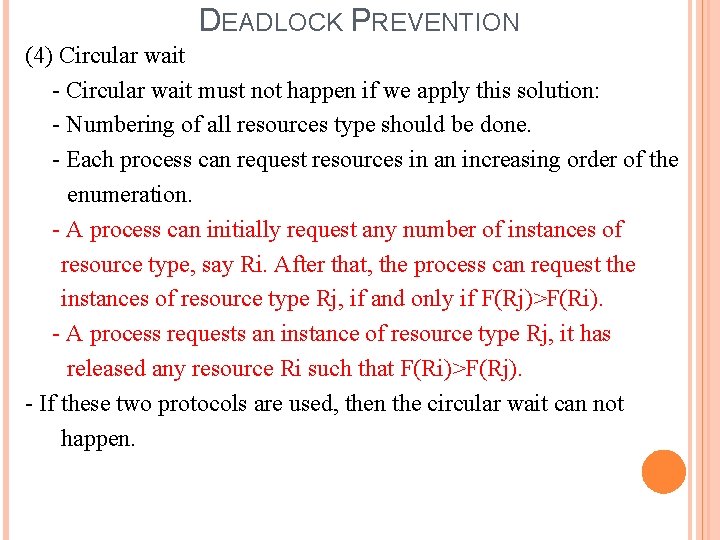

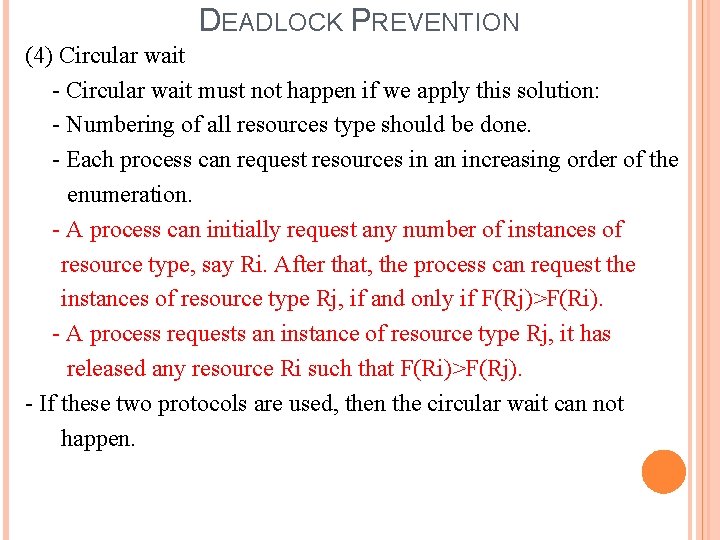

DEADLOCK PREVENTION (4) Circular wait - Circular wait must not happen if we apply this solution: - Numbering of all resources type should be done. - Each process can request resources in an increasing order of the enumeration. - A process can initially request any number of instances of resource type, say Ri. After that, the process can request the instances of resource type Rj, if and only if F(Rj)>F(Ri). - A process requests an instance of resource type Rj, it has released any resource Ri such that F(Ri)>F(Rj). - If these two protocols are used, then the circular wait can not happen.

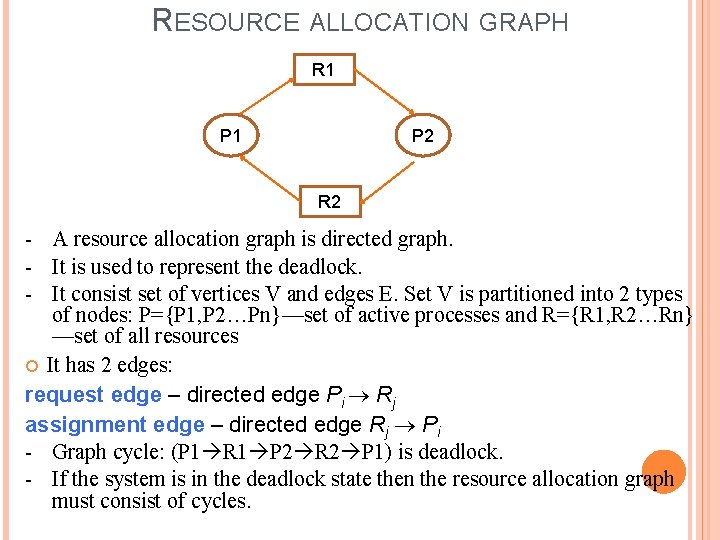

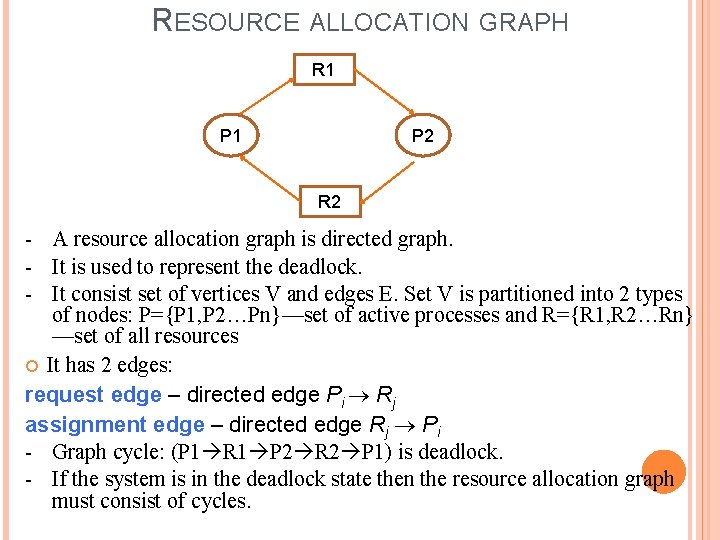

RESOURCE ALLOCATION GRAPH R 1 P 2 R 2 - A resource allocation graph is directed graph. - It is used to represent the deadlock. - It consist set of vertices V and edges E. Set V is partitioned into 2 types of nodes: P={P 1, P 2…Pn}—set of active processes and R={R 1, R 2…Rn} —set of all resources It has 2 edges: request edge – directed edge Pi Rj assignment edge – directed edge Rj Pi - Graph cycle: (P 1 R 1 P 2 R 2 P 1) is deadlock. - If the system is in the deadlock state then the resource allocation graph must consist of cycles.

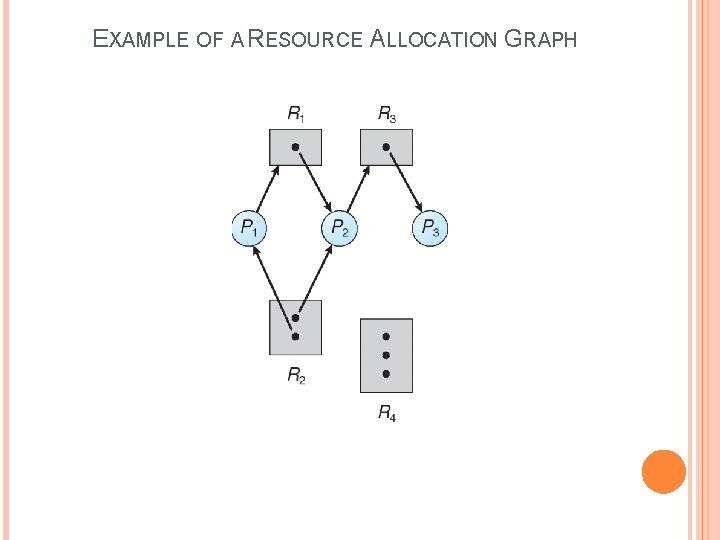

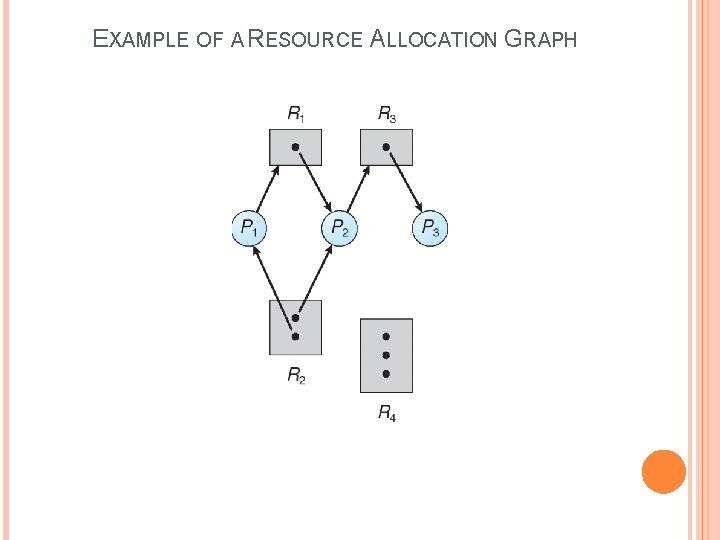

EXAMPLE OF A RESOURCE ALLOCATION GRAPH

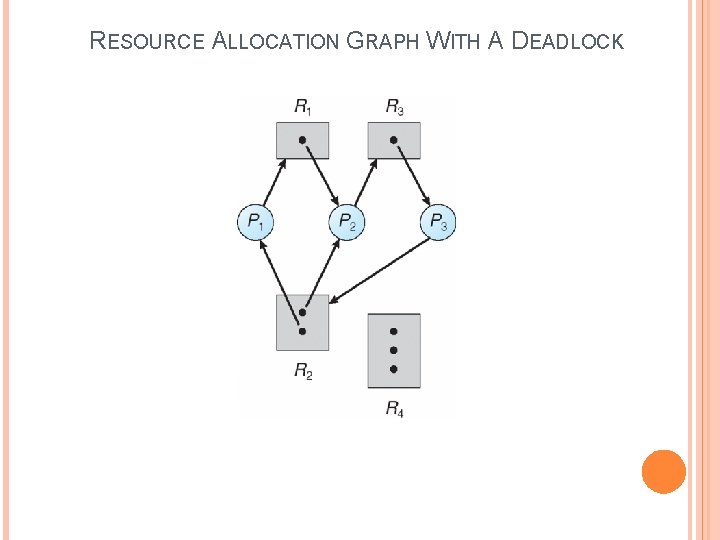

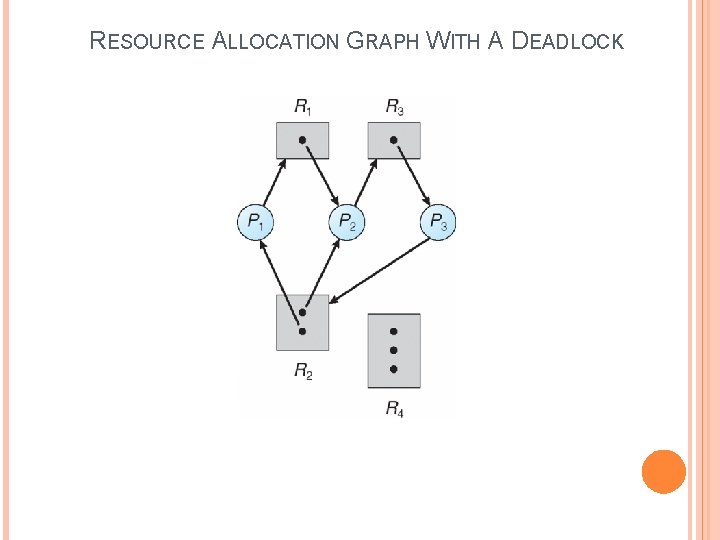

RESOURCE ALLOCATION GRAPH WITH A DEADLOCK

GRAPH WITH A CYCLE BUT NO DEADLOCK

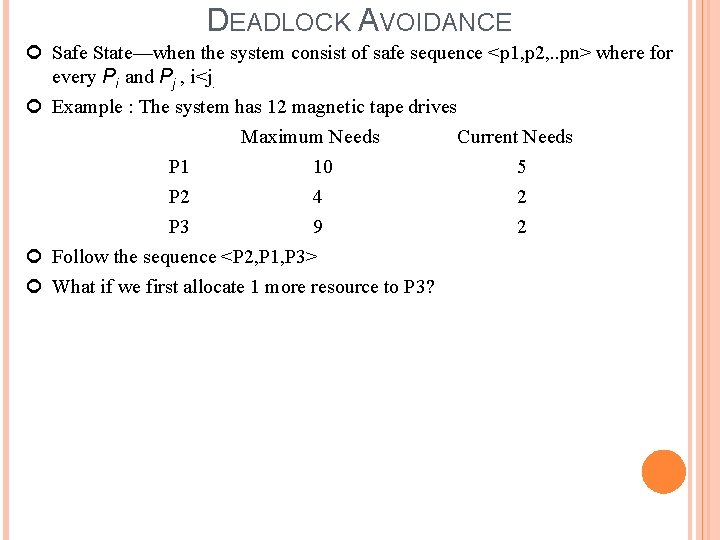

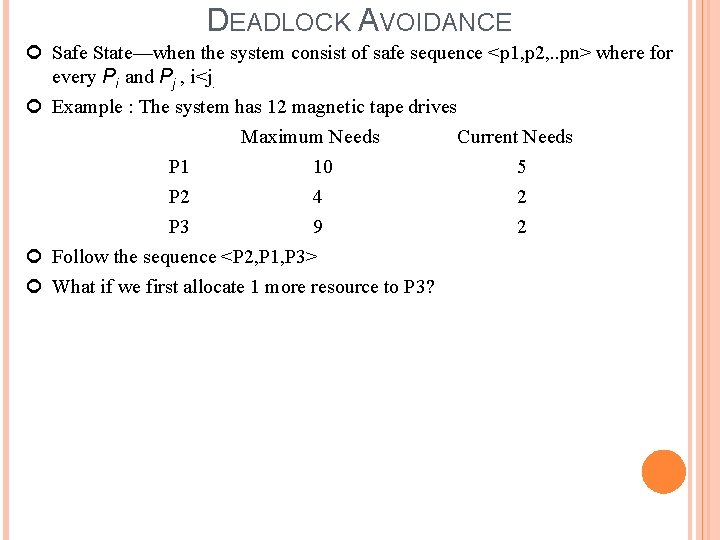

DEADLOCK AVOIDANCE Safe State—when the system consist of safe sequence <p 1, p 2, . . pn> where for every Pi and Pj , i<j. Example : The system has 12 magnetic tape drives Maximum Needs Current Needs P 1 10 5 P 2 4 2 P 3 9 2 Follow the sequence <P 2, P 1, P 3> What if we first allocate 1 more resource to P 3?

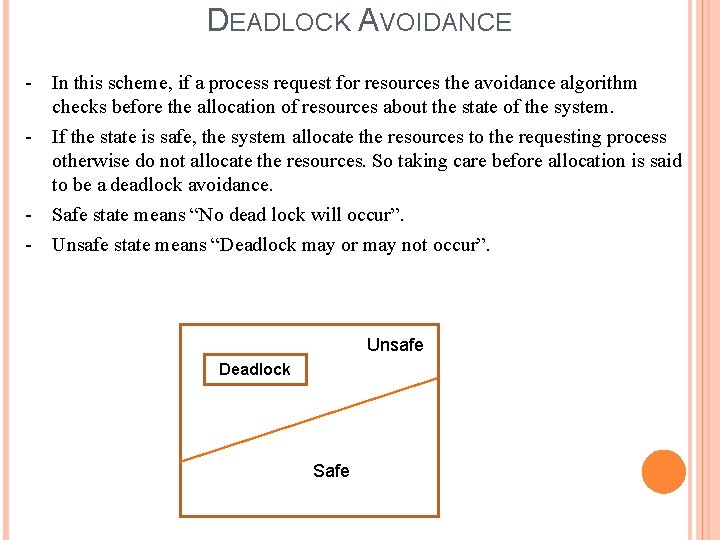

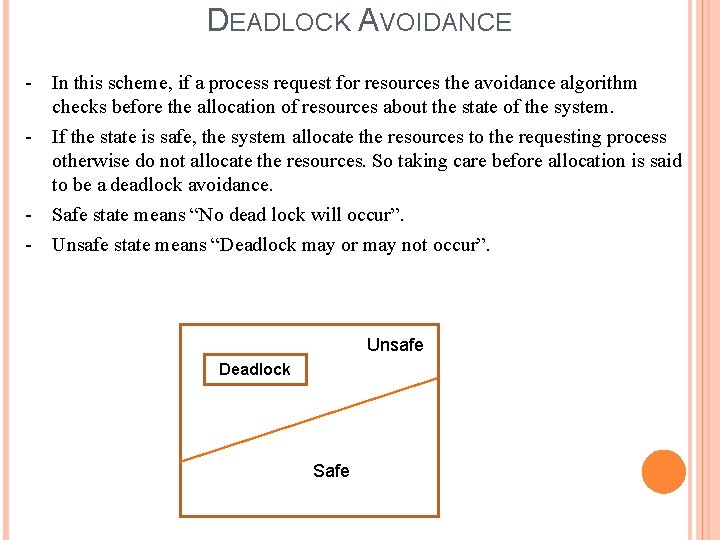

DEADLOCK AVOIDANCE - In this scheme, if a process request for resources the avoidance algorithm checks before the allocation of resources about the state of the system. - If the state is safe, the system allocate the resources to the requesting process otherwise do not allocate the resources. So taking care before allocation is said to be a deadlock avoidance. - Safe state means “No dead lock will occur”. - Unsafe state means “Deadlock may or may not occur”. Unsafe Deadlock Safe

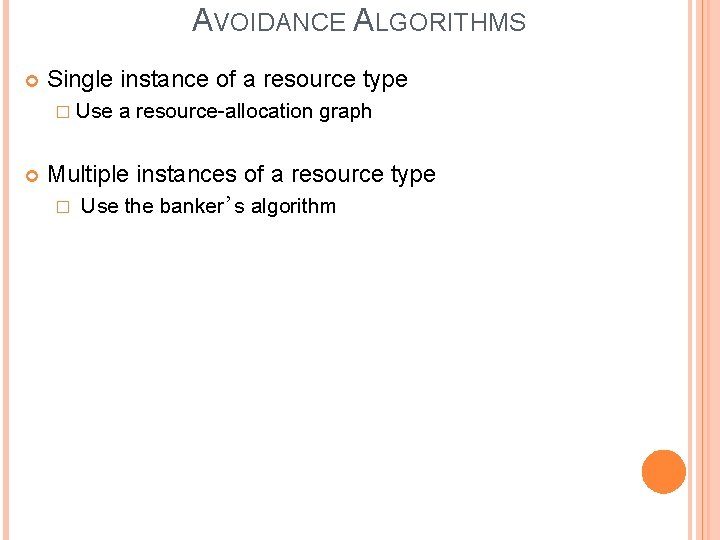

AVOIDANCE ALGORITHMS Single instance of a resource type � Use a resource-allocation graph Multiple instances of a resource type � Use the banker’s algorithm

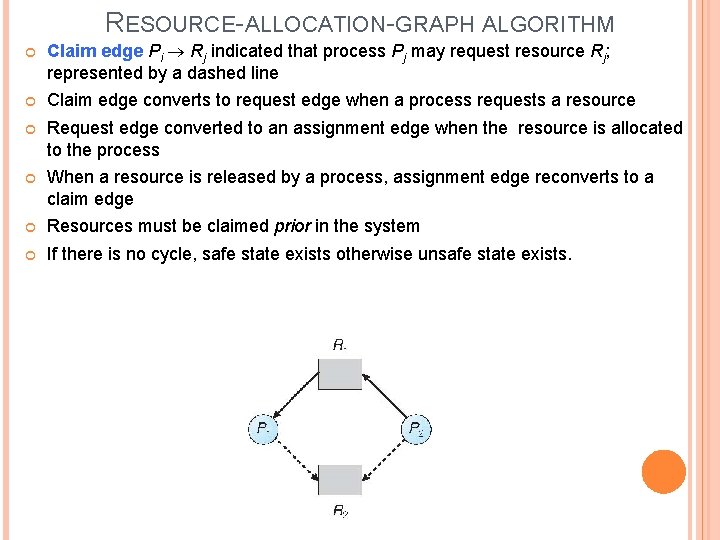

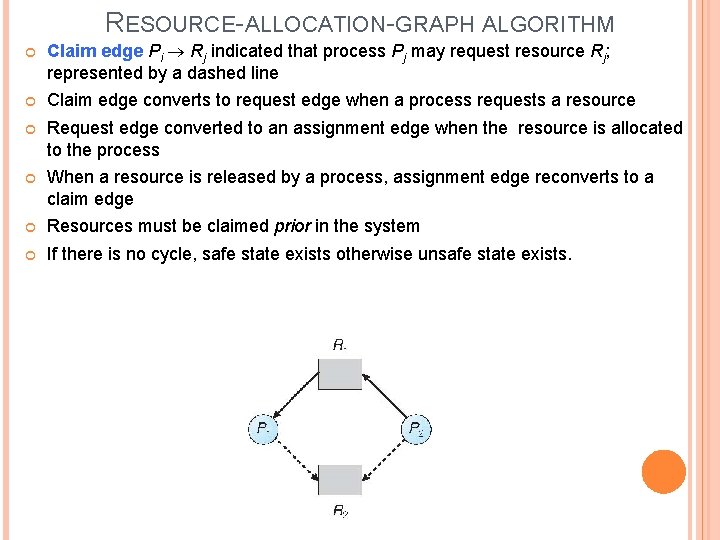

RESOURCE-ALLOCATION-GRAPH ALGORITHM Claim edge Pi Rj indicated that process Pj may request resource Rj; represented by a dashed line Claim edge converts to request edge when a process requests a resource Request edge converted to an assignment edge when the resource is allocated to the process When a resource is released by a process, assignment edge reconverts to a claim edge Resources must be claimed prior in the system If there is no cycle, safe state exists otherwise unsafe state exists.

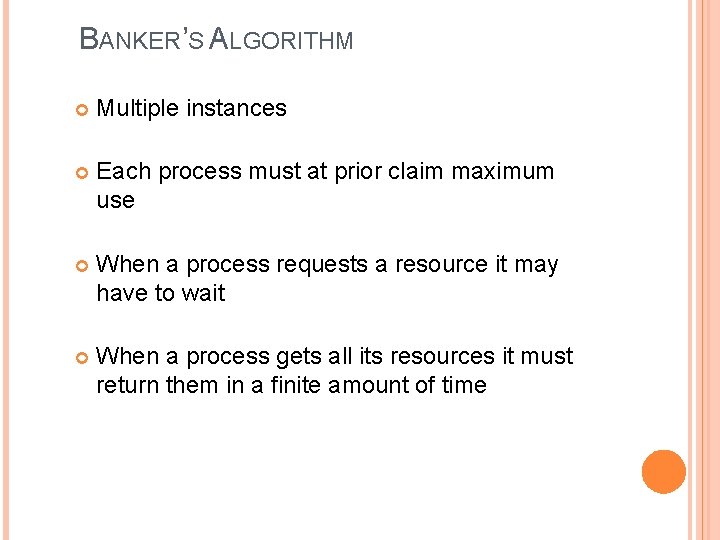

BANKER’S ALGORITHM Multiple instances Each process must at prior claim maximum use When a process requests a resource it may have to wait When a process gets all its resources it must return them in a finite amount of time

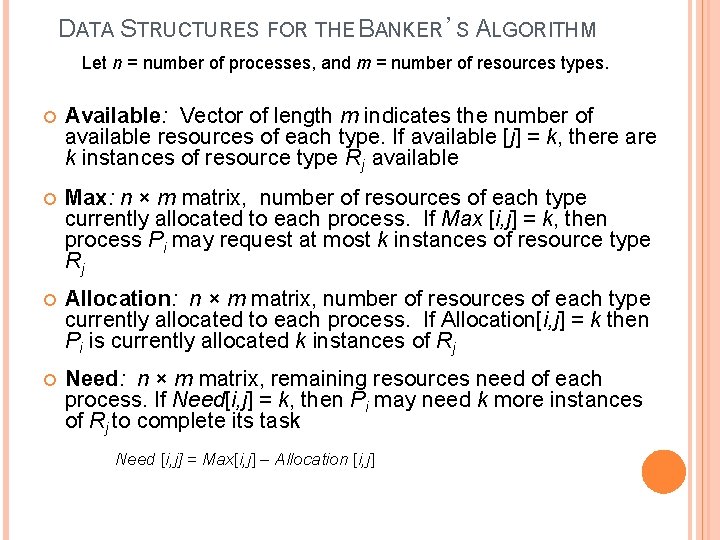

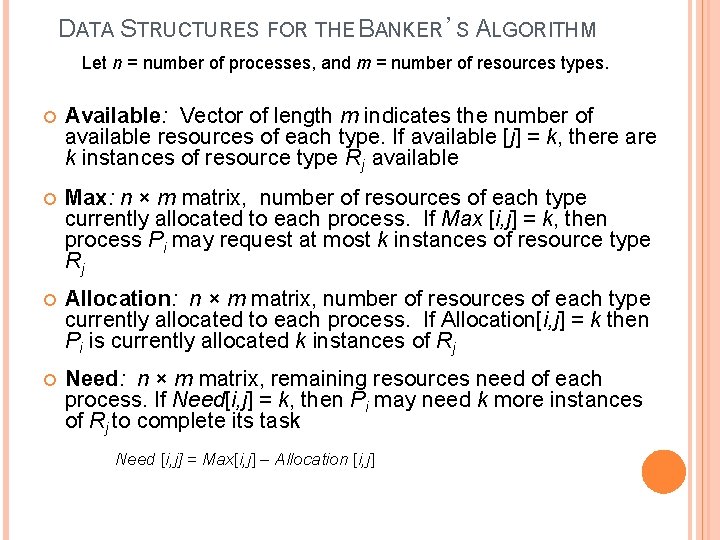

DATA STRUCTURES FOR THE BANKER’S ALGORITHM Let n = number of processes, and m = number of resources types. Available: Vector of length m indicates the number of available resources of each type. If available [j] = k, there are k instances of resource type Rj available Max: n × m matrix, number of resources of each type currently allocated to each process. If Max [i, j] = k, then process Pi may request at most k instances of resource type Rj Allocation: n × m matrix, number of resources of each type currently allocated to each process. If Allocation[i, j] = k then Pi is currently allocated k instances of Rj Need: n × m matrix, remaining resources need of each process. If Need[i, j] = k, then Pi may need k more instances of Rj to complete its task Need [i, j] = Max[i, j] – Allocation [i, j]

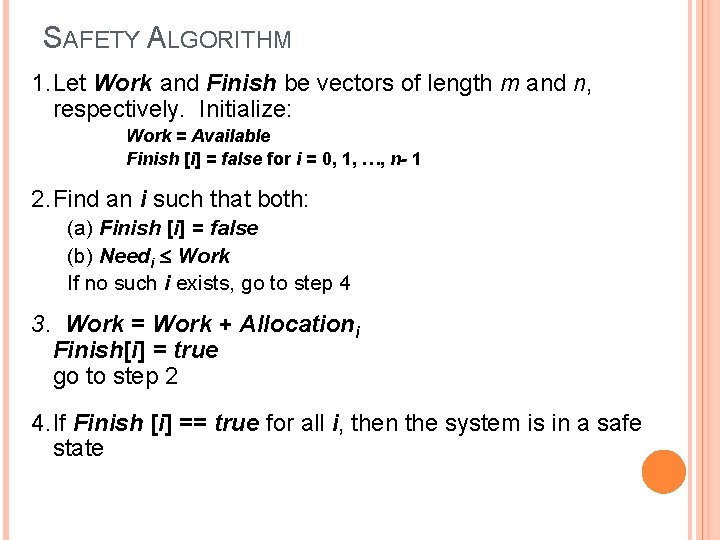

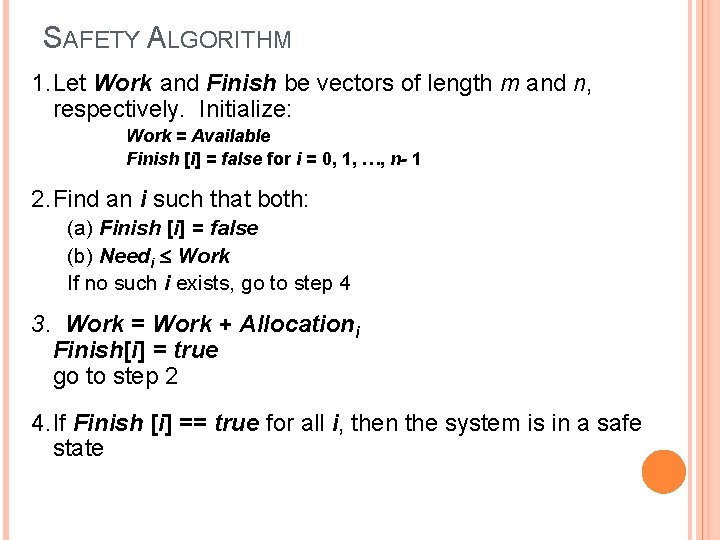

SAFETY ALGORITHM 1. Let Work and Finish be vectors of length m and n, respectively. Initialize: Work = Available Finish [i] = false for i = 0, 1, …, n- 1 2. Find an i such that both: (a) Finish [i] = false (b) Needi Work If no such i exists, go to step 4 3. Work = Work + Allocationi Finish[i] = true go to step 2 4. If Finish [i] == true for all i, then the system is in a safe state

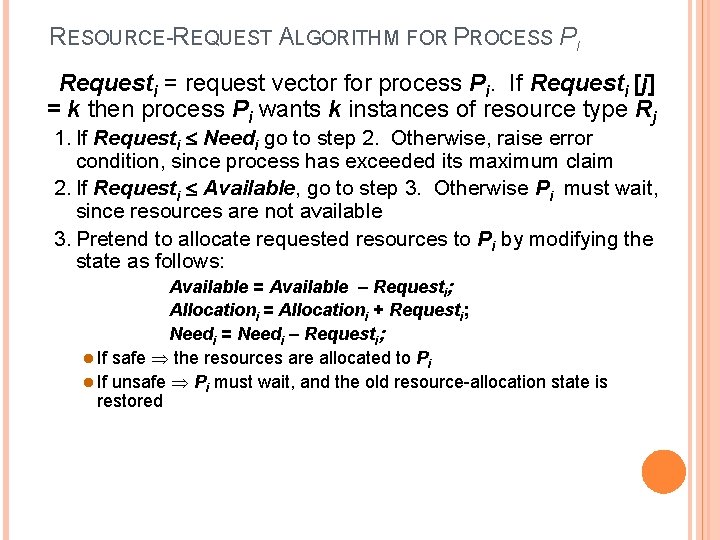

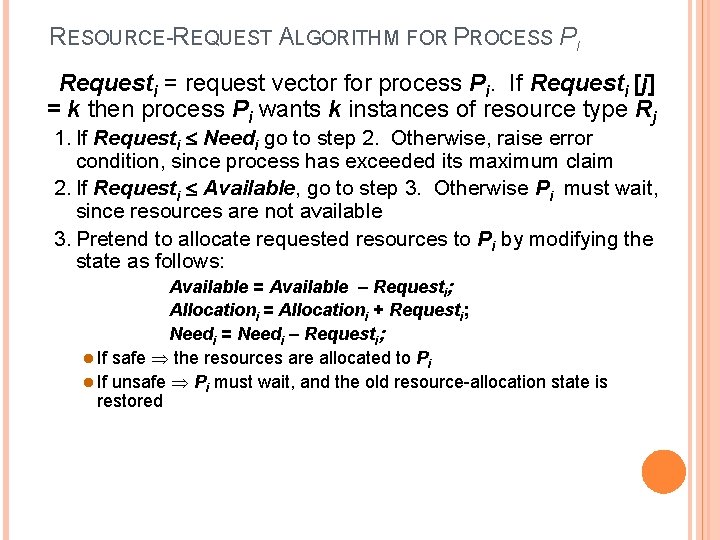

RESOURCE-REQUEST ALGORITHM FOR PROCESS PI Requesti = request vector for process Pi. If Requesti [j] = k then process Pi wants k instances of resource type Rj 1. If Requesti Needi go to step 2. Otherwise, raise error condition, since process has exceeded its maximum claim 2. If Requesti Available, go to step 3. Otherwise Pi must wait, since resources are not available 3. Pretend to allocate requested resources to Pi by modifying the state as follows: Available = Available – Requesti; Allocationi = Allocationi + Requesti; Needi = Needi – Requesti; l If safe the resources are allocated to Pi l If unsafe Pi must wait, and the old resource-allocation state is restored

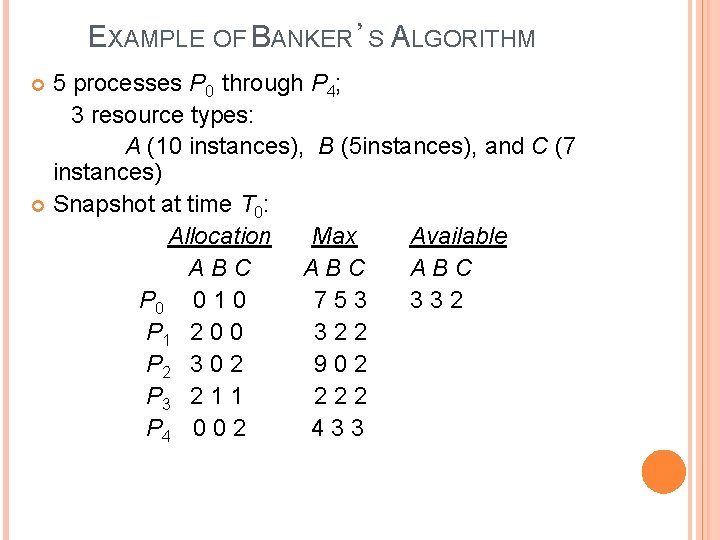

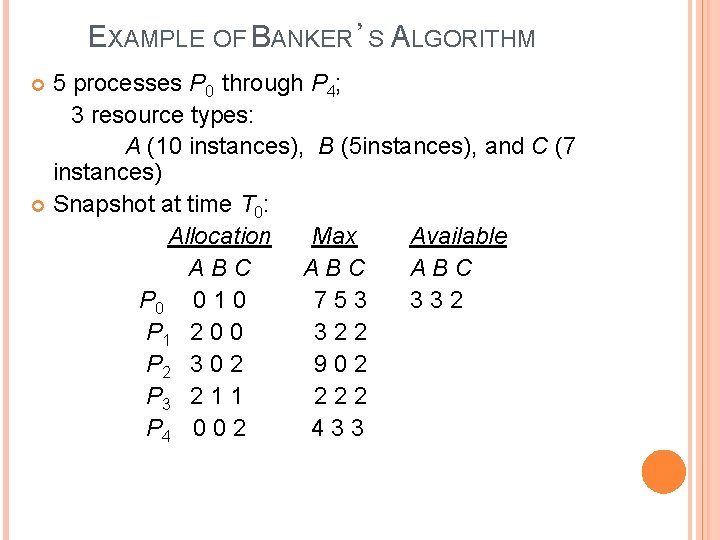

EXAMPLE OF BANKER’S ALGORITHM 5 processes P 0 through P 4; 3 resource types: A (10 instances), B (5 instances), and C (7 instances) Snapshot at time T 0: Allocation Max Available ABC ABC P 0 0 1 0 7 5 3 3 3 2 P 1 2 0 0 3 2 2 P 2 3 0 2 9 0 2 P 3 2 1 1 2 2 2 P 4 0 0 2 4 3 3

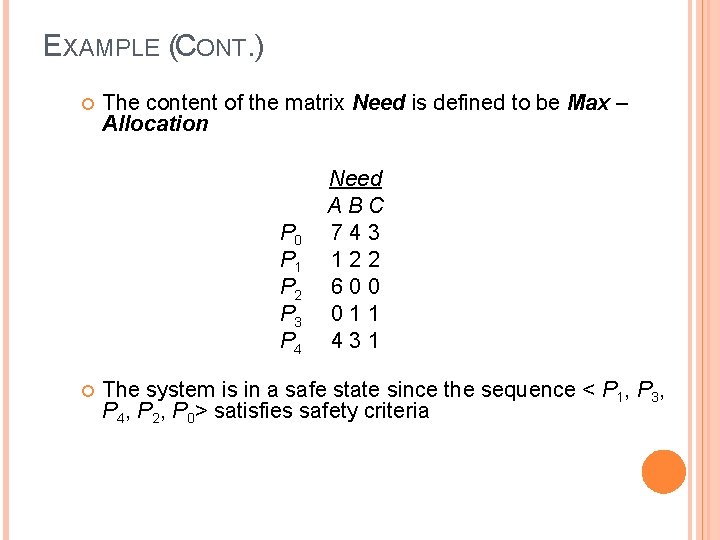

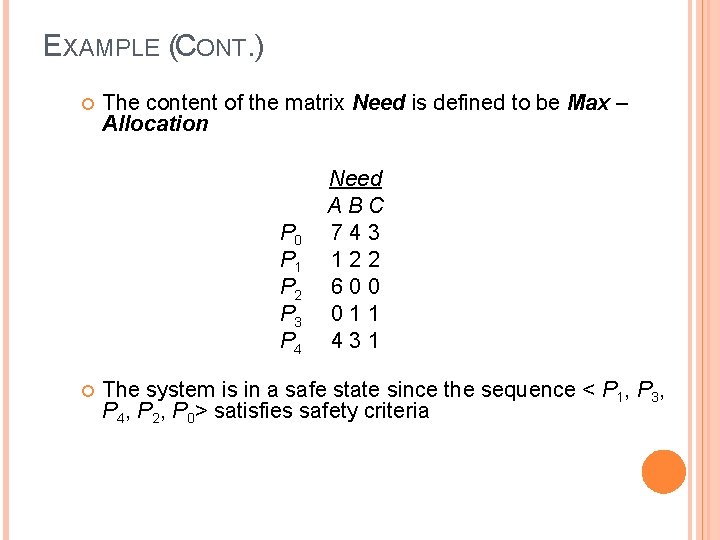

EXAMPLE (CONT. ) The content of the matrix Need is defined to be Max – Allocation P 0 P 1 P 2 P 3 P 4 Need ABC 7 4 3 1 2 2 6 0 0 0 1 1 4 3 1 The system is in a safe state since the sequence < P 1, P 3, P 4, P 2, P 0> satisfies safety criteria

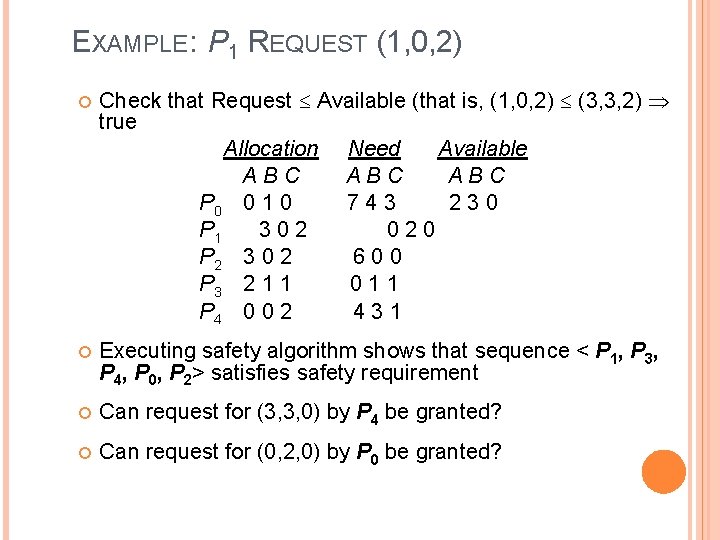

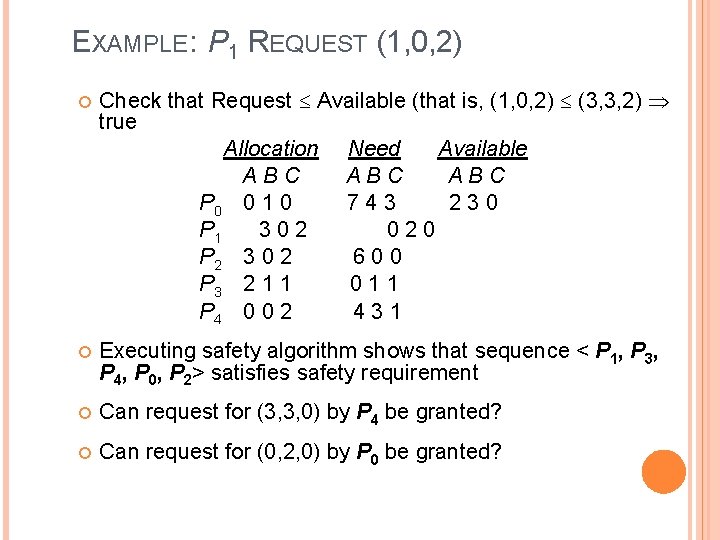

EXAMPLE: P 1 REQUEST (1, 0, 2) Check that Request Available (that is, (1, 0, 2) (3, 3, 2) true Allocation Need Available ABC ABC P 0 0 1 0 7 4 3 2 3 0 P 1 3 0 2 0 P 2 3 0 2 6 0 0 P 3 2 1 1 0 1 1 P 4 0 0 2 4 3 1 Executing safety algorithm shows that sequence < P 1, P 3, P 4, P 0, P 2> satisfies safety requirement Can request for (3, 3, 0) by P 4 be granted? Can request for (0, 2, 0) by P 0 be granted?

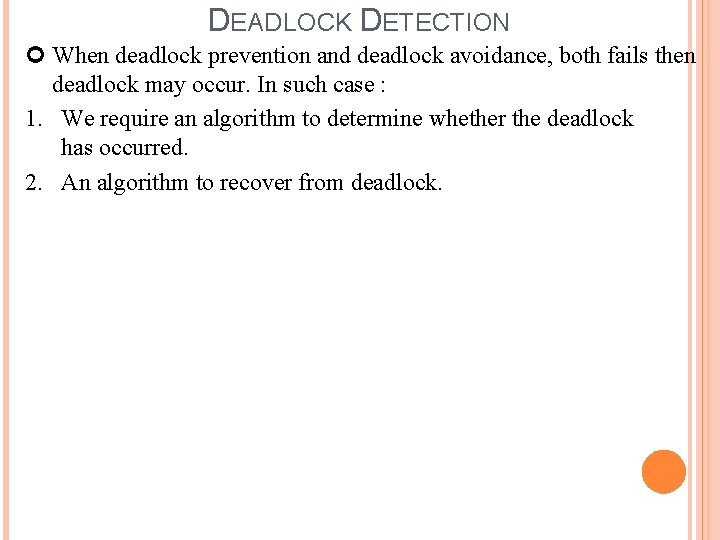

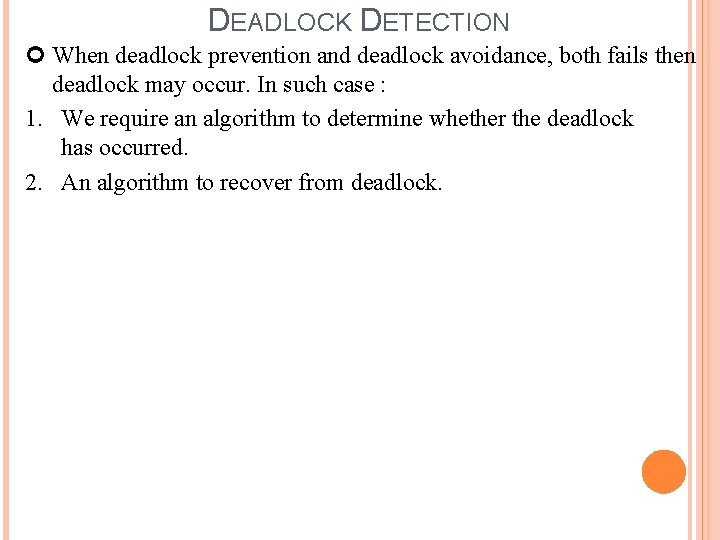

DEADLOCK DETECTION When deadlock prevention and deadlock avoidance, both fails then deadlock may occur. In such case : 1. We require an algorithm to determine whether the deadlock has occurred. 2. An algorithm to recover from deadlock.

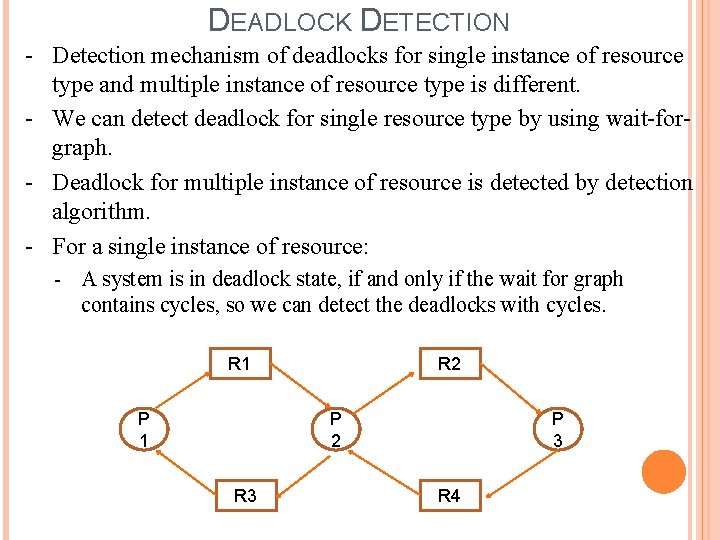

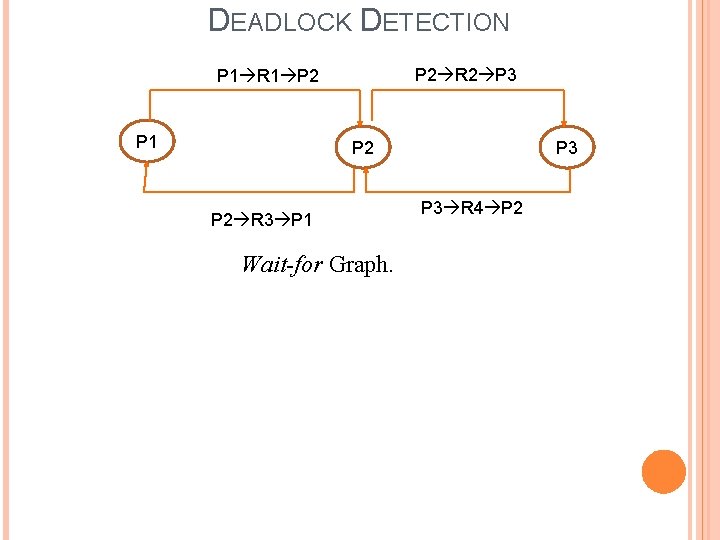

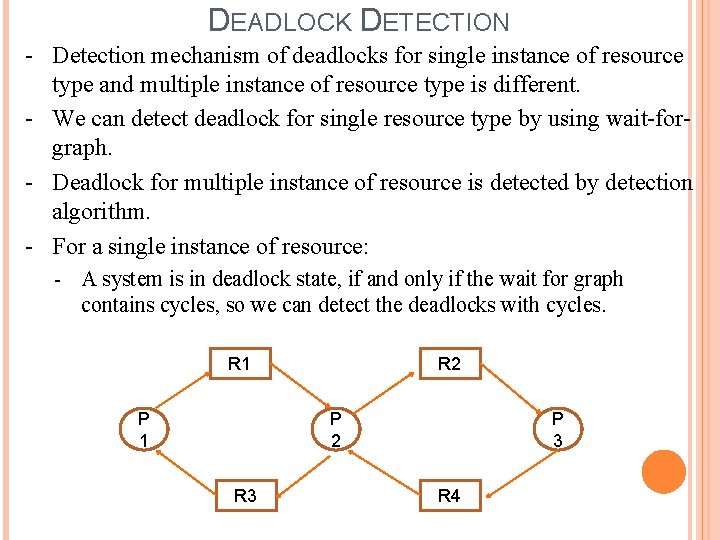

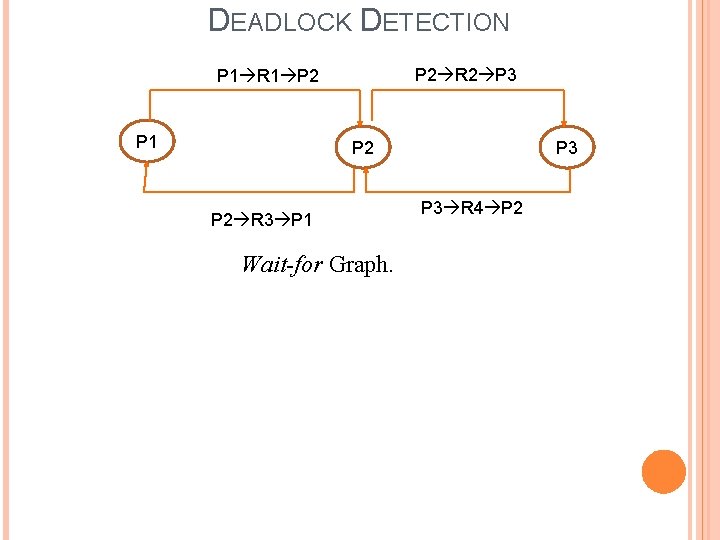

DEADLOCK DETECTION - Detection mechanism of deadlocks for single instance of resource type and multiple instance of resource type is different. - We can detect deadlock for single resource type by using wait-forgraph. - Deadlock for multiple instance of resource is detected by detection algorithm. - For a single instance of resource: - A system is in deadlock state, if and only if the wait for graph contains cycles, so we can detect the deadlocks with cycles. R 1 P 1 R 2 P 2 R 3 P 3 R 4

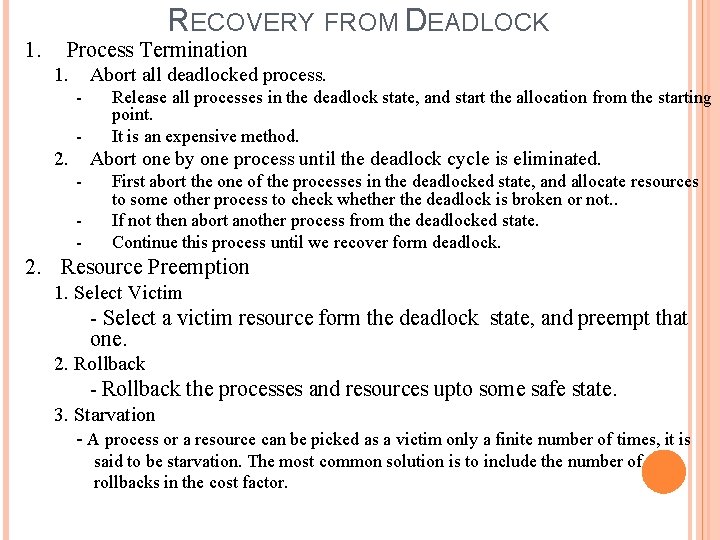

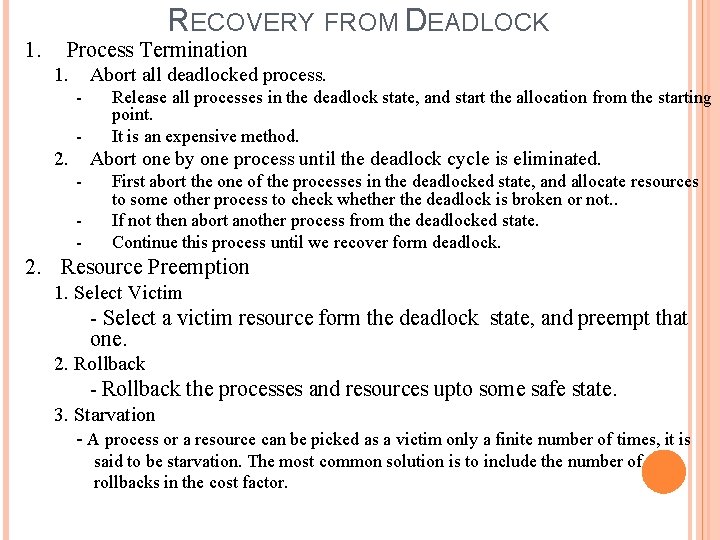

DEADLOCK DETECTION P 2 R 2 P 3 P 1 R 1 P 2 P 2 R 3 P 1 Wait-for Graph. P 3 R 4 P 2