Co-array Fortran: Compilation, Performance, Languages Issues

Cristian Coarfa, Yuri Dotsenko, John Mellor-Crummey

Department of Computer Science, Rice University

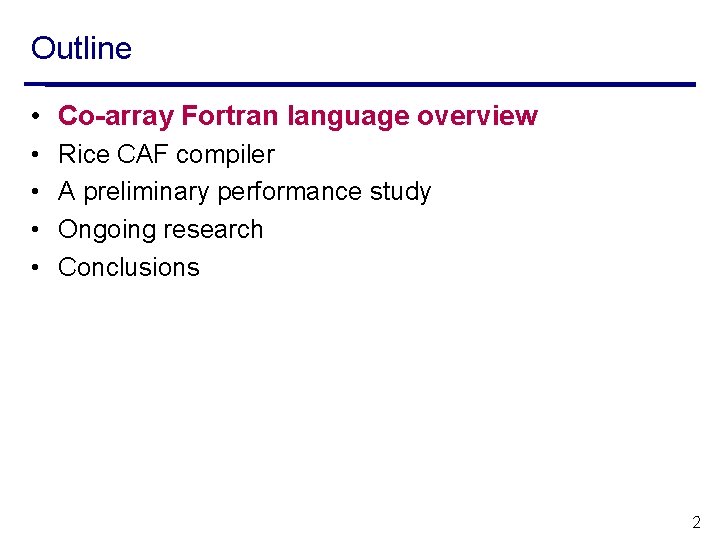

Outline

- Co-array Fortran language overview
- Rice CAF compiler
- A preliminary performance study
- Ongoing research
- Conclusions

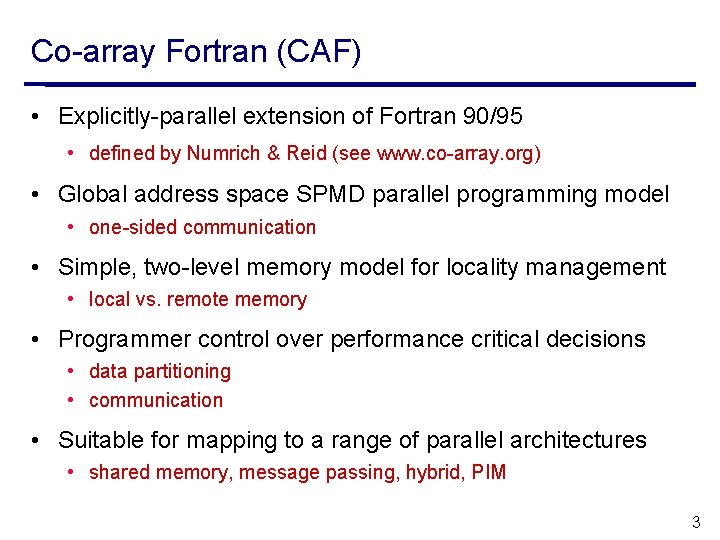

Co-array Fortran (CAF)

- Explicitly parallel extension of Fortran 90/95
  - defined by Numrich & Reid (see www.co-array.org)
- Global address space SPMD parallel programming model
  - one-sided communication
- Simple, two-level memory model for locality management
  - local vs. remote memory
- Programmer control over performance-critical decisions
  - data partitioning
  - communication
- Suitable for mapping to a range of parallel architectures
  - shared memory, message passing, hybrid, PIM

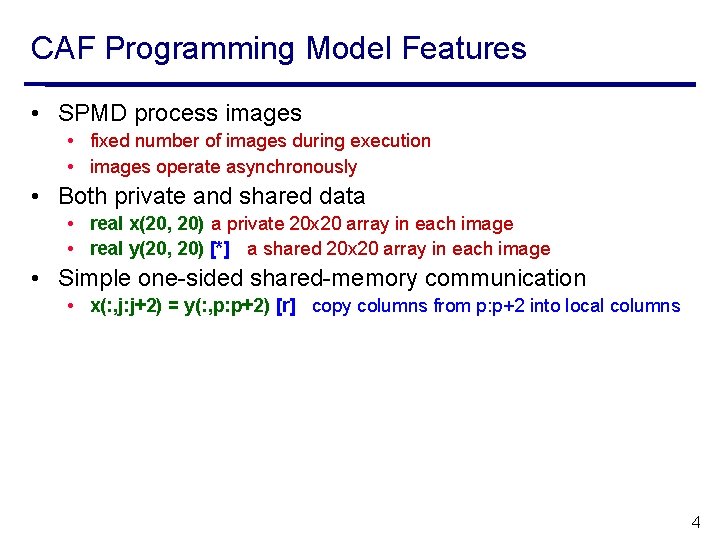

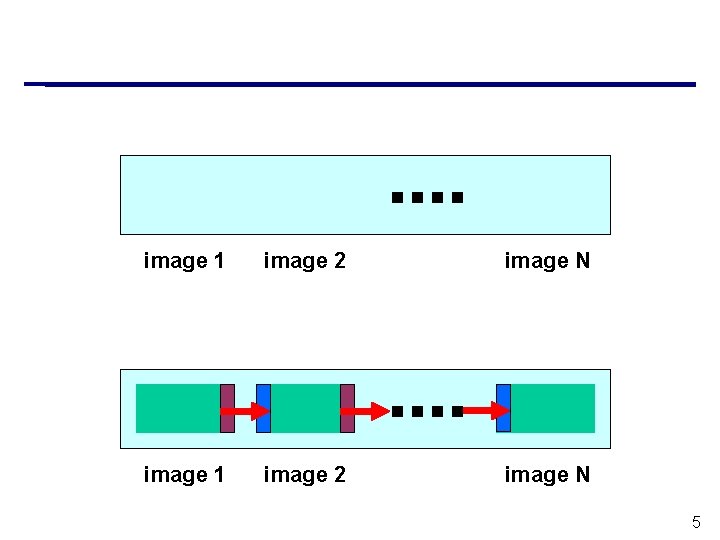

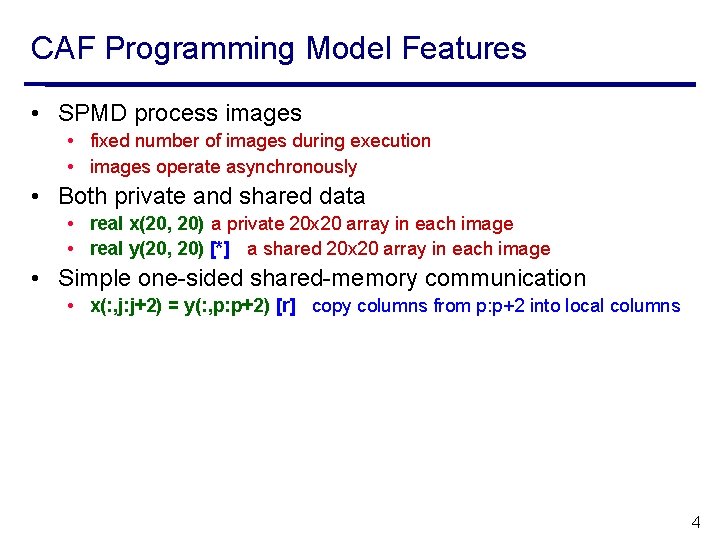

CAF Programming Model Features

- SPMD process images
  - fixed number of images during execution
  - images operate asynchronously
- Both private and shared data
  - `real x(20,20)`: a private 20 x 20 array in each image
  - `real y(20,20)[*]`: a shared 20 x 20 array in each image
- Simple one-sided shared-memory communication
  - `x(:,j:j+2) = y(:,p:p+2)[r]`: copy columns p:p+2 of y on image r into local columns j:j+2 of x
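The declarations and the one-sided copy above can be tried out in a small program. The following is a minimal sketch, assuming a compiler that supports Fortran 2008 coarrays (the standardized descendant of CAF, so `sync all` is spelled differently from the `sync_all` intrinsic in these slides). The program name, the choice of neighbor image `r`, and the values of `j` and `p` are illustrative assumptions, not taken from the slides.

```fortran
program caf_model_sketch
  implicit none
  real :: x(20, 20)       ! private: each image has its own independent copy
  real :: y(20, 20)[*]    ! co-array: one 20 x 20 block per image, remotely readable
  integer :: r, j, p

  y = real(this_image())  ! every image fills its block with its own image number
  x = 0.0
  sync all                ! all images have initialized y before any remote read

  ! Illustrative choice: read from the next image, wrapping around at the end.
  ! On a single image this is the image itself.
  r = merge(1, this_image() + 1, this_image() == num_images())
  j = 1
  p = 5

  ! One-sided GET: image r executes no matching statement for this transfer.
  x(:, j:j+2) = y(:, p:p+2)[r]

  if (all(x(:, j:j+2) == real(r))) then
    print '(a,i0,a,i0)', 'image ', this_image(), ' copied 3 columns from image ', r
  end if
end program caf_model_sketch
```

With GNU Fortran this should build as a single-image program using `gfortran -fcoarray=single`; running on several images needs a coarray runtime such as OpenCoarrays.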

[Figure: image 1, image 2, ..., image N]

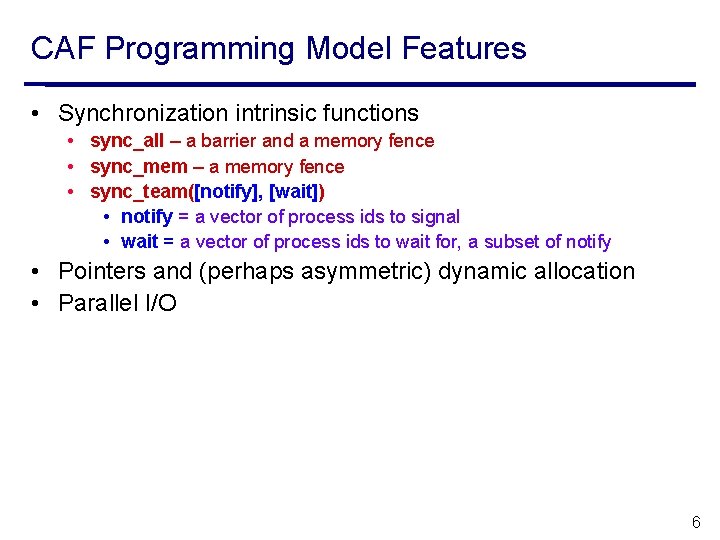

CAF Programming Model Features

- Synchronization intrinsic functions
  - sync_all: a barrier and a memory fence
  - sync_mem: a memory fence
  - sync_team([notify], [wait])
    - notify = a vector of process ids to signal
    - wait = a vector of process ids to wait for, a subset of notify
- Pointers and (perhaps asymmetric) dynamic allocation
- Parallel I/O
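To make the difference between a barrier and pairwise synchronization concrete, here is a minimal sketch in Fortran 2008 coarray syntax. It is an assumption that the standard statements `sync all` and `sync images` are close enough analogues of the `sync_all` and `sync_team` intrinsics listed above to illustrate the idea; they are not the same interface. The mailbox variable `box` and the value 42 are hypothetical.

```fortran
program caf_sync_sketch
  implicit none
  integer :: box[*]          ! one integer mailbox per image (hypothetical name)
  integer :: me

  me = this_image()
  box = 0
  sync all                   ! barrier plus memory fence: every mailbox is initialized

  ! Only images 1 and 2 take part; all other images continue without waiting.
  if (num_images() >= 2) then
    if (me == 1) then
      box[2] = 42            ! one-sided PUT into the mailbox on image 2
      sync images(2)         ! pairwise synchronization with image 2 only
    else if (me == 2) then
      sync images(1)         ! matches the sync images(2) executed by image 1
      print '(a,i0)', 'image 2 received ', box
    end if
  end if
end program caf_sync_sketch
```

On a single image the guarded block is skipped, so the program still runs to completion.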

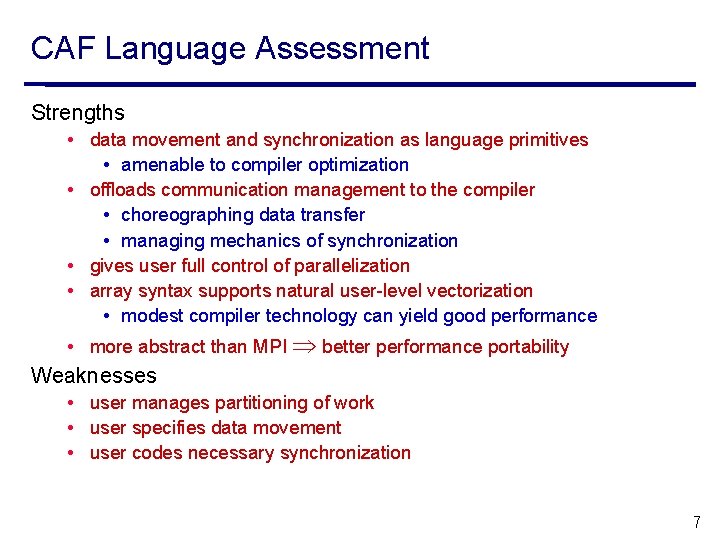

CAF Language Assessment

Strengths

- data movement and synchronization as language primitives
  - amenable to compiler optimization
- offloads communication management to the compiler
  - choreographing data transfer
  - managing mechanics of synchronization
- gives user full control of parallelization
- array syntax supports natural user-level vectorization
- modest compiler technology can yield good performance
- more abstract than MPI, hence better performance portability

Weaknesses

- user manages partitioning of work
- user specifies data movement
- user codes necessary synchronization

Outline

- Co-array Fortran language overview
- Rice CAF compiler
- A preliminary performance study
- Ongoing research
- Conclusions

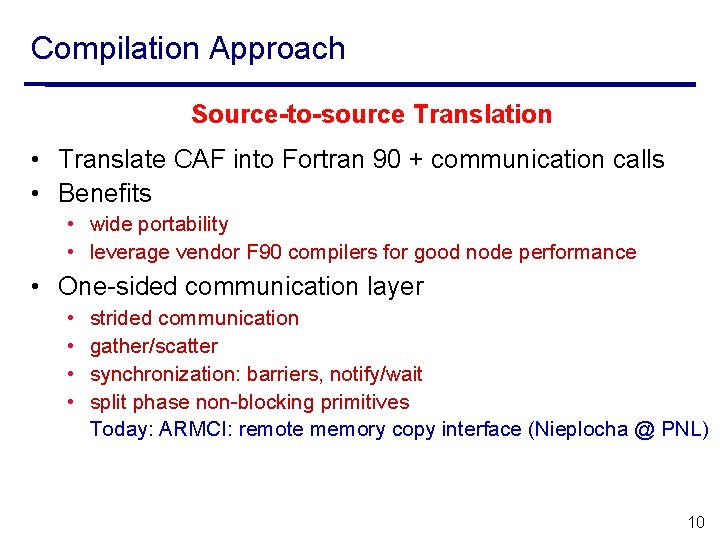

Compiler Goals

- Portable, open-source compiler
- Multi-platform code generation
- High-performance generated code

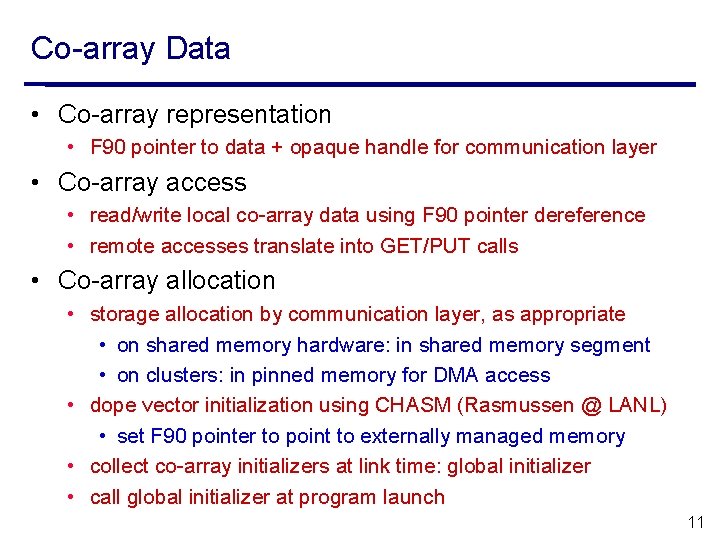

Compilation Approach

Source-to-source translation

- Translate CAF into Fortran 90 + communication calls
- Benefits
  - wide portability
  - leverage vendor F90 compilers for good node performance
- One-sided communication layer
  - strided communication
  - gather/scatter
  - synchronization: barriers, notify/wait
  - split-phase non-blocking primitives
- Today: ARMCI: remote memory copy interface (Nieplocha @ PNL)

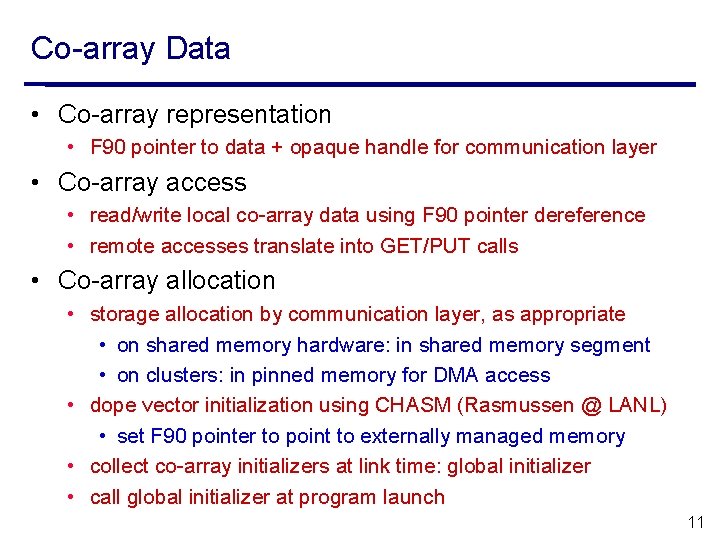

Co-array Data

- Co-array representation
  - F90 pointer to data + opaque handle for communication layer
- Co-array access
  - read/write local co-array data using F90 pointer dereference
  - remote accesses translate into GET/PUT calls
- Co-array allocation
  - storage allocation by communication layer, as appropriate
    - on shared memory hardware: in shared memory segment
    - on clusters: in pinned memory for DMA access
  - dope vector initialization using CHASM (Rasmussen @ LANL)
    - set F90 pointer to point to externally managed memory
  - collect co-array initializers at link time: global initializer
  - call global initializer at program launch

![Implementing Communication Given a statement X1 n A1 np Implementing Communication • Given a statement • X(1: n) = A(1: n)[p] + …](https://slidetodoc.com/presentation_image_h2/105c22dbcc3978ef8d61a3b4fd8d4dcb/image-12.jpg)

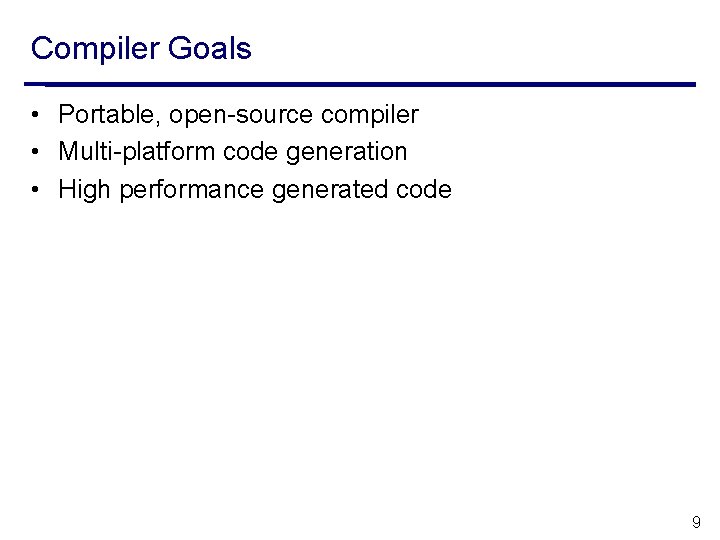

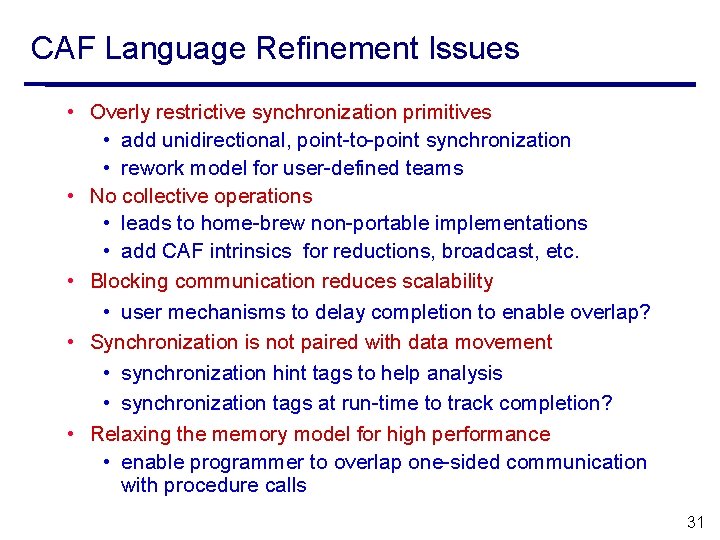

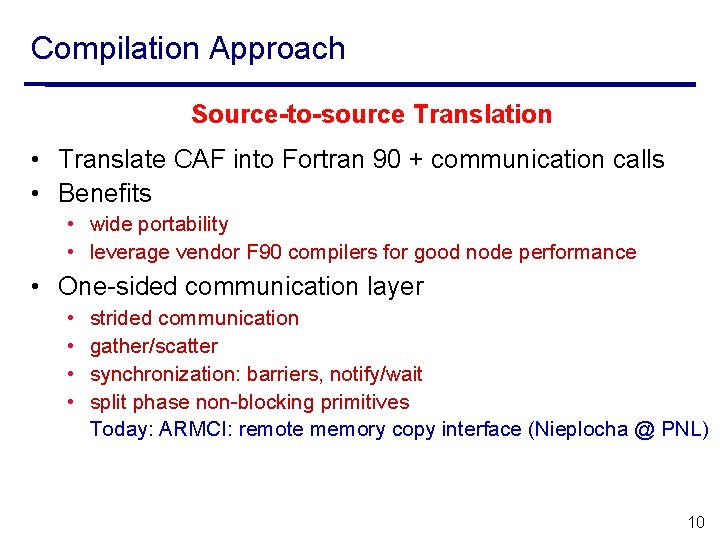

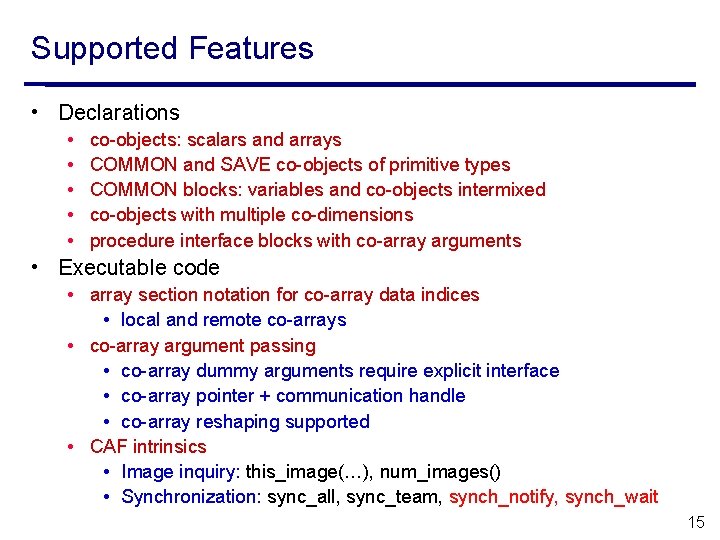

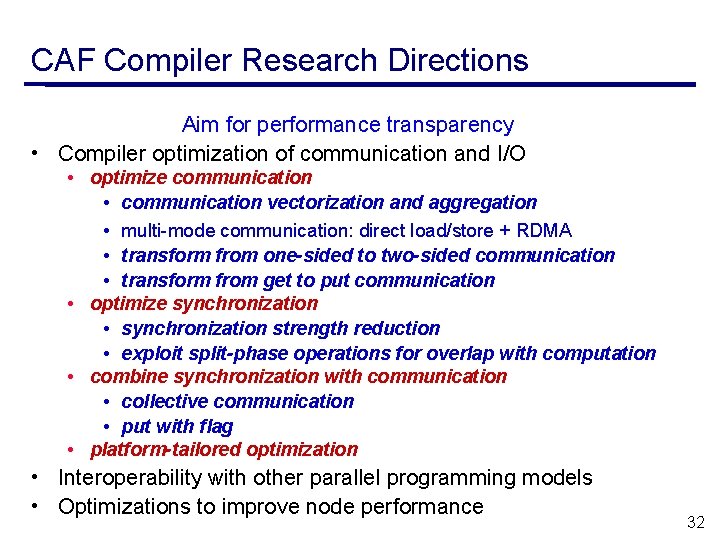

Implementing Communication

- Given a statement
  - `X(1:n) = A(1:n)[p] + …`
- A temporary buffer is used for off-processor data
  - invoke communication library to allocate tmp in suitable temporary storage
  - dope vector filled in so tmp can be accessed as F90 pointer
  - call communication library to fill in tmp (GET)
  - `X(1:n) = tmp(1:n) + …`
  - deallocate tmp
- Optimizations
  - Co-array to co-array communication: no temporary storage
  - On shared-memory systems: direct load/store
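The buffer-based translation described above can be sketched by hand. This is only an illustration of the shape of the generated code, not the Rice compiler's actual output: the subroutine `get_section` is a hypothetical stand-in for the communication library's GET call, an ordinary `allocate` stands in for library-managed temporary storage, and the `+ 1.0` replaces the elided remainder of the right-hand side.

```fortran
program caf_get_translation_sketch
  implicit none
  integer, parameter :: n = 8
  real :: a(n)[*]              ! co-array holding the remote operand
  real :: x(n)                 ! private destination array
  real, allocatable :: tmp(:)  ! temporary buffer for off-processor data
  integer :: p

  a = real(this_image())
  sync all
  p = num_images()             ! illustrative choice: read from the last image

  ! Source statement written by the programmer:
  !   x(1:n) = a(1:n)[p] + 1.0
  ! Shape of the translated code:
  allocate(tmp(n))             ! stand-in for library-allocated temporary storage
  call get_section(tmp, p)     ! stand-in for the library GET that fills tmp
  x(1:n) = tmp(1:n) + 1.0      ! purely local computation on the buffer
  deallocate(tmp)

  if (all(x == real(p) + 1.0)) then
    print '(a,i0,a)', 'image ', this_image(), ': buffered GET gave the expected values'
  end if

contains

  ! Hypothetical helper: fetch a(1:size(buf)) from image img into buf.
  subroutine get_section(buf, img)
    real, intent(out) :: buf(:)
    integer, intent(in) :: img
    buf = a(1:size(buf))[img]
  end subroutine get_section

end program caf_get_translation_sketch
```

The co-array to co-array optimization listed above corresponds to skipping `tmp` entirely when the destination is itself a co-array.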

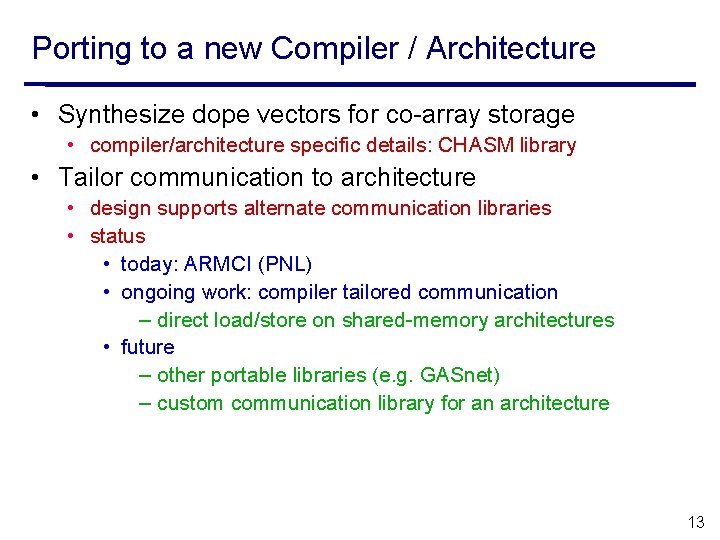

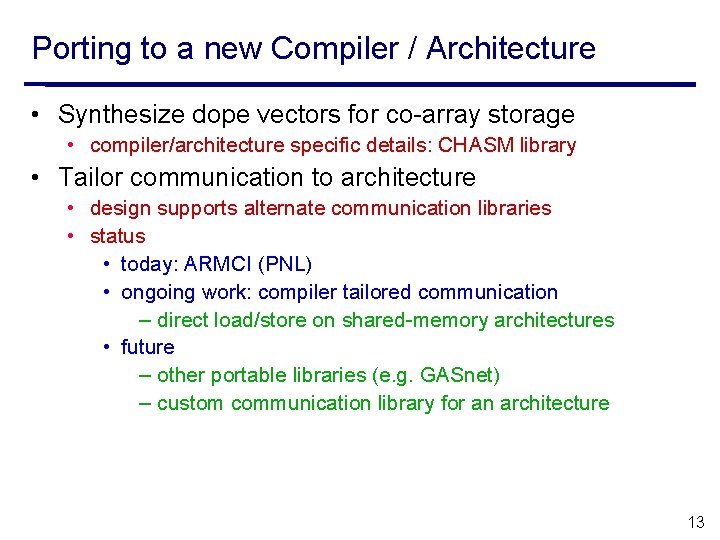

Porting to a New Compiler / Architecture

- Synthesize dope vectors for co-array storage
  - compiler/architecture specific details: CHASM library
- Tailor communication to architecture
  - design supports alternate communication libraries
  - status
    - today: ARMCI (PNL)
    - ongoing work: compiler-tailored communication
      - direct load/store on shared-memory architectures
    - future
      - other portable libraries (e.g. GASNet)
      - custom communication library for an architecture

14

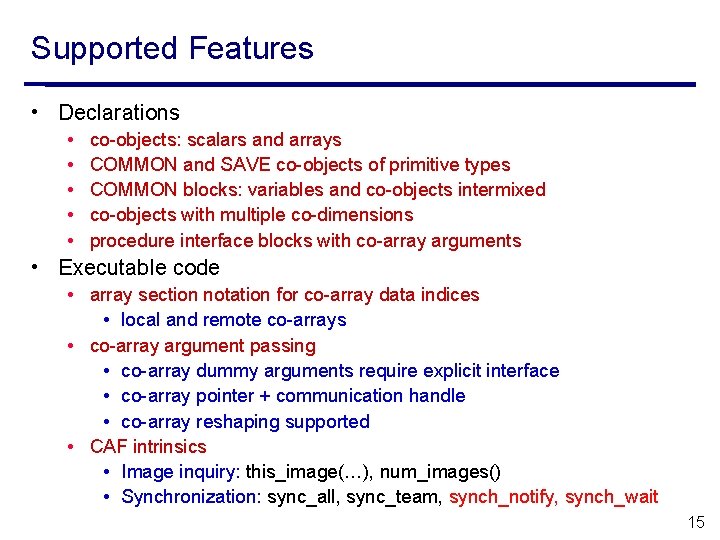

Supported Features

- Declarations
  - co-objects: scalars and arrays
  - COMMON and SAVE co-objects of primitive types
  - COMMON blocks: variables and co-objects intermixed
  - co-objects with multiple co-dimensions
  - procedure interface blocks with co-array arguments
- Executable code
  - array section notation for co-array data indices
  - local and remote co-arrays
  - co-array argument passing
    - co-array dummy arguments require explicit interface
    - co-array pointer + communication handle
  - co-array reshaping supported
- CAF intrinsics
  - Image inquiry: this_image(…), num_images()
  - Synchronization: sync_all, sync_team, synch_notify, synch_wait

![Coming Attractions Allocatable coarrays REAL8 ALLOCATABLE X ALLOCATEXMYXNUM Coarrays Coming Attractions • Allocatable co-arrays REAL(8), ALLOCATABLE : : X(: )[*] ALLOCATE(X(MYX_NUM)[*]) • Co-arrays](https://slidetodoc.com/presentation_image_h2/105c22dbcc3978ef8d61a3b4fd8d4dcb/image-16.jpg)

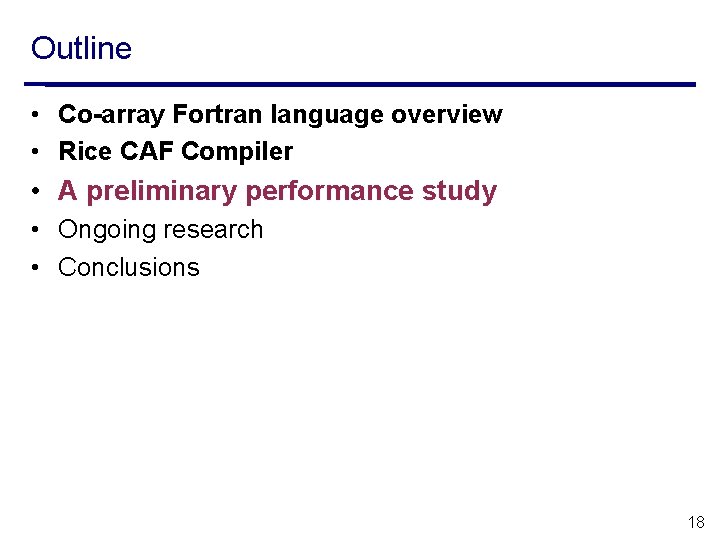

Coming Attractions

- Allocatable co-arrays
  - `REAL(8), ALLOCATABLE :: X(:)[*]`
  - `ALLOCATE(X(MYX_NUM)[*])`
- Co-arrays of user-defined types
- Allocatable co-array components
  - user-defined type with pointer components
- Triplets in co-dimensions
  - `A(j,k)[p+1:p+4]`
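For readers who want to experiment with allocatable co-arrays today, here is a minimal sketch in standardized Fortran 2008. One difference from the slide is worth flagging: the standard requires a deferred co-shape `[:]` in the declaration, with `[*]` appearing only in the `ALLOCATE` statement. The value 100 for `myx_num` is an arbitrary assumption.

```fortran
program caf_alloc_sketch
  implicit none
  real(8), allocatable :: x(:)[:]   ! Fortran 2008 spelling: deferred co-shape [:]
  integer :: myx_num

  myx_num = 100                     ! arbitrary size; must be the same on every image
  allocate(x(myx_num)[*])           ! executed by all images; implies a synchronization

  x = real(this_image(), 8)         ! each image fills its own part
  sync all                          ! make the writes visible before remote reads

  ! Every image reads the first element stored on image 1.
  print '(a,i0,a,f4.1)', 'image ', this_image(), ' sees x(1)[1] = ', x(1)[1]

  deallocate(x)                     ! also synchronizes, so no image frees x too early
end program caf_alloc_sketch
```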


Outline

- Co-array Fortran language overview
- Rice CAF compiler
- A preliminary performance study
- Ongoing research
- Conclusions

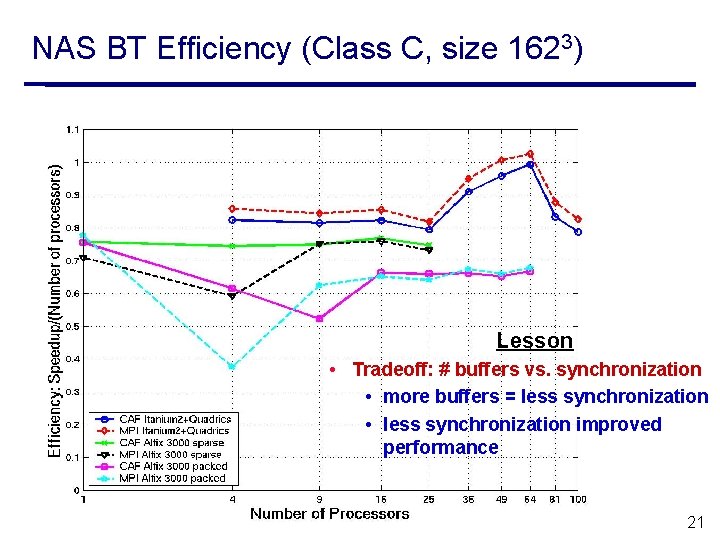

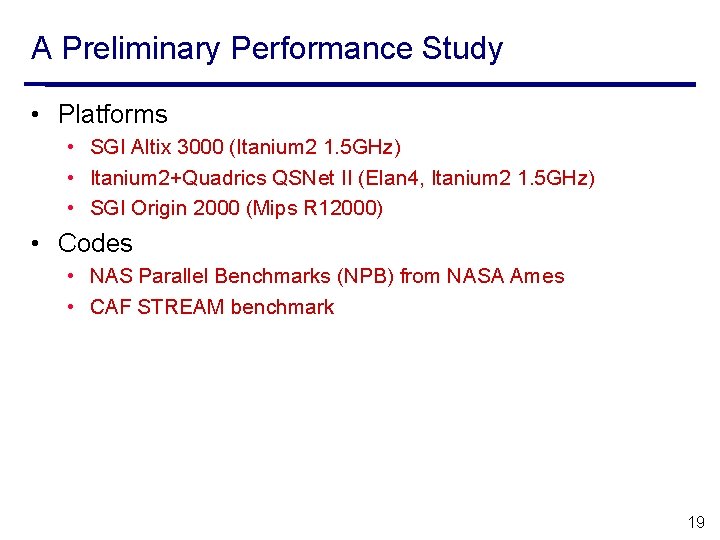

A Preliminary Performance Study

- Platforms
  - SGI Altix 3000 (Itanium 2, 1.5 GHz)
  - Itanium 2 + Quadrics QSNet II (Elan 4, Itanium 2, 1.5 GHz)
  - SGI Origin 2000 (MIPS R12000)
- Codes
  - NAS Parallel Benchmarks (NPB) from NASA Ames
  - CAF STREAM benchmark

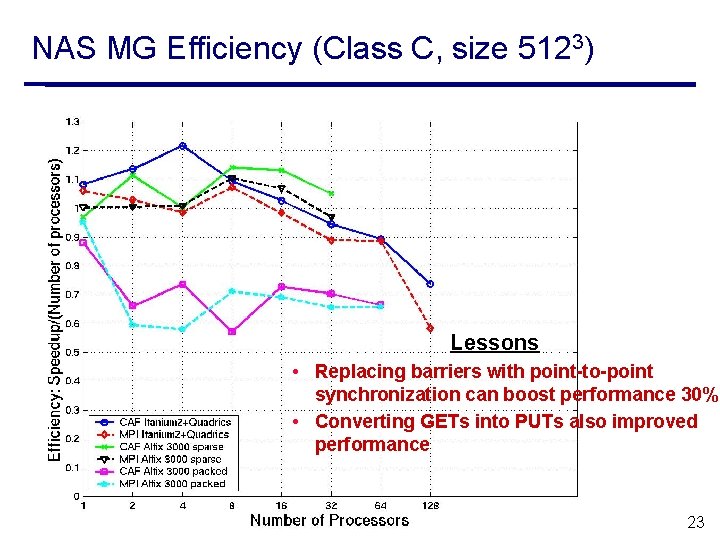

NAS Parallel Benchmarks (NPB) 2.3

- Benchmarks by NASA Ames
  - 2-3K lines each (Fortran 77)
- Widely used to test parallel compiler performance
- BT, SP, MG and CG
- NAS versions
  - NPB 2.3b2: hand-coded MPI
  - NPB 2.3-serial: serial code extracted from MPI version
- Our version
  - NPB 2.3-CAF: CAF implementation, based on the MPI version

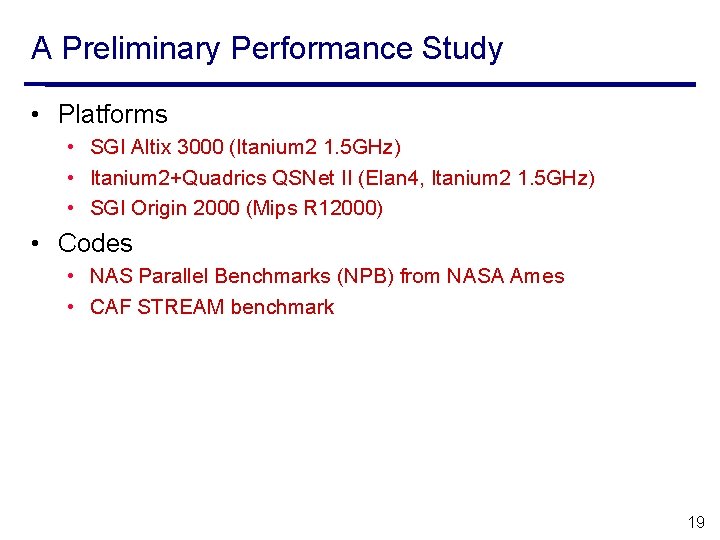

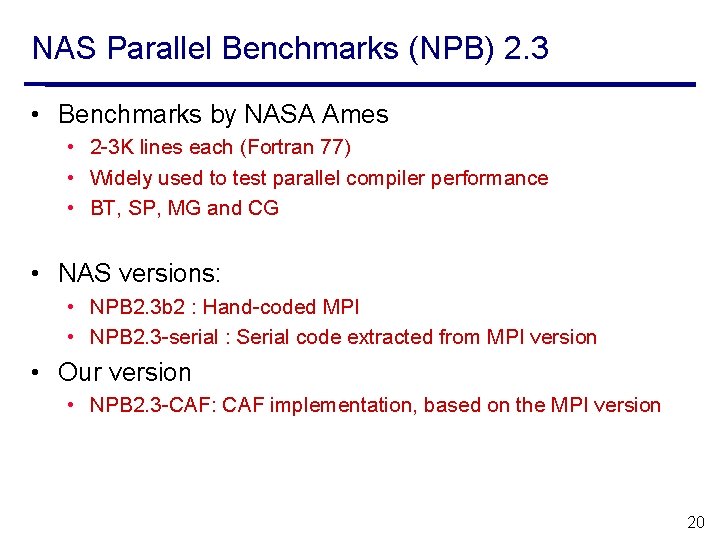

NAS BT Efficiency (Class C, size 162^3)

Lesson

- Tradeoff: number of buffers vs. synchronization
  - more buffers means less synchronization
  - less synchronization means improved performance

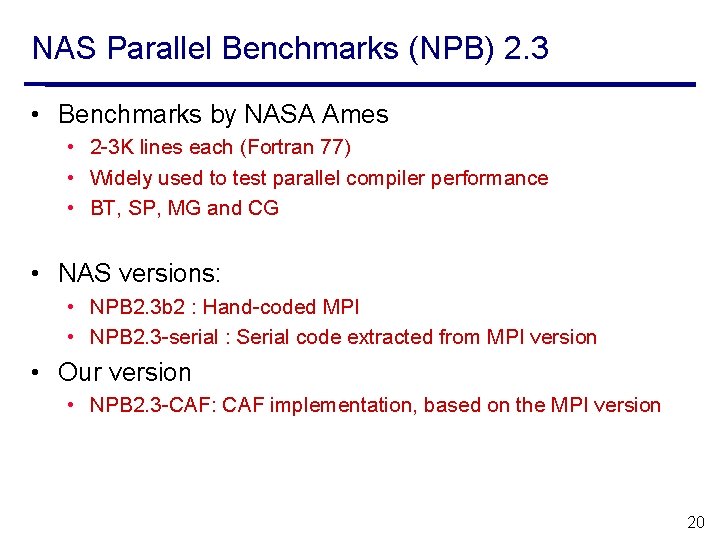

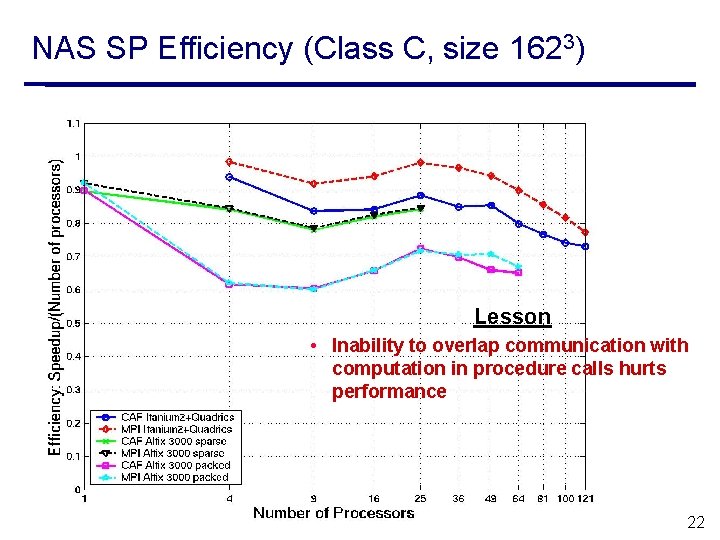

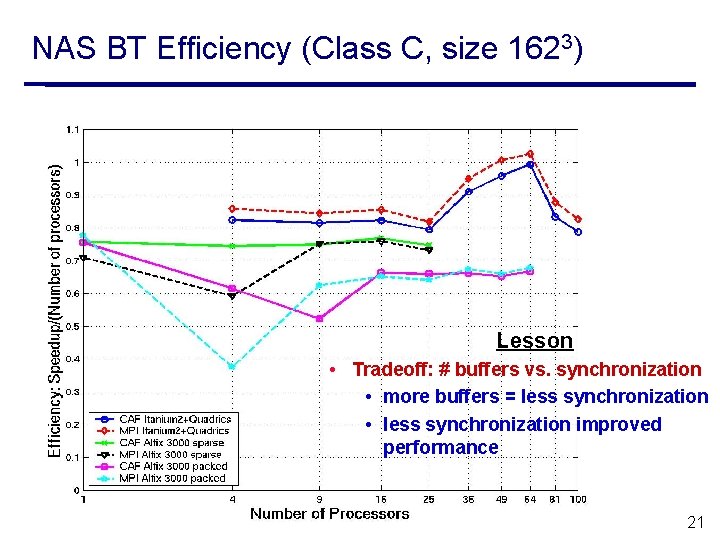

NAS SP Efficiency (Class C, size 162^3)

Lesson

- Inability to overlap communication with computation in procedure calls hurts performance

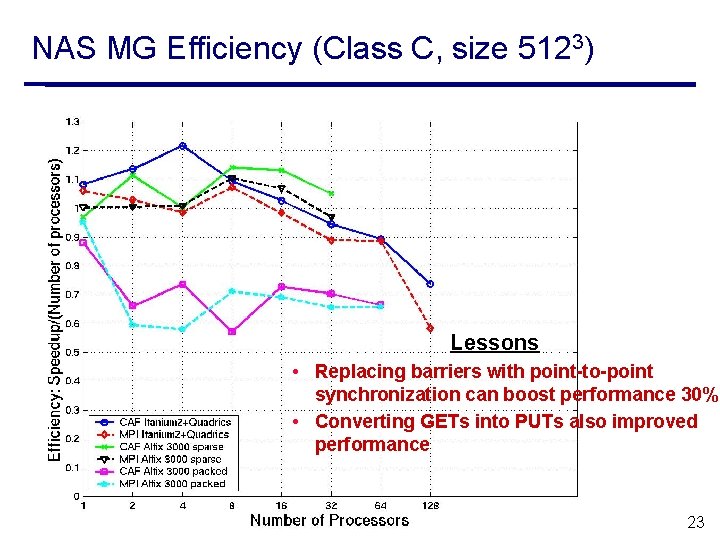

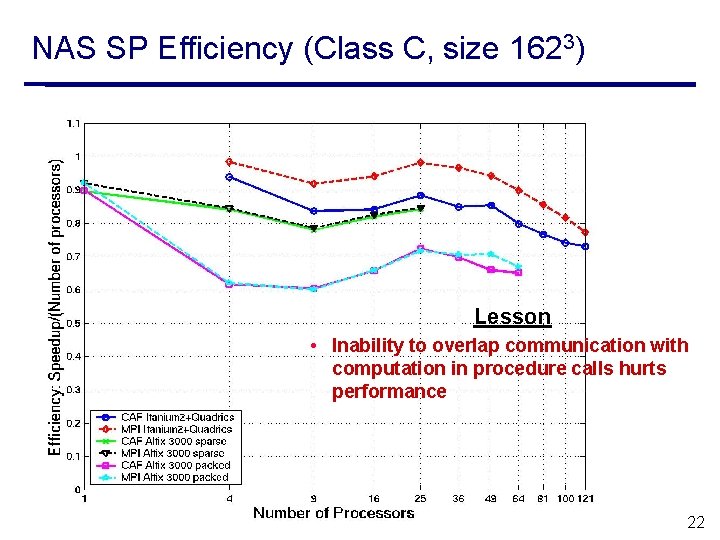

NAS MG Efficiency (Class C, size 512^3)

Lessons

- Replacing barriers with point-to-point synchronization can boost performance by 30%
- Converting GETs into PUTs also improved performance

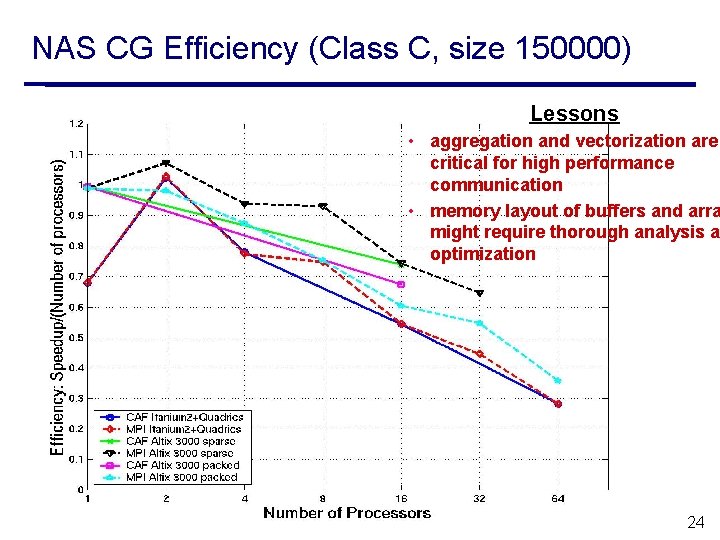

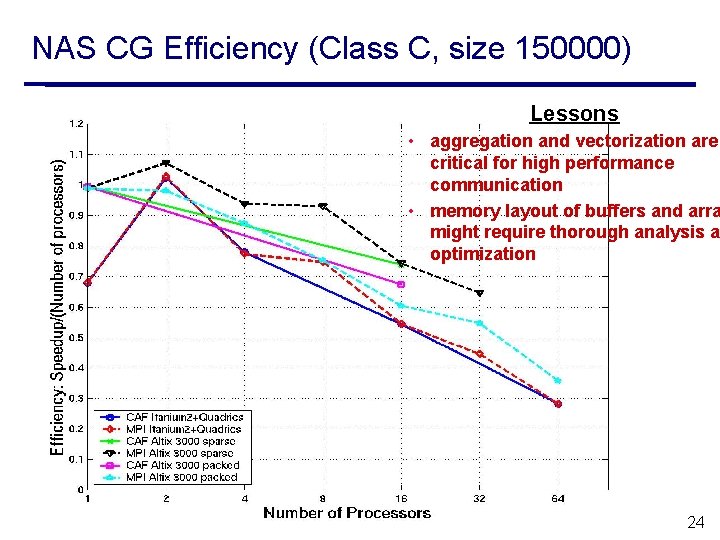

NAS CG Efficiency (Class C, size 150000)

Lessons

- Aggregation and vectorization are critical for high-performance communication
- Memory layout of buffers and arrays might require thorough analysis and optimization

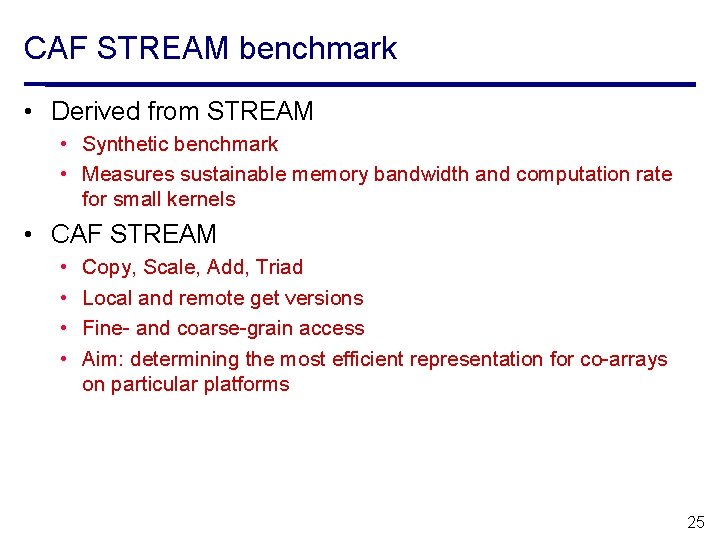

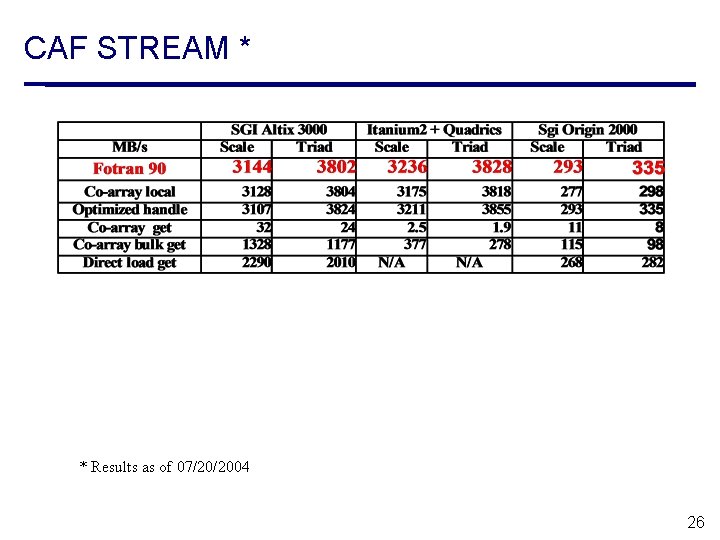

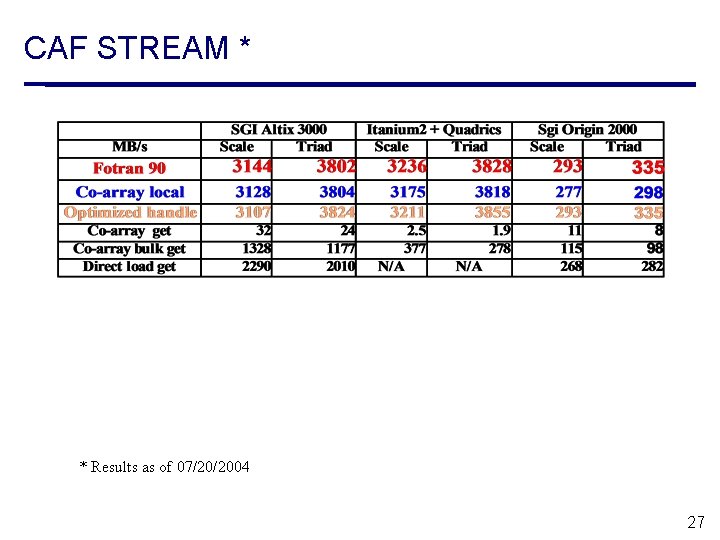

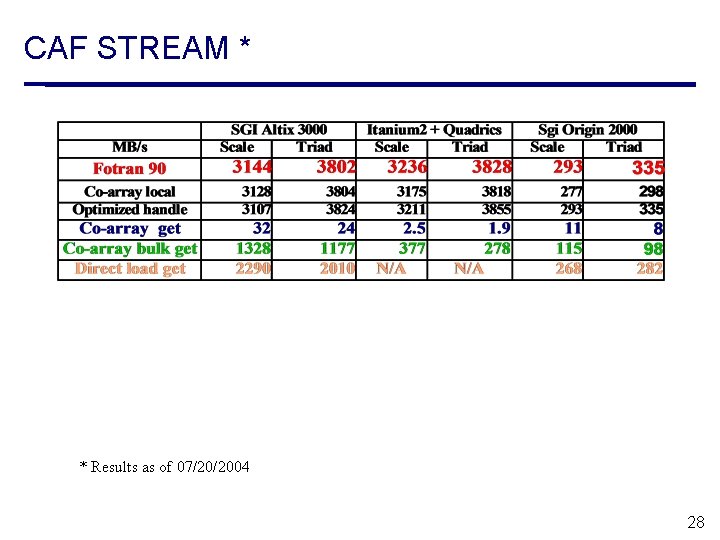

CAF STREAM Benchmark

- Derived from STREAM
  - synthetic benchmark
  - measures sustainable memory bandwidth and computation rate for small kernels
- CAF STREAM
  - Copy, Scale, Add, Triad
  - local and remote get versions
  - fine- and coarse-grain access
- Aim: determining the most efficient representation for co-arrays on particular platforms

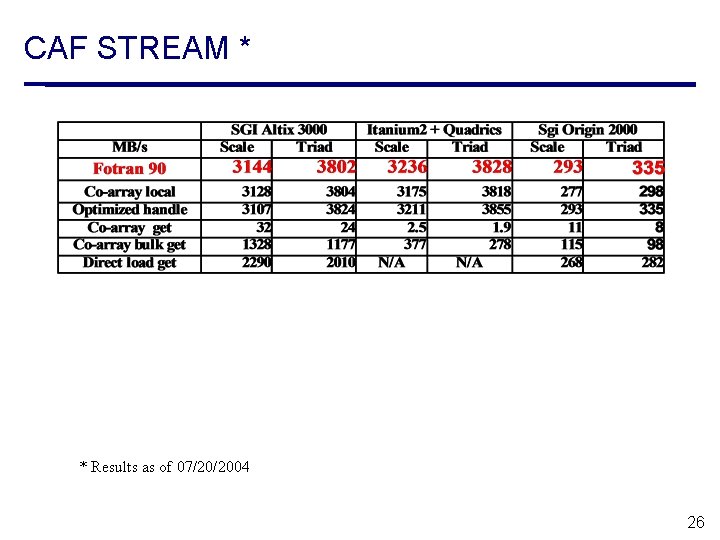

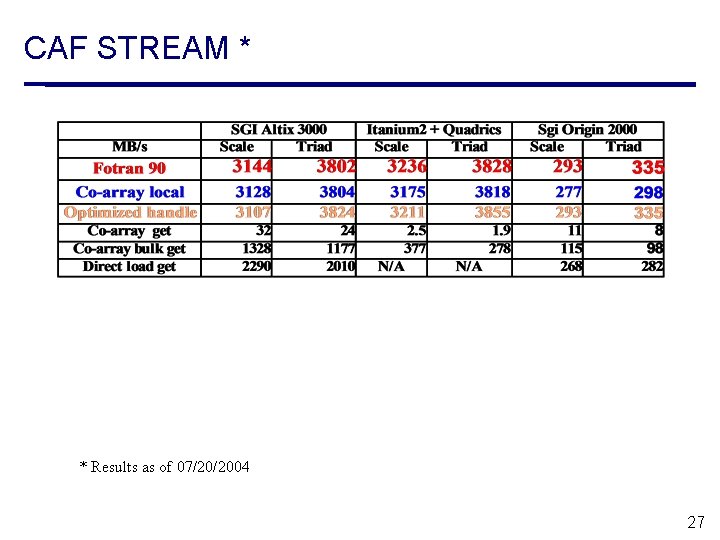

CAF STREAM

Results as of 07/20/2004

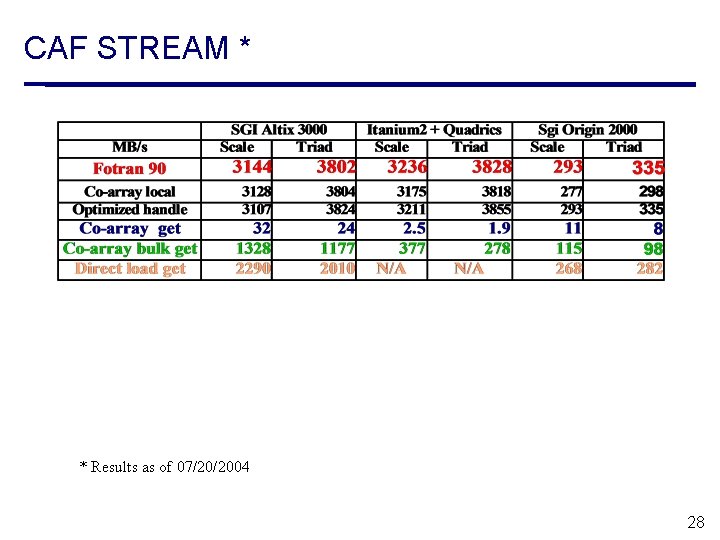

CAF STREAM * * Results as of 07/20/2004 27

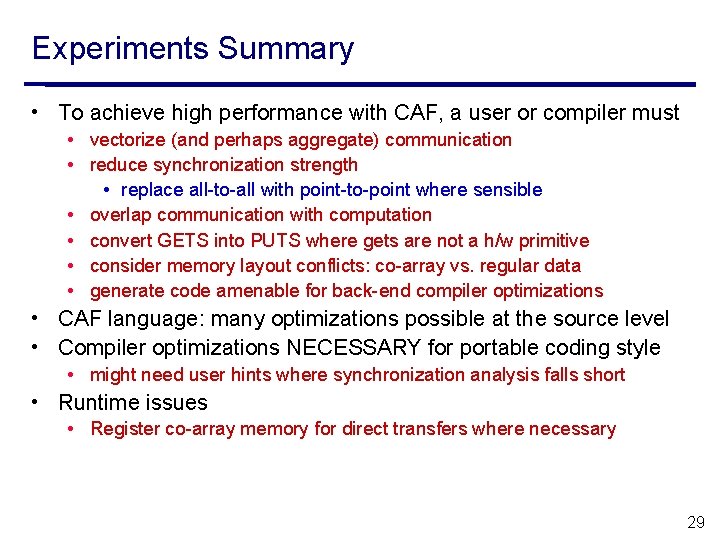

CAF STREAM * * Results as of 07/20/2004 28

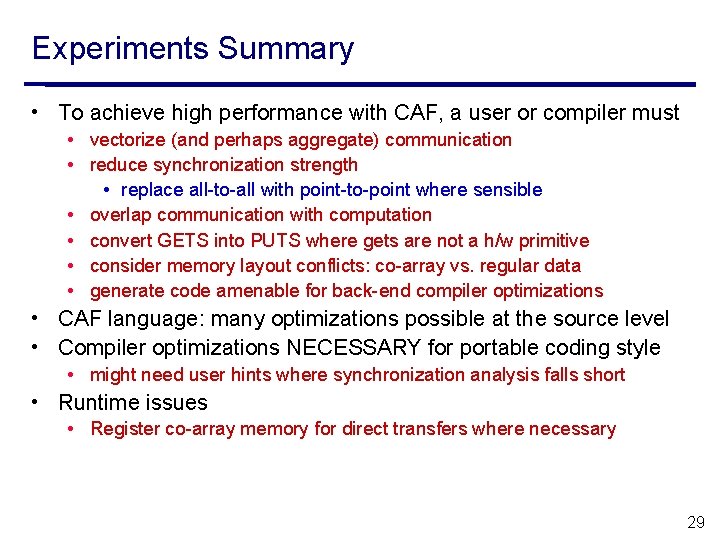

Experiments Summary

- To achieve high performance with CAF, a user or compiler must
  - vectorize (and perhaps aggregate) communication
  - reduce synchronization strength
    - replace all-to-all with point-to-point where sensible
  - overlap communication with computation
  - convert GETs into PUTs where GETs are not a hardware primitive
  - consider memory layout conflicts: co-array vs. regular data
  - generate code amenable to back-end compiler optimizations
- CAF language: many optimizations possible at the source level
- Compiler optimizations NECESSARY for portable coding style
  - might need user hints where synchronization analysis falls short
- Runtime issues
  - register co-array memory for direct transfers where necessary
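The first recommendation, vectorizing communication, is easy to show at the source level. The sketch below contrasts element-at-a-time PUTs with a single array-section PUT, again in Fortran 2008 coarray syntax. The array names, the size `n`, and the ring-neighbor pattern are illustrative assumptions, and no timing claim is made here: the relative cost depends on the platform and on whether the compiler vectorizes the loop itself.

```fortran
program caf_vectorize_sketch
  implicit none
  integer, parameter :: n = 1000
  real :: src(n)[*], dst(n)[*]
  integer :: i, left, right

  ! Ring neighbors; on a single image both are the image itself.
  right = merge(1, this_image() + 1, this_image() == num_images())
  left  = merge(num_images(), this_image() - 1, this_image() == 1)

  src = real(this_image())
  dst = 0.0
  sync all                      ! dst is initialized everywhere before any PUT

  ! Fine-grained form: n separate one-element PUTs unless the compiler
  ! combines them.
  do i = 1, n
    dst(i)[right] = src(i)
  end do

  ! Vectorized form: the same transfer expressed as one array-section PUT.
  dst(1:n)[right] = src(1:n)

  sync all                      ! all PUTs are complete and visible

  if (all(dst == real(left))) then
    print '(a,i0,a,i0)', 'image ', this_image(), ' received data from image ', left
  end if
end program caf_vectorize_sketch
```

Both forms are PUTs, which also matches the advice to prefer PUTs where GETs are not a hardware primitive.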

Outline

- Co-array Fortran language overview
- Rice CAF compiler
- A preliminary performance study
- Ongoing research
- Conclusions

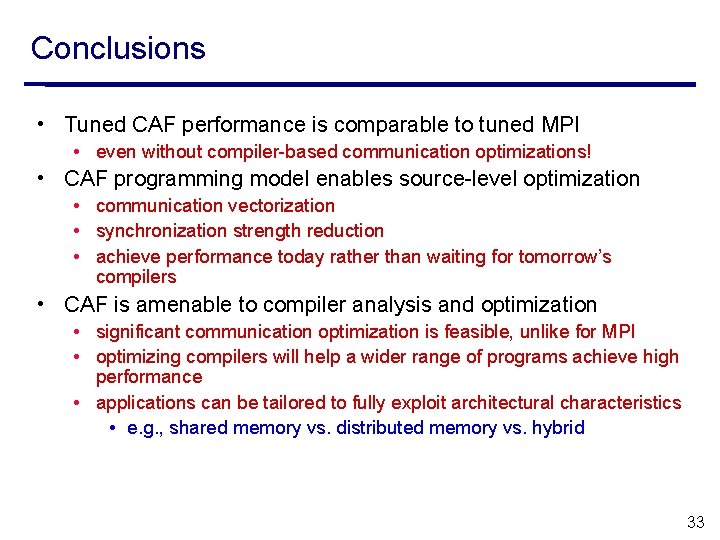

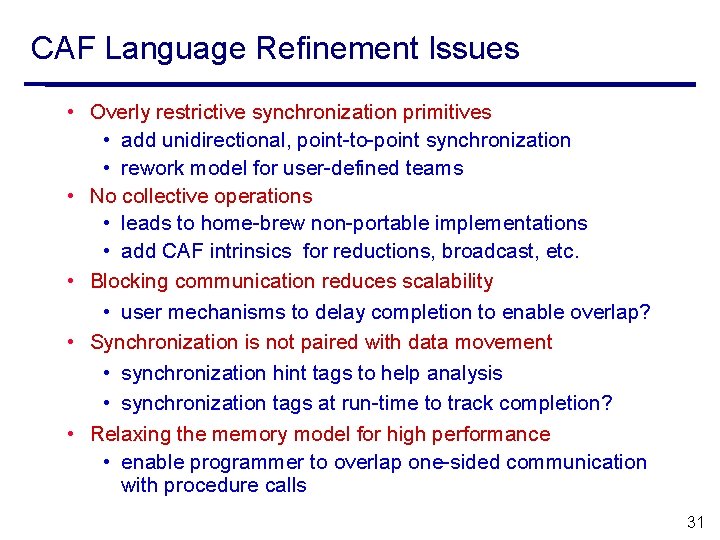

CAF Language Refinement Issues

- Overly restrictive synchronization primitives
  - add unidirectional, point-to-point synchronization
  - rework model for user-defined teams
- No collective operations
  - leads to home-brew non-portable implementations
  - add CAF intrinsics for reductions, broadcast, etc.
- Blocking communication reduces scalability
  - user mechanisms to delay completion to enable overlap?
- Synchronization is not paired with data movement
  - synchronization hint tags to help analysis
  - synchronization tags at run-time to track completion?
- Relaxing the memory model for high performance
  - enable programmer to overlap one-sided communication with procedure calls

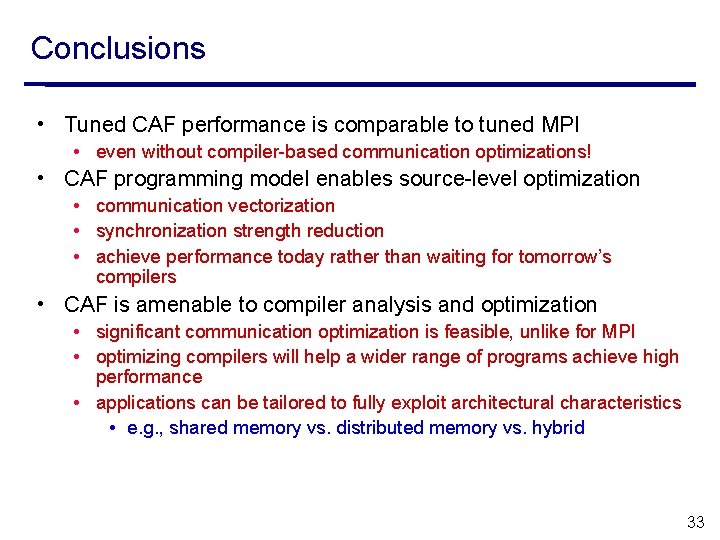

CAF Compiler Research Directions

Aim for performance transparency

- Compiler optimization of communication and I/O
  - optimize communication
    - communication vectorization and aggregation
    - multi-mode communication: direct load/store + RDMA
    - transform from one-sided to two-sided communication
    - transform from get to put communication
  - optimize synchronization
    - synchronization strength reduction
    - exploit split-phase operations for overlap with computation
  - combine synchronization with communication
    - collective communication
    - put with flag
  - platform-tailored optimization
- Interoperability with other parallel programming models
- Optimizations to improve node performance

Conclusions

- Tuned CAF performance is comparable to tuned MPI
  - even without compiler-based communication optimizations!
- CAF programming model enables source-level optimization
  - communication vectorization
  - synchronization strength reduction
  - achieve performance today rather than waiting for tomorrow's compilers
- CAF is amenable to compiler analysis and optimization
  - significant communication optimization is feasible, unlike for MPI
  - optimizing compilers will help a wider range of programs achieve high performance
  - applications can be tailored to fully exploit architectural characteristics
    - e.g., shared memory vs. distributed memory vs. hybrid

Project URL: http://www.hipersoft.rice.edu/caf