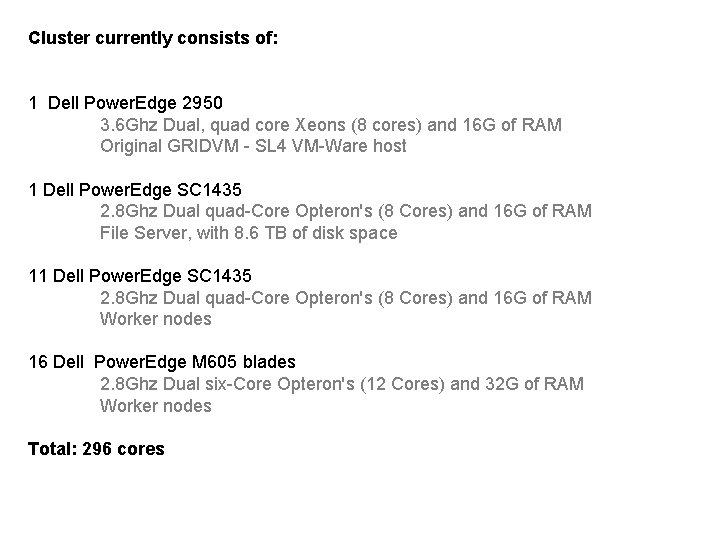


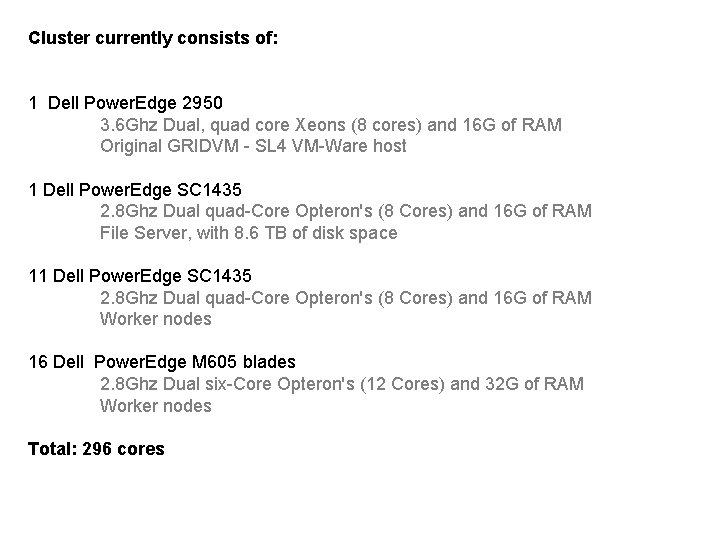

The cluster currently consists of:

- 1 Dell PowerEdge 2950: dual quad-core 3.6 GHz Xeons (8 cores), 16 GB of RAM. Original GRIDVM; Scientific Linux (SL) 4 VMware host.
- 1 Dell PowerEdge SC1435: dual quad-core 2.8 GHz Opterons (8 cores), 16 GB of RAM. File server with 8.6 TB of disk space.
- 11 Dell PowerEdge SC1435: dual quad-core 2.8 GHz Opterons (8 cores each), 16 GB of RAM. Worker nodes.
- 16 Dell PowerEdge M605 blades: dual six-core 2.8 GHz Opterons (12 cores each), 32 GB of RAM. Worker nodes.

Total: 296 cores
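
As a quick check of the stated total, the core count can be recomputed from the inventory. This is a minimal Python sketch; the `INVENTORY` list and `total_cores` function are illustrative names, not part of any cluster tooling.

```python
# Node inventory transcribed from the slide: (description, node count, cores per node).
INVENTORY = [
    ("PowerEdge 2950 (GRIDVM / VMware host)", 1, 8),
    ("PowerEdge SC1435 (file server)", 1, 8),
    ("PowerEdge SC1435 (worker nodes)", 11, 8),
    ("PowerEdge M605 blades (worker nodes)", 16, 12),
]


def total_cores(inventory):
    """Sum node count x cores per node over every inventory entry."""
    return sum(count * cores for _, count, cores in inventory)


print(total_cores(INVENTORY))  # 296
```

The blades dominate: 16 x 12 = 192 of the 296 cores.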

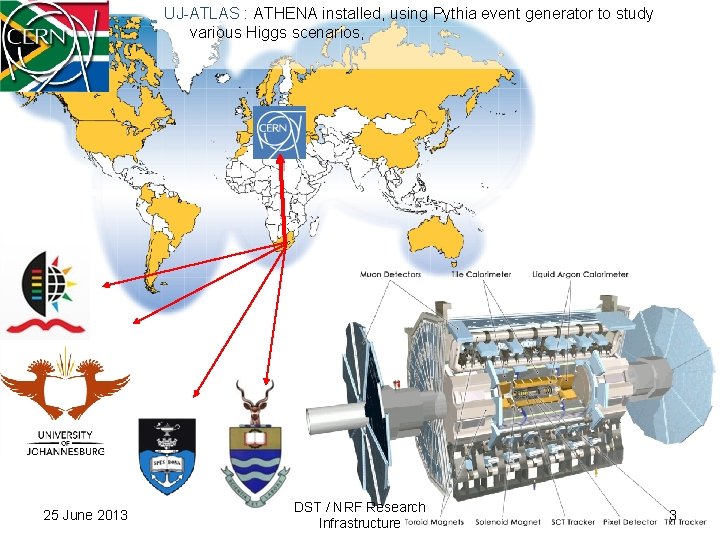

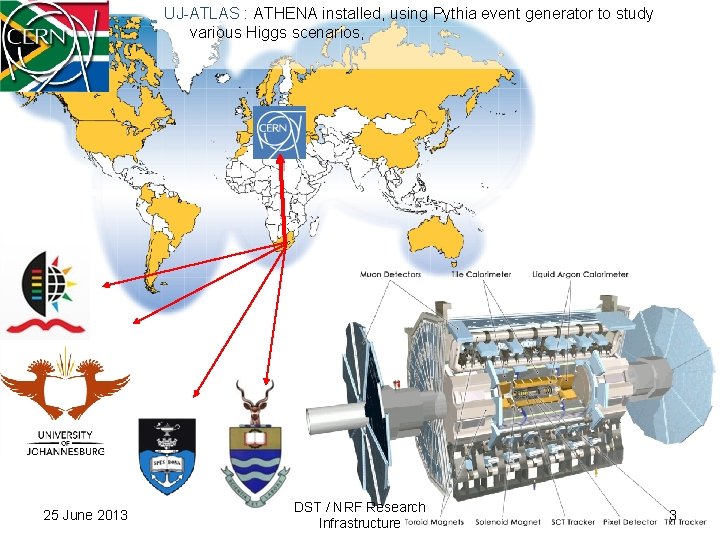

UJ-ATLAS: ATHENA installed; the Pythia event generator is used to study various Higgs scenarios.

25 June 2013, DST/NRF Research Infrastructure

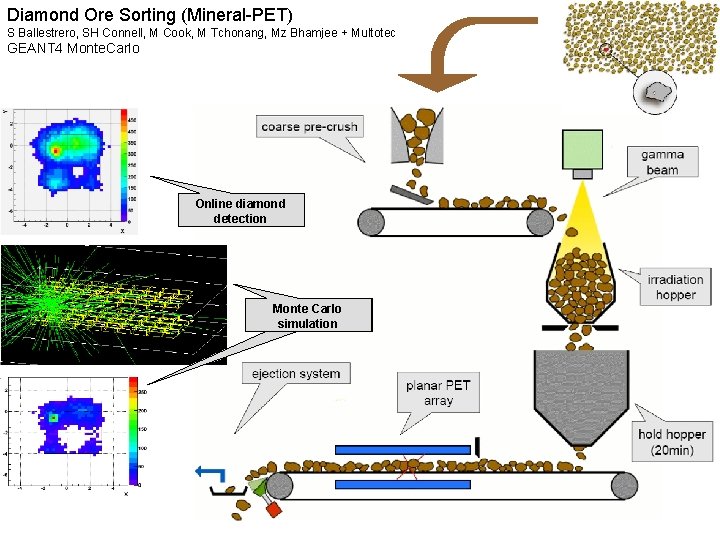

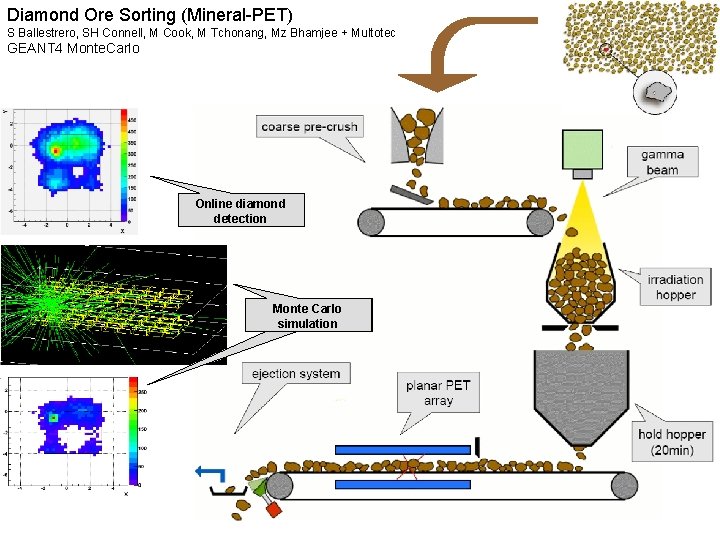

Diamond Ore Sorting (Mineral-PET): S Ballestrero, SH Connell, M Cook, M Tchonang, Mz Bhamjee + Multotec

Figure panels: GEANT4 Monte Carlo simulation; online diamond detection.

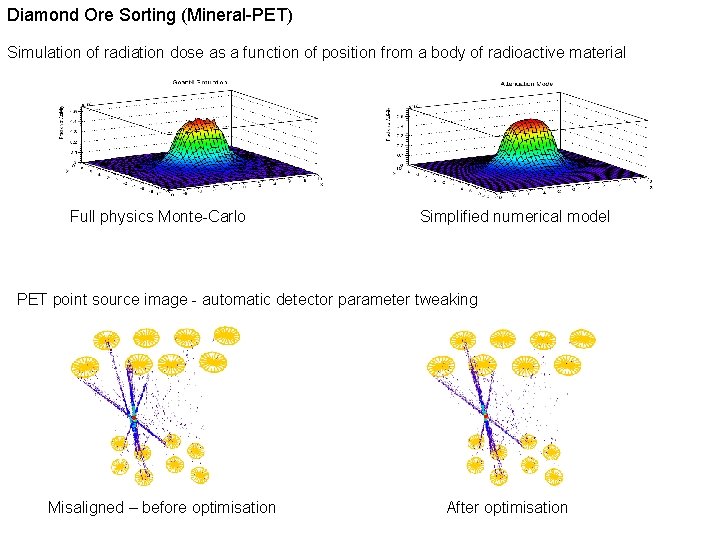

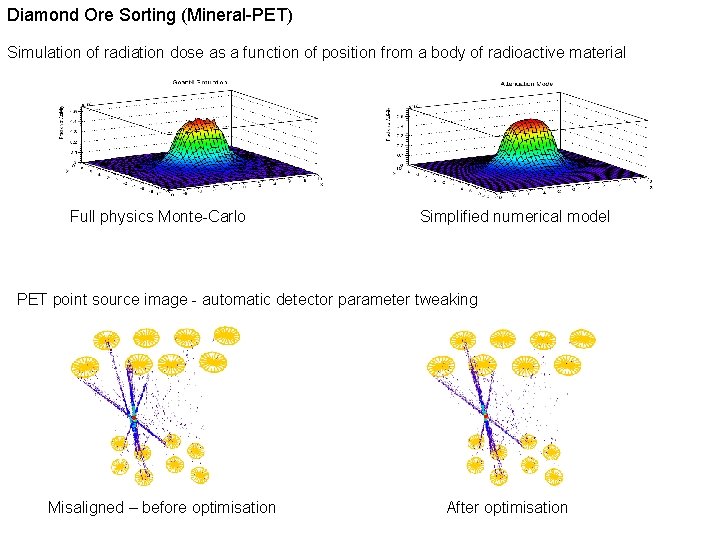

Diamond Ore Sorting (Mineral-PET)

- Simulation of radiation dose as a function of position from a body of radioactive material: full-physics Monte Carlo vs. a simplified numerical model.
- PET point-source image with automatic tweaking of detector parameters: misaligned (before optimisation) and after optimisation.
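
The slide does not describe the simplified numerical model. One common simplified approach, sketched here purely as an assumption, is the point-kernel method: split the radioactive body into small voxels, treat each as an isotropic point source, and sum inverse-square contributions with exponential attenuation. The function name, the cubic source shape and the single effective attenuation coefficient `mu` (no buildup factor) are hypothetical simplifications.

```python
import math


def point_kernel_dose(detector, centre, half_size, n_per_side, mu=0.0):
    """Relative dose rate at `detector` from a uniformly active cube (point-kernel sketch).

    The cube is split into n_per_side**3 equal voxels. Each voxel is treated as an
    isotropic point source carrying an equal share of a total strength of 1, and
    contributes exp(-mu * r) / (4 * pi * r**2) at distance r. `mu` is a single
    effective attenuation coefficient (assumption: uniform medium, no buildup factor).
    """
    step = 2.0 * half_size / n_per_side
    share = 1.0 / n_per_side**3
    total = 0.0
    for i in range(n_per_side):
        for j in range(n_per_side):
            for k in range(n_per_side):
                # Centre of voxel (i, j, k).
                voxel = (
                    centre[0] - half_size + (i + 0.5) * step,
                    centre[1] - half_size + (j + 0.5) * step,
                    centre[2] - half_size + (k + 0.5) * step,
                )
                r = math.dist(detector, voxel)
                total += share * math.exp(-mu * r) / (4.0 * math.pi * r * r)
    return total


# Dose profile along the x axis for a unit cube centred at the origin (hypothetical values).
for x in (1.0, 2.0, 4.0, 8.0):
    print(x, point_kernel_dose((x, 0.0, 0.0), (0.0, 0.0, 0.0), 0.5, 10, mu=0.1))
```

A point kernel without a buildup factor ignores scattered radiation, so some disagreement with a full-physics Monte Carlo simulation is expected.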

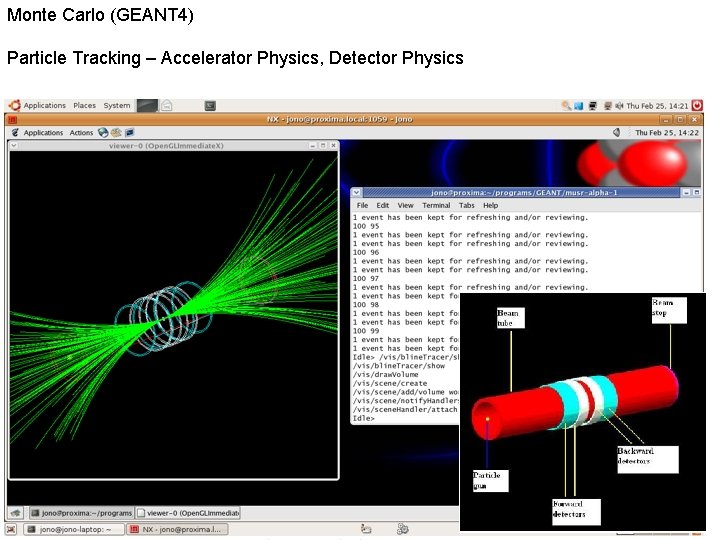

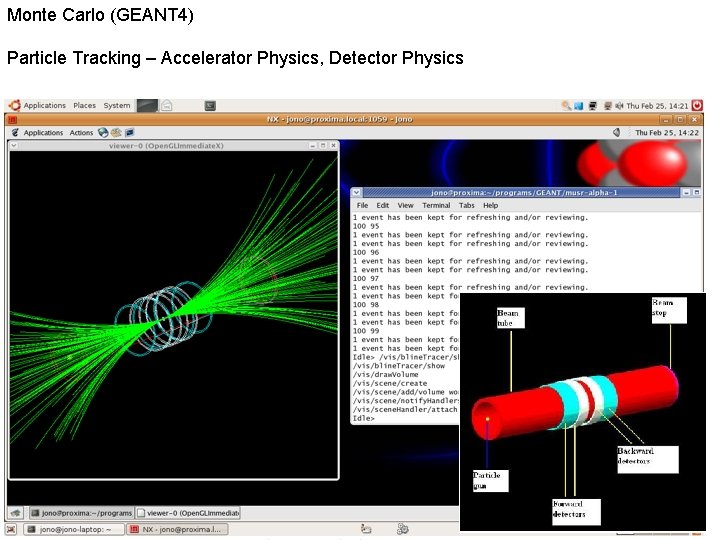

Monte Carlo (GEANT4) particle tracking: accelerator physics, detector physics
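
GEANT4 tracks particles step by step through a full detector geometry with detailed physics models. The core Monte Carlo idea can be illustrated with a much simpler toy, which is not GEANT4 code: sample each particle's distance to its first interaction from an exponential distribution with mean free path 1/mu, and count how many cross a slab without interacting. The estimate should converge to the analytic transmission exp(-mu * thickness). Names and parameter values here are hypothetical.

```python
import math
import random


def transmitted_fraction(mu, thickness, n_particles, seed=0):
    """Toy Monte Carlo estimate of the fraction of particles crossing a slab without interacting.

    Particles enter the slab at normal incidence. The distance to each particle's
    first interaction is drawn from an exponential distribution with rate mu
    (mean free path 1/mu); any particle whose sampled path exceeds the slab
    thickness is counted as transmitted.
    """
    rng = random.Random(seed)
    transmitted = sum(1 for _ in range(n_particles) if rng.expovariate(mu) > thickness)
    return transmitted / n_particles


mu, thickness = 0.5, 2.0  # hypothetical attenuation coefficient (1/cm) and slab thickness (cm)
estimate = transmitted_fraction(mu, thickness, 100_000)
print(f"Monte Carlo: {estimate:.4f}  analytic: {math.exp(-mu * thickness):.4f}")
```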

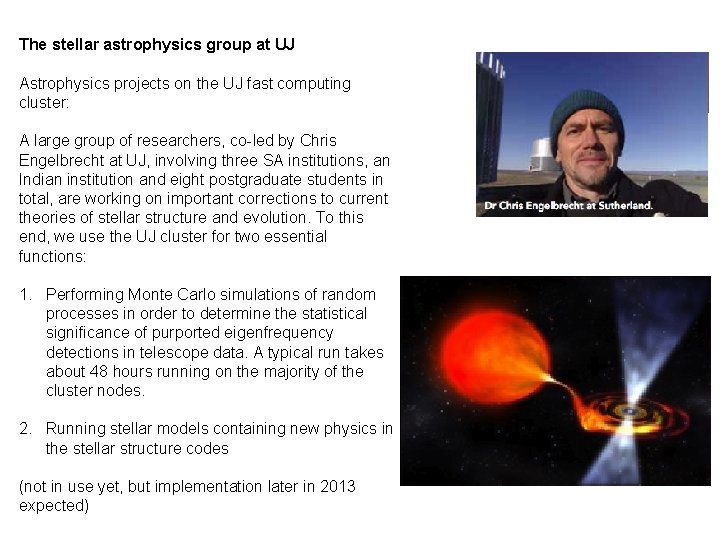

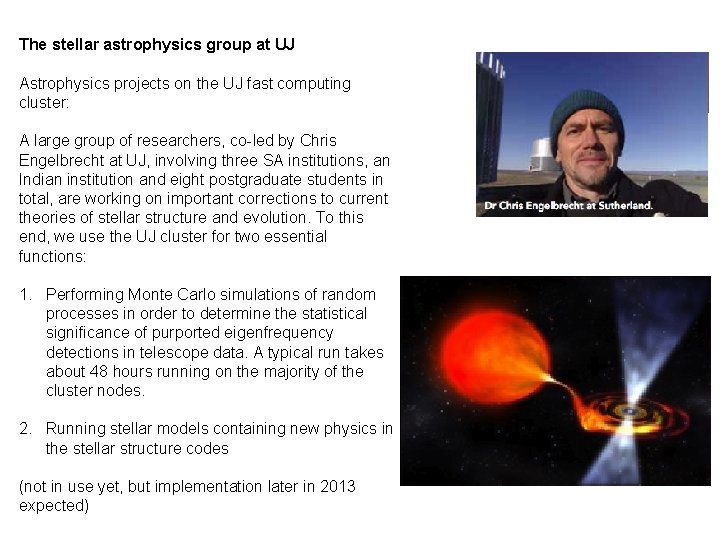

The stellar astrophysics group at UJ

Astrophysics projects on the UJ fast computing cluster: a large group of researchers, co-led by Chris Engelbrecht at UJ and involving three SA institutions, an Indian institution and eight postgraduate students in total, is working on important corrections to current theories of stellar structure and evolution. To this end, we use the UJ cluster for two essential functions:

1. Performing Monte Carlo simulations of random processes to determine the statistical significance of purported eigenfrequency detections in telescope data. A typical run takes about 48 hours on the majority of the cluster nodes.
2. Running stellar models containing new physics in the stellar structure codes (not in use yet, but implementation is expected later in 2013).
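
The slide does not say how the significance tests are set up. A minimal sketch of the general approach, under stated assumptions: simulate pure Gaussian white noise at the same observation times as the real data, compute a classical amplitude spectrum for each simulated light curve, and count how often the highest noise peak is at least as large as the observed one. That fraction estimates the false-alarm probability. The white-noise model, the DFT amplitude spectrum and all function names are assumptions for illustration; the group's actual procedure may differ.

```python
import math
import random


def amplitude_spectrum(times, values, freqs):
    """Classical DFT amplitude spectrum; works for unevenly sampled data."""
    n = len(times)
    mean = sum(values) / n
    centred = [v - mean for v in values]
    amps = []
    for f in freqs:
        w = 2 * math.pi * f
        re = sum(y * math.cos(w * t) for t, y in zip(times, centred))
        im = sum(y * math.sin(w * t) for t, y in zip(times, centred))
        # Scaled so a pure sinusoid of amplitude A gives a peak of about A.
        amps.append(2.0 / n * math.hypot(re, im))
    return amps


def false_alarm_probability(times, noise_sigma, freqs, observed_peak, n_trials=200, seed=1):
    """Fraction of pure-noise simulations whose highest peak reaches observed_peak."""
    rng = random.Random(seed)
    exceed = 0
    for _ in range(n_trials):
        # Assumed noise model: independent Gaussian noise at the real sampling times.
        noise = [rng.gauss(0.0, noise_sigma) for _ in times]
        if max(amplitude_spectrum(times, noise, freqs)) >= observed_peak:
            exceed += 1
    return exceed / n_trials


# Hypothetical sampling: 60 points, 0.5 time units apart.
times = [0.5 * i for i in range(60)]
freqs = [0.01 * k for k in range(1, 50)]
print(false_alarm_probability(times, noise_sigma=1.0, freqs=freqs, observed_peak=0.8, n_trials=100))
```

Each trial is independent of the others, so runs of this kind split naturally across many cluster nodes, and the cost grows with the number of trials, data points and test frequencies.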

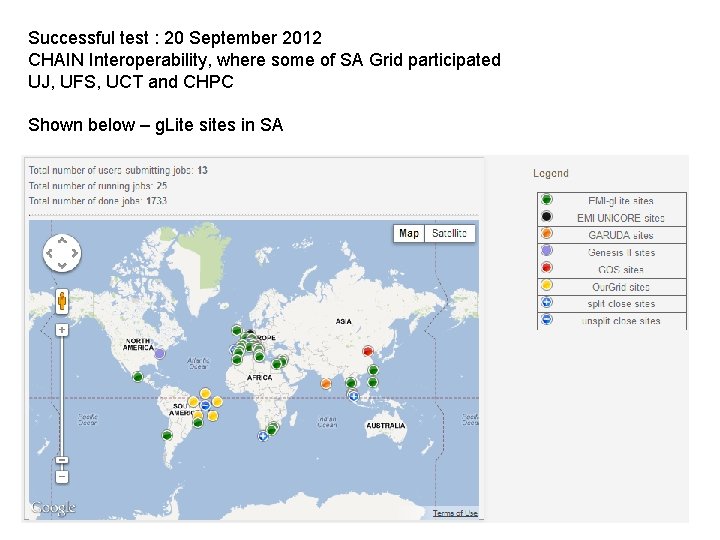

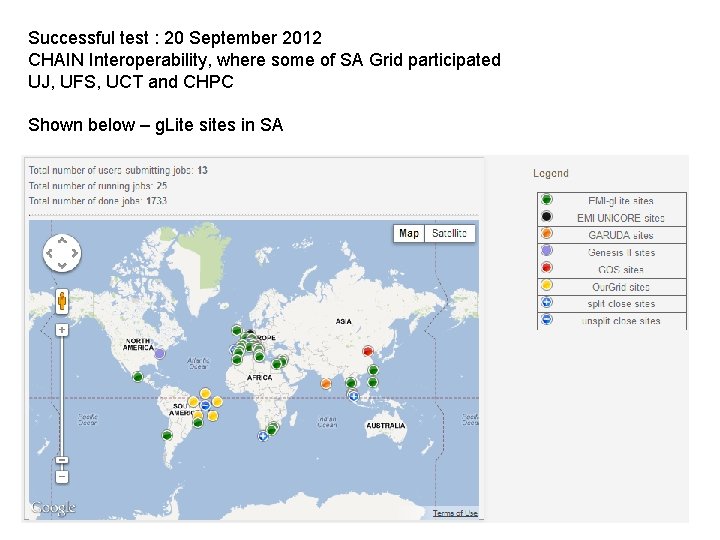

Successful test on 20 September 2012: CHAIN interoperability test, in which several SAGrid sites participated (UJ, UFS, UCT and CHPC). Shown below: gLite sites in SA.

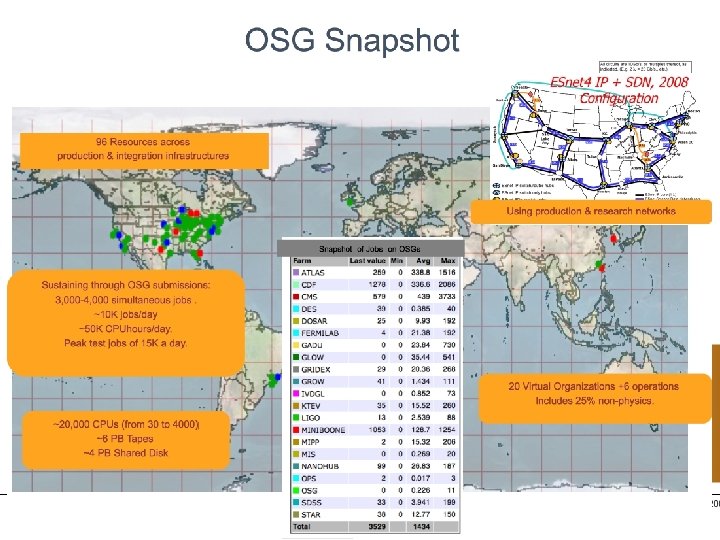

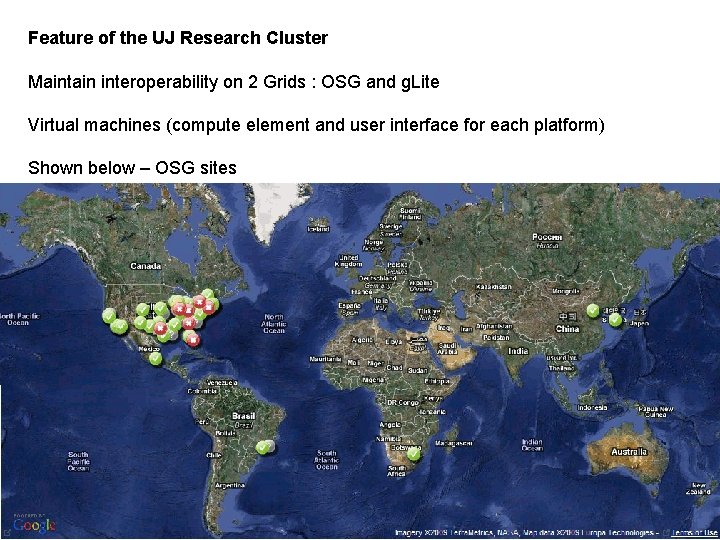

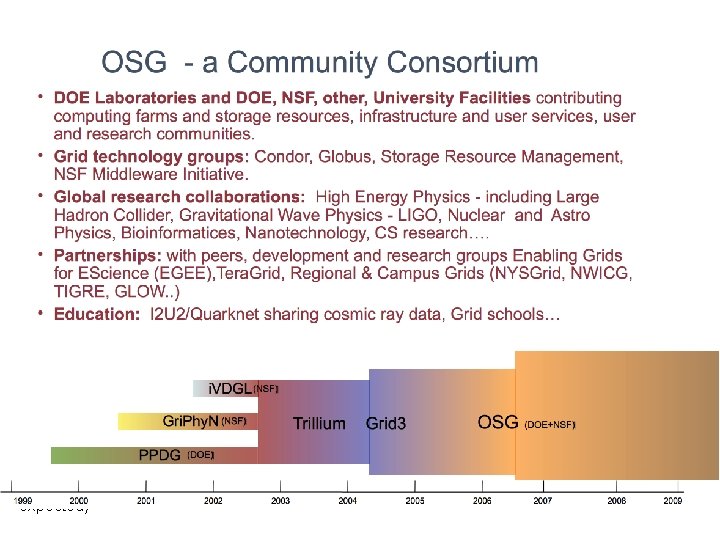

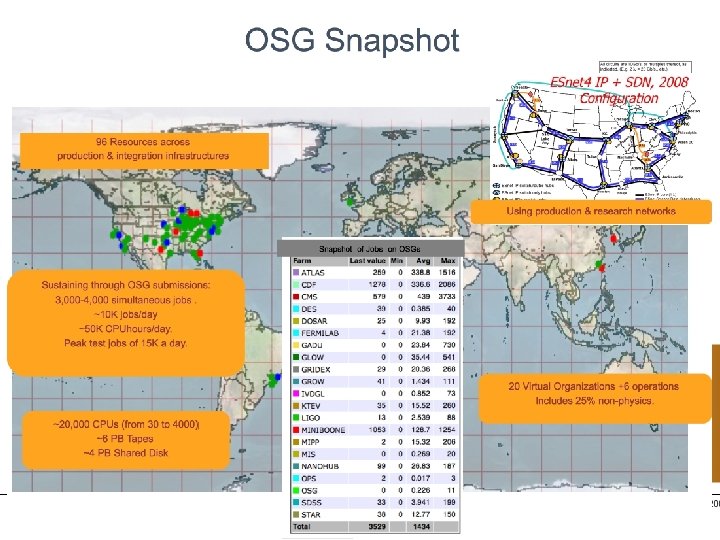

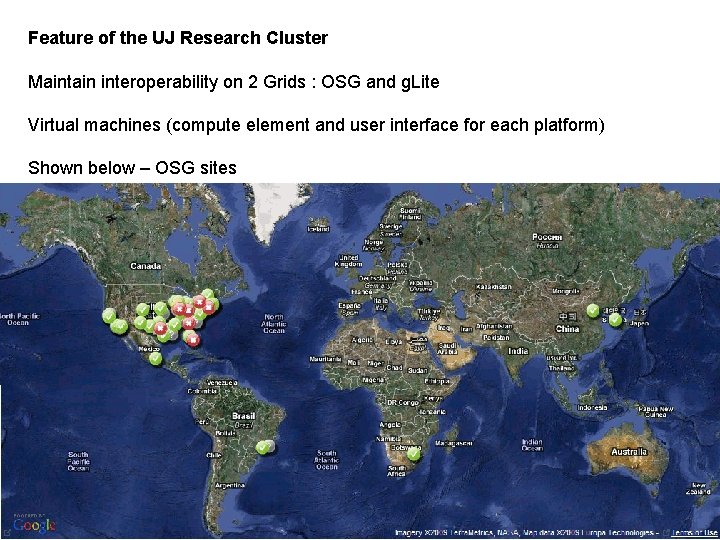

Feature of the UJ Research Cluster: it maintains interoperability with two grids, OSG and gLite, using virtual machines (a compute element and a user interface for each platform). Shown below: OSG sites.

Currently in the middle of an upgrade: nodes and virtual machines are running a spread of Scientific Linux 4, 5 and 6 to keep services online. The system administrator is a South African currently based at CERN in Europe and administers the cluster with remote tools. Using PXE network booting and Puppet, a node can be rebooted and reinstalled with any version of Scientific Linux and EMI (European Middleware Initiative) within 45 minutes.
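
How the 45-minute reinstall is wired up is not described. As a hedged sketch of the network-boot half: PXELINUX looks in its `pxelinux.cfg/` directory for a per-host configuration file named after the node's MAC address (ARP type `01`, lower-case hex, dash-separated). Pointing that file at a Scientific Linux installer with a kickstart file starts an unattended install, after which Puppet can apply the node's configuration. The TFTP paths, kickstart URL and function names below are hypothetical.

```python
def pxelinux_config_name(mac):
    """PXELINUX per-host config filename: ARP type 01 plus the MAC, lower-case and dash-separated."""
    return "01-" + mac.lower().replace(":", "-")


def pxelinux_install_entry(sl_version, tftp_prefix, kickstart_url):
    """Hypothetical boot entry that starts an unattended Scientific Linux install.

    `ks=` tells the SL 4-6 era installer (anaconda) where to fetch the kickstart file;
    the kernel/initrd layout under `tftp_prefix` is an assumption.
    """
    return "\n".join([
        "DEFAULT install",
        "LABEL install",
        f"  KERNEL {tftp_prefix}/sl{sl_version}/vmlinuz",
        f"  APPEND initrd={tftp_prefix}/sl{sl_version}/initrd.img ks={kickstart_url}",
    ]) + "\n"


# The result would be written to pxelinux.cfg/<name> on the TFTP server.
name = pxelinux_config_name("00:1A:2B:3C:4D:5E")
print(name)
print(pxelinux_install_entry(6, "images", "http://install.example/sl6.ks"))
```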

Trying to keep the cluster usable by:

- SAGrid
- ATLAS (Large Hadron Collider)
- ALICE (Large Hadron Collider)
- e-NMR (biomolecular)

OSG ATLAS jobs have been running for the last 9 months, and in the production queue (in test mode) for the last 4 weeks.

It is difficult to keep both OSG and gLite running: when one demands an upgrade, the other breaks. It is important, though: grids are all about joining computers, and we are helping to keep compatibility between the two big physics grids.

Currently on the to-do list:

- Finish the partially completed Scientific Linux upgrade.
- Return OSG to functional status.
- Set up an IPMI implementation, allowing complete remote control at a lower level than the OS.
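
IPMI gives access to a node's baseboard management controller independently of the operating system. A minimal sketch of how remote power control might be scripted around the standard `ipmitool` command-line tool, assuming BMCs reachable over IPMI v2.0 (`-I lanplus`) and the password supplied through the `IPMI_PASSWORD` environment variable (`-E`). Host names, user names and helper functions are hypothetical.

```python
import subprocess

# Hypothetical action names mapped to real ipmitool subcommands.
ACTIONS = {
    "status": ["chassis", "power", "status"],
    "cycle": ["chassis", "power", "cycle"],
    "pxe-next-boot": ["chassis", "bootdev", "pxe"],
}


def ipmitool_cmd(bmc_host, user, action):
    """Build an ipmitool command for a node's BMC.

    -E makes ipmitool read the password from IPMI_PASSWORD, keeping it
    out of the command line and process list.
    """
    return ["ipmitool", "-I", "lanplus", "-H", bmc_host, "-U", user, "-E", *ACTIONS[action]]


def run(cmd):
    """Run the command, raising CalledProcessError on failure; returns stdout."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


# Example (not executed here): force a network reinstall of a hypothetical node.
# run(ipmitool_cmd("node01-bmc", "admin", "pxe-next-boot"))
# run(ipmitool_cmd("node01-bmc", "admin", "cycle"))
```

Setting the next boot device to PXE and then power-cycling would push a node into the network reinstall described above without anyone at the console.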

Rising edge and falling edge

Rising edge and falling edge Dell hpc cluster

Dell hpc cluster Power triangle diagram

Power triangle diagram Power edge

Power edge Scapi error no license manager

Scapi error no license manager V root

V root Currently enrolled

Currently enrolled Incentives build robustness in bittorrent

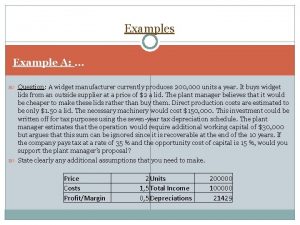

Incentives build robustness in bittorrent Widget manufacturer

Widget manufacturer Solar power satellites and microwave power transmission

Solar power satellites and microwave power transmission Actual power

Actual power Flex28024a

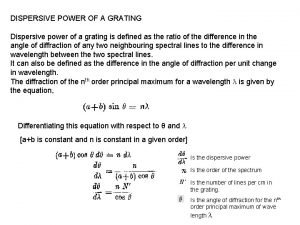

Flex28024a Plane transmission grating is

Plane transmission grating is Power of a power property

Power of a power property General power rule vs power rule

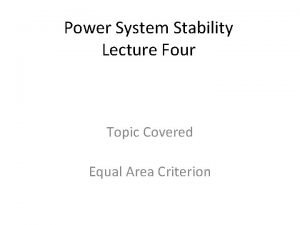

General power rule vs power rule Power angle curve in power system stability

Power angle curve in power system stability