Chapter 4 Syntax Analysis Syntax Error Handling Example

- Slides: 46

Chapter 4 Syntax Analysis

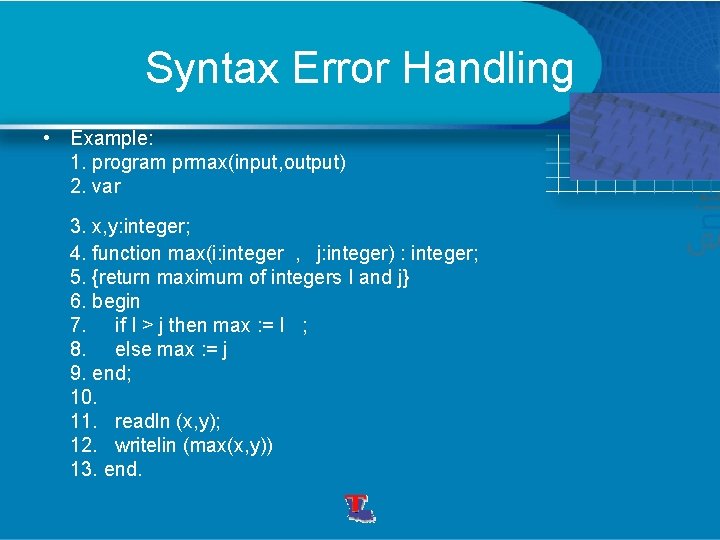

Syntax Error Handling • Example (a Pascal program containing several syntax errors):
1. program prmax(input, output)
2. var
3. x, y: integer;
4. function max(i: integer , j: integer) : integer;
5. {return maximum of integers i and j}
6. begin
7. if i > j then max := i ;
8. else max := j
9. end;
10.
11. readln (x, y);
12. writelin (max(x, y))
13. end.

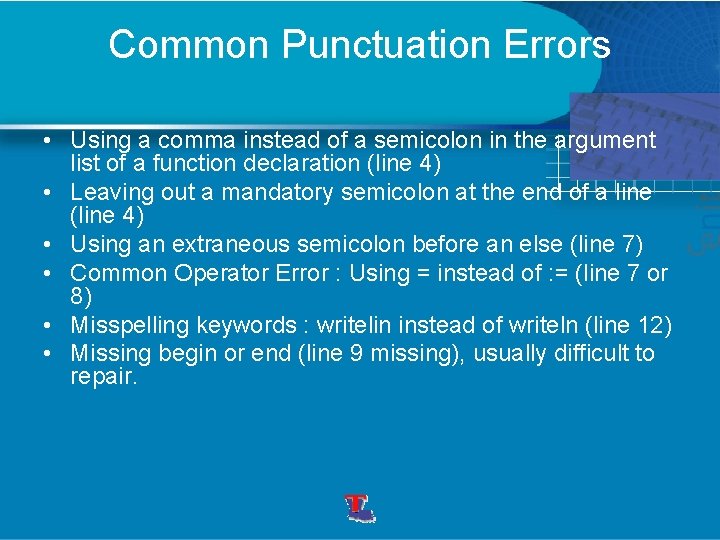

Common Punctuation Errors • Using a comma instead of a semicolon in the argument list of a function declaration (line 4) • Leaving out a mandatory semicolon at the end of a line (e.g., the semicolon at the end of line 4) • Using an extraneous semicolon before an else (line 7) • Common Operator Error: using = instead of := (line 7 or 8) • Misspelling keywords: writelin instead of writeln (line 12) • Missing begin or end (line 9 missing), usually difficult to repair.

Error Reporting • A common technique is to print the offending line with a pointer to the position of the error. • The parser might add a diagnostic message like “semicolon missing at this position” if it knows what the likely error is.
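The reporting technique above can be sketched in a few lines of Python. This is a minimal illustration, not any particular compiler's implementation: `report_error` is a hypothetical helper, columns are assumed to be 0-based, and the line is assumed to contain no tabs before the error position (a tab would shift the caret).

```python
def report_error(source_line: str, column: int, message: str) -> str:
    """Format a diagnostic: the offending line, a caret under `column` (0-based), and a message."""
    pointer = " " * column + "^"  # assumes no tabs before the error position
    return f"{source_line}\n{pointer}\n{message}"


if __name__ == "__main__":
    line = "function max(i: integer , j: integer) : integer;"
    col = line.index(",")  # the comma that should be a semicolon
    print(report_error(line, col, "semicolon expected at this position"))
```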

How to handle Syntax errors • Error Recovery: The parser should try to recover from an error quickly so that subsequent errors can be reported. If the parser doesn't recover correctly, it may report spurious errors. • Panic mode • Phrase-level Recovery • Error Productions

Panic-mode Recovery • Discard input tokens until a synchronizing token (such as ; or end) is found. • Simple, but it may skip a considerable amount of input without checking it for additional errors. • Guaranteed not to go into an infinite loop.
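Panic-mode recovery can be sketched as a loop that discards tokens until it reaches a member of a synchronizing set. The sketch assumes tokens are plain strings and uses the slide's example set {;, end}; `panic_mode_skip` and `SYNC_TOKENS` are hypothetical names. Because every iteration consumes a token, the loop cannot run forever, which is the property noted above.

```python
SYNC_TOKENS = {";", "end"}  # assumed synchronizing set, taken from the slide's example


def panic_mode_skip(tokens: list[str], pos: int) -> int:
    """Discard tokens from `pos` until a synchronizing token is found.

    Returns the index of the synchronizing token, or len(tokens) if the input
    runs out first. Each iteration consumes one token, so the loop always ends.
    """
    while pos < len(tokens) and tokens[pos] not in SYNC_TOKENS:
        pos += 1  # discard tokens[pos]
    return pos


if __name__ == "__main__":
    # An error is detected at index 2 ("*" cannot follow ":=").
    tokens = ["x", ":=", "*", "y", "+", ";", "z", ":=", "1", ";"]
    resume = panic_mode_skip(tokens, 2)
    print(resume, tokens[resume])
```

A real parser would also unwind its own state (pop its stack, or return from the procedure that detected the error) before resuming at the returned position.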

Phrase-level Recovery • Perform local corrections: replace a prefix of the remaining input with some string that allows the parser to continue. – Examples: replace a comma with a semicolon, delete an extraneous semicolon, or insert a missing semicolon. • Replacements must be chosen carefully so that the parser does not get into an infinite loop (e.g., by always inserting something ahead of the current input symbol).

Recovery with Error Productions • Augment the grammar with productions that generate common errors, so the parser can recognize them and issue an appropriate diagnostic. • Example: parameter_list → identifier_list : type | parameter_list ; identifier_list : type | parameter_list , {error; writeln("comma should be a semicolon")} identifier_list : type

Recovery with Global Corrections • Find the minimum number of changes to correct the erroneous input stream. • Too costly in time and space to implement. • Currently only of theoretical interest.

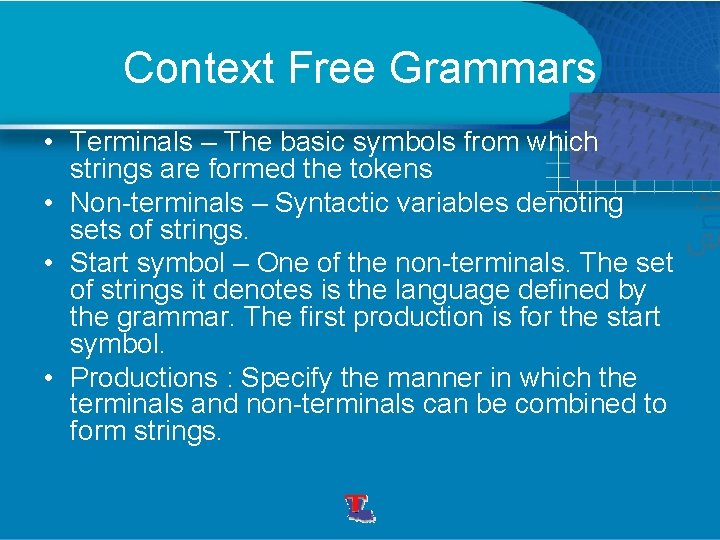

Context-Free Grammars • A CFG consists of – terminals, – nonterminals, – a start symbol, and – productions.

Context-Free Grammars • Terminals – The basic symbols from which strings are formed (the tokens). • Nonterminals – Syntactic variables denoting sets of strings. • Start symbol – One of the nonterminals; the set of strings it denotes is the language defined by the grammar. By convention, the productions for the start symbol are listed first. • Productions – Specify the manner in which terminals and nonterminals can be combined to form strings.

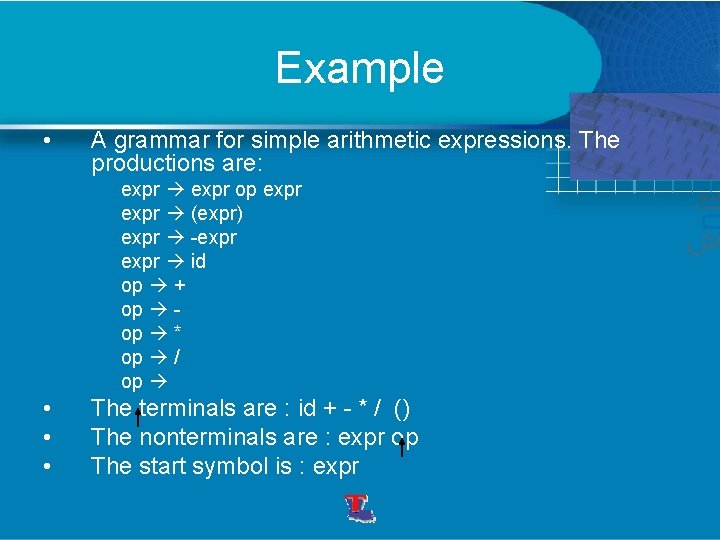

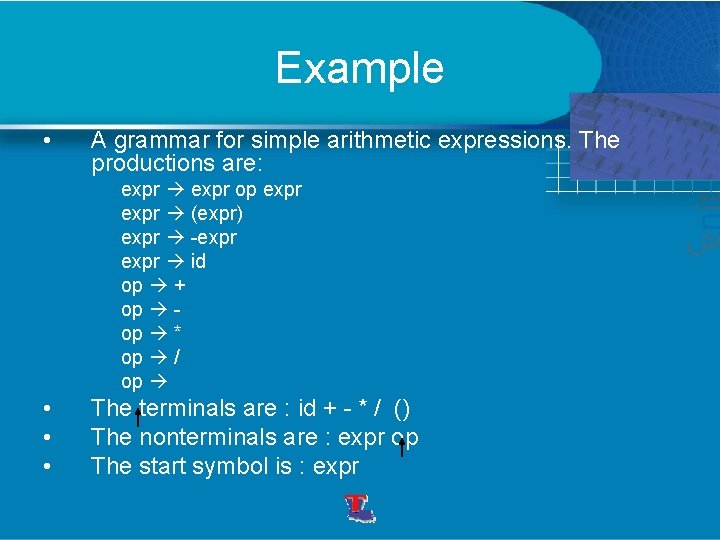

Example • A grammar for simple arithmetic expressions. The productions are: expr → expr op expr; expr → ( expr ); expr → - expr; expr → id; op → +; op → -; op → *; op → /; op → ↑ • The terminals are: id + - * / ↑ ( ) • The nonterminals are: expr, op • The start symbol is: expr
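One way to hold a CFG in a program is a dictionary from each nonterminal to its list of right sides. The Python sketch below encodes the expression grammar above (including ↑, assumed here to be the exponentiation operator) and recovers the terminal and nonterminal sets from it; the names `GRAMMAR` and `START` are illustrative.

```python
# Each nonterminal maps to its alternatives; each alternative is a list of symbols.
GRAMMAR = {
    "expr": [["expr", "op", "expr"], ["(", "expr", ")"], ["-", "expr"], ["id"]],
    "op": [["+"], ["-"], ["*"], ["/"], ["↑"]],
}
START = "expr"  # by convention, the nonterminal whose productions come first

# Nonterminals are exactly the symbols that have productions; every other symbol is a terminal.
nonterminals = set(GRAMMAR)
terminals = {
    sym
    for alternatives in GRAMMAR.values()
    for rhs in alternatives
    for sym in rhs
    if sym not in nonterminals
}

print("nonterminals:", sorted(nonterminals))
print("terminals:", sorted(terminals))
```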

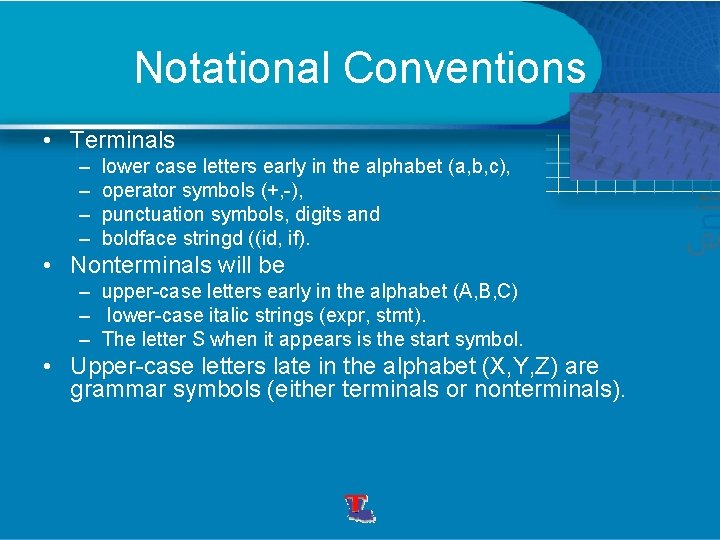

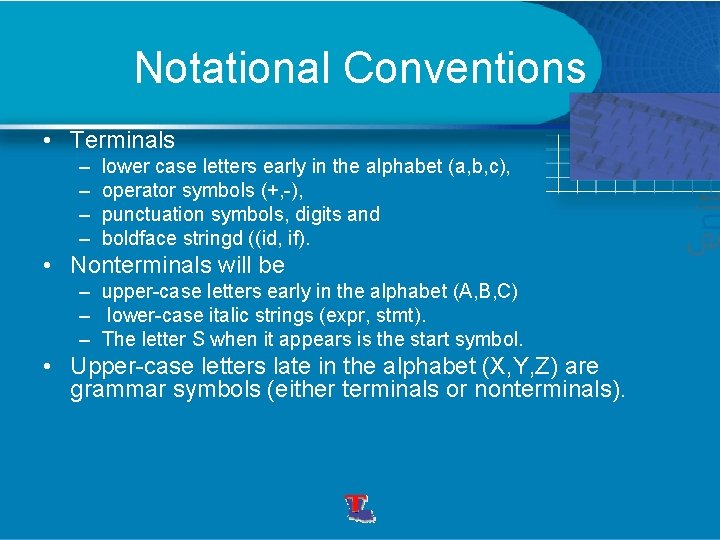

Notational Conventions • Terminals: – lower-case letters early in the alphabet (a, b, c), operator symbols (+, -), punctuation symbols, digits, and boldface strings (id, if). • Nonterminals: – upper-case letters early in the alphabet (A, B, C); – lower-case italic strings (expr, stmt); – the letter S, when it appears, is the start symbol. • Upper-case letters late in the alphabet (X, Y, Z) are grammar symbols (either terminals or nonterminals).

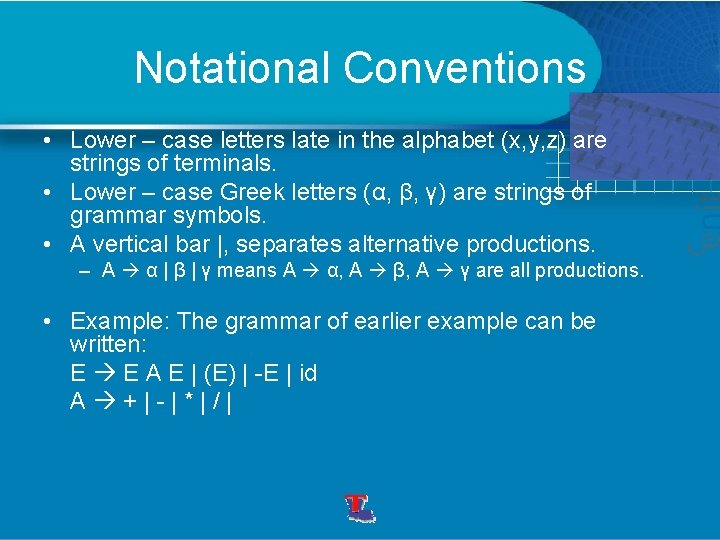

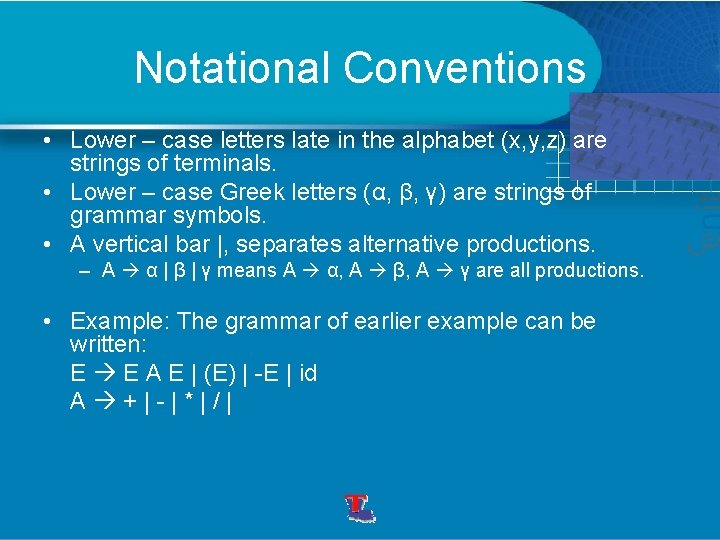

Notational Conventions • Lower-case letters late in the alphabet (x, y, z) are strings of terminals. • Lower-case Greek letters (α, β, γ) are strings of grammar symbols. • A vertical bar | separates alternative productions: A → α | β | γ means that A → α, A → β, and A → γ are all productions. • Example: The grammar of the earlier example can be written: E → E A E | (E) | -E | id; A → + | - | * | / | ↑

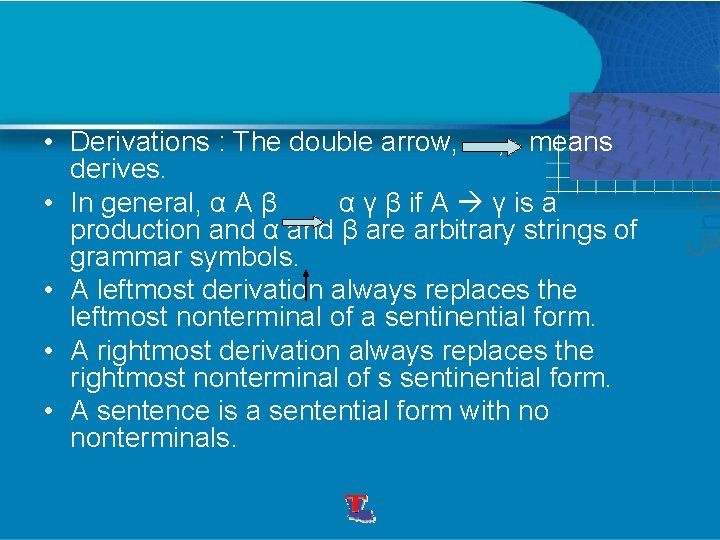

• Derivations: The double arrow ⇒ means "derives in one step". • In general, αAβ ⇒ αγβ if A → γ is a production and α and β are arbitrary strings of grammar symbols. • If α can be derived from the start symbol S in zero or more steps (S ⇒* α), α is called a sentential form. • A leftmost derivation always replaces the leftmost nonterminal of a sentential form. • A rightmost derivation always replaces the rightmost nonterminal of a sentential form. • A sentence is a sentential form with no nonterminals.
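A leftmost derivation can be replayed mechanically: at each step, find the leftmost nonterminal and substitute one of its alternatives. This sketch assumes the compact grammar E → E A E | (E) | -E | id, A → + | - | * | / (↑ omitted for brevity), with alternatives numbered from 0 in the order written; `leftmost_derivation` is a hypothetical helper.

```python
# The grammar E -> E A E | (E) | -E | id ;  A -> + | - | * | /   (alternatives indexed from 0)
GRAMMAR = {
    "E": [["E", "A", "E"], ["(", "E", ")"], ["-", "E"], ["id"]],
    "A": [["+"], ["-"], ["*"], ["/"]],
}


def leftmost_derivation(start: str, choices: list[int]) -> list[str]:
    """Apply one production per step to the leftmost nonterminal.

    `choices[k]` is the index of the alternative used at step k.
    Returns every sentential form, starting with `start`.
    """
    form = [start]
    forms = [" ".join(form)]
    for choice in choices:
        positions = [k for k, sym in enumerate(form) if sym in GRAMMAR]
        if not positions:
            raise ValueError("no nonterminal left to replace")
        i = positions[0]  # leftmost nonterminal
        form = form[:i] + GRAMMAR[form[i]][choice] + form[i + 1:]
        forms.append(" ".join(form))
    return forms


if __name__ == "__main__":
    # E => -E => -(E) => -(E A E) => -(id A E) => -(id + E) => -(id + id)
    print(" => ".join(leftmost_derivation("E", [2, 1, 0, 3, 0, 3])))
```

The last form contains no nonterminals, so it is a sentence of the grammar.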

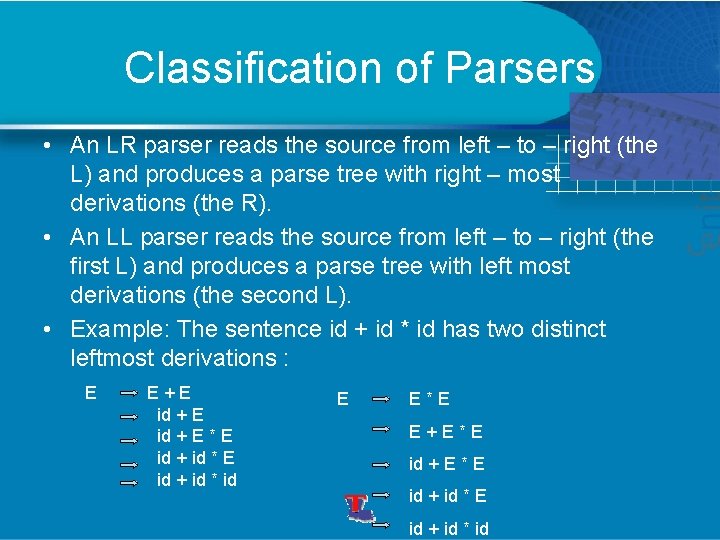

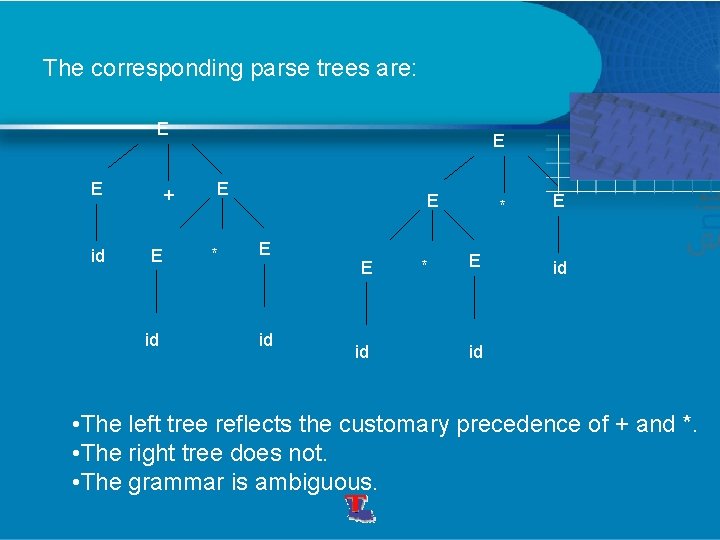

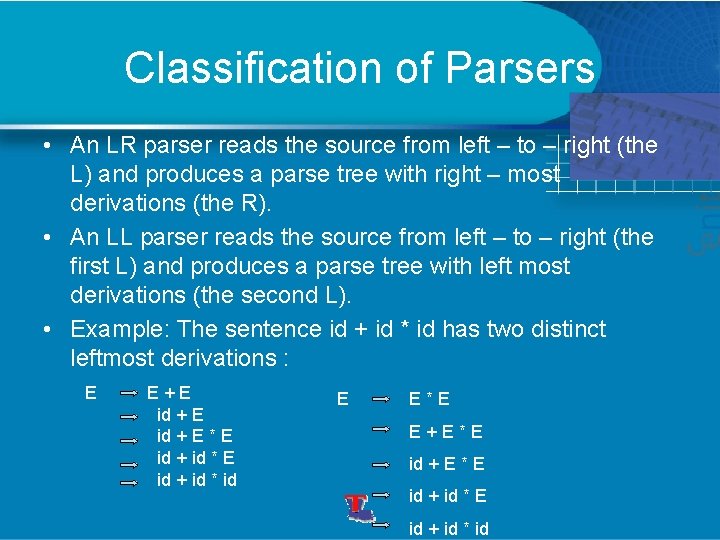

Classification of Parsers • An LR parser reads the source from left to right (the L) and constructs a rightmost derivation (the R), in reverse. • An LL parser reads the source from left to right (the first L) and constructs a leftmost derivation (the second L). • Example: With the grammar E → E + E | E * E | (E) | -E | id, the sentence id + id * id has two distinct leftmost derivations: E ⇒ E + E ⇒ id + E ⇒ id + E * E ⇒ id + id * E ⇒ id + id * id and E ⇒ E * E ⇒ E + E * E ⇒ id + E * E ⇒ id + id * E ⇒ id + id * id

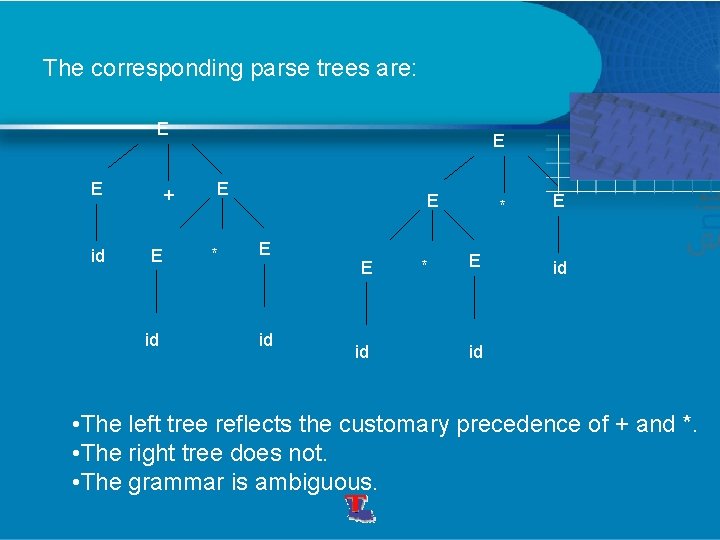

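The corresponding parse trees, written as labeled brackets, are: E[ E[id] + E[ E[id] * E[id] ] ] and E[ E[ E[id] + E[id] ] * E[id] ]. • The left (first) tree reflects the customary precedence of + and *: id * id forms a subtree. • The right (second) tree does not. • The grammar is ambiguous.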

Ambiguity • A grammar is ambiguous if it can produce more than one parse tree for some sentence; equivalently, some sentence has more than one leftmost derivation or more than one rightmost derivation. • An unambiguous grammar is desirable. Otherwise the parser needs disambiguating rules to throw away the undesired parse trees. • Regular expressions vs. grammars: Every construct described by a regular expression can also be described by a grammar. The converse is not true. • We could therefore use a CFG instead of regular expressions to describe the tokens for a lexical analyzer.
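To see the ambiguity concretely, the sketch below counts the parse trees for a token sequence under the subset E → E + E | E * E | id (parentheses and unary minus are left out to keep it short). It is a memoized recursion over substrings that tries each operator in turn as the root of the tree; `count_parse_trees` is a hypothetical name.

```python
from functools import lru_cache


def count_parse_trees(tokens: list[str]) -> int:
    """Count the parse trees of E -> E + E | E * E | id for the given tokens."""
    toks = tuple(tokens)

    @lru_cache(maxsize=None)
    def count(i: int, j: int) -> int:
        """Number of distinct trees deriving toks[i:j] from E."""
        if j - i == 1:
            return 1 if toks[i] == "id" else 0
        total = 0
        for k in range(i + 1, j - 1):  # toks[k] is the operator at the root
            if toks[k] in ("+", "*"):
                total += count(i, k) * count(k + 1, j)
        return total

    return count(0, len(toks))


if __name__ == "__main__":
    print(count_parse_trees("id + id * id".split()))
    print(count_parse_trees("id + id * id + id".split()))
```

For id + id * id it finds the two trees discussed above; for id + id * id + id it finds five, so the number of parse trees grows quickly with the length of the expression.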

• We use regular expressions because: - Regular expressions usually give a more concise and easier-to-understand description of tokens than grammars. - The lexical rules of a language are simple and don't need the full power of a grammar. - A more efficient analyzer can be constructed automatically from regular expressions than from an arbitrary grammar.

• We could combine lexical analysis with syntax analysis for a simple language, using a single grammar whose terminals are source characters instead of tokens. • Separating the two functions is better because it modularizes the front end into two components of manageable size.

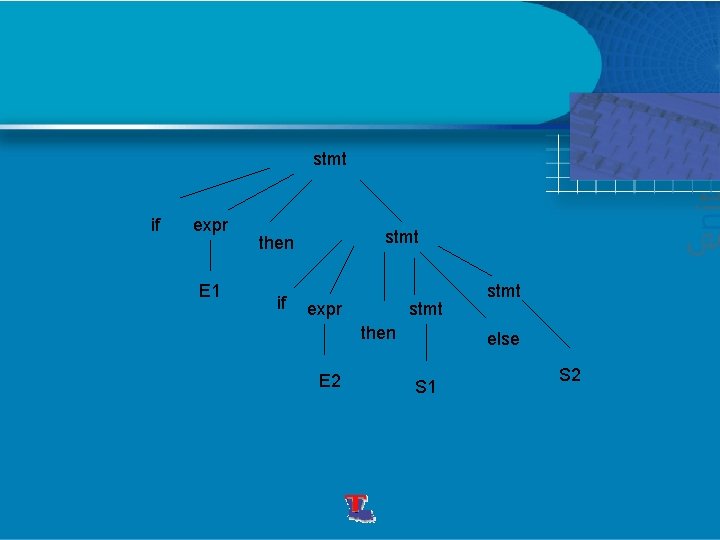

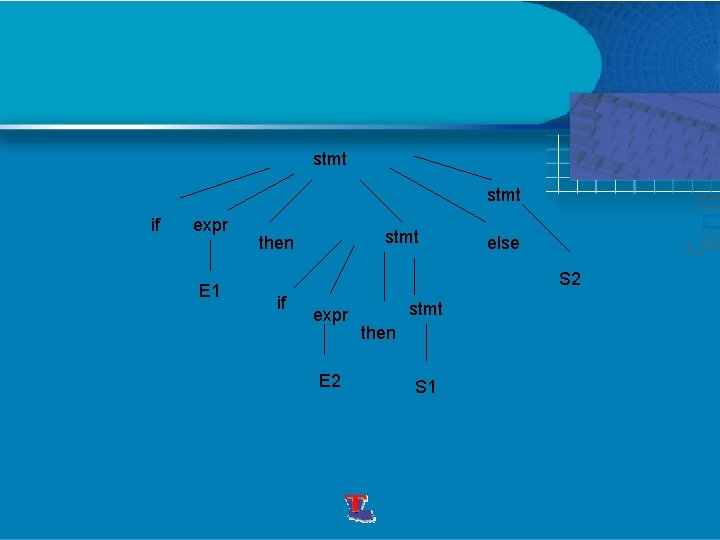

Removing common Ambiguity • Eliminating the "dangling-else" ambiguity. Some languages allow both if-then and if-then-else statements: stmt → if expr then stmt | if expr then stmt else stmt • This grammar is ambiguous, since the string if E1 then if E2 then S1 else S2 has two parse trees:

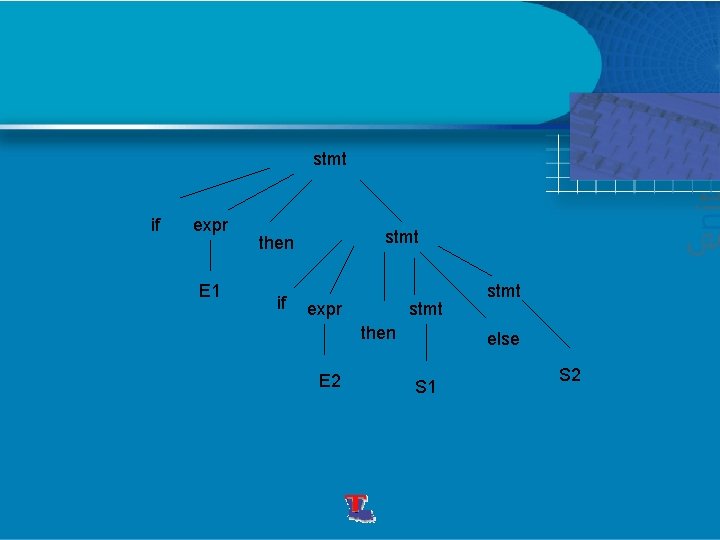

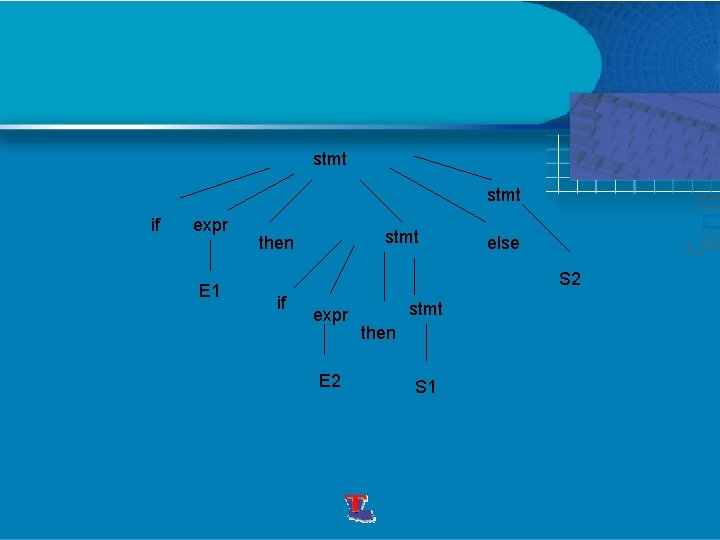

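Parse tree 1 (else matched with the inner then): stmt[ if expr[E1] then stmt[ if expr[E2] then stmt[S1] else stmt[S2] ] ]

Parse tree 2 (else matched with the outer then): stmt[ if expr[E1] then stmt[ if expr[E2] then stmt[S1] ] else stmt[S2] ]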

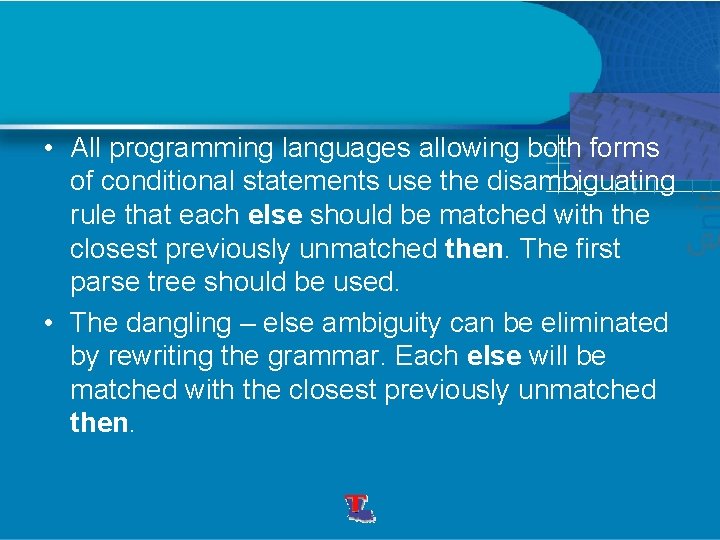

• Programming languages that allow both forms of conditional statement use the disambiguating rule that each else is matched with the closest previous unmatched then, so the first parse tree is the one used. • The dangling-else ambiguity can also be eliminated by rewriting the grammar so that each else is matched with the closest previous unmatched then.

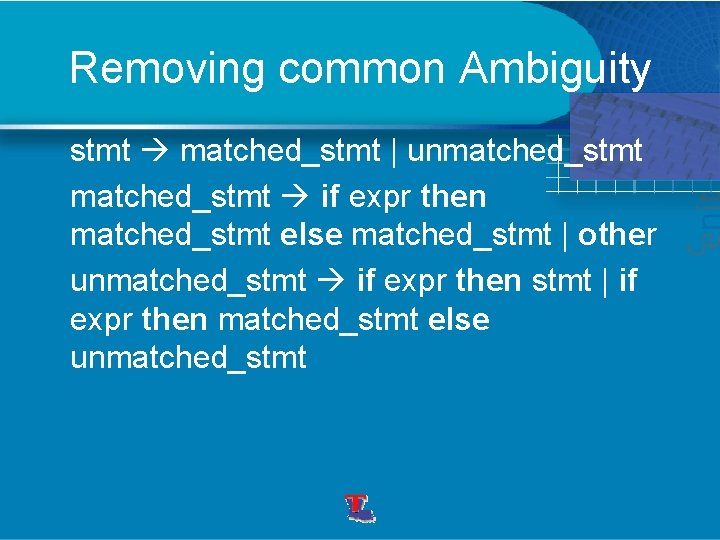

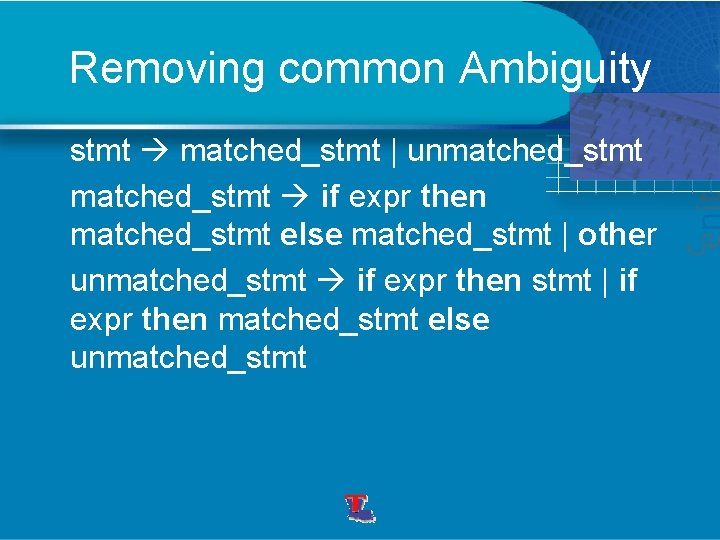

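Removing common Ambiguity • stmt → matched_stmt | unmatched_stmt; matched_stmt → if expr then matched_stmt else matched_stmt | other; unmatched_stmt → if expr then stmt | if expr then matched_stmt else unmatched_stmt • Every then inside a matched_stmt already has its else, so only a matched_stmt may appear between a then and an else; this forces each else to pair with the closest unmatched then.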

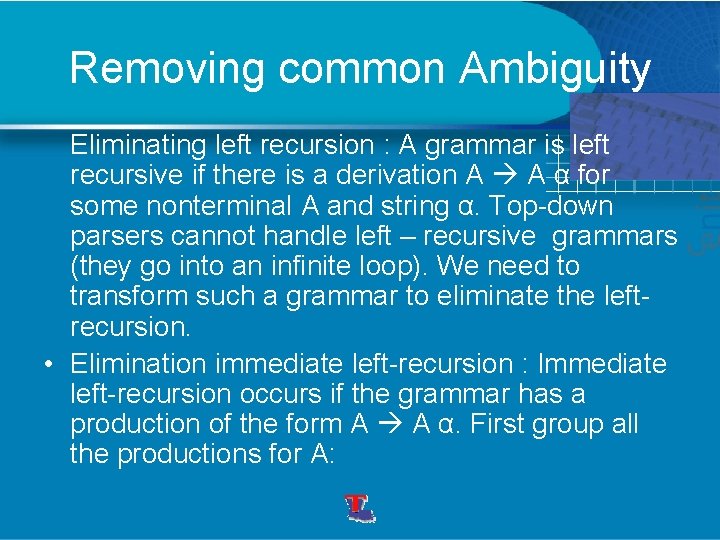

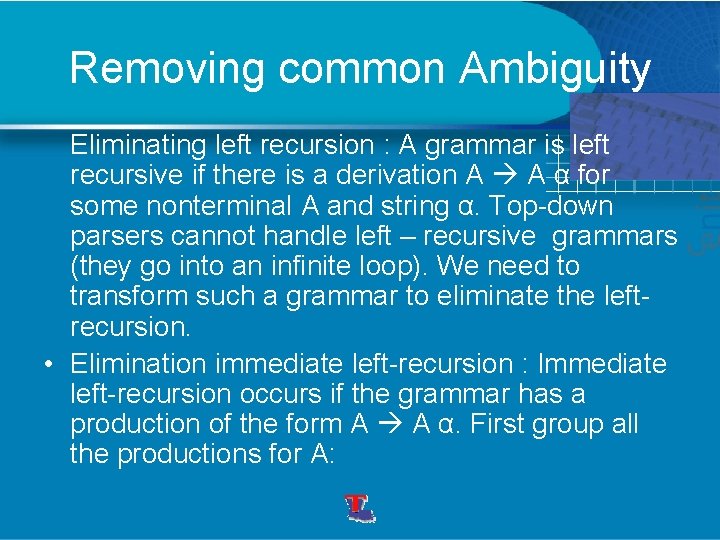

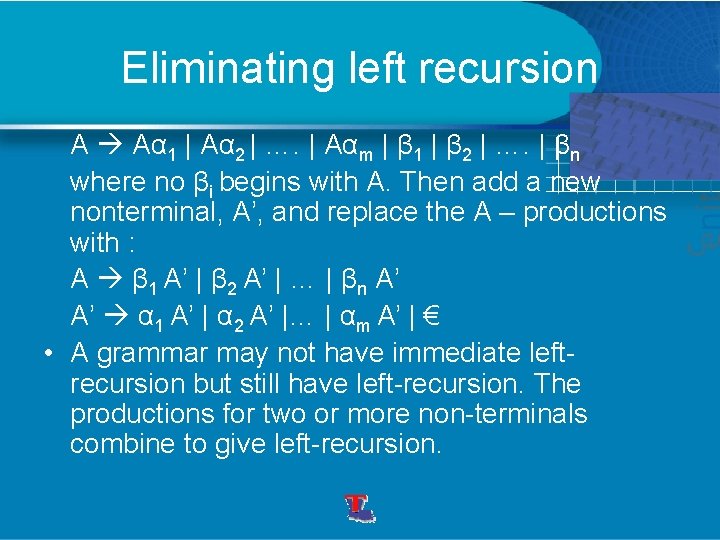

Removing common Ambiguity • Eliminating left recursion: A grammar is left recursive if it has a nonterminal A with a derivation A ⇒+ Aα (in one or more steps) for some string α. Top-down parsers cannot handle left-recursive grammars (they go into an infinite loop), so we need to transform such a grammar to eliminate the left recursion. • Eliminating immediate left recursion: Immediate left recursion occurs if the grammar has a production of the form A → Aα. First group all the productions for A:

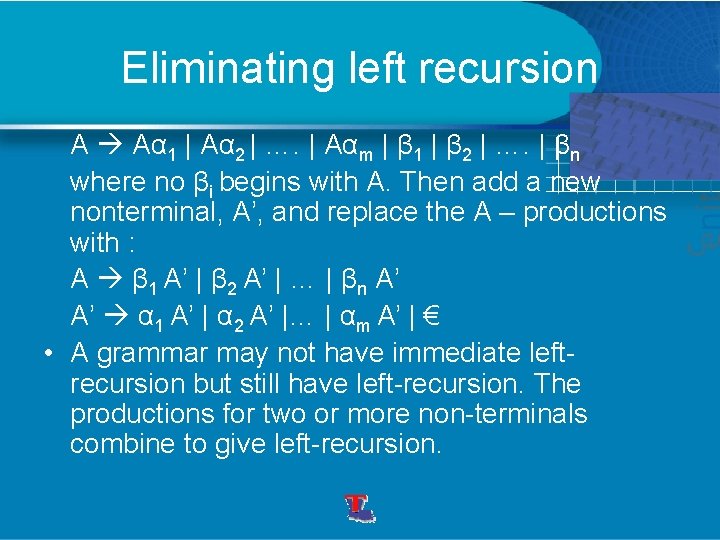

Eliminating left recursion • A → Aα1 | Aα2 | … | Aαm | β1 | β2 | … | βn, where no βi begins with A (and no αi is ε). Then add a new nonterminal A′ and replace the A-productions with: A → β1A′ | β2A′ | … | βnA′; A′ → α1A′ | α2A′ | … | αmA′ | ε • A grammar may have no immediate left recursion but still be left recursive: the productions for two or more nonterminals can combine to give left recursion (e.g., with S → Aa | b and A → Sd | ε, we have S ⇒ Aa ⇒ Sda).
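The transformation above is mechanical enough to code directly. In this sketch a right side is a list of symbols and [] stands for ε; the new nonterminal is named by appending a prime (A'), which assumes that name is not already in use. Like the rule it implements, it assumes no αi is empty (no production A → A). The function name is hypothetical.

```python
def eliminate_immediate_left_recursion(a: str, productions: list[list[str]]) -> dict:
    """Rewrite A -> A a1 | ... | A am | b1 | ... | bn  as
    A -> b1 A' | ... | bn A'   and   A' -> a1 A' | ... | am A' | epsilon.

    Right sides are lists of symbols; [] stands for epsilon.
    """
    alphas = [rhs[1:] for rhs in productions if rhs and rhs[0] == a]  # A -> A alpha
    betas = [rhs for rhs in productions if not rhs or rhs[0] != a]    # A -> beta
    if not alphas:
        return {a: productions}  # no immediate left recursion: nothing to do
    a_prime = a + "'"
    return {
        a: [beta + [a_prime] for beta in betas],
        a_prime: [alpha + [a_prime] for alpha in alphas] + [[]],
    }


if __name__ == "__main__":
    print(eliminate_immediate_left_recursion("E", [["E", "+", "T"], ["T"]]))
```

Applied to the slide's example A → Aa | b, it yields A → bA' and A' → aA' | ε.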

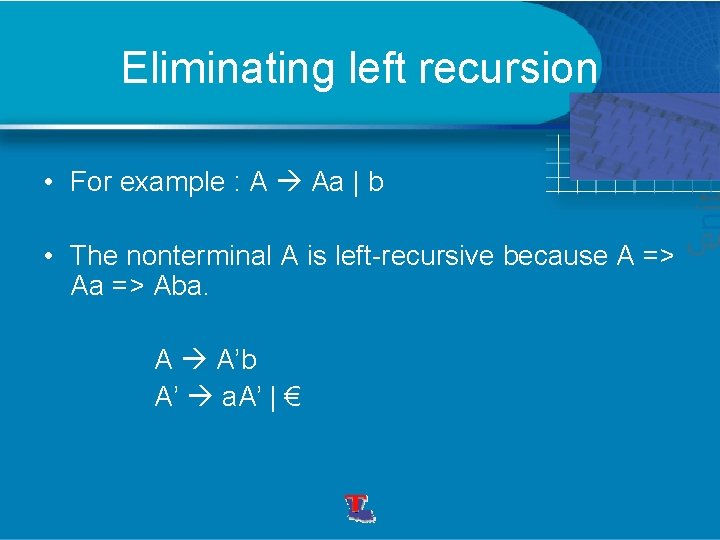

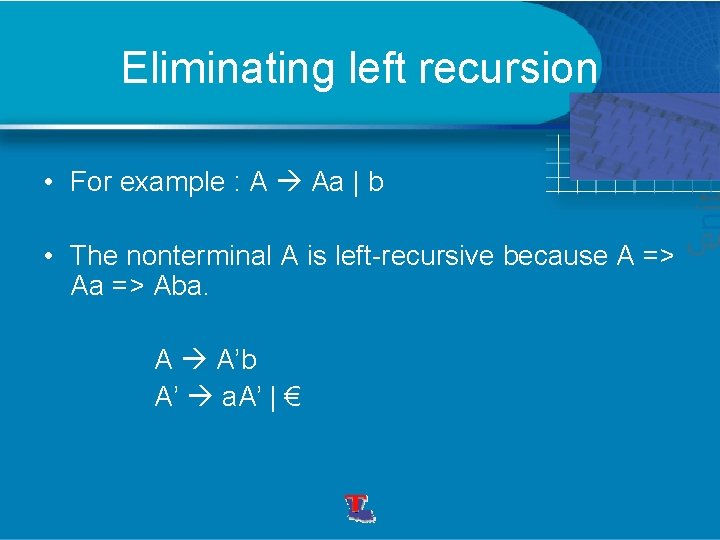

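Eliminating left recursion • For example: A → Aa | b • The nonterminal A is left recursive because A ⇒ Aa: A derives a string that begins with A itself (e.g., A ⇒ Aa ⇒ Aaa ⇒ baa). • Eliminating the left recursion gives: A → bA′; A′ → aA′ | ε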

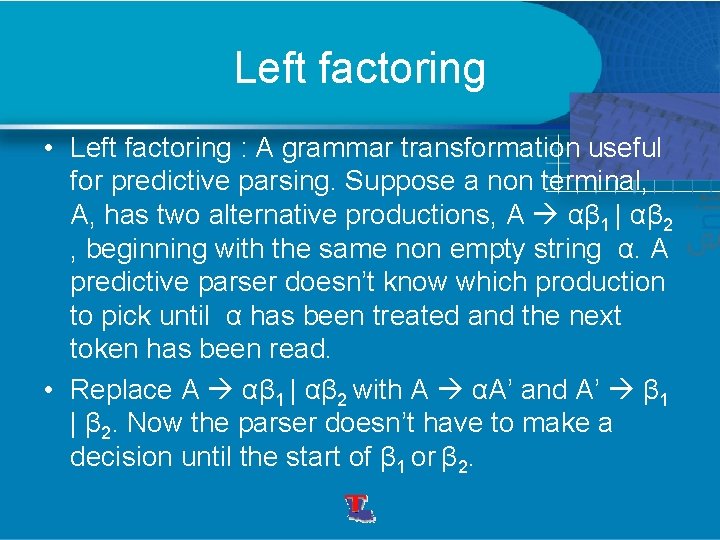

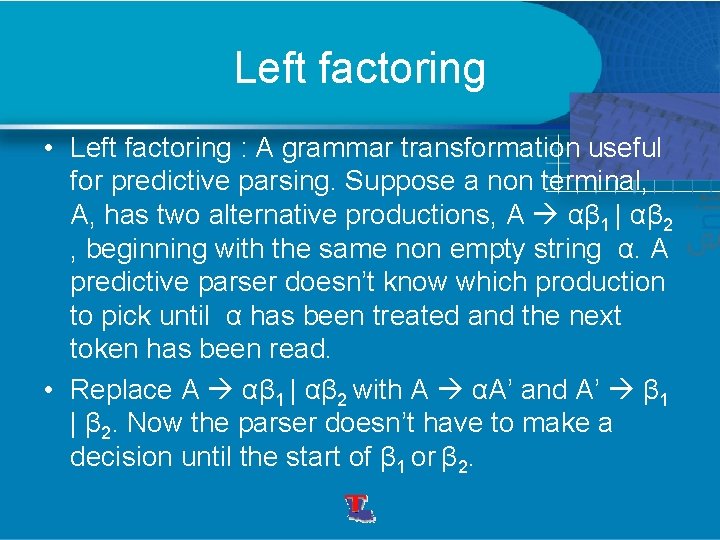

Left factoring • Left factoring is a grammar transformation useful for predictive parsing. Suppose a nonterminal A has two alternative productions A → αβ1 | αβ2 that begin with the same nonempty string α. A predictive parser cannot tell which production to choose until it has processed the input derived from α and read the next token. • Replace A → αβ1 | αβ2 with A → αA′ and A′ → β1 | β2. Now the parser doesn't have to make a decision until it reaches the input derived from β1 or β2.
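A single left-factoring step can be sketched as: group the alternatives by their first symbol, take the longest common prefix α of a group with two or more members, and move the differing suffixes into a new nonterminal. Right sides are lists of symbols with [] for ε; the function name and the primed name for the new nonterminal are assumptions. Repeating the step until it returns None factors the grammar completely.

```python
from collections import defaultdict


def left_factor_once(a: str, productions: list[list[str]]):
    """Perform one left-factoring step on nonterminal `a`.

    A -> alpha b1 | alpha b2 | gamma  becomes  A -> alpha A' | gamma  and  A' -> b1 | b2.
    Returns a dict of new productions, or None if no two alternatives share a first symbol.
    """
    groups = defaultdict(list)
    for rhs in productions:
        if rhs:
            groups[rhs[0]].append(rhs)
    for group in groups.values():
        if len(group) < 2:
            continue
        alpha = []  # longest prefix shared by every alternative in the group
        for symbols in zip(*group):
            if len(set(symbols)) != 1:
                break
            alpha.append(symbols[0])
        a_prime = a + "'"
        rest = [rhs for rhs in productions if rhs not in group]
        return {
            a: [alpha + [a_prime]] + rest,
            a_prime: [rhs[len(alpha):] for rhs in group],  # [] means epsilon
        }
    return None


if __name__ == "__main__":
    print(left_factor_once("cond_stmt", [
        ["if", "expr", "then", "stmt"],
        ["if", "expr", "then", "stmt", "else", "stmt"],
    ]))
```

On the cond_stmt productions of the next example it produces cond_stmt → if expr then stmt cond_stmt' and cond_stmt' → ε | else stmt, which is the slide's else_part under another name.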

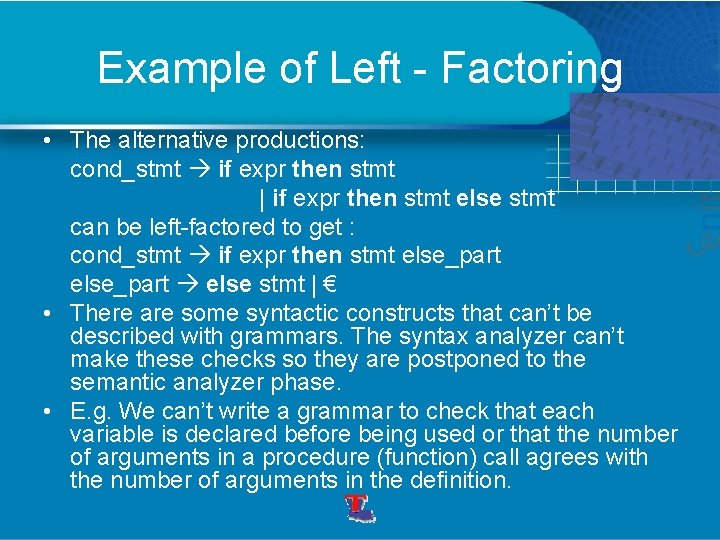

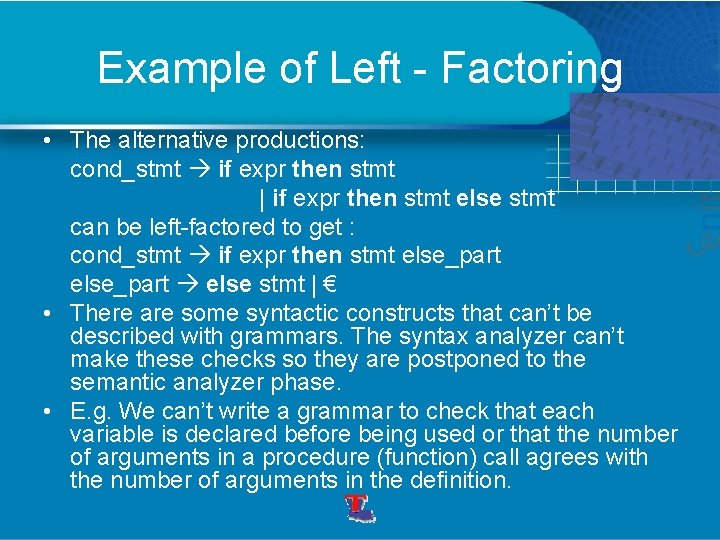

Example of Left-Factoring • The alternative productions cond_stmt → if expr then stmt | if expr then stmt else stmt can be left-factored to get: cond_stmt → if expr then stmt else_part; else_part → else stmt | ε • Some syntactic constructs can't be described with context-free grammars. The syntax analyzer can't make these checks, so they are postponed to the semantic analysis phase. • E.g., we can't write a context-free grammar to check that each variable is declared before it is used, or that the number of arguments in a procedure (function) call agrees with the number of parameters in the definition.

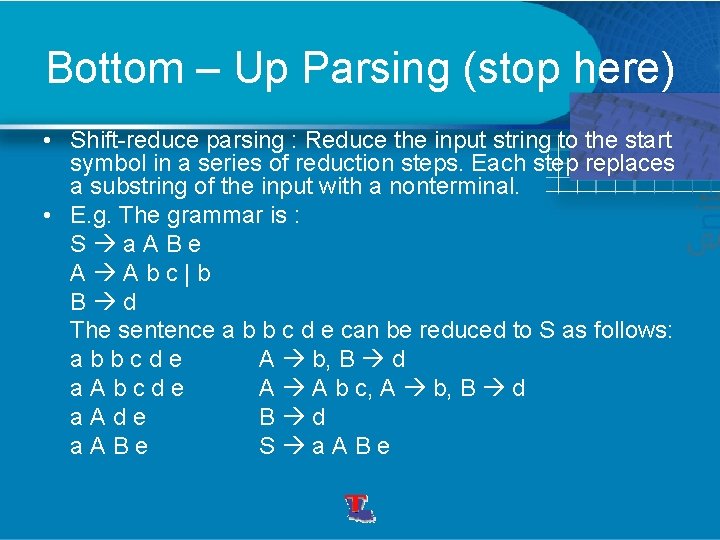

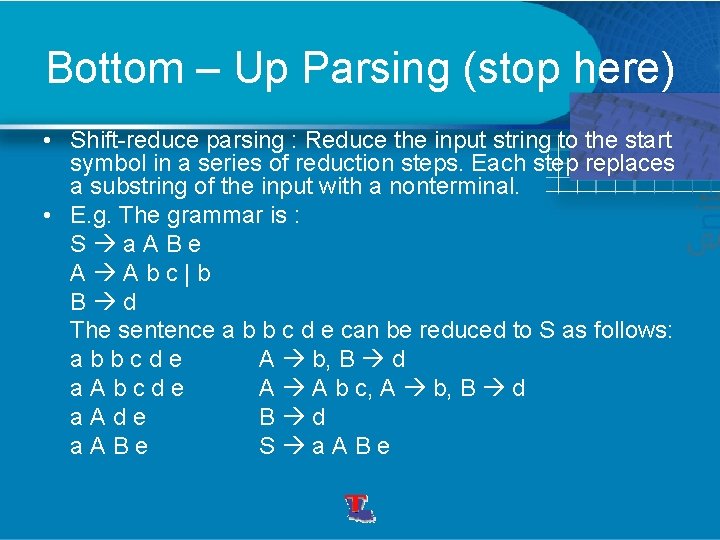

Bottom-Up Parsing (stop here) • Shift-reduce parsing: Reduce the input string to the start symbol in a series of reduction steps. Each step replaces a substring that matches the right side of a production with the nonterminal on its left side. • E.g., the grammar is: S → aABe; A → Abc | b; B → d. The sentence abbcde can be reduced to S as follows (at each step the productions whose right sides match some substring are listed, and the first one is applied): abbcde [A → b, B → d]; aAbcde [A → Abc, A → b, B → d]; aAde [B → d]; aABe [S → aABe]; S

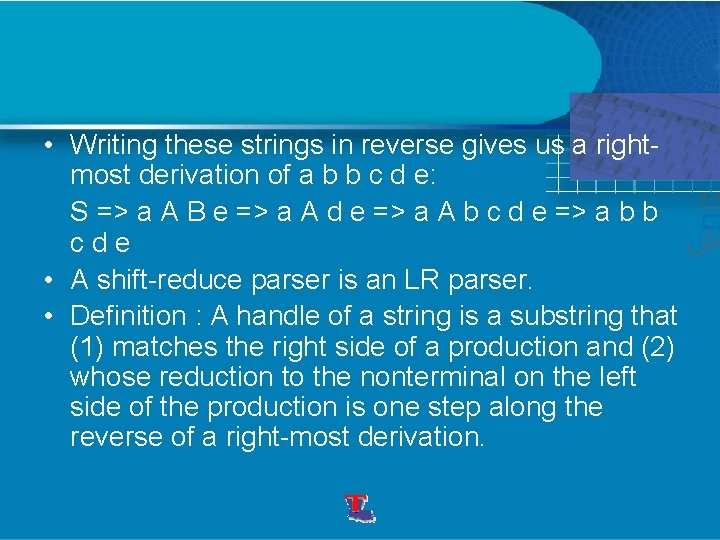

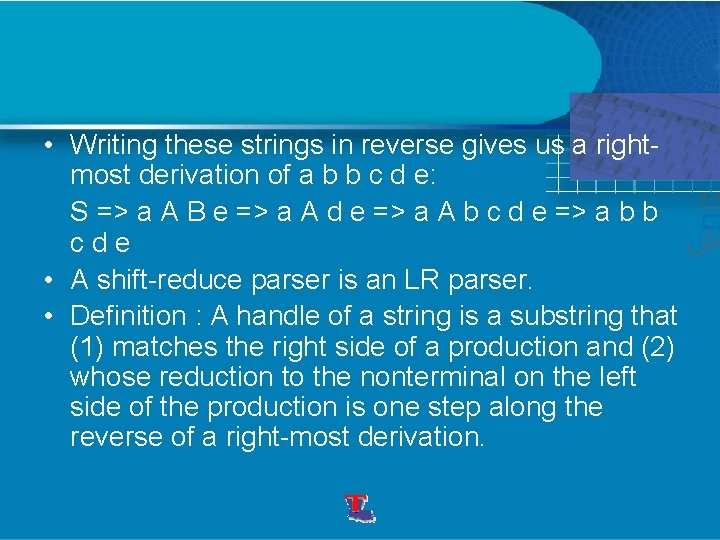

• Writing these strings in reverse order gives a rightmost derivation of abbcde: S ⇒ aABe ⇒ aAde ⇒ aAbcde ⇒ abbcde • Shift-reduce parsing is the bottom-up technique used by LR parsers: it traces out a rightmost derivation in reverse. • Definition: A handle of a string is a substring that (1) matches the right side of a production and (2) whose reduction to the nonterminal on the left side of that production represents one step along the reverse of a rightmost derivation.
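The link between reductions and rightmost derivations can be checked with a small sketch: expand the rightmost nonterminal at each step, then read the resulting sentential forms backwards. It uses the grammar S → aABe, A → Abc | b, B → d from above; `rightmost_derivation` is a hypothetical helper, and symbols are assumed to be single characters so that forms can be printed without spaces.

```python
GRAMMAR = {"S": [["a", "A", "B", "e"]], "A": [["A", "b", "c"], ["b"]], "B": [["d"]]}


def rightmost_derivation(start: str, steps: list[list[str]]) -> list[str]:
    """Expand the rightmost nonterminal at each step with the given right side.

    Returns the sentential forms as strings (symbols are single characters here).
    """
    form = [start]
    forms = ["".join(form)]
    for rhs in steps:
        positions = [k for k, sym in enumerate(form) if sym in GRAMMAR]
        if not positions:
            raise ValueError("no nonterminal left to expand")
        i = positions[-1]  # rightmost nonterminal
        if rhs not in GRAMMAR[form[i]]:
            raise ValueError(f"{form[i]} -> {''.join(rhs)} is not a production")
        form = form[:i] + rhs + form[i + 1:]
        forms.append("".join(form))
    return forms


if __name__ == "__main__":
    forms = rightmost_derivation("S", [["a", "A", "B", "e"], ["d"], ["A", "b", "c"], ["b"]])
    print(" => ".join(forms))            # the rightmost derivation
    print(" <= ".join(reversed(forms)))  # the same forms read as reductions
```

The reversed list is exactly the sequence abbcde, aAbcde, aAde, aABe, S produced by the shift-reduce reductions.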

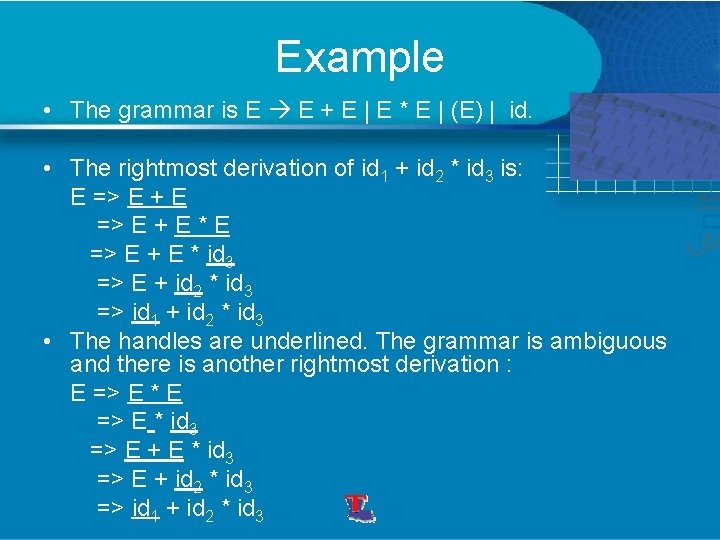

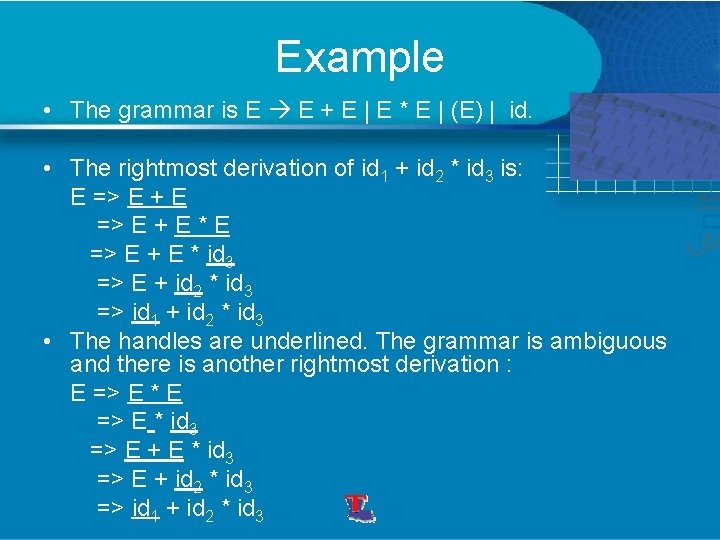

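Example • The grammar is E → E + E | E * E | (E) | id. • A rightmost derivation of id1 + id2 * id3 is: E ⇒ E + E ⇒ E + E * E ⇒ E + E * id3 ⇒ E + id2 * id3 ⇒ id1 + id2 * id3 • Reading it backwards, the handles of the right-sentential forms are id1 (in id1 + id2 * id3), id2 (in E + id2 * id3), id3 (in E + E * id3), E * E (in E + E * E), and E + E (in E + E). • The grammar is ambiguous, and there is another rightmost derivation: E ⇒ E * E ⇒ E * id3 ⇒ E + E * id3 ⇒ E + id2 * id3 ⇒ id1 + id2 * id3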

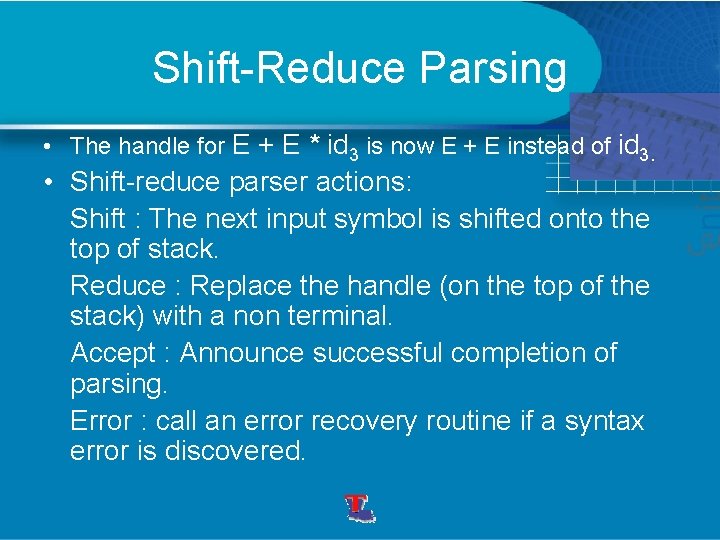

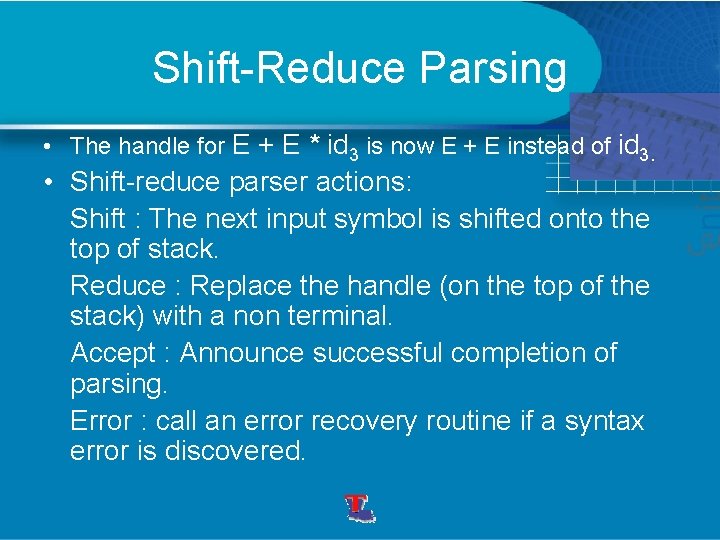

Shift-Reduce Parsing • In the second derivation, the handle of E + E * id3 is E + E instead of id3. • Shift-reduce parser actions: – Shift: the next input symbol is shifted onto the top of the stack. – Reduce: the handle, which is on top of the stack, is replaced with the nonterminal on the left side of the matching production. – Accept: announce successful completion of parsing. – Error: call an error recovery routine when a syntax error is discovered.

Stop here

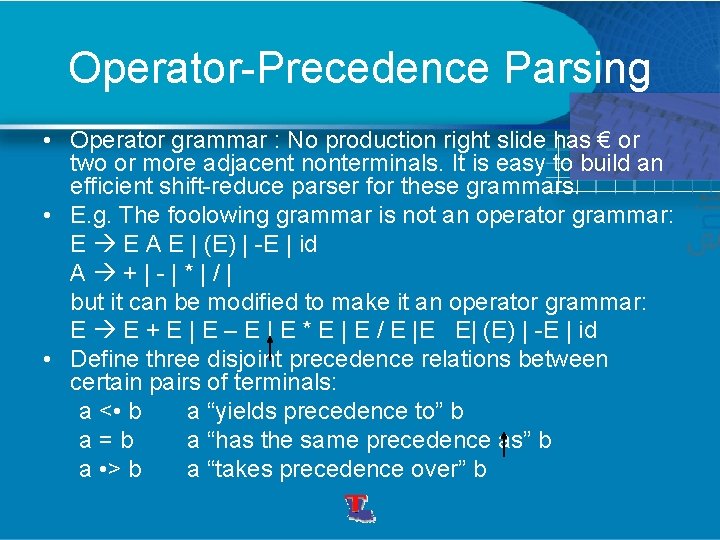

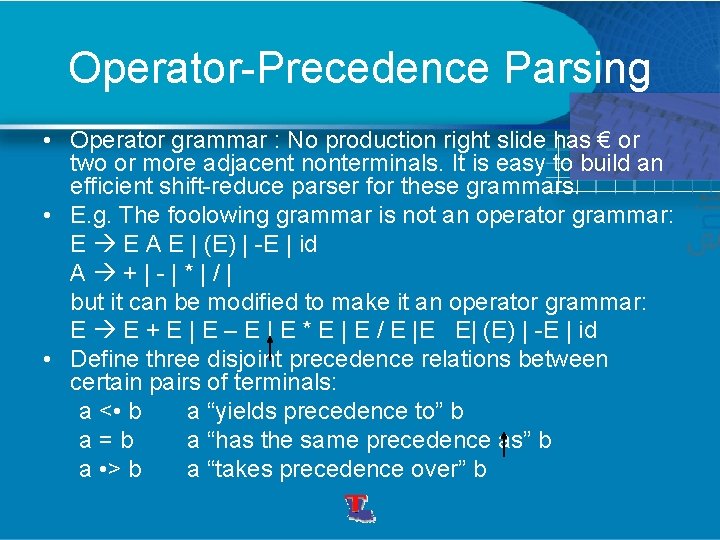

Operator-Precedence Parsing • Operator grammar: no production right side is ε or has two adjacent nonterminals. It is easy to build an efficient shift-reduce parser for such grammars. • E.g., the following grammar is not an operator grammar, because E A E has adjacent nonterminals: E → E A E | (E) | -E | id; A → + | - | * | / | ↑ but it can be turned into an operator grammar by substituting each alternative of A: E → E + E | E - E | E * E | E / E | E ↑ E | (E) | -E | id • Define three disjoint precedence relations between certain pairs of terminals: a <· b (a "yields precedence to" b), a ≐ b (a "has the same precedence as" b), and a ·> b (a "takes precedence over" b).

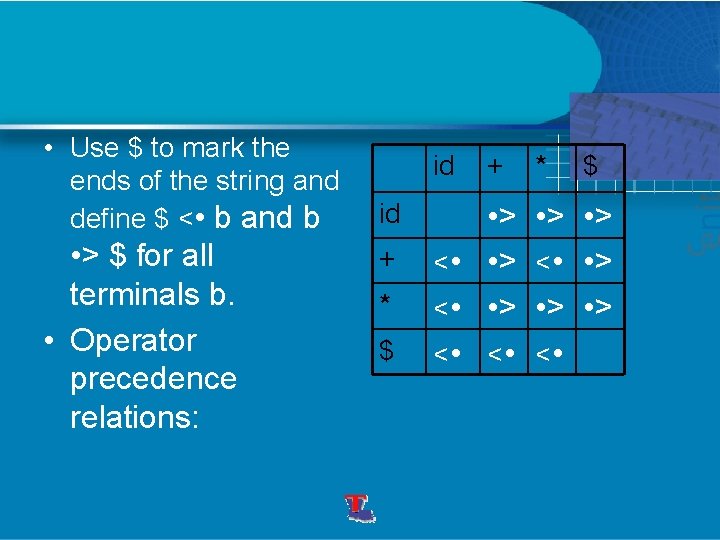

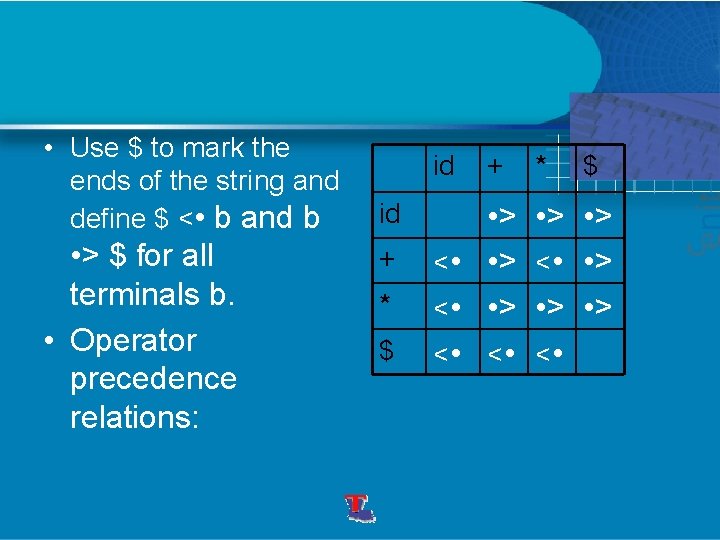

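• Use $ to mark the ends of the string, and define $ <· b and b ·> $ for all terminals b. • Operator-precedence relations:

|    | id | +  | *  | $  |
|----|----|----|----|----|
| id |    | ·> | ·> | ·> |
| +  | <· | ·> | <· | ·> |
| *  | <· | ·> | ·> | ·> |
| $  | <· | <· | <· |    |

(Row: terminal on the left; column: terminal on the right; blank: error.)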

• Note that id takes precedence over + (id ·> +), and * takes precedence over + (+ <· * and * ·> +). The + and * operators are both left associative, so + ·> + and * ·> *. • Finding the handle in a right-sentential form: the string never has two adjacent nonterminals, so ignore the nonterminals and insert the precedence relations between adjacent terminals. Scan the string from the left end until the first ·> is found. Then scan backwards (to the left) over any ≐ until a <· is found. The handle contains everything between the <· and the ·>, including any intervening or surrounding nonterminals.

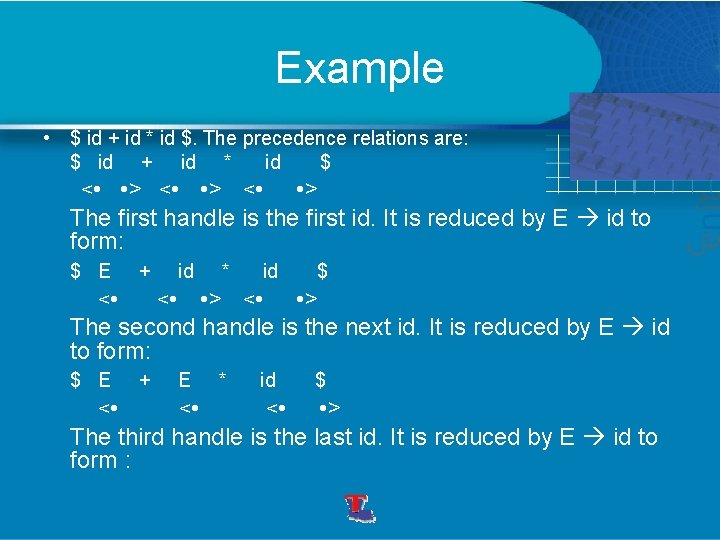

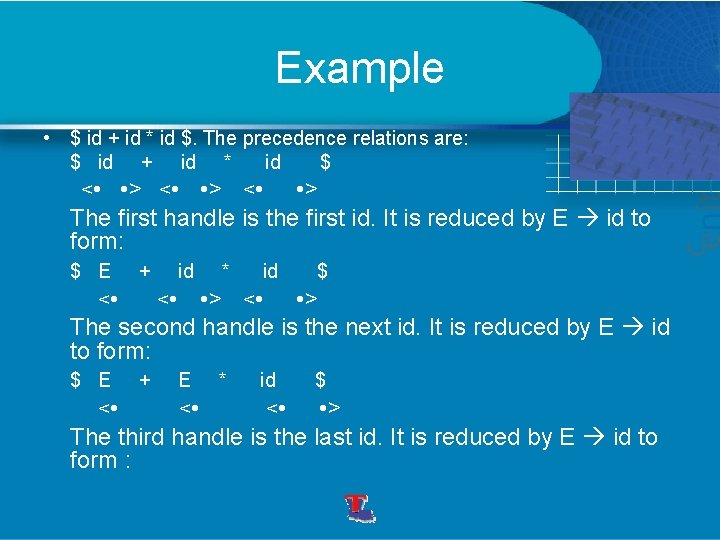

Example • For $ id + id * id $, the precedence relations are: $ <· id ·> + <· id ·> * <· id ·> $. The first handle is the first id. It is reduced by E → id to form $ E + id * id $, whose relations (ignoring E) are $ <· + <· id ·> * <· id ·> $. The second handle is the next id. It is reduced by E → id to form $ E + E * id $, with relations $ <· + <· * <· id ·> $. The third handle is the last id. It is reduced by E → id to form:

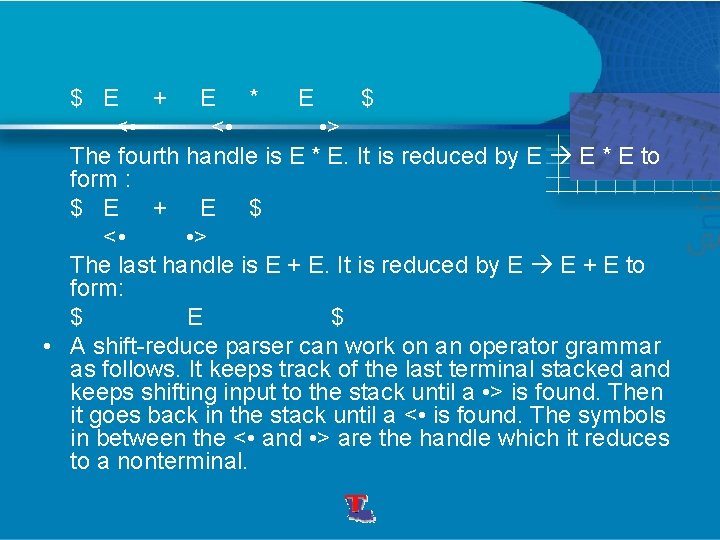

$ E + E * E $, with relations $ <· + <· * ·> $. The fourth handle is E * E. It is reduced by E → E * E to form $ E + E $, with relations $ <· + ·> $. The last handle is E + E. It is reduced by E → E + E to form $ E $. • A shift-reduce parser can work on an operator grammar as follows. It keeps track of the topmost terminal on the stack and keeps shifting input onto the stack as long as that terminal <· or ≐ the next input symbol. When a ·> is found, it pops the stack until the topmost terminal is related by <· to the terminal most recently popped. The symbols between the <· and the ·> (including any nonterminals there) form the handle, which it reduces to a nonterminal.
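The parsing procedure just described can be sketched in Python for the precedence relations among id, +, * and $ given earlier. Assumptions: the relations are hard-coded (`<` for <·, `>` for ·>), every reduction produces the single nonterminal E, and a handle that matches no right side of E → E + E | E * E | id is reported as a syntax error. The function names are illustrative. Nonterminals are kept on the stack so the recorded handles look like the ones in the example.

```python
# PREC[(left, right)] for terminals; "<" is <., ">" is .>, a missing pair is an error entry.
PREC = {
    ("id", "+"): ">", ("id", "*"): ">", ("id", "$"): ">",
    ("+", "id"): "<", ("+", "+"): ">", ("+", "*"): "<", ("+", "$"): ">",
    ("*", "id"): "<", ("*", "+"): ">", ("*", "*"): ">", ("*", "$"): ">",
    ("$", "id"): "<", ("$", "+"): "<", ("$", "*"): "<",
}
TERMINALS = {"id", "+", "*", "$"}
PRODUCTIONS = {("id",), ("E", "+", "E"), ("E", "*", "E")}  # right sides; left side is E


def topmost_terminal(stack: list[str]) -> str:
    return next(sym for sym in reversed(stack) if sym in TERMINALS)


def parse(tokens: list[str]) -> list[str]:
    """Operator-precedence parse of `tokens`; returns the handles in the order reduced."""
    stack, inp, ip, handles = ["$"], list(tokens) + ["$"], 0, []
    while True:
        a, b = topmost_terminal(stack), inp[ip]
        if a == "$" and b == "$":
            if stack == ["$", "E"]:
                return handles  # accept
            raise SyntaxError("empty input")
        rel = PREC.get((a, b))
        if rel == "<":  # a <. b: shift (this table has no = entries)
            stack.append(b)
            ip += 1
        elif rel == ">":  # a .> b: the handle is on top of the stack
            handle = []
            while True:
                sym = stack.pop()
                handle.append(sym)
                if sym in TERMINALS and PREC.get((topmost_terminal(stack), sym)) == "<":
                    break
            if stack[-1] not in TERMINALS:  # nonterminal just right of the <.
                handle.append(stack.pop())
            handle.reverse()
            if tuple(handle) not in PRODUCTIONS:
                raise SyntaxError(f"no production matches handle {' '.join(handle)}")
            handles.append(" ".join(handle))
            stack.append("E")  # reduce the handle to E
        else:
            raise SyntaxError(f"no precedence relation between {a} and {b}")


if __name__ == "__main__":
    print(parse("id + id * id".split()))
```

For id + id * id it records the handles id, id, id, E * E, E + E, in the same order as the worked example.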

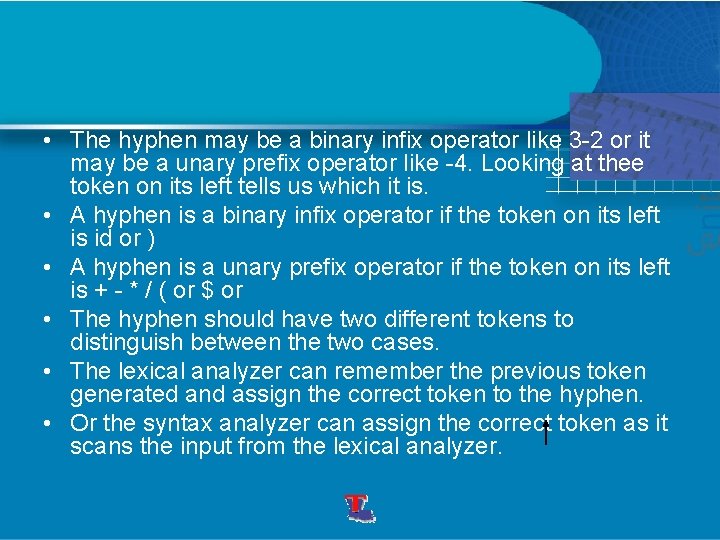

• The hyphen may be a binary infix operator, as in 3 -2, or a unary prefix operator, as in -4. Looking at the token on its left tells us which it is. • A hyphen is a binary infix operator if the token on its left is id or ). • A hyphen is a unary prefix operator if the token on its left is an operator or delimiter such as + - * / ( or $. • The two uses of the hyphen should be given different tokens to distinguish between the two cases. • The lexical analyzer can remember the previous token it generated and assign the correct token to the hyphen. • Or the syntax analyzer can assign the correct token as it scans the input from the lexical analyzer.
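The lexical-analyzer approach can be sketched as a single pass that remembers the previous token. The token names "unary-" and "binary-" are hypothetical, and treating a number token num like id is an assumption (the rule above mentions only id and )).

```python
# Tokens after which a hyphen is binary; "num" is an assumed extension of the id rule.
BINARY_CONTEXT = {"id", "num", ")"}


def classify_hyphens(tokens: list[str]) -> list[str]:
    """Replace each '-' with 'binary-' or 'unary-' based on the token to its left."""
    result = []
    prev = "$"  # start of input: a hyphen here must be unary
    for tok in tokens:
        if tok == "-":
            tok = "binary-" if prev in BINARY_CONTEXT else "unary-"
        result.append(tok)
        prev = tok
    return result


if __name__ == "__main__":
    print(classify_hyphens(["-", "id", "-", "(", "-", "id", ")"]))
```

Because the classified token becomes the new "previous" token, a run like id - - id is read as a binary minus followed by a unary minus.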

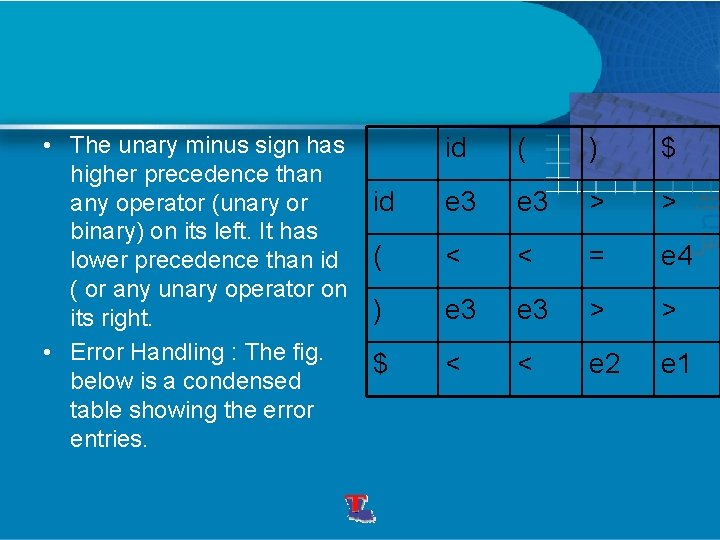

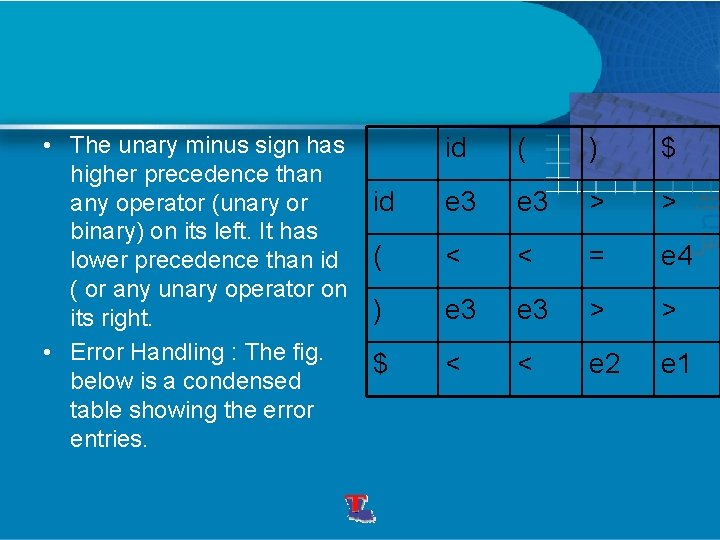

• The unary minus sign has higher precedence than any operator (unary or binary) on its left, and lower precedence than id, (, or any unary operator on its right. • Error Handling: The figure below is a condensed precedence table showing the error entries e1 to e4:

|    | id | (  | )  | $  |
|----|----|----|----|----|
| id | e3 | e3 | ·> | ·> |
| (  | <· | <· | ≐  | e4 |
| )  | e3 | e3 | ·> | ·> |
| $  | <· | <· | e2 | e1 |

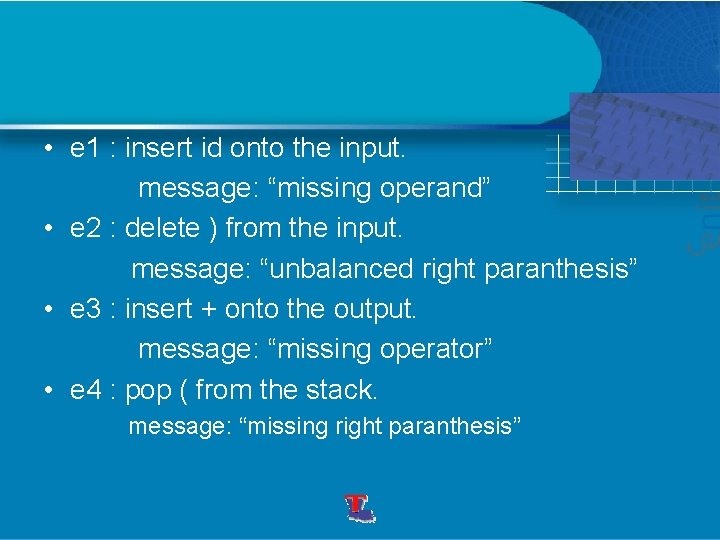

• e1 ($ followed by $: the whole expression is missing): insert id onto the input; message: "missing operand" • e2 ($ followed by )): delete ) from the input; message: "unbalanced right parenthesis" • e3 (id or ) followed by id or (): insert + onto the input; message: "missing operator" • e4 (( followed by $): pop ( from the stack; message: "missing right parenthesis"

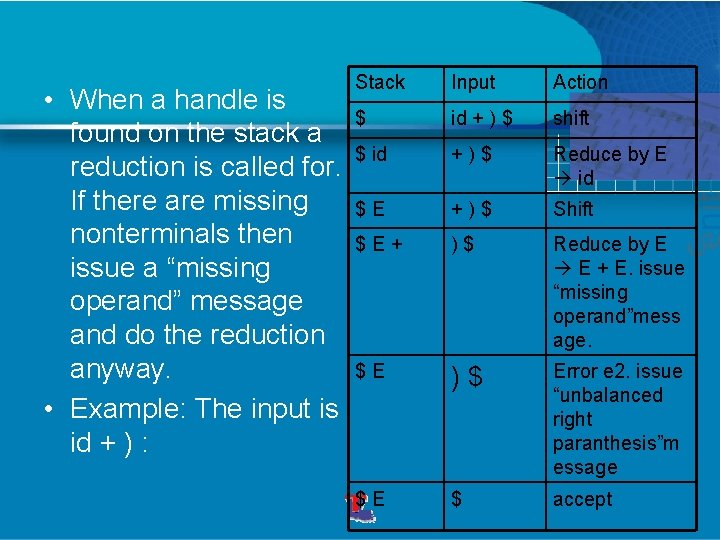

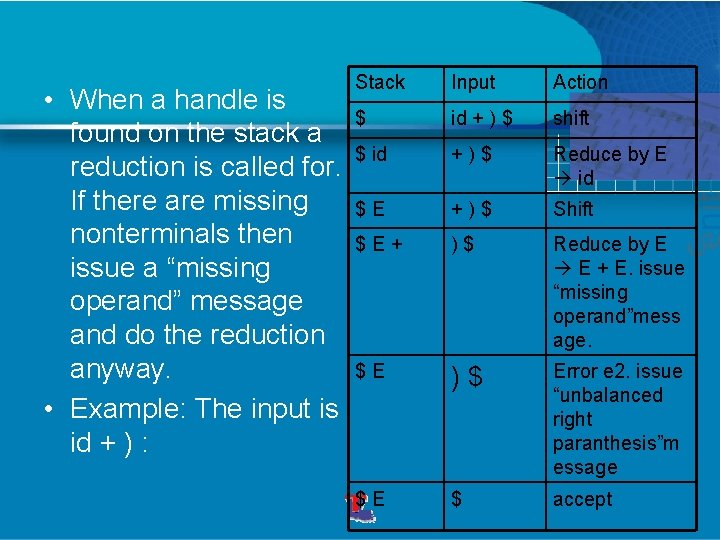

• When a handle is found on the stack, a reduction is called for. If nonterminals are missing from the handle, issue a "missing operand" message and do the reduction anyway. • Example: the input is id + ) :

| Stack | Input | Action |
|---|---|---|
| $ | id + ) $ | shift |
| $ id | + ) $ | reduce by E → id |
| $ E | + ) $ | shift |
| $ E + | ) $ | reduce by E → E + E; issue "missing operand" message |
| $ E | ) $ | error e2: delete ) and issue "unbalanced right parenthesis" message |
| $ E | $ | accept |

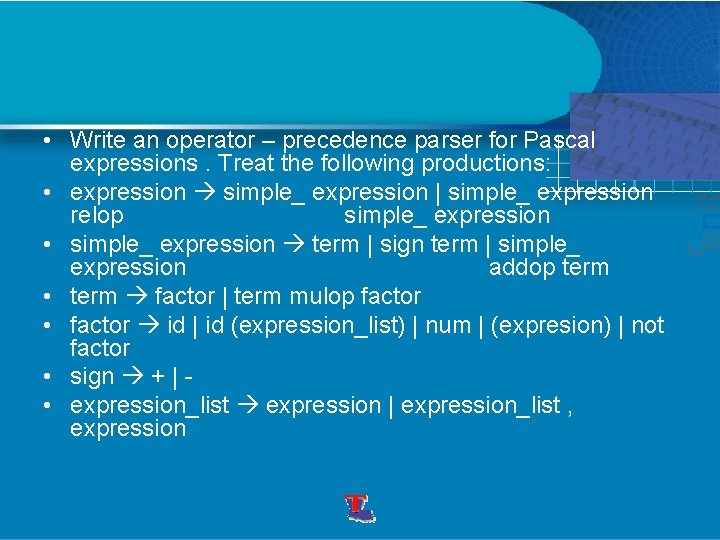

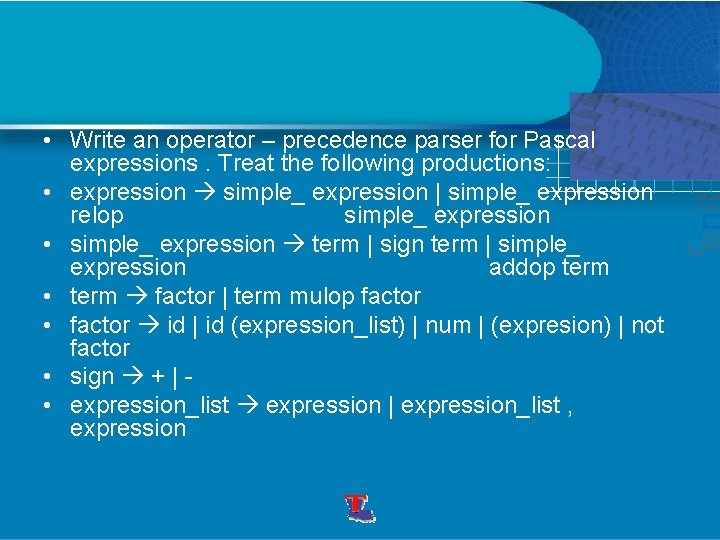

• Write an operator-precedence parser for Pascal expressions. Treat the following productions: • expression → simple_expression | simple_expression relop simple_expression • simple_expression → term | sign term | simple_expression addop term • term → factor | term mulop factor • factor → id | id ( expression_list ) | num | ( expression ) | not factor • sign → + | - • expression_list → expression | expression_list , expression

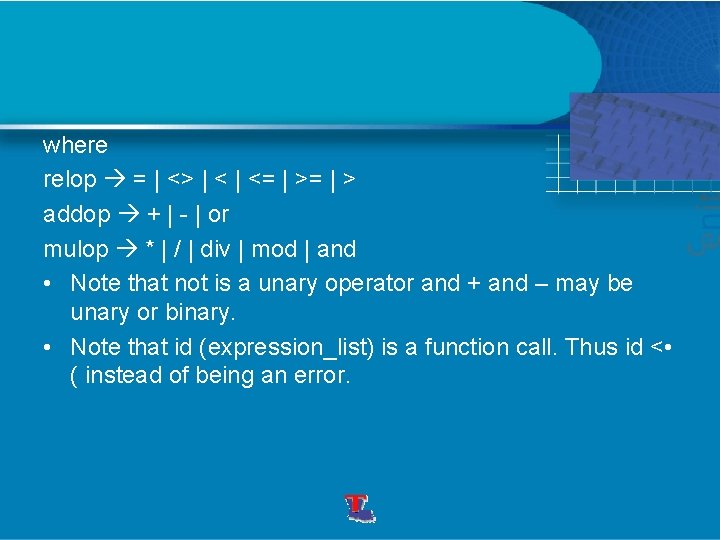

where relop → = | <> | < | <= | >= | >; addop → + | - | or; mulop → * | / | div | mod | and • Note that not is a unary operator, and + and - may be unary or binary. • Note that id ( expression_list ) is a function call. Since id and ( are adjacent in the same right side, id ≐ ( instead of being an error.