
- Slides: 37

Chair for Computer Aided Medical Procedures & Augmented Reality

Master seminar: Deep Learning for Medical Applications

Transfusion: Understanding Transfer Learning for Medical Imaging

Maithra Raghu, Chiyuan Zhang, Jon Kleinberg and Samy Bengio

Tutor: Shadi Albarqouni, Ph.D.

Student: Panarit Jahiri

Introduction

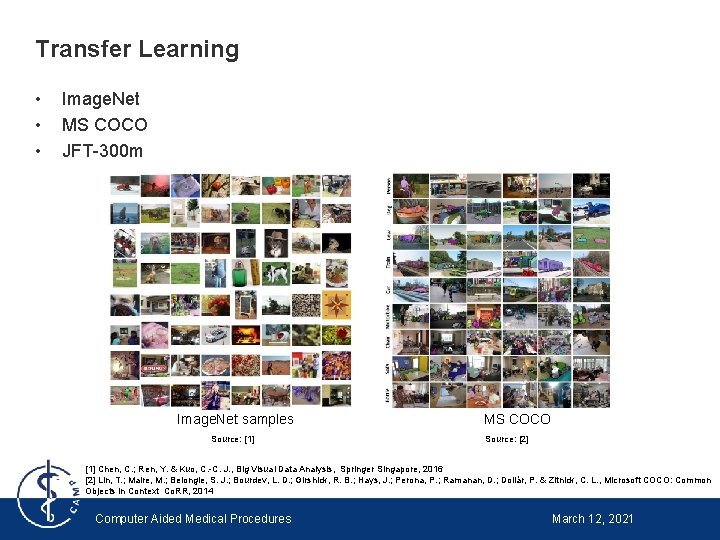

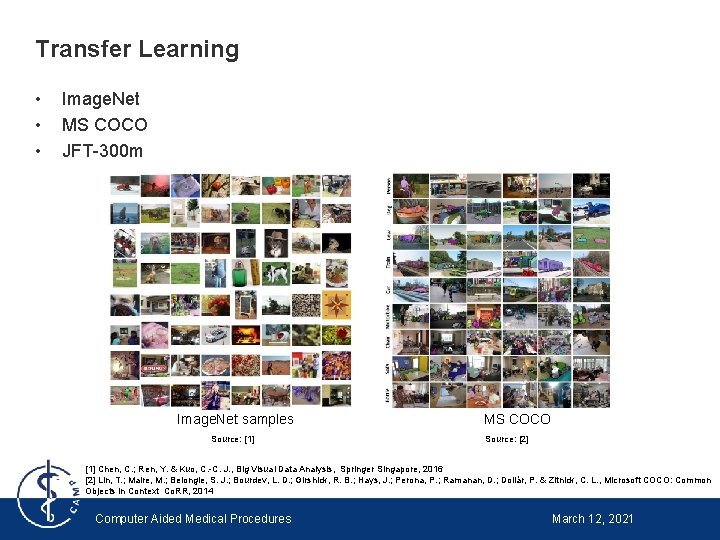

Transfer Learning

- ImageNet
- MS COCO
- JFT-300M

ImageNet samples. Source: [1]

MS COCO. Source: [2]

[1] Chen, C.; Ren, Y. & Kuo, C.-C. J., Big Visual Data Analysis, Springer Singapore, 2016

[2] Lin, T.; Maire, M.; Belongie, S. J.; Bourdev, L. D.; Girshick, R. B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P. & Zitnick, C. L., Microsoft COCO: Common Objects in Context, CoRR, 2014

Computer Aided Medical Procedures March 12, 2021

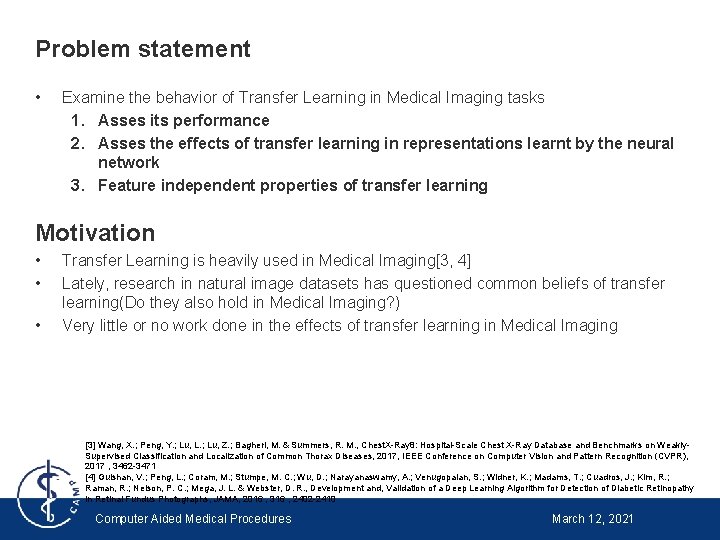

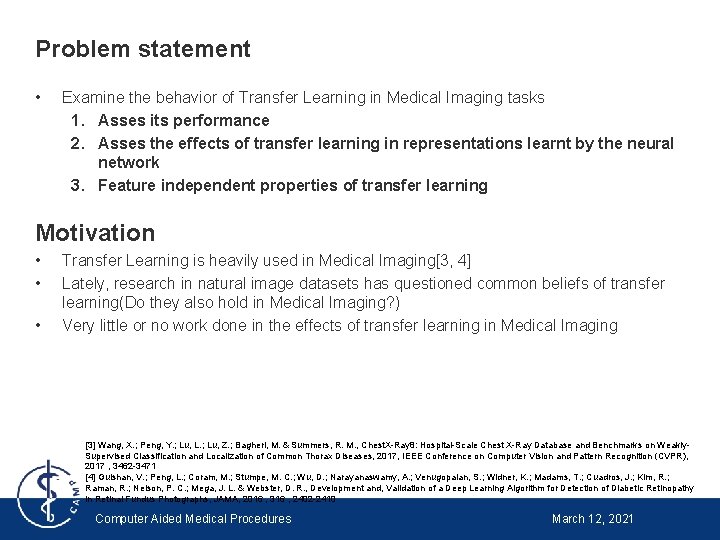

Problem statement

- Examine the behavior of transfer learning in medical imaging tasks:
  1. Assess its performance
  2. Assess the effects of transfer learning on the representations learnt by the neural network
  3. Examine the feature-independent properties of transfer learning

Motivation

- Transfer learning is heavily used in medical imaging [3, 4]
- Recent research on natural image datasets has questioned common beliefs about transfer learning (do they also hold in medical imaging?)
- Very little or no work has been done on the effects of transfer learning in medical imaging

[3] Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M. & Summers, R. M., ChestX-ray8: Hospital-Scale Chest X-Ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases, 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, 3462-3471

[4] Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M. C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; Kim, R.; Raman, R.; Nelson, P. C.; Mega, J. L. & Webster, D. R., Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs, JAMA, 2016, 316, 2402-2410

…motivation

- Big difference between ImageNet classification and medical imaging tasks (maybe we get interesting results?)
  - Medical imaging tasks often have no clear global subject or pathology in the image
  - Medical imaging datasets usually have fewer samples [5, 6], but the images are often much larger (better for searching for local variations)
  - Medical tasks have fewer classes (5 for diabetic retinopathy diagnosis, 14 for chest x-ray pathologies), compared with 1000 classes in ImageNet [4, 5]

[4] Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M. C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; Kim, R.; Raman, R.; Nelson, P. C.; Mega, J. L. & Webster, D. R., Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs, JAMA, 2016, 316, 2402-2410

[5] Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D. Y.; Bagul, A.; Langlotz, C.; Shpanskaya, K. S.; Lungren, M. P. & Ng, A. Y., CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning, CoRR, 2017, abs/1711.05225

[6] Khosravi, P.; Kazemi, E.; Zhan, Q.; Toschi, M.; Malmsten, J. E.; Hickman, C.; Meseguer, M.; Rosenwaks, Z.; Elemento, O.; Zaninovic, N. & Hajirasouliha, I., Robust Automated Assessment of Human Blastocyst Quality using Deep Learning, bioRxiv, Cold Spring Harbor Laboratory, 2018

![Related works: Domain Adaptive Transfer Learning with Specialist Models](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-6.jpg)

Related works

- Domain Adaptive Transfer Learning with Specialist Models [7]
  - More pre-training data does not always help
  - Matching to the target dataset distribution improves transfer learning
  - Fine-grained target tasks require fine-grained pretraining

| Pre-training method | Birdsnap | Oxford-IIIT Pets | Stanford Cars | FGVC Aircraft | Food-101 | CIFAR-10 |
| --- | --- | --- | --- | --- | --- | --- |
| Entire JFT dataset | 74.2 | 92.5 | 94.0 | 88.2 | 88.6 | 97.6 |
| Random initialization | 75.2 | 80.8 | 92.1 | 88.3 | 86.4 | 95.7 |

JFT-Adaptive Transfer: 81.7, 97.1, 95.7, 94.1, 98.3 (only five of the six values survive for this row, so they are not assigned to columns)

Source: Adapted from [7]

[7] Ngiam, J.; Peng, D.; Vasudevan, V.; Kornblith, S.; Le, Q. V. & Pang, R., Domain Adaptive Transfer Learning with Specialist Models, arXiv, 2018

![Related works, continued: Do Better ImageNet Models Transfer Better?](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-7.jpg)

…related works

- Do Better ImageNet Models Transfer Better? [8]
  - ImageNet pretraining does not necessarily improve accuracy on fine-grained tasks
- What makes ImageNet good for transfer learning? [11]
  - How many pre-training ImageNet examples are sufficient for transfer learning?
  - How many pre-training ImageNet classes are sufficient for transfer learning?

Source: [8] Kornblith, S.; Shlens, J. & Le, Q. V., Do better ImageNet models transfer better?, 2019

Methodology

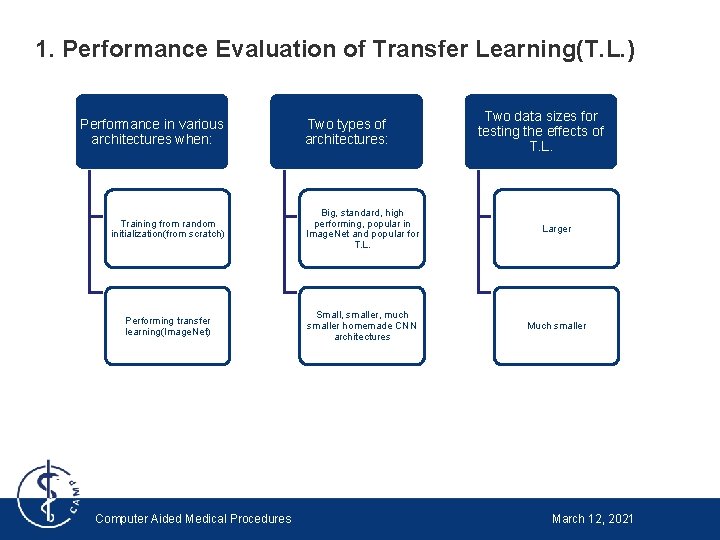

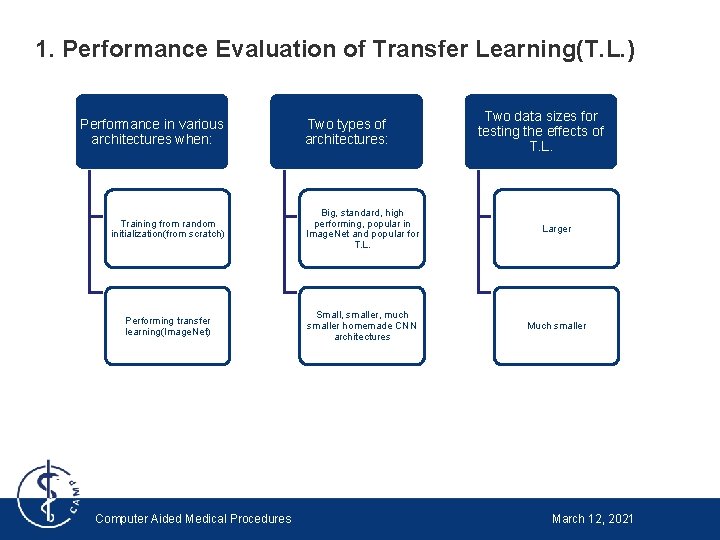

1. Performance Evaluation of Transfer Learning (T.L.)

- Performance in various architectures when:
  - Training from random initialization (from scratch)
  - Performing transfer learning (ImageNet)
- Two types of architectures:
  - Big, standard, high-performing architectures that are popular on ImageNet and for T.L.
  - Small, smaller and much smaller homemade CNN architectures
- Two data sizes for testing the effects of T.L.:
  - Larger
  - Much smaller

2. Analyze the effects of T.L. on the learned representations

![(Singular Vector) Canonical Correlation Analysis, (SV)CCA: overview](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-11.jpg)

(Singular Vector) Canonical Correlation Analysis – (SV)CCA [9]

- An efficient tool, invariant to affine transformations, for comparing two representations, for example [9]:
  - Two layers of the same network
  - The same layer of the network before/after training
  - The same layer of the network trained with different initializations
- It works with the collection of outputs of a neuron on a sequence of inputs. This collection is called a neuron activation vector

[9] Raghu, M.; Gilmer, J.; Yosinski, J. & Sohl-Dickstein, J., SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability, 2017
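To make the neuron activation vector idea concrete, here is a minimal sketch of plain CCA between two layers, assuming NumPy is available. Each column of an activation matrix is one neuron activation vector (one neuron's outputs over the same sequence of inputs). The function name `cca_correlations` and the QR-based formulation are choices made for this illustration, not code from [9], and the preliminary SVD pruning step that gives SVCCA its name is left out.

```python
import numpy as np


def cca_correlations(acts_a, acts_b):
    """Canonical correlations between two layers.

    acts_a: (num_inputs, a) matrix, one column per neuron activation vector.
    acts_b: (num_inputs, b) matrix recorded on the same inputs.
    Assumes num_inputs exceeds a and b and that both matrices have full column rank.
    """
    # Center each neuron so that constant offsets do not matter.
    a = acts_a - acts_a.mean(axis=0, keepdims=True)
    b = acts_b - acts_b.mean(axis=0, keepdims=True)
    # Orthonormal bases of the two activation subspaces.
    qa, _ = np.linalg.qr(a)
    qb, _ = np.linalg.qr(b)
    # Singular values of Qa^T Qb are the canonical correlations.
    corr = np.linalg.svd(qa.T @ qb, compute_uv=False)
    return np.clip(corr, 0.0, 1.0)


rng = np.random.default_rng(0)
layer = rng.normal(size=(200, 5))               # stand-in activations (hypothetical data)
mixing = rng.normal(size=(5, 5))                # arbitrary invertible linear map
affine_copy = layer @ mixing + 3.0              # same information, affinely transformed
unrelated = rng.normal(size=(200, 5))           # independent activations

same_score = cca_correlations(layer, affine_copy).mean()
unrelated_score = cca_correlations(layer, unrelated).mean()
```

In this sketch `same_score` comes out at essentially 1 even though the second matrix is an affine transformation of the first, which is the invariance the slide refers to, while `unrelated_score` stays well below it.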

![(Singular Vector) Canonical Correlation Analysis, (SV)CCA: neuron activation vectors](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-12.jpg)

(Singular Vector) Canonical Correlation Analysis – (SV)CCA [9]

Figure labels: neuron activation vector; a neurons; b neurons; dataset.

[9] Raghu, M.; Gilmer, J.; Yosinski, J. & Sohl-Dickstein, J., SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability, 2017

[10] Morcos, A. S.; Raghu, M. & Bengio, S., Insights on representational similarity in neural networks with canonical correlation, 2018

![(Singular Vector) Canonical Correlation Analysis, (SV)CCA](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-13.jpg)

(Singular Vector) Canonical Correlation Analysis – (SV)CCA [9]

[9] Raghu, M.; Gilmer, J.; Yosinski, J. & Sohl-Dickstein, J., SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability, 2017

[10] Morcos, A. S.; Raghu, M. & Bengio, S., Insights on representational similarity in neural networks with canonical correlation, 2018

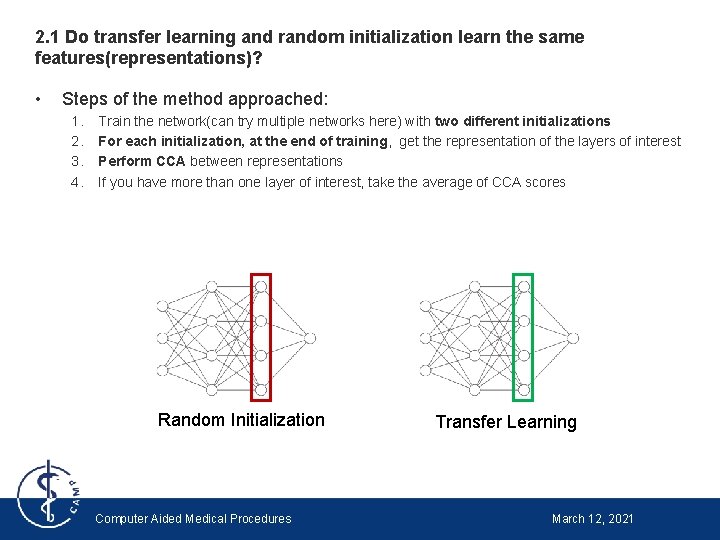

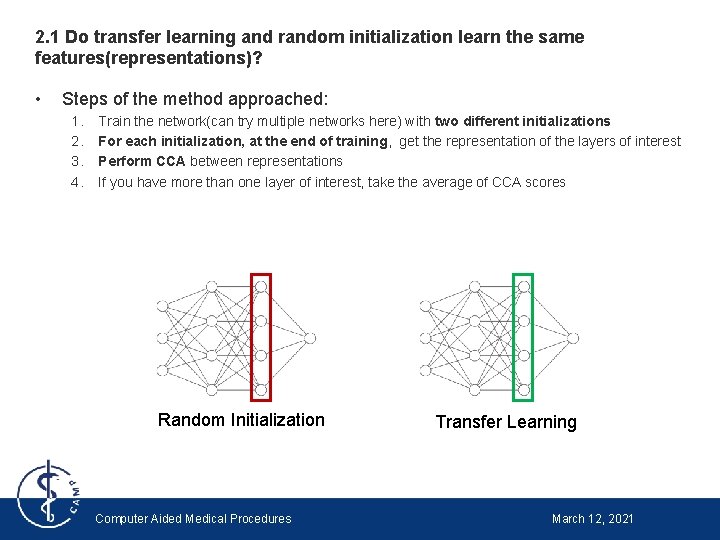

2.1 Do transfer learning and random initialization learn the same features (representations)?

- Steps of the method:
  1. Train the network (multiple networks can be tried here) with two different initializations
  2. For each initialization, at the end of training, get the representation of the layers of interest
  3. Perform CCA between the representations
  4. If there is more than one layer of interest, take the average of the CCA scores

Figure labels: Random Initialization; Transfer Learning.
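The last two steps of this procedure can be sketched as follows, assuming NumPy and assuming the activations of each layer of interest have already been recorded on the same inputs for both trained networks. The layer names, the helper names `mean_cca` and `average_similarity`, and the synthetic stand-in activations are all hypothetical; training itself is not shown.

```python
import numpy as np


def mean_cca(acts_a, acts_b):
    """Mean canonical correlation between two (inputs x neurons) activation matrices."""
    a = acts_a - acts_a.mean(axis=0)
    b = acts_b - acts_b.mean(axis=0)
    qa, _ = np.linalg.qr(a)
    qb, _ = np.linalg.qr(b)
    corr = np.linalg.svd(qa.T @ qb, compute_uv=False)
    return float(corr.clip(0.0, 1.0).mean())


def average_similarity(run_a, run_b, layers):
    """Step 3: CCA per layer of interest. Step 4: average of the per-layer scores."""
    scores = {name: mean_cca(run_a[name], run_b[name]) for name in layers}
    return scores, sum(scores.values()) / len(scores)


# Stand-in activations (hypothetical): "conv1" is nearly shared between the two
# runs, "conv2" is unrelated. Real values would come from the trained networks.
rng = np.random.default_rng(0)
shared = rng.normal(size=(500, 8))
run_random_init = {
    "conv1": shared + 0.1 * rng.normal(size=(500, 8)),
    "conv2": rng.normal(size=(500, 8)),
}
run_transfer = {
    "conv1": shared + 0.1 * rng.normal(size=(500, 8)),
    "conv2": rng.normal(size=(500, 8)),
}
per_layer, average = average_similarity(run_random_init, run_transfer, ["conv1", "conv2"])
```

Keeping the per-layer scores alongside the average makes it possible to see which layers drive a low overall similarity.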

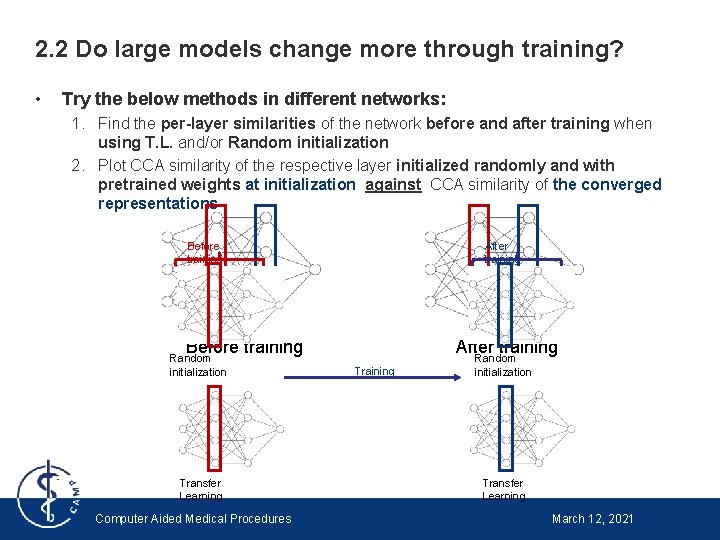

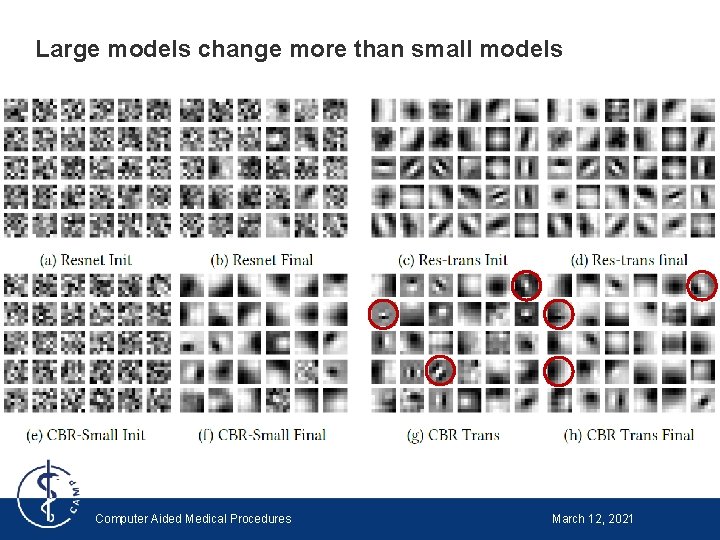

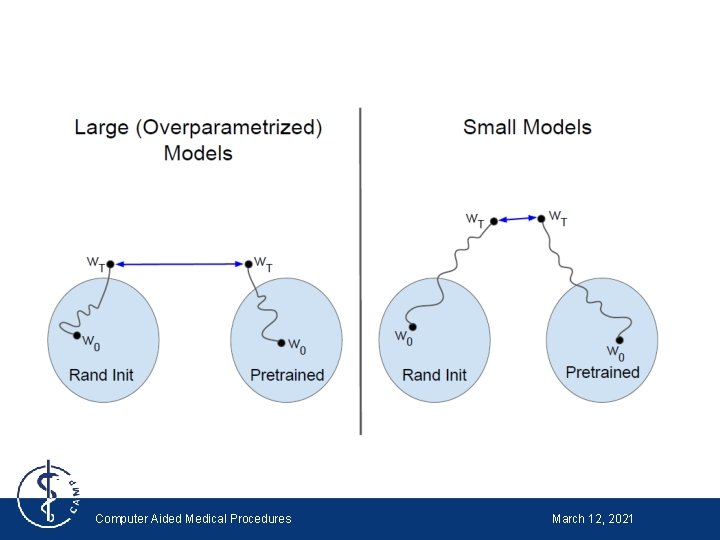

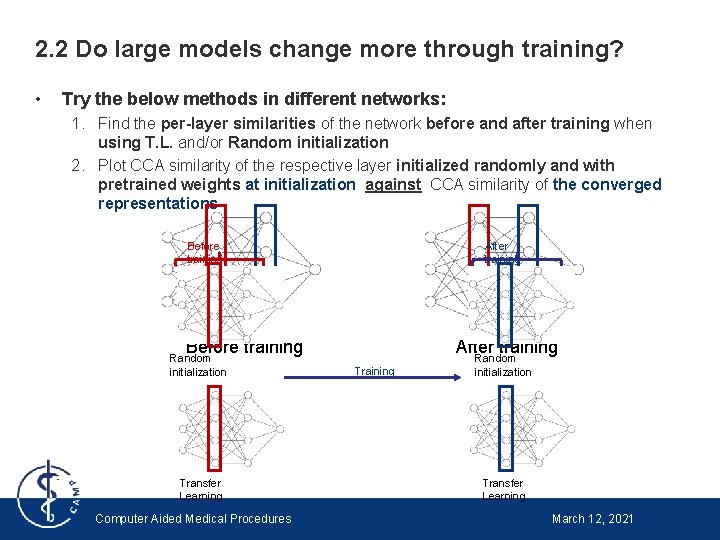

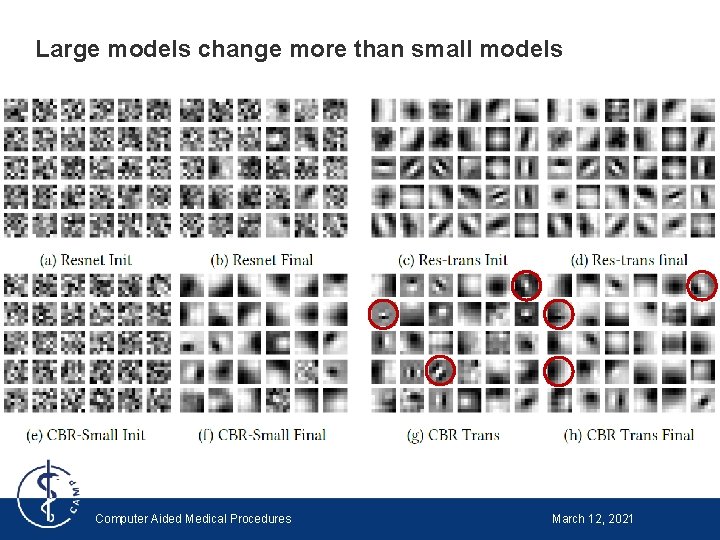

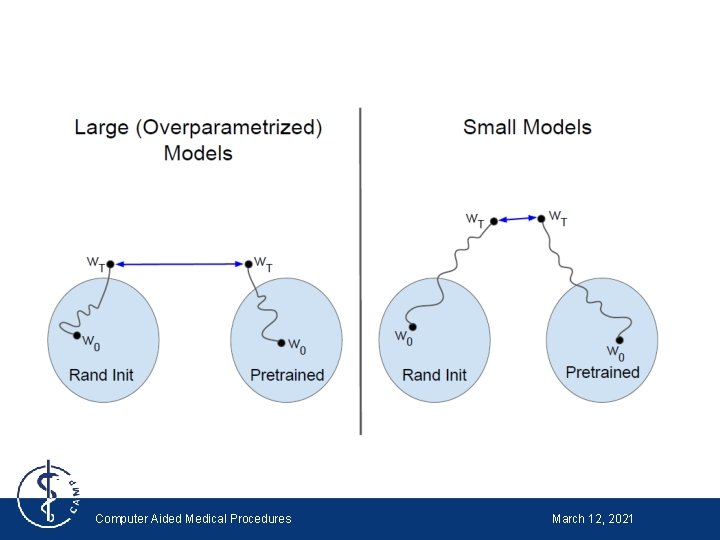

2.2 Do large models change more through training?

- Try the methods below on different networks:
  1. Find the per-layer similarities of the network before and after training when using T.L. and/or random initialization
  2. Plot the CCA similarity between the randomly initialized and the pretrained version of the respective layer at initialization against the CCA similarity of the converged representations

Figure labels: before training and after training, for random initialization and transfer learning.

3. Feature independent properties of transfer learning

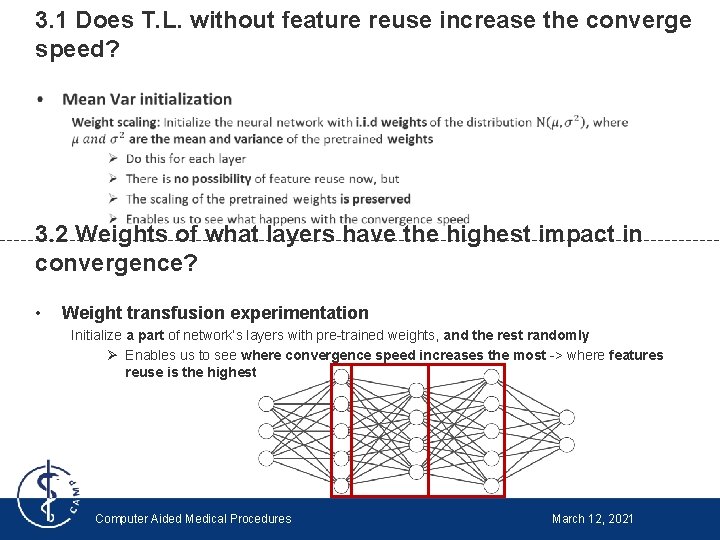

3.1 Does T.L. without feature reuse increase the convergence speed?

3.2 Weights of which layers have the highest impact on convergence?

- Weight transfusion experiment: initialize part of the network's layers with pretrained weights, and the rest randomly
  - Enables us to see where the convergence speed increases the most -> where feature reuse is the highest
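A minimal sketch of the weight transfusion setup, under simplifying assumptions: each layer's weights are a flat Python list, the layer names are hypothetical, and the random part uses a plain Gaussian initializer chosen only for illustration.

```python
import random


def transfuse(pretrained, layer_order, num_transfused, rng, scale=0.05):
    """Copy pretrained weights into the first `num_transfused` layers and draw
    the remaining layers at random (hypothetical Gaussian initializer)."""
    weights = {}
    for index, name in enumerate(layer_order):
        if index < num_transfused:
            # Lowest layers: reuse the pretrained values (copied, not aliased).
            weights[name] = list(pretrained[name])
        else:
            # Remaining layers: fresh random values of the same size.
            weights[name] = [rng.gauss(0.0, scale) for _ in pretrained[name]]
    return weights


layer_order = ["block1", "block2", "block3", "block4"]          # hypothetical names
pretrained = {name: [0.1 * (i + 1)] * 4 for i, name in enumerate(layer_order)}
mixed = transfuse(pretrained, layer_order, num_transfused=2, rng=random.Random(0))
```

Sweeping `num_transfused` from 0 to the number of layers and recording how fast each variant converges would show which layers contribute the most.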

3.3 Hybrid approach to Transfer Learning

- Slim models instead of big models
  1. Reuse pretrained weights up to a certain layer
     - Slim the rest of the network and initialize its layers randomly

Experimental setup

![Datasets: RETINA and CheXpert](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-20.jpg)

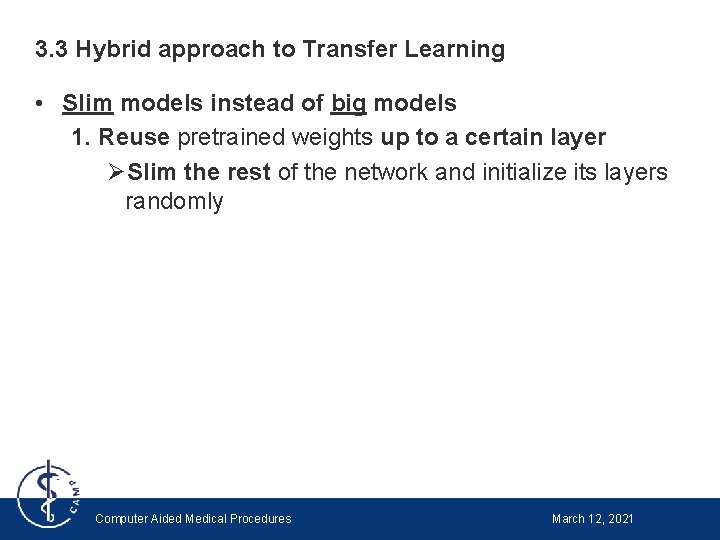

Datasets

RETINA dataset

- Retinal fundus photographs (back-of-the-eye images) [4] of size 587 x 587
- Detect diabetic retinopathy (DR), graded on a 5-level scale of increasing severity
- Evaluate via AUC-ROC on identifying referable DR (grade 3 and up)
- Around 250k training images and 70k testing images

CheXpert dataset [11]

- Chest x-ray images resized to 224 x 224
- Used to detect 5 pathologies (atelectasis, cardiomegaly, consolidation, edema and pleural effusion)
- Evaluate on the AUC of diagnosing the pathologies
- Around 223k training images

[4] Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M. C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; Kim, R.; Raman, R.; Nelson, P. C.; Mega, J. L. & Webster, D. R., Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs, JAMA, 2016, 316, 2402-2410

[11] Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R. L.; Shpanskaya, K. S.; Seekins, J.; Mong, D. A.; Halabi, S. S.; Sandberg, J. K.; Jones, R.; Larson, D. B.; Langlotz, C. P.; Patel, B. N.; Lungren, M. P. & Ng, A. Y., CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison, CoRR, 2019
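As an illustration of the RETINA evaluation, the sketch below binarizes DR grades into referable (grade 3 and up) versus non-referable and computes AUC-ROC as the fraction of (positive, negative) pairs that the scores rank correctly. The function names, the example grades and the example scores are made up for this illustration.

```python
def referable_labels(grades, threshold=3):
    """Binarize DR grades: 1 for referable DR (grade >= threshold), else 0."""
    return [1 if grade >= threshold else 0 for grade in grades]


def roc_auc(labels, scores):
    """AUC-ROC as the probability that a positive outscores a negative (ties count half)."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for pos in positives:
        for neg in negatives:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


grades = [0, 1, 2, 3, 4]                  # hypothetical DR grades
scores = [0.10, 0.40, 0.35, 0.80, 0.30]   # hypothetical model outputs
auc = roc_auc(referable_labels(grades), scores)
```

The pairwise form is quadratic in the number of examples, which is fine for a demonstration; at the scale of these datasets a rank-based implementation would be the practical choice.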

![Models: ImageNet architectures and the CBR family of small CNNs](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-21.jpg)

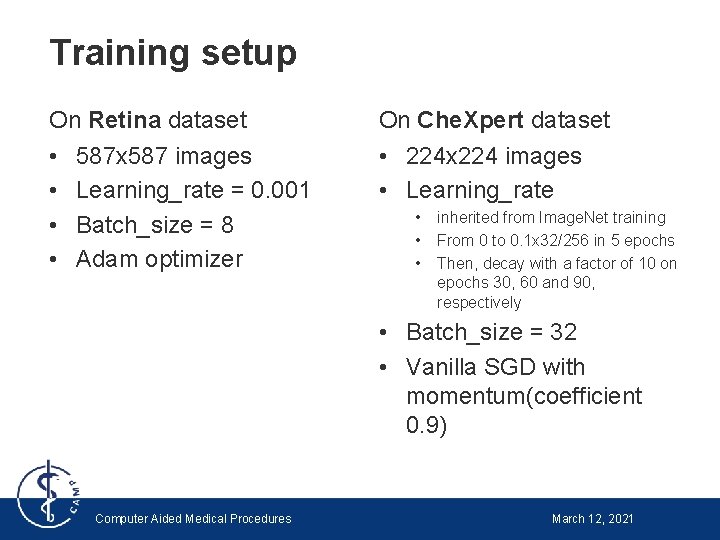

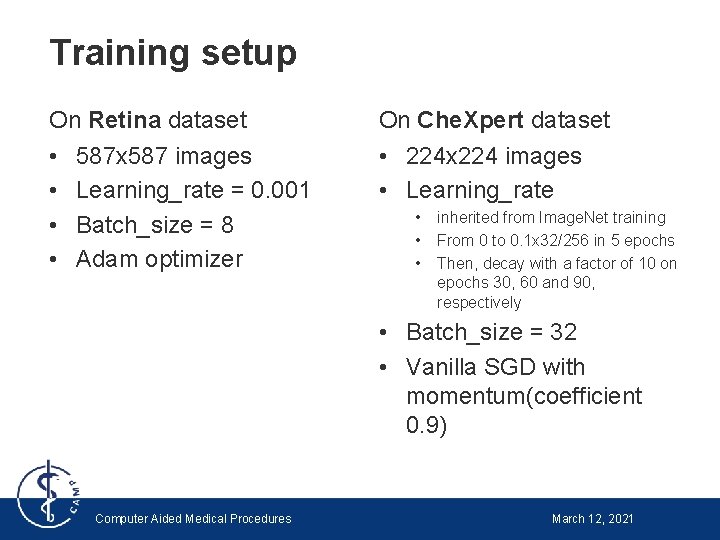

Models

ImageNet architectures

- ResNet50 and Inception-v3

Sources: [12]; https://cloud.google.com/tpu/docs/inception-v3-advanced

CBR family of small CNNs

Diagram: Convolution -> Batch normalization -> ReLU -> Maxpool (3 x 3, s=2) -> Convolution -> Batch normalization -> ReLU -> Maxpool (3 x 3, s=2) -> Convolution -> Batch normalization -> ReLU

[12] F.-F. Li, J. Johnson and S. Yeung, "CS231n: Convolutional Neural Networks for Visual Recognition," Stanford, 2017.

Training setup

On Retina dataset

- 587 x 587 images
- Learning_rate = 0.001
- Batch_size = 8
- Adam optimizer

On CheXpert dataset

- 224 x 224 images
- Learning_rate inherited from ImageNet training:
  - From 0 to 0.1 x 32/256 in 5 epochs
  - Then decay by a factor of 10 at epochs 30, 60 and 90
- Batch_size = 32
- Vanilla SGD with momentum (coefficient 0.9)
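The warm-up and step-decay learning rate described on this slide can be written as a small function. The slide only says the rate goes "from 0 to 0.1 x 32/256 in 5 epochs"; the linear shape of that warm-up and the function name are assumptions of this sketch.

```python
def learning_rate(epoch, peak=0.1 * 32 / 256, warmup_epochs=5, milestones=(30, 60, 90)):
    """Learning rate at a (possibly fractional) epoch.

    Warm-up: linear ramp from 0 to `peak` over `warmup_epochs` (linearity is assumed).
    Afterwards: divide by 10 at each milestone epoch that has been reached.
    """
    if epoch < warmup_epochs:
        return peak * epoch / warmup_epochs
    drops = sum(1 for milestone in milestones if epoch >= milestone)
    return peak / (10 ** drops)


schedule = [learning_rate(epoch) for epoch in (0, 2.5, 5, 29, 30, 60, 90)]
```

The peak value 0.1 x 32/256 = 0.0125 is the ImageNet base rate of 0.1 rescaled from a batch size of 256 to the batch size of 32 listed on the slide.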

Results and Discussion

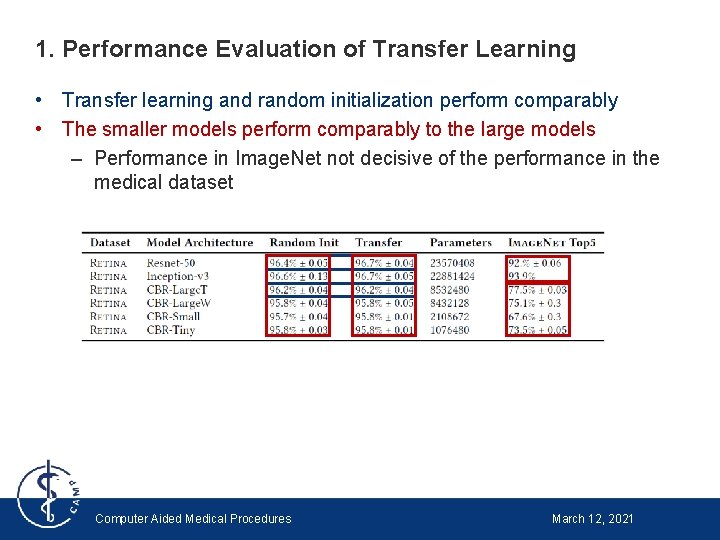

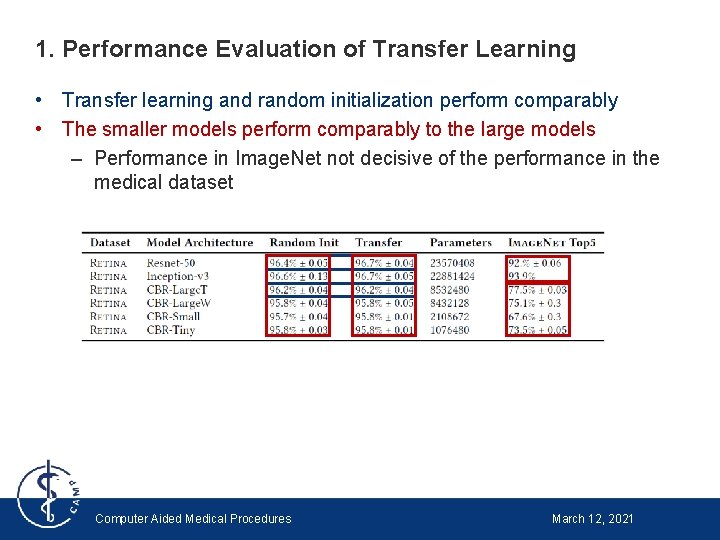

1. Performance Evaluation of Transfer Learning

- Transfer learning and random initialization perform comparably
- The smaller models perform comparably to the large models
  - Performance on ImageNet is not decisive for performance on the medical dataset

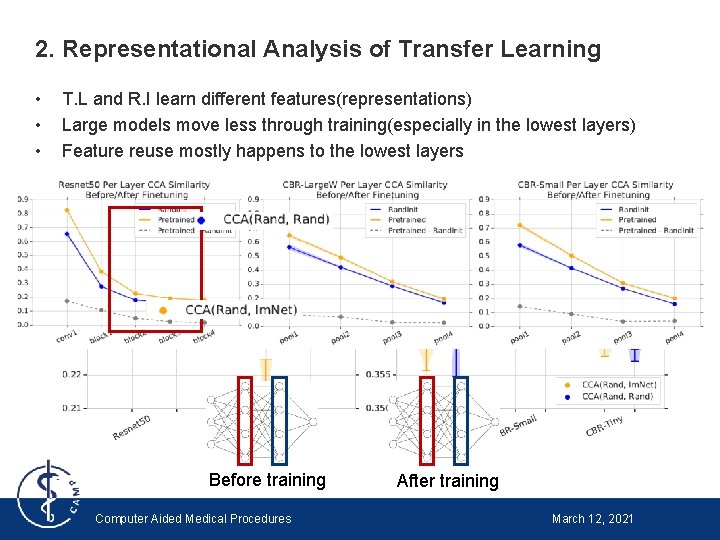

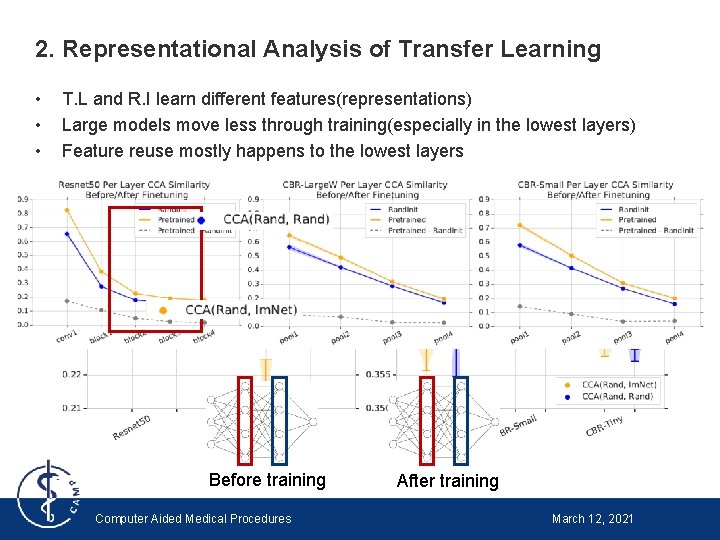

2. Representational Analysis of Transfer Learning

- T.L. and R.I. learn different features (representations)
- Large models move less through training (especially in the lowest layers)
- Feature reuse mostly happens in the lowest layers

Figure labels: before training; after training.

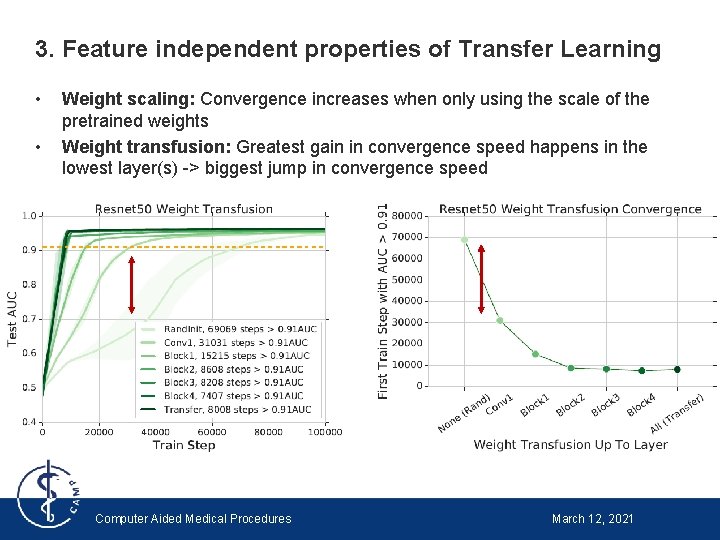

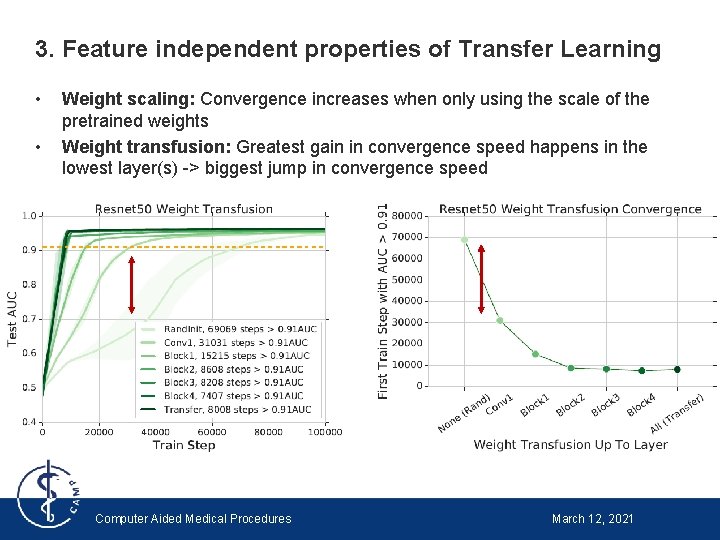

3. Feature independent properties of Transfer Learning

- Weight scaling: convergence speed increases when using only the scale of the pretrained weights
- Weight transfusion: the greatest gain in convergence speed comes from the lowest layer(s)

Figure panels: RETINA dataset; CheXpert dataset.
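One way to read "using only the scale of the pretrained weights" is to discard the pretrained values themselves and draw fresh random weights with the same per-layer mean and standard deviation. The sketch below follows that reading; the Gaussian sampling, the per-layer granularity and the name `mean_var_init` are assumptions of this illustration, and the slides do not spell out the exact scheme.

```python
import random
import statistics


def mean_var_init(pretrained_layer, rng):
    """Sample new weights i.i.d. from a Gaussian whose mean and standard deviation
    match the pretrained layer (assumed scheme; no pretrained value is reused)."""
    mu = statistics.fmean(pretrained_layer)
    sigma = statistics.pstdev(pretrained_layer)
    return [rng.gauss(mu, sigma) for _ in pretrained_layer]


rng = random.Random(0)
# Stand-in for one pretrained layer (hypothetical values).
pretrained_layer = [rng.gauss(0.2, 0.05) for _ in range(20000)]
rescaled_layer = mean_var_init(pretrained_layer, rng)
```

The new layer keeps the overall scale of the pretrained one while sharing none of its learned features, which is what allows the effect of scale to be separated from feature reuse.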

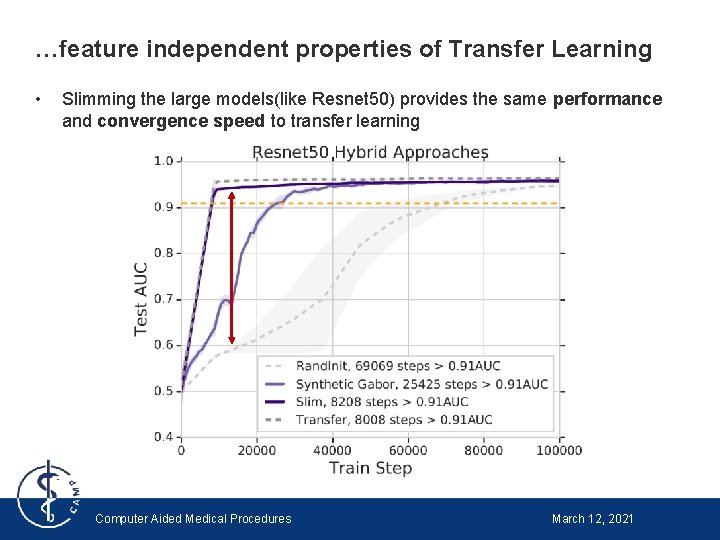

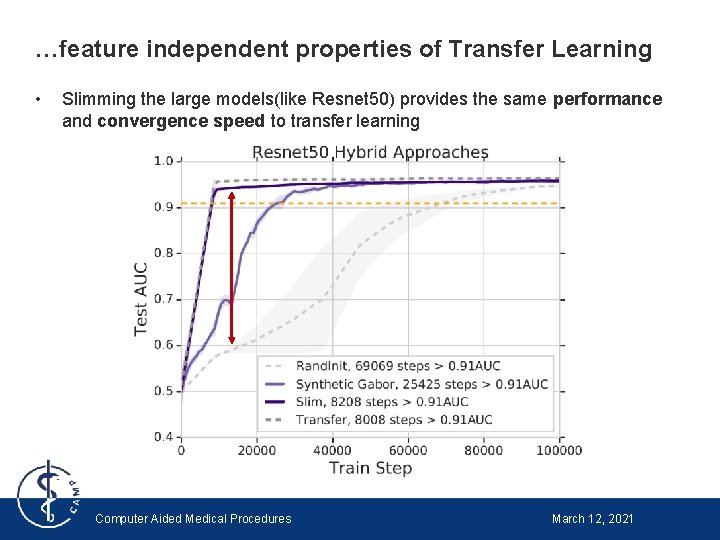

…feature independent properties of Transfer Learning

- Slimming the large models (like ResNet50) provides the same performance and convergence speed as transfer learning

Conclusion and Future Work

Open questions and applicability to other fields

- Does the domain adaptation method proposed in [7] work for medical datasets?
  - Or maybe with transfer learning within the medical area, for example when texture detection is important
- Come up with a precise mathematical definition of weight scaling that guarantees(?) better convergence
- Does weight scaling also work within the same domain?
  - Train on ImageNet -> do weight scaling -> train again on ImageNet
- More detailed observation of the optimization space of the loss function

[7] Ngiam, J.; Peng, D.; Vasudevan, V.; Kornblith, S.; Le, Q. V. & Pang, R., Domain Adaptive Transfer Learning with Specialist Models, arXiv, 2018

Own view of the paper

Good side:

- Very interesting results
- Good layout of the paper
- Very nice ideas for analyzing the results of transfer learning

Could be improved:

- Be more specific about which dataset was used when
- Separate the Introduction from the Related work
- Be more concise in some definitions and clearer in the meaning of some sentences

Lessons learned

- Using something a lot does not mean we understand it deeply enough
- There is still room to better understand what transfer learning is doing
- Following the trend of research is extremely important

Thank you very much

Any questions?

Tutor: Shadi Albarqouni, Ph.D.

Student: Panarit Jahiri

![Bibliography](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-33.jpg)

Bibliography

[1] Chen, C.; Ren, Y. & Kuo, C.-C. J., Big Visual Data Analysis, Springer Singapore, 2016

[2] Lin, T.; Maire, M.; Belongie, S. J.; Bourdev, L. D.; Girshick, R. B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P. & Zitnick, C. L., Microsoft COCO: Common Objects in Context, CoRR, 2014

[3] Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M. & Summers, R. M., ChestX-ray8: Hospital-Scale Chest X-Ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases, 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, 3462-3471

[4] Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M. C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J.; Kim, R.; Raman, R.; Nelson, P. C.; Mega, J. L. & Webster, D. R., Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs, JAMA, 2016, 316, 2402-2410

[5] Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D. Y.; Bagul, A.; Langlotz, C.; Shpanskaya, K. S.; Lungren, M. P. & Ng, A. Y., CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning, CoRR, 2017, abs/1711.05225

[6] Khosravi, P.; Kazemi, E.; Zhan, Q.; Toschi, M.; Malmsten, J. E.; Hickman, C.; Meseguer, M.; Rosenwaks, Z.; Elemento, O.; Zaninovic, N. & Hajirasouliha, I., Robust Automated Assessment of Human Blastocyst Quality using Deep Learning, bioRxiv, Cold Spring Harbor Laboratory, 2018

[7] Ngiam, J.; Peng, D.; Vasudevan, V.; Kornblith, S.; Le, Q. V. & Pang, R., Domain Adaptive Transfer Learning with Specialist Models, arXiv, 2018

[8] Kornblith, S.; Shlens, J. & Le, Q. V., Do better ImageNet models transfer better?, 2019

[9] Raghu, M.; Gilmer, J.; Yosinski, J. & Sohl-Dickstein, J., SVCCA: Singular Vector Canonical Correlation Analysis for Deep Learning Dynamics and Interpretability, 2017

[10] Morcos, A. S.; Raghu, M. & Bengio, S., Insights on representational similarity in neural networks with canonical correlation, 2018

[11] Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R. L.; Shpanskaya, K. S.; Seekins, J.; Mong, D. A.; Halabi, S. S.; Sandberg, J. K.; Jones, R.; Larson, D. B.; Langlotz, C. P.; Patel, B. N.; Lungren, M. P. & Ng, A. Y., CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison, CoRR, 2019

[12] F.-F. Li; J. Johnson; S. Yeung, "CS231n: Convolutional Neural Networks for Visual Recognition," Stanford, 2017.

![Extra slides](https://slidetodoc.com/presentation_image_h/36ed4a9610050b9f11e92fd002cb1d6d/image-34.jpg)

Extra slides

[7] Ngiam, J.; Peng, D.; Vasudevan, V.; Kornblith, S.; Le, Q. V. & Pang, R., Domain Adaptive Transfer Learning with Specialist Models, arXiv, 2018

Large models change more than small models

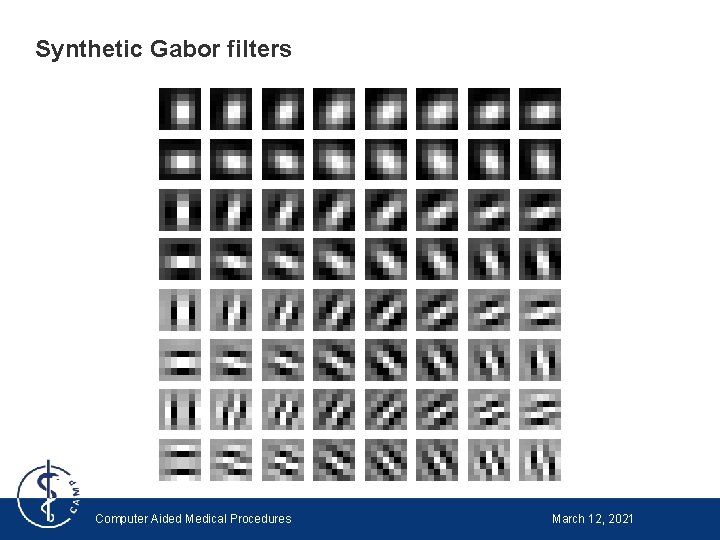

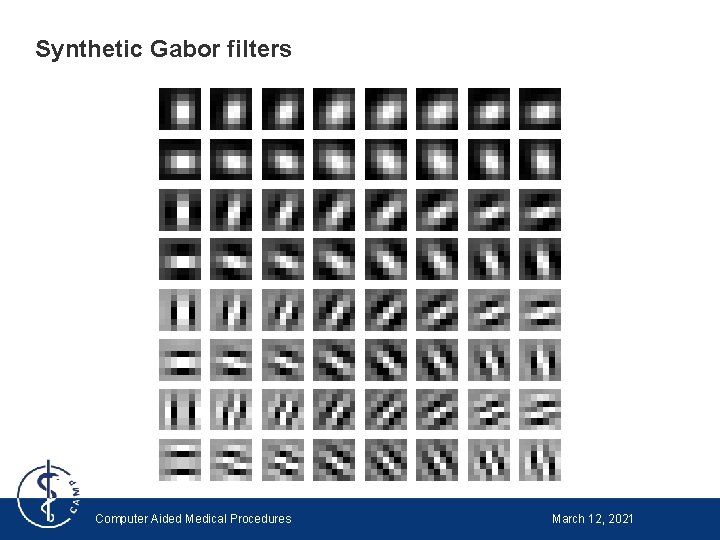

Synthetic Gabor filters
