ATLAS Data Challenges US ATLAS Physics Computing ANL

ATLAS Data Challenges
US ATLAS Physics & Computing, ANL, October 30th 2001
Gilbert Poulard, CERN EP-ATC
ATLAS Plenary-18 October 2001

Outline
- ATLAS Data Challenges & “LHC Computing Grid Project”
- Goals
- Scenarios
- Organization

From CERN Computing Review (December 1999 - February 2001)
Recommendations:
- Organize the computing for the LHC era
  - LHC Grid project
    - Phase 1: Development & prototyping (2001-2004)
    - Phase 2: Installation of the 1st production system (2005-2007)
  - Software & Computing Committee (SC2)
- Project accepted by the CERN council (20 September)
- Ask the experiments to validate their computing model by iterating on a set of Data Challenges of increasing complexity
- However, DCs were in our plans

LHC Computing GRID project
Phase 1:
- Prototype construction
  - develop Grid middleware
  - acquire experience with high-speed wide-area network
  - develop model for distributed analysis
  - adapt LHC applications
  - deploy a prototype (CERN + Tier 1 + Tier 2)
- Software
  - complete the development of the 1st version of the physics applications and enable them for the distributed grid model
  - develop & support common libraries, tools & frameworks, including simulation, analysis, data management, ...
  - in parallel, LHC collaborations must develop and deploy the first version of their core software

ATLAS Data Challenges
- Goal
  - understand and validate:
    - our computing model, our data model and our software
    - our technology choices
- How?
  - By iterating on a set of DCs of increasing complexity
    - Start with data which looks like real data
    - Run the filtering and reconstruction chain
    - Store the output data into our database
    - Run the analysis
    - Produce physics results
  - To study performance issues, database technologies, analysis scenarios, ...
  - To identify weaknesses, bottlenecks, etc. (but also good points)

ATLAS Data Challenges
- But: today we don’t have ‘real data’
  - We need to produce ‘simulated data’ first, so the following will be part of the first Data Challenges:
    - Physics event generation
    - Simulation
    - Pile-up
    - Detector response
    - plus reconstruction and analysis
  - We also need to “satisfy” the ATLAS communities: HLT, Physics groups, ...

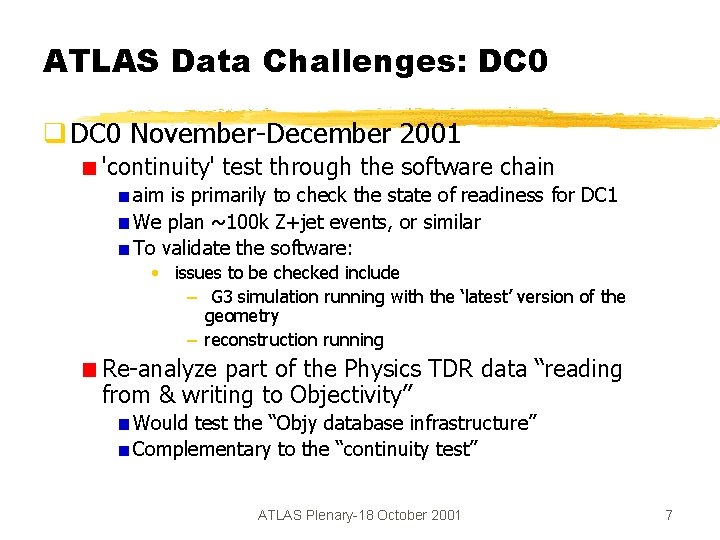

ATLAS Data Challenges: DC0
- DC0: November-December 2001
  - ‘continuity’ test through the software chain
  - the aim is primarily to check the state of readiness for DC1
  - We plan ~100k Z+jet events, or similar
  - To validate the software, issues to be checked include:
    - G3 simulation running with the ‘latest’ version of the geometry
    - reconstruction running
  - Re-analyze part of the Physics TDR data, “reading from & writing to Objectivity”
    - Would test the “Objy database infrastructure”
    - Complementary to the “continuity test”

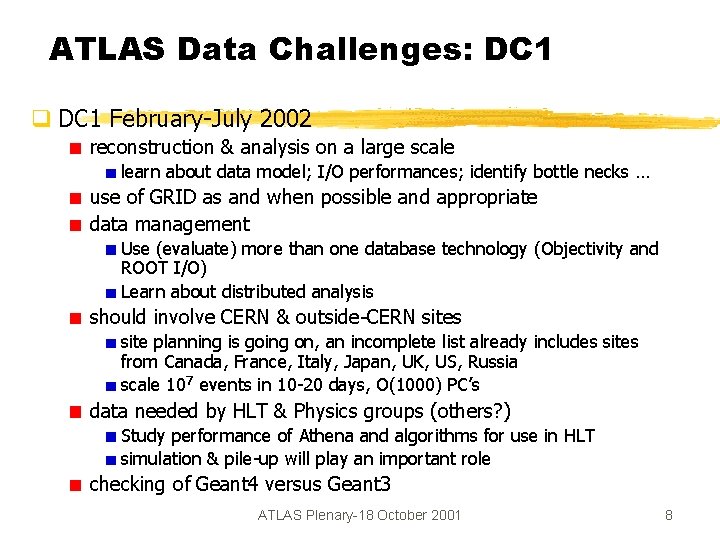

ATLAS Data Challenges: DC1
- DC1: February-July 2002
  - reconstruction & analysis on a large scale
    - learn about the data model and I/O performance; identify bottlenecks ...
  - use of GRID as and when possible and appropriate
  - data management
    - Use (evaluate) more than one database technology (Objectivity and ROOT I/O)
    - Learn about distributed analysis
  - should involve CERN & outside-CERN sites
    - site planning is going on; an incomplete list already includes sites from Canada, France, Italy, Japan, UK, US, Russia
  - scale: 10^7 events in 10-20 days, O(1000) PCs
  - data needed by HLT & Physics groups (others?)
    - Study performance of Athena and algorithms for use in HLT
    - simulation & pile-up will play an important role
  - checking of Geant4 versus Geant3
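The DC1 scale quoted above fixes an average per-PC throughput. A minimal sketch of that arithmetic, assuming the load is spread evenly and reading “O(1000)” as exactly 1000 PCs (both are simplifications, and the function name is hypothetical):

```python
def events_per_pc_per_day(n_events: float, n_pcs: int, n_days: float) -> float:
    """Average number of events each PC must process per day."""
    return n_events / (n_pcs * n_days)


N_EVENTS = 1e7   # from the slide
N_PCS = 1000     # assumption: "O(1000)" taken as exactly 1000

fast = events_per_pc_per_day(N_EVENTS, N_PCS, 10)   # 10-day campaign
slow = events_per_pc_per_day(N_EVENTS, N_PCS, 20)   # 20-day campaign
print(f"{slow:.0f} to {fast:.0f} events per PC per day")
```

Under these assumptions each PC has to turn around roughly 500 to 1000 events per day.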

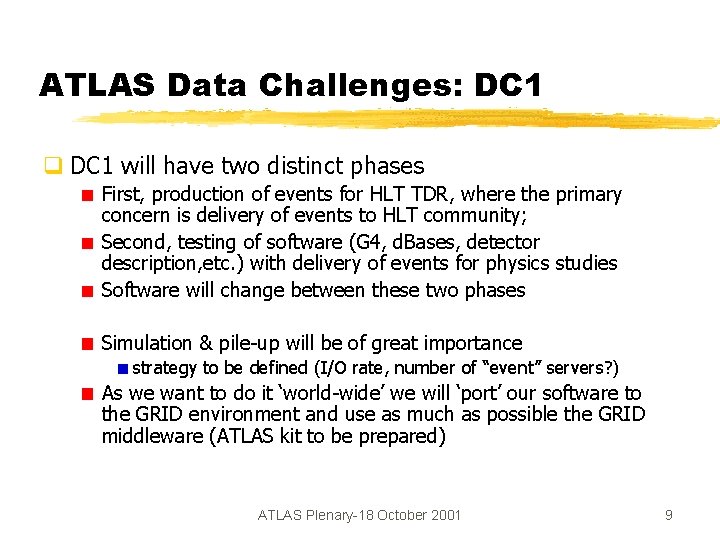

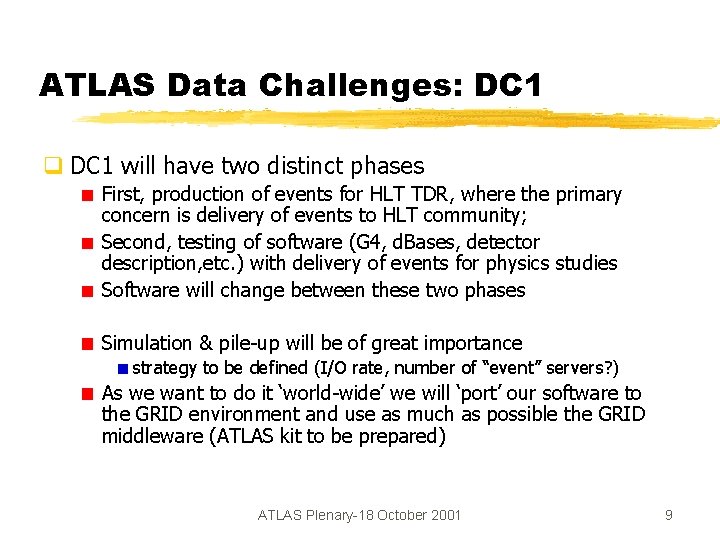

ATLAS Data Challenges: DC1
- DC1 will have two distinct phases
  - First, production of events for the HLT TDR, where the primary concern is delivery of events to the HLT community
  - Second, testing of software (G4, dBases, detector description, etc.) with delivery of events for physics studies
  - Software will change between these two phases
- Simulation & pile-up will be of great importance
  - strategy to be defined (I/O rate, number of “event” servers?)
- As we want to do it ‘world-wide’, we will ‘port’ our software to the GRID environment and use the GRID middleware as much as possible (ATLAS kit to be prepared)

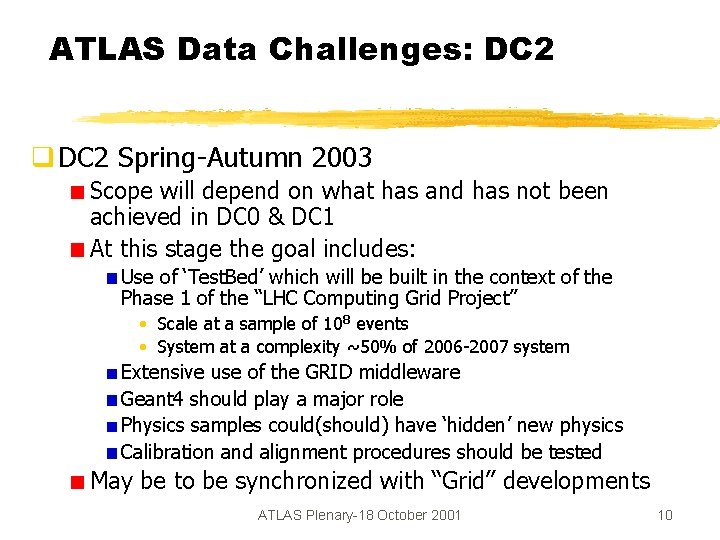

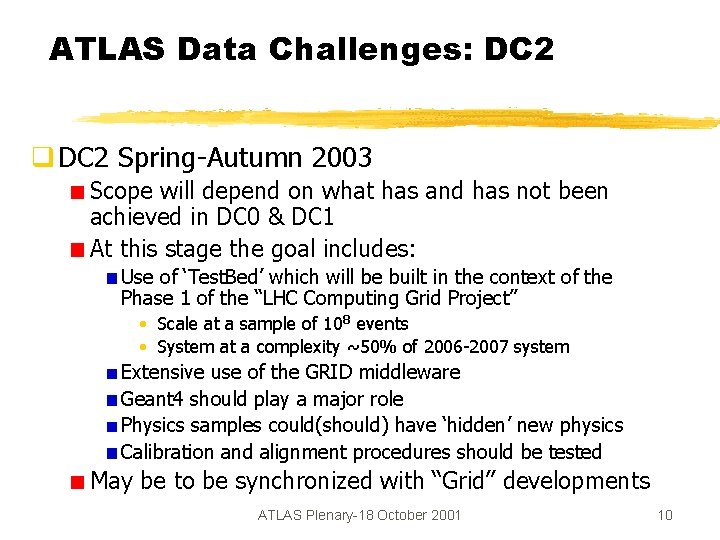

ATLAS Data Challenges: DC2
- DC2: Spring-Autumn 2003
  - Scope will depend on what has and has not been achieved in DC0 & DC1
  - At this stage the goal includes:
    - Use of the ‘TestBed’ which will be built in the context of Phase 1 of the “LHC Computing Grid Project”
      - Scale: a sample of 10^8 events
      - System at a complexity ~50% of the 2006-2007 system
    - Extensive use of the GRID middleware
    - Geant4 should play a major role
    - Physics samples could (should) have ‘hidden’ new physics
    - Calibration and alignment procedures should be tested
  - May need to be synchronized with “Grid” developments

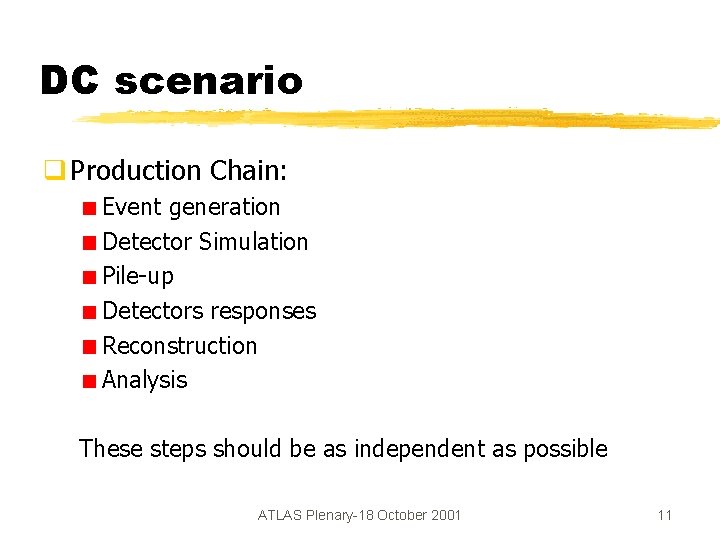

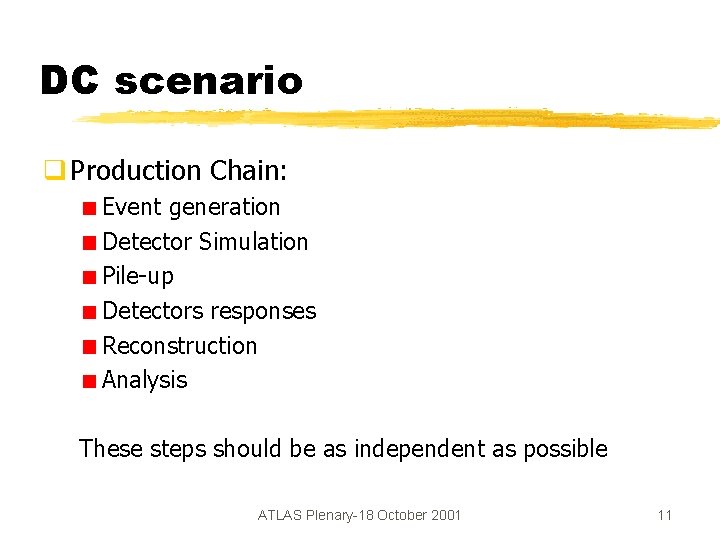

DC scenario
- Production chain:
  - Event generation
  - Detector simulation
  - Pile-up
  - Detector response
  - Reconstruction
  - Analysis
- These steps should be as independent as possible
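One way to read “as independent as possible” is that each step consumes only the stored output of the previous one, so the chain can be stopped and resumed at any boundary. The sketch below illustrates that idea with placeholder stages; the stage names follow the slide, while `make_step`, `run_chain` and the dictionary event layout are hypothetical and not ATLAS software.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]
Step = Callable[[Event], Event]

# Stage names taken from the production chain on the slide.
STAGES: List[str] = [
    "event generation",
    "detector simulation",
    "pile-up",
    "detector response",
    "reconstruction",
    "analysis",
]


def make_step(name: str) -> Step:
    """Build a placeholder stage that only records that it ran."""
    def step(event: Event) -> Event:
        out = dict(event)  # copy, so the stage never mutates its input
        out["history"] = list(event.get("history", [])) + [name]
        return out
    return step


def run_chain(event: Event, start: str = STAGES[0], stop: str = STAGES[-1]) -> Event:
    """Run the stages from `start` to `stop` inclusive."""
    first, last = STAGES.index(start), STAGES.index(stop)
    for name in STAGES[first:last + 1]:
        event = make_step(name)(event)
    return event


# Stop after simulation, then resume later from the stored intermediate output.
simulated = run_chain({"id": 1}, stop="detector simulation")
final = run_chain(simulated, start="pile-up")
print(final["history"])
```

Because every stage only sees the event handed to it, a stage can be swapped (for example one simulation engine for another) without touching the rest of the chain.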

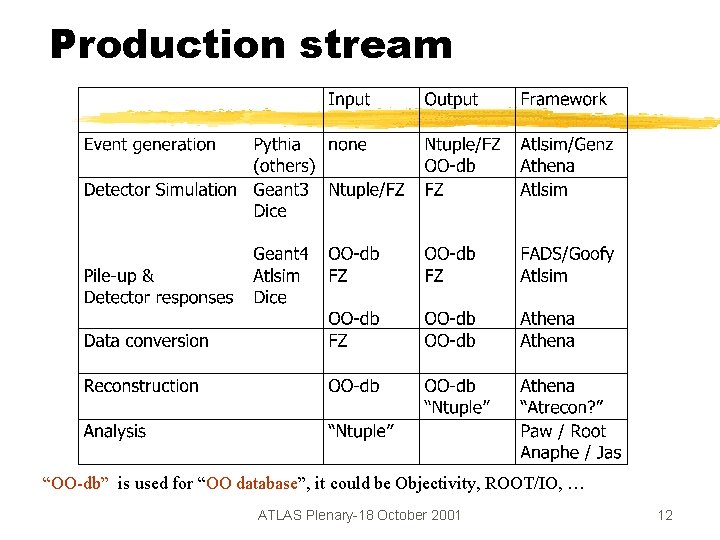

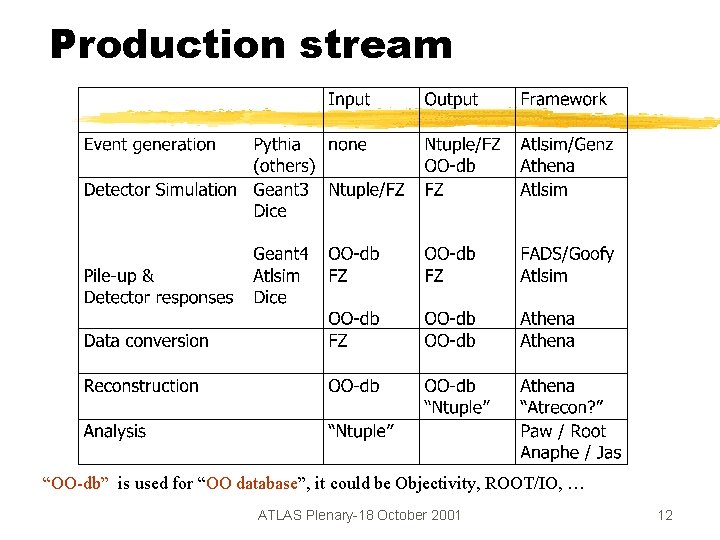

Production stream
“OO-db” stands for “OO database”; it could be Objectivity, ROOT/IO, …

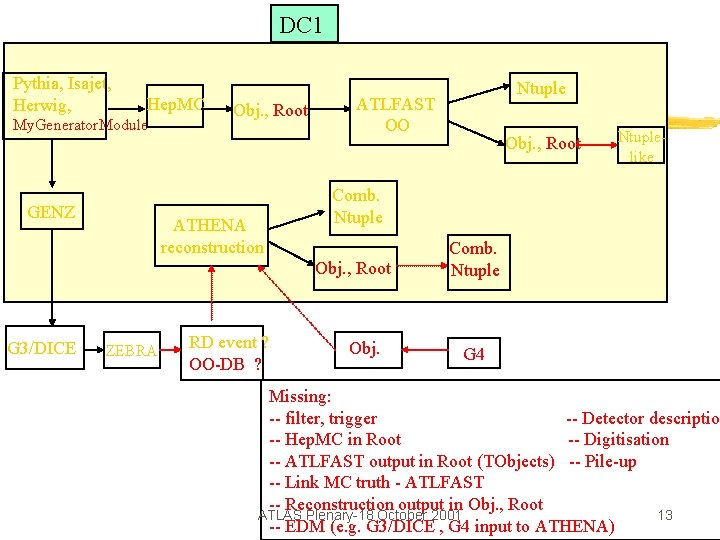

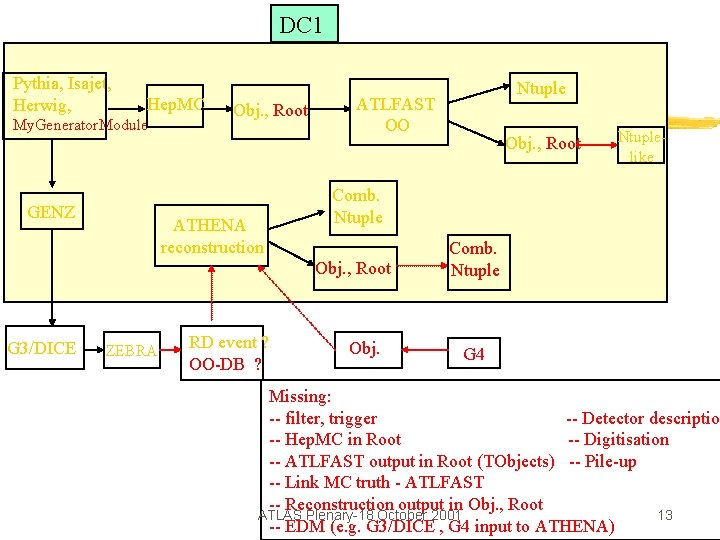

DC1
[Diagram: DC1 production flow. Labels: Pythia, Isajet, Herwig, HepMC, MyGeneratorModule, GENZ, G3/DICE, G4, ZEBRA, ATHENA reconstruction, ATLFAST, RD event?, OO-DB?, Ntuple, Ntuple-like, Comb. Ntuple, Obj., Root]
Missing:
- filter, trigger
- Detector description
- HepMC in Root
- Digitisation
- ATLFAST output in Root (TObjects)
- Pile-up
- Link MC truth - ATLFAST
- Reconstruction output in Obj., Root
- EDM (e.g. G3/DICE, G4 input to ATHENA)

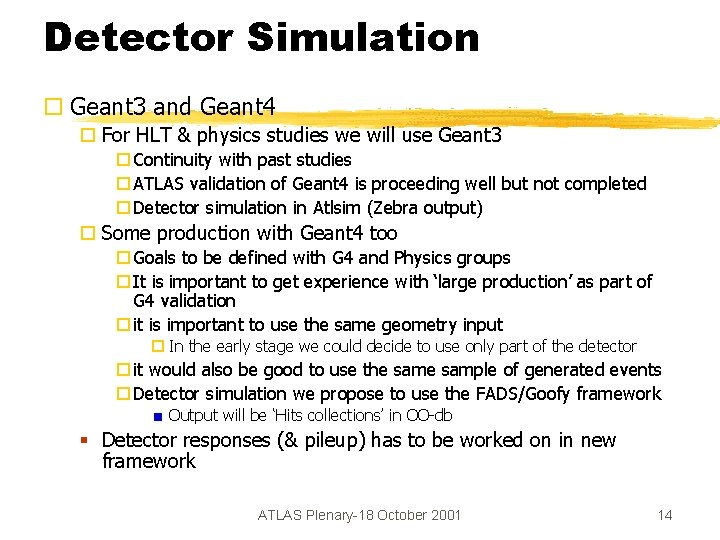

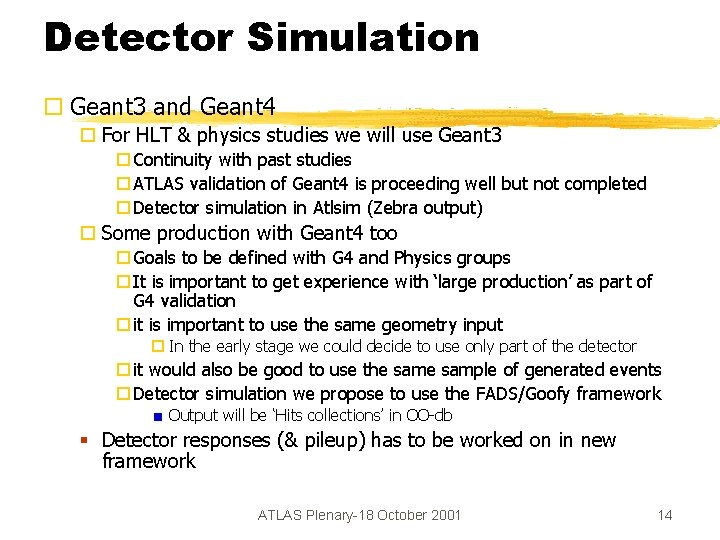

Detector Simulation
- Geant3 and Geant4
- For HLT & physics studies we will use Geant3
  - Continuity with past studies
  - ATLAS validation of Geant4 is proceeding well but is not completed
  - Detector simulation in Atlsim (Zebra output)
- Some production with Geant4 too
  - Goals to be defined with G4 and Physics groups
  - It is important to get experience with ‘large production’ as part of G4 validation
  - It is important to use the same geometry input
    - In the early stage we could decide to use only part of the detector
  - It would also be good to use the same sample of generated events
  - For detector simulation we propose to use the FADS/Goofy framework
    - Output will be ‘Hits collections’ in OO-db
  - Detector response (& pile-up) has to be worked on in the new framework

Reconstruction
- We want to use the ‘new reconstruction’ code being run in the Athena framework
  - Input should be from OO-db
  - Output in OO-db:
    - ESD (event summary data)
    - AOD (analysis object data)
    - TAG (event tag)

Analysis
- We are just starting to work on this, but
  - Analysis tools evaluation should be part of the DC
  - It will be a good test of the Event Data Model
  - Performance issues should be evaluated
- Analysis scenario
  - It is important to know the number of analysis groups, the number of physicists per group, and the number of people who want to access the data at the same time
  - It is of ‘first’ importance to ‘design’ the analysis environment
    - to measure the response time
    - to identify the bottlenecks
  - For that, users’ input is needed

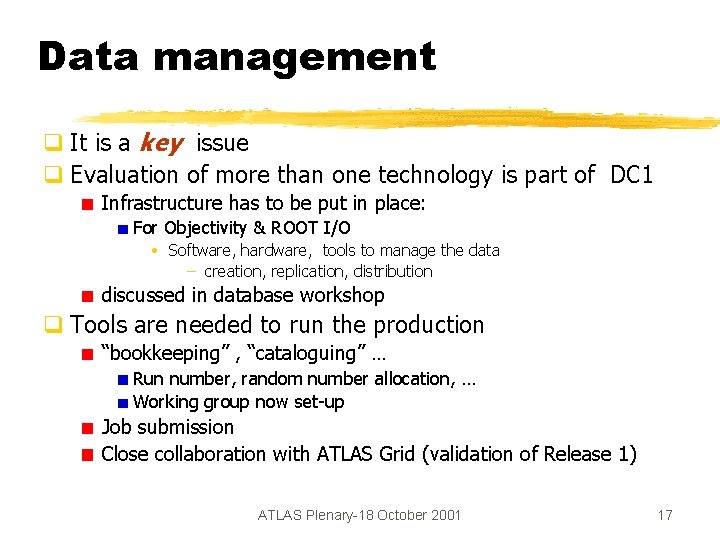

Data management
- It is a key issue
- Evaluation of more than one technology is part of DC1
  - Infrastructure has to be put in place for Objectivity & ROOT I/O
    - Software, hardware, tools to manage the data: creation, replication, distribution
  - discussed in the database workshop
- Tools are needed to run the production
  - “bookkeeping”, “cataloguing”, ...
  - Run number, random number allocation, ...
    - Working group now set up
  - Job submission
    - Close collaboration with ATLAS Grid (validation of Release 1)
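The need for run number and random number allocation can be illustrated with a toy bookkeeping service: if every job draws its run number and seed from one central counter, no two sites can produce overlapping samples. This is only a sketch under stated assumptions; `JobRecord`, `Bookkeeper` and the seeding rule are hypothetical and do not describe the tools the working group will build.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class JobRecord:
    """One production job as seen by the bookkeeping (hypothetical layout)."""
    run_number: int
    seed: int
    site: str


@dataclass
class Bookkeeper:
    """Hands out run numbers and seeds from a single central counter."""
    next_run: int = 1
    jobs: List[JobRecord] = field(default_factory=list)

    def allocate(self, site: str) -> JobRecord:
        run = self.next_run
        self.next_run += 1
        # Assumption: one odd seed per run number is enough to keep seeds
        # distinct; a real generator may need a different seeding scheme.
        job = JobRecord(run_number=run, seed=2 * run + 1, site=site)
        self.jobs.append(job)  # the catalogue of everything handed out
        return job


book = Bookkeeper()
jobs = [book.allocate(site) for site in ("CERN", "ANL", "CERN")]
for job in jobs:
    print(job)
```

Keeping the allocation in one place also gives the catalogue for free: the list of records is the history of what was produced where.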

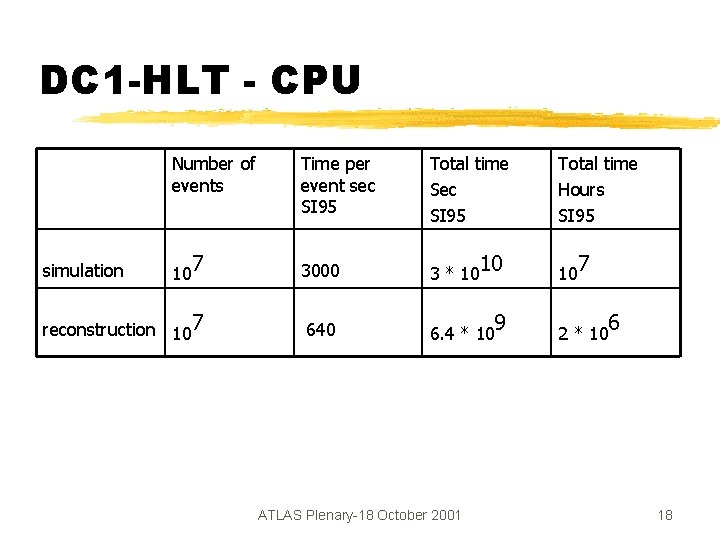

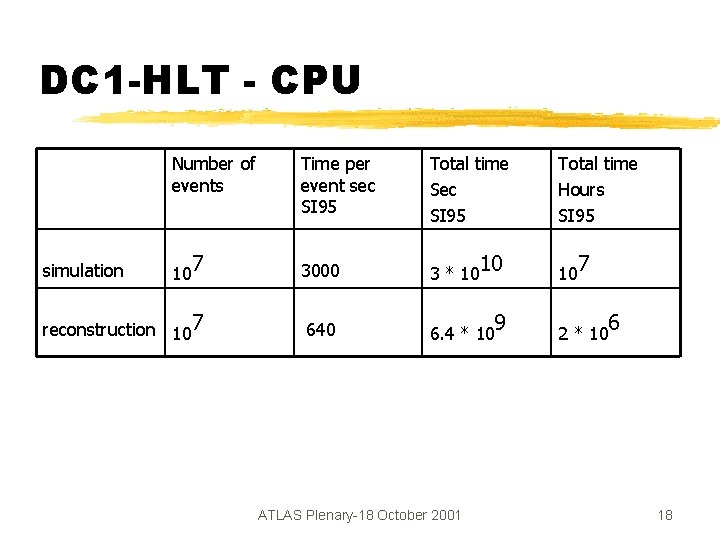

DC1-HLT - CPU

| | Number of events | Time per event (sec SI95) | Total time (sec SI95) | Total time (hours SI95) |
|---|---|---|---|---|
| simulation | 10^7 | 3000 | 3 * 10^10 | 10^7 |
| reconstruction | 10^7 | 640 | 6.4 * 10^9 | 2 * 10^6 |
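The totals in the CPU table follow from the event count and the per-event cost. The sketch below redoes that arithmetic and, as an extra step not on the slide, estimates the sustained SI95 rating each PC would need; the PC count of 1000 is an assumption standing in for “O(1000)”, and all function names are hypothetical.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 86400

N_EVENTS = 1e7                 # from the slide
SEC_SI95_PER_EVENT = {         # from the slide
    "simulation": 3000,
    "reconstruction": 640,
}


def total_si95_seconds(step: str) -> float:
    """Total cost of one step in SI95 seconds."""
    return N_EVENTS * SEC_SI95_PER_EVENT[step]


def total_si95_hours(step: str) -> float:
    """Total cost of one step in SI95 hours."""
    return total_si95_seconds(step) / SECONDS_PER_HOUR


def si95_needed_per_pc(n_pcs: int, n_days: float) -> float:
    """Sustained SI95 rating per PC to finish both steps in n_days."""
    total = sum(total_si95_seconds(step) for step in SEC_SI95_PER_EVENT)
    return total / (n_pcs * n_days * SECONDS_PER_DAY)


for step in SEC_SI95_PER_EVENT:
    print(step, f"{total_si95_seconds(step):.2e} s", f"{total_si95_hours(step):.2e} h")
print(f"{si95_needed_per_pc(1000, 10):.0f} SI95 per PC for a 10-day run")
print(f"{si95_needed_per_pc(1000, 20):.0f} SI95 per PC for a 20-day run")
```

The unrounded totals are about 8.3 * 10^6 and 1.8 * 10^6 SI95 hours, which the slide rounds to 10^7 and 2 * 10^6. With 1000 PCs, finishing in 10 to 20 days would need roughly 42 to 21 SI95 per PC, ignoring I/O and scheduling overheads.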

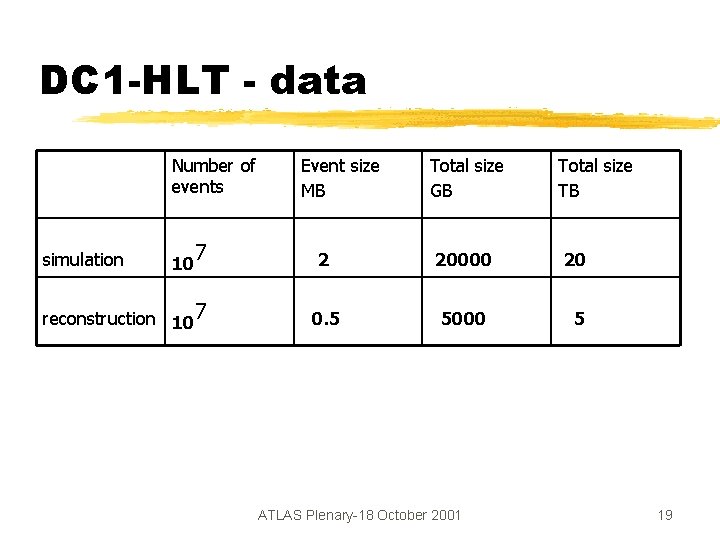

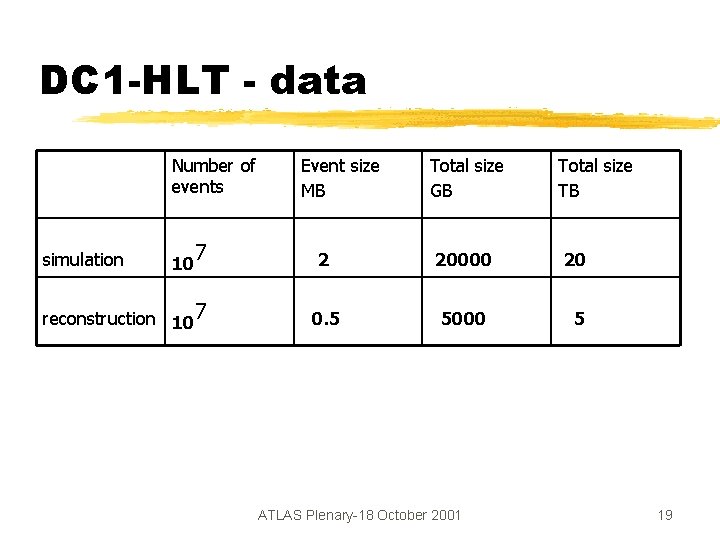

DC1-HLT - data

| | Number of events | Event size (MB) | Total size (GB) | Total size (TB) |
|---|---|---|---|---|
| simulation | 10^7 | 2 | 20000 | 20 |
| reconstruction | 10^7 | 0.5 | 5000 | 5 |
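The data volumes are simply the number of events times the event size. A short sketch of the conversion, assuming decimal units (1 GB = 1000 MB, 1 TB = 1000 GB), which is what the slide's own numbers imply; the helper name is hypothetical:

```python
MB_PER_GB = 1000   # assumption: decimal units, as the slide's totals imply
GB_PER_TB = 1000


def total_size(n_events: float, event_size_mb: float) -> tuple:
    """Return the total sample size as (GB, TB)."""
    size_gb = n_events * event_size_mb / MB_PER_GB
    return size_gb, size_gb / GB_PER_TB


sim_gb, sim_tb = total_size(1e7, 2.0)     # simulation output
rec_gb, rec_tb = total_size(1e7, 0.5)     # reconstruction output
print(f"simulation:     {sim_gb:.0f} GB = {sim_tb:.0f} TB")
print(f"reconstruction: {rec_gb:.0f} GB = {rec_tb:.0f} TB")
```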

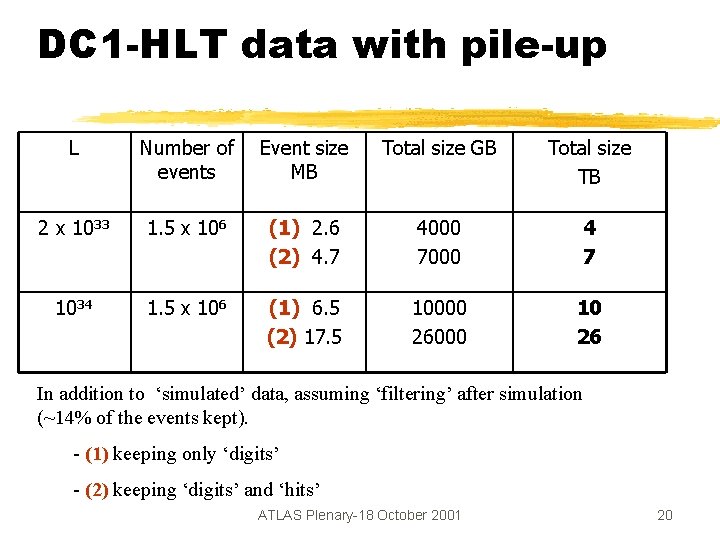

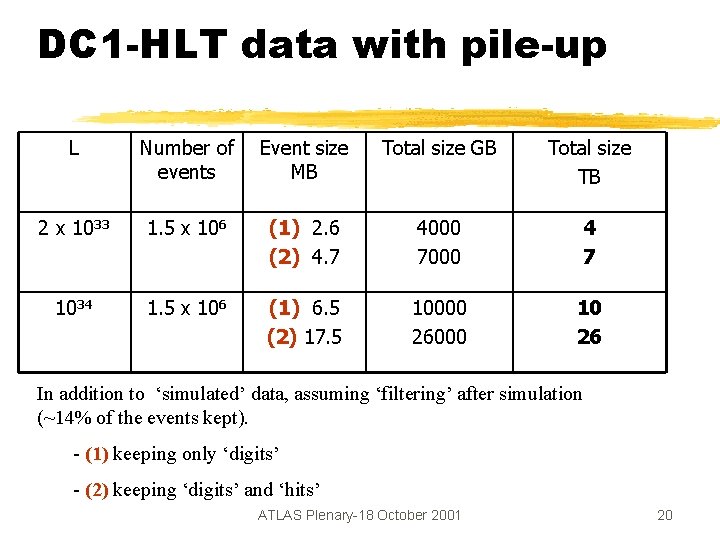

DC1-HLT data with pile-up

| L | Number of events | Event size (MB) | Total size (GB) | Total size (TB) |
|---|---|---|---|---|
| 2 x 10^33 | 1.5 x 10^6 | (1) 2.6 | 4000 | 4 |
| 2 x 10^33 | 1.5 x 10^6 | (2) 4.7 | 7000 | 7 |
| 10^34 | 1.5 x 10^6 | (1) 6.5 | 10000 | 10 |
| 10^34 | 1.5 x 10^6 | (2) 17.5 | 26000 | 26 |

In addition to ‘simulated’ data, assuming ‘filtering’ after simulation (~14% of the events kept).
- (1) keeping only ‘digits’
- (2) keeping ‘digits’ and ‘hits’
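The pile-up volumes can be cross-checked against the filtering assumption. The sketch below takes the event counts and sizes from the table, computes the fraction of simulated events kept, and compares the unrounded totals with the rounded values on the slide; the dictionary keys and function names are hypothetical, and decimal units (1 GB = 1000 MB) are assumed.

```python
MB_PER_GB = 1000          # assumption: decimal units

N_SIMULATED = 1e7         # events simulated in DC1
N_KEPT = 1.5e6            # events kept after filtering (from the table)

# Event size in MB per (luminosity, content kept), from the table.
EVENT_SIZE_MB = {
    ("2e33", "digits"): 2.6,
    ("2e33", "digits+hits"): 4.7,
    ("1e34", "digits"): 6.5,
    ("1e34", "digits+hits"): 17.5,
}


def kept_fraction() -> float:
    """Fraction of simulated events that survive the filter."""
    return N_KEPT / N_SIMULATED


def total_gb(luminosity: str, content: str) -> float:
    """Unrounded total size in GB for one table row."""
    return N_KEPT * EVENT_SIZE_MB[(luminosity, content)] / MB_PER_GB


print(f"kept fraction: {kept_fraction():.0%}")
for (lumi, content) in EVENT_SIZE_MB:
    print(lumi, content, f"{total_gb(lumi, content):.0f} GB")
```

The kept fraction comes out at 15%, close to the ~14% quoted, and the unrounded totals (about 3900, 7050, 9750 and 26250 GB) agree with the rounded figures in the table.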

Ramp-up scenario @ CERN
[Chart; horizontal axis: week in 2002]

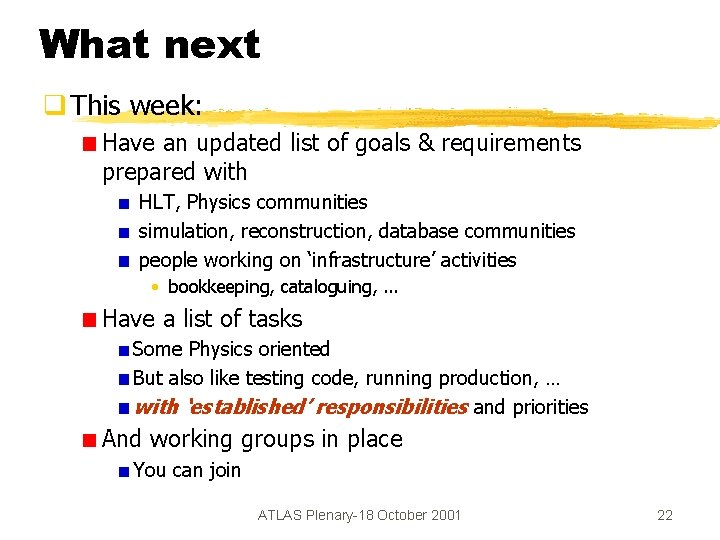

What next
- This week:
  - Have an updated list of goals & requirements, prepared with
    - HLT and Physics communities
    - simulation, reconstruction, database communities
    - people working on ‘infrastructure’ activities (bookkeeping, cataloguing, ...)
  - Have a list of tasks
    - Some physics oriented
    - But also tasks like testing code, running production, ...
    - with ‘established’ responsibilities and priorities
  - And working groups in place
    - You can join

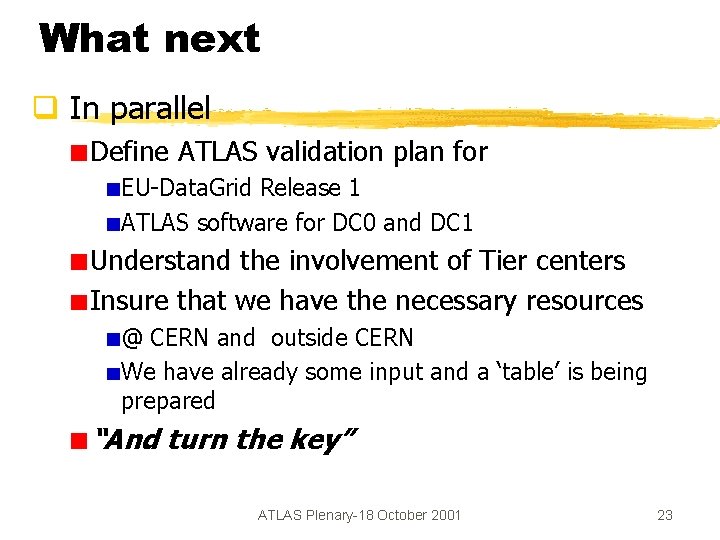

What next
- In parallel
  - Define the ATLAS validation plan for
    - EU-DataGrid Release 1
    - ATLAS software for DC0 and DC1
  - Understand the involvement of Tier centers
  - Ensure that we have the necessary resources @ CERN and outside CERN
    - We already have some input and a ‘table’ is being prepared
- “And turn the key”