Big Data: Issues, Challenges, Tools and Good Practices

“BIG” data

- 1 petabyte = 10^15 bytes = 1000 terabytes
- 1 exabyte = 10^18 bytes = 1000 petabytes
- 1 zettabyte = 10^21 bytes = 1000 exabytes
- We now have to deal with exabytes of data
  - Soon zettabytes…
- Computing resources are not keeping up
  - Google sorted 1 PB of data across 4000 computers in 2008
  - Time taken: 6 hours
- 200 PB of data flow in the Large Hadron Collider
- Utah Data Center stores yottabytes of data (1 yottabyte = 1000 zettabytes)
- Walmart stores 2.5 PB of data to serve 1 million customers an hour
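The petabyte sort figures can be turned into throughput numbers with a little arithmetic. The sketch below assumes decimal units and an even spread of the data over all machines, which is an idealization and not something the slide states; the function name is illustrative.

```python
# Decimal (SI) units, as on the slide.
PETABYTE = 10**15
GIGABYTE = 10**9
MEGABYTE = 10**6


def sort_throughput(total_bytes, machines, hours):
    """Return (aggregate, per-machine) throughput in bytes per second.

    Assumes the data is spread evenly over all machines.
    """
    seconds = hours * 3600
    aggregate = total_bytes / seconds
    return aggregate, aggregate / machines


aggregate, per_machine = sort_throughput(PETABYTE, machines=4000, hours=6)
print(f"aggregate:   {aggregate / GIGABYTE:.1f} GB/s")
print(f"per machine: {per_machine / MEGABYTE:.1f} MB/s")
```

Under these assumptions the cluster moves about 46 GB/s in total, yet each machine only handles about 12 MB/s of final output. A real sort reads and writes the data more than once, so the actual disk and network traffic is higher than this estimate.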

But size is not everything

- Variety of data
  - Log files, sensor devices, e-mails
  - Semi-structured or unstructured data
- Velocity
  - Data constantly in motion to database stores
  - Data coming in real time from sensors
- Variability
  - Inconsistencies in the data flow
  - Peaks of data load in social media
- Complexity
  - Must cleanse and correlate data from different sources
- Value
  - Extract value from the data collected
  - Big data => more profit

Application examples

- Log files
  - Help find the root cause of rare problems
  - Current log retention: weeks
  - Systems cannot handle bigger logs
  - Semi-structured data
- Sensor data
  - Take real-time decisions based on sensor values
  - Distribution of road traffic
  - Lack of storage infrastructure and analysis techniques
- Risk analysis for banks
- Social media
  - Customer sentiment about your product
  - Huge amount of feedback from social media

Big data challenges and issues

- Privacy and security
- Access and sharing
- Storage and processing
- Analytics
- Skill requirements
- Fault tolerance
- Scalability
- Data quality
- Heterogeneous data

Privacy and security

- Personal information + big data => new private information
- Insights into people's lives that they are not aware of
- Social stratification
- Mining big data may widen social gaps
- Big data can discriminate against people without their knowing

Access and sharing

- To improve decision making, data should be open
- Available through standard APIs
- Contrary to business secrecy
- Companies wish to keep their data hidden to stay competitive

Storage and processing

- Available storage is not big enough => outsourcing data to the cloud
- Costly to move data from the storage point to the processing point
- Problematic to upload terabytes in real time
- Vital to build indexes prior to data storage

Analytics

- What do I do with all this data?
- Do I need all this data?
- Which part should be analyzed?
- Which part is important?
- What can I extract from it?
- What can I do if the data volume suddenly becomes too large?

Skill requirements

- New subject
- Requires young engineers with diverse skills
  - Research
  - Analysis
  - Interpretation
  - Creation
- Training programs in companies
- Big data curriculum

Fault tolerance

- Minimize the consequences of a failure
- Do not restart the whole computation from the beginning
- Google petabyte sort: “every time we ran our sort, at least one of our disks managed to break”
- Viable strategy:
  - Divide the task between nodes
  - Master nodes make sure each task completes correctly
- Other strategy:
  - Put checkpoints in the process
  - When the computation is interrupted, restart from the checkpoint
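The checkpoint strategy can be sketched in a few lines of Python. This is a minimal single-process illustration, not how any particular framework implements it: the function names, the JSON file format and the `every` parameter are all assumptions made for the example, and results must be JSON-serializable.

```python
import json
import os
import tempfile


def save_checkpoint(path, next_index, results):
    """Write the checkpoint atomically: write a temp file, then rename it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump({"next_index": next_index, "results": results}, f)
    os.replace(tmp, path)


def process_with_checkpoints(items, work, checkpoint_path, every=100):
    """Apply `work` to each item, resuming from the last checkpoint if any.

    The checkpoint stores the index of the next item to process and the
    results so far, so an interrupted run only redoes the work done since
    the last save.
    """
    start, results = 0, []
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)
        start, results = state["next_index"], state["results"]

    for i in range(start, len(items)):
        results.append(work(items[i]))
        if (i + 1) % every == 0:
            save_checkpoint(checkpoint_path, i + 1, results)

    return results
```

Calling `process_with_checkpoints` again with the same `checkpoint_path` after a crash skips everything that was saved. The trade-off is the usual one: a smaller `every` loses less work on failure but spends more time writing checkpoints.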

Scalability

- Big data = cloud computing
- Data distributed across large clusters
- => Sharing resources between clusters
- How to run the jobs across these resources?
- How to deal with system failures?

Data quality

- With big data, it is suicidal to start storing large amounts of irrelevant data
- Focus on quality data from the beginning
- Easier to extract better information from quality data
- Which data is relevant?
- How much data?
- How accurate is it?

Heterogeneous data

- Cannot deal only with structured data
- Unstructured data must be taken into account
  - Social media interactions
  - PDF documents
  - Sensor data
- Very costly to work with this kind of data
- Re-organizing it into structured data is very costly too

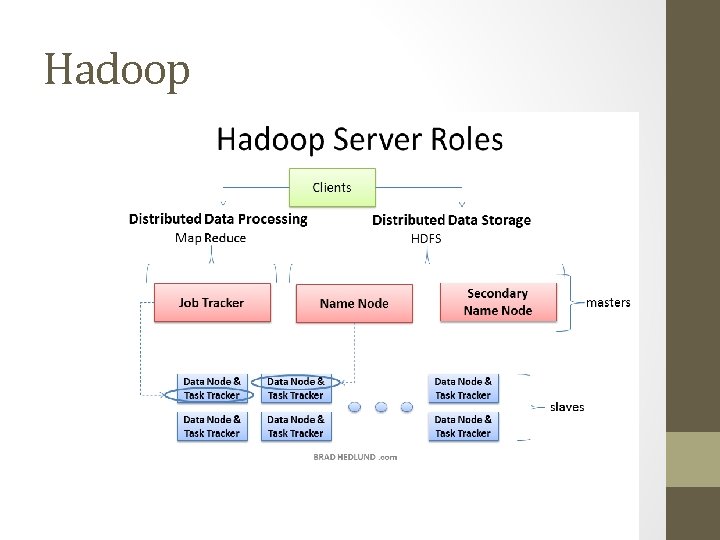

Hadoop

- Open source, hosted by Apache!
- Two main components:
  - Hadoop File System
  - Hadoop MapReduce
- Reading from disk is slow
  - Better to read from several disks at the same time
  - Many disks imply more failures => Hadoop File System
- After reading from all these disks, combine the data
  - => Hadoop MapReduce
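The trade-off behind these two components can be made concrete with rough numbers. In the sketch below the transfer rate (100 MB/s per disk) and the per-disk failure probability (0.1% during a job) are assumed values chosen for illustration, and disk failures are treated as independent.

```python
def read_time_seconds(total_bytes, disks, bytes_per_second_per_disk):
    """Time to read the data when it is split evenly over `disks` disks."""
    return total_bytes / (disks * bytes_per_second_per_disk)


def prob_any_failure(disks, p_single):
    """Probability that at least one of `disks` independent disks fails."""
    return 1 - (1 - p_single) ** disks


TERABYTE = 10**12
RATE = 100 * 10**6   # assumed: 100 MB/s per disk
P_FAIL = 0.001       # assumed: 0.1% chance a given disk fails during the job

for n in (1, 100):
    t = read_time_seconds(TERABYTE, n, RATE)
    print(f"{n:>3} disk(s): {t / 60:6.1f} min to read 1 TB, "
          f"P(at least one failure) = {prob_any_failure(n, P_FAIL):.3f}")
```

With these assumptions, reading 1 TB drops from almost three hours on one disk to under two minutes on 100 disks, while the chance of hitting at least one failed disk rises from 0.1% to roughly 9.5%. That is why parallel reads need a file system that tolerates failures.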

Hadoop File System

- Stores very large files on clusters
- Streaming data access
- Block size: 64 MB (the default in Hadoop 1.x; later versions default to 128 MB)
  - Large block size to reduce disk seeks
- Two types of nodes:
  - Namenode (master)
  - Datanodes (workers)
- The namenode manages the namespace, the file system tree and the metadata
- Datanodes store and retrieve data blocks
- Close to Google File System
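The division of labor between namenode and datanodes can be illustrated with a toy model. This is not the HDFS API: the class, the round-robin placement and the file and node names are hypothetical, and the sketch ignores features the slide does not cover.

```python
BLOCK_SIZE = 64 * 1024 * 1024  # 64 MB, as on the slide


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks a file of `file_size` bytes occupies."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


class ToyNamenode:
    """Keeps only metadata: which datanode holds which block of which file."""

    def __init__(self, datanodes):
        self.datanodes = list(datanodes)
        self.block_map = {}  # filename -> list of (block_index, datanode)

    def add_file(self, name, file_size):
        blocks = split_into_blocks(file_size)
        # Round-robin placement: a simplification chosen for this sketch.
        self.block_map[name] = [
            (i, self.datanodes[i % len(self.datanodes)])
            for i in range(len(blocks))
        ]
        return self.block_map[name]


namenode = ToyNamenode(["datanode-1", "datanode-2", "datanode-3"])
placement = namenode.add_file("logs.txt", 200 * 1024 * 1024)  # a 200 MB file
print(placement)
```

A 200 MB file occupies three full 64 MB blocks plus one 8 MB block. The namenode only records where each block lives; the block contents themselves would sit on the datanodes.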

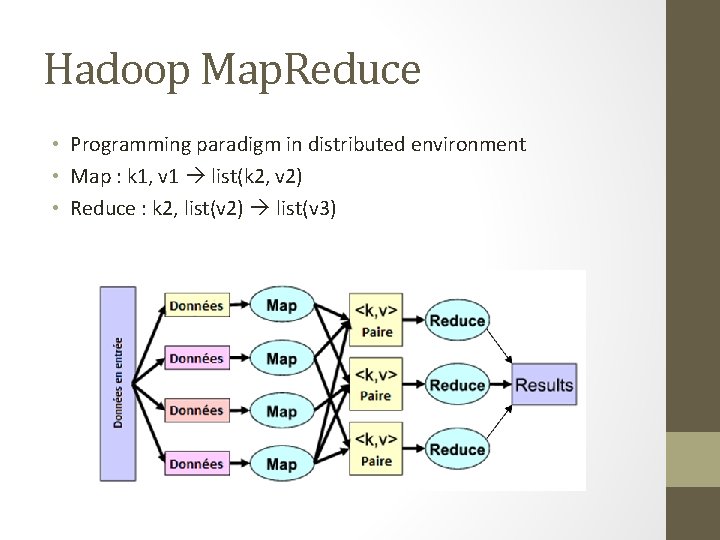

Hadoop MapReduce

- Programming paradigm for distributed environments
- Map: (k1, v1) → list(k2, v2)
- Reduce: (k2, list(v2)) → list(v3)

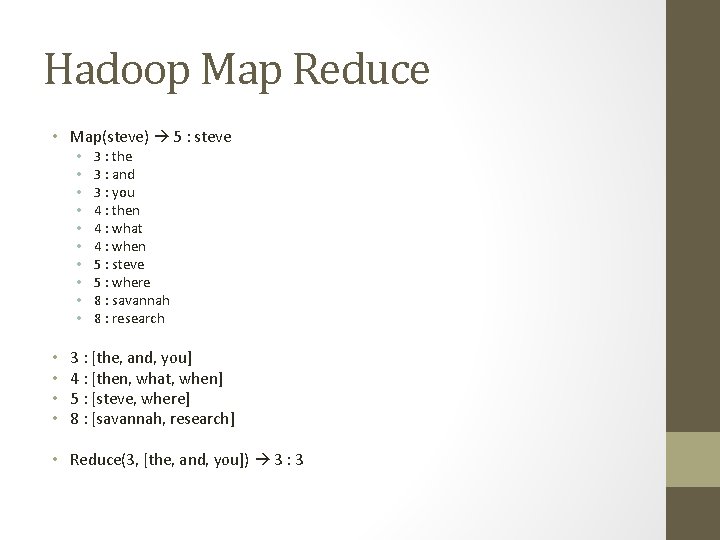

Hadoop MapReduce

- Map(steve) → 5 : steve
- Map output (key = word length):
  - 3 : the
  - 3 : and
  - 3 : you
  - 4 : then
  - 4 : what
  - 4 : when
  - 5 : steve
  - 5 : where
  - 8 : savannah
  - 8 : research
- Values grouped by key:
  - 3 : [the, and, you]
  - 4 : [then, what, when]
  - 5 : [steve, where]
  - 8 : [savannah, research]
- Reduce(3, [the, and, you]) → 3 : 3
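The word-length example above can be reproduced with a small in-memory sketch. It runs in a single process and only imitates the map, group-by-key and reduce phases; the function names are illustrative and this is not Hadoop's API.

```python
from collections import defaultdict


def map_word(word):
    """Map: emit (length, word) for one input word."""
    return [(len(word), word)]


def reduce_count(length, words):
    """Reduce: emit (length, number of words of that length)."""
    return [(length, len(words))]


def run_mapreduce(inputs, mapper, reducer):
    """Run map, group the pairs by key (the shuffle), then reduce each group."""
    groups = defaultdict(list)
    for item in inputs:
        for key, value in mapper(item):
            groups[key].append(value)
    output = []
    for key in sorted(groups):
        output.extend(reducer(key, groups[key]))
    return output


words = ["the", "and", "you", "then", "what", "when",
         "steve", "where", "savannah", "research"]
print(run_mapreduce(words, map_word, reduce_count))
# [(3, 3), (4, 3), (5, 2), (8, 2)]
```

Because the mapper looks at one word at a time and the reducer at one key at a time, both phases could be spread over many machines without changing the result.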

Hadoop MapReduce

- Map tasks and Reduce tasks are divided between workers
- The master controller assigns the tasks and tracks the completion of each
- Failures are detected by the master and the failed tasks are restarted
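The master's role can be sketched as a loop that hands out tasks and re-queues any task whose worker fails. This is a simplified sequential illustration under stated assumptions: `run_task` is a hypothetical callable that raises an exception when a worker fails, assignment is plain round-robin, and `max_attempts` is an invented safety limit.

```python
from collections import deque


def run_with_master(tasks, workers, run_task, max_attempts=3):
    """Assign each task to a worker; re-queue a task when its worker fails.

    `run_task(worker, task)` is a hypothetical callable that returns a
    result or raises an exception to signal a worker failure.
    Tasks must be hashable because results are stored in a dict.
    """
    pending = deque((task, 1) for task in tasks)
    results = {}
    turn = 0
    while pending:
        task, attempt = pending.popleft()
        worker = workers[turn % len(workers)]  # simple round-robin assignment
        turn += 1
        try:
            results[task] = run_task(worker, task)
        except Exception:
            if attempt >= max_attempts:
                raise RuntimeError(f"task {task!r} failed {attempt} times")
            pending.append((task, attempt + 1))  # restart the task later
    return results
```

Only the failed task is rerun; completed tasks keep their results, which is what lets a long job survive individual machine failures.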

Big data practices

- Always anticipate, before the data gets out of control
- Create dimensions for your data
- Create surrogate keys
- Be prepared to integrate structured AND unstructured data
- Stay generic when manipulating the data
- Always work with key-value pairs
- Privacy is the toughest challenge of big data
- Ensure data quality at the earliest point possible
- Big data widens the gap between business leaders and IT leaders; they must work together!
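Surrogate keys and key-value pairs fit together naturally. The sketch below is one possible illustration, not a prescribed design: the class, the sample records and the choice to normalize e-mail addresses to lowercase are all hypothetical.

```python
import itertools


class SurrogateKeys:
    """Hand out a stable integer key for each distinct natural key."""

    def __init__(self):
        self._next = itertools.count(1)
        self._keys = {}

    def key_for(self, natural_key):
        if natural_key not in self._keys:
            self._keys[natural_key] = next(self._next)
        return self._keys[natural_key]


customers = SurrogateKeys()
# Hypothetical records from two sources that identify customers differently.
records = [("alice@example.com", "web"), ("ALICE@example.com", "store"),
           ("bob@example.com", "web")]
# Normalizing the natural key first lets both sources share one surrogate key.
pairs = [(customers.key_for(email.lower()), source) for email, source in records]
print(pairs)  # [(1, 'web'), (1, 'store'), (2, 'web')]
```

The resulting `(key, value)` pairs no longer depend on how each source spells its identifiers, which keeps later processing generic.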

- Slides: 21