Association Rule Mining II CS 685 Special Topics


CS 685: Special Topics in Data Mining, Spring 2008
The University of Kentucky

Frequent Pattern Analysis
Finding inherent regularities in data:
- What products were often purchased together? Beer and diapers?!
- What are the subsequent purchases after buying a PC?
- What are the commonly occurring subsequences in a group of genes?
- What are the shared substructures in a group of effective drugs?

What Is Frequent Pattern Analysis?
Frequent pattern: a pattern (a set of items, subsequences, substructures, etc.) that occurs frequently in a data set.
Applications:
- Identify motifs in bio-molecules: DNA sequence analysis, protein structure analysis
- Identify patterns in microarrays
- Business applications: market basket analysis, cross-marketing, catalog design, sale campaign analysis, etc.

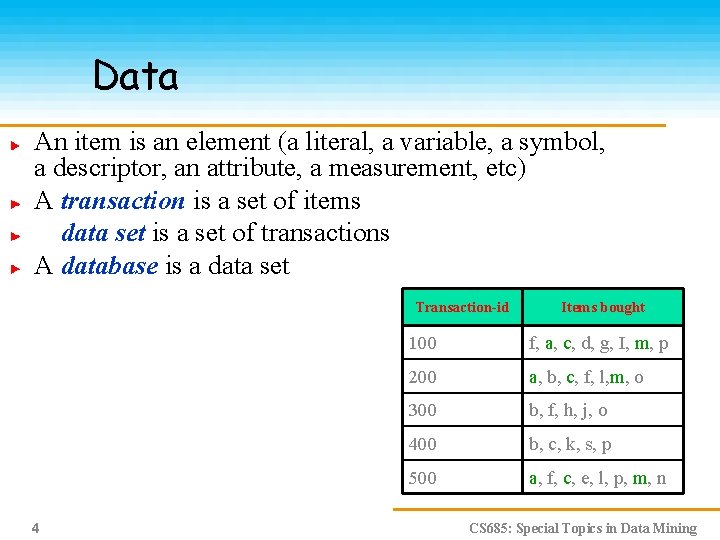

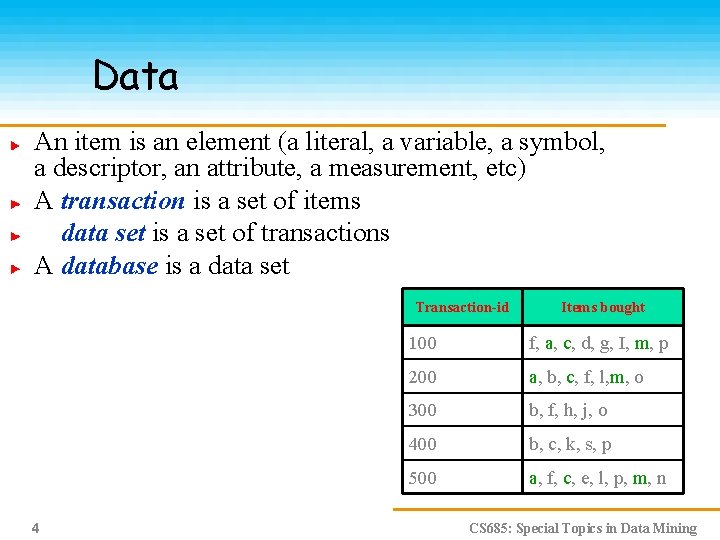

Data
- An item is an element (a literal, a variable, a symbol, a descriptor, an attribute, a measurement, etc.).
- A transaction is a set of items.
- A data set is a set of transactions; a database is a data set.

| Transaction-id | Items bought |
|---|---|
| 100 | f, a, c, d, g, i, m, p |
| 200 | a, b, c, f, l, m, o |
| 300 | b, f, h, j, o |
| 400 | b, c, k, s, p |
| 500 | a, f, c, e, l, p, m, n |

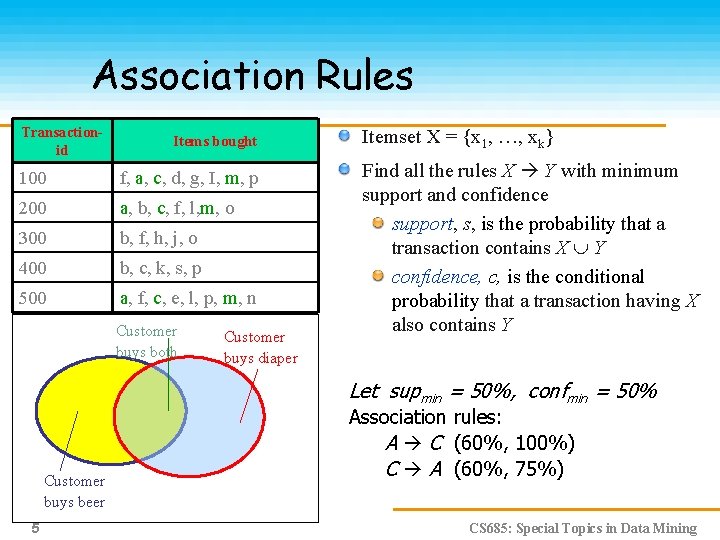

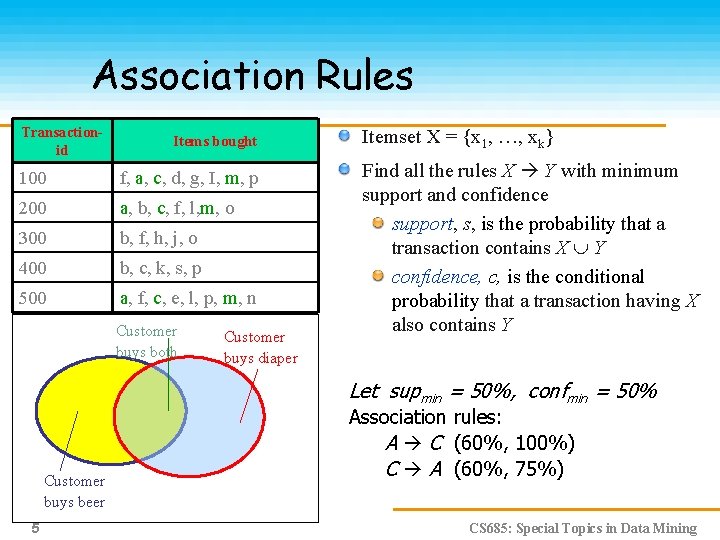

Association Rules
[Figure: Venn diagram of customers who buy beer, customers who buy diapers, and customers who buy both.]
- Itemset $X = \{x_1, \ldots, x_k\}$
- Find all the rules $X \Rightarrow Y$ with minimum support and confidence:
  - support, $s$, is the probability that a transaction contains $X \cup Y$
  - confidence, $c$, is the conditional probability that a transaction containing $X$ also contains $Y$
- Let $sup_{min} = 50\%$ and $conf_{min} = 50\%$. In the transaction table above (TIDs 100 to 500), a occurs in 3 transactions, c in 4, and both together in 3, so example association rules are:
  - $a \Rightarrow c$ (60%, 100%)
  - $c \Rightarrow a$ (60%, 75%)
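Both measures can be computed directly from the transaction table on the Data slide. The sketch below is a minimal Python illustration. `DB`, `count`, `support`, and `confidence` are hypothetical helper names and do not come from the slides. Confidence is computed from counts rather than from two rounded fractions, so 3/4 comes out as exactly 0.75.

```python
# Transaction table from the "Data" slide (TIDs 100-500); items are single letters.
DB = {
    100: set("facdgimp"),
    200: set("abcflmo"),
    300: set("bfhjo"),
    400: set("bcksp"),
    500: set("afcelpmn"),
}


def count(itemset, db=DB):
    """Number of transactions that contain every item in `itemset`."""
    return sum(1 for items in db.values() if set(itemset) <= items)


def support(itemset, db=DB):
    """Fraction of transactions containing the itemset."""
    return count(itemset, db) / len(db)


def confidence(x, y, db=DB):
    """P(Y | X), computed as count(X ∪ Y) / count(X)."""
    return count(set(x) | set(y), db) / count(x, db)


for lhs, rhs in [("a", "c"), ("c", "a")]:
    print(f"{lhs} => {rhs}: support={support({lhs, rhs}):.0%}, "
          f"confidence={confidence({lhs}, {rhs}):.0%}")
# a => c: support=60%, confidence=100%
# c => a: support=60%, confidence=75%
```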

Apriori-based Mining
- Generate length-(k+1) candidate itemsets from length-k frequent itemsets, and
- Test the candidates against the DB.

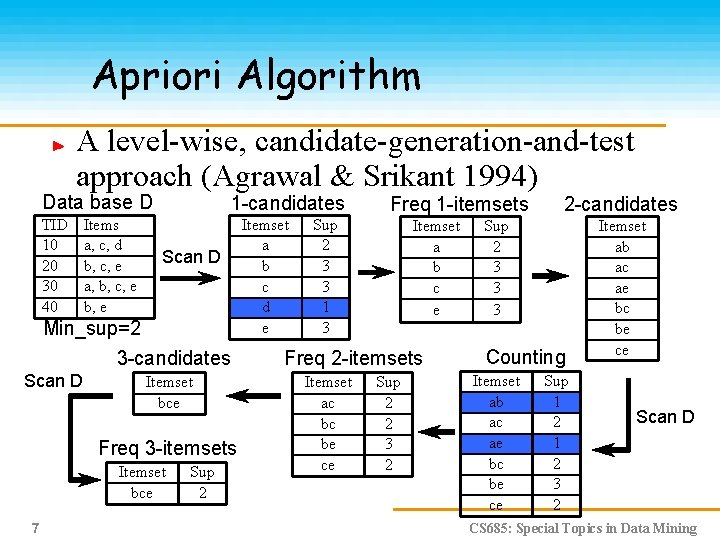

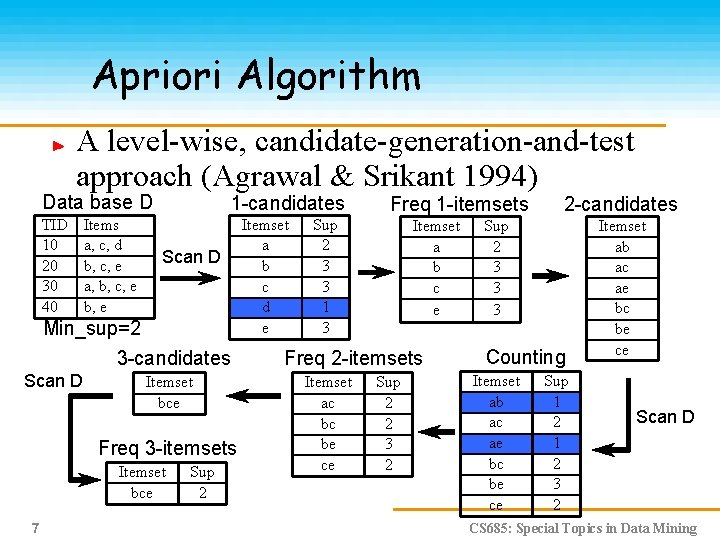

Apriori Algorithm
A level-wise, candidate-generation-and-test approach (Agrawal & Srikant 1994).

Database D, Min_sup = 2:

| TID | Items |
|---|---|
| 10 | a, c, d |
| 20 | b, c, e |
| 30 | a, b, c, e |
| 40 | b, e |

- Scan D to count the 1-candidates: a: 2, b: 3, c: 3, d: 1, e: 3
- Frequent 1-itemsets: a: 2, b: 3, c: 3, e: 3
- 2-candidates: ab, ac, ae, bc, be, ce
- Scan D to count them: ab: 1, ac: 2, ae: 1, bc: 2, be: 3, ce: 2
- Frequent 2-itemsets: ac: 2, bc: 2, be: 3, ce: 2
- 3-candidates: bce
- Scan D to count it: bce: 2
- Frequent 3-itemsets: bce: 2

Important Details of Apriori
- How to generate candidates?
  - Step 1: self-joining Lk
  - Step 2: pruning
- How to count supports of candidates?

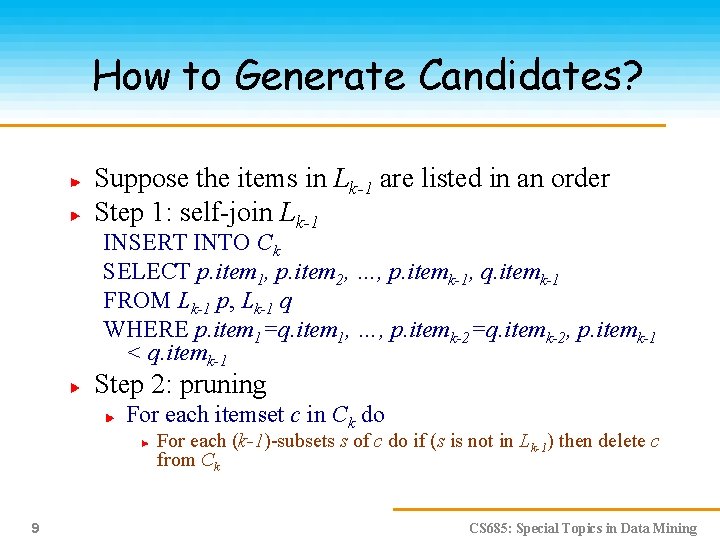

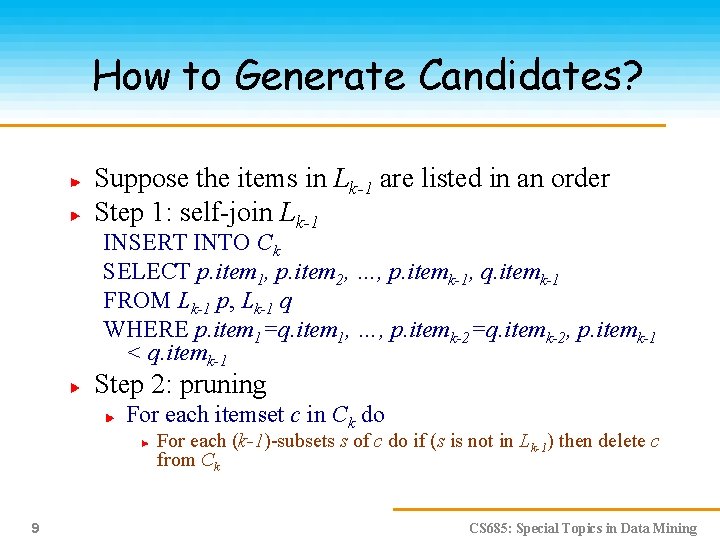

How to Generate Candidates?
Suppose the items within each itemset of Lk-1 are listed in a fixed order.

Step 1: self-join Lk-1

    INSERT INTO Ck
    SELECT p.item1, p.item2, …, p.itemk-1, q.itemk-1
    FROM Lk-1 p, Lk-1 q
    WHERE p.item1 = q.item1 AND … AND p.itemk-2 = q.itemk-2 AND p.itemk-1 < q.itemk-1

Step 2: pruning

    for each itemset c in Ck do
        for each (k-1)-subset s of c do
            if (s is not in Lk-1) then delete c from Ck

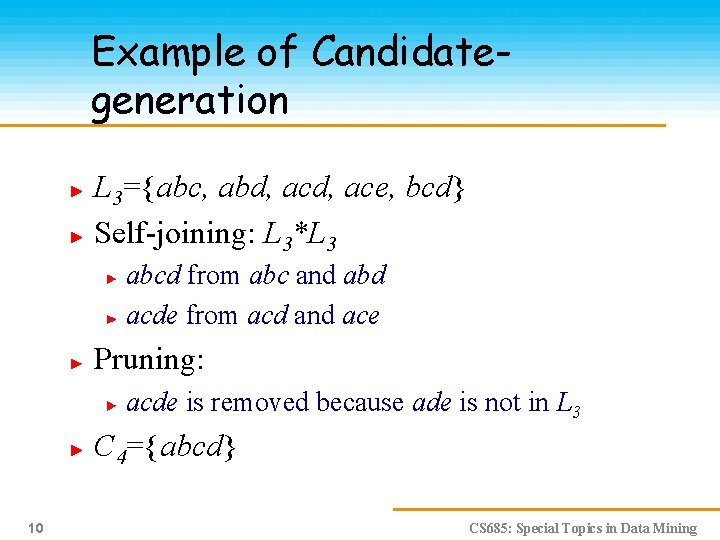

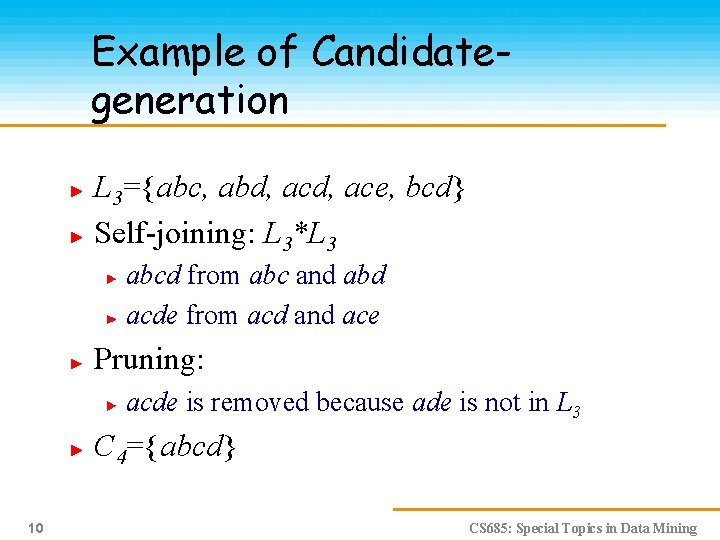

Example of Candidate Generation
- L3 = {abc, abd, acd, ace, bcd}
- Self-joining: L3 * L3
  - abcd from abc and abd
  - acde from acd and ace
- Pruning: acde is removed because ade is not in L3
- C4 = {abcd}
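The join-and-prune procedure can be sketched in Python as follows. This assumes itemsets are kept as sorted tuples; plain strings such as "abc" are sorted on entry. `apriori_gen` is a hypothetical helper name. The example uses L3 = {abc, abd, acd, ace, bcd}. Note that acd has to be in L3, both to produce acde by joining acd with ace and to keep abcd through pruning. The code returns the joined set and the pruned set so that both steps of the example can be seen.

```python
from itertools import combinations


def apriori_gen(prev_level):
    """Build C_k from L_{k-1}; returns (joined, candidates)."""
    prev = sorted(tuple(sorted(s)) for s in prev_level)
    prev_set = set(prev)
    joined = set()
    # Step 1: self-join. Two (k-1)-itemsets join when their first k-2 items
    # agree; because `prev` is sorted, p's last item is then < q's last item.
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            if p[:-1] == q[:-1]:
                joined.add(p + (q[-1],))
    # Step 2: prune any candidate that has a (k-1)-subset outside L_{k-1}.
    candidates = {c for c in joined
                  if all(s in prev_set for s in combinations(c, len(c) - 1))}
    return joined, candidates


L3 = ["abc", "abd", "acd", "ace", "bcd"]
joined, C4 = apriori_gen(L3)
print(sorted("".join(c) for c in joined))  # ['abcd', 'acde']
print(sorted("".join(c) for c in C4))      # ['abcd']
```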

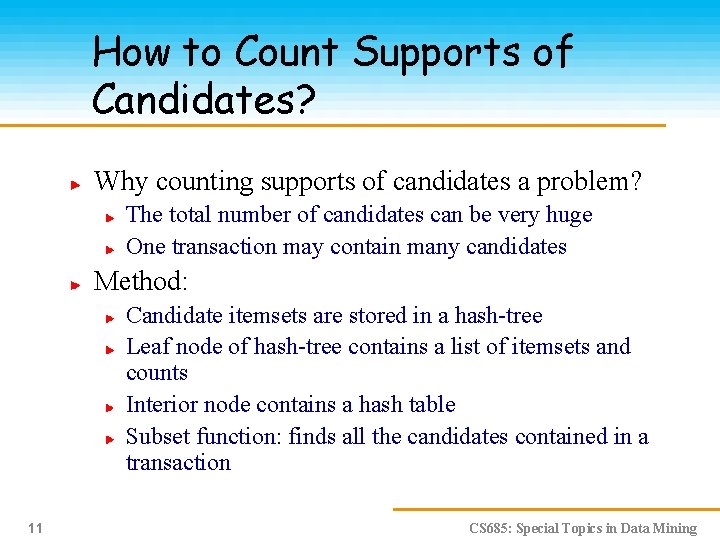

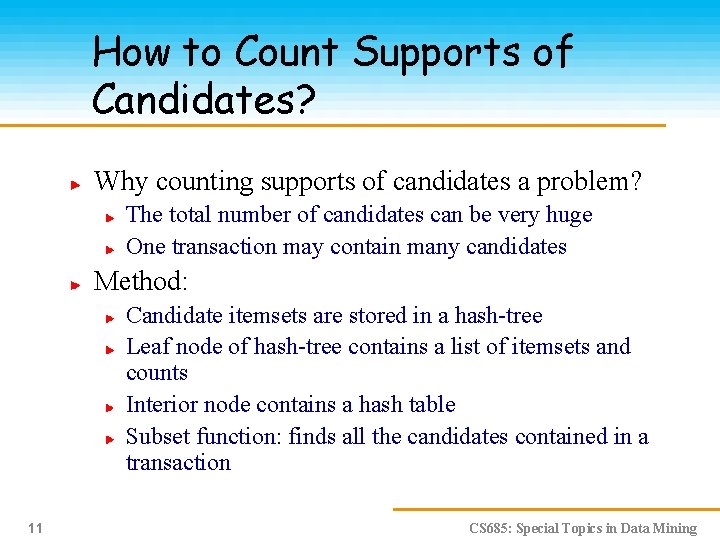

How to Count Supports of Candidates?
Why is counting supports of candidates a problem?
- The total number of candidates can be huge.
- One transaction may contain many candidates.
Method:
- Candidate itemsets are stored in a hash-tree.
- A leaf node of the hash-tree contains a list of itemsets and counts.
- An interior node contains a hash table.
- Subset function: finds all the candidates contained in a transaction.
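Below is a minimal Python sketch of a hash-tree. It assumes all candidates have the same size k and are stored as sorted tuples. The bucket count (`n_buckets`), the leaf capacity (`max_leaf`), and the use of Python's built-in `hash` are illustrative choices, not parameters from the slides. An interior node at depth d hashes the d-th item. A leaf that overflows is split, unless all k item positions have already been used. Leaves are checked with a full containment test. Because several hashing paths can reach the same leaf, a per-transaction `visited` set stops that leaf from being counted twice.

```python
class Node:
    """Hash-tree node: interior nodes hash one item position; leaves hold counts."""

    def __init__(self, depth):
        self.depth = depth      # item position this node hashes on, if interior
        self.children = None    # bucket -> Node, once the node becomes interior
        self.counts = {}        # leaf only: candidate (sorted tuple) -> count


class HashTree:
    def __init__(self, k, n_buckets=3, max_leaf=2):
        self.k, self.n_buckets, self.max_leaf = k, n_buckets, max_leaf
        self.root = Node(0)

    def _bucket(self, item):
        return hash(item) % self.n_buckets

    def insert(self, candidate):
        node = self.root
        while node.children is not None:  # descend, hashing one item per level
            b = self._bucket(candidate[node.depth])
            if b not in node.children:
                node.children[b] = Node(node.depth + 1)
            node = node.children[b]
        node.counts[candidate] = 0
        # Split an overfull leaf unless all k item positions are already used.
        if len(node.counts) > self.max_leaf and node.depth < self.k:
            pending, node.counts, node.children = node.counts, {}, {}
            for c in pending:
                self.insert(c)

    def count_transaction(self, transaction):
        """Subset function: add 1 to every candidate contained in the transaction."""
        t = sorted(transaction)
        self._visit(self.root, t, 0, set(t), visited=set())

    def _visit(self, node, t, start, t_set, visited):
        if node.children is None:
            if id(node) not in visited:  # a leaf may be reachable by several paths
                visited.add(id(node))
                for c in node.counts:
                    if t_set.issuperset(c):
                        node.counts[c] += 1
            return
        # t[i] can fill position `depth` only if k - depth - 1 items follow it.
        for i in range(start, len(t) - (self.k - node.depth) + 1):
            child = node.children.get(self._bucket(t[i]))
            if child is not None:
                self._visit(child, t, i + 1, t_set, visited)

    def supports(self):
        out, stack = {}, [self.root]
        while stack:
            node = stack.pop()
            if node.children is None:
                out.update(node.counts)
            else:
                stack.extend(node.children.values())
        return out


# 2-candidates and database from the Apriori example slide (TIDs 10-40).
db = ["acd", "bce", "abce", "be"]
tree = HashTree(k=2)
for c in ["ab", "ac", "ae", "bc", "be", "ce"]:
    tree.insert(tuple(c))
for t in db:
    tree.count_transaction(t)
print({"".join(c): n for c, n in sorted(tree.supports().items())})
# {'ab': 1, 'ac': 2, 'ae': 1, 'bc': 2, 'be': 3, 'ce': 2}
```

The counts are the same as those on the Apriori example slide. Only the shape of the tree depends on the hash values.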

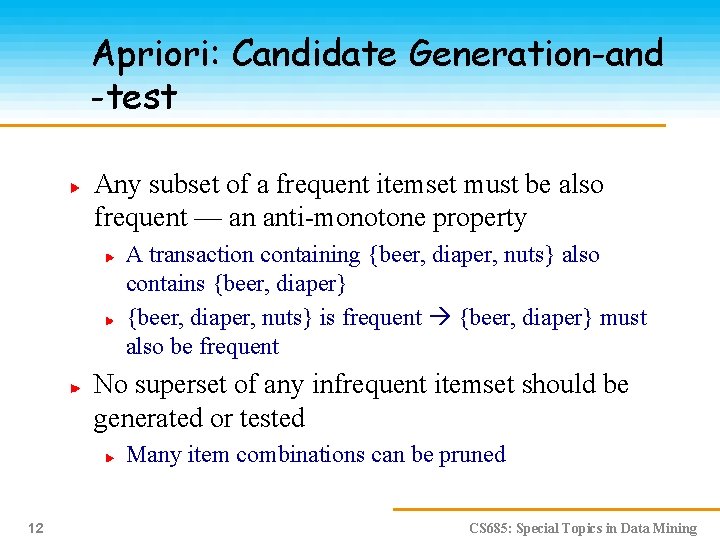

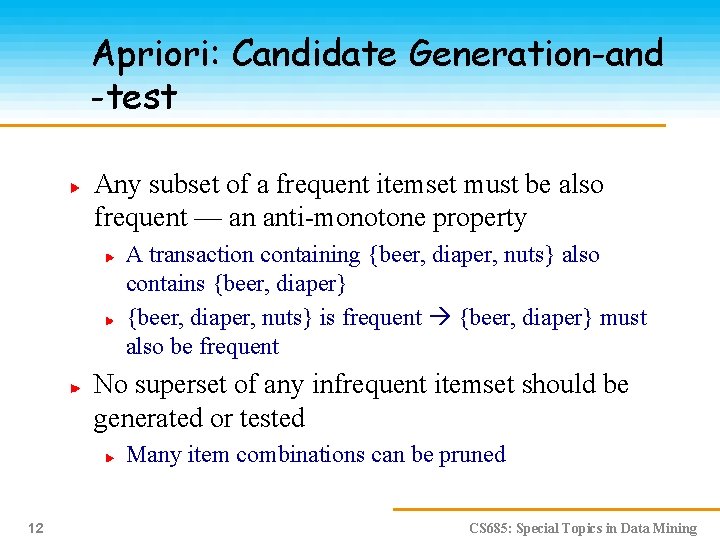

Apriori: Candidate Generation-and-Test
- Any subset of a frequent itemset must also be frequent: an anti-monotone property.
  - A transaction containing {beer, diaper, nuts} also contains {beer, diaper}.
  - If {beer, diaper, nuts} is frequent, then {beer, diaper} must also be frequent.
- No superset of any infrequent itemset should be generated or tested.
  - Many item combinations can be pruned.
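The anti-monotone property can be checked by brute force on the small four-transaction database from the Apriori example (TIDs 10 to 40). This Python sketch is only an illustration, and its helper names are hypothetical. It asserts the property for every itemset, then counts how many supersets a single infrequent item (d, with count 1 < min_sup = 2) removes from consideration.

```python
from itertools import combinations

# Database from the Apriori example slide (TIDs 10-40).
DB = [set("acd"), set("bce"), set("abce"), set("be")]
ITEMS = sorted(set().union(*DB))


def count(itemset):
    return sum(1 for t in DB if set(itemset) <= t)


# Anti-monotonicity: adding an item can never increase the support count.
for r in range(1, len(ITEMS)):
    for x in combinations(ITEMS, r):
        for y in ITEMS:
            if y not in x:
                assert count(x + (y,)) <= count(x)

# Pruning consequence: {d} is infrequent, so every superset of {d} is too and
# never needs to be generated or counted.
others = [i for i in ITEMS if i != "d"]
supersets = [("d",) + c for r in range(1, len(others) + 1)
             for c in combinations(others, r)]
print(len(supersets), max(count(s) for s in supersets))  # 15 1
```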

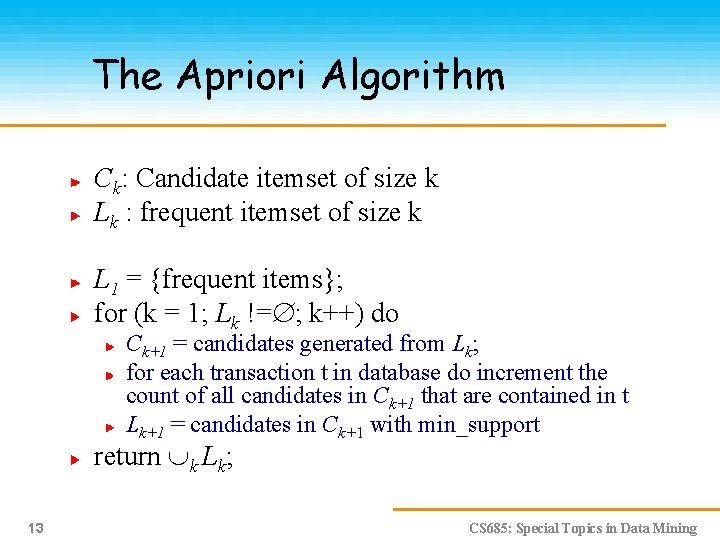

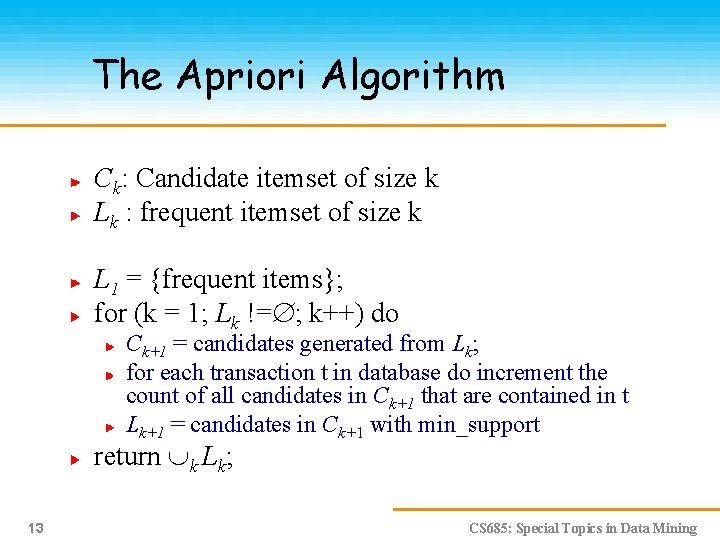

The Apriori Algorithm
Ck: candidate itemsets of size k
Lk: frequent itemsets of size k

    L1 = {frequent items};
    for (k = 1; Lk != ∅; k++) do begin
        Ck+1 = candidates generated from Lk;
        for each transaction t in database do
            increment the count of all candidates in Ck+1 that are contained in t
        Lk+1 = candidates in Ck+1 with min_support
    end
    return ∪k Lk;
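The pseudocode maps almost line for line onto Python. The sketch below is one possible rendering, not the original authors' code. It assumes transactions are iterables of sortable items and that `min_sup` is an absolute count. The self-join and prune steps are written inline, and each level makes a single pass over the database. Run on the database from the Apriori example slide, it gives the same frequent itemsets as that trace.

```python
from collections import Counter
from itertools import combinations


def apriori(db, min_sup):
    """Level-wise Apriori; returns {itemset (sorted tuple): support count}."""
    db = [frozenset(t) for t in db]
    item_counts = Counter(item for t in db for item in t)
    level = {(i,): n for i, n in item_counts.items() if n >= min_sup}  # L1
    frequent = dict(level)
    while level:
        # C_{k+1}: self-join L_k on the first k-1 items, then prune by L_k.
        prev = sorted(level)
        candidates = set()
        for i, p in enumerate(prev):
            for q in prev[i + 1:]:
                if p[:-1] == q[:-1]:
                    c = p + (q[-1],)
                    if all(s in level for s in combinations(c, len(c) - 1)):
                        candidates.add(c)
        # One scan of the database counts every candidate of this level.
        counts = Counter(c for t in db for c in candidates if t.issuperset(c))
        level = {c: n for c, n in counts.items() if n >= min_sup}  # L_{k+1}
        frequent.update(level)
    return frequent


result = apriori(["acd", "bce", "abce", "be"], min_sup=2)
for itemset, n in sorted(result.items(), key=lambda kv: (len(kv[0]), kv[0])):
    print("".join(itemset), n)
# a 2, b 3, c 3, e 3, ac 2, bc 2, be 3, ce 2, bce 2 (one per line)
```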

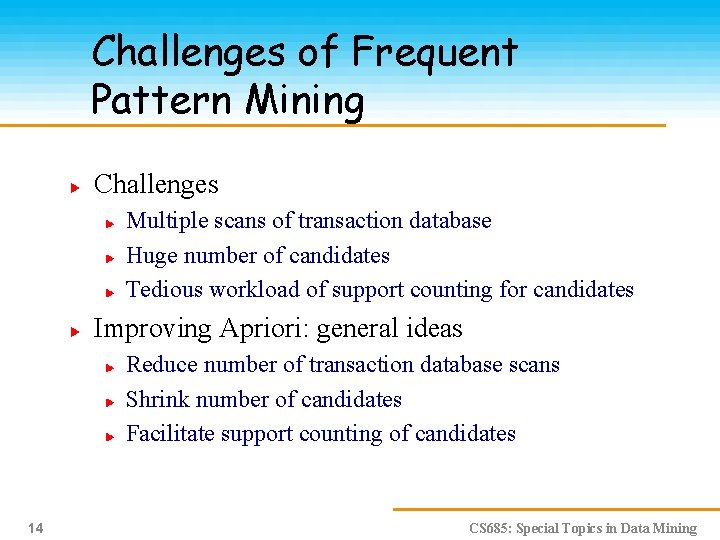

Challenges of Frequent Pattern Mining
Challenges:
- Multiple scans of the transaction database
- Huge number of candidates
- Tedious workload of support counting for candidates
Improving Apriori, general ideas:
- Reduce the number of transaction database scans
- Shrink the number of candidates
- Facilitate support counting of candidates

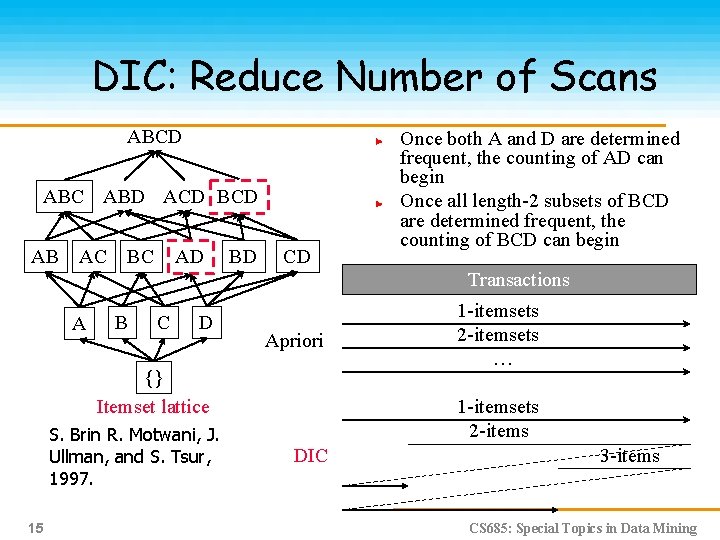

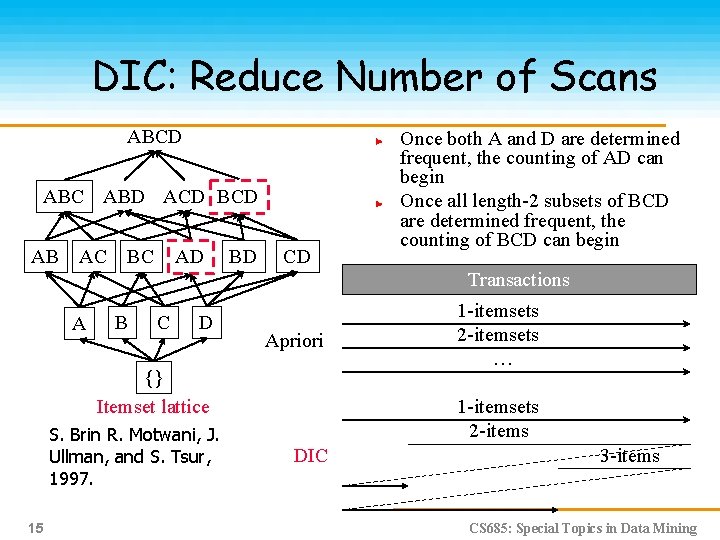

DIC: Reduce Number of Scans
[Figure: the itemset lattice over {A, B, C, D}, from {} through A, B, C, D; AB, AC, AD, BC, BD, CD; ABC, ABD, ACD, BCD; to ABCD. Alongside it, a timeline over the transactions: Apriori counts 1-itemsets, then 2-itemsets, and so on in separate passes, while DIC overlaps the counting of 1-, 2-, and 3-itemsets.]
- Once both A and D are determined frequent, the counting of AD can begin.
- Once all length-2 subsets of BCD are determined frequent, the counting of BCD can begin.
S. Brin, R. Motwani, J. Ullman, and S. Tsur, 1997.

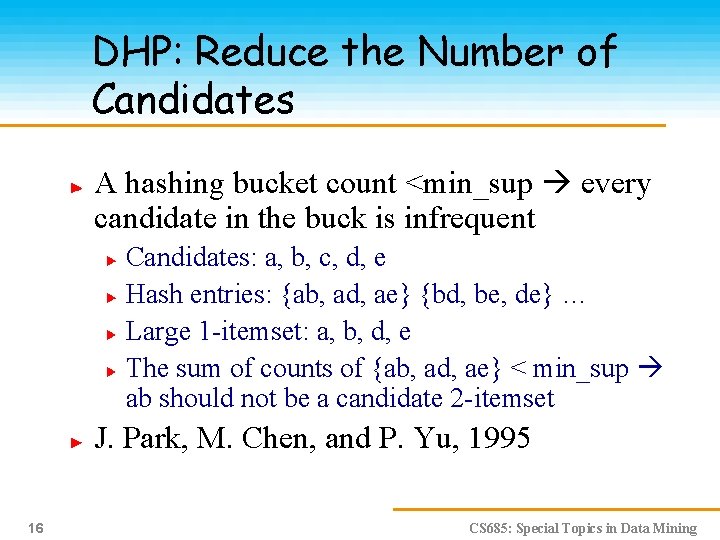

DHP: Reduce the Number of Candidates
- If a hash bucket's count is below min_sup, every candidate in that bucket is infrequent.
- Example:
  - Candidates: a, b, c, d, e
  - Hash entries: {ab, ad, ae}, {bd, be, de}, …
  - Large 1-itemsets: a, b, d, e
  - If the sum of counts of {ab, ad, ae} is below min_sup, ab should not be a candidate 2-itemset.
J. Park, M. Chen, and P. Yu, 1995.
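A minimal Python sketch of the DHP bucket test, run on the database from the Apriori example slide. The hash function h(x, y) = (10·order(x) + order(y)) mod 7 is an illustrative assumption, where order is an item's position in sorted order. `dhp_candidate_pairs` is a hypothetical name. During the first scan, the sketch counts the single items and also hashes every 2-subset of each transaction into a bucket. A pair of frequent items remains a candidate only if its bucket reaches min_sup.

```python
from collections import Counter
from itertools import combinations


def dhp_candidate_pairs(db, min_sup, n_buckets=7):
    """First scan of DHP-style pruning: count items, hash all 2-subsets into
    buckets, and return the candidate 2-itemsets that survive the bucket test."""
    items = sorted(set().union(*db))
    order = {item: i for i, item in enumerate(items)}

    def h(x, y):
        # Illustrative hash on item positions (an assumption, not from the slide).
        return (order[x] * 10 + order[y]) % n_buckets

    item_counts, buckets = Counter(), Counter()
    for t in db:
        t = sorted(set(t))
        item_counts.update(t)
        for x, y in combinations(t, 2):
            buckets[h(x, y)] += 1
    frequent_items = [i for i in items if item_counts[i] >= min_sup]
    # A pair of frequent items stays a candidate only if its bucket reaches min_sup.
    return {(x, y) for x, y in combinations(frequent_items, 2)
            if buckets[h(x, y)] >= min_sup}


pairs = dhp_candidate_pairs(["acd", "bce", "abce", "be"], min_sup=2)
print(sorted("".join(p) for p in pairs))  # ['ac', 'bc', 'be', 'ce']
```

Plain Apriori would produce six 2-candidates here. With this hash function, the buckets holding ab and ae have a count of only 1, so both pairs are dropped. With other data or another hash function, collisions can let infrequent pairs through. A bucket count is only an upper bound on each pair's support, so the surviving pairs still have to be counted.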

Partition: Scan Database Only Twice
- Partition the database into n partitions.
- Any itemset that is frequent in the whole database must be frequent (at the same relative min_sup) in at least one partition.
- Scan 1: partition the database and find local frequent patterns.
- Scan 2: consolidate global frequent patterns.
A. Savasere, E. Omiecinski, and S. Navathe, 1995.
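A small Python sketch of the two-scan idea. It assumes a relative threshold `min_ratio` that is applied to each partition and to the whole database. To keep the sketch short, a brute-force local miner stands in for running Apriori on each in-memory partition. This is only feasible for tiny partitions. The function names are hypothetical.

```python
from itertools import combinations


def local_frequent(part, min_ratio):
    """Brute-force miner for one in-memory partition (a stand-in for Apriori)."""
    counts = {}
    for t in part:
        for r in range(1, len(t) + 1):
            for c in combinations(sorted(set(t)), r):
                counts[c] = counts.get(c, 0) + 1
    return {c for c, n in counts.items() if n >= min_ratio * len(part)}


def partition_mine(db, min_ratio, n_parts):
    size = -(-len(db) // n_parts)  # ceiling division
    parts = [db[i:i + size] for i in range(0, len(db), size)]
    # Scan 1: the union of all local frequent itemsets is the global candidate set.
    candidates = set().union(*(local_frequent(p, min_ratio) for p in parts))
    # Scan 2: count every candidate over the whole database.
    counts = {c: sum(1 for t in db if set(c) <= set(t)) for c in candidates}
    return {c: n for c, n in counts.items() if n >= min_ratio * len(db)}


# Database from the Apriori example slide; min_sup = 2 of 4 transactions = 50%.
db = ["acd", "bce", "abce", "be"]
result = partition_mine(db, min_ratio=0.5, n_parts=2)
print(sorted("".join(c) for c in result))
# ['a', 'ac', 'b', 'bc', 'bce', 'be', 'c', 'ce', 'e']
```

The relative threshold makes the guarantee hold. If an itemset falls below min_ratio in every partition, its total count falls below min_ratio times |D|. So the global frequent itemsets can never be absent from the candidate set.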

Sampling for Frequent Patterns
- Select a sample of the original database and mine frequent patterns within the sample using Apriori.
- Scan the database once to verify the frequent itemsets found in the sample; only the borders of the closure of frequent patterns are checked.
  - Example: check abcd instead of ab, ac, …, etc.
- Scan the database again to find missed frequent patterns.
H. Toivonen, 1996.
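In Toivonen's method, the border check uses the negative border. These are the minimal itemsets that are not frequent in the sample but whose immediate subsets all are. If no negative-border itemset turns out to be frequent in the full database, one full scan is enough. The Python sketch below is illustrative and relies on several assumptions. A brute-force miner stands in for running Apriori on the sample. `lowered_ratio` is the reduced threshold applied to the sample. The second scan is only signalled through `missed`, not performed. All names are hypothetical.

```python
from itertools import combinations


def count(db, itemset):
    return sum(1 for t in db if itemset <= t)


def mine(db, min_count):
    """Brute-force miner for the (small) sample."""
    seen = {frozenset(c) for t in db for r in range(1, len(t) + 1)
            for c in combinations(sorted(t), r)}
    return {s for s in seen if count(db, s) >= min_count}


def negative_border(frequent, items):
    """Minimal itemsets outside `frequent` whose immediate subsets are all in it."""
    border = set()
    for s in frequent | {frozenset()}:
        for i in items - s:
            cand = s | {i}
            if cand not in frequent and all(
                    len(cand) == 1 or cand - {x} in frequent for x in cand):
                border.add(cand)
    return border


def toivonen(db, sample, min_ratio, lowered_ratio):
    db = [frozenset(t) for t in db]
    sample = [frozenset(t) for t in sample]
    s_freq = mine(sample, lowered_ratio * len(sample))
    border = negative_border(s_freq, frozenset().union(*db))
    min_count = min_ratio * len(db)
    # One full scan counts the sample-frequent itemsets plus their negative border.
    counts = {s: count(db, s) for s in s_freq | border}
    frequent = {s for s in s_freq if counts[s] >= min_count}
    missed = {s for s in border if counts[s] >= min_count}
    return frequent, missed  # a non-empty `missed` means a second scan is needed


db = ["acd", "bce", "abce", "be"]
frequent, missed = toivonen(db, sample=db[:2], min_ratio=0.5, lowered_ratio=0.5)
print(sorted("".join(sorted(s)) for s in frequent), missed)
# ['a', 'ac', 'b', 'bc', 'bce', 'be', 'c', 'ce', 'e'] set()
frequent, missed = toivonen(db, sample=db[3:], min_ratio=0.5, lowered_ratio=0.5)
print(sorted("".join(sorted(s)) for s in missed))  # ['a', 'c']
```

The first sample covers every frequent pattern, so one scan suffices. The second sample contains only {b, e}, and the border check shows that a and c were missed.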

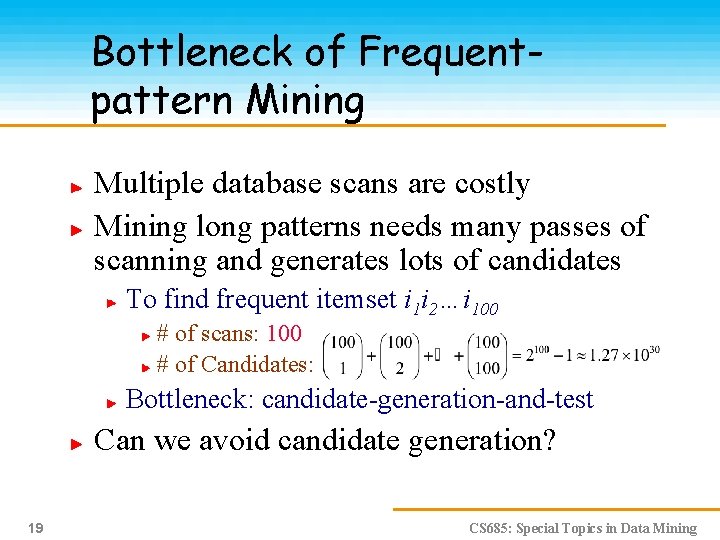

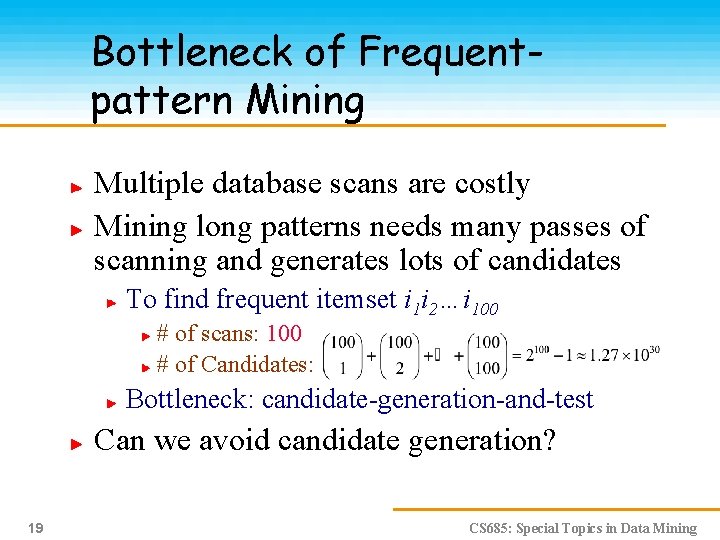

Bottleneck of Frequent-pattern Mining
- Multiple database scans are costly.
- Mining long patterns needs many passes of scanning and generates lots of candidates.
  - To find the frequent itemset $i_1 i_2 \ldots i_{100}$:
    - # of scans: 100
    - # of candidates: $\binom{100}{1} + \binom{100}{2} + \cdots + \binom{100}{100} = 2^{100} - 1 \approx 1.27 \times 10^{30}$
- Bottleneck: candidate-generation-and-test
- Can we avoid candidate generation?
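The candidate count on this slide equals the number of non-empty subsets of a 100-item set, because every subset of a frequent itemset is itself frequent and therefore becomes a candidate at some level. A quick Python check of the arithmetic:

```python
from math import comb

n = 100
# Every non-empty subset of {i1, ..., i100} is a candidate at some level.
candidates = sum(comb(n, k) for k in range(1, n + 1))
assert candidates == 2**n - 1
print(n, f"{candidates:.2e}")  # 100 1.27e+30
```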

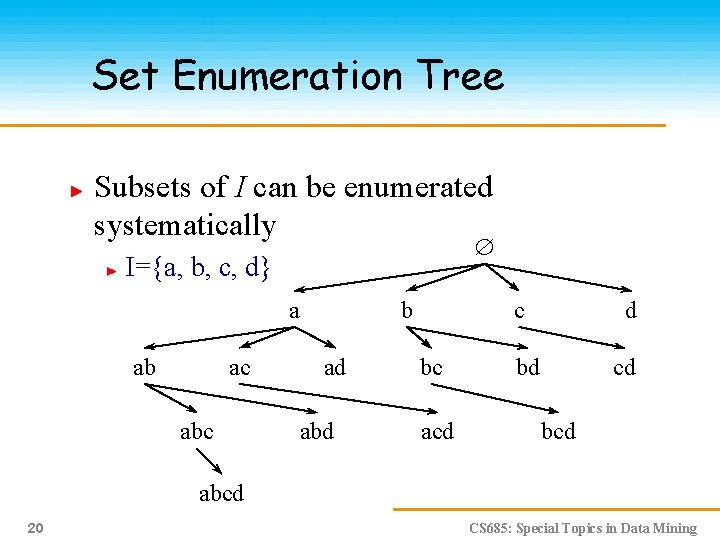

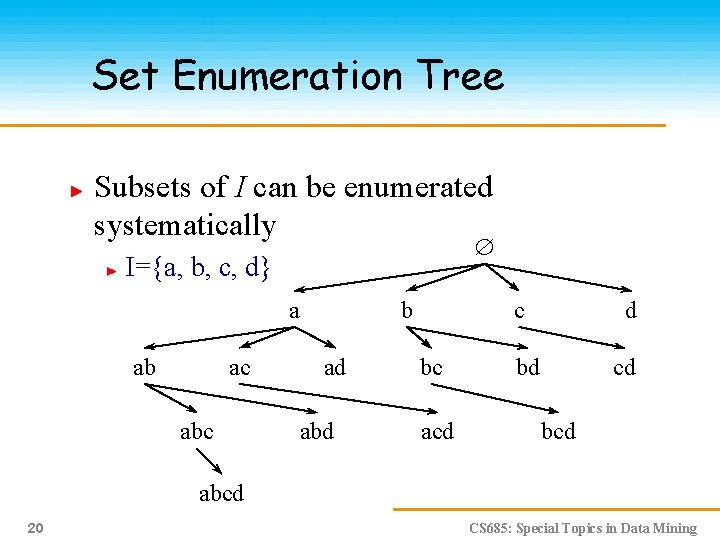

Set Enumeration Tree
Subsets of I can be enumerated systematically. For I = {a, b, c, d}, below the empty root, each node's children extend it by one item that comes later in the order:
- a
  - ab
    - abc
      - abcd
    - abd
  - ac
    - acd
  - ad
- b
  - bc
    - bcd
  - bd
- c
  - cd
- d
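Here is a short Python generator that walks the set enumeration tree depth-first. `set_enumeration` is a hypothetical name. Each node is extended only with items that come after its own last item, so every subset is produced exactly once, in the same order as the tree above.

```python
def set_enumeration(items, prefix=(), start=0):
    """Yield every non-empty subset of `items` once, in depth-first (preorder)
    order of the set enumeration tree."""
    for i in range(start, len(items)):
        node = prefix + (items[i],)
        yield node
        # Children of `node` may only append items after position i.
        yield from set_enumeration(items, node, i + 1)


print(["".join(s) for s in set_enumeration("abcd")])
# ['a', 'ab', 'abc', 'abcd', 'abd', 'ac', 'acd', 'ad', 'b', 'bc', 'bcd', 'bd', 'c', 'cd', 'd']
```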

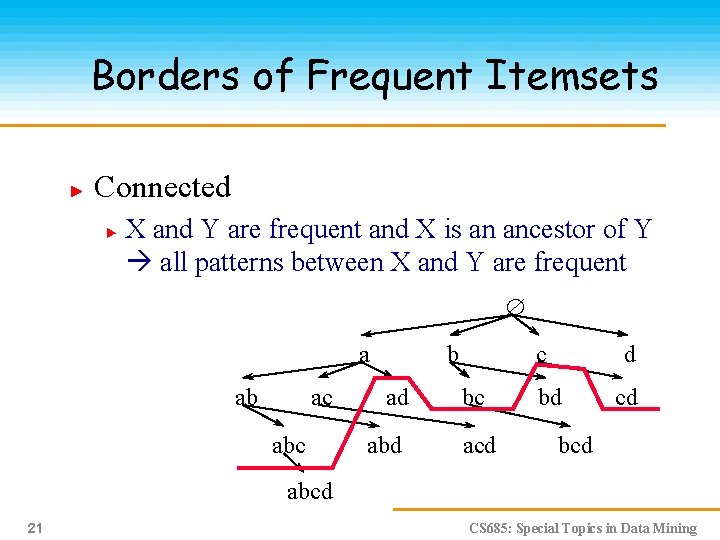

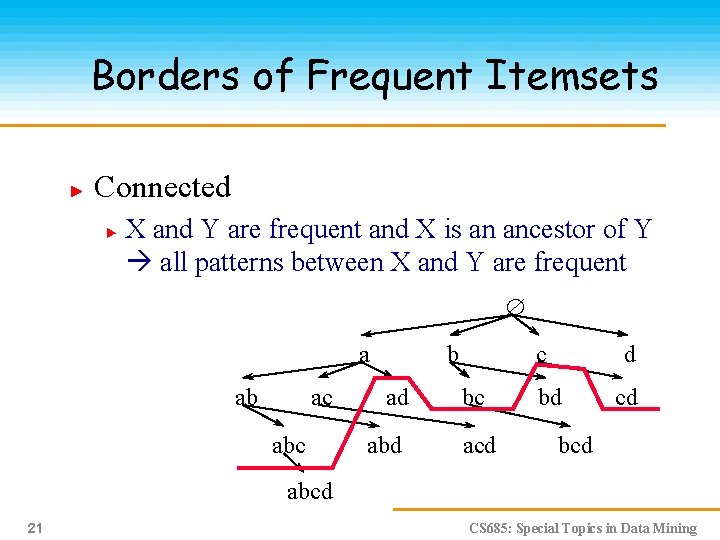

Borders of Frequent Itemsets
- Connected: if X and Y are frequent and X is an ancestor of Y, then all patterns between X and Y are frequent.
[Figure: the set enumeration tree of I = {a, b, c, d}.]

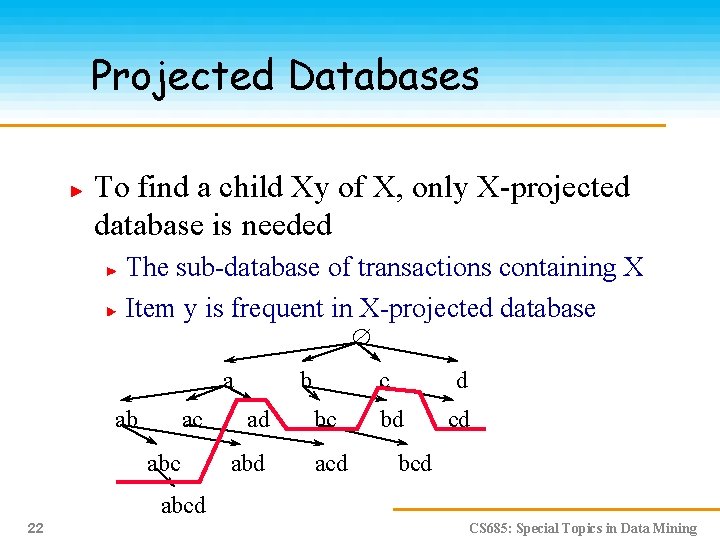

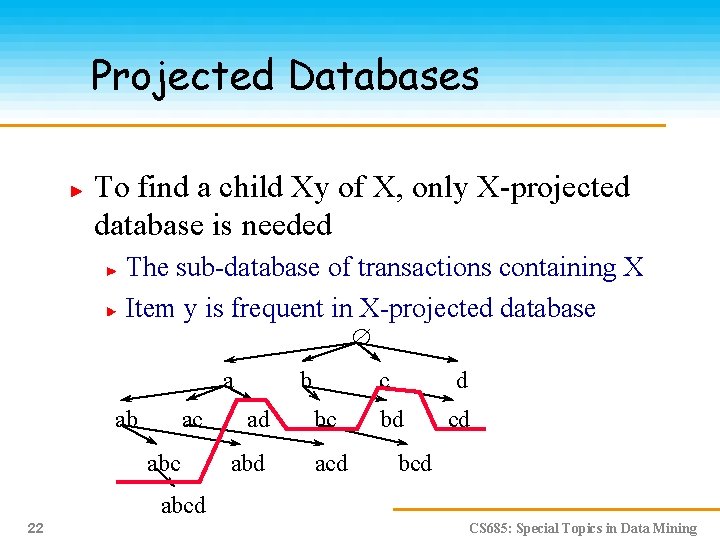

Projected Databases
- To find a child Xy of X, only the X-projected database is needed: the sub-database of transactions containing X.
- Xy is frequent exactly when item y is frequent in the X-projected database.
[Figure: the set enumeration tree of I = {a, b, c, d}.]
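The following Python sketch is a depth-first miner that only ever consults projected databases. It assumes transactions are sets of items with a total order, and `mine_projected` is a hypothetical name. In the X-projected database, the count of y equals the support of Xy, so a single counting pass per node finds every frequent child. Infrequent children are pruned along with their whole subtrees.

```python
from collections import Counter


def mine_projected(db, min_sup, prefix=(), result=None):
    """Depth-first mining over the set enumeration tree.

    `db` is the prefix-projected database: each transaction that contains
    `prefix`, restricted to items after the last item of `prefix`.
    """
    if result is None:
        result = {}
    counts = Counter(item for t in db for item in t)
    for item in sorted(counts):
        if counts[item] < min_sup:
            continue  # prefix + item is infrequent: skip its whole subtree
        pattern = prefix + (item,)
        result[pattern] = counts[item]  # support of y in X-proj db = support of Xy
        projected = [{x for x in t if x > item} for t in db if item in t]
        mine_projected(projected, min_sup, pattern, result)
    return result


# Database from the Apriori example slide, min_sup = 2.
result = mine_projected([set("acd"), set("bce"), set("abce"), set("be")], min_sup=2)
print(sorted("".join(p) for p in result))
# ['a', 'ac', 'b', 'bc', 'bce', 'be', 'c', 'ce', 'e']
```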

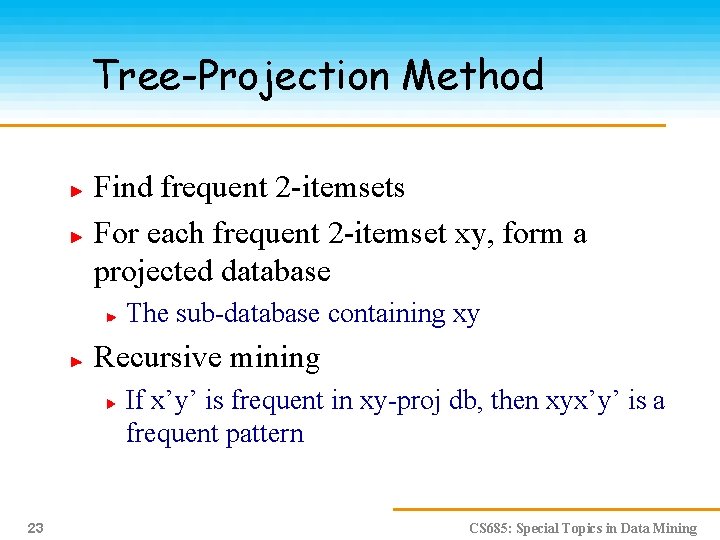

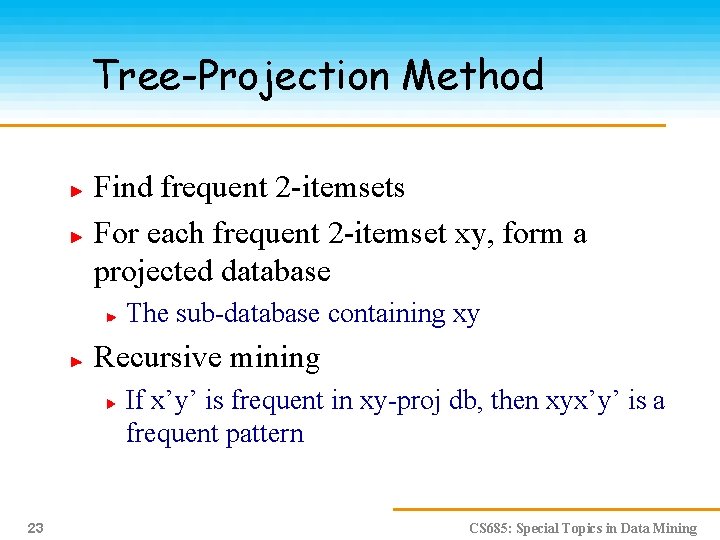

Tree-Projection Method
- Find frequent 2-itemsets.
- For each frequent 2-itemset xy, form a projected database: the sub-database of transactions containing xy.
- Recursive mining: if x'y' is frequent in the xy-projected database, then xyx'y' is a frequent pattern.

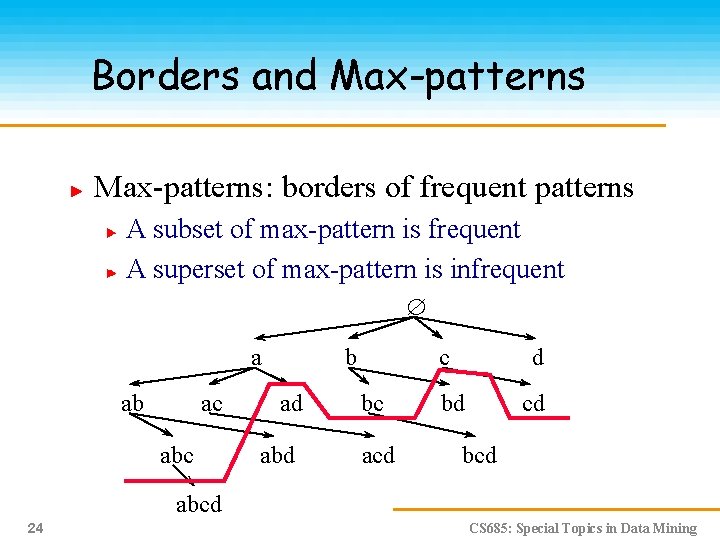

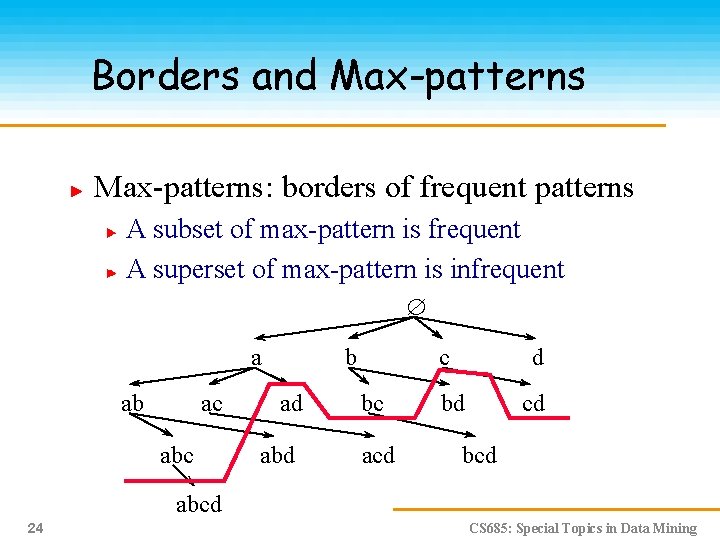

Borders and Max-patterns
- Max-patterns: borders of frequent patterns
  - Any subset of a max-pattern is frequent.
  - Any proper superset of a max-pattern is infrequent.
[Figure: the set enumeration tree of I = {a, b, c, d}.]
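The Python sketch below finds max-patterns by brute force for the toy databases in these slides, using hypothetical helper names. Unlike Max-Miner, it enumerates every frequent itemset first. It therefore illustrates the definition only (every subset frequent, every proper superset infrequent) and is not an efficient algorithm.

```python
from collections import Counter
from itertools import combinations


def frequent_itemsets(db, min_sup):
    """Brute force: count every itemset that occurs in some transaction."""
    counts = Counter(frozenset(c) for t in db
                     for r in range(1, len(set(t)) + 1)
                     for c in combinations(sorted(set(t)), r))
    return {s for s, n in counts.items() if n >= min_sup}


def max_patterns(frequent):
    """Frequent itemsets with no frequent proper superset."""
    return {x for x in frequent if not any(x < y for y in frequent)}


def show(patterns):
    return sorted("".join(sorted(p)) for p in patterns)


# Apriori example database, then the Max-Miner example database; min_sup = 2.
print(show(max_patterns(frequent_itemsets(["acd", "bce", "abce", "be"], 2))))
# ['ac', 'bce']
print(show(max_patterns(frequent_itemsets(["ABCDE", "BCDE", "ACDF"], 2))))
# ['ACD', 'BCDE']
```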

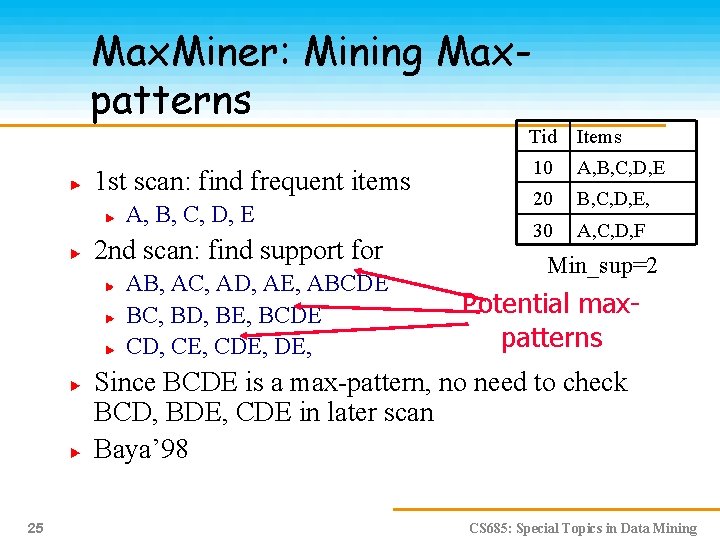

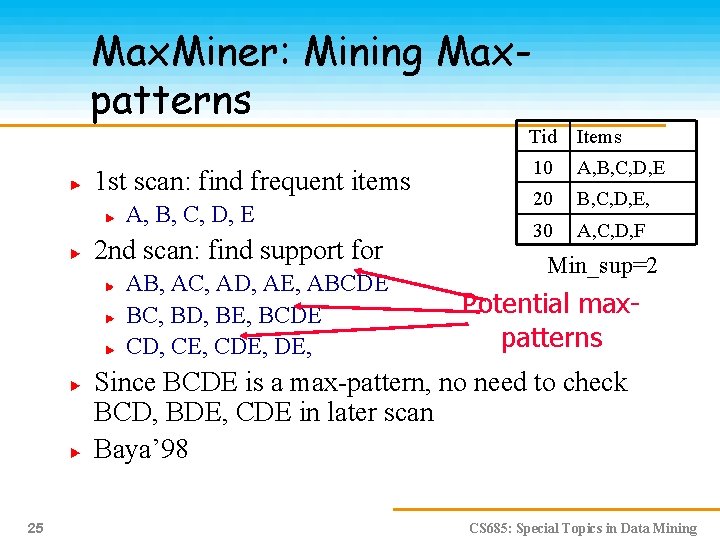

Max-Miner: Mining Max-patterns

| Tid | Items |
|---|---|
| 10 | A, B, C, D, E |
| 20 | B, C, D, E |
| 30 | A, C, D, F |

Min_sup = 2
- 1st scan: find frequent items A, B, C, D, E.
- 2nd scan: find support for
  - AB, AC, AD, AE, ABCDE
  - BC, BD, BE, BCDE
  - CD, CE, CDE

  where ABCDE, BCDE, and CDE are the potential max-patterns.
- Since BCDE is a max-pattern, there is no need to check BCD, BDE, CDE in later scans.
Baya'98

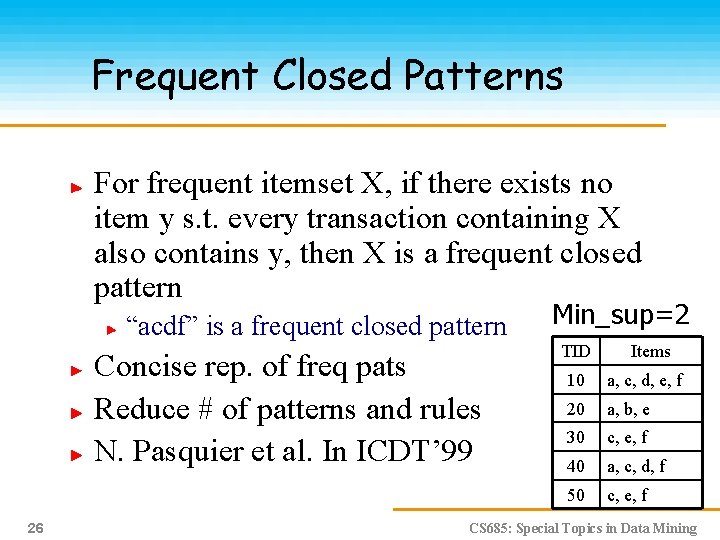

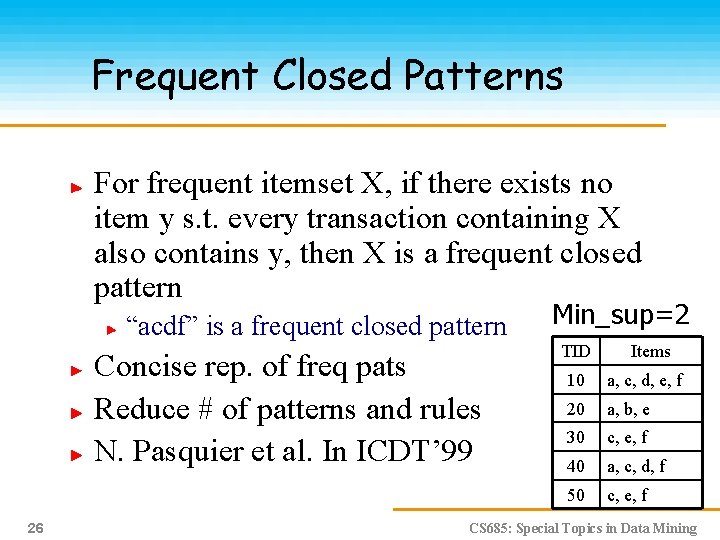

Frequent Closed Patterns
- For a frequent itemset X, if there exists no item y outside X such that every transaction containing X also contains y, then X is a frequent closed pattern.
  - "acdf" is a frequent closed pattern: it occurs in TIDs 10 and 40, whose only common items are a, c, d, f.
- A concise representation of frequent patterns
- Reduces the number of patterns and rules
N. Pasquier et al., ICDT'99

Min_sup = 2

| TID | Items |
|---|---|
| 10 | a, c, d, e, f |
| 20 | a, b, e |
| 30 | c, e, f |
| 40 | a, c, d, f |
| 50 | c, e, f |
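The definition can be checked directly by computing closures. The closure of X is the set of items shared by every transaction that contains X, and X is closed exactly when its closure is X itself. This brute-force Python sketch, with a hypothetical name and practical only for toy data, applies that test to the table above with min_sup = 2.

```python
from collections import Counter
from itertools import combinations


def closed_patterns(db, min_sup):
    """Return {closed frequent itemset: support} by brute force."""
    db = [frozenset(t) for t in db]
    counts = Counter(frozenset(c) for t in db
                     for r in range(1, len(t) + 1)
                     for c in combinations(sorted(t), r))
    closed = {}
    for x, n in counts.items():
        if n < min_sup:
            continue
        # Closure of X: the items common to every transaction containing X.
        closure = frozenset.intersection(*(t for t in db if x <= t))
        if closure == x:  # no item outside X appears in all of them
            closed[x] = n
    return closed


db = ["acdef", "abe", "cef", "acdf", "cef"]  # TIDs 10-50
result = closed_patterns(db, min_sup=2)
print(sorted(("".join(sorted(s)), n) for s, n in result.items()))
# [('a', 3), ('acdf', 2), ('ae', 2), ('cef', 3), ('cf', 4), ('e', 4)]
```

From these six closed patterns, every frequent itemset and its support can be recovered. For example, ac is not closed because its closure is acdf, and so its support is 2.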

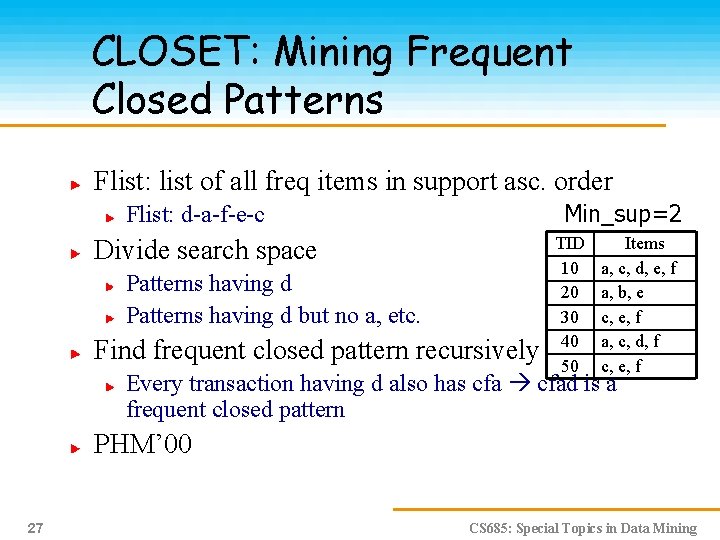

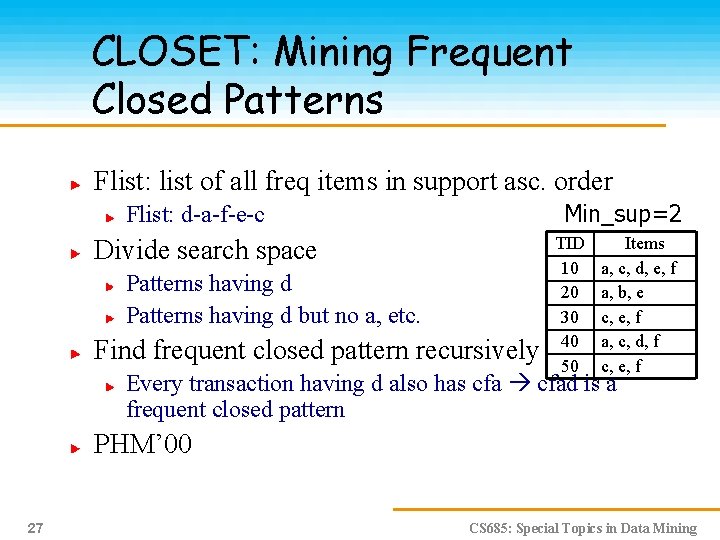

CLOSET: Mining Frequent Closed Patterns
(Same transaction database as the previous slide, TIDs 10 to 50; Min_sup = 2.)
- Flist: list of all frequent items in support-ascending order
  - Flist: d-a-f-e-c
- Divide the search space
  - Patterns having d
  - Patterns having a but no d, etc.
- Find frequent closed patterns recursively
  - Every transaction having d also has c, f, and a, so cfad is a frequent closed pattern.
PHM'00

Closed and Max-patterns
- Closed pattern mining algorithms can be adapted to mine max-patterns.
  - A max-pattern must be closed.
- Depth-first search methods have advantages over breadth-first search ones.

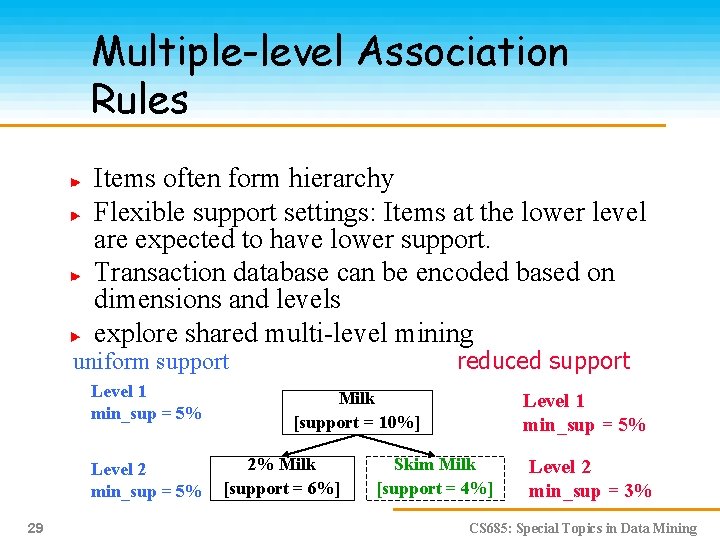

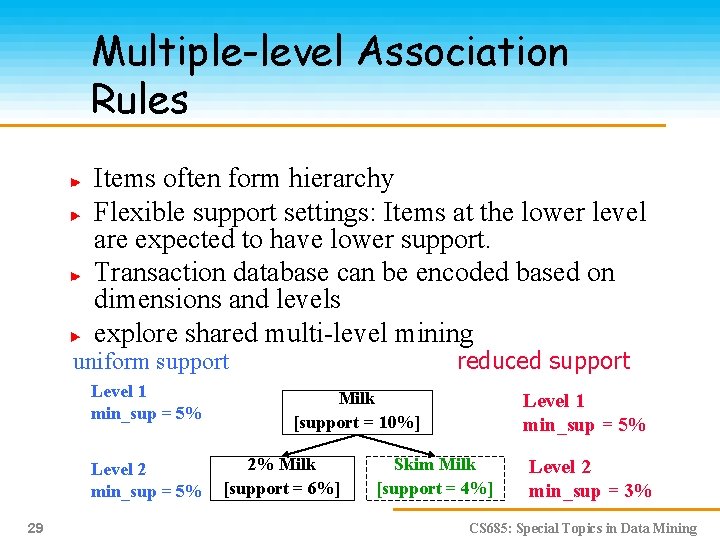

Multiple-level Association Rules
- Items often form a hierarchy.
- Flexible support settings: items at the lower level are expected to have lower support.
- The transaction database can be encoded based on dimensions and levels.
- Explore shared multi-level mining.
[Figure: Milk [support = 10%] at level 1, with 2% Milk [support = 6%] and Skim Milk [support = 4%] at level 2, under two settings. Uniform support: level 1 min_sup = 5%, level 2 min_sup = 5%. Reduced support: level 1 min_sup = 5%, level 2 min_sup = 3%.]
Under uniform support, Skim Milk (4%) falls below the level-2 threshold of 5%. Under reduced support, it meets the level-2 threshold of 3%.
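The two support settings amount to a per-level threshold lookup. This minimal Python sketch uses the supports from the milk figure, stored as integer percentages so the comparisons are exact. `frequent_items` and the dictionaries are hypothetical names.

```python
# Supports (in percent) and hierarchy levels from the milk example.
SUPPORT = {"Milk": 10, "2% Milk": 6, "Skim Milk": 4}
LEVEL = {"Milk": 1, "2% Milk": 2, "Skim Milk": 2}


def frequent_items(min_sup_by_level):
    """Items whose support meets the minimum support of their own level."""
    return {item for item, s in SUPPORT.items()
            if s >= min_sup_by_level[LEVEL[item]]}


uniform = frequent_items({1: 5, 2: 5})
reduced = frequent_items({1: 5, 2: 3})
print(sorted(uniform))  # ['2% Milk', 'Milk']
print(sorted(reduced))  # ['2% Milk', 'Milk', 'Skim Milk']
```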

Multi-dimensional Association Rules
- Single-dimensional rules: buys(X, "milk") ⇒ buys(X, "bread")
- Multi-dimensional rules: ≥ 2 dimensions or predicates
  - Inter-dimension association rules (no repeated predicates): age(X, "19-25") ∧ occupation(X, "student") ⇒ buys(X, "coke")
  - Hybrid-dimension association rules (repeated predicates): age(X, "19-25") ∧ buys(X, "popcorn") ⇒ buys(X, "coke")
- Categorical attributes: finite number of possible values, no order among values
- Quantitative attributes: numeric, implicit order among values

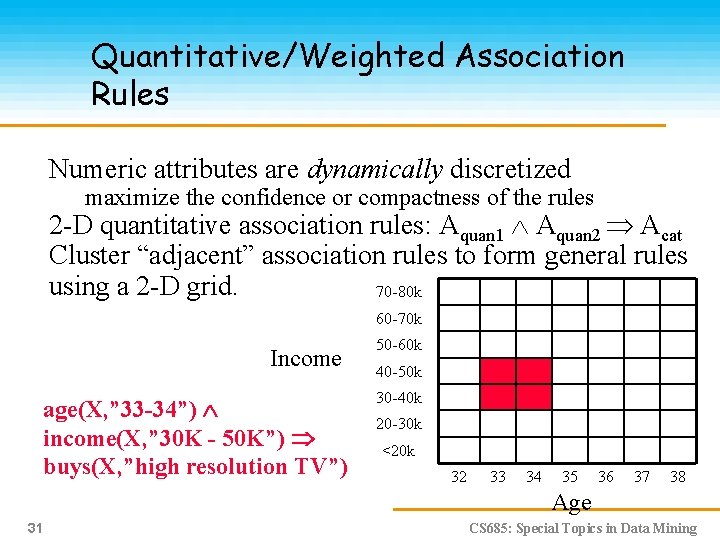

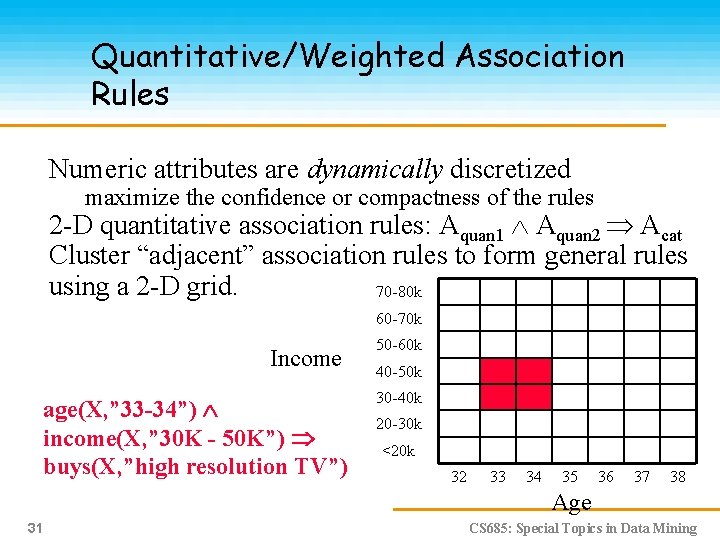

Quantitative/Weighted Association Rules
- Numeric attributes are dynamically discretized to maximize the confidence or compactness of the rules.
- 2-D quantitative association rules: Aquan1 ∧ Aquan2 ⇒ Acat
- Cluster "adjacent" association rules to form general rules using a 2-D grid.
  - Example: age(X, "33-34") ∧ income(X, "30K-50K") ⇒ buys(X, "high resolution TV")
[Figure: 2-D grid with Age (32 to 38) on the horizontal axis and Income (<20k, 20-30k, 30-40k, 40-50k, 50-60k, 60-70k, 70-80k) on the vertical axis.]

Constraint-based Data Mining
- Find all the patterns in a database autonomously? The patterns could be too many and unfocused!
- Data mining should be interactive: the user directs what is to be mined.
- Constraint-based mining
  - User flexibility: the user provides constraints on what is to be mined
  - System optimization: the system pushes constraints into mining for efficiency

Constraints in Data Mining
- Knowledge type constraint: classification, association, etc.
- Data constraint, using SQL-like queries: find product pairs sold together in stores in New York
- Dimension/level constraint: in relevance to region, price, brand, customer category
- Rule (or pattern) constraint: small sales (price < $10) triggers big sales (sum > $200)
- Interestingness constraint: strong rules (support and confidence)