Fifteen Implementation Principles CS 685 Network Algorithmics Spring

- Slides: 27

Fifteen Implementation Principles CS 685 Network Algorithmics Spring 2006 1 Spring 2006 CS 685 Network Algorithmics

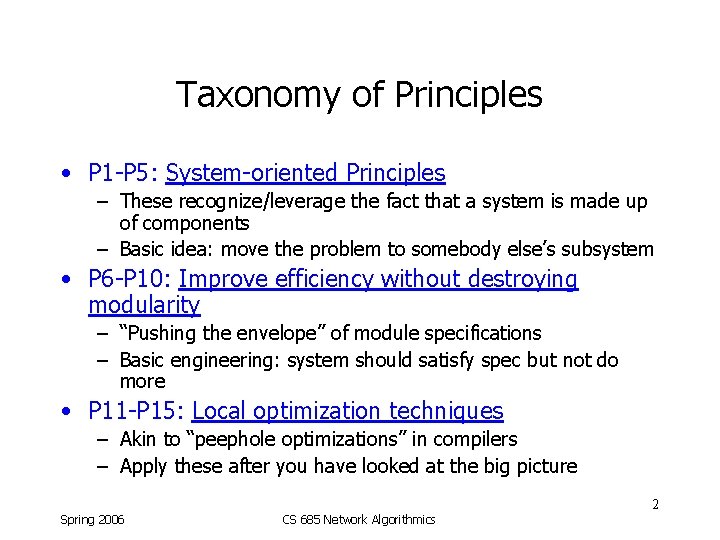

Taxonomy of Principles • P 1 -P 5: System-oriented Principles – These recognize/leverage the fact that a system is made up of components – Basic idea: move the problem to somebody else’s subsystem • P 6 -P 10: Improve efficiency without destroying modularity – “Pushing the envelope” of module specifications – Basic engineering: system should satisfy spec but not do more • P 11 -P 15: Local optimization techniques – Akin to “peephole optimizations” in compilers – Apply these after you have looked at the big picture 2 Spring 2006 CS 685 Network Algorithmics

Part I: Systems Principles 3 Spring 2006 CS 685 Network Algorithmics

P 1: Avoid Obvious Waste • Key Concept: look for ways to avoid doing something, or to avoid doing it multiple times • Example: copying in protocol stacks – In the old days, copying was not a bottleneck – But transmission & processor speeds increased much faster than memory speeds • Today, reading/writing from/to memory is slowest operation on a packet • “Zero-copy” protocol stacks never copy packet data once it is stored in memory by the NIC – Eventually multiple copies became a bottleneck 4 Spring 2006 CS 685 Network Algorithmics

P 2: Shift Computation in Time • Key Concept: Move expensive operations from where time is scarce to where it is more plentiful – P 2 a: Precompute (= shift earlier in time) • Example: computing a function by table lookup – P 2 b: Evaluate Lazily (= shift later in time) • Example: copy-on-write – P 2 c: Share Expenses (= collect things in time) • Example: garbage collection • Examples: – initializing counter arrays: lazy evaluation + batching 5 Spring 2006 CS 685 Network Algorithmics

P 3: Relax (Sub)System Requirements • Key Concept: make one subsystem’s job easier by relaxing the specification (possibly at the expense of another subsystem’s job getting slightly harder) – P 3 a: Trade certainty for time (= use probabilistic solutions) – P 3 b: Trade accuracy for time (= use approximate solutions) • Remember: “Good enough” is good enough – P 3 c: Shift computation in space (= let someone else do it) • Example: DF bit in IPv 4 – Purpose: to help End Systems avoid fragmentation – Why avoid? • ease load on routers, avoid loss of APDUs 6 Spring 2006 CS 685 Network Algorithmics

P 4: Leverage Off-System Components • Key Concept: design bottom-up, use hardware – P 4 a: Exploit locality (= exploit caches) • Note: not always useful (cf. IP forwarding lookups) – P 4 b: Trade Memory for Speed • Note: this can be interpreted two ways – Use more memory to avoid computation (cf P 12) – Use less memory to make data structures fit in cache – P 4 c: Exploit hardware features • Examples – Some NIC cards compute TCP checksum on the board (i. e. the OS/software does not need to compute it) 7 Spring 2006 CS 685 Network Algorithmics

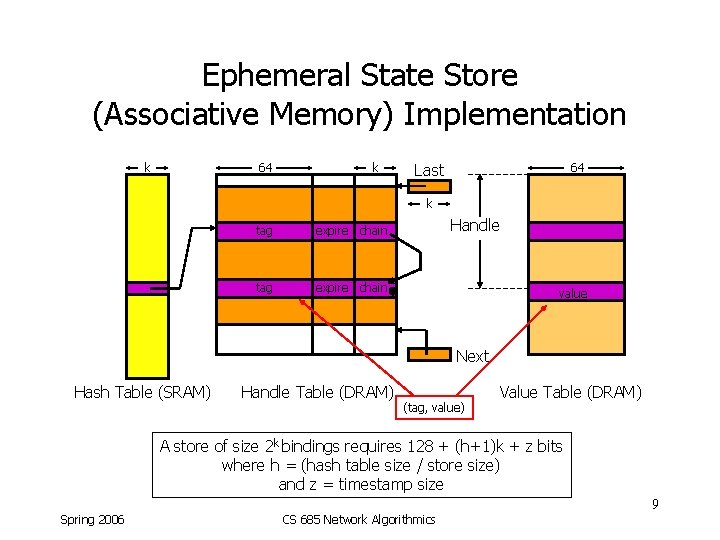

P 5: Add Hardware to Improve Performance • Key Concept: Hardware is inherently parallel, can be very fast, and is cheap in volume; consider adding hardware to perform operations that must be done often and are expensive in software – P 5 a: Use memory interleaving, pipelining (= parallelism) – P 5 b: Use Wide-word parallelism (save memory accesses) – P 5 c: Combine SRAM, DRAM • Examples: – DES encryption — requires permuting bits, nonlinear mappings (some say it was designed to require hardware for fast implementation) • Add-on boards for encryption/decryption at wire speeds – Ephemeral State Store 8 Spring 2006 CS 685 Network Algorithmics

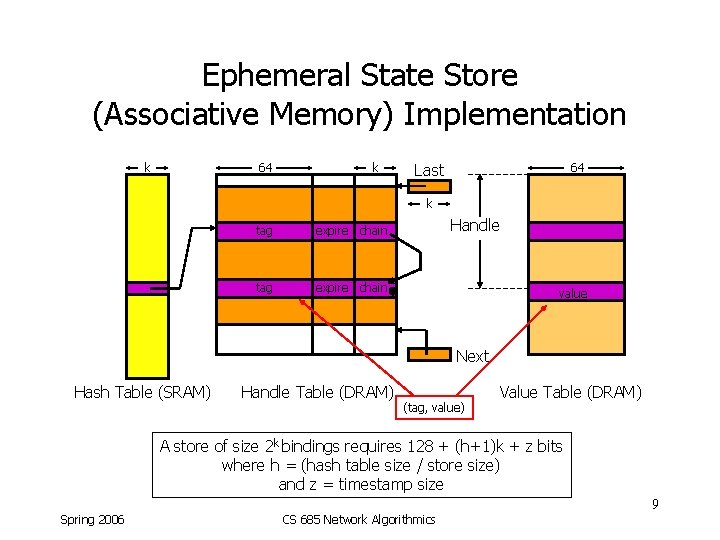

Ephemeral State Store (Associative Memory) Implementation k 64 k Last 64 k tag expire chain Handle value Next Hash Table (SRAM) Handle Table (DRAM) (tag, value) Value Table (DRAM) A store of size 2 k bindings requires 128 + (h+1)k + z bits where h = (hash table size / store size) and z = timestamp size 9 Spring 2006 CS 685 Network Algorithmics

Part II: Modularity With Efficiency • Note: read Clark & Tennenhouse, “Architectural Considerations for a New Generation of Protocols”, Proceedings of ACM SIGCOMM 1990 10 Spring 2006 CS 685 Network Algorithmics

P 6: Replace inefficient general routines with efficient specialized ones • Key Concept: General-purpose routines cannot leverage problem-specific knowledge that can improve performance • Example: – in_pcblookup() function in BSD Unix: generalpurpose state-block retrieval for both TCP and UDP sockets • Uses simple linear search • Not adequate for large servers with 10000’s of open sockets • Applications have very different reference characteristics, so organize PCB’s for different apps separately (Calvert & Dixon '95) 11 Spring 2006 CS 685 Network Algorithmics

P 7: Avoid Unnecessary Generality • Key Concept: Do not include features or handle cases that are not needed. – "When in doubt, leave it out" • Example: – mbuf structure was designed to not waste memory for devices that produced small amounts of input • Today memory is cheap, and most devices produce 1000+ bytes at once • Most implementations use large monolithic buffers, big enough to hold an Ethernet packet (2 K bytes or more) 12 Spring 2006 CS 685 Network Algorithmics

P 8: Don't be tied to reference implementations • Key Concept: – Implementations are sometimes given (e. g. by manufacturers) as a way to make the specification of an interface precise, or show to use a device – These do not necessarily show the right way to think about the problem—they are chosen for conceptual clarity! • Examples: – RSARef implementation of RSA cryptography – Thread-per-layer implementations vs. upcalls 13 Spring 2006 CS 685 Network Algorithmics

P 9: Pass hints across interfaces • Key Concept: if the caller knows something the callee will have to compute, pass it (or something that makes it easier to compute) as an argument! – "hint" = something that makes the recipient's life easier, but may not be correct – "tip" = hint that is guaranteed to be correct – Caveat: callee must either trust caller, or verify (probably should do both) • Example – Passing addresses of device-mapped pages in from user processes (Text Section 4. 1) 14 Spring 2006 CS 685 Network Algorithmics

P 10: Pass hints in protocol headers • Key Concept: If sender knows something receiver will have to compute, pass it in the header • Example: – Identifying state blocks • TCP/IP method (4 -tuple) is inefficient and slow • Better: have each end give the other a handle, to be included in each packet; handle can be index into array – Include a nonce or key to validate the information – Nonce stored in both header and state block 15 Spring 2006 CS 685 Network Algorithmics

Part III: Local Speedup Techniques 16 Spring 2006 CS 685 Network Algorithmics

P 11: Optimize the Expected Case • Key Concept: If 80% of the cases can be handled similarly, optimize for those cases • P 11 a: Use Caches – A form of using state to improve performance • Example: – TCP input "header prediction" • If an incoming packet is in order and does what is expected, can process in small number of instructions (see code) 17 Spring 2006 CS 685 Network Algorithmics

P 12: Add or Exploit State to Gain Speed • Key Concept: Remember things to make it easier to compute them later • P 12 a: Compute incrementally – Here the idea is to "accumulate" as you go, rather than computing all-at-once at the end • Example: – Incremental computation of Chi-Square statistic 18 Spring 2006 CS 685 Network Algorithmics

P 13: Optimize Degrees of Freedom • Key Concept: Consider all the aspects of the problem that might be adapted to the conditions • Example: IP trie lookups, where "width" of tree varies in the tree 19 Spring 2006 CS 685 Network Algorithmics

P 14: Use special techniques for finite universes (e. g. small integers) • Key Concept: when the domain of a function is small, techniques like bucket sorting, bitmaps, etc. become feasible. • Example: Timing wheels 20 Spring 2006 CS 685 Network Algorithmics

P 15: Use algorithmic techniques to create efficient data structures • Key Concept: once P 1 -P 14 have been applied, think about how to build an ingenious data structure that exploits what you know • Examples – IP forwarding lookups • PATRICIA trees (data structure) were first • Then many other more-efficient approaches – Packet classification • Given a set of patterns to match 5 -tuples, and a 5 -tuple, find {all|the first} pattern(s) that it matches 21 Spring 2006 CS 685 Network Algorithmics

Caveats • These are implementation principles, not design principles – But when you go to design new protocols, it is very helpful to know them! (E. g. SCTP uses receiver-chosen handles to identify state blocks. ) • There are other (probably better) ways to carve up these ideas into groups of "Principles" – The value is in thinking about and applying them 22 Spring 2006 CS 685 Network Algorithmics

Cautionary Examples • Having web servers pre-serve embedded objects when an HTML object is requested – Varghese says they tried it, found it hurt performance! – Two proposed reasons: • Interaction with TCP slow-start • Client caching • Multiple-string matching in Snort (IDS) – Modified Boyer-Moore matching algorithm to do "set matches" – Incorporated into Snort – little improvement – Why? • String matching was not the bottleneck! • A large data structure was required, which no longer fit in cache 23 Spring 2006 CS 685 Network Algorithmics

Cautionary Examples, cont. • Process-list searching in PDP-11 Unix – Many kernel operations involved a linear search through the list of processes – Idea: use doubly-linked list of processes to speed search, insertion, deletion – Result: would've taken about twice as long for typical table sizes; needed 1000's of processes before it would pay off! 24 Spring 2006 CS 685 Network Algorithmics

Cautionary Questions • • Q 1: Is improvement really needed? Q 2: Is this really the bottleneck? Q 3: What impact will change have on rest of system? Q 4: Does Bo. E-analysis indicate significant improvement? Q 5: Is it worth adding custom hardware? Q 6: Can protocol change be avoided? Q 7: Do prototypes confirm the initial promise? Q 8: Will performance gains be lost if environment changes? 25 Spring 2006 CS 685 Network Algorithmics

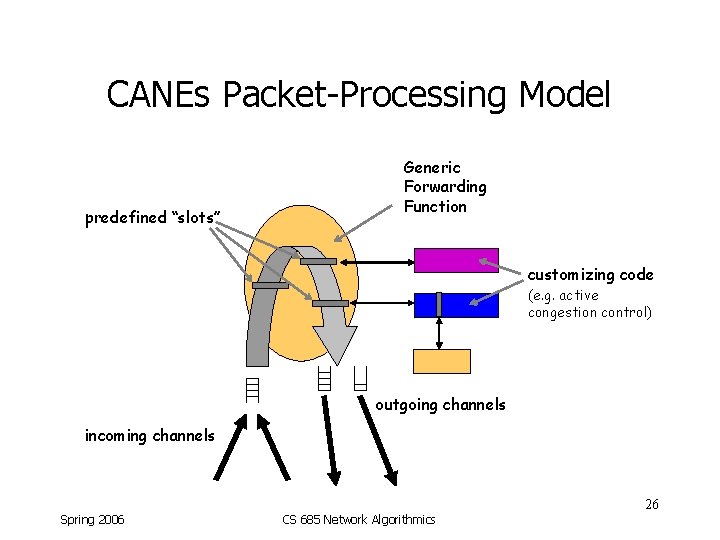

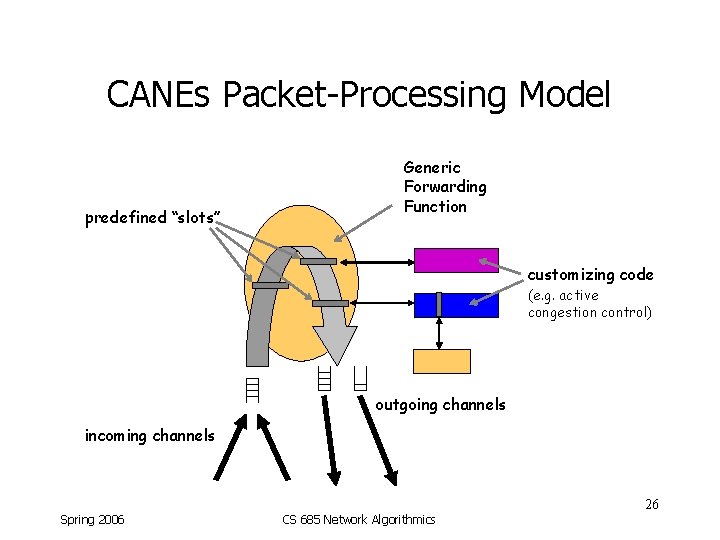

CANEs Packet-Processing Model predefined “slots” Generic Forwarding Function customizing code (e. g. active congestion control) outgoing channels incoming channels 26 Spring 2006 CS 685 Network Algorithmics

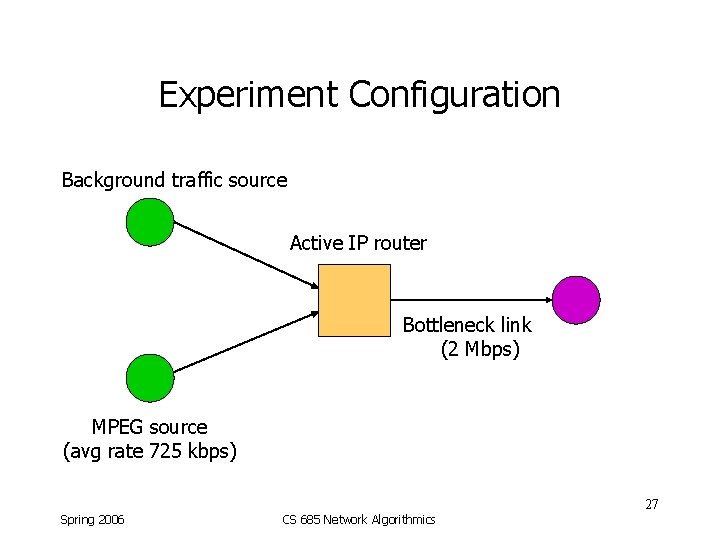

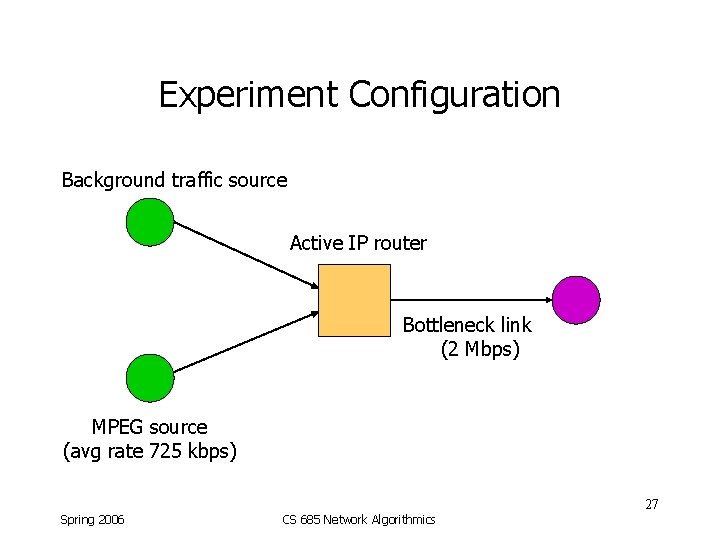

Experiment Configuration Background traffic source Active IP router Bottleneck link (2 Mbps) MPEG source (avg rate 725 kbps) 27 Spring 2006 CS 685 Network Algorithmics