
Apache Hadoop YARN: Yet Another Resource Negotiator
Wei-Chiu Chuang
10/17/2013
Permission to copy/distribute/adapt the work, except the figures, which are copyrighted by ACM

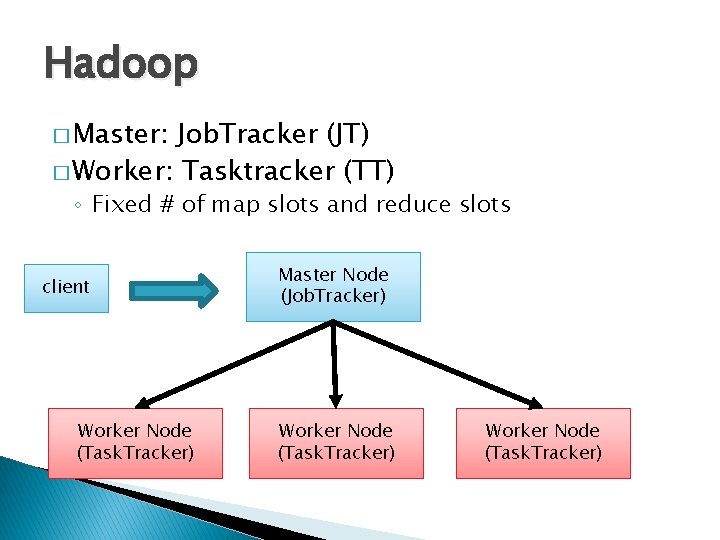

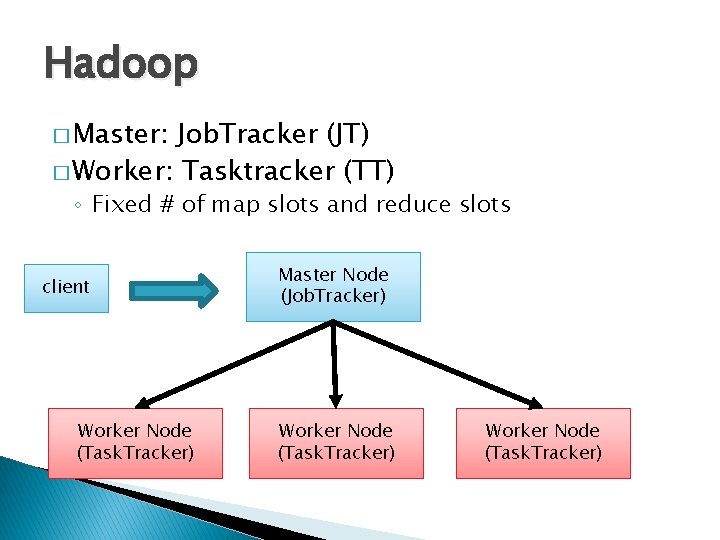

Hadoop
- Master: JobTracker (JT)
- Worker: TaskTracker (TT)
  - Fixed number of map slots and reduce slots

[Figure: client, master node (JobTracker), and worker nodes (TaskTracker)]

The Problem
- Hadoop is being used for all kinds of tasks beyond its original design
- Tight coupling of a specific programming model with the resource-management infrastructure
- Centralized handling of jobs' control flow

Hadoop Design Criteria
- Scalability

Hadoop on Demand
- Scalability
- Multi-tenancy
  - A shared pool of nodes for all jobs
  - Allocate Hadoop clusters of fixed size on the shared pool
- Serviceability
  - Sets up a new cluster for every job
  - Old and new Hadoop versions co-exist
  - Hadoop has a short, 3-month release cycle

Failure of HoD
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
  - The JobTracker tries to place tasks close to the input data
  - But the node allocator is not aware of data locality

Failure of HoD
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
- High Cluster Utilization
  - HoD does not resize the cluster between stages
  - Users allocate more nodes than needed
    - Competing for resources results in longer latency to start a job

Problem with Shared Cluster
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
- High Cluster Utilization
- Reliability/Availability
  - A failure in the single JobTracker can bring down the entire cluster
  - Overhead of tracking multiple jobs in a larger, shared cluster

Challenge of Multi-tenancy
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
- High Cluster Utilization
- Reliability/Availability
- Secure and auditable operation
  - Authentication

Challenge of Multi-tenancy
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
- High Cluster Utilization
- Reliability/Availability
- Secure and auditable operation
- Support for Programming Model Diversity
  - Iterative computation
  - Different communication patterns

YARN
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
- High Cluster Utilization
- Reliability/Availability
- Secure and auditable operation
- Support for Programming Model Diversity
- Flexible Resource Model
  - Hadoop: the numbers of map and reduce slots are fixed
  - Easy, but lower utilization
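
To make the utilization point concrete, here is a minimal sketch with made-up numbers: a node with 8 GB for tasks, 1 GB per task, and an assumed static split of 6 map slots plus 2 reduce slots. The function names are hypothetical; the sketch only counts how many tasks can run at once during a map-only phase under each model.

```python
# Illustrative numbers only: a node with 8 GB for tasks, 1 GB per task.
TASK_MB = 1024
NODE_MB = 8 * TASK_MB


def fixed_slot_tasks(map_slots: int, reduce_slots: int,
                     pending_maps: int, pending_reduces: int) -> int:
    """Concurrent tasks when map and reduce slots are statically separate (Hadoop 1.x style)."""
    return min(map_slots, pending_maps) + min(reduce_slots, pending_reduces)


def pooled_tasks(node_mb: int, task_mb: int,
                 pending_maps: int, pending_reduces: int) -> int:
    """Concurrent tasks when any pending task may use any free memory (YARN-style pool)."""
    return min(node_mb // task_mb, pending_maps + pending_reduces)


# Map-only phase: 20 maps waiting, no reduces yet.
print(fixed_slot_tasks(6, 2, 20, 0))          # 6: the two reduce slots sit idle
print(pooled_tasks(NODE_MB, TASK_MB, 20, 0))  # 8: all memory is usable by maps
```

The fixed split is easy to manage, but it strands capacity whenever the job mix does not match the split.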

YARN
- Scalability
- Multi-tenancy
- Serviceability
- Locality Awareness
- High Cluster Utilization
- Reliability/Availability
- Secure and auditable operation
- Support for Programming Model Diversity
- Flexible Resource Model
- Backward Compatibility
  - The system behaves similarly to the old Hadoop

YARN
- Separates resource-management functions from the programming model
- MapReduce becomes just one of the applications, alongside Dryad, etc.
- Binary/source compatibility for existing MapReduce applications

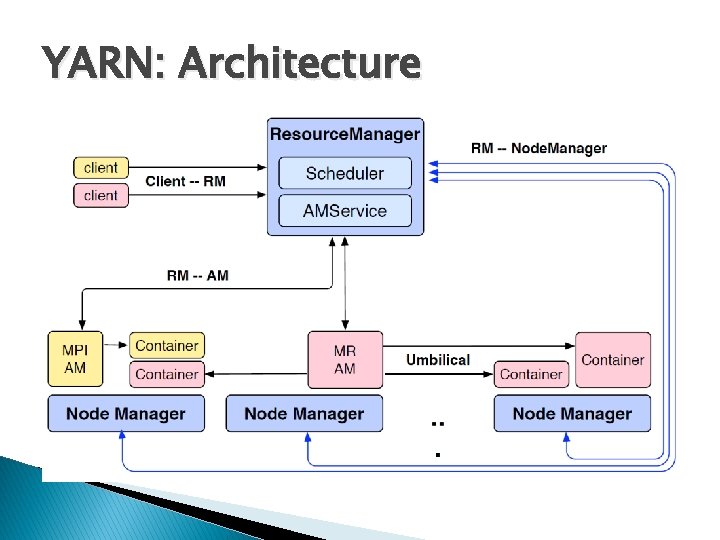

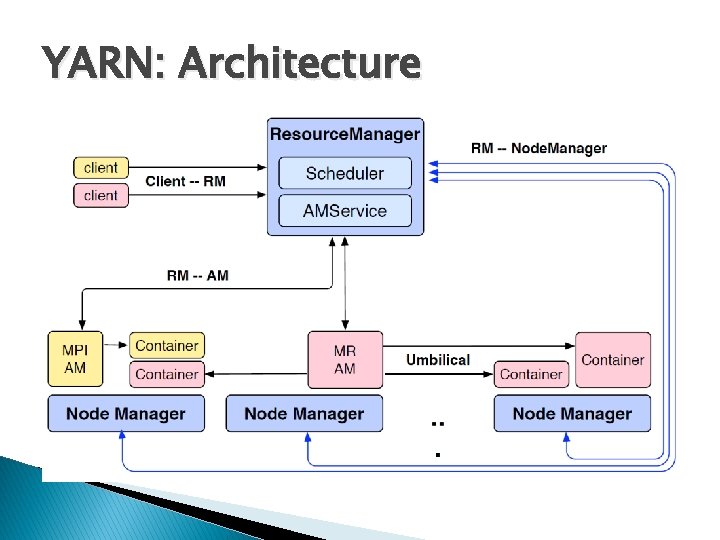

YARN: Architecture

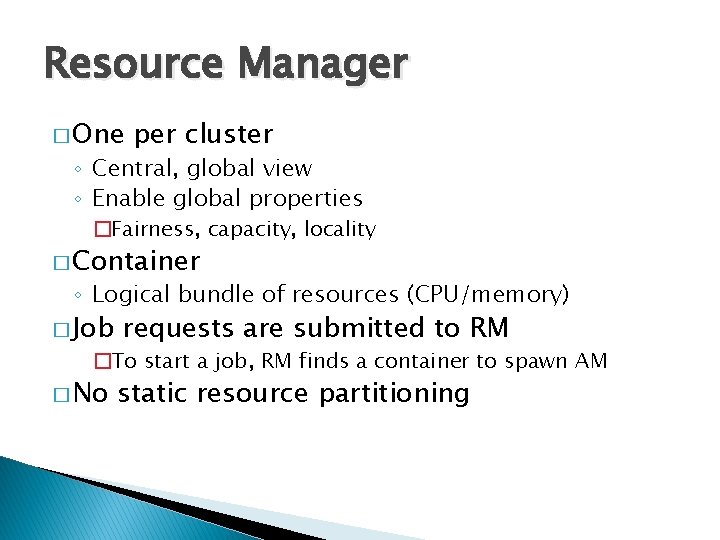

Resource Manager
- One per cluster
  - Central, global view
  - Enables global properties
    - Fairness, capacity, locality
- Container
  - Logical bundle of resources (CPU/memory)
- Job requests are submitted to the RM
- No static resource partitioning
  - To start a job, the RM finds a container in which to spawn the AM
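
A toy model of the two ideas on this slide, assuming a container is just (memory, vcores) and that the RM places a request on the first node with room. Class and method names are hypothetical and the placement rule is a naive first-fit, not YARN's actual scheduler; the point is that each node holds one pool of capacity with no static partitioning, and the job's AM is simply the first container allocated.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Resource:
    """A container's logical bundle of resources (memory and virtual cores)."""
    memory_mb: int
    vcores: int

    def fits_in(self, other: "Resource") -> bool:
        return self.memory_mb <= other.memory_mb and self.vcores <= other.vcores

    def minus(self, other: "Resource") -> "Resource":
        return Resource(self.memory_mb - other.memory_mb, self.vcores - other.vcores)


class ToyResourceManager:
    """Hypothetical, heavily simplified RM with a global view of free capacity per node."""

    def __init__(self, nodes: Dict[str, Resource]):
        self.free = dict(nodes)

    def allocate(self, ask: Resource) -> Optional[str]:
        """First-fit placement of one container; returns the chosen node or None."""
        for node, free in self.free.items():
            if ask.fits_in(free):
                self.free[node] = free.minus(ask)
                return node
        return None  # no room right now; a real RM would keep the request pending


rm = ToyResourceManager({"n1": Resource(4096, 4), "n2": Resource(8192, 8)})
am_node = rm.allocate(Resource(6144, 2))  # spawn the job's AM in a container
print(am_node)  # n2
```

Python dicts preserve insertion order, so this first-fit scan checks `n1` before `n2`; `n1` is too small for the AM here.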

Resource Manager (cont’d)
- Only handles an overall resource profile for each application
  - Local optimization and internal flow are up to the application
- Preemption
  - The RM asks an application to give resources back
  - The application can checkpoint a snapshot instead of having its work explicitly killed, or migrate the computation to other containers
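
The slide leaves the choice of *what* to give back to the application. One plausible AM-side policy, sketched here as a hypothetical, is to release the containers with the least progress first, so the least work is lost or has to be checkpointed. The names, the `progress` field, and the policy itself are assumptions for illustration; the slide does not prescribe them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunningContainer:
    container_id: str
    memory_mb: int
    progress: float  # fraction of the task completed, 0.0 to 1.0


def choose_containers_to_release(running: List[RunningContainer],
                                 reclaim_mb: int) -> List[str]:
    """Hypothetical policy: release least-advanced work first until enough memory is freed."""
    released: List[str] = []
    freed = 0
    for c in sorted(running, key=lambda c: c.progress):
        if freed >= reclaim_mb:
            break
        released.append(c.container_id)  # checkpoint this task, then give the container back
        freed += c.memory_mb
    return released


running = [
    RunningContainer("c1", 2048, 0.9),
    RunningContainer("c2", 2048, 0.1),
    RunningContainer("c3", 1024, 0.5),
]
print(choose_containers_to_release(running, 3000))  # ['c2', 'c3']
```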

Application Master
- The head of a job
- Runs as a container
- Requests resources from the RM
  - Number of containers, resources per container, locality, …
- Dynamically changing resource consumption
- Can run any user code (Dryad, MapReduce, Tez, REEF, etc.)
- Requests are "late-binding"
  - A granted container is bound to the lease, not to a specific request; the AM decides what to run on it when it arrives
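
A sketch of how an AM might aggregate per-task locality preferences into resource requests at three levels: a specific host, a rack, and `"*"` for any node (the convention YARN uses for "anywhere"). The `Ask` type and its field names are hypothetical, and each task is assumed to prefer a single host. Because the RM only sees counts per location, a granted container is not tied to any particular task.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

ANY = "*"  # "any node", the coarsest locality level


@dataclass(frozen=True)
class Ask:
    """Hypothetical resource request: how many containers of a given size, and where."""
    priority: int
    location: str  # a host name, a rack name, or ANY
    memory_mb: int
    vcores: int
    num_containers: int


def asks_for_tasks(task_locations: List[Tuple[str, str]], memory_mb: int,
                   vcores: int, priority: int = 1) -> List[Ask]:
    """Turn one (host, rack) preference per task into host-, rack- and ANY-level asks."""
    counts: Dict[str, int] = {}
    for host, rack in task_locations:
        for location in (host, rack, ANY):
            counts[location] = counts.get(location, 0) + 1
    return [Ask(priority, loc, memory_mb, vcores, n) for loc, n in sorted(counts.items())]


asks = asks_for_tasks([("h1", "/r1"), ("h2", "/r1"), ("h3", "/r2")], 1024, 1)
for a in asks:
    print(a.location, a.num_containers)
```

In this sketch the ANY-level count equals the number of tasks, so it bounds the total; the host and rack entries only express preference.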

MapReduce AM
- Optimizes for locality among map tasks with identical resource requirements
  - Selects a task whose input data is close to the container
- The AM determines the semantics of the success or failure of the container
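
Late binding on the MapReduce side can be sketched as follows: when a container arrives, pick a pending map task by locality, preferring node-local, then rack-local, then any task. The function name and the (host, rack) input format are hypothetical; real input splits usually have several replica hosts.

```python
from typing import Dict, Optional, Tuple


def pick_map_task(pending: Dict[str, Tuple[str, str]],
                  container_host: str, container_rack: str) -> Optional[str]:
    """Pick a pending map task for a just-granted container.

    `pending` maps task id -> (host, rack) holding that task's input split.
    """
    candidates = sorted(pending.items())  # deterministic order for the sketch
    for task_id, (host, _) in candidates:
        if host == container_host:
            return task_id  # node-local
    for task_id, (_, rack) in candidates:
        if rack == container_rack:
            return task_id  # rack-local
    return candidates[0][0] if candidates else None  # off-rack, or nothing pending


pending = {"m1": ("h1", "/r1"), "m2": ("h2", "/r1"), "m3": ("h3", "/r2")}
print(pick_map_task(pending, "h2", "/r1"))  # m2 (node-local)
print(pick_map_task(pending, "h9", "/r2"))  # m3 (rack-local)
print(pick_map_task(pending, "h9", "/r9"))  # m1 (no locality)
```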

Node Manager
- The "worker" daemon; registers with the RM
- One per node
- Container Launch Context (CLC): environment variables, commands, …
- Reports resources (memory/CPU/etc.)
- Configures the environment for task execution
- Garbage collection / authentication
- Auxiliary services
  - E.g., serving intermediate output data between map and reduce tasks (the shuffle)
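
A rough sketch of one Node Manager duty: turning a Container Launch Context (environment variables plus commands) into a launch script for a container's working directory. The `LaunchContext` type, the script layout, the `CONTAINER_ID` variable, the JVM path, and the `Worker` class are all assumptions for illustration, not the NM's actual format. Commands are emitted verbatim, so they must already be valid shell; only environment values are quoted.

```python
import shlex
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LaunchContext:
    """Hypothetical stand-in for a Container Launch Context (CLC)."""
    env: Dict[str, str] = field(default_factory=dict)
    commands: List[str] = field(default_factory=list)


def render_launch_script(ctx: LaunchContext, container_id: str, workdir: str) -> str:
    """Set up one container's environment, then run its commands in order."""
    lines = ["#!/bin/sh", "set -e", f"cd {shlex.quote(workdir)}"]
    env = {**ctx.env, "CONTAINER_ID": container_id}  # the NM-assigned id wins over the CLC
    for key, value in sorted(env.items()):
        lines.append(f"export {key}={shlex.quote(value)}")
    lines.extend(ctx.commands)  # emitted verbatim: must already be valid shell
    return "\n".join(lines) + "\n"


ctx = LaunchContext(env={"JAVA_HOME": "/usr/lib/jvm/default"},
                    commands=["java -Xmx512m Worker"])
print(render_launch_script(ctx, "container_01", "/tmp/c01"), end="")
```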

YARN framework/application writers
1. Submit the application by passing a CLC for the Application Master to the RM.
2. When the RM starts the AM, the AM should register with the RM and periodically advertise its liveness and requirements over the heartbeat protocol.
3. Once the RM allocates a container, the AM can construct a CLC to launch the container on the corresponding NM. It may also monitor the status of the running container and stop it when the resource should be reclaimed. Monitoring the progress of work done inside the container is strictly the AM's responsibility.
4. Once the AM is done with its work, it should unregister from the RM and exit cleanly.
5. Optionally, framework authors may add control flow between their own clients to report job status and expose a control plane.
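
The writer's checklist can be exercised against an in-memory stand-in for the RM. `FakeRM`, its methods, and the container naming are hypothetical; the sketch starts at step 2 (the AM is already running), follows register, heartbeat, launch, and unregister, and leaves out NMs, failures, and step 5.

```python
from typing import List, Set


class FakeRM:
    """Hypothetical in-memory RM that grants at most `max_grant` containers per heartbeat."""

    def __init__(self, max_grant: int):
        if max_grant < 1:
            raise ValueError("max_grant must be at least 1")
        self.max_grant = max_grant
        self.registered: Set[str] = set()
        self.beats = 0
        self.log: List[str] = []

    def register(self, app_id: str) -> None:
        self.registered.add(app_id)
        self.log.append(f"register {app_id}")

    def heartbeat(self, app_id: str, ask: int) -> List[str]:
        """Liveness plus outstanding asks in; newly granted container ids out."""
        if app_id not in self.registered:
            raise RuntimeError("AM must register before heartbeating")
        self.beats += 1
        granted = min(ask, self.max_grant)
        self.log.append(f"heartbeat {app_id} ask={ask} granted={granted}")
        return [f"c{self.beats}_{i}" for i in range(granted)]

    def unregister(self, app_id: str) -> None:
        self.registered.discard(app_id)
        self.log.append(f"unregister {app_id}")


def run_application_master(rm: FakeRM, app_id: str, tasks: List[str]) -> List[str]:
    launched: List[str] = []
    rm.register(app_id)                                       # step 2: register
    pending = list(tasks)
    while pending:
        for container in rm.heartbeat(app_id, len(pending)):  # step 2: heartbeat with asks
            task = pending.pop(0)                             # late binding: choose work on arrival
            launched.append(f"{task}@{container}")           # step 3: a real AM sends a CLC to the NM here
    rm.unregister(app_id)                                     # step 4: unregister and exit
    return launched


rm = FakeRM(max_grant=2)
print(run_application_master(rm, "app_1", ["t1", "t2", "t3"]))
# ['t1@c1_0', 't2@c1_1', 't3@c2_0']
```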

Fault tolerance and availability
- RM failure
  - Recover using persistent storage
  - Kill all containers, including the AMs'
  - Relaunch AMs
- NM failure
  - The RM detects it, marks the containers as killed, and reports to the AMs
- AM failure
  - The RM kills the container and restarts it
- Container failure
  - The framework is responsible for recovery
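
For the NM-failure case, the RM's side can be sketched as a heartbeat-expiry monitor: a node that stays silent longer than a timeout is declared lost, its containers are marked killed, and each owning AM gets a report. The class name, method names, and the 600-second timeout in the usage are illustrative assumptions; recovery itself stays with each framework.

```python
from typing import Dict, List, Set, Tuple


class NodeLivenessMonitor:
    """Hypothetical RM-side sketch of detecting NM failure via missed heartbeats."""

    def __init__(self, expiry_s: float):
        self.expiry_s = expiry_s
        self.last_seen: Dict[str, float] = {}
        self.containers: Dict[str, Set[Tuple[str, str]]] = {}  # node -> {(app_id, container_id)}

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now
        self.containers.setdefault(node, set())

    def add_container(self, node: str, app_id: str, container_id: str) -> None:
        self.containers.setdefault(node, set()).add((app_id, container_id))

    def check(self, now: float) -> Dict[str, List[str]]:
        """Expire silent nodes; return {app_id: [killed container ids]} to report to AMs."""
        reports: Dict[str, List[str]] = {}
        expired = [n for n, t in self.last_seen.items() if now - t > self.expiry_s]
        for node in expired:
            del self.last_seen[node]
            for app_id, cid in sorted(self.containers.pop(node, set())):
                reports.setdefault(app_id, []).append(cid)  # marked killed
        return reports


mon = NodeLivenessMonitor(expiry_s=600)
mon.heartbeat("n1", now=0)
mon.heartbeat("n2", now=0)
mon.add_container("n1", "app_1", "c1")
mon.add_container("n1", "app_2", "c2")
mon.add_container("n2", "app_1", "c3")
mon.heartbeat("n2", now=500)
print(mon.check(now=700))  # {'app_1': ['c1'], 'app_2': ['c2']}
```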

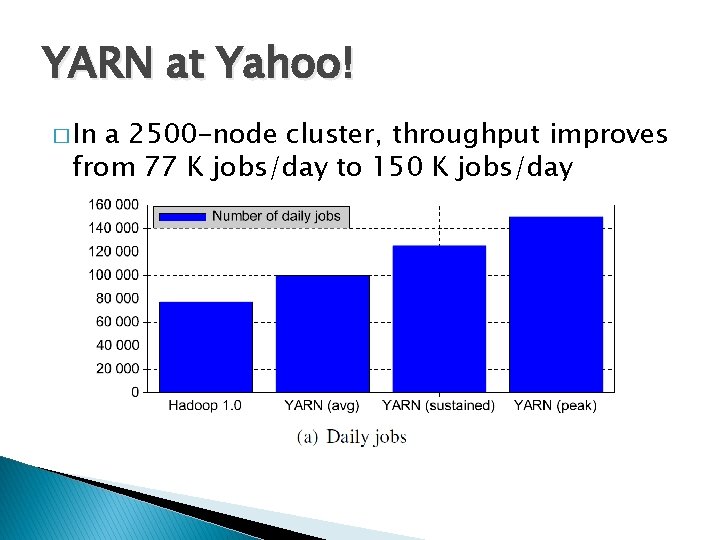

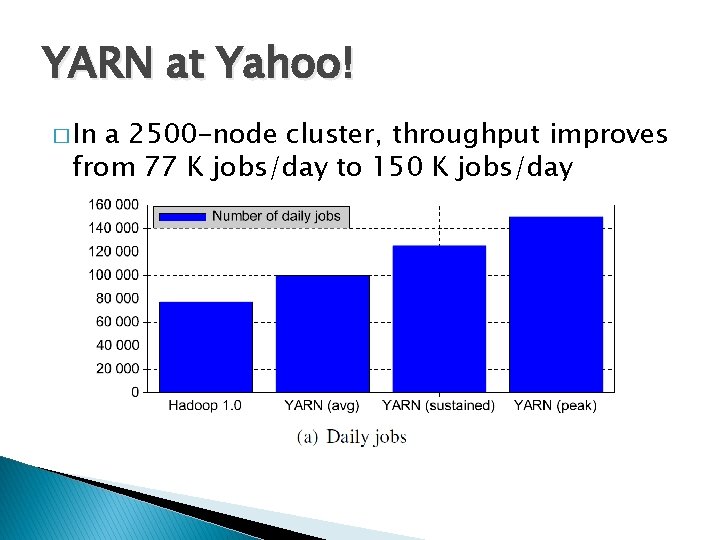

YARN at Yahoo!
- In a 2500-node cluster, throughput improves from 77K jobs/day to 150K jobs/day

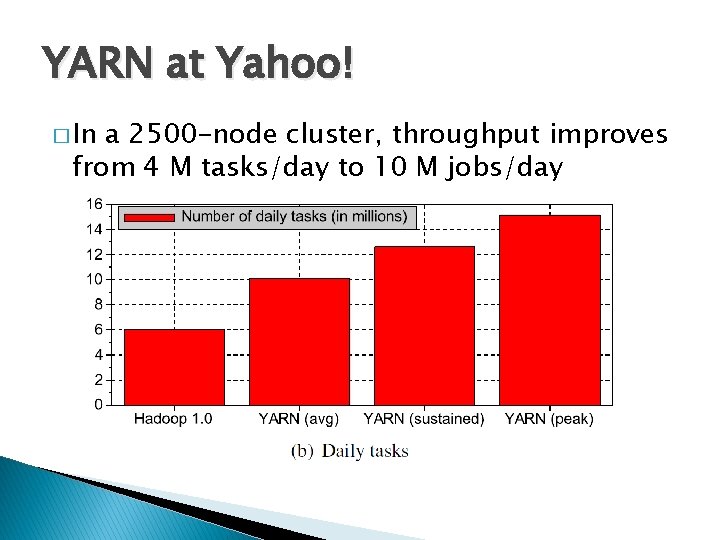

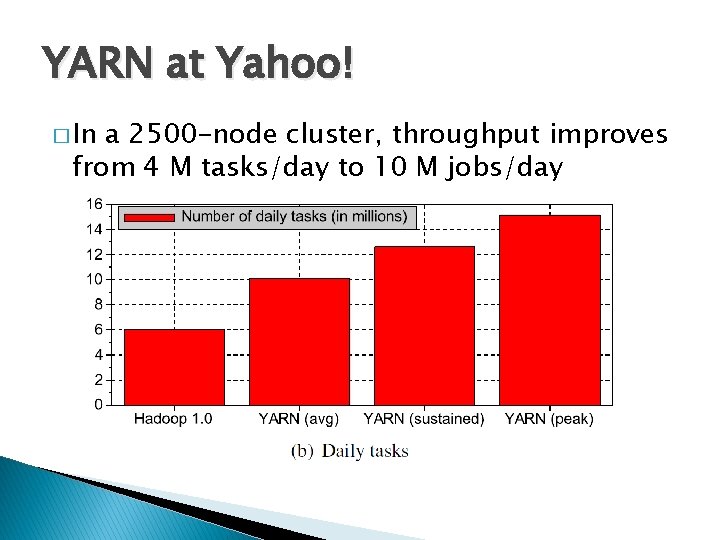

YARN at Yahoo!
- In a 2500-node cluster, throughput improves from 4M tasks/day to 10M tasks/day

YARN at Yahoo!
- Why?
  - The removal of the static split between map and reduce slots
- Essentially, after moving to YARN, CPU utilization almost doubled
- "Upgrading to YARN was equivalent to adding 1000 machines [to this 2500-machine cluster]"
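
As a quick check, the Yahoo! figures on these slides work out as follows (arithmetic only, using the jobs/day numbers and the 1000-machine quote):

$$
\frac{150\,\text{K jobs/day}}{77\,\text{K jobs/day}} \approx 1.95,
\qquad
\frac{2500 + 1000}{2500} = 1.4
$$

So job throughput nearly doubled, and the quoted 1000-machine equivalence corresponds to about 40% more capacity from the same hardware.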

Applications/Frameworks
- Pig, Hive, Oozie
  - Decompose a DAG job into multiple MR jobs
- Apache Tez
  - DAG execution framework
- Spark
- Dryad
- Giraph
  - Vertex-centric graph computation framework
  - Fits naturally within the YARN model
- Storm: distributed real-time processing engine (parallel stream processing)
- REEF
  - Simplifies implementing an ApplicationMaster
- Hoya: HBase clusters

Evaluation
- Sorting
- MapReduce benchmarks
- Preemption
- With Apache Tez
- REEF

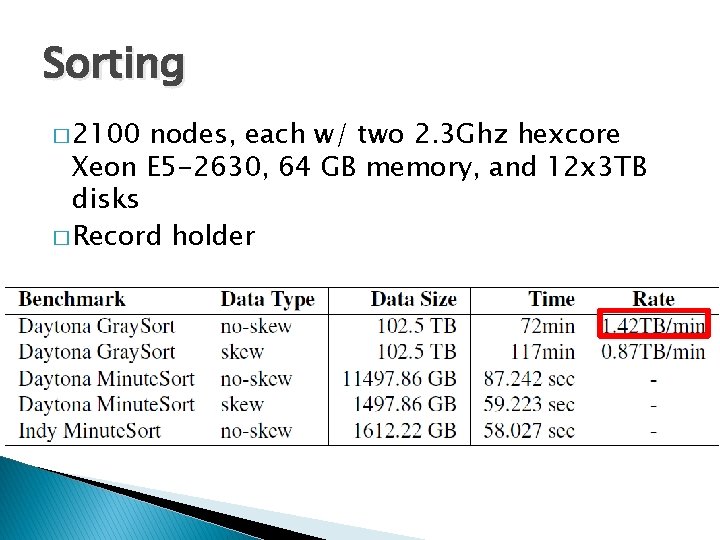

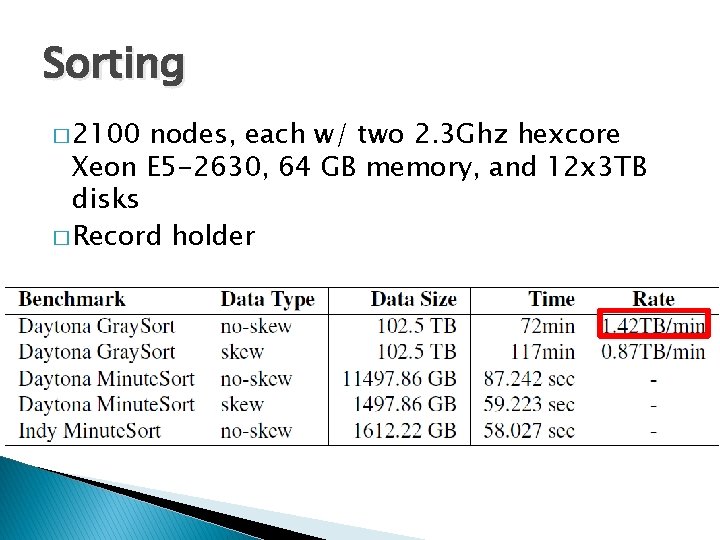

Sorting
- 2100 nodes, each with two 2.3 GHz hexcore Xeon E5-2630 CPUs, 64 GB memory, and 12 × 3 TB disks
- Record holder

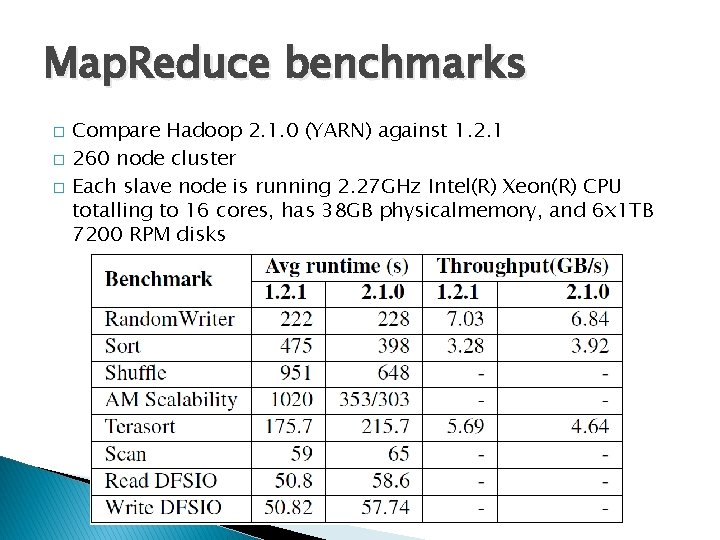

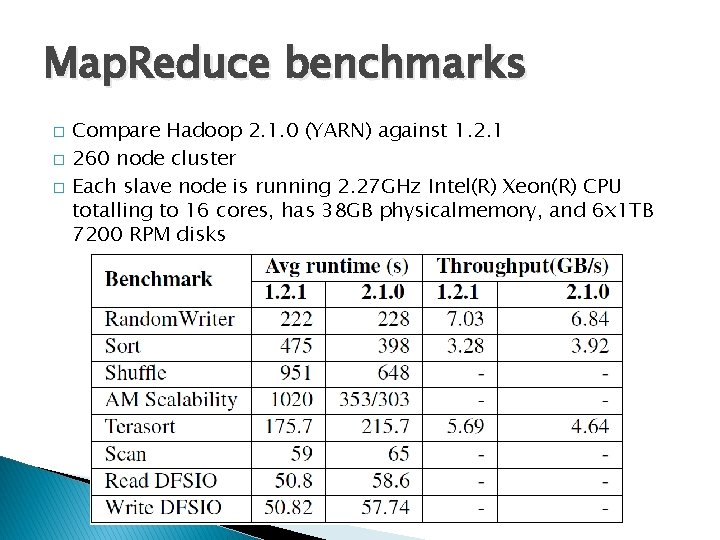

MapReduce benchmarks
- Compare Hadoop 2.1.0 (YARN) against 1.2.1
- 260-node cluster
- Each slave node has 2.27 GHz Intel(R) Xeon(R) CPUs totalling 16 cores, 38 GB of physical memory, and 6 × 1 TB 7200 RPM disks

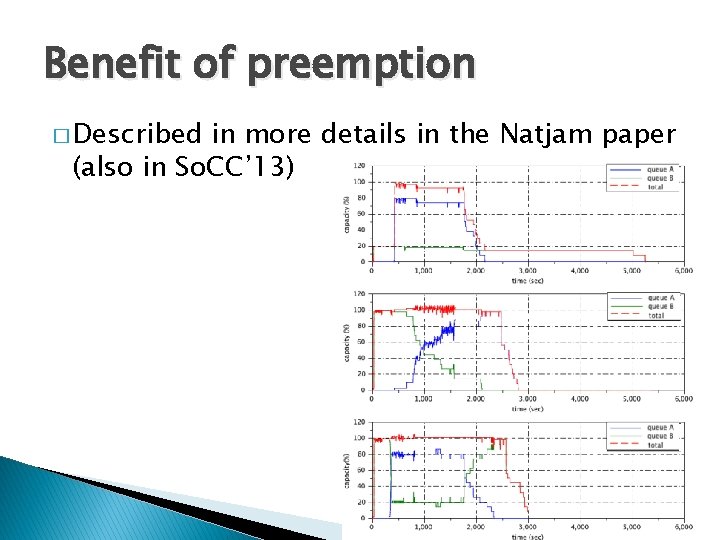

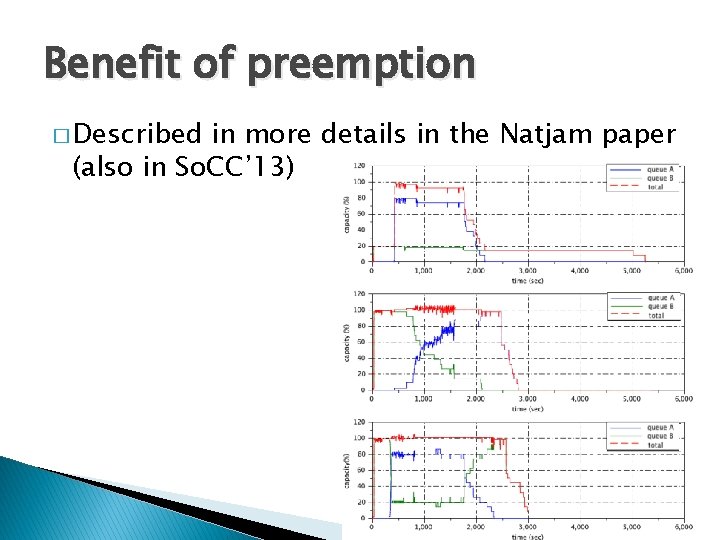

Benefit of preemption
- Described in more detail in the Natjam paper (also in SoCC’13)