SZU Apache Hadoop

- Slides: 31


Contents

- Motivations for Hadoop
- What is Apache Hadoop?
- HDFS and MapReduce
- Hadoop Ecosystem

Part One: Why? Motivations for Hadoop

- What were the limitations of earlier large-scale computing?
- What requirements should an alternative approach have?
- How does Hadoop address those requirements?

Early Large-Scale Computing

In the early days, this was the dominant model of computing:

- Historically, computation was processor-bound.
  - Data volumes were relatively small.
  - Complicated computations were performed on that data.
- Advances in computer technology historically centered on improving the power of a single machine.

Advances in CPUs

- Moore’s Law: the number of transistors on a dense integrated circuit doubles every two years.
- Single-core computing can’t scale with current computing needs.

Single-Core Limitation

Power consumption limits the speed increase we get from transistor density.

Distributed Systems

- A distributed system allows developers to use multiple machines for a single task.

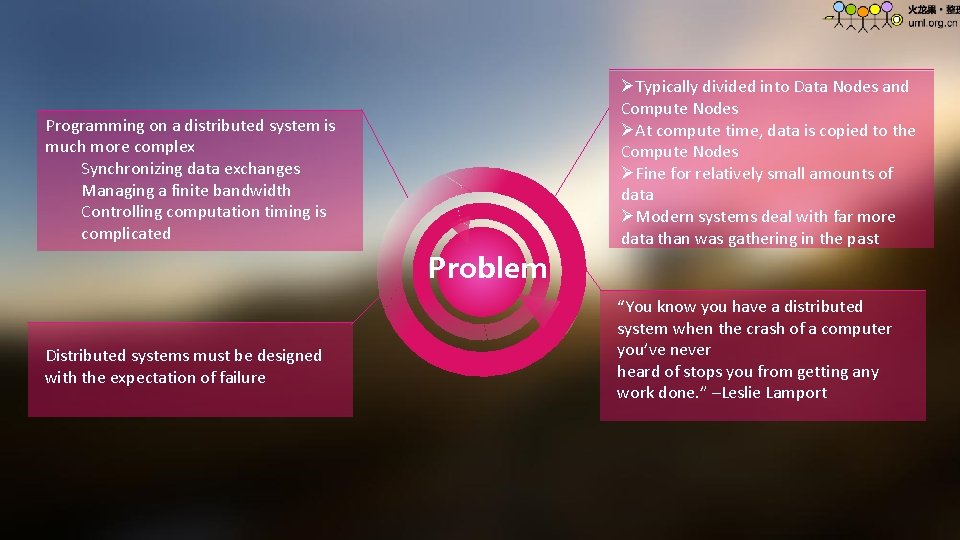

Distributed Systems: Problems

- Programming on a distributed system is much more complex:
  - Synchronizing data exchanges
  - Managing finite bandwidth
  - Controlling computation timing
- Distributed systems must be designed with the expectation of failure.

“You know you have a distributed system when the crash of a computer you’ve never heard of stops you from getting any work done.” –Leslie Lamport

Distributed Systems: Data Storage

- Typically divided into Data Nodes and Compute Nodes
- At compute time, data is copied to the Compute Nodes
- Fine for relatively small amounts of data
- Modern systems deal with far more data than was gathered in the past

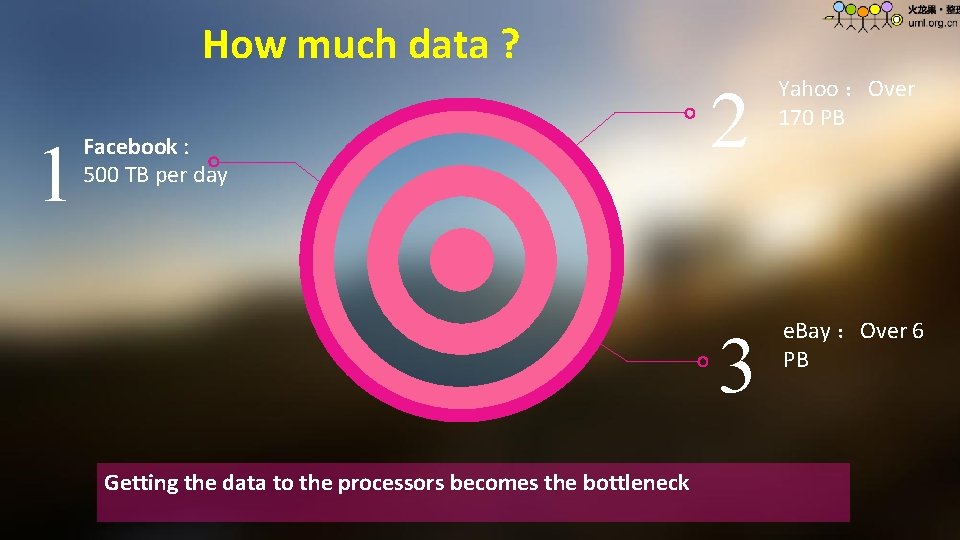

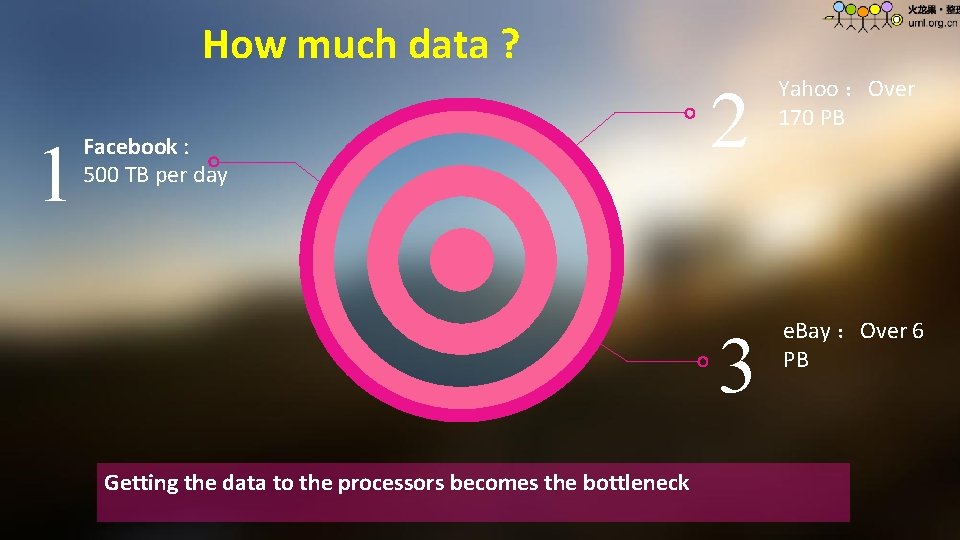

How Much Data?

1. Facebook: 500 TB per day
2. Yahoo: over 170 PB
3. eBay: over 6 PB

Getting the data to the processors becomes the bottleneck.

Requirements for a New Approach

- Must support partial failure
- Must be scalable

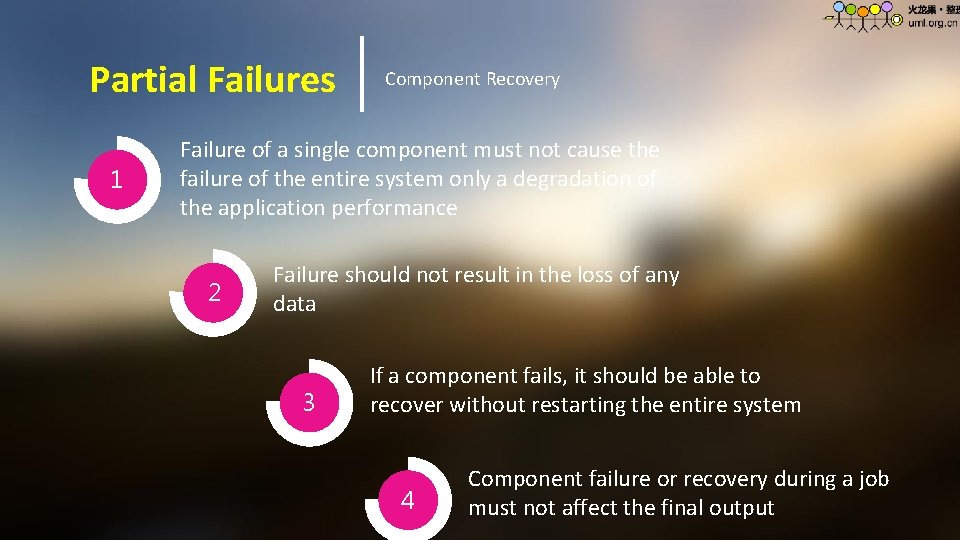

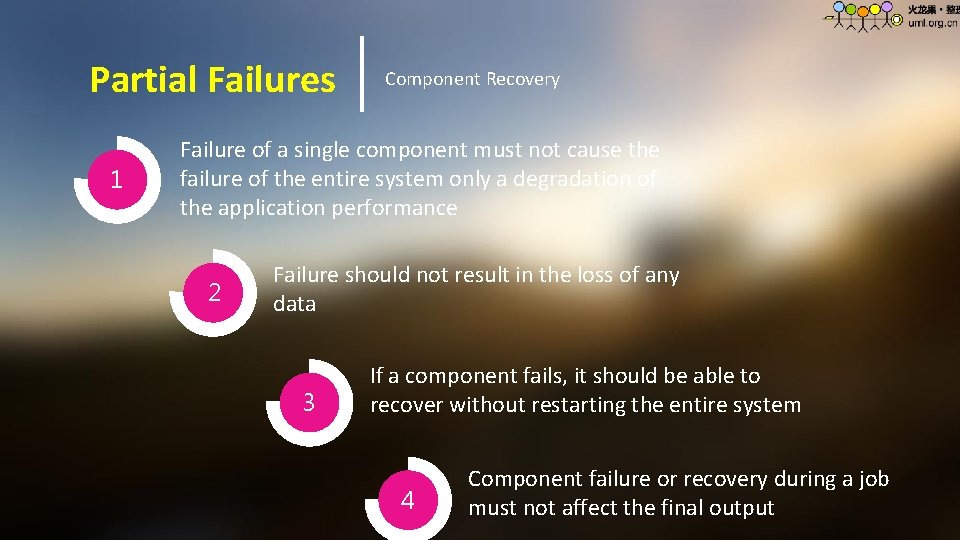

Partial Failures and Component Recovery

1. Failure of a single component must not cause the failure of the entire system, only a degradation of application performance.
2. Failure should not result in the loss of any data.
3. If a component fails, it should be able to recover without restarting the entire system.
4. Component failure or recovery during a job must not affect the final output.

Scalability

The system must be scalable:

- Increasing resources should increase load capacity.
- Increasing the load on the system should result in a graceful decline in performance for all jobs, not system failure.

Part Two: What? What is Apache Hadoop?

What is Apache Hadoop?

- Based on work done by Google in the early 2000s:
  - “The Google File System” (2003)
  - “MapReduce: Simplified Data Processing on Large Clusters” (2004)
- The core idea was to distribute the data as it is initially stored.
  - Each node can then perform computation on the data it stores without moving the data for the initial processing.

Core Hadoop Concepts

1. Applications are written in a high-level programming language.
   - No network programming or temporal dependencies
2. Nodes should communicate as little as possible.
   - A “shared nothing” architecture
3. Data is spread among the machines in advance.
   - Perform computation where the data is already stored as often as possible.

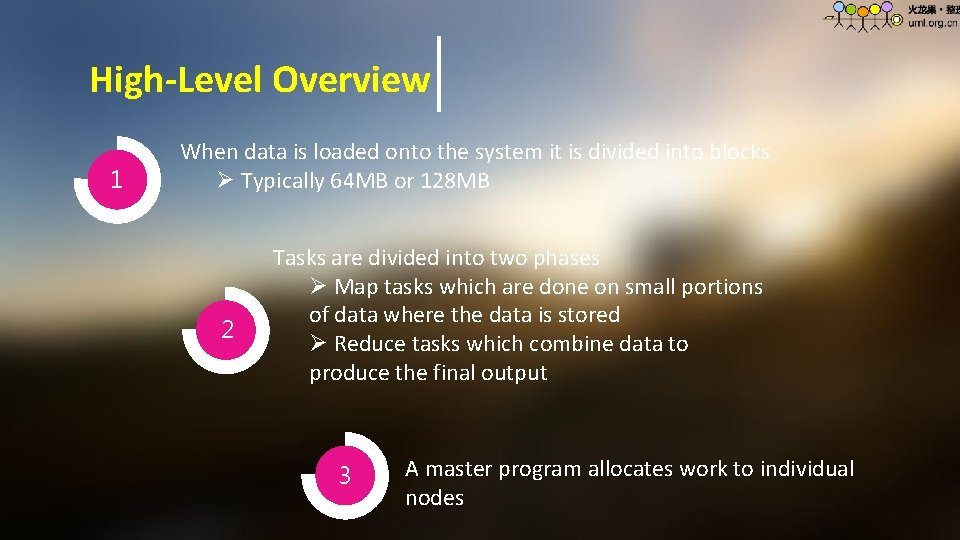

High-Level Overview

1. When data is loaded onto the system, it is divided into blocks.
   - Typically 64 MB or 128 MB
2. Tasks are divided into two phases:
   - Map tasks, which are done on small portions of data where the data is stored
   - Reduce tasks, which combine data to produce the final output
3. A master program allocates work to individual nodes.
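To make the block idea concrete, here is a minimal Python sketch of cutting a file's bytes into fixed-size blocks and counting how many blocks a file needs. The function names `split_into_blocks` and `block_count` are hypothetical, and the sketch ignores details a real system handles, such as records that straddle a block boundary; the last block is simply shorter than the others.

```python
def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut `data` into consecutive blocks of at most `block_size` bytes.

    Hypothetical helper: every block is full-size except possibly the last one.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def block_count(file_size: int, block_size: int) -> int:
    """How many blocks a file of `file_size` bytes occupies (ceiling division)."""
    return (file_size + block_size - 1) // block_size


if __name__ == "__main__":
    print([len(b) for b in split_into_blocks(b"x" * 300, 128)])  # [128, 128, 44]
    MB = 1024 * 1024
    print(block_count(1024 * MB, 128 * MB))  # 8: a 1 GB file in 128 MB blocks
    print(block_count(1024 * MB, 64 * MB))   # 16 with 64 MB blocks
```

Larger blocks mean fewer blocks per file, so fewer map tasks and less bookkeeping for the master.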

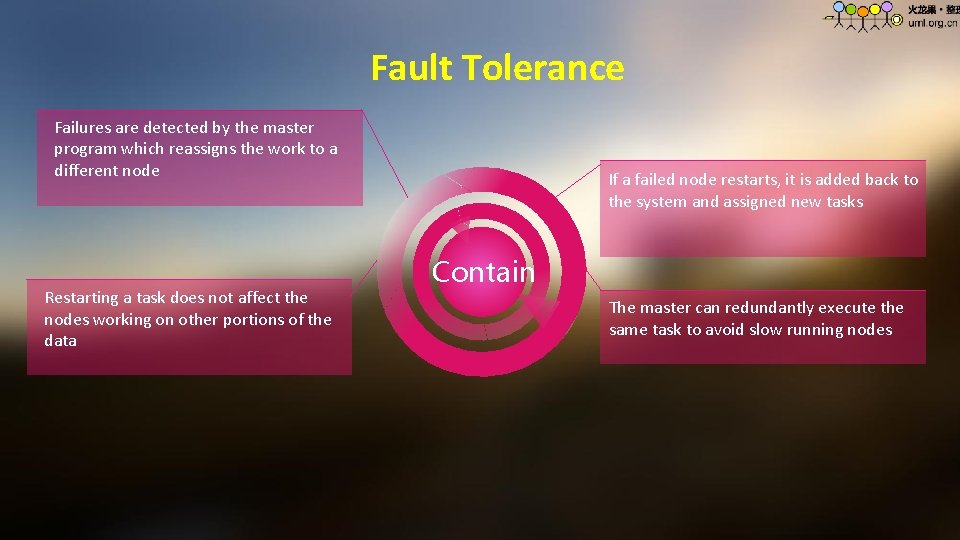

Fault Tolerance

- Failures are detected by the master program, which reassigns the work to a different node.
- Restarting a task does not affect the nodes working on other portions of the data.
- If a failed node restarts, it is added back to the system and assigned new tasks.
- The master can redundantly execute the same task to avoid slow-running nodes (speculative execution).
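The reassignment rule can be illustrated with a toy, single-process simulation. This is a sketch under simple assumptions, not Hadoop's scheduler: tasks start out round-robin across nodes, and when the master learns that some nodes have failed, only the tasks on those nodes are moved to healthy nodes. The function names and node/task labels are hypothetical.

```python
def assign_round_robin(tasks, nodes):
    """Initial plan: task i goes to nodes[i % len(nodes)]."""
    return {task: nodes[i % len(nodes)] for i, task in enumerate(tasks)}


def reassign_failed(assignment, nodes, failed):
    """Move tasks off failed nodes; tasks on healthy nodes are left untouched.

    Hypothetical master logic: the orphaned tasks are spread round-robin over
    the nodes that are still healthy.
    """
    healthy = [n for n in nodes if n not in failed]
    if not healthy:
        raise RuntimeError("no healthy nodes left to take over the work")
    new_assignment = dict(assignment)
    orphaned = [task for task, node in assignment.items() if node in failed]
    for i, task in enumerate(orphaned):
        new_assignment[task] = healthy[i % len(healthy)]
    return new_assignment


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]
    plan = assign_round_robin([f"task{i}" for i in range(6)], nodes)
    # The master notices node2 has stopped responding.
    print(reassign_failed(plan, nodes, failed={"node2"}))
    # {'task0': 'node1', 'task1': 'node1', 'task2': 'node3',
    #  'task3': 'node1', 'task4': 'node3', 'task5': 'node3'}
```

Only `task1` and `task4` move; every other task keeps its node, which is the property the slide describes.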

Part Three: HDFS (Hadoop Distributed File System)

HDFS is responsible for storing data on the cluster:

- Data files are split into blocks and distributed across the nodes in the cluster.
- Each block is replicated multiple times.

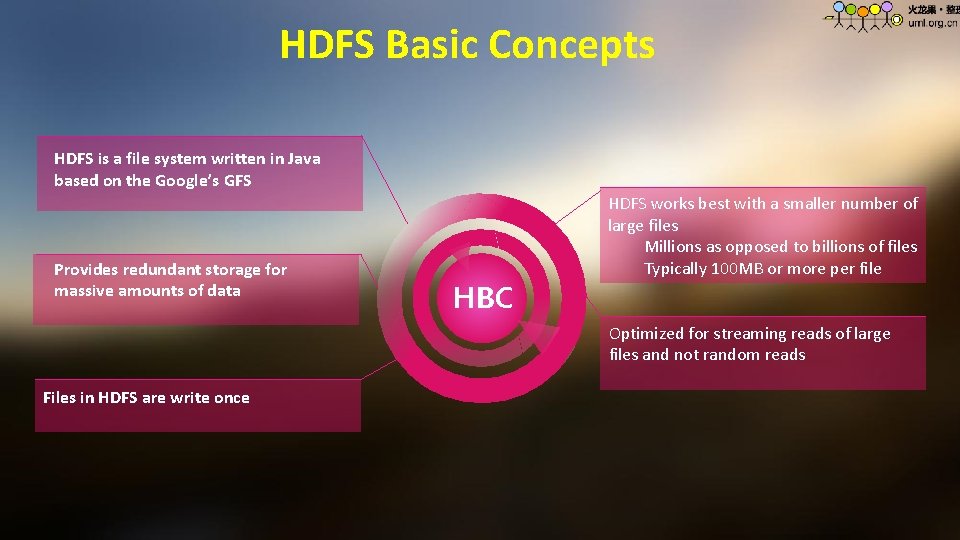

HDFS Basic Concepts

- HDFS is a file system written in Java, based on Google’s GFS.
- It provides redundant storage for massive amounts of data.
- HDFS works best with a smaller number of large files:
  - Millions as opposed to billions of files
  - Typically 100 MB or more per file
- It is optimized for streaming reads of large files, not random reads.
- Files in HDFS are write-once.

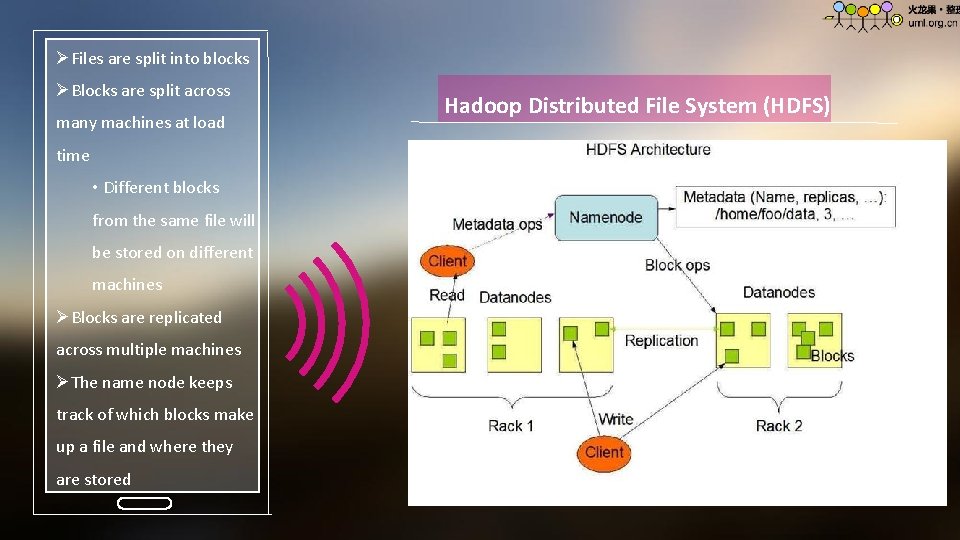

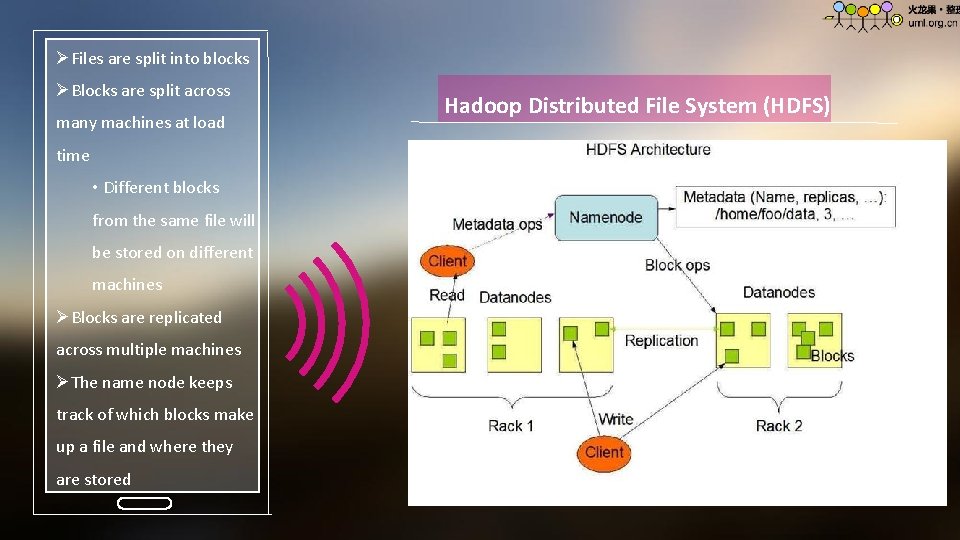

Hadoop Distributed File System (HDFS)

- Files are split into blocks.
- Blocks are split across many machines at load time.
  - Different blocks from the same file will be stored on different machines.
- Blocks are replicated across multiple machines.
- The NameNode keeps track of which blocks make up a file and where they are stored.
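The NameNode's bookkeeping (which blocks make up a file, and where each replica lives) can be pictured as a simple map. The class below is a hypothetical in-memory sketch, not the HDFS API: it uses a replication factor of 3 (the HDFS default) and a plain round-robin placement that guarantees replicas of one block land on distinct machines. Real HDFS uses a rack-aware placement policy instead.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNameNode:
    """Hypothetical in-memory stand-in for the NameNode's block map.

    Placement is plain round-robin, NOT HDFS's real rack-aware policy.
    """

    datanodes: list[str]
    replication: int = 3
    # file name -> [(block id, [DataNodes holding a replica of that block])]
    block_map: dict[str, list[tuple[str, list[str]]]] = field(default_factory=dict)
    _cursor: int = field(default=0, init=False)

    def add_file(self, name: str, num_blocks: int) -> None:
        """Record a file of `num_blocks` blocks and choose replica locations."""
        if self.replication > len(self.datanodes):
            raise ValueError("not enough DataNodes for the replication factor")
        blocks = []
        for b in range(num_blocks):
            # Take `replication` consecutive DataNodes (wrapping around), so the
            # replicas of one block sit on distinct machines; shifting the start
            # for each block spreads consecutive blocks across the cluster.
            replicas = [
                self.datanodes[(self._cursor + r) % len(self.datanodes)]
                for r in range(self.replication)
            ]
            self._cursor += 1
            blocks.append((f"{name}#blk{b}", replicas))
        self.block_map[name] = blocks

    def locations(self, name: str) -> list[tuple[str, list[str]]]:
        """Answer: which blocks make up this file, and where are they stored?"""
        return self.block_map[name]


if __name__ == "__main__":
    nn = ToyNameNode(["dn1", "dn2", "dn3", "dn4"])
    nn.add_file("logs.txt", 3)
    for block_id, replicas in nn.locations("logs.txt"):
        print(block_id, replicas)
```

With four DataNodes, losing any one machine still leaves at least two replicas of every block.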

Part Three: MapReduce (Distributing Computation Across Nodes)

MapReduce Overview

- A method for distributing computation across multiple nodes
- Each node processes the data that is stored at that node
- Consists of two main phases:
  - Map
  - Reduce
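"Each node processes the data that is stored at that node" amounts to a scheduling preference. The greedy sketch below assumes a hypothetical `schedule_map_tasks` function that places each block's map task on a node holding a replica when one has a free slot, and falls back to any free node (shipping the data over the network) otherwise. Real Hadoop schedulers weigh more factors, such as rack locality.

```python
def schedule_map_tasks(block_locations, free_slots):
    """Assign one map task per block, preferring a node that stores the block.

    block_locations: block id -> nodes holding a replica of that block
    free_slots:      node -> number of additional map tasks it can run
    Returns:         block id -> (chosen node, True if the read is node-local)
    """
    slots = dict(free_slots)  # work on a copy; don't mutate the caller's dict
    plan = {}
    for block, replicas in block_locations.items():
        node = next((n for n in replicas if slots.get(n, 0) > 0), None)
        is_local = node is not None
        if not is_local:
            # No replica holder is free: run elsewhere and ship the block over
            # the network, which is exactly what locality tries to avoid.
            node = next((n for n, free in slots.items() if free > 0), None)
            if node is None:
                raise RuntimeError(f"no free slot for block {block}")
        slots[node] -= 1
        plan[block] = (node, is_local)
    return plan


if __name__ == "__main__":
    locations = {"b0": ["dn1", "dn2"], "b1": ["dn2", "dn3"], "b2": ["dn1", "dn3"]}
    print(schedule_map_tasks(locations, {"dn1": 1, "dn2": 1, "dn3": 1}))
    # {'b0': ('dn1', True), 'b1': ('dn2', True), 'b2': ('dn3', True)}
```

Because blocks are replicated, the scheduler usually has several local candidates to choose from, which makes all-local plans like this one common.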

MapReduce Features

1. Automatic parallelization and distribution
2. Fault tolerance
3. A clean abstraction for programmers to use

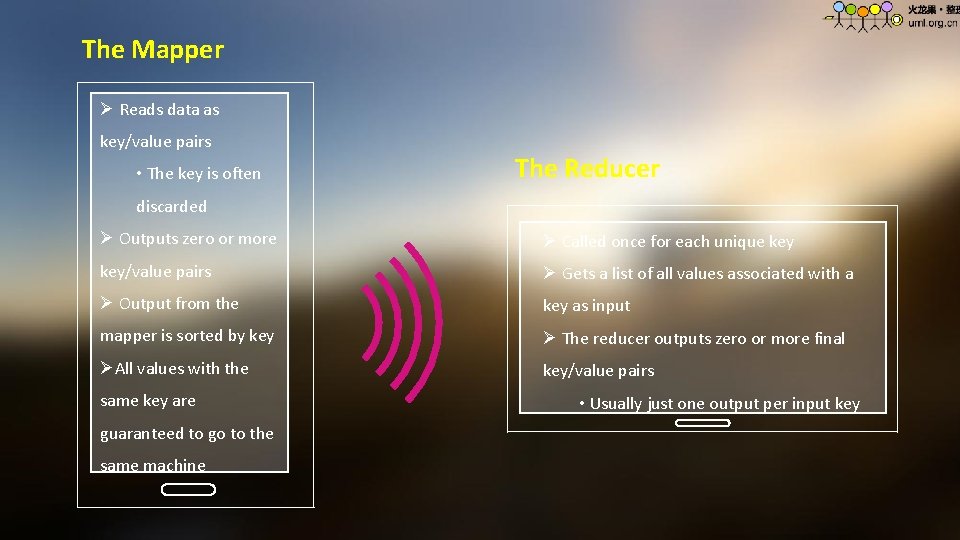

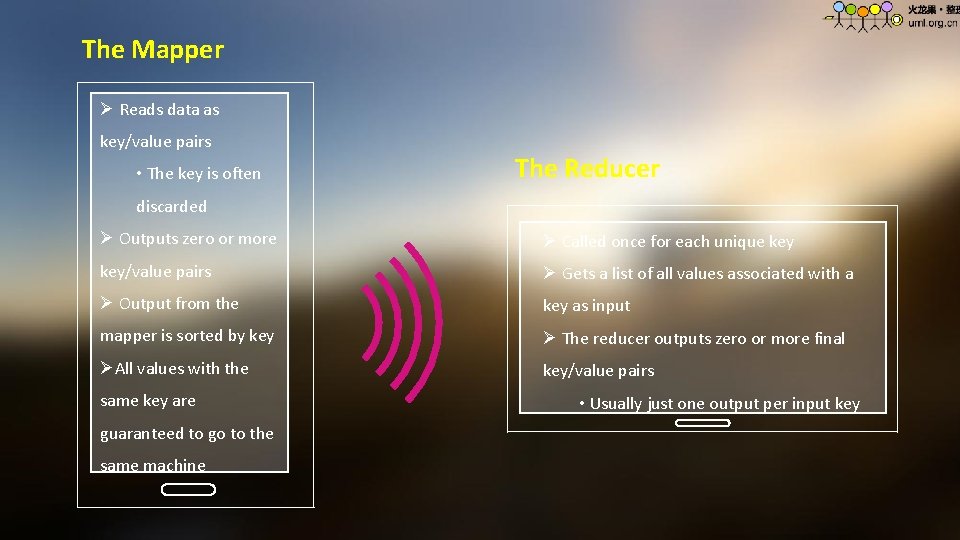

The Mapper

- Reads data as key/value pairs
  - The key is often discarded
- Outputs zero or more key/value pairs
- Output from the mapper is sorted by key
- All values with the same key are guaranteed to go to the same machine

The Reducer

- Called once for each unique key
- Gets a list of all values associated with a key as input
- Outputs zero or more final key/value pairs
  - Usually just one output per input key
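The guarantee that all values with the same key reach the same machine comes from a partition function applied to every mapper output key. Hadoop's default, `HashPartitioner`, hashes the key modulo the number of reduce tasks. The Python sketch below follows the same idea; `partition` and `shuffle` are hypothetical names, and `zlib.crc32` stands in for Java's `hashCode()` because Python's built-in `hash()` of a string is randomized per process.

```python
import zlib
from collections import defaultdict


def partition(key: str, num_reducers: int) -> int:
    """Pick a reducer for `key`: the same key always maps to the same reducer.

    crc32 is used because it is stable across processes; Python's built-in
    hash() of a str is randomized per interpreter run.
    """
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(mapper_output, num_reducers):
    """Route (key, value) pairs to reducers and group the values by key.

    Returns one dict per reducer (key -> list of values) with keys in sorted
    order, mirroring how a reducer sees mapper output sorted by key.
    """
    buckets = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in mapper_output:
        buckets[partition(key, num_reducers)][key].append(value)
    return [dict(sorted(bucket.items())) for bucket in buckets]


if __name__ == "__main__":
    pairs = [("apple", 1), ("pear", 1), ("apple", 1), ("fig", 1)]
    for i, part in enumerate(shuffle(pairs, 2)):
        print(f"reducer {i}: {part}")
```

Each reducer then makes one call per key in its dict, receiving that key's full value list.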

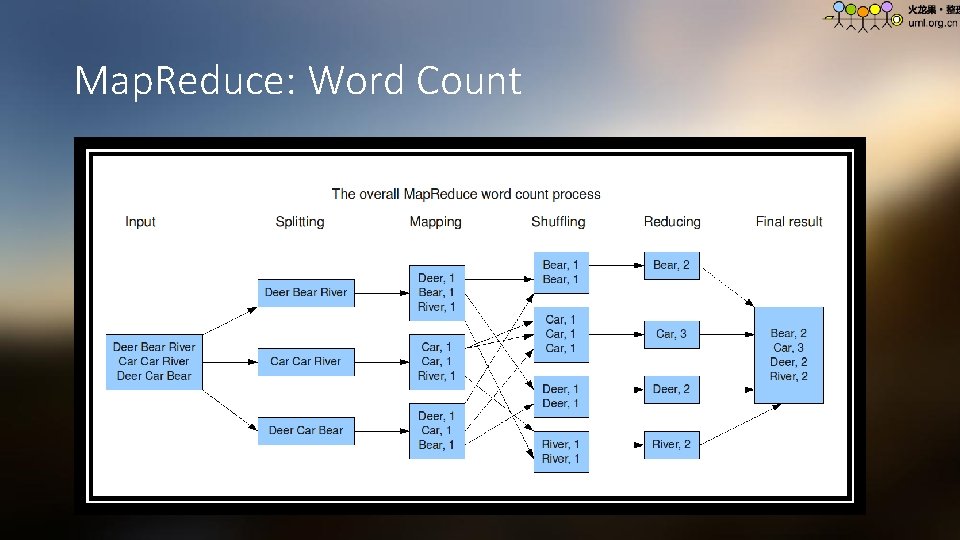

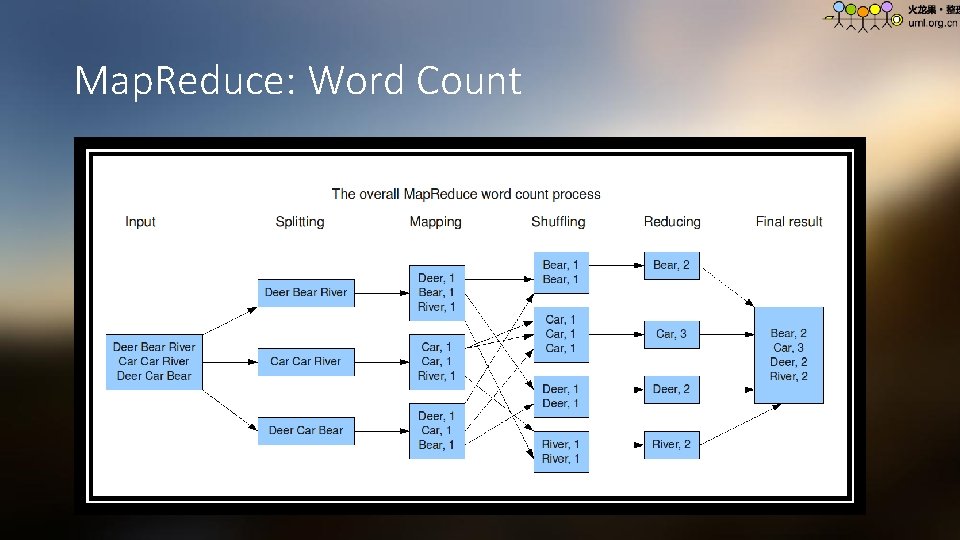

MapReduce: Word Count
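Word count is the classic first MapReduce program. Real Hadoop jobs are usually written in Java against the Hadoop API; the sketch below is instead a single-process Python simulation of the three stages (map, shuffle/sort, reduce) with no Hadoop dependency. Assumptions: words are found by lowercasing and splitting on whitespace, and the line index stands in for the byte-offset key that Hadoop's `TextInputFormat` would supply.

```python
from itertools import groupby
from operator import itemgetter


def mapper(_offset, line):
    """Emit (word, 1) for every word in the line; the input key is discarded."""
    for word in line.lower().split():
        yield word, 1


def reducer(word, counts):
    """Called once per unique word with all of that word's counts."""
    yield word, sum(counts)


def word_count(lines):
    # Map phase: the key is the line's position, which the mapper ignores.
    mapped = [kv for offset, line in enumerate(lines) for kv in mapper(offset, line)]
    # Shuffle/sort phase: sort by key so that equal words become adjacent.
    mapped.sort(key=itemgetter(0))
    # Reduce phase: one reducer call per unique key.
    result = {}
    for word, group in groupby(mapped, key=itemgetter(0)):
        for key, total in reducer(word, (count for _, count in group)):
            result[key] = total
    return result


if __name__ == "__main__":
    print(word_count(["the cat sat", "the cat ran", "a dog ran"]))
    # {'a': 1, 'cat': 2, 'dog': 1, 'ran': 2, 'sat': 1, 'the': 2}
```

On a cluster, the mapper would run on each block's node in parallel and the sort/group step would happen during the shuffle, but the mapper and reducer logic stays the same.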

Part Four: Hadoop Ecosystem (Other Available Tools)

Why Do These Tools Exist?

- MapReduce is very powerful, but can be awkward to master.
- These tools allow programmers who are familiar with other programming styles to take advantage of the power of MapReduce.

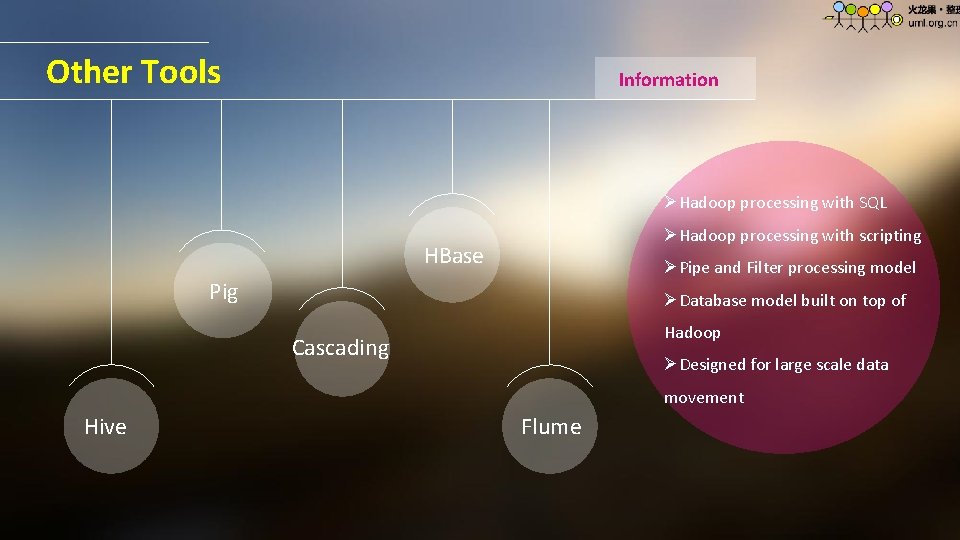

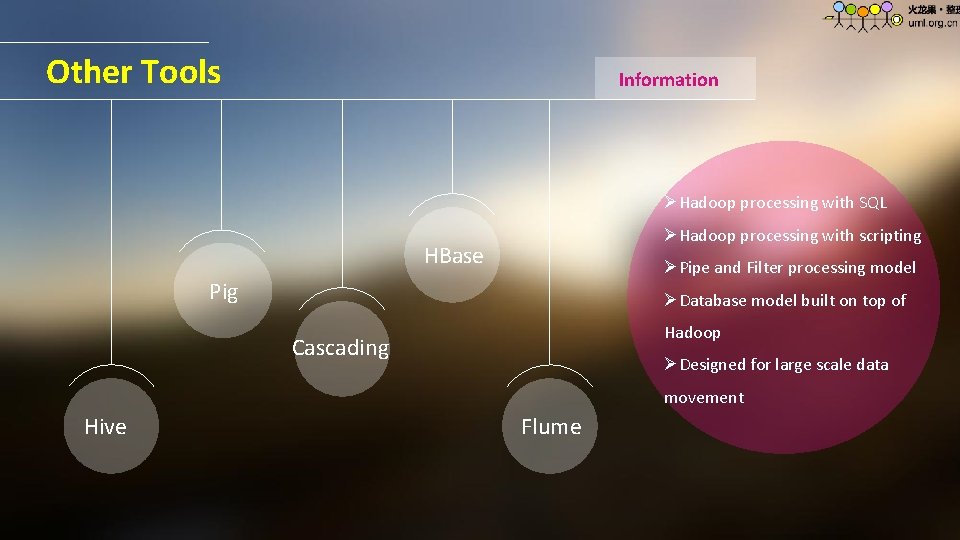

Other Tools

- Hive: Hadoop processing with SQL
- Pig: Hadoop processing with scripting
- Cascading: pipe-and-filter processing model
- HBase: database model built on top of Hadoop
- Flume: designed for large-scale data movement

Thank you!