Accelerating Multiplication and Parallelizing Operations in Non-Volatile Memory

- Slides: 23

Mohsen Imani, Saransh Gupta, Tajana S. Rosing
University of California San Diego, System Energy Efficiency Lab, seelab.ucsd.edu

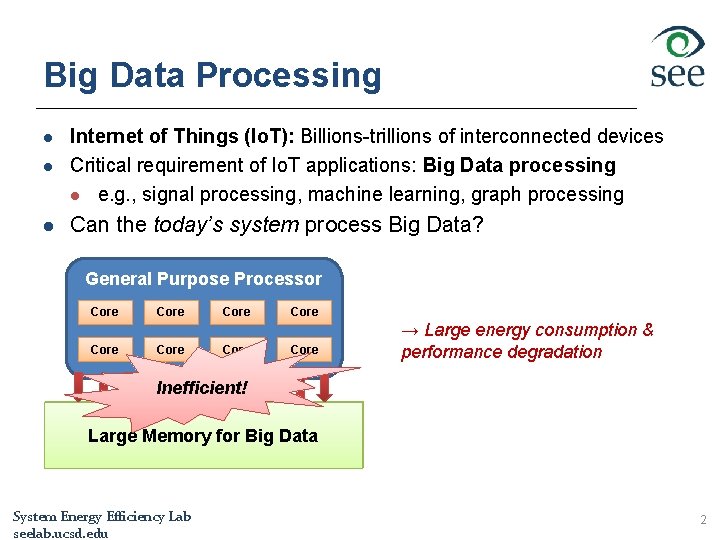

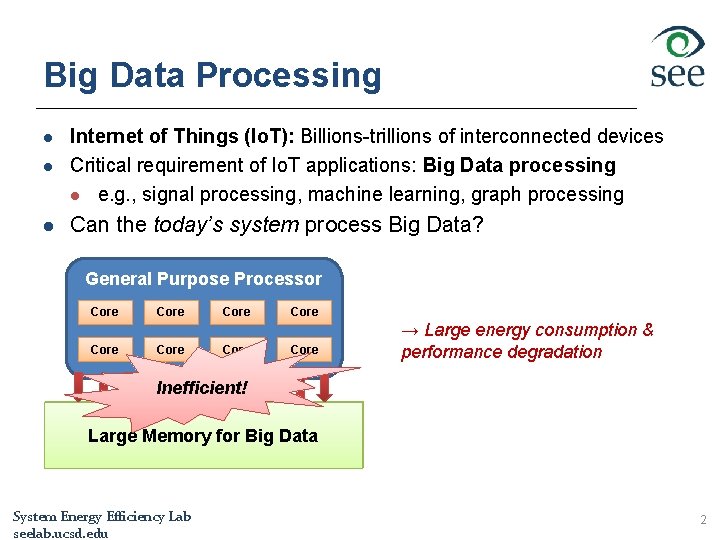

Big Data Processing
- Internet of Things (IoT): billions to trillions of interconnected devices
- A critical requirement of IoT applications is Big Data processing, e.g., signal processing, machine learning, and graph processing
- Can today's systems process Big Data?
- A general-purpose processor must move data between its cores and a large memory holding the Big Data. This is inefficient: it leads to large energy consumption and performance degradation

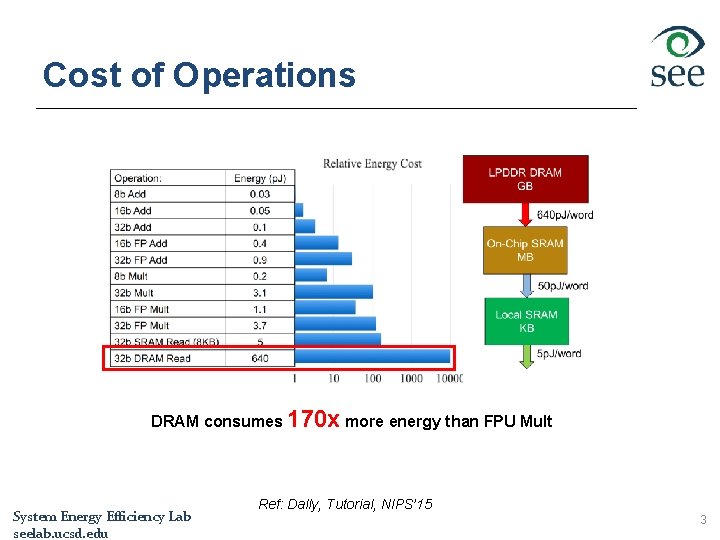

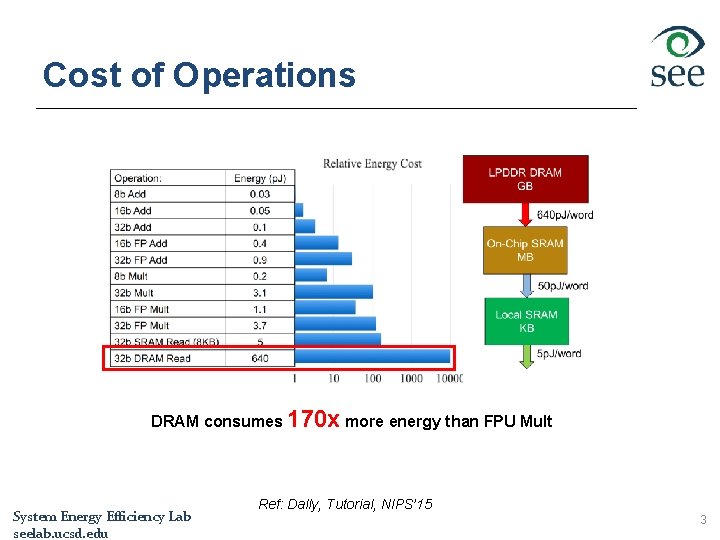

Cost of Operations
- A DRAM access consumes 170× more energy than a floating-point multiplication (FPU Mult)

Ref: Dally, Tutorial, NIPS’15

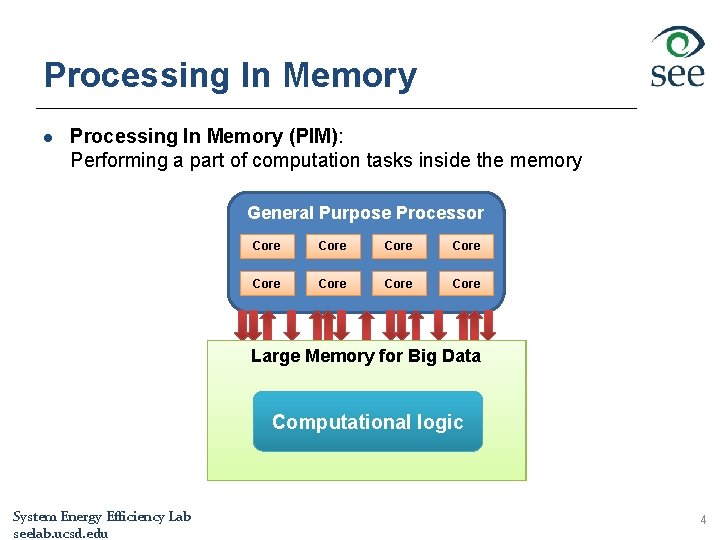

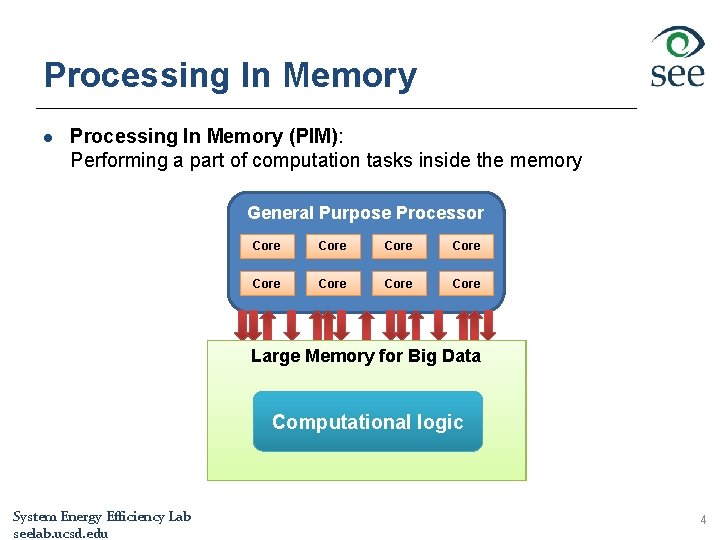

Processing In Memory
- Processing In Memory (PIM): performing part of the computation inside the memory
- (Figure: general-purpose processor cores next to a large memory for Big Data that contains computational logic.)

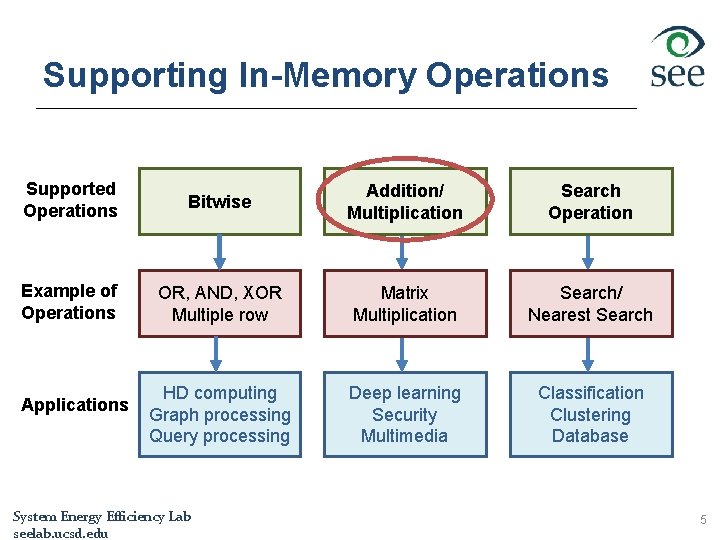

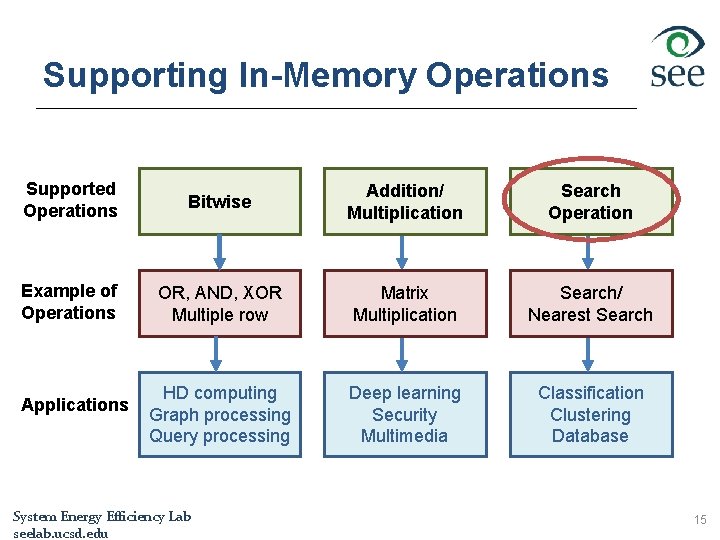

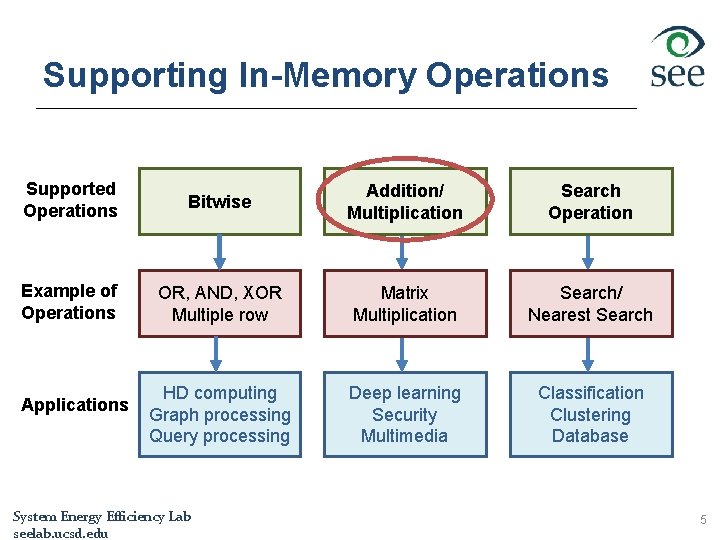

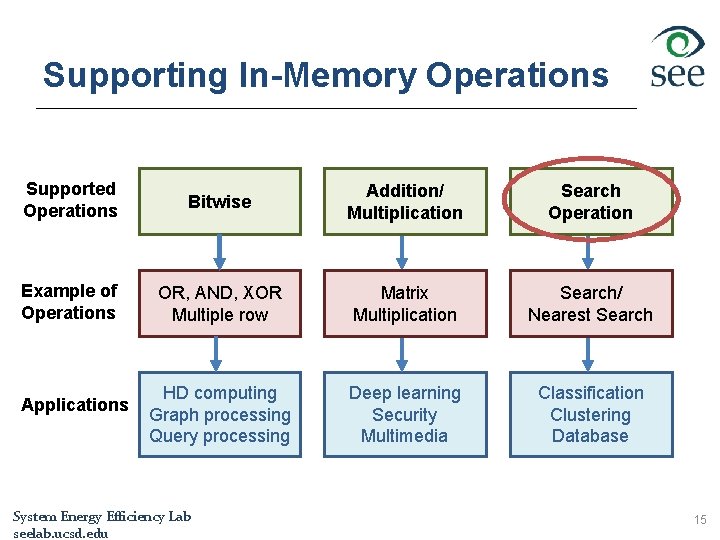

Supporting In-Memory Operations
- Bitwise: OR, AND, XOR
- Addition/Multiplication: multiple-row addition, matrix multiplication
- Search: search / nearest search
- Applications: HD computing, graph processing, query processing, deep learning, security, multimedia, classification, clustering, database

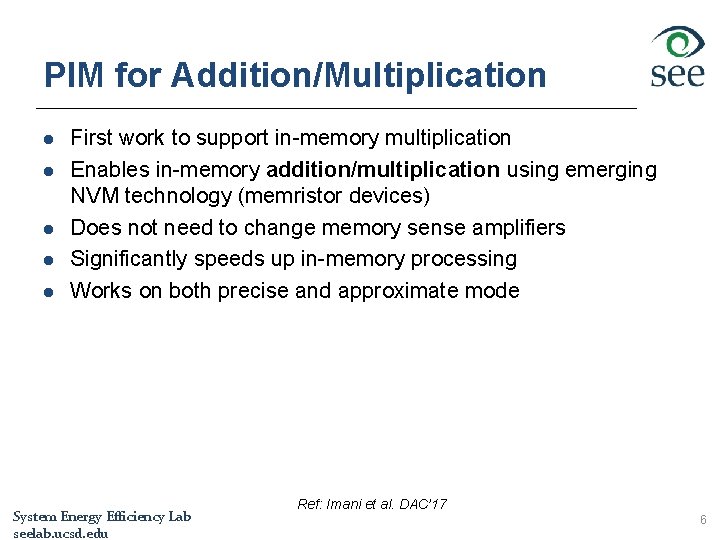

PIM for Addition/Multiplication
- First work to support in-memory multiplication
- Enables in-memory addition and multiplication using emerging NVM technology (memristor devices)
- Does not require changes to the memory sense amplifiers
- Significantly speeds up in-memory processing
- Works in both precise and approximate modes

Ref: Imani et al. DAC’17

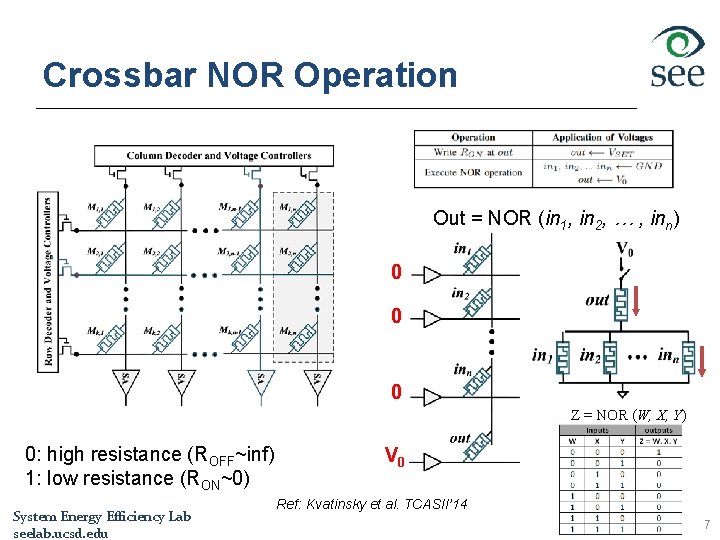

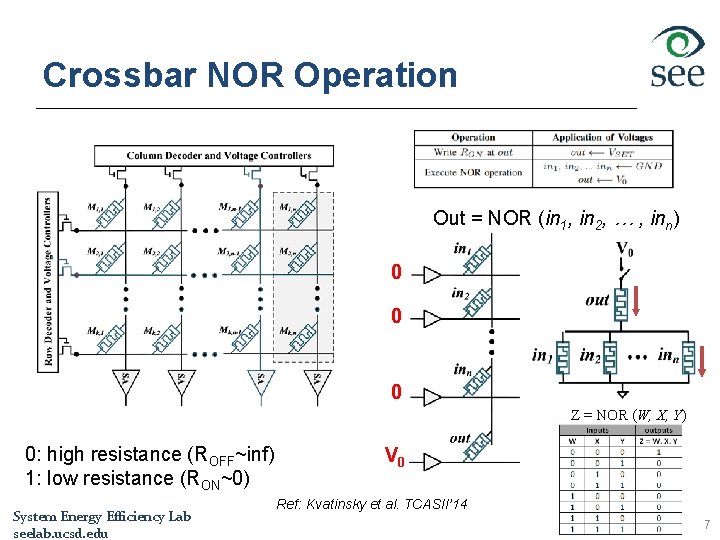

Crossbar NOR Operation
- Out = NOR(in_1, in_2, …, in_n); for example, Z = NOR(W, X, Y)
- Logic 0: high resistance (R_OFF ≈ ∞)
- Logic 1: low resistance (R_ON ≈ 0)

Ref: Kvatinsky et al. TCASII’14
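A minimal logic-level sketch of how a MAGIC-style NOR could evaluate in one crossbar row, assuming the input cells sit in parallel and are in series with an output cell that starts at logic 1 (low resistance). `R_ON` and `R_OFF` reuse the 10 kΩ and 10 MΩ values from the experimental setup; the applied voltage `V0` and the switching threshold `V_TH` are hypothetical values chosen only so the example behaves like a NOR for small fan-in, not device parameters from the source.

```python
# Hypothetical logic-level model of a MAGIC-style NOR in a crossbar row.
R_ON = 10e3   # low resistance = logic 1 (value from the experimental setup)
R_OFF = 10e6  # high resistance = logic 0
V0 = 1.0      # hypothetical voltage applied across the row
V_TH = 0.3    # hypothetical threshold that resets the output cell to logic 0


def resistance(bit):
    return R_ON if bit else R_OFF


def magic_nor(*inputs):
    """Return the output cell's logic value after one NOR evaluation."""
    out_r = R_ON  # output cell is initialized to logic 1 (low resistance)
    # Input cells are in parallel; together they are in series with the output.
    r_in = 1.0 / sum(1.0 / resistance(b) for b in inputs)
    v_out = V0 * out_r / (out_r + r_in)  # voltage divider across the output cell
    # A large enough voltage switches the output to high resistance (logic 0).
    return 0 if v_out > V_TH else 1
```

With these numbers, a single low-resistance input puts about half of `V0` across the output cell and resets it, while three high-resistance inputs leave only a few millivolts across it. The thresholds are only valid for a few inputs: many high-resistance inputs in parallel would eventually conduct enough to flip the output.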

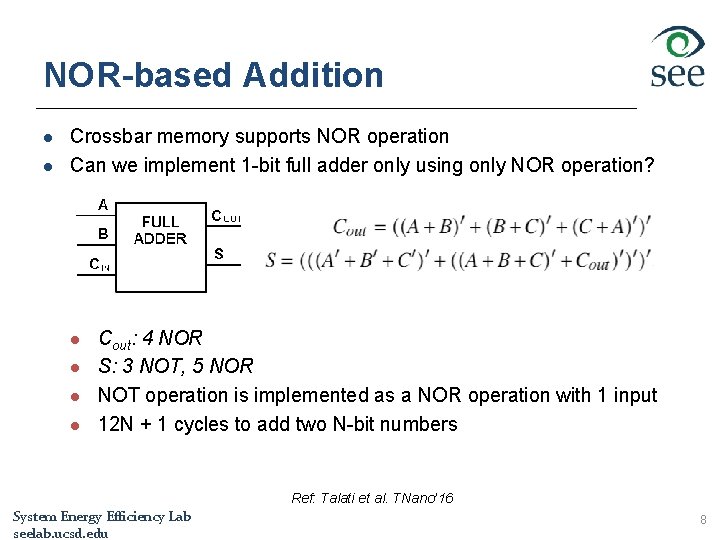

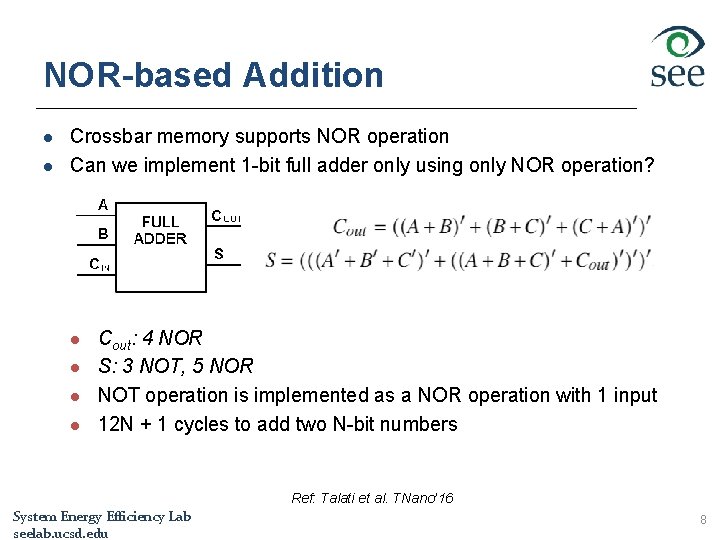

NOR-based Addition
- Crossbar memory supports the NOR operation. Can we implement a 1-bit full adder using only NOR operations?
- Cout: 4 NOR; S: 3 NOT, 5 NOR
- NOT is implemented as a NOR with a single input
- Adding two N-bit numbers takes 12N + 1 cycles

Ref: Talati et al. TNano’16
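The slide gives operation counts but not the gate network. One NOR-only decomposition that matches those counts is sketched below; it is an illustration consistent with the counts, not necessarily the exact network of Talati et al. Cout is the majority function built from four NORs, and S is the NOR of the four even-parity minterms, which needs the three inverted inputs plus five NORs. The hypothetical `ripple_add` counts 12 operations per bit, which reproduces the 12N term; the extra cycle in 12N + 1 is not modeled.

```python
def nor(*bits):
    """NOR of any number of bits; NOT is a NOR with a single input."""
    return 0 if any(bits) else 1


def full_adder_nor(a, b, c):
    """1-bit full adder from 12 operations: 4 NOR for Cout, 3 NOT + 5 NOR for S."""
    # Cout = majority(a, b, c) = (a + b)(b + c)(a + c)
    n1, n2, n3 = nor(a, b), nor(b, c), nor(a, c)
    cout = nor(n1, n2, n3)
    # Inverted inputs, then the four even-parity minterms
    na, nb, nc = nor(a), nor(b), nor(c)
    m0 = nor(a, b, c)     # a'b'c'
    m3 = nor(a, nb, nc)   # a'bc
    m5 = nor(na, b, nc)   # ab'c
    m6 = nor(na, nb, c)   # abc'
    s = nor(m0, m3, m5, m6)  # 1 exactly when an odd number of inputs are 1
    return s, cout


def ripple_add(x, y, n):
    """Add two n-bit numbers bit by bit; returns (sum, NOR/NOT operation count)."""
    carry, result, ops = 0, 0, 0
    for i in range(n):
        s, carry = full_adder_nor((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
        ops += 12  # 4 NOR for Cout + 3 NOT + 5 NOR for S
    return result | (carry << n), ops


print(ripple_add(13, 7, 4))
```

Because the carry of bit i feeds bit i + 1, the operations of different bits cannot overlap in this serial model, which is why the cost grows linearly with N.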

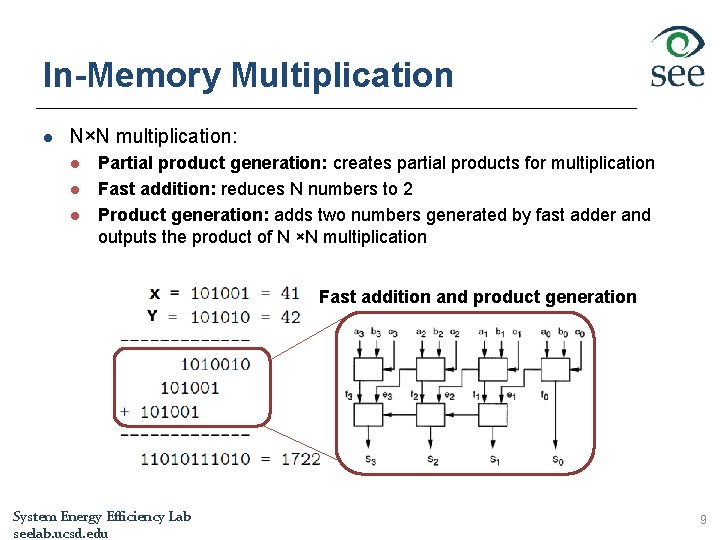

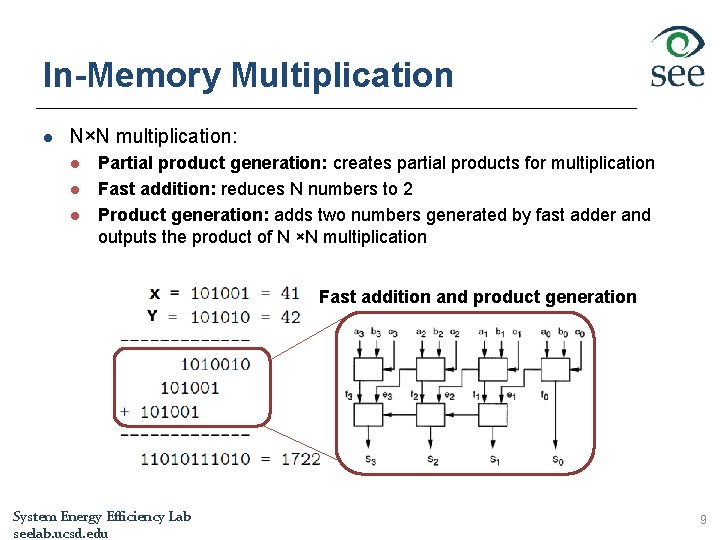

In-Memory Multiplication
- N×N multiplication proceeds in three steps:
  - Partial product generation: creates the partial products of the multiplication
  - Fast addition: reduces the N partial products to 2 numbers
  - Product generation: adds the two numbers produced by the fast adder and outputs the product of the N×N multiplication
- (Figure: fast addition and product generation.)
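A functional sketch of the first step, assuming unsigned operands: partial product i is the multiplicand ANDed with bit i of the multiplier and shifted left by i positions. It models only the values, not how the rows are laid out in the crossbar; the function name is hypothetical.

```python
def partial_products(a, b, n):
    """Return the n partial products of an unsigned n x n multiplication a * b."""
    mask = (1 << n) - 1
    rows = []
    for i in range(n):
        b_i = (b >> i) & 1
        # AND every bit of a with bit i of b (all ones or all zeros), then shift by i
        row = (a & (mask if b_i else 0)) << i
        rows.append(row)
    return rows


rows = partial_products(11, 13, 4)
print(rows, sum(rows))
```

Summing the rows gives a × b; the fast-addition and product-generation steps exist to perform that summation quickly in memory.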

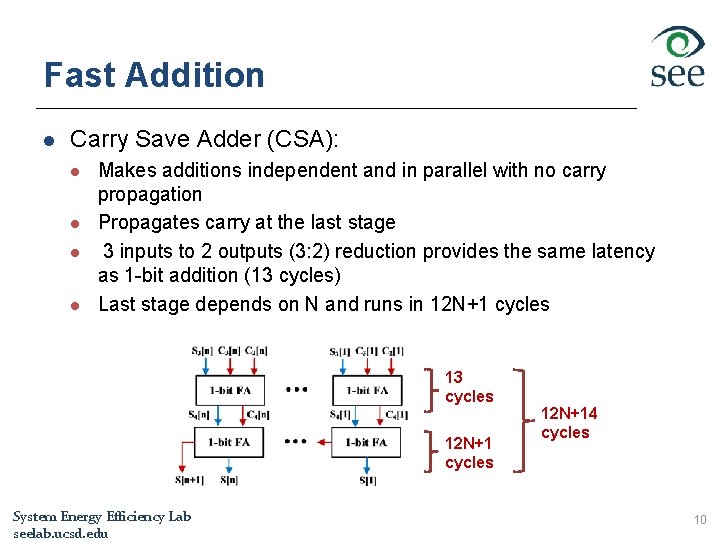

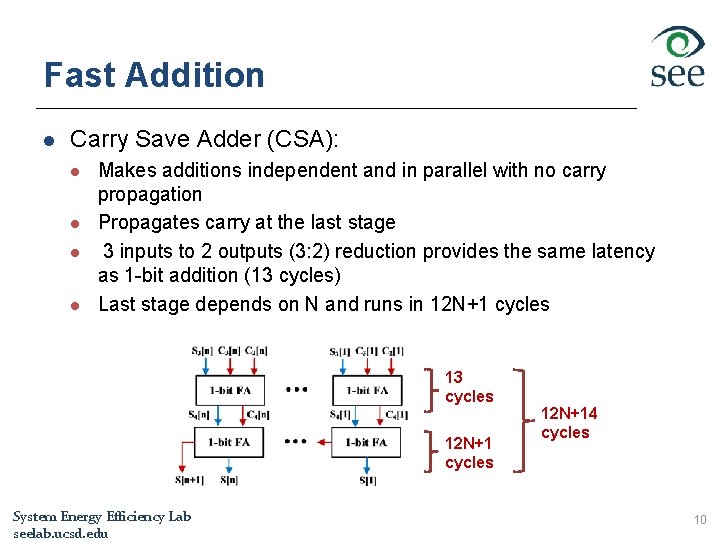

Fast Addition
- Carry Save Adder (CSA):
  - Makes additions independent and parallel, with no carry propagation
  - Propagates the carry only at the last stage
- A 3-input to 2-output (3:2) reduction has the same latency as a 1-bit addition (13 cycles)
- The last stage depends on N and runs in 12N + 1 cycles
- (Figure: a 3:2 stage of 13 cycles followed by a last stage of 12N + 1 cycles, 12N + 14 cycles in total.)
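A functional sketch of carry-save reduction, assuming unsigned integers: `csa` computes the sum and carry bits of every position independently, so no carry ripples, and `reduce_to_two` repeats 3:2 steps until two operands remain for the final carry-propagating addition. `three_operand_latency` only restates the slide's arithmetic for three N-bit operands (13 cycles for the 3:2 step plus 12N + 1 for the last stage). All names are hypothetical, and crossbar layout, shifting, and the timing of multi-stage trees are not modeled.

```python
def csa(x, y, z):
    """3:2 carry-save step: every bit position is computed independently."""
    s = x ^ y ^ z                           # per-bit sum, no carry propagation
    c = ((x & y) | (y & z) | (x & z)) << 1  # per-bit carry, moved one position left
    return s, c


def reduce_to_two(operands):
    """Apply 3:2 steps until two operands remain; returns (operands, stage count)."""
    ops = list(operands)
    stages = 0
    while len(ops) > 2:
        nxt = []
        # Each group of three becomes two; leftover operands pass through unchanged
        for i in range(0, len(ops) - 2, 3):
            nxt.extend(csa(ops[i], ops[i + 1], ops[i + 2]))
        nxt.extend(ops[len(ops) - len(ops) % 3:])
        ops = nxt
        stages += 1
    return ops, stages


def three_operand_latency(n):
    """Slide arithmetic: one 3:2 step (13 cycles) plus the last stage (12N + 1)."""
    return 13 + (12 * n + 1)


pair, stages = reduce_to_two([11, 0, 44, 88])  # partial products of 11 * 13
print(pair, sum(pair), stages, three_operand_latency(8))
```

Because `x + y + z == s + c` holds for every step, the two remaining operands always add up to the original total; for the partial products of 11 × 13 the final pair sums to 143.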

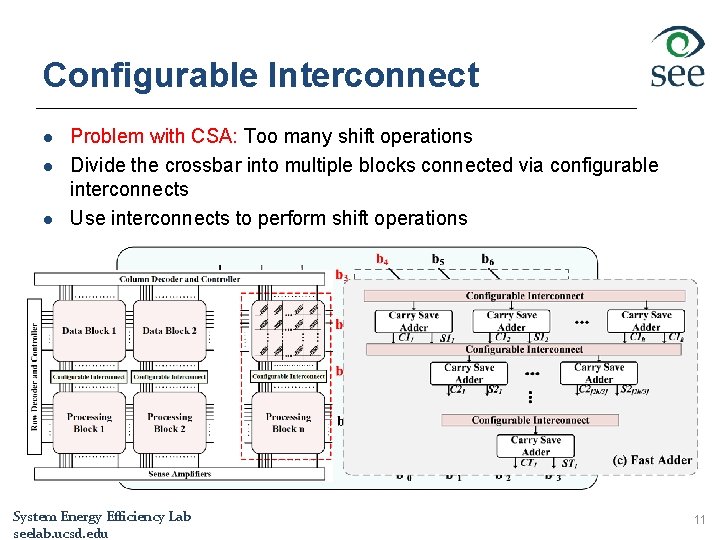

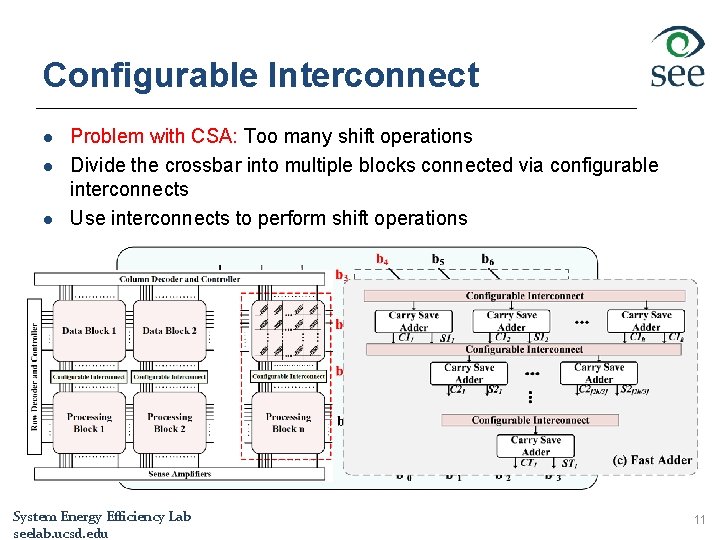

Configurable Interconnect
- Problem with CSA: too many shift operations
- Divide the crossbar into multiple blocks connected via configurable interconnects
- Use the interconnects to perform the shift operations

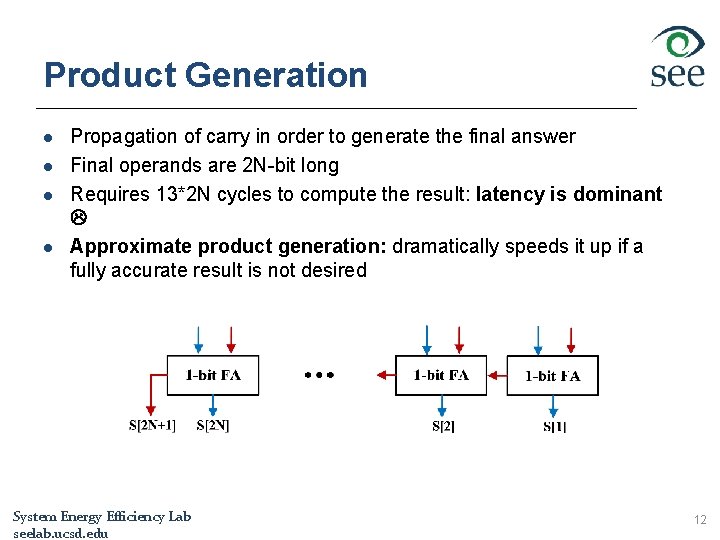

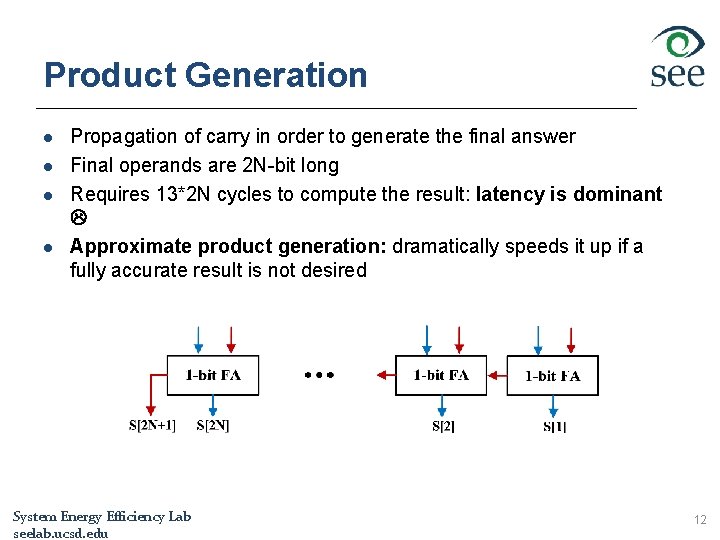

Product Generation
- Propagates the carry to generate the final answer
- The final operands are 2N bits long
- Requires 13·2N cycles to compute the result, so this step dominates the latency
- Approximate product generation: dramatically speeds up this step when a fully accurate result is not required
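The slide does not say how the approximate mode works. As one hypothetical illustration (a generic carry-truncation scheme, not necessarily APIM's method), the low k bits of the final addition can be computed carry-free with XOR while only the upper bits propagate carries. The result then never exceeds the exact sum and falls short of it by less than 2^(k+1).

```python
def approx_add(x, y, k):
    """Hypothetical approximate adder: the low k bits skip carry propagation."""
    mask = (1 << k) - 1
    low = (x ^ y) & mask               # carry-free sum of the low k bits
    high = ((x >> k) + (y >> k)) << k  # exact, carry-propagating upper bits
    return high | low                  # high has zeros in its low k bits


exact, approx = 11 + 7, approx_add(11, 7, 2)
print(exact, approx)
```

The error equals twice the AND of the two low parts, because x + y = (x XOR y) + 2(x AND y). If carry propagation costs about 13 cycles per bit as stated above, shortening the carry chain from 2N to 2N − k bits would cut that cost roughly proportionally; this is an estimate under that assumption, not a measured result.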

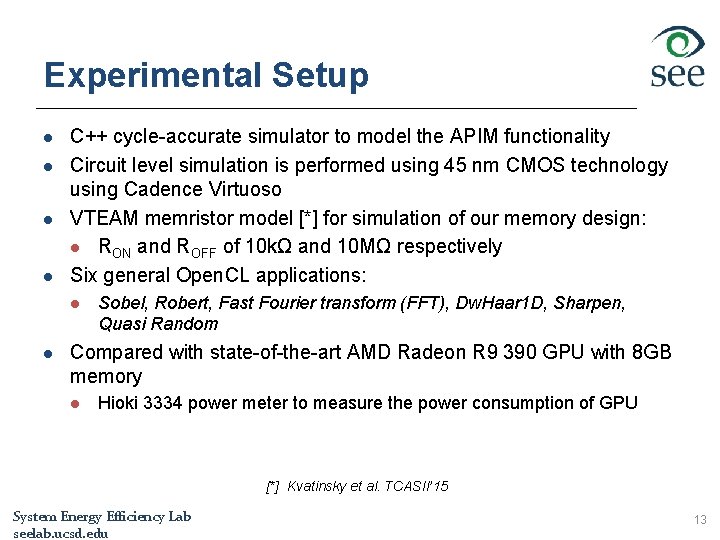

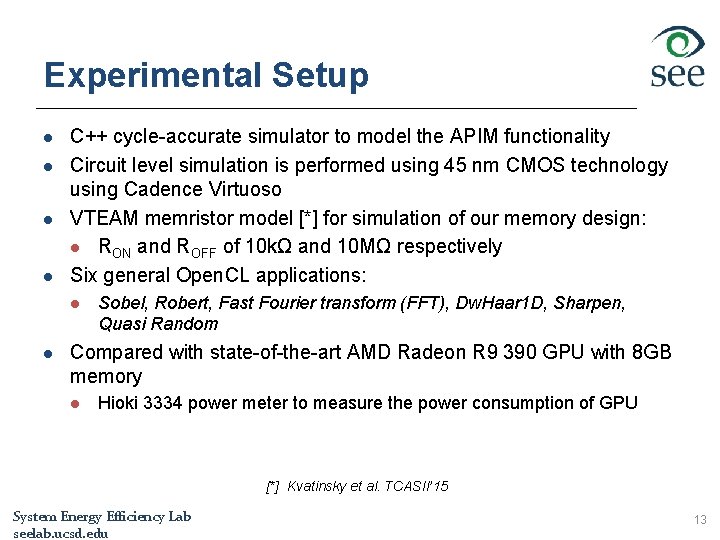

Experimental Setup
- A C++ cycle-accurate simulator models the APIM functionality
- Circuit-level simulation uses 45 nm CMOS technology in Cadence Virtuoso
- The VTEAM memristor model [*] is used to simulate the memory design, with R_ON and R_OFF of 10 kΩ and 10 MΩ, respectively
- Six general OpenCL applications: Sobel, Robert, Fast Fourier Transform (FFT), DwtHaar1D, Sharpen, Quasi Random
- Compared against a state-of-the-art AMD Radeon R9 390 GPU with 8 GB of memory
- A Hioki 3334 power meter measures the power consumption of the GPU

[*] Kvatinsky et al. TCASII’15

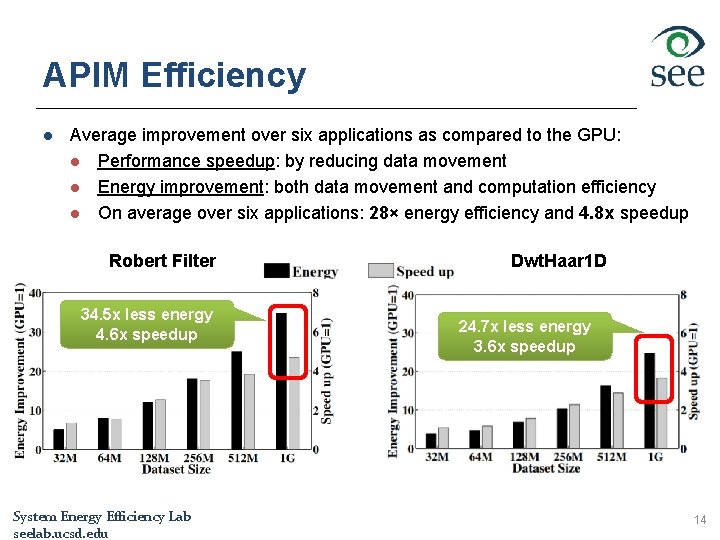

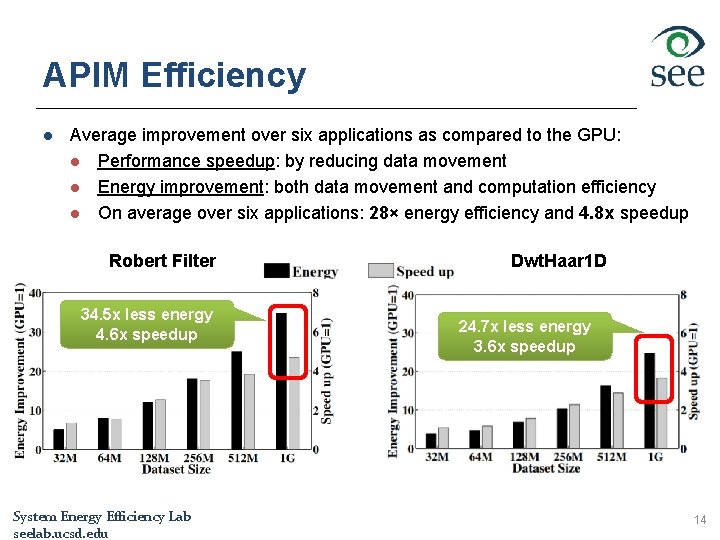

APIM Efficiency
- Improvement over the GPU, averaged over the six applications:
  - Performance speedup comes from reduced data movement
  - Energy improvement comes from both reduced data movement and more efficient computation
- On average over the six applications: 28× energy efficiency and 4.8× speedup
- Robert filter: 34.5× less energy, 4.6× speedup
- DwtHaar1D: 24.7× less energy, 3.6× speedup

Supporting In-Memory Operations Supported Operations Bitwise Addition/ Multiplication Search Operation Example of Operations OR, AND, XOR Multiple row Matrix Multiplication Search/ Nearest Search HD computing Graph processing Query processing Deep learning Security Multimedia Classification Clustering Database Applications System Energy Efficiency Lab seelab. ucsd. edu 15

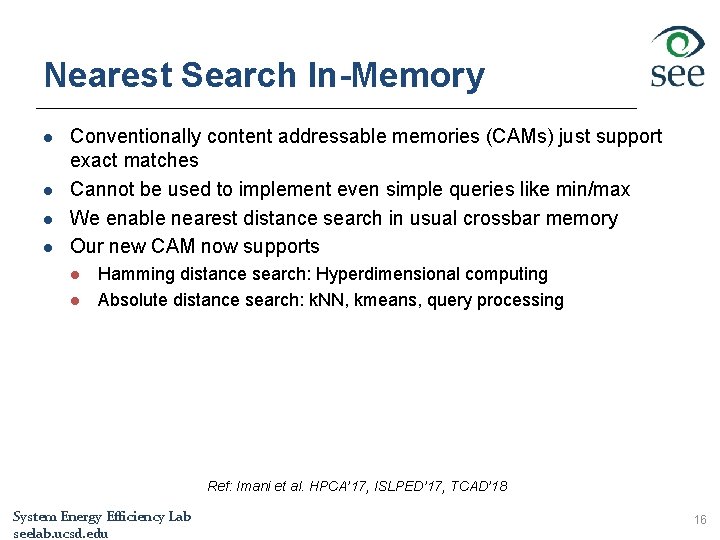

Nearest Search In-Memory
- Conventional content addressable memories (CAMs) support only exact matches, so they cannot implement even simple queries such as min/max
- We enable nearest-distance search in a conventional crossbar memory
- Our new CAM supports:
  - Hamming distance search: hyperdimensional computing
  - Absolute distance search: kNN, k-means, query processing

Ref: Imani et al. HPCA’17, ISLPED’17, TCAD’18
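A sequential software sketch of what the two search modes return, assuming the stored rows and the query are plain integers. The in-memory CAM compares the query against all rows in parallel, whereas this version simply scans them; function names are hypothetical, and ties go to the lowest row index.

```python
def hamming(a, b):
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def nearest_hamming(rows, query):
    """Index of the stored row with minimum Hamming distance to the query."""
    return min(range(len(rows)), key=lambda i: hamming(rows[i], query))


def nearest_absolute(rows, query):
    """Index of the stored row with minimum absolute difference to the query."""
    return min(range(len(rows)), key=lambda i: abs(rows[i] - query))


print(nearest_hamming([0b1010, 0b1111, 0b0001], 0b0011))
print(nearest_absolute([3, 10, 25], 12))
```

An exact-match CAM can only answer "is the query stored?"; returning the closest row is what makes operations such as kNN lookups and min/max queries possible.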

![In-Memory Computing Accelerator](https://slidetodoc.com/presentation_image_h/247009d01b8496726412c0987f5bcd52/image-17.jpg)

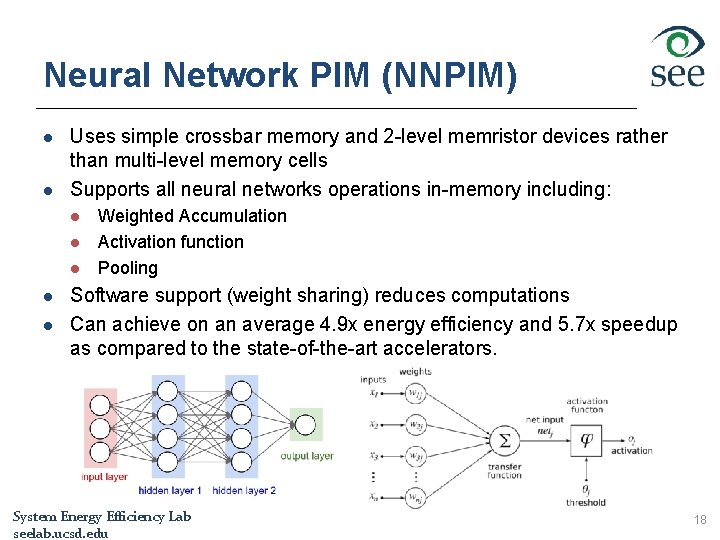

In-Memory Computing Accelerator
- Classification and clustering workloads:
  - Hyperdimensional classification [HPCA’17]
  - K-means
  - AdaBoost [ICCAD’17]
  - Hyperdimensional clustering
  - DNN, CNN [DATE’17]
  - Graph processing
  - Decision tree
  - kNN [ICRC’17]
- Database query processing [ISLPED’17] [TCAD’18]
- Supports both training and testing

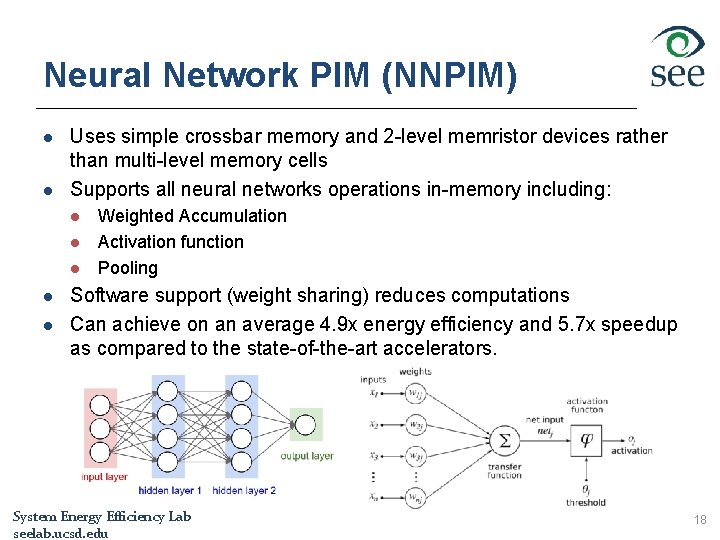

Neural Network PIM (NNPIM)
- Uses a simple crossbar memory with 2-level memristor devices rather than multi-level memory cells
- Supports all neural network operations in memory, including:
  - Weighted accumulation
  - Activation function
  - Pooling
- Software support (weight sharing) reduces the amount of computation
- Achieves on average 4.9× higher energy efficiency and 5.7× speedup compared to state-of-the-art accelerators
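Weight sharing is sketched below as one generic way to cut multiplications, assuming each weight is stored as an index into a small codebook of shared values; this illustrates the idea and is not NNPIM's implementation. Inputs that share a weight are first summed using additions only, and each shared value is then multiplied once, so a dot product over n inputs with K shared values needs K multiplications instead of n.

```python
def dot_with_weight_sharing(inputs, weight_ids, codebook):
    """Weighted accumulation when every weight is one of a few shared values."""
    # Accumulate the inputs that share the same weight (additions only)
    partial = [0] * len(codebook)
    for x, w_id in zip(inputs, weight_ids):
        partial[w_id] += x
    # One multiplication per shared weight instead of one per input
    return sum(c * p for c, p in zip(codebook, partial))


inputs, weight_ids, codebook = [1, 2, 3, 4], [0, 1, 0, 1], [3, -1]
print(dot_with_weight_sharing(inputs, weight_ids, codebook))
```

The result equals the ordinary dot product 3·1 − 1·2 + 3·3 − 1·4 = 6, but only two multiplications are performed.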

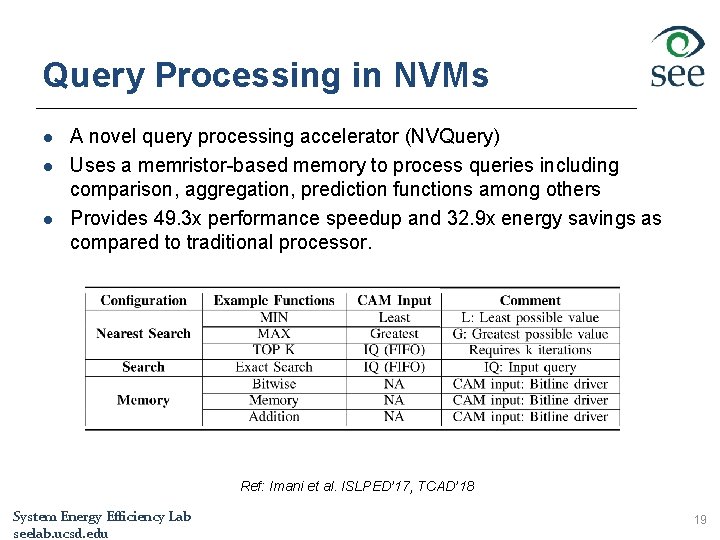

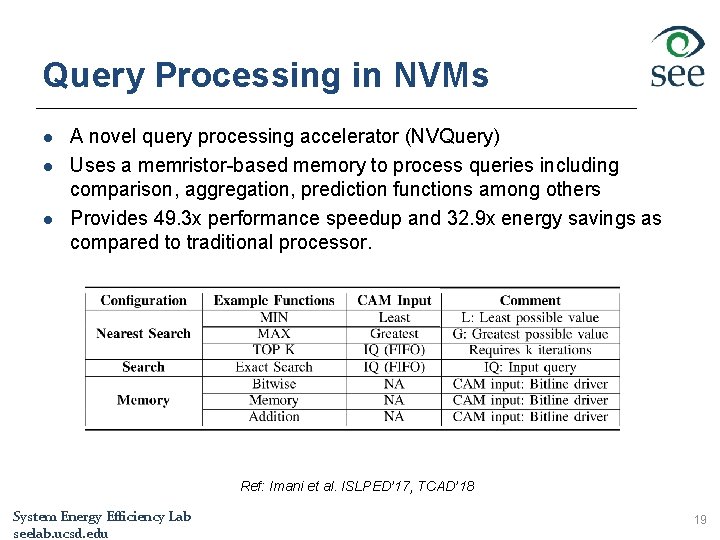

Query Processing in NVMs
- NVQuery: a novel query processing accelerator
- Uses a memristor-based memory to process queries, including comparison, aggregation, and prediction functions, among others
- Provides 49.3× speedup and 32.9× energy savings compared to a traditional processor

Ref: Imani et al. ISLPED’17, TCAD’18

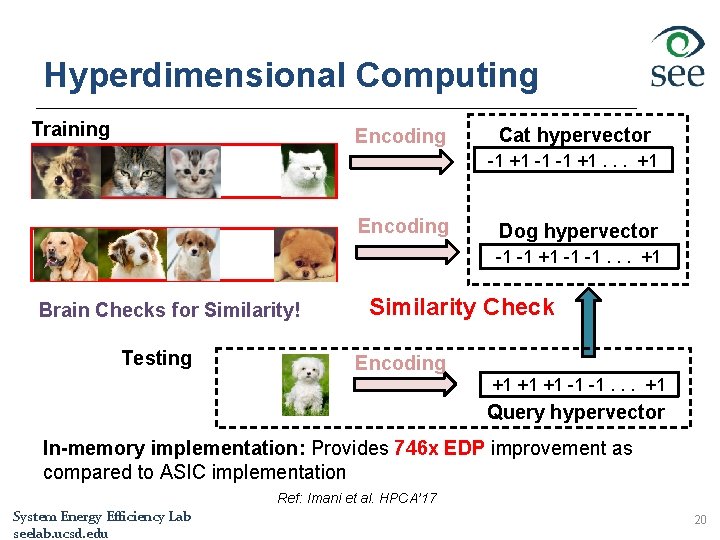

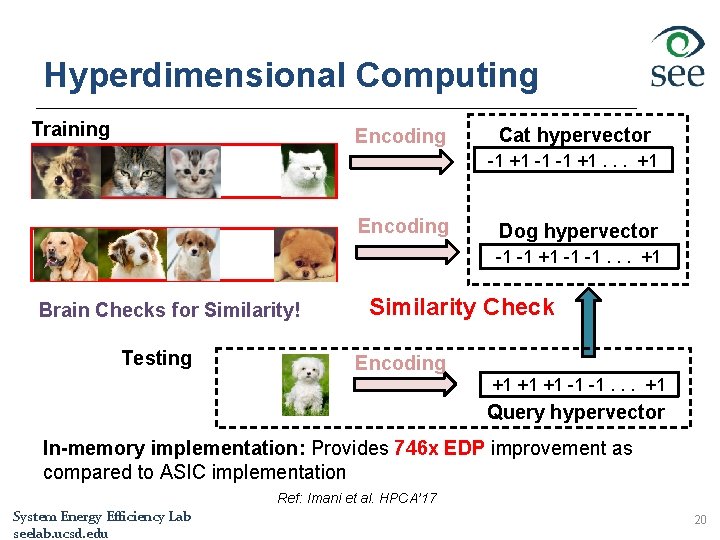

Hyperdimensional Computing
- Training: encoding produces one hypervector per class, e.g., the Cat hypervector (-1 +1 -1 -1 +1 … +1) and the Dog hypervector (-1 -1 +1 -1 -1 … +1)
- Testing: the input is encoded into a query hypervector (+1 +1 +1 -1 -1 … +1), and a similarity check against the class hypervectors selects the closest class (the brain checks for similarity!)
- In-memory implementation: provides 746× EDP improvement compared to an ASIC implementation

Ref: Imani et al. HPCA’17
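A toy sketch of the training and testing flow above, assuming bipolar (±1) hypervectors. Encoding is replaced by randomly flipping components of a per-class base vector (real HD encoding maps input features to hypervectors, which is not modeled here); training bundles several encoded samples into a class hypervector by a component-wise majority, and testing picks the class with the highest dot-product similarity. The dimensionality `D`, the noise levels, and all function names are hypothetical choices.

```python
import random

D = 2000  # hypothetical hypervector dimensionality


def random_hv(rng):
    """Random bipolar hypervector."""
    return [rng.choice((-1, 1)) for _ in range(D)]


def noisy(hv, flip_frac, rng):
    """Copy of hv with a fraction of components flipped (stand-in for encoding)."""
    return [-v if rng.random() < flip_frac else v for v in hv]


def bundle(hvs):
    """Class hypervector: component-wise sign of the sum (odd count avoids ties)."""
    return [1 if sum(col) > 0 else -1 for col in zip(*hvs)]


def similarity(a, b):
    """Dot product of two bipolar hypervectors."""
    return sum(x * y for x, y in zip(a, b))


def classify(query, classes):
    """Name of the class hypervector most similar to the query."""
    return max(classes, key=lambda name: similarity(query, classes[name]))


rng = random.Random(0)
cat, dog = random_hv(rng), random_hv(rng)
# Training: bundle five encoded samples per class
classes = {
    "cat": bundle([noisy(cat, 0.1, rng) for _ in range(5)]),
    "dog": bundle([noisy(dog, 0.1, rng) for _ in range(5)]),
}
# Testing: encode a new cat sample and check similarity
query = noisy(cat, 0.2, rng)
print(classify(query, classes))
```

For bipolar vectors the dot product equals D − 2 × (Hamming distance), so the maximum-similarity check is the same as the Hamming nearest search that the in-memory CAM supports.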

Conclusion
- We are working to accelerate a wide range of applications in memory
- At the circuit level, we are working to support more operations in memory and to make the existing operations more efficient
- At the architecture and system level, we are designing new application-specific accelerators
- At the application level, we are designing libraries that provide an interface for programmers to accelerate their applications using PIM

References
- Dally, Tutorial, NIPS’15.
- Mohsen Imani, Saransh Gupta, and Tajana Rosing. "Ultra-efficient processing in-memory for data intensive applications." In Proceedings of the 54th Annual Design Automation Conference 2017, p. 6. ACM, 2017.
- Shahar Kvatinsky, Dmitry Belousov, Slavik Liman, Guy Satat, Nimrod Wald, Eby G. Friedman, Avinoam Kolodny, and Uri C. Weiser. "MAGIC—Memristor-aided logic." IEEE Transactions on Circuits and Systems II: Express Briefs 61, no. 11 (2014): 895-899.
- Nishil Talati, Saransh Gupta, Pravin Mane, and Shahar Kvatinsky. "Logic design within memristive memories using memristor-aided loGIC (MAGIC)." IEEE Transactions on Nanotechnology 15, no. 4 (2016): 635-650.
- Shahar Kvatinsky, Misbah Ramadan, Eby G. Friedman, and Avinoam Kolodny. "VTEAM: A general model for voltage-controlled memristors." IEEE Transactions on Circuits and Systems II: Express Briefs 62, no. 8 (2015): 786-790.
- Mohsen Imani, Abbas Rahimi, Deqian Kong, Tajana Rosing, and Jan M. Rabaey. "Exploring hyperdimensional associative memory." In High Performance Computer Architecture (HPCA), 2017 IEEE International Symposium on, pp. 445-456. IEEE, 2017.
- Mohsen Imani, Saransh Gupta, Atl Arredondo, and Tajana Rosing. "Efficient query processing in crossbar memory." In Low Power Electronics and Design (ISLPED), 2017 IEEE/ACM International Symposium on, pp. 1-6. IEEE, 2017.

- Mohsen Imani, Saransh Gupta, Sahil Sharma, and Tajana Rosing. "NVQuery: Efficient query processing in non-volatile memory." IEEE Transactions on Computer Aided Design of Integrated Circuits and Systems (2018), in press.
- Yeseong Kim, Mohsen Imani, and Tajana Rosing. "Orchard: Visual object recognition accelerator based on approximate in-memory processing." In Computer-Aided Design (ICCAD), 2017 IEEE/ACM International Conference on, pp. 25-32. IEEE, 2017.
- Mohsen Imani, Daniel Peroni, Yeseong Kim, Abbas Rahimi, and Tajana Rosing. "Efficient neural network acceleration on GPGPU using content addressable memory." In 2017 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 1026-1031. IEEE, 2017.
- Mohammad Samragh Razlighi, Mohsen Imani, Farinaz Koushanfar, and Tajana Rosing. "LookNN: Neural network with no multiplication." In 2017 Design, Automation & Test in Europe Conference & Exhibition (DATE), pp. 1775-1780. IEEE, 2017.
- Mohsen Imani, Yeseong Kim, and Tajana Rosing. "NNgine: Ultra-Efficient Nearest Neighbor Accelerator Based on In-Memory Computing." In Rebooting Computing (ICRC), 2017 IEEE International Conference on, pp. 1-8. IEEE, 2017.
- Mohsen Imani, Deqian Kong, Abbas Rahimi, and Tajana Rosing. "VoiceHD: Hyperdimensional computing for efficient speech recognition." In Rebooting Computing (ICRC), 2017 IEEE International Conference on, pp. 1-8. IEEE, 2017.