
- Slides: 42

Parallelizing Sequential Graph Computations

Wenfei Fan, University of Edinburgh and Beihang University

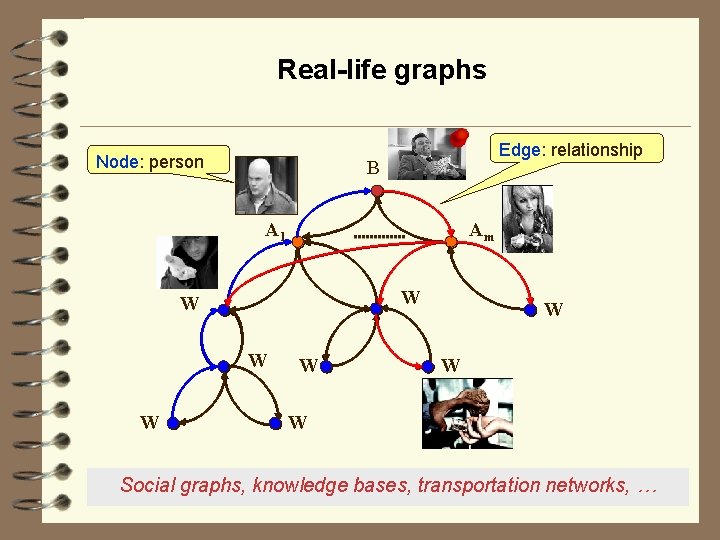

Real-life graphs

- Node: person; edge: relationship
- Social graphs, knowledge bases, transportation networks, …

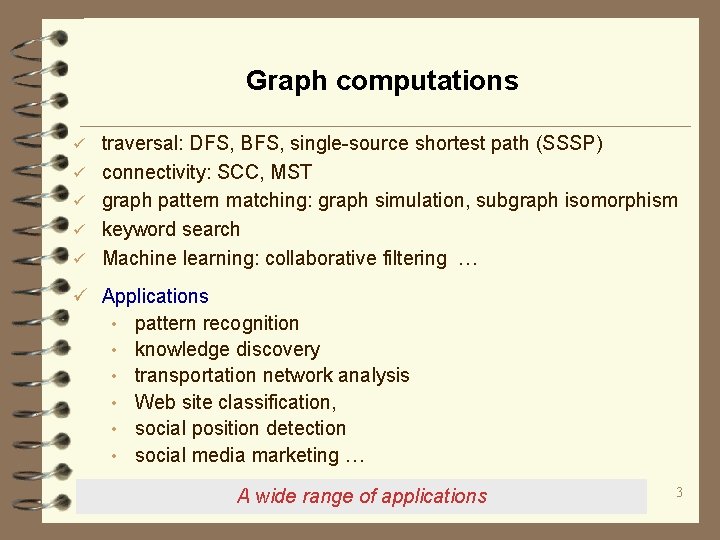

Graph computations

- Traversal: DFS, BFS, single-source shortest path (SSSP)
- Connectivity: SCC, MST
- Graph pattern matching: graph simulation, subgraph isomorphism
- Keyword search
- Machine learning: collaborative filtering
- …

Applications: pattern recognition, knowledge discovery, transportation network analysis, Web site classification, social position detection, social media marketing, … A wide range of applications.

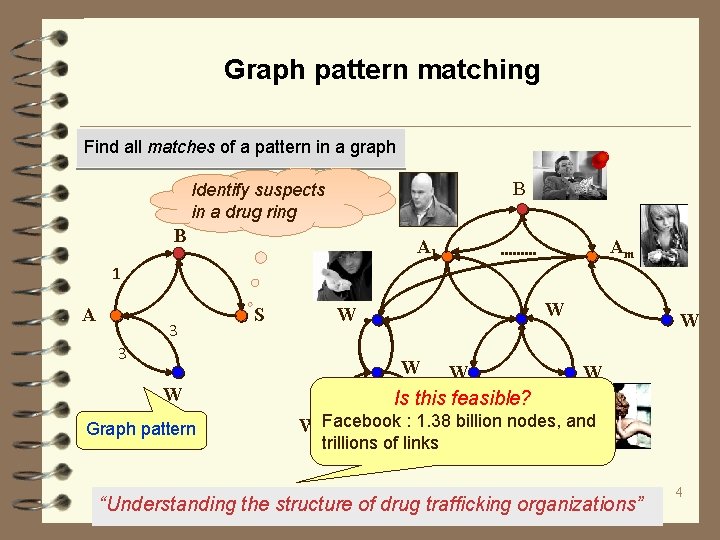

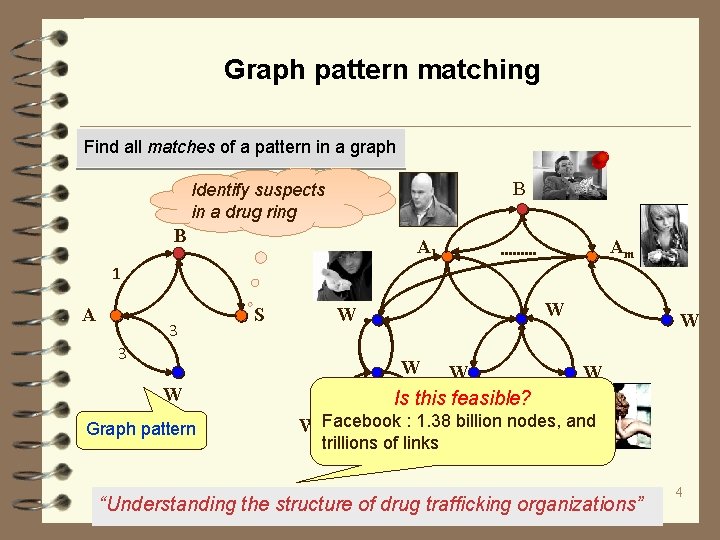

Graph pattern matching

- Find all matches of a pattern in a graph
- Example: identify suspects in a drug ring (figure: a graph pattern with nodes B, AM, S and W, after “Understanding the structure of drug trafficking organizations”)
- Is this feasible? Facebook: 1.38 billion nodes, and trillions of links

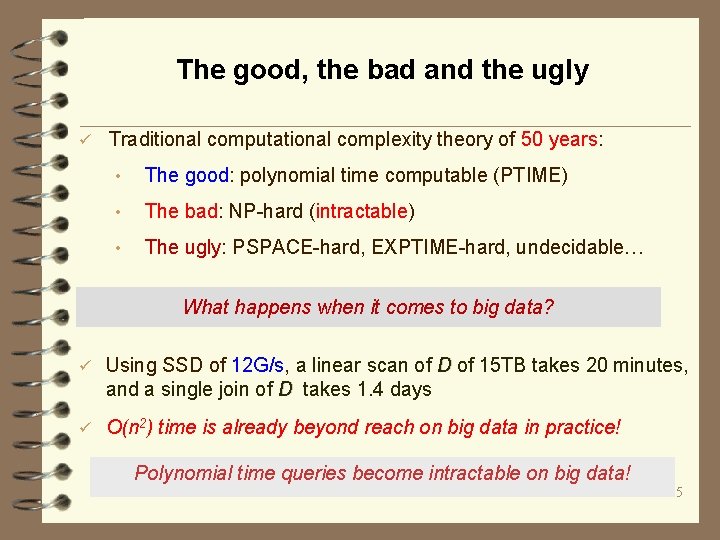

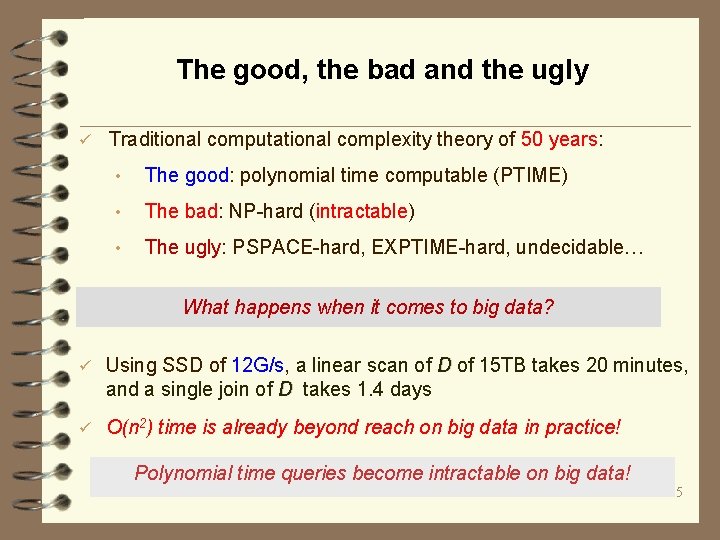

The good, the bad and the ugly

- Traditional computational complexity theory of the past 50 years:
  - The good: polynomial-time computable (PTIME)
  - The bad: NP-hard (intractable)
  - The ugly: PSPACE-hard, EXPTIME-hard, undecidable, …
- What happens when it comes to big data?
  - Using an SSD at 12 GB/s, a linear scan of a dataset D of 15 TB takes about 20 minutes, and a single join of D takes 1.4 days
  - O(n²) time is already beyond reach on big data in practice!

Polynomial-time queries become intractable on big data!
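
The scan figure is plain arithmetic and easy to check. A minimal sketch, assuming decimal units (1 TB = 10^12 bytes) and reading "12 G/s" as a constant 12 GB/s sequential read rate; the join estimate depends on the join algorithm and is not reproduced here.

```python
# Back-of-the-envelope check of the linear-scan time quoted on the slide.
# Assumptions: decimal units and a constant sequential read rate.
DATA_BYTES = 15 * 10**12        # |D| = 15 TB
SSD_BYTES_PER_SEC = 12 * 10**9  # 12 GB/s


def scan_seconds(size_bytes: int, bytes_per_sec: int) -> float:
    """Time to read every byte of the dataset exactly once."""
    return size_bytes / bytes_per_sec


seconds = scan_seconds(DATA_BYTES, SSD_BYTES_PER_SEC)
print(f"linear scan: {seconds:.0f} s, about {seconds / 60:.1f} min")
# prints: linear scan: 1250 s, about 20.8 min
```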

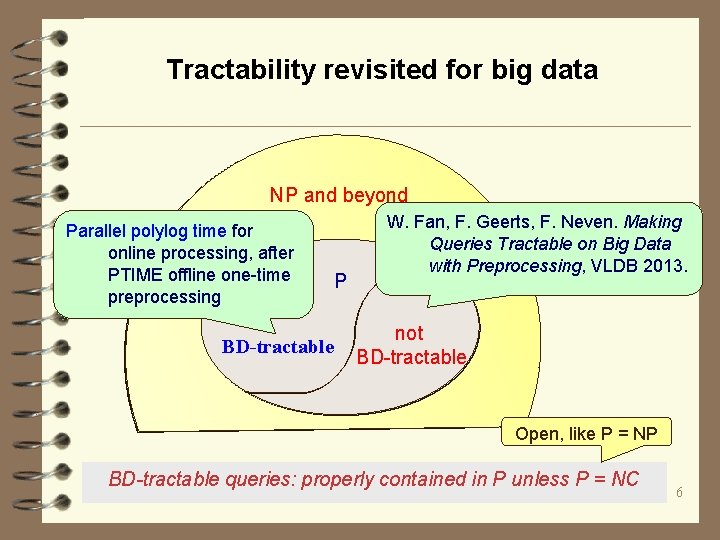

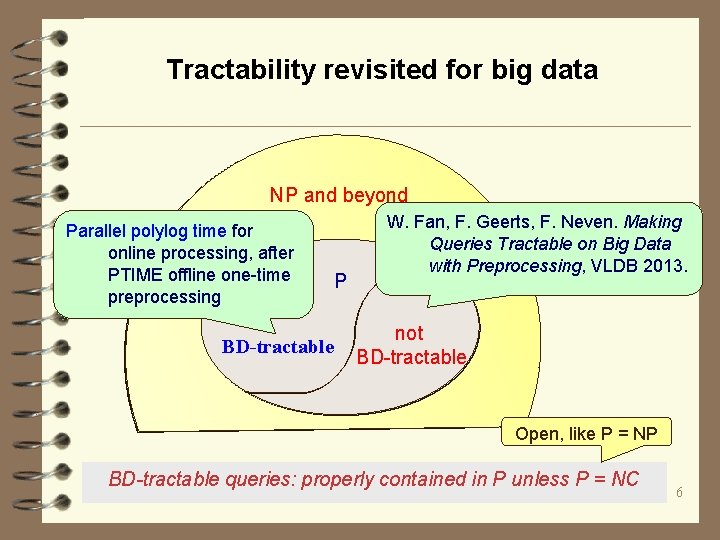

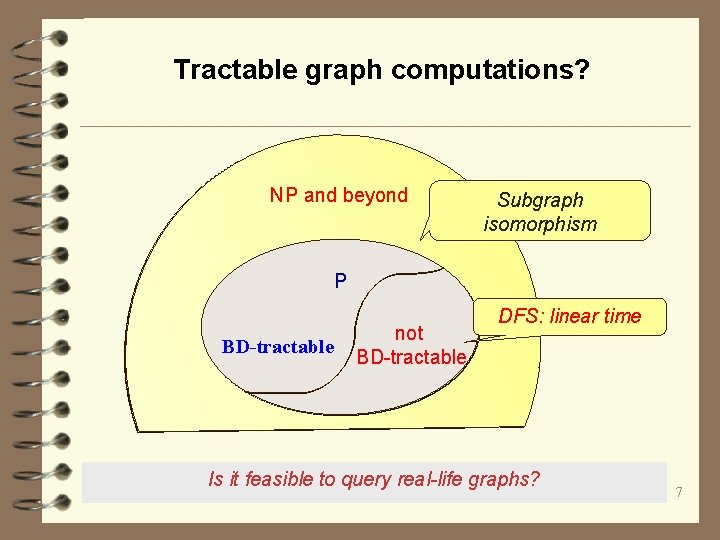

Tractability revisited for big data

- BD-tractable: parallel polylog time for online processing, after PTIME offline one-time preprocessing
- Figure: BD-tractable queries sit inside P, next to queries in P that are not BD-tractable; NP and beyond lies outside P
- BD-tractable queries are properly contained in P unless P = NC; whether they are properly contained in P is open, like P = NP

W. Fan, F. Geerts, F. Neven. Making Queries Tractable on Big Data with Preprocessing, VLDB 2013.

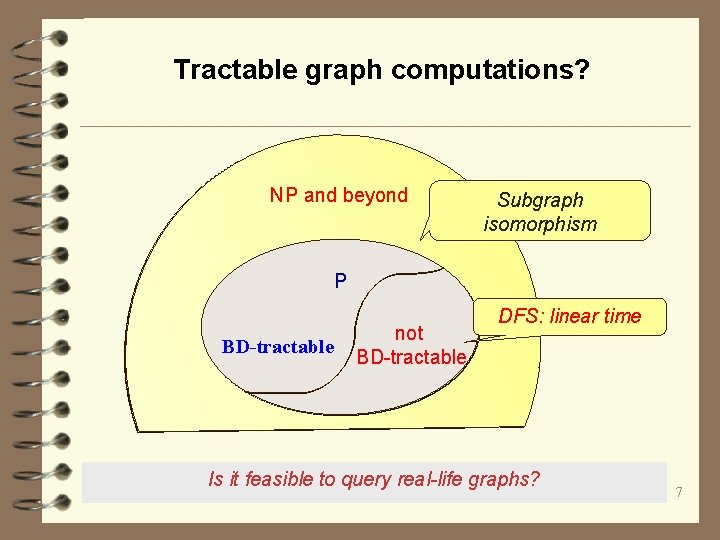

Tractable graph computations?

- Figure: subgraph isomorphism lies in NP and beyond; DFS (linear time) lies in P, relative to the BD-tractable and not BD-tractable classes
- Is it feasible to query real-life graphs?

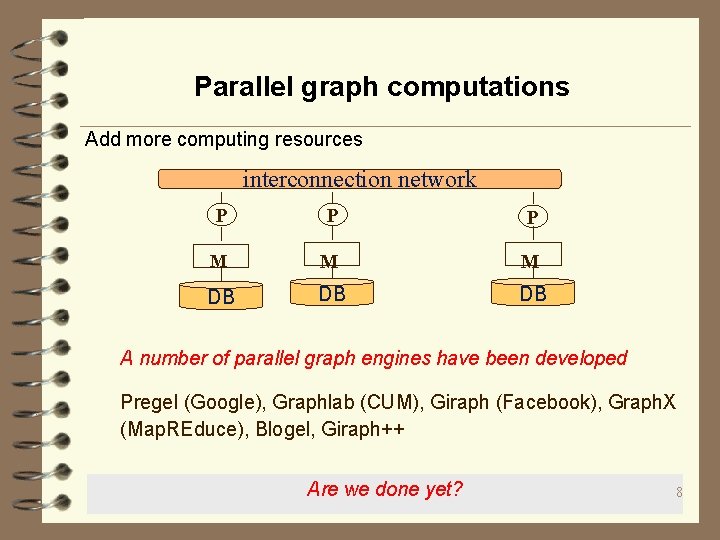

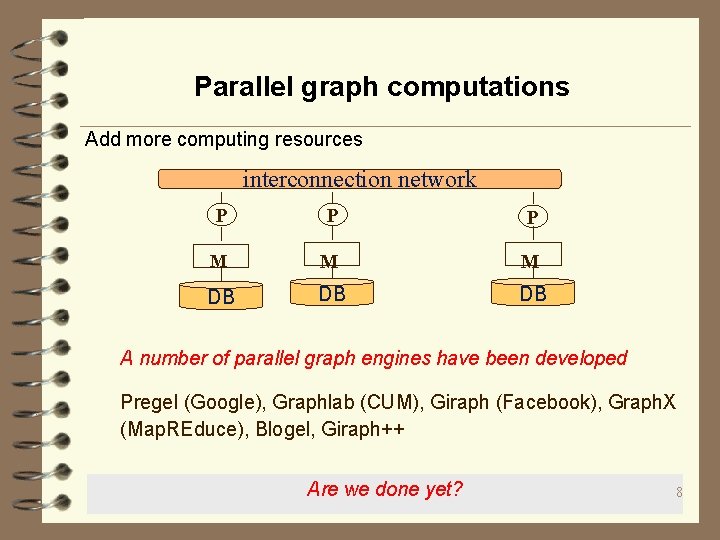

Parallel graph computations

- Add more computing resources (figure: processors P, each with its own memory M and database DB, linked by an interconnection network)
- A number of parallel graph engines have been developed: Pregel (Google), GraphLab (CMU), Giraph (Facebook), GraphX (Spark), Blogel, Giraph++
- Are we done yet?

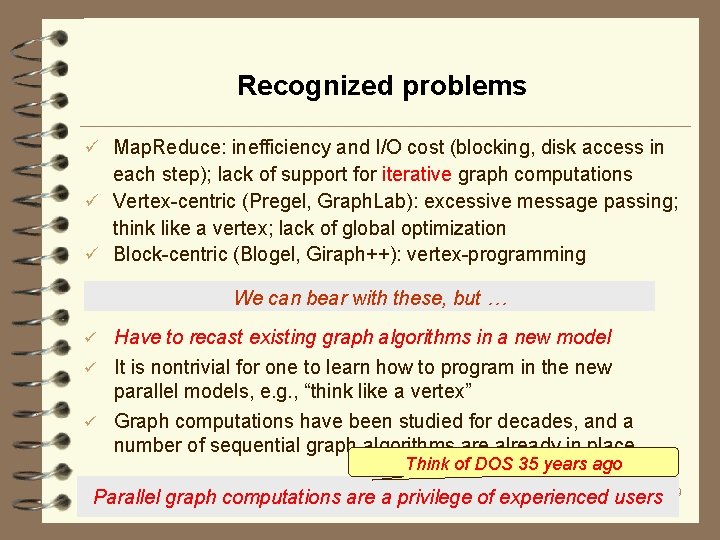

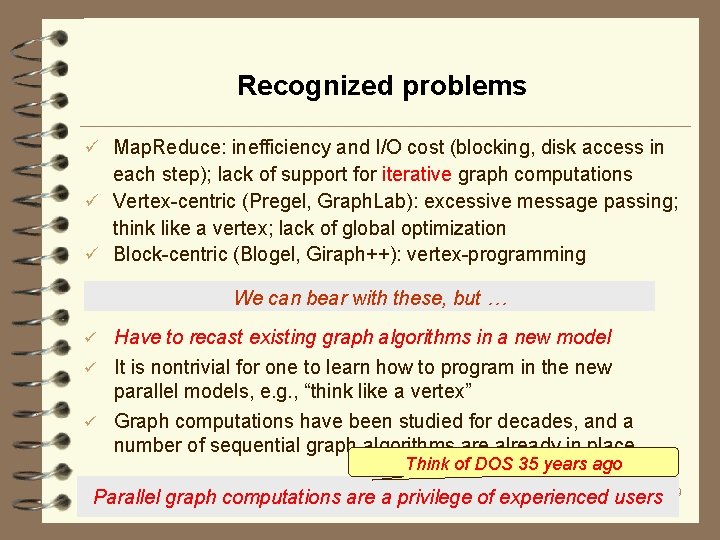

Recognized problems

- MapReduce: inefficiency and I/O cost (blocking, disk access in each step); lack of support for iterative graph computations
- Vertex-centric (Pregel, GraphLab): excessive message passing; "think like a vertex"; lack of global optimization
- Block-centric (Blogel, Giraph++): still vertex programming

We can bear with these, but we have to recast existing graph algorithms in a new model:

- It is nontrivial to learn how to program in the new parallel models, e.g., "think like a vertex"
- Graph computations have been studied for decades, and a number of sequential graph algorithms are already in place
- Think of DOS 35 years ago

Parallel graph computations are a privilege of experienced users.

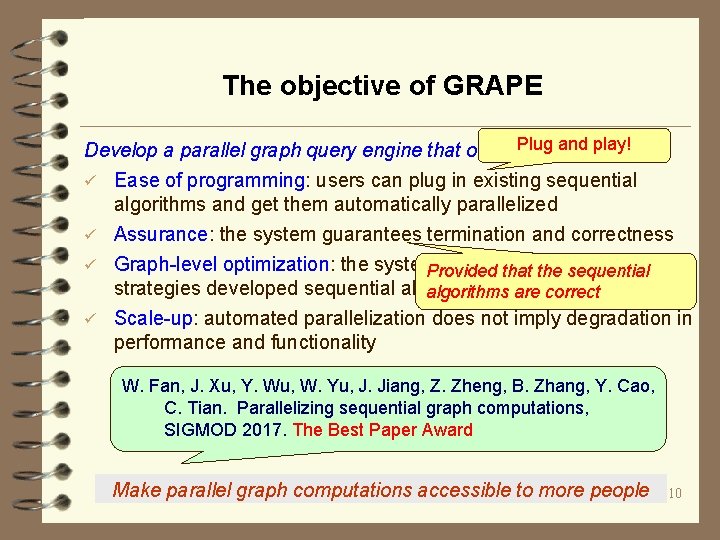

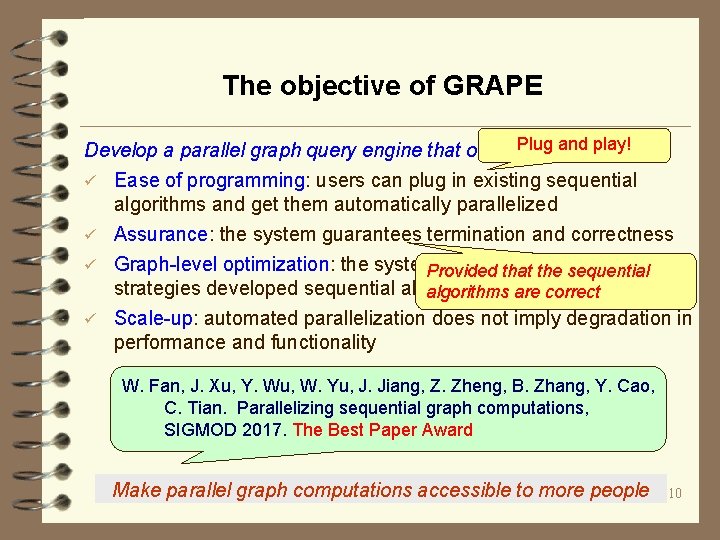

The objective of GRAPE

Develop a parallel graph query engine that offers plug and play:

- Ease of programming: users can plug in existing sequential algorithms and get them automatically parallelized
- Assurance: the system guarantees termination and correctness, provided that the sequential algorithms are correct
- Graph-level optimization: the system inherits all the optimization strategies developed for sequential algorithms
- Scale-up: automated parallelization does not imply degradation in performance or functionality

Make parallel graph computations accessible to more people.

W. Fan, J. Xu, Y. Wu, W. Yu, J. Jiang, Z. Zheng, B. Zhang, Y. Cao, C. Tian. Parallelizing sequential graph computations, SIGMOD 2017. The Best Paper Award.

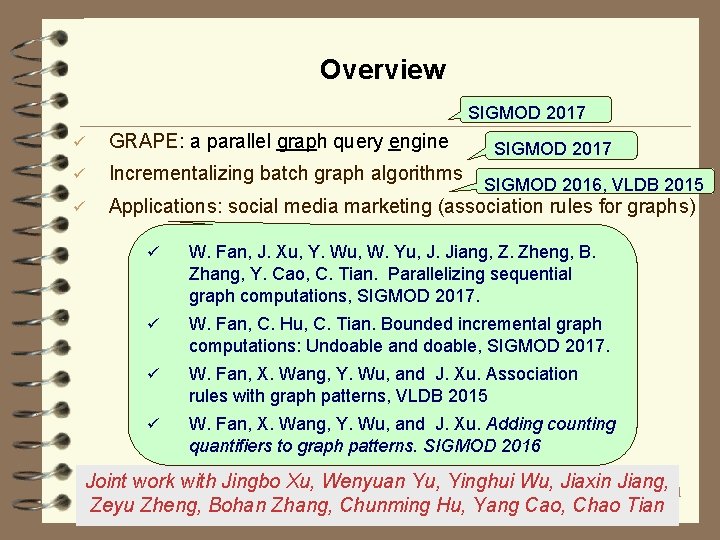

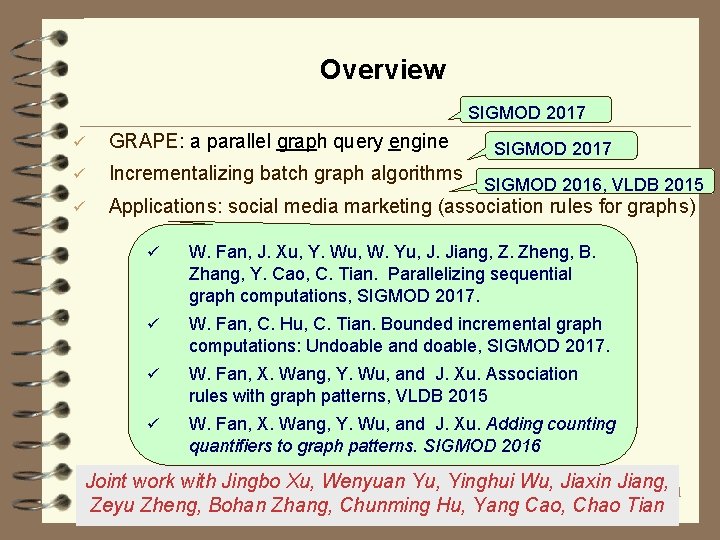

Overview

- GRAPE: a parallel graph query engine (SIGMOD 2017)
- Incrementalizing batch graph algorithms (SIGMOD 2017)
- Applications: social media marketing with association rules for graphs (VLDB 2015, SIGMOD 2016)

- W. Fan, J. Xu, Y. Wu, W. Yu, J. Jiang, Z. Zheng, B. Zhang, Y. Cao, C. Tian. Parallelizing sequential graph computations, SIGMOD 2017.
- W. Fan, C. Hu, C. Tian. Bounded incremental graph computations: Undoable and doable, SIGMOD 2017.
- W. Fan, X. Wang, Y. Wu, and J. Xu. Association rules with graph patterns, VLDB 2015.
- W. Fan, Y. Wu, and J. Xu. Adding counting quantifiers to graph patterns, SIGMOD 2016.

Joint work with Jingbo Xu, Wenyuan Yu, Yinghui Wu, Jiaxin Jiang, Zeyu Zheng, Bohan Zhang, Chunming Hu, Yang Cao, Chao Tian.

GRAPE: a parallel graph query engine

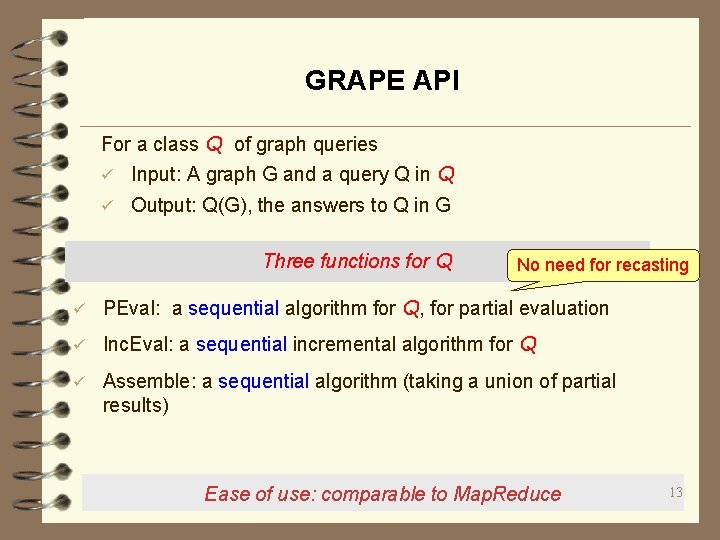

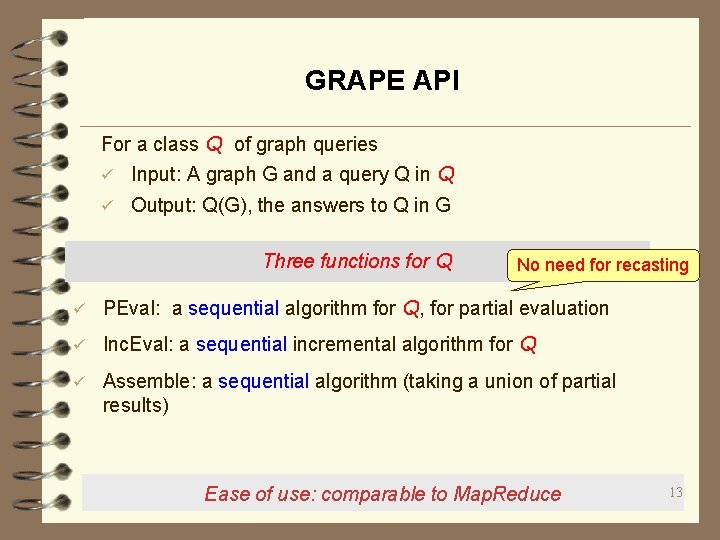

GRAPE API

For a class 𝒬 of graph queries:

- Input: a graph G and a query Q in 𝒬
- Output: Q(G), the answers to Q in G

Three functions for 𝒬, with no need for recasting:

- PEval: a sequential algorithm for 𝒬, for partial evaluation
- IncEval: a sequential incremental algorithm for 𝒬
- Assemble: a sequential algorithm (taking a union of partial results)

Ease of use: comparable to MapReduce.
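
One way to picture this API in code is an abstract class with one method per function. The sketch below is a hypothetical Python rendering: the class name `GrapeProgram`, the method names and the message format (a map from border node to updated value) are illustrative assumptions, not the interface of the GRAPE system itself.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Hashable, List, Tuple

# Hypothetical message format: border-node id -> updated value.
Messages = Dict[Hashable, Any]


class GrapeProgram(ABC):
    """Illustrative stand-in for the three user-supplied functions."""

    @abstractmethod
    def peval(self, query: Any, fragment: Any) -> Tuple[Any, Messages]:
        """Sequential batch algorithm on one fragment; returns the partial
        result and the border-node values to ship to other workers."""

    @abstractmethod
    def inceval(self, query: Any, partial: Any, fragment: Any,
                messages: Messages) -> Tuple[Any, Messages]:
        """Sequential incremental algorithm: treats incoming messages as
        updates to the fragment and returns the revised partial result."""

    @abstractmethod
    def assemble(self, partials: List[Any]) -> Any:
        """Combine the partial results, e.g. by taking their union."""
```

A concrete program (SSSP, say) would subclass it, putting Dijkstra's algorithm in `peval` and an incremental shortest-path routine in `inceval`.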

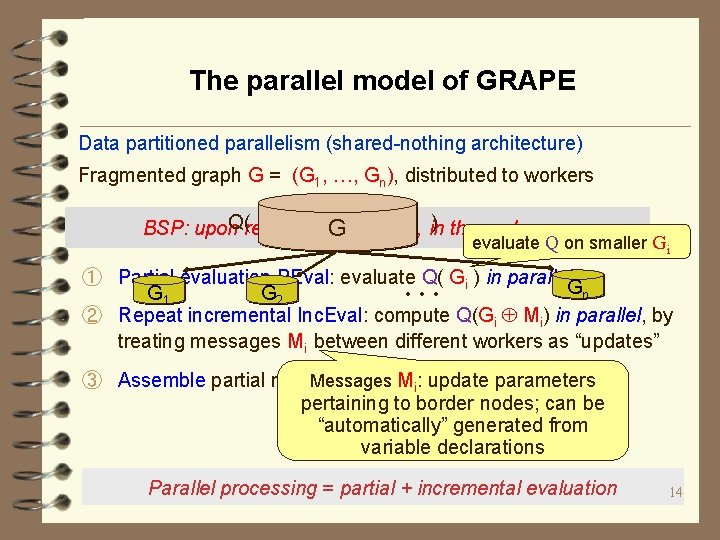

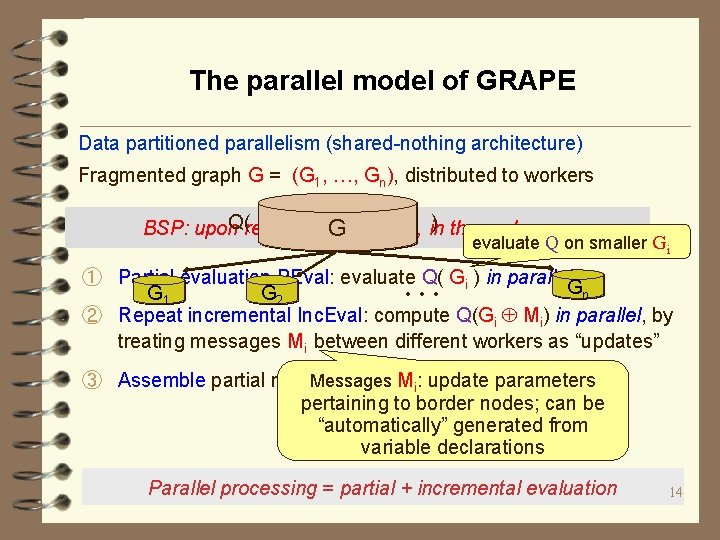

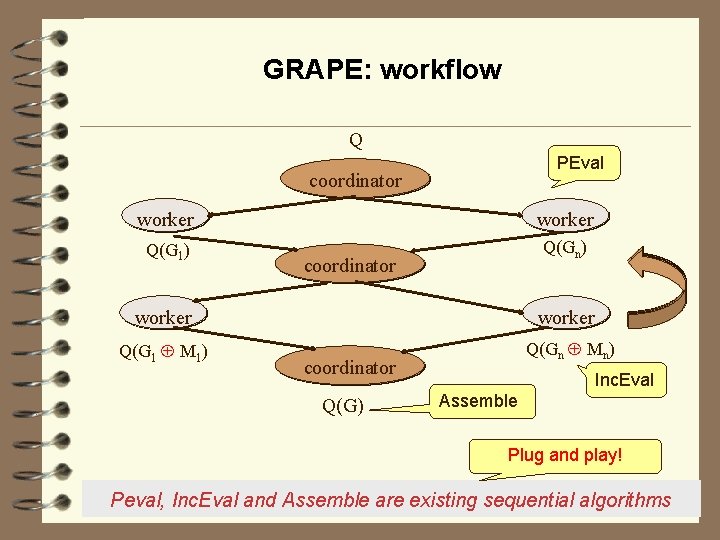

The parallel model of GRAPE

Data-partitioned parallelism (shared-nothing architecture): a fragmented graph G = (G1, …, Gn) is distributed to workers. Upon receiving a query Q, GRAPE evaluates Q on the smaller fragments Gi in three BSP phases:

1. Partial evaluation (PEval): evaluate Q(Gi) in parallel.
2. Repeated incremental evaluation (IncEval): compute Q(Gi ⊕ Mi) in parallel, treating the messages Mi between different workers as "updates".
3. Assemble the partial results when the computation reaches a "fixed point".

Messages Mi: updates to parameters pertaining to border nodes; they can be generated "automatically" from variable declarations.

Parallel processing = partial evaluation + incremental evaluation.

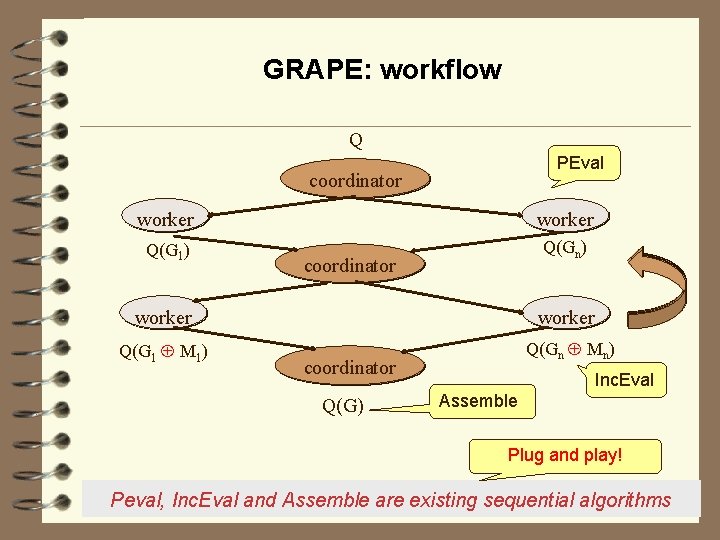

GRAPE: workflow

Query Q → coordinator → PEval at each worker: Q(G1), …, Q(Gn) → coordinator → IncEval at each worker, repeated: Q(G1 ⊕ M1), …, Q(Gn ⊕ Mn) → coordinator → Assemble: Q(G)

Plug and play! PEval, IncEval and Assemble are existing sequential algorithms.
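
The workflow can be simulated in a single process to see how the pieces fit. A toy sketch, assuming SSSP as the query class, fragments that store their owned nodes plus the outgoing edges of those nodes, and messages that carry tentative distances of nodes owned by other workers. All names (`peval`, `inceval`, `run`, the fragment layout) are hypothetical; this is not GRAPE's implementation.

```python
import heapq
import math
from collections import defaultdict


def relax(dist, seeds, out_edges):
    """Dijkstra-style relaxation from `seeds`, lowering `dist` in place.
    Returns every node whose distance was set or lowered (seeds included)."""
    heap = [(dist[v], v) for v in seeds]
    heapq.heapify(heap)
    changed = set(seeds)
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist[v]:
            continue  # stale heap entry
        for w, weight in out_edges.get(v, []):
            if d + weight < dist.get(w, math.inf):
                dist[w] = d + weight
                changed.add(w)
                heapq.heappush(heap, (dist[w], w))
    return changed


def outgoing(dist, changed, frag):
    """Messages: new distances of changed nodes owned by other workers."""
    return {v: dist[v] for v in changed if v not in frag["nodes"]}


def peval(source, frag):
    """PEval: plain sequential Dijkstra on the local fragment."""
    dist = {v: math.inf for v in frag["nodes"]}
    seeds = [source] if source in dist else []
    for v in seeds:
        dist[v] = 0
    return dist, outgoing(dist, relax(dist, seeds, frag["out"]), frag)


def inceval(dist, frag, msgs):
    """IncEval: treat smaller incoming distances as updates and re-relax."""
    seeds = [v for v, d in msgs.items() if d < dist[v]]
    for v in seeds:
        dist[v] = msgs[v]
    return dist, outgoing(dist, relax(dist, seeds, frag["out"]), frag)


def run(source, frags, owner):
    """Coordinator: PEval, IncEval rounds until no messages, then Assemble."""
    results, outbox = {}, []
    for i, frag in frags.items():
        results[i], msgs = peval(source, frag)
        outbox.append(msgs)
    while True:
        inbox = defaultdict(dict)
        for msgs in outbox:  # route each value to its owner, keeping the minimum
            for v, d in msgs.items():
                inbox[owner[v]][v] = min(d, inbox[owner[v]].get(v, math.inf))
        if not inbox:
            break  # fixed point: nobody has anything to send
        outbox = []
        for i, msgs in inbox.items():
            results[i], out = inceval(results[i], frags[i], msgs)
            outbox.append(out)
    # Assemble: union of the distances each worker owns.
    return {v: d for i, frag in frags.items()
            for v, d in results[i].items() if v in frag["nodes"]}


OWNER = {"a": 0, "b": 0, "c": 1, "d": 1}
FRAGS = {
    0: {"nodes": {"a", "b"}, "out": {"a": [("b", 4), ("c", 1)]}},
    1: {"nodes": {"c", "d"}, "out": {"c": [("d", 1), ("b", 1)]}},
}
print(dict(sorted(run("a", FRAGS, OWNER).items())))
# prints: {'a': 0, 'b': 2, 'c': 1, 'd': 2}
```

Each worker only ever runs its sequential routine; the coordinator loop supplies the parallel structure. A distance is re-sent only when it strictly decreases, so the rounds stop after finitely many steps.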

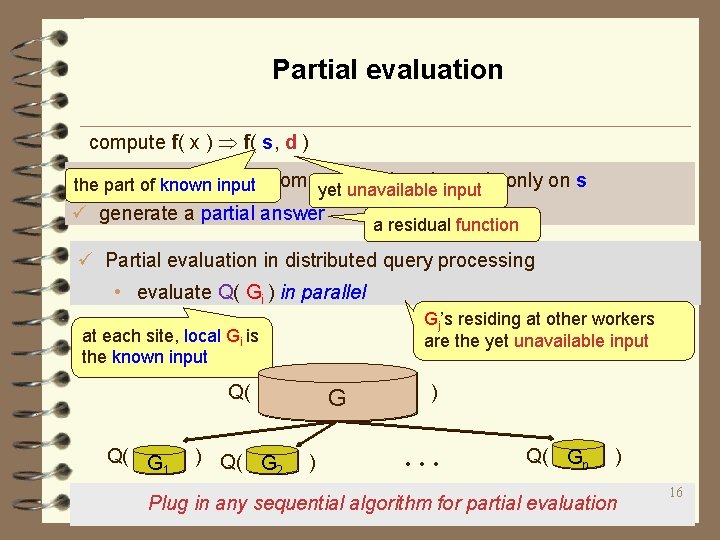

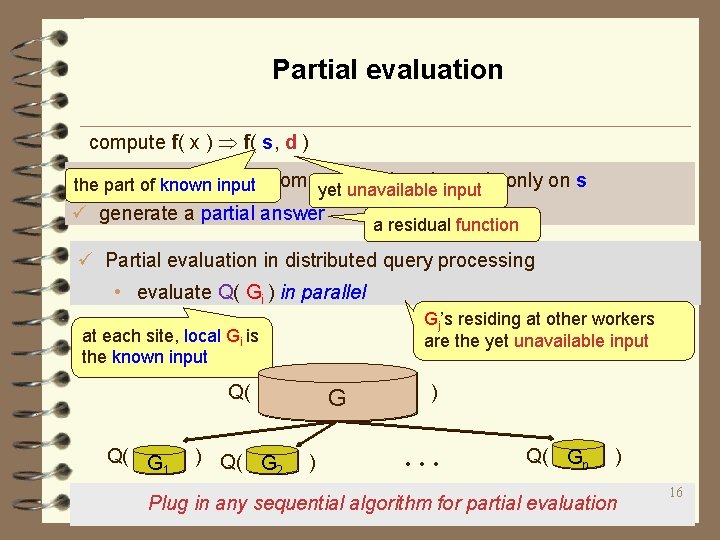

Partial evaluation

- To compute f(s, d), where s is the known input and d is the yet unavailable input:
  - conduct the part of the computation that depends only on s
  - generate a partial answer: a residual function of d
- Partial evaluation in distributed query processing:
  - evaluate Q(Gi) in parallel
  - at each site, the local fragment Gi is the known input, and the fragments Gj residing at other workers are the yet unavailable input
  - Q(G) is obtained from Q(G1), Q(G2), …, Q(Gn)

Plug in any sequential algorithm for partial evaluation.
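
To make the idea of a residual function concrete, here is a small sketch, assuming the query is single-source shortest distance and that the only unknown input at a worker is the distance of each in-border node (a node that edges from other fragments point to). The function names are hypothetical; this illustrates partial evaluation in general, not how GRAPE's PEval is written.

```python
import heapq
import math


def local_distances(out_edges, start):
    """Dijkstra restricted to one fragment: uses only the known input."""
    dist = {start: 0}
    heap = [(0, start)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist[v]:
            continue  # stale heap entry
        for w, weight in out_edges.get(v, []):
            if d + weight < dist.get(w, math.inf):
                dist[w] = d + weight
                heapq.heappush(heap, (dist[w], w))
    return dist


def partially_evaluate(out_edges, in_border):
    """Do all work that depends only on the local fragment and return a
    residual function of the yet unavailable border distances."""
    table = {b: local_distances(out_edges, b) for b in in_border}

    def residual(border_dist):
        result = {}
        for b, db in border_dist.items():
            for v, dv in table[b].items():
                result[v] = min(result.get(v, math.inf), db + dv)
        return result

    return residual


# Fragment with in-border nodes x and y; their distances arrive later.
edges = {"x": [("p", 2)], "y": [("p", 5)], "p": [("q", 1)]}
residual = partially_evaluate(edges, ["x", "y"])
print(residual({"x": 10, "y": 3}))
# prints: {'x': 10, 'p': 8, 'q': 9, 'y': 3}
```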

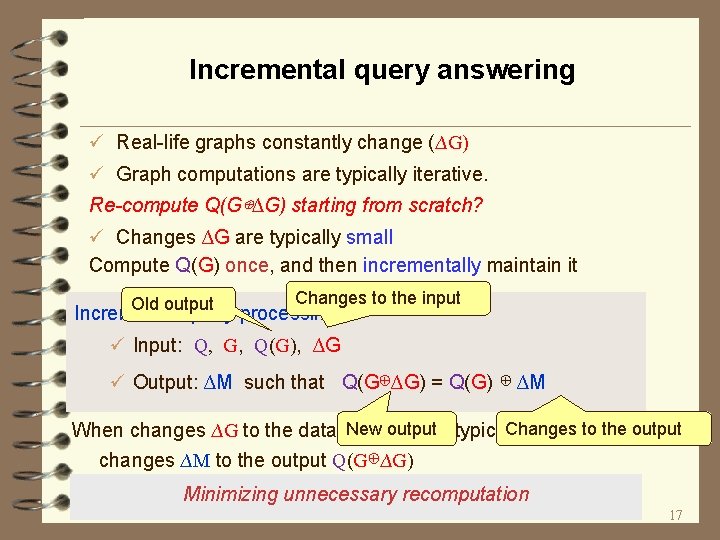

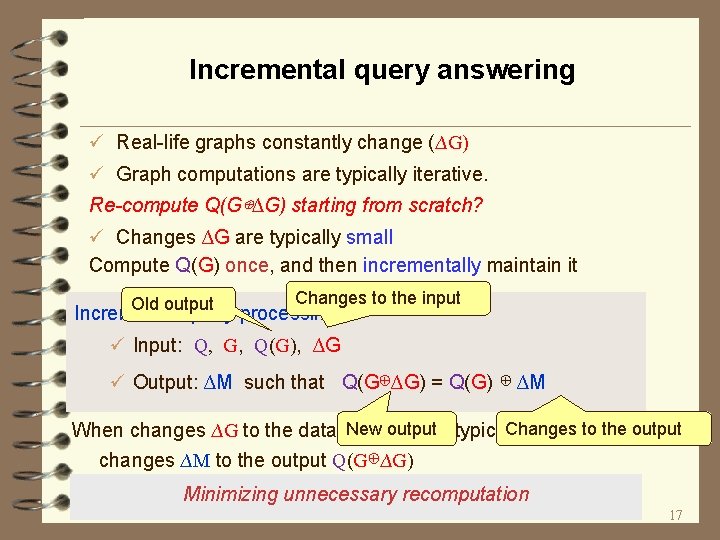

Incremental query answering

- Real-life graphs constantly change (ΔG)
- Graph computations are typically iterative
- Re-compute Q(G ⊕ ΔG) starting from scratch?
- Changes ΔG are typically small: compute Q(G) once, and then incrementally maintain it

Incremental query processing:

- Input: Q, G, the old output Q(G), and the changes ΔG to the input
- Output: the changes ΔM to the output such that Q(G ⊕ ΔG) = Q(G) ⊕ ΔM, the new output

When the changes ΔG to the data G are small, typically so are the changes ΔM to the output Q(G ⊕ ΔG). The goal: minimize unnecessary recomputation.
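
Shortest distances under an edge insertion give a compact example of computing ΔM instead of recomputing Q(G ⊕ ΔG). A sketch, assuming non-negative edge weights and insertions only (deletions need more bookkeeping); `insert_edge` is a hypothetical helper that re-relaxes only from the affected node, and the batch `sssp` is used only to check the answer.

```python
import heapq
import math


def sssp(adj, source):
    """Batch algorithm: Dijkstra from scratch."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist[v]:
            continue  # stale heap entry
        for w, weight in adj.get(v, []):
            if d + weight < dist.get(w, math.inf):
                dist[w] = d + weight
                heapq.heappush(heap, (dist[w], w))
    return dist


def insert_edge(adj, dist, u, v, w):
    """Apply ΔG = {insert edge (u, v) of weight w}; update dist in place and
    return ΔM: the nodes whose distance changed, with their new values."""
    adj.setdefault(u, []).append((v, w))
    if dist.get(u, math.inf) + w >= dist.get(v, math.inf):
        return {}  # the new edge shortens nothing
    dist[v] = dist[u] + w
    delta = {v: dist[v]}
    heap = [(dist[v], v)]
    while heap:  # propagate only through nodes that actually improve
        d, x = heapq.heappop(heap)
        if d > dist[x]:
            continue
        for y, wy in adj.get(x, []):
            if d + wy < dist.get(y, math.inf):
                dist[y] = d + wy
                delta[y] = dist[y]
                heapq.heappush(heap, (dist[y], y))
    return delta


adj = {"s": [("a", 4), ("b", 1)], "a": [("c", 1)], "b": [("c", 5)]}
dist = sssp(adj, "s")                        # {'s': 0, 'a': 4, 'b': 1, 'c': 5}
delta = insert_edge(adj, dist, "b", "a", 1)
print(delta)                                 # {'a': 2, 'c': 3}
assert dist == sssp(adj, "s")                # same as recomputing from scratch
```

The work done by `insert_edge` tracks the size of ΔG and ΔM rather than the size of G.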

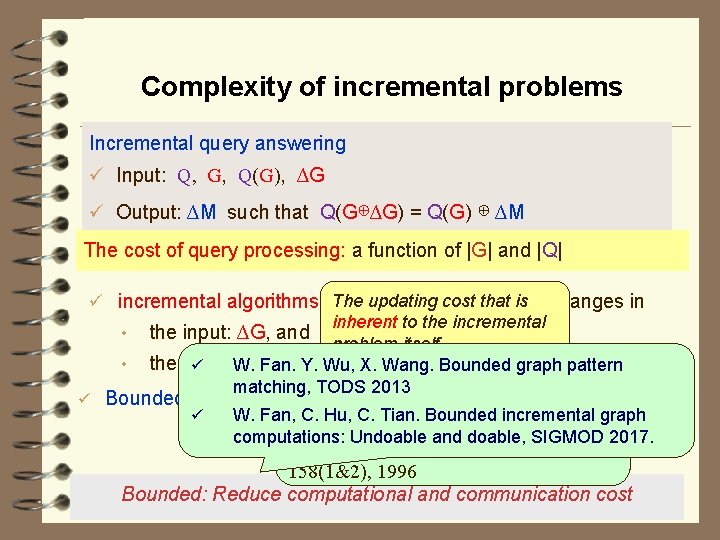

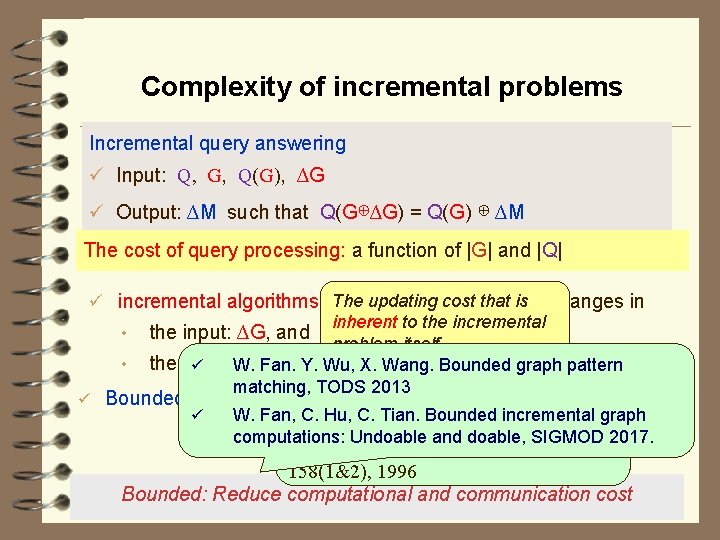

Complexity of incremental problems

Incremental query answering:

- Input: Q, G, Q(G), ΔG
- Output: ΔM such that Q(G ⊕ ΔG) = Q(G) ⊕ ΔM

The cost of query processing is a function of |G| and |Q|. For incremental algorithms, the updating cost is measured in |CHANGED|, the size of the changes in the input (ΔG) and in the output (ΔM), which is inherent to the incremental problem itself.

Bounded: is the cost expressible as f(|CHANGED|, |Q|)?

- G. Ramalingam, T. W. Reps. On the Computational Complexity of Dynamic Graph Problems. TCS 158(1&2), 1996.
- W. Fan, Y. Wu, X. Wang. Bounded graph pattern matching, TODS 2013.
- W. Fan, C. Hu, C. Tian. Bounded incremental graph computations: Undoable and doable, SIGMOD 2017.

Bounded incremental computation reduces both computational and communication cost.
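
Written as a formula, the boundedness question reads as follows; a restatement of the definition above, where f is some computable function and the cost may not depend on |G|:

$$
|\mathrm{CHANGED}| = |\Delta G| + |\Delta M|, \qquad
\text{bounded:}\quad \mathrm{cost}(Q, G, \Delta G) \le f\bigl(|\mathrm{CHANGED}|,\ |Q|\bigr)
$$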

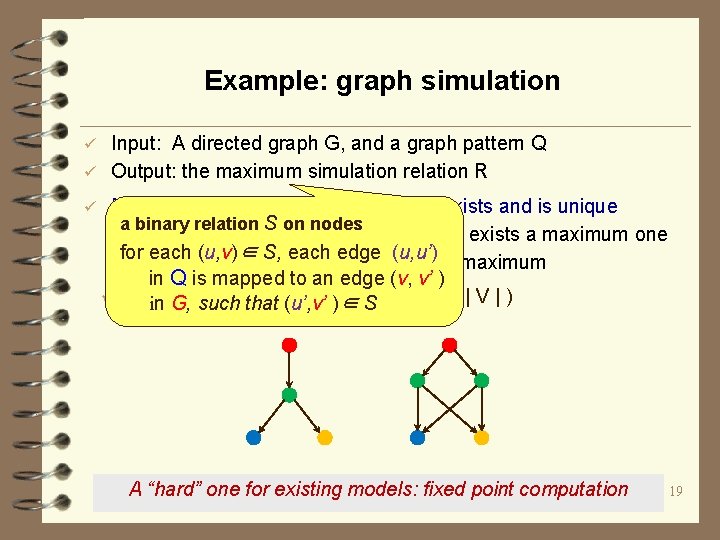

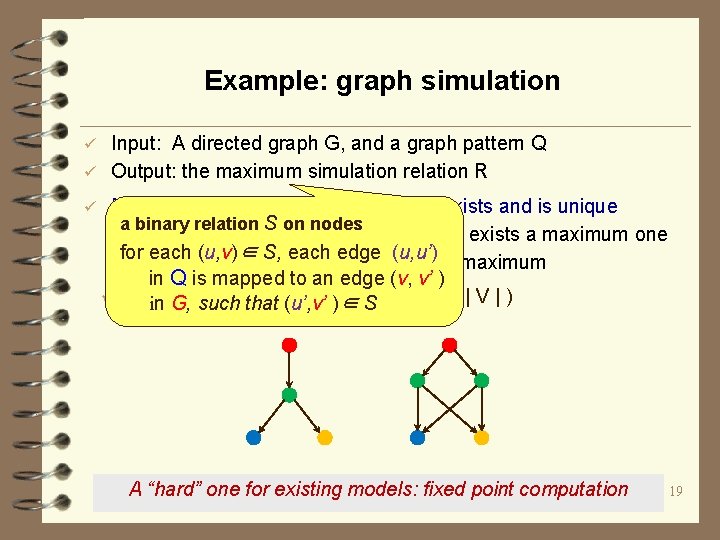

Example: graph simulation

- Input: a directed graph G and a graph pattern Q
- Output: the maximum simulation relation R

A simulation relation is a binary relation S on nodes such that for each (u, v) ∈ S, each edge (u, u′) in Q is mapped to an edge (v, v′) in G with (u′, v′) ∈ S.

- The maximum simulation relation always exists and is unique:
  - if a match relation exists, then there exists a maximum one
  - otherwise, it is the empty set, which is still maximum
- Complexity: O((|V_Q| + |E_Q|)(|V| + |E|))

A "hard" one for existing models: fixed-point computation.
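
A direct way to compute the maximum simulation is to start from all label-compatible pairs and repeatedly delete pairs that violate the edge condition until a fixed point is reached. The sketch below assumes node labels must agree (as in the usual definition over labeled graphs) and returns the empty relation when some pattern node has no match. It is the naive fixpoint, not the refined algorithm that attains the O((|V_Q| + |E_Q|)(|V| + |E|)) bound; the toy data is made up, loosely modeled on the drug-ring example.

```python
def graph_simulation(q_nodes, q_edges, g_nodes, g_edges):
    """q_nodes, g_nodes: node -> label; q_edges, g_edges: (src, dst) pairs.
    Returns the maximum simulation as {pattern node: set of graph nodes}."""
    succ = {v: set() for v in g_nodes}
    for a, b in g_edges:
        succ[a].add(b)
    # Start from every label-compatible pair.
    sim = {u: {v for v, lab in g_nodes.items() if lab == q_lab}
           for u, q_lab in q_nodes.items()}
    changed = True
    while changed:  # drop (u, v) if some pattern edge (u, u2) has no image
        changed = False
        for u, u2 in q_edges:
            for v in list(sim[u]):
                if not succ[v] & sim[u2]:
                    sim[u].remove(v)
                    changed = True
    if any(not matches for matches in sim.values()):
        return {u: set() for u in q_nodes}  # no match relation exists
    return sim


Q_NODES = {"B": "boss", "AM": "manager", "W": "worker"}
Q_EDGES = [("B", "AM"), ("AM", "W"), ("W", "AM")]
G_NODES = {"b1": "boss", "a1": "manager", "a2": "manager",
           "w1": "worker", "w2": "worker"}
G_EDGES = [("b1", "a1"), ("b1", "a2"), ("a1", "w1"), ("w1", "a1"), ("a2", "w2")]
print(graph_simulation(Q_NODES, Q_EDGES, G_NODES, G_EDGES))
# prints: {'B': {'b1'}, 'AM': {'a1'}, 'W': {'w1'}}
```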

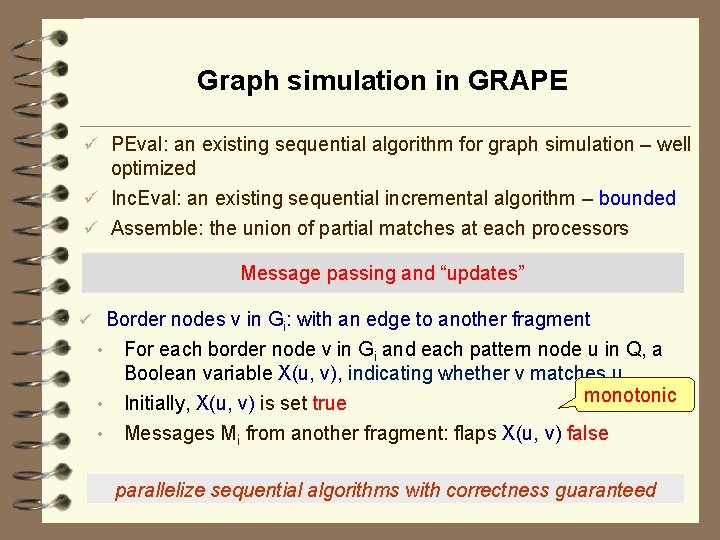

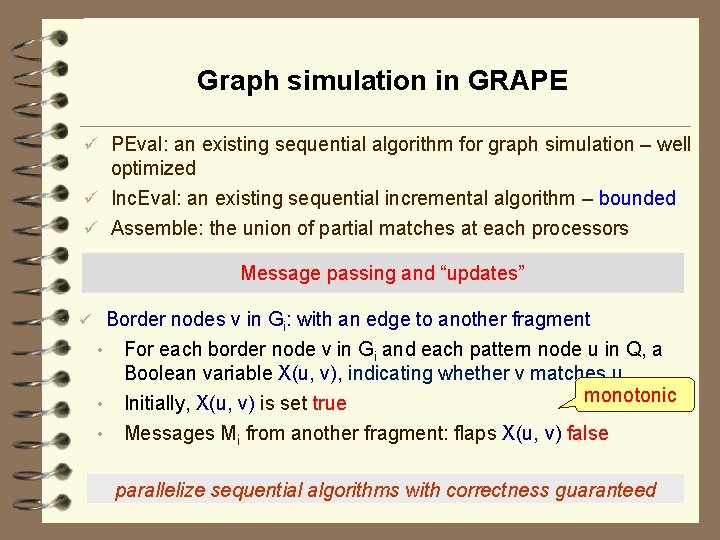

Graph simulation in GRAPE

- PEval: an existing sequential algorithm for graph simulation, well optimized
- IncEval: an existing sequential incremental algorithm, which is bounded
- Assemble: the union of the partial matches at each processor

Message passing and "updates":

- Border nodes v in Gi: nodes with an edge to another fragment
- For each border node v in Gi and each pattern node u in Q, a Boolean variable X(u, v) indicates whether v matches u
- Initially, X(u, v) is set to true
- Messages Mi from another fragment flip X(u, v) to false; the updates are monotonic

This parallelizes sequential algorithms with correctness guaranteed.
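
The message scheme can be sketched in one process. Assumptions: each worker holds its own nodes plus their outgoing edges, so a cross edge (a, v) gives the worker holding a a variable X(u, v) for the remote node v; X(u, v) starts true whenever labels agree; and the owner of v announces X(u, v) = false once v stops matching u. For brevity each round recomputes the local simulation from scratch instead of calling a genuinely incremental IncEval, and all names are hypothetical.

```python
def local_simulation(frag, q_nodes, q_edges, g_nodes, succ, false_flags):
    """Sequential simulation on one fragment. A remote node v matches u iff
    labels agree and no message has set X(u, v) to false."""
    sim = {u: {v for v in frag if g_nodes[v] == q_nodes[u]} for u in q_nodes}

    def matches(u, v):
        if v in frag:
            return v in sim[u]
        return g_nodes[v] == q_nodes[u] and (u, v) not in false_flags

    changed = True
    while changed:
        changed = False
        for u, u2 in q_edges:
            for v in list(sim[u]):
                if not any(matches(u2, w) for w in succ[v]):
                    sim[u].discard(v)
                    changed = True
    return sim


def distributed_simulation(q_nodes, q_edges, g_nodes, g_edges, owner):
    succ = {v: set() for v in g_nodes}
    for a, b in g_edges:
        succ[a].add(b)
    frags = {}
    for v, i in owner.items():
        frags.setdefault(i, set()).add(v)
    # Border nodes: targets of cross edges, i.e. nodes other workers hold X(u, v) for.
    border = {b for a, b in g_edges if owner[a] != owner[b]}
    false_flags = set()  # X(u, v) values that messages have flipped to false
    while True:
        sims = {i: local_simulation(nodes, q_nodes, q_edges, g_nodes, succ,
                                    false_flags)
                for i, nodes in frags.items()}
        # Messages: border nodes that no longer match a label-compatible u.
        flips = {(u, v) for i, nodes in frags.items() for v in nodes & border
                 for u in q_nodes
                 if g_nodes[v] == q_nodes[u] and v not in sims[i][u]}
        if flips <= false_flags:
            break  # no new messages: fixed point
        false_flags |= flips
    # Assemble: union of the partial matches.
    result = {u: set().union(*(sims[i][u] for i in frags)) for u in q_nodes}
    if any(not matches for matches in result.values()):
        return {u: set() for u in q_nodes}
    return result


Q_NODES = {"B": "boss", "AM": "manager", "W": "worker"}
Q_EDGES = [("B", "AM"), ("AM", "W"), ("W", "AM")]
G_NODES = {"b1": "boss", "a1": "manager", "a2": "manager",
           "w1": "worker", "w2": "worker"}
G_EDGES = [("b1", "a1"), ("b1", "a2"), ("a1", "w1"), ("w1", "a1"), ("a2", "w2")]
OWNER = {"b1": 0, "a1": 0, "a2": 0, "w1": 1, "w2": 1}
print(distributed_simulation(Q_NODES, Q_EDGES, G_NODES, G_EDGES, OWNER))
# prints: {'B': {'b1'}, 'AM': {'a1'}, 'W': {'w1'}}
```

Flags only ever go from true to false, and every extra round adds at least one new flag, so the loop stops after at most |V_Q| times the number of border nodes rounds: the monotonic behaviour the slide relies on.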

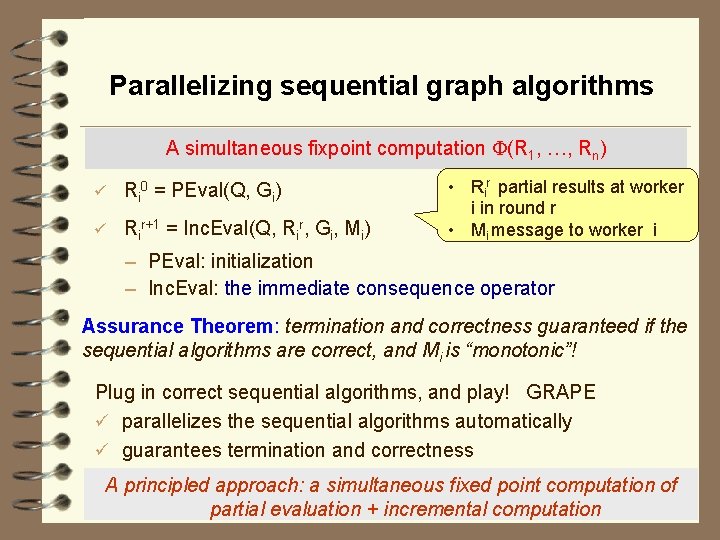

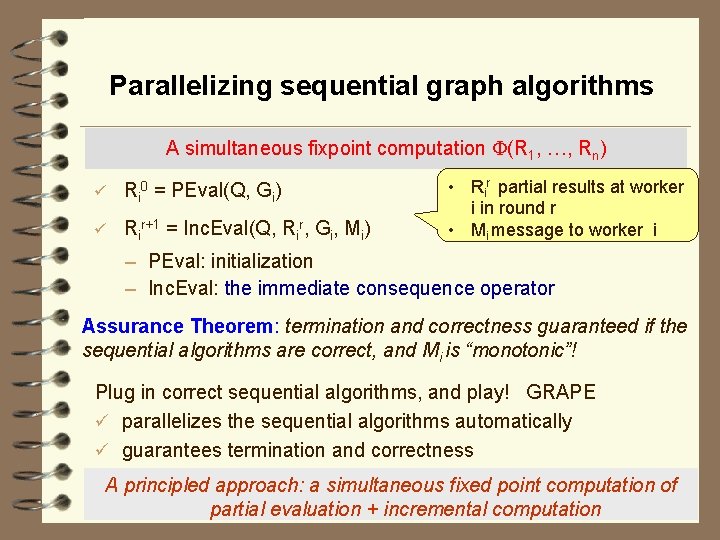

Parallelizing sequential graph algorithms

A simultaneous fixpoint computation over (R1, …, Rn):

- $R_i^0 = \mathrm{PEval}(Q, G_i)$
- $R_i^{r+1} = \mathrm{IncEval}(Q, R_i^r, G_i, M_i)$

where $R_i^r$ is the partial result at worker i in round r and $M_i$ is the message to worker i. PEval acts as the initialization, and IncEval as the immediate consequence operator.

Assurance Theorem: termination and correctness are guaranteed if the sequential algorithms are correct and the messages Mi are "monotonic"!

Plug in correct sequential algorithms, and play! GRAPE

- parallelizes the sequential algorithms automatically
- guarantees termination and correctness

A principled approach: a simultaneous fixed-point computation of partial evaluation + incremental computation.

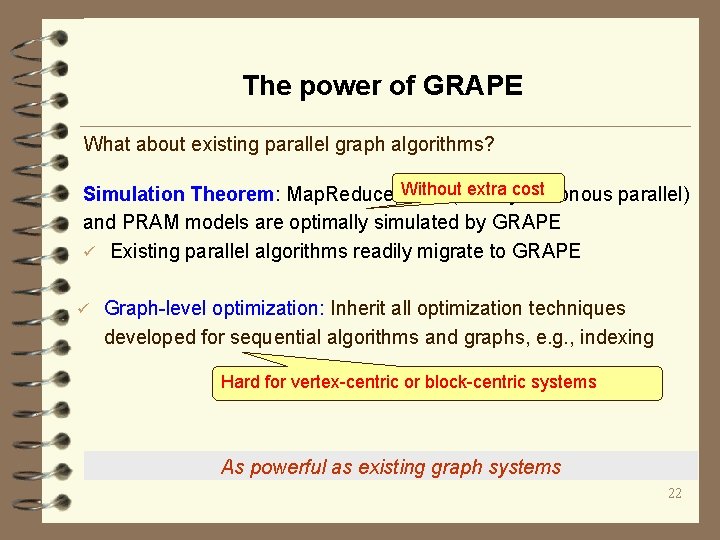

The power of GRAPE

What about existing parallel graph algorithms?

- Simulation Theorem: the MapReduce, BSP (bulk synchronous parallel) and PRAM models are optimally simulated by GRAPE, without extra cost
- Existing parallel algorithms readily migrate to GRAPE
- Graph-level optimization: GRAPE inherits all optimization techniques developed for sequential algorithms and graphs, e.g., indexing; this is hard for vertex-centric or block-centric systems

As powerful as existing graph systems.

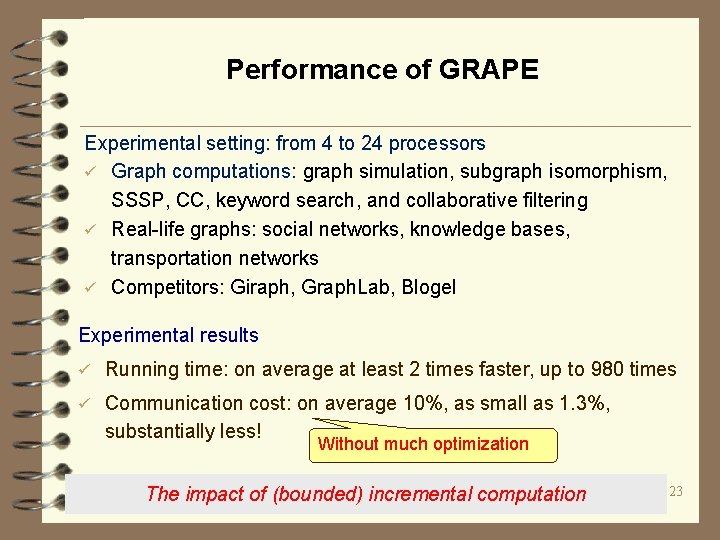

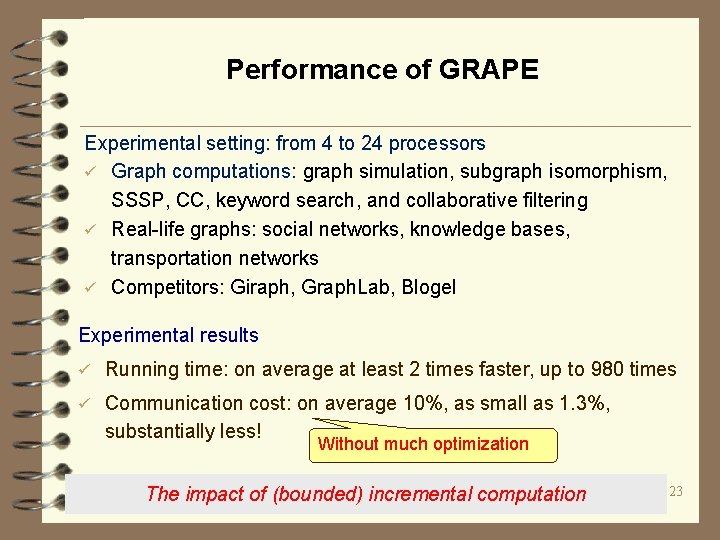

Performance of GRAPE

Experimental setting: from 4 to 24 processors

- Graph computations: graph simulation, subgraph isomorphism, SSSP, CC, keyword search, and collaborative filtering
- Real-life graphs: social networks, knowledge bases, transportation networks
- Competitors: Giraph, GraphLab, Blogel

Experimental results, without much optimization:

- Running time: on average at least 2 times faster, up to 980 times
- Communication cost: on average 10% of the competitors', as small as 1.3%; substantially less!

The impact of (bounded) incremental computation.

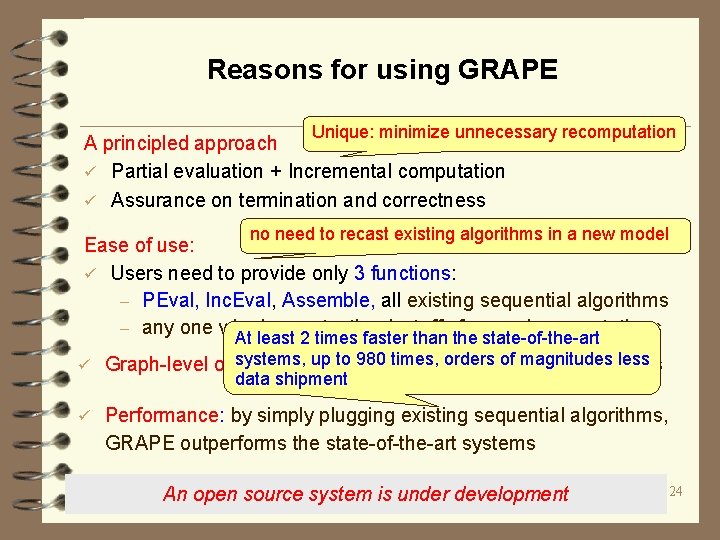

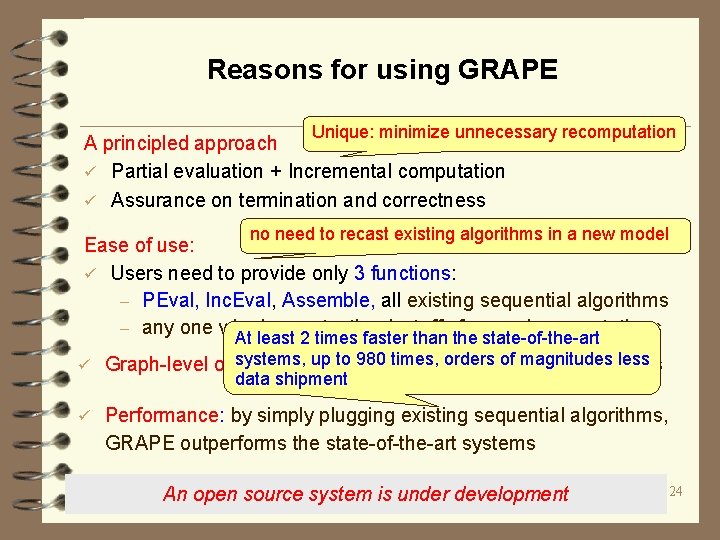

Reasons for using GRAPE

- A principled approach: partial evaluation + incremental computation
  - Assurance on termination and correctness
  - Unique: minimizes unnecessary recomputation
- Ease of use: no need to recast existing algorithms in a new model
  - Users need to provide only 3 functions: PEval, IncEval and Assemble, all existing sequential algorithms
  - Anyone who knows textbook graph algorithms can use it
- Graph-level optimization: all sequential optimization techniques apply
- Performance: by simply plugging in existing sequential algorithms, GRAPE outperforms the state-of-the-art systems: on average at least 2 times faster, up to 980 times, with orders of magnitude less data shipment

An open-source system is under development.

Relatively bounded incremental computations

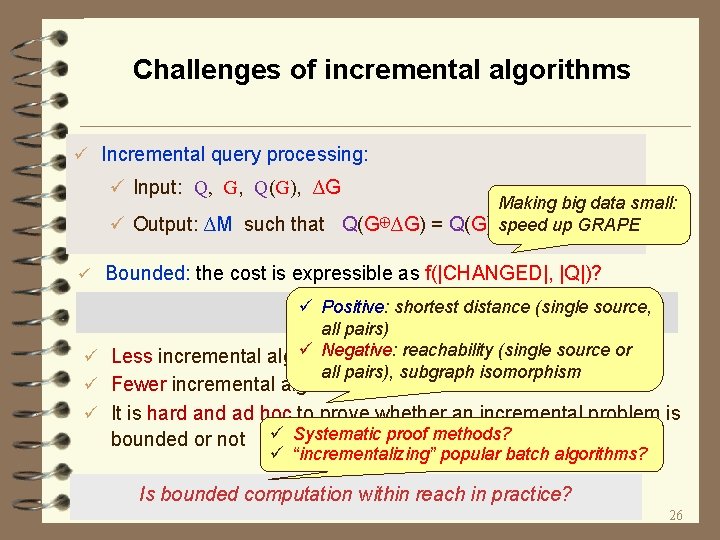

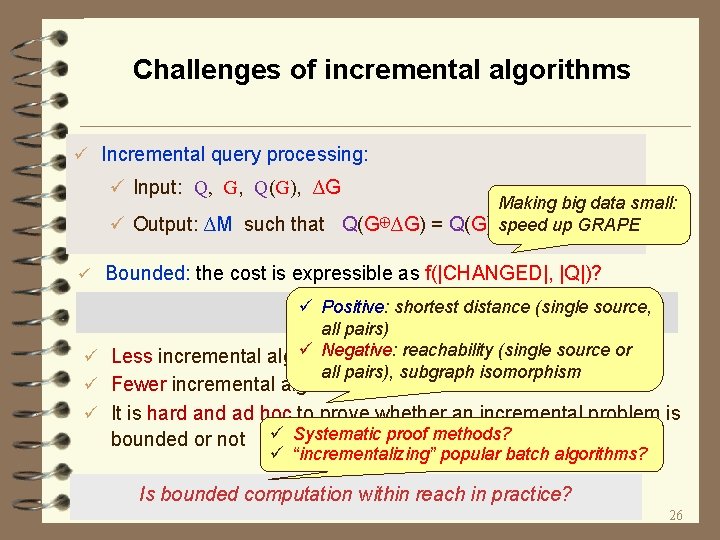

Challenges of incremental algorithms

Incremental query processing, making big data small to speed up GRAPE:

- Input: Q, G, Q(G), ΔG
- Output: ΔM such that Q(G ⊕ ΔG) = Q(G) ⊕ ΔM
- Bounded: is the cost expressible as f(|CHANGED|, |Q|)?
  - Positive: shortest distance (single source, all pairs)
  - Negative: reachability (single source or all pairs), subgraph isomorphism

However:

- Fewer incremental algorithms are in place than batch algorithms
- For few of them is it known whether they are bounded or not
- It is hard and ad hoc to prove whether an incremental problem is bounded or not

Systematic proof methods? "Incrementalizing" popular batch algorithms? Is bounded computation within reach in practice?

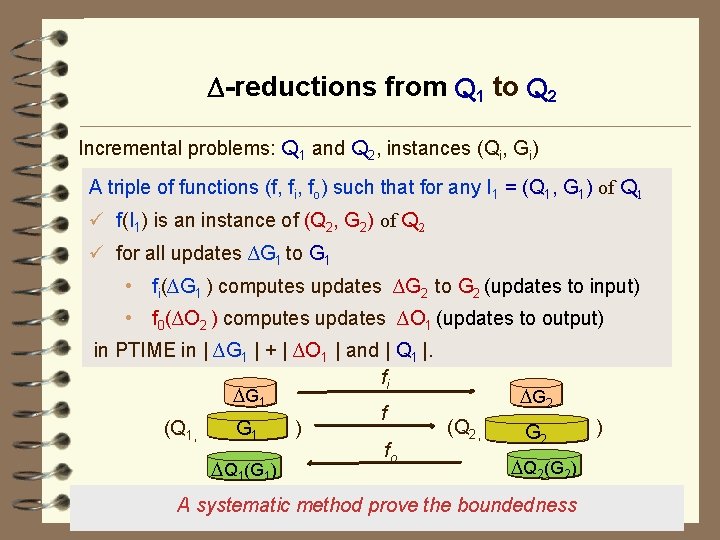

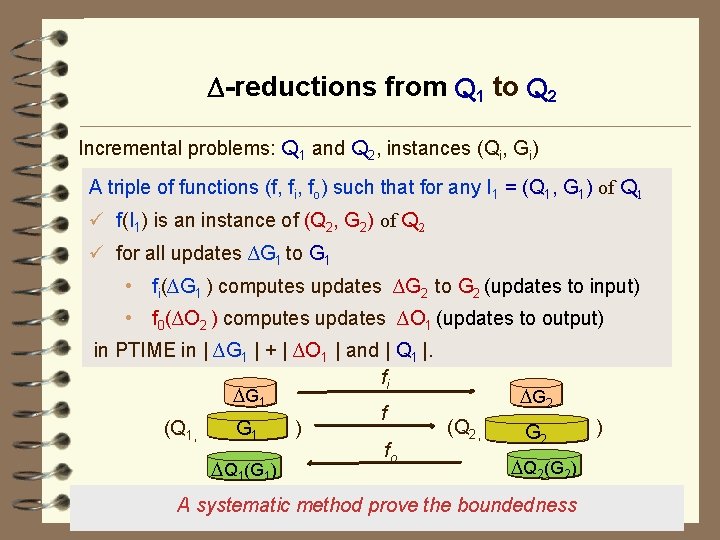

Δ-reductions from 𝒬1 to 𝒬2

Given incremental problems for 𝒬1 and 𝒬2, with instances (Qi, Gi), a Δ-reduction is a triple of functions (f, fi, fo) such that for any instance I1 = (Q1, G1) of 𝒬1:

- f(I1) is an instance (Q2, G2) of 𝒬2
- for all updates ΔG1 to G1:
  - fi(ΔG1) computes updates ΔG2 to G2 (updates to the input)
  - fo(ΔO2) computes updates ΔO1 to Q1(G1) (updates to the output)

These functions are computable in PTIME in |ΔG1| + |ΔO1| and |Q1|.

Figure: (Q1, G1) is mapped by f to (Q2, G2), input updates flow through fi, and output updates to Q2(G2) flow back through fo to Q1(G1).

A systematic method to prove boundedness.

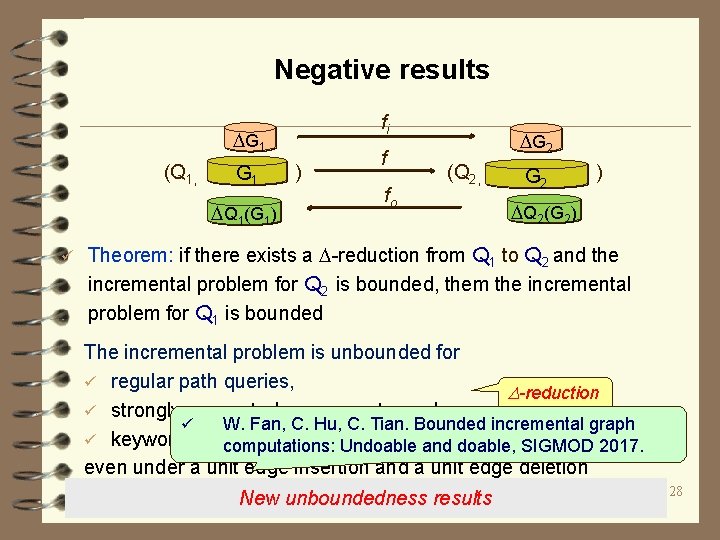

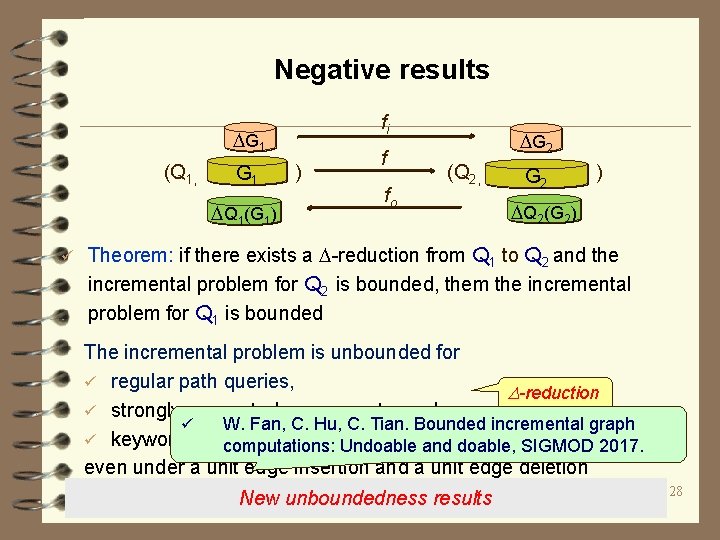

Negative results

Theorem: if there exists a Δ-reduction from 𝒬1 to 𝒬2 and the incremental problem for 𝒬2 is bounded, then the incremental problem for 𝒬1 is bounded.

Using Δ-reductions, the incremental problem is unbounded for

- regular path queries,
- strongly connected components, and
- keyword search,

even under a unit edge insertion and a unit edge deletion.

New unboundedness results: W. Fan, C. Hu, C. Tian. Bounded incremental graph computations: Undoable and doable, SIGMOD 2017.

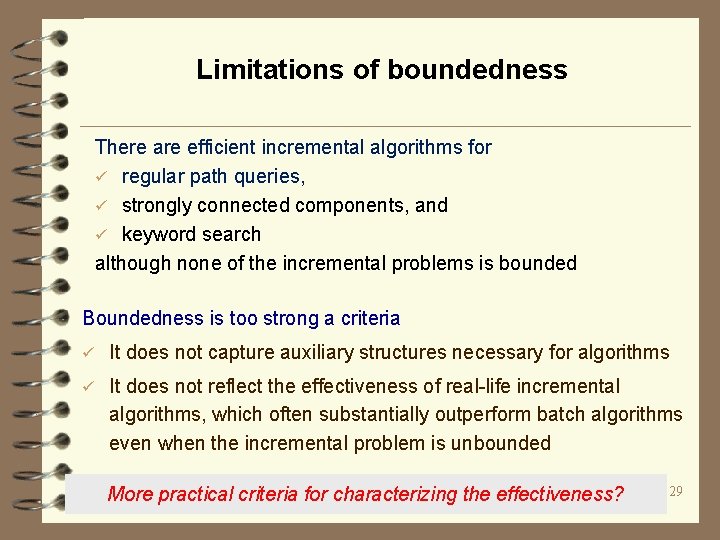

Limitations of boundedness

There are efficient incremental algorithms for

- regular path queries,
- strongly connected components, and
- keyword search,

although none of these incremental problems is bounded.

Boundedness is too strong a criterion:

- It does not capture auxiliary structures necessary for algorithms
- It does not reflect the effectiveness of real-life incremental algorithms, which often substantially outperform batch algorithms even when the incremental problem is unbounded

More practical criteria for characterizing the effectiveness?

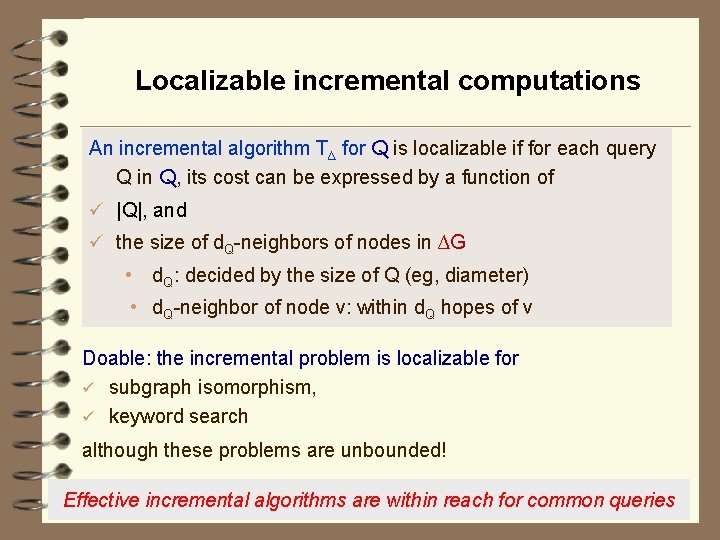

Localizable incremental computations

An incremental algorithm T_Δ for 𝒬 is localizable if, for each query Q in 𝒬, its cost can be expressed as a function of

- |Q|, and
- the size of the d_Q-neighbors of nodes in ΔG, where
  - d_Q is decided by the size of Q (e.g., its diameter)
  - the d_Q-neighbors of a node v are the nodes within d_Q hops of v

Doable: the incremental problem is localizable for

- subgraph isomorphism, and
- keyword search,

although these problems are unbounded (shown via Δ-reductions)!

Effective incremental algorithms are within reach for common queries.
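
The region a localizable algorithm may inspect can be computed with a multi-source breadth-first search. A sketch, assuming hops are counted ignoring edge direction and that the seeds are the endpoints of the updated edges in ΔG; `d_neighborhood` is a hypothetical helper name.

```python
from collections import deque


def d_neighborhood(adj, seeds, d):
    """All nodes within d hops of any seed (multi-source BFS)."""
    dist = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        v = queue.popleft()
        if dist[v] == d:
            continue  # do not expand beyond d hops
        for w in adj.get(v, ()):
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return set(dist)


# Undirected path 0 - 1 - 2 - 3 - 4 - 5 - 6; an update touches node 3.
adj = {}
for a, b in [(i, i + 1) for i in range(6)]:
    adj.setdefault(a, []).append(b)
    adj.setdefault(b, []).append(a)
print(sorted(d_neighborhood(adj, [3], 2)))  # prints: [1, 2, 3, 4, 5]
```

With d set to d_Q, the cost of a localizable algorithm depends on the size of this set and |Q|, not on the whole graph.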

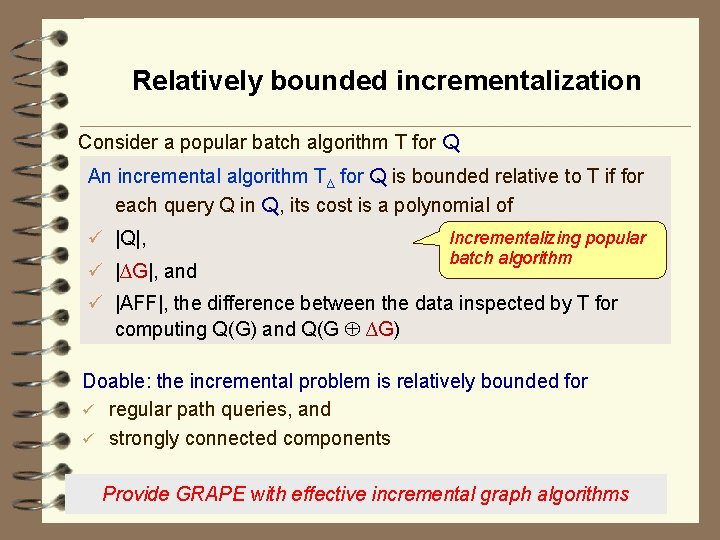

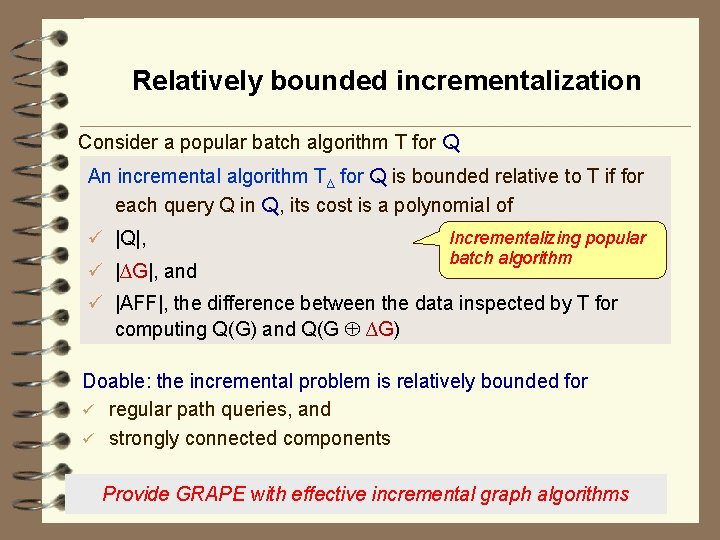

Relatively bounded incrementalization

Consider a popular batch algorithm T for 𝒬. An incremental algorithm T_Δ for 𝒬 is bounded relative to T if, for each query Q in 𝒬, its cost is a polynomial of

- |Q|,
- |ΔG|, and
- |AFF|, the difference between the data inspected by T for computing Q(G) and for computing Q(G ⊕ ΔG).

This incrementalizes popular batch algorithms.

Doable: the incremental problem is relatively bounded for

- regular path queries, and
- strongly connected components.

This provides GRAPE with effective incremental graph algorithms.

Application: Graph-pattern association rules

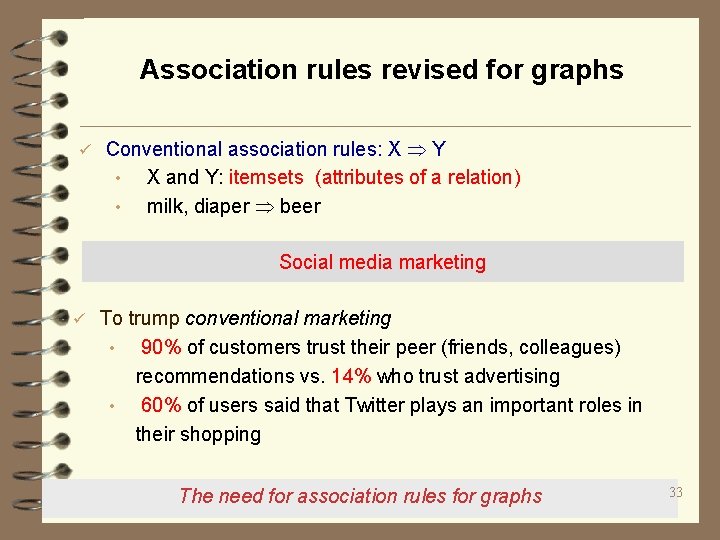

Association rules revisited for graphs

- Conventional association rules: X ⇒ Y
  - X and Y: itemsets (attributes of a relation)
  - e.g., milk, diaper ⇒ beer
- Social media marketing, to trump conventional marketing:
  - 90% of customers trust their peers' (friends', colleagues') recommendations vs. 14% who trust advertising
  - 60% of users said that Twitter plays an important role in their shopping

The need for association rules for graphs.
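
For comparison with the graph case, a conventional rule X ⇒ Y is usually scored by support and confidence over a set of transactions. A sketch of these textbook definitions on made-up shopping baskets; exact fractions are used to avoid rounding noise.

```python
from fractions import Fraction


def support(transactions, itemset):
    """Fraction of transactions containing every item of `itemset`."""
    hits = sum(1 for t in transactions if itemset <= t)
    return Fraction(hits, len(transactions))


def confidence(transactions, x, y):
    """Estimated P(Y | X) for the rule X => Y."""
    return support(transactions, x | y) / support(transactions, x)


baskets = [
    {"milk", "diaper", "beer"},
    {"milk", "diaper"},
    {"milk", "bread"},
    {"diaper", "beer"},
    {"milk", "diaper", "beer", "bread"},
]
x, y = {"milk", "diaper"}, {"beer"}
print(support(baskets, x | y), confidence(baskets, x, y))  # prints: 2/5 2/3
```

Counting baskets like this has no direct analogue once X is a graph pattern, which is why the graph rules need revised notions.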

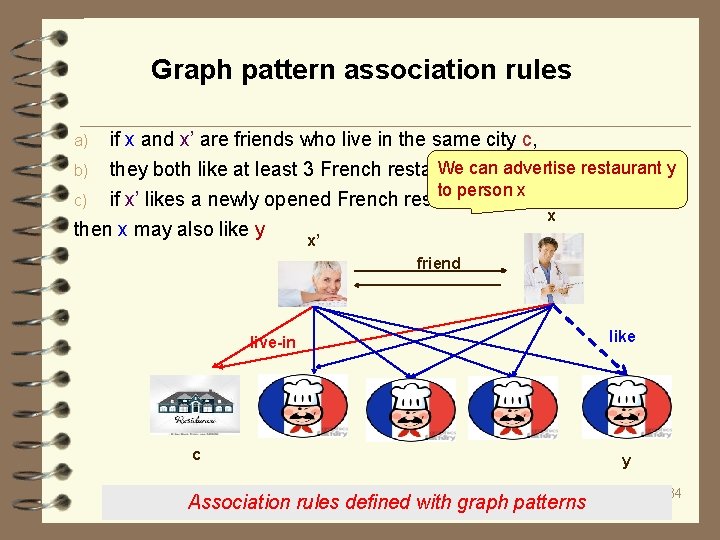

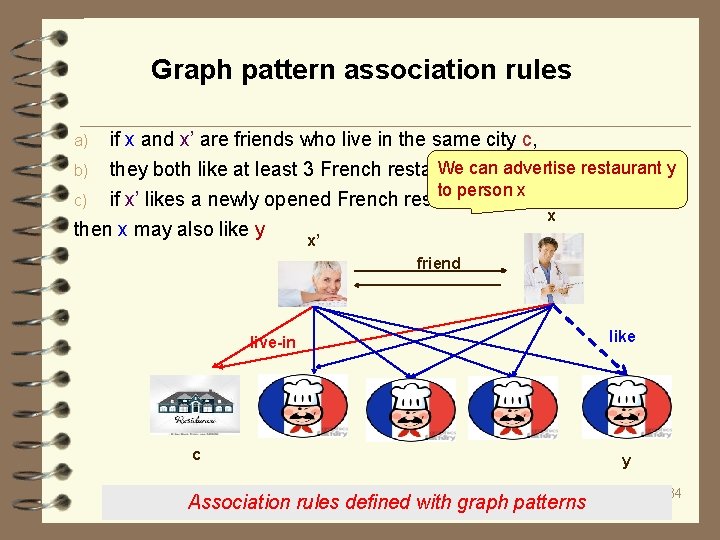

Graph pattern association rules

If x and x′ are friends who live in the same city c, they both like at least 3 French restaurants in c, and x′ likes a newly opened French restaurant y, then x may also like y. We can then advertise restaurant y to person x.

Figure: a pattern over x, x′, c and y, with edges labeled friend, live-in and like.

Association rules defined with graph patterns.

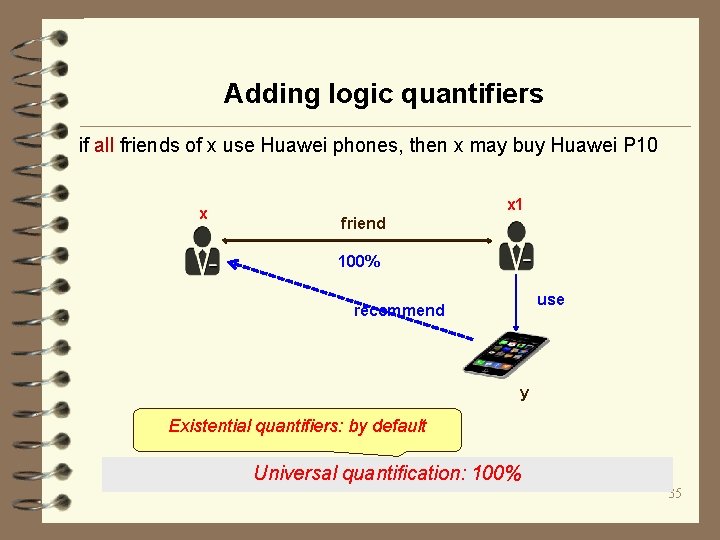

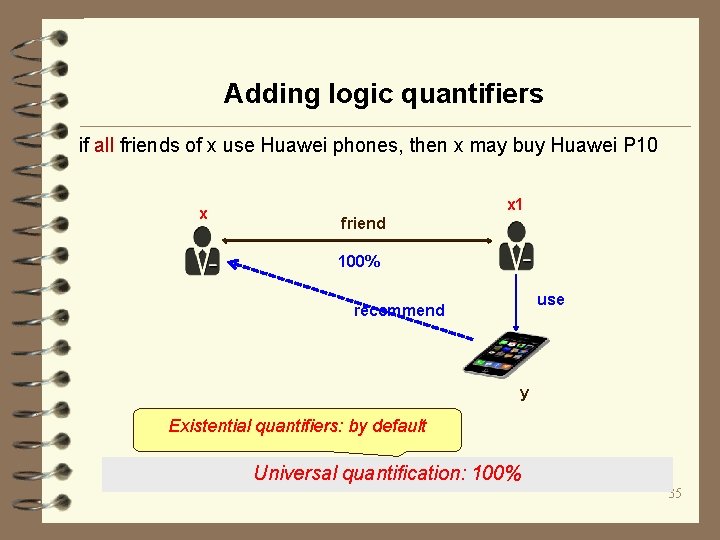

Adding logic quantifiers

If all friends of x use Huawei phones, then x may buy a Huawei P10.

- Figure: x is linked to its friends x1 by a friend edge annotated 100%; x1 uses y; y is recommended to x
- Existential quantifiers: by default
- Universal quantification: 100%

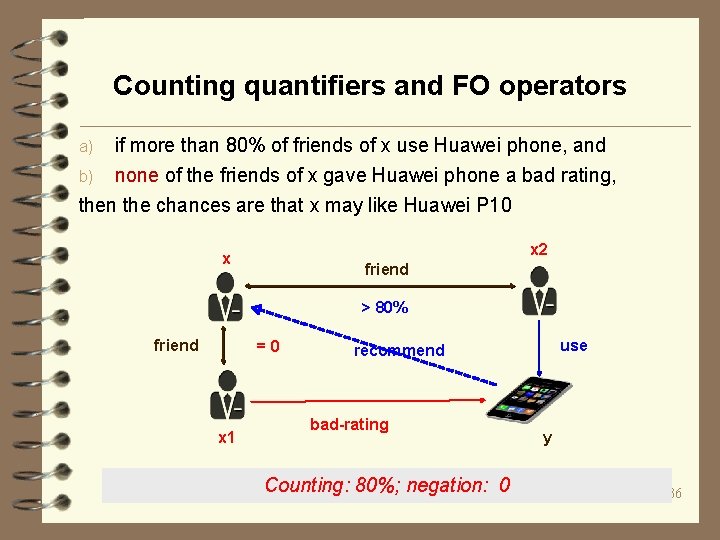

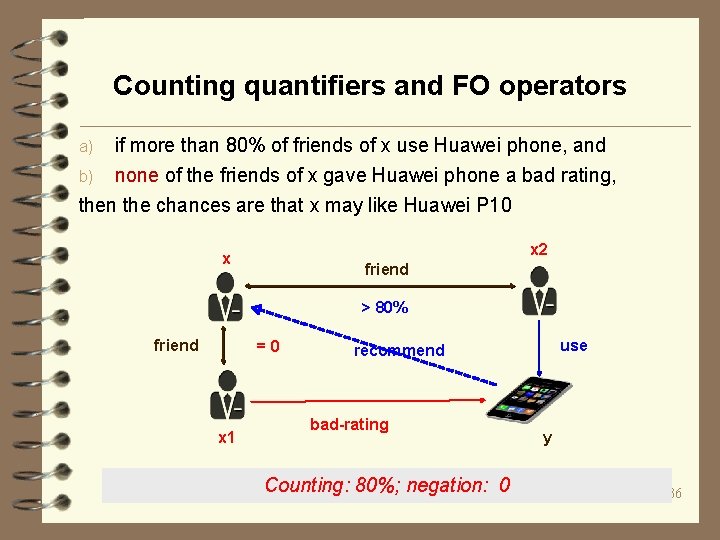

Counting quantifiers and FO operators

If more than 80% of the friends of x use Huawei phones, and none of the friends of x gave Huawei phones a bad rating, then chances are that x may like the Huawei P10.

- Figure: friend edges from x annotated > 80% (friends x1 who use y) and = 0 (friends x2 with a bad-rating edge to y); y is recommended to x
- Counting: 80%; negation: = 0
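
Restricted to the star-shaped part of these rules (x and its friends), the quantifiers reduce to counting over x's neighbours. A simplified sketch, not the subgraph-isomorphism semantics of the cited work: the data, the helper names and the choice to require at least one friend for the universal and counting quantifiers are all assumptions.

```python
from fractions import Fraction


def exists(x, friends, prop):
    """Existential quantifier (the default): at least one friend has prop."""
    return bool(friends.get(x, set()) & prop)


def for_all(x, friends, prop):
    """Universal quantifier (100%); assumes x needs at least one friend."""
    fs = friends.get(x, set())
    return bool(fs) and fs <= prop


def more_than(x, friends, prop, ratio):
    """Counting quantifier: strictly more than `ratio` of x's friends."""
    fs = friends.get(x, set())
    return bool(fs) and Fraction(len(fs & prop), len(fs)) > ratio


def no_friend(x, friends, prop):
    """Negation (= 0): no friend of x has prop."""
    return not (friends.get(x, set()) & prop)


def may_like_p10(x, friends, uses_huawei, bad_rating):
    return (more_than(x, friends, uses_huawei, Fraction(4, 5))
            and no_friend(x, friends, bad_rating))


FRIENDS = {"x": {f"f{i}" for i in range(10)}, "y": {f"g{i}" for i in range(5)}}
USES_HUAWEI = {f"f{i}" for i in range(9)} | {f"g{i}" for i in range(4)}
BAD_RATING = set()
print(may_like_p10("x", FRIENDS, USES_HUAWEI, BAD_RATING))  # 9/10 > 80%: True
print(may_like_p10("y", FRIENDS, USES_HUAWEI, BAD_RATING))  # 4/5 is not > 80%: False
```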

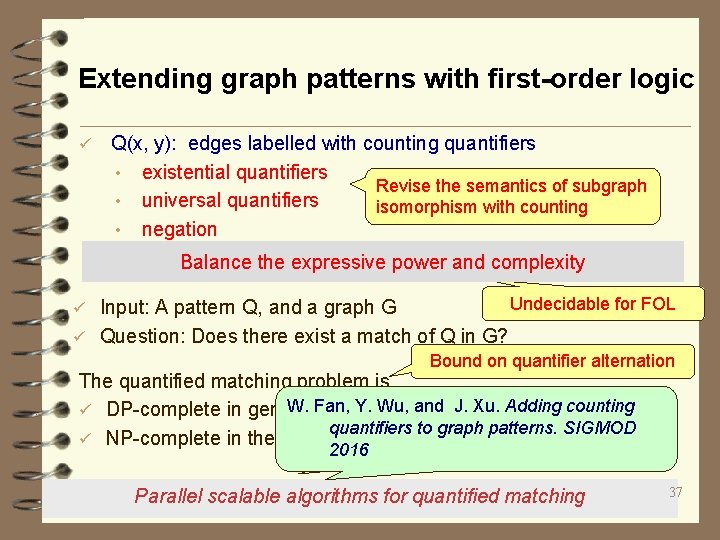

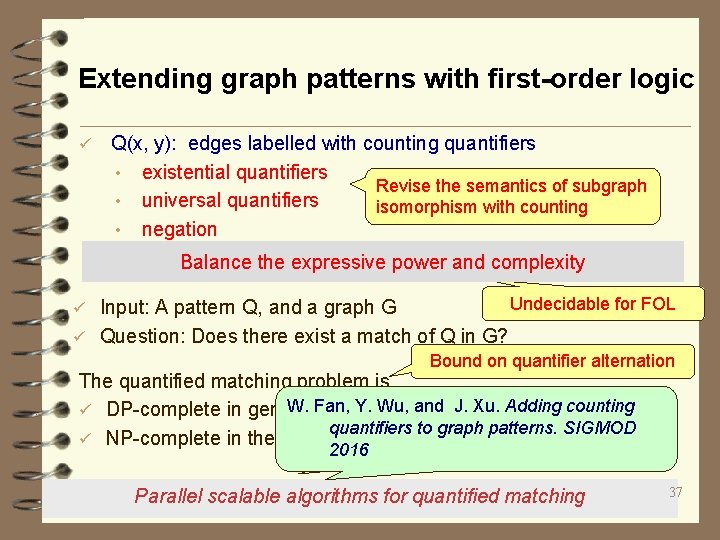

Extending graph patterns with first-order logic

- Q(x, y): edges labelled with counting quantifiers, covering
  - existential quantifiers
  - universal quantifiers
  - negation
- Revise the semantics of subgraph isomorphism with counting
- Balance the expressive power and complexity: full FOL is undecidable, so bound quantifier alternation

The quantified matching problem:

- Input: a pattern Q and a graph G
- Question: does there exist a match of Q in G?

It is

- DP-complete in general
- NP-complete in the absence of negation

Practical: parallel scalable algorithms for quantified matching, used to discover and apply GPARs.

W. Fan, Y. Wu, and J. Xu. Adding counting quantifiers to graph patterns. SIGMOD 2016.

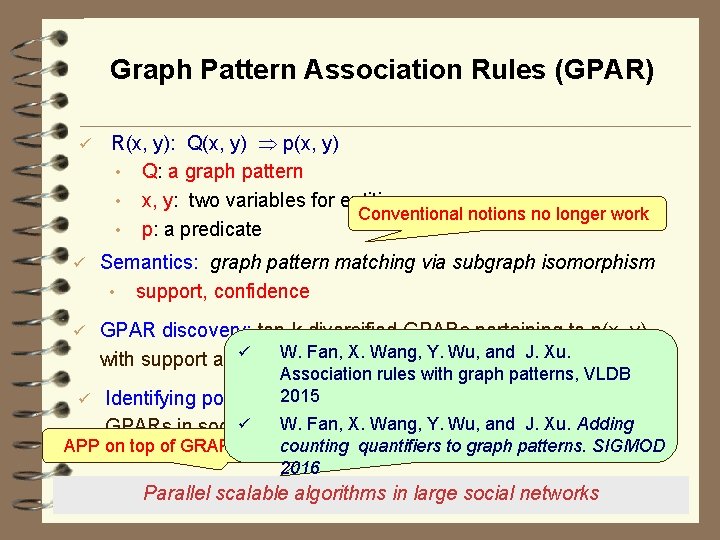

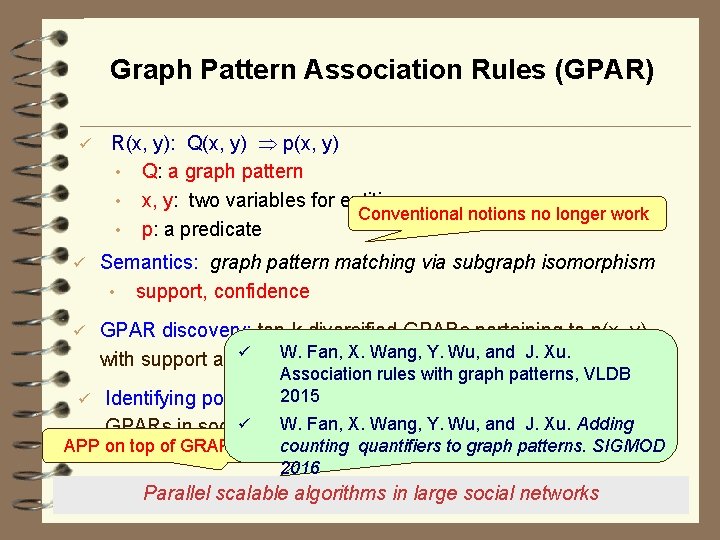

Graph Pattern Association Rules (GPARs)

- R(x, y): Q(x, y) ⇒ p(x, y)
  - Q: a graph pattern
  - x, y: two variables for entities
  - p: a predicate
- Semantics: graph pattern matching via subgraph isomorphism
- Support and confidence: conventional notions no longer work
- GPAR discovery: top-k diversified GPARs pertaining to p(x, y) from a social graph G, with support above a given threshold
- Identifying potential customers: the set of entities identified by GPARs in a social graph G with confidence above a given threshold

Parallel scalable algorithms in large social networks, as an app on top of GRAPE.

- W. Fan, X. Wang, Y. Wu, and J. Xu. Association rules with graph patterns, VLDB 2015.
- W. Fan, Y. Wu, and J. Xu. Adding counting quantifiers to graph patterns. SIGMOD 2016.

Summing up

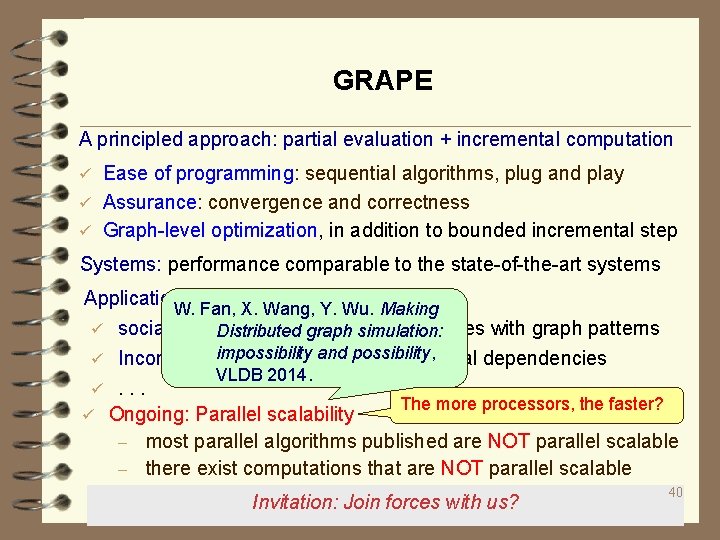

GRAPE

- A principled approach: partial evaluation + incremental computation
- Ease of programming: sequential algorithms, plug and play
- Assurance: convergence and correctness
- Graph-level optimization, in addition to the bounded incremental step
- Systems: performance comparable to the state-of-the-art systems
- Applications:
  - social media marketing: association rules with graph patterns
  - inconsistency checking: graph functional dependencies
  - …

The more processors, the faster?

- Ongoing: parallel scalability
  - most published parallel algorithms are NOT parallel scalable
  - there exist computations that are NOT parallel scalable (W. Fan, X. Wang, Y. Wu. Distributed graph simulation: impossibility and possibility, VLDB 2014)
- Invitation: join forces with us?

References

- W. Fan, J. Xu, Y. Wu, W. Yu, J. Jiang, Z. Zheng, B. Zhang, Y. Cao, C. Tian. Parallelizing sequential graph computations. SIGMOD 2017.
- W. Fan, J. Xu, Y. Wu, W. Yu, J. Jiang. GRAPE: Parallelizing sequential graph computations. VLDB 2017 (demo).
- W. Fan, C. Hu, C. Tian. Bounded incremental graph computations: Undoable and doable. SIGMOD 2017.
- W. Fan, X. Wang, Y. Wu. Answering Graph Pattern Queries using Views. TKDE 2016 (invited).
- W. Fan, Y. Wu, J. Xu. Adding counting quantifiers to graph patterns. SIGMOD 2016.
- W. Fan, Y. Wu, J. Xu. Functional dependencies for graphs. SIGMOD 2016.
- W. Fan, X. Wang, Y. Wu, J. Xu. Association rules with graph patterns. VLDB 2015.

- Y. Cao, W. Fan, R. Huang. Making pattern queries bounded in big graphs. ICDE 2015.
- W. Fan, X. Wang, Y. Wu. Querying big graphs with bounded resources. SIGMOD 2014.
- W. Fan, X. Wang, Y. Wu. Distributed Graph Simulation: Impossibility and Possibility. VLDB 2014.
- W. Fan, X. Wang, Y. Wu. Diversified Top-k Graph Pattern Matching. VLDB 2014.
- W. Fan, X. Wang, Y. Wu. Answering Graph Pattern Queries using Views. ICDE 2014.
- W. Fan, X. Wang, Y. Wu. Incremental Graph Pattern Matching. TODS 38(3), 2013.
- W. Fan, F. Geerts, F. Neven. Making Queries Tractable on Big Data with Preprocessing. VLDB 2013.