4.3 Least-Squares Regression

- Slides: 19

4.3 Least-Squares Regression

- Regression Lines
- Least-Squares Regression Line
- Facts about Least-Squares Regression
- Correlation and Regression

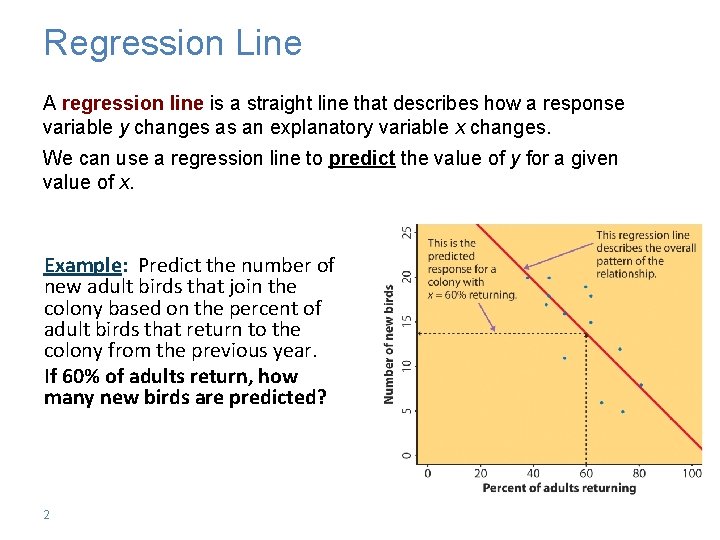

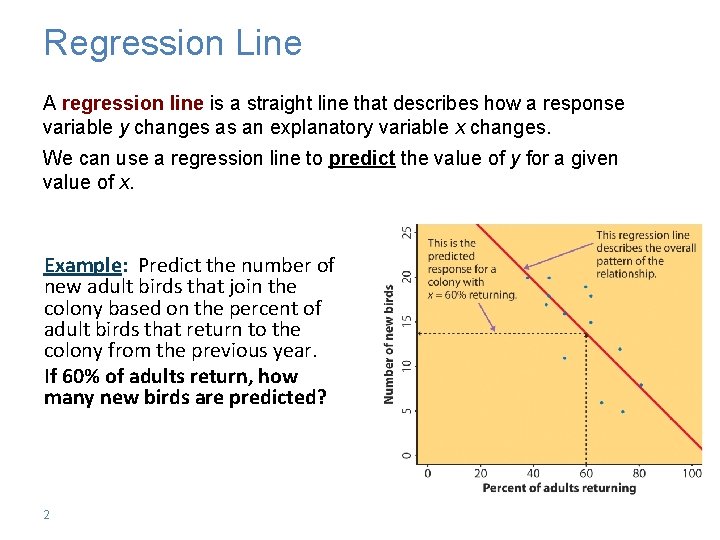

Regression Line

A regression line is a straight line that describes how a response variable y changes as an explanatory variable x changes. We can use a regression line to predict the value of y for a given value of x.

Example: Predict the number of new adult birds that join the colony based on the percent of adult birds that return to the colony from the previous year. If 60% of adults return, how many new birds are predicted?

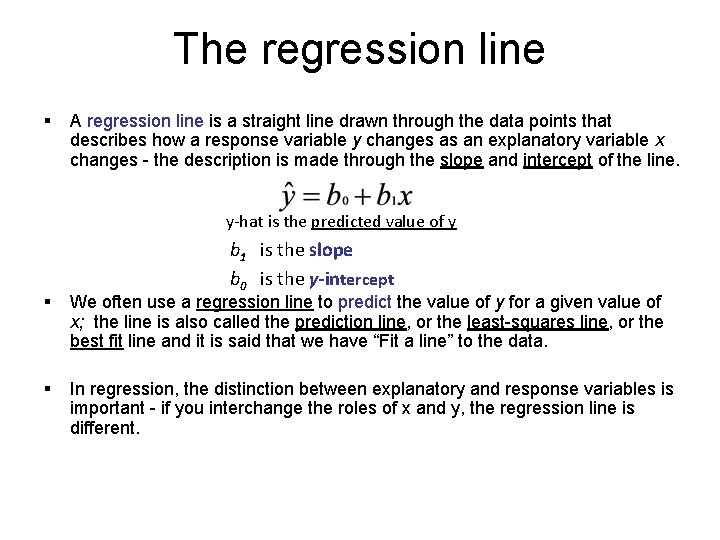

The regression line

- A regression line is a straight line drawn through the data points that describes how a response variable y changes as an explanatory variable x changes. The description is made through the slope and intercept of the line, $\hat{y} = b_0 + b_1 x$, where:
  - $\hat{y}$ is the predicted value of y
  - $b_1$ is the slope
  - $b_0$ is the y-intercept
- We often use a regression line to predict the value of y for a given value of x. The line is also called the prediction line, the least-squares line, or the best-fit line, and we say that we have "fit a line" to the data.
- In regression, the distinction between explanatory and response variables is important: if you interchange the roles of x and y, the regression line is different.

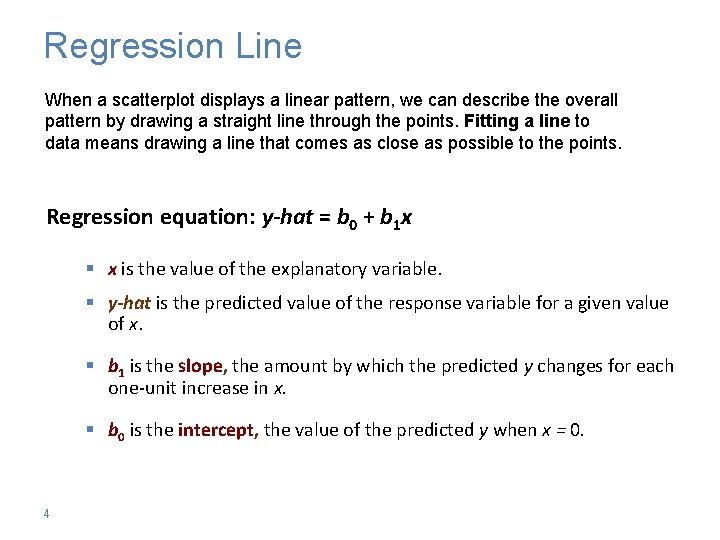

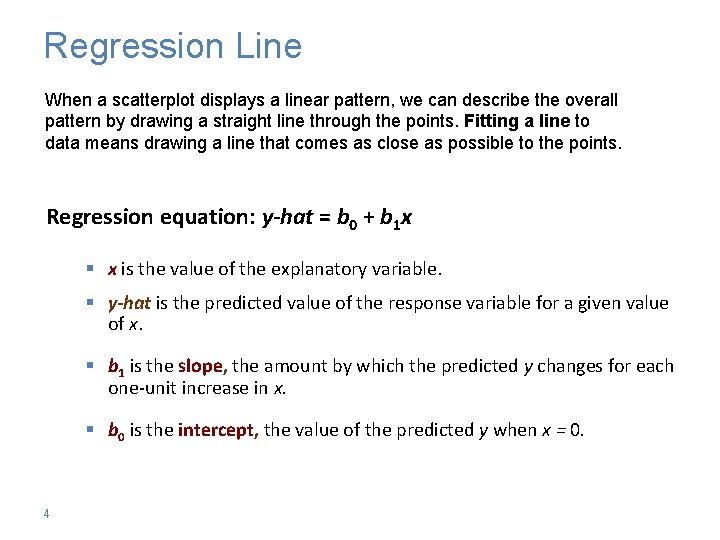

Regression Line

When a scatterplot displays a linear pattern, we can describe the overall pattern by drawing a straight line through the points. Fitting a line to data means drawing a line that comes as close as possible to the points.

Regression equation: $\hat{y} = b_0 + b_1 x$

- x is the value of the explanatory variable.
- $\hat{y}$ is the predicted value of the response variable for a given value of x.
- $b_1$ is the slope, the amount by which the predicted y changes for each one-unit increase in x.
- $b_0$ is the intercept, the value of the predicted y when x = 0.

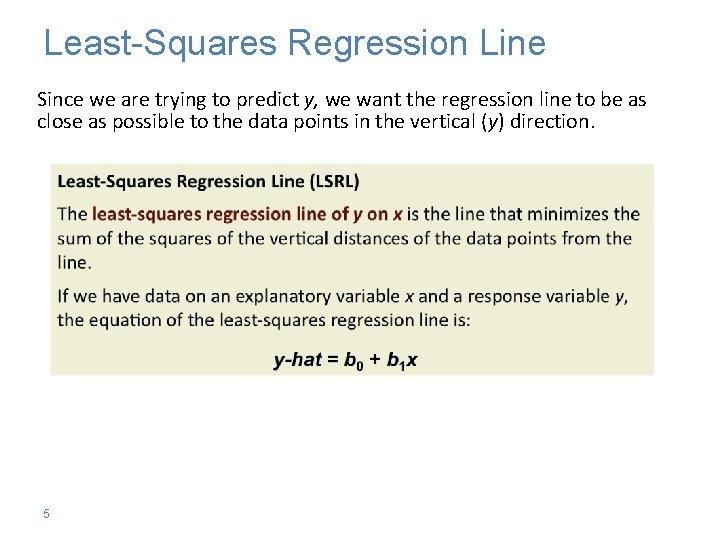

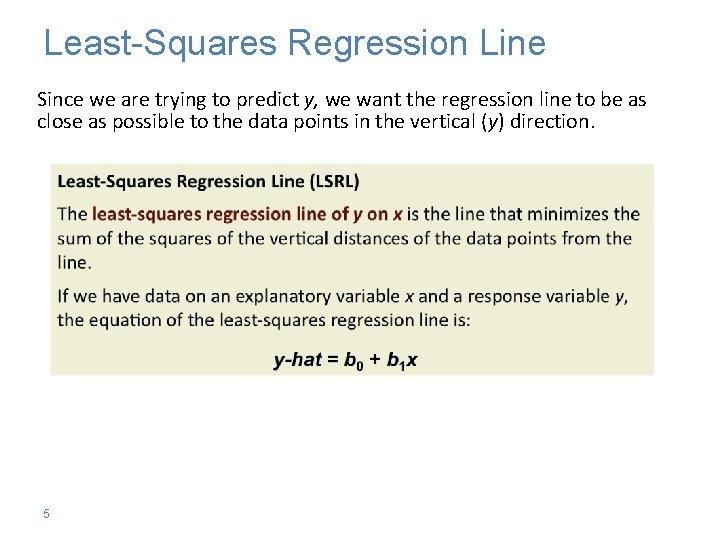

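Least-Squares Regression Line

Since we are trying to predict y, we want the regression line to be as close as possible to the data points in the vertical (y) direction. The least-squares regression line is the line that makes the sum of the squared vertical distances from the data points to the line as small as possible.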
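
As a rough illustration of what "as close as possible in the vertical direction" means, the sketch below scores candidate lines by the sum of their squared vertical distances to the points. The five data points and the helper name `sum_squared_errors` are made up for illustration and do not come from the slides. For this toy data the least-squares line works out to y-hat = 2.2 + 0.6x, so nudging either coefficient should only make the score worse.

```python
def sum_squared_errors(xs, ys, b0, b1):
    """Sum of squared vertical distances from the points to the line b0 + b1*x."""
    return sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical toy data, not taken from the slides.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

# Least-squares coefficients for this toy data (worked out by hand).
best = sum_squared_errors(xs, ys, b0=2.2, b1=0.6)

# Score eight nearby lines; each should have a larger sum of squares.
nudged = [
    sum_squared_errors(xs, ys, 2.2 + db0, 0.6 + db1)
    for db0 in (-0.1, 0.0, 0.1)
    for db1 in (-0.05, 0.0, 0.05)
    if (db0, db1) != (0.0, 0.0)
]

print(f"least-squares line: {best:.3f}")
print(f"best nudged line:   {min(nudged):.3f}")
```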

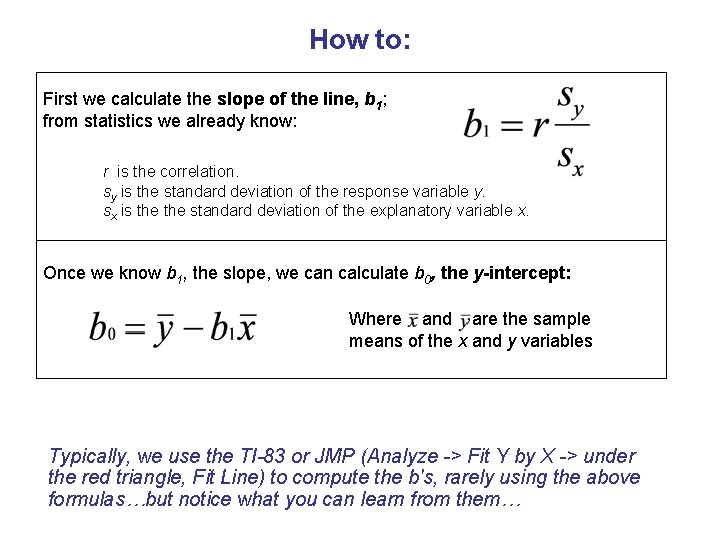

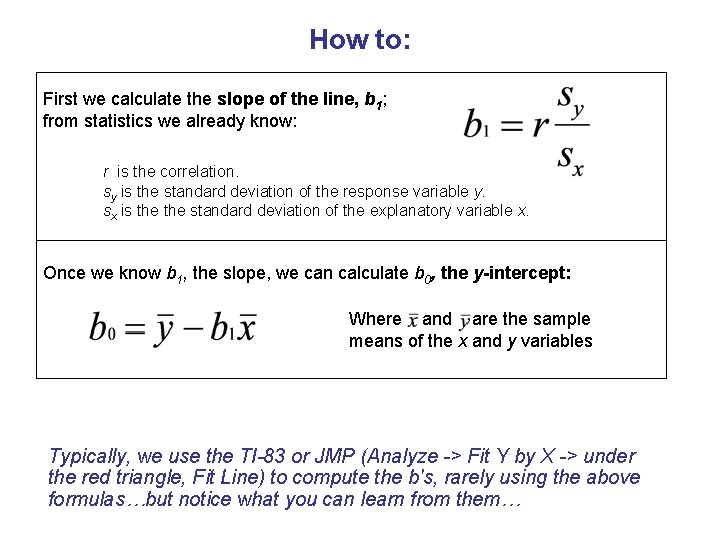

How to:

First we calculate the slope of the line, $b_1$; from statistics we already know:

$$b_1 = r \frac{s_y}{s_x}$$

- r is the correlation.
- $s_y$ is the standard deviation of the response variable y.
- $s_x$ is the standard deviation of the explanatory variable x.

Once we know the slope $b_1$, we can calculate the y-intercept $b_0$:

$$b_0 = \bar{y} - b_1 \bar{x}$$

where $\bar{x}$ and $\bar{y}$ are the sample means of the x and y variables.

Typically, we use the TI-83 or JMP (Analyze -> Fit Y by X -> under the red triangle, Fit Line) to compute the b's, rarely using the above formulas. But notice what you can learn from them.
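
For readers without a TI-83 or JMP at hand, here is a minimal sketch of the same calculation in Python, assuming the standard formulas slope = r·s_y/s_x and intercept = y-bar − slope·x-bar. The data values are hypothetical and chosen only so the arithmetic is easy to follow.

```python
from statistics import mean, stdev

# Hypothetical toy data, not taken from the slides.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

n = len(xs)
x_bar, y_bar = mean(xs), mean(ys)
s_x, s_y = stdev(xs), stdev(ys)  # sample standard deviations (n - 1 divisor)

# Correlation: sum of products of standardized values, divided by n - 1.
r = sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y) for x, y in zip(xs, ys)) / (n - 1)

b1 = r * s_y / s_x        # slope
b0 = y_bar - b1 * x_bar   # y-intercept

print(f"r = {r:.4f}, b1 = {b1:.4f}, b0 = {b0:.4f}")
```

For this toy data the fitted line is y-hat = 2.2 + 0.6x.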

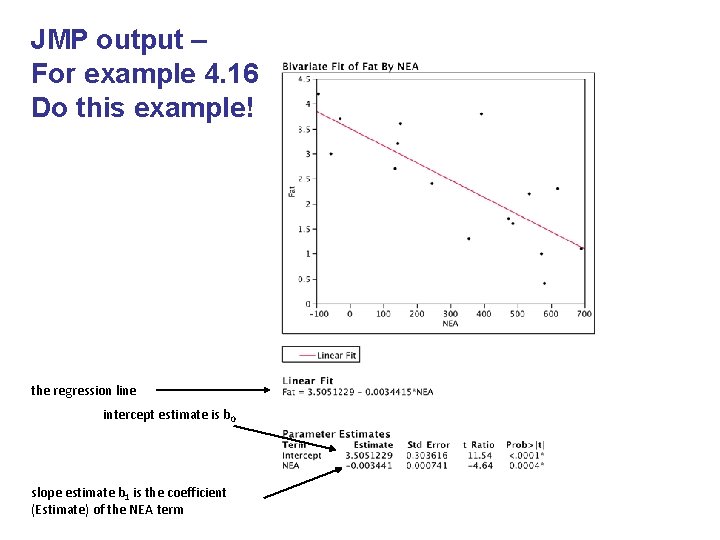

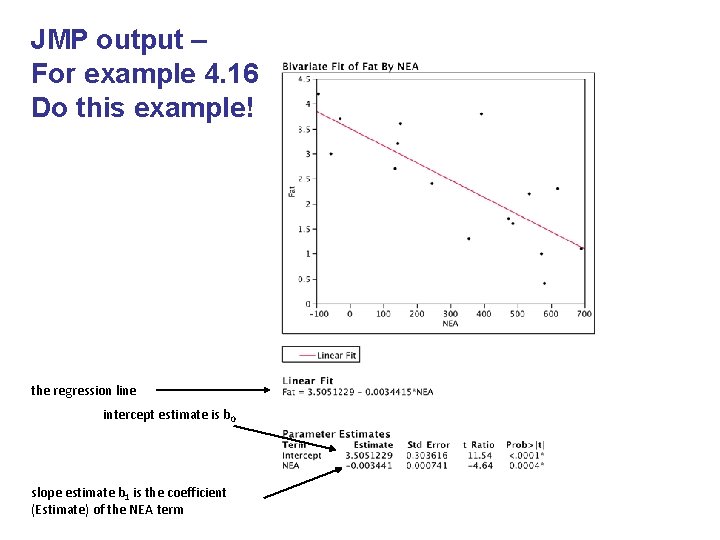

JMP output for Example 4.16 (do this example!): in the regression output, the intercept estimate is $b_0$, and the slope estimate $b_1$ is the coefficient (Estimate) of the NEA term.

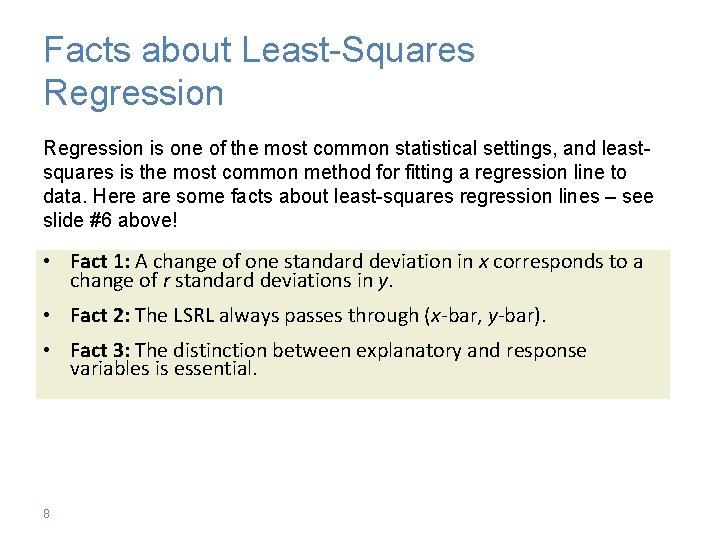

Facts about Least-Squares Regression

Regression is one of the most common statistical settings, and least-squares is the most common method for fitting a regression line to data. Here are some facts about least-squares regression lines (see slide #6 above!):

- Fact 1: A change of one standard deviation in x corresponds to a change of r standard deviations in the predicted y.
- Fact 2: The least-squares regression line (LSRL) always passes through $(\bar{x}, \bar{y})$.
- Fact 3: The distinction between explanatory and response variables is essential.
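
The three facts can be checked numerically. The sketch below does so on a small made-up data set; the helper `fit_line` is a hypothetical name that applies slope = r·s_y/s_x and intercept = y-bar − slope·x-bar, and is not part of any library.

```python
from statistics import mean, stdev


def fit_line(xs, ys):
    """Return (b0, b1, r) for the least-squares line predicting ys from xs."""
    n = len(xs)
    x_bar, y_bar = mean(xs), mean(ys)
    s_x, s_y = stdev(xs), stdev(ys)
    r = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / ((n - 1) * s_x * s_y)
    b1 = r * s_y / s_x
    return y_bar - b1 * x_bar, b1, r


# Hypothetical toy data, not taken from the slides.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
b0, b1, r = fit_line(xs, ys)

# Fact 1: moving one s_x along the line changes y-hat by r * s_y.
change_in_y_hat = b1 * stdev(xs)
r_times_s_y = r * stdev(ys)

# Fact 2: the line passes through (x-bar, y-bar).
y_hat_at_x_bar = b0 + b1 * mean(xs)

# Fact 3: regressing x on y gives a different line.
c0, c1, _ = fit_line(ys, xs)     # x-hat = c0 + c1 * y
slope_if_solved_for_y = 1 / c1   # that line rewritten with y on the left

print(change_in_y_hat, r_times_s_y)
print(y_hat_at_x_bar, mean(ys))
print(b1, slope_if_solved_for_y)
```

In this example the y-on-x slope is 0.6, while the x-on-y line, rewritten in terms of y, has slope 1.0. The two agree only when r is exactly 1 or −1.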

Correlation and Regression

Least-squares regression looks at the distances of the data points from the line only in the y direction. The variables x and y play different roles in regression. Even though correlation r ignores the distinction between x and y, there is a close connection between correlation and regression.

The square of the correlation, $r^2$, is the fraction of the variation in the values of y that is explained by the least-squares regression of y on x. $r^2$ is called the coefficient of determination.

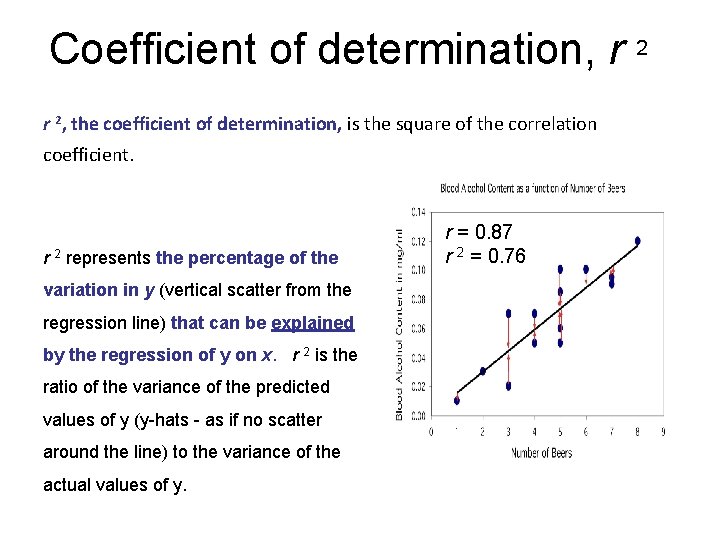

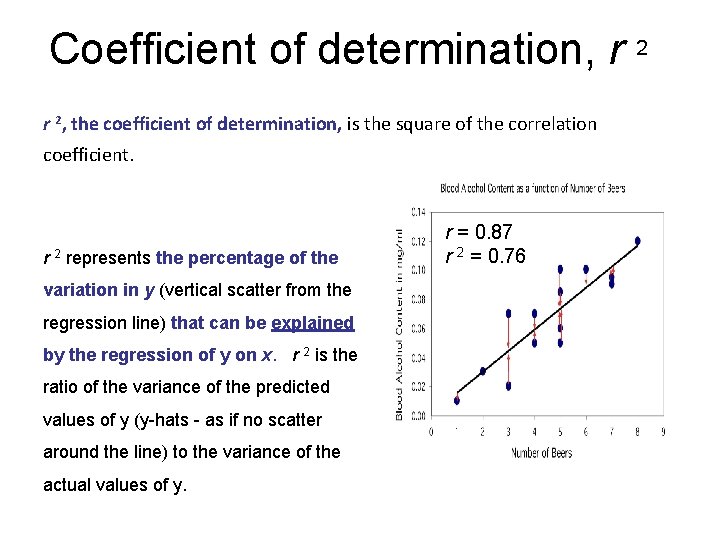

Coefficient of determination

$r^2$, the coefficient of determination, is the square of the correlation coefficient. It represents the fraction of the variation in y that can be explained by the regression of y on x; the remainder is the vertical scatter about the regression line.

$r^2$ is the ratio of the variance of the predicted values of y (the $\hat{y}$ values, as if there were no scatter around the line) to the variance of the actual values of y.

r = 0.87, $r^2$ = 0.76
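
The variance-ratio description can be verified directly. The following sketch, on hypothetical data, fits the least-squares line and then compares the variance of the predicted values with the variance of the observed values. The ratio should match the squared correlation.

```python
from statistics import mean, stdev, variance

# Hypothetical toy data, not taken from the slides.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

n = len(xs)
x_bar, y_bar = mean(xs), mean(ys)

# Correlation r with the usual n - 1 divisor.
r = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (
    (n - 1) * stdev(xs) * stdev(ys)
)

# Least-squares line and its predictions at the observed x values.
b1 = r * stdev(ys) / stdev(xs)
b0 = y_bar - b1 * x_bar
y_hats = [b0 + b1 * x for x in xs]

# Variance of the predictions divided by variance of the observations.
ratio = variance(y_hats) / variance(ys)

print(f"r^2   = {r ** 2:.4f}")
print(f"ratio = {ratio:.4f}")
```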

HW: Read sections 4.3 and 4.4. Do #4.53-4.56, 4.58, and 4.69; use JMP to compute the regression line, the correlation coefficient, and its square.

4.4 Cautions about Correlation and Regression

- Residuals and Residual Plots
- Outliers and Influential Observations
- Lurking Variables
- Correlation and Causation

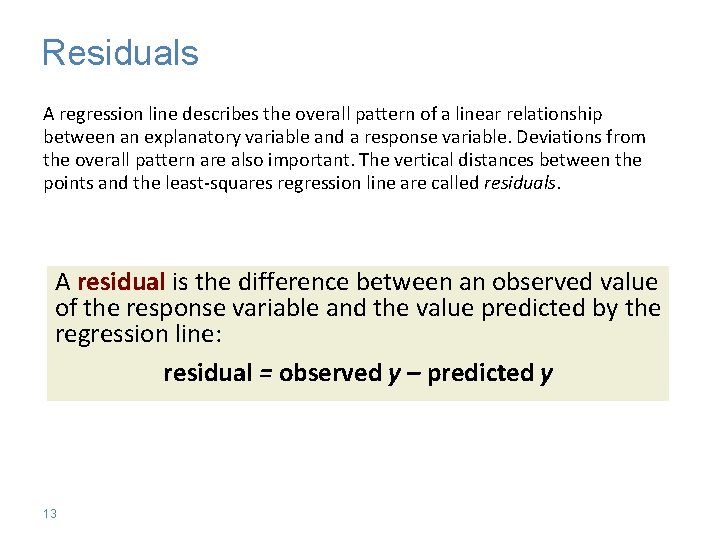

Residuals

A regression line describes the overall pattern of a linear relationship between an explanatory variable and a response variable. Deviations from the overall pattern are also important. The vertical distances between the points and the least-squares regression line are called residuals.

A residual is the difference between an observed value of the response variable and the value predicted by the regression line:

residual = observed y - predicted y = $y - \hat{y}$
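
A short sketch of the residual calculation, using made-up data and the least-squares line for that data (y-hat = 2.2 + 0.6x, worked out by hand). One property worth noticing is that the residuals from a least-squares line add up to zero, apart from rounding.

```python
# Hypothetical toy data, not taken from the slides.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

# Least-squares line for this toy data.
b0, b1 = 2.2, 0.6

# residual = observed y - predicted y
residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]

for x, y, e in zip(xs, ys, residuals):
    print(f"x = {x}: observed {y}, predicted {b0 + b1 * x:.1f}, residual {e:+.1f}")

print(f"sum of residuals: {sum(residuals):.10f}")
```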

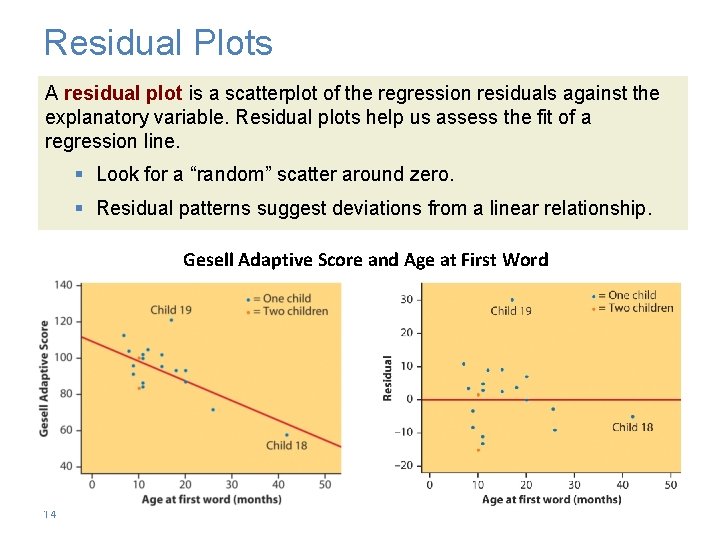

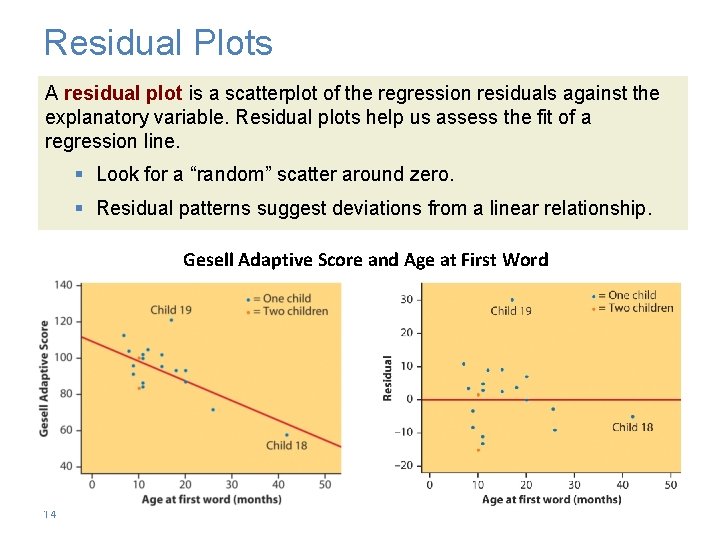

Residual Plots

A residual plot is a scatterplot of the regression residuals against the explanatory variable. Residual plots help us assess the fit of a regression line.

- Look for a "random" scatter around zero.
- Residual patterns suggest deviations from a linear relationship.

Figure: Gesell Adaptive Score and Age at First Word
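
To see what a residual pattern looks like, the sketch below fits a straight line to data that are deliberately curved (y = x², a made-up example) and lists the residuals in order of x. It prints numbers rather than drawing the plot, so it needs no plotting library.

```python
from statistics import mean

# Hypothetical curved data: y = x squared.
xs = [0, 1, 2, 3, 4]
ys = [x ** 2 for x in xs]

# Least-squares slope and intercept from sums of squares and cross-products.
x_bar, y_bar = mean(xs), mean(ys)
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
sxx = sum((x - x_bar) ** 2 for x in xs)
b1 = sxy / sxx
b0 = y_bar - b1 * x_bar

residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]

# A residual plot would put these values on the vertical axis against x.
for x, e in zip(xs, residuals):
    print(f"x = {x}: residual = {e:+.1f}")
```

The residuals run positive, negative, negative, negative, positive: a U shape rather than random scatter around zero, which signals that a straight line is the wrong model here.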

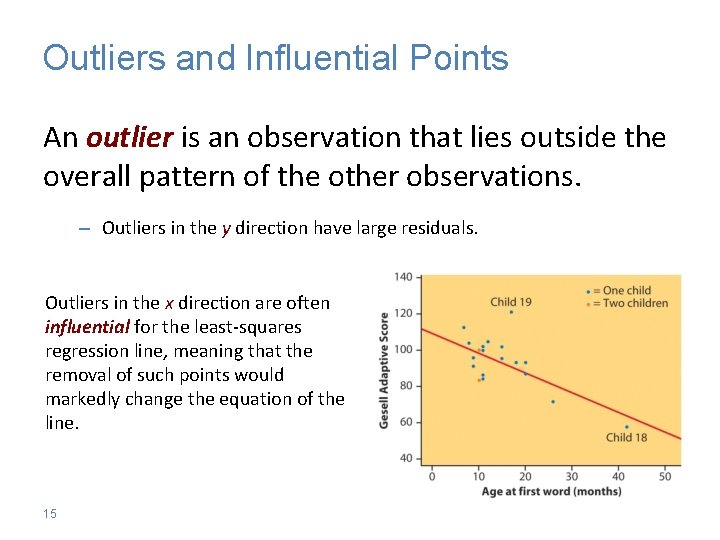

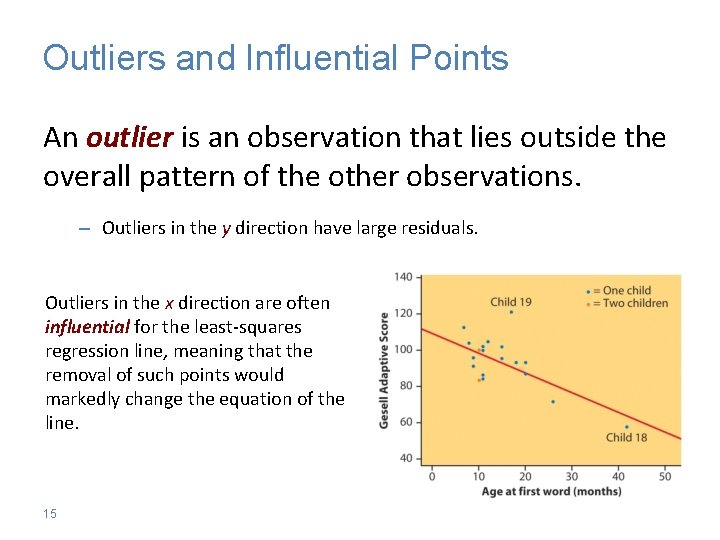

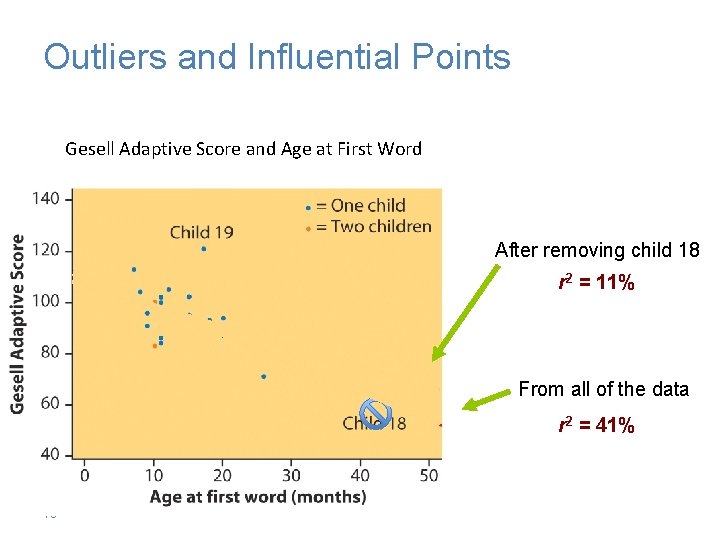

Outliers and Influential Points

An outlier is an observation that lies outside the overall pattern of the other observations.

- Outliers in the y direction have large residuals.
- Outliers in the x direction are often influential for the least-squares regression line, meaning that the removal of such points would markedly change the equation of the line.
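
The effect of an influential point can be sketched by fitting the line twice, with and without the point. The data below are hypothetical (they are not the Gesell data); the last point sits far out in the x direction. `slope_intercept` is an illustrative helper, not a library function.

```python
from statistics import mean


def slope_intercept(xs, ys):
    """Return (b0, b1) for the least-squares line predicting ys from xs."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


# Hypothetical data; the last point (15, 3) is an outlier in the x direction.
xs = [1, 2, 3, 4, 5, 15]
ys = [2, 4, 5, 4, 5, 3]

b0_all, b1_all = slope_intercept(xs, ys)
b0_trim, b1_trim = slope_intercept(xs[:-1], ys[:-1])  # drop the last point

print(f"all points:      y-hat = {b0_all:.3f} + {b1_all:.3f} x")
print(f"without (15, 3): y-hat = {b0_trim:.3f} + {b1_trim:.3f} x")
```

Here a single point is enough to flip the slope from positive to slightly negative.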

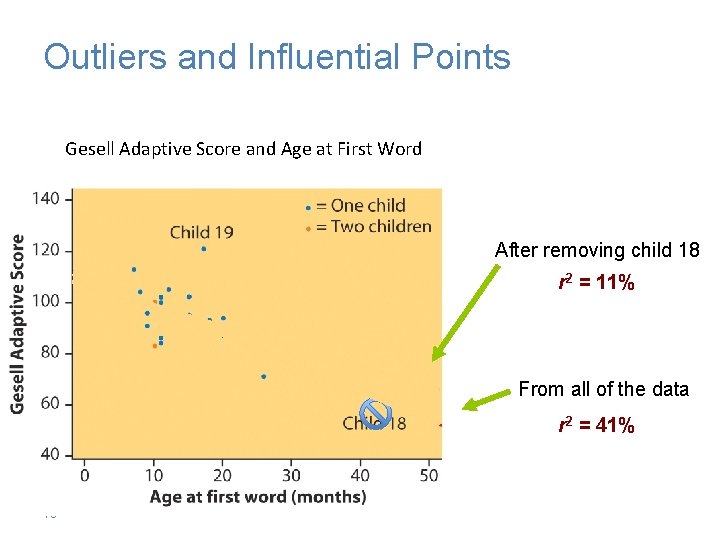

Outliers and Influential Points

Figure: Gesell Adaptive Score and Age at First Word

- From all of the data: $r^2$ = 41%
- After removing child 18: $r^2$ = 11%

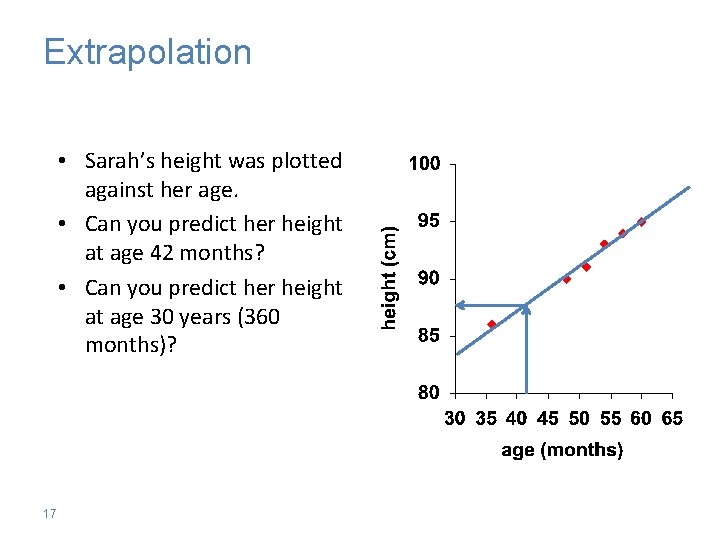

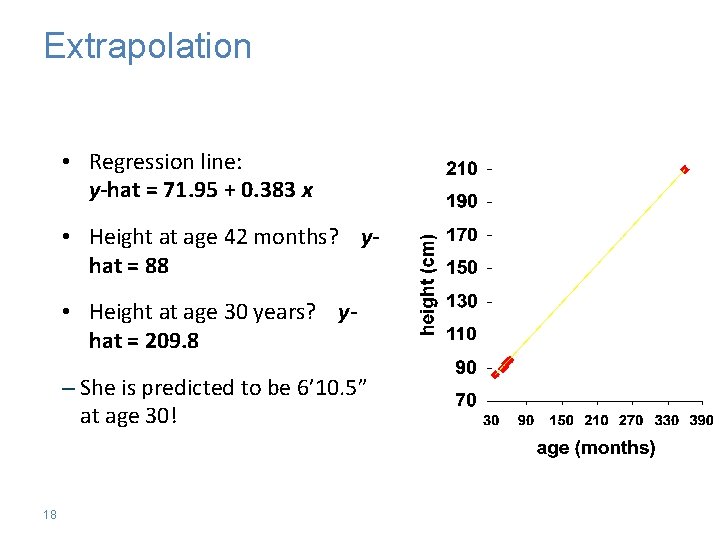

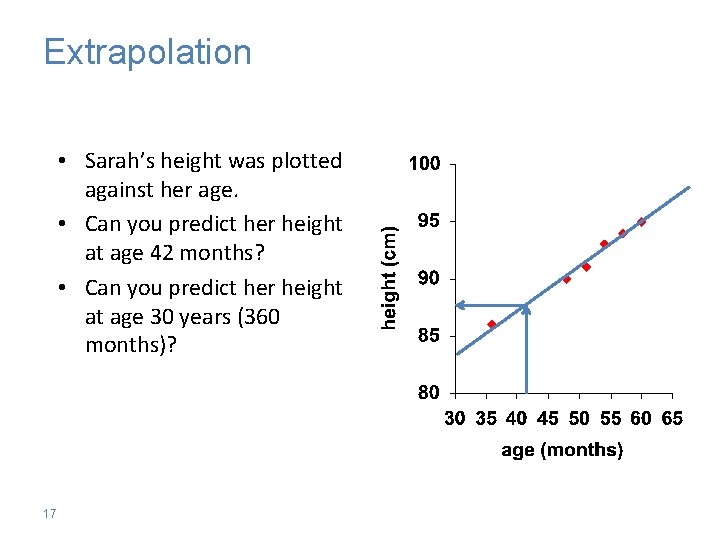

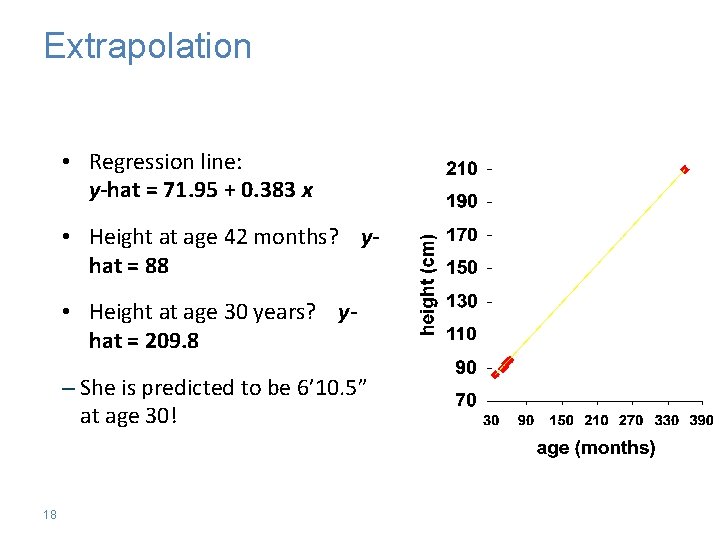

Extrapolation

- Sarah's height was plotted against her age.
- Can you predict her height at age 42 months?
- Can you predict her height at age 30 years (360 months)?

Extrapolation

- Regression line: $\hat{y} = 71.95 + 0.383x$
- Height at age 42 months? $\hat{y} = 88$
- Height at age 30 years? $\hat{y} = 209.8$
  - She is predicted to be 6' 10.5" at age 30!
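
The two predictions can be reproduced from the regression line quoted on the slide. The sketch below also adds a simple range check. The observed age range is not stated in the text, so `OBSERVED_AGES` is an assumed placeholder, and `is_extrapolation` is a hypothetical helper name.

```python
def predict_height(age_months):
    """y-hat = 71.95 + 0.383 * x, the regression line quoted on the slide."""
    return 71.95 + 0.383 * age_months


# ASSUMPTION: range of ages in the data (months); not given in the text.
OBSERVED_AGES = (36, 60)


def is_extrapolation(age_months, observed=OBSERVED_AGES):
    """True when the requested x lies outside the range of the observed x values."""
    low, high = observed
    return not (low <= age_months <= high)


h42 = predict_height(42)
h360 = predict_height(360)

for age, height in ((42, h42), (360, h360)):
    flag = "extrapolation!" if is_extrapolation(age) else "within the data"
    print(f"age {age} months: y-hat = {height:.1f} ({flag})")
```

The arithmetic is equally valid for both ages. What differs is whether the linear pattern can be trusted that far beyond the data.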

Cautions about Correlation and Regression

- Both describe linear relationships.
- Both are affected by outliers.
- Always plot the data before interpreting.
- Beware of extrapolation.
  - Extrapolation is predicting outside of the range of x.
- Beware of lurking variables.
  - These have an important effect on the relationship among the variables in a study, but are not included in the study.
- Correlation does not imply causation!