LEAST-SQUARES REGRESSION Section 3.3


Regression line
- A straight line that describes how a response variable y changes as an explanatory variable x changes
- Used to predict y for a given value of x
- The data must have an explanatory variable and a response variable
- Used for linear trends

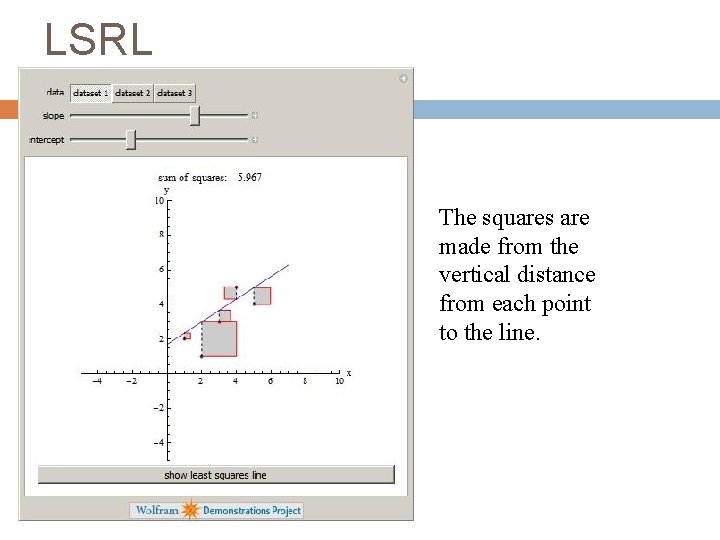

Least-squares regression line
- The line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible
- Equation: $\hat{y} = a + bx$, where $\hat{y}$ is the predicted value, $a$ is the y-intercept, and $b$ is the slope of the line

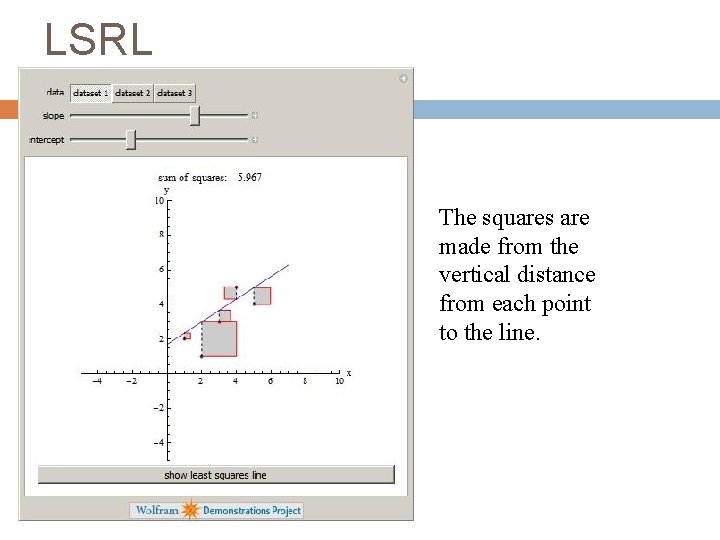

LSRL
- Each square is drawn on the vertical distance from a data point to the line; the LSRL is the line that makes the total area of these squares as small as possible.

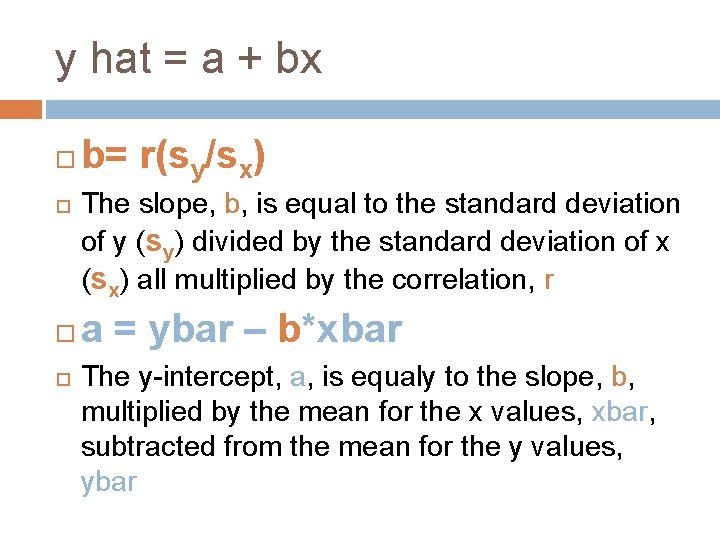

$\hat{y} = a + bx$
- Slope: $b = r\,\frac{s_y}{s_x}$. The slope $b$ equals the standard deviation of y ($s_y$) divided by the standard deviation of x ($s_x$), all multiplied by the correlation $r$.
- y-intercept: $a = \bar{y} - b\bar{x}$. The y-intercept $a$ equals the mean of the y values, $\bar{y}$, minus the slope $b$ times the mean of the x values, $\bar{x}$.

Calculators
- You can calculate a, b, and r with the LinReg(a+bx) tool on your calculator: go to STAT → CALC → 8:LinReg(a+bx).
- Option 4 under CALC, LinReg(ax+b), gives the same line for the data set, but in that form a is the slope and b is the y-intercept. Use option 8 so the letters match $\hat{y} = a + bx$.

Coefficient of determination, $r^2$
- The fraction of the variation in the values of y that is explained by the least-squares regression of y on x
- Often reported as a percentage: "____% of the variation in y is explained by the least-squares regression of y on x."

Facts about least-squares regression
- The distinction between explanatory and response variables is essential: regressing y on x and regressing x on y generally give different lines.
- A change of one standard deviation in x corresponds to a change of r standard deviations in predicted y.
- The LSRL always passes through the point $(\bar{x}, \bar{y})$.

Residual
- The difference between an observed value of the response variable and the value predicted by the regression line
- Residual = observed y - predicted y = $y - \hat{y}$
- The mean of the least-squares residuals is always zero

Residual plot
- A scatterplot of the regression residuals against the explanatory variable
- Helps assess how well a regression line fits the data
- Uniform scatter with no pattern indicates that the regression line fits well
- A curved pattern indicates that the relationship is not linear
- Individual points with large residuals are outliers in the vertical (y) direction because they lie far from the line
- Individual points that are extreme in the x direction may not have large residuals

Influential observations
- An observation is influential if removing it would markedly change the result of the calculation
- Points that are outliers in the x direction are often influential

Practice Problems: pg. 176, #3.50-3.61