3-1: Memory Hierarchy (Prof. Mikko H. Lipasti)

- Slides: 30

3-1: Memory Hierarchy

Prof. Mikko H. Lipasti, University of Wisconsin-Madison

Companion Lecture Notes for Modern Processor Design: Fundamentals of Superscalar Processors, 1st edition, by John P. Shen and Mikko H. Lipasti.

Terms of use: This lecture is licensed for noncommercial private use to anyone who owns a legitimate purchased copy of the above-mentioned textbook. All others must contact mikko@engr.wisc.edu for licensing terms.

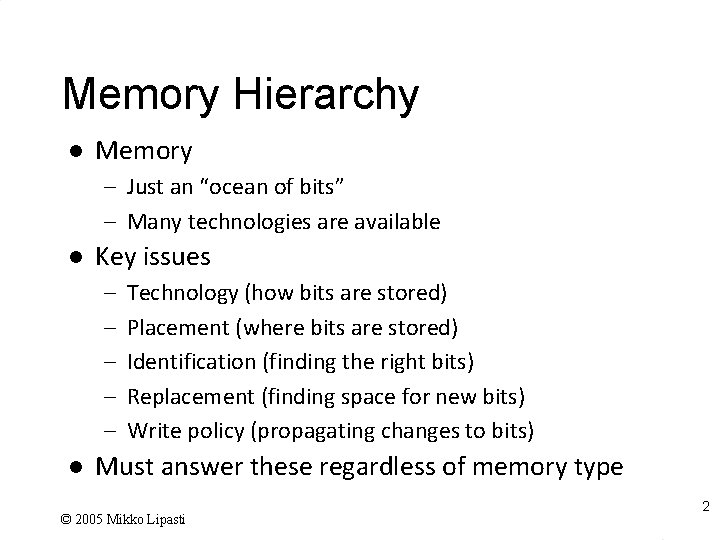

Memory Hierarchy

- Memory
  - Just an “ocean of bits”
  - Many technologies are available
- Key issues
  - Technology (how bits are stored)
  - Placement (where bits are stored)
  - Identification (finding the right bits)
  - Replacement (finding space for new bits)
  - Write policy (propagating changes to bits)
- Must answer these regardless of memory type

© 2005 Mikko Lipasti

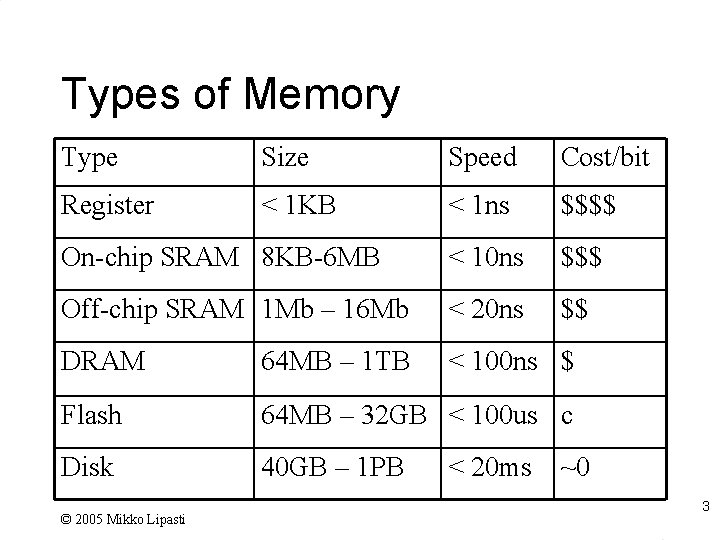

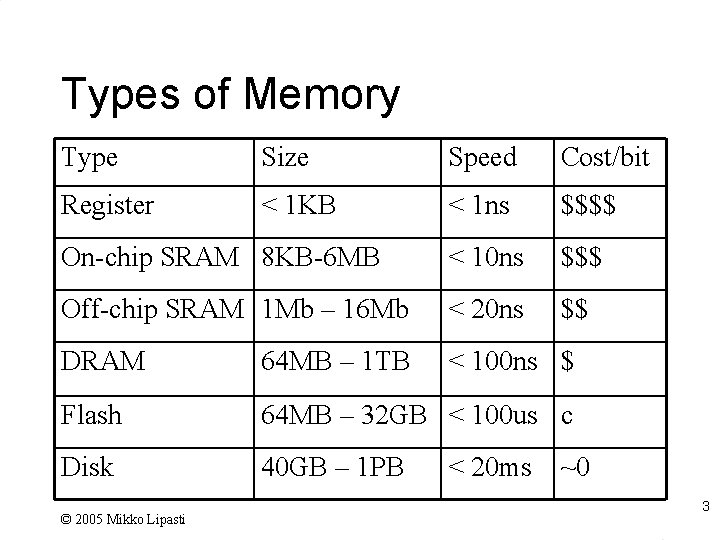

Types of Memory

| Type | Size | Speed | Cost/bit |
| --- | --- | --- | --- |
| Register | < 1 KB | < 1 ns | \$\$\$\$ |
| On-chip SRAM | 8 KB – 6 MB | < 10 ns | \$\$\$ |
| Off-chip SRAM | 1 Mb – 16 Mb | < 20 ns | \$\$ |
| DRAM | 64 MB – 1 TB | < 100 ns | \$ |
| Flash | 64 MB – 32 GB | < 100 us | ¢ |
| Disk | 40 GB – 1 PB | < 20 ms | ~0 |

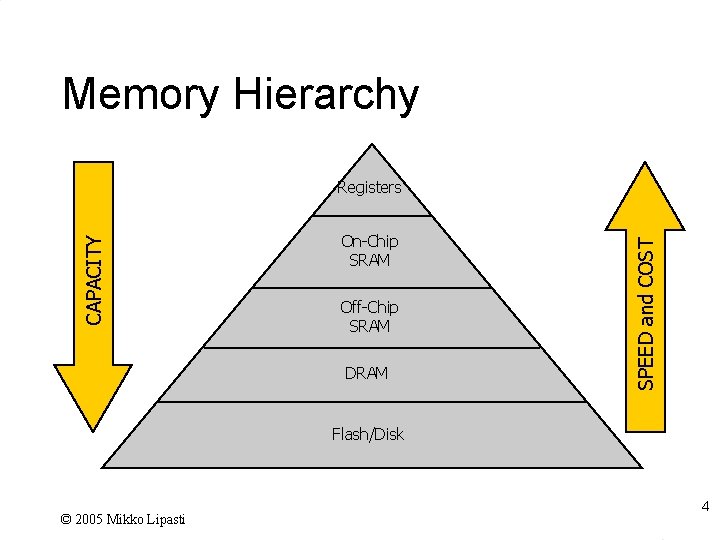

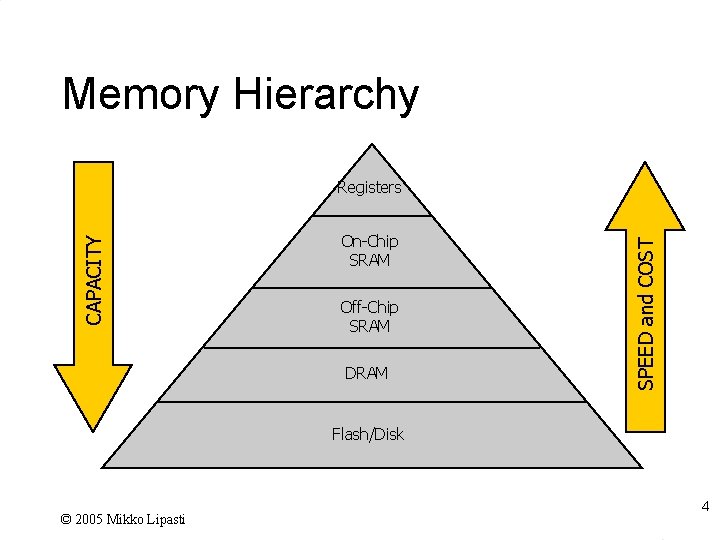

Memory Hierarchy

Figure: the levels ordered as Registers, On-Chip SRAM, Off-Chip SRAM, DRAM, Flash/Disk. Speed and cost are highest at the register end; capacity is highest at the Flash/Disk end.

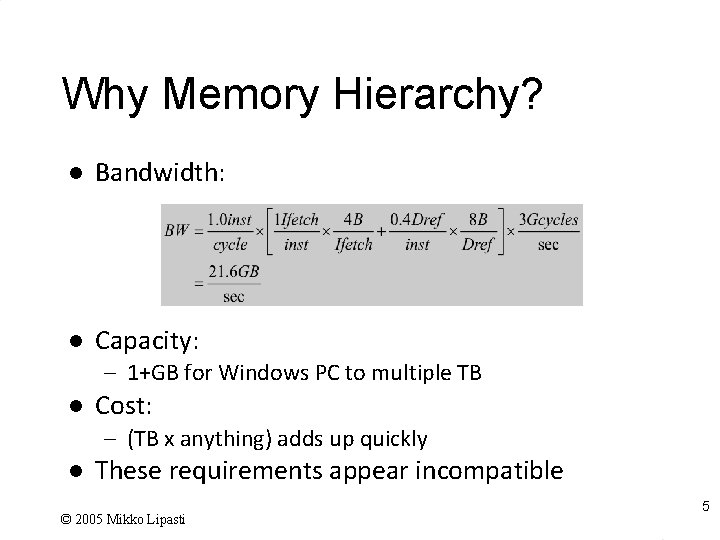

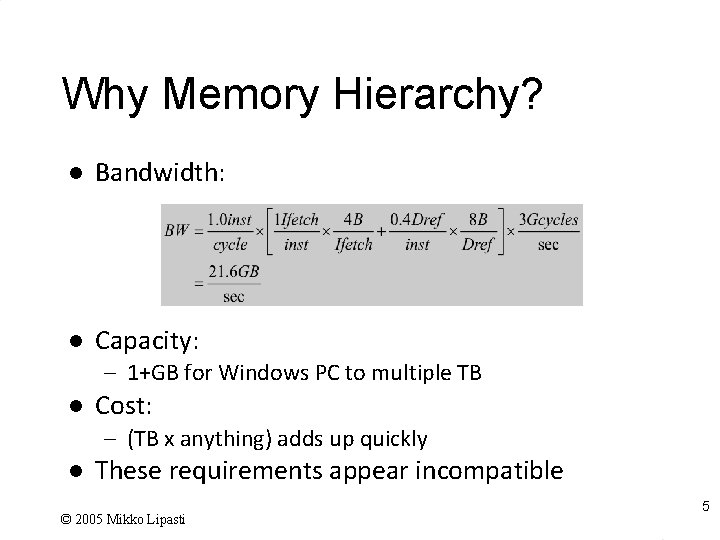

Why Memory Hierarchy?

- Bandwidth:
- Capacity:
  - 1+ GB for Windows PC to multiple TB
- Cost:
  - (TB x anything) adds up quickly
- These requirements appear incompatible

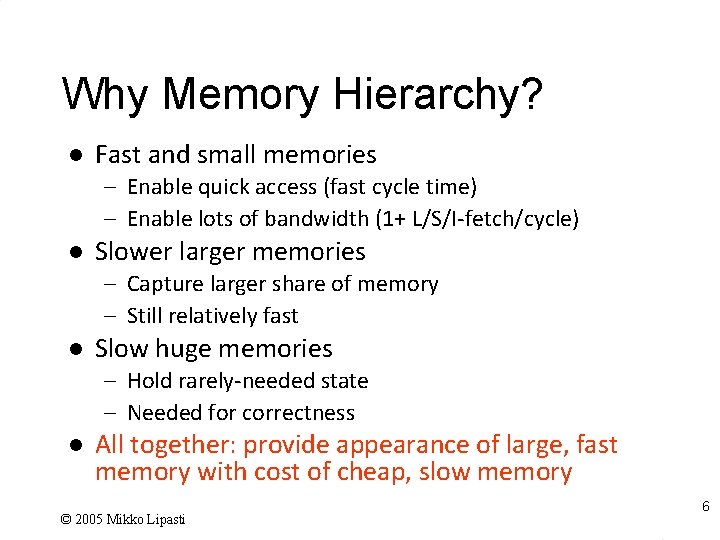

Why Memory Hierarchy?

- Fast and small memories
  - Enable quick access (fast cycle time)
  - Enable lots of bandwidth (1+ load/store/I-fetch per cycle)
- Slower larger memories
  - Capture larger share of memory
  - Still relatively fast
- Slow huge memories
  - Hold rarely-needed state
  - Needed for correctness
- All together: provide appearance of large, fast memory with cost of cheap, slow memory

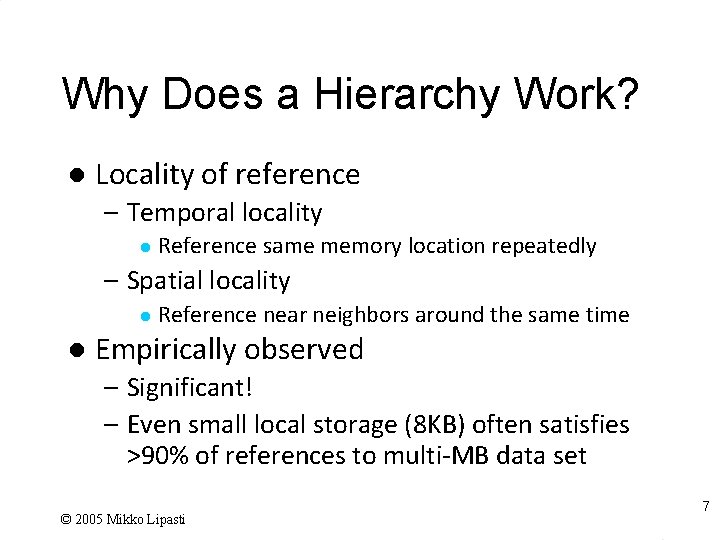

Why Does a Hierarchy Work?

- Locality of reference
  - Temporal locality
    - Reference same memory location repeatedly
  - Spatial locality
    - Reference near neighbors around the same time
- Empirically observed
  - Significant!
  - Even small local storage (8 KB) often satisfies >90% of references to multi-MB data set

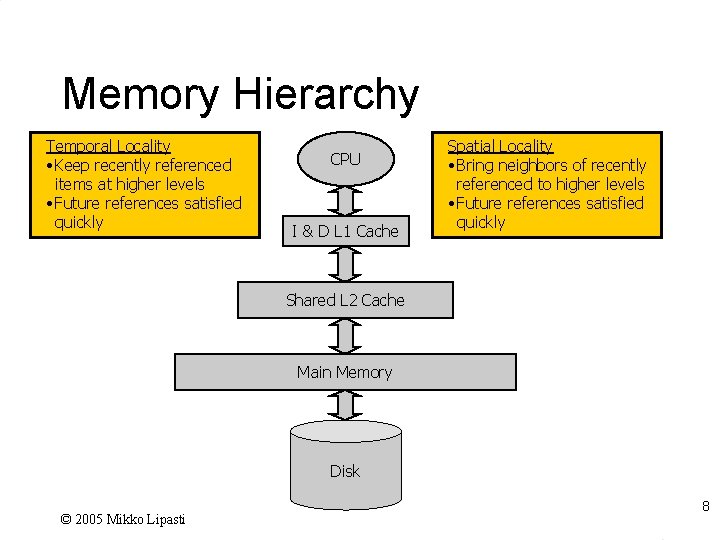

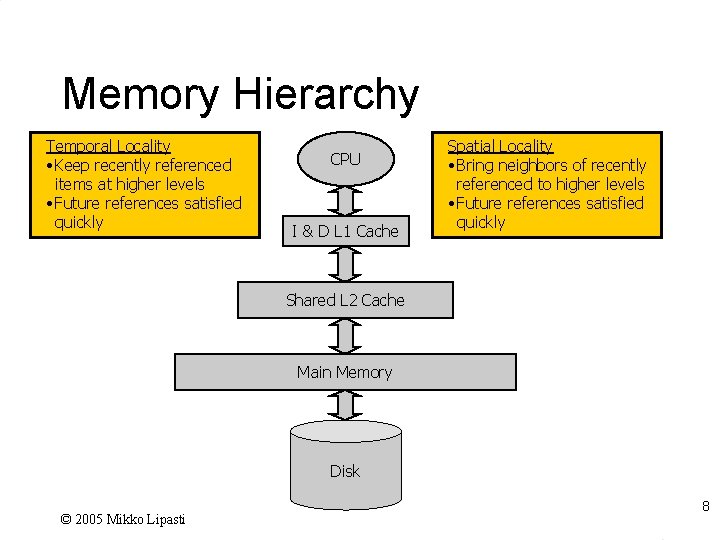

Memory Hierarchy

- Temporal Locality
  - Keep recently referenced items at higher levels
  - Future references satisfied quickly
- Spatial Locality
  - Bring neighbors of recently referenced items to higher levels
  - Future references satisfied quickly

Figure: CPU, then I & D L1 Cache, then Shared L2 Cache, then Main Memory, then Disk.

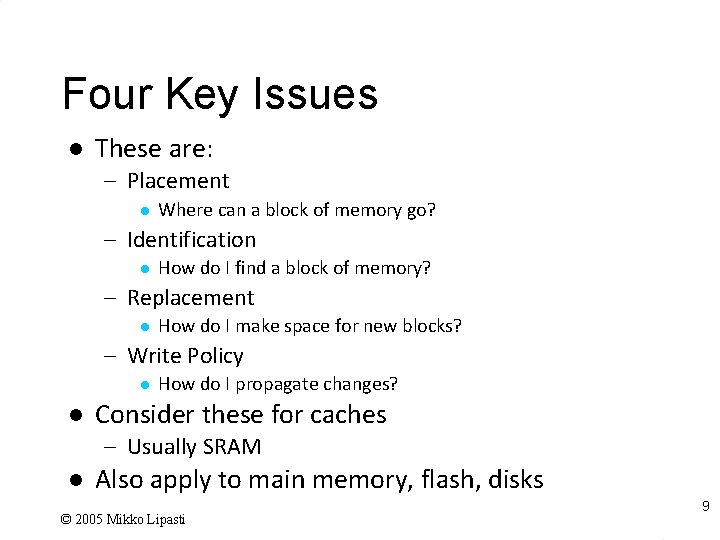

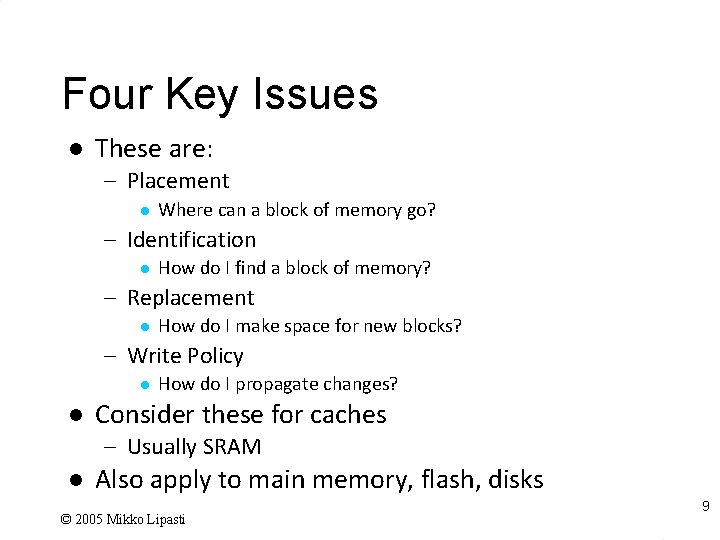

Four Key Issues

- These are:
  - Placement
    - Where can a block of memory go?
  - Identification
    - How do I find a block of memory?
  - Replacement
    - How do I make space for new blocks?
  - Write Policy
    - How do I propagate changes?
- Consider these for caches
  - Usually SRAM
- Also apply to main memory, flash, disks

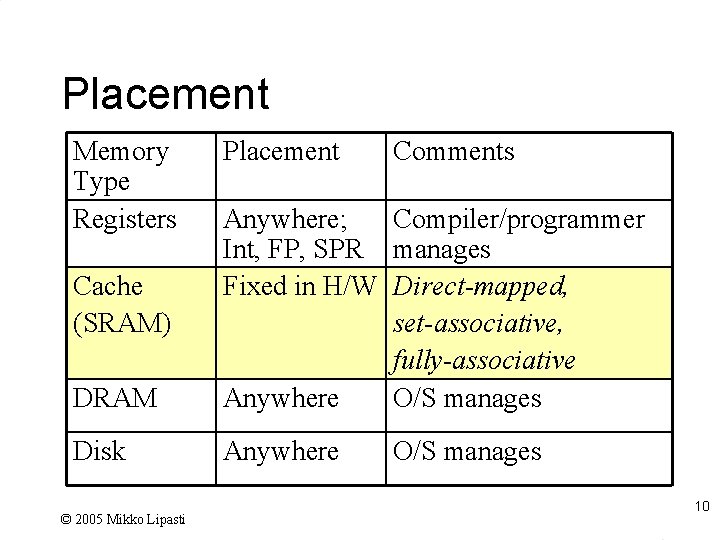

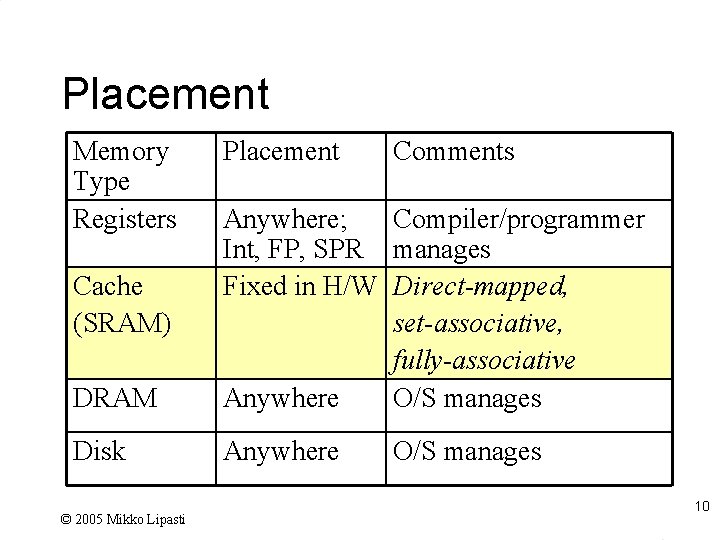

Placement

| Memory Type | Placement | Comments |
| --- | --- | --- |
| Registers | Anywhere; Int, FP, SPR | Compiler/programmer manages |
| Cache (SRAM) | Fixed in H/W | Direct-mapped, set-associative, fully-associative |
| DRAM | Anywhere | O/S manages |
| Disk | Anywhere | O/S manages |

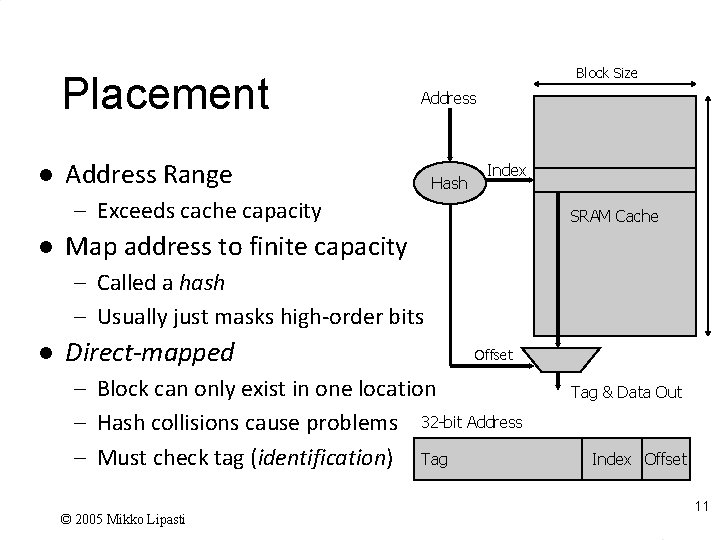

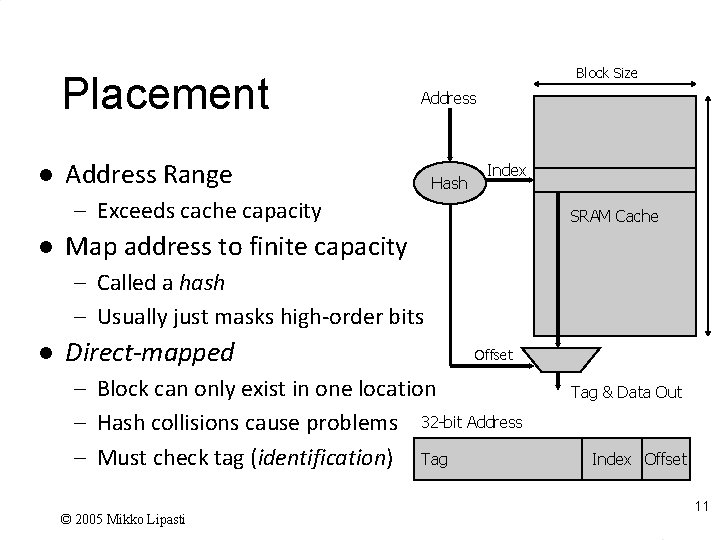

Placement

- Address Range
  - Exceeds cache capacity
- Map address to finite capacity
  - Called a hash
  - Usually just masks high-order bits
- Direct-mapped
  - Block can only exist in one location
  - Hash collisions cause problems
  - Must check tag (identification)

Figure: 32-bit address split into Tag | Index | Offset. The index (hash) selects one block of the SRAM cache, which outputs tag and data; the offset selects within the block.
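As a minimal sketch of the "hash by masking high-order bits" idea, the following assumes a cache of 64 blocks of 64 bytes each. These sizes and the function names `direct_mapped_index` and `tag_of` are illustrative assumptions, not taken from the slide.

```python
BLOCK_SIZE = 64   # bytes per block (assumed for illustration)
NUM_BLOCKS = 64   # blocks in the cache (assumed for illustration)

OFFSET_BITS = BLOCK_SIZE.bit_length() - 1   # log2(64) = 6
INDEX_BITS = NUM_BLOCKS.bit_length() - 1    # log2(64) = 6


def direct_mapped_index(address: int) -> int:
    """Drop the offset bits, then mask off the high-order (tag) bits."""
    return (address >> OFFSET_BITS) & (NUM_BLOCKS - 1)


def tag_of(address: int) -> int:
    """The high-order bits that the mask discarded."""
    return address >> (OFFSET_BITS + INDEX_BITS)


# Two addresses that differ only in their high-order bits.
addr_a = 0x0000_1040
addr_b = 0x0001_1040
```

Both addresses map to the same cache location, which is the hash collision the slide warns about; only the stored tag can tell them apart.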

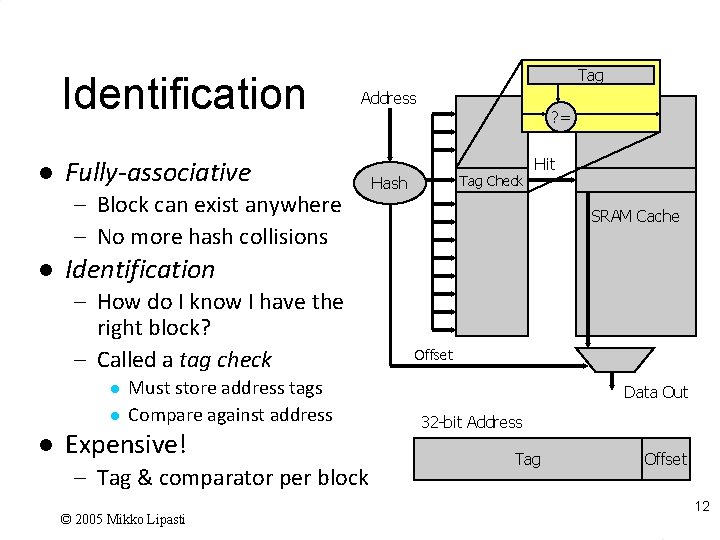

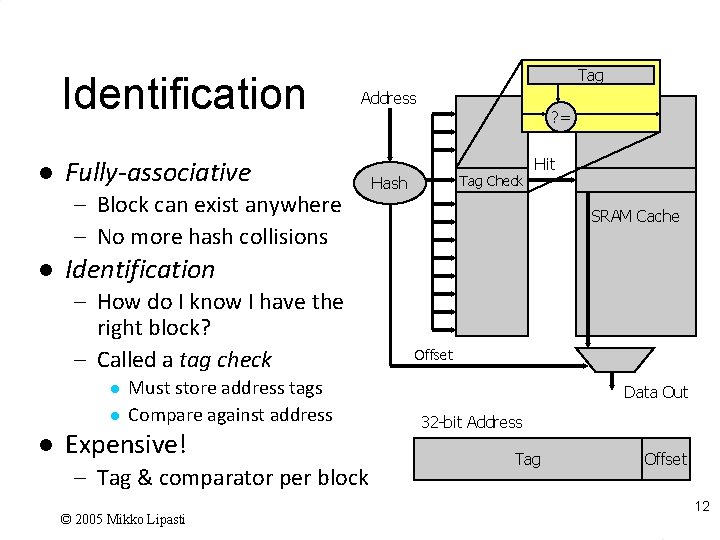

Identification

- Fully-associative
  - Block can exist anywhere
  - No more hash collisions
- Identification
  - How do I know I have the right block?
  - Called a tag check
    - Must store address tags
    - Compare against address
- Expensive!
  - Tag & comparator per block

Figure: 32-bit address split into Tag | Offset. The address tag is compared (?=) against the stored tags of the SRAM cache to produce Hit and Data Out.

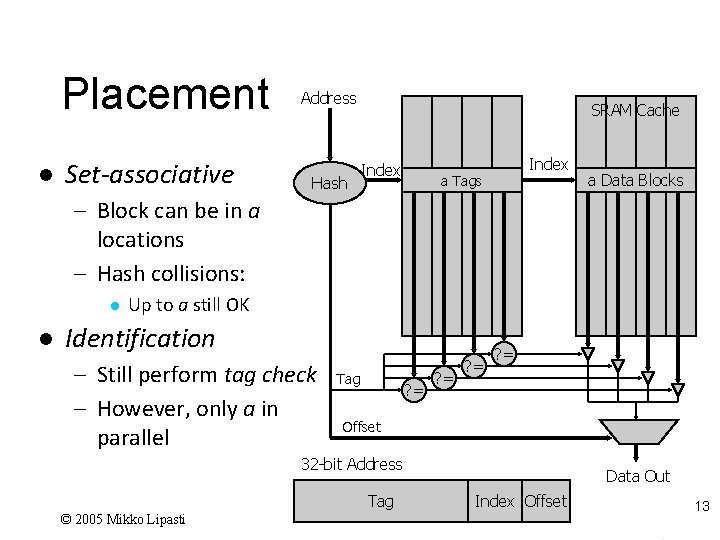

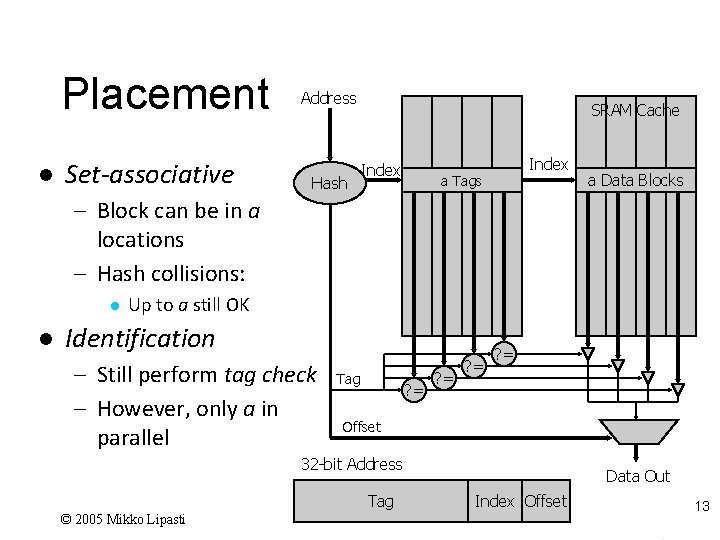

Placement

- Set-associative
  - Block can be in *a* locations
  - Hash collisions:
    - Up to *a* still OK
- Identification
  - Still perform tag check
  - However, only *a* in parallel

Figure: 32-bit address split into Tag | Index | Offset. The index (hash) selects a set of *a* tags and *a* data blocks in the SRAM cache; the *a* tags are compared (?=) in parallel to select Data Out.
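A set-associative lookup can be sketched as follows. This is a simplified model under stated assumptions: the class name `SetAssociativeCache`, its default sizes (64-byte blocks, 16 sets, 4 ways), and the explicit `way` argument to `fill` are all hypothetical, and only tags are stored, not data.

```python
from dataclasses import dataclass, field


@dataclass
class SetAssociativeCache:
    block_size: int = 64   # bytes per block (assumed)
    num_sets: int = 16     # number of sets (assumed)
    ways: int = 4          # associativity a (assumed)
    sets: list = field(init=False)

    def __post_init__(self):
        # Each set holds up to `ways` tags; None marks an empty way.
        self.sets = [[None] * self.ways for _ in range(self.num_sets)]

    def split(self, address):
        """Return (tag, index) for an address; the offset is discarded."""
        block = address // self.block_size
        return block // self.num_sets, block % self.num_sets

    def lookup(self, address):
        """Tag check: hardware performs these `ways` compares in parallel."""
        tag, index = self.split(address)
        return tag in self.sets[index]

    def fill(self, address, way):
        """Place the block in the given way of its set."""
        tag, index = self.split(address)
        self.sets[index][way] = tag


cache = SetAssociativeCache()
# Four blocks that all hash to set 0 can coexist in a 4-way cache.
for way in range(4):
    cache.fill(way * 64 * 16, way)
```

A fifth block that hashes to the same set would miss and need a victim, which is where the replacement policy comes in.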

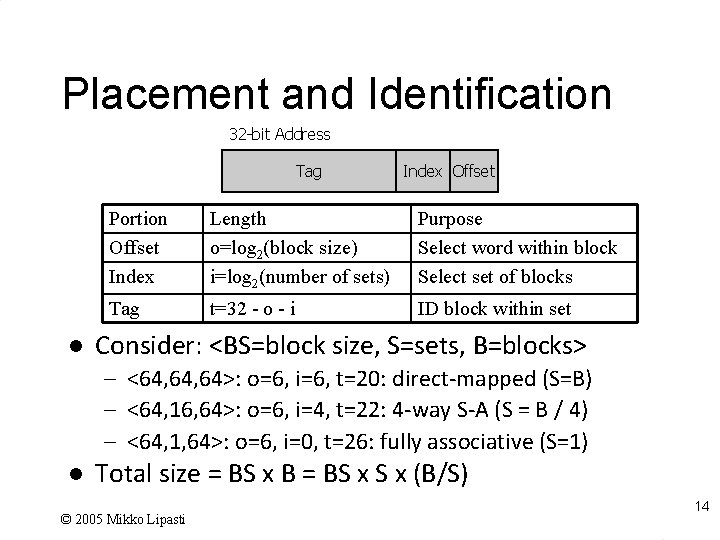

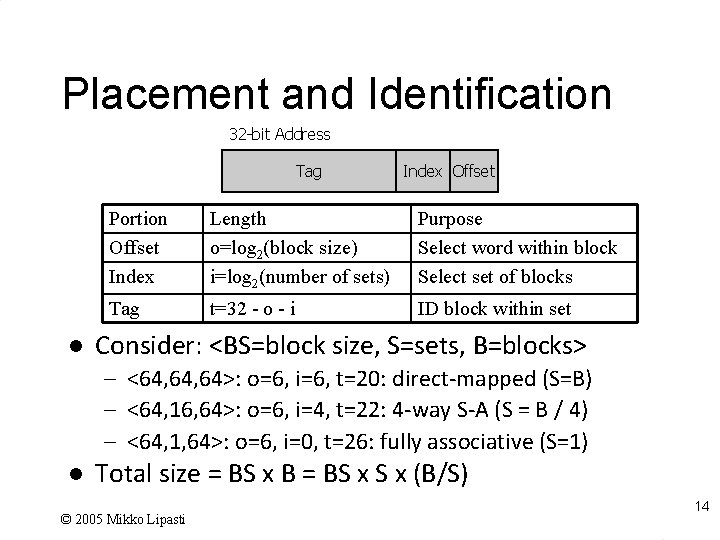

Placement and Identification

32-bit Address: Tag | Index | Offset

| Portion | Length | Purpose |
| --- | --- | --- |
| Offset | o = log2(block size) | Select word within block |
| Index | i = log2(number of sets) | Select set of blocks |
| Tag | t = 32 - o - i | ID block within set |

- Consider: <BS = block size, S = sets, B = blocks>
  - <64, 64, 64>: o=6, i=6, t=20: direct-mapped (S = B)
  - <64, 16, 64>: o=6, i=4, t=22: 4-way set-associative (S = B / 4)
  - <64, 1, 64>: o=6, i=0, t=26: fully associative (S = 1)
- Total size = BS x B = BS x S x (B/S)
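The field-length formulas on this slide can be checked with a short sketch. The helper names `field_widths` and `split_address` are hypothetical, and the code assumes block size and set count are powers of two.

```python
def field_widths(block_size, sets, address_bits=32):
    """Return (o, i, t): offset, index and tag lengths in bits."""
    # For powers of two, bit_length() - 1 equals log2.
    assert block_size & (block_size - 1) == 0, "block size must be a power of two"
    assert sets & (sets - 1) == 0, "number of sets must be a power of two"
    o = block_size.bit_length() - 1
    i = sets.bit_length() - 1
    t = address_bits - o - i
    return o, i, t


def split_address(address, block_size, sets):
    """Split an address into (tag, index, offset)."""
    o, i, _ = field_widths(block_size, sets)
    offset = address & (block_size - 1)
    index = (address >> o) & (sets - 1)
    tag = address >> (o + i)
    return tag, index, offset


# The three 64-block configurations from the slide (block size, sets).
direct_mapped = field_widths(64, 64)
four_way = field_widths(64, 16)
fully_associative = field_widths(64, 1)
```

With 64 blocks of 64 bytes, all three organizations hold 4 KB; only the split between index and tag bits changes.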

Replacement

- Cache has finite size
  - What do we do when it is full?
- Analogy: desktop full?
  - Move books to bookshelf to make room
- Same idea:
  - Move blocks to next level of cache

Replacement

- How do we choose victim?
  - Verbs: Victimize, evict, replace, cast out
- Several policies are possible
  - FIFO (first-in-first-out)
  - LRU (least recently used)
  - NMRU (not most recently used)
  - Pseudo-random (yes, really!)
- Pick victim within set where a = associativity
  - If a <= 2, LRU is cheap and easy (1 bit)
  - If a > 2, it gets harder
  - Pseudo-random works pretty well for caches
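To see why LRU needs only one bit when a = 2, consider this sketch of a single two-way set. The class name `TwoWaySet` and its interface are illustrative assumptions; the single `lru` field plays the role of the one LRU bit.

```python
class TwoWaySet:
    """One set of a 2-way set-associative cache with a 1-bit LRU policy."""

    def __init__(self):
        self.tags = [None, None]
        self.lru = 0  # the single LRU bit: index of the way to evict next

    def access(self, tag):
        """Return True on a hit; on a miss, replace the LRU way."""
        if tag in self.tags:
            way = self.tags.index(tag)
            hit = True
        else:
            way = self.lru           # victim is the least recently used way
            self.tags[way] = tag
            hit = False
        self.lru = 1 - way           # the other way is now least recently used
        return hit


s = TwoWaySet()
results = [s.access(t) for t in "ABACA"]
```

In the access sequence A, B, A, C, A the block B is the victim when C arrives, because A was touched more recently. For a > 2 a full ordering of the ways must be tracked, which is why the slide says it gets harder.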

Write Policy

- Replication in memory hierarchy
  - 2 or more copies of same block
    - Main memory and/or disk
    - Caches
- What to do on a write?
  - Eventually, all copies must be changed
  - Write must propagate to all levels

Write Policy

- Easiest policy: write-through
- Every write propagates directly through hierarchy
  - Write in L1, L2, memory, disk (?!?)
- Why is this a bad idea?
  - Very high bandwidth requirement
  - Remember, large memories are slow
- Popular in real systems only to the L2
  - Every write updates L1 and L2
  - Beyond L2, use write-back policy

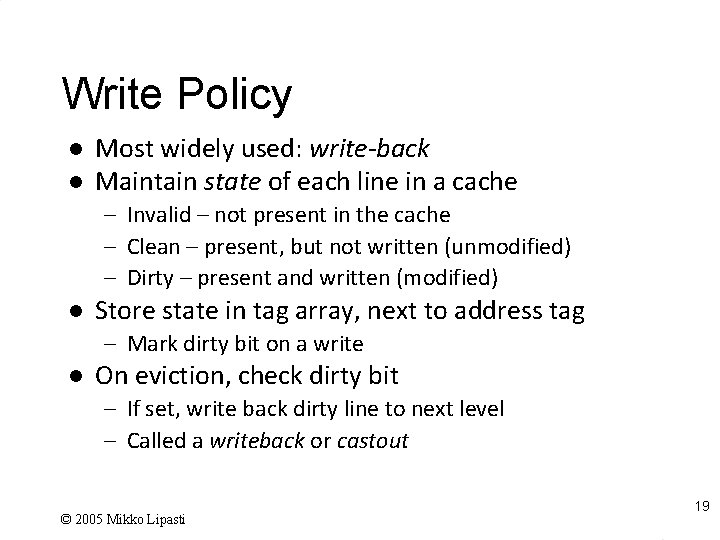

Write Policy

- Most widely used: write-back
- Maintain state of each line in a cache
  - Invalid: not present in the cache
  - Clean: present, but not written (unmodified)
  - Dirty: present and written (modified)
- Store state in tag array, next to address tag
  - Mark dirty bit on a write
- On eviction, check dirty bit
  - If set, write back dirty line to next level
  - Called a writeback or castout
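The invalid/clean/dirty bookkeeping can be sketched with a small direct-mapped model. Everything here is an illustrative assumption: the class name `WriteBackCache`, the use of a plain dict as the next level, and the choice to fetch a block on a write miss (write-allocate), which the slide does not specify.

```python
from enum import Enum


class State(Enum):
    INVALID = 0   # not present in the cache
    CLEAN = 1     # present, unmodified
    DIRTY = 2     # present and modified


class WriteBackCache:
    """Direct-mapped write-back cache over whole blocks (toy model)."""

    def __init__(self, num_lines, next_level):
        self.num_lines = num_lines
        self.next_level = next_level   # dict: block number -> data
        self.lines = [
            {"state": State.INVALID, "block": None, "data": None}
            for _ in range(num_lines)
        ]
        self.writebacks = 0

    def _evict(self, line):
        # On eviction, check the dirty bit; write back only if it is set.
        if line["state"] is State.DIRTY:
            self.next_level[line["block"]] = line["data"]
            self.writebacks += 1
        line["state"] = State.INVALID

    def _line_for(self, block):
        line = self.lines[block % self.num_lines]
        if line["state"] is State.INVALID or line["block"] != block:
            self._evict(line)
            line.update(state=State.CLEAN, block=block,
                        data=self.next_level.get(block))
        return line

    def read(self, block):
        return self._line_for(block)["data"]

    def write(self, block, data):
        line = self._line_for(block)   # assumed write-allocate
        line["data"] = data
        line["state"] = State.DIRTY    # mark dirty bit on a write


memory = {0: "old-0", 4: "old-4"}
cache = WriteBackCache(num_lines=4, next_level=memory)
cache.write(0, "new-0")        # memory still holds the stale "old-0"
stale = memory[0]
cache.read(4)                  # block 4 maps to the same line: castout of block 0
```

After the last call the dirty copy of block 0 has been written back, so `memory[0]` holds the new value; evicting a clean line costs no writeback.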

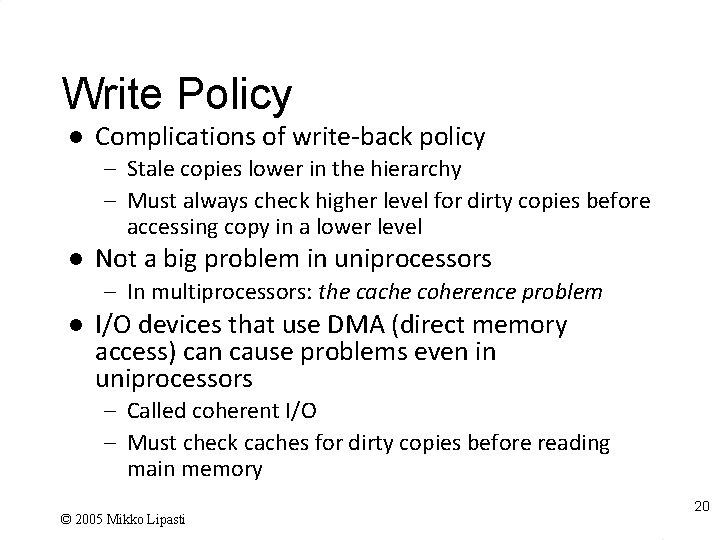

Write Policy

- Complications of write-back policy
  - Stale copies lower in the hierarchy
  - Must always check higher level for dirty copies before accessing copy in a lower level
- Not a big problem in uniprocessors
  - In multiprocessors: the cache coherence problem
- I/O devices that use DMA (direct memory access) can cause problems even in uniprocessors
  - Called coherent I/O
  - Must check caches for dirty copies before reading main memory

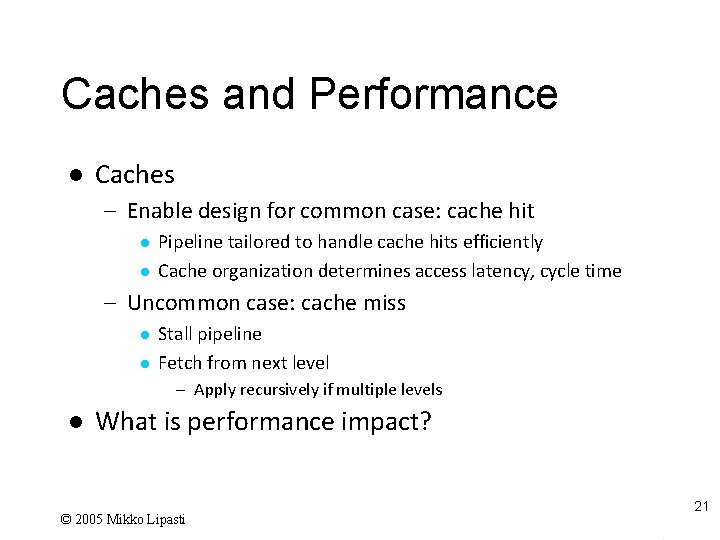

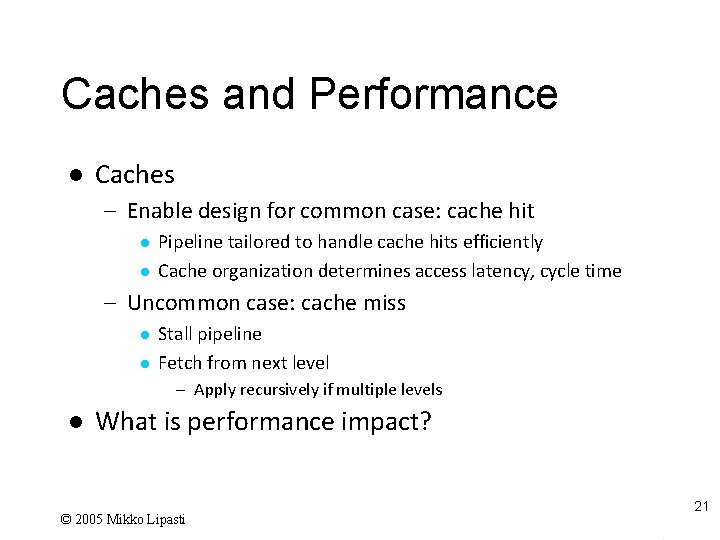

Caches and Performance

- Caches
  - Enable design for common case: cache hit
    - Pipeline tailored to handle cache hits efficiently
    - Cache organization determines access latency, cycle time
  - Uncommon case: cache miss
    - Stall pipeline
    - Fetch from next level
      - Apply recursively if multiple levels
- What is performance impact?

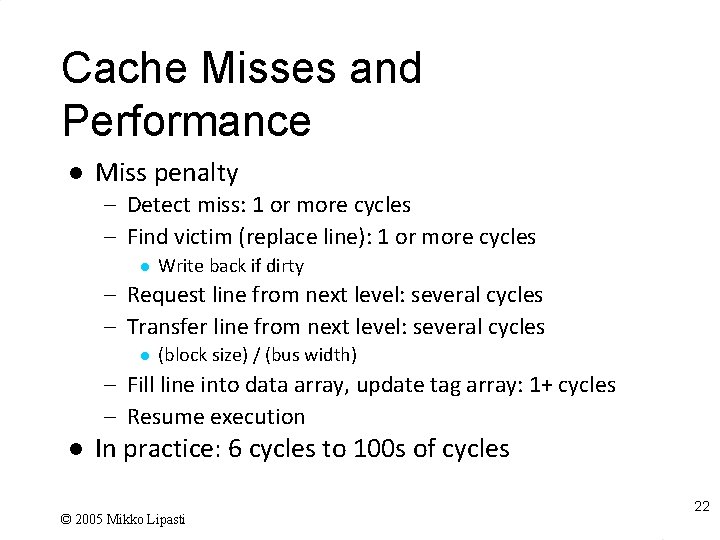

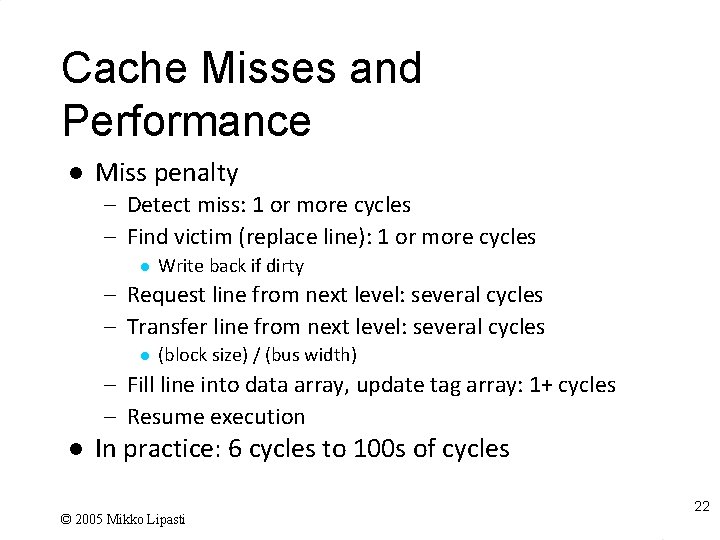

Cache Misses and Performance

- Miss penalty
  - Detect miss: 1 or more cycles
  - Find victim (replace line): 1 or more cycles
    - Write back if dirty
  - Request line from next level: several cycles
  - Transfer line from next level: several cycles
    - (block size) / (bus width)
  - Fill line into data array, update tag array: 1+ cycles
  - Resume execution
- In practice: 6 cycles to 100s of cycles
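The miss-penalty components simply add up, and the transfer term follows from (block size) / (bus width). A minimal sketch, with hypothetical function and parameter names and made-up cycle counts chosen only for illustration:

```python
from math import ceil


def transfer_cycles(block_size_bytes, bus_width_bytes, cycles_per_transfer=1):
    """Cycles to move one block over the bus: (block size) / (bus width) transfers."""
    transfers = ceil(block_size_bytes / bus_width_bytes)
    return transfers * cycles_per_transfer


def miss_penalty(detect, find_victim, request, transfer, fill):
    """Total miss penalty as the sum of its sequential steps."""
    return detect + find_victim + request + transfer + fill


# Illustrative numbers only: a 64 B block over a 16 B bus at 2 cycles per transfer.
xfer = transfer_cycles(64, 16, cycles_per_transfer=2)
total = miss_penalty(detect=1, find_victim=1, request=10, transfer=xfer, fill=1)
```

The sketch treats the steps as strictly sequential; real designs may overlap some of them (for example, victim selection with the request to the next level).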

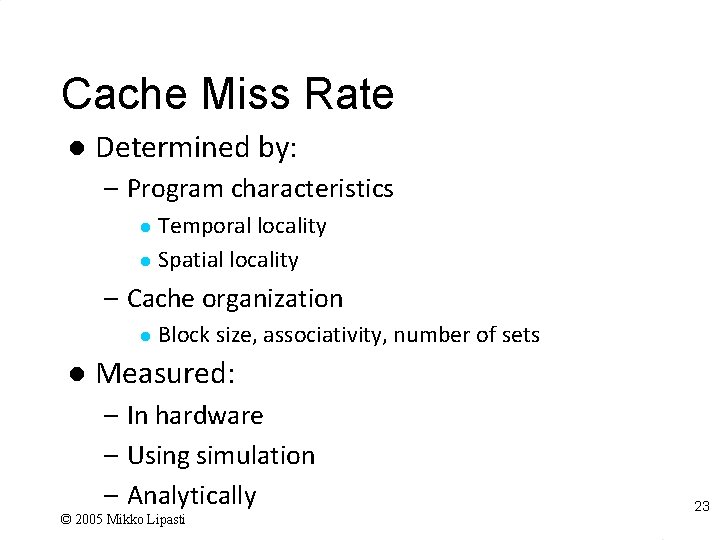

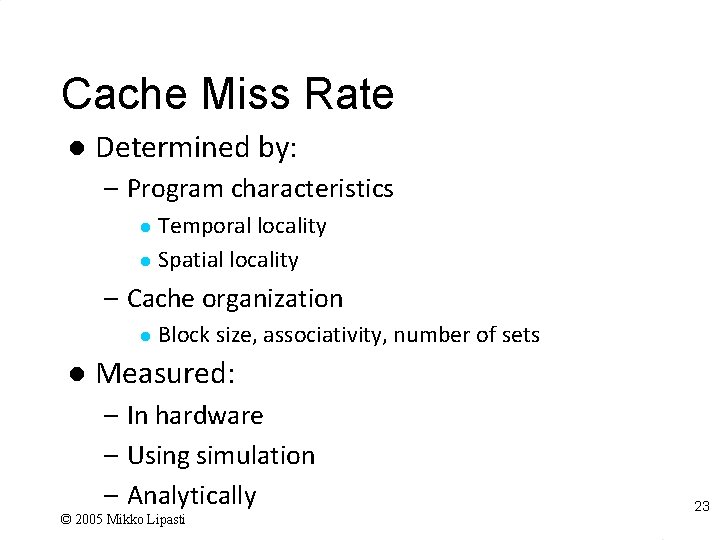

Cache Miss Rate

- Determined by:
  - Program characteristics
    - Temporal locality
    - Spatial locality
  - Cache organization
    - Block size, associativity, number of sets
- Measured:
  - In hardware
  - Using simulation
  - Analytically

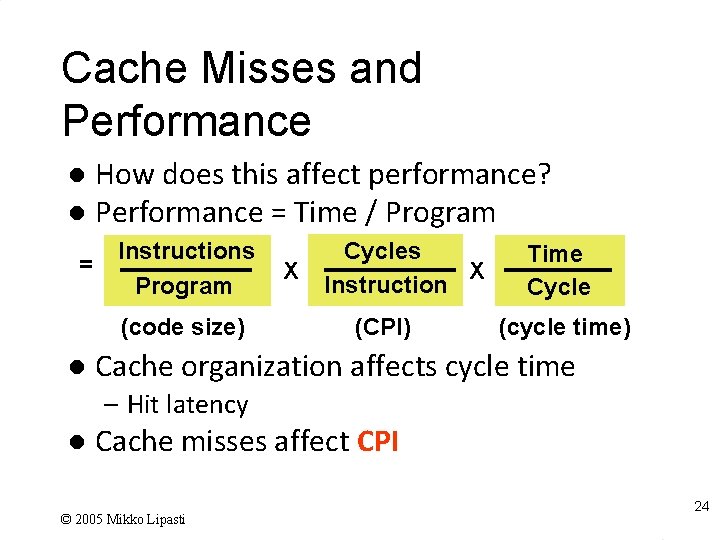

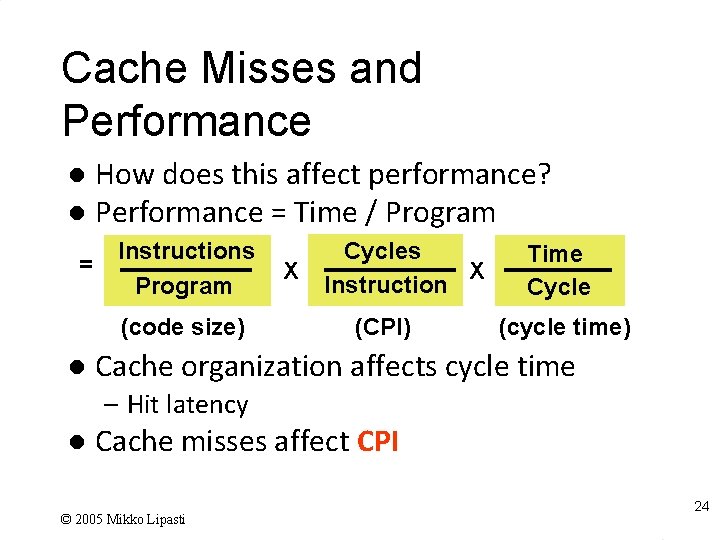

Cache Misses and Performance

- How does this affect performance?
- Performance = Time / Program

$$
\frac{\text{Time}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Time}}{\text{Cycle}}
$$

The three factors are, in order, code size, CPI, and cycle time.

- Cache organization affects cycle time
  - Hit latency
- Cache misses affect CPI

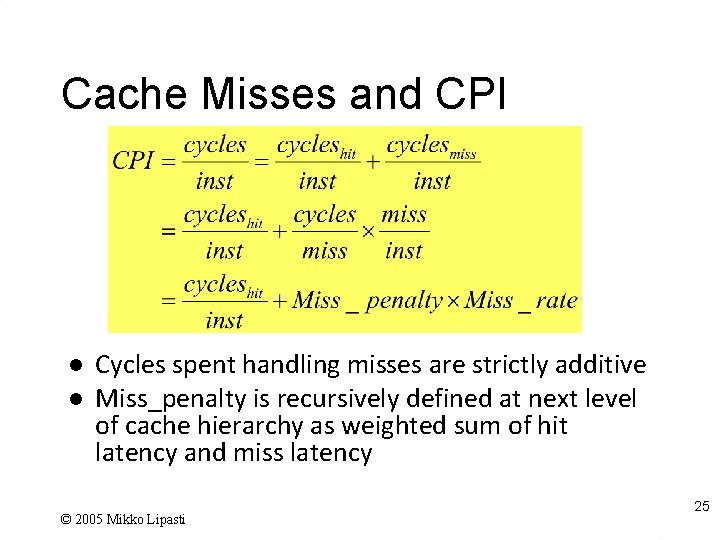

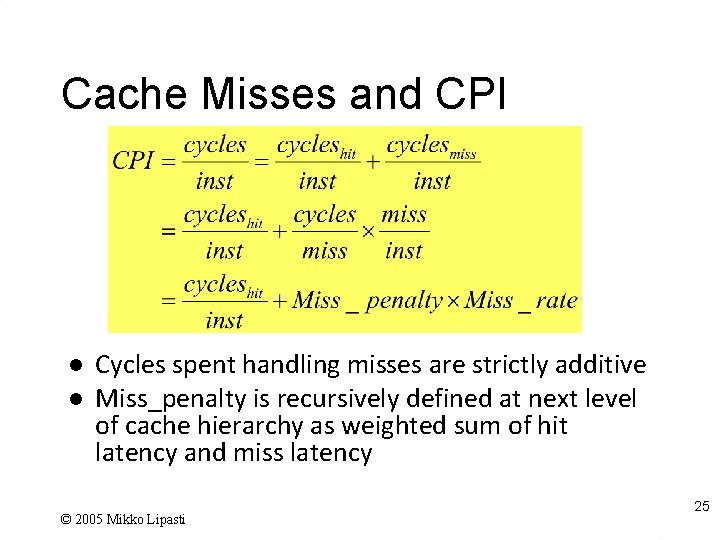

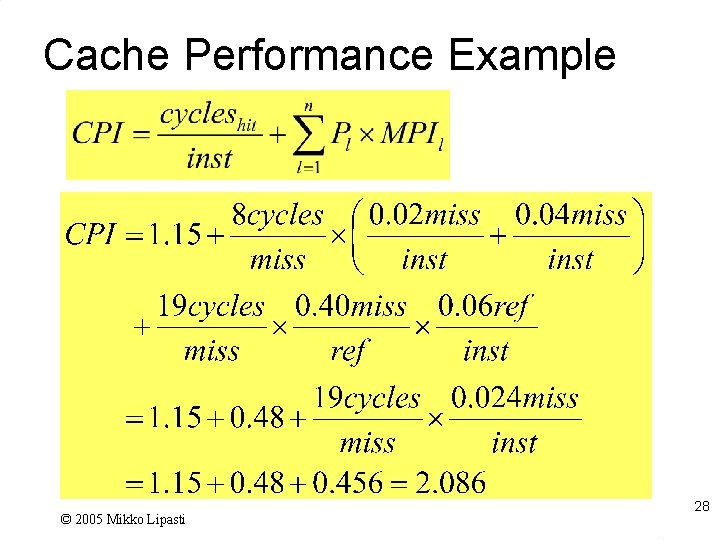

Cache Misses and CPI

- Cycles spent handling misses are strictly additive
- Miss_penalty is recursively defined at next level of cache hierarchy as weighted sum of hit latency and miss latency
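The equation on this slide survives only as an image. The following is a reconstruction consistent with the two bullets, offered as an assumption rather than the slide's exact notation; the symbol $h_{l+1}$ (local hit rate of the next level) is introduced here for illustration.

```latex
% Miss cycles add to the cycles an instruction would take if every access hit.
\mathrm{CPI} = \frac{\text{cycles}_{\text{hit}}}{\text{instruction}}
             + \text{Miss\_penalty} \times \frac{\text{misses}}{\text{instruction}}

% Recursive definition: the penalty seen at level l is a weighted sum of the
% next level's hit latency and miss latency.
\text{Miss\_penalty}_{l} = h_{l+1} \times \text{hit\_latency}_{l+1}
                         + (1 - h_{l+1}) \times \text{miss\_latency}_{l+1}
```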

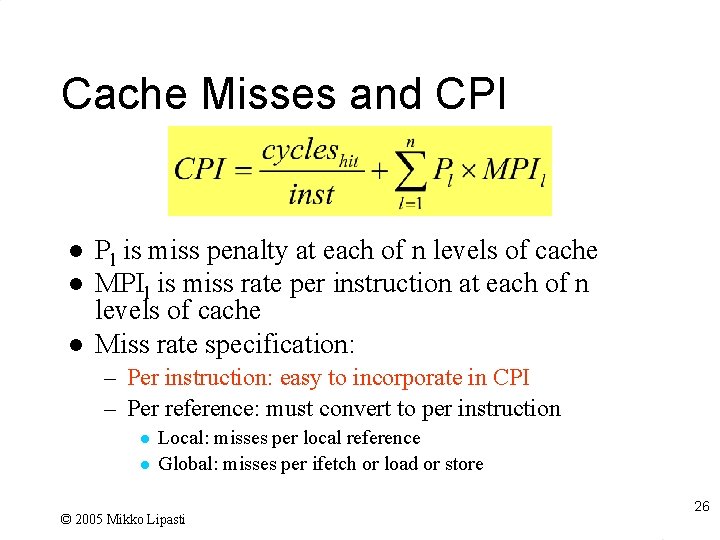

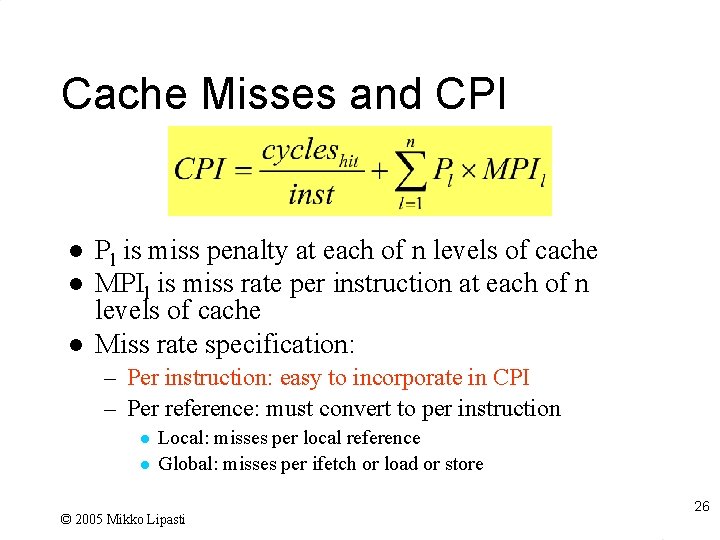

Cache Misses and CPI

- $P_l$ is miss penalty at each of n levels of cache
- $MPI_l$ is miss rate per instruction at each of n levels of cache
- Miss rate specification:
  - Per instruction: easy to incorporate in CPI
  - Per reference: must convert to per instruction
    - Local: misses per local reference
    - Global: misses per ifetch or load or store
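Converting a per-reference miss rate into a per-instruction one is a single multiplication by the number of references each instruction makes to that level. The sketch below uses hypothetical names and made-up rates (1.3 references per instruction, 5% and 40% local miss rates) purely for illustration.

```python
def misses_per_instruction(local_miss_rate, references_per_instruction):
    """MPI at one level = local (per-reference) miss rate x references per instruction."""
    return local_miss_rate * references_per_instruction


# Assumed workload: 1 instruction fetch plus 0.3 loads/stores per instruction.
l1_refs_per_instr = 1.0 + 0.3
l1_mpi = misses_per_instruction(0.05, l1_refs_per_instr)

# Only L1 misses reach the L2, so the L2 sees l1_mpi references per instruction.
l2_mpi = misses_per_instruction(0.40, l1_mpi)

# The L2's global miss rate is its misses per original (L1) reference.
l2_global_miss_rate = l2_mpi / l1_refs_per_instr
```

Under these assumed numbers the L2's 40% local miss rate corresponds to a 2% global miss rate, which is why the two must not be confused when plugging values into a CPI calculation.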

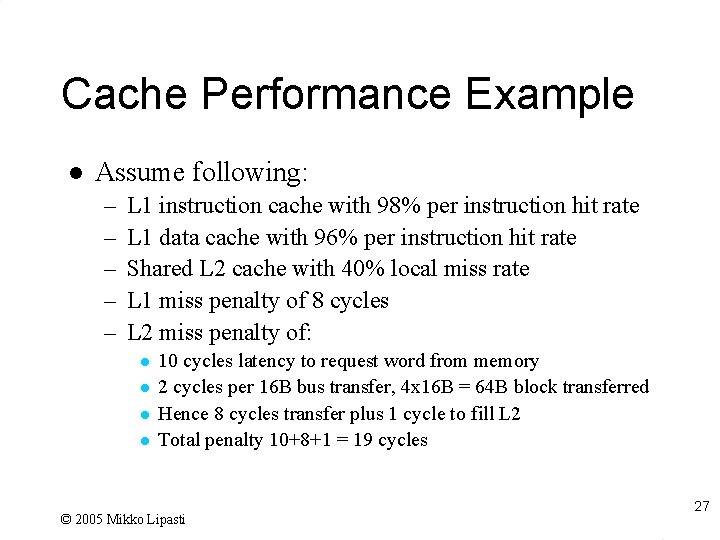

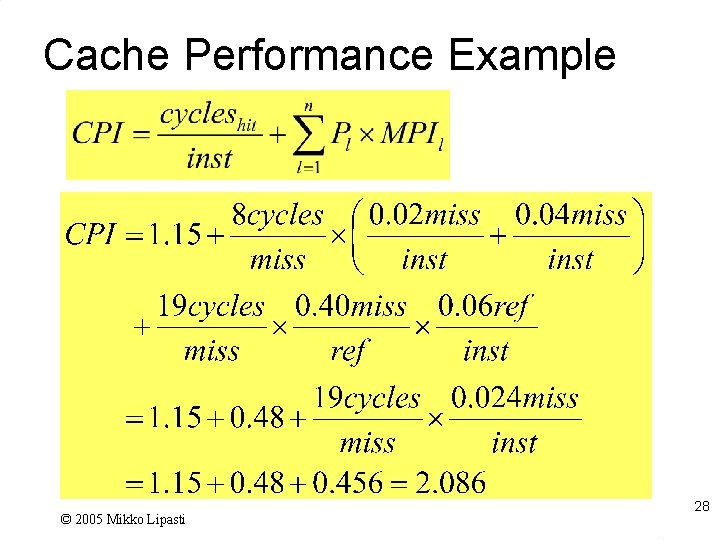

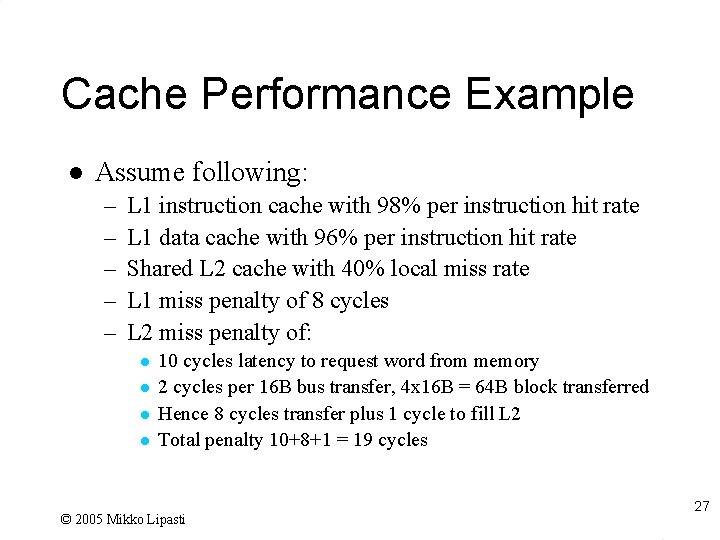

Cache Performance Example

- Assume following:
  - L1 instruction cache with 98% per instruction hit rate
  - L1 data cache with 96% per instruction hit rate
  - Shared L2 cache with 40% local miss rate
  - L1 miss penalty of 8 cycles
  - L2 miss penalty of:
    - 10 cycles latency to request word from memory
    - 2 cycles per 16 B bus transfer, 4 x 16 B = 64 B block transferred
    - Hence 8 cycles transfer plus 1 cycle to fill L2
    - Total penalty 10+8+1 = 19 cycles

Cache Performance Example

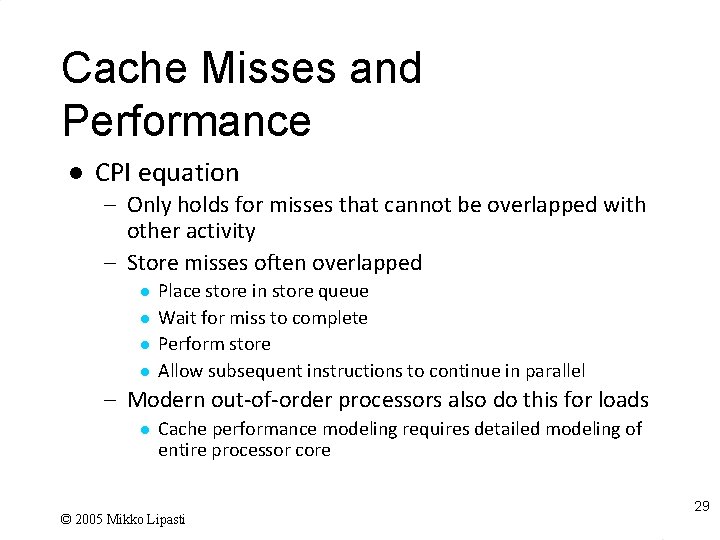
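The calculation for this example survives only as an image, so the following is a sketch of how the stated assumptions combine, treating miss cycles as strictly additive. The base CPI of 1.0 (`CPI_PERFECT`) is an assumption and does not appear in the surviving slide text; the variable names are illustrative.

```python
CPI_PERFECT = 1.0  # ASSUMPTION: CPI with no cache misses, not given in the text

# L1 misses per instruction: instruction cache plus data cache.
l1_mpi = (1 - 0.98) + (1 - 0.96)

# Every L1 miss is an L2 reference; 40% of those miss in the L2.
l2_mpi = l1_mpi * 0.40

l1_penalty = 8                     # cycles per L1 miss
l2_penalty = 10 + 4 * 2 + 1        # request + 4 transfers of 2 cycles + fill

cpi = CPI_PERFECT + l1_penalty * l1_mpi + l2_penalty * l2_mpi
```

Under that assumed base CPI, the misses add 0.48 cycles per instruction at the L1 and 0.456 at the L2, for a total CPI of about 1.936.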

Cache Misses and Performance

- CPI equation
  - Only holds for misses that cannot be overlapped with other activity
  - Store misses often overlapped
    - Place store in store queue
    - Wait for miss to complete
    - Perform store
    - Allow subsequent instructions to continue in parallel
  - Modern out-of-order processors also do this for loads
- Cache performance modeling requires detailed modeling of entire processor core

Recap

- Memory Hierarchy
  - Small for bandwidth and latency (cache)
  - Large for cost and capacity
- Key issues
  - Technology (how bits are stored)
  - Placement (where bits are stored)
  - Identification (finding the right bits)
  - Replacement (finding space for new bits)
  - Write policy (propagating changes to bits)
- Simple cache performance analysis